Abstract

Lane recognition is important for safe driving in structured road environments, and it is becoming an indispensable part of the advanced driver-assistance system (ADAS) for active security control. This paper proposes a novel lane detection and tracking approach for ADAS that uses the line segment detector (LSD), an adaptive angle filter and a dual Kalman filter. In the lane detection process, the region of interest (ROI) within the input image is converted to a grayscale image, which is further preprocessed with median filtering, histogram equalization, image thresholding and perspective mapping. Then, the fast and robust LSD algorithm is applied to the ROI, together with the proposed adaptive angle filter, to eliminate incorrect line segments more efficiently. In addition, a new three-level classifier based on the color, length and quantity of detected line segments is designed for lane classification. Finally, two Kalman filters are applied to track the detected lanes and predict the following ROIs, which improves robustness and processing speed. The experimental results on four datasets show that the proposed method performs robustly in complex environments with shadows or other artifacts. Its average correct lane detection rate is higher than 94%, its processing time is about 50% lower than that of a state-of-the-art method, and its average lane classification success rate is above 85%.

1 Introduction

With the increasing number of private cars on the road (WHO 2018), traffic accidents have become an increasingly severe problem, as reported by several investigations. To deal with this issue, the advanced driver-assistance system (ADAS), which aims at active security control, has emerged in recent years. In particular, lane departure warning system (LDWS) and lane change assistance (LCA) functions based on computer vision have been applied successfully in cars by some automobile manufacturers (Kodeeswari and Philemon 2018). One key technology in such systems is the recognition of lane markings, since it allows ADAS to keep the lane and to decide whether lane changing or overtaking is permitted.

Among the above-mentioned sub-systems of ADAS, lane detection is clearly the most important technology. Owing to advantages such as easy installation, informative data and similarity to the principle of human perception, vision-based methods are currently the mainstream in this field. Feature selection is the key step of image identification (Abualigah et al. 2019; Abualigah et al. 2018a, b, c; Abualigah and Khader 2017); techniques such as the Hough transform (HT) (Son et al. 2015), the Canny detector (Wu et al. 2014a, b) and linear or parabolic models (Mu and Ma 2014) are usually adopted to detect lane markings (Narote et al. 2018). McCall and Trivedi (2006) used a simple parabolic model that incorporates lane position, angle and curvature, together with steerable filters, to provide better performance for solid and segmented lane marking detection. Wu et al. (2014a, b) proposed a lane-mark extraction method that uses Canny edge detection with multi-adaptive thresholds for different blocks and uses either straight or curved line models to verify the candidate lane-mark edges and fit the lane marks. Mammeri et al. (2016) used maximally stable extremal region (MSER) blobs and the progressive probabilistic Hough transform (PPHT) to detect lane markings. Zhao et al. (2012) proposed a simple, flexible and robust spline model for lane detection and applied an extended Kalman filter to support multiple lane tracking and independent tracking. However, as discussed in Shin et al. (2014), difficulties remain if the feature extraction methods are too restrictive to deal with the complexity of road environments, or if the adopted model does not fit the practical situation sufficiently. To account for the perspective effect of the camera image, others put forward inverse perspective mapping (IPM) (Li et al. 2018) to transform the original image into a bird’s view image and used the vanishing point (VP) (Liu and Li 2013) to locate the ROI and filter out incorrect lines. However, these methods assume that the road is flat and constant in width.

More recently, the faster and more robust line segment detector (LSD) has attracted increasing attention from researchers (Von Gioi et al. 2010; Li et al. 2018). Liu and Li (2013) applied LSD to detect lane markings and eliminated non-lane markings by clustering on orientation information and the vanishing point. Nan et al. (2016) used LSD, a crossing point filter (CPF) and a structure triangle filter (STF) based on spatial–temporal knowledge to detect lane markings. Moreover, the methods for prediction and continuous tracking should be designed carefully (Abualigah and Hanandeh 2015; Abualigah et al. 2018a, b, c). Huang et al. (2018) proposed a classification–generation–growth-based (CGG) operator that uses multiple visual cues for lane detection and applied an adaptive noise covariance Kalman filter for lane tracking based on the dynamic switch of linear–parabolic models, which showed good performance in challenging scenarios. Besides, learning-based methods such as convolutional neural networks (Li et al. 2016; Lee et al. 2019) and support vector machines (Dou et al. 2016) have also been applied to lane detection and prediction in recent years.

On the other hand, lane classification also plays an important part in ADAS, as it indicates whether the moving car may change lanes. Unfortunately, few studies concentrate on this aspect. Ding et al. (2015) utilized spatial and frequency sampling for different types of lane markings. A Bayesian classifier based on mixtures of Gaussians was developed in Paula and Jung (2015) to classify lane markings into five categories. However, the above-mentioned methods process the whole image, which may lead to high computation cost. Song et al. (2018) used manually labeled ROIs transformed by IPM to train a convolutional neural network (CNN) with a real-time processing speed.

Inspired by the methods mentioned above, we present an efficient and robust lane detection and tracking method for ADAS in structured road environments. Experiments under different conditions are used to evaluate the accuracy and real-time performance of the method.

The main contributions of this paper are as follows:

(1) A lane detection method based on the LSD algorithm is proposed, with an adaptive angle filter used to eliminate incorrect line segments more efficiently.

(2) An adaptive ROI selection method based on warp perspective mapping (WPM) and a dual Kalman filter is designed for lane tracking.

(3) New three-level features that combine the color, length and quantity of lane lines are used for lane classification.

The rest of this paper is organized as follows. The pipeline and detailed design of the proposed lane detection and tracking method are presented in Sect. 2. The experimental results are given and analyzed in Sect. 3. Finally, Sect. 4 concludes the paper and discusses the future research work and directions.

2 Proposed method

The framework of the whole system is presented in Fig. 1. Each block of this system is described as follows.

2.1 Predetermined ROI selection

Lane markings usually lie in the middle of the image when the camera is positioned relative to the car as shown in Fig. 2. To improve the real-time performance and accuracy of the lane detection system, the ROI needs to be located first to eliminate the noise brought by irrelevant sky, trees, buildings, etc. Unlike previous methods, which defined the ROI based on the VPs or the mean value of each row, predetermined ROIs are set in front of the car, as shown in Fig. 2. The upper-left coordinate and size of the predetermined ROI should be changed in proportion to the size of the image used (Toan et al. 2016). To predetermine the ROI, the coordinates of the upper-left corner and the size are set to (X, Y) and W × H, respectively. The initial ROI is drawn as the red rectangle in Fig. 2.
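The proportional rescaling of the predetermined ROI can be sketched as follows. This is a minimal illustration only: the function name `scale_roi` is hypothetical, the base values are the (X, Y, W, H) reported in Sect. 3 for 640 × 480 frames, and simple rounding to the nearest pixel is an assumption.

```python
def scale_roi(roi, base_size, image_size):
    """Scale an (x, y, w, h) ROI defined on base_size to image_size."""
    x, y, w, h = roi
    base_w, base_h = base_size
    img_w, img_h = image_size
    sx, sy = img_w / base_w, img_h / base_h  # horizontal and vertical scale
    return (round(x * sx), round(y * sy), round(w * sx), round(h * sy))


# ROI reported in Sect. 3 for 640 x 480 frames: X=100, Y=245, W=440, H=100.
BASE_ROI = (100, 245, 440, 100)

# Hypothetical camera that delivers frames twice as large in each direction.
print(scale_roi(BASE_ROI, (640, 480), (1280, 960)))
```

Keeping the ROI as a fraction of the frame in this way means the same settings can be reused when the camera resolution changes.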

2.2 Preprocessing operations

2.2.1 Grayscale method

Color information is not used in lane marking detection; therefore, the RGB camera image is converted to a grayscale image to reduce redundant data. The general computation of the gray value is

\[Gray = \omega_{1} R + \omega_{2} G + \omega_{3} B \qquad (1)\]

Previous studies usually set \(\omega_{1} = 0.299,\omega_{2} = 0.587,\omega_{3} = 0.114\), as the human eye is more sensitive to red and green; some grayscale results are shown in Fig. 3a. In contrast to this common approach, an effective contrast enhancement method shows that the R and G channels display excellent contrast for the white and yellow colors of actual road lanes; thus, the new weighting coefficients are set as \(\omega_{1} = 0.5,\omega_{2} = 0.5,\omega_{3} = 0\) to achieve better grayscale performance, as shown in Fig. 3b.
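The effect of the two weight sets can be illustrated with a short sketch. The pixel values below are hypothetical and chosen only to represent a saturated yellow marking and a dark, slightly bluish road surface; the function name `to_gray` is likewise an assumption.

```python
STANDARD_WEIGHTS = (0.299, 0.587, 0.114)  # common luminance weights
PROPOSED_WEIGHTS = (0.5, 0.5, 0.0)        # R/G-only weights used in this paper


def to_gray(pixel, weights=PROPOSED_WEIGHTS):
    """Weighted-average gray value of one (R, G, B) pixel."""
    r, g, b = pixel
    w1, w2, w3 = weights
    return w1 * r + w2 * g + w3 * b


yellow_marking = (255, 255, 0)   # hypothetical lane-marking pixel
asphalt = (90, 90, 100)          # hypothetical road-surface pixel

for name, weights in (("standard", STANDARD_WEIGHTS), ("proposed", PROPOSED_WEIGHTS)):
    contrast = to_gray(yellow_marking, weights) - to_gray(asphalt, weights)
    print(name, round(contrast, 2))
```

For these sample pixels the R/G-only weights give the larger gap between marking and road, which is the behavior the contrast argument above relies on.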

2.2.2 Image smoothing

Road images contain considerable noise caused by external influences such as illumination variation, moving cars and shadows. Noise usually appears as high-frequency information, which means the image needs to be processed by low-pass filtering. After a comprehensive comparison, the median filter is chosen to smooth the image and reduce noise. The kernel size, seen in Eq. (2), is selected as 3 × 3 to balance the smoothing performance and the computation cost. Examples of smoothed images are shown in Fig. 4.
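A 3 × 3 median filter can be sketched in a few lines. This is an illustrative implementation on a nested-list image, not the implementation used in the experiments; leaving the border pixels unchanged is an assumption made to keep the example short.

```python
from statistics import median


def median_filter_3x3(img):
    """Apply a 3 x 3 median filter; border pixels are copied unchanged."""
    height, width = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            window = [img[y + dy][x + dx]
                      for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            out[y][x] = median(window)  # middle value of the 9 neighbors
    return out


# Hypothetical patch with one impulse-noise pixel in the center.
patch = [[10, 10, 10],
         [10, 255, 10],
         [10, 10, 10]]
print(median_filter_3x3(patch)[1][1])
```

The isolated bright pixel is replaced by the value of its neighbors, while a true edge spanning several pixels would survive, which is why the median is preferred over a mean filter for this kind of noise.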

2.2.3 Image enhancing

To magnify the contrast between the road area and the non-road area, an image enhancement step is performed. Three-segment linear transformation enhancement and histogram equalization were compared experimentally, and the latter was selected. Histogram equalization changes the original histogram into a more uniform distribution, as shown in Fig. 5, which increases the dynamic range of the pixel gray values and thereby enhances the image. Some results of image enhancement are shown in Fig. 6.
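Histogram equalization can be sketched with the usual cumulative-distribution mapping. The function name and the tiny test image are hypothetical, and the normalization by the smallest non-zero CDF value is one common convention rather than a detail taken from this paper.

```python
def equalize_histogram(img, levels=256):
    """Histogram-equalize a grayscale image given as a list of rows."""
    flat = [v for row in img for v in row]
    hist = [0] * levels
    for v in flat:
        hist[v] += 1
    cdf, running = [], 0
    for count in hist:            # cumulative histogram
        running += count
        cdf.append(running)
    cdf_min = min(c for c in cdf if c > 0)
    n = len(flat)
    if n == cdf_min:              # constant image: nothing to stretch
        return [row[:] for row in img]
    scale = (levels - 1) / (n - cdf_min)
    lut = [max(0, round((c - cdf_min) * scale)) for c in cdf]
    return [[lut[v] for v in row] for row in img]


# Hypothetical low-contrast patch: all values lie between 100 and 120.
low_contrast = [[100, 100],
                [110, 120]]
print(equalize_histogram(low_contrast))
```

The narrow input range is stretched to the full 0 to 255 range while the ordering of gray levels is preserved.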

2.2.4 Image threshold

After the above preprocessing operations, image segmentation is much easier to perform because of the high contrast between the lane area and the non-lane area. This step is indispensable because its result serves as the input of LSD; meanwhile, the amount of data is reduced sharply. Candidate thresholding methods include the iterative method, the OTSU method, the optimal threshold method, etc. Considering the variety of road environments, a method that produces an adaptive threshold is needed. Hence, in this paper, we choose the classical OTSU method, which has a low computation cost and is not sensitive to changes in image quality. Examples of the processed images are shown in Fig. 7.
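The OTSU criterion selects the threshold that maximizes the between-class variance of the two resulting pixel groups. A minimal sketch on a flat list of gray values is given below; the function names and the sample data are hypothetical.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximizes the between-class variance."""
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0, sum0 = 0, 0                      # weight and gray sum of class 0
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue                     # one class is empty
        mu0, mu1 = sum0 / w0, (sum_all - sum0) / w1
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t


def binarize(pixels, t):
    """Pixels brighter than t become white (255), the rest black (0)."""
    return [255 if v > t else 0 for v in pixels]


# Hypothetical data: dark road pixels plus a smaller group of bright markings.
sample = [20] * 50 + [30] * 50 + [200] * 20 + [220] * 20
t = otsu_threshold(sample)
print(t, binarize(sample, t).count(255))
```

Because the threshold is recomputed from each frame's histogram, it adapts to global brightness changes without manual tuning.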

2.3 Warp perspective mapping (WPM)

The existing method named warp perspective mapping (WPM), also called the four-point method, is applied to transform the camera image into a bird’s view image, since it requires neither camera calibration nor external parameters. The homography matrix \({\mathbf{H}}\) is acquired by mapping the corners of an assumed ground-plane rectangle to the corresponding points in the bird’s view image. After obtaining \({\mathbf{H}}\), the original image is mapped pixel by pixel. (One result is shown in Fig. 8.) The lane detection results in Sect. 3 show better performance than our earlier work (Liu et al. 2018); for example, the lane in the far field can also be detected by LSD.

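Suppose the homogeneous coordinate of a point in the input image is \({\mathbf{X}} = \left( {u,v,1} \right)^{T}\), and the corresponding coordinate in the bird’s view image is \({\mathbf{X}}^{^{\prime}} = \left( {u^{^{\prime}} ,v^{^{\prime}} ,1} \right)^{T}\); then, their relationship can be described, up to a nonzero scale factor, as

\[{\mathbf{X}}^{^{\prime}} = {\mathbf{H}}{\mathbf{X}} \qquad (3)\]

where \({\mathbf{H}}\) is a 3 × 3 homography matrix:

\[{\mathbf{H}} = \begin{pmatrix} h_{11} & h_{12} & h_{13} \\ h_{21} & h_{22} & h_{23} \\ h_{31} & h_{32} & h_{33} \end{pmatrix} \qquad (4)\]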
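Applying a homography to a single point can be sketched as below. The function name `warp_point` and the example matrix are hypothetical; the matrix is chosen only to make the perspective division visible and is not a calibrated mapping from this work.

```python
def warp_point(H, u, v):
    """Map image point (u, v) through a 3 x 3 homography H (nested lists)."""
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    if w == 0:
        raise ValueError("point maps to infinity")
    # Divide by the third homogeneous coordinate to obtain (u', v').
    return x / w, y / w


# Hypothetical matrix: identity except for a scale of 2 in the last entry.
H_EXAMPLE = [[1.0, 0.0, 0.0],
             [0.0, 1.0, 0.0],
             [0.0, 0.0, 2.0]]
print(warp_point(H_EXAMPLE, 4.0, 6.0))
```

In an OpenCV-based implementation, the four-point estimation and the per-pixel mapping would typically be handled by `getPerspectiveTransform` and `warpPerspective`; whether those exact calls were used here is not stated in the text.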

2.4 Lane detection

After the above image preprocessing operations, LSD is employed to detect the line segments that belong to lanes. Niu et al. (2016) have shown that the capability of LSD is superior to that of the Hough transform. In particular, the input binary image is divided into two parts, namely the left ROI and the right ROI. This step benefits the subsequent selection of candidate line segments and the lane tracking based on Kalman filters.

The LSD detection process is expressed as

The detected line segments are denoted as \(S = \left\{ {s_{1} ,s_{2} ,s_{3} ,...,s_{n} } \right\}\); each line segment \(s_{i} \left( {i = 1,2,...,n} \right)\) is defined as

\[s_{i} = \left\{ {x_{1i} ,y_{1i} ,x_{2i} ,y_{2i} ,k_{i} } \right\} \qquad (6)\]

where \(\left( {x_{1i} ,y_{1i} } \right)\) and \(\left( {x_{2i} ,y_{2i} } \right)\) are the coordinates of the starting and ending points, respectively. Here, the point with the smaller y-axis coordinate is defined as the starting point, and \(k_{i}\) is the angle of the line segment \(s_{i}\), given by Eq. (7).

Therefore, the detected line segments of the two parts of the ROI can be expressed as \(S_{left} = \left\{ {s_{1} ,s_{2} ,s_{3} ,...,s_{n} } \right\}\) and \(S_{right} = \left\{ {s_{1} ,s_{2} ,s_{3} ,...,s_{m} } \right\}\). Each line segment \(s_{i}\) is redefined as \(S_{left\_i} = \left\{ {x_{1i} ,y_{1i} ,x_{2i} ,y_{2i} ,k_{i} } \right\},\left( {i = 1,2,...,n} \right)\), or \(S_{right\_i} = \left\{ {x_{1i} ,y_{1i} ,x_{2i} ,y_{2i} ,k_{i} } \right\},\left( {i = 1,2,...,m} \right)\). The angles are expressed as \(K_{left\_i} = \left\{ {k_{1} ,k_{2} ,k_{3} ,...k_{n} } \right\},\left( {K_{left\_i} < 0} \right)\) and \(K_{right\_i} = \left\{ {k_{1} ,k_{2} ,k_{3} ,...k_{m} } \right\},\left( {K_{right\_i} > 0} \right)\).
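The segment representation can be sketched as a small data structure. Several details here are assumptions: the angle is taken as the arctangent of the slope in degrees (the exact form of the angle equation is not reproduced in this text), vertical segments are assigned 90°, and the names `Segment`, `make_segment` and `split_left_right` are hypothetical. Image coordinates with the y-axis pointing downward are assumed.

```python
import math
from dataclasses import dataclass


@dataclass
class Segment:
    x1: float
    y1: float
    x2: float
    y2: float
    k: float  # angle in degrees (assumed definition)


def make_segment(xa, ya, xb, yb):
    """Order the end points so the start has the smaller y, then add the angle."""
    if ya > yb:
        xa, ya, xb, yb = xb, yb, xa, ya
    dx, dy = xb - xa, yb - ya
    k = 90.0 if dx == 0 else math.degrees(math.atan(dy / dx))
    return Segment(xa, ya, xb, yb, k)


def split_left_right(segments):
    """Negative angles go to the left set, positive angles to the right set."""
    left = [s for s in segments if s.k < 0]
    right = [s for s in segments if s.k > 0]
    return left, right


# Hypothetical end points of one left and one right lane boundary.
segs = [make_segment(100, 100, 200, 0), make_segment(300, 0, 400, 100)]
left, right = split_left_right(segs)
print(left[0].k, right[0].k)
```

With this convention a boundary that leans toward the image center from the left side has a negative angle and one that leans from the right side has a positive angle, matching the sign conditions on the left and right angle sets.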

2.5 Noisy lanes filtering

The detection results show that LSD also produces some false detections (i.e., some detected segments do not belong to a lane), as shown in Fig. 9a. Owing to the preprocessing operations, however, the incorrect line segments are clearly fewer than the true ones. Using this characteristic, an adaptive angle filtering method is proposed, instead of the fixed slope range adopted in Toan et al. (2016), to obtain the candidate lane markings more robustly and correctly. Equation (8) first calculates the average angle of the detected line segments; if the difference between the angle \(k_{i}\) and the average angle \(k_{i}^{*}\) exceeds a threshold T, then the segment \(s_{i}\) is deleted unless it conforms to Eq. (9).

Combined with some other filtering approaches such as the length property of line segments, the filtering process and results are shown in Fig. 9b.
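The core of the adaptive angle filter, comparing each angle with the mean angle of the current frame, can be sketched as follows. The exception condition of Eq. (9) and the additional length-based filtering are not modeled, and the threshold and angle values in the example are hypothetical.

```python
def adaptive_angle_filter(angles, threshold):
    """Return indices of segments whose angle is within threshold of the mean."""
    if not angles:
        return []
    mean_angle = sum(angles) / len(angles)   # recomputed for every frame
    return [i for i, k in enumerate(angles)
            if abs(k - mean_angle) <= threshold]


# Hypothetical left-ROI angles in degrees: four lane segments and one outlier.
left_angles = [-60.0, -58.0, -62.0, -61.0, -10.0]
print(adaptive_angle_filter(left_angles, threshold=15.0))
```

Because the reference angle follows the majority of segments in each frame, the filter adapts to lane orientation changes, provided that true lane segments outnumber false ones as argued above.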

2.6 Lane classification

Driving maneuvers, such as lane changing or overtaking, are usually operated by drivers. In this case, ADAS should know the additional information of each lane to judge whether such operations could be allowed or not. In this part, our work is to further classify the lanes into various types, for instance yellow/white, double/single and solid/dashed.

Yellow or white lane markings on a two-way road are used to separate traffic flows in the same or opposite directions. Little attention has been paid to this before. In Ding’s method (Ding et al. 2015), color information is extracted in the HSV space. As the original RGB image is still stored in memory, we distinguish yellow and white directly in the RGB space, with no need for a color space transformation. The other colors can be eliminated through Eq. (10):

Only yellow and white then remain in the processed image. In yellow, the B channel value is much lower than the R and G channel values, while in white the three channel values are almost equal. Therefore, white and yellow can be distinguished by Eq. (11):

where R, G and B are the values of the red, green and blue channels, respectively, and \(V_{B}\) represents the blue proportion of the pixel.

In our experiments, a pixel is judged to be yellow if \(V_{B}\) is less than 0.25; otherwise, it is judged to be white.
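The color decision can be sketched as below. The blue proportion is assumed here to be B / (R + G + B), which is consistent with the description of \(V_{B}\) and with the 0.25 threshold but is not confirmed by the text; the function names and sample pixels are hypothetical, and the removal of other colors by Eq. (10) is not modeled.

```python
def blue_proportion(r, g, b):
    """Assumed definition of V_B: the share of blue in the channel sum."""
    total = r + g + b
    if total == 0:
        return 1.0 / 3.0   # treat a black pixel as achromatic
    return b / total


def classify_color(r, g, b, threshold=0.25):
    """Yellow if the blue proportion is below the threshold, else white."""
    return "yellow" if blue_proportion(r, g, b) < threshold else "white"


# Hypothetical marking pixels.
print(classify_color(230, 200, 40))    # low blue share
print(classify_color(240, 240, 235))   # nearly equal channels
```

A white pixel has a blue share close to one third, so the 0.25 threshold leaves a margin on both sides of the decision.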

Solid and dashed lanes indicate whether lane changes are possible. Current methods focus on accumulating gradient changes or on sampling, both of which are statistical approaches. However, the most significant and obvious feature of these lanes is their length. That is, solid lanes always run through the entire ROI, while dashed lanes occupy only a small part of the ROI. Thus, a simple binary judgment can be used, and the length threshold is set as half of the ROI height.

Owing to the robustness and accuracy of LSD, all lane boundaries can be detected efficiently. This means that a double lane yields four line segments, while a single lane yields two. In unexpected situations, one detected line may be split into two discrete segments because of undesirable environmental conditions. Therefore, the threshold for the number of line segments is set as 4.

In conclusion, the three criteria above are combined into a three-level classifier that distinguishes eight types of lane, as shown in Fig. 10.
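The three decision levels can be combined as in the sketch below. The label format follows the abbreviations used in Sect. 3 (for example "YDSL" for a yellow double solid lane). Two points are assumptions: the length test is applied to the longest segment of the lane, and a count of four or more segments is treated as a double lane. The function name `classify_lane` is hypothetical.

```python
def classify_lane(color, longest_length, roi_height, n_segments,
                  count_threshold=4):
    """Return a four-letter label: color, single/double, solid/dashed, 'L'."""
    color_code = "Y" if color == "yellow" else "W"
    # Level 2 (length): solid if the lane spans at least half the ROI height.
    style_code = "S" if longest_length >= roi_height / 2 else "D"
    # Level 3 (quantity): assumed rule, >= count_threshold means double.
    count_code = "D" if n_segments >= count_threshold else "S"
    return f"{color_code}{count_code}{style_code}L"


# Hypothetical measurements for an ROI of height 100 pixels.
print(classify_lane("yellow", 95, 100, 4))
print(classify_lane("white", 30, 100, 2))
```

Two colors, two counts and two styles give the 2 × 2 × 2 = 8 lane types mentioned above.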

2.7 Lane tracking

After the angle filtering of the line segments, the central line of each detected side line is drawn, and the dashed lines are extended to reach the lower and upper sides of the ROI, as shown in Fig. 11. Then, in the lane tracking step, two Kalman filters are used, one in the left half ROI and one in the right half ROI, to track the left and right lanes, respectively. Instead of tracking one point coordinate and the slope of the line (Chen et al. 2014), two virtual lane points, i.e., A and B, or C and D, are tracked directly. Furthermore, as the vertical coordinates of the virtual points are already known, only the horizontal coordinates a, b, c and d need to be tracked.

Both left and right lane detection results are tracked by the Kalman filter (Narote et al. 2018; Miad and Chung 2019; Goleijani and Ameli 2019). In the left lane tracking process, the state vector and observation vector are defined as

where (a, b) is the horizontal coordinates of left virtual boundary points A and B, and \(\Delta a{\text{ and }}\Delta b\) are the deviations of a and b. The prediction and correction process are expressed by Eqs. (14) and (15), respectively.

The state transition matrix \(A\) is

And the measurement matrix \(H\) is

where \(P\left( {k\left| {k - 1} \right.} \right){\text{ and }}P\left( {k\left| k \right.} \right)\) are the prior and posterior estimated error covariances, respectively, and \(K\left( k \right)\) is the Kalman gain used to update the predicted \(X\left( {k\left| {k - 1} \right.} \right)\) and \(P\left( {k\left| {k - 1} \right.} \right)\).

The process noise and the measurement noise, with covariances \(Q\) and \(R\), respectively, are assumed to be Gaussian white noise and independent of each other.

Similarly, the lane tracking of the right half ROI is the same as the above process.
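The prediction and correction steps can be sketched for one horizontal coordinate as below. This is not the filter used in the experiments: it assumes a constant-velocity transition (position plus deviation), a measurement of the position only, and diagonal noise covariances, under which the four-dimensional filter for (a, b, Δa, Δb) decouples into one two-state filter per coordinate. The class name and the noise values are hypothetical.

```python
class ScalarKalman:
    """Two-state (position, deviation) Kalman filter for one coordinate."""

    def __init__(self, position, q=0.01, r=1.0, p0=100.0):
        self.pos, self.vel = position, 0.0
        self.P = [[p0, 0.0], [0.0, p0]]   # estimated error covariance
        self.q, self.r = q, r             # assumed noise variances

    def predict(self):
        # X(k|k-1) = A X(k-1|k-1) with assumed A = [[1, 1], [0, 1]]
        self.pos += self.vel
        (p00, p01), (p10, p11) = self.P
        # P(k|k-1) = A P A^T + Q with Q = q * I
        self.P = [[p00 + p01 + p10 + p11 + self.q, p01 + p11],
                  [p10 + p11, p11 + self.q]]
        return self.pos

    def update(self, z):
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.r                  # innovation covariance, H = [1, 0]
        k0, k1 = p00 / s, p10 / s         # Kalman gain K(k)
        residual = z - self.pos
        self.pos += k0 * residual
        self.vel += k1 * residual
        # P(k|k) = (I - K H) P(k|k-1)
        self.P = [[(1 - k0) * p00, (1 - k0) * p01],
                  [p10 - k1 * p00, p11 - k1 * p01]]
        return self.pos


# Hypothetical measurements of coordinate a drifting by 2 pixels per frame.
kf_a = ScalarKalman(100.0)
for z in range(102, 162, 2):
    kf_a.predict()
    kf_a.update(float(z))
print(round(kf_a.predict(), 2))   # prediction for the next frame
```

When no segment is detected in a frame, only `predict` would be called, so the predicted coordinate can stand in for the missing measurement.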

After the points A, B, C and D (as shown in Fig. 11) are tracked successfully by the Kalman filters, another operation named ROI tracking, i.e., ROI prediction, is performed. As the coordinates a, b, c and d are already known from lane tracking, the width of the left half ROI in the next frame can be redefined based on the difference between a and b. (The same goes for the right half ROI.) Both detection accuracy and computation speed can therefore be improved because the ROI is smaller and more precise. To guard against unexpected situations and enhance robustness, the predicted ROIs are extended outward by M pixels. For example, the width of the left half ROI is |a-b|+ M, as shown in Fig. 12a. An example of the predicted ROIs is shown in Fig. 12b, c.

Meanwhile, a tracking failure mechanism triggered by three consecutive frame failures is added to cope with extreme situations. If this happens (i.e., the ROI prediction has failed), the next ROI is reset to the predetermined ROI shown in Fig. 2.
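The ROI update and the fallback rule can be sketched together. The width formula |a-b| + M and the three-failure rule follow the description above, with M = 20 as set in Sect. 3; the class and function names are hypothetical, and resetting the failure counter after a fallback is an assumption.

```python
def predicted_roi_width(a, b, margin=20):
    """Width of a half ROI in the next frame: |a - b| plus the margin M."""
    return abs(a - b) + margin


class RoiTracker:
    """Falls back to the predetermined ROI after consecutive failures."""

    def __init__(self, default_roi, max_failures=3):
        self.default_roi = default_roi
        self.max_failures = max_failures
        self.failures = 0

    def next_roi(self, predicted_roi, detection_ok):
        if detection_ok:
            self.failures = 0
            return predicted_roi
        self.failures += 1
        if self.failures >= self.max_failures:
            self.failures = 0            # assumed: restart counting
            return self.default_roi
        return predicted_roi


# Hypothetical tracked coordinates and ROIs given as (x, y, w, h).
default = (100, 245, 440, 100)
width = predicted_roi_width(a=180, b=150)
predicted = (140, 245, width, 100)
tracker = RoiTracker(default)
print([tracker.next_roi(predicted, ok) for ok in (True, False, False, False)])
```

Only the third consecutive failure triggers the reset, so a single missed frame does not discard the smaller predicted ROI.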

Some lane tracking results are shown in Fig. 13. It can be seen that if no line segment is detected, the virtual lane will be drawn by the predicted points A, B, C, D.

3 Experiments

To test lane marking detection and tracking, we implemented the proposed algorithm using Microsoft Visual Studio 2012 and OpenCV 3.0 and conducted the experiments on a PC with an Intel Core i5-3470 CPU @ 3.20 GHz. In our experiments, X is set as 100, Y as 245, W as 440 and H as 100; the threshold T of Eq. (9) is set as 85, and the M of Fig. 12a is set empirically as 20 pixels.

3.1 Experiment results

3.1.1 Lane detection method results

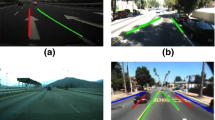

To verify the efficiency of the adaptive ROI and the adaptive angle filter for lane detection, we conducted experiments on the Caltech datasets (Toan et al. 2016), which total 1225 frames with an image size of 640 × 480 and contain challenging scenarios such as different pavements, moving cars, artificial road markings and many shadows. The final detection results are shown in Fig. 14.

The images in Fig. 14 are representative detection results, which show that the proposed method can deal with such complex scenarios successfully. Meanwhile, three indexes, namely the correct detection rate (CDR), false detection rate (FDR) and missing detection rate (MDR), are adopted to evaluate the detection accuracy quantitatively. These indexes are defined in Eq. (18):

\[CDR = \frac{NCD}{TNF} \times 100\% ,\quad FDR = \frac{NFD}{TNF} \times 100\% ,\quad MDR = \frac{NMD}{TNF} \times 100\% \qquad (18)\]

where NCD, NFD, NMD and TNF are the number of correct detection, false detection, missing detection and total frames, respectively. And the statistical results are shown in Table 1.
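The three indexes can be computed as in the sketch below, assuming each rate is the corresponding count divided by the total number of frames. The counts in the example are hypothetical and are not taken from Table 1.

```python
def detection_rates(ncd, nfd, nmd, tnf):
    """Return (CDR, FDR, MDR) in percent for the given frame counts."""
    if tnf <= 0:
        raise ValueError("total number of frames must be positive")
    return tuple(100.0 * count / tnf for count in (ncd, nfd, nmd))


# Hypothetical counts for a 1000-frame sequence.
cdr, fdr, mdr = detection_rates(ncd=945, nfd=30, nmd=25, tnf=1000)
print(cdr, fdr, mdr)
```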

It can be seen that the test on Cordova1 has the highest correct detection rate and the lowest false and missing detection rates, as its road environment is much better than the others. In contrast, lane detection performance on Washington1 is the worst because of varying illumination conditions, more cluttered shadows and other interference. Even in such complex conditions, the proposed method still achieves an average correct rate of 94.48%, which means that the accuracy and robustness of our algorithm can satisfy the requirements of ADAS.

3.1.2 Lane tracking method results

To verify the effectiveness of the lane tracking method based on warp perspective mapping (WPM), the dual Kalman filter and the predicted ROIs, we compared our method with the algorithm presented in Toan et al. (2016). Some typical situations (frames) are selected, and the results of lane detection and tracking are shown in Fig. 15.

It can be seen that both methods perform well on the first-row images, which show standard road conditions. The influence of shadows on lane detection is overcome by the robust LSD. However, when the car drives across roads with stained writing or without lane markings in the ROI, fatal detection errors occur in the second row of Fig. 15a. Owing to the proposed warp perspective mapping (WPM) and the lane tracking step using Kalman filters, our method can deal with such cases, as shown in the second row of Fig. 15b.

In addition, a comparison of correct detection rate and processing time between our method and the method without adaptive ROI in Shin et al. (2015) is made, as shown in Table 2. Shin et al. (2015) also applied WPM to transform the image and presented particle filter based on a more general model and lane detectors for the estimation of lane borders.

As shown in Table 2, our method is generally more advantageous than Shin’s method, despite a slightly lower CDR on the Cordova1 sequence. Because the predicted ROIs consist of smaller left and right ROIs, the processing time needed by our method is only about half that of their method, which takes nearly 60.7 ms on average (Shin et al. 2015; Narote et al. 2018). On the whole, a sufficiently fast speed (about 30.4 frames per second) can be guaranteed by the proposed method.

3.1.3 The lane classification results

In the next experiments, lane classification is added to the whole algorithm. As shown in Fig. 16, some representative results are obtained using the designed three-level classifier.

For visualization, lane marking like yellow double solid lane is denoted as “YDSL” for short, white single solid lane as “WSSL,” white single dashed lane as “WSDL,” and so on.

As the Caltech dataset has not been used for lane classification before, we design a new evaluation criterion. There are mainly three types of lanes. To evaluate the effectiveness of the proposed classifier, the left and right lanes are recognized separately, and Table 3 shows the specific classification results. NULL means that the car is driving toward a crossroads. The left lane classification rate is higher than the right, and the average success rate is above 85%.

The left lane classification rate is always higher than the right because the features of the left lane are more evident, being less affected by shadows and similar influences. Another interesting phenomenon is that the yellow lane is easier to classify correctly than the white lane.

3.2 Discussion

The above test results show that the LSD-based method with the adaptive angle filter and dual Kalman filter can detect and track lane markings even in the presence of shadows or other artifacts. However, examples of incorrect detection results are also shown in Fig. 17. A missing detection caused by overexposure occurs on the left side of Fig. 17a, while on the right side of Fig. 17b the diversity in road texture makes the lane detection inaccurate. In addition, our algorithm does not distinguish zebra crossings, so the results differ slightly from the true lane position, as shown in Fig. 17c.

Another limitation of the methodology is that it detects lane markings only in structured environments, i.e., it is not suited to unstructured roads or lanes with high curvature. Meanwhile, although LSD and the smaller divided ROIs reduce the computational time significantly, the ROI is only a part of the image, so lane markings outside it cannot be detected.

4 Conclusion

This paper proposes an efficient and robust lane detection and tracking method, which is verified successfully in some typical structured road environments. The new weighted-average grayscale conversion and warp perspective mapping for LSD, the adaptive angle filtering approach and the three-level classifier are combined into the whole system. Moreover, to reduce the influence of noise and improve robustness and computation speed, a dual Kalman filter is applied to track the line segments and predict the following ROIs. Without using much prior knowledge or many assumptions, the experiments confirm that our method runs accurately and in real time.

In future work, we will consider further optimizing the performance and applying the algorithm to practical ADAS. Meanwhile, since the region of interest (ROI) is only a small part of the image and more and more labeled data are becoming available, we would like to fuse LSD with deep learning to detect and classify lane markings over the whole image for ADAS and driverless cars.

References

Abualigah LM (2019) Feature selection and enhanced krill herd algorithm for text document clustering. In: Studies in computational intelligence, Springer, Berlin.

Abualigah LMQ, Hanandeh ES (2015) Applying genetic algorithms to information retrieval using vector space model. Int J Comput Sci Eng Appl 5(1):19–28

Abualigah LM, Khader AT (2017) Unsupervised text feature selection technique based on hybrid particle swarm optimization algorithm with genetic operators for the text clustering. J Supercomput 73(11):4773–4795

Abualigah LM, Khader AT, Hanandeh ES (2018a) A new feature selection method to improve the document clustering using particle swarm optimization algorithm. J Comput Sci 25:456–466

Abualigah LM, Khader AT, Hanandeh ES (2018b) Hybrid clustering analysis using improved krill herd algorithm. Appl Intell 48(11):4047–4071

Abualigah LM, Khader AT, Hanandeh ES (2018c) A combination of objective functions and hybrid Krill herd algorithm for text document clustering analysis. Eng Appl Artif Intell 73:111–125

Chen C, Zhang B, Gao S (2014) A lane detection algorithm based on hyperbola model. In: International conference on computer engineering and networking, proceedings, pp 609–616

Ding D, Yoo J, Jung J, Jin S, Kwon S (2015) Various lane marking detection and classification for vision-based navigation system. In: IEEE international conference on consumer electronics, proceedings, pp 491–492

Dou Y, Yan F, Feng D (2016) Lane changing prediction at highway lane drops using support vector machine and artificial neural network classifiers. In: IEEE international conference on advanced intelligent mechatronics, proceedings, pp 901–906

Goleijani S, Ameli MT (2019) An agent-based approach to power system dynamic state estimation through dual unscented kalman filter and artificial neural network. Soft Comput 23(23):12585–12606

Huang Z, Fan B, Song X (2018) Robust lane detection and tracking using multiple visual cues under stochastic lane shape conditions. J Electron Imag 27(2):023025

Kodeeswari M, Philemon D (2018) Survey on various lane and driver detection techniques based on image processing for hilly terrain. IET Image Process 12(9):1511–1520

Lee M, Han KY, Yu J, Lee YS (2019) A new lane following method based on deep learning for automated vehicles using surround view images. J Ambient Intell Humaniz Comput Online. https://doi.org/10.1007/s12652-019-01496-8

Li J, Mei X, Prokhorov D (2016) Deep neural networks for structural prediction and lane detection in traffic scene. IEEE Trans Neural Netw Learn Syst 28(3):690–703

Li W, Qu F, Wang Y, Wang L, Chen Y (2018) A robust lane detection method based on hyperbolic model. Soft Comput Fusion Found Methodol Appl 2018:1–14

Liu W, Li S (2013) An effective lane detection algorithm for structured road in urban. In: Intelligent science and intelligent data engineering, Springer, Berlin, Heidelberg

Liu S, Lu L, Zhong X, Zeng J (2018) Effective road lane detection and tracking method using line segment detector. In: IEEE 37th Chinese control conference, proceedings, pp 5222–5227

Mammeri A, Boukerche A, Tang Z (2016) A real-time lane marking localization, tracking and communication system. Comput Commun 73(2):132–143

McCall J, Trivedi M (2006) Video-based lane estimation and tracking for driver assistance: survey, system, and evaluation. IEEE Trans Intell Transp Syst 7(1):20–37

Miad S, Chung Z (2019) State estimation of nonlinear dynamic system using novel heuristic filter based on genetic algorithm. Soft Comput 23(14):5559–5570

Mu C, Ma X (2014) Lane detection based on object segmentation and piecewise fitting. Telkomnika Indones J Electr Eng 12(5):3491–3500

Nan Z, Wei P, Xu L, Zheng N (2016) Efficient lane boundary detection with spatial-temporal knowledge filtering. Sensors 16(8):1276–1295

Narote SP, Bhujbal PN, Narote AS, Dhane DM (2018) A review of recent advances in lane detection and departure warning system. Pattern Recognit 73(2018):216–234

Niu J, Lu J, Xu M, Lv P, Zhao X (2016) Robust lane detection using two-stage feature extraction with curve fitting. Pattern Recognit 59:225–233

Paula M, Jung C (2015) Automatic detection and classification of road lane markings using onboard vehicular cameras. IEEE Trans Intell Transp Syst 16(6):3160–3169

Shin B, Xu Z, Klette R (2014) Visual lane analysis and high-order tasks: a concise review. Mach Vis Appl 25(6):1519–1547

Shin B, Tao J, Klette R (2015) A superparticle filter for lane detection. Pattern Recognit 48(11):3333–3345

Son J, Yoo H, Kim S, Sohn K (2015) Real-time illumination invariant lane detection for lane departure warning system. Expert Syst Appl 42(4):1816–1824

Song W, Yang Y, Fu M, Li Y, Wang M (2018) Lane detection and classification for forward collision warning system based on stereo vision. IEEE Sensors J 18(12):5151–5163

Toan H, Hyung H, Husan V, Kang P (2016) Road lane detection by discriminating dashed and solid road lanes using a visible light camera sensor. Sensors (Basel) 16(8):1313–1335

Von Gioi R, Jakubowicz J, Morel J, Randall G (2010) LSD: a fast line segment detector with a false detection control. IEEE Trans Pattern Anal Mach Intell 32(4):722–732

World Health Organization (2018) Global status report on road safety 2018. WHO, Geneva

Wu PC, Chang CY, Lin CH (2014a) Lane-mark extraction for automobiles under complex conditions. Pattern Recognit 47(8):2756–2767

Wu P, Chang C, Lin C (2014b) Lane mark extraction for automobile under complex conditions. Pattern Recognit 47(8):2756–2767

Zhao K, Meuter M, Nunn C, Muller D, Pauli J (2012) A novel multi-lane detection and tracking system. In: IEEE intelligent vehicles symposium (IV), proceedings, pp 1084–1089

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant Nos. 61703356 and 61305117) and the Fundamental Research Funds for the Central Universities (Grant No. 20720190129).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Human and animal rights

This article does not contain any studies with human participants or animals performed by any of the authors.

Cite this article

Tian, J., Liu, S., Zhong, X. et al. LSD-based adaptive lane detection and tracking for ADAS in structured road environment. Soft Comput 25, 5709–5722 (2021). https://doi.org/10.1007/s00500-020-05566-4
