Abstract

Discriminative models have been shown to be advantageous for pattern recognition problems in machine learning. The main focus of this study is a new hybrid model that combines the advantages of a discriminative technique, namely support vector machines (SVM), with the efficiency offered by full-covariance multivariate generalized Gaussian mixture models (MGGMM). The hybrid model uses Fisher and Kullback–Leibler kernels derived from the MGGMM to improve the kernel function of the SVM. The approach relies on two learning techniques: the Fisher scoring algorithm and Bayesian inference based on Markov Chain Monte Carlo with the Metropolis–Hastings algorithm. These learning methods are paired with two model selection approaches (minimum message length and marginal likelihood) to determine the number of clusters. The effectiveness of the framework is demonstrated through extensive experiments on synthetic datasets, facial expression recognition and human activity recognition.


1 Introduction

Within machine learning research, the recognition problem has been the subject of many studies. Major challenges, such as visualizing, explaining and representing patterns, have been addressed by a wide range of feature extraction and learning techniques. The key objective when dealing with pattern recognition problems is maximizing the recognition rate. In this context, discriminative techniques, namely support vector machines (SVM), have been shown to be powerful and attractive models for many pattern recognition applications, as they can produce accurate classifiers by learning decision boundaries without regard to the underlying class densities. On the other hand, generative approaches, namely finite mixtures, have also been successfully deployed due to their ability to model a large range of data and to provide a principled framework for handling missing and incomplete data. Indeed, studies (Bouguila 2012) have provided a good understanding of the advantages and limitations of both generative and discriminative approaches.

Finite mixture models have been broadly applied in the last few years in several applications related to machine learning and pattern recognition (Fan and Bouguila 2013, 2014). This growing interest has led to many fascinating data modeling techniques. Gaussian mixture models (GMM), for instance, are popular, flexible and powerful tools for modeling univariate and multivariate data. However, Gaussian mixture models fail to fit the complex shapes of some observed data. In previous work (Najar et al. 2018, 2019), we showed that the multivariate generalized Gaussian mixture model (MGGMM) provides more flexibility to fit the shape of non-Gaussian data and correlated features, for which the full-covariance matrix assumption is an appropriate choice. An important task in finite mixture models is estimating the model’s parameters. The approaches used for parameter estimation can be deterministic or Bayesian. In deterministic approaches, all parameters are assumed to be fixed and unknown, and inference is based on the likelihood of the data. Deterministic techniques generally suffer from dependency on initialization and convergence to local maxima. As an alternative to the likelihood-based approach, the posterior can also be considered for learning. This can be done through Bayesian inference, which has been successfully used for computer vision and pattern recognition applications and especially for mixture models, as in Bourouis et al. (2019), Fan et al. (2016) and Elguebaly and Bouguila (2015). In Bayesian approaches, parameters are considered random and the posterior distribution of each parameter is estimated by taking the prior of that parameter into account. One of the most popular algorithms for generating samples from the posterior distribution is the Gibbs sampler (Neal 1992), which is a Markov Chain Monte Carlo (MCMC) technique (Gelman et al. 2013).

In this paper, we propose two learning approaches: one is based on likelihood-based estimation and the Fisher information matrix, and the other is based on an MCMC algorithm using Gibbs sampling and the Metropolis–Hastings technique. Another fundamental step in mixture modeling is selecting the number of components; for this purpose, we adopt the minimum message length (MML) criterion for the deterministic approach and the marginal likelihood for the Bayesian inference, given their superior performance with respect to other model selection criteria (Bouguila and Ziou 2007; Kass and Raftery 1995). Following that, we propose a hybrid framework that uses both the generalized Gaussian mixture and SVM simultaneously to recognize human activities and facial expressions. To the best of our knowledge, no previous work has addressed a hybrid generative-discriminative approach using both deterministic and Bayesian methods for the multivariate generalized Gaussian mixture model.

The rest of this paper is organized as follows. Section 2 presents some relevant works. Section 3 introduces the multivariate generalized Gaussian distribution. In Sect. 4, we describe the proposed approaches to learn MGG mixture models (Fisher scoring via expectation–maximization and Bayesian learning) and the model selection techniques based on MML and marginal likelihood. Following this, the proposed models are used to generate SVM kernels based on information divergence and Fisher scores, as presented in Sect. 5. Section 6 is devoted to discussing the results obtained from experiments conducted on synthetic, Cohn–Kanade and KTH datasets. Finally, Sect. 7 concludes the paper with a few remarks and future works.

2 Relevant related works

For the recognition of facial expressions, several related works address the same context as our methods. In Lajevardi and Hussain (2009), a supervised classification based on linear discriminant analysis has been proposed to recognize facial expressions from static images. A semi-supervised technique has been adopted by Cohen et al. (2003), where the classification builds on a 3D wireframe model to extract landmark facial features from static images. Online variational learning based on Beta-Liouville has also been applied to static images in Fan and Bouguila (2013). In Yeasin et al. (2004), the authors used discrete hidden Markov models to learn a refined optical flow computed from a sequence of images selected from 21 subjects among 97 subjects. The authors in Roh et al. (2018) proposed a fuzzy transform for face recognition using a radial basis function neural network classifier, and used their model to classify face images into various categories. A discriminative model based on support vector machines (SVM) for facial expression and emotion classification was proposed in Bartlett et al. (2003). Another interesting model using SVM (Tsai and Chang 2018) was introduced to detect faces in images by combining Haar features with a self-quotient image filter.

For human activity recognition, many works have been proposed, with contributions targeting dataset preprocessing, feature extraction or activity learning. In terms of feature extraction, an unsupervised learning method has been proposed (Niebles et al. 2008) using the probabilistic latent semantic analysis (pLSA) model and latent Dirichlet allocation. The authors construct a bag of spatial-temporal words by extracting space-time interest points. Another contribution based on local spatio-temporal features has been introduced in Wong and Cipolla (2007). The contribution of that work lies in feature extraction; for clustering, the authors used different models, among them the generative model pLSA. In Dollár et al. (2005), the authors used a cuboid approach for feature extraction, which is an extension of the Harris spatio-temporal detector, and a 1-nearest neighbor model to classify facial expressions and human actions. A more recent method based on sensor data was proposed in Adama et al. (2018). The information regarding the human activities was extracted using RGB-depth sensors, and three classifiers (support vector machines, k-nearest neighbor and random forest) were used for learning those activities. Regarding activity learning, also using sensor data, a gamma growing neural gas algorithm was introduced to find signatures characterizing the activities from the time series of the data. In Fan and Bouguila (2014), a nonparametric Bayesian approach based on a mixture of Dirichlet processes with Dirichlet distributions has been introduced. SVM classification schemes have been widely used for recognition, as in Schuldt et al. (2004) for classifying human actions using space-time features.

In this paper, to recognize facial expressions and human activities, we build on the characteristics of SVM and the efficiency of MGGMM with full covariance and propose robust SVM kernels based on mixtures of multivariate generalized Gaussian distributions.

3 Multivariate generalized Gaussian mixture model

Let \(\mathcal {X}=(\mathbf {X}_1,\mathbf {X}_2,\dots ,\mathbf {X}_N)\) be a set of feature vectors where each \(\mathbf {X}_i\) is generated from a multivariate generalized Gaussian mixture model (MGGMM) (Kelker 1970) whose jth component is defined by its mean \({\varvec{\mu }}_j\), shape parameter \(\beta _{j}\) and covariance matrix \(\varSigma _j\):
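
A form consistent with the definitions that follow, assuming the standard finite-mixture density with \(\varTheta \) collecting all mixture parameters, is:

$$\begin{aligned} p(\mathbf {X}_i|\varTheta )=\sum _{j=1}^{K} p_j\, p(\mathbf {X}_i|{\varvec{\mu }}_j,\varSigma _j,\beta _j) \end{aligned}$$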

where \(p_j\) denotes the mixing weight of the jth component such that:

where K is the number of clusters, N is the number of feature vectors \(\mathbf {X}_i\), and \(Z_{ij}\) is a membership indicator that specifies whether the vector \(\mathbf {X}_i\) belongs to cluster j \((Z_{ij}=1)\) or not \((Z_{ij}=0)\). Moreover, the mixing weights satisfy the following: \(p_j \ge 0, \sum _{j=1}^{K} p_j=1\).

For a selected cluster j, each \(\mathbf {X}_i\) follows a multivariate generalized Gaussian distribution with a probability density function (Kotz 1975) defined by:
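
Given the description that follows (a density generator applied to the quadratic form, with \(\varSigma _j\) as covariance matrix), the density presumably takes the usual elliptical form:

$$\begin{aligned} p(\mathbf {X}_i|{\varvec{\mu }}_j,\varSigma _j,\beta _j)=\frac{1}{|\varSigma _j|^{1/2}}\, h_{\beta _j}\big ((\mathbf {X}_i-{\varvec{\mu }}_j)^T\varSigma _j^{-1}(\mathbf {X}_i-{\varvec{\mu }}_j)\big ) \end{aligned}$$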

where \( \mathbf {X}_i \in R^d\), \(\varSigma _j\) is a \(d \times d\) symmetric positive definite matrix, called the covariance matrix, \({\varvec{\mu }}_j\) is a d-dimensional mean vector, and \(\mathbf {h_{\beta _j}} (.)\) is a so-called density generator function defined by:

where \(\beta _j > 0\) is the shape parameter, \(\varGamma (.)\) is the gamma function, and Y is a scalar.

It is noteworthy that if \(\beta _j=1\), the multivariate generalized Gaussian distribution reduces to the multivariate Gaussian distribution. The shape parameter \(\beta _j \) controls the peakedness and the spread of the distribution. If \(\beta _j \ll 1\), the distribution is more peaked than the Gaussian and has heavier tails, and if \(\beta _j \gg 1\), it converges to a uniform distribution.
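
The reduction to the Gaussian case can be checked numerically. The sketch below is illustrative only: it assumes the unit-scale density generator of Pascal et al. (2013), \(h_{\beta }(y)=\frac{\beta \varGamma (d/2)}{\pi ^{d/2}\varGamma (d/(2\beta ))2^{d/(2\beta )}}\exp (-\frac{1}{2}y^{\beta })\), restricts itself to the bivariate case so that the inverse covariance can be written explicitly, and uses a hypothetical function name.

```python
import math

def mggd_logpdf_2d(x, mu, sigma, beta):
    """Log-density of a bivariate generalized Gaussian.

    Assumption: unit-scale density generator as in Pascal et al. (2013).
    sigma is a 2x2 symmetric positive definite matrix given as nested tuples.
    """
    d = 2
    (a, b), (c, e) = sigma
    det = a * e - b * c
    if a <= 0 or det <= 0 or beta <= 0:
        raise ValueError("sigma must be positive definite and beta positive")
    dx0, dx1 = x[0] - mu[0], x[1] - mu[1]
    # Quadratic form u = (x - mu)^T sigma^{-1} (x - mu), using the explicit 2x2 inverse.
    u = (e * dx0 * dx0 - (b + c) * dx0 * dx1 + a * dx1 * dx1) / det
    # Log of the normalizing constant of the assumed generator, including |sigma|^{-1/2}.
    log_norm = (math.log(beta) + math.lgamma(d / 2)
                - (d / 2) * math.log(math.pi)
                - math.lgamma(d / (2 * beta))
                - (d / (2 * beta)) * math.log(2)
                - 0.5 * math.log(det))
    return log_norm - 0.5 * u ** beta

def gaussian_logpdf_2d(x, mu, sigma):
    """Reference bivariate Gaussian log-density, used only for comparison."""
    (a, b), (c, e) = sigma
    det = a * e - b * c
    dx0, dx1 = x[0] - mu[0], x[1] - mu[1]
    u = (e * dx0 * dx0 - (b + c) * dx0 * dx1 + a * dx1 * dx1) / det
    return -math.log(2 * math.pi) - 0.5 * math.log(det) - 0.5 * u
```

With \(\beta =1\) the two functions agree up to rounding error, which mirrors the statement above; other values of \(\beta \) change both the normalizing constant and the exponent of the quadratic form.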

4 Parameter estimation of multivariate generalized Gaussian mixture model

One of the most important issues in mixture models is the learning of parameters. Given that each distribution is characterized by a different set of parameters, the learning step is a challenging task. In this paper, we consider the multivariate generalized Gaussian mixture model, which is described by four distinct parameters, namely the mixing weight, the shape, the mean and the covariance matrix. In the first part, we present a maximum likelihood estimation algorithm based on the Fisher scoring technique, and in the second part, we propose a Bayesian learning framework.

4.1 Fisher scoring via expectation–maximization

In this section, we present a novel learning method based on the expectation–maximization technique (Dempster et al. 1977) combined with a Fisher scoring algorithm (Lindley and Rao 1953) that estimates the covariance matrix. The mean and the mixing weight are estimated by maximum likelihood. Setting the derivatives of the complete log-likelihood with respect to the mixing weight and the mean to zero, we obtain the update equation of the mixing weight as follows:
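
In the standard EM form, which the description here suggests, this update is the average posterior membership:

$$\begin{aligned} \hat{p}_j=\frac{1}{N}\sum _{i=1}^{N}p(j|\mathbf {X}_i) \end{aligned}$$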

where \(p(j|\mathbf {X}_i)\) is the posterior distribution that defines the probability of assigning a vector \(\mathbf {X}_i\) to the component j,
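
By Bayes' rule applied to the mixture, this posterior takes the usual form (written here with the component densities of Sect. 3):

$$\begin{aligned} p(j|\mathbf {X}_i)=\frac{p_j\, p(\mathbf {X}_i|{\varvec{\mu }}_j,\varSigma _j,\beta _j)}{\sum _{l=1}^{K}p_l\, p(\mathbf {X}_i|{\varvec{\mu }}_l,\varSigma _l,\beta _l)} \end{aligned}$$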

and the mean parameter is estimated using the equation below:

Regarding the covariance estimation, a maximum likelihood estimation algorithm and a fixed-point algorithm have been proposed earlier (Pascal et al. 2013; Najar et al. 2019). Those algorithms have proven robust and effective for estimating the covariance matrix in such applications. However, the authors only consider the case where the shape parameter lies between 0 and 1, which is restrictive since the shape parameter in many applications can take values such as \(2,3,\dots \). For this reason, we consider the Fisher scoring algorithm, which estimates the covariance matrix for any value of the shape. The main idea of the Fisher scoring algorithm (Boukouvalas et al. 2015) is to optimize the likelihood function using a fixed-point technique followed by an optimization iteration through the Fisher information matrix.

To optimize the likelihood function of N vectors \(\mathcal {X}=(\mathbf {X}_1,\mathbf {X}_2,\dots ,\mathbf {X}_N)\), we first write it as follows:

As we take into account only the covariance matrix, we consider a simplified gradient formula of this likelihood function which depends only on the entries of \(\varSigma \), as defined in the work of Boukouvalas et al. (2015):

with F a function defined on the space of all real \(d \times d\) symmetric and positive definite matrices \( S_{++}^d\):

where \(u_i=X_i^T\varSigma ^{-1}X_i\), \(|\varSigma |\) is the determinant of the covariance matrix \(\varSigma \) and the gradient of F at a point \(\varSigma \in S_{++}^d\) is given by:

where \(f(\varSigma )\) is defined by the fixed-point algorithm in Pascal et al. (2013). The existence of the solution was proved by showing that the profile likelihood is positive, bounded on the set of symmetric positive definite matrices and equal to zero on the boundary of this set. Regarding uniqueness, it was proved that for any initial symmetric positive definite matrix, the sequence of matrices satisfying a fixed-point equation converges to the unique maximum of this profile likelihood defined by:

To numerically maximize the likelihood function with respect to the covariance matrix, the Fisher scoring iteration at \(t+1\) of \(\varSigma \) parameter is given by:

where the update of the new covariance matrix at \(t+1\) is based on the previously estimated covariance at time t and the inverse of the Fisher information matrix G which is defined by Verdoolaege and Scheunders (2012):

for \(m,j=1,\dots ,d\).

Afterward, to compute the maximum likelihood estimate of the shape parameter, we use an iterative algorithm based on the Newton–Raphson technique. The Newton–Raphson method is also a fixed-point estimation technique; the difference is its use of a first-order Taylor expansion around the considered parameter. In other words, the fixed-point function is replaced by the first derivative of the log-likelihood function.
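
With the notation introduced next, the Newton–Raphson update has the familiar form (stated here under that assumption):

$$\begin{aligned} \hat{\beta }_{t+1}=\hat{\beta }_{t}-\frac{\alpha (\hat{\beta }_{t})}{\alpha '(\hat{\beta }_{t})} \end{aligned}$$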

where \(\hat{\beta }_{t+1}\) is the estimate of the shape parameter at iteration \(t+1\), and \(\alpha (\beta )\) is the derivative of the complete log-likelihood \(\mathcal {L}(\mathcal {X}|\varTheta )\) with respect to the \(\beta \) parameter, defined by (Pascal et al. 2013)

where \(\psi (.)\) is the digamma function and \(\alpha '(\beta )\) is the derivative of \(\alpha (\beta )\).

In finite mixture modeling, selecting the number of components is one of the challenging tasks. Several model selection approaches have been considered, such as Akaike’s information criterion (AIC) (Akaike 1974), the minimum description length (MDL) (Rissanen 1978), the mixture MDL (MMDL) (Figueiredo and Jain 2002) and the minimum message length (MML) criterion (Wallace and Boulton 1968). Among those criteria, MML, which is based on information theory, has shown better performance for mixture models (Baxter and Oliver 2000; Bouguila and Ziou 2007). MML evaluates statistical models according to their ability to compress a message containing the data. Therefore, by using MML, the optimal number of components in the mixture is obtained by minimizing the following function:
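
In the standard MML formulation (an assumption here, chosen to match the terms explained next), the message length reads:

$$\begin{aligned} \mathrm {MessLen}(K)\approx -\log p(\varTheta )-L(\varTheta |\mathcal {X})+\frac{1}{2}\log |F(\varTheta )|+\frac{N_p}{2}\left( 1+\log K_{N_p}\right) \end{aligned}$$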

where \(p(\varTheta )\) is the prior probability, \(L(\varTheta |\mathcal {X})\) is the complete log-likelihood, \(|F(\varTheta )|\) is the determinant of the Fisher information matrix, obtained from the expected Hessian of minus the log-likelihood of the mixture, \(N_p\) is the number of free parameters to be estimated and is equal to \(K(3d+1)\) in our case, and \(K_{N_p}\) is the \(N_p\)-dimensional optimal quantizing lattice constant (Conway and Sloane 2013), where \(K_1=\frac{1}{12} \approxeq 0.083\) for \(N_p=1\) and \(K_2=\frac{5}{36 \sqrt{3}}\) for \(N_p=2\). As \(N_p\) grows, \(K_{N_p}\) tends to the asymptotic value \(\frac{1}{2 \pi e} \approxeq 0.0585\). Since \(K_{N_p}\) does not vary much, we approximate it by \(\frac{1}{12}\).
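
The size of this approximation can be gauged with a few lines of arithmetic. The sketch below evaluates the three lattice constants quoted above and, assuming the usual MML lattice term \(\frac{N_p}{2}(1+\log K_{N_p})\), the per-parameter gap between using \(\frac{1}{12}\) and the asymptotic value; the function name is illustrative.

```python
import math

k1 = 1 / 12                          # N_p = 1
k2 = 5 / (36 * math.sqrt(3))         # N_p = 2
k_inf = 1 / (2 * math.pi * math.e)   # asymptotic value as N_p grows

def lattice_term(n_params, kappa=k1):
    """Assumed MML lattice contribution (N_p / 2) * (1 + log kappa), in nats."""
    return 0.5 * n_params * (1 + math.log(kappa))

# Gap, per free parameter, between the 1/12 approximation and the asymptotic constant.
gap_per_param = lattice_term(1, k1) - lattice_term(1, k_inf)
```

The gap grows linearly with \(N_p\) but is independent of the data, so it matters only when comparing models whose numbers of free parameters differ.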

The most challenging task when using the minimum message length is choosing an appropriate prior distribution \(p(\varTheta )\), which was developed in our previous work (Najar et al. 2019), where we also define the elements of the Fisher information matrix with respect to the set of parameters.

Having our selection criterion and estimation equations, the complete proposed learning approach is summarized in Algorithm 1.

4.2 Bayesian learning

We describe in this section a Bayesian approach for learning the multivariate generalized Gaussian mixture model. Bayesian learning has been proposed as an alternative to the likelihood-based approach, which generally suffers from sensitivity to initialization and the presence of many local maxima. To avoid this kind of problem, we consider one of the most popular MCMC algorithms, namely the Gibbs sampler (Bouguila 2011). In our Bayesian framework, the full posterior distribution of all variables given the training data \(\mathcal {X}\) is defined by Bayes' rule (Marin and Robert 2007):
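
Written out with the quantities explained next, Bayes' rule gives:

$$\begin{aligned} p(\varTheta |\mathcal {X})=\frac{p(\mathcal {X}|\varTheta )\,p(\varTheta )}{\int p(\mathcal {X}|\varTheta )\,p(\varTheta )\,d\varTheta }\propto p(\mathcal {X}|\varTheta )\,p(\varTheta ) \end{aligned}$$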

where \(p(\mathcal {X}|\varTheta )\) is the likelihood of the data given the model’s parameters and \(p(\varTheta )\) is the prior distribution of the parameters. The Gibbs sampler generates posterior samples by cycling through the conditional distributions. At each iteration t, \(\varTheta ^t\) is sampled conditionally on the previous values \(\varTheta ^{t-1}\), and this process continues until convergence (i.e., until the samples follow the true joint posterior distribution).

The key to the Bayesian algorithm is the choice of target distributions. In the following section, we state the prior distributions we developed and the related posterior distributions.

4.3 Prior and posterior distributions

We start with the conditional distribution of the mixing weights \(\mathbf {P}=(p_1,\dots ,p_K)\). Since \(\mathbf {P}\) satisfies \(\forall j~ p_j \ge 0, \sum _{j=1}^{K}p_j=1\), a natural choice of prior is a distribution on probabilities, namely the Dirichlet distribution with parameters \(\eta =(\eta _1,\dots ,\eta _K)\):
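
In its standard form, the Dirichlet density is:

$$\begin{aligned} p(\mathbf {P}|\eta )=\frac{\varGamma \left( \sum _{j=1}^{K}\eta _j\right) }{\prod _{j=1}^{K}\varGamma (\eta _j)}\prod _{j=1}^{K}p_j^{\eta _j-1} \end{aligned}$$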

Also, according to equation 2, we define the missing vectors \(\mathcal {Z}=(\mathbf {Z}_1,\dots ,\mathbf {Z}_N)\), where each \(\mathbf {Z}_i=(Z_{i1},\dots ,Z_{iK})\) satisfies \(p(Z_{ij}=1)=p_j\) and \(\sum _{j=1}^{K}Z_{ij}=1\). Thus, the probability of generating the missing vectors conditioned on the mixing weights is given by:
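
Since each \(\mathbf {Z}_i\) selects exactly one component, this probability can be written, consistently with the counts \(n_j\) defined next, as:

$$\begin{aligned} p(\mathcal {Z}|\mathbf {P})=\prod _{i=1}^{N}\prod _{j=1}^{K}p_j^{Z_{ij}}=\prod _{j=1}^{K}p_j^{n_j} \end{aligned}$$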

where \(n_j=\sum _{i=1}^{N} Z_{ij}\) is the number of vectors \(\mathbf {X}_i\) assigned to the cluster j. The above equation corresponds to a multinomial distribution with probabilities \(p_1,\dots ,p_K\) and N independent trials. The Gibbs sampler requires generating the parameters from the related posterior. Thus, we derive the posterior of the mixing weights from Eqs. 20 and 21.
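
Multiplying the Dirichlet prior by this multinomial term gives, up to normalization:

$$\begin{aligned} p(\mathbf {P}|\mathcal {Z})\propto p(\mathcal {Z}|\mathbf {P})\,p(\mathbf {P}|\eta )\propto \prod _{j=1}^{K}p_j^{\eta _j+n_j-1} \end{aligned}$$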

As a result, the posterior distribution of the mixing weights \(\mathbf {P}\) is again a Dirichlet distribution, with parameters \((\eta _1+n_1,\dots ,\eta _K+n_K)\), where \(\eta \) is the hyperparameter of the Dirichlet prior of the mixing weights and \(n_j,~j=1\dots ,K\), is the number of vectors assigned to cluster j, as defined above.
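
This conjugate update is simple to sample from. The sketch below is a minimal illustration, not the authors' implementation: it draws the mixing weights from the Dirichlet posterior by normalizing independent gamma variates, a standard construction, and the function name and example values are hypothetical.

```python
import random

def sample_mixing_weights(eta, counts, rng=random):
    """Draw P ~ Dirichlet(eta_1 + n_1, ..., eta_K + n_K).

    Uses the standard construction: independent Gamma(eta_j + n_j, 1) draws,
    normalized to sum to one.
    """
    draws = [rng.gammavariate(e + n, 1.0) for e, n in zip(eta, counts)]
    total = sum(draws)
    return [g / total for g in draws]

# Example: K = 3 components, symmetric prior, 100 vectors assigned 50/30/20.
weights = sample_mixing_weights([1.0, 1.0, 1.0], [50, 30, 20], random.Random(0))
```

Each call corresponds to one Gibbs step for \(\mathbf {P}\), with `counts` recomputed from the current assignments \(\mathcal {Z}\).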

As for the parameters \({\varvec{\mu }}_j, \beta _{j}\) and \(\varSigma _j\), we consider a Normal prior for the mean, a gamma prior for the shape parameter and an inverted Wishart for the covariance matrix (Robert 2007; Robert and Casella 2004). In fact, \({\varvec{\mu }}_j \sim \mathcal {N}({\varvec{\mu }}_0,\varSigma _0)\), \(\beta _j\sim \mathcal {G}(\alpha _{\beta },\beta _{\beta })\), and \(\varSigma _j \sim IW (\psi , \nu )\), where \({\varvec{\mu }}_0, \varSigma _0\) are the hyperparameters (mean, variance) for the normal distribution, \(\alpha _{\beta },\beta _{\beta }\) are the hyperparameters (shape, scale) for the gamma distribution, and \(\psi , \nu \) are the hyperparameters (degree of freedom, scale matrix) for the inverted Wishart distribution. The prior distributions for \({\varvec{\mu }}_j, \beta _{j}\) and \(\varSigma _j\) are considered as follows:

Having those prior distributions, we can easily deduce the posterior distributions using Bayes' rule \(p(\varTheta |X)=\frac{p(\varTheta ) p(X|\varTheta )}{p(X)} \propto p(\varTheta ) p(X|\varTheta )\). Accordingly, we multiply the prior of each parameter within the cluster j by the likelihood, taking into account only the vectors assigned to cluster j (\(Z_{ij}=1\)):

In this case, samples are generated for the \(\mu \) parameters only, while \(\beta _j\) and \(\varSigma _j\) are treated as constants, which gives a simpler form of the posterior distribution:

Following the same methodology, the posterior distribution of the shape parameter, using the prior from equation 24 and the likelihood from equation 3, is:

Note that when sampling the shape parameter \(\beta _j\), the hyperparameters \(\alpha _{\beta },\beta _{\beta }\) and \(\varSigma _j\) are treated as constants; thus we have:

Finally, the last conditional distribution to consider is that of the covariance matrix. We again use the prior defined in Eq. 25 and the likelihood, as follows:

Again, sampling from this last distribution treats the hyperparameters \(\psi , \nu \) and \(\beta _j\) as constants, which gives:

4.4 Complete Bayesian estimation and model selection

Having our posterior distributions, we give the complete Bayesian learning algorithm. The steps of our Gibbs sampler are given in Algorithm 2. First, we initialize all the hyperparameters (\({\varvec{\mu }}_0, \varSigma _0, \alpha _{\beta },\beta _{\beta }, \psi , \nu \)). Then, over many iterations, we sample the missing vectors from a multinomial distribution whose parameters are the posterior probabilities. Next, we sample the mixing weights from their conditional posterior distribution. Finally, we generate the remaining parameters (mean, shape and covariance matrix) from the posterior distributions introduced in the previous section, combined with an acceptance/rejection method, namely the “Metropolis–Hastings” algorithm,

where \( \mathcal {M} (1,p(1|\mathbf {X}_i)^{(t-1)},\dots ,p(K|\mathbf {X}_i)^{(t-1)})\) denotes a multinomial distribution of order one with parameters \((p(1|\mathbf {X}_i)^{(t-1)},\dots ,p(K|\mathbf {X}_i)^{(t-1)})\), where

represents the posterior probability that a vector \(\mathbf {X}_i\) is assigned to the component j of the mixture.

We use the Metropolis–Hastings method (Chib and Greenberg 1995) in our Bayesian estimation algorithm to decide whether the new samples at iteration t should be accepted or discarded for the next iteration, through an acceptance ratio defined as follows:
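
In generic form, matching the per-parameter ratios given in the algorithm summary, the ratio is:

$$\begin{aligned} r=\frac{p(\tilde{\varTheta }_j|\mathcal {X},\mathcal {Z})\,q(\varTheta _j^{(t-1)}|\tilde{\varTheta }_j)}{p(\varTheta _j^{(t-1)}|\mathcal {X},\mathcal {Z})\,q(\tilde{\varTheta }_j|\varTheta _j^{(t-1)})} \end{aligned}$$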

where \(q(\varTheta _j^{(t-1)}|\tilde{\varTheta }_j)\) is a proposal distribution for the previous \(\varTheta _j^{(t-1)}\) parameters conditioned on the new ones \(\tilde{\varTheta }_j\), and \(p(\tilde{\varTheta }_j|\mathcal {X},\mathcal {Z})\) is the posterior distribution of the new sample \(\tilde{\varTheta }_j\) parameter. The most challenging aspect in this algorithm is how to choose the proposal distributions \(q(\varTheta _j^{(t-1)}|\tilde{\varTheta }_j),q(\tilde{\varTheta }_j|\varTheta _j^{(t-1)}) \). In our case, we have chosen normal distribution for mean parameters and shape parameter with variance \(\zeta ^2\) : \(\tilde{{\varvec{\mu }}}_j \sim \mathcal {N}({\varvec{\mu }}_j^{(t-1)} , \zeta ^2), \tilde{\beta }_j \sim \mathcal {N}(\beta _j^{(t-1)}, \zeta ^2) \). For the covariance matrix, we have considered the inverted Wishart distribution with a degree of freedom \(\delta \) : \(\tilde{\varSigma _j} \sim \mathcal {IW} (\varSigma _j^{(t-1)},\delta )\).

We summarize the Metropolis–Hastings algorithm as follows:

1. Generate candidate parameters \(\tilde{\varTheta }_j\) from the proposal distributions:

$$\begin{aligned}&\tilde{{{\varvec{\mu }}}}_j \sim \mathcal {N}({\varvec{\mu }}_j^{(t-1)} , \zeta ^2), \tilde{\beta }_j \sim \mathcal {N}(\beta _j^{(t-1)}, \zeta ^2), \\&\tilde{\varSigma }_j \sim \mathcal {IW} (\varSigma _j^{(t-1)},\delta ), U \sim \mathcal {U}_{[0,1]}. \end{aligned}$$

2. Compute the acceptance ratio for each parameter:

$$\begin{aligned} r_{\mu _{j}}&= \frac{p(\tilde{{\varvec{\mu }}}_j|\mathcal {X},\mathcal {Z})\mathcal {N}({\varvec{\mu }}_j^{(t-1)}|\tilde{{\varvec{\mu }}}_j, \zeta ^2)}{p({\varvec{\mu }}_j^{(t-1)}|\mathcal {X},\mathcal {Z}) \mathcal {N}(\tilde{{\varvec{\mu }}}_j|{\varvec{\mu }}_j^{(t-1)}, \zeta ^2)}\\ r_{\beta _{j}}&= \frac{p(\tilde{\beta }_j|\mathcal {X},\mathcal {Z}) \mathcal {N}(\beta _j^{(t-1)}|\tilde{\beta }_j, \zeta ^2)}{p(\beta _j^{(t-1)}|\mathcal {X},\mathcal {Z}) \mathcal {N}(\tilde{\beta }_j|\beta _j^{(t-1)}, \zeta ^2)}\\ r_{\varSigma _{j}}&= \frac{p(\tilde{\varSigma }_j|\mathcal {X},\mathcal {Z})\mathcal {IW}(\varSigma _j^{(t-1)}|\tilde{\varSigma }_j, \delta )}{p(\varSigma _j^{(t-1)}|\mathcal {X},\mathcal {Z}) \mathcal {IW}(\tilde{\varSigma }_j|\varSigma _j^{(t-1)}, \delta )}. \end{aligned}$$

3. If \(U < \alpha =\min [1,r]\) and \(\tilde{\varSigma }_j\) is a positive definite matrix, then:

$$\begin{aligned}&{\varvec{\mu }}_j^t=\tilde{{\varvec{\mu }}}_j, \end{aligned}$$(34)

$$\begin{aligned}&\beta _{j}^t=\tilde{\beta }_j, \end{aligned}$$(35)

$$\begin{aligned}&\varSigma _{j}^t= \frac{d \, \tilde{\varSigma }_j}{\mathrm {trace}(\tilde{\varSigma }_j)} \end{aligned}$$(36)

else \( {\varvec{\mu }}_j^t={\varvec{\mu }}_j^{(t-1)},\beta _{j}^t= \beta _j^{(t-1)}, \varSigma _{j}^t=\varSigma _j^{(t-1)} \).
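
The accept/reject logic of these steps can be sketched for a single scalar parameter. The code below is an illustration under stated assumptions rather than the sampler used in the paper: the target is a toy Gamma(3, 1) density standing in for the posterior of a positive shape parameter, the proposal is a symmetric normal random walk (so the proposal terms in the ratio cancel), and all names are hypothetical. The run length and burn-in mirror the 4000 iterations and 800 discarded samples used in Sect. 6.1.

```python
import math
import random

def log_gamma_target(beta, shape=3.0, scale=1.0):
    """Unnormalized log-density of a toy Gamma(shape, scale) target; -inf for beta <= 0."""
    if beta <= 0:
        return -math.inf
    return (shape - 1.0) * math.log(beta) - beta / scale

def metropolis_hastings(log_target, start, step, n_iter, burn_in, rng):
    """Random-walk Metropolis-Hastings for one scalar parameter."""
    current, current_lp = start, log_target(start)
    if current_lp == -math.inf:
        raise ValueError("start must lie in the support of the target")
    chain = []
    for _ in range(n_iter):
        candidate = rng.gauss(current, step)   # symmetric normal proposal
        candidate_lp = log_target(candidate)
        # Symmetric proposal: the q terms cancel, so r is a ratio of target densities.
        alpha = math.exp(min(0.0, candidate_lp - current_lp))
        if rng.random() < alpha:               # accept when U < alpha
            current, current_lp = candidate, candidate_lp
        chain.append(current)                  # a rejected step repeats the old value
    return chain[burn_in:]

samples = metropolis_hastings(log_gamma_target, 1.0, 1.0, 4000, 800, random.Random(0))
posterior_mean = sum(samples) / len(samples)
```

Candidates outside the support receive log-density \(-\infty \), so they are always rejected; this is one simple way to keep a normal proposal compatible with a positivity constraint such as \(\beta _j>0\).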

The major issue when using Bayesian inference is convergence to the target distributions (i.e., how long the MCMC run should be). Different techniques have been considered and applied with success to stop sampling (Roberts and Tweedie 1999). In our case, we adopt a widely used approach for Markov Chain Monte Carlo algorithms based on one long run with diagnostics, proposed in Carlo (1992).

One of the most challenging aspects of finite mixture models is determining the number of components that best describes a given dataset. Various model selection approaches have been considered to solve this problem. The most successful Bayesian model selection method, which we follow, is the integrated likelihood (Kass and Raftery 1995) defined by:
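
In terms of the quantities described next, the integrated (marginal) likelihood is:

$$\begin{aligned} p(\mathcal {X}|K)=\int p(\mathcal {X}|\varTheta ,K)\,p(\varTheta |K)\,d\varTheta \end{aligned}$$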

where \(\varTheta \) is the parameter vector, \(p(\varTheta |K)\) is the prior of the parameters given the number of components K and \(p(\mathcal {X}|\varTheta ,K)\) is the likelihood function. The marginal likelihood is generally approximated using the Laplace method as follows:

where \(N_p\) is the number of parameters and \(|H(\hat{\varTheta })|\) denotes the determinant of the Hessian matrix given by:

The parameters of different components in the mixture are independent, since having no knowledge about a parameter in one class does not provide any knowledge about the parameters of another class. Thus, we can assume that our parameters \((\varvec{\mu }=({\varvec{\mu }}_1,\dots ,{\varvec{\mu }}_K), \varvec{\beta }=(\beta _1,\dots ,\beta _K), \varvec{\varSigma }=(\varSigma _1,\dots ,\varSigma _K), \mathbf {P}=(p_1,\dots ,p_K))\) are independent, then:
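
Under this independence assumption, the joint prior factorizes as:

$$\begin{aligned} p(\varTheta |K)=p(\mathbf {P})\prod _{j=1}^{K}p({\varvec{\mu }}_j)\,p(\beta _j)\,p(\varSigma _j) \end{aligned}$$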

where \(p({\varvec{\mu }}_j), p(\beta _j), p(\varSigma _j)\) and \(p(\mathbf {P})\) are defined in Sect. 4.3.

Having our model selection criterion and learning parameters algorithm, the complete Bayesian estimation algorithm is as follows:

5 Discriminative learning based on the mixture model

Discriminative approaches have become powerful tools for machine learning tasks involving pattern recognition and computer vision applications. They aim to separate data points by creating decision boundaries. One of the most successful discriminative models is the support vector machine, which involves two key points. The first is to define a separating hyperplane that maximizes the margin between two classes by solving a quadratic programming problem. The second is the choice of kernel function. Indeed, a kernel function is a similarity measure between vectors, and a given kernel should capture the intrinsic properties of the data to classify and take into account prior knowledge of the problem domain. Deriving kernels from generative models in order to enhance the capability of SVM in describing the data forms a link between generative and discriminative models through hybrid frameworks.

In this section, we develop kernels, from multivariate generalized Gaussian mixture models, based on Fisher score and information divergence to tackle the problem of finding an appropriate kernel for SVM.

5.1 Fisher kernels

We develop Fisher kernels for our generative models. Let \(O=\{O_1,\dots ,O_K\}\) be a set of multimedia objects, where each object \(O_j,j=1,\dots ,K\) is described by \(\mathcal {X}=\{\mathbf {X}_1,\dots ,\mathbf {X}_N\}\), a set of feature vectors generated by a finite generalized Gaussian mixture model \(p(\mathbf {X}|\varTheta )\). The dimension of the corresponding feature space is \((2K(d+1)-1)\). The Fisher kernel is defined through the gradient of the log-likelihood with respect to the mixture model parameters, called the Fisher score \(U_{\mathcal {X}}(\varTheta )=\nabla _{\varTheta }\log p(\mathcal {X}|\varTheta )\):
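
In the form introduced by Jaakkola and Haussler (1999), and writing the kernel as \(\mathcal {K}\) to avoid confusion with the number of components K (a notational choice made here), the Fisher kernel between two sets \(\mathcal {X}\) and \(\mathcal {X}'\) is:

$$\begin{aligned} \mathcal {K}(\mathcal {X},\mathcal {X}')=U_{\mathcal {X}}(\varTheta )^{T}\,I(\varTheta )^{-1}\,U_{\mathcal {X}'}(\varTheta ) \end{aligned}$$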

where \(I(\varTheta )\) is the Fisher information matrix, which plays a less significant role and can be approximated by the identity matrix, as shown in Jaakkola and Haussler (1999). Note that the Fisher kernel has quadratic complexity; for simplicity, we consider covariance matrices of the form \(\varSigma =\sigma ^2 I\). By computing the gradient of \(\log p(\mathcal {X} |\varTheta )\) with respect to our model parameters \(p_j,\mu _j, \beta _{j},\sigma _j\), we obtain:

and for \(j=1,\dots ,K\)

where \(p(j|\mathbf {X_i}) \) is the posterior probability. In Eq. 42, we take into account that \(\sum _{j=1}^{K}p_j=1\) and thus we have only \(K-1\) free mixing parameters.

5.2 Kullback–Leibler kernel

Another approach to developing SVM kernels is to measure the divergence between two generative probabilistic models. For instance, a kernel distance based on the Kullback–Leibler divergence between Gaussian mixtures was applied in Moreno et al. (2004) for speaker identification. Let \(\mathcal {X}=\{\mathbf {X}_1,\dots ,\mathbf {X}_N\}\) and \(\mathcal {X}'=\{\mathbf {X'}_1,\dots ,\mathbf {X'}_N\}\) be two sets of feature vectors modeled by two generative models \(p(\mathbf {X}|\varTheta )\) and \(p'(\mathbf {X'}|\varTheta ')\). Once the probability density functions (PDFs) have been estimated for each set of feature vectors, the kernel computation in the original sequence space is replaced by a computation in the space of PDFs (i.e., the kernel becomes a measure of similarity between probability distributions) as follows:
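
Following the exponentiated-divergence construction of Moreno et al. (2004), and writing the kernel as \(\mathcal {K}\) and the divergence as J (both notational assumptions made here), a kernel of this type reads:

$$\begin{aligned} \mathcal {K}(\mathcal {X},\mathcal {X}')=\exp \big (-a\,J(p(\mathbf {X}|\varTheta ),p'(\mathbf {X}|\varTheta '))\big ) \end{aligned}$$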

where \(a > 0\) is a kernel parameter included for numerical stability and

The KLD between two zero-mean multivariate GGDs \(p(X|\varSigma ,\beta )\) and \(p'(X|\varSigma ', \beta ')\) is defined by (Verdoolaege et al. 2009):

where \(\gamma \equiv \lambda ^{-1}\), with \(\lambda \) the eigenvalues of \(\varSigma ^{-1}\varSigma '\), and \(A \equiv \frac{\gamma -\gamma '}{\gamma +\gamma '}\).

However, because no closed-form expression exists in the case of finite mixture models, we use the upper-bound approximation proposed for Gaussian mixtures by Hershey and Olsen (2007):
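To make the construction concrete, the following sketch builds a divergence-based kernel between two small mixtures. It rests on several assumptions: the kernel is taken in the form \(\exp (-a\,J)\) with \(J\) the symmetrized divergence, as in Moreno et al. (2004); univariate Gaussian components stand in for the multivariate generalized Gaussian components; and the mixture divergence is bounded with the simple convexity bound \(KL(p\Vert p')\le \sum _{a}\sum _{b}p_a\,p'_b\,KL(p_a\Vert p'_b)\), which may differ from the specific bound adopted in this work. All function names are hypothetical.

```python
import math


def kl_gaussian(mean_p, std_p, mean_q, std_q):
    """Closed-form KL(p || q) between two univariate Gaussians."""
    return (math.log(std_q / std_p)
            + (std_p ** 2 + (mean_p - mean_q) ** 2) / (2.0 * std_q ** 2)
            - 0.5)


def kl_mixture_upper_bound(mix_p, mix_q):
    """Convexity upper bound on KL(p || q) between two mixtures.

    Each mixture is a list of (weight, mean, std) components.
    """
    return sum(w_a * w_b * kl_gaussian(m_a, s_a, m_b, s_b)
               for w_a, m_a, s_a in mix_p
               for w_b, m_b, s_b in mix_q)


def kl_kernel(mix_p, mix_q, a=1.0):
    """Kernel exp(-a * J), with J the symmetrized bounded divergence."""
    j = (kl_mixture_upper_bound(mix_p, mix_q)
         + kl_mixture_upper_bound(mix_q, mix_p))
    return math.exp(-a * j)


mix_p = [(0.6, 0.0, 1.0), (0.4, 2.0, 0.5)]
mix_q = [(0.5, 0.5, 1.0), (0.5, 2.5, 0.7)]
similarity = kl_kernel(mix_p, mix_q, a=0.5)
```

Because the convexity bound is loose, it does not vanish for two identical multi-component mixtures; tighter approximations are usually preferred when components overlap strongly.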

6 Experimental results

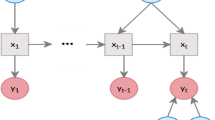

In this section, we conduct experiments to investigate the performance of the proposed framework and to compare it with other methods proposed in the literature. To this end, we first validate the effectiveness of our two learning methods (Fisher scoring and Bayesian inference) using synthetic datasets. For the hybrid framework, we apply the proposed approaches to two challenging applications: facial expression recognition using the Cohn–Kanade database and human activity recognition using the well-known KTH database. Moreover, we compare the kernels proposed in Sect. 5, namely the Fisher kernel and the Kullback–Leibler divergence kernel. The entire recognition framework is presented in Fig. 1.

6.1 Synthetic dataset

In this section, we investigate the performance of our mixture model (Algorithm 1 in Sect. 3.1 and Algorithm 3 in Sect. 3.2) on three two-dimensional synthetic datasets, each with 100 vectors per cluster. Figure 2 shows the generated mixtures of multivariate generalized Gaussian distributions for these datasets. We compare the effectiveness of Bayesian and Fisher scoring learning of the multivariate generalized Gaussian mixture model. The real and estimated parameters for the synthetic datasets under both learning methods are given in Table 1, which shows that both algorithms estimate the mixture parameters accurately. We also evaluate our two model selection methods (minimum message length for the Fisher scoring algorithm and marginal likelihood for the Bayesian learning method). According to Fig. 3, the minimum message length is as accurate as the marginal likelihood in estimating the correct number of components. Figure 4 plots the estimated parameters for the first synthetic dataset against the iterations of our Bayesian algorithm and shows that all the parameters of the multivariate generalized Gaussian mixture model converge within 2000 iterations. Accordingly, we ran the Metropolis–Hastings combined with Gibbs sampler algorithm for 4000 iterations, discarded the first 800 iterations as 'burn-in' to stabilize the model and kept the rest.
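The burn-in step can be illustrated with a deliberately simplified sampler. The sketch below is a plain random-walk Metropolis–Hastings chain on a toy one-dimensional Gaussian target, not the Metropolis–Hastings-within-Gibbs sampler used for the mixture parameters; the target, step size, seed and function names are all assumptions chosen for illustration. Only the iteration count (4000) and burn-in length (800) mirror the setting described above.

```python
import math
import random


def metropolis_hastings(log_target, start, n_iter, step, seed=0):
    """Random-walk Metropolis-Hastings on a one-dimensional target."""
    rng = random.Random(seed)
    current = start
    current_lp = log_target(current)
    chain = []
    for _ in range(n_iter):
        proposal = current + rng.gauss(0.0, step)
        proposal_lp = log_target(proposal)
        # The proposal is symmetric, so the acceptance ratio is the target ratio.
        accept_prob = math.exp(min(0.0, proposal_lp - current_lp))
        if rng.random() < accept_prob:
            current, current_lp = proposal, proposal_lp
        chain.append(current)
    return chain


def discard_burn_in(chain, burn_in):
    """Drop the first burn_in draws and keep the rest."""
    return chain[burn_in:]


def toy_log_target(x):
    """Toy target: unnormalized log-density of a Gaussian, mean 3, variance 1."""
    return -0.5 * (x - 3.0) ** 2


chain = metropolis_hastings(toy_log_target, start=0.0, n_iter=4000, step=1.0)
kept = discard_burn_in(chain, burn_in=800)
posterior_mean = sum(kept) / len(kept)
```

Plotting `chain` against the iteration index gives a trace of the kind used to judge convergence; the estimate is computed from `kept` only.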

6.2 Recognition of facial expressions

Faces are commonly used in daily life to express action and intent, so the visual analysis of facial expressions is of fundamental interest. This interest is motivated by many promising applications such as human–computer interaction, medicine, e-learning, access control and marketing. All facial expression recognition approaches include two main steps: extracting facial features from images or video sequences, and then classifying these visual characteristics efficiently. For our experiments, we use the Cohn–Kanade database (Kanade et al. 2000), which includes 100 university students ranging in age from 18 to 30 years. We follow the experimental setting of Zhao and Pietikainen (2007), which consists of selecting 374 sequences from the database for basic emotional expression recognition. The selected sequences are those that could be labeled as one of the six basic emotions (49 angry, 38 disgust, 52 fear, 90 joy, 67 sadness and 79 surprise). Sample images from this database with different facial expressions are shown in Fig. 5. For feature extraction, we first extract dynamic texture features using the LBP-TOP descriptor (Zhao and Pietikainen 2007). Following the experiments with different block sizes reported in Zhao and Pietikainen (2007), we chose to use \(9 \times 8\) blocks. As a result, each video sequence is encoded as a bag of 72 (\(9 \times 8\)) \(\text {LBP-TOP}_{4,4,4,3,3,3}\) histograms with a length of 1080 (\(15 \times 72\)) and is then modeled by our finite mixture models (FS-MGGMM and BI-MGGMM) using Algorithms 1 and 3, respectively. We then construct kernel matrices based on Fisher information and on probabilistic distances between these mixture models. Finally, a multi-class SVM is trained on the computed kernel matrices using the one-versus-all approach, and the classification results are obtained using tenfold cross-validation.
We consider the number of components to be the same for all the mixture models (we take K as the smallest number of components selected when modeling the different videos, K=3).
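When an SVM is trained on a precomputed kernel matrix, each cross-validation fold needs the train-by-train block for fitting and the test-by-train block for prediction. The sketch below shows one way to organize this, assuming a simple round-robin stratified split; the helper names `stratified_folds` and `kernel_blocks` are hypothetical, and the SVM training itself is left out.

```python
from collections import defaultdict


def stratified_folds(labels, n_folds=10):
    """Assign sample indices to folds so each class is spread across folds."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    folds = [[] for _ in range(n_folds)]
    for indices in by_class.values():
        for position, index in enumerate(indices):
            folds[position % n_folds].append(index)
    return folds


def kernel_blocks(kernel, test_indices):
    """Split a precomputed kernel into the blocks one fold needs.

    Returns the training indices, the train-by-train block used to fit the
    classifier, and the test-by-train block used to score held-out samples.
    """
    held_out = set(test_indices)
    train_indices = [i for i in range(len(kernel)) if i not in held_out]
    k_train = [[kernel[i][j] for j in train_indices] for i in train_indices]
    k_test = [[kernel[i][j] for j in train_indices] for i in test_indices]
    return train_indices, k_train, k_test


# Toy example: 30 sequences, two classes, identity kernel as a placeholder.
labels = ["joy"] * 20 + ["fear"] * 10
kernel = [[1.0 if i == j else 0.0 for j in range(30)] for i in range(30)]
folds = stratified_folds(labels, n_folds=10)
train_indices, k_train, k_test = kernel_blocks(kernel, folds[0])
```

In a one-versus-all scheme, one binary SVM per class would be fitted on `k_train` with that class relabeled as positive, and the class with the largest decision value on `k_test` would be predicted.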

Fig. 4 Metropolis–Hastings combined with Gibbs sampler algorithm for the first synthetic dataset. Plots of the estimated mean parameters a \(\hat{\mu }_1(1,1)\), b \(\hat{\mu }_1(1,2)\), c \(\hat{\mu }_2(1,1)\), d \(\hat{\mu }_2(1,2)\), of the shape parameters e \(\hat{\beta }_1\), f \(\hat{\beta }_2\), and of the mixing weights g \(\hat{p}_1\), h \(\hat{p}_2\)

6.3 Recognition of human activity

Recognizing human activities plays a significant role in research areas such as computer vision and pattern recognition (Vrigkas et al. 2015). It is in demand in various applications such as robotics, health care, video surveillance systems, human-to-human interaction and human-to-computer interfaces. For this application, we use the same methodology as for the facial expression recognition considered in the previous section. To evaluate the performance of the proposed mixture model, we chose the KTH human action dataset (Schuldt et al. 2004), which is the largest available video sequence dataset of human actions. It contains six human action classes: walking, jogging, running, boxing, hand waving and hand clapping. Each action is performed by 25 subjects in four different scenarios: outdoors, outdoors with scale variations, outdoors with different clothes and indoors. Examples of frames from video sequences in each category are shown in Fig. 6.

6.4 Results

As mentioned in Sect. 4.4, convergence to the target distribution is a major problem for Bayesian learning. To address it, we adopt the strategy of one long run with diagnostics. Figures 7 and 8 show the impact of the number of iterations on the performance of our models for both applications. For each number of iterations, we discard the first 15\(\%\) of the total iterations as 'burn-in' to stabilize the model and keep the rest. According to these results, the maximum accuracy for Cohn–Kanade is obtained with 4000 iterations in the case of Fisher kernels and 2000 iterations in the case of the Kullback–Leibler kernel. For the KTH dataset, we get the best accuracy with 2000 iterations (Fisher kernels) and with 1000 iterations (Kullback–Leibler). Moreover, Tables 2 and 3 show the recognition rates achieved with different Gaussian-based mixture models, namely the Gaussian mixture model, the generalized Gaussian mixture model with diagonal covariance matrix and the multivariate generalized Gaussian mixture model trained with our parameter learning methods (Fisher scoring MGGMM and Bayesian-inference MGGMM). The results in Table 2 are obtained using the Fisher kernels and those in Table 3 using the Kullback–Leibler divergence. Based on these results, the multivariate generalized Gaussian mixture model performs better than the Gaussian mixture model and the generalized Gaussian mixture model. In addition, the multivariate generalized Gaussian mixture model based on the Fisher scoring algorithm provides better recognition rates than the fixed-point approach, which is limited by the requirement that the shape parameter lie in the range [0, 1]. Comparing the likelihood-based approaches with the Bayesian model, we conclude that the Bayesian learning method performs better. Finally, comparing Tables 2 and 3, it is clear that the best classification results are obtained using the information divergence-based kernels.

Tables 4 and 5 summarize the recognition results obtained when our mixture model is used to generate the kernels, together with the recognition rates reported in the papers of several other approaches. These results are not directly comparable because of differences in experimental setups, database preprocessing and the number of video sequences or images used. However, they still give an indication of the discriminative power of our proposed framework. Our method differs in two respects. First, we extract dynamic texture features from the videos using LBP-TOP, a recent descriptor initially introduced for facial expression recognition. Second, our contribution concerns activity learning rather than feature extraction. To this end, we have constructed new robust models that achieve results comparable to the well-known techniques mentioned in Sect. 2. Comparing our MGGMM techniques (Bayesian and Fisher scoring) with the relevant related works shows, on the one hand, the high performance achieved by combining the advantages of generative and discriminative techniques and, on the other hand, the benefit of using Bayesian learning and the two proposed kernels. Moreover, the proposed models achieve these comparable results on video data, whereas the experiments in all the mentioned related works were carried out on static images, which further underlines the advantages of our proposed framework. We have also evaluated different kernels in Table 6: the well-known SVM kernels (linear, radial basis function, polynomial), the kernels (Fisher, Kullback–Leibler) generated from the proposed generative models (FS-MGGMM, BI-MGGMM) and those generated from another generative model that we proposed previously in Najar et al. (2018). To obtain comparable results, we applied the bag-of-words approach to the LBP-TOP features extracted for both databases (Cohn–Kanade, KTH), with a vocabulary size of 100. The results indicate the importance of incorporating the information divergence-based kernels while exploiting the advantages of both finite mixture models and SVM. The recognition rates are also relatively good for the hybrid framework combined with the different learning methods for the multivariate generalized Gaussian mixture model, especially for Bayesian inference combined with Kullback–Leibler divergence-based kernels.
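The bag-of-words encoding used for the standard SVM kernels can be sketched as follows, assuming a codebook has already been learned (for instance by clustering training descriptors). The function name `bag_of_words`, the Euclidean nearest-codeword rule and the normalization to relative frequencies are assumptions; in the setting above the codebook would contain 100 codewords.

```python
def bag_of_words(descriptors, codebook):
    """Encode a set of descriptors as a normalized codeword histogram.

    Each descriptor votes for its nearest codeword (squared Euclidean
    distance); the counts are then divided by the number of descriptors.
    """
    counts = [0] * len(codebook)
    for descriptor in descriptors:
        nearest = min(
            range(len(codebook)),
            key=lambda k: sum((a - b) ** 2
                              for a, b in zip(descriptor, codebook[k])),
        )
        counts[nearest] += 1
    total = sum(counts)
    if total == 0:
        return [0.0] * len(codebook)
    return [count / total for count in counts]


# Toy example with a two-word vocabulary and four descriptors.
codebook = [[0.0, 0.0], [1.0, 1.0]]
descriptors = [[0.1, 0.0], [0.9, 1.0], [1.0, 1.2], [0.0, 0.2]]
histogram = bag_of_words(descriptors, codebook)
```

Each video then becomes a single fixed-length vector, which is what the linear, radial basis function and polynomial kernels require.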

7 Conclusion

In this paper, we have developed hybrid generative discriminative approaches for facial expression recognition and human activity recognition. The novelty of our approach lies in three contributions. The first is the combination of generative and discriminative techniques in one framework through new kernels: Fisher kernels and a Kullback–Leibler kernel. The second is a new learning method for the full-covariance generalized Gaussian mixture model that involves a minimum message length criterion. The third is Bayesian learning based on the Metropolis–Hastings technique combined with the Gibbs sampler and the marginal likelihood. The effectiveness and efficiency of the proposed methods are demonstrated through experimental results and simulations. Future work could focus on feature selection, which could further improve the results.

References

Adama DA, Lotfi A, Langensiepen C, Lee K, Trindade P (2018) Human activity learning for assistive robotics using a classifier ensemble. Soft Comput 22(21):7027–7039

Akaike H (1974) A new look at the statistical model identification. IEEE Trans Autom Control 19(6):716–723

Bartlett MS, Littlewort G, Fasel I, Movellan JR (2003) Real time face detection and facial expression recognition: development and applications to human computer interaction. In: Conference on computer vision and pattern recognition workshop, 2003. CVPRW’03, vol. 5. IEEE, pp 53–53

Baxter RA, Oliver JJ (2000) Finding overlapping components with MML. Stat Comput 10(1):5–16

Bouguila N (2011) Bayesian hybrid generative discriminative learning based on finite Liouville mixture models. Pattern Recognit 44(6):1183–1200

Bouguila N (2012) Hybrid generative/discriminative approaches for proportional data modeling and classification. IEEE Trans Knowl Data Eng 24(12):2184–2202

Bouguila N, Ziou D (2007) High-dimensional unsupervised selection and estimation of a finite generalized Dirichlet mixture model based on minimum message length. IEEE Trans Pattern Anal Mach Intell 29(10):1716–1731

Boukouvalas Z, Fu GS, Adalı T (2015) An efficient multivariate generalized Gaussian distribution estimator: application to IVA. In: 49th Annual conference on information sciences and systems (CISS), 2015. IEEE, pp 1–4

Bourouis S, Al-Osaimi FR, Bouguila N, Sallay H, Aldosari F, Al Mashrgy M (2019) Bayesian inference by reversible jump MCMC for clustering based on finite generalized inverted Dirichlet mixtures. Soft Comput 23(14):5799–5813

Carlo M (1992) Comment: one long run with diagnostics: implementation strategies for Markov chain. Stat Sci 7(4):493–497

Chib S, Greenberg E (1995) Understanding the Metropolis–Hastings algorithm. Am Stat 49(4):327–335

Cohen I, Sebe N, Cozman FG, Huang TS (2003) Semi-supervised learning for facial expression recognition. In: Proceedings of the 5th ACM SIGMM international workshop on Multimedia information retrieval. ACM, pp 17–22

Conway JH, Sloane NJA (2013) Sphere packings, lattices and groups, vol 290. Springer, Berlin

Dempster AP, Laird NM, Rubin DB (1977) Maximum likelihood from incomplete data via the EM algorithm. J R Stat Soc Ser B (Methodol) 39(1):1–22

Dollár P, Rabaud V, Cottrell G, Belongie S (2005) Behavior recognition via sparse spatio-temporal features. VS-PETS, Beijing

Elguebaly T, Bouguila N (2015) A hierarchical nonparametric Bayesian approach for medical images and gene expressions classification. Soft Comput 19(1):189–204

Fan W, Bouguila N (2013) Online facial expression recognition based on finite Beta-Liouville mixture models. In: 2013 International conference on computer and robot vision (CRV). IEEE, pp 37–44

Fan W, Bouguila N (2014) Variational learning for Dirichlet process mixtures of Dirichlet distributions and applications. Multimed Tools Appl 70(3):1685–1702

Fan W, Sallay H, Bouguila N, Bourouis S (2016) Variational learning of hierarchical infinite generalized Dirichlet mixture models and applications. Soft Comput 20(3):979–990

Figueiredo MAT, Jain AK (2002) Unsupervised learning of finite mixture models. IEEE Trans Pattern Anal Mach Intell 24(3):381–396

Gelman A, Stern HS, Carlin JB, Dunson DB, Vehtari A, Rubin DB (2013) Bayesian data analysis. Chapman and Hall/CRC, Boca Raton

Hershey JR, Olsen PA (2007) Approximating the Kullback Leibler divergence between Gaussian mixture models. In: IEEE international conference on acoustics, speech and signal processing, 2007. ICASSP 2007. vol. 4. IEEE, pp IV–317

Jaakkola T, Haussler D (1999) Exploiting generative models in discriminative classifiers. In: Advances in neural information processing systems, pp 487–493

Kanade T, Tian Y, Cohn JF (2000) Comprehensive database for facial expression analysis. In: Proceedings fourth IEEE international conference on automatic face and gesture recognition. IEEE, p 46

Kass RE, Raftery AE (1995) Bayes factors. J Am Stat Assoc 90(430):773–795

Kelker D (1970) Distribution theory of spherical distributions and a location-scale parameter generalization. Sankhyā Indian J Stat Ser A 32:419–430

Kotz S (1975) Multivariate distributions at a cross-road. Stat Distrib Sci Work 1:247–270

Lajevardi SM, Hussain ZM (2009) Zernike moments for facial expression recognition. rn 2, 3

Lindley DV and Rao CR (1953) Advanced statistical methods in biometric research. J R Stat Soc 116(1):86–87

Marin JM, Robert C (2007) Bayesian core: a practical approach to computational Bayesian statistics. Springer, Berlin

Moreno PJ, Ho PP, Vasconcelos N (2004) A Kullback–Leibler divergence based kernel for SVM classification in multimedia applications. In: Advances in neural information processing systems, pp 1385–1392

Najar F, Bourouis S, Bouguila N, Belghith S (2018) A fixed-point estimation algorithm for learning the multivariate GGMM: application to human action recognition. In: 2018 IEEE Canadian conference on electrical & computer engineering (CCECE). IEEE, pp 1–4

Najar F, Bourouis S, Bouguila N, Belghith S (2019) Unsupervised learning of finite full covariance multivariate generalized Gaussian mixture models for human activity recognition. Multimed Tools Appl 78:1–23

Neal RM (1992) Bayesian mixture modeling. In: Maximum entropy and Bayesian methods. Springer, pp. 197–211

Niebles JC, Wang H, Fei-Fei L (2008) Unsupervised learning of human action categories using spatial-temporal words. Int J Comput Vis 79(3):299–318

Pascal F, Bombrun L, Tourneret JY, Berthoumieu Y (2013) Parameter estimation for multivariate generalized Gaussian distributions. IEEE Trans Signal Process 61(23):5960–5971

Rissanen J (1978) Modeling by shortest data description. Automatica 14(5):465–471

Robert C (2007) The Bayesian choice: from decision-theoretic foundations to computational implementation. Springer, Berlin

Robert C, Casella G (2000) Monte Carlo statistical methods. Springer Text in Statistics, Springer. https://doi.org/10.1007/978-1-4757-4145-2

Roberts GO, Tweedie RL (1999) Bounds on regeneration times and convergence rates for Markov chains. Stoch Process Appl 80(2):211–229

Roh SB, Oh SK, Yoon JH, Seo K (2018) Design of face recognition system based on fuzzy transform and radial basis function neural networks. Soft Comput 23:1–17

Schuldt C, Laptev I, Caputo B (2004) Recognizing human actions: a local SVM approach. In: Proceedings of the 17th international conference on pattern recognition, 2004. ICPR 2004. vol. 3. IEEE, pp 32–36

Tsai HH, Chang YC (2018) Facial expression recognition using a combination of multiple facial features and support vector machine. Soft Comput 22(13):4389–4405

Verdoolaege G, Rosseel Y, Lambrechts M, Scheunders P (2009) Wavelet-based colour texture retrieval using the Kullback–Leibler divergence between bivariate generalized Gaussian models. In: 2009 16th IEEE international conference on image processing (ICIP). IEEE, pp 265–268

Verdoolaege G, Scheunders P (2012) On the geometry of multivariate generalized Gaussian models. J Math Imaging Vis 43(3):180–193

Vrigkas M, Nikou C, Kakadiaris IA (2015) A review of human activity recognition methods. Front Robot AI 2:28

Wallace CS, Boulton DM (1968) An information measure for classification. Comput J 11(2):185–194

Wong SF, Cipolla R (2007) Extracting spatiotemporal interest points using global information. In: 2007 IEEE 11th international conference on computer vision. Citeseer, pp 1–8

Yeasin M, Bullot B, Sharma R (2004) From facial expression to level of interest: a spatio-temporal approach. In: Proceedings of the 2004 IEEE computer society conference on computer vision and pattern recognition, 2004. CVPR 2004. vol. 2. IEEE, pp II–II

Zhao G, Pietikainen M (2007) Dynamic texture recognition using local binary patterns with an application to facial expressions. IEEE Trans Pattern Anal Mach Intell 29(6):915–928

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Communicated by V. Loia.

Cite this article

Najar, F., Bourouis, S., Bouguila, N. et al. A new hybrid discriminative/generative model using the full-covariance multivariate generalized Gaussian mixture models. Soft Comput 24, 10611–10628 (2020). https://doi.org/10.1007/s00500-019-04567-2

DOI: https://doi.org/10.1007/s00500-019-04567-2