Abstract

After discussing the basics of belief structures, we introduce a new class of belief structures in which we select from among the focal elements using a possibility measure. We refer to this as a maxitive belief structure, MBS. The concepts of belief and plausibility are defined for an MBS, and we note how an MBS can be used to model imprecise possibility distributions. We describe various operations with these structures, including arithmetic and fusion. We look at the use of Choquet integral aggregation with an MBS. Finally, we define measures other than belief and plausibility for these structures.


1 Introduction

The classic Dempster–Shafer belief structure can be viewed as a bi-level model for representing the uncertainty associated with a variable that takes its value in the space X (Dempster 1966, 1967; Shafer 1976; Yager 1987; Smets 1988; Yager et al. 1994; Dempster 2008; Liu and Yager 2008; Yager and Liu 2008). At the first level a subset of X is randomly selected, using a probability distribution, from a collection of subsets of X called the focal elements. Once this subset has been selected, in the second step an object is chosen from it in some unknown, indeterminate manner. This chosen object is the value of the variable of interest. The indeterminism in this second step leads to a model of uncertainty that mixes both randomness and imprecision. One use of this structure has been for modeling imprecise probabilistic information (Caselton and Luo 1992). Here we introduce a variation of the classic belief structure in which, in the first step, we select from among the focal elements using a possibility (maxitive) distribution instead of a probability distribution. We refer to this new structure as a maxitive belief structure, MBS. We look at various properties and features of the new structure. We note that one use of the MBS is for modeling imprecise possibilistic information (Tsiporkova and Baets 1998; Dubois et al. 2013). An important real-world application of the framework developed here is multi-criteria decision-making under uncertainty (Dammak et al. 2016). In many situations, rather than knowing precisely the degree to which an alternative satisfies a criterion, we can only specify this satisfaction with some uncertainty that is best modeled by an MBS.

2 Basic belief structure

Assume \(X = \{x_{i}\hbox { for }i = 1\hbox { to }n\}\) is a set of elements. Consider a structure m defined on X consisting of a collection of non-empty subsets of X, \(F_{j}\) for \(j = 1\) to q, called the focal elements, and a group of associated weights \(m(F_{j})=\alpha _{j }\in [0, 1]\) such that \(\sum \nolimits _{j=1}^q {\alpha _j } = 1\). This is used as the basis of a model of uncertainty called a Dempster–Shafer belief structure (Dempster 1966, 1967; Shafer 1976; Dempster 2008; Liu and Yager 2008; Yager and Liu 2008). Let V be a variable whose value is determined from the set of focal elements \(\mathbf{F} = \{F_{1}, {\ldots }, F_{q}\}\) by a probabilistic experiment where \(\alpha _{j}\) is the probability that \(F_{j}\) will be the outcome. In addition, let U be a related variable taking its value in the space X. If \(V =F_{j*}\), then U is selected from \(F_{j*}\) in some indeterminate manner; that is, all we know is that the value of U is some element in \(F_{j*}\). Here we see that \(\hbox {Poss}(U= x_{k}) = 1\) for every \(x_{k} \in F_{j*}\).

One interpretation of the D–S belief structure is as an imprecise probability distribution on X associated with the variable U. Here instead of precisely assigning probabilities to the elements in X we indicate that an amount of probability, \(\alpha _{j}\), is allocated in some unspecified manner to the elements in \(F_{j}\).

If m is a belief structure in which two of the focal elements are the same, \(F_{k_1 } =F_{k_2 } \), with weights \(\alpha _{k_1 } \) and \(\alpha _{k_2 } \), we can replace these by one focal element \(F_{k}=F_{k_1 } =F_{k_2 } \) with weight \(\alpha _k =\alpha _{k_1 } +\alpha _{k_2}\). We shall refer to this as the compression principle.

Two concepts closely associated with a D–S structure are the measures of plausibility, denoted Pl, and belief, denoted Bel, which are set mappings from the subsets of X into the unit interval (Shafer 1976). We recall that Pl: \(2^{X} \rightarrow [0, 1]\) and Bel: \(2^{X} \rightarrow [0, 1]\) are such that for any subset A of X, \(\hbox {Pl}_{m}(A)=\sum \nolimits _{j, F_j \cap A \ne \varnothing } {\alpha _j}\) and \(\hbox {Bel}_{m}(A) = \sum \limits _{j,\,{F_j}\, \subseteq \,A} {{\alpha _j}} \). We observe that for any subset \(A \subseteq X\), \(\hbox {Bel}_{m}(A) \le \hbox {Pl}_{m}(A)\).
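A minimal Python sketch of these two definitions follows. It assumes a belief structure stored as a list of (focal element, weight) pairs with `frozenset` focal elements and exact `Fraction` weights; the structure `m` and the function names `plausibility` and `belief` are hypothetical illustrations, not part of any library.

```python
from fractions import Fraction

# A D-S belief structure m: list of (focal element F_j, weight alpha_j) pairs.
# Fractions keep the sums exact.

def plausibility(m, a):
    """Pl_m(A): total weight of the focal elements F_j with F_j ∩ A non-empty."""
    return sum((w for f, w in m if f & a), Fraction(0))

def belief(m, a):
    """Bel_m(A): total weight of the focal elements F_j contained in A."""
    return sum((w for f, w in m if f <= a), Fraction(0))

# Hypothetical structure on X = {x1, x2, x3, x4}; the weights sum to 1.
m = [
    (frozenset({"x1", "x2"}), Fraction(1, 2)),
    (frozenset({"x2", "x3"}), Fraction(3, 10)),
    (frozenset({"x4"}), Fraction(1, 5)),
]
A = frozenset({"x1", "x2"})
print(plausibility(m, A), belief(m, A))  # 4/5 1/2
```

Here the first two focal elements meet A while only the first lies inside it, which is why the bounds differ.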

It can easily be shown that both Pl and Bel are monotonic set measures on the space X (Klir 2006):

-

(1)

\(\hbox {Pl}(\varnothing ) = \hbox {Bel}(\varnothing ) = 0\)

-

(2)

\(\hbox {Pl}(X) = \hbox {Bel}(X) = 1\)

-

(3)

If \(A \subseteq B \subseteq X\), then \(\hbox {Pl}(A) \le \hbox {Pl}(B)\) and \(\hbox {Bel}(A)\le \hbox { Bel}(B)\)

We also note that Bel and Pl are duals: for any subset \(A \subseteq X\), \(\hbox {Bel}(A) = 1 - \hbox {Pl}(\bar{{A}})\).

Under the interpretation of a belief structure as an imprecise probability distribution associated with U it is well known that \(\hbox {Pl}(A)\) is the upper bound on the probability of A and \(\hbox {Bel}(A)\) is the lower bound on the probability of A. In this spirit \(\hbox {Prob}(A) \in [\hbox {Bel}(A), \hbox {Pl}(A)]\). An alternate view is that Prob(A) can be seen as an imprecise probability with \(\hbox {Prob}_{m}(A)=[\hbox {Bel}_{m}(A),\hbox {Pl}_{m}(A)]\).

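Another measure that can be associated with a belief structure is the pignistic measure (Smets 1992; Smets and Kennes 1994), which is defined such that \(\hbox {Pig}_{m}(A) = \sum \nolimits _{j=1}^q {\frac{\hbox {Card}(F_j \cap A) m(F_j )}{\hbox {Card}(F_j)}} \). We note that for any A

\(\hbox {Bel}_{m}(A) \le \hbox {Pig}_{m}(A) \le \hbox {Pl}_{m}(A)\).

The pignistic measure is actually a probability distribution, with

\(\hbox {Pig}_{m}(\{x_{k}\}) = \sum \nolimits _{j, x_k \in F_j } {\frac{m(F_j )}{\hbox {Card}(F_j)}} \) and \(\sum \nolimits _{k=1}^n \hbox {Pig}_{m}(\{x_{k}\}) = 1\),

and for any subset A of X, \(\hbox {Pig}_{m}(A) = \sum \limits _{x_k \in A} {\hbox {Pig}_{m}(\{x_k \})} \).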
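The pignistic measure can be sketched directly from its defining sum. This is an illustration only; `pignistic` is a hypothetical helper, and the structure `m` is a made-up example with exact `Fraction` weights.

```python
from fractions import Fraction

def pignistic(m, a):
    """Pig_m(A) = sum_j Card(F_j ∩ A) * m(F_j) / Card(F_j)."""
    return sum((Fraction(len(f & a), len(f)) * w for f, w in m), Fraction(0))

# Hypothetical D-S structure: (focal element, weight) pairs summing to 1.
m = [
    (frozenset({"x1", "x2"}), Fraction(1, 2)),
    (frozenset({"x2", "x3"}), Fraction(3, 10)),
    (frozenset({"x4"}), Fraction(1, 5)),
]
X = frozenset().union(*(f for f, _ in m))

# Probability assigned to each singleton {x_k}.
singletons = {x: pignistic(m, frozenset({x})) for x in sorted(X)}
print(singletons)
print(sum(singletons.values()))  # 1
```

Each weight \(m(F_j)\) is spread evenly over the elements of \(F_j\), so the singleton values sum to one and \(\hbox {Pig}_m(A)\) is the sum of the singleton values in A.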

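The concepts of possibility and certainty introduced by Zadeh (1978, 1979) are useful in discussing the measures of plausibility and belief. Assume A and B are two crisp subsets of X; then the possibility of A given B is defined as

\(\hbox {Poss}(A/B) = 1\) if \(A \cap B \ne \varnothing \) and \(\hbox {Poss}(A/B) = 0\) if \(A \cap B = \varnothing \),

and the certainty of A given B is defined as

\(\hbox {Cert}(A/B) = 1\) if \(B \subseteq A\) and \(\hbox {Cert}(A/B) = 0\) otherwise.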

Using these concepts we see that \(\hbox {Pl}_{m}(A) = \sum \nolimits _{j=1}^q \hbox {Poss}(A/F_j )m(F_{j})\) and \(\hbox {Bel}_{m}(A)=\sum \nolimits _{j=1}^q {\hbox {Cert}(A/F_j )}m(F_{j})\). Thus, the plausibility of A is the expected possibility of A over the focal elements, and the belief of A is the expected certainty of A over the focal elements. We note that \(\hbox {Cert}(A/B) = 1 - \hbox {Poss}(\bar{{A}}/B)\).
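As a sketch of this reading of Pl and Bel, the code below computes them as expected possibility and expected certainty. It uses the crisp-set forms (Poss(A/B) is 1 exactly when A and B intersect; Cert(A/B) is 1 exactly when B is contained in A); `poss`, `cert` and the structure `m` are hypothetical names chosen for illustration.

```python
from fractions import Fraction

def poss(a, b):
    """Poss(A/B) for crisp sets: 1 if A and B intersect, else 0."""
    return 1 if a & b else 0

def cert(a, b):
    """Cert(A/B) for crisp sets: 1 if B is a subset of A, else 0."""
    return 1 if b <= a else 0

# Hypothetical D-S structure: (focal element, weight) pairs summing to 1.
m = [
    (frozenset({"x1", "x2"}), Fraction(1, 2)),
    (frozenset({"x2", "x3"}), Fraction(3, 10)),
    (frozenset({"x4"}), Fraction(1, 5)),
]
X = frozenset({"x1", "x2", "x3", "x4"})
A = frozenset({"x1", "x2"})

# Pl and Bel as expectations of Poss(A/F_j) and Cert(A/F_j) under m.
pl = sum((poss(A, f) * w for f, w in m), Fraction(0))
bel = sum((cert(A, f) * w for f, w in m), Fraction(0))
print(pl, bel)  # 4/5 1/2

# Cert(A/B) = 1 - Poss(complement of A / B) for every focal element B.
assert all(cert(A, f) == 1 - poss(X - A, f) for f, _ in m)
```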

3 Maxitive/possibilistic belief structures

Here we shall introduce a variation of the classic D–S belief structure also defined on the space \(X = \{x_{i}\hbox { for }i = 1\hbox { to }n\}\). Here again we have a collection of non-empty subsets of X, \(F_{j}\) for \(j= 1\) to q, called focal elements and a collection of associated weights \(\pi _{j }\in \) [0, 1]; however, we require \(\hbox {Max}_{j}[\pi _{j}] = 1\). Here again the variable V is a value from the space \(\mathbf{F} = \{F_{1}, {\ldots }, F_{q}\}\); however, here it is determined via a possibility measure \(\lambda \) (Klir 2006; Klir and Wierman 1999; Wang and Klir 2009) on F such that \(\lambda (\{F_{j}\}) = \pi _{j}\) and for any subset “B” of F we have \(\lambda (\text {``}B\text {''}) = \mathop {\hbox {Max}}\nolimits _{j, F_{j } \in \text {``}B\text {''}} [\pi _j ]\).

Here again we let U be a related variable taking its value in the space X. As in the preceding if \(V = F_{j*}\) then U is selected from \(F_{j*}\) in some unspecified manner, that is all we know is that U is some element in \(F_{j*}\). We shall denote this structure as g and refer to it as a maxitive Dempster–Shafer belief structure. As we shall subsequently see one interpretation of g is as an imprecise possibility distribution.

Here we note that one conceivable source of the possibility distribution \(\lambda \) on V is a fuzzy subset D on the set F describing our knowledge of the value of the variable V. In this perspective, as noted by Zadeh (1978), the value \(\pi _{j}\) associated with the possibility measure \(\lambda \) would be equal to the membership grade of the focal element \(F_{j}\) in D, \(D(F_{j})\).

We note that a corresponding compression principle exists for these maxitive belief structures. If F has two focal elements that are the same, \(F_{k_1 } =F_{k_2 } \), with weights \(\pi _{k_1 } \) and \(\pi _{k_2 } \), we can replace these by one focal element \(F_{k}=F_{k_1 } =F_{k_2 } \) with weight \(\pi _{k }= \hbox {Max}[\pi _{k_1 }, \pi _{k_2 }]\).
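Both compression principles differ only in how duplicate weights are combined: by summation in a D–S structure and by Max in a maxitive one. A small sketch, where `compress` is a hypothetical helper and the structures are made-up examples:

```python
from collections import defaultdict

def compress(structure, combine):
    """Merge duplicate focal elements of a (focal element, weight) list.

    combine=sum gives the D-S compression principle; combine=max gives the
    maxitive one.
    """
    merged = defaultdict(list)
    for focal, weight in structure:
        merged[frozenset(focal)].append(weight)
    return {focal: combine(weights) for focal, weights in merged.items()}

ds = [({"a", "b"}, 0.25), ({"c"}, 0.5), ({"b", "a"}, 0.25)]
mbs = [({"a", "b"}, 0.4), ({"c"}, 1.0), ({"b", "a"}, 0.7)]
print(compress(ds, sum))   # weight 0.5 for {a, b} and 0.5 for {c}
print(compress(mbs, max))  # weight 0.7 for {a, b} and 1.0 for {c}
```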

In this framework we can define the concepts of plausibility and belief associated with a maxitive belief structure g. Assume E is any subset of X, then \(\hbox {Pl}_{g}(E) = \mathop {\hbox {Max}}\nolimits _{j, F_{j } \cap E \ne \varnothing } [\pi _{j}\)] and \(\hbox {Bel}_{g}(E) = \mathop {\hbox {Max}}\nolimits _{j, F_j \subseteq E} [\pi _{j}\)]. We note that if “A” is the subset of F consisting of those focal elements for which \(E \cap F_{j} \ne \varnothing \), then \(\hbox {Pl}_{g}(E) = \lambda \)(“A”). Similarly if “B” is the subset of F consisting of those focal elements for which \(F_{j} \subseteq \) E, then \(\hbox {Bel}_{g}(E) = \lambda \)(“B”).
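These maxitive definitions replace the sums of the D–S case by maxima. A minimal sketch follows, assuming an MBS stored as (focal element, \(\pi _{j}\)) pairs and taking the maximum over an empty collection to be 0, as in \(\lambda (\varnothing ) = 0\). The structure `g` and the function names are hypothetical.

```python
def mbs_plausibility(g, e):
    """Pl_g(E): max of pi_j over focal elements F_j meeting E (0 if none)."""
    return max((pi for f, pi in g if f & e), default=0.0)

def mbs_belief(g, e):
    """Bel_g(E): max of pi_j over focal elements F_j contained in E (0 if none)."""
    return max((pi for f, pi in g if f <= e), default=0.0)

# Hypothetical MBS on X = {x1, ..., x5}; the weights are illustrative only.
g = [
    (frozenset({"x1", "x3", "x5"}), 1.0),
    (frozenset({"x2", "x4"}), 0.4),
    (frozenset({"x1", "x4"}), 0.7),
]
assert max(pi for _, pi in g) == 1.0  # an MBS requires Max_j pi_j = 1

E = frozenset({"x1", "x4"})
print(mbs_plausibility(g, E), mbs_belief(g, E))  # 1.0 0.7
```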

Under the interpretation of a maxitive belief structure g as an imprecise possibility distribution on X, we see that \(\hbox {Pl}_{g}(E)\) is the upper bound on the possibility of E and \(\hbox {Bel}_{g}(E)\) is the lower bound on the possibility of E. Here then \(\hbox {Poss}_{g}(E) \in [\hbox {Bel}_{g}(E),\hbox { Pl}_{g}(E)]\). In an alternative view, \(\hbox {Poss}_{g}(E)\) can be seen as an imprecise possibility, \(\hbox {Poss}_{g}(E) = [\hbox {Bel}_{g}(E), \hbox {Pl}_{g}(E)]\).

Example

Assume \(X = \{x_{1}, x_{2}, x_{3}, x_{4}, x_{5}\}\). Let g be a maxitive D–S belief structure with focal elements: \(F_{1} = \{x_{1}, x_{3}, x_{5}\}, F_{2} = \{x_{2}, x_{4}\}\), \(F_{3} = \{x_{1}, x_{4}\}, F_{4} {=} \{x_{2}, x_{3}, x_{4}\}\). Here \(\mathbf{F} {=} \{F_{1}, F_{2}, F_{3}, F_{4}\}\).

Assume \(\lambda \) is a maxitive/possibility measure on F such that:

Since \(\lambda \) is a maxitive measure, then \(\lambda (\hbox {``}A\hbox {''}) = \mathop {\hbox {Max}}\nolimits _{F_{j } \in \hbox {``}A\hbox {''}} [\pi _{j}]\) and hence

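Assume \(E_{1} = \{x_{1}\}\). Then \(F_{1}\) and \(F_{3}\) are the focal elements that intersect \(E_{1}\), and no focal element is contained in \(E_{1}\).

From this we get \(\hbox {Pl}_{g}(E_{1})=\lambda (\{F_{1}, F_{3}\}) = 0.6\) and \(\hbox {Bel}_{g}(E_{1})=\lambda (\varnothing ) = 0\).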

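Assume \(E_{2} = \{x_{1}, x_{4}\}\). Then every focal element intersects \(E_{2}\), and \(F_{3}\) is the only focal element contained in \(E_{2}\).

From this we get \(\hbox {Pl}_{g}(E_{2})=\lambda (\mathbf{F}) = 1\) and \(\hbox {Bel}_{g}(E_{2})=\lambda (\{F_{3}\}) = 0.5\).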

4 Arithmetic and other operations on maxitive belief structures

Assume \(V_{1}\) and \(V_{2}\) are variables whose values lie in the set R of real numbers. Let us assume our knowledge of the values of each of these variables is expressed in terms of maxitive D–S structures. Assume \(V = V_{1} + V_{2}\) where \(+\) is the arithmetic addition operation. Here we provide an approach for obtaining the maxitive D–S structure for V.

As background we note that as discussed in Moore (1966) if A and B are two discrete sets defined on the real line R, then \(C = A + B\) is a subset of R where \(C = \{x + y{\vert }\) for all \(x \in A\) and \(y \in B\}\).

Example

If \(A = \{10, 15, 20\}\) and \(B = \{2, 4\}\), then \(A + B = \{12, 14, 17, 19, 22, 24\}\)

Using this we can extend the idea of addition to two maxitive belief structures. Assume our knowledge of \(V_{1}\) and \(V_{2}\) is expressed via maxitive belief structures \(g_{1}\) and \(g_{2}\). Let \(g_{1}\) be a maxitive belief structure with focal elements \(A_{i}\) for \(i = 1\) to q and possibility measure \(\lambda _{1}\). Let \(g_{2}\) also be a maxitive belief structure with focal elements \(B_{j}\) for \(j = 1\) to r and possibility measure \(\lambda _{2}\). Then our knowledge of \(V = V_{1} + V_{2}\) is expressed by a maxitive belief structure g with focal elements \(F_{ij}= A_{i} + B_{j}\), for \(i = 1\) to q and \(j = 1\) to r, and associated possibility measure \(\lambda \) such that \(\lambda (\{F_{ij}\})=\hbox {Min}[\lambda _{1}(\{A_{i}\}), \lambda _{2}(\{B_{j}\})]\).

At times we shall find it more convenient to represent this operation directly as an addition of maxitive belief structures, \(g = g_{1} + g_{2}\). Also, to simplify the notation we shall at times write \(g(F_{i})\) for \(\lambda (\{F_{i}\})\), \(g(F_{1}, F_{2}, F_{3})\) for \(\lambda (\{F_{1}, F_{2}, F_{3}\})\), and \(g(\hbox {``}B\hbox {''}) = \mathop {\hbox {Max}}\nolimits _{F_i \in {\hbox {``}B\hbox {''}}} [g(F_{i})]\).

We note that the operation \(\lambda (\{F_{ij}\}) = \hbox {Min}[\lambda _{1}(\{A_{i}\}), \lambda _{2}(\{B_{j}\})]\) always results in the appropriate type of weights for a possibility measure. In particular, all \(\lambda (\{F_{ij}\}) \in [0, 1]\), and since there always exists a pair \(A_{i^{*}} \) and \(B_{j^{*}} \) such that \(\lambda _{1}(\{A_{i^{*}} \}) = 1\) and \(\lambda _{2}(\{B_{j^{*}} \}) = 1\), there always exists a focal element \(F_{i^{*}j^{*}} \) such that \(\lambda (\{F_{i^{*}j^{*}}\}) = 1\). Since \(F_{i^{*}j^{*}} =A_{i^{*}} +B_{j^{*}} \), \(F_{i^{*}j^{*}} \) is always non-null when \(A_{i^{*}} \) and \(B_{j^{*}} \) are non-null.

The following two properties can easily be shown to hold for the addition of maxitive belief structures:

-

(1)

Symmetry: \(g_{1} + g_{2} = g_{2} + g_{1}\)

-

(2)

Associativity: \(g_{1} + g_{2} + g_{3} = (g_{1} + g_{2}) + g_{3} = g_{1} + (g_{2} + g_{3})\)

In cases when addition of maxitive belief structures leads to duplicate focal elements with different weights the compression principle can be used to eliminate the duplicates.
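A minimal Python sketch of MBS addition, assuming each structure is stored as a dictionary from `frozenset` focal elements to weights; `set_sum` and `mbs_add` are hypothetical names. When two sums \(A_{i} + B_{j}\) coincide, they are merged with Max, per the compression principle. `g1` is a hypothetical structure chosen so that two sums coincide, while `g2` reuses \(B_{1}\) and \(B_{2}\) and their weights from the example.

```python
from itertools import product

def set_sum(a, b):
    """Set addition: {x + y | x in A, y in B}."""
    return frozenset(x + y for x in a for y in b)

def mbs_add(g1, g2):
    """g = g1 + g2 for MBS stored as {frozenset focal element: weight}.

    Focal elements are A_i + B_j with weight min(g1(A_i), g2(B_j)); when two
    sums coincide they are merged with max (compression principle).
    """
    g = {}
    for (a, pa), (b, pb) in product(g1.items(), g2.items()):
        f = set_sum(a, b)
        g[f] = max(g.get(f, 0.0), min(pa, pb))
    return g

print(sorted(set_sum({10, 15, 20}, {2, 4})))  # [12, 14, 17, 19, 22, 24]

g1 = {frozenset({5}): 1.0, frozenset({-10}): 0.3}          # hypothetical
g2 = {frozenset({10, 20}): 1.0, frozenset({25, 35}): 0.5}  # B_1, B_2 of the example
for focal, weight in mbs_add(g1, g2).items():
    print(sorted(focal), weight)
```

Here \(\{5\} + B_{1}\) and \(\{-10\} + B_{2}\) both give \(\{15, 25\}\). The merged weight is \(\hbox {Max}[1, 0.3] = 1\).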

Example

Let \(g_{1}\) and \(g_{2}\) be two maxitive belief structures on R. Assume that \(g_{1}\) has focal elements

and possibility measure \(\lambda _{1}\) such that \(g_{1}(A_{1}) = 0.7\), \(g_{1}(A_{2}) = 0.8\), and \(g_{1}(A_{3}) = 1\).

In addition \(g_{2}\) has focal elements \(B_{1} = \{10, 20\}\) and \(B_{2} = \{25, 35\}\) and possibility measure \(\lambda _{2}\) with \(\lambda _{2}(B_{1}) = 1\) and \(\lambda _{2}(B_{2}) = 0.5\).

Here then \(g = g_{1} + g_{2}\) with focal elements

Further g is a maxitive measure with \(g(F_{ij}) = g(A_{i}) \wedge g(B_{j})\); hence, we have

The preceding approach can be extended to other binary arithmetic operations. Assume \(g_{1}\) and \(g_{2}\) are two maxitive belief structures with focal elements \(A_{i}\), \(i = 1\) to p, and \(B_{j}\), \(j = 1\) to q, respectively. Let \(\bot \) be any arithmetic operator: addition, subtraction, multiplication, division, exponentiation. If \(g = g_{1} \bot g_{2}\), then g is a maxitive belief structure with focal elements \(F_{ij} = A_{i} \bot B_{j} = \{x \bot y \mid x \in A_{i}, y \in B_{j}\}\) and weights \(g(F_{ij}) = g_{1}(A_{i}) \wedge g_{2}(B_{j})\).

We note here that all \(F_{ij}\) are non-null and that the weights \(g(F_{ij})\) form a valid possibility distribution.
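The same construction works for any element-wise operator. In the sketch below, `mbs_binary` is a hypothetical helper, and `operator.add`, `operator.sub`, `operator.mul`, `operator.truediv` or `operator.pow` from Python's standard `operator` module can be passed as \(\bot \). The structures are made-up examples. Division is only meaningful when no \(B_{j}\) contains 0, and the sketch does not check this.

```python
import operator
from itertools import product

def mbs_binary(op, g1, g2):
    """g = g1 ⊥ g2 for an arithmetic operator op (e.g. operator.mul).

    Focal elements are {op(x, y) | x in A_i, y in B_j}; weights are
    min(g1(A_i), g2(B_j)); duplicate focal elements are merged with max.
    The caller must avoid undefined cases such as division by zero.
    """
    g = {}
    for (a, pa), (b, pb) in product(g1.items(), g2.items()):
        f = frozenset(op(x, y) for x in a for y in b)
        g[f] = max(g.get(f, 0.0), min(pa, pb))
    return g

# Hypothetical structures on R.
g1 = {frozenset({2, 3}): 1.0, frozenset({4}): 0.6}
g2 = {frozenset({10}): 1.0, frozenset({1, 2}): 0.8}
for focal, weight in mbs_binary(operator.mul, g1, g2).items():
    print(sorted(focal), weight)
```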

Let \(g_{1}\) be a maxitive belief structure (MBS) with focal elements \(A_{j}\) for \(j = 1\) to q, all subsets of R. If b is a number, then \(g = b\, g_{1}\) is an MBS with focal elements \(B_{j} = b\; A_{j} = \{bx{\vert }\hbox { for all }x \in A_{j}\}\) and \(g(B_{j}) = g_{1}(A_{j})\) for \(j = 1\) to q. Using this and our definition of addition of MBS, we can easily obtain the weighted aggregation of MBS. Thus, if \(g_{k}\) for \(k = 1\) to r are MBS with focal elements in R and if \(w_{k}\) are a set of weights such that \(w_{k} \in [0, 1]\) and \(\sum \nolimits _{k = 1}^r {w_k } = 1\), then the weighted average of these r belief structures is \(g = \sum \nolimits _{k=1}^r {w_k g_k } \).
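A sketch of the weighted average built from scalar multiples and MBS addition is given below. The names `mbs_scale`, `mbs_add` and `mbs_weighted_average` are hypothetical. Starting the sum from the crisp value 0 with weight 1 is our own device: \(\{0\} + A = A\) and \(\hbox {Min}[1, \pi ] = \pi \), so it acts as an identity for addition.

```python
from itertools import product

def mbs_scale(b, g):
    """b * g: focal elements {b*x | x in A_j}, weights unchanged (max on merge)."""
    out = {}
    for a, pi in g.items():
        f = frozenset(b * x for x in a)
        out[f] = max(out.get(f, 0.0), pi)
    return out

def mbs_add(g1, g2):
    """g1 + g2: focal elements A_i + B_j, weights min(g1(A_i), g2(B_j))."""
    out = {}
    for (a, pa), (b, pb) in product(g1.items(), g2.items()):
        f = frozenset(x + y for x in a for y in b)
        out[f] = max(out.get(f, 0.0), min(pa, pb))
    return out

def mbs_weighted_average(weights, structures):
    """g = sum_k w_k g_k, built from scalar multiples and MBS addition."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    total = {frozenset({0}): 1.0}  # crisp 0 with weight 1: identity for addition
    for w, g in zip(weights, structures):
        total = mbs_add(total, mbs_scale(w, g))
    return total

# Hypothetical structures on R.
g1 = {frozenset({10}): 1.0, frozenset({20}): 0.4}
g2 = {frozenset({30}): 1.0}
print(mbs_weighted_average([0.5, 0.5], [g1, g2]))
```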

We now consider further operations with maxitive belief structures. Assume \(X_{1}\), \(X_{2}\) and \(X_{3}\) are three not necessarily different sets. Let S be a set operator defined as \(S: 2^{X_{1}} \times 2^{X_{2}} \rightarrow 2^{X_{3}}\).

That is, if A and B are subsets of \(X_{1}\) and \(X_{2}\), respectively, then \(S(A, B) = C\) where C is a subset of \(X_{3}\). Let \(g_{1}\) and \(g_{2}\) be two MBS on \(X_{1}\) and \(X_{2}\), respectively, with focal elements \(A_{i}\) for \(i = 1\) to q and \(B_{j}\) for \(j = 1\) to p. We are now interested in obtaining the MBS \(g = S(g_{1}, g_{2})\). Here we let \(F_{ij}=S(A_{i}, B_{j})\), which is a subset of \(X_{3}\). We associate with this a set mapping \(\hat{{g}}\) on \(X_{3}\) so that \(\hat{{g}}(F_{ij}) = g_{1}(A_{i}) \wedge g_{2}(B_{j})\). We note that \(\hat{{g}}\) is such that all \(\hat{{g}}(F_{ij}) \in [0, 1]\) and there exists at least one \(F_{i^{*}j^{*}} \) such that \(\hat{{g}}(F_{i^{*}j^{*}} ) = 1\); this occurs for any pair for which \(g_{1}(A_{i^{*}} ) = g_{2}(B_{j^{*}} ) = 1\).

At this point we must distinguish between two classes of the operator S. We call S non-null forming if \(S(A, B) \ne \varnothing \) for any A and B that are not null. On the other hand, we shall say that S is null forming if for some \(A \ne \varnothing \) and \(B \ne \varnothing \) we can have \(S(A, B) = \varnothing \). We note that union is an example of a non-null forming S; another example is the Cartesian product. An example of a null forming operator is intersection. We must use different procedures for obtaining g from \(\hat{{g}}\) in these two cases. If S is non-null forming, then g is \(\hat{{g}}\): the focal elements of g are the \(F_{ij} = S(A_{i}, B_{j})\) and \(g(F_{ij})=\hat{{g}}(F_{ij})\). We see in this case that g is a possibility measure: all \(g(F_{ij}) \in [0, 1]\), and there is at least one \(F_{ij}\) with \(g(F_{ij})=\hat{{g}}(F_{ij}) = 1\). If S is null forming, we must use the following procedure for obtaining \(g = S(g_{1}, g_{2})\):

-

(1)

Focal elements of g are all the non-null \(F_{ij}\), that is \(\mathbf{F} = \{F_{ij}\hbox { s.t. }F_{ij} \ne \varnothing \}\)

-

(2)

If there exists one \(F_{ij} \ne \varnothing \) such that \(\hat{{g}}(F_{ij}) = 1\), then g is an MBS with focal elements all \(F_{ij} \ne \varnothing \) and for these focal elements \(g(F_{ij})=\hat{{g}}(F_{ij})\)

-

(3)

If there is no \(F_{ij} \ne \varnothing \) such that \(\hat{{g}}(F_{ij}) = 1\), we let \(\alpha = \hbox {Max}[\hat{{g}}(F_{ij})]\) over those \(F_{ij}\ne \varnothing \). Using this we define g as having focal elements all \(F_{ij} \ne \varnothing \) and \(g(F_{ij})=\frac{\hat{{g}}(F_{ij} )}{\alpha }\).

We note here that \(g(F_{ij}) \in [0, 1]\) and there exists at least one \(F_{ij}\) with \(g(F_{ij}) = 1\).
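Steps (1) to (3) can be sketched for any set operator S passed in as a function of two `frozenset`s. This sketch follows our own conventions. `mbs_set_op` is a hypothetical name, and duplicate non-null \(F_{ij}\) are merged with Max, per the compression principle. Dividing by \(\alpha \) covers case (2), where \(\alpha = 1\) and nothing changes, as well as case (3). For a non-null forming S such as union, nothing is discarded and \(\alpha = 1\), so the result is simply \(\hat{{g}}\).

```python
from itertools import product

def mbs_set_op(s, g1, g2):
    """g = S(g1, g2) for a set operator S, following steps (1)-(3).

    g_hat(F_ij) = min(g1(A_i), g2(B_j)); empty F_ij are discarded; duplicate
    focal elements are merged with max; all weights are divided by the
    largest remaining weight alpha (alpha = 1 leaves them unchanged).
    """
    g_hat = {}
    for (a, pa), (b, pb) in product(g1.items(), g2.items()):
        f = frozenset(s(a, b))
        if f:  # step (1): keep only the non-null F_ij
            g_hat[f] = max(g_hat.get(f, 0.0), min(pa, pb))
    if not g_hat:
        raise ValueError("every F_ij is empty; S(g1, g2) is undefined")
    alpha = max(g_hat.values())  # 1 in case (2), below 1 in case (3)
    return {f: w / alpha for f, w in g_hat.items()}

# Hypothetical structures on X = {1, 2, 3, 4}.
g1 = {frozenset({1, 2}): 1.0, frozenset({3}): 0.6}
g2 = {frozenset({3, 4}): 1.0, frozenset({2}): 0.8}
print(mbs_set_op(lambda a, b: a & b, g1, g2))  # null forming: intersection
print(mbs_set_op(lambda a, b: a | b, g1, g2))  # non-null forming: union
```

With intersection, the only non-null pieces are \(\{2\}\) with 0.8 and \(\{3\}\) with 0.6. Case (3) therefore applies and rescales them to 1 and 0.75.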

5 Fusion of multiple MBS

A fundamental issue in the classic Dempster–Shafer theory is the fusion of multiple structures. The predominant methodology for accomplishing this is Dempster’s rule (Dempster 1966, 1967; Shafer 1976; Dempster 1968; Zadeh 1979; Fu and Yang 2011). Here we look at the issue of fusing multiple maxitive belief structures.

We note that for the case of \(S(g_{1}, g_{2})\) where S is the intersection, \(S(g_{1}, g_{2})\) as defined earlier becomes an extension of Dempster’s rule. More generally, assume \(g_{k}\) for \(k = 1\) to t are a collection of MBS based on possibility measures \(\lambda _{k}\), respectively, with focal elements \(F_{kj}\) for \(j=1\) to \(n_{k}\). In the following we shall denote \(\lambda _{k}(F_{kj})=\pi _{kj}\). We now can consider the fusion of these to obtain \(g = S(g_{1}, {\ldots }, g_{t})\). Here g is an MBS with focal elements \(E_{r}\), where each \(E_{r}\) is formed as a non-null intersection of one focal element from each of the \(g_{k}\); thus, each \(E_{r}\) is an intersection \(E_{r}=\bigcap \nolimits _{k = 1}^t {F_{kj_k } } \ne \varnothing \). Also, for each \(E_{r}\) we obtain \(\hat{{g}}(E_{r})=\mathop {\hbox {Min}}\nolimits _{k = 1\text { to }t} [\lambda _{k}(F_{kj_k } )]\). If there exists at least one \(E_{r} \ne \varnothing \) with \(\hat{{g}}(E_{r}) = 1\), then \(g(E_{r})=\hat{{g}}(E_{r})\). If there is no \(E_{r} \ne \varnothing \) with \(\hat{{g}}(E_{r}) = 1\), then we let \(\alpha = \hbox {Max}[\hat{{g}}(E_{r})]\) over all \(E_{r} \ne \varnothing \) and define \(g(E_{r})=\frac{\hat{{g}}(E_r )}{\alpha }\).
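A sketch of this t-way fusion follows. It assumes MBS stored as dictionaries from `frozenset` focal elements to weights, and `mbs_fuse` is a hypothetical name. Duplicate intersections are merged with Max (the compression principle), which is our own convention since the text does not say how repeated \(E_{r}\) are handled. If every intersection is empty, the structures are in total conflict and the sketch raises an error.

```python
from functools import reduce
from itertools import product

def mbs_fuse(structures):
    """Fuse t MBS by intersecting one focal element from each g_k."""
    g_hat = {}
    for combo in product(*(g.items() for g in structures)):
        e = reduce(lambda x, y: x & y, (f for f, _ in combo))
        if e:  # keep only non-null intersections E_r
            w = min(pi for _, pi in combo)  # min over k of lambda_k(F_kj_k)
            g_hat[e] = max(g_hat.get(e, 0.0), w)
    if not g_hat:
        raise ValueError("no non-empty intersection: total conflict")
    alpha = max(g_hat.values())
    return {e: w / alpha for e, w in g_hat.items()}

# Hypothetical structures on X = {a, b, c}.
g1 = {frozenset({"a", "b"}): 1.0, frozenset({"c"}): 0.5}
g2 = {frozenset({"b", "c"}): 1.0}
g3 = {frozenset({"a", "c"}): 1.0, frozenset({"b"}): 0.9}
print(mbs_fuse([g1, g2, g3]))
```

No intersection reaches weight 1 in this example. The largest, \(\{b\}\) with 0.9, sets \(\alpha = 0.9\).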

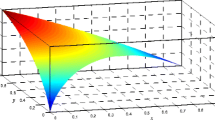

6 Choquet integral associated with an MBS

Let \(F = \{F_{1}, {\ldots }, F_{q}\}\) be a collection of focal elements, non-empty subsets of X. Let \(r_{j}\) be some numeric value associated with each focal element. In many cases we are interested in some kind of average of these values. When we have an MBS g based on the possibility measure \(\lambda \) with \(\lambda (\{F_{j}\}) = \pi _{j}\), we often use the Choquet integral (Choquet 1953; Beliakov et al. 2007) to obtain the average; we shall denote this \(\hbox {Choq}_{\lambda }(r_{j}\hbox { for }j = 1\hbox { to }q)\). Assume \(\rho \) is an index function so that \(\rho \)(i) is the index of the ith largest of the \(r_{j}\); thus, \(r_{\rho _{(i)}}\) is the \(i{\mathrm{th}}\) largest of the \(r_{j}\). Using this we get

\(\hbox {Choq}_{\lambda }(r_{j}\hbox { for }j = 1\hbox { to }q) = \sum \nolimits _{i=1}^q (\lambda (\hbox {``}H_{i}\hbox {''}) - \lambda (\hbox {``}H_{i - 1}\hbox {''}))\, r_{\rho _{(i)}}\)

where \(\hbox {``}H_{i}\hbox {''}= \{F_{\rho _{(1)}}, {\ldots }, F_{\rho _{(i)}}\}\) is the subset of focal elements with the i largest values of \(r_{j}\), and \(\hbox {``}H_{0}\hbox {''} = \varnothing \) with \(\lambda (\varnothing ) = 0\). Denoting \(w_{i}=\lambda (\hbox {``}H_{i}\hbox {''}) - \lambda (\hbox {``}H_{i - 1}\hbox {''})\), we see \(\hbox {Choq}_{\lambda }(r_{j}\hbox { for }j = 1\hbox { to }q) = \sum \nolimits _{i=1}^q {w_i r_{\rho _{(i)}} } \). It is easy to show that each \(w_{i}\in [0, 1]\) and \(\sum \nolimits _{i=1}^q {w_i }= 1\). Thus, we see that the Choquet integral essentially provides a weighted average of the \(r_{\rho _{(i)}}\), with weights determined by the measure \(\lambda \).

We further note that since \(\lambda \) is a possibility measure with \(\lambda (\{F_{j}\}) = \pi _{j}\), for a subset “B” of F we have \(\lambda (\hbox {``}B\hbox {''}) = \mathop {\hbox {Max}}\nolimits _{F_j \in \hbox {``}B\hbox {''}} [\pi _{j}]\). In the special case of “\(H_{i}\)” we have \(\lambda (\hbox {``}H_{i}\hbox {''}) = \mathop {\hbox {Max}}\nolimits _{k = 1\text { to }i} [\pi _{\rho _{(k)}}]\). Thus, here \(w_{i} = \lambda (\hbox {``}H_i \hbox {''}) - \lambda (\hbox {``}H_{i - 1}\hbox {''}) = \hbox {Max}[\pi _{\rho _{(i)}}, \lambda (\hbox {``}H_{i - 1}\hbox {''})] - \lambda (\hbox {``}H_{i - 1}\hbox {''}) = \hbox {Max}[0, \pi _{\rho _{(i)}} -\mathop {\hbox {Max}}\nolimits _{k = 1\text { to }i-1} [\pi _{\rho _{(k)}}]]\). We observe that if \(\pi _{\rho _{(i^{*})}}= 1\), then \(w_{i} = 0\) for all \(i > i^{*}\). Here we shall use the notational convention that, in the case of an MBS g based on the possibility measure \(\lambda \), \(g(F_{j})=\pi _{j }=\lambda (\{F_{j}\})\).

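With a little algebra we can express the Choquet integral as

\(\hbox {Choq}_{\lambda }(r_{j}\hbox { for }j = 1\hbox { to }q) = \sum \nolimits _{i=1}^q \lambda (\hbox {``}H_{i}\hbox {''})\,(r_{\rho _{(i)}} - r_{\rho _{(i+1)}})\), with \(r_{\rho _{(q+1)}} = 0\).

In the case where \(\lambda \) is a possibility measure, then

\(\hbox {Choq}_{\lambda }(r_{j}\hbox { for }j = 1\hbox { to }q) = \sum \nolimits _{i=1}^q \mathop {\hbox {Max}}\nolimits _{k = 1\text { to }i} [\pi _{\rho _{(k)}}]\,(r_{\rho _{(i)}} - r_{\rho _{(i+1)}})\).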
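A minimal sketch of the Choquet integral with respect to a possibility measure \(\lambda (\{F_{j}\}) = \pi _{j}\) follows. It computes the weights \(w_{i} = \lambda (\hbox {``}H_{i}\hbox {''}) - \lambda (\hbox {``}H_{i-1}\hbox {''})\) directly and checks the result against the rearranged form \(\sum _i \lambda (\hbox {``}H_{i}\hbox {''})(r_{\rho _{(i)}} - r_{\rho _{(i+1)}})\) with \(r_{\rho _{(q+1)}} = 0\). The function names and the data are hypothetical.

```python
def choquet_possibility(r, pi):
    """Choquet integral of r_j w.r.t. the possibility measure lambda({F_j}) = pi_j.

    Returns the integral and the weights w_i = lambda("H_i") - lambda("H_{i-1}").
    """
    order = sorted(range(len(r)), key=lambda j: r[j], reverse=True)  # rho
    total, lam_prev, weights = 0.0, 0.0, []
    for j in order:
        lam_i = max(lam_prev, pi[j])  # lambda("H_i") = max of pi over H_i
        weights.append(lam_i - lam_prev)
        total += (lam_i - lam_prev) * r[j]
        lam_prev = lam_i
    return total, weights

def choquet_possibility_alt(r, pi):
    """Same integral as sum_i lambda("H_i") * (r_rho(i) - r_rho(i+1)), r_rho(q+1) = 0."""
    order = sorted(range(len(r)), key=lambda j: r[j], reverse=True)
    sorted_r = [r[j] for j in order] + [0.0]
    total, lam = 0.0, 0.0
    for i, j in enumerate(order):
        lam = max(lam, pi[j])
        total += lam * (sorted_r[i] - sorted_r[i + 1])
    return total

r = [0.2, 0.9, 0.5]
pi = [1.0, 0.3, 0.6]
value, w = choquet_possibility(r, pi)
print(round(value, 6), [round(x, 6) for x in w])  # 0.5 [0.3, 0.3, 0.4]
print(round(choquet_possibility_alt(r, pi), 6))   # 0.5
```

Each step adds only the increase of the running maximum of \(\pi \), so each \(w_{i} = \hbox {Max}[0, \pi _{\rho _{(i)}} - \lambda (\hbox {``}H_{i-1}\hbox {''})]\). The weights become 0 once the focal element with \(\pi = 1\) has been reached.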

We now show that the Choquet integral can provide an alternative and useful formula for the measure of plausibility and belief associated with g.

Theorem

-

\(\hbox {Pl}_{g}(E) = \hbox {Choq}_{\lambda }(\hbox {Poss}(E/F_{j})\hbox { for }j = 1\hbox { to }q)\)

-

\(\hbox {Bel}_{g}(E) = \hbox {Choq}_{\lambda }(\hbox {Cert}(E/F_{j})\hbox { for }j = 1\hbox { to } q)\)

Proof

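(1) Let \(\rho \) be an index function so that \(\rho \)(i) is the index of the ith largest \(\hbox {Poss}(E/F_{j})\); thus, \(\hbox {Poss}(E/F_{\rho _{(i)}})\) is the ith largest of the \(\hbox {Poss}(E/F_{j})\). Here then

\(\hbox {Choq}_{\lambda }(\hbox {Poss}(E/F_{j})\hbox { for }j = 1\hbox { to }q) = \sum \nolimits _{i=1}^q (\lambda (\hbox {``}H_{i}\hbox {''}) - \lambda (\hbox {``}H_{i-1}\hbox {''}))\,\hbox {Poss}(E/F_{\rho _{(i)}})\).

We note that if \(E \cap F_{j} \ne \varnothing \), then \(\hbox {Poss}(E/F_{j}) = 1\), and if \(E \cap F_{j}=\varnothing \), then \(\hbox {Poss}(E/F_{j}) = 0\). Let \(j^{*}\) be the number of focal elements that intersect E; these occupy the first \(j^{*}\) positions of the ordering. Using this we get

\(\hbox {Choq}_{\lambda }(\hbox {Poss}(E/F_{j})\hbox { for }j = 1\hbox { to }q) = \sum \nolimits _{i=1}^{j^{*}} (\lambda (\hbox {``}H_{i}\hbox {''}) - \lambda (\hbox {``}H_{i-1}\hbox {''}))\)

since \(\hbox {Poss}(E/F_{\rho _{(i)}}) = 0\) for those focal elements not intersecting E and \(\hbox {Poss}(E/F_{\rho _{(i)}}) = 1\) for those focal elements intersecting E. We further see that this telescoping sum equals \(\lambda (\hbox {``}H_{j^{*}}\hbox {''}) - \lambda (\hbox {``}H_{0}\hbox {''}) = \lambda (\hbox {``}H_{j^{*}}\hbox {''})\).

But “\(H_{j^{*}} \)” \(= \{F_{\rho _{(1)}}, {\ldots }, F_{\rho _{(j^{*})}}\}\) is exactly the subset of focal elements that intersect E; thus, \(\hbox {Choq}_{\lambda }(\hbox {Poss}(E/F_{j})\hbox { for }j = 1\hbox { to }q) = \lambda \)(“A”), where “A” is the subset of focal elements that intersect E, and hence it equals \(\hbox {Pl}_{g}(E)\).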

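(2) In a similar way we can show that \(\hbox {Choq}_{\lambda } (\hbox {Cert}(E/F_{j})\hbox { for }j = 1\hbox { to }q) = \lambda \)(“B”) \(= \hbox {Bel}_{g}(E)\), where “B” is the set of focal elements contained in E, since \(\hbox {Cert}(E/F_{j}) = 1\hbox { if }F_{j}\subseteq E\) and \(\hbox {Cert}(E/F_{j})= 0\) otherwise. \(\square \)

A related integral is the Sugeno integral (Beliakov et al. 2007; Sugeno 1977; Klement et al. 2010). Here

\(\hbox {Sug}_{\lambda }(r_{j}\hbox { for }j = 1\hbox { to }q) = \mathop {\hbox {Max}}\nolimits _{i = 1\text { to }q} [r_{\rho _{(i)}} \wedge \lambda (\hbox {``}H_{i}\hbox {''})]\).

It is well known that the Sugeno integral is also a mean operator. In the special case where \(\lambda \) is a possibility measure, then

\(\hbox {Sug}_{\lambda }(r_{j}\hbox { for }j = 1\hbox { to }q) = \mathop {\hbox {Max}}\nolimits _{j = 1\text { to }q} [r_{j} \wedge \pi _{j}]\).

Using this we can also show that

\(\hbox {Pl}_{g}(E) = \hbox {Sug}_{\lambda }(\hbox {Poss}(E/F_{j})\hbox { for }j = 1\hbox { to }q)\) and \(\hbox {Bel}_{g}(E) = \hbox {Sug}_{\lambda }(\hbox {Cert}(E/F_{j})\hbox { for }j = 1\hbox { to }q)\).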
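The theorem and its Sugeno counterpart can be checked numerically on a small hypothetical MBS. The sketch below uses the standard reduction of the Sugeno integral with respect to a possibility measure to \(\hbox {Max}_{j}[r_{j} \wedge \pi _{j}]\). It compares both integrals of \(\hbox {Poss}(E/F_{j})\) and \(\hbox {Cert}(E/F_{j})\) with the direct Max formulas for \(\hbox {Pl}_{g}\) and \(\hbox {Bel}_{g}\). All names and data are illustrative assumptions.

```python
def choquet_possibility(r, pi):
    """Choquet integral of r_j w.r.t. the possibility measure with weights pi_j."""
    order = sorted(range(len(r)), key=lambda j: r[j], reverse=True)
    total, lam_prev = 0.0, 0.0
    for j in order:
        lam_i = max(lam_prev, pi[j])
        total += (lam_i - lam_prev) * r[j]
        lam_prev = lam_i
    return total

def sugeno_possibility(r, pi):
    """Sugeno integral w.r.t. a possibility measure: max_j min(r_j, pi_j)."""
    return max(min(rj, pj) for rj, pj in zip(r, pi))

# Hypothetical MBS: focal elements F_j with weights pi_j.
focal = [frozenset({1, 2}), frozenset({3}), frozenset({2, 4})]
pi = [0.6, 1.0, 0.8]
E = frozenset({1, 2})

poss = [1.0 if f & E else 0.0 for f in focal]   # Poss(E/F_j)
cert = [1.0 if f <= E else 0.0 for f in focal]  # Cert(E/F_j)
pl = max((p for f, p in zip(focal, pi) if f & E), default=0.0)
bel = max((p for f, p in zip(focal, pi) if f <= E), default=0.0)

print(pl, choquet_possibility(poss, pi), sugeno_possibility(poss, pi))
print(bel, choquet_possibility(cert, pi), sugeno_possibility(cert, pi))
```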

7 Alternative measures associated with an MBS

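In the preceding we showed that two measures associated with the variable U on the space X are the measures of plausibility and belief, where for any subset E of X

\(\hbox {Pl}_{g}(E) = \mathop {\hbox {Max}}\nolimits _{j, F_{j } \cap E \ne \varnothing } [\pi _{j}]\) and \(\hbox {Bel}_{g}(E) = \mathop {\hbox {Max}}\nolimits _{j, F_j \subseteq E} [\pi _{j}]\).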

Here, with g an MBS based on \(\lambda \) having focal elements \(\mathbf{F} = \{F_{1}, {\ldots }, F_{q}\}\), each \(F_{j}\) having weight \(g(F_{j})=\pi _{j}\), we now provide a whole class of measures on X, in addition to plausibility and belief, that can be generated from g.

Let \(\mathbf{W} = \langle W_{1}, {\ldots }, W_{q}\rangle \) be a collection of vectors called the allocation imperative. Each \(W_{j}\) is of dimension \(n_{j} = {\vert }F_{j}{\vert }\), and the components of \(W_{j}\) are \(w_{j}(k) \in [0, 1]\) with \(\sum \nolimits _{k=1}^{n_j } {w_j (k)}= 1\). If E is a subset of X, then for each focal element \(F_{j}\) let \(z_{j}=\sum \nolimits _{k=1}^{|F_j \cap E|} {w_j (k)} \). Consider the set function \(\mu _{\mathbf{W}, g}\) defined so that for any subset E of X

\(\mu _{\mathbf{W}, g}(E) = \hbox {Choq}_{\lambda }(z_{j}\hbox { for }j = 1\hbox { to }q) = \sum \nolimits _{i=1}^q (\lambda (\hbox {``}H_{i}\hbox {''}) - \lambda (\hbox {``}H_{i-1}\hbox {''}))\, z_{\rho _{(i)}}\)

where \(\rho \)(i) is the index of the ith largest \(z_{j}\) and \(\hbox {``}H_{i}\hbox {''} = \{F_{\rho _{(1)}}, {\ldots }, F_{\rho _{(i)}}\}\). We now show that \(\mu _{\mathbf{W}, g} \) is a measure on X:

-

(1)

If \(E = \varnothing \), then \(F_{j} \cap E = \varnothing \) for all j, hence \(z_{j} = 0\) for all \(j = 1\) to q and \(\mu _{\mathbf{W}, g} (\varnothing ) = 0\).

-

(2)

If \(E = X\), then \(F_{j} \cap X = F_{j}\) and \({\vert }F_{j}{\vert } = n_{j}\), hence \(z_{j} = \sum \nolimits _{k=1}^{n_j} w_{j}(k) = 1\) for all j. In this case the sum telescopes and \(\mu _{\mathbf{W}, g} (X) = \lambda (\mathbf{F}) = 1\).

-

(3)

If \(E_{1}\) and \(E_{2}\) are such that \(E_{1} \subseteq E_{2}\), then \((E_{1} \cap F_{j}) \subseteq (E_{2} \cap F_{j})\) and \({\vert }E_{1}\cap F_{j}{\vert }\le {\vert }E_{2}\cap F_{j}{\vert }\) for all j. Since all \(w_{j}(k) \ge 0\), it follows that \(\sum \nolimits _{k=1}^{|E_1 \cap F_j |} {w_j (k)} \le \sum \nolimits _{k=1}^{|E_2 \cap F_j |} {w_j (k)} \); that is, no \(z_{j}\) decreases. Since the Choquet integral is monotonic in its arguments, it follows that if \(E_{1} \subseteq E_{2}\), then \(\mu _{\mathbf{W}, g} (E_{1}) \le \mu _{\mathbf{W}, g}(E_{2})\). Thus, we see that \(\mu _{\mathbf{W}, g} \) is a measure on X.
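A sketch of \(\mu _{\mathbf{W}, g}\) for a hypothetical MBS follows. The allocation imperative is stored as a dictionary from each focal element to its weight list \(W_{j}\), and the name `mu` is our own. The three allocation imperatives used below are \(w_{j}(1) = 1\), \(w_{j}(n_{j}) = 1\) and \(w_{j}(k) = 1/n_{j}\). They illustrate the boundary properties just shown and the ordering among the special cases that follow.

```python
def mu(W, g, e):
    """mu_{W,g}(E) for an MBS g (dict: focal element -> pi_j) and an
    allocation imperative W (dict: focal element -> list of n_j weights)."""
    focal = list(g)
    # z_j: sum of the first |F_j ∩ E| components of W_j
    z = [sum(W[f][:len(f & e)]) for f in focal]
    # Choquet integral of the z_j w.r.t. the possibility measure of g
    order = sorted(range(len(focal)), key=lambda j: z[j], reverse=True)
    total, lam_prev = 0.0, 0.0
    for j in order:
        lam_i = max(lam_prev, g[focal[j]])
        total += (lam_i - lam_prev) * z[j]
        lam_prev = lam_i
    return total

F1, F2 = frozenset({1, 2, 3}), frozenset({3, 4})
g = {F1: 1.0, F2: 0.5}                        # hypothetical MBS
W_pl = {F1: [1.0, 0.0, 0.0], F2: [1.0, 0.0]}  # w_j(1) = 1
W_bel = {F1: [0.0, 0.0, 1.0], F2: [0.0, 1.0]} # w_j(n_j) = 1
W_uni = {F1: [1 / 3] * 3, F2: [0.5, 0.5]}     # w_j(k) = 1/n_j

E = frozenset({3, 4})
for name, W in [("w(1) = 1", W_pl), ("w(k) = 1/n", W_uni), ("w(n) = 1", W_bel)]:
    print(name, round(mu(W, g, E), 4))
```

For this E, \(\hbox {Pl}_{g}(E) = 1\) because \(F_{1}\) meets E, and \(\hbox {Bel}_{g}(E) = 0.5\) because only \(F_{2}\) lies inside it. The uniform allocation falls between them.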

Let us look at some special cases of W. First consider the case where W is such that for all \(W_{j}\), \(w_{j}(1) = 1\). Here we see that if \(E \cap F_{j} \ne \varnothing \), then \({\vert }E \cap F_{j}{\vert } \ge 1\) and \(z_{j} = 1\), while if \(E \cap F_{j}=\varnothing \), then \({\vert }E \cap F_{j}{\vert } = 0\) and \(z_{j} = 0\). Here we see for this W that \(z_{j}\) is essentially \(\hbox {Poss}(E/F_{j})\). Thus, in this case

\(\mu _{\mathbf{W}, g}(E) = \hbox {Choq}_{\lambda }(\hbox {Poss}(E/F_{j})\hbox { for }j = 1\hbox { to }q) = \hbox {Pl}_{g}(E)\).

Thus, the special case in which \(w_{j}(1) = 1\) for all j gives us the plausibility measure.

Consider now the special case where for all \(W_{j}\) we have \(w_{j}(n_{j}) = 1\). Here we observe that if \(F_{j} \subseteq E\), then \(E \cap F_{j} = F_{j}\), thus \({\vert }E \cap F_{j}{\vert } = n_{j}\) and \(z_{j} = 1\), while if \(F_{j} \not \subseteq E\), then \({\vert }E \cap F_{j}{\vert }< n_{j}\) and \(z_{j} = 0\). Thus, we see that \(z_{j}\) is essentially \(\hbox {Cert}(E/F_{j})\), and in this case

\(\mu _{\mathbf{W}, g}(E) = \hbox {Choq}_{\lambda }(\hbox {Cert}(E/F_{j})\hbox { for }j = 1\hbox { to }q) = \hbox {Bel}_{g}(E)\).

We note that if E is a singleton, \(E = \{x^{*}\}\), then \(\hbox {Pl}_{g}(\{x^{*}\}) = \hbox {Max}_{j}[\pi _{j}]\) over all j such that \(x^{*}\in F_{j}\). On the other hand, in this case \(\hbox {Bel}_{g}(\{x^{*}\}) = \hbox {Max}_{j}[\pi _{j}]\) over all j such that \(F_{j} = \{x^{*}\}\), and 0 if there is no such j.

Another special case of interest is one in which each vector \(W_{j}\) is such that \(w_{j}(k) = \frac{1}{n_j}\) for all \(k = 1\) to \(n_{j}\). Here we see that \(z_{j}=\frac{|F_j \cap E|}{n_j }\); that is, \(z_{j}\) is the proportion of elements in \(F_{j}\) that are in E. Let \(\rho \) be an index function on the focal elements so that \(\rho (i)\) is the index of the focal element with the \(i{\mathrm{th}}\) largest \(z_{j}\). Thus, \(z_{\rho _{(i) }}=\frac{|F_{\rho (i)} \cap E|}{n_{\rho (i)} }\), and with “\(H_{i}\)”\(=\{F_{\rho _{(1)}},{\ldots }, F_{\rho _{(i)}}\}\) we have

\(\mu _{\mathbf{W}, g}(E) = \sum \nolimits _{i=1}^q (\lambda (\hbox {``}H_{i}\hbox {''}) - \lambda (\hbox {``}H_{i-1}\hbox {''}))\,\frac{|F_{\rho (i)} \cap E|}{n_{\rho (i)} }\).

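A standardization of the determination of the vectors \(W_{j}\) can be obtained using a methodology introduced by O’Hagan (1990). Here we provide a parameter \(\beta \in [0, 1]\) called the attitudinal character or degree of optimism; the larger the \(\beta \), the more optimistic the resulting measure. For each \(W_{j}\) we obtain its \(n_{j}\) components, the \(w_{j}\)(k), by solving the following optimization problem:

Maximize \(-\sum \nolimits _{k=1}^{n_j} w_{j}(k)\ln (w_{j}(k))\)

subject to \(\sum \nolimits _{k=1}^{n_j} \frac{n_{j}-k}{n_{j}-1}\, w_{j}(k) = \beta \), \(\sum \nolimits _{k=1}^{n_j} w_{j}(k) = 1\), and \(w_{j}(k) \in [0, 1]\).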
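O’Hagan’s maximum-entropy problem can be solved without a general optimizer. The sketch assumes the orness constraint \(\sum _k \frac{n_j-k}{n_j-1} w_j(k) = \beta \). Under that assumption, the Lagrange conditions of the entropy objective give weights proportional to \(\exp (t\,(n_j-k)/(n_j-1))\) for some real t, and t can then be found by bisection. The names `orness` and `me_owa_weights` are hypothetical. This is a sketch, not a reference implementation.

```python
import math

def orness(w):
    """Degree of optimism of an OWA weight vector: sum_k (n-k)/(n-1) * w_k."""
    n = len(w)
    return sum((n - k) / (n - 1) * w[k - 1] for k in range(1, n + 1))

def me_owa_weights(n, beta, iters=200):
    """Maximum-entropy OWA weights of length n with orness beta.

    Assumes the optimum has the form w_k proportional to
    exp(t * (n - k) / (n - 1)) and finds t by bisection.
    """
    if n == 1:
        return [1.0]
    if beta >= 1.0:
        return [1.0] + [0.0] * (n - 1)   # all weight on k = 1
    if beta <= 0.0:
        return [0.0] * (n - 1) + [1.0]   # all weight on k = n

    def weights(t):
        raw = [math.exp(t * (n - k) / (n - 1)) for k in range(1, n + 1)]
        s = sum(raw)
        return [x / s for x in raw]

    lo, hi = -60.0, 60.0  # orness(weights(t)) increases with t
    for _ in range(iters):
        mid = (lo + hi) / 2
        if orness(weights(mid)) < beta:
            lo = mid
        else:
            hi = mid
    return weights((lo + hi) / 2)

print([round(x, 4) for x in me_owa_weights(4, 0.7)])
```

With \(\beta = 0.5\) the bisection settles at \(t = 0\), so the weights are uniform. The extreme values \(\beta = 1\) and \(\beta = 0\) are handled directly.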

We shall here refer to the measure obtained using this \(\beta \) across all \(W_{j}\) as \(\mu _{\beta , g}\). We note that the following properties can be shown

-

(1)

If \(\beta = 1\), then all \(w_{j}(1) = 1\) and \(\mu _{\beta , g} (E) = \hbox {Pl}_{g}(E)\)

-

(2)

If \(\beta = 0\), then all \(w_{j}(n_{j}) = 1\) and \(\mu _{\beta , g}(E) = \hbox {Bel}_{g}(E)\)

-

(3)

If \(\beta = 0.5\), then all \(w_{j}(k) =\frac{1}{n_j}\)

-

(4)

If \(\beta _{1 }> \beta _{2}\), then \(\mu _{\beta _{1}, g}(E) \ge \mu _{\beta _{2}, g}(E)\) for all E

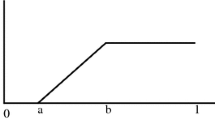

Another method for standardizing the \(W_{j}\) uses a weight generating function \(f:\, [0, 1] \rightarrow [0, 1]\) such that (1) \(f(0) = 0\), (2) \(f(1) = 1\) and (3) \(f(a) \ge f(b)\) if \(a > b\) (Yager 2017). Using this function we can generate the OWA weights for each \(W_{j}\), \(w_{j}(k)\) for \(k = 1\) to \(n_{j}\), as (Yager 1996)

\(w_{j}(k) = f\left(\frac{k}{n_{j}}\right) - f\left(\frac{k-1}{n_{j}}\right)\).

We note that the function \(f^{*}\) such that \(f^{*}(x) = 1\) for all \(x > 0\) has \(w_{j}(1) = 1\) and generates the plausibility measure. The function \(f_{*}\) such that \(f_{*}(x) = 0\) for all \(x < 1\) has \(w_{j}(n_{j}) = 1\) and generates the belief measure. In addition, the function \(f(x) = x\) generates the weights \(w_{j}(k) = \frac{1}{n_j}\) for all k. It can be shown that if \(f_{1}\) and \(f_{2}\) are two functions such that \(f_{1}(x) \ge f_{2}(x)\) for all x, then the associated generated measures, \(\mu _{f_1 , g} \) and \(\mu _{f_2 , g} \), are such that \(\mu _{f_1 , g} (E) \ge \mu _{f_2 , g}(E)\) for all E. In addition, we can associate with every weight generating function f a measure of optimism \(\beta =\int _0^1 {f(x)\mathrm{d}x} \). We see here that if \(f_{1}(x) \ge f_{2}(x)\) for all x, then \(\beta _{1 }=\int _0^1 {f_1 (x)\mathrm{d}x} \ge \int _0^1 {f_2 (x)\mathrm{d}x} = \beta _{2}\). Here also note that \(\int _0^1 {f^{*}(x)\mathrm{d}x} = 1\), \(\int _0^1 {f_*(x)\mathrm{d}x} = 0\), and if \(f(x) = x\), then \(\beta = 0.5\).
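A sketch of measures generated from a weight generating function follows. It assumes the quantifier-guided form \(w_{j}(k) = f(k/n_{j}) - f((k-1)/n_{j})\) and computes \(\mu _{f, g}\) as the Choquet integral of the resulting \(z_{j}\) for a hypothetical MBS. The names `rim_weights`, `f_upper` (for \(f^{*}\)), `f_lower` (for \(f_{*}\)) and `mu_f` are our own. The loop prints the measure for several functions ordered from largest to smallest f.

```python
def rim_weights(f, n):
    """OWA weights from a weight generating function f (assumed form):
    w(k) = f(k/n) - f((k-1)/n) for k = 1..n."""
    return [f(k / n) - f((k - 1) / n) for k in range(1, n + 1)]

def f_upper(x):  # f^*: 1 for x > 0, so w(1) = 1 (plausibility)
    return 1.0 if x > 0 else 0.0

def f_lower(x):  # f_*: 0 for x < 1, so w(n) = 1 (belief)
    return 1.0 if x >= 1 else 0.0

def mu_f(f, g, e):
    """mu_{f,g}(E): Choquet integral of the z_j w.r.t. the possibility measure of g."""
    focal = list(g)
    z = [sum(rim_weights(f, len(fj))[:len(fj & e)]) for fj in focal]
    order = sorted(range(len(focal)), key=lambda j: z[j], reverse=True)
    total, lam_prev = 0.0, 0.0
    for j in order:
        lam_i = max(lam_prev, g[focal[j]])
        total += (lam_i - lam_prev) * z[j]
        lam_prev = lam_i
    return total

# Hypothetical MBS (focal element -> pi_j), for illustration only.
g = {frozenset({1, 2, 3}): 1.0, frozenset({3, 4}): 0.5}
E = frozenset({3, 4})
for name, f in [("f^*", f_upper), ("x^0.5", lambda x: x ** 0.5),
                ("x", lambda x: x), ("x^2", lambda x: x ** 2), ("f_*", f_lower)]:
    print(name, round(mu_f(f, g, E), 4))
```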

Consider a weight generating function \(f_{1}\) and the function \(f_{2}(x) = 1 - f_{1}(1 - x)\). We see

-

(1)

\(f_{2}(1) = 1 - f_{1}(0) = 1\)

-

(2)

\(f_{2}(0) = 1 - f_{1}(1 - 0) = 1 - f_{1}(1) = 0\)

-

(3)

If \(x \ge y\), then \(f_{2}(x) \ge f_{2}(y)\). We see this as follows. We have \(f_{2}(x) = 1 - f_{1}(1 - x)\) and \(f_{2}(y) = 1 - f_{1}(1 - y)\). When \(x \ge y\), then \(1 - x \le 1 - y\) and hence \(f_{1}(1 - x)\le f_{1}(1 - y)\); therefore \(f_{2}(x) = 1 - f_{1}(1 - x) \ge 1 - f_{1}(1 - y) = f_{2}(y)\).

Thus, when \(f_{1}\) is a weight generating function, then \(f_{2}(x) = 1 - f_{1}(1 - x)\) is also a weight generating function; in this case we shall refer to \(f_{2}\) as the dual of \(f_{1}\). If \(f_{1}\) and \(f_{2}\) are dual weight generating functions and \(\mu _{f_1 , g}\) and \(\mu _{f_2 , g} \) are the measures obtained from an MBS g using these functions, then it can be shown that \(\mu _{f_1 , g}\) and \(\mu _{f_2 , g} \) are duals (Yager 2017).

If \(f_{1}\) and \(f_{2}\) are dual weight generating functions, \(f_{2}(x) = 1 - f_{1}(1 - x)\), then \(\beta _{2}= \int _0^1 {(1 - f_{1}(1 - x))\mathrm{d}x} = 1 - \beta _{1}\). Suppose \(f_{1}\) and \(f_{2}\) are a dual pair of weight generating functions such that \(f_{1}(x) \ge f_{2}(x)\) for all x. Then (1) \(\mu _{f_1 , g} (E) \ge \mu _{f_2 , g} (E)\) for all E, and (2) \(\beta _{1 }\ge \beta _{2}\). Thus, when \(f_{1}(x) \ge f_{2}(x)\), a natural ordering exists between their respective measures. The prototypical example of this is \(f^{*}\) and \(f_{*}\), with their associated measures of plausibility and belief.
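The dual construction can be checked numerically. The Python sketch below treats weight generating functions as plain callables on [0, 1]. The helper `dual` builds \(\hat{f}(x) = 1 - f(1 - x)\). The checks confirm the following:

- properties (1) to (3) hold on a grid;
- \(\hat{\beta} = 1 - \beta\) for the illustrative choice \(f_1(x) = x^2\);
- \(f^{*}\) and \(f_{*}\) are duals;
- \(f(x) = x\) is self-dual.

The grid test and the midpoint-rule integral are approximations, not proofs.

```python
def dual(f):
    """Dual weight generating function: f_hat(x) = 1 - f(1 - x)."""
    return lambda x: 1.0 - f(1.0 - x)


def optimism(f, steps=100_000):
    """beta = integral of f over [0, 1] (midpoint rule)."""
    h = 1.0 / steps
    return h * sum(f((i + 0.5) * h) for i in range(steps))


def is_weight_generating(f, grid=1000, tol=1e-12):
    """Grid check of f(0) = 0, f(1) = 1 and f non-decreasing."""
    ys = [f(i / grid) for i in range(grid + 1)]
    ends_ok = abs(ys[0]) < tol and abs(ys[-1] - 1.0) < tol
    return ends_ok and all(b >= a - tol for a, b in zip(ys, ys[1:]))


def f_upper(x):   # f^*
    return 1.0 if x > 0 else 0.0


def f_lower(x):   # f_*
    return 1.0 if x >= 1 else 0.0


def f1(x):        # illustrative generator with beta = 1/3
    return x ** 2


f2 = dual(f1)     # 1 - (1 - x)^2, with beta = 2/3

print(is_weight_generating(f1), is_weight_generating(f2))   # True True
print(round(optimism(f1) + optimism(f2), 6))                # 1.0
```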

We shall find it convenient to adopt the notation \(\hat{{f}}\) for the dual of f and \(\hat{{\beta }}\) for its degree of optimism. In addition, we shall use the notation \(\hat{{\mu }}_{f, g} \) for \(\mu _{\hat{{f}}, g}\). If f and \(\hat{{f}}\) are duals, let \(\Delta = {\vert } \beta -\hat{{\beta }}{\vert }\). Since \(\hat{{\beta }} = 1 - \beta \), we have \(\Delta = {\vert } 2\beta - 1{\vert }\).

Consider the weight generating function \(f(x) = x\). Here \(\hat{{f}}(x) = 1 - (1 - x) = x\). Thus, \(f(x)=x\) is self-dual, and in this case \(\mu _{f, g}(E) = \hat{{\mu }}_{f, g}(E)\). We also observe that in this case \(\hat{{\beta }}=\beta = 0.5\). We see that \(\Delta \) takes its maximum value of one for \(f_{*}\) and \(f^{*}\). It takes its minimum value of zero, for example, in the self-dual case \(f(x) = x\).

A useful class of weight generating functions is \(f(x) = x^{t}\) for \(t \in (0, \infty )\). As \(t \rightarrow 0\) we get \(f^{*}\), and as \(t \rightarrow \infty \) we get \(f_{*}\). In addition, for \(t = 1\) we get the function \(f(x) = x\). Here \(\beta =\int _0^1 {x^{t}\mathrm{d}x} =\frac{1}{t+1}\). The dual is \(\hat{{f}}(x) = 1 - (1 - x)^{t }\), with \(\hat{{\beta }}=1 - \frac{1}{t+1}=\frac{t}{t+1}\). In this case \(\Delta = {\vert }\frac{t - 1}{t + 1}{\vert }\). Thus, if \(t \le 1\), then \(\Delta =\frac{1 - t}{t + 1}\), and if \(t \ge 1\), then \(\Delta =\frac{t - 1}{t + 1}\).
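The closed form for \(\Delta\) in the family \(f(x) = x^{t}\) can be spot-checked numerically. The Python sketch below integrates \(x^{t}\) and its dual \(1 - (1 - x)^{t}\) with the midpoint rule. It then compares the resulting \(\Delta\) with \(|t - 1|/(t + 1)\). The sample values of t are arbitrary.

```python
def optimism(f, steps=20_000):
    """beta = integral of f over [0, 1] (midpoint rule)."""
    h = 1.0 / steps
    return h * sum(f((i + 0.5) * h) for i in range(steps))


def delta_numeric(t):
    """|beta - beta_hat| for f(x) = x^t and its dual 1 - (1 - x)^t."""
    beta = optimism(lambda x: x ** t)
    beta_hat = optimism(lambda x: 1.0 - (1.0 - x) ** t)
    return abs(beta - beta_hat)


def delta_closed_form(t):
    """Delta = |t - 1| / (t + 1)."""
    return abs(t - 1.0) / (t + 1.0)


for t in (0.25, 0.5, 1.0, 2.0, 4.0):
    print(t, round(delta_numeric(t), 4), round(delta_closed_form(t), 4))
```

As t moves away from 1 in either direction, \(\Delta\) grows toward 1, which matches the limits \(f^{*}\) and \(f_{*}\).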

8 Approximating belief plausibility range

Consider now an MBS g on X; as we noted, this can be used to model an imprecise possibility distribution. From this perspective, for any subset E of X we can say

\[ \hbox {Poss}(E)\in [\hbox {Bel}_{g}(E), \hbox {Pl}_{g}(E)]. \]

Consider any dual pair f and \(\hat{{f}}\) such that \(f(x) \ge \hat{{f}}(x)\) for all x; in this case we have \(\mu _{f,g}(E)\ge \hat{{\mu }}_{f,g}(E)\). Further, since \(f^{*}(x)\ge f(x)\) for all x, we have \(\hbox {Pl}_{g}(E) \ge \mu _{f, g}(E)\) for all E. In addition, since \(\hat{{f}}(x) \ge f_{*}(x)\) for all x, we have \(\hat{{\mu }}_{f,g} (E) \ge \hbox {Bel}_{g}(E)\) for all E. Consider now the interval

\[ \hbox {Range}_{f, g}(E) = [\hat{{\mu }}_{f,g}(E), \mu _{f,g}(E)]. \]

We see that \(\hbox {Range}_{f, g}(E)\subseteq [\hbox {Bel}_{g}(E), \hbox {Pl}_{g}(E)]\). Thus \(\hbox {Range}_{f, g}(E)\) provides a narrower interval for the value Poss(E), and in some situations we may prefer such a narrower interval for expressing the possibility of E. We should note, however, that formally we cannot be certain that \(\hbox {Poss}(E)\in \hbox {Range}_{f, g}(E)\). \(\hbox {Range}_{f,g}(E)\) only provides an approximation to the true interval value of \(\hbox {Poss}(E)\). In this spirit, let \(\beta \) be the degree of optimism associated with the function f. Then \(\Delta = {\vert }\beta -\hat{{\beta }}{\vert }\) can provide some indication of our confidence in using \(\hbox {Range}_{f,g}(E)\) as the approximation. In the special case where f is self-dual, \(\hat{{f}}=f\), we have \(\mu _{f,g}(E) = \mu _{\hat{{f}},g}(E)\). The range then collapses to a single point, and we take \(\hbox {Poss}(E)=\mu _{f,g}(E)\).
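To make the interval concrete, the Python sketch below computes \(\hbox {Bel}_{g}(E)\), \(\hbox {Pl}_{g}(E)\) and \(\hbox {Range}_{f,g}(E)\) for a small hypothetical MBS. It relies on the following assumptions:

- the focal elements are crisp;
- \(\lambda (H) = \max _{F_j \in H} \pi _j\);
- the weights follow \(w_j(k) = f(k/n_j) - f((k-1)/n_j)\), under which \(z_j = \sum _{k=1}^{|F_j \cap E|} w_j(k)\) telescopes to \(f(|F_j \cap E|/n_j)\);
- \(\mu _{f,g}(E)\) is the Choquet integral of the \(z_j\) with respect to \(\lambda \), mirroring the \(\mu _{B,g}\) formula in the next paragraph.

The focal elements, the \(\pi _j\) and the choice \(f(x) = x^{0.5}\) are illustrative.

```python
import math


def choquet_possibility(values, pis):
    """Choquet integral of values[j] w.r.t. lambda(H) = max_{j in H} pis[j].

    Indices are visited in decreasing order of value, so after i steps the
    visited set is H_i; lambda(H_0) = 0.
    """
    order = sorted(range(len(values)), key=lambda j: values[j], reverse=True)
    total, lam_prev = 0.0, 0.0
    for j in order:
        lam = max(lam_prev, pis[j])          # lambda(H_i)
        total += (lam - lam_prev) * values[j]
        lam_prev = lam
    return total


def mu(f, focal, pis, E):
    """mu_{f,g}(E) with z_j = f(|F_j & E| / |F_j|) (assumed weight rule)."""
    z = [f(len(F & E) / len(F)) for F in focal]
    return choquet_possibility(z, pis)


def f_upper(x):   # f^*, reproduces Pl_g
    return 1.0 if x > 0 else 0.0


def f_lower(x):   # f_*, reproduces Bel_g
    return 1.0 if x >= 1 else 0.0


def f(x):         # x^0.5; satisfies f(x) >= 1 - f(1 - x) on [0, 1]
    return math.sqrt(x)


def f_hat(x):     # dual of f
    return 1.0 - math.sqrt(1.0 - x)


# Hypothetical MBS on X = {1, ..., 5}; the largest pi must equal 1.
focal = [{1, 2}, {2, 3, 4}, {4, 5}]
pis = [1.0, 0.6, 0.3]
E = {2, 3}

bel, pl = mu(f_lower, focal, pis, E), mu(f_upper, focal, pis, E)
low, high = mu(f_hat, focal, pis, E), mu(f, focal, pis, E)
print(f"[Bel, Pl] = [{bel:.4f}, {pl:.4f}]")      # [0.0000, 1.0000]
print(f"Range     = [{low:.4f}, {high:.4f}]")    # [0.3707, 0.7727]
```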

We note that we can also use the O’Hagan approach to obtain dual measures. Let \(\mathbf{W}=\langle W_{1},{\ldots }, W_{q}\rangle \) be a collection of OWA weight vectors obtained by using an optimism degree \(\beta \in [0, 1]\) in the algorithm used for calculating the \(w_{j}(k)\). Let \(\hat{{W}}=\langle \hat{{W}}_1 , {\ldots }, \hat{{W}}_q \rangle \) be a collection of OWA weight vectors such that \(W_{j}\) and \(\hat{{W}}_j \) are duals. In this case the vector components are \(\hat{{w}}_j (k) = w_{j}(n_{j} - k + 1)\). From these we calculate \(z_{j}=\sum \nolimits _{k=1}^{|F_j \cap E|} {w_j (k)}\) and \(\hat{{z}}_j =\sum \nolimits _{k=1}^{|F_j \cap E|} {\hat{{w}}_j (k)} \). Using these we calculate \(\mu _{B, g}(E) = \sum \nolimits _{i=1}^q (\lambda (\hbox {``}H_{i}\hbox {''}) -\lambda (\hbox {``}H_{i - 1}\hbox {''}))\, z_{\rho (i)}\). Here \(\rho (i)\) is the index of the \(i{\mathrm{th}}\) largest \(z_{j}\), \(\hbox {``}H_{i}\hbox {''} = \{F_{\rho (1)}, {\ldots }, F_{\rho (i)}\}\), and \(\lambda (\hbox {``}H_{0}\hbox {''}) = 0\). We also calculate \(\hat{{\mu }}_{B,g} (E)=\sum \nolimits _{i=1}^q (\lambda (\hbox {``}\hat{{H}}_i\hbox {''})- \lambda (\hbox {``}\hat{{H}}_{i-1}\hbox {''}))\, \hat{{z}}_{\hat{{\rho }}(i)}\). Here \(\hat{{\rho }}(i)\) is the index of the \(i{\mathrm{th}}\) largest \(\hat{{z}}_j \), and \(\hbox {``}\hat{{H}}_i\hbox {''}=\{F_{\hat{{\rho }}(1)}, {\ldots }, F_{\hat{{\rho }}(i)}\}\).
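Once the OWA vectors \(W_j\) are available, the dual computation is mechanical. The Python sketch below does not implement O'Hagan's maximum-entropy algorithm. The vectors in `W` are hypothetical values with \(n_j\) entries each. They serve only to show how the pieces fit together on a small hypothetical MBS: the reversal \(\hat{w}_j(k) = w_j(n_j - k + 1)\), the partial sums \(z_j\) and \(\hat{z}_j\), and the two Choquet integrals.

```python
def choquet_possibility(values, pis):
    """Choquet integral of values[j] w.r.t. lambda(H) = max_{j in H} pis[j]."""
    order = sorted(range(len(values)), key=lambda j: values[j], reverse=True)
    total, lam_prev = 0.0, 0.0
    for j in order:
        lam = max(lam_prev, pis[j])
        total += (lam - lam_prev) * values[j]
        lam_prev = lam
    return total


def owa_measures(W, focal, pis, E):
    """Return (mu_B(E), mu_hat_B(E)) from OWA vectors W[j] of length |F_j|."""
    z, z_hat = [], []
    for w, F in zip(W, focal):
        if len(w) != len(F):
            raise ValueError("W_j must have n_j = |F_j| components")
        w_hat = w[::-1]              # w_hat(k) = w(n_j - k + 1)
        m = len(F & E)               # |F_j intersect E|
        z.append(sum(w[:m]))
        z_hat.append(sum(w_hat[:m]))
    return choquet_possibility(z, pis), choquet_possibility(z_hat, pis)


# Hypothetical MBS and illustrative (not O'Hagan-derived) weight vectors.
focal = [{1, 2}, {2, 3, 4}, {4, 5}]
pis = [1.0, 0.6, 0.3]
W = [[0.7, 0.3], [0.5, 0.3, 0.2], [0.6, 0.4]]
mu_B, mu_hat_B = owa_measures(W, focal, pis, {2, 3})
print(round(mu_B, 4), round(mu_hat_B, 4))   # 0.76 0.42
```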

9 Random method of selecting an object from the chosen focal element

Here we shall consider a variation of the maxitive belief structure in which we use a different approach for selecting the element from the chosen focal element. Again we have a collection \(\mathbf{F} = \{F_{1}, {\ldots }, F_{q}\}\) of focal elements and a maxitive measure \(\lambda \) for choosing the value V from F. We shall again let \(\lambda (F_{j})=\pi _{j}\) where at least one of these has value one. Again we let U be a related variable taking its value in \(X = \{x_{1}, {\ldots }, x_{n}\}\). Here, however, if \(V = F_{k}\) we select the value U from \(F_{k}\) in the manner described below.

Associated with each \(x_{i}\) in X is a probability \(\alpha _{i }\in [0, 1]\). We select the element x from \(F_{k}\) by a random experiment in which the probability of selecting \(x_{i}\) given \(V = F_{k}\) is \(p_{ik}=\frac{\alpha _i \hbox {Poss}(\{x_i \}/F_k )}{\sum \nolimits _{l=1}^n {\alpha _l \hbox {Poss}(\{x_l \}/F_k )} }\). Thus \(p_{ik}\) is the normalized probability of selecting \(x_{i}\) from \(F_{k}\).

Note: The \(\alpha _{i}\) need not be a probability distribution but can be a finite nonnegative collection of weights.

In the following we find it convenient to use the notation \(F_{k}(x_{i})\) for \(\hbox {Poss}(\{x_{i}\}/F_{k})\); it is essentially the membership grade of \(x_{i}\) in \(F_{k}\). Using this, \(p_{ik}=\frac{\alpha _i F_k (x_i )}{\sum \nolimits _{l=1}^n {\alpha _l F_k (x_l )} }\).

Denote \(T_{k}=\sum \nolimits _{i=1}^n {\alpha _i F_k (x_i )} \), assumed positive; for a crisp \(F_{k}\) this is the sum of the \(\alpha _{i}\) of the elements in \(F_{k}\). We then have \(p_{ik}=\frac{\alpha _i F_k (x_i )}{T_k }\). Note that \(T_{k}\) is not the probability of selecting \(F_{k}\), since \(F_{k}\) is chosen using the measure \(\lambda \).
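A direct Python transcription of \(T_k\) and \(p_{ik}\) follows. It assumes the memberships \(F_k(x_i)\) are given as a list indexed like X, and that \(T_k > 0\). The weights in the example are hypothetical. As the note above allows, they need not sum to one.

```python
def selection_probabilities(alpha, membership):
    """Return (p, T): T = sum_i alpha_i F_k(x_i), p_i = alpha_i F_k(x_i) / T."""
    if len(alpha) != len(membership):
        raise ValueError("alpha and membership must both be indexed by X")
    T = sum(a * m for a, m in zip(alpha, membership))
    if T <= 0:
        raise ValueError("T_k must be positive for p_ik to be defined")
    return [a * m / T for a, m in zip(alpha, membership)], T


# X = {x1, x2, x3, x4}; crisp F_k = {x2, x3} written as membership grades.
alpha = [1.0, 2.0, 3.0, 4.0]
p, T = selection_probabilities(alpha, [0, 1, 1, 0])
print(T, p)   # 5.0 [0.0, 0.4, 0.6, 0.0]
```

Fuzzy focal elements work unchanged, since only the products \(\alpha _i F_k(x_i)\) enter the formula.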

Our interest here is in determining the anticipation that \(U \in E\), where E is some subset of X. The probability that \(U \in E\) given \(V = F_{j}\) is \(\hbox {Prob}(E/F_{j})=\sum \nolimits _{x_i \in E} {p_{ij} } =\frac{1}{T_j }\sum \nolimits _{x_i \in E} {\alpha _i F_j (x_i )} \). To obtain the anticipation that \(U \in E\), denoted Ant(E), we calculate the mean of the \(\hbox {Prob}(E/F_{j})\) with respect to the measure \(\lambda \). We obtain this value using the Choquet integral of the \(\hbox {Prob}(E/F_{j})\) with respect to \(\lambda \):

\[ \hbox {Ant}(E) = \sum \nolimits _{i=1}^q (\lambda (\hbox {``}H_{i}\hbox {''}) -\lambda (\hbox {``}H_{i - 1}\hbox {''}))\, \hbox {Prob}(E/F_{\rho (i)}), \]

where \(\rho \) is an index function on the focal elements such that \(\rho (i)\) is the index of the focal element with the \(i{\mathrm{th}}\) largest value of \(\hbox {Prob}(E/F_{j})\), \(\hbox {``}H_{i}\hbox {''} = \{F_{\rho (1)}, {\ldots }, F_{\rho (i)}\}\), and \(\lambda (\hbox {``}H_{0}\hbox {''}) = 0\).

We note here that Ant(E) is not an interval but a specific value. This is because the value of U is selected from \(F_{k}\) by a well-defined random mechanism rather than in an indeterminate manner.
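The anticipation can be computed in a few lines. The Python sketch below first evaluates \(\hbox {Prob}(E/F_j)\) for each focal element. It then takes the Choquet integral with respect to \(\lambda (H) = \max _{F_j \in H} \pi _j\). The MBS, the weights \(\alpha _i\) and the set E are hypothetical. Elements of X are represented by their indices 0 to n - 1.

```python
def choquet_possibility(values, pis):
    """Choquet integral of values[j] w.r.t. lambda(H) = max_{j in H} pis[j]."""
    order = sorted(range(len(values)), key=lambda j: values[j], reverse=True)
    total, lam_prev = 0.0, 0.0
    for j in order:
        lam = max(lam_prev, pis[j])          # lambda(H_i), lambda(H_0) = 0
        total += (lam - lam_prev) * values[j]
        lam_prev = lam
    return total


def prob_given_focal(E, alpha, membership):
    """Prob(E/F_j) = (1 / T_j) * sum_{x_i in E} alpha_i F_j(x_i)."""
    T = sum(a * m for a, m in zip(alpha, membership))
    num = sum(a * m for i, (a, m) in enumerate(zip(alpha, membership)) if i in E)
    return num / T


def ant(E, alpha, memberships, pis):
    """Ant(E): Choquet integral of Prob(E/F_j) w.r.t. lambda."""
    probs = [prob_given_focal(E, alpha, mem) for mem in memberships]
    return choquet_possibility(probs, pis)


# Hypothetical MBS on X = {x0, x1, x2, x3} with crisp focal elements
# F1 = {x0, x1}, F2 = {x1, x2, x3}, F3 = {x3}.
alpha = [0.1, 0.2, 0.3, 0.4]
memberships = [[1, 1, 0, 0], [0, 1, 1, 1], [0, 0, 0, 1]]
pis = [1.0, 0.6, 0.3]

print(round(ant({1, 2}, alpha, memberships, pis), 4))   # 0.6667
```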

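We also note that Ant is a measure on the space X, since we can easily show that

\[ \hbox {Ant}(\emptyset ) = 0, \quad \hbox {Ant}(X) = 1, \quad \hbox {and} \quad A \subseteq B \Rightarrow \hbox {Ant}(A) \le \hbox {Ant}(B). \]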

Let us look at Ant(E) for some notable cases of E. Consider the case \(E = \{x_{1}\}\), where \(x_{1}\) is some arbitrary element of X. Here \(\hbox {Prob}(\{x_1 \}/F_j )=\frac{\alpha _1 }{T_j }F_{j}(x_{1}) = p_{1j }\) and

\[ \hbox {Ant}(\{x_{1}\}) = \hbox {Choq}_{\lambda }(p_{1j} \hbox { for } j = 1 \hbox { to } q). \]

Let \(id_{1}\) be an index function such that \(id_{1}(i)\) is the index of the \(i{\mathrm{th}}\) largest \(p_{1j}\), and let \(H_{1i}=\{F_{id_1 (1)} , {\ldots }, F_{id_1 (i)}\}\). Using this we have

\[ \hbox {Ant}(\{x_{1}\}) = \sum \nolimits _{i=1}^{q} (\lambda (H_{1i}) -\lambda (H_{1,i - 1}))\, \frac{\alpha _1 F_{id_1 (i)} (x_1 )}{T_{id_1 (i)} }. \]

Assume the focal elements are crisp, and let \(n_{1}\) be the number of focal elements containing \(x_{1}\). Then \(F_{id_1 (i)}(x_{1}) = 1\) for \(i \le n_{1}\) and \(F_{id_1 (i)} (x_{1}) = 0\) for \(i > n_{1}\). From this we have \(\hbox {Ant}(\{x_{1}\}) = \sum \nolimits _{i=1}^{n_1 } {(\lambda (H_{1i} )} -\lambda (H_{1,i - 1}))\;\frac{\alpha _1 }{T_{id_1 (i)} }\).

Similarly, for the case \(E = \{x_{2}\}\), with \(id_{2}\), \(H_{2i}\) and \(n_{2}\) defined analogously, we get \(\hbox {Ant}(\{x_{2}\}) = \sum \nolimits _{i=1}^{n_2 } {(\lambda (H_{2i} )} -\lambda (H_{2,i - 1})) \frac{\alpha _2 F_{id_2 (i)} (x_2 )}{T_{id_2 (i)} }\).
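For crisp focal elements, the singleton formulas above can be checked against the general Choquet computation. In the Python sketch below, the hypothetical helper `ant_singleton` implements the \(n_1\)-term sum. It visits only the focal elements that contain the chosen element, in decreasing order of \(\alpha _1 / T_j\). The helper `ant_general` is the full Choquet integral over all q focal elements. The MBS and weights are illustrative.

```python
def choquet_possibility(values, pis):
    """Choquet integral of values[j] w.r.t. lambda(H) = max_{j in H} pis[j]."""
    order = sorted(range(len(values)), key=lambda j: values[j], reverse=True)
    total, lam_prev = 0.0, 0.0
    for j in order:
        lam = max(lam_prev, pis[j])
        total += (lam - lam_prev) * values[j]
        lam_prev = lam
    return total


def ant_general(i0, alpha, focal, pis):
    """Choquet integral of p_{i0 j} = alpha_{i0} F_j(x_{i0}) / T_j over all q sets."""
    T = [sum(alpha[i] for i in F) for F in focal]
    p = [alpha[i0] / T[j] if i0 in F else 0.0 for j, F in enumerate(focal)]
    return choquet_possibility(p, pis)


def ant_singleton(i0, alpha, focal, pis):
    """Closed form: only the n_1 focal sets containing x_{i0} contribute."""
    T = [sum(alpha[i] for i in F) for F in focal]
    containing = [j for j, F in enumerate(focal) if i0 in F]
    containing.sort(key=lambda j: alpha[i0] / T[j], reverse=True)  # id_1 order
    total, lam_prev = 0.0, 0.0
    for j in containing:
        lam = max(lam_prev, pis[j])          # lambda(H_{1i})
        total += (lam - lam_prev) * (alpha[i0] / T[j])
        lam_prev = lam
    return total


alpha = [0.1, 0.2, 0.3, 0.4]
focal = [{0, 1}, {1, 2, 3}, {3}]
pis = [0.5, 1.0, 0.3]
for i0 in range(4):
    print(i0, round(ant_singleton(i0, alpha, focal, pis), 4),
          round(ant_general(i0, alpha, focal, pis), 4))
```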

Let us consider the case \(E = \{x_{1}, x_{2}\}\). Here \(\hbox {Prob}(\{x_{1}, x_{2}\}/F_{j}) = p_{1j} + p_{2j}=\frac{\alpha _1 F_j (x_1 ) + \alpha _2 F_j (x_2 )}{T_j }\), and hence \(\hbox {Ant}(\{x_{1}, x_{2}\}) = \hbox {Choq}_{\lambda }(\frac{\alpha _1 F_j (x_1 ) + \alpha _2 F_j (x_2 )}{T_j }\hbox { for } j = 1 \hbox { to } q)\).

A natural question is whether there is any simple relationship between the values \(\hbox {Ant}(\{x_{1}\})\) and \(\hbox {Ant}(\{x_{2}\})\) and the value \(\hbox {Ant}(\{x_{1}, x_{2}\})\). Unfortunately, the complexity of the Choquet integral with respect to the possibility measure \(\lambda \) precludes uncovering any natural relationship. In particular, in general \(\hbox {Ant}(\{x_{1}\}) + \hbox {Ant}(\{x_{2}\}) \ne \hbox {Ant}(\{x_{1}, x_{2}\})\). Thus, while Ant is a measure, it is not a probability measure.
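A two-element example makes the non-additivity concrete. The Python sketch below uses a hypothetical MBS with \(F_1 = \{x_1, x_2\}\) (\(\pi _1 = 1\)) and \(F_2 = \{x_1\}\) (\(\pi _2 = 0.5\)), with equal weights \(\alpha _1 = \alpha _2 = 1\). The Choquet integral with respect to \(\lambda \) gives \(\hbox {Ant}(\{x_1\}) = 0.75\) and \(\hbox {Ant}(\{x_2\}) = 0.5\). Their sum exceeds \(\hbox {Ant}(\{x_1, x_2\}) = 1\).

```python
def choquet_possibility(values, pis):
    """Choquet integral of values[j] w.r.t. lambda(H) = max_{j in H} pis[j]."""
    order = sorted(range(len(values)), key=lambda j: values[j], reverse=True)
    total, lam_prev = 0.0, 0.0
    for j in order:
        lam = max(lam_prev, pis[j])
        total += (lam - lam_prev) * values[j]
        lam_prev = lam
    return total


def ant(E, alpha, focal, pis):
    """Ant(E) for crisp focal sets: Choquet integral of Prob(E/F_j)."""
    probs = [sum(alpha[i] for i in F & E) / sum(alpha[i] for i in F) for F in focal]
    return choquet_possibility(probs, pis)


alpha = [1.0, 1.0]          # x_1 -> index 0, x_2 -> index 1
focal = [{0, 1}, {0}]       # F_1 = {x_1, x_2}, F_2 = {x_1}
pis = [1.0, 0.5]

a1, a2, a12 = (ant(E, alpha, focal, pis) for E in ({0}, {1}, {0, 1}))
print(a1, a2, a1 + a2, a12)   # 0.75 0.5 1.25 1.0
```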

10 Conclusion

We introduced a new class of belief structures in which we select from among the focal elements using a possibility measure instead of a probability measure. We referred to this as a maxitive belief structure, MBS. The concepts of belief and plausibility were defined for an MBS, and it was noted how an MBS can be used to model imprecise possibility distributions. We described various operations that can be performed with these structures including arithmetic and fusion. We looked at the use of the Choquet integral type aggregation for these MBS. Measures other than belief and plausibility were defined for these structures.

References

Beliakov G, Pradera A, Calvo T (2007) Aggregation functions: a guide for practitioners. Springer, Heidelberg

Caselton WF, Luo W (1992) Decision making with imprecise probabilities: Dempster–Shafer theory and application. Water Resour Res 28:3071–3083

Choquet G (1953) Theory of capacities. Annales de l’Institut Fourier 5:131–295

Dammak F, Baccour L, Alimi A (2016) An exhaustive study of possibility measures of interval-valued intuitionistic fuzzy sets and application to multicriteria decision making. Adv Fuzzy Syst 2016:9185706. https://doi.org/10.1155/2016/9185706

Dempster AP (1966) New methods of reasoning toward posterior distributions based on sample data. Ann Math Stat 37:355–374

Dempster AP (1967) Upper and lower probabilities induced by a multi-valued mapping. Ann Math Stat 38:325–339

Dempster AP (1968) A generalization of Bayesian inference. J R Stat Soc Ser B 30:205–247

Dempster AP (2008) The Dempster–Shafer calculus for statisticians. Int J Approx Reason 48:365–377

Dubois D, Prade H, Rico A (2013) Qualitative capacities as imprecise possibilities. In: van der Gaag LC (ed) Symbolic and quantitative approaches to reasoning with uncertainty. ECSQARU 2013. Lecture Notes in Computer Science, vol 7958. Springer, Berlin, pp 169–180

Fu C, Yang SL (2011) Analyzing the applicability of Dempster’s rule to the combination of interval-valued belief structures. Expert Syst Appl 38:4291–4301

Klement EP, Mesiar R, Pap E (2010) A universal integral as common frame for Choquet and Sugeno. IEEE Trans Fuzzy Syst 18:178–187

Klir GJ (2006) Uncertainty and information. Wiley, New York

Klir GJ, Wierman MJ (1999) Uncertainty based information. Springer, Heidelberg

Liu L, Yager RR (2008) Classic works of the Dempster–Shafer theory of belief functions: an introduction. In: Yager RR, Liu L (eds) Classic works of the Dempster–Shafer theory of belief functions. Springer, Heidelberg, pp 1–34

Moore RE (1966) Interval analysis. Prentice-Hall, Englewood Cliffs

O’Hagan M (1990) Using maximum entropy-ordered weighted averaging to construct a fuzzy neuron. In: Proceedings 24th annual IEEE Asilomar conference on signals, systems and computers, Pacific Grove, CA, pp 618–623

Shafer G (1976) A mathematical theory of evidence. Princeton University Press, Princeton

Smets P (1988) Belief functions. In: Smets P, Mamdani EH, Dubois D, Prade H (eds) Non-standard logics for automated reasoning. Academic Press, London, pp 253–277

Smets P (1992) The transferable belief model and random sets. Int J Intell Syst 7:37–46

Smets P, Kennes R (1994) The transferable belief model. Artif Intell 66:191–234

Sugeno M (1977) Fuzzy measures and fuzzy integrals: a survey. In: Gupta MM, Saridis GN, Gaines BR (eds) Fuzzy automata and decision process. North-Holland, Amsterdam, pp 89–102

Tsiporkova E, De Baets B (1998) A general framework for upper and lower possibilities and necessities. Int J Uncertain Fuzziness Knowl Based Syst 6:1–34

Wang Z, Klir GJ (2009) Generalized measure theory. Springer, New York

Yager RR, Liu L (eds) (2008) Classic works of the Dempster–Shafer theory of belief functions (advisory editors: Dempster AP, Shafer G). Springer, Heidelberg

Yager RR (1987) On the Dempster–Shafer framework and new combination rules. Inf Sci 41:93–137

Yager RR (1996) Quantifier guided aggregation using OWA operators. Int J Intell Syst 11:49–73

Yager RR (2017) Belief structures, weight generating functions and decision-making. Fuzzy Optim Decis Mak 16:1–21

Yager RR, Kacprzyk J, Fedrizzi M (1994) Advances in the Dempster–Shafer theory of evidence. Wiley, New York

Zadeh LA (1978) Fuzzy sets as a basis for a theory of possibility. Fuzzy Sets Syst 1:3–28

Zadeh LA (1979) Fuzzy sets and information granularity. In: Gupta MM, Ragade RK, Yager RR (eds) Advances in fuzzy set theory and applications. North-Holland, Amsterdam, pp 3–18

Zadeh LA (1979) On the validity of Dempster’s rule of combination of evidence, Memo# UCB/ERL, M79/32. University of California, Berkeley


Ethics declarations

Conflicts of interest

The author declares that he has no conflict of interest.

Additional information

Communicated by A. Di Nola.


About this article

Cite this article

Yager, R.R. A class of belief structures based on possibility measures. Soft Comput 22, 7909–7917 (2018). https://doi.org/10.1007/s00500-018-3062-8


DOI: https://doi.org/10.1007/s00500-018-3062-8