Abstract

Many real-world multiobjective optimization problems are dynamic, requiring an optimization algorithm that is able to continuously track the moving Pareto front over time. In this paper, we propose a directed search strategy (DSS) consisting of two mechanisms for improving the performance of multiobjective evolutionary algorithms in changing environments. The first mechanism reinitializes the population based on the predicted moving direction as well as the directions that are orthogonal to the moving direction of the Pareto set, when a change is detected. The second mechanism aims to accelerate the convergence by generating solutions in predicted regions of the Pareto set according to the moving direction of the non-dominated solutions between two consecutive generations. The two mechanisms, when combined together, are able to achieve a good balance between exploration and exploitation for evolutionary algorithms to solve dynamic multiobjective optimization problems. We compare DSS with two existing prediction strategies on a variety of test instances having different changing dynamics. Empirical results show that DSS is powerful for evolutionary algorithms to deal with dynamic multiobjective optimization problems.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In many real-world optimization problems, the fitness functions to be optimized may change over time, which are known as dynamic optimization problems (DOPs). Like stationary optimization problems, DOPs can also be categorized into dynamic single-objective optimization problems (DSOPs) and dynamic multiobjective optimization problems (DMOPs), see detailed discussions in Farina et al. (2004), Jin and Branke (2005) and Nguyen et al. (2012). In recent years, solving dynamic multiobjective optimization problems using evolutionary algorithms (EAs) has attracted increasing attention (Farina et al. 2004; Jin and Branke 2005; Nguyen et al. 2012) due to its practical significance in a wide range of applications, such as scheduling (Abello et al. 2011a, b; Deb et al. 2007), control (Zhang 2008), airspace sectorization (Tang et al. 2012), vehicle motion planning (Wu et al. 2011) and wireless network design (Martins et al. 2009), although many challenges remain (Nguyen et al. 2012).

In this paper, we focus on the following class of DMOPs:

where \(t=0,1,2,\ldots \in T\) is the time instant and \(x=(x_{1},\ldots , x_{n})\) is the decision vector.

\([L,U]=\{x=(x_{1},\ldots , x_{n})| l_{i}\le x_{i}\le u_{i},i=1,2,\ldots ,n\}\) defines the decision space, where \(L=(l_{1},\ldots ,l_{n})\), \(U=(u_{1},\ldots ,u_{n})\) are the lower and upper bounds, respectively. The objective vector consists of \(m\) time-varying objective functions \(f_{i}(x,t),(i=1,2,\ldots ,m)\).

For DMOPs defined in (1), the Pareto front (PF) and/or Pareto set (PS) may change over time. Typically, dynamic multiobjective optimization evolutionary algorithms (DMOEAs) aim to track moving PF (PS) as closely as possible when environment changes.

However, EAs usually lose the ability to find a new optimum once the population has converged. A straightforward idea to address this problem is to increase the population diversity so that the population will not fully converge even if the current optimum has been found. Based on this idea, many approaches have been proposed to adapt EAs to solving DMOPs. Hyper mutation methods (Deb et al. 2007; Zhou et al. 2007; Zheng 2007; Liu et al. 2011) promote diversity by increasing the mutation rate drastically after a change is detected. Random immigrants and other immigrants methods (Deb et al. 2007; Aragon et al. 2005; Azevedo and Araujo 2011) maintain diversity by inserting new immigrants throughout the evolutionary optimization. There are also other methods to increase diversity, such as employing multiple populations and parallel computing (Camara et al. 2009, 2010) and applying different crossover and mutation operator (Yang et al. 2008; Jin and Sendhoff 2004) after a change.

In addition to enhancing population diversity, accelerating convergence is another important issue for dynamic MOEAs to trace the moving PF(PS), as the time for relocating the changed optimum is typically rather short. Unfortunately, most methods for increasing population diversity are likely to disrupt convergence, e.g., the random initialization method (Deb et al. 2007; Zhang 2008; Greeff and Engelbrecht 2008; Liu and Wang 2006, 2009). To alleviate this problem, ideas of using history information about previous optimums have been proposed to speed up convergence, such as the memory-based methods (Zhang 2008; Hatzakis and Wallace 2006a, b; Wang and Li 2009; Manriquez et al. 2010; Vinek et al. 2011; Helbig and Engelbrecht 2012), among others. However, memory-based approaches are most effective only when the changes are periodic.

History information of the previous optimums can be used to predict their future behavior, more or less, so long as the changes in the fitness functions are not fully random. Various prediction strategies have been suggested to demonstrate that they can accelerate convergence of dynamic MOEAs (Zhou et al. 2007; Liu et al. 2011; Hatzakis and Wallace 2006a, b; Wei and Zhang 2011; Ma et al. 2011; Wei and Wang 2012; Zhou et al. 2014). A feed-forward prediction strategy (FPS) was studied in Hatzakis and Wallace (2006b), where an autoregressive model was used to estimate the new position of an individual once a change occurs based on the position of a number of previous optimums that are considered to be related to this individual. The difficulty is that it is non-trivial to identify the right previous optimums that are really associated with the current individual. In Zhou et al. (2014), a population prediction strategy (PPS) has been investigated, which was shown to be able to enhance the performance of MOEAs in dynamic environments thanks to the predicted initial population after a change. Comparative studies indicated that PPS (Zhou et al. 2014) can converge faster than FPS (Hatzakis and Wallace 2006b). However, as a linear regression model was used in PPS, the performance of PPS will severely degrade if the changes are irregular or nonlinear.

In solving DOPs, it is essential for EAs to achieve a good balance between maintaining a high degree of population diversity and accelerating convergence. To this end, we design a new prediction based method to take both diversity and convergence into account. The proposed method consists of two mechanisms. The first mechanism reinitializes the population based on the predicted moving direction and the orthogonal directions of the moving Pareto set. The second mechanism guides the search by adding a small number of individuals generated according to the moving direction of the non-dominated solutions between two consecutive generations. Among these mechanisms, generating candidate solutions along the predicted moving direction of the Pareto or non-dominated set is meant for speeding up convergence, whereas the solutions generated along the orthogonal directions of the moving direction can enhance the diversity in the beginning of an environmental change. It is noted that if the change severity is large, the new problem is less relevant to the previous problem and a restart strategy may be better.

The paper is structured as follows. Section 2 presents the proposed algorithm in detail. The test instances and performance metric are presented in Sect. 3. In Sect. 4, experimental results are reported. Finally, conclusions are drawn in Sect. 5.

2 The proposed algorithm

2.1 Directed search strategy (DSS)

The basic idea of the proposed method is to predict the possible regions in decision space where new PS may be located once an environmental change is detected. The main idea here is to take advantage of the predicted information about the moving directions of non-dominated solutions between two consecutive environments and between two consecutive generations. For the sake of convenience, we term the proposed method directed search strategy (DSS). DSS contains two mechanisms, one used when an environmental change is detected (DSS1 for short), and the other used in each generation (DSS2).

-

DSS1 Reinitialize the population based on the predicted moving direction of the Pareto set and a local search along the directions orthogonal to the moving direction of PS between two consecutive environments once a change occurs.

-

DSS2 Guide the search by introducing the promising individuals generated in the regions predicted based on relative positions of the non-dominated solutions between two consecutive generations.

The first mechanism, DSS1, is used to reinitialize the population after an environmental change. After a change occurs, part of the population is reinitialized with individuals generated in the predicted regions where the new PS may be located. In addition, the rest of the individuals are generated by performing a local search along the orthogonal directions of the predicted Pareto set, aiming to enhance the diversity of the population and to deal with inaccurate prediction and sudden irregular changes. By contrast, the second mechanism, DSS2, aims to improve the speed of convergence to PS. At every generation, some individuals generated around the PS region predicted using the history information of the PSs are inserted into the population to speed up the convergence.

2.2 DSS1

2.2.1 Prediction upon an environmental change

The prediction in this work plays a similar role as other methods (Hatzakis and Wallace 2006b; Zhou et al. 2014), which aims to make a good guess of the location of the new optimum so that solutions close to the new PS can be generated and the new PS can be obtained more quickly than random initialization. The main assumption in predicting the location of the new PS is that the moving direction of PS at time \(t+1\) can be estimated based on the changes of the non-dominated solutions in \(t-1\) and \(t\). The prediction we used here is very coarse, which however, offers the benefit of a more explorative search than the finer prediction in FPS and PPS, as illustrated in Fig. 1.

Let \(C^t\) be the centroid of PS\(^t\), PS\(^t\) is the non-dominated solutions obtained by the algorithm at the end of time step \(t\), then \(C^t\) can be calculated as follows:

where \(|\mathrm{PS}^t|\) is the cardinality of \(\mathrm{PS}^t\), \(x=(x_{1},\ldots ,x_{n})\in \mathrm{PS}^t\). Then the moving direction of the non-dominated set at time \(t\), denoted by \(D_1^t\) can be estimated as follows:

Then the predicted moving direction \(D_{1}^{t}\) is used to generate individuals according to the following formulas for initializing the population after the environmental change:

where \(x\) is any individual in the population before the environmental change, \(\text{ sgn }(\cdot )\) is the sign function, \(d\) is the Euclidian distance between centroid \(C^t\) and \(C^{t-1}\) and \(N(0,d)\) denotes a normally distributed one-dimensional random number with mean of zero and standard deviation \(d\). In the above equation, the noise is added for compensating possible errors in the prediction. Noted that \(S^t\) results in a bias towards the original moving direction, refer to Fig. 1.

2.2.2 Directed local search

The predicted individuals focus on generating solutions in the predicted new PS area after an environmental change. As the population might have converged before the environmental change, the diversity of the new population may be insufficient if all individuals are reinitialized with individuals based on prediction. For this reason, part of the population will be reinitialized with individuals generated in the regions orthogonal to \(D_{1}^{t}\). We will show in the empirical studies that the proposed local search along the direction orthogonal to the moving direction of the non-dominated sets in two consecutive environments works better than a random local search.

There are many directions which are orthogonal to \(D_{1}^{t}\) and here we choose the orthogonal basis of the null space of \(D_{1}^{t}\) as the orthogonal directions to \(D_{1}^{t}\). Let \(D_{1}^{t}=(v_{1},\ldots ,v_{n})\), the null space of \(D_{1}^{t}\) is defined as

According to singular value decomposition, the orthogonal basis of \(\text{ Null }(D_{1}^{t})\) is as follows:

Given \(D_{i}^{t}, i\in \{2,\ldots ,n\}\) as calculated above are orthogonal to \(D_{1}^{t}\), new candidate solutions can be generated as follows from any individual (\(x\)) in the population before the environmental change:

where \(N(0,1)\) denotes a normally distributed random number with mean zero and standard deviation 1.

The main steps of DSS1 are summarized in Algorithm 1.

In line 1 of Algorithm 1, \(N\) is population size, set \(C^{0}=(0,\ldots ,0)\), assuming that the starting location of the centroid is at the origin. In line 3, \(0< r_1 < 1 \) is a ratio. Typically, we set \(r_1=0.5\) in this paper. In lines 6 and 12, \(P^{t}\) is the reinitialized population. Boundary check is to see if the generated solutions are within the given boundary of the decision variable and if not, a repairing operation will be performed as follows:

where \(i=1,\ldots ,n\).

2.3 DSS2

In tracking a moving Pareto front, the convergence speed is central to the performance since the time period between two environmental changes can be very short. DSS1 aims to improve the tracking performance by generating an initial population close enough to and widely distributed along the Pareto set. Clearly, these one-shot measures based on a very rough prediction are inadequate for reliably and significantly improving the search performance in a changing environment. DSS2 is therefore designed to accelerate the convergence speed of DMOEAs by inserting promising individuals into the current population at every generation. These promising individuals are generated according to the moving direction of centroid of \(\mathrm{PS}^\mathrm{iter}\) of the non-dominated solutions between two consecutive generations, where iter is the generation index. Here, the assumption is that the moving direction of centroid between two consecutive generations indicates the direction of true PS to head for. The moving direction \(D_{1}^\mathrm{iter}\) is estimated as in Eq. (3) with \(t\) being replaced by iter. Thus, promising individuals are generated as follows:

where \(x\in \mathrm{PS}^\mathrm{iter}\) is a randomly selected non-dominated individual from the current population, \(d^{\prime }\) is the Euclidian distance between centroid \(C^\mathrm{iter}\) and \(C^{\mathrm{iter}-1}\), \(N(0,d^{\prime })\) denotes a normally distributed random number with mean zero and standard deviation \(d^{\prime }\), \(d^{\prime }\) is used to control the range of search, and \(S^\mathrm{iter}\) is calculated as (4) to maintain the original increasing or decreasing direction. The main components of DSS2 are summarized in Algorithm 2.

In line 1, \(N\) is the population size. Set \(C^{0}=(0,\ldots ,0)\), assuming that the starting location of centroid is at the origin.

In DSS2, the new individuals are generated according to the moving direction to search the most promising area of \(PS\). This can be seen as a sort of “gradient” and is expected to provide useful information for the EA to converge to the changed Pareto front more rapidly.

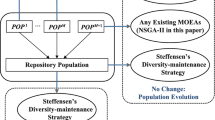

2.4 Overall framework of the proposed algorithm

The proposed directed search strategy (DSS) is incorporated into the most widely used Pareto-based MOEA, the elitist non-dominated sorting algorithm, NSGA-II (Deb et al. 2002). However, the crossover operator used in the original NSGA-II, simulated binary crossover (SBX) is replaced by the DE operator (Li and Zhang 2009; Iorio and Li 2005). Thus, the main procedure of the proposed algorithm, denoted by NSGA-II/DE+DSS, is described in Algorithm 3:

The DE operator in line 4 of Algorithm 3 is described as follows:

where \(F\) is a control parameter, \(F=0.5\) and \(k_{1},k_{2},k_{3}\) are mutually exclusive integers randomly generated within the range \([1, N]\).

3 Test instances and performance indicators

3.1 Test instances

Twelve dynamic multiobjective test instances as listed in Table 1 are adopted here to examine the performance of NSGA-II/DE+DSS in dynamic environments. The first ten test problems are taken from Farina et al. (2004), Goh and Tan (2009) and Zhou et al. (2014). The last two problems are newly proposed to include two additional challenging environmental changes. Among these test problems, F1–F4 have linearly correlated decision variables, while F5–F12 have nonlinearly correlated decision variables. As to environmental changes, F1–F8 are subject to continuous environmental changes, whereas F9–F12 have irregular and sharp environmental changes. So F9–F12 are the most difficult problems among the twelve test functions. As indicated in Zhou et al. (2014), the Pareto fronts of F9 will have a large shift between two consecutive environments, which occurs occasionally. For F10, the shape of two consecutive PFs is substantially changed. By contrast, the PS of F11 suffers from rapid reverse movements near the boundary. F12’s Pareto front will have both abrupt large shifts and big rotations, especially when \(n_{T}\) is small. Figure 2 plots the projections of the PSs of F9–F12 on lower dimensional spaces. Details about the changes in PFs and PSs of other test problems can be found in Zhou et al. (2014).

3.2 Performance indicators

IGD Zhang et al. (2008) is a widely used metric in static MOEAs, which can be used to measure both diversity and convergence of non-dominated optimums to true Pareto front. In this paper, we adopt a modified IGD metric to measure the performance of DMOEAs. Let \(P_{f}^{t^{*}}\) be a set of uniformly distributed Pareto optimal points in PF\(^t\) and \(P_{f}^{t}\) be an approximation of PF\(^t\). The original IGD metric is defined as Zhang et al. (2008)

where \(d(v,P_{f}^{t})\) is the minimum Euclidian distance between \(v\) and the point in \(P_{f}^{t}\). \(|P_{f}^{t^*}|\) is the cardinality of \(P_{f}^{t^*}\).

The objective of DMOEAs is to track moving PF (PS) as closely as possible, to find a particular single PF (PS). To consider all PF (PS), the average IGD, which is denoted as MIGD (Zhou et al. 2014) over the time steps in the whole run can be defined as follows:

where \(T\) is a set of discrete time instances in a run and \(|T|\) is the cardinality of \(T\). The lower a MIGD value is, the better the tracking performance.

In our experiments, 2,500 uniformly distributed points in the PFs of F4 and F8 and 500 uniformly distributed points in the PFs of the rest test problems are taken to form \(P_{f}^{t^*}\) for computing IGD.

4 Experimental results

4.1 Compared algorithms and parameter settings

For fair comparative studies, two existing prediction strategies for handling dynamic MOEAs, namely, FPS (Hatzakis and Wallace 2006b) and PPS (Zhou et al. 2014) are chosen to be incorporated into NSGA-II/DE. For simplicity, the three algorithms under comparison are denoted as DSS, FPS and PPS, respectively.

The parameter settings for the test problems and different algorithms are as follows. The severity of changes is set to be \(n_{T}\) = 10. The dimensions of the test problems are \(n=20\). The population size is set to be \(N=100\) for all test problems. For change detection, at every generation, \(5~\%\) randomly selected points from the population are reevaluated to detect environmental changes. The environments change for every \(K\) = 5,500 function evaluations. As a result, the environment changes every 50 generations for DSS and 52.5 generations for FPS and PPS. For simplicity, the change frequency for FPS and PPS is set to be 55 generations. The crossover and mutation probability are set to be 0.9 and 0.1, respectively. Twenty independent simulation runs are performed for each of the test problems. The parameter settings for different algorithms are listed as follows.

-

Parameters in DSS: \(r_1=0.5\) in DSS1 and \(r_2=0.05\) in DSS2

-

Parameters in FPS Hatzakis and Wallace (2006b): The reinitialized population is composed of three parts, which are \(3(m+1)\) predicted points, \(30~\%(N-3(m+1))\) inherited points from the previous population, and \(70~\%(N-3(m+1))\) randomly sampled points from the search space. The \(AR(p)\) model order is \(p=3\) and the length of history mean point series is \(M=23\). The probability in the prediction model is 0.9.

-

Parameters in PPS (Zhou et al. (2014)): In the original PPS, the reinitialized population contains prediction points only. In this paper, since NSGA-II/DE is not as powerful as RM-MEDA (Zhang et al. 2008), in which the PPS was originally embedded (Zhou et al. 2014), we slightly modify the setup, i.e., when \(t<23\), the initialized population will combine 50 % predicted points, 30 % randomly sampled points and 20 % inherited points from the previous population. When \(t\ge 24\), PPS is exactly the same as in the original algorithm Zhou et al. (2014). The \(AR(p)\) model used here is the same as in Zhou et al. (2014).

4.2 Experimental results

Table 2 gives the mean and standard deviation values of the MIGD of each algorithm on each instance. These results are presented with \(t=0\), \(1\le t\le 20\), \(21\le t\le 40\), \(41\le t\le 80\). Figure 3 shows the average IGD values versus the time on F1–F12. Figure 4 plots the Pareto front of final populations obtained by FPS, PPS and DSS at different time steps. From the above results, we can make the following observations.

-

1.

From the Table 2, we see that DSS performs better than FPS and PPS at \(t=0\), except for F1, F3 and F4, while DSS performs comparably with FPS and PPS on F1, F3 and F4. The difference between DSS, FPS and PPS at \(t=0\) may be attributed to the fact that DSS introduces some promising individuals to guide the search by DSS2. This indicates that DSS2 does accelerate the convergence of the proposed algorithm. We will further investigate the influence of DSS2 in Sect. 4.4.

Table 2 Mean and standard deviation of MIGD for FPS, PPS and DSS on F1–F12 over 20 runs -

2.

From Table 2 and Fig. 3, we can see that DSS has better performance than FPS and PPS on all test instances when \(1\le t\le 20\). Both FPS and PPS need a long history to sensibly predict the new points in a changed environment to improve the algorithm. However, when \(1\le t\le 20\), there is little history information available, which degrades the performance of FPS and PPS. On the contrary, DSS needs the moving direction of the centroid in the previous environment and no other history information is needed.

-

3.

When \(t\ge 21\), as shown in Table 2 and Fig. 3, the mean and deviation values of MIGD of DSS are better than that of FPS and PPS on most of test problems except for F1, F2, F4 and F8. Recall that F1–F4 are the easiest problems that have the linear correlation between the decision variables and are subject to smooth environmental changes. Therefore, DSS, FPS and PPS all perform well on these test problems. FPS and PPS perform better when \(t\ge 21\) than when \(1\le t\le 20\) because the quality of history information stored by FPS and PPS improves. F5–F8 can be considered to be of medium difficulty because they have the nonlinear correlation between the decision variables and smooth changes in the environment. DSS outperforms FPS and PPS except on F8. FPS and PPS are slightly worse than DSS on F6 and F7 and outperform DSS on F8. The reason might be that DSS, FPS and PPS can all predict well the new location in the presence of smooth changes in the environment. However, as observed on F1–F4, the statistical results for FPS and PPS on F5–F8 when \(t\ge 21\) are better when \(1\le t\le 20\). It indicates that history information can help improve the prediction accuracy of FPS and PPS. For DSS, it performs similarly well when \(t<21\). The results from PPS on F6 are similar to those presented in Zhou et al. (2014). F9–F12 are the most difficult problems as they have nonlinear correlation between the decision variables and they experience sharp environmental changes. From Table 2 and Fig. 3, we can see that DSS consistently shows better performance than FPS and PPS on F9–F12. For PPS, the reinitialized population completely depends on the prediction. When there is a severe environmental change such as a large shift and rotation in the Pareto front, PPS often gets trapped in a local optimum if the whole reinitialized population based on a poor estimation is far away from the true new location of the PS and if the diversity of the reinitialized population is not large enough. Although the reinitialized population in FPS includes individuals randomly sampled from the whole space to address inaccurate prediction, FPS incurs more computational cost for convergence due to an overly large search space. So when \(t\ge 21\), the history information cannot help improve the performance of PPS and FPS. By contrast, DSS aims to achieve good diversity resulting from the fact that half of the reinitialized population is based on a rough prediction of the new position of the Pareto set and the rest based on the local search along the directions orthogonal to the moving direction of the Pareto set. The convergence is further speed up by adding promising solutions generated along the moving direction of the non-dominated front in two consecutive generations, which is similar to the derivative information in single-objective optimization.

-

4.

From the overall MIGD results of DSS, FPS and PPS, we can see that DSS has robust performance except for \(t=0\), even when the environment changes irregularly. Meanwhile, from Table 2 and Fig. 4, we can see that the statistical results from the complex test problems are worse than those from the simple test problems.

4.3 Influence of severity of changes

To investigate the influence of the severity of changes, \(n_{T}\), on the performance of DSS, we conduct additional tests on F9–F12 by setting \(n_{T}=2,5,10,15\). The other parameters are the same as in Sect. 4.1.

Table 3 shows the mean and standard deviation values of MIGD over 20 runs for DSS with different settings of \(n_{T}\). From the statistical results, we can see that the performance of DSS becomes better as \(n_{T}\) increases. It means that DSS performs better when the severity of change becomes smaller. This is fairly expected, indicating that DSS is able to focus more on convergence when the change is milder.

4.4 Influence of DSS2 and the parameter of \(r_{2}\)

We are also interested in examining the influence of \(r_{2}\) on the search performance of DSS. When \(r_{2}=0\), it means that the proposed algorithm does not apply DSS2 mechanism. Table 4 shows the mean and standard deviation of MIGD metric for DSS with different settings for \(r_{2}=0,0.05,0.1,0.2,0.5\) on F9–F12 over 20 runs. We can observe that the proposed algorithm performs the worst when \(r_{2}=0\), which indicates that DSS2 can help enhance the exploitation of DSS. For other values of \(r_{2}\), the obtained results are similar when \(r_{2}=0.05,0.1,0.2\). DSS performs increasingly reliably with the increase of \(r_{2}\). However, the performance deteriorates again when \(r_{2}=0.5\), indicating that introducing too many individuals by DSS2 may reduce the diversity thus degrade the performance. In this paper, we choose \(r_{2}=0.05\), also taking computational time into consideration.

4.5 The influence of the local search in DSS1

Local search in DSS plays an important role in finding good and diverse individuals especially when prediction is inaccurate. Here we test the influence of the local search along the direction orthogonal to the moving direction of the Pareto front in comparison with a random local search. For a random local search, a new solution is generated as follows:

where \(x=(x_{1},\ldots ,x_{n})\in PS^{t}\). Then we use formula (10) replace formula (6) when a random local search is performed. All other parameters are the same as in Sect. 4.1.

Figure 5 plots the average IGD values over 20 runs over time when a local search along the orthogonal directions of the moving Pareto set and a random local search are carried out for optimization of F9–F12, respectively. It demonstrates that the algorithm with the orthogonal local search performs better than that with a random local search. This clearly shows that the local search along the orthogonal direction contributes to the diversity better than the random local search.

5 Conclusion

For MOEAs tracking a moving Pareto front, it is extremely important to achieve a good balance between maintaining diversity and accelerating convergence. In this paper, we propose a DSS to improve the performance of MOEAs in dynamic environments. DSS includes two mechanisms aiming to encourage exploration and enhance convergence. The proposed DSS is embedded into a variant of NSGA-II with SBX being replaced by a DE operation. DSS has been compared with FPS and PPS, two state-of-the-art prediction-based strategies on twelve test problems having smooth or nonsmooth environmental changes. Our experimental results show that DSS performs better than FPS and PPS on most of the test problems considered in this work.

Although DSS has showed very promising performance in dealing with DMOPs, several ideas remain to be verified. For example, due to the good performance of DSS in the early stage of the search, DSS can be combined with FPS or PPS to improve the performance of FPS or PPS. In addition, DSS only uses the information in the previous environments and it may be of interest to explore additional history information. Embedding DSS in other MOEAs such as MOEA/D (Zhang and Li 2007) is of potential interest. Finally, as indicated in Jin et al. (2013), how DSS can contribute to finding trade-off solutions that are robust over time is another future work.

References

Abello M, Bui L, Michalewicz Z (2011a) An adaptive approach for solving dynamic scheduling with time-varying number of tasks: part I. In: Proceedings of IEEE CEC. IEEE Press, New York, pp 1703–1710

Abello M, Bui LT, Michalewicz Z (2011b) An adaptive approach for solving dynamic scheduling with time-varying number of tasks: part II. In: Proceedings of IEEE CEC. IEEE, New York, pp 1711–1718

Aragon V, Esquivel S, Coello CC (2005) Evolutionary multiobjective optimization in non-stationary environments. J Comput Sci Technol 5(3):133–143

Azevedo C, Araujo A (2011) Generalized immigration schemes for dynamic evolutionary multiobjective optimization. In: Proceedings of IEEE CEC. IEEE, New York, pp 2033–2040

Camara M, Ortega J, de Toro F (2009) A single front genetic algorithm for parallel multiobjective optimization in dynamic environments. Neurocomputing 72(16–18):3570–3579

Camara M, Ortega J, de Toro F (2010) Generalized immigration schemes for dynamic evolutionary multiobjective optimization. In: Proceedings of advances multi-objective nature inspired computation. Springer, Berlin, pp 63–86

Deb K, Pratap A, Agarwal S, Meyarivan T (2002) A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans Evol Comput 6(2):182–197

Deb K, Rao U, Karthik S (2007) Dynamic multiobjective optimization and decision-making using modified NSGA-II: A case study on hydro-thermal power scheduling. In: Evolutionary multi-criterion optimization: 4th international conference, EMO. Springer, Berlin, pp 803–817

Farina M, Deb K, Amato P (2004) Dynamic multiobjective optimization problems: test cases, approximations, and applications. IEEE Trans Evol Comput 8(5):425–442

Goh CK, Tan K (2009) A competitive-cooperative coevolutionary paradigm for dynamic multiobjective optimization. IEEE Trans Evol Comput 13(1):103–127

Greeff M, Engelbrecht A (2008) Solving dynamic multiobjective problems with vector evaluated particle swarm optimisation. In: Proceedings of IEEE CEC. IEEE Press, New York, pp 2922–2929

Hatzakis I, Wallace D (2006a) Dynamic multiobjective optimization with evolutionary algorithms: a forward-looking approach. In: Proceedings of GECCO. ACM Press, New York, pp 1201–1208

Hatzakis I, Wallace D (2006a) Topology of anticipatory populations for evolutionary dynamic multiobjective optimization. In: Proceedings of 11th AIAA/ISSMO multidisciplinary analysis and optimization conference, AIAA, pp 1944–1950

Helbig M, Engelbrecht A (2012) Analyses of guide update approaches for vector evaluated particle swarm optimisation on dynamic multiobjective optimisation problems. In: Proceedings of IEEE CEC. IEEE Press, New York, pp 2621–2628

Iorio A, Li X (2005) Solving rotated multi-objective optimization problems using differential evolution. In: Advances in artificial intelligence. LNAI 3339. Springer, Berlin, pp 861–872

Jin Y, Branke J (2005) Evolutionary optimization in uncertain environments: a survey. IEEE Trans Evol Comput 9(3):303–317

Jin Y, Tang K, Yu X, Sendhoff B, Yao X (2013) A framework for finding robust optimal solutions over time. Memet Comput 5(1):3–18

Jin Y, Sendhoff B (2004) Constructing dynamic test problems using the multi-objective optimization concept. In: Applications of evolutionary computing. LNCS 3005. Springer, Berlin, pp 525–536

Li H, Zhang Q (2009) Multiobjective optimization problems with complicated pareto sets, MOEA/D and NSGA-II. IEEE Trans Evol Comput 13(2):284–302

Liu C, Wang Y (2009) Multiobjective evolutionary algorithm for dynamic nonlinear constrained optimization problems. J Syst Eng Electron 20(1):204–210

Liu C, Wang Y (2006) New evolutionary algorithm for dynamic multiobjective optimization problems. In: Advances in natural computation, LNCS 4221. Springer, Berlin, pp 889–892

Liu R, Zhang W, Jiao L, Liu F, Ma J (2011) A sphere-dominance based preference immune-inspired algorithm for dynamic multiobjective optimization. In: Proceedings of GECCO. ACM Press, New York, pp 423–430

Ma Y, Liu R, Shang R (2011) A hybrid dynamic multiobjective immune optimization algorithm using prediction strategy and improved differential evolution crossover operator. In: Neural information processing. LNCS 7063. Springer, Berlin, pp 435–444

Manriquez A, Pulido G, Torres J (2010) Handling dynamic multiobjective problems with particle swarm optimization. In: Proceedings of the international conference on agents and artificial intelligence, ICAART, pp 337–342

Martins F, Carrano E, Wanner E, Takahashi R, Mateus G (2009) A dynamic multiobjective hybrid approach for designing wireless sensor networks. In: Proceedings of IEEE CEC. IEEE Press, New York, pp 1145–1152

Nguyen T, Yang S, Branke J (2012) Evolutionary dynamic optimization: a survey of the state of the art. Swarm Evol Comput 6:1–24

Tang J, Alam S, Lokan C, Abbass H (2012) A multiobjective evolutionary method for dynamic airspace re-sectorization using sectors clipping and similarities. In: Proceedings of IEEE CEC. IEEE Press, New York, pp 3565–3572

Vinek E, Beran P, Schikuta E (2011) A dynamic multiobjective optimization framework for selecting distributed deployments in a heterogeneous environment. Procedia Comput Sci 4:166–175

Wang Y, Li B (2009) Investigation of memory-based multiobjective optimization evolutionary algorithm in dynamic environment. In: Proceedings of IEEE CEC. IEEE Press, New York, pp 630–637

Wei J, Wang Y (2012) Hyper rectangle search based particle swarm algorithm for dynamic constrained multiobjective optimization problems. In: Proceedings of IEEE CEC. IEEE Press, New York, pp 259–266

Wei J, Zhang M (2011) Simplex model based evolutionary algorithm for dynamic multiobjective optimization. In: Advances in artificial intelligence, LNCS 7106. Springer, Berlin, pp 372–381

Wu PY, Campbel D, Merz T (2011) Multiobjective four-dimensional vehicle motion planning in large dynamic environments. IEEE Trans Syst Man Cybern B Cybern 41(3):621–634

Yang M, Kang L, Guan J (2008) Multialgorithm co-evolution strategy for dynamic multiobjective TSP. In: Proceedings of IEEE CEC. IEEE Press, New York, pp 466–471

Zhang Q, Li H (2007) MOEA/D: a multiobjective evolutionary algorithm based on decomposition. IEEE Trans Evol Comput 11(6):712–731

Zhang Q, Zhou A, Jin Y (2008) RM-MEDA: a regularity model based multiobjective estimation of distribution algorithm. IEEE Trans Evol Comput 12(1):41–63

Zhang Z (2008) Multiobjective optimization immune algorithm in dynamic environments and its application to greenhouse control. Appl Soft Comput 8(2):959–971

Zheng B (2007) A new dynamic multiobjective optimization evolutionary algorithm. In: Third international conference on natural computation (ICNC). IEEE Press, New York, pp 565–570

Zhou A, Jin Y, Zhang Q (2014) A population prediction strategy for evolutionary dynamic multiobjective optimization. IEEE Trans Cybern 44(1):40–53

Zhou A, Jin Y, Zhang Q, Sendhoff B, Tsang E (2007) Prediction based population re-initialization for evolutionary dynamic multiobjective optimization. In: Evolutionary multi-criterion optimization. LNCS 4403. Springer, Berlin, pp 832–846

Acknowledgments

This work was supported in part by the National Natural Science Foundation of China (No. 61105065 and No.11326188), the Fundamental Research Funds for the Central Universities (No. K5051270009), and the Joint Research Fund for Overseas Chinese, Hong Kong and Macao Scholars of the National Natural Science Foundation of China (Grant No. 61428302).

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by V. Loia.

Rights and permissions

About this article

Cite this article

Wu, Y., Jin, Y. & Liu, X. A directed search strategy for evolutionary dynamic multiobjective optimization. Soft Comput 19, 3221–3235 (2015). https://doi.org/10.1007/s00500-014-1477-4

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00500-014-1477-4