Abstract

The geometric problem of estimating an unknown compact convex set from evaluations of its support function arises in a range of scientific and engineering applications. Traditional approaches typically rely on estimators that minimize the error over all possible compact convex sets; in particular, these methods allow for limited incorporation of prior structural information about the underlying set and the resulting estimates become increasingly more complicated to describe as the number of measurements available grows. We address both of these shortcomings by describing a framework for estimating tractably specified convex sets from support function evaluations. Building on the literature in convex optimization, our approach is based on estimators that minimize the error over structured families of convex sets that are specified as linear images of concisely described sets—such as the simplex or the spectraplex—in a higher-dimensional space that is not much larger than the ambient space. Convex sets parametrized in this manner are significant from a computational perspective as one can optimize linear functionals over such sets efficiently; they serve a different purpose in the inferential context of the present paper, namely, that of incorporating regularization in the reconstruction while still offering considerable expressive power. We provide a geometric characterization of the asymptotic behavior of our estimators, and our analysis relies on the property that certain sets which admit semialgebraic descriptions are Vapnik–Chervonenkis classes. Our numerical experiments highlight the utility of our framework over previous approaches in settings in which the measurements available are noisy or small in number as well as those in which the underlying set to be reconstructed is non-polyhedral.

1 Introduction

We consider the problem of estimating a compact convex set given (possibly noisy) evaluations of its support function. Formally, let \(K^{\star } \subset {\mathbb {R}}^d\) be a set that is compact and convex. The support function \(h_{K^{\star }}(u)\) of the set \(K^{\star }\) evaluated in the direction \(u\in S^{d-1}\) is defined as

$$\begin{aligned} h_{K^{\star }}(u) := \sup _{g\in K^{\star }} \langle g, u\rangle . \end{aligned}$$

Here \(S^{d-1} := \{ x\mid \Vert x\Vert _2 = 1 \} \subset {\mathbb {R}}^d\) denotes the \((d-1)\)-dimensional unit sphere. In words, the quantity \(h_{K^{\star }} (u)\) measures the maximum displacement, in the direction \(u\), of a hyperplane normal to \(u\) that intersects \(K^{\star }\). Given noisy support function evaluations \(\{ (u^{(i)}, y^{(i)} )\mid y^{(i)} = h_{K^{\star }} (u^{(i)}) + \varepsilon ^{(i)},\, 1 \le i \le n \}\), where each \(\varepsilon ^{(i)}\) denotes additive noise, our goal is to reconstruct a convex set \({\hat{K}}\) that is close to \(K^{\star }\).
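To make the measurement model concrete, the following Python sketch draws directions uniformly on the sphere and evaluates the support function of a polytope with additive noise. It assumes the standard definition \(h_{K}(u) = \sup _{x\in K} \langle x, u\rangle \); the function names, the choice of a square for \(K^{\star }\), and the Gaussian noise are illustrative assumptions, since the text only requires the noise to be additive.

```python
import numpy as np

def support_polytope(vertices, u):
    """Support function of conv(vertices): h(u) = max_j <v_j, u>.

    vertices: (m, d) array of points; u: (n, d) array of unit directions.
    Returns an (n,) array of support values.
    """
    return np.max(u @ vertices.T, axis=1)

def sample_measurements(vertices, n, sigma, rng):
    """Draw n directions uniformly on S^{d-1} and return noisy evaluations."""
    d = vertices.shape[1]
    g = rng.standard_normal((n, d))
    # Normalized Gaussian vectors are uniformly distributed on the sphere.
    u = g / np.linalg.norm(g, axis=1, keepdims=True)
    # Gaussian noise is an assumption made for this illustration.
    y = support_polytope(vertices, u) + sigma * rng.standard_normal(n)
    return u, y

# Hypothetical example: K* is the square [-1, 1]^2.
square = np.array([[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, -1.0]])
rng = np.random.default_rng(0)
u, y = sample_measurements(square, n=50, sigma=0.1, rng=rng)
```

The pairs `(u[i], y[i])` play the role of \((u^{(i)}, y^{(i)})\) above.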

The problem of estimating a convex set from support function evaluations arises in a wide range of problems such as computed tomography [24], target reconstruction from laser-radar measurements [18], and projection magnetic resonance imaging [13]. For example, in tomography the extent of the absorption of parallel rays projected onto an object provides support information [24, 30], while in robotics applications support information can be obtained from an arm clamping onto an object in different orientations [24]. A natural approach to fit a compact convex set to support function data is the following least-squares estimator (LSE):

$$\begin{aligned} {\hat{K}}^{\mathrm {LSE}}_n \in \mathop {\mathrm {arg\,min}}_{K\subset {\mathbb {R}}^d:\, K \text { is compact, convex}} \; \frac{1}{n} \sum _{i=1}^{n} \bigl ( y^{(i)} - h_{K}(u^{(i)}) \bigr )^2. \end{aligned}$$ (1)

An LSE always exists but is not uniquely defined; however, it is always possible to select a polytope that is an LSE, and this is the choice that is most commonly employed and analyzed in prior work. For example, the algorithm proposed by Prince and Willsky [24] for planar convex sets reconstructs a polygonal LSE described in terms of its facets, while the algorithm proposed by Gardner and Kiderlen [10] for convex sets in any dimension provides a polytopal LSE reconstruction described in terms of extreme points. The least-squares estimator \({\hat{K}}^{\mathrm {LSE}}_n\) is a consistent estimator of \(K^\star \), but it has a number of significant drawbacks. In particular, as the formulation (1) does not incorporate any additional structural information about \(K^\star \) beyond convexity, the estimator \({\hat{K}}^{\mathrm {LSE}}_n\) can provide poor reconstructions when the measurements available are noisy or small in number. The situation is problematic even when the number of measurements available is large, as the complexity of the resulting estimate grows with the number of measurements in the absence of any regularization due to the regression problem (1) being non-parametric (the collection of all compact convex sets in \({\mathbb {R}}^d\) is not finitely parametrized); consequently, the facial structure of the reconstruction provides little information about the geometry of the underlying set. Finally, if the underlying set \(K^\star \) is not polyhedral, a polyhedral choice for the solution \({\hat{K}}^{\mathrm {LSE}}_n\) (as is the case with much of the literature on this topic) can provide poor reconstructions. Indeed, even for intrinsically “simple” convex bodies such as the Euclidean ball, one necessarily requires many vertices or facets to obtain accurate polyhedral approximations. Figure 1 provides an illustration of these points.

1.1 Our Contributions

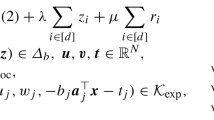

To address the drawbacks underlying the least-squares estimator, we seek a framework that regularizes the complexity of the reconstruction in the formulation (1). A natural approach to developing such a framework is to design an estimator with the same objective as in (1) but in which the decision variable \(K\) is constrained to lie in a subclass \({\mathcal {F}}\) of the collection of all compact, convex sets. For such a method to be useful, the subclass \({\mathcal {F}}\) must balance several considerations. First, \({\mathcal {F}}\) should be sufficiently expressive in order to faithfully model various attributes of convex sets that arise in applications (for example, sets consisting of both smooth and singular features in their boundary). Second, the elements of \({\mathcal {F}}\) should be suitably structured so that incorporating the constraint \(K\in {\mathcal {F}}\) leads to estimates that are more robust to noise; further, the type of structure underlying the sets in \({\mathcal {F}}\) also informs the analysis of the statistical properties of the constrained analog of (1) as well as the development of efficient algorithms for computing the associated estimate. Building on the literature on lift-and-project methods in convex optimization [12, 34], we consider families \({\mathcal {F}}\) in which the elements are specified as images under linear maps of a fixed ‘concisely specified’ compact convex set; the choice of this set governs the expressivity of the family \({\mathcal {F}}\) and we discuss this in greater detail in the sequel. Due to the availability of computationally tractable procedures for optimization over linear images of concisely described convex sets [21], the study of such descriptions constitutes a significant topic in optimization. 
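We employ these ideas in a conceptually different context in the setting of the present paper, namely that of incorporating regularization in the reconstruction, which addresses many of the drawbacks with the LSE outlined previously. Formally, we consider the following regularized convex set regression problem:

$$\begin{aligned} {\hat{K}}_n^{C} \in \mathop {\mathrm {arg\,min}}_{K:\, K = A(C),\, A \in L({\mathbb {R}}^{q},{\mathbb {R}}^d)} \; \frac{1}{n} \sum _{i=1}^{n} \bigl ( y^{(i)} - h_{K}(u^{(i)}) \bigr )^2. \end{aligned}$$ (2)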

Here \(C\subset {\mathbb {R}}^q\) is a user-specified compact convex set and \(L({\mathbb {R}}^{q},{\mathbb {R}}^d)\) denotes the set of linear maps from \({\mathbb {R}}^q\) to \({\mathbb {R}}^d\). The set \(C\) governs the expressive power of the family \(\{A(C)\mid A \in L({\mathbb {R}}^{q},{\mathbb {R}}^d)\}\). In addition to this consideration, our choices for \(C\) are also driven by statistical and computational aspects of the estimator (2). Our analysis of the statistical properties of the estimator (2) relies in part on the observation that sets \({\mathcal {F}}\) that admit certain semialgebraic descriptions form VC classes; this fact serves as the foundation for our characterization based on stochastic equicontinuity of the asymptotic properties of the estimator \({\hat{K}}_n^{C}\) as \(n \rightarrow \infty \). On the computational front, the algorithms we propose for (2) require that the support function associated to \(C\) as well as its derivatives (when they exist) can be computed efficiently. Motivated by these issues, the choices of \(C\) that we primarily discuss in our examples and numerical illustrations are the simplex and the spectraplex:

Example

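The simplex in \({\mathbb {R}}^q\) is the set

$$\begin{aligned} \Delta ^{q} := \{ x\in {\mathbb {R}}^q \mid x\ge 0,\ \langle x, 1\rangle = 1 \}, \end{aligned}$$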

where \(1= (1,\ldots ,1)^{T}\). Convex sets expressed as projections of \(\Delta ^{q}\) are precisely polytopes with at most q extreme points.

Example

Let \({\mathbb {S}}^p \cong {\mathbb {R}}^{\binom{p+1}{2}}\) denote the space of \(p \times p\) real symmetric matrices. The spectraplex \({\mathcal {O}}^{p} \subset {\mathbb {S}}^p\) (also called the free spectrahedron) is the set

$$\begin{aligned} {\mathcal {O}}^{p} := \{ X \in {\mathbb {S}}^p \mid X \succeq 0,\ \langle X, I\rangle = 1 \}, \end{aligned}$$

where \(I \in {\mathbb {S}}^p\) is the identity matrix. The spectraplex is a semidefinite analog of the simplex, and it is especially useful if we seek non-polyhedral reconstructions, as can be seen in Fig. 1 and in Sect. 5; in particular, linear images of the spectraplex exhibit both smooth and singular features in their boundaries.
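For both choices of \(C\), the support function of a linear image has a simple closed form, which the following Python sketch evaluates. For the simplex, \(h_{A(\Delta ^q)}(u)\) is the largest inner product between \(u\) and a column of \(A\); for the spectraplex, it is the largest eigenvalue of \(\sum _k u_k A_k\), where the symmetric matrices \(A_k\) represent the linear map \(X \mapsto (\langle A_k, X\rangle )_k\). The function names and the two example maps are assumptions made for illustration.

```python
import numpy as np

def support_simplex_image(A, u):
    """h_{A(simplex)}(u) = max_j <a_j, u>, where a_j are the columns of A (d x q)."""
    return np.max(A.T @ u)

def support_spectraplex_image(As, u):
    """h_{A(spectraplex)}(u) = lambda_max(sum_k u_k A_k).

    As: (d, p, p) array of symmetric matrices; the map is X -> (<A_k, X>)_k.
    """
    Z = np.tensordot(u, As, axes=1)      # adjoint applied to u: sum_k u_k A_k
    return np.linalg.eigvalsh(Z)[-1]     # eigvalsh returns eigenvalues in ascending order

# A triangle in R^2 as a linear image of the simplex in R^3.
A_tri = np.array([[0.0, 1.0, 0.0],
                  [0.0, 0.0, 1.0]])
# The unit disc in R^2 as a linear image of the 2 x 2 spectraplex.
As_disc = np.array([[[1.0, 0.0], [0.0, -1.0]],
                    [[0.0, 1.0], [1.0, 0.0]]])

u = np.array([np.cos(0.3), np.sin(0.3)])
h_tri = support_simplex_image(A_tri, u)
h_disc = support_spectraplex_image(As_disc, u)
```

The second example illustrates the non-polyhedral case: the image of \({\mathcal {O}}^{2}\) under \(X \mapsto (X_{11} - X_{22},\, 2X_{12})\) is the unit disc, so its support function equals one in every unit direction.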

The specific selection of \(C\) from the families \(\{\Delta ^{q}\}_{q=1}^\infty \) and \(\{{\mathcal {O}}^p\}_{p=1}^\infty \) is governed by the complexity of the reconstruction one seeks, which is typically based on prior information about \(K^\star \). Our analysis in Sect. 3 of the statistical properties of the estimator (2) relies on the availability of such additional knowledge about the complexity of \(K^\star \). In practice in the absence of such information, cross-validation may be employed to obtain a suitable reconstruction; see Sect. 6.

In Sect. 2 we discuss preliminary aspects of our technical setup such as properties of the set of minimizers of the problem (2) as well as a stylized probabilistic model for noisy support function evaluations. These serve as a basis for the subsequent development in the paper. In Sect. 3 we provide the main theoretical guarantees of our approach. In our first result, we show that the sequence of estimates \(\{{\hat{K}}_n^{C}\}_{n=1}^{\infty }\) converges almost surely (as \(n \rightarrow \infty \)) in the Hausdorff metric to that linear image of \(C\) which is closest to the underlying set \(K^{\star }\) (see Theorem 3.1). Under additional conditions, we also characterize certain asymptotic distributional aspects of the sequence \(\{{\hat{K}}_n^{C}\}_{n=1}^{\infty }\) (see Theorem 3.4); this result is based on a functional central limit theorem, which requires the computation of appropriate entropy bounds for Vapnik–Chervonenkis (VC) classes of sets that admit semialgebraic descriptions, and it is here that our choice of \(C\) as either a simplex or a spectraplex plays a prominent role. Our third result describes the facial structure of \(\{{\hat{K}}_n^{C}\}_{n=1}^{\infty }\) in relation to the underlying set \(K^\star \). We prove under appropriate conditions that if \(K^\star \) is a polytope, our approach provides a reconstruction that recovers all the simplicial faces (for sufficiently large n); if \(K^\star \) is a simplicial polytope, we recover a polytope that is combinatorially equivalent to \(K^\star \). This result also applies more generally to ‘rigid’ faces for non-polyhedral \(K^{\star }\) (see Theorem 3.9).

In the sequel, we relate our formulation (2) (when \(C\) is a simplex) to the task of fitting piecewise affine convex functions to data (known as max-affine regression) as well as K-means clustering. Accordingly, the algorithm we propose in Sect. 4 for computing \({\hat{K}}_n^{C}\) bears significant similarities with methods for max-affine regression [20] as well as Lloyd’s algorithm for clustering problems.
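To illustrate the connection with Lloyd's algorithm, the following Python sketch performs a Lloyd-style alternating update for the case in which \(C\) is a simplex: each measurement is assigned to the column of \(A\) attaining the maximum, and each column is then refit by least squares on its assigned measurements. This is a generic heuristic in the spirit of max-affine regression, written under our own assumptions; it is not the algorithm of Sect. 4, and the function names and example data are hypothetical.

```python
import numpy as np

def alternating_step(A, U, y):
    """One Lloyd-style pass for C = simplex.

    A: (d, q) current map; U: (n, d) directions; y: (n,) measurements.
    """
    assign = np.argmax(U @ A, axis=1)            # column attaining the max for each direction
    A_new = A.copy()
    for j in range(A.shape[1]):
        idx = assign == j
        if np.count_nonzero(idx) >= A.shape[0]:  # leave columns with too few points unchanged
            A_new[:, j] = np.linalg.lstsq(U[idx], y[idx], rcond=None)[0]
    return A_new

def empirical_loss(A, U, y):
    """Average squared residual of the fit h_C(A^T u) = max_j <a_j, u>."""
    return np.mean((y - np.max(U @ A, axis=1)) ** 2)

# Hypothetical noiseless data from a triangle with vertices given by the columns of A_true.
rng = np.random.default_rng(1)
A_true = np.array([[1.0, -1.0, 0.0],
                   [0.0,  0.0, 1.5]])
G = rng.standard_normal((300, 2))
U = G / np.linalg.norm(G, axis=1, keepdims=True)
y = np.max(U @ A_true, axis=1)

# Run a few passes from a perturbed starting point.
A = A_true + 0.1 * rng.standard_normal(A_true.shape)
for _ in range(20):
    A = alternating_step(A, U, y)
```

As with Lloyd's algorithm, such a scheme is only a local method, and the quality of the result may depend on the initialization.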

A restriction in the development in this paper is that the simplex and the spectraplex represent particular affine slices of the non-negative orthant and the cone of positive semidefinite matrices. In principle, one can further optimize these slices (both their orientation and their dimension) in (2) to obtain improved reconstructions. However, this additional degree of flexibility in (2) leads to technical complications in establishing asymptotic normality in Sect. 3.2 as well as to challenges in developing algorithms for solving (2) (even to obtain a local optimum). The root of these difficulties lies in the fact that it is hard to characterize the variation in the support function with respect to small changes in the slice. We remark on these challenges in greater detail in Sect. 6, and for the remainder of the paper we proceed with investigating the estimator (2).

1.2 Related Work

1.2.1 Consistency of Convex Set Regression

There is a well-developed body of prior work on the consistency of convex set regression (1). Gardner et al. [11] prove that the (polyhedral) estimates \({\hat{K}}^{\mathrm {LSE}}_n\) converge almost surely to the underlying set \(K^\star \) in the Hausdorff metric as \(n \rightarrow \infty \) provided the directions \(\{u^{(i)}\}_{i=1}^{n}\) cover the sphere in a suitably uniform manner. Guntuboyina [14] analyzes rates of convergence in minimax settings, and also notes that constraining the growth of the number of vertices in the reconstruction as the number of measurements increases provides a form of robustness. Cai et al. [6] study the impact of choosing the directions \(\{u^{(i)}\}_{i=1}^{n}\) adaptively in estimating planar convex sets. The consistency result in the present paper corresponding to the constrained estimator (2) is qualitatively different. On the one hand, for a given compact convex set \(C\subset {\mathbb {R}}^q\), we prove that the sequence of estimates \(\{{\hat{K}}_n^{C}\}_{n=1}^{\infty }\) converges to that linear image of \(C\) which is closest to the underlying set \(K^{\star }\); in particular, \(\{{\hat{K}}_n^{C}\}_{n=1}^{\infty }\) only converges to \(K^\star \) if \(K^\star \) can be represented as a linear image of \(C\). On the other hand, there are several advantages to the framework presented in this paper in comparison with prior work. First, the constrained estimator (2) lends itself to a precise asymptotic distributional characterization which is unavailable in the unconstrained case (1). Second, under appropriate conditions, the constrained estimator (2) recovers the facial structure of the underlying set \(K^\star \), unlike \({\hat{K}}^{\mathrm {LSE}}_n\). 
More significantly, beyond these technical distinctions, the constrained estimator (2) also yields concisely described non-polyhedral reconstructions (as well as associated consistency and asymptotic distributional characterizations) based on linear images of the spectraplex, in contrast to the usual choice of a polyhedral LSE in the previous literature.

1.2.2 Incorporating Prior Information and Fitting Smooth Boundaries

The problem of integrating prior information about the underlying convex set has been considered in [25], where the authors propose a method of incorporating certain structural or shape priors for fitting convex sets in two dimensions, with a particular focus on settings in which the underlying set is a disc or an ellipsoid. However, the reconstructions produced by the method in [25] are still polyhedral, and the method assumes that the support function evaluations are available at angles that are equally spaced. We are also aware of a line of work [8, 15] on fitting convex sets in two dimensions with smooth boundaries to support function measurements. The first of these papers estimates a convex set with a smooth boundary without any vertices, while the second proposes a two-step method in which one initially estimates a set of vertices followed by a second step that connects these vertices via smooth boundaries. In both cases, splines are used to interpolate between the support function evaluations with a subsequent smoothing procedure using the von Mises kernel. The smoothing is done in a local fashion and the resulting reconstruction is increasingly complex to describe as the number of measurements available grows. In contrast, our approach to producing non-polyhedral estimates based on fitting linear images of spectraplices is more global in nature, and we explicitly regularize the complexity of our reconstruction based on the dimension of the spectraplex. Further, the approaches proposed in [8, 15] estimate the singular and the smooth parts of the boundary separately, whereas our framework based on linear images of spectraplices estimates these features in a unified manner (for example, see the illustration in Fig. 12). Finally, the methods described in [8, 15, 25] are only applicable to two-dimensional reconstruction problems, while problems of a three-dimensional nature arise in many contexts (see Sect. 5.4 for an example that involves the reconstruction of a human lung).

1.2.3 Piecewise Affine Convex Regression

The formulation (2) when \(C= \Delta ^q\) may be viewed as fitting a piecewise linear function (with at most q pieces) to the given data. This is a special case of the max-affine regression problem in which one is interested in fitting a piecewise affine function (typically with a bound on the number of pieces) to data, which is a topic that has been studied previously [2, 16, 20]. In particular, our algorithm in Sect. 4 when specialized to the setting \(C= \Delta ^q\) is analogous to the methods described in [20]. However, our framework and the algorithm in Sect. 4 may also be employed to fit more general convex functions that are not piecewise linear, but that can still be specified in a tractable manner via linear images of the spectraplex.

1.3 Outline

In Sect. 2 we discuss the geometric, algebraic, and analytic aspects of the optimization problem (2); this section serves as the foundation for the subsequent statistical analysis in Sect. 3. Throughout both of these sections, we give several examples that provide additional insight into our mathematical development. We describe algorithms for solving (2) in Sect. 4, and we demonstrate the application of these methods in a range of numerical experiments in Sect. 5. We conclude with a discussion of future directions in Sect. 6.

Notation: Given a convex set \(C\subset {\mathbb {R}}^q\), we denote the associated induced norm by \(\Vert A \Vert _{C,2} := \sup _{x\in C} \Vert A x\Vert _2\). We denote the unit \(\Vert \,{\cdot }\,\Vert \)-ball centered at \(x\) by \(B_{\Vert \cdot \Vert }(x) := \{ y\mid \Vert y-x\Vert \le 1 \}\), and we denote the Frobenius norm by \(\Vert \,{\cdot }\,\Vert _\mathrm{F}\). Given a point \(a\in {\mathbb {R}}^q\) and a subset \(U \subseteq {\mathbb {R}}^q\), we define \({{\,\mathrm{dist}\,}}(a,U) := \inf _{b\in U} \Vert a- b\Vert \), where the norm \(\Vert \,{\cdot }\,\Vert \) is the Euclidean norm. Last, given any two subsets \(U,V \subset {\mathbb {R}}^q\), the Hausdorff distance between U and V is denoted by

$$\begin{aligned} d_{\mathrm {H}}(U,V) := \max \Bigl \{ \sup _{a\in U} {{\,\mathrm{dist}\,}}(a,V),\ \sup _{b\in V} {{\,\mathrm{dist}\,}}(b,U) \Bigr \}. \end{aligned}$$

2 Problem Setup and Other Preliminaries

In this section, we begin with a preliminary discussion of the geometric, algebraic, and analytical aspects of our procedure (2); these underpin our subsequent development in this paper. We make the following assumptions about our problem setup for the remainder of the paper:

- (A1) The set \(K^{\star }\subset {\mathbb {R}}^d\) is compact and convex.

- (A2) The set \(C\subset {\mathbb {R}}^q\) is compact and convex.

- (A3) Probabilistic Model for Support Function Measurements: We assume that we are given n independent and identically distributed support function evaluations \(\{(u^{(i)},y^{(i)})\}_{i=1}^n \subset S^{d-1} \times {\mathbb {R}}\) from the following probabilistic model:

  $$\begin{aligned} P_{K^{\star }}:\quad y = h_{K^{\star }} (u) + \varepsilon . \end{aligned}$$ (3)

  Here \(u\in S^{d-1}\) is a vector distributed uniformly at random (u.a.r.) over the unit sphere, \(\varepsilon \) is a centered random variable with variance \(\sigma ^2\) (i.e., \({\mathbb {E}}[\varepsilon ]=0\) and \({\mathbb {E}}[\varepsilon ^2]=\sigma ^2\)), and \(u\) and \(\varepsilon \) are independent.

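In our analysis, we quantify dissimilarity between convex sets in terms of a metric applied to their respective support functions. Let \(K_1,K_2\) be compact convex sets in \({\mathbb {R}}^d\), and let \(h_{K_1}(\,{\cdot }\,), h_{K_2}(\,{\cdot }\,)\) be the corresponding support functions. We define the \(L_p\) metric to be

$$\begin{aligned} \rho _p(K_1,K_2) := \biggl ( \int _{S^{d-1}} | h_{K_1}(u) - h_{K_2}(u) |^p \, \mathrm {d}u\biggr )^{1/p}, \quad 1 \le p < \infty , \end{aligned}$$ (4)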

where the integral is with respect to the Lebesgue measure over \(S^{d-1}\); as usual, we denote \(\rho _{\infty } (K_1, K_2) = \max _{u} | h_{K_1}(u) - h_{K_2}(u)|\). We prove our convergence guarantees in Sect. 3.1 in terms of the \(\rho _p\)-metric. This metric represents an important class of distance measures over convex sets. For instance, it features prominently in the literature on approximating convex sets as polytopes [5]. In addition, the specific case of \(p=\infty \) coincides with the Hausdorff distance [27, p. 66].
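As a small numerical illustration, the following Python sketch approximates \(\rho _p\) for planar sets by a Riemann sum over equally spaced angles, assuming the definition \(\rho _p(K_1,K_2) = \bigl (\int _{S^{1}} |h_{K_1}(u) - h_{K_2}(u)|^p \,\mathrm {d}u\bigr )^{1/p}\) and the maximum for \(p=\infty \). The function name, the grid size, and the example sets are assumptions made for illustration.

```python
import numpy as np

def rho_p_planar(h1, h2, p, m=4096):
    """Riemann-sum approximation of rho_p for planar sets.

    h1, h2: callables mapping an (m, 2) array of unit vectors to (m,) support values.
    p: a real number >= 1, or np.inf for the sup metric.
    """
    theta = np.linspace(0.0, 2.0 * np.pi, m, endpoint=False)
    U = np.column_stack([np.cos(theta), np.sin(theta)])
    diff = np.abs(h1(U) - h2(U))
    if np.isinf(p):
        return np.max(diff)
    # The circle has total length 2*pi, so the integral is 2*pi times the grid average.
    return (2.0 * np.pi * np.mean(diff ** p)) ** (1.0 / p)

def disc(r):
    """Support function of the centered disc of radius r."""
    return lambda U: r * np.linalg.norm(U, axis=1)

def square(U):
    """Support function of the square [-1, 1]^2."""
    return np.sum(np.abs(U), axis=1)

rho2 = rho_p_planar(disc(1.0), disc(2.0), p=2)
rho_inf = rho_p_planar(disc(1.0), square, p=np.inf)
```

In the second example the value agrees with the Hausdorff distance between the unit disc and the square, which is the distance \(\sqrt{2} - 1\) from a corner of the square to the disc.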

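Due to the form of the estimator (2), one may reparametrize the optimization problem in terms of the linear map A. In particular, by noting that \(h_{A(C)}(u) = h_{C}(A^Tu)\), the problem (2) can be reformulated as follows:

$$\begin{aligned} {\hat{A}}_n \in \mathop {\mathrm {arg\,min}}_{A \in L({\mathbb {R}}^{q},{\mathbb {R}}^d)} \; \frac{1}{n} \sum _{i=1}^{n} \bigl ( y^{(i)} - h_{C}(A^{T}u^{(i)}) \bigr )^2. \end{aligned}$$ (5)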

Based on this observation, we analyze some properties of the set of minimizers of (2) via an analysis of (5). (The reformulation (5) is also more conducive to the development of numerical algorithms for solving (2).) In turn, a basic strategy for investigating the asymptotic properties of the estimator (5) is to analyze the minimizers of the loss function at the population level. Concretely, for any probability measure P over pairs \((u,y) \in S^{d-1} \times {\mathbb {R}}\), the loss function with respect to P is defined as

$$\begin{aligned} \Phi _{C}(A,P) := {\mathbb {E}}_{P} \bigl [ ( y - h_{C}(A^{T}u) )^2 \bigr ]. \end{aligned}$$ (6)

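Thus, the focus of our analysis is on studying the set of minimizers of the population loss function \(\Phi _{C}(\,{\cdot }\,,P_{K^{\star }})\):

$$\begin{aligned} M_{K^{\star },C} := \mathop {\mathrm {arg\,min}}_{A \in L({\mathbb {R}}^{q},{\mathbb {R}}^d)} \Phi _{C}(A,P_{K^{\star }}). \end{aligned}$$ (7)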

2.1 Geometric Aspects

In this subsection, we focus on the convex sets defined by the elements of the set of minimizers \(M_{K^{\star },C}\). In the next subsection, we consider the elements of \(M_{K^{\star },C}\) as linear maps. To begin with, we state a simple result on the continuity of \(\Phi _{C}(\,{\cdot }\,,P)\):

Proposition 2.1

Let P be any probability distribution over measurement pairs \((u,y) \in S^{d-1} \times {\mathbb {R}}\) satisfying \({\mathbb {E}}_{P}[|y|]<\infty \). Then the function \(\Phi _{C}(\,{\cdot }\,,P)\) defined in (6) is continuous.

The proof relies on a simple bound, which we state formally because we use it again later.

Lemma 2.2

Given any pair of linear maps \(A_1, A_2 \in L({\mathbb {R}}^q,{\mathbb {R}}^d)\), any unit-norm vector \(u\), and any scalar y, we have

Proof

First note that \(h_{C}(A_1^{T}u) \le h_{C}(A_2^{T}u) + h_{C}((A_1-A_2)^{T}u)\). Since u is unit-norm, we have \(h_{C}(A_1^{T}u) - h_{C}(A_2^{T}u) \le h_{C}((A_1-A_2)^{T}u) \le \Vert A_1 - A_2 \Vert _{C,2}\). Similarly we have \(h_{C}(A_2^{T}u) - h_{C}(A_1^{T}u) \le \Vert A_1 - A_2 \Vert _{C,2}\). Hence (8) follows. \(\square \)
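The Lipschitz-type bound \(|h_{C}(A_1^{T}u) - h_{C}(A_2^{T}u)| \le \Vert A_1 - A_2 \Vert _{C,2}\) established in this proof can be checked numerically. The following Python sketch does so for the simplex, for which \(\Vert A \Vert _{C,2}\) equals the largest Euclidean norm of a column of \(A\) (a convex function attains its maximum over the simplex at a vertex). The function names, dimensions, and random test maps are assumptions made for illustration.

```python
import numpy as np

def h_simplex(A, u):
    """h_C(A^T u) for C the simplex: the largest entry of A^T u."""
    return np.max(A.T @ u)

def induced_norm_simplex(A):
    """||A||_{C,2} for C the simplex: sup of ||Ax||_2 is attained at a vertex e_j."""
    return np.max(np.linalg.norm(A, axis=0))

rng = np.random.default_rng(2)
d, q = 3, 5
worst_slack = np.inf
for _ in range(1000):
    A1 = rng.standard_normal((d, q))
    A2 = rng.standard_normal((d, q))
    u = rng.standard_normal(d)
    u /= np.linalg.norm(u)
    gap = abs(h_simplex(A1, u) - h_simplex(A2, u))
    # Slack of the inequality gap <= ||A1 - A2||_{C,2}; it should never be negative.
    worst_slack = min(worst_slack, induced_norm_simplex(A1 - A2) - gap)
```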

Proof of Proposition 2.1

Let \(\epsilon >0\) be arbitrary. Let \(r = 1+\Vert A \Vert _{C,2}\) and pick \(\delta =\min {\{ \epsilon /(3{\mathbb {E}}[r+|y|]), r\}}\). Then for any \(A_0 \) satisfying \(\Vert A - A_0 \Vert _{C,2} < \delta \), we have

We apply Lemma 2.2 to obtain the bound

Combining this bound with our earlier expression, it follows that \(|\Phi _{C}(A,P) - \Phi _{C}(A_0,P)| < {\mathbb {E}}_{P} [\delta (2r+\delta +2|y|)] \le \epsilon \). \(\square \)

The following result gives a series of properties about the set \(M_{K^{\star },C}\). Crucially, it shows that \(M_{K^{\star },C}\) characterizes the optimal approximations of \(K^{\star }\) as linear images of \(C\):

Proposition 2.3

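Suppose that the assumptions (A1), (A2), (A3) hold. Then the set of minimizers \(M_{K^{\star },C}\) defined in (7) is compact and non-empty. Moreover, we have

$$\begin{aligned} {\hat{A}} \in M_{K^{\star },C} \quad \Longleftrightarrow \quad {\hat{A}} \in \mathop {\mathrm {arg\,min}}_{A \in L({\mathbb {R}}^{q},{\mathbb {R}}^d)} \rho _2 ( A(C), K^{\star } ). \end{aligned}$$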

Proof

Define the event \(G_{r,v}:= \{ (u,y) \mid \langle v,u\rangle \ge 1/2, \,|y| \le r/4 \}\) over \(v\in S^{d-1}\). In addition, define the function \(s(v) := {\mathbb {P}}[ (u,y) \mid \langle v,u\rangle \ge 1/2 ]\). For every \(r \ge 0\), consider the function \(g_{r}(v) :={\mathbb {P}}[G_{r,v}]\). By noting that \( g_{r} \le g_{r^{\prime }}\) whenever \(r \le r^{\prime }\) (i.e., the sequence \(\{g_{r}\}_{r\ge 0}\) is monotone increasing), \(g_{r}(\,{\cdot }\,) \uparrow s(\,{\cdot }\,)\), and that \(g_{r}(\,{\cdot }\,)\) is a continuous function over the compact domain \(S^{d-1}\), we conclude that \(g_{r}(\,{\cdot }\,)\) converges to \(s(\,{\cdot }\,)\) uniformly. Thus there exists \({\hat{r}}\) sufficiently large so that \({\hat{r}}^2{\mathbb {P}}[G_{{\hat{r}},v}]/16>\Phi _{C}(0,P_{K^{\star }})\) for all \(v\in S^{d-1}\).

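Next, we show that \(M_{K^{\star },C}\subseteq {\hat{r}} B_{\Vert \cdot \Vert _{C,2}} (0)\). Let \(A \notin {\hat{r}} B_{\Vert \cdot \Vert _{C,2}} (0)\). Then, for such an A, there exists \({\hat{x}}\in C\) such that \(\Vert A{\hat{x}}\Vert _{2} > {\hat{r}}\). Define \({\hat{v}} = A {\hat{x}}/\Vert A{\hat{x}}\Vert _{2}\). On the event \(G_{{\hat{r}},{\hat{v}}}\) we have \(h_{C}(A^{T}u) \ge \langle A{\hat{x}},u\rangle = \Vert A{\hat{x}}\Vert _{2} \langle {\hat{v}},u\rangle > {\hat{r}}/2\) and \(|y| \le {\hat{r}}/4\). We have

$$\begin{aligned} \Phi _{C}(A,P_{K^{\star }}) \ge {\mathbb {E}}\bigl [ \mathbf{1 }(G_{{\hat{r}},{\hat{v}}}) ( h_{C}(A^{T}u) - y )^2 \bigr ] \ge ({\hat{r}}/2 - {\hat{r}}/4 )^2 \, {\mathbb {P}}[G_{{\hat{r}},{\hat{v}}}] = {\hat{r}}^2 {\mathbb {P}}[G_{{\hat{r}},{\hat{v}}}]/16 > \Phi _{C}(0,P_{K^{\star }}). \end{aligned}$$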

Here, \(\mathbf{1 }(G_{{\hat{r}},{\hat{v}}})\) denotes the indicator function for the event \(G_{{\hat{r}},{\hat{v}}}\). As such, the above inequality implies that \(A \notin M_{K^{\star },C}\). Therefore \(M_{K^{\star },C}\subseteq {\hat{r}} B_{\Vert \cdot \Vert _{C,2}} (0)\), and hence \(M_{K^{\star },C}\) is bounded.

By Proposition 2.1, the function \(A \mapsto \Phi _{C}(A,P_{K^{\star }})\) is continuous. As the minimizers of \(\Phi _{C}(\,{\cdot }\,,P_{K^{\star }})\), if they exist, must be contained in \( {\hat{r}} B_{\Vert \cdot \Vert _{C,2}} (0)\), we can view \(M_{K^{\star },C}\) as the set of minimizers of a continuous function restricted to the compact set \( {\hat{r}} B_{\Vert \cdot \Vert _{C,2}} (0)\), and hence it is non-empty. Moreover, since \(M_{K^{\star },C}\) is the pre-image of a closed set under a continuous function, it is also closed and thus compact.

By Fubini’s theorem, and because \(\varepsilon \) is centered and independent of \(u\), we have \({\mathbb {E}}[ \varepsilon (h_{K^{\star }}(u) - h_{C}(A^{T}u) )] = {\mathbb {E}}_{u} [ {\mathbb {E}}_{\varepsilon } [ \varepsilon (h_{K^{\star }}(u) - h_{C}(A^{T}u) ) ]] = 0\). Hence \(\Phi _{C}(A,P_{K^{\star }}) = {\mathbb {E}}\bigl [ (h_{K^{\star }}(u) + \varepsilon - h_{C}(A^{T}u) )^2\bigr ]= {\mathbb {E}}\bigl [(h_{K^{\star }}(u) - h_{C}(A^{T}u) )^2 \bigr ] + {\mathbb {E}}[ \varepsilon ^2 ]\), from which the last assertion follows. \(\square \)
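The decomposition of the population loss into an approximation term plus the noise variance can be checked by simulation. The following Python sketch does so for a triangle \(K^{\star } = A^{\star }(\Delta ^{3})\) and an arbitrary competing map \(A\), assuming the loss \(\Phi _{C}(A,P) = {\mathbb {E}}_{P}[(y - h_{C}(A^{T}u))^2]\); the specific maps, the Gaussian noise, and the sample size are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(3)
n, sigma = 200_000, 0.5
A_star = np.array([[1.0, -1.0, 0.0],
                   [0.0,  0.0, 1.5]])    # K* = A_star(simplex), a triangle
A = np.array([[0.8, -1.2, 0.1],
              [0.1,  0.0, 1.0]])         # an arbitrary competing map

# Directions uniform on the circle, noise independent of the directions.
G = rng.standard_normal((n, 2))
U = G / np.linalg.norm(G, axis=1, keepdims=True)
eps = sigma * rng.standard_normal(n)

h_star = np.max(U @ A_star, axis=1)      # h_{K*}(u)
h_A = np.max(U @ A, axis=1)              # h_C(A^T u)

# Monte Carlo estimate of the population loss at A.
loss = np.mean((h_star + eps - h_A) ** 2)
# Approximation error plus noise variance; the cross term averages out.
approx_plus_noise = np.mean((h_star - h_A) ** 2) + sigma ** 2
```

The two quantities agree up to Monte Carlo error, which reflects the fact that minimizing the population loss is equivalent to minimizing the \(\rho _2\) distance between \(A(C)\) and \(K^{\star }\).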

It follows from Proposition 2.3 that an optimal approximation of \(K^{\star }\) as a linear image of \(C\) always exists. In Sect. 3.1, we show that the estimators obtained using our method converge to an optimal approximation of \(K^{\star }\) as a linear image of \(C\) if such an approximation is unique. While this is often the case in applications one encounters in practice, the following examples demonstrate that the uniqueness condition need not always hold:

Example

Suppose \(K^{\star }\) is the regular q-gon in \({\mathbb {R}}^2\), and \(C\) is the spectraplex \({\mathcal {O}}^{2}\). Then \(M_{K^{\star },C}\) uniquely specifies an \(\ell _2\)-ball.

Example

Suppose \(K^{\star }\) is the unit \(\ell _2\)-ball in \({\mathbb {R}}^2\), and \(C\) is the simplex \(\Delta ^{q}\). Then the sets specified by the elements of \(M_{K^{\star },C}\) are not unique; they all correspond to a centered regular q-gon, but with an unspecified rotation.

A natural question then is to identify settings in which \(M_{K^{\star },C}\) defines a unique set. Unfortunately, obtaining a complete characterization of this uniqueness property appears to be difficult due to the interplay between the invariances underlying the sets \(K^{\star }\) and \(C\). However, based on Proposition 2.3, we can provide a simple sufficient condition under which \(M_{K^{\star },C}\) defines a unique set:

Corollary 2.4

Assume that the conditions of Proposition 2.3 hold. Suppose further that we have \(K^{\star }= A^{\star } (C)\) for some \(A^{\star }\in L({\mathbb {R}}^q,{\mathbb {R}}^d)\). Then the set of minimizers \(M_{K^{\star },C}\) described in (7) uniquely defines \(K^{\star }\); i.e., \(K^{\star }= A (C)\) for all \(A \in M_{K^{\star },C}\).

Proof

It is clear that \(A^{\star }\in M_{K^{\star },C}\). Note that \(h_{C}(A^{T}u)\) is a continuous function of \(u\) over a compact domain for every \(A\in L({\mathbb {R}}^q,{\mathbb {R}}^d)\). Hence it follows that \({\hat{A}}\in M_{K^{\star },C}\) if and only if \(h_{C}(A^{\star T}u)=h_{C}({\hat{A}}^{T}u)\) everywhere. By applying Proposition 2.3 and using the fact that a pair of compact convex sets that have the same support function must be equal, it follows that \(K^{\star }={\hat{A}}(C)\) for all \({\hat{A}} \in M_{K^{\star },C}\). \(\square \)

2.2 Algebraic Aspects of Our Method

While the preceding subsection focused on conditions under which the set of minimizers \(M_{K^{\star },C}\) specifies a unique convex set, the aim of the present section is to obtain a more refined picture of the collection of linear maps in \(M_{K^{\star },C}\). We begin by discussing the identifiability issues that arise in reconstructing a convex set by estimating a linear map via (5). Given a compact convex set \(C\), let g be a linear transformation that preserves \(C\); i.e., \(g(C)= C\). Then the linear map defined by Ag specifies the same convex set as A because \(Ag (C) = A (g(C)) = A (C)\). As such, every linear map \(A\in L({\mathbb {R}}^q,{\mathbb {R}}^d)\) is a member of the equivalence class defined by

$$\begin{aligned} A \sim Ag, \quad g \in {{\,\mathrm{Aut}\,}}C. \end{aligned}$$ (9)

Here \({{\,\mathrm{Aut}\,}}C\) denotes the subset of linear transformations that preserve \(C\). When \(C\) is non-degenerate, the elements of \({{\,\mathrm{Aut}\,}}C\) are invertible matrices and form a subgroup of \(\mathrm {GL}(q,{\mathbb {R}})\). As a result, the equivalence class \(A \cdot {{\,\mathrm{Aut}\,}}C:= \{ Ag \mid g \in {{\,\mathrm{Aut}\,}}C\}\) specified by (9) can be viewed as the orbit of \(A \in L({\mathbb {R}}^q,{\mathbb {R}}^d)\) under (right) action of the group \({{\,\mathrm{Aut}\,}}C\). In the sequel, we focus our attention on convex sets \(C\) for which the associated automorphism group \({{\,\mathrm{Aut}\,}}C\) consists of isometries:

- (A4) The automorphism group of \(C\) is a subgroup of the orthogonal group, i.e., \({{\,\mathrm{Aut}\,}}C\le O (q,{\mathbb {R}})\).

This assumption leads to structural consequences that are useful in our analysis. In particular, as \({{\,\mathrm{Aut}\,}}C\) can be viewed as a compact matrix Lie group, the orbit \(A\cdot {{\,\mathrm{Aut}\,}}C\) inherits structure as a smooth manifold of the ambient space \(L({\mathbb {R}}^q,{\mathbb {R}}^d)\). The assumption (A4) is satisfied for the choices of \(C\) that are primarily considered in this paper—the automorphism group of the simplex is the set of permutation matrices, and the automorphism group of the spectraplex is the set of linear operators specified as conjugation by an orthogonal matrix.

Based on this discussion, it follows that the space of linear maps \(L({\mathbb {R}}^q,{\mathbb {R}}^d)\) can be partitioned into orbits \(A \cdot {{\,\mathrm{Aut}\,}}C\). Further, the population loss \(\Phi _{C}(\,{\cdot }\,,P_{K^{\star }})\) is also invariant over orbits of A: for every \(g \in {{\,\mathrm{Aut}\,}}C\) we have that \(h_{C}(A^{T}u) = h_{C}((Ag)^{T}u)\). Thus, the set of minimizers \(M_{K^{\star },C}\) can also be partitioned into a union of orbits. Consequently, in our analysis in Sect. 3 we view the problem (5) as one of recovering an orbit rather than a particular linear map. The convergence results we obtain in Sect. 3 depend on the number of orbits in \(M_{K^{\star },C}\), with sharper asymptotic distributional characterizations available when \(M_{K^{\star },C}\) consists of a single orbit.
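The invariance of the support function over an orbit is easy to verify numerically for both choices of \(C\). In the following Python sketch, the automorphism of the simplex is a permutation matrix acting on the columns of \(A\), and the automorphism of the spectraplex is conjugation \(X \mapsto QXQ^{T}\) by an orthogonal matrix \(Q\), under which the matrices \(A_k\) representing the map become \(Q^{T}A_kQ\). The dimensions and the randomly generated maps are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(4)
u = rng.standard_normal(3)
u /= np.linalg.norm(u)

# Simplex: g is a permutation matrix, so Ag permutes the columns of A.
A = rng.standard_normal((3, 5))
P = np.eye(5)[rng.permutation(5)]
h_simplex = np.max(A.T @ u)
h_simplex_g = np.max((A @ P).T @ u)

# Spectraplex: g is X -> Q X Q^T with Q orthogonal, so Ag is given by Q^T A_k Q.
B = rng.standard_normal((3, 4, 4))
As = (B + B.transpose(0, 2, 1)) / 2               # symmetrize each A_k
Q, _ = np.linalg.qr(rng.standard_normal((4, 4)))  # a random orthogonal matrix
As_g = np.array([Q.T @ Ak @ Q for Ak in As])

def h_spectraplex(mats):
    """Largest eigenvalue of sum_k u_k A_k, i.e. the support value in direction u."""
    return np.linalg.eigvalsh(np.tensordot(u, mats, axes=1))[-1]

h_spec, h_spec_g = h_spectraplex(As), h_spectraplex(As_g)
```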

When \(M_{K^{\star },C}\) specifies multiple convex sets, then \(M_{K^{\star },C}\) clearly consists of multiple orbits; as an illustration, in the example in the previous subsection in which \(K^{\star }\) is the unit \(\ell _2\)-ball in \({\mathbb {R}}^2\) and \(C= \Delta ^{q}\), the corresponding set \(M_{K^{\star },C}\) is a union of multiple orbits in which each orbit specifies a unique rotation of the centered regular q-gon. However, even when \(M_{K^{\star },C}\) specifies a unique convex set, it may still be the case that it consists of more than one orbit:

Example

Suppose \(K^{\star }\) is the interval \([-1,1] \subset {\mathbb {R}}\) and \(C= \Delta ^{3}\). Then \(M_{K^{\star },C}\) is a union of orbits, with an orbit specified as the set of all permutations of the vector \((-1,1,\epsilon )\) for each \(\epsilon \in [-1, 1]\). Nonetheless, \(M_{K^{\star },C}\) specifies a unique convex set, namely, \(K^{\star }\).
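This example can be enumerated directly. The sketch below treats each linear map from \({\mathbb {R}}^3\) to \({\mathbb {R}}\) as a vector \(a\), so that the image of the simplex is the interval \([\min a, \max a]\); the sampled values of \(\epsilon \) and the helper name `image_of_simplex` are illustrative choices.

```python
import numpy as np
from itertools import permutations

def image_of_simplex(a):
    """Image of the simplex under x -> <a, x>: the interval [min(a), max(a)]."""
    return (float(np.min(a)), float(np.max(a)))

all_images, orbit_sizes = set(), []
for eps in (-1.0, -0.3, 0.0, 0.7, 1.0):
    # The orbit of (-1, 1, eps) under Aut(simplex): all distinct permutations.
    orbit = {tuple(p) for p in permutations((-1.0, 1.0, eps))}
    orbit_sizes.append(len(orbit))
    all_images |= {image_of_simplex(np.array(a)) for a in orbit}

print(orbit_sizes, all_images)
```

Every sampled orbit yields the same image \([-1,1]\), while distinct values of \(\epsilon \) give distinct orbits; the orbit is smaller at \(\epsilon = \pm 1\) because two entries coincide.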

More generally, it is straightforward to check that \(M_{K^{\star },C}\) consists of a single orbit if \(K^{\star }\) is a polytope with q extreme points and \(C= \Delta ^{q}\). The situation for linear images of non-polyhedral sets such as the spectraplices is much more delicate. One simple instance in which \(M_{K^{\star },C}\) consists of a single orbit is when \(K^{\star }\) is the image under a bijective linear map A of \({\mathcal {O}}^q\). Our next result states an extension to convex sets that are representable as linear images of an appropriate slice of the outer product of cones of positive semidefinite matrices.

Proposition 2.5

Let \(C=\bigl \{ X_1 \times \cdots \times X_k\mid X_i \in {\mathbb {S}}^{q_i}, \,X_i \succeq 0,\, \sum _{i=1}^{k} {{\,\mathrm{trace}\,}}X_i=1\bigr \}\), and let \(K^{\star }= A^{\star }(C) \subset {\mathbb {R}}^d\). Suppose that there is a collection of disjoint exposed faces \(F_i \subseteq K^{\star }\) such that: (i) \((A^{\star })^{-1}(F_i) \cap C\) is the i-th block \(\{ 0 \times \cdots \times 0 \times X_i \times 0 \times \cdots \times 0 \mid X_i \in {\mathbb {S}}^{q_i},\, X_i \succeq 0,\, {{\,\mathrm{trace}\,}}X_i=1\} \subset C\), and (ii) \(\dim F_i=\dim {\mathcal {O}}^{q_i}\). Then \(M_{K^{\star },C}\) consists of a single orbit.

Example

By expressing \(C= \Delta ^{q} = \bigl \{ X_1 \times \cdots \times X_q \mid X_i \in {\mathbb {S}}^{1},\, X_i \succeq 0, \sum _{i=1}^{q} {{\,\mathrm{trace}\,}}X_i=1\bigr \}\) and by considering \(K^{\star }\) to be a polytope with q extreme points, Proposition 2.5 simplifies to our earlier remark noting that \(M_{K^{\star },C}\) consists of a single orbit.

Example

The nuclear norm ball \(B_{\text {nuc}}:=\{X \in {\mathbb {S}}^2\mid \Vert X\Vert _{\text {nuc}}\le 1 \} \) is expressible as the linear image of \({\mathcal {O}}^{2} \times {\mathcal {O}}^{2}\). The extreme points of \(B_{\text {nuc}}\) comprise two connected components of unit-norm rank-one matrices specified by \(\{ U^{T} E_{11} U \mid U \in {{\,\mathrm{SO}\,}}(2,{\mathbb {R}}) \} \) and \(\{ - U^{T} E_{11} U \mid U \in {{\,\mathrm{SO}\,}}(2,{\mathbb {R}})\}\), where \(E_{11}\) is the \(2\times 2\) matrix with (1, 1)-entry equal to one and other entries equal to zero. Furthermore, each connected component is isomorphic to \({\mathcal {O}}^{2}\). It is straightforward to verify that the conditions of Proposition 2.5 hold for this instance, and thus \(M_{B_{\text {nuc}},{\mathcal {O}}^{2} \times {\mathcal {O}}^{2} }\) consists of a single orbit.
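The representation in this example can be sanity-checked through support functions. The source does not spell out the linear map, so the sketch below assumes the map \((X_1,X_2) \mapsto X_1 - X_2\) and reads \({\mathcal {O}}^{2} \times {\mathcal {O}}^{2}\) as the trace-normalized slice of Proposition 2.5. Under these assumptions the adjoint sends \(U\) to \((U,-U)\), the support function of the slice at \((Z_1,Z_2)\) is the larger of the two top eigenvalues, and the support function of \(B_{\text {nuc}}\) at a symmetric \(U\) is its spectral norm.

```python
import numpy as np

def lam_max(Z):
    """Largest eigenvalue of a symmetric matrix."""
    return float(np.linalg.eigvalsh(Z)[-1])

def h_slice(Z1, Z2):
    """Support function of {(X1, X2) PSD, trace X1 + trace X2 = 1} at (Z1, Z2)."""
    return max(lam_max(Z1), lam_max(Z2))

rng = np.random.default_rng(1)
gaps = []
for _ in range(200):
    M = rng.standard_normal((2, 2))
    U = (M + M.T) / 2                           # a random symmetric direction
    h_image = h_slice(U, -U)                    # h_C(A^T U) under the assumed map
    h_nuc_ball = float(np.linalg.norm(U, 2))    # spectral norm, dual to the nuclear norm
    gaps.append(abs(h_image - h_nuc_ball))
max_gap = max(gaps)
print(max_gap)
```

Agreement of the two support functions on sampled directions is consistent with the two sets coinciding, although a finite sample is of course not a proof.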

The proof of Proposition 2.5 requires an impossibility result showing that a spectraplex cannot be expressed as the linear image of the outer product of finitely many smaller-sized spectraplices. The result follows as a consequence of a related result stated in terms of the cone of positive semidefinite matrices [1, 26]. In the following, \({\mathbb {S}}^{q}_{+}\) denotes the cone of \(q\times q\) dimensional positive semidefinite matrices.

Proposition 2.6

Suppose that \({\mathbb {S}}^{q}_{+} = A( {\mathbb {S}}^{q_1}_{+} \times \cdots \times {\mathbb {S}}^{q_k}_{+} \cap L)\) for some linear map A and some affine subspace L. Then \(q \le \max q_i\).

Proposition 2.7

Let \(C= \bigl \{ X_1 \times \cdots \times X_k \mid X_i \in {\mathbb {S}}^{q_i}_{+},\, \sum _{i=1}^k{{\,\mathrm{trace}\,}}X_i=1\bigr \}\). Suppose \({\mathcal {O}}^{q} = A( C)\) for some A. Then \(q \le \max q_i\).

Proof

Express \({\mathbb {S}}^{q}_{+}\) as

where \(\Pi \) projects out the coordinate t. The result follows from Proposition 2.6. \(\square \)

Lemma 2.8

Let \(K= A(C) \subset {\mathbb {R}}^d\) where \(C\subset {\mathbb {R}}^q\) is compact convex, and suppose that \(\dim K=\dim C\). If \(K= {\tilde{A}}(C)\) for some \({\tilde{A}} \in L({\mathbb {R}}^q,{\mathbb {R}}^d)\), then \({\tilde{A}} = A g\) for some \(g\in {{\,\mathrm{Aut}\,}}C\).

Proof

Suppose that \(0\notin {{\,\mathrm{aff}\,}}K\). By applying a suitable rotation, we may assume that \(K\) is contained in the first \(\dim K\) dimensions. Then the maps A and \({\tilde{A}}\) are of the form

Since \(\dim K=\dim C\), the map \(A_1\) is invertible. Subsequently \(A_1^{-1} {\tilde{A}}_1 \in {{\,\mathrm{Aut}\,}}C\), and thus \({\tilde{A}}_1 = A_1 g\) for some \(g \in {{\,\mathrm{Aut}\,}}C\).

The proof is similar for the case where \(0\in {{\,\mathrm{aff}\,}}K\). The only necessary modification is that we embed \(K\) into \({\mathbb {R}}^{d+1}\) via the set \({\tilde{K}}:=\{ (x,1) \mid x\in K\}\), and we repeat the same sequence of steps with \({\tilde{K}}\) in place of \(K\). We omit the necessary details as they follow in a straightforward fashion from the previous case. \(\square \)

Proof of Proposition 2.5

Let \({\tilde{A}} \in M_{K^{\star },C}\). We show that \({\tilde{A}}\) defines a one-to-one correspondence between the collection of faces \(\{F_i\}_{i=1}^{k}\) and the collection of blocks \(\{\{ 0 \times \cdots \times X_j \times \cdots \times 0\mid X_j \in {\mathcal {O}}^{q_j} \} \}_{j=1}^{k}\) subject to the condition \(\dim F_i=\dim {\mathcal {O}}^{q_j}\). We prove such a correspondence via an inductive argument beginning with the faces of largest dimensions.

We assume (without loss of generality) that \(\dim F_1=\ldots =\dim F_{k^{\prime }}>\ldots \) and that \(\dim {\mathcal {O}}^{q_1}=\ldots =\dim {\mathcal {O}}^{q_{k^{\prime }}}> \ldots \) We further denote \(q = q_1 = \ldots =q_{k^{\prime }}\). As \(F_i\) is an exposed face, the pre-image \({\tilde{A}}^{-1}(F_i) \cap C\) must be an exposed face of \(C\), and thus is of the form \(U_{i,1} X_{i,1} U_{i,1}^{\prime } \times \cdots \times U_{i,k} X_{i,k} U_{i,k}^{\prime }\), where \(X_{i,j} \in {\mathcal {O}}^{q_{i,j}}\) for some \(q_{i,j} \le q_j\), and where \(U_{i,j} \in {\mathbb {R}}^{q_{i,j} \times q_j}\) are partial orthogonal matrices. By Proposition 2.7, we have \(\max _j q_{i,j} \ge q_i = q\). Subsequently, by noting that there are \(k^{\prime }\) blocks with dimensions \(q \times q\), that there are also \(k^{\prime }\) faces \(F_i\) with \(\dim F_i = \dim {\mathcal {O}}^{q}\), and that the faces \(F_i\) are disjoint, we conclude that each block in the collection \(\{\{ 0 \times \cdots \times X_j \times \cdots \times 0 \mid X_j \in {\mathcal {O}}^{q_j} \} \}_{j=1}^{k}\) lies in the pre-image of a unique face \(F_i\), \(1\le i \le k^{\prime }\). By repeating the same sequence of arguments for the remaining faces of smaller dimensions, we establish a one-to-one correspondence between faces and blocks. Finally, we apply Lemma 2.8 to each face-block pair to conclude that, after accounting for permutations among blocks of the same size, the maps \(A_{i}^{\star }\) and \({\tilde{A}}_{i}\) are equivalent up to conjugation by an orthogonal matrix. The final assertion that \(M_{K^{\star },C}\) consists of a single orbit is straightforward to establish. \(\square \)

2.3 Analytical Aspects of Our Method

In this third subsection, we describe some of the derivative computations that recur throughout our analysis, examples, and numerical experiments. Given a compact convex set \(C\), the support function \(h_{C}(\,{\cdot }\,)\) is differentiable at \(u\) if and only if

\({{\,\mathrm{arg\,max}\,}}_{g \in C} \langle g, u\rangle \qquad (10)\)

is a singleton; the derivative in these cases is given by the unique element of (10) (see [27, p. 47]). We denote the derivative of \(h_{C}\) at a point \(u\) of differentiability by \(e_{C}(u) := \nabla _{u} (h_{C}(u))\).

Example

Suppose \(C= \Delta ^{q} \subset {\mathbb {R}}^q\) is the simplex. The function \(h_{C}(\,{\cdot }\,)\) returns the maximum entry of the input vector. It is differentiable at a given vector if and only if the maximum is attained by a unique entry, in which case the derivative \(e_{C}(\,{\cdot }\,)\) equals the corresponding standard basis vector.

Example

Suppose \(C= {\mathcal {O}}^{p} \subset {\mathbb {S}}^p\) is the spectraplex. The function \(h_{C}(\,{\cdot }\,)\) returns the largest eigenvalue of the input matrix. It is differentiable at a given matrix if and only if the largest eigenvalue has multiplicity one, in which case the derivative \(e_{C}(\,{\cdot }\,)\) equals the projector onto the corresponding one-dimensional eigenspace.
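The two derivative formulas in these examples can be compared against finite differences. The sketch below is illustrative only: the helper names, dimensions, and step size are assumptions, and the check presumes that the random inputs have a unique maximum entry and a simple top eigenvalue, which holds with probability one.

```python
import numpy as np

def e_simplex(v):
    """Gradient of max(v) when the maximum is unique: a standard basis vector."""
    e = np.zeros_like(v)
    e[np.argmax(v)] = 1.0
    return e

def e_spectraplex(Z):
    """Gradient of lambda_max(Z) when it is simple: projector onto the top eigenvector."""
    _, vecs = np.linalg.eigh(Z)      # eigenvalues are returned in ascending order
    w = vecs[:, -1]
    return np.outer(w, w)

def directional_fd(h, x, direction, t=1e-6):
    """Central finite-difference estimate of the directional derivative of h at x."""
    return (h(x + t * direction) - h(x - t * direction)) / (2 * t)

rng = np.random.default_rng(2)

# Simplex case: compare <e_C(v), dv> with the finite difference of max(.).
v, dv = rng.standard_normal(6), rng.standard_normal(6)
err_simplex = abs(directional_fd(np.max, v, dv) - e_simplex(v) @ dv)

# Spectraplex case: compare <e_C(Z), dZ> with the finite difference of lambda_max(.).
M, N = rng.standard_normal((4, 4)), rng.standard_normal((4, 4))
Z, dZ = (M + M.T) / 2, (N + N.T) / 2
lam_max = lambda S: np.linalg.eigvalsh(S)[-1]
err_spectraplex = abs(directional_fd(lam_max, Z, dZ) - np.sum(e_spectraplex(Z) * dZ))

print(err_simplex, err_spectraplex)
```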

The following result gives a formula for the derivative of \(\Phi _{C}(\,{\cdot }\,,P_{K^{\star }})\).

Proposition 2.9

Let P be a probability distribution over the measurement pairs \((u,y)\), and suppose that \({\mathbb {E}}_{P}[y^2] < \infty \). Let \(A \in L({\mathbb {R}}^q,{\mathbb {R}}^d)\) be a linear map such that \(h_{C}(\,{\cdot }\,)\) is differentiable at \(A^{T}u\) for P-a.e. \(u\). Then the function \(\Phi _{C}(\,{\cdot }\,,P)\) is differentiable with derivative

Proof

Let \(\lambda _{C}(\,{\cdot }\,,A,D)\) denote the remainder term satisfying

Since the function \(h_{C}(\,{\cdot }\,)\) is differentiable at \(A^{T}u\) for P-a.e. \(u\), we have \(\lambda _{C}(\,{\cdot }\,,A,D) \rightarrow 0\) as \(\Vert D\Vert _{C,2} \rightarrow 0\), P-a.e. Suppose D is in a bounded set. First, we can bound \(|h_{C}((A+D)^{T}u) + h_{C}(A^{T}u) -2y | \le c_1 (1 + |y|)\) for some constant \(c_1\). Second, by Lemma 2.2, we have the inequality \(| h_{C}((A+D)^{T}u) - h_{C}(A^{T}u)| \le \Vert D\Vert _{C,2}\). Third, by noting that \(|h_{C}(A^{T}u) - y|\) can be bounded by \(c_2 (1 + |y|)\) for some constant \(c_2\), and by noting that the entries of the linear map \( u\otimes e_{C}(A^{T}u)\) are uniformly bounded, we may bound \(\Vert (h_{C}(A^{T}u) - y) u\otimes e_{C}(A^{T}u)\Vert _{C,2}\) by a function of the form \(c_3 (1 + |y|)\) for some constant \(c_3\). Subsequently we may bound

for some constant c. Since \({\mathbb {E}}_{P} [y^2]<\infty \), we have \(\lambda _{C}(\,{\cdot }\,,A,D) \in {\mathcal {L}}^2(P)\), and hence \(\lambda _{C}(\,{\cdot }\,,A,D) \in {\mathcal {L}}^1(P)\). The result follows from an application of the Dominated Convergence Theorem. \(\square \)
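As a numerical companion to Proposition 2.9, the sketch below checks a candidate derivative on an empirical measure with \(C\) the simplex. It assumes that the derivative takes the form \(2\,{\mathbb {E}}_{P}[(h_{C}(A^{T}u) - y)\, u\otimes e_{C}(A^{T}u)]\), which is the expression quoted in the proof of Proposition 2.10; the data, dimensions, and function names are hypothetical.

```python
import numpy as np

def loss(A, U, y):
    """Empirical squared loss with C the simplex: mean of (max(A^T u_i) - y_i)^2."""
    return float(np.mean((np.max(U @ A, axis=1) - y) ** 2))

def grad(A, U, y):
    """Candidate derivative: 2 * mean of (h - y) * (u outer e_C(A^T u)), a d x q matrix."""
    S = U @ A                                  # row i holds A^T u_i
    idx = np.argmax(S, axis=1)                 # active column for each measurement
    resid = S[np.arange(len(y)), idx] - y
    G = np.zeros_like(A)
    for i, j in enumerate(idx):
        G[:, j] += 2.0 * resid[i] * U[i]       # only the active column receives u_i
    return G / len(y)

rng = np.random.default_rng(3)
d, q, n = 3, 4, 50
A = rng.standard_normal((d, q))
U = rng.standard_normal((n, d))
U /= np.linalg.norm(U, axis=1, keepdims=True)  # directions on the unit sphere
y = rng.standard_normal(n)                     # synthetic responses
D = rng.standard_normal((d, q))                # perturbation direction

t = 1e-7
fd = (loss(A + t * D, U, y) - loss(A - t * D, U, y)) / (2 * t)
grad_err = abs(fd - np.sum(grad(A, U, y) * D))
print(grad_err)
```

The check relies on the maximum in each \(A^{T}u_i\) being unique, which mirrors the a.e. differentiability hypothesis of the proposition.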

It turns out to be considerably more difficult to compute an explicit expression of the second derivative of \(\Phi _{C}(\,{\cdot }\,,P_{K^{\star }})\). For this reason, our next result applies in a much more restrictive setting in comparison to Proposition 2.9.

Proposition 2.10

Suppose that the underlying set \(K^{\star }= A^{\star }(C)\) for some \(A^{\star } \in L({\mathbb {R}}^q,{\mathbb {R}}^d)\). In addition, suppose that the function \(h_{C}(\,{\cdot }\,)\) is continuously differentiable at \(A^{\star T}u\) for \(P_{K^{\star }}\)-a.e. \(u\). Then the map \(A \mapsto \Phi _{C}(A,P_{K^{\star }})\) is twice differentiable at \(A^{\star }\) and its second derivative is the operator \(\Gamma \in L(L({\mathbb {R}}^q,{\mathbb {R}}^d),L^*({\mathbb {R}}^q,{\mathbb {R}}^d))\) defined by

Proof

To simplify notation, we denote the operator norm \(\Vert \,{\cdot }\,\Vert _{C,2}\) by \(\Vert \,{\cdot }\,\Vert \) in the remainder of the proof. By Proposition 2.9, the map \(A\mapsto \Phi (A,P)\) is differentiable in an open neighborhood around \(A^{\star }\) with derivative \(2{\mathbb {E}}[(h_{C}(A^{T}u) - y) u\otimes e_{C}(A^{T}u)]\). Hence to show that the map is twice differentiable with second derivative \(\Gamma \), it suffices to show that

First we note that every component of \(\varepsilon (u) u\otimes e_{C}((A^{\star }+D)^{T}u)\) is integrable because \({\mathbb {E}}[\varepsilon (u)^2] < \infty \), and \(u\otimes e_{C}((A^{\star }+D)^{T}u)\) is uniformly bounded. Hence by Fubini’s Theorem we have

Similarly,

Second, by differentiability of the map \(A\mapsto \Phi (A,P)\) at \(A^{\star }\) the limit

is 0. By noting that every component of \(u\otimes e_{C}((A^{\star }+D)^{T}u)\) is uniformly bounded, and an application of the Dominated Convergence Theorem, we have

Third, since \(h_{C}(\,{\cdot }\,)\) is continuously differentiable at \(A^{\star T}u\) for P-a.e. \(u\), we have \(e_{C}((A^{\star }+D)^{T}u) \rightarrow e_{C}(A^{\star T}u)\) as \(\Vert D\Vert \rightarrow 0\), for P-a.e. \(u\). By the Dominated Convergence Theorem we have \({\mathbb {E}}[e_{C}((A^{\star }+D)^{T}u)] \rightarrow {\mathbb {E}}[ e_{C}(A^{\star T}u)] \) as \(\Vert D\Vert \rightarrow 0\). It follows that

The result follows by summing the contributions from (14) and (15), as well as noting that the expressions in (12) and (13) vanish. \(\square \)

3 Main Results

In this section, we investigate the statistical aspects of minimizers of the optimization problem (5). Our objective in this section is to relate a sequence of minimizers \(\{{\hat{A}}_n\}_{n=1}^\infty \) of \(\Phi _{C}(\,{\cdot }\,,P_{n,K^{\star }})\) to minimizers of \(\Phi _{C}(\,{\cdot }\,,P_{K^{\star }})\). Based on this analysis, we draw conclusions about properties of sequences of minimizers \(\{{\hat{K}}_{n}^{C}\}_{n=1}^{\infty }\) of the problem (2). In establishing various convergence results, we rely on an important property of the Hausdorff distance, namely that it defines a metric over collections of non-empty compact sets; therefore, the collection of all orbits \(\{A \cdot {{\,\mathrm{Aut}\,}}C\mid A \in L({\mathbb {R}}^q,{\mathbb {R}}^d)\}\) endowed with the Hausdorff distance defines a metric space.

Our results provide progressively sharper recovery guarantees based on increasingly stronger assumptions. Specifically, Sect. 3.1 focuses on conditions under which a sequence of minimizers \(\{{\hat{K}}_{n}^{C}\}_{n=1}^{\infty }\) of (2) converges to \(K^{\star }\); this result relies only on the fact that the optimal approximation of \(K^{\star }\) by a convex set specified by an element of \(M_{K^{\star },C}\) is unique (see Sect. 2.1 for the relevant discussion). Next, Sect. 3.2 gives a limiting distributional characterization of the sequence \(\{{\hat{K}}_{n}^{C}\}_{n=1}^{\infty }\) based on an asymptotic normality analysis of the sequence \(\{{\hat{A}}_n\}_{n=1}^\infty \); among other assumptions, this analysis relies on the stronger requirement that \(M_{K^{\star },C}\) consists of a single orbit. Finally, based on additional conditions on the facial structure of \(K^{\star }\), we describe in Sect. 3.3 how the sequence \(\{{\hat{K}}_{n}^{C}\}_{n=1}^{\infty }\) preserves various attributes of the face structure of \(K^{\star }\).

3.1 Strong Consistency

We describe conditions for convergence of a sequence of minimizers \(\{{\hat{K}}_{n}^{C}\}_{n=1}^{\infty }\) of (2). Our main result essentially states that such a sequence converges to an optimal \(\rho _2\) approximation of \(K^{\star }\) as a linear image of \(C\), provided that such an approximation is unique:

Theorem 3.1

Suppose that the assumptions (A1)–(A4) hold. Let \(\{ {\hat{A}}_n \}_{n=1}^{\infty }\) be a sequence of minimizers of the empirical loss function \(\Phi _{C}(\,{\cdot }\,,P_{n,K^{\star }})\) with the corresponding reconstructions given by \({\hat{K}}_{n}^{C}= {\hat{A}}_n(C)\). We have that \({{\,\mathrm{dist}\,}}({\hat{A}}_n,M_{K^{\star },C})\rightarrow 0\) a.s. and

As a consequence, if \(M_{K^{\star },C}\) specifies a unique set—there exists \({\hat{K}}\subset {\mathbb {R}}^d\) such that \({\hat{K}}= A(C)\) for all \(A \in M_{K^{\star },C}\)—then \(\rho _{p} ( {\hat{K}}_{n}^{C}, {\hat{K}}) \rightarrow 0\) a.s. for \(1 \le p \le \infty \). Further, if \(K^{\star }= A^{\star }(C)\) for some linear map \(A^{\star } \in L({\mathbb {R}}^q,{\mathbb {R}}^d)\), then \(\rho _{p} ({\hat{K}}_{n}^{C},K^{\star }) \rightarrow 0\) a.s. for \(1 \le p \le \infty \).

When \(M_{K^{\star },C}\) defines multiple sets, our result does not imply convergence of the sequence \(\{{\hat{K}}_{n}^{C}\}_{n=1}^{\infty }\). Rather, we obtain the weaker consequence that the sequence \(\{{\hat{K}}_{n}^{C}\}_{n=1}^{\infty }\) eventually becomes arbitrarily close to the collection \(\{A(C)\mid A \in M_{K^{\star },C}\}\).

Example

Suppose \(K^{\star }\) is the unit \(\ell _2\)-ball in \({\mathbb {R}}^2\), and \(C= \Delta ^{q}\). The optimal \(\rho _2\) approximation is the regular q-gon with an unspecified rotation. The sequence \(\{{\hat{K}}_{n}^{C}\}_{n=1}^{\infty }\) does not have a limit; rather, there is a sequence \(\{g_n\}_{n=1}^{\infty } \subset {{\,\mathrm{SO}\,}}(2,{\mathbb {R}})\) such that \(g_n {\hat{K}}_{n}^{C}\) converges to a centered regular q-gon (with fixed orientation) a.s.

The proof of Theorem 3.1 comprises two parts. First, we show that there exists a ball in \(L({\mathbb {R}}^q,{\mathbb {R}}^d)\) such that \({\hat{A}}_n\) belongs to this ball for all sufficiently large n a.s. Second, we appeal to the following uniform convergence result. The structure of our proof is similar to that of a corresponding result for K-means clustering (see the main theorem in [22]).

Lemma 3.2

Let \(U \subset L({\mathbb {R}}^q,{\mathbb {R}}^d)\) be bounded and suppose the set \(C\subset {\mathbb {R}}^q\) satisfies assumption (A3). Let P be a probability distribution over the measurement pairs \((u,y) \in S^{d-1} \times {\mathbb {R}}\) satisfying \({\mathbb {E}}_{P}[y^2] < \infty \), and let \(P_n\) be the empirical measure corresponding to drawing n i.i.d. observations from the distribution P. Consider the collection of functions \({\mathcal {G}} := \{ (h_{C}(A^{T}u) - y)^2\mid A \in U \}\) in the variables \((u,y)\). Then \(\sup _{g \in {\mathcal {G}}}|{\mathbb {E}}_{P_n}[g] - {\mathbb {E}}_{P}[g]| \rightarrow 0\) as \(n \rightarrow \infty \) a.s.

The proof of Lemma 3.2 follows from an application of the following uniform strong law of large numbers (SLLN) [23, Thm. 3, p. 8].

Theorem 3.3

(Uniform SLLN) Let Q be a probability measure and let \(Q_n\) be the corresponding empirical measure. Let \({\mathcal {G}}\) be a collection of Q-integrable functions. Suppose that for every \(\epsilon >0\) there exists a finite collection of functions \({\mathcal {G}}_{\epsilon }\) such that for every \(g\in {\mathcal {G}}\) there exist \({\overline{g}},{\underline{g}}\in {\mathcal {G}}_{\epsilon }\) satisfying

(i) \({\underline{g}} \le g \le {\overline{g}}\), and

(ii) \({\mathbb {E}}_{Q}[ {\overline{g}} - {\underline{g}} ] < \epsilon \).

Then \(\sup _{g \in {\mathcal {G}}} | {\mathbb {E}}_{Q_n}[g] - {\mathbb {E}}_{Q}[g] | \rightarrow 0 \) a.s.

Proof of Lemma 3.2

Based on Theorem 3.3, it suffices to construct the finite collection of functions \({\mathcal {G}}_{\epsilon }\). Pick r sufficiently large so that \(U \subset r B_{\Vert \cdot \Vert _{C,2}} (0)\). Let \({\mathcal {D}}_{\delta }\) be a \(\delta \)-cover for U in the \(\Vert \,{\cdot }\,\Vert _{C,2}\)-norm, where \(\delta \) is chosen so that \(4 \delta {\mathbb {E}}_{P}[ r + |y| ] \le \epsilon \). We define \({\mathcal {G}}_{\epsilon }:=\{ ((|h_{C}(A^{T}u)-y| - \delta )_{+})^2 \}_{A \in {\mathcal {D}}_{\delta }} \cup \{ (|h_{C}(A^{T}u)-y| + \delta )^2 \}_{A \in {\mathcal {D}}_{\delta }} \).

We proceed to verify (i) and (ii). Let \(g=(h_{C}(A^{T}u)-y)^2 \in {\mathcal {G}}\) be arbitrary. Let \(A_0 \in {\mathcal {D}}_{\delta }\) be such that \(\Vert A - A_0 \Vert _{C,2} \le \delta \). Define \({\underline{g}} = ((|h_{C}(A^{T}_0 u)-y| - \delta )_{+})^2\) and \({\overline{g}} = (|h_{C}(A^{T}_0 u)-y| + \delta )^2\). It follows that \({\underline{g}} \le g \le {\overline{g}}\), which verifies (i). Next, we have \({\mathbb {E}}[{\overline{g}} - {\underline{g}} ] \le 4 \delta {\mathbb {E}}[ | h_{C}(A_0^{T}u) - y| ] \le 4 \delta {\mathbb {E}}[ r + |y| ] \le \epsilon \), which verifies (ii). \(\square \)
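The bracketing construction in this proof can be illustrated numerically for the simplex. The sketch assumes that \(\Vert D\Vert _{C,2} = \sup _{x \in C} \Vert Dx\Vert _2\), which for the simplex is the largest column norm of \(D\); this definition comes from an earlier part of the paper and is taken as an assumption here, as are the synthetic data and dimensions.

```python
import numpy as np

rng = np.random.default_rng(4)
d, q, n, delta = 3, 4, 200, 0.05

A0 = rng.standard_normal((d, q))                   # an element of the delta-cover
P = rng.standard_normal((d, q))
P *= delta / np.max(np.linalg.norm(P, axis=0))     # rescale: largest column norm equals delta
A = A0 + P                                         # a map within delta of A0

U = rng.standard_normal((n, d))
U /= np.linalg.norm(U, axis=1, keepdims=True)      # unit-norm directions
y = rng.standard_normal(n)                         # synthetic responses

g = (np.max(U @ A, axis=1) - y) ** 2               # g = (h_C(A^T u) - y)^2
r0 = np.abs(np.max(U @ A0, axis=1) - y)            # |h_C(A0^T u) - y|
g_lower = np.maximum(r0 - delta, 0.0) ** 2         # lower bracket
g_upper = (r0 + delta) ** 2                        # upper bracket

# A small tolerance absorbs floating-point rounding.
bracket_holds = bool(np.all(g_lower <= g + 1e-12) and np.all(g <= g_upper + 1e-12))
print(bracket_holds)
```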

Proof of Theorem 3.1

To simplify notation in the following proof, we denote \(B := B_{\Vert \cdot \Vert _{C,2}}(0)\). First, we recall the definition of the event \(G_{r,v}\) and the function \(s(v)\) from the proof of Proposition 2.3. Using a sequence of arguments identical to that in the proof of Proposition 2.3, it follows that there exists \({\hat{r}}\) sufficiently large so that \({\hat{r}}^2{\mathbb {P}}[G_{{\hat{r}},v}]/16 > \Phi _{C}(0,P_{K^{\star }})\) for all \(v\in S^{d-1}\). We claim that \({\hat{A}}_{n} \in {\hat{r}} B\) eventually a.s. We prove this assertion by contradiction. Suppose on the contrary that \({\hat{A}}_{n} \notin {\hat{r}} B\) infinitely often (i.o.). For every \({\hat{A}}_n \notin {\hat{r}} B\), there exists \({\hat{x}}_{n} \in C\) such that \(\Vert {\hat{A}}_n{\hat{x}}_{n} \Vert _{2} >{\hat{r}}\). The sequence of unit-norm vectors \({\hat{A}}_n {\hat{x}}_{n} / \Vert {\hat{A}}_n {\hat{x}}_{n} \Vert _{2}\), defined over the subset of indices n such that \({\hat{A}}_n \notin {\hat{r}} B\), contains a convergent subsequence with limit point \({\hat{v}}\in S^{d-1}\). Then

Here, the last equality follows from the SLLN. This implies \(\Phi _{C}({\hat{A}}_n, P_{n,K^{\star }}) > \Phi _{C}(0, P_{n,K^{\star }})\) i.o., which contradicts the minimality of \({\hat{A}}_n\). Hence \({\hat{A}}_{n} \in {\hat{r}} B\) eventually a.s.

Second, we show that \({{\,\mathrm{dist}\,}}({\hat{A}}_n,M_{K^{\star },C}) \rightarrow 0\) a.s. It suffices to show that \({\hat{A}}_n \in U\) eventually a.s., where U is any open set containing \(M_{K^{\star },C}\). Let \({\hat{A}} \in M_{K^{\star },C}\) be arbitrary. By Proposition 2.1, the function \(A \mapsto \Phi _{C}(A,P_{K^{\star }})\) is continuous. By noting that the set of minimizers of \(\Phi _{C}(\,{\cdot }\,,P_{K^{\star }})\) is compact from Proposition 2.3, we can pick \(\epsilon >0\) sufficiently small so that \(\{ A\mid \Phi _{C}(A,P_{K^{\star }}) <\Phi _{C}({\hat{A}},P_{K^{\star }}) + \epsilon \} \subset U\). Next, since \({\hat{A}}_n\) is defined as the minimizer of an empirical sum, we have \(\Phi _{C}({\hat{A}}_n,P_{n,K^{\star }}) \le \Phi _{C}({\hat{A}},P_{n,K^{\star }})\) for all n. By applying Lemma 3.2 with the choice of \(P=P_{K^{\star }}\), we have \(\Phi _{C}({\hat{A}}_n,P_{n,K^{\star }}) \rightarrow \Phi _{C}({\hat{A}}_n,P_{K^{\star }})\), and \(\Phi _{C}({\hat{A}},P_{n,K^{\star }}) \rightarrow \Phi _{C}({\hat{A}},P_{K^{\star }})\), both uniformly and in the a.s. sense. Subsequently, by combining the previous two conclusions, we have \(\Phi _{C}({\hat{A}}_n,P_{K^{\star }}) < \Phi _{C}({\hat{A}},P_{K^{\star }}) + \epsilon \) eventually, for any \(\epsilon >0\). This proves that \({{\,\mathrm{dist}\,}}({\hat{A}}_n,M_{K^{\star },C}) \rightarrow 0\) a.s.

Third, we conclude that

Fix an integer n, and let \(t_n ={{\,\mathrm{dist}\,}}({\hat{A}}_n,M_{K^{\star },C})\). Since \(M_{K^{\star },C}\) is compact, we may pick \({\bar{A}} \in M_{K^{\star },C}\) such that \(\Vert {\hat{A}}_{n} - {\bar{A}} \Vert _\mathrm{F} = t_n\). Given \(A \in {\hat{A}}_n \cdot {{\,\mathrm{Aut}\,}}C\), we have \(A = {\hat{A}}_n g\) for some \(g \in {{\,\mathrm{Aut}\,}}C\). Then \({\bar{A}} g \in {\bar{A}} \cdot {{\,\mathrm{Aut}\,}}C\), and since g is an isometry by assumption (A4), we have \(\Vert {\hat{A}}_{n} g - {\bar{A}} g \Vert _\mathrm{F} = \Vert {\hat{A}}_{n} - {\bar{A}} \Vert _\mathrm{F} = t_n\). Together with the symmetric argument starting from an element of \({\bar{A}} \cdot {{\,\mathrm{Aut}\,}}C\), this implies that \(d_{\text {H}}({\hat{A}}_n \cdot {{\,\mathrm{Aut}\,}}C,{\bar{A}} \cdot {{\,\mathrm{Aut}\,}}C) \le t_n\). Since \(t_n \rightarrow 0\) as \(n \rightarrow \infty \), (16) follows. \(\square \)
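The final step of this proof, which bounds the Hausdorff distance between two orbits by the Frobenius distance between representatives, can be checked by brute force when \(C\) is a simplex and the orbits are finite. The matrices `A_hat` and `A_bar` below are arbitrary stand-ins chosen for illustration, not minimizers of any loss.

```python
import numpy as np
from itertools import permutations

def orbit(A):
    """All column permutations of A: the orbit of A under Aut(simplex)."""
    q = A.shape[1]
    return [A[:, list(p)] for p in permutations(range(q))]

def hausdorff(X, Y):
    """Hausdorff distance between two finite sets of matrices in the Frobenius norm."""
    D = np.array([[np.linalg.norm(a - b) for b in Y] for a in X])
    return float(max(D.min(axis=1).max(), D.min(axis=0).max()))

rng = np.random.default_rng(5)
A_hat = rng.standard_normal((2, 4))
A_bar = A_hat + 0.1 * rng.standard_normal((2, 4))

t = float(np.linalg.norm(A_hat - A_bar))        # Frobenius distance between representatives
d_H = hausdorff(orbit(A_hat), orbit(A_bar))     # Hausdorff distance between the orbits
print(d_H, t)
```

Because permuting columns preserves the Frobenius norm, every element of one orbit has a partner in the other orbit at distance exactly `t`, so `d_H` cannot exceed `t`.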

3.2 Asymptotic Normality

In our second main result, we characterize the limiting distribution of a sequence of estimates \(\{{\hat{K}}_{n}^{C}\}_{n=1}^{\infty }\) corresponding to minimizers of (2) by analyzing an associated sequence of minimizers of (5). Specifically, we show under suitable conditions that the estimation error in the sequence of minimizers of the empirical loss (5) is asymptotically normal. After developing this theory, we illustrate in Sect. 3.2.1 through a series of examples the asymptotic behavior of the set \({\hat{K}}_{n}^{C}\), highlighting settings in which \({\hat{K}}_{n}^{C}\) converges, as well as situations in which our asymptotic normality characterization fails due to the requisite assumptions not being satisfied. Our result relies on two key ingredients, which we describe next.

The first set of requirements pertains to non-degeneracy of the function \(\Phi _{C}(\,{\cdot }\,,P_{K^{\star }})\). First, we require that the minimizers of \(\Phi _{C}(\,{\cdot }\,,P_{K^{\star }})\) constitute a single orbit under the action of the automorphism group of the set \(C\); this guarantees the existence of a convergent sequence of minimizers of the empirical losses \(\Phi _{C}(\,{\cdot }\,,P_{n,K^{\star }})\), which is necessary to provide a Central Limit Theorem (CLT) type of characterization. Second, we require the function \(\Phi _{C}(\,{\cdot }\,,P_{K^{\star }})\) to be twice differentiable at a minimizer with a positive definite Hessian (modulo invariances due to \({{\,\mathrm{Aut}\,}}C\)); such a condition allows us to obtain a quadratic approximation of \(\Phi _{C}(\,{\cdot }\,,P_{K^{\star }})\) around a minimizer \({\hat{A}}\), and to subsequently compute first-order approximations of minimizers of the empirical losses \(\Phi _{C}(\,{\cdot }\,,P_{n,K^{\star }})\). These conditions lead to a geometric characterization of confidence regions of the extreme points of the limit of the sequence \(\{{\hat{K}}_{n}^{C}\}_{n=1}^\infty \).

Our second main assumption centers around the collection of sets that serves as the constraint in the optimization problem (2). Informally, we require that this collection is not “overly complex,” so that we can appeal to a suitable CLT. The field of empirical processes provides the tools necessary to formalize matters. Concretely, as our estimates are obtained via minimization of an empirical process, we require that the following divided differences of the loss function are well controlled:

In particular, a natural assumption is that the graph associated to these divided differences, indexed over a collection centered at \({\hat{A}}\), is of suitably “bounded complexity”:

A powerful framework to quantify the ‘richness’ of such collections is based on the notion of a Vapnik–Chervonenkis (VC) class [33]. VC classes describe collections of subsets with bounded complexity, and they feature prominently in the field of statistical learning theory, most notably in conditions under which generalization of a learning algorithm is possible.

Definition

(Vapnik–Chervonenkis class) Let \({\mathcal {F}}\) be a collection of subsets of a set F. A finite set \(D \subset F\) is said to be shattered by \({\mathcal {F}}\) if for every \(A \subset D\) there exists \(G \in {\mathcal {F}}\) such that \(A = G \cap D\). The collection \({\mathcal {F}}\) is said to be a VC class if there is a finite k such that no set with cardinality greater than k is shattered by \({\mathcal {F}}\).
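The definition of shattering can be made concrete with a brute-force check on a small collection. The sketch below uses closed intervals on the real line with endpoints on a grid, a hypothetical family chosen for illustration only; the function names `traces` and `is_shattered` are likewise assumptions.

```python
def traces(points, family):
    """Distinct subsets of `points` cut out by members of `family` (membership predicates)."""
    return {frozenset(p for p in points if member(p)) for member in family}

def is_shattered(points, family):
    """A finite set is shattered when every one of its subsets arises as a trace."""
    return len(traces(points, family)) == 2 ** len(points)

# Hypothetical family: closed intervals [a, b] with endpoints on a grid from -1 to 4.
# Pairs with a > b give the empty set, which is needed to realize the empty trace.
grid = [x / 2 for x in range(-2, 9)]
intervals = [(lambda x, a=a, b=b: a <= x <= b) for a in grid for b in grid]

shatters_two = is_shattered((1.0, 2.0), intervals)
shatters_three = is_shattered((1.0, 2.0, 3.0), intervals)
print(shatters_two, shatters_three)
```

Intervals can pick out any subset of two points but can never select the two outer points of three without the middle one, so no three-point set on the line is shattered by this family.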

Whether or not the collection (17) is VC depends on the particular choice of \(C\). If \(C\) is chosen to be either a simplex or a spectraplex then such a property is indeed satisfied—see Sect. 3.2.2 for further details. Our analysis relies on a result by Stengle and Yukich showing that certain collections of sets admitting semialgebraic representations are VC [31]. We are now in a position to state our main result of this section:

Theorem 3.4

Suppose that the assumptions (A1)–(A4) hold. Suppose that there exists \({\hat{A}} \in L({\mathbb {R}}^q,{\mathbb {R}}^d)\) such that the set of minimizers \(M_{K^{\star },C}={\hat{A}} \cdot {{\,\mathrm{Aut}\,}}C\) (i.e., \(M_{K^{\star },C}\) consists of a single orbit), the function \(h_{C}(\,{\cdot }\,)\) is differentiable at \({\hat{A}}^{T}u\) for \(P_{K^{\star }}\)-a.e. \(u\), the function \(\Phi _{C}(\,{\cdot }\,,P_{K^{\star }})\) is twice differentiable at \({\hat{A}}\), and the associated Hessian \(\Gamma \) at \({\hat{A}}\) is positive definite restricted to the subspace \(T^{\perp }\), i.e., \(\Gamma |_{T^{\perp }} \succ 0\); here \(T:=T_{{\hat{A}}} M_{K^{\star },C}\) denotes the tangent space of \(M_{K^{\star },C}\) at \({\hat{A}}\). In addition, suppose that the collection of sets specified by (17) forms a VC class. Let \({\hat{A}}_n \in {{\,\mathrm{arg\,min}\,}}_{A} \Phi _{C}(A,P_{n,K^{\star }})\), \(n \in {\mathbb {N}}\), be a sequence of minimizers of the empirical loss function, and let \({\tilde{A}}_n \in {{\,\mathrm{arg\,min}\,}}_{A \in {\hat{A}}_n \cdot {{\,\mathrm{Aut}\,}}C} \Vert {\hat{A}} - A \Vert _\mathrm{{F}}\), \(n \in {\mathbb {N}}\), specify an equivalent sequence defined with respect to the minimizer \({\hat{A}}\) of the population loss. Setting \(\nabla = \nabla _{A} ((h_{C}(A^{T}u)-y)^2)\), we have that

The proof of this theorem relies on ideas from the literature of empirical processes, which we describe next in a general setting.

Proposition 3.5

Suppose P is a probability measure over \({\mathbb {R}}^{q}\) and \(P_n\) is the empirical measure corresponding to n i.i.d. draws from P. Suppose \(f(\,{\cdot }\,,\,{\cdot }\,):{\mathbb {R}}^{q} \times {\mathbb {R}}^{p} \rightarrow {\mathbb {R}}\) is a Lebesgue-measurable function such that \(f(\,{\cdot }\,,t):{\mathbb {R}}^{q} \rightarrow {\mathbb {R}}\) is P-integrable for all \(t\in {\mathbb {R}}^{p}\). Denote the expectations \(F(t) = {\mathbb {E}}_{P}[ f(\,{\cdot }\,,t)]\) and \(F_n(t) = {\mathbb {E}}_{P_n}[f(\,{\cdot }\,,t)]\). Let \({\hat{t}} \in {{\,\mathrm{arg\,min}\,}}_{t}F(t)\) be a minimizer of the population loss, let \(\{ {\hat{t}}_n \}_{n=1}^{\infty }\), \({\hat{t}}_n \in {{\,\mathrm{arg\,min}\,}}_{t}F_n(t)\), be a sequence of empirical minimizers, and let \(f(\,{\cdot }\,,t) = f(\,{\cdot }\,,{\hat{t}}) + \langle t- {\hat{t}}, \Delta (\,{\cdot }\,) \rangle + \Vert t- {\hat{t}} \Vert _{2}r(\,{\cdot }\,,t)\) denote the linearization of \(f(\,{\cdot }\,,t)\) about \({\hat{t}}\) (we assume that \(f(\,{\cdot }\,,t)\) is differentiable with respect to \(t\) at \({\hat{t}}\) P-a.e., with derivative denoted by \(\Delta (\,{\cdot }\,)\)). Let \({\mathcal {D}}=\{d_{t, {\hat{t}}}(\,{\cdot }\,)\mid t\in B_{\Vert \cdot \Vert _{2}} ({\hat{t}}) \setminus \{{\hat{t}}\} \}\), where \(d_{t_1,t_2}(\,{\cdot }\,) = (f(\,{\cdot }\,,t_1)-f(\,{\cdot }\,,t_2)) / \Vert t_1 - t_2 \Vert _{2}\), denote the collection of divided differences. Suppose

(i) \({\hat{t}}_n \rightarrow {\hat{t}}\) a.s.,

(ii) the Hessian \(\nabla ^{2}:=\nabla ^{2}F(t)|_{t= {\hat{t}}}\) about \({\hat{t}}\) is positive definite,

(iii) the collection of sets \(\bigl \{\{ (\,{\cdot }\,, s) \mid d_{t,{\hat{t}}}(\,{\cdot }\,) \ge s \ge 0\, \text { or } \,d_{t,{\hat{t}}}(\,{\cdot }\,) \le s \le 0 \}\, \mid \, t\in B_{\Vert \cdot \Vert _{2}} ({\hat{t}}) \setminus \{ {\hat{t}} \} \bigr \}\) forms a VC class, and

(iv) there is a function \({\bar{d}}(\,{\cdot }\,):{\mathbb {R}}^q \rightarrow {\mathbb {R}}\) such that \(|d(\,{\cdot }\,)|\le {\bar{d}}(\,{\cdot }\,)\) for all \(d \in {\mathcal {D}}\), \(|\langle \Delta (\,{\cdot }\,), e\rangle | \le {\bar{d}}(\,{\cdot }\,)\) for all unit-norm vectors \(e\), \({\bar{d}}(\,{\cdot }\,)>0\), and \({\bar{d}}(\,{\cdot }\,)\in {\mathcal {L}}^{2}(P)\).

Then \(\sqrt{n}({\hat{t}}_n - {\hat{t}}) \overset{D}{\rightarrow } {\mathcal {N}}\bigl (0, (\nabla ^{2})^{-1} [ ({\mathbb {E}}_{P}[\Delta \Delta ^{T}]) - ({\mathbb {E}}_{P}[\Delta ])({\mathbb {E}}_{P}[\Delta ])^{T} ] (\nabla ^{2})^{-1}\bigr )\).
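The sandwich form of the limiting covariance can be illustrated on a toy problem that is not taken from the paper: the hypothetical loss \(f(x,t) = (x-t)^2\) with x drawn from a unit-rate exponential distribution. In this case \({\hat{t}} = {\mathbb {E}}[x] = 1\), the Hessian equals 2, \(\Delta (x) = -2(x - {\hat{t}})\), and the empirical minimizers are sample means, so the covariance formula should reduce to the variance of x. The sample sizes below are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(6)
n, reps = 400, 2000

# Hypothetical model: f(x, t) = (x - t)^2 with x ~ Exponential(1), so t_hat = E[x] = 1.
t_hat = 1.0
hessian = 2.0                                    # second derivative of F(t) = E[(x - t)^2]

# Monte Carlo estimate of the sandwich covariance H^{-1} (E[D D^T] - E[D] E[D]^T) H^{-1}.
x_big = rng.exponential(1.0, size=200_000)
delta = -2.0 * (x_big - t_hat)                   # derivative of f in t, evaluated at t_hat
sandwich = float((np.mean(delta ** 2) - np.mean(delta) ** 2) / hessian ** 2)

# Empirical minimizers are sample means; estimate the variance of sqrt(n) (t_n - t_hat).
samples = rng.exponential(1.0, size=(reps, n))
scaled_err = np.sqrt(n) * (samples.mean(axis=1) - t_hat)
empirical_var = float(np.var(scaled_err))

print(sandwich, empirical_var)
```

Both quantities should be close to 1, the variance of the unit-rate exponential distribution, up to Monte Carlo error.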

The proof of Proposition 3.5 is based on a series of ideas developed in [23, Ch. VII] (see in particular Example 19). These rely on computing entropy numbers for certain function classes. More formally, given a probability measure P and a collection of functions \({\mathcal {F}}\) with each member being a mapping from \({\mathbb {R}}^q\) to \({\mathbb {R}}\), we define \(\eta (\epsilon , P, {\mathcal {F}})\) to be the size of the smallest \(\epsilon \)-cover of \({\mathcal {F}}\) in the \({\mathcal {L}}^{2}(P)\)-distance. As these steps are built on substantial background material from [23], we state the key conceptual arguments in the following proof and refer the reader to specific pointers in the literature for further details.

Proof of Proposition 3.5

To simplify notation we denote \(B_{{\hat{t}}}:=B_{\Vert \cdot \Vert _{2}}({\hat{t}}) \setminus \{ {\hat{t}} \}\). The graphs of \({\mathcal {D}}:=\{d_{t,{\hat{t}}}(\,{\cdot }\,) \mid t\in B_{{\hat{t}}} \}\) form a VC class by assumption. By [23, Cor. 17, p. 20], these graphs have polynomial discrimination (see [23, Ch. II]). In addition, the collection \({\mathcal {D}}\) has an enveloping function \({\bar{d}}\) by assumption. Hence by [23, Lem. 36, p. 34], there exist constants \(\alpha _{{\mathcal {D}}},\beta _{{\mathcal {D}}}\) such that \(\eta (\delta ( {\mathbb {E}}_{P_n} [{\bar{d}}^2])^{1/2}, P_n, {\mathcal {D}}) \le \alpha _{{\mathcal {D}}} (1/\delta )^{\beta _{{\mathcal {D}}}}\), for all \(0<\delta \le 1\) and all n.

We obtain a similar bound for graphs of \({\mathcal {E}} := \{ \langle \Delta (\,{\cdot }\,) ,(t-{\hat{t}})/\Vert t-{\hat{t}}\Vert _2 \rangle \mid t\in B_{{\hat{t}}} \}\). First, we note that \({\mathcal {E}}\) is a subset of a finite dimensional vector space. By [17, Lem. 9.6, p. 159], the collection of subgraphs \(\{ \{ (t,s)\mid f(t) \le s \} \mid f \in {\mathcal {E}} \}\) forms a VC class. Then, by noting that the singletons \(\{ \{ (t,s)\mid s \le 0 \} \}\) form a VC class, and that the collection of sets formed by taking intersections of members of two VC classes is also a VC class (see [17, Lem. 9.7, p. 159]), we conclude that the collection of sets \(\{ \{ (t,s)\mid f(t) \le s \le 0 \} \mid f \in {\mathcal {E}} \}\) is a VC class. A similar sequence of steps shows that the collection of sets \(\{ \{ (t,s)\mid f(t) \ge s \ge 0 \} \mid f \in {\mathcal {E}} \}\) also forms a VC class. The collection of graphs of \({\mathcal {E}}\) is the union of the previous two collections. Hence by [17, Lem. 9.7, p. 159] it is also a VC class. Subsequently, by applying the same sequence of steps as we did for \({\mathcal {D}}\), there exist constants \(\alpha _{{\mathcal {E}}},\beta _{{\mathcal {E}}}\) such that \(\eta (\delta ({\mathbb {E}}_{P_n}[{\bar{d}}^2])^{1/2}, P_n, {\mathcal {E}}) \le \alpha _{{\mathcal {E}}} (1/\delta )^{\beta _{{\mathcal {E}}}}\), for all \(0<\delta \le 1\) and all n.

Next, consider the collection of functions \({\mathcal {F}} := \{ r(\,{\cdot }\,,t)\mid t\in B_{{\hat{t}}} \}\). Rearranging the linearization of \(f(\,{\cdot }\,,t)\) about \({\hat{t}}\) gives \(r(\,{\cdot }\,,t) = d_{t,{\hat{t}}}(\,{\cdot }\,)-\langle \Delta (\,{\cdot }\,), ( t- {\hat{t}} ) / \Vert t- {\hat{t}}\Vert _2 \rangle \), and hence every element in \({\mathcal {F}}\) is expressible as the difference of a function in \({\mathcal {D}}\) and a function in \({\mathcal {E}}\). Given \((\delta /\sqrt{2})\)-covers for \({\mathcal {D}}\) and \({\mathcal {E}}\) in any \({\mathcal {L}}^{2}\)-distance, one can show via the AM-GM inequality that the union of these covers forms a \(\delta \)-cover for \({\mathcal {F}}\). Subsequently, we conclude that there exist constants \(\alpha \) and \(\beta \) such that \(\eta (\delta ({\mathbb {E}}_{P_n}[{\bar{d}}^2])^{1/2},P_n,{\mathcal {F}}) \le \alpha (1/\delta )^{\beta }\).

The remaining sequence of arguments is identical to those in [23, Ch. VII]. Our bound on the quantity \(\eta (\delta ({\mathbb {E}}_{P_n}[{\bar{d}}^2])^{1/2},P_n,{\mathcal {F}})\) implies that for every \(\epsilon >0\) there exists \(\theta >0\) such that \(\int _{0}^{\theta } ( \eta (t,P_n,{\mathcal {F}}) / t)^{1/2} dt < \epsilon \) for all n. We apply [23, Lem. 15, p. 150] to obtain the following stochastic equicontinuity property: given \(\gamma >0\) and \(\epsilon >0\), there exists \(\theta \) such that

Here, \(E_n\) denotes the signed measure \(\sqrt{n}(P_n-P)\). One can check measurability of the inner supremum using the notion of permissibility; see [23, App. C] (in essence, we simply require f to be measurable and the index \(t\) to reside in a Euclidean space). Finally we apply [23, Thm. 5, p. 141] to conclude the result. \(\square \)

Proof of Theorem 3.4

The first step is to verify that the sequence \(\{ {\tilde{A}}_n \}_{n=1}^{\infty }\) quotients out the appropriate equivalences. Since \({\tilde{A}}_n \in {\hat{A}}_n \cdot {{\,\mathrm{Aut}\,}}C\), we have \({\tilde{A}}_n = {\hat{A}}_n g_n\) for an isometry \(g_n\). Subsequently we have \(\Vert {\tilde{A}}_n - {\hat{A}} \Vert _\mathrm{F} = \Vert {\hat{A}}_n g_n - {\hat{A}} \Vert _\mathrm{F} = \Vert {\hat{A}}_n - {\hat{A}} g_n^{-1} \Vert _\mathrm{F} \le d_{\text {H}} ({\hat{A}}_n,M_{K^{\star },C})\). Following the conclusions of Theorem 3.1, we have \({\tilde{A}}_n \rightarrow {\hat{A}}\) a.s. Furthermore, as a consequence of the optimality conditions in the definition of \({\tilde{A}}_n\), we also have \({\tilde{A}}_n - {\hat{A}} \in T^{\perp }\).
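The alignment step above can be made concrete in the polyhedral case. The sketch below is illustrative only and rests on two assumptions: that \(C\) is a simplex, so that \({{\,\mathrm{Aut}\,}}C\) acts on a linear map by permuting its columns, and that \({\tilde{A}}_n\) is taken to be the element of the orbit \({\hat{A}}_n \cdot {{\,\mathrm{Aut}\,}}C\) closest to \({\hat{A}}\) in the Frobenius norm. The function name `align_to_reference` is hypothetical, and the brute-force search over permutations is only practical for small \(q\).

```python
from itertools import permutations

import numpy as np


def align_to_reference(a_hat_n, a_ref):
    """Return the column permutation of a_hat_n closest to a_ref (Frobenius norm).

    Assumes the symmetry group acts by permuting columns (simplex case).
    Returns the aligned matrix and its distance to a_ref.
    """
    a_hat_n = np.asarray(a_hat_n, dtype=float)
    a_ref = np.asarray(a_ref, dtype=float)
    q = a_hat_n.shape[1]
    best, best_dist = None, np.inf
    for perm in permutations(range(q)):
        candidate = a_hat_n[:, list(perm)]
        # For a 2-D array, np.linalg.norm defaults to the Frobenius norm.
        dist = np.linalg.norm(candidate - a_ref)
        if dist < best_dist:
            best, best_dist = candidate, dist
    return best, best_dist
```

For larger \(q\) one would replace the exhaustive loop with a linear assignment solver, since minimizing the Frobenius distance over column permutations is an assignment problem.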

The second step is to apply Proposition 3.5 to the sequence \(\{{\tilde{A}}_n\}_{n=1}^{\infty }\) with \((h_{C} (A^{T}u) - y)^2\) as the choice of loss function \(f(\,{\cdot }\,,t)\), \(P_{K^{\star }}\) as the probability measure P, \((u,y)\) as the argument, and A as the index \(t\). First, the measurability of the loss as a function in A and \((u,y)\) is straightforward to establish. Second, the differentiability of the loss function at \({\hat{A}}\) for \(P_{K^{\star }}\)-a.e. \((u,y)\) follows from the assumption that \(h_{C}(\,{\cdot }\,)\) is differentiable at \({\hat{A}}^{T}u\) for \(P_{K^{\star }}\)-a.e. \(u\). Third, we have shown that \({\tilde{A}}_n \rightarrow {\hat{A}}\) a.s. in the above. Fourth, the Hessian \(\Gamma |_{T^{\perp }}\) is positive definite by assumption. Fifth, the graphs of \(\{ d_{C,A,{\hat{A}}}(u,y)\mid A \in B_{\Vert \cdot \Vert _\mathrm{F}}({\hat{A}}) \setminus \{ {\hat{A}} \} \}\) form a VC class by assumption. Sixth, we need to show the existence of an appropriate function \({\bar{d}}(\,{\cdot }\,)\) to bound the divided differences \(d_{C,A,{\hat{A}}}(\,{\cdot }\,)\) and the inner products \(\langle \nabla (\,{\cdot }\,) |_{A={\hat{A}}}, E \rangle \), where \(\Vert E\Vert _\mathrm{F} = 1\). In the former case, we note that \(|(h_{C} (A_1^{T}u)-y) - (h_{C} (A_2^{T}u) - y)| \le \Vert A_1 - A_2 \Vert _{C,2} \le c_1\Vert A_1-A_2\Vert _\mathrm{F}\) for some \(c_1>0\); here, the first inequality follows from Lemma 2.2 and the second inequality follows from the equivalence of norms. Then, by noting that \(A \in B_{\Vert \cdot \Vert _\mathrm{F}}({\hat{A}}) \setminus \{ {\hat{A}} \}\) is bounded, the expression \(| (h_{C} (A^{T}u)-y)+(h_{C} ({\hat{A}}^{T}u) - y) |\) is bounded above by a function of the form \(c_2(1+|y|)\). By expanding the divided difference expression as a difference of squares and by combining the previous two bounds, one can show that \(|d_{C,A,{\hat{A}}}(\,{\cdot }\,)| \le c_3(1+|y|)\) for some \(c_3 > 0\). In the latter case, the derivative is given by \(2(h_{C} ({\hat{A}}^{T}u) - y) u\otimes e_{C}({\hat{A}}^{T}u)\). By noting that \(h_{C}({\hat{A}}^{T}u)\), \(u\), and \(e_{C}({\hat{A}}^{T}u)\) are uniformly bounded over \(u\in S^{d-1}\), and by performing a sequence of elementary bounds, one can show that \(|\langle \nabla , E \rangle |\) is bounded above by \(c_4(1+|y|)\) uniformly over all unit-norm E. We pick \({\bar{d}}(\,{\cdot }\,)\) to be \(c(1+|y|)\), where \(c = \max {\{1,c_3,c_4\}}\). Then \({\bar{d}}(\,{\cdot }\,) > 0\) by construction and furthermore, \({\bar{d}} \in {\mathcal {L}}^2(P_{K^{\star }})\) as \({\mathbb {E}}_{P_{K^{\star }}}[ \varepsilon ^2 ]<\infty \).

Finally, the result follows from an application of Proposition 3.5. \(\square \)

This result gives an asymptotic normality characterization corresponding to a sequence of minimizers \(\{{\hat{A}}_n\}_{n=1}^\infty \) of the empirical losses \(\Phi _{C}(\,{\cdot }\,,P_{n,K^{\star }})\). In the next result, we specialize this result to the setting in which the underlying set \(K^{\star }\) is in fact expressible as a projection of \(C\), i.e., \(K^{\star }= A^{\star }(C)\) for some \(A^\star \in L({\mathbb {R}}^q,{\mathbb {R}}^d)\). This specialization leads to a particularly simple formula for the asymptotic error covariance, and we demonstrate its utility in the examples in Sect. 3.2.1.

Corollary 3.6

Suppose that the conditions of Theorem 3.4 and of Proposition 2.10 hold. Using the notation of Theorem 3.4, we have that \({\mathbb {E}}[ \nabla \otimes \nabla |_{A=A^{\star }} ] = 2\sigma ^2 \Gamma \) with \(\Gamma \) given by (11). In particular, the conclusion of Theorem 3.4 simplifies to \(\sqrt{n}({\tilde{A}}_n - {\hat{A}}) \overset{D}{\rightarrow } {\mathcal {N}}\bigl (0, 2\sigma ^2 (\Gamma |_{T^{\perp }})^{-1}\bigr )\).

Proof

One can check that \(\nabla _{A} ((h_{C}(A^{T}u) - y)^2) |_{A=A^{\star }} = - 2\varepsilon u\otimes e_{C}(A^{\star T}u) \), from which we have that \({\mathbb {E}}[\nabla \otimes \nabla |_{A=A^{\star }} ] = 2\sigma ^2 \Gamma \). This completes the proof. \(\square \)

3.2.1 Examples

Here we give examples that highlight various aspects of the theoretical results described previously. In all of our examples, the noise \(\{\varepsilon ^{(i)}\}_{i=1}^{n}\) is given by i.i.d. centered Gaussian random variables with variance \(\sigma ^2\). We begin with an illustration in which the assumptions of Theorem 3.4 are not satisfied and the asymptotic normality characterization fails to hold:

Example

Let \(K^{\star }:=\{0\} \subset {\mathbb {R}}\) be a singleton. As \(S^{0} \cong \{ -1,1\}\), the random variables \(u^{(i)}\) are \(\pm 1\) u.a.r. Further, \(h_{K^{\star }}(u)=0\) for all \(u\) and the support function measurements are simply \(y^{(i)} = \varepsilon ^{(i)}\) for all \(i=1,\dots ,n\). For \(C\) being either a simplex or a spectraplex, the set \(M_{K^{\star },C}=\{ 0 \} \subset L({\mathbb {R}}^q,{\mathbb {R}})\) is a singleton consisting only of the zero map. First, we consider fitting \(K^{\star }\) with the choice of \(C= \Delta ^{1} \subset {\mathbb {R}}^1\). Then we have \({\hat{A}}_n =({1}/{n}) \sum _{i=1}^{n} \varepsilon ^{(i)} u^{(i)}\), from which it follows that \(\sqrt{n}({\hat{A}}_n - 0)\) is normally distributed with mean zero and variance \(\sigma ^2\)—this is in agreement with Theorem 3.4. Second, we consider fitting \(K^{\star }\) with the choice \(C= \Delta ^{2} \subset {\mathbb {R}}^2\). Define \(U_{-} = \{ i \mid u^{(i)} = -1 \}\) and \(U_{+} = \{ i \mid u^{(i)} = 1 \}\), and

Then \({\hat{K}}_{n}^{C}= \{ x \mid \alpha _{-} \le x \le \alpha _{+} \}\) if \(\alpha _{-} \le \alpha _{+}\) and \({\hat{K}}_{n}^{C}= \bigl \{ ({1}/{n}) \sum _{i=1}^{n} \varepsilon ^{(i)} u^{(i)} \bigr \}\) otherwise. Notice that \(\alpha _{-}\) and \(\alpha _{+}\) have the same distribution, and hence \({\hat{K}}_{n}^{C}\) is a closed interval with non-empty interior w.p. 1/2, and is a singleton w.p. 1/2. Thus, one can see that the linear map \({\hat{A}}_n\) does not satisfy an asymptotic normality characterization. The reason for this failure is that the function \(\Phi _{C}(\,{\cdot }\,,P_{K^{\star }})\) is twice differentiable everywhere excluding the line \(\{ (c,c) \mid c \in {\mathbb {R}}\}\); in particular, it is not differentiable at the minimizer (0, 0).
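The dichotomy in this example is easy to observe numerically. The sketch below is illustrative and assumes that \(\alpha _{+}\) is the average of \(\varepsilon ^{(i)}\) over \(U_{+}\) and that \(\alpha _{-}\) is the negated average of \(\varepsilon ^{(i)}\) over \(U_{-}\); these are the unconstrained least-squares values of the right and left endpoints, and they are consistent with the singleton formula stated above. The function names are hypothetical, and the fallback to the singleton when one of \(U_{\pm }\) is empty is a convention adopted here, not something taken from the text.

```python
import numpy as np


def fit_interval(u, y):
    """Least-squares interval [lo, hi] for support values y at directions u in {-1, +1}."""
    u = np.asarray(u)
    y = np.asarray(y, dtype=float)
    plus, minus = (u == 1), (u == -1)
    if plus.any() and minus.any():
        alpha_plus = y[plus].mean()      # assumed definition of alpha_+
        alpha_minus = -y[minus].mean()   # assumed definition of alpha_-
        if alpha_minus <= alpha_plus:
            return alpha_minus, alpha_plus
    # Constraint lo <= hi is active (or one side has no data): fit a single point.
    c = float(np.mean(u * y))
    return c, c


def fraction_nondegenerate(n=200, trials=4000, sigma=1.0, seed=0):
    """Fraction of trials in which the fitted set has non-empty interior."""
    rng = np.random.default_rng(seed)
    count = 0
    for _ in range(trials):
        u = rng.choice([-1, 1], size=n)
        y = sigma * rng.standard_normal(n)   # K* = {0}, so y is pure noise
        lo, hi = fit_interval(u, y)
        count += hi > lo
    return count / trials
```

Under these assumptions `fraction_nondegenerate()` should come out close to one half for any choice of `n`, which is the behavior that rules out a Gaussian limit for \({\hat{A}}_n\).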

The above example is an instance where the function \(\Phi _{C}(\,{\cdot }\,,P_{K^{\star }})\) is not twice differentiable at \({\hat{A}}\). The manner in which an asymptotic characterization of \(\{{\hat{K}}_{n}^{C}\}_{n=1}^{\infty }\) fails in instances where \(M_{K^{\star },C}\) contains multiple orbits is also qualitatively similar. Next, we consider a series of examples in which the conditions of Theorem 3.4 hold, thus enabling an asymptotic normality characterization of the behavior of \({\hat{K}}_{n}^{C}\). To simplify our discussion, we describe settings in which the choices of \(C\) and \(A^{\star }\) satisfy the conditions of Corollary 3.6, which leads to simple formulas for the second derivative \(\Gamma \) of the map \(A\mapsto \Phi _{C}(A,P_{K^{\star }})\) at \(A^{\star }\).

3.2.2 Polyhedral Examples

We present two examples in which \(K^{\star }\) is polyhedral, and we choose \(C= \Delta ^{q}\) where q is the number of vertices of \(K^{\star }\). With this choice, the set \(M_{K^{\star },C}\) comprises linear maps \(A \in L({\mathbb {R}}^q,{\mathbb {R}}^d)\) whose columns are the extreme points of \(K^{\star }\).

Proposition 3.7

Let \(K^{\star }\) be a polytope with q extreme points and let \(A^{\star }\) be the linear map whose columns are the extreme points of \(K^{\star }\) (in any order). The second derivative of \(\Phi _{C}(\,{\cdot }\,,P)\) at \(A^{\star }\) is given by \(\Gamma (D) = \sum _{j=1}^{q} \int _{u\in H_{A^{\star },j} } \langle u\otimes e_j , D \rangle u\otimes e_j ~ d u\), where \(H_{A,j} := \{ u\in S^{d-1}\mid u^{T} A e_j > u^{T} A e_k \text { for all } k \ne j \}\).

Proof

Let \(A\in L({\mathbb {R}}^q,{\mathbb {R}}^d)\) be a linear map whose columns are pairwise distinct. Then the set \(V_{A} := \{ u\in S^{d-1} \mid u^{T} A e_i = u^{T} A e_j \text { for some } 1 \le i < j \le q \} \) is a subset of \(S^{d-1}\) with measure zero. We note that the function \(h_{C}(\,{\cdot }\,)\) is differentiable at \(A^{T}u\) whenever \(u\in S^{d-1} \setminus V_{A}\), and hence the function \(h_{C}(A^{T}u)\) is differentiable with respect to A for P-a.e. \(u\). By Proposition 2.9, the derivative of \(\Phi _{C}(\,{\cdot }\,,P)\) at A is \(2{\mathbb {E}}_{P} [(h_{C}(A^{T}u) - y) u\otimes e_{C}(A^{T}u) ]\).

The subset of linear maps whose columns are pairwise distinct is open. Hence the derivative of \(\Phi _{C}(\,{\cdot }\,,P)\) exists in a neighborhood of \(A^{\star }\). It is clear that the derivative is also continuous at \(A^{\star }\). Finally, we note that the collection \(\{H_{A^{\star },j}\}_{j=1}^{q}\) forms a disjoint cover of \(S^{d-1}\) up to a subset of measure zero, and apply Proposition 2.10 to conclude the expression for \(\Gamma \). \(\square \)

We note that the operator \(\Gamma \) has block diagonal structure since each integral \(\int _{u\in H_{A^{\star },j} } \langle u\otimes e_j , D \rangle u\otimes e_j ~ d u\) is supported on a \(d\times d\) dimensional sub-block. Subsequently, by combining the conclusions of Theorem 3.1 and Proposition 3.7, we can conclude the following about \({\hat{K}}_{n}^{C}\): (i) it is a polytope with q extreme points, (ii) each vertex of \({\hat{K}}_{n}^{C}\) is close to a distinct vertex of \(K^{\star }\), (iii) the deviations (after scaling by a factor of \(\sqrt{n}\)) between every vertex-vertex pair are asymptotically normal with inverse covariance specified by a \(d\times d\) block of \(\Gamma \), and further these deviations are pairwise independent.
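The block structure can be explored with a short Monte Carlo sketch. It is illustrative only: it assumes that \(d u\) in Proposition 3.7 denotes the uniform probability measure on \(S^{d-1}\), and the function name `gamma_blocks` is hypothetical. Since \(\langle u\otimes e_j, D\rangle = u^{T} D e_j\), the \(j\)-th block acts on the \(j\)-th column of \(D\) through the matrix \(\int _{H_{A^{\star },j}} uu^{T}\, du\), which is what the code estimates.

```python
import numpy as np


def gamma_blocks(vertices, n_samples=200_000, seed=0):
    """Monte Carlo estimate of the d x d blocks of Gamma for a polytope.

    vertices: (d, q) array whose columns are the extreme points.
    Returns an array of shape (q, d, d); block j approximates the
    integral of u u^T over H_{A,j} under the uniform probability measure.
    """
    a = np.asarray(vertices, dtype=float)
    d, q = a.shape
    rng = np.random.default_rng(seed)
    # Normalized Gaussian vectors are uniformly distributed on the sphere.
    u = rng.standard_normal((n_samples, d))
    u /= np.linalg.norm(u, axis=1, keepdims=True)
    # u lies in H_{A,j} when vertex j maximizes u^T A e_j (ties have measure zero).
    winner = np.argmax(u @ a, axis=1)
    blocks = np.zeros((q, d, d))
    for j in range(q):
        uj = u[winner == j]
        blocks[j] = uj.T @ uj / n_samples
    return blocks
```

Because the regions \(H_{A^{\star },j}\) partition the sphere up to a null set, the blocks sum to \({\mathbb {E}}[uu^{T}] = I/d\) under the assumed normalization, which gives a quick sanity check on the output.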

Example