Abstract

Intrinsic volumes and Minkowski tensors have been used to describe the geometry of real world objects. This paper presents an estimator that allows approximation of these quantities from digital images. It is based on a generalized Steiner formula for Minkowski tensors of sets of positive reach. When the resolution goes to infinity, the estimator converges to the true value if the underlying object is a set of positive reach. The underlying algorithm is based on a simple expression in terms of the cells of a Voronoi decomposition associated with the image.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Intrinsic volumes, such as volume, surface area, and Euler characteristic, are widely-used tools to capture geometric features of an object; see, for instance, [1, 22, 24]. Minkowski tensors are tensor valued generalizations of the intrinsic volumes, associating with every sufficiently regular compact set in \(\mathbb {R}^d\) a symmetric tensor, rather than a scalar. They carry information about geometric features of the set such as position, orientation, and eccentricity. For instance, the volume tensor—defined formally in Sect. 2—of rank 0 is just the volume of the set, while the volume tensors of rank 1 and 2 are closely related to the center of gravity and the tensor of inertia, respectively. For this reason, Minkowski tensors are used as shape descriptors in materials science [29, 31], physics [14], and biology [3, 36].

The main purpose of this paper is to present estimators that approximate all the Minkowski tensors of a set K when only weak information on K is available. More precisely, we assume that a finite set \(K_0\) which is close to K in the Hausdorff metric is known. The estimators are based on the Voronoi decomposition of \(\mathbb {R}^d\) associated with the finite set \(K_0\), following an idea of Mérigot et al. [21]. What makes these estimators so interesting is that they are consistent; that is, they converge to the respective Minkowski tensors of K when applied to a sequence of finite approximations converging to K in the Hausdorff metric. We emphasize that the notion of ‘estimator’ is used here in the sense of digital geometry [17] meaning ‘approximation of the true value based on discrete input’ and should not be confused with the statistical concept related to the inference from data with random noise. The main application we have in mind is the case where \(K_0\) is a digitization of K. This is detailed in the following.

As data is often only available in digital form, there is a need for estimators that allow us to approximate the Minkowski tensors from digital images. In a black-and-white image of a compact geometric object \(K\subseteq \mathbb {R}^d\), each pixel (or voxel) is colored black if its midpoint belongs to K and white otherwise. Thus, the information about K contained in the image is the set of black pixel (voxel) midpoints \(K_0=K\cap a\mathbb {L}\), where \(\mathbb {L}\) is the lattice formed by all pixel (voxel) midpoints and \(a^{-1}\) is the resolution. A natural criterion for the reliability of a digital estimator is that it yields the correct tensor when \(a\rightarrow 0_+\). If this property holds for all objects in a given family of sets, for instance, for all sets with smooth boundary, then the estimator is called multigrid convergent for this class.

Digital estimators for the scalar Minkowski tensors, that is, for the intrinsic volumes, are widespread in the digital geometry literature; see, e.g., [17, 24, 25] and the references therein. For Minkowski tensors up to rank two, estimators based on binary images are given in [28] for the two-dimensional and in [30] for the three-dimensional case. Even for the class of convex sets, multigrid convergence has not been proven for any of the above mentioned estimators. The only exception are volume related quantities. Most of the above mentioned estimators are n -local for some given fixed \(n\in \mathbb {N}\). We call an estimator n-local if it depends on the image only through the histogram of all \(n\times \cdots \times n\) configurations of black and white points. For instance, a natural surface area estimator [19] in three-dimensional space scans the image with a voxel cube of size \(2\times 2\times 2\) and assigns a surface contribution to each observed configuration. The sum of all contributions is then the surface area estimator, which is clearly 2-local. The advantage of n-local estimators is that they are intuitive, easy to implement, and the computation time is linear in the number of pixels or voxels.

However, many n-local estimators are not multigrid convergent for convex sets; see [32] and the detailed discussion in Sect. 6. This implies that many established estimators, like the mentioned one in [19] cannot be multigrid convergent for convex sets. All the estimators of 2D-Minkowski tensors in [28] are 2-local. By the results in [32], the estimators for the perimeter and the Euler characteristic can thus not be multigrid convergent for convex sets. The multigrid convergence of the other estimators has not been investigated. The algorithms for 3D-Minkowski tensors in [30] have as input a triangulation of the object’s boundary, and the way this triangulation is obtained determines whether the resulting estimators are n-local or not. There are no known results on multigrid convergence for these estimators either. Summarizing, to the best of our knowledge, this paper presents for the first time estimators of all Minkowski tensors of arbitrary rank that come with a multigrid convergence proof for a class of sets that is considerably larger than the class of convex sets.

The present work is inspired by [21], and we therefore start by recalling some basic notions from this paper. For a nonempty compact set K, the authors of [21] define a tensor valued measure, which they call the Voronoi covariance measure, defined on a Borel set \(A\subseteq \mathbb {R}^d\) by

Here, \(K^R\) is the set of points at distance at most \(R>0\) from K and \(p_K\) is the metric projection on K: the point \(p_K(x)\) is the point in K closest to x, provided that this closest point is unique. The metric projection of K is well-defined on \(\mathbb {R}^d\) with the possible exception of a set of Lebesgue-measure zero; see, e.g., [7].

The paper [21] uses the Voronoi covariance measure to determine local features of surfaces. It is proved there that if \(K \subseteq \mathbb {R}^3\) is a smooth surface, then

where B(x, r) is the Euclidean ball with midpoint \(x\in K\) and radius r, u(x) is one of the two surface unit normals at \(x\in K\), \(P_1(x),P_2(x)\) are the principal directions and \(k_1(x),k_2(x)\) the corresponding principal curvatures. Hence, the eigenvalues and -directions of the Voronoi covariance measure carry information about local curvatures and normal directions.

Assuming that a compact set \(K_0\) approximates K, Mérigot et al. [21] suggest to estimate \(\mathcal {V}_R(K;\cdot ) \) by \(\mathcal {V}_R(K_0;\cdot )\). It is shown in that paper that \(\mathcal {V}_R(K_0;\cdot )\) converges to \(\mathcal {V}_R(K;\cdot )\) in the bounded Lipschitz metric when \(K_0 \rightarrow K\) in the Hausdorff metric. Moreover, if \(K_0\) is a finite set, then the Voronoi covariance measure can be expressed in the form

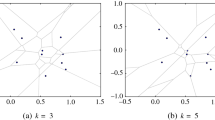

Here, \(V_x(K_0)\) is the Voronoi cell of x in the Voronoi decomposition of \(\mathbb {R}^d\) associated with \(K_0\). Thus, the estimator which is used to approximate \(\mathcal {V}_R(K;A)\) is easily computed. Given the Voronoi cells of \(K_0\), each Voronoi cell contributes with a simple integral. Figure 1a shows the Voronoi cells of a finite set of points on an ellipse. The Voronoi cells are elongated in the normal direction. This is the intuitive reason why they can be used to approximate (1).

The Voronoi covariance measure \(\mathcal {V}_R(K;A) \) can be identified with a symmetric 2-tensor. In the present work, we explore how natural extensions of the Voronoi covariance measure can be used for estimating general Minkowski tensors. The generalizations of the Voronoi covariance measure, which we will introduce, will be called Voronoi tensor measures. We will then show how the Minkowski tensors can be recovered from these. When we apply the results to digital images, we will work with full-dimensional sets K, and the finite point sample \(K_0\) is obtained from the representation \(K_0=K\cap a\mathbb {L}\) of a digital image of K. The Voronoi cells associated with \(K_0=K\cap a\mathbb {L}\) are sketched in Fig. 1b. Taking point samples from K with increasing resolution, convergence results will follow from an easy generalization of the convergence proof in [21].

The paper is structured as follows: In Sect. 2, we recall the definition of Minkowski tensors and the classical as well as a local Steiner formula for sets of positive reach. In Sect. 3, we define the Voronoi tensor measures, discuss how they can be estimated from finite point samples, and explain how the Steiner formula can be used to connect the Voronoi tensor measures with the Minkowski tensors. Section 4 is concerned with the convergence of the estimator. The results are specialized to digital images in Sect. 5. Finally, the estimator is compared with existing approaches in Sect. 6.

2 Minkowski Tensors

We work in Euclidean space \(\mathbb {R}^d\) with scalar product \(\langle \cdot \,,\cdot \rangle \) and norm \(|\cdot |\). The Euclidean ball with center \(x\in \mathbb {R}^d\) and radius \(r\ge 0\) is denoted by B(x, r), and we write \(S^{d-1}\) for the unit sphere in \(\mathbb {R}^d\). Let \(\partial A\) and \(\text {int}A\) be the boundary and the interior of a set \(A\subseteq {\mathbb R}^d\), respectively. The k-dimensional Hausdorff-measure in \(\mathbb {R}^d\) is denoted by \({\mathcal H}^k\), \(0\le k\le d\). Let \({\mathcal C}^d\) be the family of nonempty compact subsets of \(\mathbb {R}^d\) and \({\mathcal K}^d\subseteq \mathcal {C}^d\) the subset of nonempty compact convex sets. For two compact sets \(K,M \in {\mathcal C}^d\), we define their Hausdorff distance by

Let \(\mathbb {T}^p\) denote the space of symmetric p-tensors (tensors of rank p) over \(\mathbb {R}^d\). Identifying \(\mathbb {R}^d\) with its dual (via the scalar product), a symmetric p-tensor defines a symmetric multilinear map \((\mathbb {R}^d)^p\rightarrow \mathbb {R}\). Letting \(e_1,\ldots ,e_d\) be the standard basis in \(\mathbb {R}^d\), a tensor \(T\in \mathbb {T}^p\) is determined by its coordinates

with respect to the standard basis, for all choices of \({i_1},\ldots ,{i_p} \in \{1,\ldots ,d\}\). We use the norm on \(\mathbb {T}^p\) given by

for \(T\in \mathbb {T}^p\). The same definition is used for arbitrary tensors of rank p.

The symmetric tensor product of \(y_1,\ldots , y_m\in \mathbb {R}^{d}\) is given by the symmetrization \(y_1\odot \cdots \odot y_m=(m!)^{-1}\sum \otimes _{i=1}^m y_{\sigma (i)}\), where the sum extends over all permutations \(\sigma \) of \(\{1,\ldots ,m\}\) and \(\otimes \) is the usual tensor product. We write \(x^r\) for the r-fold tensor product of \(x\in \mathbb {R}^d\). For two symmetric tensors of the form \(T_1=y_1 \odot \cdots \odot y_r\) and \(T_2=y_{r+1} \odot \cdots \odot y_{r+s}\), where \(y_1, \ldots , y_{r+s} \in \mathbb {R}^d\), the symmetric tensor product \(T_1\odot T_2\) of \(T_1\) and \(T_2\), which we often abbreviate by \(T_1T_2\), is the symmetric tensor product of \(y_1, \ldots , y_{r+s} \). This is extended to general symmetric tensors \(T_1\) and \(T_2\) by linearity. Moreover, it follows from the preceding definitions that

\(y_1,\ldots , y_m\in \mathbb {R}^{d}\).

For any compact set \(K\subseteq \mathbb {R}^d\), we can define an element of \(\mathbb {T}^r\) called the rth volume tensor

For \(s\ge 1\) we define \(\varPhi _{d}^{r,s}(K)=0\). Some of the volume tensors have well-known physical interpretations. For instance, \(\varPhi _{d}^{0,0}(K)\) is the usual volume of K, \(\varPhi _{d}^{1,0}(K)\) is up to normalization the center of gravity, and \(\varPhi _{d}^{2,0}(K)\) is closely related to the tensor of inertia. All three tensors together can be used to find the best approximating ellipsoid of a particle [36]. The sequence of all volume tensors \((\varPhi _{d}^{r,0}(K))_{r=0}^\infty \) determines the compact set K uniquely. For convex sets in the plane even the following stability result [10, Rem. 4.4.] holds: If \(K, L\in {\mathcal K}^2\) are contained in the unit square and have coinciding volume tensors up to rank r, then their distance, measured in the symmetric difference metric \({\mathcal H}^2((K\setminus L) \cup (L\setminus K))\), is of order \(O(r^{-1/2})\) as \(r\rightarrow \infty \).

We will now define Minkowski surface tensors. These can also be used to characterize the shape of an object or the structure of a material as in [3, 14]. They require stronger regularity assumptions on K. Usually, like in [26, Sect. 5.4.2], the set K is assumed to be convex. However, as Minkowski tensors are tensor-valued integrals with respect to the generalized curvature measures (also called support measures) of K, they can be defined whenever the latter are available. We will use this to define Minkowski tensors for sets of positive reach.

First, we recall the definition of a set of positive reach and explain how curvature measures of such sets are determined (see [8, 35]). For a compact set \(K\in {\mathcal C}^d\), we let \(d_K(x)\) denote the distance from \(x\in \mathbb {R}^d\) to K. Then, for \(R\ge 0\), \(K^R=\{x\in \mathbb {R}^d \mid d_K(x)\le R\}\) is the R-parallel set of K. The reach \({{\mathrm{Reach}}}(K)\) of K is defined as the supremum over all \(R\ge 0\) such that for all \(x\in \mathbb {R}^d\) with \(d_K(x)<R\) there is a unique closest point \(p_K(x)\) in K. We say that K has positive reach if \({{\mathrm{Reach}}}(K)>0\). Smooth surfaces (of class \(C^{1,1}\)) are examples of sets of positive reach, and compact convex sets are characterized by having infinite reach. By definition, the map \(p_K\) is defined everywhere on \(K^R\) if \(R<{{\mathrm{Reach}}}(K)\). Let \(K\subseteq \mathbb {R}^d\) be a (compact) set of positive reach. The (global) Steiner formula for sets with positive reach states that for all \(R<{{\mathrm{Reach}}}(K)\) the R-parallel volume of K is a polynomial, that is,

Here \(\kappa _j\) is the volume of the unit ball in \(\mathbb {R}^j\). The numbers \(\varPhi ^{0,0}_0(K),\ldots , \varPhi _d^{0,0}(K)\) are the intrinsic volumes of K. They are special cases of the Minkowski tensors to be defined below. Some of them have well-known interpretations. As mentioned, \(\varPhi ^{0,0}_d(K)\) is the volume of K. Moreover, \(2\varPhi ^{0,0}_{d-1}(K)\) is the surface area, \(\varPhi ^{0,0}_{d-2}(K)\) is proportional to the total mean curvature, and \(\varPhi ^{0,0}_0(K)\) is the Euler characteristic of K. For convex sets, (2) is the classical Steiner formula which holds for all \(R\ge 0\).

Zähle [35] showed that a local version of (2) can be established giving rise to the generalized curvature measures \(\varLambda _k(K;\cdot )\) of K, for \(k=0,\ldots ,d-1\). An extension to general closed sets is considered in [12]. The generalized curvature measures (also called support measures) are measures on \(\varSigma = \mathbb {R}^d\times S^{d-1}\). They are determined by the following local Steiner formula which holds for all \(R < {{\mathrm{Reach}}}(K)\) and all Borel set \(B\subseteq \varSigma \):

The coefficients \(\varLambda _k(K;B)\) on the right side of (3) are signed Borel measures \(\varLambda _k(K;\cdot )\) evaluated on \(B\subseteq \varSigma \). These measures are called the generalized curvature measures of K. Since the pairs of points in B on the left side of (3) always consist of a boundary point of K and an outer unit normal of K at that point, each of the measures \(\varLambda _k(K,\cdot )\) is concentrated on the set of all such pairs. For this reason, the generalized curvature measures \(\varLambda _k(K;\cdot )\), \(k\in \{0,\ldots ,d-1\}\), are also called support measures. They describe the local boundary behavior of the part of \(\partial K\) that consists of points x with an outer unit normal u such that \((x,u)\in B\). A description of the generalized curvature measures \(\varLambda _k(K,\cdot )\) by means of generalized curvatures living on the normal bundle of K was first given in [35] (see also [26, §2.5, p. 217] and the references given there). The total measures \(\varLambda _k(K,\varSigma )\) are the intrinsic volumes.

Based on the generalized curvature measures, for every \(k\in \{0,\ldots ,d-1\}\), \(r,s\ge 0\) and every set \(K\subseteq \mathbb {R}^d\) with positive reach, we define the Minkowski tensor

in \(\mathbb {T}^{r+s}\). Here \(\omega _k\) is the surface area of the unit sphere \(S^{k-1}\) in \(\mathbb {R}^k\). More information on Minkowski tensors can for instance be found in [13, 15, 20, 27]. As in the case of volume tensors, the Minkowski tensors carry strong information on the underlying set. For instance, already the sequence \((\varPhi _{1}^{0,s}(K))_{s=0}^\infty \) determines any \(K\in {\mathcal K}^d\) up to a translation. A stability result also holds: if K and L are both contained in a fixed ball and have the same tensors \(\varPhi _{1}^{0,s}\) of rank \(s\le s_0\), then a translation of K is close to L in the Hausdorff metric and the distance is \(O(s_0^{-\beta })\) as \(s_0\rightarrow \infty \) for any \(0<\beta <3/(n+1)\); see [18, Thm. 4.9].

One can define local Minkowski tensors in a similar way (see [11]). For a Borel set \(B\subseteq \varSigma \), for \(k\in \{0,\ldots ,d-1\}\), \(r,s\ge 0\) and a set \(K\subseteq \mathbb {R}^d\) with positive reach, we put

and, for a Borel set \(A \subseteq \mathbb {R}^d\),

In order to avoid a distinction of cases, we also write \(\varPhi _{d}^{r,0}(K;A\times S^{d-1})\) instead of \(\varPhi _{d}^{r,0}(K;A)\). Moreover, we define \(\varPhi _{d}^{r,s}(K;\cdot )=0\) if \(s\ge 1\). The local Minkowski tensors can be used to describe local boundary properties. For instance, local 1- and 2-tensors are used for the detection of sharp edges and corners on surfaces in [6]. They also carry information about normal directions and principal curvatures as explained in the introduction.

We conclude this section with a general remark on continuity properties of the Minkowski tensors. Although the functions \(K\mapsto \varPhi _{k}^{r,s}(K)\) are continuous when considered in the metric space \((\mathcal {K}^d,d_H)\), they are not continuous on \({\mathcal C}^d\). (For instance, the volume tensors of a finite set are always vanishing, but finite sets can be used to approximate any compact set in the Hausdorff metric.) This is the reason why our approach requires an approximation argument with parallel sets as outlined below. The consistency of our estimator is mainly based on a continuity result for the metric projection map. We quote this result [4, Thm. 3.2] in a slightly different formulation which is symmetric in the two bodies involved. Let \(\Vert f\Vert _{L^1(E)}\) be the usual \(L^1\)-norm of the restriction of f to a Borel set \(E\subseteq \mathbb {R}^d\).

Proposition 2.1

Let \(\rho >0\) and let \(E\subseteq \mathbb {R}^d\) be a bounded measurable set. Then there is a constant \(C_1=C_1\left( d,{{\mathrm{diam}}}(E\cup \{0\}),\rho \right) >0\) such that

for all \(K,K_0\in {\mathcal C}^d\) with \(K,K_0\subseteq B(0,\rho )\).

Proof

Let \(E'\) be the convex hull of E and observe that

It is shown in [4, Lem. 3.3] (see also [8, Thm. 4.8]) that the map \(v_K:\mathbb {R}^d\rightarrow \mathbb {R}\) given by \(v_K(x)=|x|^2-d_K^2(x)\) is convex and that its gradient coincides almost everywhere with \(2p_K\). Since \(E'\) has rectifiable boundary, [4, Thm. 3.5] implies that

Here \(c_2={{\mathrm{diam}}}(2p_K(E')\cup 2p_{K_0}(E'))\le 2{{\mathrm{diam}}}(K\cup K_0)\le 4\rho \) and the supremum-norm \(\Vert \cdot \Vert _{\infty ,E'}\) on \(E'\) can be estimated by

Moreover, intrinsic volumes are increasing on the class of convex sets, so

Together with the trivial estimate \(d_H(K,K_0)\le 2\rho \) and with the equality \({{\mathrm{diam}}}(E\cup \{0\})={{\mathrm{diam}}}(E'\cup \{0\})\), this yields the claim. \(\square \)

The authors of [4] argue that the exponent 1/2 in Proposition 2.1 is best possible.

3 Construction of the Estimator

In Sect. 3.1 below, we define the Voronoi tensor measures and show how the Minkowski tensors can be obtained from these. We then explain in Sect. 3.2 how the Voronoi tensor measures can be estimated from finite point samples. As a special case, we obtain estimators for all intrinsic volumes. This is detailed in Sect. 3.3.

3.1 The Voronoi Tensor Measures

Let K be a compact set. Here and in the following subsections, we let \(r,s\in \mathbb {N}_0\) and \(R\ge 0\). Define the \( \mathbb {T}^{r+s}\)-valued measures \(\mathcal {V}_{R}^{r,s}(K;\cdot )\) given on a Borel set \(A\subseteq \mathbb {R}^d\) by

When K is a smooth surface, \(\mathcal {V}_{R}^{0,2}(K;\cdot )\) corresponds to the Voronoi covariance measure in [21]. We will refer to the measures defined in (4) as the Voronoi tensor measures. Note that if \(f:\mathbb {R}^d \rightarrow \mathbb {R}\) is a bounded Borel function, then

Suppose now that K has positive reach with \({{\mathrm{Reach}}}(K)>R\). Then a special case of the generalized Steiner formula derived in [12] [or an extension of (3)] implies the following version of the local Steiner formula for the Voronoi tensor measures:

where \(A\subseteq {\mathbb R}^d\) is a Borel set. In particular, the total measure is

Note that the special case \(r=s=0\) is the Steiner formula (2) for sets with positive reach.

Equation (6), used for different parallel distances R, can be solved for the Minkowski tensors. More precisely, choosing \(d+1\) different values \(0<R_0<\cdots<R_d<{{\mathrm{Reach}}}(K)\) for R, we obtain a system of \(d+1\) linear equations:

Since the Vandermonde-type matrix

in (7) is invertible, the system can be solved for the tensors, and thus we get

If \(s>0\), then \({\varPhi }_{d}^{r,s}(K;A\times S^{d-1})=0\) by definition, so we may omit one of the equations in the system (7).

3.2 Estimation of Minkowski Tensors

Let K be a compact set of positive reach. Suppose that we are given a compact set \(K_0\) that is close to K in the Hausdorff metric. In the applications we have in mind, \(K_0\) is a finite subset of K, but this is not necessary for the algorithm to work. Based on \(K_0\), we want to estimate the local Minkowski tensors of K. We do this by approximating \(\mathcal {V}_{R_k}^{r,s}(K;A)\) in Formula (9) by \(\mathcal {V}_{R_k}^{r,s}(K_0;A)\), for \(k=0,\ldots ,d\) and \(A\subseteq \mathbb {R}^d\) a Borel set. This leads to the following set of estimators for \(\varPhi _k^{r,s}(K;A\times S^{d-1})\), \(k\in \{0,\ldots ,d\}\):

with \(A_{R_0,\ldots ,R_d}^{r,s}\) given by (8). Setting \(A=\mathbb {R}^d\) in (10), we obtain estimators

of the intrinsic volumes. Note that this approach requires an estimate for the reach of K because we need to choose \(0<R_0<\cdots<R_d <{{\mathrm{Reach}}}(K)\). The idea to invert the Steiner formula is not new. It was used in [4] to approximate curvature measures of sets of positive reach. In [16, 23] it was used to estimate intrinsic volumes but without proving convergence for the resulting estimator.

We now consider the case where \(K_0\) is finite. Let

denote the Voronoi cell of \(x\in K_0\) with respect to the set \(K_0\). Since \(\mathbb {R}^d\) is the union of the finitely many Voronoi cells of \(K_0\), it follows that \(K^R_0\) is the union of the R-bounded parts \(B(x,R)\cap V_x(K_0)\), \(x\in K_0\), of the Voronoi cells \(V_x(K_0)\), \(x\in K_0\), which have pairwise disjoint interiors. Thus (4) simplifies to

Like the Voronoi covariance measure, the Voronoi tensor measure \(\mathcal {V}_{R}^{r,s}(K_0;A)\) is a sum of simple contributions from the individual Voronoi cells.

An example of a Voronoi decomposition associated with a digital image is sketched in Fig. 2. The original set K is the disk bounded by the inner black circle, and the disk bounded by the outer black circle is its R-parallel set \(K^R\). The finite point sample is \(K_0 = K \cap \mathbb {Z}^2\), which is shown as the set of red dots in the picture, and the red curve is the boundary of its R-parallel set. The Voronoi cells of \(K_0\) are indicated by blue lines. The R-bounded part of one of the Voronoi cells is the part that is cut off by the red arc.

3.3 The Case of Intrinsic Volumes

Recall that \(\varPhi _k^{0,0}(K)=\varLambda _k(K;\mathbb {R}^d)\) is the kth intrinsic volume. Thus, Sect. 3.2 provides estimators for all intrinsic volumes as a special case. This case is particularly simple. The measure \(\mathcal {V}_{R}^{0,0}(K;A)\) is simply the volume of a local parallel set

In particular, if \(K\subseteq \mathbb {R}^d\) is a compact set with \({{\mathrm{Reach}}}(K)>R\), then (6) reduces to the usual local Steiner formula

and to the (global) Steiner formula (2) if \(A=\mathbb {R}^d\).

In this case, our algorithm approximates the parallel volume \(\mathcal {H}^d(K^R)\) by \(\mathcal {H}^d(K_0^R)\). In the example in Fig. 2, this corresponds to approximating the volume of the larger black disk by the volume of the region bounded by the red curve. This volume is again the sum of the volumes of the regions bounded by the red and blue curves. In other words, it is the sum of volumes of the R-bounded Voronoi cells on the right-hand side of the equation

3.4 Estimators for General Local Minkowski Tensors

In Sect. 3.2 we have only considered estimators for local tensors of the form \(\varPhi _k^{r,s}(K;A \times S^{d-1})\), where \(K\subseteq \mathbb {R}^d\) is a set with positive reach. The natural way to estimate \(\varPhi _k^{r,s}(K;B)\), for a measurable set \(B\subseteq \varSigma \), would be to copy the idea in Sect. 3.2 with \(\mathcal {V}_{R}^{r,s}(K;A)\) replaced by the following generalization of the Voronoi tensor measures,

where \(u_K(x) = ({x-p_K(x)})/{|x-p_K(x)|}\) estimates the normal direction. Of course, this definition works for any \(K\in \mathcal {C}^d\). Moreover, we could define estimators related to (12) whenever we have a set \(K_0\) which approximates K. However, even if K has positive reach, the map \(x\mapsto u_K(x)\) is not Lipschitz on \(K^R\backslash K\), and therefore the convergence results in Sect. 4 will not work with this definition. Since the map \(x\mapsto u_K(x)\) is Lipschitz on \(K^R\backslash K^{R/2}\), it is natural to proceed as follows. For any \(K\in \mathcal {C}^d\), we define

Note that

where \(\mathcal {W}_{R}^{r,s}(K;\cdot )\) is defined in (12). We will not use the notation \(\mathcal {W}_{R}^{r,s}(K;\cdot )\) in the following. If K has positive reach and \(0<R<\text {reach}(K)\), then the generalized Steiner formula yields

Again, choosing \(0<R_1<\cdots<R_d<\text {reach}(K)\), we can recover the Minkowski tensors from

where

is a regular matrix. Using this, we can define estimators for \({\varPhi }_{k}^{r,s}(K;B)\), for \(0\le k\le d-1\), by

where \(K_0\) is a compact set which approximates K. Convergence of these modified estimators will be discussed in Sect. 4.

The estimators \(\overline{\varPhi }_{k}^{r,s}\) can be used to approximate local tensors of the form \(\varPhi _k^{r,s}(K;B)\) where the set \(B\subseteq \varSigma \) involves normal directions. Thus, they are more general than \(\hat{\varPhi }_{k}^{r,s}\). However, (14) shows that estimating \(\overline{\mathcal {V}}_{R}^{r,s}(K;B)\) requires an approximation of two parallel sets, rather than one. We therefore expect more severe numerical errors for \(\overline{\varPhi }_{k}^{r,s}\).

4 Convergence Properties

In this section we prove the main convergence results. This is an immediate generalization of [21, Thm. 5.1].

4.1 The Convergence Theorem

For a bounded Lipschitz function \(f:\mathbb {R}^d \rightarrow \mathbb {R}\), we let \(|f|_\infty \) denote the usual supremum norm,

the Lipschitz semi-norm, and

the bounded Lipschitz norm. Let \(d_{bL} \) be the bounded Lipschitz metric on the space of bounded \(\mathbb {T}^p\)-valued Borel measures on \(\mathbb {R}^d\). For any two such measures \(\mu \) and \(\nu \) on \(\mathbb {R}^d\), the distance with respect to \(d_{bL}\) is defined by

where the supremum extends over all bounded Lipschitz functions \(f:\mathbb {R}^d\rightarrow \mathbb {R}\) with \( |f|_{bL} \le 1\). The following theorem shows that the map

is Hölder continuous with exponent \( \tfrac{1}{2}\) with respect to the Hausdorff metric on \(\mathcal {C}^d\) (restricted to compact subsets of a fixed ball) and the bounded Lipschitz metric. In the proof, we use the symmetric difference \(A\varDelta B=(A\setminus B)\cup (B\setminus A)\) of sets \(A,B\subseteq \mathbb {R}^d\).

Theorem 4.1

Let \(R,\rho >0\) and \(r,s\in {\mathbb N}_0\) be given. Then there is a positive constant \(C_2=C_2(d,R,\rho ,r,s)\) such that

for all compact sets \(K,K_0\subseteq B(0,\rho )\).

Proof

Let f with \(|f|_{bL} \le 1\) be given. Then (5) yields

where I is the integral

and

By [4, Cor. 4.4], there is a constant \(c_1=c_1(d,R,\rho )>0\) such that

when \(d_H(K,K_0)\le {R}/{2}\). Replacing \(c_1\) by a possibly even bigger constant, we can ensure that (16) also holds when \(R/2\le d_H(K,K_0)\le 2 \rho \). Hence,

with some constant \(c_2=c_2(d,R,\rho ,r,s)>0\).

Using the inequalities (and interpreting empty products as 1)

with \(m=r+s\) and the rank-one tensors

we get

Since we assumed that \(|f|_{bL}\le 1\), we get

The existence of the constant \(c_3=c_3(d,R,\rho ,r,s)\) in the last inequality is guaranteed by Proposition 2.1 with \(K^R \cap K_0^R\) as the set E, because this choice of E satisfies \({{\mathrm{diam}}}(E \cup \{0\})\le 2(\rho + R)\). \(\square \)

When \(r=s=0\) and \(f=1\), the above proof simplifies to Inequality (16) as I vanishes. Hence we obtain the following strengthening of the theorem, which is relevant for the estimation of intrinsic volumes.

Theorem 4.2

Let \(R,\rho >0\). Then there is a constant \(C_3=C_3(d,R,\rho )>0\) such that

for all compact sets \(K,K_0\subseteq B(0,\rho )\).

For local tensors, the proof of Theorem 4.1 can also be adapted to show a convergence result.

Theorem 4.3

Let \(r,s\in \mathbb {N}_0\) and \(R>0\). If \(K_i \rightarrow K\) with respect to the Hausdorff metric on \({\mathcal C}^d\), as \(i\rightarrow \infty \), then \(\mathcal {V}_{R}^{r,s}(K_i;A)\rightarrow \mathcal {V}_{R}^{r,s}(K;A)\) in the tensor norm, for every Borel set \(A\subseteq \mathbb {R}^d\) which satisfies

Proof

Convergence of tensors is equivalent to coordinate-wise convergence. Hence, it is enough to show that the coordinates satisfy

for all choices of indices \(i_1, \ldots , i_{r+s};\) see the notation at the beginning of Sect. 2.

We write \(T_K(x)=p_K(x)^r(x-p_K(x))^s\). Then

is a signed measure. Let \(T_K(x)_{i_1\ldots i_{r+s}}^+\) and \(T_K(x)_{i_1\ldots i_{r+s}}^-\) denote the positive and negative part of \(T_K(x)_{i_1\ldots i_{r+s}}\), respectively. Then

are non-negative measures such that

The proof of Theorem 4.1 can immediately be generalized to show that \(\mathcal {V}_{R}^{r,s}(K_i;\cdot )^{\pm }_{i_1\ldots i_{r+s}}\) converges to \(\mathcal {V}_{R}^{r,s}(K;\cdot )^{\pm }_{i_1\ldots i_{r+s}}\) in the bounded Lipschitz norm (as \(i\rightarrow \infty \)), and hence the measures converge weakly. In particular, they converge on every continuity set of \(\mathcal {V}_{R}^{r,s}(K;\cdot )^{\pm }_{i_1\ldots i_{r+s}}\). If \(\mathcal {H}^d(p_K^{-1}(\partial A)\cap K^R)=0\), then A is such a continuity set. \(\square \)

Remark 4.4

Though relatively mild, the condition \(\mathcal {H}^d(p_K^{-1}(\partial A)\cap K^R)=0\) can be hard to control if K is unknown. It is satisfied if, for instance, K and A are smooth and their boundaries intersect transversely. A special case of this is when K is a smooth surface and A is a small ball centered on the boundary of K. This is the case in the application from [21] that was described in the introduction. Examples where it is not satisfied are when \(A=K\) or when K is a polytope intersecting \(\partial A\) at a vertex.

Remark 4.5

Let \(f:\mathbb {R}^d\rightarrow \mathbb {R}\) be a bounded measurable function. We define

Hence \(\mathcal {V}_{R}^{r,s}(K;A)=\mathcal {V}_{R}^{r,s}(K;\mathbbm {1}_A)\) for every Borel set \(A\subseteq \mathbb {R}^d\). Then, Theorem 4.3 is equivalent to saying that, for all continuous test functions \(f:\mathbb {R}^d\rightarrow \mathbb {R}\),

in the tensor norm, whenever \(K_i \rightarrow K\) with respect to the Hausdorff metric on \({\mathcal C}^d\), as \(i\rightarrow \infty \). Thus, if one is interested in the local behaviour of \(\varPhi ^{r,s}_k(K; \cdot )\) at a neighborhood A, like in [21], then one can study

where f is a continuous function with support in A. This avoids the extra condition (20).

As the matrix \(A_{R_0,\ldots ,R_d}^{r,s}\) in the definition (10) of \(\hat{\varPhi }_k^{r,s}(K_0;A\times S^{d-1})\) does not depend on the set \(K_0\), the above results immediately yield a consistency result for the estimation of the Minkowski tensors. We formulate this only for \(A=\mathbb {R}^d\).

Corollary 4.6

Let \(\rho >0\) and K be a compact subset of \(B(0,\rho )\) of positive reach such that \(\mathrm {Reach}(K)>R_d>\cdots>R_0>0\). Let \(K_0\subseteq B(0,\rho )\) be a compact set. Then there is a constant \(C_4=C_4(d,R_0,\ldots ,R_d,\rho )\) such that

for all \(k\in \{0,\ldots ,d\}\).

For \(r,s\in {\mathbb N}_0\) there is a constant \(C_5=C_5(d,R_0,\ldots ,R_d,\rho ,r,s)\) such that

for all \(k\in \{0,\ldots ,d-1\}\).

Finally, we state the convergence results for the modified estimators for \(\varPhi _k^{r,s}(K;B)\), where \(B\subseteq \varSigma \) is a Borel set, that were defined in Sect. 3.4. The map \(x\mapsto {x}/{|x|}\) is Lipschitz on \(\mathbb {R}^d \backslash {{\mathrm{int}}}({B(0,{R}/{2})})\) with Lipschitz constant 4 / R, and therefore the mapping \(u_K\), which was defined after (12), satisfies

for \(x\in (K^R \backslash K^{R/2}) \cap (K_0^R \backslash K_0^{R/2})\). Moreover,

Using this, it is straightforward to generalize the proofs of Theorems 4.1 and 4.3 to obtain the following result.

Theorem 4.7

Let \(R,\rho >0\) and \(r,s\in {\mathbb N}_0\) be given. Then there is a positive constant \(C_6=C_6(d,R,\rho ,r,s)\) such that

for all compact sets \(K,K_0\subseteq B(0,\rho )\).

This in turn leads to the next convergence result.

Theorem 4.8

Let \(r,s\in \mathbb {N}_0\) and \(R>0\). If \(K,K_i\in \mathcal {C}^d\) are compact sets such that \(K_i\rightarrow K\) in the Hausdorff metric, as \(i\rightarrow \infty \), then \(\overline{\mathcal {V}}_{R}^{r,s}(K_i;B)\) converges to \(\overline{\mathcal {V}}_{R}^{r,s}(K;B)\) in the tensor norm, for any measurable set \(B\subseteq \varSigma \) satisfying

Here \(\partial B\) is the boundary of B as a subset of \(\varSigma \).

If B satisfies this condition and \(\text {Reach}(K)>R_d\), then

Remark 4.9

We can argue as in Remark 4.5 to see that if \(K,K_i\in \mathcal {C}^d\) are compact sets such that \(K_i\rightarrow K\) in the Hausdorff metric, as \(i\rightarrow \infty \), then

whenever \(g:\varSigma \rightarrow \mathbb {R}\) is a continuous test function and \(\overline{\mathcal {V}}_{R}^{r,s}(K;g)\) is defined similarly as before.

If K satisfies \(\text {Reach}(K)>R_d\), we get \(\overline{\varPhi }_{k}^{r,s}(K_i;g) \rightarrow {\varPhi _{k}^{r,s}(K;g)}\) as \(i\rightarrow \infty \).

5 Application to Digital Images

Our main motivation for this paper is the estimation of Minkowski tensors from digital images. Recall that we model a black-and-white digital image of \(K\subseteq \mathbb {R}^d\) as the set \(K\cap a\mathbb {L}\), where \(\mathbb {L}\subseteq \mathbb {R}^d\) is a fixed lattice and \(a>0\). We refer to [2] for basic information about lattices.

The lower dimensional parts of K are generally invisible in the digital image. When dealing with digital images, we will therefore always assume that the underlying set is topologically regular, which means that it is the closure of its own interior.

In digital stereology, the underlying object K is often assumed to belong to one of the following two set classes:

-

K is called \(\delta \) -regular if it is topologically regular and the reach of its closed complement \(\mathrm{cl}({\mathbb {R}^d \backslash K})\) and the reach of K itself are both at least \(\delta >0\). This is a kind of smoothness condition on the boundary, ensuring in particular that \(\partial K\) is a \(C^1\) manifold (see the discussion after [34, Def. 1]).

-

K is called polyconvex if it is a finite union of compact convex sets. While convex sets have infinite reach, note that polyconvex sets do generally not have positive reach. Also note that for a compact convex set \(K\subseteq \mathbb {R}^d\), the set \(\mathrm{cl}({\mathbb {R}^d \backslash K})\) need not have positive reach.

It should be observed that for a compact set \(K\subseteq \mathbb {R}^d\) both assumptions imply that the boundary of K is a \((d-1)\)-rectifiable set in the sense of [9] (i.e., \(\partial K\) is the image of a bounded subset of \(\mathbb {R}^{d-1}\) under a Lipschitz map), which is a much weaker property that will be sufficient for the analysis in Sect. 5.1.

5.1 The Volume Tensors

Simple and efficient estimators for the volume tensors \(\varPhi _d^{r,0}(K)\) of a (topologically regular) compact set K are already known and are usually based on the approximation of K by the union of all pixels (voxels) with midpoint in K. This leads to the estimator

where \(V_0(\mathbb {L})\) is the Voronoi cell of 0 in the Voronoi decomposition generated by \(\mathbb {L}\). This, in turn, can be approximated by

When \(r\in \{0,1\}\), we even have \({\phi }_d^{r,0}(K\cap a\mathbb {L})=\hat{\phi }_d^{r,0}(K\cap a\mathbb {L})\).

Choose \(C>0\) such that \(V_0(\mathbb {L}) \subseteq B(0,C)\). Then

In fact, if \(x\in [\bigcup _{z\in K\cap a\mathbb {L}} (z+aV_0(\mathbb {L}))]\setminus K\), then there is some \(z\in K\cap a\mathbb {L}\) such that \(x\in z+aV_0(\mathbb {L})\) and \(x\notin K\). Since \(z\in K\) and \(x\notin K\), we have \([x,z]\cap \partial K\ne \emptyset \). Moreover, \(x-z\in aV_0(\mathbb {L})\subseteq B(0,aC)\), and hence \(|x-z|\le aC\). This shows that \(x\in (\partial K)^{aC}\). Now assume that \(x\in K\) and \(x\notin (\partial K)^{aC}\). Then \(B(x,\rho )\subseteq K\) for some \(\rho >aC\). Since \(\bigcup _{z\in a\mathbb {L}}(z+aV_0(\mathbb {L}))=\mathbb {R}^d\), there is some \(z\in a\mathbb {L}\) such that \(x\in z+aV_0(\mathbb {L})\). Hence \(x-z\in aV_0(\mathbb {L})\subseteq B(0,aC)\). We conclude that \(z\in B(x,aC)\subseteq K\), therefore \(z\in K\cap a\mathbb {L}\) and thus \(x\in \bigcup _{z\in K\cap a\mathbb {L}} (z+aV_0(\mathbb {L}))\).

Hence

If \(\mathcal {H}^{d}(\partial K)=0\), then the integral on the right-hand side goes to zero by monotone convergence, so

If \(\partial K\) is \((d-1)\)-rectifiable in the sense of [9, Sect. 3.2.14], that is, \(\partial K\) is the image of a bounded subset of \(\mathbb {R}^{d-1}\) under a Lipschitz map, then \(\mathcal {H}^{d}(\partial K)=0\). Since \(\partial K\) is compact, [9, Thm. 3.2.39] implies that \(\lim _{a\rightarrow 0_+}\mathcal {H}^d((\partial K)^{ aC})/a \) exists and equals a fixed multiple of \(\mathcal {H}^{d-1}(\partial K)\) which is finite. Hence, (21) shows that the speed of convergence in (22) is O(a) as \(a\rightarrow 0_+\).

Inequality (18) yields that \(|x^r-z^{r}|\le aC r(|x|+aC)^{r-1}\) whenever \(x\in z+ aV_0(\mathbb {L})\) and \(r\ge 1\). Therefore,

which shows that

provided that \(\mathcal {H}^d(\partial K)=0\). If \(\partial K\) is \((d-1)\)-rectifiable, then the speed of convergence is of the order O(a).

Hence, we suggest to simply use the estimators \(\hat{\phi }_d^{r,0}(K\cap a\mathbb {L})\) for the volume tensors. This estimator can be computed much faster and more directly than \(\hat{\varPhi }_d^{r,0}(K\cap a\mathbb {L})\). Moreover, it does not require an estimate for the reach of K, and it converges for a much larger class of sets than those of positive reach.

5.2 Convergence for Digital Images

For the estimation of the remaining tensors we suggest to use the Voronoi tensor measures. Choosing \(K_0=K \cap a\mathbb {L}\) in (11), we obtain

where \(A\subseteq \mathbb {R}^d\) is a Borel set.

To show some convergence results in Corollary 5.2 below, we first note that the digital image converges to the original set in the Hausdorff metric.

Lemma 5.1

If K is compact and topologically regular, then

If K is \(\delta \)-regular, then \(d_H(K,K\cap a\mathbb {L})\) is of order O(a). The same holds if K is topologically regular and polyconvex.

Proof

Recall from [2, p. 311] that \( \mu (\mathbb {L})=\max _{x\in \mathbb {R}^d}\text {dist}(x,\mathbb {L}) \) is well defined and denotes the covering radius of \(\mathbb {L}\).

Let \(\varepsilon >0\) be given. Since K is compact, there are points \(x_1,\ldots ,x_m\in K\) such that

Using the fact that K is topologically regular, we conclude that there are points \(y_i\in \text {int}(K)\cap \text {int}(B(x_i,2\varepsilon ))\) for \(i=1,\ldots ,m\). Hence, there are \(\varepsilon _i\in (0,2\varepsilon )\) such that \( B(y_i,\varepsilon _i)\subseteq K\cap B(x_i,2\varepsilon )\) for \(i=1,\ldots ,m\). Let

Since \(\varepsilon _i/a>\mu (\mathbb {L})\) it follows that \(a\mathbb {L}\cap B(y_i,\varepsilon _i)\ne \emptyset \), for \(i=1,\ldots ,m\). Thus we can choose \(z_i\in a\mathbb {L}\cap B(y_i,\varepsilon _i)\subseteq a\mathbb {L}\cap K\) for \(i=1,\ldots ,m\). By the triangle inequality, we have \(|z_i-x_i|\le \varepsilon _i+2\varepsilon \le 4\varepsilon \), and hence \(x_i\in (K\cap a\mathbb {L})+B(0,4\varepsilon )\), for \(i=1,\ldots ,m\). Therefore, \(K\subseteq (K\cap a\mathbb {L}) +B(0,5\varepsilon )\) if \(a>0\) is sufficiently small.

Assume that K is \(\delta \)-regular, for some \(\delta >0\). We choose \(0<a<\delta /(2\mu (\mathbb {L}))\). Since \(a\mu (\mathbb {L})<\delta /2\), for any \(x\in K\) there is a ball \(B(y,a\mu (\mathbb {L}))\) of radius \(a\mu (\mathbb {L})\) such that \(x\in B(y,a\mu (\mathbb {L}))\subseteq K\). From \(a\mathbb {L}\cap B(y,a\mu (\mathbb {L}))\ne \emptyset \) we conclude that there is a point \(z\in K\cap a\mathbb {L}\) with \(|x-z|\le 2a\mu (\mathbb {L})\). Hence \(x\in (K\cap a\mathbb {L}) +B(0,2a\mu (\mathbb {L}))\), and therefore \(d_H(K,K\cap a\mathbb {L})\le 2a\mu (\mathbb {L})\).

Finally, we assume that K is topologically regular and polyconvex. Then K is the union of finitely many compact convex sets with interior points. Hence, for the proof we may assume that K is convex with \(B(0,\rho )\subseteq K\) for a fixed \(\rho >0\). Choose \(0<a<\rho /(2\mu (\mathbb {L}))\) and put \(r=2a\mu (\mathbb {L})<\rho \). If \(x\in K\), then \(B((1-r/\rho )x,r)\subseteq K\) and \(B((1-r/\rho )x,r)\) contains a point \(z\in a\mathbb {L}\). Since

we get

which completes the argument. \(\square \)

Thus Theorems 4.1 and 4.2 and Corollary 4.6 together with Lemma 5.1 yield the following result.

Corollary 5.2

If K is compact and topologically regular, then

If, in addition, K has positive reach, then

If K is \(\delta \)-regular or a topologically regular convex set, then the speed of convergence is O(a) when \(r=s=0\) and \(O(\sqrt{a})\) otherwise.

The property (24) means that \(\hat{\varPhi }^{r,s}_k(K\cap a\mathbb {L})\) is multigrid convergent for the class of sets of positive reach as defined in the introduction. A similar statement about local tensors, but without the speed of convergence, can be made. We omit this here.

5.3 Possible Refinements of the Algorithm for Digital Images

We first describe how the number of necessary radii \(R_0<R_1<\cdots <R_d\) in (10) can be reduced by one if \(s=0\) and \(A=\mathbb {R}^d\). Setting \(s=0\) and \(A=\mathbb {R}^d\) and subtracting \((r!)\varPhi _d^{r,0}(K)\) on both sides of (6) yields

As mentioned in Sect. 5.1, the volume tensor \(\varPhi _d^{r,0}(K)\) can be estimated by \(\hat{\phi }_d^{r,0}(K\cap a\mathbb {L})\). We may take \(\mathcal {V}_{R}^{r,0}(K\cap a\mathbb {L})-(r!)\hat{\phi }_d^{r,0}(K\cap a\mathbb {L})\) as an improved estimator for (25). This corresponds to replacing the integration domains \(B(x,R)\cap V_x(K\cap a\mathbb {L})\) in (23) by

This makes sense since \(V_x(a\mathbb {L})\) is likely to be contained in K while the left-hand side of (25) is an integral over \(K^R\backslash K\). The Minkowski tensors can now be isolated from only d equations of the form (25) with d different values of R.

We now suggest a slightly modified estimator for the Minkowski tensors satisfying the same convergence results as \(\hat{\varPhi }_k^{r,s}(K\cap a\mathbb {L})\) but where the number of summands in (23) is considerably reduced. As the volume tensors can easily be estimated with the estimators in Sect. 5.1, we focus on the tensors with \(k<d\).

Let K be a compact set. We define the Voronoi neighborhood \(N_\mathbb {L}(0)\) of 0 to be the set of points \(y\in \mathbb {L}\) such that the Voronoi cells \(V_0(\mathbb {L})\) and \(V_y(\mathbb {L})\) of 0 and y, respectively, have exactly one common \((d-1)\)-dimensional face. Similarly, for \(z\in \mathbb {L}\) the Voronoi neighborhood \(N_\mathbb {L}(z)\) of z is defined, and thus clearly \(N_\mathbb {L}(z)=z+N_\mathbb {L}(0)\). When \(\mathbb {L}\subset \mathbb {R}^2\) is the standard lattice, \(N_\mathbb {L}(z)\) consists of the four points in \(\mathbb {L}\) that are neighbors of z in the usual 4-neighborhood [24]. Define \(I(K\cap a\mathbb {L})\) to be the set of points \(z\in K\cap a\mathbb {L}\) such that \(N_{a\mathbb {L}}(z)\subseteq K\cap a\mathbb {L}\). The relative complement \(B(K\cap a\mathbb {L})=(K\cap a\mathbb {L})\setminus I(K\cap a\mathbb {L})\) of \(I(K\cap a\mathbb {L})\) can be considered as the set of lattice points in \(K\cap a\mathbb {L}\) that are close to the boundary of the given set K.

We modify (23) by removing contributions from \(I(K\cap a\mathbb {L})\) and define

Assuming that K has positive reach, let \(0<R_0<R_1<\ldots<R_d< \text {Reach}(K)\). We write again \(K_0\) for \(K\cap a\mathbb {L}\). Then we obtain the estimators

with \(A_{R_0,\ldots ,R_d}^{r,s}\) given by (8).

Working with \(\tilde{\mathcal {V}}_{R}^{r,s}(K\cap a\mathbb {L};A)\) reduces the workload considerably. For instance, when K is \(\delta \)-regular or polyconvex and topologically regular, the number of elements in \(I(K\cap a\mathbb {L})\) increases with \(a^{-d}\), whereas the number of elements in \(B(K \cap a\mathbb {L})\) only increases with \(a^{-(d-1)}\) as \(a\rightarrow 0_+\). The set \(I(K\cap a\mathbb {L})\) can be obtained from the digital image of K in linear time using a linear filter. Moreover, we have the following convergence result.

Proposition 5.3

Let K be a topologically regular compact set with positive reach and let C be such that \(V_0(\mathbb {L})\subseteq B(0,C)\). If A is a Borel set in \(\mathbb {R}^d\) and \(aC<R_0<R_1<\ldots<R_d<\mathrm {Reach}(K)\) and \(K_0=K\cap a\mathbb {L}\), then

for all \(k\in \{0,\ldots ,d-1\}\), whenever \(s=0\) or s is odd. If s is even and \(k\in \{0,\ldots ,d-1\}\), then

Proof

Let \(aC<R<\mathrm {Reach}(K)\). For \(x\in I(K\cap a\mathbb {L})\), we have

so the contribution of x to the sum in (23) is \((s!)x^r\varPhi ^{s,0}_d(V_{0}(a\mathbb {L}))\). It follows that

For odd s we have \(\varPhi ^{s,0}_d(V_{0}(a\mathbb {L}))=0\), so the claim follows. For \(s=0\) the right-hand side of (28) does not vanish, but it is independent of R. A combination of

with (28), (10) and (27) gives the claim.

For even \(s>0\), we have that \(\varPhi ^{s,0}_d(V_{0}(a\mathbb {L}))=a^{d+s}\varPhi ^{s,0}_d(V_{0}(\mathbb {L}))\), while

Therefore, the expression on the right-hand side of (28) converges to 0. \(\square \)

It should be noted that a similar modification for \(\overline{\varPhi }_k^{r,s}\) is not necessary. In fact the modified Voronoi tensor measure (13) with \(K=K_0\) has the advantage that small Voronoi cells that are completely contained in the \(R_0/2 \)-parallel set of \(K\cap a\mathbb {L}\) do not contribute. In particular, contributions from \(I(K\cap a\mathbb {L})\) are automatically ignored when a is sufficiently small.

6 Comparison to Known Estimators

Most existing estimators of intrinsic volumes [17, 19, 24] and Minkowski tensors [28, 30] are n-local for some \(n\in \mathbb {N}\). The idea is to look at all \(n\times \,\cdots \,\times n\) pixel blocks in the image and count how many times each of the \(2^{n^d}\) possible configurations of black and white points occur. Each configuration is weighted by an element of \(\mathbb {T}^{r+s}\) and \(\varPhi ^{r,s}_k(K)\) is estimated as a weighted sum of the configuration counts. It is known that estimators of this type for intrinsic volumes other than ordinary volume are not multigrid convergent, even when K is known to be a convex polytope; see [32]. It is not difficult to see that there cannot be a multigrid convergent n-local estimator for the (even rank) tensors \(\varPhi _k^{0,2s}(K)\) with \(k=0,\ldots ,d-1\), \(s\in \mathbb {N}\), for polytopes K, either. In fact, repeatedly taking the trace of such an estimator would lead to a multigrid convergent n-local estimator of the kth intrinsic volume, in contradiction to [32].

The algorithm presented in this paper is not n-local for any \(n\in \mathbb {N}\). It is required in the convergence proof that the parallel radius R is fixed while the resolution \(a^{-1}\) goes to infinity. The non-local operation in the definition of our estimator is the calculation of the Voronoi diagram. The computation time for Voronoi diagrams of k points is \(O(k\log k + k^{\lfloor d/2\rfloor })\), see [5], which is somewhat slower than n-local algorithms for which the computation time for k data points is O(k). The computation time can be improved by ignoring interior points as discussed in Sect. 5.3.

The idea to base digital estimators for intrinsic volumes on an inversion of the Steiner formula as in (9) has occurred before in [16, 23]. In both references, the authors define estimators for polyconvex sets which are not necessarily of positive reach. This more ambitious aim leads to problems with the convergence.

In [16], the authors use a version of the Steiner formula for polyconvex sets given in terms of the Schneider index, see [26]. Since its definition is, however, n-local in nature, the authors choose an n-local algorithm to estimate it. As already mentioned, such algorithms are not multigrid convergent.

In [23], it is used that the intrinsic volumes of a polyconvex set can, on the one hand, be approximated by those of a parallel set with small parallel radius, and on the other hand, the closed complement of this parallel set has positive reach, so that its intrinsic volumes can be computed via the Steiner formula. The authors employ a discretization of the parallel volumes of digital images, but without showing that the convergence is preserved.

It is likely that the ideas of the present paper combined with the ones of [23] could be used to construct multigrid convergent digital algorithms for polyconvex sets. The price for this is that the notion of convergence in [23] is slightly artificial for practical purposes, requiring very small parallel radii in order to get good approximations and at the same time large radii compared to resolution.

In [33], n-local algorithms based on grey-valued images are suggested. They are shown to converge to the true value when the resolution tends to infinity. However, they only apply to surface and certain mean curvature tensors. Moreover, they are hard to apply in practice, since they require detailed information about the underlying point spread function which specifies the representation of the object as grey-value image. If grey-value images are given, the algorithm of the present paper could be applied to thresholded images, but there may be more efficient ways to exploit the additional information of the grey-values.

References

Arns, C.A., Knackstedt, M.A., Mecke, K.R.: Characterising the morphology of disordered materials. In: Mecke, K.R., Stoyan, D. (eds.) Morphology of Condensed Matter. Lecture Notes in Physics, vol. 600, pp. 37–74. Springer, Berlin (2002)

Barvinok, A.: A Course in Convexity. American Mathematical Society, Providence, RI (2002)

Beisbart, C., Barbosa, M.S., Wagner, H., da Costa, L.F.: Extended morphometric analysis of neuronal cells with Minkowski valuations. Eur. Phys. J. B 52, 531–546 (2006)

Chazal, F., Cohen-Steiner, D., Mérigot, Q.: Boundary measures for geometric inference. Found. Comput. Math. 10, 221–240 (2010)

Chazelle, B.: An optimal convex hull algorithm in any fixed dimension. Discrete Comput. Geom. 10, 377–409 (1993)

Clarenz, U., Rumpf, M., Telea, A.: Robust feature detection and local classification for surfaces based on moment analysis. IEEE Trans. Vis. Comput. Graph. 10, 516–524 (2004)

Fremlin, D.H.: Skeletons and central sets. Proc. Lond. Math. Soc. 74, 701–720 (1997)

Federer, H.: Curvature measures. Trans. Am. Math. Soc. 93, 418–491 (1959)

Federer, H.: Geometric Measure Theory. Springer, Berlin (1969)

Hörrmann, J., Kousholt, A.: Reconstruction of convex bodies from moments. arXiv:1605.06362v1 (2016)

Hug, D., Schneider, R.: Local tensor valuations. Geom. Funct. Anal. 24, 1516–1564 (2014)

Hug, D., Last, G., Weil, W.: A local Steiner-type formula for general closed sets and applications. Math. Z. 246, 237–272 (2004)

Hug, D., Schneider, R., Schuster, R.: Integral geometry of tensor valuations. Adv. Appl. Math. 41, 482–509 (2008)

Kapfer, S.C., Mickel, W., Schaller, F.M., Spanner, M., Goll, C., Nogawa, T., Ito, N., Mecke, K., Schröder-Turk, G.E.: Local anisotropy of fluids using Minkowski tensors. J. Stat. Mech. Theory Exp. 2010, P11010 (2010)

Kiderlen, M., Vedel Jensen, E.B.V. (eds.): Tensor Valuations and Their Applications in Stochastic Geometry and Imaging. Lecture Notes in Mathematics, vol. 2177. Springer, Berlin (2017)

Klenk, S., Schmidt, V., Spodarev, E.: A new algorithmic approach to the computation of Minkowski functionals of polyconvex sets. Comput. Geom. 34, 127–148 (2006)

Klette, R., Rosenfeld, A.: Digital Geometry. Elsevier, San Francisco (2004)

Kousholt, A., Kiderlen, M.: Reconstruction of convex bodies from surface tensors. Adv. Appl. Math. 76, 1–33 (2016)

Lindblad, J.: Surface area estimation of digitized 3D objects using weighted local configurations. Image Vis. Comput. 23, 111–122 (2005)

McMullen, P.: Isometry covariant valuations on convex bodies. Rend. Circ. Mat. Palermo 2(Suppl. 50), 259–271 (1997)

Mérigot, Q., Ovsjanikov, M., Guibas, L.: Voronoi-based curvature and feature estimation from point clouds. IEEE Trans. Vis. Comput. Graph. 17, 743–756 (2010)

Miles, R.E., Serra, J. (eds.): Geometrical Probability and Biological Structures: Buffons 200th Anniversary (Proceedings Paris, 1977). Lecture Notes in Biomath, vol. 23, Springer, Berlin (1978)

Mrkvička, T., Rataj, J.: On the estimation of intrinsic volume densities of stationary random closed sets. Stochastic Process. Appl. 118, 213–231 (2008)

Ohser, J., Mücklich, F.: Statistical Analysis of Microstructures. Wiley, Chichester (2000)

Ohser, J., Schladitz, K.: 3D Images of Materials Structures: Processing and Analysis. Wiley-VCH, Weinheim (2009)

Schneider, R.: Convex Bodies: The Brunn–Minkowski Theory, 2nd edn. Cambridge University Press, Cambridge (2014)

Schneider, R., Schuster, R.: Tensor valuations on convex bodies and integral geometry. II. Rend. Circ. Mat. Palermo 2(Suppl. 70), 295–314 (2002)

Schröder-Turk, G.E., Kapfer, S.C., Breidenbach, B., Beisbart, C., Mecke, K.: Tensorial Minkowski functionals and anisotropy measures for planar patterns. J. Microsc. 238, 57–74 (2008)

Schröder-Turk, G.E., Mickel, W., Schröter, M., Delaney, G.W., Saadatfar, M., Senden, T.J., Mecke, K., Aste, T.: Disordered spherical bead packs are anisotropic. Europhys. Lett. 90, 34001 (2010)

Schröder-Turk, G.E., Mickel, W., Kapfer, S.C., Klatt, M.A., Schaller, F.M., Hoffmann, M.J., Kleppmann, N., Armstrong, P., Inayat, A., Hug, D., Reichelsdorfer, M., Peukert, W., Schwieger, W., Mecke, K.: Minkowski tensor shape analysis of cellular, granular and porous structures. Adv. Mater. 23, 2535–2553 (2011)

Schröder-Turk, G.E., Mickel, W., Kapfer, S.C., Schaller, F.M., Breidenbach, B., Hug, D., Mecke, K.: Minkowski tensors of anisotropic spatial structure. New J. Phys. 15, 083028 (2013)

Svane, A.M.: On multigrid convergence of local algorithms for intrinsic volumes. J. Math. Imaging Vis. 49, 352–376 (2014)

Svane, A.M.: Estimation of Minkowski tensors from digital grey-scale images. Image Anal. Stereol. 34, 51–61 (2015)

Svane, A.M.: Local digital algorithms for estimating the integrated mean curvature of \(r\)-regular sets. Discrete Comput. Geom. 54, 316–338 (2015)

Zähle, M.: Integral and current representation of Federer’s curvature measures. Arch. Math. (Basel) 46, 557–567 (1986)

Ziegel, J.F., Nyengaard, J.R., Jensen, E.B.V.: Estimating particle shape and orientation using volume tensors. Scand. J. Stat. 42, 813–831 (2015)

Acknowledgements

We wish to thank the referees for carefully reading the paper and making helpful suggestions for improvements. The first author was supported in part by DFG grants FOR 1548 and HU 1874/4-2. The third author was supported by a grant from the Carlsberg Foundation. The second and third authors were supported by the Centre for Stochastic Geometry and Advanced Bioimaging, funded by the Villum Foundation.

Author information

Authors and Affiliations

Corresponding author

Additional information

Editor in Charge: Kenneth Clarkson

Rights and permissions

About this article

Cite this article

Hug, D., Kiderlen, M. & Svane, A.M. Voronoi-Based Estimation of Minkowski Tensors from Finite Point Samples. Discrete Comput Geom 57, 545–570 (2017). https://doi.org/10.1007/s00454-016-9851-x

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00454-016-9851-x