Abstract

We prove a quantitative version of the multi-colored Motzkin–Rabin theorem in the spirit of Barak et al. (Proceedings of the National Academy of Sciences, 2012): Let \(V_1,\ldots ,V_n \subset {\mathbb {R}}^d\) be \(n\) disjoint sets of points (of \(n\) ‘colors’). Suppose that for every \(V_i\) and every point \(v \in V_i\) there are at least \(\delta |V_i|\) other points \(u \in V_i\) so that the line connecting \(v\) and \(u\) contains a third point of another color. Then the union of the points in all \(n\) sets is contained in a subspace of dimension bounded by a function of \(n\) and \(\delta \) alone.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The Motzkin–Rabin (MR) theorem (see [3]) states that in a non-collinear set of points in the Euclidean plane, each colored blue or red, there always exists a monochromatic line (a line passing through at least two points and all points on the line are of the same color). Another way to state this theorem uses the following definition which we shall later generalize.

Definition 1.1

(MR configuration) Let \(V_1,V_2 \subset {\mathbb {R}}^2\) be disjoint, finite sets of points in the plane. The pair \(V_1,V_2\) is called an MR-configuration if every line \(L\) with \(|L \cap (V_1 \cup V_2)| \ge 2\) must intersect both sets \(V_1\) and \(V_2\).

The Motzkin–Rabin theorem can now be stated equivalently as:

Theorem 1.2

(Motzkin–Rabin Theorem) Let \(V_1,V_2 \subset {\mathbb {R}}^2\) be an MR-configuration. Then all points in \(V_1 \cup V_2\) must belong to a single line.

It is easy to see that one can replace \({\mathbb {R}}^2\) with \({\mathbb {R}}^d\) and that the theorem will still hold in this case (take a generic projection to the plane). This theorem answers a question first raised by Graham [7]. Theorem 1.2 was discovered independently by Motzkin [8] and Rabin (cf. Chakerian [5]. See [4, p. 306] for more references and history).

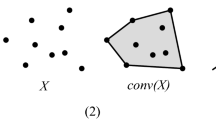

We will denote by \(\mathsf adim (S)\) the dimension of the affine span (the smallest affine subspace containing the points) of a point set \(S \subset {\mathbb {R}}^d\) and for a family of sets \(S_1,\ldots ,S_r\) we will write \(\mathsf adim (S_1,\ldots ,S_r) = \mathsf adim (S_1 \cup \cdots \cup S_r)\). Then, the conclusion of the MR theorem, namely all points in \(V_1,V_2\) being on a line, can be stated as \(\mathsf adim (V_1,V_2) \le 1\). Hence, we can view the MR theorem as converting partial information about collinearity in the sets \(V_1,V_2\) (the line through every pair of points of the same color contains a third point of a different color) into a global bound on the dimension of the entire configuration. A closely related theorem is the Sylvester–Gallai theorem which is a ‘one color’ version of the MR theorem: in every non-collinear set of points there is a line containing only two of the points.

Shannon [9] (see also [2]) proved an \(n\)-color variant of this theorem showing that if a family of \(n\) sets \(V_1,\ldots ,V_n\) spans \({\mathbb {R}}^n\) then they must define at least one monochromatic line. In this work we extend this result to the setting where the information about collinearities is only given for many of the lines passing through two points of the same color. To be precise we will give the following definition:

Definition 1.3

\((\delta ,n)\)-MR configuration) Let \(V_1, V_2, \ldots , V_n\) be disjoint sets of points in \({\mathbb {R}}^d\), and let \(V = V_1 \cup V_2 \cup \cdots \cup V_n\). We say that \(V_1, V_2, \ldots , V_n\) is a \((\delta , n)\)-MR configuration if for each \(V_i\) and for each \(v \in V_i\), there are at least \(\delta |V_i|\) points \(u \in V_i{\setminus } \{v\}\) for which the line determined by \(v\) and \(u\) contains a third point in \(V {\setminus } V_i\). For convenience we will always assume that \(|V_1| \ge |V_2| \ge \cdots \ge |V_n|\).

Our main theorem gives a dimension bound for \((\delta ,n)\)-MR configuration that depends only on \(n\) and \(\delta \). We do not believe our bound to be tight and conjecture that a bound of \(\mathsf poly (n/\delta )\) holds in general.

Theorem 1.4

(Main theorem) Let \(V = V_1, V_2, \ldots , V_n \subset {\mathbb {R}}^d\) be a \((\delta ,n)\)-MR configuration. Then, for any \(0 < \varepsilon < \delta \) we have

with \(C>0\) an absolute constant.Footnote 1

Theorem 1.4 is a multi-colored version of recent results of [1, 6], which give a similar ‘\(\delta \)-version’ of the Sylvester–Gallai theorem ([1] also establishes the \(n=2\) case of Theorem 1.4). In fact, our proof uses one of the main results of [1, 6] as its principal tool. This result, given below as Theorem 2.2, gives a lower bound on the rank of matrices whose pattern of zeros and non-zeros satisfies a certain ‘design-like’ condition. As the results of [1, 6] work also over the complex numbers, our results (in particular, Theorem 1.4) hold also when one replaces \({\mathbb {R}}^d\) with \({\mathbb {C}}^d\) (with the same bounds).

In the next section we state some preliminaries from [1, 6] that will be used in the proof of Theorem 1.4. The proof itself is given in Sect. 3.

2 Preliminaries

The main tool in the proof is a rank lower bound for design-matrices defined in [1]. For a vector \(R \in {\mathbb {F}}^n\) we denote the support of \(R\) by \(\mathsf supp (R) = \{ i \in [n]|R_i \ne 0 \}\).

Definition 2.1

(Design matrix) Let \(A\) be an \(m \times n\) matrix over a field \({\mathbb {F}}\). Let \(R_1,\ldots ,R_m \in {\mathbb {F}}^n\) be the rows of \(A\) and let \(C_1,\ldots ,C_n \in {\mathbb {F}}^m\) be the columns of \(A\). We say that \(A\) is a \((q,k,t)\)-design matrix if the following three conditions are satisfied:

-

1.

For all \(i \in [m], |\mathsf supp (R_i)| \le q\).

-

2.

For all \(j \in [n], |\mathsf supp (C_j)| \ge k\).

-

3.

For all \(j_1 \ne j_2 \in [n], |\mathsf supp (C_{j_1}) \cap \mathsf supp (C_{j_2}) | \le t\).

The following is a quantitative improvement of a bound originally proved in [1].

Theorem 2.2

[6] Let \(A\) by an \(m \times n\) complex matrix. If \(A\) is a \((q,k,t)\) design matrix then

Another lemma we will use is the following lemma whose proof is a simple consequence of the existence of diagonal Latin squares.

Lemma 2.3

[1, Lemma 2.1] Let \(r \ge 3\). Then there exists a set \(T \subset [r]^3\) of \(r^2 - r\) triples that satisfies the following properties.

-

1.

Each triple \((t_1, t_2, t_3) \in T\) consists of three distinct elements.

-

2.

For each \(i \in [r]\) there are exactly \(3(r - 1)\) triples in \(T\) that contain \(i\) as an element.

-

3.

For every pair \(i, j \in [r]\) of distinct elements there are at most 6 triples in \(T\) which contain both \(i\) and \(j\) as elements.

3 Proof of the Main Theorem

Before giving the proof of Theorem 1.4 we prove some useful lemmas. The first is the technical heart of the proof and its proof utilizes the rank bound for design matrices (Theorem 2.2). In the following we will denote by \(\dim (S)\) the dimension of the subspace spanned by a set \(S\). Notice that, since \(\mathsf adim (S) \le \dim (S)\), we can bound \(\dim (V)\) instead of \(\mathsf adim (V)\).

Lemma 3.1

Let \(V = \bigcup _{i=1}^n V_i\) be a \((\delta , n)\)-MR configuration in \({\mathbb {R}}^d\). Let \(x, y\) be indices with \(0 \le x < y \le n\). Let \(P_1 = \bigcup _{i=1}^x V_i\), let \(P_2 = \bigcup _{i=x+1}^y V_i\), and let \(P_3 = \bigcup _{i=y+1}^n V_i (P_1\) and \(P_3\) might be empty if \(x=0\) or \(y=n\)). Suppose that for some constants \(c_1, c_2 > 0\) the following two inequalities hold:

Then \(\dim (P_2) \le \dim (P_1) + 12/(c_1 c_2)\).

Proof

We start by noting that, since \(|V_1| \ge |V_2| \ge \cdots \ge |V_n|\), inequalities (1) and (2) in the lemma statement, \(|V_y| \ge c_1|P_2|\) and \((\delta - c_2)|V_y| \ge |P_3|\), also hold when \(|V_y|\) is replaced with \(|V_i|\), for \(i < y\).

We will call a line \(L\) extraordinary with respect to the configuration \(V\) if (1) \(L\) passes through at least one point of \(P_2\) and (2) \(L\) passes through at least three points of \(P_1 \cup P_2\). We will refer to the points of \(P_1 \cup P_2\) that lie on some extraordinary line \(L\) as the points associated with \(L\) (such a line \(L\) might contain additional points from \(P_3\) which are not associated with it).

Let \(L_1, L_2, \ldots , L_k\) be an enumeration of the extraordinary lines of our configuration and let \(\ell _i\) denote the number of points associated with \(L_i\), for \(1 \le i \le k\).

For each extraordinary line \(L_i\) we construct, using Lemma 2.3, a set \(T_i\) of \(\ell _i^2 - \ell _i\) triples of points so that (1) each triple in \(T_i\) consists of three distinct points associated with \(L_i\); (2) for any point \(v\) associated with \(L_i\), there are exactly \(3(\ell _i - 1)\) triples in \(T_i\) that contain \(v\); and (3) for any two points \(u \ne v\) associated with \(L_i\), there are at most 6 triples in \(T_i\) that contain both \(u\) and \(v\). Let

Next, let \(m = |V|\) and let \(M\) be the \(m \times d\) matrix whose rows are defined by the points of \(V\) (in some choice of coordinates for \({\mathbb {R}}^d\)). We will now define a matrix \(A\) that will satisfy \(A\cdot M = 0\). Each triple in \(T\) will correspond to one row of \(A\). Every triple \(t = (t_1, t_2, t_3) \in T\) consists of three distinct points in \(P_1 \cup P_2\) that are collinear. Since they are collinear, there are coefficients \(h_1, h_2, h_3\), not all zero, such that

(treating the points as vectors). We set the t’th row of \(A\) to have entries \(h_1,h_2,h_3\) in the positions corresponding to the three points \(t_1,t_2,t_3\) (we can do that since the columns of \(A\) are indexed by \(V\)) and zero elsewhere. Observe that \(A\) is a \(|T| \times m\) matrix, since there is a bijection between the elements of \(T\) and the rows of \(A\). Since the product of any row of \(A\) with \(M\) is \(0\), we must also have that

There is a bijection between the rows of the matrix \(M\) and the points in the set \(V\). Therefore, any subset of the set \(V\) corresponds to a submatrix of the matrix \(M\), obtained by taking only those rows that correspond to the points in the subset. Let \(M_1\) denote the submatrix of \(M\) corresponding to the point set \(P_1\), and likewise let \(M_2\) and \(M_3\) be the submatrices corresponding to \(P_2\) and \(P_3\). Let \(A_1\) be the submatrix of \(A\) obtained by taking those columns of \(A\) whose indices match the indices of the rows of \(M_1\) (that is, with indices corresponding to elements of \(P_1\)). Define \(A_2\) and \(A_3\) analogously (with columns in \(P_2\) and \(P_3\) respectively). Observe that \(A_1M_1, A_2M_2,\) and \(A_3M_3\) are all valid matrix products, and that

From the definition of the matrix \(A\) we have that the column corresponding to any given point in \(P_3\) contains only \(0\)’s; therefore \(A_3 = 0\), and so \(A_3M_3 = 0\). Hence \(A_1M_1 + A_2M_2 = 0\) which gives

(If \(|P_1|\) is empty we get \(A_2M_2 = 0\) and the rest of the proof is the same.)

We now claim that:

Claim 3.2

\(A_2\) is a \((3, 3c_1c_2|P_2|, 6)\)-design matrix.

Proof

By the construction of \(A\) each row contains at most three non-zero terms. Since \(A_2\) is a submatrix of \(A\), each row of \(A_2\) can contain at most three non-zero terms. Similarly, by the construction of \(A\), any two columns can share at most six non-zero locations; and again this holds for \(A_2\) as well. Finally, we claim that each column of \(A_2\) contains at least \(3c_1c_2|P_2|\) non-zero entries.

Consider a column \(C\) of \(A_2\). This column corresponds to a point \(p\) in \(P_2\). The number of non-zero entries of \(C\) is exactly equal to the number of triples in \(T\) that contain the point \(p\). Suppose that \(p \in V_i \subset P_2\) for some \(i\). We claim that there must be at least \(\delta |V_i| - |P_3|\) points \(q \ne p\) that lie on extraordinary lines through \(p\). Observe that this quantity is at least \(c_2|V_i|\) by inequality (2). Indeed, there are at least \(\delta |V_i|\) points \(q \ne p\) in \(V_i\), for which the line through \(q, p\) contains a point from some \(V_j\), with \(j \not = i\), because the configuration is \((\delta , n)\)-MR. Let us denote this set of at least \(\delta |V_i|\) points by \(S\). For each point \(q\) in \(S\), either the line through \(q, p\) contains a third point from \(P_1 \cup P_2\), and is therefore an extraordinary line, or (1) it contains no other points from \(P_1 \cup P_2\), and (2) it contains some point \(r\) from \(P_3\).

Thus, each point \(q \in S\) that is not associated with any of the extraordinary lines passing through \(p\) corresponds to some point \(r \in P_3\). Since no two \(q_1 \ne q_2 \in S\) can correspond to the same \(r\), at most \(|P_3|\) of the points in \(S\) are not associated with any of the extraordinary lines passing through \(p\). Thus, the remaining \(\delta |V_i| - |P_3|\) points are associated with one of the extraordinary lines passing through \(p\).

Now, if a given extraordinary line \(L\) passes through \(p\), and if there are \(\ell \) points associated with \(L\) besides \(p\), then that line contributes \(3\ell \) triples to \(T\) that contain \(p\). Therefore, since we showed that there are at least \(c_1 c_2 |P_2|\) points other than \(p\) that lie on the extraordinary lines passing through \(p\), there must be at least \(3c_1 c_2|P_2|\) triples in \(T\) that contain \(p\).

We conclude that that the point \(p \in V_i\) is in at least \(3c_2|V_i|\) triples; and since \(|V_i| \ge c_1|P_2|\) (by inequality (1)), this quantity is at least \(3c_1c_2|P_2|\) such points and so \(A_2\) is indeed a \((3, 3c_1c_2|P_2|, 6)\) design matrix as claimed. \(\square \)

Applying Theorem 2.2 we have that

Now, using basic linear algebra, we get that

Using Eq. (3) we immediately get

which implies \(\dim (P_2) \le \dim (P_1) + 12/(c_1c_2)\) as was required. This completes the proof of Lemma 3.1. \(\square \)

To state the next lemma we will need the following definition.

Definition 3.3

(\(\varepsilon \)-Large and \(\varepsilon \)-small indices) Let \(V_1,\ldots ,V_n \subset {\mathbb {R}}^d\) be a \((\delta ,n)\)-MR configuration and let \(c_\varepsilon = 1/(\delta -\varepsilon )\) with \(0 < \varepsilon < \delta \) some real number. We call an index \(k \in [n]\) an \(\varepsilon \)-large index if

otherwise we say that \(k\) is \(\varepsilon \)-small. By convention, we say that \(n\) is always \(\varepsilon \)-large.

Lemma 3.4

Let \(V_1, V_2, \ldots , V_n \subset {\mathbb {R}}^d\) be a \((\delta , n)\)-MR configuration, and suppose \(x\) and \(y\) are integers with \(0 \le x < y \le n\) such that \(y\) is an \(\varepsilon \)-large index, and each of the indices \(x + 1, x + 2, \ldots , y - 2, y - 1\) is \(\varepsilon \)-small. Then, for each \(i\) with \(0 \le i \le y - x - 1\) we have

Proof

We will prove the lemma by induction on \(i\). To prove the base case, \(i = 0\), we need to show that

Since \(y\) is an \(\varepsilon \)-large index, we have

and so \(\sum _{j > y} |V_j| \le 1/c_\varepsilon |V_y|.\) By adding \(|V_y|\) to both sides we immediately have that

which gives the desired bound since \(c_\varepsilon >1\) and so \(1 + 1/c_\varepsilon < 2\).

Now suppose the claim holds for \(i = k\). We wish to show that it also holds for \(i = k + 1\), assuming that \(k + 1 \le y - x - 1\). From the induction we have that

We also know that \(y- (k + 1)\) is an \(\varepsilon \)-small index, so

Substituting the first inequality into the second gives

Then adding this inequality to the first inequality yields the desired result. \(\square \)

Corollary 3.5

Under the same notations and conditions as Lemma 3.4, we have

Proof

Apply Lemma 3.4 with \(i = y - x - 1\) to get that

hence

and the corollary follows. \(\square \)

3.1 Proof of Theorem 1.4

Let \(d_1 < d_2 < \cdots < d_k=n\) be the \(\varepsilon \)-large indices determined by \(V\) (see Definition 3.3) and let us define \(d_0 = 0\). We define

etc. for \(1 \le i \le k\). Let

for \(1 \le i \le k\) and set \(m_0 = 0\).

Consider \(W_i\) for some \(1 \le i \le k\). Since \(d_i\) is an \(\varepsilon \)-large index, we have that

with \(c_\varepsilon = 1/(\delta - \varepsilon )\). Hence

Furthermore, each of \(d_{i - 1} + 1, d_{i - 1} + 2, \ldots , d_i - 2, d_i - 1\) are \(\varepsilon \)-small indices, so by Corollary 3.5 we have

Therefore our configuration satisfies the conditions of Lemma 3.1, with \(x = d_{i - 1}, y = d_i, c_1 = \frac{1}{2(1 + c_\varepsilon )^{d_i - d_{i - 1} - 1}}\), and \(c_2 = \varepsilon \). For these values of \(x\) and \(y\), the set \(P_1\) defined in the lemma equals \(W_1 \cup W_2 \cup \cdots \cup W_{i - 1}\), and the set \(P_2\) equals \(W_i\). Therefore we get that

Now, since \(m_i \le m_{i - 1} + \dim (W_i)\), we have that

Claim 3.6

For all \(0 \le i \le k\) we have

Proof

We prove the claim by induction on \(i\). The base case, \(i = 0\), holds since \(m_0 = 0\). Suppose the claim holds for \(i = h\) and consider the case \(i = h + 1\). By induction we have that

We also showed that

Substituting the first inequality into the second we find that

which gives the desired result. \(\square \)

Using the claim for \(i=k\) we get

Observe that for all \(j, d_j - d_{j - 1} \le n - k + 1\). This follows from the fact that the \(d_j\) are strictly increasing, \(d_0 = 0\), and \(d_k = n\). Therefore, the summand \(2^{k - j}(1 + c_\varepsilon )^{d_j - d_{j - 1} - 1}\) is at most \(2^{k - j}(1 + c_\varepsilon )^{n - k}\), which in turn is at most \((1 + c_\varepsilon )^n \cdot \big (\frac{2}{1 + c_\varepsilon }\big )^k\). Adding these together we get that

Observe that, since \(c_\varepsilon = 1/(\delta - \varepsilon ) > 1/(1-\varepsilon ) > 1+ \varepsilon \), we have \(2/(1+c_\varepsilon ) < 2/(2+\varepsilon )\) and so we get that

The expression \( \frac{24\cdot k}{\varepsilon } \big (\frac{2}{2+\varepsilon }\big )^k\) is maximized when \(k = -\frac{1}{\ln (2/(2+\varepsilon ))} = O(1/\varepsilon )\) and so we get

For some absolute constant \(C\). Since \(m_k = \dim (W_1 \cup W_2 \cup \cdots \cup W_n) = \dim (V_1,\ldots ,V_n)\), the proof of the theorem is complete.

Notes

One could set \(\varepsilon = \delta /2\) to get a simpler (but worse, in some cases) bound.

References

Barak, B., Dvir, Z., Wigderson, A., Yehudayoff, A.: Fractional Sylvester–Gallai theorems. Proc. Natl. Acad. Sci. USA (2012)

Borwein, P.: On monochrome lines and hyperplanes. J. Comb. Theory Ser. A 33, 76–81 (1982)

Borwein, P., Moser, W.O.J.: A survey of Sylvester’s problem and its generalizations. Aequ. Math. 40(1), 111–135 (1990)

Brass, P., Moser, W.O.J., Pach, J.: Research Problems in Discrete Geometry. Springer, New York (2005)

Chakerian, G.D.: Sylvester’s problem on collinear points and a relative. Am. Math. Mon. 77, 164–167 (1970)

Dvir, Z., Saraf, S., Wigderson, A.: Improved rank bounds for design matrices and a new proof of Kelly’s theorem. Forum Math. Sigma (2014). doi:10.1017/fms.2014.2

Grünbaum, B.: Monochromatic intersection points in families of colored lines. Geombinatorics 9, 3–9 (1999)

Motzkin, T.S.: Nonmixed connecting lines, Abstract 67T 605. Notices Am. Math. Soc. 14, 837 (1967)

Shannon, R.W.: Certain extremal problems in arrangements of hyperplanes. Ph.D. Thesis, University of Washington, Seattle (1974)

Acknowledgments

Research partially supported by NSF Grants CCF-0832797, CCF-1217416 and by the Sloan fellowship

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Dvir, Z., Tessier-Lavigne, C. A Quantitative Variant of the Multi-colored Motzkin–Rabin Theorem. Discrete Comput Geom 53, 38–47 (2015). https://doi.org/10.1007/s00454-014-9647-9

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00454-014-9647-9