Abstract

Consider an infinite planar graph with uniform polynomial growth of degree \(d > 2\). Many examples of such graphs exhibit similar geometric and spectral properties, and it has been conjectured that this is necessary. We present a family of counterexamples. In particular, we show that for every rational \(d > 2\), there is a planar graph with uniform polynomial growth of degree d on which the random walk is transient, disproving a conjecture of Benjamini (Coarse Geometry and Randomness, Volume 2100 of Lecture Notes in Mathematics. Springer, Cham, 2011). By a well-known theorem of Benjamini and Schramm, such a graph cannot be a unimodular random graph. We also give examples of unimodular random planar graphs of uniform polynomial growth with unexpected properties. For instance, graphs of (almost sure) uniform polynomial growth of every rational degree \(d > 2\) for which the speed exponent of the walk is larger than 1/d, and in which the complements of all balls are connected. This resolves negatively two questions of Benjamini and Papasoglou (Proc Am Math Soc 139(11):4105–4111, 2011).

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Say that a graph G has uniform polynomial growth of degree d if the cardinality of all balls of radius r in the graph metric lie between \(c r^d\) and \(C r^d\) for two absolute constants \(C> c > 0\), for every \(r > 0\). Say that a graph has nearly-uniform polynomial growth of degree d if the cardinality of balls is trapped between \((\log r)^{-C} r^d\) and \((\log r)^C r^d\) for some universal constant \(C \geqslant 1\).

Planar graphs of uniform (or nearly-uniform) polynomial volume growth of degree \(d > 2\) arise in a number of contexts. In particular, they appear in the study of random triangulations in 2D quantum gravity [1] and as combinatorial approximations to the boundaries of 3-dimensional hyperbolic groups in geometric group theory (see, e.g., [8]).

When the dimension of volume growth disagrees with the topological dimension, one sometimes witnesses certain geometrically or spectrally degenerate behaviors. For instance, it is known that random planar triangulations of the 2-sphere have nearly-uniform polynomial volume growth of degree 4 (in an appropriate statistical, asymptotic sense) [3]. The distributional limit (see Section 1.1.1) of such graphs is called the uniform infinite planar triangulation (UIPT). But this 4-dimensional volume growth does not come with 4-dimensional isoperimetry: With high probability, a ball in the UIPT of radius r about a vertex v can be separated from the complement of a 2r ball about v by removing a set of size O(r). And, indeed, Benjamini and Papasoglu [9] showed that this phenomenon holds generally: such annular separators of size O(r) exist in all planar graphs with uniform polynomial volume growth.

Similarly, it is known that diffusion on the UIPT is anomalous. Specifically, the random walk on the UIPT is almost surely subdiffusive. In other words, if \(\{X_t\}\) is the random walk and \(d_G\) denotes the graph metric, then \({{\,\mathrm{{\mathbb {E}}}\,}}d_G(X_0,X_t) \leqslant t^{1/2-\varepsilon }\) for some \(\varepsilon > 0\). This was established by Benjamini and Curien [6]. In [14], it is shown that on any unimodular random planar graph with nearly-uniform polynomial growth of degree \(d > 3\) (in a suitable statistical sense), the random walk is subdiffusive. So again, a disagreement between the dimension of volume growth and the topological dimension results in a degeneracy typical in the geometry of fractals (see, e.g., [4]).

Finally, consider a seminal result of Benjamini and Schramm [10]: If \((G,\rho )\) is the local distributional limit of a sequence of finite planar graphs with uniformly bounded degrees, then \((G,\rho )\) is almost surely recurrent. In this sense, any such limit is spectrally (at most) two-dimensional. This was extended by Gurel-Gurevich and Nachmias [12] to unimodular random graphs with an exponential tail on the degree of the root, making it applicable to the UIPT. Benjamini [7] has conjectured that this holds for every planar graph with uniform polynomial volume. We construct a family of counterexamples. Our focus on rational degrees of growth is largely for simplicity; suitable variants of our construction should yield similar results for all real \(d > 2\) (see Remark 3.6).

Theorem 1.1

For every rational \(d > 2\), there is a transient planar graph with uniform polynomial growth of degree d.

Conversely, it is well-known that any graph with growth rate \(d \leqslant 2\) is recurrent. The examples underlying Theorem 1.1 cannot be unimodular. Nevertheless, we construct unimodular examples addressing some of the issues raised above. Angel and Nachmias (unpublished) showed the existence, for every \(\varepsilon > 0\) sufficiently small, of a unimodular random planar graph \((G,\rho )\) on which the random walk is almost surely diffusive, and which almost surely satisfies

Here, \(B_G(\rho ,r)\) is the graph ball around \(\rho \) of radius r. In other words, r-balls have an asymptotic growth rate of \(r^{3-\varepsilon }\) as \(r \rightarrow \infty \).

The authors of [9] asked whether in planar graphs with uniform growth of degree \(d \geqslant 2\), the speed of the walk should be at most \(t^{1/d+o(1)}\). We recall the following weaker theorem.

Theorem 1.2

([14]). Suppose \((G,\rho )\) is a unimodular random planar graph and G almost surely has uniform polynomial growth of degree d. Then:

We construct examples where this dependence is nearly tight.

Theorem 1.3

For every rational \(d \geqslant 2\) and \(\varepsilon > 0\), there is a constant \(c(\varepsilon ) > 0\) and a unimodular random planar graph \((G,\rho )\) such that G almost surely has uniform polynomial growth of degree d, and

Finally, let us address another question from [9]. In conjunction with the existence of small annular separators, the authors asked whether a planar graph with uniform polynomial growth of degree \(d > 2\) can be such that the complement of every ball is connected. For example, in the UIPT, there are “baby universes” connected to the graph via a thin neck that can be cut off by removing a small graph ball.

Theorem 1.4

For every rational \(d \geqslant 2\), there is a unimodular random planar graph \((G,\rho )\) such that almost surely:

-

1.

G has uniform polynomial growth of degree d.

-

2.

The complement of every graph ball in G is connected.

Annular resistances. Our unimodular constructions have the property that the “Einstein relations” (see, e.g., [4]) for various dimensional exponents do not hold. In particular, this implies that the graphs we construct are not strongly recurrent (see, e.g., [13]). Indeed, the effective resistance across annuli can be made very small (see Section 2.3 for the definition of effective resistance).

Theorem 1.5

For every \(\varepsilon > 0\) and \(d \geqslant 3\), there is a unimodular random planar graph \((G,\rho )\) that almost surely has uniform polynomial volume growth of degree d and, moreover, almost surely satisfies

where \(C(\varepsilon ) \geqslant 1\) is a constant depending only on \(\varepsilon \).

Note that the existence of annular separators of size O(R) mentioned previously gives \({\mathsf {R}}_{\mathrm {eff}}^G\left( B_G(\rho ,R) \leftrightarrow V(G) \setminus B_G(\rho ,2R)\right) \gtrsim R^{-1}\) by the Nash-Williams inequality. Moreover, recall that since the graph \((G,\rho )\) from Theorem 1.5 is unimodular and planar, it must be almost surely recurrent (cf. [10]). Therefore the electrical flow witnessing (1.1) cannot spread out “isotropically” from \(B_G(\rho ,R)\) to \(B_G(\rho ,2R)\). Indeed, if one were able to send a flow roughly uniformly from \(B_G(\rho ,2^i)\) to \(B_G(\rho ,2^{i+1})\), then these electrical flows would chain to give

and taking \(i \rightarrow \infty \) would show that G is transient.

One formalization of this fact is that the graphs in Theorem 1.5 (almost surely) do not satisfy an elliptic Harnack inequality. These graphs are almost surely one-ended, and one can easily pass to a quasi-isometric triangulation that admits a circle packing whose carrier is the entire plane \(\mathbb R^2\). By a result of Murugan [16], this implies that the graph metric \((V(G),d_G)\) on the graphs in Theorem 1.5 is not quasisymmetric to the Euclidean metric induced on the vertices by any such circle packing. (This can also be proved directly from (1.1).)

We remark on one other interesting feature of Theorem 1.5. Suppose that \(\Gamma \) is a Gromov hyperbolic group whose visual boundary \(\partial _{\infty } \Gamma \) is homeomorphic to the 2-sphere \(\mathbb S^2\). The authors of [8] construct a family \(\{G_n : n \geqslant 1\}\) of discrete approximations to \(\partial _{\infty } \Gamma \) such that each \(G_n\) is a planar graph and the family \(\{G_n\}\) has uniform polynomial volume growth.Footnote 1 They show that if there is a constant \(c > 0\) so that the annuli in \(G_n\) satisfy uniform effective resistance estimates of the form

then \(\partial _{\infty } \Gamma \) is quasisymmetric to \(\mathbb S^2\) (cf. [8, Thm 11.1].)

In particular, if it were to hold that for any (infinite) planar graph G with uniform polynomial growth we have

then it would confirm positively Cannon’s conjecture from geometric group theory. Theorem 1.5 exhibits graphs for which this fails in essentially the strongest way possible.

1.1 Preliminaries

We will consider primarily connected, undirected graphs \(G=(V,E)\), which we equip with the associated path metric \(d_G\). We will sometimes write V(G) and E(G), respectively, for the vertex and edge sets of G. If \(U \subseteq V(G)\), we write G[U] for the subgraph induced on U.

For \(v \in V\), let \(\deg _G(v)\) denote the degree of v in G. Let \({\mathrm {diam}}(G) {:}{=}\sup _{x,y \in V} d_G(x,y)\) denote the diameter (which is only finite for G finite). For \(v \in V\) and \(r \geqslant 0\), we use \(B_G(v,r) = \{ u \in V : d_G(u,v) \leqslant r\}\) to denote the closed ball in G. For subsets \(S,T \subseteq V\), we write \(d_G(S,T) {:}{=}\inf \{ d_G(s,t) : s \in S, t \in T\}\).

Say that an infinite graph G has uniform volume growth of rate f(r) if there exist constants \(C,c > 0\) such that

A graph has uniform polynomial growth of degree d if it has uniform volume growth of rate \(f(r) = r^d\), and has uniform polynomial growth if this holds for some \(d > 0\).

For two expressions A and B, we use the notation \(A \lesssim B\) to denote that \(A \leqslant C B\) for some universal constant C. The notation \(A \lesssim _{\gamma } B\) denotes that \(A \leqslant C(\gamma ) B\) where \(C(\gamma )\) is a number depending only on the parameter \(\gamma \). We write \(A \asymp B\) for the conjunction \(A \lesssim B \wedge B \lesssim A\).

1.1.1 Distributional limits of graphs

We briefly review the weak local topology on random rooted graphs. One may consult the extensive reference of Aldous and Lyons [2], and [5] for the corresponding theory of reversible random graphs. The paper [10] offers a concise introduction to distributional limits of finite planar graphs. We briefly review some relevant points.

Let \(\mathcal G\) denote the set of isomorphism classes of connected, locally finite graphs; let \(\mathcal G_{\bullet }\) denote the set of rooted isomorphism classes of rooted, connected, locally finite graphs. Define a metric on \(\mathcal G_{\bullet }\) as follows: \(\mathbb {d}_{\mathrm {loc}}\left( (G_1,\rho _1), (G_2,\rho _2)\right) = 1/(1+\alpha )\), where

and we use \(\cong _{\rho }\) to denote rooted isomorphism of graphs. \((\mathcal G_{\bullet }, \mathbb {d}_{\mathrm {loc}})\) is a separable, complete metric space. For probability measures \(\{\mu _n\}, \mu \) on \(\mathcal G_{\bullet }\), write \(\{\mu _n\} \Rightarrow \mu \) when \(\mu _n\) converges weakly to \(\mu \) with respect to \(\mathbb {d}_{\mathrm {loc}}\).

A random rooted graph \((G,X_0)\) is said to be reversible if \((G,X_0,X_1)\) and \((G,X_1,X_0)\) have the same law, where \(X_1\) is a uniformly random neighbor of \(X_0\) in G. A random rooted graph \((G,\rho )\) is said to be unimodular if it satisfies the Mass Transport Principle (see, e.g., [2]). For our purposes, it suffices to note that if \({{\,\mathrm{{\mathbb {E}}}\,}}[\deg _G(\rho )] < \infty \), then \((G,\rho )\) is unimodular if and only if the random rooted graph \(({\tilde{G}},{\tilde{\rho }})\) is reversible, where \(({\tilde{G}},{\tilde{\rho }})\) has the law of \((G,\rho )\) biased by \(\deg _G(\rho )\)

If \(\{(G_n,\rho _n)\} \Rightarrow (G,\rho )\), we say that \((G,\rho )\) is the distributional limit of the sequence \(\{(G_n,\rho _n)\}\), where we have conflated random variables with their laws in the obvious way. Consider a sequence \(\{G_n\} \subseteq \mathcal G\) of finite graphs, and let \(\rho _n\) denote a uniformly random element of \(V(G_n)\). Then \(\{(G_n,\rho _n)\}\) is a sequence of \(\mathcal G_{\bullet }\)-valued random variables, and one has the following: if \(\{(G_n,\rho _n)\} \Rightarrow (G,\rho )\), then \((G,\rho )\) is unimodular. Equivalently, if \(\{(G_n,\rho _n)\}\) is a sequence of connected finite graphs and \(\rho _n \in V(G_n)\) is chosen according to the stationary measure of \(G_n\), then if \(\{(G_n,\rho _n)\} \implies (G,\rho )\), it holds that \((G,\rho )\) is a reversible random graph.

2 A transient planar graph of uniform polynomial growth

We begin by constructing a transient planar graph with uniform polynomial growth of degree \(d > 2\). Our construction in this section has \(d = \log _3(12) \approx 2.26\). In Section 3, this construction is generalized to any rational \(d > 2\).

2.1 Tilings and dual graphs

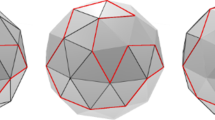

Our constructions are based on planar tilings by rectangles. A tile is an axis-parallel closed rectangle \(A \subseteq \mathbb R^2\). We will encode such a tile as a triple \((p(A), \ell _1(A), \ell _2(A))\), where \(p(A) \in \mathbb R^2\) denotes its bottom-left corner, \(\ell _1(A)\) its width (length of its projection onto the x-axis), and \(\ell _2(A)\) its height (length of its projection onto the y-axis). A tiling \(\varvec{T}\) is a finite collection of interior-disjoint tiles. Denote \([\![ \varvec{T}]\!] {:}{=}\bigcup _{A \in \varvec{T}} A\). If \(R \subseteq \mathbb R^2\), we say that \(\varvec{T}\) is a tiling of R if \([\![ \varvec{T}]\!] = R\). See Fig. 1a for a tiling of the unit square.

We associate to a tiling its dual graph \(G(\varvec{T})\) with vertex set \(\varvec{T}\) and with an edge between two tiles \(A,B \in \varvec{T}\) whenever \(A \cap B\) has Hausdorff dimension one; in other words, A, B are tangent, but not only at a corner. Denote by \(\mathcal T\) the set of all tilings of the unit square. See Fig. 1b. For the remainder of the paper, we will consider only tilings \(\varvec{T}\) for which \(G(\varvec{T})\) is connected.

Definition 2.1

(Tiling product). For \(\varvec{S}, \varvec{T}\in \mathcal T\), define the product \(\varvec{S}\circ \varvec{T}\in \mathcal T\) as the tiling formed by replacing every tile in \(\varvec{S}\) by an (appropriately scaled) copy of \(\varvec{T}\). More precisely: For every \(A \in \varvec{S}\) and \(B \in \varvec{T}\), there is a tile \(R \in \varvec{S}\circ \varvec{T}\) with \(\ell _i(R) {:}{=}\ell _i(A) \ell _i(B)\), and

for each \(i \in \{1,2\}\). See Fig. 2.

If \(\varvec{T}\in \mathcal T\) and \(n \geqslant 0\), we will use \(\varvec{T}^n {:}{=}\varvec{T}\circ \cdots \circ \varvec{T}\) to denote the n-fold tile product of \(\varvec{T}\) with itself. The following observation shows that this is well-defined.

Observation 2.2

The tiling product is associative: \((\varvec{S}\circ \varvec{T}) \circ \varvec{U}= \varvec{S}\circ (\varvec{T}\circ \varvec{U})\) for all \(S,T,U \in \mathcal T\). Moreover, if \(\varvec{I}\in \mathcal T\) consists of the single tile \([0,1]^2\), then \(\varvec{T}\circ \varvec{I}= \varvec{I}\circ \varvec{T}\) for all \(\varvec{T}\in \mathcal T\).

Definition 2.3

(Tiling concatenation). Suppose that \(\varvec{S}\) is a tiling of a rectangle R and \(\varvec{T}\) is a tiling of a rectangle \(R'\) and the heights of R and \(R'\) coincide. Let \(R''\) denote the translation of \(R'\) for which the left edge of \(R''\) coincides with the right edge of R, and denote by \(\varvec{S}\mid \varvec{T}\) the induced tiling of the rectangle \(R \cup R''\). See Fig. 3.

Let \(\varvec{H}\) denote the tiling in Fig. 1a, and define \(\mathcal H_n {:}{=}G(\varvec{H}^0 \mid \varvec{H}^1 \mid \cdots \mid \varvec{H}^n)\); see Fig. 4, where we have omitted \(\varvec{H}^0\) for ease of illustration. The next theorem represents our primary goal for the remainder of this section. Note that \(\varvec{H}^0 = \{\rho \}\) consists of a single tile, and that \(\{(\mathcal H_n,\rho )\}\) forms a Cauchy sequence in \((\mathcal G_{\bullet }, \mathbb {d}_{\mathrm {loc}})\), since \((\mathcal H_n,\rho )\) is naturally a rooted subgraph of \((\mathcal H_{n+1},\rho )\). Letting \(\mathcal H_{\infty }\) denote its limit, we will establish the following.

Theorem 2.4

The infinite planar graph \(\mathcal H_{\infty }\) is transient and has uniform polynomial volume growth of degree \(\log _3(12)\).

Uniform growth is established in Lemma 2.11 and transience in Corollary 2.17.

2.2 Volume growth

The following lemma shows that a ball of radius \(r = {\mathrm {diam}}(\varvec{H}^n)\) in \(\varvec{H}^n\) has volume \(\asymp r^{\log _3(12)}\). Later on, in Lemma 2.10, we will show a similar bound holds for balls of arbitrary radius \(1 \leqslant r \leqslant {\mathrm {diam}}(\varvec{H}^n)\) in \(\varvec{H}^n\).

Lemma 2.5

For \(n \geqslant 0\), we have \(|\varvec{H}^n| = 12^n\), and \(3^n \leqslant {\mathrm {diam}}(\varvec{H}^n) \leqslant 3^{n+1}\).

Proof

The first claim is straightforward by induction. For the second claim, note that \(\ell _1(A) = 3^{-n}\) for every \(A \in \varvec{H}^n\).

Moreover, there are \(3^n\) tiles touching the left-most boundary of \([0,1]^2\). Therefore to connect any \(A,B \in \varvec{H}^n\) by a path in \(G(\varvec{H}^n)\), we need only go from A to the left-most column in at most \(3^n\) steps, then use at most \(3^n\) steps of the column, and finally move at most \(3^n\) steps to B. \(\square \)

The next lemma is straightforward.

Lemma 2.6

Consider \(\varvec{S},\varvec{T}\in \mathcal T\) and \(G=G(\varvec{S}\circ \varvec{T})\). For any \(X \in \varvec{S}\circ \varvec{T}\), it holds that \(|B_G(X, {\mathrm {diam}}(G(\varvec{T})))| \geqslant |\varvec{T}|\).

If \(\varvec{T}\) is a tiling, let us partition the edge set \(E(G(\varvec{T})) = E_1(\varvec{T}) \cup E_2(\varvec{T})\) into horizontal and vertical edges. For \(A \in \varvec{T}\) and \(i \in \{1,2\}\), let \(N_{\varvec{T}}(A,i)\) denote the set of tiles adjacent to A in \(G(\varvec{T})\) along the ith direction, meaning that the edge separating them is parallel to the ith axis (see Fig. 5).

Further denote \(N_{\varvec{T}}(A) {:}{=}N_{\varvec{T}}(A,1) \cup N_{\varvec{T}}(A,2)\). Moreover, we define:

We take \(\alpha _{\varvec{T}} {:}{=}1\) if \(\varvec{T}\) contains a single tile. It is now straightforward to check that \(\alpha _{\varvec{T}}\) bounds the degrees in \(G(\varvec{T})\).

Lemma 2.7

For a tiling \(\varvec{T}\) and \(A \in \varvec{T}\), it holds that

Proof

After accounting for the four corners of A, every other tile \(B \in N_{\varvec{T}}(A,i)\) intersects A in a segment of length at least \(\ell _i(B) \geqslant \ell _i(A)/\alpha _{\varvec{T}}\). The second inequality follows from \(\alpha _{\varvec{T}} \geqslant 1\). \(\square \)

Lemma 2.8

Consider \(\varvec{S},\varvec{T}\in \mathcal T\) and let \(G = G(\varvec{S}\circ \varvec{T})\). Then for any \(X \in \varvec{S}\circ \varvec{T}\), it holds that

Proof

For a tile \(Y \in \varvec{S}\circ \varvec{T}\), let \({\hat{Y}} \in \varvec{S}\) denote the unique tile for which \(Y \subseteq {\hat{Y}}\). Let us also define

which is the set of vertices of \(G(\varvec{S})\) that can be reached from \({\hat{X}}\) by following at most one edge in each direction.

We will show that

It follows that

and then (2.2) follows from Lemma 2.7.

To establish (2.3), consider any path \(\left\langle X=X_0,X_1,X_2,\ldots ,X_h\right\rangle \) in G with \({\hat{X}}_h \notin {\tilde{N}}_{\varvec{S}}({\hat{X}})\). Let \(k \leqslant h\) be the smallest index for which \({\hat{X}}_k \notin {\tilde{N}}_{\varvec{S}}({\hat{X}})\). Then:

Now (2.4) implies that

And (2.5) shows that for some \(i' \in \{1,2\}\),

To clarify why this is true, note that

where ’\(+\)’ here is the Minkowski sum \(R+S {:}{=}\{ r + s : r \in R, s \in S \}\). Indeed, this inclusion motivates our definition of the “\(\ell _{\infty }\) neighborhood” \({\tilde{N}}\) above.

Combining (2.6) and (2.7) now gives

completing the proof. \(\square \)

We can now finish the analysis of the volume growth in the graphs \(\{\varvec{H}^n : n \geqslant 0\}\).

Lemma 2.9

For \(n \geqslant 1\), it holds that \(\alpha _{\varvec{H}^n} \leqslant 2\).

Proof

Consider \(A \in \varvec{H}^n\) and \(B \in N_{\varvec{H}^n}(A)\). First note that, as in the proof of Lemma 2.5, all the tiles in \(\varvec{H}^n\) have the same width \(3^{-n}\), and so \(\ell _1(A) = \ell _1(B)\). Moreover, one can easily verify that every two vertically adjacent tiles in \(\varvec{H}^n\) have the same height, and so we have \(\ell _2(A)=\ell _2(B)\) when \(B \in N_{\varvec{H}^n}(A, 1)\). Now we prove by an induction on n that for all horizontally adjacent tiles \(A, B \in \varvec{H}^n\) we have

The base case is clear for \(n = 1\). For \(n \geqslant 2\) Let us write \(\varvec{H}^n = \varvec{H}\circ \varvec{H}^{n-1}\), and let \({\hat{A}},{\hat{B}} \in \varvec{H}\) be the unique tiles for which \(A \subseteq {\hat{A}}\) and \(B \subseteq {\hat{B}}\). If \({\hat{A}}={\hat{B}}\), then the claim follows from the induction hypothesis. Otherwise, as \(B \in N_{\varvec{H}^n}(A, 2)\), it holds that \({\hat{B}} \in N_{\varvec{H}}({\hat{A}}, 2)\) as well. By symmetry, the tiles touching the left and right edges of \(\varvec{H}^{n-1}\) have the same height, and therefore it follows that

completing the proof. \(\square \)

Lemma 2.10

For any \(n \geqslant 0\), it holds that

Proof

Writing \(\varvec{H}^n = \varvec{H}^{n-k} \circ \varvec{H}^{k}\) and employing Lemma 2.6 together with Lemma 2.5 gives

The desired lower bound now follows using monotonicity of \(|B_{G(H^n)}(A,r)|\) with respect to r.

To prove the upper bound, first note that we have \(L_{\varvec{H}^k} = 3^{-k}\) (recall the definition (2.1)). Moreover, by Lemma 2.9 we have \(\alpha _{\varvec{H}^n} \leqslant 2\). Hence invoking Lemma 2.8 with \(\varvec{S}= \varvec{H}^{n-k}\) and \(\varvec{T}= \varvec{H}^k\) gives

completing the proof. \(\square \)

Finally, this allows us to establish a uniform polynomial growth rate for \(\mathcal H_{\infty }\).

Lemma 2.11

It holds that

Proof

Recall first the natural identification \(\varvec{H}^n \hookrightarrow V(\mathcal H_{\infty })\) under which \(V(\mathcal H_{\infty }) = \bigcup _{n \geqslant 0} \varvec{H}^n\) is a partition. Consider \(v \in V(\mathcal H_{\infty })\) and let \(n \geqslant 0\) be such that \(v \in \varvec{H}^n\). Now Lemma 2.10 in conjunction with Lemma 2.5 yields the bounds:

These four bounds together verify the desired claim. \(\square \)

2.3 Effective resistances

Consider a weighted, undirected graph \(G=(V,E,c)\) with edge conductances \(c :E \rightarrow \mathbb R_+\). For \(p \geqslant 1\), denote \(\ell _p(V) {:}{=}\{ f : V \rightarrow \mathbb R\mid \sum _{u \in V} |f(u)|^p < \infty \}\), and equip \(\ell _2(V)\) with the inner product \(\langle f,g\rangle = \sum _{u \in V} f(u) g(u)\).

For \(s,t \in \ell _1(V)\) with \(\Vert s\Vert _1=\Vert t\Vert _1\), we define the effective resistance

where \(L_G\) is the combinatorial Laplacian of G, and \(L_G^{\dagger }\) is the Moore-Penrose pseudoinverse. Here, \(L_G\) is the operator on \(\ell _2(V)\) defined by

If G is unweighted, we assume it is equipped with unit conductances \(c \equiv \mathbb {1}_{E(G)}\).

Equivalently, if we consider mappings \(\theta : E \rightarrow \mathbb R\), and define the energy functional

then \({\mathsf {R}}_{\mathrm {eff}}^G(s,t)\) is the minimum energy of a flow with demands \(s-t\). (See, for instance, [15, Ch. 2].) For two finite sets \(A,B \subseteq V\) in a graph, we define

and we recall the following standard characterization (see, e.g., [15, Thm. 2.3]).

If we define additionally \(c_v {:}{=}\sum _{u \in V(L)} c(\{u,v\})\) for \(v \in V\), then we can recall that weighted random walk \(\{X_t\}\) on G with Markovian law

Theorem 2.12

(Transience criterion). A weighted graph \(G=(V,E,c)\) is transient if and only if there is a vertex \(v \in V\) and an increasing sequence \(V_1 \subseteq \cdots \subseteq V_n \subseteq V_{n+1} \subseteq \cdots \) of finite subsets of vertices satisfying \(\bigcup _{n \geqslant 1} V_n = V\) and

For a tiling \(\varvec{T}\) of a closed rectangle R, let \(\mathscr {L}(\varvec{T})\) and \(\mathscr {R}(\varvec{T})\) denote the sets of tiles that intersect the left and right edges of R, respectively. We define

Observation 2.13

For any \(\varvec{S},\varvec{T}\in \mathcal T\), we have \(|\mathscr {L}(\varvec{S}\circ \varvec{T})| = |\mathscr {L}(\varvec{S})|\cdot |\mathscr {L}(\varvec{T})|\) and \(|\mathscr {R}(\varvec{S}\circ \varvec{T})| = |\mathscr {R}(\varvec{S})|\cdot |\mathscr {R}(\varvec{T})|\). In particular, \(|\mathscr {L}(\varvec{H}^n)| = |\mathscr {R}(\varvec{H}^n)| = 3^n\).

Lemma 2.14

Suppose that \(\varvec{S},\varvec{T}\) are tilings satisfying the conditions of Definition 2.3. Suppose furthermore that all rectangles in \(\mathscr {R}(\varvec{S})\) have the same height, and the same is true for \(\mathscr {L}(\varvec{T})\). Then we have

Proof

By the triangle inequality for effective resistances, it suffices to prove that

where \(G = G(\varvec{S}\mid \varvec{T})\). We construct a flow from \(\mathscr {R}(\varvec{S})\) to \(\mathscr {L}(\varvec{T})\) as follows: If \(A \in \mathscr {R}(\varvec{S}), B \in \mathscr {L}(\varvec{T})\) and \(\{A,B\} \in E(G)\), then the flow value on \(\{A,B\}\) is

Denoting \(m {:}{=}\max (|\mathscr {R}(\varvec{S})|,|\mathscr {L}(\varvec{T})|)\), we clearly have \(F_{AB} \leqslant 1/m\). Moreover,

hence

completing the proof. \(\square \)

Say that a tiling \(\varvec{T}\) is non-degenerate if \(\mathcal L(\varvec{T}) \cap \mathcal R(\varvec{T}) = \emptyset \), i.e., if no tile \(A \in \varvec{T}\) touches both the left and right edges of \([\![ \varvec{T}]\!]\). Let \(\Delta _{\varvec{T}} {:}{=}\max _{A \in \varvec{T}} \deg _{G(\varvec{T})}(A)\). If \(\varvec{S}\) and \(\varvec{T}\) are non-degenerate, we have the simple inequalities \(\rho (\varvec{T}) \geqslant 1/(\Delta _{\varvec{T}} \cdot |\mathscr {L}(\varvec{T})|) \) and \(\rho (\varvec{T}) \geqslant 1/(\Delta _{\varvec{T}} \cdot |\mathscr {R}(\varvec{T})|)\). Together with Lemma 2.7, this yields a fact that we will employ later.

Corollary 2.15

For any two non-degenerate tilings \(\varvec{S},\varvec{T}\) satisfying the assumptions of Lemma 2.14, it holds that

Lemma 2.16

For every \(n \geqslant 1\), it holds that

Proof

Fix \(n \geqslant 2\). Recalling Fig. 1b, let us consider \(\varvec{H}^n\) as consisting of three (identical) tilings stacked vertically, and where each of these three tilings is written as \(\varvec{H}^{n-1} \mid \varvec{S}\mid \varvec{H}^{n-1}\) where \(\varvec{S}\) consists of two copies of \(\varvec{H}^{n-1}\) stacked vertically. Applying Lemma 2.14 to \(\varvec{H}^{n-1} \mid \varvec{S}\mid \varvec{H}^{n-1}\) gives

where in the second inequality we have employed Observation 2.13. This yields the desired result by induction on n. \(\square \)

Corollary 2.17

The graphs \(\mathcal H_n = G(\varvec{H}^0 \mid \varvec{H}^1 \mid \cdots \mid \varvec{H}^n)\) satisfy

Hence \(\mathcal H_{\infty }\) is transient.

Proof

Employing Lemma 2.14, Observation 2.13, and Lemma 2.16 together yields

verifying (2.8). Now Theorem 2.12 yields the transience of \(\mathcal H_{\infty }\). \(\square \)

3 Generalizations and unimodular constructions

Consider a sequence \(\gamma = \left\langle \gamma _1,\ldots ,\gamma _b\right\rangle \) with \(\gamma _i \in \mathbb N\). Define a tiling \(\varvec{T}_{\gamma } \in \mathcal T\) as follows: The unit square is partitioned into b columns of width 1/b, and for \(i \in \{1,2,\ldots ,b\}\), the ith column has \(\gamma _i\) rectangles of height \(1/\gamma _i\). For instance, the tiling \(\varvec{H}\) from Fig. 1a can be written \(\varvec{H}= \varvec{T}_{\langle 3,6,3\rangle }\).

We will assume throughout this section that \(\min (\gamma )=b\) and \(\gamma _1=\gamma _b\). Let us use the notation \(|\gamma | {:}{=}\gamma _1 + \cdots + \gamma _b\). The proof of the next lemma follows just as for Lemma 2.5 using \(\min (\gamma )=b\) so that there is a column in \(\varvec{T}_{\gamma }^n\) of height \(b^n\).

Lemma 3.1

For \(n \geqslant 0\), it holds that \(|\varvec{T}_{\gamma }^n| = |\gamma |^n\), and \(b^n \leqslant {\mathrm {diam}}(\varvec{T}_{\gamma }^n) \leqslant 3 b^n\).

Clearly we have \(\alpha _{\varvec{T}_{\gamma }} \leqslant |\gamma |/b\). The following lemma can be shown using a similar argument to that of Lemma 2.9. Note that the only symmetry required in the proof of Lemma 2.9 is that the first and last column of \(\varvec{T}_{\gamma }\) have the same geometry, and this is true since \(\gamma _1=\gamma _b\).

Lemma 3.2

For any \(n \geqslant 1\), it holds that \(\alpha _{\varvec{T}_{\gamma }^n} \leqslant \alpha _{\varvec{T}_{\gamma }} \leqslant |\gamma |/b\).

The next lemma also follows from Lemma 3.1 and the same reasoning used in the proof of Lemma 2.10. The dependence of the implicit constant on \(|\gamma |/b\) comes from Lemma 3.2.

Lemma 3.3

For any \(n \geqslant 0\), it holds that

3.1 Degrees of growth

Consider \(b, k \in \mathbb N\) with \(k \geqslant b \geqslant 4\), and define the sequence

Denote \(\varvec{T}_{(b,k)} {:}{=}\varvec{T}_{\gamma ^{(b,k)}}\) and note that \(|\gamma ^{(b,k)}|=bk\). Define \({\mathsf {d}}_g(b,k) {:}{=}\log _b(bk)\), and \(\Gamma _{b,k} {:}{=}\sum _{i=1}^b 1/\gamma _i^{(b,k)}\).

Observation 3.4

The following facts hold for \(k \geqslant b \geqslant 4\) and \(n \geqslant 0\):

-

(a)

There are \(b^n\) tiles in the left- and right-most columns of \(\varvec{T}^n_{(b,k)}\).

-

(b)

If a pair of consecutive columns in \(\varvec{T}^n_{(b,k)}\) have heights h and \(h'\), then \(\min (h,h')\) divides \(\max (h,h')\).

Now observe that Lemma 3.3 yields the following.

Corollary 3.5

The family of graphs \(\mathcal F= \big \{G\big (\varvec{T}_{(b,k)}^n\big ) : n \geqslant 0\big \}\) has uniform polynomial growth of degree \({\mathsf {d}}_g(b,k)\) in the sense that

For any rational \(p/q \geqslant 2\), one can achieve \({\mathsf {d}}_g(b,k)=p/q\) by taking \(b=4^q\) and \(k=4^{p-q}\).

Remark 3.6

(Arbitrary real degrees \(d > 2\)). We note that by considering more general products of tilings, one can obtain planar graphs of uniform polynomial growth of any real degree \(d > 2\) for which the main results of this paper still hold. Instead of working with the family of powers \(\{\varvec{T}_\gamma ^n\}\) for a fixed tiling \(\varvec{T}_\gamma \), one defines an infinite sequence \(\langle \gamma ^{(1)}, \gamma ^{(2)}, \ldots \rangle \), and examines the family of graphs \(\varvec{T}_{\gamma ^{(n)}} \circ \cdots \circ \varvec{T}_{\gamma ^{(1)}}\).

More concretely, fix some real \(d > 2\), and let us consider a sequence \(\{h_n : n \geqslant 1\}\) of nonnegative integers. Also define \(\gamma ^{(n)} {:}{=}\gamma ^{(4,4+h_n)}\) and \(\varvec{T}^{(n)} {:}{=}\varvec{T}_{\gamma ^{(n)}} \circ \cdots \circ \varvec{T}_{\gamma ^{(1)}}\). Then \(|\varvec{T}^{(n)}| = 4^n \prod _{j=1}^n (4+h_j)\), and \({\mathrm {diam}}(\varvec{T}^{(n)}) \asymp 4^n\). By a similar argument as in Lemma 2.10 based on the recursive structure, it holds that for \(i \in \{1,2,\ldots ,n\}\), balls of radius \(\asymp 4^i\) in \(\varvec{T}^{(n)}\) have volume \(\asymp _K 4^i \prod _{j=1}^i (4+h_j)\), where \(K {:}{=}\max \{ h_n : n \geqslant 1\}\). Given our choice of \(\{h_1,\ldots ,h_{n-1}\}\), we choose \(h_n \geqslant 0\) as large as possible subject to

It is straightforward to argue that \(K \lesssim _d 1\), and

implying that \(4^n \prod _{j=1}^n (4+h_j) \asymp _d 4^{dn}\) for every \(n \geqslant 1\). It follows that the graphs \(\{\varvec{T}^{(n)} : n \geqslant 1 \}\) have uniform polynomial growth of degree d.

Let us now return to the graphs \(\varvec{T}^n_{(b,k)}\) and analyze the effective resistance across them.

Lemma 3.7

For every \(n \geqslant 1\), it holds that

Proof

Fix \(n \geqslant 2\) and write \(\varvec{T}^n_{(b,k)} = \varvec{T}_{(b,k)} \circ \varvec{T}^{n-1}_{(b,k)}\) as \(\varvec{A}_1 \mid \varvec{A}_2 \mid \cdots \mid \varvec{A}_b\) where, for \(1 \leqslant i \leqslant b\), each \(\varvec{A}_i\) is a vertical stack of \(\gamma _i^{(b,k)}\) copies of \(\varvec{T}^{n-1}_{(b,k)}\). Since \(\rho (\varvec{A}_i) = \rho (\varvec{T}_{(b,k)}^{n-1})/\gamma _i^{(b,k)}\) by the parallel law for effective resistances, applying Lemma 2.14 to \(\varvec{A}_1 \mid \varvec{A}_2 \mid \cdots \mid \varvec{A}_b\) gives

where in the second inequality we have employed \(\min \left( \mathscr {R}(\varvec{A}_i),\mathscr {L}(\varvec{A}_{i+1})\right) \geqslant b^{n}\) which follows from Observation 3.4(a). Finally, observe that \(\Gamma _{b,k} \geqslant 1/\gamma _1^{(b,k)} + 1/\gamma _b^{(b,k)} = 2/b\), and therefore the desired upper bound follows by induction.

For the lower bound, note that since the degrees in \(\varvec{T}^n_{(b,k)}\) are bounded by k, the Nash-Williams inequality (see, e.g., [15, §5]) gives

where \(K_i\) is the ith column of rectangles in \(\varvec{T}^n_{(b,k)}\), and the last equality follows by a simple induction. \(\square \)

The next result establishes Theorem 1.1.

Theorem 3.8

For every \(k > b\), the graphs \(\mathcal T_n^{(b,k)} {:}{=}G\left( \varvec{T}^0_{(b,k)} \mid \varvec{T}^1_{(b,k)} \mid \cdots \mid \varvec{T}^n_{(b,k)}\right) \) satisfy

Hence the limit graph \(\mathcal T_{\infty }^{(b,k)}\) is transient. Moreover, \(\mathcal T_{\infty }^{(b,k)}\) has uniform polynomial growth of degree \({\mathsf {d}}_g(b,k)\).

Proof

Employing Lemma 2.14, Observation 3.4(a), and Lemma 3.7 together yields

For \(k > b\), we have \(\max (\gamma ^{(b,k)}) > b\) and \(\min (\gamma ^{(b,k)})=b\), hence \(\Gamma _{b,k} < 1\), verifying (3.2). Now Theorem 2.12 yields transience of \(\mathcal T_{\infty }^{(b,k)}\).

Uniform polynomial growth of degree \({\mathsf {d}}_g(b,k)\) follows from Corollary 3.5 as in the proof of Lemma 2.11. \(\square \)

3.2 The distributional limit

Fix \(k \geqslant b \geqslant 4\) and take \(G_n {:}{=}G(\varvec{T}_{(b,k)}^n)\). Since the degrees in \(\{G_n\}\) are uniformly bounded, the sequence has a subsequential distributional limit, and in all arguments that follow, we could consider any such limit. But let us now argue that if \(\mu _n\) is the law of \((G_n,\rho _n)\) with \(\rho _n \in V(G_n)\) chosen according to the stationary measure, then the measures \(\{\mu _n : n \geqslant 0\}\) have a distributional limit.

Lemma 3.9

For any \(k \geqslant b \geqslant 4\), there is a reversible random graph \((G_{b,k},\rho )\) such that \(\{(G_n,\rho _n)\} \Rightarrow (G_{b,k},\rho )\). Moreover, almost surely \(G_{b,k}\) has uniform polynomial volume growth of degree \({\mathsf {d}}_g(b,k)\).

Proof

It suffices to prove that \(\{(G_n,\rho _n)\}\) has a limit \((G_{b,k},\rho )\). Reversibility of the limit then follows automatically (as noted in Section 1.1.1), and the degree of growth is an immediate consequence of Corollary 3.5. It will be slightly easier to show that the sequence \(\{(G_n,{\hat{\rho }}_n)\}\) has a distributional limit, with \({\hat{\rho }}_n \in V(G_n)\) chosen uniformly at random. As noted in Section 1.1.1, the claim then follows from [5, Prop. 2.5] (the correspondence between unimodular and reversible random graphs under degree-biasing).

Let \(\mu _{n,r}\) be the law of \(B_{G_n}({\hat{\rho }}_n,r)\). It suffices to show that the measures \(\{\mu _{n,r} : n \geqslant 0\}\) converge for every fixed \(r \geqslant 1\), and then a standard application of Kolmogorov’s extension theorem proves the existence of a limit.

For a tiling \(\varvec{T}\) of a rectangle R, let \(\partial \varvec{T}\) denote the set of tiles that intersect some side of R. Define the neighborhood \(N_r(\partial \varvec{T}_{(b,k)}^n) {:}{=}\{ v \in \varvec{T}^n_{(b,k)} : d_{G_n}(v, \partial \varvec{T}_{(b,k)}^n) \leqslant r\}\) and abbreviate \({\mathsf {d}} = {\mathsf {d}}_g(b,k)\). Then \(|\partial \varvec{T}^n_{(b,k)}| \leqslant 4b^n\), so Corollary 3.5 gives

Since \(|\varvec{T}^n_{(b,k)}| = (bk)^n\), it follows that

where \(\mathcal E_{r,n}\) is the event \(\{ B_{G_{n}}({\hat{\rho }}_{n}, r) \cap \partial \varvec{T}^{n}_{(b,k)} = \emptyset \}\).

Now write \(\varvec{T}^n_{(b,k)} = \varvec{T}_{(b,k)} \circ \varvec{T}^{n-1}_{(b,k)}\), and note that \({\hat{\rho }}_n\) falls into one of the \(|\gamma ^{(b,k)}|=bk\) copies of \(G_{n-1}\) and is, moreover, uniformly distributed in that copy. Therefore we can naturally couple \((G_n,{\hat{\rho }}_n)\) and \((G_{n-1},{\hat{\rho }}_{n-1})\) by identifying \({\hat{\rho }}_n\) with \({\hat{\rho }}_{n-1}\). Moreover, conditioned on the event \(\mathcal E_{r,n-1}\), we can similarly couple \(B_{G_n}({\hat{\rho }}_n,r)\) and \(B_{G_{n-1}}({\hat{\rho }}_{n-1},r)\).

It follows that, for every \(r \geqslant 1\),

As the latter sequence is summable, it follows that \(\{\mu _{n,r}\}\) converges for every fixed \(r \geqslant 1\), completing the proof. \(\square \)

3.3 Speed of the random walk

Let \(\{X_t\}\) denote the random walk on \(G_{b,k}\) with \(X_0 = \rho \). Our first goal will be to prove a lower bound on the speed of the walk. Define:

We will show that \({\mathsf {d}}_w(b,k)\) is related to the speed exponent for the random walk.

Theorem 3.10

Consider any \(k \geqslant b \geqslant 4\). It holds that for all \(T \geqslant 1\),

Before proving the theorem, let us observe that it yields Theorem 1.3. Fix \(k \geqslant b \geqslant 4\). Observe that for any positive integer \(p \geqslant 1\), we have \({\mathsf {d}}_g(b^p,k^p)={\mathsf {d}}_g(b,k)\). On the other hand,

So for every \(\varepsilon > 0\), there is some \(p=p(\varepsilon )\) such that

and moreover \(G_{b^p,k^p}\) almost surely has uniform polynomial growth of degree \({\mathsf {d}}_g(b,k)\). Combining this with the construction of Corollary 3.5 for all rational \(d \geqslant 2\) yields Theorem 1.3.

3.3.1 The linearized graphs

Fix integers \(k \geqslant b \geqslant 4\) and \(n \geqslant 1\), and let us consider now the (weighted) graph \(L=L_{(b,k)}^n\) derived from \(G=G(\varvec{T}_{(b,k)}^n)\) by identifying every column of rectangles into a single vertex. Thus \(|V(L)| = b^n\).

We connect two vertices \(u,v \in V(L)\) if their corresponding columns \(\mathcal C_u\) and \(\mathcal C_v\) in G are adjacent, and we define the conductances \(c_{uv} {:}{=}|E_G(\mathcal C_u,\mathcal C_v)|\), where \(E_G(S,T)\) denotes the number of edges between two subsets \(S,T \subseteq V(G)\). Define additionally \(c_{uu} {:}{=}2 |\mathcal C_u|\) and

Let us order the vertices of L from left to right as \(V(L) = \{\ell _1, \ldots , \ell _{b^n}\}\). The series law for effective resistances gives the following.

Observation 3.11

For \(1 \leqslant i \leqslant t \leqslant j\leqslant b^n\), we have

We will use this to bound the resistance between any pair of columns.

Lemma 3.12

If \(1 \leqslant s < t \leqslant b^n\), then

Proof

Let us first establish the upper bound. Denote \(h {:}{=}\lceil \log _b(t-s) \rceil \), \(\varvec{T}{:}{=}\varvec{T}_{(b,k)}\), and \(\Gamma {:}{=}\Gamma _{b,k}\). Write \(\varvec{T}^n = \varvec{T}^{n-h} \circ \varvec{T}^h\) and along this decomposition, partition \(\varvec{T}^n\) into \(b^{n-h}\) sets of tiles \(\mathcal D_1, \ldots , \mathcal D_{b^{n-h}}\), where each \(\mathcal D_i\) is formed from adjacent columns

Suppose that, for \(1 \leqslant i \leqslant b^{n-h}\), the tiling \(\varvec{T}^{n-h}\) has \(\beta _i\) tiles in its ith column. Then \(\mathcal D_i\) consists of \(\beta _i\) copies of \(\varvec{T}^h\) stacked atop each other.

Thus we have \(|\mathcal D_i| = \beta _i |\varvec{T}^h|\), and furthermore \(\rho (\mathcal D_i) \leqslant \rho (\varvec{T}^h)/\beta _i\), hence

where the last inequality uses Lemma 3.7.

Let \(1 \leqslant i \leqslant j \leqslant b^{n-h}\) be such that \(\mathcal C_s \subseteq \mathcal D_i\) and \(\mathcal C_t \subseteq \mathcal D_j\). Since \(t \leqslant s + b^h\), and each set \(\mathcal D_i\) consists of \(b^h\) consecutive columns, it must be that \(j \leqslant i+1\). If \(i=j\), then \(|\mathcal C_s|+\cdots +|\mathcal C_t| \leqslant |\mathcal D_i|\), and Observation 3.11 gives

thus (3.7) yields (3.5), as desired.

Suppose, instead, that \(j=i+1\). From Lemma 3.2, we have \(\alpha _{\varvec{T}^{n-h}} \leqslant |\gamma |/b \leqslant k\). Therefore \(1/k \leqslant \beta _{i+1}/\beta _i \leqslant k\). Since the degrees in \(G(\varvec{T}^n_{(b,k)})\) are bounded by k, this yields the following claim, which we will also employ later.

Claim 3.13

For any \(\ell _i \in V(L)\), we have

and for any \(D > 0\) and columns \(\mathcal C_a \in \mathcal D_i\) and \(\mathcal C_b \in \mathcal D_j\) with \(|i-j| \leqslant D\), it holds that

Thus using Corollary 2.15 (and noting that each \(\mathcal D_i\) is non-degenerate) along with (3.7) gives

Since it also holds that \(|\mathcal D_i|+|\mathcal D_{i+1}| = (\beta _i+\beta _{i+1}) |\varvec{T}^h| \leqslant 2k \beta _i |\varvec{T}^h|\), Observation 3.11 gives

and again (3.7) establishes (3.5). Now (3.8) completes the proof of the upper bound.

For the lower bound, define \(h' {:}{=}\lfloor \log _b (t-s)\rfloor - 1\) and decompose \(\varvec{T}^n = \varvec{T}^{n-h'} \circ \varvec{T}^{h'}\). Partition \(\varvec{T}^n\) similarly into \(b^{n-h'}\) sets of tiles \(\mathcal D_1,\ldots ,\mathcal D_{b^{n-h'}}\). Suppose that \(\mathcal C_s \subseteq \mathcal D_i\) and \(\mathcal C_t \subseteq \mathcal D_j\), and note that the width of each \(\mathcal D_i\) is \(b^{h'}\) and \(b^{h'+2} \geqslant t-s \geqslant b^{h'+1}\), hence \(j > i+1\). Therefore using again Observation 3.11 and the Nash-Williams inequality, we have

where the final inequality uses (3.1). Note also that

An application of (3.8) completes the proof of the lower bound. \(\square \)

3.3.2 Rate of escape in L

Consider again the linearized graph \(L = L^n_{(b,k)}\) with conductances \(c : E(L) \rightarrow \mathbb R_+\) defined in Section 3.3.1, and let \(\{Y_t\}\) be the random walk on L defined by

Let \(\pi _L\) be the stationary measure of \(\{Y_t\}\).

For a parameter \(1 \leqslant h \leqslant n\), consider the decomposition \(\varvec{T}^n = \varvec{T}^{n-h} \circ \varvec{T}^h\), and let \(V_1,V_2,\ldots ,V_{b^{n-h}}\) be a partition of V(L) into continguous subsets with \(|V_1|=|V_2|=\cdots =|V_b^{n-h}|=b^h\).

Let \(\{Z_i : i \in \{1,2,\ldots ,b^{n-h}\}\}\) be a collection of independent random variables with

Define the random time \(\tau (h)\) as follows: Given \(Y_0 \in V_j\), let \(\tau (h)\) be the first time \(\tau \geqslant 1\) at which

The next lemma shows that the law of the walk stopped at time \(\tau (h)\) is within a constant factor of the stationary measure.

Lemma 3.14

Suppose \(Y_0\) is chosen according to \(\pi _L\). Then for every \(v \in V(L)\),

Proof

Consider some \(5 \leqslant j \leqslant b^{n-h}-4\) and \(v \in V_j\). The proof for the other cases is similar. Let \(\mathcal E\) denote the event \(\{Y_0 \in \{V_{j-2},V_{j+2}\}\}\). The conditional measure is

Consider three linearly ordered vertices \(v,u,w \in V(L)\), i.e., such that v, w are in distinct connected components of \(L[V(L) \setminus \{u\}]\)). Let \(p_{u}^{v \prec w}\) denote the probability that the random walk, started from \(Y_0 = u\) hits v before it hits w. Now we have:

It is a classical fact (see [15, Ch. 2]) that

where the final equality uses Observation 3.11 and the fact that v, u, w are linearly ordered.

Thus from Lemma 3.12 and (3.9), whenever \(w \in V_{j-4}, u \in V_{j-2}, v \in V_{j}\) or \(u \in V_{j+2}, v \in V_j, w \in V_{j+4}\), it holds that

Another application of (3.9) gives

hence (3.11) gives

completing the proof. \(\square \)

Lemma 3.15

It holds that \({{\,\mathrm{{\mathbb {E}}}\,}}[\tau (h) \mid Y_0] \lesssim _k b^{h\,{\mathsf {d}}_w(b,k)}\).

Proof

Consider a triple of vertices \(u \in V_{j}, v \in V_{j-2}, w\in V_{j+2}\) for \(3 \leqslant j \leqslant b^{n-h} - 2\). Let \(\tau _{v,w}\) be the smallest time \(\tau \geqslant 0\) such that \(X_\tau \in \{v,w\}\), and denote

Then the standard connection between hitting times and effective resistances [11] yields

where the last line employs Lemma 3.12. Recalling that \({\mathsf {d}}_w(b,k) = \log _b(bk \Gamma _{b,k})\), this yields

for any \(v \in V_3 \cup V_4 \cup \cdots \cup V_{b^{n-h}-2}\). A one-sided variant of the argument follows in the same manner for \(v \in V_j\) when \(j \leqslant 2\) or \(j \geqslant b^{n-h} - 1\). \(\square \)

Lemma 3.16

Let \(Y_0\) have law \(\pi _L\). There is a number \(c_k > 0\) such that for any \(T \leqslant c_k {\mathrm {diam}}(L)^{{\mathsf {d}}_w(b,k)}\), we have

Proof

First, we claim that for every \(T \geqslant 1\),

Let \(s' \leqslant T\) be such that

Then there exists an even time \(s \in \{s',s'-1\}\) such that \({{\,\mathrm{{\mathbb {E}}}\,}}[d_L(Y_0,Y_s)] \geqslant {{\,\mathrm{{\mathbb {E}}}\,}}[d_L(Y_0,Y_{s'})-d_L(Y_0,Y_1)]\). Consider \(\{Y_t\}\) and an identically distributed walk \(\{{\tilde{Y}}_t\}\) such that \({\tilde{Y}}_t=Y_t\) for \(t \leqslant s/2\) and \({\tilde{Y}}_t\) evolves independently after time s/2. By the triangle inequality, we have

But since \(\{Y_t\}\) is stationary and reversible, \((Y_0,{\tilde{Y}}_T)\) and \(({\tilde{Y}}_T, Y_s)\) have the same law as \((Y_0,Y_T)\). Taking expectations yields (3.12).

Let \(h \in \{1,2,\ldots ,n\}\) be the largest value such that \({{\,\mathrm{{\mathbb {E}}}\,}}[\tau (h)] \leqslant T\). We may assume that T is sufficiently large so that \({{\,\mathrm{{\mathbb {E}}}\,}}[\tau (1)] \leqslant T\), and Lemma 3.15 guarantees that

as long as \(h < n\) (which gives our restriction \(T \leqslant c_k b^{h {\mathsf {d}}_w(b,k)} = c_k {\mathrm {diam}}(L)^{1/{\mathsf {d}}_w(b,k)}\) for some \(c_k > 0\)).

From the definition of \(\tau (h)\), we have

hence the triangle inequality implies

Again, let \(\{{\tilde{Y}}_t\}\) be an independent copy of \(\{Y_t\}\). Then since \({{\,\mathrm{{\mathbb {P}}}\,}}(\tau (h) \leqslant 2T) \geqslant 1/2\), Lemma 3.14 implies

Therefore,

Define \(\eta {:}{=}b^{-h} \max _{0 \leqslant t \leqslant 2T} {{\,\mathrm{{\mathbb {E}}}\,}}[d_L(Y_0,Y_t)]\). Using the above bound yields

for some number C(k). Taking expectations in (3.14) gives

If \(\eta \leqslant 1/(2 C(k))\), then \({{\,\mathrm{{\mathbb {E}}}\,}}[d_L(Y_0,Y_{2T})] \geqslant b^h/4\). If, on the other hand, \(\eta > 1/(2 C(k))\), then (3.12) yields

Now (3.13) completes the proof. \(\square \)

3.3.3 Rate of escape in \(G_{b,k}\)

Consider now the graphs \(G_n {:}{=}G(\varvec{T}^n_{(b,k)})\) for some \(k \geqslant b \geqslant 4\) and \(n \geqslant 1\). Let us define the cylindrical version \({\tilde{G}}_n\) of \(G_n\) with the same vertex set, but additionally and edge from the top tile to the bottom tile in every column (see Fig. 6). If we choose \({\tilde{\rho }}_n \in V({\tilde{G}}_n)\) according to the stationary measure on \({\tilde{G}}_n\), then clearly \(\{({\tilde{G}}_n,{\tilde{\rho }}_n)\} \Rightarrow (G_{b,k},\rho )\) as well.

Define also \(L_n {:}{=}L^n_{b,k}\). Because of Observation 3.4(b), the graph \({\tilde{G}}_n\) has vertical symmetry: Tiles within a column all have the same degree and, more specifically, have the same number of neighbors on the left and on the right. Let \(\pi _n : V(G_n) \rightarrow V(L_n)\) denote the projection map and observe that

Let \(\{X^{(n)}_t\}\) denote the random walk on \({\tilde{G}}_n\) with \(X^{(n)}_0={\tilde{\rho }}_n\), and let \(\{Y^{(n)}_t\}\) be the stationary random walk on \(L_n\) defined in (3.10).

Note that, by construction, \(\{\pi _n(X_t^{(n)})\}\) and \(\{Y_t^{(n)}\}\) have the same law, and therefore

With this in hand, we can establish speed lower bounds in the limit \((G_{b,k},\rho )\).

Proof of Theorem 3.10

Observe that (3.15) in conjunction with Lemma 3.16 gives, for every \(T \leqslant c_k ({\mathrm {diam}}({\tilde{G}}_n)/2)^{{\mathsf {d}}_w(b,k)}\),

Since \(\{({\tilde{G}}_n,\rho _n)\} \Rightarrow (G,\rho )\) by Lemma 3.9, it holds that if \(\{X_t\}\) is the random walk on G with \(X_0=\rho \), then for all \(T \geqslant 1\),

\(\square \)

3.4 Annular resistances

We will establish Theorem 1.5 by proving the following.

Theorem 3.17

For any \(k \geqslant b \geqslant 4\), there is a constant \(C=C(k)\) such that for \(G=G_{b,k}\), almost surely

To see that this yields Theorem 1.5, consider some \(k \geqslant b^2\), corresponding to the restriction \({\mathsf {d}}_g(b,k) \geqslant 3\). Then for all positive integers \(p \geqslant 1\), we have \({\mathsf {d}}_g(b^p,k^p)={\mathsf {d}}_g(b,k)\) and recalling (3.4),

To prove Theorem 3.17, it suffices to show the following.

Lemma 3.18

For every \(n \geqslant 1\), \(k \geqslant b \geqslant 4\), there is a constant \(C=C(k)\) such that for \(G=G(\varvec{T}^n_{(b,k)})\), we have

Proof

Denote \(\varvec{T}{:}{=}\varvec{T}_{(b,k)}\). Consider some value \(1 \leqslant R \leqslant {\mathrm {diam}}(G)/C\), and define \(h {:}{=}\lfloor \log _b(R/3)\rfloor \).

Let \(\mathcal C_1,\ldots ,\mathcal C_{b^n}\) denote the columns of \(\varvec{T}^n\) and writing \(\varvec{T}^n = \varvec{T}^{n-h} \circ \varvec{T}^h\), let us partition the columns into consecutive sets \(\mathcal D_1,\ldots ,\mathcal D_{b^{n-h}}\) (as in the proof of Lemma 3.12), where \(\mathcal D_i = \mathcal C_{(i-1) b^h + 1} \cup \cdots \cup \mathcal C_{i b^h}\). For \(1 \leqslant i \leqslant b^{n-h}\), let \(\beta _i\) denote the number of tiles in the ith column of \(\varvec{T}^{n-h}\) so that \(\mathcal D_i\) consists of \(\beta _i\) copies of \(\varvec{T}^h\) stacked vertically.

Fix some vertex \(x \in V(G)\) and suppose that \(x \in \mathcal D_s\) for some \(1 \leqslant s \leqslant b^{n-h}\). Denote \(\Delta {:}{=}9b\). By choosing C sufficiently large, we can assume that \(b^{n-h} > 2\Delta \), so that either \(s \leqslant b^{n-h} -\Delta \) or \(s \geqslant 1+\Delta \). Let us assume that \(s \leqslant b^{n-h}-\Delta \), as the other case is treated symmetrically. Define \(t {:}{=}\lceil s+2+6b\rceil \) so that \(t-s \leqslant \Delta \), and

Denote \(\xi {:}{=}\gcd (\beta _s,\beta _{s+1},\ldots ,\beta _t)\). We claim that

This follows because \(\min (\beta _i,\beta _{i+1}) \mid \max (\beta _i,\beta _{i+1})\) for all \(1 \leqslant i < b^n\) (cf. Observation 3.4(b)), and moreover the ratio \(\max (\beta _i,\beta _{i+1})/\min (\beta _i,\beta _{i+1})\) is bounded by a function depending only on k. Since \(t-s \lesssim _{k} 1\), this verifies (3.17).

Denote \({\hat{\mathcal D}} {:}{=}\mathcal D_s \cup \cdots \cup \mathcal D_t\). One can verify that \({\hat{\mathcal D}}\) is a vertical stacking of \(\xi \) copies of \({\hat{\varvec{T}}} {:}{=}\varvec{T}_{\langle \beta _s/\xi ,\ldots ,\beta _t/\xi \rangle } \circ \varvec{T}^h\), and Corollary 2.15 implies that

with the final inequality being the content of Lemma 3.7.

Let \({\hat{\varvec{A}}}\) be the copy of \({\hat{\varvec{T}}}\) that contains x, and let \(\varvec{S}\) be the copy of \(\varvec{T}^h\) in \(\varvec{T}^n = \varvec{T}^{n-h} \circ \varvec{T}^h\) that contains x. Since \(\xi \) divides \(\beta _s\), it holds that \(\varvec{S}\subseteq {\hat{\varvec{A}}}\) and \(\mathscr {L}(\varvec{S}) \subseteq \mathscr {L}({\hat{\varvec{A}}})\). We further have

This yields

where we have used the hybrid notation: For \(s \in \ell _1(V)\),

Therefore,

Since \({\mathrm {diam}}_G(\varvec{S}) \leqslant 3 b^h \leqslant R\) and \(x \in \varvec{S}\), it holds that \(\varvec{S}\subseteq B_G(x,R)\). On the other hand, since \(x \in \varvec{S}\), (3.16) shows that \(B_G(x, 2 R) \cap \mathscr {R}({\hat{\varvec{A}}}) = \emptyset \). We conclude that

as desired. \(\square \)

3.5 Complements of balls are connected

Let us finally prove Theorem 1.4. Recall the setup from Section 3.3.3: We take \(k \geqslant b \geqslant 4\), define \(G_n = G(\varvec{T}^n_{(b,k)})\), and use \(({\tilde{G}}_n,{\tilde{\rho }}_n)\) to denote the cylindrical version, which satisfies \(\{({\tilde{G}}_n,{\tilde{\rho }}_n)\} \Rightarrow (G_{b,k},\rho )\).

Partition the vertices of \({\tilde{G}}_n\) into columns \(\mathcal C_1, \ldots , \mathcal C_{b^n}\) in the natural way (as was done in Sects. 3.3 and 3.4). In what follows, we say that a set \(S \subseteq V({\tilde{G}}_n)\) is connected to mean that \({\tilde{G}}_n[S]\) is a connected graph.

Definition 3.19

Say that a set of vertices \(U \subseteq V({\tilde{G}}_n)\) is vertically convex if for all \(1 \leqslant i \leqslant b^n\), either \(U \cap \mathcal C_i = \emptyset \) or \(U \cap \mathcal C_i\) is connected.

Observation 3.20

Consider a connected set \(U \subseteq \mathcal C_i\) for some \(1 \leqslant i \leqslant b^n\). Then for \(1 \leqslant i' \leqslant b^n\) with \(|i - i'| \leqslant 1\), the set \(B_{{\tilde{G}}_n}(U, 1) \cap \mathcal C_{i'}\) is connected.

Momentarily, we will argue that balls in \({\tilde{G}}_n\) are vertically convex.

Lemma 3.21

Consider any \(A \in \varvec{T}\) and \(R \geqslant 0\). It holds that \(B_{{\tilde{G}}_n}(A,R)\) is vertically convex.

With this lemma in hand, it is easy to establish the following theorem which, in conjunction with Lemma 3.9, implies Theorem 1.4.

Theorem 3.22

Almost surely, the complement of every ball in \(G_{b,k}\) is connected.

Proof

Since \(\{({\tilde{G}}_n,{\tilde{\rho }}_n)\} \Rightarrow G_{b,k}\), it suffices to argue that for every \(n \geqslant 1\), \(x \in V({\tilde{G}}_n)\), and \(R \leqslant b^n/3\), the set \(U {:}{=}V({\tilde{G}}_n) \setminus B_{{\tilde{G}}_n}(x,R)\) is connected.

By Lemma 3.21, it holds that \(B_{{\tilde{G}}_n}(x,R)\) is vertically convex. Since the complement of a vertically convex set is vertically convex (given that every column in \({\tilde{G}}_n\) is isomorphic to a cycle), U is vertically convex as well. To argue that U is connected, it therefore suffices to prove that there is a path from \(\mathscr {L}(\varvec{T}^n_{(b,k)})\) to \(\mathscr {R}(\varvec{T}^n_{(b,k)})\) in \({\tilde{G}}_n[U]\).

In fact, the tiles in \(\varvec{T}^n_{(b,k)}\) have height at most \(b^{-n}\), and therefore the projection of \(K {:}{=}[\![ B_{{\tilde{G}}_n}(x,R)]\!]\) onto \(\{0\} \times [0,1]\) has length at most \((2R+1) b^{-n} < 1\). Hence there is some height \(h \in [0,1]\) such that a horizontal line \(\ell \) at height h does not intersect K. The set of tiles \(\{ A \in V({\tilde{G}}_n) : \ell \cap [\![ A]\!] \ne \emptyset \}\) therefore contains a path from \(\mathscr {L}(\varvec{T}^n_{(b,k)})\) to \(\mathscr {R}(\varvec{T}^n_{(b,k)})\) that is contained in \({\tilde{G}}_n[U]\), completing the proof. \(\square \)

We are left to prove Lemma 3.21. To state the next lemma more cleanly, let us denote \(\mathcal C_0 = \mathcal C_{b^n+1} = \emptyset \).

Lemma 3.23

Consider \(1 \leqslant i \leqslant b^n\) and let \(U \subseteq \mathcal C_{i-1} \cup \mathcal C_i \cup \mathcal C_{i+1}\) be a connected, vertically convex subset of vertices. Then \(B_{{\tilde{G}}_n}(U, 1) \cap \mathcal C_i\) is connected as well.

Proof

For \(i' \in \{i-1, i, i+1\}\), denote \(U_{i'} {:}{=}U \cap C_{i'}\). Clearly we have

If \(U_i = \emptyset \), then as U is connected it should be that either \(U = U_{i-1}\) or \(U = U_{i+1}\), and in either case the claim follows by Observation 3.20.

Now suppose \(U_i \ne \emptyset \), and consider some \(i' \in \{i-1, i+1\}\) such that \(U_{i'} \ne \emptyset \). Then Observation 3.20 implies that \(B_{{\tilde{G}}_n}(U_{i'}, 1) \cap \mathcal C_i\) is connected. Furthermore, since U is connected, it holds that \(B_{{\tilde{G}}_n}(U_{i'}, 1) \cap U_i \ne \emptyset \). Now, as we know that \(U_i\) is connected by the assumed vertical convexity of U, we obtain that \(B_{{\tilde{G}}_n}(U, 1) \cap \mathcal C_i\) is connected, completing the proof. \(\square \)

Proof of lemma 3.21

We proceed by induction on R, where the base case \(R=0\) is trivial, so consider \(R \geqslant 1\). Fix some \(i \in \{1,2,\ldots ,b^n\}\), and suppose \(B_{{\tilde{G}}_n}(A, R) \cap \mathcal C_i \ne \emptyset \). Denote

Clearly we have \(B_{{\tilde{G}}_n}(A, R) \cap \mathcal C_i = B_{{\tilde{G}}_n}(U, 1) \cap \mathcal C_i\). The set U is manifestly connected and, by the induction hypothesis, is also vertically convex. Thus from Lemma 3.23, it follows that \(B_{{\tilde{G}}_n}(U, 1) \cap \mathcal C_i\) is connected as well. We conclude that \(B_{{\tilde{G}}_n}(A, R)\) is vertically convex, completing the proof. \(\square \)

Notes

More precisely, for the boundary of a hyperbolic group as above, one can choose a sequence of approximations with this property.

References

Ambjørn, J., Durhuus, B., Jonsson, T.: Quantum geometry. A statistical field theory approach. Cambridge Monographs on Mathematical Physics. Cambridge University Press, Cambridge (1997)

Aldous, D., Lyons, R.: Processes on unimodular random networks. Electron. J. Probab. 12(54), 1454–1508 (2007)

Angel, O.: Growth and percolation on the uniform infinite planar triangulation. Geom. Funct. Anal. 13(5), 935–974 (2003)

Barlow, M.T.: Diffusions on fractals. In: Lectures on Probability Theory and Statistics (Saint-Flour, 1995), volume 1690 of Lecture Notes in Mathematics. pp. 1–121. Springer, Berlin (1998)

Benjamini, I., Curien, N.: Ergodic theory on stationary random graphs. Electron. J. Probab. 17(93), 20 (2012)

Benjamini, I., Curien, N.: Simple random walk on the uniform infinite planar quadrangulation: subdiffusivity via pioneer points. Geom. Funct. Anal. 23(2), 501–531 (2013)

Benjamini, I.: Coarse geometry and randomness, volume 2100 of Lecture Notes in Mathematics. Springer, Cham, 2013. Lecture notes from the 41st Probability Summer School held in Saint-Flour. Chapter 5 is due to Nicolas Curien, Chapter 12 was written by Ariel Yadin, and Chapter 13 is joint work with Gady Kozma, École d’Été de Probabilités de Saint-Flour. [Saint-Flour Probability Summer School] (2011)

Bonk, M., Kleiner, B.: Quasisymmetric parametrizations of two-dimensional metric spheres. Invent. Math. 150(1), 127–183 (2002)

Benjamini, I., Papasoglu, P.: Growth and isoperimetric profile of planar graphs. Proc. Am. Math. Soc. 139(11), 4105–4111 (2011)

Benjamini, I., Schramm, O.: Recurrence of distributional limits of finite planar graphs. Electron. J. Probab. 6(23), 13 (2001)

Chandra, A.K., Raghavan, P., Ruzzo, W.L., Smolensky, R., Tiwari, P.: The electrical resistance of a graph captures its commute and cover times. Comput. Complex. 6(4), 312–340 (1996/97)

Gurel-Gurevich, O., Nachmias, A.: Recurrence of planar graph limits. Ann. Math. (2) 177(2), 761–781 (2013)

Kumagai, T., Misumi, J.: Heat kernel estimates for strongly recurrent random walk on random media. J. Theoret. Probab. 21(4), 910–935 (2008)

Lee, J.R.: Conformal growth rates and spectral geometry on distributional limits of graphs. (2017). arXiv:1701.01598

Lyons, R., Peres, Y.: Probability on Trees and Networks. Cambridge University Press, New York, (2016). http://pages.iu.edu/~rdlyons/

Murugan, M.: Quasisymmetric uniformization and heat kernel estimates. Trans. Am. Math. Soc. 372(6), 4177–4209 (2019)

Acknowledgements

We thank Shayan Oveis Gharan and Austin Stromme for many useful preliminary discussions, Omer Angel and Asaf Nachmias for sharing with us their construction of a graph with asymptotic \((3-\varepsilon )\)-dimensional volume growth on which the random walk has diffusive speed, and Itai Benjamini for emphasizing many of the questions addressed here. We also thank the anonymous referees for very useful comments. This research was partially supported by NSF CCF-1616297 and a Simons Investigator Award.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Ebrahimnejad, F., Lee, J.R. On planar graphs of uniform polynomial growth. Probab. Theory Relat. Fields 180, 955–984 (2021). https://doi.org/10.1007/s00440-021-01045-5

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00440-021-01045-5