Abstract

Recent work has made substantial progress in understanding the transitions of random constraint satisfaction problems. In particular, for several of these models, the exact satisfiability threshold has been rigorously determined, confirming predictions of statistical physics. Here we revisit one of these models, random regular k-nae-sat: knowing the satisfiability threshold, it is natural to study, in the satisfiable regime, the number of solutions in a typical instance. We prove here that these solutions have a well-defined free energy (limiting exponential growth rate), with explicit value matching the one-step replica symmetry breaking prediction. The proof develops new techniques for analyzing a certain “survey propagation model” associated to this problem. We believe that these methods may be applicable in a wide class of related problems.


1 Introduction

In a random constraint satisfaction problem (csp), we have n variables taking values in a (finite) alphabet \(\mathcal {X}\), subject to a random set of constraints. In previous works on models of this kind, it has emerged that the space of solutions—a random subset of \(\mathcal {X}^n\)—can have a complicated structure, posing obstacles to mathematical analysis. Major advances in intuition were achieved by statistical physicists, who developed powerful analytic heuristics to shed light on the behavior of random csps ([31] and references therein). Their insights and methods are fundamental to the current understanding of random csps.

One prominent application of the physics heuristic is in giving explicit (non-rigorous) predictions for the locations of satisfiability thresholds in a large class of random csps ([36] and others). Recent works have given rigorous proofs for some of these thresholds: in the random regular nae-sat model [22, 25], in the random k-sat model [23], and in the independent set model on random regular graphs [24]. However, the satisfiability threshold is only one aspect of the rich picture that physicists have developed. There are deep conjectures for the behavior of these models inside the satisfiable regime, and it remains an outstanding mathematical challenge to prove them. In this paper we address one part of this challenge, concerning the total number of solutions for a typical instance in the satisfiable regime.

1.1 Main result

Given a cnf boolean formula, a not-all-equal-sat (nae-sat) solution is an assignment \(\underline{{{\varvec{x}}}}\) of literals to variables such that both \(\underline{{{\varvec{x}}}}\) and its negation \(\lnot \underline{{{\varvec{x}}}}\) evaluate to true—equivalently, such that no clause gives the same evaluation to all its variables. A k-nae-sat problem is one in which each clause has exactly k literals; it is termed d-regular if each variable appears in exactly d clauses. Sampling such a formula in a uniformly random manner gives rise to the random d-regular k-nae-sat model. (The formal definition is given in Sect. 2.) See [3] for important early work on the closely related model of random (Erdős–Rényi) nae-sat. The appeal of this model is that it has certain symmetries making the analysis particularly tractable, yet it is expected to share most of the interesting qualitative phenomena exhibited by other commonly studied problems, including random k-sat and random graph colorings.
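To make the definition concrete, the following minimal sketch counts nae-sat solutions by brute force on a tiny formula. The literal encoding (signed integers, 1-indexed variables) and the function names are illustrative assumptions and do not come from this paper.

```python
from itertools import product

def is_nae_solution(clauses, x):
    """Return True if assignment x (tuple of 0/1) is a nae-sat solution.

    Hypothetical encoding: literal +i is variable i, -i is its negation
    (variables are 1-indexed).
    """
    for clause in clauses:
        # Evaluate each literal; a negated literal flips the variable's bit.
        values = {x[abs(lit) - 1] ^ (lit < 0) for lit in clause}
        if len(values) == 1:  # all literals in the clause agree: violated
            return False
    return True

def count_nae_solutions(clauses, n):
    """Partition function Z: number of nae-sat solutions on n variables."""
    return sum(is_nae_solution(clauses, x) for x in product((0, 1), repeat=n))

# A 2-regular 3-nae-sat instance on 3 variables (each variable is in 2 clauses).
clauses = [(1, 2, 3), (1, -2, 3)]
Z = count_nae_solutions(clauses, 3)
```

In this toy instance the two clauses force the first and third variables to differ while leaving the second variable unconstrained, so Z = 4, and the solution set is closed under global negation as the definition requires.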

Following convention, we fix k and then parametrize the model by its clause-to-variable ratio, \(\alpha \equiv d/k\). The partition function, denoted \(Z\equiv Z_n\), is the number of valid nae-sat assignments for an instance on n variables. It is conjectured that for each \(k\geqslant 3\), the model has an exact satisfiability threshold \(\alpha _\text {sat}(k)\): for \(\alpha <\alpha _\text {sat}\) it is satisfiable (\(Z>0\)) with high probability, but for \(\alpha >\alpha _\text {sat}\) it is unsatisfiable (\(Z=0\)) with high probability. This has been proved [25, Thm. 1] for all k exceeding an absolute constant \(k_0\), together with an exact formula for \(\alpha _\text {sat}\) which matches the physics prediction. It can be approximated as

$$\begin{aligned} \alpha _\text {sat}= \bigg ( 2^{k-1} - \frac{1}{2} - \frac{1}{4\ln 2} \bigg ) \ln 2 + \epsilon _k\,, \end{aligned}$$ (1)

where \(\epsilon _k\) denotes an error tending to zero as \(k\rightarrow \infty \).

We say the model has free energy \({\textsf {f}}(\alpha )\) if \(Z^{1/n}\) converges to \({\textsf {f}}(\alpha )\) in probability as \(n\rightarrow \infty \). A priori, the limit may not be well-defined. If it exists, however, Markov’s inequality and Jensen’s inequality imply that it must be upper bounded by the replica symmetric free energy

$$\begin{aligned} {\textsf {f}}^\textsc {rs}(\alpha ) \equiv (\mathbb {E}Z)^{1/n} = 2(1-2/2^k)^\alpha \,. \end{aligned}$$ (2)

(In this model and in other random regular models, the replica symmetric free energy is the same as the annealed free energy.) One of the intriguing predictions from the physics analysis [38, 44] is that there is a critical value \(\alpha _\text {cond}\) strictly below \(\alpha _\text {sat}\), such that \({\textsf {f}}(\alpha )\) and \({\textsf {f}}^\textsc {rs}(\alpha )\) agree up to \(\alpha =\alpha _\text {cond}\) and diverge thereafter. In particular, this implies that the function \({\textsf {f}}(\alpha )\) must be non-analytic at \(\alpha =\alpha _\text {cond}\). This is the condensation transition (or Kauzmann transition), and will be further described below in Sect. 1.2. For all \(0\leqslant \alpha <\alpha _\text {sat}\), the free energy is predicted to be given by a formula

$$\begin{aligned} {\textsf {f}}(\alpha ) = {\textsf {f}}^\textsc {1rsb}(\alpha )\,. \end{aligned}$$

The function \({\textsf {f}}^\textsc {1rsb}(\alpha )\) is quite explicit, although not extremely simple to state; it is formally presented below in Definition 1.3. The formula for \({\textsf {f}}^\textsc {1rsb}(\alpha )\) is derived via the one-step replica symmetry breaking (1rsb) heuristic, discussed further below. Our main result is to prove this prediction for large k:

Theorem 1

In random regular k-nae-sat with \(k\geqslant k_0\), for all \(\alpha <\alpha _\text {sat}(k)\) the free energy \({\textsf {f}}(\alpha )\) exists and equals the predicted value \({\textsf {f}}^\textsc {1rsb}(\alpha )\).

Remark 1.1

We allow for \(k_0\) to be adjusted as long as it remains an absolute constant (so it need not equal the \(k_0\) from [25]). It is assumed throughout the paper that \(k\geqslant k_0\), even when not explicitly stated. The following considerations restrict the range of \(\alpha =d/k\) that we must consider:

- A convenient upper bound on the satisfiable regime is given by

  $$\begin{aligned} \alpha _\text {sat}\leqslant \alpha _{\textsc {rs}}\equiv \frac{\ln 2}{-\ln (1-2/2^k)} < 2^{k-1}\ln 2 \equiv \alpha _\text {ubd}\,. \end{aligned}$$

  This bound is certainly implied by the estimate (1) from [25], but it follows much more easily and directly from the first moment calculation (2). Indeed, we see from (2) that the function \({\textsf {f}}^\textsc {rs}(\alpha )\) is decreasing in \(\alpha \) and satisfies \({\textsf {f}}^\textsc {rs}(\alpha _{\textsc {rs}})=1\), so \((\mathbb {E}Z)^{1/n}<1\) for all \(\alpha >\alpha _{\textsc {rs}}\). Thus, by Markov’s inequality, we have that \(\mathbb {P}(Z>0) \leqslant \mathbb {E}Z\) tends to zero as \(n\rightarrow \infty \), i.e., the random problem instance is unsatisfiable with high probability.

- For \(\alpha >\alpha _\text {sat}\) we must have \({\textsf {f}}(\alpha )=0\). On the other hand, we can see by comparing (1) and (2) that \(\alpha _\text {sat}\) is strictly smaller than \(\alpha _{\textsc {rs}}\), and \({\textsf {f}}^\textsc {rs}(\alpha _\text {sat})\) is strictly positive. This suggests that \(\alpha _\text {cond}\) occurs strictly before \(\alpha _\text {sat}\), since \(\alpha _\text {cond}=\alpha _\text {sat}\) would mean that \({\textsf {f}}(\alpha )={\textsf {f}}^\textsc {rs}(\alpha )\) up to \(\alpha _\text {sat}\), and in this case we would expect to have \(\alpha _\text {sat}=\alpha _{\textsc {rs}}\). Formally, it requires further argument to confirm that \(\alpha _\text {cond}<\alpha _\text {sat}\) in random regular nae-sat, and we obtain this as a consequence of results in the present paper. However, the phenomenon of \(\alpha _\text {cond}<\alpha _\text {sat}\) was previously confirmed by [20] and [8] for random hypergraph bicoloring and random regular sat, both of which are very similar to random regular nae-sat. As for the value of \({\textsf {f}}(\alpha )\) at the threshold \(\alpha =\alpha _\text {sat}\), we point out that \(\alpha =\alpha _\text {sat}\) makes sense in the setting of this paper only if \(d_\text {sat}(k)\equiv k\alpha _\text {sat}(k)\) is integer-valued for some k. We have no reason to think that this ever occurs; however, if it does, then the probability for \(Z>0\) is bounded away from both zero and one [25, Thm. 1]. In this case, \(Z^{1/n}\) does not concentrate around a single value but rather on two values,

  $$\begin{aligned}\bigg \{0, \lim _{\alpha \uparrow \alpha _\text {sat}} {\textsf {f}}^\textsc {1rsb}(\alpha ) \bigg \}\,. \end{aligned}$$

- In [25, Propn. 1.1] it is shown that for \(0\leqslant \alpha \leqslant \alpha _\text {lbd}\equiv (2^{k-1}-2)\ln 2\) and n large enough,

  $$\begin{aligned} \frac{\mathbb {E}(Z^2)}{(\mathbb {E}Z)^2} \leqslant C\equiv C(k,\alpha )<\infty \end{aligned}$$

  where \(Z\equiv Z_n\) and \(C(k,\alpha )\) does not depend on n. Thus, for any fixed \(0<\epsilon <1\) and n large enough,

  $$\begin{aligned}\mathbb {P}(Z\geqslant \epsilon \mathbb {E}Z) {\mathop {\geqslant }\limits ^{\odot }} \frac{\mathbb {E}(Z \mathbf {1}\{ Z\geqslant \epsilon \mathbb {E}Z\})^2}{\mathbb {E}(Z^2)} \geqslant \frac{(1-\epsilon )^2(\mathbb {E}Z)^2}{\mathbb {E}(Z^2)} \geqslant \frac{(1-\epsilon )^2}{C} \equiv \delta \,, \end{aligned}$$

  where the step marked \(\odot \) is by the Cauchy–Schwarz inequality. The results of [25, Sec. 6] imply the stronger statement that for any \(0\leqslant \alpha \leqslant \alpha _\text {lbd}\),

  $$\begin{aligned} \lim _{\epsilon \downarrow 0} \liminf _{n\rightarrow \infty } \mathbb {P}(Z \geqslant \epsilon \mathbb {E}Z)=1\,. \end{aligned}$$

  On the other hand we already noted in (2) that \(\mathbb {E}(Z^{1/n}) \leqslant (\mathbb {E}Z)^{1/n}={\textsf {f}}^\textsc {rs}(\alpha )\) for all \(\alpha \geqslant 0\) and \(n\geqslant 1\). It follows by combining these facts that \(Z^{1/n}\) converges in probability to \({\textsf {f}}^\textsc {rs}(\alpha )\) for any \(0\leqslant \alpha \leqslant \alpha _\text {lbd}\). That is to say, the result of Theorem 1 is already proved for \(\alpha \leqslant \alpha _\text {lbd}\), with \({\textsf {f}}(\alpha )={\textsf {f}}^\textsc {rs}(\alpha )\). This also implies that the condensation transition \(\alpha _\text {cond}\) must occur above \(\alpha _\text {lbd}\).
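
For completeness, the second-moment step used in the last item can be spelled out as follows. This is the standard Paley–Zygmund-type argument; the event name \(A\) is introduced here only for illustration. Write \(A\equiv \{Z\geqslant \epsilon \mathbb {E}Z\}\). Since \(Z<\epsilon \mathbb {E}Z\) on the complement of \(A\),

$$\begin{aligned} \mathbb {E}(Z\mathbf {1}_A) = \mathbb {E}Z - \mathbb {E}(Z\mathbf {1}_{A^c}) \geqslant (1-\epsilon )\,\mathbb {E}Z\,, \end{aligned}$$

while the Cauchy–Schwarz inequality gives

$$\begin{aligned} \big (\mathbb {E}(Z\mathbf {1}_A)\big )^2 \leqslant \mathbb {E}(Z^2)\,\mathbb {P}(A)\,. \end{aligned}$$

Dividing the second display by \(\mathbb {E}(Z^2)\) and inserting the first yields \(\mathbb {P}(A)\geqslant (1-\epsilon )^2(\mathbb {E}Z)^2/\mathbb {E}(Z^2)\geqslant (1-\epsilon )^2/C\).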

In summary, we have \(\alpha _\text {lbd}< \alpha _\text {sat}< \alpha _{\textsc {rs}}< \alpha _\text {ubd}\), and it remains to prove Theorem 1 for \(\alpha \in (\alpha _\text {lbd},\alpha _\text {sat})\). Thus, we can assume for the remainder of the paper that

$$\begin{aligned} k\geqslant k_0\,,\quad \alpha _\text {lbd}\leqslant \alpha \leqslant \alpha _\text {ubd}\,. \end{aligned}$$

In the course of proving Theorem 1 we will also identify the condensation threshold \(\alpha _\text {cond}\in (\alpha _\text {lbd},\alpha _\text {sat})\) (characterized in Proposition 1.4 below).
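
The ordering of the explicit bounds in Remark 1.1 can be checked numerically. The sketch below evaluates \(\alpha _\text {lbd}\), \(\alpha _{\textsc {rs}}\) and \(\alpha _\text {ubd}\) from the formulas stated in the remark, together with the leading-order value \((2^{k-1}-1)\ln 2\) of \(\alpha _\text {cond}\) with the error term \(\epsilon _k\) dropped (an approximation that is only meaningful for large k). The function names are illustrative, and the closed form used for \({\textsf {f}}^\textsc {rs}\) is the first-moment formula \(2(1-2/2^k)^\alpha \), taken here as an assumption.

```python
import math

def alpha_lbd(k):
    """Lower end of the regime of interest: (2^(k-1) - 2) ln 2."""
    return (2 ** (k - 1) - 2) * math.log(2)

def alpha_rs(k):
    """First-moment threshold: ln 2 / (-ln(1 - 2/2^k))."""
    return math.log(2) / (-math.log1p(-2.0 / 2 ** k))

def alpha_ubd(k):
    """Convenient upper bound: 2^(k-1) ln 2."""
    return 2 ** (k - 1) * math.log(2)

def alpha_cond_leading(k):
    """Leading-order condensation threshold, dropping the error term eps_k."""
    return (2 ** (k - 1) - 1) * math.log(2)

def f_rs(k, alpha):
    """Assumed first-moment free energy (E Z)^(1/n) = 2 (1 - 2/2^k)^alpha."""
    return 2.0 * (1.0 - 2.0 / 2 ** k) ** alpha

for k in range(3, 17):
    print(k, alpha_lbd(k), alpha_cond_leading(k), alpha_rs(k), alpha_ubd(k))
```

Under these assumptions \({\textsf {f}}^\textsc {rs}\) equals 1 exactly at \(\alpha _{\textsc {rs}}\), which is the property used in the remark to derive the upper bound.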

The 1rsb heuristic, along with its implications for the condensation and satisfiability thresholds, has been studied in numerous recent works, which we briefly survey here. The existence of a condensation transition was first shown in random hypergraph bicoloring [20], which as we mentioned above is a model very similar to random nae-sat. We also point out [17], which is the first work to successfully analyze solution clusters within the condensation regime, leading to a very good lower bound on the satisfiability threshold. This was an important precursor to subsequent works [23,24,25] on exact satisfiability thresholds in random regular nae-sat, random sat, and independent sets. Condensation has been demonstrated to occur even at positive temperature in hypergraph bicoloring [11]. However, determining the precise location of \(\alpha _\text {cond}\) is challenging, and was first achieved for the random graph coloring model [10] by an impressive and technically demanding analysis. A related paper pinpoints \(\alpha _\text {cond}\) for random regular k-sat (which again is very similar to nae-sat) [8]. Subsequent work [15] characterizes the condensation threshold in a more general family of models, and shows a correspondence with information-theoretic thresholds in statistical inference problems. The main contribution of this paper is to determine for the first time the free energy throughout the condensation regime \((\alpha _\text {cond},\alpha _\text {sat})\).

1.2 Statistical physics predictions

According to the heuristic analysis by statistical physics methods, the random regular nae-sat model has a single level of replica symmetry breaking (1rsb). We summarize here some of the key phenomena that are predicted from the 1rsb framework [31, 38, 44], referring the reader to [33, Ch. 19] for a full expository account. While much of the following description remains conjectural, the implications at the free energy level are rigorously established by the present paper. Throughout the following we write \(\doteq \) to indicate equality up to subexponential factors (\(\exp \{o(n)\}\)).

Recall that we consider nae-sat with k fixed, parametrized by the clause density \(\alpha \equiv d/k\). Abbreviate \({\texttt {0}}\equiv \textsc {true}\), \({\texttt {1}}\equiv \textsc {false}\). For small \(\alpha \), almost all of the solutions lie in a single well-connected subset of \(\{{\texttt {0}},{\texttt {1}}\}^n\). This holds until a clustering transition (or dynamical transition) \(\alpha _\text {clust}\), above which the solution space becomes broken up into many well-separated pieces, or clusters (see [1, 2, 6, 35]). Informally speaking, clusters are subsets of solutions which are characterized by the property that within-cluster distances are very small relative to between-cluster distances. Conjecturally, \(\alpha _\text {clust}\) also coincides with the reconstruction threshold [28, 31, 39], and is small relative to \(\alpha _\text {sat}\) when k is large, with \(\alpha _\text {clust}/\alpha _\text {sat}\asymp (\ln k)/k\).

For \(\alpha \) above \(\alpha _\text {clust}\) it is expected that the number of clusters of size \(\exp \{ n s\}\) has mean value \(\exp \{ n\Sigma (s;\alpha ) \}\), and is concentrated about this mean. The function \(\Sigma (s)\equiv \Sigma (s;\alpha )\) is referred to as the “cluster complexity.” The 1rsb framework of statistical physics gives an explicit conjecture for \(\Sigma \), discussed below in Sect. 1.3. Then, summing over cluster sizes \(0\leqslant s\leqslant \ln 2\) gives that the total number Z of nae-sat solutions has mean

$$\begin{aligned} \mathbb {E}Z \doteq \sum _s \exp \{ n[s+\Sigma (s)] \} \doteq \exp \{ n[s_1+\Sigma (s_1)] \}\,, \end{aligned}$$

where \(s_1={{\,\mathrm{arg\,max}\,}}[s+\Sigma (s)]\). It is expected that \(\Sigma \) is continuous and strictly concave in s, and also that \(s+\Sigma (s)\) has a unique maximizer \(s_1\) with \(\Sigma '(s_1)=-1\). For nae-sat and related models, this explicit calculation reveals a critical value \(\alpha _\text {cond}\in (\alpha _\text {clust},\alpha _\text {sat})\), characterized as

By contrast, the satisfiability threshold can be characterized as

For all \(\alpha \geqslant \alpha _\text {clust}\), the expected partition function \(\mathbb {E}Z\) is dominated by clusters of size \(\exp \{n s_1\}\). However, for \(\alpha >\alpha _\text {cond}\), we have \(\Sigma (s_1)<0\), so the expected number of clusters of this size is very small: \(\exp \{n\Sigma (s_1)\}\) tends to zero exponentially fast as \(n\rightarrow \infty \). This means that clusters of size \(\exp \{ns_1\}\) are highly unlikely to appear in a typical realization of the model. Instead, in a typical realization we only expect to see clusters of size \(\exp \{ns\}\) with \(\Sigma (s)\geqslant 0\). As a result the solution space should be dominated (with high probability) by clusters of size \(\exp \{n s_{\max }\}\) where

Since \(\Sigma \) is continuous, \(s_{\max }\) is the largest root of \(\Sigma \), and for \(\alpha \in (\alpha _\text {cond}, \alpha _\text {sat})\) we should have

(where the approximation for Z holds with high probability). The 1rsb free energy, formally given by Definition 1.3 below, should be interpreted as an expression for the function \({\textsf {f}}^\textsc {1rsb}(\alpha )=s_{\max }(\alpha )\).
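
The condensation picture can be illustrated with a toy complexity curve. The sketch below uses a purely hypothetical concave parabola \(\Sigma (s)=\Sigma _0-(s-a)^2/(2b)\) (not the nae-sat complexity function; the constants and function names are assumptions made for illustration, and the constraint \(0\leqslant s\leqslant \ln 2\) is ignored). It compares the first-moment exponent \(s_1+\Sigma (s_1)\) with the typical exponent, which equals \(s_{\max }\) once \(\Sigma (s_1)<0\).

```python
import math

def toy_sigma(s, sigma0, a, b):
    """Hypothetical concave complexity curve: sigma0 - (s - a)^2 / (2 b)."""
    return sigma0 - (s - a) ** 2 / (2.0 * b)

def s_one(a, b):
    """Maximizer of s + Sigma(s); solves Sigma'(s) = -1 for the parabola."""
    return a + b

def s_max(sigma0, a, b):
    """Largest root of the toy Sigma."""
    return a + math.sqrt(2.0 * b * sigma0)

def annealed_exponent(sigma0, a, b):
    """Exponent of E Z: s_1 + Sigma(s_1)."""
    s1 = s_one(a, b)
    return s1 + toy_sigma(s1, sigma0, a, b)

def typical_exponent(sigma0, a, b):
    """Exponent of a typical Z: annealed if Sigma(s_1) >= 0, else s_max."""
    s1 = s_one(a, b)
    if toy_sigma(s1, sigma0, a, b) >= 0:
        return annealed_exponent(sigma0, a, b)
    return s_max(sigma0, a, b)

# Below condensation (Sigma(s_1) > 0) and inside it (Sigma(s_1) < 0).
uncondensed = (0.04, 0.1, 0.05)
condensed = (0.01, 0.1, 0.05)
print(typical_exponent(*uncondensed), annealed_exponent(*uncondensed))
print(typical_exponent(*condensed), annealed_exponent(*condensed))
```

For this parabola the typical exponent never exceeds the annealed one, with equality exactly when \(\Sigma (s_1)\geqslant 0\), mirroring the predicted behaviour of \({\textsf {f}}\) and \({\textsf {f}}^\textsc {rs}\) on either side of \(\alpha _\text {cond}\).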

1.3 The tilted cluster partition function

From the discussion of Sect. 1.2 we see that once the function \(\Sigma (s;\alpha )\) is determined, it is possible to derive \(\alpha _\text {cond}\), \(\alpha _\text {sat}\), and \({\textsf {f}}(\alpha )\). However, previous works have not taken the approach of actually computing \(\Sigma \). Indeed, \(\alpha _\text {sat}\) was determined [25] by an analysis involving only \(\max _s\Sigma (s;\alpha )\), which contains less information than the full curve \(\Sigma \). Related work on the exact determination (in a range of models) of \(\alpha _\text {cond}\) [8, 10, 15] also avoids computing \(\Sigma \), reasoning instead via the so-called “planted model.”

In order to compute the free energy, however, we cannot avoid computing (some version of) the function \(\Sigma \), which we will do by a physics-inspired approach. First consider the \(\lambda \)-tilted partition function

$$\begin{aligned} \bar{\varvec{Z}}_\lambda \equiv \sum _{\varvec{\gamma }\in \mathrm {\textsf {{CL}}}(\mathscr {G})} |\varvec{\gamma }|^\lambda \,, \end{aligned}$$ (5)

where \(\mathrm {\textsf {{CL}}}(\mathscr {G})\) denotes the set of solution clusters of \(\mathscr {G}\), and \(|\varvec{\gamma }|\) denotes the number of satisfying assignments inside the cluster \(\varvec{\gamma }\). According to the conjectural picture described above, we should have

$$\begin{aligned} \mathbb {E}\bar{\varvec{Z}}_\lambda \doteq \sum _s \exp \{ n[\lambda s+\Sigma (s)] \} \doteq \exp \{ n{\mathfrak {F}}(\lambda ) \}\,, \end{aligned}$$

where \({\mathfrak {F}}\) is the Legendre dual of \(-\Sigma \):

$$\begin{aligned} {\mathfrak {F}}(\lambda ) \equiv (-\Sigma )^\star (\lambda ) \equiv \max _s \{ \lambda s+\Sigma (s) \} = \lambda s_\lambda +\Sigma (s_\lambda )\,, \end{aligned}$$ (6)

where \(s_\lambda \equiv {{\,\mathrm{arg\,max}\,}}_s[\lambda s + \Sigma (s)]\). Moreover, if \(\Sigma (s_\lambda )\geqslant 0\), then \(\varvec{Z}_\lambda \) should concentrate near \(\mathbb {E}\varvec{Z}_\lambda \), and in this regime physicists have an exact prediction for \({\mathfrak {F}}(\lambda )\), which will be further discussed below in Sect. 1.5. In short, the physics approach to computing \(\Sigma \) is to first compute \({\mathfrak {F}}(\lambda )\) (in the regime where \(\Sigma (s_\lambda )\geqslant 0\)), and then set \(\Sigma = -{\mathfrak {F}}^\star \). Note that by differentiating \({\mathfrak {F}}(\lambda ) = n^{-1}\ln \mathbb {E}\bar{\varvec{Z}}_\lambda \) we find that \({\mathfrak {F}}\) is convex in \(\lambda \), so the resulting \(\Sigma \) will indeed be concave.
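
The Legendre duality between \(\Sigma \) and \({\mathfrak {F}}\) can be checked numerically on a toy example. The sketch below is an illustration under stated assumptions: the complexity curve is the hypothetical parabola \(\Sigma (s)=\Sigma _0-(s-a)^2/(2b)\) (not the nae-sat complexity), the maximization and minimization are done by brute force over finite grids, and all names are made up for this example. It computes \({\mathfrak {F}}(\lambda )=\max _s[\lambda s+\Sigma (s)]\) and then recovers \(\Sigma (s)=-{\mathfrak {F}}^\star (s)=\min _\lambda [{\mathfrak {F}}(\lambda )-\lambda s]\).

```python
SIGMA0, A, B = 0.04, 0.1, 0.05  # hypothetical parabola parameters

def toy_sigma(s):
    """Hypothetical concave complexity curve."""
    return SIGMA0 - (s - A) ** 2 / (2.0 * B)

def legendre_dual(sigma, lam, s_grid):
    """F(lam) = max over the grid of lam * s + sigma(s)."""
    return max(lam * s + sigma(s) for s in s_grid)

def recover_sigma(F, s, lam_grid):
    """Sigma(s) = -F*(s) = min over the grid of F(lam) - lam * s."""
    return min(F(lam) - lam * s for lam in lam_grid)

s_grid = [i / 1000.0 for i in range(0, 501)]     # s in [0, 0.5]
lam_grid = [i / 1000.0 for i in range(0, 1001)]  # lam in [0, 1]

def F_exact(lam):
    """Closed form for the parabola: lam * a + lam^2 * b / 2 + sigma0."""
    return lam * A + lam ** 2 * B / 2.0 + SIGMA0

F_half = legendre_dual(toy_sigma, 0.5, s_grid)
sigma_back = recover_sigma(F_exact, 0.12, lam_grid)
print(F_half, F_exact(0.5), sigma_back, toy_sigma(0.12))
```

Restricting the tilt to \(\lambda \in [0,1]\) means that only the part of the curve with slope \(\Sigma '(s)\in [-1,0]\) is recovered, which in the toy example is the interval \(s\in [a,a+b]\).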

At first glance the reduction to computing \({\mathfrak {F}}(\lambda )\) may not seem to improve matters. It is not immediately clear how “clusters” should be defined. It turns out that in the regime we are studying, a reasonable definition is that two nae-sat solutions are connected if they differ by a single bit, and the clusters are the connected components of the solution space. A typical nae-sat solution will have a positive density of variables which are free, meaning their value can be changed without violating any clause; any such solution must belong in a cluster of exponential size. Each cluster may be a complicated subset of \(\{{\texttt {0}},{\texttt {1}}\}^n\)—changing the value at one free variable may affect whether its neighbors are free, so a cluster need not be a simple subcube of \(\{{\texttt {0}},{\texttt {1}}\}^n\). Nevertheless, we wish to sum over the cluster sizes raised to non-integer powers. This computation is made tractable by constructing more explicit combinatorial models for the nae-sat solution clusters, as we next describe.
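
The cluster definition above (solutions connected by single-bit flips) and the tilted sum over cluster sizes can be illustrated by brute force on a tiny instance. This is only a sketch: the literal encoding and function names are hypothetical, and exhaustive enumeration is of course feasible only for very small n.

```python
from itertools import product

def nae_solutions(clauses, n):
    """All nae-sat solutions; literal +i / -i is variable i / its negation."""
    def ok(x):
        return all(len({x[abs(l) - 1] ^ (l < 0) for l in c}) > 1 for c in clauses)
    return {x for x in product((0, 1), repeat=n) if ok(x)}

def cluster_sizes(solutions):
    """Sizes of connected components under single-bit flips."""
    unseen = set(solutions)
    sizes = []
    while unseen:
        stack = [unseen.pop()]
        size = 0
        while stack:
            x = stack.pop()
            size += 1
            for i in range(len(x)):
                y = x[:i] + (1 - x[i],) + x[i + 1:]  # flip bit i
                if y in unseen:
                    unseen.remove(y)
                    stack.append(y)
        sizes.append(size)
    return sorted(sizes)

def tilted_partition_function(sizes, lam):
    """Sum of cluster sizes raised to the power lam."""
    return sum(size ** lam for size in sizes)

clauses = [(1, 2, 3), (1, -2, 3)]
sizes = cluster_sizes(nae_solutions(clauses, 3))
print(sizes, tilted_partition_function(sizes, 0.5))
```

In this instance the first and third variables are frozen to opposite values and the second is free, so there are two clusters of size two: the tilt \(\lambda =0\) counts clusters, while \(\lambda =1\) counts solutions.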

1.4 Modeling solution clusters

In our regime of interest (i.e., \(k\geqslant k_0\) and \(\alpha _\text {lbd}\leqslant \alpha \leqslant \alpha _\text {ubd}\); see Remark 1.1), the analysis of nae-sat solution clusters is greatly simplified by the fact that in a typical satisfying assignment the vast majority of variables are frozen rather than free. The result of this, roughly speaking, is that a cluster \(\varvec{\gamma }\in \mathrm {\textsf {{CL}}}(\mathscr {G})\) can be encoded by a configuration \(\underline{{x}}\in \{{\texttt {0}},{\texttt {1}},{\texttt {f}}\}^n\) (representing its circumscribed subcube, so \(x_v={\texttt {f}}\) indicates a free variable) with no essential loss of information. This is formalized by a combinatorial model of “frozen configurations” representing the clusters (Definition 2.2). These frozen configurations can be viewed as the solutions of a certain csp lifted from the original nae-sat problem — so the physics heuristics can be applied again to (the randomized version of) the lifted model. Variations on this idea appear in several places in the physics literature; in the specific context of random csps we refer to [13, 34, 42]. Analyzing the number of frozen configurations, corresponding to (5) with \(\lambda =0\), yields the satisfiability threshold for this model [25].

Analyzing (5) for general \(\lambda \) requires deeper investigation of the arrangement of free variables in a typical frozen configuration \(\underline{{x}}\). For this purpose it is convenient to view an nae-sat instance as a (bipartite) graph \(\mathscr {G}\), where the vertices are given by variables and clauses, and the edges indicate which variables participate in which clauses (the formal description appears in Sect. 2). A key piece of intuition is that if we consider the subgraph of \(\mathscr {G}\) induced by the free variables, together with the clauses through which they interact, then this subgraph is predominantly comprised of disjoint components \(\varvec{T}\) of bounded size. (In fact, the majority of free variables are simply isolated vertices; a smaller fraction occur in linked pairs; a yet smaller fraction occur in components of size three or more.) Each free component \(\varvec{T}\) is surrounded by frozen variables, and we let \(z(\varvec{T})\) be the number of nae-sat assignments on \(\varvec{T}\) which are consistent with the frozen boundary. Since disjoint components \(\varvec{T},\varvec{T}'\) do not interact, the size of the cluster represented by \(\underline{{x}}\) is simply the product of \(z(\varvec{T})\) over all \(\varvec{T}\).

Another key observation is that the random nae-sat graph has few short cycles, so almost all of the free components will be trees. As a result, their weights \(z(\varvec{T})\) can be evaluated recursively by belief propagation (bp), a well-known dynamic programming method (see e.g. [33, Ch. 14]). In the rsb heuristic framework, a cluster is represented by a vector \(\underline{{{\texttt {m}}}}\) of “messages,” indexed by the directed edges of the nae-sat graph \(\mathscr {G}\). Informally, for a given cluster, and for any variable v adjacent to any clause a,

where \(\partial v\) denotes the neighboring clauses of v. Each message is a probability measure on \(\{{\texttt {0}},{\texttt {1}}\}\), and the messages are related to one another via local consistency equations, which are known as the bp equations. A configuration \(\underline{{{\texttt {m}}}}\) which satisfies all the local consistency equations is a bp solution. Thus a cluster \(\varvec{\gamma }\) can be encoded either by a frozen configuration \(\underline{{x}}\) or by a bp solution \(\underline{{{\texttt {m}}}}\); the latter has the key advantage that the size of \(\varvec{\gamma }\) can be easily read off from \(\underline{{{\texttt {m}}}}\), as a certain product of local functions. For the cluster size raised to power \(\lambda \), simply raise each local function to power \(\lambda \). Thus the configurations \(\underline{{{\texttt {m}}}}\) with \(\lambda \)-tilted weights form a spin system (Markov random field), whose partition function is the quantity of interest (5). This new spin system is sometimes termed the “auxiliary model” (e.g. [33, Ch. 19]).

1.5 One-step replica symmetry breaking

In Sect. 1.4 we described informally how a solution cluster \(\varvec{\gamma }\) can be encoded by a frozen configuration \(\underline{{x}}\), or a bp solution \(\underline{{{\texttt {m}}}}\). An important caveat is that the converse need not hold. In the nae-sat model, for any value of \(\alpha \), a trivial bp solution is always given by the “replica symmetric fixed point” (also called the “factorized fixed point”), where every \({\texttt {m}}_{v\rightarrow a}\) is the uniform measure on \(\{{\texttt {0}},{\texttt {1}}\}\). However, above \(\alpha _\text {cond}\), this is a spurious solution. One way to see this is via the heuristic “cavity calculation” of \({\textsf {f}}^\textsc {rs}(\alpha )\), which we now describe to motivate the more complicated expression for \({\textsf {f}}^\textsc {1rsb}(\alpha )\).

Given a random regular nae-sat instance \(\mathscr {G}\) on n variables, choose k uniformly random variables \(v_1,\ldots ,v_k\), and assume for simplicity that no two of these share a clause. Remove the k variables along with their kd incident clauses, producing the “cavity graph” \(\mathscr {G}''\). Then add \(d(k-1)\) new clauses to \(\mathscr {G}''\), producing the graph \(\mathscr {G}'\). Under this operation (cf. [7]), \(\mathscr {G}'\) is distributed as a random regular nae-sat instance on \(n-k\) variables. If the free energy \({\textsf {f}}(\alpha )=\lim _{n\rightarrow \infty } Z^{1/n}\) exists, then we would expect it to agree asymptotically with

Let U denote the set of “cavity neighbors”: the variables in \(\mathscr {G}''\) of degree \(d-1\), which neighbored the clauses that were deleted from \(\mathscr {G}\). Then \(\mathscr {G}\) and \(\mathscr {G}'\) differ from \(\mathscr {G}''\) only in the addition of a few small subgraphs which are attached to U. Computing \(Z(\mathscr {G})/Z(\mathscr {G}'')\) or \(Z(\mathscr {G}')/Z(\mathscr {G}'')\) reduces to understanding the joint law of the spins \(({\varvec{x}}_u)_{u\in U}\) under the nae-sat model defined by \(\mathscr {G}''\). Since \(\mathscr {G}\) is unlikely to have many cycles, the vertices of U are typically far apart from one another in \(\mathscr {G}''\). Therefore, one plausible scenario is that their spins are approximately independent under the nae-sat model on \(\mathscr {G}''\), with \({\varvec{x}}_u\) marginally distributed according to \({\texttt {m}}_{u\rightarrow a}\) where a is the deleted clause that neighbored u in \(\mathscr {G}\). If this is the case, then each \({\texttt {m}}_{u\rightarrow a}\) must be uniform over \(\{{\texttt {0}},{\texttt {1}}\}\), by the negation symmetry of nae-sat. Under this assumption, we can calculate

Substituting into (8) gives the replica symmetric free energy prediction \({\textsf {f}}(\alpha )\doteq {\textsf {f}}^\textsc {rs}(\alpha )\), which we know to be false for large \(\alpha \) (in particular, it is inconsistent with the known satisfiability threshold). Thus the replica symmetric fixed point, \({\texttt {m}}_{u\rightarrow a}= \text {unif}(\{0,1\})\) for all \(u\rightarrow a\), is a spurious bp solution. In reality the \({\varvec{x}}_u\) are not approximately independent in \(\mathscr {G}''\), even though the u’s are far apart. This phenomenon of non-negligible long-range dependence may be taken as a definition of replica symmetry breaking (rsb) in this setting, and occurs precisely for \(\alpha \) larger than \(\alpha _\text {cond}\).

Since above \(\alpha _\text {cond}\) the partition function cannot be estimated by (9) due to replica symmetry breaking, a different approach is needed. To this end, the one-step rsb (1rsb) heuristic posits that even when the original nae-sat model exhibits rsb, the (seemingly more complicated) “auxiliary model” of \(\lambda \)-weighted bp solutions \(\underline{{{\texttt {m}}}}\) is replica symmetric, for \(\lambda \) small enough: conjecturally, as long as \(\Sigma (s_\lambda )\geqslant 0\) for \(s_\lambda \equiv {{\,\mathrm{arg\,max}\,}}_s \{ \lambda s+\Sigma (s)\}\) (cf. the discussion below (6)). That is, for such \(\lambda \), the auxiliary model is predicted to have correlation decay, in contrast with the long-range correlations of the original model. This would mean that in the auxiliary model of the cavity graph \(\mathscr {G}''\), the spins \(({\texttt {m}}_{u\rightarrow a})_{u\in U}\) are approximately independent, with each \({\texttt {m}}_{u\rightarrow a}\) marginally distributed according to some law \({\dot{q}}_{u\rightarrow a}\). The model has a replica symmetric fixed point, \({\dot{q}}_{u\rightarrow a}={\dot{q}}_\lambda \) for all \(u\rightarrow a\) (the analogue of \({\texttt {m}}_{u\rightarrow a}=\text {unif}(\{{\texttt {0}},{\texttt {1}}\})\) for all \(u\rightarrow a\)). If we substitute this assumption into the cavity calculation (the analogues of (8) and (9)), we obtain the replica symmetric prediction for the auxiliary model free energy \({\mathfrak {F}}(\lambda )\), expressed as a function of \({\dot{q}}_\lambda \). As explained above, from \({\mathfrak {F}}(\lambda )\) we can derive the complexity function \(\Sigma (s)\) and the 1rsb nae-sat free energy \({\textsf {f}}^\textsc {1rsb}(\alpha )\).

1.6 The 1RSB free energy prediction

Having described the heuristic reasoning, we now proceed to formally state the 1rsb free energy prediction. We first describe \({\dot{q}}_\lambda \) as a certain discrete probability measure over \({\texttt {m}}\). Since \({\texttt {m}}\) is a probability measure over \(\{{\texttt {0}},{\texttt {1}}\}\), we encode it by \(x\equiv {\texttt {m}}({\texttt {1}})\in [0,1]\). A measure q on \({\texttt {m}}\) can thus be encoded by an element \(\mu \in {\mathscr {P}}\) where \({\mathscr {P}}\) denotes the set of discrete probability measures on [0, 1]. For measurable \(B\subseteq [0,1]\), define

where \(\hat{{\mathscr {Z}}}(\mu )\) and \(\dot{{\mathscr {Z}}}(\mu )\) are the normalizing constants such that \(\hat{\mathscr {R}}_\lambda \mu \) and \(\dot{\mathscr {R}}_\lambda \mu \) are also probability measures on [0, 1]. (In the context of \(\lambda =0\) we take the convention that \(0^0=0\).) Denote \(\mathscr {R}_{\lambda } \equiv \dot{\mathscr {R}}_{\lambda }\circ \hat{\mathscr {R}}_{\lambda }\). The map \(\mathscr {R}_{\lambda }:{\mathscr {P}}\rightarrow {\mathscr {P}}\) represents the bp recursion for the auxiliary model. The following presents a solution for \(\alpha \) in the interval \((\alpha _\text {lbd},\alpha _\text {ubd})\) which we recall (Remark 1.1) is a superset of \((\alpha _\text {cond},\alpha _\text {sat})\).

Proposition 1.2

(proved in “Appendix B” ) For \(\lambda \in [0,1]\), let \(\dot{\mu }_{\lambda ,0}\equiv \frac{1}{2} \delta _0 + \frac{1}{2} \delta _1\in {\mathscr {P}}\), and define recursively \(\dot{\mu }_{\lambda ,l+1} = \mathscr {R}_\lambda \dot{\mu }_{\lambda ,l}\in {\mathscr {P}}\) for all \(l\geqslant 0\). Define \(S_l \equiv ({{\,\mathrm{supp}\,}}\dot{\mu }_{\lambda ,l}) {\setminus } ({{\,\mathrm{supp}\,}}( \dot{\mu }_{\lambda ,0} +\ldots +\dot{\mu }_{\lambda ,l-1} ))\); this is a finite subset of [0, 1]. Regard \(\dot{\mu }_{\lambda ,l}\) as an infinite sequence indexed by the elements of \(S_1\) in increasing order, followed by the elements of \(S_2\) in increasing order, and so on. For \(k\geqslant k_0\) and \(\alpha _\text {lbd}\leqslant \alpha \leqslant \alpha _\text {ubd}\), in the limit \(l\rightarrow \infty \), \(\dot{\mu }_{\lambda ,l}\) converges in the \(\ell ^1\) sequence space to a limit \(\dot{\mu }_\lambda \in {\mathscr {P}}\) satisfying the fixed point equation \(\dot{\mu }_\lambda = \mathscr {R}_\lambda \dot{\mu }_\lambda \), as well as the estimates \(\dot{\mu }_\lambda ((0,1))\leqslant 7/2^k\) and \(\dot{\mu }_\lambda (dx) = \dot{\mu }_\lambda (d(1-x))\).

The limit \(\dot{\mu }_\lambda \) of Proposition 1.2 encodes the desired replica symmetric solution \({\dot{q}}_\lambda \) for the auxiliary model. We can then express \({\mathfrak {F}}(\lambda )\) in terms of \(\dot{\mu }_\lambda \) as follows. Writing \(\hat{\mu }_\lambda \equiv \hat{{\mathscr {R}}}_\lambda \dot{\mu }_\lambda \), let \({\dot{w}}_\lambda ,{\hat{w}}_\lambda ,{\bar{w}}_\lambda \in {\mathscr {P}}\) be defined by

with \(\dot{\mathfrak {Z}}_{\lambda },\hat{\mathfrak {Z}}_{\lambda },\bar{\mathfrak {Z}}_{\lambda }\) the normalizing constants. The analogue of (9) for this model is

and substituting into (8) gives the 1rsb prediction \(\bar{\varvec{Z}}_\lambda \doteq \exp \{{\mathfrak {F}}(\lambda )\}\) where

Further, the maximizer of \(s\mapsto (\lambda s+\Sigma (s))\) is predicted to be given by

If \(s=s_\lambda \) for \(\lambda \in [0,1]\) then we define

We then use (14) to define the thresholds

We can now formally state the predicted free energy of the original nae-sat model:

Definition 1.3

For \(\alpha \in k^{-1}{\mathbb {Z}}\), the 1rsb free energy prediction \({\textsf {f}}^\textsc {1rsb}(\alpha )\) is defined as

(In regular k-nae-sat we must have integer \(d=k\alpha \), so we need not consider \(\alpha \notin k^{-1}{\mathbb {Z}}\).)

Proposition 1.4

(proved in “Appendix B” ) Assume \(k\geqslant k_0\) and write \(A\equiv [\alpha _\text {lbd}, \alpha _\text {ubd}] \cap (k^{-1}{\mathbb {Z}})\).

a. For each \(\alpha \in A\), the function \(s\mapsto \Sigma (s;\alpha )\) is well-defined, continuous, and strictly decreasing in s.

b. For each \(0\leqslant \lambda \leqslant 1\), the function \(\alpha \mapsto \Sigma (s_\lambda ;\alpha )= {\mathfrak {F}}(\lambda ) -\lambda s_\lambda \) is strictly decreasing with respect to \(\alpha \in A\). There is a unique \(\alpha _\lambda \in A\) such that \(\Sigma (s_\lambda ;\alpha )\) is nonnegative for all \(\alpha \leqslant \alpha _\lambda \), and is negative for all \(\alpha >\alpha _\lambda \). Taking \(\lambda =0\) we recover the estimate (1); and taking \(\lambda =1\) we obtain in addition

$$\begin{aligned} \alpha _\text {cond}=\alpha _1 = (2^{k-1}-1)\ln 2+ \epsilon _k\,.\end{aligned}$$(16)

(The main purpose of this proposition is to show that \(\Sigma (s_1)<0\) for all \(\alpha \in (\alpha _\text {cond},\alpha _\text {sat})\), i.e., that the “condensation regime” is a contiguous range of values of \(\alpha \). The expansion of \(\alpha _{\text {cond}}\) matches an earlier result of [20], which was obtained for a slightly different but closely related model.)

1.7 Proof approach

Since \({\textsf {f}}={\textsf {f}}(\alpha )\) is a priori not well-defined, the statement \({\textsf {f}}\leqslant \textsf {g}\) means formally that for all \(\epsilon >0\), \(\mathbb {P}( Z^{1/n} \geqslant \textsf {g}+\epsilon )\) tends to zero as \(n\rightarrow \infty \). With this notation, we will prove separately the upper bound \({\textsf {f}}(\alpha )\leqslant {\textsf {f}}^\textsc {1rsb}(\alpha )\) and the matching lower bound \({\textsf {f}}(\alpha )\geqslant {\textsf {f}}^\textsc {1rsb}(\alpha )\). This implies the main result Theorem 1: the free energy \({\textsf {f}}(\alpha )\) is indeed well-defined, and equals \({\textsf {f}}^\textsc {1rsb}(\alpha )\).

The upper bound is proved by an interpolation argument, which we defer to “Appendix E”. This argument builds on similar bounds for spin glasses on Erdős–Rényi graphs [26, 43], together with ideas from [12, 27] for interpolation in random regular models. Let \(Z_n(\beta )\) denote the partition function of nae-sat at inverse temperature \(\beta >0\). The interpolation method yields an upper bound on \(\mathbb {E}\ln Z_n(\beta )\) which is expressed as the infimum of a certain function \({\mathcal {P}}(\mu ;\beta )\), with \(\mu \) ranging over probability measures on [0, 1]. We then choose \(\mu \) according to Proposition 1.2, and take \(\beta \rightarrow \infty \) to obtain the desired bound \({\textsf {f}}(\alpha )\leqslant {\textsf {f}}^\textsc {1rsb}(\alpha )\).

Most of the paper is devoted to establishing the matching lower bound. The proof strategy is inspired by the physics picture described above, and at a high level proceeds as follows. Take any \(\lambda \) such that \(\Sigma (s_\lambda )\) (as defined by (13) and (14)) is nonnegative, and let \(\varvec{Y}_\lambda \) be the number of clusters of size roughly \(\exp \{ns_\lambda \}\). (As discussed in §1.3, we shall think of a cluster as a connected component of the solution space.) The informal statement of what we show is that

Adjusting \(\lambda \) as indicated by (15) then proves the desired bound \({\textsf {f}}(\alpha )\geqslant {\textsf {f}}^\textsc {1rsb}(\alpha )\).

Proving a formalized version of (17) occupies a significant part of the present paper. We introduce a slightly modified version of the messages \({\texttt {m}}\) which record the topologies of the free trees \(\varvec{T}\). We then restrict to cluster encodings in which every free tree has fewer than T variables, which limits the distance that information can propagate between free variables. We prove a version of (17) for every fixed T, and show that this yields the sharp lower bound in the limit \(T\rightarrow \infty \). The proof of (17) for fixed T is via the moment method for the auxiliary model, which boils down to a complicated optimization problem over many dimensions. It is known (see e.g. [25, Lem. 3.6]) that stationary points of the optimization problem correspond to “generalized” bp fixed points—these are measures \(Q_{v\rightarrow a}({\texttt {m}}_{v\rightarrow a},{\texttt {m}}_{a\rightarrow v})\), rather than the simpler “one-sided” measures \(q_{v\rightarrow a}({\texttt {m}}_{v\rightarrow a})\) considered in the 1rsb heuristic.

The one-sided property is a crucial simplification in physics calculations (cf. [33, Proposition 19.4]), but is challenging to prove in general. One contribution of this work that we wish to highlight is a novel resampling argument which yields a reduction to one-sided messages, and allows us to solve the moment optimization problem. (We are helped here by the truncation on the sizes of free trees.) Furthermore, the approach allows us to bring in methods from large deviations theory. With these we can show that the objective function has negative-definite Hessian at the optimizer, which is necessary for the second moment method. This resampling approach is quite general and should apply in a broad range of models.

1.8 Open problems

Beyond the free energy, it remains a challenge to establish the full picture predicted by statistical physicists for \(\alpha \leqslant \alpha _\text {sat}\). We refer the reader to several recent works targeted at a broad class of models in the regime \(\alpha \leqslant \alpha _\text {cond}\) [9, 16, 19], and to work on the location of \(\alpha _\text {cond}\) in a general family of models [14]. In the condensation regime \((\alpha _\text {cond},\alpha _\text {sat})\), an initial step would be to show that most solutions lie within a bounded number of clusters. A much more refined prediction is that the mass distribution among the largest clusters forms a Poisson–Dirichlet process. Another question is to show that on a typical problem instance over n variables, if \(\underline{{{\varvec{x}}}}^1,\underline{{{\varvec{x}}}}^2\) are sampled independently and uniformly at random from the solutions of that instance, then the normalized overlap \(R_{1,2}\equiv n^{-1}|\{v:{\varvec{x}}^1_v={\varvec{x}}^2_v\}|\) concentrates on two values (corresponding roughly to the two cases that \(\underline{{{\varvec{x}}}}^1,\underline{{{\varvec{x}}}}^2\) come from the same cluster, or from different clusters); this criterion is sometimes taken as the precise definition of 1rsb. During the final revision stage of this paper, some of the above questions were addressed by a new preprint [40].

Beyond the immediate context of random csps, understanding the condensation transition may deepen our understanding of the stochastic block model, a model for random networks with underlying community structure. Here again ideas from statistical physics have played an important role [21]. A great deal is now known rigorously for the case of two blocks [32, 37], where there is no condensation regime. For models with more than two blocks, however, it is predicted that condensation can occur, and may define a regime where detection is information-theoretically possible but computationally intractable. A condensation threshold has been established for the anti-ferromagnetic Potts model, corresponding to the disassortative regime of the stochastic block model. An analogous transition is expected in the ferromagnetic (assortative) case, and this remains open.

2 Combinatorial model

In this section we formalize a combinatorial model of clusters, which allows us to rigorously lower bound the tilted cluster partition function (5). We begin by reviewing the (standard) graphical depiction of nae-sat. A not-all-equal-sat (nae-sat) problem instance is naturally represented by a bipartite factor graph \(\mathscr {G}\) with signed edges, as follows. The vertex set of \(\mathscr {G}\) is partitioned into a set \(V=\{v_1,\ldots ,v_n\}\) of variables and a set \(F=\{a_1,\ldots ,a_m\}\) of clauses; we then have a set E of edges joining variables to clauses. For each edge \(e\in E\) we write v(e) for the incident variable, and a(e) for the incident clause; and we assign an edge literal \({\texttt {L}}_e\in \{{\texttt {0}},{\texttt {1}}\}\) to indicate whether v(e) participates affirmatively (\({\texttt {L}}_e={\texttt {0}}\)) or negatively (\({\texttt {L}}_e={\texttt {1}}\)) in a(e). We define all edges to have length one-half, so two variables \(v\ne v'\) lie at unit distance if and only if they appear in the same clause. Throughout this paper we denote \(\mathcal {G}\equiv (V,F,E)\) for the bipartite graph without edge literals, and \(\mathscr {G}\equiv (V,F,E,\underline{{\texttt {L}}})\equiv (\mathcal {G},\underline{{\texttt {L}}})\) for the nae-sat instance.

Formally we regard the edges E as a permutation, as follows. Each variable \(v\in V\) has incident half-edges \(\delta v\), while each clause \(a\in F\) has incident half-edges \(\delta a\). Write \(\delta V\) for the labelled set of all variable-incident half-edges, and \(\delta F\) for the labelled set of all clause-incident half-edges; we require that \(|\delta V|=|\delta F|=\ell \). Then any permutation \({\mathfrak {m}}\) of \([\ell ]\equiv \{1,\ldots ,\ell \}\) defines E by defining a matching between \(\delta V\) and \(\delta F\). Note that any permutation of \([\ell ]\) is permitted, so multi-edges can occur. In this paper we assume that the graph is (d, k)-regular: each variable has d incident edges, and each clause has k incident edges, so \(|E|=nd=mk\). A random k-nae-sat instance is given by \(\mathscr {G}=(V,F,E,\underline{{\texttt {L}}})\) where \(|V|=n\), \(|F|=m\), E is given by a uniformly random permutation \({\mathfrak {m}}\) of [nd], and \(\underline{{\texttt {L}}}\) is a uniformly random sample from \(\{{\texttt {0}},{\texttt {1}}\}^E\). We write \(\mathbb {P}\) and \(\mathbb {E}\) for probability and expectation over the law of \(\mathscr {G}\).
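As an illustration, the sampling procedure just described can be sketched in Python. This is a minimal sketch and not part of the argument: the function name `sample_instance`, the convention that variable-side half-edge i belongs to variable ⌊i/d⌋ and clause-side half-edge j belongs to clause ⌊j/k⌋, and the encoding of E as a list of triples are all assumptions made here for concreteness.

```python
import random


def sample_instance(n, d, k, seed=None):
    """Sample a (d, k)-regular NAE-SAT instance from the configuration model.

    Returns (n, m, edges), where edges is a list of triples
    (variable, clause, literal). Multi-edges are allowed, as in the text.
    """
    if (n * d) % k != 0:
        raise ValueError("n * d must be divisible by k")
    rng = random.Random(seed)
    m = n * d // k
    num_half_edges = n * d

    # A uniformly random permutation of the half-edge labels 0..nd-1:
    # variable-side half-edge i is matched to clause-side half-edge perm[i].
    perm = list(range(num_half_edges))
    rng.shuffle(perm)

    edges = []
    for i in range(num_half_edges):
        v = i // d            # variable owning variable-side half-edge i
        a = perm[i] // k      # clause owning clause-side half-edge perm[i]
        literal = rng.randrange(2)  # uniformly random edge literal in {0, 1}
        edges.append((v, a, literal))
    return n, m, edges
```

By construction every variable appears in exactly d triples and every clause in exactly k triples, so the output satisfies \(|E|=nd=mk\).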

Definition 2.1

(solutions and clusters) A solution of the nae-sat problem instance \(\mathscr {G}=(V,F,E,\underline{{\texttt {L}}})\) is any assignment \(\underline{{{\varvec{x}}}}\in \{{\texttt {0}},{\texttt {1}}\}^V\) such that for all \(a\in F\), \(({\texttt {L}}_e \oplus {\varvec{x}}_{v(e)})_{e\in \delta a}\) is neither identically \({\texttt {0}}\) nor identically \({\texttt {1}}\). Let \(\mathrm {\textsf {{SOL}}}(\mathscr {G})\subseteq \{{\texttt {0}},{\texttt {1}}\}^V\) denote the set of all solutions of \(\mathscr {G}\), and define a graph on \(\mathrm {\textsf {{SOL}}}(\mathscr {G})\) by connecting any pair of solutions at unit Hamming distance. The (maximal) connected components of the \(\mathrm {\textsf {{SOL}}}(\mathscr {G})\) graph are the solution clusters, hereafter denoted \(\mathrm {\textsf {{CL}}}(\mathscr {G})\).
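For very small instances, Definition 2.1 can be checked directly by brute force. The sketch below is only illustrative: the names `is_solution` and `solution_clusters` are hypothetical, each clause is assumed to be given as a list of (variable, literal) pairs, and the enumeration over all \(2^n\) assignments is exponential in n.

```python
from itertools import product


def is_solution(x, clauses):
    """Check that no clause evaluates to all-0 or all-1 under assignment x."""
    for clause in clauses:
        values = {lit ^ x[v] for v, lit in clause}
        if len(values) < 2:   # identically 0 or identically 1
            return False
    return True


def solution_clusters(n, clauses):
    """Return the connected components of SOL under unit Hamming distance."""
    solutions = [x for x in product((0, 1), repeat=n) if is_solution(x, clauses)]
    solution_set = set(solutions)
    seen = set()
    clusters = []
    for start in solutions:
        if start in seen:
            continue
        # Depth-first search over single-variable flips that stay inside SOL.
        component, stack = [], [start]
        seen.add(start)
        while stack:
            x = stack.pop()
            component.append(x)
            for v in range(n):
                y = x[:v] + (1 - x[v],) + x[v + 1:]
                if y in solution_set and y not in seen:
                    seen.add(y)
                    stack.append(y)
        clusters.append(sorted(component))
    return clusters
```

For a single clause on three variables with all literals \({\texttt {0}}\), the six non-constant assignments form one cluster; for a single clause on two variables, the two solutions lie at Hamming distance two and so form two singleton clusters.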

The aim of this section is to establish that (under a certain restriction) the nae-sat solution clusters can be represented by a combinatorial model of what we will term “colorings.” We will describe the correspondence in a few stages. Informally, the progression is given by

Each step of (18) is formalized below. As mentioned previously, the key feature of the last model is that the size of a cluster \(\varvec{\gamma }\) can be easily read off from its corresponding coloring \(\underline{{\sigma }}\), as a product of local functions. Some steps of the correspondence (18) appear in existing literature (see [13, 25, 33, 34, 42]) but we present them here in detail for completeness.

2.1 Frozen and warning configurations

We introduce a new value \({\texttt {f}}\) (free), and adopt the convention \({\texttt {0}}\oplus {\texttt {f}}\equiv {\texttt {f}}\equiv {\texttt {1}}\oplus {\texttt {f}}\). For \(l\geqslant 1\) and \(\underline{{x}}\in \{{\texttt {0}},{\texttt {1}},{\texttt {f}}\}^l\) let \(I^\textsc {nae}(\underline{{x}})\) be the indicator that \(\underline{{x}}\) is neither identically \({\texttt {0}}\) nor identically \({\texttt {1}}\). Given an nae-sat instance \(\mathscr {G}=(V,F,E,\underline{{\texttt {L}}})\) and an assignment \(\underline{{x}}\in \{{\texttt {0}},{\texttt {1}},{\texttt {f}}\}^V\), denote

By Definition 2.1, an nae-sat solution is an assignment \(\underline{{{\varvec{x}}}}\in \{{\texttt {0}},{\texttt {1}}\}^V\) satisfying \(I^\textsc {nae}(\underline{{{\varvec{x}}}};\mathscr {G})=1\).

Definition 2.2

(frozen configurations) Given an nae-sat instance \(\mathscr {G}=(V,F,E,\underline{{\texttt {L}}})\), for any \(e\in E\) let \(\mathscr {G}\oplus {\texttt {1}}_e\) denote the instance obtained by flipping the edge label \({\texttt {L}}_e\) to \({\texttt {L}}_e\oplus {\texttt {1}}\). We say that \(\underline{{x}}\in \{{\texttt {0}},{\texttt {1}},{\texttt {f}}\}^V\) is a valid frozen configuration on \(\mathscr {G}\) if (i) no nae-sat constraint is violated, meaning \(I^\textsc {nae}(\underline{{x}};\mathscr {G})=1\); and (ii) for all \(v\in V\), \(x_v\) takes a value in \(\{{\texttt {0}},{\texttt {1}}\}\) only when forced to do so, meaning there is some \(e\in \delta v\) such that

If no such \(e\in \delta v\) exists then \(x_v={\texttt {f}}\).

It is well known that on any given \(\mathscr {G}\), every nae-sat solution \(\underline{{{\varvec{x}}}}\) can be mapped to a frozen configuration \(\underline{{x}}=\underline{{x}}(\underline{{{\varvec{x}}}})\) via a “coarsening” or “whitening” procedure [42], as follows. Initialize \(\underline{{x}}=\underline{{{\varvec{x}}}}\). Then, whenever \(x_v\in \{{\texttt {0}},{\texttt {1}}\}\) but there exists no \(e\in \delta v\) such that (19) holds, update \(x_v\) to \({\texttt {f}}\). Iterate until no further updates can be made; the result is then a valid frozen configuration. Two nae-sat solutions \(\underline{{{\varvec{x}}}}\), \(\underline{{{\varvec{x}}}}'\) map to the same frozen configuration \(\underline{{x}}\) if and only if they lie in the same cluster \(\varvec{\gamma }\in \mathrm {\textsf {{CL}}}(\mathscr {G})\). Thus, for any given \(\mathscr {G}\), we have a well-defined mapping from clusters \(\varvec{\gamma }\) to frozen configurations \(\underline{{x}}\). This map is one-to-one but not necessarily onto: for instance, the all-free assignment \(\underline{{x}}\equiv {\texttt {f}}\) is always trivially a valid frozen configuration, but on many instances \(\mathscr {G}\) there is no solution cluster \(\varvec{\gamma }\in \mathrm {\textsf {{CL}}}(\mathscr {G})\) whose coarsening is \(\underline{{x}}\equiv {\texttt {f}}\). Since the aim is to lower bound the clusters, the lack of surjectivity must be addressed. We will do so momentarily (Definition 2.4 below), but first we review a useful alternative representation of frozen configurations:

Definition 2.3

(warning configurations) For integers \(l\geqslant 1\), define functions \(\dot{Y}: \{{\texttt {0}},{\texttt {1}},{\texttt {f}}\}^l\rightarrow \{{\texttt {0}},{\texttt {1}},{\texttt {f}},\texttt {z}\}\) and \(\hat{Y}: \{{\texttt {0}},{\texttt {1}},{\texttt {f}}\}^l\rightarrow \{{\texttt {0}},{\texttt {1}},{\texttt {f}}\}\) by

Denote \(M\equiv \{{\texttt {0}},{\texttt {1}},{\texttt {f}}\}^2\). We write \(\underline{{y}}\in M^E\) if \(\underline{{y}}=(y_e)_{e\in E}\) where \(y_e\equiv ({\dot{y}}_e,{\hat{y}}_e)\in M\). If edge e joins variable v to clause a, then \({\dot{y}}_e\) represents a “warning” along e from v to a, while \({\hat{y}}_e\) represents a “warning” along e from a to v. We say that \(\underline{{y}}\in M^E\) is a valid warning configuration on \(\mathscr {G}\) if it satisfies the local equations

for all \(e\in E\) (with no \({\dot{y}}_e=\texttt {z}\)).

It is well known that on any given \(\mathscr {G}\) there is a natural bijection

In the forward direction, given a (valid) frozen configuration \(\underline{{x}}\), for any variable v and any edge \(e\in \delta v\) such that (19) holds, set \({\hat{y}}_e=x_v \in \{{\texttt {0}},{\texttt {1}}\}\); then in all other cases set \({\hat{y}}_e={\texttt {f}}\). Then, having defined all the \({\hat{y}}_e\), the \({\dot{y}}_e\) can only be defined by the local Eq. (20). One can check that the resulting assignment \(\underline{{y}}\in M^E\) is a warning configuration. Conversely, given a warning configuration \(\underline{{y}}\), a frozen configuration \(\underline{{x}}\) can be obtained by setting \(x_v=\dot{Y}({\hat{y}}_{\delta v})\) for all v.
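The coarsening procedure of this subsection, which maps a solution to its frozen configuration, can be sketched as follows. This is a hedged illustration only: the names `whiten` and `is_forced` are hypothetical, clauses are assumed to be lists of (variable, literal) pairs, and the forcing condition (19) is assumed to say that flipping the literal on e (passing to \(\mathscr {G}\oplus {\texttt {1}}_e\) in the notation of Definition 2.2) violates the clause a(e).

```python
FREE = "f"


def xor(lit, value):
    """Literal XOR spin, with the convention 0 + f = f = 1 + f."""
    return FREE if value == FREE else lit ^ value


def nae_ok(values):
    """Indicator that the values are neither identically 0 nor identically 1."""
    return not (all(u == 0 for u in values) or all(u == 1 for u in values))


def is_forced(v, x, clauses):
    """True if some edge at v has the property that flipping its literal
    violates the incident clause (assumed reading of condition (19))."""
    for clause in clauses:
        for j, (u, _) in enumerate(clause):
            if u != v:
                continue
            flipped = [xor(lit ^ (i == j), x[w]) for i, (w, lit) in enumerate(clause)]
            if not nae_ok(flipped):
                return True
    return False


def whiten(solution, clauses):
    """Iteratively set unforced 0/1 variables to FREE until nothing changes."""
    x = list(solution)
    changed = True
    while changed:
        changed = False
        for v in range(len(x)):
            if x[v] != FREE and not is_forced(v, x, clauses):
                x[v] = FREE
                changed = True
    return tuple(x)
```

On a single clause over three variables with all literals \({\texttt {0}}\), every solution coarsens to the all-free configuration, in agreement with the fact that the six solutions of that instance form a single cluster. On a single clause over two variables, both variables stay frozen.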

2.2 Message configurations

We return to the question of surjectivity: does a given frozen configuration \(\underline{{x}}\) encode a (nonempty) solution cluster \(\varvec{\gamma }\in \mathrm {\textsf {{CL}}}(\mathscr {G})\)? We will now state an easy sufficient condition for this to hold. The condition is not in general necessary, but we will show that it captures enough of the solution space to deliver a sharp lower bound on the free energy.

Definition 2.4

(free cycles) Let \(\underline{{x}}\in \{{\texttt {0}},{\texttt {1}},{\texttt {f}}\}^V\) be a valid frozen configuration on \(\mathscr {G}=(V,F,E,\underline{{\texttt {L}}})\). We say that a clause \(a\in F\) is separating (with respect to \(\underline{{x}}\)) if there exist \(e',e''\in \delta a\) such that \({\texttt {L}}_{e'}\oplus x_{v(e')}={\texttt {0}}\) while \({\texttt {L}}_{e''}\oplus x_{v(e'')}={\texttt {1}}\). For instance, a forcing clause is also separating. A cycle in \(\mathscr {G}\) is a sequence of edges

where, taking indices modulo \(2\ell \), it holds for each integer i that \(e_{2i-1}\) and \(e_{2i}\) are distinct but share a clause, while \(e_{2i}\) and \(e_{2i+1}\) are distinct but share a variable. (In particular, if v is joined to a by two edges \(e' \ne e''\), then \(e'e''\) forms a cycle.) We say the cycle in \(\mathscr {G}\) is free (with respect to \(\underline{{x}}\)) if all its variables are free and all its clauses are non-separating.

Definition 2.5

(free trees) Let \(\underline{{x}}\) be a frozen configuration on \(\mathscr {G}=(V,F,E,\underline{{\texttt {L}}})\) that has no free cycles. Let H be the subgraph of \(\mathscr {G}\) induced by the free variables and non-separating clauses of \(\underline{{x}}\). Since \(\underline{{x}}\) has no free cycles, H must be a disjoint union of tree components \(\varvec{t}\), which we term the free trees of \(\underline{{x}}\). For each \(\varvec{t}\), let \(\varvec{T}\equiv \varvec{T}(\varvec{t})\) be the subgraph of \(\mathscr {G}\) induced by \(\varvec{t}\) together with its incident variables. The subgraphs \(\varvec{T}\) (which can contain cycles) will be termed the free pieces of \(\underline{{x}}\). Each free variable is covered by exactly one free piece. In the simplest case, a free piece consists of a single free variable surrounded by d separating clauses.
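The subgraph H and the free-cycle condition of Definition 2.4 lend themselves to a short union-find computation. The following is a sketch under stated assumptions, not the construction used in the proofs: the name `free_components` is hypothetical, clauses are lists of (variable, literal) pairs, and the function does not verify that its input is a valid frozen configuration.

```python
FREE = "f"


def free_components(x, clauses):
    """Return the connected components of H (free variables and non-separating
    clauses) as sets of nodes, or None if H contains a cycle."""

    def separating(clause):
        # A clause is separating if it sees both evaluated values 0 and 1.
        values = {lit ^ x[v] for v, lit in clause if x[v] != FREE}
        return values == {0, 1}

    parent = {}

    def find(u):
        while parent[u] != u:
            parent[u] = parent[parent[u]]  # path halving
            u = parent[u]
        return u

    for v, value in enumerate(x):
        if value == FREE:
            parent[("var", v)] = ("var", v)

    for a, clause in enumerate(clauses):
        if separating(clause):
            continue
        parent[("cl", a)] = ("cl", a)
        for v, _ in clause:
            if x[v] != FREE:
                continue
            root_a, root_v = find(("cl", a)), find(("var", v))
            if root_a == root_v:
                return None  # this edge closes a cycle (multi-edges included)
            parent[root_a] = root_v

    components = {}
    for u in parent:
        components.setdefault(find(u), set()).add(u)
    return list(components.values())
```

Each returned component plays the role of a free tree \(\varvec{t}\). A variable joined to the same non-separating clause by two edges is reported as a cycle, matching the parenthetical remark in Definition 2.4.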

Let us say that \(\underline{{{\varvec{x}}}}\in \{{\texttt {0}},{\texttt {1}}\}^V\) extends \(\underline{{x}}\in \{{\texttt {0}},{\texttt {1}},{\texttt {f}}\}^V\) if \({\varvec{x}}_v=x_v\) for all v such that \(x_v\in \{{\texttt {0}},{\texttt {1}}\}\). If \(\underline{{x}}\) is a frozen configuration on \(\mathscr {G}\) with no free cycles, it is easy to extend \(\underline{{x}}\) to valid nae-sat solutions \(\underline{{{\varvec{x}}}}\in \{{\texttt {0}},{\texttt {1}}\}^V\)—we simply extend \(\underline{{x}}\) on each free tree \(\varvec{t}\), since nae-sat on a tree is always solvable; the different free trees do not interact. Let \(\varvec{\gamma }\) denote the set of all valid nae-sat solutions on \(\mathscr {G}\) that extend \(\underline{{x}}\), and denote \(\mathrm {\textsf {{size}}}(\underline{{x}})\equiv |\varvec{\gamma }|\). Meanwhile, let \({\mathfrak {T}}(\underline{{x}})\) denote the set of all free pieces of \(\underline{{x}}\). For each \(\varvec{T}\in {\mathfrak {T}}(\underline{{x}})\), let \(\mathrm {\textsf {{size}}}(\underline{{x}};\varvec{T})\) denote the number of valid nae-sat solutions on \(\varvec{T}\) that extend \(\underline{{x}}|_{\varvec{T}}\). It follows from our discussion that \(\varvec{\gamma }\in \mathrm {\textsf {{CL}}}(\mathscr {G})\) with

That is to say, the absence of free cycles is an easy sufficient condition for a frozen configuration to encode a nonempty cluster; and it further ensures that the cluster has a relatively simple product structure (22). As noted previously, the structure within each free piece \(\varvec{T}\) can be understood by dynamic programming (bp). This is a well-known calculation (see e.g. [33, Ch. 14]) but we will review the details for our setting. To this end, we first introduce a combinatorial model of “message configurations” which will map directly to the natural bp variables.

Recall from Definition 2.3 that a warning configuration is denoted \(\underline{{y}}\in M^E\) where each \(y_e\equiv ({\dot{y}}_e,{\hat{y}}_e)\in M\). We denote a message configuration by \(\underline{{\tau }}\in {\mathscr {M}}^E\) where each \(\tau _e=(\dot{\tau }_e,\hat{\tau }_e)\in {\mathscr {M}}\) (for \({\mathscr {M}}\) to be defined below). It will be convenient to let \({\textsc {e}}\) indicate a directed edge, pointing from tail vertex \(t({\textsc {e}})\) to head vertex \(h({\textsc {e}})\). If e is the undirected version of \({\textsc {e}}\), then we denote

We will make a definition such that \(\tau _{{\textsc {e}}}\) either takes the value “\(\star \)” or is a bipartite factor tree. The tree is unlabelled except that one vertex is distinguished as the root, and some edges are assigned \({\texttt {0}}\) or \({\texttt {1}}\) values as explained below. The root of \(\tau _{{\textsc {e}}}\) is required to have degree one, and should be thought of as corresponding to the head vertex \(h({\textsc {e}})\).

In the context of message configurations \(\underline{{\tau }}\), we use “\({\texttt {0}}\)” or “\({\texttt {1}}\)” to stand for the tree consisting of a single edge which is labelled \({\texttt {0}}\) or \({\texttt {1}}\) and rooted at the endpoint corresponding to the head vertex—the root is the incident clause in the case of \(\dot{\tau }\), the incident variable in the case of \(\hat{\tau }\). We use \({\texttt {s}}\) to stand for the tree consisting of a single unlabelled edge, rooted at the incident variable; this will be related to the situation of separating clauses from Definition 2.4. Given a collection of rooted trees \(t_1,\ldots ,t_\ell \) whose roots \(o_1,\ldots ,o_\ell \) are all of the same type (either all variable or all clauses), we define \(t=\mathrm {\textsf {{join}}}(t_1,\ldots ,t_\ell )\) by identifying all the \(o_i\) as a single vertex o, then adding an edge which joins o to a new vertex \(o'\). The vertex o has the same type (variable or clause) as the \(o_i\); and the vertex \(o'\) is assigned the opposite type and becomes the root of t.
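The \(\mathrm {\textsf {{join}}}\) operation can be made concrete with a small recursive data structure. The encoding below is an assumption chosen for illustration: a rooted tree whose root has degree one is stored as a triple (root type, label of the root edge, sub-branches hanging from the root's neighbor), and the helper names `edge`, `join`, and `num_vertices` are hypothetical.

```python
def opposite(kind):
    return "clause" if kind == "var" else "var"


def edge(root_type, label=None):
    """Single-edge tree rooted at a vertex of the given type.
    label is 0, 1, or None for an unlabelled edge."""
    return (root_type, label, ())


def join(*trees):
    """Identify the roots of the given trees into one vertex o, then attach
    a new root o' of the opposite type by an unlabelled edge."""
    if not trees:
        raise ValueError("join needs at least one tree")
    kinds = {t[0] for t in trees}
    if len(kinds) != 1:
        raise ValueError("all roots must have the same type")
    (kind,) = kinds
    # Sorting by repr gives a canonical order, so the result does not
    # depend on the order of the arguments.
    return (opposite(kind), None, tuple(sorted(trees, key=repr)))


def num_vertices(tree):
    """Count vertices: the root, its neighbor, and everything below;
    each sub-branch shares its own root with the neighbor."""
    _, _, branches = tree
    return 2 + sum(num_vertices(b) - 1 for b in branches)
```

With this encoding, `s = edge("var")` is the unlabelled single edge rooted at a variable, `join(s, s)` is a four-vertex tree rooted at a clause, and joining that tree with `edge("clause", 0)` gives a six-vertex tree rooted at a variable.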

Definition 2.6

(message configurations) Start with \(\dot{\mathscr {M}}_0\equiv \{{\texttt {0}},{\texttt {1}},\star \}\) and \(\hat{\mathscr {M}}_0\equiv \varnothing \), and suppose inductively that \(\dot{\mathscr {M}}_t,\hat{\mathscr {M}}_t\) have been defined. For \(\underline{{\hat{\tau }}}\in (\hat{\mathscr {M}}_t)^{d-1}\) and \(\underline{{\dot{\tau }}}\in (\dot{\mathscr {M}}_t)^{k-1}\), let us abbreviate \(\{\hat{\tau }_i\}\equiv \{\hat{\tau }_1,\ldots ,\hat{\tau }_{d-1}\}\) and \(\{\dot{\tau }_i\}\equiv \{\dot{\tau }_1,\ldots ,\dot{\tau }_{k-1}\}\). Define

Then, for \(t\geqslant 0\), define recursively the sets

We then let \(\dot{\mathscr {M}}\) be the union of all the \(\dot{\mathscr {M}}_t\), let \(\hat{\mathscr {M}}\) be the union of all the \(\hat{\mathscr {M}}_t\), and let \({\mathscr {M}}=\dot{\mathscr {M}}\times \hat{\mathscr {M}}\). On \(\mathscr {G}=(V,F,E,\underline{{\texttt {L}}})\), the assignment \(\underline{{\tau }}\in {\mathscr {M}}^E\) is a valid message configuration if (i) it satisfies the local equations

for all \(e\in E\) (with no \(\dot{\tau }_e=\texttt {z}\)), and (ii) if one element of \(\{\dot{\tau }_e,\hat{\tau }_e\}\) equals \(\star \) then the other element is in \(\{{\texttt {0}},{\texttt {1}}\}\). In (23), we take the convention that \({\texttt {L}}_e\oplus {\texttt {f}}={\texttt {f}}\) and \({\texttt {L}}_e\oplus \star =\star \), and if \(\tau \) is a tree with labels then \({\texttt {L}}_e\oplus \tau \) is defined by applying \({\texttt {L}}_e\oplus \cdot \) entrywise to all labels of \(\tau \). See Fig. 1.

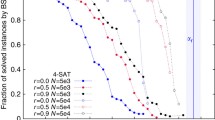

Fig. 1. Examples of messages (Definition 2.6). Variables are indicated by circle nodes, clauses by square nodes, and edges by lines. For simplicity we assume that all edges depicted have literals \({\texttt {L}}_e={\texttt {0}}\). Each message is shown with its root as a filled node at the top. The variable-to-clause messages \(\dot{\tau }\) are rooted at clauses, while the clause-to-variable messages \(\hat{\tau }\) are rooted at variables. The heavy lines indicate edges inside the message that are labelled \({\texttt {0}}\) or \({\texttt {1}}\). In our notation we have \(\dot{\tau }_0={\texttt {0}}\) and \(\dot{\tau }_1={\texttt {1}}\). Next \(\hat{\tau }_0={\hat{T}}(\dot{\tau }_1,\dot{\tau }_1)={\texttt {0}}\), while \(\hat{\tau }_1={\hat{T}}(\dot{\tau }_0,\dot{\tau }_1)={\texttt {s}}\). Finally \(\dot{\tau }_3={\dot{T}}(\hat{\tau }_1,\hat{\tau }_1) =\mathrm {\textsf {{join}}}(\hat{\tau }_1,\hat{\tau }_1)\) and \(\hat{\tau }_2={\hat{T}}(\dot{\tau }_3,\dot{\tau }_0) =\mathrm {\textsf {{join}}}(\dot{\tau }_3,\dot{\tau }_0)\).

Suppose \(\underline{{x}}\) is a frozen configuration on \(\mathscr {G}\), and let \(\underline{{y}}\) be its corresponding warning configuration from (21). Given \(\underline{{y}}\), we define \(\underline{{\tau }}\) in four stages:

1. If \({\dot{y}}_e\in \{{\texttt {0}},{\texttt {1}}\}\) then set \(\dot{\tau }_e={\dot{y}}_e\); likewise if \({\hat{y}}_e\in \{{\texttt {0}},{\texttt {1}}\}\) then set \(\hat{\tau }_e={\hat{y}}_e\).

2. If \((\underline{{\texttt {L}}}\oplus \underline{{{\dot{y}}}})_{\delta a(e){\setminus } e}\) has both \({\texttt {0}}\) and \({\texttt {1}}\) entries, then set \(\hat{\tau }_e={\texttt {s}}\).

3. Apply the local Eq. (23) recursively to define \(\dot{\tau }_e,\hat{\tau }_e\) wherever possible.

4. Lastly, if any \(\dot{\tau }_e\) or \(\hat{\tau }_e\) remains undefined, then set it to \(\star \).

An example with \(\star \) messages is given in Fig. 2.

Fig. 2. Example of how \(\star \) messages can arise in the mapping from \(\underline{{y}}\) to \(\underline{{\tau }}\) (§2.2). The figure shows a subgraph of \(\mathscr {G}\) with variables indicated by circle nodes, clauses by square nodes, and edges by lines. All edges in the figure are assumed to have label \({\texttt {L}}_e={\texttt {0}}\). All variables shown are frozen to \({\texttt {0}}\) or \({\texttt {1}}\), and all clauses shown are separating. To avoid clutter we did not label the edges with the warnings \(y_e\); instead, each variable v is labeled with its frozen configuration spin \(x_v\), according to the \(\underline{{x}}\leftrightarrow \underline{{y}}\) bijection (21). The clauses force the variables along the cycle in the clockwise direction, resulting in \(\star \) values in the final \(\underline{{\tau }}\) in the counterclockwise direction of the cycle. (Note also that \(\underline{{y}}\) can be recovered from \(\underline{{\tau }}\) by changing \(\star \) to \({\texttt {f}}\); cf. Lemma 2.7.)

Lemma 2.7

The mapping described above defines a bijection

Proof

Let \(\underline{{x}}\in \{{\texttt {0}},{\texttt {1}},{\texttt {f}}\}^V\) be a frozen configuration on \(\mathscr {G}=(V,F,E,\underline{{\texttt {L}}})\) without free cycles, and let \(\underline{{y}}\in M^E\) be the warning configuration which corresponds to \(\underline{{x}}\) via (21). We first check that the mapping \(\underline{{y}}\mapsto \underline{{\tau }}\), as described above, gives a message configuration which is valid, i.e., satisfies conditions (i) and (ii) of Definition 2.6. In the first stage, the mapping procedure sets \(\dot{\tau }_e={\dot{y}}_e\) whenever \({\dot{y}}_e\in \{{\texttt {0}},{\texttt {1}}\}\), and \(\hat{\tau }_e={\hat{y}}_e\) whenever \({\hat{y}}_e\in \{{\texttt {0}},{\texttt {1}}\}\). One can argue by induction that the rest of the procedure does not create any additional \({\texttt {0}}\) or \({\texttt {1}}\) messages, so that in the final configuration the \(\{{\texttt {0}},{\texttt {1}}\}\) values of \(\underline{{\tau }}\) will match those of \(\underline{{y}}\). The second and third stages of the procedure are clearly consistent with the local Eq. (23). Note in particular that the third stage does not produce any \(\dot{\tau }_e=\texttt {z}\) message, because it would contradict the assumption that \(\underline{{y}}\) is a valid warning configuration; it also does not produce any \(\star \) message. All \(\star \) messages are created in the fourth stage, and this is clearly consistent with the mapping of \(\star \) messages under \(\dot{T}\) and \(\hat{T}\). This concludes the proof that \(\underline{{\tau }}\) satisfies condition (i) of Definition 2.6. To check condition (ii), suppose \(\tau _{{\textsc {e}}}=\star \), and let \({\textsc {f}}\) denote the reversal of \({\textsc {e}}\). From the above construction, it must be that \(y_{{\textsc {e}}}={\texttt {f}}\) and \(\tau _{{\textsc {e}}'}=\star \) for some \({\textsc {e}}'\) that points to the tail vertex \(t({\textsc {e}})\) but does not equal \({\textsc {f}}\). 
Consequently \({\textsc {e}}\) must belong to a directed cycle \({\textsc {e}}_1 {\textsc {e}}_2\ldots {\textsc {e}}_{2k} {\textsc {e}}_1\) with all the \(\tau _{{\textsc {e}}_i}\) equal to \(\star \). Whenever \({\textsc {e}}\) points from a separating clause a to free variable v, we must have \(\tau _{{\textsc {e}}}={\texttt {s}}\). As a result, if all the variables along the cycle are free, then none of the clauses can be separating, contradicting the assumption that \(\underline{{x}}\) has no free cycles. Therefore some variable v on the cycle must take value \(x_v\in \{{\texttt {0}},{\texttt {1}}\}\), and by relabelling we may assume \(v=t({\textsc {e}}_1)\). Let \({\textsc {f}}_i\) denote the reversal of \({\textsc {e}}_i\): since \(x_v\ne {\texttt {f}}\) but \(y_{{\textsc {e}}_1}={\texttt {f}}\), it must be that \(y_{{\textsc {f}}_1}=x_v\). This means that the clause \(a=h({\textsc {e}}_1)=t({\textsc {f}}_1)\) is forcing to v, so in particular \(y_{{\textsc {f}}_2}\in \{{\texttt {0}},{\texttt {1}}\}\). Continuing in this way we see that \(y_{{\textsc {f}}_i}\in \{{\texttt {0}},{\texttt {1}}\}\) for all i, and it follows that \(\underline{{\tau }}\) satisfies condition (ii), and so is a valid message configuration.

The mapping from \(\underline{{y}}\) to \(\underline{{\tau }}\) is clearly injective. To see that it is surjective, let \(\underline{{\tau }}\) be any valid message configuration. Projecting \(\dot{\mathscr {M}}{\setminus }\{{\texttt {0}},{\texttt {1}}\}\mapsto {\texttt {f}}\) and \(\hat{\mathscr {M}}{\setminus }\{{\texttt {0}},{\texttt {1}}\}\mapsto {\texttt {f}}\) yields a valid warning configuration \(\underline{{y}}\), which in turn maps to a valid frozen configuration \(\underline{{x}}\). It remains then to check that \(\underline{{x}}\) has no free cycles. Indeed, along a free cycle, all the warnings (in either direction) must be \({\texttt {f}}\). This means none of the messages can be in \(\{{\texttt {0}},{\texttt {1}}\}\), and as a result none of the messages can be \(\star \), by condition (ii) of Definition 2.6. This means all the messages must be in \(\dot{\mathscr {M}}{\setminus }\{{\texttt {0}},{\texttt {1}},\star \}\) or \(\hat{\mathscr {M}}{\setminus }\{{\texttt {0}},{\texttt {1}},\star \}\). Suppose in one direction of the cycle we have the directed edges \({\textsc {e}}_1{\textsc {e}}_2\ldots {\textsc {e}}_{2k}{\textsc {e}}_1\). By definition of \(\dot{T}\) and \(\hat{T}\), \(\tau _{{\textsc {e}}_i}\) is a proper subtree of \(\tau _{{\textsc {e}}_{i+1}}\) for all i, with indices modulo 2k. Going around the cycle we find that \(\tau _{{\textsc {e}}_1}\) is a proper subtree of \(\tau _{{\textsc {e}}_{2k+1}}=\tau _{{\textsc {e}}_1}\), which gives the contradiction.\(\square \)

2.3 Bethe formula

We now describe the dynamic programming (bp) calculation which will ultimately take a message configuration \(\underline{{\tau }}\) and evaluate a product of local functions to compute the size of its associated cluster. The first step is to define the dynamic programming variables; these will formalize the measures \({\texttt {m}}\) which were introduced previously in (7). Recall that for \(l\geqslant 1\) and \(\underline{{x}}\in \{{\texttt {0}},{\texttt {1}},{\texttt {f}}\}^l\), we write \(I^\textsc {nae}(\underline{{x}})\) for the indicator that the entries of \(\underline{{x}}\) are not identically \({\texttt {0}}\) or identically \({\texttt {1}}\).

Definition 2.8

Recall that message configuration spins belong to the space \({\mathscr {M}}=\dot{\mathscr {M}}\times \hat{\mathscr {M}}\) (Definition 2.6). Let \({\mathscr {P}}(\{{\texttt {0}},{\texttt {1}}\})\) denote the space of probability measures on \(\{{\texttt {0}},{\texttt {1}}\}\). Define the mappings \(\dot{{\texttt {m}}}:\dot{\mathscr {M}}\rightarrow {\mathscr {P}}(\{{\texttt {0}},{\texttt {1}}\})\) and \(\hat{{\texttt {m}}}:\hat{\mathscr {M}}\rightarrow {\mathscr {P}}(\{{\texttt {0}},{\texttt {1}}\})\) as follows. For \(\dot{\tau }\in \{{\texttt {0}},{\texttt {1}}\}\) let \(\dot{{\texttt {m}}}(\dot{\tau })\) be the unit measure supported on \(\dot{\tau }\). Likewise, for \(\hat{\tau }\in \{{\texttt {0}},{\texttt {1}}\}\) let \(\hat{{\texttt {m}}}(\hat{\tau })\) be the unit measure supported on \(\hat{\tau }\). For \(\dot{\tau }\in \dot{\mathscr {M}}{\setminus }\{{\texttt {0}},{\texttt {1}},\star \}\) or \(\hat{\tau }\in \hat{\mathscr {M}}{\setminus }\{{\texttt {0}},{\texttt {1}},\star \}\) we let \(\dot{{\texttt {m}}}(\dot{\tau })\) and \(\hat{{\texttt {m}}}(\hat{\tau })\) be recursively defined: if \(\dot{\tau }=\dot{T}(\hat{\tau }_1,\ldots ,\hat{\tau }_{d-1})\) where no \(\hat{\tau }_j=\star \), define

Note that \(\hat{\tau }_1,\ldots ,\hat{\tau }_{d-1}\) can be recovered from \(\dot{\tau }\) modulo permutation of the indices, so these quantities are well-defined. We see inductively that for \(\dot{\tau }\in \dot{\mathscr {M}}{\setminus }\{{\texttt {0}},{\texttt {1}},\star \}\), the normalizing factor \(\dot{z}(\dot{\tau })\) is positive, and \(\dot{{\texttt {m}}}(\dot{\tau })\) is a nondegenerate probability measure on \(\{{\texttt {0}},{\texttt {1}}\}\). Similarly, if \(\hat{\tau }\in \hat{\mathscr {M}}{\setminus }\{{\texttt {0}},{\texttt {1}},\star \}\) equals \(\hat{T}(\dot{\tau }_1,\ldots ,\dot{\tau }_{k-1})\) where none of the \(\dot{\tau }_i\) are \(\star \), then set

Again, we see inductively that for \(\hat{\tau }\in \hat{\mathscr {M}}{\setminus }\{{\texttt {0}},{\texttt {1}},\star \}\), the normalizing factor \(\hat{z}(\hat{\tau })\) is positive, and \(\hat{{\texttt {m}}}(\hat{\tau })\) is a nondegenerate probability measure on \(\{{\texttt {0}},{\texttt {1}}\}\). Finally, we will see below that for our purposes we can take \(\dot{{\texttt {m}}}(\star )\) and \(\hat{{\texttt {m}}}(\star )\) to be arbitrary nondegenerate probability measures on \(\{{\texttt {0}},{\texttt {1}}\}\); we therefore define them both to equal the uniform measure on \(\{{\texttt {0}},{\texttt {1}}\}\).
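For orientation, the two recursions of Definition 2.8 can be written in the standard belief propagation form for nae-sat. The display below is a sketch under that assumption rather than a verbatim copy of (24) and (25); the symbols are those of the definition, and the formulas reproduce the values \(\dot{\varphi }=1/4\), \(\hat{\varphi }^\text {lit}=3/4\), \(\bar{\varphi }=2\) quoted for the example of Fig. 3.

\[
\dot{z}(\dot{\tau }) = \sum _{{\varvec{x}}\in \{{\texttt {0}},{\texttt {1}}\}} \prod _{j=1}^{d-1} \hat{{\texttt {m}}}(\hat{\tau }_j)({\varvec{x}}), \qquad
\dot{{\texttt {m}}}(\dot{\tau })({\varvec{x}}) = \frac{1}{\dot{z}(\dot{\tau })} \prod _{j=1}^{d-1} \hat{{\texttt {m}}}(\hat{\tau }_j)({\varvec{x}}),
\]
\[
\hat{z}(\hat{\tau }) = 2 - \sum _{{\varvec{x}}\in \{{\texttt {0}},{\texttt {1}}\}} \prod _{i=1}^{k-1} \dot{{\texttt {m}}}(\dot{\tau }_i)({\varvec{x}}), \qquad
\hat{{\texttt {m}}}(\hat{\tau })({\varvec{x}}) = \frac{1}{\hat{z}(\hat{\tau })} \Big ( 1 - \prod _{i=1}^{k-1} \dot{{\texttt {m}}}(\dot{\tau }_i)({\varvec{x}}) \Big ).
\]

In this form the variable update is a normalized pointwise product, while the clause update assigns to \({\varvec{x}}\) the probability that the other \(k-1\) incoming values are not all equal to \({\varvec{x}}\), which is the not-all-equal constraint seen from the remaining variable.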

Given a valid message configuration \(\underline{{\tau }}\) on \(\mathscr {G}\), define \(\underline{{{\texttt {m}}}} = ({\texttt {m}}_e)_{e\in E}\) where \({\texttt {m}}_e\equiv (\dot{{\texttt {m}}}_e,\hat{{\texttt {m}}}_e)\) with \(\dot{{\texttt {m}}}_e\equiv \dot{{\texttt {m}}}(\dot{\tau }_e)\) and \(\hat{{\texttt {m}}}_e\equiv \hat{{\texttt {m}}}(\hat{\tau }_e)\). It follows from Definition 2.8 that \(\underline{{{\texttt {m}}}}\) satisfies the following local consistency equations, which are inherited from Eq. (23) for \(\underline{{\tau }}\), in combination with the above definitions (24) and (25). If \(\dot{\tau }_e\ne \star \), then \(\dot{{\texttt {m}}}_e\) is given by the equation

for \({\varvec{x}}\in \{{\texttt {0}},{\texttt {1}}\}\). Likewise, if \(\hat{\tau }_e\ne \star \), then \(\hat{{\texttt {m}}}_e\) is given by the equation

for \({\varvec{x}}\in \{{\texttt {0}},{\texttt {1}}\}\). Equations (26) and (27) are known as the bp equations. We now proceed to the calculation of the cluster size (22). To this end, we define the local functions

where the last identity in the last two lines holds for any choice of i. The bp calculation is summarized by the following:

Lemma 2.9

Suppose on \(\mathscr {G}=(V,F,E,\underline{{\texttt {L}}})\) that \(\underline{{x}}\) is a frozen configuration with no free cycles, and let \(\underline{{\tau }}\) be its corresponding message configuration from Lemma 2.7. Let \(\varvec{T}\in {\mathfrak {T}}(\underline{{x}})\) be a free piece of \(\underline{{x}}\), and let \(\varvec{t}\) be the free tree inside it. Then the number of nae-sat extensions of \(\underline{{x}}|_{\varvec{T}}\) on \(\varvec{T}\) is given by

where \(V(\varvec{t})\) and \(F(\varvec{t})\) denote respectively the variables and clauses in \(\varvec{t}\). (An example calculation is worked out in Fig. 3.)

Fig. 3 Example of correspondence (Lemma 2.7) between frozen and message configurations. Variables are indicated by circle nodes, clauses by square nodes, and edges by lines. The graph formed by the wavy lines is a free piece \(\varvec{T}\), with the free tree \(\varvec{t}\) in green and \(\varvec{T}{\setminus }\varvec{t}\) in blue (Definition 2.5). Each variable v is labelled with its frozen configuration spin value \(x_v\). The four separating clauses are indicated by filled black squares. The message configuration is only partially shown, with the remaining values given by the obvious symmetries. The clause-to-variable messages \(\hat{\tau }\) are shown in black, and the variable-to-clause messages \(\dot{\tau }\) are shown in purple. Each message is a tree, with root vertex shown as a filled node. The heavy black and purple lines indicate edges inside the messages that are labelled \({\texttt {1}}\). For instance, the message coming up out of the bottom variable is a tree consisting of a single edge, labelled \({\texttt {1}}\) (indicated in the figure by a heavy purple line), rooted at its incident clause. We then calculate on each edge the values \(m=( {\dot{{\texttt {m}}}[\dot{\tau }]({\texttt {1}})}, {\hat{{\texttt {m}}}[\hat{\tau }]({\texttt {1}})})\), and use this to determine the factors \(\dot{\varphi }\), \(\hat{\varphi }^\text {lit}\), \(\bar{\varphi }\) from (28). In this example, \(\varvec{t}\) has two free variables each with \(\dot{\varphi }=1/4\), four separating clauses each with \(\hat{\varphi }^\text {lit}=1\), and one non-separating clause with \(\hat{\varphi }^\text {lit}=3/4\). There are six edges incident to \(\varvec{t}\), each with \(\bar{\varphi }=2\). Multiplying all these factors together (Lemma 2.9) gives \(\mathrm {\textsf {{size}}}(\underline{{x}};\varvec{T})=3\). Indeed, in this small example it is easy to see that there are exactly three nae-sat assignments extending the frozen configuration \(\underline{{x}}\) on \(\varvec{T}\), since the two free variables cannot both take value \({\texttt {1}}\), but the remaining three possibilities give valid nae-sat assignments (color figure online).
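The arithmetic in this caption can be checked numerically. The sketch below is illustrative only and rests on several assumptions: the instance is a hypothetical one with \(d=k=3\), chosen to match the counts in the caption (two free variables, one non-separating clause that also contains a frozen variable of literal-adjusted value \({\texttt {1}}\), and four separating clauses); the update rules are the standard bp rules for nae-sat, assumed to agree with (24), (25) and (28); and all function names are invented for this example.

```python
from fractions import Fraction
from itertools import product

# Messages are pairs (m(0), m(1)) of exact rationals.
UNIFORM = (Fraction(1, 2), Fraction(1, 2))
FROZEN_ONE = (Fraction(0), Fraction(1))  # unit measure on 1 (hypothetical frozen neighbour)


def prod_at(messages, x):
    """Product over the given messages of their mass at value x."""
    p = Fraction(1)
    for m in messages:
        p *= m[x]
    return p


def normalize(weights):
    z = sum(weights)
    return tuple(w / z for w in weights)


def var_to_clause(incoming_hat):
    """Assumed variable update: normalized pointwise product."""
    return normalize([prod_at(incoming_hat, x) for x in (0, 1)])


def clause_to_var(incoming_dot):
    """Assumed NAE clause update: weight of x is 1 - prod_i m_i(x)."""
    return normalize([1 - prod_at(incoming_dot, x) for x in (0, 1)])


def phi_var(incoming_hat):
    """Assumed variable factor: sum_x prod_i hat-m_i(x)."""
    return sum(prod_at(incoming_hat, x) for x in (0, 1))


def phi_clause(incoming_dot):
    """Assumed clause factor: 1 - sum_x prod_i dot-m_i(x)."""
    return 1 - sum(prod_at(incoming_dot, x) for x in (0, 1))


def phi_edge(m_dot, m_hat):
    """Assumed edge factor: 1 / sum_x dot-m(x) * hat-m(x)."""
    return 1 / sum(m_dot[x] * m_hat[x] for x in (0, 1))


# Boundary case from the proof of Lemma 2.9: a separating clause sends
# the uniform message to a free variable.
hat_sep = UNIFORM
# Free variable -> non-separating clause a (its other two clauses are separating).
dot_free_to_a = var_to_clause([hat_sep, hat_sep])
# Clause a -> free variable, given the other free variable and the frozen one.
hat_a_to_free = clause_to_var([dot_free_to_a, FROZEN_ONE])
# Free variable -> one of its separating clauses.
dot_free_to_sep = var_to_clause([hat_sep, hat_a_to_free])

phi_v = phi_var([hat_sep, hat_sep, hat_a_to_free])
phi_a = phi_clause([dot_free_to_a, dot_free_to_a, FROZEN_ONE])
edge_a = phi_edge(dot_free_to_a, hat_a_to_free)
edge_sep = phi_edge(dot_free_to_sep, hat_sep)

# Two free variables, one non-separating clause, six edges at the free
# variables; the four separating clauses contribute a factor 1 each.
size_bp = phi_v ** 2 * phi_a * edge_a ** 2 * edge_sep ** 4

# Brute force: clause a sees (x_u, x_v, 1) and must not be all equal;
# separating clauses are satisfied for every choice of the free values.
size_brute = sum(1 for xu, xv in product((0, 1), repeat=2)
                 if len({xu, xv, 1}) > 1)

print(phi_v, phi_a, edge_a, edge_sep, size_bp, size_brute)
```

Exact rationals from `fractions.Fraction` are used so that the comparison between the product formula and the brute-force count is an equality of integers rather than a floating-point approximation.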


Proof

As we have mentioned before, this calculation is well known (see e.g. [33, Ch. 14]) but we will review it here, beginning with a minor technical point. As noted in Definition 2.5, \(\varvec{t}\) is a tree but \(\varvec{T}\) has a cycle wherever a variable \(v\in \varvec{T}{\setminus }\varvec{t}\) is joined by more than one edge to \(\varvec{t}\). However, since \(\underline{{x}}|_{\varvec{T}{\setminus }\varvec{t}}\) is \(\{{\texttt {0}},{\texttt {1}}\}\)-valued, these cycles play no role in the question of extending \(\underline{{x}}|_{\varvec{T}}\) to a valid nae-sat assignment on \(\varvec{T}\)—one can simply duplicate variables in \(\varvec{T}{\setminus }\varvec{t}\) so that each one joins to \(\varvec{t}\) by exactly one edge. We may therefore assume for the rest of the proof that all the free pieces \(\varvec{T}\in {\mathfrak {T}}(\underline{{x}})\) are acyclic.

For any \(\varvec{T}\in {\mathfrak {T}}(\underline{{x}})\) and any edge \(e\in \varvec{T}\), delete from \(\varvec{T}\) the edges \(\delta a(e){\setminus } e\), and let \(\dot{\varvec{T}}_e\) denote the component containing e in what remains, rooted at a(e). Likewise, delete from \(\varvec{T}\) the edges \(\delta v(e){{\setminus }} e\), and let \(\hat{\varvec{T}}_e\) denote the component containing e in what remains, rooted at v(e). For each variable \(w\in \dot{\varvec{T}}_e{{\setminus }}\varvec{t}\), let \(\acute{x}_w\in \{{\texttt {0}},{\texttt {1}}\}\) be the boolean sum of \(x_w\) together with all the edge literals \({\texttt {L}}\) on the path joining w to a(e) in \(\dot{\varvec{T}}_e\). Note then that \(\dot{\tau }_e\) encodes the isomorphism class of \(\dot{\varvec{T}}_e\), labelled with boundary data \(\acute{x}_w\) (for all the variables \(w\in \dot{\varvec{T}}_e{\setminus }\varvec{t}\)). A similar relation holds between \(\hat{\tau }_e\) and \(\hat{\varvec{T}}_e\). For each \(e\in \varvec{T}\), let \(\dot{\textsf {s}}_e({\varvec{x}};\underline{{x}})\) count the number of valid nae-sat assignments that extend \(\underline{{x}}|_{\dot{\varvec{T}}_e}\) on \(\dot{\varvec{T}}_e\) and take value \({\varvec{x}}\) on v(e). Let \(\hat{\textsf {s}}_e({\varvec{x}};\underline{{x}})\) count the number of valid nae-sat assignments that extend \(\underline{{x}}|_{\hat{\varvec{T}}_e}\) on \(\hat{\varvec{T}}_e\) and take value \({\varvec{x}}\) on v(e). Denote

There are two boundary cases: if edge e joins a free variable in \(\varvec{t}\) to a separating clause in \(\varvec{T}{\setminus }\varvec{t}\), then we have \(\hat{\textsf {s}}_e({\texttt {0}};\underline{{x}}) = \hat{\textsf {s}}_e({\texttt {1}};\underline{{x}})=1\). If edge e instead joins a non-separating clause in \(\varvec{t}\) to a frozen variable in \(\varvec{T}{\setminus }\varvec{t}\), then we have \(\dot{\textsf {s}}_e({\varvec{x}};\underline{{x}})=\mathbf {1}\{{\varvec{x}}=x_{v(e)}\}\). By induction started from these boundary cases we find that for all \(e\in \varvec{T}\),

It follows that for any variable \(v\in \varvec{t}\), any clause \(a\in \varvec{t}\), and any edge \(e\in \varvec{T}\), we have the identities

Combining the identities and rearranging gives (writing \(E(\varvec{t})\) for the edges of \(\varvec{t}\))

For \(a\in F(\varvec{t})\) and \(e\in \delta a{\setminus }\varvec{t}\), the variable v(e) is frozen and so we have \(\dot{\textsf {s}}_e(\underline{{x}})=1\). For any \(v\in V(\varvec{t})\) and \(e\in \delta v{\setminus }\varvec{t}\), we have \(\dot{\textsf {s}}_e(\underline{{x}})=\mathrm {\textsf {{size}}}(\underline{{x}};\varvec{T})\). The tree \(\varvec{t}\) has Euler characteristic one. The right-hand side of the above equation then simplifies to \(\mathrm {\textsf {{size}}}(\underline{{x}};\varvec{T})\), thereby proving the claim.\(\square \)

Corollary 2.10

Suppose on \(\mathscr {G}=(V,F,E,\underline{{\texttt {L}}})\) that \(\underline{{x}}\) is a frozen configuration with no free cycles, and let \(\underline{{\tau }}\) be its corresponding message configuration from Lemma 2.7. Then the number of valid nae-sat extensions of \(\underline{{x}}\) is given by the product formula

This identity holds as long as \(\dot{{\texttt {m}}}(\star )\) and \(\hat{{\texttt {m}}}(\star )\) are fixed nondegenerate probability measures on \(\{{\texttt {0}},{\texttt {1}}\}\).

Proof

Let \(V'\) denote the set of free variables, and let \(E'\) denote the set of all edges incident to \(V'\). Let \(F'\) denote the set of non-separating clauses. From (22) and Lemma 2.9 we have