Abstract

Humans have the unique ability to efficiently execute instructions that were never practiced beforehand. In this Rapid Instructed-Task-Learning, not-yet-executed novel rules are presumably held in procedural working-memory (WM), which is assumed to hold stimulus-to-response bindings. In this study, we employed a computerized-cognitive training protocol targeting procedural WM to test this assumption and to examine whether the ability to rapidly learn novel rules can itself be learned. 175 participants were randomly assigned to one of three groups: procedural WM training (involving task-switching and N-back elements, all with novel rules; Shahar and Meiran in PLoS One 10(3):e0119992, 2015), active-control training (adaptive visual-search task), and no-contact control. We examined participants’ rapid instructed-task-learning abilities before and after training, by administrating 55 novel choice tasks, and measuring their performance in the first two trials (where participants had no practice). While all participants showed shorter reaction-times in post vs. pretest, only participants in the procedural WM training group did not demonstrate an increased error rate at posttest. Evidence accumulation modelling suggested that this result stems from a reduction in decision threshold (the amount of evidence that needs to be gathered to reach a decision), which was more pronounced in the control groups; possibly accompanied by an increased drift-rate (the rate of evidence accumulation) only for the training group. Implication are discussed.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Humans have the unique ability to efficiently follow task instructions, even without practice. This can be seen in many real-life situations, for instance in getting instructions on how to operate your car’s new sound system (e.g., press the left button to turn it on, press the upper button to adjust settings, etc.). Although execution of new instructions may be subjectively experienced as trivial, from a theoretical standpoint, it is not. Specifically, procedural representations, the building blocks of actions, have long been considered to form through experience, and are thought to be very different from the declarative representations which are used to communicate the instructions (Anderson, 1996; Logan, 1988). Moreover, newly instructed tasks have been shown to operate automatically under some conditions (Meiran, Liefooghe, & De Houwer, 2017). This fact further challenges the procedural-declarative distinction given the widely held assumption that procedural but not declarative knowledge leads to automatic behavior. All these and other reasons make this topic an attractive new area of research (e.g., as reflected in the 27th Attention and Performance meeting, see De Houwer, Hughes, & Brass, 2017).

The ability to perform actions based on instruction alone was termed rapid instructed-task-learning (Cole, Laurent, & Stocco, 2013). Rapid instructed-task-learning is measured by asking participants to perform a series of novel choice reaction-time (RT) tasks, each for a small number of trials (Cole, Bagic, Kass, & Schneider, 2010; Cole et al., 2013). Since the stimulus–response rules are novel and unpracticed, performance in the set of novel choice-RT tasks cannot solely rely on long-term memory, and thus is expected to rely on working-memory (WM) resources (Meiran, Cole, & Braver, 2012; see Wilhelm & Oberauer, 2006).

It is assumed that at least on the very first execution of an instruction, which has no traces from past performance, novel rules must be held in WM, whose mechanism should hold novel associations between familiar elements (Cole, Braver, & Meiran, 2017; Meiran et al., 2012). A prominent WM model discussed in this literature, by Oberauer (2009), assumes two components—the first and more relevant is a procedural subsystem for holding associations between stimuli and responses (e.g., press the right key when you see a square)—i.e., information that guides action. A similar mechanism is assumed to underlie a declarative subsystem that holds associations between stimulus elements (e.g., a letter and its location in the list). Whether these systems are separate is still debated [for supporting evidence see (Oberauer, Souza, Druey, & Gade, 2013; Souza, Oberauer, Gade, & Druey, 2012, but see Barrouillet, Corbin, Dagry, & Camos, 2015; Shahar, Teodorescu, Usher, Pereg, & Meiran, 2014, Experiment 3, for evidence questioning this distinction)]. In the current research, we do not deal with this debate. Instead, we focus on the hypothesized involvement of procedural WM in rapid instructed-task-learning, using a cognitive training protocol that was shown to improve procedural WM processing, to test whether rapid instructed-task-learning can itself be learned.

Training procedural working memory

The robust correlation between WM updating, and fluid intelligence (e.g., Friedman et al., 2006) lead researchers to predict that improving WM would lead to improved performance in fluid intelligence (Melby-Lervåg, Redick, & Hulme, 2016). Despite some encouraging findings (Jaeggi, Buschkuehl, Jonides, & Perrig, 2008; Salminen, Strobach, & Schubert, 2012), recent meta-analyses reported only near transfer effects [improvement in a training procedure leading to improvement in a similar experimental procedure, e.g., (Melby-Lervåg, Redick, & Hulme, 2016; Soveri, Antfolk, Karlsson, Salo, & Laine, 2017)], suggesting that the benefits of cognitive training might be somewhat limited. Importantly, near transfer effects are highly important on their own, as they are evidence for improvement in the underlying processes of the tasks.

In a previous study, Shahar & Meiran (2015) introduced a novel procedural WM training design that combines task-switching and N-back elements, and showed transfer to procedural WM processing. In this procedural training task, participants performed novel tasks, comprising two 2-choice reaction-time (RT) task-sets in short mini-blocks of ten trials for 19 sessions. The experimental trials included random switching between the two tasks and participants were also requested to respond according to the cue/target (randomly chosen) that was presented N trials beforehand, where N level was adapted to participants’ performance (see Fig. 1). Importantly, increasing N-back level in this task is considered to influence the procedural demands of the task, since the memoranda is action-related (i.e., serves to guide action). Thus, this training protocol is assumed to load procedural WM as well as hierarchical control demands, given the need to switch between task sets (Koechlin, Basso, Pietrini, Panzer, & Grafman, 1999).

Illustrations of example blocks in the procedural working-memory training task. a, b show an example for two sets of instructions. c, d illustrate example task sequences for performing the instructions presented in a. In c participants need to perform the tasks according to the currently presented cues and targets, whereas in d they need to perform the tasks according to the task-cue that was presented on the previous trial

Shahar & Meiran’s (2015) results showed near transfer to an untrained 2-choice reaction time task, suggesting improvement in rule retrieval relative to a no-contact control group. Benefits of this training procedure were reported in an additional study (Shahar et al., 2018), showing that error rates in a novel choice task were lower in the trained group relative to an active control group. Nonetheless, it is yet unknown whether benefit is also seen on the first trials of transfer tasks, where rapid-instructed task learning is evidenced. Importantly, this could only be tested using specifically designed tasks that allow measuring rapid instructed-task-learning, which were not previously tested in training studies, to our knowledge.

Measuring rapid instructed-task-learning

Rapid instructed-task-learning paradigms all involve multiple novel instructions performed in a small number of trials (Cole et al., 2013; Liefooghe, Wenke, & De Houwer, 2012). Here, we used the NEXT paradigm (Meiran, Pereg, Kessler, Cole, & Braver, 2015a, Fig. 2) in which participants performed a series of novel two-choice reaction time tasks (e.g., press left for “Y” and right for “X”). Participants were asked to perform each task for only two trials (GO phase; also termed an inducer task), in which the stimuli appeared in green color. In each task, prior to the GO phase, participants encountered a sequence of stimuli in red color and were requested to press a right/left response (counterbalanced between participants and constant throughout the experiment) to get to the (instructions’ implementation) GO phase. This part of the task is called the NEXT phase (also termed a ‘diagnostic task’).

Trial sequence in the NEXT paradigm. Each mini-block consists of novel stimulus–response associations (for example, X and Y). In the instructions screen, participants are introduced with instructions towards the performance in the GO phase, where the stimuli appear in green color and only performed twice. Prior to the GO phase, a number of targets in red color require a NEXT response (right/left, counterbalanced between participants and constant throughout the experiment), which can thus be compatible (cogruent) or incompatible (incongruent) with the instructed GO response

Rapid instructed-task learning abilities can be estimated using GO phase performance, and more specifically—the difference between the first and second GO trials (i.e., GO trial effect). This effect was found to stem from poorer performance in first relative to subsequent GO trials (Meiran et al., 2015a). It is the first GO trial where performance is completely based on instructions since there is no prior experience with the task, and thus no memory traces from previous GO task performance (Cole et al., 2017). For that reason, the first GO trial is considered the purest measure of rapid instructed-task learning. Small GO Trial effects indicate efficient rapid-instructed-task-learning. For example, it was found that longer GO phases comprising ten trials, that allow task learning via experience, resulted in a larger GO Trial effect as compared to the usual 2-GO-trial sequence (Meiran et al., 2015a).

Performance in the NEXT phase serves to measure a side effect of holding instructions in WM towards their execution: their reflexive activation in an inappropriate context. This effect, termed Intention-Based Reflexivity (Meiran et al., 2012; A.K.A Automatic Effects of Instructions, Meiran et al., 2017) is reflected in a compatibility effect that is also measured in the NEXT paradigm (Meiran et al., 2015a). In the NEXT paradigm, compatibility relationships are formed between the NEXT and GO responses (e.g., if “Y” was associated with a left GO response, but in the NEXT phase a red “Y” demands a right response—it is considered an incompatible NEXT trial. See Fig. 2). In addition, the inclusion of a NEXT phase adds a hierarchical control demand (Koechlin et al., 1999) to the paradigm as performance necessitates identification of the task context (here, red vs. green color, indicating whether this is the NEXT of the GO phase of the miniblock) before the execution of the instructions. Importantly, the training procedure also includes a hierarchical element, given that it necessitates determining the required task before task execution.

A previous study tested individual differences in the pretest data from the current training study (Meiran, Pereg, Givon, Danieli, & Shahar, 2016). That study aimed to examine the involvement of WM in rapid instructed-task-learning and automatic effects of instructions, while also examining the relationship between the two later phenomena. Meiran et al. (2016) showed that participants with better rapid instructed-task-learning performance had smaller NEXT compatibility effects. Although automaticity is a by-product of rapid instructed-task-learning, these are two partially distinct (but related; r = − 0.32, between the respective latent-variables) phenomena.

The current study

Since the training procedure includes novel tasks, we recently suggested (Shahar et al., 2018), that training might encourage participants to hold in mind an abstract task representation. These representations are assumed to be ‘specific-general’ representations, in the sense that they are constrained to the specific task procedure, but are general to any stimulus or response that might be used in the context of this specific task. In choice-RT tasks, holding in mind abstract stimulus–response associations should allow participants to quickly adopt novel task rules, which will then facilitate performance. The existence of such abstract stimulus–response rules should improve rapid instructed-task-learning, and allow better performance in GO phase trials in the NEXT paradigm (see Verbruggen, McLaren, & Chambers, 2014), suggesting that basic learning mechanisms enable abstract representations’ development). Training-related improvement in rapid instructed-task-learning would suggest both that it relies on procedural WM, and that it is trainable. In addition, such results would provide evidence for a somewhat wider than thought near-transfer of this training protocol, given the non-trivial differences between the choice RT tasks used to assess transfer in Shahar & Meiran’s (2015) and Shahar et al.’s (2018) works and the rapid instructed-task-learning paradigm (i.e., measuring choice performance in the very first trial(s) of a novel task). Most importantly, such a finding would point to a direction in which cognitive training can lead to wider transfer than seen so far. This is because improvement in rapid-instructed-task learning constitutes an example of learning-to-learn. As such, it influences future learning and thus sows the seeds for a continuously growing impact.

Furthermore, based on Meiran et al. (2016), we predicted that if procedural WM training would improve rapid instructed-task-learning performance, its associated automaticity would correspondingly decrease. Such a transfer effect would be considered as “intermediate”, since although these phenomena are related, as well as procedurally measured within the same task, they were found to reflect two partially independent phenomena.

In the current study, participants were randomly assigned to procedural WM training, an active control group (who were trained on a visual-search task that does not tap executive functions, Redick et al., 2013, and includes a constant stimulus–response association, thus not hypothesized to facilitate abstract stimulus–response associations) and a no-contact control group. We predicted that procedural working memory training would allow participants to form abstract stimulus–response rules, which would, in turn, enhance their rapid instructed-task-learning. Specifically, we predicted that, relative to controls, participants in the training group would show better performance in unpracticed and novel choice-RT tasks, even in the first GO trial where no prior overt practice had occurred.

Method

Participants

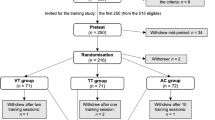

Participants were 175 students in a pre-academic preparatory course for engineering at Ben-Gurion University of the Negev. The study was performed in two consecutive years (Year 1, N = 76; Year 2, N = 99). Participants took part in the experiment in return for monetary compensation. The participants reported having normal or corrected-to-normal vision, including intact color vision, and having no psychiatric or neurological disorders.

Participants were randomly assigned to two groups in the first year of the study: procedural WM and active control. In the second year, they were assigned to these groups and in addition to a no-contact group. Thus, the total numbers were N = 71 (12 females, mean age 23.32) for procedural WM training, N = 71 (12 females, mean-age 23.63) for active control and N = 30 (5 females, mean-age 23.81) for no-contact group.

Materials and procedure

Pre and posttest testing, as well as the training sessions, took place in a classroom located on campus. The class contained 14 testing positions, each comprising of a desk and a desktop, with partitions between them. There were six 14 in. and eight 17 in. monitors. As a result, there has been a slight difference in stimulus size between the screens: In the NEXT paradigm, the stimuli were 2 × 2 cm on the 14-inch screens and 2.5 × 2.5 cm on the 17-inch ones. However, the assignment of participants to computers was random, such that there were no systematic differences between the groups. The stimuli consisted of English and Hebrew letters, digits, symbols, and mostly pictures drawn from Microsoft PowerPoint symbols pool and free Internet image databases.

Procedural overview

Due to technical issues, the training included 14 training sessions during the first year, and 12 sessions during the second year. The training sessions were performed twice a week (~ 30 min each), such that the training was completed within 8–10 weeks. During this interval, the no-contact control did not attend any measurements. Further details, including the involvement of other transfer measurements are reported in Shahar et al. (2018).

Transfer task Footnote 1

The NEXT paradigm (Fig. 2) was similar to that used by Meiran et al. (2015a). Participants performed a series of 55 experimental mini-blocks (on each pre/post measurement), each involving a set of novel 2-choice RT instructions that were only executed twice during a task-execution (GO) phase (in which the stimuli appear in GREEN color). The instructions associated each stimulus to a left/right response (the letter keys A/L, covered with stickers on a QWERTY keyboard). Just prior to task execution, a pseudo-random number of trials (in which the stimuli appear in RED color) appeared. In these NEXT trials, participants were requested to press a single key to advance the screen to reach the GO trials. The key that was used in the red targets’ NEXT phase was either right or left (counterbalanced between participants). To create ambiguity and decrease temporal expectations regarding the GO phase initiation, 10% of the mini-blocks did not involve any NEXT trials, 30% of the mini-blocks involved one NEXT trial, 20% involved 2 and 20% involved 3 trials, 10% involved 4 NEXT trials and the remaining 10% of the mini-blocks involved 5 NEXT trials. The decreasing probability of NEXT trials was introduced to partially control the temporal predictability (see Meiran et al., 2015a).

Stimuli included Hebrew letters, English letters, digits, symbols, and pictures. Each mini-block included 2 stimuli randomly selected by the computer from the same category (e.g., two digits, two Hebrew letters).

The instructions ended with a spacebar response, but not before 3 s had elapsed. Each NEXT/GO trial began with a 500 ms fixation, followed by the target stimulus presented until the response and followed by a 800 ms blank screen.

Training tasks

Working-Memory Training (Fig. 1)Footnote 2: The task, described in full by Shahar et al. (2018), was similar to that used by Shahar & Meiran (2015). In each session, participants received a series of nine novel 2-choice reaction-time tasks, comprising of an object classification task (requiring a response to the identity of the stimulus) and a spatial classification task (requiring a response to the location of the stimulus on the screen). The task-set (i.e., the novel association of stimuli to responses) was randomly generated on each block, from a pool containing 12 object pairs, 6 location pairs and 7 response pairs, and was only performed for 10 trials. Each session included nine such blocks.

At the beginning of each block, participants received instructions regarding the new task, followed by a short practice phase of four trials of each task separately. Then, following an instructions reminder, participants moved on to the experimental trials, which included random switching between the tasks.

The level of difficulty was adaptive and was increased in the following block when participants made 0–1 errors, or decreased if they made more than 3 errors. The level of difficulty involved the information for which the participants were required to respond to—either to perform according to the cue/target (randomized on each block) that was presented N trials prior to the current trial. For example, if N = 2 and the N-back level refers to the cue, the current task (semantic/spatial) depended on the task-cue that was presented 2 trials ago, and the response was made according to the currently presented stimulus. If the N-back level refered to the stimulus, the task decision was made according to the currently presented task-cue, but the response was made according to the stimulus that was presented 2 trials ago.

Each trial sequence included a fixation point (1000 ms), a task cue (500 ms), a second fixation point (1000 ms) and the target stimulus (presented until a response was made or until 6000 ms had elapsed). If the participant made an error, a 400 ms beep was played.

Active control (visual search task): This task was the same as the visual-search task used by Redick et al. (2013), with minor changes that concerns our specific study. On each trial, participants were requested to identify the letter F in an array, and report whether the letter was facing right or left (i.e., F or the mirror image of F). The task and response keys were constant throughout training. Difficulty level was manipulated by increasing the size of the array and its composition (each altered on every other level). The smallest array was of 4 letters, and it was increased until it reached 100 letters. The array size increase was made by adding two additional rows and columns. The composition factor involved the identity of the distractors. First, the distractors were homogenous (only E’s or only T’s, which could be rotated left or right), and on the following level, they were heterogenous (both E’s and T’s, rotated in different directions). Thus, in the first level there were 4 letters, homogenous distractors, then on level 2, 4 letters with heterogenous distractors, and then 16 with homogenous distractors, and so on. The criterion for level increase was accuracy higher than 87.5%, and accuracy lower than 75% led to level decrease. Since some participants managed to reach the maximal level of difficulty (100 letters, heterogenous distractors) we increased the difficulty for them by increasing the requested accuracy: the criterion for level increase was accuracy higher than 92%, and the criterion for level decrease was accuracy lower than 80% (the array was kept at 100 letters, heterogenous).

Each training session consisted of 16 blocks, with 24 trials, each. Each trial began with a 500 ms fixation, followed by a 500 ms letters-array, and then a 2500 ms mask during which the response was made.

Data analysis

Preprocessing was conducted using the “prepdat” R package (Allon & Luria, 2016). GO trials with an error, with Response Times (RTs) < 100 ms (anticipation errors) or > 4000 ms (outliers) were omitted, as well as trials above 3 SD of the participant’s mean RT per condition. We employed Bayesian analyses of variance (BANOVA) (using JASP 0.8.1.2; JASP Team, 2017) with the default priors to estimate the relative odds of H1 and H0 given the data (assuming equal priors for H0 and H1). We further report BF10, the relative odds of H1 and H0.

Given our specific interest in group differences between pre and posttest measures, in the following section, we focus on effects involving the interaction between training group and the time of measurement.

In the NEXT phase, each trial could be compatible or incompatible with the GO response (Fig. 2). The difference (measured in response times) between the incompatible and compatible trials is considered to reflect Automatic Effects of Instructions (i.e., NEXT compatibility effect, Meiran et al., 2015a). In the GO phase, the difference between the first and second GO trials in response times and error rates serves as an indication of rapid instructed-task-learning (i.e., GO Trial effect, Meiran et al., 2015a). As has been mentioned, this effect was found to stem from differences in first GO trial performance, and thus, the first GO trial is first analyzed separately.

Results

Training results

Both the WM training group and the active control group demonstrated improvement in their performance throughout the training period, as evident in a robust effect of Session on the average training level across groups (BF10 = 2e + 263; \(\eta _{{\text{p}}}^{2}\) = 0.56).

GO Phase (rapid instructed-task-learning; near transfer)

Given its theoretical and empirical importance, we report first GO trial performance separately first. The BANOVAs included the between-subjects independent variable Group (WM-Training, Active, and Silent Control), and the within-subjects independent variable Time (pre vs. posttest). In RT, the results indicated a robust Time main effect (BF10 = 5.32e + 20; \(\eta _{{\text{p}}}^{2}\) = 0.42) and no Group main effect (BF10 = 0.08; \(\eta _{{\text{p}}}^{2}\) < 0.01). The lack of interaction between Group and Time (BF10 = 0.27; \(\eta _{{\text{p}}}^{2}\) = 0.02, supporting H0), demonstrated that all three groups showed a similar pretest-to-posttest response acceleration in first GO trial. In error-rates, the results indicated a main effect for Time (BF10 = 62.73; \(\eta _{{\text{p}}}^{2}\) = 0.07), that demonstrates an increase in error-rates from pre to posttest. While the main effect for Group was indecisive (BF10 = 0.53; \(\eta _{{\text{p}}}^{2}\) = 0.03), there was strong support for a Group by Time interaction in the first GO Trial error-rates (BF10 = 20.47; \(\eta _{{\text{p}}}^{2}\) = 0.07; Fig. 3). Descriptively, both control groups showed an increase in error-rates from pre to posttest. Exploring the simple-effects, we found no Time simple-effect for the training group (BF10 = 0.18; \(\eta _{{\text{p}}}^{2}\) < 0.01), a robust Time effect in the active control group (BF10 = 2,326.16; \(\eta _{{\text{p}}}^{2}\) = 0.24), and an indecisive Time effect in the silent control group (BF10 = 1.06; \(\eta _{{\text{p}}}^{2}\) = 0.11); with no robust difference between the control groups (BF10 = 0.40; \(\eta _{{\text{p}}}^{2}\) = 0.01), or between the silent control group and the training group (BF10 = 0.70; \(\eta _{{\text{p}}}^{2}\) = 0.03).

At this point, we should note that finding a robust difference between the training and the active control group is much more meaningful than finding a difference between the training and silent control group, since the active and training groups are better matched in terms of random assignment, activity during the training period and sample size. This contrasts with the silent control group which had lower number of participants, resulting in compromised statistical power that makes it difficult to find effects related to that group. Taken together, the results demonstrate a comparable first GO trial RT improvement across the three groups. However, only the procedural WM training group did not demonstrate an accompanying (at least descriptive) increase in error rate.

Next, we tested GO Trial effect. The BANOVAs included the between-subjects independent variable Group (WM-Training, Active, and Silent Control), and the within-subjects independent variables Time (pre vs. posttest) and GO Trial (Trial 1, Trial 2) (Fig. 3). In RTs, we found a robust GO Trial main effect (BF10 = 4.61e+26; \(\eta _{{\text{p}}}^{2}\) = 0.55) indicating better performance on the second GO trial, across groups. There was strong evidence for a lack of interaction between Group, Time, and GO Trial, demonstrating that all groups showed a similar pre-to-post reduction in their GO Trial effect (the difference between the first and second GO Trials) (BF10 = 0.07; \(\eta _{{\text{p}}}^{2}\) = 0.01). The two-way interaction between Group and Time (above and beyond GO Trial) similarly indicated no difference between the groups in their GO performance RT reduction from pre to posttest (BF10 = 0.19; \(\eta _{{\text{p}}}^{2}\) = 0.02).

In error-rates, the main effect for GO Trial had BF10 = 3.58e+39, \(\eta _{{\text{p}}}^{2}\) = 0.59, indicating more errors in the first GO trial. There was no interaction between Group, Time and GO Trial, as in RT (BF10 = 0.08; \(\eta _{{\text{p}}}^{2}\) = 0.02), indicating that the error-rates GO Trial effect was not differentially influenced in the training groups. However, the interaction between Time and Group indicated a decisive effect with BF10 = 46.96 (\(\eta _{{\text{p}}}^{2}\) = 0.09), supporting the pattern observed in the first GO trial analyses.

The pre-to-post increase in error rates (control groups) coupled with RT decrease (all groups) suggests that speed-accuracy tradeoff took place (mostly) in the control groups, whereas in the WM training group, GO response acceleration did not come at the cost of an increased error rate. Additionally, the increase in error rates seen in the active-control group could reflect regression to the mean, given the trend showing that the groups did not have equal error rates at pretest (This difference in first trial error rates between the groups at pretest was indecisive, however, with BF10 = 0.49). To control for this issue, based on first-trial error rates, we sub-sampled the data by removing participants with extreme valuesFootnote 3 (as explained in the "Appendix”), resulting in equivalent pretest performance. Analyses involving this sub-sample support the same conclusion reached for the full-sample (increase in error rates from pre to posttest only in the control groups). We do however note that this sub-sampling approach decreases the within-group variance, and could thus potentially increase effect sizes. Nonetheless, the results presented in the "Appendix” demonstrate that speed-accuracy tradeoff took place in the control groups regardless of pretest performance.

NEXT Phase (automatic effects of instructions; intermediate transfer)

BANOVA was performed on NEXT RTs (NEXT errors were not monitored) with the between-subjects independent variable Group (WM-Training, Active, and Silent Control), and the within-subject independent variables Time (pre vs. posttest), and Compatibility (compatible vs. incompatible). The results showed a substantial NEXT compatibility effect (BF10 = 9.24e+8; \(\eta _{{\text{p}}}^{2}\) = 0.43, slower incompatible than compatible NEXT responses) and an overall pre-to-post reduction of the NEXT compatibility effect (BF10 = 14.02; \(\eta _{{\text{p}}}^{2}\) = 0.11), across training groups. However, the results showed no modulation of the NEXT compatibility effect by training, as indicated by the lack of interaction between Group, Time and Compatibility (BF10 = 0.32; \(\eta _{{\text{p}}}^{2}\) = 0.04) (see Fig. 4).

Evidence accumulation modelling

The current results suggest that speed-accuracy tradeoff occurred only (or mostly) in the control groups. However, we cannot be sure that speed-accuracy tradeoff is the only decision mechanism that was influenced by training. For example, it might be that in addition, training resulted in improved information processing. The above model-agnostic analysis cannot disentangle the two. Therefore, we turned to evidence accumulation modelling (Forstmann, Ratcliff, & Wagenmakers, 2016; Ratcliff, Smith, Brown, & McKoon, 2016) in examining choice mechanisms underlying the first GO trial performance. The idea in these models is of sequential sampling of evidence that continues until the amount of evidence reaches a certain boundary, at which the agent makes the decision. Although the various models differ in detail (e.g., parameters and assumptions), they all have some analogous parameters such as drift-rate (the rate of evidence accumulation), boundary (the amount of evidence that is needed for a decision) and non-decision time, reflecting the duration of non-decision processes (e.g., early perceptual processes, late motor processes).

Given the behavioral results, we hypothesized that for the training group, there would be a drift rate increase and for the control groups there would be a boundary decrease. Such results could explain the RT decrease in all groups, and the (at least descriptive) accuracy reduction in the control groups. Note that our predictions do not exclude the possibility that in addition, there might be a boundary change in the training group and a drift rate in the control groups.

For this purpose, we implement the Linear Ballistic Accumulator model (LBA; Brown & Heathcote, 2008) where N accumulators are racing independently. In the LBA, choice and RT are determined by the first accumulator to reach the boundary. It was found that the LBA produces very similar results to those of the well-known Drift Diffusion model (Donkin, Brown, Heathcote, & Wagenmakers, 2011). Importantly, we chose LBA since it is simpler than the Drift Diffusion model.

In LBA, there are five free parameters: (1) drift-rate (v), reflecting the average (across trials) rate of evidence accumulation. The higher the drift rate, the steeper the slope towards the boundary, leading to higher chance of the accumulator winning the race in a shorter time (Ratcliff, & Smith, 2004). In contrast to Drift Diffusion in which evidence accumulation rate is stochastic and determined by sequential sampling, LBA assumes a constant increase in evidence within a trial (which is why it is considered to be ‘ballistic’); (2) “boundary”, or response threshold (b)—determining how much evidence is required in order for the accumulation process of a single accumulator to end. This parameter is describing speed-accuracy tradeoff, with low threshold describing quick and careless choice policy.

Additional parameters include the starting point (SP), reflecting a bias towards a certain alternative before evidence started to gather. The amount of evidence before the beginning of the accumulation ranges between zero and SP, with a value drawn from a rectangular distribution. The forth parameter is non-decision time (NDT) which was described above. The fifth and final parameter is the standard deviation of the distribution from which the drift rate is drawn (SDDR), reflecting between-trial variability in drift-rate.

To address our questions, we employed a model-comparison approach. Here we used the Bayesian Information Criterion (BIC; Schwarz, 1978) which reflects relative model fit, with smaller values indicating better fit. BIC considers model fit (maximum likelihood of the model), but also the number of free parameters (P; such that a larger number of parameters is penalized, to avoid over-fitting), and number of observations (N):

Importantly, the ΔBIC associated with comparing two models can be translated to Bayes Factor (Neath & Cavanaugh, 2012), which suits the statistical approach we took in this study, with BF = e0.5*ΔBIC. Thus, when ΔBIC is greater than 2.2, the BF exceeds 3 and reflects a decisive result towards one of the models. However, if ΔBIC is lower, this would be considered an indecisive result. In addition to ΔBIC, we also examined the expected (vs. observed) proportion of correct responses under the models, to appreciate whether the model succeeds in capturing the descriptive accuracy trends that we observed in the data.

Due to the limited trial number, we pooled the data across groups, separately for pre and posttest. To control for individual differences in minimal RT and RT variance, we estimated the minimal and standard deviation (SD) RT per participant, followed by a within-group standardization of these values. Formally, we first subtracted from each individual RT the minimal RT per participant across trials. We then multiplied this value with the quotient of the standard deviation (SD) per group to which an individual belongs to, divided by the individual SD per participant and group. We then added for each RT the minimal RT per group. With these four values, the transformed value RTt is defined as follows:

RTijk represent the RT in Group i, Participant j, Trial k. MINij is the minimal RT for Participant j in Group i (MINij = MIN(RTijk)). SDij is the standard deviation of RT for that participant SDij = SD(RTijk). SD-GROUPi is the mean SD per group (SD-GROUPi = MEAN(SDij)), and MIN-GROUPi is the mean minimal RT per groups (MIN-GROUPi = MEAN(MINij))Footnote 4.

These transformed values were then modeled with LBA.

Modelling pretest measurement—preliminary models

Given that the results suggest differences between the groups at pretest (see Fig. 3), it was unclear whether the models we compare should assume initial group differences in boundary and drift rate at pretest. Since these are the most important parameters concerning the decision stage, and most relevant for assessing speed-accuracy tradeoff, these are the only two parameters that we examined for group differences during the modelling process. Thus, in the preliminary analysis, we considered three hypotheses regarding pretest: (1) No group differences; (2) the silent group is different from the other two groups (pooled); and (3) all groups are different from one another. The rationale for the second model is that the silent control group was only included during year 2 of the study and was thus not strictly randomly assigned.

Thus, we ran three models, where the first (Model P1) had five free parameters (drift-rate, boundary, SP, NDT and SDDR, equal across groups). The second model (Model P2) had seven free parameters: SP NDT and SDDR were assumed to be equal across groups, but drift-rate and boundary were different for the silent control group, and in the third model (Model P3) there were nine free parameters: a separate drift-rate and boundary for each group. BIC was 1807.27, 1810.57, and 1804.89 for Models P1–P3, respectively. As can be seen, the third model had the best (lowest) BIC. Compared to the first model the BF 3.29, and compared to the second model the BF 17.11. Therefore, the ΔBIC allows us to endorse Model P3, which assumes that the groups started with a different baseline boundary and drift-rate at pretest. Hence, the following analyses were based on this preliminary model.

Which process could account for the transfer effect?

Next, we compared seven models that were meant to determine whether the groups differed in the degree to which drift rate and/or boundary changed between pre-testing and post-testing. To account for the Time*Group interaction, the models were structured such that the drift rate and boundary at posttest were calculated as pretest value + Δvalue, with Δvalue representing the change in the value (of drift-rate or boundary) between pretest and posttest.

Given our hypotheses for the modelling results, we formulated the following models: The Null Model (Model 1) assuming that the groups were different at pretest, and that they did not change between pre-test to post-test, i.e., Δdrift-rate and Δboundary are both zero. Model 2 assuming that drift-rate and boundary changed from pretest to posttest, but that this change was equal across the groups, i.e., Δdrift-rate and Δboundary are free parameters, but equal across groups. Model 3 assuming that Δdrift-rate and Δboundary were different across groups, and Model 4 assuming that Δdrift-rate was equal across groups but Δboundary differed across groups. In the following models, we focused on the training-vs.-controls difference. In Model 5, we assumed that Δdrift-rate was equal across groups but Δboundary differed between training and controls. In Model 6 Δdrift-rate differed between training and controls but Δboundary was equal across groups. Finally, in Model 7, Δdrift-rate and Δboundary differed between training and controls.

Table 1 summarizes the results for these seven models. Table 2 elaborates the parameter values under each model. First, Models 3 and 4 showed poorer BIC values than those of Models 5 and 7, suggesting that pooling the control groups improves model-fitFootnote 5. The best BIC rates were obtained for Models 5 and 7, with a ΔBIC of 1.01 between them. This value translates to an indecisive BF 0.66. Thus, BIC did not allow us to decide between these two models, although when relying only on ΔBIC, then the parsimonious model should be selected (Model 5 in this case). However, as we noted earlier, we also tested whether the models correctly predict the descriptive accuracy pattern in the results. Importantly, whereas Model 7 correctly predicted the pretest-to-posttest descriptive increase in accuracy seen in the training group (the actual values were 0.889–0.896, an increase of 0.007, with the model predicting an increase from 0.863 to 0.875, an increase of 0.012); Model 5 wrongly predicted a decrease in accuracy (from 0.871 to 0.867, a decrease of 0.004). Concerning the controls, both models correctly predicted the descriptive decrease in accuracy from pretest to posttest. Thus, although the BIC value was slightly better for the model assuming just boundary separation differences between the groups (Model 5), this model misses an important qualitative pattern—the increase in accuracy in the training group. As a result of these considerations, we tentatively endorse Model 7, while acknowledging the fact that this is not a clear-cut result. Both Model 5 and Model 7 show steeper boundary decrease in the controls relative to the training group, suggesting that those participants became hastier. In addition, Model 7 suggests an additional increase in drift-rate in the training group, and a slight decrease in drift-rate in the controls (see Table 2). Figure 5 provides a graphical assessment of Model 7 fit, indicating excellent fit of the model.

Graphical assessment of Model 7. Cumulative Probability is the proportion of correct responses (or errors) multiplied by the respective quantiles (0.1, 0.3, 0.5, 0.7, 0.9). Observed data (triangles) and model predictions (circles) are presented for both correct (upper arms) and incorrect (lower arms), separately for pretest (upper panels) and posttest (lower panels)

Discussion

The current study tested the hypothesis that procedural WM training would improve the ability to execute novel stimulus–response rules immediately upon instructions and without any previous practice (rapid instructed-task-learning). The procedural WM training task used in the current study involved frequent learning of novel stimulus–response mappings (suggested to be held in procedural WM; Oberauer, 2009) and has been shown to improve the process of retrieving action rules from working memory when performing choice-RT tasks (Shahar et al., 2018). It was thus hypothesized to influence the ability to perform tasks immediately following instructions (rapid instructed-task-learning). The results, especially those from the first GO Trial, show that all groups reacted more quickly in posttest relative to pretest. However, only in the group who underwent procedural WM training, response speeding did not come at the cost of an increased error-rate. To clarify the picture, we employed LBA modelling, focusing on two parameters. One parameter, the drift-rate, represents the rate by which stimulus-related evidence favoring its associated response accumulates. The other parameter is the amount of evidence required to reach a decision (boundary). Our results generally favor two models, both assuming greater boundary decrease from pretest-to-posttest in the control groups (relative to the training group), supporting the behaviorally observed speed-accuracy tradeoff in the controls. In addition, one of these models, which correctly predicted the descriptive accuracy increase in the training group from pre-to-posttest, also assumed an increase in drift-rate in the training group with a slight decrease in the control groups. However, given that this accuracy increase in the training group was not robust, we evidently cannot state that a predicted increase in accuracy is obligatory in the chosen model.

This result proposes that the control groups became hasty at posttest relative to pretest, which accommodates the observed speed-accuracy tradeoff in those groups. We view this result as evidence that the control groups actually became worse in the sense of adopting impulsive speed-accuracy tradeoff (see Dickman, & Meyer, 1988). This finding might suggest a reduction in motivation (to succeed in the task) in the control groups, something which was prevented to a certain degree in the training group.

In addition, if one appreciates the better descriptive fit of the model assuming additional drift-rate increase in the raining group, the results might also suggest that training in reoccurring task learning improved rapid instructed-task-learning, as it somewhat influenced evidence accumulation rate. In other words, it might be possible to learn how to learn. Note that while the LBA modelling results were less clear-cut than one might hope for, the PE and RT results (especially concerning active training) are more clear-cut. Thus, what remains tentative is the explanation of the results and not the results themselves.

Importantly, it should be noted that the results in the silent control group were less clear-cut than in the other groups possibly due to the sample size differences between the groups. Unfortunately, we cannot increase sample-size at this point as that would violate random assignment. However, the most important finding is that the difference in error rates between the training group and the active control group was significant. We view this difference as most critical since the active control group is the one most closely matched to the training group.

Based on prior correlational findings (Meiran et al., 2016), we also predicted an intermediate transfer effect to automatic effects of instructions, but no such transfer effect was found. In retrospect, we can say that our training protocol improved the ability to efficiently encode the instructions, but it did not improve the ability to overcome automaticity. Specifically, the training task involved an N-back element, and thus involved responding to lures (trials in which the target was the same as the target in the trial adjacent to the Nth trial). Importantly, lures are considered to elicit familiarity signals that participants need to overcome to maintain accurate performance (Kane, Conway, Miura, & Colflesh, 2007). While this type of interference was perhaps trained to some extent, it seems to be of a different kind than the interference in the NEXT paradigm. For example, in the NEXT paradigm, interference results from automatic response activation, where the stimulus is directly associated with the response via instructions (Meiran et al., 2015a; Meiran, Pereg, Kessler, Cole, & Braver, 2015b). In contrast, lures are only indirectly linked to a motor response. Thus, perhaps it is that the training did not have a specific element that closely resembled what was measured in Automatic-Effects of Instructions.

While the results did not show the expected intermediate transfer to automaticity, they were somewhat in line with the expected near transfer to rapid instructed-task-learning. Thus, the results of the current study join many training studies demonstrating near but not far transfer effects. The current results additionally elaborate prior findings by demonstrating the WM near transfer effect in the very first trials of the task.

Another main conclusion from this study is that since rapid instructed-task-learning possibly benefitted from a procedural WM training, procedural WM processing is probably involved in rapid instructed-task-learning abilities, as has been hypothesized by Meiran et al. (2012), as well as demonstrated in a correlative study by Meiran et al., (2016).

Limitations

One prominent limitation with the measurement of the Automatic-Effect of Instructions is that the NEXT phase did not involve errors recording. It is thus possible that a similar effect to that observed in the GO phase would have showed in NEXT errors. Another limitation is that the procedure we used included an embedded measure of Instructions’ automaticity, which might interact with the rapid instructed-task-learning measurement. Cole et al. (2017) suggested that in the NEXT paradigm, during the NEXT phase, the instructions are temporarily held in an anterior prefrontal cortex “task buffer” that shields them from interference, caused by the NEXT phase, thus incorporating hierarchical control. As has been mentioned, the WM training task also involved a hierarchical component. Consequently, we cannot point to the exact procedural WM mechanism that has been transferred. This, in turn, implies that it is possible that there would not have been any transfer had we used a different rapid instructed-task-learning procedure that does not involve hierarchical control.

Conclusion

The current study demonstrates that rapid instructed-task-learning relies on procedural WM and shows that procedural WM training leads to improvement in the ability to perform novel instructions, as manifested in faster, but not less accurate responses relative to the control groups. This suggests that learning to learn (novel procedural rules) is possible.

Notes

Other transfer measures that were administered are reported in a different report (Shahar et al., 2018).

Paraphrasing Shahar et al. (2018)

We wish to thank the anonymous reviewer who has suggested this analysis.

The rationale behind this transformation is as follows. Individual differences in minimal RT are eliminated by replacing the individual’s minimal RT with the group-average of that value. Individual differences in the dispersion of values are eliminated, by the within-group standardizing of the discrepancy-from-the minimum. This standardization expands the dispersion for individuals whose RT dispersion is low (their SDRT is low) and similarly, shrinks the dispersion for individuals whose dispersion is high.

Given that the behavioral results did not show a decisive difference between the silent control group and the training group, we also ran Models 8 and 9. These models assume that the different group at posttest is the active training group. In Model 8, Δboundary was same for the training and silent groups but different for the active training, and in Model 9 both Δboundary and Δdrift-rate were different for active control relative to the other groups. Both these models did worse than Models 5 and 7 (BIC Model 8 = 1218.00; BIC Model 9 = 1223.27). These results suggest than indeed pooling the controls and contrasting them with the training group best fits the data.

References

Allon, A., & Luria, R. (2016). Prepdat—an R package for preparing experimental data for statistical analysis. Journal of Open Research Software. https://doi.org/10.5334/jors.134.

Anderson, J. R. (1996). ACT: A simple theory of complex cognition. American Psychologist, 51(4), 355–365. https://doi.org/10.1037/0003-066X.51.4.355.

Barrouillet, P., Corbin, L., Dagry, I., & Camos, V. (2015). An empirical test of the independence between declarative and procedural working memory in Oberauer’s (2009) theory. Psychonomic Bulletin & Review, 22(4), 1035–1040. https://doi.org/10.3758/s13423-014-0787-y.

Brown, S. D., & Heathcote, A. (2008). The simplest complete model of choice response time: Linear ballistic accumulation. Cognitive Psychology, 57(3), 153–178. https://doi.org/10.1016/j.cogpsych.2007.12.002.

Cole, M. W., Bagic, A., Kass, R., & Schneider, W. (2010). Prefrontal dynamics underlying rapid instructed task learning reverse with practice. Journal of Neuroscience, 30(42), 14245–14254. https://doi.org/10.1523/JNEUROSCI.1662-10.2010.

Cole, M. W., Braver, T. S., & Meiran, N. (2017). The task novelty paradox: Flexible control of inflexible neural pathways during rapid instructed task learning. Neuroscience & Biobehavioral Reviews, 81, 4–15. https://doi.org/10.1016/j.neubiorev.2017.02.009.

Cole, M. W., Laurent, P., & Stocco, A. (2013). Rapid instructed task learning: A new window into the human brain’s unique capacity for flexible cognitive control. Cognitive, Affective, & Behavioral Neuroscience, 13(1), 1–22. https://doi.org/10.3758/s13415-012-0125-7.

De Houwer, J., Hughes, S., & Brass, M. (2017). Toward a unified framework for research on instructions and other messages: An introduction to the special issue on the power of instructions. Neuroscience & Biobehavioral Reviews, 81, 1–3. https://doi.org/10.1016/j.neubiorev.2017.04.020.

Dickman, S. J., & Meyer, D. E. (1988). Impulsivity and speed-accuracy tradeoffs in information processing. Journal of Personality and Social Psychology, 54(2), 274–290. https://doi.org/10.1037/0022-3514.54.2.274.

Donkin, C., Brown, S., Heathcote, A., & Wagenmakers, E.-J. (2011). Diffusion versus linear ballistic accumulation: different models but the same conclusions about psychological processes? Psychonomic Bulletin & Review, 18(1), 61–69. https://doi.org/10.3758/s13423-010-0022-4.

Forstmann, B. U., Ratcliff, R., & Wagenmakers, E.-J. (2016). Sequential sampling models in cognitive neuroscience: Advantages, applications, and extensions. Annual Review of Psychology. https://doi.org/10.1146/annurev-psych-122414-033645.

Friedman, N. P., Miyake, A., Corley, R. P., Young, S. E., DeFries, J. C., & Hewitt, J. K. (2006). Not all executive functions are related to intelligence. Psychological Science (0956–7976), 17(2), 172–179. https://doi.org/10.1111/j.1467-9280.2006.01681.x.

Jaeggi, S. M., Buschkuehl, M., Jonides, J., & Perrig, W. J. (2008). Improving fluid intelligence with training on working memory. Proceedings of the National Academy of Sciences, 105(19), 6829–6833. https://doi.org/10.1073/pnas.0801268105.

JASP Team. (2017). JASP (Version 0.8.1.2). https://jasp-stats.org/.

Kane, M. J., Conway, A. R. A., Miura, T. K., & Colflesh, G. J. H. (2007). Working memory, attention control, and the n-back task: A question of construct validity. Journal of Experimental Psychology: Learning, Memory, and Cognition, 33(3), 615–622. https://doi.org/10.1037/0278-7393.33.3.615.

Koechlin, E., Basso, G., Pietrini, P., Panzer, S., & Grafman, J. (1999). The role of the anterior prefrontal cortex in human cognition. Nature, 399(6732), 148–151.

Liefooghe, B., Wenke, D., & De Houwer, J. (2012). Instruction-based task-rule congruency effects. Journal of Experimental Psychology: Learning, Memory, and Cognition, 38(5), 1325–1335. https://doi.org/10.1037/a0028148.

Logan, G. D. (1988). Automaticity, resources, and memory: Theoretical controversies and practical implications. Human Factors, 30(5), 583–598. https://doi.org/10.1177/001872088803000504.

Meiran, N., Cole, M. W., & Braver, T. S. (2012). When planning results in loss of control: intention-based reflexivity and working-memory. Frontiers in Human Neuroscience. https://doi.org/10.3389/fnhum.2012.00104.

Meiran, N., Liefooghe, B., & De Houwer, J. (2017). Powerful instructions: Automaticity without practice. Curr Dir Psychol Sci. https://doi.org/10.1177/0963721417711638.

Meiran, N., Pereg, M., Givon, E., Danieli, G., & Shahar, N. (2016). The role of working memory in rapid instructed task learning and intention-based reflexivity: An individual differences examination. Neuropsychologia, 90, 180–189. https://doi.org/10.1016/j.neuropsychologia.2016.06.037.

Meiran, N., Pereg, M., Kessler, Y., Cole, M. W., & Braver, T. S. (2015a). The power of instructions: Proactive configuration of stimulus–response translation. Journal of Experimental Psychology: Learning, Memory, and Cognition, 41(3), 768–786. https://doi.org/10.1037/xlm0000063.

Meiran, N., Pereg, M., Kessler, Y., Cole, M. W., & Braver, T. S. (2015b). Reflexive activation of newly instructed stimulus–response rules: Evidence from lateralized readiness potentials in no-go trials. Cognitive, Affective, & Behavioral Neuroscience, 15(2), 365–373. https://doi.org/10.3758/s13415-014-0321-8.

Melby-Lervåg, M., Redick, T. S., & Hulme, C. (2016). Working memory training does not improve performance on measures of intelligence or other measures of “far transfer”: Evidence from a meta-analytic review. Perspectives on Psychological Science, 11, 512–534.

Neath, A. A., & Cavanaugh, J. E. (2012). The Bayesian information criterion: Background, derivation, and applications. Wiley Interdisciplinary Reviews: Computational Statistics, 4(2), 199–203. https://doi.org/10.1002/wics.199.

Oberauer, K. (2009). Design for a Working Memory. Psychology of Learning and Motivation, 51, 45–100. https://doi.org/10.1016/S0079-7421(09)51002-X.

Oberauer, K., Souza, A. S., Druey, M. D., & Gade, M. (2013). Analogous mechanisms of selection and updating in declarative and procedural working memory: Experiments and a computational model. Cognitive Psychology, 66(2), 157–211. https://doi.org/10.1016/j.cogpsych.2012.11.001.

Ratcliff, R., & Smith, P. L. (2004). A comparison of sequential sampling models for two-choice reaction time. Psychological Review, 111(2), 333–367. https://doi.org/10.1037/0033-295X.111.2.333.

Ratcliff, R., Smith, P. L., Brown, S. D., & McKoon, G. (2016). Diffusion decision model: Current issues and history. Trends in Cognitive Sciences, 20(4), 260–281. https://doi.org/10.1016/j.tics.2016.01.007.

Redick, T. S., Shipstead, Z., Harrison, T. L., Hicks, K. L., Fried, D. E., Hambrick, D. Z., … Engle, R. W. (2013). No evidence of intelligence improvement after working memory training: A randomized, placebo-controlled study. Journal of Experimental Psychology: General, 142(2), 359–379. https://doi.org/10.1037/a0029082.

Salminen, T., Strobach, T., & Schubert, T. (2012). On the impacts of working memory training on executive functioning. Frontiers in Human Neuroscience. https://doi.org/10.3389/fnhum.2012.00166.

Schwarz, G. (1978). Estimating the dimension of a model. The Annals of Statistics, 6(2), 461–464. https://doi.org/10.1214/aos/1176344136.

Shahar, N., & Meiran, N. (2015). Learning to control actions: Transfer effects following a procedural cognitive control computerized training. PLoS One, 10(3), e0119992. https://doi.org/10.1371/journal.pone.0119992.

Shahar, N., Pereg, M., Teodorescu, A. R., Moran, R., Karmon-Presser, A., & Meiran, N. (2018). Formation of abstract task representations: Exploring dosage and mechanisms of working memory training effects. Cognition, 181, 151–159. https://doi.org/10.1016/j.cognition.2018.08.007.

Shahar, N., Teodorescu, A. R., Usher, M., Pereg, M., & Meiran, N. (2014). Selective influence of working memory load on exceptionally slow reaction times. Journal of Experimental Psychology: General, 143(5), 1837–1860. https://doi.org/10.1037/a0037190.

Souza, AdaS., Oberauer, K., Gade, M., & Druey, M. D. (2012). Processing of representations in declarative and procedural working memory. The Quarterly Journal of Experimental Psychology. https://doi.org/10.1080/17470218.2011.640403.

Soveri, A., Antfolk, J., Karlsson, L., Salo, B., & Laine, M. (2017). Working memory training revisited: A multi-level meta-analysis of n-back training studies. Psychonomic Bulletin & Review, 24(4), 1077–1096. https://doi.org/10.3758/s13423-016-1217-0.

Verbruggen, F., McLaren, I. P. L., & Chambers, C. D. (2014). Banishing the control homunculi in studies of action control and behavior change. Perspectives on Psychological Science, 9, 497–524.

Wilhelm, O., & Oberauer, K. (2006). Why are reasoning ability and working memory capacity related to mental speed? An investigation of stimulus–response compatibility in choice reaction time tasks. European Journal of Cognitive Psychology, 18(1), 18–50. https://doi.org/10.1080/09541440500215921.

Funding

This work was supported by a research grant from the US-Israel Binational Science Foundation Grant #2015-186 To Nachshon Meiran, Michael W. Cole, and Todd S. Braver.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

The study was approved by the departmental ethics committee.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

To control for the possibility that regression to the mean took place (especially regarding active control), we sub-sampled the data by removing participants with extreme values. We removed the four participants with the lowest PE from the active control group; the two lowest-PE and the two highest-PE participants from the training group (to roughly keep the same average, but create a sub-sample as well); and the three highest-PE participants from the silent control group (we removed three and not four participants due to the different sample size). This sub-sample resulted in 9.6%, 10.3% and 10.9% errors in the active, training and silent groups, respectively; a trend which supported the null hypothesis (BF10 = 0.09).

We then re-ran the BANOVAs, first focusing on the first GO trial. The results were similar to those described above, with a robust Group by Time interaction (BF10 = 57.65, \(\eta _{{\text{p}}}^{2}\) = 0.09) showing that error rates increased in the two control groups (BF10 = 1841.64, \(\eta _{{\text{p}}}^{2}\) = 0.26; and BF10 = 6.41, \(\eta _{{\text{p}}}^{2}\) = 0.18 for the active and silent control groups, respectively), but not in the training group (BF10 = 0.19, \(\eta _{{\text{p}}}^{2}\) < 0.01). Next, we repeated the analyses testing the GO trial effect. As with the full sample, the results did not show an interaction between Group, Time and GO Trial (BF10 = 0.16, \(\eta _{{\text{p}}}^{2}\) = 0.02, see Fig. 6), but did indicate a robust Time by Group interaction (BF10 = 48.24, \(\eta _{{\text{p}}}^{2}\) = 0.11).

For completeness we also repeated the analyses involving RTs, and the results were the same as for the full-sample, indicating no Group by Time interaction (see Fig. 6), with an equivalent acceleration in all three groups (BF10 = 1.11e+31, \(\eta _{{\text{p}}}^{2}\) = 0.42).

Sub-sample GO phase results after equivalating pretest accuracy between the groups: Three-way interaction between Group (WM-Training, Active, and Silent Control), Time (pre vs. posttest), and GO Trial (Trial 1, 2) in RT (upper panels) and Proportion of Errors (lower panels). Error bars represent Bayesian 95% credible intervals

Rights and permissions

About this article

Cite this article

Pereg, M., Shahar, N. & Meiran, N. Can we learn to learn? The influence of procedural working-memory training on rapid instructed-task-learning. Psychological Research 83, 132–146 (2019). https://doi.org/10.1007/s00426-018-1122-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00426-018-1122-4