Abstract

Introduction

Numerous extended donor criteria (EDC) have been identified in liver transplantation (LT), but different EDC have different impacts on graft and patient survival. This study aimed to identify major EDC (maEDC) that were best able to predict the outcome after LT and to examine the plausibility of an allocation algorithm based on these criteria.

Methods

All consecutive LTs between 12/2006 and 03/2014 were included (n = 611). We analyzed the following EDC: donor age > 65 years, body mass index > 30, malignancy and drug abuse history, intensive care unit stay/ventilation > 7 days, aminotransferases > 3 times normal, serum bilirubin > 3 mg/dL, serum Na+ > 165 mmol/L, positive hepatitis serology, biopsy-proven macrovesicular steatosis (BPS) > 40%, and cold ischemia time (CIT) > 14 h. We analyzed hazard risk ratios of graft failure for each EDC and evaluated primary non-function (PNF). In addition, we analyzed 30-day, 90-day, 1-year, and 3-year graft survival. We established low- and high-risk graft (maEDC 0 vs. ≥ 1) and recipient (labMELD < 20 vs. ≥ 20) groups and compared the post-LT outcomes between these groups.

Results

BPS > 40%, donor age > 65 years, and CIT > 14 h (all p < 0.05) were independent predictors of graft failure and patient mortality and increased PNF, 30-day, 90-day, 1-year, and 3-year graft failure rates. Three-year graft and patient survival decreased in recipients of ≥ 1 maEDC grafts (all p < 0.05) and LT of high-risk grafts into high-risk recipients yielded worse outcomes compared with other groups.

Conclusion

Donor age > 65 years, BPS > 40%, and CIT > 14 h are major EDC that decrease short and 3-year graft survival, and 3-year patient survival. An allocation algorithm based on maEDC and labMELD is therefore plausible.

Introduction

Liver transplantation (LT) is a well-established treatment for end-stage liver disease. Post-LT outcomes are improving, but the requirement for donor organs dramatically exceeds the supply, and even the use of donors after cardiac death (DCD) and living donor LT has not met this demand [1]. To address this problem, the use of extended donor criteria (EDC) grafts has been proposed [2, 3]. Such grafts have been widely used in the Eurotransplant (ET) region, but following the initial enthusiasm, some centers have raised concerns about the safety of EDC grafts and reported higher risks of graft dysfunction, non-anastomotic biliary strictures, and acute and chronic graft rejection [2,3,4]. Consequently, whether EDC grafts should be used in LT remains debated. Furthermore, different EDC do not have the same impact on graft and patient outcome [5,6,7]. The definition of EDC and their cutoff values is therefore ambiguous, and caution and careful recipient selection are necessary to achieve acceptable post-LT outcomes [4, 8,9,10,11,12,13].

Higher laboratory Model for End-Stage Liver Disease (labMELD) scores are associated with increased preoperative mortality and worse post-LT outcome [9, 14, 15]. Some centers have suggested that EDC grafts should be allocated to patients with lower labMELD scores, on the premise that such recipients can more easily overcome the risks associated with EDC grafts [9, 10, 16,17,18]. Conversely, other centers have recommended that EDC grafts be transplanted into patients with higher labMELD scores, arguing that transplanting high-risk patients as soon as possible with the first available graft improves their postoperative prognosis [19, 20]. The reports are contradictory; therefore, the allocation procedure for EDC grafts remains imprecisely defined.

This study aimed to identify the most relevant major EDC (maEDC) that are best able to predict post-LT graft and patient outcome in the era of MELD scores, to show that different combinations of maEDC and labMELD scores have different impacts on post-LT outcome, and to propose an LT-allocation algorithm based on maEDC in a single-center setting.

Methods

Patient collective

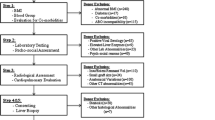

We reviewed the medical records of 611 adult deceased-donor LTs performed at the transplant center of the University of Heidelberg between December 2006 and March 2014. Patients were identified from a prospectively maintained database. Data were extracted from the comprehensive transplant recipient registry, written and electronic medical records, and ET records. All recipients were listed for LT according to ET procedures and protocols. National and institutional regulations concerning data acquisition were followed at all times. The Institutional Review Board at the University of Heidelberg granted a priori approval for the study (S-195/2015).

To ensure a homogenous collective, we excluded recipients under 18 years of age, split-liver transplants, combined LTs, and high-urgency LTs, and in the end, 465 patients remained eligible for analysis. The mean age of the recipients was 51.9 ± 11.3 years, and 73.5% of them were male. The mean age of the donors was 59.2 ± 16.9 years, and 55.7% of the donors were male. The indications for LT were stratified according to the European Liver Transplant Register (www.eltr.org). Alcoholic cirrhosis was the most common (26%) indication for LT. The second most common indication was hepatocellular carcinoma (HCC) due to alcohol- or viral hepatitis-induced cirrhosis (22.8%) followed by cirrhosis due to viral hepatitis (18.1%). The mean labMELD score was 18.7 ± 10.6 (mean ± standard deviation [SD]), and the mean standard exception (SE) MELD score was 25.8 ± 8.8. The mean cold ischemia time (CIT) in the collective was 11.7 ± 2.5 h. Donor and recipient demographic characteristics, indications for LT, and clinical parameters are summarized in Table 1.

Extended donor criteria

As proposed by ET and others, we analyzed the following EDC and cutoff values: donor age > 65 years, body mass index (BMI) > 30 kg/m², history of previous drug abuse, history of extrahepatic malignancy, peak serum sodium (Na+) > 165 mmol/L, bilirubin > 3 mg/dL, alanine aminotransferase (ALT) or aspartate aminotransferase (AST) > 3 times the normal level, positive hepatitis serology (HBs antigen, anti-HBc, anti-HCV positive), duration of intensive care unit (ICU) stay and/or duration of mechanical ventilation (MV) > 7 days prior to procurement, CIT > 14 h, and biopsy-proven macrovesicular steatosis (BPS) > 40% [3, 21,22,23,24]. All grafts that warranted caution prior to implantation (conspicuous macroscopic appearance of the liver, higher donor age, history of hepatitis, or alcohol consumption) were biopsied, and an experienced liver pathologist examined the samples. LTs were performed using the modified piggyback technique by Belghiti as described elsewhere [25].
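The cutoffs listed above can be expressed as a simple flagging routine. The following is a minimal sketch and not the authors' software: the `Donor` record, its field names, and the single `uln` argument (upper limit of normal applied to both ALT and AST) are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Donor:
    """Hypothetical donor record; field names are illustrative only."""
    age_years: float
    bmi: float
    drug_abuse_history: bool
    extrahepatic_malignancy: bool
    peak_sodium_mmol_l: float
    bilirubin_mg_dl: float
    alt_u_l: float
    ast_u_l: float
    positive_hepatitis_serology: bool
    icu_or_mv_days: float
    cit_hours: float
    macrosteatosis_pct: Optional[float] = None  # None if no biopsy was taken


# The three criteria the study later identifies as major EDC (maEDC)
MAJOR_EDC = {"donor age > 65 years", "CIT > 14 h", "BPS > 40%"}


def edc_flags(d: Donor, uln: float) -> List[str]:
    """Return the EDC met by a donor, using the cutoffs stated in the text.

    uln is an assumed common upper limit of normal for ALT and AST (U/L).
    """
    checks = [
        ("donor age > 65 years", d.age_years > 65),
        ("BMI > 30 kg/m2", d.bmi > 30),
        ("history of drug abuse", d.drug_abuse_history),
        ("history of extrahepatic malignancy", d.extrahepatic_malignancy),
        ("serum Na+ > 165 mmol/L", d.peak_sodium_mmol_l > 165),
        ("bilirubin > 3 mg/dL", d.bilirubin_mg_dl > 3),
        ("ALT or AST > 3x normal", max(d.alt_u_l, d.ast_u_l) > 3 * uln),
        ("positive hepatitis serology", d.positive_hepatitis_serology),
        ("ICU stay/MV > 7 days", d.icu_or_mv_days > 7),
        ("CIT > 14 h", d.cit_hours > 14),
        ("BPS > 40%", d.macrosteatosis_pct is not None and d.macrosteatosis_pct > 40),
    ]
    return [name for name, met in checks if met]


def count_major(flags: List[str]) -> int:
    """Count how many of the flagged criteria are major EDC."""
    return sum(1 for f in flags if f in MAJOR_EDC)
```

A graft without a biopsy is treated here as not meeting the BPS criterion, which is a simplifying assumption of the sketch.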

Assessment of clinical outcome

Overall patient survival was defined as the time from the initial (primary) LT until death or last known contact. Primary non-function (PNF) was defined as non-recoverable liver function requiring re-transplant (re-LT) or causing death within 15 days (day 0–14) [3]. The study was designed to examine the effect of EDC on the postoperative outcome and also to identify maEDC that are best able to predict post-LT graft and patient survival. For this purpose, patient records were meticulously reviewed for information on the postoperative course, causes and circumstances of graft loss, and patient death. Graft loss was separated into graft loss by patient death with a functioning graft and graft loss as a consequence of graft failure or graft complications, which resulted in re-LT or patient death. Circumstances surrounding graft loss and patient death were complex, and causes were assigned based on the most likely precipitating event. Biliary complications were considered the primary cause of graft loss in patients with severe chronic biliary complications who died of bacterial infection. Cardiac or cerebrovascular accidents, recurrence of disease, death due to trauma, and sepsis due to pulmonary infection, pancreatitis, or intestinal ischemia were not considered to be graft-related. These cases were classified as death with a functioning liver graft. The labMELD score was calculated to objectively assess the recipient condition, and a cutoff value of 20 was chosen based on a previous study [15]. Risk factor analysis was performed for 15-day, 30-day, 90-day, 1-year, and 3-year graft failure and patient mortality. Overall graft survival was corrected for labMELD score with a cutoff value of 20. Follow-up ended on the date of the last documented contact or on patient death. The median follow-up was 36 (range 0–108) months.

Statistical analysis

The Statistical Package for the Social Sciences (SPSS), version 23 (IBM Corp. released 2015, IBM SPSS Statistics for Windows. Armonk, NY; IBM) was used for statistical analysis. Unless otherwise indicated, continuous variables are expressed as mean ± SD, and categorical variables are shown as percentages. Continuous variables were compared across EDC and no-EDC categories using the independent t test or, in cases of non-normal data distribution, the Mann-Whitney U test. The Pearson chi-square test or Fisher exact test was used to compare categorical variables between groups. Survival rates were analyzed with the Kaplan-Meier method, and differences were compared using the log-rank test. Cox proportional hazard regression analysis was used to calculate multivariate hazard ratios (HR) and 95% confidence intervals (95% CI). To identify maEDC, all EDC parameters were entered in the multivariate analysis. A two-sided p value of less than 0.05 was considered statistically significant in all tests.
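For readers unfamiliar with the Kaplan-Meier method named above, the product-limit estimate can be sketched in a few lines. The analysis in this study was run in SPSS; the code below is not the authors' code, and the function name and the toy follow-up data in the usage note are hypothetical.

```python
from typing import List, Tuple


def kaplan_meier(times: List[float], events: List[bool]) -> List[Tuple[float, float]]:
    """Return (time, survival probability) pairs at each distinct event time.

    times  : follow-up time per graft (for example, in months)
    events : True if graft failure was observed, False if censored
    """
    # Sort subjects by follow-up time
    data = sorted(zip(times, events))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        failures = 0  # failures observed exactly at time t
        leaving = 0   # subjects leaving the risk set at t (failed or censored)
        while i < len(data) and data[i][0] == t:
            failures += int(data[i][1])
            leaving += 1
            i += 1
        if failures:
            # Product-limit step: multiply by the conditional survival at t
            survival *= 1.0 - failures / at_risk
            curve.append((t, survival))
        at_risk -= leaving
    return curve
```

With the hypothetical input `kaplan_meier([2, 5, 5, 8, 12, 36, 36], [True, True, False, True, False, False, False])`, the estimate steps down at months 2, 5, and 8 only; censored grafts reduce the number at risk without lowering the curve.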

Results

Extended donor criteria

Of the 465 recipients eligible for analysis, 353 (75.9%) received EDC organs (EDC ≥ 1), and 112 (24.1%) were transplanted with no-EDC grafts (EDC = 0). Among the EDC recipients, most received grafts with one EDC (n = 191; 54.1%), 114 (32.3%) received grafts with two EDC, and 32 (9.1%) received organs with three EDC. Sixteen patients (3.4% of all recipients) received grafts with four or five EDC, of whom 14 (3%) received livers with four and two (0.4%) received organs with five EDC. A donor age > 65 years was the most frequent EDC (n = 191; 41.1%) followed by > 7 days MV or ICU stay prior to procurement (n = 87; 18.7%), elevated transaminases > 3 times the normal level (n = 82; 17.6%), and a CIT > 14 h (n = 77; 16.6%). The remaining EDC were BMI > 30 kg/m² (n = 56; 12.0%), positive hepatitis serology (n = 55; 11.8%), serum Na+ > 165 mmol/L (n = 17; 3.7%), BPS > 40% (n = 8; 1.7%), history of extrahepatic malignancy (n = 9; 1.9%), serum bilirubin > 3 mg/dL (n = 5; 1.1%), and history of drug abuse (n = 5; 1.1%).

Comparison of patient groups

There were significantly more male recipients of EDC organs compared with male recipients of no-EDC grafts (75.9% vs. 66.1%; p = 0.04), and more female donors of EDC organs in comparison with female donors of no-EDC grafts (47.3% vs. 34.8%, p = 0.02). The mean labMELD score was higher in recipients of no-EDC grafts (21.2 ± 11.8 vs. 17.8 ± 10.0, p = 0.003), and so was the mean standard exception (SE)-MELD score (27.7 ± 9.2 vs. 25.8 ± 8.8, p = 0.008). No significant differences were observed in age, recipient or donor BMI, donor MV/ICU stay, and indication for LT between recipients of EDC and no-EDC grafts (Table 2).

Graft survival and EDC

The re-LT rate in the whole collective was 15.9% (74 patients). PNF and 30-day failure rates did not differ significantly between EDC and no-EDC LT groups (PNF 2.7% vs. 4.0%, p = 0.53; 30-day failure rate 3.6% vs. 5.4%, p = 0.44). In addition, 90-day graft failure and 1-year graft survival were not significantly different between recipients of EDC and no-EDC organs (90-day graft failure 6.5% vs. 3.6%, p = 0.25; 1-year graft survival 89.2 ± 1.8% vs. 88.7 ± 3.0%, log-rank p = 0.9). Three-year graft survival was slightly higher in the no-EDC group, but this difference was not significant (EDC 84.7 ± 2.2%, mean 31.9 months, 95% CI 30.7–33.1; no-EDC 88.9 ± 3.2%, mean 32.5 months, 95% CI 30.7–33.1; log-rank p = 0.382; Fig. 1a). The HR of 3-year graft loss was 1.4 (95% CI 0.7–2.8; p = 0.280) for EDC graft recipients compared with the no-EDC group.

Patient survival and EDC

Overall 3-year patient survival was 69.5 ± 2.2% (mean 27.1 months, 95% CI 25.8–28.4). Considering patient mortality secondary to graft failure or graft-related complications only, survival did not differ between the no-EDC (92.3 ± 2.6%) and EDC (87.6 ± 1.9%) groups (log-rank p = 0.235; Fig. 1b). Likewise, considering all-cause patient mortality, overall survival did not differ between recipients of no-EDC (75.2 ± 4.1%) and EDC (67.7 ± 2.6%) grafts (log-rank p = 0.166) (mean survival of no-EDC graft recipients 28.5 months [95% CI 26.0–31.0]; mean survival of EDC graft recipients 26.6 months [95% CI 25.1–28.2]).

Major EDC

Multivariate analysis of EDC parameter HRs revealed BPS (HR 10.5, 95% CI 3.6–30.3, p < 0.001), donor age (HR 2.0, 95% CI 1.1–3.4, p = 0.03), and CIT (HR 2.0, 95% CI 1.1–3.8, p = 0.024) as independent predictive factors of 3-year graft failure following LT (Table 3). These parameters were regarded as maEDC, and their effects on graft and patient survival were analyzed.

Graft survival and major EDC

PNF, 30-day, 90-day, 1-year, and 3-year graft failure rates were significantly higher in recipients of organs with at least one maEDC and increased further with an increasing number of maEDC (Table 4). No patient received an organ with three maEDC. The HR of the 3-year graft failure rate of maEDC grafts was 2.3 (95% CI 1.3–4.2, p = 0.004). The remaining, non-major EDC were termed minor EDC (miEDC). When recipients of miEDC-only and no-EDC grafts were pooled, 3-year graft survival was significantly lower when donor organs had at least one maEDC (3-year survival 80.6 ± 3.0%, mean 31.1 months, 95% CI 29.5–32.6) than in the pooled collective (91.5 ± 2.0%, mean 33.3 months, 95% CI 32.0–34.6, log-rank p = 0.007; Fig. 2a). This difference was also significant after correcting for labMELD score with a cutoff value of 20 (log-rank p = 0.004; Fig. 4). Consistent with this finding, 3-year graft outcomes were significantly worse as the number of maEDC increased (log-rank test p = 0.001) (Fig. 3a). Similarly, the difference was significant after correcting for SE-MELD score with a cutoff value of 20 (log-rank p = 0.007; data not shown).

Patient survival and major EDC

Considering patient mortality secondary to graft failure or graft-related complications only, 3-year survival was significantly lower in maEDC recipients (85.1 ± 2.5% vs. 93.2 ± 1.8%, log-rank p = 0.014; Fig. 2b) compared with non-maEDC recipients. Also, overall 3-year patient survival was significantly lower following transplantation of maEDC grafts compared with the remaining collective (63.8 ± 3.2% vs. 76.4 ± 3.0%, log-rank p = 0.011). Three-year patient survival decreased as the number of maEDC increased (log-rank test p = 0.037) (Fig. 3b).

Allocation algorithm

Based on the graft quality (maEDC) and the recipient status (labMELD score), we established allocation groups and considered the graft to be at low risk or “good” if no maEDC were present, whereas livers with ≥ 1 maEDC were regarded as higher risk organs. Based on a previous study, we considered recipients with a labMELD score ≥ 20 to be at higher risk and those with a labMELD score < 20 as low risk or “good” recipients [17]. The comparison of the groups was as follows: group A (low-risk graft to a low-risk recipient); group B (low-risk graft to a high-risk recipient); group C (high-risk graft to a low-risk recipient); and group D (high-risk graft to a high-risk recipient). Group A consisted of 122 cases (26.2%), group B of 89 cases (19.1%), group C of 156 cases (33.5%), and group D of 85 cases (18.3%).
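The four-group scheme described above amounts to a two-by-two classification. A minimal sketch follows; the function and argument names are hypothetical, and only the cutoffs (maEDC ≥ 1, labMELD ≥ 20) are taken from the text.

```python
def allocation_group(n_maedc: int, lab_meld: float) -> str:
    """Assign a transplant to group A-D from graft and recipient risk.

    n_maedc  : number of major EDC present in the graft (0-3)
    lab_meld : recipient labMELD score
    """
    if n_maedc < 0:
        raise ValueError("n_maedc must be non-negative")
    high_risk_graft = n_maedc >= 1        # at least one maEDC
    high_risk_recipient = lab_meld >= 20  # cutoff used in the study
    if not high_risk_graft:
        return "B" if high_risk_recipient else "A"
    return "D" if high_risk_recipient else "C"
```

For example, a graft with no maEDC given to a recipient with a labMELD score of 15 falls into group A, whereas a graft with two maEDC given to a recipient with a labMELD score of 25 falls into group D.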

Allocation algorithm: graft survival

Graft failure rates were lowest in group A and highest in group D at all investigated time points (Table 5). Failure rates of group B doubled after 1 year, and those of group C were similar to those of groups A and B but remained lower than those of group D (Table 5) (all p < 0.05). Group D had the lowest 3-year graft survival and group A had the highest (Fig. 5a) (p = 0.004). Among high-risk recipients, 3-year graft survival was significantly lower in those receiving grafts with two maEDC than in those receiving grafts with one maEDC (Fig. 6b; p = 0.034). No differences were observed in graft survival in recipients at low risk who received grafts with one or two maEDC (Fig. 6a; p = 0.11).

Allocation algorithm: patient survival

Patient mortality rates did not differ significantly between the groups at 90 days and 1 year after LT, but group D had the highest mortality rate at 3 years after LT (16.5%), and group A had the lowest (4.9%) (p = 0.04) (Table 6). Consistent with these findings, group D had the lowest 3-year survival (p = 0.018) (Fig. 5b). Patient survival did not differ significantly between recipients at high risk (labMELD ≥ 20) who received organs with one or two maEDC (Fig. 6d; p = 0.46). Also, no significant differences were observed in recipients at low risk who were transplanted with grafts with one or two maEDC (Fig. 6c; p = 0.17).

Discussion

Extended donor criteria and post-LT outcomes

EDC grafts carry an increased risk of PNF and are associated with higher morbidity and mortality rates after LT [4, 12, 26]. These findings are in contrast to the results of other studies that suggested that transplantation of EDC grafts is safe with acceptable outcomes [3, 27,28,29,30,31]. The current data are therefore still subject to debate, and the definition of EDC and their precise cutoff values remains difficult [1]. The MELD-based allocation policy has reduced waiting list mortality from 20 to 10%, but the post-transplant 1-year survival rates have dropped from 90 to 80% [24, 32, 33]. The quality of organs in most ET countries has deteriorated over the last 10–15 years because of organ shortage [32, 33], increased dependence of transplant centers on EDC grafts [34], and increasing MELD scores of the patients on the waiting lists [32, 33]. Considering patient mortality secondary to graft failure or graft-related complications only, the current 3-year graft and patient survival rates at our center are 85.8 ± 1.8% and 88.7 ± 1.6%, respectively.

Major EDC predict the post-LT outcome

In general, EDC did not influence graft or patient survival after LT (Fig. 1), suggesting that transplantation of EDC grafts is safe, but different EDC had different impacts on post-LT outcome in the present study. In the multivariate analysis, a macrovesicular BPS of > 40%, a donor age of > 65 years, and a CIT of > 14 h significantly affected short and 3-year graft and 3-year patient survival. These EDC were referred to as major (maEDC) in contrast to other EDC that were less important and called minor EDC (miEDC) (Table 3).

Major EDC: macrovesicular biopsy-proven steatosis > 40%

BPS, traditionally classified as mild (< 30%), moderate (30–60%), or severe (> 60%), is a valuable parameter for graft selection [35]. BPS is considered as an EDC associated with graft dysfunction and correlates with PNF and lower post-LT recipient survival [36,37,38]. PNF occurs in 80% of liver transplants with severe (> 60%) macrovesicular steatosis [39, 40], and PNF rates declined when these grafts were excluded [40]. Steatotic livers with less than 60% macrovesicular steatosis can be transplanted, but caution has been advised when using moderately fatty organs (30–60% steatosis), especially in sicker recipients (labMELD > 30) [39]. In 35% of cases, inspection-based organ assessment by the explant surgeon is a poor predictor of higher grade steatosis [41]; therefore, routine biopsies should be performed, and fibrotic grafts should not be transplanted, irrespective of the steatosis grade [41]. As proposed by others, and as currently accepted by the German Medical Association, a BPS of > 40% is considered an EDC [3, 21,22,23,24]. Our analysis supports these findings as a BPS of > 40% was the EDC with the highest risk of graft failure (Table 3). The role of BMI as a predictor of steatosis remains controversial [39, 42, 43]. In this study, however, BMI did not affect graft survival (Table 3).

Major EDC: donor age > 65 years

The mean age of liver donors is increasing worldwide. Studies have shown excellent graft survival with octogenarian donors, suggesting no age limits for organ donation [44, 45]. However, these studies analyzed carefully selected collectives with no additional risk factors. In an ET study, donor age was identified as a predictor of post-LT survival, but only in the univariate analysis, and no cutoff value was provided [1]. Others have identified high donor age as a risk factor for lower survival and strategies have been proposed on how to optimize the use of such organs [46]. In old livers, hepatocytes have a lower regenerative capacity; therefore, the hepatic parenchyma is more vulnerable to ischemia-reperfusion injury (IRI) [47] and inflammatory cytokine responses before and after transplantation [48, 49]. A donor age of > 65 years is accepted by the German Medical Association as an EDC. It was the most prevalent EDC in our study and affected the 3-year graft survival by doubling the hazard of failure (Table 3).

Major EDC: cold ischemia time > 14 h

The German Medical Association does not consider CIT to be an EDC because it can only be calculated retrospectively [23, 50]. However, prolonged CIT (over 12 h) was correlated with poor initial graft function and organ failure [5, 51]. The CIT should be limited to 14–16 h in donors of up to 60 years of age [52] and to 8–12 h for donors over 60 years with hepatosteatosis [53]. It seems that 1-year graft survival decreases by roughly 4% with every additional hour of cold ischemia provided the CIT is longer than 12 h; therefore, CIT is relevant, but its estimation before organ procurement and allocation remains imprecise [1]. Similarly, a European Liver Transplant Register study revealed that 5-year survival decreased as the CIT duration increased [5]. Prolonged CIT also increased the risk of non-anastomotic biliary strictures because the biliary epithelium became more prone to IRI after reperfusion [47]. The allocation process is very complex, and lengthy CIT is common. However, shorter CIT can be managed by regional allocation and higher internal organization [50]. In agreement with these findings, the mean CIT in the present study was 11.7 ± 2.5 h, and CIT longer than 14 h doubled the 3-year graft failure rate (Table 3).

Major EDC predicted graft failure and patient mortality within 3 years after LT. Regardless of the combination, the presence of two maEDC significantly increased the PNF, 30-day, 90-day, 1-year, and 3-year graft failure rates (Table 4). Three-year survival analysis confirmed poorer graft and patient outcome in recipients of maEDC organs (Fig. 2), and the adverse effect on survival increased as the number of maEDC increased (Fig. 3). This explains the increasing failure rates and suggests a cumulative negative impact of maEDC on the post-LT outcome (Table 4). The detrimental effect of maEDC on graft survival was not related to the labMELD score that was regarded as a surrogate for the patient’s condition (Fig. 4) and it was also not related to the SE-MELD scores (data not shown). For the subgroup analysis, a labMELD cutoff score of 20 was chosen because this value predicted a poorer post-LT outcome in a previous analysis [15].

EDC and donor risk index

A donor risk index (DRI) > 1.5 and a labMELD score ≥ 20 affected 90-day and 1-year postoperative mortality [15]. The validity of the DRI has already been confirmed in the ET region [21]. In addition to DRI, gamma-glutamyltransferase levels and rescue allocation were also predictive of poor post-LT outcome, and together these factors make up the ET-DRI [21]. This score also considers DCD and split-liver grafts. DCD is indeed a critical EDC, but donation after cardiac death is not allowed in Germany; therefore, this parameter was not analyzed in the current study. Splitting the liver has also been suggested to negatively influence the post-LT outcome, but this argument is debatable. Splitting the graft does not always have a negative impact on graft or patient survival [54]. These findings are in contrast to the results of other studies [12] and may be interpreted as selection bias because only no-EDC livers, i.e., grafts of excellent quality or “ideal organs”, are considered for splitting and are matched to appropriate recipients. These factors might introduce bias to the analysis; therefore, split-livers were not considered in the present study. The CIT and donor age, but not BPS, are used to calculate the DRI and ET-DRI. As maEDC had a cumulative effect on post-LT outcome in this study, external validation of these findings might indicate that the current indices should be modified. As expected, the DRI increased with the number of maEDC: the mean DRI was 1.53 for no-maEDC grafts, 1.88 for grafts with one maEDC, and 2.05 for grafts with two maEDC (p < 0.001; data not shown), which supports the validity of the index. The fast and straightforward calculability of the maEDC might render the suggested maEDC-based allocation model more applicable, especially in a setting of competitive organ allocation when the transplant center must provide a rapid but dependable decision.

Allocation algorithm based on major EDC

Similar to the findings of Schrem et al. [24], EDC grafts were transplanted into patients with lower labMELD scores: the mean labMELD score was 17.8 ± 10.0 in recipients of EDC grafts vs. 21.2 ± 11.8 in recipients of no-EDC grafts (p = 0.003) (Table 2). This pattern was observed in the initial phase of MELD-based organ allocation and was mostly based on the opinion that a recipient in good clinical condition might tolerate an EDC graft better than a patient with a higher labMELD score. Indeed, graft and patient outcomes were not different when donor organs with a high number of EDC were transplanted into recipients with lower MELD scores. This suggests center bias, but also that the center could choose recipients by matching MELD scores with the number of minor EDC without adverse effects on the post-LT outcomes. As previously reported [21, 30], it was not possible to compare the factors that led to organ allocation decisions. Therefore, the analysis may be prone to center bias. Moreover, the EDC were analyzed in a group of grafts that had been preselected for organ quality. Poor-quality organs were typically eliminated during the allocation procedure, and this is likely to have contributed to the low PNF rate (3.3%).

Since donor age and steatosis cannot be influenced, the CIT should be reduced as much as possible and modifications to the allocation strategies should be considered. Machine perfusion (MP) of livers is a novel organ preservation technology. Initial studies have demonstrated both the safety and feasibility of the MP and its superiority to conventional static cold storage. The main benefits of MP include an extended period of organ preservation, decreased incidence of early allograft dysfunction, and reduction of biliary complications. This novel method will likely transform liver preservation routines and improve the use of EDC grafts, but its boundaries are yet to be defined and high costs mean that this method cannot be used in every center [55, 56].

In the current study, we showed that the combination of liver grafts that carry an increased risk of graft failure (maEDC ≥ 1) and recipients at high risk (labMELD ≥ 20) worsens the post-LT outcome. In contrast, the combination of low-risk grafts and low-risk recipients (labMELD < 20) yielded the lowest graft failure and patient mortality rates (Tables 5 and 6). Regarding the labMELD score as a single surrogate parameter for the patient’s condition bears a risk of bias. However, the finding that recipients with lower labMELD scores are more tolerant of EDC grafts (EDC ≥ 1) could be seen as a window of opportunity for the center to choose recipients by matching labMELD scores with the number of EDC without adversely affecting the post-LT outcome. In high-risk recipients (labMELD ≥ 20), graft survival was worse after transplantation of organs with two maEDC than with one maEDC, whereas no difference in graft survival was seen when grafts with one or two maEDC were transplanted into recipients with labMELD scores < 20 (Figs. 5 and 6). This indicates that a liver allocation algorithm based on maEDC is plausible and that no-EDC and miEDC grafts could be eligible for any recipient. Furthermore, maEDC graft allocation could be decided by balancing the number of maEDC and the recipient’s condition (Fig. 7). In this study, an increasing number of maEDC worsened the post-LT graft survival, and this effect was significantly more prominent in high-risk recipients (Figs. 5 and 6). These findings suggest that grafts with one maEDC could be allocated to high-risk recipients and grafts with two maEDC could preferably be allocated to low-risk patients (labMELD < 20). Grafts with three maEDC could be given to oncologic patients in a match-MELD setting (e.g., patients with hepatocellular carcinoma or controlled LT trials). The current study is susceptible to center bias, but an exact match between graft and recipient is important, and the proposed allocation algorithm might facilitate graft allocation and improve LT outcome.
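The suggestions in this paragraph can be summarized as a lookup from the number of maEDC to the proposed recipient category. The sketch below only restates the text; it is an illustration with hypothetical names, not a validated clinical decision tool, and it does not reproduce Fig. 7.

```python
def suggested_recipients(n_maedc: int) -> str:
    """Map the number of major EDC in a graft to the recipient category
    proposed in the discussion (illustrative restatement only)."""
    if n_maedc < 0 or n_maedc > 3:
        raise ValueError("n_maedc must be between 0 and 3")
    suggestions = {
        # no-EDC and minor-EDC grafts: no restriction proposed
        0: "any recipient",
        # one maEDC: allocation to high-risk recipients is considered possible
        1: "any recipient, including high-risk (labMELD >= 20)",
        # two maEDC: low-risk recipients preferred
        2: "preferably low-risk recipients (labMELD < 20)",
        # three maEDC: not observed in the study; proposal only
        3: "oncologic patients in a match-MELD setting",
    }
    return suggestions[n_maedc]
```

Note that no graft in the study collective carried three maEDC, so the last entry reflects a proposal rather than observed outcomes.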

Fig. 5 Graft (a) and patient (b) survival plots. Group A (low-risk graft to low-risk patient): major extended donor criteria (maEDC) = 0, labMELD < 20; group B (low-risk graft to high-risk patient): maEDC = 0, labMELD ≥ 20; group C (high-risk graft to low-risk patient): maEDC ≥ 1, labMELD < 20; group D (high-risk graft to high-risk patient): maEDC ≥ 1, labMELD ≥ 20

In conclusion, macrovesicular BPS of > 40%, donor age of > 65 years, and prolonged CIT of > 14 h significantly decrease short and 3-year graft survival and 3-year patient survival. These three major EDC had a more significant effect than other extended criteria, and combining them increased the risk of 3-year patient mortality and 3-year graft failure irrespective of the recipient’s condition. The single-center study design and the modest transplant collective limit these findings. In addition, due to the extreme predefined EDC cutoffs and low recipient labMELD scores, donor and recipient characteristics probably do not reflect those of other countries in Europe and the USA. However, careful evaluation of the maEDC is prudent, and caution is advised when allocating a graft with at least one maEDC for transplantation.

Change history

22 July 2022

A Correction to this paper has been published: https://doi.org/10.1007/s00423-022-02619-0

References

Silberhumer GR, Rahmel A, Karam V, Gonen M, Gyoeri G, Kern B, Adam R, Muehlbacher F, Rogiers X, Burroughs AK (2013) The difficulty in defining extended donor criteria for liver grafts: the Eurotransplant experience. Transpl Int 26(10):990–998

Busuttil RW, Tanaka K (2003) The utility of marginal donors in liver transplantation. Liver Transpl 9(7):651–663

Schemmer P, Nickkholgh A, Hinz U, Gerling T, Mehrabi A, Sauer P, Encke J, Friess H, Weitz J, Büchler M Extended donor criteria have no negative impact on early outcome after liver transplantation: a single-center multivariate analysis. In: Transplantation proceedings, 2007. vol 2. Elsevier, pp 529–534

Attia M, Silva MA, Mirza DF (2008) The marginal liver donor–an update. Transpl Int 21(8):713–724

Adam R, Cailliez V, Majno P, Karam V, McMaster P, Calne RY, O'grady J, Pichlmayr R, Neuhaus P, Otte J-B (2000) Normalised intrinsic mortality risk in liver transplantation: European Liver Transplant Registry study. Lancet 356(9230):621–627

Angelico M (2005) Donor liver steatosis and graft selection for liver transplantation: a short review. Eur Rev Med Pharmacol Sci 9(5):295–297

Salizzoni M, Franchello A, Zamboni F, Ricchiuti A, Cocchis D, Fop F, Brunati A, Cerutti E (2003) Marginal grafts: finding the correct treatment for fatty livers. Transpl Int 16(7):486–493

Mirza DF, Gunson BK, Da Silva R, Mayer AD, Buckels JA, McMaster P (1994) Policies in Europe on. Lancet 344(8935):1480–1483

Nemes B, Gelley F, Zádori G, Piros L, Perneczky J, Kóbori L, Fehérvári I, Görög D (2010) Outcome of liver transplantation based on donor graft quality and recipient status. In: Transplantation proceedings, vol 6. Elsevier, pp 2327–2330

Burroughs SG, Busuttil RW (2009) Optimal utilization of extended hepatic grafts. Surg Today 39(9):746–751

Mittler J, Pascher A, Neuhaus P, Pratschke J (2008) The utility of extended criteria donor organs in severely ill liver transplant recipients. Transplantation 86(7):895–896

Feng S, Goodrich N, Bragg-Gresham J, Dykstra D, Punch J, DebRoy M, Greenstein S, Merion R (2006) Characteristics associated with liver graft failure: the concept of a donor risk index. Am J Transplant 6(4):783–790

Gwiasda J, Schrem H, Klempnauer J, Kaltenborn A (2017) Identifying independent risk factors for graft loss after primary liver transplantation. Langenbeck's Arch Surg 402(5):757–766

Halldorson J, Bakthavatsalam R, Fix O, Reyes J, Perkins J (2009) D-MELD, a simple predictor of post liver transplant mortality for optimization of donor/recipient matching. Am J Transplant 9(2):318–326

Bruns H, Lozanovski VJ, Schultze D, Hillebrand N, Hinz U, Büchler MW, Schemmer P (2014) Prediction of postoperative mortality in liver transplantation in the era of MELD-based liver allocation: a multivariate analysis. PLoS One 9(6):e98782

Avolio AW, Agnes S, Gasbarrini A, Nure E, Siciliano M, Castagneto M (2006) Prognostic value of MELD score and donor quality in liver transplantation: implications for the donor recipient match. In: Transplantation proceedings, vol 4. Elsevier, pp 1059–1062

Avolio AW, Frongillo F, Nicolotti N, Mulè A, Vennarecci G, De Simone P, Agnes S (2009) Successful use of extended criteria donor grafts with low to moderate steatosis in patients with model for end-stage liver disease scores below 27. In: Transplantation proceedings, vol 1. Elsevier, pp 208–212

Gastaca M (2009) Extended criteria donors in liver transplantation: adapting donor quality and recipient. In: Transplantation proceedings, vol 3. Elsevier, pp 975–979

Amin MG, Wolf MP, TenBrook JA, Freeman RB, Cheng SJ, Pratt DS, Wong JB (2004) Expanded criteria donor grafts for deceased donor liver transplantation under the MELD system: a decision analysis. Liver Transpl 10(12):1468–1475

Maluf DG, Edwards EB, Kauffman HM (2006) Utilization of extended donor criteria liver allograft: is the elevated risk of failure independent of the model for end-stage liver disease score of the recipient? Transplantation 82(12):1653–1657

Braat A, Blok J, Putter H, Adam R, Burroughs A, Rahmel A, Porte R, Rogiers X, Ringers J (2012) The Eurotransplant donor risk index in liver transplantation: ET-DRI. Am J Transplant 12(10):2789–2796

Nickkholgh A, Weitz J, Encke J, Sauer P, Mehrabi A, Büchler MW, Schmidt J, Schemmer P (2007) Utilization of extended donor criteria in liver transplantation: a comprehensive review of the literature. Nephrol Dial Transplant 22(suppl 8):viii29–viii36

Richtlinientext A Richtlinien zur Organtransplantation gem. § 16 TPG

Schrem H, Reichert B, Frühauf N, Becker T, Lehner F, Kleine M, Bektas H, Zachau L, Klempnauer J (2012) The donor-risk-index, ECD-score and D-MELD-score all fail to predict short-term outcome after liver transplantation with acceptable sensitivity and specificity. Ann Transplant 17(3):5–13

Mehrabi A, Mood ZA, Fonouni H, Kashfi A, Hillebrand N, Müller SA, Encke J, Büchler MW, Schmidt J (2009) A single-center experience of 500 liver transplants using the modified piggyback technique by Belghiti. Liver Transpl 15(5):466–474

Mehrabi A, Fonouni H, Müller S, Schmidt J (2008) Current concepts in transplant surgery: liver transplantation today. Langenbeck’s Arch Surg 393(3):245–260

Anderson CD, Vachharajani N, Doyle M, Lowell JA, Wellen JR, Shenoy S, Lisker-Melman M, Korenblat K, Crippin J, Chapman WC (2008) Advanced donor age alone does not affect patient or graft survival after liver transplantation. J Am Coll Surg 207(6):847–852

Briceño J, Ciria R, de la Mata M, Rufián S, López-Cillero P (2010) Prediction of graft dysfunction based on extended criteria donors in the model for end-stage liver disease score era. Transplantation 90(5):530–539

Müllhaupt B, Dimitroulis D, Gerlach JT, Clavien P-A (2008) Hot topics in liver transplantation: organ allocation–extended criteria donor–living donor liver transplantation. J Hepatol 48:S58–S67

Schrem H, Reichert B, Frühauf N, Kleine M, Zachau L, Becker T, Lehner F, Bektas H, Klempnauer J (2012) Extended donor criteria defined by the German Medical Association: study on their usefulness as prognostic model for early outcome after liver transplantation. Der Chirurg; Zeitschrift für alle Gebiete der operativen Medizin 83(11):980–988

Mehrabi A, Fonouni H, Ahmadi R, Schmied BM, Müller SA, Welsch T, Hallscheidt P, Zeier M, Weitz J, Schmidt J (2009) Transplantation of a severely lacerated liver–a case report with review of the literature. Clin Transpl 23(3):321–328

Schlitt H, Loss M, Scherer M, Becker T, Jauch K, Nashan B, Schmidt H, Settmacher U, Rogiers X, Neuhaus P (2011) Current developments in liver transplantation in Germany: MELD-based organ allocation and incentives for transplant centres. Z Gastroenterol 49(1):30–38

Weismüller TJ, Negm A, Becker T, Barg-Hock H, Klempnauer J, Manns MP, Strassburg CP (2009) The introduction of MELD-based organ allocation impacts 3-month survival after liver transplantation by influencing pretransplant patient characteristics. Transpl Int 22(10):970–978

Seehofer D, Schöning W, Neuhaus P (2013) Deceased donor liver transplantation. Chirurg; Z Geb Oper Med 84(5):391–397

Flechtenmacher C, Schirmacher P, Schemmer P (2015) Donor liver histology—a valuable tool in graft selection. Langenbeck’s Arch Surg 400(5):551–557

Portmann B, Wight D (1987) Pathology of liver transplantation (excluding rejection). In: Liver transplantation. Grune & Stratton, London, pp 437–470

Adam R, Reynes M, Johann M, Morino M, Astarcioglu I, Kafetzis I, Castaing D, Bismuth H (1991) The outcome of steatotic grafts in liver transplantation. Transplant Proc 23(1):1538–1540

D’Alessandro AM, Kalayoglu M, Sollinger HW, Hoffmann RM, Reed A, Knechtle SJ, Pirsch JD, Hafez GR, Lorentzen D, Belzer FO (1991) The predictive value of donor liver biopsies for the development of primary nonfunction after orthotopic liver transplantation. Transplantation 51(1):157–163

Nocito A, El-Badry AM, Clavien P-A (2006) When is steatosis too much for transplantation? J Hepatol 45(4):494–499

Ploeg RJ, D’Alessandro AM, Knechtle SJ, Stegall MD, Pirsch JD, Hoffmann RM, Sasaki T, Sollinger HW, Belzer FO, Kalayoglu M (1993) Risk factors for primary dysfunction after liver transplantation–a multivariate analysis. Transplantation 55(4):807–813

Durand F, Renz JF, Alkofer B, Burra P, Clavien PA, Porte RJ, Freeman RB, Belghiti J (2008) Report of the Paris consensus meeting on expanded criteria donors in liver transplantation. Liver Transpl 14(12):1694–1707

Rinella ME, Alonso E, Rao S, Whitington P, Fryer J, Abecassis M, Superina R, Flamm SL, Blei AT (2001) Body mass index as a predictor of hepatic steatosis in living liver donors. Liver Transpl 7(5):409–414

Tevar AD, Clarke C, Wang J, Rudich SM, Woodle ES, Lentsch AB, Edwards ML (2010) Clinical review of nonalcoholic steatohepatitis in liver surgery and transplantation. J Am Coll Surg 210(4):515–526

Nardo B, Masetti M, Urbani L, Caraceni P, Montalti R, Filipponi F, Mosca F, Martinelli G, Bernardi M, Daniele Pinna A (2004) Liver transplantation from donors aged 80 years and over: pushing the limit. Am J Transplant 4(7):1139–1147

Romero CJ, González EM, Ruíz FC, Carazo FP, Segurola CL, González FR, Pinto IG, García IG, Romano DR, Sanz CM (1999) Use of octogenarian livers safely expands the donor pool. Transplantation 68(4):572–575

Pinna AD, Neri F, Ravaioli M (2014) A bridge too far: we have overstepped the line for extended deceased donors. Liver Transpl 20(S2):S6–S8

Feng S, Lai JC (2014) Expanded criteria donors. Clin Liver Dis 18(3):633–649

Oweira H, Lahdou I, Daniel V, Opelz G, Schmidt J, Zidan A, Mehrabi A, Sadeghi M (2016) Early post-operative acute phase response in patients with early graft dysfunction is predictive of 6-month and 12-month mortality in liver transplant recipients. Hum Immunol 77(10):952–960

Oweira H, Lahdou I, Daniel V, Hofer S, Mieth M, Schmidt J, Schemmer P, Opelz G, Mehrabi A, Sadeghi M (2016) Early post-transplant neopterin associated with one year survival and bacteremia in liver transplant recipients. Hum Immunol 77(1):115–120

Mossdorf A, Kalverkamp S, Langenbrinck L, Ulmer TF, Temizel I, Neumann U, Heidenhain C (2013) Allocation procedure has no impact on patient and graft outcome after liver transplantation. Transpl Int 26(9):886–892

Burroughs AK, Sabin CA, Rolles K, Delvart V, Karam V, Buckels J, O'Grady JG, Castaing D, Klempnauer J, Jamieson N (2006) 3-month and 12-month mortality after first liver transplant in adults in Europe: predictive models for outcome. Lancet 367(9506):225–232

Porte R, Ploeg R, Hansen B, Van Bockel J, Thorogood J, Persijn G, Hermans J, Terpstra O, Group EMS (1998) Long-term graft survival after liver transplantation in the UW era: late effects of cold ischemia and primary dysfunction. Transpl Int 11(1):S164–S167

Yersiz H, Shared A, Thoff KO, Imagawa D, Shackleton C, Martin P, Busuttil RW (1995) Correlation between donor age and the pattern of liver graft recovery after transplantation. Transplantation 60(8):790–793

Frühauf NR, Fischer-Fröhlich C-L, Kutschmann M, Schmidtmann I, Kirste G (2011) Joint impact of donor and recipient parameters on the outcome of liver transplantation in Germany. Transplantation 92(12):1378–1384

Boteon YL, Afford SC, Mergental H (2018) Pushing the limits: machine preservation of the liver as a tool to recondition high-risk grafts. Curr Transplant Reports:1–8

Nasralla D, Coussios CC, Mergental H, Akhtar MZ, Butler AJ, Ceresa CD, Chiocchia V, Dutton SJ, García-Valdecasas JC, Heaton N (2018) A randomized trial of normothermic preservation in liver transplantation. Nature 557(7703):50–56

Author information

Contributions

VJL and AM were the main investigators, designed the study, and analyzed and interpreted the data. EK, HF, JP, RH, TB, MM, PS, CWM, KHW, and MWB contributed knowledge, and read and corrected the manuscript. EK performed the statistical analyses. All authors approved the final version of the manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest.

Ethical approval

The proposed study is a retrospective analysis of liver transplantation cases performed at Heidelberg University Hospital. All procedures were performed following the ethical standards of the institutional research committee and the 1964 Helsinki declaration and its later amendments or comparable ethical standards. The Institutional Review Board at the University of Heidelberg approved the study (reference number S-195/2015). No animal experiments were performed for this study.

Cite this article

Lozanovski, V.J., Khajeh, E., Fonouni, H. et al. The impact of major extended donor criteria on graft failure and patient mortality after liver transplantation. Langenbecks Arch Surg 403, 719–731 (2018). https://doi.org/10.1007/s00423-018-1704-z

DOI: https://doi.org/10.1007/s00423-018-1704-z