Abstract

Stability of the soil slopes is one of the most challenging issues in civil engineering projects. Due to the complexity and non-linearity of this threat, utilizing simple predictive models does not satisfy the required accuracy in analysing the stability of the slopes. Hence, the main objective of this study is to introduce a novel metaheuristic optimization namely Harris hawks’ optimization (HHO) for enhancing the accuracy of the conventional multilayer perceptron technique in predicting the factor of safety in the presence of rigid foundations. In this way, four slope stability conditioning factors, namely slope angle, the position of the rigid foundation, the strength of the soil, and applied surcharge are considered. Remarkably, the main contribution of this algorithm to the problem of slope stability lies in adjusting the computational weights of these conditioning factors. The results showed that using the HHO increases the prediction accuracy of the ANN for analysing slopes with unseen conditions. In this regard, it led to reducing the root mean square error and mean absolute error criteria by 20.47% and 26.97%, respectively. Moreover, the correlation between the actual values of the safety factor and the outputs of the HHO–ANN (R2 = 0.9253) was more significant than the ANN (R2 = 0.8220). Finally, an HHO-based predictive formula is also presented to be used for similar applications.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Local slopes can highly affect adjacent engineering studies. In most civil engineering projects, the stability of the local slopes has been considered as a significant problem. Also, slope failures can cause various psychological damages, including property loss as well as human life in our world. For instance, Iranian Landslide Working Party (2007) reported that 187 people were killed due to the destructive effects of slope failure [1]. The impregnation level, along with different intrinsic characteristics of the soil, can impact the slope failure likelihood [2, 3]. Various studies have been conducted to propose impressive modelling for slope stability issue. Traditional methods have many shortcomings, such as the requirement of utilizing laboratory equipment and also high complexity barricade them from being an appropriate solution [4]. Nevertheless, because of their constraint in studying a particular slope state, for example, soil properties, height, slope angle, groundwater level, etc., these solutions have not commonly been considered as a general solution. Various sorts of numerical solutions, finite element model (FEM), and limit equilibrium methods (LEMs) are extensively chosen for the slope stability issue [5,6,7]. For providing a trustworthy method for slope stability study, scholars have focused on the expansion of design charts [8]. However, this method also has some defects. Producing an impressive design chart needs a lot of time and cost. Also, indicating the accurate mechanical factors is a problematic duty [9, 10]. Hence, because of efficiency, design charts commonly accompanied high precision, therefore the usage of artificial intelligence methods is more bolded [11, 12]. These methods can specify the non-linear relationship between the target factors as well as its key parameters, and this is an outstanding advantage of these approaches. Artificial neural network (ANN) commonly uses any determined number of hidden nodes [13, 14]. In geotechnical studies, different scholars stated that machine learning approaches such as support vector machine (SVM) and ANNs have proper efficiency [15,16,17,18,19]. The intricacy of the slope stability issue is obvious. What makes the problem even more complex and critical is creating different buildings in the presence of slopes that are showing a considerable value of loads used on a rigid footing. It is known that the interval of the slope’s crest along with the value of surcharge is considered as two factors that can affect the stability of the target slope [20]. Because of this fact, scholars have motivated to show a relationship to compute the factor of safety of pure slopes and sometimes the slopes taking a static load [21,22,23,24,25]. Chakraborty and Goswami [26] predicted the factor of safety for around 200 slopes to distinct geometric and shear strength factors by taking into account the multiple linear regression (MLR) along with ANN algorithms. In their work, a comparison study has been conducted to compare calculated results to a FEM model. They have obtained a proper rate of precision obtained for both practical models. In addition, they found that ANN had better performance compared to MLR. Lie et al. [27] utilized the random forest (RF) along with regression tree in functional soil–landscape simulations to regionalize the depth of the failure level and density of soil bulk. Although looking for more reliable analysis of the stability of the slopes various hybrid evolutionary algorithms has been successfully employed in plenty of studies [28,29,30,31], this study presents a novel optimization technique named Harris hawks’ optimization (HHO) incorporated with ANN to give a reliable approximation of the stability of soil slopes. Notably, the HHO is a recently proposed natural inspired metaheuristic algorithm, and the authors did not come across any previous study which applied this algorithm to the mentioned subject.

2 Methodology

2.1 Artificial neural network

The artificial neural network (ANN) is based on the interaction among the neurons in the biological neural apparatus. McCulloch and Pitts [32] proposed ANN for the first one. The algorithm of ANNs is generally utilized as approximators in a non-linear survey of input–output data [33,34,35,36,37,38]. These methods are commonly used for different engineering issues because of their specific mathematical solution in optimization tasks [22, 39,40,41,42,43,44,45,46,47]. Basically, the ANN algorithm includes a group of computational relationships that are commonly worked with each other. Multilayer perceptron (MLP) is known as one of the most appropriate methods between different algorithms of ANNs that used for classification as well as regression issues. The whole structure of the MLP algorithm is presented in Fig. 1. As can be observed, this model consists of three different types of layers. In this method, the number of hidden layers usually changes; however, it just can have input and output layers. Scholars determined that MLPs possess one hidden layer in terms of efficiency [48].

The MLP is basically employed for detecting the mathematical relations among different factors with the taking into account of one and even more activation function(s). We consider W1 and W2 as the weight matrices layers in the hidden and output sections, respectively. After that, the mentioned method is adjusted as below:

where \(f_{A}\) stands for the activation function. b1 and b2 stand for the bias matrices related to the neurons located in the hidden and output layers, respectively.

2.2 Harris hawks’ optimization algorithm

The algorithm of Harris hawks’ optimization (HHO) is inspired using the cooperative treatment along with the chasing manner of Harris’ hawks that is first expanded by Heidari et al. [49]. This algorithm has been successfully used for various scientific applications [50, 51]. Hawks attempting to surprise their prey and from different paths swooped on them, cooperatively. In addition, Harris hawks have the ability to choose chase type according to the distinct patterns of prey flight. It has three base stages in HHO, including amaze pounce, tracking the prey, and other different sorts of attacking strategies. Different phases of Harris hawks’ optimization (HHO) are shown in Fig. 2. The pseudo-code of HHO algorithm is also illustrated in Table 1. In a glance, the first stage is named “Exploration” and is modelled to mathematically wait, search, and discover the desired hunt. The second stage of this algorithm is transforming from exploration to exploitation, based on the external energy of a rabbit. Finally, in the third phase which is called “Exploitation”, considering the residual energy of the prey, hawks commonly take a soft and sometimes hard surround for hunting the rabbit from different directions.

Different phases of Harris hawks’ optimization (HHO) (after Heidari et al. [49])

2.2.1 Exploration

In each step, Harris’ hawks have been considered the best solutions. The iter + 1 (the Harris hawks’ position) is mathematically modelled by the following relation:

where iter means the present iteration, \(X_{\text{rand}}\) stands selected for hawk at the available population, \(r_{i}\), i = 1, 2, 3, 4…, q are random numbers that are between 0 and 1, \(X_{{\rm rabit}}\) stands for the rabbit position, and \(X_{m}\) is the mean position for hawks and that is computed as follows:

where \(X_{i}\) shows the every hawk place and N stands for the hawks size.

2.2.2 Transition from exploration to exploitation

The rabbit energy may be calculated by the below relation:

where E is the external energy from rabbit and T stands for the maximum size about the iterations. In this relation, E stands for the energy of the rabbit, and \(E_{0} \in \left( { - 1. 1} \right)\) shows the inlet energy for each step. HHO may determine the rabbit state based on the variation trend of \(E_{0}\).

2.2.3 Exploitation

In this stage, for successful escape of the prey: if \(r < 0.5\). If \(\left| E \right| \ge 0.5\) HHO takes soft surround and if \(\left| E \right| < 0.5\) the HHO takes hard surround. To model the attacking stage, the algorithm of HHO used four distinct methods based on the escaping approaches of the prey as well as pursuing approaches of the Harris’ hawks: hard and soft surrounds, advanced rapid dives while soft surround, progressive rapid dives while hard surround. Particularly, \(\left| E \right| \ge 0.5\) means that the prey has enough energy for running out from the surround. Therefore, whether the rabbit runs out from the surround or not is based on two values of r and E.

A—soft surround: \(r \ge \frac{1}{2} {\text{and}} \left| E \right| \ge \frac{1}{2}\).

We can use the following relation:

where \(\Delta X\) stands for deference among the position vector of the prey, J = 2(1-\(r_{s}\)) stands for jump severity of the prey in the stage of escaping and \(r_{s} \in \left( {01} \right)\) shows a random number.

B—hard surround: \(r \ge \frac{1}{2} {\text{and}} \left| E \right| < \frac{1}{2}\).

We can use the following formula for showing the present positions:

C—advanced rapid dives while soft surround: \(r < \frac{1}{2} \, {\text{and}} \, \left| E \right| \ge \frac{1}{2}\).

As stated for soft surround, previously, hawks find the next purpose using the below relation:

The hawks can dive as the below relation:

where D stands for the issue dimension and \(S_{1 \times D}\) shows a random vector along with the levy flight. We can calculate LF as follows:

where \(\mu\) and \(\vartheta\) stand for random amounts among in the range of 0–1. Hence, for updating the hawks’ locations, the final approach can be shown as follows:

D—advanced rapid dives while hard surround.

In the present paper, the hawks were considered being near the rabbit. The behaviour of them can be modelled as follows:

Y and Z should be calculated as follows:

in which \(X_{m} \left( {\text{iter}} \right) {\text{shows}}\frac{1}{N}\mathop \sum \nolimits_{i = 1}^{N} X_{i} \left( {\text{iter}} \right)\) [52].

3 Data collection and methodology

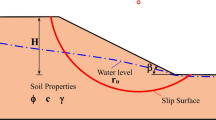

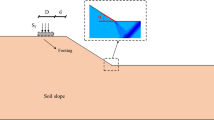

We can use a single-layer slope to obtain a reliable database. In this method, we should assume a purely cohesive soil, having only undrained cohesive strength (Cu), creates the body of this slope. The basic parameters that can have some influences on the strength of the slope versus the failure (i.e., the factor of safety) are the magnitude of the surcharge on the footing enchased onto the slope (w), setback distance ratio (b/B), and slope angle (β). Figure 3a shows these parameters. In this study, the Optum G2 software was used for computing the factor of safety. In most cases, the safety factor is a typical method to show the geotechnical stability as well as deformation in slopes [53] (see Fig. 3b). In this regard, various geometries of the slope angle (β) along with different rigid foundation (b/B) (i.e., around 630 possible cases) are drawn and then evaluated in Optum G2 for calculating the factor of safety. Other parameters were also considered into simulation including cohesive strength of the soil (Cu) and applied surcharge (w). The mechanical factors, including the ratio of Poisson, internal friction angle, and soil unit weight, were specified 0.35, 0°, and 18 kN/m3, respectively. Moreover, modulus of Young (E) differed for every amount of Cu. It is adjusted to be 1000, 2000, 3500, 5000, 9000, 15,000 and 30,000 kPa for amount of Cu 25, 50, 75, 100, 200, 300 and 400 kPa, respectively.

An example of the utilized dataset is shown in Table 2. In this table, we illustrated the examples of the relation between the slope safety factor and its effective parameters. As can be observed, when Cu has high value, the slope ensures more stability. The factors of β (5°, 30°, 45°, 60°, and 75°), as well as w (50, 100, and 150 KN/m2), have been considered as adversely proportionate for the FOS. By increasing the values of β and w, the slope is more likely to be failing. The factor of safety cannot illustrate any considerable sensibility to the b/B ratio variations (0, 1, 2, 3, 4, and 5). Also, Table 2 shows that the safety factor does not specify any considerable sensitivity for the b/B ratio variations of 0, 1, 2, 3, 4, and 5.

We have randomly divided the dataset into training and testing sub-classes that have the respective amounts of 0.8 (504 instances) and 0.2 (126 instances). It is important to note that the training instances are utilized for training the ANN and HHO–ANN models. The performance of these methods has been verified using the testing database. Also, k-fold cross-validation procedure is utilized to mitigate the bias caused by the random selection of the data [54,55,56] (see Fig. 4).

4 Results and discussion

4.1 Implementation and optimization

As stated previously, the main objective of this research is to present a new optimization of the artificial neural network, namely Harris hawks’ optimization, for the stability analysis of soil slopes by predicting the FOS. To this end, four slope stability conditioning factors, namely slope angle, the position of the rigid foundation, the strength of the soil, and the magnitude of the surcharge are considered to create the required dataset. After dividing the data into the training and testing parts, utilizing the programming language of MATLAB v.2014, the proposed ANN and HHO–ANN models were designed. Based on the authors’ experience, as well as a trial and error process, an MLP neural network with six hidden computational units in the middle layer was developed. In this sense, lots of theoretical attempts have revealed the efficiency of the MLP tool with one hidden layer [57, 58]. Notably, the activation function of “Tansig” was used to activate the calculations of these neurons. This function is expressed as follows:

After determining the optimal structure of the ANN, the HHO algorithm was coupled with it. It is worth noting that the main aim of such optimization algorithms in incorporation with intelligent tools (e.g., ANFIS and ANN) is to find the most appropriate values for their computational parameters. In the case of MLP we used in this study, the HHO performs to find the solution for a mathematically defined problem which contains the weights and biases of the neurons. Ten different structures of HHO–ANN networks were tested based on the population size. In this sense, the population size was considered to vary from 50 to 500 with 50 intervals. Each model performed within 1000 repetitions when meaning square error was defined as the objective function (Table 3). Figure 5 shows the obtained convergence curves. According to this chart, the HHO–ANN having population size = 90 outperformed other tested models. It finally achieved the MSE = 2.469635486 in 4129 s. Remarkably, the majority of the reduction of the MSE occurred in the first 100 iterations.

4.2 Performance assessment

The outputs (i.e., the predicted FOS) of the ANN and HHO–ANN models were extracted and compared with the actual values to evaluate their prediction capability. Two error criteria of root mean square error (RMSE) and mean absolute error (MAE) are used to measure the prediction error. Moreover, the correlation between the observed and predicted FOSs is measured by the coefficient of determination (R2). These indices are expressed as follows:

where Yipredicted and Yiobserved stand for the predicted and actual FOSs, respectively. The term N symbolizes the number of samples and \(\overline{Y}_{\text{observed}}\) denotes the average value of the observed FOS.

Figure 6 illustrates the results of the ANN and HHO–ANN models. In these figures, the error (i.e., the difference between the actual and predicted) and histogram of the errors are also presented. Based on the results, applying the HHO algorithm has helped the ANN to have a better analysis of the relationship between the FOS and its conditioning factors. In this sense, the training RMSE was decreased by 26.52% (from 2.1388 to 1.5715). As for the MAE, the HHO reduced this error criterion by 32.31% (from 1.7151 to 1.1610). Furthermore, the obtained values of R2 (0.8778 vs. 0.9339) show more consistency for the outputs of the HHO–ANN. About the testing phase, it can be deduced that using the HHO increases the generalization power (i.e., predicting the unseen samples) of the ANN. More clearly, the testing RMSE and MAE fell by 20.47% (from 2.0806 to 1.6546) and 26.97% (from 1.6883 to 1.2330), respectively. Besides, the correlation analysis between the testing outputs of the ANN and HHO–ANN show that the R2 increases from 0.8220 to 0.9253.

4.3 Presenting the HHO-based predictive formula

Overall, it was found that the weights and biases which were suggested by the HHO algorithm can predict the FOS more efficiently than those found in the non-optimized ANN. Hence, in this part of the study, it was aimed to extract the FOS predictive formula from the HHO–ANN model. Notably, the calculated accuracy criteria indicate that it can estimate the FOS accurately, by taking into consideration four slope stability influential factors, namely slope angle, the position of the rigid foundation, strength of the soil, and applied surcharge. Equation 20 denotes the HHO–ANN formula:

where Z1, Z2, …, Z6 are calculated as shown in Table 3.

5 Conclusion

The complexity of environmental threats has driven scholars to employ evolutionary evaluative methods for dealing with them. The stability of the soil slopes is a crucial civil engineering issue which needs nonlinear analysis. In this paper, Harris hawks’ optimization was used as a novel hybrid metaheuristic technique for optimizing the performance of the artificial neural network in predicting FOS of the soil slope. In other words, the HHO was used to overcome the computational drawbacks of the ANN, through finding the best-fitted structure. Based on the results of the sensitivity analysis, the HHO–ANN with population size = 90 outperforms others. Moreover, the findings showed that synthesizing the HHO algorithm can effectively help the ANN to have more consistent learning and predicting of the slope failure pattern. Lastly, conducting comparative studies for comparing the potential of the used HHO algorithm with other well-known optimization techniques is a good idea for future works to determine the most appropriate technique for solving the mentioned problem.

References

Pourghasemi HR, Pradhan B, Gokceoglu C (2012) Application of fuzzy logic and analytical hierarchy process (AHP) to landslide susceptibility mapping at Haraz watershed, Iran. Nat Hazards 63:965–996

Latifi N, Rashid ASA, Siddiqua S, Majid MZA (2016) Strength measurement and textural characteristics of tropical residual soil stabilised with liquid polymer. Measurement 91:46–54

Moayedi H, Huat BB, Kazemian S, Asadi A (2010) Optimization of tension absorption of geosynthetics through reinforced slope. Electron J Geotech Eng B 15:1–12

Marto A, Latifi N, Janbaz M, Kholghifard M, Khari M, Alimohammadi P, Banadaki AD (2012) Foundation size effect on modulus of subgrade reaction on sandy soils. Electron J Geotech Eng 17:2523–2530

Moayedi H, Huat BK, Kazemian S, Asadi A (2010) Optimization of shear behavior of reinforcement through the reinforced slope. Electron J Geotech Eng 15:93–104

Raftari M, Kassim KA, Rashid ASA, Moayedi H (2013) Settlement of shallow foundations near reinforced slopes. Electron J Geotech Eng 18:797–808

Nazir R, Ghareh S, Mosallanezhad M, Moayedi H (2016) The influence of rainfall intensity on soil loss mass from cellular confined slopes. Measurement 81:13–25

Javankhoshdel S, Bathurst RJ (2014) Simplified probabilistic slope stability design charts for cohesive and cohesive-frictional (c − ϕ) soils. Can Geotech J 51:1033–1045

Duncan JM (1996) State of the art: limit equilibrium and finite-element analysis of slopes. J Geotech Eng 122:577–596

Kang F, Xu B, Li J, Zhao S (2017) Slope stability evaluation using Gaussian processes with various covariance functions. Appl Soft Comput 60:387–396

Trigila A, Iadanza C, Esposito C, Scarascia-Mugnozza G (2015) Comparison of logistic regression and random forests techniques for shallow landslide susceptibility assessment in Giampilieri (NE Sicily, Italy). Geomorphology 249:119–136

Hervás-Martínez C, Gutiérrez PA, Peñá-Barragán JM, Jurado-Expósito M, López-Granados F (2010) A logistic radial basis function regression method for discrimination of cover crops in olive orchards. Expert Syst Appl 37:8432–8444

Basarir H, Kumral M, Karpuz C, Tutluoglu L (2010) Geostatistical modeling of spatial variability of SPT data for a borax stockpile site. Eng Geol 114:154–163

Secci R, Foddis ML, Mazzella A, Montisci A, Uras G (2015) Artificial neural networks and kriging method for slope geomechanical characterization, engineering, geology for society and territory-volume 2. Springer, Switzerland , pp 1357–1361

Zhang Y, Dai M, Ju Z (2015) Preliminary discussion regarding SVM kernel function selection in the twofold rock slope prediction model. J Comput Civil Eng 30:04015031

Moayedi H, Nazir R, Mosallanezhad M, Noor RBM, Khalilpour M (2018) Lateral deflection of piles in a multilayer soil medium. Case study: the terengganu seaside platform. Measurement 123:185–192

Mosallanezhad M, Moayedi H (2017) Developing hybrid artificial neural network model for predicting uplift resistance of screw piles. Arab J Geosci 10:479

Gao W, Karbasi M, Hasanipanah M, Zhang X, Guo J (2018) Developing GPR model for forecasting the rock fragmentation in surface mines. Eng Comput 34:339–345

Gao W, Karbasi M, Derakhsh AM, Jalili A (2019) Development of a novel soft-computing framework for the simulation aims: a case study. Eng Comput 35:315–322

Youssef AM, Pradhan B, Al-Harthi SG (2015) Assessment of rock slope stability and structurally controlled failures along Samma escarpment road, Asir Region (Saudi Arabia). Arab J Geosci 8:6835–6852

Acharyya R, Dey A (2018) Assessment of bearing capacity for strip footing located near sloping surface considering ANN model. Neural Comput Appl 31:1–14

Moayedi H, Hayati S (2018) Modelling and optimization of ultimate bearing capacity of strip footing near a slope by soft computing methods. Appl Soft Comput 66:208–219

Singh J, Banka H, Verma AK (2019) A BBO-based algorithm for slope stability analysis by locating critical failure surface. Neural Comput Appl 31:1–18

Jellali B, Frikha W (2017) Constrained particle swarm optimization algorithm applied to slope stability. Int J Geomech 17:06017022

Pei H, Zhang S, Borana L, Zhao Y, Yin J (2019) Slope stability analysis based on real-time displacement measurements. Measurement 131:686–693

Chakraborty A, Goswami D (2017) Prediction of slope stability using multiple linear regression (MLR) and artificial neural network (ANN). Arab J Geosci 10:385

Ließ M, Glaser B, Huwe B (2011) Functional soil-landscape modelling to estimate slope stability in a steep Andean mountain forest region. Geomorphology 132:287–299

Gao W, Raftari M, Rashid ASA, Mu’azu MA, Jusoh WAW (2019) A predictive model based on an optimized ANN combined with ICA for predicting the stability of slopes. Eng Comput 35:1–20

Moayedi H, Mosallanezhad M, Mehrabi M, Safuan ARA, Biswajeet P (2019) Modification of landslide susceptibility mapping using optimized PSO-ANN technique. Eng Comput 35:967–984

Yuan C, Moayedi H (2019) The performance of six neural-evolutionary classification techniques combined with multi-layer perception in two-layered cohesive slope stability analysis and failure recognition. Eng Comput 36:1–10

Nguyen H, Mehrabi M, Kalantar B, Moayedi H, MaM Abdullahi (2019) Potential of hybrid evolutionary approaches for assessment of geo-hazard landslide susceptibility mapping. Geomat Na Hazards Risk 10:1667–1693

McCulloch WS, Pitts W (1943) A logical calculus of the ideas immanent in nervous activity. Bull Math Biophys 5:115–133

Committee AT (2000) Artificial neural networks in hydrology. II: hydrologic applications. J Hydrol Eng 5:124–137

Alnaqi AA, Moayedi H, Shahsavar A, Nguyen TK (2019) Prediction of energetic performance of a building integrated photovoltaic/thermal system thorough artificial neural network and hybrid particle swarm optimization models. Energy Convers Manag 183:137–148

Xi W, Li G, Moayedi H, Nguyen H (2019) A particle-based optimization of artificial neural network for earthquake-induced landslide assessment in Ludian county, China. Geomat Nat Hazards Risk 10:1750–1771

Yuan C, Moayedi H (2019) Evaluation and comparison of the advanced metaheuristic and conventional machine learning methods for the prediction of landslide occurrence. Eng Comput 36:1–11

Wang B, Moayedi H, Nguyen H, Foong LK, Rashid ASA (2019) Feasibility of a novel predictive technique based on artificial neural network optimized with particle swarm optimization estimating pullout bearing capacity of helical piles. Eng Comput 36:1–10

Liu L, Moayedi H, Rashid ASA, Rahman SSA, Nguyen H (2019) Optimizing an ANN model with genetic algorithm (GA) predicting load-settlement behaviours of eco-friendly raft-pile foundation (ERP) system. Eng Comput 35:1–13

Moayedi H, Huat BB, Mohammad Ali TA, Asadi A, Moayedi F, Mokhberi M (2011) Preventing landslides in times of rainfall: case study and FEM analyses. Disaster Prevent Manag Int J 20:115–124

Moayedi H, Rezaei A (2017) An artificial neural network approach for under-reamed piles subjected to uplift forces in dry sand. Neural Comput Appl 31:327–336

Moayedi H, Hayati S (2018) Applicability of a CPT-based neural network solution in predicting load-settlement responses of bored pile. Int J Geomech 18:06018009

Seyedashraf O, Mehrabi M, Akhtari AA (2018) Novel approach for dam break flow modeling using computational intelligence. J Hydrol 559:1028–1038

Gao W, Dimitrov D, Abdo H (2018) Tight independent set neighborhood union condition for fractional critical deleted graphs and ID deleted graphs. Discrete Contin Dyn Syst S 12:711–721

Gao W, Guirao JLG, Abdel-Aty M, Xi W (2019) An independent set degree condition for fractional critical deleted graphs. Discrete Contin Dyn Syst S 12:877–886

Gao W, Guirao JLG, Basavanagoud B, Wu J (2018) Partial multi-dividing ontology learning algorithm. Inf Sci 467:35–58

Gao W, Wang W, Dimitrov D, Wang Y (2018) Nano properties analysis via fourth multiplicative ABC indicator calculating. Arab J Chem 11:793–801

Gao W, Wu H, Siddiqui MK, Baig AQ (2018) Study of biological networks using graph theory. Saudi J Biol Sci 25:1212–1219

Kavzoglu T, Mather PM (2003) The use of backpropagating artificial neural networks in land cover classification. Int J Remote Sens 24:4907–4938

Heidari AA, Mirjalili S, Faris H, Aljarah I, Mafarja M, Chen H (2019) Harris Hawks optimization: algorithm and applications. Future Gener Comput Syst 97:849–872

Bao X, Jia H, Lang C (2019) A novel hybrid harris hawks optimization for color image multilevel thresholding segmentation. IEEE Access 7:76529–76546

Jia H, Lang C, Oliva D, Song W, Peng X (2019) Dynamic harris hawks optimization with mutation mechanism for satellite image segmentation. Remote Sens 11:1421

Du P, Wang J, Hao Y, Niu T, Yang W (2019) A novel hybrid model based on multi-objective Harris hawks optimization algorithm for daily PM2. 5 and PM10 forecasting. arXiv preprint arXiv:1905.13550

Krabbenhoft K, Lyamin A, Krabbenhoft J (2015) Optum computational engineering (Optum G2). Available on: www.optumce.com. Accessed 2018

Allayear SM, Sarker K, Ara SJF (2018) Prediction model for prevalence of type-2 diabetes complications with ANN approach combining with K-fold cross validation and K-means clustering. Advances in information and communication networks: proceedings of the 2018 future of information and communication conference (FICC), San Francisco, United States

Nandi GC, Agarwal P, Gupta P, Singh A (2018) Deep learning based intelligent robot grasping strategy. 14th International conference on control and automation (ICCA), Anchorage, AK, USA

Abas M, Zubir N, Ismail N, Yassin I, Ali N, Rahiman M, Saiful N, Taib M (2017) Agarwood oil quality classifier using machine learning. J Fund Appl Sci 9:62–76

Cybenko G (1989) Approximation by superpositions of a sigmoidal function. Math Control Signals Syst 2:303–314

Hornik K, Stinchcombe M, White H (1989) Multilayer feedforward networks are universal approximators. Neural Netw 2:359–366

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Moayedi, H., Osouli, A., Nguyen, H. et al. A novel Harris hawks’ optimization and k-fold cross-validation predicting slope stability. Engineering with Computers 37, 369–379 (2021). https://doi.org/10.1007/s00366-019-00828-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00366-019-00828-8