Abstract

We describe generalizations of the universal approximation theorem for neural networks to maps invariant or equivariant with respect to linear representations of groups. Our goal is to establish network-like computational models that are both invariant/equivariant and provably complete in the sense of their ability to approximate any continuous invariant/equivariant map. Our contribution is three-fold. First, in the general case of compact groups we propose a construction of a complete invariant/equivariant network using an intermediate polynomial layer. We invoke classical theorems of Hilbert and Weyl to justify and simplify this construction; in particular, we describe an explicit complete ansatz for approximation of permutation-invariant maps. Second, we consider groups of translations and prove several versions of the universal approximation theorem for convolutional networks in the limit of continuous signals on euclidean spaces. Finally, we consider 2D signal transformations equivariant with respect to the group SE(2) of rigid euclidean motions. In this case we introduce the “charge–conserving convnet”—a convnet-like computational model based on the decomposition of the feature space into isotypic representations of SO(2). We prove this model to be a universal approximator for continuous SE(2)—equivariant signal transformations.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

1.1 Motivation

Symmetric models An important topic in learning theory is the design of predictive models properly reflecting symmetries naturally present in the data (see, e.g., [3, 35, 37]). Most commonly, in the standard context of supervised learning, this means that our predictive model should be invariant with respect to a suitable group of transformations: given an input object, we often know that its class or some other property that we are predicting does not depend on the object representation (e.g., associated with a particular coordinate system), or for other reasons does not change under certain transformations. In this case we would naturally like the predictive model to reflect this independence. If f is our predictive model and \(\Gamma \) the group of transformations, we can express the property of invariance by the identity \(f({\mathcal {A}}_\gamma {\mathbf {x}})= f({\mathbf {x}})\), where \({\mathcal {A}}_\gamma {\mathbf {x}}\) denotes the action of the transformation \(\gamma \in \Gamma \) on the object \({\mathbf {x}}\).

There is also a more general scenario where the output of f is another complex object that is supposed to transform appropriately if the input object is transformed. This scenario is especially relevant in the setting of multi-layered (or stacked) predictive models, if we want to propagate the symmetry through the layers. In this case one speaks about equivariance, and mathematically it is described by the identity \(f({\mathcal {A}}_\gamma {\mathbf {x}})= {\mathcal {A}}_\gamma f({\mathbf {x}})\), assuming that the transformation \(\gamma \) acts in some way not only on inputs, but also on outputs of f. (For brevity, here and in the sequel we will slightly abuse notation and denote any action of \(\gamma \) by \({\mathcal {A}}_\gamma \), though of course in general the input and output objects are different and \(\gamma \) acts differently on them. It will be clear which action is meant in a particular context).

A well-known important example of equivariant transformations are convolutional layers in neural networks, where the group \(\Gamma \) is the group of grid translations, \({\mathbb {Z}}^d\).

Symmetrization vs. intrinsic symmetry We find it convenient to roughly distinguish two conceptually different approaches to the construction of invariant and equivariant models that we refer to as the symmetrization-based one and the intrinsic one. The symmetrization-based approach consists in starting from some asymmetric model, and symmetrizing it by a group averaging. On the other hand, the intrinsic approach consists in imposing prior structural constraints on the model that guarantee its symmetricity.

In the general mathematical context, the difference between the two approaches is best illustrated with the example of symmetric polynomials in the variables \(x_1,\ldots ,x_n\), i.e., the polynomials invariant with respect to arbitrary permutations of these variables. With the symmetrization-based approach, we can obtain any invariant polynomial by starting with an arbitrary polynomial f and symmetrizing it over the group of permutations \(S_n\), i.e. by defining \(f_{\mathrm {sym}}(x_1,\ldots ,x_n)=\frac{1}{n!}\sum _{\rho \in S_n}f(x_{\rho (1)},\ldots ,x_{\rho (n)}).\) On the other hand, the intrinsic approach is associated with the fundamental theorem of symmetric polynomials, which states that any invariant polynomial \(f_{\mathrm {sym}}\) in n variables can be obtained as a superposition \(f(s_1,\ldots ,s_n)\) of some polynomial f and the elementary symmetric polynomials \(s_1,\ldots ,s_n\). Though both approaches yield essentially the same result (an arbitrary symmetric polynomial), the two constructions are clearly very different.

In practical machine learning, symmetrization is ubiquitous. It is often applied both on the level of data and the level of models. This means that, first, prior to learning an invariant model, one augments the available set of training examples \(({\mathbf {x}}, f({\mathbf {x}}))\) by new examples of the form \(({\mathcal {A}}_\gamma {\mathbf {x}}, f({\mathbf {x}}))\) (see, for example, Section B.2 of Thoma [43] for a list of transformations routinely used to augment datasets for image classification problems). Second, once some, generally non-symmetric, predictive model \({{\widehat{f}}}\) has been learned, it is symmetrized by setting \({{\widehat{f}}}_{\mathrm{sym}}({\mathbf {x}})=\frac{1}{|\Gamma _0|}\sum _{\gamma \in \Gamma _0}{{\widehat{f}}}({\mathcal {A}}_\gamma {\mathbf {x}})\), where \(\Gamma _0\) is some subset of \(\Gamma \) (e.g., randomly sampled). This can be seen as a manifestation of the symmetrization-based approach, and its practicality probably stems from the fact that the real world symmetries are usually only approximate, and in this approach one can easily account for their imperfections (e.g., by adjusting the subset \(\Gamma _0\)). On the other hand, the weight sharing in convolutional networks [22, 45] can be seen as a manifestation of the intrinsic approach (since the translational symmetry is built into the architecture of the network from the outset), and convnets are ubiquitous in modern machine learning [23].

Completeness In this paper we will be interested in the theoretical opportunities of the intrinsic approach in the context of approximations using neural-network-type models. Suppose, for example, that f is an invariant map that we want to approximate with the usual ansatz of a perceptron with a single hidden layer, \({{\widehat{f}}}(x_1,\ldots ,x_d)= \sum _{n=1}^N c_n\sigma (\sum _{k=1}^d w_{nk}x_k+h_n)\) with some nonlinear activation function \(\sigma \). Obviously, this ansatz breaks the symmetry, in general. Our goal is to modify this ansatz in such a way that, first, it does not break the symmetry and, second, it is complete in the sense that it is not too specialized and any reasonable invariant map can be arbitrarily well approximated by it. In Sect. 2 we show how this can be done by introducing an extra polynomial layer into the model. In Sects. 3, 4 we will consider more complex, deep models (convnets and their modifications). We will understand completeness in the sense of the universal approximation theorem for neural networks [32].

Linear representations Designing invariant and equivariant models requires us to decide how the symmetry information is encoded in the layers. A standard assumption, to which we also will adhere in this paper, is that the group acts by linear transformations. Precisely, when discussing invariant models we are looking for maps of the form

where V is a vector space carrying a linear representation \(R:\Gamma \rightarrow \mathrm {GL}(V)\) of a group \(\Gamma \). More generally, in the context of multi-layer models

we assume that the vector spaces \(V_k\) carry linear representations \(R_k:\Gamma \rightarrow \mathrm {GL}(V_k)\) (the “baseline architecture” of the model), and we must then ensure equivariance in each link. Note that a linear action of a group on the input space \(V_1\) is a natural and general phenomenon. In particular, the action is linear if \(V_1\) is a linear space of functions on some domain, and the action is induced by (not necessarily linear) transformations of the domain. Prescribing linear representations \(R_k\) is then a viable strategy to encode and upkeep the symmetry in subsequent layers of the model.

Compact vs. non-compact groups From the perspective of approximation theory, we will be interested in finite computational models, i.e. including finitely many operations as performed on a standard computer. Finiteness is important for potential studies of approximation rates (though such a study is not attempted in the present paper). Compact groups have the nice property that their irreducible linear representations are finite-dimensional. This allows us, in the case of such groups, to modify the standard shallow neural network ansatz so as to obtain a computational model that is finite, fully invariant/equivariant and complete, see Sect. 2. On the other hand, irreducible representations of non-compact groups such as \({\mathbb {R}}^\nu \) are infinite-dimensional in general. As a result, finite computational models can be only approximately \({\mathbb {R}}^\nu \)-invariant/equivariant. Nevertheless, we show in Sects. 3, 4 that complete \({\mathbb {R}}^\nu \)—and SE(\(\nu \))—equivariant models can be rigorously described in terms of appropriate limits of finite models.

1.2 Related Work

Our work can be seen as an extension of results on the universal approximation property of neural networks [7, 10, 18, 19, 24, 29, 31, 32] to the setting of group invariant/equivariant maps and/or infinite-dimensional input spaces.

Our general results in Sect. 2 are based on classical results of the theory of polynomial invariants [16, 17, 46].

An important element of constructing invariant and equivariant models is the extraction of invariant and equivariant features. In the present paper we do not focus on this topic, but it has been studied extensively, see e.g. general results along with applications to 2D and 3D pattern recognition in [3, 27, 35, 37, 41].

In a series of works reviewed in Cohen et al. [5], the authors study expressiveness of deep convolutional networks using hierarchical tensor decompositions and convolutional arithmetic circuits. In particular, representation universality of several network structures is examined in Cohen and Shashua [4].

In a series of works reviewed in Poggio et al. [33], the authors study expressiveness of deep networks from the perspective of approximation theory and hierarchical decompositions of functions. Learning of invariant data representations and its relation to information processing in the visual cortex has been discussed in Anselmi et al. [1].

In the series of papers [2, 25, 26, 39], multiscale wavelet-based group invariant scattering operators and their applications to image recognition have been studied.

There is a large body of work proposing specific constructions of networks for applied group invariant recognition problems, in particular image recognition approximately invariant with respect to the group of rotations or some of its subgroups: deep symmetry networks of Gens and Domingos [11], G-CNNs of Cohen and Welling [6], networks with extra slicing operations in Dieleman et al. [8], RotEqNets of Marcos et al. [28], networks with warped convolutions in Henriques and Vedaldi [15], Polar Transformer Networks of Esteves et al. [9]. In Sect. 4 we study a family of models equivariant w.r.t. 2D euclidean motions; our construction partly resembles the one used in Worrall et al. [48]. However, in contrast to all these papers, we are primarily interested in the theoretical guarantees of invariance and completeness.

Our Theorem 3.1 resembles the Curtis-Hedlund-Lyndon theorem from the theory of cellular automata, that states that a map \(f:\{1,\ldots ,N\}^{{\mathbb {Z}}^\nu }\rightarrow \{1,\ldots ,N\}^{{\mathbb {Z}}^\nu }\) is \({\mathbb {Z}}^\nu \)-equivariant and continuous in the product topology if and only if it is defined by a finite cellular automaton [14]. In Theorem 3.1 we characterize the maps \(f:L^2({\mathbb {R}}^\nu , {\mathbb {R}}^{d_V})\rightarrow L^2({\mathbb {R}}^\nu , {\mathbb {R}}^{d_U})\) that are \({\mathbb {R}}^\nu \)-equivariant and continuous in the norm topology as limit points of convnets.

In Sect. 2.4 we apply the invariant theory to construct complete permutation-invariant networks. See [34, 49] for related results and discussions of permutation-invariant models, as well as applications to image recognition problems.

In Kondor and Trivedi [20], it is proved that network layers of the conventional structure “a linear transformation followed by pointwise nonlinear activation” are group-equivariant iff the linear part is a (generalized) convolution. This can be viewed as a completeness result for equivariant maps implementable by a single standard layer. In the present paper, our point of view is rather different: first, we strive to describe approximations to maps from general functional classes, and second, we are particularly interested in symmetries like \({\mathbb {R}}^\nu \) or SE(2), that cannot be implemented in a single finite layer, but can be recovered in a suitable limit (cf. Sects. 3, 4).

1.3 Contribution of this Paper

As discussed above, we will be interested in the following general question: assuming there is a “ground truth” invariant or equivariant map f, how can we “intrinsically” approximate it by a neural-network-like model? Our goal is to describe models that are finite, invariant/ equivariant (up to limitations imposed by the finiteness of the model) and provably complete in the sense of approximation theory.

Our contribution is three-fold:

-

In Sect. 2 we consider general compact groups and approximations by shallow networks. Using the classical polynomial invariant theory, we describe a general construction of shallow networks with an extra polynomial layer which are exactly invariant/equivariant and complete (Propositions 2.3, 2.4). Then, we discuss how this construction can be improved using the idea of polarization and a theorem of Weyl (Propositions 2.5, 2.7). Finally, as a particular illustration of the “intrinsic” framework, we consider maps invariant with respect to the symmetric group \(S_N\), and describe a corresponding neural network model which is \(S_N\)-invariant and complete (Theorem 2.4). This last result is based on another theorem of Weyl.

-

In Sect. 3 we prove several versions of the universal approximation theorem for convolutional networks and groups of translations. The main novelty of these results is that we approximate maps f defined on the infinite-dimensional space of continuous signals on \({\mathbb {R}}^\nu \). Specifically, one of these versions (Theorem 3.1) states that a signal transformation \(f:L^2({\mathbb {R}}^\nu , {\mathbb {R}}^{d_V})\rightarrow L^2({\mathbb {R}}^\nu , {\mathbb {R}}^{d_U})\) can be approximated, in some natural sense, by convnets without pooling if and only if f is continuous and translationally-equivariant (here, by \(L^2({\mathbb {R}}^\nu , {\mathbb {R}}^{d})\) we denote the space of square-integrable functions \({\varvec{\Phi }}:{\mathbb {R}}^\nu \rightarrow {\mathbb {R}}^d\)). Another version (Theorem 3.2) states that a map \(f:L^2({\mathbb {R}}^\nu , {\mathbb {R}}^{d_V})\rightarrow {\mathbb {R}}\) can be approximated by convnets with pooling if and only if f is continuous.

-

In Sect. 4 we describe a convnet-like model which is a universal approximator for signal transformations \(f:L^2({\mathbb {R}}^2, {\mathbb {R}}^{d_V})\rightarrow L^2({\mathbb {R}}^2, {\mathbb {R}}^{d_U})\) equivariant with respect to the group SE(2) of rigid two-dimensional euclidean motions. We call this model charge–conserving convnet, based on a 2D quantum mechanical analogy (conservation of the total angular momentum). The crucial element of the construction is that the operation of the network is consistent with the decomposition of the feature space into isotypic representations of SO(2). We prove in Theorem 4.1 that a transformation \(f:L^2({\mathbb {R}}^2, {\mathbb {R}}^{d_V})\rightarrow L^2({\mathbb {R}}^2, {\mathbb {R}}^{d_U})\) can be approximated by charge–conserving convnets if and only if f is continuous and SE(2)-equivariant.

2 Compact Groups and Shallow Approximations

In this section we give several results on invariant/equivariant approximations by neural networks in the context of compact groups, finite-dimensional representations, and shallow networks. We start by describing the standard group-averaging approach in Sect. 2.1. In Sect. 2.2 we describe an alternative approach, based on the invariant theory. In Sect. 2.3 we show how one can improve this approach using polarization. Finally, in Sect. 2.4 we describe an application of this approach to the symmetric group \(S_N\).

2.1 Approximations Based on Symmetrization

We start by recalling the universal approximation theorem, which will serve as a “template” for our invariant and equivariant analogs. There are several versions of this theorem (see the survey Pinkus [32]), we will use the general and easy-to-state version given in Pinkus [32].

Theorem 2.1

(Pinkus [32], Theorem 3.1) Let \(\sigma :{\mathbb {R}}\rightarrow {\mathbb {R}}\) be a continuous activation function that is not a polynomial. Let \(V={\mathbb {R}}^d\) be a real finite dimensional vector space. Then any continuous map \(f:V\rightarrow {\mathbb {R}}\) can be approximated, in the sense of uniform convergence on compact sets, by maps \({{\widehat{f}}}: V\rightarrow {\mathbb {R}}\) of the form

with some coefficients \(c_n,w_{ns},h_n\).

Throughout the paper, we assume, as in Theorem 2.1, that \(\sigma :{\mathbb {R}}\rightarrow {\mathbb {R}}\) is some (fixed) continuous activation function that is not a polynomial.

Also, as in this theorem, we will understand approximation in the sense of uniform approximation on compact sets, i.e. meaning that for any compact \(K\subset V\) and any \(\epsilon >0\) one can find an approximating map \({{\widehat{f}}}\) such that \(|f({\mathbf {x}})-{{\widehat{f}}}({\mathbf {x}})|\le \epsilon \) (or \(\Vert f({\mathbf {x}})-{{\widehat{f}}}({\mathbf {x}})\Vert \le \epsilon \) in the case of vector-valued f) for all \({\mathbf {x}}\in K\). In the case of finite-dimensional spaces V considered in the present section, one can equivalently say that there is a sequence of approximating maps \({{\widehat{f}}}_n\) uniformly converging to f on any compact set. Later, in Sects. 3, 4, we will consider infinite-dimensional signal spaces V for which such an equivalence does not hold. Nevertheless, we will use the concept of uniform approximation on compact sets as a guiding principle in our precise definitions of approximation in that more complex setting.

Now suppose that the space V carries a linear representation R of a group \(\Gamma \). Assuming V is finite-dimensional, this means that R is a homomorphism of \(\Gamma \) to the group of linear automorphisms of V:

In the present section we will assume that \(\Gamma \) is a compact group, meaning, as is customary, that \(\Gamma \) is a compact Hausdorff topological space and the group operations (multiplication and inversion) are continuous. Accordingly, the representation R is also assumed to be continuous. We remark that an important special case of compact groups are the finite groups (with respect to the discrete topology).

One important property of compact groups is the existence of a unique, both left- and right-invariant Haar measure normalized so that the total measure of \(\Gamma \) equals 1. Another property is that any continuous representation of a compact group on a separable (but possibly infinite-dimensional) Hilbert space can be decomposed into a countable direct sum of irreducible finite-dimensional representations. There are many group representation textbooks to which we refer the reader for details, see e.g. [38, 40, 44]. Accordingly, in the present section we will restrict ourselves to finite-dimensional representations. Later, in Sects. 3 and 4, we will consider the noncompact groups \({\mathbb {R}}^\nu \) and SE(\(\nu \)) and their natural representations on the infinite-dimensional space \(L^2({\mathbb {R}}^\nu )\), which cannot be decomposed into countably many irreducibles.

Motivated by applications to neural networks, in this section and Sect. 3 we will consider only representations over the field \({\mathbb {R}}\) of reals (i.e. with V a real vector space). Later, in Sect. 4, we will consider complexified spaces as this simplifies the exposition of the invariant theory for the group SO(2).

For brevity, we will call a vector space carrying a linear representation of a group \(\Gamma \) a \(\Gamma \)-module. We will denote by \(R_\gamma \) the linear automorphism obtained by applying R to \(\gamma \in \Gamma \). In particular, the property that R is a homomorphism between \(\Gamma \) and \(\text {GL}(V)\) can then be written as

The integral over the normalized Haar measure on a compact group \(\Gamma \) is denoted by \(\int _\Gamma \cdot \mathrm{d}\gamma \). We will denote vectors by boldface characters; scalar components of the vector \({\mathbf {x}}\) are denoted \(x_k\).

Recall that given a \(\Gamma \)-module V, we call a map \(f:V\rightarrow {\mathbb {R}}\) \(\Gamma \)-invariant (or simply invariant) if \(f(R_\gamma {\mathbf {x}})=f({\mathbf {x}})\) for all \(\gamma \in \Gamma \) and \({\mathbf {x}}\in V\). We state now the basic result on invariant approximation, obtained by symmetrization (group averaging).

Proposition 2.1

Let \(\Gamma \) be a compact group and V a finite-dimensional \(\Gamma \)-module. Then, any continuous invariant map \(f:V\rightarrow {\mathbb {R}}\) can be approximated by \(\Gamma \)-invariant maps \({\widehat{f}}:V\rightarrow {\mathbb {R}}\) of the form

where \(c_n,h_n\in {\mathbb {R}}\) are some coefficients and \(l_n\in V^*\) are some linear functionals on V, i.e. \(l_n({\mathbf {x}})=\sum _{k}w_{nk}x_k\).

Proof

It is clear that the map (2.2) is \(\Gamma \)-invariant, and we only need to prove the completeness part. Let K be a compact subset in V, and \(\epsilon >0\). Consider the symmetrization of K defined by \(K_{\mathrm {sym}}=\cup _{\gamma \in \Gamma } R_\gamma (K).\) Note that \(K_{\mathrm {sym}}\) is also a compact set, because it is the image of the compact set \(\Gamma \times K\) under the continuous map \((\gamma ,{\mathbf {x}})\mapsto R_\gamma {\mathbf {x}}\). We can use Theorem 2.1 to find a map \(f_1:V\rightarrow {\mathbb {R}}\) of the form \(f_1({\mathbf {x}})=\sum _{n=1}^N c_n\sigma (l_n({\mathbf {x}})+h_n)\) and such that \(|f({\mathbf {x}})-f_1({\mathbf {x}})|\le \epsilon \) on \(K_{\mathrm {sym}}\). Now consider the \(\Gamma \)-invariant group-averaged map \({{\widehat{f}}}({\mathbf {x}})=\int _{\Gamma } f_1(R_\gamma {\mathbf {x}})\mathrm{d}\gamma \). Then for any \({\mathbf {x}}\in K\),

where we have used the invariance of f and the fact that \(|f_1({\mathbf {x}})-f({\mathbf {x}})|\le \epsilon \) for \({\mathbf {x}}\in K_{\mathrm {sym}}\). \(\square \)

Now we establish a similar result for equivariant maps. Let V, U be two \(\Gamma \)-modules. For brevity, we will denote by R the representation of \(\Gamma \) in either of them (it will be clear from the context which one is meant). We call a map \(f:V\rightarrow U\) \(\Gamma \)-equivariant if \(f(R_\gamma {\mathbf {x}})=R_\gamma f({\mathbf {x}})\) for all \(\gamma \in \Gamma \) and \({\mathbf {x}}\in V\).

Proposition 2.2

Let \(\Gamma \) be a compact group and V and U two finite-dimensional \(\Gamma \)-modules. Then, any continuous \(\Gamma \)-equivariant map \(f:V\rightarrow U\) can be approximated by \(\Gamma \)-equivariant maps \({\widehat{f}}:V\rightarrow U\) of the form

with some coefficients \(h_n\in {\mathbb {R}}\), linear functionals \(l_n\in V^*\), and vectors \({\mathbf {y}}_n\in U\).

Proof

The proof is analogous to the proof of Proposition 2.1. Fix any norm \(\Vert \cdot \Vert \) in U. Given a compact set K and \(\epsilon >0\), we construct the compact set \(K_{\mathrm {sym}}=\cup _{\gamma \in \Gamma }R_\gamma (K)\) as before. Next, we find \(f_1:V\rightarrow U\) of the form \(f_1({\mathbf {x}})=\sum _{n=1}^N {\mathbf {y}}_n\sigma (l_n({\mathbf {x}})+h_n)\) and such that \(\Vert f({\mathbf {x}})-f_1({\mathbf {x}})\Vert \le \epsilon \) on \(K_{\mathrm {sym}}\) (we can do it, for example, by considering scalar components of f with respect to some basis in U, and approximating these components using Theorem 2.1). Finally, we define the symmetrized map by \({{\widehat{f}}}({\mathbf {x}})=\int _{\Gamma }R_{\gamma }^{-1}f_1(R_\gamma {\mathbf {x}}) \mathrm{d}\gamma \). This map is \(\Gamma \)-equivariant, and, for any \({\mathbf {x}}\in K\),

By continuity of R and compactness of \(\Gamma ,\) \(\max _{\gamma \in \Gamma }\Vert R_\gamma \Vert <\infty \), so we can approximate f by \({{\widehat{f}}}\) on K with any accuracy. \(\square \)

Propositions 2.1, 2.2 present the “symmetrization-based” approach to constructing invariant/equivariant approximations relying on the shallow neural network ansatz (2.1). The approximating expressions (2.2), (2.3) are \(\Gamma \)-invariant/equivariant and universal. Moreover, in the case of finite groups the integrals in these expressions are finite sums, i.e. these approximations consist of finitely many arithmetic operations and evaluations of the activation function \(\sigma \). In the case of infinite groups, the integrals can be approximated by sampling the group.

In the remainder of Sect. 2 we will pursue an alternative approach to symmetrize the neural network ansatz, based on the theory of polynomial invariants.

We finish this subsection with the following general observation. Suppose that we have two \(\Gamma \)-modules U, V, and U can be decomposed into \(\Gamma \)-invariant submodules: \(U=\bigoplus _\beta U_\beta ^{m_\beta }\) (where \(m_\beta \) denotes the multiplicity of \(U_\beta \) in U). Then a map \(f:V\rightarrow U\) is equivariant if and only if it is equivariant in each component \(U_\beta \) of the output space. Moreover, if we denote by \({\text {Equiv}}(V,U)\) the space of continuous equivariant maps \(f:V\rightarrow U\), then

This shows that the task of describing equivariant maps \(f:V\rightarrow U\) reduces to the task of describing equivariant maps \(f:V\rightarrow U_\beta \). In particular, describing vector-valued invariant maps \(f:V\rightarrow {\mathbb {R}}^{d_U}\) reduces to describing scalar-valued invariant maps \(f:V\rightarrow {\mathbb {R}}\).

2.2 Approximations Based on Polynomial Invariants

The invariant theory seeks to describe polynomial invariants of group representations, i.e. polynomial maps \(f:V\rightarrow {\mathbb {R}}\) such that \(f(R_\gamma {\mathbf {x}})\equiv f({\mathbf {x}})\) for all \({\mathbf {x}}\in V\). A fundamental result of the invariant theory is Hilbert’s finiteness theorem [16, 17] stating that for completely reducible representations, all the polynomial invariants are algebraically generated by a finite number of such invariants. In particular, this holds for any representation of a compact group.

Theorem 2.2

(Hilbert) Let \(\Gamma \) be a compact group and V a finite-dimensional \(\Gamma \)-module. Then there exist finitely many polynomial invariants \(f_1,\ldots ,f_{N_{\mathrm {inv}}}:V\rightarrow {\mathbb {R}}\) such that any polynomial invariant \(r:V\rightarrow {\mathbb {R}}\) can be expressed as

with some polynomial \({{\widetilde{r}}}\) of \({N_{\mathrm {inv}}}\) variables.

See, e.g., Kraft and Procesi [21] for a modern expositions of the invariant theory and Hilbert’s theorem. We refer to the set \(\{f_s\}_{s=1}^{N_{\mathrm {inv}}}\) from this theorem as a generating set of polynomial invariants (note that this set is not unique and \({N_{\mathrm {inv}}}\) may be different for different generating sets).

Thanks to the density of polynomials in the space of continuous functions, we can easily combine Hilbert’s theorem with the universal approximation theorem to obtain a complete invariant ansatz for invariant maps:

Proposition 2.3

Let \(\Gamma \) be a compact group, V a finite-dimensional \(\Gamma \)-module, and \(f_1,\ldots ,f_{N_{\mathrm {inv}}}:V\rightarrow {\mathbb {R}}\) a finite generating set of polynomial invariants on V (existing by Hilbert’s theorem). Then, any continuous invariant map \(f:V\rightarrow {\mathbb {R}}\) can be approximated by invariant maps \({\widehat{f}}:V\rightarrow {\mathbb {R}}\) of the form

with some parameter N and coefficients \(c_n,w_{ns},h_n\).

Proof

It is obvious that the expressions \({\widehat{f}}\) are \(\Gamma \)-invariant, so we only need to prove the completeness part.

Let us first show that the map f can be approximated by an invariant polynomial. Let K be a compact subset in V, and, like before, consider the symmetrized set \(K_{\mathrm {sym}}.\) By the Stone-Weierstrass theorem, for any \(\epsilon >0\) there exists a polynomial r on V such that \(|r({\mathbf {x}})-f({\mathbf {x}})|\le \epsilon \) for \({\mathbf {x}}\in K_{\mathrm {sym}}\). Consider the symmetrized function \(r_{\mathrm {sym}}({\mathbf {x}})=\int _{\Gamma }r(R_\gamma {\mathbf {x}}) \mathrm{d}\gamma \). Then the function \(r_{\mathrm {sym}}\) is invariant and \(|r_{\mathrm {sym}}({\mathbf {x}})-f({\mathbf {x}})|\le \epsilon \) for \({\mathbf {x}}\in K\). On the other hand, \(r_{\mathrm {sym}}\) is a polynomial, since \(r(R_\gamma {\mathbf {x}})\) is a fixed degree polynomial in \({\mathbf {x}}\) for any \(\gamma \).

Using Hilbert’s theorem, we express \(r_{\mathrm {sym}}({\mathbf {x}})={\widetilde{r}}(f_1({\mathbf {x}}), \ldots , f_{N_{\mathrm {inv}}}({\mathbf {x}}))\) with some polynomial \({\widetilde{r}}\).

It remains to approximate the polynomial \({\widetilde{r}}(z_1,\ldots ,z_{N_{\mathrm {inv}}})\) by an expression of the form \({\widetilde{f}}(z_1,\ldots ,z_{N_{\mathrm {inv}}})=\sum _{n=1}^Nc_n\sigma (\sum _{s=1}^{N_{\mathrm {inv}}}w_{ns}z_s+h_n)\) on the compact set \(\{(f_1({\mathbf {x}}), \ldots , f_{N_{\mathrm {inv}}}({\mathbf {x}}))|{\mathbf {x}}\in K\}\subset {\mathbb {R}}^{N_{\mathrm {inv}}}\). By Theorem 2.1, we can do it with any accuracy \(\epsilon \). Setting finally \({\widehat{f}}({\mathbf {x}})={\widetilde{f}}(f_1({\mathbf {x}}), \ldots , f_{N_{\mathrm {inv}}}({\mathbf {x}})),\) we obtain \({\widehat{f}}\) of the required form such that \(|{\widehat{f}}({\mathbf {x}})-f({\mathbf {x}})|\le 2\epsilon \) for all \({\mathbf {x}}\in K\). \(\square \)

Note that Proposition 2.3 is a generalization of Theorem 2.1; the latter is a special case obtained if the group is trivial (\(\Gamma =\{e\}\)) or its representation is trivial (\(R_\gamma {\mathbf {x}}\equiv {\mathbf {x}}\)), and in this case we can just take \(N_{\mathrm {inv}}=d\) and \(f_s({\mathbf {x}}) = x_s\).

In terms of neural network architectures, formula (2.5) can be viewed as a shallow neural network with an extra polynomial layer that precedes the conventional linear combination and nonlinear activation layers.

We extend now the obtained result to equivariant maps. Given two \(\Gamma \)-modules V and U, we say that a map \(f:V\rightarrow U\) is polynomial if \(l\circ f\) is a polynomial for any linear functional \(l:U\rightarrow {\mathbb {R}}\). We rely on the extension of Hilbert’s theorem to polynomial equivariants:

Lemma 2.1

Let \(\Gamma \) be a compact group and V and U two finite-dimensional \(\Gamma \)-modules. Then there exist finitely many polynomial invariants \(f_1,\ldots ,f_{N_{\mathrm {inv}}}:V\rightarrow {\mathbb {R}}\) and polynomial equivariants \(g_1,\ldots ,g_{N_{\mathrm {eq}}}:V\rightarrow U\) such that any polynomial equivariant \(r_{\mathrm {sym}}: V\rightarrow U\) can be represented in the form \(r_{\mathrm {sym}}({\mathbf {x}})=\sum _{m=1}^{N_{\mathrm {eq}}} g_m({\mathbf {x}}){{\widetilde{r}}}_m(f_1({\mathbf {x}}), \ldots , f_{N_{\mathrm {inv}}}({\mathbf {x}}))\) with some polynomials \({{\widetilde{r}}}_m\).

Proof

We give a sketch of the proof, see e.g. Section 4 of Worfolk [47] for details. A polynomial equivariant \(r_{\mathrm {sym}}: V\rightarrow U\) can be viewed as an invariant element of the space \({\mathbb {R}}[V]\otimes U\) with the naturally induced action of \(\Gamma \), where \({\mathbb {R}}[V]\) denotes the space of polynomials on V. The space \({\mathbb {R}}[V]\otimes U\) is in turn a subspace of the algebra \({\mathbb {R}}[V\oplus U^*],\) where \(U^*\) denotes the dual of U. By Hilbert’s theorem, all invariant elements in \({\mathbb {R}}[V\oplus U^*]\) can be generated as polynomials of finitely many invariant elements of this algebra. The algebra \({\mathbb {R}}[V\oplus U^*]\) is graded by the degree of the \(U^*\) component, and the corresponding decomposition of \({\mathbb {R}}[V\oplus U^*]\) into the direct sum of \(U^*\)-homogeneous spaces indexed by the \(U^*\)-degree \(d_{U^*}=0,1,\ldots ,\) is preserved by the group action. The finitely many polynomials generating all invariant polynomials in \({\mathbb {R}}[V\oplus U^*]\) can also be assumed to be \(U^*\)-homogeneous. Let \(\{f_s\}_{s=1}^{N_{\mathrm {inv}}}\) be those of these generating polynomials with \(d_{U^*}=0\) and \(\{g_s\}_{s=1}^{N_{\mathrm {eq}}}\) be those with \(d_{U^*}=1.\) Then, a polynomial in the generating invariants is \(U^*\)-homogeneous with \(d_{U^*}=1\) if and only if it is a linear combination of monomials \(g_sf_1^{n_1}f_2^{n_2}\cdots f_{N_{\mathrm {inv}}}^{n_{N_{\mathrm {inv}}}}.\) This yields the representation stated in the lemma. \(\square \)

We will refer to the set \(\{g_s\}_{s=1}^{N_{\mathrm {eq}}}\) as a generating set of polynomial equivariants.

The equivariant analog of Proposition 2.3 now reads:

Proposition 2.4

Let \(\Gamma \) be a compact group, V and U be two finite-dimensional \(\Gamma \)-modules. Let \(f_1,\ldots ,f_{N_{\mathrm {inv}}}:V\rightarrow {\mathbb {R}}\) be a finite generating set of polynomial invariants and \(g_1,\ldots ,g_{N_{\mathrm {eq}}}:V\rightarrow U\) be a finite generating set of polynomial equivariants (existing by Lemma 2.1). Then, any continuous equivariant map \(f:V\rightarrow U\) can be approximated by equivariant maps \({\widehat{f}}:V\rightarrow U\) of the form

with some parameter N and coefficients \(c_{mn},w_{mns}, h_{mn}.\)

Proof

The proof is similar to the proof of Proposition 2.3, with the difference that the polynomial map r is now vector-valued, its symmetrization is defined by \(r_{\mathrm{sym}}({\mathbf {x}})=\int _{\Gamma }R_\gamma ^{-1}r(R_\gamma {\mathbf {x}}) \mathrm{d}\gamma \), and Lemma 2.1 is used in place of Hilbert’s theorem. \(\square \)

We remark that, in turn, Proposition 2.4 generalizes Proposition 2.3; the latter is a special case obtained when \(U={\mathbb {R}}\), and in this case we just take \(N_{\mathrm {eq}}=1\) and \(g_1= 1.\)

2.3 Polarization and Multiplicity Reduction

The main point of Propositions 2.3 and 2.4 is that the representations described there use finite generating sets of invariants and equivariants \(\{f_s\}_{s=1}^{N_{\mathrm {inv}}}, \{g_m\}_{m=1}^{N_{\mathrm {eq}}}\) independent of the function f being approximated. However, the obvious drawback of these results is their non-constructive nature with regard to the functions \(f_s, g_m\). In general, finding generating sets is not easy. Moreover, the sizes \(N_{\mathrm {inv}},N_{\mathrm {eq}}\) of these sets in general grow rapidly with the dimensions of the spaces V, U.

This issue can be somewhat ameliorated using polarization and Weyl’s theorem. Suppose that a \(\Gamma \)-module V admits a decomposition into a direct sum of invariant submodules:

Here, \(V_\alpha ^{m_\alpha }\) is a direct sum of \(m_\alpha \) submodules isomorphic to \(V_\alpha \):

Any finite-dimensional representation of a compact group is completely reducible and has a decomposition of the form (2.6) with non-isomorphic irreducible submodules \(V_\alpha \). In this case the decomposition (2.6) is referred to as the isotypic decomposition, and the subspaces \(V_\alpha ^{m_\alpha }\) are known as isotypic components. Such isotypic components and their multiplicities \(m_\alpha \) are uniquely determined (though individually, the \(m_\alpha \) spaces \(V_\alpha \) appearing in the direct sum (2.7) are not uniquely determined in general, as subspaces in V).

For finite groups the number of non-isomorphic irreducibles \(\alpha \) is finite. In this case, if the module V is high-dimensional, then this necessarily means that (some of) the multiplicities \(m_\alpha \) are large. This is not so, in general, for infinite groups, since infinite compact groups have countably many non-isomorphic irreducible representations. Nevertheless, it is in any case useful to simplify the structure of invariants for high-multiplicity modules, which is what polarization and Weyl’s theorem do.

Below, we slightly abuse the terminology and speak of isotypic components and decompositions in the broader sense, assuming decompositions (2.6), (2.7) but not requiring the submodules \(V_\alpha \) to be irreducible or mutually non-isomorphic.

The idea of polarization is to generate polynomial invariants of a representation with large multiplicities from invariants of a representation with small multiplicities. Namely, note that in each isotypic component \(V_\alpha ^{m_\alpha }\) written as \(V_\alpha \otimes {\mathbb {R}}^{m_\alpha }\) the group essentially acts only on the first factor, \(V_\alpha \). So, given two isotypic \(\Gamma \)-modules of the same type, \(V_\alpha ^{m_\alpha }=V_\alpha \otimes {\mathbb {R}}^{m_\alpha }\) and \(V_\alpha ^{m'_\alpha }=V_\alpha \otimes {\mathbb {R}}^{m'_\alpha }\), the group action commutes with any linear map \(\mathbb {1}_{V_\alpha }\otimes A:V_\alpha ^{m_\alpha }\rightarrow V_\alpha ^{m'_\alpha }\), where A acts on the second factor, \(A:{\mathbb {R}}^{m_\alpha }\rightarrow {\mathbb {R}}^{m'_\alpha }\). Consequently, given two modules \(V=\bigoplus _\alpha V_\alpha ^{m_\alpha }\), \(V'=\bigoplus _\alpha V_\alpha ^{m'_\alpha }\) and a linear map \(A_\alpha :V_\alpha ^{m_\alpha }\rightarrow V_\alpha ^{m'_\alpha }\) for each \(\alpha \), the linear operator \({\mathbf {A}}:V\rightarrow V'\) defined by

will commute with the group action. In particular, if f is a polynomial invariant on \(V'\), then \(f\circ {\mathbf {A}}\) will be a polynomial invariant on V.

The fundamental theorem of Weyl states that it suffices to take \(m'_\alpha =\dim V_\alpha \) to generate in this way a complete set of invariants for V. We will state this theorem in the following form suitable for our purposes.

Theorem 2.3

(Weyl [46], sections II.4-5) Let F be the set of polynomial invariants for a \(\Gamma \)-module \(V'=\bigoplus _\alpha V_\alpha ^{\dim V_\alpha }.\) Suppose that a \(\Gamma \)-module V admits a decomposition \(V=\bigoplus _\alpha V_\alpha ^{m_\alpha }\) with the same \(V_\alpha \), but arbitrary multiplicities \(m_\alpha \). Then the polynomials \(\{f\circ {\mathbf {A}}\}_{f\in F}\) linearly span the space of polynomial invariants on V, i.e. any polynomial invariant f on V can be expressed as \(f({\mathbf {x}}) = \sum _{t=1}^T f_{t}({\mathbf {A}}_t{\mathbf {x}})\) with some polynomial invariants \(f_t\) on \(V'\).

Proof

A detailed exposition of polarization and a proof of Weyl’s theorem based on the Capelli–Deruyts expansion can be found in Weyl’s book or in Sections 7–9 of Kraft and Procesi [21]. We sketch the main idea of the proof.

Consider first the case where V has only one isotypic component: \(V=V_\alpha ^{m_\alpha }\). We may assume without loss of generality that \(m_\alpha >\dim V_\alpha \) (otherwise the statement is trivial). It is also convenient to identify the space \(V'=V_\alpha ^{\dim V_\alpha }\) with the subspace of V spanned by the first \(\dim V_\alpha \) components \(V_\alpha \). It suffices to establish the claimed expansion for polynomials f multihomogeneous with respect to the decomposition \(V=V_\alpha \oplus \ldots \oplus V_\alpha \), i.e. homogeneous with respect to each of the \(m_\alpha \) components. For any such polynomial, the Capelli–Deruyts expansion represents f as a finite sum \(f=\sum _{n}C_nB_nf\). Here \(C_n,B_n\) are linear operators on the space of polynomials on V, and they belong to the algebra generated by polarization operators on V. Moreover, for each n, the polynomial \({{\widetilde{f}}}_n=B_nf\) depends only on variables from the first \(\dim V_\alpha \) components of \(V=V_\alpha ^{m_\alpha }\), i.e. \({{\widetilde{f}}}_n\) is a polynomial on \(V'\). This polynomial is invariant, since polarization operators commute with the group action. Since \(C_n\) belongs to the algebra generated by polarization operators, we can then argue (see Proposition 7.4 in Kraft and Procesi [21]) that \(C_nB_nf\) can be represented as a finite sum \(C_nB_nf({\mathbf {x}})=\sum _{k}{{\widetilde{f}}}_n((\mathbb {1}_{V_\alpha }\otimes A_{kn}){\mathbf {x}})\) with some \(m_\alpha \times \dim V_\alpha \) matrices \(A_{kn}\). This implies the claim of the theorem in the case of a single isotypic component.

Generalization to several isotypic components is obtained by iteratively applying the Capelli–Deruyts expansion to each component. \(\square \)

Now we can give a more constructive version of Proposition 2.3:

Proposition 2.5

Let \((f_s)_{s=1}^{N_{\mathrm {inv}}}\) be a generating set of polynomial invariants for a \(\Gamma \)-module \(V'=\bigoplus _\alpha V_\alpha ^{\dim V_\alpha }.\) Suppose that a \(\Gamma \)-module V admits a decomposition \(V=\bigoplus _\alpha V_\alpha ^{m_\alpha }\) with the same \(V_\alpha \), but arbitrary multiplicities \(m_\alpha \). Then any continuous invariant map \(f:V\rightarrow {\mathbb {R}}\) can be approximated by invariant maps \({\widehat{f}}:V\rightarrow {\mathbb {R}}\) of the form

with some parameter T and coefficients \(c_{t},w_{st},h_{t},{\mathbf {A}}_{t},\) where each \({\mathbf {A}}_{t}\) is formed by an arbitrary collection of \((m_\alpha \times \dim V_\alpha )\)-matrices \(A_\alpha \) as in (2.8).

Proof

We follow the proof of Proposition 2.3 and approximate the function f by an invariant polynomial \(r_{\mathrm {sym}}\) on a compact set \(K_{\mathrm {sym}}\subset V\). Then, using Theorem 2.3, we represent

with some invariant polynomials \(r_t\) on \(V'\). Then, by Proposition 2.3, for each t we can approximate \(r_t({\mathbf {y}})\) on \({\mathbf {A}}_tK_{\mathrm {sym}}\) by an expression

with some \({\widetilde{c}}_{nt},{\widetilde{w}}_{nst},{\widetilde{h}}_{nt}.\) Combining (2.10) with (2.11), it follows that f can be approximated on \(K_{\mathrm {sym}}\) by

The final expression (2.9) is obtained now by removing the superfluous summation over n. \(\square \)

Proposition 2.5 is more constructive than Proposition 2.3 in the sense that the approximating ansatz (2.9) only requires us to know an isotypic decomposition \(V=\bigoplus _\alpha V_\alpha ^{m_\alpha }\) of the \(\Gamma \)-module under consideration and a generating set \((f_s)_{s=1}^{N_{\mathrm {inv}}}\) for the reference module \(V'=\bigoplus _\alpha V_\alpha ^{\dim V_\alpha }\). In particular, suppose that the group \(\Gamma \) is finite, so that there are only finitely many non-isomorphic irreducible modules \(V_\alpha \). Then, for any \(\Gamma \)-module V, the universal approximating ansatz (2.9) includes not more than \(CT\dim V\) scalar weights, with some constant C depending only on \(\Gamma \) (since \(\dim V=\sum _{\alpha }m_\alpha \dim V_\alpha \)).

We remark that in terms of the network architecture, formula (2.9) can be interpreted as the network (2.5) from Proposition 2.3 with an extra linear layer performing multiplication of the input vector by \({\mathbf {A}}_t\).

We establish now an equivariant analog of Proposition 2.5. We start with an equivariant analog of Theorem 2.3.

Proposition 2.6

Let \(V'=\bigoplus _\alpha V_\alpha ^{\dim V_\alpha }\) and G be the space of polynomial equivariants \(g:V'\rightarrow U\). Suppose that a \(\Gamma \)-module V admits a decomposition \(V=\bigoplus _\alpha V_\alpha ^{m_\alpha }\) with the same \(V_\alpha \), but arbitrary multiplicities \(m_\alpha \). Then, the functions \(\{g\circ {\mathbf {A}}\}_{g\in G}\) linearly span the space of polynomial equivariants \(g:V\rightarrow U\), i.e. any such equivariant can be expressed as \(g({\mathbf {x}}) = \sum _{t=1}^T g_{t}({\mathbf {A}}_t{\mathbf {x}})\) with some polynomial equivariants \(g_t:V'\rightarrow U\).

Proof

As mentioned in the proof of Lemma 2.1, polynomial equivariants \(g:V\rightarrow U\) can be viewed as invariant elements of the extended polynomial algebra \({\mathbb {R}}[V\oplus U^*]\). The proof of the theorem is then completely analogous to the proof of Theorem 2.3 and consists in applying the Capelli–Deruyts expansion to each isotypic component of the submodule V in \(V\oplus U^*\). \(\square \)

The equivariant analog of Proposition 2.5 now reads:

Proposition 2.7

Let \((f_s)_{s=1}^{N_{\mathrm {inv}}}\) be a generating set of polynomial invariants for a \(\Gamma \)-module \(V'=\bigoplus _\alpha V_\alpha ^{\dim V_\alpha }\), and \((g_s)_{s=1}^{N_\mathrm {eq}}\) be a generating sets of polynomial equivariants mapping \(V'\) to a \(\Gamma \)-module U. Let \(V=\bigoplus _\alpha V_\alpha ^{m_\alpha }\) be a \(\Gamma \)-module with the same \(V_\alpha \). Then any continuous equivariant map \(f:V\rightarrow U\) can be approximated by equivariant maps \({\widehat{f}}:V\rightarrow U\) of the form

with some coefficients \(c_{mt},w_{mst},h_{mt},{\mathbf {A}}_{t},\) where each \({\mathbf {A}}_{t}\) is given by a collection of \((m_\alpha \times \dim V_\alpha )\)-matrices \(A_\alpha \) as in (2.8).

Proof

As in the proof of Theorem 2.4, we approximate the function f by a polynomial equivariant \(r_{\mathrm {sym}}\) on a compact \(K_{\mathrm {sym}}\subset V\). Then, using Theorem 2.6, we represent

with some polynomial equivariants \(r_t:V'\rightarrow U\). Then, by Proposition 2.4, for each t we can approximate \(r_t({\mathbf {x}}')\) on \({\mathbf {A}}_tK_{\mathrm {sym}}\) by expressions

Using (2.13) and (2.14), f can be approximated on \(K_{\mathrm {sym}}\) by expressions

We obtain the final form (2.12) by removing the superfluous summation over n. \(\square \)

We remark that Proposition 2.7 improves the earlier Proposition 2.4 in the equivariant setting in the same sense in which Proposition 2.5 improves Proposition 2.3 in the invariant setting: construction of a universal approximator in the case of arbitrary isotypic multiplicities is reduced to the construction with particular multiplicities by adding an extra equivariant linear layer to the network.

2.4 The Symmetric Group \(S_N\)

Even with the simplification resulting from polarization, the general results of the previous section are not immediately useful, since one still needs to find the isotypic decomposition of the analyzed \(\Gamma \)-modules and to find the relevant generating invariants and equivariants. In this section we describe one particular case where the approximating expression can be reduced to a fully explicit form.

Namely, consider the natural action of the symmetric group \(S_N\) on \({\mathbb {R}}^N\):

where \({\mathbf {e}}_n\in {\mathbb {R}}^N\) is a coordinate vector and \(\gamma \in S_N\) is a permutation.

Let \(V={\mathbb {R}}^N\otimes {\mathbb {R}}^M\) and consider V as a \(S_N\)-module by assuming that the group acts on the first factor, i.e. \(\gamma \) acts on \({\mathbf {x}}=\sum _{n=1}^N {\mathbf {e}}_n\otimes {\mathbf {x}}_n\in V\) by

We remark that this module appears, for example, in the following scenario (cf. Zaheer et al. [49]). Suppose that f is a map defined on the set of sets \(X=\{{\mathbf {x}}_1,\ldots ,{\mathbf {x}}_N\}\) of N vectors from \({\mathbb {R}}^M\). We can identify the set X with the element \(\sum _{n=1}^N {\mathbf {e}}_n\otimes {\mathbf {x}}_n\) of V and in this way view f as defined on a subset of V. However, since the set X is unordered, it can also be identified with \(\sum _{n=1}^N {\mathbf {e}}_{\gamma (n)}\otimes {\mathbf {x}}_n\) for any permutation \(\gamma \in {\mathcal {S}}_N\). Accordingly, if the map f is to be extended to the whole V, then this extension needs to be invariant with respect to the above action of \(S_N\).

We describe now an explicit complete ansatz for \(S_N\)-invariant approximations of functions on V. This is made possible by another classical theorem of Weyl and by a simple form of a generating set of permutation invariants on \({\mathbb {R}}^N\). We will denote by \(x_{nm}\) the coordinates of \({\mathbf {x}}\in V\) with respect to the canonical basis in V:

Theorem 2.4

Let \(V={\mathbb {R}}^N\otimes {\mathbb {R}}^M\) and \(f:V\rightarrow {\mathbb {R}}\) be a \(S_N\)-invariant continuous map. Then f can be approximated by \(S_N\)-invariant expressions

with some parameters \(T_1,T_2\) and coefficients \(c_t,w_{qt},b_q,a_{tm},e_{q},{h}_{t}\).

Proof

It is clear that expression (2.15) is \(S_N\)-invariant and we only need to prove its completeness. The theorem of Weyl [46, Section II.3] states that a generating set of symmetric polynomials on V can be obtained by polarizing a generating set of symmetric polynomials \(\{f_p\}_{p=1}^{N_\mathrm {inv}}\) defined on a single copy of \({\mathbb {R}}^N\). Arguing as in Proposition 2.5, it follows that any \(S_N\)-invariant continuous map \(f:V\rightarrow {\mathbb {R}}\) can be approximated by expressions

where \(\widetilde{{\mathbf {A}}}_t{\mathbf {x}}=\sum _{n=1}^N\sum _{m=1}^M {{\widetilde{a}}}_{tm}x_{nm}{\mathbf {e}}_n.\) A well-known generating set of symmetric polynomials on \({\mathbb {R}}^N\) is the first N coordinate power sums:

It follows that f can be approximated by expressions

Using Theorem 2.1, we can approximate \({{\widetilde{f}}}_p(y)\) by expressions \(\sum _{q=1}^{T}{{\widetilde{d}}}_{pq}\sigma ({{\widetilde{b}}}_{pq}y+{{\widetilde{h}}}_{pq})\). It follows that (2.16) can be approximated by

Replacing the double summation over p, q by a single summation over q, we arrive at (2.15). \(\square \)

Note that expression (2.15) resembles the formula of the usual (non-invariant) feedforward network with two hidden layers of sizes \(T_1\) and \(T_2\):

Let us also compare ansatz (2.15) with the ansatz obtained by direct symmetrization (see Proposition (2.1)), which in our case has the form

From the application perspective, since \(|S_N|=N!,\) at large N this expression has prohibitively many terms and is therefore impractical without subsampling of \(S_N\), which would break the exact \(S_N\)-invariance. In contrast, ansatz (2.15) is complete, fully \(S_N\)-invariant and involves only \(O(T_1N(M+T_2))\) arithmetic operations and evaluations of \(\sigma \).

We remark that another complete permutation-invariant network architecture, using the \(\max \) function, was given in Qi et al. [34, Theorem 1].

3 Translations and Deep Convolutional Networks

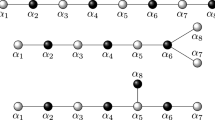

Convolutional neural networks (convnets, [22]) play a key role in many modern applications of deep learning. Such networks operate on input data having grid-like structure (usually, spatial or temporal) and consist of multiple stacked convolutional layers transforming initial object description into increasingly complex features necessary to recognize complex patterns in the data. The shape of earlier layers in the network mimics the shape of input data, but later layers gradually become “thinner” geometrically while acquiring “thicker” feature dimensions. We refer the reader to deep learning literature for details on these networks, e.g. see Chapter 9 in Goodfellow et al. [12] for an introduction.

There are several important concepts associated with convolutional networks, in particular weight sharing (which ensures approximate translation equivariance of the layers with respect to grid shifts); locality of the layer operation; and pooling. Locality means that the layer output at a certain geometric point of the domain depends only on a small neighborhood of this point. Pooling is a grid subsampling that helps reshape the data flow by removing excessive spatial detalization. Practical usefulness of convnets stems from the interplay between these various elements of convnet design.

From the perspective of the main topic of the present work—group invariant/equivariant networks—we are mostly interested in invariance/equivariance of convnets with respect to Lie groups such as the group of translations or the group of rigid motions (to be considered in Sect. 4), and we would like to establish relevant universal approximation theorems. However, we first point out some serious difficulties that one faces when trying to formulate and prove such results.

Lack of symmetry in finite computational models Practically used convnets are finite models; in particular they operate on discretized and bounded domains that do not possess the full symmetry of the spaces \({\mathbb {R}}^d\). While the translational symmetry is partially preserved by discretization to a regular grid, and the group \({\mathbb {R}}^d\) can be in a sense approximated by the groups \((\lambda {\mathbb {Z}})^d\) or \((\lambda {\mathbb {Z}}_n)^d\), one cannot reconstruct, for example, the rotational symmetry in a similar way. If a group \(\Gamma \) is compact, then, as discussed in Sect. 2, we can still obtain finite and fully \(\Gamma \)-invariant/equivariant computational models by considering finite-dimensional representations of \(\Gamma \), but this is not the case with noncompact groups such as \({\mathbb {R}}^d\). Therefore, in the case of the group \({\mathbb {R}}^d \) (and the group of rigid planar motions considered later in Sect. 4), we will need to prove the desired results on invariance/eqiuvariance and completeness of convnets only in the limit of infinitely large domain and infinitesimal grid spacing.

Erosion of translation equivariance by pooling Pooling reduces the translational symmetry of the convnet model. For example, if a few first layers of the network define a map equivariant with respect to the group \((\lambda {\mathbb {Z}})^2\) with some spacing \(\lambda \), then after pooling with stride m the result will only be equivariant with respect to the subgroup \((m\lambda {\mathbb {Z}})^2\). (We remark in this regard that in practical applications, weight sharing and accordingly translation equivariance are usually only important for earlier layers of convolutional networks.) Therefore, we will consider separately the cases of convnets without or with pooling; the \({\mathbb {R}}^d\)-equivariance will only apply in the former case.

In view of the above difficulties, in this section we will give several versions of the universal approximation theorem for convnets, with different treatments of these issues.

In Sect. 3.1 we prove a universal approximation theorem for a single non-local convolutional layer on a finite discrete grid with periodic boundary conditions (Proposition 3.1). This basic result is a straightforward consequence of the general Proposition 2.2 when applied to finite abelian groups.

In Sect. 3.2 we prove the main result of Sect. 3, Theorem 3.1. This theorem extends Proposition 3.1 in several important ways. First, we will consider continuum signals, i.e. assume that the approximated map is defined on functions on \({\mathbb {R}}^n\) rather than on functions on a discrete grid. This extension will later allow us to rigorously formulate a universal approximation theorem for rotations and euclidean motions in Sect. 4. Second, we will consider stacked convolutional layers and assume each layer to act locally (as in convnets actually used in applications). However, the setting of Theorem 3.2 will not involve pooling, since, as remarked above, pooling destroys the translation equivariance of the model.

In Sect. 3.3 we prove Theorem 3.2, relevant for convnets most commonly used in practice. Compared to the setting of Sect. 3.2, this computational model will be spatially bounded, will include pooling, and will not assume translation invariance of the approximated map.

3.1 Finite Abelian Groups and Single Convolutional Layers

We consider a group

where \({\mathbb {Z}}_n={\mathbb {Z}}/(n{\mathbb {Z}})\) is the cyclic group of order n. Note that the group \(\Gamma \) is abelian and conversely, by the fundamental theorem of finite abelian groups, any such group can be represented in the form (3.1).

We consider the “input” module \(V={\mathbb {R}}^{\Gamma }\otimes {\mathbb {R}}^{d_V}\) and the “output” module \(U={\mathbb {R}}^{\Gamma }\otimes {\mathbb {R}}^{d_U}\), with some finite dimensions \(d_V, d_U\) and with the natural representation of \(\Gamma \):

We will denote elements of V, U by boldface characters \({\varvec{\Phi }}\) and interpret them as \(d_V\)- or \(d_U\)-component signals defined on the set \(\Gamma \). For example, in the context of 2D image processing we have \(\nu =2\) and the group \(\Gamma ={\mathbb {Z}}_{n_1}\times {\mathbb {Z}}_{n_2}\) corresponds to a discretized rectangular image with periodic boundary conditions, where \(n_1,n_2\) are the geometric sizes of the image while \(d_V\) and \(d_U\) are the numbers of input and output features, respectively (in particular, if the input is a usual RGB image, then \(d_V=3\)).

Denote by \(\Phi _{\theta k}\) the coefficients in the expansion of a vector \({\varvec{\Phi }}\) from V or U over the standard product bases in these spaces:

We describe now a complete equivariant ansatz for approximating \(\Gamma \)-equivariant maps \(f:V\rightarrow U\). Thanks to decomposition (2.4), we may assume without loss that \(d_U=1\). By (3.2), any map \(f:V\rightarrow U\) is then specified by the coefficients \(f({\varvec{\Phi }})_{\theta }(\equiv f({\varvec{\Phi }})_{\theta ,1})\in {\mathbb {R}}\) as \({\varvec{\Phi }}\) runs over V and \(\theta \) runs over \(\Gamma \).

Proposition 3.1

Any continuous \(\Gamma \)-equivariant map \(f:V\rightarrow U\) can be approximated by \(\Gamma \)-equivariant maps \({\widehat{f}}:V\rightarrow U\) of the form

where \({\varvec{\Phi }} =\sum _{\gamma \in \Gamma }\sum _{k=1}^{d_V}\Phi _{\gamma k}{\mathbf {e}}_{\gamma }\otimes {\mathbf {e}}_{k}\), N is a parameter, and \(c_n,w_{n\theta k}, h_n\) are some coefficients.

Proof

We apply Proposition 2.2 with \(l_n({\varvec{\Phi }})=\sum _{\theta '\in \Gamma }\sum _{k=1}^{d_V}w'_{n\theta ' k}\Phi _{\theta ' k}\) and \({\mathbf {y}}_n=\sum _{\varkappa \in \Gamma }y_{n\varkappa }{\mathbf {e}}_{\varkappa }\), and obtain the ansatz

where

By linearity of the expression on the r.h.s. of (3.3), it suffices to check that each \({\mathbf {a}}_{\varkappa n}\) can be written in the form

But this expression results if we make in (3.4) the substitutions \(\gamma =\varkappa -\gamma ', \theta =\theta '-\varkappa \) and \(w_{n\theta k}=w'_{n,\theta +\varkappa ,k}.\) \(\square \)

The expression (3.3) resembles the standard convolutional layer without pooling as described, e.g., in Goodfellow et al. [12]. Specifically, this expression can be viewed as a linear combination of N scalar filters obtained as compositions of linear convolutions with pointwise non-linear activations. An important difference with the standard convolutional layers is that the convolutions in (3.3) are non-local, in the sense that the weights \(w_{n\theta k}\) do not vanish at large \(\theta \). Clearly, this non-locality is inevitable if approximation is to be performed with just a single convolutional layer.

We remark that it is possible to use Proposition 2.4 to describe an alternative complete \(\Gamma \)-equivariant ansatz based on polynomial invariants and equivariants. However, this approach seems to be less efficient because it is relatively difficult to specify a small explicit set of generating polynomials for abelian groups (see, e.g. Schmid [36] for a number of relevant results). Nevertheless, we will use polynomial invariants of the abelian group SO(2) in our construction of “charge-conserving convnet” in Sect. 4.

3.2 Continuum Signals and Deep Convnets

In this section we extend Proposition 3.1 in several ways.

First, instead of the group \({\mathbb {Z}}_{n_1}\times \cdots \times {\mathbb {Z}}_{n_\nu }\) we consider the group \(\Gamma ={\mathbb {R}}^\nu \). Accordingly, we will consider infinite-dimensional \({\mathbb {R}}^\nu \)-modules

with some finite \(d_V, d_U\). Here, \(L^2({\mathbb {R}}^\nu ,{\mathbb {R}}^{d})\) is the Hilbert space of maps \({\varvec{\Phi }}:{\mathbb {R}}^\nu \rightarrow {\mathbb {R}}^{d}\) with \(\int _{{\mathbb {R}}^d}|{\varvec{\Phi }}(\gamma )|^2\mathrm{d}\gamma <\infty ,\) equipped with the standard scalar product \(\langle {\varvec{\Phi }},{\varvec{\Psi }}\rangle = \int _{{\mathbb {R}}^d} {\varvec{\Phi }}(\gamma )\cdot {\varvec{\Psi }}(\gamma ) \mathrm{d}\gamma \), where \({\varvec{\Phi }}(\gamma )\cdot {\varvec{\Psi }}(\gamma )\) denotes the scalar product of \({\varvec{\Phi }}(\gamma )\) and \({\varvec{\Psi }}(\gamma )\) in \({\mathbb {R}}^{d}\). The group \({\mathbb {R}}^\nu \) is naturally represented on V, U by

Throughout this section, \(R_\gamma \) will denote this representation of \({\mathbb {R}}^\nu \) on V or U. Compared to the setting of the previous subsection, we interpret the modules V, U as carrying now “infinitely extended” and “infinitely detailed” \(d_V\)- or \(d_U\)-component signals. We will be interested in approximating arbitrary \({\mathbb {R}}^\nu \)-equivariant continuous maps \(f:V\rightarrow U\).

The second extension is that we will perform this approximation using stacked convolutional layers with local action. Our approximation will be a finite computational model, and to define it we first need to apply a discretization and a spatial cutoff to vectors from V and U.

Let us first describe the discretization. For any grid spacing \(\lambda >0\), let \(V_\lambda \) be the subspace in V formed by signals \({\varvec{\Phi }}:{\mathbb {R}}^\nu \rightarrow {\mathbb {R}}^{d_V}\) constant on all cubes

where \({\mathbf {k}} = (k_1,\ldots ,k_\nu )\in {\mathbb {Z}}^\nu .\) Let \(P_\lambda \) be the orthogonal projector onto \(V_\lambda \) in V:

A function \({\varvec{\Phi }}\in V_\lambda \) can naturally be viewed as a function on the lattice \((\lambda {\mathbb {Z}})^\nu \), so that we can also view \(V_\lambda \) as a Hilbert space

with the scalar product \(\langle {\varvec{\Phi }},{\varvec{\Psi }}\rangle =\lambda ^\nu \sum _{\gamma \in (\lambda {\mathbb {Z}})^\nu }{\varvec{\Phi }}(\gamma )\cdot {\varvec{\Psi }}(\gamma )\). We define the subspaces \(U_\lambda \subset U\) similarly to the subspaces \(V_\lambda \subset V\).

Next, we define the spatial cutoff. For an integer \(L\ge 0\) we denote by \(Z_L\) the size-2L cubic subset of the grid \({\mathbb {Z}}^\nu \):

where \({\mathbf {k}}=(k_1,\ldots ,k_\nu )\in {\mathbb {Z}}^\nu \) and \(\Vert {\mathbf {k}}\Vert _\infty =\max _{n=1,\ldots ,\nu }|k_n|\). Let \(\lfloor \cdot \rfloor \) denote the standard floor function. For any \(\Lambda \ge 0\) (referred to as the spatial range or cutoff) we define the subspace \(V_{\lambda ,\Lambda }\subset V_\lambda \) by

Clearly, \(\dim V_{\lambda ,\Lambda }=(2\lfloor \Lambda /\lambda \rfloor +1)^\nu d_V.\) The subspaces \(U_{\lambda ,\Lambda }\subset U_\lambda \) are defined in a similar fashion. We will denote by \(P_{\lambda ,\Lambda }\) the linear operators orthogonally projecting V to \(V_{\lambda , \Lambda }\) or U to \(U_{\lambda ,\Lambda }\).

In the following, we will assume that the convolutional layers have a finite receptive field \(Z_{L_\mathrm {rf}}\)—a set of the form (3.7) with some fixed \(L_\mathrm {rf}>0\).

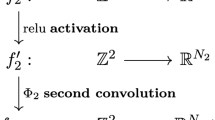

We can now describe our model of stacked convnets that will be used to approximate maps \(f:V\rightarrow U\) (see Fig. 1). Namely, our approximation will be a composition of the form

Here, the first step \(P_{\lambda ,\Lambda +(T-1)\lambda L_{\mathrm {rf}}}\) is an orthogonal finite-dimensional projection implementing the initial discretization and spatial cutoff of the signal. The maps \({{\widehat{f}}}_t\) are convolutional layers connecting intermediate spaces

with some feature dimensions \(d_t\) such that \(d_1=d_V\) and \(d_{T+1}=d_U\). The first intermediate space \(W_1\) is identified with the space \(V_{\lambda ,\Lambda +(T-1)\lambda L_{\mathrm {rf}}}\) (the image of the projector \(P_{\lambda ,\Lambda +(T-1)\lambda L_{\mathrm {rf}}}\) applied to V), while the end space \(W_{T+1}\) is identified with \(U_{\lambda ,\Lambda }\) (the respective discretization and cutoff of U).

The convolutional layers are defined as follows. Let \((\Phi _{\gamma n})_{{\mathop {n=1,\ldots ,d_t}\limits ^{\gamma \in Z_{\lfloor \Lambda /\lambda \rfloor +(T-t) L_{\mathrm {rf}}}}}}\) be the coefficients in the expansion of \({\varvec{\Phi }}\in W_t\) over the standard basis in \(W_t\), as in (3.2). Then, for \(t<T\) we define \({{\widehat{f}}}_t\) using the conventional “linear convolution followed by nonlinear activation” formula,

while in the last layer (\(t=T\)) we drop nonlinearities and only form a linear combination of values at the same point of the grid:

Note that the grid size \({\lfloor \Lambda /\lambda \rfloor +(T-t)L_{\mathrm {rf}}}\) associated with the space \(W_t\) is consistent with the rule (3.11) which evaluates the new signal \({\widehat{f}}({\varvec{\Phi }})\) at each node of the grid as a function of the signal \({\varvec{\Phi }}\) in the \(L_\mathrm {rf}\)-neighborhood of that node (so that the domain \(\lambda Z_{\lfloor \Lambda /\lambda \rfloor +(T-t) L_{\mathrm {rf}}}\) “shrinks” slightly as t grows).

Note that we can interpret the map \({{\widehat{f}}}\) as a map between V and U, since \(U_{\lambda , \Lambda }\subset U\).

Definition 3.1

A basic convnet is a map \({{\widehat{f}}}:V\rightarrow U\) defined by (3.9), (3.11), (3.12), and characterized by parameters \(\lambda , \Lambda , L_{\mathrm {rf}}, T, d_1,\ldots ,d_{T+1}\) and coefficients \(w_{n\theta k}^{(t)}\) and \(h_n^{(t)}\).

Note that, defined in this way, a basic convnet is a finite computational model in the following sense: while being a map between infinite-dimensional spaces V and U, all the steps in \({{\widehat{f}}}\) except the initial discretization and cutoff involve only finitely many arithmetic operations and evaluations of the activation function.

We aim to prove an analog of Theorem 2.1, stating that any continuous \({\mathbb {R}}^\nu \)-equivariant map \(f:V\rightarrow U\) can be approximated by basic convnets in the topology of uniform convergence on compact sets. However, there are some important caveats due to the fact that the space V is now infinite-dimensional.

First, in contrast to the case of finite-dimensional spaces, balls in \(L^2({\mathbb {R}}^\nu ,{\mathbb {R}}^{d_V})\) are not compact. The well-known general criterion states that in a complete metric space, and in particular in \(V=L^2({\mathbb {R}}^\nu ,{\mathbb {R}}^{d_V})\), a set is compact iff it is closed and totally bounded, i.e. for any \(\epsilon >0\) can be covered by finitely many \(\epsilon \)-balls.

The second point (related to the first) is that a finite-dimensional space is hemicompact, i.e., there is a sequence of compact sets such that any other compact set is contained in one of them. As a result, the space of maps \(f:{\mathbb {R}}^{n}\rightarrow {\mathbb {R}}^{m}\) is first-countable with respect to the topology of compact convergence, i.e. each point has a countable base of neighborhoods, and a point f is a limit point of a set S if and only if there is a sequence of points in S converging to f. In a general topological space, however, a limit point of a set S may not be representable as the limit of a sequence of points from S. In particular, the space \(L^2({\mathbb {R}}^\nu ,{\mathbb {R}}^{d_V})\) is not hemicompact and the space of maps \(f:L^2({\mathbb {R}}^\nu ,{\mathbb {R}}^{d_V})\rightarrow L^2({\mathbb {R}}^\nu ,{\mathbb {R}}^{d_U})\) is not first countable with respect to the topology of compact convergence, so that, in particular, we must distinguish between the notions of limit points of the set of convnets and the limits of sequences of convnets. We refer the reader, e.g., to the book [30] for a general discussion of this and other topological questions and in particular to §46 for a discussion of compact convergence.

When defining a limiting map, we would like to require the convnets to increase their resolution \(\frac{1}{\lambda }\) and range \(\Lambda \). At the same time, we will regard the receptive field and its range parameter \(L_\mathrm {rf}\) as arbitrary but fixed (the current common practice in applications is to use small values such as \(L_\mathrm {rf}=1\) regardless of the size of the network; see, e.g., the architecture of residual networks [13] providing state-of-the-art performance on image recognition tasks).

With all these considerations in mind, we introduce the following definition of a limit point of convnets.

Definition 3.2

With \(V=L^2({\mathbb {R}}^\nu ,{\mathbb {R}}^{d_V})\) and \(U=L^2({\mathbb {R}}^\nu ,{\mathbb {R}}^{d_U})\), we say that a map \(f:V\rightarrow U\) is a limit point of basic convnets if for any \(L_\mathrm {rf}\), any compact set \(K\subset V\), and any \(\epsilon>0, \lambda _0>0\) and \(\Lambda _0>0\) there exists a basic convnet \({{\widehat{f}}}\) with the receptive field parameter \(L_{\mathrm {rf}}\), spacing \(\lambda \le \lambda _0\) and range \(\Lambda \ge \Lambda _0\) such that \(\sup _{{\varvec{\Phi }}\in K}\Vert {{\widehat{f}}}({\varvec{\Phi }})-f({\varvec{\Phi }})\Vert <\epsilon \).

We can state now the main result of this section.

Theorem 3.1

A map \(f:V\rightarrow U\) is a limit point of basic convnets if and only if f is \({\mathbb {R}}^\nu \)-equivariant and continuous in the norm topology.

Before giving the proof of the theorem, we recall the useful notion of strong convergence of linear operators on Hilbert spaces. Namely, if \(A_n\) is a sequence of bounded linear operators on a Hilbert space and A is another such operator, then we say that the sequence \(A_n\) converges strongly to A if \(A_n{\varvec{\Phi }}\) converges to \(A{\varvec{\Phi }}\) for any vector \({\varvec{\Phi }}\) from this Hilbert space. More generally, strong convergence can be defined, by the same reduction, for any family \(\{A_\alpha \}\) of linear operators once the convergence of the family of vectors \(\{A_\alpha {\varvec{\Phi }}\}\) is specified.

An example of a strongly convergent family is the family of discretizing projectors \(P_\lambda \) defined in (3.6). These projectors converge strongly to the identity as the grid spacing tends to 0: \(P_{\lambda }{\varvec{\Phi }}{\mathop {\longrightarrow }\limits ^{\lambda \rightarrow 0}}{\varvec{\Phi }}.\) Another example is the family of projectors \(P_{\lambda ,\Lambda }\) projecting V onto the subspace \(V_{\lambda ,\Lambda }\) of discretized and cut-off signals defined in (3.8). It is easy to see that \(P_{\lambda ,\Lambda }\) converge strongly to the identity as the spacing tends to 0 and the cutoff is lifted, i.e. as \(\lambda \rightarrow 0\) and \(\Lambda \rightarrow \infty \). Finally, our representations \(R_\gamma \) defined in (3.5) are strongly continuous in the sense that \(R_{\gamma '}\) converges strongly to \(R_\gamma \) as \(\gamma '\rightarrow \gamma \).

A useful standard tool in proving strong convergence is the continuity argument: if the family \(\{A_\alpha \}\) is uniformly bounded, then the convergence \(A_\alpha {\varvec{\Phi }}\rightarrow A{\varvec{\Phi }}\) holds for all vectors \({\varvec{\Phi }}\) from the Hilbert space once it holds for a dense subset of vectors. This follows by approximating any \({\varvec{\Phi }}\) with \({\varvec{\Psi }}\)’s from the dense subset and applying the inequality \(\Vert A_\alpha {\varvec{\Phi }}- A{\varvec{\Phi }}\Vert \le \Vert A_\alpha {\varvec{\Psi }}- A{\varvec{\Psi }}\Vert +(\Vert A_\alpha \Vert +\Vert A\Vert )\Vert {\varvec{\Phi }}-{\varvec{\Psi }}\Vert \). In the sequel, we will consider strong convergence only in the settings where \(A_\alpha \) are orthogonal projectors or norm-preserving operators, so the continuity argument will be applicable.

Proof of Theorem 3.1

Necessity (a limit point of basic convnets is \({\mathbb {R}}^\nu \)-equivariant and continuous).

We start by noting that basic convnets \({{\widehat{f}}}:V\rightarrow U\), as defined by Definition 3.1, are continuous in the norm topology, because the initial projection \(P_{\lambda ,\Lambda +(T-1)\lambda L_{\mathrm {rf}}}\) is continuous, and the subsequent layers are finite-dimensional transformations that are continuous since they are composed of linear operations and continuous activation functions. The continuity of a limit point of convnets then follows from the continuity of convnets and their uniform convergence on compact sets by a standard argument (see Theorem 46.5 in Munkres [30]).

Let f denote a limit point of convnets. Let us prove the \({\mathbb {R}}^\nu \)-equivariance of f, i.e.

Let \(D_{M} =[-M,M]^\nu \subset {\mathbb {R}}^\nu \) with some \(M>0\), and \(P_{D_M}\) be the orthogonal projector in U onto the subspace of signals supported on the set \(D_{M}\). Then \(P_{D_M}\) converges strongly to the identity as \(M\rightarrow +\infty \). Hence, (3.13) will follow if we prove that for any M

Let \(\epsilon >0\). Let \(\gamma _\lambda \in (\lambda {\mathbb {Z}})^\nu \) be the nearest point to \(\gamma \in {\mathbb {R}}^\nu \) on the grid \((\lambda {\mathbb {Z}})^\nu \). Then, since \(R_{\gamma _\lambda }\) converges strongly to \(R_{\gamma }\) as \(\lambda \rightarrow 0\), there exist \(\lambda _0\) such that for any \(\lambda <\lambda _0\)

and

where we have also used the already proven continuity of f.

Observe that the discretization/cutoff projectors \(P_{\lambda , M}\) converge strongly to \(P_{D_M}\) as \(\lambda \rightarrow 0\), hence we can ensure that for any \(\lambda <\lambda _0\) we also have

Next, observe that basic convnets are partially translationally equivariant by our definition, in the sense that if the cutoff parameter \(\Lambda \) of the convnet is sufficiently large then

Indeed, note first that, away from the boundary of the domain \([-\Lambda ,\Lambda ]^\nu \), all the operations of the convnet (the initial discretizing projection and subsequent convolutional layers) are equivariant with respect to the subgroup \((\lambda {\mathbb {Z}})^\nu \subset {\mathbb {R}}^\nu \). Accordingly, since \(\gamma _\lambda \in (\lambda {\mathbb {Z}})^\nu \), we have \({{\widehat{f}}}(R_{\gamma _\lambda } {\varvec{\Phi }})(\lambda {\mathbf {k}})=R_{\gamma _\lambda }{{\widehat{f}}}({\varvec{\Phi }})(\lambda {\mathbf {k}})\) for any point \(\lambda {\mathbf {k}}\) such that both \(\lambda {\mathbf {k}}\) and \(\lambda {\mathbf {k}}-\gamma _\lambda \) belong to the convnet output domain \(\lambda Z_{\lfloor \Lambda /\lambda \rfloor }\). In other words, Eq. (3.18) holds as long as both sets \(\lambda Z_{\lfloor M/\lambda \rfloor }\) and \(\lambda Z_{\lfloor M/\lambda \rfloor }-\gamma _\lambda \) are subsets of \(\lambda Z_{\lfloor \Lambda /\lambda \rfloor }\). This condition is satisfied if we require that \(\Lambda >\Lambda _0\) with \(\Lambda _0=M+\Vert \gamma \Vert _\infty \).

Now, take the compact set \(K=\{R_\theta {\varvec{\Phi }}| \theta \in {\mathcal {N}}\},\) where \({\mathcal {N}}\subset {\mathbb {R}}^\nu \) is some compact set including 0 and all points \(\gamma _\lambda \) for \(\lambda <\lambda _0\). Then, by our definition of a limit point of basic convnets, there is a convnet \({{\widehat{f}}}\) with \(\lambda <\lambda _0\) and \(L>L_0\) such that for all \(\theta \in {\mathcal {N}}\) (and in particular for \(\theta =0\) or \(\theta =\gamma _\lambda \))

We can now write a bound for the difference of the two sides of (3.14):

Here in the first step we split the difference into several parts, in the second step we used the identity (3.18) and the fact that \(P_{\lambda ,M}, R_{\gamma _\lambda }\) are linear operators with the operator norm 1, and in the third step we applied the inequalities (3.15)–(3.17) and (3.19). Since \(\epsilon \) was arbitrary, we have proved (3.14).

Sufficiency (an \({\mathbb {R}}^\nu \)-equivariant and continuous map is a limit point of basic convnets). We start by proving a key lemma on the approximation capability of basic convnets in the special case when they have the degenerate output range, \(\Lambda =0\). In this case, by (3.9), the output space \(W_T=U_{\lambda ,0}\cong {\mathbb {R}}^{d_U},\) and the first auxiliary space \(W_1=V_{\lambda , (T-1)\lambda L_{\mathrm {rf}}}\subset V\).

Lemma 3.1

Let \(\lambda ,T\) be fixed and \(\Lambda =0\). Then any continuous map \(f:V_{\lambda ,(T-1)\lambda L_{\mathrm {rf}}}\rightarrow U_{\lambda ,0}\) can be approximated by basic convnets having spacing \(\lambda \), depth T, and range \(\Lambda =0\).

Note that this is essentially a finite-dimensional approximation result, in the sense that the input space \(V_{\lambda ,(T-1)\lambda L_{\mathrm {rf}}}\) is finite-dimensional and fixed. The approximation is achieved by choosing sufficiently large feature dimensions \(d_t\) and suitable weights in the intermediate layers.

Proof

The idea of the proof is to divide the operation of the convnet into two stages. The first stage is implemented by the first \(T-2\) layers and consists in approximate “contraction” of the input vectors, while the second stage, implemented by the remaining two layers, performs the actual approximation.

The contraction stage is required because the components of the input signal \({\varvec{\Phi }}_{\mathrm{in}}\in V_{\lambda ,(T-1)\lambda L_\mathrm {rf}}\cong L^2(\lambda Z_{(T-1) L_\mathrm {rf}}, {\mathbb {R}}^{d_V})\) are distributed over the large spatial domain \(\lambda Z_{(T-1) L_\mathrm {rf}}\). In this stage we will map the input signal to the spatially localized space \(W_{T-1}\cong L^2(\lambda Z_{L_\mathrm {rf}},{\mathbb {R}}^{d_{T-1}})\) so as to approximately preserve the information in the signal.