Abstract

Zero-adjusted generalized linear models (ZAGLMs) are used in many areas to fit variables that are discrete at zero and continuous on the positive real numbers. As in other classes of regression models, hypothesis testing inference in the class of ZAGLMs is usually performed using the likelihood ratio (LR) statistic. However, the LR test is substantially size distorted when the sample size is small. In this work, we derive an analytical Bartlett correction of the LR statistic. We also consider two bootstrap-based adjustments of the LR statistic. Monte Carlo simulation studies show that the improved LR tests have null rejection rates close to the nominal levels in small samples and that they have similar power. An application illustrates the usefulness of the improved statistics.



1 Introduction

Zero-adjusted regression models (ZAR models) are often used to fit variables that are discrete at zero and continuous on some interval of the positive real numbers. They are used in many areas such as insurance (Bortoluzzo et al. 2011), botany (Thomson et al. 2018), credit risk (Tong et al. 2016), microbiology (Rocha et al. 2017), biodiversity (Rubec et al. 2016) and meteorology (Zamani and Bazrafshan 2020). ZAR models are also known as zero-augmented regression models (Nogarotto et al. 2020) or zero-inflated regression models. The latter term is used especially when the continuous component of the response variable is beta distributed (Ospina and Ferrari 2012). However, zero-inflated regression models usually refer to models in which the response variable is discrete with more zeros than expected under a known probability distribution (Lambert 1992). Recent works related to ZAR models include Tomazella et al. (2019); Calsavara et al. (2019); Hashimoto et al. (2019); Pereira et al. (2020); Michaelis et al. (2020); Ye et al. (2021) and Silva et al. (2021).

Zero-adjusted generalized linear models (ZAGLMs) are a subclass of ZAR models in which the continuous component of the regression model is a generalized linear model (Dunn and Smyth 2018). The best-known members of the class of ZAGLMs are the zero-adjusted gamma regression models (ZAGA regression models, Tong et al. 2013) and the zero-adjusted inverse Gaussian regression models (ZAIG regression models, Heller et al. 2006).

Hypothesis testing inference in the class of ZAGLMs is usually performed using the likelihood ratio (LR) statistic, especially when the null hypothesis of interest involves more than one parameter. Under the null hypothesis, the LR statistic has an asymptotic chi-squared distribution (Sen et al. 2010). However, in many regression models, the chi-squared distribution is not a good approximation of the null distribution of the LR statistic when the sample size is small (Melo et al. 2009; Pereira and Cribari-Neto 2014). As a consequence, in these cases, the test based on the LR statistic is often size distorted.

An alternative for improving the chi-squared approximation to the exact null distribution of the LR statistic is the Bartlett correction (Bartlett 1937; Lawley 1956). The Bartlett correction usually brings the actual size of the test closer to the nominal level (Botter and Cordeiro 1997): in practice, the type I error rate of the corrected test is typically nearer to the fixed nominal value (usually 1%, 5% or 10%) than that of the uncorrected test. Many authors have derived Bartlett correction factors for specific regression models. Cordeiro (1983) derived the Bartlett correction factor for generalized linear models, and Botter and Cordeiro (1997) extended it to double generalized linear models. The correction was derived for mixed linear models and for beta regression by Melo et al. (2009) and Bayer and Cribari-Neto (2013), respectively. Moulton et al. (1993) and Das et al. (2018) showed that the Bartlett correction also improves the LR statistic in logistic regression. Recent works related to this topic include Loose et al. (2018); Araújo et al. (2020); Magalhães and Gallardo (2020); Rauber et al. (2020); Guedes et al. (2020, 2021) and Melo et al. (2022).

Few works have proposed small-sample adjustments to the LR statistic in ZAR models. Pereira and Cribari-Neto (2014) derived Skovgaard's adjustment (Skovgaard 2001) of the LR statistic for zero-adjusted beta regressions. For the same model, Loose et al. (2017) proposed a Bartlett correction based on the bootstrap (Efron 1979) instead of the traditional analytical correction. To the best of our knowledge, no study has proposed small-sample adjustments to the LR statistic in ZAGLMs. Moreover, previous works on ZAR models have not studied the behavior of the adjusted LR statistic when the null hypothesis involves parameters from more than one submodel of the ZAR model. In practice, it is often desirable to test whether the distribution of the response variable is related to a given covariate. In these cases, the null hypothesis involves parameters from all ZAGLM submodels.

The chief goal of our paper is to improve the LR statistic in the class of ZAGLMs. Two approaches are used. First, we derive an analytical Bartlett correction of the LR statistic. Second, we propose using two bootstrap-based adjustments of the LR statistic (Cordeiro and Cribari-Neto 2014). The performance of the adjusted statistics in small and medium-sized samples is compared with that of the usual LR statistic through extensive Monte Carlo simulation studies.

The remainder of the paper is organized as follows. Section 2 defines the ZAGLMs and presents some of their inferential aspects. The adjusted LR statistic is derived in Sect. 3, where the bootstrap corrections are also described. In Sect. 4, Monte Carlo simulation studies are performed to compare the finite sample behavior of the different LR statistics. Section 5 presents an application to real data. Concluding remarks are provided in Sect. 6.

2 Model

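Suppose that the univariate random variable \(Y \in \{0\} \cup (0, \infty )\) has a density of the form

\[ f_Y(y; \pi , \theta , \phi ) = \begin{cases} \pi , & y = 0,\\ (1-\pi ) f(y; \theta , \phi ), & y > 0, \end{cases} \tag{1} \]

where \(\pi = \mathbb {P}(Y = 0)\) and \(f(y; \theta , \phi )\) is a probability density function (PDF) of a positive continuous random variable. The expression (1) can be written as

\[ f_Y(y; \pi , \theta , \phi ) = \pi ^{\mathbb {I}_{\{0\}}^{(y)}} (1-\pi )^{1-\mathbb {I}_{\{0\}}^{(y)}} f(y; \theta , \phi )^{1-\mathbb {I}_{\{0\}}^{(y)}}, \tag{2} \]

with \(\mathbb {I}_{\{0\}}^{(y)} = 1\) if \(y = 0\) and \(\mathbb {I}_{\{0\}}^{(y)} = 0\) if \(y > 0\).

In our work, we define

\[ f(y; \theta , \phi ) = \exp \left\{ \phi \left[ y\theta - b(\theta ) + c(y)\right] + d_1(y) + d_2(\phi ) \right\} , \tag{3} \]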

where \(b(\cdot )\), \(c(\cdot )\), \(d_1(\cdot )\) and \(d_2(\cdot )\) are known functions, so that \(f(\cdot , \theta , \phi )\) is the PDF of a member of the exponential family (EF) with parameters \(\theta \) and \(\phi \), the canonical and the precision parameters, respectively (the inverse, \(\phi ^{-1}\), is the dispersion parameter). If \(Z \sim \) EF(\(\theta , \phi \)), then \(\mathbb {E}(Z) = db(\theta )/d\theta = \mu \) and Var\((Z) = \phi ^{-1} d^2b(\theta )/d\theta ^2 = \phi ^{-1} V(\mu )\), where \(V = V(\mu )\) is the variance function. Note that the factor of (2) that depends on \(\mathbb {I}_{\{0\}}^{(y)}\) alone, \(\pi ^{\mathbb {I}_{\{0\}}^{(y)}} (1-\pi )^{1-\mathbb {I}_{\{0\}}^{(y)}}\), is the probability function of a Bernoulli distribution with success probability \(\pi \), which is also a member of the EF. Consequently, \(\mathbb {E}(Y) = (1-\pi )\mu \) and Var\((Y) = (1-\pi )[\pi \mu ^2 + \phi ^{-1} V(\mu )]\). Table 1 lists the quantities in (3) for the EF distributions used in this paper.
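As a quick sanity check of the moment formulas above, the following Python sketch draws from a zero-adjusted gamma (ZAGA) distribution and compares simulated moments with \(\mathbb {E}(Y) = (1-\pi )\mu \) and Var\((Y) = (1-\pi )[\pi \mu ^2 + \phi ^{-1} V(\mu )]\). It assumes the gamma member of the EF parameterized by its mean \(\mu \) and precision \(\phi \), so that \(V(\mu ) = \mu ^2\) (shape \(\phi \), scale \(\mu /\phi \)); the function names and parameter values are illustrative only.

```python
import random


def zaga_sample(mu, phi, pi, rng):
    """Draw one observation from ZAGA(mu, phi, pi) (illustrative sketch)."""
    if rng.random() < pi:
        return 0.0  # point mass at zero, P(Y = 0) = pi
    # Gamma part with mean mu and precision phi: shape phi, scale mu / phi,
    # so its variance is mu**2 / phi = V(mu) / phi with V(mu) = mu**2.
    return rng.gammavariate(phi, mu / phi)


def zaga_moments(mu, phi, pi):
    """Closed-form E(Y) and Var(Y) of the zero-adjusted model, gamma case."""
    v_mu = mu**2  # gamma variance function V(mu)
    mean = (1.0 - pi) * mu
    var = (1.0 - pi) * (pi * mu**2 + v_mu / phi)
    return mean, var


if __name__ == "__main__":
    rng = random.Random(2024)
    mu, phi, pi = 20.0, 8.0, 0.3
    draws = [zaga_sample(mu, phi, pi, rng) for _ in range(100_000)]
    m = sum(draws) / len(draws)
    v = sum((d - m) ** 2 for d in draws) / (len(draws) - 1)
    print("closed form:", zaga_moments(mu, phi, pi))
    print("simulated:  ", (m, v))
```

With 100,000 draws, the simulated mean and variance should agree with the closed-form values up to Monte Carlo error.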

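We assume that model (1) has three systematic components, parameterized as \(\mu = \mu ({{\varvec{\beta }}})\), \(\phi = \phi ({{\varvec{\delta }}})\) and \(\pi = \pi ({{\varvec{\gamma }}})\). The systematic components are

\[ h_1(\mu ) = \eta _1 = {{\varvec{x}}}^{\top } {{\varvec{\beta }}}, \quad h_2(\phi ) = \eta _2 = {{\varvec{t}}}^{\top } {{\varvec{\delta }}}, \quad h_3(\pi ) = \eta _3 = {{\varvec{s}}}^{\top } {{\varvec{\gamma }}}, \tag{4} \]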

where \(h_1(\cdot )\) to \(h_3(\cdot )\) are the link functions, which are known, one-to-one and four times continuously differentiable, \(\eta _1\) to \(\eta _3\) are the linear predictors, \({{\varvec{x}}} = (x_1, \ldots , x_{p_{1}})^{\top }\), \({{\varvec{t}}} = (t_1, \ldots , t_{p_{2}})^{\top }\) and \({{\varvec{s}}} = (s_1, \ldots , s_{p_{3}})^{\top }\) are specified regressor vectors, and \({{\varvec{\beta }}} = (\beta _1, \ldots , \beta _{p_{1}})^{\top }\), \({{\varvec{\delta }}} = (\delta _1, \ldots , \delta _{p_{2}})^{\top }\) and \({{\varvec{\gamma }}} = (\gamma _1, \ldots , \gamma _{p_{3}})^{\top }\) are vectors of unknown parameters to be estimated. We also assume that \({{\varvec{\beta }}}\), \({{\varvec{\delta }}}\) and \({{\varvec{\gamma }}}\) are functionally independent of each other.

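Consider \(Y_{1}\), \(\ldots \), \(Y_{n}\) independent random variables from (1) and the parameter vector \({{\varvec{\lambda }}} = ({{\varvec{\beta }}}^{\top }, {{\varvec{\delta }}}^{\top }, {{\varvec{\gamma }}}^{\top })^{\top }\). The logarithm of the likelihood (log-likelihood) function based on a sample of n independent observations is given by

\[ l({{\varvec{\lambda }}}) = l_1({{\varvec{\beta }}}, {{\varvec{\delta }}}) + l_2({{\varvec{\gamma }}}), \tag{5} \]

where

\[ l_1({{\varvec{\beta }}}, {{\varvec{\delta }}}) = \sum _{\ell =1}^{n} \left( 1 - \mathbb {I}_{\{0\}}^{(y_{\ell })}\right) \left\{ \phi _{\ell } \left[ y_{\ell } \theta _{\ell } - b(\theta _{\ell }) + c(y_{\ell })\right] + d_1(y_{\ell }) + d_2(\phi _{\ell }) \right\} \]

and

\[ l_2({{\varvec{\gamma }}}) = \sum _{\ell =1}^{n} \left\{ \mathbb {I}_{\{0\}}^{(y_{\ell })} \log \pi _{\ell } + \left( 1 - \mathbb {I}_{\{0\}}^{(y_{\ell })}\right) \log (1 - \pi _{\ell }) \right\} . \]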

The function (5) is assumed to be regular with respect to all derivatives in \({{\varvec{\lambda }}}\). The score vector, obtained by differentiating the log-likelihood function \(l({{\varvec{\lambda }}})\) with respect to \({{\varvec{\lambda }}}\), can be written as \({{\varvec{U}}} = {{\varvec{U}}}({{\varvec{\lambda }}}) = \left( {{\varvec{U}}}_{{\varvec{\beta }}}({{\varvec{\lambda }}})^{\top }, {{\varvec{U}}}_{{\varvec{\delta }}}({{\varvec{\lambda }}})^{\top }, {{\varvec{U}}}_{{\varvec{\gamma }}}({{\varvec{\lambda }}})^{\top }\right) ^{\top }\), with

\[ {{\varvec{U}}}_{{\varvec{\beta }}}({{\varvec{\lambda }}}) = {{\varvec{X}}}^{\top } {{\varvec{\Phi }}} {{\varvec{W}}}^{1/2} {{\varvec{V}}}^{-1/2} {{\varvec{I}}}_{{\varvec{y}}} ({{\varvec{y}}} - {{\varvec{\mu }}}), \]

where \({{\varvec{X}}} = ({{\varvec{x}}}_1, \ldots , {{\varvec{x}}}_n)^{\top }\) is a specified \(n \times p_1\) matrix of full rank \(p_1 < n\), \({{\varvec{\Phi }}} =\) diag\(\{\phi _1, \ldots , \phi _n\}\), \({{\varvec{W}}} =\) diag\(\{w_1, \ldots , w_n\}\), \(w_{\ell } = (d\mu _{\ell }/d\eta _{1\ell })^2 V_{\ell }^{-1}\), \({{\varvec{V}}} =\) diag\(\{V_{1}, \ldots , V_{n}\}\), \({{\varvec{I}}}_{{\varvec{y}}} =\) diag\(\left\{ 1 - \mathbb {I}_{\{0\}}^{(y_{1})}, \ldots , 1 - \mathbb {I}_{\{0\}}^{(y_{n})} \right\} \), \({{\varvec{y}}} = (y_{1}, \ldots , y_{n})^{\top }\), \({{\varvec{\mu }}} = (\mu _{1}, \ldots , \mu _{n})^{\top }\),

\[ {{\varvec{U}}}_{{\varvec{\delta }}}({{\varvec{\lambda }}}) = {{\varvec{T}}}^{\top } {{\varvec{\Phi }}}_{1} {{\varvec{I}}}_{{\varvec{y}}} {{\varvec{\nu }}}, \]

where \({{\varvec{T}}} = ({{\varvec{t}}}_1, \ldots , {{\varvec{t}}}_n)^{\top }\) is a specified \(n \times p_2\) matrix of full rank \(p_2 < n\), \({{\varvec{\Phi }}}_{1} =\) diag\(\{\phi _{11}, \ldots , \phi _{1n}\}\), \(\phi _{1\ell } = d\phi _{\ell }/d\eta _{2\ell }\), \({{\varvec{\nu }}} = (\nu _{1}, \ldots , \nu _{n})^{\top }\), \(\nu _{\ell } = y_{\ell } \theta _{\ell } - b(\theta _{\ell }) + c(y_{\ell }) + d d_2(\phi _{\ell })/d\phi _{\ell }\),

\[ {{\varvec{U}}}_{{\varvec{\gamma }}}({{\varvec{\lambda }}}) = {{\varvec{S}}}^{\top } {{\varvec{W}}}_{\pi }^{1/2} {{\varvec{V}}}_{\pi }^{-1/2} ({{\varvec{y}}}^I - {{\varvec{\pi }}}), \]

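where \({{\varvec{S}}} = ({{\varvec{s}}}_1, \ldots , {{\varvec{s}}}_n)^{\top }\) is a specified \(n \times p_3\) matrix of full rank \(p_3 < n\), \({{\varvec{W}}}_{\pi } =\) diag\(\{w_{\pi 1}, \ldots , w_{\pi n}\}\), \(w_{\pi \ell } = (d\pi _{\ell }/d\eta _{3\ell })^2 V_{\pi \ell }^{-1}\), \({{\varvec{V}}}_{\pi } =\) diag\(\{V_{\pi 1}, \ldots , V_{\pi n}\}\), \(V_{\pi \ell } = \pi _{\ell } (1-\pi _{\ell })\), \({{\varvec{y}}}^I = (y_{1}^I, \ldots , y_{n}^I)^{\top }\), \(y_{\ell }^I = \mathbb {I}_{\{0\}}^{(y_{\ell })}\), \({{\varvec{\pi }}} = (\pi _{1}, \ldots , \pi _{n})^{\top }\). The partition \({{\varvec{\lambda }}} = ({{\varvec{\beta }}}^{\top }, {{\varvec{\delta }}}^{\top }, {{\varvec{\gamma }}}^{\top })^{\top }\) induces a corresponding partition of the Fisher information matrix, which is block-diagonal:

\[ {{\varvec{K}}} = {{\varvec{K}}}({{\varvec{\lambda }}}) = \text {diag}\left\{ {{\varvec{K}}}_{{\varvec{\beta }}}, {{\varvec{K}}}_{{\varvec{\delta }}}, {{\varvec{K}}}_{{\varvec{\gamma }}} \right\} = \text {diag}\left\{ {{\varvec{X}}}^{\top } {{\varvec{\Delta }}} {{\varvec{W}}} {{\varvec{\Phi }}} {{\varvec{X}}},\; - {{\varvec{T}}}^{\top } {{\varvec{\Delta }}} {{\varvec{D}}}_2 {{\varvec{\Phi }}}_{1}^{2} {{\varvec{T}}},\; {{\varvec{S}}}^{\top } {{\varvec{W}}}_{\pi } {{\varvec{S}}} \right\} , \]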

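where \({{\varvec{\Delta }}} =\) diag\(\{1 - \pi _1, \ldots , 1 - \pi _n\}\), \({{\varvec{D}}}_2 =\) diag\(\{d_{21}, \ldots , d_{2n}\}\), \(d_{2\ell } = d^2 d_2(\phi _{\ell })/d\phi _{\ell }^2\), \({{\varvec{\Phi }}}_{1}^2 =\) diag\(\{\phi _{11}^2, \ldots , \phi _{1n}^2\}\), \(\phi _{1\ell }^2 = (d\phi _{\ell }/d\eta _{2\ell })^2\). Thus, the parameters \({{\varvec{\beta }}}\), \({{\varvec{\delta }}}\) and \({{\varvec{\gamma }}}\) are globally orthogonal (Cox and Reid 1987), and their maximum likelihood estimates \({{\varvec{{\hat{\beta }}}}}\), \({{\varvec{{\hat{\delta }}}}}\) and \({{\varvec{{\hat{\gamma }}}}}\) are asymptotically independent. The former property considerably simplifies the calculation of the Bartlett correction, whereas the latter is desirable for inference. The Fisher scoring method can be used to compute \({{\varvec{{\hat{\beta }}}}}\), \({{\varvec{{\hat{\delta }}}}}\) and \({{\varvec{{\hat{\gamma }}}}}\) by iterating the following updates for \(m = 0, 1, 2, \ldots \):

\[ {{\varvec{\beta }}}^{(m+1)} = {{\varvec{\beta }}}^{(m)} + \left( {{\varvec{X}}}^{\top } {{\varvec{\Delta }}} {{\varvec{W}}} {{\varvec{\Phi }}} {{\varvec{X}}}\right) ^{-1} {{\varvec{U}}}_{{\varvec{\beta }}}, \quad {{\varvec{\delta }}}^{(m+1)} = {{\varvec{\delta }}}^{(m)} + \left( - {{\varvec{T}}}^{\top } {{\varvec{\Delta }}} {{\varvec{D}}}_2 {{\varvec{\Phi }}}_{1}^{2} {{\varvec{T}}}\right) ^{-1} {{\varvec{U}}}_{{\varvec{\delta }}}, \quad {{\varvec{\gamma }}}^{(m+1)} = {{\varvec{\gamma }}}^{(m)} + \left( {{\varvec{S}}}^{\top } {{\varvec{W}}}_{\pi } {{\varvec{S}}}\right) ^{-1} {{\varvec{U}}}_{{\varvec{\gamma }}}, \]

where the right-hand sides are evaluated at the current iterate \({{\varvec{\lambda }}}^{(m)}\).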
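Because the information matrix is block-diagonal, each block can be updated on its own. The Python sketch below (using NumPy, an assumption of this example) runs the Fisher scoring update for the \({{\varvec{\gamma }}}\) block only, assuming a logit link for \(\pi \). In that case \(d\pi /d\eta _3 = \pi (1-\pi ) = V_{\pi }\), so \({{\varvec{W}}}_{\pi } = {{\varvec{V}}}_{\pi }\), the score reduces to \({{\varvec{S}}}^{\top }({{\varvec{y}}}^I - {{\varvec{\pi }}})\), and Fisher scoring coincides with Newton-Raphson. The \({{\varvec{\beta }}}\) and \({{\varvec{\delta }}}\) blocks follow the same pattern with their own scores and information blocks and are not shown; `fit_zero_part` is a hypothetical name.

```python
import numpy as np


def fit_zero_part(S, y_zero, tol=1e-10, max_iter=100):
    """Fisher scoring for gamma in logit(pi) = S @ gamma (illustrative sketch).

    S      : (n, p3) regressor matrix of the zero-probability submodel
    y_zero : (n,) indicators, 1.0 if y_l == 0 and 0.0 otherwise
    """
    gamma = np.zeros(S.shape[1])
    for _ in range(max_iter):
        pi = 1.0 / (1.0 + np.exp(-(S @ gamma)))
        v_pi = pi * (1.0 - pi)               # V_pi; equals W_pi under the logit link
        score = S.T @ (y_zero - pi)          # U_gamma
        info = S.T @ (S * v_pi[:, None])     # K_gamma = S^T W_pi S
        step = np.linalg.solve(info, score)
        gamma = gamma + step
        if np.max(np.abs(step)) < tol:
            break
    return gamma


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    n = 5_000
    S = np.column_stack([np.ones(n), rng.uniform(size=n)])
    gamma_true = np.array([0.1, -1.0])       # intercept and slope as in Scenario 1
    pi_true = 1.0 / (1.0 + np.exp(-(S @ gamma_true)))
    y_zero = (rng.uniform(size=n) < pi_true).astype(float)
    print(fit_zero_part(S, y_zero))
```

Solving the linear system with `np.linalg.solve` avoids forming the inverse of \({{\varvec{K}}}_{{\varvec{\gamma }}}\) explicitly.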

In many problems, the restrictions imposed by the null hypothesis involve only a subset of the \({{\varvec{\beta }}}\), \({{\varvec{\delta }}}\) and \({{\varvec{\gamma }}}\) parameters. We partition the parameters as \({{\varvec{\beta }}} = \left( {{\varvec{\beta }}}_1^{\top }, {{\varvec{\beta }}}_2^{\top }\right) ^{\top }\), \({{\varvec{\delta }}} = \left( {{\varvec{\delta }}}_1^{\top }, {{\varvec{\delta }}}_2^{\top }\right) ^{\top }\) and \({{\varvec{\gamma }}} = \left( {{\varvec{\gamma }}}_1^{\top }, {{\varvec{\gamma }}}_2^{\top }\right) ^{\top }\), where \({{\varvec{\beta }}}_1 = \left( \beta _1, \ldots , \beta _{q_1}\right) ^{\top }\), \({{\varvec{\beta }}}_2 = \left( \beta _{q_1 + 1}, \ldots , \beta _{p_1}\right) ^{\top }\), \({{\varvec{\delta }}}_1 = \left( \delta _1, \ldots , \delta _{q_2}\right) ^{\top }\), \({{\varvec{\delta }}}_2 = \left( \delta _{q_2 + 1}, \ldots , \delta _{p_2}\right) ^{\top }\), \({{\varvec{\gamma }}}_1 = \left( \gamma _1, \ldots , \gamma _{q_3}\right) ^{\top }\) and \({{\varvec{\gamma }}}_2 = \left( \gamma _{q_3 + 1}, \ldots , \gamma _{p_3}\right) ^{\top }\). The partitions of \({{\varvec{\beta }}}\), \({{\varvec{\delta }}}\) and \({{\varvec{\gamma }}}\) induce the corresponding partitions \({{\varvec{X}}} = ({{\varvec{X}}}_1, {{\varvec{X}}}_2)\), \({{\varvec{T}}} = ({{\varvec{T}}}_1, {{\varvec{T}}}_2)\), \({{\varvec{S}}} = ({{\varvec{S}}}_1, {{\varvec{S}}}_2)\), \({{\varvec{U}}} = \left( {{\varvec{U}}}_{{\varvec{\beta }}_1}({{\varvec{\beta }}}_1, {{\varvec{\beta }}}_2)^{\top }, {{\varvec{U}}}_{{\varvec{\beta }}_2}({{\varvec{\beta }}}_1, {{\varvec{\beta }}}_2)^{\top }, {{\varvec{U}}}_{{\varvec{\delta }}_1}({{\varvec{\delta }}}_1, {{\varvec{\delta }}}_2)^{\top }, {{\varvec{U}}}_{{\varvec{\delta }}_2}({{\varvec{\delta }}}_1, {{\varvec{\delta }}}_2)^{\top }, {{\varvec{U}}}_{{\varvec{\gamma }}_1}({{\varvec{\gamma }}}_1, {{\varvec{\gamma }}}_2)^{\top }, {{\varvec{U}}}_{{\varvec{\gamma }}_2}({{\varvec{\gamma }}}_1, {{\varvec{\gamma }}}_2)^{\top }\right) ^{\top }\) and

\[ {{\varvec{K}}}_{{\varvec{\beta }}} = \begin{pmatrix} {{\varvec{K}}}_{{{\varvec{\beta }}}_{11}} & {{\varvec{K}}}_{{{\varvec{\beta }}}_{12}} \\ {{\varvec{K}}}_{{{\varvec{\beta }}}_{21}} & {{\varvec{K}}}_{{{\varvec{\beta }}}_{22}} \end{pmatrix}, \quad {{\varvec{K}}}_{{\varvec{\delta }}} = \begin{pmatrix} {{\varvec{K}}}_{{{\varvec{\delta }}}_{11}} & {{\varvec{K}}}_{{{\varvec{\delta }}}_{12}} \\ {{\varvec{K}}}_{{{\varvec{\delta }}}_{21}} & {{\varvec{K}}}_{{{\varvec{\delta }}}_{22}} \end{pmatrix}, \quad {{\varvec{K}}}_{{\varvec{\gamma }}} = \begin{pmatrix} {{\varvec{K}}}_{{{\varvec{\gamma }}}_{11}} & {{\varvec{K}}}_{{{\varvec{\gamma }}}_{12}} \\ {{\varvec{K}}}_{{{\varvec{\gamma }}}_{21}} & {{\varvec{K}}}_{{{\varvec{\gamma }}}_{22}} \end{pmatrix}, \]

where \({{\varvec{X}}}_1\), \({{\varvec{X}}}_2\), \({{\varvec{T}}}_1\), \({{\varvec{T}}}_2\), \({{\varvec{S}}}_1\) and \({{\varvec{S}}}_2\) are known matrices of full rank and dimensions \(n \times q_1\), \(n \times (p_1 - q_1)\), \(n \times q_2\), \(n \times (p_2 - q_2)\), \(n \times q_3\), \(n \times (p_3 - q_3)\), respectively, and \({{\varvec{K}}}_{{{\varvec{\beta }}}_{11}} = {{\varvec{X}}}_1^{\top } {{\varvec{\Delta }}} {{\varvec{W}}} {{\varvec{\Phi }}} {{\varvec{X}}}_1\), \({{\varvec{K}}}_{{{\varvec{\beta }}}_{12}} = {{\varvec{K}}}_{{{\varvec{\beta }}}_{21}}^{\top } = {{\varvec{X}}}_1^{\top } {{\varvec{\Delta }}} {{\varvec{W}}} {{\varvec{\Phi }}} {{\varvec{X}}}_2\), \({{\varvec{K}}}_{{{\varvec{\beta }}}_{22}} = {{\varvec{X}}}_2^{\top } {{\varvec{\Delta }}} {{\varvec{W}}} {{\varvec{\Phi }}} {{\varvec{X}}}_2\), \({{\varvec{K}}}_{{{\varvec{\delta }}}_{11}} = - {{\varvec{T}}}_1^{\top } {{\varvec{\Delta }}} {{\varvec{D}}}_2 {{\varvec{\Phi }}}_{1}^{2} {{\varvec{T}}}_1\), \({{\varvec{K}}}_{{{\varvec{\delta }}}_{12}} = {{\varvec{K}}}_{{{\varvec{\delta }}}_{21}}^{\top } = - {{\varvec{T}}}_1^{\top } {{\varvec{\Delta }}} {{\varvec{D}}}_2 {{\varvec{\Phi }}}_{1}^{2} {{\varvec{T}}}_2\), \({{\varvec{K}}}_{{{\varvec{\delta }}}_{22}} = - {{\varvec{T}}}_2^{\top } {{\varvec{\Delta }}} {{\varvec{D}}}_2 {{\varvec{\Phi }}}_{1}^{2} {{\varvec{T}}}_2\), \({{\varvec{K}}}_{{{\varvec{\gamma }}}_{11}} = {{\varvec{S}}}_1^{\top } {{\varvec{W}}}_{\pi } {{\varvec{S}}}_1\), \({{\varvec{K}}}_{{{\varvec{\gamma }}}_{12}} = {{\varvec{K}}}_{{{\varvec{\gamma }}}_{21}}^{\top } = {{\varvec{S}}}_1^{\top } {{\varvec{W}}}_{\pi } {{\varvec{S}}}_2\) and \({{\varvec{K}}}_{{{\varvec{\gamma }}}_{22}} = {{\varvec{S}}}_2^{\top } {{\varvec{W}}}_{\pi } {{\varvec{S}}}_2\).

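We are interested in testing

\[ {\mathcal {H}}: {{\varvec{\beta }}}_1 = {{\varvec{\beta }}}_1^{(0)}, \ {{\varvec{\delta }}}_1 = {{\varvec{\delta }}}_1^{(0)}, \ {{\varvec{\gamma }}}_1 = {{\varvec{\gamma }}}_1^{(0)} \quad \text {versus} \quad {\mathcal {A}}: {\mathcal {H}} \text { is false}, \tag{7} \]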

where \({{\varvec{\beta }}}_1^{(0)}\), \({{\varvec{\delta }}}_1^{(0)}\) and \({{\varvec{\gamma }}}_1^{(0)}\) are specified vectors of dimensions \(q_1\), \(q_2\) and \(q_3\), respectively. We assume that \(0 \le q_1 \le p_1\), \(0 \le q_2 \le p_2\) and \(0 \le q_3 \le p_3\); the trivial case \(q_1 = q_2 = q_3 = 0\) is excluded because the null hypothesis would then impose no restrictions. Let \({{\varvec{{\hat{\lambda }}}}} = \left( {{\varvec{{\hat{\beta }}}}}^{\top }, {{\varvec{{\hat{\delta }}}}}^{\top }, {{\varvec{{\hat{\gamma }}}}}^{\top }\right) ^{\top }\) be the unrestricted maximum likelihood estimates of \({{\varvec{\beta }}}\), \({{\varvec{\delta }}}\) and \({{\varvec{\gamma }}}\), and let \({{\varvec{{\tilde{\lambda }}}}} = \left( {{\varvec{\beta }}}_1^{(0)\top }, {{\varvec{{\tilde{\beta }}}}}_2^{\top }, {{\varvec{\delta }}}_1^{(0)\top }, {{\varvec{{\tilde{\delta }}}}}_2^{\top }, {{\varvec{\gamma }}}_1^{(0)\top }, {{\varvec{{\tilde{\gamma }}}}}_2^{\top } \right) ^{\top }\) be the restricted maximum likelihood estimates under \({\mathcal {H}}\). The likelihood ratio statistic for testing \({\mathcal {H}}\) is

\[ \text{ LR } = 2\left\{ l({{\varvec{{\hat{\lambda }}}}}) - l({{\varvec{{\tilde{\lambda }}}}}) \right\} , \]

which is, under \({\mathcal {H}}\) and some regularity conditions, asymptotically distributed as \(\chi _{q_1 + q_2 + q_3}^2\) with approximation error of order \(n^{-1}\).
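Because \(\pi \) enters the likelihood only through the Bernoulli factor in (2), the log-likelihood separates into a part depending on \(({{\varvec{\beta }}}, {{\varvec{\delta }}})\) and a part depending on \({{\varvec{\gamma }}}\). Hence, when \({\mathcal {H}}\) restricts only \({{\varvec{\gamma }}}\), the \(({{\varvec{\beta }}}, {{\varvec{\delta }}})\) maximizations are identical under \({\mathcal {H}}\) and \({\mathcal {A}}\) and cancel in LR. The Python sketch below illustrates this for a hypothetical special case: \(\text {logit}(\pi ) = \gamma _1 + \gamma _2 s\) with a single binary covariate s and \({\mathcal {H}}: \gamma _2 = 0\), where the maximum likelihood estimates of \(\pi \) are simply proportions of zeros. The counts are made up for illustration.

```python
import math


def bernoulli_loglik(zeros, total, p):
    """Log-likelihood of `zeros` zero responses out of `total` with P(Y = 0) = p."""
    ll = 0.0
    if zeros > 0:
        ll += zeros * math.log(p)
    if total - zeros > 0:
        ll += (total - zeros) * math.log(1.0 - p)
    return ll


def lr_zero_part_binary(zeros0, n0, zeros1, n1):
    """LR = 2{l(lambda_hat) - l(lambda_tilde)} for H: gamma_2 = 0 in
    logit(pi) = gamma_1 + gamma_2 * s with binary s (sketch).

    The (beta, delta) part of l cancels, so only the Bernoulli part remains.
    """
    # Unrestricted fit: one proportion of zeros per level of s.
    ll_hat = (bernoulli_loglik(zeros0, n0, zeros0 / n0)
              + bernoulli_loglik(zeros1, n1, zeros1 / n1))
    # Restricted fit under H: a common proportion of zeros.
    p_tilde = (zeros0 + zeros1) / (n0 + n1)
    ll_tilde = (bernoulli_loglik(zeros0, n0, p_tilde)
                + bernoulli_loglik(zeros1, n1, p_tilde))
    return 2.0 * (ll_hat - ll_tilde)


if __name__ == "__main__":
    # Hypothetical counts: 3 zeros out of 10 when s = 0, 7 out of 10 when s = 1.
    print(lr_zero_part_binary(3, 10, 7, 10))
```

The statistic would then be referred to the \(\chi _1^2\) distribution, since one restriction is imposed.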

3 Corrected likelihood ratio tests

It is known that under the null hypothesis and general regularity conditions, the likelihood ratio statistic, \(\text{ LR }\), has an asymptotic \(\chi _q^2\) distribution, where q is the number of restrictions imposed on the parameters by the null hypothesis. Lawley (1956) improved the \(\text{ LR }\) statistic by defining a statistic, say LR\(_\textrm{c}\), such that \(\mathbb {P}_{{\mathcal {H}}} \left( \text{ LR}_\textrm{c} \le w \right) = \mathbb {P} \left( \chi _q^2 \le w \right) + {\mathcal {O}}(n^{-2})\), while \(\mathbb {P}_{{\mathcal {H}}} \left( \text{ LR } \le w \right) = \mathbb {P} \left( \chi _q^2 \le w \right) + {\mathcal {O}}(n^{-1})\), where \(\mathbb {P}_{{\mathcal {H}}} \left( \cdot \right) \) denotes a probability computed under the null hypothesis. The improved statistic is given by LR\(_\textrm{c} = {\tilde{c}}^{-1} \text{ LR }\), where \({\tilde{c}} = q^{-1} \tilde{\mathbb {E}}(\text{ LR})\) is a consistent estimate of \(c = q^{-1} \mathbb {E}(\text{ LR})\) and \(\mathbb {E}(\text{ LR})\) is the expectation of the likelihood ratio statistic under the null hypothesis, computed up to order \(n^{-1}.\) The factor c is known as the Bartlett correction factor.

For the model presented in Sect. 2, define \(q = q_1 + q_2 + q_3\) and \(p = p_1 + p_2 + p_3.\) The expected likelihood ratio statistic to order \({\mathcal {O}}(n^{-1})\) for the test of hypotheses in (7) is \(\mathbb {E}(\text{ LR}) = q + \varepsilon _{p} - \varepsilon _{p - q}\), where

with \({{\varvec{1}}} = (1, \ldots , 1)_{n \times 1}^{\top }\), \({{\varvec{\Phi }}}_{2} =\) diag\(\{\phi _{21}, \ldots , \phi _{2n}\}\), \(\phi _{2\ell } = d^2\phi _{\ell }/d\eta _{2\ell }^2\), \({{\varvec{Z}}}_{\beta } = {{\varvec{X}}} {{\varvec{K}}}^{{{\varvec{\beta }}}, {{\varvec{\beta }}}} {{\varvec{X}}}^{\top }\), \({{\varvec{Z}}}_{\beta d} =\) diag\(\{z_{\beta , 11}, \ldots , z_{\beta , nn}\}\), \({{\varvec{Z}}}_{\delta } = {{\varvec{T}}} {{\varvec{K}}}^{{{\varvec{\delta }}}, {{\varvec{\delta }}}} {{\varvec{T}}}^{\top }\), \({{\varvec{Z}}}_{\delta d} =\) diag\(\{z_{\delta , 11}, \ldots , z_{\delta , nn}\}\), \({{\varvec{Z}}}_{\gamma } = {{\varvec{S}}} {{\varvec{K}}}^{{{\varvec{\gamma }}}, {{\varvec{\gamma }}}} {{\varvec{S}}}^{\top }\), \({{\varvec{Z}}}_{\gamma d} =\) diag\(\{z_{\gamma , 11}, \ldots , z_{\gamma , nn}\}\), \({{\varvec{D}}}_3 =\) diag\(\{d_{31}, \ldots , d_{3n}\}\), \(d_{3\ell } = d^3 d_2(\phi _{\ell })/d\phi _{\ell }^3\), \({{\varvec{Z}}}_{\beta }^{(2)} = {{\varvec{Z}}}_{\beta } \odot {{\varvec{Z}}}_{\beta }\), \({{\varvec{Z}}}_{\beta }^{(3)} = {{\varvec{Z}}}_{\beta }^{(2)} \odot {{\varvec{Z}}}_{\beta }\), \({{\varvec{Z}}}_{\delta }^{(2)} = {{\varvec{Z}}}_{\delta } \odot {{\varvec{Z}}}_{\delta }\), \({{\varvec{Z}}}_{\delta }^{(3)} = {{\varvec{Z}}}_{\delta }^{(2)} \odot {{\varvec{Z}}}_{\delta }\), \({{\varvec{Z}}}_{\gamma }^{(2)} = {{\varvec{Z}}}_{\gamma } \odot {{\varvec{Z}}}_{\gamma }\), \({{\varvec{Z}}}_{\gamma }^{(3)} = {{\varvec{Z}}}_{\gamma }^{(2)} \odot {{\varvec{Z}}}_{\gamma }\), and \(\odot \) denotes the elementwise (Hadamard) product of matrices. The terms \(\varepsilon _{\beta _{p_{1}}}\), \(\varepsilon _{\delta _{p_{2}}}\) and \(\varepsilon _{\gamma _{p_{3}}}\) are given in the Appendix. The term \(\varepsilon _{p}\) is of order \({\mathcal {O}}(n^{-1})\) and is evaluated at the true parameter point. For the sake of brevity, we do not discuss particular cases of (8); they can be obtained in the same way as in Botter and Cordeiro (1997).

The Bartlett-corrected LR test statistic for testing (7) is

\[ \text{ LR}_\textrm{c} = \frac{\text{ LR }}{1 + \zeta }, \]

where \(\zeta = \left( \varepsilon _p - \varepsilon _{p-q}\right) /q\) and \(\varepsilon _{p-q}\) is obtained from (8) by replacing \({{\varvec{X}}}\), \({{\varvec{T}}}\) and \({{\varvec{S}}}\) with \({{\varvec{X}}}_2\), \({{\varvec{T}}}_2\) and \({{\varvec{S}}}_2\), respectively.
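Once \(\varepsilon _p\) and \(\varepsilon _{p-q}\) have been evaluated from (8), applying the correction is simple arithmetic. The Python sketch below takes them as inputs (the numbers in the usage block are hypothetical, since (8) is not evaluated here) and computes LR\(_\textrm{c}\) together with \(\chi _q^2\) upper-tail p-values. To stay dependency-free, it computes the \(\chi ^2\) survival function with the standard recurrence for the regularized upper incomplete gamma function instead of calling a statistics library.

```python
import math


def chi2_sf(x, df):
    """Upper tail P(chi2_df > x) for integer df >= 1 and x >= 0.

    Uses Q(a + 1, y) = Q(a, y) + y**a * exp(-y) / Gamma(a + 1) for the
    regularized upper incomplete gamma function Q, with y = x / 2, a = df / 2.
    """
    y = x / 2.0
    if df % 2 == 0:
        a, q = 1.0, math.exp(-y)                 # Q(1, y)
    else:
        a, q = 0.5, math.erfc(math.sqrt(y))      # Q(1/2, y)
    while a < df / 2.0:
        q += y**a * math.exp(-y) / math.gamma(a + 1.0)
        a += 1.0
    return q


def bartlett_corrected_lr(lr, q, eps_p, eps_p_minus_q):
    """LR_c = LR / (1 + zeta) with zeta = (eps_p - eps_{p-q}) / q."""
    zeta = (eps_p - eps_p_minus_q) / q
    return lr / (1.0 + zeta)


if __name__ == "__main__":
    # Hypothetical inputs; the eps values would come from (8).
    lr, q = 9.0, 3
    lr_c = bartlett_corrected_lr(lr, q, eps_p=0.9, eps_p_minus_q=0.3)
    print(lr_c, chi2_sf(lr, q), chi2_sf(lr_c, q))
```

Since \(\zeta > 0\) when the LR statistic is inflated, the corrected statistic is smaller and its p-value larger, which counteracts the liberal behavior of the uncorrected test.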

The general expression for the Bartlett correction factor applies to a wide range of models, but in some cases it is very difficult, or even impossible, to obtain a closed form for a specific regression model. For instance, although Cordeiro et al. (1994) derived the Bartlett correction factor for dispersion models, it has no closed form for the simplex distribution. As (8) shows, a closed-form expression can be obtained for ZAGLMs. In the continuous case, i.e., \(\pi _{\ell } = 0, \forall \ell = 1, \ldots , n\), \(\varepsilon _{\gamma _{p_{3}}}\) vanishes, \({{\varvec{\Delta }}}\) is the identity matrix and the term \(\varepsilon _{p}\) in (8) coincides with equation (5) of Botter and Cordeiro (1997). Thus, our result generalizes theirs.

An alternative strategy for improving LR testing inference is the bootstrap. Suppose that \({{\varvec{{\tilde{\mu }}}}}\), \({{\varvec{{\tilde{\phi }}}}}\) and \({{\varvec{{\tilde{\pi }}}}}\) are the restricted MLEs of \({{\varvec{\mu }}}\), \({{\varvec{\phi }}}\) and \({{\varvec{\pi }}}\) obtained from the original dataset. In the parametric bootstrap, B pseudo-samples of size n are generated from ZAGLM(\({{\varvec{{\tilde{\mu }}}}}, {{\varvec{{\tilde{\phi }}}}}, {{\varvec{{\tilde{\pi }}}}}\)) and, for each \(b = 1, \ldots , B\), the unrestricted and restricted estimates \({{\varvec{{\hat{\lambda }}}}}^{(b)}\) and \({{\varvec{{\tilde{\lambda }}}}}^{(b)}\) are computed and the LR statistic is calculated as

\[ \text{ LR}^{(b)} = 2\left\{ l({{\varvec{{\hat{\lambda }}}}}^{(b)}) - l({{\varvec{{\tilde{\lambda }}}}}^{(b)}) \right\} . \]

The null hypothesis (7) is rejected if \(p^{\star } = (k + 1)/(B + 1)\) is smaller than or equal to the significance level \(\alpha \). Here, k is the number of bootstrap replications in which \(\text{ LR}^{(b)}\) is greater than the LR statistic computed using the original sample and \(p^{\star }\) is the bootstrap p-value.
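The bootstrap p-value above depends only on the observed statistic and the B bootstrap statistics. The Python sketch below implements \(p^{\star } = (k+1)/(B+1)\) and a generic bootstrap loop. The callables `simulate_null` and `lr_statistic` are hypothetical stand-ins for generating a pseudo-sample from ZAGLM(\({{\varvec{{\tilde{\mu }}}}}, {{\varvec{{\tilde{\phi }}}}}, {{\varvec{{\tilde{\pi }}}}}\)) and for fitting the restricted and unrestricted models; the toy usage replaces both with a squared standard normal draw, purely to exercise the loop.

```python
import random


def bootstrap_pvalue(lr_obs, lr_boot):
    """p* = (k + 1) / (B + 1), where k = #{b : LR^(b) > LR_obs}."""
    k = sum(1 for v in lr_boot if v > lr_obs)
    return (k + 1) / (len(lr_boot) + 1)


def parametric_bootstrap(lr_obs, simulate_null, lr_statistic, B, rng):
    """Generic parametric bootstrap loop (sketch).

    simulate_null(rng) -> pseudo-sample : hypothetical stand-in for drawing n
        observations from the model fitted under H.
    lr_statistic(sample) -> float : hypothetical stand-in for fitting the
        restricted and unrestricted models and returning LR^(b).
    """
    lr_boot = [lr_statistic(simulate_null(rng)) for _ in range(B)]
    return bootstrap_pvalue(lr_obs, lr_boot), lr_boot


if __name__ == "__main__":
    # Toy stand-in: each "LR^(b)" is a squared N(0, 1) draw (chi-squared, 1 df).
    rng = random.Random(1)
    p_star, _ = parametric_bootstrap(
        3.84, lambda r: r.gauss(0.0, 1.0), lambda z: z * z, B=999, rng=rng)
    print(p_star)
```

Returning the bootstrap statistics alongside \(p^{\star }\) lets the same B replications be reused for the bootstrap Bartlett correction described next.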

Rocke (1989) proposed using bootstrap resampling to estimate the Bartlett correction factor as follows: B parametric bootstrap samples are generated with the null hypothesis imposed, and the Bartlett-corrected bootstrap test statistic is computed as

\[ \text{ LR}_\textrm{boot2} = \frac{q \, \text{ LR }}{\overline{\text{ LR }}^{\star }}, \]

where \(\overline{\text{ LR }}^{\star } = B^{-1} \sum _{b=1}^{B} \text{ LR}^{(b)}\) is the average of the bootstrap statistics. Under \({\mathcal {H}}\), \(\text{ LR}_\textrm{boot2}\) is approximately \(\chi _q^2\) distributed. For more details on Bartlett corrections, including the bootstrap Bartlett adjustment, see Cordeiro and Cribari-Neto (2014).
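The bootstrap Bartlett adjustment replaces the analytical estimate of \(c = q^{-1}\mathbb {E}(\text{ LR})\) with \(\overline{\text{ LR }}^{\star }/q\), so that \(\text{ LR}_\textrm{boot2} = \text{ LR }/(\overline{\text{ LR }}^{\star }/q)\). A minimal Python sketch, with hypothetical bootstrap values in the usage line:

```python
def bootstrap_bartlett_lr(lr_obs, lr_boot, q):
    """LR_boot2 = q * LR / mean(LR^(1), ..., LR^(B)).

    mean(LR^(b)) / q estimates the Bartlett factor c = E(LR) / q under H,
    so the result is LR divided by the bootstrap estimate of c.
    """
    mean_boot = sum(lr_boot) / len(lr_boot)
    return q * lr_obs / mean_boot


if __name__ == "__main__":
    # Hypothetical: q = 3 restrictions, bootstrap mean 3.6, i.e. LR inflated by 20%.
    print(bootstrap_bartlett_lr(9.0, [3.0, 4.2], 3))
```

Unlike \(p^{\star }\), this statistic is still compared with \(\chi _q^2\) critical values; the bootstrap is used only to rescale it.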

4 Numerical results

This section presents the results of Monte Carlo simulation studies performed to evaluate the finite sample performance of the following tests: the likelihood ratio statistic (LR), the Bartlett-corrected statistic (\(\text{ LR}_\textrm{c}\)), the bootstrap test based on \(p^{\star }\) \((\text{ LR}_\textrm{boot1})\), and the Bartlett-corrected bootstrap statistic \((\text{ LR}_\textrm{boot2})\). For each considered scenario, we used 5,000 Monte Carlo replications and \(B = 1,000\). The simulations were performed using the Ox language (Doornik 2009).

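The following ZAGA regression model was considered in the simulations, for \(\ell = 1, \ldots , n\):

\[ \log \mu _{\ell } = \beta _1 + \beta _2 x_{2\ell } + \beta _3 x_{3\ell }, \quad \log \phi _{\ell } = \delta _1 + \delta _2 t_{2\ell } + \delta _3 t_{3\ell }, \quad \text {logit}(\pi _{\ell }) = \gamma _1 + \gamma _2 s_{2\ell } + \gamma _3 s_{3\ell }, \]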

in which we set \(x_{2l}=t_{2l}=s_{2l}\) and \(x_{3l}=t_{3l}=s_{3l}\). First, we evaluated the null rejection rates of the different tests at three nominal levels \((1\%, 5\% \text { and } 10\%)\) and for three sample sizes (50, 75 and 100). We did not consider sample sizes smaller than 50 because, in ZAR models, smaller samples often produce bootstrap samples with very few observations equal to zero or very few observations greater than zero. As a consequence, the parameters cannot be estimated in many bootstrap samples when the sample size is smaller than 50.

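Initially, we considered four scenarios for testing the hypothesis

\[ {\mathcal {H}}: \beta _3 = 0, \ \delta _3 = 0, \ \gamma _3 = 0 \quad \text {versus} \quad {\mathcal {A}}: {\mathcal {H}} \text { is false}. \tag{10} \]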

Note that in (10), we are testing whether the distribution of the response variable depends on the covariate \(x_3\). In Scenarios 1 to 3, the covariate values were taken as random draws from the standard uniform distribution. In Scenario 4, \(x_2\) and \(x_3\) were drawn from gamma and inverse Gaussian distributions, respectively, each with the same mean and variance as the standard uniform distribution (1/2 and 1/12). In all scenarios, the covariate values were kept fixed across the Monte Carlo replications.
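Matching the uniform mean \(m = 1/2\) and variance \(v = 1/12\) pins down the Scenario 4 covariate distributions: a gamma with shape \(m^2/v = 3\) and scale \(v/m = 1/6\), and an inverse Gaussian with mean \(m\) and shape \(m^3/v = 1.5\). The Python sketch below generates such covariates. The paper does not state which generators were used; here the inverse Gaussian draws use the transformation method of Michael, Schucany and Haas (1976), a choice made only for this illustration.

```python
import math
import random

UNIF_MEAN, UNIF_VAR = 0.5, 1.0 / 12.0  # mean and variance of U(0, 1)

# Gamma(shape k, scale s): mean k*s, variance k*s**2, so k = m**2/v and s = v/m.
GAMMA_SHAPE = UNIF_MEAN**2 / UNIF_VAR
GAMMA_SCALE = UNIF_VAR / UNIF_MEAN

# Inverse Gaussian(m, lam): mean m, variance m**3/lam, so lam = m**3/v.
IG_MEAN = UNIF_MEAN
IG_SHAPE = UNIF_MEAN**3 / UNIF_VAR


def rinvgauss(m, lam, rng):
    """One inverse Gaussian draw (Michael, Schucany and Haas 1976 method)."""
    y = rng.gauss(0.0, 1.0) ** 2
    x = (m + m * m * y / (2.0 * lam)
         - (m / (2.0 * lam)) * math.sqrt(4.0 * m * lam * y + (m * y) ** 2))
    # Keep x with probability m / (m + x); otherwise return m**2 / x.
    return x if rng.random() <= m / (m + x) else m * m / x


def moment_matched_covariates(n, rng):
    """x2 ~ gamma and x3 ~ inverse Gaussian, each with mean 1/2 and variance 1/12."""
    x2 = [rng.gammavariate(GAMMA_SHAPE, GAMMA_SCALE) for _ in range(n)]
    x3 = [rinvgauss(IG_MEAN, IG_SHAPE, rng) for _ in range(n)]
    return x2, x3


if __name__ == "__main__":
    x2, x3 = moment_matched_covariates(50, random.Random(123))
    print(min(x2), max(x2), min(x3), max(x3))
```

Unlike uniform covariates, both distributions are right-skewed and unbounded, which is presumably what makes Scenario 4 a harder case for the LR test.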

In Scenario 1, we considered \(\beta _1 = 3.0\), \(\beta _2 = 2.0\), \(\beta _3 = 0.0\), \(\delta _1 = 4.0\), \(\delta _2 = -2.0\), \(\delta _3 = 0.0\), \(\gamma _1 = 0.1\), \(\gamma _2 = -1.0\) and \(\gamma _3 = 0.0\). These parameter values yielded \(\mu \in (20.08,148.42)\), \(\phi \in (7.38,54.60)\), and \(\pi \in (0.289,0.525)\). In the second scenario, we changed the value of \(\gamma _1\) to 0.8, which yielded \(\pi \in (0.450,0.690)\). In the third scenario, the value of \(\delta _1\) was changed to 2.0, which yielded \(\phi \in (1.00,7.39)\). Finally, in Scenario 4, we changed the distribution used to generate the covariates as mentioned before.
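The reported ranges can be checked directly. Since \(\beta _3 = \delta _3 = \gamma _3 = 0\), only \(x_2 \in (0, 1)\) matters, and each linear predictor is monotone in \(x_2\), so the extremes occur at \(x_2 = 0\) and \(x_2 = 1\). The Python sketch below assumes log links for \(\mu \) and \(\phi \) and a logit link for \(\pi \), the links that reproduce the ranges reported above; the names are illustrative.

```python
import math


def logistic(eta):
    """Inverse of the logit link."""
    return 1.0 / (1.0 + math.exp(-eta))


def response_range(intercept, slope, inv_link):
    """Range of inv_link(intercept + slope * x) for x in [0, 1].

    The linear predictor is monotone in x, so the extremes are at x = 0 and x = 1.
    """
    lo, hi = sorted((intercept, intercept + slope))
    return inv_link(lo), inv_link(hi)


SCENARIOS = {
    "Scenario 1": {"beta": (3.0, 2.0), "delta": (4.0, -2.0), "gamma": (0.1, -1.0)},
    "Scenario 2": {"beta": (3.0, 2.0), "delta": (4.0, -2.0), "gamma": (0.8, -1.0)},
    "Scenario 3": {"beta": (3.0, 2.0), "delta": (2.0, -2.0), "gamma": (0.1, -1.0)},
}

if __name__ == "__main__":
    for name, par in SCENARIOS.items():
        mu = response_range(*par["beta"], math.exp)
        phi = response_range(*par["delta"], math.exp)
        pi = response_range(*par["gamma"], logistic)
        print(name, [round(v, 3) for v in mu + phi + pi])
```

For Scenario 1 this gives \(\mu \) between \(e^3\) and \(e^5\), \(\phi \) between \(e^2\) and \(e^4\), and \(\pi \) between logistic(\(-0.9\)) and logistic(0.1), matching the intervals in the text up to rounding.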

Table 2 presents the null rejection rates (in %) for the test of (10) in the four scenarios described above. The LR test is considerably liberal for \(n=50\); for example, its rejection rate reaches 4.00% at \(\alpha =1\%\) in Scenario 4. Even when \(n=100\), the rejection rates of the LR test were not close to the nominal levels in any scenario. In contrast, the three improved tests generally presented rejection rates close to the nominal levels, even when \(n=50\). For example, in Scenario 4, for \(\alpha =1\%\) and \(n=50\), the rejection rates of \(\text{ LR}_\textrm{c}\), \(\text{ LR}_\textrm{boot1}\) and \(\text{ LR}_\textrm{boot2}\) were 1.08%, 0.84% and 1.04%, respectively. There were some exceptions, mainly in Scenario 2, in which the rejection rates of the \(\text{ LR}_\textrm{boot1}\) test were not very close to the nominal levels.
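A rough yardstick for "close to the nominal level" (not part of the original study) is the Monte Carlo standard error of a rejection rate. With 5,000 replications, an estimated null rejection rate is a binomial proportion, so its standard error at nominal level \(\alpha \) is \(\sqrt{\alpha (1-\alpha )/5000}\): about 0.14, 0.31 and 0.42 percentage points at 1%, 5% and 10%. A minimal Python sketch:

```python
import math


def rejection_rate(pvalues, alpha):
    """Proportion of Monte Carlo replications in which the test rejects at level alpha."""
    return sum(p <= alpha for p in pvalues) / len(pvalues)


def mc_standard_error(alpha, replications):
    """Binomial standard error of a rejection rate whose true value is alpha."""
    return math.sqrt(alpha * (1.0 - alpha) / replications)


if __name__ == "__main__":
    for alpha in (0.01, 0.05, 0.10):
        se = mc_standard_error(alpha, 5000)
        print(f"alpha = {alpha:.2f}: SE = {100 * se:.2f} percentage points")
```

By this yardstick, a rate of 4.00% at the 1% level lies many standard errors away from the nominal value, whereas 1.08% and 1.04% are within one standard error.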

We also performed simulation studies for hypotheses involving a single parameter. We considered Scenario 1 and three different null hypotheses: \({\mathcal {H}}: \beta _3 = 0\), \({\mathcal {H}}: \delta _3 = 0\) and \({\mathcal {H}}: \gamma _3 = 0\). Table 3 presents the null rejection rates for these tests. The LR test is also considerably liberal for the two tests related to the continuous component of the model, but it performs well for the test related to the discrete component. In contrast, the improved LR tests have null rejection rates close to the nominal levels for all three hypotheses, even when \(n=50\).

In Tables 4, 5 and 6, we present simulation results on the non-null rejection rates (power) of the tests based on \(\text{ LR}_\textrm{c}\), \(\text{ LR}_\textrm{boot1}\) and \(\text{ LR}_\textrm{boot2}.\) The LR statistic is not included in this study because our simulations showed that it is oversized, which would make a power comparison misleading. We considered the following three settings:

- \({\mathcal {H}}: \beta _3 = 0, \delta _3 = 0, \gamma _3 = 0\) versus \({\mathcal {A}}\): at least one of \(\beta _3\), \(\delta _3\), \(\gamma _3\) is nonzero, with data generated under \(\beta _3=\delta _3=\gamma _3=\tau \) (Table 4);
- the same hypotheses, with data generated under \(\beta _3=\tau \) and \(\delta _3 = \gamma _3 = 0\) (Table 5);
- \({\mathcal {H}}: \beta _3 = 0\) versus \({\mathcal {A}}: \beta _3 \ne 0\), with data generated under \(\beta _3=\tau \) (Table 6),

where \(\tau \in \{-0.50, -0.25, 0.25, 0.50\}\). The remaining parameters were as in Scenario 1.

For the three tables, with fixed values of \(\alpha \), n and \(\tau \), the non-null rejection rates were similar in the three tests. As expected, for all cases, the non-null rejection rates increased as n and the absolute value of \(\tau \) grew.

5 Application

This section presents an application to real data using the LR test and its improved versions. The data are part of the work presented in Rocha et al. (2009) and refer to the production of mycotoxin FB\(_2\) in corn grains in Brazil. Fusarium verticillioides is a species of fungus that commonly produces mycotoxins in corn grains. When these substances are produced in high quantities, the corn grains become unfit for consumption.

The dataset consists of 200 unit samples, each composed of 30 g of corn grains. The response variable is the quantity of mycotoxin FB\(_2\) (measured in \(\mu \)g/g), and the covariates are the percentage of water activity (\(x_2\)) and the percentage of grains with F. verticillioides infection (\(x_3\)). A total of 51 out of the 200 unit samples did not contain mycotoxin FB\(_2\).

We fitted ZAGA and ZAIG regression models for the quantity of mycotoxin FB\(_2\) with the following three systematic components:

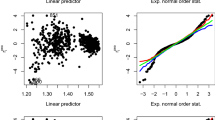

Note that in (11) we included the two covariates in all three components of the model. We used a logarithmic link function in the submodels for \(\mu \) and \(\phi \) and a logit link in the submodel for \(\pi \). Diagnostic analysis (omitted here for the sake of brevity) suggested that the ZAGA regression model is adequate for these data and that the ZAIG regression model produces a worse fit. In addition, the AIC value is much lower for the ZAGA regression model (373.09) than for the ZAIG regression model (429.37).

First, we tested whether the three parameters related to the percentage of grains with F. verticillioides infection are all equal to zero. The p-values of the four tests considered here are greater than 0.1. Therefore, there is no evidence that the quantity of mycotoxin FB\(_2\) depends on the percentage of grains with F. verticillioides infection, and we excluded this covariate from the model.

Second, we fitted a model using water activity as the single covariate and tested whether the three parameters related to this covariate were all equal to zero. The second and third columns of Table 7 present the results. Note that the value of the statistic is greater for the LR test than for the corrected tests, in agreement with the simulation results. However, all tests led to the same conclusion at the usual nominal levels: the quantity of mycotoxin FB\(_2\) depends on the water activity.

Finally, using the same model, we tested whether each parameter of the model was equal to zero; the results are presented in the last six columns of Table 7. At the usual nominal levels, all statistics again led to the same conclusion for the three tests. The mean of the continuous component of the quantity of mycotoxin FB\(_2\) and the probability that the quantity of mycotoxin FB\(_2\) is zero depend on the water activity, but there is no evidence that the precision parameter of the continuous component depends on the water activity.

Table 8 presents the parameter estimates and their standard errors for the final model. To aid interpretation, the table also presents the exponentials of the estimates. The results indicate that, for every percentage point increase in water activity, the mean quantity of mycotoxin FB\(_2\) given that some FB\(_2\) is present (the mean of the continuous component of the response variable) increases by \(5.5\%\). It was also estimated that, for every percentage point increase in water activity, the odds that a random sample of 30 g of corn contains no FB\(_2\) decrease by \(12.8\%\).
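The percentages above follow from the exponentiated estimates. With a log link for \(\mu \) (or a logit link for \(\pi \)), a one-unit increase in the covariate multiplies the mean (or the odds) by \(e^{\beta }\), that is, changes it by \(100(e^{\beta }-1)\%\). The sketch below performs this conversion in both directions. The coefficients it recovers are only those implied by the rounded percentages quoted in the text, not the estimates reported in Table 8, and the function names are hypothetical.

```python
import math


def pct_change_per_unit(coef: float) -> float:
    """Percentage change in exp(linear predictor) per one-unit covariate increase."""
    return 100.0 * (math.exp(coef) - 1.0)


def coef_from_pct_change(pct: float) -> float:
    """Coefficient (log or logit scale) implied by a given percentage change."""
    return math.log1p(pct / 100.0)


beta_mu = coef_from_pct_change(5.5)      # mean of the continuous component (log link)
alpha_pi = coef_from_pct_change(-12.8)   # odds of a zero (logit link)
print(round(beta_mu, 4), round(alpha_pi, 4))
print(round(pct_change_per_unit(beta_mu), 1), round(pct_change_per_unit(alpha_pi), 1))
```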

Similar to Melo et al. (2022), we randomly selected a subset of the data to illustrate that different tests may lead to different conclusions. Each observation was included in the subset according to a binomial (Bernoulli) random variable with success probability 0.2, which yielded a dataset with \(n=41\). Table 9 presents, for this reduced dataset, the results of the same tests performed before for the complete data. Note that, at significance levels of \(1\%\) or \(5\%\), the conclusions of the LR tests differed from those of the improved LR tests. Based on the results of the simulation studies, if the reduced dataset were the only data available, we would rely on the conclusions reached with the improved LR tests.
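The subsampling step can be sketched as independent Bernoulli(0.2) inclusion of each observation, so the size of the reduced dataset is itself random. The function name, the seed handling and the 200-row example are illustrative assumptions, not the procedure actually used to obtain the subset.

```python
import random
from typing import List, Optional, Sequence, TypeVar

T = TypeVar("T")


def bernoulli_subsample(rows: Sequence[T], p: float = 0.2,
                        seed: Optional[int] = None) -> List[T]:
    """Keep each row independently with probability p."""
    rng = random.Random(seed)  # a fixed seed makes the subset reproducible
    return [row for row in rows if rng.random() < p]


# Hypothetical dataset of 200 rows; the expected subset size is 0.2 * 200 = 40.
subset = bernoulli_subsample(list(range(200)), p=0.2, seed=123)
print(len(subset))
```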

6 Concluding remarks

Response variables that are discrete at zero and continuous on the positive real numbers are common in many areas, and they are usually fitted using zero-adjusted generalized linear models. In many regression models, the likelihood ratio test is used for hypothesis testing, especially when the null hypothesis involves more than one parameter. However, in the class of ZAGLMs the likelihood ratio test is considerably liberal (oversized) when the sample size is small. In this work, we derived an analytical Bartlett-corrected likelihood ratio test and considered two bootstrap-based corrected likelihood ratio tests. Our Monte Carlo simulation studies showed that the null rejection rates of the three improved tests are close to the nominal levels for small sample sizes and that the three improved likelihood ratio tests have similar power. An application illustrated the usefulness of the improved statistics.
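For reference, Bartlett's correction in its usual form (Bartlett 1937; Lawley 1956) rescales the LR statistic by its approximate null mean: if \(E(LR) = q + \varepsilon + O(n^{-2})\) under the null hypothesis, where \(q\) is the number of restrictions, then \(LR_b = LR/(1 + \varepsilon /q)\) is referred to the \(\chi ^2_q\) distribution. The sketch below assumes the \(O(n^{-1})\) term \(\varepsilon \) has already been computed (the paper derives it analytically for ZAGLMs) and uses a closed-form chi-square tail for integer degrees of freedom to stay dependency-free. The function names and the numbers in the usage lines are hypothetical.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """P(X > x) for X ~ chi-square(df) with integer df >= 1 (closed form)."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0.0:
        return 1.0
    y = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-y) * sum_{k=0}^{df/2 - 1} y^k / k!
        term = total = 1.0
        for k in range(1, df // 2):
            term *= y / k
            total += term
        return math.exp(-y) * total
    # Odd df: erfc(sqrt(y)) + exp(-y) * sum_{k=0}^{(df-3)/2} y^(k+1/2) / Gamma(k+3/2)
    total = math.erfc(math.sqrt(y))
    term = math.sqrt(y) / math.gamma(1.5)
    for k in range(df // 2):
        total += math.exp(-y) * term
        term *= y / (k + 1.5)
    return total


def bartlett_corrected_lr(lr: float, eps: float, q: int) -> float:
    """LR / (1 + eps/q), where E(LR) = q + eps + O(n^-2) under H0."""
    c = 1.0 + eps / q
    if c <= 0.0:
        raise ValueError("correction factor must be positive")
    return lr / c


# Hypothetical inputs: observed LR, estimated O(1/n) term, number of restrictions.
lr, eps, q = 8.5, 0.9, 3
lr_b = bartlett_corrected_lr(lr, eps, q)
print(f"LR = {lr:.3f}, p = {chi2_sf(lr, q):.4f}; LR_b = {lr_b:.3f}, p = {chi2_sf(lr_b, q):.4f}")
```

With a positive \(\varepsilon \) the correction shrinks the statistic and enlarges the p-value, which is the direction needed to counter the liberal behavior of the uncorrected LR test.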

Zero-adjusted regression models form a wide class of regression models that contains the ZAGLMs. No previous study has evaluated the performance of improved hypothesis tests whose null hypotheses simultaneously involve parameters of the continuous and discrete components of the model. Therefore, this work is the first to show that improved likelihood ratio tests perform well for such hypotheses, which are useful for testing whether the distribution of the response variable is a function of a covariate.

Based on the results of the simulation studies and the features of the three corrected likelihood ratio tests considered here, we suggest that practitioners use the analytical Bartlett-corrected likelihood ratio test when the sample size is small and they want to perform hypothesis testing in the class of ZAGLMs. Our recommendation rests on two reasons. First, the tests performed similarly in terms of size and power, but in one of the scenarios considered here the bootstrap-corrected test was slightly size distorted. Second, the bootstrap relies on randomization. Hence, when the p-value of the test is close to the significance level, two practitioners can reach different conclusions for the same dataset and hypothesis. This does not happen with the analytical correction.
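The randomization issue can be sketched with the two bootstrap adjustments most often used in this literature, assumed here rather than taken from the paper: the bootstrap p-value, i.e. the proportion of bootstrap statistics \(LR^*_b\) at least as large as the observed LR, and the bootstrap Bartlett correction of Rocke (1989), \(LR_{bb} = q\,LR/\overline{LR^*}\), referred to \(\chi ^2_q\). In practice each \(LR^*_b\) comes from data simulated under the fitted null model and refitted under both hypotheses. The hypothetical placeholder below simply draws chi-square values, which is enough to show that the same observed statistic yields different results under different seeds.

```python
import random
from statistics import fmean
from typing import List, Sequence


def bootstrap_pvalue(lr: float, lr_boot: Sequence[float]) -> float:
    """Proportion of bootstrap LR statistics at least as large as the observed one."""
    return sum(1 for s in lr_boot if s >= lr) / len(lr_boot)


def bootstrap_bartlett_lr(lr: float, lr_boot: Sequence[float], q: int) -> float:
    """Rocke-type bootstrap Bartlett correction: q * LR / mean(LR*)."""
    return q * lr / fmean(lr_boot)


def placeholder_replicates(q: int, n_boot: int, seed: int) -> List[float]:
    """Hypothetical stand-in for bootstrap LR statistics: chi-square(q) draws.

    A real implementation would simulate each sample from the model fitted
    under H0 and refit both the null and the alternative models.
    """
    rng = random.Random(seed)
    # chi-square(q) is Gamma(shape=q/2, scale=2)
    return [rng.gammavariate(q / 2.0, 2.0) for _ in range(n_boot)]


lr_obs, q = 7.5, 3  # hypothetical observed statistic and number of restrictions
for seed in (1, 2):
    boot = placeholder_replicates(q, n_boot=500, seed=seed)
    print(seed, bootstrap_pvalue(lr_obs, boot), round(bootstrap_bartlett_lr(lr_obs, boot, q), 3))
```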

Change history

07 August 2024

A Correction to this paper has been published: https://doi.org/10.1007/s00362-024-01592-8

References

Araújo MC, Cysneiros AH, Montenegro LC (2020) Improved heteroskedasticity likelihood ratio tests in symmetric nonlinear regression models. Stat Pap 61:167–188

Bartlett MS (1937) Properties of sufficiency and statistical tests. Proc R Soc Lond A 160(901):268–282

Bayer FM, Cribari-Neto F (2013) Bartlett corrections in beta regression models. J Stat Plan Inference 143(3):531–547

Bortoluzzo AB, Claro DP, Caetano MAL, Artes R (2011) Estimating total claim size in the auto insurance industry: a comparison between Tweedie and zero-adjusted inverse Gaussian distribution. Braz Adm Rev 8(1):37–47

Botter DA, Cordeiro GM (1997) Bartlett corrections for generalized linear models with dispersion covariates. Commun Stat Theory Methods 26(2):279–307

Calsavara VF, Rodrigues AS, Rocha R, Louzada F, Tomazella V, Souza AC, Costa RA, Francisco RPV (2019) Zero-adjusted defective regression models for modeling lifetime data. J Appl Stat 46(13):2434–2459

Cordeiro GM (1983) Improved likelihood ratio statistics for generalized linear models. J R Stat Soc B 45(3):404–413

Cordeiro GM, Cribari-Neto F (2014) An introduction to Bartlett correction and bias reduction. Springer, Berlin

Cordeiro GM, Paula GA, Botter DA (1994) Improved likelihood ratio tests for dispersion models. Int Stat Rev 62(2):257–274

Cox DR, Reid N (1987) Parameter orthogonality and approximate conditional inference. J R Stat Soc B 49(1):1–39

Das U, Dhar SS, Pradhan V (2018) Corrected likelihood-ratio tests in logistic regression using small-sample data. Commun Stat Theory Methods 47(17):4272–4285

Doornik JA (2009) An object-oriented matrix language: Ox 6. Timberlake Consultants Press, London

Dunn PK, Smyth GK (2018) Generalized linear models with examples in R. Springer, Berlin

Efron B (1979) Bootstrap methods: another look at the jackknife. Ann Stat 7(1):1–26

Guedes AC, Cribari-Neto F, Espinheira PL (2020) Modified likelihood ratio tests for unit gamma regressions. J Appl Stat 47(9):1562–1586

Guedes AC, Cribari-Neto F, Espinheira PL (2021) Bartlett-corrected tests for varying precision beta regressions with application to environmental biometrics. PLoS ONE 16(6):e0253349

Hashimoto EM, Ortega EM, Cordeiro GM, Cancho VG, Klauberg C (2019) Zero-spiked regression models generated by gamma random variables with application in the resin oil production. J Stat Comput Simul 89(1):52–70

Heller G, Stasinopoulos M, Rigby B (2006) The zero-adjusted inverse Gaussian distribution as a model for insurance claims. In: Hinde J, Einbeck J, Newell J (eds) Proceedings of the 21st International Workshop on Statistical Modelling, Galway, Ireland, pp 226–233

Lambert D (1992) Zero-inflated Poisson regression, with an application to defects in manufacturing. Technometrics 34(1):1–14

Lawley DN (1956) A general method for approximating to the distribution of likelihood ratio criteria. Biometrika 43(3/4):295–303

Loose LH, Bayer FM, Pereira TL (2017) Bootstrap Bartlett correction in inflated beta regression. Commun Stat-Simul Comput 46(4):2865–2879

Loose LH, Valença DM, Bayer FM (2018) On bootstrap testing inference in cure rate models. J Stat Comput Simul 88(17):3437–3454

Magalhães TM, Gallardo DI (2020) Bartlett and Bartlett-type corrections for censored data from a Weibull distribution. Stat Op Res Trans 44(1):127–140

Melo TF, Ferrari SL, Cribari-Neto F (2009) Improved testing inference in mixed linear models. Comput Stat Data Anal 53(7):2573–2582

Melo TF, Vargas TM, Lemonte AJ, Moreno-Arenas G (2022) Higher-order asymptotic refinements in the multivariate Dirichlet regression model. Commun Stat-Simul Comput 51(1):53–71

Michaelis P, Klein N, Kneib T (2020) Mixed discrete-continuous regression: a novel approach based on weight functions. Stat 9(1):e277

Moulton LH, Weissfeld LA, St Laurent RT (1993) Bartlett correction factors in logistic regression models. Comput Stat Data Anal 15(1):1–11

Nogarotto DC, Azevedo CLN, Bazán JL et al (2020) Bayesian modeling and prior sensitivity analysis for zero-one augmented beta regression models with an application to psychometric data. Braz J Probab Stat 34(2):304–322

Ospina R, Ferrari S (2012) A general class of zero-or-one inflated beta regression models. Comput Stat Data Anal 56(6):1609–1623

Pereira TL, Cribari-Neto F (2014) Modified likelihood ratio statistics for inflated beta regressions. J Stat Comput Simul 84(5):982–998

Pereira GH, Scudilio J, Santos-Neto M, Botter DA, Sandoval MC (2020) A class of residuals for outlier identification in zero adjusted regression models. J Appl Stat 47(10):1833–1847

Rauber C, Cribari-Neto F, Bayer FM (2020) Improved testing inferences for beta regressions with parametric mean link function. Adv Stat Anal 104(4):687–717

Rocha LO, Nakai VK, Braghini R, Reis TA, Kobashigawa E, Corrêa B (2009) Mycoflora and co-occurrence of fumonisins and aflatoxins in freshly harvested corn in different regions of Brazil. Int J Mol Sci 10(11):5090–5103

Rocha LO, Reis GM, Fontes LC, Piacentini KC, Barroso VM, Reis TA, Pereira AA, Corrêa B (2017) Association between fum expression and fumonisin contamination in maize from silking to harvest. Crop Prot 94:77–82

Rocke DM (1989) Bootstrap Bartlett adjustment in seemingly unrelated regression. J Am Stat Assoc 84(406):598–601

Rubec PJ, Kiltie R, Leone E, Flamm RO, McEachron L, Santi C (2016) Using delta-generalized additive models to predict spatial distributions and population abundance of juvenile pink shrimp in Tampa Bay, Florida. Marine Coastal Fish 8(1):232–243

Sen PK, Singer JM, Lima ACP (2010) From finite sample to asymptotic methods in statistics. Cambridge University Press, Cambridge

Silva AR, Azevedo CL, Bazán JL, Nobre JS (2021) Augmented-limited regression models with an application to the study of the risk perceived using continuous scales. J Appl Stat 48(11):1998–2021

Skovgaard IM (2001) Likelihood asymptotics. Scand J Stat 28(1):3–32

Thomson FJ, Letten AD, Tamme R, Edwards W, Moles AT (2018) Can dispersal investment explain why tall plant species achieve longer dispersal distances than short plant species? New Phytol 217(1):407–415

Tomazella V, Nobre JS, Pereira GH, Santos-Neto M (2019) Zero-adjusted Birnbaum-Saunders regression model. Statist Probab Lett 149:142–145

Tong E, Mues C, Thomas L (2013) A zero-adjusted gamma model for mortgage loan loss given default. Int J Forecast 29:548–562

Tong EN, Mues C, Brown I, Thomas LC (2016) Exposure at default models with and without the credit conversion factor. Eur J Op Res 252(3):910–920

Ye T, Lachos VH, Wang X, Dey DK (2021) Comparisons of zero-augmented continuous regression models from a Bayesian perspective. Stat Med 40(5):1073–1100

Zamani H, Bazrafshan O (2020) Modeling monthly rainfall data using zero-adjusted models in the semi-arid, arid and extra-arid regions. Meteorol Atmos Phys 132(2):239–253

Acknowledgements

We acknowledge the two referees’ suggestions, which helped us to improve this work.

Funding

This work was partially supported by São Paulo Research Foundation (FAPESP), grant number 2020/16334-9.


Appendix

The remaining quantities needed to define the Bartlett correction factor in equation (8) are:

\({{\varvec{F}}} = \text{ diag }\{f_{11}, \ldots , f_{nn}\}\), \({{\varvec{G}}} = \text{ diag }\{g_{11}, \ldots , g_{nn}\}\), \({{\varvec{F}}}_{\pi } = \text{ diag }\{f_{\pi 11}, \ldots , f_{\pi nn}\}\), \({{\varvec{G}}}_{\pi } = \text{ diag }\{g_{\pi 11}, \ldots , g_{\pi nn}\}\), \({{\varvec{H}}}_{i} = \text{ diag }\{h_{i,11}, \ldots , h_{i,nn}\}\), \(i = 1, 2, 3\), where

Although deriving the expression for \(\varepsilon _p\) entails a great deal of algebra, the expression involves only simple operations on diagonal matrices and is therefore straightforward to implement.

About this article

Cite this article

Magalhães, T.M., Pereira, G.H.A., Botter, D.A. et al. Bartlett corrections for zero-adjusted generalized linear models. Stat Papers 65, 2191–2209 (2024). https://doi.org/10.1007/s00362-023-01477-2
