Abstract

We revisit and update estimating variances, fundamental quantities in a time series forecasting approach called kriging, in time series models known as FDSLRMs, whose observations can be described by a linear mixed model (LMM). As a result of applying the convex optimization, we resolved two open problems in FDSLRM research: (1) theoretical existence and equivalence between two standard estimation methods—least squares estimators, non-negative (M)DOOLSE, and maximum likelihood estimators, (RE)MLE, (2) and a practical lack of free available computational implementation for FDSLRM. As for computing (RE)MLE in the case of n observed time series values, we also discovered a new algorithm of order \({\mathcal {O}}(n)\), which at the default precision is \(10^7\) times more accurate and \(n^2\) times faster than the best current Python(or R)-based computational packages, namely CVXPY, CVXR, nlme, sommer and mixed. The LMM framework led us to the proposal of a two-stage estimation method of variance components based on the empirical (plug-in) best linear unbiased predictions of unobservable random components in FDSLRM. The method, providing non-negative invariant estimators with a simple explicit analytic form and performance comparable with (RE)MLE in the Gaussian case, can be used for any absolutely continuous probability distribution of time series data. We illustrate our results via applications and simulations on three real data sets (electricity consumption, tourism and cyber security), which are easily available, reproducible, sharable and modifiable in the form of interactive Jupyter notebooks.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The need to obtain sufficiently accurate predictions for facilitating and improving decision making becomes an integral part not only of science, industry or economy but also in many other human activities. The key idea of the so-called kriging as a time series prediction approach consists in modeling the given time series data in an appropriate general class of linear regression models (LRMs) and subsequently finding the best linear unbiased predictor—the BLUP which minimizes the mean squared error (MSE) of prediction among all linear unbiased predictors, see e.g. Štulajter (2002), Kedem and Fokianos (2005), Christensen (2011) and Brockwell and Davis (2016).

In the frame of kriging, we investigate theoretical features and econometric applications of a class of time series models called finite discrete spectrum linear regression models or shortly FDSLRMs. The FDSLRM class was introduced in 2002–2003 by Štulajter (Štulajter 2002, 2003) as a direct extension of classical (ordinary) linear regression models (see e.g. Christensen 2011; Hyndman and Athanasopoulos 2018).

The FDSLRM has mean values (trend) given by linear regression and random components (error terms) are represented as a sum of a linear combination of uncorrelated zero-mean random variables and white noise which together can be interpreted in terms of the finite discrete spectrum (Priestley 2004).

Formally the FDSLRM can be presented as

whereFootnote 1

-

\(\mathbb {T}\) representing the time domain is a countable subset of the real line \(\mathbb {R}^{}\),

-

k and l are some fixed non-negative integers, i.e. \(k,l \in \mathbb {N}_0\),

-

\(\varvec{\beta }= (\beta _1, \beta _2, \ldots , \beta _k)' \in \mathbb {R}^{k}\) is a vector of regression parameters,

-

\(\varvec{Y}= (Y_1,Y_2,\ldots ,Y_l)'\) is an unobservable \(l \times 1\) random vector with zero mean vector \(E\left\{ \varvec{Y}\right\} ={\varvec{0}}_{l}\), and with \(l\times l\) diagonal covariance matrix \({Cov}\{\varvec{Y}\} = \text {diag}\left\{ \sigma _j^2\right\} \), where \(\sigma _j^2 \in \mathbb {R}^{}_+\) are non-negative real numbers,

-

\(f_i(.); \, i=1,2,\ldots ,k\) and \(v_j(.); \, j=1,2,\ldots ,l\) are real functions defined on \(\mathbb {R}^{}\),

-

Z(.) stands for white noise uncorrelated with \(\varvec{Y}\) and having a positive dispersion \(D\left\{ Z(t)\right\} =\sigma _0^2 \in \mathbb {R}^{}_{++}\).

Typically, due to the nature of time series data collection, the most frequently considered time domain \(\mathbb {T}\) is the set of natural numbers \(\mathbb {N}=\{1,2, \ldots \}\). FDSLRM variance parameters, which are fundamental quantities in kriging, are commonly described by one vector \(\varvec{\upnu }\equiv (\upnu _0, \upnu _1, \ldots , \upnu _l)' = (\sigma _0^2,\sigma _1^2,\ldots ,\sigma _l^2)'\), an element of the parametric space \(\Upupsilon = (0, \infty ) \times [ 0, \infty )^{l}\) or \(\Upupsilon = \mathbb {R}^{}_{++} \times \mathbb {R}^{l}_+\) for short.

Our recent article (Gajdoš et al. 2017) summarizes the FDSRLM kriging procedure, guiding methodology and corresponding references dealing with FDSLRM kriging for time series econometric forecasting as one of the advanced alternative approaches to the most popular Box-Jenkins methodology (Box et al. 2015; Shumway and Stoffer 2017).

The principal goal of this paper is to revisit and update theory, computational and implementation aspects of estimation procedures for variances in FDSLRM fitting (Štulajter 2002; Štulajter and Witkovský 2004; Hančová 2008), the first part of FDSLRM kriging, in the light of recent advances in closely related linear mixed modeling (LMM), convex optimization and modern computational technology. As a result of such investigation, we would also like to present relations of existing FDSLRM estimators with the well-established standard variance estimators in the field of linear mixed models. Moreover, the alternative views via convex optimization and the theory of empirical BLUPs lead us to new theoretical results, computational algorithms and tools in estimating FDSLRM variances.

The structure of the paper is organized as follows. Since the time series analysis and prediction based on FDSLRM is still a relatively new approach and therefore not broadly known, brief review Sect. 2 recapitulates key basic features and benefits of FDSLRM together with important notes about corresponding theoretical methods and computational tools offered by closely connected mathematical branches and computational research. This background became the strong basis for our investigation focusing especially on non-negative versions of the estimators.

Section 3 is devoted to standard estimation methods of variances \(\varvec{\upnu }\) in FDSLRM leading to non-negative estimates of \(\varvec{\upnu }\) in the frame of convex optimization. Since the methods are based on maximum likelihood or least squares (Gajdoš et al. 2017), we also demonstrate their relations to the well-known maximum likelihood estimators in LMM—MLE, residual MLE (REMLE) requiring specification of the time series distribution, and noniterative distribution-free quadratic estimators—method of moments (MM), minimum norm quadratic estimation (MINQE) and variance least squares (VLS) estimation (Demidenko 2013).

Section 4 describes new alternative estimators for FDSLRM variances whose definition becomes a consequence of applying the general theory of the BLUP in LMM. Our approach also represents an improved two-stage modification of the previously developed method of natural estimators (NE) (Hančová 2008) which is now based on the idea of empirical (plug-in) BLUPs. Therefore, we will refer to our new method as EBLUP-NE for short. The section also develops the computational form and some basic statistical properties of EBLUP-NE using two special matrices known in linear algebra as the Schur complement and Gram matrix (Zhang 2005). The method is distribution-free which means that a finite FDLSRM observation \(\varvec{X}= (X(1),\ldots ,X(n))',\) \(n \in \mathbb {N}\) must have only an absolutely continuous probability distribution with respect to some \(\sigma \)-finite measure.

In the following fifth section containing numerical simulations, we illustrate theoretical results of the paper and the performance of EBLUP-NE on three real time series data sets—electricity consumption, tourism and cyber security. Final Sect. 6 presents the conclusions of the paper. For the sake of paper readability, we moved some proofs to the Appendix. In the Appendix, we report current computational tools found or developed by us for FDSLRM kriging. Finally for a better orientation, the Appendix also contains a list of acronyms and abbreviations used in the paper (Table 6).

Since the paper is aimed at statisticians, analysts, econometricians and data scientists applying time series and forecasting methods, our notation is standard for time series analysis and prediction using linear regression models (Štulajter 2002; Kedem and Fokianos 2005; Brockwell and Davis 2009). Sets like \(\mathbb {R}^{}, \mathbb {R}^{}_{+}, \mathbb {R}^{}_{++}\) are labeled as it is usual in convex optimization (Boyd and Vandenberghe 2009).

2 Theoretical and computational background for FDSLRM kriging

Let us recall some important notes about time series FDSLRM kriging, its connection to other math branches and current computational technology.

Any finite FDSLRM observation \(\varvec{X}= (X(1),\ldots ,X(n))',\) \(n \in \mathbb {N}\) satisfies a special type of linear mixed model (LMM) of the formFootnote 2

where

Matrices \(\mathbf{F }= \{F_{ti}\} =\{f_i(t)\}, \mathbf{V }=\{V_{tj}\} =\{v_j(t)\} \text { for } t=1, 2, \ldots ,n\); \(i=1,2,\ldots ,k\); \(j=1,2\ldots ,l\) are LMM design matrices and random vector \(\varvec{Z}=(Z(1), Z(2), \ldots , Z(n))'\) is a finite n-dimensional white noise observation. In the language of LMM terminology \(\varvec{\beta }\) represents the \(k\times 1\) vector of fixed effects and \(\varvec{Y}\) the \(l\times 1\) vector of the random effects (Witkovský 2012; Demidenko 2013).

Thanks to the fundamental property (2.1), we can apply convenient LMM mathematical techniques for FDSLRM fitting and forecasting (Jiang 2007; Pinheiro and Bates 2009; Christensen 2011; Witkovský 2012; Demidenko 2013; Rao and Molina 2015; Covarrubias-Pazaran 2016; Singer et al. 2017).

Another characteristic feature of LMM (2.1) is that it also belongs to linear models with linear covariance structure or shortly linear covariance models (Demidenko 2013, Sect. 4.3)

where vectors \(\varvec{v}_j, j = 1, 2, \ldots , l\) are columns of design matrix \(\mathbf{V }\). According to Wu and Xiao (2012), such type of a linear model was introduced just in time series domain by Anderson (1970). From the perspective of the variance parameter estimation, it was studied extensively e.g. in Rao and Kleffe (1988) and it still remains the subject of intensive research (Zwiernik et al. 2017).

From a time series modeling point of view, if we consider any time series model X(.) in the additive form \(X(t)=m(t)+\varepsilon (t);t \in \mathbb {T}\) whose mean value function m(.) is a real function on \(\mathbb {R}^{}\) expressible by a functional series (e.g. Taylor or Fourier series) and error term \(\varepsilon (.)\) is a mean-zero stationary process, then according to the spectral representation theory of time series (Priestley 2004; Percival and Walden 2009) X(.) can be approximated arbitrarily closely by FDSLRM. Therefore, from a practice perspective, FDSLRMs can be potentially applied in many practical situations.

In econometric applications of FDSLRM kriging, we have some restrictions given by character of econometric data. There is typically only one realization of time series X(.) and we do not know the mean-value parameters \(\varvec{\beta }\in \mathbb {R}^{k}\) nor the variance parameters \(\varvec{\upnu }\in \Upupsilon \). From the view of time series analysis, the problem of estimating variances \(\varvec{\upnu }\) belongs to the class of problems concerning covariance matrix estimation with one realization (Wu and Xiao 2012).

Simultaneously, in time series econometric analysis using FDSLRMs (Štulajter 2002; Gajdoš et al. 2017), econometric datasets almost always show some periodic patterns as they are influenced by seasons or regularly repeating events. Therefore the fundamental modeling procedure is based on spectral analysis of time series (Štulajter 2002; Priestley 2004; Brockwell and Davis 2009) using trigonometric functions \(\cos , \sin \) as \(f_i\), \(v_j\) regressors in FDLSRM (1.1).

If we identify the significant Fourier frequencies in time series data by periodogram (Štulajter and Witkovský 2004; Gajdoš et al. 2017), then it restricts the form of design matrices \(\mathbf{F }\) an \(\mathbf{V }\) leading to the orthogonality condition for FDSLRM (Štulajter and Witkovský 2004)

where \(\varvec{v}_j, j = 1,2\ldots ,l\) is j-th column of \(\mathbf{V }\) and \(\left\| \bullet \right\| ^2 = (\bullet )'(\bullet )\) is the square of the standard Euclidean vector norm. Such FDSLRM, satisfying condition (2.3), is called orthogonal (Štulajter and Witkovský 2004). If we consider Gaussian FDSLRM, then with respect to the model identifiability (Demidenko 2013, Sect. 3.2) the orthogonal model (2.3) is always identifiable. So in econometric practice, we mainly work with the orthogonal and identifiable version of FDSLRM. Our interactive supplementary materials (Gajdoš et al. 2019c), dealing with real data sets in Sect. 5, demonstrate every step of the iterative econometric FDLSRM building that can be followed in every detail with respect to given restrictions.

Finally, it is worth to mention that from the viewpoint of prediction quality (the size of MSE), any FDSLRM model with \(k, l > 0\) is always better in applications than corresponding classical LRM with the same regressors (containing \(\alpha _j \in \mathbb {R}^{}\) instead of \(Y_j\) in (1.1)), because MSE of the FDSLRM is always less or equal and conditions of the same MSE practically never hold. It was directly proved in Hančová (2007) using block matrix algebra and the Löwner partial order relation.

On the other hand, the standard maximum likelihood or least squares estimates of \(\varvec{\upnu }\) in FDSLRM are optimization problems. Our motivation to explore estimating FDSLRM variances in the frame of convex optimization lays on the fact that its mathematical tools and efficient, very reliable computational interior-point methods became important or fundamental tools in many other branches of mathematics (Boyd and Vandenberghe 2009) like the design of experiments, high-dimensional data, machine learning or data mining.

Inspired by essential and recent works on convex optimization (Boyd and Vandenberghe 2009; Bertsekas 2009; Koenker et al. 2014; Cornuéjols et al. 2018; Agrawal et al. 2018), we prove new theoretical relations among existing FDSLRM estimators in the extended parametric space \(\Upupsilon = \mathbb {R}^{l+1}_+\) for the orthogonal version of FDSLRM. Obtained theoretical results bring us also to the development of new fast and accurate computational algorithms for estimating variances in FDSLRM and can be effectively used in time series simulations (Monte Carlo and bootstrap).

One of the reasons, why time series FDSLRM kriging is not widely used, seems to be also the lack of available computer implementation of FDSLRM approach.Footnote 3 Therefore all our computational algorithms, developed functions, packages and results, confronted with standard LMM and convex optimization computational tools, are easily readable, sharable, reproducible, and modifiable, thanks to open-source Jupyter technology (Kluyver et al. 2016). In particular, we present our results in the form of Jupyter notebooks, dynamic HTLM documents integrating prose, code (Python or R language) and results similarly as Mathematica notebooks, stored in our free available GitHub (Lima et al. 2014) repositories devoted to our FDSLRM research (Gajdoš et al. 2019a). This easily reproducible way and presentation of our computing with real data were inspired by works (Brieulle et al. 2019; Weiss 2017). More details dealing with the computer implementation and tools are described in the Appendix A.3.

3 Estimating FDSLRM variances via convex optimization

3.1 Mathematical approaches in estimating FDSLRM variances

In the LMM framework (Searle et al. 2009; Demidenko 2013), generally in variance estimation problems, the basic nonlinear optimization theory with the standard matrix calculus or advanced matrix (tensor) calculusFootnote 4 are applied. Recently, however, we can also see the use of mathematical apparatus of convex optimization in the aforementioned work, Zwiernik et al. (2017), dealing with MLE in linear covariance models.

In time series analysis and forecasting, one of the traditional approaches in finding least squares or maximum likelihood estimators of unknown parameters in linear regression models, initiated by Kolmogorov in 1941 and presented in the well-known reference books (Brockwell and Davis 1991, 2009; Pourahmadi 2001; Galántai 2004; Christensen 2011), is represented by geometrical projection methods in the frame of Hilbert (or inner product) spaces. Therefore the theory and computational aspects of the estimation procedures for \(\varvec{\upnu }\) in FDSLRM fitting, have been developed using orthogonal and oblique projections (Štulajter 2002; Štulajter and Witkovský 2004; Hančová 2007).

In this section we investigate, revisit and update five variance estimation methods under different assumptions on the structure and distribution of FDSLRM observation \(\varvec{X}\), summarized in Table 1, in the light of convex optimization, LMM and modern computational technology.

According to Agrawal et al. (2018) and Boyd and Vandenberghe (2009), to formulate any mathematical problem as an convex optimization problem, we need to identify three attributes of the problem: optimization variable, convex constraints that the variable must satisfy and the convex objective function depending on the variable whose optimal value we want to achieve.

In all our estimation methods, optimization variable is \(\varvec{\upnu }\in \mathbb {R}^{l+1}\) satisfying constraints \(\Upupsilon = \mathbb {R}^{}_{++} \times \mathbb {R}^{l}_{+} \). In order to avoid any incompatibility problems in the convex optimization theoretical or computational framework, we extend constraints \(\Upupsilon \) into the form of standard non-negativity constraints \(\Upupsilon ^{*} = \mathbb {R}^{l+1}_{+} = [0, \infty ]^{l+1}\) or \(\varvec{\upnu }\succeq 0\) using generalized inequality.

Finally, we also explain relations of the FDSLRM estimators with the standard estimators in the LMM framework, where the problem of estimating variances has a long and rich history with many essential reference works (e.g. Rao and Kleffe 1988; Searle et al. 2009; Christensen 2011; Demidenko 2013 and Rao and Molina 2015, see also a review paper Witkovský 2012).

3.2 General case of the orthogonal FDSLRM

First of all, we formulate the basic assumptions required in our investigations on the structure and distribution of FDSLRM. As we mentioned in review Sect. 2, in econometric FDSLRM analysis we mainly work with orthogonal FDSLRMs (2.3). Unless otherwise stated, we will assume hereafter this type of FDSLRMs.

The second assumption deals with a distribution of the FDSLRM observation \(\varvec{X}= (X(1),\ldots ,X(n))',\) \(n \in \mathbb {N}\). We will assume \(\varvec{X}\) (2.1), having any absolutely continuous probability distribution with respect to some \(\sigma \)-finite measure.

Under these two assumptions, we can consider three noniterative distribution-free quadratic estimation methods: natural estimators (NE), double ordinary least squares estimators (DOOLSE) and modified (unbiased) double ordinary least squares estimators (MDOOLSE).

3.2.1 Natural estimators—NE

In the paper Hančová (2008), we proposed the method of almost surely positive invariant quadratic estimators of FDSLRM variances \(\varvec{\upnu }\), called natural estimators (NE) . The main idea behind NE came from the fact that \(\sigma _j^2 = {Cov}\{Y_j\} = E\left\{ Y_j^2\right\} ; j = 1, \ldots , l\). Therefore, if random vector \(\varvec{Y}\) in (1.1) was known, the natural estimate of \(\sigma _j^2\) would be just \(Y_j^2\). This initial consideration was identical with Rao’s estimates known as MINQE (Rao and Kleffe (1988, Sect. 5.1) and Christensen (2011, Sect. 12.7)).

To get sufficiently simple, explicit analytic expressions available for further theoretical study, we predicted the random vector \(\varvec{Y}\) as fixed by the ordinary least squares method leading to the following form of linear predictor of \(\varvec{Y}\) based on \(\varvec{X}\)

Matrix \(\mathbf{M} _\mathbf{F }= (\mathbf{I }_n-\mathbf{F }(\mathbf{F }'\mathbf{F })^{-1}\mathbf{F }') \in \mathbb {R}^{n \times n}\) represents (Hančová 2008) the orthogonal projector onto the orthogonal complement of the column space of \(\mathbf{F }\) and matrixFootnote 5\(\mathbf{W }\succ 0\) is known in linear algebra and statistics (Zhang 2005, chap. 6) as the Schur complement of \(\mathbf{F }'\mathbf{F }\) in the block matrix  .

.

The form (3.1) exists only in the case of FDSLRM with the full column rank \(k+l\) of matrix \((\mathbf{F }\; \mathbf{V })\). Such FDSLRM, not necesarily orthogonal, is called full-rank FDSLRM. However, NE exist and can be calculated in FDSLRMs without full-rank assumption. Moreover, if we assume extra condition \(n>k+l\) together with full-rankness, then from the viewpoint of LMM identifiability FDSLRM (2.1) under the assumption of normal distribution becomes identifiable (Demidenko 2013, Sect. 3.2). As for \(\sigma _0^2\), NE is simply the sum of residuals in the OLS method applied to (2.1) assuming both \(\varvec{\beta }\) and \(\varvec{Y}\) as fixed divided by degrees of freedom. Unlike NE for \(\sigma _j^2, j = 1,..., l\), such estimator is not only almost surely positive, but also unbiased.

Employing results of Hančová (2008), orthogonality condition (2.3), also giving \(\mathbf{M} _\mathbf{F }\mathbf{V }=\mathbf{V }\) in (3.1), leads to the following analytic form of NE

where \({\varvec{e}}=\varvec{x}-\mathbf{F }\varvec{\beta ^*}=\mathbf{M} _\mathbf{F }\varvec{x}\,\, (\varvec{\beta ^*}= (\mathbf{F }'\mathbf{F })^{-1}\mathbf{F }\varvec{x}) \) is nothing else than the vector of ordinary least squares (OLS) residuals in FDSLRM, \(\varvec{x}\) is an arbitrary realization of the FDSLRM observation \(\varvec{X}\) and vectors \(\varvec{v}_j, j = 1, 2, \ldots , l\) are columns of design matrix \(\mathbf{V }\). Matrix \(\mathbf{M} _\mathbf{V }= \mathbf{I }_n- \mathbf{V }(\mathbf{V }'\mathbf{V })^{-1}\mathbf{V }'\) represents again the orthogonal projector onto the orthogonal complement of the column space of \(\mathbf{V }\).

As for computational complexity of NE, using elementary theory of complexity (Boyd and Vandenberghe 2018), we get the complexity of 2kn operations for the residual vector \({\varvec{e}}\) and 4ln for NE (3.2). Therefore, computing NE (3.2) represents an algorithm with the complexity having order \({\mathcal {O}}(n)\) with respect to the realization length or the number of time series data n.

Since NE employ the least-squares method, it is easy to show that in orthogonal FDSLRMs, NE can be obtained as a unique, always existing, non-negative solution of the following convex optimization problem (Agrawal et al. 2018; Boyd and Vandenberghe 2009)

where matrices in the objective function \(f_0(\varvec{\upnu })\) are defined by expressions \(\mathbf{V }_0=\mathbf{M} _\mathbf{F }\mathbf{M} _\mathbf{V }\) and \(\mathbf{V }_j = \varvec{v}_j\varvec{v}_j', j=1,\ldots ,l.\). Now for any symmetric matrix \(\mathbf{A }\)

means the standard Euclidean matrix norm of \(\mathbf{A }\).

In convex optimization, this kind of optimization problems belongs to the convex quadratic optimization problems or the norm approximation problems (Boyd and Vandenberghe 2009, Sects. 4.4, 6.1). Although we known the analytic form of NE, from the computational point of view, the representation as the convex optimization problem can be useful for the purposes of software cross-checking results and evaluating numerical precision of general convex optimization algorithms.

Remark 1

NE vs. MM

From LMM perspective, NE method used in orthogonal FDSLRMs can be viewed as a variation of the Henderson method 3 (method of fitting constants), whose generalization is the method of moments (MM) provinding unbiased estimators (Christensen (2011, Sect. 12.9), Demidenko (2013, Sect. 3.11), Rao and Molina (2015, Sect. 5.1)).

If we applied the idea behind MM estimator in orthogonal FDSLRMs, we would also calculate residuals \({\varvec{e}}=\varvec{x}-\mathbf{F }\varvec{\beta ^*}=\mathbf{M} _\mathbf{F }\varvec{x}\) as in (3.2). Then we would orthogonally project \({\varvec{e}}\) onto column space of \(\mathbf{V }\) by OLS leading to empirical covariance matrix \(\breve{\varvec{Y}}\breve{\varvec{Y}}'\) with vector \(\breve{\varvec{Y}} = (\mathbf{V }'\mathbf{V })^{-1}\mathbf{V }'\varvec{X}\), the same as \(\breve{\varvec{Y}}\) (3.1) for orthogonal FDSLRM (\(\mathbf{V }'\mathbf{M} _\mathbf{F }=\mathbf{V }'\)), from which we would finally removed its bias.

Comparing NE and MM, in orthogonal FDSLRMs, gives us that NE for \(\upnu _0\) is identical with the MM estimator and NE for each \(\upnu _j, j = 1,..., l\) is the first non-negative term of MM estimator for \(\upnu _j\) (or the term before removing bias). In other words, if we removed a bias from NE, we got MM estimator. But such unbiased NE, equivalent to MM estimates, generally do not hold non-negative constrains \(\varvec{\upnu }\succeq 0\) required by convex optimization.

3.2.2 Double least squares estimators—DOOLSE, MDOOLSE

From the optimization viewpoint, assuming full-rank, not necessarily orthogonal FDSLRM observation \(\varvec{X}\) (2.1), DOOLSE and MDOOLSE can be viewed as the following optimization problems for \(\varvec{\upnu }\) at given OLS residuals \({\varvec{e}}\)

where \({\varvec{e}}\) are again OLS residuals as in the case of NE and \(\varvec{{\Sigma }}_{\varvec{\upnu }}= \sigma _0^2\mathbf{I }_n+ \mathbf{V }\mathbf{D }_{\varvec{\upnu }}\mathbf{V }'\) is the covariance matrix of \(\varvec{X}\). We call empirical covariance matrix \(\mathbf{S }_{{\varvec{e}}}\equiv {\varvec{e}}{\varvec{e}}'\) matrix of residual products or more compactly the residual products matrix. Using elementary properties of mean value, we can derive that \( E\left\{ \mathbf{S }_{{\varvec{e}}}\right\} = \mathbf{M} _\mathbf{F }\varvec{{\Sigma }}_{\varvec{\upnu }}\mathbf{M} _\mathbf{F }\).

Remark 2

DOOLSE, MDOOLSE vs. VLS, MINQE

In the LMM framework, DOOLSE and MDOOLSE without non-negativity constraints are identical with variance least squares (VLS) and unbiased VLS (UVLS) estimation methods (Demidenko 2013, Sect. 3.12) applied to FDSLRM observation \(\varvec{X}\) described by the LMM model (2.1). The idea behind VLS is known more than 40 years (Amemiya 1977). The DOOLSE method, its name and acronym DOOLSE were independently reinvented and formulated in time series analysis by Štulajter (1991, 2002). Since the DOOLSE method consists in applying two OLS regressions, the first one producing residuals \({\varvec{e}}\) and the second one variance estimates, we consider name DOOLSE, even not well-known in statistical community, as more descriptive and better representing the idea of the method as name VLS.

In addition, using results of (Witkovský 2012), we can also prove by direct computation that in the orthogonal FDSLRM invariant MINQE or shortly MINQE(I) are identical with DOOLSE and invariant unbiased MINQE, MINQE(U,I), are identical to MDOOLSE.

In the next text, some theoretical results can be written in one form for both of DOOLSE and MDOOLSE problems. To emphasize this fact, we will use one abbreviation with parenthesis ( ), e.g (M)DOOLSE, assuming that given results or considerations hold for both problems.

Using the geometrical language of Hilbert spaces (Brockwell and Davis 1991, 2009; Štulajter 2002), (M)DOOLSE determine an unknown variances \(\varvec{\upnu }\) stored in matrices \(\varvec{{\Sigma }}_{\varvec{\upnu }}\) or in \(\mathbf{M} _\mathbf{F }\varvec{{\Sigma }}_{\varvec{\upnu }}\mathbf{M} _\mathbf{F }\) in such way, that these matrices have the smallest Euclidean distance to the observable matrix \(\mathbf{S }_{{\varvec{e}}}\). In such case, (M)DOOLSE for orthogonal FDSLRMs could be computed geometrically as the orthogonal projection of \(\mathbf{S }_{{\varvec{e}}}\) onto the linear span \({\mathscr {L}}(\mathbf{V }_0, \mathbf{V }_1,\ldots , \mathbf{V }_l)\) using the Gram matrix \(\mathbf{G }\) of set \(\{\mathbf{V }_0, \mathbf{V }_1, \ldots , \mathbf{V }_l\}\) generated by the inner product \((\bullet ,\bullet ) = \text {tr}(\bullet \cdot \bullet )\). Matrices \(\mathbf{V }_j, j=1,\ldots , l\) are the same as in (2.2), for DOOLSE: \(\mathbf{V }_0 = \mathbf{I }_n\) and for MDOOLSE: \(\mathbf{V }_0 = \mathbf{M} _\mathbf{F }\).

According to Štulajter and Witkovský (2004), the mentioned projection is given by

where

where for DOOLSE: \(n^* \equiv n\), for MDOOLSE: \(n^* \equiv n-k\), \({\varvec{e}}\) are again OLS residuals, \(\varvec{v}_j\) are columns of \(\mathbf{V }\). This formula gives the same results as formula (4.111) in Demidenko (2013) obtained by standard matrix calculus, applied to orthogonal FDSLRMs in (2.1).

However, the formula (3.6) can produce estimates out of the parametric space \(\Upupsilon \) at a relatively high probability (Gajdoš et al. 2017), i.e. in many practical cases of econometric time series analysis it frequently produces negative estimates. The projection-based (or standard matrix calculus) calculation (3.6) is not able to handle non-negative constraints given by \(\Upupsilon \) reliably and in some simple way.

To distinguish the projection-based estimates (or VLS estimates from the viewpoint of LMM) from actual DOOLSE (3.4), MDOOLSE (3.5) which are always non-negative, we suggest to be more accurate and use full names for real DOOLSE and MDOOLSE: non-negative DOOLSE (NN-DOOLSE) and non-negative MDOOLSE (NN-MDOOLSE). Such specific way of notation is not rare and is similarly used e.g. in the case of Rao’s MINQE (Rao and Kleffe 1988, chap. 5). For the projection-based DOOLSE and MDOOLSE (3.6), without considering non-negativity constraints, we leave the original acronyms DOOLSE, MDOOLSE coined by Štulajter.

3.2.3 Non-negative double least squares estimators – NN-(M)DOOLSE

Now applying the basic convex quadratic optimization theory (Cornuéjols et al. 2018; Bertsekas 2009) in orthogonal FDSLRMs, we can rewrite NN-(M)DOOLSE as strictly convex quadratic problems, whose solutions \(\tilde{\varvec{\upnu }}\) always exist and are unique global minimizers on \(\Upupsilon ^{*}\). Here are details proving this conclusion.

Using the standard inner product of matrices defined as \((\bullet , \bullet ) = \text {tr}(\bullet , \bullet )\), generating the Euclidean norm \(\left\| \bullet \right\| \) of a matrix, and basic properties of the trace function, we can easily rewrite expressions (3.4), (3.5) for the objective functions as quadratic forms. The particular form of these quadratic forms in optimization problems (M)DOOLSE is described by the following proposition.

Proposition 1

(NN-(M)DOOLSE as quadratic optimization problems)

In an orthogonal FDSLRM the NN-(M)DOOLSE problems are convex quadratic optimization problems in the form

where for NN-DOOLSE: \(n^* \equiv n\), NN-MDOOLSE: \(n^* \equiv n-k\),

According to the fundamental KKT theorem of convex optimization (Boyd and Vandenberghe 2009, chap. 5) which handles convex optimization problems with constraints in the form of inequalities giving a list of the so-called Karush–Kuhn–Tucker (KKT) optimality conditions, we consider our optimization problem NN-(M)DOOLSE in the Lagrange multiplier form with Lagrangian L as a sum of the objective function \(f_0(\varvec{\upnu })\) and a linear combination of multipliers \(\varvec{\uplambda }= (\uplambda _0, \uplambda _1, \ldots , \uplambda _l)' \succeq {\varvec{0}}_{l+1}\) and constraints \(-\varvec{\upnu }=(\upnu _0, \upnu _1, \ldots , \upnu _l)' \preceq {\varvec{0}}_{l+1}\).

Then KKT optimality conditions for (3.7) become necessary and sufficient conditions of optimality, satisfied at any local optimal solution, being represented by three sets of conditions: (1) primal and dual feasibility: \(\varvec{\upnu }\succeq {\varvec{0}}_{l+1}\), \(\lambda \succeq {\varvec{0}}_{l+1}\), (2) stationarity of the Lagrangian: \(\nabla _{\varvec{\upnu }} L(\varvec{\upnu }, \varvec{\uplambda }) = {\varvec{0}}_{l+1}\) and (3) complementary slackness: \(\upnu _j\uplambda _j=0,\, j\in \{0,1, \ldots ,l\}\). Computing the gradient of the Lagrangian, we arrive at the following proposition.

Proposition 2

(KKT optimality conditions for NN-(M)DOOLSE)

In an orthogonal FDSLRM consider the non-negative NN-(M)DOOLSE problems in the Lagrange multiplier form with the Lagrangian

Then, a necessary and sufficient condition for \(\tilde{\varvec{\upnu }}, \tilde{\varvec{\uplambda }}\) to be problems’ optimal solution is

-

(1)

\(\varvec{\upnu }\succeq {\varvec{0}}_{l+1}, \quad \varvec{\uplambda }\succeq {\varvec{0}}_{l+1}\),

-

(2)

\(\begin{pmatrix} \mathbf{G} &{} -\mathbf{I}_{l+1} \\ \end{pmatrix} \begin{pmatrix} \varvec{\upnu }\\ \varvec{\uplambda }\end{pmatrix} = \varvec{q}\),

-

(3)

\(\varvec{\upnu }\circ \varvec{\uplambda }= {\varvec{0}}_{l+1} \quad \left( \Leftrightarrow \upnu _j\uplambda _j=0,\,\, j\in \{0,1, \ldots ,l\}\right) \).

As for the existence of optimal solutions, we can apply the well-known basic results for minimizing quadratic forms (Bertsekas (2009, chap. 3)). Since the Hessian of (3.7) equal to \(\nabla _{\varvec{\upnu }}^2 f_0(\varvec{\upnu }) = 2\mathbf{G }\) is a positive definite matrix, like \(\mathbf{G }\), then \(f_0(\varvec{\upnu })\) is strictly convex and also coercive proper function. These two properties are sufficient conditions for NN-(M)DOOLSE (Bertsekas (2009, Weierstrass’ theorem, p. 119)) to have always a unique global optimal solution \(\tilde{\varvec{\upnu }}\). Employing the familiar Bessel’s inequality (Brockwell and Davis 2009, Sect. 2.4)

it is also not difficult to rewrite KKT conditions (1–3) as the following system

implying an optimal solution \(\tilde{\varvec{\upnu }}\) with \(\tilde{\upnu }_0=0\) if and only if the vector of OLS residuals \({\varvec{e}}\) belongs to the column space of \(\mathbf{V }\). Since probability of \({\varvec{e}}\in {\mathscr {L}}(\mathbf{V })\) is zero, our existence conclusions can be summarized in the next proposition.

Proposition 3

(Solution existence of NN-(M)DOOLSE)

In an orthogonal FDSLRM the following holds

-

(1)

NN-(M)DOOLSE problems are strictly quadratic optimization problems.

-

(2)

Their solutions \(\tilde{\varvec{\upnu }}\) always exist and they are unique global minimizers.

-

(3)

\(\tilde{\upnu }_0=0 \Leftrightarrow {\varvec{e}}\in \mathscr {L}(\mathbf{V}) \Leftrightarrow \varvec{x}\in \mathscr {L}({\mathbf{F}, \mathbf{V}}) \).

-

(4)

\(P(\tilde{\upnu }_0=0)=P({\varvec{e}}\in \mathscr {L}(\mathbf{V}))=P\left( \varvec{x}\in \mathscr {L}({\mathbf{F}, \mathbf{V}})\right) =0\).

From the practical computational viewpoint, the most important result is fundamental KKT conditions because finding solutions of convex optimization problems is nothing else than solving the corresponding KKT conditions analytically or in general numerically.

In our case of NN-(M)DOOLSE, the KKT conditions (1–3) in Proposition 2 can be represented by a set of \(2^{l}\) nonsingular linear systems of equations, where each of them is given by a specific matrix \(\mathbf{K }\) derived from \(\mathbf{G }\) and by vector \(\varvec{q}\) from (3.6). Details of such representation can be found in the Appendix A.2. Then our theoretical results in Proposition 3 dictate that only one of the linear systems always gives us all required non-negative elements of \(\tilde{\varvec{\upnu }}\).

On this representation, we can establish a simple KKT optimization algorithm which run through given linear systems, compute their analytical solution and stops when the solution appears non-negative. In other words, the proposed KKT algorithm always founds the required NN-(M)DOOLSE \(\tilde{\varvec{\upnu }}\) in at most \(2^{l}\) steps. The algorithm is summarized in Table 2, whereas the proof is in the Appendix A.2.

As for computational complexity of the KKT optimization algorithm, all calculations of input have complexity \({\mathcal {O}}(n)\), whereas the body of the algorithm (steps 1–3) needs extra operations of order \({\mathcal {O}}(l^2\cdot 2^{l})\). Since fixed number l of variance parameters in matrix \(\mathbf{D }_{\varvec{\upnu }}= {Cov}\{\varvec{Y}\}\) is usually much smaller than n (or \(l^2\cdot 2^l\) is not bigger than n), then the complete algorithm has the leading order \({\mathcal {O}}(n)\) with respect to n. It is the same as in the case of NE and generally typical only for computationally fastest analytical solutions of the KKT conditions.

3.3 Gaussian orthogonal FDSLRM

In the LMM framework, the most usual distribution of \(\varvec{X}\) used in practice is represented by the multivariate normal (Gaussian) distribution. Therefore, in addition to the orthogonality of FDSLRM, we will require in this section the distributional assumption \(\varvec{X}\sim {\mathcal {N}}_n(\mathbf{F }\varvec{\beta }, \varvec{{\Sigma }}_{\varvec{\upnu }})\). We will refer to FDSLRM under these assumptions as to Gaussian orthogonal FDSLRM.

In the case of Gaussian orthogonal FDSLRM, we can add to our investigation last two always non-negative estimation methods from Table 1: maximum likelihood estimators (MLE) and residual maximum likelihood estimators (REMLE).

3.3.1 Maximum likelihood estimators—MLE, REMLE

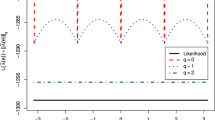

Both MLE and REMLE of variances \(\varvec{\upnu }\) provide estimates maximizing ML and REML log-likelihood functions (logarithms of likelihoods).

Using simple and clear arguments from Lamotte’s paper (LaMotte 2007), we can easily form the ML log-likelihood function  and REML loglikelihood function

and REML loglikelihood function  , assuming full-rank FDSLRM observation \(\varvec{X}\) (2.1), as

, assuming full-rank FDSLRM observation \(\varvec{X}\) (2.1), as

where \({\varvec{e}}_{\varvec{\upnu }}=\varvec{x}-\mathbf{F }\varvec{\beta }_{\varvec{\upnu }}^{*}\) are FDSLRM residuals, known as marginal residuals in LMM (Singer et al. 2017), \(\varvec{x}\) is an arbitrary realization of the FDSLRM observation \(\varvec{X}\) with covariance matrix \(\varvec{{\Sigma }}_{\varvec{\upnu }}\), \(\varvec{\beta }_{\varvec{\upnu }}^{*}= (\mathbf{F }'\varvec{{\Sigma }}_{\varvec{\upnu }}^{-1}\mathbf{F })^{-1}\mathbf{F }'\varvec{{\Sigma }}_{\varvec{\upnu }}^{-1}\varvec{x}\) is the best linear unbiased estimator (BLUE) of \(\varvec{\beta }\) and \(\left\| \bullet \right\| _{\varvec{{\Sigma }}_{\varvec{\upnu }}^{-1}}^2 = (\bullet )'\varvec{{\Sigma }}_{\varvec{\upnu }}^{-1}(\bullet )\) is the square of a generalized vector norm given by \(\varvec{{\Sigma }}_{\varvec{\upnu }}^{-1}\). Moreover, in orthogonal FDSLRM, BLUE \(\varvec{\beta }_{\varvec{\upnu }}^{*}= (\mathbf{F }'\varvec{{\Sigma }}_{\varvec{\upnu }}^{-1}\mathbf{F })^{-1}\mathbf{F }'\varvec{{\Sigma }}_{\varvec{\upnu }}^{-1}\varvec{x}\) and marginal residuals \({\varvec{e}}_{\varvec{\upnu }}\) are identical with OLS estimate \(\varvec{\beta ^*}=(\mathbf{F }'\mathbf{F })^{-1}\mathbf{F }'\varvec{x}\) and OLS residuals \({\varvec{e}}\) respectively, not depending on \(\varvec{\upnu }\) (Štulajter and Witkovský 2004).

Remark 3

— REML likelihood

In Lammote’s paper (LaMotte 2007), which presents a direct derivation of REML likelihood function in LMM using familiar linear algebra operations, we can find that the formulation of ML and REML likelihood in LMM under the assumption of normality was originally done in Harville’s works (Harville 1974, 1977).

However, LMM references up to 2007 show the explicit form of REML likelihood for LMM rarely. In Štulajter’s FDSLRM reference works dealing with MLE (Štulajter 2002; Štulajter and Witkovský 2004) REML likelihood for FDSLRM is also absent. According to Lammote (LaMotte 2007), the reason why seems to be Harville’s too sophisticated, and indirect derivation. As for Demidenko’s LMM monograph (Demidenko 2013), the FDSLRM formulas (3.11), (3.12) are special cases of ML and REML log-likelihood functions presented in Sect. 2.2.6, where estimator \(\varvec{\beta }_{\varvec{\upnu }}^{*}\) is mentioned as the generalized least squares estimator. Assumption about full column rank of \(\mathbf{F }\) is identical with Demidenko’s assumption in Sect. 2.2.6.

Maximum likelihood and residual maximum likelihood estimation of variance components in LMMs produce, in general, no analytical expressions for the estimators (Searle et al. 2009, chap. 6). They exist only for \(\upnu _0 >0\). According to Demidenko (2013, Sect. 2.5, Theorem 4) MLE and REMLE estimates do not exist in general FDSLRM with LMM observation \(\varvec{X}\) (2.1) if and only if a realization \(\varvec{x}\) of \(\varvec{X}\) belongs to \({\mathscr {L}}(\mathbf{F },\mathbf{V })\), linear span of columns \(\mathbf{F }\) and \(\mathbf{V }\). This condition is also equivalent with \({\varvec{e}}\in {\mathscr {L}}(\mathbf{V })\).

In orthogonal FDSLRM, the MLE and REMLE can be rewritten as the optimization problems for \(\varvec{\upnu }\) at given OLS residuals \({\varvec{e}}\)

where \(\left\| {\varvec{e}}\right\| _{\varvec{{\Sigma }}_{\varvec{\upnu }}^{-1}}^2 = {\varvec{e}}'\varvec{{\Sigma }}_{\varvec{\upnu }}^{-1}{\varvec{e}}= \text {tr}\left( \mathbf{S }_{{\varvec{e}}}\varvec{{\Sigma }}_{\varvec{\upnu }}^{-1}\right) \) and \(\mathbf{S }_{{\varvec{e}}}\) is the residual products matrix used in definitions (3.4), (3.5) of non-negative (M)DOOLSE.

Generally, (RE)MLE in FDSLRM are not convex optimization problems with respect to \(\varvec{\upnu }\) and they do not exist for \(\upnu _0 = 0\) which occurs if and only if \({\varvec{e}}\in \mathscr {L}(\mathbf{V})\). If we apply the following bijective transformation defined on \(\Upupsilon = (0,\infty )\times [0,\infty )^l\)

we can convert the (RE)MLE problems into the form of equivalent convex problems (Boyd and Vandenberghe 2009, Sect. 4.1.3) whose solutions can be readily converted into (RE)MLE solutions by the inverse transformation to (3.15). Here are more detailed steps of the conversion.

In orthogonal FDSLRM \(\varvec{{\Sigma }}_{\varvec{\upnu }}^{-1}\) (Štulajter 2003, Lemma 2.1) and \({\varvec{e}}' \varvec{{\Sigma }}_{\varvec{\upnu }}^{-1}{\varvec{e}}\) are equal to

Using orthogonality conditions (2.3) and determinant expression (Searle and Khuri 2017, Sect. 6.8)

we get for determinants in (RE)ML loglikelihoods (3.13), (3.14)

Since the chosen bijective transformation (3.15) also transforms convex constraints for \(\varvec{\upnu }\) to convex constraints for \(\varvec{d}\), substituting (3.16), (3.18) into objective functions (3.13), (3.14) of (RE)MLE, we can formulate the following proposition.

Proposition 4

((RE)MLE as equivalent convex problems)

Let assume \({\varvec{e}}\not \in \mathscr {L}(\mathbf{V})\) and consider a bijective transformation in the following form:

Then, in a Gaussian orthogonal FDSLRM the (RE)MLE problems are equivalent to convex problems:

where for \(MLE: n^* \equiv n,\) and \( for REMLE: n^* \equiv n - k \).

Similarly as for NN-(M)DOOLSE, we can write the equivalent (RE)MLE problem with 2l constraints in the corresponding Lagrangian multiplier form based on the following Lagrangian with 2l multipliers \((\lambda _1, \ldots , \lambda _l, \mu _1, \ldots , \mu _l)' = (\varvec{\uplambda }', \varvec{\mu }')' \in \mathbb {R}^{2l}_+\)

Then by direct computation, we obtain KKT conditions for equivalent (RE)MLE describing: (1) primal and dual feasibility for \(\varvec{d}\) and \((\varvec{\uplambda }', \varvec{\mu }')'\), (2) stationarity of the Lagrangian (\(\nabla _{\varvec{d}}L(\varvec{d}, \varvec{\uplambda }, \varvec{\mu }) = 0\)) and (3) complementary slackness, all described by the next proposition.

Proposition 5

(KKT optimality conditions for equivalent (RE)MLE)

Consider equivalent convex optimization problems to (RE)MLE in the Lagrangian multiplier form

Then, a necessary and sufficient condition for \({\hat{\varvec{d}}},{\hat{\varvec{\uplambda }}},{\hat{\varvec{\mu }}}\) to be problems’ optimal solution is

-

(1)

\(d_0-d_j\left\| \varvec{v}_j\right\| ^2>0,\,\, d_j \ge 0, \,\,\lambda _j\ge 0,\,\,\mu _j\ge 0,\,\, j\in \{1,\ldots ,l\},\)

-

(2)

\(||{\varvec{e}}||^2-\dfrac{n^{*}-l}{d_0}-\displaystyle \sum \limits _{j=1}^{l}\left( \mu _j+ \dfrac{1}{d_0-d_j||\varvec{v}_j||^2}\right) =0, \)

\(\qquad \dfrac{||\varvec{v}_j||^2}{d_0-d_j||\varvec{v}_j||^2}-({\varvec{e}}'\varvec{v}_j)^2- \lambda _j+\mu _j||\varvec{v}_j||^2=0,\,\,j\in \{1,\ldots ,l\},\)

-

(3)

\(-d_j\lambda _j=0,\,\,-(d_0-d_j||\varvec{v}_j||^2)\mu _j=0, j\in \{1,\ldots ,l\}\).

Similarly as in the case of NN-(M)-DOOLSE, the Hessian \(\mathbf{H }\equiv \nabla _{\varvec{d}}^2 f_0(\varvec{d})\) equal to

leads to strict convexity of the problem. Since \(f_0(\varvec{d})\) is also coercive, we can summarize the existence conditions of optimal solutions in the final proposition as we did for NN-(M)DOOLSE problems.

Proposition 6

(Solution existence of equivalent (RE)MLE)

Let assume \({\varvec{e}}\not \in \mathscr {L}(\mathbf{V})\). Then in a Gaussian orthogonal FDSLRM the following holds

-

(1)

Equivalent (RE)MLE problems are strictly convex optimization problems.

-

(2)

The objective function \(f_0(\varvec{d})\) is coercive with respect to constraints.

-

(3)

Their solutions \({\hat{\varvec{d}}}\) always exist and they are unique global minimizers.

The bijection (3.15) implies \(d_0-d_j \left\| \varvec{v}_j\right\| ^2 = (\upnu _0+\upnu _j\left\| \varvec{v}_j\right\| ^2)^{-1} \ne 0\) which dictates \(\mu _j = 0\) to satisfy the complementary slackness conditions in KKT (3) and simultaneously forms the new version of KKT (1–3) of Proposition 5

But for \({\varvec{e}}\not \in {\mathscr {L}}(\mathbf{V })\), which occurs with probability 1, the system (3.19)–(3.21) is the same as the system (3.8)–(3.10).

In other words we just proved that for \(\upnu _0 \ne 0\) (or \({\varvec{e}}\not \in {\mathscr {L}}(\mathbf{V }) \)) the KKT conditions for NN-(M)DOOLSE are equivalent with the KKT conditions for (RE)MLE and the bijective transformation. It means that using the convex optimization approach in orthogonal FDSLRM, we proved the equivalence of NN-(M)DOOLSE and (RE)MLE with probability 1.

Theorem 1

(equivalence between NN-(M)DOOLSE and (RE)MLE)

In a Gaussian orthogonal FDSLRM non-negative (M)DOOLSE are almost surely equal to (RE)MLE, i.e.

The important theoretical result of Theorem 1 is stronger and more general than in Štulajter and Witkovský (2004) proved only for interior points of \(\Upupsilon \) and whose iterative computational algorithm could fail in calculating (RE)MLE when (M)DOOLSE, not satisfying non-negativity (equivalent with VLS estimates), are negative.

The very useful consequence of Theorem 1 is that we can practically compute MLE or REMLE in orthogonal FDSLRMs as quadratic NN-(M)DOOLSE. Moreover, for \({\varvec{e}}\in {\mathscr {L}}(\mathbf{V })\) NN-(M)DOOLSE do not fail as (RE)MLE, but naturally extend them. Therefore, we can use our KKT algorithm of order \({\mathcal {O}}(n)\). However, in computing (RE)MLE we can apply any of the current standard computational tools of convex optimization or LMM (all giving algorithms at least of the order \({\mathcal {O}}(n^3)\)) described in detail in the Appendix A.3.

4 Estimating FDSLRM variances via EBLUPs

4.1 Definition and computational form of new estimators

The convex optimization approach brought us new theoretical relations between non-iterative distribution-free quadratic and maximum likelihood estimators of FDSLRM variances and also a new fast computational algorithm for their computation. As we can see in this section, the LMM framework itself gives us a new improved version of the previously developed method of NE described in Sect. 3.2.

If we look at formula (3.1) for NE through eyes of the general theory of the BLUP in LMM (see e.g. Christensen (2011, chap. 12)), then in FDSLRM the OLS predictor \(\breve{\varvec{Y}}\) for \(\varvec{Y}\) is linear, unbiased, computationally and analytically simple as we required for further theoretical research, but not the best. Therefore, following the standard practice of LMMs in predicting \(\varvec{Y}\), the random effect in LMM (2.1), we take the best linear unbiased predictor (BLUP) as the estimator of \(\varvec{Y}\). This consideration leads to the following improved version of our original NE.

Let us consider FDSLRM (1.1) and its observation \(\varvec{X}\) described by (2.1), not necessarily orthogonal. Then estimators in the form

are said to be natural estimators of \(\varvec{\upnu }\) based on the BLUP or BLUP-NE for short.

The BLUP \(\varvec{Y}_{\varvec{\upnu }}^{*}\) of \(\varvec{Y}\) together with the best linear unbiased estimator \(\varvec{\beta }_{\varvec{\upnu }}^{*}\) (BLUE) of \(\varvec{\beta }\), mentioned in (RE)ML log-likelihoods (3.11) and (3.12), can be obtained from celebrated Henderson’s mixed model equations, MME for short [for MME in the current framework of LMM see e.g. Christensen (2011, Sect. 12.3) or Witkovský (2012, Sect. 2)]. MME have the following form in the case of FDSLRM

where \(\mathbf{G} _{\varvec{\upnu }}\equiv \mathbf{G }_\mathbf{V }+ \sigma _0^2\mathbf{D }_{\varvec{\upnu }}^{-1} = \mathbf{V }'\mathbf{V }+\sigma _0^2\mathbf{D }_{\varvec{\upnu }}^{-1}\) and \(\mathbf{G }_\mathbf{V }\equiv \mathbf{V }'\mathbf{V }\in \mathbb {R}^{l \times l}\) is the Gram matrix (Boyd and Vandenberghe 2018, Sect. 10.1) for columns of matrix \(\mathbf{V }\).

Assuming the full-ranked FDSLRM with \(\text {r}(\mathbf{F }\; \mathbf{V }) = k+l\) as in the case of NE and using the so-called Banachiewicz inversion formula for the inverse of a \(2\times 2\) partitioned (block) matrix (see e.g. Zhang 2005, Sect. 6.0.2) or (Hančová 2008, Sect. 2.1), we can easily prove the existence and the following form of the inverse to the block matrix in (4.2)

where \(\mathbf{W} _{\varvec{\upnu }}\equiv \mathbf{W }+ \sigma _0^2\mathbf{D }_{\varvec{\upnu }}^{-1} = \mathbf{V }'\mathbf{M} _\mathbf{F }\mathbf{V }+ \sigma _0^2\mathbf{D }_{\varvec{\upnu }}^{-1} \succ 0\), \(\mathbf{W }\) is the same Schur complement as in NE case (3.1). The matrix \(\mathbf{W} _{\varvec{\upnu }}\) is also Schur complement of \(\mathbf{F }'\mathbf{F }\) but now in the block matrix  . We call matrix \(\mathbf{W} _{\varvec{\upnu }}\) more specifically as the extended Schur complement determined by variances \(\varvec{\upnu }\).

. We call matrix \(\mathbf{W} _{\varvec{\upnu }}\) more specifically as the extended Schur complement determined by variances \(\varvec{\upnu }\).

Substituting the last result for the inverse into MME (4.2) and rearranging, we get for \(\varvec{Y}_{\varvec{\upnu }}^{*}\) (symbols \(\bullet \) denote blocks not needed in deriving)

Denoting matrix \(\mathbf{W} _{\varvec{\upnu }}^{-1}\mathbf{V }'\mathbf{M} _\mathbf{F }\in \mathbb {R}^{l \times n}\) as \(\mathbf{T }_{\varvec{\upnu }}\), we just derived the following computational form of new BLUP-NE estimators.

Proposition 7

(the computational form of BLUP-NE)

Let us consider the following full-ranked LMM model for FDSLRM observation \(\varvec{X}\)

Then BLUP-NE estimators \(\mathring{{\sigma }}_{1}^2,\ldots ,\mathring{{\sigma }}_{l}^2\) of parameters \(\sigma _1^2,\ldots ,\sigma _l^2\) are given by

where \(\varvec{\tau }_{ j}\equiv ({T}_{\varvec{\upnu }}{j1},{T}_{\varvec{\upnu }}{j2},\ldots ,{T}_{\varvec{\upnu }jn})'\) are rows of matrix \(\mathbf{T}_{\varvec{\upnu }}\) and \(\mathbf{T}_{\varvec{\upnu }}=\mathbf{W}_{\varvec{\upnu }}^{-1}{} \mathbf{V}'{} \mathbf{M}_{\mathbf{F}}\) with \(\mathbf{W}_{\varvec{\upnu }}= \text {diag}\left\{ \sigma _0^2/\sigma _j^2\right\} +\mathbf{V}'{} \mathbf{M}_{\mathbf{F}}{} \mathbf{V}\).

It is straightforward to extend our computational form to the case when some variances \(\sigma _j^2\) are zero or in other words when matrix \(\mathbf{D }_{\varvec{\upnu }}\) is singular. For a singular \(\mathbf{D }_{\varvec{\upnu }}\), MME (4.2) have the alternative form (Witkovský 2012)

where \(\mathbf{H} _{\varvec{\upnu }}\equiv \mathbf{G }_\mathbf{V }\mathbf{D }_{\varvec{\upnu }}+ \sigma _0^2 \mathbf{I }_l= \mathbf{V }'\mathbf{V }\mathbf{D }_{\varvec{\upnu }}+\sigma _0^2 \mathbf{I }_l\) and \(\varvec{Y}_{\varvec{\upnu }}^{*}= \mathbf{D }_{\varvec{\upnu }}\varvec{Z}_{\varvec{\upnu }}^{*}\).

Applying the same argument with the Banachiewicz inversion formula, we get the second version of the computational form of \(\mathbf{T }_{\varvec{\upnu }}\) determining \(\varvec{Y}_{\varvec{\upnu }}^{*}\)

As opposed to \(\mathbf{W} _{\varvec{\upnu }}, \mathbf{G} _{\varvec{\upnu }}\), matrices \(\mathbf{U} _{\varvec{\upnu }}, \mathbf{H} _{\varvec{\upnu }}\) always exist under the assumption of \(\text {r}(\mathbf{F }\;\mathbf{V }) = k+l\). Simultaneously both of \(\mathbf{U} _{\varvec{\upnu }}, \mathbf{H} _{\varvec{\upnu }}\) are also always invertible, since both of \(\det \mathbf{U} _{\varvec{\upnu }}, \det \mathbf{H} _{\varvec{\upnu }}\) are nonzero for any \(\mathbf{D }_{\varvec{\upnu }}\) in our FDSLRM as it is shown in the Appendix A.4. In the case of a nonsingular \(\mathbf{D }_{\varvec{\upnu }}\) the mentioned matrices are connected via following relationships

The version (4.5) of MME is also preferred in numerical calculations (Witkovský 2012), since it can handle not only a singular \(\mathbf{D }_{\varvec{\upnu }}\) but also a very ill-conditioned \(\mathbf{D }_{\varvec{\upnu }}\) appearing when \(\varvec{\upnu }\) has very small positive components \(\sigma _j^2\).

It is worth to mention that originally MME were derived under the normality assumptions [see the original work Henderson et al. (1959) or Witkovský (2012)], but from the viewpoint of least squares both versions (4.2), (4.5) of MME describe the BLUP \(\varvec{Y}_{\varvec{\upnu }}^{*}\) and BLUE \(\varvec{\beta }_{\varvec{\upnu }}^{*}\) (Christensen 2011, Sect. 12.3) with no need to restrict distributions of \(\varvec{Y}\) and \(\varvec{Z}\) to be normal.

Remark 4

—General FDSLRM and BLUP-NE for \(\sigma _0^2\)

Unlike our computational form in Proposition 7, the idea (4.1) behind BLUP-NE does not depend on the assumption of FDSLRM full-rankness. As it is described in (Searle et al. 2009) or (Witkovský 2012) the inverse of block matrix in MME (4.2) can be calculated using any g-inverse of the block matrix. In this situation, we would have to abandon our analytic approach and explore the problem only numerically, but in econometric applications this is not the case.

As for the first component \(\upnu _0 = \sigma _0^2\) of \(\varvec{\upnu }\), we could consider as the BLUP based natural estimator the sum of squares of white noise residuals \(\varvec{Z}_{\varvec{\upnu }}^{*}=\varvec{X}-\mathbf{F }\varvec{\beta }_{\varvec{\upnu }}^{*}-\mathbf{V }\varvec{Y}_{\varvec{\upnu }}^{*}\) divided by the number of degrees of freedom in FDSLRM

In the LMM framework, FDSLRM white noise residuals \(\varvec{Z}_{\varvec{\upnu }}^{*}\) are also called conditional residuals (Singer et al. 2017). It is not difficult to show that such estimator would be biased even in orthogonal FDSLRM. Therefore, as a natural estimator of \(\sigma _0^2\) we will keep the original unbiased invariant quadratic NE \(\breve{\sigma }_{0}^2(\varvec{X})\) of \(\sigma _0^2\), the sum of residuals in the OLS method applied to (2.1) assuming both \(\varvec{\beta }\) and \(\varvec{Y}\) as fixed divided by degrees of freedom [Eq. (2.2) in Hančová (2008)].

4.2 Statistical properties at known variance parameters

The derivation of theoretical properties of BLUP-NE estimates under the assumption of known \(\varvec{\upnu }\), regarding the first and second order moment characteristics, can be significantly facilitated by the following lemma describing the properties of matrix \(\mathbf{T }_{\varvec{\upnu }}\) determining the BLUP \(\varvec{Y}_{\varvec{\upnu }}^{*}\) in (4.3).

Its proof can be accomplished in a similar way as the proof of Lemma 3.1 in Hančová (2008) by a direct routine computation employing formulas (4.7), \(\mathbf{T }_{\varvec{\upnu }}=\mathbf{W} _{\varvec{\upnu }}^{-1}\mathbf{V }'\mathbf{M} _\mathbf{F }\), \(\mathbf{W} _{\varvec{\upnu }}=\mathbf{V }'\mathbf{M} _\mathbf{F }\mathbf{V }+\sigma _0^2\mathbf{D }_{\varvec{\upnu }}^{-1}\), properties of orthogonal projectors (orthogonality, idempotency, symmetry) and Schur complements (symmetry, positive definiteness).

Lemma 1

(basic properties of \(\mathbf{T }_{\varvec{\upnu }}\))

-

(1)

\(\mathbf{T}_{\varvec{\upnu }}{} \mathbf{T}_{\varvec{\upnu }}'=\mathbf{W}_{\varvec{\upnu }}^{-1}(\mathbf{I}_l-\sigma _0^2\mathbf{D}_{\varvec{\upnu }}^{-1}{} \mathbf{W}_{\varvec{\upnu }}^{-1})=\mathbf{D}_{\varvec{\upnu }}{} \mathbf{U}_{\varvec{\upnu }}^{-1}(\mathbf{I}_l-\sigma _0^2\mathbf{U}_{\varvec{\upnu }}^{-1})\)

-

(2)

\(\mathbf{T}_{\varvec{\upnu }}{} \mathbf{F}={\varvec{0}}_{l \times n}\) and \(\mathbf{T}_{\varvec{\upnu }}{} \mathbf{V}=\mathbf{I}_l-\sigma _0^2\mathbf{D}_{\varvec{\upnu }}^{-1}{} \mathbf{W}_{\varvec{\upnu }}^{-1} = \mathbf{I}_l-\sigma _0^2\mathbf{U}_{\varvec{\upnu }}^{-1}\)

-

(3)

\(\mathbf{T}_{\varvec{\upnu }}\varvec{{\Sigma }}_{\varvec{\upnu }}\mathbf{T}_{\varvec{\upnu }}'=\mathbf{D}_{\varvec{\upnu }}-\sigma _0^2 \mathbf{W}_{\varvec{\upnu }}^{-1} = \mathbf{D}_{\varvec{\upnu }} (\mathbf{I}_l - \sigma _0^2 \mathbf{U}_{\varvec{\upnu }}^{-1})\)

The computational forms (4.4) of BLUP-NE are also quadratic forms of \(\varvec{X}\). In addition, result (2) \(\mathbf{T }_{\varvec{\upnu }}\mathbf{F }= {\varvec{0}}_{l \times n} \) implies that \(\varvec{\tau }_{ j}\mathbf{F }={\varvec{0}}_{}\). Such a condition leads to the same conclusion as in the case of NE. BLUP based natural estimators \({\mathring{{\sigma }}_{j}}^2(\varvec{X})\) are always translation invariant or shortly invariant quadratic estimators (Štulajter (2002, Sect. 1.5) or Hančová (2008, Sect. 3.1)). The following theorem summarizes theoretical properties of BLUP-NE.

Theorem 2

(statistical properties of \({\mathring{{\sigma }}_{j}}^2(\varvec{X})\))

Natural estimators \({\mathring{{\sigma }}_{j}}^2(\varvec{X});j=1,2, \ldots , l\) of \(\varvec{\upnu }\) based on the BLUP \(\varvec{Y}_{\varvec{\upnu }}^{*}\) of \(\varvec{Y}\) are invariant quadratic estimators having the following properties

-

(1)

\(E_{\varvec{\upnu }}\left\{ {\mathring{{\sigma }}_{j}}^2(\varvec{X})\right\} =\sigma _j^2-\sigma _0^2(\mathbf{W}_{\varvec{\upnu }}^{-1})_{jj};\) \(j=1,2,\ldots ,l,\)

If \(\varvec{X}\sim {\mathcal {N}}_n(\mathbf{F}\varvec{\beta }, \varvec{{\Sigma }}_{\varvec{\upnu }})\), then

-

(2)

\(D_{\varvec{\upnu }}\{{\mathring{{\sigma }}_{j}}^2(\varvec{X})\}=2(\sigma _j^2-\sigma _0^2(\mathbf{W}_{\varvec{\upnu }}^{-1})_{jj})^2;\) \(j=1,2,\ldots ,l,\)

-

(3)

\({Cov}_{\varvec{\upnu }}\left\{ {\mathring{{\sigma }}_{i}}^2(\varvec{X}),{\mathring{{\sigma }}_{j}}^2(\varvec{X})\right\} =2(\sigma _0^2 (\mathbf{W}_{\varvec{\upnu }}^{-1})_{ij})^2;\) \(i,j=1,2,\ldots ,l,\) \(i\ne j\),

-

(4)

\({MSE}_{\varvec{\upnu }}\{{\mathring{{\sigma }}_{j}}^2(\varvec{X})\}=2{(\sigma _j^2-\sigma _0^2(\mathbf{W}_{\varvec{\upnu }}^{-1})_{jj})}^2+\sigma _0^4(\mathbf{W}_{\varvec{\upnu }}^{-1})_{jj}^2;\)

\(j=1,2,\ldots ,l.\)

Proof

See the Appendix A.4. \(\square \)

Remark 5

Bias of BLUP-NE

If some components in \(\varvec{\upnu }\) are zero, there is a need to use expression \(\mathbf{D }_{\varvec{\upnu }}\mathbf{U} _{\varvec{\upnu }}^{-1}\) instead of \(\mathbf{W} _{\varvec{\upnu }}^{-1}\). The first property, biasedness of \({\mathring{{\sigma }}_{j}}^2(\varvec{X}), j = 1,2,\ldots ,l\), is in full accordance with the Ghosh theorem (Ghosh 1996; Gajdoš et al. 2017) about the incompatibility between simultaneous non-negativity and unbiasedness of estimators for variances of random components \(\varvec{Y}\) in LMM. As for bias, defined as \(\Updelta _{\varvec{\upnu }}\left\{ {\mathring{{\sigma }}_{j}}^2(\varvec{X})\right\} = E_{\varvec{\upnu }}\left\{ {\mathring{{\sigma }}_{j}}^2(\varvec{X})\right\} -\sigma _j^2\), we have the following expression given by the extended Schur complement \(\mathbf{W} _{\varvec{\upnu }}\)

Now we can theoretically compare quality of the BLUP natural estimators with original natural estimators (NE) proposed in our previous paper (Hančová 2008). As we can see from summarizing Table 3, their quality is determined by Schur complements \(\mathbf{W }\) and \(\mathbf{W} _{\varvec{\upnu }}\) where both of them are symmetric and positive definite matrices.

Using elementary properties of the Löwner partial ordering (Puntanen et al. 2013, chap. 24), we have immediately relations \(\mathbf{W} _{\varvec{\upnu }}> \mathbf{W }, \mathbf{W }^{-1}>\mathbf{W} _{\varvec{\upnu }}^{-1}, (\mathbf{W })_{jj} > (\mathbf{W} _{\varvec{\upnu }}^{-1})_{jj}\) which imply a smaller absolute value of bias, dispersion and MSE for BLUP-NE \({\mathring{{\sigma }}_{j}}^2(\varvec{X})\) in comparison with original NE \(\breve{\sigma }_{j}^2(\varvec{X})\). This conclusion directly supports our idea of the improvement leading to the BLUP-NE definition (4.1).

Under the condition of orthogonality (2.3), we can write for matrices \(\mathbf{M} _\mathbf{F }\), \(\mathbf{U} _{\varvec{\upnu }}\), \(\mathbf{W} _{\varvec{\upnu }}\), \(\mathbf{T }_{\varvec{\upnu }}\) and BLUP-NE \({\mathring{{\sigma }}_{j}}^2(\varvec{X})\)

where we introduced \( \rho _j \equiv \frac{\sigma _j^2\left\| \varvec{v}_j\right\| ^2}{\sigma _0^2+\sigma _j^2\left\| \varvec{v}_j\right\| ^2} \in \mathbb {R}^{}, \; 0\le \rho _j <1, j = 1, 2, \ldots , l \).

Applying these results, we get the following direct corollary of Theorem 2 for any orthogonal FDSLRM.

Corollary 1

(properties of \({\mathring{{\sigma }}_{j}}^2(\varvec{X})\) in orthogonal FDSLRM)

In an orthogonal FDSLRM natural estimators \({\mathring{{\sigma }}_{j}}^2\) based on the BLUP \(\varvec{Y}_{\varvec{\upnu }}^{*}\) have the following properties

-

(1)

\(E_{\varvec{\upnu }}\left\{ {\mathring{{\sigma }}_{j}}^2(\varvec{X})\right\} = \rho _j \sigma _j^2 \); \(j=1,2,\ldots ,l,\) where \(\rho _j=\dfrac{\sigma _j^2\left\| \varvec{v}_j\right\| ^2}{\sigma _0^2+\sigma _j^2\left\| \varvec{v}_j\right\| ^2} \in \mathbb {R}^{}_+\).

If \(\varvec{X}\sim {\mathcal {N}}_n(\mathbf{F}\varvec{\beta }, \varvec{{\Sigma }}_{\varvec{\upnu }})\), then

-

(2)

\(D_{\varvec{\upnu }}\{{\mathring{{\sigma }}_{j}}^2(\varvec{X})\}= 2 \rho _j^2 \sigma ^4_j\); \(j=1,2,\ldots ,l,\)

-

(3)

\({Cov}_{\varvec{\upnu }}\left\{ {\mathring{{\sigma }}_{i}}^2(\varvec{X}),{\mathring{{\sigma }}_{j}}^2(\varvec{X})\right\} =0\); \(i,j=1,2,\ldots ,l,\) \(i \ne j\),

-

(4)

\({MSE}_{\varvec{\upnu }}\{{\mathring{{\sigma }}_{j}}^2(\varvec{X})\}=[2\rho _j^2+(1-\rho _j)^2]\sigma ^4_j\); \(j=1,2,\ldots ,l.\)

Finally, if we look at original natural estimators \(\breve{\sigma }_{j}^2(\varvec{X})\) from our paper (Hančová 2008), then in orthogonal FDSLRM it can be easily shown that \({\mathring{{\sigma }}_{j}}^2(\varvec{X}) = \rho _j^2 \breve{\sigma }_{j}^2(\varvec{X}),\) \(j = 1, 2 , \ldots , l\). If we introduce \(\rho _0 = 1 \), then we obtain the complete orthogonal version of (4.4) for computing BLUP-NE \({\mathring{{\sigma }}_{j}}^2(\varvec{X})\):

4.3 Empirical version of BLUP-NE method

The computational form of our BLUP natural estimators, their first and second order moment characteristics were derived under the assumption of known variances \(\varvec{\upnu }\), which is in practice rarely fulfilled. In such case, the simplest reasonable solution is to use an empirical version of BLUP (EBLUP), as it is usual in the general theory of empirical BLUPs in LMMs (Štulajter (2002, chap. 5), Witkovský (2012) and Rao and Molina 2015, chap. 5). In particular, this step means replacing unknown true parameters \(\varvec{\upnu }\) with other “initial” values or estimates of \(\varvec{\upnu }\) in FDSLRM. In the light of iterative approaches, such empirical version of BLUP-NE can be viewed as a two-stage iterative estimation method with one step in the iteration.

From the theoretical perspective, we prefer initial estimates with the simplest explicit form and under least restrictive assumptions on the structure or distribution of FDSLRM. On the other hand, from the practical point of view, it will be sufficient to have such initial estimates which can be obtained by reliable and time efficient computational methods. Therefore, we suggest natural estimators (NE) as initial estimates for BLUP-NE, although we could consider any estimates based on least squares or maximum likelihood.

Using (4.9), these new distribution-free estimates which will be referred as empirical BLUP-NE or EBLUP-NE for short, are invariant and given in orthogonal FDSLRM by

where \(\breve{\rho }_0 = 1, \, \breve{\rho }_j=\frac{\breve{\sigma }_j^2\left\| \varvec{v}_j\right\| ^2}{\breve{\sigma }_0^2+\breve{\sigma }_j^2\left\| \varvec{v}_j\right\| ^2}\).

As for computational complexity of EBLUP-NE, we get 5l or \({\mathcal {O}}(l)\) extra operations to compute \(\breve{\rho }_j^2\) and product with \(\breve{\sigma }_{j}^2(\varvec{X})\) in comparison with NE from (3.2). Therefore, computing EBLUP-NE represents again an algorithm with the complexity having order \({\mathcal {O}}(n)\). It has the same order as our KKT algorithm, but in computational practice it will be a little quicker, since KKT algorithm extra operations are \({\mathcal {O}}(l.2^l)\), which should be observable in cases of smaller n, comparable with \(l.2^l\).

Remark 6

EBLUPs from NE

According to general theory of EBLUPs (Štulajter 2002; Ża̧dło 2009; Witkovský 2012; Rao and Molina 2015), if initial estimates \({{\tilde{\varvec{\upnu }}}}\) of \(\varvec{\upnu }\) are even, invariant and probability distribution of \(\varvec{Y}\) and \(\varvec{Z}\) in LMM (2.1) are both symmetric around zero with finite mean values, then empirical non-linear BLUP \(\varvec{Y}_{{\tilde{\varvec{\upnu }}}}^{*}\) based on \({{\tilde{\varvec{\upnu }}}}\) is unbiased. Our quadratic natural estimators NE, which are even and invariant, belong to this type of initial estimates.

However, general theory of EBLUPs also says that an explicit expression for MSE of EBLUP is not known. Non-linearity of EBLUP causes that theoretical properties of EBLUP can be usually explored only by approximate methods, using functional series, which will be our goal in future research.

5 Application in real data examples

5.1 Three real data sets and their FDSLRMs

Generally, since EBLUPs are nonlinear functions of the observation \(\varvec{X}\), their theoretical study (especially their second order statistical properties) is considered as a very difficult mathematical task and it is still open research theoretical problem in LMM and FDSLRM (Witkovský 2012; Gajdoš et al. 2017). Therefore, we will illustrate our theoretical results, performance and properties of the proposed EBLUP-NE method applying numerical and computer simulation approach.

We use three real data sets: electricity consumption, tourism and cyber security. For all data sets, we identified the most parsimonious structure of the FDSLRM using an iterative process of the model building and selection based on exploratory tools of spectral analysis and LMM theory. All details of our FDSLRM analysis and modeling can be found in our easy reproducible Jupyter notebooks freely available at our GitHub fdslrm repository EBLUP-NE (Gajdoš et al. 2019a).

5.1.1 Electricity consumption

As the first real data example, we have the econometric time series data set, representing hourly observations of the consumption of the electric energy (in kWh) in a department store. The number of time series observations is \(n=24\). The data and model was adapted from Štulajter and Witkovský (2004)

with \(k=3, l=4, \;\varvec{Y}= (Y_1, Y_2, Y_3, Y_4)' \sim {\mathcal {N}}_4({\varvec{0}}_{4}, \mathbf{D }_{\varvec{\upnu }}),\; Z(.) \sim iid \, {\mathcal {N}}(0,\sigma _0^2) \) and \(\varvec{\upnu }= (\sigma _0^2, \sigma _1^2, \sigma _2^2, \sigma _3^2, \sigma _4^2)' \in \mathbb {R}^{5}_{+}\). Frequencies \(\left( \omega _1,\omega _2,\omega _3\right) ' = 2\pi \left( 1/24, 2/24, 3/24\right) '\) are significant Fourier frequencies from the time series periodogram in spectral analysis (Priestley 2004; Štulajter and Witkovský 2004; Gajdoš et al. 2017).

Since the time series data set contains only 24 observations and we have to estimate three regression and five variance parameters of the FDSLRM, this real data FDSLRM example should be considered only as a toy example. This model give us the opportunity to check our numerical results and to demonstrate how in principle FDSLRM estimation methods and computational tools work.

5.1.2 Tourism

In this econometric FDSLRM application, we consider the time series data set, called visnights, representing total quarterly visitor nights (in millions) from 1998 to 2016 in one of the regions of Australia—inner zone of Victoria state. The number of time series observations is \(n=76\). The data was adapted from Hyndman and Athanasopoulos (2018).

The Gaussian orthogonal FDSLRM fitting the tourism data has the following form (for modeling details see our Jupyter notebook tourism.ipynb):

with \(k=3, l=3, \;\varvec{Y}= (Y_1, Y_2, Y_3)' \sim {\mathcal {N}}_3({\varvec{0}}_{3}, \mathbf{D }_{\varvec{\upnu }}),\, Z(.) \sim iid \, {\mathcal {N}}(0,\sigma _0^2)\) and \(\varvec{\upnu }= (\sigma _0^2, \sigma _1^2, \sigma _2^2, \sigma _3^2)' \in \mathbb {R}^{4}_{+}\).

5.1.3 Cyber attacks

Our final FDSLRM application describes the real time series data set representing the total weekly number of cyber attacks against a honeynet—an unconventional tool which mimics real systems connected to Internet, like business or school computers intranets, to study methods, tools and goals of cyber attackers. Data, taken from Sokol and Gajdoš (2018), were collected from November 2014 to May 2016 in CZ.NIC honeynet consisting of Kippo honeypots in medium-interaction mode. The number of time series observations is \(n=72\).

The suitable FDSLRM, after a preliminary logarithmic transformation of data \(L(t) = \log X(t)\), is again Gaussian orthogonal (for modeling details see our Jupyter notebook cyberattacks.ipynb) and in comparison with previous models (5.1), (5.2) has the simplest structure:

with \(k=3, l=2, \;\varvec{Y}= (Y_1, Y_2)' \sim {\mathcal {N}}_2({\varvec{0}}_{2}, \mathbf{D }_{\varvec{\upnu }}), \, Z(.) \sim iid \, {\mathcal {N}}(0,\sigma _0^2)\) and \(\varvec{\upnu }= (\sigma _0^2, \sigma _1^2, \sigma _2^2)' \in \mathbb {R}^{3}_{+}\).

5.1.4 Numerical results of estimating \(\varvec{\upnu }\)

For cross-checking purposes, we realized our numerical computations in both Python and R based software tools (or packages). As we describe in the Appendix A.3, we implemented own algorithms and methods in SciPy, SageMath and R, particularly analytical expressions (3.2) for NE and the KKT algorithm for NN-(M)DOOLSE (Table 2) in orthogonal FDSLRM. Simultaneously, we confirmed the same estimations using CVXPY (or CVXR) package based on convex optimization and analogically using up-to-date standard LMM R packages nlme, MMEinR (mixed) and sommer.

Table 4 summarizes all types of considered estimates in all four models. Since computations also confirmed equivalency of NN-(M)DOOLSE and (RE)MLE described by Theorem 1, we wrote results for (RE)MLE only.

Detailed computational results for all three data sets using all corresponding relevant tools can be found and easily reproduced in our collection of Jupyter notebooks (asterisk * represents a name of data and specific computational tool): PY-estimation-*.ipynb and R-estimation-*.ipynb, available in repository EBLUP-NE (Gajdoš et al. 2019c).

5.2 Simulation study of EBLUP-NE properties

In this section we present the results of a simulation study in the following scenario:

-

(1)

We take all three considered Gaussian FDSLRM models (5.1), (5.2), (5.3);

-

(2)

We set NE of variances \({\breve{\varvec{\upnu }}}\) and OLSE of regression parameters \(\varvec{\beta }^*\), computed from corresponding data sets (the first and last column in Table 4), as true values of models’ parameters;

-

(3)

For each model we simulate \(N=5000\) time series realizations of \(\varvec{X}\) by Monte Carlo from corresponding multivariate normal distributions \({\mathcal {N}}_n(\mathbf{F }\varvec{\beta }^*, \varvec{{\Sigma }}_{\breve{\varvec{\upnu }}})\);

-

(4)

Finally we calculate NE, (RE)MLE, EBLUP-NE and their statistical properties – Monte Carlo mean value (Mean) and Monte Carlo mean squared error (MSE).

Complete results of simulation summaries for all models are reported in Table 5.

As expected, NE exhibit the biggest biases, the biggest MSEs from all methods. Mean values of NE are always bigger than true variance parameters \(\varvec{\upnu }\) which is consistent with the positive theoretical bias in Table 3. As for the proposed method EBLUP-NE, results indicate that it is a really improvement of the NE method. Moreover, it seems that although we replaced in EBLUP-NE true parameters by NE, results indicate that absolute value of bias and MSE still remained smaller as we conclude from Table 3 for theoretical BLUP-NE at known \(\varvec{\upnu }\).

Comparing the proposed method EBLUP-NE with MLE and REMLE, it is important for our further research that EBLUP-NE slightly outperforms both of them in majority of variance parameters (achieves smaller biases and smaller MSEs). But differences are really small, so results indicate that EBLUP-NE shows at least comparable performance as MLE and REMLE. Simulations also confirmed comparable runtimes of computational form (4.10) for EBLUP-NE and the KKT algorithm (Table 2) used for (RE)MLE, which is in full accordance with the same order \({\mathcal {O}}(n)\) of theoretical computational complexity.

As it can be seen in our Jupyter notebooks, all results obtained by our procedures are fully consistent with the results of the best convex optimization and LMM computational tools (see Appendix A.3). However, we also noticed that unlike our procedures or the convex optimization tools, standard LMM tools failed in small number of realizations during simulations.

Both—the computational form for EBLUP-NE and the KKT algorithm for (RE)MLE working with double floating-point precision \(\epsilon <10^{-15}\) as the default precision of outputs—significantly outperform these Python or R-based standard computational tools in precision (\(10^7\) times more accurate) and also in run-times (10–100 times faster on the current standard PC).Footnote 6

6 Conclusions

In this paper we revisited and updated theoretical and computational knowledge dealing with FDSLRM least squares and maximum likelihoods estimation procedures, in the light of recent advances in closely related convex optimization, linear mixed modeling (LMM), and modern computational technology.

Specifically, applying the convex optimization theory, we reformulated all estimation methods as convex optimization problems in the so-called orthogonal FDSLRM, the most usual form of FDSLRM used in econometric practice. We formulated the KKT optimality conditions, which, unlike likelihood equations, are necessary and sufficient conditions for optimal solutions of (RE)MLE or NN-(M)DOOLSE on extended parametric space \(\Upupsilon = [ 0, \infty )^{l+1}\).

KKT optimality conditions dictate not only the exact existence conditions of estimates but they also solve the well-known problem dealing with standardly used likelihood equations (Christensen (2011, chap. 12) and Searle et al (2009, chap. 6)) in LMM where their solutions for (RE)MLE or NN-(M)DOOLSE may not be required estimates—they may be out of the parameter space or they may be other than the maximum or minimum.

Moreover, using KKT optimality conditions in orthogonal FDSLRMs, we proved the equivalence of NN-(M)DOOLSE and (RE)MLE with propability 1. This important theoretical result of the paper is the stronger and more general result than in Štulajter and Witkovský (2004) proved only for interior points of \(\Upupsilon \).

Simultaneously, the convex optimization theory brought us to the new KKT algorithm for computing NN-(M)DOOLSE, equivalent to (RE)MLE, with double floating-point precision \(\epsilon <10^{-15}\) as the default precision of outputs and with computational complexity \({\mathcal {O}}(n)\).

Such an algorithm which we implemented in SciPy, SageMath and R (all free and open-source) and which at the default precision level is \(10^7\) times more accurate and approximately \(n^2\) faster than the best current Python(or R)-based standard computational tools, can be used in effective computational time series research to study properties of FDSLRM (Monte Carlo and bootstrap methods, Kreiss and Lahiri (2012)).

As a consequence of applying the general theory of BLUP in LMM, we suggested and investigated an alternative, new method EBLUP-NE based on empirical BLUPs for estimating variances in time series modeled by FDSLRM. The estimation method can be also viewed, analogously like EBLUP (Rao and Molina 2015; Witkovský 2012), as a two-stage iterative method with one step in the iteration.

EBLUP-NE are almost surely positive invariant estimators whose simple explicit computational form are given by two special matrices—the Schur complement and Gram matrix (Zhang 2005). The method can be used not only in the case of normally distributed time series data, but for any absolutely continuous probability distribution of time series data.