Abstract

In this paper, a new robust and efficient estimation approach based on local modal regression is proposed for partially linear models with large-dimensional covariates. We show that the resulting estimators for both the parametric and nonparametric components are more efficient than least squares estimators in the presence of outliers or heavy-tailed error distributions, and are as asymptotically efficient as the corresponding least squares estimators when there are no outliers and the error distribution is normal. We also establish the asymptotic properties of the proposed estimators when the covariate dimension diverges at the rate of \(o(\sqrt{n})\). To achieve sparsity and enhance interpretability, we develop a variable selection procedure based on the SCAD penalty to select significant parametric covariates, and show that the method enjoys the oracle property under mild regularity conditions. Moreover, we propose a practical modified modal expectation-maximization (MEM) algorithm for the proposed procedures. Monte Carlo simulations and a real data example illustrate the finite-sample performance of the proposed estimators. Finally, based on the sure independence screening procedure of Fan and Lv (J R Stat Soc 70:849–911, 2008), a robust two-step approach is introduced to deal with ultra-high dimensional data.


1 Introduction

Consider the partially linear model (PLM)

$$Y = X^T\beta + f(Z) + \varepsilon, \qquad (1)$$

where \(X = (x_1, \ldots, x_{p_n})^T \in \mathbb{R}^{p_n}\) and \(Z = (z_1, \ldots, z_q)^T \in \mathbb{R}^q\) are the covariates in the parametric and nonparametric components, respectively, \(\beta = (\beta_1, \ldots, \beta_{p_n})^T\) is a \(p_n\)-dimensional vector of unknown parameters, \(f(\cdot)\) is an unknown smooth function, and the random error \(\varepsilon\) satisfies \(E(\varepsilon \mid X, Z) = 0\). Since their introduction by Engle et al. (1986), PLMs have been extensively studied; see, for example, Robinson (1988), Speckman (1988), Zeger and Diggle (1994), Severini and Staniswalis (1994) and Härdle et al. (2000).

In practice, a large number of variables are often included in a regression model to reduce possible modeling bias. However, including too many irrelevant variables can degrade estimation accuracy and model interpretability. Classical variable selection procedures such as AIC (Akaike 1973), BIC (Schwarz 1978), Mallows’ Cp (Mallows 1973) and k-fold cross-validation (Breiman 1995) suffer from instability and heavy computation. To address these deficiencies, Tibshirani (1996) proposed the least absolute shrinkage and selection operator (LASSO) penalty to perform simultaneous estimation and variable selection. However, as conjectured by Fan and Li (2001), the LASSO penalty does not possess the oracle property. Later, the smoothly clipped absolute deviation (SCAD) penalty (Fan and Li 2001) and the adaptive LASSO penalty (Zou 2006) were proposed, and both possess the oracle property. In recent years, these penalized methods have been widely used for variable selection in PLM. For instance, Li and Liang (2008) proposed two classes of penalized procedures for variable selection in PLM with measurement errors; Ni et al. (2009) proposed a double-penalized method for PLM with a divergent number of covariates; Xie and Huang (2009) studied SCAD-penalized regression in high-dimensional PLM; Zhou et al. (2010) proposed a nonconcave penalized procedure for fixed-effects PLM with errors in variables; and Chen et al. (2012) combined profiling with the adaptive Elastic-net for variable selection in PLM with large-dimensional covariates. It is important to note that all these works are built on least squares (LS) regression, which is highly sensitive to outliers and can lose considerable efficiency under many commonly used non-normal errors. To this end, researchers have studied robust estimation and variable selection for PLM in the framework of least absolute deviation (LAD) regression (Wang et al. 2007), which is particularly suited to heavy-tailed error distributions. In particular, Zhu et al. (2013) proposed a class of penalized LAD approaches for PLM with large-dimensional covariates. However, the LAD method may lose some efficiency when there are no outliers and the error distribution is normal. Hence, it is highly desirable to develop a robust and efficient method that can simultaneously conduct estimation and variable selection in PLM under different error distributions.

More recently, Yao et al. (2012) investigated a new estimation method based on local modal regression (LMR) for nonparametric models. They demonstrated that, compared with the LS estimator, the LMR estimator achieves substantial efficiency gains across a wide spectrum of non-normal error distributions while losing almost no efficiency under normal errors. Similar conclusions have also been reported by Zhang et al. (2013), Yang and Yang (2014), Yao and Li (2014) and Zhao et al. (2014). This motivates us to extend local modal regression to PLM. The main goal of this paper is to develop a robust and efficient estimation and variable selection procedure for PLM in which the covariate dimension diverges at the rate of \(o(\sqrt{n})\). We show that the resulting estimators for both the parametric and nonparametric components are more efficient than the LS estimators in the presence of outliers or heavy-tailed error distributions, and are as asymptotically efficient as the corresponding LS estimators when there are no outliers and the error distribution is normal. The main contributions of this paper are threefold. First, the proposed LMR estimators for both the parametric and nonparametric components are robust and efficient in PLM with large-dimensional covariates. Second, we develop a variable selection procedure based on the SCAD penalty to identify significant covariates in the parametric component, and prove that the method enjoys the oracle property under mild regularity conditions. Finally, a robust two-step procedure based on sure independence screening and penalized LMR is proposed to deal with ultra-high dimensional cases.

The rest of this paper is organized as follows. In Sect. 2, following the idea of LMR, we propose a new estimation method for PLM with large-dimensional covariates and establish the theoretical properties of the resulting LMR estimators for both the parametric and nonparametric components. In Sect. 3, a robust and efficient variable selection procedure based on the SCAD penalty is developed to select significant parametric covariates, and its oracle property is established. In Sect. 4, we discuss bandwidth selection in detail, suggest a BIC criterion for selecting the regularization parameter, and introduce a modified MEM algorithm for implementation. In Sect. 5, Monte Carlo simulations and a real data example illustrate the finite-sample performance of the proposed estimators. In Sect. 6, a robust two-step procedure based on sure independence screening and penalized LMR is proposed for ultra-high dimensional cases, together with a simulation study. We conclude with a few remarks in Sect. 7. All technical proofs are given in the “Appendix”.

2 Robust estimation procedure

2.1 Robust LMR for PLM

In this subsection, we first use the profile least squares approach (Speckman 1988) to transform the semiparametric model into a classical linear model, and then develop a robust estimation procedure for PLM.

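Suppose that \(\{(X_i, Y_i, Z_i), i = 1, 2, \ldots, n\}\) is an independent and identically distributed sample from model (1). Taking conditional expectations given \(Z_i\) in model (1) and using \(E(\varepsilon_i \mid X_i, Z_i) = 0\), the profile least squares approach gives

$$Y_i - E(Y_i \mid Z_i) = \{X_i - E(X_i \mid Z_i)\}^T\beta + \varepsilon_i, \qquad (2)$$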

which is a standard linear model if \(E(X_i \mid Z_i)\) and \(E(Y_i \mid Z_i)\) are known. However, both \(E(X_i \mid Z_i)\) and \(E(Y_i \mid Z_i)\) in model (2) are unknown in practice, so we first need to estimate them. This can be done by kernel smoothing or local linear approximation (Speckman 1988; Fan and Gijbels 1996). For example, we can estimate \(E(X_i \mid Z_i)\) and \(E(Y_i \mid Z_i)\) by the kernel estimators

$$\widehat{E}(X_i \mid Z_i) = \frac{\sum_{j=1}^n K\{(Z_j - Z_i)/d_1\}X_j}{\sum_{j=1}^n K\{(Z_j - Z_i)/d_1\}} \quad \mathrm{and} \quad \widehat{E}(Y_i \mid Z_i) = \frac{\sum_{j=1}^n K\{(Z_j - Z_i)/d_2\}Y_j}{\sum_{j=1}^n K\{(Z_j - Z_i)/d_2\}}, \qquad (3)$$

respectively, where \(K(\cdot)\) is a q-dimensional kernel function and \(d_1\) and \(d_2\) are the bandwidths. In what follows, we denote \(m_X(Z) = E(X \mid Z)\), \(m_Y(Z) = E(Y \mid Z)\), \(\widetilde{X} = X - E(X \mid Z)\), \(\widetilde{Y} = Y - E(Y \mid Z)\), \(\widehat{\widetilde{X}} = X - \widehat{E}(X \mid Z)\), and \(\widehat{\widetilde{Y}} = Y - \widehat{E}(Y \mid Z)\).
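A minimal Python sketch of the profiling step follows. It assumes the kernel estimators take the Nadaraya–Watson form and uses a product Epanechnikov kernel for \(K(\cdot)\) (symmetric with compact support, as required by (A3)); the function names `nw_smooth` and `profile` are hypothetical. It returns the profiled quantities \(\widehat{\widetilde{X}}\) and \(\widehat{\widetilde{Y}}\) used in the rest of this section.

```python
import numpy as np

def nw_smooth(Z, V, d):
    """Kernel (Nadaraya-Watson) estimate of E(V | Z = Z_i) at every sample point.

    Z : (n, q) array of nonparametric covariates
    V : (n,) or (n, p) array to be smoothed (Y, or the columns of X)
    d : bandwidth (d_1 for X, d_2 for Y)
    """
    Z = np.asarray(Z, dtype=float)
    U = (Z[:, None, :] - Z[None, :, :]) / d                          # U[i, j] = (Z_i - Z_j)/d; K is symmetric
    W = np.prod(np.clip(0.75 * (1.0 - U ** 2), 0.0, None), axis=2)   # product Epanechnikov kernel
    W /= W.sum(axis=1, keepdims=True)                                # row i: weights for estimating at Z_i
    return W @ V

def profile(X, Y, Z, d1, d2):
    """Profiled design and response: X - E^(X|Z) and Y - E^(Y|Z)."""
    return X - nw_smooth(Z, X, d1), Y - nw_smooth(Z, Y, d2)
```

The diagonal weight \(K(0) > 0\) guarantees that every row sum is positive, so the normalization never divides by zero.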

After profiling, we can focus on estimation procedures for the classical linear model. First, we introduce the commonly used LS method: the LS estimator is obtained by minimizing

$$\sum_{i=1}^n \left(\widehat{\widetilde{Y}}_i - \widehat{\widetilde{X}}_i^T\beta\right)^2 \qquad (4)$$

with respect to \(\beta\).

It is well known that the LS method is very sensitive to outliers. A natural remedy is to use outlier-resistant loss functions such as the \(L_1\) loss or, more generally, Huber’s \(\psi\) function (Huber 1981). For illustration, we only consider the \(L_1\) loss, which yields the LAD estimator (Wang et al. 2007); see Zhu et al. (2013) for a detailed discussion of this class of estimators. That is, instead of minimizing (4), we minimize

$$\sum_{i=1}^n \left|\widehat{\widetilde{Y}}_i - \widehat{\widetilde{X}}_i^T\beta\right| \qquad (5)$$

with respect to \(\beta\).

However, the LAD method may lose some efficiency when there are no outliers and the error distribution is normal. Hence, it is highly desirable to develop a method that is both robust and efficient across different error distributions. In general, the mode is insensitive to outliers and to heavy-tailed error distributions. Moreover, modal regression provides more meaningful point predictions and larger coverage probabilities for prediction than mean regression when the error density is skewed (Zhang et al. 2013). Motivated by these facts, we extend local modal regression to PLM.

In this paper, we define the LMR estimator \(\widehat{\beta}\) as the maximizer of the objective function

$$\sum_{i=1}^n \phi_{h_1}\left(\widehat{\widetilde{Y}}_i - \widehat{\widetilde{X}}_i^T\beta\right) \qquad (6)$$

with respect to \(\beta\), where \(\phi_{h_1}(t) = h_1^{-1}\phi(t/h_1)\), \(\phi(t)\) is a kernel density function, and \(h_1\) plays the role of a bandwidth that determines the degree of robustness of the LMR estimator. For ease of computation, we use the standard normal density for \(\phi(\cdot)\) throughout this paper. Similar ideas can be found in Yao et al. (2012), Zhang et al. (2013), and Yang and Yang (2014).
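To see why \(h_1\) controls robustness, note that with the standard normal \(\phi\) the score \(\phi_{h_1}'(t) = -(t/h_1^2)\phi_{h_1}(t)\) is bounded and tends to 0 as \(|t| \to \infty\), whereas the LS score grows linearly in the residual. The sketch below (hypothetical helper names, illustrative only) evaluates objective (6) on profiled data together with this score.

```python
import numpy as np

def phi_h(t, h):
    """phi_h(t) = phi(t/h)/h with phi the standard normal density."""
    return np.exp(-0.5 * (t / h) ** 2) / (h * np.sqrt(2.0 * np.pi))

def lmr_objective(beta, Xt, Yt, h1):
    """Objective (6): sum_i phi_h1(Yt_i - Xt_i^T beta); larger is better."""
    return np.sum(phi_h(Yt - Xt @ beta, h1))

def lmr_score(t, h):
    """Derivative phi_h'(t) = -(t/h^2) phi_h(t): bounded, and ~0 for gross outliers."""
    return -(t / h ** 2) * phi_h(t, h)

# A residual of 50 contributes essentially nothing to the LMR score,
# whereas its LS score (the residual itself) would be 50.
print(lmr_score(np.array([0.5, 1.0, 50.0]), h=1.0))
```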

After obtaining the LMR estimator of \(\beta\), we can further estimate the unknown smooth function \(f(\cdot)\). We adopt the local linear approximation (Fan and Gijbels 1996). That is, for any fixed \(Z = z \in \mathbb{R}^q\), we approximate \(f(z_0)\) by a linear function

$$f(z_0) \approx f(z) + \{f'(z)\}^T(z_0 - z) \equiv a + b^T(z_0 - z) \qquad (7)$$

for \(z_0\) in a neighborhood of z, where \(f'(z)\) denotes the gradient of f at z. Estimating \(f(z)\) therefore reduces to estimating the intercept a. To this end, we define the LMR estimator \(\widehat{f}(z) = \widehat{a}\) by maximizing

$$\sum_{i=1}^n K\left(\frac{Z_i - z}{h_2}\right)\phi_{h_3}\left\{Y_i - X_i^T\widehat{\beta} - a - b^T(Z_i - z)\right\} \qquad (8)$$

with respect to a and b.

Note that the bandwidths \(h_1\), \(h_2\) and \(h_3\) are selected by data-driven methods so that the resulting estimators are adaptively robust; the choice of these bandwidths is discussed in detail in Sect. 4.

2.2 Theoretical properties

In this subsection, we study the theoretical properties of the proposed LMR estimators. For simplicity, we denote \(U = (X, Z)\) and let \(\beta_0\) be the true value of \(\beta\). Let \(F(u,h) = E\{\phi_h''(\varepsilon) \mid U = u\}\) and \(G(u,h) = E\{\phi_h'(\varepsilon)^2 \mid U = u\}\).

To obtain the theoretical properties of the LMR estimator \(\widehat{\beta }\), we assume the following regularity conditions:

-

(A1)

The matrix cov(\(\widetilde{X}\)) is positive-definite, \(E\left( {{{\left| \varepsilon \right| }^3}\left| {X,Z} \right. } \right) < \infty \), and \({\sup _z}E\left( {{{\left\| X \right\| }^3}\left| {Z = z} \right. } \right) < \infty \).

-

(A2)

The bandwidth \(d_k\) in Eq. (3) satisfies \(n{d_k^8} \rightarrow 0\) and \(n{d_k^{2q}} \rightarrow \infty \) for \(k=1,2\).

-

(A3)

The kernel function \(K\left( \cdot \right) \) is a symmetric density function with compact support and satisfies \(\int _{ - \infty }^{ + \infty } { \cdots \int _{ - \infty }^{ + \infty } {K\left( \mathbf{t} \right) } } d{t_1} \cdots d{t_q} = 1\), \(\int _{ - \infty }^{ + \infty } { \cdots \int _{ - \infty }^{ + \infty } {\mathbf{t}K\left( \mathbf{t} \right) } } d{t_1} \cdots d{t_q} = 0\), where \(\mathbf{t}= {\left( {{t_1}, \ldots ,{t_q}} \right) ^T}\).

-

(A4)

\(f(\cdot )\) and \({m_X}(\cdot )\) are continuous on their support sets.

-

(A5)

F(u, h), G(u, h) are continuous with respect to u.

-

(A6)

\(F(u,h)<0\) for any \(h>0\).

-

(A7)

\(E\left( {{\phi }^\prime _h\left( \varepsilon \right) \left| {U = u} \right. } \right) = 0\), \(E\left( {\phi _h^\prime {{\left( \varepsilon \right) }^3}\left| {U = u} \right. } \right) \), \(E\left( {\phi _h^{\prime \prime }{{\left( \varepsilon \right) }^2}\left| {U = u} \right. } \right) \), and \(E\left( {\phi _h^{\prime \prime \prime }\left( \varepsilon \right) \left| {U = u} \right. } \right) \) are continuous with respect to u.

Remark 1

Conditions (A1)–(A4) are standard in the semiparametric regression literature. Condition (A1) is a necessary moment condition. Condition (A2) ensures that undersmoothing is not needed to obtain root-\(n/p_n\) consistency and asymptotic normality; in practice, the bandwidths \(d_k\), \(k=1,2\), can be selected by a data-driven approach. Condition (A3) is a common condition on the kernel function. Condition (A4), together with conditions (A1)–(A3), ensures the consistency of the kernel estimators. A detailed discussion of these conditions can be found in Härdle et al. (2000). Conditions (A5)–(A7) are the conditions used for local modal nonparametric regression in Yao et al. (2012). Condition (A5) is a basic assumption. Condition (A6) ensures that the objective function (6) has a local maximizer, while condition (A7) is used to control the magnitude of the remainder in a third-order Taylor expansion of this objective function. In particular, the condition \(E\{\phi_h'(\varepsilon) \mid U = u\} = 0\) holds whenever the conditional error density is symmetric about zero, because \(\phi_h'\) is an odd function; this condition ensures that the proposed LMR estimator is consistent.

Theorem 1

Under the regularity conditions (A1)–(A7), if \(p_n^2/n \rightarrow 0\) as \(n \rightarrow \infty\) and \(h_1\) is a constant that does not depend on n, then

$$\|\widehat{\beta} - \beta_0\| = O_p\left(\sqrt{p_n/n}\right),$$

where \(\left\| {\cdot } \right\| \) stands for the Euclidean norm.

Theorem 1 indicates that the LMR estimator \(\widehat{\beta }\) is root-\({{n / {{p_n}}}}\) consistent. Meanwhile, the following theorem states the asymptotic normality of the LMR estimator \(\widehat{\beta }\) when \(p_n\) diverges at the rate of \(o( {{n^{{1 / 2}}}} )\).

Theorem 2

Under the regularity conditions (A1)–(A7), if \({{p_n^2} / n} \rightarrow 0\) as \(n \rightarrow \infty ,\) and \(h_1\) is a constant and does not depend on n, then we have

in distribution, where \({\varSigma _1} = E\{ {F( {u,{h_1}}){\widetilde{X}}{{ \widetilde{X}}^T}}\},\) and \({\varSigma _2} = Var\{ { {\widetilde{X}}\phi _{{h_1}}^\prime ( \varepsilon )}\}.\)

We also establish the asymptotic normality of the LMR estimator \(\widehat{f}(z)\).

Throughout, let \(\rho(z)\) be the density function of Z and \(g(\bar{y} \mid z)\) be the conditional density of \(\bar{Y} = Y - X^T\beta_0\) given \(Z = z\) with respect to a measure \(\mu\). For a given constant h that does not depend on n, let \(\varphi_h(t \mid z) = E\{-\phi_h(\varepsilon + t) \mid Z = z\}\), and let \(\varphi_h'(t \mid z)\) and \(\varphi_h''(t \mid z)\) denote \(\partial \varphi_h(t \mid z)/\partial t\) and \(\partial^2 \varphi_h(t \mid z)/\partial t^2\), respectively. In addition to the conditions in Theorem 1, we further assume the following regularity conditions:

-

(B1)

The smooth function \(f\left( \cdot \right) \) has a continuous second derivative.

-

(B2)

The density function \(\rho \left( \cdot \right) \) is continuous and positive on its support.

-

(B3)

Assume that \(\varphi _h(t|{z_n})\), \(\varphi _h^{\prime } (t|{z_n})\) and \(\varphi _h^{\prime \prime } (t|{z_n})\) as functions of \({z_n}\) are bounded and continuous in a neighborhood of z for all small t and that \(\varphi _h(0|{{z_n}}) \ne 0\). \(\varphi _h^{\prime \prime } (t|{z_n})\) as a function of t is continuous in a neighborhood of point 0, uniformly for \({z_n}\) in a neighborhood of z.

-

(B4)

The conditional density function \(g( {{\bar{y}}| z })\) is continuous in z for each \({\bar{y}}\). Moreover, there exist positive constants \(\epsilon \), \(\sigma \) and a positive function \(G( {{\bar{y}}|z})\) such that

$$\begin{aligned}&{\sup _{| {{z_n} - z} | \le \epsilon }}g( {{\bar{y}}| z_n}) \le G( {{\bar{y}}|z}),\\&\quad {\int |{{\phi }^\prime _h({\varepsilon })|} ^{2 + \sigma }}G( {{\bar{y}}| z })d\mu ( {{\bar{y}}}) <\infty , \end{aligned}$$and

$$\begin{aligned} {\int {{\{{\phi _{h}}({{\bar{y}} - t} ) - {\phi _{h}}({{\bar{y}}})- {\phi }^\prime _h(\bar{y})t}}}\}^2G( {{\bar{y}}|z})d\mu ( {{\bar{y}}} ) = o( {{t^2}}), \quad \mathrm{as} \quad t \rightarrow 0. \end{aligned}$$

Remark 2

Conditions (B1)–(B4) are adapted from Condition A of Fan et al. (1994) and are easy to verify. Conditions (B1) and (B2) ensure that the bias and the variance of \(\widehat{f}(z)\), respectively, have the right rate of convergence. Condition (B3) ensures the uniqueness of the maximizer of the objective function (8). Condition (B4) is required by the dominated convergence theorem and the moment calculations in the proof of asymptotic normality.

Theorem 3

Suppose that the conditions in Theorem 1 and the regularity conditions (B1)–(B4) hold. If \(h_2 \rightarrow 0\) and \(nh_2^q \rightarrow \infty\) as \(n \rightarrow \infty\), and \(h_3\) is a constant that does not depend on n, then for any fixed \(Z = z \in \mathbb{R}^q\),

in distribution, where

3 Variable selection procedure

3.1 Penalized LMR for PLM

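In this subsection, we develop a variable selection procedure to select significant parametric covariates in PLM. To this end, we consider the following penalized objective function based on LMR:

$$\sum_{i=1}^n \phi_{h_1}\left(\widehat{\widetilde{Y}}_i - \widehat{\widetilde{X}}_i^T\beta\right) - n\sum_{j=1}^{p_n} p_{\lambda}(|\beta_j|), \qquad (9)$$

where \(p_{\lambda}(\cdot)\) is a penalty function with regularization parameter \(\lambda\). One of the most commonly used penalties is the SCAD penalty, which satisfies \(p_\lambda(0) = 0\) and is defined through its first derivative

$$p_{\lambda}'(t) = \lambda\left\{I(t \le \lambda) + \frac{(a\lambda - t)_+}{(a - 1)\lambda}I(t > \lambda)\right\}, \quad t > 0,$$

where \(a > 2\) is a constant, usually taken to be 3.7 as suggested in Fan and Li (2001) (this a is unrelated to the intercept in (7)). For a given regularization parameter \(\lambda\), maximizing (9) yields a sparse estimator \(\widehat{\beta}^{\lambda}\) of \(\beta\), and the covariates with nonzero estimated coefficients are selected.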
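For reference, a short Python sketch of the SCAD penalty and its derivative, with the default \(a = 3.7\). The closed form of \(p_\lambda(t)\) below is obtained by integrating \(p_\lambda'\) from 0, so that \(p_\lambda(0) = 0\); the function names are hypothetical.

```python
import numpy as np

def scad_derivative(beta, lam, a=3.7):
    """p'_lam(|beta|): lam for |beta| <= lam, (a*lam - |beta|)_+ / (a - 1) beyond."""
    t = np.abs(np.asarray(beta, dtype=float))
    return lam * ((t <= lam) + np.maximum(a * lam - t, 0.0) / ((a - 1) * lam) * (t > lam))

def scad_penalty(beta, lam, a=3.7):
    """p_lam(|beta|): the integral of p'_lam from 0 to |beta|."""
    t = np.abs(np.asarray(beta, dtype=float))
    middle = (2 * a * lam * t - t ** 2 - lam ** 2) / (2 * (a - 1))
    return np.where(t <= lam, lam * t,
                    np.where(t <= a * lam, middle, (a + 1) * lam ** 2 / 2))
```

The penalty is linear near the origin (which produces sparsity, as with the LASSO) and constant beyond \(a\lambda\), so large coefficients are not shrunk; this is what makes the oracle property possible.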

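However, because the SCAD penalty function is singular at the origin, it is difficult to maximize the objective function (9) directly. Following Fan and Li (2001), we apply the local quadratic approximation (LQA) to the SCAD penalty for fixed \(\lambda\). Suppose that the initial value \(\widehat{\beta}^{\lambda(0)}\) is close to the maximizer of (9). If \(\widehat{\beta}_j^{\lambda(0)}\) is very close to 0, we set \(\widehat{\beta}_j^\lambda = 0\). Otherwise, \(p_{\lambda}(|\beta_j|)\) can be locally approximated by

$$p_{\lambda}(|\beta_j|) \approx p_{\lambda}(|\widehat{\beta}_j^{\lambda(0)}|) + \frac{1}{2}\frac{p_{\lambda}'(|\widehat{\beta}_j^{\lambda(0)}|)}{|\widehat{\beta}_j^{\lambda(0)}|}\left\{\beta_j^2 - (\widehat{\beta}_j^{\lambda(0)})^2\right\}.$$

As a consequence, dropping terms that do not depend on \(\beta\), we can obtain the penalized LMR estimator \(\widehat{\beta}^{\lambda}\) by maximizing

$$\sum_{i=1}^n \phi_{h_1}\left(\widehat{\widetilde{Y}}_i - \widehat{\widetilde{X}}_i^T\beta\right) - \frac{n}{2}\sum_{j=1}^{p_n}\frac{p_{\lambda}'(|\widehat{\beta}_j^{\lambda(0)}|)}{|\widehat{\beta}_j^{\lambda(0)}|}\beta_j^2$$

with respect to \(\beta\).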
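The local quadratic approximation can be sketched as follows: coefficients whose initial value is below a small threshold are set to zero, and each remaining coordinate receives the weight \(p_\lambda'(|\beta_j^{(0)}|)/|\beta_j^{(0)}|\). The quadratic surrogate agrees with \(p_\lambda\) in both value and slope at \(\beta_j^{(0)}\). The threshold `tol` and the helper names are assumptions made for illustration.

```python
import numpy as np

def scad_derivative(t, lam, a=3.7):
    t = np.abs(t)
    return lam * ((t <= lam) + np.maximum(a * lam - t, 0.0) / ((a - 1) * lam) * (t > lam))

def lqa_weights(beta0, lam, a=3.7, tol=1e-6):
    """LQA weights w_j = p'_lam(|b0_j|)/|b0_j| and a mask of retained coefficients.
    Coefficients with |b0_j| < tol are treated as zero (hypothetical threshold)."""
    beta0 = np.asarray(beta0, dtype=float)
    keep = np.abs(beta0) >= tol
    w = np.zeros_like(beta0)
    w[keep] = scad_derivative(beta0[keep], lam, a) / np.abs(beta0[keep])
    return w, keep

def lqa_surrogate(beta, beta0, pen0, w):
    """Quadratic surrogate p(|b0|) + 0.5 * w * (b^2 - b0^2), coordinate-wise."""
    return pen0 + 0.5 * w * (np.asarray(beta) ** 2 - np.asarray(beta0) ** 2)
```

Because the surrogate is quadratic in \(\beta_j\), the penalized problem becomes a ridge-type problem with data-dependent weights, which is what makes the M-step in Sect. 4.4 available in closed form.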

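Then, analogously to (8), we obtain the LMR estimator \(\widehat{f}^\lambda(z) = \widehat{a}^\lambda\) by maximizing

$$\sum_{i=1}^n K\left(\frac{Z_i - z}{h_2}\right)\phi_{h_3}\left\{Y_i - X_i^T\widehat{\beta}^{\lambda} - a - b^T(Z_i - z)\right\}$$

with respect to a and b.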

3.2 Theoretical properties

In this subsection, we study the theoretical properties of the penalized LMR estimator \(\widehat{\beta}^{\lambda}\). We first introduce some notation. Without loss of generality, we decompose the true parameter vector as \(\beta_0 = (\beta_{0a}^T, \beta_{0b}^T)^T\), where \(\beta_{0a} = (\beta_{01}, \ldots, \beta_{0k_n})^T\) contains all the nonzero coefficients and \(\beta_{0b} = (\beta_{0,k_n+1}, \ldots, \beta_{0p_n})^T\) contains all the zero coefficients. Similarly, we decompose \(\widetilde{X} = (\widetilde{X}_a^T, \widetilde{X}_b^T)^T\) and \(\widehat{\beta}^\lambda = ((\widehat{\beta}_a^\lambda)^T, (\widehat{\beta}_b^\lambda)^T)^T\). Denote

Theorem 4

Under the regularity conditions (A1)–(A7), suppose that \(p_n^2/n \rightarrow 0\) as \(n \rightarrow \infty\), that \(h_1\) is a constant that does not depend on n, that the penalty function \(p_{\lambda}(t)\) satisfies \(a_n = O(n^{-1/2})\) and \(b_n \rightarrow 0\) as \(n \rightarrow \infty\), and that there are constants \(M_1\) and \(M_2\) such that \(|p_\lambda''(t_1) - p_\lambda''(t_2)| \le M_2|t_1 - t_2|\) for any \(t_1, t_2 > M_1\lambda\). Then

$$\|\widehat{\beta}^{\lambda} - \beta_0\| = O_p\left(\sqrt{p_n/n}\right),$$

where \(\left\| {\cdot } \right\| \) stands for the Euclidean norm.

Theorem 4 indicates that the penalized LMR estimator \(\widehat{\beta }^{\lambda }\) is root-\({{n / {{p_n}}}}\) consistent with suitable penalty function. Furthermore, the following theorem states the oracle property of the penalized LMR estimator \(\widehat{\beta }^{\lambda }\).

Theorem 5

Under the same conditions as in Theorem 4, if \({\lambda }\rightarrow 0\), \({({n / {{p_n}}})^{{1 / 2}}}{\lambda } \rightarrow \infty \) as \(n \rightarrow \infty ,\) and the penalty function \({p_{{\lambda }}}(t)\) satisfies

Then, with probability tending to 1, the root-\({{n / {{p_n}}}}\) consistent estimator \({{\widehat{\beta }}^\lambda } = {({(\widehat{\beta }_a^\lambda )^T},{(\widehat{\beta }_b^\lambda )^T})^T}\) in Theorem 4 satisfies:

-

(a)

Sparsity: \(\widehat{\beta }_b^\lambda = 0.\)

-

(b)

Asymptotic normality:

$$\begin{aligned} \sqrt{n} \left( \varSigma _1^{(1)} + {\varPsi _\lambda }\right) \left\{ \widehat{\beta }_a^\lambda - {\beta _{0a}} + {\left( \varSigma _1^{(1)} + {\varPsi _\lambda }\right) ^{ - 1}}{\mathbf{{s}}_n}\right\} \rightarrow {N}\left( {\mathbf{{0}},\varSigma _2^{(1)}}\right) \end{aligned}$$in distribution, where \(\varSigma _1^{(1)}\), \(\varSigma _2^{(1)}\) are the submatrices of \(\varSigma _1\) and \(\varSigma _2\) corresponding to \(\beta _{0a}\).

Built upon the results on the penalized LMR estimator \(\widehat{\beta }^{\lambda }\), we provide the asymptotic normality of the LMR estimator \({{\widehat{f}}^\lambda }(z)\) as follows:

Theorem 6

Suppose that the conditions in Theorem 5 and the regularity conditions (B1)–(B4) hold. If \(h_2 \rightarrow 0\) and \(nh_2^q \rightarrow \infty\) as \(n \rightarrow \infty\), and \(h_3\) is a constant that does not depend on n, then for any fixed \(Z = z \in \mathbb{R}^q\),

in distribution, where

4 Bandwidth selection and estimation algorithm

In this section, we first discuss bandwidth selection both in theory and in practice. We then suggest a BIC criterion for selecting the regularization parameter. Finally, we introduce a modified MEM algorithm to compute the proposed estimators.

4.1 Asymptotic optimal bandwidth

Based on Theorem 2 and the asymptotic variance of the LS estimator given in Zhu et al. (2013), the ratio of the asymptotic variance of the LMR estimator \(\widehat{\beta}\) to that of the corresponding LS estimator is

$$R(u, h_1) = \frac{G(u, h_1)F(u, h_1)^{-2}}{\sigma^2(u)},$$

where \(Var(\varepsilon \mid U = u) = \sigma^2(u)\).

The ratio \(R(u, h_1)\) depends only on u and \(h_1\), and it governs the efficiency and robustness of the LMR estimator \(\widehat{\beta}\). Therefore, the asymptotically optimal bandwidth \(h_1\) can be chosen as

$$h_{1,\mathrm{opt}} = \arg\min_{h_1} R(u, h_1),$$

which does not depend on n and depends only on the conditional distribution of \(\varepsilon\) given U. It follows from Theorem 2.4 in Yao et al. (2012) that \(\inf_{h_1} R(u, h_1) \le 1\) for any error distribution, and that if the error is normally distributed, \(R(u, h_1) > 1\) and \(\inf_{h_1} R(u, h_1) = 1\). That is, with a suitably chosen bandwidth the LMR estimator \(\widehat{\beta}\) is more efficient than the corresponding LS estimator when there are outliers or the error distribution is heavy-tailed, and loses no efficiency asymptotically under normal errors.
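The claim for normal errors can be checked numerically. Assuming \(\varepsilon \sim N(0, 1)\) independent of U, the sketch below computes \(R(h) = G(h)F(h)^{-2}/\sigma^2\) by a Riemann sum. For this case a direct Gaussian calculation gives \(R(h) = [(1 + h^2)^2/\{h^2(h^2 + 2)\}]^{3/2}\), which exceeds 1 for every finite h and decreases to 1 as \(h \to \infty\), where the LMR estimator behaves like the LS estimator. The function name is hypothetical.

```python
import numpy as np

def efficiency_ratio_normal(h, sigma=1.0, half_width=12.0, num=24001):
    """R(h) = G(h) / (F(h)^2 sigma^2) for eps ~ N(0, sigma^2), via a Riemann sum."""
    e = np.linspace(-half_width * sigma, half_width * sigma, num)
    de = e[1] - e[0]
    dens = np.exp(-0.5 * (e / sigma) ** 2) / (sigma * np.sqrt(2.0 * np.pi))
    phi = np.exp(-0.5 * (e / h) ** 2) / (h * np.sqrt(2.0 * np.pi))
    d1 = -(e / h ** 2) * phi                       # phi_h'(e)
    d2 = (e ** 2 / h ** 4 - 1.0 / h ** 2) * phi    # phi_h''(e)
    F = np.sum(d2 * dens) * de                     # E phi_h''(eps), negative
    G = np.sum(d1 ** 2 * dens) * de                # E phi_h'(eps)^2
    return G / (F ** 2 * sigma ** 2)

# Compare with the closed form, which is valid for sigma = 1 only.
for h in (0.5, 1.0, 2.0, 10.0):
    closed = ((1 + h ** 2) ** 2 / (h ** 2 * (h ** 2 + 2))) ** 1.5
    print(h, efficiency_ratio_normal(h), closed)
```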

Similarly, the asymptotically optimal bandwidth \(h_3\) is given by

Moreover, according to Theorem 3, we can see that the optimal bandwidth for \(h_2\) is of order \(n^{-1/(4+q)}\). If \(q=1\), the order of the optimal bandwidth for \(h_2\) is \(n^{-1/5}\), which is a common requirement for semiparametric models in the kernel literature.

4.2 Bandwidth selection in practice

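In this subsection, we address how to select the bandwidths for the LMR estimators in practice. For simplicity, we further assume that \(\varepsilon\) is independent of U, so that \(F(u, h_1)\) and \(G(u, h_1)\) do not depend on u. We can then estimate them by

$$\widehat{F}(h_1) = \frac{1}{n}\sum_{i=1}^n \phi_{h_1}''(\widehat{\varepsilon}_i) \quad \mathrm{and} \quad \widehat{G}(h_1) = \frac{1}{n}\sum_{i=1}^n \{\phi_{h_1}'(\widehat{\varepsilon}_i)\}^2,$$

respectively, where \(\widehat{\varepsilon}_i = \widehat{\widetilde{Y}}_i - \widehat{\widetilde{X}}_i^T\widehat{\beta}\).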

Then, we can estimate \(R(h_1)\) by \(\widehat{R}(h_1) = \widehat{G}(h_1)\widehat{F}(h_1)^{-2}/\widehat{\sigma}^2\), where \(\widehat{\sigma}\) is estimated from a pilot estimator. We use a grid search to find the \(h_{1,\mathrm{opt}}\) that minimizes \(\widehat{R}(h_1)\). Following Yao et al. (2012), the grid points for \(h_1\) can be taken as \(0.5\widehat{\sigma} \times 1.02^j\), \(j = 0, 1, \ldots, k\), for some fixed k such as \(k = 50\) or \(k = 100\). The optimal bandwidth \(h_3\) can be found similarly.
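A sketch of this grid search in Python, assuming the residuals \(\widehat{\varepsilon}_i\) and the pilot scale \(\widehat{\sigma}\) are already available (how \(\widehat{\sigma}\) is obtained is left to the user). Grid points where \(\widehat{F}(h_1) \ge 0\), which would violate condition (A6), are skipped. The function name `select_h1` is hypothetical.

```python
import numpy as np

def select_h1(resid, sigma_hat, k=100):
    """Minimise R_hat(h) = G_hat(h) / (F_hat(h)^2 sigma_hat^2) over h = 0.5*sigma_hat*1.02**j."""
    resid = np.asarray(resid, dtype=float)
    grid = 0.5 * sigma_hat * 1.02 ** np.arange(k + 1)
    ratios = np.full(grid.shape, np.inf)
    for idx, h in enumerate(grid):
        phi = np.exp(-0.5 * (resid / h) ** 2) / (h * np.sqrt(2.0 * np.pi))
        F_hat = np.mean((resid ** 2 / h ** 4 - 1.0 / h ** 2) * phi)   # average of phi_h''
        G_hat = np.mean(((resid / h ** 2) * phi) ** 2)               # average of phi_h'^2
        if F_hat < 0:                                                # keep only h satisfying (A6)
            ratios[idx] = G_hat / (F_hat ** 2 * sigma_hat ** 2)
    return grid[np.argmin(ratios)], ratios
```

If \(\widehat{F}(h_1) \ge 0\) on the whole grid, every ratio is infinite and the sketch simply returns the first grid point; in that case the pilot fit or the grid should be revisited.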

Finally, we suggest using k-fold cross-validation (Breiman 1995) or generalized cross-validation (Tibshirani 1996) to select the bandwidth \(h_2\).

4.3 Selection of regularization parameter

To produce a sparse estimator of \(\beta _0,\) it remains to select the regularization parameter \({\lambda }\). In this paper, we propose a modified BIC-type criterion to choose the optimal \({\lambda }\), which minimizes the following objective function

where \(df{_\lambda }\) is the number of non-zero coefficients of \(\widehat{\beta }^{\lambda }\).

4.4 Estimation algorithm

In this subsection, we modify the modal expectation-maximization (MEM) algorithm of Li et al. (2007) to compute the proposed estimators. The algorithm alternates between two steps, analogous to the expectation and maximization steps of the EM algorithm (Dempster et al. 1977).

-

Step 1 We first calculate the LMR estimator \(\widehat{\beta }\). Let \({\widehat{\beta }^{(0)}} = (\widehat{\beta }_1^{(0)}, \ldots ,\widehat{\beta }_{{p_n}}^{(0)})^{T}\) be the initial value and set \(k=0\).

-

(E-step): Update \(\pi_1(j \mid \widehat{\beta}^{(k)})\) by

$$\pi_1(j \mid \widehat{\beta}^{(k)}) = \frac{\phi_{h_1}\left(\widehat{\widetilde{Y}}_j - \widehat{\widetilde{X}}_j^T\widehat{\beta}^{(k)}\right)}{\sum_{i=1}^n \phi_{h_1}\left(\widehat{\widetilde{Y}}_i - \widehat{\widetilde{X}}_i^T\widehat{\beta}^{(k)}\right)}, \quad j = 1, 2, \ldots, n.$$

-

(M-step): Update \(\beta\) to obtain \(\widehat{\beta}^{(k+1)}\) by

$$\begin{aligned} \widehat{\beta}^{(k+1)} &= \arg\max_\beta \sum_{i=1}^n \left\{\pi_1(i \mid \widehat{\beta}^{(k)})\log\phi_{h_1}\left(\widehat{\widetilde{Y}}_i - \widehat{\widetilde{X}}_i^T\beta\right)\right\}\\ &= \left(\widehat{\widetilde{\mathbf{X}}}^T W_1^{(k)}\widehat{\widetilde{\mathbf{X}}}\right)^{-1}\widehat{\widetilde{\mathbf{X}}}^T W_1^{(k)}\widehat{\widetilde{\mathbf{Y}}}, \end{aligned}$$

where \(\widehat{\widetilde{\mathbf{X}}} = (\widehat{\widetilde{X}}_1, \ldots, \widehat{\widetilde{X}}_n)^T\), \(\widehat{\widetilde{\mathbf{Y}}} = (\widehat{\widetilde{Y}}_1, \ldots, \widehat{\widetilde{Y}}_n)^T\) and \(W_1^{(k)} = \mathrm{diag}\{\pi_1(1 \mid \widehat{\beta}^{(k)}), \ldots, \pi_1(n \mid \widehat{\beta}^{(k)})\}\). Iterate the E-step and M-step until convergence.

Remark 3

When \(\phi(t)\) is the standard normal density, the M-step has a unique maximizer, namely a weighted least squares solution. As in the EM algorithm, it is usually much easier to maximize \(\sum_{i=1}^n \{\pi_1(i \mid \beta)\log\phi_{h_1}(\widehat{\widetilde{Y}}_i - \widehat{\widetilde{X}}_i^T\beta)\}\) than the original objective function (6).
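The Step 1 iterations can be sketched in Python as follows. Because \(\phi\) is the standard normal density, the M-step is a weighted least squares fit, and the normalizing constant of \(\phi_{h_1}\) cancels in the E-step weights. The sketch assumes profiled data and a reasonable starting value (for example an LS or LAD fit); the stopping rule and the function name are hypothetical choices.

```python
import numpy as np

def mem_beta(Xt, Yt, h1, beta0, max_iter=500, tol=1e-8):
    """MEM iterations for the LMR estimator of beta (Step 1).
    Xt: (n, p) profiled design; Yt: (n,) profiled response; beta0: initial value."""
    beta = np.asarray(beta0, dtype=float).copy()
    for _ in range(max_iter):
        # E-step: pi_1(j | beta) proportional to phi_h1(Yt_j - Xt_j^T beta)
        w = np.exp(-0.5 * ((Yt - Xt @ beta) / h1) ** 2)
        if w.sum() == 0.0:
            raise ValueError("all weights underflowed; use a better initial value or larger h1")
        w /= w.sum()
        # M-step: weighted least squares (X^T W X)^{-1} X^T W Y
        XtW = Xt.T * w
        beta_new = np.linalg.solve(XtW @ Xt, XtW @ Yt)
        if np.max(np.abs(beta_new - beta)) < tol:
            return beta_new
        beta = beta_new
    return beta
```

Gross outliers receive weights that are numerically zero, so each M-step is effectively a least squares fit on the bulk of the data; like any EM-type scheme, the iterations converge to a local mode, which is why the starting value matters.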

-

Step 2 Similarly, we then compute the LMR estimator \(\widehat{f}(z)\). Let \(\widehat{\theta}^{(0)} = (\widehat{a}^{(0)}, \widehat{b}^{(0)T})^T\) be the initial value and set \(k = 0\).

-

(E-step): Update \(\pi _2 (j| {{\widehat{\theta }^{(k)}}} )\) by

$$\pi_2(j \mid \widehat{\theta}^{(k)}) = \frac{K\left(\frac{Z_j - z}{h_2}\right)\phi_{h_3}\left(Y_j^* - X_j^{*T}\widehat{\theta}^{(k)}\right)}{\sum_{i=1}^n K\left(\frac{Z_i - z}{h_2}\right)\phi_{h_3}\left(Y_i^* - X_i^{*T}\widehat{\theta}^{(k)}\right)}, \quad j = 1, 2, \ldots, n,$$

where \(Y_i^* = Y_i - X_i^T\widehat{\beta}\) and \(X_i^* = \{1, (Z_i - z)^T\}^T\).

-

(M-step): Update \(\theta \) to obtain \(\widehat{\theta }^{(k+1)}\) by

$$\begin{aligned} \widehat{\theta}^{(k+1)} &= \arg\max_\theta \sum_{i=1}^n \left\{\pi_2(i \mid \widehat{\theta}^{(k)})\log\phi_{h_3}\left(Y_i^* - X_i^{*T}\theta\right)\right\}\\ &= \left(\mathbf{X}^{*T}W_2^{(k)}\mathbf{X}^*\right)^{-1}\mathbf{X}^{*T}W_2^{(k)}\mathbf{Y}^*, \end{aligned}$$

where \(\mathbf{X}^* = (X_1^*, \ldots, X_n^*)^T\), \(\mathbf{Y}^* = (Y_1^*, \ldots, Y_n^*)^T\) and \(W_2^{(k)} = \mathrm{diag}\{\pi_2(1 \mid \widehat{\theta}^{(k)}), \ldots, \pi_2(n \mid \widehat{\theta}^{(k)})\}\). Iterate the E-step and M-step until convergence.
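Step 2 can be sketched in the same way. The sketch assumes \(Y_i^* = Y_i - X_i^T\widehat{\beta}\) has already been computed, takes Z as an (n, q) array (a 1-D array is treated as q = 1), and uses a product Epanechnikov kernel for \(K(\cdot)\); these are illustrative choices, the function name is hypothetical, and the first entry of the returned vector is \(\widehat{f}(z) = \widehat{a}\).

```python
import numpy as np

def mem_local_linear(Z, Ystar, z, h2, h3, theta0, max_iter=500, tol=1e-8):
    """MEM iterations for theta = (a, b^T)^T at the point z (Step 2)."""
    Z = np.asarray(Z, dtype=float)
    if Z.ndim == 1:
        Z = Z[:, None]                                   # treat as q = 1
    D = Z - np.atleast_1d(z)                             # Z_i - z, shape (n, q)
    K = np.prod(np.clip(0.75 * (1.0 - (D / h2) ** 2), 0.0, None), axis=1)  # K((Z_i - z)/h2)
    Xs = np.column_stack([np.ones(len(Z)), D])           # X*_i = (1, (Z_i - z)^T)^T
    theta = np.asarray(theta0, dtype=float).copy()
    for _ in range(max_iter):
        # E-step: pi_2(j | theta) proportional to K(.) * phi_h3(Y*_j - X*_j^T theta)
        w = K * np.exp(-0.5 * ((Ystar - Xs @ theta) / h3) ** 2)
        if w.sum() == 0.0:
            raise ValueError("no effective observations near z; increase h2 or h3")
        w /= w.sum()
        # M-step: weighted least squares in theta
        XsW = Xs.T * w
        theta_new = np.linalg.solve(XsW @ Xs, XsW @ Ystar)
        if np.max(np.abs(theta_new - theta)) < tol:
            return theta_new
        theta = theta_new
    return theta
```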

With the aid of the LQA algorithm, we can obtain the penalized LMR estimator \(\widehat{\beta}^\lambda\) by modifying Step 1 as follows:

-

Step 1’ Let \(\widehat{\beta}^{\lambda(0)} = (\widehat{\beta}_1^{\lambda(0)}, \ldots, \widehat{\beta}_{p_n}^{\lambda(0)})^T\) be the initial value and set \(k = 0\).

-

(E-step): Update \(\pi_1(j \mid \widehat{\beta}^{\lambda(k)})\) by

$$\pi_1(j \mid \widehat{\beta}^{\lambda(k)}) = \frac{\phi_{h_1}\left(\widehat{\widetilde{Y}}_j - \widehat{\widetilde{X}}_j^T\widehat{\beta}^{\lambda(k)}\right)}{\sum_{i=1}^n \phi_{h_1}\left(\widehat{\widetilde{Y}}_i - \widehat{\widetilde{X}}_i^T\widehat{\beta}^{\lambda(k)}\right)}, \quad j = 1, 2, \ldots, n.$$

-

(M-step): Update \(\beta \) to obtain \({\widehat{\beta }^{\lambda (k + 1)}}\) by

$$\begin{aligned} {\widehat{\beta }^{\lambda (k + 1)}}= & {} \arg \mathop {{\max }}\limits _\beta \sum \limits _{i = 1}^n \left\{ {\pi _1}\left( i|{{\widehat{\beta }}^{\lambda (k)}}\right) {\mathrm{log}}{\phi _{{h_1}}}\left( {{{\widehat{\widetilde{Y}}}}_i} - {{{\widehat{\widetilde{X}}}}_j^T}{\widehat{\beta } }^{\lambda (k)}\right) \right. \\&\left. - \frac{n}{2}\sum \limits _{j = 1}^{p_n} \left\{ {{{{p_{{\lambda }}^\prime }\left( {\widehat{\beta }_j^{\lambda (k)}}\right) }/ {| {\widehat{\beta }_j^{\lambda (k)}}|}}} \right\} \beta _j^2\right\} \\= & {} \left\{ {{{\widehat{\widetilde{\mathbf{X}}}}}^T}{W_1^{(k)}}{{\widehat{\widetilde{\mathbf{X}}}}} + n{\varSigma _\lambda }\left( {{\widehat{\beta } }^{\lambda (k)}}\right) \right\} ^{ - 1}{{ {{\widehat{\widetilde{\mathbf{X}}}}}}^T}{W_1^{(k)}}{{\widehat{\widetilde{\mathbf{Y}}}}}, \end{aligned}$$

where \({\varSigma _\lambda }(\beta ) = \mathrm{diag}\left\{ p_{{\lambda }}^\prime (| {{\beta _1}}|)/|\beta _1|, \ldots ,p_{{\lambda }}^\prime (| {{\beta _{{p_n}}}}|)/|\beta _{p_n}|\right\} \), and \({\widehat{\widetilde{\mathbf{X}}}}\), \({\widehat{\widetilde{\mathbf{Y}}}}\), and \(W_1^{(k)}\) are the same as in Step 1. Iterate the E-step and M-step until convergence.
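A minimal Python sketch of Step 1’ with the SCAD penalty follows; it is not the authors' implementation. In the code, `X` and `Y` stand for \(\widehat{\widetilde{\mathbf{X}}}\) and \(\widehat{\widetilde{\mathbf{Y}}}\). To make the E- and M-steps a self-consistent minorize–maximize pair, the sketch assumes the penalized objective \(n^{-1}\sum_i \phi_{h_1}(\widehat{\widetilde{Y}}_i - \widehat{\widetilde{X}}_i^T\beta) - \sum_j p_\lambda(|\beta_j|)\) and uses unnormalized weights \(w_i = \phi_{h_1}(r_i)\). Under these assumptions the M-step becomes \((\widehat{\widetilde{\mathbf{X}}}^T W \widehat{\widetilde{\mathbf{X}}} + n h_1^2\varSigma_\lambda)^{-1}\widehat{\widetilde{\mathbf{X}}}^T W \widehat{\widetilde{\mathbf{Y}}}\). The display above, which uses normalized \(\pi_1\), corresponds to a different scaling of \(\lambda\). The SCAD derivative with \(a = 3.7\) follows Fan and Li (2001). The OLS starting value, the fixed bandwidth, and the thresholds `eps` and `zero_tol` are illustrative choices, and the function names are hypothetical.

```python
import numpy as np


def scad_derivative(t, lam, a=3.7):
    """SCAD derivative p'_lambda(|t|) (Fan and Li 2001)."""
    t = np.abs(np.asarray(t, dtype=float))
    return np.where(t <= lam, lam, np.maximum(a * lam - t, 0.0) / (a - 1.0))


def penalized_modal_lqa(X, Y, h, lam, a=3.7, max_iter=500, tol=1e-8,
                        eps=1e-10, zero_tol=1e-6):
    """Hypothetical SCAD-penalised modal regression via E-/M-steps with LQA.

    Assumed objective: (1/n) sum_i phi_h(Y_i - X_i^T beta) - sum_j p_lambda(|beta_j|).
    M-step: beta = (X^T W X + n h^2 Sigma_lambda)^{-1} X^T W Y with
    W = diag{phi_h(r_i)} and Sigma_lambda = diag{p'_lambda(|beta_j|) / |beta_j|}.
    """
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    n = X.shape[0]
    beta = np.linalg.lstsq(X, Y, rcond=None)[0]        # starting value: OLS
    for _ in range(max_iter):
        r = Y - X @ beta
        # E-step: Gaussian kernel weights phi_h(r_i), not normalised
        w = np.exp(-r ** 2 / (2.0 * h ** 2)) / (np.sqrt(2.0 * np.pi) * h)
        # LQA weights; |beta_j| is floored at eps before dividing
        abs_b = np.maximum(np.abs(beta), eps)
        sigma_lam = np.diag(scad_derivative(abs_b, lam, a) / abs_b)
        XtW = X.T * w
        beta_new = np.linalg.solve(XtW @ X + n * h ** 2 * sigma_lam, XtW @ Y)
        converged = np.max(np.abs(beta_new - beta)) < tol
        beta = beta_new
        if converged:
            break
    beta[np.abs(beta) < zero_tol] = 0.0                  # report tiny values as zero
    return beta
```

The floor `eps` keeps the LQA weights \(p_\lambda'(|\beta_j|)/|\beta_j|\) finite. Coefficients that the iteration drives below `zero_tol` are reported as exactly zero, which is a common convention with LQA.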

5 Simulation studies

5.1 Monte Carlo simulations

In this subsection, we conduct Monte Carlo simulations to evaluate the finite-sample performance of the proposed estimators in terms of robust estimation and variable selection. We consider the following four models:

-

(I):

\(Y = {X^T}\beta + 2\sin ({\gamma ^T}Z) + \varepsilon \);

-

(II):

\(Y = {X^T}\beta + \left| {{\gamma ^T}Z + 1} \right| + \varepsilon \);

-

(III):

\(Y = {X^T}\beta + \exp \{ {\gamma ^T}Z/2\} /2 + \varepsilon \);

-

(IV):

\(Y = {X^T}\beta + 2{\gamma ^T}Z + \varepsilon .\)

In each model, we generate \(Z = {({z_1},{z_2})^T}\) from a bivariate normal distribution with mean zero and identity covariance matrix, and generate X from \({X_j} = \gamma ^T Z + 2{e_j}\) for \(j = 1,2, \ldots , p_n\), where \(\gamma = {({1 / {\sqrt{2} ,}}{1 / {\sqrt{2} }})^T}\) and the \({e_j}\)’s are independent standard normal variables. Similar models were also considered in Zhu et al. (2013). We set \(\beta = {({\mathbf{1}_{{k_n}}},{\mathbf{0}_{{p_n}-{k_n}}})^T}\) with \({k_n} = {p_n}/4\), so that the number of significant parametric covariates also diverges with the sample size. To examine the robustness and efficiency of the proposed LMR estimators, we compare them with the corresponding LS and LAD estimators. We consider three error distributions: the N(0, 1) distribution; the t(3) distribution, which produces heavy tails; and the mixed normal distribution \(0.9N(0,1)+0.1N(0,10)\), which produces outliers. Similar error distributions were also considered in Yao et al. (2012), Zhang et al. (2013), and Zhao et al. (2015). Each simulation is repeated 200 times with sample size \(n=400\) and dimension \({p_n} = 2{n^{{1 / 2}}}=40\).
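To make the design concrete, the sketch below draws one dataset from model (I) under each of the three error distributions. The function name is hypothetical. The sketch also assumes that \(N(0,10)\) in the mixture denotes a normal distribution with variance 10, not standard deviation 10.

```python
import numpy as np


def simulate_model_I(n=400, p=40, error="normal", rng=None):
    """Hypothetical generator for model (I): Y = X^T beta + 2 sin(gamma^T Z) + eps."""
    rng = np.random.default_rng() if rng is None else rng
    gamma = np.array([1.0, 1.0]) / np.sqrt(2.0)
    Z = rng.standard_normal((n, 2))                          # Z ~ N(0, I_2)
    index = Z @ gamma                                        # gamma^T Z
    X = index[:, None] + 2.0 * rng.standard_normal((n, p))   # X_j = gamma^T Z + 2 e_j
    k = p // 4                                               # k_n = p_n / 4 nonzeros
    beta = np.concatenate([np.ones(k), np.zeros(p - k)])
    if error == "normal":
        eps = rng.standard_normal(n)
    elif error == "t3":
        eps = rng.standard_t(3, n)
    elif error == "mixture":
        # Assumption: N(0, 10) means variance 10, i.e. standard deviation sqrt(10)
        outlier = rng.random(n) < 0.1
        eps = np.where(outlier, rng.normal(0.0, np.sqrt(10.0), n), rng.standard_normal(n))
    else:
        raise ValueError(f"unknown error distribution: {error}")
    Y = X @ beta + 2.0 * np.sin(index) + eps
    return X, Z, Y, beta
```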

To evaluate the estimators, the accuracy of the parametric estimators is measured by the mean and median of the mean squared errors (MeanMSE and MedianMSE) over the 200 simulated datasets. The performance of the nonparametric estimators is assessed by the median absolute prediction error (MAPE), which is defined by

and the sample means and standard deviations (SD) of the MAPEs are presented in the last columns of Tables 1 and 2. In addition, we use the notation (C, IC) to summarize the performance of variable selection in Table 3. Here C is the average number of zero regression coefficients that are correctly estimated as zero, and IC is the average number of nonzero regression coefficients that are incorrectly set to zero.
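The summary measures for the parametric part can be computed from the stored estimates, as in this sketch. Two choices here are assumptions: the per-replication MSE is taken to be \(\Vert\widehat{\beta} - \beta\Vert^2\), and a coefficient counts as zero when \(|\widehat{\beta}_j|\) is at most `zero_tol`. The function name is hypothetical.

```python
import numpy as np


def simulation_summary(beta_hats, beta_true, zero_tol=1e-8):
    """Hypothetical MeanMSE, MedianMSE, C and IC over R replications.

    beta_hats: (R, p) array holding one estimate per replication.
    Assumption: the per-replication MSE is ||beta_hat - beta||^2.
    """
    B = np.asarray(beta_hats, dtype=float)
    beta_true = np.asarray(beta_true, dtype=float)
    sq_err = np.sum((B - beta_true) ** 2, axis=1)
    est_zero = np.abs(B) <= zero_tol
    true_zero = beta_true == 0
    return {
        "MeanMSE": float(np.mean(sq_err)),
        "MedianMSE": float(np.median(sq_err)),
        # C: true zeros correctly estimated as zero, averaged over replications
        "C": float(est_zero[:, true_zero].sum(axis=1).mean()),
        # IC: true nonzeros incorrectly set to zero, averaged over replications
        "IC": float(est_zero[:, ~true_zero].sum(axis=1).mean()),
    }
```

For example, with \(\beta = (1, 1, 0, 0)^T\) and two replications \((1, 1, 0, 0)\) and \((1, 0, 0, 0.5)\), the squared errors are 0 and 1.25. This gives MeanMSE = MedianMSE = 0.625, C = 1.5 and IC = 0.5.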

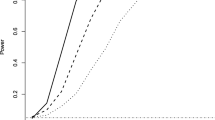

From Table 1, we can see that the LMR estimator \(\widehat{\beta }\) performs best under the non-normal error distributions and performs comparably to the corresponding LS estimator when the error is normally distributed. The LMR estimator \(\widehat{f}(z)\) appears to perform no worse than the corresponding LAD estimator under the \(t_3\) error distribution and 10\(\%\) outliers, and is comparable to the corresponding LS estimator when the error is drawn from the normal distribution. A similar conclusion can be drawn from Table 2 for the penalized LMR procedure. Furthermore, the penalized LMR estimator \(\widehat{\beta }^{\lambda }\) outperforms the other estimators in terms of C and IC. Finally, we plot the LMR estimator \(\widehat{f}(z)\). For illustration, we present only the figures obtained when the LMR procedure is applied to model (I). Figs. 1, 2, and 3 show that the LMR estimator \(\widehat{f}(z)\) performs favorably compared with the other estimators.

5.2 A real data example

As an illustration, we apply the proposed procedures to an automobile dataset (Johnson 2003), which has previously been analyzed by Zhu et al. (2012) and Zhu et al. (2013). We investigate how the manufacturer's suggested retail price (MSRP) of vehicles depends on various factors, so the MSRP is treated as the response variable (Y). After sixteen observations with missing values are removed, the dataset contains 412 observations. Seven factors may affect the MSRP of a vehicle: engine size (\(x_1\)), number of cylinders (\(x_2\)), horsepower (\(x_{3}\)), weight in pounds (\(x_{4}\)), wheel base in inches (\(x_{5}\)), average city miles per gallon (\(z_1\)) and average highway miles per gallon (\(z_2\)). Following Zhu et al. (2013), we choose \(Z = {({z_1}, {z_2})^T}\) as the nonparametric covariate. In the subsequent analysis, we first standardize the response variable Y and each component of the parametric covariate vector \(X = {({x_1}, \ldots ,{x_{5}})^T}\).

The histogram and boxplot of the standardized Y in Figs. 4 and 5 show that its distribution is highly skewed and that it contains a number of outliers. We then apply the three estimation procedures (LS, LAD, LMR) to fit the partially linear model (1). Prediction performance is measured by the median absolute prediction error (MAPE), defined as the median of \(\{ {| {Y_i - \widehat{Y}_i}|,i =1, 2, \ldots ,412} \}\). The estimation results are summarized in Table 4, which shows that the LMR method has a smaller MAPE than the other methods in the presence of outliers. Following the analysis of Zhu et al. (2013), we further include seven binary variables as auxiliary covariates that contribute little to the MSRP: sport car (\(x_6\)), sport utility vehicle (\(x_7\)), wagon (\(x_8\)), mini-van (\(x_9\)), pickup (\(x_{10}\)), all-wheel drive (\(x_{11}\)), and rear-wheel drive (\(x_{12}\)). The dimension of the covariate vector X thus becomes 12, and we then consider the problem of variable selection. Table 5 shows that all three penalized procedures identify \(x_3\), \(x_4\), and \(x_5\) as important variables. This agrees with the finding of Zhu et al. (2012) that \(x_3\) seems to be the most important factor affecting the MSRP, followed by \(x_4\). In addition, the penalized LMR procedure selects \(x_1\) as an important variable, with a negative association with the MSRP. The penalized LAD procedure, by contrast, also selects two auxiliary covariates, \(x_6\) and \(x_{12}\). In summary, the penalized LMR procedure outperforms the penalized LS procedure in terms of MAPE. It also yields a sparser model than the penalized LAD procedure, because it shrinks all the auxiliary covariates to zero.
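For reference, the standardization step and the MAPE criterion can be sketched as follows. Using the sample standard deviation (`ddof=1`) is an assumption, and the helper names are hypothetical.

```python
import numpy as np


def standardize(a):
    """Column-wise z-scores; the sample standard deviation (ddof=1) is assumed."""
    a = np.asarray(a, dtype=float)
    return (a - a.mean(axis=0)) / a.std(axis=0, ddof=1)


def mape(y, y_hat):
    """Median absolute prediction error: the median of |Y_i - Yhat_i|."""
    resid = np.asarray(y, dtype=float) - np.asarray(y_hat, dtype=float)
    return float(np.median(np.abs(resid)))
```

Because MAPE is a median, a single large residual barely affects it. For example, `mape([0, 0, 0, 0, 100], [0, 0, 0, 0, 0])` returns `0.0`.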

6 Extension

In this section, we discuss how the penalized LMR procedure can be applied to ultra-high dimensional data with \(p_n>n\). Based on correlation learning, Fan and Lv (2008) proposed sure independence screening (SIS) to reduce the dimensionality from a high to a moderate scale below the sample size, and they established the sure screening property of SIS. Inspired by their results, we propose a two-stage approach that combines SIS with penalized LMR to handle ultra-high dimensional data. We first apply SIS to reduce the model dimension to \(d_n=o\left( {\sqrt{n} } \right) \), and then fit the data using penalized LMR to obtain the final estimates. We call this two-step procedure SIS + LMR.
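The screening stage of SIS + LMR can be sketched as follows. Each column of X is ranked by its absolute marginal sample correlation with the response, and the top \(d\) columns are kept for the penalized LMR fit. This is plain SIS in the spirit of Fan and Lv (2008) and ignores Z. Screening \(\widetilde{Y}\) against \(\widetilde{X}\) instead would be one possible adaptation to the PLM, but the text does not specify one. The function name is hypothetical, and the choice of \(d\) is left to the caller.

```python
import numpy as np


def sis_screen(X, y, d):
    """Hypothetical SIS step: indices of the d columns with largest |corr(X_j, y)|.

    Columns with zero variance would cause a division by zero and must be removed first.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    Xs = (X - X.mean(axis=0)) / X.std(axis=0)
    ys = (y - y.mean()) / y.std()
    omega = np.abs(Xs.T @ ys) / len(y)        # |sample correlation| of each column
    keep = np.argsort(omega)[::-1][:d]        # indices of the d largest values
    return np.sort(keep)
```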

To demonstrate SIS + LMR, we consider model (I) of Sect. 5.1 with \(p_n=1000\). Table 6 shows that when the error is normally distributed, SIS + LMR is comparable to SIS + LS. With 10\(\%\) outliers or the \(t_3\) error distribution, however, SIS + LMR outperforms the other procedures in terms of both parameter estimation and variable selection.

7 Concluding discussion

In this paper, we adopt local modal regression for robust estimation in partially linear models with large-dimensional covariates. We show that the resulting estimators for both the parametric and nonparametric components are more efficient in the presence of outliers or heavy-tailed errors. When there are no outliers and the error is normally distributed, they are asymptotically as efficient as the corresponding least squares estimators. We also develop a variable selection procedure to select significant parametric covariates and establish its oracle property under mild regularity conditions.

In some applications, the dimension of the parametric covariate X may be much higher than \(o\left( {\sqrt{n} } \right) \). For such cases, we introduce a robust two-step approach based on the idea of sure independence screening to handle ultra-high dimensional data. How to apply the proposed procedure directly in such high-dimensional settings, without feature screening, remains a question of both theoretical and practical importance.

Furthermore, the proposed procedures may suffer from the curse of dimensionality when the dimension of the nonparametric covariate Z is large, even though our theoretical results allow Z to be multivariate. In practice, a partially linear single-index model may be used to address this issue. Such an extension is by no means trivial, however, and requires further investigation.

References

Akaike H (1973) Maximum likelihood identification of Gaussian autoregressive moving average models. Biometrika 60:255–265

Breiman L (1995) Better subset regression using the nonnegative garrote. Technometrics 37:373–384

Chen B, Yu Y, Zou H, Liang H (2012) Profiled adaptive Elastic-Net procedure for partially linear models with high-dimensional covariates. J Stat Plann Inference 142:1733–1745

Dempster AP, Laird NM, Rubin DB (1977) Maximum likelihood from incomplete data via the EM algorithm. J R Stat Soc 39:1–21

Engle R, Granger C, Rice J, Weiss A (1986) Semiparametric estimates of the relation between weather and electricity sales. J Am Stat Assoc 81:310–320

Fan J, Gijbels I (1996) Local polynomial modelling and its applications. Chapman and Hall, London

Fan J, Li R (2001) Variable selection via nonconcave penalized likelihood and its oracle properties. J Am Stat Assoc 96:1348–1360

Fan J, Lv J (2008) Sure independence screening for ultra-high-dimensional feature space. J R Stat Soc 70:849–911

Fan J, Hu TC, Truong YK (1994) Robust nonparametric function estimation. Scand J Stat 21:433–446

Hardle W, Liang H, Gao JT (2000) Partial linear models. Springer, New York

Huber PJ (1981) Robust statistics. Wiley, New York

Johnson RW (2003) Kiplinger's personal finance. J Stat Educ 57:104–123

Li R, Liang H (2008) Variable selection in semiparametric regression modeling. Ann Stat 36:261–286

Li J, Ray S, Lindsay B (2007) A nonparametric statistical approach to clustering via mode identification. J Mach Learn Res 8:1687–1723

Li GR, Peng H, Zhu LX (2011) Nonconcave penalized M-estimation with diverging number of parameters. Stat Sin 21:391–420

Mallows CL (1973) Some comments on \(C_p\). Technometrics 15:661–675

Ni X, Zhang HH, Zhang D (2009) Automatic model selection for partially linear models. J Multivar Anal 100:2100–2111

Pollard D (1991) Asymptotics for least absolute deviation regression estimators. Econom Theory 7:186–199

Rao BLSP (1983) Nonparametric functional estimation. Academic Press, Orlando

Robinson PM (1988) Root \(n\)-consistent semiparametric regression. Econometrica 56:931–954

Schwarz G (1978) Estimating the dimension of a model. Ann Stat 6:461–464

Severini TA, Staniswalis JG (1994) Quasi-likelihood estimation in semiparametric models. J Am Stat Assoc 89:501–511

Speckman PE (1988) Kernel smoothing in partial linear models. J R Stat Soc 50:413–436

Tibshirani R (1996) Regression shrinkage and selection via the LASSO. J R Stat Soc 58:267–288

Wang H, Li G, Jiang G (2007) Robust regression shrinkage and consistent variable selection through the LAD-Lasso. J Bus Econ Stat 25:347–355

Xie H, Huang J (2009) SCAD-penalized regression in high-dimensional partially linear models. Ann Stat 37:673–696

Yang H, Yang J (2014) A robust and efficient estimation and variable selection method for partially linear single-index models. J Multivar Anal 129:227–242

Yao W, Li L (2014) A new regression model: modal linear regression. Scand J Stat 41:656–671

Yao W, Lindsay B, Li R (2012) Local modal regression. J Nonparametr Stat 24:647–663

Zeger S, Diggle P (1994) Semiparametric models for longitudinal data with application to CD4 cell numbers in HIV seroconverters. Biometrics 50:689–699

Zhang R, Zhao W, Liu J (2013) Robust estimation and variable selection for semiparametric partially linear varying coefficient model based on modal regression. J Nonparametr Stat 25:523–544

Zhao W, Zhang R, Liu J, Lv Y (2014) Robust and efficient variable selection for semiparametric partially linear varying coefficient model based on modal regression. Ann Inst Stat Math 66:165–191

Zhao W, Zhang R, Liu Y, Liu J (2015) Empirical likelihood based modal regression. Stat Pap 56:411–430

Zhou H, You J, Zhou B (2010) Statistical inference for fixed-effects partially linear regression models with errors in variables. Stat Pap 51:629–650

Zhu LX, Fang KT (1996) Asymptotics for kernel estimation of sliced inverse regression. Ann Stat 24:1053–1068

Zhu L, Huang M, Li R (2012) Semiparametric quantile regression with high-dimensional covariates. Stat Sin 22:1379–1401

Zhu L, Li R, Cui H (2013) Robust estimation for partially linear models with large-dimensional covariates. Sci China Math 56:2069–2088

Zou H (2006) The adaptive lasso and its oracle properties. J Am Stat Assoc 101:1418–1429

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Grant No. 11671059).

Appendix: Proofs of Theorems

Proof of Theorem 1

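We want to show that for any given \({{\delta > 0}}\), there exists a large constant C such that

$$P\left\{ \mathop {\sup }\limits _{\Vert \mathbf{v} \Vert = C} R\left( \beta _0 + \mu _n \mathbf{v}\right) < R(\beta _0)\right\} \ge 1 - \delta ,\tag{13}$$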

where \(R(\beta ) = \frac{1}{n}\sum \limits _{i = 1}^n {\phi _{h_1}}( {{{{\widehat{\widetilde{Y}}}}_i} - {{{\widehat{\widetilde{X}}}}_i}^T\beta })\), \({\mu _n}={p_n}^{{1 / 2}} {{n^{{{ - 1} / 2}}}}\).

For simplicity, we define \({D_n}(\mathbf{{v}}) = {R}( {\beta _0 + {{\mu _n}}{} \mathbf{{v}}}) - {R }(\beta _0)\) and then obtain that

where \({\sigma _i} = {{\widetilde{Y}}_i} - {{{\widehat{\widetilde{Y}}}}_i} - {({{\widetilde{X}}_i} - {{{\widehat{\widetilde{X}}}}_i})^T}{\beta _0}\), and \(t_i\) lies between \({\varepsilon _i} - {\sigma _i}\) and \({\varepsilon _i} - {\sigma _i}-{\mu _n}{\mathbf{{v}}^T}{{{\widehat{\widetilde{X}}}}_i}\). By the regularity conditions (A1)–(A4), the consistency of the kernel estimators implies that \({\max _{1 \le i \le n}}\Vert {{\sigma _i}} \Vert = {o_p}(1)\) almost surely, a fact that is used repeatedly in the proof. A detailed discussion of this argument can be found in Lemma 3.5.1 and Lemma A.1 of Hardle et al. (2000).

Based on the fact that \(\xi = E(\xi ) + {\mathrm{O}_p}(\sqrt{Var(\xi )})\), the regularity condition (A7) and the uniform convergence of \({{\mathbf{{v}}^T}{{{\widehat{\widetilde{X}}}}_i}}\) entail that \({I_1} = {\mathrm{O}_p}(\frac{{C{\mu _n}}}{{\sqrt{n} }})\); see Rao (1983) and Zhu and Fang (1996) for the technical details. Similarly, \({I_3} = {\mathrm{O}_p}({C^3}{\mu _n}^3)\). For \(I_2\), we have \({I_2} = \frac{1}{2}{\mu _n}^2{\mathbf{{v}}^T}{\varSigma _1}{} \mathbf{{v}}(1 + {o_p}(1))\), where \({\varSigma _1} = E\{ F(U,{h_1}){\widetilde{X}}{{\widetilde{X}}^T}\}.\) Under the condition \({{p_n^2} / n} \rightarrow 0\) as \(n \rightarrow \infty \), it can be shown that \({I_1} = {o_p}\left( {{I_2}} \right) \) and \({I_3} = {o_p}\left( {{I_2}} \right) \). A similar argument can be found in Li et al. (2011).

By the regularity condition (A6), \(F(u,{h_1}) < 0\); hence, \({\varSigma _1}\) is a negative definite matrix. Since \(\left\| \mathbf {v} \right\| = C\), we can choose C large enough that \(I_2\) dominates both \(I_1\) and \(I_3\) with probability at least \(1- \delta \). It follows that Eq. (13) holds. Hence, \(\widehat{\beta }\) is a root-\({{n / {{p_n}}}}\) consistent estimator of \(\beta \). \(\square \)

Proof of Theorem 2

Let \({{\widehat{\gamma }}_i} = {{{\widehat{\widetilde{X}}}}_i}^T( {\widehat{\beta }- {\beta _0}} )\). If \(\widehat{\beta }\) maximizes Eq. (6), then \(\widehat{\beta }\) satisfies the following equation:

where \({\varepsilon _i^ * }\) is between \(\varepsilon _i\) and \(\varepsilon _i-{\sigma _i}-{\widehat{\gamma }}_i\).

For \(I_5\), we have

where \({\varSigma _1} = E\{ {F( {U,{h_1}}){\widetilde{X}}{{ \widetilde{X}}^T}}\},\) and the last equality follows from the regularity conditions (A5) and (A7).

Since \({| {{{\widehat{\gamma }}_i}} |^2} = {\mathrm{O}_p}({\Vert {\widehat{\beta }- {\beta _0}}\Vert ^2})\) and \({{p_n^2} / n} \rightarrow 0\) as \(n \rightarrow \infty \), we have \({I_6} = o_p({I_5})\). A straightforward calculation then shows that \(\sqrt{n} (\widehat{\beta }- {\beta _0}) = \frac{1}{{\sqrt{n} }}\varSigma _1^{ - 1}\sum \nolimits _{i = 1}^n {{{{\widehat{\widetilde{X}}}}_i}\phi _{{h_1}}^\prime ( {{\varepsilon _i}})} + {o_p}(1).\)

Note that \(E\left( {{\phi }^\prime _h( \varepsilon )\left| {U = u} \right. } \right) = 0\), and by the central limit theorem, we have

where \({\varSigma _2} = Var\{ \widetilde{X}\phi _{{h_1}}^\prime (\varepsilon )\}\). This completes the proof. \(\square \)
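Theorem 2 can be made concrete under two extra assumptions that are not stated here: \(\varepsilon\) is independent of \((X, Z)\), and the kernel is Gaussian. Then \(\varSigma_1 = F(h_1)\varSigma\) and \(\varSigma_2 = G(h_1)\varSigma\), where \(F(h) = E\{\phi_h''(\varepsilon)\}\), \(G(h) = E\{\phi_h'(\varepsilon)^2\}\) and \(\varSigma = E(\widetilde{X}\widetilde{X}^T)\). The asymptotic covariance \(\varSigma_1^{-1}\varSigma_2\varSigma_1^{-1}\) therefore equals \(\{G(h_1)/F(h_1)^2\}\varSigma^{-1}\), compared with \(\sigma^2\varSigma^{-1}\) for least squares. The sketch below evaluates the factor \(G(h)/F(h)^2\) with the trapezoidal rule. The helper names are hypothetical.

```python
import math

import numpy as np


def kernel_derivatives(t, h):
    """phi_h'(t) and phi_h''(t) for phi_h(t) = exp(-t^2 / (2 h^2)) / (sqrt(2 pi) h)."""
    phi = np.exp(-t ** 2 / (2.0 * h ** 2)) / (math.sqrt(2.0 * math.pi) * h)
    d1 = -t / h ** 2 * phi
    d2 = (t ** 2 / h ** 4 - 1.0 / h ** 2) * phi
    return d1, d2


def normal_pdf(x):
    return np.exp(-x ** 2 / 2.0) / math.sqrt(2.0 * math.pi)


def t_pdf(x, df):
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return c * (1.0 + x ** 2 / df) ** (-(df + 1) / 2)


def modal_variance_factor(h, density, half_width=60.0, n_grid=400_001):
    """G(h) / F(h)^2 with G = E[phi_h'(eps)^2], F = E[phi_h''(eps)], by the trapezoidal rule."""
    x = np.linspace(-half_width, half_width, n_grid)
    dx = x[1] - x[0]
    d1, d2 = kernel_derivatives(x, h)
    f = density(x)

    def integrate(y):
        return dx * (y.sum() - 0.5 * (y[0] + y[-1]))

    G = integrate(d1 ** 2 * f)
    F = integrate(d2 * f)
    return G / F ** 2
```

For N(0, 1) errors, the factor equals \(8/(3\sqrt{3}) \approx 1.54\) at \(h = 1\) and tends to \(\sigma^2 = 1\) as \(h\) grows. This mirrors the statement that LMR is asymptotically as efficient as LS under normal errors. For \(t(3)\) errors, the factor at \(h = 1\) is below \(\mathrm{Var}(\varepsilon) = 3\). For heavy-tailed densities combined with large \(h\), the truncation point `half_width` should be increased.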

Proof of Theorem 3

Since Theorem 3 is parallel to Theorem 6, we present a detailed proof only for Theorem 6. \(\square \)

Proof of Theorem 4

It is sufficient to show that for any given \({{\delta > 0}}\), there exists a large constant C such that

where \({\omega _n} = p_n^{{1 / 2}}({n^{ - {1 / 2}}} + {a_n}).\)

Let \({I_7} = - \sum \nolimits _{j = 1}^{{k_n}} {\{ p{}_{{\lambda }}(\left| {{\beta _{{0j}}} + {\omega _n}{v_j}} \right| ) - p{}_{{\lambda }}(\left| {{\beta _{{0j}}}} \right| )\} } \), where \({k_n}\) is the number of components of \(\beta _{{0a}}\). Note that \(p{}_{{\lambda }}(0) = 0\) and \(p{}_{{\lambda }}(| {{\beta _{{j}}}} |) \ge 0\) for all \(\beta _j\). By the proof of Theorem 1, we have

where \({{\bar{I}}_1}\), \({{\bar{I}}_2}\), and \({{\bar{I}}_3}\) are the same as \(I_1\), \(I_2\) and \(I_3\), except that \({\mu _n}\) is replaced by \(\omega _n\).

By the Taylor expansion and the Cauchy–Schwarz inequality, \(I_7\) is bounded by

Consequently, as \(b_n \rightarrow 0\), \(I_7\) is dominated by \({{\bar{I}}_2} = \frac{1}{2}\omega _n^2{\mathbf{{v}}^T}{\varSigma _1}{} \mathbf{{v}}(1 + {o_p}(1))\), provided C is sufficiently large. Hence, for large C, \({\bar{I}}_2\) dominates the other three terms in Eq. (15). Since \({\bar{I}}_2<0\), Eq. (14) holds, which proves Theorem 4. \(\square \)

To prove Theorem 5, we need the following lemma.

Lemma 1

Under the conditions in Theorem 5, with probability tending to 1, for any given \({\beta _a}\) satisfying \(\left\| {{\beta _a} - {\beta _{0a}}} \right\| = {\mathrm{O}_p}(\sqrt{{{{p_n}} / n}} )\) and any constant C, we have

Proof of Lemma 1

From the proof of Theorem 2, we have

It can be shown that \(\frac{1}{n}\sum \nolimits _{i = 1}^n {{{{\widehat{\widetilde{X}}}}_i}\phi _{h_1}^\prime ({\varepsilon _i})}= {O_p}({n^{ - {1 / 2}}})\). Since \(\left\| {{\beta _a} - {\beta _{0a}}} \right\| = {O_p}(\sqrt{{{{p_n}} / n}} )\) by assumption, we have \(R_j^\prime (\beta ) = {O_p}(\sqrt{{{{p_n}} / n}} )\). Therefore, for \({\beta _j} \ne 0\) and \(j = {k_n} + 1, \ldots ,{p_n}\),

Since \({{\lim {{\inf }_{n \rightarrow \infty }}\lim {{\inf }_{t \rightarrow {0^ + }}}p_{{\lambda }}^\prime (t)} / {{\lambda }}} > 0\) and \({({n / {{p_n}}})^{{1 / 2}}}{\lambda } \rightarrow \infty \), the sign of the derivative for \({\beta _j} \in ( - C\sqrt{{{{p_n}} / n}}, C\sqrt{{{{p_n}} / n}})\) is completely determined by the sign of \({\beta _j}\). Therefore, Eq. (16) holds. \(\square \)

Proof of Theorem 5

From Lemma 1, it follows that \(\widehat{\beta }_b^\lambda = 0\). We next show the asymptotic normality of \(\widehat{\beta }_a^\lambda \). By Theorem 4, there exists a root-\({{n / {{p_n}}}}\) consistent local maximizer \(\widehat{\beta }_a^\lambda \) of \({Q_\lambda }\{ {{{\left( {\beta _a^T,0} \right) }^T}}\}\), which satisfies the following equations:

Therefore,

where \({\varepsilon _i^ * }\) is between \(\varepsilon _i\) and \(\varepsilon _i-{\widehat{\gamma }}_i\).

By arguments similar to those in the proof of Theorem 2, the central limit theorem and Slutsky’s theorem yield

in distribution, where \(\varSigma _1^{(1)}\), \(\varSigma _2^{(1)}\) are the submatrices of \(\varSigma _1\) and \(\varSigma _2\) corresponding to \(\beta _{0a}\). \(\square \)

Proof of Theorem 6

For notational clarity, we let \({K_i} = K({\frac{{{Z_i} -Z}}{{{h_2}}}})\) and \(l\left( r \right) = - {\phi _{{h_3}}}(r)\). Then, Eq. (11) can be rewritten as

Let \({\theta } = {\left( {nh_2^q} \right) ^{{1 / 2}}}[ {{{a}}-f(Z),{h_2}( {{{b}}-f'(Z)})}]\), \(z_i^ * = {[ {1,{{{{( {{Z_i} - Z} )}^T}}/{{h_2}}}} ]^T}\), \({s_i} = X_i^T( {{\beta _0} - {{\widehat{\beta }}^\lambda }})\), \({\delta _i} = {Y_i} - X_i^T\widehat{\beta }^{\lambda } - f(Z) - f'(Z)({Z_i} - Z)\), \({\delta _i^*} = {Y_i} - X_i^T\beta _0 - f(Z) - f'(Z)({Z_i} - Z)\) and \(f_i={f({Z_i}) - f(Z) - f'({Z})({Z_i} - Z)}\). Then, \({\theta _n}= {\left( {nh_2^q} \right) ^{{1 / 2}}}[ {{{\widehat{a}}^\lambda }-f(Z),{h_2}( {{{\widehat{b}}^\lambda }-f'(Z)})}]\) minimizes the function

Since the function \({J_n}({\theta })\) is convex in \(\theta \), it is sufficient to prove that \({J_n}({\theta })\) converges pointwise to its conditional expectation (Pollard 1991).

Given \({{{{\mathbf{{X}}}}}} = {({{{{X}}}_1}, \ldots ,{{{{X}}}_n})^T}\) and \({{{{\mathbf{{Z}}}}}} = {({{{{Z}}}_1}, \ldots ,{{{{Z}}}_n})^T}\), we can obtain that

where the last equality follows from the regularity condition (B3). Similar arguments can also be found in (D.2) and (D.3) of Zhu et al. (2013).

Then, we can obtain that

which parallels (4.6) of Fan et al. (1994). The rest of the proof follows directly from Fan et al. (1994) by treating the dimension of Z as fixed, so the details are omitted here. \(\square \)

About this article

Cite this article

Yang, H., Li, N. & Yang, J. A robust and efficient estimation and variable selection method for partially linear models with large-dimensional covariates. Stat Papers 61, 1911–1937 (2020). https://doi.org/10.1007/s00362-018-1013-1

DOI: https://doi.org/10.1007/s00362-018-1013-1