Abstract

In the framework of censored regression models the random errors are routinely assumed to have a normal distribution, mainly for mathematical convenience. However, this method has been criticized in the literature because of its sensitivity to deviations from the normality assumption. Here, we first establish a new link between the censored regression model and a recently studied class of symmetric distributions, which extend the normal one by the inclusion of kurtosis, called scale mixtures of normal (SMN) distributions. The Student-t, Pearson type VII, slash, contaminated normal, among others distributions, are contained in this class. A member of this class can be a good alternative to model this kind of data, because they have been shown its flexibility in several applications. In this work, we develop an analytically simple and efficient EM-type algorithm for iteratively computing maximum likelihood estimates of the parameters, with standard errors as a by-product. The algorithm has closed-form expressions at the E-step, that rely on formulas for the mean and variance of certain truncated SMN distributions. The proposed algorithm is implemented in the R package SMNCensReg. Applications with simulated and a real data set are reported, illustrating the usefulness of the new methodology.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Regression models with censored dependent variable (hereafter CR models) are applied in many fields, like econometric analysis, clinical essays, medical surveys, engineering studies, among others. For example, in econometrics, the study of the labor force participation of married women is usually conducted under the ordinary Tobit model Greene (2012). In this case, the observed response is the wage rate, which is typically considered as censored below zero, i.e., for working women, positive values for the wage rates are registered, whereas for the non-working women the observed wage rates are zero (see Mroz 1987). In AIDS research, the viral load measures may be subjected to some upper and lower detection limits, below or above which they are not quantifiable. As a result, the viral load responses are either left or right censored depending on the diagnostic assays used (see Wu 2010).

In general, for mathematical tractability reasons, it is assumed that the random errors have a normal distribution Wei and Tanner (1990). However, it is well-known that several phenomena are not always in agreement with this assumption, yielding data with a distribution with heavier tails. The problem of longer-than-normal tails (or outliers) can be circumvented by data transformations (namely, Box–Cox, etc.), which can render approximate normality with reasonable empirical results. However, some possible drawbacks of these methods are: (i) transformations provide reduced information on the underlying data generation scheme; (ii) component wise transformations may not guarantee joint normality; (iii) parameters may lose interpretability on a transformed scale and (iv) transformations may not be universal and usually vary with the data set. Hence, from a practical perspective, there is a necessity to seek an appropriate theoretical model that avoids data transformations, yet presenting a robustified “Gaussian” framework.

To deal with the problem of atypical observations in regression models with complete responses, proposals have been made in the literature to replace normality with more flexible classes of distributions. For instance, Lange et al. (1989) discussed the use of the Student-t distribution in multivariate regression models. In this case, the degrees of freedom parameter is the natural choice to control kurtosis. Ibacache-Pulgar and Paula (2011), proposed some local influence measures in Student-t partially linear models. Villegas et al. (2012) proposed the generalized symmetric linear models, in which a link function is defined to establish a relationship between the mean values of symmetric distributions and linear predictors. Recently, Arellano-Valle et al. (2012) advocated the use of the Student-t distribution in the context of truncated regression models. More recently, Massuia et al. (2014) developed diagnostic measures for censored regression models using the Student-t distribution, including the implementation of an interesting (and simple) expectation-maximization (EM) algorithm for maximum likelihood (ML) estimation. They demonstrated its robustness aspects against outliers through extensive simulations.

Although there are some proposals that overcome the problem of atypical observations in CR models, there are no studies taking into account, at the same time, censored responses and observational errors modeled by a distribution in the scale mixture of normal class, which is, maybe, the most important family of symmetric distributions. SMN distributions are extensions of the normal one, incorporating kurtosis. The Student-t (T), Pearson type VII (PVII), slash (SL), power exponential (PE), contaminated normal (CN) and, obviously, the normal (N) distributions are included in this class. Comprehensive surveys are available in Fang and Zhang (1990), Arellano-Valle (1994) and Meza et al. (2012), among others. In this paper, we propose a CR model where the observational errors have a SMN distribution (hereafter we will call it the SMN-CR model). A fully likelihood-based approach is carried out, including the implementation of an exact EM-type algorithm for ML estimation. As in Massuia et al. (2014), we show that the E-step reduces to computing the first two moments of certain truncated SMN distributions. The general formulas for these moments were derived in closed form by Genç (2012). The likelihood function and the asymptotic standard errors (SE) are easily computed as a by-product of the E-step and are used for monitoring convergence and for model selection using the akaike information criterion (AIC) or the bayesian information criterion (BIC). The theoretical justification of the proposal rests on the facts that the SMN class stochastically attributes varying weights to each subject, i.e., lower weight for outliers and thus controls the influence of atypical observations on the overall inference. Moreover, every member of the SMN class tends to the normal case, for example, as the Student-t degrees of freedom tends to the infinity, it approaches normality.

The rest of the paper is organized as follows. Section 2 briefly outlines some preliminary properties of the SMN and truncated SMN distributions. The SMN-CR model is presented in Sect. 3, including the implementation of the ECME algorithm Liu and Rubin (1994) for ML estimation, which is a simple extension/modification of the EM algorithm. In Sect. 4, we derive approximate SE for the regression parameters of the SMN-CR model. Sect. 5, presents some simulation studies to compare the performance of our methods with other normality-based methods. In Sect. 6, advantages of the proposed methodology is illustrated through the analysis of a real data set on housewives wages, previously analyzed under normal errors. Section 7 concludes with a short discussion on the issues raised by our study and some possible directions for future research.

2 Preliminaries

Throughout this paper \(X \sim \text {N}(\mu ,\sigma ^2)\) denotes a random variable X with normal distribution with mean \(\mu \) and variance \(\sigma ^2\) and \(\phi \left( \cdot |\mu ,\sigma ^2\right) \) denotes its probability density function (pdf). \(\phi (\cdot )\) and \(\Phi (\cdot )\) denote, respectively, the pdf and the cumulative distribution function (cdf) of the standard normal distribution. In general, we use the traditional convention denoting a random variable (or a random vector) by an upper case letter and its realization by the corresponding lower case. Random vectors and matrices are denoted by boldface letters. \(\mathbf {X}^{\top }\) is the transpose of \(\mathbf {X}\). \(X \bot Y\) indicates that the random variables X and Y are independent.

We start by defining the SMN distributions, through their hierarchical formulation, and then we introduce some further properties.

Definition 1

We say that a random variable X has a SMN distribution with location parameter \(\mu \) and scale parameter \(\sigma ^2>0\) if it has the following stochastic representation:

where \(Z\sim {N}(0,\sigma ^2)\), U is a positive random variable with cdf \(H(\cdot |{\varvec{\nu }})\) and \({\varvec{\nu }}\) is a scalar or vector parameter indexing the distribution of U.

We use the notation \(X \sim \text {SMN}(\mu , \sigma ^2,{\varvec{\nu }}).\) When \(\mu =0\) and \(\sigma ^2=1\) we have the so-called standard SMN distribution. Note from (1) that \(X|U=u~\sim ~N(\mu ,u^{-1}\sigma ^2)\). Thus, integrating out U from the joint density of X and U will lead to the following marginal density of X:

where \(H(\cdot |{\varvec{\nu }})\) is the cdf of U, which determines the form of the SMN distribution. U is called the scale factor and \(H(\cdot |{\varvec{\nu }})\) is called the mixture distribution.

It is important to notice that there exists a relation between SMN distributions and elliptical distributions. We say that the random variable X has a univariate elliptical distribution with location parameter \(\mu \) and scale parameter \(\sigma ^2\), when its density is given by

where \(z=(x-\mu )^2/\sigma ^2\) and \(g: \mathbb {R} \rightarrow [0,\infty )\) satisfies \(\int _{0}^{\infty } z^{-\frac{1}{2}}g(z) dz < \infty \). It easy to see that (2) has the form (3). The relation between SMN and elliptical distributions will be used in Sect. 4, to obtain SE for the regression parameters.

Definition 2

Let \(X \sim \text {SMN}(\mu , \sigma ^2,{\varvec{\nu }})\) and \(a<b\) such that \(P(a<X<b)>0\). A random variable Y has a truncated SMN distribution in the interval (a, b) if it has the same distribution as \(X|X \in (a,b) \). In this case we write \(Y \sim \text {TSMN}_{(a,b)}(\mu , \sigma ^2,{\varvec{\nu }})\).

As an obvious consequence of Definition 2, we can obtain the density of \(Y \sim \text {TSMN}_{(a,b)}(\mu ,\sigma ^2,{\varvec{\nu }})\), given by

and \(f_{\text {TSMN}}(y|\mu ,\sigma ^2,{\varvec{\nu }};(a,b))=0\) otherwise, where \({F}_{SMN}(\cdot )\) denotes the cdf of the standard SMN distribution. Now we establish the following proposition, which is crucial to the development of our proposed theory. It is a natural extension of Theorem 1 (and Corollary 1) of Genç (2012). In what follows \(\text {E}[\cdot ]\) denotes expectation, \(\text {E}_X[\cdot ]\) denotes expectation relative to the distribution of X and, for the sake of notation simplicity, we denote all pdf’s by \(f(\cdot )\). Thus, for example, f(u, x) denotes the joint pdf of U and X and \(f(u|X \in {\mathcal A})\) denotes the pdf of U given the event \(\left\{ X \in {\mathcal A}\right\} \).

Proposition 1

Let \(X \sim \text {SMN}(0,1,{\varvec{\nu }})\) with scale factor U and mixture distribution \(H(\cdot |{\varvec{\nu }})\). Then, for \(a <b\), the \(\text {E}\left[ U^{r}X^{s}|X\in (a,b)\right] \) for \(r\ge 1\) and \(s=0,1,2\) is given by:

where

Proof

Let \({\mathcal A}=(a,b)\). From Definitions 1 and 2, we have that \(X|U=u\sim \text { N}(0,u^{-1})\), \(X|X\in \mathcal{A}\sim \text {TSMN}_{\mathcal{A}}(0,1,{\varvec{\nu }})\) and \(X|U=u,X\in \mathcal{A}\sim \text {TN}_{\mathcal{A}}(0,u^{-1})\), that is, a truncated normal distribution in \(\mathcal{A}\), being 0 and \(u^{-1}\) the mean and variance, respectively, before truncation. Then,

The pdf in the integral sign takes the following form:

where \(\mathcal{A}^*=(a u^{\frac{1}{2}},b u^{\frac{1}{2}})\) and \(\mathbb {I}_{A}(\cdot )\) is the indicator function of the set A. Equation (10) is obtained using the pdf’s expression of \(X| X \in \mathcal A\). Equation (11) is consequence of the fact that, if \(\left\{ x \in \mathcal A\right\} \), then \(\left\{ X \in \mathcal A, X=x\right\} =\left\{ X=x\right\} \), implying that \(f(u,x) =f(u|X=x)f(x) =f(u|X=x, X \in \mathcal A)f(x)\). If \(\left\{ x \notin \mathcal A\right\} \) then \(\mathbb {I}_{\mathcal A}(x) =0\) and the integrands in (10) and (11) are equal to zero. By (8) and Lemma 1 given in Appendix A, it follows that

-

for \(s=0\),

$$\begin{aligned} \text {E}\left[ U^{r}|X\in \mathcal{A}\right]&=\int ^{\infty }_{0}u^{r}f(u|X \in {\mathcal A})du \\&=\tau (a,b) \text {E}_U\left\{ U^{r}\left[ \Phi \left( b {U} ^{\frac{1}{2}}\right) -\Phi \left( a {U} ^{\frac{1}{2}}\right) \right] \right\} \!; \end{aligned}$$ -

for \(s=1\),

$$\begin{aligned} \text {E}\left[ U^{r}X|X\in \mathcal{A}\right]&= \int ^{\infty }_{0}\frac{u^{r}}{u^{\frac{1}{2}}} \frac{\phi \left( a {u}^{\frac{1}{2}} \right) -\phi \left( b {u}^{\frac{1}{2}}\right) }{\Phi \left( b {u}^{\frac{1}{2}}\right) -\Phi \left( a {u}^{\frac{1}{2}} \right) } f(u|X \in {\mathcal A})(u)du\\&=\tau (a,b) \text {E}_U\left\{ U^{r-\frac{1}{2}}\left[ \phi \left( a {U}^{\frac{1}{2}} \right) -\phi \left( b {U}^{\frac{1}{2}}\right) \right] \right\} \!. \end{aligned}$$ -

for \(s=2\),

$$\begin{aligned} \text {E}\left[ U^{r}X^2|X\in \mathcal{A}\right]&=\int ^{\infty }_{0}\left[ u^{r-1}+\frac{au^{r-\frac{1}{2}}\phi \left( a {u}^{\frac{1}{2}}\right) -bu^{r-\frac{1}{2}}\phi \left( b {u}^{\frac{1}{2}}\right) }{\Phi \left( b {u}^{\frac{1}{2}}\right) -\Phi \left( a {u}^{\frac{1}{2}}\right) }\right] \nonumber \\&\quad \times f(u|X \in {\mathcal A})du\\&=\tau (a,b) \text {E}_U\left\{ U^{r-1}\left[ \Phi \left( b {U}^{\frac{1}{2}}\right) - \Phi \left( a {U}^{\frac{1}{2}}\right) \right] \right. \\&\left. \quad {} + U^{r-\frac{1}{2}} \left[ a\phi \left( a {U}^{\frac{1}{2}}\right) -b\phi \left( b {U}^{\frac{1}{2}}\right) \right] \right\} . \end{aligned}$$

\(\square \)

When the distribution of U is available, this proposition gives closed form expressions for the expected values \(\text {E}\left[ U^{r}X^{s}|X\in (a,b)\right] \), where \(s=0,1,2\) and \(r \ge 1.\)

Now we compute the quantities \(\text {E}_{\phi }\left( r,h\right) \) and \(\text {E}_{\Phi }\left( r,h\right) \) for some elements of the SMN family. They are useful for implementing the ECME algorithm. For the sake of completeness, a detailed proof of these results is sketched in Appendix B.

-

Pearson type VII distribution: in this case we consider \(U\sim Gamma(\nu /2,\delta /2)\), with \(\nu >0~\text {and}~\delta >0\), where Gamma(a, b) denotes the Gamma distribution with mean a / b. The density of the random variable X, defined in (1), takes the form

$$\begin{aligned} f_{PVII}(x|\nu ,\delta )=\frac{1}{B\left( \nu /2,1/2\right) \sqrt{\delta }}\left( 1+\frac{x^2}{\delta }\right) ^{-\frac{\nu +1}{2}}, \end{aligned}$$where \(\delta >0\) and \(\nu >0\) are shape parameters and B(a, b) represents the beta function. We use the notation \(X\sim PVII(0,1;\nu ,\delta )\). In this case, we have that

$$\begin{aligned} \text {E}_{\Phi }\left( r,h\right)&=\frac{\Gamma \left( \frac{\nu +2r}{2}\right) }{\Gamma \left( \frac{\nu }{2}\right) }\left( \frac{\delta }{2}\right) ^{-r}F_{PVII}(h|\nu +2r,\delta );\\ \text {E}_{\phi }\left( r,h\right)&=\frac{\Gamma \left( \frac{\nu +2r}{2}\right) }{\Gamma \left( \frac{\nu }{2}\right) \sqrt{2\pi }}\left( \frac{\delta }{2}\right) ^{\frac{\nu }{2}}\left( \frac{h^2 +\delta }{2}\right) ^{-\frac{\left( \nu +2r\right) }{2}}, \end{aligned}$$where \(\Gamma \left( a\right) \) is the gamma function and \(F_{PVII}(\cdot )\) is the cdf of the Pearson type VII distribution. When \(\delta =\nu \) we have the Student-t distribution with \(\nu \) degrees of freedom. Also, we have the Cauchy distribution when \(\delta =\nu =1\).

-

Slash distribution: here the distribution of the scale factor U is \(Beta(\nu ,1)\), with \(\nu >0\). The density of the random variable X, defined in (1), is given by

$$\begin{aligned} f_{sl}(x|\nu )=\nu \int ^1_0u^{\nu -1}\phi (x {u}^{\frac{1}{2}})du. \end{aligned}$$We use the notation \(X\sim SL(0,1;\nu )\). In this case, we have that

$$\begin{aligned} \text {E}_{\Phi }\left( r,h\right)&=\left( \frac{\nu }{\nu +r}\right) F_{SL}(h|\nu +r);\\ \text {E}_{\phi }\left( r,h\right)&=\frac{\nu }{\sqrt{2\pi }}\left( \frac{h^2}{2}\right) ^{-\left( \nu +r\right) }\Gamma \left( \nu +r,\frac{h^2}{2}\right) \!, \end{aligned}$$where \(\Gamma \left( a,b\right) =\int ^{b}_{0}e^{-t}t^{a-1}dt\) is the incomplete gamma function, see Lemma 6 in Genç (2012), and \(F_{SL}(\cdot )\) is the cdf of the slash distribution.

-

Contaminated normal distribution: here U is a discrete random variable taking one of two states 1 or \(\gamma \). In this case the probability function of U is given by

$$\begin{aligned} U= \left\{ \begin{array}{ll} \gamma &{} \text{ with } \text{ probability }~~\xi ;\\ 1 &{} \text{ with } \text{ probability } 1- \xi , \end{array} \right. \end{aligned}$$It follows immediately that the density of the random variable X, defined in (1), is given by

$$\begin{aligned} f_{CN}(x|\xi ,\gamma )= & {} \xi \phi (x|0,\gamma ^{-\frac{1}{2}})+(1-\xi )\phi (x). \end{aligned}$$So, we have that

$$\begin{aligned} \text {E}_{\Phi }\left( r,h\right)&=\gamma ^r F_{CN}(h|\xi ,\gamma ) + \left( 1-\gamma ^r\right) \left( 1-\xi \right) \Phi \left( h\right) ;\\ \text {E}_{\phi }\left( r,h\right)&=\xi \gamma ^{r}\phi \left( h\sqrt{\gamma }\right) +\left( 1-\xi \right) \phi \left( h\right) , \end{aligned}$$where \(F_{CN}(\cdot )\) is the cdf of the contaminated normal distribution.

As a direct consequence of Proposition 1, in Appendix A we present an important corollary, which is useful for implementing the ECME algorithm.

3 The SMN censored linear regression model

3.1 The model

Consider first a linear regression model where the responses are observed with errors which are independent and identically distributed according to some SMN distribution. To be more precise, let us write

where the \(Y_i\) are responses, \({\varvec{\beta }}=(\beta _1,\ldots ,\beta _p)^{\top }\) is a vector of regression parameters and \(\mathbf {x}^{\top }_i=(x_{i1},\ldots ,x_{ip})\) is a vector such that \(x_{ij}\) is the value of the j-th explanatory variable for the subject i. By Definition 1, we have that \(Y_i\sim \text {SMN}(\mathbf {x}^{\top }_i{\varvec{\beta }},\sigma ^2,{\varvec{\nu }})\), for \(i=1,\ldots ,n\). We call it the SMN regression (SMN-R) model.

We are interested in the case where left-censored observations can occur. That is, the observations are of the form

\(i=1,\ldots ,n\), for some threshold point \(\kappa _{i}\). This is called the SMN-CR model. For convenience, we have chosen to work with the left censored case, but the results are easily extensible to other censoring types. If we make \(\kappa _i=0\) and assume that \(\epsilon _i \sim \text {N}(0,\sigma ^2)\), which corresponds to \(U_i=1\) in Definition 1, \(i=1,\ldots ,n\), we obtain the Tobit censored response model studied by Barros et al. (2010). In addition, if \(U_i\sim Gamma(\nu /2,\nu /2)\) we obtain the Student-t censored regression model developed by Massuia et al. (2014).

It is important to emphasize the difference between censored and truncated data. Citing Lee and Scott (2012), data are said to be censored when the exact values of measurements are not reported. For example, the needle of a scale that does not provide a reading over 200 kg will show 200 kg for all the objects that weigh more than the limit. Data are said to be truncated when the number of measurements outside a certain range is not reported.

Let \({\varvec{\theta }}=({\varvec{\beta }}^{\top },\sigma ^2,{\varvec{\nu }})^{\top }\) be the vector with all parameters in the SMN-CR model. Supposing that there are (possibly) m censored values of the characteristic of interest, we can partition the observed sample \(\mathbf {y}_\text {obs}\) in two subsamples of m censored and \(n-m\) uncensored values, such that \(\mathbf {y}_\text {obs}=\{\kappa _1,\ldots ,\kappa _m,y_{m+1},\ldots ,y_n\}\). Then, the log-likelihood function is given by

To estimate the parameters of the SMN-CR model, an alternative is to maximize this log-likelihood function directly, a procedure that can be quite cumbersome. Alternatively, the standard algorithm in this case is the so-called EM algorithm of Dempster et al. (1977) or some extension like the ECM Meng and Rubin (1993) or the ECME algorithms Liu and Rubin (1994). Our choice is to use the ECME algorithm, a classical, reliable, widespread tool to obtain maximum likelihood estimates.

3.2 Parameter estimation via an EM-type algorithm for the SMN-CR model

In this section we develop an EM-type algorithm for maximum likelihood estimation of the parameters in the SMN-CR model. In order to do this, we need a representation of the model in terms of missing data. First, note that using Definition 1, we have the following hierarchical representation:

If the observation i is censored, we can consider \(y_i\) as a realization of the latent unobservable variable \(Y_i \sim \text {SMN}(\mathbf {x}_i^{\top } {\varvec{\beta }}, \sigma ^2,{\varvec{\nu }})\), \(i=1,\ldots ,m\). The key to the development of our EM-type algorithm is to consider the complete-data \(\mathbf {z}=\{\mathbf {y}_\text {obs}, y_1,\ldots ,y_m,u_1,\ldots ,u_n\}\), that is, we treat the problem as if the missing data \(\mathbf {y}_{L}=\{y_1,\ldots ,y_m\}\) and \(\mathbf {u}=\{u_1,\ldots ,u_n\}\) were in fact observed. Then, using representation (15), we obtain the complete-data log-likelihood, given by

where \(f(\cdot |{\varvec{\nu }})\) is the density of the random variable U.

In what follows the superscript (k) indicates the estimate of the related parameter at stage k of the algorithm. In the E-step of the algorithm, we must obtain the so-called Q-function,

where \(\text {E}_{{\varvec{\theta }}^{(k)}}\) means that the expectation is being affected using \({\varvec{\theta }}^{(k)}\) for \({\varvec{\theta }}\). Observe that the expression of the Q-function is completely determined by the knowledge of the following expectations

as well as

Thus, dropping unimportant constants, the Q-function can be written in a synthetic form as

At each step, the conditional expectations \(\mathcal{E}_{si}\big ({\varvec{\theta }}^{(k)}\big )\) can be easily derived from the results given in Proposition 1. Thus, for an uncensored observation i, we have that \(Y_{\text {obs}_i}=Y_i \sim \text {SMN}(\mathbf {x}_i^{\top } {\varvec{\beta }},\sigma ^2,{\varvec{\nu }})\) and, therefore,

where \(\text {E}_{{\varvec{\theta }}^{(k)}}[U_i |y_i]\) can be obtained using results in Osorio et al. (2007). Thus, for example,

-

If \(Y_i \sim PVII(\mathbf {x}_i^{\top } {\varvec{\beta }},\sigma ^2,\nu ,\delta )\), we have

$$\begin{aligned} \text {E}_{{\varvec{\theta }}^{(k)}}[U_i |y_i]=\frac{\left( \nu +1\right) }{\delta +d^2\big ({\varvec{\theta }}^{(k)},y_i\big )}; \end{aligned}$$ -

If \(Y_i \sim SL(\mathbf {x}_i^{\top } {\varvec{\beta }},\sigma ^2,\nu )\), we have

$$\begin{aligned} \text {E}_{{\varvec{\theta }}^{(k)}}[U_i |y_i] = \frac{\Gamma \left( \nu +1.5,d^2\big ({\varvec{\theta }}^{(k)},y_i\big )/2\right) }{\Gamma \left( \nu +0.5,d^2\big ({\varvec{\theta }}^{(k)},y_i\big )/2\right) }; \end{aligned}$$ -

If \(Y_i \sim CN(\mathbf {x}_i^{\top } {\varvec{\beta }},\sigma ^2,\nu ,\gamma )\), we have

$$\begin{aligned} \text {E}_{{\varvec{\theta }}^{(k)}}[U_i |y_i] = \frac{1-\nu +\nu \gamma ^{1.5}e^{0.5\left( 1-\gamma \right) d^2\big ({\varvec{\theta }}^{(k)},y_i\big )}}{1-\nu +\nu \gamma ^{0.5}e^{0.5\left( 1-\gamma \right) d^2\big ({\varvec{\theta }}^{(k)},y_i\big )}}, \end{aligned}$$

where \(d\big ({\varvec{\theta }}^{(k)},y_i\big )=\left( y_i-\mathbf {x}_i^{\top }{\varvec{\beta }}^{(k)}\right) /\sigma ^{(k)}\).

For a censored observation i, we have \(Y_i \le \kappa _i\), so that

which can be obtained for the different distributions using the results given in Proposition 1, along with the results given in Eqs. (6) and (7) with \(r=1\).

When the M-step turns out to be analytically intractable, it can be replaced with a sequence of conditional maximization (CM) steps. The resulting procedure in known as ECM algorithmMeng and Rubin (1993). The ECME algorithmLiu and Rubin (1994), a faster extension of EM and ECM algorithm, is obtained by maximizing the constrained Q-function with some CM-steps that maximize the corresponding constrained actual marginal likelihood function, called CML-steps. Therefore, our EM-type algorithm (ECME) for the SMN-CR models can be summarized in the following way (see Appendix C for details):

E-step: Given \({\varvec{\theta }}={\varvec{\theta }}^{(k)}\), for \(i=1,\ldots ,n\);

-

If observation i is uncensored then, for \(s=0,1,2\), compute \(\mathcal{E}_{si}\big ({\varvec{\theta }}^{(k)}\big ) \) given in (18);

-

If observation i is censored then, for \(s=0,1,2\), compute \(\mathcal{E}_{si}\big ({\varvec{\theta }}^{(k)}\big ) \) in (19).

CM-step: Update \({{\varvec{\theta }}}^{(k)}\) by maximizing \(Q\big ({\varvec{\theta }}|{\varvec{\theta }}^{(k)}\big )\) over \({\varvec{\theta }}\), which leads to the following expressions,

CML-step: Update \(\nu ^{(k)}\) by maximizing the actual marginal log-likelihood function, obtaining

This process is iterated until some distance involving two successive evaluations of the actual log-likelihood \(\ell ({\varvec{\theta }}|\mathbf {y}_{obs})\), like

is small enough. We have adopted this strategy to update the estimate of \({\varvec{\nu }}\), by direct maximization of the marginal log-likelihood, circumventing the computation of \(\text {E}_{{\varvec{\theta }}^{(k)}}[\log (U_i)|y_{\text {obs}_i}]\) and \(\text {E}_{{\varvec{\theta }}^{(k)}}[\log (f(U_i |{\varvec{\nu }}))|y_{\text {obs}_i}]\).

4 Approximated standard errors for the fixed effects

Standard errors of the ML estimates can be approximated by the inverse of the observed information matrix, but there is generally no closed form, see Meilijson (1989) and Lin (2009). Considering \({\varvec{\theta }}=\left( {\varvec{\beta }},\sigma ^2,{\varvec{\nu }}\right) \), the empirical information matrix is defined as

where \(\mathbf {V}^{\top }\left( \mathbf {y}_{{obs}}|{\varvec{\theta }}\right) =\sum _{i=1}^{n}\mathbf {v}\left( \mathbf {y}_{{obs}_i}|{\varvec{\theta }}\right) \). It is noted from the result of Louis (1982) that, the individual score can be determined as

Thus, substituting the ML estimates of \({\varvec{\theta }}\) in (23), the empirical information matrix \(\mathbf {I}_{e}\left( {\varvec{\theta }}|\mathbf {y}_{obs}\right) \) is reduced to

where \(\widehat{\mathbf {v}}_i=\left( \widehat{\mathbf {v}}_{{\varvec{\beta }}i},\widehat{\mathbf {v}}_{\sigma ^2 i},\widehat{\mathbf {v}}_{{\varvec{\nu }}i}\right) \) is an individual score vector and

where \(\ell _{c}({\varvec{\theta }}|\mathbf {z}_i )\) is the log-likelihood formed from the single complete observation \(\mathbf {z}_i=(y_{\text {obs}_i},y_{i},u_i)^{\top }\) and \(\mathcal{E}_{si}({\varvec{\theta }}^{(k)})=\text {E}_{{\varvec{\theta }}^{(k)}}[U_i Y_i^s|y_{\text {obs}_i}]\). It is important notice that the values of Eq. (25) depend of the distribution of U. Thus for example:

-

For the Student-t distribution: We consider \(U\sim Gamma(\nu /2,\delta /2)\), with \(\nu >0\), then

$$\begin{aligned} \widehat{\mathbf {v}}_{\nu i}= & {} -\psi \left( \frac{\widehat{\nu }}{2}\right) +\frac{1}{2}\left( \log \left( \frac{\widehat{\nu }}{2}\right) +1\right) \\&+\frac{1}{2}\left( \text {E}\left[ \log \left( U_i\right) |\mathbf {y}_{{obs}_i},\widehat{{\varvec{\theta }}}\right] -\mathcal{E}_{0 i}\big (\widehat{{\varvec{\theta }}}\big )\right) , \end{aligned}$$where \(\psi \left( x\right) \) represents the digamma function of x.

-

For the Slash distribution: We consider \(U \sim Beta(\nu ,1)\) with positive shape parameter \(\nu \), then

$$\begin{aligned} \widehat{\mathbf {v}}_{\nu i}= & {} \frac{1}{\widehat{\nu }}+ \text {E}\left[ \log \left( U_i\right) |\mathbf {y}_{{obs}_i},\widehat{{\varvec{\theta }}}\right] . \end{aligned}$$

It is important to stress that the SE of \(\nu \) depends heavily on the calculation of \(\text {E}\left[ \log \left( U_i\right) |\mathbf {y}_{{obs}_i},\widehat{{\varvec{\theta }}}\right] \), which relies on computationally intensive Monte Carlo integrations. In our analysis, we focus solely on comparing the SE of \(\beta \) and \(\sigma ^2\).

5 Simulation studies

5.1 Robustness of the EM estimates (simulation study 1)

The goal of this section is to compare the performance of the estimates for some censored regression models in the presence of outliers on the response variable. We consider the cases normal, Student-t, contaminated normal and slash, and denote them by N-CR, T-CR, CN-CR and SL-CR, respectively. The computational procedures were implemented using the "R" software R Core Team (2015).

We performed a simulation study based on the N-CR model. Specifically, we considered model (12) with \(\mathbf {x}_i^{\top }=(1,x_i)\) and \(\varepsilon _i\sim \text {N}(0, \sigma ^2)\), \(i=1,\ldots ,n\). We generated 1000 artificial samples of size \(n=300\), considering \({\varvec{\beta }}^{\top }=\left( \beta _1,\beta _2\right) =\left( 1,4\right) ,\sigma ^2=2\) and fixing the left censoring level at \(p=8,~20~\text {and}~35\,\%\) (that is, 8, 20 and \(35\,\%\) of the observations in each data set were left censored, respectively). We generated independently the values \(x_i\), for \(i=1,\ldots ,n\), from a uniform distribution on the interval (2, 20). These values were fixed throughout the simulations.

To assess how much the EM estimates are influenced by the presence of outliers, we replaced the observation \(y_{150}\) by \(y_{150}(\vartheta )=y_{150}-\vartheta \), with \(\vartheta =1,2,\ldots ,10\). Let \(\widehat{\beta }_{i}(\vartheta )\) and \(\widehat{\beta }_{i}\) be the EM estimates of \(\beta _i\) with and without contamination, respectively, \(i=1,2\). We are particularly interested in the relative changes

We define the relative changes for \(\sigma ^2\) analogously.

For each replication we obtained the parameter estimates with and without outliers, under the following models: N-CR, T-CR and SL-CR, both with \(\nu =3\), and CN-CR with \({\varvec{\nu }}^{\top }= (\xi ,\gamma )=(0.3,0.3)\). Table 1 and Fig. 1 depict the average values of the relative changes across all samples and different censoring levels. In the N-CR case, we observe that influence increases dramatically when \(\vartheta \) increases. However, for the SMN-CR models with heavy tails, as the T-CR and the SL-CR, these measures vary little, which indicates that they are more robust than the N-CR model in the presence of discrepant observations. For the CN-CR model with censoring level \(p=20\,\%\) and \(35\,\%\) we can observe that the relative change increases as \(\vartheta \) increases, specially for the parameter \(\beta _1\).

5.2 Asymptotic properties (simulation study 2)

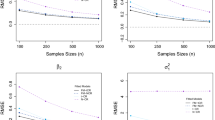

We also conducted a simulation study to evaluate the finite-sample performance of the parameter estimates. We generated artificial samples from the SMN-CR model (12), with \(\mathbf {x}_i^{\top }=(1,x_{i})\), \(i=1,\ldots ,n\).

We considered the censoring levels \(p=10\), 25 and \(45\,\%\). The sample sizes were fixed at \(n=50\), 100, 150, 200, 300, 400, 500, 700 and 800. The true values of the regression parameters were taken as \(\beta _1=1.5,~\beta _2=4\) and \(\sigma ^2=0.5\). As considered in Labra et al. (2012), the variable \(x_i\) ranges from 0.1 to 20 and these values were maintained throughout the experiment. For each combination of parameters, sample sizes and censoring levels, we generated 1000 samples from the SMN-CR model, under four different situations: N-CR, T-CR \((\nu =3)\), SL-CR \((\nu =4)\) and CN-CR \(\left( {\varvec{\nu }}^{\top }=(0.5,0.5)\right) \).

In order to analyze the performance of the estimates obtained using our proposed EM-type algorithm, we computed the bias and the mean squared error (MSE) for each combination of sample size, level of censoring and parameter value. For \(\beta _i\), they are given, respectively, by

where \(\widehat{\beta }^{(j)}_i\) is the estimate of \(\beta _i\) for the j-th sample. We define bias and MSE for \(\sigma ^2\) in the same manner. The result considering \(p=10\,\%\) is shown in Fig. 2. We can see a pattern of convergence to zero of the bias and MSE when n increases. As a general rule, we can say that bias and MSE tend to approach to zero when the sample size increases indicating that the estimates based on the proposed EM-type algorithm do provide good asymptotic properties. This same pattern of convergence to zero is repeated considering different levels of censoring p (see Appendix D for details).

5.3 Consistency of the estimates of the standard errors for the MLE’s of the parameters (simulation study 3)

Now we show, via simulation study, that the method suggested in Sect. 4 to approximate the SE of the MLE of the regression parameters has good asymptotic properties. We fixed a SMN-CR model (N-CR and T-CR or SL-CR with \(\nu =4\) respectively) and a censoring level (5, 10, 20, 30 or 50 %). For each one of these fifteen combinations of model and censoring level, we generated 1000 samples of size \(n=100\) with \(\beta _1=2\), \(\beta _2=1\) and \(\sigma ^2=1\). For each sample, we obtained the MLE’s of \({\varvec{\theta }}=(\beta _1,\beta _2,\sigma ^2)\), the estimates of their SE using the technique proposed in Sect. 4 and an approximate 95 % confidence interval assuming asymptotic normality. Table 2 presents the sample standard errors of \(\widehat{\theta }\), i.e., the value

The results from this table show a reasonable MC coverage for both \({\varvec{\beta }}\) and \(\sigma ^2\), although the values for \(\sigma ^2\) tend to be lower the nominal level \((95\,\%)\). Taking into account the moderate sample size (n=100), we consider these results quite satisfactory.

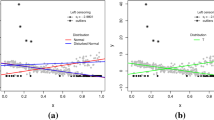

6 Application

In this section, we provide an application of the results derived in the previous sections using the data described by Mroz (1987). The data set consists of 753 married white women with ages between 30 and 60 years old in 1975, with 428 women that worked at some point during that year. The response variable is the wage rate, which represents a measure of the wage of the housewife known as the average hourly earnings. It is important to stress that if the wage rates are set equal to zero, these wives did not work in 1975. Therefore, these observations are considered left censored at zero. Four predictor variables were considered: the wife’s age, years of schooling, the number of children younger than six years old in the household and the number of children between six and nineteen years old. These data were analyzed by Arellano-Valle et al. (2012) using a truncated Student-t regression model. We analyzed it with the aim of providing additional inferences by using the SMN distributions in the context of censored models. We fitted a regression model with an intercept parameter \(\beta _1\) and applied the EM-type algorithm for censored data explained in Sect. 3.2, considering again the N-CR, T-CR, SL-CR and CN-CR models for comparative purposes.

Table 3 shows the parameter estimates, together with their corresponding SE. Table 4 presents some model selection criteria, together with the values of the log-likelihood. The AIC Akaike (1974), BIC Schwarz (1978) and EDC Bai et al. (1989) criteria indicate that the three models with longer tails than the N-CR model seem to produce more accurate estimates. The SE of the T-CR, SL-CR and CN-CR models are smaller than that of the N-CR model.

In order to identify atypical observations and/or model misspecification, we analyzed the transformation of the martingale residual, \(r_{MT_i}\), proposed by Barros et al. (2010). These residuals are defined by

\(i=1,\ldots ,n\), where \({r_{M_i}=\delta _i + \log S(y_i,\widehat{{\varvec{\theta }}}) }\) is the martingale residual proposed by Ortega et al. (2003)—see more details in Therneau et al. (1990), with \(\delta _i=0,1\) indicating whether the i-th observation is censored or not, respectively, \(\text {sign}(r_{M_i})\) denoting the sign of \(r_{M_i}\) and \(S\big (y_i,\widehat{{\varvec{\theta }}}\big )=P_{\widehat{{\varvec{\theta }}}}(Y_i > y_i)\) representing the survival function evaluated at \({y}_i\), supposing that it is being affected using the EM estimate \(\widehat{{\varvec{\theta }}}\) for \({\varvec{\theta }}\).

The plots of \(r_{MT_i}\) with generated confidence envelopes are presented in Fig. 3. From this figure, we can see clearly that the SMN-CR models with heavy tails fit better the data than the N-CR model, since, in that cases, there are fewer observations which lie outside the envelopes.

The robustness of the three models with longer tails than the N-CR model can be assessed by considering the influence of a single outlying observation on the EM estimate of \({\varvec{\theta }}\). In particular, we can assess how much the EM estimate of \({\varvec{\theta }}\) is influenced by a change of \(\nabla \) units in a single observation \(y_{i}\). Replacing \(y_{i}\) by \(y_{i}(\nabla )=y_{i}+\nabla \), we define \(\widehat{\beta }_{i}(\nabla )\) as the EM estimate of \(\beta _i\) after contamination, \(i=1,\ldots ,5\), and analyze the behavior of the relative changes, as we did in Sect. 5.1. In this study we contaminated the observations \(y_{750}\) (censored) and \(y_7\) (uncensored), considering \(\nabla \in \{0,1,\ldots ,10\}\).

Figure 4 displays the results of the relatives changes of the estimates for different values of \(\nabla \). We omitted the plot concerning \(\beta _2\) because the relative changes patterns are not so distinguishable in this case. As expected, the estimates from the models with longer tails than the N-CR model are less affected by variations on \(\nabla \), no matter if the observation is censored or not. Thus, it is clear that the SMN-CR models with heavy tails are more robust, providing more accurate estimates when the data have departures from normality.

7 Conclusions

We have proposed a robust approach to linear regression models with censored observations based on SMN distributions, called SMN-CR models. This offers a high degree of flexibility, allowing us to deal properly with censored data in the presence of outliers. A novel ECME algorithm to obtain approximated maximum likelihood estimates is developed using formulas for the moments of the truncated SMN distribution, leading to closed-form expressions for the E-step. We applied our methodology to real data set (freely downloadable from "R") as well as to simulated data, in order to illustrate how the procedures can be used to evaluate model assumptions, identify outliers, and obtain robust parameter estimates. From these results, it is encouraging that the use of SMN-CR models with heavy tails offer a better fitting, a better protection against outliers and more precise inferences than the N-CR model.

Although the SMN-CR models considered here have shown great flexibility to model symmetric data, its robustness against outliers can be seriously affected by the presence of skewness. Recently, Lachos et al. (2010) proposed a remedy to accommodate skewness and heavy-tailedness simultaneously, using SMSN distributions. We conjecture that our methodology can be used under CR models, and should yield satisfactory results at the expense of additional complexity in implementation. An in-depth investigation of such extensions is beyond the scope of the present paper, but it is an interesting topic for further research. Finally, the proposed EM-type algorithm has been coded and implemented in the "R" package "SMNCensReg" Garay et al. (2013), which is available for download at CRAN repository. A great advantage of this package is that all the censoring possibilities are taken into account: left, right and interval.

References

Akaike H (1974) A new look at the statistical model identification. Autom Control IEEE Trans 19:716–723

Arellano-Valle R, Castro L, González-Farías G, Muñoz-Gajardo K (2012) Student-t censored regression model: properties and inference. Stat Methods Appl 21:453–473

Arellano-Valle R. B (1994) Distribuições elípticas: propriedades, inferência e aplicações a modelos de regressão. Ph.D. thesis, Instituto de Matemática e Estatística, Universidade de São Paulo, in portuguese

Bai ZD, Krishnaiah PR, Zhao LC (1989) On rates of convergence of efficient detection criteria in signal processing with white noise. Inform Theory IEEE Trans 35:380–388

Barros M, Galea M, González M, Leiva V (2010) Influence diagnostics in the Tobit censored response model. Stat Methods Appl 19:716–723

Dempster A, Laird N, Rubin D (1977) Maximum likelihood from incomplete data via the EM algorithm. J R Stat Soc Ser B 39:1–38

Fang KT, Zhang YT (1990) Generalized multivariate analysis. Springer, Berlin

Garay A. M, Lachos V, Massuia M. B (2013) SMNCensReg: fitting univariate censored regression model under the scale mixture of normal distributions. R package version 2.2. http://CRAN.R-project.org/package=SMNCensReg

Genç AI (2012) Moments of truncated normal/independent distributions. Stat Pap 54:741–764

Greene W (2012) Econometric analysis. Prentice Hall, New York

Ibacache-Pulgar G, Paula G (2011) Local influence for Student-t partially linear models. Comput Stat Data Anal 55:1462–1478

Kim HJ (2008) Moments of truncated Student- distribution. J Korean Stat Soc 37:81–87

Labra FV, Garay AM, Lachos VH, Ortega EMM (2012) Estimation and diagnostics for heteroscedastic nonlinear regression models based on scale mixtures of skew-normal distributions. J Stat Plan Inference 142:2149–2165

Lachos VH, Ghosh P, Arellano-Valle RB (2010) Likelihood based inference for skew-normal independent linear mixed models. Stat Sin 20:303–322

Lange KL, Little R, Taylor J (1989) Robust statistical modeling using t distribution. J Am Stat Assoc 84:881–896

Lee G, Scott C (2012) EM algorithms for multivariate Gaussian mixture models with truncated and censored data. Comput Stat Data Anal 56:2816–2829

Liu C, Rubin DB (1994) The ECME algorithm: a simple extension of EM and ECM with faster monotone convergence. Biometrika 80:267–278

Lin TI (2010) Robust mixture modeling using multivariate skew tădistributions. Stat Comput 20:343–356

Louis TA (1982) Finding the observed information matrix when using the EM algorithm. J R Stat Soc 44:226–233

Massuia MB, Cabral CRB, Matos LA, Lachos VH (2014) Influence diagnostics for Student-t censored linear regression models. Statistics 85:1–21. doi:10.1080/02331888.2014.958489

Meilijson I (1989) A fast improvement to the EM algorithm to its own terms. J R Stat Soc Ser B 51:127–138

Meng XL, Rubin BD (1993) Maximum likelihood estimation via the ECM algorithm: a general framework. Biometrika 80:267–278

Meza C, Osorio F, la Cruz RD (2012) Estimation in nonlinear mixed-effects models using heavy-tailed distributions. Stat Comput 22:121–139

Mroz TA (1987) The sensitivity of an empirical model of married women’s hours of work to economic and statistical assumptions. Econometrica 55:765–799

Ortega EMM, Bolfarine H, Paula GA (2003) Influence diagnostics in generalized log-gamma regression models. Comput Stat Data Anal 42:165–186

Osorio F, Paula GA, Galea M (2007) Assessment of local influence in elliptical linear models with longitudinal structure. Comput Stat Data Anal 51:4354–4368

R Core Team (2015) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, http://www.R-project.org

Schwarz G (1978) Estimating the dimension of a model. Ann Stat 6:461–464

Therneau TM, Grambsch PM, Fleming RT (1990) Martingale-based residuals for survival models. Biometrika 77(1):147–160

Villegas C, Paula G, Cysneiros F, Galea M (2012) Influence diagnostics in generalized symmetric linear models. Comput Stat Data Anal 59:161–170

Wei CG, Tanner MA (1990) Posterior computations for censored regression data. J Am Stat Assoc 85:829–839

Wu L (2010) Mixed effects models for complex data. Chapman & Hall/CRC, Boca Raton

Acknowledgments

We thank the editor, associate editor, and two referees whose constructive comments led to an improved presentation of the paper. The research of Víctor H. Lachos was supported by Grant 305054/2011-2 from Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPq-Brazil) and by Grant 2014/02938-9 from Fundação de Amparo à Pesquisa do Estado de São Paulo (FAPESP-Brazil). Celso R. B. Cabral was supported by CNPq (via the Universal and CT-Amazônia projects) and CAPES (via Project PROCAD 2007). The research of Aldo M. Garay is supported by Grant 161119/2012-3 from CNPq and by Grant 2013/21468-0 from FAPESP-Brazil and Heleno Bolfarine was supported by CNPq.

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix 1: Lemmas and corollary

The following Lemmas, provided by Kim (2008) and Genç (2012), are useful for evaluating some integrals used in this paper as well as for the implementation of the proposed EM-type algorithm.

Lemma 1

If \(Z\sim \text {TN}_{(a,b)}\left( 0,1\right) \), then

for \(k=-1,0,1,2,\ldots \)

Proof: See Lemma 2.3 in Kim (2008).

Lemma 2

Let U be a positive random variable. Then \({F}_{SMN}\left( a\right) =E_U\left[ \Phi \left( aU^{\frac{1}{2}}\right) \right] ,\) where \({F}_{SMN}(\cdot )\) denotes the cdf of a standard SMN random variable, that is, when \(\mu =0\) and \(\sigma ^2=1\).

Proof: See Lemma 3 in Genç (2012).

The following Corollary is a direct consequence of Proposition 1 given in Sect. 2.

Corollary 1

Let \(Y \sim \text {SMN}(\mu ,\sigma ^2, {\varvec{\nu }})\) with scale factor U and \(\mathcal{A}=(\text {a},\text {b})\). Then, for \(r \ge 1\),

where \(X \sim \text {SMN }(0,1,{\varvec{\nu }})\) and \(\mathcal{A}^*=\left( a^*,b^*\right) \), with \(a^*=\left( a-\mu \right) /\sigma \) and \(b^*=\left( b-\mu \right) /\sigma \).

Appendix 2: Derivations of quantities \(\text {E}_{\phi }\left( r,h\right) \) and \(\text {E}_{\Phi }\left( r,h\right) ~\)for SMN distributions

In this Appendix, we calculate the expressions for the expected values \(\text {E}_{\phi }\left( r,h\right) \) and \(\text {E}_{\Phi }\left( r,h\right) ,\) for \(r,h\ge 0\), given in Proposition 1.

1.1 Pearson type VII distribution (and the Student-t distribution)

In this case \(U\sim Gamma(\nu /2,\delta /2)\), with \(\nu >0~\text {and}~\delta >0.\) To facilitate the notation, let us make \(\alpha _1=(\nu +2r)/2\) and \(\alpha _2=(h^2+\delta )/2\). Then,

where the integrand in (26) is the pdf of a random variable \(U'\) with distribution \(Gamma\left( \alpha _1,\alpha _2\right) \).

where in (27) the expectation is computed with respect to \(U' \sim Gamma\left( \alpha _1,\delta /2\right) \) and \(F_{PVII}(\cdot )\) represents the cdf of the Pearson type VII distribution. Then, the result follows from Lemma 2. When \(\delta =\nu \), i.e., the Student-t distribution, we have that \(\text { E}_{\phi }\left( r,h\right) \) and \(\text { E}_{\Phi }\left( r,h\right) \) are given by

1.2 Slash distribution

In this case \(U \sim Beta(\nu ,1)\), with positive shape parameter \(\nu \), and

thus, considering \(\gamma ^{*}\left( a,x\right) =\int ^{x}_{0}e^{-t}t^{a-1}dt\), we obtain Eq. (28).

where the integrand in (29) is the expectation of the random variable \(\Phi (h U'^{\{ \frac{1}{2} \} })\), with \({U'}\sim ~\text {Beta}(\nu +r,1)\). Using Lemma 2, we obtain Eq. (30), where \(F_{SL}(\cdot )\) is the cdf of the slash distribution.

1.3 Contaminated normal distribution

where \(F_{CN}(\cdot )\) is the cdf of the contaminated normal distribution.

Appendix C. Details of the EM-type algorithm

In this Appendix, we derive the EM algorithm Eqs. (20)–(22). Let \({\varvec{\theta }}=({\varvec{\beta }}^{\top }, \sigma ^2, {\varvec{\nu }})\) be the vector with all parameters in the SMN-CR model and consider the notation given in Sect. 3.2. Denoting the complete-data likelihood by \(L(\cdot |\mathbf {y}_\text {obs}, \mathbf {y}_{L},\mathbf {u})\) and pdf’s in general by \(f(\cdot )\), we have that

Dropping unimportant constants, the complete-data log-likelihood function is given by

The Q-function at the E-step of the algorithm is given by

so we have

The expectations \(\mathcal{E}_{si}\big ({\varvec{\theta }}^{(k)}\big ) = \text {E}_{{\varvec{\theta }}^{(k)}}[U_i Y_i^s|y_{\text {obs}_i}],\,\,\, s=0,1,2\), used in the E-step of the algorithm, are computed considering the two possible cases: (i) when the observation i is uncensored and (ii) otherwise. In the former case we solve the problem using results obtained by Osorio et al. (2007). In the later case we use Proposition 1. Then, we have

In the CM-step, we take the derivatives of \(Q\big ({\varvec{\theta }}|{{\varvec{\theta }}}^{(k)}\big )\) with respect to \({\varvec{\beta }}\) and \(\sigma ^2\), i.e.,

The solution of \(\displaystyle \frac{\partial Q\big ({\varvec{\theta }}|{\varvec{\theta }}^{(k)}\big )}{\partial {\varvec{\beta }}} = 0\) is

The solution of \(\displaystyle \frac{\partial Q\big ({\varvec{\theta }}|{\varvec{\theta }}^{(k)}\big )}{\partial \sigma ^2} = 0\) is

For the CML-step, we estimate \({\varvec{\nu }}\) by maximizing the marginal log-likelihood, circumventing the (in general) complicated task of computing \(\text {E}_{{\varvec{\theta }}^{(k)}}[\log \left( U_i\right) |y_{\text {obs}_i}]\) and \(\text {E}_{{\varvec{\theta }}^{(k)}}[\log \left( f(U_i |{\varvec{\nu }})\right) |y_{\text {obs}_i}]\), i.e.,

Appendix D. Complementary results of the simulation studies: asymptotic properties

Figures 5 and 6 depict the average bias and the average MSE of \(\widehat{\beta }_1\), \(\widehat{\beta }_2\) and \(\widehat{\sigma ^2}\) for the levels of censoring \(p=25\,\%\) and \(p=45\,\%\), respectively.

Rights and permissions

About this article

Cite this article

Garay, A.M., Lachos, V.H., Bolfarine, H. et al. Linear censored regression models with scale mixtures of normal distributions. Stat Papers 58, 247–278 (2017). https://doi.org/10.1007/s00362-015-0696-9

Received:

Revised:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00362-015-0696-9