Abstract

This paper proposes an improved calibration method for multi-camera line structured light vision systems that addresses discontinuities, and even faults, in the overlapping measurement areas after the camera coordinate systems are unified. First, a two-dimensional target is placed in different poses in the overlapping area of two adjacent cameras to obtain an initial matching matrix between the adjacent camera coordinate systems. Then, exploiting the characteristics of the ring-shaped measurement field, an optimization objective function is constructed to refine the internal and external camera parameters and achieve the best matching of the overlapping areas. Experimental results show that, compared with the traditional matching method, the proposed method reduces the matching error of adjacent cameras by about 66.2\(\%\) and the accumulated error by about 91.8\(\%\), and improves the overall measurement accuracy by a factor of 4.5.

1 Introduction

Visual measurement is an important means of industrial measurement. Current visual measurement methods fall into two categories: passive and active. Passive methods include binocular stereo vision [1] and multi-view geometry [2]; however, when the object surface has little texture, the number of matched image point pairs drops and measurement accuracy degrades severely. Active methods project feature information onto the object under test, so they apply to a wider range of scenes. For example, the laser speckle coding technology used by Kinect V1 forms spots of different diameters when the laser strikes surfaces at different depths, and depth information can be calculated from this change; however, the speckle is affected by ambient light. The Time-of-Flight (ToF) principle [3] measures the distance between a sensor and an object from the time difference between the emission of a signal and its return to the sensor after reflection by the object. ToF systems can be very compact and suitable for mobile applications, but they still suffer from low resolution and high power consumption. Digital fringe projection [4] is a high-precision structured light measurement method. However, when the surface height of the object changes sharply or is discontinuous, as on an engine camshaft, the fringes captured by the camera become misaligned and the fringe order becomes ambiguous, which leads to inaccurate contour calculation. Line structured light measurement is a non-contact technique based on laser triangulation [5, 6]. It offers high precision and fast measurement speed and is well suited to measuring shaft parts.
Zhou [7] successfully applied line structured light measurement to the detection and measurement of rail cross-sections. Miao [8] calculated shaft diameters by projecting line structured light onto shaft parts. However, because of its limited field of view, a single line structured light measurement system often has to cooperate with a multi-axis motion system to scan the whole contour of a workpiece [9]. This is time-consuming and laborious, and because the overall geometric parameters are estimated from local information, the accuracy is relatively poor. Therefore, several researchers [10,11,12,13] have combined multiple single-line structured light measurement systems (MLSVS) to measure the full profile of complex structures, which greatly improves measurement efficiency and the accuracy with which the overall structural parameters are estimated.

Little research directly addresses the global calibration of multi-camera line structured light vision systems, but the ideas behind the global calibration of dual-camera and multi-camera systems can be borrowed. The key step in calibrating a multi-camera line structured light vision system is to unify the fields of view of the cameras, that is, to find the transformation between each local coordinate system and the target world coordinate system, which is exactly the goal of dual- and multi-camera global calibration. By the dimension of the target, these methods can be divided into 1D, 2D, and 3D methods. Wang [14] proposed an accurate multi-camera calibration algorithm with one-dimensional objects based on convex relaxation. Two-dimensional targets, such as chessboards [15] or circular targets [16], are flexible and low-cost, and are widely favored. Cai [17] proposed replacing traditional targets with three phase-shifting circular gratings to improve center extraction accuracy under defocus. Usamentiaga [18] used a calibration plate with protruding cylinders: a laser line is projected onto the cylinders, and global calibration between multiple cameras is completed from the cylinder profile information. However, before each use the plate must be measured in advance with a coordinate measuring machine to ensure positioning accuracy, which is impractical for industrial field measurement, and the plate is bulky and difficult to carry. To reduce the cost of the 3D target and improve portability and ease of operation, Sun [19] achieved three-dimensional calibration with two high-precision cylinders of known radius and length, but the extraction accuracy of the cylinders' projected profiles is affected by illumination conditions.
Among current global calibration techniques, two-dimensional calibration is still the mainstream and also serves as the benchmark against which the accuracy of novel calibration techniques is compared. The methods above mostly address multiple cameras that share some overlapping area. Matching non-overlapping fields of view can be regarded as an extension of the overlapping case, and most global calibration techniques for non-overlapping fields of view also apply to overlapping ones, so they are also worth considering. Wei [20] used a manipulator to move the structured light projection to multiple known positions and solved for the relationship between the structured light equations and the external parameters of each camera to calibrate the system. Yang [21] designed a non-overlapping camera calibration device containing two fixed chessboard targets. An [22] proposed an omnidirectional camera calibration method based on a ChArUco board. Despite their success, these methods remain limited because they rely on large targets, auxiliary equipment, or complex procedures. To reduce experimental cost, Van Crombrugge [23] proposed a multi-camera calibration method based on Gray code projection. However, because the patterns must be projected onto a screen, the method suits cameras arranged in parallel but cannot handle cameras arranged in a ring, so its flexibility is limited. Moreover, its accuracy is lower than that of methods using high-precision targets or high-precision motion devices [24].

Whether a method matches overlapping or non-overlapping areas, if feature points could be extracted exactly and the imaging system were manufactured perfectly, there would be no camera calibration error. In practice such error always exists, and it accumulates as the number of cameras increases, producing uneven joins or even faults in the overlapping areas. To address this issue, Jiang [25] proposed replacing the checkerboard target with an LCD screen, whose better contrast helps extract feature points accurately. However, further improving accuracy with this approach requires a very high-resolution LCD screen, which is costly. Many researchers also consider that blindly improving the manufacturing accuracy of the imaging system is very expensive, and therefore focus on back-end data processing. Huang [26] proposed a point cloud correction algorithm that optimizes the global point cloud coordinates obtained by different individual systems, successfully reducing the error and improving the coincidence of the stitched point clouds. However, although such back-end processing improves point cloud smoothness, it does not address the overall measurement accuracy, and it ignores improvements in the calibration accuracy of the imaging system itself; even a low-end imaging system has considerable room for improving calibration accuracy. With the rapid development of deep learning in recent years, Chen [27] achieved high-precision target extraction with a deep CNN, thereby improving calibration accuracy. Charco [28] predicted camera transformations in multi-view settings with a variant of the AlexNet network. Although this approach is of some reference value for unifying multi-camera coordinate systems, the accuracy of the predicted transformations is limited.

To address the discontinuities and faults in overlapping fields of view described above, this paper studies the calibration of multi-camera line structured light vision systems in annular measurement fields and proposes a loop closure calibration scheme based on the characteristics of the annular field. First, the relative poses of the sensors are determined preliminarily with a two-dimensional target. Then, an overall optimization equation for the measurement field is constructed from the characteristics of the annular measurement field, and the relative camera poses and the nonlinear errors are optimized jointly. Finally, the optimization results yield the best matching of the overlapping areas. The method improves the overall accuracy of the multi-camera line structured light vision system, does not rely on complex equipment, and is suitable for fast multi-field calibration of multi-line structured light measurement systems in industrial settings.

The remainder of this paper is organized as follows: Sect. 2 introduces the system setup and measurement model; Sect. 3 presents the calibration principle; Sect. 4 describes the experiments and analysis; and Sect. 5 concludes.

2 System setup and measurement model

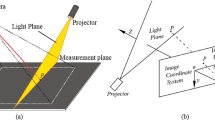

The multi-camera line structured light vision system studied in this paper consists of multiple line structured light sensors, each comprising a camera and a line structured light generator. The sensors are arranged around the object to be measured at 120° intervals, as shown in Fig. 1.

The measurement system contains three line structured light probe coordinate systems, \(O_{c_1}-X_{c_1}Y_{c_1}Z_{c_1}\), \(O_{c_2}-X_{c_2}Y_{c_2}Z_{c_2}\), and \(O_{c_3}-X_{c_3}Y_{c_3}Z_{c_3}\). The coordinate system of camera 1, \(O_{c_1}-X_{c_1}Y_{c_1}Z_{c_1}\), is taken as the world coordinate system of the system, and the \(Z_c\) axis of each coordinate system is the optical axis of the corresponding camera.

The light emitted by each line structured light generator intersects the surface of the cylinder to produce an arc-shaped light strip, which is captured by the camera. The measurement system processes the light strip images and uses laser triangulation to obtain surface measurements. The entire system is mounted on a vertical lift table for push-broom measurement.

As shown in Fig. 1, \(P_c(x, y, z)\) is the point where the line structured light intersects the outer surface of the object to be measured, expressed in the camera coordinate system, and \(P(u, v, 1)\) is its homogeneous pixel coordinate on the camera CCD. The camera follows the pinhole imaging model, so \(P_c\) and P satisfy the following relationship:
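A standard form of this relationship, assuming the usual pinhole model of Zhang's method [15] and the parameters defined in the next paragraph, is (here \(\rho = z\)):

\[
\rho \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = A \begin{bmatrix} x \\ y \\ z \end{bmatrix}, \qquad
A = \begin{bmatrix} f/d_x & \gamma & u_0 \\ 0 & f/d_y & v_0 \\ 0 & 0 & 1 \end{bmatrix}
\]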

In formula (1), \(\rho\) is the scale factor and A is the camera's intrinsic parameter matrix; \(\gamma\) describes the skew between the u-axis and the v-axis (ideally \(\gamma = 0\)); \((u_0, v_0)\) are the coordinates of the principal point in the pixel coordinate system; f is the camera focal length; and \((d_x, d_y)\) is the CCD pixel size. The parameters in formula (1) are calibrated with Zhang's camera calibration method [15]. Once the camera parameters are known, the structured light plane must be calibrated. The structured light plane equation in the camera coordinate system is:
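Assuming the usual implicit form, with the light plane coefficients (a, b, c, d) named in the next paragraph, the plane is:

\[
a x + b y + c z + d = 0
\]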

In formula (2), (a, b, c, d) are the light plane coefficients of the structured light. The plane equation is obtained by fitting the camera-frame coordinates of points on multiple structured light strips. Because the system contains multiple structured light probes, this paper focuses on calibrating each line structured light coordinate system into a common coordinate system.
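Both steps, fitting the light plane and then triangulating a strip pixel, can be sketched in Python with NumPy. This is an illustrative sketch, not the authors' MATLAB code: the plane is fitted by SVD (total least squares), lens distortion is ignored, and the function names `fit_light_plane` and `triangulate_pixel` are hypothetical.

```python
import numpy as np


def fit_light_plane(points):
    """Fit a*x + b*y + c*z + d = 0 to an (N, 3) array of camera-frame points.

    Total least squares: the unit normal (a, b, c) is the right singular vector
    of the centred points with the smallest singular value.
    """
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    _, _, vt = np.linalg.svd(pts - centroid)
    normal = vt[-1]
    d = -normal @ centroid
    return normal[0], normal[1], normal[2], d


def triangulate_pixel(u, v, A, plane):
    """Laser triangulation: intersect the ray through pixel (u, v) with the light plane.

    A is the 3x3 intrinsic matrix of formula (1); lens distortion is ignored.
    Returns the 3D point in the camera coordinate system.
    """
    a, b, c, d = plane
    ray = np.linalg.solve(A, np.array([u, v, 1.0]))  # back-projected direction with z = 1
    normal = np.array([a, b, c])
    s = -d / (normal @ ray)  # solve normal . (s * ray) + d = 0; s equals the depth z
    return s * ray
```

Because the back-projected ray has unit depth, the scale `s` found on the plane equals the depth z, i.e. the factor \(\rho\) of formula (1); the sign ambiguity of the SVD normal cancels in the ratio `-d / (normal @ ray)`.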

3 Calibration of improved multi-camera line structured light vision system

3.1 Preliminary calibration of multi-camera line structured light vision system

In this paper, the traditional calibration method is implemented with the stereo camera calibration toolbox of MATLAB R2019b [29]. A two-dimensional circular-dot target is placed in the overlapping field of view of two adjacent cameras, and through this target the coordinate systems of the three structured light probes are transformed into the coordinate system of camera 1. The calibration is illustrated schematically in Fig. 2.

Taking the transformation relationship between camera 1 and camera 2 as an example, the pixel coordinates of camera 1 and camera 2 satisfy the following relationship:
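Assuming each camera obeys the pinhole relation of formula (1) and that \(RT_{1j}\) is the \(3\times 4\) matrix \([R\,|\,t]\) mapping target-1 coordinates into camera j, this relationship can be written as:

\[
\rho_1 \begin{bmatrix} u_1 \\ v_1 \\ 1 \end{bmatrix} = A_1\, RT_{11} \begin{bmatrix} x_w \\ y_w \\ z_w \\ 1 \end{bmatrix}, \qquad
\rho_2 \begin{bmatrix} u_2 \\ v_2 \\ 1 \end{bmatrix} = A_2\, RT_{12} \begin{bmatrix} x_w \\ y_w \\ z_w \\ 1 \end{bmatrix}
\]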

In formula (3), \((x_w, y_w, z_w)\) is the world coordinate of a point on the target, \((u_1, v_1)\) is its pixel coordinate in camera 1, and \((u_2,v_2)\) is its pixel coordinate in camera 2. \(\rho _1\) and \(\rho _2\) are the scale factors of camera 1 and camera 2. \(RT_{11}\) is the transformation from the local coordinate system of target 1 to the camera coordinate system of camera 1, and \(RT_{12}\) is the transformation from the local coordinate system of target 1 to the camera coordinate system of camera 2. \(A_1\) and \(A_2\) are the intrinsic parameter matrices of camera 1 and camera 2.

Each variable in formula (3) can be obtained with Zhang's camera calibration method [15]. Table 1 lists the coordinate transformations involved in the calibration process.

\(RT_{ij}\) in Table 1 is the transformation matrix from the world coordinate system where target i is located to the camera coordinate system of camera j.

The world coordinate system coincides with the camera coordinate system of camera 1. From the relationships in Table 1, the transformation matrix from the camera 2 coordinate system to the world coordinate system is:

So the transformation matrix from the camera 3 coordinate system to the world coordinate system is:
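Formulas (4) and (5) follow from chaining target poses. With \(RT_{ij}\) as a \(4\times 4\) homogeneous matrix, a point on target i satisfies \(P_{c_j} = RT_{ij} P_{t_i}\), so camera 2 maps into camera 1 through \(RT_{11} RT_{12}^{-1}\). The NumPy sketch below composes these transforms. Because Table 1 is not shown here, it assumes (hypothetically) that target 2 is seen by cameras 2 and 3, which gives camera 3 → camera 1 as \(RT_{11} RT_{12}^{-1} RT_{22} RT_{23}^{-1}\); all function names are illustrative.

```python
import numpy as np


def rigid_transform(R, t):
    """Stack a 3x3 rotation R and a translation t into a 4x4 homogeneous matrix."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def via_shared_target(RT_target_to_a, RT_target_to_b):
    """Transform from camera b's frame to camera a's frame through a target both cameras see.

    P_a = RT_target_to_a @ P_t and P_b = RT_target_to_b @ P_t,
    hence P_a = RT_target_to_a @ inv(RT_target_to_b) @ P_b.
    """
    return RT_target_to_a @ np.linalg.inv(RT_target_to_b)


def cameras_to_world(RT11, RT12, RT22, RT23):
    """Return (camera 2 -> camera 1, camera 3 -> camera 1); the world frame is camera 1.

    Hypothetical assumption: target 1 is shared by cameras 1 and 2,
    target 2 by cameras 2 and 3.
    """
    T_1_from_2 = via_shared_target(RT11, RT12)
    T_2_from_3 = via_shared_target(RT22, RT23)
    return T_1_from_2, T_1_from_2 @ T_2_from_3
```

Each pairwise estimate carries its own error, and the camera 3 transform inherits the errors of both links, which is one way to see why error accumulates along the chain.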

Calibrating the camera coordinate systems pairwise with the traditional two-dimensional target method is simple, and the transformation between each camera and the sensor coordinate system can be computed quickly. However, when the reprojection error of feature points captured by adjacent cameras is computed in the world coordinate system unified by this method, the error turns out to be large, and error accumulates between non-adjacent cameras. Figure 3 shows the actual experimental results.

As Fig. 3a shows, the reprojection error of adjacent cameras obtained with the traditional two-dimensional target calibration method is not only large overall but also widely scattered. Comparing the two panels of Fig. 3 shows that the accumulated error reaches about 4 pixels. The main reason is that camera calibration is an optimization process, and the intrinsic calibration results depend on the target poses captured and on the target accuracy. Even cameras with identical configurations yield different intrinsic calibration results. When the target coordinates are then computed in each camera coordinate system, errors in the intrinsic parameters directly degrade this computation, ultimately causing matching errors between adjacent cameras and accumulated errors across cameras.

Taking the focal length f of the camera as an example, let the focal lengths of camera 1, camera 2, and camera 3 be \(f_1\), \(f_2\), and \(f_3\), which satisfy the following relationship.

In formula (6), \(\varDelta f\) is a non-negative number indicating the deviation of the focal lengths of the other cameras from that of camera 1.
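The effect of a focal-length error on the recovered target pose can be illustrated with an idealized case (an assumption for illustration, not the paper's simulation): for a fronto-parallel target of known size S imaged with size s, similar triangles give \(z = f S / s\), so using \(f + \varDelta f\) scales the recovered depth by \((f + \varDelta f)/f\). With the experimental values \(f = 25\) mm and a working distance of about 300 mm, a hypothetical 100 mm target, and \(\varDelta f = 0.2\) mm (the value used in the Fig. 5 simulation), the depth is biased by about 2.4 mm:

```python
def depth_from_known_size(f_mm, object_size_mm, image_size_mm):
    """Pinhole similar triangles: image_size = f * object_size / z, so z = f * object_size / image_size."""
    return f_mm * object_size_mm / image_size_mm


f_true = 25.0   # lens focal length from Sect. 4 (mm)
z_true = 300.0  # approximate working distance from Sect. 4 (mm)
width = 100.0   # hypothetical target width (mm)
delta_f = 0.2   # focal-length calibration error (mm)

image_width = f_true * width / z_true  # size the sensor actually records
z_est = depth_from_known_size(f_true + delta_f, width, image_width)
print(round(z_est - z_true, 3))  # → 2.4 (0.8 % of the working distance)
```

A depth bias of this size differs from camera to camera when their intrinsic errors differ, which is consistent with the growth of the matching and accumulated errors with \(\varDelta f\).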

Figure 4 shows the influence of the calibration error of the camera focal length f on the matching error of adjacent cameras and on the accumulated error of different cameras.

Figure 4 shows that as the focal length error increases, both the adjacent-camera error and the accumulated error grow. To address this problem, this paper treats the traditional algorithm as a preliminary calibration of the pose relationships between cameras and, on this basis, proposes a loop closure calibration method that optimizes both the nonlinear errors of the cameras and the transformations between them.

3.2 Loop calibration of the multi-camera line structured light vision system

In an annular measurement field, a point on the target should, in theory, return to its initial coordinates after being transformed once around the ring of camera coordinate systems. In this paper, the deviation between the coordinates of a target point after one full circle of coordinate transformations and its initial coordinates is called the loop closure reprojection error. When the camera intrinsic and extrinsic parameter matrices contain no error, the loop closure reprojection error is 0. To evaluate the actual matching error of the loop closure system, this paper uses the loop closure reprojection error as the evaluation index, calculated as in formula (7).

In formula (7), \(M_{loop}\) is the loop closure matrix, i.e., the transformation that starts from a given camera coordinate system and returns to it after one full circle of coordinate transformations. \(P_w\) is a point in the world coordinate system, which may coincide with the coordinate system of any camera. \(D_i\) is the loop closure reprojection error starting from camera i, which measures how far \(P_w\) deviates from its original position after the loop of transformations, and \(D(RT,P_w)\) is the total loop closure reprojection error. Ideally, when the relative camera poses are accurate, the loop closure matrix equals the identity matrix and the loop closure reprojection error is 0.
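A minimal NumPy sketch of the loop closure reprojection error for a three-camera ring follows. It assumes each ring transform is a \(4\times 4\) homogeneous matrix from camera k into camera k+1 (cyclically), takes \(D_i\) as the mean Euclidean displacement of the points after one loop starting at camera i, and takes D as the sum of the \(D_i\); the exact norm and aggregation of formula (7), and the function names, are assumptions.

```python
import numpy as np


def loop_matrix(ring, start):
    """Compose the ring once, starting and ending in camera `start` (0-based).

    ring[k] is a 4x4 transform from camera k into camera k+1 (indices taken cyclically),
    so the product is the identity when the calibration is perfect.
    """
    M = np.eye(4)
    n = len(ring)
    for k in range(n):
        M = ring[(start + k) % n] @ M
    return M


def loop_closure_error(ring, points):
    """Return ([D_1, ..., D_n], D) for an (N, 3) array of points.

    D_i is the mean displacement of the points after one loop starting at camera i;
    the same points are used in every start frame, as the world frame may coincide
    with any camera frame.
    """
    P = np.column_stack([points, np.ones(len(points))]).T  # 4 x N homogeneous
    D = []
    for i in range(len(ring)):
        moved = (loop_matrix(ring, i) @ P)[:3].T
        D.append(float(np.linalg.norm(moved - points, axis=1).mean()))
    return D, sum(D)
```

For a ring built from exact transforms the returned total is zero up to rounding; perturbing any single link makes every \(D_i\) non-zero, because each loop passes through every link.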

Because the camera intrinsic parameters affect field-of-view matching through the extraction accuracy of \(P_w\), formulas (1) and (7) are combined to establish the optimization objective function, formula (8).
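The idea of loop closure refinement can be sketched with SciPy's `least_squares` under simplifying assumptions: only the three extrinsic ring transforms are adjusted (each parameterized by a rotation vector and a translation), whereas formula (8) also adjusts the intrinsic parameters through formula (1); and a hypothetical penalty `weight` keeps the solution close to the pairwise calibration, in line with the small parameter changes reported in Sect. 4.1. This is not the authors' objective function, and all names are illustrative.

```python
import numpy as np
from scipy.optimize import least_squares
from scipy.spatial.transform import Rotation


def to_matrix(p):
    """6-vector (rotation vector, translation) -> 4x4 rigid transform."""
    T = np.eye(4)
    T[:3, :3] = Rotation.from_rotvec(p[:3]).as_matrix()
    T[:3, 3] = p[3:]
    return T


def to_params(T):
    """4x4 rigid transform -> 6-vector (rotation vector, translation)."""
    return np.concatenate([Rotation.from_matrix(T[:3, :3]).as_rotvec(), T[:3, 3]])


def refine_ring(ring, points, weight=1e-2):
    """Fine-tune ring transforms (camera 1 -> 2 -> 3 -> 1) so one loop maps points onto themselves.

    Residuals: the loop-closure displacement of every point, plus a weighted pull
    towards the initial pairwise calibration so the result stays a small correction.
    """
    n = len(ring)
    x0 = np.concatenate([to_params(T) for T in ring])
    P = np.column_stack([points, np.ones(len(points))]).T  # 4 x N homogeneous

    def residuals(x):
        M = np.eye(4)
        for k in range(n):
            M = to_matrix(x[6 * k:6 * k + 6]) @ M
        loop = ((M @ P)[:3].T - points).ravel()
        return np.concatenate([loop, weight * (x - x0)])

    sol = least_squares(residuals, x0)
    return [to_matrix(sol.x[6 * k:6 * k + 6]) for k in range(n)]
```

The penalty term is needed because loop closure alone constrains only the product of the transforms; without it, many combinations of transforms would close the loop equally well.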

Figure 5 shows simulation results of the loop closure optimization when \(\varDelta f=0.2\) mm. The overall error decreases steadily and converges, and the loop closure reprojection error converges after about 100 iterations. Figure 6 compares the adjacent-camera matching errors and accumulated matching errors of the traditional calibration method and the loop closure calibration method.

Figures 6 and 7 show that, after loop closure optimization, both the matching error of adjacent cameras and the accumulated error of non-adjacent cameras are reduced. Besides the focal length, other intrinsic parameters also affect the accuracy of the computed target coordinates. To fully demonstrate the effectiveness of the method, this paper therefore also compares the traditional and loop closure calibration methods under errors in other parameters. Let the principal points of the three cameras be \((u_1, v_1)\), \((u_2, v_2)\), and \((u_3, v_3)\), satisfying formula (9).

In formula (9), \(i = 1, 2, 3\), \(\varDelta u = 0.2\) pixels, and \(\varDelta v = 0.2\) pixels. Figures 8 and 9 show the influence on coordinate system matching of a deviation in the calibrated principal point among the camera's intrinsic parameters.

Figures 8 and 9 show that, after loop closure optimization, both the matching error of adjacent cameras and the accumulated error of non-adjacent cameras are reduced. Therefore, compared with the traditional calibration method, the proposed loop closure calibration method reduces not only the matching error of adjacent cameras but also the accumulated error of coordinate system matching.

4 Experiment and result analysis

To verify the feasibility and practicability of the above calibration method for multi-line structured light systems in an annular measurement field, a multi-camera line structured light vision system with the configuration of Fig. 1 was built in the laboratory, as shown in Fig. 10.

The system consists of three self-contained line structured light sensors, each comprising a camera with \(2592\times 2048\) resolution, a lens with focal length \(f = 25\) mm, and a line structured light generator. Each camera is placed about 300 mm from the object to be measured. A lift table with a repeatability of \(\pm 3\) \({\upmu }\)m is placed in the middle of the system; in use, the measured object is placed on the lift table for push-broom scanning.

We wrote the measurement system control program in Visual Studio 2015 (VS2015) to capture the images required for calibration. We then wrote a calibration program in MATLAB R2019b for the calibration experiments, which verified the effectiveness of the proposed calibration method.

The experiments consist of two comparisons. First, the traditional and loop closure calibration methods are compared in terms of the matching error of adjacent camera coordinate systems and the accumulated matching error across the coordinate systems of different cameras. Second, a reference cylinder is scanned, and the two methods are compared in terms of the stitching error of line scan data from adjacent cameras, the accumulated stitching error of line scan data from different cameras, and the overall measurement accuracy.

4.1 Coordinate system error comparison

The circular-dot calibration board used for camera calibration and coordinate system matching has a dot spacing of 10 mm and an accuracy of \(\pm 10\) \({\upmu }\)m. Figure 11 shows the adjacent-camera reprojection errors obtained with the traditional calibration method and the loop closure calibration method, and Fig. 12 shows the accumulated reprojection errors of different cameras.

Figures 11 and 12 show that, for the actual system, loop closure optimization reduces both the reprojection error of adjacent cameras and the accumulated error. Table 2 lists the errors before and after optimization. The root mean square (RMS) reprojection error of adjacent cameras decreases from 0.4809 mm before loop closure optimization to 0.1627 mm, a reduction of about 66.2\(\%\). The RMS accumulated error decreases from 4.5823 mm before loop closure optimization to 0.3675 mm, a reduction of about 91.8\(\%\). These results confirm the effectiveness of the loop closure calibration method. Table 3 lists the parameter changes after optimization.

The parameters in Table 3 are the intrinsic parameters of camera 1. They change only slightly, which shows that loop closure optimization fine-tunes the result of the traditional calibration method rather than overturning it, and supports the soundness of the loop closure calibration method.

4.2 Comparison of line scan splicing error and accuracy

Improving the matching accuracy of the camera coordinate systems should make the merging of line scan data from different cameras more accurate and raise the overall measurement accuracy. To verify this, the multi-camera line structured light vision system was used to measure a standard cylinder with a diameter of 30 mm and a tolerance of \(\pm 2\) \({\upmu }\)m, over a push-broom height of 2.25 cm.

Figure 13 shows the spatial distribution of the three light planes; their spatial positions change only slightly after optimization.

Figure 14 shows the cylindrical scanning results obtained with the traditional calibration method and the loop closure calibration method.

Figure 14 shows that with the traditional calibration method the overlapping point clouds of two probes are clearly layered, whereas with the loop closure calibration method the uneven distribution of the overall system error is significantly improved. To compare the effect of the improved calibration method on the overall error distribution more intuitively, Fig. 15 shows cross-sections taken at the same position of the cylindrical point clouds measured with the traditional and loop closure calibration methods.

Figure 15 shows that the cylindrical cross-section obtained with the traditional calibration method does not close into a ring, whereas the one obtained with the loop closure calibration method does. This demonstrates that the improved calibration method eliminates the uneven distribution of the overall system error.

The scan was repeated 10 times, and the cylindrical point clouds obtained with the two methods were fitted to obtain the diameter. Table 4 lists the results.

Table 4 shows that the mean diameter measured with the traditional calibration method is 30.013 mm, while that measured with the loop closure calibration method is 30.006 mm, a slight improvement in diameter accuracy. Because diameter fitting averages errors over the whole point cloud, a fault does not necessarily have a large influence on the fitted diameter.
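Diameter fitting can be illustrated with an algebraic (Kasa) least-squares circle fit applied to one cross-section. This is a simplified stand-in for the cylinder fitting used in the paper: it assumes the cylinder axis is parallel to the lift direction, so each height slice is a circle, and the function name `fit_circle` is hypothetical.

```python
import numpy as np


def fit_circle(xy):
    """Algebraic (Kasa) least-squares circle fit to an (N, 2) array; returns (xc, yc, r).

    Solves x^2 + y^2 + D*x + E*y + F = 0 linearly for D, E, F, then
    xc = -D/2, yc = -E/2 and r^2 = xc^2 + yc^2 - F.
    """
    x, y = np.asarray(xy, dtype=float).T
    M = np.column_stack([x, y, np.ones_like(x)])
    (D, E, F), *_ = np.linalg.lstsq(M, -(x**2 + y**2), rcond=None)
    xc, yc = -D / 2, -E / 2
    return xc, yc, np.sqrt(xc**2 + yc**2 - F)
```

Averaging the diameters \(2r\) of many slices gives one diameter estimate per scan; because each fit uses every point in the slice, a local step between two probes' data shifts the fitted radius only modestly, which matches the small diameter difference in Table 4.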

To show the matching before and after optimization more clearly, the point clouds obtained before and after optimization were registered to the standard cylinder. Figure 16 shows the resulting distribution of the shape error.

Figure 16 shows that, compared with the traditional calibration method, the loop closure calibration method yields a smaller and smoother surface shape error. For the traditional calibration method, the peak-to-valley (PV) surface shape error is 334 \({\upmu }\)m and the RMS is 59 \({\upmu }\)m; for the loop closure calibration method, the PV is 72 \({\upmu }\)m and the RMS is 13 \({\upmu }\)m. After optimization, the RMS profile error is reduced by 46 \({\upmu }\)m, making it about 4.5 times smaller, and the PV profile error is reduced by 262 \({\upmu }\)m. This again confirms the effectiveness of the loop closure optimization.
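The reported improvements can be checked directly from the PV and RMS values above; the factor of 4.5 is the ratio of the RMS errors:

```python
# Surface-shape error of the 30 mm reference cylinder in µm, as reported above.
pv_traditional, rms_traditional = 334, 59
pv_loop, rms_loop = 72, 13

rms_reduction = rms_traditional - rms_loop  # µm
pv_reduction = pv_traditional - pv_loop     # µm
rms_ratio = rms_traditional / rms_loop      # the "4.5 times" accuracy improvement

print(rms_reduction, pv_reduction, round(rms_ratio, 2))  # → 46 262 4.54
```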

The proposed method has considerable industrial application value and can be used for the rapid measurement of shaft parts. Figure 17 shows the result of a 3D scan of a camshaft from a piston engine.

Based on the three-dimensional scan results, we measured the shaft diameter and roundness of the camshaft; the parameters to be measured are shown in Fig. 18. \(R_1\), \(R_2\), \(R_3\), \(R_4\), and \(R_5\) are the radii of the contour arcs. Each radius was obtained by cylinder fitting, and the errors between the measurement results and the design values are listed in Table 5.

Roundness error is the deviation of the contour, in a cross-section perpendicular to the axis of the rotating body, from its ideal circle. A cross-section was extracted at the position to be measured, and the roundness measurement results are shown in Fig. 19. The roundness error of this area is 11 \({\upmu }\)m, which is consistent with its machining accuracy and demonstrates that the method can be used to measure roundness.
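Roundness can be evaluated from such a cross-section as the spread of radial distances about a reference circle. The paper does not state which reference circle it uses; the sketch below assumes the least-squares circle (LSC), obtained here with an algebraic least-squares fit, and the function name `roundness_lsc` is hypothetical.

```python
import numpy as np


def roundness_lsc(xy):
    """Roundness of an (N, 2) cross-section: max minus min radial distance about the LSC centre."""
    x, y = np.asarray(xy, dtype=float).T
    M = np.column_stack([x, y, np.ones_like(x)])
    # Algebraic circle fit: x^2 + y^2 + D*x + E*y + F = 0, centre at (-D/2, -E/2).
    (D, E, _), *_ = np.linalg.lstsq(M, -(x**2 + y**2), rcond=None)
    xc, yc = -D / 2, -E / 2
    r = np.hypot(x - xc, y - yc)
    return r.max() - r.min()
```

For example, a synthetic 15 mm radius profile with a three-lobed form error of ±5 µm, \(r(\theta) = 15 + 0.005\cos 3\theta\) mm, gives a roundness of 0.010 mm (10 µm). Other reference circles (minimum zone, minimum circumscribed, maximum inscribed) can give slightly different values for the same data.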

5 Conclusion

This paper proposes an improved calibration method for multi-camera line structured light vision systems that solves the problem of discontinuities, or even faults, in the overlapping areas during coordinate system calibration. Loop closure calibration optimizes the system model as a whole, reducing the matching error of adjacent cameras by about 66.2\(\%\) and the accumulated error by about 91.8\(\%\), and improving the overall measurement accuracy by a factor of 4.5.

The proposed calibration method is suitable for annular measurement fields. The calibration process is simple and requires no expensive external measurement equipment, which makes the method well suited to online measurement systems at industrial sites. It has already been applied to calibrate a full-topography measurement system for shaft parts, where a simple calibration procedure quickly corrects the pose of each coordinate system.

References

R.B. Xia, R. Su, J. Zhao et al., An accurate and robust method for the measurement of circular holes based on binocular vision. Meas. Sci. Technol. 31(2), 25006 (2019)

B. Hepp, M. Nießner, O. Hilliges, Plan3D: Viewpoint and trajectory optimization for aerial multi-view stereo reconstruction. ACM Trans. Graph. (TOG) 38(1), 1–17 (2018)

L. Streeter, Time-of-flight range image measurement in the presence of transverse motion using the Kalman filter. IEEE Trans. Instrum. Meas. 67(7), 1573–1578 (2018)

C. Zuo, S.J. Feng, L. Huang et al., Phase shifting algorithms for fringe projection profilometry: a review. Opt. Lasers Eng. 109, 23–59 (2018)

M. O’Toole, J. Mather, K.N. Kutulakos, 3D shape and indirect appearance by structured light transport. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 3246–3253 (2014)

E. Lilienblum, A. Al-Hamadi, A structured light approach for 3-D surface reconstruction with a stereo line-scan system. IEEE Trans. Instrum. Meas. 64(5), 1258–1266 (2015)

P. Zhou, K. Xu, D. Wang, Rail profile measurement based on line-structured light vision. IEEE Access 6, 16423–16431 (2018)

J. Miao, H. Yuan, L. Li et al., Line structured light vision online inspection of automotive shaft parts. In: International Conference on Application of Intelligent Systems in Multi-modal Information Analytics. Springer, Cham, 585–595 (2019)

Z. Shi, T. Wang, J. Lin, A simultaneous calibration technique of the extrinsic and turntable for structured-light-sensor-integrated CNC system. Opt. Lasers Eng. 138, 106451 (2021)

X.U. Yuan, Y. Wang, J. Zhou et al., A laser 3D scanner system based on multi-camera 3D-reconstruction. Comput. Digit. Eng. 46(11), 2342–2346 (2018)

J.Q. Gao, D.H. Liu, 3D detection technology for rail surface with multi-camera line structure light. Mach. Des. Manuf. 3, 170–172 (2017)

D. Zhan, L. Yu, J. Xiao et al., Multi-camera and structured-light vision system (MSVS) for dynamic high-accuracy 3D measurements of railway tunnels. Sensors 15(4), 8664–8684 (2015)

W. Liu, Z. Jia, F. Wang et al., An improved online dimensional measurement method of large hot cylindrical forging. Measurement 45(8), 2041–2051 (2012)

L. Wang, W. Wang, C. Shen et al., A convex relaxation optimization algorithm for multi-camera calibration with 1D objects. Neurocomputing 215, 82–89 (2016)

Z.Y. Zhang, A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 22(11), 1330–1334 (2000)

B.H. Shan, W.T. Yuan, Z.L. Xue, A calibration method for stereovision system based on solid circle target. Measurement 132, 213–223 (2019)

B.L. Cai, Y.W. Wang, J.J. Wu et al., An effective method for camera calibration in defocus scene with circular gratings. Opt. Lasers Eng. 114, 44–49 (2019)

R. Usamentiaga, D.F. Garcia, Multi-camera calibration for accurate geometric measurements in industrial environments. Measurement 134, 345–358 (2019)

J.H. Sun, X.Q. Cheng, Q.Y. Fan, Camera calibration based on two-cylinder target. Opt. Express 27(20), 29319–29331 (2019)

Z.Z. Wei, W. Zou, G.J. Zhang et al., Extrinsic parameters calibration of multi-camera with non-overlapping fields of view using laser scanning. Opt. Express 27(12), 16719–16737 (2019)

T.L. Yang, Q.C. Zhao, X. Wang et al., Accurate calibration approach for non-overlapping multi-camera system. Opt. Laser Technol. 110, 78–86 (2019)

G.H. An, S. Lee, M.W. Seo et al., Charuco board-based omnidirectional camera calibration method. Electronics 7(12), 421 (2018)

I. Van Crombrugge, R. Penne, S. Vanlanduit, Extrinsic camera calibration for non-overlapping cameras with Gray code projection. Opt. Lasers Eng. 134, 106305 (2020)

R.B. Xia, M.B. Hu, J.B. Zhao et al., Global calibration of non-overlapping cameras: state of the art. Optik 158, 951–961 (2018)

J. Jiang, L.C. Zeng, B. Chen et al., An accurate and flexible technique for camera calibration. Computing 101(12), 1971–1988 (2019)

L. Huang, F.P. Da, S.Y. Gai, Research on multi-camera calibration and point cloud correction method based on three-dimensional calibration object. Opt. Lasers Eng. 115, 32–41 (2019)

B. Chen, Y. Liu, C. Xiong, Automatic checkerboard detection for robust camera calibration. In: 2021 IEEE International Conference on Multimedia and Expo (ICME), IEEE, 1–6 (2021)

J.L. Charco, B.X. Vintimilla, A.D. Sappa, Deep learning based camera pose estimation in multi-view environment. In: 14th International Conference on Signal-Image Technology and Internet-Based Systems (SITIS), IEEE, 224–228 (2018)

MATLAB, 9.7.0.1190202 (R2019b), Natick, Massachusetts: The MathWorks Inc (2018)

Funding

This research was funded by the National Key Research and Development Program of China (Grant No. 2017YFA0701200); Science Challenge Program (Grant No. TZ2018006-0203-01); Tianjin Natural Science Foundation of China (Grant No. 19JCZDJC39100).

Author information

Contributions

Hongmai Yang and Changshuai Fang wrote the main manuscript text and prepared the experimental data. All authors reviewed the manuscript.

Ethics declarations

Conflicts of interest

The authors declare no conflicts of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Yang, H., Fang, C. & Zhang, X. Improvement of calibration method for multi-camera line structured light vision system. Appl. Phys. B 128, 116 (2022). https://doi.org/10.1007/s00340-022-07832-9


DOI: https://doi.org/10.1007/s00340-022-07832-9