Abstract

Objectives

Coronary computed tomography angiography (CCTA) has rapidly developed in the coronary artery disease (CAD) field. However, manual coronary artery tree segmentation and reconstruction are time-consuming and tedious. Deep learning algorithms have been successfully developed for medical image analysis to process extensive data. Thus, we aimed to develop a deep learning tool for automatic coronary artery reconstruction and an automated CAD diagnosis model based on a large, single-centre retrospective CCTA cohort.

Methods

Automatic CAD diagnosis consists of two subtasks. One is a segmentation task, which aims to extract the region of interest (ROI) from original images with U-Net. The second task is an identification task, which we implemented using 3DNet. The coronary artery tree images and clinical parameters were input into 3DNet, and the CAD diagnosis result was output.

Results

We built a coronary artery segmentation model based on CCTA images with the corresponding labelling. The segmentation model had a mean Dice value of 0.771 ± 0.021. Based on this model, we built an automated diagnosis model (classification model) for CAD. The average accuracy and area under the receiver operating characteristic curve (AUC) were 0.750 ± 0.056 and 0.737, respectively.

Conclusion

Herein, using a deep learning algorithm, we realized the rapid classification and diagnosis of CAD from CCTA images in two steps. Our deep learning model can automatically segment the coronary artery quickly and accurately and can deliver a diagnosis of ≥ 50% coronary artery stenosis. Artificial intelligence methods such as deep learning have the potential to elevate the efficiency in CCTA image analysis considerably.

Key Points

• The deep learning model rapidly achieved a high Dice value (0.771 ± 0.021) in the autosegmentation of coronary arteries using CCTA images.

• Based on the segmentation model, we built a CAD autoclassifier with the 3DNet algorithm, which achieved a good diagnostic performance (AUC) of 0.737.

• The deep neural network could be used in the image postprocessing of coronary computed tomography angiography to achieve a quick and accurate diagnosis of CAD.

Introduction

Coronary artery disease (CAD) is one of the leading causes of myocardial ischemia and has become a global healthcare concern in recent years [1]. Risk assessments in the CAD population play a pivotal role in the early identification of high-risk patients as well as the optimization of treatment options, including medication, coronary intervention, and surgery, to improve patient prognosis. In contemporary practice, invasive imaging procedures, such as invasive coronary angiography, are commonly used to assess the severity of coronary stenosis [2]. However, coronary computed tomography angiography (CCTA) has been rapidly developed in the 20 years since its emergence and has changed the diagnostic landscape [3, 4]. This technology allows physicians to obtain high-quality coronary anatomy images in a short period with high diagnostic accuracy. Considering its excellent specificity in CAD diagnosis, CCTA has become the first-line recommendation in terms of screening (class I, level B) and risk stratification (class I, level B) for suspected CAD patients [5].

CCTA images are volume collections of computed tomography measurements, where the contrast agent in the coronary artery makes the lumen visible. With segmentation of the coronary artery wall and lumen, anatomical and morphological information of plaques, especially the severity of stenosis, can be obtained. However, image postprocessing is usually performed in a radiology workstation, and the segmentation and reconstruction of the coronary artery tree are time-consuming and tedious.

Recently, deep learning algorithms have been successfully developed for medical image analysis to process extensive data, segment or detect regions of interest (ROIs), and automatically classify diseases without human interference with high precision [6, 7]. Most research in this area has been limited to coronary artery tree segmentation [8, 9]. For example, Huang et al obtained a Dice value of 0.71–0.78 in coronary segmentation using deep learning in a CCTA training set of 18 samples [10]. However, previous work aimed at evaluating the practicability of the proposed method has been limited by the scale of the testing set. Moreover, few studies have shown the diagnostic accuracy of deep convolutional neural networks for the detection of stenosis of the coronary artery tree. Predicting the outcome of a patient is meaningful and challenging. Therefore, more efforts should be made to construct a large-scale dataset, develop deep learning models to automatically segment the coronary artery tree, and analyze the relationships between CAD and CCTA images with clinical indices.

In this study, we aimed to develop a deep learning tool for automatic coronary artery reconstruction and a CAD-automated diagnosis model based on a large, single-centre retrospective CCTA cohort and to evaluate the performance of this artificial intelligence-assisted tool.

Methods

Study population

The data source of this study was an image cohort in West China Hospital. This single-centre study enrolled patients with suspected CAD who underwent CCTA examination from January 2012 to August 2012. The exclusion criteria were as follows: (1) age < 18 years old; (2) end-stage renal disease; (3) severe liver disease requiring artificial liver plasmapheresis; and (4) malignancies. This study protocol was approved by the ethics committee and institutional review boards and conducted in accordance with the Declaration of Helsinki.

Data collection

Thin-slice (0.6 mm) computed tomography angiography (CTA) images of all patients were obtained using a second-generation dual-source computed tomography system (SOMATOM Definition CT, Siemens Medical Solutions).

The scanning protocol was as follows: 50–70 mL of contrast agent followed by 50 mL of normal saline was injected through the median cubital vein with a dual-head injector at a flow rate of 5.0 mL/s. The aortic root was set as the ROI. Bolus tracking was used: when the attenuation exceeded 100 HU, scanning was automatically triggered after a delay of 5 s.

The scanning parameters were as follows: collimation of 2 × 128 × 0.6 mm, slice thickness of 0.6 mm, gantry rotation time of 0.28 s, tube voltage of 80–120 kV (adjusted by CARE kV to reduce radiation exposure), and tube current of 290–560 mAs/R (adjusted by CARE Dose 4D technology to reduce radiation exposure). The pitch was 0.17–0.24 (adjusted according to patient heart rate during scanning).

All the image data were analyzed by one cardiologist and one radiologist independently and anonymously. The stenosis severity of the coronary artery was assessed following the Society of Cardiovascular Computed Tomography (SCCT) grading scale. We used the Coronary Artery Disease Reporting and Data System (CAD-RADS) score to describe the standardized report and perform the risk assessment. The demographic data, medical history, laboratory examination, and vital signs at admission were obtained for each patient from the hospital information system. From January 2012 to August 2012, 443 patients with a total of 1327 vessels were included in the study.

Model development

Automatic CAD diagnosis consists of two subtasks. One is a segmentation task, which aims to extract the ROI from original images. In this study, the coronary artery tree was first cropped from all CCTA images. The other task is an identification task, in which each case (the coronary artery tree image and the corresponding clinical parameters) is classified as normal or abnormal, where abnormal cases denote the stenosis ≥ 50% group. CCTA coronary artery labelling intended for segmentation task training was conducted by two experienced cardiovascular physicians with 5 years of experience in cardiac image postprocessing and analysis. The labelled data were inspected three times and confirmed to contain the following coronary artery structures: (1) left and right coronary artery ostia; (2) ostia of the left main coronary and anterior descending arteries, as well as the circumflex artery. Segments with vessel diameters less than 1.5 mm were excluded.

In the first stage, we implemented our model using U-Net for the segmentation task [11]. This convolutional neural network included two parts. In part one, complex features were extracted from each CCTA image. In part two, the neural network was used to decode the feature representation with a sole focus on the coronary artery tree. The original CCTA images were the input of U-Net, and the coronary artery tree was the output. We further applied continuous frame postprocessing procedures to generate consecutive slices for a 3D coronary artery tree.

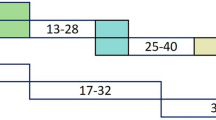

In the second stage, we implemented our model using 3DNet for the identification task. CAD was defined as stenosis of 50% or above in the three main branches (the left anterior descending branch, the left circumflex branch, and the right coronary artery) or in the left main coronary artery. This network included three parts. In part one, the neural network was used to extract image features from the full coronary artery tree image. In part two, we extracted text features from clinical parameters. In part three, we used this network to combine all features for the final feature representation. The final classification was calculated using the weighted softmax layer. The coronary artery tree images and clinical parameters were the input of 3DNet, and the CAD diagnosis result was the output (Fig. 1).
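The three-part design described above (image features, clinical features, fused representation) can be illustrated with a minimal NumPy forward pass. This is only a sketch under stated assumptions, not the authors' 3DNet: the image feature vector is taken as given, the 1024-unit fully connected layer for the clinical parameters follows the width mentioned in the Discussion, a plain softmax stands in for the weighted softmax layer (whose weighting is not specified in the text), and the names `init_params` and `fuse_and_classify` are hypothetical.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over the last axis."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def init_params(n_image, n_clinical, n_hidden=1024, n_classes=2, seed=0):
    """Random weights for illustration only (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    return {
        "w_clin": rng.normal(0.0, 0.01, (n_clinical, n_hidden)),
        "b_clin": np.zeros(n_hidden),
        "w_out": rng.normal(0.0, 0.01, (n_image + n_hidden, n_classes)),
        "b_out": np.zeros(n_classes),
    }

def fuse_and_classify(image_feat, clinical, params):
    """Expand clinical inputs, concatenate with image features, classify.

    image_feat: (batch, n_image) features from the coronary tree image
    clinical:   (batch, n_clinical) clinical parameters
    Returns (batch, 2) probabilities for [stenosis < 50%, stenosis >= 50%].
    """
    # Part two: fully connected layer (ReLU assumed) on clinical parameters.
    clin_feat = np.maximum(0.0, clinical @ params["w_clin"] + params["b_clin"])
    # Part three: concatenate both feature sets into one representation.
    fused = np.concatenate([image_feat, clin_feat], axis=-1)
    # Plain softmax used here; the paper's weighted variant is not specified.
    return softmax(fused @ params["w_out"] + params["b_out"])
```

Calling `fuse_and_classify` on a batch of feature vectors returns one probability pair per patient; in a trained model the weights would be learned by backpropagation rather than drawn at random.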

Fig. 1 Main architecture for automatic CAD diagnosis. a First stage for auto-segmentation of the coronary artery tree. b Second stage for automatic CAD diagnosis. The blue arrow represents the convolution operation, the green arrow represents the upsampling convolution, and the yellow arrow represents the fully connected computation. Each cube denotes the learned feature maps, and the number next to the cube denotes the corresponding number (channels) of feature maps. The final two outputs of the network denote whether the case belongs to the stenosis ≥ 50% group or not

The coronary artery segmentation model was based on the data of 243 patients, and the data of the remaining 200 patients were used to establish a classification model of stenosis ≥ 50%. The training process of the proposed deep convolutional neural networks mainly consisted of two phases: data preprocessing and network learning. In the preprocessing phase, the pixel values for each CCTA slice were normalized between 0 and 1. In the first stage, the image size remained at 512 × 512, and the input of the second model was resized from 512 × 512 to 128 × 128 to reduce the number of network parameters. The backpropagation (BP) algorithm was used for network learning [12]. Because a small independent test set would fail to illustrate the effectiveness of the model, we used 5-fold cross-validation to evaluate the accuracy of the coronary artery tree segmentation and CAD classification, averaging over the folds with all cases weighted equally. In cross-validation, part of the data is employed as the training set, and the other part is used as the testing set. Multiple rounds of testing are performed, and the average performance is taken as the final result. This approach can avoid the limitations and particularity of fixed datasets. All models were implemented using the PyTorch deep learning framework [13].
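The preprocessing and validation steps described above can be sketched in a few lines of NumPy. This is an illustration under assumptions rather than the study's pipeline: per-slice min-max scaling is assumed for the normalization to [0, 1], 4 × 4 block averaging is assumed for the resize from 512 × 512 to 128 × 128 (the interpolation method is not stated), and the function names are hypothetical.

```python
import numpy as np

def normalize_slice(slice_values):
    """Min-max scale one CCTA slice to [0, 1] (assumed normalization)."""
    lo, hi = float(slice_values.min()), float(slice_values.max())
    if hi == lo:  # constant slice: avoid division by zero
        return np.zeros_like(slice_values, dtype=np.float32)
    return ((slice_values - lo) / (hi - lo)).astype(np.float32)

def downsample_4x(img):
    """Resize e.g. 512x512 -> 128x128 by averaging 4x4 blocks (assumed)."""
    h, w = img.shape
    return img.reshape(h // 4, 4, w // 4, 4).mean(axis=(1, 3))

def five_fold_indices(n_cases, n_folds=5, seed=0):
    """Yield (train_idx, test_idx); every case is tested exactly once."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_cases), n_folds)
    for k in range(n_folds):
        train_idx = np.concatenate(
            [folds[j] for j in range(n_folds) if j != k]
        )
        yield train_idx, folds[k]
```

With the 200-patient classification cohort, each round of this scheme trains on 160 cases and tests on the remaining 40; the reported metrics are then averaged over the five rounds.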

Statistical analysis

Continuous variables are expressed as the mean ± standard deviation (SD), and categorical variables are reported as counts and percentages. Patients’ baseline characteristics were compared between the stenosis ≥ 50% group and the stenosis < 50% group. The differences between groups were evaluated by t-tests and chi-square tests for continuous and categorical variables, respectively. In the segmentation task, we used several quantitative metrics to evaluate the coronary artery tree segmentation performance including the Dice similarity coefficient (DSC), true positive volume fraction (TPVF), and positive predictive value (PPV) [14, 15]. The Dice coefficient (Dice), also called the overlap index, is the most commonly used metric to compare automatic AI results and ground truth (GT) data from doctors (\( \mathrm{Dice}=\frac{2\,\left|\mathrm{GT}\cap \mathrm{AI}\right|}{\left|\mathrm{GT}\right|+\left|\mathrm{AI}\right|} \)). Higher scores indicate greater volumetric overlap between automatic segmentation and manual annotation. In the identification task, we calculated several indices to evaluate the CAD diagnostic accuracy, including accuracy, recall, precision, F1 score, and area under the curve (AUC), where the stenosis ≥ 50% group was defined as the true sample. The F1 score is defined as the harmonic mean of the model’s precision and recall. Higher scores indicate a better prediction of CAD diagnosis [16].
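As a concrete reading of the three segmentation metrics, the sketch below computes Dice, TPVF, and PPV from a pair of binary masks. It assumes the standard voxel-count definitions (TPVF as the overlap divided by the ground-truth volume, PPV as the overlap divided by the predicted volume); the function name `segmentation_metrics` is hypothetical and the masks in the usage note are toy data, not study data.

```python
import numpy as np

def segmentation_metrics(gt, ai):
    """Overlap metrics between a manual mask (gt) and a predicted mask (ai)."""
    gt = np.asarray(gt, dtype=bool)
    ai = np.asarray(ai, dtype=bool)
    overlap = np.logical_and(gt, ai).sum()  # voxels labelled by both
    gt_vol, ai_vol = gt.sum(), ai.sum()
    # Dice = 2|GT ∩ AI| / (|GT| + |AI|); two empty masks count as a match.
    dice = 2.0 * overlap / (gt_vol + ai_vol) if (gt_vol + ai_vol) else 1.0
    tpvf = overlap / gt_vol if gt_vol else 0.0  # fraction of GT recovered
    ppv = overlap / ai_vol if ai_vol else 0.0   # fraction of AI that is GT
    return {"dice": float(dice), "tpvf": float(tpvf), "ppv": float(ppv)}
```

For example, if the manual mask holds 8 voxels and the prediction holds 12 voxels that include all 8, the function gives TPVF = 1.0, PPV ≈ 0.667, and Dice = 0.8. Dice is the harmonic mean of TPVF and PPV, which is why it penalizes both missed vessel and over-segmentation.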

Results

From January 2012 to August 2012, 443 patients with a total of 1327 vessels were included in the study. The average age of the patients was 65.84 ± 11.21 years, 261 (58.92%) of the patients were male, and 164 (37.02%) patients had coronary artery stenosis ≥ 50%. The baseline characteristics of the two groups are shown in Table 1. Fivefold cross-validation was performed in both groups, and statistical analysis revealed no significant differences in baseline characteristics between the two groups (Supplement 1).

We built a coronary artery segmentation model based on CCTA images with the corresponding labelling. The metrics used to quantify automatic segmentation performance are listed in Table 2. The segmentation model achieved a mean Dice value of 0.771 ± 0.021, a TPVF value of 0.766 ± 0.041, and a PPV of 0.815 ± 0.018. These results suggested that the model can be used to automatically segment coronary arteries with high accuracy. The segmentation border for automated prediction and manual labelling is illustrated in Fig. 2. The model trained with the neural network can effectively segment the three coronary arteries and their major branches.

Based on the segmentation model, we built an automated diagnosis model (classification model) for CAD according to the stenosis of the three main coronary arteries and the left main coronary artery (equal to or greater than 50% or less than 50%). Regarding the classification metrics of the model, the performance metrics average accuracy, recall, precision, and F1 score were 0.750 ± 0.056, 0.860 ± 0.108, 0.719 ± 0.084, and 0.775 ± 0.040, respectively. The receiver operating characteristic (ROC) curves for automated CAD diagnosis are shown in Fig. 3, and the average AUC for the five subsets was 0.737 (Fig. 3). This result implies that the classification model can rapidly achieve an accurate diagnosis of CAD based on CCTA images.
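To make the relationship between these classification metrics explicit, the following sketch derives them from the four confusion-matrix counts of a single fold and then averages over folds. The function names are hypothetical, and the counts in the usage note are invented for illustration; they are not the study's results.

```python
def classification_metrics(tp, fp, fn, tn):
    """Metrics for one fold; stenosis >= 50% is the positive class."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    denom = precision + recall
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "recall": recall,
        "precision": precision,
        "f1": f1,
    }

def mean_over_folds(fold_metrics):
    """Average each metric across folds, weighting folds equally."""
    keys = fold_metrics[0].keys()
    n = len(fold_metrics)
    return {k: sum(m[k] for m in fold_metrics) / n for k in keys}
```

For a hypothetical fold of 40 patients with 13 true positives, 5 false positives, 2 false negatives, and 20 true negatives, this gives an accuracy of 0.825, a recall of about 0.867, a precision of about 0.722, and an F1 score of about 0.788. Because the reported values are means over five folds, the mean F1 score need not equal the harmonic mean of the mean precision and mean recall.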

We also compared the processing speed between the deep learning approach and the manual approach on the same personal platform (i7-9700K, GTX1650, 16 GB RAM). The image postprocessing procedure of manual segmentation includes the selection of individual threshold islands and the level tracing of blood vessels. The physician manually segments the coronary artery and removes irrelevant cardiac tissue and the pulmonary vascular tree. After training, segmentation of a coronary tree through deep learning was completed in 6.73 s on average. The average time required for a physician to manually trace a coronary tree using 3D Slicer (version 4.11) was 45 min. For the diagnosis of coronary heart disease (stenosis ≥ 50%), the deep learning method did not require additional processing, while the manual approach required an additional 5 min to establish a diagnosis.

Discussion

In this study, utilizing deep learning, we achieved the rapid classification and diagnosis of CAD based on CCTA images through 2 steps. First, we achieved rapid autosegmentation of coronary arteries using CCTA images and secured a high Dice value. Second, based on the segmentation model, we built an autoclassifier of CAD. The results suggested that CCTA image analysis using deep learning can accurately reconstruct the coronary tree and diagnose CAD and do so in less time than required by human experts.

Coronary artery segmentation and extraction represent crucial steps in the postprocessing of CCTA images. The traditional method of manual segmentation and reconstruction is very tedious and time-consuming. Previous studies have suggested that deep learning can automatically extract the coronary lumen or coronary central line [17]. Ring et al used the I2I-3D method to explore the autodetection of the coronary artery wall, lumen, and plaque [8]. In our study, a total of 243 patients were included to build a coronary artery segmentation model. To the best of our knowledge, this sample size is larger than that of previous studies. U-Net is a typical segmentation model that uses a deep learning algorithm, and we added a postprocessing procedure based on the outcome of the network [18]. Therefore, the accuracy of this segmentation model is higher than that of previous research, with a Dice value of 0.771 ± 0.021, a TPVF of 0.766 ± 0.041, and a PPV of 0.815 ± 0.018. These results indicate that the segmentation model based on deep learning was able to accurately identify and extract coronary arteries and has fair robustness to possible graphical errors or noise in the input data.

It is generally believed that patients with stenosis ≥ 50% in major branches, as confirmed by invasive angiography, can be diagnosed with CAD [19]. With a high negative predictive value, CCTA can serve as an effective tool for the initial screening of CAD. In the past, evaluating stenosis required radiologists to perform multiplanar reconstruction of CCTA images and measure the diameter of coronary lesions, which was a time-consuming and expertise-dependent process. A few studies have used machine learning or deep learning to evaluate plaque or stenosis in the coronary artery. Using a 3D CNN and combining radiomics parameters extracted from CCTA, Denzinger et al were able to accurately predict the degree of coronary artery stenosis [20]. In addition, Moon et al were able to automate keyframe extraction and stenosis assessment in invasive coronary angiography through a deep learning algorithm [21]. In this study, to prevent potential subjective bias during labelling and overfitting, we did not perform coronary artery segmentation and stenosis recognition in the same group of patients. We built a classification model in another cohort of 200 patients without segmentation labelling. Using the radiology workstation report as the label, we achieved the autoclassification of CAD patients based on a deep learning algorithm. Because the U-Net architecture performed well on coronary artery tree segmentation in the first stage, this architecture was also expected to learn to classify the CAD diagnosis result from the CTA images combined with clinical information. U-Net is an encoder-decoder network architecture based on a single image slice, whereas the coronary artery tree is three-dimensional; we therefore extended the 2D filters to 3D convolutions in the encoder part for feature extraction [11, 22]. Because 3D convolutional filters have more parameters than their 2D counterparts, fewer filters than in the 2D network (4, 8, 16, 32, and 64 in successive layers) were used to reduce the number of parameters in 3DNet [23]. Using few convolutional filters for feature extraction from the coronary artery tree also helped to limit overfitting in the classification task. Furthermore, a fully connected layer with 1024 neurons was deployed to upsample the clinical information, because a sparse high-dimensional representation is more conducive to feature learning. In addition, the classification model utilizing 3D U-Net used only part of the CCTA data, including the coronary tree [24]. The accuracy of the classification model was 0.750 ± 0.056, and the AUC was 0.737, suggesting that the autodetection of ≥ 50% stenosis based on the coronary segmentation model through deep learning has acceptable accuracy.
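The trade-off between filter count and parameter count can be checked with simple arithmetic. The sketch below counts the weights and biases of a stack of convolutions, assuming 3 × 3 (2D) or 3 × 3 × 3 (3D) kernels, a single input channel, and one convolution per level; these choices and the function names are illustrative assumptions, since the text gives only the filter counts.

```python
def conv_params(c_in, c_out, kernel=3, dims=2, bias=True):
    """Learnable parameters of one convolution layer.

    A dims-dimensional kernel has kernel**dims weights per
    (input channel, output channel) pair, plus one bias per output channel.
    """
    weights = (kernel ** dims) * c_in * c_out
    return weights + (c_out if bias else 0)

def encoder_params(channels, c_in=1, kernel=3, dims=3):
    """Total parameters of a stack with one convolution per level (assumed)."""
    total = 0
    for c_out in channels:
        total += conv_params(c_in, c_out, kernel, dims)
        c_in = c_out  # the next level consumes this level's output
    return total

filters = (4, 8, 16, 32, 64)              # filter counts quoted in the text
params_3d = encoder_params(filters, dims=3)
params_2d = encoder_params(filters, dims=2)
```

Under these assumptions, a 3 × 3 × 3 layer carries three times the weights of a 3 × 3 layer with the same channel counts (27 versus 9 per channel pair), so keeping the channel widths small is a direct way to hold the 3D encoder to a size that a 200-patient cohort can plausibly support.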

In recent years, the use of machine learning and deep learning methods has driven rapid advancements in CCTA image analysis, but some unresolved issues remain. Previous studies have shown that classic classifiers or neural network algorithms can effectively detect coronary artery calcification in CCTA, but their performance in detecting low-density or mixed plaque is less satisfactory. In addition, the coronary artery occupies a small area in the whole CCTA image and presents considerable variability, posing a more significant challenge for artificial intelligence to learn the coronary artery and its lesions than other kinds of diseases [25]. Recently, several artificial intelligence platforms have been proposed in an attempt to overcome these challenges, but there remains significant room for improvement in terms of sample size or comprehensive reporting [26]. Lee et al suggested that the transformer network, combined with an appropriate penalty, performs well in coronary artery segmentation, achieving a Dice value of 0.768–0.787 [27]. In addition, the results of a multicentre study suggested that commercial software based on VGG19 and 3D U-Net can accurately estimate the degree of coronary artery stenosis through CCTA, with only a 2.3% mean difference from experts in prediction at the patient level [28]. This study focused on the important task of coronary artery segmentation and CAD classification in image analysis and clinical practice and on the deployment of a deep learning algorithm, and it achieved promising results. However, further research is warranted to examine the anatomical, morphological, and functional analysis of coronary artery lesions using an artificial intelligence-based approach.

Limitations

Due to the inherent design of retrospective studies, we could include only patients who had received CCTA examinations in the past, and we were unable to compare the deep learning method with traditional image analysis prospectively. However, by building the CAD classification model using an isolated cohort and comparing it with traditional reports, we obtained conclusive and convincing results. In addition, as metal stents in CCTA can significantly impact deep learning training, we excluded patients with prior stent implantation. However, since CCTA was indicated for CAD screening in the majority of patients, the number of patients with metal stent implantation was not large (12 patients). Moreover, due to the limitations of the study design, the materials used to verify ≥ 50% stenosis in this study were radiology workstation reports rather than invasive coronary angiography findings. However, these reports were issued by a radiologist with more than 10 years of experience in chest imaging and contain quantitative measurements of the degree and location of stenosis; therefore, the measurements have high accuracy, and the reports serve as valuable references. Last, the classification of coronary artery stenosis in this study was dichotomous. Limited by the sample size, this work did not perform multiclass prediction, such as CAD-RADS classification. Further investigation is needed to develop artificial intelligence models that can utilize more anatomical and morphological information on coronary lesions.

Conclusion

In this study, using a deep learning algorithm, we realized the rapid classification and diagnosis of CAD based on CCTA images in two steps. Our deep learning model can automatically segment the coronary artery quickly and accurately and can deliver a diagnosis of ≥ 50% coronary artery stenosis. We constructed a large-scale dataset and evaluated the performance of our methods based on cross-validation. Although this type of artificial intelligence cannot replace manual operation in CCTA image analysis, it may considerably increase the efficiency of CCTA image analysis. In future studies, we intend to refine the utility of our submodel in the clinical context and improve the accuracy and thoroughness of CAD assessment with more clinical input and algorithm optimization.

Abbreviations

- AUC: Area under the curve

- BP: Backpropagation

- CAD: Coronary artery disease

- CAD-RADS: Coronary Artery Disease Reporting and Data System

- CCTA: Coronary computed tomography angiography

- DSC: Dice similarity coefficient

- PPV: Positive predictive value

- ROI: Region of interest

- SCCT: Society of Cardiovascular Computed Tomography

- SD: Standard deviation

- TPVF: True positive volume fraction

References

Joseph P, Leong D, McKee M et al (2017) Reducing the global burden of cardiovascular disease, part 1: the epidemiology and risk factors. Circ Res 121:677–694

Campeau L (1989) Percutaneous radial artery approach for coronary angiography. Catheter Cardiovasc Diagn 16:3–7

Budoff MJ, Dowe D, Jollis JG et al (2008) Diagnostic performance of 64-multidetector row coronary computed tomographic angiography for evaluation of coronary artery stenosis in individuals without known coronary artery disease: results from the prospective multicenter ACCURACY (Assessment by Coronary Computed Tomographic Angiography of Individuals Undergoing Invasive Coronary Angiography) trial. J Am Coll Cardiol 52:1724–1732

Miller JM, Rochitte CE, Dewey M et al (2008) Diagnostic performance of coronary angiography by 64-row CT. N Engl J Med 359:2324–2336

Knuuti J, Wijns W, Saraste A et al (2020) 2019 ESC Guidelines for the diagnosis and management of chronic coronary syndromes. Eur Heart J 41:407–477

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521:436–444

Goodfellow I, Bengio Y, Courville A (2016) Deep learning. MIT Press

Ring F (2018) Deep learning for coronary artery segmentation in cta images,

Chen M, Wang X, Hao G et al (2020) Diagnostic performance of deep learning-based vascular extraction and stenosis detection technique for coronary artery disease. Br J Radiol 93:20191028

Huang W, Huang L, Lin Z et al (2018) Coronary artery segmentation by deep learning neural networks on computed tomographic coronary angiographic images. 2018 40th Annual international conference of the IEEE engineering in medicine and biology society (EMBC). IEEE, pp 608-611

Ronneberger O, Fischer P, Brox T (2015) U-net: convolutional networks for biomedical image segmentation. International Conference on Medical image computing and computer-assisted intervention. Springer, pp 234–241

Braspenning PJ, Thuijsman F, Weijters AJMM (1995) Artificial neural networks: an introduction to ANN theory and practice. Springer Science & Business Media

Imambi S, Prakash KB, Kanagachidambaresan G (2021) PyTorch. Programming with TensorFlow. Springer, pp 87–104

Taha AA, Hanbury A (2015) Metrics for evaluating 3D medical image segmentation: analysis, selection, and tool. BMC Med Imaging 15:1–28

Yeghiazaryan V, Voiculescu ID (2018) Family of boundary overlap metrics for the evaluation of medical image segmentation. J Med Imaging 5:015006

Ling CX, Huang J, Zhang H (2003) AUC: a statistically consistent and more discriminating measure than accuracy. Ijcai, pp 519–524

Tatsugami F, Higaki T, Nakamura Y et al (2019) Deep learning–based image restoration algorithm for coronary CT angiography. Eur Radiol 29:5322–5329

Oktay O, Schlemper J, Folgoc LL et al (2018) Attention u-net: learning where to look for the pancreas. arXiv preprint arXiv:1804.03999

Raff GL, Abidov A, Achenbach S et al (2009) SCCT guidelines for the interpretation and reporting of coronary computed tomographic angiography. J Cardiovasc Comput Tomogr 3:122–136

Denzinger F, Wels M, Ravikumar N et al (2019) Coronary artery plaque characterization from CCTA scans using deep learning and radiomics. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, pp 593-601

Moon JH, Cha WC, Chung MJ, Lee K-S, Cho BH, Choi JH (2021) Automatic stenosis recognition from coronary angiography using convolutional neural networks. Comput Methods Prog Biomed 198:105819

Isensee F, Petersen J, Klein A et al (2018) nnu-net: self-adapting framework for u-net-based medical image segmentation. arXiv preprint arXiv:1809.10486

Wohlkinger W, Aldoma A, Rusu RB, Vincze M (2012) 3dnet: large-scale object class recognition from cad models. 2012 IEEE international conference on robotics and automation. IEEE, pp 5384-5391

Çiçek Ö, Abdulkadir A, Lienkamp SS, Brox T, Ronneberger O (2016) 3D U-Net: learning dense volumetric segmentation from sparse annotation. International conference on medical image computing and computer-assisted intervention. Springer, pp 424–432

Baskaran L, Maliakal G, Al’Aref SJ et al (2020) Identification and quantification of cardiovascular structures from CCTA: an end-to-end, rapid, pixel-wise, deep-learning method. Cardiovasc Imaging 13:1163–1171

Kumamaru KK, Fujimoto S, Otsuka Y et al (2020) Diagnostic accuracy of 3D deep-learning-based fully automated estimation of patient-level minimum fractional flow reserve from coronary computed tomography angiography. Eur Heart J-Cardiovasc Imaging 21:437–445

Lee MCH, Petersen K, Pawlowski N, Glocker B, Schaap M (2019) TeTrIS: template transformer networks for image segmentation with shape priors. IEEE Trans Med Imaging 38:2596–2606

Choi AD, Marques H, Kumar V et al (2021) CT evaluation by artificial intelligence for atherosclerosis, stenosis and vascular morphology (CLARIFY): a multi-center, international study. J Cardiovasc Comput Tomogr 15:470–476

Funding

This work was supported by the National Major Science and Technology Projects (grant number 2018AAA0100201) to Z.Y.; the National Natural Science Foundation of China (grant number 81970325 to M.C.; grant number 61906127 to J.W.); the 1.3.5 project for disciplines of excellence, West China Hospital, Sichuan University to M.C.; the Science and Technology Achievement Transformation Fund of West China Hospital of Sichuan University (CGZH19009) to M.C.; and Open Fund Research from State Key Laboratory of Hydraulics and Mountain River Engineering (SKHL1920 to Xiong. T.Y.).

Ethics declarations

Guarantor

The scientific guarantors of this publication are Prof. Zhang Yi and Prof. Mao Chen.

Conflict of interest

The authors declare no competing interests.

Statistics and biometry

No complex statistical methods were necessary for this paper.

Informed consent

Written informed consent was obtained from all subjects (patients) in this study.

Ethical approval

Institutional Review Board approval was obtained.

Methodology

• retrospective

• diagnostic study

• performed at one institution

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

ESM 1

(DOCX 24 kb)

Cite this article

Li, Y., Wu, Y., He, J. et al. Automatic coronary artery segmentation and diagnosis of stenosis by deep learning based on computed tomographic coronary angiography. Eur Radiol 32, 6037–6045 (2022). https://doi.org/10.1007/s00330-022-08761-z

DOI: https://doi.org/10.1007/s00330-022-08761-z