Abstract

We study a discrete-time multi-type Wright–Fisher population process. The mean-field dynamics of the stochastic process is induced by a general replicator difference equation. We prove several results regarding the asymptotic behavior of the model, focusing on the impact of the mean-field dynamics on it. One of the results is a limit theorem that describes sufficient conditions for an almost certain path to extinction, first eliminating the type which is the least fit at the mean-field equilibrium. The effect is explained by the metastability of the stochastic system, which under the conditions of the theorem spends almost all time before the extinction event in a neighborhood of the equilibrium. In addition to the limit theorems, we propose a maximization principle for a general deterministic replicator dynamics and study its implications for the stochastic model.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this paper, we study a multi-type Wright–Fisher process, modeling the evolution of a population of cells or microorganisms in discrete time. Each cell belongs to one of the several given types, and its type evolves stochastically while the total number of cells does not change with time. The process is a time-homogeneous Markov chain that exhibits a rich and complex behavior.

Due to the apparent universality of its dynamics, availability of effective approximation schemes, and its relative amenability to numerical simulations, the Wright–Fisher process constitutes a popular modelling tool in applied biological research. In particular, there is a growing body of literature using the Wright–Fisher model of evolutionary game theory (Imhof and Nowak 2006) and its variations along with other tools of game theory and evolutionary biology to study compartmental models of complex microbial communities and general metabolic networks (Cavaliere et al. 2017; Hummert et al. 2014; Tarnita 2017; West et al. 2007; Zeng et al. 2017; Zomorrodi and Segrè 2017). For instance, recent studies (Zomorrodi and Segrè 2017) incorporate evolutionary game theory and concepts from behavioral economics into their hybrid models of metabolic networks including microbial communities with so-called Black Queen functions. See also Cai et al. (2019), Hummert et al. (2014), Ruppin et al. (2010) for related research. The Wright–Fisher model has been employed to study the evolution of host-associated microbial communities (Zeng et al. 2017). In a number of studies, the Wright–Fisher model was applied to examine adaptive dynamics in living organisms, for instance viral and immune populations perpetually adapting (Nourmohammad et al. 2016) and response to T-cell-mediated immune pressure (Barton et al. 2016; Chen and Kardar 2019) in HIV-infected patients. Another interesting application of the Wright–Fisher model to the study of evolution of viruses has been previously reported (Zinger et al. 2019).

Other biological applications of the Wright–Fisher model supported by experimental data include, for instance, substitutions in protein-coding genes (dos Reis 2015), analysis of the single-nucleotide polymorphism differentiation between populations (Dewar et al. 2011), correlations between genetic diversity in the soil and above-ground population (Koopmann et al. 2017), and persistence of decease-associated gene alleles in wild populations (Vallier et al. 2017). In cancer-related research, the Wright–Fisher model has been used to model tumor progression (Beerenwinkel et al. 2007; Datta et al. 2013), clonal interference (Garcia et al. 2018; Park and Krug 2007), cancer risk across species (Peto’s paradox) (Caulin et al. 2015), and intra-tumoral heterogeneity (Iwasa and Michor 2011). Not surprisingly, the Wright–Fisher model is also extensively used to model phenomena in theoretical evolutionary biology (Cvijović et al. 2018; Goudenège and Zitt 2015; Hobolth and Sireén 2016; Hofrichter et al. 2017; Houchmandzadeh 2015; John and Seetharaman 2016; Proulx 2011; Simonsen Speed et al. 2019; Waxman and Loewe 2010).

We now describe the Wright–Fisher model and state our results in a semi-formal setting. For a population with N cells, we define M-dimensional vectors

where \(Z^{(N)}_k(i)\) represents the number of type i cells in the generation k. We denote by

the frequency vectors of the population. That is,

for all \(1\le i\le M.\) We refer to the frequency vector \(\textbf{X}^{(N)}_k\) as the profile of the population at time k.

With biological applications in mind, the terms “particles” and “cells” are used interchangeably throughout this paper. We assume that the sequence \(\textbf{Z}^{(N)}_k\) forms a time-homogeneous Markov chain with the Wright–Fisher frequency-dependent transition mechanism. That is, conditioned on \(\textbf{Z}^{(N)}_k,\) the next generation vector \(\textbf{Z}^{(N)}_{k+1}\) has a multinomial distribution with the profile-dependent parameter \({\varvec{\Gamma }}\big (\textbf{X}^{(N)}_k\big ),\) where \({\varvec{\Gamma }}\) is a vector field that shapes the fitness landscape of the population (see Sect. 2.2 for details). In Example 1 we list several examples of the fitness taken from either biological or biologically inspired mathematical literature and briefly indicate a rationale behind their introduction.

It is commonly assumed in evolutionary population biology that particles reproduce or adopt their behavior according to their fitness, which depends on the population composition through a parameter representing utility of a random interaction within the population. In Sect. 3, in order to relate the stochastic dynamics of the Wright–Fisher model to first biological principles, we interpret the mean-field model in terms of the reproductive fitness of the stochastic process and study some basic properties of the fitness. Our main contribution here is the following maximization principle for a general deterministic replicator dynamics. An informal statement of this contribution is as follows. We refer to a vector \(\textbf{x}\) in \({{\mathbb {R}}}^m\) as a population profile vector if all its components are non-negative and their sum is one (i. e., \(\textbf{x}\) is a probability vector).

Theorem 1

Assume that \({\varvec{\Gamma }}\) is a continuous vector-field. Then there exists a “nice” (non-decreasing, bounded, complete Lyapunov, see Definition 2) function h mapping population profiles into the set of reals \({{\mathbb {R}}},\) such that:

-

(i)

\(h(\textbf{x})\) is the average \(\sum _i x(i)\varphi _i(\textbf{x})\) of an ordinal Darwinian fitness given by

$$\begin{aligned} \varphi _i(\textbf{x})=\frac{\Gamma _i(\textbf{x})}{x(i)}h(\textbf{x}),\qquad \qquad i=1,\ldots ,M. \end{aligned}$$(2) -

(ii)

\(h\big ({\varvec{\Gamma }}(\textbf{x})\big )\ge h(\textbf{x})\) for any population profile \(\textbf{x}.\)

-

(iii)

\(h\big ({\varvec{\Gamma }}(\textbf{x})\big )>h(\textbf{x})\) for any population profile \(\textbf{x}\) which is, loosely speaking, “not recurrent” (see Definition 1 for the definition of the “recurrence”).

We refer to Theorem 3.2 for a formal statement of the result. The theorem is a simple consequence of the “fundamental theorem of dynamical systems” due to Conley (1978), see Sect. 3.2 for details.

Let \(\textbf{x}\) be an arbitrary population profile, set \({{\varvec{\psi }}}_0=\textbf{x},\) and define recursively,

We will occasionally use the notation \({{\varvec{\psi }}}_k(\textbf{x})\) to indicate the initial state \({{\varvec{\psi }}}_0=\textbf{x}.\) It turns out that dynamical system \({{\varvec{\psi }}}_k\) serves as mean-field approximation for the stochastic model (see Sect. 2.2 and Theorem 4.1 for details). It follows from Theorem 1 that the average fitness h is non-decreasing along the orbits of \({{\varvec{\psi }}}_k:\)

and, moreover, (again, informally) “typically” we have a strong inequality \(h({{\varvec{\psi }}}_{k+1})>h({{\varvec{\psi }}}_k).\) Furthermore, since h is a bounded function,

exists for all initial conditions \({{\varvec{\psi }}}_0.\)

In fact, the average/population Darwinian fitness (as defined in (14) below) generically remains constant during the evolution of any Wright–Fisher model driven by a replicator mean-field dynamics (see Sect. 3.1 for details). Theorem 1 offers an alternative notion of fitness, a distinguished ordinal utility, that is free of this drawback. In contrast to the most of the known results of this type (Ao 2005; Birch 2016; Bratus et al. 2018; Doebeli et al. 2017; Edwards 2016; Ewens 2011; Ewens and Lessard 2015; Gavrilets 2010; Obolski et al. 2018; Orr 2009; Queller 2017), our maximization principle 1) is genuinely universal, such that it can be applied to any discrete-time and continuous in state space replicator dynamics; and 2) refers to maximization of the entire reproduction fitness, rather than of its part.

In Sect. 4.3 we study fixation probabilities of a certain class of Wright–Fisher models, induced by a classical linear-fractional replicator mean-field dynamics with a symmetric payoff matrix which corresponds to a partnership normal-form game with a unique evolutionary stable equilibrium. Understanding the route to fixation of multivariate population models is of a great importance to many branches of biology (Der et al. 2011; Gavrilets 2010; Hofrichter et al. 2017; Nowak and Sigmund 2004; Obolski et al. 2018; Waxman and Loewe 2010). For instance, extinction risk of biologically important components, regulated by metabolism in living cells, has been the focus of intensive research and is a key factor in numerous biotechnologically and biomedically relevant applications (Assaf and Meerson 2008; Samoilov and Arkin 2006). Our contribution can be summarized as follows. Firstly, Theorem 4.6 offers an interpretation of the notion “the least fit” which is in the direct accordance to the law of competitive exclusion and Fisher’s fundamental theorem of natural selection. Secondly, on a more practical note, the theorem suggests that for the underlying class of population models, the path to extinction is almost deterministic when the population size is large. We remark that for a different class of models, a similar fixation mechanism has been described in Assaf and Meerson (2010), Assaf and Mobilia (2011), Park and Traulsen (2017). However, our proof is based on a different idea, and is mathematically rigorous in contrast to the previous work (where, in order to establish the result, analytical methods are combined with some heuristic arguments).

Informally, the result in Theorem 4.6 can be stated as follows:

Theorem 2

Suppose that \({\varvec{\Gamma }}\) satisfies the following set of conditions:

-

(i)

There is \({{\varvec{\chi _{eq}}}} \in {{\mathbb {R}}}^M\) such that \({\chi _{eq} }(i)>0\) for all \(i=1,\ldots ,M\) and \(\lim _{k\rightarrow \infty } {{\varvec{\psi }}}_k={{\varvec{\chi _{eq}}}}\) whenever \(\psi _0(i)>0\) for all \(i=1,\ldots ,M.\)

-

(ii)

There are no mutations, that is \(\Gamma _i(x)=0\) if \(x(i)=0.\)

-

(iii)

The Markov chain \(\textbf{X}^{(N)}_k\) is metastable, that is it spends a long time confined in a small neighborhood of the deterministic equilibrium \({{\varvec{\chi _{eq}}}}\) before a large (catastrophic) fluctuation occurs and one of the types gets instantly extinct.

Then the probability that the least fit at the equilibrium \({{\varvec{\chi _{eq}}}}\) type will extinct first converges to one when N goes to infinity.

Metastability or stable coexistence is described in Broekman et al. (2019) as “the long-term persistence of multiple competing species, without any species being competitively excluded by the others, and with each species able to recover from perturbation to low density.” Various mathematical conditions equivalent to a variant of the above concept can be found in Auletta et al. (2018), Bovier and den Hollander (2015), Landim (2019), see also Assaf and Meerson (2010). The interplay between metastability, quasi-stationary distribution, strong persistence, and extinction probabilities in multitype population models has been discussed by many authors, see, for instance, Ao (2005), Ashcroft et al. (2015), Benaïm and Schreiber (2019), Block and Allen (2000), Gyllenberg and Silvestrov (2008), Hofbauer and Sigmund (1998), Hutson and Schmitt (1992), Kang and Chesson (2010), Méléard and Villemonais (2012), Steinsaltz and Evans (2004), Xu et al. (2014), Zhou and Qian (2011) and references therein.

Heuristically, the result in our theorem suggests that if a stochastic population model has a metastable state, then under some technical conditions that grantee a strong enough attraction to the quasi-equilibrium, the extinction by a series of intermediate steps is “costly” to the system (that is where the existence of a Lyapunov function is relevant to the result), and the overwhelmingly most likely extinction scenario is an instantaneous “collapse” of the “weakest link”, namely an instantaneous extinction of the type which is the least abundant at the quasi-equilibrium state. We also emphasize the importance of the strong attraction to the quasi-equilibrium factor, without which the mechanism of extinction can be completely different as it has been illustrated in the literature.

The benefit of the kind of results stated in Theorem 2 for applied biological research is that they elucidate conditions under which the ultimate outcome of a complex stochastic evolution is practically deterministic, and thus can be robustly predicted in a general accordance with a simple variation of the competitive exclusion principle.

In Sect. 4.3, in addition to our main result stated in Theorem 4.6, we prove an auxiliary Proposition 4.7 which complements (Losert and Akin 1983) and explains the mechanism of convergence to the equilibrium under the conditions of Theorem 4.6. The proposition establishes a strong contraction near the equilibrium property for the systems considered in Theorem 4.6. The claim is a “reverse engineering” in the sense that it would immediately imply Theorem 2-(i), whereas the latter has been obtained in Losert and Akin (1983) in a different way and is used here to prove the claim in Proposition 4.7.

The Wright–Fisher Markov chains are known to exhibit complex multi-scale dynamics, partially because of the non-smooth boundary of their natural phase space. The analytic intractability of Wright–Fisher models has motivated an extensive literature on the approximation of these stochastic processes (Chalub and Souza 2014; Ewens 2012; Hartle and Clark 2007; Hofrichter et al. 2017; Nagylaki 1990). These approximations “illuminate the evolutionary process by focusing attention on the appropriate scales for the parameters, random variables, and time and by exhibiting the parameter combinations on which the limiting process depends” (Nagylaki 1990). Our results in Sect. 4.3 concerning the model’s path to extinction rely partially on the Gaussian approximation constructed in Theorem 4.1 and error estimates proved in Sect. 4.2. The construction is fairly standard and has been used to study other stochastic population models. Our results in Sects. 4.1 and 4.2 can be informally summarized as follows:

Theorem 3

Assuming that the vector field \({\varvec{\Gamma }}\) is smooth enough, the following is true:

-

(i)

\(\textbf{X}^{(N)}_k={{\varvec{\psi }}}_k+\frac{\textbf{u}^{(N)}_k}{\sqrt{N}},\) where \({{\varvec{\psi }}}_k\) is the deterministic mean-field sequence defined in (3) (in particular, independent of N) and \(\textbf{u}^{(N)}_k\) is a random sequence that, as N goes to infinity, converges in distribution to a solution of certain random linear recursion.

-

(ii)

For a fixed N and any given \(\varepsilon >0,\) with an overwhelmingly large probability, the norm of the noise perturbation vector \(\frac{\textbf{u}^{(N)}_k}{\sqrt{N}}\) will not exceed \(\varepsilon \) for an order of \(e^{\alpha \varepsilon ^2 N}\) first steps of the Markov chain, where \(\alpha >0\) is a constant independent of N and \(\varepsilon .\)

The theorem implies that when the population size N is large, the stochastic Wright–Fisher model can be interpreted as a perturbation of the mean-field dynamical system \({\varvec{\Gamma }}\) with a small random noise.

Due to the particular geometry of the phase space boundary, the stochastic dynamics of the Wright–Fisher model often exhibits structural patterns (specifically, invariant and quasi-invariant sets) inherited from the mean-field model. “Appendix A” of this paper is devoted to the exploration of the peculiar connection between invariant sets of the mean-field dynamical system and the form of the equilibria of the stochastic model when mutation is allowed. The results in the appendix aim to contribute to the general theory of Wright–Fisher processes with mutations and are not used directly anywhere in the paper.

The rest of the paper is organized as follows. The mathematical model is formally introduced in Sect. 2. Section 3 is concerned with the geometry of the fitness landscape and fitness maximization principle for the deterministic mean-field model. Our main results for the stochastic dynamics are stated and discussed in Sect. 4, with proofs deferred to Sect. 5. Finally, conclusions are outlined in Sect. 6. “Appendix A” ties the geometric properties of the fitness to the form of stochastic equilibria of the Wright–Fisher process. In order to facilitate the analysis of stochastic persistence in the Wright–Fisher model considered in Sect. 4, we survey in “Appendix B” a mathematical theory of coexistence for a class of deterministic interacting species models, known as permanent (or uniform persistent) dynamical systems.

2 The model

The aim of this section is to formally present general form of the Wright–Fisher model and introduce some of the key notions pertinent to the general framework. The section is divided into two subsections. Section 2.1 introduces a broader geometric context and thus sets the stage for a formal definition of the model given in Sect. 2.2.

2.1 Geometric setup, notation

Fix \(M\in {{\mathbb {N}}}\) and define \(S_M=\{1,2,\ldots ,M\}.\) Let \({{\mathbb {Z}}}_+\) and \({{\mathbb {R}}}_+\) denote, respectively, the set of non-negative integers and the set of non-negative reals. Given a vector \(\textbf{x}\in {{\mathbb {R}}}^M,\) we usually use the notation x(i) to denote its i-th coordinate. The only exception is in the context of vector fields, whose i-th component will typically be indicated with the subscript i.

Let \(\textbf{e}_i=(0,\ldots ,0,1,0,\ldots ,0)\) denote the unit vector in the direction of the i-th axis in \({{\mathbb {R}}}^M.\) We define the \((M-1)\)-dimensional simplex

and denote by \(V_M\) the set of its vertices:

\(\Delta _M\) is a natural state space for the entire collection of stochastic processes \((\textbf{X}^{(N)})_{N\in {{\mathbb {N}}}}\) of (1). We equip \(\Delta _M\) with the usual Euclidean topology inherited from \({{\mathbb {R}}}^M.\) For an arbitrary closed subset \(\Delta \) of \(\Delta _M,\) we denote by \(\text{ bd }(\Delta )\) its topological boundary and by \(\text{ Int }(\Delta )\) its topological interior \(\Delta \backslash \text{ bd }(\Delta ).\) For any non-empty subset J of \(S_M\) we denote by \(\Delta _{[J]}\) the simplex built on the vertices corresponding to J. That is,

We denote by \(\partial (\Delta _{[J]})\) the manifold boundary of \(\Delta _{[J]}\) and by \(\Delta _{[J]}^\circ \) the corresponding interior \(\Delta _{[J]}\backslash \partial (\Delta _{[J]}).\) That is,

and

We write \(\partial (\Delta _M)\) and \(\Delta _M^\circ \) for, respectively, \(\partial (\Delta _{[S_M]})\) and \(\Delta _{[S_M]}^\circ .\) Note that \( \text{ bd }(\Delta _{[J]})=\Delta _{[J]},\) and hence \(\partial (\Delta _{[J]})\ne \text{ bd }(\Delta _{[J]})\) for any proper subset J of \(S_M.\)

Furthermore, let

Note that \(\textbf{X}^{(N)}_k\in \Delta _{M,N}\) for all \(k\in {{\mathbb {Z}}}_+\) and \(N\in {{\mathbb {N}}}.\) For any set of indices \(J\subset S_M\) we write

We simplify the notations \(\partial (\Delta _{[S_M],N})\) and \(\Delta _{[S_M],N}^\circ \) to \(\partial (\Delta _{M,N})\) and \(\Delta _{M,N}^\circ ,\) respectively.

We use the maximum (\(L^\infty \)) norms for vectors in \(\Delta _M\) and functions in \(C(\Delta _M),\) the space of real-valued continuous functions on \(\Delta _M:\)

The Hadamard (element-wise) product of two vectors \(\textbf{u},\textbf{v}\in {{\mathbb {R}}}^M\) is denoted by \(\textbf{u}\circ \textbf{v}.\) That is, \(\textbf{u}\circ \textbf{v} \in {{\mathbb {R}}}^M\) and

The usual dot-notation is used to denote the regular scalar product of two vectors in \({{\mathbb {R}}}^M\).

2.2 Formulation of the model

We now proceed with the formal definition of the Markov chain \(\textbf{X}^{(N)}_k.\) Let P denote the underlying probability measure defined in some large probability space that includes all random variables considered in this paper. We use E and COV to denote the corresponding expectation and covariance operators. For a random vector \(\textbf{X}=(X(1),X(2),\ldots ,X(M))\in {{\mathbb {R}}}^M,\) we set \(E(\textbf{X}):=\big (E\big (X(1)\big ),E\big (X(2)\big ),\ldots ,E\big (X(M)\big )\big ).\)

Let \({\varvec{\Gamma }}:\Delta _M\rightarrow \Delta _M\) be a mapping of the probability simplex \(\Delta _M\) into itself. Suppose that the population profile at time \(k\in {{\mathbb {Z}}}_+,\) is given by a vector \(\textbf{x}=\bigl (x(1),\ldots ,x(M)\bigr )\in \Delta _{M,N},\) i.e. \(\textbf{X}^{(N)}_k=\textbf{x}.\) Then the next generation’s profile, \(\textbf{X}^{(N)}_{k+1}\), is determined by a multinomial trial where the probability of producing an i-th type particle is equal to \({\varvec{\Gamma }}_i (\textbf{x}).\) In other words, \(\textbf{X}^{(N)}\) is a Markov chain whose transition probability matrix is defined as

for all \(k\in {{\mathbb {Z}}}_+\) and \(\Bigl (\frac{j_1}{N},\ldots ,\frac{j_M}{N}\Bigr )\in \Delta _{M,N}.\) Note that for any state \(\textbf{x}\in \Delta _{M,N}\) and time \(k\in {{\mathbb {Z}}}_+\) we have

and

We refer to any Markov chain \(\textbf{X}^{(N)}\) on \(\Delta _{M,N}\) that satisfies (7) as a Wright–Fisher model associated with the update rule \({\varvec{\Gamma }}.\)

We denote transition kernel of \(\textbf{X}^{(N)}\) on \(\Delta _{M,N}\) by \(P_N.\) That is, \(P_N(\textbf{x},\textbf{y})\) is the conditional probability in (7) with \(y(i)=j_i/N.\) For \(k\in {{\mathbb {N}}},\) we denote by \(P_N^k\) the k-th iteration of the kernel. Thus,

for any \(m\in {{\mathbb {Z}}}_+.\)

The Wright–Fisher model is completely characterized by the choice of the parameters M, N and the update rule \({\varvec{\Gamma }}(\textbf{x}).\) Theorem 4.1 below states that when N approaches infinity while M and \({\varvec{\Gamma }}\) remain fixed, the update rule emerges as the model’s mean-field map, in that the path of the Markov chain \(\textbf{X}^{(N)}\) with \(\textbf{X}^{(N)}_0=\textbf{x}\) converges to the orbit of the (deterministic) discrete-time dynamical system \({{\varvec{\psi }}}_k\) introduced in (3). Thus, for the large population size N, the Wright–Fisher process can be perceived as a small-noise perturbation of the deterministic dynamical system \({{\varvec{\psi }}}_k.\)

Throughout the paper we assume that the mean-field dynamics is induced by a replicator equation

where the vector-field \({{\varvec{\varphi }}}:\Delta _M\rightarrow {{\mathbb {R}}}_+^M\) serves as a fitness landscape of the model (see Sect. 3 for details). For biological motivations of this definition, see (Cressman and Tao 2014; Hofbauer and Sigmund 2003; Sigmund 1986). The replicator dynamics can be viewed as a natural discretization scheme for its continuous-time counterpart described in the form of a differential equation (Garay and Hofbauer 2003; Hofbauer and Sigmund 1998). The normalization factor \(\sum _{j\in S_m} x(j) \varphi _j(\textbf{x})\) in (10) is the total population fitness and plays a role similar to that of the partition function in statistical mechanics (Barton and Coe 2009).

Example 1

-

(i)

One standard choice of the fitness \({{\varvec{\varphi }}}\) in the replicator equation (10) is Hofbauer (2011), Hofbauer and Sigmund (1998), Hofrichter et al. (2017), Imhof and Nowak (2006):

$$\begin{aligned} {{\varvec{\varphi }}} (\textbf{x})=(1-\omega )\textbf{b}+\omega \textbf{A} \textbf{x}, \end{aligned}$$(11)where \(\textbf{A}=(A_{ij})_{i,j\in S_M}\) is an \(M\times M\) payoff matrix, \(\textbf{b}\) is a constant M-vector, and \(\omega \in (0,1)\) is a selection parameter. The evolutionary game theory interprets \((Ax)(i)=\sum _{j\in S_M}A_{ij}x(j)\) as the expected payoff of a type i particle in a single interaction (game) with a particle randomly chosen from the population profile \(\textbf{x}\) when the utility of the interaction with a type j particle is \(A_{ij}.\) The constant vector \(\textbf{b}\) represents a baseline fitness and the selection parameter w is a proxy for modeling the strength of the effect of interactions on the evolution of the population compared to inheritance (Imhof and Nowak 2006).

-

(ii)

In replicator models associated with multiplayer games (Broom and Rychtář 2013; Gokhale and Traulsen 2014), the pairwise interaction of case (i) (two-person game) is replaced by a more complex interaction within a randomly selected group (multiplayer game). In this case, the fitness \({{\varvec{\varphi }}}\) is typically a (multivariate) polynomial of \(\textbf{x}\) of a degree higher than one.

-

(iii)

An evolutionary game dynamics with non-uniform interaction rates has been considered in Taylor and Nowak (2006). Mathematically, their assumptions amount to replacing (11) with

$$\begin{aligned} \varphi _i(\textbf{x})=(1-\omega )b(i)+\omega \frac{Bx(i)}{Rx(i)}, \end{aligned}$$where \(\textbf{R}\) is the matrix of rates reflecting the frequency of pair-wise interaction between different types, and \(\textbf{B}=\textbf{A}\circ \textbf{R},\) the Hadamard product of the payoff matrix \(\textbf{A}\) and \(\textbf{R}.\)

-

(iv)

Negative frequency dependence of the fitness can be captured by, for instance, allowing matrix \(\textbf{A}\) in (11) to have negative entries. In fact, it suffices that the matrix off-diagonal elements are larger than the respective diagonal elements (Broekman et al. 2019).

-

(v)

A common choice of a non-linear fitness is an exponential function (Hofbauer and Sigmund 1998; Sandholm 2010):

$$\begin{aligned} \varphi _i(\textbf{x})=e^{\textbf{e}ta Ax(i)}, \end{aligned}$$(12)where, as before, \(\textbf{A}\) is a payoff matrix, and \(\textbf{e}ta>0\) is a “cooling” parameter [analogue of the inverse temperature in statistical mechanics (Barton and Coe 2009)].

3 Mean-field model: fitness landscape

In Sect. 3.1 we relate the update rule \(\Gamma \) to a family of fitness landscapes of the mean-field model in the absence of mutations. All the fitness functions in the family yield the same relative fitness and differ from each other only by a scalar normalization function. Section 3.2 contains a version of Fisher’s maximization principle for the deterministic mean-field model. In Sect. 3.3, we incorporate mutations into the framework presented in Sects. 3.1 and 3.2.

The main result of this section is Theorem 3.2 asserting the existence of a non-constant reproductive fitness which is maximized during the evolutionary process. The result is an immediate consequence of a version of Conley’s “fundamental theorem of dynamical systems” (1978, Theorem B in Section II.6.4), a celebrated mathematical result that appears to be underutilized in biological applications.

3.1 Reproductive fitness and replicator dynamics

The main goal of this section is to introduce a notion of fitness suitable for supporting a formalization of Fisher’s fitness maximization principle stated below in Sect. 3.2 as Theorem 3.2. Proposition 3.1 provides a useful characterization of the fitness, while Theorem 1 [which is a citation from Garay and Hofbauer (2003)] addresses some of its more technical properties, all related to the characterization of the support of the fitness function.

Let \({{\mathcal {C}}}:\Delta _M\rightarrow 2^{S_M}\) be the composition set-function defined as

According to (8), for a population profile \(\textbf{x}\in \Delta _{M,N},\) the M-dimensional vector \(\textbf{f}(\textbf{x})\) with i-th component equal to

represents the Darwinian fitness of the profile \(\textbf{x}\) (Hartle and Clark 2007; Orr 2009), see also Doebeli et al. (2017), Svensson and Connallon (2019). We will adopt the terminology of Chalub and Souza (2017), Traulsen et al. (2005) and call a vector field \({{\varvec{\varphi }}}: \Delta _M\rightarrow {{\mathbb {R}}}_+^M\) a reproductive fitness of the population if for all \(\textbf{x}\in \Delta _M\) we have:

Notice that even though we assume that both \({{\varvec{\varphi }}}(\textbf{x})\) and \(\textbf{f}(\textbf{x})\) are vectors in \({{\mathbb {R}}}_+^M,\) the definitions (14) and (15) only consider \(i\in {{\mathcal {C}}}(\textbf{x}),\) imposing no restriction on the rest of the vectors components. Notice that, since \({\varvec{\Gamma }}\) is a probability vector, the Darwinian fitness defined in (14) can serve as an example of the reproductive fitness. Furthermore, a comparison of (15) and (14) shows that \(\varphi (\textbf{x})\) is a reproductive fitness if and only if \({{\varvec{\varphi }}}(\textbf{x})=h(\textbf{x})\textbf{f}(\textbf{x})\) for all \(\textbf{x}\in \Delta _M,\) where \(h: \Delta _M\rightarrow {{\mathbb {R}}}_+\) is an arbitrary strictly positive scalar-valued function.

Both concepts of fitness are pertinent to the amount of reproductive success of particles of a certain type per capita of the population. We remark that in McAvoy et al. (2018), \({{\varvec{\varphi }}}\) is referred to as the reproductive capacity rather than the reproductive fitness. A similar definition of reproductive fitness, but with the particular normalization \(\textbf{x}\cdot {{\varvec{\varphi }}}=1\) and the restriction of the domain of \({\varvec{\Gamma }}\) to the interior \(\Delta _M^\circ ,\) is given in Sandholm (2010, p. 160).

In the following straightforward extension of Lemma 1 in Chalub and Souza (2017), we show that two notions of fitness essentially agree under the following no-mutations condition:

Proposition 3.1

The following is true for any given \({\varvec{\Gamma }}:\Delta _M\rightarrow \Delta _M:\)

-

(i)

A reproductive fitness exists if and only if condition (16) is satisfied.

-

(ii)

Suppose that condition (16) is satisfied and let \(h: \Delta _M\rightarrow (0,\infty )\) be an arbitrary strictly positive function defined on the simplex. Then any \({{\varvec{\varphi }}}:\Delta _M\rightarrow {{\mathbb {R}}}_+^M\) such that

$$\begin{aligned} \varphi _i(\textbf{x})=\frac{\Gamma _i(\textbf{x})}{x(i)}h(\textbf{x})\qquad \forall \, i\in {{\mathcal {C}}}(\textbf{x}), \end{aligned}$$(17)is a reproductive fitness.

-

(iii)

Conversely, if \({{\varvec{\varphi }}}: \Delta _M\rightarrow {{\mathbb {R}}}_+^M\) is a reproductive fitness, then (17) holds for \(h(\textbf{x})=\textbf{x}\cdot {{\varvec{\varphi }}}(\textbf{x}).\)

-

(iv)

If \({{\varvec{\varphi }}}:\Delta _M\rightarrow {{\mathbb {R}}}_+^M\) is a reproductive fitness, \(\textbf{x}\in \Delta _M\) and \(i\in {{\mathcal {C}}}(\textbf{x}),\) then \(\varphi _i(\textbf{x})>0\) if and only if \(\Gamma _i(\textbf{x})>0.\)

Note that (16) is equivalent to the condition that \({\varvec{\Gamma }}\big (\Delta _{[I]}^\circ \big )\subset \Delta _{[I]}\) for all \(I\subset S_M.\) Therefore, if \({\varvec{\Gamma }}\) is a continuous map, then (16) is equivalent to the condition

For continuous fitness landscapes, the following refined version of Proposition 3.1 has been obtained in Garay and Hofbauer (2003). Recall that \({\varvec{\Gamma }}\) is called a homeomorphism of \(\Delta _M\) if it is a continuous bijection of \(\Delta _M\) and its inverse function is also continuous. Intuitively, a bijection \({\varvec{\Gamma }}:\Delta _M\rightarrow \Delta _M\) is a homeomorphism when the distance \(\Vert {\varvec{\Gamma }}(x)-{\varvec{\Gamma }}(y)\Vert \) is small if and only if \(\Vert \textbf{x}-\textbf{y}\Vert \) is small. \({\varvec{\Gamma }}\) is a diffeomorphism if it is a homeomorphism and both \({\varvec{\Gamma }}\) and \({\varvec{\Gamma }}^{-1}\) are continuously differentiable.

Theorem 1

(Garay and Hofbauer 2003) Suppose that \({\varvec{\Gamma }}=\textbf{x}\circ {{\varvec{\varphi }}}\) for a continuous \({{\varvec{\varphi }}}:\Delta _M\rightarrow {{\mathbb {R}}}_+^M\) and \({\varvec{\Gamma }}\) is a homeomorphism of \(\Delta _M.\) Then:

-

(i)

We have:

$$\begin{aligned} \Gamma \big (\Delta _{[I]}^\circ \big )=\Delta _{[I]}^\circ \quad \text{ and } \quad \Gamma \big (\partial (\Delta _{[I]})\big )=\partial \big (\Delta _{[I]}\big ),\qquad \forall \,I\subset S_M. \end{aligned}$$ -

(ii)

If, in addition, \({\varvec{\Gamma }}\) is a diffeomorphism of \(\Delta _M\) and each \(\varphi _i,\) \(i\in S_M,\) can be extended to an open neighborhood U of \(\Delta _M\) in \({{\mathbb {R}}}^M\) in such a way that the extension is continuously differentiable on U, then \(\varphi _i(\textbf{x})>0\) for all \(\textbf{x}\in \Delta _M\) and \(i\in S_M.\)

The first part of the above theorem is the Surjectivity Theorem on p. 1022 of Garay and Hofbauer (2003) and the second part is Corollary 6.1 there. The assumption that \({\varvec{\Gamma }}\) is a bijection, i. e. one-to-one and onto on \(\Delta _M,\) seems to be quite restrictive in biological applications (Bratus et al. 2018; de Visser and Krug 2014; Gavrilets 2010; Obolski et al. 2018). Nevertheless, the theorem is of a considerable interest for us because it turns out that its conditions are satisfied for \({\varvec{\Gamma }}\) in (11) and (12).

3.2 Maximization principle for the reproductive fitness

The aim of this section is to introduce a maximization principle suitable for the deterministic replicator dynamics and reproductive fitness. The result is stated in Theorem 3.2. An example of application to the stochastic Wright–Fisher model is given in Proposition 3.3.

For the Darwinian fitness we have \(\overline{\textbf{f}}(\textbf{x}):=\sum _{i\in S_M} x_if_i(\textbf{x})= \sum _{i\in S_M} \Gamma _i(\textbf{x})=1.\) Thus the average Darwinian fitness is independent of the population profile, and in particular does not change with time. In contrary, the average reproductive fitness \(\overline{{\varvec{\varphi }}}(\textbf{x}):= \sum _{i\in S_M} x_i\varphi _i(\textbf{x})\) is equal to the (arbitrary) normalization factor \(h(\textbf{x})\) in (17), and hence in principle can carry more structure. If the mean-field dynamics is within the domain of applicability of Fisher’s fundamental theorem of natural selection, we should have (Birch 2016; Edwards 2016; Ewens and Lessard 2015; Queller 2017; Schneider 2010):

In order to elaborate on this point, we need to introduce the notion of chain recurrence.

Definition 1

(Conley 1978) An \(\varepsilon \)-chain from \(\textbf{x}\in \Delta _M\) to \(\textbf{y}\in \Delta _M\) for \({\varvec{\Gamma }}\) is a sequence of points in \(\Delta _M,\) \(\textbf{x} = \textbf{x}_0, \textbf{x}_1, \ldots , \textbf{x}_n = \textbf{y},\) with \(n\in {{\mathbb {N}}},\) such that \(\big \Vert {\varvec{\Gamma }}(\textbf{x}_i)-\textbf{x}_{i+1}\big \Vert < \varepsilon \) for \(0 \le i \le n-1.\) A point \(\textbf{x} \in \Delta _M\) is called chain recurrent if for every \(\varepsilon > 0\) there is an \(\varepsilon \)-chain from x to itself. The set \({{\mathcal {R}}}({\varvec{\Gamma }})\) of chain recurrent points is called the chain recurrent set of \({\varvec{\Gamma }}.\)

Write \(\textbf{x} \looparrowright \textbf{y}\) if for every \(\varepsilon >0\) there is an \(\varepsilon \)-chain from \(\textbf{x}\) to \(\textbf{y}\) and \(\textbf{x}\sim \textbf{y}\) if both \(\textbf{x} \looparrowright \textbf{y}\) and \(\textbf{y} \looparrowright \textbf{x}\) hold true. It is easy to see that \(\sim \) is an equivalence relation on \({{\mathcal {R}}}({\varvec{\Gamma }}).\) For basic properties of the chain recurrent set we refer the reader to Conley (1978) and Block and Franke (1985), Fathi and Pageault (2015), Franke and Selgrade (1976). In particular, Conley showed [see Proposition 2.1 in Franke and Selgrade (1976)] that equivalence classes of \({{\mathcal {R}}}({\varvec{\Gamma }})\) are precisely its connected components. Note that if \({\varvec{\Gamma }}(\textbf{x})=\textbf{x},\) then \(\textbf{x}_0=\textbf{x}\) and \(\textbf{x}_1=\textbf{x}\) form an \(\varepsilon \)-chain for any \(\varepsilon >0.\) Thus, any fixed point of \({\varvec{\Gamma }}\) is chain recurrent

Somewhat surprisingly, the following general result is true:

Theorem 3.2

Assume that \({\varvec{\Gamma }}:\Delta _M\rightarrow \Delta _M\) is continuous. Then there exists a reproductive fitness function \({{\varvec{\varphi }}}\) such that

-

(i)

\({{\varvec{\varphi }}}\) is continuous in \(\Delta _{[I]}^\circ \) for all \(I\subset \Delta _M.\)

-

(ii)

The average \(h(\textbf{x})=\textbf{x}\cdot {{\varvec{\varphi }}}(\textbf{x})\) satisfies (18) and, moreover, is a complete Lyapunov function for \({\varvec{\Gamma }}\) (see Definition 2).

In particular, the difference \(h\big ({\varvec{\Gamma }}(\textbf{x})\big )-h(\textbf{x})\) is strictly positive on \(\Delta _M\backslash {{\mathcal {R}}}({\varvec{\Gamma }}).\)

Theorem 3.2 is an immediate consequence of a well-known mathematical result which is sometimes called a “fundamental theorem of dynamical systems” (Franks; Norton 1995; Papadimitriou and Piliouras 2016). The following definition and theorem are adapted from Hurley (1998). To simplify notation, in contrast to the conventional definition, we define Lyapunov function as a function non-decreasing (rather than non-increasing) along the orbits of \({{\varvec{\psi }}}_k.\)

Definition 2

A complete Lyapunov function for \({\varvec{\Gamma }}:\Delta _M\rightarrow \Delta _M\) is a continuous function \(h: \Delta _M \rightarrow [0,1]\) with the following properties:

-

1.

If \(\textbf{x}\in \Delta _M\backslash {{\mathcal {R}}}({\varvec{\Gamma }}),\) then \(h\big ({\varvec{\Gamma }}(\textbf{x})\big ) > h(\textbf{x}).\)

-

2.

If \(\textbf{x}, \textbf{y} \in {{\mathcal {R}}}({\varvec{\Gamma }})\) and \( \textbf{x} \looparrowright \textbf{y},\) then \(h(\textbf{x})\le h(\textbf{y}).\) Moreover, \(h(\textbf{x}) = h(\textbf{y})\) if and only if \(\textbf{x} \sim \textbf{y}.\)

-

3.

The image \(h\big ({{\mathcal {R}}}({\varvec{\Gamma }})\big )\) is a compact nowhere dense subset of [0, 1].

We remark that while h must be bounded as a continuous functions defined on a compact domain \(\Delta _M,\) the normalization \(h(\textbf{x})\in [0,1]\) is rather arbitrary and chosen for the convenience only. Indeed, any multiplier ch of a complete Lyapunov function h with a positive scalar \(c>0,\) is a complete Lyapunov function itself.

We have:

Theorem 2

(Fundamental theorem of dynamical systems) If \({\varvec{\Gamma }}:\Delta _M\rightarrow \Delta _M\) is continuous, then there is a complete Lyapunov function for \({\varvec{\Gamma }}.\)

To derive Theorem 3.2 from this fundamental result, set \(\varphi _i(\textbf{x})=\frac{\Gamma _i(\textbf{x})}{x_i}h(\textbf{x})\) for \(i\in {{\mathcal {C}}}(\textbf{x})\) and, for instance, \(\varphi _i(\textbf{x})=0\) for \(i\not \in {{\mathcal {C}}}(\textbf{x}).\)

Theorem 2 was established by Conley for continuous-time dynamical systems on a compact space (Conley 1978). The above discrete-time version of the theorem is taken from Hurley (1998), where it is proven for an arbitrary separable metric state space. For recent progress and refinements of the theorem see Bernardi and Florio (2019), Fathi and Pageault (2019), Pageault (2009) and references therein.

The Lyapunov functions constructed in Conley (1978) and Hurley (1998) map \({{\mathcal {R}}}({\varvec{\Gamma }})\) into a subset of the Cantor middle-third set. Thus, typically, \(\Delta _M\backslash {{\mathcal {R}}}({\varvec{\Gamma }})\) is a large set [cf. Section 6.2 in Conley (1978), Theorem A in Block and Franke (1985), and an extension of Theorem 2 in Fathi and Pageault (2015)]. For example, for the class of Wright–Fisher models discussed in Sect. 4.3, \({{\mathcal {R}}}({\varvec{\Gamma }})\) consists of the union of a single interior point (asymptotically stable global attractor in \(\Delta _M^\circ \)) and the boundary of \(\Delta _M\) (repeller). For a related more general case, see “Appendix B” and Example 7 below.

Recall that a point \(\textbf{x}\in \Delta _M\) is called recurrent if the orbit \(({{\varvec{\psi }}}_k)_{k\in {{\mathbb {Z}}}_+}\) with \({{\varvec{\psi }}}_0=\textbf{x}\) visits any open neighborhood of \(\textbf{x}\) infinitely often. Any recurrent point is chain recurrent, but the converse is not necessarily true. It is easy to verify that the equality \(h(\Gamma (\textbf{x})) =h(\textbf{x})\) holds for any recurrent point \(\textbf{x}.\) The following example builds upon this observation. It shows that for a general chaotic map the complete Lyapunov function needs to be constant.

Example 2

The core of this example is the content of Theorem 4 in Basener (2013). Assume that (18) holds for a continuous function \(h:\Delta _M\rightarrow {{\mathbb {R}}}_+\) in (17). Suppose in addition that the mean-field dynamics is chaotic. More precisely, assume that \({\varvec{\Gamma }}\) is topologically transitive, that is \({\varvec{\Gamma }}\) is continuous and for any two non-empty open subsets U and V of \(\Delta _M,\) there exists \(k\in {{\mathbb {N}}}\) such that \({{\varvec{\psi }}}_k(U)\bigcap V\ne \emptyset \) [this is not a standard definition of a chaotic dynamical system, we omitted from a standard Devaney’s set of conditions a part which is not essential for our purpose, cf. Banks et al. (1992), Basener (2013), Silverman (1992)]. Equivalently (Silverman 1992), there is \(\textbf{x}_0\in \Delta _M\) such that the orbit \(\big ({{\varvec{\psi }}}_k(\textbf{x}_0)\big )_{k\in {{\mathbb {Z}}}_+}\) is dense in \(\Delta _M.\)

Let \(\textbf{x}={{\varvec{\psi }}}_k(\textbf{x}_0)\) be an arbitrary point on this orbit. We will now show that the assumption \(\varepsilon :=\big [h\big ({\varvec{\Gamma }}(\textbf{x})\big )-h(\textbf{x})\big ]>0\) leads to a contradiction. To this end, let \(\delta >0\) be so small that \(|h(\textbf{x})-h(\textbf{y})|<\varepsilon /2\) whenever \(\Vert \textbf{x}-\textbf{y}\Vert <\delta .\) By our assumption, there exists \(m>k\) such that \(\Vert {{\varvec{\psi }}}_m(\textbf{x}_0)-\textbf{x}\Vert <\delta ,\) and hence \(h\big ({{\varvec{\psi }}}_m(\textbf{x}_0)\big )<h\big ({\varvec{\Gamma }}(\textbf{x})\big )=h\big ({{\varvec{\psi }}}_{k+1}(\textbf{x}_0)\big ),\) which is impossible in view of the monotonicity condition (18). Thus h is constant along the forward orbit of \(\textbf{x}_0.\) Since the normalization function \(h(\textbf{x})\) is constant on a dense subset of \(\Delta _M\) and \(\Delta _M\) is compact, \(h(\textbf{x})\) is a constant function. In other words, for a chaotic mean-field dynamics a constant function is the only choice of a continuous normalization \(h(\textbf{x})\) that satisfies (18).

A common example of a chaotic dynamical system is the iterations of a one-dimensional logistic map. In our framework this can be translated into the two-dimensional case \(M=2\) and \({\varvec{\Gamma }}(x,y)=\big (4x(1-x), 1-4x(1-x)\big )=(4xy,1-4xy).\) In principle, the example can be extended to higher-dimensions using, for instance, techniques of Akhmet and Fen (2016).

A generic instance of the mean-field update function \({\varvec{\Gamma }}\) with a non-constant complete Lyapunov function is discussed in Example 7 deferred to “Appendix B”.

The existence of a non-decreasing reproductive fitness does not translate straightforwardly into a counterpart for the stochastic dynamics. Indeed, in the absence of mutations \(\textbf{X}^{(N)}_k\) converges almost surely to an absorbing state, i. e. to a random vertex of the simplex \(\Delta _M.\) Therefore, by the bounded convergence theorem,

where \(p_j(\textbf{x})=\lim _{k\rightarrow \infty } P\big (\textbf{X}^{(N)}_k=\textbf{e}_j\,\mid \,\textbf{X}^{(N)}_0=\textbf{x}\big ).\) It then follows from part 2 of Definition 2 that if the boundary \(\partial \big (\Delta _{M,N}\big )\) is a repeller for \({\varvec{\Gamma }}\) (cf. “Appendix B”), then generically the limit is not equal to the \(\sup _{k\in {{\mathbb {N}}}} E\big (h(\textbf{X}^{(N)}_k)\,\mid \,\textbf{X}^{(N)}_0=\textbf{x}\big ).\)

The following example shows that under some additional convexity assumptions, the mean of a certain continuous reproductive fitness is a strictly increasing function of time. More precisely, for the class of Wright–Fisher models in the example, \(h\big (\textbf{X}^{(N)}_k)\) is a submartingale for an explicit average reproductive fitness h.

Example 3

Let \(h:\Delta _M\rightarrow {{\mathbb {R}}}_+\) be an arbitrary convex (and hence, continuous) function that satisfies (18), not necessarily the one given by Theorem 3.2. Then, by the multivariate Jensen’s inequality (Ferguson 1967, p. 76), with probability one, for all \(k\in {{\mathbb {Z}}}_+,\)

Thus, \(M_k=h\big (\textbf{X}^{(N)}_k\big )\) is a submartingale in the filtration \({{\mathcal {F}}}_k=\sigma \big (\textbf{X}^{(N)}_0,\ldots ,\textbf{X}^{(N)}_k\big ).\) In particular, the sequence \({{\mathfrak {f}}}_k=E(M_k)\) is non-decreasing and converges to its supremum as \(k\rightarrow \infty .\) In fact, since the first inequality in (19) is strict whenever \(\textbf{X}^{(N)}_k\not \in V_M,\) the mean of the reproductive fitness is strictly increasing under the no-mutations assumption (16):

as long as \(P\big (\textbf{X}^{(N)}_0\in \Delta _{M,N}^\circ \big )=1.\) Note that the maximum exists and is finite because h is continuous and \(\Delta _M\) is a compact set.

The effect of the fitness convexity assumption on the evolution of biological populations has been discussed by several authors, see, for instance, Christie and Beekman (2017), Hintze et al. (2015), Spichtig and Kawecki (2004) and references therein. Generally speaking, the inequality \(h\big (\alpha \textbf{x}+(1-\alpha )\textbf{y}\big )\le \alpha h(\textbf{x})+(1-\alpha )h(\textbf{y}),\) \(\alpha \in [0,1],\) \(\textbf{x},\textbf{y}\in \Delta _M,\) that characterizes convexity, manifests an evolutionary disadvantage of the intermediate population \(\alpha \textbf{x}+(1-\alpha )\textbf{y}\) in comparison to the extremes \(\textbf{x},\textbf{y}.\) A typical biological example of such a situation is competitive foraging (Hintze et al. 2015).

It can be shown [see Section 6 in Hofbauer (2011) or Losert and Akin (1983)] that if \(\textbf{A}\) is a non-negative symmetric \(M\times M\) matrix, then (18) holds with \(h(\textbf{x})=\textbf{x}^T\textbf{A}{} \textbf{x}\) for (11) and (12). Here and henceforth, \(\textbf{A}^T\) for a matrix \(\textbf{A}\) (of arbitrary dimensions) denotes the transpose of \(\textbf{A}.\) Moreover, the inequality is strict unless \(\textbf{x}\) is a game equilibrium. The evolutionary games with \(\textbf{A}=\textbf{A}^T\) are referred to in Hofbauer (2011) as partnership games, such games are a particular case of so-called potential games [see Hofbauer and Sigmund (2003), Sandholm (2010) and references therein]. In the case of the replicator mean-field dynamics (10) and potential games, the potential function \(h(\textbf{x})\) coincides with the average reproductive fitness \(\textbf{x}\cdot {{\varvec{\varphi }}}(\textbf{x}).\) Let

It is easy to check that if \(\textbf{A}\) is positive definite on \(W_M,\) that is

then \(\textbf{f}(\textbf{x})=\textbf{x}^T\textbf{A}{} \textbf{x}\) is a convex function on \(\Delta _M,\) that is

We thus have:

Proposition 3.3

(Hofbauer 2011; Losert and Akin 1983) Suppose that \(\textbf{A}\) is a symmetric invertible \(M\times M\) matrix with positive entries such that (21) holds true (i. e. \(\textbf{A}\) is positive-definite on \(W_M\)). Then (19) holds with \(h(\textbf{x})=\textbf{x}^T\textbf{A}{} \textbf{x}.\)

The above proposition shows that a normalized (in order to scale its range into the interval [0, 1]) version of h, namely \({{\widetilde{h}}}(\textbf{x})=\frac{1}{\Vert A\Vert }{} \textbf{x}^T\textbf{A}{} \textbf{x}\) can serve as a complete Lyapunov function, strictly increasing within the interior of \(\Delta _M.\) It is shown in Losert and Akin (1983) that under the conditions of the proposition, \({{\varvec{\psi }}}_k\) converges, as k tends to infinity, to an equilibrium on the boundary of the simplex \(\Delta _M\) (cf. Theorem 4 in “Appendix B”). The proposition thus indicates that when \(\textbf{A}\) is positive-definite on \(W_M,\) the stochastic and deterministic mean-field model might have very similar asymptotic behavior when N is large. This observation partially motivated our Theorem 4.6 stated below in Sect. 4.3.

3.3 Incorporating mutations

In order to incorporate mutations into the interpretation of the update rule \({\varvec{\Gamma }}\) in terms of the population’s fitness landscape, we adapt the Wright–Fisher model with neutral selection and mutation of Hobolth and Sireén (2016). Namely, for the purpose of this discussion we will make the following assumption:

Assumption 3.4

The update rule \({\varvec{\Gamma }}\) can be represented in the form \({\varvec{\Gamma }}(\textbf{x})={{\varvec{\Upsilon }}}(\textbf{x}^T{{\varvec{\Theta }}}),\) where \(\textbf{x}^T\) denotes the transpose of \(\textbf{x},\) and

-

1.

\({{\varvec{\Upsilon }}}: \Delta _M\rightarrow \Delta _M\) is a vector field that satisfies the “no-mutations” condition (cf. (16))

$$\begin{aligned} x(j)=0~\Longrightarrow ~\Upsilon _j(\textbf{x})=0 \end{aligned}$$(22)for all \(\textbf{x}\in \Delta _M\) and \(j\in S_M.\)

-

2.

\({{\varvec{\Theta }}}=(\Theta _{ij})_{i,j\in S_M}\) is \(M\times M\) stochastic matrix, that is \(\Theta _{ij}\ge 0\) for all \(i,j\in S_m\) and \(\sum _{j\in S_M}\Theta _{ij}=1\) for all \(i\in S_M.\)

The interpretation is that while the entry \(\Theta _{ij}\) of the mutation matrix \({{\varvec{\Theta }}}\) specifies the probability of mutation (mutation rate) of a type i particle into a particle of type j for \(j\ne i,\) the update rule \({{\varvec{\Upsilon }}}\) encompasses the effect of evolutional forces other than mutation, for instance genetic drift and selection. The total probability of mutation of a type i particle is \(\sum _{j\ne i} \Theta _{ij}=1-\Theta _{ii},\) and, accordingly, \({\varvec{\Gamma }}\) satisfies the no-mutation condition if \({{\varvec{\Theta }}}\) is the unit matrix. Note that, since \({{\varvec{\Theta }}}\) is assumed to be stochastic, the linear transformation \(\textbf{x}\mapsto \textbf{x}^T{{\varvec{\Theta }}}\) leaves the simplex \(\Delta _M\) invariant, that is \(\textbf{x}^T{{\varvec{\Theta }}}\in \Delta _M\) for all \(\textbf{x}\in \Delta _M.\) Both the major implicit assumptions forming a foundation for writing \({\varvec{\Gamma }}\) as the composition of a non-mutative map \({{\varvec{\Upsilon }}}\) and the action of a mutation matrix \({{\varvec{\Theta }}},\) namely that mutation can be explicitly separated from other evolutionary forces and that mutations happen at the beginning of each cycle before other evolutionary forces take effect, are standard (Antal et al. 2009; Hobolth and Sireén 2016; Hofrichter et al. 2017) even though at least the latter is a clear idealization (Stephens 2014). Certain instances of Fisher’s fundamental theorem of natural selection have been extended to include mutations in Basener and Sanford (2018), Hofbauer (1985).

We now can extend the definition (15) as follows:

Definition 3

Let Assumption 3.4 hold. A vector field \({{\varvec{\varphi }}}: \Delta _M\rightarrow {{\mathbb {R}}}_+^M\) is called the reproductive fitness landscape of the model if for all \(\textbf{x}\in \Delta _M\) we have:

where \(\textbf{x}^T\) is the transpose of \(\textbf{x}.\)

An analogue of Proposition 3.1 for a model with mutation reads:

Proposition 3.5

Let Assumption 3.4 hold. Then \({{\varvec{\varphi }}}:\Delta _M\rightarrow \Delta _M\) is a reproductive fitness landscape if and only if

for some function \(h:\Delta _M\rightarrow (0,\infty ).\)

Proof

For the “if part” of the proposition, observe that if \({{\varvec{\varphi }}}\) is given by (24), then

where in the second step we used the fact that \({{\mathcal {C}}}\big ({\varvec{\Gamma }}(\textbf{x})\big )\subset {{\mathcal {C}}}\big (\textbf{x}^T{{\varvec{\Theta }}}\big )\) by virtue of (22). Thus \({{\varvec{\varphi }}}\) is a solution to (23).

For the “only if part” of the proposition, note that if \({{\varvec{\varphi }}}\) is a reproductive fitness landscape, then (23) implies that (24) holds with \(h(\textbf{x})=\textbf{x}^T{{\varvec{\Theta }}}{{\varvec{\varphi }}} (\textbf{x}).\) \(\square \)

If the weighted average \(\textbf{x}^T{{\varvec{\Theta }}}{{\varvec{\varphi }}} (\textbf{x})\) is adopted as the definition of the mean fitness, Theorem 3.2 can be carried over verbatim to the setup with mutations.

4 Stochastic dynamics: main results

In this section we present our main results for the stochastic model. The proofs are deferred to Sect. 5.

First, we prove two different approximation results. The Markov chain \(\textbf{X}^{(N)}\), even for the classical two-allele haploid model of genetics, is fairly complex. In fact, most of the known results about the asymptotic behavior of Wright–Fisher Markov chains were obtained through a comparison to various limit processes, including the mean-field deterministic system, branching process, diffusions and Gaussian processes (Chalub and Souza 2014; Ewens 2012; Hartle and Clark 2007; Hofrichter et al. 2017; Nagylaki 1990).

In Sect. 4.1 we are concerned with the so-called Gaussian approximation, which can be considered as an intermediate between the deterministic and the diffusion approximations. This approximation process can be thought of as the mean-field iteration scheme perturbed by an additive Gaussian noise. Theorem 4.1 constructs such an approximation for the Wright–Fisher model. Results of this type are well-known in literature, and our proof of Theorem 4.1 is a routine adaptation of classical proof methods.

In Sect. 4.2, we study the longitudinal error of the deterministic approximation by the mean-field model. Specifically, Theorem 4.2 provides an exponential lower bound on the decoupling time, namely the first time when an error of approximation exceeds a given threshold. A variation of the result is used later in the proof of Theorem 4.6, the main result of this section.

Conceptually, Theorem 4.1 is a limit theorem suggesting that for large values of N, the stochastic component is the major factor in determination of the asymptotic behavior of the stochastic model. Theorem 4.2 then complements this limit theorem by quantifying this intuition for the stochastic model of a given (though large) population size N (versus the infinite-population-limit result of Theorem 4.1).

The impact of the mean-field dynamics on the underlying stochastic process is further studied in Sect. 4.3. The main result is Theorem 4.6 which specifies the route to extinction of the stochastic system in a situation when the mean-field model is “strongly permanent” (heuristically, strongly repelling from the boundary, cf. “Appendix B” and the discussion on p. 22) and has a unique interior equilibrium. In general, the problem of determining the route to extinction for a multi-type stochastic population model is of an obvious importance to biological sciences, but is notoriously difficult in practice.

The proof of Theorem 4.6 highlights a mechanism leading to an almost deterministic route to extinction for the model. Intuitively, under the conditions of Theorem 4.6 the system is trapped in a quasi-equilibrium stochastic state, fluctuating near the deterministic equilibrium for a very long time, eventually “untying the Gordian knot”, and instantly jumping to the boundary. A similar mechanism of extinction was previously described for different stochastic population models in Assaf and Meerson (2010), Assaf and Mobilia (2011), Park and Traulsen (2017). We note that analysis in these papers involves a mixture of rigorous mathematical and heuristic arguments, and is different in nature from our approach.

4.1 Gaussian approximation of the Wright–Fisher model

Let \(\overset{P}{\rightarrow }\) denote convergence in probability as the population size N goes to infinity. The following theorem is an adaptation of Theorems 1 and 3 in Buckley and Pollett (2010) for our multivariate setup. We also refer to Klebaner and Nerman (1994), Nagylaki (1986), Nagylaki (1990), Parra-Rojas et al. (2014) for earlier related results and to (Rao 2001, p. 527) for a rigorous construction of a degenerate Gaussian distribution.

Recall \({{\varvec{\psi }}}_k\) from (3). We have:

Theorem 4.1

Suppose that

for some \({{\varvec{\psi }}}_0\in \Delta _M.\) Then the following holds true:

-

(a)

\(\textbf{X}^{(N)}_k\overset{P}{\rightarrow }\ {{\varvec{\psi }}}_k\) for all \(k\in {{\mathbb {Z}}}_+.\)

-

(b)

Let

$$\begin{aligned} \textbf{u}^{(N)}_k=\sqrt{N}\bigl (\textbf{X}^{(N)}_k-{{\varvec{\psi }}}_k\bigr ),\qquad N\in {{\mathbb {N}}},\,k\in {{\mathbb {Z}}}_+, \end{aligned}$$and \({{\varvec{\Sigma }}}(\textbf{x})\) be the matrix \(M\times M\) with entries (cf. (9) in Sect. 2.2)

$$\begin{aligned} \Sigma _{i,j}(\textbf{x}):= \left\{ \begin{array}{ll} -\Gamma _i(\textbf{x})\Gamma _j(\textbf{x})&{}\text{ if }~i\ne j, \\ \Gamma _i(\textbf{x})\bigl (1-\Gamma _i(\textbf{x})\bigr )&{}\text{ if }~i=j \end{array} \right. , \qquad \textbf{x}\in \Delta _M. \end{aligned}$$Suppose that in addition to (25), \({\varvec{\Gamma }}\) is twice continuously differentiable and \(\textbf{u}^{(N)}_0\) converges weakly, as N goes to infinity, to some (possibly random) \(\textbf{u}_0.\)

Then the sequence \(\textbf{u}^{(N)}:=\bigl (\textbf{u}^{(N)}_k\bigr )_{k\in {{\mathbb {Z}}}_+}\) converges in distribution, as N goes to infinity, to a time-inhomogeneous Gaussian AR(1) sequence \((\textbf{U}_k)_{k\in {{\mathbb {Z}}}_+}\) defined by

where \(\textbf{D}_x({{\varvec{\psi }}}_k)\) denotes the Jacobian matrix of \({\varvec{\Gamma }}\) evaluated at \({{\varvec{\psi }}}_k,\) and \(\textbf{g}_k,\) \(k\in {{\mathbb {Z}}}_+,\) are independent degenerate Gaussian vectors, each \(\textbf{g}_k\) distributed as \(N\bigl (0,{{\varvec{\Sigma }}}({{\varvec{\psi }}}_k)\bigr ).\)

The proof of the theorem is included in Sect. 5.1. It is not hard to prove [cf. Remark (v) on p. 61 of Buckley and Pollett (2010), see also Klebaner and Nerman (1994)] that if \({{\varvec{\chi _{eq}}}}\) is a unique global stable point of \({\varvec{\Gamma }},\) \({{\varvec{\psi }}}_0\overset{P}{\rightarrow }\ {{\varvec{\chi _{eq}}}}\) in the statement of Theorem 4.1 and, in addition, \(\textbf{u}^{(N)}_0\) converges weakly, as N goes to infinity, to some \(\textbf{u}_0,\) then the linear recursion (26) can be replaced with

where \(\widetilde{\textbf{g}}_k,\) \(k\in {{\mathbb {Z}}}_+,\) are i. i. d. degenerate Gaussian vectors in \({{\mathbb {R}}}^M,\) each \(\widetilde{\textbf{g}}_k\) distributed as \(N\bigl (0,{{\varvec{\Sigma }}}({{\varvec{\chi _{eq}}}})\bigr ).\) One then can show that if the spectral radius of \(\textbf{D}_x({{\varvec{\chi _{eq}}}})\) is strictly less than one, Markov chain \(\textbf{U}_k\) has a stationary distribution, see Buckley and Pollett (2010, p. 61) for more details. In the case when \({\varvec{\Gamma }}\) has a unique global stable point, (27) was obtained in Parra-Rojas et al. (2014) by different from ours, analytic methods.

4.2 Difference equation approximation

Theorem 4.1 indicates that when N is large, the trajectory of the deterministic dynamical system \({{\varvec{\psi }}}_k\) can serve as a good approximation to the path of the Markov chain \(\textbf{X}^{(N)}_k.\) The following theorem offers some insight into the duration of the time for which the deterministic and the stochastic paths stay fairly close to each other, before separating significantly for the first time. The theorem is a suitable modification of some results of Aydogmus (2016) in a one-dimensional setup.

For \(\varepsilon >0,\) let

where \({{\varvec{\psi }}}_0=\textbf{X}^{(N)}_0\) and \({{\varvec{\psi }}}_k\) is the sequence introduced in (3). Thus \(\tau _N(\varepsilon )\) is the first time when the path of the Markov chain \(\textbf{X}^{(N)}_k\) deviates from the trajectory of the deterministic dynamical system (3) by more than a given threshold \(\varepsilon >0.\) We have:

Theorem 4.2

Suppose that the map \({\varvec{\Gamma }}:\Delta _M \rightarrow \Delta _M\) is Lipschitz continuous, that is there exists a constant \(\rho >0\) such that

for all \(\textbf{x},\textbf{y}\in \Delta _M.\) Then the following holds for any \(\varepsilon >0,\) \(K\in {{\mathbb {N}}},\) and \(N\in {{\mathbb {N}}}:\)

where

In particular, if \(\rho <1\) we have:

The proof of the theorem is given in Sect. 5.2. Note that the upper bound in (30) is meaningful for large values of N even when \(\rho \ge 1.\) When \({\varvec{\Gamma }}\) is a contraction, i.e. \(\rho <1,\) the Banach fixed point theorem implies that there is a unique point \({{\varvec{\chi _{eq}}}}\in \Delta _M\) such that \({\varvec{\Gamma }}({{\varvec{\chi _{eq}}}})={{\varvec{\chi _{eq}}}}.\) Furthermore, for any \(\textbf{x}\in \Delta _M\) and \(k\in {{\mathbb {N}}}\) we have

that is, as k goes to infinity, \({{\varvec{\psi }}}_k\) converges exponentially fast to \({{\varvec{\chi _{eq}}}}.\) The following theorem is a suitable modification of Theorem 4.2 for contraction maps.

Theorem 4.3

Assume that there exist a closed subset \({{\mathcal {E}}}\) of \(\Delta _M\) and a constant \(\rho \in (0,1)\) such that (29) holds for any \(\textbf{x},\textbf{y}\in {{\mathcal {E}}}.\) Then the following holds for any \(\varepsilon >0,\) \(K\in {{\mathbb {N}}},\) \(N\in {{\mathbb {N}}}\) such that \(1-2M\exp \bigl (-\frac{(1-\rho )^2\varepsilon ^2 N}{2}\bigr )>0,\) and \(\textbf{X}^{(N)}_0\in {{\mathcal {E}}}:\)

In particular,

The proof of the theorem is given in Sect. 5.3. We remark that relaxing the assumption that \({\varvec{\Gamma }}\) is Lipschitz continuous on the whole simplex \(\Delta _M\) allows, for instance, to cover the one-dimensional setup of Aydogmus (2016) where \(M=2,\) the underlying Markov chain is binomial, and \({\varvec{\Gamma }}\) is a contraction only on a subset of \(\Delta _2.\)

4.3 Metastability and elimination of types

In this section, we consider a large population Wright–Fisher model with the update rule \({\varvec{\Gamma }}\) satisfying the following conditions:

Assumption 4.4

Suppose that \({\varvec{\Gamma }}\) is defined by (10) and (11). Furthermore, assume that \(\textbf{b}=(1,1,\ldots ,1)\) and \(\textbf{A}\) is a symmetric invertible \(M\times M\) matrix with positive entries such that the following two conditions hold:

and there are \({{\varvec{\chi _{eq}}}}\in \Delta _M^\circ \) and \(c>0\) such that

where \(\textbf{e}:=(1,\ldots ,1)\in {{\mathbb {R}}}^M.\)

Note that \(\Gamma _j(\textbf{x})=0\) if and only if \(x_j=0,\) and hence the Markov chain \(\textbf{X}^{(N)}\) is absorbing and will eventually become fixed on one of the simplex vertices (cf. “Appendix A”). The assumption is borrowed from Losert and Akin (1983), where the defined in (3) deterministic dynamical system \({{\varvec{\psi }}}_k\) is studied. In particular, it is shown in Losert and Akin (1983) that under these conditions the internal equilibrium point of \({{\varvec{\psi }}}_k\) is stable (see Theorem 4 in our “Appendix B” for technical details).

The main result of the section is Theorem 4.6, which intuitively says that if the push away from the boundary and toward the equilibrium is strong enough and Assumption 4.4 holds, with a high degree of certainty, the type with the lowest fitness at the equilibrium is the one that goes extinct first. The result provides an interesting link between the interior equilibrium of the mean-field dynamics and the asymptotic behavior of the stochastic system which is eventually absorbed at the boundary. A similar phenomenon for a different type of stochastic dynamics has been described in physics papers (Assaf and Meerson 2010; Assaf and Mobilia 2011; Park and Traulsen 2017), see also Auletta et al. (2018). Key generic features that are used to prove the result are:

-

1.

The mean-field dynamics is permanent, that is (see “Appendix B” below for a brief background discuion): there exists \(\delta >0\) such that

$$\begin{aligned} \liminf _{k\rightarrow \infty } \psi _k(i)>\delta \qquad \forall \,i\in S_M,{{\varvec{\psi }}}_0\in \Delta _M^\circ . \end{aligned}$$The condition ensures a strong repelling of the mean-field dynamical system from the boundary. Heuristically, for the corresponding Wright–Fisher model and a large population N, this condition propagates into the metastable (asymptotically quasi-stationary with a long life-time before extinction of any type) behavior of the stochastic system.

-

2.

There is a unique interior equilibrium \({{\varvec{\chi _{eq}}}}\). In addition, \(\lim _{k\rightarrow \infty } {{\varvec{\psi }}}_k={{\varvec{\chi _{eq}}}}\) for any \({{\varvec{\psi }}}_0\in \Delta _M^\circ .\)

-

3.

The push toward equilibrium is strong enough to force the stochastic system to follow the deterministic dynamics closely and get trapped for a prolonged time in a “metastable” state near the equilibrium.

-

4.

Finally, the same strong push toward the equilibrium yields a large deviation principle which ensures that the stochastic system is much likely to jump to the boundary directly from the neighborhood of the equilibrium by one giant fluctuation than by a sequence of small consecutive steps.

With these features, one would expect the following. If the starting point \(\textbf{X}^{(N)}_0\) lies within the interior of \(\Delta _M,\) the mean-field dynamical system \({{\varvec{\psi }}}_k\) converges to the unique equilibrium, \({{\varvec{\chi _{eq}}}}\in \Delta _M^\circ .\) It can be shown that the rate of the convergence is exponential. In view of Theorem 4 in “Appendix B” and Theorem 4.1, one can expect that the trajectory of the stochastic system will stay close to the deterministic mean-field path for a long enough time, so that the stochastic model will follow the mean-field path to a small neighborhood of \({{\varvec{\chi _{eq}}}}.\) Then the probability of an immediate elimination of a type i will be of order \(\big (1-\Gamma _i({{\varvec{\chi _{eq}}}})\big )^N.\) Assuming that

we expect that the stochastic system will enter a metastable state governed by its quasi-stationary distribution and will be trapped in a neighborhood of the equilibrium for a very long time. In fact, since by virtue of Theorem 4.1, \(\textbf{X}^{(N)}\) can be considered as a random perturbation of the dynamical system \({{\varvec{\psi }}}_k\) with a small noise, the quasi-stationary distribution should converge as N goes to infinity to the degenerate probability measure supported by the single point \({{\varvec{\chi _{eq}}}}.\) Finally, general results in the theory of large deviations (Faure and Schreiber 2014; Iglehart 1972) suggest that under a suitable condition (34), with an overwhelming probability, the system will eventually jump to the boundary by one giant fluctuation. It follows from the above heuristic argument, that the probability of such an instant jump to the facet where, say, \(x(j)=0\) and the rest of coordinates are positive is roughly \(\Gamma _j({{\varvec{\chi _{eq}}}})^N.\) For large values of N this practically guarantees that the stochastic system will hit the boundary through the interior of \(\Delta _{[J^*]},\) where \(J^*=S_M\backslash \{j^*\},\) and \(j^*\in \arg \min _{i\in S_M}\{\Gamma _i({{\varvec{\chi _{eq}}}}):i\in S_M\}.\) In other words, under Assumption 4.4, when N is large, the elimination of the least fit and survival of the remaining types is almost certain (see results of a numerical simulation supporting the hypothesis below in Tables 1 and 2).

We now turn to the statement of our results. First, we state the following technical result. Recall (3) and Definition 1.

Proposition 4.5

Let Assumption 4.4 hold. Then for any neighborhood \({{\mathcal {N}}}\) of the interior equilibrium \({{\varvec{\chi _{eq}}}}\) and any compact set \(K\subset \Delta _M\) there exist a real constant \(\varepsilon =\varepsilon ({{\mathcal {N}}},K)>0\) and an integer \(T=T({{\mathcal {N}}},K)\in {{\mathbb {N}}}\) such that if \(\delta \in (0,\varepsilon ),\) \(\textbf{x}_0\in K,\) and \(\textbf{x}_0,\textbf{x}_1,\ldots ,\textbf{x}_T\) is an \(\delta \)-chain of length T for the dynamical system \({{\varvec{\psi }}}_k,\) then \(\textbf{x}_T\in {{\mathcal {N}}}.\)

The proof of the proposition is deferred to Sect. 5.4. The proposition is a general property of dynamical system converging to an asymptotically stable equilibrium (see Remark 2 at the end of the proof). Since we were unable to find a reference to this claim in the literature, we provided a short self-contained proof in Sect. 5.4.

We are now ready to state the main result of this section. Suppose \(\textbf{x}\in \Delta _M\) such that at least one component \(\Gamma _i(\textbf{x})\) is not equal to 1/M. Then we define

We set \(\alpha :=\alpha _{{\varvec{\chi _{eq}}}},\) \(\textbf{e}ta:=\textbf{e}ta_{{\varvec{\chi _{eq}}}},\) and \(J^*:=J^*_{{\varvec{\chi _{eq}}}}.\)

Additionally, for any \(\theta \in \big (0,\frac{\textbf{e}ta-\alpha }{2}\big )\) and \(\eta >0\) such that

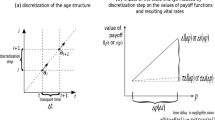

we define (see Fig. 1):

and

In the infinite population limit we have:

Theorem 4.6

Assume that \({\varvec{\Gamma }}\) satisfies Assumption 4.4 and \(\textbf{X}^{(N)}_0\overset{P}{\rightarrow }\textbf{x}_0\) for some \(\textbf{x}_0\in \Delta _M^\circ .\) Suppose in addition that there exist \(\theta \in \big (0,\frac{\textbf{e}ta-\alpha }{2}\big )\) and \(\eta \in \Big (0,1-\frac{\log (1-\alpha -\theta )}{\log (1-\textbf{e}ta+\theta )}\Big )\) (and, therefore, the inequality in (35) is satisfied) such that

where the constant \(c_T\) is introduced in (31), \(\varepsilon _\theta =\varepsilon ({{\mathcal {N}}}_\theta ,K_{\theta ,\eta }),\) \(T=T({{\mathcal {N}}}_\theta ,K_{\theta ,\eta }),\) and \(\varepsilon ({{\mathcal {N}}},K)\) and \(T({{\mathcal {N}}}_\theta ,K_{\theta ,\eta })\) are introduced in Proposition 4.5.

Let

and \({{\mathcal {E}}}_N\) be the event that

-

(1)

\(\textbf{X}^{(N)}_{\nu _N}\) has exactly \(M-1\) non-zero components (that is, \(|C(\textbf{X}^{(N)}_{\nu _N}) |=M-1\) in notation of (13)); and

-

(2)

\(X^{(N)}_{\nu _N}(i)=0\) for some \(i\in J^*.\)

Then the following holds true:

-

(i)

\(\lim _{N\rightarrow \infty } P ({{\mathcal {E}}}_N)=1.\)

-

(ii)

\(\lim _{N\rightarrow \infty } E(\nu _N)=+\infty .\)

The proof of Theorem 4.6 is included in Sect. 5.5. In words, part (i) of the theorem is the claim that with overwhelming probability, at the moment that the Markov chain hits the boundary, exactly one of its components is zero, and, moreover, the index i of the zero component \(\textbf{X}^{(N)}_{\nu _N}(i)\) belongs to the set \(J^*.\) A similar metastability phenomenon for continuous-time population models has been considered in Assaf and Meerson (2010) and Assaf and Mobilia (2011), Auletta et al. (2018), Park and Traulsen (2017), however their proofs rely on a mixture of rigorous computational and heuristic “physicist arguments”.

Condition (38) is a rigorous version of the heuristic condition (34). We note that several more general but less explicit and verifiable conditions of this type can be found in mathematical literature, see, for instance, Faure and Schreiber (2014), Panageas et al. (2016) and Assaf and Meerson (2010), Park and Traulsen (2017). It seems plausible that the negative-definite condition (32) is an artifact of the proof and can be relaxed.

It is shown in Losert and Akin (1983) that in this more general situation, the mean-field dynamical system \({{\varvec{\psi }}}_k\) still converges to an equilibrium which might be on the boundary and depends on the initial condition \({{\varvec{\psi }}}_0.\)

Remark 1

Recall (13). A classical result of Karlin and McGregor (1964) implies that under the conditions of Theorem 4.6, the types vanish from the population with an exponential rate until only one left. That is, \(\lim _{k\rightarrow \infty } \frac{1}{k}\log E(|C(\textbf{X}^{(N)}_k)|-1)\) exists and is finite and strictly negative. However, heuristically, the limit is extremely small under the conditions of the theorem [the logarithm of the expected extinction time is anticipated to be of order \(M\cdot N\) (Panageas et al. 2016)], and hence the convergence to boundary states is extremely slow for any practical purpose. The situation when the system escapes from a quasi-equilibrium fast but with a rate of escape quickly converging to zero when the population size increases to infinity, is typical for metastable population systems (Auletta et al. 2018; Broekman et al. 2019; Doorn and Pollett 2013; Méléard and Villemonais 2012).

We will next illustrate the (hypothesized) important role of the threshold condition (38) with a numerical example. To that end, we consider two examples (see matrices \(\textbf{A}_1\) and \(\textbf{A}_2\), bellow) with \(N=500\) and \(M=3\). The choice of the low dimension \(M=3\) is due to the computational intensity involved with tracking the evolution of a metastable stochastic system in a “quasi-equilibrium” state. For each example, we use various sets of initial conditions and run 10000 rounds of simulations. Each simulation is stopped at the random time T defined as follows:

Using this termination rule, we were able to complete all the simulation runs in a reasonable finite time. In each simulation run, we sample the value of the process at some random time ranging between 1000 and 5000. At this intermediate sampling time, we measure \(d_{eq},\) the distance between the stochastic system and the equilibrium \({{\varvec{\chi _{eq}}}}\). In addition, we record the composition of the last state of the Markov chain at the end of each simulation run.

Example 4

Our first example does not satisfy the threshold conditions. More precisely, we choose \(\omega \) in (11) in such a way that \(\frac{\omega }{1-\omega }=10^{-3}\) and we consider the following symmetric \(3\times 3\) matrix \(\textbf{A}_1:\)

with the equilibrium \({{\varvec{\chi ^{(1)}_{eq}}}}=(0.24766355, 0.41121495, 0.3411215)\). Table 1 reports the number of times that each type \(j\in S_3\) was the type with abundance less than 0.05 at the time of exiting the simulation runs.

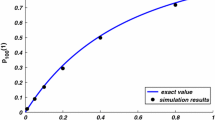

In addition, Fig. 2 below depicts the distribution of \(d_{eq}\) different initial values. Evidently, the threshold condition plays an important role as the theory predicts in Theorem 4.6. The results in Table 1 cannot be explained by the equilibrium value \({{\varvec{\chi ^{(1)}_{eq}}}}\) only, rather they constitute an intricate result of the combined effect of this value and the initial position of the Markov chain.

Example 5

For the second example, we let \(\omega =1/2\) and use the following matrix \(\textbf{A}_2\):

with the equilibrium \({{\varvec{\chi ^{(2)}_{eq}}}}=(0.0246913, 0.7345679, 0.2407407).\) This matrix is almost the same as \(\textbf{A}_1,\) with the only difference that 45 is changed to 35. The result of simulations for three different initial values are reported in Table 2 and Fig. 3, which are in complete agreement with the prediction of Theorem 4.6.

The difference between the two examples is two-fold: (1) by increasing the value of \(\omega \) we increase the influence of the “selection matrix” \(\textbf{A}\) comparing to the neutral selection, and (2) by changing \(\textbf{A}_1\) to \(\textbf{A}_2\) we replace the equilibrium \({{\varvec{\chi ^{(1)}_{eq}}}}\) with fairly uniform distribution of “types” by the considerably less balanced \({{\varvec{\chi ^{(2)}_{eq}}}},\) increasing the threshold in (38) by a considerable margin. We note that in a general, and not specifically designed, example, it may be hard to verify conditions of the theorem, particularly (38).

Simulations of the trajectories of \({{\varvec{\psi }}}_k\) and \(\textbf{X}^{(N)}_k\) for \(\Gamma _i(\textbf{x})=\frac{1000+A_1x(i)}{1000+\textbf{x}^T\textbf{A}_1\textbf{x}}x(i).\) The x-axis represents \(d_{eq},\) and the height of a histogram bar corresponds to the number of occurrences of \(d_{eq}\) in a simulation of 10,000 runs

Next we provide an auxiliary “contraction near the equilibrium” type result, further illustrating the mechanism of convergence to equilibrium in Theorem 4.6. Formally, the result is a slight improvement of a classical stability theorem of Losert and Akin (1983) (see Theorem 4 in “Appendix B” of this paper) showing that the unique interior equilibrium of the dynamical system \({{\varvec{\psi }}}_k\) introduced in (3) is in fact exponentially stable.

Proposition 4.7

Let Assumption 4.4 hold. Then the spectral radius of the Jacobian (Frechét derivative in \(\Delta _M\)) of \({\varvec{\Gamma }}\) at \({{\varvec{\chi _{eq}}}}\) is strictly less than one.

The proof of the proposition is included in Sect. 5.6. This result is instrumental in understanding various applications including but not limited to mixing times (Panageas et al. 2016) and the random sequence \(\textbf{u}_k\) defined in the statement of Theorem 4.1. In the later case, as we mentioned before, it implies that the limiting sequence \(\textbf{U}_k\) in (27) converges to a stationary distribution.