Abstract

Ion channel data recorded using the patch clamp technique are low-pass filtered to remove high-frequency noise. Almanjahie et al. (Eur Biophys J 44:545–556, 2015) based statistical analysis of such data on a hidden Markov model (HMM) with a moving average adjustment for the filter but without correlated noise, and used the EM algorithm for parameter estimation. In this paper, we extend their model to include correlated noise, using signal processing methods and deconvolution to pre-whiten the noise. The resulting data can be modelled as a standard HMM and parameter estimates are again obtained using the EM algorithm. We evaluate this approach using simulated data and also apply it to real data obtained from the mechanosensitive channel of large conductance (MscL) in Escherichia coli. Estimates of mean conductances are comparable to literature values. The key advantages of this method are that it is much simpler and computationally considerably more efficient than currently used HMM methods that include filtering and correlated noise.

Introduction

An ion channel is a large protein molecule that regulates cell function by controlling the flow of ions across the cell membrane (Aidley and Stanfield 1996, p. 3). Conduction of ions occurs through an aqueous pore which opens in response to a stimulus specific to the type of channel. Simple channels exhibit only two levels, open (conducting) and closed (Hille 2001, Chapters 4 and 5), but complex channels also have subconducting levels (Sukharev et al. 1999).

Mechanosensitive channels are triggered (gated) by membrane tension. These channels undergo conformational changes when the cell membrane is mechanically stressed (Martinac 2011). The most studied mechanosensitive channels are those of small (MscS) and large (MscL) conductance in the bacterium Escherichia coli (E. coli); see Hamill and Martinac (2001) and Martinac (2011). These two types of channel are multi-level and control intracellular pressure. In other organisms, mechanosensitive channels mediate the senses of touch, hearing, balance and proprioception, the latter including the sense of position of body parts in humans (Gillespie and Walker 2001; Ernstrom and Chalfie 2002; Xiao and Xu 2010).

Ion channel currents are recorded using the patch clamp technique (Hamill et al. 1981), and this provides a key source of information regarding ion channel activity. Currents appear to fluctuate randomly between various conducting levels. The noisy current is low-pass filtered and then digitised (sampled and quantised) to produce a sequence of observed currents in discrete time. Evidence indicates that noise in the recordings is not white (Venkataramanan et al. 1998a; Qin et al. 2000b; Fredkin and Rice 2001; Colquhoun and Sigworth 2009). For example, Schouten (2000, Chapter 4) analysed patch clamp recordings of barley leaf protoplasts and concluded, based on plots of autocorrelation functions of the noise at the closed level, that the noise in these recordings was correlated.

Hidden Markov models (HMMs) are widely used for describing the gating behaviour of a single ion channel and form a basis for statistical analysis of patch clamp data (Chung et al. 1990; Khan et al. 2005). Incorporating adjustments for filtering and coloured noise complicates the application of hidden Markov methodology. However, Michalek et al. (1999) found that model parameters and the channel gating scheme for Na\(^+\) channels were incorrectly estimated when correlations in channel noise were ignored.

Other models for ion channels have also been proposed, including fractal models (Liebovitch et al. 1987; Liebovitch 1989) and defect-diffusion models (Läuger 1985, 1988; Millhauser et al. 1988; French and Stockbridge 1988). These models differ fundamentally from the Markov model in that transition rates are no longer constant. The general conclusion from the literature is that while non-Markovian models may have some merit, Markovian models have so far provided a better fit to the data (Colquhoun and Sigworth 2009; Korn and Horn 1988; McManus et al. 1988; Sansom et al. 1989; McManus and Magleby 1989; Petracchi et al. 1991; Gibb and Colquhoun 1992). More recently, a non-parametric technique with exact missed event correction has been reported (Epstein et al. 2016). However, the method rests on the rather strong assumption that the filtered and digitised channel record has perfect resolution, and it has not yet been compared with Markov-based methods.

In their paper, Almanjahie et al. (2015) considered an HMM with filtering but uncorrelated noise for ion channel data. They used the EM algorithm for parameter estimation, based on an extended forward–backward algorithm similar to that of Fredkin and Rice (2001). In this paper, we extend that work to an HMM with filtering and correlated noise.

The remainder of the paper is organised as follows. In “Standard hidden Markov models”, we review the standard HMM and present some mathematical preliminaries in “Some preliminaries”. Extensions of the HMM that include filtering and AR models for correlated noise are given in “HMMs with filtering and correlated noise”. We then introduce our filtered HMM with a moving average (MA) model for correlated noise in “Deconvolution approach”, using deconvolution (a signal processing method) to pre-whiten the noise. The pre-processed data can be modelled as a standard HMM, and parameters are estimated using the EM algorithm. Results of simulation studies to evaluate the performance of this approach are discussed in “Simulation study”. In “Application: MscL data”, the method is applied to real data obtained from MscL in E. coli. Finally, in “Discussion”, we discuss our findings and make concluding remarks.

Standard hidden Markov models

We model the gating behaviour of a single ion channel by a continuous time, regular, homogeneous Markov chain with a finite number of states that correspond to the conformational states of the channel (Colquhoun and Hawkes 1981, 1997), which we assume (for the moment) have distinct conductances. Let \(X_t\) denote the state of the channel at time \(t\ge 0\), \(S =\{0 ,1,\ldots ,N-1\}\) the state space and \(\varvec{Q}\) the intensity matrix for the process \(\{X_t:t\ge 0\}\). Further, let \(\varvec{\mu }=(\mu _{0},\mu _{1},\ldots ,\mu _{N-1})\) and \(\varvec{\sigma }=(\sigma _{0},\sigma _{1},\ldots ,\sigma _{N-1})\), where \(\mu _{i}\) and \(\sigma _{i}\) are, respectively, the mean current and the noise standard deviation corresponding to state i.

In practice, the noisy current is low-pass filtered and sampled, but for the moment we ignore the effect of the filtering. Then \(\varvec{X}=(X_{1},X_{2},\ldots ,X_{T})\) is a (segment of a) discrete-time, homogeneous, irreducible Markov chain with a finite state space \(S =\{0 ,1 , \ldots ,N-1\}\), \(N\times N\) transition probability matrix \(\varvec{P}=[p_{ij}]\), and initial state distribution \(\varvec{\pi }=(\pi _{0},\pi _{1},\ldots ,\pi _{N-1})\) where \(\pi _i={\mathbb {P}}(X_1=i)\), \(i\in S\). The sampled current at time t can be represented as
\[ Y_t = \mu_{X_t} + \sigma_{X_t}\varepsilon_t, \quad t = 1,2,\ldots,T, \tag{2.1} \]
where \(\varepsilon _{1},\varepsilon _{2},\ldots ,\varepsilon _{T}\) are independent and identically distributed (iid) N(0, 1) random variables, assumed also to be independent of \(\varvec{X}\). Given \(\varvec{X}\), the random variables \(Y_{1},Y_{2},\ldots ,Y_{T}\) are conditionally independent. Moreover, the distribution of \(Y_{t}\) conditional on \(\varvec{X}\) depends only on \(X_{t}\) and, by Eq. (2.1),
\[ Y_t \mid \varvec{X} \sim N\left(\mu_{X_t}, \sigma^2_{X_t}\right). \]
Set \(\varvec{Y} = (Y_{1},Y_{2},\ldots ,Y_{T})\). The joint distribution of \((\varvec{X}, \varvec{Y})\), i.e. probability mass function for \(\varvec{X}\) and (conditional) probability density function for \(\varvec{Y}\) given \(\varvec{X}\), is
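\[ {\mathbb {P}}(\varvec{X}=\varvec{x})\, f_{\varvec{Y}\mid \varvec{X}}(\varvec{y}\mid \varvec{x}) = \pi_{x_1}\prod_{t=2}^{T} p_{x_{t-1}x_t}\prod_{t=1}^{T} f_{x_t}(y_t), \tag{2.2} \]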
\(\varvec{x}\in S^T, \varvec{y}\in {\mathbb {R}}^T\), where \(f_{x_t}\) is the \(N(\mu _{x_{t}},\sigma ^2_{x_{t}})\) probability density function. We call the representation in (2.2) a standard HMM.

Denote the model parameters by the vector \(\varvec{\phi }=(\varvec{\pi },\varvec{P},\varvec{\mu },\varvec{\sigma })\). As in Khan et al. (2005) and Almanjahie et al. (2015), the EM algorithm (Dempster et al. 1977) can be used for parameter estimation. In the following \(i,j=0,1,\ldots ,N-1\) represent the states of the Markov chain. Let the index \(m=0\) indicate initial parameter values. Then at iteration \(m=1,2,\ldots\) of the EM algorithm, the updating formulae for \(\pi _{i}\), \(p_{ij}\), \(\mu _{i}\) and \(\sigma _{i}\) are
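\[ \pi_i^{m} = \gamma_1^{m}(i), \tag{2.3} \]
\[ p_{ij}^{m} = \frac{\sum_{t=1}^{T-1}\gamma_t^{m}(i,j)}{\sum_{t=1}^{T-1}\gamma_t^{m}(i)}, \tag{2.4} \]
\[ \mu_i^{m} = \frac{\sum_{t=1}^{T}\gamma_t^{m}(i)\,y_t}{\sum_{t=1}^{T}\gamma_t^{m}(i)}, \tag{2.5} \]
\[ \sigma_i^{m} = \left(\frac{\sum_{t=1}^{T}\gamma_t^{m}(i)\left(y_t-\mu_i^{m}\right)^2}{\sum_{t=1}^{T}\gamma_t^{m}(i)}\right)^{1/2}, \tag{2.6} \]
where
\[ \gamma_t^{m}(i) = {\mathbb {P}}\left(X_t=i \mid \varvec{Y}=\varvec{y};\ \varvec{\phi}^{m-1}\right), \quad t=1,2,\ldots,T, \]
and
\[ \gamma_t^{m}(i,j) = {\mathbb {P}}\left(X_t=i,\ X_{t+1}=j \mid \varvec{Y}=\varvec{y};\ \varvec{\phi}^{m-1}\right), \]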
\(t=1,2,\ldots ,T-1\). The \(\gamma _{t}^{m}(i)\) and \(\gamma _{t}^{m}(i,j)\) can be computed recursively (Khan et al. 2005; Almanjahie et al. 2015) using Baum’s forward and backward algorithms (Baum et al. 1970; Devijver 1985). Iterations continue until some stopping criterion, such as a pre-set tolerance, is satisfied.
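As an illustration of the updating formulae (2.3)–(2.6), the following minimal sketch performs one EM (Baum–Welch) iteration for a Gaussian HMM in plain Python. It assumes the usual scaled forward–backward recursions (a standard safeguard against numerical underflow, not something specified in the cited papers); the function name `em_step` and its argument layout are hypothetical, and the code favours readability over speed.

```python
import math


def normal_pdf(y, mu, sigma):
    """Density of N(mu, sigma^2) at y."""
    z = (y - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def em_step(y, pi, P, mu, sigma):
    """One EM update for a Gaussian HMM (hypothetical helper).

    Returns the updated (pi, P, mu, sigma) and the log-likelihood of the
    *input* parameters, obtained from the forward scaling constants.
    """
    T, N = len(y), len(pi)
    B = [[normal_pdf(y[t], mu[i], sigma[i]) for i in range(N)] for t in range(T)]

    # Scaled forward pass: alpha[t][i] = P(X_t = i | y_1, ..., y_t).
    alpha, scale = [], []
    for t in range(T):
        if t == 0:
            a = [pi[i] * B[0][i] for i in range(N)]
        else:
            a = [sum(alpha[t - 1][j] * P[j][i] for j in range(N)) * B[t][i]
                 for i in range(N)]
        c = sum(a)
        scale.append(c)
        alpha.append([v / c for v in a])

    # Scaled backward pass, normalised by the same constants.
    beta = [[1.0] * N for _ in range(T)]
    for t in range(T - 2, -1, -1):
        beta[t] = [sum(P[i][j] * B[t + 1][j] * beta[t + 1][j] for j in range(N))
                   / scale[t + 1] for i in range(N)]

    # gamma_t(i), and gamma_t(i, j) summed over t = 1, ..., T-1.
    gamma = [[alpha[t][i] * beta[t][i] for i in range(N)] for t in range(T)]
    xi = [[0.0] * N for _ in range(N)]
    for t in range(T - 1):
        for i in range(N):
            for j in range(N):
                xi[i][j] += (alpha[t][i] * P[i][j] * B[t + 1][j]
                             * beta[t + 1][j] / scale[t + 1])

    # Updating formulae (2.3)-(2.6).
    new_pi = gamma[0][:]
    occ = [sum(gamma[t][i] for t in range(T - 1)) for i in range(N)]
    new_P = [[xi[i][j] / occ[i] for j in range(N)] for i in range(N)]
    tot = [sum(gamma[t][i] for t in range(T)) for i in range(N)]
    new_mu = [sum(gamma[t][i] * y[t] for t in range(T)) / tot[i] for i in range(N)]
    new_sigma = [math.sqrt(sum(gamma[t][i] * (y[t] - new_mu[i]) ** 2
                               for t in range(T)) / tot[i]) for i in range(N)]
    return new_pi, new_P, new_mu, new_sigma, sum(math.log(c) for c in scale)
```

Calling `em_step` repeatedly, and stopping once the change in log-likelihood falls below a pre-set tolerance, gives the iteration described above; since this is an EM algorithm, the returned log-likelihood should not decrease from one call to the next.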

Some preliminaries

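The notation
\[ \{x_k : k \in \mathcal{K}\}, \qquad \mathcal{K} \subseteq {\mathbb {Z}}, \]
represents a discrete time sequence indexed by a subset \(\mathcal{K}\) of the integers. When the limits of the index are clear from the context we will omit them and write simply \(\{x_k\}\).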

A discrete time system is a function T that maps an input sequence \(\{x_k: k \in {\mathbb {Z}}\}\) to an output sequence \(\{y_k: k \in {\mathbb {Z}}\}\) given by
\[ \{y_k\} = T\left(\{x_k\}\right). \]
A system is stable if for every bounded input sequence \(\{x_k\},\) the output \(\{y_k\}\) is bounded. The system is linear if for sequences \(\{u_k\}\) and \(\{v_k\},\)
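\[ T\left(\alpha\{u_k\} + \beta\{v_k\}\right) = \alpha\, T\left(\{u_k\}\right) + \beta\, T\left(\{v_k\}\right) \quad \text{for all constants } \alpha, \beta, \]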
and is time invariant if the input–output relationship does not change over time, that is, for each \(j \in {\mathbb {Z}}\), \(T\left( \{x_{k-j}\}\right) = \{y_{k-j}\}\). Henceforth we consider only linear time-invariant (LTI) systems.

The impulse response \(\{h_k\}\) of a discrete LTI system is the output of the system when the input is an impulse \(\{\delta _k\}\), where \(\{\delta _k\}\) is the Kronecker delta defined as
\[ \delta_k = \begin{cases} 1, & k = 0,\\ 0, & k \ne 0. \end{cases} \]
The system is called finite (duration) impulse response (FIR) if its impulse response is a sequence of finite length.

Let \(\{u_k: k \in {\mathbb {Z}}\}\) and \(\{x_k: k \in {\mathbb {Z}}\}\) be discrete time sequences. The convolution of \(\{u_k\}\) and \(\{x_k\}\), denoted \(\{x_k\}*\{u_k\}\), is defined as
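\[ \{x_k\}*\{u_k\} = \left\{\sum_{j=-\infty}^{\infty} x_j\, u_{k-j}\right\}; \]
see Proakis and Manolakis (1996, pp. 76–82). Note that the convolution of two sequences is itself a sequence. It follows that for any sequence \(\{x_k\},\)
\[ \{x_k\}*\{\delta_k\} = \{x_k\}, \tag{3.3} \]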
so \(\{\delta _k\}\) is the identity for convolution.
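To make these definitions concrete, here is a minimal sketch that stores finitely supported sequences as Python dicts mapping index to value (a representation chosen only for illustration). It checks the identity property (3.3) and, in line with Theorem 1 below, computes the output of an LTI system as the convolution of a hypothetical two-point impulse response with an input.

```python
def convolve(x, u):
    """Convolution {x_k} * {u_k} of finitely supported sequences.

    Each sequence is a dict {index k: value x_k}; absent indices are zero.
    """
    y = {}
    for j, xj in x.items():
        for i, ui in u.items():
            # x_j u_i contributes to output index k = i + j (i.e. u_{k-j}).
            y[i + j] = y.get(i + j, 0.0) + xj * ui
    return y


delta = {0: 1.0}  # Kronecker delta

# Hypothetical impulse response and input; the output is h * x.
h = {0: 0.5, 1: 0.5}
x = {-1: 2.0, 0: 3.0, 2: -1.0}
y = convolve(h, x)  # {-1: 1.0, 0: 2.5, 1: 1.5, 2: -0.5, 3: -0.5}
```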

An LTI system can be completely characterised by its impulse response \(\{h_k\}\), as formalised in the following result (Proakis and Manolakis 1996, p.76).

Theorem 1

The output \(\{y_k\}\) of an LTI system is related to the input \(\{x_k\}\) by
\[ \{y_k\} = \{h_k\}*\{x_k\}, \tag{3.4} \]
where \(\{h_k\}\) is the system impulse response.

In particular, since \(\{h_k\}\) is the impulse response,
\[ \{h_k\} = \{h_k\}*\{\delta_k\}, \]
a result that also follows from (3.3).

The z-transform

The z transform (Adsad and Dekate 2015; Proakis and Manolakis 1996, p. 169) of the sequence \(\left\{ x_k\right\}\) is given by
\[ X(z) = \sum_{k=-\infty}^{\infty} x_k z^{-k}. \]
The region of convergence (ROC) of X(z), denoted \(R_X\), is the subset of the complex plane for which the sum converges. In simple cases, the z transform can be written in closed form.

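Note that if we substitute \(z = e^{sT}\), where T here denotes the sampling interval, then the z transform of the samples \(x_k = x(kT)\) is equivalent to the Laplace transform of the impulse-sampled continuous time signal \(x(t)\), sampled at frequency \(f=1/T\) (Adsad and Dekate 2015).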

The next theorem relates convolution to the z transform.

Theorem 2

Let \(\{u_k\}\) and \(\{x_k\},\ k \in {\mathbb {Z}}\), be discrete time sequences and put
\[ \{y_k\} = \{u_k\}*\{x_k\}. \]
Then the z transform Y(z) of \(\{y_k\}\) is
\[ Y(z) = U(z)X(z), \]
where U(z) and X(z) are the z transforms of \(\{u_k\}\) and \(\{x_k\},\) respectively.
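Theorem 2 can be checked numerically for finite sequences. The sketch below (dict-based sequences and helper names are illustrative assumptions) evaluates both sides at an arbitrary nonzero complex point; for finitely supported sequences the z transform converges everywhere except possibly at 0 and infinity, so any such point will do.

```python
def convolve(x, u):
    """Convolution of finitely supported sequences stored as {index: value} dicts."""
    y = {}
    for j, xj in x.items():
        for i, ui in u.items():
            y[i + j] = y.get(i + j, 0.0) + xj * ui
    return y


def z_transform(x, z):
    """X(z) = sum_k x_k z^(-k) for a finitely supported sequence (z != 0)."""
    return sum(v * z ** (-k) for k, v in x.items())


u = {0: 1.0, 1: -0.5}
x = {-1: 0.2, 0: 1.0, 1: 0.3}
z = 0.7 + 0.4j                                # arbitrary nonzero evaluation point
lhs = z_transform(convolve(u, x), z)          # transform of the convolution
rhs = z_transform(u, z) * z_transform(x, z)   # product of the transforms
```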

Theorem 2 implies that convolution in the discrete time domain is equivalent to multiplication in the z-domain. Applying the result to (3.4) gives
\[ Y(z) = H(z)X(z). \tag{3.8} \]
The function H(z) is the z transform of the system impulse response and is called the transfer function of the system. It can be shown that an LTI system is stable if and only if the ROC of H(z) includes the unit circle (Proakis and Manolakis 1996, p. 209).

Deconvolution

Deconvolution is the process of determining the input sequence given an output sequence and the system impulse response. This requires finding a discrete time sequence \(\{b_k\}\) that when convolved with the known output \(\{y_k\}\) yields the input sequence \(\{x_k\}\). By (3.4), it follows that
\[ \{x_k\} = \{b_k\}*\{y_k\} = \{b_k\}*\{h_k\}*\{x_k\}. \tag{3.9} \]
By (3.3), the sequence \(\{b_k\}\) must satisfy
\[ \{b_k\}*\{h_k\} = \{\delta_k\}, \tag{3.10} \]
that is, \(\{b_k\}\) is the inverse of \(\{h_k\}\) under convolution. Solving (3.10) for \(\{b_k\}\) given \(\{h_k\}\) in the time domain is usually difficult (Proakis and Manolakis 1996, p. 356). However, (3.8) can be rewritten as
\[ X(z) = \frac{Y(z)}{H(z)} = F(z)Y(z), \tag{3.11} \]
where \(F(z) = 1/H(z)\) is the reciprocal of the transfer function. Thus, deconvolution in the time domain is equivalent to division in the z domain. It follows from (3.9), (3.11) and Theorem 2 that F(z) is the z transform of \(\{b_k\}\), that is, \(F(z) = \sum _k b_k z^{-k}\).

Note that in general the inverse \(\{b_k\}\) may be of infinite length, in which case it must be truncated in practice (Mourjopoulos 1994). We will consider a truncation to be adequate if it satisfies the (Euclidean) norm-based criterion

for some pre-set value of \(\varepsilon\) (Proakis and Manolakis 1996, §8.5.2).
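The truncation step can be sketched as follows. The exact form of the criterion is an assumption here: we take the Euclidean norm of the deviation \(\{b'_k\}*\{h_k\}-\{\delta_k\}\) and keep the shortest symmetric truncation \(|k|\le L\) whose deviation is below \(\varepsilon\). The helper names are hypothetical, and the example uses a simple causal filter whose inverse is known exactly.

```python
import math


def convolve(x, u):
    """Convolution of finitely supported sequences stored as {index: value} dicts."""
    y = {}
    for j, xj in x.items():
        for i, ui in u.items():
            y[i + j] = y.get(i + j, 0.0) + xj * ui
    return y


def residual_norm(b_trunc, h):
    """Euclidean norm of {b'_k} * {h_k} - {delta_k} (assumed form of the criterion)."""
    c = convolve(b_trunc, h)
    keys = set(c) | {0}
    return math.sqrt(sum((c.get(k, 0.0) - (1.0 if k == 0 else 0.0)) ** 2 for k in keys))


def truncate_inverse(b, h, eps):
    """Shortest truncation {b_k : |k| <= L} of a long inverse b meeting the criterion."""
    for L in range(max(abs(k) for k in b) + 1):
        b_trunc = {k: v for k, v in b.items() if abs(k) <= L}
        if residual_norm(b_trunc, h) < eps:
            return b_trunc
    raise ValueError("no truncation of the supplied inverse meets the criterion")


# Example: h_0 = 1, h_1 = -0.5 has inverse b_k = 0.5**k (k >= 0), since
# 1 / (1 - 0.5 z^{-1}) = sum_k 0.5**k z^{-k} for |z| > 0.5.
h = {0: 1.0, 1: -0.5}
b = {k: 0.5 ** k for k in range(40)}
b_trunc = truncate_inverse(b, h, 1e-3)  # keeps k = 0, ..., 9
```

Here the deviation after truncating at lag K is exactly \(0.5^{K+1}\), so \(\varepsilon = 0.001\) first holds at K = 9.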

HMMs with filtering and correlated noise

Correlation in the noise arises from the low-pass filter and possibly from channel gating characteristics. Various models that include correlated noise have been proposed in the literature.

Venkataramanan et al. (1998a, b) developed a model incorporating correlated background noise and state-dependent ‘excess’ noise. They modelled background correlated noise \(\{n_t\}\) as an autoregressive (AR) process of order p. Their model can be written for \(t=1,2,\ldots ,T\) as
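\[ Y_t = \mu_{X_t} + \sigma_{X_t}\varepsilon_t + n_t, \tag{4.1a} \]
\[ n_t = \sum_{j=1}^{p}\zeta_j\, n_{t-j} + \delta_t. \tag{4.1b} \]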
Each of the sequences \(\{\varepsilon _t\}\) and \(\{\delta _t\}\) was assumed to be iid normally distributed, with variances 1 and \(\sigma ^2_{\delta },\) respectively, and \(\zeta _1,\zeta _2,\ldots ,\zeta _{p}\) are the coefficients specifying the AR(p) model for \(\{n_t\}\).

Venkataramanan et al. (1998a, b) estimated the coefficients of the AR process as follows. First, the autocorrelation function of the noise was estimated from long stretches of noise at the closed level. Then the coefficients in Eq. (4.1b) were estimated by using the Levinson–Durbin algorithm to solve the Yule–Walker equations for the autocorrelations of \(\{n_t\}\), a standard time series approach (Brockwell and Davis 2006, Chapter 8). They then preprocessed the data using a pre-whitening filter of length k to remove the correlation in the noise, the parameters of this filter being obtained from the inverse of the AR filter transfer function. Since the signal \(\mu _{X_t}\) also passes through the pre-whitening filter, the observed current \(Y_t\) at time t now depends on the Markov chain states at k time points. This collection (vector) of k Markov chain states is referred to as a meta-state (Venkataramanan et al. 1998a). To calculate the likelihood of the model for these preprocessed data, Venkataramanan et al. (1998a) considered a vector Markov chain over the \(M=N^k\) meta-states. They developed a modified Baum–Welch algorithm (Baum et al. 1970; Baum and Eagon 1967), involving some approximations, to estimate the parameters in their HMM (Venkataramanan et al. 1998a, b). However, the modified Baum–Welch algorithm does not guarantee that the likelihood is non-decreasing after each iteration, and does not necessarily produce maximum likelihood estimates (Venkataramanan et al. 1998b, p. 1926).
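The AR estimation step described above can be sketched with the textbook Levinson–Durbin recursion for the Yule–Walker equations. This is not code from Venkataramanan et al.; it assumes the input is a list of autocovariances \(r_0,\ldots,r_p\) (in practice estimated from a long closed-level stretch of noise, for example with the sample estimator included below), and both function names are hypothetical.

```python
def sample_autocov(x, max_lag):
    """Sample autocovariances r_0, ..., r_max_lag (divisor n) of a noise record x."""
    n = len(x)
    m = sum(x) / n
    return [sum((x[t] - m) * (x[t + h] - m) for t in range(n - h)) / n
            for h in range(max_lag + 1)]


def levinson_durbin(r, p):
    """Solve the Yule-Walker equations for an AR(p) model by Levinson-Durbin.

    r[h] is the autocovariance at lag h (h = 0..p). Returns (zeta, v), where
    zeta[j-1] is the coefficient of n_{t-j} and v is the innovation variance.
    """
    zeta, v = [], r[0]
    for k in range(1, p + 1):
        # Reflection coefficient (partial autocorrelation at lag k).
        kappa = (r[k] - sum(zeta[j] * r[k - 1 - j] for j in range(k - 1))) / v
        # Update the order-(k-1) coefficients and append the new one.
        zeta = [zeta[j] - kappa * zeta[k - 2 - j] for j in range(k - 1)] + [kappa]
        v *= 1.0 - kappa ** 2
    return zeta, v
```

For an AR(2) process with \(\zeta_1 = 0.5\) and \(\zeta_2 = -0.3\), feeding in the theoretical autocorrelations \(\rho_1 = 0.5/1.3\) and \(\rho_2 = 0.5\rho_1 - 0.3\) recovers the two coefficients.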

Schouten (2000, Chapter 5) and De Gunst et al. (2001) used the model in Eq. (4.1), but also incorporated a Gaussian MA filter with length \(2r+1\) as an adjustment for the effect of the low-pass filter. Specifically, for \(\max (r,p)\le t\le T-r\),

where \(\{\varepsilon _t\}\) and \(\{\delta _t\}\) are as in Eq. (4.1) and the filter weights \(\eta _s\), \(s=-r,\ldots ,r\), are determined from filter characteristics as described in Colquhoun and Sigworth (2009, Appendix 3, p. 576); see also Table 4.1 in Schouten (2000). They too estimated the order of the AR process from long stretches of the noise at the closed level, but used Markov chain Monte Carlo (MCMC) methods based on a meta-state approach to estimate the AR coefficients \(\zeta _{j}\), \(j=1,2,\ldots ,p\), and the HMM parameters.

Fredkin and Rice (2001) modelled the effect of the low-pass filter by a finite (duration) impulse response (FIR) filter and the state-independent correlated noise by an AR model. They pre-whitened the noise and developed an approximation to simplify likelihood computations, but did not report an application of their method to real data.

Qin et al. (2000b) extended the model of Fredkin and Rice (2001) to allow for state-dependent correlated noise. They modelled the effect of the low-pass filter by an FIR filter with coefficients \(h_s\), \(s=0,\dots ,r-1\), and used AR models for the state-dependent correlated noise \(\{n^{(X_t)}_t\}\), i.e. a separate model for each level. To simplify the computations they assumed that the AR models at all channel levels had the same order. Their model can be written as

for \(\max (r,p)\le t\le T\).

Rewriting Eq. (4.3b) gives

where \(\kappa ^{(X_t)}_0=1\) and \(\kappa ^{(X_t)}_j=-\zeta _{j}^{(X_t)}\), \(j=1,2,\dots ,p\). This equation can be written as

where \(*\) indicates convolution. Taking z transforms and using Theorem 2 give

whence

where \(K^{(X)}(z)\) is the transfer function of the AR filter. Then taking the z transform of the model in Eq. (4.3a) and using Eq. (4.4) yield

where H(z) is the transfer function of the FIR filter.

Pre-whitening is equivalent to multiplying both sides of (4.5) by \(K^{(X)}(z)\), yielding

Rewriting Eq. (4.6) in the time domain gives

where \(k=r+p\) and \(c_j^{(X_t)}\) is the convolution of \(\{h_s\}\) and \(\{\kappa ^{(X_t)}_\ell \}\). Hence, as in equation 4 of Qin et al. (2000b),

Finally, based on a meta-state approach, Qin et al. (2000b) obtained parameter estimates by direct optimisation using quasi-Newton methods.

For an HMM with state-dependent Gaussian white noise, Khan et al. (2005) used a (Gaussian) MA adjustment for filtering. They considered the following model. For \(t=r+1,r+2,\ldots ,T-r\),
\[ Y_t = \sum_{s=-r}^{r}\eta_s\,\mu_{X_{t-s}} + \sigma_{X_t}\varepsilon_t, \tag{4.9} \]
where the filter weights \(\eta _s\), \(s=-r,\ldots ,r\) are determined as for Eq. (4.2). Parameters were estimated by the EM algorithm based on a meta-state process.

Almanjahie et al. (2015) employed the model of Eq. (4.9), which they called a moving average filtered HMM (MAFHMM), and obtained parameter estimates using an EM-based algorithm. A key aspect of that work was the development of a generalised Baum’s forward–backward algorithm, similar to that of Fredkin and Rice (2001), which greatly reduced the computational demand. Nonetheless, estimation of model parameters is still effectively based on a meta-state model; see Almanjahie et al. (2015) for details. As a result, computational requirements were much greater than for the standard HMM.

A common feature of each of the above models is that they are based on meta-state processes. Note also that the vector Markov chain based on the meta-states has a much expanded state space. For example, consider a Markov chain with five states. If a 3-tuple of states is considered, there are \(5^3=125\) possible meta-states. Consequently, maximising the log-likelihood and estimating parameters for these models considerably increases the computational demand.

Deconvolution approach

In Eq. (4.9), only the current is considered to be filtered. However, in practice, during data collection it is the noisy current that is low-pass filtered, so the filter also affects the state-dependent noise. Thus, a more appropriate model for the recorded current is
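\[ Y_t = \sum_{s=-r}^{r}\eta_s\,\mu_{X_{t-s}} + \sum_{s=-r}^{r}\eta_s\,\sigma_{X_{t-s}}\varepsilon_{t-s}, \tag{5.1} \]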
where \(t=1,2,\ldots ,T\), and the value of r and the filter weights \(\eta _{s}\) are determined as for Eq. (4.2). Note that the Markov chain has been relabelled here, so \(\varvec{X}=(X_{-r+1},X_{-r+2},\ldots ,X_1,X_2,\ldots ,X_{T+r})\). The second term in Eq. (5.1) now represents correlated state-dependent noise. Furthermore, in Eq. (5.1) the mean current and the state-dependent noise at time t both depend on the underlying Markov chain states at the present time t as well as the immediate r past and r future time points.

Our justification for this choice of model is as follows. In reported studies for the models in Eqs. (4.1) and (4.2), either \(\sigma _{\delta }^2\) exceeds each \(\sigma _i^2\) (\(i=0,\ldots ,N-1\)) by at least an order of magnitude, or vice versa; see Table 3 in De Gunst et al. (2001). Since only one of these noise terms dominates in the model, we absorb all noise sources into the filtered state-dependent noise.

Each sum on the right hand side of Eq. (5.1) is a convolution, so this equation can be written in the time domain as
\[ \{Y_t\} = \{\eta_t\}*\{\mu_{X_t}\} + \{\eta_t\}*\{\sigma_{X_t}\varepsilon_t\}. \tag{5.2} \]
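Taking the z transform of Eq. (5.2) and using linearity gives
\[ Y(z) = H(z)M(z) + H(z)W(z), \tag{5.3} \]
where \(M(z)\) and \(W(z)\) denote the z transforms of \(\{\mu_{X_t}\}\) and \(\{\sigma_{X_t}\varepsilon_t\}\), respectively, and \(H(z)=\sum _{s=-r}^{r}\eta _{s}z^{-s}\) is the transfer function of the MA filter. Let \(F(z)={1}/{H(z)}=\sum _{t} b_{t}z^{-t}\). This inverse exists under certain conditions, for example if the series converges in a region of the complex plane that includes the unit circle; see the example in “Implementing MAD” and the appendix in Mourjopoulos (1994). Multiplying both sides of Eq. (5.3) by F(z) yields
\[ F(z)Y(z) = M(z) + W(z). \tag{5.4} \]
In the time domain, Eq. (5.4) becomes
\[ \{b_t\}*\{Y_t\} = \{\mu_{X_t}\} + \{\sigma_{X_t}\varepsilon_t\}. \tag{5.5} \]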
Note that \(\{b_t\}*\{\eta _t\}=\{\delta _t\}\). Thus, Eq. (5.5) is simply the convolution of \(\{b_t\}\) with Eq. (5.2).

Putting \(\{\breve{Y}_{t}\} = \{b_t\}*\{Y_t\}\) in Eq. (5.5) gives
\[ \breve{Y}_t = \mu_{X_t} + \sigma_{X_t}\varepsilon_t. \tag{5.6} \]
This final model is a standard HMM for \(\breve{\varvec{Y}}\), so Baum’s forward and backward algorithms and the EM algorithm can be used for parameter estimation. We call this method moving average with deconvolution (MAD). Khan’s algorithm (Khan 2003) can be used to compute standard errors of the parameter estimates.
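The pre-whitening step of MAD can be sketched as below: a record is MA-filtered, then convolved with a truncated inverse \(\{b'_t\}\) so that, away from the edges, the result approximates \(\mu_{X_t}+\sigma_{X_t}\varepsilon_t\), after which any standard HMM fit can be applied. The helper names, the deterministic test sequence and the five-tap truncation are illustrative assumptions; the filter weights are those used in “Implementing MAD”, and points without a full filter window are simply dropped.

```python
import math


def ma_filter(w, eta):
    """y_t = sum_s eta_s w_{t-s}; only t with a full window are kept (r lost per end)."""
    r = max(abs(s) for s in eta)
    return [sum(e * w[t - s] for s, e in eta.items()) for t in range(r, len(w) - r)]


def prewhiten(y, b):
    """breve_Y_t = sum_s b_s Y_{t-s} for a truncated inverse b = {s: b_s}.

    Edge points without a full window are dropped (L = max |s| at each end).
    """
    L = max(abs(s) for s in b)
    return [sum(v * y[t - s] for s, v in b.items()) for t in range(L, len(y) - L)]


# Three-tap filter and a five-tap truncation of its inverse,
# b_t = (-a)**|t| / sqrt(eta0**2 - 4 eta1**2), |t| <= 2 (assumed truncation length).
eta = {-1: 0.035, 0: 0.93, 1: 0.035}
root = math.sqrt(0.93 ** 2 - 4 * 0.035 ** 2)
a = (0.93 - root) / (2 * 0.035)
b_trunc = {t: (-a) ** abs(t) / root for t in range(-2, 3)}

w = [float(t % 5) for t in range(50)]  # stands in for mu_{X_t} + sigma_{X_t} eps_t
y = ma_filter(w, eta)                  # filtered "record"; y[i] corresponds to w[i + 1]
w_rec = prewhiten(y, b_trunc)          # w_rec[j] approximates w[j + 3]
```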

Simulation study

The data simulation mimicked the data generation process for ion channels. We began by simulating a continuous time Markov chain, which was then sampled to produce a discrete time Markov chain that represented the sequence of states of the channel. Each state was mapped to a mean current, following which noise was added.

Almanjahie et al. (2015) showed that MscL in E. coli has five subconductance levels in addition to the closed and fully open levels, and also estimated the mean current and noise standard deviation at each level. For our simulations, we chose an intensity matrix \(\varvec{Q}\) which gave mean dwell times reflecting those estimated for MscL, but was otherwise arbitrary. A seven-state continuous time Markov chain with state space \(S=\{0,1,\ldots ,6\}\) was generated and then sampled at 50 kHz, giving a discrete time Markov chain.

Currents were set to 0, 15, 30, 45, 65, 85 and 105, and state-dependent Gaussian white noise was added to these currents at each sampling point. The noise standard deviation at level 0 was set to 3, increasing in steps of 0.5 to 6 at the fully open level. The resulting noisy current was digitally filtered at 25 kHz to produce a data set containing 100,000 points.
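A minimal sketch of this data-generating process is given below. It is an illustration under stated assumptions, not the simulation code used for the study: the seven-state \(\varvec{Q}\) is not reproduced here, so the test uses a hypothetical three-state \(\varvec{Q}\); the initial state is drawn uniformly; and the digital filtering is represented by the three-tap MA filter quoted in “Implementing MAD”.

```python
import random


def simulate_record(Q, means, sds, eta, dt, n, rng):
    """Simulate a filtered single-channel record (illustrative sketch only)."""
    N = len(Q)
    # 1. Continuous time Markov chain observed on the grid 0, dt, 2dt, ...
    x = rng.randrange(N)                     # assumption: uniform initial state
    next_jump = rng.expovariate(-Q[x][x])    # exponential holding time, rate -q_xx
    states = []
    for k in range(n):
        while next_jump <= k * dt:
            # Jump to j != x with probability q_xj / (-q_xx).
            rates = [Q[x][j] if j != x else 0.0 for j in range(N)]
            x = rng.choices(range(N), weights=rates)[0]
            next_jump += rng.expovariate(-Q[x][x])
        states.append(x)
    # 2. Map states to mean currents and add state-dependent Gaussian white noise.
    raw = [means[s] + sds[s] * rng.gauss(0.0, 1.0) for s in states]
    # 3. Digital low-pass filtering by the MA filter eta = {s: eta_s}.
    r = max(abs(s) for s in eta)
    filtered = [sum(e * raw[t - s] for s, e in eta.items()) for t in range(r, n - r)]
    return states, filtered
```

Given a seeded `random.Random` instance, a 50 kHz sampling rate corresponds to `dt = 1 / 50000` when the entries of `Q` are rates per second.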

Implementing MAD

For our simulated data set, the ratio \(f_s/f_c\) of the sampling frequency \(f_s\) to the filter cutoff frequency \(f_c\) is 2. From Colquhoun and Sigworth (2009, Appendix 3, p. 576) or Schouten (2000, Table 4.1), this corresponds to a filter of length 3 (\(r=1\)) with coefficients \(\eta _0=0.93\) and \(\eta _{-1}=\eta _1=0.035\). The reciprocal of the transfer function of the corresponding MA filter is
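\[ F(z) = \frac{1}{H(z)} = \frac{1}{0.035z + 0.93 + 0.035z^{-1}} = \frac{28.57\,z}{(z+0.0377)(z+26.53)}, \tag{6.1} \]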
where 0.0377 is obtained from 1 / 26.53. The function given by Eq. (6.1) is well defined in the region \(0.0377<|z|<26.53\), which includes the unit circle \(|z|=1\). Therefore, the corresponding system is stable (Proakis and Manolakis 1996, p. 209; see also appendix in Mourjopoulos 1994), so an inverse filter \(\{b_t\}\) can be constructed.

From (6.1), a (Laurent) series expansion yields
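\[ F(z) = 1.0783\sum_{t=-\infty}^{\infty}(-0.0377)^{|t|}z^{-t} = \cdots + 0.0015z^{2} - 0.0406z + 1.0783 - 0.0406z^{-1} + 0.0015z^{-2} - \cdots. \tag{6.2} \]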
We obtain \(b_t\) as the coefficient of \(z^{-t}\) in Eq. (6.2), for \(t=0,\pm\, 1, \pm \, 2,\ldots\). Note that, here and in general, the inverse filter \(\{b_t\}\) is of infinite length and needs to be truncated. We consider a truncation \(\{b'_t\}\) of \(\{b_t\}\) that satisfies (Proakis and Manolakis 1996, §8.5.2)

The corresponding truncated discrete time inverse MA filter is

where the number in bold indicates the entry corresponding to \(t = 0\). In this case,
\[ \{b'_t\}*\{\eta_t\} = \{\delta_t\} \]
to within four decimal places for each entry.
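For a symmetric three-tap filter with \(\eta_0 > 2\eta_1 > 0\), the Laurent coefficients in Eq. (6.2) have the closed form \(b_t = (-a)^{|t|}/\sqrt{\eta_0^2-4\eta_1^2}\), where \(-a\) is the pole inside the unit circle (here \(a \approx 0.0377\) and \(1/\sqrt{0.93^2-4(0.035)^2}\approx 1.0783\)). The sketch below computes them and evaluates a Euclidean-norm deviation from \(\{\delta_t\}\); treating that deviation as the truncation criterion is our assumption, and the function names are hypothetical.

```python
import math


def inverse_symmetric_ma(eta0, eta1, L):
    """Coefficients b_t, |t| <= L, of F(z) = 1 / (eta1 z + eta0 + eta1 z^{-1}).

    Assumes eta0 > 2 * eta1 > 0, so the poles -a and -1/a are real with
    0 < a < 1 and the Laurent series in a < |z| < 1/a gives
    b_t = (-a)**|t| / sqrt(eta0**2 - 4 * eta1**2).
    """
    root = math.sqrt(eta0 ** 2 - 4 * eta1 ** 2)
    a = (eta0 - root) / (2 * eta1)
    return {t: (-a) ** abs(t) / root for t in range(-L, L + 1)}


def residual_norm(b, eta):
    """Euclidean norm of {b_t} * {eta_t} - {delta_t}."""
    c = {}
    for j, bj in b.items():
        for s, e in eta.items():
            c[j + s] = c.get(j + s, 0.0) + bj * e
    return math.sqrt(sum((v - (1.0 if k == 0 else 0.0)) ** 2 for k, v in c.items()))


eta = {-1: 0.035, 0: 0.93, 1: 0.035}
b = inverse_symmetric_ma(0.93, 0.035, 2)  # about 0.0015, -0.0406, 1.0783, -0.0406, 0.0015
```

Under this assumed criterion with \(\varepsilon = 0.001\), the sketch needs \(L = 2\) (five taps) for the simulation filter, while the MscL filter used later (\(\eta_0 = 0.84\), \(\eta_{\pm 1} = 0.080\)) needs \(L = 3\) (seven taps).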

Results

The simulated data set was analysed using MAD, MAFHMM and standard HMM. The results are summarised in Table 1, which shows the estimates of mean current and noise standard deviation for each level, together with the corresponding standard errors (determined by Khan’s algorithm, Khan 2003) and (individual) \(95\%\) confidence intervals.

All three methods gave good estimates of the mean current at each level, although MAFHMM gave the largest standard errors. MAD, however, provided much better estimates of the noise standard deviations, and again MAFHMM produced the largest standard errors.

For HMM, MAFHMM and MAD, data were idealised as described in Almanjahie et al. (2015) and Khan et al. (2005). The idealised and simulated Markov chains were compared to identify any discrepancies or idealisation errors between them. MAD made 90 such errors, compared to 150 for HMM and 119 for MAFHMM out of a total of 100,000 points.

The mean dwell times and the number of points at each level as computed from the idealisations are presented in Table 2. For each level, this table shows the following mean dwell times: theoretical or true (\(\tau _i\), computed as \(-1/q_{ii}\), for each i, where \(q_{ii}\) is the \(i\)th diagonal entry of the \(\varvec{Q}\) used in the simulation), simulated (\({\bar{\tau }}_i\), computed as the mean dwell time in each state of the sampled simulated Markov chain) and estimated (\({\hat{\tau }}_i\)). The mean dwell times estimated by the methods were comparable, and similar to the corresponding theoretical and simulated values.
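The dwell-time quantities in Table 2 can be computed along the following lines. This is a minimal sketch with hypothetical function names: the empirical mean dwell time averages run lengths in a sampled (or idealised) path, and the theoretical value is \(\tau_i = -1/q_{ii}\). For simplicity the censored runs at the two ends of the record are counted like any other run.

```python
def mean_dwell_times(path, dt):
    """Mean sojourn time in each state of a sampled or idealised path.

    Runs at the start and end of the record are censored but are
    counted like any other run in this sketch.
    """
    totals, counts = {}, {}
    start = 0
    for t in range(1, len(path) + 1):
        if t == len(path) or path[t] != path[start]:
            s = path[start]
            totals[s] = totals.get(s, 0) + (t - start)
            counts[s] = counts.get(s, 0) + 1
            start = t
    return {s: dt * totals[s] / counts[s] for s in totals}


def theoretical_dwell_times(Q):
    """tau_i = -1 / q_ii for an intensity matrix Q."""
    return [-1.0 / Q[i][i] for i in range(len(Q))]
```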

Fifty such simulation studies were conducted and gave results similar to the above.

Algorithm complexity

Computation of the likelihood is dominated by the number of arithmetic operations required for calculating the forward and backward probabilities. For an N-state Markov chain of length T, the forward probabilities require \(O(N^2T)\) calculations; see Rabiner (1989). If a moving average filter of total length \(2r+1\) is included, the number of calculations required when using the meta-state approach is \(O(M^2T)\), where \(M=N^{2r+1}\) (Khan et al. 2005). The generalised forward–backward algorithm used in MAFHMM (Almanjahie et al. 2015) requires \(O(MNT)\) calculations.

As an example, when \(N=7\) and \(T=100{,}000\), the numbers of calculations required for each type of algorithm are summarised in Table 3. Note that MAD has the same complexity as the standard HMM, apart from the comparatively negligible cost of the pre-whitening convolution. For large data sets (typical of ion channel records), the run-time savings for MAD can be considerable.
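The scale of these differences can be checked with a few lines of arithmetic. The sketch below evaluates only the leading terms of the complexity expressions quoted above (constants and lower-order terms dropped), assuming a filter of length 3 (\(r=1\)) as in the simulation study, so its numbers show relative magnitudes rather than reproducing Table 3.

```python
def leading_order_counts(N, T, r):
    """Leading-order operation counts (constants omitted) for one likelihood pass."""
    M = N ** (2 * r + 1)  # number of meta-states for a filter of length 2r + 1
    return {
        "standard HMM / MAD": N ** 2 * T,
        "meta-state HMM": M ** 2 * T,
        "generalised forward-backward (MAFHMM)": M * N * T,
    }


counts = leading_order_counts(N=7, T=100_000, r=1)  # r = 1 is an assumption
```

With these inputs the meta-state count exceeds the standard HMM count by a factor of \(M^2/N^2 = 2401\), and the generalised forward–backward count by a factor of \(M/N = 49\).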

Application: MscL data

Extensive high bandwidth patch clamp data were obtained in the laboratory of Professor Boris Martinac (Head of Mechanosensory Biophysics Laboratory, Victor Chang Cardiac Research Institute) from MscL in the bacterium E. coli. The data were recorded by the same researcher in the same laboratory during the same afternoon under identical environmental conditions, with applied voltage \(+100\) mV, bandwidths 25 kHz and 50 kHz and digitally sampled at 75 kHz and 150 kHz, respectively. Four recordings at each bandwidth were obtained, each containing between 5 and 30 million data values. Further details of the experiment can be found in Almanjahie et al. (2015).

The data were screened and eight data sets (four at each bandwidth) each containing about 200,000 values were selected for analysis. Preliminary exploration and analysis (see Almanjahie et al. 2015) revealed that the noise standard deviations at the intermediate levels were larger than those at the closed and fully open levels. Following Khan et al. (2005), constraints were placed so the noise standard deviations at intermediate levels were equally spaced between those for the closed and fully open levels. Based on comprehensive analysis of the data using HMM and MAFHMM (moving average filtered HMM), Almanjahie et al. (2015) concluded that MscL in E. coli had five subconducting levels along with the fully open and closed levels. The main purpose in this section is to compare the performance of MAD with that of HMM and MAFHMM.

For the MscL data, the ratio \(f_s/f_c=3\) corresponds to a filter of length 3 (\(r=1\)) with coefficients \(\eta _0=0.84\) and \(\eta _{-1}=\eta _1=0.080\), as in Colquhoun and Sigworth (2009, Appendix 3, p. 576) or Schouten (2000, Table 4.1). For MAD, the norm-based criterion Eq. (3.12) with \(\varepsilon =0.001\) yields the corresponding truncated discrete time inverse MA filter as

In this case \(\{b'_t\}*\{\eta _t\} =\{\delta _t\}\) to within 3 decimal places for each entry.

Statistical analysis

With the number of levels set at \(N=7\) (Almanjahie et al. 2015) and state-dependent noise constrained as described above, the eight data sets were analysed using HMM, MAFHMM and MAD. Equations (2.3)–(2.6) were used for parameter estimation in HMM, and also MAD (for the preprocessed data). Parameter estimates for MAFHMM, derived in Almanjahie et al. (2015), are given for comparison. Estimated mean currents were offset to give \({\check{\mu }}_i= {\hat{\mu }}_i - {\hat{\mu }}_0\), \(i=0,1,\ldots ,6\), so that the mean current at the closed level was zero.

Results

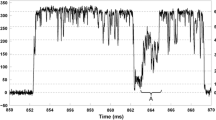

Table 4 shows estimated intermediate conductance values for MscL. For each level the estimated conductance (% max) is the corresponding mean current as a percentage of that at the fully open level. (Conductance values for the closed and fully open levels are 0 and 100, respectively.) Also given for each bandwidth are the mean (\({\bar{x}}\)) and standard deviation (s) of the four estimates of mean conductances at each level. Figure 1 is a plot of the conductance values for the eight data sets.
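The conversion from estimated mean currents to conductance (% max) is simple arithmetic; a minimal sketch follows. It assumes (our assumption) that the estimated level means are ordered from the closed level (first entry) to the fully open level (last entry), and the function name is hypothetical. It applies the offset \({\check{\mu }}_i= {\hat{\mu }}_i - {\hat{\mu }}_0\) and then scales by the fully open value.

```python
def conductance_percent(mu_hat):
    """Offset level means so the closed level is zero, then express each as a
    percentage of the fully open level (assumed to be the last entry)."""
    offset = [m - mu_hat[0] for m in mu_hat]
    return [100.0 * c / offset[-1] for c in offset]
```

For example, the simulation currents 0, 15, ..., 105 shifted by a hypothetical baseline of 2 give 0, about 14.29, ..., 100.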

For the 25 kHz data, the estimated intermediate conductance at each level is highest for HMM. However, for the 50 kHz data there is little difference in the conductance estimates obtained by the three approaches.

Discussion

We have incorporated correlated noise in an HMM for ion channel data and used deconvolution to pre-whiten the noise, resulting in a standard HMM for the preprocessed data. Parameter estimates were obtained by the EM algorithm. The method performed well in simulation studies.

We applied this methodology to MscL data from E. coli, giving the results in Table 4 and Fig. 1. The estimates of channel conductances are comparable to those of other researchers (Sukharev et al. 1999, 2001; Petrov et al. 2011), as can be seen from Table 3 and Figure 8 in Almanjahie et al. (2015).

We have also computed standard errors and confidence intervals for parameter estimates for the simulated data sets; these are not routinely produced by channel researchers, but are an important adjunct as they quantify precision of the estimates.

An important point to note is that the model in Eq. (5.6) is an HMM for the preprocessed data. Since the preprocessing depends on an approximation to the inverse filter, the parameter estimates and standard errors for Eq. (5.6) do not coincide exactly with those for Eq. (5.1). However, in practice this approximation should have minimal effect when the truncation error is small.

Almanjahie et al. (2015) determined transition schemes for MscL in E. coli based on HMM and MAFHMM analyses of the eight data sets. Compared with that for the 50 kHz data, their scheme for the 25 kHz data had one extra transition. Based on MAD analysis of all eight data sets, we produced transition schemes for this channel, and these coincided exactly with that for the 50 kHz data in Almanjahie et al. (2015), who also reported that the channel has two states at the closed level. However, these differences between transition schemes have no effect on the estimation of mean channel conductances.

Overall, the key contributions of this paper are the development of a filtered HMM incorporating correlated noise, together with a meta-state-free algorithm for parameter estimation; a statistical analysis of extensive high-bandwidth data; and highlighting the importance of bandwidth for estimating channel characteristics. The new algorithm is simple and greatly reduces computation time and memory requirements. These advantages are important for processing the very large data sets produced by high-bandwidth recording, which improvements in technology and experimental technique have made possible. Technological advances, coupled with corresponding advances in computational techniques, are instrumental in furthering our understanding of the structure of ion channels.
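A rough operation count helps show where the savings in computation and memory come from. The sketch assumes that a filtered HMM without deconvolution tracks a meta-state consisting of the current and previous `p` underlying states, as in moving-average filtered HMMs. This gives N^(p+1) meta-states, each with only N possible successors. A standard HMM on deconvolved data has only N states. The values of N, p and T below are hypothetical. The count ignores constant factors and any additional cost of modelling correlated noise, so it indicates orders of magnitude only.

```python
def forward_pass_cost(n_states: int, p: int, T: int) -> dict:
    """Per-step work and forward-variable storage for (a) a meta-state
    filtered HMM with meta-state (X_t, ..., X_{t-p}) and (b) a standard
    HMM on deconvolved data. Assumes sparse meta-state transitions."""
    meta = n_states ** (p + 1)
    return {
        "meta_states": meta,
        "meta_ops_per_step": meta * n_states,   # each meta-state has n_states successors
        "meta_floats_stored": T * meta,         # forward variables over T samples
        "std_ops_per_step": n_states ** 2,      # dense N x N transition matrix
        "std_floats_stored": T * n_states,
    }


if __name__ == "__main__":
    T = 1_000_000  # hypothetical record length in samples
    for p in range(1, 5):
        c = forward_pass_cost(5, p, T)
        print(p, c["meta_states"], c["meta_ops_per_step"], c["std_ops_per_step"],
              c["meta_floats_stored"] // c["std_floats_stored"])
```

For N = 5 and p = 3, for example, each forward step involves 3125 meta-state transitions rather than 25, and the stored forward variables are 125 times larger. Both ratios grow geometrically with the filter length p.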

References

Adsad SS, Dekate MV (2015) Relation of Z-transform and Laplace transform in discrete time signal. Int Res J Eng Technol 2:813–815

Aidley DJ, Stanfield PR (1996) Ion channels: molecules in action. Cambridge University Press, New York

Almanjahie IM, Khan RN, Milne RK, Martinac B, Nomura T (2015) Hidden Markov analysis of improved bandwidth mechanosensitive ion channel gating data. Eur Biophys J 44:545–556

Baum LE, Eagon JA (1967) An inequality with applications to statistical estimation for probabilistic functions of Markov processes and to a model for ecology. Bull Am Math Soc 73:360–363

Baum LE, Petrie T, Soules G, Weiss N (1970) A maximization technique occurring in the statistical analysis of probabilistic functions of Markov chains. Ann Math Stat 41:164–171

Brockwell PJ, Davis RA (2006) Time series: theory and methods, 2nd edn. Springer, New York

Chung SH, Moore JB, Xia L, Premkumar LS, Gage PW (1990) Characterization of single channel currents using digital signal processing techniques based on hidden Markov models. Philos Trans R Soc Lond Ser B 329:265–285

Colquhoun D, Hawkes A (1981) On the stochastic properties of single ion channels. Proc R Soc Lond Ser B 211:205–235

Colquhoun D, Hawkes A (1977) Relaxation and fluctuations of membrane currents that flow through drug-operated channels. Proc R Soc Lond Ser B 199:231–262

Colquhoun D, Sigworth FJ (2009) Fitting and statistical analysis of single-channel records. In: Sakmann B, Neher E (eds) Single channel recording, 2nd edn. Springer, New York, pp 483–587

De Gunst MCM, Künsch HR, Schouten JG (2001) Statistical analysis of ion channel data using hidden Markov models with correlated state-dependent noise and filtering. J Am Stat Assoc 96:805–815

Dempster AP, Laird NM, Rubin DB (1977) Maximum likelihood from incomplete data via the EM algorithm. J R Stat Soc Ser B 39:1–22

Devijver PA (1985) Baum’s forward–backward algorithm revisited. Pattern Recognit Lett 3:369–373

Epstein M, Calderhead B, Girolami M, Sivilotti G (2016) Bayesian statistical inference in ion-channel models with exact missed event correction. Biophys J 111:338–348

Ernstrom GG, Chalfie M (2002) Genetics of sensory mechanotransduction. Annu Rev Genet 36:411–453

Fredkin DR, Rice JA (2001) Fast evaluation of the likelihood of an HMM: ion channel currents with filtering and coloured noise. IEEE Trans Signal Process 49:625–633

French AS, Stockbridge LL (1988) Fractal and Markov behaviour in ion channel kinetics. Can J Physiol Pharmacol 66:967–970

Gibb AJ, Colquhoun D (1992) Activation of NMDA receptors by L-glutamate in cells dissociated from adult rat hippocampus. J Physiol 456:143–179

Gillespie PG, Walker RG (2001) Molecular basis of mechanosensory transduction. Nature 413:194–202

Hamill OP, Martinac B (2001) Molecular basis of mechanotransduction in living cells. Physiol Rev 81:685–740

Hamill OP, Marty A, Neher E, Sakmann B, Sigworth FJ (1981) Improved patch-clamp techniques for high-resolution current recordings from cells and cell-free membrane patches. Pflügers Arch Eur J Physiol 391:85–100

Hille B (2001) Ionic channels of excitable membranes, 3rd edn. Sinauer Associates Inc, Sunderland

Khan RN (2003) Statistical modelling and analysis of ion channel data based on hidden Markov models and the EM algorithm. Dissertation, University of Western Australia, Perth

Khan RN, Martinac B, Madsen BW, Milne RK, Yeo GF, Edeson RO (2005) Hidden Markov analysis of mechanosensitive ion channel gating. Math Biosci 193:139–158

Korn SJ, Horn R (1988) Statistical discrimination of fractal and Markov models for single channel gating. Biophys J 54:871–877

Läuger P (1985) Ionic channels with conformational substates. Biophys J 47:581–590

Läuger P (1988) Internal motion in proteins and gating kinetics of ionic channels. Biophys J 53:877–884

Liebovitch LS (1989) Testing fractal and Markov models of ion channel kinetics. Biophys J 55:373–377

Liebovitch LS, Fischbarg J, Koniarek JP, Todorova I, Wang M (1987) Fractal model of ion channel kinetics. Biochim Biophys Acta 896:173–180

Martinac B (2011) Bacterial mechanosensitive channels as a paradigm for mechanosensory transduction. Cell Physiol Biochem 28:1051–1060

McManus OB, Magleby KL (1989) Kinetic time constants independent of previous single-channel activity suggest Markov gating for a large conductance Ca-activated K-channel. J Gen Physiol 94:1037–1070

McManus OB, Weiss DS, Spivak CE, Blatz AL, Magleby KL (1988) Fractal models are inadequate for the kinetics of four different channels. Biophys J 54:859–870

Michalek S, Lerche H, Wagner M, Mitrović N, Schiebe M, Lehmann-Horn F, Timmer J (1999) On identification of Na+ channel gating schemes using moving-average filtered hidden Markov models. Eur Biophys J 28:605–609

Millhauser GL, Salpeter EE, Oswald RE (1988) Rate-amplitude correlation from single channel records. Biophys J 54:1165–1168

Mourjopoulos JN (1994) Digital equalization of room acoustics. J Audio Eng Soc 42:884–900

Petracchi D, Barbi M, Pellegrini M, Simoni A (1991) Use of conditional distributions in the analysis of ion channel recordings. Eur Biophys J 20:31–39

Petrov E, Rohde PR, Martinac B (2011) Flying-patch patch-clamp study of G22E-MscL mutant under high hydrostatic pressure. Biophys J 100:1635–1641

Proakis JG, Manolakis DG (1996) Digital signal processing: principles, algorithms, and applications. Prentice Hall, Englewood Cliffs

Qin F, Auerbach A, Sachs F (2000) Hidden Markov modeling for single channel kinetics with filtering and correlated noise. Biophys J 79:1928–1944

Rabiner LR (1989) A tutorial on hidden Markov models and selected applications in speech recognition. Proc IEEE 77:257–285

Sansom MSP, Ball FG, Kerry CJ, McGee R, Ramsey RL, Usherwood PNR (1989) Markov, fractal, diffusion and related models of ion channel gating: a comparison with experimental data from two ion channels. Biophys J 56:1229–1243

Schouten JG (2000) Stochastic modelling of ion channel kinetics. Dissertation, Thomas Stieltjes Institute for Mathematics, Vrije Universiteit, Amsterdam

Sukharev S, Sigurdson WJ, Kung C, Sachs F (1999) Energetic and spatial parameters for gating of the bacterial large conductance mechanosensitive channel, MscL. J Gen Physiol 113:525–539

Sukharev S, Betanzos M, Chiang CS, Guy HR (2001) The gating mechanism of the large mechanosensitive channel MscL. Nature 409:720–724

Venkataramanan L, Walsh JL, Kuc R, Sigworth FJ (1998a) Identification of hidden Markov models for ion channel currents—part I: coloured background noise. IEEE Trans Signal Process 46:1901–1915

Venkataramanan L, Kuc R, Sigworth FJ (1998b) Identification of hidden Markov models for ion channel currents–part II: state dependent excess noise. IEEE Trans Signal Process 46:1916–1929

Xiao R, Xu XZS (2010) Mechanosensitive channels: in touch with Piezo. Curr Biol 20:936–938

Acknowledgements

The experimental part of the study was supported by the APP1047980 grant from the National Health and Medical Research Council of Australia to Professor B. Martinac. Ibrahim M. Almanjahie thanks the government of Saudi Arabia, King Khalid University and the Cultural Mission of Royal Embassy of Saudi Arabia in Australia for support during his PhD study. We also thank two referees for their comments, which have enabled us to significantly improve the paper.


Cite this article

Almanjahie, I.M., Khan, R.N., Milne, R.K. et al. Moving average filtering with deconvolution (MAD) for hidden Markov model with filtering and correlated noise. Eur Biophys J 48, 383–393 (2019). https://doi.org/10.1007/s00249-019-01368-1


DOI: https://doi.org/10.1007/s00249-019-01368-1