Abstract

We use convex duality techniques to study a spatial Pareto problem with transport costs and derive a spatial second welfare theorem. The existence of an integrable equilibrium distribution of quantities is nontrivial and established under general monotonicity assumptions. Our variational approach also enables us to give a numerical algorithm à la Sinkhorn and present simulations for equilibrium prices and quantities in one-dimensional domains and a network of French cities.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The present article proposes a simple model of efficient spatial trade in an exchange economy subject to transportation costs. The notion of efficiency we consider comprises two quite different aspects: efficiency of the transport (import/export) process and efficiency in the Pareto sense of the distribution of goods after import/export has taken place. We take as primitives the spatial (initial) distribution of goods, the transportation cost for these goods and the agent’s preferences, given by a (possibly location dependent) utility function. The unknown is the (final) distribution of goods after trade among the different locations. Our approach is based on the maximization of a global criterion which is a weighted average of the utility of final consumption (Pareto efficiency) net of the minimal total transport cost taking into account mass conservation constraints (optimal transport).

Our model relies to a large extent on optimal transport theory which has been the object of an intensive and fruitful stream of research in the last three decades and has found numerous applications in PDEs, functional inequalities, probability theory, economics and more recently machine learning, see the textbooks of Villani [1] and Santambrogio [2], and for an overview of optimal transport methods in economics and econometrics, see Galichon [3]. Optimal transport aims at explaining how to transport mass efficiently (i.e. so as to minimize a total cost) between two given measures with the same total mass. A cornerstone of this theory is the Kantorovich duality which characterizes optimal plans in terms of a pair of dual variables which are naturally interpreted as prices.

In a spatially distributed exchange economy where various goods are available in different quantities according to location, agents located at different locations may have an interest to trade and they will certainly do so if they can all increase their utility for a small transport cost. Possible utility improvements is then the reason why some mass is actually transported. To fix ideas, think of two locations A and B, all the resources in water are concentrated at A and all the resources in food are concentrated at B, agents located at B will then import some water from A and export some food to B but the precise amount of water/food transported will depend on: (i) the initial endowments of A and B, (ii) the preferences of agents located at A and B and (iii) on how costly transporting these goods from one location to another is. If we fix the demand for water of B and the demand for food of A, this is a simple optimal transport problem but these demands result from a Pareto (weighted utility maximization) problem between these two locations. A dual version of the problem consists in looking for prices for which markets clear and also reflect the cost of transporting the goods as efficiently as possible.

We propose a simple variational model that combines optimal transport (that is how to transport the goods) with Pareto efficiency requirements (that is why some goods are actually transported). The variational problem we consider to find Pareto efficient distributions involves separable optimal transport terms and a joint concave utility term. Interestingly, this has a somehow similar structure as steps of the celebrated JKO scheme of Jordan, Kinderlehrer and Otto [4] for Wasserstein gradient flows, see Ambrosio, Gigli and Savaré [5] for a detailed account of this theory. Convex duality also enables us to derive supporting prices and to prove a sort of spatial second welfare theorem. From a mathematical point, the existence of integrable Pareto optimal final resource distributions is non trivial and we provide a complete proof using quite general monotonicity properties. We also prove existence of continuous prices supporting these Pareto distribution of the goods. The fact that the analysis resorts on convex minimization and duality enables us to use state of the art solvers based on the Sinkhorn algorithm for entropic optimal transport, see Cuturi [6], Benamou et al. [7], Cuturi and Peyré [8], Peyré [9]. We illustrate the practical use of our model by solving numerically one-dimensional examples as well as a graph model representing some cities in France.

The paper is organized as follows. Our variational spatial Pareto model and our assumptions are introduced in Sect. 2. Section 3 is concerned with existence and duality results. Primal-dual optimality conditions are further analysed and a second welfare theorem is derived from the latter in Sect. 4. Finally, Sect. 5 presents an algorithm for the entropic regularization of the problem and numerical simulations for equilibrium prices and quantities in one-dimensional domains and a graph representing a network of French cities.

2 Assumptions and Notations

We consider a region denoted by X which we assume to be a compact metric space. We consider agents populating X having initial endowments of N goods, given by nonnegative Borel measures \(\left( \mu _1, \ldots , \mu _N \right) \in {{{\mathcal {M}}}}_+(X)^N\). Choosing a reference measure \(m\in {{{\mathcal {M}}}}_+(X)\) such that \(\mu _i\) is absolutely continuous with respect to m for \(i=1, \ldots , N\) (e.g. \(m=\sum _{i=1}^N \mu _i\)) we denote by \(\alpha _i\) the density of \(\mu _i\) with respect to m i.e.

Assumption A1

A1 For each good i, there is a transport cost \(c_i \in C(X\times X)\) which satisfies

The quantity \(c_i(x,y)\) represents the cost of transporting one unit of mass of good i from x to y.

The image (or pushforward) of a Borel measure \(\theta \) by a Borel map T will be denoted \(T_\#\theta \) in the sequel. More precisely, if Y and Z are compact metric spaces, if \(\theta \in {{{\mathcal {M}}}}(Y)\) (i.e. \(\theta \) is a Borel measure on Y) and if T : \(Y \rightarrow Z\) is Borel, then \(T_\#\theta \) is defined by \(T_\# \theta (B)= \theta (T^{-1}(B))\) for every Borel subset B of Z. Equivalently, using test-functions, \(T_\#\theta \) is defined by

In particular, if \(\gamma \in {{{\mathcal {M}}}}_+(X\times X)\), the marginals of \(\gamma \) are \({\mathop {\textrm{proj}}\nolimits _1}_\# \gamma \) and \({\mathop {\textrm{proj}}\nolimits _{2}}_\# \gamma \), where \((\mathop {\textrm{proj}}\nolimits _1(x,y), \mathop {\textrm{proj}}\nolimits _2(x,y))=(x,y)\) denote the canonical projections. Given \(\nu _i\) a Borel measure on X, we set

where \( \Pi (\mu _i, \nu _i)\) is the set of transport plans between \(\mu _i\) and \(\nu _i\) i.e. the set of nonnegative Borel measures on \(X\times X\) having \(\mu _i\) and \(\nu _i\) as marginals (so that \(\gamma _i(A\times B)\) represents the amount of mass of good i initially in A transported to a location in B). In our model, transporting goods is costly but may be worth because it may result in some improvement in terms of the agent’s preferences. These preferences are given by a (space-dependent) utility function U: \(X\times {\mathbb {R}}_+^N \rightarrow {\mathbb {R}}\cup \{-\infty \}\), on which we make the following assumptions:

Assumption A2

A2 For m-a.e. \(x\in X\), \(\beta \in {\mathbb {R}}_+^N \mapsto U(x, \beta )\) is usc, concave and nondecreasingFootnote 1,

Assumption A3

A3 For every \(\beta \in {\mathbb {R}}_+^N\), \(x\in X \mapsto U(x, \beta )\) is m-measurable.

Assumption A4

A4 The map \((x, \beta ) \mapsto U(x,\beta )\) is sublinear with respect to \(\beta \) uniformly in \(x\in X\), meaning that for every \(\delta >0\) there exists \(C_\delta \) such that for m-a.e. \(x\in X\) one has

Assumption A5

A5 There exists \(({{\overline{\alpha }}}_1, \ldots , {{\overline{\alpha }}}_N)\in L^1(X,m)^N\) such that

and

A typical utility which satisfies the above assumptions (as well as the stronger version (A5’) of (A5) introduced in Sect. 3.3) is the Cobb–Douglas utility:

with w, a positive measurable and bounded weight function and \(a_i > 0\) and \(\sum _{i=1}^N a_i < 1\).

Now, Given \(\nu := (\nu _1, \ldots , \nu _N) \in {{{\mathcal {M}}}}_+(X)^N\), let us define

and

We are here interested in allocations which maximize the average utility (Pareto efficiency) taking into account transportation cost of the different goods and therefore consider

which can also be expressed as

which incorporates the mass conservation constraints

Note that (A5) and (A4) ensure that the value of the maximization problem (2.2) is finite.

3 Existence and Duality

3.1 Existence of \(L^1\) Optimizers

Notice that the existence of an optimizer in \(L^1\) for (2.2) is not totally straighforward, and this is the object of the next result.

Proposition 3.1

Assuming (A1)–(A5), the maximization problem (2.2) admits at least one solution. If, in addition, there exists \(\Omega \subset X\), Borel, such that \(m(\Omega ) > 0\) and U(x, .) is strictly concave on \({\mathbb {R}}_+^N\) for every \(x \in \Omega \) then the solution is unique.

Proof

Let \(\nu ^n =\beta ^n m\) be a maximizing sequence for (2.2). Since \(\beta _i^n\ge 0\) and \(\int _X \beta _i^n \hbox {d} m=\mu _i(X)\), \((\beta ^n)_n\) is bounded in \(L^1(X,m)^N\). It therefore follows from Komlos’ Theorem [10], that there exists a (not relabeled) subsequence such that the Cesaro means

converges m-a.e. to some \(\beta =(\beta _1, \ldots , \beta _N)\). Since both \({{{\mathcal {U}}}}\) and \(-{{{\mathcal {T}}}}_{\mu }\) are concave, the above sequence of Cesaro means is also a maximizing sequence, hence, slightly abusing notations by calling again \(\beta ^n\) this new sequence we may assume that

Let \(\delta >0\), the sublinearity assumption (A4), together with (3.1), the semicontinuity of U(x, .) and Fatou’s Lemma yield

by letting \(\delta \rightarrow 0^+\), we thus get

Moreover, \(\nu ^n= \beta ^n m\) being bounded in \({{{\mathcal {M}}}}_+(X)^N\), we can assume (again passing to a subsequence if necessary) that it converges weakly-\(*\) to some \(\nu =(\nu _1, \ldots , \nu _N)\in {{{\mathcal {M}}}}_+(X)^N\)

Notice that \(\nu _i(X)=\mu _i(X)\), and that \(T_{c_i}(\mu _i, .)\) is sequentially weakly-\(*\) lsc (as a consequence of the well-known Kantorovich duality formula for optimal transport, see [2]) so that

From (3.2)–(3.4) and the fact that \(\nu ^n\) is a maximizing sequence, we deduce:

Since \(\beta \) and \(\nu \) may be different and \(\beta \) may violate the mass preservation constraints (2.4), the identity (3.5) is not enough to conclude that (2.2) has a solution. To obtain a solution of (2.2), we shall use some monotonicity arguments relying on the assumptions (A1) and (A2).

Let \(f \in C(X)\) with \(f\ge 0\). For all \(M>0\), we have,

Letting \(n\rightarrow +\infty \), using (3.1), Lebesgue’s dominated convergence Theorem and (3.3), we deduce

so that, letting \(M\rightarrow \infty \), by monotone convergence, we obtain

Thanks to the Radon-Nikodym Theorem, we can decompose each measure \(\nu _i\) as

Since \(\nu _i^s\) and m are mutually singular there exists a Borel subset of X, which we denote \(A_i\), such that \(m(X\setminus A_i)=\nu _i^s(A_i)=0\) so that for every Borel subset B of X, there holds

As a consequence, with (3.6), we also have

Let now \(\gamma _i \in \Pi (\mu _i, \nu _i)\) be such that \({{{\mathcal {T}}}}_{c_i}(\mu _i, \nu _i)=\int _{X\times X} c_i \hbox {d} \gamma _i\) and let us decompose \(\gamma _i\) as \(\gamma _i=\gamma _i^a+\gamma _i^s\) where \(\gamma _i^a\) and \(\gamma _i^s\) are defined by

for every \(\varphi \in C(X\times X)\). By construction, we have

and one can decompose \( \mu _i=\alpha _i m={\mathop {\textrm{proj}}\nolimits _1}_\# \gamma _i\) as \({\mathop {\textrm{proj}}\nolimits _1}_\# \gamma _i^a + {\mathop {\textrm{proj}}\nolimits _1}_\# \gamma _i^s\). Since these two terms are absolutely continuous with respect to m, we can write them as

Now let us define

and observe that

and

so that \({\widetilde{\gamma }}_i \in \Pi (\mu _i, {\widetilde{\beta }}_i m)\). Using (A1) and the definition of \({\widetilde{\gamma }}_i\) in (3.10), we have

Setting \({\widetilde{\beta }}=({\widetilde{\beta }}_1, \ldots , {\widetilde{\beta }}_N)\) we thus have

and since \({\widetilde{\beta }}_i \ge \beta _i^a\), from (3.9), we deduce that \({\widetilde{\beta }}\ge \beta \), hence from the monotonicity part in assumption (A2), we get

Together with (3.5), this implies that \({\widetilde{\beta }}\) solves (2.2). Of course, this solution is unique as soon as \({\mathcal {U}}\) is strictly concave since \({\mathcal {T}}_{\mu }\) is convex. \(\square \)

Remark 3.2

The proof above shows that if \(\nu \in {{{\mathcal {M}}}}_+(X)^N\) is such that \(\nu _i(X) =\mu _i(X)\) for \(i=1, \ldots , N\), and writing the Lebesgue decomposition of \(\nu _i\) with respect to m as

there exists \({\widetilde{\beta }}_i \in L^1(X, m)\) such that

This, together with the monotonicity of U, shows that

therefore we have

where in the right-hand side \(\nu ^a=(\nu _1^a, \ldots , \nu _N^a) \) denotes the absolutely continuous part of \(\nu \) with respect to m.

3.2 Duality

The Pareto problem (2.2) appears naturally as the dual of a convex minimization problem over continuous functions which we now describe. Let us first introduce for \((x, \varphi )=(x, \varphi _1, \ldots , \varphi _N)\in X\times {\mathbb {R}}^N\):

Note that each V(x, .) is convex, lsc, nonincreasing and that \(\varphi \in {\mathbb {R}}_+^N\) whenever \(V(x,\varphi )<+\infty \). Let us then consider the convex integral functional

and observe that, thanks to (A4), one has

Recall that, for any \(i=1, \ldots , N\) and given \(\psi \in C(X)\), the \(c_i\)-transform of \(\psi \), denoted by \(\psi ^{c_i}\), is defined by

For \(\varphi :=(\varphi _1, \ldots , \varphi _N)\in C(X)^N\), let us set

so that K is convex and everywhere continuous w.r.t the uniform norm. Finally, let us consider the convex minimization problem

It is easy to deduce, from (A5) and the fact that \(c_i\) is bounded, that \(K + {{{\mathcal {V}}}}\) is bounded from below. Hence, (3.13) together with the continuity of K implies that the value of (3.15) is finite and that the Fenchel-Rockafellar Theorem applies to (3.15). Identifying the dual of \(C(X)^N\) with \({{{\mathcal {M}}}}(X)^N\), the Fenchel-Rockafellar dual of (3.15) reads

where \(K^*\) and \({{{\mathcal {V}}}}^*\) respectively stand for the Legendre transform of K and \({{{\mathcal {V}}}}\) (for the duality between continuous functions and Radon measures), i.e. for every \(\nu \in {{{\mathcal {M}}}}(X)^N\):

It turns out that (3.16) is nothing but (2.2), slightly in disguise, as expressed by the next result.

Lemma 3.3

Let \(\nu \in {{{\mathcal {M}}}}(X)^N\), there holds

where \(\nu ^a\) is the absolutely continuous part of \(\nu \) with respect to m. Moreover, we have

Proof

The fact that \(K^*(\nu )= {{{\mathcal {T}}}}_\mu (\nu )\) is a consequence of the well-known Kantorovich duality formula for optimal transport (see for instance [2]). As for the Legendre transform of \({{{\mathcal {V}}}}\), it follows from the seminal results of Rockafellar [11, 12] for convex integral functionals. More precisely, thanks to Theorem 4 in [12] and our assumptions on U, we have:

On the one hand, by the Fenchel-Moreau Theorem and the convexity and lower semicontinuity of \(-U(x,.)\) we have

On the other hand, since \({{{\mathcal {V}}}}(\varphi )<+\infty \) whenever each \(\varphi _i\) is strictly positive and \({{{\mathcal {V}}}}(\varphi )<+\infty \) implies that each \(\varphi _i\) is nonnegative, we have

As a consequence, using the monotonicity of U(x, .) and the fact that for \(\theta _i \in L^1(X,m)\), \(0 \le \theta _i \le \nu _i\) is equivalent to \(0\le \theta _i \le \nu _i^a\), we get

By the Fenchel-Rockafellar Theorem (see [13]), together with the continuity of K and the fact that \({{{\mathcal {V}}}}\) is lsc and \({{{\mathcal {V}}}}(\varphi )\) is finite whenever the components of \(\varphi \) are bounded from below by a positive constant, we get

from which we deduce (3.18) thanks to proposition 3.1 and (3.11). \(\square \)

3.3 Existence for the Primal

Let us consider the following qualification condition which is a strengthening of (A5):

Assumption A5’ There exists \({{\overline{\alpha }}}\in L^1(X,m)^N\) such that (2.1) holds and there exists \(\varepsilon \in (0,1)\) such that

We then have an existence result for (3.15):

Proposition 3.4

Assuming (A1)–(A5’), the minimization problem (3.15) admits at least one solution.

Proof

Let \(\varphi ^n=(\varphi _1^n, \ldots , \varphi _N^n)\) be a minimizing sequence for (3.15), let us then define \({\widetilde{\varphi }}^n:=({\widetilde{\varphi }}_1^n, \ldots , {\widetilde{\varphi }}_N^n)\) by

It is well-known that \(({\widetilde{\varphi }}_i^n)^{c_i}= ({\varphi _i^n})^{c_i}\) and that \({\widetilde{\varphi }}_i^n \ge \varphi _i^n\) so that \(K({\widetilde{\varphi }}^n)=K(\varphi ^n)\) and (since V(x, .) is nonincreasing) \({{{\mathcal {V}}}}({\widetilde{\varphi }}^n)\le {{{\mathcal {V}}}}(\varphi ^n)\). Hence \({\widetilde{\varphi }}^n\) is also a minimizing sequence for (3.15). Now the advantage of working with the minimizing sequence \({\widetilde{\varphi }}_i^n\) is that it is uniformly equicontinuous (indeed, thanks to (3.19), a modulus of continuity of \(c_i\) is also a modulus of \({\widetilde{\varphi }}_i^n\) for every n). Since \({{{\mathcal {V}}}}({\widetilde{\varphi }}^n)\) is finite, \({\widetilde{\varphi }}_i^n\ge 0\), let us then set \(\delta _i^n:=\min _X {\widetilde{\varphi }}_i^n \ge 0\), and let us prove that \(\delta _i^n\) is bounded. First, from the equicontinuity, there is a constant \(C\ge 0\) such that for every \(i\in \{1, \ldots , N\}\) and every n, one has

Now fix, M such that

Since \( {\widetilde{\varphi }}_i^n \ge \delta _i^n\), thanks to (A1) we have \(K({\widetilde{\varphi }}_n) \ge \sum _{i=1}^N (\delta _i^n -\min _{X\times X} c_i) \mu _i(X)= \sum _{i=1}^N \delta _i^n \mu _i(X)\). Moreover, by the very definition of V and (3.20)

Integrating and using (A5’), this yields that for some constant \(C'\), \({{{\mathcal {V}}}}({\widetilde{\varphi }}^n) \ge C'-(1-\varepsilon ) \sum _{i=1}^N \delta _i^n \mu _i(X)\). Thanks to (3.21), we therefore get

which shows that \(\delta _i^n\) is bounded so that \({\widetilde{\varphi }}^n\) is uniformly bounded. Applying Ascoli’s Theorem, we may therefore assume that \({\widetilde{\varphi }}^n\) converges uniformly to some \({\widetilde{\varphi }}\), so that \(K({\widetilde{\varphi }}^n)\) converges to \(K({\widetilde{\varphi }})\), and since for some constant \(C''\), one has \(V(x, {\widetilde{\varphi }}_n(x)) \ge U(x, {{\overline{\alpha }}}(x))-C''\), one deduces from (A5) and Fatou’s lemma that \(\liminf _n {{{\mathcal {V}}}}({\widetilde{\varphi }}^n) \ge {{{\mathcal {V}}}}({\widetilde{\varphi }})\) which finally proves that \({\widetilde{\varphi }}\) solves (3.15). \(\square \)

4 Optimality Conditions and a Second Welfare Theorem

4.1 Primal-Dual Extremality Conditions

Now that we know that, under the assumptions of proposition 3.4, there exist solutions both to (2.2) and its primal (3.15), we can easily use (3.18) to deduce a characterization of these solutions. Indeed, by construction, we have

and for every i, \(\beta :=(\beta _1,\ldots ,\beta _N) \in L^1(X, m)^N\) such that \(\beta _i \ge 0\) and \(\int _X \beta _i m =\mu _i(X)\) and every \(\varphi := (\varphi _1,\ldots ,\varphi _N) \in C(X)^N\), we have

Now if \(\varphi \) solves (3.15) and \(\beta \) solves (2.2), from (3.18), we obtain

so that with (4.1), we get

Likewise, with (4.2), we get for every i,

so that

By continuity of \(\varphi _i\) and \(c_i\), this means that, whenever (x, y) is in the support of \(\gamma _i\), one has

Note in particular that (4.3) implies that for m-a.e. x, \(\varphi (x)\) is a supergradient of U(x, .) at \(\beta (x)\) and then

4.2 A Second Welfare Theorem with Transport Costs

The optimality conditions (4.4)–(4.6) can easily be interpreted in terms of equilibrium conditions as we shall now explain. Imagine that there is a representative agent at each location x, with utility U(x, .) and an initial endowment \(\alpha (x)\) for the goods \(1, \ldots , N\). Assume also that this agent has a given (monetary) endowment w(x). In an exchange economy, where the agent located at y can trade with agents located at any location x, an equilibrium for the monetary endowment w is a system of prices \(y\mapsto p(y)\) together with a final endowment \(y\mapsto \beta (y)\) of the goods, satisfying:

-

There is free-mobility of trade Given the price, system p, and a location x, agents located at x exporting one unit of good i to location y, get a profit \(p_i(y)-c_i(x,y)\), so that their total profit is

$$\begin{aligned} \pi (x) \cdot \alpha (x)=\sum _{i=1}^N \pi _i(x) \alpha _i(x), \; \pi _i(x)=\max _{y\in Y} \{p_i(y)-c_i(x,y)\}=-p_i^{c_i}(x),\end{aligned}$$ -

Consumers maximize utility Agents located at y maximize their utility \(U(y, \beta )\) subject to their budget constraint that expenditure \(p(y)\cdot \beta \) is smaller than their total revenue which is w(y) augmented by their export profit \(\pi (y) \cdot \alpha (y)\), i.e. \(\beta (y)\) should solve

$$\begin{aligned}\max \{ U(y, \beta ) \; : \; p(y) \cdot \beta \le \pi (y)\cdot \alpha (y)+w(y)\}\end{aligned}$$ -

Markets clear which means that for every i, one can find a plan \(\gamma _i\) (\(\gamma _i(A\times B)\) represents the quantity of good i transported from a location in A to a location in B), such that \(\gamma _i \in \Pi (\alpha _i m, \beta _i m)\) (i.e. demand and supply match) and \(\pi _i(x)=p_i(y)-c_i(x,y)\) for every (x, y) in the support of \(\gamma _i\) (which means that this plan is consistent with free-mobility of trade).

We then straighforwardly deduce from the optimality conditions (4.4)–(4.6), a sort of second welfare theorem with transport costs

Theorem 4.1

Let \(\varphi \) and \(\beta \) solves (3.15) and (2.2) respectively. Define then

then \((\beta , p)\) is an equilibrium with monetary endowment w.

5 Algorithm and Simulations

In this final section, we describe the entropic approximation of (3.15) and its dual (2.2). Since the influential paper of Cuturi [6], entropic optimal transport has become a popular and efficient tool for computational optimal transport thanks to Sinkhorn’s celebrated scaling algorithm, and we refer to [8] for a comprehensive exposition. The algorithm detailed in the next paragraph is based on a variant of the Sinkhorn algorithm introduced by Peyré [9] in the context of Wasserstein gradient flows. We finally give numerical results in the one-dimensional case and in the case of a network of cities.

5.1 An Entropic Approximation Algorithm

From now on, we assume that X is finite, take the counting measure m as reference measure on X, and denote by \(\alpha _i(x)>0\) the inital endowment of location \(x\in X\) in the good \(i\in \{1, \ldots , N\}\). Problem (3.15) thus takes the form:

Given a regularization parameter \(\varepsilon >0\), we consider the smooth approximation of (5.1) where maxima are replaced by soft maximaFootnote 2:

Now, let us observe that (5.2) can be conveniently reformulated by considering the convex function

for \((\psi , \varphi ) \in {\mathbb {R}}^{X\times N} \times {\mathbb {R}}^{X\times N}\). Indeed, for fixed \(\varphi \), the minimizer of \(\psi \mapsto \Phi (\varphi , \psi )\) is explicitly given by

so replacing in \(\Phi \), we get

where C is the constant

Optimality system in primal and dual form The optimality condition for (5.2) (which is the minimization of the sum of a convex lsc function with a smooth convex function) writes:

where \(\beta (y)=(\beta _1(y), \ldots , \beta _N(y))\) is given by

Defining

we thus have \(\gamma _i \in \Pi (\alpha _i, \beta _i)\) and \(\gamma _i\) solves the entropic optimal transport problem

It is then easy to check that (5.4)–(5.5) is equivalent to the fact that \(\beta \) solves the dual of (5.2):

which is the entropic regularization of (2.2) with regularization parameter \(\varepsilon \).

Coordinate descent/Sinkhorn We can find the (regularized) prices \(\varphi \) by solving (5.2) and then the optimal quantities \(\beta \) by using (5.5). As noted above, (5.2) is equivalent to

which can be solved by coordinate descent as follows. Starting from \((\psi ^{0}, \varphi ^0)\), we recursively compute \((\psi ^{k+1}, \varphi ^{k+1})\) by:

which, using (5.3), gives totally explicit Sinkhorn-like updates:

for \(i=1, \ldots , N\) and every \(x\in X\). Then, we update the prices by:

which is the same as solving a one-dimensional strictly convex minimization problem for each \(y\in X\):

This boils down to a strictly monotone equation (if V is differentiable, see the Cobb–Douglas case below) or inclusion (if V is nonsmooth) in t which can be solved efficiently by dichotomy for instance. The next price updates are performed similarly by iteratively solving for, \(i=2, \ldots , N-1\) and every \(y\in X\):

and for \(i=N\)

The Cobb–Douglas case If we further specify the utility to be of Cobb–Douglas formFootnote 3 :

then a direct computation gives

Hence, each minimization step as (5.10) amounts, for some parameter \(A>0\) and exponent b, to finding the root t of the strictly monotone equation

which can be solved efficiently with a few steps of a dichotomy method. We can also notice that since V is smooth and locally strongly convex on its domain, the linear convergence of the coordinate scheme described above is guaranteed by the results of Beck and Tetruashvili [15].

5.2 Numerical Results

We now present some simulations using the entropic approximation algorithm described in the previous paragraph.

5.2.1 One-Dimensional Experiments

We first consider the one dimensional case \(X=[0,1]\) (suitably discretized), assume there are twoo goods and that the utility is a Cobb-Douglas function with parameters \(a_1\) and \(a_2\). As for the transport cost, we take a power of the distance:

When \(\lambda \) is large, transport is costly and \(\beta _1\), \(\beta _2\) tend to be close to the initial distributions \(\alpha _1\) and \(\alpha _2\). On the contrary, when \(\lambda \) is small, the utility term dominates and \(\beta _1\) and \(\beta _2\) tend to be constant.

In our first three simulations, \(\alpha _1\) and \(\alpha _2\) are gaussians of expected values respectively \(\mu _1 = 0.25\) and \(\mu _2 = 0.75\) and standard deviations \(\sigma _1 = \sigma _2 = 0.2\), \((a_1,a_2) = (0.49,0.49)\) and \(\varepsilon = 0.01\). The three simulations correspond to different exponents p for the cost: a concave cost with \(p=0.3\) (Fig. 1), the linear cost \(p=1\) (Fig. 2) and the quadratic cost \(p=2\) (Fig. 3). In the first row, \(\alpha \) appears in light grey and \(\beta \) in dark grey, the prices \((\varphi _1,\varphi _2)\) are plotted in the second row. The third row represents the convergence of the algorithm: we plot the decay of the functional \(\Phi \) and the evolution of the error \(||\partial V(\varphi (\cdot )) + \beta (\cdot )||_2\) for the first order necessary and sufficient condition (5.4).

The most striking phenomenon shown by these simulations is the emergence of extra modes in the final distributions whereas the initial distributions are single peaked. This phenomenon seems to be related to the concavity of the transport cost. In the concave case of Fig. 1, \(\beta \) exhibits three local maxima, the linear case of Fig. 2 exhibits two modes and in the quadratic case of case of Fig. 3, initial and final distributions roughly have the same unimodal shape.

In order to further explore numerically the impact of the various parameters on the structure of the Pareto optimum, we present in Figs. 4 and 5, the case of mixture of three gaussians for \(\alpha _1\) and of two gaussians for \(\alpha _2\) for different values of \(a_1\) and \(a_2\) (in Fig. 4, \(a_1+a_2\) is close to 1 and in Fig. 5, \(a_1+a_2=0.5\)).

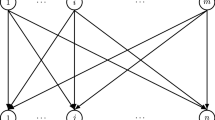

5.2.2 A Network Model

Our next application concerns a connected network of French cities. The nodes of this network (see Fig. 6) are the nine French cities: Brest, Lille, Lyon, Marseille, Nantes, Paris, Rennes, St-Malo and Strasbourg. The cost between two neighbouring cities is given by the distance as the crow fliesFootnote 4 and more generally the cost between two cities is given by the shortest path distance between them. To take into account population differences between these cities, we have weighted the Cobb–Douglas utility by a weight w(x) for city x:

City | Brest | Lyon | Marseille | Paris | Strasbourg | Rennes | Lille | Saint-Malo | Nantes |

|---|---|---|---|---|---|---|---|---|---|

w (thousands) | 139 | 518 | 868 | 2175 | 285 | 217 | 233 | 46 | 314 |

This leads to the weighted utility

and the corresponding conjugate term (see (3.12)):

with \(a=a_1+a_2+a_3<1\). In our examples, we have taken \( (a_1,a_2,a_3)=(0.4,0.1,0.2)\). In Figs. 6, 7, 8 and 9, we have represented the initial and final distributions as well as the optimal transport plans between the cities. Note that the larger \(\varepsilon \), the stronger the diffusion effect in the transport plan, which explains why we see more connections in Figs. 6 and 7 (large diffusion with \(\varepsilon =0.1\)) than in Figs. 8 and 9 (\(\varepsilon =0.01\)). We also give the prices of the goods in the different cities and analyze the convergence of the algorithm both in terms of the decay of the objective function \(\Phi \) and in the residuals for the optimality condition.

Notes

by nondecreasing we mean that \(\beta -\beta ' \in {\mathbb {R}}_+^N\) implies \(U(x, \beta ) \ge U(x, \beta ')\).

for simplicity, we take U independent of the location but there is no extra difficulty in having a dependence in y in a multiplicative prefactor or even in the exponents \(a_i\).

given by the website https://www.coordonnees-gps.fr/distance.

References

Villani, C.: Topics in optimal transportation. Graduate Studies in Mathematics, vol. 58. American Mathematical Society, Providence, RI (2003)

Santambrogio, F.: Optimal transport for applied mathematicians. Progress in Nonlinear Differential Equations and their Applications, 87. Birkhäuser/Springer, Cham (2015). Calculus of variations, PDEs, and modeling

Galichon, A.: Optimal Transport Methods in Economics. Princeton University Press, Princeton (2016)

Jordan, R., Kinderlehrer, David, Otto, F.: The variational formulation of the Fokker–Planck equation. SIAM J. Math. Anal. 29(1), 1–17 (1998)

Ambrosio, L., Gigli, N., Savaré, G.: Gradient Flows in Metric Spaces and in the Space of Probability Measures. Lectures in Mathematics ETH Zürich, 2nd edn. Birkhäuser, Basel (2008)

Marco, C.: Sinkhorn distances: Lightspeed computation of optimal transport. In Advances in Neural Information Processing Systems, pp 2292–2300 (2013)

Benamou, J. D., Carlier, G., Cuturi, M., Nenna, L., & Peyré, G.: Iterative Bregman projections for regularized transportation problems. SIAM J. Sci. Comput. 37(2), A1111–A1138 (2015)

Peyré, G., Cuturi, M.: Computational optimal transport: with applications to data science. Found. Trends Mach. Learn. 11(5–6), 355–607 (2019)

Peyré, G.: Entropic approximation of wasserstein gradient flows. SIAM J. Imaging Sci. 8(4), 2323–2351 (2015)

Komlós, J.: A generalization of a problem of Steinhaus. Acta Math. Acad. Sci. Hungar. 18, 217–229 (1967)

Rockafellar, R.T.: Integrals which are convex functionals. Pacific J. Math. 24, 525–539 (1968)

Rockafellar, R.T.: Integrals which are convex functionals. II. Pacific J. Math. 39, 439–469 (1971)

Ivar, E. and Roger, T.: Convex analysis and variational problems, volume 28 of Classics in Applied Mathematics. Society for Industrial and Applied Mathematics (SIAM), Philadelphia, english edition (1999)

Alfred, G., Bernard, S.: Cupid’s invisible hand: social surplus and identification in matching models. Rev. Econ. Stud. 89(5), 2600–2629 (2021)

Beck, A., Tetruashvili, L.: On the convergence of block coordinate descent type methods. SIAM J. Optim. 23(4), 2037–2060 (2013)

Acknowledgements

GC is grateful to the Agence Nationale de la Recherche for its support through the projects MAGA (ANR-16-CE40-0014) and MFG (ANR-16-CE40-0015-01). GC also acknowledges the support of the Lagrange Mathematics and Computation Research Center.

Funding

The authors have not disclosed any funding.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors have not disclosed any competing interests..

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Bacon, X., Carlier, G. & Nazaret, B. A Spatial Pareto Exchange Economy Problem. Appl Math Optim 87, 45 (2023). https://doi.org/10.1007/s00245-022-09947-z

Accepted:

Published:

DOI: https://doi.org/10.1007/s00245-022-09947-z

Keywords

- Exchange economy Pareto optimality

- Optimal transport

- Convex duality

- Second welfare theorem

- Entropic optimal transport

- Sinkhorn algorithm