Abstract

In this paper we deal with a general second order continuous dynamical system associated to a convex minimization problem with a Fréchet differentiable objective function. We show that inertial algorithms, such as Nesterov’s algorithm, can be obtained via the natural explicit discretization from our dynamical system. Our dynamical system can be viewed as a perturbed version of the heavy ball method with vanishing damping, however the perturbation is made in the argument of the gradient of the objective function. This perturbation seems to have a smoothing effect for the energy error and eliminates the oscillations obtained for this error in the case of the heavy ball method with vanishing damping, as some numerical experiments show. We prove that the value of the objective function in a generated trajectory converges in order \(\mathcal {O}(1/t^2)\) to the global minimum of the objective function. Moreover, we obtain that a trajectory generated by the dynamical system converges to a minimum point of the objective function.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Since Su et al. in [32] showed that Nesterov’s accelerated convex gradient method has the exact limit the second order differential equation that governs the heavy ball system with vanishing damping, that is,

with \(\alpha =3\), the latter system has been intensively studied in the literature in connection to the minimization problem \(\inf _{x\in \mathbb {R}^m}g(x).\) Here \(g:\mathbb {R}^m\longrightarrow \mathbb {R}\) is a convex Fréchet differentiable function with Lipschitz continuous gradient.

In [32] the authors proved that

for every \(\alpha \ge 3,\) however they did not show the convergence of a generated trajectory to a minimum of the objective function g.

In [9], Attouch et al. considered the case \(\alpha >3\) in (1), and showed that the generated trajectory x(t) converges to a minimizer of g as \(t\longrightarrow +\infty \). Actually in [9] the authors considered the perturbed version of the heavy ball system with vanishing damping, that is,

where \(h:[t_0,+\infty )\longrightarrow \mathbb {R}\) is a small perturbation term that satisfies \(\int _{t_0}^{+\infty }t\Vert h(t)\Vert dt<+\infty .\) Beside the convergence of a generated trajectory x(t) to a minimizer of g, they showed that also in this case the convergence rate of the objective function along the trajectory, that is \(g(x(t))-\min g,\) is of order \(\mathcal {O}\left( \frac{1}{t^2}\right) \).

Another perturbed version of (1) was studied by Attouch et al. in [8], see also [3, 7, 11, 30]. They assumed that the objective g is twice continuously differentiable and the perturbation of their system is made at the damping term. More precisely, they studied the dynamical system with Hessian driven damping

where \(\alpha >0\) and \(\beta >0.\) In case \(\alpha>3,\,\beta >0\) they showed the convergence of a generated trajectory to a minimizer of g. Moreover, they obtained that in this case the convergence rate of the objective function along the trajectory, that is \(g(x(t))-\min g,\) is of order \({o}\left( \frac{1}{t^2}\right) \).

Further, Attouch et al. in [10] studied the subcritical case \(\alpha \le 3\) and they proved that in this case the convergence rates of the objective function g along the trajectory generated by (1), i.e \(g(x(t))-\min g,\) is of order \(\mathcal {O}\left( \frac{1}{t^{\frac{2}{3}\alpha }}\right) \).

Another approach is due to Aujol et al. [14], who assumed that beside convexity, the objective g in (1) satisfies some geometrical conditions, such as the Łojasiewicz property. The importance of their results obtained in [14] is underlined by the fact that applying the classical Nesterov scheme on a convex objective function without studying its geometrical properties may lead to sub-optimal algorithms.

It is worth mentioning the work of Aujol and Dossal [13], who did not assumed the convexity of g, but the convexity of the function \((g(x(t))-g(x^*))^\beta \), where \(\beta \) is strongly related to the damping parameter \(\alpha \) and \(x^*\) is a global minimizer of g. Under these assumptions, they obtained some general convergence rates and also the convergence of the generated trajectories of (1). In case \(\beta =1\) they results reduce to the results obtained in [8,9,10].

However, the convergence of the trajectories generated by the continuous heavy ball system with vanishing damping (1), in the general case when g is non-convex is still an open question. Some important steps in this direction have been made in [19] (see also [18]), where convergence of the trajectories of a perturbed system, have been obtained in a non-convex setting. More precisely in [19] is considered the system

where \(t_0>0,u_0,v_0\in \mathbb {R}^m,\,\gamma >0,\,\alpha \in \mathbb {R}.\) Note that here \(\alpha \) can take non-positive values. For \(\alpha =0\) we recover the dynamical system studied in [16]. According to [19], the trajectory generated by the dynamical system (4) converges to a critical point of g, if a regularization of g satisfies the Kurdyka–Łojasiewicz property.

Further results concerning the heavy ball method and its extensions can be found in [4, 6, 15, 21,22,23].

1.1 An Extension of the Heavy Ball Method and the Nesterov Type Algorithms Obtained via Explicit Discretization

What one can notice concerning the heavy ball system and its variants is, that despite of the result of Su et al. [32], these systems will never give through the natural implicit/explicit discretization the Nesterov algorithm. This is due to the fact that the gradient of g is evaluated in x(t), and this via discretization will become \({\nabla }g(x_n)\) or \({\nabla }g(x_{n+1})\) and never of the form \({\nabla }g(x_n+\alpha _n(x_n-x_{n-1}))\) as Nesterov’s gradient method requires. Another observation is that using the same approach as in [32] one can show, see [25], that (1), (and also (4)), models beside Nesterov’s algorithm other algorithms too. In this paper we overcome the deficiencies emphasized above by introducing a dynamical system that via explicit discretization leads to inertial algorithms of gradient type. To this end, let us consider the optimization problem

where \(g:\mathbb {R}^m\longrightarrow \mathbb {R}\) is a convex Fréchet differentiable, function with \(L_g\)-Lipschitz continuous gradient, i.e. there exists \(L_g\ge 0\) such that \(\Vert {\nabla }g(x)-{\nabla }g(y)\Vert \le L_g\Vert x-y\Vert \) for all \(x,y\in \mathbb {R}^m.\)

We associate to (5) the following second order dynamical system:

where \(u_0,v_0\in \mathbb {R}^m,\) \(t_0>0\) and \(\alpha >0,\,\beta \in \mathbb {R},\,\gamma \ge 0.\)

Remark 1

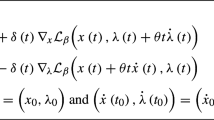

The connection of (6) with the heavy ball system with vanishing damping (1) is obvious, the latter one can be obtained from (6) for \(\gamma =\beta =0.\) Further, by using the Taylor expansion for \({\nabla }g(\cdot )\) we get

Hence, (6) is strongly related to the following version of heavy ball method with Hessian driven damping:

We emphasize that second order dynamical systems with Hessian driven damping similar to (8) have been intensively studied in the literature, see [3, 7, 8, 11, 21, 30]

The study of the dynamical system (6) in connection to the optimization problem (5) is motivated by the following facts.

- 1.:

-

The dynamical system (6) leads via explicit discretization to inertial algorithms. In particular Nesterov’s algorithm can be obtained via this natural discretization.

- 2.:

-

A generated trajectory and the objective function value in this trajectory in general have a better convergence behaviour than a trajectory generated by the heavy ball system with vanishing damping (1), as some numerical examples show.

- 3.:

-

The same numerical experiments reveal that the perturbation term \(\left( \gamma +\frac{\beta }{t}\right) \dot{x}(t)\) in the argument of the gradient of the objective function g has a smoothing effect and annihilates the oscillations obtained in case of the dynamical system (1) for the errors \(g(x(t)-\min g\) and \(\Vert x(t)-x^*\Vert ,\) where \(x^*\) is a minimizer of g. In view of Remark 1 this fact is not surprising, since according to [30] the system (8) has the same smoothing effect.

- 4.:

-

Despite of the similarities between the systems (6) and (8) underlined in Remark 1, the trajectories generated by the dynamical system (6) seems to have a better convergence behaviour than the trajectories generated by (8) as some numerical experiments show.

- 5.:

-

A trajectory x(t) generated by the dynamical system (6) ensures the convergence rate of order \(\mathcal {O}\left( \frac{1}{t^2}\right) \) for the decay \(g\left( x(t)+\left( \gamma +\frac{\beta }{t}\right) \dot{x}(t)\right) -\min g\), provided it holds that \(\alpha>3,\,\gamma > 0,\,\beta \in \mathbb {R}\) or \(\alpha \ge 3,\,\gamma =0,\,\beta \ge 0.\)

- 6.:

-

A trajectory x(t) generated by the dynamical system (6) ensures the same convergence rate of order \(\mathcal {O}\left( \frac{1}{t^2}\right) \) for the decay \(g(x(t))-\min g\) as the heavy ball method with vanishing damping, for the case \(\alpha >3,\,\gamma =0,\,\beta \ge 0.\)

- 7.:

-

The convergence of a generated trajectory x(t) to a minimizer of the objective function g can be obtained in case \(\alpha>3,\,\gamma >0\) and \(\beta \in \mathbb {R}\) and also in the case \(\alpha >3,\,\gamma =0\) and \(\beta \ge 0.\)

Remark 2

Nevertheless, in case \(\gamma =0\) and \(\beta <0\) the dynamical system (6) can generate periodical solutions, hence the convergence of a generated trajectory to a minimizer of the objective is hopeless. To illustrate this fact, for \(\beta <0,\,\alpha >0,\,\gamma =0\) consider the strongly convex objective function \(g:\mathbb {R}\longrightarrow \mathbb {R},\) \(g(x)=\frac{\alpha }{-2\beta } x^2\). Then, taking into account that \(\gamma =0\) the dynamical system (6) becomes

Now, the periodical function \(x(t)=\sin \sqrt{\frac{\alpha }{-\beta }} t\) is a solution of (9), consequently do not exist the limit \(\lim _{t\longrightarrow +\infty }x(t).\)

We emphasize that the formulation of the dynamical system (6) is natural since by explicit discretization leads to inertial gradient methods, in particular the famous Polyak and Nesterov numerical schemes can be obtained from (6). For other inertial algorithms we refer to [2, 17, 25].

Indeed, explicit Euler discretization of (6), with the constant stepsize h, \(t_n=nh,\,\,x_n=x(t_n)\) leads to

Equivalently, the latter equation can be written as

Now, setting \(h^2=s\) and denoting the constants \(\frac{\gamma }{h}\) and \(\frac{\beta }{h^2}\) still with \(\gamma \) and \(\beta \), we get the following general inertial algorithm:

Let \(x_0,x_{-1}\in \mathbb {R}^m\) and for all \(n\in \mathbb {N}\) consider the sequences

However, from a practical point of view it is more convenient to work with the following equivalent formulation: Let \(x_0,x_{-1}\in \mathbb {R}^m\) and for all \(n\in \mathbb {N}\) consider the sequences

where \(\alpha >0,\,\beta \in \mathbb {R}\) and \(\gamma \ge 0.\)

Remark 3

Notice that for \(\gamma =\beta =0\) we obtain a variant of Polyak’s algorithm [29] and for \(\gamma =1\) and \(\beta =0\) we obtain Nesterov’s algorithm [27, 28]. An interesting fact is that Algorithm (12) allows different inertial steps and this approach seems to be new in the literature.

Remark 4

Note that according to Theorem 9 below, the dynamical system (6) can equivalently be rewritten as a first order system. Further, since the explicit Euler method applied to a first order system is an explicit Runge–Kutta method of order 1, the interested reader can use the results from [33] in order to obtain numerical schemes from (6) via Runge–Kutta discretization. In the view of the results from [33] the Runge–Kutta discretization of the dynamical system (6) may have an acceleration effect.

Remark 5

Independently to us, very recently, a system similar to (6) was studied by Muehlebach and Jordan in [26] and they show that Nesterov’s accelerated gradient method can be obtained from their system via a semi-implicit Euler discretization scheme. They considered a constant damping instead of \(\frac{\alpha }{t}\) and also took \(\beta =0.\) Further they also treated the ODE

which for \(s=1\) is obviously equivalent to the particular case of the governing ODE from (6), obtained for \(\alpha =3,\,\gamma =1,\,\beta =-3.\) However, the freedom of controlling the parameters \(\beta \) and \(\gamma \) in (6) is essential as the next numerical experiments show.

1.2 Some Numerical Experiments

In this section we consider two numerical experiments for the trajectories generated by the dynamical system (6) for a convex but not strongly convex objective function.

Everywhere in the following numerical experiments we consider the continuous time dynamical systems (6) and (8), solved numerically with the ode45 adaptive method in MATLAB. We solved these dynamical systems with ode45 on the interval [1, 100] and the plot in Figs. 1, 2 show the energy error \(|g(x(t)) - g(x^*)|\) on the left and the iterate error \(\Vert x(t) - x^*\Vert \) on the right.

We show the evolution of the two errors with respect to different values for \(\alpha \), \(\beta \) and \(\gamma \), including the case that yields the Heavy Ball with Friction. One can observe, see Fig. 1, that the best choice is not \(\gamma =\beta =0\) which is the case of heavy ball system with vanishing damping (1). Further, in the view of Remark 1, it seems interesting to compare the convergence behaviour of the trajectories generated by the dynamical systems (6) and (8) for different values for \(\alpha \), \(\beta \) and \(\gamma \), see Fig. 2.

In the next experiments we consider the convex, but not strongly convex function \(g:\mathbb {R}^2\longrightarrow \mathbb {R},\)

Then, \({\nabla }g(x,y)=(4x^3-4,10y-10)\), further \(x^*=(1,1)\) is the unique minimizer of g and \(g^*=g(1,1)=0.\)

In our first experiment we compare the convergence behaviour of the generated trajectories of (6) by taking into account the following instances.

\(\alpha \) | \(\beta \) | \(\gamma \) | Color |

|---|---|---|---|

3.1 | 0 | 0 | Blue |

3.1 | 2 | 0 | Red |

3.1 | 0 | 1 | Yellow |

3.1 | 0.5 | 0.5 | Purple |

3.1 | \(-\) 0.5 | 1 | Green |

The result are depicted in Fig. 1 for the starting points \(u_0=(-1,5),\,v_0=(2,-2)\) and \(u_0=(2,-2),\) \(v_0=(-2,2)\), respectively.

Remark 6

Observe that in all cases the trajectories generated by dynamical system (6) have a better behaviour than the trajectories generated by the heavy ball system with vanishing damping (1). The choice of the parameters \(\alpha \), \(\gamma \) and \(\beta \) will be validated by the theoretical results from Sect. 3, where we show that in case \(\alpha>3,\,\gamma >0,\,\beta \in \mathbb {R}\) and also in the case \(\alpha >3,\,\gamma =0,\,\beta \ge 0\) the energy error \(g(x(t)-\min g\) is of order \(\mathcal {O}\left( \frac{1}{t^2}\right) \) just as the case of heavy ball method. Further, for the values \(\alpha>3,\,\gamma >0,\,\beta \in \mathbb {R}\) and for \(\alpha >3,\,\gamma =0,\,\beta \ge 0\) we are able to show that a generated trajectory x(t) converges to a minimum of the objective function g.

Remark 7

As we have emphasized before, it seems that the perturbation \(\left( \gamma +\frac{\beta }{t}\right) \dot{x}(t)\) in the argument of the gradient of the objective function has a smoothing effect. The explanation of this fact is that, according to Remark 1, the trajectories generated by the dynamical system (6) and the trajectories generated by the dynamical system (8) are similar. Further, the behaviour of the trajectories generated by the dynamical system (8), in particular the explanation for this smoothing effect, are enlightened in [30].

In our second numerical experiment we consider the same convex function g and we compare the convergence behaviour of the trajectories generated by the dynamical system (6) (abbreviated D.Sys. (6)) and the trajectories generated by the dynamical system (8) (abbreviated D.Sys. (8)) for the following instances.

\(\alpha \) | \(\beta \) | \(\gamma \) |

|---|---|---|

3.1 | 3 | 1 |

3.1 | 1.5 | 0 |

3.1 | \(-\) 1 | 2.5 |

The result are depicted in Fig. 2 for the starting points \(u_0=(2,-2)\) and \(v_0=(-2,2)\). One can observe that also for these instances the trajectories generated by (6) have slightly better convergence properties than the trajectories generated by (8).

1.3 The Organization of the Paper

The outline of the paper is the following. After a short section concerning the existence and uniqueness of the trajectories generated by the dynamical system (6) in Sect. 3 we deal with the convergence analysis of the generated trajectories. We introduce a general energy functional, which will play the role of a Lyapunov function associated to the dynamical system (6) (see the full computations given in the Appendix). We obtain a rate of order \(\mathcal {O}(1/t^2)\) for the decay \(g\left( x(t)+\left( \gamma +\frac{\beta }{t}\right) \dot{x}(t)\right) -\min g.\) Further, we show that also the error \(g(x(t))-\min g\) has a rate of order \(\mathcal {O}(1/t^2)\). Finally, we show the convergence of the generated trajectories to a minimum of the objective function g. In Sect. 4 we conclude our paper and we present some possible related future researches.

2 Existence and Uniqueness

The first step toward our existence and uniqueness result obtained in the present section concerns the definition of a strong global solution of the dynamical system (6).

Definition 1

We call the function \(x:[t_0, + \infty ) \rightarrow \mathbb {R}^m\) a strong global solution of the dynamical system (6) if satisfies the following properties:

For brevity reasons, we recall that a mapping \(x : [t_0, + \infty ) \rightarrow \mathbb {R}^m\) is called locally absolutely continuous if it is absolutely continuous on every compact interval \([t_0, T]\), where \(T > t_0\). Further, we have the following equivalent characterizations for an absolutely continuous function \(x:[t_0,T]\longrightarrow \mathbb {R}^m\), (see, for instance, [1, 5]):

-

(a)

there exists \(y : [t_0, T] \rightarrow \mathbb {R}^m\) an integrable function, such that

$$\begin{aligned} x(t) = x(t_0) + \int \limits _{t_0}^{t} y(t) ds, \forall t \in [t_0, T]; \end{aligned}$$ -

(b)

x is a continuous function and its distributional derivative is Lebesgue integrable on the interval \([t_0, T];\)

-

(c)

for every \(\varepsilon > 0\), there exists \(\eta > 0\), such that for every finite family \(I_k = (a_k, b_k)\) from \([t_0, T]\), the following implication is valid :

$$\begin{aligned} \left[ I_k \cap I_j = \emptyset \text { and } \sum \limits _{k} |b_k-a_k|< \eta \right] \Rightarrow \left[ \sum \limits _k \Vert x(b_k) - x(a_k) \Vert < \varepsilon \right] . \end{aligned}$$

Remark 8

Let \(x : [t_0, +\infty ) \rightarrow \mathbb {R}^m\) be a locally absolutely continuous function. Then x is differentiable almost everywhere and its derivative coincides with its distributional derivative almost everywhere. On the other hand, we have the equality \(\dot{x}(t) = y(t)\) for almost every \(t \in [t_0, +\infty )\), where \(y = y(t)\) is defined at the integration formula (a).

The first result of the present section concerns the existence and uniqueness of the trajectories generated by the dynamical system (6). We prove existence and uniqueness of a strong global solution of (6) by making use of the Cauchy-Lipschitz-Picard Theorem for absolutely continues trajectories (see for example [24, Proposition 6.2.1], [31, Theorem 54]). The key argument is that one can rewrite (6) as a particular first order dynamical system in a suitably chosen product space (see also [3, 12, 19, 20]).

Theorem 9

Let \((u_0, v_0) \in \mathbb {R}^m \times \mathbb {R}^m\). Then, the dynamical system (6) admits a unique strong global solution.

It is worthwhile to emphasize that we do not need to assume that the objective function g is convex in order to obtain existence and uniqueness of the trajectories generated by the dynamical system (6). Further, we need the following result.

Proposition 10

For the starting points \((u_0,v_0) \in \mathbb {R}^m \times \mathbb {R}^m\) let x be the unique strong global solution of the dynamical system (6). Then, \(\ddot{x}\) is locally absolutely continuous on \([t_0, + \infty )\). In particular the third order derivative \(x^{(3)}\) exists almost everywhere on \([t_0, + \infty )\).

The proofs of Theorem 9 and Proposition 10 are postponed to Appendix.

3 Convergence Analysis

3.1 On a General Energy Functional Associated to the Dynamical System (6)

In order to obtain convergence rates for the function values in the trajectories generated by the dynamical system (6), we need to introduce an appropriate energy functional which will play the role of a Lyapunov function. The form of such an energy functional associated to heavy ball system with vanishing damping and its extensions is well known in the literature, see for instance [8, 9, 13, 14]. However, the novelty of the dynamical system (6), compared with the extended/perturbed variants of the heavy ball system studied in the above mentioned papers, consists in the fact that in system (6) the perturbation is carried out in the argument of the gradient of the objective function. This seems to be a new approach in the literature, therefore the previously mentioned energy functionals are not suitable for a valuable convergence analysis of the dynamical system (6).

Hence, let us denote \(\alpha (t)=\frac{\alpha }{t}\) and \(\beta (t)=\gamma +\frac{\beta }{t}\), and assume that \({{\,\mathrm{argmin}\,}}g\ne \emptyset .\) Further, let \(g^*=\min g=g(x^*),\,x^*\in {{\,\mathrm{argmin}\,}}g.\) In connection to the dynamical system (6), we introduce the general energy functional

which can be seen as an extension of the energy function studied in [9] in connection to the heavy ball system with vanishing damping.

Our purpose is to define the non-negative functions a(t), b(t), c(t), d(t) such that \(\dot{\mathcal {E}}(t)\le 0\), that is, the function \(\mathcal {E}(t)\) is non-increasing after a \(t_1\ge t_0.\) Indeed, if \(\mathcal {E}(t)\) is non-increasing for \(t\ge t_1,\) then

in other words

Concerning the derivative of the energy functional \(\mathcal {E}(t)\) we can prove the following result (see the Sect. A2 at the Appendix).

Consequently, in order to have \(\dot{\mathcal {E}}(t)\le 0\) for all \(t\ge t_1,\,t_1\ge t_0,\) one must assume that for all \(t\ge t_1\) the following inequalities hold:

Remark 11

Observe that (16) implies that \(\beta (t)\ge 0\) for all \(t\ge t_1,\,t_1\ge t_0\) and this shows that in dynamical system (6), one must have \(\beta \ge 0\) whenever \(\gamma =0.\) Further, (18) is satisfied whenever b(t) and d(t) are constant functions. It is obvious that there exists \(t_1\) such that for all \(t\ge t_1\) we have \(-\alpha (t)\beta (t)+\beta '(t)+1=1-\frac{\alpha \gamma }{t}-\frac{\alpha \beta +\beta }{t^2}>0,\) hence from (17) we get

Since (19) and (20) do not depend by \(\beta (t),\) it seem natural to choose c(t), b(t) and d(t) the same as in case of heavy ball system with vanishing damping (see [9]), that is, \(c(t)=t,\, b(t)=b\in (0,\alpha -1]\) and \(d(t)=b(\alpha -1-b),\) for all \(t\ge t_0,\) provided \(\alpha >1.\) Now, an easy computation shows that in this case

hence (15) is satisfied whenever \(b>2,\) which implies that \(\alpha >3.\)

However, if \(\gamma =0\) then

hence (15) holds also for \(b=2\) and \(\alpha =3.\)

3.2 Error Estimates for the Values

In this section we obtain convergence rate of order \(\mathcal {O}(1/t^2),\,t\longrightarrow +\infty \) for the difference \(g\left( x(t)+\left( \gamma +\frac{\beta }{t}\right) \dot{x}(t)\right) -g^*\) where \(g^*=g(x^*)=\min g,\,x^*\in {{\,\mathrm{argmin}\,}}g\ne \emptyset .\) From here we are able to show that \(g(x(t))-g^*\) also has a convergence rate of order \(\mathcal {O}(1/t^2),\,t\longrightarrow +\infty .\) However, just as in the case of heavy ball system with vanishing damping, in order to obtain these rates, it is necessary to assume \(\alpha \ge 3\) in our system (6). We have the following result.

Theorem 12

Let x be the unique strong global solution of (6) and assume that \({{\,\mathrm{argmin}\,}}g\ne \emptyset .\) Assume further that \(\alpha > 3\), \(\gamma >0\) and \(\beta \in \mathbb {R}\).

Then, there exists \(K\ge 0\) and \(t_1\ge t_0\) such that

Further,

and

Proof

Let \(\min g=g^*=g(x^*),\,x^*\in {{\,\mathrm{argmin}\,}}g.\) Consider the energy functional (13) with

According to Remark 11 we have

and the conditions (17)–(20) are satisfied with equality for every \(t\ge t_0\).

Obviously, if t is big enough on has that \(a(t)>0\) and since \(\gamma >0\) it holds that \(\gamma +\frac{\beta }{t}>0\) for t big enough, even if \(\beta <0\). Hence, there exists \(t'\ge t_0\) such (16) is satisfied for every \(t\ge t'.\)

Now, \(a'(t)-b(t)c(t)=(3-\alpha )t+\gamma +\mathcal {O}\left( \frac{1}{t^2}\right) \) and by taking into account that \(\alpha >3\) we obtain that there exists \(t''\ge t_0\) such that (15) holds for every \(t\ge t''.\)

Let \(t'''=\max (t',t'').\) We conclude that, \(\dot{\mathcal {E}}(t)\le 0 \) for all \(t\ge t''',\) hence \(\mathcal {E}(t)\) is non-increasing, i.e.

But, obviously there exists \(t_1\ge t'''\) such that \(a(t)\ge t^2\) for all \(t\ge t_1\) and by denoting \(K=\mathcal {E}(t''')\) we obtain

Next we prove (22). Since \(a'(t)-b(t)c(t)=(3-\alpha )t+\gamma +\mathcal {O}\left( \frac{1}{t^2}\right) \) there exist \(t_2\ge t_1\) such that

Hence from (14) we have

and by integrating from \(t_2\) to \(T> t_2\) we get

Now, by letting \(T\longrightarrow +\infty \) and taking into account that \(\mathcal {E}\) is non-increasing, the conclusion follows.

For proving (23) observe that there exists \(t_3\ge t_1\) such that \(-a(t)\left( \gamma +\frac{\beta }{t}\right) \le -\frac{\gamma }{2} t^2\) for all \(t\ge t_3.\) Consequently

Integrating from \(t_3\) to \(T>t_3\) and letting \(T\longrightarrow +\infty \) we obtain the desired conclusion. \(\square \)

Remark 13

Note that according to Remark 11 the condition (16) holds even if \(\gamma =0\) and \(\beta \ge 0.\) Consequently, even in the case \(\alpha >3,\,\gamma =0,\,\beta \ge 0\) the conclusions (21) and (22) in Theorem 12 hold. However, if \(\beta =0\) then we cannot obtain (23). Nevertheless, if \(\beta >0\) then (23) becomes:

Remark 14

Concerning the case \(\alpha =3\), note that (16) is satisfied if one assumes that \(\gamma =0\) but \(\beta \ge 0.\) Moreover, in this case (15) is also satisfied since, one has \(a'(t)-b(t)c(t)=- \frac{4\beta ^2(\alpha +1)}{(t^2-\beta (\alpha +1))^2}\le 0.\) Hence, also in the case \(\alpha =3,\,\gamma =0,\,\beta \ge 0\) (21) in the conclusion of Theorem 12 holds.

Moreover, if we assume \(\beta >0\) then (23) becomes:

Next we show that also the error \(g\left( x(t)\right) -\min g\) is of order \(\mathcal {O}(1/t^2).\) For obtaining this result we need the Descent Lemma, see [28].

Lemma 15

Let \(g:\mathbb {R}^m\longrightarrow \mathbb {R}\) be a Frèchet differentiable function with \(L_g\) Lipschitz continuous gradient. Then one has

Theorem 16

Let x be the unique strong global solution of (6) and assume that \({{\,\mathrm{argmin}\,}}g\ne \emptyset .\) If \(\alpha> 3,\,\gamma >0\) and \(\beta \in \mathbb {R}\) or \(\alpha > 3,\,\gamma =0\) and \(\beta \ge 0\) , then x is bounded and there exists \(K\ge 0\) and \(t_1\ge t_0\) such that

Further,

and for \(\gamma =0\) and \(\beta \ge 0\) there exists \(K_1\ge 0\) and \(t_2\ge t_1\) such that

Proof

For \(x^*\in {{\,\mathrm{argmin}\,}}g\) let \(g^*=g(x^*)=\min g\) and consider the energy function (13) with \(b(t)=b,\) where \(2<b<\alpha -1\), \(c(t)=t\), \(d(t)=b(\alpha -1-b)>0\) and

According to Remark 11 there exists \(t_1\ge t_0\) such that the conditions (15)–(20) are satisfied.

From the definition of \(\mathcal {E}\) one has

and

that is

and

By using the fact that \(\mathcal {E}\) non-increasing on an interval \([t_1,+\infty )\) the latter inequality assures that x is bounded.

Now, by using the inequality \(\Vert X-Y\Vert \ge \Vert X\Vert -\Vert Y\Vert \) we get

hence for all \(t\ge t_1\) one has

where \(K=\left( 1+\sqrt{\frac{1}{\alpha -1-b}}\right) \sqrt{2\mathcal {E}(t_1)}.\)

Further, (20) becomes \((b+1-\alpha )t<0,\) hence for all \(t\ge t_1\) one has

By integrating from \(t_1\) to \(T>t_1\) one gets

By letting \(T\longrightarrow +\infty \) we obtain

Now, by considering \(\gamma =0,\,\beta \ge 0\) and by using Lemma 15 with \(y=x(t)\) and \(x=x(t)+\frac{\beta }{t}\dot{x}(t)\) we obtain

From (24), the Lipschitz continuity of \({\nabla }g\) and the facts that x and \(\dot{x}\) are bounded, we get that there exists \(t_2'\ge t_1\) and \(K'>0\) such that

Further (24) assures that

Combining the latter relations with (27) we get that there exists \(K_1'>0\) such that

Now, by adding (21) and (28) we get that there exists \(K_1>0\) and \(t_2\ge t_2'\) such that

\(\square \)

Remark 17

Note that (23), which holds whenever \(\alpha >3\) and \(\gamma >0\), assures that

Further, (25) assures that

Consequently, the system (6) leads to

hence, by (38) (see Lemma 27 in Appendix), that is \(\Vert {x}^{(3)}(t)\Vert \le K(\Vert \dot{x}(t)\Vert +\Vert \ddot{x}(t)\Vert )\) for some \(K>0\), we get

Remark 18

Notice that (29) and (30) and (38) assure in particular that

Remark 19

In the case \(\gamma =0\) and \(\beta \ge 0\) according to Remark 13 one has

Hence, as in Remark 17 we derive that

and consequently (31) holds.

3.3 The Convergence of the Generated Trajectories

In this section we show convergence of the generated trajectories to a minimum point of the objective g. Consider the set of limit points of the trajectory x, that is

We show that \(\omega (x)\subseteq {{\,\mathrm{argmin}\,}}g.\) We emphasize that since g is convex one has

We have the following result.

Lemma 20

Let x be the unique strong global solution of (6) and assume that \({{\,\mathrm{argmin}\,}}g\ne \emptyset .\) If \(\alpha> 3,\,\gamma >0\) and \(\beta \in \mathbb {R}\), then the following assumptions hold.

-

(i)

\(\omega (x)=\omega \left( x(\cdot )+\left( \gamma +\frac{\beta }{t}\right) \dot{x}(\cdot )\right) \);

-

(ii)

\(\omega (x)\subseteq {{\,\mathrm{argmin}\,}}g.\)

Proof

Indeed (31) assures that \(\lim _{t \longrightarrow +\infty } \left( \gamma + \dfrac{\beta }{t} \right) \dot{x}(t) = 0\), which immediately proves (i).

For proving (ii) consider \(\overline{x}\in \omega (x).\) Then, there exists \((t_n)_{n\in \mathbb {N}}\subseteq \mathbb {R},\, t_n\longrightarrow +\infty ,\,n\longrightarrow +\infty \) such that \(\lim _{n\longrightarrow +\infty }x(t_n) = \overline{x}.\) Now, since \({\nabla }g\) is continuous and \(\lim _{n\longrightarrow +\infty }\left( x(t_n)+\left( \gamma +\frac{\beta }{t_n}\right) \dot{x}(t_n)\right) =\overline{x}\) one has

Further, according to (31)

Now, the system (6) gives

that is \(\overline{x}\in {{\,\mathrm{argmin}\,}}g\) and this proves (ii). \(\square \)

Remark 21

Obviously, according to Remark 19 the conclusion of Lemma 20 remains valid also in the case \(\alpha >3,\,\gamma =0\) and \(\beta \ge 0.\)

Our aim is to prove that the limit \(\lim _{t\longrightarrow +\infty }\Vert x(t)-x^*\Vert \) exists for every \(x^*\in {{\,\mathrm{argmin}\,}}g,\) and for this we need the following result from [9].

Lemma 22

(Lemma A.4. [9]) Let \(t_0>0\), and let \(w:[t_0,+\infty )\longrightarrow \mathbb {R}\) be a continuously differentiable function which is bounded from below. Assume that

for some \(\alpha > 1,\) almost every \(t >t_0\), and some non-negative function \(G\in L^1(t_0,+\infty ).\) Then, the positive part \([\dot{w}]_+\) of \(\dot{w}\) belongs to \(L^1(t_0,+\infty )\) and limit \(\lim _{t\longrightarrow +\infty } w(t)\) exists.

Now we can prove the following.

Lemma 23

Let x be the unique strong global solution of (6) and assume that \({{\,\mathrm{argmin}\,}}g\ne \emptyset .\) If \(\alpha> 3,\,\gamma >0\) and \(\beta \in \mathbb {R}\), then for every \(x^*\in {{\,\mathrm{argmin}\,}}g\) there exists the limit

Proof

Let \( x^*\in {{\,\mathrm{argmin}\,}}g\) and define the function \(h_{x^*}:[t_0,+\infty )\longrightarrow \mathbb {R},\,h_{x^*}(t)= \dfrac{1}{2} \Vert x(t) - x^* \Vert ^2\). Using the chain rule with respect to the differentiation of \(h_{x^*}\), we obtain that

and that

Let us denote \(g^{*} = g(x^{*}) = min(g)\). Using the dynamical system (6), one has that

This means that

By the convexity of the mapping g and taking into account that \(g^*=g(x^*)=\min g\), we obtain

hence by using (6) the inequality (32) becomes

Consequently, one has

Now, according to (29) one has

Consequently,

Further,

and (30) assures that

hence

According to (34) and (35) we get that the function

Moreover, it is obvious that there exists \(t_1\ge t_0\) such that G is non negative for every \(t\ge t_1.\) From (33) one has

hence Lemma 22 leads to the existence of the limit

and consequently the limit

also exists. \(\square \)

Now, we present the main result of this subsection regarding the convergence of the solution of the dynamical system (6) as \(t \longrightarrow + \infty \).

Theorem 24

Let x be the unique strong global solution of the dynamical system (6) and assume that \({{\,\mathrm{argmin}\,}}g\ne \emptyset .\) If \(\alpha> 3,\,\gamma >0\) and \(\beta \in \mathbb {R}\), then x(t) converges to a point in \({{\,\mathrm{argmin}\,}}g\) as \(t \longrightarrow +\infty \).

Proof

Let \(x^*\in {{\,\mathrm{argmin}\,}}g\). Then, by Lemma 23, we know that \(\lim \limits _{t \longrightarrow +\infty } \Vert x(t) - x^*\Vert \) exists, hence x is bounded. But then from Bolzano–Weierstrass Theorem we get that there exists \(\bar{x} \in \omega (x)\). In other words, there exists a sequence \(t_n \longrightarrow +\infty ,\,n\longrightarrow +\infty \), such that \(\lim \limits _{n \longrightarrow +\infty } x(t_n) =\bar{x}.\) From Lemma 20, we obtain that \(\bar{x} \in {{\,\mathrm{argmin}\,}}g\). Using Lemma 23 again, we find that \(\lim \limits _{t \longrightarrow +\infty } \Vert x(t) - \bar{x} \Vert = \lim \limits _{n \longrightarrow +\infty } \Vert x(t_n) - \bar{x} \Vert = 0,\) hence

\(\square \)

Remark 25

As it was expected, Theorem 24 remains true if in its hypothesis we assume only that \(\alpha >3,\, \gamma =0\) and \(\beta \ge 0.\) Indeed, note that under these assumptions the conclusion of Lemma 23 holds, since G from its proof becomes

and according to Remark 19\(G(t)\in L^1([t_0,+\infty ),\mathbb {R}).\) Moreover, it is obvious that there exists \(t_1\ge t_0\) such that G is non negative for every \(t\ge t_1.\)

This fact combined with Remark 21 lead to the desired conclusion.

4 Conclusion

In this paper we study a second order dynamical system which can be viewed as an extension of the heavy ball system with vanishing damping. This dynamical system is actually a perturbed version of the heavy ball system with vanishing damping, but the perturbation is made in the argument of the gradient of the objective function. Our system is also strongly related to the heavy ball system with Hessian driven damping. Numerical experiments show that the above mentioned perturbation brings a smoothing effect in the behaviour of the energy error \(g(x(t))-\min g\) and also in the behaviour of the absolute error \(\Vert x(t)-x^*\Vert \), where x(t) is a trajectory generated by our dynamical system and \(x^*\) is a minimum of the objective g. We show that our system via explicit discretization leads to inertial algorithms. A related future research is the convergence analysis of algorithm (12), since this algorithm contains as particular case the famous Nesterov algorithm. However, since Algorithm (12) may allow different inertial steps, its area of applicability can be considerable.

We have shown existence and uniqueness of the trajectories generated by our dynamical system even for the case the objective function g is non-convex. Further, we treated the cases when the energy error \(g(x(t))-\min g\) is of order \(\mathcal {O}\left( \frac{1}{t^2}\right) \) and we obtained the convergence of a generated trajectory to a minimum of the objective function g. Another related research is the convergence analysis of the generated trajectories in the case when the objective function g is possible non-convex. This would be a novelty in the literature even for the case \(\alpha >3,\,\gamma =\beta =0\), that is, for the case of the heavy ball system with vanishing damping.

Finally, we underline that the dynamical system (6) can easily be extended to proximal-gradient dynamical systems, (see [18, 20] and the references therein).

References

Abbas, B., Attouch, H., Svaiter, B.F.: Newton-like dynamics and forward–backward methods for structured monotone inclusions in Hilbert spaces. J. Optim. Theory Appl. 161(2), 331–360 (2014)

Alecsa, C., László, S.C.: Viorel, A: A gradient type algorithm with backward inertial steps associated to a nonconvex minimization problem. Num. Algorithms (2019). https://doi.org/10.1007/s11075-019-00765-z

Alvarez, F., Attouch, H., Bolte, J., Redont, P.: A second-order gradient-like dissipative dynamical system with Hessian-driven damping. Application to optimization and mechanics. Journal de Mathématiques Pures et Appliquées 81(8), 747–779 (2002)

Attouch, H., Chbani, Z.: Fast inertial dynamics and fista algorithms in convex optimization. Perturbation aspects. (2015). arXiv:1507.01367

Attouch, H., Svaiter, B.F.: A continuous dynamical Newton-like approach to solving monotone inclusions. SIAM J. Control Optim. 49(2), 574–598 (2011)

Attouch, H., Goudou, X., Redont, P.: The heavy ball with friction method, I. The continuous dynamical system: global exploration of the local minima of a real-valued function by asymptotic analysis of a dissipativ dynamical system. Commun. Contemp. Math. 2, 1–34 (2000)

Attouch, H., Peypouquet, J., Redont, P.: A dynamical approach to an inertial forward–backward algorithm for convex minimization. SIAM J. Optim. 24(1), 232–256 (2014)

Attouch, H., Peypouquet, J., Redont, P.: Fast convex optimization via inertial dynamics with Hessian driven damping. J. Differ. Equ. 261(10), 5734–5783 (2016)

Attouch, H., Chbani, Z., Peypouquet, J., Redont, P.: Fast convergence of inertial dynamics and algorithms with asymptotic vanishing viscosity. Math. Program. 168(1–2), 123–175 (2018)

Attouch, H., Chbani, Z., Riahi, H.: Rate of convergence of the Nesterov accelerated gradient method in the subcritical case \(\alpha \le 3\). ESAIM: COCV 25, 2 (2019)

Attouch, H., Chbani, Z., Fadili, J., Riahi, H.: First-order optimization algorithms via inertial systems with Hessian driven damping. (2019). arXiv:1907.10536

Attouch, H., Chbani, Z., Riahi, H.: Fast convex optimization via time scaling of damped inertial gradient dynamics. https://hal.archives-ouvertes.fr/hal-02138954 (2019)

Aujol, J.F., Dossal, Ch.: Optimal rate of convergence of an ODE associated to the Fast Gradient Descent schemes for \(b > 0\). https://hal.inria.fr/hal-01547251v2/document (2017)

Aujol, J.F., Dossal, C., Rondepierre, A.: Optimal convergence rates for Nesterov acceleration. SIAM J. Optim. 29(4), 3131–3153 (2019)

Balti, M., May, R.: Asymptotic for the perturbed heavy ball system with vanishing damping term. Evol. Equ. Control Theory 6(2), 177–186 (2017)

Bégout, P., Bolte, J., Jendoubi, M.A.: On damped second-order gradient systems. J. Differ. Equ. 259, 3115–3143 (2015)

Boţ, R.I., Csetnek, E.R., László, S.C.: An inertial forward–backward algorithm for minimizing the sum of two non-convex functions. Euro J. Comput. Optim. 4(1), 3–25 (2016)

Boţ, R.I., Csetnek, E.R., László, S.C.: Approaching nonsmooth nonconvex minimization through second-order proximal-gradient dynamical systems. J. Evol. Equ. 18(3), 1291–1318 (2018)

Boţ, R.I., Csetnek, E.R., László, S.C.: A second order dynamical approach with variable damping to nonconvex smooth minimization. Appl. Anal. 99(3), 361–378 (2018)

Boţ, R.I., Csetnek, E.R., László, S.C.: A primal-dual dynamical approach to structured convex minimization problems. (2019). arXiv:1905.08290

Boţ, R.I., Csetnek, E.R., László, S.C.: Tikhonov regularization of a second order dynamical system with Hessian driven damping. (2019). arXiv:1911.12845

Cabot, A., Engler, H., Gadat, S.: On the long time behavior of second order differential equations with asymptotically small dissipation. Trans. Am. Math. Soc. 361, 5983–6017 (2009)

Cabot, A., Engler, H., Gadat, S.: Second order differential equations with asymptotically small dissipation and piecewise at potentials. Electr. J. Differ. Equ. 17, 33–38 (2009)

Haraux, A.: Systèmes Dynamiques Dissipatifs et Applications. Recherches en Mathématiques Appliquéées 17, Masson (1991)

László, S.C.: Convergence rates for an inertial algorithm of gradient type associated to a smooth nonconvex minimization. (2018). arXiv:1811.09616

Muehlebach, M., Jordan, M.I.: A Dynamical systems perspective on Nesterov acceleration. (2019). arXiv:1905.07436

Nesterov, Y.: A method for solving the convex programming problem with convergence rate \(O(1/k^2)\). Dokl. Akad. Nauk SSSR 269(3), 543–547 (1983). (Russian)

Nesterov, Y.: Introductory Lectures on Convex Optimization: A Basic Course. Kluwer Academic Publishers, Dordrecht (2004)

Polyak, B.T.: Some methods of speeding up the convergence of iteration methods. U.S.S.R. Comput. Math. Math. Phys. 4(5), 1–17 (1964)

Shi, B., Du, S.S., Jordan, M.I., Su, W.J.: Understanding the acceleration phenomenon via high-resolution differential equations. (2018). arXiv:1810.08907

Sontag, E.D.: Mathematical Control Theory. Deterministic Finite-Dimensional Systems. Texts in Applied Mathematics 6, vol. 2. Springer, New York (1998)

Su, W., Boyd, S., Candes, E.J.: A differential equation for modeling Nesterov’s accelerated gradient method: theory and insights. J. Mach. Learn. Res. 17, 1–43 (2016)

Zhang, J., Mokhtari, A., Sra, S., Jadbabaie, A.: Direct Runge-Kutta discretization achieves acceleration. In: Advances in Neural Information Processing Systems, pp. 3900–3909 (2018)

Acknowledgements

The authors are thankful to two anonymous referees for their valuable remarks and suggestions which improved the quality of the paper.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work was supported by a Grant of Ministry of Research and Innovation, CNCS - UEFISCDI, project number PN-III-P1-1.1-TE-2016-0266.

Appendices

A. Complements to the Section Existence and uniqueness

In what follows we give a detailed proof for Theorem 9.

Proof

(Theorem 9).

By making use of the notation \(X(t)=(x(t),\dot{x}(t))\) the system (6) can be rewritten as a first order dynamical system:

where \(F:[t_0,+\infty )\times \mathbb {R}^m\times \mathbb {R}^m\longrightarrow \mathbb {R}^m\times \mathbb {R}^m,\, F(t,u,v)=\left( v, -\frac{\alpha }{t}v-\nabla g\left( u+\left( \gamma +\frac{\beta }{t}\right) v\right) \right) .\)

The existence and uniqueness of a strong global solution of (6) follows according to the Cauchy–Lipschitz–Picard Theorem applied to the first order dynamical system (37). In order to prove the existence and uniqueness of the trajectories generated by (37) we show the following:

-

(I)

For every \(t\in [t_0,+\infty )\) the mapping \(F(t, \cdot , \cdot )\) is L(t)-Lipschitz continuous and \(L(\cdot )\in L^1_{loc}([t_0,+\infty ))\).

-

(II)

For all \(u,v\in {\mathbb {R}^m}\) one has \(F(\cdot ,u,v)\in L^1_{loc}([t_0,+\infty ),{\mathbb {R}^m}\times {\mathbb {R}^m})\) .

Let us prove (I). Let \(t \in [t_0, +\infty )\) be fixed and consider the pairs (u, v) and \((\bar{u}, \bar{v})\) from \(\mathbb {R}^m \times \mathbb {R}^m\). Using the Lipschitz continuity of \({\nabla }g\) and the obvious inequality \(\Vert A+B\Vert ^2\le 2\Vert A\Vert ^2+2\Vert B\Vert ^2\) for all \(A,B\in \mathbb {R}^m\), we make the following estimations :

By employing the notation \(L(t)= \sqrt{1+4L_g^2+2\left( \frac{\alpha }{t}\right) ^2+4L_g^2\left( \gamma +\frac{\beta }{t}\right) ^2}\), we have that

Obviously the function \(t \longrightarrow L(t)\) is continuous on \([t_0, +\infty )\), hence \(L(\cdot )\) is integrable on \([t_0,T]\) for all \(t_0<T<+\infty \).

For proving (II) consider \((u,v) \in \mathbb {R}^m \times \mathbb {R}^m\) a fixed pair of elements and let \(T > t_0\). We consider the following estimations:

and the conclusion follows by the continuity of the function \(t \longrightarrow \sqrt{5+2\left( \frac{\alpha }{t}\right) ^2+4L_g^2\left( \gamma +\frac{\beta }{t}\right) ^2}\) on \([t_0,T]\).

The Cauchy–Lipschitz–Picard theorem guarantees existence and uniqueness of the trajectory of the first order dynamical system (37) and thus of the second order dynamical system (6). \(\square \)

Remark 26

Note that we did not use the convexity assumption imposed on g in the proof of Theorem 9. However, we emphasize that according to the proof of Theorem 9, the assumption that g has a Lipschitz continuous gradient is essential in order to obtain existence and uniqueness of the trajectories generated by the dynamical system (6).

Next we present the complete proof of Proposition 10. For simplicity, in the proof of the following sequel we employ the 1-norm on \(\mathbb {R}^m \times \mathbb {R}^m\), defined as \(\Vert (x_1, x_2) \Vert _{1} = \Vert x_1 \Vert + \Vert x_2 \Vert \), for all \(x_1,x_2 \in \mathbb {R}^m\). Obviously one has

Proof

(Proposition 10).

We show that \(\dot{X}(t) = (\dot{x}(t), \ddot{x}(t))\) is locally absolutely continuous, hence \(\ddot{x}\) is also locally absolutely continuous. This implies by Remark 8 that \(x^{(3)}\) exists almost everywhere on \([t_0, + \infty )\).

Let \(T > t_0\) and \(s,t \in [t_0, T]\). We consider the following chain of inequalities :

and by using the \(L_g\)-Lipschitz continuity of \({\nabla }g\) we obtain

Further, let us introduce the following additional notations:

Then, one has

By the fact that x is the strong global solution for the dynamical system (6), it follows that x and \(\dot{x}\) are absolutely continuous on the interval \([t_0,T]\). Moreover, the function \(t \longrightarrow \dfrac{1}{t}\) belongs to \(C^1([t_0, T], \mathbb {R})\), hence it is also absolutely continuous on the interval \([t_0,T]\). Let \(\varepsilon > 0\). Then, there exists \(\eta > 0\), such that for \(I_k = (a_k,b_k) \subseteq [t_0,T]\) satisfying \(I_k \cap I_j = \emptyset \) and \(\sum \limits _{k} |b_k-a_k| < \eta \), we have that

Summing all up, we obtain

consequently \(\dot{X}\) is absolutely continuous on \([t_0,T]\). and the conclusion follows. \(\square \)

Concerning an upper bound estimate of the third order derivative \(x^{(3)}\) the following result holds.

Lemma 27

For the initial values \((u_0,v_0) \in \mathbb {R}^m \times \mathbb {R}^m\) consider x the unique strong global solution of the second-order dynamical system (6). Then, there exists \(K> 0\) such that for almost every \(t \in [t_0, +\infty )\), we have that:

Proof

For \(h > 0 \) we consider the following inequalities :

Now, dividing by \(h > 0\) and taking the limit \({h \longrightarrow 0}\), it follows that

Consequently,

Finally,

where \(K=\max \left\{ \max _{t\ge t_0}\left( L_g + \dfrac{\alpha +L_g|\beta |}{t^2}\right) ,\max _{t\ge t_0}\left( \frac{\alpha }{t} + L_g \left| \gamma + \dfrac{\beta }{t} \right| \right) \right\} \). \(\square \)

B. On the Derivative of the Energy Functional (13)

In what follows we derive the conditions which must be imposed on the positive functions a(t), b(t), c(t), d(t) in order to obtain \(\dot{\mathcal {E}}(t)\le 0\) for every \(t\ge t_1.\) We have,

Now, from (6) we get \(\ddot{x}(t)=-\alpha (t)\dot{x}(t)-{\nabla }g(x(t)+\beta (t)\dot{x}(t)),\) hence

Consequently,

But

and by the convexity of g we have \(\langle {\nabla }g(x(t)+\beta (t)\dot{x}(t)),x(t)+\beta (t)\dot{x}(t)-x^*\rangle \ge g(x(t)+\beta (t)\dot{x}(t))-g^*,\) hence

Therefore, (39) becomes

From here one easily can observe that the conditions (15)–(20) assure that

Remark 28

Note that we did not use the form of the sequences \(\alpha (t)\) and \(\beta (t)\) in the above computations. Consequently, the energy functional (13) may be suitable also for the following general system.

where \(u_0,v_0\in \mathbb {R}^m\) and \(\alpha ,\beta :[t_0+\infty )\longrightarrow (0,+\infty ),\, t_0\ge 0\) are continuously derivable functions.

Rights and permissions

About this article

Cite this article

Alecsa, C.D., László, S.C. & Pinţa, T. An Extension of the Second Order Dynamical System that Models Nesterov’s Convex Gradient Method. Appl Math Optim 84, 1687–1716 (2021). https://doi.org/10.1007/s00245-020-09692-1

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00245-020-09692-1

Keywords

- Convex optimization

- Heavy ball method

- Continuous second order dynamical system

- Convergence rate

- Inertial algorithm