Abstract

The synthesis of reactive systems from linear temporal logic (LTL) specifications is an important aspect in the design of reliable software and hardware. We present our adaption of the classic automata-theoretic approach to LTL synthesis, implemented in the tool Strix which has won the two last synthesis competitions (Syntcomp2018/2019). The presented approach is (1) structured, meaning that the states used in the construction have a semantic structure that is exploited in several ways, it performs a (2) forward exploration such that it often constructs only a small subset of the reachable states, and it is (3) incremental in the sense that it reuses results from previous inconclusive solution attempts. Further, we present and study different guiding heuristics that determine where to expand the on-demand constructed arena. Moreover, we show several techniques for extracting an implementation (Mealy machine or circuit) from the witness of the tree-automaton emptiness check. Lastly, the chosen constructions use a symbolic representation of the transition functions to reduce runtime and memory consumption. We evaluate the proposed techniques on the Syntcomp2019 benchmark set and show in more detail how the proposed techniques compare to the techniques implemented in other leading LTL synthesis tools.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Synthesis refers to the problem of finding for a formal specification of an input–output relation a matching implementation [37], e.g. an (I/O)-transducer, a Mealy machine, a Moore machine or a circuit. In our case we focus on linear temporal logic (LTL) as the specification logic. While an asymptotically optimal synthesis algorithm has been given in [37], this approach and other algorithms solving this taskFootnote 1 have not yet been successfully put into industrial practice. Tools able to deal with large specifications have been elusive and those that are available often produce subpar results compared to straight-forward manual implementations when successfully applied. [29] identifies four challenges that hinder the practical impact of these synthesis algorithms: “algorithmic, methodological, scope, and qualitative” [29]. The first challenge is to find efficient synthesis algorithms. The second challenge is the discrepancy between the assumed synthesis setting and reality: a finished and complete specification is the exception and not the rule. Users often iterate specifications and thus this brings up the task of reusing and composing intermediate results. The third challenge is expressiveness and succinctness of input and output formats. Finally, the fourth challenge is not only to compute any valid solution, but to find implementations that have good quality. In this paper we primarily address the algorithmic and qualitative side of the synthesis problem, but also sketch ideas for the two other areas.

The classic automata-theoretic synthesis procedure using deterministic automata suffers from the “messy state space” [29] of Safra’s determinisation, which hinders efficient implementations that need to work on top of it. Moreover this automata-theoretic approach to synthesis requires the construction of a potentially double exponentially sized automaton (in the length of the specification) [22]. These two issues gave rise to “Safraless” approaches [5, 9, 14, 27, 30] to avoid the complicated state structure and to alleviate the state space explosion problem. Further, bounded synthesis adds to the synthesis problem an additional size constraint on the matching implementation. This effectively turns the synthesis problem into a search problem.

We, on the other hand, address the “messy state space” issue by employing a collection of “Safraless” LTL to deterministic parity automaton (DPA) translations [12, 13, 38] in combination with a special product automaton construction that includes a latest appearance record (LAR) construction and a formula decomposition in the spirit of [10, 15, 34, 35]. Our construction recovers the Boolean structure present in the input specification. The state explosion problem is tackled by exploring the on-demand constructed parity game using a forward search resembling the optimisation described in [17]. This enables our customised strategy iteration [31] to leave most of the arena (and thus of the automaton) unexplored. Further, the decomposition allows us to split-off formulas and use them to prune the search-space which is a generalisation of a central insight from [39].

Further, we propose two heuristics guiding the construction of the arena in directions of probably decisive regions and thus focussing on important states, while skipping irrelevant parts. One approach is agnostic about the internal structure of the parity game, while the other one extracts information from the special parity automaton construction. Lastly, since we use strategy iteration to compute winning strategies, we can reuse so-far constructed solution attempts after expanding the arena, thereby reducing the amount of iterations until stabilisation is reached. We believe that our approach could be adapted to cache intermediate results (constructed automata, partial strategies) when using LTL synthesis interactively to speed up synthesis, which addresses the second area.

Regarding the quality of the synthesised structures “there is no emphasize [sic]” on constructing optimal or well-structured systems [29]. While in this paper we do not look at general methods for producing qualitatively good solutions and do not support synthesis under a specific quality measure, we provide a set of best-effort heuristics to produce good solutions: we make use of a range of post-processing steps to ensure that the solution is as small as possible. We also provide a modular encoding of the product automata into circuits that retains the Boolean structure of the specification. This approach surprisingly yields on some of the specifications used in the experimental evaluation smaller circuits compared to extracting a circuit out of a minimised Mealy machine. This data suggests that minimisation of the implementation represented as a Mealy machine might be in some cases diametral to generating small circuits. It seems that this area has not been studied enough and we think enriching specifications with additional, explicit optimisation goals is worthwhile, but currently these ideas have not manifested in specifications such as the Syntcomp2019 benchmarks.

We implement and test the outlined ideas within StrixFootnote 2 [33], which relies on [28] for automata translations and [32] for parity game solving. An older version of Strix won in the TLSF/LTL track in all six categories of Syntcomp2018 against other mature tools such as ltlsynt [23], which also implements synthesis using parity games, and BoSy [14], which implements several bounded synthesis approaches. We further improve the prototype by representing the transition relation symbolically to address scalability issues for large alphabets and replace external tooling such as Speculoos with an internal implementation able to cope with larger systems. This newer version again won in all TLSF/LTL tracks of Syntcomp2019 against ltlsynt.

The rest of the paper is structured as follows: after introducing preliminaries, we give a high-level overview of the synthesis procedure and detail it in the following subsections. We then put our improvements to the test by evaluating them on the Syntcomp2019 benchmarks and comparing them with the old version, ltlsynt and BoSy. Each section, if appropriate, contains its specific discussion of related work.

Editorial Note. This paper is an extended version of the preliminary report published in [33]. The synthesis approach is the same, but we give a much more detailed explanation of the techniques used, e.g., the decomposition and the product automaton construction. Further, we describe new extensions for different exploration strategies in Sect. 3.2.1 and different encoding strategies in Sect. 3.3.2. We also give an updated experimental evaluation on a larger set of benchmarks and a comprehensive comparison with the old version and other tools.

2 Preliminaries

2.1 \(\omega \)-languages and \(\omega \)-automata

Let \(\varSigma \) be a finite alphabet. An \(\omega \)-word w over \(\varSigma \) is an infinite sequence of letters \(a_0 a_1 a_2 \ldots \) with \(a_i \in \varSigma \) for all \(i \ge 0\) and an \(\omega \)-language is a set of \(\omega \)-words. The set of all \(\omega \)-words is denoted \(\varSigma ^\omega \). We denote the i-th letter of an \(\omega \)-word w (starting at 0) by w(i) and the infinite suffix \(w(i) w(i+1) \ldots \) by \(w_{i}\).

In this paper we focus on deterministic \(\omega \)-automata with accepting conditions defined on transitions which is nowadays the preferred acceptance condition in implementations due to the succinctness and in-line with other recent papers and tools [2, 8, 20, 28]. The discussed constructions can also be transferred to automata with acceptance defined on states with the folklore translation from transition acceptance to state acceptance.

A deterministic pre-automaton (DA) over \(\varSigma \) is a tuple \((Q, \delta , q^0)\) where Q is a finite set of states, \(\delta :Q \times \varSigma \rightarrow Q\) is a transition function, and \(q^0\) is an initial state. A transition is a triple \((q, a, q')\) such that \(q' = \delta (q, a)\).

A deterministic Parity automaton (DPA) is a deterministic pre-automaton automaton \({A}= (Q, \delta , q^0, \chi , d, p)\) with the addition of the transition colouring\(\chi :Q\times \varSigma \rightarrow \{0,1,\ldots ,d\}\), \(d \ge 1\) the maximal colour and \(p\in \{ 0,1\}\) the parity that determines whether a run is accepting or not (as defined below). A run of A on an \(\omega \)-word \(w :{\mathbb {N}}_0 \rightarrow \varSigma \) is an \(\omega \)-sequence of states \(\rho :{\mathbb {N}}_0 \rightarrow Q\) such that \(\rho (0)=q^0\) and for all positions \(i \in {\mathbb {N}}_0\), we have that \((\rho (i),w(i),\rho (i+1)) \in \delta \). Given a run \(\rho \) over a word w, the infinite sequence of colours traversed by the run \(\rho \) is denoted by \(\chi (\rho )\,{:=}\,\bigl (\chi (\rho (i),w(i))\bigr )_{i\in {\mathbb {N}}_0}\). The minimal colour appearing infinitely often along a run \(\rho \) is \(\liminf \chi (\rho )\). A run \(\rho \) is accepting if \(\liminf \chi (\rho ) \equiv _2 p\) (with \(x\equiv _2y :\Leftrightarrow (x-y)\bmod {2} = 0\)). An \(\omega \)-word w is in the language of \({A}\), denoted \(w \in {\mathcal {L}}({A})\), iff the run for w on \({A}\) is an accepting run.

For a parity \(p\in \{0,1\}\), define \({\overline{p}} \,{:=}\,1-p\) as the switched parity. Note that by changing the parity \(p\) to \({\overline{p}}\), we obtain a complement automaton\({\overline{{A}}} \,{:=}\,(Q, \delta , q^0, \chi , d, {\overline{p}})\) for which we have \({\mathcal {L}}({\overline{{A}}}) = \varSigma ^\omega {\setminus } {\mathcal {L}}({A})\).

To change the parity \(p\) to \({\overline{p}}\) while preserving the language, one can use \({A}' \,{:=}\,(Q, \delta , q^0, \chi ', d + 1, {\overline{p}})\) with \(\chi '(q, a) \,{:=}\,\chi (q, a) + 1\), which has one more colour and satisfies \({\mathcal {L}}({A}') = {\mathcal {L}}({A})\).

A deterministic Büchi automaton (DBA) with the set of accepting transitions \(\alpha \) is a DPA with colours \(\{0,1\}\), parity \(0\) and \(\chi \) defined as:

A deterministic co-Büchi automaton (DCA) with the set of rejecting transitions \(\beta \) is a DPA with colours \(\{0,1\}\), parity \(1\) and \(\chi \) defined as:

A deterministic weak automaton (DWA) with the set of accepting states \(\gamma \) is a DPA with colours \(\{0,1\}\) and parity \(0\) or \(1\), where for each strongly connected component \(S \subseteq Q\), either \(S \subseteq \gamma \) or \(S \cap \gamma = \emptyset \). Then \(\chi \) is defined as:

Note that for weak automata, we can switch between parity \(0\) and \(1\) while preserving the language without increasing the number of colours.

A bottom state of a DPA is a special state \(\bot \in Q\) such that \(\delta (\bot , a) = \bot \) and \(\chi (\bot , a) \equiv _2 {\overline{p}}\) for each \(a \in \varSigma \). A top state of a DPA is a special state \(\top \in Q\) such that \(\delta (\top , a) = \top \) and \(\chi (\top , a) \equiv _2 p\) for each \(a \in \varSigma \).

2.2 Linear temporal logic

We present LTL [36] with a larger than usual set of modal operators and Boolean connectives, instead of a minimalistic syntax often found in other publications. While a minimalistic syntax reduces the amount of cases, e.g., in an induction, we want to keep as much structure of the given formula as possible and thus add redundancy. In particular, we are going to present customised constructions in order to deal with the Boolean connective \(\leftrightarrow \) that effectively reduces the size of the automaton that is constructed. We work with a syntax for LTL in which formulas within the scope of modal operators (\({\mathbf {X}}\), \({\mathbf {U}}\)) are written in negation-normal form, i.e., negations only occur in front of atomic propositions. Thus we need to introduce \({\mathbf {ff}}\), \(\lnot a\), \(\vee \), and the temporal operator \({\mathbf {R}}\) (release) in order to remove \(\lnot \) from the syntax. It is easy to see that LTL formulas with the usual syntax can be translated to equivalent LTL formulas of the same size in our syntax. A formula of LTL over a set of atomic propositions (\(\mathsf {Ap}\)) is given by the following syntax:

Definition 1

(Syntax of LTL)

We also define the usual abbreviations \({\mathbf {F}}\varphi \,{:=}\,{\mathbf {tt}}{\mathbf {U}}\varphi \) (eventually) and \({\mathbf {G}}\varphi \,{:=}\,{\mathbf {ff}}{\mathbf {R}}\varphi \) (always). The satisfaction relation \(\models \) between \(\omega \)-words over the alphabet \(\varSigma \,{:=}\,2^\mathsf {Ap}\) and formulas is inductively defined as follows:

Definition 2

(Semantics of LTL)

We denote by \({\mathcal {L}}(\varphi )\) the language of \(\varphi \) defined as \({\mathcal {L}}(\varphi ) \,{:=}\,\{ w \in \varSigma ^\omega \mid w \models \varphi \}\).

2.3 Notable fragments of LTL

In the latter section we are going to consider the following four fragments of LTL:

\(\mu {LTL}\) and \(\nu {LTL}\):

\(\mu {LTL}\) is the fragment of LTL restricted to the temporal operator \({\mathbf {U}}\), the Boolean connectives \((\wedge , \vee )\), the literals \((a, \lnot a)\), and the next operator \(({\mathbf {X}})\). \(\nu {LTL}\) is defined analogously, but with the operator \({\mathbf {R}}\) instead of \({\mathbf {U}}\). In the literature \(\mu {LTL}\) is also called syntactic co-safety and \(\nu {LTL}\) syntactic safety.

\({\mathbf {G}}(\mu {LTL})\) and \({\mathbf {F}}(\nu {LTL})\):

These fragments contain the formulas of the form \({\mathbf {G}}\varphi \), where \(\varphi \in \mu {LTL}\), and \({\mathbf {F}}\varphi \), where \(\varphi \in \nu {LTL}\).

The reason for the names \(\mu {LTL}\) and \(\nu {LTL}\) is that \({\mathbf {U}}\) is a least-fixed-point operator, in the sense that its semantic is naturally formulated by a least fixed point, e.g., in the \(\mu \)-calculus, while the semantics of \({\mathbf {R}}\) is naturally formulated by a greatest fixed point.

For all these fragments several translations to deterministic automata are known and we are going to use the constructions for \(\mu {LTL}\), \(\nu {LTL}\), \({\mathbf {G}}{\mathbf {F}}(\mu {LTL})\), and \({\mathbf {F}}{\mathbf {G}}(\nu {LTL})\) described in [13], for \({\mathbf {G}}(\mu {LTL})\) and \({\mathbf {F}}(\nu {LTL})\) the construction described in [38], and for arbitrary LTL formulas the construction described in [12]. It should be noted that of course these constructions can be swapped with other constructions, but some of the implemented heuristics rely on the specific state structure these constructions yield.

2.4 Synthesis problem

Let \(\varphi \) be a specification given as an LTL formula and let the atomic propositions \(\mathsf {Ap}= {\mathsf {Ap}_{\textsf {in}}}\uplus {\mathsf {Ap}_{\textsf {out}}}\) be partitioned into input symbols\({\mathsf {Ap}_{\textsf {in}}}\) and output symbols\({\mathsf {Ap}_{\textsf {out}}}\). We then define \(\varSigma \,{:=}\,2^\mathsf {Ap}\), \(\varSigma _{\textsf {in}}\,{:=}\,2^{\mathsf {Ap}_{\textsf {in}}}\), and \(\varSigma _{\textsf {out}}\,{:=}\,2^{\mathsf {Ap}_{\textsf {out}}}\).

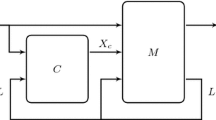

Then the synthesis problem is to decide if a function \(\sigma : \varSigma _{\textsf {in}}^* \rightarrow \varSigma _{\textsf {out}}\) exists such that for every \(\omega \)-word \(v \in \varSigma _{\textsf {in}}^\omega \), the \(\omega \)-word \(w \in \varSigma ^\omega \) defined by \(w(i) \,{:=}\,v(i) \cup \sigma (v(0)v(1)\ldots v(i))\) satisfies \(w \in {\mathcal {L}}(\varphi )\). In the positive case, also a finite and executable representation of \(\sigma \) in the form of a controller should be produced, e.g., a Mealy machine or a circuit.

3 Synthesis procedure

We start with an overview on how Strix constructs parity games from specification formulas and solves them. For the controller extraction we refer the reader to Sect. 3.3. We illustrate the intuition of Algorithm 1 using a simple arbiter example and refer to functionality explained in subsequent sections via oracles: First, the formula is analysed with \({{\mathcal {O}}}_{{\mathcal {T}}}\) and a DPA is constructed on-the-fly via \({{\mathcal {O}}}_{q_0}\), \({{\mathcal {O}}}_{p}\), and \({{\mathcal {O}}}_{\delta }\). Second, the DPA is interpreted as a parity game and the game is solved via \({{\mathcal {O}}}_{\textsf {win}}\) computing the winning regions. Further an exploration heuristic (\({{\mathcal {O}}}_{\textsf {expl}}\)) guides which parts of the parity games are extended. To be more precise, we use the following oracles:

\({{\mathcal {O}}}_{{\mathcal {T}}}(\varphi )\): Given the formula \(\varphi \), the oracle returns an annotated formula \(\alpha \) that labels syntax nodes with a recommended automaton type to be used for this subformula for translation and how to compose a (product) DPA from these components.

\({{\mathcal {O}}}_{q_0}(\alpha )\): Given the annotated formula \(\alpha \), the oracle returns the initial state \(q_0\) of the (product) DPA recognising \(\varphi \).

\({{\mathcal {O}}}_{p}(\alpha )\): Given the annotated formula \(\alpha \), the oracle returns the parity \(p\) of the (product) DPA recognising \(\varphi \).

\({{\mathcal {O}}}_{\delta }(\alpha , q)\): Given the annotated formula \(\alpha \) and a (product) state q, the oracle returns a set of outgoing transitions from q. The elements of the set are tuples \((I,O,c,q')\), where \(I \subseteq \varSigma _{\textsf {in}}\) are the input letters, \(O \subseteq \varSigma _{\textsf {out}}\) are the output letters, c is the colour of the transition and \(q'\) is the successor state. Formally for each such tuple \((I,O,c,q')\) we have \(\delta (q, i \cup o) = q'\) and \(\chi (q, i \cup o) = c\) for all \(i \in I\) and \(o \in O\). The oracle will return \(q' = \bot \) for states that are trivially losing for the system and \(q' = \top \) for states that are trivially winning.

\({{\mathcal {O}}}_{\textsf {win}}\): Given

, compute whether the state q is won by player

, compute whether the state q is won by player  , where

, where  is the set of nodes from which player

is the set of nodes from which player  moves,

moves,  is the set of nodes from which player

is the set of nodes from which player  moves,

moves,  is the labeled edge relation, \(\chi :E\rightarrow {\mathbb {N}}_0\) is an edge colouring,

is the labeled edge relation, \(\chi :E\rightarrow {\mathbb {N}}_0\) is an edge colouring,  is the set of boundary nodes (i.e. nodes whose successors have yet to be constructed), \(p\) is the parity for player \(\mathsf {P}\), i.e. player \(\mathsf {P}\) wins if the minimal colour occurring infinitely often along the edges of a play has parity \(p\), and \(\kappa \) is an initial (partial, nondeterministic)Footnote 3 strategy for player \(\mathsf {P}\). \({{\mathcal {O}}}_{\textsf {win}}\) also returns an updated strategy \(\kappa '\), which is winning from q for \(\mathsf {P}\) if q is won by player \(\mathsf {P}\). Depending on the player \(\mathsf {P}\) boundary nodes are declared as winning for the opponent in order to correctly under-approximate the parity game on the completely constructed arena.

is the set of boundary nodes (i.e. nodes whose successors have yet to be constructed), \(p\) is the parity for player \(\mathsf {P}\), i.e. player \(\mathsf {P}\) wins if the minimal colour occurring infinitely often along the edges of a play has parity \(p\), and \(\kappa \) is an initial (partial, nondeterministic)Footnote 3 strategy for player \(\mathsf {P}\). \({{\mathcal {O}}}_{\textsf {win}}\) also returns an updated strategy \(\kappa '\), which is winning from q for \(\mathsf {P}\) if q is won by player \(\mathsf {P}\). Depending on the player \(\mathsf {P}\) boundary nodes are declared as winning for the opponent in order to correctly under-approximate the parity game on the completely constructed arena.\({{\mathcal {O}}}_{\textsf {expl}}\): Given a set B of boundary nodes, the so far constructed arena and the intermediate strategies, the oracle returns a nonempty subset of B of nodes that should be further explored.

We use the specification of a simple arbiter as an example. In this setting two processes (\(i\in \{1,2\}\)) request access to the critical section by raising the flag \(r_i\) and the arbiter eventually grants access to process i by raising \(g_i\). Thus we have \({\mathsf {Ap}_{\textsf {in}}}= \{r_1,r_2\}\) and \({\mathsf {Ap}_{\textsf {out}}}= \{g_1,g_2\}\) and the following specification:

Applying the annotation oracle, we obtain from the specification \(\phi \) an annotated syntax tree \(\alpha \) shown in Fig. 1 that represents a decomposition of \(\phi \) into subformulas w.r.t. the weakest class of deterministic automata needed for their translation and how to combine the automata in order to obtain the automaton for \(\phi \) itself. In our example, \(\psi _0\) is a simple mutex requirement which is classified as recognisable by a DWA (denoted by \({\mathcal {W}}\)), and \(\psi _1\) and \(\psi _2\) are fairness requirements that are classified as recognisable by a DBA (denoted by \({\mathcal {B}}\)). The conjunctions \(\psi _1 \wedge \psi _2\) and \(\phi \) itself are then also recognisable by a DBA (denoted by \({\mathcal {B}}_{\wedge }\)). The corresponding automata are displayed in Fig. 2.

We query \({{\mathcal {O}}}_{q_0}(\alpha )\) and obtain \(q_0 \,{:=}\,(a_0,((b_0,c_0),0))\), which matches the tree structure of \(\alpha \). Here, we have add a round-robin counter \(r\in \{0,1\}\) for the intersection of the two Büchi automata representing \(\psi _1\) and \(\psi _2\): This round-robin counter remembers which of the two Büchi automata is due to take an accepting transition. To ease notation for our example and the corresponding figures we flatten \((a_0,((b_0,c_0),0))\) to \((a_0,\underline{b_0},c_0)\) with the underlined state representing the round-robin counter. Further, we query \({{\mathcal {O}}}_{p}(\alpha )\) for the parity associated with the controller, i.e. player  . In our example it is 0 and

. In our example it is 0 and  wins a play if the minimal colour encountered infinitely often is even.

wins a play if the minimal colour encountered infinitely often is even.

As we are using Mealy semantics, we let the environment  move from the initial node \((a_0,\underline{b_0},c_0)\). Our parity game also includes two nodes \(\bot \) and \(\top \) where by our construction \(\bot \) is always won by the environment

move from the initial node \((a_0,\underline{b_0},c_0)\). Our parity game also includes two nodes \(\bot \) and \(\top \) where by our construction \(\bot \) is always won by the environment  , while \(\top \) is always won by the controller

, while \(\top \) is always won by the controller  .

.

We now start the on-the-fly forward exploration of parity game arena. In every iteration of the while-loop in Algorithm 1 we extend the boundaryB. The boundary always consists of nodes belonging to the environment  whose successors have yet to be explored. Initially the boundary is just the initial node of the parity game, in our example \(B=\{(a_0,\underline{b_0},c_0)\}\).

whose successors have yet to be explored. Initially the boundary is just the initial node of the parity game, in our example \(B=\{(a_0,\underline{b_0},c_0)\}\).

As initially B is a singleton set, \({{\mathcal {O}}}_{\textsf {expl}}\) tells us to explore all direct successors of \(q_0=(a_0,\underline{b_0},c_0)\). \({{\mathcal {O}}}_{\delta }(\alpha , q_0)\) groups the outgoing transitions \(\delta (q_0, \star )\) using \(\varSigma _{\textsf {in}}\) and \(\varSigma _{\textsf {out}}\) as previously mentioned as a set of tuples of the shape \((I, O, q', c)\). Due to the Mealy semantics each such tuple \((I, O, q', c)\) is broken up in two steps: starting in \(q_0\), first the environment issues a signal \(i \in I\), which leads the game into the intermediate state \((q_0,I)\);Footnote 4 as this is only an intermediate step the corresponding edge is assigned the (w.r.t. min-parity) “neutral” colour \(\infty \); in \((q_0,I)\) the controller  then issues a signal \(o \in O\) leading to the next state of the DPA. We illustrate this using our example: we have 4 choices for the inputs at \((a_0,\underline{b_0},c_0)\):

then issues a signal \(o \in O\) leading to the next state of the DPA. We illustrate this using our example: we have 4 choices for the inputs at \((a_0,\underline{b_0},c_0)\):

- 1.

\(\overline{r_1}\, \overline{r_2} = \{\emptyset \}\), i.e. no process requests access;

- 2.

\(r_1\, \overline{r_2} = \{\{r_1\}\}\), i.e. only process 1 requests access;

- 3.

\(\overline{r_1}\, r_2 = \{\{r_2\}\}\), i.e. only process 2 requests access; and

- 4.

\(r_1\, r_2 = \{\{r_1,r_2\}\}\), i.e. both processes want to access the critical section.

Consider the case that the environment chooses the input \(I=r_1\,\overline{r_2}\). \({{\mathcal {O}}}_{\delta }\) groups the outputs available to  into the three groups \(g_1\,g_2\) (grant access to both processes), \(g_1\,\overline{g_2}\) (grant access to only process 1), and \(\overline{g_1}\) (do not grant access to process 1). In case of \(O=\overline{g_1}=\{\{\}, \{g_2\}\}\), the DBA \({{\mathcal {A}}}_{\psi _1}\) takes a non-accepting transition, hence, the round-robin counter stays unchanged, while the other two automata take a loop, arriving at state \((a_0,\underline{b_1},c_0)\) in the product; \({{\mathcal {O}}}_{\delta }\) determines by analysing \(\alpha \) that we only need the colours \(\{0,1\}\) for the parity game under construction; as \({{\mathcal {A}}}_{\psi _1}\) takes a non-accepting transition, \({{\mathcal {O}}}_{\delta }\) gives the input–output pair \((I, O) = (r_1\,\overline{r_2},\overline{g_1})\) a colour of parity \({\overline{p}}\) in order to prevent

into the three groups \(g_1\,g_2\) (grant access to both processes), \(g_1\,\overline{g_2}\) (grant access to only process 1), and \(\overline{g_1}\) (do not grant access to process 1). In case of \(O=\overline{g_1}=\{\{\}, \{g_2\}\}\), the DBA \({{\mathcal {A}}}_{\psi _1}\) takes a non-accepting transition, hence, the round-robin counter stays unchanged, while the other two automata take a loop, arriving at state \((a_0,\underline{b_1},c_0)\) in the product; \({{\mathcal {O}}}_{\delta }\) determines by analysing \(\alpha \) that we only need the colours \(\{0,1\}\) for the parity game under construction; as \({{\mathcal {A}}}_{\psi _1}\) takes a non-accepting transition, \({{\mathcal {O}}}_{\delta }\) gives the input–output pair \((I, O) = (r_1\,\overline{r_2},\overline{g_1})\) a colour of parity \({\overline{p}}\) in order to prevent  from replying to \(r_1\,\overline{r_2}\) by \(\overline{g_1}\) infinitely often. Thus \((r_1\,\overline{r_2},\overline{g_1},1,(a_0,\underline{b_1},c_0)) \in {{\mathcal {O}}}_{\delta }(\alpha , q_0)\). For \(O=g_1\,\overline{g_2}\) we obtain analogously the entry \((r_1\,\overline{r_2}, g_1\,\overline{g_2},0,(a_0,\underline{b_0},c_0))\): as all automata take an accepting loop in this case the input–output pair is given the colour 0 and, as the round-robin counter is incremented twice, we are back in the initial node of the parity game. Finally, for \(O=g_1\,g_2\) the oracle \({{\mathcal {O}}}_{\delta }\) determines that

from replying to \(r_1\,\overline{r_2}\) by \(\overline{g_1}\) infinitely often. Thus \((r_1\,\overline{r_2},\overline{g_1},1,(a_0,\underline{b_1},c_0)) \in {{\mathcal {O}}}_{\delta }(\alpha , q_0)\). For \(O=g_1\,\overline{g_2}\) we obtain analogously the entry \((r_1\,\overline{r_2}, g_1\,\overline{g_2},0,(a_0,\underline{b_0},c_0))\): as all automata take an accepting loop in this case the input–output pair is given the colour 0 and, as the round-robin counter is incremented twice, we are back in the initial node of the parity game. Finally, for \(O=g_1\,g_2\) the oracle \({{\mathcal {O}}}_{\delta }\) determines that  has no chance of winning anymore as the DWA \({{\mathcal {A}}}_{\psi _0}\) representing the mutex requirements cannot reach an accepting transition anymore; for this reason, \({{\mathcal {O}}}_{\delta }\) simplifies the successor state to \(\bot \) which by construction is always won by the environment

has no chance of winning anymore as the DWA \({{\mathcal {A}}}_{\psi _0}\) representing the mutex requirements cannot reach an accepting transition anymore; for this reason, \({{\mathcal {O}}}_{\delta }\) simplifies the successor state to \(\bot \) which by construction is always won by the environment  and includes the tuple \((r_1\,\overline{r_2},g_1g_2,1,\bot )\) into its output.Footnote 5 Analogously, \({{\mathcal {O}}}_{\delta }\) handles the other three possible inputs by

and includes the tuple \((r_1\,\overline{r_2},g_1g_2,1,\bot )\) into its output.Footnote 5 Analogously, \({{\mathcal {O}}}_{\delta }\) handles the other three possible inputs by  which eventually leads to the arena shown in Fig. 3 with border \(B=\{(a_0,\underline{b_0},c_1), (a_0,b_0,\underline{c_1}), (a_0,\underline{b_1},c_1)\}\).

which eventually leads to the arena shown in Fig. 3 with border \(B=\{(a_0,\underline{b_0},c_1), (a_0,b_0,\underline{c_1}), (a_0,\underline{b_1},c_1)\}\).

We now run the parity game solver, i.e. query \({{\mathcal {O}}}_{\textsf {win}}\) for the so-far constructed arena. We first mark the boundary nodes as losing for the controller and ask \({{\mathcal {O}}}_{\textsf {win}}\) for an optimal winning strategy for the controller. In this case, this leads to the nondeterministic strategy \(\sigma \) depicted in Fig. 4 where the thick edges in dark green belong to \(\sigma \), while edges disabled by \(\sigma \) are drawn as dashed lines. We will describe our instantiation of \({{\mathcal {O}}}_{\textsf {win}}\) in Sect. 3.2 in more detail, but intuitively \({{\mathcal {O}}}_{\textsf {win}}\) tries to maximise the distance from the trivial losing states \(\bot \) and the boundary B with each colour interpreted as a distance; hence, in the intermediate states corresponding to \(I\in \{\overline{r_1}\, r_2, \overline{r_1}\,\overline{r_2}, r_1\,\overline{r_2}\}\), \({{\mathcal {O}}}_{\textsf {win}}\) chooses to let the controller play back to the initial state so that ideally never the losing state is reached and thus the distance to it is maximised. One particular feature of our instantiation of \({{\mathcal {O}}}_{\textsf {win}}\) is that it outputs nondeterministic strategies, i.e. a strategy \(\sigma \) for  is still required to respect the edge relation of the parity game but \(\sigma (q)\) is only required to be some subset of the successors of q in the parity game; in particular \(\sigma (q)=\emptyset \) is allowed and has to be interpreted as

is still required to respect the edge relation of the parity game but \(\sigma (q)\) is only required to be some subset of the successors of q in the parity game; in particular \(\sigma (q)=\emptyset \) is allowed and has to be interpreted as  giving up at node q which is the case of the strategy shown in Fig. 4 at the intermediate node \((q_0, r_1\,r_2)\). Note that we do not draw \(\bot \) and the corresponding edges in Figs. 4 and 5 as \({{\mathcal {O}}}_{\textsf {win}}\) will always prefer to tell the controller

giving up at node q which is the case of the strategy shown in Fig. 4 at the intermediate node \((q_0, r_1\,r_2)\). Note that we do not draw \(\bot \) and the corresponding edges in Figs. 4 and 5 as \({{\mathcal {O}}}_{\textsf {win}}\) will always prefer to tell the controller  to give up instead of playing to \(\bot \) (analogously for

to give up instead of playing to \(\bot \) (analogously for  and \(\top \)). More importantly, if a strategy tells a player to give up at a specific node this means that the player loses any play reaching this specific node. For this reason, the computed strategy \(\sigma \) is not winning for the controller so far, hence, we also ask \({{\mathcal {O}}}_{\textsf {win}}\) if the environment

and \(\top \)). More importantly, if a strategy tells a player to give up at a specific node this means that the player loses any play reaching this specific node. For this reason, the computed strategy \(\sigma \) is not winning for the controller so far, hence, we also ask \({{\mathcal {O}}}_{\textsf {win}}\) if the environment  might win the initial state—now with the boundary marked as winning for the controller. As \({{\mathcal {O}}}_{\textsf {win}}\) also fails to find a winning strategy for the environment (

might win the initial state—now with the boundary marked as winning for the controller. As \({{\mathcal {O}}}_{\textsf {win}}\) also fails to find a winning strategy for the environment ( can easily force

can easily force  directly into the boundary), we proceed to further explore and construct the arena.

directly into the boundary), we proceed to further explore and construct the arena.

Optimal strategy for the controller in the parity game arena of Fig. 3

In this iteration \({{\mathcal {O}}}_{\textsf {expl}}\) now uses \(\sigma \) and \(\tau \): as neither the controller nor the environment wins the initial node so far, starting in \(q_0=(a_0,\underline{b_0},c_0)\) each player can force his opponent into the boundary; hence, \(\sigma \) and \(\tau \) give us some information where to further explore the arena; further, from \(\alpha \) we obtain scores that tell us how far from acceptance resp. rejection a given boundary state is w.r.t. to the product of the underlying automata; in our example, using \(\alpha \) the oracle \({{\mathcal {O}}}_{\textsf {expl}}\) tells us to first explore only the two states \((a_0,\underline{b_1},c_0)\) and \((a_0,b_0,\underline{c_0})\) as in both cases only one of the two processes is waiting for being granted access. Proceeding as before, we further extend the parity game under construction leading to the parity game shown in Fig. 5 (which also shows the strategy obtained for  ) and again ask if either

) and again ask if either  or

or  can now win the initial node \(q_0\). As the extended parity game coincides with that of the previous iteration in all but the boundary nodes, we pass the so-far computed strategies also to \({{\mathcal {O}}}_{\textsf {win}}\) in order to re-use the information stored in them.

can now win the initial node \(q_0\). As the extended parity game coincides with that of the previous iteration in all but the boundary nodes, we pass the so-far computed strategies also to \({{\mathcal {O}}}_{\textsf {win}}\) in order to re-use the information stored in them.

Fig. 5 shows the strategy that \({{\mathcal {O}}}_{\textsf {win}}\) now computes: the updated \(\sigma \) coincides with the previous \(\sigma \) on the nodes where  did not give up (edges coloured in dark green); it only adds the edges coloured in light blue. The so updated \(\sigma \) now wins the initial node for the controller. In particular, \(\sigma \) keeps the nondeterminism at \((q_0,r_1\,r_2)\) where it only tells the controller to grant access to exactly one process but it does not tell

did not give up (edges coloured in dark green); it only adds the edges coloured in light blue. The so updated \(\sigma \) now wins the initial node for the controller. In particular, \(\sigma \) keeps the nondeterminism at \((q_0,r_1\,r_2)\) where it only tells the controller to grant access to exactly one process but it does not tell  which one of the processes should be preferred. This ambiguity can be used when translating the strategy into a circuit or a program to reduce the description size. Finally note that as we mark the nodes on the boundary as losing for the respective “main” player when calling \({{\mathcal {O}}}_{\textsf {win}}\), if the “main” player can win the so-far constructed parity game, then his strategy has to avoid the boundary, i.e. by construction we always find winning strategies that try to enclose all plays starting in the initial node in a “minimal” winning region. We remark that this bears some similarity to the local strategy iteration schemes by Friedmann [17]; but there the parity game is assumed to be explicitly given, and the goal is simply to speed-up strategy iteration itself; in our case the goal is to construct as little as possible from the actual parity game, while the actual choice of the oracle \({{\mathcal {O}}}_{\textsf {win}}\) is unimportant at this point.

which one of the processes should be preferred. This ambiguity can be used when translating the strategy into a circuit or a program to reduce the description size. Finally note that as we mark the nodes on the boundary as losing for the respective “main” player when calling \({{\mathcal {O}}}_{\textsf {win}}\), if the “main” player can win the so-far constructed parity game, then his strategy has to avoid the boundary, i.e. by construction we always find winning strategies that try to enclose all plays starting in the initial node in a “minimal” winning region. We remark that this bears some similarity to the local strategy iteration schemes by Friedmann [17]; but there the parity game is assumed to be explicitly given, and the goal is simply to speed-up strategy iteration itself; in our case the goal is to construct as little as possible from the actual parity game, while the actual choice of the oracle \({{\mathcal {O}}}_{\textsf {win}}\) is unimportant at this point.

This brings us to the end of our walk-through of the main algorithm. In the following sections we describe in more detail how we choose to instantiate the oracles.

3.1 DPA construction

3.1.1 Formula analysis and decomposition

Before constructing a DPA the formula is analysed and its syntax-tree is annotated with automata acceptance conditions based on syntactic criteria. Such a formula decomposition focussed on conjunctions has been previously used in other work such as [10, 15, 34]. However, we will also consider disjunctions and bi-implications. In Sect. 2.1 we introduced the following three sub-classes of DPAs: DWAs, DBAs, and DCAs. Accordingly we annotate the LTL formula with “acceptance-typing” information:

Definition 3

[Acceptance-Type Annotated LTL]

We obtain an acceptance-typed LTL formula \(\alpha \) from an LTL formula \(\varphi \) using the following heuristic approach: First, we determine syntactically the “simplest” acceptance type, denoted \(\tau _\varphi \), such that we can build a deterministic automaton with acceptance \(\tau _\varphi \) for \(\varphi \) efficiently. Second, we annotate \(\varphi \) with this information and obtain \(\alpha \) as the result of \({\mathcal {T}}_\varphi \). Formally:

Definition 4

Let \(\varphi \) be a formula. Then \(\tau \) is recursively defined as:

where \(\sqcup \) denotes the least upper bound relative to the partial order \(\preceq \) defined by \({\mathcal {W}} \prec {\mathcal {B}}\), \({\mathcal {W}} \prec {\mathcal {C}}\), \({\mathcal {B}} \prec {\mathcal {P}}\), \({\mathcal {C}} \prec {\mathcal {P}}\), \({\mathcal {B}} \not \preceq {\mathcal {C}}\) and \({\mathcal {C}} \not \preceq {\mathcal {B}}\). The acceptance-type annotated formula \({\mathcal {T}}_\varphi \) is then recursively defined as:

In a specific implementation this decomposition and annotation might be fine-tuned to allow better translation performance, e.g. safety properties classified as separate weak sub-formulas might be grouped for performance reasons.

Further, let \({\mathcal {X}}\), \({\mathcal {Y}}\), and \({\mathcal {Z}}\) be acceptance-typed LTL formulas. We make use of the following simple pattern matching notation:

\({\mathcal {X}} = {\mathcal {B}}_\wedge ({\mathcal {X}}_1, {\mathcal {X}}_2)\): Here \({\mathcal {X}}_1\) and \({\mathcal {X}}_2\) are fresh variables binding to the left and right subtree of \({\mathcal {X}}\), which is constrained to be a conjunction typed as Büchi acceptance.

\({\mathcal {Y}} = {\mathcal {Y}}_{\vee }({\mathcal {C}}_1, {\mathcal {Y}}_2)\): Here \({\mathcal {C}}_1\) and \({\mathcal {Y}}_2\) are fresh variables binding to the left and right subtree of \({\mathcal {Y}}\). Further, \({\mathcal {C}}_1\) has to be typed as co-Büchi acceptance and \({\mathcal {Y}}\) can be typed with any acceptance condition, but needs to be a disjunction.

\({\mathcal {Z}} = {\mathcal {W}}_{\leftrightarrow }({\mathcal {W}}_1, {\mathcal {W}}_2)\): Here \({\mathcal {W}}_1\) and \({\mathcal {W}}_2\) are fresh variables binding to the left and right subtree of \({\mathcal {Z}}\) and are both typed with weak acceptance. Moreover, \({\mathcal {Z}}\) is a bi-implication with weak acceptance.

Moreover, instead of only binary conjunctives, we use Büchi conjunction and co-Büchi disjunction of sets, i.e. we add

for any \(n \ge 2\) to the syntax. We restrict this rule to applications where all children are in the Büchi class for \({\mathcal {B}}_\wedge \) (resp. co-Büchi class for \({\mathcal {C}}_\vee \)). After computing \({\mathcal {T}}_\varphi \), successive conjunctions are directly grouped together with the rule \({\mathcal {B}}_\wedge ({\mathcal {B}}_\wedge ({\mathcal {B}}_1, {\mathcal {B}}_2), {\mathcal {B}}_3) = {\mathcal {B}}_\wedge ({\mathcal {B}}_1, {\mathcal {B}}_\wedge ({\mathcal {B}}_2, {\mathcal {B}}_3)) = {\mathcal {B}}_\wedge ({\mathcal {B}}_1, {\mathcal {B}}_2, {\mathcal {B}}_3)\), and the respective rule for \({\mathcal {C}}_\vee \).

3.1.2 Product construction with LAR

Given an acceptance-type annotated syntax tree \({\mathcal {T}}_\varphi \) for a formula \(\varphi \), we now describe a recursive procedure to construct a (transition-based) DPA \({A}({\mathcal {T}}_\varphi )\) with \({\mathcal {L}}({A}({\mathcal {T}}_\varphi )) = {\mathcal {L}}(\varphi )\). Observe that not all patterns that are syntactically possible are covered, but all patterns generated by \({\mathcal {T}}_\varphi \).

Base Case. In the case \({\mathcal {T}}_\varphi \in \{{\mathcal {W}}(\varphi ), {\mathcal {B}}(\varphi ), {\mathcal {C}}(\varphi ), {\mathcal {P}}(\varphi )\}\) we use one of the direct automata constructions described in Sect. 2.3.

Conjunction. Now consider the case for the conjunction \({\mathcal {T}}_{\varphi } = {\mathcal {X}}_\wedge ({\mathcal {T}}_{\psi _1}, {\mathcal {T}}_{\psi _2})\), or \({\mathcal {T}}_{\varphi } = {\mathcal {B}}_\wedge ({\mathcal {T}}_{\psi _1}, \ldots , {\mathcal {T}}_{\psi _n})\). We start the construction by recursively constructing DPAs \({A}({\mathcal {T}}_{\psi _i}) = (Q_i, \delta _i, q^0_i, \chi _i, d_i, p_i)\) for each child \({\mathcal {T}}_{\psi _i}\). Then we apply a case distinction based on \({\mathcal {T}}_{\varphi }\) and each \({\mathcal {T}}_{\psi _i}\).

In the cases where we have \({\mathcal {X}}_\wedge ({\mathcal {W}}_1, {\mathcal {X}}_2)\) (and symmetrically \({\mathcal {X}}_\wedge ({\mathcal {X}}_1, {\mathcal {W}}_2)\)), we can use a simple product construction. Then define the DPA

$$\begin{aligned} {A}({\mathcal {X}}_\wedge ({\mathcal {W}}_1, {\mathcal {X}}_2))&\,{:=}\,(Q_1 \times Q_2, \delta , (q^0_1, q^0_2), \chi , d_2, p_2) \end{aligned}$$with:

$$\begin{aligned} \delta (q, a)&\,{:=}\,(\delta _1(q_1, a), \delta _2(q_2, a))&\chi (q, a)&\,{:=}\,{\left\{ \begin{array}{ll} \chi _2(q_2, a) &{} \hbox { if}\ \chi _1(q_1, a) = p_1 \\ \overline{p_2} &{} \hbox { if}\ \chi _1(q_1, a) \ne p_1 \end{array}\right. } \end{aligned}$$In the cases where we have \({\mathcal {X}}_\wedge ({\mathcal {C}}_1, {\mathcal {X}}_2)\) (and symmetrically \({\mathcal {X}}_\wedge ({\mathcal {X}}_1, {\mathcal {C}}_2)\)), we can also use a product construction, with possibly one extra colour. W.l.o.g. assume \(p_2 = 1\). This can be achieved by switching the parity of \(A({\mathcal {X}}_2)\) if necessary. Then define the DPA

$$\begin{aligned} {A}({\mathcal {X}}_\wedge ({\mathcal {C}}_1, {\mathcal {X}}_2))&\,{:=}\,(Q_1 \times Q_2, \delta , (q^0_1, q^0_2), \chi , d_2, 1) \end{aligned}$$with:

$$\begin{aligned} \delta (q, a)&\,{:=}\,(\delta _1(q_1, a), \delta _2(q_2, a))&\chi (q, a)&\,{:=}\,{\left\{ \begin{array}{ll} 0 &{} \text {if }\chi _1(q_1, a) = 0 \\ \chi _2(q_2, a) &{} \text {if }\chi _1(q_1, a) = 1 \end{array}\right. } \end{aligned}$$Next, we consider the case \({\mathcal {B}}_\wedge ({\mathcal {B}}_1, \ldots , {\mathcal {B}}_n)\) with two or more Büchi children, and only Büchi children. Here, on top of a product construction, we need an additional round-robin-counter to track of successive satisfaction of the Büchi acceptance of the children. We define the DBA

$$\begin{aligned} {A}({\mathcal {B}}_\wedge ({\mathcal {B}}_1, \ldots , {\mathcal {B}}_n))&\,{:=}\,(Q \times \{ 0, 1, \ldots , n-1 \}, \delta , (q^0, 0), \chi , 1, 0) \end{aligned}$$with \(Q \,{:=}\,\left( Q_1 \times \ldots \times Q_n \right) \), \(q^0 \,{:=}\,(q^0_1, \ldots , q^0_n)\) and

$$\begin{aligned} \delta ((q, r), a)&\,{:=}\,((\delta _1(q_1, a), \ldots , \delta _n(q_n, a)), r' \bmod n) \\ \chi ((q, r), a)&\,{:=}\,{\left\{ \begin{array}{ll} 0 &{} \text {if }r' = n \\ 1 &{} \text {if }r' < n \end{array}\right. } \end{aligned}$$where \(r' \,{:=}\,\max \{ s \in \{r,r+1,\ldots ,n\} \mid \forall r < j \le s : \chi _j(q_j, a) = 0\}\).

Last, we consider the case \({\mathcal {P}}_\wedge ({\mathcal {B}}_1, {\mathcal {P}}_2)\) (and symmetrically \({\mathcal {P}}_\wedge ({\mathcal {P}}_1, {\mathcal {B}}_2)\)). W.l.o.g. we may assume \(p_2 = 1\) by switching parity if necessary. Here, we need additional memory to remember the minimal colour of \({\mathcal {P}}_2\) between acceptances of \({\mathcal {B}}_1\). We define the DPA

$$\begin{aligned} {A}({\mathcal {P}}_\wedge ({\mathcal {B}}_1, {\mathcal {P}}_2))&\,{:=}\,(Q_1 \times Q_2 \times \{0, 1, \ldots , d_2\}, \delta , ((q^0_1, q^0_2), d_2), \chi , d, 1) \end{aligned}$$with \(d \,{:=}\,\min \{d \in \{d_2, d_2 + 1\} \mid d \equiv _2 0\}\) and

$$\begin{aligned} \delta ((q, c), a)&\,{:=}\,{\left\{ \begin{array}{ll} ((\delta _1(q_1, a), \delta _2(q_2, a)), d_2) &{} \text {if }\chi _1(q_1, a) = 0 \\ ((\delta _1(q_1, a), \delta _2(q_2, a)), c') &{} \text {otherwise} \end{array}\right. } \\ \chi ((q, c), a)&\,{:=}\,{\left\{ \begin{array}{ll} c' &{} \text {if }\chi _1(q_1, a) = 0 \\ d &{} \text {otherwise} \end{array}\right. } \end{aligned}$$where \(c' \,{:=}\,\min \left( c, \chi _2(q_2, a) \right) \).

Note that if some child in a conjunction reaches a non-accepting sink, then we also know that the conjunction can never accept again, and we can simplify the product state. A similar argument holds if all children reach a accepting sink. Formally, we replace \(\delta \) by \(\delta '\) and \(\chi \) by \(\chi '\) defined by:

Disjunction. The construction of the DPA \({A}({\mathcal {X}}_\vee ({\mathcal {X}}_1, {\mathcal {X}}_2))\) or \({A}({\mathcal {C}}_\vee ({\mathcal {C}}_1, \ldots , {\mathcal {C}}_n))\) for the disjunctive \({\mathcal {X}}_\vee \) is dual to the conjunction case.

Bi-implication. Finally, we consider the bi-implication \({\mathcal {X}}_\leftrightarrow ({\mathcal {X}}_1, {\mathcal {X}}_2)\). This can be expressed through \({\mathcal {X}}_\wedge \) and \({\mathcal {X}}_\vee \) by the logical equivalence \(\varphi \leftrightarrow \psi \equiv (\varphi \wedge \psi ) \vee (\lnot \varphi \wedge \lnot \psi )\). However, this construction would increase the state space and number of colours in some cases, since four automata (\(\varphi \), \(\lnot \varphi \), \(\psi \), \(\lnot \psi \)) instead of two (\(\varphi \), \(\psi \)) need to be constructed. Therefore we have a special construction for the DPA \({A}({\mathcal {X}}_\leftrightarrow ({\mathcal {X}}_1, {\mathcal {X}}_2))\). As before, we start by constructing the DPAs for the children \({\mathcal {X}}_1,{\mathcal {X}}_2\). Let \({A}({\mathcal {X}}_i) = (Q_i, \delta _i, q^0_i, \chi _i, d_i, p_i)\) for \(i \in \{1,2\}\).

In the case \({\mathcal {W}}_\leftrightarrow ({\mathcal {W}}_1, {\mathcal {W}}_2)\), we can apply a simple product construction. W.l.o.g. assume \(p_1 = p_2\) by switching parity if necessary. We define the DWA

$$\begin{aligned} {A}({\mathcal {W}}_\leftrightarrow ({\mathcal {W}}_1, {\mathcal {W}}_2))&\,{:=}\,(Q_1 \times Q_2, \delta , (q^0_1, q^0_2), \chi , 1, 0) \end{aligned}$$with:

$$\begin{aligned} \delta (q, a)&\,{:=}\,(\delta _1(q_1, a), \delta _2(q_2, a))&\chi (q, a)&\,{:=}\,\left( \chi _1(q_1, a) + \chi _2(q_2, a)\right) \bmod 2 \end{aligned}$$Now we consider the general case for \({\mathcal {P}}_\leftrightarrow ({\mathcal {X}}_1, {\mathcal {X}}_2)\) (note that \({\mathcal {B}}_\leftrightarrow \) and \({\mathcal {C}}_\leftrightarrow \) never occur). W.l.o.g. assume that \({\mathcal {X}}_1 \ne {\mathcal {P}}\) and thus \(d_1 = 1\). We consider \({\mathcal {X}}_2\) to be of class \({\mathcal {P}}\) and need to store its minimal colour between acceptances of \({\mathcal {X}}_1\), as for conjunction. However, whenever \({\mathcal {X}}_1\) does not accept, we emit the colour of \(A_2\), shifted by one. If \({\mathcal {X}}_1 = {\mathcal {W}}\), we can actually omit the memory to store the colour of \({\mathcal {X}}_2\). We define the DPA

$$\begin{aligned} {A}({\mathcal {P}}_\leftrightarrow ({\mathcal {X}}_1, {\mathcal {X}}_2))&\,{:=}\,(\left( Q_1 \times Q_2 \right) \times \{ 0, 1, \ldots , d_2 \}, \delta , ((q^0_1, q^0_2), d_2), \chi , d, p) \end{aligned}$$with \(d \,{:=}\,d_2 + 1\), \(p\,{:=}\,\left( p_1 + p_2\right) \bmod 2\) and

$$\begin{aligned} \delta ((q, c), a)&\,{:=}\,{\left\{ \begin{array}{ll} ((\delta _1(q_1, a), \delta _2(q_2, a)), d_2) &{} \text {if }\chi _1(q_1, a) = p_1\text { or }{\mathcal {X}}_1 = {\mathcal {W}} \\ ((\delta _1(q_1, a), \delta _2(q_2, a)), c') &{} \text {if }\chi _1(q_1, a) \ne p_1 \end{array}\right. } \\ \chi (q, a)&\,{:=}\,{\left\{ \begin{array}{ll} c' &{} \text {if }\chi _1(q_1, a) = p_1 \\ \chi _2(q_2, a) + 1 &{} \text {if }\chi _1(q_1, a) \ne p_1 \end{array}\right. } \end{aligned}$$where \(c' \,{:=}\,\min \left( c, \chi _2(q_2, a) \right) \).

We note that the construction of the previous case can be generalized to the product of two arbitrary DPAs by remembering for each colour c of \({A}({\mathcal {X}}_1)\) the minimal colour of \({A}({\mathcal {X}}_2)\) between minimal occurrences of c in \({A}({\mathcal {X}}_1)\).

As with conjunction, we apply the simplification to \({A}({\mathcal {X}}_\leftrightarrow ({\mathcal {X}}_1,{\mathcal {X}}_2))\), that if both children reach a state in \(\{\bot ,\top \}\), also the product state is either \(\bot \) or \(\top \). Replace \(\delta \) by \(\delta '\) and \(\chi \) by \(\chi '\) defined by:

Let \(\varphi \) be a formula and let \(\alpha = {\mathcal {T}}_\varphi \) be the acceptance-type annotated formula. We then implement the oracles \({{\mathcal {O}}}_{p}\), \({{\mathcal {O}}}_{q_0}\), and \({{\mathcal {O}}}_{\delta }\) by the DPA \({A}(\alpha ) = (Q, \delta , q^0, \chi , d, p)\) in the following way: \({{\mathcal {O}}}_{p}(\alpha ) \,{:=}\,p\), \({{\mathcal {O}}}_{q_0}(\alpha ) \,{:=}\,q^0\), and \({{\mathcal {O}}}_{\delta }(\alpha , q) \,{:=}\,\{ (\{i\}, \{o\}, \chi (q, i \cup o), \delta (q, i \cup o) ) \mid i \in \varSigma _{\textsf {in}}, o \in \varSigma _{\textsf {out}}\}\).

3.1.3 Implementation details

On-the-fly construction. For this construction, the functions \(\delta \) and \(\chi \) only need to query the local state, and never need a global state. They might call the functions \(\delta \) and \(\chi \) for their children, but those also only depend on the local state and their respective children. Therefore it is possible to implement the construction of the DPA \({A}(\alpha )\) for a decomposition \(\alpha \)on-the-fly: Starting with the initial state, successor states are only generated when necessary, and the DPA is not fully constructed until all states have been queried. This holds both for the root automaton and for any child automata constructed by the decomposition.

Memoization. When querying the root DPA for successors, the successors of the same state in a child DPA may be needed several times. Instead of recomputing them each time, the successors of the state are cached or memoized for direct access.

Formula Isomorphism. Building up on the memoization feature the construction only constructs one automaton for a pair of formulas isomorphic under renaming atomic propositions und remaps letters in the query stage, effectively reducing the automata states needed to be constructed for parametric formulas, where the same pattern is repeated with different atomic propositions.

Symbolic Construction and Representation of Transition Relations. The description of the oracle interface only specifies that successor function represented by a set needs to be returned. An implementation can choose to represent such a transition relation explicitly (e.g. by a list of length \(2^n\)) or symbolically (e.g. by an MTBDD). In fact Strix 19.07 relies on a symbolic construction and representation of the transition relation of the arena and the automata. This then allows efficient (symbolic) grouping of inputs and outputs into equivalence classes by \({{\mathcal {O}}}_{\delta }\).

3.2 Parity game solver

We instantiate \({{\mathcal {O}}}_{\textsf {win}}\) with a variant of the strategy iteration algorithm in [31].

We first give a brief description of what strategy iteration is and why we deem it particularly useful when combined with a demand-driven construction of the arena. We then exemplify these ideas in a bit more detail using our preceding example from Sec. 3.

In brief, strategy iteration consists of improving the current strategy (resp. controller) by iterating the two steps: (i) compute the “worst case” the environment can inflict on the system w.r.t. the current strategy; (ii) state-wise redefine the current strategy by selecting any successor(s) which lead to a better “worst case”. The iteration stops as soon as the strategy cannot be improved anymore i.e. if the currently selected successors led to the best “worst case”. Strategy iteration nicely combines with our approach of constructing the actual arena in a demand driven way: we only construct the arena to that extent that allows the controller to stay within the constructed subarena; all nodes resp. edges which are outside of this subarena are simply flagged to be lost to the controller, when checking for realisability, resp. the system, when checking for unrealisability; in addition, if we need to further explore the arena as we could neither prove realisability nor unrealisability using the subarena constructed so far, we can re-use the already computed strategies as initial strategies for computing the optimal strategies for the extended subarena.

We exemplify these ideas now. Let  be a parity game arena with nodes

be a parity game arena with nodes  split between the two players

split between the two players  and

and  , edges E, edge colouring \(\chi :E\rightarrow {\mathbb {N}}_0\), and boundary nodes \(B\subseteq V\). We assume that \({{\mathcal {A}}}\) includes two special nodes \(\bot \) and \(\top \) where \(\bot \) is always won by the environment

, edges E, edge colouring \(\chi :E\rightarrow {\mathbb {N}}_0\), and boundary nodes \(B\subseteq V\). We assume that \({{\mathcal {A}}}\) includes two special nodes \(\bot \) and \(\top \) where \(\bot \) is always won by the environment  and \(\top \) is always won by the controller

and \(\top \) is always won by the controller  . We further require that all nodes in \(V{\setminus } B\) have at least one successor w.r.t. E.Footnote 6 To simplify notation, we forget about the inputs and outputs that also label the edges (as shown e.g. in Fig. 3) s.t. we can simply write vE for the set of successors of the node v.

. We further require that all nodes in \(V{\setminus } B\) have at least one successor w.r.t. E.Footnote 6 To simplify notation, we forget about the inputs and outputs that also label the edges (as shown e.g. in Fig. 3) s.t. we can simply write vE for the set of successors of the node v.

Besides \({{\mathcal {A}}}\), the parity game solver takes as additional input the “main player”  , the parity \(p\in \{0,1\}\) with which \(\mathsf {P}\) wins a play, a node \(q\in V\) of the arena whose winner we want to determine, and an initial strategy \(\kappa \) for the main player \(\mathsf {P}\). We will write \({\overline{\mathsf {P}}}\) for the opponent of \(\mathsf {P}\) s.t.

, the parity \(p\in \{0,1\}\) with which \(\mathsf {P}\) wins a play, a node \(q\in V\) of the arena whose winner we want to determine, and an initial strategy \(\kappa \) for the main player \(\mathsf {P}\). We will write \({\overline{\mathsf {P}}}\) for the opponent of \(\mathsf {P}\) s.t.  with the parity of the opponent \({\overline{\mathsf {P}}}\) being \({\overline{p}}\) accordingly. All nodes in B are considered to be losing for the main player P, i.e. \({\overline{\mathsf {P}}}\) can win be forcing \(\mathsf {P}\) into B.

with the parity of the opponent \({\overline{\mathsf {P}}}\) being \({\overline{p}}\) accordingly. All nodes in B are considered to be losing for the main player P, i.e. \({\overline{\mathsf {P}}}\) can win be forcing \(\mathsf {P}\) into B.

In order to solve the parity game, we reformulate the winning condition into a sup-inf-distance problem: To this end, we first introduce an (implicit) auxiliary node \(\bullet \) to which only the main player \(\mathsf {P}\) can move to in order to give up. This node is in addition to the two nodes \(\top \) and \(\bot \) and only serves to simplify presentation. All edges leading to \(\bullet \) are defined to be coloured by \(\infty \) (“don’t care”). We denote such a modification of an arena \({{\mathcal {A}}}\) by \({{\mathcal {A}}}^\bullet \).

We interpret the edge colours \(\chi (v,w)\) as weights \(\gamma (v,w)\) that measure how close P comes to winning resp. losing when taking the corresponding edge. Let \(C\subseteq {\mathbb {N}}_0\) be the set of all colours occurring in \({{\mathcal {A}}}\) except for \(\infty \). (Recall that we used the colour “\(\infty \)” for edges that are unimportant w.r.t. the winning condition.) A colour \(c\in C\) is identified with the multiset \(\{c\}\) which we represent by its characteristic function w.r.t. \({\mathbb {N}}_0^C\). The colour \(\infty \) is identified with the empty multiset resp. its characteristic function. Addition on \({\mathbb {N}}_0^C\) is defined point-wise as usual s.t. it coincides with the union of multisets. The weight of a finite play is then simply the sum of the weights of the edges traversed by it, i.e. the multiset of the colours of the edges of a play.

We order \({\mathbb {N}}_0^C\) from the point of view of \(\mathsf {P}\) by means of the following order relation \(\prec _{\mathsf {P}}\): Given two distinct functions \(g,g'\in {\mathbb {N}}_0^C\), let \(c:=\min \{ c' \in C \mid g(c') \ne g'(c')\}\) be the least colour in which the two differ; if c has parity \(p\), we set \(g \prec _{\mathsf {P}}g'\) if and only if \(g(c) < g'(c)\); else if c has parity \({\overline{p}}\), we set \(g \prec _{\mathsf {P}}g'\) if and only if \(g(c)> g'(c)\). For instance, taking a look at the arena of Fig. 3, the colours 0 and 1 are mapped on the functions (represented as tuples) (1, 0) and (0, 1), respectively. From the perspective of the controller  and its winning parity 0, the weight (1, 0) (representing the colour 0) is more attractive than the weight (0, 1) (representing the colour 1), i.e. \((1,0) \prec _{\mathsf {P}}(0,1)\).

and its winning parity 0, the weight (1, 0) (representing the colour 0) is more attractive than the weight (0, 1) (representing the colour 1), i.e. \((1,0) \prec _{\mathsf {P}}(0,1)\).

The arena of Fig. 3 extended by the auxiliary node \(\bullet \). The colours 0, 1 and \(\infty \) have been transformed into the respective edge weights (1, 0), (0, 1) and (0, 0) in \({\mathbb {N}}_0^C\). We assume that we have to decide whether the controller  can win in the initial node \((a_0,\underline{b_0},c_0)\). Here, the parity of \(\mathsf {P}\) is \(p=0\) so the colour 0 resp. the tuple (1, 0) is positive from the point of view of

can win in the initial node \((a_0,\underline{b_0},c_0)\). Here, the parity of \(\mathsf {P}\) is \(p=0\) so the colour 0 resp. the tuple (1, 0) is positive from the point of view of  , while the colour 1 resp. the tuple (0, 1) is negative. When not given an initial strategy, we start with the strategy that tells \(\mathsf {P}\) to give up at every node controlled by \(\mathsf {P}\), thereby preventing the existence of negative cycles in any case, as shown in the figure on the left. This leads to the sup-inf-distance to be (0, 0) at every node s.t. playing back to the initial node is an improvement as it closes in each case a cycle of positive weight. Choosing all these improvements (thick dotted edges) yields the strategy shown in the figure on the right; after removing the auxiliary node \(\bullet \), this yields the strategy shown already in Fig. 4

, while the colour 1 resp. the tuple (0, 1) is negative. When not given an initial strategy, we start with the strategy that tells \(\mathsf {P}\) to give up at every node controlled by \(\mathsf {P}\), thereby preventing the existence of negative cycles in any case, as shown in the figure on the left. This leads to the sup-inf-distance to be (0, 0) at every node s.t. playing back to the initial node is an improvement as it closes in each case a cycle of positive weight. Choosing all these improvements (thick dotted edges) yields the strategy shown in the figure on the right; after removing the auxiliary node \(\bullet \), this yields the strategy shown already in Fig. 4

To make the interpretation of the functions in \({\mathbb {N}}_0^C\) as weights more intuitive, let us remark that one can recover the order \(\prec _{\mathsf {P}}\) by reading the function \(g\in {\mathbb {N}}_0^C\) as a numeral w.r.t. the alternating basis \(-b\), i.e. g is interpreted as the integer \(\sum _{c\in C} g(c)\cdot (-1)^{p+c} \cdot b^{-c+\max C}\) where b is any sufficiently large positive integer, e.g. \(b=\left| {V}\right| \). This ensures that in every simple cycle in \({{\mathcal {A}}}\) we have that the cycle is won by \(\mathsf {P}\) if and only if the total weight of the cycle is positive, i.e. staying forever in the cycle leads to gaining infinite distance. For instance in the arena of Fig. 6 we could choose \(b=2\) s.t. (1, 0) is mapped onto 2, while (0, 1) is mapped on \(-1\).

\(\mathsf {P}\)’s goal thus becomes to maximise the distance to losing, i.e. the minimal total weight accumulated along a play—thus P will only accept infinite plays if these yield an infinite distance to losing, otherwise P will use the option to play to \(\bullet \) (or into the boundary) in order to terminate a play and bound the distance to losing to a finite value.

Computation of the sup-inf-distances in \({{\mathcal {A}}}^\bullet \) is complicated by the existence of both positive and negative cycles (w.r.t. the interpretation of \({\mathbb {N}}_0^C\) as numerals). For this reason, we use strategy iteration, i.e. we construct a sequence of strategies for \(\mathsf {P}\) where each strategy only allows for positive cycles, while negative cycles are prevented by playing to \(\bullet \): Assume \(\mathsf {P}\) uses a (nondeterministic memoryless) strategy \(\kappa \) (i.e. \(\emptyset \ne \kappa (v) \subseteq vE\) for all \(v\in V_P\)) s.t. in the accordingly restricted arena \({{\mathcal {A}}}^\bullet _\kappa \) (i.e. \(\mathsf {P}\) may only move to a successor in \(\kappa (v)\) at every node \(v\in V_{\mathsf {P}}\)) s.t. no negative cycles exist anymore (e.g. consider \(\kappa = V_P\times \{\bullet \}\)); then the sup-inf-distance for every node can be easily computed using fixed-point iteration just as in the case of the standard attractor computation using some variant of the Bellmann-Ford algorithm (in order to identify infinite positive sup-inf-distance). Let \(d_{\kappa }(v)\) denote the sup-inf-distance of node v in \({{\mathcal {A}}}^\bullet _{\kappa }\). For every node v in \(B\cup \{\bullet \}\), we define \(d_{\kappa }(v)=0\); for the nodes \(\bot \) and \(\top \) we predefine \(d_{\kappa }\) accordingly; for every v controlled by \({\overline{\mathsf {P}}}\) we have the unrestricted optimality equation \(d_{\kappa }(v) = \min ^{\prec _{\mathsf {P}}}\{ \gamma (v,w)+d_{\kappa }(w) \mid w\in vE\}\), while for v controlled by \(\mathsf {P}\) we have the \(\kappa \)-restricted optimality equation \(d_{\kappa }(v)=\max ^{\prec _{\mathsf {P}}}\{ \gamma (v,w)+ d_{\kappa }(w) \mid w\in \kappa (v)\}\) (with \(\gamma (v,w)\) the weight induced by the colour of the edge from v to w). We call any nondeterministic strategy \(\kappa '\) an improvement of \(\kappa \) if (1) \(\kappa '\ne \kappa \), (2) \(\emptyset \ne \kappa '(v) \subseteq vE\) for all \(v\in V_P\), (3) if \((v,w)\in \kappa '{\setminus } \kappa \), then \(d_{\kappa }(v) < \gamma (v,w)+d_{\kappa }(w)\) (i.e. v’s sup-inf-distance can be improved by playing to w), and (4) if \((v,w)\in \kappa '\cap \kappa \), then \(d_{\kappa }(v)=\gamma (v,w)+d_{\kappa }(w)\). [31] shows that any such improvement \(\kappa '\) does not introduce any negative cycles and that \(d_{\kappa '} > d_{\kappa }\), i.e. the sup-inf-distances w.r.t. \(\kappa '\) do not decrease for any node and strictly improves for at least one node. Thus, no strategy can be encountered twice. Finally, note that if \(W_{\mathsf {P}}\uplus W_{{\overline{\mathsf {P}}}}\) is the winning partition of the nodes V of \({{\mathcal {A}}}\) w.r.t. the min-parity-condition, then \(\mathsf {P}\) can use his (memoryless deterministic) winning strategy from the parity game to ensure infinite sup-inf-distance on \(W_{\mathsf {P}}\) also in \({{\mathcal {A}}}^\bullet \), while \({\overline{\mathsf {P}}}\) can use his winning strategy from the parity game to ensure at least finite sup-inf-distance in \({{\mathcal {A}}}^\bullet \). Hence, eventually the strategy iteration will terminate in a strategy that guarantees infinite sup-inf-distance on exactly \(W_P\) (Fig. 7).

In our implementation, we make use of the fact that the fixed-point iteration used for solving the optimality equations stated above can be easily parallelised in order to make use of modern multi-core CPUs (or even GPUs [32]).

The arena after one exploration step as show in Fig. 5 but extended with the node \(\bullet \). To simplify presentation, we have removed the node \(\bot \) as  will always rather play to \(\bullet \); we also have dropped the neutral edge weights i.e. every unlabelled edge is implicitly labeled by (0, 0). Top figure: Here, we are given the strategy we computed in Fig. 6 as initial strategy for

will always rather play to \(\bullet \); we also have dropped the neutral edge weights i.e. every unlabelled edge is implicitly labeled by (0, 0). Top figure: Here, we are given the strategy we computed in Fig. 6 as initial strategy for  ; for every node added by the exploration step, we extend the strategy by letting

; for every node added by the exploration step, we extend the strategy by letting  play to \(\bullet \) again. We identify as improvements the thick dotted edges. This gives us the strategy shown in the figure in the middle where the edges in green have been inherited from the initial strategy, while the edges in blue are the improvement identified in the preceding step of the strategy iteration. W.r.t. this strategy we only identify one further improvement which leads to the final strategy shown in the lower figure that wins the node \((a_0,\underline{b_0},c_0)\)

play to \(\bullet \) again. We identify as improvements the thick dotted edges. This gives us the strategy shown in the figure in the middle where the edges in green have been inherited from the initial strategy, while the edges in blue are the improvement identified in the preceding step of the strategy iteration. W.r.t. this strategy we only identify one further improvement which leads to the final strategy shown in the lower figure that wins the node \((a_0,\underline{b_0},c_0)\)

3.2.1 Exploring the boundary

\({{\mathcal {O}}}_{\textsf {expl}}\) selects nodes from the boundary B that should be further explored, i.e., where successors should be computed. After each expansion B is recomputed such that it contains the nodes which successors have not yet been explored. We instantiate \({{\mathcal {O}}}_{\textsf {expl}}\) with two different approaches. One is based on breadth-first search exploration, and one on a priority queue ordering states in the boundary by some quality score. For each method, we then have an additional variant that filters the states that are currently needed to determine the winner of the arena. In total, this results in the following four methods.

\({{\mathcal {O}}}_{\textsf {expl}}^{\textsf {bfs}}\) (breadth-first search (BFS) exploration): In the initial implementation the algorithm explored and constructed the parity game using a breadth-first-search (BFS). This approach helps to ignore parts of the game that are far away from the initial state and not decisive for winning the game, however it also explores states that are close to the initial state, but are irrelevant with the currently computed strategy.

Conceptually, we use the exploration function that picks states from B with a minimal distance from the initial state \(q_0\) in each step. Define \({{\mathcal {O}}}_{\textsf {expl}}^{\textsf {bfs}}\) by:

In the implementation, instead of recomputing the minimal distances in each step, we select the next state from the boundary from a worklist queue.

\({{\mathcal {O}}}_{\textsf {expl}}^{\textsf {bfs+}}\) (BFS exploration with strategy-based worklist filtering): This exploration strategy is a variant of \({{\mathcal {O}}}_{\textsf {expl}}^{\textsf {bfs}}\). The worklist is still populated in a BFS way, but we only keep states that we know are needed to determine if the initial state is winning or losing. These are states which are reachable through some path not blocked by an already winning or losing state. We define the exploration function \({{\mathcal {O}}}_{\textsf {expl}}^{\textsf {bfs+}}\) as follows:

We reuse the results of \({{\mathcal {O}}}_{\textsf {win}}\) already computed in the main algorithm. However, in contrast to \({{\mathcal {O}}}_{\textsf {expl}}^{\textsf {bfs}}\), we need to check for existence of a path from \(q_0\) to all states \(b \in B\) without already won states, which we do using a single linear-time search in each iteration.

\({{\mathcal {O}}}_{\textsf {expl}}^{\textsf {pq}}\) (priority queue exploration based on scores): This exploration method is based on quality scores (to be defined in the next section) assigned to each state in the boundary. The idea is that a high score means this state is a promising state for

to win the game from the initial state, while a low score is a promising state for

to win the game from the initial state, while a low score is a promising state for  to win the game. As we initially do not know if the specification is realisable or unrealisable, and thus do not know for which player the initial state is winning, this method explores both states with high and low scores simultaneously. We assign scores not to states, but to edges, to incorporate information from colours and updates in the LAR. Let \({{\mathcal {O}}}_{\textsf {score}}(\alpha , q, a, q')\) be a function assigning a score to a given edge \((q,a,q')\) of the parity automaton \({A}(\alpha )\). With an intermediate function s(b) assigning to a state \(b \in B\) all scores of incoming edges, we define the exploration method \({{\mathcal {O}}}_{\textsf {expl}}^{\textsf {pq}}\) by:

to win the game. As we initially do not know if the specification is realisable or unrealisable, and thus do not know for which player the initial state is winning, this method explores both states with high and low scores simultaneously. We assign scores not to states, but to edges, to incorporate information from colours and updates in the LAR. Let \({{\mathcal {O}}}_{\textsf {score}}(\alpha , q, a, q')\) be a function assigning a score to a given edge \((q,a,q')\) of the parity automaton \({A}(\alpha )\). With an intermediate function s(b) assigning to a state \(b \in B\) all scores of incoming edges, we define the exploration method \({{\mathcal {O}}}_{\textsf {expl}}^{\textsf {pq}}\) by:

We implement this method with a double-ended priority queue, in which states are inserted with their respective minimal and maximal score of an incoming edge upon discovery.

\({{\mathcal {O}}}_{\textsf {expl}}^{\textsf {pq+}}\) (priority queue exploration with strategy-based worklist filtering): Finally, we can combine the exploration using scores with the filtering function \(\textsf {filter}\) from \({{\mathcal {O}}}_{\textsf {expl}}^{\textsf {bfs+}}\), yielding the method \({{\mathcal {O}}}_{\textsf {expl}}^{\textsf {pq+}}\) defined by:

3.2.2 Quality scores

We now describe how to compute scores by \({{\mathcal {O}}}_{\textsf {score}}({\mathcal {T}}_\varphi , q,a,q')\) for an edge \((q,a,q')\) of the DPA \({A}({\mathcal {T}}_\varphi )\) leading to a boundary state \(q'\). We first define a function \(\textsf {score}\), which takes the acceptance-type annotated formula \({\mathcal {T}}_\varphi \) and an edge \((q,a,q')\) of \({A}({\mathcal {T}}_\varphi )\), and returns a weighted score\((w,s) = \textsf {score}({\mathcal {T}}_\varphi ,q,a,q')\). We always assign the score value s such that \(s = 0\) if \(q' = \bot \), \(s = 1\) if \(q' = \top \) and \(0< s < 1\) otherwise. The function \(\textsf {score}({\mathcal {T}}_\varphi ,q,a,q')\) computes (w, s) recursively on \({\mathcal {T}}_\varphi \), similarly to the LAR-product construction, and we define it by case distinction on \({\mathcal {T}}_\varphi \) and q.

If \(q = \bot \), return \(w = 1\) and \(s = 0\).

If \(q = \top \), return \(w = 1\) and \(s = 1\).

In the base case for \({\mathcal {W}}(\varphi ), {\mathcal {B}}(\varphi ), {\mathcal {C}}(\varphi )\) and \({\mathcal {P}}(\varphi )\), return \(w = 1\) and compute s depending on the type of automaton.

If we encounter \({\mathcal {W}}(\varphi )\), then \(\varphi \in \mu {LTL}\cup \nu {LTL}\). By using the construction of [13] the state \(q'\) is in fact a Boolean formula over modal operators treated as variables. Let \({\mathcal {V}}\) be the set of variables and let \({\mathcal {M}}\) be the set of satisfying assignments of \(q'\). We then set \(s = |{\mathcal {M}}| / 2^{|{\mathcal {V}}|}\). Using this definition, we assign to the state \({\mathbf {tt}}\) (which corresponds to \(\top \)) \(s = 1\) and to the state \({\mathbf {ff}}\) (which corresponds to \(\bot \)) \(s = 0\).

If we encounter \({\mathcal {B}}(\varphi )\), then we apply the construction of [38] and the state \(q' = (p, p')\) is a tuple of two Boolean formulas p and \(p'\). We then construct \(p \wedge p'\) and compute the scoring for \({\mathcal {W}}(p \wedge p')\).

If we encounter \({\mathcal {C}}(\varphi )\), then we apply the same approach as in \(B(\varphi )\), since these automata are obtained by complementing Büchi automata and have an identical structure besides the acceptance condition.

If we encounter \({\mathcal {P}}(\varphi )\), we bail and return \(s = \frac{1}{2}\).

In the case \({\mathcal {T}}_\varphi = {\mathcal {X}}_\wedge ({\mathcal {X}}_1,\ldots ,{\mathcal {X}}_n)\) and \({\mathcal {T}}_\varphi = {\mathcal {X}}_\vee ({\mathcal {X}}_1,\ldots ,{\mathcal {X}}_n)\), we first compute the child scores \((w_i,s_i) = \textsf {score}({\mathcal {X}}_i,q,a,q')\). Then, for each \({\mathcal {X}}_i\), we update \(w_i\) and \(s_i\) as follows:

If \({\mathcal {T}}_\varphi = {\mathcal {X}}_\wedge \), set \(w_i \leftarrow w_i \cdot \log _{\nicefrac {1}{2}} s_i\). This gives score values close to 0 an increased weight, which could make the whole conjunction false.

If \({\mathcal {T}}_\varphi = {\mathcal {X}}_\vee \), set \(w_i \leftarrow w_i \cdot \log _{\nicefrac {1}{2}} (1 - s_i)\). This gives score values close to 1 an increased weight, which could make the whole disjunction true.

If \({\mathcal {T}}_\varphi = {\mathcal {B}}_\wedge ({\mathcal {B}}_1, \ldots , {\mathcal {B}}_n)\), or \({\mathcal {T}}_\varphi = {\mathcal {C}}_\vee ({\mathcal {C}}_1, \ldots , {\mathcal {C}}_n)\), then \({\mathcal {X}}_i = {\mathcal {B}}\) or \({\mathcal {X}}_i = {\mathcal {C}}\) and \(q = (q'',r)\) and \(q' = (q''',r')\) for some round-robin counters \(r,r'\). Now if the child caused the round-robin counter to increase, i.e. we have \(r \le i < r'\), then we set \(w_i \leftarrow 2 \cdot w_i\) and update the score. If \({\mathcal {X}}_i = {\mathcal {B}}\), set \(s_i \leftarrow \frac{3+s_i}{4}\) to increase the score, and if \({\mathcal {X}}_i = {\mathcal {C}}\), set \(s_i \leftarrow \frac{s_i}{4}\) to decrease the score.