Abstract

We investigate schema languages for unordered XML having no relative order among siblings. First, we propose unordered regular expressions (UREs), essentially regular expressions with unordered concatenation instead of standard concatenation, that define languages of unordered words to model the allowed content of a node (i.e., collections of the labels of children). However, unrestricted UREs are computationally too expensive as we show the intractability of two fundamental decision problems for UREs: membership of an unordered word to the language of a URE and containment of two UREs. Consequently, we propose a practical and tractable restriction of UREs, disjunctive interval multiplicity expressions (DIMEs). Next, we employ DIMEs to define languages of unordered trees and propose two schema languages: disjunctive interval multiplicity schema (DIMS), and its restriction, disjunction-free interval multiplicity schema (IMS). We study the complexity of the following static analysis problems: schema satisfiability, membership of a tree to the language of a schema, schema containment, as well as twig query satisfiability, implication, and containment in the presence of schema. Finally, we study the expressive power of the proposed schema languages and compare them with yardstick languages of unordered trees (FO, MSO, and Presburger constraints) and DTDs under commutative closure. Our results show that the proposed schema languages are capable of expressing many practical languages of unordered trees and enjoy desirable computational properties.


1 Introduction

When XML is used for document-centric applications, the relative order among the elements is typically important, e.g., the relative order of paragraphs and chapters in a book. On the other hand, in the case of data-centric XML applications, the order among the elements may be unimportant [1]. In this paper we focus on the latter use case. As an example, take the simplified fragment of an XML document containing the DBLP repository in Fig. 1. While the order of the elements title, author, and year may differ from one publication to another, it has no impact on the semantics of the data stored in this semi-structured database.

Typically, a schema for XML defines for every node its content model, i.e., the children nodes it must, may, and cannot contain. For instance, in the DBLP example, one would require every article to have exactly one title, one year, and one or more authors. A book may additionally contain one publisher and may also have one or more editors instead of authors. A schema has numerous important uses. For instance, it allows one to validate a document against the schema and identify potential errors. A schema also serves as a reference for a user who does not yet know the structure of the XML document and attempts to query or modify its content.

The Document Type Definition (DTD), the most widespread XML schema formalism for (ordered) XML [8, 23], is essentially a set of rules associating with each label a regular expression that defines the admissible sequences of children. DTDs are best suited to ordered content because they use regular expressions, a formalism that defines sequences of labels. However, when an unordered content model needs to be defined, there is a tendency to use over-permissive regular expressions. For instance, the DTD below corresponds to the one used in practice for the DBLP repository (see Footnote 1):

This DTD allows an article to contain any number of titles, years, and authors. A book may also have any number of titles, years, authors, editors, and publishers. These regular expressions are clearly over-permissive because they allow XML documents that do not follow the intuitive guidelines set out earlier, e.g., an XML document containing an article with two titles and no author should not be valid.

While it is possible to capture unordered content models with regular expressions, a simple pumping argument shows that their size may need to be exponential in the number of possible labels of the children. In the case of the DBLP repository, this number reaches 12, which essentially precludes any practical use of such regular expressions. This suggests that over-permissive regular expressions may be employed for reasons of conciseness and readability, a consideration of great practical importance.

The use of over-permissive regular expressions, apart from allowing documents that do not follow the guidelines, has other negative consequences e.g., in static analysis tasks that involve the schema. Take for example the following two twig queries [3, 47]:

The first query selects the book elements that are children of dblp and have an author containing the text “C. Papadimitriou.” The second query additionally requires the book to have a title. Naturally, these two queries should be equivalent because every book should have a title. However, the DTD above does not properly capture this requirement, and consequently the two queries are not equivalent w.r.t. this DTD.

In this paper, we investigate schema languages for unordered XML. First, we study languages of unordered words, where an unordered word can be seen as a multiset of symbols. We consider unordered regular expressions (UREs), which are essentially regular expressions with unordered concatenation “∥” instead of standard concatenation. Unordered concatenation can be seen as multiset union, and consequently, the star “∗” can be seen as the Kleene closure of unordered languages.

Similarly to a DTD, which associates with each label a regular expression to define its (ordered) content model, an unordered schema uses UREs to define the unordered content model of each label. For instance, take the following schema (satisfied by the tree in Fig. 1):

The above schema uses UREs and captures the intuitive requirements for the DBLP repository. In particular, an article must have exactly one title, exactly one year, and at least one author. A book may additionally have a publisher and may have one or more editors instead of authors. Note that, unlike the DTD defined earlier, this schema does not allow documents having an article with several titles or without any author.

Using UREs is equivalent to using DTDs with regular expressions interpreted under the commutative closure [4, 34]: essentially, a word matches the commutative closure of a regular expression if some permutation of the word matches the regular expression in the standard way. Deciding this problem is known to be NP-complete [26] for arbitrary regular expressions. We show that testing the membership of an unordered word to the language of a URE is NP-complete even for a restricted subclass of UREs that allows only unordered concatenation and the option operator “?”. Not surprisingly, testing the containment of two UREs is also intractable. These results are of particular interest because they are novel and do not follow from complexity results for regular expressions, where order typically plays an essential role [31, 46]. Consequently, we focus on finding restrictions that render UREs tractable while still capturing practical languages in a simple and concise manner.
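The commutative-closure semantics can be made concrete with a small brute-force check. The sketch below is ours, not the paper's: it relies on Python's standard `re` module for ordinary (ordered) matching and tries every distinct permutation of the word. This mirrors the "guess a permutation, then match" idea behind membership in NP, and it is exponential in the worst case.

```python
import re
from itertools import permutations


def matches_commutative_closure(word: str, regex: str) -> bool:
    """Return True iff some permutation of `word` fully matches `regex`.

    A direct transcription of the commutative-closure definition:
    a single permutation that matches in the ordered sense suffices.
    """
    pattern = re.compile(regex)
    # set() skips duplicate permutations caused by repeated letters, but the
    # number of candidates can still grow factorially with len(word).
    return any(pattern.fullmatch("".join(p)) is not None
               for p in set(permutations(word)))


print(matches_commutative_closure("cba", "ab*c"))    # True  (permutation "abc")
print(matches_commutative_closure("baab", "(ab)*"))  # True  (permutation "abab")
print(matches_commutative_closure("aab", "(ab)*"))   # False (odd length)
```

The enumeration is only meant for tiny inputs; Theorem 1 in Section 3.1 indicates that no polynomial algorithm should be expected in general.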

The first restriction is to disallow repetitions of a symbol in a URE, thus banning expressions of the form a∥a? because the symbol a is used twice. Instead, we add general interval multiplicities such as \(a^{[1,2]}\), which specify a range of occurrences of a symbol in an unordered word without repeating the symbol in the URE. While membership of an unordered word to the language of a URE with interval multiplicities and without symbol repetitions has recently been shown to be in PTIME [11], containment of two such UREs remains intractable. We, therefore, add limitations on the nesting of the disjunction and unordered concatenation operators and on the use of intervals, which yields the proposed class of disjunctive interval multiplicity expressions (DIMEs). DIMEs enjoy good computational properties: both the membership and the containment problems become tractable. Also, we believe that despite the imposed restrictions, DIMEs remain a practical class of UREs. For instance, all UREs used in the schema for the DBLP repository above are DIMEs.

Next, we employ DIMEs to define languages of unordered trees and propose two schema languages: disjunctive interval multiplicity schema (DIMS), and its restriction, disjunction-free interval multiplicity schema (IMS). Naturally, the above schema for the DBLP repository is a DIMS. We study the complexity of several basic decision problems: schema satisfiability, membership of a tree to the language of a schema, containment of two schemas, twig query satisfiability, implication, and containment in the presence of schema. We present in Table 1 a summary of the complexity results and we observe that DIMSs and IMSs enjoy the same computational properties as general DTDs and disjunction-free DTDs, respectively.

The lower bounds for the decision problems for DIMSs and IMSs are generally obtained by easy adaptations of their counterparts for general DTDs and disjunction-free DTDs. To obtain the upper bounds, we develop several new tools. We propose to represent DIMEs with characterizing tuples that can be efficiently computed and allow deciding in polynomial time the membership of a tree to the language of a DIMS and the containment of two DIMSs. We also develop dependency graphs for IMSs and a generalized definition of an embedding of a query. These two tools help us reason about query satisfiability, query implication, and query containment in the presence of IMSs. Our constructions and results for IMSs also allow us to characterize the complexity of query implication and query containment in the presence of disjunction-free DTDs, which, to the best of our knowledge, have not been studied before.

Finally, we compare the expressive power of the proposed schema languages with yardstick languages of unordered trees (FO, MSO, and Presburger constraints) and DTDs under commutative closure. We show that the proposed schema languages are capable of expressing many practical languages of unordered trees.

It is important to mention that this paper is a substantially extended version of a preliminary work presented in [10]. More precisely, in this paper we show novel intractability results for some subclasses of unordered regular expressions and we extend the expressibility of the tractable subclasses. While in [10] we have considered only simple multiplicities (∗,+,?), in this paper we deal with arbitrary interval multiplicities of the form [n,m].

Organization

In Section 2 we introduce some preliminary notions. In Section 3 we study the reasons for the intractability of unordered regular expressions, while in Section 4 we present the tractable subclass of disjunctive interval multiplicity expressions (DIMEs). In Section 5 we define two schema languages, the disjunctive interval multiplicity schemas (DIMSs) and their restriction, the disjunction-free interval multiplicity schemas (IMSs), together with the related problems of interest. In Sections 6 and 7 we analyze the complexity of the problems of interest for DIMSs and IMSs, respectively. In Section 8 we discuss the expressiveness of the proposed formalisms. In Section 9 we present related work. In Section 10 we summarize our results and outline further directions.

2 Preliminaries

Throughout this paper we assume an alphabet Σ that is a finite set of symbols. We also assume that Σ has a total order <Σ that can be tested in constant time.

Trees

We model XML documents with unordered labeled trees. Formally, a tree t is a tuple \((N_{t}, root_{t}, lab_{t}, child_{t})\), where \(N_{t}\) is a finite set of nodes, \(root_{t}\in N_{t}\) is a distinguished root node, \(lab_{t}:N_{t}\rightarrow {\Sigma }\) is a labeling function, and \(child_{t}\subseteq N_{t}\times N_{t}\) is the parent-child relation. We assume that the relation \(child_{t}\) is acyclic and require every non-root node to have exactly one predecessor in this relation. By \(\mathit{Tree}\) we denote the set of all trees.

Queries

We work with the class of twig queries, which are essentially unordered trees whose nodes may additionally be labeled with a distinguished wildcard symbol \(\star\notin\Sigma\) and that use two types of edges, child (/) and descendant (//), corresponding to the standard XPath axes. Note that the semantics of the //-edge is that of a proper descendant (and not that of descendant-or-self). Formally, a twig query q is a tuple \((N_{q}, root_{q}, lab_{q}, child_{q}, desc_{q})\), where \(N_{q}\) is a finite set of nodes, \(root_{q}\in N_{q}\) is the root node, \(lab_{q}:N_{q}\rightarrow {\Sigma }\cup \{\star \}\) is a labeling function, \(child_{q}\subseteq N_{q}\times N_{q}\) is a set of child edges, and \(desc_{q}\subseteq N_{q}\times N_{q}\) is a set of descendant edges. We assume that \(child_{q}\ \cap \ desc_{q}= \varnothing \) and that the relation \(child_{q} \ \cup \ desc_{q}\) is acyclic, and we require every non-root node to have exactly one predecessor in this relation. By \(\mathit{Twig}\) we denote the set of all twig queries. Twig queries are often presented using the abbreviated XPath syntax [47], e.g., the query \(q_{0}\) in Fig. 2a can be written as r/⋆[⋆]//a.

Embeddings

We define the semantics of twig queries using the notion of embedding, which is essentially a mapping of the nodes of a query to the nodes of a tree that respects the semantics of the edges of the query. Formally, for a query \(q\in\mathit{Twig}\) and a tree \(t\in\mathit{Tree}\), an embedding of q in t is a function \(\lambda : N_{q} \rightarrow N_{t}\) such that:

1. \(\lambda(root_{q})=root_{t}\),
2. for every \((n,n^{\prime })\in child_{q}\), \((\lambda (n),\lambda (n^{\prime }))\in child_{t}\),
3. for every \((n,n^{\prime })\in desc_{q}\), \((\lambda (n),\lambda (n^{\prime }))\in (child_{t})^{+}\) (the transitive closure of \(child_{t}\)),
4. for every \(n\in N_{q}\), \(lab_{q}(n)=\star\) or \(lab_{q}(n)=lab_{t}(\lambda(n))\).

We write \(t\preccurlyeq q\) if there exists an embedding of q in t. In Section 7.2, we generalize this definition of embedding into a tool that allows us to characterize the problems of interest.

As already mentioned, we use the notion of embedding to define the semantics of twig queries. In particular, we say that t satisfies q if there exists an embedding of q in t and we write t⊧q. By L(q) we denote the set of all trees satisfying q.

Note that we do not require the embedding to be injective, i.e., two nodes of the query may be mapped to the same node of the tree. Figure 3 presents all embeddings of the query \(q_{0}\) in the tree \(t_{0}\) from Fig. 2.
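The embedding conditions translate directly into a bottom-up test. The following is a minimal sketch under our own encoding (trees and queries as Python dicts, the string `"*"` standing in for the wildcard ⋆); `satisfies` and `fits` are hypothetical helper names. Because embeddings need not be injective, each child or descendant edge of a query node can be matched independently, so memoizing which query node fits which tree node yields a polynomial-time test of t ⊧ q.

```python
from functools import lru_cache

STAR = "*"  # stands in for the wildcard label ⋆


def satisfies(tree, troot, query, qroot):
    """Decide t ⊧ q, i.e. whether some embedding of q in t exists.

    tree:  dict node -> (label, [child nodes])
    query: dict node -> (label or STAR, [(child node, "/" or "//")])
    """
    desc = {}  # proper descendants of every tree node, i.e. (child_t)^+

    def collect(m):
        below = set()
        for c in tree[m][1]:
            below.add(c)
            below |= collect(c)
        desc[m] = below
        return below

    collect(troot)

    @lru_cache(maxsize=None)
    def fits(n, m):
        """Can the subquery rooted at n be embedded with n mapped to m?"""
        label, edges = query[n]
        if label != STAR and label != tree[m][0]:
            return False  # condition 4 fails
        for n2, axis in edges:
            targets = tree[m][1] if axis == "/" else desc[m]
            if not any(fits(n2, m2) for m2 in targets):
                return False  # condition 2 or 3 fails for the edge (n, n2)
        return True

    return fits(qroot, troot)  # condition 1: the root goes to the root


# q0 = r/⋆[⋆]//a
q0 = {"q": ("r", [("x1", "/")]),
      "x1": (STAR, [("x2", "/"), ("x3", "//")]),
      "x2": (STAR, []),
      "x3": ("a", [])}
t1 = {"n0": ("r", ["n1"]), "n1": ("b", ["n2"]), "n2": ("a", [])}
t2 = {"n0": ("r", ["n1"]), "n1": ("a", [])}
print(satisfies(t1, "n0", q0, "q"))  # True
print(satisfies(t2, "n0", q0, "q"))  # False
```

In `t1`, both the ⋆ filter node and the a-node of the query are mapped to the same tree node `n2`, which is allowed precisely because embeddings are not required to be injective.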

Unordered Words

An unordered word is essentially a multiset of symbols, i.e., a function \(w:{\Sigma }\rightarrow {\mathbb {N}}_{0}\) mapping symbols from the alphabet to natural numbers (including 0). We call w(a) the number of occurrences of the symbol a in w. We also write \(a\in w\) as a shorthand for \(w(a)\neq 0\). The empty word ε is the unordered word that has 0 occurrences of every symbol, i.e., ε(a)=0 for every a∈Σ. We often use a simple representation of unordered words, writing each symbol of the alphabet as many times as it occurs in the unordered word. For example, when the alphabet is Σ={a,b,c}, \(w_{0}=aaacc\) stands for the function \(w_{0}(a)=3\), \(w_{0}(b)=0\), and \(w_{0}(c)=2\). Additionally, we may write \(w_{0}=a^{3}c^{2}\) instead of \(w_{0}=aaacc\).

We use unordered words to model collections of children of XML nodes. As is usually done in the context of XML validation [41, 42], we assume that the XML document is encoded in unary, i.e., every node takes the same amount of memory. Thus, we use a unary representation of unordered words, where each occurrence of a symbol occupies the same amount of space. However, we point out that none of the results presented in this paper change with a binary representation. In particular, the intractability of the membership of an unordered word to the language of a URE (Theorem 1) also holds with a binary representation of unordered words.

Consequently, the size of an unordered word w, denoted |w|, is the sum of the numbers of occurrences in w of all symbols in the alphabet. For instance, the size of \(w_{0}=aaacc\) is \(|w_{0}|=5\).

The (unordered) concatenation of two unordered words \(w_{1}\) and \(w_{2}\) is defined as the multiset union \(w_{1}\uplus w_{2}\), i.e., the function defined as \((w_{1}\uplus w_{2})(a)=w_{1}(a)+w_{2}(a)\) for every a∈Σ. For instance, \(aaacc\uplus abbc=aaaabbccc\). Note that ε is the identity element of unordered concatenation: \(\varepsilon\uplus w=w\uplus\varepsilon=w\) for every unordered word w. Also, given an unordered word w, by \(w^{i}\) we denote the concatenation \(w\uplus\ldots\uplus w\) (i times).

A language is a set of unordered words. The unordered concatenation of two languages \(L_{1}\) and \(L_{2}\) is the language \(L_{1}\uplus L_{2}=\{w_{1}\uplus w_{2}\mid w_{1}\in L_{1}, w_{2}\in L_{2}\}\). For instance, if \(L_{1}=\{a,aac\}\) and \(L_{2}=\{ac,b,\varepsilon\}\), then \(L_{1}\uplus L_{2}=\{a,ab,aac,aabc,aaacc\}\).
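Multisets are available directly in Python as `collections.Counter`, which makes the definitions above easy to experiment with. The sketch below (the helper names `word`, `size`, `concat`, `show`, and `concat_lang` are ours) reproduces the examples of this section, using alphabetically sorted strings as a canonical display form and the empty string for ε.

```python
from collections import Counter


def word(s=""):
    """An unordered word built from a string; only the symbol counts survive."""
    return Counter(s)


def size(w):
    """|w|: total number of symbol occurrences (unary representation)."""
    return sum(w.values())


def concat(w1, w2):
    """Unordered concatenation: (w1 ⊎ w2)(a) = w1(a) + w2(a)."""
    return w1 + w2


def show(w):
    """Canonical string for w, listing the symbols in alphabetical order."""
    return "".join(sorted(w.elements()))


def concat_lang(L1, L2):
    """L1 ⊎ L2 for finite languages given as sets of strings ("" is ε)."""
    return {show(concat(word(u), word(v))) for u in L1 for v in L2}


w0 = word("aaacc")
print(w0["a"], w0["b"], w0["c"], size(w0))    # 3 0 2 5
print(show(concat(w0, word("abbc"))))          # aaaabbccc
print(sorted(concat_lang({"a", "aac"}, {"ac", "b", ""})))
# ['a', 'aaacc', 'aabc', 'aac', 'ab']
```

`Counter` returns 0 for absent symbols, which matches the view of an unordered word as a total function \(w:\Sigma\rightarrow\mathbb{N}_{0}\).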

Unordered Regular Expressions

Analogously to regular expressions, which are used to define languages of ordered words, we propose unordered regular expressions to define languages of unordered words. Essentially, an unordered regular expression (URE) defines unordered words by using Kleene star “∗”, disjunction “∣”, and unordered concatenation “∥”. Formally, we have the following grammar:

where a∈Σ. The semantics of UREs is defined as follows:

For instance, the URE (a∥(b∣c))∗ accepts the unordered words having the number of occurrences of a equal to the total number of b’s and c’s.

The grammar above uses only one multiplicity ∗ and we introduce macros for two other standard and commonly used multiplicities:

The URE (a∥b?)+∥(a∣c)? accepts the unordered words having at least one a, at most one c, and a number of b’s less than or equal to the number of a’s.
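Membership in L(E) can be decided by brute force. The sketch below encodes UREs as nested tuples (our own encoding) and assumes the standard macros E+ = E∥E∗ and E? = E∣ε together with an ε leaf. For an input word w it computes only those words of L(E) that fit under w symbol by symbol; this is sound because unordered concatenation never decreases counts, so every decomposition of w uses only such words. The intermediate sets can be exponentially large, which is consistent with the NP-hardness established in Section 3.1.

```python
from collections import Counter


def sym(a):
    return ("sym", a)


def alt(e, f):
    return ("or", e, f)


def cat(e, f):
    return ("cat", e, f)  # unordered concatenation ∥


def star(e):
    return ("star", e)


def plus(e):
    return cat(e, star(e))  # assumed macro: E+ = E ∥ E*


def opt(e):
    return alt(e, ("eps",))  # assumed macro: E? = E | ε


def add(u, v):
    return tuple(x + y for x, y in zip(u, v))


def within(v, bound):
    return all(x <= y for x, y in zip(v, bound))


def lang(E, alpha, bound):
    """Count vectors (over alpha) of the words of L(E) that fit under bound."""
    zero = tuple(0 for _ in alpha)
    kind = E[0]
    if kind == "eps":
        return {zero}
    if kind == "sym":
        v = tuple(int(s == E[1]) for s in alpha)
        return {v} if E[1] in alpha and within(v, bound) else set()
    if kind == "or":
        return lang(E[1], alpha, bound) | lang(E[2], alpha, bound)
    if kind == "cat":
        left, right = lang(E[1], alpha, bound), lang(E[2], alpha, bound)
        return {add(u, v) for u in left for v in right if within(add(u, v), bound)}
    if kind == "star":
        base, reached, frontier = lang(E[1], alpha, bound), {zero}, {zero}
        while frontier:  # breadth-first closure under ⊎ inside the finite box
            frontier = {add(s, b) for s in frontier for b in base
                        if within(add(s, b), bound)} - reached
            reached |= frontier
        return reached
    raise ValueError(f"unknown node {kind!r}")


def member(word, E):
    """Decide word ∈ L(E); word is a string of one-letter symbols, order ignored."""
    counts = Counter(word)
    alpha = tuple(sorted(counts))
    bound = tuple(counts[s] for s in alpha)
    return bound in lang(E, alpha, bound)


E1 = star(cat(sym("a"), alt(sym("b"), sym("c"))))                            # (a∥(b∣c))∗
E2 = cat(plus(cat(sym("a"), opt(sym("b")))), opt(alt(sym("a"), sym("c"))))  # (a∥b?)+∥(a∣c)?
print(member("abca", E1), member("aab", E1))   # True False
print(member("abcab", E2), member("abb", E2))  # True False
```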

Interval Multiplicities

While the multiplicities ∗, +, and ? allow one to specify unordered words with multiple occurrences of a symbol, we additionally introduce interval multiplicities, which specify a range of allowed occurrences of a symbol in an unordered word. More precisely, we extend the grammar of UREs by allowing expressions of the form \(E^{[n,m]}\) and \(E^{[n,m]^?}\), where \(n\in \mathbb N_{0}\) and \(m\in \mathbb N_{0}\cup \{\infty \}\). Their semantics is defined as follows:

In the rest of the paper, we simply write interval instead of interval multiplicity. Furthermore, we view the standard multiplicities as macros for intervals: ∗ for [0,∞], + for [1,∞], and ? for [0,1].

Additionally, we introduce the single occurrence multiplicity 1 as a macro for the interval [1,1].

Note that intervals do not add expressive power to general UREs, but they become useful once we impose restrictions. For example, if we disallow repetitions of a symbol in a URE and thus ban expressions of the form a∥a?, we can still write \(a^{[1,2]}\) to specify a range of occurrences of a symbol without repeating it in the URE.
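To see why intervals add no expressive power to general UREs, assume the natural reading \(L(E^{[n,m]})=\bigcup_{i=n}^{m}L(E)^{i}\) (with \(L(E)^{0}=\{\varepsilon\}\)) and \(E^{?}\equiv E\mid\varepsilon\). Since \((E^{?})^{m-n}\) contributes between 0 and m−n further words of L(E), one possible interval-free rewriting is:

$$ E^{[n,m]} \equiv \underbrace{E\parallel\cdots\parallel E}_{n\ \text{times}}\parallel\underbrace{E^{?}\parallel\cdots\parallel E^{?}}_{m-n\ \text{times}}, \qquad E^{[n,\infty]} \equiv \underbrace{E\parallel\cdots\parallel E}_{n\ \text{times}}\parallel E^{*}. $$

For instance, \(a^{[1,2]}\equiv a\parallel a^{?}\). The rewriting repeats E, which is exactly what is no longer possible once repetitions of symbols are banned.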

3 Intractability of Unordered Regular Expressions

In this section, we study the reasons for the intractability of UREs w.r.t. two fundamental decision problems: membership and containment. In Section 3.1 we show that membership is NP-complete even under significant restrictions on the UREs, while in Section 3.2 we show that containment is \({\Pi }_{2}^{\mathrm P}\)-hard (and in 3-EXPTIME). We note that the proofs of both results rely on UREs allowing repetitions of the same symbol. Consequently, we disallow such repetitions and show that this restriction alone does not avoid the intractability of containment (Section 3.3). We observe that the proof of this result employs UREs with arbitrary use of disjunction and intervals; therefore, in Section 4 we impose further restrictions and define the disjunctive interval multiplicity expressions (DIMEs), a subclass for which we show that the two problems of interest become tractable.

3.1 Membership

In this section, we study the problem of deciding the membership of an unordered word to the language of a URE. First, note that this problem can easily be reduced to testing the membership of a vector to the Parikh image of a regular language, known to be NP-complete [26], and vice versa. We show that deciding the membership of an unordered word to the language of a URE remains NP-complete even under significant restrictions on the class of UREs, a result that does not follow from [26].

Theorem 1

Given an unordered word w and an expression E of the grammar \(E ::= a\mid E^{?}\mid (E \parallel E)\), deciding whether \(w\in L(E)\) is NP-complete.

Proof

To show that this problem is in NP, note that a nondeterministic Turing machine can guess a permutation of w and check whether it is accepted by the NFA corresponding to E with unordered concatenation replaced by standard concatenation. Since w has a unary representation, the guessed permutation has polynomial size.

Next, we prove the NP-hardness by reduction from \(\text{SAT}_{1\text{-in-}3}\), i.e., given a 3CNF formula, determine whether there exists a valuation such that each clause has exactly one true literal (and exactly two false literals). The \(\text{SAT}_{1\text{-in-}3}\) problem is known to be NP-complete [38]. The reduction works as follows. We take a 3CNF formula \(\varphi =c_{1}\wedge \ldots \wedge c_{k}\) over the variables \(\{x_{1},\ldots ,x_{n}\}\) and the alphabet \(\{d_{1},\ldots ,d_{k},v_{1},\ldots ,v_{n}\}\). Each \(d_{i}\) corresponds to a clause \(c_{i}\) (for \(1\leqslant i\leqslant k\)) and each \(v_{j}\) corresponds to a variable \(x_{j}\) (for \(1\leqslant j\leqslant n\)). We construct the unordered word \(w_{\varphi }=d_{1}{\ldots } d_{k}v_{1}{\ldots } v_{n}\) and the expression \(E_{\varphi } = X_{1}\parallel {\ldots } \parallel X_{n}\), where for \(1\leqslant j\leqslant n\):

and \(d_{t_{1}},\ldots ,d_{t_{l}}\) (with \(1\leqslant t_{1},\ldots ,t_{l}\leqslant k\)) correspond to the clauses that use the literal \(x_{j}\), and \(d_{f_{1}},\ldots ,d_{f_{m}}\) (with \(1\leqslant f_{1},\ldots ,f_{m}\leqslant k\)) correspond to the clauses that use the literal \(\neg x_{j}\). For example, for the formula \(\varphi _{0}=(x_{1}\vee \neg x_{2}\vee x_{3})\wedge (\neg x_{1}\vee x_{3}\vee \neg x_{4})\), we construct \(w_{\varphi _{0}}=d_{1}d_{2}v_{1}v_{2}v_{3}v_{4}\) and

We claim that \(\varphi\in\text{SAT}_{1\text{-in-}3}\) iff \(w_{\varphi}\in L(E_{\varphi})\). For the only if case, let \(V:\{x_{1},\ldots ,x_{n}\}\rightarrow \{\mathit {true},\mathit {false}\}\) be a \(\text{SAT}_{1\text{-in-}3}\) valuation of φ. We use V to construct the derivation of \(w_{\varphi}\) in \(L(E_{\varphi})\): for \(1\leqslant j\leqslant n\), we take \((v_{j}\parallel d_{t_{1}}\parallel \ldots \parallel d_{t_{l}})\) from \(X_{j}\) if \(V(x_{j})=\mathit{true}\), and \((v_{j}\parallel d_{f_{1}}\parallel \ldots \parallel d_{f_{m}})\) from \(X_{j}\) otherwise. Since V is a \(\text{SAT}_{1\text{-in-}3}\) valuation of φ, each \(d_{i}\) (with \(1\leqslant i\leqslant k\)) occurs exactly once, hence \(w_{\varphi }\in L(E_{\varphi })\). For the if case, we assume that \(w_{\varphi }\in L(E_{\varphi })\). Since \(w_{\varphi }(v_{j}) = 1\), the derivation of \(w_{\varphi}\) uses exactly one of the two expressions of the form \((v_{j}\parallel\ldots)^{?}\) in \(X_{j}\), which encodes a truth value for \(x_{j}\). Moreover, since \(w_{\varphi}(d_{i})=1\), the valuation encoded in this derivation makes exactly one literal of each clause of φ true, and therefore \(\varphi\in\text{SAT}_{1\text{-in-}3}\). Clearly, the described reduction works in polynomial time. □
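The reduction can be sanity-checked on small formulas. Following the proof, which uses expressions of the form \((v_{j}\parallel\ldots)^{?}\), we read the construction as \(X_{j}=(v_{j}\parallel d_{t_{1}}\parallel\ldots\parallel d_{t_{l}})^{?}\parallel(v_{j}\parallel d_{f_{1}}\parallel\ldots\parallel d_{f_{m}})^{?}\); this reading is our assumption. The sketch represents \(E_{\varphi}\) by its list of optional groups, writes literals as strings such as `"x2"` and `"-x2"` (our convention), and compares membership of \(w_{\varphi}\) with a brute-force \(\text{SAT}_{1\text{-in-}3}\) test. Both checks are exponential and meant for tiny inputs only.

```python
from collections import Counter
from itertools import product


def literal_true(lit, vals):
    """Truth of a literal such as "x2" or "-x2", where vals[j-1] is the value of x_j."""
    value = vals[int(lit.lstrip("-x")) - 1]
    return not value if lit.startswith("-") else value


def one_in_three(n, clauses):
    """Brute force: is there a valuation with exactly one true literal per clause?"""
    return any(all(sum(literal_true(l, vals) for l in c) == 1 for c in clauses)
               for vals in product([True, False], repeat=n))


def reduction(n, clauses):
    """Build w_phi and the groups G_r such that E_phi = G_1? ∥ G_2? ∥ ... (assumed form)."""
    k = len(clauses)
    w = Counter([f"d{i}" for i in range(1, k + 1)] +
                [f"v{j}" for j in range(1, n + 1)])
    groups = []
    for j in range(1, n + 1):
        for lit in (f"x{j}", f"-x{j}"):  # one group for x_j and one for ¬x_j
            g = Counter({f"v{j}": 1})
            for i, c in enumerate(clauses, 1):
                if lit in c:
                    g[f"d{i}"] += 1
            groups.append(g)
    return w, groups


def member(w, groups):
    """w ∈ L(G_1? ∥ ... ∥ G_r) iff some sub-collection of the groups sums to w."""
    for choice in product([False, True], repeat=len(groups)):
        total = Counter()
        for keep, g in zip(choice, groups):
            if keep:
                total += g
        if total == w:
            return True
    return False


phi0 = [["x1", "-x2", "x3"], ["-x1", "x3", "-x4"]]
print(one_in_three(4, phi0), member(*reduction(4, phi0)))  # True True
```

For \(\varphi_{0}\), the valuation \(x_{1}=x_{2}=\mathit{true}\), \(x_{3}=x_{4}=\mathit{false}\) makes exactly one literal per clause true, and the corresponding choice of groups yields \(w_{\varphi_{0}}\).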

3.2 Containment

In this section, we study the problem of deciding the containment of two UREs. It is well known that regular expression containment is a PSPACE-complete problem [46], but we cannot adapt this result to characterize the complexity of the containment of UREs because the order plays an essential role in the reduction. In this section, we prove that deciding the containment of UREs is \({\Pi }_{2}^{\mathrm P}\)-hard and we show an upper bound which follows from the complexity of deciding the satisfiability of Presburger logic formulas [36, 44].

Theorem 2

Given two UREs \(E_{1}\) and \(E_{2}\), deciding \(L(E_{1})\subseteq L(E_{2})\) is 1) \({\Pi }_{2}^{\mathrm P}\)-hard and 2) in 3-EXPTIME.

Proof

1) We prove the \({\Pi }_{2}^{\mathrm P}\)-hardness by reduction from the problem of checking the satisfiability of \(\forall^{*}\exists^{*}\)QBF formulas, a classical \({\Pi }_{2}^{\mathrm P}\)-complete problem. We take a \(\forall^{*}\exists^{*}\)QBF formula

where \(\varphi=c_{1}\wedge\ldots\wedge c_{k}\) is a quantifier-free CNF formula. We call the variables \(x_{1},\ldots,x_{n}\) universal and the variables \(y_{1},\ldots,y_{m}\) existential.

We take the alphabet \(\{d_{1},\ldots ,d_{k},t_{1},f_{1},\ldots ,t_{n},f_{n}\}\) and we construct two expressions, \(E_{\psi }\) and \(E_{\psi }^{\prime }\). First, \(E_{\psi } = d_{1}\parallel \ldots \parallel d_{k}\parallel X_{1}\parallel \ldots \parallel X_{n}\), where for \(1\leqslant i\leqslant n\) \(X_{i} = ((t_{i}\parallel d_{a_{1}}\parallel \ldots \parallel d_{a_{l}})\mid (f_{i}\parallel d_{b_{1}}\parallel \ldots \parallel d_{b_{s}}))\), and \(d_{a_{1}},\ldots ,d_{a_{l}}\) (with \(1\leqslant a_{1},\ldots ,a_{l}\leqslant k\)) correspond to the clauses that use the literal \(x_{i}\), and \(d_{b_{1}},\ldots ,d_{b_{s}}\) (with \(1\leqslant b_{1},\ldots ,b_{s}\leqslant k\)) correspond to the clauses that use the literal \(\neg x_{i}\). For example, for the formula

we construct:

Note that there is a one-to-one correspondence between the unordered words in \(L(E_{\psi})\) and the valuations of the universal variables. For example, given the formula \(\psi_{0}\), the unordered word \({d_{1}^{3}}d_{2}d_{3}t_{1}f_{2}\) corresponds to the valuation V such that \(V(x_{1})=\mathit{true}\) and \(V(x_{2})=\mathit{false}\).

Next, we construct \(E_{\psi }^{\prime } = X_{1}\parallel \ldots \parallel X_{n}\parallel Y_{1}\parallel \ldots \parallel Y_{m}\), where:

-

\(X_{i} = ((t_{i}\parallel d_{a_{1}}^{*}\parallel \ldots \parallel d_{a_{l}}^{*})\mid (f_{i}\parallel d_{b_{1}}^{*}\parallel \ldots \parallel d_{b_{s}}^{*}))\), and \(d_{a_{1}},\ldots ,d_{a_{l}}\) (with \(1\leqslant a_{1},\ldots ,a_{l}\leqslant k\)) correspond to the clauses that use the literal \(x_{i}\), and \(d_{b_{1}},\ldots ,d_{b_{s}}\) (with \(1\leqslant b_{1},\ldots ,b_{s}\leqslant k\)) correspond to the clauses that use the literal \(\neg x_{i}\) (for \(1\leqslant i\leqslant n\)),

-

\(Y_{j} = ((d_{a_{1}}^{*}\parallel \ldots \parallel d_{a_{l}}^{*})\mid (d_{b_{1}}^{*}\parallel \ldots \parallel d_{b_{s}}^{*}))\), and \(d_{a_{1}},\ldots ,d_{a_{l}}\) (with \(1\leqslant a_{1},\ldots ,a_{l}\leqslant k\)) correspond to the clauses that use the literal \(y_{j}\), and \(d_{b_{1}},\ldots ,d_{b_{s}}\) (with \(1\leqslant b_{1},\ldots ,b_{s}\leqslant k\)) correspond to the clauses that use the literal \(\neg y_{j}\) (for \(1\leqslant j\leqslant m\)).

For example, for \(\psi_{0}\) above we construct:

We claim that \(\models\psi\) iff \(L(E_{\psi })\subseteq L(E_{\psi }^{\prime })\). For the only if case, for each valuation of the universal variables, we take the corresponding unordered word \(w\in L(E_{\psi})\). Since ψ holds, there exists a valuation of the existential variables that, together with this valuation, satisfies φ, and we use it to construct a derivation of w in \(L(E_{\psi }^{\prime })\). For the if case, for every unordered word in \(L(E_{\psi})\), we take its derivation in \(L(E_{\psi }^{\prime })\) and use the branches chosen in \(Y_{1},\ldots,Y_{m}\) to construct a valuation of the existential variables that satisfies φ. Clearly, the described reduction works in polynomial time.

2) The membership of the problem in 3-EXPTIME follows from the complexity of deciding the satisfiability of Presburger logic formulas, which is in 3-EXPTIME [36]. Given two UREs \(E_{1}\) and \(E_{2}\), we compute in linear time [44] two existential Presburger formulas for their Parikh images, \(\varphi _{E_{1}}\) and \(\varphi _{E_{2}}\), respectively. Next, we test the satisfiability of the following closed Presburger logic formula: \(\forall \overline {x}.\ \varphi _{E_{1}}(\overline {x})\Rightarrow \varphi _{E_{2}}(\overline {x})\). □
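For very small formulas, the equivalence in part 1) can be checked mechanically. The sketch below is ours: it encodes UREs as nested tuples, decides membership with a brute-force evaluator that only builds words fitting under the input word, enumerates the finite language \(L(E_{\psi})\) (which is star-free), and compares the resulting containment test with a brute-force evaluation of ψ. Literals are strings such as `"x1"` or `"-y2"`; everything here is exponential and serves as a sanity check only, not as the 3-EXPTIME procedure of part 2).

```python
from collections import Counter
from itertools import product


def add(u, v):
    return tuple(x + y for x, y in zip(u, v))


def within(v, bound):
    return all(x <= y for x, y in zip(v, bound))


def lang(E, alpha, bound):
    """Count vectors (over alpha) of the words of L(E) that fit under bound.

    Nodes: ("eps",), ("sym", a), ("or", E, F), ("cat", E, F), ("star", E).
    """
    zero = tuple(0 for _ in alpha)
    if E[0] == "eps":
        return {zero}
    if E[0] == "sym":
        v = tuple(int(s == E[1]) for s in alpha)
        return {v} if E[1] in alpha and within(v, bound) else set()
    if E[0] == "or":
        return lang(E[1], alpha, bound) | lang(E[2], alpha, bound)
    if E[0] == "cat":
        L1, L2 = lang(E[1], alpha, bound), lang(E[2], alpha, bound)
        return {add(u, v) for u in L1 for v in L2 if within(add(u, v), bound)}
    if E[0] == "star":
        base, reached, frontier = lang(E[1], alpha, bound), {zero}, {zero}
        while frontier:
            frontier = {add(s, b) for s in frontier for b in base
                        if within(add(s, b), bound)} - reached
            reached |= frontier
        return reached
    raise ValueError(E[0])


def member(w, E):
    """w ∈ L(E) for an unordered word given as a dict symbol -> count."""
    alpha = tuple(sorted(w))
    bound = tuple(w[s] for s in alpha)
    return bound in lang(E, alpha, bound)


def seq(parts):
    """Unordered concatenation of a list of UREs (ε for the empty list)."""
    E = ("eps",)
    for p in parts:
        E = ("cat", E, p)
    return E


def occurrences(E, acc=None):
    """Number of occurrences of each symbol in E (bounds a star-free language)."""
    acc = Counter() if acc is None else acc
    if E[0] == "sym":
        acc[E[1]] += 1
    else:
        for sub in E[1:]:
            occurrences(sub, acc)
    return acc


def reduction(n, m, clauses):
    """E_psi and E'_psi for psi = ∀x1..xn ∃y1..ym (conjunction of clauses)."""
    def uses(lit):
        return [j for j, c in enumerate(clauses, 1) if lit in c]

    def d(j, starred):
        return ("star", ("sym", f"d{j}")) if starred else ("sym", f"d{j}")

    def X(i, starred):  # t_i encodes x_i = true, f_i encodes x_i = false
        return ("or", seq([("sym", f"t{i}")] + [d(j, starred) for j in uses(f"x{i}")]),
                      seq([("sym", f"f{i}")] + [d(j, starred) for j in uses(f"-x{i}")]))

    E = seq([d(j, False) for j in range(1, len(clauses) + 1)] +
            [X(i, False) for i in range(1, n + 1)])
    E2 = seq([X(i, True) for i in range(1, n + 1)] +
             [("or", seq([d(j, True) for j in uses(f"y{i}")]),
                     seq([d(j, True) for j in uses(f"-y{i}")])) for i in range(1, m + 1)])
    return E, E2


def contained(E, E2):
    """L(E) ⊆ L(E2) for a star-free E, by listing every word of L(E)."""
    occ = occurrences(E)
    alpha = tuple(sorted(occ))
    for v in lang(E, alpha, tuple(occ[s] for s in alpha)):
        if not member({s: k for s, k in zip(alpha, v) if k}, E2):
            return False
    return True


def qbf_true(n, m, clauses):
    """Brute force: for all x there exist y making every clause true."""
    def val(lit, xs, ys):
        name = lit.lstrip("-")
        v = (xs if name[0] == "x" else ys)[int(name[1:]) - 1]
        return not v if lit.startswith("-") else v
    return all(any(all(any(val(l, xs, ys) for l in c) for c in clauses)
                   for ys in product([True, False], repeat=m))
               for xs in product([True, False], repeat=n))


for clauses in ([["x1", "y1"], ["-x1", "-y1"]], [["x1", "y1"], ["x1", "-y1"]]):
    print(qbf_true(1, 1, clauses), contained(*reduction(1, 1, clauses)))
# True True
# False False
```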

While the complexity gap for the containment of UREs left by Theorem 2 is currently large, we believe that it may be narrowed by working on quantifier elimination for the Presburger formula obtained by translating the containment of UREs (as shown in the second part of the proof of Theorem 2). Although we conjecture that this problem is \({\Pi }_{2}^{\mathrm P}\)-complete, its exact complexity remains an open question.

3.3 Disallowing Repetitions

The proofs of Theorem 1 and Theorem 2 rely on UREs allowing repetitions of the same symbol, which might be one of the causes of intractability. Consequently, from now on we disallow repetitions of the same symbol in a URE. Similar restrictions are commonly imposed on regular expressions in practical settings: single occurrence regular expressions (SOREs) [7], conflict-free types [17, 18, 22], and duplicate-free DTDs [33]. While membership of an unordered word to the language of a URE without symbol repetitions has recently been shown to be in PTIME [11], containment of two such UREs remains intractable.

Theorem 3

Given two UREs \(E_{1}\) and \(E_{2}\) that do not allow repetitions of symbols, deciding \(L(E_{1})\subseteq L(E_{2})\) is coNP-hard.

Proof

We show the coNP-hardness by reduction from the complement of 3SAT. Take a 3CNF formula \(\varphi =c_{1}\wedge \ldots \wedge c_{k}\) over the variables \(\{x_{1},\ldots ,x_{n}\}\). We assume w.l.o.g. that each variable occurs at most once in a clause. Take the alphabet \(\{a_{ij}\mid 1\leqslant i\leqslant k,\ 1\leqslant j\leqslant n,\ c_{i}\text{ uses }x_{j}\text{ or }\neg x_{j}\}\). We construct the expression \(E_{\varphi } = X_{1}\parallel \ldots \parallel X_{n}\), where \(X_{j}=((a_{t_{1}j}\parallel \ldots \parallel a_{t_{l}j})\mid (a_{f_{1}j}\parallel \ldots \parallel a_{f_{m}j}))\) (for \(1\leqslant j\leqslant n\)), and \(c_{t_{1}},\ldots ,c_{t_{l}}\) (with \(1\leqslant t_{1},\ldots ,t_{l}\leqslant k\)) are the clauses that use the literal \(x_{j}\), and \(c_{f_{1}},\ldots ,c_{f_{m}}\) (with \(1\leqslant f_{1},\ldots ,f_{m}\leqslant k\)) are the clauses that use the literal \(\neg x_{j}\). Next, we construct \(E_{\varphi }^{\prime }= (C_{1}\mid \ldots \mid C_{k})^{[0,k-1]}\), where \(C_{i}=(a_{ij_{1}}\mid \ldots \mid a_{ij_{p}})^{+}\) (for \(1\leqslant i\leqslant k\)), and \(x_{j_{1}},\ldots ,x_{j_{p}}\) (with \(1\leqslant j_{1},\ldots ,j_{p}\leqslant n\)) are the variables used by the clause \(c_{i}\). For example, for

we obtain:

Note that there is a one-to-one correspondence between the unordered words \(w_{V}\) in \(L(E_{\varphi})\) and the valuations V of the variables \(x_{1},\ldots ,x_{n}\) (*). For example, for \(\varphi_{0}\) above and the valuation V such that \(V(x_{1})=V(x_{2})=V(x_{3})=\mathit{true}\) and \(V(x_{4})=\mathit{false}\), the unordered word \(w_{V}=a_{11}a_{32}a_{13}a_{23}a_{24}a_{34}\) is in \(L(E_{\varphi _{0}})\). Moreover, given a word \(w_{V}\in L(E_{\varphi})\), one can easily recover the valuation V.

We observe that the interval [0,k−1] is applied to a disjunction of k expressions of the form \(C_{i}\), and no symbol is repeated among these expressions. This allows us to state an instrumental property (**): \(w\in L(E_{\varphi }^{\prime })\) iff there exists an \(i\in\{1,\ldots,k\}\) such that none of the symbols used in \(C_{i}\) occurs in w. From (*) and (**), we infer that for every valuation V, \(V\models\varphi\) iff \(w_{V}\in L(E_{\varphi })\setminus L(E_{\varphi }^{\prime })\), which yields \(\varphi\in\) 3SAT iff \(L(E_{\varphi })\nsubseteq L(E_{\varphi }^{\prime })\). Clearly, the described reduction works in polynomial time. □
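Property (**) and the resulting equivalence can be tested on toy formulas. The following sketch uses our own encoding and hypothetical helper names: it builds \(E_{\varphi}\) and \(E_{\varphi}^{\prime}\) as nested tuples, supports intervals through a node `("rep", E, lo, hi)` under the assumed semantics \(L(E^{[n,m]})=\bigcup_{i=n}^{m}L(E)^{i}\) (with `hi=None` for ∞), and checks containment by enumerating the finite language \(L(E_{\varphi})\). Clauses may have fewer than three literals here, which keeps the examples small.

```python
from itertools import product


def add(u, v):
    return tuple(x + y for x, y in zip(u, v))


def within(v, bound):
    return all(x <= y for x, y in zip(v, bound))


def grow(layer, base, bound):
    """Extend every word of layer by one word of base, staying inside the box."""
    return {add(s, b) for s in layer for b in base if within(add(s, b), bound)}


def lang(E, alpha, bound):
    """Count vectors (over alpha) of the words of L(E) that fit under bound."""
    zero = tuple(0 for _ in alpha)
    kind = E[0]
    if kind == "eps":
        return {zero}
    if kind == "sym":
        v = tuple(int(s == E[1]) for s in alpha)
        return {v} if E[1] in alpha and within(v, bound) else set()
    if kind == "or":
        return lang(E[1], alpha, bound) | lang(E[2], alpha, bound)
    if kind == "cat":
        return grow(lang(E[1], alpha, bound), lang(E[2], alpha, bound), bound)
    if kind == "rep":
        base, lo, hi = lang(E[1], alpha, bound), E[2], E[3]
        layer = {zero}
        for _ in range(lo):  # words of L(E)^lo
            layer = grow(layer, base, bound)
        result, frontier, steps = set(layer), set(layer), 0
        while frontier and (hi is None or steps < hi - lo):
            steps += 1
            frontier = grow(frontier, base, bound)
            if hi is None:
                frontier -= result  # unbounded case: iterate to a fixpoint
            result |= frontier
        return result
    raise ValueError(kind)


def member(w, E):
    alpha = tuple(sorted(w))
    bound = tuple(w[s] for s in alpha)
    return bound in lang(E, alpha, bound)


def cat(parts):
    E = ("eps",)
    for p in parts:
        E = ("cat", E, p)
    return E


def alt(parts):
    """Disjunction of a non-empty list of UREs."""
    E = parts[0]
    for p in parts[1:]:
        E = ("or", E, p)
    return E


def symbols(E):
    if E[0] == "sym":
        return {E[1]}
    return set().union(*(symbols(s) for s in E[1:] if isinstance(s, tuple)))


def reduction(n, clauses):
    """E_phi and E'_phi of the proof; clauses are lists such as ["x1", "-x3"]."""
    def a(i, j):
        return ("sym", f"a{i}_{j}")

    def uses(lit):
        return [i for i, c in enumerate(clauses, 1) if lit in c]

    E = cat([alt([cat([a(i, j) for i in uses(f"x{j}")]),
                  cat([a(i, j) for i in uses(f"-x{j}")])]) for j in range(1, n + 1)])
    C = [("rep", alt([a(i, int(l.lstrip("-x"))) for l in c]), 1, None)
         for i, c in enumerate(clauses, 1)]
    return E, ("rep", alt(C), 0, len(clauses) - 1)


def contained(E, E2):
    """L(E) ⊆ L(E2); E has no intervals and uses each symbol at most once."""
    alpha = tuple(sorted(symbols(E)))
    for v in lang(E, alpha, tuple(1 for _ in alpha)):
        if not member({s: c for s, c in zip(alpha, v) if c}, E2):
            return False
    return True


def satisfiable(n, clauses):
    def val(lit, xs):
        v = xs[int(lit.lstrip("-x")) - 1]
        return not v if lit.startswith("-") else v
    return any(all(any(val(l, xs) for l in c) for c in clauses)
               for xs in product([True, False], repeat=n))


for n, phi in [(1, [["x1"], ["-x1"]]), (2, [["x1", "x2"], ["-x1"]])]:
    print(satisfiable(n, phi), contained(*reduction(n, phi)))
# False True
# True False
```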

Theorem 3 shows that disallowing repetitions of symbols in a URE does not avoid the intractability of the containment.

Additionally, we observe that the proof of Theorem 3 employs UREs with arbitrary use of disjunction and intervals. Consequently, in the next section we impose further restrictions that yield a class of UREs with desirable computational properties.

4 Disjunctive Interval Multiplicity Expressions (DIMEs)

In this section, we present the DIMEs, a subclass of UREs for which membership and containment become tractable. First, we present an intuitive representation of DIMEs with characterizing tuples (Section 4.1). Next, we formally define DIMEs and show that they are precisely captured by their characterizing tuples (Section 4.2). Finally, we use a compact representation of the characterizing tuples to show the tractability of DIMEs (Section 4.3).

4.1 Characterizing Tuples

In this section, we introduce the notion of a characterizing tuple, an alternative and more intuitive representation of DIMEs, the subclass of UREs that we formally define in Section 4.2. Recall that by \(a\in w\) we denote \(w(a)\neq 0\). Given a DIME E, the characterizing tuple \({\Delta }_{E}=(C_{E},N_{E},P_{E},K_{E})\) is as follows.

-

The conflicting pairs of siblings \(C_{E}\), consisting of all pairs of symbols in Σ such that E defines no word using both symbols simultaneously:

$$ C_{E} = \{(a,b)\in{\Sigma}\times{\Sigma}\mid \nexists w\in L(E).\ a\in w\wedge b\in w\}. $$

-

The extended cardinality map \(N_{E}\), capturing, for each symbol in the alphabet, the possible numbers of its occurrences in the unordered words defined by E:

$$ N_{E} = \{(a, w(a))\in {\Sigma}\times\mathbb N_{0}\mid w\in L(E)\}. $$

-

The collections of required symbols \(P_{E}\), capturing the symbols that must be present in every word; essentially, a set of symbols X belongs to \(P_{E}\) if every word defined by E contains at least one element of X:

$$ P_{E}=\{X\subseteq{\Sigma}\mid\forall w\in L(E).\ \exists a\in X.\ a \in w\}. $$

-

The counting dependencies \(K_{E}\), consisting of the pairs of symbols (a,b) such that, in every word defined by E, the number of b's is at most the number of a's. Note that if both (a,b) and (b,a) belong to \(K_{E}\), then all unordered words defined by E have the same number of a's and b's.

$$\begin{array}{@{}rcl@{}} K_{E}=\{(a,b)\in{\Sigma}\times{\Sigma}\mid\forall w\in L(E).\ w(a)\geqslant w(b)\}. \end{array} $$

As an example, we take \(E_{0}= a^{+} \parallel ((b \parallel c^?)^{+}\mid d^{[5,\infty ]})\) and illustrate its characterizing tuple \({\Delta }_{E_{0}}\). Because \(P_{E_{0}}\) is closed under supersets, we list only its minimal elements:

We point out that \(N_{E}\) may be infinite and \(P_{E}\) may be exponential in the size of E. Later on, we discuss how to represent both sets compactly while allowing efficient manipulation.
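To make the four components concrete, the following Python sketch (ours) recomputes \({\Delta }_{E_{0}}\) by brute force. It relies on a hand-written membership test for \(E_{0}\) and enumerates only the words over {a,b,c,d} in which every symbol occurs at most BOUND = 7 times, a hypothetical cut-off. In this instance \(C_{E_{0}}\), \(K_{E_{0}}\), and the minimal elements of \(P_{E_{0}}\) come out the same as for the unbounded language, whereas \(N_{E_{0}}\) is necessarily truncated. Reflexive pairs (x, x), which trivially belong to \(K_{E_{0}}\), are omitted.

```python
from itertools import combinations, product

SIGMA = "abcd"
BOUND = 7  # hypothetical cut-off on the number of occurrences of each symbol

def in_e0(w):
    """Membership in E0 = a^+ || ((b || c^?)^+ | d^[5,inf]) for a count map w."""
    if w["a"] < 1:
        return False
    # (b || c^?)^+: k >= 1 repetitions give k b's and at most k c's, no d
    left = w["d"] == 0 and w["b"] >= 1 and w["c"] <= w["b"]
    # d^[5,inf]: at least five d's, no b or c
    right = w["b"] == 0 and w["c"] == 0 and w["d"] >= 5
    return left or right

words = [dict(zip(SIGMA, counts))
         for counts in product(range(BOUND + 1), repeat=len(SIGMA))]
lang = [w for w in words if in_e0(w)]

# C_E0: pairs of symbols never occurring together in a word of L(E0)
C = {(x, y) for x in SIGMA for y in SIGMA
     if not any(w[x] > 0 and w[y] > 0 for w in lang)}
# N_E0, truncated at BOUND: observed (symbol, count) pairs
N = {(x, w[x]) for w in lang for x in SIGMA}
# K_E0 without reflexive pairs: w(x) >= w(y) in every word
K = {(x, y) for x in SIGMA for y in SIGMA
     if x != y and all(w[x] >= w[y] for w in lang)}
# P_E0: non-empty sets hit by every word; keep only the subset-minimal ones
P = [frozenset(X) for r in range(1, len(SIGMA) + 1)
     for X in combinations(SIGMA, r)
     if all(any(w[x] > 0 for x in X) for w in lang)]
P_min = {X for X in P if not any(Y < X for Y in P)}

print(sorted(C), sorted(K), sorted(sorted(X) for X in P_min))
```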

Then, an unordered word w satisfies the characterizing tuple \({\Delta }_{E}\) of a DIME E, denoted \(w\models {\Delta }_{E}\), if the following conditions are satisfied:

-

1.

\(w\models C_{E}\), i.e., \(\forall (a, b) \in C_{E}.\ (a\in w \Rightarrow b \notin w) \wedge (b\in w \Rightarrow a\notin w)\),

-

2.

\(w\models N_{E}\), i.e., \(\forall a\in {\Sigma }.\ (a,w(a))\in N_{E}\),

-

3.

\(w\models P_{E}\), i.e., \(\forall X\in P_{E}.\ \exists a\in X.\ a\in w\),

-

4.

\(w\models K_{E}\), i.e., \(\forall (a,b)\in K_{E}.\ w(a)\geqslant w(b)\).

For instance, the unordered word aabbc satisfies the characterizing tuple \({\Delta }_{E_{0}}\) of the aforementioned DIME \(E_{0}= a^{+} \parallel ((b \parallel c^?)^{+}\mid d^{[5,\infty ]})\) since it satisfies all four conditions imposed by \({\Delta }_{E_{0}}\). On the other hand, the following unordered words do not satisfy \({\Delta }_{E_{0}}\):

-

abddddd, because it contains both b and d, and \((b,d)\in C_{E_{0}}\),

-

add, because it has two d's and \((d,2)\notin N_{E_{0}}\),

-

aa, because it contains neither b nor d, and \(\{b,d\}\in P_{E_{0}}\),

-

abbccc, because it has more c's than b's, and \((b,c)\in K_{E_{0}}\).
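These four checks can be carried out mechanically. The Python sketch below (ours) writes \({\Delta }_{E_{0}}\) out by hand from the definitions over the assumed alphabet {a,b,c,d}, gives \(N_{E_{0}}\) as one predicate per symbol because it is infinite, and gives \(P_{E_{0}}\) by its minimal elements; checking the minimal elements suffices since \(P_{E_{0}}\) is closed under supersets. The function name `violations` is hypothetical; it reports which conditions a word breaks.

```python
from collections import Counter

# Characterizing tuple of E0 = a^+ || ((b || c^?)^+ | d^[5,inf]) over {a, b, c, d},
# derived by hand from the definitions (reflexive pairs of K are omitted).
C = {("b", "d"), ("d", "b"), ("c", "d"), ("d", "c")}
N = {  # N_E0 is infinite, so the allowed counts are given as predicates
    "a": lambda n: n >= 1,
    "b": lambda n: True,
    "c": lambda n: True,
    "d": lambda n: n == 0 or n >= 5,
}
P_MIN = [{"a"}, {"b", "d"}]  # P_E0 is the upward closure of these sets
K = {("b", "c")}

def violations(word):
    """Return the conditions (C, N, P, K) that the unordered word violates."""
    w = Counter(word)
    failed = []
    if any(w[x] > 0 and w[y] > 0 for x, y in C):
        failed.append("C")
    if not all(allowed(w[x]) for x, allowed in N.items()):
        failed.append("N")
    if not all(any(w[x] > 0 for x in X) for X in P_MIN):
        failed.append("P")
    if not all(w[x] >= w[y] for x, y in K):
        failed.append("K")
    return failed

for word in ["aabbc", "abddddd", "add", "aa", "abbccc"]:
    print(word, violations(word) or "satisfies the tuple")
```

Each counterexample above violates exactly one condition, which is what the loop reports.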

In the next section, we define the DIMEs and show that they are precisely captured by characterizing tuples.

4.2 Grammar of DIMEs

An atom is \((a_{1}^{I_{1}}\parallel \ldots \parallel a_{k}^{I_{k}})\), where all \(I_{i}\)'s are ? or 1. For example, \((a\parallel b^?\parallel c)\) is an atom, but \((a^{[3,4]}\parallel b)\) is not. A clause is \((A_{1}^{I_{1}}\mid \ldots \mid A_{k}^{I_{k}})\), where all \(A_{i}\)'s are atoms and all \(I_{i}\)'s are intervals. A clause is simple if all \(I_{i}\)'s are ? or 1. For example, \((a^{[2,3]}\mid (b^?\parallel c)^{*})\) is a clause (which is not simple), \(((a^?\parallel b)\mid c^?)\) is a simple clause, while \(((a^?\parallel b^{+})\mid c)\) is not a clause.

A disjunctive interval multiplicity expression (DIME) is \((D_{1}^{I_{1}}\parallel \ldots \parallel D_{k}^{I_{k}})\), where for \(1\leqslant i\leqslant k\) either 1) \(D_{i}\) is a simple clause and \(I_{i}\in \{+,*\}\), or 2) \(D_{i}\) is a clause and \(I_{i}\in \{1,?\}\). Moreover, a symbol can occur at most once in a DIME. For example, \((a\mid (b\parallel c^?)^{+})\parallel (d^{[3,4]}\mid e^{*})\) is a DIME, while \((a\parallel b^?)^{+}\parallel (a\mid c)\) is not a DIME because it uses the symbol a twice. A disjunction-free interval multiplicity expression (IME) is a DIME that does not use the disjunction operator. An example of an IME is \(a\parallel (b\parallel c^?)^{+}\parallel d^{[3,4]}\). For more practical examples of DIMEs, see Examples 3 and 4 in Section 5.
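The syntactic restrictions can be phrased as a small well-formedness check. In the following Python sketch (ours), which uses a hypothetical nested-list encoding, an atom is a list of (symbol, interval) pairs, a clause is a list of (atom, interval) pairs, and a DIME is a list of (clause, interval) pairs; intervals are the strings "1", "?", "+", "*" or bracketed ranges such as "[3,4]". The helper `sym` is likewise hypothetical and only shortens the examples.

```python
BASIC = ("1", "?")

def is_atom(atom):
    """An atom only uses the multiplicities 1 and ?."""
    return all(iv in BASIC for _, iv in atom)

def is_clause(clause):
    """A clause is a disjunction of atoms, each with an arbitrary interval."""
    return all(is_atom(atom) for atom, _ in clause)

def is_simple_clause(clause):
    return is_clause(clause) and all(iv in BASIC for _, iv in clause)

def is_dime(dime):
    for clause, iv in dime:
        if iv in ("+", "*"):
            ok = is_simple_clause(clause)   # case 1): simple clause under + or *
        elif iv in BASIC:
            ok = is_clause(clause)          # case 2): any clause under 1 or ?
        else:
            ok = False
        if not ok:
            return False
    symbols = [s for clause, _ in dime for atom, _ in clause for s, _ in atom]
    return len(symbols) == len(set(symbols))  # no symbol is repeated

def is_ime(dime):
    """An IME is a DIME without disjunction: every clause has a single atom."""
    return is_dime(dime) and all(len(clause) == 1 for clause, _ in dime)

def sym(s, iv="1"):
    return (s, iv)

# (a | (b || c^?)^+) || (d^[3,4] | e^*)
e_ok = [([([sym("a")], "1"), ([sym("b"), sym("c", "?")], "+")], "1"),
        ([([sym("d")], "[3,4]"), ([sym("e")], "*")], "1")]
# (a || b^?)^+ || (a | c): the symbol a is used twice
e_bad = [([([sym("a"), sym("b", "?")], "1")], "+"),
         ([([sym("a")], "1"), ([sym("c")], "1")], "1")]
# a || (b || c^?)^+ || d^[3,4]
ime = [([([sym("a")], "1")], "1"),
       ([([sym("b"), sym("c", "?")], "1")], "+"),
       ([([sym("d")], "[3,4]")], "1")]
print(is_dime(e_ok), is_dime(e_bad), is_ime(ime), is_ime(e_ok))
```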

We have tailored DIMEs so that they can be captured by characterizing tuples that permit deciding membership and containment in polynomial time (cf. Section 4.3). As we have already pointed out in Section 3.3, a slightly more relaxed restriction on the nesting of disjunction and intervals leads to intractability of containment (Theorem 3). Even though DIMEs may look complex, the imposed restrictions are necessary to obtain lower complexity while retaining fragments of practical relevance (cf. Section 8).

Next, we show that each DIME can be rewritten as an equivalent reduced DIME. Reduced DIMEs may also seem complex, but they are a building block for (i) proving that the language of a DIME is precisely captured by its characterizing tuple (Lemma 1), and (ii) computing the compact representation of the characterizing tuples that yield the tractability of DIMEs (cf. Section 4.3).

Before defining the reduced DIMEs, we need to introduce some additional notation. Given an atom A (resp. a clause D), we denote by \({\Sigma }_{A}\) (resp. \({\Sigma }_{D}\)) the set of symbols occurring in A (resp. D). Given a DIME E, by \({I_{E}^{a}}\) (resp. \({I_{E}^{A}}\) or \({I_{E}^{D}}\)) we denote the interval associated in E to the symbol a (resp. atom A or clause D). Because we consider only expressions without repetitions, this interval is well-defined. Moreover, if E is clear from the context, we simply write \(I^{a}\) (resp. \(I^{A}\) or \(I^{D}\)) instead of \({I_{E}^{a}}\) (resp. \({I_{E}^{A}}\) or \({I_{E}^{D}}\)). Furthermore, given an interval I, which can be either [n,m] or \([n,m]^?\), by \(I^?\) we understand the interval \([n,m]^?\). In a reduced DIME E, each clause with interval \(D^{I}\) has one of the following three types:

-

1.

\(D^{I}=(A_{1}\mid \ldots \mid A_{k})^{+}\), where \(k\geqslant 2\) and, for every \(i\in \{1,\ldots ,k\}\), \(A_{i}\) is an atom such that there exists \(a\in {\Sigma }_{A_{i}}\) with \(I^{a}=1\).

For example, \(((a\parallel b^?)\mid c)^{+}\) has type 1, but \(a^{+}\) and \(((a^?\parallel b^?)\mid c)^{+}\) do not.

-

2.

\((A_{1}^{I_{1}}\mid \ldots \mid A_{k}^{I_{k}})\), where for every \(i\in \{1,\ldots ,k\}\), 1) \(A_{i}\) is an atom such that there exists \(a\in {\Sigma }_{A_{i}}\) with \(I^{a}=1\), and 2) 0 does not belong to the set represented by the interval \(I_{i}\).

For example, \((a\mid (b^?\parallel c)^{[5,\infty ]})\) and \(a^{+}\) have type 2, but \((a\mid (b^?\parallel c^?)^{[5,\infty ]})\) and \((a^{*}\mid (b^?\parallel c)^{[5,\infty ]})\) do not.

-

3.

\((A_{1}^{I_{1}}\mid \ldots \mid A_{k}^{I_{k}})\), where for every \(i\in \{1,\ldots ,k\}\), \(A_{i}\) is an atom and \(I_{i}\) is an interval such that 0 belongs to the set represented by \(I_{i}\).

For example, \((a^{*}\mid (b\parallel c)^{[3,4]^?})\) and \((a^?\parallel b^?)^{*}\) have type 3, but \((a^?\parallel b^?)^{[3,4]}\) does not.
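The three types can be told apart by a few tests on intervals. In the Python sketch below (ours), an interval is encoded, as an assumption, by a triple (lo, hi, opt) standing for [lo, hi] with 0 added when opt is true, so that \([n,m]^?\) becomes (n, m, True); each atom is given by its interval and a map from its symbols to "1" or "?". A single-atom clause such as \(a^{+}\) is written with the interval on the atom, which is how it obtains type 2. The function returns None for clauses that are not yet in reduced form.

```python
import math

INF = math.inf
# Intervals are triples (lo, hi, opt): the set [lo, hi], plus 0 when opt is True.
ONE, PLUS, STAR = (1, 1, False), (1, INF, False), (0, INF, False)

def has_zero(interval):
    lo, _, opt = interval
    return opt or lo == 0

def clause_type(outer, atoms):
    """Type (1, 2 or 3) of a clause with interval D^I, or None if not reduced.

    outer: the interval I of the whole clause (PLUS or ONE);
    atoms: list of (interval of the atom, {symbol: "1" or "?"}).
    """
    required = all("1" in syms.values() for _, syms in atoms)
    if (outer == PLUS and len(atoms) >= 2 and required
            and all(iv == ONE for iv, _ in atoms)):
        return 1
    if outer == ONE and required and not any(has_zero(iv) for iv, _ in atoms):
        return 2
    if outer == ONE and all(has_zero(iv) for iv, _ in atoms):
        return 3
    return None

print(clause_type(PLUS, [(ONE, {"a": "1", "b": "?"}), (ONE, {"c": "1"})]))  # ((a||b^?)|c)^+
print(clause_type(ONE, [(PLUS, {"a": "1"})]))                               # a^+
print(clause_type(ONE, [(STAR, {"a": "?", "b": "?"})]))                     # (a^?||b^?)^*
```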

Reduced DIMEs make the construction of their characterizing tuples straightforward. Take a clause with interval \(D^{I}\) from a DIME E and observe that the symbols from \({\Sigma }_{D}\) are present in the characterizing tuple \({\Delta }_{E}\) as follows.

-

If \(D^{I}\) is of type 1, then no symbol of \({\Sigma }_{D}\) occurs in a conflict in \(C_{E}\). Otherwise, \(C_{E}\) contains all pairs of distinct symbols (a,b) from \({\Sigma }_{D}\) that appear in different atoms of \(D^{I}\).

-

If \(D^{I}\) is of type 1, then \((a,n)\in N_{E}\) for every \((a,n)\in {\Sigma }_{D}\times {\mathbb {N}_{0}}\). Otherwise, the possible numbers of occurrences of every symbol a from \({\Sigma }_{D}\) can be obtained directly from the two intervals above it: the interval of D and the interval of the atom containing a. We explain in Section 4.3 how to construct a compact representation of the potentially infinite set \(N_{E}\).

-

If \(D^{I}\) is of type 1 or 2, then every unordered word defined by E contains at least one symbol a from \({\Sigma }_{D}\) with \(I^{a}=1\). More precisely, \(P_{E}\) contains all sets of symbols \(X\subseteq {\Sigma }\) containing, for every atom of D, at least one symbol a with \(I^{a}=1\). For example, for \(((a\parallel b\parallel c^?)\mid (d\parallel e))^{+}\), \(P_{E}\) consists of the sets {a,d}, {a,e}, {b,d}, {b,e} and all their supersets. Otherwise, if \(D^{I}\) is of type 3, then no set in \(P_{E}\) contains only symbols from \({\Sigma }_{D}\).

-

Regardless of the type of \(D^{I}\), the counting dependencies \(K_{E}\) contain all pairs of distinct symbols (a,b) that appear in the same atom of D with \(I^{a}=1\).

To obtain reduced DIMEs, we use the following rules:

-

Take a simple clause \((A_{1}^{I_{1}}\mid \ldots \mid A_{k}^{I_{k}})\).

-

\((A_{1}^{I_{1}}\mid \ldots \mid A_{k}^{I_{k}})^{*}\) goes to \(A_{1}^{*}\parallel \ldots \parallel A_{k}^{*}\) (k clauses of type 3). Essentially, we distribute the ∗ of a disjunction of atoms with intervals to each of the atoms. For example, \((a\mid (b\parallel c^?))^{*}\) goes to \(a^{*}\parallel (b\parallel c^?)^{*}\).

-

\((A_{1}^{I_{1}}\mid \ldots \mid A_{k}^{I_{k}})^{+}\) goes to \(A_{1}^{*}\parallel \ldots \parallel A_{k}^{*}\) (k clauses of type 3) if there exists an atom with interval \(A_{i}^{I_{i}}\) (\(i\in \{1,\ldots ,k\}\)) that defines the empty word, i.e., \(I_{i}=?\) or \(I^{a}=?\) for every symbol \(a\in {\Sigma }_{A_{i}}\). If the empty word is defined, then we can transform the + into ∗ and then distribute the ∗ as in the previous case. For example, \(((a\parallel b^?)\mid (c\parallel d)^?)^{+}\) goes to \((a\parallel b^?)^{*}\parallel (c\parallel d)^{*}\).


-

Take a clause \((A_{1}^{I_{1}}\mid \ldots \mid A_{k}^{I_{k}})\).

-

\((A_{1}^{I_{1}}\mid \ldots \mid A_{k}^{I_{k}})^?\) goes to \((A_{1}^{I_{1}^?}\mid \ldots \mid A_{k}^{I_{k}^?})\) (type 3). We essentially distribute the ? of a disjunction of atoms with intervals to each of the atoms. For example, \((a^{[2,3]}\mid b^{+})^?\) goes to \((a^{[2,3]^?}\mid b^{*})\).

-

\((A_{1}^{I_{1}}\mid \ldots \mid A_{k}^{I_{k}})\) goes to \((A_{1}^{I_{1}^?}\mid \ldots \mid A_{k}^{I_{k}^?})\) (type 3) if there exists an atom with interval \(A_{i}^{I_{i}}\) (\(i\in \{1,\ldots ,k\}\)) that defines the empty word, i.e., 0 belongs to the set represented by \(I_{i}\) or \(I^{a}=?\) for every symbol \(a\in {\Sigma }_{A_{i}}\). If one of the atoms defines the empty word, then we can distribute ? to all of them. For example, \((a\mid (b\parallel c)^{[0,5]})\) goes to \((a^?\mid (b\parallel c)^{[0,5]})\).


-

Take an atom \((a_{1}^?\parallel \ldots \parallel a_{k}^?)\) and an interval I. Then, \((a_{1}^?\parallel \ldots \parallel a_{k}^?)^{I}\) goes to \((a_{1}^?\parallel \ldots \parallel a_{k}^?)^{[0,\max (I)]}\), where by \(\max (I)\) we denote the maximum value of the set represented by the interval I. This step may be combined with one of the previous ones to rewrite a clause with interval as one of type 3. For example, \(((a^?\parallel b^?)^{[3,6]}\mid c)\) goes to \(((a^?\parallel b^?)^{[0,6]}\mid c^?)\).

-

Remove symbols a (resp. atoms A or clauses D) such that \(I^{a}\) (resp. \(I^{A}\) or \(I^{D}\)) is [0,0].

Note that each of the rewriting steps gives an equivalent reduced expression.

Next, we assume that we work with reduced DIMEs only and show that the language defined by a DIME E consists of all unordered words satisfying the characterizing tuple \({\Delta }_{E}\).

Lemma 1

Given an unordered word w and a DIME E, \(w\in L(E)\) iff \(w\models {\Delta }_{E}\).

Proof

The only if part follows from the definition of the satisfiability of \({\Delta }_{E}\). For the if part, we take the tuple \({\Delta }_{E}\) corresponding to a DIME \(E=D_{1}^{I_{1}}\parallel \ldots \parallel D_{k}^{I_{k}}\) and an unordered word w such that \(w\models {\Delta }_{E}\). Let \(w=w_{1}\uplus \ldots \uplus w_{k}\uplus w^{\prime }\), where each \(w_{i}\) contains all occurrences in w of the symbols from \({\Sigma }_{D_{i}}\) (for \(1\leqslant i\leqslant k\)). Since \(w\models N_{E}\), there is no symbol \(a\in {\Sigma }\setminus ({\Sigma }_{D_{1}}\cup \ldots \cup {\Sigma }_{D_{k}})\) such that \(a\in w\), which implies \(w^{\prime }=\varepsilon \). Thus, proving \(w\models E\) reduces to proving that \(w_{i}\models D_{i}^{I_{i}}\) (for \(1\leqslant i\leqslant k\)). Since E is a reduced DIME, each of these derivations can be constructed by reasoning on the three possible types of \(D_{i}^{I_{i}}\) (for \(1\leqslant i\leqslant k\)).

Case 1

Take \(D_{i}^{I_{i}}=(A_{1}\mid \ldots \mid A_{k})^{+}\) of type 1. From the semantics of UREs, we observe that proving \(w_{i}\models D_{i}^{I_{i}}\) is equivalent to proving that (i) \(w_{i}\) is non-empty and (ii) \(w_{i}\) can be split as \(w_{i}= w_{1}^{\prime }\uplus \ldots \uplus w_{p}^{\prime }\), where every \(w_{j}^{\prime }\) (\(1\leqslant j\leqslant p\)) satisfies an atom \(A_{l}\) (\(1\leqslant l\leqslant k\)). First, since w satisfies the collections of required symbols \(P_{E}\), we infer that \(w_{i}\) is non-empty, which implies (i). Then, since w satisfies the extended cardinality map \(N_{E}\) and the counting dependencies \(K_{E}\), we infer that (ii) is also satisfied.

Case 2

Take \(D_{i}^{I_{i}} = (A_{1}^{I_{1}}\mid \ldots \mid A_{k}^{I_{k}})\) of type 2. From the semantics of UREs, we observe that proving \(w_{i}\models D_{i}^{I_{i}}\) is equivalent to proving that (i) \(w_{i}\) is non-empty and (ii) there exists an atom with interval \(A_{j}^{I_{j}}\) (\(1\leqslant j\leqslant k\)) such that \(w_{i}\models A_{j}^{I_{j}}\). Since \(w\models P_{E}\), we infer that \(w_{i}\) is non-empty, hence (i) is satisfied. Then, since \(w\models C_{E}\), only the symbols from one atom \(A_{j}\) of \(D_{i}\) are present in \(w_{i}\). Moreover, since \(w\models N_{E}\) and \(w\models K_{E}\), the number of occurrences of each symbol from \({\Sigma }_{A_{j}}\) is such that \(w_{i}\models A_{j}^{I_{j}}\). Hence, condition (ii) is also satisfied.

Case 3

Take \(D_{i}^{I_{i}} = (A_{1}^{I_{1}}\mid \ldots \mid A_{k}^{I_{k}})\) of type 3. The only difference w.r.t. the previous case is that \(w_{i}\) may also be empty; hence, proving \(w_{i}\models D_{i}^{I_{i}}\) is equivalent to proving only that there exists an atom with interval \(A_{j}^{I_{j}}\) (\(1\leqslant j\leqslant k\)) such that \(w_{i}\models A_{j}^{I_{j}}\), which follows as in the previous case.

□

Moreover, we define the subsumption of two characterizing tuples, which captures the containment of DIMEs. Given two DIMEs E and \(E^{\prime }\), we write \({\Delta }_{E^{\prime }}\preccurlyeq {\Delta }_{E}\) if \(C_{E}\subseteq C_{E^{\prime }}\), \(N_{E^{\prime }}\subseteq N_{E}\), \(P_{E}\subseteq P_{E^{\prime }}\), and \(K_{E}\subseteq K_{E^{\prime }}\). Then, we obtain the following.

Lemma 2

Given two DIMEs E and \(E^{\prime }\) , \({L}(E^{\prime })\subseteq {L}(E)\) iff \({\Delta }_{E^{\prime }}\preccurlyeq {\Delta }_{E}\).

Proof

First, we claim that given two DIMEs E and \(E^{\prime }\): \({\Delta }_{E^{\prime }}\preccurlyeq {\Delta }_{E}\) iff \(w\models {\Delta }_{E^{\prime }}\) implies w⊧Δ E for every w (*). The only if part of (*) follows directly from the definitions while the if part can be easily shown by contraposition. From Lemma 1 and (*) we infer the correctness of Lemma 2. □

Example 1

For the following DIMEs, it holds that \(L(E^{\prime })\subsetneq L(E)\) and \(L(E)\nsubseteq L(E^{\prime })\):

-

Take \(E=a^{*}\parallel b^{*}\) and \(E^{\prime } = (a\parallel b^?)^{*}\). Note that \(K_{E}=\varnothing \) and \(K_{E^{\prime }} = \{(a,b)\}\). For instance, the unordered word b belongs to L(E), but does not belong to \(L(E^{\prime })\).

-

Take \(E=a^{[3,6]^?}\mid b^{*}\) and \(E^{\prime } = a^{[3,6]}\mid b^{+}\). Note that \(P_{E} =\varnothing \) and \(P_{E^{\prime }} = \{\{a,b\}\}\). For instance, the empty word ε belongs to L(E), but does not belong to \(L(E^{\prime })\).

-

Take \(E=(a\parallel b^?)^{*}\) and \(E^{\prime } = (a\parallel b^?)^{[0,5]}\). Note that (a,6) belongs to \(N_{E}\), but not to \(N_{E^{\prime }}\). For instance, the unordered word \(a^{6}\) belongs to L(E), but does not belong to \(L(E^{\prime })\).

-

Take \(E=(a\mid b)^{+}\) and \(E^{\prime } = a^{+}\mid b^{+}\). Note that \(C_{E} = \varnothing \) and \(C_{E^{\prime }} = \{(a,b),(b,a)\}\). For instance, the unordered word ab belongs to L(E), but does not belong to \(L(E^{\prime })\).

Lemma 2 shows that two equivalent DIMEs yield the same characterizing tuple, and hence, the tuple Δ E can be viewed as a “canonical form” for the language defined by a DIME E. Formally, we obtain the following.

Corollary 1

Given two DIMEs E and \(E^{\prime }\) , \(L(E) = L(E^{\prime })\) iff \({\Delta }_{E} = {\Delta }_{E^{\prime }}\).

In the next section, we show that the characterizing tuple has a compact representation that permits us to decide the problems of membership and containment in polynomial time.

4.3 Tractability of DIMEs

We now show that the characterizing tuple admits a compact representation that yields the tractability of deciding membership and containment of DIMEs.

Given a reduced DIME E, note that \(C_{E}\) and \(K_{E}\) are quadratic in |Σ| and can be easily constructed. The set \(C_{E}\) consists of all pairs of distinct symbols (a,b) that appear in different atoms of the same clause of type 2 or 3. Moreover, \(K_{E}\) consists of all pairs of distinct symbols (a,b) that appear in the same atom with \(I^{a}=1\).
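Following this description, the Python sketch below (ours) computes \(C_{E}\) and \(K_{E}\) from a reduced DIME in a hypothetical encoding where each clause is given by its type and its atoms, and each atom maps its symbols to their intervals "1" or "?" inside the atom. The nested loops touch each pair of symbols a bounded number of times, which reflects the quadratic bound. The usage example encodes the two DIMEs E and \(E^{\prime }\) of Example 2.

```python
from itertools import permutations

def conflicts_and_dependencies(reduced_dime):
    """Compute (C_E, K_E) for a reduced DIME.

    reduced_dime: list of (clause_type, atoms); each atom is a dict mapping a
    symbol to its interval "1" or "?" inside the atom.
    """
    conflicts, dependencies = set(), set()
    for ctype, atoms in reduced_dime:
        for i, atom in enumerate(atoms):
            # K_E: distinct a, b in the same atom with I^a = 1
            for a, b in permutations(atom, 2):
                if atom[a] == "1":
                    dependencies.add((a, b))
            # C_E: distinct symbols in different atoms of a type-2 or type-3 clause
            if ctype in (2, 3):
                for other in atoms[i + 1:]:
                    for a in atom:
                        for b in other:
                            conflicts.update({(a, b), (b, a)})
    return conflicts, dependencies

# E' = (a || b)^+ | (c || d)^+ : one clause of type 2 with two atoms
e_prime = [(2, [{"a": "1", "b": "1"}, {"c": "1", "d": "1"}])]
# E = a^* || (b | c)^+ || d^* : clauses of types 3, 1 and 3
e = [(3, [{"a": "1"}]), (1, [{"b": "1"}, {"c": "1"}]), (3, [{"d": "1"}])]
print(conflicts_and_dependencies(e_prime))
print(conflicts_and_dependencies(e))
```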

While N E may be infinite, it can be easily represented in a compact manner using intervals: for every symbol a, the set \(\{i\in \mathbb N_{0}\mid (a,i)\in N_{E}\}\) is representable by an interval. Given a symbol a∈Σ, by \(\hat N_{E}(a)\) we denote the interval representing the set \(\{i\in \mathbb N_{0}\mid (a,i)\in N_{E}\}\) that can be easily obtained from E:

-

\(\hat N_{E}(a) = [0,0]\) if a appears in no clause in E,

-

\(\hat N_{E}(a) = [0,\infty ]\) (or simply ∗) if a appears in a clause of type 1 in E,

-

\(\hat N_{E}(a)= I^{A}\) if \(I^{a}=1\), A is the atom containing a, and A is the unique atom of a clause of type 2 or 3,

-

\(\hat N_{E}(a) = {I^{A}}^?\) if \(I^{a}=1\), A is the atom containing a, and A appears in a clause of type 2 or 3 containing at least two atoms,

-

\(\hat N_{E}(a)= [0,\max (I^{A})]\) if \(I^{a}=?\), A is the atom containing a, and A appears in a clause of type 2 or 3.

For example, for \(E_{0}= a^{+} \parallel ((b \parallel c^?)^{+}\mid d^{[5,\infty ]})\), we obtain the following \(\hat N_{E_{0}}\):

Naturally, testing \(N_{E^{\prime }}\subseteq N_{E}\) reduces to a simple test on \(\hat N_{E^{\prime }}\) and \(\hat N_{E}\).
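The case analysis for \(\hat N_{E}\), together with the inclusion test on intervals, can be sketched in Python as follows. The encoding is our assumption: an interval is a triple (lo, hi, opt) standing for [lo, hi], with 0 added when opt is true (so \([n,m]^?\) becomes (n, m, True)), and a reduced DIME is a list of (clause type, atoms) with each atom given by its interval and a map from its symbols to "1" or "?". The usage example encodes \(E_{0}\) as two clauses of type 2, namely \(a^{+}\) and \(((b\parallel c^?)^{+}\mid d^{[5,\infty ]})\).

```python
import math

INF = math.inf
# Interval (lo, hi, opt): the set [lo, hi], plus 0 when opt is True.
ONE, PLUS = (1, 1, False), (1, INF, False)

def n_hat(reduced_dime, sigma):
    """Interval of allowed occurrence counts for every symbol of sigma."""
    result = {a: (0, 0, False) for a in sigma}       # a appears in no clause
    for ctype, atoms in reduced_dime:
        for (lo, hi, opt), symbols in atoms:
            for a, mult in symbols.items():
                if ctype == 1:
                    result[a] = (0, INF, False)      # [0, inf], i.e. *
                elif mult == "?":
                    result[a] = (0, hi, False)       # [0, max(I^A)]
                elif len(atoms) == 1:
                    result[a] = (lo, hi, opt)        # I^A
                else:
                    result[a] = (lo, hi, True)       # (I^A)^?
    return result

def member(n, interval):
    lo, hi, opt = interval
    return (opt and n == 0) or lo <= n <= hi

def included(small, big):
    """Set inclusion between two intervals, as needed to test N_E' ⊆ N_E."""
    lo, hi, opt = small
    if opt and not member(0, big):
        return False
    if lo == 0 and member(0, big):
        lo = 1                                       # 0 is already covered
    return lo > hi or (big[0] <= lo and hi <= big[1])

e0 = [(2, [(PLUS, {"a": "1"})]),
      (2, [(PLUS, {"b": "1", "c": "?"}), ((5, INF, False), {"d": "1"})])]
print(n_hat(e0, "abcd"))
```

Under this encoding, \(N_{E^{\prime }}\subseteq N_{E}\) amounts to calling `included` once per symbol of the alphabet.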

Representing \(P_{E}\) in a compact manner is more tricky. A natural idea would be to store only its \(\subseteq \)-minimal elements since \(P_{E}\) is closed under supersets. Unfortunately, there exist DIMEs having an exponential number of \(\subseteq \)-minimal elements. For instance, for the DIME \(E_{1}=((a\parallel b)\mid (c\parallel d))^{+}\parallel ((e\parallel f)^{[2,5]}\mid g^{[1,3]})\parallel (h^{*}\parallel i^{[0,9]})\), the set \(P_{E_{1}}\) has 6 \(\subseteq \)-minimal elements: {a,c}, {a,d}, {b,c}, {b,d}, {e,g}, and {f,g}. The example easily generalizes to arbitrary numbers of atoms used in the clauses, since a clause contributes as many minimal sets as the product of the numbers of symbols with interval 1 in its atoms.

However, we observe that the exponentially many \(\subseteq \)-minimal elements may contain redundant information that is already captured by other elements of the characterizing tuple. For instance, for the above DIME \(E_{1}\), if we know that {a,c} belongs to \(P_{E_{1}}\), we can easily see that other \(\subseteq \)-minimal elements also belong to \(P_{E_{1}}\). More precisely, for every unordered word w defined by \(E_{1}\) it holds that w(a)=w(b), w(c)=w(d), and w(e)=w(f), which is captured by the counting dependencies \(K_{E_{1}}=\{(a,b),(b,a),(c,d),(d,c),(e,f),(f,e)\}\). Hence, for the unordered words defined by \(E_{1}\), the presence of an a implies the presence of a b, the presence of a c implies the presence of a d, etc. Consequently, if {a,c} belongs to \(P_{E_{1}}\), then {b,c}, {a,d}, and {b,d} also belong to \(P_{E_{1}}\). Similarly, if {e,g} belongs to \(P_{E_{1}}\), then {f,g} also belongs to \(P_{E_{1}}\).

Next, we use the aforementioned observation to define a compact representation of \(P_{E}\). For this purpose, we introduce the auxiliary notion of the symbols implied by a DIME E in the presence of a set of symbols X, denoted \(\mathit {impl}_{E}(X)\):

For example, for the above \(E_{1}\), we have \(\mathit {impl}_{E_{1}}(\{a,c\})=\{a,b,c,d\}\).

Moreover, given a DIME E, by \(\mathit {P^{\subseteq _{\tiny \min }}_{E}}\) we denote the set of all \(\subseteq \)-minimal elements of P E . Given a subset \(P\subseteq \mathit {P^{\subseteq _{\tiny \min }}_{E}}\), we say that P is:

-

non-redundant if \(\forall X\in P.\ \not \exists Y\in P\setminus \{X\}.\ X\subseteq \mathit {impl}_{E}(Y)\),

-

covering if \(\forall X\in \mathit {P^{\subseteq _{\tiny \min }}_{E}}.\ \exists Y\in P.\ X\subseteq \mathit {impl}_{E}(Y)\).

For example, take the above \(E_{1}=((a\parallel b)\mid (c\parallel d))^{+}\parallel ((e\parallel f)^{[2,5]}\mid g^{[1,3]})\parallel (h^{*}\parallel i^{[0,9]})\) and recall that \(\mathit {P^{\subseteq _{\tiny \min }}_{E_{1}}}=\{\{a,c\},\{a,d\},\{b,c\},\{b,d\},\{e,g\},\{f,g\}\}\). Then, we have the following:

-

{{b,c},{f,g}} is non-redundant and covering,

-

{{b,c}} is non-redundant and it is not covering,

-

{{a,c},{b,c},{f,g}} is redundant and covering,

-

{{a,c},{b,c}} is redundant and not covering.
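The four claims above can be checked with a few lines of Python. The sketch (ours) needs a concrete \(\mathit {impl}_{E}\); as an assumption, it reads \(\mathit {impl}_{E}(X)\) as X together with every symbol b tied to some a in X by both (a,b) and (b,a) in \(K_{E}\), a reading that agrees with \(\mathit {impl}_{E_{1}}(\{a,c\})=\{a,b,c,d\}\) and with the proof of Lemma 4. The non-redundancy test compares only distinct sets X and Y. All function names are hypothetical.

```python
def impl(X, K):
    """ASSUMED reading of impl_E(X): X plus every b such that (a, b) and
    (b, a) are both in K_E for some a in X (so w(a) = w(b) in every word)."""
    return set(X) | {b for (a, b) in K if a in X and (b, a) in K}

def non_redundant(P, K):
    return not any(X <= impl(Y, K) for X in P for Y in P if X != Y)

def covering(P, P_min, K):
    return all(any(X <= impl(Y, K) for Y in P) for X in P_min)

# E1 = ((a||b)|(c||d))^+ || ((e||f)^[2,5] | g^[1,3]) || (h^* || i^[0,9])
K1 = {("a", "b"), ("b", "a"), ("c", "d"), ("d", "c"), ("e", "f"), ("f", "e")}
P1_MIN = [set(s) for s in ("ac", "ad", "bc", "bd", "eg", "fg")]

for P in ([{"b", "c"}, {"f", "g"}], [{"b", "c"}],
          [{"a", "c"}, {"b", "c"}, {"f", "g"}], [{"a", "c"}, {"b", "c"}]):
    print(P, non_redundant(P, K1), covering(P, P1_MIN, K1))
```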

Given a DIME E, the compact representation of the collections of required symbols \(P_{E}\) is naturally a non-redundant and covering subset of \(\mathit {P^{\subseteq _{\tiny \min }}_{E}}\). Since there may exist many non-redundant and covering subsets of \(\mathit {P^{\subseteq _{\tiny \min }}_{E}}\), we use the total order \(<_{\Sigma }\) on the alphabet Σ to give a deterministic construction of the compact representation \(\hat P_{E}\). For this purpose, we first define some additional notation.

Given an atom A, by Φ(A) we denote the smallest label from Σ w.r.t. \(<_{\Sigma }\) that is present in A and has interval 1:

For example, \({\Phi }(a\parallel b)=a\). Then, given a clause with interval \(D^{I}\), by \({\Phi }(D^{I})\) we denote the set of all symbols Φ(A) for every atom A in D:

For example, \({\Phi }(((a\parallel b)\mid (c\parallel d))^{+})=\{a,c\}\) and \({\Phi }(((e\parallel f)^{[2,5]}\mid g^{[1,3]}))=\{e,g\}\). Then, \(\hat P_{E}\) consists of all such sets for the clauses with intervals of type 1 or 2:

For example, \(\hat P_{E_{1}}=\{\{a,c\},\{e,g\}\}\). Notice that the set {a,c} is due to the clause with interval \(((a\parallel b)\mid (c\parallel d))^{+}\) of type 1 and the set {e,g} is due to the clause with interval \(((e\parallel f)^{[2,5]}\mid g^{[1,3]})\) of type 2. Also notice that \(h^{*}\) and \(i^{[0,9]}\) are clauses with intervals of type 3; none of their symbols is required, and consequently, no set in \(\hat P_{E_{1}}\) contains symbols from them.
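The construction of \(\hat P_{E}\) is a single pass over the clauses. In the Python sketch below (ours), alphabetical order stands in for \(<_{\Sigma }\), and a reduced DIME is encoded, as an assumption, by the type of each clause and, for each atom, a map from its symbols to "1" or "?"; the type-3 clauses \(h^{*}\) and \(i^{[0,9]}\) of \(E_{1}\) contribute nothing. Since each clause of type 1 or 2 yields one set with one symbol per atom, the output is linear in |Σ|.

```python
def phi_atom(atom):
    """Φ(A): the smallest symbol of the atom with interval 1
    (alphabetical order stands in for the total order <_Σ)."""
    return min(s for s, mult in atom.items() if mult == "1")

def p_hat(reduced_dime):
    """One set Φ(D^I) per clause with interval of type 1 or 2."""
    return [frozenset(phi_atom(atom) for atom in atoms)
            for ctype, atoms in reduced_dime if ctype in (1, 2)]

# E1 = ((a||b)|(c||d))^+ || ((e||f)^[2,5] | g^[1,3]) || (h^* || i^[0,9])
e1 = [(1, [{"a": "1", "b": "1"}, {"c": "1", "d": "1"}]),
      (2, [{"e": "1", "f": "1"}, {"g": "1"}]),
      (3, [{"h": "1"}]),
      (3, [{"i": "1"}])]
print(p_hat(e1))
```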

We have now introduced all the elements needed to define the compact representation of a characterizing tuple. Given a DIME E, we say that \(\hat {\Delta }_{E}=(C_{E},\hat N_{E},\hat P_{E}, K_{E})\) is the compact representation of its characterizing tuple \({\Delta }_{E}\). Then, an unordered word w satisfies \(\hat {\Delta }_{E}\), denoted \(w\models \hat {\Delta }_{E}\), if

-

\(w\models C_{E}\) and \(w\models K_{E}\), as previously defined for \(w\models {\Delta }_{E}\),

-

\(w\models \hat N_{E}\) i.e., \(\forall a\in {\Sigma }.\ w(a)\in \hat N_{E}(a)\),

-

\(w\models \hat P_{E}\) i.e., \(\forall X\in \hat P_{E}.\ \exists a\in X.\ a\in w\). Notice that we use exactly the same definition as for w⊧P E and recall that \(\hat P_{E}\) is in fact a non-redundant and covering subset of \(\mathit {P^{\subseteq _{\tiny \min }}_{E}}\).

Next, we show that given a DIME E, its compact characterizing tuple \(\hat {\Delta }_{E}\) defines precisely the same set of unordered words as its characterizing tuple Δ E .

Lemma 3

Given an unordered word w and a DIME E, \(w\models {\Delta }_{E}\) iff \(w\models \hat {\Delta }_{E}\).

Proof

The only if part follows directly from the definitions. For the if part, proving \(w\models {\Delta }_{E}\) reduces to proving that \(w\models P_{E}\), which, moreover, reduces to proving that for every X from \(\mathit {P^{\subseteq _{\tiny \min }}_{E}}\) there is a symbol a in X that occurs in w (*). Since \(\hat P_{E}\) is a covering subset of \(\mathit {P^{\subseteq _{\tiny \min }}_{E}}\), we know that for every \(X\in \mathit {P^{\subseteq _{\tiny \min }}_{E}}\) there exists a set \(Y\in \hat P_{E}\) such that \(X\subseteq \mathit {impl}_{E}(Y)\). Since \(w\models \hat P_{E}\) and \(w\models K_{E}\), we infer that (*) is satisfied. □

Additionally, we define the subsumption of the compact representations of two characterizing tuples. Given two DIMEs E and \(E^{\prime }\), we write \(\hat {\Delta }_{E^{\prime }}\preccurlyeq \hat {\Delta }_{E}\) if

-

\(C_{E}\subseteq C_{E^{\prime }}\) and \(K_{E}\subseteq K_{E^{\prime }}\) (as for the subsumption of characterizing tuples),

-

\(\forall a\in {\Sigma }.\ \hat N_{E^{\prime }}(a)\subseteq \hat N_{E}(a)\),

-

\(\forall X\in \hat P_{E}.\ \exists Y\in \hat P_{E^{\prime }}.\ Y\subseteq \mathit {impl}_{E^{\prime }}(X)\).

Next, we show that the subsumption of compact representations of characterizing tuples captures the subsumption of characterizing tuples.

Lemma 4

Given two DIMEs E and \(E^{\prime }\) , \({\Delta }_{E^{\prime }}\preccurlyeq {\Delta }_{E}\) iff \(\hat {\Delta }_{E^{\prime }}\preccurlyeq \hat {\Delta }_{E}\).

Proof

First, since \(P_{E}\) is closed under supersets, we observe that

Moreover, the conditions \(C_{E}\subseteq C_{E^{\prime }}\) and \(K_{E}\subseteq K_{E^{\prime }}\) are part of both \({\Delta }_{E^{\prime }}\preccurlyeq {\Delta }_{E}\) and \(\hat {\Delta }_{E^{\prime }}\preccurlyeq \hat {\Delta }_{E}\). Consequently, proving \({\Delta }_{E^{\prime }}\preccurlyeq {\Delta }_{E}\) iff \(\hat {\Delta }_{E^{\prime }}\preccurlyeq \hat {\Delta }_{E}\) reduces to proving that, if \(C_{E}\subseteq C_{E^{\prime }}\) and \(K_{E}\subseteq K_{E^{\prime }}\), then

For the only if part, take a set X from \(\hat P_{E}\). Since X also belongs to \(\mathit {P^{\subseteq _{\tiny \min }}_{E}}\), we know by hypothesis that there exists a set Y in \(\mathit {P^{\subseteq _{\tiny \min }}_{E^{\prime }}}\) such that \(Y\subseteq X\). Then, construct a set \(Y^{\prime }\) from Y by replacing each symbol b from Y with the smallest a w.r.t. \(<_{\Sigma }\) such that (a,b) and (b,a) belong to \(K_{E^{\prime }}\). Moreover, since \(K_{E}\subseteq K_{E^{\prime }}\), we infer that \(Y^{\prime }\subseteq \mathit {impl}_{E^{\prime }}(X)\). For the if part, take an X from \(\hat P_{E}\) and a Y from \(\hat P_{E^{\prime }}\) such that \(Y\subseteq \mathit {impl}_{E^{\prime }}(X)\). To construct the corresponding \(X^{\prime }\) in \(\mathit {P^{\subseteq _{\tiny \min }}_{E}}\) and \(Y^{\prime }\) in \(\mathit {P^{\subseteq _{\tiny \min }}_{E^{\prime }}}\) such that \(Y^{\prime }\subseteq X^{\prime }\), we replace symbols a from X and \(a^{\prime }\) from Y with symbols b in \(X^{\prime }\) and \(b^{\prime }\) in \(Y^{\prime }\) such that (a,b) and (b,a) belong to \(K_{E}\), and \((a^{\prime },b^{\prime })\) and \((b^{\prime },a^{\prime })\) belong to \(K_{E^{\prime }}\). Since \(K_{E}\subseteq K_{E^{\prime }}\), we know that such \(X^{\prime }\) and \(Y^{\prime }\) do exist. □

Example 2

Take \(E=a^{*}\parallel (b\mid c)^{+}\parallel d^{*}\) and \(E^{\prime }=(a\parallel b)^{+}\mid (c\parallel d)^{+}\). Notice that \(L(E^{\prime })\subseteq L(E)\), \({\Delta }_{E^{\prime }}\preccurlyeq {\Delta }_{E}\), and \(\hat {\Delta }_{E^{\prime }}\preccurlyeq \hat {\Delta }_{E}\). In particular, we have the following.

-

\(C_{E}=\varnothing \) is included in \(C_{E^{\prime }}=\{(a,c),(a,d),(b,c),(b,d),(c,a),(c,b),(d,a),(d,b)\}\),

-

\(\hat N_{E}(a) = \hat N_{E^{\prime }}(a) = *,\ldots , \hat N_{E}(d) = \hat N_{E^{\prime }}(d) = *\),

-

\(K_{E}=\varnothing \) is included in \(K_{E^{\prime }} = \{(a,b),(b,a),(c,d),(d,c)\}\),

-

\(\hat P_{E} = \{\{b,c\}\}\) and \(\hat P_{E^{\prime }} = \{\{a,c\}\}\), which compactly represent \(P_{E}=\{\{b,c\},\ldots \}\) and \(P_{E^{\prime }}=\{\{a,c\}, \{a,d\}, \{b,c\}, \{b,d\},\ldots \}\), respectively (we have listed only the \(\subseteq \)-minimal sets). Then, take X={b,c} from \(\hat P_{E}\) and notice that there exists Y={a,c} in \(\hat P_{E^{\prime }}\) such that \( Y\subseteq \mathit {impl}_{E^{\prime }}(X)\) because \(\mathit {impl}_{E^{\prime }}(\{b,c\}) = \{a,b,c,d\}\).
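The three conditions of compact subsumption can be checked directly on these components. The Python sketch below (ours, with hypothetical function names) takes the values of Example 2 as listed above, encodes intervals as triples (lo, hi, opt) meaning [lo, hi] plus 0 when opt holds, and reads \(\mathit {impl}_{E}\) as collecting the symbols tied to X by counting dependencies in both directions; that reading of \(\mathit {impl}_{E}\) is our assumption, consistent with the examples in this section.

```python
import math

INF = math.inf
STAR = (0, INF, False)  # interval (lo, hi, opt): [lo, hi], plus 0 if opt

def member(n, iv):
    lo, hi, opt = iv
    return (opt and n == 0) or lo <= n <= hi

def included(small, big):
    lo, hi, opt = small
    if opt and not member(0, big):
        return False
    if lo == 0 and member(0, big):
        lo = 1
    return lo > hi or (big[0] <= lo and hi <= big[1])

def impl(X, K):
    # ASSUMED reading of impl: symbols tied to X by both (a, b) and (b, a) in K
    return set(X) | {b for (a, b) in K if a in X and (b, a) in K}

def subsumed(small, big):
    """Compact subsumption Δ̂_small ≼ Δ̂_big; each argument has keys C, N, P, K."""
    return (big["C"] <= small["C"] and big["K"] <= small["K"]
            and all(included(small["N"][a], big["N"][a]) for a in big["N"])
            and all(any(Y <= impl(X, small["K"]) for Y in small["P"])
                    for X in big["P"]))

# Example 2: E = a^* || (b | c)^+ || d^*  and  E' = (a || b)^+ | (c || d)^+
E = {"C": set(), "K": set(), "N": dict.fromkeys("abcd", STAR), "P": [{"b", "c"}]}
cross = {(x, y) for x in "ab" for y in "cd"}
E_PRIME = {"C": cross | {(y, x) for (x, y) in cross},
           "K": {("a", "b"), ("b", "a"), ("c", "d"), ("d", "c")},
           "N": dict.fromkeys("abcd", STAR),
           "P": [{"a", "c"}]}
print(subsumed(E_PRIME, E), subsumed(E, E_PRIME))
```

The reverse check fails already on the conflicts, in line with \(L(E)\nsubseteq L(E^{\prime })\) (for instance, the word ac).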

Next, we show that the compact representation is of polynomial size.

Lemma 5

Given a DIME E, the compact representation \(\hat {\Delta }_{E}=(C_{E},\hat N_{E},\hat P_{E},K_{E})\) of its characterizing tuple \({\Delta }_{E}\) is of size polynomial in the size of the alphabet Σ.

Proof

By construction, the sizes of \(C_{E}\) and \(K_{E}\) are quadratic in |Σ|, while the sizes of \(\hat P_{E}\) and \(\hat N_{E}\) are linear in |Σ|. □

The use of compact representation of characterizing tuples allows us to state the main result of this section.

Theorem 4

Given an unordered word w and two DIMEs E and \(E^{\prime }\):

-

1.

deciding whether w∈L(E) is in PTIME,

-

2.

deciding whether \(L(E^{\prime })\subseteq L(E)\) is in PTIME.

Proof

The first part follows from Lemma 1, Lemma 3, and Lemma 5. The second part follows from Lemma 2, Lemma 4, and Lemma 5. □

5 Interval Multiplicity Schemas

In this section, we employ DIMEs to define schema languages and we present the related problems of interest.

Definition 1

A disjunctive interval multiplicity schema (DIMS) is a tuple \(S=(\mathit {root}_{S},R_{S})\), where \(\mathit {root}_{S}\in {\Sigma }\) is a designated root label and \(R_{S}\) maps symbols in Σ to DIMEs. By \(\mathit {DIMS}\) we denote the set of all disjunctive interval multiplicity schemas. A disjunction-free interval multiplicity schema (IMS) \(S=(\mathit {root}_{S},R_{S})\) is a restricted DIMS, where \(R_{S}\) maps symbols in Σ to IMEs. By \(\mathit {IMS}\) we denote the set of all disjunction-free interval multiplicity schemas.

We define the language captured by a DIMS S in the following way. Given a tree t, we first define the unordered word \({\mathit {ch}_{t}^{n}}\) of the children of a node \(n\in N_{t}\) of t, i.e., \({\mathit {ch}_{t}^{n}}(a) = |\{m\in N_{t}\mid (n,m)\in \mathit {child}_{t}\wedge \mathit {lab}_{t}(m)=a\}|\). Now, a tree t satisfies S, in symbols \(t\models S\), if \(\mathit {lab}_{t}(\mathit {root}_{t})=\mathit {root}_{S}\) and for every node \(n\in N_{t}\), \({\mathit {ch}_{t}^{n}}\in L(R_{S}(\mathit {lab}_{t}(n)))\). By \(L(S)\subseteq {\mathit {Tree}}\) we denote the set of all trees satisfying S.

In the sequel, we present a schema \(S=(\mathit {root}_{S},R_{S})\) as a set of rules of the form \(a\rightarrow R_{S}(a)\), for every \(a\in {\Sigma }\). If \(L(R_{S}(a))=\{\varepsilon \}\), then we write \(a\rightarrow \epsilon \) or simply omit the rule.

Example 3

Take the content model of a semi-structured database storing information about a peer-to-peer file sharing system, having the following rules: 1) a peer is allowed to download at most the same number of files that it uploads, and 2) peers are split into two groups: a peer is a vip if it uploads at least 100 files, otherwise it is a simple user:

Example 4

Take the content model of a semi-structured database storing information about two types of cultural events: plays and movies. Every event has a date when it takes place. If the event is a play, then it takes place in a theater while a movie takes place in a cinema.

Problems of Interest

We define next the problems of interest and we formally state the corresponding decision problems parameterized by the class of schema \(\mathcal S\) and, when appropriate, by a class of queries \(\mathcal Q\).

-

Schema satisfiability – checking if there exists a tree satisfying the given schema:

$$ \text{SAT}_{\mathcal{S}} = \{ S \in\mathcal{S} \mid \exists t\in Tree.\ t\models S \}. $$

-

Membership – checking if the given tree satisfies the given schema:

$$ \text{MEMB}_{\mathcal{S}} = \{(S,t) \in\mathcal{S}\times Tree \mid t\models S\}. $$

-

Schema containment – checking if every tree satisfying one given schema satisfies another given schema:

$$ \text{CNT}_{\mathcal{S}} = \{(S_{1},S_{2})\in\mathcal{S}\times\mathcal{S}\mid L(S_{1})\subseteq L(S_{2})\}. $$

-

Query satisfiability by schema – checking if there exists a tree that satisfies the given schema and the given query:

$$ \text{SAT}_{\mathcal{S},\mathcal{Q}} = \{(S,q)\in \mathcal{S}\times\mathcal{Q}\mid \exists t\in L(S).\ t\models q\}. $$

-

Query implication by schema – checking if every tree satisfying the given schema also satisfies the given query:

$$ \text{IMPL}_{\mathcal{S},\mathcal{Q}} = \{(S, q)\in \mathcal{S}\times\mathcal{Q}\mid \forall t\in L(S).\ t\models q\}. $$

-

Query containment in the presence of schema – checking if every tree satisfying the given schema and one given query also satisfies another given query:

$$ \text{CNT}_{\mathcal{S},\mathcal{Q}}= \{(p, q, S) \in \mathcal{Q}\times\mathcal{Q}\times\mathcal{S} \mid \forall t\in L(S).\ t \models p\Rightarrow t\models q\}. $$

We study these problems for DIMSs and IMSs in Sections 6 and 7 of the paper.

6 Complexity of Disjunctive Interval Multiplicity Schemas (DIMSs)

In this section, we present the complexity results for DIMSs. First, we show the tractability of schema satisfiability and containment. Then, we provide an algorithm for deciding membership in streaming, i.e., one that processes an XML document in a single pass, using memory that depends on the height of the tree and not on its size. Finally, we point out that the complexity of query satisfiability, implication, and containment in the presence of the schema follows from existing results.

First, we show the tractability of schema satisfiability and schema containment.

Proposition 1

\(\text{SAT}_{\text{DIMS}}\) and \(\text{CNT}_{\text{DIMS}}\) are in PTIME.

Proof

A simple algorithm based on dynamic programming can decide the satisfiability of a DIMS. More precisely, given a schema \(S=(\mathit{root}_{S},R_{S})\), one has to determine for every symbol a of the alphabet Σ whether there exists a (finite) tree t that satisfies \(S^{\prime }=(a,R_{S})\). Then, the schema S is satisfiable iff such a tree exists for the root label \(\mathit{root}_{S}\).

Moreover, testing the containment of two DIMSs reduces to testing, for each symbol in the alphabet, the containment of the associated DIMEs, which is in PTIME (Theorem 4). □
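One way to realize the dynamic programming for satisfiability is a least-fixpoint computation of the "productive" symbols, i.e., those that can label the root of some finite tree. The Python sketch below is only an illustration under assumptions of our own: rules use a hypothetical nested-list encoding (a rule is an unordered concatenation of clauses, each clause a disjunction of alternatives with an interval, each alternative an unordered concatenation of symbols with intervals), a symbol without a rule is treated as having the rule ε, and all intervals are assumed non-empty. It is not the authors' implementation.

```python
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical encoding, not the paper's data structure.
Interval = Tuple[int, Optional[int]]          # (min, max); max=None means unbounded
Alternative = List[Tuple[str, Interval]]      # a1^I1 || ... || ak^Ik
Clause = Tuple[List[Alternative], Interval]   # (A1 | ... | An)^I
Rule = List[Clause]                           # C1 || ... || Cm


def has_word_over(rule: Rule, allowed: Set[str]) -> bool:
    """True if the rule accepts some unordered word using only `allowed` symbols."""
    for alternatives, (lo, _hi) in rule:
        if lo == 0:
            continue  # the clause may be repeated zero times
        # The clause must occur at least once, so some alternative must be usable:
        # every symbol it forces (positive lower bound) has to be allowed.
        if not any(all(s in allowed for s, (s_lo, _) in alt if s_lo > 0)
                   for alt in alternatives):
            return False
    return True


def satisfiable(root: str, rules: Dict[str, Rule], sigma: Set[str]) -> bool:
    """Least fixpoint over symbols that root some finite tree (assumed encoding)."""
    productive: Set[str] = set()
    changed = True
    while changed:  # at most |sigma| rounds, each polynomial in the schema size
        changed = False
        for a in sigma - productive:
            if has_word_over(rules.get(a, []), productive):  # missing rule = epsilon
                productive.add(a)
                changed = True
    return root in productive
```

For instance, with the rules r → a ∥ b?, a → ε, and b → b, the fixpoint marks a and then r as productive, so the schema is satisfiable even though no finite tree is rooted at b.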

Next, we provide an algorithm for deciding membership in streaming, i.e., one that processes an XML document in a single pass and uses memory depending on the height of the tree and not on its size. Our notion of streaming has been employed in [42] as a relaxation of constant-memory XML validation against DTDs, which is possible only for some DTDs [41, 42]. In general, validation against DIMSs cannot be performed with constant memory, for the same reasons as in [41, 42] regarding the use of recursion in the schema. Hence, we have chosen our notion of streaming so that the algorithm works for the entire class of DIMSs. We assume that the input tree is given in XML format, with an arbitrary ordering of sibling nodes. Moreover, the proposed algorithm has earliest rejection, i.e., if the given tree does not satisfy the given schema, the algorithm outputs the result as early as possible. For a tree t, \(\mathit{height}(t)\) is the height of t defined in the usual way. We employ the standard RAM model and assume that consecutive natural numbers are used as labels in Σ.

Proposition 2

\(\text{MEMB}_{\text{DIMS}}\) is in PTIME. There exists an earliest rejection streaming algorithm that checks membership of a tree t in a DIMS S in time \(O(|t|\times|\Sigma|^{2})\) and space \(O(\mathit{height}(t)\times|\Sigma|^{2})\).

Proof

We propose Algorithm 1 for deciding the membership of a tree t to the language of a DIMS S. The input tree t is given in XML format, and the algorithm works for any ordering of sibling nodes. We assume a well-formed stream \(\widetilde {t} \in (\{\mathit {open},\mathit {close}\}\times {\Sigma })^{*}\) representing the tree t and a procedure \(read(\widetilde {t})\) that returns the next pair \((\theta,b)\) in the stream, where \(\theta\in\{\mathit{open},\mathit{close}\}\) and \(b\in\Sigma\). To validate a tree t against a DIMS \(S=(\mathit{root}_{S},R_{S})\), one runs Algorithm 1 after reading the opening tag of the root.

For a given node, the algorithm constructs the compact representation of the characterizing tuple of its label (line 1), which requires space \(O(|\Sigma|^{2})\) (cf. Lemma 5). The algorithm also stores, for a given node, the number of occurrences of each label in Σ among its children, using the array \(\mathit{count}\), which requires space \(O(|\Sigma|)\). Initially, all values in \(\mathit{count}\) are set to 0 (lines 2-3), and they are updated after reading the opening tag of each child (lines 4-6). During the execution, the algorithm maintains a stack whose height is the depth of the currently visited node. Consequently, the required space is bounded by \(O(\mathit{height}(t)\times|\Sigma|^{2})\).

The algorithm has earliest rejection, i.e., it rejects a tree as early as possible. More precisely, it can reject right after reading the opening tag of a node that exceeds the maximum allowed cardinality for its label (lines 7-8) or that violates a conflicting pair of siblings (lines 9-10). Otherwise, the algorithm recursively validates the corresponding subtree (lines 11-12). After reading all children of the current node, the algorithm checks whether the components of the characterizing tuple are satisfied: the extended cardinality map (lines 14-15), the collections of required symbols (lines 16-17), and the counting dependencies (lines 18-19). Since the conflicting pairs of siblings are checked after reading each opening tag, they need not be checked again after reading all children. However, the extended cardinality map must still be checked at this point to see whether the number of occurrences of each label lies in the allowed interval: upon reading an opening tag, the algorithm can reject only if the maximum allowed number of occurrences has already been exceeded. As for the collections of required symbols and the counting dependencies, whether they are satisfied can be established only after reading all children. If none of the constraints imposed by the characterizing tuple is violated, the algorithm returns true (line 20). As shown by Lemma 1 and Lemma 3, the compact representation of the characterizing tuple captures precisely the language of a given DIME. Consequently, the algorithm returns true after reading the root node iff the given tree satisfies the given schema. □
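To illustrate the stack discipline and the earliest-rejection behaviour, the following Python sketch validates an event stream against a deliberately simplified, disjunction-free schema in which each rule is just a map from child labels to intervals (rules of the form \(a_1^{I_1}\parallel\ldots\parallel a_k^{I_k}\)). It is an assumption-laden illustration, not Algorithm 1: it omits the conflicting pairs, collections of required symbols, and counting dependencies of the characterizing tuple, uses dictionaries instead of arrays indexed by consecutive natural numbers, and replaces recursion with an explicit stack. The function name `validate_stream` and the event format are hypothetical.

```python
from typing import Dict, Iterable, Optional, Tuple

Interval = Tuple[int, Optional[int]]  # (min, max); max=None means unbounded
# Hypothetical simplification: a rule maps each allowed child label to its
# interval; labels absent from a rule are forbidden as children.
Rules = Dict[str, Dict[str, Interval]]


def validate_stream(events: Iterable[Tuple[str, str]], root: str,
                    rules: Rules) -> Tuple[bool, Optional[int]]:
    """Single pass over ("open"|"close", label) events of a well-formed stream.
    Returns (accepted, index of the rejecting event or None)."""
    stack = []  # one (label, child counts) frame per open node: O(height) frames
    for i, (kind, label) in enumerate(events):
        if kind == "open":
            if not stack:
                if label != root:
                    return False, i
            else:
                parent, counts = stack[-1]
                counts[label] = counts.get(label, 0) + 1
                bounds = rules.get(parent, {}).get(label)
                # Earliest rejection: forbidden label or maximum already exceeded.
                if bounds is None or (bounds[1] is not None and counts[label] > bounds[1]):
                    return False, i
            stack.append((label, {}))
        else:  # "close": all children have been read, so check lower bounds
            node, counts = stack.pop()
            for child, (lo, _hi) in rules.get(node, {}).items():
                if counts.get(child, 0) < lo:
                    return False, i
    return True, None
```

With the rule r → a^{[1,2]} ∥ b^{?}, a stream opening a third a child under r is rejected at that opening tag, without reading the rest of the document; a missing a is detected only at the closing tag of r.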

We continue with complexity results that follow from known facts. Satisfiability of twig queries in the presence of DTDs is NP-complete [5], and we adapt this result to DIMSs.

Proposition 3

\(\text{SAT}_{\text{DIMS},\text{Twig}}\) is NP-complete.

Proof

Proposition 4.2.1 from [5] implies that satisfiability of twig queries in the presence of DTDs is NP-hard. We adapt the proof and obtain the following reduction from SAT to \(\text{SAT}_{\text{DIMS},\text{Twig}}\): we take a CNF formula \(\varphi =\bigwedge _{i=1}^{n}C_{i}\) over the variables \(x_{1},\ldots,x_{m}\), where each \(C_{i}\) is a disjunction of literals. We take \(\Sigma=\{r,t_{1},f_{1},\ldots,t_{m},f_{m},c_{1},\ldots,c_{n}\}\) and construct:

- The DIMS S having the root label r and the rules:
  - \(r \rightarrow (t_{1} \mid f_{1}) \parallel \dots \parallel (t_{m}\mid f_{m})\),
  - \(t_{j} \rightarrow c_{j_{1}}\parallel \ldots \parallel c_{j_{k}}\), where \(c_{j_{1}},\ldots ,c_{j_{k}}\) correspond to the clauses using \(x_{j}\) (for \(1\leqslant j\leqslant m\)),
  - \(f_{j} \rightarrow c_{j_{1}}\parallel \ldots \parallel c_{j_{k}}\), where \(c_{j_{1}},\ldots ,c_{j_{k}}\) correspond to the clauses using \(\neg x_{j}\) (for \(1\leqslant j\leqslant m\)).
- The twig query \(q = r[{/\!/} c_{1}]\dots [{/\!/} c_{n}]\).

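For example, for the formula \(\varphi _{0}=(x_{1}\vee \neg x_{2} \vee x_{3})\wedge (\neg x_{1}\vee x_{3}\vee \neg x_{4})\) we obtain the DIMS S containing the rules:

- \(r \rightarrow (t_{1}\mid f_{1})\parallel (t_{2}\mid f_{2})\parallel (t_{3}\mid f_{3})\parallel (t_{4}\mid f_{4})\),
- \(t_{1}\rightarrow c_{1}\), \(f_{1}\rightarrow c_{2}\),
- \(t_{2}\rightarrow \epsilon\), \(f_{2}\rightarrow c_{1}\),
- \(t_{3}\rightarrow c_{1}\parallel c_{2}\), \(f_{3}\rightarrow \epsilon\),
- \(t_{4}\rightarrow \epsilon\), \(f_{4}\rightarrow c_{2}\),

and the query \(q=/r[{/\!/}c_{1}][{/\!/}c_{2}]\). In general, the formula φ is satisfiable iff \((S,q)\in\text{SAT}_{\text{DIMS},\text{Twig}}\), and the described reduction works in polynomial time in the size of the input formula.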

For the NP upper bound, we reduce \(\text{SAT}_{\text{DIMS},\text{Twig}}\) to \(\text{SAT}_{\text{DTD},\text{Twig}}\) (i.e., the problem of satisfiability of twig queries in the presence of DTDs), known to be in NP (Theorem 4.4 from [5]). Given a DIMS S, we construct a DTD D having the same root label as S and whose rules are obtained from the rules of S by replacing the unordered concatenation with standard (ordered) concatenation. Then, take a twig query q. We claim that there exists an (unordered) tree satisfying q and S iff there exists an (ordered) tree satisfying q and D. For the if part, take an ordered tree t satisfying q and D, remove the order to obtain an unordered tree \(t^{\prime }\), and observe that \(t^{\prime }\) satisfies S. For the only if part, take an unordered tree t satisfying q and S. From the construction of D, we infer that there exists an ordered tree \(t^{\prime }\) (obtained via some ordering of the sibling nodes of t) satisfying both q and D. Recall that twig queries disregard the relative order among siblings.

□
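The hardness reduction in the proof of Proposition 3 is mechanical, so it can be sketched in a few lines of Python. The sketch assumes, for illustration only, DIMACS-style integer literals (j for \(x_j\), −j for \(\neg x_j\)), leaves the root rule \((t_1\mid f_1)\parallel\ldots\parallel(t_m\mid f_m)\) implicit, and treats every \(c_i\) as a leaf. Because each tree of S picks exactly one of \(t_j, f_j\) per variable, it corresponds to a truth assignment; the brute-force helper (usable only for tiny formulas) checks that q holds on that tree exactly when the assignment satisfies the formula. All function names are hypothetical.

```python
from itertools import product
from typing import Dict, List, Tuple

Rules = Dict[str, List[str]]  # t_j / f_j -> clause labels c_i required as children


def build_reduction(cnf: List[List[int]], m: int) -> Tuple[Rules, str]:
    """Children of each t_j / f_j (an unordered concatenation) and the twig query."""
    rules: Rules = {f"{p}{j}": [] for j in range(1, m + 1) for p in "tf"}
    for i, clause in enumerate(cnf, start=1):
        for lit in set(clause):  # c_i is added at most once per literal
            rules[("t" if lit > 0 else "f") + str(abs(lit))].append(f"c{i}")
    query = "/r" + "".join(f"[//c{i}]" for i in range(1, len(cnf) + 1))
    return rules, query


def query_holds(rules: Rules, n: int, assignment: Dict[int, bool]) -> bool:
    """An assignment fixes the tree of S up to sibling order (c_i are leaves);
    q holds iff every c_1, ..., c_n occurs below the root."""
    present = set()
    for j, value in assignment.items():
        present.update(rules[("t" if value else "f") + str(j)])
    return all(f"c{i}" in present for i in range(1, n + 1))


def reduction_agrees(cnf: List[List[int]], m: int) -> bool:
    """Brute force over all 2^m assignments: q holds iff the assignment satisfies cnf."""
    rules, _ = build_reduction(cnf, m)
    for bits in product([False, True], repeat=m):
        a = dict(enumerate(bits, start=1))
        sat = all(any(a[abs(lit)] == (lit > 0) for lit in c) for c in cnf)
        if query_holds(rules, len(cnf), a) != sat:
            return False
    return True
```

Applied to \(\varphi_0\), encoded as `[[1, -2, 3], [-1, 3, -4]]` with m = 4, `build_reduction` yields the children lists of the rules for \(t_1,f_1,\ldots,t_4,f_4\) listed in the example (an empty list standing for ε) and the query string `/r[//c1][//c2]`.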

The complexity results for query implication and query containment in the presence of DIMSs follow from the EXPTIME-completeness proof from [35] for twig query containment in the presence of DTDs.

Proposition 4

\(\text{IMPL}_{\text{DIMS},\text{Twig}}\) and \(\text{CNT}_{\text{DIMS},\text{Twig}}\) are EXPTIME-complete.

Proof

The EXPTIME-hardness proof of twig query containment in the presence of DTDs (Theorem 4.5 from [35]) uses a reduction from the two-player corridor tiling problem and a technique introduced in [32]. In the proof from [35], when testing the containment \(p\subseteq _{S}q\), p is chosen such that every tree satisfying the schema satisfies p; hence \(\text{IMPL}_{\text{DTD},\text{Twig}}\) is also EXPTIME-complete. Furthermore, Lemma 3 in [32] can be adapted to twig queries and DIMSs: for every \(S\in\text{DIMS}\) and twig queries \(q_{0},q_{1},\ldots,q_{m}\), there exist \(S^{\prime }\in\text{DIMS}\) and twig queries q and \(q^{\prime }\) such that \(q_{0} \subseteq _{S} q_{1}\cup \ldots \cup q_{m}\) iff \(q \subseteq _{S^{\prime }} q^{\prime }\). Moreover, the DTD in [35] can be captured by a DIMS constructible in polynomial time: take the same reduction as in [35] and replace the standard concatenation with unordered concatenation. Hence, we infer that \(\text{CNT}_{\text{DIMS},\text{Twig}}\) and \(\text{IMPL}_{\text{DIMS},\text{Twig}}\) are also EXPTIME-hard.

For the EXPTIME upper bound, we reduce \(\text{CNT}_{\text{DIMS},\text{Twig}}\) to \(\text{CNT}_{\text{DTD},\text{Twig}}\) (i.e., the problem of twig query containment in the presence of DTDs), known to be in EXPTIME (Theorem 4.4 from [35]). Given a DIMS S, we construct a DTD D having the same root label as S and whose rules are obtained from the rules of S by replacing the unordered concatenation with standard (ordered) concatenation. Then, take two twig queries p and q. We claim that \(p\subseteq _{S} q\) iff \(p\subseteq _{D} q\) and show both directions by contraposition. For the if part, assume \(p\not \subseteq _{S} q\); hence there exists an unordered tree t that satisfies p and S, but not q. From the construction of D, we infer that there exists an ordered tree \(t^{\prime }\) (obtained via some ordering of the sibling nodes of t) that satisfies p and D, but not q. For the only if part, assume \(p\not \subseteq _{D} q\); hence there exists an ordered tree t that satisfies p and D, but not q. By removing the order of t, we obtain an unordered tree \(t^{\prime }\) that satisfies p and S, but not q. Recall that twig queries disregard the relative order among siblings. The membership of \(\text{CNT}_{\text{DIMS},\text{Twig}}\) in EXPTIME yields that \(\text{IMPL}_{\text{DIMS},\text{Twig}}\) is also in EXPTIME (it suffices to take as p the universal query). □

7 Complexity of Disjunction-Free Interval Multiplicity Schemas (IMSs)

Although query satisfiability and query implication in the presence of schema are intractable for DIMSs, we prove that they become tractable for IMSs (Section 7.4). We also show a considerably lower complexity for query containment in the presence of schema: coNP-completeness for IMSs instead of EXPTIME-completeness for DIMSs (Section 7.4). Additionally, we point out that our results for IMSs also allow us to characterize the complexity of query implication and query containment in the presence of disjunction-free DTDs (i.e., restricted DTDs using regular expressions without the disjunction operator), which, to the best of our knowledge, have not been previously studied (Section 7.5). To prove our results, we develop a set of tools that we present next: dependency graphs (Section 7.1), a generalized definition of embedding (Section 7.2), and a family of characteristic graphs (Section 7.3).

7.1 Dependency Graphs

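Recall that IMSs use IMEs, which are essentially expressions of the form \(A_{1}^{I_{1}}\parallel \ldots \parallel A_{k}^{I_{k}}\), where \(A_{1},\ldots,A_{k}\) are atoms and \(I_{1},\ldots,I_{k}\) are intervals. Given an IME E, let \(\mathit{symbols}^{\forall}(E)\) be the set of symbols present in all unordered words in L(E), and \(\mathit{symbols}^{\exists}(E)\) the set of symbols present in at least one unordered word in L(E):

$$ \mathit{symbols}^{\forall}(E) = \{ a\in\Sigma \mid \forall w\in L(E).\ a\in w \}, \qquad \mathit{symbols}^{\exists}(E) = \{ a\in\Sigma \mid \exists w\in L(E).\ a\in w \}. $$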

Given an IME E, notice that \({\mathit {symbols}^{\forall }}(E)\subseteq { \mathit {symbols}^{\exists }}(E)\); moreover, both sets can be easily constructed from E. For example, given \(E_{0}=(a\parallel b^{?})^{[5,6]}\parallel c^{+}\), we have \(\mathit{symbols}^{\forall}(E_{0})=\{a,c\}\) and \(\mathit{symbols}^{\exists}(E_{0})=\{a,b,c\}\).
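As a sketch of how both sets can be read off an IME, the Python code below assumes, as in \(E_0\), that each atom is an unordered concatenation of symbols with multiplicities, and uses a hypothetical list-of-tuples encoding with non-empty intervals. A symbol occurs in every word iff some occurrence is forced by positive lower bounds on both the atom and the symbol; it occurs in some word iff some occurrence has positive upper bounds on both.

```python
from typing import List, Optional, Set, Tuple

Interval = Tuple[int, Optional[int]]   # (min, max); max=None means unbounded
Atom = List[Tuple[str, Interval]]      # unordered concatenation of symbols
IME = List[Tuple[Atom, Interval]]      # A1^I1 || ... || Ak^Ik (hypothetical encoding)


def symbols_forall(e: IME) -> Set[str]:
    """Symbols forced by a positive lower bound on the atom and on the symbol."""
    return {s for atom, (lo, _) in e if lo > 0
            for s, (s_lo, _) in atom if s_lo > 0}


def symbols_exists(e: IME) -> Set[str]:
    """Symbols whose atom and own multiplicity both allow at least one occurrence."""
    return {s for atom, (_, hi) in e if hi is None or hi > 0
            for s, (_, s_hi) in atom if s_hi is None or s_hi > 0}


# E0 = (a || b?)^[5,6] || c+
E0: IME = [([("a", (1, 1)), ("b", (0, 1))], (5, 6)),
           ([("c", (1, 1))], (1, None))]
```

Evaluating `symbols_forall(E0)` and `symbols_exists(E0)` reproduces the sets {a, c} and {a, b, c} given above.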

Definition 2

Given an IMS \(S=(\mathit{root}_{S},R_{S})\), the existential dependency graph of S is the directed rooted graph \(G_{S}^{\exists } = ({\Sigma },\mathit{root}_{S}, E_{S}^{\exists })\) with the node set Σ, the distinguished root node \(\mathit{root}_{S}\), and the set of edges \(E_{S}^{\exists }\) such that \((a,b)\in E_{S}^{\exists }\) if \(b\in\mathit{symbols}^{\exists}(R_{S}(a))\). Furthermore, the universal dependency graph of S is the directed rooted graph \(G_{S}^{\forall } = ({\Sigma },\mathit{root}_{S}, E_{S}^{\forall })\) such that \((a,b)\in E_{S}^{\forall }\) if \(b\in\mathit{symbols}^{\forall}(R_{S}(a))\).

Example 5

Take the IMS S containing the rules:

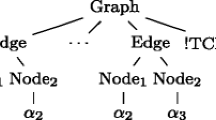

In Fig. 4 we present the existential dependency graph of S and the universal dependency graph of S.

Given an IMS S and a symbol a, we say that a is reachable (or useful) in S if there exists a tree in L(S) which has a node labeled by a. Moreover, we say that an IMS is trimmed if it contains rules only for the reachable symbols. For every satisfiable IMS S, there exists an equivalent trimmed version which can be obtained by removing the rules for the symbols involved in unreachable components in \(G_{S}^{\forall }\) (in the spirit of [2]). Notice that the unreachable components of \(G_{S}^{\forall }\) correspond in fact to cycles in \(G_{S}^{\forall }\). In the sequel, we assume w.l.o.g. that all IMSs that we manipulate are satisfiable and trimmed.
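The following Python sketch builds the edge sets of both dependency graphs from Definition 2 and isolates the cycle-related part of trimming: it returns the symbols that lie on, or can reach, a cycle of \(G_S^\forall\). Every edge \((a,b)\) of \(G_S^\forall\) means that each node labeled a needs a child labeled b, so such symbols cannot label any node of a finite tree. The encoding (atoms as unordered concatenations of symbols with intervals) and all names are assumptions made for illustration; the helper functions are repeated so that the block is self-contained, and full trimming would additionally discard symbols that cannot be reached from the root, which this sketch does not handle.

```python
from typing import Dict, List, Optional, Set, Tuple

Interval = Tuple[int, Optional[int]]
IME = List[Tuple[List[Tuple[str, Interval]], Interval]]  # hypothetical encoding


def symbols_forall(e: IME) -> Set[str]:
    return {s for atom, (lo, _) in e if lo > 0 for s, (s_lo, _) in atom if s_lo > 0}


def symbols_exists(e: IME) -> Set[str]:
    return {s for atom, (_, hi) in e if hi is None or hi > 0
            for s, (_, s_hi) in atom if s_hi is None or s_hi > 0}


def dependency_edges(rules: Dict[str, IME]):
    """Edge sets of the existential and universal dependency graphs."""
    e_exists = {(a, b) for a, e in rules.items() for b in symbols_exists(e)}
    e_forall = {(a, b) for a, e in rules.items() for b in symbols_forall(e)}
    return e_exists, e_forall


def forced_infinite(rules: Dict[str, IME]) -> Set[str]:
    """Symbols on, or able to reach, a cycle of the universal dependency graph."""
    _, e_forall = dependency_edges(rules)
    succ: Dict[str, Set[str]] = {}
    for a, b in e_forall:
        succ.setdefault(a, set()).add(b)

    def reach(a: str) -> Set[str]:  # symbols reachable from a in at least one step
        seen: Set[str] = set()
        todo = list(succ.get(a, ()))
        while todo:
            b = todo.pop()
            if b not in seen:
                seen.add(b)
                todo.extend(succ.get(b, ()))
        return seen

    on_cycle = {a for a in succ if a in reach(a)}
    return {a for a in rules if a in on_cycle or reach(a) & on_cycle}
```

For example, with r → a ∥ b?, a → ε, b → c, and c → b, the symbols b and c form a cycle of \(G_S^\forall\) and are returned, while r and a are kept; dropping the rules for b and c leaves a schema equivalent to r → a.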

7.2 Generalizing the Embedding

We generalize the notion of embedding previously defined in Section 2. Note that in the rest of the section we use the term dependency graphs to refer to both existential and universal dependency graphs. First, an embedding of a query q in a dependency graph \(G=(\Sigma,\mathit{root},E)\) is a function \(\lambda : N_{q} \rightarrow {\Sigma }\) such that:

-

1.