Abstract

We investigate the behavior of the spectrum of the continuous Anderson Hamiltonian \(\mathcal{H}_{L}\), with white noise potential, on a segment whose size \(L\) is sent to infinity. We zoom around energy levels \(E\) either of order 1 (Bulk regime) or of order \(1\ll E \ll L\) (Crossover regime). We show that the point process of (appropriately rescaled) eigenvalues and centers of mass converges to a Poisson point process. We also prove exponential localization of the eigenfunctions at an explicit rate. In addition, we show that the eigenfunctions converge to well-identified limits: in the Crossover regime, these limits are universal. Combined with the results of our companion paper (Dumaz and Labbé in Ann. Probab. 51(3):805–839, 2023), this identifies completely the transition between the localized and delocalized phases of the spectrum of \(\mathcal{H}_{L}\). The two main technical challenges are the proof of a two-points or Minami estimate, as well as an estimate on the convergence to equilibrium of a hypoelliptic diffusion, the proof of which relies on Malliavin calculus and the theory of hypocoercivity.


1 Introduction

In a celebrated article [4], Anderson proposed the Hamiltonian \(-\Delta + V\) on the lattice \({{\mathchoice{\text{\textbf{Z}}}{\text{\textbf{Z}}}{\text{\scriptsize \textbf{Z}}}{ \text{\tiny \textbf{Z}}}}}^{d}\) as a simplified model for electron conduction in a crystal. The so-called disorder \(V\) is a random potential that models the defects of the crystal. The question was whether those defects can trap the electron i.e. localize the electronic wave function. He argued that for a large enough disorder \(V\), the spectrum is pure point and the eigenfunctions exponentially localized – a phenomenon now referred to as Anderson localization. Mathematically, one can see this model as the interpolation between the discrete Laplacian \(-\Delta \) on the grid \({{\mathchoice{\text{\textbf{Z}}}{\text{\textbf{Z}}}{\text{\scriptsize \textbf{Z}}}{ \text{\tiny \textbf{Z}}}}}^{d}\), which has delocalized eigenfunctions and the multiplication by a potential \(V\) on each site, whose eigenfunctions are the coordinate vectors.

One of the first rigorous results of Anderson localization was obtained by Goldsheid, Molchanov and Pastur [15]: it concerned the continuum analogue of the above model in dimension \(d=1\), namely the operator \(-\partial _{x}^{2} +V\) on \({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{ \text{\tiny \textbf{R}}}}}\), for some specific random potential \(V\). This was followed by a series of major articles [3, 10, 13, 23] to name but a few, in the discrete or the continuum setting, and for general dimension \(d\ge 1\). One can summarize the main results as follows: (1) In dimension \(d=1\), Anderson localization holds in the whole spectrum; (2) In dimension \(d \geq 2\), for a large enough disorder or at a low enough energy, Anderson localization holds. In dimension \(d \geq 3\), it is expected (but not proved) that there is a delocalized phase for \(V\) weak enough while in dimension \(d=2\), the question remains open. We refer to [6, 20] for more details.

In the present article, we consider the case where the potential is a white noise \(\xi \) in dimension \(d=1\). This is a random Gaussian distribution with covariance given by the Dirac delta: it models physical situations where the disorder is totally uncorrelated. Due to its universality property (white noise arises as scaling limit of appropriately rescaled noises with finite variance), this is a natural choice of potential in the continuum. However, white noise is a highly irregular potential as it is only distribution valued, and therefore falls out of scope of virtually all general results of the literature.

It is standard to tackle Anderson localization by considering first the Hamiltonian truncated to a finite box, before passing to the infinite volume limit. In this article we focus on the truncated Hamiltonian \(\mathcal{H}_{L} = -\partial ^{2}_{x} + \xi \) on \((-L/2,L/2)\) with Dirichlet b.c., and investigate its entire spectrum in the limit \(L\to \infty \). More precisely, we study the local statistics of the operator recentered around energy levels \(E\) that are either finite or diverge with \(L\): note that the infinite volume limit only captures energy levels that do not depend on \(L\). The results of this article, complemented by those presented in our companion papers [7, 9], reveal a rich variety of behaviors for the eigenvalues/eigenfunctions of \(\mathcal{H}_{L}\) according to the energy regime considered: in particular, delocalized eigenfunctions arise at large enough energies. This is to be compared with the aforementioned general results of Anderson localization in dimension \(d=1\) that assert localization in the full spectrum. A proof of Anderson localization for the infinite volume Hamiltonian with white noise potential was given by Minami [25], see also [8] for an alternative proof.

Let us now present the main results of the present article, and their connections with the results already obtained in [7, 9]. We recenter \(\mathcal{H}_{L}\) around some energy level \(E=E(L)\) and distinguish five regimes:

- (1) Bottom: \(E \sim -(\frac{3}{8} \ln L)^{2/3}\),
- (2) Bulk: \(E\) is fixed with respect to \(L\),
- (3) Crossover: \(1 \ll E \ll L\),
- (4) Critical: \(E \asymp L\),
- (5) Top: \(E\gg L\).

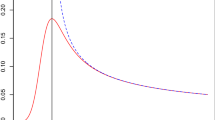

For each regime, we investigate the local statistics of the eigenvalues \(\lambda _{i}\) near \(E\), and the behavior of the corresponding normalized eigenfunctions \(\varphi _{i}\). In [7, 9], we covered the Bottom, Critical and Top regimes, while in the present article we derive the Bulk and Crossover regimes. The main results are summarized on Fig. 1.

The transition between Poisson statistics and Picket Fence occurs in the Critical regime where \(E\asymp L\). In [9], we prove that, in that regime, the eigenvalue statistics are given by the Sch point process introduced by Kritchevski, Valkó and Virág [24]. Actually, we obtain a convergence at the operator level, and show not only that the eigenvalues converge towards Sch but also that the eigenfunctions converge to a universal limit given by the exponential of a two-sided Brownian motion plus a negative linear drift. Note that this limit lives on a finite interval thus making the eigenfunctions delocalized. Our denomination universal is justified by the fact that this shape already appeared in the work of Rifkind and Virág [33] who conjectured that it should arise in various critical models.

In the present article, we show that in the Bulk and Crossover regimes, the local statistics of the eigenvalues (jointly with the centers of mass of the eigenfunctions) converge to a Poisson point process. Moreover, we establish exponential decay of the eigenfunctions (from their centers of mass) at an explicit rate, which is of order 1 in the Bulk regime and of order \(E\) in the Crossover regime.

Actually we provide much more information about the eigenfunctions. We show that the eigenfunctions (recentered at their centers of mass and rescaled in space by 1 in the Bulk and by \(E\) in the Crossover) converge to explicit limits. In the Bulk regime, the limits are some well-identified diffusions \(Y_{E}\), whose law depends on \(E\). In the Crossover regime, the limits are given by the same universal shape as in the Critical regime: namely, the exponential of a two-sided Brownian motion plus a negative linear drift. However, since the space scale is \(E \ll L\), the eigenfunctions are still localized and the limiting shape lives on an infinite interval, in contrast with the Critical regime. As \(E\uparrow L\), one formally recovers delocalized states and this justifies a posteriori the denomination Crossover: this regime of energy interpolates between the (localized) Bulk regime and the (delocalized) Critical regime and shares features with both (Poisson statistics with the former and universal shape with the latter).

Finally, in the Bottom regime, investigated in [7], we also obtained Poisson statistics for the eigenvalues (and centers of mass). Furthermore we showed that the eigenfunctions are strongly localized: at space scale \(1/(\log L)^{1/3}\) and recentered at their centers of mass, they converge to the deterministic limit \(1/\cosh \).

Let us comment on the regime of energies \(-(\frac{3}{8} \ln L)^{2/3} \ll E \ll -1\). In this case, the eigenvalues (and centers of mass) should still converge to a Poisson point process, while the eigenfunctions, at space scale \(1/\sqrt{\vert E\vert }\) and recentered at their centers of mass, should still converge to the deterministic limit \(1/\cosh \). A modification of the proof presented in [7] should suffice to prove these claims for energies \(E\) that go to \(-\infty \) fast enough.

Overall, our results provide a transition from strongly localized states to totally delocalized states and identify explicitly the local statistics of the eigenvalues together with the asymptotic shapes of the eigenfunctions.

Let us now comment on the technical challenges that these results represent. Since white noise is out of scope of usual standing assumptions, we do not rely on general results from Anderson localization literature, so that our article is self-contained. Let us point out two major difficulties that we encounter. First, the derivation of the two-points estimate, often called Minami estimate [26], is delicate in the context of the irregular potential given by the white noise, whereas some general 1-d results [21] are available for some related models with smoother potential. To prove this estimate, we rely on a thorough study of a joint diffusion, see Sect. 8. Second, in the Crossover regime the phase function rotates at an unbounded speed on the unit circle and this yields many technical challenges. In particular, to obtain quantitative (with respect to the unbounded parameter \(E\)) estimates on the convergence to equilibrium of this phase, we cannot simply apply Hörmander’s Theory of hypoellipticity in contrast with the situation in [5, 28]: we obtain these estimates using Malliavin Calculus and the theory of hypocoercivity, and this constitutes one of the main technical achievements of the present article, see Sect. 5.

Let us relate our results to other studies in the literature. The discrete counterpart of our model is given by the \(N\times N\) random tridiagonal matrix \(-\Delta _{N} + \sigma _{N} \,V_{N}\) where \(\Delta _{N}\) is the discrete Laplacian on \(\{1,\ldots ,N\}\), \(V_{N}\) is a diagonal matrix with i.i.d. entries of mean 0 and variance 1 and \(\sigma _{N}\) a positive parameter, possibly depending on \(N\). If \(\sigma _{N}\) does not depend on the size \(N\) of the matrix, then the limit \(N\to \infty \) of the model falls into the scope of general 1-d Anderson localization results, and the spectrum is localized. On the other hand, one recovers delocalized states when \(\sigma _{N} = O(N^{-1/2})\), see [11]. Actually for \(\sigma _{N} = \sigma N^{-1/2}\) with \(\sigma > 0\) the point process of eigenvalues of the matrix in the bulk converges to the Sch random point process [24] and the eigenfunctions are delocalized [33].

The aforementioned Russian model of Goldsheid, Molchanov and Pastur [15] deals with a potential \(V(x) = F(B_{x})\) where \(F\) is a smooth bounded function and \((B_{x},x\in{{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}})\) is a stationary Brownian motion taking values in a compact manifold. Molchanov [27, 28] established for this model Poisson statistics for the appropriately rescaled eigenvalues in the large volume limit and for energies that correspond to our Bulk regime; he mentioned that his results should remain true in the white noise case. The present article confirms this statement. Let us point out that the boundedness of \(V\) provides many a priori estimates on the eigenvalues and eigenfunctions which are not available anymore in the white noise case.

Let us mention that Minami [26] showed that for Anderson operators in arbitrary dimension, the local statistics of the eigenvalues converge to Poisson point processes provided some control on the density of states and the exponential decay of the fractional moments of the Green’s function.

There are also connections with recent investigations in [22, 30, 31] of the aforementioned Russian model of Goldsheid, Molchanov and Pastur [15], in which, as in the random matrix model, a parameter depending on the size of the system is added in front of the potential to reduce its influence.

Our description of the local statistics of the eigenvalues/centers of mass is in the vein of results obtained by Nakano [29], on the local statistics of eigenvalues/eigenfunctions for discrete Anderson operators, and more recently by Germinet and Klopp [14], who proved precise results on the local and global eigenvalue statistics for a large class of Schrödinger operators. On the other hand, we provide a complete and explicit description of the asymptotic shape taken by the eigenfunctions: to the best of our knowledge, such results are very rare in the literature on Anderson localization.

2 Main results

Let \(\xi \) be a Gaussian white noise on \(\mathbf{R}\), that is, the derivative of a Brownian motion \(B\). We consider the truncated Anderson Hamiltonian (sometimes also called Hill’s operator)

$$ \mathcal{H}_{L} = -\partial _{x}^{2} + \xi \;,\quad x\in (-L/2,L/2)\;, $$

endowed with homogeneous Dirichlet boundary conditions. It was shown in [12] that this operator is self-adjoint on \(L^{2}(-L/2,L/2)\) with pure point spectrum of multiplicity one, bounded from below: \(\lambda _{1} < \lambda _{2} < \cdots \). We let \((\varphi _{k})_{k}\) be the corresponding eigenfunctions, normalized in \(L^{2}\) and satisfying \(\varphi _{k}'(-L/2) > 0\). These r.v. depend on \(L\), but for notational simplicity we omit this dependence.
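For readers who wish to experiment numerically, the spectrum of \(\mathcal{H}_{L}\) can be approximated by a naive finite-difference scheme. The sketch below is not taken from the article: the function names, the grid size `n`, the amplitude parameter `noise` and the cell-averaged discretization of \(\xi \) (independent Gaussians of variance \(1/h\) on a mesh of size \(h\)) are all assumptions made for illustration, and no convergence claim is intended.

```python
import numpy as np

def discretized_hamiltonian(L, n, noise=1.0, seed=0):
    """Finite-difference matrix for -d^2/dx^2 + noise * xi on (-L/2, L/2), Dirichlet b.c.

    Hypothetical helper: `n` interior grid points, `noise` scales the potential.
    """
    h = L / (n + 1)                                   # mesh size
    rng = np.random.default_rng(seed)
    # White noise averaged over a cell of width h: centered Gaussian, variance 1/h.
    xi = noise * rng.normal(0.0, 1.0 / np.sqrt(h), size=n)
    off = -np.ones(n - 1) / h**2                      # off-diagonal of the discrete Laplacian
    return np.diag(2.0 / h**2 + xi) + np.diag(off, 1) + np.diag(off, -1)

def counting_function(L, n, E, noise=1.0, seed=0):
    """Empirical count #{eigenvalues <= E} / L for the discretized operator."""
    eigenvalues = np.linalg.eigvalsh(discretized_hamiltonian(L, n, noise, seed))
    return np.count_nonzero(eigenvalues <= E) / L
```

With `noise=0.0` the scheme reduces to the Dirichlet Laplacian, whose eigenvalues are close to \((k\pi /L)^{2}\); this gives a simple sanity check before switching the noise on.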

This operator has a deterministic density of states \(n(E)\), see [12, 18]. This is defined as \(n(E) := dN(E)/dE\) where

$$ N(E) := \lim _{L\to \infty} \frac{1}{L}\, \#\{\lambda _{i}: \lambda _{i} \le E\}\;. \tag{2} $$

Here the convergence is almost sure and the limit is deterministic. Roughly speaking, \(1/(L n(E))\) measures the typical spacing between two consecutive eigenvalues lying near \(E\) for the operator \(\mathcal{H}_{L}\). From the explicit integral expression of \(n(E)\), see [12], one deduces that \(E\mapsto n(E)\) is smooth and that

In the present article, we focus on two regimes of energy:

- (1) Bulk regime: the energy \(E\) is fixed with respect to \(L\),
- (2) Crossover regime: the energy \(E = E(L)\) satisfies \(1 \ll E \ll L\),

and investigate the asymptotic behavior as \(L\to \infty \) of the eigenvalues \(\lambda _{i}\) and of the eigenfunctions, seen as probability measures by considering \(\varphi ^{2}_{i}(t) dt\). For every eigenfunction \(\varphi _{i}\), a relevant statistic for its localization center is the center of mass \(U_{i}\) defined through

$$ U_{i} := \int _{-L/2}^{L/2} t\, \varphi _{i}^{2}(t)\, dt\;. $$

Our first result shows convergence, in both regimes, of the point process of rescaled eigenvalues and centers of mass.

Theorem 1

Poisson statistics

In the Bulk and the Crossover regimes, the following random measure on \({{\mathchoice{\textit{\textbf{R}}}{\textit{\textbf{R}}}{\textit{\scriptsize \textbf{R}}}{ \textit{\tiny \textbf{R}}}}}\times [-1/2,1/2]\)

converges in law as \(L\to \infty \) to a Poisson random measure on \({{\mathchoice{\textit{\textbf{R}}}{\textit{\textbf{R}}}{\textit{\scriptsize \textbf{R}}}{ \textit{\tiny \textbf{R}}}}}\times [-1/2,1/2]\) of intensity \(d\lambda \otimes du\).

In this statement, the convergence holds for the vague topology on the set of Radon measures on \({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{ \text{\tiny \textbf{R}}}}}\times [-1/2,1/2]\), that is, the smallest topology that makes continuous the maps \(m\mapsto \langle m,f\rangle \) with \(f:{{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{ \text{\tiny \textbf{R}}}}}\times [-1/2,1/2]\to {{\mathchoice{\text{\textbf{R}}}{ \text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}\) bounded continuous and compactly supported in its first variable.
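The pairs (eigenvalue, center of mass) entering Theorem 1 can be approximated on a grid. The following sketch is illustrative only: it reuses a naive finite-difference discretization with cell-averaged noise (an assumption, not the article's construction), and the names `eigenpairs_with_centers`, `n` and `noise` are hypothetical. The center of mass is computed as the discrete analogue of \(\int t\, \varphi _{i}^{2}(t)\, dt\).

```python
import numpy as np

def eigenpairs_with_centers(L, n, noise=1.0, seed=0):
    """Eigenvalues, L^2-normalized eigenvectors and centers of mass on a grid."""
    h = L / (n + 1)
    t = -L / 2 + h * np.arange(1, n + 1)              # interior grid points
    rng = np.random.default_rng(seed)
    xi = noise * rng.normal(0.0, 1.0 / np.sqrt(h), size=n)   # cell-averaged white noise
    off = -np.ones(n - 1) / h**2
    H = np.diag(2.0 / h**2 + xi) + np.diag(off, 1) + np.diag(off, -1)
    lam, vec = np.linalg.eigh(H)                      # columns of vec are eigenvectors
    phi = vec / np.sqrt(h)                            # now sum(phi**2) * h == 1 per column
    centers = h * (t[:, None] * phi**2).sum(axis=0)   # discrete integral of t * phi^2
    return lam, phi, centers
```

Plotting the points `(lam[i], centers[i] / L)` for eigenvalues in a small window around a chosen energy gives a rough picture of the point process considered in Theorem 1; the rescaling by \(L\,n(E)\) is left out here because it requires the density of states.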

Our second result establishes exponential localization of the eigenfunctions from their centers of mass. In the Bulk regime, the exponential rate is given by \((1/2)\nu _{E}\) where

while in the Crossover regime it is given by \(1/(2E)\). This rate is of course related to the Lyapunov exponent of the underlying diffusions.

Theorem 2

Exponential localization

Fix \(h >0\) and set \(\Delta := [E-h/(Ln(E)),E+h/(Ln(E))]\). For every \(\varepsilon > 0\) small enough, there exist some r.v. \(c_{i}>0\) such that:

- (1) for every eigenvalue \(\lambda _{i} \in \Delta \) we have in the Bulk regime

$$ \big(\varphi _{i}(t)^{2} + \varphi '_{i}(t)^{2}\big)^{1/2} \le c_{i} \exp \Big(-\frac{(\nu _{E} - \varepsilon )}{2}\,|t-U_{i}|\Big)\;, \quad \forall t\in [-L/2,L/2]\;, $$

and in the Crossover regime

$$ \Big(\varphi _{i}(t)^{2} + \frac{\varphi '_{i}(t)^{2}}{E}\Big)^{1/2} \le \frac{c_{i}}{\sqrt {E}} \exp \Big(-\frac{(1-\varepsilon )}{2} \frac{|t-U_{i}|}{E}\Big)\;,\quad \forall t\in [-L/2,L/2]\;, $$

- (2) in both regimes, there exists \(q=q(\varepsilon ) > 0\) such that

$$ \limsup _{L\to \infty} \mathbb{E}\Big[\sum _{\lambda _{i} \in \Delta} c_{i}^{q} \Big] < \infty \;. $$

Our third result shows that the eigenfunctions (rescaled into probability measures) converge to a Poisson point process with an explicit intensity. Actually the convergence will be taken jointly with the eigenvalues and the centers of mass, so the result is a strengthening of Theorem 1.

Let us start with the definition of the probability measures associated with the eigenfunctions. Given the rate of exponential localization appearing in the previous result, one needs to recenter the eigenfunctions appropriately to get convergence, so we define for every eigenvalue \(\lambda _{i}\)

which is an element of the set \(\mathcal{M}= \mathcal{M}({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{ \text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}})\) of all probability measures on \({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{ \text{\tiny \textbf{R}}}}}\) endowed with the topology of weak convergence.

In the statement below, we rely on a probability measure on ℳ that describes the law of the limits. In the Crossover regime, this probability measure \(\sigma _{\infty}\) admits a simple definition: it is the law of the random probability measure

where ℬ is a two-sided Brownian motion on \({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{ \text{\tiny \textbf{R}}}}}\).
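To visualize a shape of this type (the exponential of a two-sided Brownian motion plus a negative linear drift, normalized into a probability measure), one can sample it on a truncated window. This is only a sketch: the parameters `drift` and `sigma` are placeholders and are not the constants of the article, and the truncation to `[-half_width, half_width]` is an approximation of the measure on the whole line.

```python
import numpy as np

def exp_brownian_shape(half_width, n, drift, sigma, seed=0):
    """Grid weights proportional to Y(t)^2, with Y(t) = exp(sigma * B(t) - drift * |t|).

    B is a two-sided Brownian motion built from two independent paths started at 0.
    `drift` and `sigma` are illustrative parameters, not values from the article.
    """
    rng = np.random.default_rng(seed)
    dt = half_width / n
    t_pos = dt * np.arange(n + 1)
    right = np.concatenate(([0.0], np.cumsum(rng.normal(0.0, np.sqrt(dt), n))))
    left = np.concatenate(([0.0], np.cumsum(rng.normal(0.0, np.sqrt(dt), n))))
    t = np.concatenate((-t_pos[:0:-1], t_pos))        # grid on [-half_width, half_width]
    B = np.concatenate((left[:0:-1], right))          # glue the two paths at t = 0
    log_density = 2.0 * (sigma * B - drift * np.abs(t))
    log_density -= log_density.max()                  # avoid overflow in exp
    weights = np.exp(log_density)
    return t, weights / weights.sum()                 # weights sum to 1
```

For instance, `exp_brownian_shape(50.0, 5000, drift=1.0, sigma=1.0)` returns a grid and weights that can be plotted directly; the negative drift is what makes the total mass finite.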

In the Bulk regime, this probability measure \(\sigma _{E}\) is the law of the random probability measure

where \(Y_{E}\) is the concatenation at \(t=0\) of two processes \((Y_{E}(t),t\ge 0)\) and \((Y_{E}(-t),t\ge 0)\) with explicit laws. The precise definition of \(Y_{E}\) requires some notations and is given in Sect. 9.1.

Theorem 3

Shape

In the Bulk and the Crossover regimes, the random measure

converges in law as \(L\to \infty \) to a Poisson random measure on \({{\mathchoice{\textit{\textbf{R}}}{\textit{\textbf{R}}}{\textit{\scriptsize \textbf{R}}}{ \textit{\tiny \textbf{R}}}}}\times [-1/2,1/2] \times \mathcal{M}\) of intensity \(d\lambda \otimes du \otimes \sigma _{E}\) in the Bulk regime and of intensity \(d\lambda \otimes du \otimes \sigma _{\infty}\) in the Crossover regime.

Here \(\mathcal{N}_{L}\) is seen as a random element of the set of measures on \({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{ \text{\tiny \textbf{R}}}}}\times [-1/2,1/2] \times \mathcal{M}\) that give finite mass to \(K\times [-1/2,1/2] \times \mathcal{M}\), for any given compact set \(K\subset {{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}\). The topology considered in the convergence above is then the smallest topology on this set of measures that makes continuous the maps \(m\mapsto \langle f,m\rangle \), for every bounded and continuous function \(f:{{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{ \text{\tiny \textbf{R}}}}}\times [-1/2,1/2] \times \mathcal{M}\to {{ \mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}\) that is compactly supported in its first coordinate.

Let us make some comments on the limits of these eigenfunctions. First of all, the localization length is of order 1 in the Bulk regime and of order \(E\) in the Crossover regime: this is in line with the exponential decay of Theorem 2. Moreover, this localization length increases with the level of energy, which is coherent with the general fact that the lower we look into the spectrum, the more localized the eigenfunctions are. Second, it suggests that for \(E\) of order \(L\) the eigenfunctions are no longer localized: this is rigorously proved in our companion paper [9].

Remark 2.1

Informally, our result shows that in the Crossover regime the eigenfunction \(\varphi _{\lambda }\), properly rescaled, converges as a probability measure towards \(Y_{\infty }\) (rescaled by its \(L^{2}\) mass). It is possible to go further. Introduce the distorted polar coordinates \(\frac{\varphi '_{\lambda }}{E} + i \varphi _{\lambda }= r_{\lambda }e^{i \theta _{\lambda }}\) and note that the phase \(\theta _{\lambda }\) oscillates very fast (as soon as \(E\to \infty \)). After removing deterministic oscillations from the phase \(\theta _{\lambda }\), the arguments presented in this article can be adapted to show that it converges towards a non-trivial limiting phase. Moreover, the modulus \(r_{\lambda }\), after a proper rescaling, converges to \(\sqrt{2} Y_{\infty }\).

We now outline the remainder of this article. We introduce in Sect. 3 the diffusions associated with our eigenvalue equation, as they play a central role in this article. Then we detail in Sect. 4 our strategy of proof: this section presents the main intermediate results of this paper and contains the proofs of Theorems 1, 2 and 3. The subsequent sections are then devoted to proving these intermediate results; more details on their contents and relationships will be given at the end of Sect. 4.

3 The diffusions

The eigenvalue problem associated with the operator \(\mathcal{H}_{L}\) gives rise to a family of ODEs indexed by \(\lambda \in \mathbf{R}\), whose solutions \(y_{\lambda }\) satisfy the eigenvalue equation:

$$ -y_{\lambda }'' + \xi \, y_{\lambda } = \lambda \, y_{\lambda }\;. $$

Up to fixing two degrees of freedom there is a unique solution to this equation for every parameter \(\lambda \in {{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}\). If we fix the starting conditions \(y_{\lambda }(-L/2) = 0\) and \(y_{\lambda }'(-L/2)\) to an arbitrary value different from 0, then the map \(\lambda \mapsto y_{\lambda }(L/2)\) is continuous. The zeros of this map are the eigenvalues of \(\mathcal{H}_{L}\), and the corresponding solutions \(y_{\lambda }\) are the eigenfunctions of \(\mathcal{H}_{L}\) (which are defined up to a multiplicative factor corresponding to \(y_{\lambda }'(-L/2)\) of course).

It is crucial in our analysis to consider the evolution of both \(y_{\lambda }\) and \(y'_{\lambda }\), and this is naturally described by the complex function \(y_{\lambda}' + i y_{\lambda}\). It will actually be convenient to work in polar coordinates (also called Prüfer variables): we consider \((r_{\lambda},\theta _{\lambda})\) where

$$ y_{\lambda }' + i\, y_{\lambda } =: r_{\lambda }\, e^{i\theta _{\lambda }}\;. $$

The process \(\theta _{\lambda }\) is called the phase of the above ODE. It is instrumental for the study of the spectrum of \(\mathcal{H}_{L}\) as we will see later on. It is also convenient to define

$$ \rho _{\lambda } := \ln r_{\lambda }^{2}\;. $$

In these new coordinates, we have the following coupled stochastic differential equations:

where \(dB(t) = \xi (dt)\).
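The form of these equations can be checked by a short Itô computation. The following is a sketch that is not quoted from the article: it assumes the conventions \(y_{\lambda }' + i y_{\lambda } = r_{\lambda } e^{i\theta _{\lambda }}\) and \(\rho _{\lambda } = \ln r_{\lambda }^{2}\), and reads the eigenvalue equation as \(dy_{\lambda }' = -\lambda y_{\lambda }\,dt + y_{\lambda }\,dB\). Writing \(u := y_{\lambda }' = r\cos \theta \) and \(v := y_{\lambda } = r\sin \theta \) (subscripts \(\lambda \) dropped), one has \(dv = u\,dt\), \(du = -\lambda v\,dt + v\,dB\) and \(d\langle u\rangle = v^{2}\,dt\), so that

$$
\begin{aligned}
d\theta &= \frac{u\,dv - v\,du}{r^{2}} + \frac{uv}{r^{4}}\,d\langle u\rangle
= \big(1 + (\lambda -1)\sin ^{2}\theta + \sin ^{3}\theta \cos \theta \big)\,dt - \sin ^{2}\theta \,dB\;,\\
d\rho &= \frac{2u\,du + 2v\,dv}{r^{2}} + \Big(\frac{1}{r^{2}} - \frac{2u^{2}}{r^{4}}\Big)\,d\langle u\rangle
= \big(-(\lambda -1)\sin 2\theta + \sin ^{2}\theta - \tfrac{1}{2}\sin ^{2} 2\theta \big)\,dt + \sin 2\theta \,dB\;.
\end{aligned}
$$

In particular, when \(\sin \theta = 0\) the drift of the phase equals 1 and its diffusion coefficient vanishes, which is consistent with the monotonicity property of the phase used throughout this section.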

The two degrees of freedom given by the initial conditions \(y_{\lambda }(-L/2)\) and \(y_{\lambda }'(-L/2)\) are transferred to \(\theta _{\lambda }(-L/2)\) and \(r_{\lambda }(-L/2)\) (or equivalently \(\rho _{\lambda }(-L/2)\)). Note that

while

For any angle \(\theta \in \mathbf{R}\), we define

$$ \lfloor \theta \rfloor _{\pi } := \lfloor \theta /\pi \rfloor \in \mathbf{Z}\;,\qquad \{\theta \}_{\pi } := \theta - \pi \lfloor \theta \rfloor _{\pi } \in [0,\pi )\;. $$

Let us point out an important property of the process \(\theta _{\lambda }\): at the times \(t\) such that \(\{\theta _{\lambda }(t)\}_{\pi }= 0\), the drift term is strictly positive while the diffusion coefficient vanishes. Consequently \(\{\theta _{\lambda }\}_{\pi}\) cannot hit 0 from above: this readily implies that \(t \mapsto \lfloor \theta _{\lambda }(t) \rfloor _{\pi}\) is non-decreasing. Moreover, the evolution of \(\theta _{\lambda }\) depends only on \(\{\theta _{\lambda }\}_{\pi}\) so that the latter is a Markov process. We let \(p_{\lambda ,t}(\theta _{0}, \theta )\) be the density of its transition probability at time \(t\) when it starts from \(\theta _{0}\) at time 0. When this process starts from 0 at time 0, we drop the first variable and simply write \(p_{\lambda ,t}(\theta )\).

On some occasions, we will write \(\mathbb{P}_{(t_{0},\theta _{0})}\) for the law of the process \(\theta _{\lambda }\) starting from \(\theta _{0}\) at time \(t_{0}\), and we will write \(\mathbb{P}_{(t_{0},\theta _{0})\to (t_{1},\theta _{1})}\) to denote the law of the bridge of diffusion that starts from \(\theta _{0}\) at time \(t_{0}\) and is conditioned to reach \(\theta _{1}+\pi \mathbf{Z}\) at time \(t_{1}\).

Most of the time, we will take \(\theta _{\lambda }(-L/2) = 0\) and \(r_{\lambda }(-L/2) > 0\). In this setting, \(\lambda \) is an eigenvalue of \(\mathcal{H}_{L}\) iff \(\{\theta _{\lambda }(L/2)\}_{\pi }= 0\) and then, the function \(y_{\lambda}\) is the associated eigenfunction. Since \(\lambda \mapsto \lfloor \theta _{\lambda }(L/2) \rfloor _{\pi}\) is non-decreasing, we deduce the so-called Sturm-Liouville property: almost surely,

$$ \#\{\lambda _{i}: \lambda _{i} \le \lambda \} = \lfloor \theta _{\lambda }(L/2)\rfloor _{\pi }\;,\quad \forall \lambda \in \mathbf{R}\;. $$

This phase function \(\theta _{\lambda }(\cdot )\) is a powerful tool to investigate the spectrum of \(\mathcal{H}_{L}\). It has been used in numerous articles on 1d-Schrödinger operators, sometimes under the form of the so-called Riccati transform \(y'_{\lambda }/y_{\lambda }\) which is nothing but \(\text{cotan}\,\theta _{\lambda }\).
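The use of the phase as an eigenvalue counter can be illustrated with a crude Euler-Maruyama simulation. The sketch below rests on assumptions that are not taken verbatim from the article: the phase is taken to solve \(d\theta = (1 + (\lambda -1)\sin ^{2}\theta + \sin ^{3}\theta \cos \theta )\,dt - \sin ^{2}\theta \,dB\) (what Itô's formula gives for \(y' + iy = re^{i\theta }\)), the hypothetical parameter `noise` multiplies the potential, and the number of eigenvalues below \(\lambda \) is read off as the number of completed half-turns of \(\theta _{\lambda }(L/2)\) started from 0. The time discretization does not exactly preserve the monotonicity of the phase, so the count is only approximate.

```python
import math
import numpy as np

def phase_at_right_endpoint(lam, L, dt=1e-3, noise=1.0, seed=0):
    """Euler-Maruyama approximation of theta_lambda(L/2), started from 0 at -L/2."""
    rng = np.random.default_rng(seed)
    n_steps = int(round(L / dt))
    dB = noise * rng.normal(0.0, math.sqrt(dt), size=n_steps)   # scaled Brownian increments
    theta = 0.0
    for k in range(n_steps):
        s, c = math.sin(theta), math.cos(theta)
        # Drift includes the Ito correction, which scales with noise**2.
        drift = 1.0 + (lam - 1.0) * s * s + noise**2 * s**3 * c
        theta += drift * dt - s * s * dB[k]
    return theta

def eigenvalue_count_below(lam, L, **kwargs):
    """Approximate number of eigenvalues <= lam: completed half-turns of the phase."""
    return math.floor(phase_at_right_endpoint(lam, L, **kwargs) / math.pi)
```

With `noise=0.0` the count can be compared with the Dirichlet Laplacian on an interval of length \(L\), which has \(\lfloor L\sqrt{\lambda }/\pi \rfloor \) eigenvalues below \(\lambda > 0\) (away from exact ties).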

3.1 The distorted coordinates

For large energies, that is, whenever \(\lambda \) is of order \(E\) for some \(E=E(L) \to \infty \), the phase \(\theta _{\lambda }\) takes a time of order \(1/\sqrt {E}\) to make one rotation so that the solutions \(y_{\lambda }\), \(y_{\lambda }'\) oscillate very fast. It is then more convenient to use distorted and sped up coordinates. We set

and

Defining the Brownian motion \(B^{(E)}(t) = E^{-1/2} B(tE)\) and setting \(\rho _{\lambda }^{(E)} := \ln (r_{\lambda }^{(E)})^{2}\), the evolution equations take the form

Let \(p_{\lambda ,t}^{(E)}(\theta _{0}, \theta )\) be the density of the transition probability at time \(t\) of the processes \(\{\theta _{\lambda }^{(E)}\}_{\pi}\) starting from \(\theta _{0}\) at time \(t=0\). When this process starts from 0 at time 0, we drop the first variable and simply write \(p_{\lambda ,t}^{(E)}(\theta )\). The change of variable formula shows that

Again, we use the notation \(\mathbb{P}_{(t_{0},\theta _{0})}\), resp. \(\mathbb{P}_{(t_{0},\theta _{0})\to (t_{1},\theta _{1})}\), to denote the law of the diffusion, resp. bridge of diffusion.

3.2 Condensed notation

The distorted coordinates will be used in the Crossover regime, while we will rely on the original coordinates in the Bulk regime. However most of our arguments apply indifferently to both cases. Consequently, we will adopt condensed notations as much as possible. First we introduce

Moreover, when the arguments apply to both sets of coordinates, we will use the generic notation \(\theta _{\lambda }^{(\mathbf{E})}\), \(\rho _{\lambda }^{(\mathbf{E})}\) to denote \(\theta _{\lambda }\), \(\rho _{\lambda }\) in the original coordinates, and \(\theta _{\lambda }^{(E)}\), \(\rho _{\lambda }^{(E)}\) in the distorted coordinates. We will sometimes introduce quantities of interest such as processes, measures, etc. using the generic notation at once. For instance, we could have introduced the SDE satisfied by \(\theta _{\lambda }\), \(\rho _{\lambda }\) and \(\theta _{\lambda }^{(E)}\), \(\rho _{\lambda }^{(E)}\) by simply writing

3.3 Invariant measure

The Markov process \(\{\theta _{\lambda }^{(\mathbf{E})}\}_{\pi}\) admits a unique invariant probability measure whose density \(\mu _{\lambda }^{(\mathbf{E})}\) has a simple integral expression, see Sect. A.4 of the Appendix for more details. Straightforward computations relying on this expression show the following estimates:

Lemma 3.1

Bounds on the invariant measure

Original coordinates. For any compact interval \(\Delta \subset {{\mathchoice{\textit{\textbf{R}}}{\textit{\textbf{R}}}{\textit{\scriptsize \textbf{R}}}{\textit{\tiny \textbf{R}}}}}\), there are two constants \(0 < c < C\) such that for all \(\theta \in [0,\pi )\) and all \(\lambda \in \Delta \) we have

Distorted coordinates. For any \(h>0\) there are two constants \(c,C > 0\) such that for all \(E>1\), all \(\theta \in [0,\pi )\) and all \(\lambda \in \Delta := [E-\frac{h}{En(E)},E+\frac{h}{En(E)}]\)

Finally, we have as \(E \to \infty \)

The last convergence shows that our distorted coordinates are the “right ones” in the Crossover regime: as \(E\to \infty \) the corresponding invariant measure converges to a non-degenerate limit given by the uniform measure on the circle.

Remark 3.2

The length of \(\Delta \) is of order 1 for the original coordinates and of order \(E^{-1/2}\) in the distorted coordinates. The parameter \(L\) does not play any role in this setting. This should not be confused with the setting of Theorem 2 (and of many other estimates/statements of the article) where we consider an interval \(\Delta \) of length of order \(1/L \ll 1\) in the Bulk regime and of order \(E^{1/2}/L \ll E^{-1/2}\) in the Crossover regime.

3.4 Rotation time and density of states

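Let us introduce the first rotation time of the diffusion \(\theta _{\lambda }^{(\mathbf{E})}\)

$$ \zeta _{\lambda }^{(\mathbf{E})} := \inf \{t\ge 0: \theta _{\lambda }^{(\mathbf{E})}(t) = \theta _{\lambda }^{(\mathbf{E})}(0) + \pi \}\;. $$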

Lemma 3.3

The law of \(\zeta _{\lambda }^{(\mathbf{E})}\) is independent of the initial condition \(\theta _{\lambda }^{(\mathbf{E})}(0)\).

Proof

Write \(\theta _{\lambda }^{(\mathbf{E})}(0) = n\pi + \theta \) with \(\theta \in [0,\pi )\) and \(n\in \mathbf{Z}\). Introduce \(H_{\pi }:= \inf \{t\ge 0: \theta _{\lambda }^{(\mathbf{E})}(t) =(n+1) \pi \}\) and \(H_{\theta }' := \inf \{t\ge 0 : \theta _{\lambda }^{(\mathbf{E})}(t+H_{ \pi }) =\theta _{\lambda }^{(\mathbf{E})}(0) + \pi \}\). By the strong Markov property, \(H_{\theta }'\) is independent of \(H_{\pi }\). Furthermore, \(H'_{\theta }\) has the same law as \(T_{0,\theta }\), and \(H_{\pi }\) has the same law as \(T_{\theta ,\pi }\) where \(T_{\theta _{0},\theta _{1}}\) is the first hitting time of \(\theta _{1}\) for the diffusion starting from \(\theta _{0}\).

We thus showed that \(\zeta _{\lambda }^{(\mathbf{E})} = H_{\pi }+ H'_{\theta }\) has the same law as \(T_{0,\theta } + T_{\theta ,\pi }\) where \(T_{\theta ,\pi }\) is independent of \(T_{0,\theta }\). But the strong Markov property implies that \(T_{0,\theta } + T_{\theta ,\pi }\) has the law of \(\zeta _{\lambda }^{(\mathbf{E})}\) when \(\theta _{\lambda }^{(\mathbf{E})}(0) = 0\), thus concluding the proof. □

As a consequence, the expectation of \(\zeta _{\lambda }^{(\mathbf{E})}\) does not depend on the initial condition and we thus set \(m_{\lambda }^{(\mathbf{E})} := \mathbb{E}[\zeta _{\lambda }^{(\mathbf{E})}]\).

This expectation of the rotation time admits the following integral expression

In the original coordinates, this expression is established in [2]. On the other hand, the mere definition of our distorted coordinates implies that \(\zeta _{\lambda }^{(E)}\) is equal in law to \(\zeta _{\lambda }/ E\), so that \(m_{\lambda }^{(E)} = m_{\lambda }/ E\) and the integral expression follows in this case as well.

Note that \(m_{\lambda }\) is nothing but \(1/N(\lambda )\), that is, the inverse of the integrated density of states introduced in (2). Indeed, by the law of large numbers, the number of rotations of \(\theta _{\lambda }\) on the interval \([-L/2,L/2]\) is of order \(L/m_{\lambda }\), so that the Sturm-Liouville property recalled above implies that the number of eigenvalues of \(\mathcal{H}_{L}\) that lie below \(\lambda \) is of the same order.
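The counting argument can be checked by hand in the noise-free case. The following Python sketch assumes that, without the white noise, the phase solves \(\theta ' = \cos ^{2}\theta + \lambda \sin ^{2}\theta \) started from 0 (an assumption made for illustration; all function names are hypothetical). It compares the number of half-turns of the phase over an interval of length \(L\) with the number of Dirichlet eigenvalues of \(-\partial _{x}^{2}\) below \(\lambda \), which are known explicitly.

```python
import math


def phase_rhs(theta, lam):
    # Assumed noise-free phase equation: theta' = cos^2(theta) + lam * sin^2(theta).
    return math.cos(theta) ** 2 + lam * math.sin(theta) ** 2


def final_phase(lam, length, steps=20000):
    """Integrate the phase from theta = 0 over an interval of the given length (RK4)."""
    h = length / steps
    theta = 0.0
    for _ in range(steps):
        k1 = phase_rhs(theta, lam)
        k2 = phase_rhs(theta + 0.5 * h * k1, lam)
        k3 = phase_rhs(theta + 0.5 * h * k2, lam)
        k4 = phase_rhs(theta + h * k3, lam)
        theta += h * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return theta


def count_by_rotations(lam, length):
    # Number of multiples of pi crossed by the phase, i.e. completed rotations.
    return math.floor(final_phase(lam, length) / math.pi)


def count_exact(lam, length):
    # Dirichlet eigenvalues of -y'' on an interval of this length: (k*pi/length)^2, k >= 1.
    return sum(1 for k in range(1, 10000) if (k * math.pi / length) ** 2 <= lam)


if __name__ == "__main__":
    for lam in (0.5, 1.0, 4.0):
        print(lam, count_by_rotations(lam, 10.0), count_exact(lam, 10.0))
```

In this deterministic toy case the two counts coincide exactly. With the white noise potential, the text above replaces the exact count by the law of large numbers, so that the number of rotations is only of order \(L/m_{\lambda }\).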

From the integral expression, one can check that

and this allows one to recover the asymptotics of \(N(\lambda )\) stated in the introduction. Let us finally mention that some moment estimates on \(\zeta _{\lambda }^{(\mathbf{E})}\) are presented in Appendix A.3.

3.5 Forward and backward diffusions

We introduce in this paragraph the so-called forward and backward diffusions as their concatenation will be instrumental in our study. Let us consider the original coordinates first.

The eigenproblem is symmetric in law under the map \(t\mapsto -t\) since \(B'(\cdot )\) and \(B'(-\cdot )\) have the same law. To take advantage of this symmetry, we consider the solutions \((r_{\lambda }^{\pm }=e^{\frac{1}{2} \rho _{\lambda }^{\pm}},\theta _{ \lambda }^{\pm})\) of

for \(t\in [-L/2,L/2]\) where

The processes \((r_{\lambda }^{+},\theta _{\lambda }^{+})\) will be called the forward diffusions, while \((r_{\lambda }^{-},\theta _{\lambda }^{-})\) will be called the backward diffusions. We also introduce \((y^{\pm}_{\lambda })' + i y^{\pm}_{\lambda }:= r_{\lambda }^{\pm} \exp (i \theta ^{\pm}_{\lambda })\). Of course the forward diffusions coincide with the original diffusions \((r_{\lambda },\theta _{\lambda })\).

Take \(\theta _{\lambda }^{+}(-L/2) = \theta _{\lambda }^{-}(-L/2) = 0\). We have already seen that \(\lambda \) is an eigenvalue of \(\mathcal{H}_{L}\) if and only if \(\{\theta _{\lambda }^{+}(L/2)\}_{\pi }= 0\). From the symmetry of the eigenvalue problem, we can also read off the set of eigenvalues out of the backward diffusions: namely, \(\lambda \) is an eigenvalue if and only if \(\{\theta _{\lambda }^{-}(L/2)\}_{\pi }= 0\).

It will actually be convenient to combine these two criteria in the following way: one follows the forward diffusion up to some given time \(u \in [-L/2,L/2]\), and the backward diffusion up to time \(-u\). The set of eigenvalues can then be read off using the following fact (whose proof is postponed below):

Lemma 3.4

Take \(\theta _{\lambda }^{\pm}(-L/2) = 0\). It holds:

It is therefore natural to consider concatenations of the forward and backward diffusions. For any time \(u\in [-L/2,L/2]\), we set:

and we define (see Footnote 4)

where \(k := \lfloor \theta _{\lambda }^{+}(u)+\theta _{ \lambda }^{-}(-u) \rfloor _{\pi }\). Note that \(\hat{r}_{\lambda }\), \(\hat{\theta }_{\lambda }\) depend on \(u\), but for notational convenience we omit writing explicitly this dependence.

We also define the process \(\hat{y}_{\lambda }\) by setting

Using the identity \((r_{\lambda }^{\pm }(t) \sin \theta _{\lambda }^{\pm }(t))' = r_{ \lambda }^{\pm }(t) \cos \theta _{\lambda }^{\pm }(t)\), we deduce that for all \(t\in [-L/2,L/2] \backslash \{u\}\)

and that this identity remains true at \(u_{+}\) and \(u_{-}\), with possibly a discontinuity there. When \(\lambda _{i}\) is an eigenvalue, denoting \(\|\cdot \|_{2}\) the \(L^{2}\)-norm, we have the identity (valid for all \(u \in [-L/2,L/2]\)):

Proof of Lemma 3.4

If \(\lambda \) is an eigenvalue of \(\mathcal{H}_{L}\), and provided \(\{\theta _{\lambda }^{\pm}(-L/2)\}_{\pi }= 0\), the functions \(y^{+}_{\lambda }\) and \(y^{-}_{\lambda }(-\cdot )\) coincide up to a multiplicative factor (which is related to the values \(r_{\lambda }^{\pm}(-L/2)\)). Consequently if \(\lambda \) is an eigenvalue then we must have the following equality in \(\{-\infty \}\cup {{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}\)

This is equivalent to \(\text{cotan}\,( \theta ^{+}_{\lambda }(u_{-})) = \text{cotan}\,(\pi - \theta ^{-}_{\lambda }((-u)_{+}))\), which is itself equivalent to \(\{\theta _{\lambda }^{+}(u_{-})\}_{\pi }= \{\pi -\theta _{\lambda }^{-}((-u)_{+}) \}_{\pi}\). Note that \(\{\theta _{\lambda }^{+}(u_{-})\}_{\pi }= \{\theta _{\lambda }^{+}(u) \}_{\pi}\), and similarly \(\{\pi -\theta _{\lambda }^{-}((-u)_{+})\}_{\pi }= \{\pi -\theta _{ \lambda }^{-}(-u)\}_{\pi}\). We end up with \(\{\theta _{\lambda }^{+}(u)\}_{\pi }= \{\pi -\theta _{\lambda }^{-}((-u)) \}_{\pi}\) which is equivalent to \(\{\theta _{\lambda }^{+}(u) + \theta _{\lambda }^{-}(-u)\}_{\pi }=0\).

On the other hand, if \(\{\theta _{\lambda }^{+}(u) + \theta _{\lambda }^{-}(-u)\}_{\pi }= 0\) then the concatenation \(\hat{y}_{\lambda }\) is continuously differentiable at \(u\) (recall that \(r_{\lambda }^{\pm}(\pm u)=1\)), satisfies (6) at all points \(t\in [-L/2,L/2]\) and satisfies the Dirichlet b.c., consequently \(\lambda \) is an eigenvalue. □
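The matching criterion of Lemma 3.4 can be illustrated in the noise-free case. The sketch below assumes that, without noise, both the forward and the backward phases solve \(\theta ' = \cos ^{2}\theta + \lambda \sin ^{2}\theta \) started from 0 at time \(-L/2\) (an illustrative assumption; the function names are hypothetical). It measures how far \(\theta ^{+}(u) + \theta ^{-}(-u)\) is from \(\pi{\mathbf{Z}}\) for a given junction point \(u\).

```python
import math


def phase_after(lam, elapsed, steps=20000):
    """Phase at time -L/2 + elapsed, started from 0 at time -L/2 (RK4, noise-free)."""
    def rhs(theta):
        return math.cos(theta) ** 2 + lam * math.sin(theta) ** 2

    h = elapsed / steps
    theta = 0.0
    for _ in range(steps):
        k1 = rhs(theta)
        k2 = rhs(theta + 0.5 * h * k1)
        k3 = rhs(theta + 0.5 * h * k2)
        k4 = rhs(theta + h * k3)
        theta += h * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return theta


def mismatch(lam, u, length):
    """Distance from theta^+(u) + theta^-(-u) to the nearest multiple of pi."""
    forward = phase_after(lam, u + length / 2.0)    # forward phase run up to time u
    backward = phase_after(lam, -u + length / 2.0)  # backward phase run up to time -u
    total = (forward + backward) % math.pi
    return min(total, math.pi - total)


if __name__ == "__main__":
    length = 10.0
    eigenvalue = (2.0 * math.pi / length) ** 2  # second Dirichlet eigenvalue of -y''
    for u in (-3.0, 0.0, 1.5, 4.0):
        print(u, mismatch(eigenvalue, u, length), mismatch(1.0, u, length))
```

For the eigenvalue the mismatch vanishes (up to discretization error) whatever the junction point \(u\), while for \(\lambda = 1\), which is not an eigenvalue when \(L = 10\), it stays bounded away from zero.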

With distorted coordinates, all the above quantities naturally find their counterparts. For \(u\in [-L/(2E),L/(2E)]\), we denote by \(\hat{y}^{(E)}_{\lambda }\), \(\hat{r}^{(E)}_{\lambda }\), \(\hat{\theta }^{(E)}_{\lambda }\) the concatenations of the backward diffusion \(\theta ^{-,(E)}_{\lambda }\) and of the forward diffusion \(\theta ^{+,(E)}_{\lambda }\) on \([-L/(2E),L/(2E)]\). In particular, \(\hat{y}_{\lambda }^{(E)} = \hat{r}_{\lambda }^{(E)} \sin \hat{\theta }^{(E)}_{\lambda }\), \((\hat{y}_{\lambda }^{(E)})'/E^{3/2} = \hat{r}_{\lambda }^{(E)} \cos \hat{\theta }^{(E)}_{\lambda }\) and when \(\{\theta _{\lambda }^{\pm }(-L/2)\}_{\pi }= 0\), the link between \(\hat{y}^{(E)}_{\lambda _{i}}\) and \(\varphi _{i}\) becomes:

For both sets of coordinates and for any \(u\in [-L/(2\mathbf{E}),L/(2\mathbf{E})]\) we will denote by \(\mathbb{P}^{(u)}_{\theta ,\theta '}\) the product law \(\mathbb{P}^{+}_{(-L/(2\mathbf{E}),0)\rightarrow (u,\theta )} \otimes \mathbb{P}^{-}_{(-L/(2\mathbf{E}),0)\rightarrow (-u,\theta ')}\) under which \(\theta ^{\pm ,(\mathbf{E})}_{\lambda }\) are two independent bridges of diffusion between \((-L/(2\mathbf{E}),0)\) and \((u,{\theta +\pi{{\mathchoice{\text{\textbf{Z}}}{\text{\textbf{Z}}}{ \text{\scriptsize \textbf{Z}}}{\text{\tiny \textbf{Z}}}}}})\) for \(\theta _{\lambda }^{+,(\mathbf{E})}\), and between \((-L/(2\mathbf{E}),0)\) and \((-u,{\theta '+\pi{{\mathchoice{\text{\textbf{Z}}}{\text{\textbf{Z}}}{ \text{\scriptsize \textbf{Z}}}{\text{\tiny \textbf{Z}}}}}})\) for \(\theta _{\lambda }^{-,(\mathbf{E})}\). Then, the processes \(\hat{r}_{\lambda }^{(\mathbf{E})}\), \(\hat{\theta }_{\lambda }^{(\mathbf{E})}\) and \(\hat{y}_{\lambda }^{(\mathbf{E})}\) are defined under \(\mathbb{P}^{(u)}_{\theta ,\theta '}\) according to (18) and (19) with the original coordinates, and according to similar equations with distorted coordinates.

4 Strategy of proof

4.1 Convergence to equilibrium of the phase

Our proofs rely on the following exponential convergence of the transition probability of the phase \(\theta _{\lambda }^{(\mathbf{E})}\) toward its equilibrium measure \(\mu _{\lambda }^{(\mathbf{E})}\).

Theorem 4

Exponential convergence to the invariant measure

In the original coordinates, fix a compact set of energies \(\Delta \). Then there exist \(c,C>0\) such that for all \(t\ge 1\)

In the distorted coordinates, fix \(h>0\) and set \(\Delta = [E-\frac{h}{n(E)E},E+\frac{h}{n(E)E}]\). There exist \(c,C > 0\) such that uniformly over all \(E>1\) and for all \(t\ge 1\)

The proof of this estimate is delicate, especially in the distorted coordinates since the bound is uniform over all \(E\in [1,\infty )\). It relies on tools from Malliavin calculus and the theory of hypocoercivity, and is an important technical step in our article. This result will be crucial for deriving the exponential decay of the eigenfunctions and evaluating the expectation of the number of eigenvalues in small intervals.

4.2 Goldscheid-Molchanov-Pastur (GMP) formula

We have seen that the Sturm-Liouville property allows one to extract a lot of spectral information from the phase function. A major observation on which this article relies is that we can extract even more information through a beautiful formula, originally obtained by Goldscheid, Molchanov and Pastur [15] in a similar but smoother context, which we call the GMP formula from now on. This formula expresses the intensity of the point process

in terms of concatenations of the forward and backward diffusions introduced earlier. Roughly speaking, it shows that on average the eigenfunctions are concatenations of the forward and backward diffusions at uniform points \(u \in (-L/2,L/2)\). Below \(\|\cdot \|_{2}\) denotes the \(L^{2}\)-norm.

Proposition 4.1

GMP formula

Let \(\mathcal{D}\) be the Skorohod space of càdlàg functions on \([-L/2, L/2]\). For any measurable map \(G\) from \({{\mathchoice{\textit{\textbf{R}}}{\textit{\textbf{R}}}{\textit{\scriptsize \textbf{R}}}{ \textit{\tiny \textbf{R}}}}}\times \mathcal{D}\times \mathcal{D}\) into \({{\mathchoice{\textit{\textbf{R}}}{\textit{\textbf{R}}}{\textit{\scriptsize \textbf{R}}}{ \textit{\tiny \textbf{R}}}}}_{+}\), with the original coordinates we have

and with the distorted coordinates this becomes

To exploit this formula, one needs to analyze both the transition probabilities of the phase and the concatenation \(\hat{y}^{(\mathbf{E})}_{\lambda }\) for \(\lambda \) in the regime of energies under consideration. Regarding the transition probabilities, Theorem 4 allows us to replace them, up to an error that vanishes as \(L\to \infty \), by the equilibrium measure whose expression is explicit. On the other hand, the concatenation of the diffusions can be thoroughly studied since the SDEs at stake are tractable.

Taking \(G = 1_{\Delta}(\lambda )\), we will derive a Wegner estimate, that is, an estimate on the number of eigenvalues in small (microscopic) intervals: this will be an important ingredient for the convergence to a Poisson point process, see Sect. 4.4. Studying carefully the concatenation \(\hat{y}^{(\mathbf{E})}_{\lambda }\), we will prove the exponential decay of the eigenfunctions and we will derive their asymptotic behavior, see the next paragraph.

4.3 Exponential decay

Until the end of Sect. 4, we are given a function \(E=E(L)\) of \(L\) that satisfies either

1. Bulk regime: \(E\) is fixed with respect to \(L\),

2. Crossover regime: \(E = E(L)\) satisfies \(1 \ll E \ll L\),

and all the forthcoming estimates lie in that setting.

Let us fix again \(h >0\) and let \(\Delta := [E-\frac{h}{Ln(E)},E+\frac{h}{Ln(E)}]\). Introduce

where \(\nu _{E}\) was defined in (3). As we will see in Sect. 7.2, this quantity is related to the Lyapunov exponent of our diffusions, that is, the rate of linear growth in time of \(\ln r_{\lambda }^{(\mathbf{E})}(t)\).

The main technical step towards the exponential decay is the following result:

Proposition 4.2

Exponential decay of the eigenfunctions

In the Bulk and Crossover regimes, for any \(\varepsilon > 0\) small enough, there exists \(q_{0}(\varepsilon ) > 0\) such that for all \(q\in [0,q_{0}(\varepsilon )]\)

where

With this result at hand, we can present the proof of the exponential decay of the eigenfunctions.

Proof of Theorem 2

Fix \(\varepsilon >0\) small enough. From the last proposition, we deduce that for every eigenvalue \(\lambda _{i} \in \Delta \) there exists \(\tilde{U}_{i}\) (depending on \(\varepsilon \)) such that

where \(\tilde{c}_{i} := \inf _{u\in [-L/2,L/2]} G_{u}(\lambda _{i},\varphi _{ \lambda _{i}},\varphi _{\lambda _{i}}')\). Recentering the exponential term at \(U_{i}\), we obtain

with \(c_{i} = \tilde{c}_{i} \exp \Big( \frac{(\boldsymbol{\nu _{E}}-\varepsilon )}{2} \frac{|\tilde{U}_{i}- U_{i}|}{\mathbf{E}}\Big)\). We need some control on the distance \(\tilde{U}_{i} - U_{i}\) to conclude the proof. From the definition of \(U_{i}\) and since \(\varphi _{i}^{2}(t) dt\) is a probability measure, we have

By Jensen’s inequality, we thus have for any \(a \in (0, \boldsymbol{\nu _{E}}-\varepsilon )\)

From the bound of the proposition, we already know that

Using the previous bound, this remains true with \(c_{i}\) instead of \(\tilde{c}_{i}\), up to decreasing \(q\). □

The main ideas of the proof of Proposition 4.2 are simple. First of all, we apply the GMP formula to rephrase our statement on the eigenfunctions in terms of the concatenation of the forward/backward processes. Second, we establish precise moment bounds on the exponential growth of \(r^{(\mathbf{E})}_{\lambda }\). Third, we transfer these estimates to the concatenation \(\hat{y}^{(\mathbf{E})}_{\lambda }\). We refer to Sect. 7 for the details.

4.4 Poisson statistics

Obviously, Theorem 3 implies Theorem 1 so we concentrate on the former statement. The argument is twofold. First, we introduce an approximation \(\bar{\mathcal{N}}_{L}\) of the random measure \(\mathcal{N}_{L}\) that possesses more independence, and we prove that it converges to the Poisson random measure of the statement. Second we show that \(\mathcal{N}_{L}-\bar{\mathcal{N}}_{L}\) goes to 0.

There are some topological difficulties arising in the spaces at stake, which will be explained in more detail in the proof of Theorem 3, see below. For the time being, we view the r.v. \(\mathcal{N}_{L}\) and \(\bar{\mathcal{N}}_{L}\) as random Radon measures on \({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}\times [-1/2,1/2]\times \mathcal{M}( \bar{{{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}})\), where \(\bar{\mathcal{M}}:= \mathcal{M}( \bar{{{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}})\) is the set of probability measures on \(\bar{{{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}}\) endowed with the topology of weak convergence, and where \(\bar{{{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}}\) is the compactification of \({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}\).

Let us present the approximation scheme that leads to the definition of \(\bar{\mathcal{N}}_{L}\). We subdivide the interval \((-L/2,L/2)\) into \(k\) (microscopic) disjoint boxes \((t_{j-1},t_{j})\) where \(t_{j} = -L/2+ j L/k\) and where \(k=k(L)\) is a quantity that goes to \(+\infty \) sufficiently slowly (see Footnote 5). We consider the Anderson Hamiltonian \(\mathcal{H}^{(j)}_{L} = -\partial ^{2}_{x} + \xi \) restricted to \((t_{j-1},t_{j})\) with Dirichlet b.c., and we denote by \(\lambda ^{(j)}_{i}\) its eigenvalues, \(\varphi ^{(j)}_{i}\) its eigenfunctions, \({U_{i}}^{(j)}\) its centers of mass and \(w_{i}^{(j)}(dt) = \mathbf{E}\varphi ^{(j)}_{i}({U_{i}}^{(j)} + \mathbf{E}\,t)^{2} dt\) the associated probability measure (after recentering at \(U_{i}^{(j)}\)). We then define

as well as

Proposition 4.3

In the Bulk and Crossover regimes, provided \(k\to \infty \) slowly enough, the random measure \(\mathcal{N}_{L} - \bar{\mathcal{N}}_{L}\) converges in law to the null measure as \(L\to \infty \).

The proof of this proposition is presented in Sect. 9.3 and relies on two inputs: (1) the eigenfunctions of \(\mathcal{H}_{L}\) and \(\mathcal{H}^{(j)}_{L}\) are exponentially localized, (2) \(\bar{\mathcal{N}}_{L}\) converges to a Poisson random measure. Point (1) is the content of Proposition 4.2 (the exponential localization of the eigenfunctions of \(\mathcal{H}^{(j)}_{L}\) holds for exactly the same reasons). Point (2) is proven below. Given these two inputs, the proof of Proposition 4.3 consists in building a one-to-one correspondence between the atoms of \(\mathcal{N}_{L}\) and \(\bar{\mathcal{N}}_{L}\), when these measures are restricted to some arbitrary set \([-h,h]\times [-1/2,1/2]\times \bar{\mathcal{M}}\), and in showing that the corresponding pairs of atoms are close in the topology at stake.

Let us now explain the main steps towards the convergence of \(\bar{\mathcal{N}}_{L}\) to a Poisson random measure. We fix a constant \(h >0\) (independent of \(L\)) and define the interval of energies

For convenience, we set

as well as

First, we control the second moments of \(N_{L}^{(j)}(\Delta )\). Note that the \(N_{L}^{(j)}(\Delta )\) are i.i.d., so it suffices to consider \(j=1\). (We also state a similar estimate on \(N_{L}(\Delta )\) for later convenience).

Proposition 4.4

In the Bulk and Crossover regimes, provided \(k\to \infty \) slowly enough, we have

Second, we show that, with large probability, every box \((t_{j-1},t_{j})\) contains at most one eigenvalue that lies in the interval of energy \(\Delta \).

Proposition 4.5

Minami estimate

In the Bulk and Crossover regimes, provided \(k\to \infty \) slowly enough,

Our proof of this two-point estimate, which is usually named after Minami [26], is technically involved and appears as one of the main achievements of this paper. As mentioned in the introduction, it relies on probabilistic tools, and can be viewed as an alternative approach to the strategy of proof of Molchanov [28] (in a smoother context). The proofs of Propositions 4.4 and 4.5 are given in Sect. 8.

Remark 4.6

The arguments presented in the proof are sufficient to prove the stronger statement: there exists \(C>0\) such that for all \(L\) large enough

This is another form of the so-called Minami estimate.

Third, we identify the limit of the intensity measure of \(\bar{\mathcal{N}}_{L}\). Let \(\boldsymbol{\sigma _{E}}\) be the measure \(\sigma _{E}\) (introduced in (5)) in the Bulk regime, and \(\sigma _{\infty}\) (defined in (4)) in the Crossover regime. Note that the definition in the Bulk regime will be given in Sect. 9, while the definition in the Crossover regime was presented above Theorem 3.

Proposition 4.7

Intensity of \(\bar{\mathcal{N}}_{L}\)

In the Bulk and Crossover regimes, provided \(k\to \infty \) slowly enough, for any compactly supported, continuous function \(f:{{\mathchoice{\textit{\textbf{R}}}{\textit{\textbf{R}}}{\textit{\scriptsize \textbf{R}}}{ \textit{\tiny \textbf{R}}}}}\times [-1/2,1/2]\times \bar {\mathcal{M}}\to{{ \mathchoice{\textit{\textbf{R}}}{\textit{\textbf{R}}}{\textit{\scriptsize \textbf{R}}}{\textit{\tiny \textbf{R}}}}}\), we have

The proof of this proposition is presented in Sect. 9.1: it combines many different arguments and definitions introduced earlier in the article.

Proofs of Theorems 1 and 3

As already explained, it suffices to concentrate on the stronger statement given by Theorem 3. Let us now explain the topological difficulties at stake. The space \(\mathcal{M}({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}})\) is not locally compact so that the tightness of \((\mathcal{N}_{L})_{L\ge 1}\) or \((\bar{\mathcal{N}}_{L})_{L\ge 1}\) is not elementary in \({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}\times [-1/2,1/2]\times \mathcal{M}({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}})\). One option would have been to prove this tightness directly (using the exponential decay of the eigenfunctions), but we prefer a simpler point of view. Namely, we deal with \(\bar{\mathcal{M}}:= \mathcal{M}( \bar{{{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}})\), the set of probability measures on \(\bar{{{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}}\) endowed with the topology of weak convergence, where \(\bar{{{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}}\) is the compactification of \({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}\). Note that \(\mathcal{M}( \bar{{{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}})\) is compact. We then view the r.v. \(\mathcal{N}_{L}\) and \(\bar{\mathcal{N}}_{L}\) as random Radon measures on \({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}\times [-1/2,1/2]\times \mathcal{M}( \bar{{{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}})\), so that we are in a more common setting to prove convergences. It can be checked that if the convergence of Theorem 3 holds in \({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}\times [-1/2,1/2]\times \mathcal{M}( \bar{{{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}})\), then it also holds in \({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}\times [-1/2,1/2]\times \mathcal{M}({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}})\).

Given Proposition 4.3, we only need to show that \(\bar{\mathcal{N}}_{L}\) converges to a Poisson random measure of intensity \(d\lambda \otimes dx \otimes \boldsymbol{\sigma _{E}}\). By standard criteria, see for instance [19, Th 16.16], the convergence follows if we can show that for any given compactly supported, continuous function \(f:{{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{ \text{\tiny \textbf{R}}}}}\times [-1/2,1/2]\times \bar{\mathcal{M}}\to {{ \mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}\) we have

We fix such a function and concentrate on the proof of this convergence. From the independence of the \(\mathcal{N}_{L}^{(j)}\) we have

Choose \(h>0\) large enough such that \(f(\lambda ,\cdot ,\cdot )= 0\) whenever \(\lambda \notin [-h,h]\), and set \(\Delta \) as in (22). The main observation is that on the event \(\{N_{L}^{(j)}(\Delta )=1\}\), the random measure \({\mathcal{N}}_{L}^{(j)}\) has at most one atom on the support of \(f\) and therefore on this event

We can therefore write

The third term on the r.h.s. is bounded by a constant (independent of \(j\) and \(L\)) times \(\mathbf{1}_{\{N_{L}^{(j)}(\Delta ) \ge 2\}}\), so that by Proposition 4.5 its expectation is negligible compared to \(1/k\). We write the expectation of the second term

Set \(C:=\|e^{i f}-1\|_{\infty }< \infty \). Using Proposition 4.4 there exists \(C'>0\) such that for all \(L\) large enough

Moreover

By Propositions 4.4 and 4.5, this last term is negligible compared to \(1/k\), uniformly over all \(j\) and \(L\) large enough. Putting everything together, we obtain

uniformly over all \(j\) and all \(L\) large enough. Plugging this identity into (25), we get

which converges to the desired limit by Proposition 4.7 (note that \(e^{i f}-1\) is compactly supported, since \(f(\lambda ,\cdot ,\cdot )=0\) whenever \(\lambda \notin [-h,h]\)). □
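The mechanism behind this limit is the classical law of rare events: \(k\) independent boxes, each containing one relevant eigenvalue with probability of order \(1/k\) and two or more with negligible probability, produce a Poisson count. The Python sketch below illustrates only this elementary fact, in a deliberately simplified setting where each box holds exactly zero or one point with probability \(\mu /k\) (the parameter `mu` and the function name are hypothetical and do not come from the article). It computes the total variation distance between the Binomial\((k,\mu /k)\) and Poisson\((\mu )\) laws.

```python
import math


def tv_binomial_poisson(k, mu, jmax=200):
    """Total variation distance between Binomial(k, mu/k) and Poisson(mu).

    Requires k > mu. The sum is truncated at jmax, which is harmless when
    mu is small compared with jmax.
    """
    p = mu / k
    b = (1.0 - p) ** k   # Binomial mass at 0
    q = math.exp(-mu)    # Poisson mass at 0
    total = 0.0
    for j in range(jmax):
        total += abs(b - q)
        # Ratio recursions giving the masses at j + 1 from the masses at j.
        b = b * (k - j) / (j + 1) * p / (1.0 - p) if j < k else 0.0
        q = q * mu / (j + 1)
    return 0.5 * total


if __name__ == "__main__":
    for k in (10, 100, 1000):
        print(k, tv_binomial_poisson(k, 2.0))
```

The distance decreases as \(k\) grows, in line with Le Cam's inequality. In the proof above, Proposition 4.5 plays the role of the "at most one point per box" assumption and Proposition 4.7 identifies the intensity.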

The remaining sections are organized as follows. In Sect. 5, we prove the convergence to equilibrium stated in Theorem 4. In Sect. 6, we establish the GMP formula of Proposition 4.1 and collect some corollaries. In Sect. 7 we prove Proposition 4.2 on the exponential decay of the eigenfunctions. In Sect. 8 we establish the estimates on \(N_{L}^{(j)}(\Delta )\) stated in Propositions 4.4 and 4.5. These four sections can be read independently of each other.

On the other hand, Sect. 9, where the proofs of Propositions 4.3 and 4.7 are presented, relies extensively on definitions and intermediate results collected in Sect. 7.

In order not to interrupt the flow of arguments, we have postponed to Appendix A some technical (but elementary) results used along the article.

5 Convergence to equilibrium

The goal of this section is to prove Theorem 4. This result is delicate for three reasons. First, we stated \(L^{\infty}\) bounds on the density, which require much finer control than the more usual total-variation bounds. Second, the (generator of the) diffusion that we are considering is not uniformly elliptic, but simply hypoelliptic, thus making both regularization and convergence estimates delicate. Third, for \(E\to \infty \), the drift term of the diffusion \(\theta _{\lambda }^{(E)}\) is unbounded and makes the process rotate very fast on the circle: it is then a priori unclear whether one can obtain bounds on the density that are uniform over \(E>1\).

The proof consists of two distinct steps. First, we show quantitative regularization estimates on the density of the diffusion. The existence and the smoothness of the density at any time \(t>0\) is due to the hypoellipticity of the associated generator and follows from Hörmander’s Theorem [16]. However this result does not provide any quantitative estimate on this density: this is problematic in particular in the case where \(E\to \infty \). We establish a quantitative estimate using Malliavin calculus (which was originally introduced to give a probabilistic proof of Hörmander’s Theorem).

Second, we show exponential convergence to equilibrium in \(H^{1}\): by Sobolev embedding, this readily implies exponential convergence in \(L^{\infty}\). From the first step, we know that at a time of order 1, the \(H^{1}\)-norm of the density is itself of order 1. To establish an exponential convergence to equilibrium, one would use coercivity in \(H^{1}\) of the (adjoint in \(L^{2}(\mu _{\lambda }^{(\mathbf{E})})\) of the) generator of the diffusion: however the lack of ellipticity prevents one from getting this coercivity. We thus rely on hypocoercivity techniques following Villani’s monograph [35]: we identify a “twisted” \(H^{1}\)-norm, equivalent to the original one, in which the (adjoint in \(L^{2}(\mu _{\lambda }^{(\mathbf{E})})\) of the) generator of the diffusion is coercive. In particular, when working with distorted coordinates, we obtain a control on the coercivity constant which is uniform over \(E > 1\).

From now on, all the functions are defined on the circle \({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{ \text{\tiny \textbf{R}}}}}/\pi {{\mathchoice{\text{\textbf{Z}}}{\text{\textbf{Z}}}{ \text{\scriptsize \textbf{Z}}}{\text{\tiny \textbf{Z}}}}}\) and take values in \({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{ \text{\tiny \textbf{R}}}}}\). We will denote by \(\mathcal{C}^{k}\) the space of \(k\)-times continuously differentiable functions on the circle. Furthermore “uniformly over all parameters” will mean uniformly over all \(\lambda \in \Delta \) when working with the original coordinates and uniformly over all \(E >1\) and \(\lambda \in \Delta \) with the distorted coordinates.

Remark 5.1

Let us mention that the result of Theorem 4 controls the exponential decay in \(L^{\infty}\) while a control in \(L^{2}\) would have sufficed for our purpose. However, the proof would have been only marginally simpler if we had worked in \(L^{2}\) instead of \(L^{\infty}\).

5.1 Hypoellipticity - regularization step

We apply Malliavin calculus to the diffusion \(\theta _{\lambda }^{(\mathbf{E})}\) following Norris [32] and Hairer [17]. We drop the superscript \((\mathbf{E})\) but we argue simultaneously in both sets of coordinates. We also drop the subscript \(\lambda \). We let \(\mathbb{P}_{\theta _{0}}\) denote the law of the diffusion starting from \(\theta _{0}\) at time 0 and \(p_{t}(\theta _{0},\cdot )\) the density of this diffusion. The goal of this step is to show the following estimate: for any \(k\ge 1\), there exists \(C_{k}>0\) such that uniformly over all parameters

We write

with

Remark 5.2

The diffusion is not elliptic since \(V_{1}\) vanishes at \(x=0\). However, it satisfies the so-called Hörmander’s Bracket Condition which can be stated as follows. Let us associate to any function \(F\) an operator defined through \(Ff(x) := F(x) f'(x)\). Introduce the Lie bracket \([A,B] := AB - BA\) for any two operators \(A\), \(B\). The condition then reads: there exists \(k\ge 0\) (here \(k=2\) works) such that

where \(\mathscr{V}_{0} := \{V_{1}\}\) and \(\mathscr{V}_{n+1} := \{[F,\tilde{V}_{0}], [F,V_{1}]: F\in \mathscr{V}_{n}\} \cup {\mathscr{V}_{n}}\) where \(\tilde{V}_{0} = V_{0} + (1/2) V'_{1} V_{1}\).
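The claim that \(k=2\) works can be sanity-checked numerically at the degenerate point \(x=0\). The Python sketch below uses assumed forms of the coefficients, namely \(V_{1}(x) = -\sin ^{2} x\) and a drift \(\tilde{V}_{0}(x) = \cos ^{2} x + \lambda \sin ^{2} x\); these are illustrative stand-ins rather than the exact expressions of the article, and only two features matter: \(V_{1}\) vanishes to second order at 0 and \(\tilde{V}_{0}(0)\ne 0\). With the convention \(Ff := F f'\), the commutator \([F,G]\) is the operator associated with the function \(FG' - GF'\), which is what `bracket` computes with central finite differences.

```python
import math

LAM = 0.7  # hypothetical value of the energy, for illustration only


def v1(x):
    # Assumed noise coefficient: vanishes to second order at x = 0.
    return -math.sin(x) ** 2


def v0_tilde(x):
    # Assumed drift: only its non-vanishing at x = 0 matters here.
    return math.cos(x) ** 2 + LAM * math.sin(x) ** 2


def derivative(f, x, h=1e-4):
    # Central finite difference approximation of f'(x).
    return (f(x + h) - f(x - h)) / (2.0 * h)


def bracket(f, g):
    """Function associated with [F, G] when F acts as f -> F f' (and same for G)."""
    return lambda x: f(x) * derivative(g, x) - g(x) * derivative(f, x)


level1 = bracket(v1, v0_tilde)      # element of the first generation
level2 = bracket(level1, v0_tilde)  # element of the second generation

if __name__ == "__main__":
    print(v1(0.0), level1(0.0), level2(0.0))
```

Under these assumptions, \(V_{1}\) and \([V_{1},\tilde{V}_{0}]\) both vanish at 0, while a direct computation gives \([[V_{1},\tilde{V}_{0}],\tilde{V}_{0}](0) = -2\tilde{V}_{0}(0)^{2} = -2\), so two generations of brackets are needed and suffice at that point.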

We rely on the process \(J_{0,t}\) which is the derivative of the flow associated to the SDE satisfied by \(\theta \):

We will also need the inverse \(J_{0,t}^{-1}\) that satisfies

We also set \(J_{s,t} := J_{0,t} J_{0,s}^{-1}\).

Lemma 5.3

Fix \(t > 0\). For every \(p\ge 1\), there exists \(c_{p} > 0\) such that uniformly over all parameters

Proof

The proof is virtually the same for \(J\) and \(J^{-1}\). If we let \(U\) be the logarithm of either of these processes, then it solves

where \(\alpha \) and \(\beta \) are adapted processes. The crucial observation is that \(|\alpha (t)|\) and \(|\beta (t)|\) are bounded by some deterministic constant \(C>0\) uniformly over all parameters: indeed, the unbounded term \(E^{3/2}\) that appears in \(V_{0}\) (when working with the distorted coordinates) is “killed” upon differentiation. Lemma A.2 then suffices to conclude. □

Define the so-called Malliavin covariance matrix (which is a scalar here since our diffusion is one-dimensional):

The following is a standard result of Malliavin calculus: the only specificity is that our estimates are uniform over all parameters (in particular, w.r.t. \(E>1\) in the distorted coordinates) and this requires some extra care in the proof.

Proposition 5.4

Fix \(t>0\). Assume that for every \(p\ge 1\) there exists \(K_{p}>0\) such that \(\sup _{\theta _{0}}\mathbb{E}_{\theta _{0}}[\mathscr{C}_{t}^{-p}] < K_{p}\). Then for every \(k\ge 1\) there exists \(c_{k} > 0\) such that uniformly over all parameters

Proof

Assume that for every \(k\ge 1\) there exists \(C_{k} > 0\) such that for all \(\mathcal{C}^{\infty }\) function \(G\) there exists a r.v. \(Z_{k}\) such that

and such that uniformly over all parameters

Then, we deduce that for all \(\mathcal{C}^{\infty }\) function \(G\) uniformly over all parameters

so that standard functional analysis arguments, see for instance [32, Th. 0.1], ensure that the bound of the statement holds.

To establish (27) and (28) we rely on the notion of Malliavin derivative that we do not recall, see for instance [17, Sect. 3 and 5]. Let \(Y\) be a Malliavin differentiable r.v. and denote by \(\mathscr{D}_{s} Y\) the Malliavin derivative at time \(s\) of \(Y\). We recall the following properties of the Malliavin derivative [17, Sect. 3]:

The key tool in Malliavin calculus is the so-called integration by parts formula, which reads

for any (regular enough) adapted process \(u\). In the sequel all derivatives will be taken in direction \(u\) where \(u(s) := J_{0,s}^{-1} V_{1}(\theta (s))\), \(s\in [0,t]\). For convenience, we use the notation \(\mathscr{D}_{u} Q := \langle \mathscr{D}_{\cdot }Q , u(\cdot ) \rangle _{L^{2}([0,t])}\). We also introduce the successive derivatives in direction \(u\) by setting recursively \(\mathscr{D}^{(k)}_{u} Q := \mathscr{D}_{u} (\mathscr{D}^{(k-1)}_{u} Q)\).

Let us state a general result on the Malliavin derivative of the solution of an SDE. Assume that \(X = (X_{j})_{1\le j \le d} \in{{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{ \text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}^{d}\) solves the autonomous SDE

where \(A\) and \(C\) are smooth maps from \({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{ \text{\tiny \textbf{R}}}}}^{d}\) to \({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{ \text{\tiny \textbf{R}}}}}^{d}\). Then the \(d\)-dimensional process \(Y =(Y_{j})_{1\le j \le d}\) whose \(j\)-th coordinate is \(Y_{j}(s) := \langle \mathscr{D}_{\cdot }X_{j}(s), u(\cdot ) \rangle _{L^{2}([0,s])}\) solves

In this last equation, \(\nabla A(x)\) is the \(d\times d\) matrix whose \((i,j)\)-entry equals \(\partial _{x_{j}} A_{i}(x)\), and similarly for \(\nabla C(x)\). This result can be established as follows. First of all if \(J^{X}(r,s)\) stands for the \(d\times d\) Jacobian matrix associated to the SDE \(X\) then for any \(0\le r \le s\)

see for instance [17, Eq. (5.2)]. By [17, Eq. (5.6)], \(\mathscr{D}_{r} X_{j}(s) = \Big (J^{X}(r,s) C(X(r)) \Big )_{j}\) and consequently the following identity holds in \({{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{ \text{\tiny \textbf{R}}}}}^{d}\)

from which we derive the above SDE.

In the particular case where \(X=\theta \), we find

We observe that

Combined with the chain rule (29), we deduce that for any integer \(k\ge 1\),

Let us set \(R(t) := \int _{0}^{t} u(s) dB(s)\). For every smooth function \(G\) and any Malliavin differentiable r.v. \(Z\), we apply the integration by parts formula to \(Q=G(\theta (t)) \mathscr{N}_{t}^{-1} Z\) and get

where \(\tilde{Z}\) is given by

Starting with \(Z_{0} = 1\) and applying iteratively this identity we obtain for some r.v. \(Z_{k}\)

thus proving (27). It remains to establish the bound (28).

First, observe that \(Z_{k}\) is a polynomial in \(\mathscr{N}_{t}^{-1}\), in \(\mathscr{D}_{u} \theta (t)\), \(\mathscr{D}_{u}^{(2)} \theta (t)\), … and in \(R(t)\), \(\mathscr{D}_{u} R(t)\), \(\mathscr{D}_{u}^{(2)} R(t)\), …. Indeed, the class of all such polynomials contains \(Z_{0}\), is stable under the action of \(\mathscr{D}_{u}\), and thus, remains stable under the map that leads from \(Z\) to \(\tilde{Z}\).

The assumption of the statement together with Lemma 5.3 allows us to bound the moments of \(\mathscr{N}_{t}^{-1}\) uniformly over all parameters. In addition, the Burkholder-Davis-Gundy inequality combined with Lemma 5.3 allows us to bound the moments of \(R(t)\) uniformly over all parameters. We are left with bounding the moments of \(\mathscr{D}_{u} \theta (t)\), \(\mathscr{D}_{u}^{(2)} \theta (t)\), … and \(\mathscr{D}_{u} R(t)\), \(\mathscr{D}_{u}^{(2)} R(t)\), ….

Consider the triplet \(X^{(0)}(s) = (\theta (s), J_{0,s}^{-1}, R(s)) \in{{\mathchoice{\text{\textbf{R}}}{ \text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}^{3}\). This is the solution of an autonomous SDE of the form (30), see Footnote 6, with

We can thus apply the general result stated above and let \(Y^{(0)} \in{{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{\text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}^{3}\) be the process of the Malliavin derivative of \(X^{(0)}\) in direction \(u\). Note that the only problematic term is the first component of \(A^{(0)}\) which contains the factor \(\mathbf{E}^{3/2}\). However, the function \(C^{(0)}\), together with the functions \(\nabla A^{(0)}(x)\) and \(\nabla C^{(0)}(x)\) that appear in the evolution equation of \(Y^{(0)}\), are bounded uniformly over all parameters. This remains true for any higher order derivatives of these functions and this will suffice for our purpose.

We then consider the process \(X^{(1)} := (X^{(0)}, Y^{(0)}) \in{{\mathchoice{\text{\textbf{R}}}{\text{\textbf{R}}}{ \text{\scriptsize \textbf{R}}}{\text{\tiny \textbf{R}}}}}^{6}\). It is straightforward that \(X^{(1)}\) solves an autonomous SDE of the form (30). We can thus iterate the arguments and build recursively some processes \(X^{(n)}\) and \(Y^{(n)}\). The functions \(\nabla A^{(n)}(x)\) and \(\nabla C^{(n)}(x)\) that appear in the evolution equation of \(Y^{(n)}\) are bounded uniformly over all parameters.

Since the r.v. \(\mathscr{D}_{u} \theta (t)\), \(\mathscr{D}_{u}^{(2)} \theta (t)\), … and \(\mathscr{D}_{u} R(t)\), \(\mathscr{D}_{u}^{(2)} R(t)\), … eventually appear in the entries of the sequence \(Y^{(n)}\), \(n\ge 0\), it suffices to bound the moments of \(\vert Y^{(n)}(t)\vert \) for any given \(n\ge 0\).

We write \(Y\) for a generic element among the sequence \(Y^{(n)}\), \(n\ge 0\). Recall that it solves

where \(C(x)\), \(\nabla A(x)\), \(\nabla C(x)\) are bounded uniformly over all parameters. Applying Itô’s formula, one can check that the process \(U(s) := \ln (1+\vert Y(s)\vert ^{2})\) satisfies

where \(\alpha \) and \(\beta \) are adapted processes such that \(\vert \alpha (s)\vert \) and \(\vert \beta (s)\vert \) are bounded by some deterministic constant \(K>0\) uniformly over all parameters and all \(s\ge 0\). Lemma A.2 allows us to obtain bounds on the \(p\)-th moments of \(\int _{0}^{t} \alpha (s) ds + \int _{0}^{t}\beta (s) dB(s)\). In addition, the moments of the first term on the r.h.s. can be bounded since there exists some deterministic constant \(K'>0\) such that

□
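The Itô computation invoked at the end of the preceding proof can be illustrated in a generic setting. The following is only a sketch under stated assumptions, not the exact equation of the proof: we take a process \(Y\in \mathbf{R}^{d}\) driven by a one-dimensional Brownian motion \(B\) with \(dY = a\, ds + b\, dB\), where \(a\), \(b\) are hypothetical adapted \(\mathbf{R}^{d}\)-valued coefficients satisfying \(|a(s)| + |b(s)| \le M(1+|Y(s)|)\) for a deterministic constant \(M\). Then \(U = \ln (1+|Y|^{2})\) satisfies

$$ dU = \bigg( \frac{2\langle Y, a\rangle + |b|^{2}}{1+|Y|^{2}} - \frac{2\langle Y, b\rangle ^{2}}{(1+|Y|^{2})^{2}} \bigg) ds + \frac{2\langle Y, b\rangle}{1+|Y|^{2}}\, dB \;. $$

Since \(|Y|(1+|Y|) \le \tfrac{3}{2}(1+|Y|^{2})\) and \((1+|Y|)^{2} \le 2(1+|Y|^{2})\), both the drift and the martingale coefficients are bounded by a constant that depends only on \(M\). This is the mechanism by which the logarithm converts linear growth of the coefficients into bounded ones.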

It remains to check the assumption of Proposition 5.4 at time \(t=1\) (this is arbitrary). In the classical proof of Hörmander’s Theorem with Malliavin Calculus, this is where one uses the so-called Hörmander’s Bracket Condition (see Remark 5.2) via repeated applications of Itô’s formula involving the process \(\mathscr{C}_{t}\), see for instance [17, Proof of Theorem 6.3]. This would work with the original coordinates, but with the distorted coordinates this does not seem to work out (easily). We proceed differently and write

Lemma 5.3 allows us to bound the second term. To bound the first term, we need some control on the time spent near \(\pi {{\mathchoice{\text{\textbf{Z}}}{\text{\textbf{Z}}}{\text{\scriptsize \textbf{Z}}}{ \text{\tiny \textbf{Z}}}}}\) by the diffusion \(\theta \): this is provided by Lemma A.6, which relies on a comparison of \(\theta \) with the solution of the deterministic part of its SDE. This completes the proof of (26).

5.2 Hypocoercivity - convergence step

In this subsection, we argue simultaneously in the two sets of coordinates. We abbreviate \(\mu _{\lambda }\) and \(\mu _{\lambda }^{(E)}\) to \(\mu \). All the \(L^{p}\) spaces will be taken w.r.t. the measure \(\mu \) (and not the Lebesgue measure). We write \(\|\cdot \|\) and \(\langle \cdot ,\cdot \rangle \) for the \(L^{2}\) norm and inner product w.r.t. \(\mu \).

By Lemma 3.1, uniformly over all parameters the measure \(\mu \) is equivalent to Lebesgue measure. This implies that the corresponding \(L^{p}\) norms are equivalent. In addition, we have the following Poincaré inequality

uniformly over all parameters. Indeed,

and

so that

For some constant \(c>0\) (to be adjusted later on) we define the following \(H^{1}\) norm (w.r.t. \(\mu \))

Let \(\mathcal{L}\) be the generator of our diffusion

where

Let \(\mathcal{L}^{*}\) be its adjoint in \(L^{2}\). The unique decomposition of \(-\mathcal{L}^{*}\) into a symmetric (actually self-adjoint) and an anti-symmetric part is given by \(-\mathcal{L}^{*}= A^{*}A + B\) where

and \(A^{*}\) is the adjoint in \(L^{2}\) of \(A\)

It is a standard fact that the centered density \(q_{t} = (p_{t} / \mu - 1)\) w.r.t. the invariant measure of a diffusion satisfies the PDE

$$ \partial _{t} q_{t} = \mathcal{L}^{*}q_{t} \;. $$

In the previous section we dealt with regularity issues and showed that \(p_{1}\) (and therefore \(q_{1}\) since \(\mu \) is bounded from above and below) is smooth: more precisely, its \(\mathcal{C}^{1}\)-norm is bounded uniformly over all parameters. Our goal is to prove an exponential decay of the \(L^{\infty}\) norm of \(q_{t}\). By Sobolev embedding it suffices to prove an exponential decay in \(H^{1}\).

The natural route to such an estimate is to prove some coercivity: unfortunately this fails. Indeed, we have

and since \(\sigma \) vanishes at 0, \(\| Af\|\) is not equivalent to \(\| f'\|\) and so we cannot use the Poincaré inequality to get a bound in terms of \(\|f\|\). Actually it is possible to check that the operator \(A^{*}A\) does not have a spectral gap, so that one cannot get exponential decay of the \(L^{2}\)-norm of \(q_{t}\) from the previous computation. In \(H^{1}\) the situation is similar. Generally speaking, if we work with “standard” norms then we do not have enough control on the derivative(s) of the function at stake near 0.
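The computation behind this obstruction follows in one line from the decomposition \(-\mathcal{L}^{*}= A^{*}A + B\). As a sketch, assuming only that \(f\) is real-valued and lies in the domains of the operators involved:

$$ \langle f, \mathcal{L}^{*} f\rangle = -\langle f, A^{*}A f\rangle - \langle f, B f\rangle = -\|Af\|^{2} \;, $$

where the last equality uses the anti-symmetry of \(B\), which gives \(\langle f, Bf\rangle = -\langle Bf, f\rangle = -\langle f, Bf\rangle \) and hence \(\langle f, Bf\rangle = 0\). The \(L^{2}\) dissipation therefore only involves \(\|Af\|\), and \(B\) plays no role at this level.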

These computations do not take advantage of the anti-symmetric part \(B\) of \(\mathcal{L}^{*}\). The general idea of hypocoercivity [35] consists in exploiting the successive Lie brackets between \(A\) and \(B\) to recover some coercivity. One can check that \([A,B] f := AB f-BA f\) contains the term \(-\mathbf{E}^{3/2} \sigma ' f'\) and \([[A,B],B] f\) contains the term \(\mathbf{E}^{3} \sigma '' f'\): since \(\sigma ''\) does not vanish anymore at \(x=0\), these terms should offer the required control on the derivative at 0. To implement this idea, one constructs a twisted \(H^{1}\)-norm, denoted \(\mathfrak{H}^{1}\), that contains some successive Lie brackets of \(A\) and \(B\), see below for the precise expression, and that satisfies the following properties.

Proposition 5.5

There exists a norm \(\|\cdot \|_{\mathfrak{H}^{1}}\), derived from an inner product \(\langle \cdot ,\cdot \rangle _{\mathfrak{H}^{1}}\) such that:

(1) There exists \(\kappa >0\) such that uniformly over all parameters we have

$$ \|f\|_{H^{1}} \le \| f \|_{\mathfrak{H}^{1}} \le (1+\kappa ) \|f\|_{H^{1}} \;. $$

(2) There exists \(K>0\) such that uniformly over all parameters we have

$$ \langle f, \mathcal{L}^{*}f\rangle _{\mathfrak{H}^{1}} \le -K \|f'\|^{2} \;. $$

With this proposition at hand, we can easily conclude the proof of the main result of this section.

Proof of Theorem 4

In the previous subsection, we showed that there exists \(C_{1} > 0\) such that uniformly over all parameters

Set \(q_{t} := \frac{p_{t}}{\mu} - 1\) and recall that \(\partial _{t} q_{t} = \mathcal{L}^{*}q_{t}\). The second property of the proposition then yields

Since \(\int q_{t} d\mu = 0\), Poincaré inequality (31) together with the first property of the proposition show that for some constant \(K'>0\)

Applying the first property again, we deduce that uniformly over all the parameters, for all \(t \geq 1\),

for some constants \(c',C'>0\). Combining this bound with (32), applying the Sobolev embedding \(H^{1}(dx) \subset L^{\infty}(dx)\) and the fact that \(\mu \) is equivalent to the Lebesgue measure, we obtain the desired result. □
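The chain of inequalities in the proof above can be summarized as a Grönwall-type argument. The following is a hedged sketch: it assumes that the \(H^{1}\) norm has the form \(\|f\|_{H^{1}}^{2} = \|f\|^{2} + c\|f'\|^{2}\) and that the Poincaré inequality reads \(\|f\| \le C_{P} \|f'\|\) for centered \(f\); the symbols \(C_{P}\) and \(\gamma \) are introduced here for illustration only. By the second property of Proposition 5.5,

$$ \partial _{t} \|q_{t}\|_{\mathfrak{H}^{1}}^{2} = 2 \langle q_{t}, \mathcal{L}^{*} q_{t}\rangle _{\mathfrak{H}^{1}} \le -2K \|q_{t}'\|^{2} \;, $$

while the first property and the Poincaré inequality give

$$ \|q_{t}\|_{\mathfrak{H}^{1}}^{2} \le (1+\kappa )^{2} \|q_{t}\|_{H^{1}}^{2} \le (1+\kappa )^{2} (C_{P}^{2} + c) \|q_{t}'\|^{2} \;. $$

Setting \(\gamma := K (1+\kappa )^{-2} (C_{P}^{2}+c)^{-1}\), we obtain \(\partial _{t} \|q_{t}\|_{\mathfrak{H}^{1}}^{2} \le -2\gamma \|q_{t}\|_{\mathfrak{H}^{1}}^{2}\), so that for all \(t\ge 1\)

$$ \|q_{t}\|_{H^{1}} \le \|q_{t}\|_{\mathfrak{H}^{1}} \le e^{-\gamma (t-1)} \|q_{1}\|_{\mathfrak{H}^{1}} \le (1+\kappa )\, e^{-\gamma (t-1)} \|q_{1}\|_{H^{1}} \;. $$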

The rest of this subsection is devoted to the proof of Proposition 5.5. We start by introducing successive Lie brackets: actually we identify within the successive Lie brackets between \(A\) and \(B\) the terms \(\mathbf{E}^{3/2} C_{k}\) that will allow us to gain coercivity and we denote the remainder \(R_{k}\). Introduce \(C_{0} f := A f\), and recursively for \(k=1,2\)

and finally

We now introduce a family of coefficients indexed by \(\delta \in (0,1/4)\). Set \(b_{-1} := \delta \) and for every \(k\ge 0\)

One can check that for all \(0\le k \le 2\)

We then introduce

as well as \(\langle f,g \rangle _{\mathfrak{H}^{1}} = \langle f,g \rangle _{{H}^{1}} + \left [\mkern - 3 mu\left [f,g\right ]\mkern - 3 mu\right ]\) where

This norm depends on two parameters, \(c\) and \(\delta \), that will be adjusted later on.

As \(b_{k}^{2} = \delta a_{k} a_{k+1}\), we have

Note that for every \(k\in \{0,1,2,3\}\), we have \(\|C_{k} f\| \le 2\|f'\|\). Since \(\mathbf{E}\ge 1\), we easily deduce that \(0 \le \left [\mkern - 3 mu\left [f,f\right ]\mkern - 3 mu\right ] \le \kappa \|f'\|^{2}\) for some constant \(\kappa >0\) independent of all parameters, and the first property of Proposition 5.5 follows.

The proof of the second property is carried out in two steps. First, we show that there exists a constant \(C>0\) such that uniformly over all parameters,

Second we show that there exists \(\delta \in (0,1/4)\) and \(K'>0\) such that uniformly over all parameters

With these two bounds at hand, we get

It suffices to set \(c:= K' (2C)^{-1}\) and \(K=K'/2\) in order to deduce the second property of the proposition.
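The choice of \(c\) and \(K\) can be checked by a short computation. The forms of the two bounds used here are assumptions made for the purpose of illustration: we suppose that \(\langle f,g\rangle _{H^{1}} = \langle f,g\rangle + c \langle f',g'\rangle \), that (34) reads \(\langle f', (\mathcal{L}^{*}f)'\rangle \le C \|f'\|^{2}\), and that (35) reads \(\langle f, \mathcal{L}^{*}f\rangle + \left [\mkern - 3 mu\left [f,\mathcal{L}^{*}f\right ]\mkern - 3 mu\right ] \le -K' \|f'\|^{2}\). Under these assumptions,

$$ \langle f, \mathcal{L}^{*}f\rangle _{\mathfrak{H}^{1}} = \langle f, \mathcal{L}^{*}f\rangle + \left [\mkern - 3 mu\left [f,\mathcal{L}^{*}f\right ]\mkern - 3 mu\right ] + c \langle f', (\mathcal{L}^{*}f)'\rangle \le (cC - K') \|f'\|^{2} \;, $$

and with \(c = K'(2C)^{-1}\) the right-hand side equals \(-\frac{K'}{2} \|f'\|^{2} = -K \|f'\|^{2}\).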

Remark 5.6

Our proof is close to [35, Proof of Theorem 24] and would be essentially the same if we had taken \(c=0\), that is, if we had taken \(\|f\|_{2}^{2}\) instead of \(\|f\|_{H^{1}}^{2}\) in the definition of the \(\mathfrak{H}^{1}\) norm. Actually, with the original coordinates, the proof would carry through with \(c=0\). With the distorted coordinates however, the first property of Proposition 5.5 would fail with \(c=0\).

We proceed with the proof of (34). For convenience, we define the operator \(Df=f'\). We then have

Since \(B\) is anti-symmetric we find \(\langle Df, DBf\rangle = \langle Df, [D,B] f\rangle \). Note that \([D,B] f = (b - 2\sigma \sigma ' - \sigma ^{2} \frac{\mu '}{\mu})' f'\). The key point here is that the only unbounded factor (\(\mathbf{E}^{3/2}\) which appears in \(b\)) is killed upon differentiation. A simple computation shows that there exists \(C_{1}\) such that

On the other hand, we have

Recall the bounds on \(\mu \) stated in Lemma 3.1. Recall that Young’s inequality yields \(XY \le \varepsilon X^{2} + \frac{1}{4 \varepsilon } Y^{2}\) for all \(\varepsilon >0\). There exists \(C_{2}>0\) such that

and

We deduce that there exists \(C_{3} > 0\) such that

Combining (36) and (37) we obtain (34).

We turn to the proof of (35), which is very close to [35, Proof of Theorem 24]. The main difference lies in the unboundedness of the coefficients of the operator at stake, namely the term \(E^{3/2}\) in \(B\) in the distorted coordinates, that requires some additional care.

First of all, we say that an operator \(Q\) is bounded relatively to some operators \(\{E_{j}\}_{j}\) if