Abstract

We prove the existence and the linear stability of Cantor families of small amplitude time quasi-periodic standing water wave solutions—namely periodic and even in the space variable x—of a bi-dimensional ocean with finite depth under the action of pure gravity. Such a result holds for all the values of the depth parameter in a Borel set of asymptotically full measure. This is a small divisor problem. The main difficulties are the fully nonlinear nature of the gravity water waves equations—the highest order x-derivative appears in the nonlinear term but not in the linearization at the origin—and the fact that the linear frequencies grow just in a sublinear way at infinity. We overcome these problems by first reducing the linearized operators, obtained at each approximate quasi-periodic solution along a Nash–Moser iterative scheme, to constant coefficients up to smoothing operators, using pseudo-differential changes of variables that are quasi-periodic in time. Then we apply a KAM reducibility scheme which requires very weak Melnikov non-resonance conditions which lose derivatives both in time and space. Despite the fact that the depth parameter moves the linear frequencies by just exponentially small quantities, we are able to verify such non-resonance conditions for most values of the depth, extending degenerate KAM theory.


1 Introduction

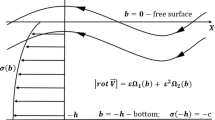

We consider the Euler equations of hydrodynamics for a 2-dimensional perfect, incompressible, inviscid, irrotational fluid under the action of gravity, filling an ocean with finite depth h and with space periodic boundary conditions, namely the fluid occupies the region

In this paper we prove the existence and the linear stability of small amplitude quasi-periodic in time solutions of the pure gravity water waves system

where \( g > 0 \) is the acceleration of gravity. The unknowns of the problem are the free surface \( y = \eta (t,x) \) and the velocity potential \( \Phi : {{\mathcal {D}}}_\eta \rightarrow {\mathbb {R}}\), i.e. the irrotational velocity field \( v =\nabla _{x,y} \Phi \) of the fluid. The first equation in (1.2) is the Bernoulli condition stating the continuity of the pressure at the free surface. The last equation in (1.2) expresses the fact that the fluid particles on the free surface always remain part of it.

Following Zakharov [60] and Craig–Sulem [26], the evolution problem (1.2) may be written as an infinite-dimensional Hamiltonian system in the unknowns \( (\eta (t,x), \psi (t,x) ) \) where \( \psi (t,x)=\Phi (t,x,\eta (t,x)) \) is, at each instant t, the trace at the free boundary of the velocity potential. Given the shape \(\eta (t,x)\) of the domain top boundary and the Dirichlet value \(\psi (t,x)\) of the velocity potential at the top boundary, there is a unique solution \(\Phi (t,x,y; h)\) of the elliptic problem

As proved in [26], system (1.2) is then equivalent to the Craig–Sulem–Zakharov system

where \( G(\eta , h)\) is the Dirichlet–Neumann operator defined as

(we denote by \( \eta _x \) the space derivative \( \partial _x \eta \)). The reason for the name “Dirichlet–Neumann” is that \(G(\eta ,h)\) maps the Dirichlet datum \(\psi \) into the (normalized) normal derivative \(G(\eta ,h)\psi \) at the top boundary (Neumann datum). The operator \( G(\eta , h) \) is linear in \( \psi \), self-adjoint with respect to the \( L^2 \) scalar product and positive-semidefinite, and its kernel contains only the constant functions. The Dirichlet–Neumann operator is a pseudo-differential operator with principal symbol \( D \tanh (hD) \), with the property that \( G(\eta , h ) - D \tanh (hD) \) is in \( \textit{OPS}^{-\infty } \) when \( \eta (x) \in {{\mathcal {C}}}^\infty \). This operator was introduced in Craig–Sulem [26] and its properties are now well understood thanks to the works of Lannes [46, 47], Alazard–Métivier [5], Alazard–Burq–Zuily [2] and Alazard–Delort [4]. In Appendix A we provide a self-contained analysis of the Dirichlet–Neumann operator adapted to our purposes.

Furthermore, equations (1.4) are the Hamiltonian system (see [26, 60])

where \( \nabla \) denotes the \( L^2 \)-gradient, and the Hamiltonian

is the sum of the kinetic and potential energies expressed in terms of the variables \( (\eta , \psi ) \). The symplectic structure induced by (1.6) is the standard Darboux 2-form

for all \( u_1 = (\eta _1, \psi _1) \), \( u_2 = (\eta _2, \psi _2) \). In the paper we will often write \(G(\eta ), H(\eta ,\psi )\) instead of \(G(\eta ,h), H(\eta ,\psi , h)\), omitting for simplicity the dependence on the depth parameter h.

The phase space of (1.4) is

$$ (\eta , \psi ) \in H^1_0 ({\mathbb {T}}) \times {\dot{H}}^1 ({\mathbb {T}}) \quad \text{where} \quad {\dot{H}}^1 ({\mathbb {T}}) := H^1 ({\mathbb {T}}) / \sim \tag{1.9} $$

is the homogeneous space obtained by the equivalence relation \( \psi _1 (x) \sim \psi _2 (x) \) if and only if \( \psi _1 (x) - \psi _2 (x) = c \) is a constant, and \(H^1_0({\mathbb {T}})\) is the subspace of \(H^1({\mathbb {T}})\) of zero average functions. For simplicity of notation we denote the equivalence class \( [\psi ] \) by \( \psi \). Note that the second equation in (1.4) is in \( {\dot{H}}^1 ({\mathbb {T}}) \), as it is natural because only the gradient of the velocity potential has a physical meaning. Since the quotient map induces an isometry of \( {\dot{H}}^1 ({\mathbb {T}}) \) onto \( H^1_0 ({\mathbb {T}}) \), it is often convenient to identify \( \psi \) with a function with zero average.

The water waves system (1.4)–(1.6) exhibits several symmetries. First of all, the mass \( \int _{\mathbb {T}}\eta \, dx \) is a first integral of (1.4). In addition, the subspace of functions that are even in x,

$$ \eta (-x) = \eta (x), \quad \psi (-x) = \psi (x), \tag{1.10} $$

is invariant under (1.4). In this case also the velocity potential \( \Phi (x,y) \) is even and \( 2 \pi \)-periodic in x and so the x-component of the velocity field \( v = (\Phi _x, \Phi _y) \) vanishes at \( x = k \pi \), for all \( k \in {\mathbb {Z}}\). Hence there is no flow of fluid through the lines \( x = k \pi \), \( k \in {\mathbb {Z}}\), and a solution of (1.4) satisfying (1.10) describes the motion of a liquid confined between two vertical walls.

Another important symmetry of the water waves system is reversibility, namely equations (1.4)–(1.6) are reversible with respect to the involution \( \rho : (\eta , \psi ) \mapsto (\eta , - \psi ) \), or, equivalently, the Hamiltonian H in (1.7) is even in \( \psi \):

$$ H(\eta , - \psi ) = H(\eta , \psi ). \tag{1.11} $$

As a consequence it is natural to look for solutions of (1.4) satisfying

$$ \eta (-t, x) = \eta (t, x), \quad \psi (-t, x) = - \psi (t, x) \quad \text{for all } t, x, \tag{1.12} $$

namely \( \eta \) is even in time and \( \psi \) is odd in time. Solutions of the water waves equations (1.4) satisfying (1.10) and (1.12) are called gravity standing water waves.

In this paper we prove the first existence result of small amplitude time quasi-periodic standing waves solutions of the pure gravity water waves equations (1.4), for most values of the depth h, see Theorem 1.1.

The existence of standing water waves is a small divisor problem, which is particularly difficult because (1.4) is a fully nonlinear system of PDEs, the nonlinearity contains derivatives of order higher than those present in the linearized system at the origin, and the linear frequencies grow as \( \sim j^{1/2} \). The existence of small amplitude time-periodic gravity standing wave solutions for bi-dimensional fluids was first proved by Plotnikov and Toland [53] in finite depth and by Iooss, Plotnikov and Toland in [42] in infinite depth, see also [38, 39]. More recently, the existence of time periodic gravity-capillary standing wave solutions in infinite depth has been proved by Alazard and Baldi [1]. Next, both the existence and the linear stability of time quasi-periodic gravity-capillary standing wave solutions, in infinite depth, have been proved by Berti and Montalto in [21], see also the expository paper [20].

We also mention that the bifurcation of small amplitude one-dimensional traveling gravity water wave solutions (namely traveling waves in bi-dimensional fluids like (1.4)) dates back to Levi-Civita [48]; note that standing waves are not traveling because they are even in space, see (1.10). For three-dimensional fluids, the existence of small amplitude traveling water wave solutions with space periodic boundary conditions has been proved by Craig and Nicholls [25] for the gravity-capillary case (which is not a small divisor problem) and by Iooss and Plotnikov [40, 41] in the pure gravity case (which is a small divisor problem).

From a physical point of view, it is natural to consider the depth h of the ocean as a fixed physical quantity and to introduce the space wavelength \( 2 \pi \varsigma \) as an internal parameter. Rescaling time, space and amplitude of the solution \((\eta (t,x), \psi (t,x))\) of (1.4) as

we get that \(({\tilde{\eta }}(\tau ,{\tilde{x}}), {\tilde{\psi }}(\tau ,{\tilde{x}}))\) satisfies

Choosing the scaling parameters \( \varsigma , \mu , \alpha \) such that \( \frac{\varsigma ^2}{ \alpha \mu } = 1 \), \( \frac{g \alpha }{\varsigma \mu } = 1 \) we obtain system (1.4) where the gravity constant g has been replaced by 1 and the depth parameter h by

Changing the parameter \(\mathtt {{h}}\) can be interpreted as changing the space period \( 2 \pi \varsigma \) of the solutions and not the depth h of the water, giving results for a fixed equation (1.4).
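
The two normalization conditions on the scaling parameters can be solved explicitly. The following is a minimal sketch, assuming positive parameters and taking positive square roots; the function name `scaling_parameters` and the sample values of g and \( \varsigma \) are illustrative and not part of the paper.

```python
import math

def scaling_parameters(g: float, varsigma: float) -> tuple[float, float]:
    """Solve varsigma^2/(alpha*mu) = 1 and g*alpha/(varsigma*mu) = 1 for (mu, alpha).

    Eliminating mu = g*alpha/varsigma from the first condition gives
    alpha^2 = varsigma^3 / g (positive roots are an assumption of this sketch).
    """
    alpha = math.sqrt(varsigma**3 / g)
    mu = math.sqrt(g * varsigma)
    return mu, alpha

# Illustrative values only: gravity in SI units and an arbitrary wavelength factor.
g, varsigma = 9.81, 2.5
mu, alpha = scaling_parameters(g, varsigma)
cond_1 = varsigma**2 / (alpha * mu)
cond_2 = g * alpha / (varsigma * mu)
print(cond_1, cond_2)
```

Both printed quantities should equal 1 up to rounding, which confirms that the pair \( (\mu , \alpha ) \) is determined by g and \( \varsigma \) alone.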

In the sequel we shall look for time quasi-periodic solutions of the water waves system

with \(\eta (t) \in H^1_0({\mathbb {T}}_x) \) and \(\psi (t) \in {\dot{H}}^1 ({\mathbb {T}}_x) \), actually belonging to more regular Sobolev spaces.

1.1 Main result

We look for small amplitude solutions of (1.14). Hence a fundamental rôle is played by the dynamics of the system obtained linearizing (1.14) at the equilibrium \((\eta , \psi ) = (0,0)\), namely

where \( G(0, {\mathtt {{h}}}) = D \tanh ({\mathtt {{h}}} D) \) is the Dirichlet–Neumann operator at the flat surface \( \eta = 0 \). In the compact Hamiltonian form as in (1.6), system (1.15) reads

which is the Hamiltonian system generated by the quadratic Hamiltonian (see (1.7))

The solutions of the linear system (1.15), i.e. (1.16), even in x, satisfying (1.12) and (1.9), are

with linear frequencies of oscillation

$$ \omega _j := \omega _j ({\mathtt {{h}}}) := \sqrt{j \tanh ({\mathtt {{h}}} j)}, \quad j \ge 1. \tag{1.19} $$

Note that, since \( j \mapsto j \tanh ({\mathtt {{h}}} j) \) is monotone increasing, all the linear frequencies are simple.
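
The simplicity of the linear frequencies can be checked numerically on a finite range of indices. The sketch below assumes the dispersion relation \( \omega _j = \sqrt{j \tanh ({\mathtt {{h}}} j)} \); the helper names and the sampled depths are illustrative choices, and a finite check of this kind is of course no substitute for the monotonicity argument.

```python
import math

def omega(j: int, h: float) -> float:
    """Linear frequency omega_j(h) = sqrt(j * tanh(h * j))."""
    return math.sqrt(j * math.tanh(h * j))

def frequencies_are_simple(h: float, j_max: int) -> bool:
    """Check that omega_1 < omega_2 < ... < omega_{j_max} for the given depth."""
    freqs = [omega(j, h) for j in range(1, j_max + 1)]
    return all(a < b for a, b in zip(freqs, freqs[1:]))

for depth in (0.1, 1.0, 5.0):
    print(depth, frequencies_are_simple(depth, 2000))
```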

The main result of the paper proves that most solutions (1.18) of the linear system (1.15) can be continued to solutions of the nonlinear water waves equations (1.14) for most values of the parameter \( {\mathtt {{h}}} \in [{\mathtt {{h}}}_1, {\mathtt {{h}}}_2] \). More precisely we look for quasi-periodic solutions \( u (\widetilde{\omega }t) = (\eta , \psi )( \widetilde{\omega }t) \) of (1.14), with frequency \( \widetilde{\omega }\in {\mathbb {R}}^\nu \) (to be determined), close to solutions (1.18) of (1.15), in the Sobolev spaces of functions

where \(\langle \ell ,j \rangle := \max \{ 1, |\ell |, |j| \} \). For

one has \( H^s ( {\mathbb {T}}^{\nu +1},{\mathbb {R}}) \subset L^\infty ( {\mathbb {T}}^{\nu +1},{\mathbb {R}})\), and \(H^s({\mathbb {T}}^{\nu +1},{\mathbb {R}})\) is an algebra.

Fix an arbitrary finite subset \( {\mathbb {S}}^+\subset {\mathbb {N}}^+ := \{1,2, \ldots \} \) (tangential sites) and consider the solutions of the linear equation (1.15)

which are Fourier supported on \( {\mathbb {S}}^+\). We denote by \( \nu := | {\mathbb {S}}^+| \) the cardinality of \( {\mathbb {S}}^+\).

Theorem 1.1

(KAM for gravity water waves in finite depth) For every choice of the tangential sites \( {\mathbb {S}}^+\subset {\mathbb {N}}{\setminus } \{0\} \), there exist \( {\bar{s}} > \frac{|{\mathbb {S}}^+| + 1}{2} \) and \( \varepsilon _0 \in (0,1) \) such that for every vector \( \vec {a} := (a_j)_{j \in {\mathbb {S}}^+} \), with \(a_j > 0\) for all \(j \in {\mathbb {S}}^+\) and \( |\vec {a}| \le \varepsilon _0 \), there exists a Cantor-like set \( {\mathcal {G}}\subset [{\mathtt {{h}}}_1, {\mathtt {{h}}}_2] \) with asymptotically full measure as \( \vec {a} \rightarrow 0 \), i.e.

such that, for any \( {\mathtt {{h}}} \in {\mathcal {G}}\), the gravity water waves system (1.14) has a time quasi-periodic solution \( u( {\widetilde{\omega }} t, x ) = (\eta ( {\widetilde{\omega }} t, x), \psi ( {\widetilde{\omega }} t, x) ) \), with Sobolev regularity \( (\eta , \psi ) \in H^{{\bar{s}}} ( {\mathbb {T}}^\nu \times {\mathbb {T}}, {\mathbb {R}}^2) \), with a Diophantine frequency vector \( {\widetilde{\omega }} := {\widetilde{\omega }}(\mathtt {{h}}, \vec {a}) := ({\widetilde{\omega }}_j )_{j \in {\mathbb {S}}^+} \in {\mathbb {R}}^\nu \), of the form

with \( {{\widetilde{\omega }}}(\mathtt {{h}}, \vec {a}) \rightarrow \vec \omega ({\mathtt {{h}}}) := (\omega _j ({\mathtt {{h}}}))_{j \in {{\mathbb {S}}}^+} \) as \( \vec {a} \rightarrow 0 \), and the functions \( r_1 ( \varphi ,x ), r_2(\varphi , x)\) are \(o( |\vec {a}| )\)-small in \( H^{{\bar{s}}} ( {\mathbb {T}}^\nu \times {\mathbb {T}}, {\mathbb {R}}) \), i.e. \(\Vert r_i \Vert _{{\bar{s}}}/ |\vec {a}| \rightarrow 0\) as \(|\vec {a}| \rightarrow 0\) for \(i = 1, 2\). The solution \( (\eta ({\widetilde{\omega }} t, x), \psi ({\widetilde{\omega }} t, x)) \) is even in x, \( \eta \) is even in t and \( \psi \) is odd in t. In addition these quasi-periodic solutions are linearly stable, see Theorem 1.2.

Let us make some comments on the result.

No global wellposedness results concerning the initial value problem of the water waves equations (1.4) under periodic boundary conditions are known so far. Global existence results have been proved for smooth Cauchy data rapidly decaying at infinity in \( {\mathbb {R}}^d \), \( d = 1, 2 \), exploiting the dispersive properties of the flow. For three dimensional fluids (i.e. \( d = 2 \)) it has been proved independently by Germain–Masmoudi–Shatah [33] and Wu [59]. In the more difficult case of bi-dimensional fluids (i.e. \( d = 1 \)) it has been proved by Alazard–Delort [4] and Ionescu–Pusateri [37].

In the case of periodic boundary conditions, Ifrim–Tataru [36] proved, for small initial data, a cubic lifespan of existence, which is longer than the one provided by the local existence theory alone, see for example [3]. For longer times, we mention the almost global existence result in Berti–Delort [19] for gravity-capillary space periodic water waves.

The Nash–Moser-KAM iterative procedure used to prove Theorem 1.1 selects many values of the parameter \( {\mathtt {{h}}} \in [{\mathtt {{h}}}_1, {\mathtt {{h}}}_2] \) that give rise to the quasi-periodic solutions (1.23), which are defined for all times. By a Fubini-type argument it also follows that, for most values of \({\mathtt {{h}}} \in [{\mathtt {{h}}}_1, {\mathtt {{h}}}_2] \), there exist quasi-periodic solutions of (1.14) for most values of the amplitudes \( | \vec {a} | \le \varepsilon _0 \). The fact that we find quasi-periodic solutions only for a proper subset of parameters is not a technical issue, because the gravity water waves equations (1.4) are expected not to be integrable, see [27, 28] in the case of infinite depth.

The dynamics of the pure gravity and gravity-capillary water waves equations is very different:

- (i): The pure gravity water waves vector field in (1.14) is a singular perturbation of the linearized vector field at the origin in (1.15), which, after symmetrization, is \( |D_x|^{\frac{1}{2}}{\tanh ^{\frac{1}{2}}(\mathtt {{h}}|D_x|)}\); in fact, the linearization of the nonlinearity gives rise to a transport vector field \(V\partial _x\), see (1.43). On the other hand, the gravity capillary vector field is quasi-linear and contains derivatives of the same order as the linearized vector field at the origin, which is \(\sim |D_x|^{\frac{3}{2}}\). This difference, which is well known in the water waves literature, requires a very different analysis of the linearized operator (Sects. 6–12) with respect to the gravity capillary case in [1, 21], see Remark 1.4.

- (ii): The linear frequencies \( \omega _j \) in (1.19) of the pure gravity water waves grow like \(\sim j^{\frac{1}{2}}\) as \( j \rightarrow + \infty \), while, in the presence of surface tension \( \kappa \), the linear frequencies are \( \sqrt{(1+ \kappa j^2)j \tanh ({\mathtt {{h}}}j) } \sim j^{\frac{3}{2}}\). This makes a substantial difference for the development of KAM theory. When the linear frequencies grow sublinearly, \(\sim j^\alpha \) with \(\alpha <1\), one may impose only very weak second order Melnikov non-resonance conditions, see e.g. (1.36), which lose also space (and not only time) derivatives along the KAM reducibility scheme. This is not the case of the abstract infinite-dimensional KAM theorems [44, 45, 54] where the linear frequencies grow as \( j^\alpha \), \( \alpha \ge 1 \), and the perturbation is bounded. In this paper we overcome the difficulties posed by the sublinear growth \(\sim j^{\frac{1}{2}}\) and by the unboundedness of the water waves vector field thanks to a regularization procedure performed on the linearized PDE at each approximate quasi-periodic solution obtained along a Nash–Moser iterative scheme, see the regularized system (1.41). This regularization strategy is in principle applicable to a broad class of PDEs where the second order Melnikov non-resonance conditions lose space derivatives.

- (iii):

-

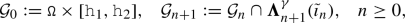

The linear frequencies (1.19) vary with \(\mathtt {{h}}\) only by exponentially small quantities: they admit the asymptotic expansion

$$\begin{aligned}&\sqrt{j \tanh ({\mathtt {{h}}} j)} = \sqrt{j} + r(j,\mathtt {{h}}) \\&\quad \mathrm{where} \quad \big | \partial _{\mathtt {{h}}}^k r(j,\mathtt {{h}}) \big | \le C_k e^{ - {\mathtt {{h}}} j} \quad \forall k \in {\mathbb {N}}, \ \forall j \ge 1, \end{aligned} \tag{1.24}$$

uniformly in \( {\mathtt {{h}}} \in [{\mathtt {{h}}}_1, {\mathtt {{h}}}_2] \), where the constant \(C_k\) depends only on k and \(\mathtt {{h}}_1\). Nevertheless we shall be able, extending the degenerate KAM theory approach in [11, 21], to use the finite depth parameter \( \mathtt {{h}}\) to impose the required Melnikov non-resonance conditions, see (1.36) and Sects. 3 and 4.2. On the other hand, for the gravity capillary water waves considered in [21], the surface tension parameter \( \kappa \) moves the linear frequencies \( \sqrt{(1+ \kappa j^2)j \tanh ({\mathtt {{h}}}j) } \) by polynomial quantities \( O( j^{3/2})\).
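
The exponential smallness of the remainder \( r(j,\mathtt {{h}}) = \sqrt{j \tanh ({\mathtt {{h}}} j)} - \sqrt{j} \) can be illustrated numerically for \( k = 0 \). The sketch below is not taken from the paper: it uses the elementary inequality \( 1 - \tanh x \le 2 e^{-2x} \), which gives \( | r(j,\mathtt {{h}}) | \le 2 \sqrt{j}\, e^{-2 \mathtt {{h}} j} \), a bound of the same type as (1.24). The function names and the sampled depths are illustrative assumptions.

```python
import math

def remainder(j: int, h: float) -> float:
    """r(j, h) = sqrt(j*tanh(h*j)) - sqrt(j), evaluated without cancellation."""
    e = math.exp(-2.0 * h * j)
    one_minus_tanh = 2.0 * e / (1.0 + e)   # exact identity for 1 - tanh(h*j)
    tanh_val = 1.0 - one_minus_tanh
    # Uses sqrt(t) - 1 = -(1 - t) / (1 + sqrt(t)) to avoid subtracting close numbers.
    return -math.sqrt(j) * one_minus_tanh / (1.0 + math.sqrt(tanh_val))

def elementary_bound(j: int, h: float) -> float:
    """Upper bound 2*sqrt(j)*exp(-2*h*j) for |r(j, h)| (an assumption of this sketch)."""
    return 2.0 * math.sqrt(j) * math.exp(-2.0 * h * j)

for h in (0.5, 1.0, 2.0):
    worst = max(abs(remainder(j, h)) / elementary_bound(j, h) for j in range(1, 61))
    print(h, worst)
```

Each printed ratio stays below 1, so on the sampled range the dependence of the frequencies on the depth is confined to an exponentially small correction of \( \sqrt{j} \).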

Linear stability. The quasi-periodic solutions \( u( {\widetilde{\omega }} t) = (\eta ( {\widetilde{\omega }} t), \psi ( {\widetilde{\omega }} t) ) \) found in Theorem 1.1 are linearly stable. Since this is not only dynamically relevant information, but also an essential ingredient of the existence proof (it is not necessary for time periodic solutions as in [1, 38, 39, 42, 53]), we state the result precisely.

The quasi-periodic solutions (1.23) are mainly supported in Fourier space on the tangential sites \( {\mathbb {S}}^+\). As a consequence, the dynamics of the water waves equations (1.4) on the symplectic subspaces

is quite different. We shall call \( v \in H_{{\mathbb {S}}^+} \) the tangential variable and \( z \in H_{{\mathbb {S}}^+}^\bot \) the normal one. In the finite dimensional subspace \( H_{{\mathbb {S}}^+} \) we shall describe the dynamics by introducing the action-angle variables \( (\theta , I) \in {\mathbb {T}}^\nu \times {\mathbb {R}}^\nu \) in Sect. 4.

The classical normal form formulation of KAM theory for lower dimensional tori, see for instance [13, 14, 29, 43, 44, 45, 49, 54, 55, 62], provides, when applicable, existence and linear stability of quasi-periodic solutions at the same time. On the other hand, existence (without linear stability) of periodic and quasi-periodic solutions of PDEs has been proved by using the Lyapunov–Schmidt decomposition combined with Nash–Moser implicit function theorems, see e.g. [1, 6, 22, 24, 25, 38, 39, 42, 53] and references therein. In this paper we follow the Nash–Moser approach to KAM theory outlined in [16] and implemented in [8, 21], which combines ideas of both formulations, see Sect. 1.2 “Analysis of the linearized operators” and Sect. 5.

We prove that around each torus filled by the quasi-periodic solutions (1.23) of the Hamiltonian system (1.14) constructed in Theorem 1.1 there exist symplectic coordinates \( (\phi , y, w) = (\phi , y, \eta , \psi ) \in {\mathbb {T}}^\nu \times {\mathbb {R}}^\nu \times H_{{\mathbb {S}}^+}^\bot \) (see (5.16) and [16]) in which the water waves Hamiltonian reads

where \( K_{\ge 3} \) collects the terms at least cubic in the variables (y, w) (see (5.18) and note that, at a solution, one has \( \partial _\phi K_{00} = 0 \), \( K_{10} = {\widetilde{\omega }} \), \( K_{01} = 0 \) by Lemma 5.4). The \( (\phi , y) \) coordinates are modifications of the action-angle variables and w is a translation of the Cartesian variable z in the normal subspace, see (5.16). In these coordinates the quasi-periodic solution reads \( t \mapsto ({\widetilde{\omega }} t, 0, 0 ) \) and the corresponding linearized water waves equations are

The self-adjoint operator \( K_{02} ({\widetilde{\omega }} t) \) is defined in (5.18) and \( J K_{02} ({\widetilde{\omega }} t) \) is the restriction to \( H_{{\mathbb {S}}^+}^\bot \) of the linearized water waves vector field \( J \partial _u \nabla _u H (u ({\widetilde{\omega }} t ))\) (computed explicitly in (6.8)) up to a finite dimensional remainder, see Lemma 6.1.

We have the following result of linear stability for the quasi-periodic solutions found in Theorem 1.1.

Theorem 1.2

(Linear stability) The quasi-periodic solutions (1.23) of the pure gravity water waves system are linearly stable, meaning that for all s belonging to a suitable interval \([s_1,s_2]\), for any initial datum \(y(0) \in {\mathbb {R}}^\nu \), \(w(0) \in H^{s - \frac{1}{4}}_x \times H^{s + \frac{1}{4}}_x\), the solutions y(t), w(t) of system (1.27) satisfy

In fact, by (1.27), the actions \( y (t) = y(0) \) do not evolve in time and the third equation reduces to the linear PDE

Sections 6–14 imply the existence of a transformation \((H^s_x \times H^s_x) \cap H_{{\mathbb {S}}^+}^\bot \rightarrow (H^{s - \frac{1}{4}}_x \times H^{s + \frac{1}{4}}_x) \cap H_{{\mathbb {S}}^+}^\bot \), bounded and invertible for all \(s \in [s_1, s_2]\), such that, in the new variables \({{\mathtt {w}}}_\infty \), the homogeneous equation \({\dot{w}} = J K_{02}({\widetilde{\omega }} t)[ w]\) transforms into a system of infinitely many uncoupled scalar and time independent ODEs of the form

where \(\mathrm{i} \) is the imaginary unit, \({\mathbb {S}}_0^c := {\mathbb {Z}}{\setminus } {\mathbb {S}}_0\), \({{\mathbb {S}}}_0 := {\mathbb {S}}^+\cup (- {\mathbb {S}}^+) \cup \{ 0 \} \subseteq {\mathbb {Z}}\), the eigenvalues \(\mu _j^{\infty }\) are (see (4.26), (4.27))

and \( {\mathtt {m}}_{\frac{1}{2}}^{\infty } = 1 + O( | \vec {a} |^{c}) \), \(\sup _{j \in {{\mathbb {S}}}_0^c} |j|^{\frac{1}{2}} |{\mathfrak {r}}_j^{\infty }| = O( |\vec {a} |^{c} ) \) for some \( c > 0 \), see (4.28). Since \(\mu _j^\infty \) are even in j, equations (1.29) can be equivalently written in the basis \((\cos (jx))_{j\in {\mathbb {N}}{\setminus }{\mathbb {S}}^+}\) of functions even in x; in Sect. 14, for convenience, we represent even operators in the exponential basis \((e^{\mathrm{i} j x})_{j\in {{\mathbb {S}}}_0^c}\). The above result is the reducibility of the linearized quasi-periodically time dependent equation \({\dot{w}} = J K_{02}({\widetilde{\omega }} t)[w]\). The Floquet exponents of the quasi-periodic solution are the purely imaginary numbers \( \{ 0, \mathrm{i} \mu _j^{\infty }, j \in {{\mathbb {S}}}_0^c \}\) (the null Floquet exponent comes from the action component \(\dot{y} = 0\)). Since \(\mu _j^\infty \) are real, the Sobolev norms of the solutions of (1.29) are constant.
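
The conservation of Sobolev norms for a diagonal system with real eigenvalues can be illustrated on a finite truncation. The sketch below assumes that each uncoupled equation has the form \( \partial _t w_j = - \mathrm{i} \mu _j w_j \) (the sign convention is an assumption), and it uses the unperturbed values \( \sqrt{|j| \tanh ({\mathtt {{h}}} |j|)} \) as hypothetical stand-ins for the final eigenvalues \( \mu _j^\infty \). The tangential set, the initial datum and the function names are illustrative as well.

```python
import cmath
import math

def evolve(w0: dict[int, complex], mu: dict[int, float], t: float) -> dict[int, complex]:
    """Exact flow of dw_j/dt = -i*mu_j*w_j: every mode only rotates in phase."""
    return {j: cmath.exp(-1j * mu[j] * t) * w for j, w in w0.items()}

def sobolev_norm(w: dict[int, complex], s: float) -> float:
    """Weighted l^2 norm with weights max(1, |j|)^(2s)."""
    return math.sqrt(sum(max(1, abs(j)) ** (2 * s) * abs(c) ** 2 for j, c in w.items()))

h = 1.0
tangential = {1, 3}                      # hypothetical choice of tangential sites
normal_sites = [j for j in range(-20, 21) if j != 0 and abs(j) not in tangential]
# Stand-in eigenvalues, real and even in j (not the paper's final eigenvalues).
mu = {j: math.sqrt(abs(j) * math.tanh(h * abs(j))) for j in normal_sites}
w0 = {j: complex(1.0, 0.5) / (1 + j * j) for j in normal_sites}

norm_start = sobolev_norm(w0, 2.0)
norm_later = sobolev_norm(evolve(w0, mu, 37.5), 2.0)
print(norm_start, norm_later)
```

Because every \( \mu _j \) is real, the factor \( e^{- \mathrm{i} \mu _j t} \) has modulus one, so the two printed norms agree up to rounding.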

The reducibility of the linear equation \({\dot{w}} = J K_{02}({\widetilde{\omega }} t)[w]\) is obtained by two well-separated procedures:

1. First, we perform a reduction of the linearized operator into a constant coefficient pseudo-differential operator, up to smoothing remainders, via changes of variables that are quasi-periodic transformations of the phase space, see (1.41). We perform such a reduction in Sects. 6–13.

2. Then, we implement in Sect. 14 a KAM iterative scheme which completes the diagonalization of the linearized operator. This scheme uses very weak second order Melnikov non-resonance conditions which lose derivatives both in time and in space. This loss is compensated along the KAM scheme by the smoothing nature of the variable coefficients remainders. Actually, in Sect. 14 we explicitly state only a result of almost-reducibility (in Theorems 14.3–14.4 we impose only finitely many Melnikov non-resonance conditions and there appears a remainder \({\mathcal {R}}_n\) of size \(O(N_n^{-{\mathtt {a}}})\), where \({\mathtt {a}} > 0\) is the large parameter fixed in (14.7)), because this is sufficient for the construction of the quasi-periodic solutions. However the frequencies of the quasi-periodic solutions that we construct in Theorem 1.1 satisfy all the infinitely many Melnikov non-resonance conditions in (4.29) and Theorems 14.3–14.4 pass to the limit as \(n\rightarrow \infty \), leading to (1.29).

We shall explain these steps in detail in Sect. 1.2. In the pioneering works of Plotnikov-Toland [53] and Iooss-Plotnikov-Toland [42] dealing with time-periodic solutions of the water waves equations, as well as in [1], the latter diagonalization is not required. The key difference is that, in the periodic problem, a sufficiently regularizing operator in the space variable is also regularizing in the time variable, on the “characteristic” Fourier indices which correspond to the small divisors. This is definitely not true for quasi-periodic solutions.

Literature about KAM for quasilinear PDEs. KAM theory for PDEs has been developed to a large extent for bounded perturbations and for linear frequencies growing at least linearly, as \( j^\alpha \), \( \alpha \ge 1 \). The case \( \alpha = 1 \), which corresponds to 1d wave and Klein-Gordon equations, is more delicate. In the sublinear case \( \alpha < 1\), as far as we know, there are no general KAM results, since the second order Melnikov conditions lose space derivatives. Since the eigenvalues of \(- \Delta \) on \({\mathbb {T}}^d\) grow, according to the Weyl law, like \( \sim j^{2/d}\), \(j \in {\mathbb {N}}\), one could regard the KAM results for multidimensional Schrödinger and wave equations in [15, 18, 22, 29, 55] from this perspective. Actually the proof of these results exploits specific properties of clustering of the eigenvalues of the Laplacian.

The existence of quasi-periodic solutions of PDEs with unbounded perturbations (i.e. the nonlinearity contains derivatives) was first proved by Kuksin [45] and Kappeler-Pöschel [43] for KdV, then by Liu-Yuan [49] and Zhang-Gao-Yuan [62] for derivative NLS, and by Berti–Biasco–Procesi [13, 14] for the derivative wave equation. All these previous results still refer to semilinear perturbations, i.e. where the order of the derivatives in the nonlinearity is strictly lower than the order of the constant coefficient (integrable) linear differential operator.

For quasi-linear or fully nonlinear PDEs the first KAM results have been recently proved by Baldi–Berti–Montalto in [7,8,9] for perturbations of Airy, KdV and mKdV equations, introducing tools of pseudo-differential calculus for the spectral analysis of the linearized equations. In particular, [7] concerns quasi-periodically forced perturbations of the Airy equation

where the forcing frequency \(\omega \) is an external parameter. The key step is the reduction of the linearized operator at an approximate solution to constant coefficients up to a sufficiently smoothing remainder, followed by a KAM reducibility scheme leading to its complete diagonalization. Once such a reduction has been achieved, the second order Melnikov nonresonance conditions required for the diagonalization are easily imposed, since the frequencies are \(\sim j^3\) and \(\omega \) can be used as an external parameter. Because of the purely differential structure of (1.31), the required tools of pseudo-differential calculus are mainly multiplication operators and Fourier multipliers. These techniques have been extended by Feola-Procesi [31] for quasi-linear forced perturbations of Schrödinger equations and by Montalto [51] for the forced Kirchhoff equation.

The paper [8] deals with the more difficult case of completely resonant autonomous Hamiltonian perturbed KdV equations of the form

Since the Airy equation \(u_t + u_{xxx}=0\) possesses only \(2\pi \)-periodic solutions, the existence of quasi-periodic solutions of (1.32) is entirely due to the nonlinearity, which determines the modulation of the tangential frequencies of the solutions with respect to its amplitudes. This is achieved via “weak” Birkhoff normal form transformations that are close to the identity up to finite rank operators. The paper [8] implements the general symplectic procedure proposed in [16] for autonomous PDEs, which reduces the construction of an approximate inverse of the linearized operator to the construction of an approximate inverse of its restriction to the normal directions. This is obtained along the lines of [7], but with more careful size estimates because (1.32) is a completely resonant PDE. The symplectic procedure of [16] is also applied in [21] and in Sect. 5 of the present paper. We refer to [23] and [32] for a similar reduction which applies also to PDEs which are not Hamiltonian, but for example reversible.

By further extending these techniques, the existence of quasi-periodic solutions of gravity capillary water waves has been recently proved in [21]. In items (i)–(iii) after Theorem 1.1 we have described the major differences between the pure gravity and gravity-capillary water waves equations and we postpone to Remark 1.4 more comments about the differences regarding the reducibility of the linearized equations.

1.2 Ideas of the proof

The three major difficulties in proving the existence of time quasi-periodic solutions of the gravity water waves equations (1.14) are:

- (i): The nonlinear water waves system (1.14) is a singular perturbation of (1.15).

- (ii): The dispersion relation (1.19) is sublinear, i.e. \( \omega _j \sim \sqrt{j} \) for \( j \rightarrow \infty \).

- (iii): The linear frequencies \( \omega _j ( \mathtt {{h}}) = j^{\frac{1}{2}}\tanh ^{\frac{1}{2}}(\mathtt {{h}}j)\) vary with \( \mathtt {{h}}\) only by exponentially small quantities.

We present below the key ideas to solve these three major problems.

Nash–Moser Theorem 4.1 of hypothetical conjugation. In Sect. 4 we rescale \(u \mapsto \varepsilon u\) and introduce the action angle variables \((\theta , I) \in {\mathbb {T}}^\nu \times {\mathbb {R}}^\nu \) on the tangential sites (see (1.25))

where \( \xi _j > 0 \), \( j = 1, \ldots , \nu \), the variables \(I_j\) satisfy \( | I_j | < \xi _j \), so that system (1.14) becomes the Hamiltonian system generated by

where P is given in (4.8). The unperturbed actions \(\xi _j\) in (1.33) and the unperturbed amplitudes \(a_j\) in (1.22) and Theorem 1.1 are related by the identity \(a_j = \varepsilon \sqrt{(2/\pi )}\,\omega _j^{\frac{1}{2}} \sqrt{\xi _j}\) for all \(j \in {\mathbb {S}}^+\).
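
The identity relating unperturbed actions and amplitudes can be turned into a small conversion routine. This is a minimal sketch, assuming the dispersion relation \( \omega _j = \sqrt{j \tanh ({\mathtt {{h}}} j)} \); the function names and the numerical values are illustrative and do not come from the paper.

```python
import math

def omega(j: int, h: float) -> float:
    """Linear frequency omega_j(h) = sqrt(j * tanh(h * j))."""
    return math.sqrt(j * math.tanh(h * j))

def amplitude_from_action(xi: float, j: int, h: float, eps: float) -> float:
    """a_j = eps * sqrt(2/pi) * omega_j^(1/2) * sqrt(xi_j)."""
    return eps * math.sqrt(2.0 / math.pi) * math.sqrt(omega(j, h)) * math.sqrt(xi)

def action_from_amplitude(a: float, j: int, h: float, eps: float) -> float:
    """Inverse relation: xi_j = pi * a_j^2 / (2 * eps^2 * omega_j)."""
    return math.pi * a**2 / (2.0 * eps**2 * omega(j, h))

# Illustrative values: one tangential site, small rescaling parameter.
j, h, eps, xi = 3, 1.2, 1e-2, 0.7
a = amplitude_from_action(xi, j, h, eps)
xi_back = action_from_amplitude(a, j, h, eps)
print(a, xi_back)
```

The round trip recovers the original action, and the amplitude scales linearly in \( \varepsilon \), in line with the smallness assumption \( |\vec {a}| \le \varepsilon _0 \).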

The expected quasi-periodic solutions of the autonomous Hamiltonian system generated by \(H_\varepsilon \) will have shifted frequencies \( {\widetilde{\omega }}_j \)—to be found—close to the linear frequencies \( \omega _j ({\mathtt {{h}}}) \) in (1.19). The perturbed frequencies depend on the nonlinearity and on the amplitudes \( \xi _j \). Since the Melnikov non-resonance conditions are naturally imposed on \( \omega \), it is convenient to use the frequencies \(\omega \in {\mathbb {R}}^\nu \) as parameters, introducing “counterterms” \( \alpha \in {\mathbb {R}}^\nu \) (as in [21], in the spirit of Herman-Féjoz [30]) in the family of Hamiltonians (see (4.9))

Then the first goal (Theorem 4.1) is to prove that, for \( \varepsilon \) small enough, there exist \( \alpha _\infty (\omega , {\mathtt {{h}}}, \varepsilon )\), close to \(\omega \), and a \( \nu \)-dimensional embedded torus \(i_\infty (\varphi ; \omega ,{\mathtt {{h}}}, \varepsilon )\) of the form

close to \( (\varphi , 0, 0 ) \), defined for all \((\omega ,\mathtt {{h}}) \in {\mathbb {R}}^\nu \times [{\mathtt {{h}}}_1, {\mathtt {{h}}}_2]\), such that, for all \((\omega ,\mathtt {{h}})\) belonging to the set \({{\mathcal {C}}}^\gamma _\infty \) defined in (4.20), \((i_\infty , \alpha _\infty )(\omega ,{\mathtt {{h}}}, \varepsilon )\) is a zero of the nonlinear operator (see (4.10))

The explicit set \({{\mathcal {C}}}^\gamma _\infty \) requires \( \omega \) to satisfy, in addition to the Diophantine property

the first and second Melnikov non-resonance conditions stated in (4.20), in particular

where \( \mu _{j}^\infty (\omega , {\mathtt {{h}}}) \) are the “final eigenvalues” in (4.18), defined for all \( (\omega ,\mathtt {{h}}) \in {\mathbb {R}}^\nu \times [{\mathtt {{h}}}_1, {\mathtt {{h}}}_2]\) (we use the abstract Whitney extension theorem in Appendix B). The torus \( i_\infty \), the counterterm \( \alpha _\infty \) and the final eigenvalues \( \mu _{j}^\infty (\omega , {\mathtt {{h}}}) \) are \( {{\mathcal {C}}}^{k_0} \) differentiable with respect to the parameters \( (\omega , {\mathtt {{h}}}) \). The value of \( k_0 \) is fixed in Sect. 3, depending only on the unperturbed linear frequencies, so that transversality conditions like (1.39) hold, see Proposition 3.4. The value of the counterterm \( \alpha := \alpha _\infty (\omega , {\mathtt {{h}}}, \varepsilon ) \) is adjusted along the Nash–Moser iteration in order to control the average of the first component of the Hamilton equation (4.10), especially for solving the linearized equation (5.35), in particular (5.39).

Theorem 4.1 follows by the Nash–Moser Theorem 15.1 which relies on the analysis of the linearized operators \( d_{i,\alpha } {{\mathcal {F}}} \) at an approximate solution, performed in Sects. 5–14. The formulation of Theorem 4.1 is convenient as it completely decouples the Nash–Moser iteration required to prove Theorem 1.1 and the discussion about the measure of the set of parameters \( {{\mathcal {C}}}^\gamma _\infty \) where all the Melnikov non-resonance conditions are verified. In Sect. 4.2 we are able to prove positive measure estimates, if the exponent \( {{\mathtt {d}}} \) in (1.36) is large enough and \(\gamma = o(1)\) as \(\varepsilon \rightarrow 0\). Since such a value of \( {{\mathtt {d}}} \) determines the number of regularization steps to be performed on the linearized operator, we prefer to first discuss how we fix it, applying degenerate KAM theory.

Proof of Theorem 1.1: degenerate KAM theory and measure estimates In order to prove the existence of quasi-periodic solutions of the system with Hamiltonian \(H_\varepsilon \) in (1.34), thus (1.14), and not only of the system with modified Hamiltonian \( H_\alpha \) with \( \alpha := \alpha _\infty (\omega , {\mathtt {{h}}}, \varepsilon ) \), we have to prove that the curve of the unperturbed linear tangential frequencies

intersects the image \( \alpha _\infty ({{\mathcal {C}}}^\gamma _\infty ) \) of the set \( {{\mathcal {C}}}^\gamma _\infty \) under the map \( \alpha _\infty \), for “most” values of \( {\mathtt {{h}}} \in [{\mathtt {{h}}} _1, {\mathtt {{h}}} _2] \). Setting

where \(\alpha _\infty ^{- 1}(\cdot , {\mathtt {{h}}} )\) is the inverse of the function \(\alpha _\infty (\cdot , {\mathtt {{h}}} )\) at a fixed \({\mathtt {{h}}} \in [{\mathtt {{h}}}_1, {\mathtt {{h}}}_2]\), if the vector \(( \omega _\varepsilon ({\mathtt {{h}}} ), {\mathtt {{h}}} )\) belongs to \({{\mathcal {C}}}^\gamma _\infty \), then Theorem 4.1 implies the existence of a quasi-periodic solution of the system with Hamiltonian \(H_\varepsilon \) with Diophantine frequency \(\omega _\varepsilon ({\mathtt {{h}}} )\).

In Theorem 4.2 we prove that for all the values of \(\mathtt {{h}}\in [\mathtt {{h}}_1,\mathtt {{h}}_2]\) except a set of small measure \(O(\gamma ^{1/k_0^*})\) (where \(k_0^*\) is the index of non-degeneracy appearing below in (1.39)), the vector \(( \omega _\varepsilon ({\mathtt {{h}}} ), {\mathtt {{h}}} )\) belongs to \({{\mathcal {C}}}^\gamma _\infty \). Since the parameter interval \( [{\mathtt {{h}}}_1, {\mathtt {{h}}}_2 ] \) is fixed, independently of the \( O(\varepsilon ) \)-neighborhood of the origin where we look for the solutions, the small divisor constant \( \gamma \) in the definition of \({\mathcal {C}}^\gamma _\infty \) (see e.g. (1.36)) can be taken as \( \gamma = \varepsilon ^a \) with \( a > 0 \) as small as needed, see (4.22), so that all the quantities \(\varepsilon \gamma ^{-\kappa }\) that we encounter along the proof are \(\ll 1\).

The first task is to prove a transversality property for the unperturbed tangential frequencies \(\vec \omega ({\mathtt {{h}}})\) in (1.37) and the normal ones \(\vec \Omega (\mathtt {{h}}):= (\Omega _j(\mathtt {{h}}))_{j\in {\mathbb {N}}^+{\setminus }{\mathbb {S}}^+} :=(\omega _j(\mathtt {{h}}))_{j\in {\mathbb {N}}^+{\setminus }{\mathbb {S}}^+}\). Exploiting the fact that the maps \( {\mathtt {{h}}} \mapsto \omega _j ({\mathtt {{h}}}^4) \) are analytic, simple—namely injective in j—in the subspace of functions even in x, and they grow asymptotically like \( \sqrt{j} \) for \( j \rightarrow \infty \), we first prove that the linear frequencies \(\omega _j(\mathtt {{h}})\) are non-degenerate in the sense of Bambusi–Berti–Magistrelli [11] (i.e. they are not contained in any hyperplane). This is verified in Lemma 3.2 using a generalized Vandermonde determinant (see Lemma 3.3). Then in Proposition 3.4 we translate this qualitative non-degeneracy condition into quantitative transversality information: there exist \(k_0^*>0, \rho _0>0\) such that, for all \(\mathtt {{h}}\in [\mathtt {{h}}_1,\mathtt {{h}}_2]\),

and similarly for the 0th, 1st and 2nd order Melnikov non-resonance condition with the \( +\) sign. We call (following [57]) \( k_0^*\) the index of non-degeneracy and \( \rho _0 \) the amount of non-degeneracy. Note that the restriction to the subspace of functions with zero average in x eliminates the zero frequency \( \omega _0 = 0 \), which is trivially resonant (this is used also in [27]).

The transversality condition (1.39) is stable under perturbations that are small in \( {{\mathcal {C}}}^{k_0}\)-norm, where \( k_0 := k_0^* + 2 \), see Lemma 4.4. Since \(\omega _\varepsilon ({\mathtt {{h}}})\) in (1.38) and the perturbed Floquet exponents \(\mu _j^\infty (\mathtt {{h}}) = \mu _j^\infty (\omega _\varepsilon ({\mathtt {{h}}}), \mathtt {{h}})\) in (4.26) are small perturbations of the unperturbed linear frequencies \( \sqrt{j \tanh ({\mathtt {{h}}} j)} \) in \( {{\mathcal {C}}}^{k_0}\)-norm, the transversality property (1.39) still holds for the perturbed frequencies. As a consequence, by applying the classical Rüssmann lemma (Theorem 17.1 in [57]) we prove that, for most \(\mathtt {{h}}\in [\mathtt {{h}}_1,\mathtt {{h}}_2]\), the 0th, 1st and 2nd Melnikov conditions on the perturbed frequencies hold if \( {\mathtt {d}}> \frac{3}{4} \, k_0^*\), see Lemma 4.5 and (4.46).
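The exponent \( 1/k_0^* \) in the measure of the excluded set of depths can be understood from a model computation. This is only an illustrative toy case, not the Rüssmann-type lemma itself; the function \( f \), the point \( \mathtt {{h}}_0 \) and the integer \( k \) are hypothetical. Take a small divisor that vanishes to order \( k \) at \( \mathtt {{h}}_0 \) and whose \( k \)-th derivative equals \( \rho _0 \):

$$\begin{aligned} f(\mathtt {{h}}) := \frac{\rho _0}{k!} (\mathtt {{h}}- \mathtt {{h}}_0)^k , \qquad \partial _{\mathtt {{h}}}^k f \equiv \rho _0 , \qquad \big | \{ \mathtt {{h}}: |f(\mathtt {{h}})| < \gamma \} \big | = 2 \Big ( \frac{k! \, \gamma }{\rho _0} \Big )^{\frac{1}{k}} . \end{aligned}$$

A zero of order \( k \) therefore costs a set of depths of measure proportional to \( \gamma ^{1/k} \), and a quantitative transversality bound involving derivatives up to order \( k_0^* \) excludes zeros of higher order, which is consistent with an excluded set of size \( O(\gamma ^{1/k_0^*}) \).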

The larger is \( {\mathtt {d}}\), the weaker are the Melnikov conditions (1.36), and the stronger will be the loss of space derivatives due to the small divisors in the reducibility scheme of Sect. 14. In order to guarantee the convergence of such a KAM reducibility scheme, these losses of derivatives will be compensated by the regularization procedure of Sects. 6–13, where we reduce the linearized operator to constant coefficients up to very regularizing terms \( O( |D_x|^{-M}) \) for some \( M := M({\mathtt {d}}, \tau )\) large enough, fixed in (14.8), which is large with respect to \( {\mathtt {d}}\) and \( \tau \) by (14.7). We will explain in detail this procedure below.

Analysis of the linearized operators In order to prove the existence of a solution of \( {{\mathcal {F}}} (i, \alpha ) = 0 \) in (1.35), proving the Nash–Moser Theorem 4.1, the key step is to show that the linearized operator \( d_{i, \alpha } {{\mathcal {F}}} \) obtained at any approximate solution along the iterative scheme admits an almost approximate inverse satisfying tame estimates in Sobolev spaces with loss of derivatives, see Theorem 5.6. Following the terminology of Zehnder [61], an approximate inverse is an operator which is an exact inverse at a solution (note that the operator \({\mathcal {P}}\) in (5.49) is zero when \({{\mathcal {F}}}(i, \alpha ) = 0 \)). The adjective almost refers to the fact that at the n-th step of the Nash–Moser iteration we shall require only finitely many non-resonance conditions of Diophantine type, therefore there remain operators (like (5.50)) that are Fourier supported on high frequencies of magnitude larger than \(O(N_n)\) and thus they can be estimated as \(O(N_n^{-a})\) for some \(a > 0\) (in suitable norms). The tame estimates (5.49)–(5.52) are sufficient for the convergence of a differentiable Nash–Moser scheme: the remainder (5.49) produces a quadratic error since it is of order \(O({\mathcal {F}}(i_n,\alpha _n))\); the remainder (5.50) arising from the almost-reducibility is small enough by taking \({\mathtt {a}}>0\) sufficiently large, as in (14.7); the remainder (5.51) arises by ultraviolet cut-off truncations and its contribution is small by usual differentiable Nash–Moser mechanisms, see for instance [17]. These abstract tame estimates imply the Nash–Moser Theorem 15.1.

In order to find an almost approximate inverse of \( d_{i, \alpha } {{\mathcal {F}}} \) we first implement the strategy of Sect. 5 introduced in Berti–Bolle [16], which is based on the following simple observation: around an invariant torus there are symplectic coordinates \((\phi , y, w)\) in which the Hamiltonian assumes the normal form (1.26) and therefore the linearized equations at the quasi-periodic solution assume the triangular form as in (1.27). In these new coordinates it is immediate to solve the equations in the variables \( \phi , y \), and it remains to invert an operator acting on the w component, which is precisely \({{\mathcal {L}}}_\omega \) defined in (5.26). By Lemma 6.1 the operator \({{\mathcal {L}}}_\omega \) is a finite rank perturbation (see (6.5)) of the restriction to the normal subspace \( H_{{\mathbb {S}}^+}^\bot \) in (1.25) of

where the functions B, V are given in (6.7), which is obtained linearizing the water waves equations (1.14) at a quasi-periodic approximate solution \( (\eta , \psi ) (\omega t, x) \) and changing \( \partial _t \) into the directional derivative \( \omega \cdot \partial _\varphi \).

If \( {{\mathcal {F}}}(i, \alpha ) \) is not zero but it is small, we say that i is approximately invariant for \( X_{H_\alpha } \), and, following [16], in Sect. 5 we transform \( d_{i, \alpha } {{\mathcal {F}}} \) into an approximately triangular operator, with an error of size \( O({{\mathcal {F}}}(i, \alpha ))\). In this way, we have reduced the problem of almost approximately inverting \( d_{i, \alpha } {{\mathcal {F}}} \) to the task of almost inverting the operator \({{\mathcal {L}}}_\omega \). The precise invertibility properties of \( {{\mathcal {L}}}_\omega \) are stated in (5.29)–(5.33).

Remark 1.3

The main advantage of this approach is that the problem of inverting \( d_{i, \alpha } {{\mathcal {F}}} \) on the whole space (i.e. both tangential and normal modes) is reduced to inverting a PDE on the normal subspace \( H_{{\mathbb {S}}^+}^\bot \) only. In this sense this is reminiscent of the Lyapunov-Schmidt decomposition, where the complete nonlinear problem is split into a bifurcation and a range equation on the orthogonal of the kernel. However, the Lyapunov-Schmidt approach is based on a splitting of the space \(H^s({\mathbb {T}}^{\nu +1})\) of functions \(u(\varphi ,x)\) of time and space, whereas the approach of [16] splits the phase space (of functions of x only) into \( H_{{\mathbb {S}}^+} \oplus H_{{\mathbb {S}}^+}^\bot \), in closer analogy with a classical KAM theory formulation.

The procedure of Sect. 5 is a preparation for the reducibility of the linearized water waves equations in the normal subspace developed in Sects. 6–14, where we conjugate the operator \( {{\mathcal {L}} }_\omega \) to a diagonal system of infinitely many decoupled, constant coefficients, scalar linear equations, see (1.42) below. First, in Sects. 6–12, in order to use the tools of pseudo-differential calculus, it is convenient to ignore the projection on the normal subspace \( H_{{\mathbb {S}}^+}^\bot \) and to perform a regularization procedure on the operator \({{\mathcal {L}}}\) acting on the whole space, see Remark 6.2. Then, in Sect. 13, we project back on \( H_{{\mathbb {S}}^+}^\bot \). Our approach involves two well separated procedures that we describe in detail:

1. Symmetrization and diagonalization of \( {\varvec{{\mathcal {L}}} } \) up to smoothing operators. The goal of Sects. 6–12 is to conjugate \( {{\mathcal {L}} }\) to an operator of the form

$$\begin{aligned} \omega \cdot \partial _\varphi + \mathrm{i} {{\mathtt {m}}}_{\frac{1}{2}} |D|^{\frac{1}{2}} \tanh ^{\frac{1}{2}}(\mathtt {{h}}|D|) + \mathrm{i} r (D) + {{\mathcal {T}}}_8 (\varphi ) \end{aligned}$$(1.41)

where \( {{\mathtt {m}}}_{\frac{1}{2}} \approx 1 \) is a real constant, independent of \( \varphi \), the symbol \( r (\xi ) \) is real and independent of \( (\varphi , x) \), of order \( S^{-1/2} \), and the remainder \( {{\mathcal {T}}}_8 (\varphi ) \), as well as \( \partial _\varphi ^\beta {{\mathcal {T}}}_8 \) for all \( | \beta | \le \beta _0 \) large enough, is a small, still variable coefficient operator, which is regularizing at a sufficiently high order, and satisfies tame estimates in Sobolev spaces.

2. KAM reducibility. In Sect. 13 we restrict the operator in (1.41) to \(H_{{\mathbb {S}}^+}^\bot \) and in Sect. 14 we implement an iterative diagonalization scheme to reduce quadratically the size of the perturbation, completing the conjugation of \( {{\mathcal {L}} }_\omega \) to a diagonal, constant coefficient system of the form

$$\begin{aligned} \omega \cdot \partial _\varphi + \mathrm{i} \mathrm{Op} (\mu _j ) \end{aligned}$$(1.42)

where \( \mu _j = {{\mathtt {m}}}_{\frac{1}{2}} |j|^{\frac{1}{2}} \tanh ^{\frac{1}{2}}(\mathtt {{h}}|j|) + r (j) + {\tilde{r}}(j) \) are real and \( {\tilde{r}}(j) \) are small.
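The mechanism behind the iterative diagonalization in item 2 can be illustrated on a finite-dimensional toy model. The sketch below is not the scheme of Sect. 14: it treats an operator \( \omega \cdot \partial _\varphi + \mathrm{i} D + R(\varphi ) \) with \( D = \mathrm{diag}(\mu _j) \) a finite matrix and \( R \) a matrix-valued trigonometric polynomial, and solves the linear homological equation \( \omega \cdot \partial _\varphi \Psi + \mathrm{i} [D, \Psi ] + R = [R] \), where \( [R] \) is the diagonal of the \( \varphi \)-average of \( R \). All names (`kam_step`, `residual`), the lower bound `gamma` on the divisors and the sample data are assumptions made for illustration; in particular the weighted Melnikov conditions of the paper are replaced by a plain threshold.

```python
import math
from typing import Dict, List, Sequence, Tuple

Mode = Tuple[int, ...]          # Fourier index ell in Z^nu
Matrix = List[List[complex]]    # entries R_j^{j'}


def kam_step(omega: Sequence[float], mu: Sequence[float],
             r_hat: Dict[Mode, Matrix], gamma: float = 1e-6):
    """One linear KAM step for the toy operator omega.d_phi + i D + R(phi).

    For each Fourier mode ell and each pair (j, j') the equation reads
        i (omega.ell + mu_j - mu_j') Psi_hat = -R_hat,
    except for ell = 0, j = j', where R_hat is kept and shifts mu_j.
    """
    n = len(mu)
    mu_new: List[complex] = [complex(m) for m in mu]
    psi_hat: Dict[Mode, Matrix] = {}
    for ell, r in r_hat.items():
        w = sum(o * l for o, l in zip(omega, ell))
        zero_mode = all(l == 0 for l in ell)
        psi = [[0j] * n for _ in range(n)]
        for j in range(n):
            for jp in range(n):
                if zero_mode and j == jp:
                    # resonant term: i mu_j + R_jj(0) = i (mu_j - i R_jj(0))
                    mu_new[j] = mu[j] - 1j * r[j][j]
                    continue
                divisor = w + mu[j] - mu[jp]   # the small divisor
                if abs(divisor) < gamma:
                    raise ValueError("toy non-resonance condition violated")
                psi[j][jp] = 1j * r[j][jp] / divisor
        psi_hat[ell] = psi
    return psi_hat, mu_new


def residual(omega, mu, r_hat, psi_hat) -> float:
    """Largest entry of omega.d_phi Psi + i[D, Psi] + R - [R]."""
    n = len(mu)
    worst = 0.0
    for ell, r in r_hat.items():
        w = sum(o * l for o, l in zip(omega, ell))
        zero_mode = all(l == 0 for l in ell)
        for j in range(n):
            for jp in range(n):
                kept = r[j][jp] if (zero_mode and j == jp) else 0j
                val = (1j * (w + mu[j] - mu[jp]) * psi_hat[ell][j][jp]
                       + r[j][jp] - kept)
                worst = max(worst, abs(val))
    return worst


if __name__ == "__main__":
    omega = (1.0, math.sqrt(2.0))   # hypothetical frequency vector
    mu = [1.0, 1.7, 2.3]            # hypothetical normal frequencies
    eps = 1e-2
    r_hat = {
        (0, 0): [[eps * 1j, eps, 0j], [eps, 2 * eps * 1j, eps], [0j, eps, -eps * 1j]],
        (1, 0): [[eps] * 3 for _ in range(3)],
        (-1, 1): [[eps * 1j] * 3 for _ in range(3)],
    }
    psi_hat, mu_new = kam_step(omega, mu, r_hat)
    print(mu_new, residual(omega, mu, r_hat, psi_hat))
```

Conjugating by \( I + \Psi \) then leaves a remainder of the form \( (I + \Psi )^{-1} ( R \Psi - \Psi [R] ) \), which is quadratic in \( R \) and is not computed in this sketch. In the toy data the diagonal entries of the averaged perturbation are purely imaginary, so the corrected \( \mu _j \) stay real, mimicking the role of reversibility.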

We underline that all the transformations performed in Sects. 6–14 are quasi-periodically-time-dependent changes of variables acting in phase spaces of functions of x (quasi-periodic Floquet operators). Therefore, they preserve the dynamical system structure of the conjugated linear operators.

All these changes of variables are bounded and satisfy tame estimates between Sobolev spaces. As a consequence, the estimates that we shall obtain inverting the final operator (1.42) directly provide good tame estimates for the inverse of the operator \({{\mathcal {L}}}_\omega \) in (6.5).

We also note that the original system \( {{\mathcal {L}}} \) is reversible and even and that all the transformations that we perform are reversibility preserving and even. The preservation of these properties ensures that in the final system (1.42) the \( \mu _j \) are real valued. In this respect, the linear stability of the quasi-periodic standing wave solutions proved in Theorem 1.1 is obtained as a consequence of the reversible nature of the water waves equations. We could also preserve the Hamiltonian nature of \( {{\mathcal {L}}} \) performing symplectic transformations, but it would be more complicated.

Remark 1.4

(Comparison with the gravity-capillary linearized PDE) With respect to the gravity capillary water waves in infinite depth in [1, 21], the reduction in decreasing orders of the linearized operator is completely different. The linearized operator in the gravity-capillary case is like

the term \(V \partial _x\) is a lower order perturbation of \(|D_x|^{\frac{3}{2}}\), and it can be reduced to constant coefficients by conjugating the operator with a “semi-Fourier Integral Operator” A of type \((\frac{1}{2}, \frac{1}{2})\) (like in [1] and [21]): the commutator of \(|D_x|^{\frac{3}{2}}\) and A produces a new operator of order 1, and one chooses appropriately the symbol of A for the reduction of \(V \partial _x\). Instead, in the pure gravity case we have a linearized operator of the type

where the term \(V \partial _x\) is a singular perturbation of \( \mathrm{i} |D_x|^{\frac{1}{2}} \). The commutator between \(|D_x|^{\frac{1}{2}}\) and any bounded pseudo-differential operator produces operators of order \(\le 1/2\), which do not interact with \(V \partial _x\). Hence one uses the commutator with \(\omega \cdot \partial _\varphi \) (which is the leading term of the unperturbed operator) to produce operators of order 1 that cancel out \(V \partial _x\). This is why our first task is to straighten the first order vector field (1.44), which corresponds to a time quasi-periodic transport operator. Furthermore, the fact that the unperturbed linear operator is \(\sim |D|^{\frac{1}{2}}\), unlike \(\sim |D|^{\frac{3}{2}}\), also affects the conjugation analysis of the lower order operators, where the contribution of the commutator with \(\omega \cdot \partial _\varphi \) is always of order higher than the commutator with \(|D_x|^{\frac{1}{2}}\). As a consequence, in the procedure of reduction of the symbols to constant coefficients in Sects. 11–12, we remove first their dependence on \(\varphi \), and then their dependence on x. We also note that in [21], since the second order Melnikov conditions do not lose space derivatives, there is no need to perform such reduction steps at negative orders before starting with the KAM reducibility algorithm. \(\square \)

We now explain in detail the steps of the conjugation of the quasi-periodic linear operator (1.40) described in the items 1 and 2 above. We underline that all the coefficients of the linearized operator \( {{\mathcal {L}} } \) in (1.40) are \( {{\mathcal {C}}}^\infty \) in \( (\varphi , x) \) because each approximate solution \( (\eta (\varphi , x), \psi (\varphi , x)) \) at which we linearize along the Nash–Moser iteration is a trigonometric polynomial in \( (\varphi , x) \) (at each step we apply the projector \( \Pi _n \) defined in (15.1)) and the water waves vector field is analytic. This allows us to work in the usual framework of \( {{\mathcal {C}}}^\infty \) pseudo-differential symbols, as recalled in Sect. 2.3.

1. Linearized good unknown of Alinhac The first step is to introduce in Sect. 6.1 the linearized good unknown of Alinhac, as in [1] and [21]. This is indeed the same change of variable introduced by Lannes [46] (see also [47]) for proving energy estimates for the local existence theory. Subsequently, the nonlinear good unknown of Alinhac has been introduced by Alazard–Métivier [5], see also [2, 4] to perform the paralinearization of the Dirichlet–Neumann operator. In these new variables, the linearized operator (1.40) becomes the more symmetric operator (see (6.15))

where the Dirichlet–Neumann operator admits the expansion

and \({\mathcal {R}}_G \) is an \( \textit{OPS}^{-\infty } \) smoothing operator. In Appendix A we provide a self-contained proof of such a representation. We cannot directly use a result already existing in the literature (for the Cauchy problem) because we have to provide tame estimates for the action of \(G(\eta )\) on Sobolev spaces of time-space variables \((\varphi , x)\) and to control its smooth dependence with respect to the parameters \((\omega , \mathtt {{h}})\). Nor can we directly apply the corresponding result of [21], which is given in the case \(\mathtt {{h}}= + \infty \).

Notice that the first order transport operator \( V \partial _x \) in (1.43) is a singular perturbation of \({\mathcal {L}}_0\) evaluated at \((\eta ,\psi ) = 0\), i.e. \(\omega \cdot \partial _\varphi + \big ( {\begin{matrix} 0 &{} - G(0) \\ 1 &{} 0 \end{matrix}} \big )\).

2. Straightening the first order vector field \(\omega \cdot \partial _\varphi + V(\varphi ,x) \partial _x \). The next step is to conjugate the variable coefficients vector field (we regard equivalently a vector field as a differential operator)

to the constant coefficient vector field \(\omega \cdot \partial _\varphi \) on the torus \( {\mathbb {T}}^\nu _{\varphi } \times {\mathbb {T}}_x \) for \( V (\varphi , x) \) small. This is a perturbative problem of rectification of a close to constant vector field on a torus, which is a classical small divisor problem. For perturbations of a Diophantine vector field this problem was solved at the beginning of KAM theory; we refer e.g. to [61] and references therein. Notice that, despite the fact that \( \omega \in {\mathbb {R}}^\nu \) is Diophantine, the constant vector field \( \omega \cdot \partial _\varphi \) is resonant on the higher dimensional torus \( {\mathbb {T}}^{\nu }_{\varphi } \times {\mathbb {T}}_x \). We exploit in a crucial way the symmetry induced by the reversible structure of the water waves equations, i.e. \( V(\varphi , x ) \) is odd in \( \varphi \), to prove that it is possible to conjugate \( \omega \cdot \partial _\varphi + V(\varphi ,x) \partial _x \) to the constant vector field \( \omega \cdot \partial _\varphi \) without changing the frequency \( \omega \).

From a functional point of view we have to solve a linear transport equation which depends on time in a quasi-periodic way, see equation (7.4). Actually we solve equation (7.6) for the inverse diffeomorphism. This problem amounts to proving that all the solutions of the quasi-periodically time-dependent scalar characteristic equation \( \dot{x} = V(\omega t, x )\) are quasi-periodic in time with frequency \( \omega \), see Remark 7.1, [42, 53] and [52]. We solve this problem in Sect. 7 using a Nash–Moser implicit function theorem. Actually, after having inverted the linearized operator at an approximate solution (Lemma 7.2), we apply the Nash–Moser–Hörmander Theorem C.1, proved in Baldi-Haus [10]. We cannot directly use already existing results for equation (7.6) because we have to prove tame estimates and Lipschitz dependence of the solution with respect to the approximate torus, as well as its smooth dependence with respect to the parameters \((\omega ,\mathtt {{h}})\), see Lemmata 7.4–7.5.
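The transport equation mentioned above can be motivated by a chain-rule computation. The following is a sketch under the assumption that the change of variables has the form \( y = x + \beta (\varphi , x) \) with \( \beta \) small; the symbol \( \beta \) is used here only for this illustration and the precise equations are (7.4) and (7.6). Writing \( u(\varphi , x) = v(\varphi , x + \beta (\varphi , x)) \), one finds

$$\begin{aligned} (\omega \cdot \partial _\varphi + V \partial _x) u = (\omega \cdot \partial _\varphi v)(\varphi , y) + \big [ \omega \cdot \partial _\varphi \beta + V (1 + \beta _x) \big ] (\partial _y v)(\varphi , y) , \qquad y = x + \beta (\varphi , x) . \end{aligned}$$

Hence the first order term disappears if \( \omega \cdot \partial _\varphi \beta + V (1 + \beta _x) = 0 \). At first order in \( V \) this reads \( \omega \cdot \partial _\varphi \beta = - V \), namely \( \mathrm{i} \, \omega \cdot \ell \, {\widehat{\beta }}_{\ell , j} = - {\widehat{V}}_{\ell , j} \) for the Fourier coefficients in \( (\varphi , x) \). The divisor \( \omega \cdot \ell \) vanishes for \( \ell = 0 \) and every \( j \), which reflects the resonance of \( \omega \cdot \partial _\varphi \) on \( {\mathbb {T}}^\nu _\varphi \times {\mathbb {T}}_x \). These modes are absent, i.e. \( {\widehat{V}}_{0, j} = 0 \), exactly when \( V \) has zero average in \( \varphi \) for every x, which is the case if \( V \) is odd in \( \varphi \). This first order heuristic only indicates why the oddness of \( V \) matters; the full nonlinear equation is solved by the Nash–Moser argument described above.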

We remark that, when searching for time periodic solutions as in [42, 53], the corresponding transport equation is not a small-divisor problem and has been solved in [53] by a direct ODE analysis.

In Lemma 7.6 we apply this change of variable to the whole operator \( {\mathcal {L}}_0 \) in (1.43), obtaining the new conjugated system (see (7.31))

where the remainder \( {{\mathcal {R}}}_1 \) is in \( \textit{OPS}^{-\infty } \).

3. Change of the space variable In Sect. 8 we introduce a change of variable induced by a diffeomorphism of \( {\mathbb {T}}_x \) of the form (independent of \( \varphi \))

Conjugating \( {{\mathcal {L}}}_1\) by the change of variable \( u (x) \mapsto u (x + \alpha (x) )\), we obtain an operator of the same form

see (8.5), where \( {{\mathcal {R}}}_2 \) is in \( \textit{OPS}^{-\infty } \), and the functions \( a_5, a_6 \) are given by

We shall choose in Sect. 11 the function \( \alpha (x) \) (see (11.23)) in order to eliminate the dependence on x from the time average \(\langle a_7 \rangle _\varphi (x)\) in (11.17)–(11.18) of the coefficient of \(|D_x|^{\frac{1}{2}}\). The advantage of introducing the diffeomorphism (1.45) at this step, rather than in Sect. 11 where it is used, is that it is easier to study the conjugation under this change of variable of differentiation and multiplication operators, Hilbert transform, and integral operators in \( \textit{OPS}^{-\infty }\), see Sect. 2.4 (on the other hand, performing this transformation in Sect. 11 would require delicate estimates of the symbols obtained after an Egorov-type analysis).

4. Symmetrization of the order 1/2. In Sect. 9 we apply two simple conjugations with a Fourier multiplier and a multiplication operator, whose goal is to obtain a new operator of the form

see (9.9)–(9.13), up to lower order operators. The function \( a_7 \) is close to 1 and \( \breve{a}_4 \) is small in \( \varepsilon \), see (9.16). Notice that the off-diagonal operators in \({\mathcal {L}}_3\) are opposite to each other, unlike in \({\mathcal {L}}_2\). Then, in the complex unknown \( h = \eta + \mathrm{i} \psi \), the first component of such an operator reads

(which corresponds to (10.1) neglecting the projector \( \mathrm{i} \Pi _0 \)) where \( P_5 (\varphi ) \) is a \( \varphi \)-dependent family of pseudo-differential operators of order \( - 1/ 2 \), and \( Q_5 (\varphi ) \) of order 0. We shall call the former operator “diagonal”, and the latter “off-diagonal”, with respect to the variables \( (h, {\bar{h}} ) \).

In Sects. 10–12 we perform the reduction to constant coefficients of (1.46) up to smoothing operators, dealing separately with the diagonal and off-diagonal operators.

5. Symmetrization of the lower orders. In Sect. 10 we reduce the off-diagonal term \( Q_5 \) to a pseudo-differential operator with very negative order, i.e. we conjugate the above operator to another one of the form (see Lemma 10.3)

where \( P_6 \) is in \({ OPS}^{- \frac{1}{2} } \) and \( Q_6 \in { OPS}^{-M }\) for a constant M large enough fixed in Sect. 14, in view of the reducibility scheme.

6. Time and space reduction at the order 1/2 In Sect. 11 we eliminate the \( \varphi \)- and the x-dependence from the coefficient of the leading operator \( \mathrm{i} a_7 (\varphi , x) |D|^{\frac{1}{2}} T_\mathtt {{h}}^{\frac{1}{2}} \). We conjugate the operator (1.47) by the time-1 flow of the pseudo-PDE

where \( \beta (\varphi , x) \) is a small function to be chosen. This kind of transformations—which are “semi-Fourier integral operators”, namely pseudo-differential operators of type \((\frac{1}{2}, \frac{1}{2})\) in Hörmander’s notation—has been introduced in [1] and studied as flows in [21].

Choosing appropriately the functions \( \beta (\varphi , x) \) and \( \alpha (x) \) (introduced in Sect. 8), see formulas (11.19) and (11.23), the final outcome is a linear operator of the form, see (11.31),

where \({\mathcal {H}}\) is the Hilbert transform. This linear operator has the constant coefficient \( {\mathtt {m}}_{\frac{1}{2}} \approx 1 \) at the order 1/2, while \( P_7 \) is in \( \textit{OPS}^{-1/2} \) and the operator \( {{\mathcal {T}}}_7 \) is small, smoothing and satisfies tame estimates in Sobolev spaces, see (11.39).

7. Reduction of the lower orders In Sect. 12 we further diagonalize the linear operator in (1.48), reducing it to constant coefficients up to regularizing smoothing operators of very negative order \( | D |^{-M} \). This step, based on standard pseudo-differential calculus, is not needed in [21], because the second order Melnikov conditions in [21] do not lose space derivatives. We apply an iterative sequence of pseudo-differential transformations that eliminate first the \( \varphi \)- and then the x-dependence of the diagonal symbols. The final system has the form

where the constant Fourier multiplier \( r (\xi ) \) is real, even \( r ( \xi ) = r (- \xi ) \), it satisfies (see (12.78))

and the variable coefficient operator \( {{\mathcal {T}}}_8 (\varphi ) \) is regularizing and satisfies tame estimates, see more precisely (12.85). We also remark that the operator (1.49) is reversible and even, since all the previous transformations that we performed are reversibility preserving and even.

At this point the procedure of diagonalization of \({\mathcal {L}}\) up to smoothing operators is complete. Thus, in Sect. 13, restricting the operator (1.49) to \( H_{{\mathbb {S}}^+}^\bot \), we obtain the reduction of \({\mathcal {L}}_\omega \) up to smoothing remainders. We are now ready to begin the KAM reduction procedure.

8. KAM reducibility In order to decrease quadratically the size of the resulting perturbation \( {{\mathcal {R}}}_0 \) (see (14.4)) we apply the KAM diagonalization iterative scheme of Sect. 14, which converges because the operators

satisfy tame estimates for some \( {\mathtt {b}} := {\mathtt {b}} (\tau , k_0) \in {\mathbb {N}}\) and \( {\mathfrak {m}}:= {\mathfrak {m}}(k_0) \) that are large enough (independently of s), see Lemma 14.2. Such conditions hold under the assumption that M (the order of regularization of the remainder) is chosen large enough as in (14.8) (essentially \( M = O({\mathfrak {m}}+ {{\mathtt {b}}})\)). This is the property that compensates, along the KAM iteration, the loss of derivatives in \( \varphi \) and x produced by the small divisors in the second order Melnikov non-resonance conditions. Actually, for the construction of the quasi-periodic solutions, it is sufficient to prove the almost-reducibility of the linearized operator, in the sense that the remainder \({\mathcal {R}}_n\) in Theorem 14.4 is not zero but it is of order \( O( \varepsilon \gamma ^{-2(M+1)} N_{n-1}^{- {\mathtt {a}}})\), which can be obtained imposing only the finitely many Diophantine conditions (14.41), (14.26).

The big difference of the KAM reducibility scheme of Sect. 14 with respect to the one developed in [21] is that the second order Melnikov non-resonance conditions that we impose are very weak, see (14.26), in particular they lose regularity, not only in the \( \varphi \)-variable, but also in the space variable x. For this reason we apply at each iterative step a smoothing procedure also in the space variable (see the Fourier truncations \(|\ell |, |j-j'| \le N_{{\mathtt {n}}-1}\) in (14.26)).

After the above almost-diagonalization of the linearized operator we almost-invert it, by imposing the first order Melnikov non-resonance conditions in (14.92), see Lemma 14.9. Since all the changes of variables that we performed in the diagonalization process satisfy tame estimates in Sobolev spaces, we finally conclude the existence of an almost inverse of \({{\mathcal {L}}}_\omega \) which satisfies tame estimates, see Theorem 14.10.

At this point the proof of the Nash–Moser Theorem 4.1, given in Sect. 15, follows in a usual way, in the same setting of [21].

Notation. Given a function \( u(\varphi , x ) \) we write that it is \( \text {even}(\varphi ) \text {even}(x)\) if it is even in \( \varphi \) for any x and, separately, even in x for any \( \varphi \). With similar meaning we say that \( u (\varphi , x) \) is \( \text {even}(\varphi ) \text {odd}(x)\), \( \text {odd}(\varphi ) \text {even}(x)\) and \( \text {odd}(\varphi ) \text {odd}(x)\).

The notation \( a \lesssim _{s, \alpha , M} b \) means that \(a \le C(s, \alpha , M ) b\) for some constant \(C(s, \alpha , M ) > 0\) depending on the Sobolev index s and the constants \( \alpha , M \). Sometimes, along the paper, we omit to write the dependence \( \lesssim _{s_0, k_0} \) with respect to \( s_0, k_0 \), because \( s_0 \) (defined in (1.21)) and \( k_0 \) (determined in Sect. 3) are considered as fixed constants. Similarly, the set \({\mathbb {S}}^+\) of tangential sites is considered as fixed along the paper.

2 Functional setting

2.1 Function spaces

In the paper we will use Sobolev norms for real or complex functions \(u(\omega , \mathtt {{h}}, \varphi , x)\), \((\varphi ,x) \in {\mathbb {T}}^\nu \times {\mathbb {T}}\), depending on parameters \((\omega ,\mathtt {{h}}) \in F\) in a Lipschitz way together with their derivatives in the sense of Whitney, where F is a closed subset of \({\mathbb {R}}^{\nu +1}\). We use the compact notation \(\lambda := (\omega ,\mathtt {{h}})\) to collect the frequency \(\omega \) and the depth \(\mathtt {{h}}\) into a parameter vector.

We use the multi-index notation: if \( k = ( k_1, \ldots , k_{\nu +1}) \in {\mathbb {N}}^{\nu +1} \) we denote \(|k| := k_1 + \cdots + k_{\nu +1}\) and \(k! := k_1! \cdots k_{\nu +1}!\) and if \( \lambda = (\lambda _1, \ldots , \lambda _{\nu +1}) \in {\mathbb {R}}^{\nu +1}\), we denote the derivative \( \partial _\lambda ^k := \partial _{\lambda _1}^{k_1} \ldots \partial _{\lambda _{\nu +1}}^{k_{\nu +1}} \) and \(\lambda ^k := \lambda _1^{k_1} \cdots \lambda _{\nu +1}^{k_{\nu +1}} \). Recalling that \(\Vert \ \Vert _s\) denotes the norm of the Sobolev space \(H^s({\mathbb {T}}^{\nu +1}, {\mathbb {C}}) = H^s_{(\varphi ,x)}\) introduced in (1.20), we now define the “Whitney-Sobolev” norm \(\Vert \cdot \Vert _{s,F}^{k+1,\gamma }\).

Definition 2.1

(Whitney–Sobolev functions) Let F be a closed subset of \({\mathbb {R}}^{\nu +1}\). Let \(k \ge 0\) be an integer, \(\gamma \in (0,1]\), and \(s \ge s_0 > (\nu +1)/2\). We say that a function \(u : F \rightarrow H^s_{(\varphi ,x)}\) belongs to \(\text {Lip}(k+1,F,s,\gamma )\) if there exist functions \(u^{(j)} : F \rightarrow H^s_{(\varphi ,x)}\), \(j \in {\mathbb {N}}^{\nu +1}\), \(0 \le |j| \le k\), with \(u^{(0)} = u\), and a constant \(M > 0\) such that, if \( R_j(\lambda ,\lambda _0) := R_j^{(u)}(\lambda ,\lambda _0) \) is defined by

then

An element of \(\text {Lip}(k+1,F,s,\gamma )\) is in fact the collection \(\{ u^{(j)} : |j| \le k \}\). The norm of \(u \in \text {Lip}(k+1,F,s,\gamma )\) is defined as

If \( F = {\mathbb {R}}^{\nu +1} \) by \( \text {Lip}(k+1,{\mathbb {R}}^{\nu +1},s, \gamma ) \) we shall mean the space of the functions \( u = u^{(0)} \) for which there exist \( u^{(j)} = \partial _{\lambda }^j u \), \( |j| \le k \), satisfying (2.2), with the same norm (2.3).
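For orientation, the conditions entering Definition 2.1 can be written out as follows. This is a sketch assuming the standard Whitney form of the Taylor remainders, chosen so that it agrees with the equivalent expression (2.4); the summation index \( \ell \in {\mathbb {N}}^{\nu +1} \) is introduced here only for illustration.

$$\begin{aligned}&u^{(j)}(\lambda ) = \sum _{\ell \in {\mathbb {N}}^{\nu +1} : |j + \ell | \le k} \frac{1}{\ell !} \, u^{(j+\ell )}(\lambda _0) \, (\lambda - \lambda _0)^\ell + R_j(\lambda ,\lambda _0), \quad \lambda , \lambda _0 \in F, \\&\gamma ^{|j|} \Vert u^{(j)}(\lambda ) \Vert _s \le M, \quad \gamma ^{k+1} \Vert R_j(\lambda ,\lambda _0) \Vert _s \le M |\lambda - \lambda _0|^{k+1-|j|}, \quad \forall \lambda , \lambda _0 \in F, \ |j| \le k, \\&\Vert u \Vert _{s,F}^{k+1,\gamma } := \inf \{ M > 0 : \text {the two bounds above hold} \}. \end{aligned}$$

With this reading, the infimum over M coincides with the maximum appearing in (2.4).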

We make some remarks.

1. If \( F = {\mathbb {R}}^{\nu +1} \) and \( u \in \text {Lip}(k+1,F,s,\gamma ) \), then the \( u^{(j)} \), \( 1 \le | j | \le k \), are uniquely determined as the partial derivatives \( u^{(j)} = \partial _{\lambda }^j u \) of \( u = u^{(0)} \). Moreover all the derivatives \( \partial _{\lambda }^j u \), \( | j | = k \), are Lipschitz. Since \( H^s \) is a Hilbert space we have that \( \text {Lip}(k+1,{\mathbb {R}}^{\nu +1},s,\gamma ) \) coincides with the Sobolev space \( W^{k+1, \infty } ({\mathbb {R}}^{\nu + 1}, H^s) \).

2. The Whitney–Sobolev norm of u in (2.3) is equivalently given by

$$\begin{aligned}&\Vert u \Vert _{s,F}^{k+1,\gamma }:= \Vert u \Vert _s^{k+1,\gamma }\nonumber \\&\qquad = \max _{|j| \le k} \Bigg \{ \gamma ^{|j|} \sup _{\lambda \in F} \Vert u^{(j)}(\lambda ) \Vert _s, \gamma ^{k+1} \sup _{\lambda \ne \lambda _0} \frac{\Vert R_j(\lambda , \lambda _0) \Vert _s}{|\lambda - \lambda _0|^{k+1 - |j|}}\Bigg \}.\qquad \end{aligned}$$(2.4)

Theorem B.2 and (B.10) provide an extension operator which associates to an element \(u \in \text {Lip}(k+1,F,s,\gamma )\) an extension \({\tilde{u}} \in \text {Lip}(k+1,{\mathbb {R}}^{\nu +1},s,\gamma ) \). As already observed, the space \(\text {Lip}(k+1,{\mathbb {R}}^{\nu +1},s,\gamma ) \) coincides with \( W^{k+1, \infty } ({\mathbb {R}}^{\nu + 1}, H^s)\), with equivalence of the norms (see (B.9))

By Lemma B.3, the extension \({\tilde{u}}\) is independent of the Sobolev space \(H^s\).

We can identify any element \(u \in \text {Lip}(k+1, F, s, \gamma )\) (which is a collection \(u = \{ u^{(j)} : |j| \le k\}\)) with the equivalence class of functions \(f \in W^{k+1, \infty }({\mathbb {R}}^{\nu +1}, H^s) / \sim \) with respect to the equivalence relation \(f \sim g\) when \(\partial _\lambda ^j f(\lambda ) = \partial _\lambda ^j g(\lambda )\) for all \(\lambda \in F\), for all \(|j| \le k+1\).

For any \(N>0\), we introduce the smoothing operators

Lemma 2.2

(Smoothing) Consider the space \(\text {Lip}(k+1,F,s,\gamma )\) defined in Definition 2.1. The smoothing operators \(\Pi _N, \Pi _N^\perp \) satisfy the estimates

Proof

See Appendix B. \(\square \)
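To illustrate why estimates of this kind hold, here is a sketch for the plain Sobolev norm \( \Vert \ \Vert _s \). It assumes that \( \Pi _N \) is the Fourier truncation to the modes \( \langle \ell , j \rangle \le N \) (the same cut-off used for operators in (2.25)) and that the norm in (1.20) is the weighted \( \ell ^2 \) norm of the Fourier coefficients \( u_{\ell , j} \) with weight \( \langle \ell , j \rangle ^{2s} \).

$$\begin{aligned}&\Pi _N u := \sum _{\langle \ell , j \rangle \le N} u_{\ell , j} \, e^{\mathrm{i} (\ell \cdot \varphi + j x)}, \qquad \Pi _N^\perp := \mathrm{Id} - \Pi _N, \\&\Vert \Pi _N u \Vert _s^2 = \sum _{\langle \ell , j \rangle \le N} \langle \ell , j \rangle ^{2s} |u_{\ell , j}|^2 \le N^{2\alpha } \sum _{\langle \ell , j \rangle \le N} \langle \ell , j \rangle ^{2(s - \alpha )} |u_{\ell , j}|^2 \le N^{2 \alpha } \Vert u \Vert _{s - \alpha }^2, \\&\Vert \Pi _N^\perp u \Vert _s^2 = \sum _{\langle \ell , j \rangle > N} \langle \ell , j \rangle ^{2s} |u_{\ell , j}|^2 \le N^{- 2\alpha } \Vert u \Vert _{s + \alpha }^2, \qquad \alpha \ge 0. \end{aligned}$$

Lemma 2.2 concerns bounds of the same type for the Whitney–Sobolev norm.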

Lemma 2.3

(Interpolation) Consider the space \(\text {Lip}(k+1,F,s,\gamma )\) defined in Definition 2.1.

(i) Let \(s_1<s_2\). Then for any \(\theta \in (0,1)\) one has

(ii) Let \(a_0, b_0 \ge 0\) and \(p,q>0\). For all \(\epsilon >0\), there exists a constant \(C(\epsilon ):= C(\epsilon ,p,q)>0\), which satisfies \(C(1)<1\), such that

Proof

See Appendix B. \(\square \)
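The mechanism behind an interpolation inequality as in item (i) can be seen on the plain Sobolev norm. The following sketch assumes that \( \Vert u \Vert _s^2 = \sum _{\ell , j} \langle \ell , j \rangle ^{2s} |u_{\ell , j}|^2 \) and applies the Hölder inequality with exponents \( 1/\theta \) and \( 1/(1-\theta ) \).

$$\begin{aligned} \Vert u \Vert _s^2 = \sum _{\ell , j} \big ( \langle \ell , j \rangle ^{2 s_1} |u_{\ell , j}|^2 \big )^{\theta } \big ( \langle \ell , j \rangle ^{2 s_2} |u_{\ell , j}|^2 \big )^{1 - \theta } \le \Vert u \Vert _{s_1}^{2 \theta } \, \Vert u \Vert _{s_2}^{2 (1 - \theta )}, \qquad s := \theta s_1 + (1 - \theta ) s_2. \end{aligned}$$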

Lemma 2.4

(Product and composition) Consider the space \(\text {Lip}(k+1,F,s,\gamma )\) defined in Definition 2.1. For all \( s \ge s_0 > (\nu + 1)/2 \), we have

Let \( \Vert \beta \Vert _{2s_0+1}^{k+1,\gamma }\le \delta (s_0, k) \) small enough. Then the composition operator

satisfies the following tame estimates: for all \( s \ge s_0\),

Let \( \Vert \beta \Vert _{2s_0+k+2}^{k+1,\gamma }\le \delta (s_0, k) \) small enough. The function \( \breve{\beta }\) defining the inverse diffeomorphism, namely \( y = x + \beta (\varphi , x) \) if and only if \( x = y + \breve{\beta }( \varphi , y ) \), satisfies

Proof

See Appendix B. \(\square \)
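The composition operator and its inverse can be made explicit. The following is a sketch assuming that the operator in question is the change of variables \( x \mapsto x + \beta (\varphi , x) \), denoted here by \( {\mathcal {B}} \) (a name introduced for illustration). The identity for \( \breve{\beta } \) follows directly from the defining relation \( y = x + \beta (\varphi , x) \Leftrightarrow x = y + \breve{\beta }(\varphi , y) \).

$$\begin{aligned}&({\mathcal {B}} u)(\varphi , x) := u (\varphi , x + \beta (\varphi , x)), \qquad ({\mathcal {B}}^{-1} u)(\varphi , y) = u (\varphi , y + \breve{\beta }(\varphi , y)), \\&\breve{\beta }(\varphi , y) = x - y = - \beta (\varphi , x) = - \beta \big ( \varphi , y + \breve{\beta }(\varphi , y) \big ). \end{aligned}$$

In particular \( \breve{\beta } \) is determined by a fixed point equation involving only \( \beta \).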

If \( \omega \) belongs to the set of Diophantine vectors \( \mathtt {DC}(\gamma , \tau ) \), where

the equation \(\omega \cdot \partial _\varphi v = u\), where \(u(\varphi ,x)\) has zero average with respect to \( \varphi \), has the periodic solution

For all \(\omega \in {\mathbb {R}}^\nu \) we define its extension

where \(\chi \in {\mathcal {C}}^\infty ({\mathbb {R}},{\mathbb {R}})\) is an even and positive cut-off function such that

Note that \((\omega \cdot \partial _\varphi )^{-1}_{ext} u = (\omega \cdot \partial _\varphi )^{-1} u\) for all \(\omega \in \mathtt {DC}(\gamma , \tau )\).
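The objects of this paragraph can be written in Fourier series as follows. This is a sketch under assumptions: the Diophantine condition is taken in its usual form, the thresholds \( 1/3 \) and \( 2/3 \) for the cut-off \( \chi \) are illustrative, and a quotient with \( \chi = 0 \) in the numerator is understood to be zero.

$$\begin{aligned}&\mathtt {DC}(\gamma , \tau ) := \Big \{ \omega \in {\mathbb {R}}^\nu : |\omega \cdot \ell | \ge \frac{\gamma }{\langle \ell \rangle ^{\tau }} \quad \forall \ell \in {\mathbb {Z}}^\nu {\setminus } \{ 0 \} \Big \}, \\&(\omega \cdot \partial _\varphi )^{-1} u := \sum _{\ell \ne 0, \, j} \frac{u_{\ell , j}}{\mathrm{i} \, \omega \cdot \ell } \, e^{\mathrm{i} (\ell \cdot \varphi + j x)}, \\&(\omega \cdot \partial _\varphi )^{-1}_{ext} u := \sum _{\ell \ne 0, \, j} \frac{\chi (\omega \cdot \ell \, \gamma ^{-1} \langle \ell \rangle ^{\tau })}{\mathrm{i} \, \omega \cdot \ell } \, u_{\ell , j} \, e^{\mathrm{i} (\ell \cdot \varphi + j x)}, \\&\chi (\xi ) = 0 \ \ \text {for} \ |\xi | \le 1/3, \qquad \chi (\xi ) = 1 \ \ \text {for} \ |\xi | \ge 2/3. \end{aligned}$$

The first formula follows by matching Fourier coefficients in \( \omega \cdot \partial _\varphi v = u \), which gives \( \mathrm{i} \, (\omega \cdot \ell ) \, v_{\ell , j} = u_{\ell , j} \). If \( \omega \in \mathtt {DC}(\gamma , \tau ) \) then \( |\omega \cdot \ell | \gamma ^{-1} \langle \ell \rangle ^{\tau } \ge 1 \) for all \( \ell \ne 0 \), so the cut-off equals 1 on every mode and the two operators coincide.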

Lemma 2.5

(Diophantine equation) For all \(u \in W^{k+1,\infty ,\gamma }({\mathbb {R}}^{\nu +1}, H^{s+\mu })\), we have

Moreover, for \(F \subseteq \mathtt {DC}(\gamma ,\tau ) \times {\mathbb {R}}\) one has

Proof

See Appendix B. \(\square \)

We finally state a standard Moser tame estimate for the nonlinear composition operator

Since the variables \( (\varphi , x) := y \) have the same role, we state it for a generic Sobolev space \( H^s ({\mathbb {T}}^d ) \).

Lemma 2.6

(Composition operator) Let \( f \in {{\mathcal {C}}}^{\infty }({\mathbb {T}}^d \times {\mathbb {R}}, {\mathbb {C}})\) and \(C_0 > 0\). Consider the space \(\text {Lip}(k+1,F,s,\gamma )\) given in Definition 2.1. If \(u(\lambda ) \in H^s({\mathbb {T}}^d, {\mathbb {R}})\), \(\lambda \in F\) is a family of Sobolev functions satisfying \(\Vert u \Vert _{s_0,F}^{k+1, \gamma } \le C_0\), then, for all \( s \ge s_0 > (d + 1)/2 \),

The constant \(C(s,k,f, C_0)\) depends on s, k and linearly on \(\Vert f \Vert _{{\mathcal {C}}^m({\mathbb {T}}^d \times B)}\), where m is an integer larger than \(s+k+1\), and \(B \subset {\mathbb {R}}\) is a bounded interval such that \(u(\lambda ,y) \in B\) for all \(\lambda \in F\), \(y \in {\mathbb {T}}^d\), for all \(\Vert u \Vert _{s_0,F}^{k+1, \gamma } \le C_0\).

Proof

See Appendix B. \(\square \)

2.2 Linear operators

Throughout the paper we consider \( \varphi \)-dependent families of linear operators \( A : {\mathbb {T}}^\nu \rightarrow {{\mathcal {L}}}( L^2({\mathbb {T}}_x)) \), \( \varphi \mapsto A(\varphi ) \), acting on functions u(x) of the space variable x, i.e. on subspaces of \( L^2 ({\mathbb {T}}_x) \), either real or complex valued. We also regard A as an operator (which for simplicity we denote by A as well) that acts on functions \( u(\varphi , x) \) of space-time, i.e. we consider the corresponding operator \( A \in {{\mathcal {L}}}(L^2({\mathbb {T}}^\nu \times {\mathbb {T}}) )\) defined by

We say that an operator A is real if it maps real valued functions into real valued functions.

We represent a real operator acting on \( (\eta , \psi ) \in L^2({\mathbb {T}}^{\nu + 1}, {\mathbb {R}}^2) \) by a matrix

where A, B, C, D are real operators acting on the scalar valued components \( \eta , \psi \in L^2({\mathbb {T}}^{\nu + 1}, {\mathbb {R}})\).

The action of an operator A as in (2.20) on a scalar function \( u := u(\varphi , x ) \in L^2 ({\mathbb {T}}^\nu \times {\mathbb {T}}, {\mathbb {C}}) \), that we expand in Fourier series as

is

We shall identify an operator A with the matrix \( \big ( A^{j'}_j (\ell - \ell ') \big )_{j, j' \in {\mathbb {Z}}, \ell , \ell ' \in {\mathbb {Z}}^\nu } \), which is Töplitz with respect to the index \(\ell \). In this paper we always consider Töplitz operators as in (2.20), (2.23).

The matrix entries \(A^{j'}_j (\ell - \ell ')\) of a bounded operator \(A : H^s \rightarrow H^s\) (as in (2.23)) satisfy

where \(\Vert A \Vert _{{\mathcal {L}}(H^s)} := \sup \{ \Vert Ah \Vert _s : \Vert h \Vert _s = 1 \}\) is the operator norm (consider \(h = e^{\mathrm{i} (\ell ', j') \cdot (\varphi ,x)}\)).
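The bound on the matrix entries can be checked on a single exponential. The sketch below assumes that the action (2.23) reads \( A u = \sum _{\ell , j} \sum _{\ell ', j'} A^{j'}_j (\ell - \ell ') u_{\ell ', j'} e^{\mathrm{i} (\ell \cdot \varphi + j x)} \) and that \( \Vert u \Vert _s^2 = \sum _{\ell , j} \langle \ell , j \rangle ^{2s} |u_{\ell , j}|^2 \).

$$\begin{aligned}&h := e^{\mathrm{i} (\ell ' \cdot \varphi + j' x)}, \qquad \Vert h \Vert _s = \langle \ell ', j' \rangle ^{s}, \qquad A h = \sum _{\ell , j} A^{j'}_j (\ell - \ell ') \, e^{\mathrm{i} (\ell \cdot \varphi + j x)}, \\&\sum _{\ell , j} |A^{j'}_j (\ell - \ell ')|^2 \langle \ell , j \rangle ^{2s} = \Vert A h \Vert _s^2 \le \Vert A \Vert _{{\mathcal {L}}(H^s)}^2 \, \langle \ell ', j' \rangle ^{2s}, \qquad \forall (\ell ', j') \in {\mathbb {Z}}^\nu \times {\mathbb {Z}}. \end{aligned}$$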

Definition 2.7

Given a linear operator A as in (2.23) we define the operator

1. |A| (majorant operator) whose matrix elements are \( | A_j^{j'}(\ell - \ell ')| \),

2. \( \Pi _N A \), \( N \in {\mathbb {N}}\) (smoothed operator) whose matrix elements are

$$\begin{aligned} (\Pi _N A)^{j'}_j (\ell - \ell ') := {\left\{ \begin{array}{ll} A^{j'}_j (\ell - \ell ') &{} \mathrm{if} \quad \langle \ell - \ell ' ,j - j' \rangle \le N \\ 0 &{} \mathrm{otherwise}. \end{array}\right. } \end{aligned}$$(2.25)

We also denote \( \Pi _N^\bot := \mathrm{Id} - \Pi _N \),

3. \( \langle \partial _{\varphi , x} \rangle ^{b} A \), \( b \in {\mathbb {R}}\), whose matrix elements are \( \langle \ell - \ell ', j - j' \rangle ^b A_j^{j'}(\ell - \ell ') \),

4. \(\partial _{\varphi _m} A(\varphi ) = [\partial _{\varphi _m}, A] = \partial _{\varphi _m} \circ A - A \circ \partial _{\varphi _m}\) (differentiated operator) whose matrix elements are \(\mathrm{i} (\ell _m - \ell _m') A_{j}^{j'}(\ell - \ell ')\).

Similarly the commutator \( [\partial _x, A ]\) is represented by the matrix with entries \( \mathrm{i} (j - j') A^{j'}_j (\ell - \ell ') \).
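The matrix elements of the two commutators can be verified on an exponential \( e^{\mathrm{i} (\ell ' \cdot \varphi + j' x)} \). The sketch assumes, as above, that A acts on this exponential as \( \sum _{\ell , j} A^{j'}_j (\ell - \ell ') e^{\mathrm{i} (\ell \cdot \varphi + j x)} \).

$$\begin{aligned}&[\partial _{\varphi _m}, A] \, e^{\mathrm{i} (\ell ' \cdot \varphi + j' x)} = \sum _{\ell , j} \big ( \mathrm{i} \ell _m - \mathrm{i} \ell _m' \big ) A^{j'}_j (\ell - \ell ') \, e^{\mathrm{i} (\ell \cdot \varphi + j x)}, \\&[\partial _x, A] \, e^{\mathrm{i} (\ell ' \cdot \varphi + j' x)} = \sum _{\ell , j} \big ( \mathrm{i} j - \mathrm{i} j' \big ) A^{j'}_j (\ell - \ell ') \, e^{\mathrm{i} (\ell \cdot \varphi + j x)}. \end{aligned}$$

In each line the first term comes from differentiating the output exponential and the second from differentiating the input one.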

Given linear operators A, B as in (2.23) we have that (see Lemma 2.4 in [21])

where, for a given function \( u(\varphi , x) \) expanded in Fourier series as in (2.22), we define the majorant function

Note that the Sobolev norms of u and \( | |u | |\) are the same, i.e.
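Concretely, assuming that the majorant function is obtained by replacing each Fourier coefficient with its modulus and that \( \Vert u \Vert _s^2 = \sum _{\ell , j} \langle \ell , j \rangle ^{2s} |u_{\ell , j}|^2 \), the equality of the norms is immediate, since the Sobolev norm depends only on the moduli of the coefficients.

$$\begin{aligned} | |u | | (\varphi , x) := \sum _{\ell , j} |u_{\ell , j}| \, e^{\mathrm{i} (\ell \cdot \varphi + j x)}, \qquad \big \Vert \, | |u | | \, \big \Vert _s^2 = \sum _{\ell , j} \langle \ell , j \rangle ^{2s} |u_{\ell , j}|^2 = \Vert u \Vert _s^2. \end{aligned}$$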

2.3 Pseudo-differential operators

In this section we recall the main properties of pseudo-differential operators on the torus that we shall use in the paper, similarly to [1, 21]. Pseudo-differential operators on the torus may be seen as a particular case of the theory on \( {\mathbb {R}}^n \), as developed for example in [35].

Definition 2.8

(\(\Psi \mathrm{DO}\)) A linear operator A is called a pseudo-differential operator of order m if its symbol a(x, j) is the restriction to \( {\mathbb {R}}\times {\mathbb {Z}}\) of a function \( a (x, \xi ) \) which is \( {{\mathcal {C}}}^\infty \)-smooth on \( {\mathbb {R}}\times {\mathbb {R}}\), \( 2 \pi \)-periodic in x, and satisfies the inequalities

We call \( a(x, \xi ) \) the symbol of the operator A, which we denote

We denote by \( S^m \) the class of all the symbols \( a(x, \xi ) \) satisfying (2.29), and by \( \textit{OPS}^m \) the associated set of pseudo-differential operators of order m. We set \( \textit{OPS}^{-\infty } := \cap _{m \in {\mathbb {R}}} \textit{OPS}^{m} \).
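For orientation, the symbol inequalities referred to as (2.29) are, assuming the usual convention for classical symbol classes, of the following form; the example symbol and the function \( c(x) \) are introduced here only for illustration.

$$\begin{aligned}&| \partial _x^\alpha \partial _\xi ^\beta a (x, \xi ) | \le C_{\alpha , \beta } \langle \xi \rangle ^{m - \beta }, \qquad \forall \alpha , \beta \in {\mathbb {N}}, \\&a(x, \xi ) = c(x) (1 + \xi ^2)^{m/2} \in S^m \quad \text {for any } 2\pi \text {-periodic } c \in {{\mathcal {C}}}^\infty ({\mathbb {R}}). \end{aligned}$$

In the example each \( \xi \)-derivative lowers the growth in \( \xi \) by one power, while x-derivatives fall only on \( c(x) \) and do not affect it.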

For a matrix of pseudo differential operators

we say that \(A \in \textit{OPS}^m\).

When the symbol a(x) is independent of j, the operator \( A = \mathrm{Op} (a) \) is the multiplication operator by the function a(x), i.e. \( A : u (x) \mapsto a ( x) u(x )\). In such a case we shall also denote \( A = \mathrm{Op} (a) = a (x) \).

We underline that we regard any operator \( \mathrm{Op}(a) \) as an operator acting only on \( 2 \pi \)-periodic functions \( u (x) = \sum _{j \in {\mathbb {Z}}} u_j e^{\mathrm{i} j x }\) as

Along the paper we consider \( \varphi \)-dependent pseudo-differential operators \( (A u)(\varphi , x) = \sum _{j \in {\mathbb {Z}}} a(\varphi , x, j) u_j(\varphi ) e^{\mathrm{i} j x } \) where the symbol \( a(\varphi , x, \xi ) \) is \( {{\mathcal {C}}}^\infty \)-smooth also in \( \varphi \). We still denote \( A := A(\varphi ) = \mathrm{Op} (a(\varphi , \cdot ) ) = \mathrm{Op} (a ) \).
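The action of \( \mathrm{Op}(a) \) on periodic functions, and its two basic special cases, can be summarized as follows. The formula is the \( \varphi \)-independent version of the one displayed above; the example \( a(\xi ) = \mathrm{i} \xi \) is added for illustration.

$$\begin{aligned}&(A u)(x) = \sum _{j \in {\mathbb {Z}}} a(x, j) \, u_j \, e^{\mathrm{i} j x}, \qquad u(x) = \sum _{j \in {\mathbb {Z}}} u_j e^{\mathrm{i} j x}, \\&a = a(x): \quad (A u)(x) = a(x) \sum _{j \in {\mathbb {Z}}} u_j e^{\mathrm{i} j x} = a(x) u(x) \quad \text {(multiplication operator)}, \\&a = a(\xi ): \quad (A u)(x) = \sum _{j \in {\mathbb {Z}}} a(j) \, u_j \, e^{\mathrm{i} j x} \quad \text {(Fourier multiplier), e.g. } \mathrm{Op}(\mathrm{i} \xi ) = \partial _x. \end{aligned}$$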

Moreover we consider pseudo-differential operators \( A(\lambda ) := \mathrm{Op}(a(\lambda , \varphi , x, \xi )) \) that are \({k_0}\) times differentiable with respect to a parameter \( \lambda := (\omega , \mathtt {{h}})\) in an open subset \( \Lambda _0 \subseteq {\mathbb {R}}^\nu \times [\mathtt {{h}}_1, \mathtt {{h}}_2] \). The regularity constant \( k_0 \in {\mathbb {N}}\) is fixed once and for all in Sect. 3. Note that \( \partial _{\lambda }^k A = \mathrm{Op}(\partial _{\lambda }^k a) \), \( \forall k \in {\mathbb {N}}^{\nu + 1} \).

We shall use the following notation, used also in [1, 21]. For any \(m \in {\mathbb {R}}{\setminus } \{ 0\}\), we set

where \(\chi \) is the even, positive \({\mathcal {C}}^\infty \) cut-off defined in (2.16). We also identify the Hilbert transform \({{\mathcal {H}}}\), acting on the \(2 \pi \)-periodic functions, defined by

with the Fourier multiplier \( \mathrm{Op}\big (- \mathrm{i} \, \mathrm{sign}(\xi ) \chi (\xi ) \big )\), i.e. \( {{\mathcal {H}}} \equiv \mathrm{Op}\big (- \mathrm{i} \,\mathrm{sign}(\xi ) \chi (\xi ) \big ) \).

We shall identify the projector \(\pi _0 \), defined on the \( 2 \pi \)-periodic functions as

with the Fourier multiplier \( \mathrm{Op}\big ( 1 - \chi (\xi ) \big )\), i.e. \( \pi _0 \equiv \mathrm{Op}\big ( 1 - \chi (\xi ) \big ) \), where the cut-off \(\chi (\xi )\) is defined in (2.16). We also define the Fourier multiplier \( \langle D \rangle ^m \), \(m \in {\mathbb {R}}{\setminus } \{ 0 \}\), as
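On the Fourier basis these multipliers act diagonally. The following is a sketch assuming that \( |D|^m := \mathrm{Op}(\chi (\xi ) |\xi |^m) \), that \( \pi _0 \) is the projector on the space average, and consequently that the cut-off satisfies \( \chi (0) = 0 \) and \( \chi (j) = 1 \) for all integers \( |j| \ge 1 \).

$$\begin{aligned}&|D|^m e^{\mathrm{i} j x} = \chi (j) |j|^m e^{\mathrm{i} j x}, \qquad {{\mathcal {H}}} e^{\mathrm{i} j x} = - \mathrm{i} \, \mathrm{sign}(j) \chi (j) \, e^{\mathrm{i} j x}, \qquad \pi _0 e^{\mathrm{i} j x} = (1 - \chi (j)) \, e^{\mathrm{i} j x}, \\&\langle D \rangle ^m := \pi _0 + |D|^m = \mathrm{Op} \big ( (1 - \chi (\xi )) + \chi (\xi ) |\xi |^m \big ), \qquad \langle D \rangle ^m e^{\mathrm{i} j x} = \max \{ 1, |j| \}^m e^{\mathrm{i} j x}. \end{aligned}$$

For instance, for \( j \ge 1 \) the second formula gives \( {{\mathcal {H}}} \cos (j x) = \sin (j x) \) and \( {{\mathcal {H}}} \sin (j x) = - \cos (j x) \), while \( {{\mathcal {H}}} (1) = 0 \).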

We now recall the pseudo-differential norm introduced in Definition 2.11 in [21] (inspired by Métivier [50], chapter 5), which controls the regularity in \( (\varphi , x)\), and the decay in \( \xi \), of the symbol \( a (\varphi , x, \xi ) \in S^m \), together with its derivatives \( \partial _\xi ^\beta a \in S^{m - \beta }\), \( 0 \le \beta \le \alpha \), in the Sobolev norm \( \Vert \ \Vert _s \).

Definition 2.9

(Weighted \(\Psi DO\) norm) Let \( A(\lambda ) := a(\lambda , \varphi , x, D) \in \textit{OPS}^m \) be a family of pseudo-differential operators with symbol \( a(\lambda , \varphi , x, \xi ) \in S^m \), \( m \in {\mathbb {R}}\), which are \(k_0\) times differentiable with respect to \( \lambda \in \Lambda _0 \subset {\mathbb {R}}^{\nu + 1} \). For \( \gamma \in (0,1) \), \( \alpha \in {\mathbb {N}}\), \( s \ge 0 \), we define the weighted norm

where

For a matrix of pseudo differential operators \(A \in \textit{OPS}^m\) as in (2.30), we define its pseudo differential norm
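For orientation, a norm with the properties described before Definition 2.9 can be written as follows. This is a sketch under assumptions: the exact weights are modelled on the analogous definition in [21], and the entries \( A_1, \ldots , A_4 \) of the matrix in (2.30) are named here only for illustration.

$$\begin{aligned}&| A |_{m, s, \alpha }^{k_0, \gamma } := \sum _{|k| \le k_0} \gamma ^{|k|} \sup _{\lambda \in \Lambda _0} | \partial _\lambda ^k A(\lambda ) |_{m, s, \alpha }, \\&| A(\lambda ) |_{m, s, \alpha } := \max _{0 \le \beta \le \alpha } \, \sup _{\xi \in {\mathbb {R}}} \Vert \partial _\xi ^\beta a(\lambda , \cdot , \cdot , \xi ) \Vert _s \, \langle \xi \rangle ^{- m + \beta }, \\&| A |_{m, s, \alpha }^{k_0, \gamma } := \max _{i = 1, \ldots , 4} | A_i |_{m, s, \alpha }^{k_0, \gamma } \quad \text {for a matrix of operators } A_i \in \textit{OPS}^m. \end{aligned}$$

The weight \( \langle \xi \rangle ^{-m + \beta } \) compensates exactly the growth allowed for \( \partial _\xi ^\beta a \in S^{m - \beta } \), so each term in the maximum is finite for a symbol in \( S^m \).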