Abstract

Living in a complex, multisensory environment involves constant interaction between perception and action. There is evidence that multisensory integration is governed by temporal factors. These include physiological synchrony between cross-modal stimuli, which favours a multisensory benefit, and a range of asynchronies between the stimuli within which they are bound together (the temporal window of integration). These factors were examined in a bimanual sensorimotor synchronization task with cross-modal stimuli. Participants synchronized each hand to a pair of audio–tactile stimuli, and the asynchrony between the onsets of the auditory and tactile stimuli was systematically manipulated. In cross-modal conditions, participants were instructed to tap either to the auditory stimuli or to the tactile stimuli. The results revealed a temporal window of integration of 160 ms centred around 40 and 80 ms (tactile first). Moreover, the temporal interval between the auditory and tactile stimuli affected the stability of bimanual coordination and of synchronization only when participants were instructed to synchronize with the tactile stimuli. Overall, the results indicate that both physiological asynchrony and the temporal window of integration apply to cross-modal integration in a bimanual synchronization task. They also show the effect of auditory dominance on multisensory temporal processes. This study sheds light on the role of temporal factors in multisensory processes when perception and action are rhythmic and coupled.



Introduction

Professional dancers are striking in their ability to coordinate steps and body movements while keeping the beat of the music. This finely tuned body synchronization is usually associated with a feeling of beauty and harmony. Combining information across the senses, such as touch and audition, is critical in helping dancers move in unison. According to the “temporal rule” of multisensory integration (Spence and Squire 2003), temporal alignment between the musical beat and tactile information acts as a powerful binding cue to guide movements. Like dancing, a variety of tasks, such as walking, running, juggling and speaking, involve the sustained coordination of several joints. In the present study, we examined the temporal factors that act on coordination behaviour during synchronization to bimodal stimuli. We considered bimanual coordination, as it is a widely used model of multi-joint coordination (Kelso 1995; Todorov and Jordan 2002; Diedrichsen et al. 2010; Swinnen 2002). To date, only a few studies have examined the impact of multisensory perception on bimanual coordination (Ronsse et al. 2009; Blais et al. 2015; Zelic et al. 2012, 2016; Lagarde et al. 2012), and none has tested the role of temporal alignment between stimuli. As an example of bimodal stimuli rhythmically pacing hand movements, we focused on pairs of auditory and tactile cues, which engage sensory interactions at various levels of the nervous system (Ghazanfar and Schroeder 2006; Lakatos et al. 2007; Soto-Faraco and Deco 2009).

Numerous studies have investigated the effect of bimodal and tri-modal stimuli on behaviour, and a set of factors and principles governing their multisensory integration has been proposed (Todd 1912; Hershenson 1962; Raab 1962; Miller 1982; Ernst and Bülthoff 2004; Driver and Noesselt 2008). Yet their robustness is still debated (Otto et al. 2013). The main predictions about the effect of multisensory processes on behaviour are based on “the spatial rule”, “the temporal rule” and the “principle of inverse effectiveness”, originally derived from electrophysiological studies (Stein and Meredith 1993). A multisensory benefit is observed when signals occur at the same location, when they are presented at the same time and when their intensity is relatively low (Alais et al. 2010).

Evidence for a lawful relation between multisensory processes and the spatial and temporal configuration of the stimuli comes mostly from studies of latency processes. Processing and integrating latencies is common to a variety of tasks, such as stimulus detection, decision-making, saccades to a visual target and simple reaction times (Harrar and Harris 2005; Zampini et al. 2005; Colonius and Diederich 2010). These processes are often accounted for by counter models and, more generally, by diffusion models. These models postulate that sensory evidence that the stimulus is present accumulates over time; once a threshold is crossed, after a given temporal interval or latency, a detection or decision is made and a movement follows (Diederich 1995; Jones and Dzhafarov 2014). In these tasks, which require a reaction to cross-modal stimuli, cross-modal stimulation shortens sensory accumulation and thereby yields a functional behavioural improvement, such as shorter reaction times (Bell et al. 2005; Rowland et al. 2007). This latency-based account of reaction time tasks, however, is likely to be insufficient to explain multisensory integration in dancing or in rhythmic tasks, such as bimanual coordination to uni-modal or bimodal rhythmic stimuli. These interlimb coordination tasks are typically accounted for by models derived from coordination dynamics theory (e.g. Haken et al. 1985; Kelso 1995; Swinnen 2002).

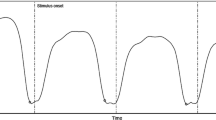

A large number of studies of bimanual coordination have documented two preferred movement patterns: in-phase, with the right and left fingers flexing simultaneously, and anti-phase, with the fingers moving alternately (Turvey 1990; Kelso 1995; Swinnen 2002). Notably, these stable patterns also strongly dominate daily manual activities (Howard et al. 2009). The theory of coordination dynamics explains bimanual coordination patterns with a model of the entrainment of two dynamically coupled oscillators (the HKB model, after Haken et al. 1985). This theory is based on the empirical discovery of a systematic transition from anti-phase to in-phase patterns when movement rate is increased (Kelso 1984, 1995; Schöner et al. 1986; Haken 1988; Schöner and Kelso 1988a; Beek et al. 1995; Leise and Cohen 2007). In the HKB model, bimanual patterns form through changes in temporal stability, which arise from the interplay between the dynamics of each hand and its coupling with the other hand. The architecture of the HKB model is shown conceptually in Fig. 1a. Note that we use the term stable as it is used in the dynamical systems approach to brain and behaviour (see Kelso 1995), that is, strictly in its mathematical sense for dynamical systems. Put simply, a state is stable if the system returns to it after a perturbation; such a stable equilibrium is called an attractor.

Fig. 1 a The HKB model (Haken et al. 1985). b The paced HKB model (Schöner and Kelso 1988b). c The scenario corresponding to the present study: the HKB model paced by the sum of two modalities, auditory (aud) and tactile (tact). In a, the architecture of the model is left–right symmetric and comprises component dynamics, represented by an evolution function F acting on the state of each hand, which generate the stable rhythmic behaviour of each hand. The states of the left and right hands are represented by X and Y, respectively, each being two-dimensional (position and velocity or, in polar coordinates, amplitude and phase). A coupling function G enables information exchange between the left and right hands. Left- and right-hand time series are depicted by solid and dotted lines, respectively. From the interplay between F and G, stable in-phase and anti-phase patterns emerge, and a transition from anti-phase to in-phase occurs when movement rate is increased beyond a critical value. Only the anti-phase pattern was studied here. In b, each hand is paced by the addition of a sine-wave function of time, S(t), with the subscripts denoting a specific hand. This pacing function is simply S(t) = A sin(ωt + θ) (see text for the abbreviations). In c, each hand is paced by two sine waves, one per modality, which are assumed to add as in the paced HKB model.
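For readers less familiar with the HKB model, its best-known form describes the relative phase φ between the hands as dφ/dt = −a sin φ − 2b sin 2φ (Haken et al. 1985), where the ratio b/a decreases as movement rate increases. The minimal Python sketch below is an illustration, not the model fitted in this study: it checks the linear stability of the in-phase (φ = 0) and anti-phase (φ = π) fixed points, and the values of a and b are arbitrary. Anti-phase loses stability when b/a falls below 1/4, which reproduces the anti-phase to in-phase transition described above.

```python
import math

def hkb_rate(phi: float, a: float, b: float) -> float:
    """Relative-phase flow of the HKB model: dphi/dt = -a sin(phi) - 2b sin(2 phi)."""
    return -a * math.sin(phi) - 2.0 * b * math.sin(2.0 * phi)

def slope_at(phi: float, a: float, b: float) -> float:
    """Derivative of the flow with respect to phi at a fixed point."""
    return -a * math.cos(phi) - 4.0 * b * math.cos(2.0 * phi)

def is_stable(phi: float, a: float, b: float) -> bool:
    """A fixed point is (linearly) stable when the slope of the flow is negative."""
    return slope_at(phi, a, b) < 0.0

# In-phase (0) and anti-phase (pi) are fixed points for any a, b.
# Decreasing b/a mimics increasing movement rate (arbitrary illustrative values).
for ratio in (1.0, 0.5, 0.2):
    a, b = 1.0, ratio
    print(f"b/a={ratio}: in-phase stable={is_stable(0.0, a, b)}, "
          f"anti-phase stable={is_stable(math.pi, a, b)}")
```

Running the loop should show in-phase remaining stable for all three ratios, whereas anti-phase is stable only for b/a = 1.0 and 0.5.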

Bimanual coordination while synchronizing to external periodic events

Bimanual coordination can be made more stable when participants synchronize their movements to periodic events, such as an auditory metronome (Byblow et al. 1994; Fink et al. 2000). External periodic events also reduce the variability of hand-movement trajectories (the “anchoring effect”; Byblow et al. 1994). The HKB model can account for these effects of sensory pacing by adding a periodic perturbation to the coordination dynamics. This perturbation represents an external forcing that entrains the phase of the oscillators. The forcing introduces an explicit dependence on time and takes the form S(t) = A sin(ωt + θ) (Schöner and Kelso 1988b), where A is the amplitude, ω the angular frequency, t time and θ the phase. The phase θ makes it possible to introduce a phase offset between the stimuli with which the right and left hands, respectively, synchronize (see Fig. 1b). The amplitude corresponds to the intensity of the stimuli (see Bialunska et al. 2011 for uni-manual tapping).
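The pacing term can be illustrated with a short Python sketch, assuming the forcing S(t) = A sin(ωt + θ) with ω = 2πf. The amplitude A = 1 is arbitrary, and the 1.25-Hz frequency is borrowed from the 800-ms interonset interval of experiment 2 purely for illustration. Setting θ = π for one hand yields pacing signals of opposite sign at every instant, that is, anti-phase pacing of the left and right hands.

```python
import math

def pacing(t: float, amplitude: float, freq_hz: float, theta: float) -> float:
    """Periodic forcing S(t) = A sin(omega t + theta), with omega = 2*pi*freq_hz."""
    omega = 2.0 * math.pi * freq_hz
    return amplitude * math.sin(omega * t + theta)

A, F = 1.0, 1.25  # illustrative amplitude and frequency (Hz)
for t in (0.0, 0.2, 0.4, 0.6):  # time in seconds
    s_left = pacing(t, A, F, 0.0)       # reference hand
    s_right = pacing(t, A, F, math.pi)  # shifted by pi: anti-phase pacing
    print(f"t={t:.1f} s  S_left={s_left:+.3f}  S_right={s_right:+.3f}")
```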

In the context of rhythmic bimanual coordination, if the phase difference between the cues for the left and right hands equals the phase difference of the coordination pattern performed, the stability of the pattern increases (Schöner and Kelso 1988b; Schöner et al. 1992).

Bimanual coordination while synchronizing to a pacing stimulus can be analysed at two levels: a synchronization of each hand to the stimuli, and the coordination pattern between the hands. Taking into account the stochastic nature of biological systems, the dynamical stability of those processes can be estimated by the SD of the phase difference (i.e. “asynchrony”) between movement and stimuli (Kelso et al. 1990) and by the SD of the phase difference (i.e. temporal shift) between hands’ positions (this is based on the diffusion theory framework; see Schöner et al. 1986).
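As a minimal sketch of how the SD of a phase difference can be computed on a circular scale, the Python example below uses the circular SD, sqrt(−2 ln R), where R is the mean resultant length of the phase angles. This is one common circular-statistics choice, not necessarily the estimator used in the studies cited; the present study itself reports its variables in ms. The helper `interval_to_phase`, the 1600-ms period and the time shifts in the example are hypothetical.

```python
import math

def circular_stats(phases_rad):
    """Return (circular mean, circular SD) of angles in radians.

    Circular SD = sqrt(-2 ln R), where R is the mean resultant length.
    """
    n = len(phases_rad)
    c = sum(math.cos(p) for p in phases_rad) / n
    s = sum(math.sin(p) for p in phases_rad) / n
    r = math.hypot(c, s)  # 0 <= R <= 1; R close to 1 means tightly clustered phases
    if r == 0.0:
        return float("nan"), float("inf")  # uniformly spread phases: no mean direction
    # max() guards against R marginally above 1 because of rounding.
    return math.atan2(s, c), math.sqrt(max(0.0, -2.0 * math.log(r)))

def interval_to_phase(dt_ms, period_ms):
    """Convert a time shift into a phase angle (radians) for a given movement period."""
    return 2.0 * math.pi * dt_ms / period_ms

# Hypothetical right-to-left time shifts (ms) for an assumed 1600-ms movement period.
shifts_ms = [780.0, 800.0, 820.0]
mean_phi, sd_phi = circular_stats([interval_to_phase(dt, 1600.0) for dt in shifts_ms])
print(f"mean = {math.degrees(mean_phi):.1f} deg, circular SD = {sd_phi:.4f} rad")
```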

Having presented how cross-modal stimuli should theoretically lead to more stable behaviour, we next focus on the effects of the relative timing of cross-modal stimuli. As mentioned above, our goal is to examine the “temporal rule” of multisensory integration in a bimanual synchronization task (i.e. bimanual tapping to cross-modal stimuli). We now introduce three properties likely to affect multisensory integration in this task: physiological synchronicity, as stated by the physiological synchronicity hypothesis (PSH), the temporal window of integration (TWI) and auditory dominance.

Physiological synchronicity for cross-modal stimuli

The temporal rule states that a cross-modal benefit is observed when stimuli are presented at approximately the same time. The PSH, first formulated by Raab (1962), qualifies this basic rule: physiological synchrony matters more than physical stimulus synchrony per se. Early perceptual studies supported this hypothesis by showing enhanced performance when auditory signals were delayed relative to visual ones (Hershenson 1962). The required temporal gap is dictated by differences in sensory transduction and conduction times between modalities.

Recent results consistent with the PSH have been obtained in a variety of tasks, such as reaction time (RT), temporal order judgement (TOJ) and discrimination tasks. RT tasks show that participants react 42–45 ms faster to auditory than to tactile stimuli (Diederich and Colonius 2004; Diederich 1995; Murray et al. 2005). In two studies, the difference between RTs to uni-modal auditory and tactile stimuli was first estimated and then used to scale the stimulus-onset asynchrony (SOA) between a pair of stimuli of distinct modalities. SOAs that compensated for the time difference between uni-modal responses increased the multisensory benefit (Diederich and Colonius 2004; Diederich 1995). During passive movement, TOJ tasks also revealed that tactile stimuli have to be presented 45 ms before auditory stimuli to reach subjective simultaneity (Frissen et al. 2012). Finally, in detection tasks using a two-alternative forced choice (2AFC), performance improved for synchronous relative to asynchronous presentation. However, when presentation was asynchronous, performance was optimal only when the tactile stimulus preceded the auditory one (Wilson et al. 2009). Altogether, these studies suggest that introducing a temporal gap between auditory and tactile stimuli improves performance.

In addition, a hypothesis based on assumptions similar to those of the PSH was proposed long ago for uni-manual sensorimotor synchronization to rhythmic stimuli: the Paillard–Fraisse hypothesis (Paillard 1949; Fraisse 1980). This hypothesis was put forward to account for the mean negative asynchrony typically observed in finger-tapping experiments: when asked to tap along with sequences of isochronous sounds, participants tend to anticipate the sound by 20–80 ms. According to the Paillard–Fraisse hypothesis, this phenomenon arises from constant time differences between the perception of the sound and that of the cutaneous and proprioceptive reafferences produced by the movement; anticipating the sound ensures that the two are aligned at some central level in the CNS (for reviews see Aschersleben 2002; Repp 2005). Interestingly, mean negative asynchrony varies with the sensory modality of the stimuli. Participants tap (Müller et al. 2008), or reach maximal flexion when there is no physical contact with a surface (Lagarde and Kelso 2006), before auditory stimuli and after tactile stimuli, with a difference of around 40 ms between the two. In sum, data from uni-manual sensorimotor synchronization suggest that the PSH also applies to rhythmic motor tasks in which participants synchronize with periodic stimuli.

The fact that the PSH provides a reasonable account of phenomena spanning both uni-manual reaction time tasks and rhythmic tasks is particularly appealing. It therefore seems plausible that a similar hypothesis extends to bimanual coordination paced by pairs of auditory and tactile stimuli. Given the differences in mean asynchrony between uni-manual synchronization to tactile and to auditory stimuli, one may expect the optimal SOA to be reached when tactile cues precede auditory cues by about 40 ms. This possibility entails an extension of the dynamical model previously illustrated for rhythmic pacing by a single modality: each hand can be conceived as driven by two sine waves instead of one (see Fig. 1c). This assumption seems reasonable for both auditory and tactile pacing, as recent magnetoencephalographic studies have shown, for both modalities, time-locked oscillatory brain responses to periodic stimuli in the respective primary sensory areas (Carver et al. 2002; Nangini et al. 2006). The paced HKB model predicts that the stabilization of the coordination pattern depends on the intensity of the pacing stimuli. Assuming that rhythmic pacing by two modalities is a simple sum of two sine waves (Fig. 1c), the resulting intensity is maximal when the two sensory inputs are synchronous. As explained above, physiological synchrony of auditory and tactile stimuli is not reached when they are physically sent in synchrony, but rather when tactile stimuli precede auditory stimuli. Accordingly, and in agreement with the PSH, synchrony between the two unisensory processes, by increasing the total intensity of cross-modal stimulation, is likely to play an important role in stabilizing bimanual coordination. To date, it is not known whether the results of uni-manual studies extend to bimanual coordination with cross-modal stimuli. The interaction between the hands, at the core of coordination dynamics, may modify the effects found in uni-manual tasks, and, to the best of our knowledge, the hypothesis of an optimal SOA has not been tested in this area. So far, we have described the effect of the PSH on behaviour as a way to optimize the benefit of cross-modal stimuli. We now turn to the TWI.
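The claim that the summed pacing intensity is maximal for synchronous inputs follows from the identity for adding two sine waves of equal frequency: the amplitude of A_aud sin(ωt) + A_tact sin(ω(t − lag)) is sqrt(A_aud² + A_tact² + 2 A_aud A_tact cos(ω·lag)), which is largest when the lag is zero. The Python sketch below assumes that `lag_ms` is the lag between the central (physiological) auditory and tactile signals rather than the physical SOA, and it uses an 800-ms period and equal unit amplitudes purely for illustration. A brute-force check over one period confirms the closed form.

```python
import math

def summed_amplitude(a_aud: float, a_tact: float, lag_ms: float, period_ms: float = 800.0) -> float:
    """Amplitude of a_aud*sin(w t) + a_tact*sin(w (t - lag)), with w = 2*pi/period."""
    delta = 2.0 * math.pi * lag_ms / period_ms  # lag expressed as a phase angle
    value = a_aud**2 + a_tact**2 + 2.0 * a_aud * a_tact * math.cos(delta)
    return math.sqrt(max(value, 0.0))  # guard against tiny negative rounding errors

def brute_force_amplitude(a_aud, a_tact, lag_ms, period_ms=800.0, n=8000):
    """Numerical check: peak of the summed signal sampled over one period."""
    w = 2.0 * math.pi / period_ms
    return max(
        a_aud * math.sin(w * t) + a_tact * math.sin(w * (t - lag_ms))
        for t in (period_ms * k / n for k in range(n))
    )

for lag in (0, 40, 80, 160, 400):  # central lag in ms (hypothetical values)
    print(lag, round(summed_amplitude(1.0, 1.0, lag), 3))
```

Under the PSH, a physical tactile lead that offsets the difference in processing time would bring `lag_ms` to zero and the summed amplitude to its maximum, here 2.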

Temporal window of integration

The TWI refers to the integration of cross-modal stimuli that have distinct arrival times. It translates into a certain invariance of the multisensory response of the central nervous system within a given range of SOAs (Spence and Squire 2003; Colonius and Diederich 2010). A temporal window of integration is likely to be adaptive in real life, as signals from a single source do not always arrive at the same time. For instance, when a person speaks at a sufficient distance, we see the lip movements before hearing the speech, yet we integrate both as coming from a single source without noticing the time gap. The temporal window of integration has been tested in the laboratory with perceptual and RT tasks (Colonius et al. 2009; Harris et al. 2009). For example, in TOJ tasks, a temporal window is reported within which participants cannot determine which of two stimuli (here, auditory and tactile) came first. The size of this window varies between 25 and 80 ms (Kitagawa et al. 2005; Zampini et al. 2005; Harrar and Harris 2008; Fujisaki and Nishida 2009). Variations in window size may result from task and methodological differences between studies (Occelli et al. 2011). A temporal window of integration has also been reported in RT tasks, but to our knowledge only for audio–visual (Diederich and Colonius 2009) and visuo-tactile stimuli (Diederich and Colonius 2007a, b). To date, a temporal window of integration has not been investigated in bimanual synchronization tasks. Finally, we turn to the last process that affects cross-modal integration: auditory dominance.

Auditory dominance

Sensory dominance of one modality over another is a longstanding theme in multisensory research. It denotes the fact that one modality can influence behaviour more strongly than another on a given dimension (e.g. space or timing). Recent studies have shown that this so-called dominance can be modulated by the reliability of the signals (Ernst and Banks 2002). Auditory dominance, identified in uni-manual tasks (Repp and Penel 2004), is expected to influence synchronization to cross-modal events. A robust finding in sensorimotor synchronization is that movement is attracted by the auditory modality when participants synchronize to audio–visual rhythmic stimuli (Aschersleben and Bertelson 2003; Kato and Konishi 2006; Repp and Penel 2002, 2004). In these studies, which vary the SOA between the two modalities parametrically, participants perform a tapping task with asynchronous cross-modal stimuli. They are instructed to synchronize their taps with stimuli presented in one modality while ignoring stimuli presented in the other modality, which act as distractors. Typically, movement is strongly disturbed by auditory stimuli when participants synchronize with visual stimuli; in contrast, visual stimuli can easily be ignored when participants tap to auditory stimuli and thus disrupt synchronization to the target sounds less. This dominance of the auditory over the visual modality is visible in both the accuracy and the variability of synchronization. When auditory and visual stimuli are both presented with varying SOAs, movement is particularly attracted by the auditory stimuli. In sum, auditory dominance is a robust phenomenon that, to date, has been obtained exclusively with pairs of audio–visual stimuli. Although evidence about the effects of audio–tactile stimulation is scant, auditory dominance is expected in this case too, based on uni-modal synchronization data (Kolers and Brewster 1985; Lagarde and Kelso 2006).

In the present study, we examined the temporal factors governing bimanual coordination paced by cross-modal periodic events by varying the SOA between tactile and auditory stimuli. Cross-modal stimuli were presented to participants, who synchronized their hand movements either with the auditory or with the tactile stimuli. Based on the TWI, we expected a temporal window of 80 ms, which corresponds to the maximal window size observed in perceptual tasks (Zampini et al. 2005). In addition, based on the PSH, we postulated that the optimal condition would be tactile stimuli preceding auditory stimuli by 40 ms, because participants have been found to tap before auditory stimuli and after tactile stimuli, with differences between the two reaching 40 ms (Lagarde and Kelso 2006; Müller et al. 2008). Taking these assumptions together, we predicted a window centred not on zero asynchrony between the auditory and tactile stimuli but on a 40-ms gap separating them. Within this window, we postulated that all multisensory conditions would lead to better performance than uni-modal stimuli (i.e. a multisensory benefit). Operationally, this would appear as a decrease in variability, corresponding to an increase in stability, of both the coordination between hands and the synchronization with the stimuli. In addition, because of auditory dominance, stronger effects of the SOA manipulation were predicted when participants synchronized with tactile stimuli. Indeed, previous experiments reported that focusing on the auditory modality removed the effect of visual stimuli (Aschersleben and Bertelson 2003; Repp and Penel 2004).
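The predicted window can be made concrete with a short Python sketch. It assumes a sign convention in which the SOA is the tactile onset minus the auditory onset, so negative values mean tactile first; this convention, the function name and the treatment of the window edges as inclusive are assumptions for illustration. With the predicted 80-ms window centred on a 40-ms tactile lead, the window spans −80 to 0 ms, so three of the nine SOAs tested in experiment 2 fall inside it.

```python
SOAS_MS = [-160, -120, -80, -40, 0, 40, 80, 120, 160]  # SOAs tested in experiment 2

def in_window(soa_ms, centre_ms=-40.0, width_ms=80.0):
    """True if the SOA lies within a window of total width `width_ms` centred on `centre_ms`.

    Assumed sign convention: SOA = tactile onset - auditory onset (negative = tactile first).
    Window edges are treated as inclusive (an assumption).
    """
    return abs(soa_ms - centre_ms) <= width_ms / 2.0

inside = [soa for soa in SOAS_MS if in_window(soa)]
print(inside)  # -> [-80, -40, 0]
```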

In the present study, we performed two experiments: a reaction time (RT) task and a bimanual synchronization task. The RT task, a standard reference task for evaluating the behavioural response to a specific stimulus, served as a control to estimate the difference in processing time between the modalities. Together, the two experiments thus allow the temporal gap between the uni-modal conditions to be compared.

The same participants performed the two tasks in a single session. The order of the tasks was counter-balanced. For the sake of clarity, we present the RT and the bimanual synchronization tasks as two experiments.

Experiment 1

Method

Participants

Fifteen adults (6 females), aged between 22 and 32 years (mean = 28.9 years), volunteered to participate in the experiment. All reported normal hearing and touch and no movement disorders. Thirteen were right-handed and two left-handed.

Material and equipment

The auditory stimuli were 80-ms square-wave pulses with a 440-Hz tone carrier, presented at a sound pressure level of 65 dB. The tactile stimuli, delivered by piezo-electric vibrators 0.5 cm in diameter, were 80-ms square-wave pulses with a 150-Hz vibration carrier. Stimulus duration was chosen based on previous experiments (Zelic et al. 2012, 2016). Participants wore earphones for the auditory stimuli and headphones playing white noise to mask environmental noise and the noise produced by the vibrators. To avoid a loss of tactile sensitivity caused by the ongoing movement (i.e. a gating effect), we positioned the vibrators on the hips rather than on the fingers. The vibrators were held by a belt in direct contact with the participants' skin on the right and left hips, above the iliac bone.

The experiment was run on two PCs: one for stimulus presentation and the other for simultaneously recording finger movements and stimuli via an A/D card (NI USB-6009, National Instruments). The stimuli, controlled with the MATLAB Data Acquisition Toolbox (MathWorks), were sent via the sound card to a hardware system (Arduino 1.0.5), which delivered the auditory and tactile stimuli while avoiding electronic delays. Index finger positions were recorded with finger goniometers (resistive flex sensors; Spectra Symbol; resolution: 1°) attached to the index finger and to the back of the hand, to estimate the metacarpophalangeal joint angle of each index finger. Finger angular positions and stimuli were sampled at 1000 Hz by the second PC through the NI USB-6009 acquisition board. Recording was controlled by a custom program using the functions of the MATLAB Data Acquisition Toolbox.

Procedure

Participants sat comfortably with their forearms resting horizontally on a table. They were instructed to keep the index finger of their dominant hand extended with the other fingers flexed, while the other hand rested. As soon as they detected a stimulus, they had to flex their index finger and touch a physical surface (see Fig. 2). Each participant performed three conditions, with auditory, tactile or audio–tactile stimuli, respectively. In the audio–tactile condition, both stimuli were sent synchronously, that is, with an SOA of 0 ms. Each condition comprised three blocks of 40 trials (120 trials per stimulus type; 360 trials overall). A 3-min break was imposed after every 120 trials; participants thus took two breaks during the RT task and a 10-min break at the end of the RT task, before beginning the second experiment. Each trial was preceded by a warning signal (a double stimulus identical to the stimulus presented in the reaction time task). The interval between the warning signal and the target stimulus varied randomly from 2 to 4 s in 100-ms steps. The interval between the stimulus onset and the next warning signal was fixed at 3 s. The order of the three conditions was randomized across participants. The task lasted around 60 min.

Fig. 2 Experimental designs: the reaction time task (top panel) and the bimanual synchronization task (bottom panel). In both tasks, the angular position of the index finger(s) was recorded at the metacarpophalangeal joint by goniometers taped to each index finger and to the back of the hand and connected to an A/D card (not shown). Participants were instructed to move the index finger only at the metacarpophalangeal joint and to touch the physical surface, represented here by the white box.
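The trial structure of the RT task can be sketched in Python as follows. The sketch assumes that foreperiods are drawn uniformly from the 21 values between 2 and 4 s in 100-ms steps and that the three blocks of each condition are run consecutively; the function and constant names are hypothetical. The final line estimates the stimulation time only, excluding breaks.

```python
import random

FOREPERIODS_MS = list(range(2000, 4001, 100))  # 2-4 s in 100-ms steps (21 values)
CONDITIONS = ("auditory", "tactile", "audio-tactile")
BLOCKS_PER_CONDITION = 3
TRIALS_PER_BLOCK = 40
POST_STIMULUS_MS = 3000  # fixed interval from stimulus onset to the next warning signal

def build_rt_session(seed=None):
    """Return a list of (condition, block_index, foreperiod_ms) tuples for one participant."""
    rng = random.Random(seed)
    order = list(CONDITIONS)
    rng.shuffle(order)  # condition order randomized across participants
    trials = []
    for condition in order:
        for block in range(BLOCKS_PER_CONDITION):
            for _ in range(TRIALS_PER_BLOCK):
                # Foreperiod between warning signal and target (uniform draw assumed).
                trials.append((condition, block, rng.choice(FOREPERIODS_MS)))
    return trials

session = build_rt_session(seed=7)
stimulation_s = sum(fp + POST_STIMULUS_MS for _, _, fp in session) / 1000.0
print(len(session), "trials,", round(stimulation_s / 60.0, 1), "min excluding breaks")
```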

Preprocessing and data analysis

The finger position time series were low-pass filtered with a zero-lag, second-order Butterworth filter with a 5-Hz cutoff. Participants performed a reactive flexion at the metacarpophalangeal joint, and each flexion peak corresponded to a tap on the physical surface. Flexion peaks were identified as the maximal excursions of the goniometer signal using the “findpeaks” function in MATLAB (version 2012b, MathWorks Inc.). Stimulus onsets were estimated with the same function. This procedure was checked visually for all trials. The first three index flexions of each block were removed because most participants responded before the stimulus at the beginning of a block. RT was computed as the difference between the stimulus onset and the peak of the index flexion. Trials with RTs shorter than 150 ms or longer than 600 ms were excluded (1 % of all trials).
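The RT computation and exclusion rules can be summarized in a minimal Python sketch. It assumes that stimulus onsets and flexion peaks have already been detected and paired trial by trial within a block; the function name and the example values are hypothetical. RTs are kept when they lie between 150 and 600 ms inclusive, a boundary choice the text does not specify.

```python
def block_rts(onsets_ms, peaks_ms, n_discard=3, min_rt=150.0, max_rt=600.0):
    """Return (kept RTs, number excluded) for one block of paired onsets and flexion peaks.

    RT = flexion peak time minus stimulus onset. The first `n_discard` trials of the
    block are dropped, then RTs outside [min_rt, max_rt] are excluded.
    """
    if len(onsets_ms) != len(peaks_ms):
        raise ValueError("each stimulus onset needs exactly one flexion peak")
    rts = [peak - onset for onset, peak in zip(onsets_ms, peaks_ms)][n_discard:]
    kept = [rt for rt in rts if min_rt <= rt <= max_rt]
    return kept, len(rts) - len(kept)

# Hypothetical block of six trials (ms).
onsets = [0, 3000, 6000, 9000, 12000, 15000]
peaks = [100, 3100, 6100, 9300, 12120, 15700]
kept, n_excluded = block_rts(onsets, peaks)
print(kept, n_excluded)  # -> [300] 2
```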

Results

Mean RTs for the three conditions are reported in Fig. 3. RTs were entered in a one-way ANOVA with three conditions (auditory, tactile and audio–tactile). The analysis yielded a significant effect of condition (F(2,28) = 16.83, p < .001). Post hoc comparisons (Tukey HSD) showed longer RTs for tactile stimuli than for auditory (p < .001) and audio–tactile stimuli (p < .001); however, RTs did not differ between the auditory-alone and audio–tactile conditions (p = .52). Mean RTs were 307 ms for auditory stimuli alone, 337 ms for tactile stimuli alone and 300 ms for audio–tactile stimuli. The difference between RTs to tactile and to auditory stimuli was thus 30 ms (337 − 307).

Discussion

The RT task served to evaluate the behavioural response to the auditory and tactile stimuli, presented alone and together, that were used in experiment 2 in a bimanual synchronization task. It allowed us to estimate the difference in processing time between the modalities. A temporal gap was found between the uni-modal conditions: RTs to tactile stimuli were 30 ms longer than RTs to auditory stimuli. This difference is slightly smaller than, although still comparable to, the differences usually reported in the literature, which range between 42 and 45 ms (Diederich 1995; Diederich and Colonius 2004; Murray et al. 2005). Thus, with the present stimulus intensities, we confirm the general observation of a number of previous studies that auditory stimuli are processed slightly faster than tactile stimuli.

Interestingly, with the current stimuli, we did not observe a multisensory benefit: RTs in the audio–tactile condition were not faster than in the auditory-alone condition. This result is contrary to previous findings (Murray et al. 2005). The discrepancy may result from methodological factors. For example, the intensities of the auditory and tactile stimuli were not calibrated before the experiment (i.e. we did not ask participants to subjectively match the intensities of the tactile and auditory stimuli by reducing the sound level of the auditory stimuli). According to the principle of inverse effectiveness, the multisensory benefit is inversely related to the intensity of the uni-modal signals (Stein and Meredith 1993): the more effective the uni-modal signals, the weaker the benefit. In this configuration, the auditory stimuli may have been too intense, thus preventing a general multisensory benefit.

These results pave the way for the second experiment by showing different RTs to the specific auditory and tactile stimuli used here. Shorter RTs to auditory than to tactile stimuli are consistent with the hypothesis that auditory stimuli should be delayed relative to tactile stimuli in order to be optimally integrated.

Experiment 2

The goal of this second experiment was to examine the effect of the temporal factors associated with multisensory integration on bimanual synchronization. The participants of experiment 1 took part in this experiment, and the same material and equipment were used. However, the two experiments differed in the nature of the task (RT vs bimanual synchronization with audio–tactile stimuli) and in the manipulated variables. First, in the bimanual synchronization task, the stimuli were presented in sequences. Second, the SOA between auditory and tactile stimuli was manipulated systematically (9 SOAs from −160 to 160 ms in steps of 40 ms). Finally, to pace an anti-phase coordination, the stimuli were presented alternately to the right and left sides. The order of the RT task and the bimanual synchronization task was alternated across participants.

Procedure

In the bimanual synchronization task, participants were instructed to synchronize their index fingers with the stimuli while executing a continuous flexion–extension movement, as shown in Fig. 2. When the right index finger was flexed, the left index finger was extended and vice versa (anti-phase movement). When the fingers were flexed, participants tapped on the physical surface (see Fig. 2). Stimuli were lateralized and presented alternately on the left and right sides, that is, in anti-phase. Participants synchronized their right index flexion with the stimuli presented on the right and their left index flexion with the stimuli presented on the left (i.e. movement was ipsilateral to the stimuli).

Two variables were manipulated: the SOA between auditory and tactile stimuli (9 SOAs) and the Focus (2 Focuses: audio vs. tactile). Auditory and tactile stimuli were presented at 9 SOAs: −160, −120, −80, −40, 0, 40, 80, 120 and 160 ms. The two Focuses corresponded to two different instructions. In the Focus audio condition, participants were instructed to synchronize their index flexion with the auditory stimuli and, in the Focus tactile condition, with the tactile stimuli. Thus, when participants were instructed to synchronize with the sounds (Focus audio), the tactile stimuli were presented before the sound (SOAs of −160, −120, −80 and −40 ms), after the sound (SOAs of 40, 80, 120 and 160 ms) or simultaneously (0 ms). The opposite held when participants were instructed to synchronize with the tactile stimuli (Focus tactile). Altogether, participants performed 36 trials, that is, 2 trials in each of the 18 experimental conditions (2 Focuses × 9 SOAs). In addition, 4 baseline trials with uni-modal stimuli were performed before the trials with bimodal stimuli: two with auditory stimuli alone and two with tactile stimuli alone. On each trial, a sequence of 80 stimuli was presented with an interonset interval of 800 ms (1.25 Hz). This frequency corresponds approximately to the average spontaneous frequency of anti-phase coordination (Zelic et al. 2016). Each trial lasted approximately 1 min (80 × 800 ms = 64 s). The order of the Focus conditions was counter-balanced, and the order of the cross-modal conditions was randomized across participants. The experiment lasted approximately 60 min.
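The factorial design of experiment 2 can be laid out in a Python sketch. It assumes that the uni-modal baselines come first, that each Focus is run as a block whose 18 trials (9 SOAs × 2 repetitions) are shuffled, and that counter-balancing alternates the Focus order by participant number; these scheduling details and all names are hypothetical. The last line recovers the 64-s trial duration and the 1.25-Hz stimulation rate from the 80 stimuli and the 800-ms interonset interval.

```python
import random
from collections import Counter

SOAS_MS = list(range(-160, 161, 40))  # -160 ... 160 ms in 40-ms steps (9 SOAs)
FOCUSES = ("audio", "tactile")        # modality participants synchronize with
REPS = 2                              # trials per Focus x SOA cell
IOI_MS = 800                          # interonset interval between successive stimuli
N_STIMULI = 80                        # stimuli per trial

def build_session(participant_id, seed=None):
    """Return (condition, soa_ms) trials; soa_ms is None for uni-modal baselines."""
    rng = random.Random(seed)
    # Hypothetical counter-balancing: even ids start with Focus audio, odd ids with Focus tactile.
    order = FOCUSES if participant_id % 2 == 0 else FOCUSES[::-1]
    trials = [("audio-only", None)] * 2 + [("tactile-only", None)] * 2  # baselines first
    for focus in order:
        block = [(focus, soa) for soa in SOAS_MS for _ in range(REPS)]
        rng.shuffle(block)  # random SOA order within the Focus block
        trials.extend(block)
    return trials

session = build_session(participant_id=0, seed=3)
cells = Counter(t for t in session if t[1] is not None)
print(len(cells), "cells,", sum(cells.values()), "bimodal trials")
print("trial duration:", N_STIMULI * IOI_MS / 1000, "s; rate:", 1000 / IOI_MS, "Hz")
```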

Preprocessing and data analysis

The time series of finger position were low-pass filtered with a zero-lag, second-order Butterworth filter (5-Hz cutoff). Participants continuously performed flexion and extension at the metacarpophalangeal joint, and each peak of flexion corresponded to a tap on the physical surface. The peak of flexion was estimated as the maximal excursion of the position measured by the goniometer, using the “findpeaks” function in MATLAB. Stimulus onsets were estimated with the same function. This procedure was visually checked for all trials. At the beginning of a trial, participants adapted their movements to the stimuli; consequently, to ensure stationarity, we discarded the first four index flexions. The analysis was carried out on 72 index-finger flexions per trial (i.e. 36 for the right finger and 36 for the left).
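A Python analogue of this preprocessing is sketched below, using SciPy's `butter`, `filtfilt` and `find_peaks` (the latter being the counterpart of MATLAB's `findpeaks`). It is not the authors' code. The sampling rate is not reported in this section and must be supplied, and the prominence threshold used to reject small ripples is a hypothetical value. Note that `filtfilt` applies the filter forward and backward: this removes the phase lag, but the effective magnitude response is that of the filter applied twice; whether "second-order" refers to the design order or the effective order is not stated in the text.

```python
import numpy as np
from scipy.signal import butter, filtfilt, find_peaks


def detect_flexion_peaks(position, fs, cutoff_hz=5.0, n_discard=4, min_prominence=0.5):
    """Return times (s) of peak flexion for one finger, after discarding the first n_discard.

    position: goniometer signal, with peak flexion assumed to be a local maximum.
    fs: sampling rate in Hz (not reported in this section; an assumption).
    min_prominence: hypothetical threshold, in signal units, rejecting small ripples.
    """
    # 2nd-order Butterworth low-pass; filtfilt runs it forward and backward,
    # which cancels the phase lag (zero-lag) but squares the magnitude response.
    b, a = butter(2, cutoff_hz, btype="low", fs=fs)
    smoothed = filtfilt(b, a, np.asarray(position, dtype=float))
    # Local maxima of the smoothed signal, analogous to MATLAB's findpeaks.
    peak_idx, _ = find_peaks(smoothed, prominence=min_prominence)
    # Drop the first flexions, during which participants adapt to the stimuli.
    return peak_idx[n_discard:] / fs
```

For a quick check, a synthetic 1.25 Hz sinusoid contaminated with 40 Hz noise (frequency, amplitude and the 200 Hz sampling rate chosen only for illustration) should yield its true peak times once the first four are dropped.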

Sequences of taps relative to the bimodal stimuli were analysed to obtain measures of (1) interlimb coordination and (2) synchronization between movements and stimuli. Coordination patterns are defined by the relative phase (Kelso 1995) between right and left finger flexions, expressed as an angle on a circular scale, whereas synchronization is most often expressed as a time difference between stimuli and finger flexions. For simplicity, all variables are presented in ms. In the bimanual synchronization task, the coordination between fingers was obtained by computing the time elapsed between each right index flexion and the subsequent left index flexion. The mean and SD of these intervals were computed. The SD of relative time is a measure of the stability of the coordination pattern between limb movements (Kelso 1984; Schöner et al. 1986; Kelso et al. 1990). In the current experiment, this measure is named “SD coordination”: the higher the SD coordination, the less stable the behaviour.
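The coordination measure can be sketched as follows: for each right flexion, find the first left flexion that follows it and take the time difference. This is a minimal illustration, assuming flexion times in ms are already available for each finger; the use of the sample SD (`ddof=1`) is an assumption, as the text does not name the estimator.

```python
import numpy as np


def coordination_stats(right_ms, left_ms):
    """Right-to-left relative times: each right flexion to the next left flexion (ms)."""
    right = np.asarray(right_ms, dtype=float)
    left = np.sort(np.asarray(left_ms, dtype=float))
    # Index of the first left flexion strictly later than each right flexion.
    idx = np.searchsorted(left, right, side="right")
    has_next = idx < len(left)  # the last right flexion may have no later left flexion
    rel = left[idx[has_next]] - right[has_next]
    # Sample SD (ddof=1) is an assumption; the text does not state the estimator.
    return rel.mean(), rel.std(ddof=1), rel
```

With illustrative flexion times `right = [0, 1600, 3200, 4800]` and `left = [810, 2390, 4000, 5620]`, the relative times are 810, 790, 800 and 820 ms, giving a mean of 805 ms and an SD coordination of about 12.9 ms.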

Synchronization between movement and stimuli was obtained by computing the temporal difference between each index flexion and the onset of the stimuli presented on the same side (i.e. those with which participants were instructed to synchronize). By convention, this difference was negative when the movement preceded the stimulus and positive when the movement lagged behind it (see Fig. 4). For each trial, there were 71 temporal differences, referred to as “asynchronies”. Mean asynchrony was computed as a measure of synchronization accuracy; a positive mean asynchrony indicates that, on average, participants flexed their fingers after the stimuli (see Fig. 4). As for the coordination between limbs, the SD of asynchrony was calculated as a measure of synchronization stability (Kelso et al. 1990; Repp and Penel 2004; Bialunska et al. 2011).
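The sign convention above amounts to subtracting the stimulus onset from the flexion time. A minimal sketch, assuming each flexion has already been paired with its same-side target onset (the pairing rule is not described in the text, so one-to-one pairing by position is a hypothetical simplification):

```python
import numpy as np


def asynchrony_stats(flexion_ms, onset_ms):
    """Mean and SD of asynchronies between paired flexions and same-side target onsets.

    Assumes flexion_ms[k] is already paired with onset_ms[k] (hypothetical pairing).
    """
    flex = np.asarray(flexion_ms, dtype=float)
    onset = np.asarray(onset_ms, dtype=float)
    if flex.shape != onset.shape:
        raise ValueError("flexions and onsets must be paired one-to-one")
    asyn = flex - onset  # negative: flexion precedes the stimulus; positive: it lags
    # Sample SD (ddof=1) is an assumption, as for SD coordination.
    return asyn.mean(), asyn.std(ddof=1), asyn
```

For example, flexions at 20, 1630 and 3190 ms paired with onsets at 0, 1600 and 3200 ms give asynchronies of 20, 30 and −10 ms, a mean asynchrony of about 13.3 ms and an SD asynchrony of about 20.8 ms.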

Schematic presentation of the conventions used to estimate the asynchrony between the peak flexion of the index finger and the onset of the stimuli. The peak flexion is the maximal excursion of the flexing action, namely the tap on the physical surface. In the left panel, one peak flexion is marked by a filled black dot. When the tap on the physical surface had two bumps, as shown here, the time of the first maximum was used. Asynchronies were computed relative to the modality towards which the focus of attention was directed: (a) relative to the auditory stimuli when the focus was on audio, or (b) relative to the tactile stimuli when the focus was on tactile. In (a), auditory stimuli were presented first; in (b), tactile stimuli were presented first. Asynchrony was positive when the peak flexion lagged behind the target stimulus and negative when it occurred before the stimulus. In Fig. 6, we present the mean asynchronies calculated with both references (tactile and audio) to emphasize that the asynchrony was invariant relative to one of the modalities (see text)

Hereafter, the methods used to estimate the PSH, the TWI and auditory dominance are explained. Existing methods for the analysis of perceptual and RT tasks with cross-modal stimuli (Vroomen and Keetels 2010; Mégevand et al. 2013) had to be adapted for rhythmic and continuous movement. To test the prediction based on the PSH (i.e. that physiological synchrony is achieved when tactile stimuli precede auditory stimuli by 40 ms), we sought the condition with the smallest variability (i.e. the most stable performance). Based on previous uni-manual studies using audio–visual stimuli (Repp and Penel 2004), we expected the response to SOA to follow a U-shaped function centred on this minimum. We therefore fitted a quadratic polynomial function to the SD coordination and SD asynchrony data. The purpose of this fit was to confirm that the response curve to SOA variation has a minimum and that this minimum is not located at SOA = 0 ms. The fit was obtained by least squares with the “lm” function of the R software (R Core Team 2013); we report its goodness of fit (R²) as an index of how well the quadratic function describes the participants’ data, together with the significance level of the fit.
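The quadratic fit can be reproduced in Python as a least-squares check; it should match an ordinary least-squares model such as `lm(sd ~ soa + I(soa^2))` in R, although the exact R call used by the authors is not given. The sketch also returns the SOA at the vertex (−b/2a), which is where the fitted curve reaches its minimum when the parabola opens upward, and the overall F test on (2, n − 3) degrees of freedom. The data in the check below are synthetic.

```python
import numpy as np
from scipy.stats import f as f_dist


def fit_quadratic(soa_ms, sd_ms):
    """Least-squares fit of sd = a*soa**2 + b*soa + c, with R^2, F test and vertex."""
    x = np.asarray(soa_ms, dtype=float)
    y = np.asarray(sd_ms, dtype=float)
    a, b, c = np.polyfit(x, y, deg=2)          # coefficients, highest power first
    y_hat = np.polyval([a, b, c], x)
    ss_res = np.sum((y - y_hat) ** 2)          # residual sum of squares
    ss_tot = np.sum((y - y.mean()) ** 2)       # total sum of squares about the mean
    r2 = 1.0 - ss_res / ss_tot
    df1, df2 = 2, len(x) - 3                   # 2 predictors + intercept
    f_stat = (r2 / df1) / ((1.0 - r2) / df2) if r2 < 1.0 else np.inf
    p_value = f_dist.sf(f_stat, df1, df2)
    # The parabola has a minimum only if it opens upward (a > 0).
    vertex = -b / (2.0 * a) if a > 0 else None
    return {"coef": (a, b, c), "r2": r2, "F": f_stat, "df": (df1, df2),
            "p": p_value, "vertex_ms": vertex}
```

With eight SOA levels, as in the fits reported in the Results, the F test has (2, 5) degrees of freedom.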

Further analyses tested the TWI hypothesis, namely that performance is invariant (and enhanced) within a certain range of temporal discrepancies between cross-modal stimuli. In keeping with our hypotheses, we expected, for Focus tactile, a TWI of 80 ms centred at 40 ms (tactile first), within which stability would be higher than in the uni-tactile condition. The expected TWI comprised three SOAs: 80 (tactile first), 40 (tactile first) and 0 ms. This was tested by computing, for each SOA, the difference between the cross-modal condition and the uni-modal tactile condition (cross-modal minus uni-modal). The value 0 thus represents performance in the uni-modal condition, and a difference below 0 indicates a cross-modal benefit, that is, a condition more stable (lower SD) than the uni-tactile condition. The range of SOAs thus identified could be considered as the extent of the TWI.
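The difference-score procedure can be sketched as follows, assuming a participants × SOAs matrix of cross-modal SDs and one uni-tactile SD per participant. The per-SOA one-sample t-test against 0 and the "span of SOAs with a negative mean difference" rule are illustrative choices; this sketch does not check that the benefiting SOAs are contiguous.

```python
import numpy as np
from scipy.stats import ttest_1samp


def twi_from_differences(cross_sd, uni_sd, soas_ms):
    """cross_sd: (n_participants, n_soas) SDs; uni_sd: (n_participants,) uni-tactile SDs."""
    cross = np.asarray(cross_sd, dtype=float)
    uni = np.asarray(uni_sd, dtype=float)
    diff = cross - uni[:, None]             # cross-modal minus uni-modal; < 0 means more stable
    mean_diff = diff.mean(axis=0)
    t_res = ttest_1samp(diff, 0.0, axis=0)  # one t-test per SOA against 0
    benefit = [s for s, m in zip(soas_ms, mean_diff) if m < 0]
    # Window extent = span of SOAs with a benefit (contiguity is not checked here).
    width = max(benefit) - min(benefit) if benefit else 0
    return {"mean_diff": mean_diff, "t": t_res.statistic, "p": t_res.pvalue,
            "benefit_soas": benefit, "width_ms": width}
```

Under this rule, a benefit spanning SOAs from 120 ms on one side of 0 to 40 ms on the other corresponds to a window of 160 ms.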

Finally, the hypothesis that auditory stimuli attracted the movement more strongly than tactile stimuli (auditory dominance) was tested by comparing the mean asynchronies across SOAs when the Focus was on auditory versus tactile stimuli. An effect of SOA was expected only when the focus was on tactile stimuli, because participants are usually strongly attracted by auditory stimuli.

Results

For statistical analysis, the SOAs are signed according to the Focus. When Focus was on tactile stimuli, negative SOAs represent auditory first and positive SOAs auditory after. Conversely, when Focus was on auditory stimuli, negative SOAs represent tactile first and positive SOAs tactile after. However, to represent both Focus and SOA in the figures, we plot the data according to the order of the stimuli, that is, according to which modality came first. Thus, in the figures, the SOAs labelled auditory first correspond in the statistical analysis to the positive SOAs for Focus on auditory and to the negative SOAs for Focus on tactile.
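The two coding schemes can be related by a small helper: in the statistical coding, a negative SOA always means that the non-attended modality came first. The function name and labels below are illustrative only.

```python
def presentation_order(focus, soa_ms):
    """Map a statistical SOA (signed relative to the Focus) to the modality presented first.

    Negative SOA: the non-focused modality led; positive SOA: the focused modality led.
    """
    if focus not in ("auditory", "tactile"):
        raise ValueError("focus must be 'auditory' or 'tactile'")
    if soa_ms == 0:
        return "simultaneous"
    other = "tactile" if focus == "auditory" else "auditory"
    return f"{other} first" if soa_ms < 0 else f"{focus} first"
```

For instance, `presentation_order("tactile", -40)` returns `"auditory first"`, and `presentation_order("auditory", 40)` also returns `"auditory first"`, matching the correspondence stated above.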

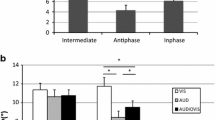

General effect of SOA on cross-modal stimuli

Synchronization and coordination variables were entered in a 2 (Focuses) × 9 (SOAs) repeated-measures ANOVA with SOA (−160, −120, −80, −40, 0, 40, 80, 120 and 160 ms) and Focus (auditory and tactile) as within-subject factors. Stability of coordination (Fig. 5a) varied as a function of SOA, an effect that depended on the Focus, as revealed by a significant SOA × Focus interaction (F(8, 112) = 2.31, p < .05). Simple effects showed that the effect of SOA was confined to the tactile Focus (F(8, 112) = 2.55, p < .05) and absent when participants focused on auditory stimuli (F(8, 112) = 1.15, p = .33). Synchronization stability (Fig. 5b) varied as a function of SOA, regardless of the Focus (F(8, 112) = 3.10, p < .01). Finally, synchronization accuracy (Fig. 6a) was affected by SOA depending on the Focus, as shown by the SOA × Focus interaction (F(8, 112) = 102.02, p < .01).
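A simple effect of SOA within one Focus can be illustrated as a one-way repeated-measures ANOVA over the 9 SOA levels. The sketch below assumes sphericity (no Greenhouse–Geisser correction) and uses the error term of that single Focus. The authors may instead have used a pooled error term from the full 2 × 9 model, so this is an illustration of the technique rather than their procedure. With 9 levels, the degrees of freedom are (8, 8(n − 1)), so the reported (8, 112) is consistent with n = 15 participants, an inference from the df rather than a figure stated in this section.

```python
import numpy as np
from scipy.stats import f as f_dist


def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA; data has shape (n_subjects, k_conditions)."""
    x = np.asarray(data, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_cond = n * np.sum((x.mean(axis=0) - grand) ** 2)  # between-condition variation
    ss_subj = k * np.sum((x.mean(axis=1) - grand) ** 2)  # between-subject variation, removed
    ss_total = np.sum((x - grand) ** 2)
    ss_err = ss_total - ss_cond - ss_subj                # condition x subject residual
    df_cond, df_err = k - 1, (k - 1) * (n - 1)
    f_stat = (ss_cond / df_cond) / (ss_err / df_err)
    p_value = f_dist.sf(f_stat, df_cond, df_err)
    return f_stat, (df_cond, df_err), p_value
```

For two conditions, this F equals the square of the paired t statistic; for example, `[[1, 3], [2, 5], [3, 4]]` gives F(1, 2) = 12.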

(a) Mean asynchrony for audio and tactile focus as a function of SOA. (b) Mean asynchrony for tactile focus, computed relative to the auditory stimuli, as a function of SOA. Panel a presents the mean asynchrony computed from the differences between the peak flexions and the tactile stimuli. Panel b presents the mean asynchrony computed from the differences between the peak flexions and the auditory stimuli. Positive mean asynchrony indicates that participants flexed their fingers after the stimuli. Error bars indicate the SE of the mean. Note that when the focus was on tactile stimuli and the tactile stimulus came first in a pair (panel a, in grey), participants tapped after both stimuli, with a lawful linear relation between mean asynchrony and SOA (mean asynchrony = 2 × SOA; see also the figures with raw data in the Supplementary Materials)

Auditory dominance

In addition to the effect of SOA confined to the tactile Focus, auditory dominance was tested by considering the mean asynchrony between movements and stimuli, an indicator of synchronization accuracy.

An asymmetry depending on the order of presentation of the auditory and tactile stimuli was observed. As explained in the Preprocessing and data analysis section, in the Focus tactile conditions the mean asynchrony was computed from the differences between the peak of flexion and the tactile stimuli (see Fig. 4), because participants were instructed to synchronize with the tactile stimuli. However, inspection of the mean asynchrony data revealed that, for SOAs where auditory stimuli preceded tactile stimuli, participants tapped so as to keep the asynchrony with the auditory stimuli close to zero, irrespective of the SOA. To illustrate this adaptation, we also computed the mean asynchrony between the peaks of flexion and the auditory stimuli (Fig. 6b). This was not the case when auditory stimuli followed tactile stimuli. Intriguingly, participants then flexed their fingers after both the tactile and the auditory stimuli, even though the focus was on tactile stimulation and the sound lagged behind the tactile stimuli (i.e. when tactile stimuli preceded auditory stimuli by 40 to 160 ms). This phenomenon can readily be seen in the individual distributions of asynchronies as a function of SOA (Fig. 7). More strikingly, the mean asynchrony estimated relative to the first stimulus (i.e. tactile) was consistently very close to twice the SOA. Therefore, the fingers’ peak flexion occurred systematically after the second stimulus, the sound, with a lag equal to the SOA.
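The last step of this argument is a change of reference. Writing $t_F$ for the time of peak flexion, $t_T$ for the tactile onset and $t_A = t_T + \mathrm{SOA}$ for the sound onset when tactile comes first (symbols introduced here for illustration), the asynchronies relative to each modality are related as follows:

$$
\begin{aligned}
a_T &= t_F - t_T, \qquad a_A = t_F - t_A = t_F - (t_T + \mathrm{SOA}) = a_T - \mathrm{SOA},\\
a_T &\approx 2\,\mathrm{SOA} \;\Rightarrow\; a_A \approx 2\,\mathrm{SOA} - \mathrm{SOA} = \mathrm{SOA}.
\end{aligned}
$$

For example, at SOA = 80 ms (tactile first), a mean asynchrony of about 160 ms relative to touch corresponds to about 80 ms relative to the sound.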

Individual histograms of asynchronies (ms) for each SOA in the focus tactile condition. Each row on the y-axis represents one participant. The bottom row shows, in grey, the grand histogram estimated from the pooled data. As a function of SOA, the histograms show both the shift in central tendency, corresponding to the mean asynchrony used in the study, and the consistency of responses within and between participants, corresponding to the SD asynchrony used in the study

Physiological synchronicity hypothesis

First, we computed the mean asynchrony in the uni-modal conditions. On average, participants flexed their fingers 24.4 ms after auditory stimuli and 70.5 ms after tactile stimuli, an average difference of 46.1 ms. The condition SOA = 40 ms (tactile first) thus corresponded well to this difference. Positive mean asynchrony, namely tapping after the stimulus, has also been reported in bimanual tasks with audio–visual stimuli for anti-phase coordination (Blais et al. 2015).
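The PSH prediction follows from simple arithmetic. Flexion follows a tactile stimulus later than an auditory one, so for both stimuli to yield the same flexion time, the tactile stimulus must lead by the difference in uni-modal mean asynchronies. The sketch below computes that lead and the nearest tested tactile-first SOA; the helper name and the list of tactile-first SOAs are illustrative.

```python
TACTILE_LEAD_SOAS_MS = [0, 40, 80, 120, 160]  # tested SOAs with tactile first (or simultaneous)


def psh_predicted_soa(mean_asyn_tactile_ms, mean_asyn_audio_ms, soas_ms=TACTILE_LEAD_SOAS_MS):
    """Tactile lead that equalizes flexion times across modalities, and the nearest tested SOA."""
    # Flexion follows touch later than sound, so touch must lead by the difference.
    lead = mean_asyn_tactile_ms - mean_asyn_audio_ms
    nearest = min(soas_ms, key=lambda s: abs(s - lead))
    return lead, nearest
```

With the uni-modal means reported above, `psh_predicted_soa(70.5, 24.4)` gives a lead of about 46.1 ms, whose nearest tested condition is SOA = 40 ms (tactile first).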

As SOA did not influence performance when the Focus was on auditory stimuli, only data with the Focus on tactile stimuli were considered. Inspection of the individual distributions of synchronization data revealed two peaks at the 160 ms SOA (i.e. auditory first), whereas the distributions were single-peaked at the other SOAs (Fig. 7). In the 160 ms SOA condition, the mean asynchrony was −256 ms for 7 participants and −70 ms for the 9 others. Because of this high between-participant variability, this condition was excluded from the fit.

The quadratic polynomial provided a good fit to the data for both the SD of coordination (R² = .88; F(2, 5) = 18.72, p < .01; Fig. 8a) and the SD of synchronization (R² = .90; F(2, 5) = 23.66, p < .01; Fig. 8b). These results confirm what visual inspection suggested: the presence of a minimum and an increase towards the extreme SOA values. Interestingly, the data and the fitted curves showed that SD asynchrony was lowest not at physical synchrony between auditory and tactile stimuli (i.e. SOA = 0 ms) but when tactile stimuli preceded auditory stimuli by 40 and 80 ms. For SOAs larger or smaller than these values, SD asynchrony increased, that is, synchronization stability diminished. For SD coordination, the minimum of the fitted curve corresponds to SOA = 40 ms (tactile first); however, as can be seen in Fig. 8a, the lowest observed value corresponds to SOA = 80 ms (tactile first). For SOAs larger or smaller than 80 ms (tactile first), SD coordination increased, that is, coordination stability diminished.

Finally, coordination stability increased together with synchronization stability (r = .84, p < .05).

Temporal window of integration

To test whether a temporal window of integration affects audio–tactile integration, we computed, for each SOA, the difference between the cross-modal condition and the uni-modal tactile condition (Fig. 9), for both SD coordination (Fig. 9a) and SD asynchrony (Fig. 9b). As shown in Fig. 9a, no SOA showed a benefit in coordination stability over the uni-tactile condition. For synchronization stability, the difference differed significantly from 0 when tactile preceded auditory by 80 ms (t(14) = 3.27, p < .01) and by 40 ms (t(14) = 4.00, p < .01). Moreover, for SOAs with tactile first (120, 80 and 40 ms), for SOA = 0 and for the SOA with audio first (40 ms), the SD asynchrony difference was below 0, indicating an advantage of the cross-modal stimuli over the uni-tactile condition. This indicates a temporal window of integration of 160 ms, namely between 120 ms (tactile first) and 40 ms (audio first).

Discussion

The goal of the present study was to examine the temporal factors governing the synchronization of bimanual coordination paced by audio–tactile stimuli, by varying the SOA between tactile and auditory stimuli. Our predictions were partly confirmed. Overall, the uni-modal auditory condition proved to be the pacing condition leading to the most stable performance in terms of both coordination and synchronization to the rhythmic stimuli. Nevertheless, both physiological synchronicity and the temporal window of integration contributed to multisensory integration. The effect of these temporal factors on the stability of synchronization was visible only when the focus of attention was on tactile stimuli.

Multisensory integration

To investigate whether audio–tactile stimulation leads to a multisensory benefit, cross-modal stimulation was compared with uni-modal stimulation. All bimodal conditions were less efficient than the uni-modal auditory condition for coordination and were comparable to it for synchronization. Nonetheless, a multisensory benefit in synchronization stability was observed when the bimodal conditions with tactile focus were compared with the uni-modal tactile condition, in particular when tactile stimulation preceded the auditory stimuli by 80 and 40 ms. This effect was found for synchronization to the stimuli but not for the coordination between hands. This finding indicates that multisensory benefit, albeit frequently observed in perceptual and RT tasks, may not systematically extend to tasks involving repeated rhythmic movement. This is consistent with previous evidence of limited enhancement, or lack of effect, of audio–tactile stimulation on uni-manual synchronization or on the coordination of continuous movement. For example, walking with auditory stimuli has the same effect on gait parameters, such as stride interval variability, as audio-visuo-tactile stimulation (Sejdić et al. 2012). In uni-manual synchronization, only one study reported audio–tactile benefits (Wing et al. 2010), while two others did not (Lagarde and Kelso 2006; Elliott et al. 2010). To the best of our knowledge, the effect of audio–tactile stimuli on bimanual coordination has been examined in only one study, which showed a general multisensory benefit when pairs of stimuli were presented alternately to the left and the right, directly matching the anti-phase pattern of hand coordination (Zelic et al. 2016). Using the same condition here, we did not replicate a multisensory benefit for coordination stability. However, this discrepancy may partly stem from methodological differences between the two studies. Zelic et al. (2016) employed a transition protocol in which the rate of movement and stimuli varied during bimanual coordination. In that study, the stabilization of coordination was indexed by the rate up to which anti-phase coordination was maintained before a spontaneous switch to in-phase occurred. In the present study, rate was kept constant and stability was measured by the standard deviation of the coordination (Schöner et al. 1986). Moreover, as discussed for Experiment 1, a factor that may have limited the observation of a multisensory benefit was the intensity of stimulation: the loudness of the auditory stimuli may have hindered the emergence of a multisensory benefit in both the RT task and the bimanual synchronization task. Nevertheless, even in the absence of a multisensory benefit, we found an effect of SOA on performance, which provides valuable information about the effect of this temporal factor.

Physiological synchronicity and temporal window of integration

We obtained compelling evidence that the SOA between isochronous auditory and tactile stimulation influences synchronization and coordination, provided that the focus of attention is on tactile stimuli. According to the TWI and the PSH, we expected a window of 80 ms centred at 40 ms (i.e. tactile first). Thus, for SOAs of 80 and 40 ms (tactile first) and 0 ms, we expected a decrease in the variability of the coordination between hands and of the synchronization with the stimuli relative to the uni-modal condition. The results obtained for synchronization to the stimuli showed a TWI spanning a wider range of SOAs, from 120 ms (tactile first) to 40 ms (audio first). By contrast, for the coordination between hands, a TWI was not confirmed: the variability of the cross-modal conditions was never lower than in the uni-tactile condition.

The analysis of synchronization stability and of coordination stability provided clear insights into the hypothesis that introducing a temporal gap between cross-modal stimuli can make their binding with movement more efficient than in an isochronous cross-modal condition. The curves of SD synchronization and SD coordination showed a clear effect of SOA, with a central minimum and increasing values towards the extremes. For SOAs of 160 ms, irrespective of the order of the stimulus modalities, SD synchronization and SD coordination increased, indicating a decrease in stability. Moreover, these response functions were displaced towards the left: relative to the uni-modal tactile baseline, the lowest values of SD synchronization and SD coordination as a function of SOA were shifted to the left instead of being centred around zero SOA. Previous studies supporting the physiological synchronicity hypothesis found larger mean asynchronies when participants synchronized uni-manually to tactile than to auditory stimuli (Lagarde and Kelso 2006; Müller et al. 2008). Our results in a bimanual task also showed a larger mean asynchrony for uni-modal tactile than for uni-modal auditory conditions, with a time difference of 46 ms. This is of the same order as the difference estimated from the reaction time task (30 ms) and comparable to what was reported in previous studies using reaction time tasks (Diederich and Colonius 2004; Diederich 1995; Murray et al. 2005). Note that this time difference corresponds to the condition in which tactile preceded auditory stimuli by 40 ms. As reported, the curves reached their minimum between 80 and 40 ms (tactile first) for both synchronization stability and coordination stability.
Moreover, the low variability among participants, in terms of the stability measure for synchronization (SD synchronization) and of mean asynchrony, indicated high consistency between individuals in this range of SOA. Thus, the results confirmed that conditions which respect the physiological synchronicity of modalities facilitate the binding of movements with cross-modal events.

In addition, as reported for SD synchronization, the 80 and 40 ms conditions (tactile first) showed a benefit compared with the uni-tactile condition. Regarding coordination stability, the pattern was less stable for extreme SOAs than for SOAs of 0, 40 and 80 ms (tactile first), but none of these conditions showed a benefit compared with the uni-modal tactile condition. This absence of benefit in SD coordination precluded identifying a TWI for this variable (the differences between the two variables are further discussed below). Regarding synchronization to cross-modal stimuli, the results pointed towards a TWI of 160 ms, between 120 ms (tactile first) and 40 ms (audio first), which is wider than expected (80 ms). This wider-than-expected TWI is quite surprising, considering that the TWI is narrower in RT tasks than in temporal order judgement tasks for audio–visual stimuli (Mégevand et al. 2013). A wider TWI in a synchronization task with audio–tactile stimuli may reflect the ability of the system to keep behaviour stable despite discrepancies between the senses. It is intriguing that behaviour remained stable for larger discrepancies in a motor coordination task than in a perceptual task. The dependence of the TWI on the nature of the task and on specific task demands (e.g. maintaining stability) deserves further study.

Altogether, both the PSH and the TWI influence the performance in a bimanual synchronization task to audio–tactile stimuli. A final hypothesis concerned the effect of auditory dominance. Indeed, because of auditory dominance, stronger effects of SOA manipulation were expected when participants focused on tactile stimuli.

Auditory dominance

The results showed an effect of the SOA between modalities when the focus was on the tactile stimuli. When the focus was on the auditory stimuli, stability, in particular that of synchronization, remained generally constant despite the changing SOA, revealing no multisensory integration. Together, the analyses of synchronization stability and accuracy indicated that instructing participants to synchronize with auditory stimuli prevented an effect of the tactile stimuli, suggesting the absence of effective multisensory processes. This finding is consistent with the so-called auditory dominance effect (Repp and Penel 2002, 2004; Kato and Konishi 2006). This phenomenon is often explained by the specialization of the modalities: the auditory modality is efficient when temporal aspects are critical for the execution of a task, whereas the visual modality is more appropriate for spatial aspects and the tactile modality for surface discrimination (Welch and Warren 1980). Auditory dominance over vision has been reported in tapping (Aschersleben and Bertelson 2003; Kato and Konishi 2006; Repp and Penel 2002, 2004). By systematically varying the SOA, those studies have shown that synchronized movement is typically more attracted by auditory than by visual stimuli. Here, we observed auditory dominance in a bimanual synchronization task with audio–tactile stimuli. However, despite auditory dominance, previous uni-manual studies reported multisensory integration, that is, an effect of SOA, when participants were instructed to synchronize with the visual stimuli (Aschersleben and Bertelson 2003; Kato and Konishi 2006; Repp and Penel 2002, 2004). We obtained similar results in the present study when participants focused on the tactile stimulation.

Additionally, the analysis of mean asynchrony revealed an intriguing effect of the order of cross-modal stimuli. Varying the SOA from negative to positive changed the order of presentation of the stimuli: either the tactile stimuli appeared first, or the auditory stimuli appeared first. This asymmetry has been shown for uni-manual audio–visual tapping (Repp and Penel 2002, 2004), where the attraction exerted by the leading modality was usually stronger than that exerted by the lagging modality. Mean asynchrony allowed us to pinpoint two distinct kinds of adaptation to the stimuli depending on stimulus order (Fig. 6). This change was most clearly visible when asynchrony was calculated relative to the auditory stimuli (see Fig. 6b). When the tactile stimuli were presented first, the mean asynchrony increased as a function of SOA, and participants tapped after each cross-modal pair. When the auditory stimuli were first, mean asynchrony remained in the vicinity of zero. In the former situation, a clear dependence of synchronization accuracy (mean asynchrony) on SOA was found; yet why the movement was placed after both stimuli is still unclear. The latter situation could result from the dominance of the auditory modality: when sounds occurred first, movement was mostly anchored to the auditory information, leaving little room for tactile stimulation to act on synchronization. At the same time, the increase in SD synchronization (i.e. the loss of synchronization stability) for SOAs greater than 40 ms revealed that this spontaneous locking of synchronization to the sound was not immune to a multisensory effect. Note that asymmetry effects linked to the order of stimulus presentation are not uncommon in multisensory studies (Diederich and Colonius 2015; Fujisaki and Nishida 2009; Mégevand et al. 2013; Powers et al. 2009; Wilson et al. 2009).
For example, the attentional prior-entry effect indicates that attending to a modality can improve reactivity to that modality compared with a less attended or unattended modality (Spence and Parise 2010). In our study, when the focus was on tactile stimuli and auditory stimuli preceded tactile stimuli, the auditory modality was not the attended modality. The observed attraction of movement to auditory stimuli was likely to result from both auditory dominance and the order of presentation, whereby attention directed to the tactile stimuli may have interfered with synchronization processes.

Relation between synchronization and movement

As mean asynchrony showed, the most stable synchronization was not accompanied by the smallest mean asynchrony. How multisensory processes led this timing to be adopted with such consistency remains an open question. In this part, we therefore focus on the link between the variables of the experiment, specifically those measuring stability. For the synchronization of each hand to the cross-modal stimuli, a given range of SOAs clearly facilitated the multisensory processes that bind movement to stimulation. However, this effect was less clear for the stability of coordination between the hands. The two levels were indeed correlated, but coordination stability in the cross-modal conditions never outperformed that in the uni-modal tactile condition. Accordingly, physiological synchronicity applied to the synchronization of each hand during bimanual coordination, but the advantage obtained for hand synchronization to the stimuli did not extend to the stability of the coordination pattern. Yet the HKB model directly predicts such a gain when the intended anti-phase pattern is paced by events presented in anti-phase to the left and right hands. One interpretation is that synchronization to cross-modal events competed to some extent with the coordination between hands. For coordination to be stable, cycle-to-cycle co-variation between the hands is required, enabled by an interhemispheric exchange represented by the HKB coupling. However, if the timing of each hand varied from cycle to cycle because of movement adjustments and local random errors while synchronizing to the cross-modal stimuli, and assuming that the origins of such variation are independent between the hands, this process may compete with the bimanual coupling. Studying the relation between synchronization and coordination in cross-modal conditions in more detail is beyond the scope of the present study.
However, further studies can be conducted to examine the autocorrelations between cycles for each hand in cross-modal and in the uni-modal tactile conditions, to shed light on the dynamical relations between the coordination between the hands (stabilized “globally”) and the synchronization of each hand to stimuli (stabilized “locally”).

In conclusion, in keeping with the hypotheses of physiological synchronicity and of the temporal window of integration, we found that tactile stimuli had to precede auditory stimuli by 40 and 80 ms to obtain the best synchronization performance. The effect was weaker for coordination. Moreover, the estimated TWI was wider (160 ms) than in perceptual tasks (maximum 80 ms). This suggests that, in an interlimb coordination task, the CNS can dynamically adapt to larger temporal discrepancies between auditory and tactile stimuli. These stabilizing effects of cross-modal stimuli occurred when participants attempted to synchronize with tactile stimuli. This effect of the focus of attention may be relevant to subsequent research on the binding of movement with audio–tactile events. Taken together, these findings bring new knowledge on the temporal factors determining multisensory processes when perception and movements are rhythmic and coupled. An interesting extension of this paradigm would be to investigate these questions in more realistic situations, such as cued walking, which is particularly promising for rehabilitation protocols.

References

Alais D, Newell FN, Mamassian P (2010) Multisensory processing in review: from physiology to behaviour. Seeing Perceiving 23:3–38. doi:10.1371/journal.pone.0011283

Aschersleben G (2002) Temporal control of movements in sensorimotor synchronization. Brain Cogn 48:66–79. doi:10.1006/brcg.2001.1304

Aschersleben G, Bertelson P (2003) Temporal ventriloquism: crossmodal interaction on the time dimension. 2. Evidence from sensorimotor synchronization. Int J Psychophysiol 50:157–163. doi:10.1016/S0167-8760

Beek PJ, Peper CE, Stegeman DF (1995) Dynamical models of movement coordination. Hum Mov Sci 14:573–608. doi:10.1016/0167-9457(95)00028-5

Bell AH, Meredith MA, Van Opstal AJ, Munoz DP (2005) Crossmodal integration in the primate superior colliculus underlying the preparation and initiation of saccadic eye movements. J Neurophysiol 93:3659–3673. doi:10.1152/jn.01214.2004

Bialunska A, Dalla Bella S, Jaskowski P (2011) Increasing stimulus intensity does not affect sensorimotor synchronization. Psychol Res 75:43–53. doi:10.1007/s00426-010-0282-7

Blais M, Albaret J-M, Tallet J (2015) Is there a link between sensorimotor coordination and inter-manual coordination? Differential effects of auditory and/or visual rhythmic stimulations. Exp Brain Res 233:3261–3269. doi:10.1007/s00221-015-4394-6

Byblow WD, Carson RG, Goodman D (1994) Expressions of asymmetries and anchoring in bimanual coordination. Hum Mov Sci 13:3–28

Carver FW, Fuchs A, Jantzen KJ, Kelso JAS (2002) Spatiotemporal analysis of the neuromagnetic response to rhythmic auditory stimulation: rate dependence and transient to steady-state transition. Clin Neurophysiol 113:1921–1931. doi:10.1016/S1388-2457(02)00299-7

Colonius H, Diederich A (2010) The optimal time window of visual-auditory integration: a reaction time analysis. Front Integr Neurosci. doi:10.3389/fnint.2010.00011

Colonius H, Diederich A, Steenken R (2009) Time-window-of-integration (TWIN) model for saccadic reaction time: effect of auditory masker level on visual-auditory spatial interaction in elevation. Brain Topogr 21:177–184. doi:10.1007/s10548-009-0091-8

Diederich A (1995) Intersensory facilitation of reaction time: evaluation of counter and diffusion coactivation models. J Math Psychol 39:197–215. doi:10.1006/jmps.1995.1020

Diederich A, Colonius H (2004) Bimodal and trimodal multisensory enhancement: effects of stimulus onset and intensity on reaction time. Percept Psychophys 66:1388–1404. doi:10.3758/BF03195006

Diederich A, Colonius H (2007a) Modeling spatial effects in visual-tactile saccadic reaction time. Percept Psychophys 69:56–67. doi:10.3758/BF03194453

Diederich A, Colonius H (2007b) Why two “Distractors” are better than one: modeling the effect of non-target auditory and tactile stimuli on visual saccadic reaction time. Exp Brain Res 179:43–54. doi:10.1007/s00221-006-0768-0

Diederich A, Colonius H (2009) Crossmodal interaction in speeded responses: time window of integration model. In: Raab M (ed) Progress in brain research. Elsevier, Amsterdam, pp 119–135

Diederich A, Colonius H (2015) The time window of multisensory integration: relating reaction times and judgments of temporal order. Psychol Rev 122:232–241. doi:10.1037/a0038696

Diedrichsen J, Shadmehr R, Ivry RB (2010) The coordination of movement: optimal feedback control and beyond. Trends Cogn Sci 14:31–39. doi:10.1016/j.tics.2009.11.004

Driver J, Noesselt T (2008) Multisensory interplay reveals crossmodal influences on “sensory-specific” brain regions, neural responses, and judgments. Neuron 57:11–23. doi:10.1016/j.neuron.2007.12.013

Elliott MT, Wing AM, Welchman AE (2010) Multisensory cues improve sensorimotor synchronisation. Eur J Neurosci 31:1828–1835. doi:10.1111/j.1460-9568.2010.07205.x

Ernst MO, Banks MS (2002) Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415:429–433. doi:10.1038/415429a

Ernst MO, Bülthoff HH (2004) Merging the senses into a robust percept. Trends Cogn Sci 8:162–169. doi:10.1016/j.tics.2004.02.002

Fink PW, Foo P, Jirsa VK, Kelso JAS (2000) Local and global stabilization of coordination by sensory information. Exp Brain Res 134:9–20. doi:10.1007/s002210000439

Fraisse P (1980) Les synchronisations sensori-motrices aux rythmes [The sensorimotor synchronization of rhythms]. In: Requin J (ed) Anticipations et comportements. Centre National, Paris, pp 233–257

Frissen I, Ziat M, Campion G et al (2012) The effects of voluntary movements on auditory-haptic and haptic-haptic temporal order judgments. Acta Psychol (Amst) 141:140–148. doi:10.1016/j.actpsy.2012.07.010

Fujisaki W, Nishida S (2009) Audio-tactile superiority over visuo-tactile and audio-visual combinations in the temporal resolution of synchrony perception. Exp Brain Res 198:245–259. doi:10.1007/s00221-009-1870-x

Ghazanfar AA, Schroeder CE (2006) Is neocortex essentially multisensory? Trends Cogn Sci 10:278–285. doi:10.1016/j.tics.2006.04.008

Haken H (1988) Information and self-organization: a macroscopic approach to complex systems. Springer, Berlin

Haken H, Kelso JAS, Bunz H (1985) A theoretical model of phase transitions in human hand movements. Biol Cybern 51:347–356. doi:10.1007/BF00336922

Harrar V, Harris LR (2005) Simultaneity constancy: detecting events with touch and vision. Exp Brain Res 166:465–473. doi:10.1007/s00221-005-2386-7

Harrar V, Harris LR (2008) The effect of exposure to asynchronous audio, visual, and tactile stimulus combinations on the perception of simultaneity. Exp Brain Res 186:517–524. doi:10.1007/s00221-007-1253-0

Harris L, Harrar V, Jaekl P, Kopinska A (2009) Mechanisms of simultaneity constancy. In: Nijhawan R, Khurana B (eds) Space and time in perception and action. Cambridge University Press, Cambridge, pp 232–253

Hershenson M (1962) Reaction time as a measure of intersensory facilitation. J Exp Psychol 63:289

Howard IS, Ingram JN, Körding KP, Wolpert DM (2009) Statistics of natural movements are reflected in motor errors. J Neurophysiol 102:1902–1910. doi:10.1152/jn.00013.2009

Jones M, Dzhafarov EN (2014) Unfalsifiability and mutual translatability of major modeling schemes for choice reaction time. Psychol Rev 121:1–32

Kato M, Konishi Y (2006) Auditory dominance in the error correction process: a synchronized tapping study. Brain Res 1084:115–122. doi:10.1016/j.brainres.2006.02.019

Kelso JAS (1984) Phase transitions and critical behavior in human bimanual coordination. Am J Physiol 15:1000–1004

Kelso JAS (1995) Dynamic patterns: the self-organization of brain and behavior. MIT Press, Cambridge

Kelso JAS, Delcolle JD, Schöner G (1990) Action-perception as a pattern formation process. In: Jeannerod M (ed) Attention and performance XIII. Erlbaum, Hillsdale, pp 139–169

Kitagawa N, Zampini M, Spence C (2005) Audiotactile interactions in near and far space. Exp Brain Res 166:528–537. doi:10.1007/s00221-005-2393-8

Kolers PA, Brewster JM (1985) Rhythms and responses. J Exp Psychol Hum Percept Perform 11:150–167. doi:10.1037/0096-1523.11.2.150

Lagarde J, Kelso JAS (2006) Binding of movement, sound and touch: multimodal coordination dynamics. Exp Brain Res 173:673–688. doi:10.1007/s00221-006-0410-1

Lagarde J, Zelic G, Mottet D (2012) Segregated audio-tactile events destabilize the bimanual coordination of distinct rhythms. Exp Brain Res 219:409–419. doi:10.1007/s00221-012-3103-y

Lakatos P, Chen CM, O’Connell MN et al (2007) Neuronal oscillations and multisensory interaction in primary auditory cortex. Neuron 53:279–292. doi:10.1016/j.neuron.2006.12.011

Leise T, Cohen A (2007) Nonlinear oscillators at our fingertips. Am Math Mon 114:14–28

Mégevand P, Molholm S, Nayak A, Foxe JJ (2013) Recalibration of the multisensory temporal window of integration results from changing task demands. PLoS One 8:e71608. doi:10.1371/journal.pone.0071608

Miller J (1982) Divided attention: evidence for coactivation with redundant signals. Cogn Psychol 14:274–279

Müller K, Aschersleben G, Schmitz F et al (2008) Inter- versus intramodal integration in sensorimotor synchronization: a combined behavioral and magnetoencephalographic study. Exp Brain Res 185:309–318. doi:10.1007/s00221-007-1155-1

Murray MM, Molholm S, Michel CM et al (2005) Grabbing your ear: rapid auditory-somatosensory multisensory interactions in low-level sensory cortices are not constrained by stimulus alignment. Cereb Cortex 15:963–974. doi:10.1093/cercor/bhh197

Nangini C, Ross B, Tam F, Graham SJ (2006) Magnetoencephalographic study of vibrotactile evoked transient and steady-state responses in human somatosensory cortex. Neuroimage 33:252–262. doi:10.1016/j.neuroimage.2006.05.045

Occelli V, Spence C, Zampini M (2011) Audiotactile interactions in temporal perception. Psychon Bull Rev 18:429–454. doi:10.3758/s13423-011-0070-4

Otto TU, Dassy B, Mamassian P (2013) Principles of multisensory behavior. J Neurosci 33:7463–7474. doi:10.1523/JNEUROSCI.4678-12.2013

Paillard J (1949) Quelques données psychophysiologiques relatives au déclenchement de la commande motrice [Some psychophysiological data relating to the triggering of motor commands]. Annee Psychol 48:28–47

Powers AR, Hillock AR, Wallace MT (2009) Perceptual training narrows the temporal window of multisensory binding. J Neurosci 29:12265–12274. doi:10.1523/JNEUROSCI.3501-09.2009

R Core Team (2013) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria

Raab DH (1962) Statistical facilitation of simple reaction time. Trans N Y Acad Sci 43:574–590

Repp BH (2005) Sensorimotor synchronization: a review of the tapping literature. Psychon Bull Rev 12:969–992. doi:10.3758/BF03206433

Repp BH, Penel A (2002) Auditory dominance in temporal processing: new evidence from synchronization with simultaneous visual and auditory sequences. J Exp Psychol Hum Percept Perform 28:1085–1099. doi:10.1037/0096-1523.28.5.1085

Repp BH, Penel A (2004) Rhythmic movement is attracted more strongly to auditory than to visual rhythms. Psychol Res 68:252–270. doi:10.1007/s00426-003-0143-8

Ronsse R, Miall RC, Swinnen SP (2009) Multisensory integration in dynamical behaviors: maximum likelihood estimation across bimanual skill learning. J Neurosci 29:8419–8428. doi:10.1523/JNEUROSCI.5734-08.2009

Rowland BA, Quessy S, Stanford TR, Stein BE (2007) Multisensory integration shortens physiological response latencies. J Neurosci 27:5879–5884. doi:10.1523/JNEUROSCI.4986-06.2007

Schöner G, Kelso JAS (1988a) A synergetic theory of environmentally-specified and learned patterns of movement coordination II. Component oscillator dynamics. Biol Cybern 58:81–89

Schöner G, Kelso JAS (1988b) A synergetic theory of environmentally-specified and learned patterns of movement coordination I. Relative phase dynamics. Biol Cybern 58:71–80

Schöner G, Haken H, Kelso JAS (1986) A stochastic theory of phase transitions in human hand movement. Biol Cybern 53:247–257. doi:10.1007/BF00336995

Schöner G, Zanone PG, Kelso JAS (1992) Learning as change of coordination dynamics: theory and experiment. J Mot Behav 24:29–48

Sejdić E, Fu Y, Pak A et al (2012) The effects of rhythmic sensory cues on the temporal dynamics of human gait. PLoS One. doi:10.1371/journal.pone.0043104

Soto-Faraco S, Deco G (2009) Multisensory contributions to the perception of vibrotactile events. Behav Brain Res 196:145–154. doi:10.1016/j.bbr.2008.09.018

Spence C, Parise C (2010) Prior-entry: a review. Conscious Cogn 19:364–379. doi:10.1016/j.concog.2009.12.001

Spence C, Squire S (2003) Multisensory integration: maintaining the perception of synchrony. Curr Biol 13:519–521. doi:10.1016/S0960-9822(03)00445-7

Stein BE, Meredith MA (1993) The merging of the senses. MIT Press, Cambridge

Swinnen SP (2002) Intermanual coordination: from behavioural principles to neural–network interactions. Nat Rev Neurosci 3:348–359. doi:10.1038/nrn807

Todd JW (1912) Reaction to multiple stimuli. Arch Psychol 25:1–65

Todorov E, Jordan MI (2002) Optimal feedback control as theory of motor coordination. Nat Neurosci 5:1226–1235. doi:10.1038/nn963

Turvey MT (1990) Coordination. Am Psychol 45:938–953. doi:10.1037/0003-066X.45.8.938

Vroomen J, Keetels M (2010) Perception of intersensory synchrony: a tutorial review. Atten Percept Psychophys 72:871–884. doi:10.3758/APP.72.4.871

Welch RB, Warren DH (1980) Immediate perceptual response to intersensory discrepancy. Psychol Bull 88:638–667. doi:10.1037/0033-2909.88.3.638

Wilson EC, Reed CM, Braida LD (2009) Integration of auditory and vibrotactile stimuli: effects of phase and stimulus-onset asynchrony. J Acoust Soc Am 126:1960–1974

Wing AM, Doumas M, Welchman AE (2010) Combining multisensory temporal information for movement synchronisation. Exp Brain Res 200:277–282. doi:10.1007/s00221-009-2134-5

Zampini M, Brown T, Shore DI et al (2005) Audiotactile temporal order judgments. Acta Psychol (Amst) 118:277–291. doi:10.1016/j.actpsy.2004.10.017

Zelic G, Mottet D, Lagarde J (2012) Behavioral impact of unisensory and multisensory audio-tactile events: pros and cons for interlimb coordination in juggling. PLoS One 7:e32308. doi:10.1371/journal.pone.0032308

Zelic G, Mottet D, Lagarde J (2016) Perceptuo-motor compatibility governs multisensory integration in bimanual coordination dynamics. Exp Brain Res 234:463–474. doi:10.1007/s00221-015-4476-5

Acknowledgments

We thank two anonymous reviewers for their careful reading of, and suggestions on, earlier drafts of the manuscript. The study was supported by a grant from the European Commission (FP7-ICT-2013-10 “BeatHealth: Health and Wellness on the Beat”). The authors declare no competing financial interests. We would like to thank Bernard Gilbert, Déborah Varoqui and Robin Salesse for their help at various stages of this project.

Cite this article

Roy, C., Dalla Bella, S. & Lagarde, J. To bridge or not to bridge the multisensory time gap: bimanual coordination to sound and touch with temporal lags. Exp Brain Res 235, 135–151 (2017). https://doi.org/10.1007/s00221-016-4776-4

DOI: https://doi.org/10.1007/s00221-016-4776-4