Abstract

The involvement or noninvolvement of a clock-like neural process, an effector-independent representation of the time intervals to be produced, is described as the essential difference between event-based and emergent timing. In a previous work (Bravi et al. in Exp Brain Res 232:1663–1675, 2014a. doi:10.1007/s00221-014-3845-9), we studied repetitive isochronous wrist flexion–extensions (IWFEs), performed while minimizing visual and tactile information, to clarify whether non-temporal and temporal characteristics of paced auditory stimuli affect the precision and accuracy of rhythmic motor performance. Here, with the inclusion of new recordings, we extend the analysis of the dataset described in that study to investigate whether simple and complex paced auditory stimuli (clicks and music) and their imagery differentially influence the timing mechanisms of repetitive IWFEs. Sets of IWFEs were analyzed with the windowed (lag one) autocorrelation, wγ(1), a statistical method recently introduced for distinguishing between event-based and emergent timing. Our findings provide evidence that paced auditory information and its imagery favor the engagement of a clock-like neural process. Specifically, music, unlike clicks, lacks the power to elicit event-based timing and does not counteract the natural shift of wγ(1) toward positive values as movement frequency increases.

Introduction

In a previous work, we studied repetitive isochronous wrist flexion–extensions (IWFEs) to clarify whether non-temporal and temporal characteristics of paced auditory stimuli affect rhythmic motor performance. The results showed that distinct characteristics of an auditory stimulus differentially influenced the accuracy of isochronous movements by altering their duration, and provided evidence for an adaptable control of timing in audio-motor coupling for isochronous movements (Bravi et al. 2014a). In the present work, we analyze whether paced auditory stimuli with distinct characteristics, and their recall, influence the timing mechanisms of repetitive IWFEs.

The involvement or noninvolvement of a clock-like neural process, an abstract effector-independent representation of the time intervals to be produced (Wing and Kristofferson 1973a, b), is described as the essential difference between event-based and emergent timing, i.e., the two forms of timing for the control of rhythmic movements (Spencer and Ivry 2005; Baer et al. 2013). Event-based timing, stemming from information processing theory, is nicely captured by the two-level model proposed by Wing and Kristofferson, which shows that temporal precision in self-paced tapping is determined by variability in a central timekeeper and by variability arising in the peripheral motor system (Semjen et al. 2000). Emergent timing, deriving from dynamical systems theory, is considered to arise from the dynamic control of non-temporal movement parameters (Schöner 2002; Huys et al. 2008), such as stiffness, which plays a major role in regulating movement frequency (Turvey 1977; Delignières et al. 2004). In particular, event-based timing predicts that a longer interval should be followed by a shorter interval and vice versa, because a motor delay that lengthens one interval shortens the next. A statistical approach used to identify the timing mode of a rhythmic activity is the (lag one) autocorrelation γ(1), which returns negative values (between −0.5 and 0) when event-based timing controls the timed movements, or positive values (between 0 and 0.5) when emergent timing is involved (Wing and Kristofferson 1973a, b). Extensions of the two-level model to synchronization have been examined (Vorberg and Wing 1996; Pressing 1998; Vorberg and Schulze 2002), and the available models of synchronization, based on a timekeeper whose time estimates integrate information on previous performance and on expected events (Joiner and Shelhamer 2009), have recently been evaluated for their predictive abilities (Jacoby and Repp 2012).
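The negative lag-one autocorrelation predicted by the two-level model can be illustrated with a short simulation. The sketch below is not part of the original analyses: it assumes Gaussian timekeeper intervals C_j (mean 500 ms, sd 20 ms) and Gaussian motor delays D_j (sd 10 ms), all chosen arbitrarily, generates inter-response intervals I_j = C_j + D_{j+1} − D_j, and compares the sample γ(1) with the value implied by the model, −σ_D²/(σ_C² + 2σ_D²).

```python
import random
import statistics


def lag1_autocorrelation(x):
    """Sample lag-one autocorrelation: sum((x_t - m)(x_{t+1} - m)) / sum((x_t - m)^2)."""
    m = statistics.fmean(x)
    num = sum((a - m) * (b - m) for a, b in zip(x[:-1], x[1:]))
    den = sum((a - m) ** 2 for a in x)
    return num / den


def simulate_two_level(n, mean_ms=500.0, sd_clock=20.0, sd_motor=10.0, seed=0):
    """Wing-Kristofferson intervals I_j = C_j + D_{j+1} - D_j with Gaussian noise (assumed)."""
    rng = random.Random(seed)
    clock = [rng.gauss(mean_ms, sd_clock) for _ in range(n)]
    motor = [rng.gauss(0.0, sd_motor) for _ in range(n + 1)]
    return [clock[j] + motor[j + 1] - motor[j] for j in range(n)]


def predicted_lag1(sd_clock, sd_motor):
    """Model value of gamma(1); it lies between -0.5 (no clock noise) and 0 (no motor noise)."""
    var_motor = sd_motor ** 2
    return -var_motor / (sd_clock ** 2 + 2 * var_motor)


intervals = simulate_two_level(5000)
print(round(predicted_lag1(20.0, 10.0), 3))  # -0.167
print(round(lag1_autocorrelation(intervals), 3))  # near the prediction; exact value depends on the seed
```

Because each motor delay D_j enters two consecutive intervals with opposite signs, their covariance is −σ_D², which is what pushes γ(1) below zero in this model.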

Different rhythmic motor tasks are used to study timing abilities. Tapping is thought to employ event-based temporal control, whereas circle drawing or forearm oscillations involve emergent timing control (Zelaznik et al. 2002). Robertson et al. (1999) found that temporal precision in tapping was not related to temporal precision in circle drawing and hypothesized that the two tasks involved distinct timing processes, the former favoring a process of timing “with a timer” and the latter a process of timing “without a timer”. The discontinuous character of tapping and the continuous character of circle drawing were considered key factors in the engagement of one or the other timing mode (Robertson et al. 1999). Theoretical analyses (Schöner 2002; Huys et al. 2008) substantiated that discontinuous movements, defined as having a definite beginning and end, favor the involvement of clock-like neural processes.

The discreteness of rhythmic movements is not the only variable favoring the use of event-based timing. Recent studies have demonstrated that salient perceptual markers, e.g., streams of clicks and tactile feedback, are also able to elicit event-based timing (Torre and Delignières 2008; Studenka et al. 2012). It is also known that the characteristics of an auditory stimulus, particularly non-temporal characteristics such as nature, energy, and complexity, have a decisive influence on the perception of time (Allan 1979). Indeed, the framework for synchronizing movement to different classes of auditory stimuli, such as streams of clicks or music, may be very different. Paced clicks are simple and brief sensory events marking only the onset and the offset of empty silent intervals. A stream of clicks offers unique informational economy by making the next click in the sequence perfectly predictable (Fraisse 1982). Rhythm perception in this case is simply related to the duration of the interonset interval defined by the periodic auditory markers (Repp 2005). Conversely, paced music consists of a complex sound architecture that entirely fills temporal intervals subdivided by periodic pulses. Musical beat perception is a brain function involving the extraction of a periodic pulse from spectrotemporally complex sound sequences, organized as hierarchical recursive structures composed of adjacent notes and meters, which allows the listener to infer a steady succession of beats (Jackendoff and Lerdahl 2006; Large and Snyder 2009; Patel and Iversen 2014). Finally, movement also participates in the encoding of the musical beat, even for ambiguous musical rhythms (Phillips-Silver and Trainor 2007). These results suggest that movement takes part in the coding of rhythm from audition.

People are able to mentally represent an auditory stimulus and retain it (Halpern 1992). The introspective persistence of an auditory experience, including one constructed from components drawn from memory, in the absence of direct sensory instigation, has been defined as auditory imagery (Hubbard 2010; Bishop et al. 2013). Zelaznik and Rosenbaum (2010) reasoned that kinematic differences between tapping and circle drawing may not fully account for the distinction between event-based and emergent timing, and asked whether the sensory representation of movement may also matter for the difference between the two timing modes. Using a paradigm based on correlations of individual differences between timing variability in tapping and in circle drawing, the authors showed that the distinction between event-based and emergent timing also resides in the representation of the goal, and provided evidence that the auditory representation of movement favors event-based timing (Zelaznik and Rosenbaum 2010).

After Spencer et al. (2003) demonstrated that air finger tapping, a motor task performed in the air with no surface contact, could invoke either an event-based or an emergent timing mode, the notion that a rhythmic motor task employs only one specific timing mode was challenged. Indeed, tasks typically thought to exploit event-based timing have been shown to exhibit an emergent timing mode, and vice versa (Huys et al. 2008; Studenka and Zelaznik 2008; Zelaznik and Rosenbaum 2010; Studenka et al. 2012). This evidence suggested that timing processes are more flexible than previously thought and that the event–emergent distinction is not all-or-none (Repp and Steinman 2010). As a consequence, the relationship between the two forms of temporal control is now a matter of debate.

Repp and Steinman (2010), using a condition in which tapping with the left hand and circle drawing with the right hand were performed simultaneously, showed that event-based and emergent timing can coexist when two different effectors perform different tasks, but also raised the possibility that the two timing modes could coexist within a single activity. Delignières and Torre (2011), on the other hand, rejected the possibility of a simultaneous expression of event-based and emergent timing in a single task. Using an air tapping task, they showed that the two forms of temporal processes represent mutually exclusive alternatives during the performance of a single task.

On the basis of the above-mentioned findings and theories, we address three questions in the domain of timing as a result of sensory-motor integration. To this end, we use in part the dataset already described in Bravi et al. (2014a), obtained from a combined task of audio- and recall-motor integration in which sets of IWFEs were performed, while minimizing visual and tactile information, under different conditions (self-paced and free from auditory constraint, driven by streams of paced clicks or by excerpts of paced music, and self-paced during the recall of previous auditions) and at various tempi. Moreover, since in Bravi et al. (2014a, Table 1) a small part of the studied ranges of tempi was unequally distributed in terms of width, the original segmentation was slightly modified to obtain a continuous representation of tempo as a variable.

Following the findings on the ability of perceptual events to elicit event-based timing, we first investigate whether simple and complex paced auditory stimuli, such as streams of clicks and excerpts of music, differentially influence the processes for the temporal regulation of IWFEs. In particular, we want to study whether music, although not homogeneous and coming in many genres, differs from the commonly used streams of clicks in its power to elicit event-based timing. While listening to music, the complexity inherent in beat perception (Patel and Iversen 2014) could make the beat less predictable and offer a poor temporal anchor for repetitive movements, making them less discrete and, consequently, evoking emergent timing. Conversely, listening to clicks could allow for an easy representation of the time intervals and produce an attraction toward event-based management of timing (Zelaznik and Rosenbaum 2010). Therefore, we hypothesize a priori that the ability of music to elicit event-based timing should be somewhat hampered by its intrinsic sound architecture; this hypothesis is particularly plausible at high frequencies, where movement has been shown to be less discontinuous (Huys et al. 2008; Repp 2008, 2011).

Second, we explore whether the recall of an auditory stimulus, which involves auditory imagery as an internal representation, is sufficient to influence timing control processes for the production of rhythmic movements. Our interest stems from the fact that research on millisecond timing has never considered whether the recall of auditory information may be relevant to the difference between the two timing processes. In particular, little or nothing is known about the timing mechanisms active when the pacing stimulus is drawn from memory stores beyond short-term/working memory, i.e., those pertaining to intermediate-term and long-term memory (Kesner and Hopkins 2006; Rosenzweig et al. 1993).

Finally, our IWFEs, performed with no direct surface opposition and while minimizing visual information, could potentially employ both event-based and emergent timing processes, as air finger tapping does (Spencer et al. 2003, 2007; Delignières and Torre 2011). By using sets of IWFEs performed under different conditions and at various tempi, we seek better insight into whether the two timing modes are mutually exclusive or whether an event-based component can coexist with an emergent component. We chose to analyze our data with the windowed (lag one) autocorrelation wγ(1), a recently introduced and reliable statistical method for distinguishing between event-based and emergent timing (Lemoine and Delignières 2009; Delignières and Torre 2011), in order to reduce the positive bias on autocorrelation values caused by drift variance (Collier and Ogden 2004) and to investigate the evolution of γ(1) over time, so that the underlying timing processes can be identified appropriately. The hybrid hypothesis proposed by Repp and Steinman (2010) would predict series of wγ(1) values near zero over the entire duration of a set. Conversely, the mutually exclusive hypothesis of Delignières and Torre (2011) would predict an alternation between epochs of positive and negative wγ(1) values over time.

Materials and methods

Participants in the present study are largely from the same cohort as those examined in a previous study (Bravi et al. 2014a). A new cohort of nine subjects was added to cover the modified ranges of tempi. The setup, audio stimuli, data format, and session structure are fully described elsewhere (Bravi et al. 2014a) and are summarized here.

Participants

Eighty-seven right-handed volunteers took part in the study (forty males and forty-seven females, 18–27 years old). None was musically trained, and all reported no auditory, motor, or other neurological impairments. Informed consent procedures were in accordance with local ethical guidelines and conformed to the Declaration of Helsinki.

Setup

Each participant was tested individually, sitting upright on a chair with the feet on a leg rest, wearing an eye mask and headphones. The eye mask prevented interference from visual information, while the headphones (K 240 Studio, AKG Acoustics GmbH, Wien, AT) were used to reduce environmental noise and to deliver audio information. The dominant forearm was placed in a horizontal position, supported by an armrest. The wrist and hand were free to move in midair. A triaxial accelerometer sensor (ADXL330, by Analog Devices Inc., Norwood, MA 02062), held in position by an elastic band and secured by a Velcro strap, was positioned on the dorsal aspect of the hand, over the proximal part of the second–third metacarpal bones. Sensor output was acquired and digitized at 200 Hz through a PCI-6071E board (12-Bit E Series Multifunction DAQ, National Instruments, Austin, TX, USA).

Audio stimuli

Stimuli were delivered binaurally to the participants through headphones. Audio stimuli (60 s duration) were paced streams of clicks and excerpts of music. Clicks and music were selected to fit reference musical tempi chosen within the metronome markings from Adagio to Presto (Sachs 1953), from 64 to 176 bpm (beats per minute, the unit used to measure tempo in music; our reference tempi range from 1.067 to 2.933 Hz, equivalent to 937.5 to 340.9 ms, Table 1). In Bravi et al. (2014a), the fourteen selected reference musical tempi were taken from the original and generally accepted scale by Maelzel (Maelzel 1818), with minor modifications. The same tempi were used for both streams of clicks and excerpts of music. To treat tempo as a continuous variable in the present study, the tempi selection of Bravi et al. (2014a) was slightly modified by including the 160 bpm tempo, in place of the 162 bpm tempo, and the 168 bpm tempo, for a total of fifteen reference tempi. Streams of clicks were produced using the audio editor Audacity (GNU/GPL, http://audacity.sourceforge.net/), via the function Generate Click (duration = 10 ms, sound: white noise). Music excerpts were extracted from original professional productions. The music fragments had a simple, explicit rhythm and a stable tempo that remained constant over time. Since the determination of perceptual musical tempo can be ambiguous (McKinney and Moelants 2007) and algorithms for tempo extraction have limitations (McKinney et al. 2007), three musical experts (professionals with musical experience) independently verified the tempo of the excerpts. Only fragments that the experts unanimously agreed had a single musical tempo were selected. Music fragments were taken from a variety of musical genres popular among young people: rock, techno, dance, trance, hard rock, and film music.
We used fragments from music productions likely to be unfamiliar to the participants, since it has been shown that familiarity with a song installs an accurate long-term memory of its tempo (Levitin and Cook 1996; Quinn and Watt 2006). Tempo variations of the music fragments were analyzed, beat-mapped, and set to a fixed bpm using the software Jackson (http://vanaeken.com/; accuracy within a 0.001 bpm margin) and Audition (Adobe Systems Inc., San Jose, CA 95110-2704, USA). All time manipulations were below 5 % deviation from the mean music tempo (Dannenberg and Mohan 2011), well under the threshold for detecting temporal changes in music (Ellis 1991; Madison and Paulin 2010). All audio files were normalized to −0.3 dB and stored as WAV files (Waveform Audio File Format; 44.1 kHz; 16-bit depth; using Audition). A 10 ms fade-in and fade-out were applied at the beginning and end of each file.
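Two pieces of arithmetic in the preparation of the audio stimuli can be made explicit. The sketch below converts tempo from bpm to frequency and inter-beat interval (60000/bpm ms, so 64 bpm corresponds to exactly 937.5 ms; deriving the period from the rounded frequency 1.067 Hz instead gives about 937.2 ms), and it mimics peak normalization to −0.3 dB followed by 10 ms fades at 44.1 kHz. The actual processing was done in Adobe Audition; reading −0.3 dB as a peak level relative to a full scale of 1.0 and using linear fade ramps are assumptions of this sketch, and Audition's fade curves may differ.

```python
def bpm_to_hz(bpm):
    """Tempo in beats per minute to beats per second."""
    return bpm / 60.0


def bpm_to_period_ms(bpm):
    """Tempo in beats per minute to inter-beat interval in milliseconds."""
    return 60000.0 / bpm


def normalize_peak(samples, target_dbfs=-0.3):
    """Scale samples so the largest absolute value sits at target_dbfs (full scale = 1.0, assumed)."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return list(samples)
    gain = 10 ** (target_dbfs / 20) / peak
    return [s * gain for s in samples]


def apply_linear_fades(samples, sample_rate=44100, fade_ms=10.0):
    """Multiply the first and last fade_ms of the signal by a linear ramp starting at 0 (assumed shape)."""
    n = min(int(round(sample_rate * fade_ms / 1000)), len(samples) // 2)  # 441 samples at 44.1 kHz
    out = list(samples)
    for i in range(n):
        gain = i / n
        out[i] *= gain
        out[-1 - i] *= gain
    return out


for bpm in (64, 160, 168, 176):
    print(bpm, round(bpm_to_hz(bpm), 3), round(bpm_to_period_ms(bpm), 3))
# 64 1.067 937.5
# 160 2.667 375.0
# 168 2.8 357.143
# 176 2.933 340.909

one_second = [0.5] * 44100  # placeholder constant signal, not a study stimulus
processed = apply_linear_fades(normalize_peak(one_second))
print(round(max(abs(s) for s in processed), 4), processed[0], processed[-1])  # 0.9661 0.0 0.0
```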

Data format

Sets of IWFEs were recorded during the session, and kinematic parameters were evaluated. Sensor data were stored and analyzed offline. Raw data from the triaxial accelerometer were processed to extract wrist flexion–extensions. The duration of a single wrist flexion–extension was calculated as the time difference between two consecutive wrist flexions (see Bravi et al. 2014a, b) using custom software developed in Matlab®. The tempi of the recorded IWFEs sets were grouped into ranges, and each IWFEs set was assigned to a range according to its tempo (Table 1).
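The custom Matlab routine is not reproduced here. As a minimal sketch of the duration computation, assuming the accelerometer output has already been reduced to a one-dimensional signal in which each wrist flexion appears as a local maximum (a hypothetical simplification; the actual preprocessing is described in Bravi et al. 2014a, b), durations are the differences between the times of consecutive flexions, and the tempo of the set follows from the mean duration. A synthetic 2 Hz sinusoid sampled at 200 Hz stands in for real data, and the helper names are illustrative.

```python
import math


def flexion_indices(signal, threshold):
    """Hypothetical flexion detector: indices of local maxima above threshold."""
    return [
        i for i in range(1, len(signal) - 1)
        if signal[i] > threshold and signal[i] > signal[i - 1] and signal[i] >= signal[i + 1]
    ]


def cycle_durations(signal, fs=200.0, threshold=0.5):
    """Durations (s) between consecutive detected flexions."""
    idx = flexion_indices(signal, threshold)
    return [(b - a) / fs for a, b in zip(idx[:-1], idx[1:])]


fs = 200.0
synthetic = [math.sin(2 * math.pi * 2.0 * n / fs) for n in range(int(10 * fs))]  # 10 s at 2 Hz
durations = cycle_durations(synthetic, fs)
mean_duration = sum(durations) / len(durations)
print(len(durations), mean_duration, 1 / mean_duration)  # 19 0.5 2.0
```

With real recordings, a detector of this kind would need filtering and a robust threshold; the sketch only fixes the definition of duration used in the text.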

Session

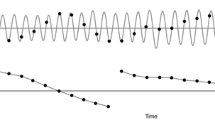

Subjects were instructed to refrain from listening to music on the day of the session. Each subject participated in a single session. During a session, IWFEs sets were originally performed under seven conditions (Bravi et al. 2014a). In this study, we focus our analyses on IWFEs sets performed in the absence of auditory information (free, Fr), while listening to a stream of clicks or an excerpt of music (Cl, Mu), and 2 min after the end of the respective audio condition (Cl2, Mu2). IWFEs sets performed 5 min after the end of the audio conditions (i.e., conditions Cl5 and Mu5; Bravi et al. 2014a) are excluded, and the session is described accordingly (Fig. 1a, b). A single session comprised a baseline recording and two blocks. One block consisted of two pairs of Cl and Cl2 sets, or of Mu and Mu2 sets, performed at two different reference tempi for the auditory Cl or Mu sets; the same reference tempi were then used for the auditory sets of the second block. The order of presentation of clicks and music was counterbalanced, so that an equal number of subjects received each stimulus type first.

Five conditions and the structure of the session. a Audio information is presented as streams of clicks and excerpts of music. Spectrograms are obtained with the open-source application Sonic Visualiser (decibels relative to full scale, dBFS; Cannam et al. 2010). In the free and recall conditions, audio is not presented. b The session is designed to record isochronous wrist flexion–extensions (IWFEs) first in a condition free from auditory constraint (in the absence of auditory information, free = Fr, self-paced = SP; faster than SP = >SP; faster than >SP = ≫SP; slower than SP = <SP, see text for more details) and then in conditions related to clicks (during the listening of clicks = Cl; recall 2 min after the end of the Cl condition = Cl2; one block consisting of two pairs of Cl and Cl2 sets performed at two different reference tempi: RT1 and RT2), followed by those related to music (during the listening of music = Mu; recall 2 min after the end of the Mu condition = Mu2; one block consisting of two pairs of Mu and Mu2 sets performed at two different reference tempi: RT1 and RT2), or vice versa. In all figures, the conditions and the associated results are color-coded (color figure online)

Each session was preceded by a short practice test, after which the subject was asked whether he/she felt comfortable with the task. Recordings from the practice test were not used in further analyses. No information was given to participants about how to perform the tasks in terms of discreteness or smoothness of movements (Delignières and Torre 2011). The session started with recordings in the Fr baseline condition, which consisted of three IWFEs sets. In the first set, the subject was asked to perform IWFEs in a self-paced manner. In the second set, the subject was asked to perform IWFEs faster than in the first set. In the third set, the subject was asked to perform IWFEs slower than in the first set. The three IWFEs sets in the Fr condition were performed at the beginning of the session to avoid influences from previous synchronizations (as would occur in the recall conditions, see below).

The subject was then instructed to perform a set of repetitive IWFEs while listening to clicks or music, in time with the tempo of the delivered audio (i.e., maintaining an in-phase 1:1 relationship between repeated wrist flexion–extensions and the tempo of the audio; conditions Cl or Mu). For the recall conditions, instructions were of the kind: “now, try to hear in your head the auditory stimulus you just listened to” (given immediately after the end of the stimulus; see Zatorre and Halpern 2005). After 2 min of mentally hearing the auditory stimulus, the subject was asked to perform IWFEs as accurately as possible at the tempo of the auditory stimulus while continuing to hear it mentally (conditions Cl2 or Mu2). The session continued with the recordings of IWFEs in the reverse order (i.e., with the Mu conditions if Cl was recorded first, or vice versa). To obtain a balanced number of performances for the chosen reference tempi, the two tempi selected for the blocks were randomized for each session.
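The order of recordings in a session of the original cohort, as described above, can be summarized as a small generator. In this sketch the 5-min recall sets (Cl5, Mu5), which are not analyzed here, are omitted; the two reference tempi are drawn with `random.sample` from a caller-supplied list (the full set of fifteen tempi is given in Table 1); and alternating clicks-first and music-first by subject index is an assumed way of balancing presentation order, not necessarily the procedure actually used.

```python
import random


def session_plan(subject_index, candidate_tempi_bpm, rng):
    """Ordered (condition, tempo) recordings for one session of the original cohort (sketch)."""
    rt1, rt2 = rng.sample(candidate_tempi_bpm, 2)  # two reference tempi, randomized per session
    baseline = [("Fr", "SP"), ("Fr", ">SP"), ("Fr", "<SP")]  # self-paced, faster, slower
    clicks = [("Cl", rt1), ("Cl2", rt1), ("Cl", rt2), ("Cl2", rt2)]
    music = [("Mu", rt1), ("Mu2", rt1), ("Mu", rt2), ("Mu2", rt2)]
    # Assumed counterbalancing: even-indexed subjects start with clicks, odd-indexed with music
    blocks = clicks + music if subject_index % 2 == 0 else music + clicks
    return baseline + blocks


rng = random.Random(42)
for subject in range(2):
    print(subject, session_plan(subject, [160, 168], rng))
```

Here 160 and 168 bpm are used only as a short candidate list; any subset of the reference tempi can be passed in.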

The session for the new cohort of subjects likewise comprised a baseline recording and two blocks. Unlike in Bravi et al. (2014a), the free baseline condition consisted of four IWFEs sets. In the first set, the subject was asked to perform IWFEs in a self-paced manner. In the second set, the subject was asked to perform IWFEs slower than in the first set. In the third and fourth sets, the subject was asked to perform IWFEs faster than in the first recording and then faster than in the third recording (the free baseline condition in Fig. 1b, see also Bravi et al. 2014b). In addition, the two blocks consisted of two pairs of Cl and Cl2 sets, or Mu and Mu2 sets, performed at the two reference tempi of 160 and 168 bpm for the auditory Cl or Mu sets.

Since time-series patterns have been reported to vary depending primarily on learning and several other brain factors (Pressing and Jolley-Rogers 1997; Madison 2004), and since the goal of our experiment was not to analyze individual behavior, our session design, in which each participant performed only a limited number of IWFEs sets at the same tempo, was deemed suitable for this study. A similar strategy has previously been used to study synchronized human tapping (Kadota et al. 2004).

Finally, we studied a homogeneous group made up only of musically untrained subjects, for several reasons: the differences between musicians and non-musicians in the perception of music (Fujioka et al. 2004, 2005), in the vividness of auditory images, and in the potency of their effects on skilled behavior (Aleman et al. 2000; Brodsky et al. 2003; Herholz et al. 2008); a possibly different involvement of the two timing modes in musically trained and untrained individuals performing repetitive movements in different motor tasks (Baer et al. 2013); and the ability of musicians to employ movement strategies different from those of non-musicians to maintain accurate timing (Baer et al. 2013).

Statistical methods

To investigate whether, and to what extent, the processes for temporal regulation are differentially influenced by auditory stimuli with different characteristics (Click vs. Music), and to explore whether the recall of an auditory stimulus, which involves auditory imagery, might influence timing control processes, we computed a series of windowed lag-one autocorrelations, abbreviated here as wγ(1), for each set of IWFEs produced in the Fr, Cl, Mu, Cl2, and Mu2 conditions (Delignières and Torre 2011). The lag-one autocorrelation is the correlation of a series with itself shifted by one observation. We computed wγ(1) over a window of 30 points, starting with the first 30 points of the set and moving the window forward one point at a time until the end of the set. We then calculated the mean of the resulting series of windowed values, mean wγ(1), for each set of IWFEs and considered it an estimator of the overall autocorrelation in the set. We also calculated the percentage of positive wγ(1) values for each set of IWFEs. Unless otherwise indicated, mean wγ(1) values and percentages of positive wγ(1) are reported as means over the sets of a given condition and, where applicable, range of tempi. Two separate two-way ANOVAs were conducted, on mean wγ(1) values and on percentages of positive wγ(1) of the IWFEs sets, to evaluate within-condition differences. The first factor in all ANOVAs was Condition (i.e., Fr, Cl, Mu, Cl2, and Mu2). The second factor was Tempo, with fifteen ranges (as in Table 1). To account for multiple testing, ANOVAs were followed by Bonferroni-corrected post hoc tests. The significance level was set at 0.05. To allow an appropriate use of parametric statistical tests, the Fisher Z-transformation was used to normalize the distribution of autocorrelation coefficients (Nolte et al. 2004; Freyer et al. 2012).
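The windowed statistics described above can be written compactly. The sketch below follows the description in the text (30-point windows shifted by one point, mean wγ(1), percentage of positive values); it uses the usual sample autocorrelation estimator, which is an assumption about the exact formula, and it applies the Fisher transform z = artanh(r) to the mean wγ(1) of a set, although the text does not specify at which stage the transformation was applied.

```python
import math
import statistics


def lag1_autocorrelation(x):
    """Sample lag-one autocorrelation of the sequence x."""
    m = statistics.fmean(x)
    num = sum((a - m) * (b - m) for a, b in zip(x[:-1], x[1:]))
    den = sum((a - m) ** 2 for a in x)
    return num / den


def windowed_lag1(series, window=30):
    """wγ(1) series: lag-one autocorrelation in each window, shifted one point at a time."""
    if len(series) < window:
        raise ValueError("set shorter than the analysis window")
    return [lag1_autocorrelation(series[i:i + window]) for i in range(len(series) - window + 1)]


def summarize_set(durations, window=30):
    """Return (mean wγ(1), percentage of positive wγ(1), Fisher z of the mean) for one set."""
    w = windowed_lag1(durations, window)
    mean_w = statistics.fmean(w)
    pct_positive = 100.0 * sum(1 for v in w if v > 0) / len(w)
    return mean_w, pct_positive, math.atanh(mean_w)  # atanh fails if mean_w is exactly +/-1


alternating = [500.0, 520.0] * 50  # strict long-short alternation, 100 durations (ms)
print(len(windowed_lag1(alternating)))  # 71 windows
print(summarize_set(alternating)[:2])   # mean about -0.967, 0 % positive
```

A strictly alternating series is an extreme case of the "long then short" pattern expected under event-based timing, so every window yields a strongly negative value.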

Because wγ(1) is computed over short windows, it is less affected than the lag-one autocorrelation γ(1) by long-range correlations, which tend to induce a positive bias (Delignières and Torre 2011). The use of wγ(1) thus appears more consistent with the theoretically expected values (i.e., negative for event-based timing and positive for emergent timing) than γ(1) calculated over the entire set. In addition, since wγ(1) can detect variations of the autocorrelation value over the duration of each set, it allows a more fine-grained analysis of the evolution of lag-one autocorrelation over time. With this analysis, we aim to shed light on the controversy over whether timing modes form a dichotomy or a continuum.
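A synthetic example illustrates why windowing reduces the positive bias. The sketch below, with arbitrary parameters that are not estimates from this study, adds a slow linear drift of 0.5 ms per interval to intervals generated by the two-level model (clock sd 10 ms, motor sd 20 ms, for which the model predicts γ(1) ≈ −0.44). Over the whole 600-interval set the drift dominates the variance and γ(1) becomes strongly positive, whereas within 30-point windows the drift contributes little and the mean wγ(1) remains negative.

```python
import random
import statistics


def lag1_autocorrelation(x):
    """Sample lag-one autocorrelation of the sequence x."""
    m = statistics.fmean(x)
    num = sum((a - m) * (b - m) for a, b in zip(x[:-1], x[1:]))
    den = sum((a - m) ** 2 for a in x)
    return num / den


def mean_windowed_lag1(x, window=30):
    """Mean of lag-one autocorrelations over windows shifted by one point."""
    values = [lag1_autocorrelation(x[i:i + window]) for i in range(len(x) - window + 1)]
    return statistics.fmean(values)


rng = random.Random(7)
n = 600
clock = [rng.gauss(0.0, 10.0) for _ in range(n)]
motor = [rng.gauss(0.0, 20.0) for _ in range(n + 1)]
# Two-level intervals plus a linear drift from 500 ms to about 800 ms (hypothetical slowing)
intervals = [500.0 + 0.5 * j + clock[j] + motor[j + 1] - motor[j] for j in range(n)]

print(round(lag1_autocorrelation(intervals), 2))  # strongly positive, driven by the drift
print(round(mean_windowed_lag1(intervals), 2))    # negative
```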

Results

The dataset

For the present study, we analyzed 726 sets of IWFEs from the original recordings. Criteria for excluding sets are described in Bravi et al. (2014a). IWFEs were performed under the Fr, Cl, Mu, Cl2, and Mu2 conditions (n = 153 for Fr, n = 154 for Cl, n = 134 for Cl2, n = 147 for Mu, n = 138 for Mu2), distributed over the 87 subjects, and each set contained a relatively high number of IWFEs (on average from a minimum of 53.1, for condition Mu, range 1.017–1.133 Hz, to a maximum of 149.43, for condition Cl, range 2.883–3.000 Hz). For each set, we first calculated the within-set mean and sd of IWFE durations. Each within-set mean IWFE duration was assigned to the appropriate rank (Table 1). ANOVAs were performed on an equal number of sets per condition (n = 10 for rank 1, n = 7 for rank 2, n = 7 for rank 3, n = 7 for rank 4, n = 8 for rank 5, n = 9 for rank 6, n = 10 for rank 7, n = 9 for rank 8, n = 8 for rank 9, n = 9 for rank 10, n = 10 for rank 11, n = 7 for rank 12, n = 9 for rank 13, n = 9 for rank 14, n = 7 for rank 15).
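The cell sizes listed above determine the degrees of freedom of the Condition × Tempo ANOVAs reported in the following subsections. As a consistency check, assuming a standard fixed-effects two-way design with these per-rank counts repeated in each of the three conditions (Fr, Cl, Mu), the residual degrees of freedom equal the total number of sets minus the number of cells; this reproduces the denominators 333 (all fifteen ranks) and 198 (ranks 1–9) of the F statistics.

```python
# Sets per rank (ranks 1-15), identical in each condition, as listed in the text
SETS_PER_RANK = [10, 7, 7, 7, 8, 9, 10, 9, 8, 9, 10, 7, 9, 9, 7]


def effect_dfs(n_conditions, n_ranks):
    """Numerator df for Condition, Tempo, and their interaction."""
    return n_conditions - 1, n_ranks - 1, (n_conditions - 1) * (n_ranks - 1)


def residual_df(sets_per_rank, n_conditions):
    """Total observations minus the number of Condition x Tempo cells."""
    return sum(sets_per_rank) * n_conditions - len(sets_per_rank) * n_conditions


print(sum(SETS_PER_RANK))                                    # 126 sets per condition
print(effect_dfs(3, 15), residual_df(SETS_PER_RANK, 3))      # (2, 14, 28) 333
print(effect_dfs(3, 9), residual_df(SETS_PER_RANK[:9], 3))   # (2, 8, 16) 198
```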

Mean wγ(1) values and percentages of positive wγ(1) of IWFEs in the Fr and the audio conditions

We investigated whether, and to what extent, the processes for temporal regulation are differentially affected by simple and complex auditory stimuli. For comparison, we calculated mean wγ(1) values and percentages of positive wγ(1) of IWFEs (see Statistical methods) under the Fr, Cl, and Mu conditions, with the Fr condition as the baseline.

Mean wγ(1) values and percentages of positive wγ(1) of IWFEs plotted per condition are shown in Fig. 2a, b. Mean wγ(1) was slightly positive for the Fr condition, negative for Cl, and slightly negative for Mu (Fig. 2a). The wγ(1) values were mostly positive for Fr (61 % positive values) and mostly negative for Cl and Mu (only 30 and 37 % positive values, respectively; Fig. 2b).

Mean wγ(1) and percentages of positive wγ(1) in the Fr, Cl, and Mu conditions. a Mean wγ(1) values (±sd) from the sets of IWFEs performed in the Fr condition and while listening to streams of clicks or excerpts of music (Cl and Mu), plotted per condition, pooling all ranges of tempi. Note that mean wγ(1) is slightly positive for Fr, negative for Cl, and slightly negative for Mu. b Percentages of positive wγ(1) (±sd) in the Fr, Cl, and Mu conditions, plotted per condition, pooling all ranges of tempi. Note that the value for Fr is above 60 %, whereas for Cl and Mu it is below 40 %

Then, for the Fr, Cl, and Mu conditions, we plotted mean wγ(1) values and percentages of positive wγ(1) of IWFEs as a function of the ranges of tempi. In all three conditions, mean wγ(1) appeared to change with movement duration (Fig. 3a). In the Fr condition, mean wγ(1) values were almost all positive. Overall, the Cl and Mu conditions showed negative mean wγ(1) values; however, whereas all mean wγ(1) values were negative in the Cl condition, in the Mu condition they were negative only for the first nine ranges of tempi and positive from ranks 10 to 15 (Fig. 3c). Percentages of positive wγ(1) also appeared to be somewhat sensitive to movement duration (Fig. 3b). In the Fr condition, percentages of positive wγ(1) were almost always above 50 %. Moreover, whereas in the Cl condition percentages of positive wγ(1) were below 50 % for virtually all ranges of tempi, in the Mu condition they were above 50 % at the high ranges of tempi, from ranks 10 to 15 (Fig. 3d).

Mean wγ(1) and percentages of positive wγ(1), as a function of the ranges of tempi, in the Fr, Cl, and Mu conditions. a Mean wγ(1) values of IWFEs are plotted as a function of the ranges of tempi for the Fr, Cl, and Mu conditions. Values change with movement duration. In the Fr condition, mean wγ(1) values are almost all positive. In the Cl condition, mean wγ(1) values are all negative. The Mu condition shows positive values for fast movements, from ranks 10 to 15. b Percentages of positive wγ(1) are plotted as a function of the ranges of tempi for the Fr, Cl, and Mu conditions. Here too, percentages of positive wγ(1) are clearly sensitive to movement duration. In the Fr condition, percentages of positive wγ(1) are almost always above 50 %. In the Cl condition, percentages of positive wγ(1) are below 50 % for almost all ranks of tempi. In the Mu condition, percentages of positive wγ(1) are above 50 % at the high ranks (10–15). c Inset of a. d Inset of b

To evaluate differences in mean wγ(1) values among the Fr, Cl, and Mu conditions and across tempi, a two-way ANOVA was performed with Condition and Tempo as factors. Results showed highly significant effects of Condition (factor 1, F(2,333) = 74.421, p < 0.001) and of Tempo (factor 2, F(14,333) = 8.783, p < 0.001). The Condition × Tempo interaction was also significant (F(28,333) = 1.931, p < 0.01). Bonferroni post hoc tests among the levels of factor 1 (Condition) showed highly significant differences between all pairs of conditions, confirming not only that the mean wγ(1) values of self-paced IWFEs, free from auditory constraint, differed from those of IWFEs performed in synchrony with paced auditory inputs, but also that clicks and music affected the mean wγ(1) of IWFEs differently.
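The F ratios reported above come from partitioning the total variance into Condition, Tempo, interaction, and error components. The sketch below shows that partition for a balanced design in plain Python. It is a simplification: the reported degrees of freedom imply unequal numbers of sets per Condition × Tempo cell, for which a general linear model with an explicit choice of sums of squares (e.g., Type II or III) would be needed. The function name and the nested-list input layout are assumptions for illustration, and p values (obtainable, for example, from `scipy.stats.f.sf`) are omitted to keep the block dependency-free.

```python
# Illustrative sketch for a balanced design; the function name is hypothetical.
from statistics import mean

def two_way_anova(cells):
    """F ratios for a balanced two-way ANOVA.

    cells[a][b] is the list of observations (e.g. mean wγ(1) per set) for
    level a of factor 1 (Condition) and level b of factor 2 (Tempo).
    Assumes every cell holds the same number n >= 2 of observations.
    Returns {effect: (F, df_effect, df_error)}.
    """
    n_a, n_b = len(cells), len(cells[0])
    n = len(cells[0][0])
    if any(len(cell) != n for row in cells for cell in row):
        raise ValueError("this sketch handles balanced designs only")
    grand = mean(v for row in cells for cell in row for v in cell)
    mean_a = [mean(v for cell in row for v in cell) for row in cells]
    mean_b = [mean(v for row in cells for v in row[b]) for b in range(n_b)]
    cell_mean = [[mean(cell) for cell in row] for row in cells]

    # Sums of squares for main effects, interaction, and within-cell error
    ss_a = n_b * n * sum((m - grand) ** 2 for m in mean_a)
    ss_b = n_a * n * sum((m - grand) ** 2 for m in mean_b)
    ss_ab = n * sum((cell_mean[a][b] - mean_a[a] - mean_b[b] + grand) ** 2
                    for a in range(n_a) for b in range(n_b))
    ss_e = sum((v - cell_mean[a][b]) ** 2
               for a in range(n_a) for b in range(n_b) for v in cells[a][b])

    df_a, df_b = n_a - 1, n_b - 1
    df_ab, df_e = df_a * df_b, n_a * n_b * (n - 1)
    ms_e = ss_e / df_e
    return {
        "Condition": (ss_a / df_a / ms_e, df_a, df_e),
        "Tempo": (ss_b / df_b / ms_e, df_b, df_e),
        "Condition x Tempo": (ss_ab / df_ab / ms_e, df_ab, df_e),
    }
```

Each returned tuple gives (F, df of the effect, df of the error), matching the F(df1,df2) notation used in the text.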

Likewise, a two-way ANOVA on the percentages of positive wγ(1) in the Fr, Cl, and Mu conditions showed highly significant effects of Condition (factor 1, F(2,333) = 55.430, p < 0.001) and of Tempo (factor 2, F(14,333) = 6.899, p < 0.001). The Condition × Tempo interaction was significant (F(28,333) = 1.591, p < 0.05). Bonferroni post hoc tests generally confirmed the results obtained for the mean wγ(1) values, except that Cl and Mu did not differ significantly (p > 0.05).
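With three conditions there are three pairwise post hoc comparisons (Fr–Cl, Fr–Mu, Cl–Mu). A Bonferroni correction controls the family-wise error rate by multiplying each raw p value by the number of comparisons (capped at 1), which is equivalent to testing each comparison at α/3. The minimal sketch below assumes the raw pairwise p values are already available; the helper name and the example p values are hypothetical and are not values from this study.

```python
# Illustrative sketch; the helper name and example p values are hypothetical.
from itertools import combinations

CONDITIONS = ["Fr", "Cl", "Mu"]
# All unordered pairs of conditions: Fr-Cl, Fr-Mu, Cl-Mu
PAIRS = list(combinations(CONDITIONS, 2))

def bonferroni(raw_p):
    """Return Bonferroni-adjusted p values for a dict {pair: raw p}.
    Each p is multiplied by the number of comparisons and capped at 1."""
    k = len(raw_p)
    return {pair: min(1.0, p * k) for pair, p in raw_p.items()}

# Hypothetical raw p values, for illustration only
example = {("Fr", "Cl"): 0.0001, ("Fr", "Mu"): 0.004, ("Cl", "Mu"): 0.5}
adjusted = bonferroni(example)
significant = {pair for pair, p in adjusted.items() if p < 0.05}
```

Here `significant` would contain the Fr–Cl and Fr–Mu pairs but not Cl–Mu, mirroring the pattern of outcomes described for the percentages of positive wγ(1).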

It was apparent, however, that at the high tempi (ranks 10–15) the mean wγ(1) values and percentages of positive wγ(1) in the Mu condition clearly diverged, moving toward positive wγ(1), from those of the Cl condition, whose mean wγ(1) values remained negative and whose percentages of positive wγ(1) remained below 50 % (Fig. 3c, d). This observation was examined further with two-way ANOVAs on mean wγ(1) values and percentages of positive wγ(1), performed separately for the first nine ranges of tempi (ranks 1–9) and for the last six (ranks 10–15).

For the first nine ranges of tempi, the ANOVAs showed highly significant effects of Condition [factor 1, F(2,198) = 61.157, p < 0.001, for mean wγ(1) values; F(2,198) = 50.490, p < 0.001, for percentages of positive wγ(1)] and of Tempo [factor 2, F(8,198) = 4.255, p < 0.001, for mean wγ(1) values; F(8,198) = 3.453, p < 0.001, for percentages of positive wγ(1)]. The Condition × Tempo interactions were not significant [F(16,198) = 1.052, p > 0.05, for mean wγ(1) values; F(16,198) = 0.479, p > 0.05, for percentages of positive wγ(1)]. For both mean wγ(1) values and percentages of positive wγ(1), Bonferroni post hoc tests among the levels of factor 1 revealed highly significant differences between Fr and Cl (p < 0.001) and between Fr and Mu (p < 0.001), but no significant difference between Cl and Mu (p > 0.05).

By contrast, for the last six ranges of tempi, the two-way ANOVAs showed highly significant effects of Condition [factor 1, F(2,135) = 22.279, p < 0.001, for mean wγ(1) values; F(2,135) = 14.861, p < 0.001, for percentages of positive wγ(1)] but no significant effect of Tempo [factor 2, F(5,135) = 0.088, p > 0.05, for mean wγ(1) values; F(5,135) = 0.102, p > 0.05, for percentages of positive wγ(1)]. The Condition × Tempo interactions were not significant [F(10,135) = 1.307, p > 0.05, for mean wγ(1) values; F(10,135) = 1.132, p > 0.05, for percentages of positive wγ(1)]. Bonferroni post hoc tests still revealed highly significant differences between Fr and Cl (p < 0.001). Notably, however, Fr and Mu did not differ significantly (p > 0.05), whereas Cl and Mu differed highly significantly (p < 0.001).
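As a consistency check on the reported degrees of freedom, assume a full-factorial Condition × Tempo layout in which each set of IWFEs contributes one observation. The error degrees of freedom then equal the number of sets minus the number of cells, so the three analyses can be related by simple arithmetic. The sketch below (hypothetical helper names) shows that the split analyses on ranks 1–9 and ranks 10–15 together account for the same 378 sets as the full analysis, and that 378 sets over 45 cells implies unequal cell sizes.

```python
# Illustrative arithmetic check; helper names are hypothetical.
def implied_n(df_error, n_conditions, n_tempi):
    """Number of observations implied by the error df of a full-factorial
    two-way ANOVA: df_error = N - n_conditions * n_tempi."""
    return df_error + n_conditions * n_tempi

def effect_dfs(n_conditions, n_tempi):
    """Degrees of freedom for Condition, Tempo, and their interaction."""
    return n_conditions - 1, n_tempi - 1, (n_conditions - 1) * (n_tempi - 1)

n_all = implied_n(333, 3, 15)   # all 15 ranges of tempi
n_slow = implied_n(198, 3, 9)   # ranks 1-9
n_fast = implied_n(135, 3, 6)   # ranks 10-15
print(n_all, n_slow, n_fast, n_slow + n_fast)   # 378 225 153 378
print(effect_dfs(3, 15), effect_dfs(3, 9), effect_dfs(3, 6))
# (2, 14, 28) (2, 8, 16) (2, 5, 10)
print(n_all / (3 * 15))   # 8.4 sets per cell on average, so cells are unequal
```

The effect degrees of freedom printed above match those reported for each analysis.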

Taken together, these results provide evidence that streams of clicks and excerpts of music influence mean wγ(1) values and percentages of positive wγ(1) differently, specifically for fast rhythmic movements.

Mean wγ(1) values and percentages of positive wγ(1) in Fr and recall conditions

Here we investigated whether evoking auditory imagery during the rhythmic motor performance might influence timing control processes. For comparison, we calculated mean wγ(1) values and percentages of positive wγ(1) of IWFEs under the Fr, Cl2, and Mu2 conditions. In imagery research, comparing tasks in which participants are instructed to generate auditory imagery (here, Cl2 and Mu2) with tasks in which they are not (here, Fr) is one of the types of evidence considered (Hubbard 2010).

Mean wγ(1) values and percentages of positive wγ(1) of IWFEs were plotted per condition (Fig. 4a, b). Mean wγ(1) was somewhat negative for both Cl2 and Mu2, compared with the slightly positive value for the Fr condition (Fig. 4a). Likewise, wγ(1) values were more often negative in Cl2 and Mu2 (45 % positive values in each) than in Fr (61 % positive values); compared with Fr, the recall conditions thus presented an intermediate profile, with a more balanced distribution of negative and positive wγ(1) (Fig. 4b).

Fig. 4 Mean wγ(1) and percentages of positive wγ(1) in the Fr, Cl2, and Mu2 conditions. a Mean wγ(1) values (±sd) of the sets of IWFEs, pooled across all ranges of tempi, are plotted for the recall conditions Cl2 and Mu2 (Fr is shown for reference). Note that mean wγ(1) values are slightly positive for Fr and somewhat negative for Cl2 and Mu2. b Percentages of positive wγ(1) values (±sd), pooled across all ranges of tempi, are plotted for the recall conditions Cl2 and Mu2 (Fr is shown for reference). Note that the values for Cl2 and Mu2 are below 50 %

When the Fr, Cl2, and Mu2 mean wγ(1) values were plotted as a function of ranges of tempi (Fig. 5a, c), they appeared to change, to some extent, with movement duration. In the Cl2 and Mu2 conditions, mean wγ(1) values were mostly negative (in eleven of fifteen ranges in Cl2 and ten of fifteen in Mu2). In the Fr condition, mean wγ(1) values were almost all positive. Likewise, when the Fr, Cl2, and Mu2 percentages of positive wγ(1) were plotted as a function of ranges of tempi (Fig. 5b, d), they appeared to change, to some extent, with movement duration. In Cl2 and Mu2, but not in Fr, percentages of positive wγ(1) were often below 50 %, although in most cases very close to it.

Fig. 5 Mean wγ(1) and percentages of positive wγ(1), as a function of the ranges of tempi, in the Fr, Cl2, and Mu2 conditions. a Mean wγ(1) values of IWFEs are plotted, as a function of the ranges of tempi, for the Cl2 and Mu2 conditions (Fr is shown for reference). Values change with movement duration. b Percentages of positive wγ(1) are plotted, as a function of the ranges of tempi, for the Cl2 and Mu2 conditions (Fr is shown for reference). Here too, percentages of positive wγ(1) are evidently sensitive to movement duration. c Inset of a. d Inset of b

To evaluate differences in mean wγ(1) values and in percentages of positive wγ(1) among the Fr, Cl2, and Mu2 conditions and across tempi, two-way ANOVAs were performed with Condition and Tempo as factors. Results showed highly significant effects of Condition [factor 1, F(2,333) = 22.751, p < 0.001, for mean wγ(1) values; F(2,333) = 16.957, p < 0.001, for percentages of positive wγ(1)] and significant effects of Tempo [factor 2, F(14,333) = 2.030, p < 0.05, for mean wγ(1) values; F(14,333) = 1.919, p < 0.05, for percentages of positive wγ(1)]. The Condition × Tempo interactions were not significant [F(28,333) = 0.641, p > 0.05, for mean wγ(1) values; F(28,333) = 0.462, p > 0.05, for percentages of positive wγ(1)]. For both mean wγ(1) values and percentages of positive wγ(1), post hoc tests among the levels of factor 1 showed highly significant differences between the baseline Fr and Cl2 (p < 0.001) and between Fr and Mu2 (p < 0.001), but no significant difference between Cl2 and Mu2 (p > 0.05). This indicates that auditory experience constructed from memory, in the absence of direct sensory instigation, influences both mean wγ(1) values and percentages of positive wγ(1).

Discussion

Our data show that, in the audio synchronization conditions Cl and Mu, the wγ(1) of IWFE durations is biased toward negative values (the domain of event-based timing), with lower mean wγ(1) and lower percentages of positive wγ(1) than in the baseline Fr condition. The latter is mostly characterized by positive values (the domain of emergent timing), and the difference between the audio conditions and the baseline is highly significant. Delignières and Torre (2011) argued that event-based timing is determined not by «the presence of motor events, or any external events such as metronomic signals, but by the presence of cognitive events provided by an internal timekeeper that trigger motor responses» (Delignières and Torre 2011, p. 317), and they disagreed with the idea that synchronization should necessarily imply event-based timing. We show that paced auditory information, together with a rhythmic movement task, may favor an abstract representation of the time intervals to produce.
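The association between negative lag-one autocorrelation and event-based timing can be illustrated with the two-level (timekeeper plus motor delay) model of Wing and Kristofferson, in which each produced interval is I_n = C_n + M_{n+1} − M_n and the expected lag-one autocorrelation is −σ_M²/(σ_C² + 2σ_M²), always between −0.5 and 0. The Python sketch below simulates that model and, for contrast, a series whose period drifts as a first-order autoregressive process, which yields positive lag-one autocorrelation. The drifting-period process is our illustrative stand-in for the positive-autocorrelation signature of emergent timing, not a model proposed in this article; parameter values and function names are likewise assumptions.

```python
# Illustrative sketch; function names and parameter values are hypothetical.
import random

def lag1(x):
    """Lag-one autocorrelation (mean-centred)."""
    m = sum(x) / len(x)
    d = [v - m for v in x]
    return sum(a * b for a, b in zip(d, d[1:])) / sum(v * v for v in d)

def two_level_intervals(n, tau=500.0, sd_clock=10.0, sd_motor=5.0, seed=1):
    """Two-level model: I_n = C_n + M_{n+1} - M_n, with independent Gaussian
    clock intervals C_n (mean tau) and motor delays M_n."""
    rng = random.Random(seed)
    motor = [rng.gauss(0.0, sd_motor) for _ in range(n + 1)]
    return [rng.gauss(tau, sd_clock) + motor[i + 1] - motor[i] for i in range(n)]

def expected_two_level_lag1(sd_clock, sd_motor):
    """Theoretical lag-one autocorrelation of the two-level model."""
    return -sd_motor ** 2 / (sd_clock ** 2 + 2 * sd_motor ** 2)

def drifting_intervals(n, tau=500.0, phi=0.5, sd=10.0, seed=1):
    """Illustrative stand-in (our assumption): the produced period drifts as an
    AR(1) process with coefficient phi, giving lag-one autocorrelation near phi."""
    rng = random.Random(seed)
    dev, out = 0.0, []
    for _ in range(n):
        dev = phi * dev + rng.gauss(0.0, sd)
        out.append(tau + dev)
    return out

r_event = lag1(two_level_intervals(20000))   # close to -1/6 with these settings
r_drift = lag1(drifting_intervals(20000))    # close to +0.5
```

With a clock SD of 10 and a motor SD of 5, the theoretical value is −25/150 = −1/6, and the simulated series should fall close to it.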

In addition, movement duration has an evident influence on the mean wγ(1) values and percentages of positive wγ(1) of IWFEs. Our data indicate that, as movement duration decreases, the emergent component of timing for IWFEs is favored, independently of the condition. Slow discrete and fast continuous tapping are considered to belong to two distinct topological classes, the former requiring a clock-like process and the latter a self-organized oscillatory process (Huys et al. 2008). As the frequency of a discontinuous movement increases, the movement comes to resemble an oscillation, and its processing can be equated with emergent timing (Repp 2008, 2011). In agreement with this interpretation, we found the highest mean wγ(1) values and percentages of positive wγ(1) mostly for fast IWFEs.

More specifically, our findings provide evidence that, for fast movements in particular, clicks and music differ in their ability to elicit event-based timing. During fast movements, music, unlike clicks, seems unable to counteract the shift of wγ(1) toward positive values as movement frequency increases. In other words, at the fastest tempi music is too amorphous to evoke event-based timing, so that the difference between the baseline Fr and the Mu conditions, conspicuous at adagio to moderato tempi, vanishes at allegro to presto tempi.

Synchronization with a metronome differs from self-paced responding in that the former involves error-correction processes that prevent large asynchronies and tempo drift (Semjen et al. 2000; Repp 2005). The type of movement and the pacing characteristics used to specify the timing target further influence the accuracy of sensorimotor synchronization (Lorås et al. 2012).

It has been shown that the adjustment of movement trajectories is correlated with the degree of correction of synchronization errors and, consequently, with the accuracy of synchronization with paced clicks. Balasubramaniam et al. (2004) showed that synchronization with clicks increases the asymmetry of movement trajectories and that, if the goal is to maximize engagement with an external auditory input, the better strategy is to move discretely, because discrete movements support faster phase correction. Since our participants received no instructions about the discreteness or smoothness of the movements to be performed, we hypothesize that clicks, characterized by punctate perceptual markers, provide an efficient temporal anchor (Zelaznik and Rosenbaum 2010) that affects IWFEs during synchronization, even at high frequencies, by nudging movement from continuous to discrete. Discrete movements offer more salient sensory information on which phase correction can be based (Elliott et al. 2009).

Both discrete movements and faster phase correction could increase the event-based component in the production of IWFEs. It has been shown that the production of discrete movements requires a neural structure, or network of structures, not implicated in implementing the dynamics for motion (Huys et al. 2008). Moreover, fast phase correction during synchronization is assumed to increase the event-based component in the production of rhythmic movements. Indeed, as predicted by extensions of the two-level model to synchronization, slow correction tends to introduce positive correlations between successive movements, whereas fast correction tends to introduce alternations between shorter and longer movements, and thus negative correlations (Semjen et al. 2000; Vorberg and Schulze 2002; Repp and Steinman 2010).
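The claim that fast correction introduces alternation can be checked with a deliberately simplified linear phase-correction model (in the spirit of Vorberg and Schulze 2002, but not their full model): the asynchrony is updated as A_{k+1} = (1 − α)A_k + ε_k, with correction gain α and independent timing noise ε_k, and the inter-response interval is τ + A_{k+1} − A_k. Differencing this first-order autoregressive asynchrony gives a lag-one autocorrelation of −α/2 for the intervals, so stronger (faster) correction produces more negative values. This sketch covers only the negative-going effect of fast correction; the positive correlations attributed to slow correction in the extended models cited above are not reproduced by this simplification. Parameter values and names are illustrative assumptions.

```python
# Illustrative sketch of a simplified model; names and parameters are hypothetical.
import random

def lag1(x):
    """Lag-one autocorrelation (mean-centred)."""
    m = sum(x) / len(x)
    d = [v - m for v in x]
    return sum(a * b for a, b in zip(d, d[1:])) / sum(v * v for v in d)

def phase_corrected_intervals(n, alpha, tau=500.0, sd=10.0, seed=2):
    """Simplified linear phase correction (illustrative, not the full model):
    asynchrony A[k+1] = (1 - alpha) * A[k] + noise,
    inter-response interval = tau + A[k+1] - A[k]."""
    if not 0.0 < alpha < 2.0:
        raise ValueError("alpha must lie in (0, 2) for a stable asynchrony process")
    rng = random.Random(seed)
    asyn = [0.0]
    for _ in range(n):
        asyn.append((1.0 - alpha) * asyn[-1] + rng.gauss(0.0, sd))
    return [tau + asyn[k + 1] - asyn[k] for k in range(n)]

for alpha in (0.2, 0.5, 0.9):
    r = lag1(phase_corrected_intervals(20000, alpha))
    print(f"alpha={alpha}: lag-one r = {r:+.3f} (theory {-alpha / 2:+.3f})")
```

Comparing the printed values across α shows the intervals alternating more strongly (more negative lag-one autocorrelation) as correction becomes faster.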

Identifying the musical beat involves perceiving a periodic series of regularly recurring, roughly equivalent mental events that must be extrapolated from spectrotemporally complex sound sequences (Large and Snyder 2009; Patel and Iversen 2014). Musical beats can be perceived across a wide range of tempi (Van Noorden and Moelants 1999) and influence how subjects organize the elements of a rhythm, both as figural and as metric codes (London 2012). Inter-beat intervals between 400 and 1200 ms produce the strongest sense of beat, with a preference for periods around 600 ms (London 2012). Moreover, in a walking synchronization task, participants failed to synchronize with the music, especially at the highest tempi (between 146 and 190 bpm, i.e., between 2.433 and 3.167 Hz; Styns et al. 2007). In addition, cutaneous mechanoreceptive afferents participate in the perception of musical meter, and information from audition and touch is grouped into a common percept (Huang et al. 2012). Thus, the complexity inherent in perceiving the musical beat, together with the performance of movements lacking additional tactile information, could make the beat poorly predictable for fast movements and offer a poor temporal anchor for converting oscillatory IWFEs into discrete ones, a motor conversion strategy engaged during fast phase correction in synchronization (Elliott et al. 2009). All of this makes music inadequate to elicit the event-based timing process for the production of IWFEs at high frequencies.
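The tempo values above can be related by simple unit conversions (frequency in Hz = bpm/60; bpm = 60 000/interval in ms). The short sketch below, with hypothetical helper names, shows that the 400–1200 ms range associated with the strongest sense of beat corresponds to 50–150 bpm (about 0.83–2.5 Hz), so the walking tempi at which participants failed to synchronize with music (146–190 bpm) lie at and beyond the fast end of that range.

```python
# Illustrative unit conversions; helper names are hypothetical.
def bpm_to_hz(bpm):
    """Beats per minute to frequency in Hz."""
    return bpm / 60.0

def interval_ms_to_bpm(interval_ms):
    """Inter-beat interval in milliseconds to beats per minute."""
    return 60000.0 / interval_ms

print(round(bpm_to_hz(146), 3), round(bpm_to_hz(190), 3))   # 2.433 3.167
print(interval_ms_to_bpm(1200), interval_ms_to_bpm(600), interval_ms_to_bpm(400))
# 50.0 100.0 150.0
print(round(bpm_to_hz(interval_ms_to_bpm(1200)), 2), bpm_to_hz(interval_ms_to_bpm(400)))
# 0.83 2.5
```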

A further basis for the distinction between event-based and emergent timing resides in the auditory representation of movement (Zelaznik and Rosenbaum 2010). Auditory imagery, in turn, refers to the aspect of cognition in which auditory information is internally generated and processed in the absence of real sound perception (Herholz et al. 2012). On these premises, we investigated whether auditory imagery might influence timing control processes for motor production. We show that in the recall conditions, timing shifts toward a more event-based regime compared with the Fr condition, in which the emergent component predominates.

The production of rhythmic movements has been widely employed for the study of timing. The most common paradigm used to explore timing is the synchronization–continuation task, introduced by Stevens in 1886 (Lorås et al. 2012). In this paradigm, after the sensory stimulus stops, subjects attempt to reproduce the stimulus interval with a sustained, periodic motor act by retrieving the previously learned duration (continuation phase; Coull et al. 2011). Much of our knowledge of timing mechanisms derives from analyses of the continuation phase, during which auditory imagery has been shown to be exploited (Rao et al. 1997). Our results suggest that even the simple recall of an auditory stimulus is sufficient to influence timing mechanisms.

It is also interesting to note that recalling music is more effective than music itself in invoking the event-based component at high tempi (compare Fig. 3a–d with Fig. 5a–d). Building a mental representation of an auditory input is always possible, but the ability to do so varies among people (White et al. 1977) and is influenced by training and by musical experience in perception and performance (Keller and Koch 2008; Hubbard 2010). Halpern (1992) showed that, when participants imagine familiar music and tap a finger along with the imagined beat, musical experience increases the spread of tempo representations within auditory imagery. In our task, whose goal was to perform sets of isochronous movements as precisely as possible, the greater difficulty non-musicians have in representing musical tempo could bias them toward the musical beat, reducing the weight of other characteristics (musical contour, melody, and harmony). This transformed representation could paradoxically make the imagined beat decisive in shaping more discrete IWFEs and in invoking the event-based component of timing.

Event-based and emergent timing have been investigated for years using prototypical tasks. With finger tapping as the prototype of event-based timing and circle drawing or forearm oscillation as prototypes of emergent timing, the two forms of timing were regarded as mutually exclusive (Robertson et al. 1999; Zelaznik et al. 2002; Delignières et al. 2004). This mutual exclusivity was first challenged by Repp and Steinman (2010). Using IWFEs performed under different conditions and at various tempi, we tried to clarify whether timing processes are mutually exclusive or coexist, and how they might interrelate. Under the hybrid model (Repp and Steinman 2010), we predicted a close-to-zero wγ(1) series over the entire set; under the mutually exclusive model (Delignières and Torre 2011), we predicted an alternation between positive and negative wγ(1) values over the entire set.

We discuss our observations by presenting the entire data board used for the ANOVAs, per condition and range of tempi, as time series of wγ(1) for each set of IWFEs (Figs. 6, 7, 8). The data board shows unequivocally that, in favor of the mutually exclusive model and in contrast to the hybrid model, participants can exploit either the event-based or the emergent timing mode, or the two modes in alternation, but that in all cases each mode appears to be exclusive (see the color-coded series in Figs. 6, 7, 8). It is evident, once again, that the emergent component of timing becomes stronger and prevails over the event-based component as movement tempo increases, despite the alternation of timing modes and in good agreement with Repp (2008). Moreover, whereas the alternation of timing modes has been described in the continuation phase of a rhythmic motor task (Delignières and Torre 2011), we find that it is also present when participants have to synchronize with an auditory stimulus, indicating that the alternation of timing modes is not limited to the absence of auditory input.
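The color-coding of the wγ(1) series amounts to labeling each window by the sign of its wγ(1) value and reading off runs of the same label. A minimal sketch is given below. It assumes that negative values mark event-based windows and non-negative values mark emergent windows; the zero threshold, the function names, and the example values are our assumptions. Note that a simple sign split cannot by itself separate genuine alternation from values hovering near zero, as the hybrid model would predict; a tolerance band around zero would be a further assumption.

```python
# Illustrative sketch; threshold, function names, and example values are hypothetical.
from itertools import groupby

def timing_mode(w):
    """Label one window: negative wγ(1) -> event-based, otherwise emergent.
    The zero threshold is an illustrative assumption."""
    return "event-based" if w < 0 else "emergent"

def timing_epochs(w_series):
    """Consecutive runs of the same timing mode as (mode, run length) pairs."""
    return [(mode, len(list(run))) for mode, run in groupby(w_series, key=timing_mode)]

def mode_switches(w_series):
    """Number of transitions between timing modes within one set."""
    return max(0, len(timing_epochs(w_series)) - 1)

series = [-0.21, -0.12, 0.08, 0.31, 0.27, -0.05]   # hypothetical wγ(1) values
print(timing_epochs(series))
# [('event-based', 2), ('emergent', 3), ('event-based', 1)]
print(mode_switches(series))   # 2
```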

Fig. 6 Data board of the time series of wγ(1) values per condition and range of tempi (ranks 1–5). The data used to perform the ANOVAs are shown. Within each column, graphs are organized by rank (ranks 1–5 are illustrated), with wγ(1) values for slower movements at the top and those for faster movements at the bottom. In each graph, the x-axis represents the order number of the window (window #) over which the autocorrelation is computed. Columns of graphs refer, from left to right, to wγ(1) values in the Fr, Cl, Mu, Cl2, and Mu2 conditions

Fig. 8 Data board of the time series of wγ(1) values per condition and range of tempi (ranks 11–15). The data used to perform the ANOVAs are shown. Within each column, graphs are organized by rank (ranks 11–15 are illustrated). All other parameters are the same as in Fig. 6

A final metaphor that brings our data together comes from the synchronization of musicians in an ensemble during performance. The conductor uses baton movements to give cues for expressive character, including dynamics and accelerando or ritardando in timing. Although conventions are recognized for conducting at different tempi, there are no standards for defining the beat. Thus, the point on the movement that is considered to mark the beat may vary among conductors. Conductors’ movement trajectories can often appear smooth and continuous. However, skilled musicians develop great sensitivity to baton movements, and studies have shown that players pick out points of relatively high acceleration to define events for synchronization (Luck and Toiviainen 2006; Luck and Sloboda 2008). By analogy, we can infer that our participants also have no standard for defining the timing mode. The timing mode may thus vary depending on the motor task and the sensory input, with participants seemingly alternating between modes to optimize performance, as event-based and emergent timing complement each other (Semjen 1996). However, participants develop great sensitivity to being in time (Janata et al. 2012) and tend to favor epochs of emergent timing as movements become fast enough to be difficult to manage with event-based timing. Further studies are needed to explore this hypothesis and, as recently advocated in an elegant study of auditory-motor coupling in human gait (Hunt et al. 2014), to begin capitalizing on the potential that auditory-motor coupling offers, especially in neuromuscular rehabilitation.

References

Aleman A, Nieuwenstein MR, Boecker KBE, de Haan EHF (2000) Music training and mental imagery ability. Neuropsychologia 38:1664–1668. doi:10.1016/S0028-3932(00)00079-8

Allan LG (1979) The perception of time. Percept Psychophys 26:340–354

Baer LH, Thibodeau JL, Gralnick TM, Li KZ, Penhune VB (2013) The role of musical training in emergent and event-based timing. Front Hum Neurosci 7:191. doi:10.3389/fnhum.2013.00191

Balasubramaniam R, Wing AM, Daffertshofer A (2004) Keeping with the beat: movement trajectories contribute to movement timing. Exp Brain Res 159:129–134. doi:10.1007/s00221-004-2066-z

Bishop L, Bailes F, Dean RT (2013) Musical expertise and the ability to imagine loudness. PLoS ONE 8:e56052. doi:10.1371/journal.pone.0056052

Bravi R, Del Tongo C, Cohen EJ, Dalle Mura G, Tognetti A, Minciacchi D (2014a) Modulation of isochronous movements in a flexible environment: links between motion and auditory experience. Exp Brain Res 232:1663–1675. doi:10.1007/s00221-014-3845-9

Bravi R, Quarta E, Cohen EJ, Gottard A, Minciacchi D (2014b) A little elastic for a better performance: kinesiotaping of the motor effector modulates neural mechanisms for rhythmic movements. Front Syst Neurosci 8:181. doi:10.3389/fnsys.2014.00181

Brodsky W, Henik A, Rubinstein B, Zorman M (2003) Auditory imagery from musical notation in expert musicians. Percept Psychophys 65:602–612. doi:10.3758/BF03194586

Cannam C, Landone C, Sandler M (2010) Sonic visualiser: an open source application for viewing, analysing, and annotating music audio files. In: Proceedings of ACM multimedia 2010 international conference. doi:10.1145/1873951.1874248

Collier GL, Ogden RT (2004) Adding drift to the composition of simple isochronous tapping: an extension of the Wing-Kristofferson model. J Exp Psychol Hum Percept Perform 30:853–872. doi:10.1037/0096-1523.30.5.853

Coull JT, Cheng R, Meck WH (2011) Neuroanatomical and neurochemical substrates of timing. Neuropsychopharmacol 36:3–25. doi:10.1038/npp.2010.113

Dannenberg RB, Mohan S (2011) Characterizing tempo change in musical performances. In: ICMC Proceedings. Publishing, Ann Arbor, MI

Delignières D, Torre K (2011) Event-based and emergent timing: dichotomy or continuum? A reply to Repp and Steinman (2010). J Mot Behav 43:311–318. doi:10.1080/00222895.2011.588274

Delignières D, Lemoine L, Torre K (2004) Time intervals production in tapping and oscillatory motion. Hum Mov Sci 23:87–103. doi:10.1016/j.humov.2004.07.001

Elliott MT, Welchman AE, Wing AM (2009) Being discrete helps keep to the beat. Exp Brain Res 192:731–737. doi:10.1007/s00221-008-1646-8

Ellis M (1991) Thresholds for detecting tempo change. Psychol Music 19:164–169. doi:10.1177/0305735691192007

Fraisse P (1982) Rhythm and tempo. In: Deutsch D (ed) The psychology of music. Academic Press, New York, NY, pp 149–180

Freyer F, Reinacher M, Nolte G, Dinse HR, Ritter P (2012) Repetitive tactile stimulation changes resting-state functional connectivity: implications for treatment of sensorimotor decline. Front Hum Neurosci 6:144. doi:10.3389/fnhum.2012.00144

Fujioka T, Trainor LJ, Ross B, Kakigi R, Pantev C (2004) Musical training enhances automatic encoding of melodic contour and interval structure. J Cogn Neurosci 16:1010–1021. doi:10.1162/0898929041502706

Fujioka T, Trainor LJ, Ross B, Kakigi R, Pantev C (2005) Automatic encoding of polyphonic melodies in musicians and nonmusicians. J Cogn Neurosci 17:1578–1592. doi:10.1162/089892905774597263

Halpern AR (1992) Musical aspects of auditory imagery. In: Reisberg D (ed) Auditory imagery. Erlbaum, Hillsdale, NJ, pp 1–27

Herholz SC, Lappe C, Knief A, Pantev C (2008) Neural basis of music imagery and the effect of musical expertise. Eur J Neurosci 28:2352–2360. doi:10.1111/j.1460-9568.2008.06515.x

Herholz SC, Halpern AR, Zatorre RJ (2012) Neuronal correlates of perception, imagery, and memory for familiar tunes. J Cogn Neurosci 24:1382–1397. doi:10.1162/jocn_a_00216

Huang J, Gamble D, Sarnlertsophon K, Wang X, Hsiao S (2012) Feeling music: integration of auditory and tactile inputs in musical meter perception. PLoS ONE 7:e48496. doi:10.1371/journal.pone.0048496

Hubbard TL (2010) Auditory imagery: empirical findings. Psychol Bull 136:302–329. doi:10.1037/a0018436

Hunt N, McGrath D, Stergiou N (2014) The influence of auditory-motor coupling on fractal dynamics in human gait. Sci Rep 4:5879. doi:10.1038/srep05879

Huys R, Studenka BE, Rheaume NR, Zelaznik HN, Jirsa VK (2008) Distinct timing mechanisms produce discrete and continuous movements. PLoS Comput Biol 4:e1000061. doi:10.1371/journal.pcbi.1000061

Jackendoff R, Lerdahl F (2006) The capacity for music: what is it, and what’s special about it? Cognition 100:33–72. doi:10.1016/j.cognition.2005.11.005

Jacoby N, Repp BH (2012) A general linear framework for the comparison and evaluation of models of sensorimotor synchronization. Biol Cybern 106:135–154. doi:10.1007/s00422-012-0482-x

Janata P, Tomic S, Haberman J (2012) Sensorimotor coupling in music and the psychology of the groove. J Exp Psychol Gen 141:54–75. doi:10.1037/a0024208

Joiner WM, Shelhamer M (2009) A model of time estimation and error feedback in predictive timing behavior. J Comput Neurosci 26:119–138. doi:10.1007/s10827-008-0102-x

Kadota H, Kudo K, Ohtsuki T (2004) Time-series pattern changes related to movement rate in synchronized human tapping. Neurosci Lett 370:97–101. doi:10.1016/j.neulet.2004.08.004

Keller PE, Koch I (2008) Action planning in sequential skills: relations to music performance. Q J Exp Psychol (Hove) 61:275–291. doi:10.1080/17470210601160864

Kesner RP, Hopkins RO (2006) Mnemonic functions of the hippocampus: a comparison between animals and humans. Biol Psychol 73:3–18. doi:10.1016/j.biopsycho.2006.01.004

Large EW, Snyder JS (2009) Pulse and meter as neural resonance. Ann N Y Acad Sci 1169:46–57. doi:10.1111/j.1749-6632.2009.04550.x

Lemoine L, Delignières D (2009) Detrended windowed (Lag One) auto-correlation: a new method for distinguishing between event-based and emergent timing. Q J Exp Psychol (Hove) 62:585–604. doi:10.1080/17470210802131896

Levitin DJ, Cook PR (1996) Memory for musical tempo: additional evidence that auditory memory is absolute. Percept Psychophys 58:927–935. doi:10.3758/BF03205494

London J (2012) Hearing in time: psychological aspects of musical meter, 2nd edn. Oxford University Press, New York, NY

Lorås H, Sigmundsson H, Talcott JB, Öhberg FO, Stensdotter AK (2012) Timing continuous or discontinuous movements across effectors specified by different pacing modalities and intervals. Exp Brain Res 220:335–347. doi:10.1007/s00221-012-3142-4

Luck G, Sloboda JA (2008) Exploring the spatiotemporal properties of the beat in simple conducting gestures using a synchronization task. Music Percept 25:225–239

Luck GPB, Toiviainen P (2006) Ensemble musicians’ synchronization with conductors’ gestures: an automated feature-extraction analysis. Music Percept 24:195–206

Madison G (2004) Fractal modeling of human isochronous serial interval production. Biol Cybern 90:105–112. doi:10.1007/s00422-003-0453-3

Madison G, Paulin J (2010) Ratings of speed in real music as a function of both original and manipulated beat tempo. J Acoust Soc Am 128:3032–3040. doi:10.1121/1.3493462

Maelzel JN (1818) Mr. Maelzel’s for an instrument or instruments, machine or machines, for the improvement of all musical performance, which he calls a metronome or musical time-keeper. In: The repertory of arts, manufactures, and agriculture: consisting of original communications, specifications of patent inventions, practical and interesting papers, selected from the philosophical transactions and scientific journals of all nations: vol 33, 2nd series, J. Wyatt, Repertory Office, Hatton Garden, London, pp 7–13

McKinney MF, Moelants D (2007) Ambiguity in tempo perception: what draws listeners to different metric levels? Music Percept 24:155–165. doi:10.1525/mp.2006.24.2.155

McKinney MF, Moelants D, Davies EP, Klapuri A (2007) Evaluation of audio beat tracking and music tempo extraction algorithms. J New Music Res 36:1–16. doi:10.1080/09298210701653252

Nolte G, Bai O, Wheaton L, Mari Z, Vorbach S, Hallett M (2004) Identifying true brain interaction from EEG data using the imaginary part of coherency. Clin Neurophysiol 115:2292–2307. doi:10.1016/j.clinph.2004.04.029

Patel AD, Iversen JR (2014) The evolutionary neuroscience of musical beat perception: the action simulation for auditory prediction (ASAP) hypothesis. Front Syst Neurosci 8:57. doi:10.3389/fnsys.2014.00057

Phillips-Silver J, Trainor LJ (2007) Hearing what the body feels: auditory encoding of rhythmic movement. Cognition 105:533–546. doi:10.1016/j.cognition.2006.11.006

Pressing J (1998) Error correction processes in temporal pattern production. J Math Psychol 42:63–101. doi:10.1006/jmps.1997.1194

Pressing J, Jolley-Rogers G (1997) Spectral properties of human cognition and skill. Biol Cybern 76:339–347. doi:10.1007/s004220050347

Quinn S, Watt R (2006) The perception of tempo in music. Perception 35:267–280. doi:10.1068/p5353

Rao SM, Harrington DL, Haaland KY, Bobholz JA, Cox RW, Binder JR (1997) Distributed neural systems underlying the timing of movements. J Neurosci 17:5528–5535

Repp BH (2005) Sensorimotor synchronization: a review of the tapping literature. Psychon Bull Rev 12:969–992. doi:10.3758/BF03206433

Repp BH (2008) Perfect phase correction in synchronization with slow auditory sequences. J Mot Behav 40:363–367. doi:10.3200/JMBR.40.5.363-367

Repp BH (2011) Comfortable synchronization of drawing movements with a metronome. Hum Mov Sci 30:18–39. doi:10.1016/j.humov.2010.09.002

Repp BH, Steinman SR (2010) Simultaneous event-based and emergent timing: synchronization, continuation, and phase correction. J Mot Behav 42:111–126. doi:10.1080/00222890903566418

Robertson SD, Zelaznik HN, Lantero DA, Bojczyk KG, Spencer RM, Doffin JG, Schneidt T (1999) Correlations for timing consistency among tapping and drawing tasks: evidence against a single timing process for motor control. J Exp Psychol Hum Percept Perform 25:1316–1330. doi:10.1037/0096-1523.25.5.1316

Rosenzweig MR, Bennett EL, Colombo PJ, Lee DW, Serrano PA (1993) Short-term, intermediate-term, and long-term memories. Behav Brain Res 57:193–198. doi:10.1016/0166-4328(93)90135-D

Sachs C (1953) Rhythm and tempo: a study in music history. Norton, New York, NY

Schöner G (2002) Timing, clocks, and dynamical systems. Brain Cogn 48:31–51. doi:10.1006/brcg.2001.1302

Semjen A (1996) Emergent versus programmed temporal properties of movement sequences. In: Helfrich H (ed) Time and mind. Hogrefe & Huber, Seattle, WA, pp 23–43

Semjen A, Schulze HH, Vorberg D (2000) Timing precision in continuation and synchronization tapping. Psychol Res 63:137–147. doi:10.1007/PL00008172

Spencer RMC, Ivry RB (2005) Comparison of patients with Parkinson’s disease or cerebellar lesions in the production of periodic movements involving event-based or emergent timing. Brain Cogn 58:84–93. doi:10.1016/j.bandc.2004.09.010

Spencer RMC, Zelaznik HN, Diedrichsen J, Ivry RB (2003) Disrupted timing of discontinuous but not continuous movements by cerebellar lesions. Science 300:1437–1439. doi:10.1126/science.1083661

Spencer RMC, Verstynen T, Brett M, Ivry R (2007) Cerebellar activation during discrete and not continuous timed movements: an fMRI study. Neuroimage 36:378–387. doi:10.1016/j.neuroimage.2007.03.009

Studenka BE, Zelaznik HN (2008) The influence of dominant versus non-dominant hand on event and emergent motor timing. Hum Mov Sci 27:29–52. doi:10.1016/j.humov.2007.08.004

Studenka BE, Zelaznik HN, Balasubramaniam R (2012) The distinction between tapping and circle drawing with and without tactile feedback: an examination of the sources of timing variance. Q J Exp Psychol (Hove) 65:1086–1100. doi:10.1080/17470218.2011.640404

Styns F, van Noorden L, Moelants D, Leman M (2007) Walking on music. Hum Mov Sci 26:769–785. doi:10.1016/j.humov.2007.07.007

Torre K, Delignières D (2008) Distinct ways of timing movements in bimanual coordination tasks: contribution of serial correlation analysis and implications for modeling. Acta Psychol (Amst) 129:284–296. doi:10.1016/j.actpsy.2008.08.003

Turvey MT (1977) Preliminaries to a theory of action with reference to vision. In: Shaw R, Bransford J (eds) Perceiving, acting and knowing: toward an ecological psychology. Erlbaum, Hillsdale, NJ, pp 211–265

Van Noorden L, Moelants D (1999) Resonance in the perception of musical pulse. J New Music Res 28:43–66. doi:10.1076/jnmr.28.1.43.3122

Vorberg D, Schulze HH (2002) Linear phase-correction in synchronization: predictions, parameter estimation, and simulations. J Math Psychol 46:56–87. doi:10.1006/jmps.2001.1375

Vorberg D, Wing A (1996) Modeling variability and dependence in timing. In: Heuer H, Keele SW (eds) Handbook of perception and action, vol 2. Motor skills. Academic Press, London, UK, pp 181–262

White K, Ashton R, Brown R (1977) The measurement of imagery vividness: normative data and their relationship to sex, age, and modality differences. Br J Psychol 68:203–211. doi:10.1111/j.2044-8295.1977.tb01576.x

Wing AM, Kristofferson AB (1973a) The timing of interresponse intervals. Percept Psychophys 13:455–460. doi:10.3758/BF03205802

Wing AM, Kristofferson AB (1973b) Response delays and timing of discrete motor responses. Percept Psychophys 14:5–12. doi:10.3758/BF03198607

Zatorre RJ, Halpern AR (2005) Mental concerts: musical imagery and auditory cortex. Neuron 47:9–12. doi:10.1016/j.neuron.2005.06.013

Zelaznik HN, Rosenbaum DA (2010) Timing processes are correlated when tasks share a salient event. J Exp Psychol Hum Percept Perform 36:1565–1575. doi:10.1037/a0020380

Zelaznik HN, Spencer RMC, Ivry RB (2002) Dissociation of explicit and implicit timing in repetitive tapping and drawing movements. J Exp Psychol Hum Percept Perform 28:575–588. doi:10.1037/0096-1523.28.3.575

Acknowledgments

We wish to thank Erez James Cohen and Prof. Anna Gottard for their careful reading of the manuscript, comments, and suggestions.

Cite this article

Bravi, R., Quarta, E., Del Tongo, C. et al. Music, clicks, and their imaginations favor differently the event-based timing component for rhythmic movements. Exp Brain Res 233, 1945–1961 (2015). https://doi.org/10.1007/s00221-015-4267-z

DOI: https://doi.org/10.1007/s00221-015-4267-z