Abstract

Previous studies showed that self-localisation ability involves both vision and proprioception, integrated into a single percept, with the tendency to rely more heavily on visual than proprioceptive cues. Despite the increasing evidence for the importance of vision in localising the hands, the time course of the interaction between vision and proprioception during visual occlusion remains unclear. In particular, we investigated how the brain weighs visual and proprioceptive information in hand localisation over time when the visual cues do not reflect the real position of the hand. We tested three hypotheses: Self-localisations are less accurate when vision and proprioception are incongruent; under the same conditions of incongruence, people first rely on vision and gradually revert to proprioception; if vision is removed immediately prior to hand localisation, accuracy increases. Sixteen participants viewed a video of their hands, under three conditions each undertaken with eyes open or closed: Incongruent conditions (right hand movement seen: inward, right hand real movement: outward), Congruent conditions (movement seen congruent to real movement). The right hand was then hidden from view and participants performed a localisation task whereby a moving vertical arrow was stopped when aligned with the felt position of their middle finger. A second experiment used identical methodology, but with the direction of the arrow switched. Our data showed that, in the Incongruent conditions (both with eyes open and closed), participants perceived their right hand close to its last seen position. Over time, the perceived position of the hand shifted towards the physical position. Closing the eyes before the localisation task increased the accuracy in the Incongruent condition. Crucially, Experiment 2 confirmed the findings and showed that the direction of arrow movement had no effect on hand localisation. 
Our hypotheses were supported: When vision and proprioception were incongruent, participants were less accurate and initially relied on vision and then proprioception over time. When vision was removed, this shift occurred more quickly. Our findings are relevant in understanding the normal and pathological processes underpinning self-localisation.

Introduction

The perception of owning our body and the ability to locate it in space are two fundamental requirements for self-consciousness to develop. While the majority of us take these functions for granted, there are some pathological conditions in which these mechanisms are disrupted (e.g. in autotopagnosia, a condition arising from brain damage to posterior parietal cortices, in which the ability to localise one’s own body parts is affected; Guariglia et al. 2002; Pick 1922; Semenza and Goodglass 1985).

Chronic pain patients have a distorted body image, leading to difficulties not only in self-representing the correct size of their affected limb (Moseley 2005), but also its position in space (Lotze and Moseley 2007). This close relationship between the position of our body in space and processing of sensory input is further supported by evidence in healthy participants that the processing of tactile stimuli to the hands is impaired when the hands are crossed over the body midline (Aglioti et al. 1999; Azañón and Soto-Faraco 2008; Eimer et al. 2003; Yamamoto and Kitazawa 2001). This crossed-hands deficit has been interpreted as a result of the mismatch between somatotopical and space-based frames of reference in determining the position of the external stimuli (e.g. when the right hand occupies the left side of space and vice versa). Interestingly, the deficit also includes the intensity of the sensation, such that tactile or noxious stimuli to the hands are perceived as less intense if the hands are crossed than if they are not (Gallace et al. 2011; Sambo et al. 2013; Torta et al. 2013).

Knowing where our body is allows us to navigate our environment efficiently, avoid obstacles and perform our daily activities. In the healthy population, the central nervous system (CNS) integrates the range of internal and external cues, with ongoing motor commands (e.g. “efferent copy”, Holst and Mittelstaedt 1950; Sperry 1950) to generate a unique, coherent, multisensory experience. Although the CNS typically integrates multiple cues from different senses, it is still possible to locate one’s own body when a number of sensory cues are not available, for example when vision is occluded (for a comprehensive review on non-visual contributions to body position sense, see Proske and Gandevia 2012). Indeed, neurologically intact people are quite accurate in reaching for one hand with the other while keeping their eyes closed. Furthermore, when information about position is available from both visual and proprioceptive modalities, it has been shown that the perceived location of the limb more closely aligns with the visual information about its location than with the proprioceptive information about its location (van Beers et al. 1999a).

Thus, what is the relative role of vision and proprioception in correctly locating one’s own body part? Several studies have investigated this issue (Ernst and Banks 2002; Ernst and Bülthoff 2004; Smeets et al. 2006; van Beers et al. 1998, 1999a, b, 2002) and most of them support the idea that the CNS optimises the estimated position by integrating visual and proprioceptive signals. These studies have also shown that humans are less accurate in judging the position of their hand when they cannot directly see it, which suggests a relevant role of visual information. A consistent finding is that when vision is occluded, the perceived location of the hand drifts towards the body (Block 1890; Paillard and Brouchon 1968; Craske and Crawshaw 1975; Wann and Ibrahim 1992). This drifting effect, however, does not occur immediately after vision is occluded, suggesting that the visually encoded body position maintains an influence on the localisation of one’s own body. It has been proposed that this influence reduces as the visually encoded position decays, and then proprioception takes over (Desmurget et al. 2000; see Table 1).

Critically, the observed drift occurs not only along the sagittal axis, but also the transverse axis. During visual occlusion, estimates of hand location decrease in accuracy, leading healthy participants to judge their left hand as more leftward and their right hand as more rightward during both reaching estimation (i.e. localisation by pointing with the seen hand; Crowe et al. 1987; Ghilardi et al. 1995; Haggard et al. 2000) and proprioceptive estimation (i.e. no movement of the seen hand; Jones et al. 2010). This directional bias is explained in terms of a misperception of the hand location relative to the body midline (Jones et al. 2010) and confirms again the predominant role of vision in localising one’s own hands (Newport et al. 2001).

Despite the increasing evidence for the importance of vision in localising the hands, the time course of the interaction between vision and proprioception during visual occlusion remains unclear. We suggest that with one hand hidden from view, participants will initially rely more on vision, locating the hidden hand where they last saw it, on the basis of its visually encoded position. Over time, this visual trace will decay, such that the estimation of location will become more dependent on proprioceptive inputs (Chapman et al. 2000). Our suggestion would be in line with the maximum likelihood estimation rule (Ernst and Banks 2002). This theory states that in order to create a unified perception of a stimulus by means of different senses, the nervous system combines the information coming from the different sensory modalities in a statistically optimal fashion. This would suggest that the sensory modality that carries less variance dominates in determining the final percept. Further, the variance is direction-specific and sense-specific. In fact, research has demonstrated that proprioception-based localisations are more precise in the radial direction (reference shoulder; thus carrying more variance in the azimuthal direction), while vision-based localisations are more precise in the azimuthal direction (reference cyclopean eye; thus, carrying more variance in the radial direction; van Beers et al. 1999a, b).
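The maximum likelihood estimation rule described above can be illustrated with a minimal sketch. The two positions (9 cm seen, 20 cm real) are taken from the Incongruent condition of the present study; the variances and the function name `mle_combine` are hypothetical and chosen only for illustration, not estimates from our data.

```python
def mle_combine(x_vis, var_vis, x_prop, var_prop):
    """Combine two position estimates using inverse-variance weights."""
    # The modality with the smaller variance receives the larger weight.
    w_vis = (1.0 / var_vis) / (1.0 / var_vis + 1.0 / var_prop)
    w_prop = 1.0 - w_vis
    x_hat = w_vis * x_vis + w_prop * x_prop
    # The combined variance is never larger than either single-cue variance.
    var_hat = (var_vis * var_prop) / (var_vis + var_prop)
    return x_hat, var_hat, w_vis


# Hypothetical variances (cm^2): vision assumed more reliable than proprioception.
x_hat, var_hat, w_vis = mle_combine(x_vis=9.0, var_vis=1.0, x_prop=20.0, var_prop=4.0)
print(f"estimate = {x_hat:.1f} cm, variance = {var_hat:.2f}, visual weight = {w_vis:.2f}")
```

Under these assumed variances the combined estimate lies closer to the seen than to the real position; raising the visual variance (as might happen while a visual trace decays) shifts the estimate towards the proprioceptive position.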

In the present study, we investigated how vision and proprioception interact over time in localising one’s own hidden hand by using a bodily visual illusion that alters the perception of where one’s hand is. That is, the hand appears to be located where it is not. In order to test our hypothesis, we used a new illusion based on the disappearing hand trick (DHT) using the MIRAGE system (Newport and Gilpin 2011). Our illusion allowed us to manipulate the relationship between the seen and felt location of the right hand.

We hypothesised that when making hand localisation judgements, participants would initially rely primarily on the visually encoded position of the hand. However, we expected that, over time, there would be a shift to rely more heavily on proprioception, as the visually encoded position decays. As such, we hypothesised that in the 3 min following an illusory condition in which the visually encoded (perceived) position of the hand is rendered incongruent with its proprioceptively encoded (physical) position, we would observe a faster and larger drift towards the hidden right hand than in a non-illusory condition where the visually and proprioceptively encoded positions are congruent. A drift towards the right for the right hand is expected in any condition, but according to our hypothesis, its nature would not be the same. In particular, in Congruent conditions, when the visually encoded position of the hand is not manipulated (i.e. it is congruent with the proprioceptively encoded position), we predict that the participants will localise their hidden hand as more rightwards, in line with previous research reported above. In the Incongruent condition, where the visually encoded position of the hand has been manipulated (i.e. only the proprioceptively encoded position of the hand is correct) instead, we predict that we will find a summation between the directional bias towards right and an increasing reliance on proprioception over time that would lead to a larger and faster drift towards right than in the Congruent condition during the three minutes following the illusion.

Additionally, in order to better clarify the role of vision in hand localisation, we manipulated the rate of decay of the visually encoded position by asking participants, after their right hand was occluded, to either close their eyes (during which the decay of the visually encoded position will be accelerated; Chapman et al. 2000) or to continue to look at the blank space (during which the decay of the visually encoded position will be slower; Chapman et al. 2000). Furthermore, an increase in the amount of visual exposure to an incorrect visual trace has been found to decrease the reliance on proprioception during a reaching task (Holmes and Spence 2005). As a consequence, a faster decay of the visual trace might accelerate the reliance on proprioception and thus produce a larger and faster drift towards the right when eyes are closed prior to localisation judgements.

Experiment 1

Materials and methods

Participants

Sixteen healthy volunteers (eight males, mean age 31 ± 11 years) participated. All participants had normal or corrected-to-normal visual acuity and were right-handed (self-reported). They had no current or past neurological impairment and no current pain or history of a significant pain disorder. They were also naïve about the purpose of the study. All participants gave written consent prior to their participation in the experiment. The study was performed in accordance with the ethical standards laid down in the 1991 Declaration of Helsinki and was approved by the Human Research Ethics Committee of the University of South Australia.

Apparatus and experimental setup

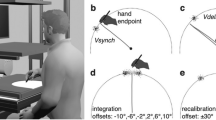

Participants viewed a real-time video image of their hands in first person perspective using the MIRAGE system (Newport et al. 2009). A combination of mirrors and camera allowed participants to view their hands in an identical spatial location and from the same perspective as if directly viewing their real hands (Newport et al. 2010). The seen position of the participants’ right hand could be manipulated and presented in real time via customised in-house software. In particular, the participants’ right hand could appear to them in its true location, where vision and proprioception offered congruent input (i.e. control congruent conditions) or in an alternative location in which vision and proprioception were incongruent.

Procedure

In all conditions, participants were seated at a table with their hands resting inside the MIRAGE system (Fig. 1). In this position, they could see an online image of their hands. A fabric, opaque bib was secured around participants’ necks and the bottom edge was attached to the MIRAGE to conceal the position of their elbows and thus remove any additional visual cues to hand location. The height of the chair was adjusted such that participants were able to look inside the MIRAGE and to comfortably raise their hands and forearms above the surface of the table.

Experimental setup. The participants were seated at a table with their hands resting inside the MIRAGE system. A fabric bib was attached to prevent the participants from seeing the position of their elbows. The chair was adjusted for each participant to ensure a comfortable position during the experiment. The pictures on the right show the participants’ perspective while watching their hands moving between the blue bars inside the MIRAGE

Before starting the experiment, participants underwent a training procedure to familiarise themselves with the localisation task. During the training task, participants practised hand localisation by stopping a visual arrow (that was presented via MIRAGE software, directly above their actual hand location) when the arrow reached the middle finger of their hidden right hand. The main goals of the training procedure were: (1) fixating on a spot within a blank space without being distracted by the movement of the arrow and (2) being able to stop the arrow accurately, even with time constraints. The training involved three stages, for a total of 22 practice localisations. The participants were allowed to practise until they felt fully confident with the task and with the timing. Then, the experimental conditions commenced (see Supplemental Materials for an extensive explanation of the practice trials). Importantly, the training trials were performed at the very beginning of the experimental session, and their sole aim was to ensure that the participants had fully understood the task and were familiar with it.

In all experimental conditions (see Fig. S1, Supplemental Materials), participants underwent an adaptation procedure in which they were asked to hold their hands approximately 5 cm above the table surface and maintain the position of their hands between two moving blue bars on either side of their hands (see Supplemental Materials). In all the conditions, both hands were initially positioned approximately 13 cm laterally from the body midline. During the adaptation procedure, the positions of the blue bars were manipulated laterally, so that the positions of the hands could be gradually shifted relative to their seen position by independently moving the seen image of the hands relative to their real locations. The position of the right hand was varied across three conditions (Incongruent, Congruent Outer, Congruent Inner). In the Incongruent condition, the seen image of the right hand moved inwards at approximately 25 mm/s. Thus, in order to maintain the appearance of their right hand remaining stationary, participants were (unknowingly) required to move their right hand outwards at the same rate. This adaptation yielded a visuo-proprioceptive discrepancy between the seen and real positions of the hand. In this illusory condition, the adaptation procedure resulted in the actual position of the participants’ right hand being 11 cm further to the right (20 cm from midline) than the seen position (9 cm from midline). Conversely, in the Congruent control conditions, the movement of the visual image was identical to the real movement of the right hand.

There were two Congruent conditions based on final hand position: the Congruent Outer condition (right hand moves from 13 to 20 cm from the midline) and the Congruent Inner condition (right hand moves from 13 to 9 cm from midline). These two conditions were designed in order to control for both the seen position of the hand (9 cm from midline) and the real position of the hand (20 cm from midline) in the Incongruent condition. The final true location of the right hand was identical between the Incongruent and the Congruent Outer conditions, and the final seen location of the right hand was identical between the Incongruent and the Congruent Inner conditions. The movement of the left hand seen on the screen was congruent with the participant’s real hand movement in all the conditions, such that its final position was 9 cm from the body midline (4 cm more inwards than the initial position).
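The final right-hand positions in the three conditions can be summarised in a short sketch. The numerical values are those reported above; the dictionary layout and the names `CONDITIONS` and `discrepancy` are ours and purely illustrative.

```python
START_CM = 13.0  # initial lateral distance of both hands from the body midline

# Final real and seen right-hand positions (cm from midline) in each condition.
CONDITIONS = {
    "Incongruent": {"real": 20.0, "seen": 9.0},
    "Congruent Outer": {"real": 20.0, "seen": 20.0},
    "Congruent Inner": {"real": 9.0, "seen": 9.0},
}


def discrepancy(cond):
    """Real minus seen position; positive means the hand lies to the right of its image."""
    return cond["real"] - cond["seen"]


for name, cond in CONDITIONS.items():
    print(f"{name}: real {cond['real']} cm, seen {cond['seen']} cm, "
          f"discrepancy {discrepancy(cond)} cm")
```

Laid out this way, the control logic is visible at a glance: Congruent Outer matches the Incongruent condition on the real position, and Congruent Inner matches it on the seen position.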

Immediately after the adaptation procedure, the experimenter placed the participant’s hands on the table (maintaining their position between the blue bars) and participants kept both hands still. They were instructed to fixate on their right hand. In all conditions, the right hand was then occluded from view (i.e. disappeared from the screen). The participants were then either asked to close their eyes for 20 s (Eyes Closed, EC) or to fixate on the space in which they had seen their right hand (Eyes Open, EO). Thus, each of the three conditions (Incongruent, Congruent Outer and Congruent Inner) was repeated twice—once with the eyes open and once with the eyes closed. In the EC condition, once the eyes were open again, participants were instructed to fixate on the location where they felt their hand to be. Then the localisation task commenced (Fig. S1 Supplemental Materials, see description below). In order to avoid any reaching error bias due to mislocalisation of the non-experimental hand, we used a localisation task that did not require any hand movement (i.e. a moving arrow as used in the training task).

Participants performed the six conditions in a randomised, counterbalanced order: Congruent Inner, EO and EC; Congruent Outer, EO and EC; Incongruent, EO and EC (see Table S1, Supplemental Materials). Following each condition, participants verbally responded to a questionnaire (see Table S2, Supplemental Materials), giving a number from zero to ten in accordance with their agreement with each sentence, in order to check whether they were aware of the visual illusion performed in the Incongruent conditions. The questionnaire was a shortened version of that used in the original DHT experiment (Newport and Gilpin 2011). At the very end of the experimental session, the experimenters briefly interviewed the participants. The participants were told that in one or more conditions, the seen position of their hands was not their actual position, because a visual illusion was elicited. They were then asked whether they were aware of it and whether they could try to report in which condition (or conditions) this illusion had been performed.

Localisation task

The localisation task did not require any movement of either hand. Reaching tasks are typically used to localise one’s own body part and require reach planning. Such tasks have been shown to utilise proprioceptive information, rely on an accurate localisation of the non-experimental hand (Jones et al. 2010) and incorporate effort and motor command components (Proske and Gandevia 2012). As mentioned above, participants fixated on the point of the screen corresponding to their perceived location of the middle finger of their hidden right hand. An arrow (controlled by the experimenter) was displayed centrally in the upper part of the screen, pointing towards the participants. The arrow moved at a constant speed (2.65 cm/s) horizontally in the direction of the right hand (i.e. outwards from midline). Participants were instructed to say “stop” when they judged the arrow to be aligned vertically with the tip of their hidden right middle finger. This gave the experimenter a numerical value corresponding to the position of the arrow on the screen. This value was recorded for each localisation. It was not possible to blind the experimenters to the conditions, so the experimenter who was controlling the arrow looked away from the screen during the localisation task in order to minimise any possible interference due to expectation about the localisation outcome. The same experimenter also visually monitored the participants’ gaze direction. The arrow was displayed 20 s after the right hand had disappeared from view, during which time the participants either kept looking at the spot where they felt their right hand to be (EO conditions) or had their eyes closed (EC conditions). The arrow returned to the starting point in the centre of the screen immediately after each localisation. Participants performed the localisation task every 15 s for a total of 13 localisation values.
A second experimenter recorded each value before the arrow was returned to the starting point by the first experimenter. Following the localisation task, participants remained with their hands in position inside the MIRAGE but viewed a blank screen, allowing the experimenters to record the numerical value of the real position of the participant’s right hand without revealing this to the participant. This was done with exactly the same procedure used in the localisation task, that is, by recording the numerical value of the arrow when it was placed exactly on the participant’s fingertip.
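The timing of the localisation task can be sketched as follows. The interval, number of localisations and arrow speed are those reported above; the function names are ours, and the distance passed to `arrow_travel_time` is a hypothetical example, since the exact screen coordinates of the arrow's starting point are not given here.

```python
INTERVAL_S = 15          # time between successive localisations
N_LOCALISATIONS = 13     # localisations per condition
ARROW_SPEED_CM_S = 2.65  # constant horizontal speed of the arrow


def localisation_times(n=N_LOCALISATIONS, interval=INTERVAL_S):
    """Onset of each localisation, in seconds after the first one."""
    return [i * interval for i in range(n)]


def arrow_travel_time(distance_cm, speed=ARROW_SPEED_CM_S):
    """Seconds the arrow needs to cover a given horizontal distance."""
    return distance_cm / speed


times = localisation_times()
print(f"last localisation at {times[-1]} s ({times[-1] / 60:.0f} min)")
# Hypothetical distance: an arrow travelling 20 cm before being stopped.
print(f"travel time for 20 cm: {arrow_travel_time(20.0):.1f} s")
```

Thirteen localisations spaced 15 s apart span the 3 min window referred to in the hypotheses.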

Data analysis

For each participant, and for each condition, the localisation error was calculated (i.e. the error values, calculated as the difference score between the participants’ judged location and the true location of their hidden hand). The true hand location was set at zero, such that overestimations (i.e. mislocalisation to the right of the hidden hand) were represented by positive values and underestimations (i.e. mislocalisation to the left of the hidden hand) by negative values. Because the data did not satisfy the assumptions of a conventional ANOVA (i.e. the homogeneity of the regression slopes), we undertook a random effect analysis of variance in order to analyse the error values. The random effect model is used when the factor levels are meant to be representative of the general population of possible levels. Random effects in ANOVA assume that the groups are a random sampling of many potential groups. The researchers are usually interested in whether the factor has a significant effect in explaining the responses in a general way. The objective of the researcher is to extend the conclusion based on a sample to all levels in the population. Random effect ANOVA assumes that the researchers randomly selected groups or subjects from all the groups or subjects in the population, even the ones not included in the research. It seeks to answer the effect of the factor in general. For a random (effect) factor, data are collected for a random sample of possible levels, with the hope that these levels are representative of all levels in that factor. This approach can be appropriate where there are a large number of possible levels (e.g. see Larson 2008). 
Based on the graphical plot of the data and on the Wald Z Test, the factor Participants was considered as a random factor and the factors Congruency (Congruent Outer, Congruent Inner, Incongruent), Sight (Eyes Open, EO; Eyes Closed, EC), Time (13 points over 3 min), and their interactions (Congruency × Sight, Congruency × Time, Time × Sight) as fixed factors. Different models were compared using Schwarz’s Bayesian criterion (BIC), and the model with the best fit, including a random intercept (Participants) and random slopes (Condition, Sight, Time), was identified.
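The error score and its sign convention can be made explicit with a minimal sketch. It assumes that positions are expressed on a common axis with larger values further to the right; the function names and example values are hypothetical.

```python
def localisation_error(judged_cm, true_cm):
    """Judged minus true position, so that the true hand location sits at zero."""
    return judged_cm - true_cm


def direction(error_cm):
    """Label the sign convention used for the error values."""
    if error_cm > 0:
        return "overestimation (right of the hidden hand)"
    if error_cm < 0:
        return "underestimation (left of the hidden hand)"
    return "accurate"


# Hypothetical judgement: hand truly at 20 cm, judged at 13.5 cm from midline.
err = localisation_error(judged_cm=13.5, true_cm=20.0)
print(err, direction(err))
```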

In order to investigate overall differences in error values between conditions (i.e. participants’ accuracy), all error scores were normalised to the first localisation judgement, and a 2 (EO, EC) × 3 (Congruent Outer, Congruent Inner, Incongruent) repeated-measures ANOVA compared error across conditions. Since Mauchly’s test for sphericity was significant, a Greenhouse–Geisser correction was applied.
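Normalising to the first localisation judgement amounts to subtracting the first error value from every value in the series, as in the sketch below. The function name and the example series are hypothetical and are not data from the study.

```python
def normalise_to_first(errors):
    """Express each error relative to the first localisation, which becomes zero."""
    first = errors[0]
    return [e - first for e in errors]


# Hypothetical error series (cm) drifting rightwards over successive localisations.
raw = [-7.0, -6.5, -6.0, -5.5]
print(normalise_to_first(raw))
```

After normalisation, series that start from very different absolute errors can be compared directly in terms of how far, and in which direction, they drift.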

For the questionnaire scores, we performed a one-way repeated-measures ANOVA to compare the participants’ rating scores across conditions (Congruent Outer, Congruent Inner, Incongruent) for each of the seven questionnaire items. If Mauchly’s test for sphericity was significant, a Greenhouse–Geisser correction was applied.

In addition, Pearson’s correlation coefficients were computed to assess the relationship between the error scores at T13 (i.e. the last localisation) and the rating scores for the question “I couldn’t tell where my hand was”. For each of the main conditions (i.e. Congruent Outer, Congruent Inner and Incongruent), the rating score for this item was calculated as the mean of the rating given at the end of the Eyes Open condition and the rating given at the end of the Eyes Closed condition.
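The correlation step can be sketched as follows: ratings are first averaged across the Eyes Open and Eyes Closed versions of a condition, and Pearson's r is then computed against the error scores at T13. The analyses themselves were run in SPSS; the functions and the example numbers below are hypothetical and only illustrate the computation.

```python
from math import sqrt


def mean_rating(eo_ratings, ec_ratings):
    """Average each participant's EO and EC ratings for one condition."""
    return [(eo + ec) / 2 for eo, ec in zip(eo_ratings, ec_ratings)]


def pearson_r(xs, ys):
    """Pearson's product-moment correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Hypothetical data for four participants in one condition.
ratings = mean_rating([2, 4, 6, 8], [4, 4, 8, 8])
errors_t13 = [-5.0, -4.0, -6.5, -3.0]
print(f"r = {pearson_r(errors_t13, ratings):.3f}")
```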

All analyses were carried out using the SPSS statistics package (IBM SPSS Statistics 21).

Results

There was a significant effect on error values of Congruency [F(2, 36.31) = 105.63, p < 0.001; r = −0.661] (Fig. 2a) and of Time [F(1,15.03) = 11.64, p < 0.005; r = 0.102]. That is, the overall error value reduced over time. No main effect of Sight [F(1,18.69) = 0.072, p = 0.791] was detected. This indicates that both the Congruency and Time modulated the perceived location of the participants’ hidden hand. We observed a significant interaction between Congruency and Sight [F(2,1162.68) = 9.60, p < 0.001] and Congruency and Time [F(2,1162.68) = 9.72, p < 0.001] (Fig. 2b, c), suggesting that the main effect of Time was mainly driven by Incongruent condition (as this effect has been detected in Incongruent but not in Congruent conditions). No other interactions were found to be significant.

Results (Experiment 1). a For the factor Congruency, in the Incongruent conditions the error values were significantly different from those found in the Congruent Inner (p < 0.001) or Congruent Outer (p < 0.001) conditions, in which mean error values were both positive. b The very first localisation was set to zero (and the other error points were recalculated accordingly) in order to highlight the increase in error over time; the significant interaction between Congruency and Time (p < 0.001) showed a larger and quicker drift towards the right in the Incongruent conditions than in either of the Congruent conditions. c The significant interaction between Sight and Congruency is shown. In all panels, the mean error scores are plotted, and the vertical bars represent the standard errors

For the factor Congruency (Fig. 2a), in the Incongruent conditions, participants’ error values across all localisations were negative (i.e. left of the actual location of the hand) and were significantly different from those found in the Congruent Inner [t(60.24) = 9.766, p < 0.001] or Congruent Outer [t(60.24) = 9.006, p < 0.001] conditions, in which mean error values were both positive (i.e. right of the actual location of the hand). Thus, the fact that the error values were positive (i.e. drifted more towards the right with respect to the real hand position) in the control conditions suggests an overestimation of the hand position to the right of the real location of the hand. This is in line with previous studies that showed a drift towards the right when the occluded hand was the right one (Jones et al. 2010). Conversely, the fact that the error values in the Incongruent conditions were negative suggests an underestimation of hand position, that is, a mislocalisation to the left of the real location of the hand, in the opposite direction to the drift found in the Congruent conditions. Since the last seen position was actually more leftwards than the real position of the hand, these findings support the view that the initial localisation judgements were captured by the visual trace of the hand.

The Congruency by Time interaction (Fig. 2b) showed that the change in error values over time was larger in the Incongruent condition than in the Congruent Inner condition [b = −0.99, t(1162.68) = −1.98, p = 0.048] or the Congruent Outer condition [b = −1.44, t(1162.68) = −2.85, p = 0.004]. In the Incongruent condition, this change was from larger negative error values to smaller negative error values (i.e. moving towards the correct hand position). In the Congruent conditions, this change was from smaller positive error values to larger positive error values (i.e. moving away from the correct hand position). This result suggests a greater amount of drift over time in the Incongruent condition than in the control conditions. As in the Congruent conditions, this drift was consistently rightwards; however, given this significant difference, we can hypothesise that the localisations in the Incongruent conditions shifted not just rightwards but also towards the real location of the hidden hand.

Finally, closing the eyes for 20 s after the hand disappeared led to more rapid improvement in localisations during the Incongruent conditions but not during the Congruent conditions (Fig. 2c). That is, during the Incongruent conditions, the mean localisation error was −6.53 cm (90 % CI −7.56 to −5.50) for the EO trials and −5.62 cm (90 % CI −6.66 to −4.59) for the EC trials. In contrast, during the Congruent trials, the mean localisation errors were positive in both the Congruent Inner conditions (EO mean = 1.48 cm, 90 % CI 0.45 to 2.51; EC mean = 1.00 cm, 90 % CI −0.03 to 2.03) and the Congruent Outer conditions (EO mean = 0.86 cm, 90 % CI −0.17 to 1.89; EC mean = 0.17 cm, 90 % CI −0.86 to 1.20).

The analysis of the overall differences in error values between conditions (i.e. participants’ accuracy) revealed a significant effect of Congruency [Wilks’ Lambda = 0.390, F(2,14) = 10.958, p < 0.001], but no effect of Sight [Wilks’ Lambda = 0.970, F(1,15) = 0.460, p = 0.508] and no interaction effect [Wilks’ Lambda = 0.861, F(2,14) = 1.126, p = 0.139]. Bonferroni-corrected pairwise comparisons (α = 0.0167) revealed that accuracy was significantly lower in the Incongruent condition than in both the Congruent Inner (p = 0.001) and Congruent Outer (p = 0.007) conditions. No significant difference was found between the two Congruent conditions. We interpreted this result as confirmation that the perceived position of the right hand was indeed manipulated by the illusion and that this deception lasted over time. Alternatively, one may argue that the mislocalisation of the right hand during the Incongruent condition could be explained simply as visual capture of hand position (Pavani et al. 2000). Thus, to check that the participants were indeed unaware of the difference between the Congruent and Incongruent conditions, and so to rule out the possibility that the effect we found was merely due to visual capture, we analysed the questionnaire ratings. Of specific relevance was the item “I couldn’t tell where my right hand was”, as higher ratings for this item in the Incongruent condition (vs. the Congruent conditions) would suggest that participants were aware of the deception and thus were unsure of their actual hand position. The repeated-measures ANOVA comparing the participants’ rating scores across conditions (Congruent Outer, Congruent Inner, Incongruent) showed no effect of Congruency for any of the questionnaire items (see Table S1 in the Supplemental materials). Nonetheless, this null result might simply reflect a low sensitivity of the questionnaire itself in evaluating the ability of the participants to determine where their right hand was.
The absence of a Congruency effect is consistent with the participants being naïve to the experimental manipulations. The lack of awareness regarding the experimental manipulations is also supported by the participants’ final self-report. None of the participants claimed to be aware of the illusion and, when asked to try to identify the condition(s) in which the illusion was performed, they reported that they were guessing. None of the participants correctly identified both of the Incongruent conditions.
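The Bonferroni threshold quoted for the pairwise comparisons above can be reproduced with a short calculation. The sketch below is illustrative only: the family-wise α of 0.05 is an assumption (the text reports only the corrected value of 0.0167), and the function name is hypothetical. The two p values are those reported in the text.

```python
def bonferroni_alpha(family_alpha: float, n_comparisons: int) -> float:
    """Per-comparison significance threshold under a Bonferroni correction."""
    return family_alpha / n_comparisons


# Assumed family-wise alpha of 0.05 (not stated in the text), split across
# the three pairwise comparisons between the three Congruency conditions.
alpha = bonferroni_alpha(0.05, 3)

# p values reported in the text for the Incongruent condition versus each
# Congruent condition.
reported = {
    "Incongruent vs Congruent Inner": 0.001,
    "Incongruent vs Congruent Outer": 0.007,
}
significant = {name: p < alpha for name, p in reported.items()}

print(f"corrected alpha = {alpha:.4f}")
for name, flag in significant.items():
    print(name, "significant" if flag else "not significant")
```

Under this assumption, both reported p values fall below the corrected threshold, in line with the comparisons described above.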

Finally, Pearson’s correlation coefficient, computed separately for the Congruent Inner, Congruent Outer and Incongruent conditions, did not reveal any significant correlation between the error scores at T13 and the questionnaire ratings for the question “I couldn’t tell where my hand was” in any of the conditions analysed (Congruent Outer: r = −0.292, n = 16, p = 0.273; Congruent Inner: r = −0.42, n = 16, p = 0.105; Incongruent: r = −0.326, n = 16, p = 0.217). This result suggests that the amount of error at the last localisation was not related to the participants’ perceived ability to locate their hidden hand. Also, the absence of any significant correlation supports the idea that the participants did not realise that a visuo-proprioceptive manipulation was performed only in the Incongruent condition (and not in the Congruent conditions). This was also confirmed by a debriefing with the participants at the end of the experimental session.
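As a check on how the p values above follow from r and n, the sketch below converts a Pearson correlation into a two-tailed p value through the t distribution with n − 2 degrees of freedom. It assumes the reported p values are two-tailed, which the text does not state. The function names are hypothetical, and the tail probability is obtained by simple numerical integration (Simpson's rule) rather than by a statistics library.

```python
import math


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return c * (1 + x * x / df) ** (-(df + 1) / 2)


def pearson_p_value(r: float, n: int, steps: int = 2000) -> float:
    """Two-tailed p value for a Pearson correlation r from n observations."""
    df = n - 2
    t = abs(r) * math.sqrt(df / (1 - r * r))
    # Simpson's rule (steps must be even) for the t density over [0, |t|].
    h = t / steps
    total = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_mass = 2 * total * h / 3  # probability that |T| <= |t|
    return 1 - central_mass


# Correlations reported in the text (n = 16 in each condition).
for label, r in [("Congruent Outer", -0.292),
                 ("Congruent Inner", -0.42),
                 ("Incongruent", -0.326)]:
    print(f"{label}: p = {pearson_p_value(r, 16):.3f}")
```

With the rounded r values from the text, this calculation gives p values close to those reported; small differences are expected because r is only given to two or three decimal places.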

Experiment 2

In order to rule out the possibility that the shift towards the right was merely an effect of the arrow movement direction used in the localisation task, we designed a second experiment in which we varied only this direction. The participants performed two conditions (both incongruent) that differed only in the starting point and direction of the arrow.

Materials and methods

Participants

Eighteen healthy volunteers (10 males, mean age 33 ± 9 years) participated. The conditions were randomised and counterbalanced across participants. All participants had normal or corrected-to-normal vision and were right-handed (self-reported). They had no current or past neurological impairment and no current pain or history of significant pain disorder. They were also naïve to the aims of the study. All the participants gave written consent prior to their participation in the experiment. The study was performed in accordance with the ethical standards laid down in the 1964 Declaration of Helsinki and was approved by the Human Research Ethics Committee of the University of South Australia.

Procedures

The participants underwent the original DHT (Newport and Gilpin 2011) twice. Note that this illusion differed from the illusion used in Experiment 1 only in that both hands were actually moving. However, we know from pilot data that this difference does not modulate the effects of the arrow direction on the localisation responses. Importantly, since the aim of this experiment was not merely to replicate the results of Experiment 1, the change in the procedure was made in order to maximise the effect of the adaptation procedure on the localisation task. In fact, in Experiment 2, since both hands are involved, a possible asymmetry in the arm movements can be ruled out, such that the participants are able to focus just on the localisation task. During the localisation task, in one condition, the arrow started from the centre of the screen and moved rightwards (as in Experiment 1), while in the other condition, the arrow moved at the same velocity but from the right-hand side of the screen towards the left. The task was otherwise exactly the same as that described above.

Results

We performed a 2 (Arrow Direction: Centre to Right, Right to Centre) by 2 (Time: T0, T12) repeated-measures ANOVA. A main effect of Time [η² = 0.52, F(1,17) = 18.38, p < 0.001] showed that localisations were more accurate (i.e. error scores were less negative) on the last judgement (T12 mean = −9.23 cm, SE = 0.86, 95 % CI −11.05 to −7.40) than on the first (T0 mean = −11.64 cm, SE = 0.46, 95 % CI −12.61 to −10.58). There was no main effect of Arrow Direction [F(1,17) = 3.17, p = 0.093] and no significant interaction between Arrow Direction and Time [F(1,17) = 2.06, p = 0.170]. Thus, in line with Experiment 1, participants became more accurate over time, but the direction of the arrow did not influence the extent of rightward drift (see Fig. 3).
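The effect size reported for Time can be related to its F statistic. The sketch below assumes that the reported η² is a partial eta squared, which the text does not state explicitly, and uses the standard identity η²p = (F × df_effect) / (F × df_effect + df_error). The function name is hypothetical.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Main effect of Time as reported in the text: F(1,17) = 18.38.
eta_time = partial_eta_squared(18.38, 1, 17)
print(f"partial eta squared for Time = {eta_time:.2f}")
```

Under this assumption, the value recovered from F(1,17) = 18.38 rounds to the 0.52 given in the text.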

Discussion

Our results support our prediction that, when the perceived hand position differs from the physical hand position (due to a visual illusion), in the three minutes following visual occlusion of the hand, participants rely less on vision and more on proprioception, such that hand localisation judgements become more accurate (i.e. closer to the physical position of the hand) over time. We also hypothesised that providing participants with a congruent physical and perceived location of the hand would result in more accurate hand localisation judgements than when a visuo-proprioceptive incongruency was introduced. We controlled for the role of vision by accelerating the decay of the visual trace: participants closed their eyes immediately after hand occlusion. When the participants were forced to rely more on proprioception (i.e. the physical position of the hand was different from its perceived position), the switch to proprioception occurred earlier when they closed their eyes before the localisation task than when they kept them open.

In the Congruent conditions, accuracy in the localisation task decreased over time after the visual occlusion of the hand, as evidenced by the increase in error values detected over the three minutes following the hand occlusion. That is, when the visually encoded (perceived) hand position was the same as the proprioceptively encoded (physical) position, the localisation judgements diverged from the physical position of the hand over time according to a directional bias. Also, our hypothesis that a visuo-proprioceptive incongruence (yielded by the illusion) would increase the use of proprioception to localise the hand was confirmed by our finding of an acceleration of the drift towards the real position of the hand in the condition in which the illusion was performed. This result is consistent with the maximum likelihood estimation (MLE) theory of multisensory integration (Ernst and Banks 2002), which holds that the sensory modality that dominates in a given situation is the one with the lowest variance. In the Incongruent condition, the increase in accuracy with time since the last visual confirmation of hand position would suggest that remembered visual information has more variance (due to decay of the visually encoded position) than ongoing proprioceptive information. Even in stationary sitting, there are continual perturbations incurred by breathing, cardiac rhythm and postural sway that are sufficient to activate low-threshold proprioceptive organs (see Proske and Gandevia 2012). This idea seems to be supported by the finding that accelerating the visual trace decay, by closing the eyes, results in better performance in hand localisation only in the Incongruent condition, when visual information is inaccurate. While we did not predict that the effect of closing the eyes would be specific to the Incongruent condition, it is a reasonable prediction.
In fact, we hypothesised that vision would interfere with the correct localisation only when the visual trace is inaccurate. We hypothesised that when this occurs (i.e. in the Incongruent condition), the participants would rely more on proprioception over time, leading to an increase in the accuracy of hand localisation. Thus, an earlier decay of the visual trace could quicken the onset of the switch from vision to proprioception. Our results support this idea—closing the eyes only matters when an inaccurate visual trace is provided and this leads to more accurate localisations compared with keeping the eyes open.
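The reliability-weighted account discussed above can be made concrete with a small numerical sketch. It uses the standard inverse-variance weighting of maximum likelihood cue combination and adds one purely illustrative assumption: that the variance of the remembered visual position grows linearly with time since occlusion. All positions, variances and the growth rate below are hypothetical values chosen for illustration; they are not estimates from our data.

```python
def mle_estimate(x_vis: float, var_vis: float, x_prop: float, var_prop: float):
    """Combine a visual and a proprioceptive position estimate by inverse-variance weighting.

    Returns the combined estimate and the weight given to vision.
    """
    w_vis = (1 / var_vis) / (1 / var_vis + 1 / var_prop)
    estimate = w_vis * x_vis + (1 - w_vis) * x_prop
    return estimate, w_vis


# Hypothetical set-up: the true (proprioceptive) hand position is at 0 cm and
# the last seen position is 10 cm to its left.
SEEN_POSITION_CM = -10.0
TRUE_POSITION_CM = 0.0
PROPRIOCEPTIVE_VARIANCE = 4.0  # assumed constant over time


def visual_variance(seconds_since_occlusion: float) -> float:
    """Assumed linear growth of visual-memory variance (illustrative only)."""
    return 1.0 + 0.05 * seconds_since_occlusion


errors = []
for t in (0, 60, 120, 180):
    estimate, w_vis = mle_estimate(SEEN_POSITION_CM, visual_variance(t),
                                   TRUE_POSITION_CM, PROPRIOCEPTIVE_VARIANCE)
    errors.append(estimate - TRUE_POSITION_CM)
    print(f"t = {t:3d} s  weight on vision = {w_vis:.2f}  error = {errors[-1]:.2f} cm")
```

In this toy example, the weight on vision falls as the visual variance grows, so the combined estimate moves from near the seen position towards the true position. A faster growth rate, as might follow from closing the eyes, would bring this shift forward in time.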

One might argue that the effect we found might be due to a spontaneous return towards the real position. However, once the illusion is in place, the hands are stationary and there would not be any reason for updating their perceived position. In Newport and Gilpin’s study (2011), immediately after the right hand disappeared from view, the participants were required to reach across with their left hand to touch their right hand. All the participants failed to touch their hidden hand, showing that the perceived position of the hands had not yet been updated to their real position. We can argue that, in our experiment, until proven otherwise, the visually encoded position of the hands is maintained. However, our results show that, even though there is no actual or potential motor requirement, the location of the hand is updated on the basis of the available data, in this case proprioceptive input (i.e. visual input is no longer available). One would predict that if there is a biological advantage to being ready for movement even though none is expected, then this constant updating or recalibration would be helpful. Importantly, the shift in weighting given to proprioception is not immediate and complete, but rather occurs gradually over time.

Alternatively, during the adaptation procedure of the Incongruent condition, it is possible that a recalibration of the felt position of the hand with the seen position of the hand occurred, such that the relationship between proprioceptive and visual information was updated, to the detriment of proprioception. A decay of this recalibration between proprioception and visual information may be another explanation for the increased accuracy over time of hand localisation judgements in the Incongruent condition. Previous work using prism adaptation, in which the seen position of the hand is manipulated, suggests that the participants, under certain conditions, might start to use new visuospatial coordinates for their limb (Rossetti et al. 1998). Importantly, when the adaptation is removed, this recalibration spontaneously decays (Newport and Schenk 2012). It may be that our data are a corollary of this spontaneous decay seen in prism adaptation. Again, the fact that the decay occurred more quickly when visual information was removed would support this idea. Our data are in line with both the MLE and the recalibration hypotheses; however, it was not our intent to differentially interrogate those theories.

Early prioritisation of vision

In line with our hypotheses, in all conditions, participants first localised their hidden right hand at a point located towards the last seen location of the hand. This was true both for the Congruent conditions (where the last seen location matched the true location of the hand) and for the Incongruent condition (where the last seen location did not match the true location of the hand). In the Incongruent condition, localisation scores were significantly leftwards (i.e. towards the last seen location) and less accurate than those in the two control conditions, which supports the dominant role of vision in localisation of our hands. Our data confirm and extend the previous findings that relate the amount of visual exposure (in terms of time) to the reliance on proprioception (Holmes and Spence 2005). In fact, Holmes and Spence found that the longer the participants were allowed to look at the (incorrect) position of their right hand, the less they relied on proprioception, tending rather to rely on vision. We found that the opposite also holds: with time, when the decay of the visually encoded position is accelerated (by closing the eyes), the relative weighting of, and reliance on, incoming sensory information switches sooner to proprioception, to the detriment of vision.

The directional bias and the proprioceptively encoded position of the hand

Regardless of the Congruency, a rightward drift was found in all the experimental conditions. A number of studies have shown that a mislocalisation of one’s own arm and hand occurs when vision is occluded (Block 1890; Paillard and Brouchon 1968; Craske and Crawshaw 1975; Desmurget et al. 2000; Smeets et al. 2006; Wann and Ibrahim 1992). It is well established that when healthy participants are asked to locate their own hidden hand in space, there is a directional bias towards the attended side of space (i.e. the right hand is overestimated as being more rightwards, while the left hand as more leftwards) (Crowe et al. 1987; Ghilardi et al. 1995; Haggard et al. 2000; Jones et al. 2010; van Beers et al. 1998). Thus, the significant rightward shift in localisations over time in all conditions is consistent with the drift reported in prior studies. Not only did we observe the same drift (in this case towards the right) in all conditions, but we also found that this drift increased over time. We propose that this directional bias is driven by the fact that the participants were engaged in a task that occurred in that portion of space. Due to the well-established decay of the visually encoded position after hand occlusion over time (Chapman et al. 2000), the influence of this bias, although present since the first localisation, seems to become prevalent, leading to localisations that are increasingly shifted towards the side to which the participants were performing the localisation task (i.e. to the right in our experiment). Thus, over time, the ability to localise one’s own limb in space becomes less accurate due to the reliance on a rapidly fading visually encoded position. However, if the fading visually encoded position was the only reason for less accurate localisations, the localisation judgements would be randomly distributed around the real hand location, to both the right and to the left of the real hand position.
Instead, a specific trend towards the right, beyond the true (or last seen) location, was found. The question addressed here is why, when the participants start to become less accurate in localising their hidden right hand, they systematically localise it increasingly towards the right. Our hypothesis accounts for this peculiar trend, suggesting that while the visual trace decays, a bias towards the space in which the experiment is occurring seems to guide the localisations. We can also exclude the possibility that this directional bias was simply the product of the arrow movement, as clearly shown by the results from Experiment 2.

One might argue that the shift towards the right is simply due to a cumulative error effect (i.e. the successive summation of the error produced by each consecutive response in a task; Bock and Arnold 1993; Dijkerman and de Haan 2007) caused by the repeated measures. However, cumulative error effects have been found in, and related to, motor tasks. For example, in Bock and Arnold’s study (1993), the cumulative errors were directly related to the motor component of the task. Also, Jones et al. (2010), on the basis of work by Dijkerman and de Haan (2007), noted that reaching tasks might lead to kinematic errors that cannot be disentangled from localisation errors. Our protocol did not involve repeated movements, but repeated judgements of an independently moved arrow. Moreover, in the Incongruent condition, our protocol did not show accumulating error, but accumulating accuracy. Furthermore, if the drift reflected an accumulating error relative to the visually encoded location of the hand, then it would be consistent across conditions, which it was not.

Importantly, the drift towards the right side was significantly different between the Congruent and the Incongruent conditions. We interpreted this significant difference as evidence of the contribution of proprioception when there is a visuo-proprioceptive incongruence (i.e. the physical and perceived positions of the hand are different), but not when the visual trace has simply faded (i.e. when the hand is simply hidden from view). In fact, equal accuracy in the localisation task across the three conditions would have suggested reliance primarily on proprioception (i.e. in the Incongruent conditions, no matter where the perceived position was, the participants would correctly localise the position of the hidden hand). We contend that the greater rightwards drift when vision was occluded confirms that an updated proprioceptive input drives the rightward shift over and above any generic directional bias. On the other hand, a similar amount of drift towards the right side across all the conditions would have suggested that the localisations were mainly guided by the directional bias. Our findings clearly confirm that vision is prioritised over proprioception even when the visual input is inaccurate, but over time, in turn, proprioception is prioritised over the directional bias.

Our experiments do not exclude that a proprioceptive component was also present in the two control conditions. However, they do suggest that this component is stronger when vision is unreliable. Importantly, the adaptation procedure used in the Incongruent conditions resulted in participants being unaware of any difference between the control conditions and the illusion, as reported after the experiment and confirmed by the responses to the questionnaire. Crucially, this indicates that the switch from a visually to a proprioceptively encoded location of the hidden hand occurred entirely outside of participants’ awareness. There is clearly a complex interaction between visual, proprioceptive (and task-related) factors in self-localisation of one’s own hand. In particular, we shed light on the relative roles of vision and proprioception over time, concluding that sighted, neurologically healthy participants tend to rely heavily on vision even when the visually encoded position of their hidden hand has decayed and become unreliable, which in turn seems to result in a strong directional bias due to the task itself. In addition, our findings underline the important contribution of proprioception when vision is unreliable. In fact, although in most cases the physical (proprioceptively encoded) position of the hand is ignored (or perhaps just underestimated), there are some circumstances in which proprioception can be utilised effectively in accurately locating one’s own body part. Vision gives us distal information about the external world, allowing us to make predictions without directly contacting a potentially dangerous stimulus (Gregory 1997). It seems then an evolutionarily advantageous choice to adopt a heavy reliance on visual information in a number of situations. However, there are cases in which proprioception becomes not just useful but essential.
In particular, people who are blind or partially blind and who are in a condition similar to the one described here should choose to rely on proprioception (in fact, the occluded hand is inserted into a box-like system, making other strategies, such as echolocation, highly unlikely). Gaining knowledge about the relative weighting of sensory inputs for self-localisation is also of importance for a variety of disorders in which proprioception is known to be damaged. In cerebral palsy, for example, a deficit in the visual-proprioceptive system has been observed (e.g. Wann 1991). In addition, patients whose sense of touch is severely damaged (as in the case of deafferentation) are also unable to locate their body in space and navigate in the environment. In order to successfully execute a movement, these patients need to visually monitor their limbs during the execution (Cole and Paillard 1995). Also, it is well known that chronic pain involves disturbances in the motor system (e.g. Moseley 2004) and body image (e.g. Moseley 2005) that may also disrupt proprioception (see Lotze and Moseley 2007, for review). In addition, recent research has pointed out the relationship between the mechanisms underlying the processing of body location and nociception (Gallace et al. 2011; Sambo et al. 2013; see also Moseley et al. 2012, for a review).

Future directions

In order to provide further support for the idea that the drift towards the right (i.e. towards the real position of the hand) in the Incongruent conditions is indeed due to the heavier weight assigned to proprioception, future experiments will need to investigate a condition where the seen position is to the right of the true hand position (instead of to the left, as in the present experiments). In line with the results of our study, a drift guided by proprioception towards the real position of the hand (i.e. towards the body midline and in the opposite direction to that in the present study) should also be found under this condition. However, according to the extant literature (Crowe et al. 1987; Ghilardi et al. 1995; Haggard et al. 2000; Jones et al. 2010) and as confirmed by the results of the present study, a rightward mislocalisation of the right hand (i.e. the directional bias) should also be found. The directional bias, being in the opposite direction to the drift guided by proprioception, might therefore reduce the effect of this leftward drift. Thus, further experiments will be needed in order to investigate the effect of the direction of the adaptation procedure on self-localisation performance when the directional bias and the proprioception-guided drift act in opposite directions.

Conclusion

In conclusion, when the perceived hand position is different from the physical hand position (due to a visual illusion), we demonstrate a time-dependent shift from relying on visually encoded to proprioceptively encoded information, experimentally reversing the seemingly usual dominance of vision in localising the body. In addition to this, we showed the time course of self-localisation abilities when visual information becomes less reliable and, possibly, when proprioception starts to be more reliable (due to consistent signals coming from the limb to be localised). In fact, over time, the participants switch from a visual-based localisation strategy to a proprioceptive-based one. Last, we show new evidence supporting the claim that the brain updates limb location, even when there is no conscious need to do so (Haggard and Wolpert 2001).

References

Aglioti S, Smania N, Peru A (1999) Frames of reference for mapping tactile stimuli in brain-damaged patients. J Cognit Neurosci 11(1):67–79. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/9950715

Azañón E, Soto-Faraco S (2008) Changing reference frames during the encoding of tactile events. Curr Biol 18(14):1044–1049. doi:10.1016/j.cub.2008.06.045

Block AM (1890) Expériences sur les sensations musculaires. Revue Scientifique 45:294–230

Bock O, Arnold K (1993) Error accumulation and error correction in sequential pointing movements. Exp Brain Res 95:111–117

Chapman CD, Heath MD, Westwood DA, Roy EA (2000) Memory for kinesthetically defined target location: evidence for manual asymmetries. Brain Cognit 46(1–2):62–66. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/11527365

Cole J, Paillard J (1995) Living without touch and peripheral information about body position and movement: studies with deafferented participants. In: Bermudez JL, Marcel A, Eilan N (eds) The body and the self. MIT Press, Cambridge, pp 245–266

Craske B, Crawshaw M (1975) Shifts in kinesthesis through time and after active and passive movement. Percept Mot Skills 40(3):755–61. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/1178361

Crowe A, Keessen W, Kuus W, Van Vliet R, Zegeling A (1987) Proprioceptive accuracy in two dimensions. Percept Mot Skills 64:831–846

Desmurget M, Vindras P, Gréa H, Viviani P, Grafton ST (2000) Proprioception does not quickly drift during visual occlusion. Exp Brain Res 134(3):363–377. doi:10.1007/s002210000473

Dijkerman HC, de Haan EHF (2007) Somatosensory processes subserving perception and action. Behav Brain Sci 30(2):189–201; discussion 201–239. doi:10.1017/S0140525X07001392

Eimer M, Forster B, Van Velzen J (2003) Anterior and posterior attentional control systems use different spatial reference frames: ERP evidence from covert tactile-spatial orienting. Psychophysiology 40(6):924–933. doi:10.1111/1469-8986.00110

Ernst MO, Banks MS (2002) Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415(6870):429–433. doi:10.1038/415429a

Ernst MO, Bülthoff HH (2004) Merging the senses into a robust percept. Trends Cognit Sci 8(4):162–169. doi:10.1016/j.tics.2004.02.002

Gallace A, Torta DME, Moseley GL, Iannetti GD (2011) The analgesic effect of crossing the arms. Pain 152(6):1418–1423. doi:10.1016/j.pain.2011.02.029

Ghilardi MF, Gordon J, Ghez C (1995) Learning a visuomotor transformation in a local area of work space produces directional biases in other areas. J Neurophysiol 73(6):2535–2569. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/7666158

Gregory RL (1997) Eye and brain: the psychology of seeing, 5th edn. Princeton University Press, Princeton

Guariglia C, Piccardi L, Puglisi Allegra MC, Traballesi M (2002) Is autotopoagnosia real? EC says yes. A case study. Neuropsychologia 40(10):1744–9. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/11992662

Haggard P, Wolpert DM (2001) Disorders of Body Scheme. In: Freund H-J, Jeannerod M, Hallett M, Leiguarda R (eds) Higher-order motor disorders: from neuroanatomy and neurobiology to clinical neurology. Oxford University Press, New York, pp 261–271

Haggard P, Newman C, Blundell J, Andrew H (2000) The perceived position of the hand in space. Percept Psychophys 62(2):363–77. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/10723215

Holmes NP, Spence C (2005) Visual bias of unseen hand position with a mirror: spatial and temporal factors. Exp Brain Res 166(3–4):489–497. doi:10.1007/s00221-005-2389-4

Holst E, Mittelstaedt H (1950) Das reafferenzprinzip. Naturwissenschaften 37(20):464–476

Jones SAH, Cressman EK, Henriques DYP (2010) Proprioceptive localization of the left and right hands. Exp Brain Res 204(3):373–383. doi:10.1007/s00221-009-2079-8

Larson MG (2008) Analysis of variance. Circulation 117(1):115–121. doi:10.1161/CIRCULATIONAHA.107.654335

Lotze M, Moseley GL (2007) Role of distorted body image in pain. Curr Rheumatol Rep 9(6):488–96. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/18177603

Moseley GL (2004) Why do people with complex regional pain syndrome take longer to recognize their affected hand? Neurology 62(12):2182–2186

Moseley GL (2005) Distorted body image in complex regional pain syndrome type 1. Neurology 65:773

Moseley GL, Gallace A, Spence C (2012) Bodily illusions in health and disease: physiological and clinical perspectives and the concept of a cortical “body matrix”. Neurosci Biobehav Rev 36(1):34–46. doi:10.1016/j.neubiorev.2011.03.013

Newport R, Gilpin HR (2011) Multisensory disintegration and the disappearing hand trick. Curr Biol 21(19):R804–R805. doi:10.1016/j.cub.2011.08.044

Newport R, Schenk T (2012) Prisms and neglect: what have we learned? Neuropsychologia 50(6):1080–1091. doi:10.1016/j.neuropsychologia.2012.01.023

Newport R, Hindle JV, Jackson SR (2001) Links between vision and somatosensation. Vision can improve the felt position of the unseen hand. Curr Biol 11(12):975–980. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/11448775

Newport R, Preston C, Pearce R, Holton R (2009) Eye rotation does not contribute to shifts in subjective straight ahead: implications for prism adaptation and neglect. Neuropsychologia 47(8–9):2008–2012. doi:10.1016/j.neuropsychologia.2009.02.017

Newport R, Pearce R, Preston C (2010) Fake hands in action: embodiment and control of supernumerary limbs. Exp Brain Res 204(3):385–395. doi:10.1007/s00221-009-2104-y

Paillard J, Brouchon M (1968) Active and passive movements in the calibration of position sense. In: Freedman SJ (ed) The neuropsychology of spatially oriented behavior. Dorsey Press, Illinois, pp 35–56

Pavani F, Spence C, Driver J (2000) Visual capture of touch: out-of-the-body experiences with rubber gloves. Psychol Sci 11(5):353–359. doi:10.1111/1467-9280.00270

Pick A (1922) Störung der Orientierung am eigenen Körper. Psychologische Forschung 1(1):303–318. doi:10.1007/BF00410392

Proske U, Gandevia SC (2012) The proprioceptive senses: their roles in signaling body shape, body position and movement, and muscle force. Physiol Rev 92(4):1651–1697. doi:10.1152/physrev.00048.2011

Rossetti Y, Rode G, Pisella L, Alessandro F, Li L, Boisson D, Perenin M-T (1998) Prism adaptation to a rightward optical deviation rehabilitates left hemispatial neglect. Nature 395:166–169

Sambo CF, Torta DM, Gallace A, Liang M, Moseley GL, Iannetti GD (2013) The temporal order judgement of tactile and nociceptive stimuli is impaired by crossing the hands over the body midline. Pain 154(2):242–247. doi:10.1016/j.pain.2012.10.010

Semenza C, Goodglass H (1985) Localisation of body parts in brain injured subjects. Neuropsychologia 23(2):161–175

Smeets JBJ, van den Dobbelsteen JJ, de Grave DDJ, van Beers RJ, Brenner E (2006) Sensory integration does not lead to sensory calibration. Proc Natl Acad Sci USA 103(49):18781–18786. doi:10.1073/pnas.0607687103

Sperry RW (1950) Neural basis of the spontaneous optokinetic response produced by visual inversion. J Comp Physiol Psychol 43(6):482

Torta DM, Diano M, Costa T, Gallace A, Duca S, Geminiani GC, Cauda F (2013) Crossing the line of pain: FMRI correlates of crossed-hands analgesia. J Pain 14(9):957–965. doi:10.1016/j.jpain.2013.03.009

Van Beers RJ, Sittig AC, Denier van der Gon JJ (1998) The precision of proprioceptive position sense. Exp Brain Res 122(4):367–377. doi:10.1007/s002210050525

Van Beers RJ, Sittig AC, Denier van der Gon JJ (1999a) Localization of a seen finger is based exclusively on proprioception and on vision of the finger. Exp Brain Res 125(1):43–49. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/10100975

Van Beers RJ, Sittig AC, Van Der Gon JJD (1999b) Integration of proprioceptive and visual position-information: an experimentally supported model. J Neurophysiol 81(3):1355–1364

Van Beers RJ, Wolpert DM, Haggard P (2002) When feeling is more important than seeing in sensorimotor adaptation. Curr Biol 12(10):834–837. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/12015120

Wann JP (1991) The integrity of visual-proprioceptive mapping in cerebral palsy. Neuropsychologia 29(11):1095–1106. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/1775227

Wann JP, Ibrahim SF (1992) Does limb proprioception drift? Exp Brain Res 91(1):162–166. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/1301369

Yamamoto S, Kitazawa S (2001) Reversal of subjective temporal order due to arm crossing. Nat Neurosci 4(7):759–765. doi:10.1038/89559

Acknowledgments

We would like to thank Ms. Cat Jones for her help in creating the video of the Adaptation Procedure.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Supplementary material 1 (MP4 9164 kb)

Cite this article

Bellan, V., Gilpin, H.R., Stanton, T.R. et al. Untangling visual and proprioceptive contributions to hand localisation over time. Exp Brain Res 233, 1689–1701 (2015). https://doi.org/10.1007/s00221-015-4242-8

DOI: https://doi.org/10.1007/s00221-015-4242-8