Abstract

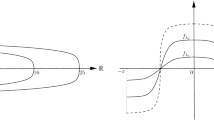

The Muskat problem, in its general setting, concerns the interface evolution between two incompressible fluids of different densities and viscosities in porous media. The interface motion is driven by gravity and capillarity forces, where the latter is due to surface tension. To leading order, both the Muskat problems with and without surface tension effect are scaling invariant in the Sobolev space \(H^{1+\frac{d}{2}}({\mathbb {R}}^d)\), where d is the dimension of the interface. We prove that for any subcritical data satisfying the Rayleigh-Taylor condition, solutions of the Muskat problem with surface tension \({\mathfrak {s}}\) converge to the unique solution of the Muskat problem without surface tension locally in time with the rate \(\sqrt{\mathfrak {s}}\) when \({\mathfrak {s}}\rightarrow 0\). This allows for initial interfaces that have unbounded or even not locally square integrable curvature. If in addition the initial curvature is square integrable, we obtain the convergence with optimal rate \({\mathfrak {s}}\).


1 Introduction

In the studies of fluid flows, interfacial dynamics is broad and mathematically challenging. Some interfacial problems in fluid dynamics that have been rigorously studied include the water wave problem, the compressible free-boundary Euler equations, the Hele-Shaw problem, the Muskat problem and the Stefan problem. The dynamics of the interface between the fluids strongly depends on properties of the fluids and of the media through which they flow. However, a common feature in all the above problems is that the interface is driven by gravity and surface tension. Gravity is incorporated in the momentum equations as an external force. On the other hand, surface tension balances the pressure jump across the interface (Young-Laplace equation):

\[
\llbracket p\rrbracket = {\mathfrak {s}} H,
\]

where \(\llbracket p\rrbracket \) is the pressure jump, H is the mean-curvature of the interface, and \({\mathfrak {s}}>0\) is the surface tension coefficient. Well-posedness in Sobolev spaces always holds when surface tension is taken into account but only holds under the Rayleigh-Taylor stability condition on the initial data when surface tension is neglected. It is a natural problem to justify the models without surface tension as the limit of the corresponding full models as surface tension vanishes. This question was addressed in [4, 5, 8, 9, 29, 36, 37] for the problems listed above. The common theory is the following: if the initial data is stable, i.e. it satisfies the Rayleigh-Taylor stability condition, and is sufficiently smooth, then solutions to the problem with surface tension converge to the unique solution of the problem without surface tension locally in time. The general strategy of proof consists of two points.

(i) To leading order, the surface tension term \({\mathfrak {s}}H\) provides a regularizing effect. For sufficiently smooth solutions, the difference between \({\mathfrak {s}}H\) and its leading contribution can be controlled by the energy of the problem without surface tension. This yields a uniform time of existence \(T_*\) for the problem with surface tension as \({\mathfrak {s}}\rightarrow 0\).

(ii) For sufficiently smooth solutions and for some \(\theta \in [0, 1)\), the weighted mean curvature \({\mathfrak {s}}^\theta H\) is bounded uniformly in \({\mathfrak {s}}\) (in some appropriate Sobolev norm) by the energy of the problem without surface tension. It follows that \({\mathfrak {s}}H\), the difference between the two problems, vanishes as \({\mathfrak {s}}^{1-\theta }\) as \({\mathfrak {s}}\rightarrow 0\), establishing the convergence on the time interval \([0, T_*]\). Note that the optimal rate corresponds to \(\theta =0\).
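
The rate bookkeeping in point (ii) can be written out in one line. The following is a schematic computation and is not taken from the cited works: \(X\) stands for an unspecified Sobolev norm and \(C\) for the energy bound, both introduced here purely for illustration.

```latex
% X: placeholder norm, C: placeholder energy bound (both hypothetical)
\[
\|\mathfrak{s} H\|_{X}
  = \mathfrak{s}^{1-\theta}\,\|\mathfrak{s}^{\theta} H\|_{X}
  \le C\,\mathfrak{s}^{1-\theta},
\qquad \theta\in[0,1).
\]
% theta = 1/2 yields the rate sqrt(s); theta = 0 yields the optimal rate s.
```

In particular, \(\theta =\frac{1}{2}\) corresponds to the rate \(\sqrt{\mathfrak {s}}\), and \(\theta =0\) to the optimal rate \({\mathfrak {s}}\).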

Therefore, the vanishing surface tension limit becomes subtle if the initial data is sufficiently rough so that it can accommodate curvature singularities. As a matter of fact, in the aforementioned works, the initial curvature is at least bounded. In this paper, we prove that for the Muskat problem, the zero surface tension limit can be established for rough initial interfaces whose curvatures are not bounded or even not locally \(L^2\). Regarding quantitative properties of the zero surface tension limit, the convergence rates in the aforementioned works are either unspecified or suboptimal. In this paper, we obtain the optimal convergence rate for the Muskat problem. The next subsections are devoted to a description of the Muskat problem and the statement of our main result.

1.1 The Muskat problem

The Muskat problem [43] concerns the interface evolution between two fluids of densities \(\rho ^\pm \) and viscosities \(\mu ^\pm \) governed by Darcy’s law for flows through porous media. Specifically, the fluids occupy two domains \(\Omega ^\pm = \Omega ^\pm _t \subset {\mathbb {R}}^{d+1}\) separated by an interface \(\Sigma = \Sigma _t\), with \(\Omega ^+\) confined below a rigid boundary \(\Gamma ^+\), and \(\Omega ^-\) likewise above \(\Gamma ^-\). We consider the case when the surfaces \(\Gamma ^\pm \) and \(\Sigma \) are given by the graphs of functions, that is, we designate \(b^\pm : {\mathbb {R}}^{d}_x \rightarrow {\mathbb {R}}\) and \(\eta : {\mathbb {R}}_t\times {\mathbb {R}}^{d}_x \rightarrow {\mathbb {R}}\) for which

We also consider the case where one or both of \(\Gamma ^\pm = \emptyset \). In each domain \(\Omega ^\pm \), the fluid velocity \(u^\pm \) and pressure \(p^\pm \) obey Darcy’s law:

\[
\mu ^\pm u^\pm + \nabla _{x, y} p^\pm = -\rho ^\pm g\, \mathbf {e}_{d+1}, \qquad \mathrm {div}_{x, y}\, u^\pm = 0 \quad \text {in } \Omega ^\pm ,
\]

where g denotes the gravitational acceleration, and \(\mathbf {e}_{d+1}\) is the upward unit vector in the vertical direction. For any two objects \(A^+\) and \(A^-\) associated with the domains \(\Omega ^+\) and \(\Omega ^-\) respectively, we denote the jump

\[
\llbracket A\rrbracket = A^- - A^+
\]

whenever this difference is well-defined. In particular, set

At the interface, there are three boundary conditions. First, the normal component of the fluid velocity is continuous across the interface

\[
u^+\cdot n = u^-\cdot n \quad \text {on } \Sigma ,
\]

where we fix n to be the upward normal of the interface, specifically \(n =\langle \nabla \eta \rangle ^{-1} (-\nabla \eta , 1)\) with

\[
\langle \nabla \eta \rangle = \sqrt{1+|\nabla \eta |^2}.
\]

Second, the interface is transported by the normal fluid velocity, leading to the kinematic boundary condition

\[
\partial _t\eta = \langle \nabla \eta \rangle \, u^-\cdot n\vert _{\Sigma }.
\]

Third, according to the Young-Laplace equation, the pressure jump is proportional to the mean curvature through surface tension:

\[
\llbracket p\rrbracket = {\mathfrak {s}} H(\eta ) \quad \text {on } \Sigma ,
\]

where \({\mathfrak {s}}\ge 0\) is the surface tension coefficient and

is twice the mean curvature of \(\Sigma \).

Finally, there is no transportation of fluid through the rigid boundaries:

\[
u^\pm \cdot \nu ^\pm = 0 \quad \text {on } \Gamma ^\pm ,
\]

where \(\nu ^\pm = \pm \langle \nabla b^\pm \rangle ^{-1}(-\nabla b^\pm , 1)\) is the outward normal of \(\Gamma ^\pm \). If \(\Gamma ^\pm = \emptyset \), this condition is replaced by the decay condition

For the two-phase problem, both \(\rho ^\pm \) and \(\mu ^\pm \) are positive. We will also consider the one-phase problem where the top fluid is treated as a vacuum by setting \(\rho ^+ = \mu ^+ = 0\) and \(\Gamma ^+ = \emptyset \).

In the absence of the boundaries \(\Gamma ^\pm \), both the Muskat problems with and without surface tension to leading order admit \(\dot{H}^{1+\frac{d}{2}}({\mathbb {R}}^d)\) as the scaling invariant Sobolev space in view of the scaling

In either case, the problem is quasilinear. The literature on well-posedness for the Muskat problem is vast. Early results can be found in [6, 7, 17, 23, 31, 49, 51]. For more recent developments, we refer to [22, 24,25,26, 30, 34] for well-posedness, to [12, 20,21,22, 27, 28, 32, 34] for global existence, and to [13, 14] for singularity formation. Directly related to the problem addressed in the current paper is local well-posedness for low regularity large data. Consider first the problem without surface tension. In [18], the authors obtained well-posedness for \(H^2({\mathbb {T}})\) data for the one-phase problem, allowing for unbounded curvature. For the 2D Muskat problem without viscosity jump, i.e. \(\mu ^+=\mu ^-\), [22] proves well-posedness for data in all subcritical Sobolev spaces \(W^{2, 1+}({\mathbb {R}})\). In \(L^2\)-based Sobolev spaces, [39] obtains well-posedness for data in all subcritical spaces \(H^{\frac{3}{2}+}({\mathbb {R}})\). We also refer to [2] for a generalization of this result to homogeneous Sobolev spaces \(\dot{H}^1({\mathbb {R}})\cap \dot{H}^s({\mathbb {R}})\), \(s\in (\frac{3}{2}, 2)\), allowing non-\(L^2\) data. In [45], local well-posedness for the Muskat problem in the general setting as described above was obtained for initial data in all subcritical Sobolev spaces \(H^{1+\frac{d}{2}+}({\mathbb {R}}^d)\), \(d\ge 1\). The case of one fluid with infinite depth was independently obtained by [3]. Regarding the problem with surface tension, [39, 40] consider initial data in \(H^{2+}({\mathbb {R}})\). In the recent work [46], well-posedness for data in all subcritical Sobolev spaces \( H^{1+\frac{d}{2}+}({\mathbb {R}}^d)\), \(d\ge 1\), was established.
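
Returning to the scaling invariance noted at the start of this discussion, the critical index \(1+\frac{d}{2}\) can be checked by a change of variables at a fixed time. The sketch below assumes a spatial rescaling of the form \(\eta _\lambda (x)=\lambda ^{-1}\eta (\lambda x)\); this form, and the omission of the accompanying time rescaling, are assumptions made here for illustration.

```latex
% Assumed spatial rescaling: eta_lambda(x) = lambda^{-1} eta(lambda x), lambda > 0.
\[
\widehat{\eta_\lambda}(\xi) = \lambda^{-1-d}\,\widehat{\eta}(\xi/\lambda),
\]
\[
\|\eta_\lambda\|_{\dot H^{\sigma}}^2
 = \int_{\mathbb{R}^d} |\xi|^{2\sigma}\,\lambda^{-2-2d}\,|\widehat{\eta}(\xi/\lambda)|^2\,d\xi
 = \lambda^{2\sigma-2-d}\,\|\eta\|_{\dot H^{\sigma}}^2 ,
\]
\[
\|\eta_\lambda\|_{\dot H^{\sigma}} = \lambda^{\sigma-1-\frac d2}\,\|\eta\|_{\dot H^{\sigma}} .
\]
% The norm is invariant exactly when sigma = 1 + d/2.
```

Under this assumption the homogeneous norm is unchanged precisely for \(\sigma =1+\frac{d}{2}\), which is why data in \(H^{1+\frac{d}{2}+}\) are called subcritical.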

1.2 Main result

In order to state the Rayleigh-Taylor stability condition solely in terms of the interface, we define the operator

where \(J^\pm (\eta )\) and \(\mathfrak {B}^\pm (\eta )\) are respectively defined by (2.14) and (2.21) below. Our main result is the following.

Theorem 1.1

Consider either the one-phase Muskat problem or the two-phase Muskat problem in the stable regime \(\rho ^->\rho ^+\). The boundaries \(\Gamma ^\pm \) can be empty or graphs of Lipschitz functions \(b^\pm \in \dot{W}^{1, \infty }({\mathbb {R}}^d)\). For any \(d\ge 1\), let \(s > 1+\frac{d}{2}\) be an arbitrary subcritical Sobolev index. Consider an initial datum \(\eta _0\in H^s({\mathbb {R}}^d)\) satisfying

Let \({\mathfrak {s}}_n\) be a sequence of surface tension coefficients converging to 0. Then, there exists \(T_*>0\) depending only on \(\Vert \eta _0\Vert _{H^s}\) and \(({\mathfrak {a}}, h, s, \mu ^\pm , {\mathfrak {g}})\) such that the following holds.

(i) The Muskat problems without surface tension and with surface tension \({\mathfrak {s}}_n\) have a unique solution on \([0, T_*]\), denoted respectively by \(\eta \) and \(\eta _n\), that satisfy

where \({\mathcal {F}}:{\mathbb {R}}^+\times {\mathbb {R}}^+\rightarrow {\mathbb {R}}^+\) is nondecreasing and depends only on \((h, s, \mu ^\pm , {\mathfrak {g}})\).

(ii) As \(n\rightarrow \infty \), \(\eta _n\) converges to \(\eta \) on \([0, T_*]\) with the rate \(\sqrt{{\mathfrak {s}}_n}\):

If in addition \(s\ge 2\), then we have the convergence with optimal rate \({\mathfrak {s}}_n\):

The convergence (1.21) holds for initial data in any subcritical Sobolev spaces \(H^{1+\frac{d}{2}+}({\mathbb {R}}^d)\). In particular, this allows for initial interfaces whose curvatures are unbounded in all dimensions and not locally square integrable in one dimension. The former is because \(H(\eta _0)\in H^{-1+\frac{d}{2}+}({\mathbb {R}}^d)\not \subset L^\infty ({\mathbb {R}}^d)\) and the latter is due to the fact that in one dimension we have \(H(\eta _0)\in H^{-\frac{1}{2}+\varepsilon }({\mathbb {R}})\not \subset L^2_{loc}({\mathbb {R}})\). This appears to be the first result on vanishing surface tension that can accommodate curvature singularities of the initial interface. On the other hand, the convergence (1.22) has optimal rate \({\mathfrak {s}}_n\) and holds under the additional condition that \(s\ge 2\). This is only a condition in one dimension since \(s>1+\frac{d}{2}\ge 2\) for \(d\ge 2\). Note also that \(s \ge 2\) is the minimal regularity to ensure that the initial curvature is square integrable, yet it still allows for unbounded curvature. See the technical Remark 1.3.
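
The regularity count behind these statements can be written out explicitly. The sketch below uses only the fact, quoted above, that the curvature of an \(H^s\) interface lies in \(H^{s-2}\), together with the standard embedding \(H^{\sigma }({\mathbb {R}}^d)\subset L^\infty ({\mathbb {R}}^d)\) for \(\sigma >\frac{d}{2}\); the sample value \(s=\frac{7}{4}\) is chosen here for illustration.

```latex
\[
\eta_0\in H^{s}(\mathbb{R}^d)\ \Longrightarrow\ H(\eta_0)\in H^{s-2}(\mathbb{R}^d),
\]
\[
s-2\ge 0 \iff s\ge 2 \quad (\text{square integrable curvature}),
\qquad
s-2>\tfrac d2 \iff s>2+\tfrac d2 \quad (\text{curvature bounded by Sobolev embedding}).
\]
% Example with d = 1: s = 7/4 is subcritical since 7/4 > 3/2, yet s - 2 = -1/4 < 0,
% so the curvature is only known to lie in H^{-1/4}.
```

For \(d\ge 2\) every subcritical \(s\) already satisfies \(s>2\), so the extra condition \(s\ge 2\) is a genuine restriction only when \(d=1\).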

The proof of Theorem 1.1 exploits the Dirichlet-Neumann reformulation [45, 46] for the Muskat problem in a general setting. See also [3] for the one-fluid case. Part (i) of Theorem 1.1 is a uniform local well-posedness with respect to surface tension. The key tools in proving this are the paralinearization results for the Dirichlet-Neumann operator taken from [1, 45]. The convergences (1.21) and (1.22) rely on contraction estimates for the Dirichlet-Neumann operator proved in [45] for a large Sobolev regularity range of the Dirichlet data. Together with [45] and [46], Theorem 1.1 provides a rather complete local regularity theory for (large) subcritical data.

Remark 1.2

In [8] and [9], the first results on the zero surface tension limit for Muskat were obtained respectively in 2D and 3D for smooth initial data, i.e. \(\Sigma _0\in H^{s_0}\) for some sufficiently large \(s_0\). The interface is not necessarily a graph but if it is then the convergence estimates therein translate into

which has the same rate as (1.21).

Remark 1.3

The condition \(s\ge 2\) in (1.22) is due to the control of low frequencies in the paralinearization and contraction estimates for the Dirichlet-Neumann operator \(G(\eta )f\) (see (2.8) for its definition). Precisely, the best currently available results (see Sects. 2.4 and 2.5 below) require \(f\in H^\sigma ({\mathbb {R}}^d)\) with \(\sigma \ge \frac{1}{2}\). The proof of the \(L^\infty _t H^{s-2}_x\) convergence in (1.22) appeals to these results with \(\sigma =s-\frac{3}{2}\).

Remark 1.4

It was proved in [45] that the Rayleigh-Taylor condition holds unconditionally in the following configurations:

- the one-phase problem without bottom or with Lipschitz bottoms;

- the two-phase problem with constant viscosity (\(\mu ^+=\mu ^-\)).

When the Rayleigh-Taylor condition is violated, analytic solutions to the problem without surface tension exist [49]. The works [47, 48] and [15, 16] strongly indicate that these solutions are not limits of solutions to the problem with surface tension. We also refer to [35] for the instability of the trivial solution (with surface tension) and to [33] for the stability of bubbles (without surface tension).

Remark 1.5

In Theorem 1.1, the initial data is fixed for all surface tension coefficients \({\mathfrak {s}}_n\). In general, one can consider \(\eta _n\vert _{t=0}=\eta _{n, 0}\) uniformly bounded in \(H^s({\mathbb {R}}^d)\) such that the conditions in (1.15) hold uniformly in n. Then, for any \(s>1+\frac{d}{2}\), we have

On the other hand, if \(s>1+\frac{d}{2}\) and \(s\ge 2\) then

Remark 1.6

By interpolating the convergence estimate (1.21) and the uniform bounds in (1.18), we obtain the vanishing surface tension limit in \(H^{s'}\) for all \(s'\in [s-1, s)\):

Convergence in the highest regularity \(L^\infty _tH^s_x\) is more subtle and can possibly be established using the Bona-Smith type argument [10]. This would imply in particular that \(\eta \) is continuous in time with values in \(H^s_x\), \(\eta \in C_t H^s_x\). In the context of vanishing viscosity limit, this question was addressed in [41], while convergence in lower Sobolev spaces (compared to initial data) was proved in [19]. For gravity water waves, the Bona-Smith type argument was applied in [44] to establish the continuity of the flow map in the highest regularity.
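
The interpolation step in this remark can be sketched as follows. The computation assumes that (1.21) is measured in \(L^\infty _tH^{s-1}_x\), as the range \(s'\in [s-1, s)\) suggests, and writes \(C\) for a constant standing in for the bounds in (1.18) and (1.21); both are assumptions made here for illustration.

```latex
% Interpolation between H^{s-1} and H^{s}; C is a placeholder constant.
\[
\|f\|_{H^{s'}} \le \|f\|_{H^{s-1}}^{\,s-s'}\,\|f\|_{H^{s}}^{\,1-(s-s')},
\qquad s'\in[s-1,s],
\]
\[
\|\eta_n-\eta\|_{H^{s-1}}\le C\sqrt{\mathfrak{s}_n},\quad
\|\eta_n-\eta\|_{H^{s}}\le C
\ \Longrightarrow\
\|\eta_n-\eta\|_{H^{s'}}\le C\,\mathfrak{s}_n^{\frac{s-s'}{2}} .
\]
% The exponent (s - s')/2 is positive for s' < s and vanishes at s' = s.
```

The exponent degenerates as \(s'\rightarrow s\), which is consistent with the endpoint \(s'=s\) requiring a separate argument.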

Remark 1.7

The proof of the local well-posedness in all subcritical Sobolev spaces in [45] uses a parabolic regularization. Theorem 1.1 provides an alternative proof via regularization by vanishing surface tension. We stress that the assertions about \(\eta \) in Theorem 1.1 do not make use of the local well-posedness results in [45].

The rest of this paper is organized as follows. In Sect. 2, we recall the reformulation of the Muskat problem in terms of the Dirichlet-Neumann operator together with results on the Dirichlet-Neumann operator established in [1, 45]. Section 3 is devoted to the proof of uniform-in-\({\mathfrak {s}}\) a priori estimates. In Sect. 4, we prove contraction estimates for the operators \(J^\pm \) which arise in the reformulation of the two-phase problem. The proof of Theorem 1.1 is given in Sect. 5. Finally, in “Appendix A”, we recall the symbolic paradifferential calculus and the Gårding inequality for paradifferential operators.

2 Preliminaries

2.1 Big-O notation

If X and Y are Banach spaces and \(T: X\rightarrow Y\) is a bounded linear operator, \(T\in {\mathcal {L}}(X, Y)\), with operator norm bounded by A, we write

We define the space of operators of order \(m \in {\mathbb {R}}\) in the scale of Sobolev spaces \(H^s({\mathbb {R}}^d)\):

We shall write \(T=O_{Op^m}(A)\) when \(T\in Op^m\) and for all \(s\in {\mathbb {R}}\), there exists \(C=C(s)\) such that \(T=O_{H^{s}\rightarrow H^{s-m}}(CA)\).
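
As a simple illustration of this notation, consider the Fourier multiplier \(\langle D\rangle ^m\) with symbol \(\langle \xi \rangle ^m=(1+|\xi |^2)^{\frac{m}{2}}\). The computation below assumes the usual normalization \(\Vert u\Vert _{H^s}=\Vert \langle \xi \rangle ^s\widehat{u}\Vert _{L^2}\), which is not spelled out in this section.

```latex
% Assumed normalization: ||u||_{H^s} = || <xi>^s \hat u ||_{L^2}.
\[
\|\langle D\rangle^{m}u\|_{H^{s-m}}
 = \big\|\langle\xi\rangle^{s-m}\langle\xi\rangle^{m}\,\widehat{u}\,\big\|_{L^2}
 = \|u\|_{H^{s}}
 \qquad\text{for all } s\in\mathbb{R},
\]
\[
\text{hence}\qquad \langle D\rangle^{m} = O_{Op^{m}}(1)\quad\text{with } C(s)=1 .
\]
```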

2.2 Function spaces

In the general setting, to reformulate the dynamics of the Muskat problem solely in terms of the interface, we require some function spaces.

We shall always assume that \(\eta \in W^{1, \infty }({\mathbb {R}}^d)\) and either \(\Gamma ^\pm =\emptyset \) or \(b^\pm \in \dot{W}^{1, \infty }({\mathbb {R}}^d)\) with \({{\,\mathrm{dist}\,}}(\eta , b^\pm )>0\). Recall that the fluid domains \(\Omega ^\pm \) are given in (1.2). Define

For any \(\sigma \in {\mathbb {R}}\), we define the ‘slightly homogeneous’ Sobolev space

When \(b^\pm \in \dot{W}^{1, \infty }({\mathbb {R}}^d)\), we fix an arbitrary number \(a\in (0, 1)\) and define the ‘screened’ fractional Sobolev spaces

According to Proposition 3.2 [45], these spaces are independent of \(\eta \) in \(W^{1, \infty }({\mathbb {R}}^d)\) satisfying \({{\,\mathrm{dist}\,}}(\eta , b^\pm )>h>0\). Thus, we can set

It was proved in [38, 50] that there exist unique continuous trace operators

with norm depending only on \(\Vert \eta \Vert _{\dot{W}^{1, \infty }({\mathbb {R}}^d)}\) and \(\Vert b^\pm \Vert _{\dot{W}^{1, \infty }({\mathbb {R}}^d)}\).

Finally, for \(\sigma > \frac{1}{2}\), we define

and equip it with the norm \(\Vert \cdot \Vert _{\widetilde{H}_\pm ^\sigma }=\Vert \cdot \Vert _{\widetilde{H}^\frac{1}{2}_\pm }+\Vert \cdot \Vert _{H^{1, \sigma }}\).

2.3 Dirichlet–Neumann formulation

Given a function \(f: {\mathbb {R}}^d\rightarrow {\mathbb {R}}\), let \(\phi \) solve the Laplace equation

with the final condition replaced by decay at infinity of \(\phi \) if \(\Gamma ^- = \emptyset \). Then, we define the (rescaled) Dirichlet-Neumann operator \(G^- \equiv G^-(\eta )\) by

The operator \(G^+(\eta )\) for the top fluid domain \(\Omega ^+\) is defined similarly. The solvability of (2.7) is given in the next proposition.

Proposition 2.1

([45] Propositions 3.4 and 3.6). Assume that either \(\Gamma ^-=\emptyset \) or \(b^-\in \dot{W}^{1, \infty }({\mathbb {R}}^d)\). If \(\eta \in W^{1, \infty }({\mathbb {R}}^d)\) and \({{\,\mathrm{dist}\,}}(\eta , \Gamma ^-)>h>0\), then for every \(f\in \widetilde{H}^\frac{1}{2}_-({\mathbb {R}}^d)\) there exists a unique variational solution \(\phi ^-\in \dot{H}^1(\Omega ^-)\) to (2.7). Precisely, \(\phi ^-\) satisfies \(\mathrm {Tr}_{\Omega ^-\rightarrow \Sigma }\phi ^-=f\),

together with the estimate

for some \({\mathcal {F}}: {\mathbb {R}}^+\rightarrow {\mathbb {R}}^+\) depending only on h and \(\Vert \nabla _xb^-\Vert _{L^\infty ({\mathbb {R}}^d)}\).

As the functions \(b^\pm \) are fixed in \(\dot{W}^{1, \infty }({\mathbb {R}}^d)\), we shall omit the dependence on \(\Vert \nabla _xb^-\Vert _{L^\infty ({\mathbb {R}}^d)}\) in various estimates below.

The Muskat problem can be reformulated in terms of \(G^\pm \) as follows.

Proposition 2.2

([46] Proposition 1.1). (i) If \((u, p, \eta )\) solve the one-phase Muskat problem then \(\eta :{\mathbb {R}}^d\rightarrow {\mathbb {R}}\) obeys the equation

Conversely, if \(\eta \) is a solution of (2.11) then the one-phase Muskat problem has a solution which admits \(\eta \) as the free surface.

(ii) If \((u^\pm , p^\pm , \eta )\) is a solution of the two-phase Muskat problem then

where \(f^\pm :=p^\pm \vert _{\Sigma }+\rho ^\pm g \eta \) satisfy

Conversely, if \(\eta \) is a solution of (2.12) where \(f^\pm \) solve (2.13) then the two-phase Muskat problem has a solution which admits \(\eta \) as the free interface.

To make use of the results on the Dirichlet-Neumann operator established in [1, 45, 46], it is convenient to introduce the linear operators

where \(f^\pm :{\mathbb {R}}^d \rightarrow {\mathbb {R}}\) are solutions to the system

Introduce

For the two-phase case, L coincides with \(\frac{\mu ^+ + \mu ^-}{\mu ^+}G^+ J ^+\) in view of (2.13). Thus, writing \(L = (\frac{\mu ^-}{\mu ^+ + \mu ^-} +\frac{\mu ^+}{\mu ^+ + \mu ^-})L\) yields the symmetric formula

This formula holds for the one phase problem (2.11) as well. Indeed, when \(\mu ^+ = 0\) we have \( J ^+ = 0\) and \( J ^- = \text {Id}\), and hence \(L = G^-\). In view of (2.16), Proposition 2.2 implies the following.

Lemma 2.3

The Muskat problem (both one-phase and two-phase) is equivalent to

The next proposition gathers results on the existence and boundedness of the operators \(J ^\pm \), \(G^\pm \), and L in Sobolev spaces.

Proposition 2.4

([1] Theorem 3.12, and [45] Propositions 3.8, 4.8, 4.10 and Remark 4.9). Let \(\mu ^+ \ge 0\) and \(\mu ^- > 0\). Assume \({{\,\mathrm{dist}\,}}(\eta , \Gamma ^\pm )>h>0\).

(i) If \(\eta \in W^{1, \infty }({\mathbb {R}}^d)\) then

where \({\mathcal {F}}\) is nondecreasing and depends only on \((h, \mu ^\pm )\).

(ii) If \(\eta \in H^s({\mathbb {R}}^d)\) with \(s > 1+\frac{d}{2}\) then for any \(\sigma \in [\frac{1}{2}, s]\), we have

where \({\mathcal {F}}\) is nondecreasing and depends only on \((h, \mu ^\pm , s, \sigma )\).

2.4 Paralinearization

Given a function f, define the operators

We note here that \({{\mathfrak {B}}}^\pm f = \partial _y \phi ^\pm |_\Sigma \) and \({{\mathfrak {V}}}^\pm f= \nabla _x \phi ^\pm |_{\Sigma }\), where \(\phi \) solves (2.7). Moreover, as a consequence of (2.20) and product rules, we have

for all \(s>1+\frac{d}{2}\) and \(\sigma \in [\frac{1}{2}, s]\).

The principal symbol of \(G^-(\eta )\) is

while that of \(G^+(\eta )\) is \(-\lambda (x, \xi )\). Note that \(\lambda (x, \xi ) \ge |\xi |\) with equality when \(d=1\). Next we record results on paralinearization of \(G^\pm (\eta )\).
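
The lower bound \(\lambda \ge |\xi |\) can be verified directly. The display for \(\lambda \) is not reproduced here, so the sketch below assumes the standard form of the principal symbol of the Dirichlet-Neumann operator; if the definition in (2.24) differs, the computation should be adapted accordingly.

```latex
% Assumed (standard) form of the principal symbol:
\[
\lambda(x,\xi) = \sqrt{(1+|\nabla\eta(x)|^2)\,|\xi|^2 - (\nabla\eta(x)\cdot\xi)^2}\, ,
\]
% Cauchy-Schwarz: (grad eta . xi)^2 <= |grad eta|^2 |xi|^2, therefore
\[
\lambda(x,\xi)^2 - |\xi|^2 = |\nabla\eta(x)|^2|\xi|^2 - (\nabla\eta(x)\cdot\xi)^2 \ \ge\ 0 .
\]
% For d = 1 the two vectors are scalars, Cauchy-Schwarz is an equality,
% and lambda(x, xi) = |xi|.
```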

Theorem 2.5

([1] Proposition 3.13, [45] Theorem 3.18). Let \(s>1+\frac{d}{2}\) and let \(\delta \in (0,\frac{1}{2}]\) satisfy \(\delta <s-1-\frac{d}{2}\). Assume that \(\eta \in H^s\) and \({{\,\mathrm{dist}\,}}(\eta , \Gamma ^\pm )>h>0\).

(i) For any \(\sigma \in [\frac{1}{2}, s-\delta ]\), there exists a nondecreasing function \({\mathcal {F}}\) depending only on \((h, s,\sigma ,\delta )\) such that

(ii) For any \(\sigma \in [\frac{1}{2}, s]\), there exists a nondecreasing function \({\mathcal {F}}\) depending only on \((h, s,\sigma ,\delta )\) such that for all \(f \in {\tilde{H}}^\sigma _\pm ({\mathbb {R}}^d)\), we have

Proof

(2.25) was proved in Proposition 3.13 in [1] for \(\sigma \in [\frac{1}{2}, s-\frac{1}{2}]\) but its proof allows for \(\sigma \in [\frac{1}{2}, s-\delta ]\). On the other hand, (2.27) was proved in Theorem 3.17 in [45]. Let us prove (2.26) and (2.28). We recall from (2.17) that \(L= G^- J ^-+G^+J^+\). Applying (2.25) we have

By virtue of (2.20) we have \(\Vert J ^\pm \Vert _{{\mathcal {L}}(H^{\sigma },\widetilde{H}^{\sigma }_\pm )}\le {\mathcal {F}}(\Vert \eta \Vert _{H^s})\), and thus

It follows that

where in the second equality we have used the fact that \( [\![J]\!]=\mathrm {Id}\). This completes the proof of (2.26). Finally, (2.28) can be proved similarly upon using the paralinearization (2.27). \(\quad \square \)

Finally, the mean curvature operator \(H(\cdot )\), defined by (1.11), can be paralinearized as follows.

Proposition 2.6

([46] Proposition 3.1). Let \(s > 1 + \frac{d}{2}\) and let \(\delta \in (0,\frac{1}{2}]\) satisfy \(\delta <s-1-\frac{d}{2}\). Then there exists a nondecreasing function \({\mathcal {F}}\) depending only on s such that

where

In addition, if \(\sigma \ge -1\) then

2.5 Contraction estimates

Let \(s>1+\frac{d}{2}\) and consider \(\eta _j\in H^s({\mathbb {R}}^d)\) satisfying \({{\,\mathrm{dist}\,}}(\eta _j, \Gamma ^\pm )>h>0\), \(j=1, 2\). We have the following contraction estimates for \(G^\pm (\eta _1)-G^\pm (\eta _2)\).

Theorem 2.7

([45] Corollary 3.25 and Proposition 3.31). For any \(\sigma \in [\frac{1}{2}, s]\), there exists a nondecreasing function \({\mathcal {F}}:{\mathbb {R}}^+\rightarrow {\mathbb {R}}^+\) depending only on \((h, s, \sigma )\) such that

and

Theorem 2.8

([45] Theorem 3.24). Let \(\delta \in (0, \frac{1}{2}]\) satisfy \(\delta <s-1-\frac{d}{2}\). Let \(\sigma \in [\frac{1}{2}+\delta , s]\). For any \(f \in \widetilde{H}^s_\pm \), there exists a nondecreasing function \({\mathcal {F}}\) depending only on \((h, s, \sigma )\) such that

where \(\lambda _1\) is defined by (2.24) with \(\eta =\eta _1\).

3 Uniform A Priori Estimates

Conclusion (i) in Theorem 1.1 concerns the uniform local well-posedness of the Muskat problem with surface tension. Key to this are the following a priori estimates, which are uniform in the vanishing surface tension limit \({\mathfrak {s}}\rightarrow 0\).

Proposition 3.1

Let \(s > 1+\frac{d}{2}\), \(\mu ^- > 0\), \(\mu ^+ \ge 0\), \({\mathfrak {s}} > 0\), and \(h>0\). Suppose

is a solution to (2.18) with initial data \(\eta _0\in H^s({\mathbb {R}}^d)\) such that

Then, there exists a nondecreasing function \({\mathcal {F}}:{\mathbb {R}}^+\times {\mathbb {R}}^+\rightarrow {\mathbb {R}}^+\) depending only on \((h, s, \mu ^\pm )\) such that

and

where \({\mathcal {F}}_1(m, n)=m^2{\mathcal {F}}(m, n)\).

Proof

Set \( B= [\![{\mathfrak {B}}(\eta ) J (\eta )]\!]\eta \) and \(V= [\![{\mathfrak {V}}(\eta ) J (\eta )]\!]\eta \). We shall write \({\mathcal {Q}}={\mathcal {Q}}(t)={\mathcal {F}}(\Vert \eta (t)\Vert _{H^s}, {\mathfrak {a}}^{-1})\) when \({\mathcal {F}}(\cdot , \cdot )\) is nondecreasing and depends only on \((h, s, \mu ^\pm )\). Note that \({\mathcal {F}}\) may change from line to line. From (2.18) we have

for both the one-phase and two-phase problems. Fix \(\delta \in \big (0, \min (\frac{1}{2}, s-1-\frac{d}{2})\big )\). By virtue of the paralinearization (2.28) (with \(\sigma =s\)) we have

On the other hand, (2.26) (with \(\sigma =s-\frac{1}{2}\)) together with (2.31) gives

Combining this with the linearization (2.29) for \(H(\eta )\) yields

where we have applied Theorem A.2 to have \(T_\lambda =O_{Op^1}({\mathcal {F}}(\Vert \eta \Vert _{H^s}))\).

Then in view of (3.6) we obtain

Note that \(\lambda \in \Gamma ^1_\delta \), \(l\in \Gamma ^2_\delta \) and \((B, V)\in W^{1+\delta , \infty }\subset \Gamma ^0_\delta \), with seminorms bounded by \({\mathcal {F}}(\Vert \eta \Vert _{H^s})\). The symbolic calculus in Theorem A.3 then gives

where \(\mathrm {Re}(T_{\xi \cdot V}) = \frac{1}{2}(T_{\xi \cdot V} + T_{\xi \cdot V}^*)\). It then follows from (3.7) that

We set \(\eta _s = \langle D\rangle ^s \eta \). Appealing to Theorem A.3 again we have

where \([A, B]=AB-BA\) and in the second line we have used the lower bound (3.2) for \((1-B)\) together with the fact that \(\lambda \ge |\xi |\). Note that we have adopted the convention that \({\mathcal {F}}(\Vert \eta \Vert _{H^s})\equiv {\mathcal {F}}(\Vert \eta \Vert _{H^s}, 0)\). This implies

Since \(i\text {Re}(T_{\xi \cdot V})\) is skew-adjoint, by testing (3.9) against \(\eta _s\), we obtain

where \((\cdot , \cdot )_{L^2}\) denotes the \(L^2\) pairing. The term involving \(1+ \Vert \eta _s\Vert _{H^{\frac{1}{2}-\delta }}\) is treated as follows:

In view of (3.2) and the fact that \(\lambda (x, \xi )\ge |\xi |\), we have the lower bounds

Moreover, \(\lambda (1-B)\in \Gamma ^1_\delta \) and \(l\lambda \in \Gamma ^3_\delta \) with seminorms bounded by \({\mathcal {F}}(\Vert \eta \Vert _{H^s})\). Then applying Gårding’s inequality (A.9) gives

where \(\Psi (D)\) denotes the Fourier multiplier with symbol \(\Psi \) defined by (A.3). In addition, we have

Thus, (3.10) amounts to

We use Young’s inequality and interpolation as follows:

and similarly,

Applying these inequalities to (3.14), and then subtracting terms involving \(\Vert \eta _s\Vert _{H^{\frac{1}{2}}}\), we obtain for a larger \({\mathcal {Q}}\) if needed that

A Grönwall argument then leads to

where \({\mathcal {Q}}_T={\mathcal {F}}(\Vert \eta \Vert _{L^\infty ([0,T];H^s)}, {\mathfrak {a}}^{-1})\) with \({\mathcal {F}}\) depending only on \((h, s, \mu ^\pm )\). In particular, we have the \(H^s\) estimate (3.4). As for the dissipation estimate, we have

On the other hand, plugging (3.4) into \({\mathcal {Q}}_T\) gives

Therefore, upon setting \({\mathcal {F}}_1(m, n)=m^2{\mathcal {F}}(m, n)\) we obtain

which finishes the proof of (3.5). \(\quad \square \)

4 Contraction Estimates for \(J^\pm \)

Our goal in this section is to prove contraction estimates for \(J^\pm (\eta )\) at two different surfaces \(\eta _1\) and \(\eta _2\). This is only a question for the two-phase problem since for the one-phase problem we have \(J^-=\mathrm {Id}\) and \(J^+\equiv 0\). Given an object X depending on \(\eta \), we shall denote \(X_j = X|_{\eta = \eta _j}\) and the difference

Proposition 4.1

Let \(s > 1 + \frac{d}{2}\) and consider \(\eta _j\in H^s({\mathbb {R}}^d)\) satisfying \({{\,\mathrm{dist}\,}}(\eta _j, \Gamma ^\pm )> h>0\), \(j=1, 2\). For any \(\sigma \in [\frac{1}{2}, s]\), there exists \({\mathcal {F}}:{\mathbb {R}}^+\rightarrow {\mathbb {R}}^+\) depending only on \((h, s, \sigma , \mu ^\pm )\) such that

where we denoted

We shall prove Proposition 4.1 for the most general case of two fluids and with bottoms, i.e. \(\mu ^+>0\) and \(\Gamma ^\pm \ne \emptyset \). Adaptation to the other cases is straightforward.

4.1 Flattening the domain

There exist \(\eta _*^\pm \in C_b^{s+100}({\mathbb {R}}^d)\) such that

and for some \(C=C(h, s, d)\),

For \(j=1, 2\) we set \(\Omega _*=\Omega ^+_{j, *}\cup \Omega ^-_{j, *}\) where

Note that \(\Omega _*=\{(x,y):x\in {\mathbb {R}}^d, \eta ^-_*(x)\le y\le \eta ^+_*(x)\}\) is independent of \(j\in \{1, 2\}\). For small \(\tau >0\) to be chosen, define \(\varrho _j(x, z): {\mathbb {R}}^d\times [-1, 1]\rightarrow {\mathbb {R}}\) by

Lemma 4.2

There exists \(K>0\) depending only on (s, d) such that if \(\tau K\Vert \eta _j\Vert _{H^s}\le \frac{h}{12}\) then

For \(j =1,2\), the mapping

is a Lipschitz diffeomorphism and respectively maps \({\mathbb {R}}^d\times [0, 1]\) and \({\mathbb {R}}^d\times [-1, 0]\) onto \(\Omega _{*, j}^+\) and \(\Omega _{*, j}^-\). Moreover, there exists \(C=C(h, s, d)\) such that

Proof

We first note that \(\Phi _j(x, 0)=\Sigma _j=\{(x, \eta _j(x)): x\in {\mathbb {R}}^d\}\), \(\Phi _j(x, 1)=\{(x, \eta ^+_*(x)): x\in {\mathbb {R}}^d\}\) and \(\Phi _j(x, -1)=\{(x, \eta ^-_*(x)): x\in {\mathbb {R}}^d\}\). Thus, in order to prove that \(\Phi _j\) is one-to-one and onto, it suffices to prove that \(\partial _z\varrho _j(x, z)\ge c>0\) for a.e. \((x, z)\in {\mathbb {R}}^d\times (-1, 1)\). For \(z\in (-1, 1){\setminus }\{0\}\) we have

For \(z\in [\frac{1}{3}, 1]\), \(1-2z\in [-1, \frac{1}{3}]\) and \(1+2z\in [\frac{5}{3}, 3]\). In addition, by (4.4) we have  . Consequently,

Similarly we obtain (4.11) for \(z\in (-1, -\frac{1}{3})\) and \(z\in [-\frac{1}{3}, \frac{1}{3}]{\setminus }\{0\}\). Next writing

we obtain that

for some constant \(K=K(s, d)\). Note that the condition \(s>1+\frac{d}{2}\) has been used. Choosing \(\tau >0\) such that \(\tau K\Vert \eta _j\Vert _{H^s}\le h/12\) gives \(\partial _z\varrho _j(x, z)\ge h/12\) for a.e. \((x, z)\in {\mathbb {R}}^d\times (-1, 1)\).

Since

there exists \(K'=K'(s, d)\) such that

where we have used (). Then in view of the fact that \(\tau \Vert \eta _j\Vert _{H^s}\le hK^{-1}\), we obtain

whence (4.9) follows. On the other hand, we have \(\Phi ^{-1}_j(x, y)=(x, \kappa _j(x, y))\) where

Then the relation \(\kappa _j(x, \varrho _j(x, z))=z\) yields

Thus, in view of (4.8) and (4.12), we obtain (4.10). \(\quad \square \)

Lemma 4.3

Set

and

Then, \(\Upsilon \) is a Lipschitz diffeomorphism on \(\Omega \) and

Moreover, M satisfies

Proof

According to Lemma 4.2, \(\Upsilon \) is a Lipschitz diffeomorphism on \(\Omega _*\). For \((x, y)\in \Omega {\setminus }\Omega _*\) we have \(\Upsilon =\mathrm {Id}\), and hence \(\Upsilon \) is a Lipschitz diffeomorphism on \(\Omega \) and \(M-\mathrm {Id}=0\). It thus suffices to consider \((x, y)\in \Omega _*\). On \(\Omega _*\) we have \(\Upsilon (x, y)=(x, \varrho _1(x, \kappa _2(x, y)))\), and so

where

Using (4.14) (with \(j=2\)) gives

In view of (4.8) and (4.12) we obtain

Next we compute

Using the above formulas for a and b together with (4.7) and (4.8) we deduce that

This combined with (4.21) leads to (4.18), (4.19) and (4.20). \(\quad \square \)

4.2 Proof of Proposition 4.1

The proof proceeds in three steps.

Step 1. We first recall from (2.15) that \(J^\pm v=f^\pm \) where \(f^\pm \) solve

From the definition of the Dirichlet-Neumann operator we see that \(f^\pm =q^\pm \vert _\Sigma \) where \(q^\pm \) solve the two-phase elliptic problem

To remove the jump of q at \(\Sigma \) we take a function \(\theta :\Omega \rightarrow {\mathbb {R}}\) satisfying

Then, the solution of (4.23) can be taken to be \(q^\pm :=(r\pm \theta )\vert _{\Omega ^\pm }\) where \(r\in \dot{H}^1(\Omega )\) solves

Integration by parts leads to the following variational form of (4.26):

For example, for \(\varsigma (z):{\mathbb {R}}\rightarrow {\mathbb {R}}^+\) a cutoff function that is identically 1 for \(|z|\le \frac{1}{2}\) and vanishes for \(|z|\ge 1\), we set

Then, \(\theta (x, y)=0\) for \(|y-\eta (x)|\ge h\), and hence \(\theta \equiv 0\) near \(\Gamma ^\pm \) in view of the condition \({{\,\mathrm{dist}\,}}(\eta , \Gamma ^\pm )>h\). Moreover, we have

By virtue of the Lax-Milgram theorem, there exists a unique solution \(r\in \dot{H}^1(\Omega )\) to (4.28) which obeys the bound

Consequently,

and hence by the trace operation (2.5),

We note that \(\theta \) defined by (4.27) depends on \(\eta \), and so does r.

Step 2. In this step we prove contraction estimates for \(J^\pm _\delta = J^\pm _1-J^\pm _2\) in \(\widetilde{H}^\frac{1}{2}_\pm \). Recall that \(\Upsilon \) defined by (4.15) is a Lipschitz diffeomorphism on \(\Omega \). Let \(\theta _1\) be defined as in (4.29) with \(\eta =\eta _1\), and let \(\theta _2=\theta _1\circ \Upsilon \). Let us check that \(\theta _2\) obeys (4.24) and (4.25) for \(\eta =\eta _2\). Indeed, using the fact that \(\Upsilon :\Sigma _2\rightarrow \Sigma _1\) we have

On the other hand, since \(\Upsilon \equiv \mathrm {Id}\) near \(\Gamma ^\pm \) and \(\theta _1\equiv 0\) near \(\Gamma ^\pm \), we deduce that \(\theta _2\equiv 0\) near \(\Gamma ^\pm \). Finally, the bound (4.30) for \(\theta _2\) follows from (4.25) for \(\theta _1\) and the Lipschitz bound (4.18) for \(\Upsilon \).

According to Step 1, we have

where \(r_j\in \dot{H}^1(\Omega )\) satisfies

Set \(\widetilde{r}_2 = r_2 \circ \Upsilon ^{-1}\) and recall that \(\theta _1=\theta _2\circ \Upsilon ^{-1}\). Combining (4.31) and (4.17) gives

Since the map \(\phi \mapsto \phi \circ \Upsilon ^{-1}\) is an isomorphism on \(\dot{H}^1 (\Omega )\), with \(M = \nabla \Upsilon \nabla \Upsilon ^t/|\det \nabla \Upsilon |\) we have for all \(\phi \in \dot{H}^1(\Omega )\) that

where gradients of scalar functions are understood as row vectors, and the rows of the Jacobian matrix \(\nabla \Upsilon \) are the gradients of each component of \(\Upsilon \). Taking the difference between (4.35) with \(j=2\) and (4.34) with \(j=1\), we obtain

for all \(\phi \in \dot{H}^1(\Omega )\). Setting \(\phi =\widetilde{r}_2 -r_1\) and using the estimate (4.19) for \(M-\mathrm {Id}\) in \(L^\infty (\Omega )\) we obtain

On the other hand, using (4.20) for \(M-\mathrm {Id}\) in \(L^2(\Omega )\) instead gives

where in the last inequality we have used the fact that

Since \(r_2=\widetilde{r}_2\circ \Upsilon \), \(\theta _2= \theta _1\circ \Upsilon \), and \(\Upsilon :\Omega _2^\pm \rightarrow \Omega _1^\pm ,~\Sigma _2\rightarrow \Sigma _1\) we have

and hence

In view of (4.37) and (4.38), the trace operation (2.5) yields

For the proof of (4.1), we shall only need (4.40).
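The change of variables that produces the matrix \(M\) in Step 2 can be sketched as follows. This is a standard computation written under the row-vector convention stated above; the generic functions \(u\), \(\phi \), \(\widetilde{u}\), \(\widetilde{\phi }\) are notation introduced here for illustration, and the point at which \(M\) is evaluated (namely \(\Upsilon ^{-1}(y)\)) is our reading of the definition rather than a quotation of the original display.

```latex
% Let u = \widetilde{u}\circ\Upsilon and \phi = \widetilde{\phi}\circ\Upsilon,
% with \Upsilon a bi-Lipschitz map of \Omega onto itself.
% Chain rule (gradients are row vectors, rows of \nabla\Upsilon are gradients):
\begin{align*}
\nabla u = (\nabla\widetilde{u}\circ\Upsilon)\,\nabla\Upsilon,
\qquad
\nabla \phi = (\nabla\widetilde{\phi}\circ\Upsilon)\,\nabla\Upsilon .
\end{align*}
% Substitute y = \Upsilon(x), so that dx = |\det\nabla\Upsilon|^{-1}\,dy:
\begin{align*}
\int_\Omega \nabla u\cdot\nabla\phi \,dx
&= \int_\Omega (\nabla\widetilde{u}\circ\Upsilon)\,
   \nabla\Upsilon\,\nabla\Upsilon^{t}\,
   (\nabla\widetilde{\phi}\circ\Upsilon)^{t}\,dx \\
&= \int_\Omega \nabla\widetilde{u}\; M\; (\nabla\widetilde{\phi})^{t}\,dy,
\qquad
M = \frac{\nabla\Upsilon\,\nabla\Upsilon^{t}}{|\det\nabla\Upsilon|}
    \circ\Upsilon^{-1}.
\end{align*}
% When \Upsilon = \mathrm{Id} this reduces to M = \mathrm{Id}.
```

Written this way, the difference of the two variational identities involves only \(M-\mathrm {Id}\), which is why the \(L^\infty \) and \(L^2\) bounds of Lemma 4.3 are exactly what the contraction estimates require.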

Step 3. We have \(J^\pm _jv\equiv J^\pm (\eta _j)v=f^\pm _j\) where

By taking differences we obtain \(f^-_\delta =f^+_\delta \) and

where we recall the notation \(G^\pm _\delta =G^\pm _1-G^\pm _2\). Combining the contraction estimate (2.32) with the continuity (2.20) for \(J^\pm \), we deduce that

We take \(\delta \in (0, \frac{1}{2}]\) satisfying \(\delta <s-1-\frac{d}{2}\). In light of the paralinearization (2.25) for \(G^\pm _1\), we have

It follows from (4.42),(4.43) and (4.44) that if

then

Applying Lemma A.6 we have

But for \(\tau >\frac{1}{2}\),

whence (4.46) implies that

provided that \(\nu \) and \(\sigma \) satisfy (4.45).

We note that (4.40) implies that

and hence (4.1) holds for \(\sigma =\frac{1}{2}\). Now fix \(\sigma \in (\frac{1}{2}, s]\). We use (4.47) to bootstrap the base estimate (4.48) to

Indeed, we can always take \(\delta \) smaller if necessary so that \(\frac{1}{2}+\delta <\sigma \). Plugging (4.48) into (4.47) with \(\nu =\frac{1}{2}\) yields (4.49) with \(\frac{1}{2}+\delta \) in place of \(\sigma \). Continuing for n steps, where n is the greatest integer such that \(\frac{1}{2}+n\delta \le \sigma \), we obtain (4.49) with \(\frac{1}{2}+n\delta \) in place of \(\sigma \). This is justified since \(\nu =\frac{1}{2}+(n-1)\delta \) satisfies (4.45). Thus, after possibly one more step to gain the remaining \(\sigma -(\frac{1}{2}+n\delta )\) derivatives, we obtain (4.49). The proof of (4.1) is complete.
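The bookkeeping of the bootstrap above can be summarized as follows. This is a sketch of the index count only; the symbol \(\sigma _k\) is notation introduced here, and the estimates themselves are those of (4.47), (4.48) and (4.49).

```latex
% Regularity levels reached by the iteration, starting from the base case 1/2:
\begin{align*}
\sigma_k := \tfrac{1}{2} + k\delta, \qquad k = 0, 1, \dots, n,
\qquad
n := \Big\lfloor \frac{\sigma - \tfrac{1}{2}}{\delta} \Big\rfloor .
\end{align*}
% Step k -> k+1 applies (4.47) with \nu = \sigma_k and gains \delta derivatives.
% After n steps:
\begin{align*}
\sigma_n \le \sigma < \sigma_n + \delta,
\end{align*}
% so a final step gaining \sigma - \sigma_n \in [0, \delta) derivatives
% (not needed if \sigma = \sigma_n) reaches the level \sigma.
% The total number of steps is at most
\begin{align*}
n + 1 \le \frac{\sigma - \tfrac{1}{2}}{\delta} + 1 ,
\end{align*}
% which is finite because \sigma \le s and \delta > 0 is fixed.
```

Since the number of steps depends only on \(\sigma \) and \(\delta \), the constants accumulated along the iteration remain of the same form as in (4.49).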

Finally, (4.2) can be proved similarly except that one uses the contraction estimate (2.33) to estimate \(G^\pm _\delta f^\pm _2\) in (4.43).

5 Proof of Theorem 1.1

Let \(s > 1+\frac{d}{2}\), \(\mu ^- > 0\), \(\mu ^+ \ge 0\), and \({\mathfrak {s}} \in (0, 1]\). Consider an initial datum \(\eta _0\in H^s({\mathbb {R}}^d)\) satisfying

Theorem 1.1 will be proved in Propositions 5.1, 5.2 and 5.5 below. Precisely, Proposition 5.1 establishes the uniform local well-posedness for the Muskat problem with surface tension belonging to any bounded set, say \({\mathfrak {s}}\in (0, 1]\). Then, in Propositions 5.2 and 5.5, we prove that in appropriate topologies, \(\eta ^{({\mathfrak {s}})}\) converges to \(\eta \) with the rate \(\sqrt{\mathfrak {s}}\) and \({\mathfrak {s}}\) respectively.

Proposition 5.1

There exists a time \(T_*>0\) depending only on \(\Vert \eta _0\Vert _{H^s}\) and \(({\mathfrak {a}}, h, s, \mu ^\pm , {\mathfrak {g}})\) such that the following holds. For each \({\mathfrak {s}}\in (0, 1]\), there exists a unique solution

to the Muskat problem with surface tension \({\mathfrak {s}}\), \(\eta ^{({\mathfrak {s}})}\vert _{t=0}=\eta _0\) and

for some nondecreasing function \({\mathcal {F}}:{\mathbb {R}}^+\times {\mathbb {R}}^+\rightarrow {\mathbb {R}}^+\) depending only on \((h, s, \mu ^\pm , {\mathfrak {g}})\). Furthermore, for all \({\mathfrak {s}}\in (0, 1]\) we have

Proof

According to Theorems 1.2 and 1.3 in [46], for each initial datum \(\eta _0\in H^s\) satisfying \({{\,\mathrm{dist}\,}}(\eta _0, \Gamma ^\pm )\ge 2h>0\) and for each \({\mathfrak {s}}>0\), there exists \(T_{\mathfrak {s}}>0\) such that the Muskat problem has a unique solution

satisfying \(\inf _{t\in [0, T_{\mathfrak {s}}]}{{\,\mathrm{dist}\,}}(\eta ^{({\mathfrak {s}})}(t),\Gamma ^\pm )> \frac{3}{2}h\). We stress the continuity in time of the \(H^s\) norm of \(\eta ^{({\mathfrak {s}})}\). Now we have in addition that \(\eta _0\) satisfies the Rayleigh-Taylor condition (5.1). Thus, we define

We shall prove that \(T^*_{\mathfrak {s}}>0\) for each \({\mathfrak {s}}\in (0, 1]\) and there exists \(T_*>0\) such that \(T^*_{\mathfrak {s}}\ge T_*\) for all \({\mathfrak {s}}\in (0, 1]\).

Step 1. We claim that there exist \(\theta >0\) depending only on s, and \({\mathcal {F}}_0: {\mathbb {R}}^+\rightarrow {\mathbb {R}}^+\) depending only on \((h, s, \mu ^\pm , {\mathfrak {g}})\) such that

for all \(t\le T_{\mathfrak {s}}\), where

Set

The continuity properties (2.20) and (2.23) of \(J^\pm \) and \(B^\pm \) imply that

On the other hand, denoting \({\mathfrak {B}}^\pm (\eta ^{({\mathfrak {s}})}(t))=\mathfrak {B}^\pm _t\) and \(J^\pm (\eta ^{({\mathfrak {s}})}(t))=J^\pm _t\), we can write

We treat the first two terms since the other terms are either similar or easier. The contraction estimate (2.32) with \(\sigma =s-\frac{1}{2}\) gives

From this and the definition of \(\mathfrak {B}^\pm \) it is easy to prove that

Then recalling the continuity of \(J^\pm \) from \(H^{s-\frac{1}{2}}\rightarrow \widetilde{H}^{s-\frac{1}{2}}_\pm \) we obtain

Next regarding \(\mathfrak {B}^-_0(J^-_t-J^-_0)\eta ^{({\mathfrak {s}})}(t)\) we use the contraction estimate (4.1) with \(\sigma =s-\frac{1}{2}\)

together with the continuity (2.23) to have

Therefore, we arrive at

To bound \(\Vert \eta ^{({\mathfrak {s}})}(t)-\eta _0\Vert _{H^{s-\frac{1}{2}}}\) we first use the mean-value theorem and equation (2.18) to have

for all \({\mathfrak {s}}\in (0, 1]\) and \(t\le T_{\mathfrak {s}}\). Interpolating this with the obvious bound \(\Vert \eta ^{({\mathfrak {s}})}(t)-\eta _0\Vert _{H^s}\le {\mathcal {F}}(M_{\mathfrak {s}}(t))\) gives

for some \(\theta _0\in (0, 1)\) and \({\mathcal {F}}\) depending only on \((h, s, \mu ^\pm , {\mathfrak {g}})\). Then in view of (5.11), this implies

Fixing \(s'\in (\max \{1+\frac{d}{2}, s-\frac{3}{2}\}, s)\) and interpolating this with the \(H^s\) bound (5.10) we obtain

for some \(\theta \in (0, 1)\). Using the embedding \(H^{s'-1}\subset L^\infty ({\mathbb {R}}^d)\) we conclude the proof of (5.8).
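The interpolation steps used in Step 1 rely on the standard Sobolev interpolation inequality, recalled here as a sketch. The indices \(s_0<s'<s_1\) and the function \(u\) are generic placeholders introduced for illustration; the specific exponents in the proof are those of the displays above.

```latex
% For s_0 < s' < s_1 write s' = \theta s_0 + (1-\theta) s_1, i.e.
\begin{align*}
\theta = \frac{s_1 - s'}{s_1 - s_0} \in (0, 1).
\end{align*}
% On the Fourier side,
\begin{align*}
\langle\xi\rangle^{2s'}|\widehat{u}(\xi)|^2
= \big(\langle\xi\rangle^{2s_0}|\widehat{u}(\xi)|^2\big)^{\theta}
  \big(\langle\xi\rangle^{2s_1}|\widehat{u}(\xi)|^2\big)^{1-\theta},
\end{align*}
% and Hölder's inequality with exponents 1/\theta and 1/(1-\theta) gives
\begin{align*}
\|u\|_{H^{s'}} \le \|u\|_{H^{s_0}}^{\theta}\,\|u\|_{H^{s_1}}^{1-\theta}.
\end{align*}
```

Applied to \(u=\eta ^{({\mathfrak {s}})}(t)-\eta _0\), a low norm that is small for short times combined with a high norm that is merely bounded yields an intermediate norm that is small with a power \(\theta \) of the time, which is the mechanism behind the factor \(t^\theta \)-type gain claimed in this step.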

Step 2. We note that (5.8) implies the continuity of

Thus, in view of the definition (5.7) and the initial condition (5.1), we have \(T^*_{\mathfrak {s}}>0\) for all \({\mathfrak {s}}\in (0, 1]\).

By the definition of \(T^*_{\mathfrak {s}}\), conditions (3.1), (3.2) and (3.3) in Proposition 3.1 are satisfied for all \(T\le T^*_{\mathfrak {s}}\). Thus, the estimates (3.4) and (3.5) imply the existence of a strictly increasing \({\mathcal {F}}_2:{\mathbb {R}}^+\times {\mathbb {R}}^+\rightarrow {\mathbb {R}}^+\) depending only on \((h, s, \mu ^\pm , {\mathfrak {g}})\) such that

and

for all \({\mathfrak {s}}\in (0, 1]\) and \(T\le T_{\mathfrak {s}}^*\), where

Set

independent of \({\mathfrak {s}}\). We claim that

If not, then there exist \({\mathfrak {s}}_0\in (0, 1]\) and \(T_3\le \min \{T_2, T^*_{{\mathfrak {s}}_0}\}\) such that \(M_{{\mathfrak {s}}_0}(T_3)> K_0\). Since \(M_{{\mathfrak {s}}_0}(0)=\Vert \eta _0\Vert _{H^s}^2<K_0\), the continuity of \(T\mapsto E_{{\mathfrak {s}}_0}(T)\) then yields the existence of \(T_4\in (0, T_3)\) such that \(M_{{\mathfrak {s}}_0}(T_4)= K_0\). Consequently, at \(T=T_4\), (5.14) gives

where we have used the definition of \(T_2\) in the last inequality. This contradicts the fact that \({\mathcal {F}}_2\) was chosen to be strictly increasing.

Now for all \(T\le \min \{T_2, T^*_{\mathfrak {s}}\}\), we use (5.8) and the fact that \(E_{\mathfrak {s}}(\cdot )\le M_{\mathfrak {s}}(\cdot )\) to have

Choosing \(T_*\le T_2\) sufficiently small so that

we obtain

Clearly, \(T_*\) is independent of \({\mathfrak {s}}\). Moreover, (5.18) and the definition of \(T_{\mathfrak {s}}^*\) show that \(T_*\le T^*_{\mathfrak {s}}\) for all \({\mathfrak {s}}\in (0, 1]\). Finally, since \(T_*\le \min \{T_2, T_{\mathfrak {s}}^*\}\), (5.16) and the definition of \(T^*_{\mathfrak {s}}\) guarantee that the estimates (5.4), (5.5) and (5.6) hold true. \(\quad \square \)

Proposition 5.2

There exists \({\mathcal {F}}:{\mathbb {R}}^+\times {\mathbb {R}}^+\rightarrow {\mathbb {R}}^+\) depending only on \((h, s, \mu ^\pm , {\mathfrak {g}})\) such that

for all \({\mathfrak {s}}_1\) and \({\mathfrak {s}}_2\) in (0, 1].

Proof

Denote \(\eta _j=\eta ^{({\mathfrak {s}}_j)}\), \(j=1, 2\), and \(\eta _\delta =\eta _1-\eta _2\) which exists on \([0, T_*]\). We fix \(\delta \in \big (0, \min (\frac{1}{2}, s-1-\frac{d}{2})\big )\). From (2.18) we have that \(\eta _\delta \) evolves according to

We now paralinearize \(L_2\) and \(L_\delta \). Applying (2.26) with \(\sigma =s-\frac{1}{2}-\delta \) gives

As for \(L_\delta \) we write

Using (2.25) at \(\sigma = s-\frac{1}{2}-\delta \), we have

Recall that \(N_s\) is given by (4.3). However,

and by virtue of Proposition 4.1 (with \(\sigma =s-\frac{1}{2}-\delta )\),

We thus obtain

As for \(G_\delta ^\pm J _1^\pm \), we apply Theorem 2.8 with \(\sigma =s-\frac{1}{2}\) and (2.20) to have

and hence

Combining this with (5.24) yields

From (5.22) and (5.25) we have

Interchanging \(\eta _1\) and \(\eta _2\) gives

But \(L_1\eta _\delta +L_\delta \eta _2=L_2\eta _\delta +L_\delta \eta _1\), thus taking the average of the above identities yields

where

and similarly for \(([\![{\mathfrak {V}} J]\!]\eta )_\alpha \). It then follows from (5.20) and (5.21) that

Next we perform \(H^{s-1}\) energy estimate for (5.26). Introduce \(\eta _{\delta , s-1}=\langle D\rangle ^{s-1}\eta _\delta \). Upon commuting (5.26) with \(\langle D\rangle ^{s-1}\) and applying Theorem A.3 we arrive at

where \({\mathcal {F}}\) depends only on \((h, s, \mu ^\pm )\). Moreover, the uniform estimate () implies that

Testing (5.27) against \(\eta _{\delta , s-1}\) yields

where we have used the fact that \(i\mathrm {Re}\big (T_{([\![{\mathfrak {V}} J]\!]\eta )_\alpha \cdot \xi }\big )\) is skew-adjoint.

From (5.5) we have that \( (\lambda (1-[\![{\mathfrak {B}} J ]\!]\eta ))_\alpha \) is an elliptic symbol in \(\Gamma ^1_\delta \):

where \({\mathcal {F}}\) depends only on \((h, s, \mu ^\pm , {\mathfrak {g}})\). Applying the Gårding inequality (A.9), we have

This combined with the estimate for \({\mathcal {R}}\) in (5.27) and (5.28) implies

where

\({\mathcal {F}}\) depending only on \((h, s, \mu ^\pm , {\mathfrak {g}})\). Using interpolation and Young’s inequality, we have

Thus, for possibly a larger \({\mathcal {F}}\) in \({\mathcal {Q}}_{T_*}\), we obtain

Finally, since \(\eta _\delta \vert _{t=0}=0\) and by (5.4)

an application of Grönwall’s lemma leads to the estimate (5.19). \(\quad \square \)
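For reference, the form of Grönwall's lemma used in this last step can be sketched as follows. The functions \(y\), \(a\), \(b\), \(b_0\) and the parameter \(\varepsilon \) are generic placeholders introduced here; the actual coefficient and forcing are those appearing in the displays leading to (5.19) and are not reproduced.

```latex
% Let y \ge 0 be absolutely continuous on [0, T] and a, b \ge 0 integrable, with
\begin{align*}
y'(t) \le a(t)\,y(t) + b(t) \qquad \text{for a.e. } t \in [0, T].
\end{align*}
% Then, for all t \in [0, T],
\begin{align*}
y(t) \le \exp\!\Big(\int_0^t a\Big)\,y(0)
 + \int_0^t \exp\!\Big(\int_\tau^t a\Big)\,b(\tau)\,d\tau .
\end{align*}
% If y(0) = 0 and b = \varepsilon\, b_0, this gives
\begin{align*}
\sup_{t\in[0,T]} y(t) \le \varepsilon\,\exp\!\Big(\int_0^T a\Big)\int_0^T b_0 .
\end{align*}
% When y is a squared norm, y = \|\cdot\|^2, the norm itself is O(\sqrt{\varepsilon}).
```

This explains schematically why a forcing of size \(\varepsilon \) in a squared-norm energy inequality produces a convergence rate \(\sqrt{\varepsilon }\) in the norm, while a forcing of size \(\varepsilon ^2\) produces the rate \(\varepsilon \).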

Now let \({\mathfrak {s}}_n\rightarrow 0\) and let \(\eta _n=\eta ^{({\mathfrak {s}}_n)}\) denote the solution to the Muskat problem with surface tension \({\mathfrak {s}}_n\) on \([0, T_*]\). The uniform estimates in (5.4) show that, along a subsequence, \(\eta _n\) converges weakly-* to

together with the bounds

The estimate (5.19) implies that \((\eta _n)_n\) is a Cauchy sequence in \(C([0, T_*]; H^{s-1})\cap L^2([0, T_*]; H^{s-\frac{1}{2}})\). Therefore,

in particular, \(\eta \vert _{t=0}=\eta _0\). Moreover, by interpolating between \(L^\infty _t H^s_x\) and \(C_t H^{s-1}\), we deduce that \(\eta \in C([0, T_*]; H^{s'})\) for all \(s'<s\). Since \(\eta _n\rightarrow \eta \) in \(C_t H^{s-1}_x\subset C_tL^\infty _x\), (5.6) gives

Lemma 5.3

\(\eta \) is a solution on \([0, T_*]\) of the Muskat problem without surface tension with initial data \(\eta _0\).

Proof

For each n, we have from (2.18) that

For any compactly supported test function \(\varphi \in C^\infty ((0, T_*)\times {\mathbb {R}}^d)\), we have

Clearly, (5.35) implies that

The continuity (2.20) of L combined with (2.31) and the uniform bound (5.4) yields

Since \(s-\frac{3}{2}>0\), this implies

Next we write

Combining (2.32) and (2.20) we obtain

On the other hand, (2.20) and (4.1) yield

Finally, by (2.20) we have

Putting together the above considerations, we obtain

by virtue of the strong convergence (5.35) and the uniform \(H^s\) bound in (5.4). We have proved that

for all compactly supported smooth test functions \(\varphi \). Therefore, \(\eta \) is a solution on \([0, T_*]\) of the Muskat problem without surface tension. \(\quad \square \)

Lemma 5.4

We have

Proof

Set

Arguing as in the proof of (5.11) we find that

On the other hand, by estimating each term in K we have

Choosing \(s'\in (\max \{\frac{d}{2}, s-2\}, s-1)\), then interpolating the above estimates gives

for some \(\theta \in (0, 1)\). Then, (5.39) follows from this and (5.5). \(\quad \square \)

Now in view of the properties (5.34), (5.36) and (5.39) of \(\eta \), we see that in the proof of (5.19), if we replace \(\eta ^{({\mathfrak {s}}_1)}\) with \(\eta _n\), \(\eta ^{({\mathfrak {s}}_2)}\) with \(\eta \), and \(({\mathfrak {s}}_1, {\mathfrak {s}}_2)\) with \(({\mathfrak {s}}_n, 0)\), then we obtain the convergence estimate

Furthermore, assume that \(\eta _1\) and \(\eta _2\) are two solutions on \([0, T_*]\) of the Muskat problem without surface tension with the same initial data \(\eta _0\) and that both satisfy (5.34), (5.36) and (5.39). Then the proof of (5.19) with \({\mathfrak {s}}_1={\mathfrak {s}}_2=0\) yields that \(\eta _1\equiv \eta _2\) on \([0, T_*]\). This proves the uniqueness of \(\eta \). In other words, we have obtained an alternative proof for the local well-posedness of the Muskat problem without surface tension for any subcritical data satisfying (5.1) and (5.2).

The next proposition improves the rate in (5.40) to the optimal rate.

Proposition 5.5

If in addition \(s\ge 2\), then

where \({\mathcal {F}}:{\mathbb {R}}^+\times {\mathbb {R}}^+\rightarrow {\mathbb {R}}^+\) depends only on \((h, s, \mu ^\pm , {\mathfrak {g}})\).

Proof

We follow the notation in the proof of Proposition 5.2 but set \(\eta _1=\eta _n\) and \(\eta _2=\eta \). Then, \(\eta _\delta =\eta _n-\eta \) satisfies

Applying (2.20) and (2.31) with \(\sigma =s-\frac{3}{2}\ge \frac{1}{2}\) yields

Next we paralinearize \(L_2\) and \(L_\delta \). For \(L_2\) we apply (2.26) with \(\sigma =s-\frac{3}{2}\ge \frac{1}{2}\)

\(L_\delta \) can be written as in (5.23). Using (2.25) with \(\sigma = s-\frac{3}{2}\ge \frac{1}{2}\) together with the fact that \(J _\delta ^--J^+_\delta =0\), we obtain

Applying Proposition 4.1 with \(\sigma =s-\frac{3}{2}\ge \frac{1}{2}\), we obtain

and hence

On the other hand, Theorem 2.8 can be applied with \(\sigma =s-\frac{3}{2}\ge \frac{1}{2}\), implying

We thus obtain

Applying this with \(f=\eta _1\), then combining with (5.44) and symmetrizing we arrive at

Plugging this and (5.43) into (5.20) leads to

Next we set \(\eta _{\delta , s-2}=\langle D\rangle ^{s-2}\eta _\delta \) and commute the first equation in (5.46) with \(\langle D\rangle ^{s-2}\) to obtain after applying Theorem A.3 that

where \({\mathcal {F}}\) depends only on \((h, s, \mu ^\pm )\). An \(L^2\) energy estimate as in (5.29) yields

where \({\mathcal {Q}}_{T_*}\) is given by (5.30). Interpolating as in (5.31) we obtain

From the uniform estimate (5.4) we have

Thus, applying Grönwall’s lemma to (5.49) we arrive at (5.41). \(\quad \square \)

References

Alazard, T., Burq, N., Zuily, C.: On the Cauchy problem for gravity water waves. Invent. Math. 198(1), 71–163 (2014)

Alazard, T., Lazar, O.: Paralinearization of the Muskat equation and application to the Cauchy problem. arXiv:1907.02138 [math.AP] (2019)

Alazard, T., Meunier, N., Smets, D.: Lyapounov functions, Identities and the Cauchy problem for the Hele-Shaw equation. Preprint arXiv:1907.03691

Ambrose, D.M., Masmoudi, N.: The zero surface tension limit of two-dimensional water waves. Commun. Pure Appl. Math. 58(10), 1287–1315 (2005)

Ambrose, D.M., Masmoudi, N.: The zero surface tension limit of three-dimensional water waves. Indiana Univ. Math. J. 58(2), 479–521 (2009)

Ambrose, D.M.: Well-posedness of two-phase Hele-Shaw flow without surface tension. Eur. J. Appl. Math. 15(5), 597–607 (2004)

Ambrose, D.M.: Well-posedness of two-phase Darcy flow in 3D. Q. Appl. Math. 65(1), 189–203 (2007)

Ambrose, D.M.: The zero surface tension limit of two-dimensional interfacial Darcy flow. J. Math. Fluid Mech. 16, 105–143 (2014)

Ambrose, D.M., Liu, S.: The zero surface tension limit of three-dimensional interfacial Darcy flow. J. Differ. Equ. 268(7), 3599–3645 (2020)

Bona, J.L., Smith, R.: The initial-value problem for the Korteweg-de Vries equation. Philos. Trans. R. Soc. Lond. Ser. A 278(1287), 555–601 (1975)

Bony, J.-M.: Calcul symbolique et propagation des singularités pour les équations aux dérivées partielles non linéaires. Ann. Sci. École Norm. Sup. (4) 14(2), 209–246 (1981)

Cameron, S.: Global well-posedness for the two-dimensional Muskat problem with slope less than 1. Anal. PDE 12(4), 997–1022 (2019)

Castro, A., Córdoba, D., Fefferman, C., Gancedo, F.: Breakdown of smoothness for the Muskat problem. Arch. Ration. Mech. Anal. 208(3), 805–909 (2013)

Castro, A., Córdoba, D., Fefferman, C.L., Gancedo, F., López-Fernández, M.: Rayleigh-Taylor breakdown for the Muskat problem with applications to water waves. Ann. Math. 175(2), 909–948 (2012)

Ceniceros, H.D., Hou, T.Y.: The singular perturbation of surface tension in Hele-Shaw flows. J. Fluid Mech. 409, 251–272 (2000)

Ceniceros, H.D., Hou, T.Y.: Numerical study of interfacial problems with small surface tension, in: First International Congress of Chinese Mathematicians, Beijing, 1998, in: AMS/IP Stud. Adv. Math., vol.20, Amer. Math. Soc., Providence, RI, 2001, pp.63–92

Chen, X.: The Hele-Shaw problem and area-preserving curve-shortening motions. Arch. Ration. Mech. Anal. 123(2), 117–151 (1993)

Cheng, C.H., Granero-Belinchón, R., Shkoller, S.: Well-posedness of the Muskat problem with \(H^2\) initial data. Adv. Math. 286, 32–104 (2016)

Constantin, P.: Note on loss of regularity for solutions of the 3-D incompressible Euler and related equations. Commun. Math. Phys. 104(2), 311–326 (1986)

Constantin, P., Córdoba, D., Gancedo, F., Rodriguez-Piazza, L., Strain, R.M.: On the Muskat problem: global in time results in 2D and 3D. Am. J. Math. 138(6), 1455–1494 (2016)

Constantin, P., Córdoba, D., Gancedo, F., Strain, R.M.: On the global existence for the Muskat problem. J. Eur. Math. Soc. 15, 201–227 (2013)

Constantin, P., Gancedo, F., Shvydkoy, R., Vicol, V.: Global regularity for 2D Muskat equations with finite slope. Ann. Inst. H. Poincaré Anal. Non Linéaire 34(4), 1041–1074 (2017)

Constantin, P., Pugh, M.: Global solutions for small data to the Hele-Shaw problem. Nonlinearity 6(3), 393–415 (1993)

Córdoba, A., Córdoba, D., Gancedo, F.: Interface evolution: the Hele-Shaw and Muskat problems. Ann. Math. 173(1), 477–542 (2011)

Córdoba, A., Córdoba, D., Gancedo, F.: Porous media: the Muskat problem in three dimensions. Anal. & PDE 6(2), 447–497 (2013)

Córdoba, D., Gancedo, F.: Contour dynamics of incompressible 3-D fluids in a porous medium with different densities. Commun. Math. Phys. 273(2), 445–471 (2007)

Córdoba, D., Lazar, O.: Global well-posedness for the 2d stable Muskat problem in \(H^{3/2}\), preprint (2018), arXiv:1803.07528 [math.AP]

Deng, F., Lei, Z., Lin, F.: On the two-dimensional Muskat problem with monotone large initial data. Commun. Pure Appl. Math. 70(6), 1115–1145 (2017)

Coutand, D., Hole, J., Shkoller, S.: Well-posedness of the free-boundary compressible 3-D Euler equations with surface tension and the zero surface tension limit. SIAM J. Math. Anal. 45, 3690–3767 (2013)

Escher, J., Matioc, B.V.: On the parabolicity of the Muskat problem: well-posedness, fingering, and stability results. Z. Anal. Anwend. 30(2), 193–218 (2011)

Escher, J., Simonett, G.: Classical solutions for Hele-Shaw models with surface tension. Adv. Differ. Equ. 2, 619–642 (1997)

Gancedo, F., García-Juárez, E., Patel, N., Strain, R.M.: On the Muskat problem with viscosity jump: global in time results. Adv. Math. 345, 552–597 (2019)

Gancedo, F., García-Juárez, E., Patel, N., Strain, R.M.: Global regularity for gravity unstable Muskat bubbles. arXiv:1902.02318v2, (2020)

Granero-Belinchón, R., Shkoller, S.: Well-posedness and decay to equilibrium for the Muskat problem with discontinuous permeability. Trans. Am. Math. Soc. 372(4), 2255–2286 (2019)

Guo, Y., Hallstrom, C., Spirn, D.: Dynamics near unstable, interfacial fluids. Commun. Math. Phys. 270(3), 635–689 (2007)

Hadzic, M., Shkoller, S.: Well-posedness for the classical Stefan problem and the zero surface tension limit. Arch. Rational Mech. Anal. 223, 213–264 (2017)

Shatah, J., Zeng, C.: Local well-posedness for fluid interface problems. Arch. Ration. Mech. Anal. 199(2), 653–705 (2011)

Leoni, G., Tice, I.: Traces for homogeneous Sobolev spaces in infinite strip-like domains. J. Funct. Anal. 277(7), 2288–2380 (2019)

Matioc, B.-V.: The Muskat problem in 2D: equivalence of formulations, well-posedness, and regularity results. Anal. PDE 12(2), 281–332 (2018)

Matioc, B.-V.: Viscous displacement in porous media: the Muskat problem in 2D. Trans. Am. Math. Soc. 370(10), 7511–7556 (2018)

Masmoudi, N.: Remarks about the inviscid limit of the Navier-Stokes system. Commun. Math. Phys. 270(3), 777–788 (2007)

Métivier, G.: Para-differential calculus and applications to the Cauchy problem for nonlinear systems. Centro di Ricerca Matematica Ennio De Giorgi (CRM) Series, vol. 5. Edizioni della Normale, Pisa (2008)

Muskat, M.: Two Fluid systems in porous media. The encroachment of water into an oil sand. Physics 5, 250–264 (1934)

Nguyen, H.Q.: Hadamard well-posedness of the gravity water waves system. J. Hyperbolic Differ. Equ. 13(4), 791–820 (2016)

Nguyen, H.Q., Pausader, B.: A paradifferential approach for well-posedness of the Muskat problem. arXiv:1907.03304 [math.AP], (2019)

Nguyen, H.Q.: On well-posedness of the Muskat problem with surface tension. arXiv:1907.11552 [math.AP], (2019)

Siegel, M., Tanveer, S.: Singular perturbation of smoothly evolving Hele-Shaw solutions. Phys. Rev. Lett. 76, 419–422 (1996)

Siegel, M., Tanveer, S., Dai, W.-S.: Singular effects of surface tension in evolving Hele-Shaw flows. J. Fluid Mech. 323, 201–236 (1996)

Siegel, M., Caflisch, R., Howison, S.: Global existence, singular solutions, and ill-posedness for the Muskat problem. Commun. Pure Appl. Math. 57, 1374–1411 (2004)

Strichartz, R.S.: “Graph paper” trace characterizations of functions of finite energy. J. Anal. Math. 128, 239–260 (2016)

Yi, F.: Local classical solution of Muskat free boundary problem. J. Partial Differ. Equ. 9, 84–96 (1996)

Acknowledgements

The work of HQN was partially supported by NSF Grant DMS-1907776. The authors thank B. Pausader for discussions about the Muskat problem. We would like to thank the reviewer for his/her careful reading and helpful suggestions.

Communicated by A. Ionescu.

Appendix A. Paradifferential Calculus

In this appendix, we recall the symbolic calculus of Bony’s paradifferential calculus. See [11, 42].

Definition A.1

1. (Paradifferential symbols) Given \(\rho \in [0, \infty )\) and \(m\in {\mathbb {R}}\), \(\Gamma _{\rho }^{m}({\mathbb {R}}^d)\) denotes the space of locally bounded functions \(a(x,\xi )\) on \({\mathbb {R}}^d\times ({\mathbb {R}}^d{\setminus } 0)\), which are \(C^\infty \) with respect to \(\xi \) for \(\xi \ne 0\) and such that, for all \(\alpha \in {\mathbb {N}}^d\) and all \(\xi \ne 0\), the function \(x\mapsto \partial _\xi ^\alpha a(x,\xi )\) belongs to \(W^{\rho ,\infty }({\mathbb {R}}^d)\) and there exists a constant \(C_\alpha \) such that,

Let \(a\in \Gamma _{\rho }^{m}({\mathbb {R}}^d)\), we define the semi-norm

2. (Paradifferential operators) Given a symbol a, we define the paradifferential operator \(T_a\) by

where \({\widehat{a}}(\theta ,\xi )=\int e^{-ix\cdot \theta }a(x,\xi )\, dx\) is the Fourier transform of a with respect to the first variable; \(\chi \) and \(\Psi \) are two fixed \(C^\infty \) functions such that:

and \(\chi (\theta ,\eta )\) satisfies, for \(0<\varepsilon _1<\varepsilon _2\) small enough,

and such that

Theorem A.2

For all \(m\in {\mathbb {R}}\), if \(a\in \Gamma ^m_0\) then

Theorem A.3

(Symbolic calculus). Let \(a \in \Gamma _r^{m}, a'\in \Gamma _r^{m'}\) and set \(\delta = \min \{1,r\}\). Then,

(i)

(ii)

Remark A.4

In the definition (A.2) of paradifferential operators, the cut-off \(\Psi \) removes the low frequency part of u. In particular, if \(a\in \Gamma ^m_0\) then

and similarly for other estimates involving paradifferential operators.

Proposition A.5

(Gårding’s inequality). Assume \(a\in \Gamma ^m_r\) with \(m\in {\mathbb {R}}\) and \(r\in (0, 1]\) such that for some \(c>0\)

Then, for all \(\sigma \in {\mathbb {R}}\), there exists \({\mathcal {F}}:{\mathbb {R}}^+\times {\mathbb {R}}^+\rightarrow {\mathbb {R}}^+\) nondecreasing such that

and

provided that both sides are finite. Here, \(\Psi (D)\) is the Fourier multiplier with symbol \(\Psi \) given by (A.3).

Proof

We have

According to Theorem A.3 (ii), \(T_a^*-T_{{\overline{a}}}\) is of order \(m-r\) and

Set \(b=(\mathrm {Re}(a))^\frac{1}{2}\). By virtue of (A.7) we have \(b\in \Gamma ^{\frac{m}{2}}_r\) and \(M^{\frac{m}{2}}_r(b)\le {\mathcal {F}}(M^m_r(a))\). We write

Applying Theorem A.3 (ii) once again we deduce that \(T_b^*-T_b\) is of order \(\frac{m}{2}-r\) and

where we used Remark A.4 in the last inequality. On the other hand, an application of Theorem A.3 (i) yields

Thus, we obtain

By shifting derivative differently in the above inner products, we have the variant

Next we note that \(T_{b^{-1}}T_b-\Psi (D)=T_{b^{-1}}T_b-T_1\) is of order \(-r\) and

Finally, a combination of (A.10) and (A.12) leads to (A.8), and a combination of (A.11) and (A.12) leads to (A.9). \(\quad \square \)

The proof of (A.12) also proves the following lemma.

Lemma A.6

Let \(a\in \Gamma ^m_r\), \(r\in (0, 1]\), be a real symbol satisfying \(a(x, \xi )\ge c|\xi |^m\) for all \((x, \xi )\in {\mathbb {R}}^d\times {\mathbb {R}}^d\). Then for all \(s\in {\mathbb {R}}\) we have

Flynn, P.T., Nguyen, H.Q. The Vanishing Surface Tension Limit of the Muskat Problem. Commun. Math. Phys. 382, 1205–1241 (2021). https://doi.org/10.1007/s00220-021-03980-9
