Abstract

In routine monitoring of foods, reduction of analyzed test portion size generally leads to higher sample throughput, less labor, and lower costs of monitoring, but to meet analytical needs, the test portions still need to accurately represent the original bulk samples. With the intent to determine minimal fit-for-purpose sample size, analyses were conducted for up to 93 incurred and added pesticide residues in 10 common fruits and vegetables processed using different sample comminution equipment. The commodities studied consisted of apple, banana, broccoli, celery, grape, green bean, peach, potato, orange, and squash. A Blixer® was used to chop the bulk samples at room temperature, and test portions of 15, 10, 5, 2, and 1 g were taken for analysis (n = 4 each). Additionally, 40 g subsamples (after freezing) were further comminuted using a cryomill device with liquid nitrogen, and test portions of 5, 2, and 1 g were analyzed (n = 4 each). Both low-pressure gas chromatography-tandem mass spectrometry (LPGC-MS/MS) and ultrahigh-performance liquid chromatography (UHPLC)-MS/MS were used for analysis. An empirical approach was followed to isolate and estimate the measurement uncertainty contribution of each step in the overall method by adding quality control spikes prior to each step. Addition of an internal standard during extraction normalized the sample preparation step to 0% error contribution, and coefficients of variation (CVs) were 6–7% for the analytical steps (LC and GC) and 6–9% for the sample processing techniques. In practice, overall CVs averaged 9–11% among the different analytes, commodities, batches, test portion weights, and analytical and sample processing methods. On average, CVs increased up to 4% and bias 8–12% when using 1–2 g test portions vs. 10–15 g.

[Graphical abstract: Efficient quality control approach to include sample processing]

Introduction

The fundamental purpose of analytical chemistry is to obtain a correct result that meets the need to know the chemical composition of a sample. However, some analytical chemists, in their enthusiasm to demonstrate novelty, generate income, promote ideas, or save resources (among many other reasons), develop analytical methods and techniques that emphasize data quality aspects of analytical chemistry more than practical aspects, or vice versa. Sometimes, ulterior and/or personal motives of analytical chemists intrude as an unstated and uncertain subjective contributor to the work itself, independent of the actual analytical biases in the results.

The desire for high-quality results to meet expectations or standards is a common cause of subjective biases in analytical chemistry (as in other human endeavors). One of the subtler forms of subjective human assumptions and decision-making relates to dogmatic practices and an underlying fit-for-purpose premise about the analysis: does the proposed method meet the analytical need even if it yields "acceptably scientific" results? Furthermore, does the validation process adequately demonstrate that the method meets the real-world need? Even then, does the acceptably validated method provide a potentially useful new alternative in comparison with existing methods?

One of the ways that some analytical chemists tend to knowingly or unknowingly distance their efforts from the fundamental purpose of their work is to ignore the sample processing step when developing analytical methods. In reviewing the literature, we noted some very thorough and detailed papers about method validation, traceability, measurement uncertainty, proficiency testing, and quality assurance and control [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15], but only two of these specifically include sample processing in the discussions [3, 14]. In nearly all studies of analytical chemistry, the underlying premise when assessing method traceability to certified reference materials, conducting inter-laboratory method trials, analyzing proficiency test samples, validating analytical methods, and performing quality control (QC) procedures is that the (typically unstated) sample processing method is assumed to be valid for the analyte/matrix combination(s) being analyzed. Sampling is another factor that is sometimes mentioned [3, 8, 9], but unlike sample processing, sample collection typically resides outside of laboratory control. In the case of real samples (as opposed to proficiency test samples and the like), sample processing is nearly always conducted in the laboratory facility; thus, it makes sense that it should be considered as part of the laboratory method.

In accordance with widely accepted protocols, method validation is conducted by adding the analytes to the sample test portions rather than to the original bulk samples [1,2,3,4,5,6,7,8,9,10,11,12,13,14]. In a highly regarded guidebook on pesticide residue monitoring of foods, validation of sample processing is stated to be important, but no validation protocol or acceptability criteria for results are suggested [16]. In some applications, the sample processing step may have already been demonstrated to yield acceptable results; thus, it could be wasteful of potentially costly reagents to validate it again (albeit other resources of time and labor would remain the same as any other validation). In other cases, sample processing is indeed a trivial matter that should yield valid results, but this is usually an expectation rather than a tested hypothesis. In actuality, many analytical chemists tend to ignore the sample processing step for more mundane reasons, such as the lack of a requirement to do so, added complications in interpreting and comparing results, desire for higher quality results (or avoiding the risk of low quality results), and indolence. Even when the sample comminution step has been previously validated, the systematic and random error contributions from this step are not reflected in reported method validation results, even in studies involving multiple laboratories [15].

Certainly, the assessment and validity of sample processing can be addressed through scientific investigations, which may lead to changes in currently accepted practices. Method validation protocols would simply begin by spiking samples at the comminution step rather than just prior to extraction. In proficiency testing or inter-laboratory trials, known amounts of analyte(s) could be added to individual food items, which the participating labs would then comminute with blank matrix, to determine the accuracy of the overall methods. Choosing representative commodities [16] (or mixtures thereof) could be an additional option to test ruggedness of the approaches.

Although sample processing is nearly always neglected in publications, some reports have described studies on the topic [17,18,19,20,21,22,23,24,25,26,27,28,29]. In 2015, Lehotay and Cook wrote a perspective article about sample processing in pesticide residue analysis of foods [17]. Recently, Han et al. [18] compared different sample processing conditions and devices in the attempt to minimize the analytical test portion size for monitoring of pesticides and environmental contaminants in food mixtures. As also proposed in both articles, we assert that method validation protocols should begin from the initial sample comminution step, which would require analytical chemists to treat the sample processing step in the overall method on equal footing as the subsequent steps of sample preparation, analysis, and data processing. Otherwise, a non-validated sample processing procedure may lead to analyte degradation or a test portion that does not adequately represent the original sample. An incorrect result is actually worse than meaningless if the outcome leads to a wrong decision, and in either case, the resources spent on improper analyses would be wasted.

Beyond method validation, we recommend that the sample comminution step be assessed routinely as part of ongoing QC practices to help ensure quality of results. Not only can QC standards added to samples before each step in the method help demonstrate acceptability in the analysis of particular samples, but they can also help pinpoint which step failed if data quality objectives are not met. This is because propagation of error, via the sum of squares of the measured precision of each QC standard, permits estimation of the error contribution from each step in the overall method [1,2,3,4,5,6,7,8,9,10,11,12,13,14, 18].

In publications about measurement uncertainty [1,2,3,4,5,6,7,8,9,10,11,12,13,14], authors describe concepts that are very familiar and applicable to analytical chemists. However, the Greek symbols and complex equations originating from statisticians can hinder the efforts of practicing analytical chemists to understand and implement the concepts. In a simpler presentation, the following relationship can be used to efficiently estimate measurement uncertainties:

$$\mathrm{CV}_{\mathrm{overall}}^{2} = \mathrm{CV}_{\mathrm{proc}}^{2} + \mathrm{CV}_{\mathrm{prep}}^{2} + \mathrm{CV}_{\mathrm{anal}}^{2}$$

where CV is the coefficient of variation for sample processing (proc), sample preparation (prep), and analysis (anal). We presented a more detailed explanation previously [18], but in brief, we differentiate the measured relative standard deviations (RSDs) of the analyzed QC standards (QCproc, QCprep, and QCanal) from the CVs, which are calculated from the RSDs using the sum-of-squares equations. Because QCproc is carried through the overall method from start to finish, and QCanal directly isolates the final analytical step, CVoverall = RSDproc and CVanal = RSDanal. This leads to the relationship:

$$\mathrm{CV}_{\mathrm{proc}}^{2} = \mathrm{RSD}_{\mathrm{proc}}^{2} - \mathrm{CV}_{\mathrm{prep}}^{2} - \mathrm{RSD}_{\mathrm{anal}}^{2}$$

Furthermore, since

$$\mathrm{RSD}_{\mathrm{prep}}^{2} = \mathrm{CV}_{\mathrm{prep}}^{2} + \mathrm{CV}_{\mathrm{anal}}^{2}$$

then substitution of terms leads to

$$\mathrm{CV}_{\mathrm{proc}}^{2} = \mathrm{RSD}_{\mathrm{proc}}^{2} - \mathrm{RSD}_{\mathrm{prep}}^{2}$$

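The sum-of-squares relations (CVanal = RSDanal, CVprep² = RSDprep² − RSDanal², CVproc² = RSDproc² − RSDprep², and CVoverall = RSDproc) can be applied directly to the measured QC RSDs. The following is a minimal Python sketch of that arithmetic, not the authors' spreadsheet implementation. The function names are hypothetical, clamping a negative difference to zero is our assumption (with few replicates, a later-step RSD can exceed an earlier-step RSD by chance), and the example RSDs are illustrative values, not results from this study.

```python
import math


def diff_in_quadrature(rsd_total, rsd_subset):
    """Isolate the variance added by one step: sqrt(a^2 - b^2).

    Assumption: a negative difference (possible by chance with few
    replicates) is clamped to zero instead of raising an error.
    """
    return math.sqrt(max(rsd_total**2 - rsd_subset**2, 0.0))


def step_cvs(rsd_proc, rsd_prep, rsd_anal):
    """Estimate per-step CVs (%) from RSDs (%) of QC spikes added before each step.

    rsd_proc: QC spiked before sample processing (carried through the whole method)
    rsd_prep: QC spiked before sample preparation
    rsd_anal: QC spiked just before analysis
    """
    cv_anal = rsd_anal                                # CV_anal = RSD_anal
    cv_prep = diff_in_quadrature(rsd_prep, rsd_anal)  # CV_prep^2 = RSD_prep^2 - RSD_anal^2
    cv_proc = diff_in_quadrature(rsd_proc, rsd_prep)  # CV_proc^2 = RSD_proc^2 - RSD_prep^2
    cv_overall = rsd_proc                             # CV_overall = RSD_proc
    return {"proc": cv_proc, "prep": cv_prep, "anal": cv_anal, "overall": cv_overall}


if __name__ == "__main__":
    # Illustrative RSDs (%), chosen only to show the arithmetic.
    cvs = step_cvs(rsd_proc=10.0, rsd_prep=6.0, rsd_anal=6.0)
    print(cvs)  # {'proc': 8.0, 'prep': 0.0, 'anal': 6.0, 'overall': 10.0}
    # The step CVs recombine in quadrature to the overall CV.
    print(math.sqrt(cvs["proc"]**2 + cvs["prep"]**2 + cvs["anal"]**2))  # 10.0
```

Setting `rsd_prep` equal to `rsd_anal` mimics the situation reported in the abstract, where IS normalization brought the sample preparation contribution to 0%.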
The aim of this study was to use this type of ongoing quality control approach to assess and compare the quality of analytical results obtained when using different comminution devices for sample processing of 10 common fruits and vegetables that contain incurred and spiked pesticides. A simple method using a Blixer® at room temperature was to be compared with a more involved two-step procedure to further comminute frozen subsamples using a liquid nitrogen cryomill device. The results would be used to calculate the minimal analytical test portions that still yield accurately representative results to the original bulk sample.

Materials and methods

Reagents and consumables

Pesticide standards were obtained from ChemService (West Chester, PA, USA), Dr. Ehrenstorfer GmbH (Augsburg, Germany), Sigma-Aldrich (St. Louis, MO, USA) or the Environmental Protection Agency's National Pesticide Repository (Fort Meade, MD, USA). Atrazine-d5 and fenthion-d6 originated from C/D/N Isotopes (Pointe-Claire, Quebec, Canada), p-terphenyl-d14 was from AccuStandard (New Haven, CT, USA), and pyridaben-d13 and 13C-phenacetin came from Cambridge Isotope Laboratories (Andover, MA, USA). Formic acid, ammonium formate, ethylglycerol (3-ethoxy-1,2-propanediol), shikimic acid, d-sorbitol, sodium chloride (NaCl), and anhydrous magnesium sulfate (anh. MgSO4) were from Sigma-Aldrich. Deionized water (18.2 MΩ cm) was prepared with a Barnstead/Thermolyne (Dubuque, IA, USA) E-Pure Model D4641, and HPLC-grade acetonitrile (MeCN) and methanol (MeOH) were from Fisher Scientific (Pittsburgh, PA, USA). Mini-cartridge solid-phase extraction (SPE) cleanup was conducted using automated instrument-top sample preparation (ITSP) with 45 mg sorbent mixtures of anh. MgSO4/primary secondary amine (PSA)/C18/CarbonX (20/12/12/1, w/w/w/w) purchased from ITSP Solutions (Hartwell, GA, USA). Filter vials (0.45 μm PVDF) were from Thomson Instrument Company (Oceanside, CA, USA).

Samples and solutions

Ten different common fruits and vegetables were individually processed for analysis on separate days in the following order: (1) celery, (2) grapes, (3) green beans, (4) broccoli, (5) squash, (6) apples, (7) oranges, (8) peaches, (9) potatoes, and (10) bananas. All samples were obtained from local grocery stores in the expectation that they would contain incurred pesticides as reported in the Pesticide Data Program [30]. The 93 targeted pesticide analytes were carefully chosen based on pesticide monitoring reports for the analyzed commodities, including the 21 diverse pesticides that were selected for spiking to minimize overlap with expected incurred pesticides.

Tables S1 and S2 (see the Electronic Supplementary Material, ESM) list the incurred, spiked, QC, and IS analytes in the study. QCanal consisted of p-terphenyl-d14 for analysis by low-pressure gas chromatography-tandem mass spectrometry (LPGC-MS/MS) [31,32,33] and 13C-phenacetin for ultrahigh-performance liquid chromatography (UHPLC)-MS/MS. To isolate the ITSP cleanup step in sample preparation prior to GC analysis, QCITSP consisted of carbofenothion, piperonyl butoxide, and procymidone. For QuEChERS extraction, QCextr (also serving as the IS mix) consisted of atrazine-d5, fenthion-d6, and pyridaben-d13. A mixture of diazinon, pyriproxyfen, and tetraconazole constituted QCcryo, which was added to subsamples in the cryomill vessels just before cryomilling. Similarly, QCBlix (and QCBlix + cryo) included atrazine, azinphos-methyl, chlorothalonil, o,p′-DDD, dichlorvos, fenthion, hexachlorobenzene, lindane, oxyfluorfen, parathion-methyl, pendimethalin, propargite, spinosad (spinosyns A + D), and vinclozolin.

All solutions were prepared in MeCN to yield 100 ng/g equivalent sample spiking concentrations (except half as concentrated for o,p′-DDD and hexachlorobenzene). For GC, p-terphenyl-d14 at 0.88 ng/μL was included in the analyte protectant solution, which consisted of 25 μg/μL ethylglycerol, 2.5 μg/μL gulonolactone, 2.5 μg/μL d-sorbitol, and 1.25 μg/μL shikimic acid in 2/1 MeCN/water containing 1.1% formic acid.

Sample processing

A Robot Coupe (Ridgeland, MS, USA) Blixer® 2 and a Spex (Metuchen, NJ, USA) 6870D Freezer/Mill® cryomill were evaluated in the study. Figure 1 outlines the sequence of steps in the experimental design, which was followed for each commodity individually. The first step was to cut the samples into manageable chunks using a knife (none of the fruits or vegetables were washed or peeled). The pieces were placed into a tared 1-L beaker atop a top-loading balance to yield 520 ± 0.5 g of bulk sample, which was transferred into a 2-L stainless steel Blixer® container. The sample was chopped for 1 min, then 20 ± 0.1 g was removed for preparation of matrix-matched (MM) calibration standards. The remaining 500 g sample was spiked with 900 μL of 55.6 ng/μL QCBlix solution before processing for another 1 min at room temperature. The container contents were transferred to a glass jar, which was sealed and placed in a −20 °C freezer for at least an hour.
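As a quick check on the spiking arithmetic, the equivalent level in the sample is spike volume × solution concentration ÷ sample mass: 900 μL × 55.6 ng/μL ÷ 500 g ≈ 100 ng/g, consistent with the 100 ng/g equivalent spiking level stated for the solutions. The minimal Python sketch below encodes this calculation; the function names are hypothetical helpers, not part of any software used in the study.

```python
def spike_level_ng_per_g(volume_uL, conc_ng_per_uL, sample_g):
    """Equivalent analyte level in the sample (ng/g) after adding a spike."""
    return volume_uL * conc_ng_per_uL / sample_g


def spike_volume_uL(target_ng_per_g, conc_ng_per_uL, sample_g):
    """Spike volume (uL) needed to reach a target level in a given sample mass."""
    return target_ng_per_g * sample_g / conc_ng_per_uL


if __name__ == "__main__":
    # QCBlix spike from the text: 900 uL of 55.6 ng/uL into the remaining 500 g.
    print(round(spike_level_ng_per_g(900, 55.6, 500), 2))  # 100.08
    # Volume of the same solution that would give exactly 100 ng/g.
    print(round(spike_volume_uL(100, 55.6, 500), 1))  # 899.3
```

The same helper applies to the components prepared at half concentration (o,p′-DDD and hexachlorobenzene), which would correspond to about 50 ng/g.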

The samples were partially thawed to ease transfer of subsamples to the cryomill containers and centrifuge tubes for sample preparation and analysis. For cryomilling, duplicate 20 ± 0.05 g portions were weighed into tared polycarbonate cylinder containers, each fitted with a stainless steel cap at the bottom. The QCcryo solution (50 μL) was added to both vessels to yield 100 ng/g each of diazinon, pyriproxyfen, and tetraconazole. The cryomill's metal rod was enclosed with each sample before the other stainless steel caps were fitted to the tops of the cylinders. The cryomilling method, conducted in a bath of liquid nitrogen, entailed 3 cycles of 1 min each at 10 beats/s of the impactor. Then, the duplicate cryomilled samples were combined and stirred by spatula in a glass jar for subsequent analysis of different test portion amounts.

Sample preparation

Sample preparation of each commodity was done using QuEChERS extraction and automated ITSP cleanup as before [32]. Sample test portions from the different sample processing approaches were weighed (four replicates each) into polypropylene centrifuge tubes of appropriate sizes: 50 mL tubes for 15, 10, and 5 g subsamples and 15 mL tubes for 2 and 1 g subsamples (see Fig. 1). Appropriate volumes of QCextr solution were added to yield 100 ng/g of the QCextr (and IS) analytes.

For extraction, 1 mL MeCN per gram of sample was added, followed by 10 min of shaking at room temperature using a Glas-Col (Terre Haute, IN, USA) platform pulsed-vortex shaker at the 80% pulsation setting. Then, 0.5 g of 4/1 (w/w) anh. MgSO4/NaCl per gram of sample was added to each tube, followed by another 3 min of shaking. The tubes were then centrifuged for 3 min at 4150 rpm (3711 rcf) using a Kendro (Osterode, Germany) Sorvall Legend RT centrifuge.
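Because the extraction uses fixed per-gram ratios (1 mL MeCN and 0.5 g of 4/1 (w/w) anh. MgSO4/NaCl per gram of sample), reagent amounts scale linearly with test portion size. The Python sketch below tabulates them for the five test portion sizes. It also back-calculates the effective rotor radius implied by the stated 4150 rpm / 3711 rcf pair using the standard relation RCF = 1.118 × 10⁻⁵ × r(cm) × rpm². The helper names are hypothetical, and the radius is an inference from the stated numbers, not a value reported in the study.

```python
RCF_CONSTANT = 1.118e-5  # standard rpm-to-rcf conversion factor, radius in cm


def quechers_amounts(sample_g):
    """Reagent amounts for one test portion, using the per-gram ratios in the text."""
    salts_g = 0.5 * sample_g            # 0.5 g salt mixture per g sample
    return {
        "MeCN_mL": 1.0 * sample_g,      # 1 mL MeCN per g sample
        "MgSO4_g": salts_g * 4 / 5,     # 4/1 (w/w) MgSO4/NaCl: 4/5 is MgSO4
        "NaCl_g": salts_g * 1 / 5,      # and 1/5 is NaCl
    }


def implied_radius_cm(rpm, rcf):
    """Rotor radius (cm) implied by an rpm/rcf pair: r = RCF / (1.118e-5 * rpm^2)."""
    return rcf / (RCF_CONSTANT * rpm**2)


if __name__ == "__main__":
    for grams in (15, 10, 5, 2, 1):
        print(grams, quechers_amounts(grams))
    print(round(implied_radius_cm(4150, 3711), 1))  # 19.3
```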

For ITSP, 0.75 mL aliquots of each QuEChERS extract were pipetted into autosampler vials, and QCITSP and 13C-phenacetin (QCanal for LC) were added at 100 ng/g equivalent concentrations prior to the automated mini-column SPE cleanup step. In ITSP, 300 μL of each extract passed through the mini-cartridge at 2 μL/s, yielding ≈220 μL, to which 25 μL of QCanal (p-terphenyl-d14) solution, also containing the analyte protectants, was added. An additional 25 μL of MeCN was then added as make-up volume to match the volume of standard solution added to the MM calibration standards.

For LC, 0.4 mL of each extract (the portion remaining in the autosampler vials that did not undergo ITSP cleanup) plus 42.3 μL of the appropriate calibration standard solution or MeCN make-up volume were transferred to the shell portions of 0.45 μm PVDF filter vials, which were filtered by slowly compressing the plungers into the shells using a Thomson Instrument Co. multi-vial press. Reagent-only (RO) calibration standards were prepared by the same procedures, with equal volumes of MeCN substituted for the extracts. For both GC and LC, the RO and MM calibration standards were prepared at 0, 2, 8, 32, 128, and 512 ng/g equivalents (half as concentrated for DDE/DDD/DDTs and hexachlorobenzene).

Analyses

Final extracts were analyzed in parallel by LPGC-MS/MS (after ITSP cleanup) and UHPLC-MS/MS (after filtration). For the former, we used an Agilent (Little Falls, DE, USA) 7890A/7010A gas chromatograph/triple quadrupole tandem mass spectrometer controlled by the MassHunter B07 software. Table S1 (see the ESM) lists the retention times (tR) and MS/MS conditions for analysis of the 73 analytes and QC standards. Chromatography entailed use of a 15 m × 0.53 mm i.d. × 1 μm film thickness Restek (Bellefonte, PA, USA) Rtx-5MS analytical column connected via an Agilent Ultimate union to a 5 m × 0.18 mm i.d. uncoated restrictor/guard Hydroguard column from Restek. Injection volume was 1 μL with 9:1 split injection into an Agilent Multi-Mode Inlet at 250 °C containing a Restek Sky single taper liner with glass wool. The column oven was initially 75 °C, then ramped after 0.5 min to 320 °C at 50 °C/min, where it was held until 10 min. The helium carrier gas flow rate was 2 mL/min using electronic pressure control set for virtual column dimensions of 5.5 m × 0.18 mm i.d. The transfer line was kept at 280 °C, the ion source at 320 °C, and the quadrupoles at 150 °C. Electron ionization at −70 eV was employed with 100 μA filament current.

For UHPLC-MS/MS, we used a Waters (Milford, MA, USA) Acquity UPLC-TQD. The analytical column maintained at 40 °C was a Waters BEH C18 of 100 mm length, 2.1 mm i.d., and 1.7 μm particles, protected by a 5-mm pre-column of the same i.d. and type of particles. Mobile phases A and B consisted of 95/2.5/2.5 (v/v/v) water/MeCN/MeOH and 1/1 (v/v) MeCN/MeOH, respectively, both also containing 0.1% formic acid and 20 mM ammonium formate. Flow rate was 0.45 mL/min, and the gradient was 95% A for 0.25 min, linearly ramped to 99.8% B for 7.5 min, and held until 8.75 min. Injection volume was 2 μL. Table S2 (see the ESM) provides the tR and MS/MS conditions for the 49 analytes and QC standards using electrospray positive ionization with 2 kV capillary and 3 V extractor voltages, 120 °C source and 450 °C desolvation temperatures, and 1000 L/h cone and 50 L/h desolvation gas flow rates.

For microscopy, a Nikon Instruments (Tokyo, Japan) Eclipse E800 was used at 40× magnification. Procedurally, 0.25 g of each comminuted sample from the different sample processing devices was mixed with 1.25 mL water in a vial and vortexed briefly. Immediately, a drop was placed on a glass microscope slide for viewing. Selected images are provided in the ESM that show typical particle shape and size differences among the commodities and comminution methods. No significant differences in analyte results were observed due to the comminution technique in this application, but we present the images for the sake of other possible applications [20].

Results and discussion

Study design and execution

This study was designed to assess analytical performance factors for different test portion sizes using two different sample processing approaches (Blix and Blix + cryo) and two analytical methods (LC and GC) for a wide range of incurred and spiked pesticides in 10 fruit and vegetable commodities. Although the study consisted of many variables (sample processing, test portion, cleanup, analysis, matrix, analyte, concentration, incurred, spiked, intra-day, and inter-day), we controlled as many of these factors as possible by adding the QC standards. As shown in Fig. 1, the experimental protocol was straightforward, and the sample processing, sample preparation, and analytical steps, including chromatographic MS/MS peak integrations [33], were conducted quickly over the course of 10 mostly consecutive days. However, compiling and processing so much data afterwards became a demanding and time-consuming task.

Previously [18], we chose to comminute several incurred matrices together in the comparison of LPGC-MS/MS results from different sample processing tools and techniques, including Blixing with dry ice. Even though use of dry ice during the comminution step was shown to yield somewhat better results for certain less stable and more volatile pesticides [18, 26], we purposefully conducted sample processing at room temperature in this study because very few pesticide monitoring labs use cryogenic sample processing, which takes too long, among other inconveniences [17, 18]. Previous findings showed that, depending on test portion size, acceptable quality of results was achieved for nearly all types of residues under ambient conditions [17, 18, 22, 25,26,27,28,29]. Cryomilling was still conducted in this study to assess a broader range of pesticides and matrices than before. Another lesson learned from our previous study was that analytical test portions < 1 g require greater precision in handling the subsamples, reagents, and extract volumes than we could reasonably manage without specialized 96-well plate techniques [21, 23]. Thus, we only tested subsample portions ≥ 1 g in this study.

Furthermore, our previous study [18] entailed the analysis of only a single batch of extracts containing the same analytes at similar concentrations in all extracts. Data processing in that situation was relatively easy to manage, and the results could be more readily compared to estimate the error contributed by each step in the study protocol. In contrast, this study was much more complex: it involved 10 batches of individual commodities, each analyzed by two methods and containing different incurred pesticides at different levels. In the previous study [18], by minimizing other sources of error, we were able to carefully isolate the effects of the different sample processing methods. However, that approach did not fully mimic real-world operations because it mixed matrices, eliminated multi-day and multi-method variations, and limited the scope of analytes. In this study, we evaluated the two sample processing methods more broadly by fully incorporating the real-world variations among commodities, analytes, concentrations, and analytical methods, as well as day-to-day sources of random, systematic, and spurious errors.

Data processing

Another important factor among all the study variables was how to treat the data, which is the subject of numerous suggested options [1,2,3,4,5,6,7,8,9,10,11,12,13,14]. Accurate chromatographic peak integration through the summation integration function was automatic, fast, and reliable [33], but aspects in calibration, compilation, treatment, and interpretation of the data presented a wide array of options. An incomplete list of the possibilities includes (1) choice of quantification ion (or sum of ions); (2) using peak area or peak height as signal; (3) requiring identification criteria (including reporting level) to be met or not, and if so, what acceptability criteria; (4) normalizing signal to an IS or not [18], and if so, which IS; (5) using RO, MM, or both sets of calibration standards (and whether to employ the method of standard additions for the incurred residues); (6) choosing linear or quadratic calibration with a weighting factor, or not; (7) choosing a bracketed concentration range or removing outliers (or not), as defined by what criteria; and lastly, (8) evaluating results by which among many accepted statistical treatments [34]. Moreover, data for each analyte/matrix pair could be justifiably treated differently due to their particular facets, but this was not done for the sake of consistency and comparability. Also, calibration standards could have been combined across multiple days, but we chose to process each batch of samples using the full contemporaneous (not combined) calibration curves.

Treatment of outliers is another very important matter in analytical studies [12, 15]. The known spurious errors were removed from datasets, but no outliers for the incurred, QCBlix, or QCcryo analytes were removed otherwise. Statistical outliers in this study could very well have been a measurement anomaly, or the curious result could have been an analyte “hot” or “dead” spot due to subsample heterogeneity. We could not tell the difference; thus, we did not remove the suspicious results.

Yet more decisions in data handling included whether to combine the incurred analytes with the QCBlix spikes in the assessment of CVproc, whether to combine LC and GC results, and whether to treat multiple QC analytes independently or together. Only in the case of QCanal was its CV calculated from a single analyte (albeit normalized data for 13C-phenacetin in LC and p-terphenyl-d14 in GC were combined in one of the treatments for consideration). Otherwise, the RSDs obtained for the QCextr standard atrazine-d5 by LC and GC were substantially different on some days/matrices than on others, and even greater deviations occurred between and among the other QCextr standards, pyridaben-d13 and fenthion-d6. Multiple analytes also provided options for the QCcryo and QCITSP standards in the calculations of their respective CVs. In yet another question, should the CVs for individual analytes, matrices, methods, and test portions be calculated first and then averaged, or should the average CVs be calculated directly from datasets of normalized signals among the common QC analytes?
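The last question can be made concrete with a small example. Averaging per-group RSDs and computing a single RSD from pooled, group-normalized signals generally give somewhat different answers. The Python sketch below (standard library only) implements both options; it does not indicate which option the authors adopted, the function names are hypothetical, and the QC signals are invented for illustration.

```python
import statistics


def rsd_pct(values):
    """Relative standard deviation (%) using the sample standard deviation (n - 1)."""
    return 100 * statistics.stdev(values) / statistics.mean(values)


def mean_of_group_rsds(groups):
    """Option 1: compute an RSD within each group, then average the RSDs."""
    return statistics.mean(rsd_pct(g) for g in groups)


def rsd_of_pooled_normalized(groups):
    """Option 2: scale each group to its own mean, pool all values, compute one RSD."""
    pooled = []
    for g in groups:
        m = statistics.mean(g)
        pooled.extend(v / m for v in g)
    return rsd_pct(pooled)


if __name__ == "__main__":
    # Hypothetical QC signals for two groups (n = 4 each) on different scales;
    # normalizing to each group's mean puts them on a common basis for pooling.
    groups = [[0.9, 1.0, 1.1, 1.0], [80, 120, 100, 100]]
    print(round(mean_of_group_rsds(groups), 2))        # 12.25
    print(round(rsd_of_pooled_normalized(groups), 2))  # 11.95
```

Other pooling conventions (for example, weighting variances by degrees of freedom) would give yet another value, which illustrates why the choice of treatment needs to be stated.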

In the evaluation, we processed the integrated peak areas (not heights), testing nearly every option listed above using the instrument data handling software and many extensive and complex Excel spreadsheets. We treated the data in many different ways before settling on the presented results. Unfortunately, n = 4 replicates for each test portion size were not enough to achieve trustworthy findings by different forms of ANOVA. We sought to maximize replicates whenever possible, thereby avoiding calculation of RSDs from already averaged data within the same batch to determine CVs. Again, this study relies on the diversity of the source data to provide estimations and form conclusions, and in this respect, that diversity was both a strength and a weakness of the study.

In the most straightforward approach, we used the instrument software to determine concentrations using RO standards normalized to the nearest IS with 1/x-weighted quadratic best-fit calibrations. Tables 1 and 2 summarize those results for all analytes and matrices, and Tables S3 and S4 (see the ESM) contain the concentrations and recoveries, respectively, for each analyte, matrix, and test portion weight. Others are free to treat these results as they wish if our data processing choices described in this report do not conform to their preferences. Other means of data treatment should lead to the same general conclusions, independent of the specific values.
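For readers who want to reproduce this type of calibration outside the instrument software, the following is a minimal NumPy sketch of a 1/x-weighted quadratic fit to IS-normalized responses (analyte area ÷ IS area). It is an illustration under stated assumptions, not the vendor software's algorithm: the blank (0 ng/g) level is omitted because 1/x weighting is undefined at x = 0, the peak areas are synthetic, and the helper names are hypothetical. Note that `numpy.polyfit` applies `w` to the unsquared residuals, so 1/x weighting of the squared residuals requires `w = 1/sqrt(x)`.

```python
import numpy as np

# Nonzero calibration levels from the text (ng/g equivalents): 2, 8, 32, 128, 512.
LEVELS = 2.0 * 4.0 ** np.arange(5)


def fit_quadratic_1_over_x(conc, ratio):
    """1/x-weighted quadratic fit; returns coefficients (a, b, c) of a*x^2 + b*x + c."""
    w = 1.0 / np.sqrt(conc)  # polyfit squares w * residual, giving 1/x weighting
    return np.polyfit(conc, ratio, deg=2, w=w)


def back_calculate(coeffs, ratio):
    """Concentration whose predicted ratio equals `ratio` (smallest non-negative real root)."""
    a, b, c = coeffs
    roots = np.roots([a, b, c - ratio])
    real = roots[np.isreal(roots)].real
    candidates = real[real >= 0]
    if candidates.size == 0:
        raise ValueError("no non-negative root; response outside calibrated range")
    return float(candidates.min())


def r_squared(conc, ratio, coeffs):
    """Unweighted coefficient of determination of the fitted curve."""
    pred = np.polyval(coeffs, conc)
    ss_res = np.sum((ratio - pred) ** 2)
    ss_tot = np.sum((ratio - ratio.mean()) ** 2)
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Synthetic peak areas: a slightly curved analyte response and a constant IS.
    is_area = np.full(LEVELS.shape, 5.0e4)
    analyte_area = is_area * (0.01 * LEVELS + 2.0e-6 * LEVELS**2)
    ratio = analyte_area / is_area  # IS-normalized response
    coeffs = fit_quadratic_1_over_x(LEVELS, ratio)
    print(r_squared(LEVELS, ratio, coeffs) > 0.999)  # True
    print(round(back_calculate(coeffs, 0.01 * 50 + 2.0e-6 * 50**2), 3))  # 50.0
```

Choosing the smallest non-negative root selects the branch of the parabola that passes through the calibrated range for both upward- and downward-curving fits.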

Overall results for incurred and spiked pesticides

Our methods were devised for routine usage [31,32,33], and no difficulties were encountered in generating analyte calibration curves, making analyte identifications, or determining the incurred and spiked pesticide concentrations. The MM and RO calibrations were excellent, with nearly all analytes giving R² > 0.999 in the analyses. In fact, the many RO and MM calibration standards and blanks of the different commodities over the course of 10 days in this study were used to assess summation integration in LPGC-MS/MS for the determination of analyte limits of quantification and identification [33].

For incurred analytes, Table 1 lists the average concentrations and standard deviations of the pesticides identified by UHPLC-MS/MS (noted by an asterisk) and LPGC-MS/MS for all test portions (n = 32). Due to the presence of incurred analytes in the MM standards, RO standards were employed. As expected from previous findings [30], the pesticides and their levels are typical for the chosen commodities in the study.

Although we could not control which incurred pesticides would be present at what concentrations in the matrices, several analytes were analyzed by both LC and GC. For those analytes, the average concentrations were usually very similar, such as azoxystrobin in banana and potato, linuron in celery, myclobutanil and trifloxystrobin in grape, propiconazole in peach, and phosmet and pyrimethanil in apple. However, boscalid in green bean and peach yielded 16–31% higher concentrations (with 20–22% RSDs) by LC than by GC (with 8–13% RSDs). Imazalil and thiabendazole also gave significant differences between the orthogonal methods, with LC yielding more trustworthy results than GC for those rather polar fungicides. The cleanup sorbents used prior to GC analysis also partially retain these pesticides; thus, GC itself is not the only reason for the differences.

With respect to spiked pesticides in the study, Table 2 lists the UHPLC-MS/MS (noted by an asterisk) and LPGC-MS/MS overall average results for the QCanal, QCITSP, QCextr, QCBlix, and QCcryo pesticides in each commodity. The relatively few recoveries outside of the 70–120% range and RSDs > 20% appear in italic text. Chlorothalonil and dichlorvos are known to degrade and/or volatilize [26], but we purposefully included them in the study as markers to assess those factors. Both analytes gave highly accurate recoveries in apple, orange, and grape, and both were essentially lost in the more complex vegetables, broccoli and squash. However, curious differences were observed for peach and potato, in which dichlorvos yielded high accuracies in the former but not in the latter, and vice versa for chlorothalonil. These are examples showing that generalizations commonly made in method validations may fail when extending one set of pesticide-matrix combinations to another.
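The screening criteria used to italicize entries in Table 2 (mean recovery outside 70–120% or RSD > 20%) are easy to apply programmatically. The following Python sketch is a hypothetical helper, not the authors' spreadsheet, and the replicate recoveries in the example are invented.

```python
import statistics


def recovery_summary(recoveries_pct, low=70.0, high=120.0, max_rsd=20.0):
    """Mean recovery (%), RSD (%), and flags for one analyte/matrix combination."""
    mean = statistics.mean(recoveries_pct)
    rsd = 100 * statistics.stdev(recoveries_pct) / mean
    return {
        "mean": mean,
        "rsd": rsd,
        "recovery_flag": not (low <= mean <= high),  # outside 70-120%
        "rsd_flag": rsd > max_rsd,                    # RSD above 20%
    }


if __name__ == "__main__":
    # Hypothetical replicate recoveries (%), n = 4 each.
    print(recovery_summary([95, 100, 105, 100]))  # neither flag raised
    print(recovery_summary([30, 45, 60, 45]))     # both flags raised
```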

Some of the other spiked pesticides were also partially or fully lost in certain commodities. Results for fenthion in celery, green bean, peach, and potato were the most notable. The excellent calibrations and relatively consistent high recoveries for fenthion-d6 in the QCextr spike indicate that sample processing is most likely to blame for the losses, not the sample preparation or analysis steps. Indeed, we did not note low recoveries for fenthion previously [35] in which the analytes were spiked just before extraction. This example demonstrates the point made in the Introduction that results from validated methods may be misleading if the sample comminution step is not included in the method validation protocol.

A few other examples of this situation may be deduced from Table 2, but sample processing may not be the only cause because other factors cannot be ruled out. Note that propargite recoveries by GC were 56–71% in broccoli, green bean, orange, peach, and squash, but recoveries were essentially 100% in the other matrices by GC and in all matrices by LC. Further investigation of the GC results indicates that matrix effects, as shown in ESM Fig. S1, were the likely cause of propargite's low biases in those matrices. Similar observations can be made by comparing the results in Table 2 with ESM Fig. S1 for oxyfluorfen in orange, pyriproxyfen in green bean and orange, and piperonyl butoxide in green bean, orange, peach, and squash. Those pesticides have retention times (tR) of 4.5–5.2 min (see ESM Fig. S1), and we hypothesize that co-elution of matrix components is the main reason for the observed biases in those cases. Thus, even the use of both LC and GC for the same analyte was not an ideal control, because matrix effects can differ between the techniques [36]. For this reason, we recommend that matrix effects be evaluated routinely in every analytical sequence using both MM and RO calibration standards. No appreciable matrix effects were observed for dichlorvos, chlorothalonil, and fenthion, providing further evidence that those analyte losses more likely occurred during sample processing.
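
One common way to quantify matrix effects from MM and RO calibration standards is to compare the slopes of the two calibration curves. The sketch below assumes that convention, ME (%) = 100 × (slopeMM/slopeRO − 1), and uses the ±20% tolerance of the SANTE guidance [16] as an illustrative flag. The helper names are hypothetical, and the exact calculation behind ESM Fig. S1 may differ.

```python
def ls_slope(x, y):
    """Ordinary least-squares slope of y regressed on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    return sxy / sxx


def matrix_effect_pct(conc, resp_mm, resp_ro):
    """Matrix effect (%) from matrix-matched (MM) vs reagent-only (RO) slopes.

    Negative values indicate signal suppression; positive values indicate enhancement.
    """
    return 100.0 * (ls_slope(conc, resp_mm) / ls_slope(conc, resp_ro) - 1.0)


def flag_matrix_effect(me_pct, limit=20.0):
    """True if the matrix effect exceeds the +/- limit (%)."""
    return abs(me_pct) > limit
```

Evaluating this for every analyte/matrix pair in each sequence would give the routine check recommended above.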

QC spike results for analysis and sample preparation

Different factors in the overall results were isolated using different data treatments, as mentioned above. However, day-to-day and matrix-to-matrix biases, such as the matrix effects described above, first had to be taken into account [11]. Also, the effects of different incurred analyte concentrations and recoveries (another form of systematic error) had to be minimized as much as possible. This was done as before [18] by normalizing each analyte signal to the average signal for that analyte in the 15 g Blix test portion for the same method and matrix (or the largest test portion available, in the cases of GC of banana and LC of celery). In theory, this approach also removes calibration and the associated concentration ranges as sources of error in the evaluation.

As described in the “Introduction” section, calculation of CVs for the different steps in the methods depends on the RSDs of the QC spikes. Ideally, the QCanal, QCextr, and QCITSP results would remain the same each day, especially when normalized to the 15 g Blix results. Figure 2 displays the variability of the results for the stepwise-spiked QC standards when the signal is the analyte peak area divided by the peak area of atrazine-d5 from the same injection. Atrazine-d5 served as the IS for the same extracts analyzed by both LC and GC, which reduced sources of error from method-to-method and analyte-to-IS differences.
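
The two normalizations described above (analyte peak area divided by the atrazine-d5 peak area from the same injection, then comparison with the mean of the 15 g Blix test portions) can be sketched as follows. This is a minimal illustration, assuming equal-length lists of peak areas; the function names are hypothetical.

```python
from statistics import mean


def is_normalized(analyte_areas, is_areas):
    """Analyte peak area divided by IS (atrazine-d5) peak area, injection by injection."""
    return [a / i for a, i in zip(analyte_areas, is_areas)]


def deviation_from_reference(signals, reference_signals):
    """Percent deviation of each signal from the mean reference signal.

    Here the reference is the set of 15 g Blix test portions for the same
    analyte, method, and matrix; 0% means perfect agreement.
    """
    ref = mean(reference_signals)
    return [100.0 * (s / ref - 1.0) for s in signals]
```

Plotting these deviations for the QC spikes, grouped by commodity or by test portion size, yields the kind of markers and error bars shown in Fig. 2.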

Fig. 2 Results for the post-comminution QC spikes by UHPLC-MS/MS and LPGC-MS/MS when each peak area was first normalized to that of atrazine-d5 (IS) and then to the average signal for the 15 g Blix test portion, with respect to (a) commodity (n = 32–96) and (b) test portion size (n = 40–120). Error bars represent the standard deviation.

Most prominently, differences in water content among the commodities lead to different upper-phase MeCN volumes during the salting-out step in QuEChERS, and final extract volumes in ITSP are not highly consistent from cartridge to cartridge [32]. QuEChERS methods rely on an IS to compensate for these matrix-to-matrix and sample-to-sample differences in final extract volume. An IS is not needed to achieve acceptably accurate results, but as in most instances when an IS is employed, accuracy improves when signals are normalized to the IS.
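
Why an IS compensates for volume differences can be shown with a short numerical sketch. It assumes an idealized case in which the analyte and the IS are dissolved in the same final extract volume and the detector response is proportional to concentration; the masses and volumes are hypothetical.

```python
def extract_concentration(mass_ng, volume_ml):
    """Concentration (ng/mL) of a compound dissolved in the final extract."""
    return mass_ng / volume_ml


def analyte_to_is_ratio(analyte_ng, is_ng, volume_ml):
    # Analyte and IS share the same extract volume, so volume cancels in the ratio
    return extract_concentration(analyte_ng, volume_ml) / extract_concentration(is_ng, volume_ml)


# Hypothetical: 50 ng analyte and 100 ng IS, with upper-phase volumes differing by ~10%
for v in (9.5, 10.5):
    print(v, round(extract_concentration(50, v), 3), analyte_to_is_ratio(50, 100, v))
```

The raw concentration changes by roughly 10% between the two volumes, whereas the analyte/IS ratio stays at 0.5. In practice, IS-specific matrix effects break this ideal cancellation, which is consistent with the LC results discussed in this article.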

The choice of a QCextr analyte as the IS also theoretically eliminates the error contributions of the subsequent steps assessed by QCITSP (in GC only) and QCanal, because the QCextr results include those steps in their empirical RSD. Indeed, this outcome is demonstrated by the better consistency of the QCextr results compared with the other standards shown in Fig. 2. The major error component in the QCextr results is the difference in signals between atrazine-d5 and the other QCextr standards (fenthion-d6 and pyridaben-d13 in GC, and only the latter in LC). For comparison, the same data treatment was conducted using peak area as the signal (without IS), as shown in ESM Fig. S2.

The QC results shown in Fig. 2 and ESM Fig. S2 represent the baseline variations of the analytical steps from which CVproc is calculated. Sample processing was not a factor per se for these QC analytes; the purpose of these plots was to check for systematic factors in the data treatment that could have affected the CVproc calculations. Ideally, each marker in Figs. 2 and S2 would lie on the 0% line with no error bars. The error bars in Fig. 2a and ESM Fig. S2A indicate the intra-day standard deviations for the given matrices, independent of the sample processing method and test portion size. The offsets of the markers from the 0% line reflect the inter-day and inter-matrix differences, showing the degree of residual bias in the results.

In Fig. 2b and ESM Fig. S2B, the error bars show the inter-day and inter-matrix standard deviations for each sample processing method and test portion size independent of the matrix/day of analysis. The differences in the markers from the 0% line relate to typical biases among the days/matrices for the given parameters. In the cases of the QCextr and QCITSP results, the error bars and markers also include the inter-analyte variability. The overall combined results for each QC spike are also shown in Figs. 2b and S2B to the left of the first vertical lines.

The results in the upper plots (A) indicate that some matrices still yield greater random and systematic errors than others. Suitable instrument performance each day was demonstrated through excellent calibrations, among other factors [33]; thus, the matrix (through direct and indirect effects) was the main contributor to the measurement uncertainties displayed, even though these and other sources of error should have been eliminated via the normalizations. Average biases fell within ± 5% in most cases, with QCanal for LC of squash yielding more uncertainty. When results from different matrices were combined in the lower plots (B), the greater variability among matrices averaged out. The QCITSP results were strongly affected by matrix effects on piperonyl butoxide in some commodities, as already described, but this did not affect the calculations because QCextr, not QCITSP, was used to calculate CVprep. Ultimately, we calculated a series of individual CVs from the results for each matrix and test portion and from the overall averages, as discussed below.

Incurred and QC analyte results for sample processing

Although we mentioned that different analyte concentrations affect the degree of variations in the results, the underlying factor is not really concentration; it is signal/noise ratio. Mol et al. presented a clear demonstration of this aspect when assessing MS methods for analyte identification purposes [37]. Similarly, Fig. 3 exhibits semi-log plots of LC and GC signals for all incurred and QCBlix analytes in the study in terms of precision (RSDoverall) and bias vs. the 15 g Blix average. The plots not only show the effect of signal on uncertainties but also indicate the extent of signal differences that contributed to measured RSDs and calculated CVs in the study. Some incurred analytes in particular contributed more noise to certain matrices than others due to this factor.

The individual results shown in Fig. 3 are widely dispersed, but a general trend emerges, especially for the LC results, that RSD decreased as signal increased. In the case of GC, the high variability of chlorpropham at exceedingly high signal levels in potato flattened the trend. The signals normalized to atrazine-d5 in GC also yielded greater overall consistency than in LC. Trend lines are shown for each sample processing approach and test portion size for all matrices, with colors and line types denoted in the figure legends. The trends for the 10–15 g test portions indicated slightly better overall precision than for the 1–2 g portions, but the difference was not significant. In addition, a significant positive signal bias of up to 10% on average vs. the 15 g Blix test portion was observed.
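
Trend lines on semi-log plots such as Fig. 3 can be obtained by regressing RSD against the logarithm of signal. The sketch below assumes a straight-line least-squares fit of RSD (%) on log10(signal); the fitting form actually used for the figure is not stated here, so this is one plausible choice, and the example values are hypothetical.

```python
import math


def semilog_trend(signals, rsds):
    """Least-squares fit of RSD (%) against log10(signal); returns (intercept, slope)."""
    x = [math.log10(s) for s in signals]
    n = len(x)
    mx, my = sum(x) / n, sum(rsds) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, rsds)) / sxx
    return my - slope * mx, slope


# Hypothetical data: RSD falls by 5 percentage points per tenfold increase in signal
intercept, slope = semilog_trend([1e3, 1e4, 1e5, 1e6], [20.0, 15.0, 10.0, 5.0])
```

A negative slope corresponds to the observed tendency for precision to improve as signal/noise increases.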

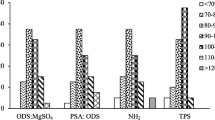

The degree of random and systematic error with respect to commodity, test portion size, and sample processing method is displayed more clearly in Fig. 4. These results were calculated separately for LC and GC as follows: (1) normalize the analyte peak area to the peak area of atrazine-d5 for each injection; (2) average the signals and determine the RSD (n = 4) for each analyte, which is retained as the RSD in further calculations; (3) normalize the signal averages to the averages of the 15 g Blix test portions for the same analyte (the differences from the average yield the biases); (4) average all RSDs and normalized averages for the analytes in each matrix and test portion, and also among test portions and matrices; (5) calculate the individual and overall CVproc values, CVproc = √(RSDproc² − RSDprep²), from the individual and combined averages. Figure 4 displays the results when using normalized signals and averages from the individual calculations (CVproc was set to 0 when RSDprep > RSDproc). This entire procedure was also conducted separately using peak areas as signals (not normalized to atrazine-d5).
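
Steps (2)–(5) above can be sketched for a single matrix and test portion size as follows. This is a minimal illustration, assuming the signals have already been IS-normalized (step 1) and that RSDprep is taken from the sample preparation QC spike (e.g., QCextr); the function names and data layout are hypothetical.

```python
import math
from statistics import mean, stdev


def rsd_pct(values):
    """Relative standard deviation (%) of replicate signals."""
    return 100.0 * stdev(values) / mean(values)


def cv_proc(rsd_proc, rsd_prep):
    """CVproc = sqrt(RSDproc^2 - RSDprep^2), set to 0 when RSDprep > RSDproc."""
    diff = rsd_proc ** 2 - rsd_prep ** 2
    return math.sqrt(diff) if diff > 0 else 0.0


def summarize_portion(replicates, ref_means, rsd_prep):
    """Steps 2-5 for one matrix/test portion.

    replicates: {analyte: IS-normalized signals of the replicate test portions}
    ref_means:  {analyte: mean IS-normalized signal of the 15 g Blix portions}
    rsd_prep:   RSD (%) attributed to sample preparation plus analysis
    """
    rsds = [rsd_pct(sig) for sig in replicates.values()]             # step 2
    biases = [100.0 * (mean(sig) / ref_means[name] - 1.0)            # step 3
              for name, sig in replicates.items()]
    avg_rsd, avg_bias = mean(rsds), mean(biases)                     # step 4
    return avg_rsd, avg_bias, cv_proc(avg_rsd, rsd_prep)             # step 5
```

Clamping to 0 in `cv_proc` avoids taking the square root of a negative number when the preparation step alone appears noisier than the full process, which can happen by chance with n = 4.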

The upper plot in Fig. 4 exhibits the relationship of the calculated average CVproc among all incurred and QCBlix analytes for each variable vs. test portion size. In LC analysis, a slight decrease from 11 to 10% average CVBlix among the matrices occurred from 1 to 15 g portion weight, and the trend in average CVBlix + cryo was 12 to 10% from 1 to 5 g. In the case of GC, greater sample processing consistency and a more significant decrease from 8 to 4% was observed from 1 to 15 g in the case of CVBlix, whereas CVBlix + cryo was a consistent 7% from 1 to 5 g. Since sample processing does not actually depend on the analysis, the LC and GC results can be averaged, which yields CVproc of 8–10% for all test portion sizes and both techniques. Although individual analytes/commodities can yield more or less extensive uncertainties, as indicated by the ranges shown in the figures, the consistency of the averages provides confidence in the estimations of 8–10% for CVproc.

The lower plot of Fig. 4 tracks the biases of the different test portions vs. the 15 g Blix results, even after accounting for the potential biases shown in Fig. 2 in the calculations. In LC, the average bias among the matrices decreased from 14% (1 g) to 5% (10 g) for the Blix test portions, whereas in GC it decreased from 6 to 4%. For the Blix + cryo results, average bias went from 12 to 8% in LC and from 7 to 6% in GC for 1–5 g test portions. Combined LC and GC results yielded a trend in average bias from 9% (1 g) to 5% (10 g) for the Blix test portions and 9–10% for the 1–5 g Blix + cryo test portions.

It is not unexpected that a positive bias would occur as subsample size decreases [19, 20], but the extent of the bias was larger than expected. Low bias of the 15 g test portion can also explain the trend, and indeed, the QCBlix results vs. test portion sizes shown in Table S4 (see ESM) show several instances when the 15 g Blix results gave low recoveries. Thus, the bias is not actually as large as calculated, but the trend is real. The greater relative impact of solvent evaporation when dealing with smaller volume extracts may have contributed to this effect, as well as other biases involved when weighing smaller test portions. However, we believe that matrix effects probably contribute the most to the positive bias in the results, as further discussed below.

Estimated uncertainty budgets

As shown above and previously [18], the CVanal, CVITSP, CVextr, CVBlix, and CVBlix + cryo values for LC and GC (separately and combined), with or without an IS, can all be calculated from the RSDs of the incurred analytes and QC standards. Figures 2–4 and ESM Fig. S2 show the measurement uncertainties in this study for a series of different variables and data subsets. Systematic error is treated separately from this assessment of individual and overall uncertainties.

Figure 5 consists of pie charts sized to scale showing the overall uncertainty budgets independent of test portion and matrix when RSDs of all individual analytes, matrices, test portions, and analytical and sample processing methods are averaged within each QC category to yield the calculated overall CVs. The average CVs overall and for each slice of the uncertainty pies are provided in the figure. The upper half of the figure gives the results when atrazine-d5 is used as the IS, and the lower half displays the results when peak areas are used directly. Similarly, the left half of the figure exhibits the LC results, and GC results appear on the right half. The Blix and Blix + cryo sample processing results are presented in alternating rows.

As expected, and as usual [18, 32], the GC analysis benefited significantly when an IS was employed, but curiously, the LC analysis became less consistent when the IS was used. MS analysis by electrospray ionization is notoriously susceptible to signal suppression due to matrix effects [38], and we believe this is the most probable cause of the observed outcome, despite the normalization to the 15 g test portions. In LC, atrazine-d5 may have reduced error to some extent by accounting for volumetric biases during extraction, but it also contributed its own share of error due to its own matrix effects, which did not always occur to the same extent as for the other analytes [36]. As would be expected for the straightforward initial QuEChERS step, CVextr averaged 0% even when an IS was not used. However, the cumulative uncertainty of matrix effects in LC caused the calculated CV (and measured RSD) for the overall method to increase from 11 to 13%. We used an older LC-MS instrument in this study, and dilution of injected extracts followed by analysis with a more sensitive modern instrument would likely have improved our LC results considerably. Filtering of the extracts may also have contributed to the variability of the results.

In the case of GC, matrix enhancement tends to occur rather than suppression [36, 38], which is another possible cause of high bias in the results, but we employed analyte protectants in the GC analysis to greatly reduce or eliminate matrix effects [31–33, 36]. ESM Fig. S1 shows that anomalies occurred for only a few analytes in a few matrices, although biases of up to ± 20% due to matrix effects are considered an acceptable norm [16]. Nevertheless, Fig. 5 demonstrates how the use of atrazine-d5 as the IS eliminated the error contributed by ITSP cleanup by accounting for its volumetric differences [32]. The average CVoverall (RSDoverall) in GC decreased from 12–13 to 8–9% when the IS was used. In practice, to achieve the most accurate results with these methods, the IS would be used for ITSP + LPGC-MS/MS but not for UHPLC-MS/MS.

The percent contributions of the measurement uncertainties in each step of the methods can be discerned from the sizes of their slices in the pie charts. When the error contribution of the sample preparation step is reduced to 0% by using an IS or eliminating the cleanup step, sample comminution by either Blix or Blix + cryo accounts for ≈ 55% of the pie, and analysis contributes the remaining ≈ 45%. Thus, overall method performance can be improved by addressing both of those steps in the LC and GC analyses.

As stated previously, sample processing is independent of the analytical method; thus, the LC and GC results can be combined to give a better estimate of CVproc. Table 3 presents both systematic and random errors averaged from the 20 sets of analyses among the 10 commodities. The results show that the Blix + cryo comminution technique provides little if any benefit over the Blix approach at room temperature in this application. Because of the summation-of-squares relationship, when CVanal averages ≈ 6% as in this method, a CVproc of 5% yields CVoverall ≈ 8%, and a CVproc of 11% yields CVoverall ≈ 12.5%. Thus, the maximum difference of ≈ 5% in average CVproc due to test portion size resulted in an overall method CV ≈ 4% higher. With respect to bias, the 1–2 g test portions gave ≈ 10% higher determined analyte concentrations on average than the 15 g portions. Slightly better calculated accuracies can be attained in this estimation by averaging IS-normalized GC results with non-normalized LC results.
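
The summation-of-squares arithmetic above can be checked directly. This sketch assumes that the analysis and sample processing contributions are independent, so their CVs combine in quadrature; `combined_cv` is a hypothetical helper name.

```python
import math


def combined_cv(*components):
    """Root-sum-of-squares combination of independent CV contributions (%)."""
    return math.sqrt(sum(c ** 2 for c in components))


cv_anal = 6.0  # average analytical CV in this method (%)
for cv_p in (5.0, 11.0):
    print(f"CVproc = {cv_p:.0f}% -> CVoverall = {combined_cv(cv_anal, cv_p):.1f}%")
# CVproc = 5% -> CVoverall = 7.8%
# CVproc = 11% -> CVoverall = 12.5%
```

Because the contributions add in quadrature, a 6-point increase in CVproc raises CVoverall by only about 4.7 points, which is why test portion size has a smaller effect on overall precision than on the processing step alone.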

Conclusions

Different sample processing tools at ambient and cryogenic conditions were evaluated and compared in the routine UHPLC-MS/MS and ITSP + LPGC-MS/MS targeted analysis of 93 spiked and incurred pesticides in 10 commodities extracted over the course of 10 days using the QuEChERS method. For commonly tested pesticides and commodities, use of the cryomill with liquid nitrogen was not shown to yield better accuracy than the much easier and faster Blixing® approach at room temperature. Overall reproducibility of the analyses, including sample processing, averaged 9–11% CV (RSD) when the IS was used (or omitted) optimally. The sample comminution step contributed ≈ 55% of the overall uncertainty of the methods, with analysis contributing the rest. Matrix effects among the commodities and analytes were the most likely limiting source of error in the analysis, particularly in UHPLC-MS/MS, but a more sensitive instrument would likely have reduced this factor. The measurement uncertainty demonstrated by the semi-automated, high-throughput method compared very well with multi-laboratory results [14, 15], especially considering that the sample processing step was incorporated into this study.

Test portions of 15, 10, 5, 2, and 1 g were investigated, and CVoverall increased by up to ≈ 4% when the smaller portions were taken for analysis. Bias increased by ≈ 10% when using the 1–2 g portions vs. the 15 g portions, but recoveries indicated that the actual bias relative to the 5–10 g portions was probably within ± 5%. In our opinion, QuEChERS using 5 g test portions from the ambient Blix procedure could meet common fit-for-purpose monitoring needs in place of the current 10–15 g test portions. In this way, higher sample throughput can be achieved with less time, cost, and labor per sample. We recommend that well-characterized, inexpensive, and representative QCproc spikes be added to the bulk samples during the comminution step to check the validity of this equally critical but often-neglected step in the overall methods. We also recommend that validation protocols call for spiking the analytes into bulk samples prior to comminution rather than into subsampled test portions.

References

Ellison SLR, Barwick VJ. Using validation data for ISO measurement uncertainty estimation. Part 1. Principles of an approach using cause and effect analysis. Analyst. 1998;123:1387–92.

Barwick VJ, Ellison SLR. Measurement uncertainty: approaches to the evaluation of uncertainties associated with recovery. Analyst. 1999;124:981–90.

Van Zoonen P, Hoogerbrugge R, Gort SM, van de Wiel HJ, van’t Klooster HA. Some practical examples of method validation in the analytical laboratory. Trends Anal Chem. 1999;18:584–93.

Hund E, Luc Massart D, Smeyers-Verbeke J. Operational definitions of uncertainty. Trends Anal Chem. 2001;20:394–406.

Taverniers I, Van Bockstaele E, De Loose M. Trends in quality in the analytical laboratory. I. Traceability and measurement uncertainty of analytical results. Trends Anal Chem. 2004;23:480–90.

Feinberg M, Boulanger B, Dewé W, Hubert P. New advances in method validation and measurement uncertainty aimed at improving the quality of chemical data. Anal Bioanal Chem. 2004;380:502–14.

Egea González FJ, Hernández Torres ME, Garrido Frenich A, Martínez Vidal JL, García Campaña AM. Internal quality-control and laboratory-management tools for enhancing the stability of results in pesticide multi-residue analytical methods. Trends Anal Chem. 2004;23:361–9.

Gustavo González A, Ángeles HM. A practical guide to analytical method validation, including measurement uncertainty and accuracy profiles. Trends Anal Chem. 2007;26:227–38.

Companyó R, Granados M, Guiteras J, Prat MD. Antibiotics in food: legislation and validation of analytical methodologies. Anal Bioanal Chem. 2009;395:877–91.

Araujo P. Key aspects of analytical method validation and linearity evaluation. J Chromatogr B. 2009;877:2224–34.

Rozet E, Marini RD, Ziemons E, Dewé W, Roudaz S, Boulanger B, et al. Total error and uncertainty: friends or foes? Trends Anal Chem. 2011;30:797–806.

Andersen JET. On the development of quality assurance. Trends Anal Chem. 2014;60:16–24.

Azilawati MI, Dzulkifly MH, Jamilah B, Shuhaimi M, Amin I. Estimation of uncertainty from method validation data: application to a reverse-phase high-performance liquid chromatography method for the determination of amino acids in gelatin using 6-aminoquinoyl-N-hydroxysuccinimidyl carbamate reagent. J Pharm Biomed Anal. 2016;129:389–97.

Valverde A, Aguilera A, Valverde-Monterreal A. Practical and valid guidelines for realistic estimation of measurement uncertainty in multi-residue analysis of pesticides. Food Control. 2017;71:1–9.

Ferrer C, Lozano A, Uclés S, Valverde A, Fernández-Alba AR. European Union proficiency tests for pesticide residues in fruit and vegetables from 2009 to 2016: overview of the results and main achievements. Food Control. 2017;82:101–13.

European Commission Directorate-General for Health and Food Safety. Guidance document on analytical quality control and method validation procedures for pesticides residues analysis in food and feed. SANTE/11945/2015.

Lehotay SJ, Cook JM. Sampling and sample processing in pesticide residue analysis. J Agric Food Chem. 2015;63:4395–404.

Han L, Lehotay SJ, Sapozhnikova Y. Use of an efficient measurement uncertainty approach to compare room temperature and cryogenic sample processing in the analysis of chemical contaminants in foods. J Agric Food Chem. 2018; https://doi.org/10.1021/acs.jafc.7b04359.

Herrmann SS, Hajeb P, Andersen G, Poulsen ME. Effects of milling on the extraction efficiency of incurred pesticides in cereals. Food Addit Contam A. 2017;34:1948–58.

Raynie DE. Know your sample: size matters. LCGC N Am. 2016;34:530–2.

Riter LS, Wujcik CE. Novel two-stage fine milling enables high-throughput determination of glyphosate residues in raw agricultural commodities. J AOAC Int. in press; https://doi.org/10.5740/jaoacint.17-0317.

Sapozhnikova Y, Lehotay SJ. Evaluation of different parameters in the extraction of incurred pesticides and environmental contaminants in fish. J Agric Food Chem. 2015;63:5163–8.

Riter LS, Lynn KJ, Wujcik CE, Buchholz LM. Interlaboratory assessment of cryomilling sample preparation for residue analysis. J Agric Food Chem. 2015;63:4405–8.

Naik S, Malla R, Shaw M, Chaudhuri B. Investigation of comminution in a Wiley mill: experiments and DEM simulations. Powder Technol. 2013;237:338–54.

Fussell RJ, Hetmanski MT, MacArthur R, Findlay D, Smith F, Ambrus Á, et al. Measurement uncertainty associated with sample processing of oranges and tomatoes for pesticide residue analysis. J Agric Food Chem. 2007;55:1062–70.

Fussell RJ, Hetmanski MT, Colyer A, Caldow M, Smith F, Findlay D. Assessment of the stability of pesticides during the cryogenic processing of fruits and vegetables. Food Addit Contam. 2007;24:1247–56.

Fussell RJ, Addie KJ, Reynolds SL, Wilson MF. Assessment of the stability of pesticides during cryogenic sample processing. 1. Apples. J Agric Food Chem. 2002;50:441–8.

Hill ARC, Harris CA, Warburton AG. Effects of sample processing on pesticide residues in fruits and vegetables. In: Fajgelj A, Ambrus Á, editors. Principles and practices of method validation. Cambridge: Royal Society of Chemistry; 2000. p. 41–8.

Young SJV, Parfitt CH Jr, Newell RF, Spittler TD. Homogeneity of fruits and vegetables comminuted in a vertical cutter mixer. J AOAC Int. 1996;79:976–80.

US Department of Agriculture, Agricultural Marketing Service. Pesticide Data Program. Washington, DC. www.ams.usda.gov/pdp. Accessed Dec 2017.

Sapozhnikova Y, Lehotay SJ. Review of recent developments and applications in low-pressure (vacuum outlet) gas chromatography. Anal Chim Acta. 2015;899:13–22.

Lehotay SJ, Han L, Sapozhnikova Y. Automated mini-column solid-phase extraction cleanup for high-throughput analysis of chemical contaminants in foods by low-pressure gas chromatography-tandem mass spectrometry. Chromatographia. 2016;79:1113–30.

Lehotay SJ. Utility of the summation chromatographic peak integration function to avoid manual reintegrations in the analysis of targeted analytes. LCGC N Am. 2017;35:391–402.

Dixon WJ, Massey FJ Jr. Introduction to statistical analysis. Fourth ed. New York: McGraw-Hill; 1983.

Lehotay SJ, de Kok A, Hiemstra M, van Bodegraven P. Validation of a fast and easy method for the determination of residues from 229 pesticides in fruits and vegetables using gas and liquid chromatography and mass spectrometric detection. J AOAC Int. 2005;88:595–614.

Kwon H, Lehotay SJ, Geis-Asteggiante L. Variability of matrix effects in liquid and gas chromatography-mass spectrometry analysis of pesticide residues after QuEChERS sample preparation of different food crops. J Chromatogr A. 2012;1270:235–45.

Mol HGJ, Zomer P, López MG, Fussell RJ, Scholten J, de Kok A, et al. Identification in residue analysis based on liquid chromatography with tandem mass spectrometry: experimental evidence to update performance criteria. Anal Chim Acta. 2015;873:1–13.

Uclés S, Lozano A, Sosa A, Parrilla Vázquez P, Valverde A, Fernández-Alba AR. Matrix interference evaluation employing GC and LC coupled to triple quadrupole tandem mass spectrometry. Talanta. 2017;174:72–81.

Acknowledgments

We thank Robyn Moten, Limei Yun, and Tawana Simons for their help in the laboratory related to this study. We also thank Joseph Uknalis for his help with the microscope.

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest.

Additional information

Published in the topical collection Food Safety Analysis with guest editor Steven J. Lehotay.

Disclaimer: The use of trade, firm, or corporation names does not constitute an official endorsement or approval by the USDA of any product or service to the exclusion of others that may be suitable.

Electronic supplementary material

ESM 1

(PDF 1.94 mb)

About this article

Cite this article

Lehotay, S.J., Han, L. & Sapozhnikova, Y. Use of a quality control approach to assess measurement uncertainty in the comparison of sample processing techniques in the analysis of pesticide residues in fruits and vegetables. Anal Bioanal Chem 410, 5465–5479 (2018). https://doi.org/10.1007/s00216-018-0905-1
