Abstract

Fluid configurations in three dimensions that display a plausible loss of regularity in finite time are constructed and examined. Vortex rings are the primary ingredients of this study. The full Navier–Stokes system is converted into a 3D scalar problem, for which appropriate numerical methods are implemented in order to investigate the behavior of the solutions. Further simplifications in 2D and 1D provide interesting toy problems, which may serve as a starting platform for a better understanding of blowup phenomena.

1 Six collapsing rings

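The aim of this paper is to propose a way to build special explicit solutions of the time-dependent incompressible Navier–Stokes equations. The model consists of the law of momentum conservation, given by the vector equation:

\[ \frac{\partial \textbf{v}}{\partial t}+(\textbf{v}\cdot {\bar{\nabla }})\textbf{v}-\nu {\bar{\Delta }}\textbf{v}=-{\bar{\nabla }}p+\textbf{f}, \qquad (1.1) \]
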
where the velocity field \(\textbf{v}\) is required to be divergence-free, i.e.: \(\textrm{div}{} \textbf{v}=0\). The last relation guarantees mass conservation. The time t belongs to the finite interval [0, T]. As customary, \(\nu >0\) denotes the viscosity parameter. The potential p plays the role of pressure and \(\textbf{f}\) is a given force field. The equations are required to be satisfied in the whole three-dimensional space \(\textbf{R}^3\). The symbol \({\bar{\Delta }}\) denotes the 3D vector Laplacian. Later, we will introduce another symbol \(\Delta \) (with no over bar) with a slightly different meaning.

Specifically, we will refer to the phenomena known as vortex rings [1, 27]. The fluid follows a rotatory motion in which the streamlines revolve around the major circumference of a doughnut. As a consequence of diffusion, the movement of the particles is accompanied by a drift of the ring. At the same time, a progressive reduction of the energy is expected, depending on the magnitude of \(\nu \). We would like to see what happens when the ring is constrained inside an infinite cone. There, the sections of the ring, which in normal circumstances tend to be approximated by circles, assume unusual shapes during the evolution, also depending on their sense of rotation (Figs. 3 and 10).

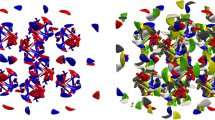

Since we want to avoid boundary conditions and have a solution defined on the whole space \(\textbf{R}^3\), we drop the idea of the cones and instead divide the space into six virtual pyramidal regions, as suggested by Fig. 1. Each pyramid has an aperture of 90 degrees, spanned by two independent angles \(\theta \) and \(\phi \). Six identical vortex rings are assembled along the six Cartesian semi-axes. They progress while maintaining a global symmetry and exerting reciprocal constraints, without mixing with each other. Such congestion may lead to singular behavior, which will be clarified later in the exposition. For intense initial velocity fields and a very small diffusive parameter, there is a chance that smooth solutions may, at some instant, lose regularity.
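A minimal sketch of the partition in Fig. 1, assuming that the pyramid attached to a semi-axis is the set of points whose largest coordinate in absolute value lies along that semi-axis (so that each pyramid has a 90-degree aperture). The helper names `pyramid_of` and `local_angles` are hypothetical, and the angle map in `local_angles` is only an illustrative parametrization, not necessarily the \((r,\theta ,\phi )\) coordinates of Sect. 2.

```python
import math

AXES = ("x", "y", "z")


def pyramid_of(p):
    """Label ('+x', '-x', '+y', '-y', '+z', '-z') of the pyramid containing p != 0.

    Assumption: the pyramid around the semi-axis +x is {x >= |y|, x >= |z|},
    and similarly for the other five; points on a separating plane are
    assigned to the first axis (x, then y, then z) attaining the maximum.
    """
    i = max(range(3), key=lambda k: abs(p[k]))
    if p[i] == 0:
        raise ValueError("the origin is the common vertex of all six pyramids")
    return ("+" if p[i] > 0 else "-") + AXES[i]


def local_angles(p):
    """Hypothetical angles of p != 0 inside its pyramid: atan of the two
    transverse coordinates divided by the axial one. Both lie in [-pi/4, pi/4]."""
    i = max(range(3), key=lambda k: abs(p[k]))
    j, k = (m for m in range(3) if m != i)
    axial = abs(p[i])
    return math.atan(p[j] / axial), math.atan(p[k] / axial)


print(pyramid_of((1.0, 0.2, -0.5)))
print(pyramid_of((0.1, -2.0, 1.0)))
print(local_angles((2.0, 2.0, 0.0)))  # a point on a separating plane: first angle is pi/4
```

Because every transverse coordinate is bounded by the axial one, both angles stay in \([-\pi /4, \pi /4]\), which is the 90-degree aperture mentioned above.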

To tell the whole truth, we will not solve the problem just mentioned. Our rings will not move autonomously, but will be subject to external forces. This implies that \(\textbf{f}\) in (1.1) is going to be different from zero. By suitably manipulating the equations, we transfer part of the nonlinear term to the right-hand side, thus obtaining a forcing term \(\textbf{f}(\textbf{v})\) that depends on the solution itself. Assuming that the revised equation admits a unique solution, the field \(\textbf{f}(\textbf{v})\) (known a posteriori) is interpreted as a given external force. Note that the new solution may not have any physical relevance. These steps, which may look like a trivial trick (escamotage), nevertheless have some hope of being useful. In fact, let us suppose that we are able to check that \(\textbf{v}\) loses regularity in a finite time, whereas \(\textbf{f}(\textbf{v})\) remains smooth (even if its knowledge is implicitly tied to that of \(\textbf{v}\)); this would mean that it is possible to generate singularities from regular data. By ‘singularity’ here we mean a degeneracy of some partial derivative of \(\textbf{v}\). It is known from the literature that a minimal degree of regularity for \(\textbf{v}\) is always preserved over time. This means that we do not expect extraordinary explosions. It is important to remark that these mild forms of deterioration of the regularity might not be clearly detected by standard numerical simulations. This makes our analysis somewhat uncertain.

We translate the full set of Navier–Stokes equations into a 3D nonlinear scalar differential equation, where the unknown is a potential \(\Psi \). Further simplifications in 2D and 1D allow us to introduce some toy problems aimed at providing a starting platform for possible theoretical advances. Some statements will be checked with the help of numerical experiments. Although these checks are only numerical, we believe that the ideas proposed in the present paper provide a solid foundation for more rigorous studies.

As far as the 3D incompressible fluid dynamics equations are concerned, the research on the regularity of solutions has produced thousands of papers. A proof that solutions maintain their smoothness during long-time evolution is not available at the moment. Indeed, the problem of describing the behavior in three space dimensions has always been borderline. Due to the viscosity term, smooth data are expected to produce solutions that remain regular for all times. On the other hand, the lack of a conclusive theoretical analysis suggests the possible existence of counterexamples. The community supporting the idea that a blowup may actually happen in a finite time is growing, and numerous publications, concerning both the Euler and the Navier–Stokes equations, are nowadays available. We cite here just a few titles, since an accurate review would take too much time and effort. From the theoretical side, we mention [2, 8, 9, 11, 16]. In [5, 12, 19, 22, 26], possible scenarios regarding the development of singularities are presented. From the numerical viewpoint, we also cite [14] and [18]. Finally, sophisticated laboratory experiments on vortex rings at critical regimes are found, for instance, in [17] and [20].

2 A suitable coordinates environment

It is enough to study the Navier–Stokes problem on a single pyramidal subdomain and then assemble the six pieces of the solution (see Sect. 10). It is convenient to work within a suitable system of coordinates, in which the infinitesimal distance ds is given by:

with r denoting the radial variable, whereas \(\theta \) and \(\phi \) are angles (Fig. 1). In these coordinates, the gradient of a potential p is evaluated as follows:

For a given vector field \(\textbf{A}=(A_1, A_2, A_3)\), we can compute some of the most classical differential operators, such as:

In the last expression we assumed that \(\textrm{div} \textbf{A}=0\). The symbol \(\Delta \) without the upper bar denotes the usual Laplacian in the variables \(\theta \) and \(\phi \). Applying \(\Delta \) to the scalar functions \(A_k\), \(k=1,2,3\), leads us to the equality:

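Finally, we define the open set:

\[ \Omega =\left] -\frac{\pi }{\omega },\frac{\pi }{\omega }\right[ \times \left] -\frac{\pi }{\omega },\frac{\pi }{\omega }\right[ . \qquad (2.4) \]
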
In practice, we always choose \(\omega =4\). A single pyramidal domain corresponds to the set: \(\Sigma = \{ (r,\theta ,\phi )\vert \ r>0, \ (\theta ,\phi )\in \Omega \}\).

3 Reformulation of the equations

We work in the reference frame \((r, \theta ,\phi )\) introduced in the previous section. Our functions will be regular enough to allow for the exchange of the order of derivatives. We start by introducing the vector potential:

satisfying \(\textrm{div} \textbf{A}=0\). This setting will be useful for the study of ring type displacements. We then define \(\Phi \) and u such that:

We recall that the symbols \(\Delta \) and \(\nabla \) (without the upper bars) do not contain partial derivatives with respect to r (see (2.3)). Based on these assumptions, the velocity field turns out to be:

with \(q_0=r\Phi \). Of course, we have: \(\textrm{div}{} \textbf{v}=0\). Through the use of standard calculus we also get:

with \(q_1=-(\partial /\partial r)(u/r)\). Regarding the nonlinear term, a second function \(q_2=-\frac{1}{2} \vert \textbf{v}\vert ^2\) is involved in the vector relation:

Subsequently, we have:

In the above expression we introduced the following functions:

Alternatively, we can define \(q_3=\frac{1}{2} (u/r)^2\) and adjust \(f_2\) and \(f_3\) accordingly.

After having defined the pressure \(\ p=(\partial q_0 /\partial t)+\nu q_1+q_2+q_3\ \) and the forcing term \(\textbf{f}=(0, f_2, f_3)\), the first component of the vector momentum equation (1.1) is synthetically represented by the scalar equation:

With a little further manipulation, we finally arrive at the system of two second-order equations:

For the unknowns \(\Psi \) and u, we will require Neumann type boundary conditions on \(\partial \Omega \), for any value \(r>0\). We first introduce the outward normal vector \({\bar{n}} = (n_2, n_3)\) to the domain \(\Omega \) defined in (2.4). At each one of the four corners, \({\bar{n}}\) is taken as the normalized sum of the limits of the normal vectors along the two concurring sides. This means that:

From (3.3), the first relation above implies that \(v_2n_2+v_3n_3=0\) on \(\partial \Omega \). This says that the velocity field is tangent to the separating surfaces of the six pyramidal domains partitioning the whole three-dimensional space. These constraints ensure the smoothness of the velocity field across the boundaries (see Sect. 10).

It is worth clarifying the above steps. We obtained a functional equation of the type \(G(\Psi )=0\), which follows by substituting u, defined in (3.9), into (3.10). The aim is to solve the Navier–Stokes Eq. (1.1). Therefore, we can write:

After setting \(p=(\partial q_0 /\partial t)+\nu q_1+q_2+q_3\) and \(\textbf{f}=(0, f_2, f_3)\), we actually arrive at the relation \(G(\Psi )=0\). In this way, the pressure is not an unknown of the system, since it can be built from \(\textbf{v}\). Similarly, the forcing term \(\textbf{f}\) is not given a priori, but depends on the unknown. In the end, we are not solving for the autonomous motion of a fluid: our vortex ring develops under the action of forces that depend on its own dynamics. This evolution may have no physical interest, and we do not expect the results to be easily interpreted from the fluid mechanics viewpoint. In any case, our interest here is mainly analytical. Indeed, suppose that \(\textbf{v}\) presents some deterioration of smoothness in a finite time; then two eventualities may occur. If \(\textbf{f}\) also loses regularity, we end up proving nothing, because it is reasonable to assume that a bad forcing term may give rise to bad solutions. If instead we can show that \(\textbf{f}\) maintains a certain degree of regularity (even if it depends on the solution itself), then these results start to become interesting.

Before ending this section, we make some heuristic considerations about the system (3.9–3.10). First of all, we introduce the two functionals:

Afterwards, we take for instance the two low-order eigenmodes:

Here, for a given \(\gamma >0\), the function \(\chi \) is defined, up to a multiplicative constant, as:

where \(J_{\sigma +\frac{1}{2}}\) is the Bessel function of the first kind of order \(\sigma +\frac{1}{2}\), which underlies the spherical Bessel functions. This implies that \(\chi \) solves the differential equation:

By choosing \(\sigma \) in such a way that \(\sigma (\sigma +1)=2\omega ^2\), a straightforward computation passing through (3.16) shows that:

By using again (3.16), the last expression can be rewritten as:

with \(\lambda =2\omega ^2 -\gamma ^2 r^2\). This means that in first approximation, one can suppose that: \(\ u\approx \Delta \Psi +\lambda \Psi \) (although \(\lambda \) depends on r).

We now proceed with further approximations. When \(\omega =4\), we must have \(\sigma (\sigma +1)=2\omega ^2=32\), which gives \(\sigma \approx 5.18\). From classical estimates on Bessel functions, \(\chi \) in (3.15) behaves like \(r^\sigma \) near the origin (up to a multiplicative constant). Denoting by \(r_M>0\) the first nontrivial zero of \(\chi \), we can argue that:

The first zero of the Bessel function \(J_{\sigma +\frac{1}{2}}\) for \(\sigma \approx 5.18\) is approximately \(z\approx 9.56\). Thus, we must have \(\gamma =z/r_M\). We continue this rough analysis by introducing a new parameter \(\alpha \le 1\). If \({\hat{r}}\) is a point such that:

by making use of \(\chi \) in (3.19), we obtain:

Recalling the definition of \(\lambda \), we also have:

We go back to Eq. (3.10), and use as initial guess \(u_0=-\gamma ^2{\hat{r}}^2\Psi _0\). We have:

Thus, for small times t, the nonlinear term in square brackets of (3.9) changes according to:

with \(\mu _1=\alpha \) and \(\mu _2=\alpha -2\). As far as Eq. (3.9) is concerned, we are led to write:

with \(\lambda \) depending on \(\alpha \) as in (3.22). The simplified versions (3.24–3.25) will be studied further in Sect. 5.
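The constants used in this section can be checked numerically. The sketch below solves \(\sigma (\sigma +1)=2\omega ^2\) for \(\omega =4\) and locates the first positive zero z of \(J_{\sigma +\frac{1}{2}}\) by bisection, giving values close to the quoted \(\sigma \approx 5.18\) and \(z\approx 9.56\). To stay dependency-free it sums the power series of the Bessel function (with SciPy, `scipy.special.jv` could be used instead); the helper names are hypothetical, and \(r_M=10\) is the value adopted later in Sect. 6.

```python
import math


def bessel_j(nu, x, terms=40):
    """J_nu(x) from its power series; adequate for nu >= 0 and moderate x (< 20)."""
    return sum(
        (-1) ** m / (math.factorial(m) * math.gamma(m + nu + 1)) * (x / 2) ** (2 * m + nu)
        for m in range(terms)
    )


def first_zero(nu, step=0.05, tol=1e-10):
    """First positive zero of J_nu: J_nu > 0 on ]0, j_1[, so scan until the
    sign turns negative, then refine the bracket [a, b] by bisection."""
    a, b = step, 2 * step
    while bessel_j(nu, b) > 0:
        a, b = b, b + step
    while b - a > tol:
        mid = 0.5 * (a + b)
        if bessel_j(nu, mid) > 0:
            a = mid
        else:
            b = mid
    return 0.5 * (a + b)


omega = 4.0
sigma = (-1.0 + math.sqrt(1.0 + 8.0 * omega**2)) / 2.0  # positive root of s(s+1) = 2*omega^2
z = first_zero(sigma + 0.5)                             # first zero of J_{sigma+1/2}
r_M = 10.0                                              # outer radius used in Sect. 6
gamma = z / r_M
print(f"sigma = {sigma:.4f}, z = {z:.3f}, gamma = {gamma:.4f}")
```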

4 Special cases

Before facing the general case (treated starting from Sect. 6), we deal with some preliminary simplified examples. We start by discussing the case of a scalar potential \(\Psi =\Psi (\theta , \phi )\) depending neither on r nor on t. We revisit (3.2) by neglecting \(\Phi \) and setting \(u=\Delta \Psi \). Going through the same computations as in the previous section, we find that:

The above equation (of fourth order in the unknown \(\Psi \)) summarizes the whole Navier–Stokes system (1.1) for a homogeneous right-hand side \(\textbf{f}=0\). Note that, from (3.3), \(\textbf{v}\) now turns out to be singular at the origin (\(r=0\)). We then impose Neumann conditions on both unknowns \(\Psi \) and u.

By integrating the differential Eq. (4.1) in \(\Omega \), we discover the following compatibility condition for u:

The other simplified situation that we would like to discuss is when \(\nu =0\). In this new setting, we replace \(\Psi (t, r, \theta ,\phi )\) by \(\ r^2\Psi (t, \theta ,\phi )\). Equations (3.9) and (3.10) take respectively the form:

Note that the term \({\bar{\Delta }} \textbf{v}\) has been excluded since \(\nu =0\). In fact, the Laplacian \(\Delta u\) cannot be taken into account here, because its dependence on r is not homogeneous with that of the other terms.

Some preliminary numerical tests can be carried out. In view of more sophisticated applications, we set up the computational machinery starting from the one-dimensional version of Eq. (4.1) with \(u=\Delta \Psi \). Thus, we consider:

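where u and \(\Psi \) now depend exclusively on the variable \(\phi \). We then build the Fourier expansions:

\[ u(\phi )=\sum _{k=0}^{\infty } c_k\cos (\omega k\phi ), \qquad \Psi (\phi )=\sum _{k=0}^{\infty } d_k\cos (\omega k\phi ), \qquad (4.5) \]

where

\[ c_0=\frac{\omega }{2\pi }\int _{-\pi /\omega }^{\pi /\omega } u(\phi )\, d\phi , \qquad c_k=\frac{\omega }{\pi }\int _{-\pi /\omega }^{\pi /\omega } u(\phi )\cos (\omega k\phi )\, d\phi ,\quad k\ge 1. \qquad (4.6) \]
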
Analogous formulas hold for \(d_k\), \(k\ge 0\). In this way, the boundary conditions \(u^\prime =0\) and \(\Psi ^\prime =0\) at the endpoints \(\phi =\pm \pi /\omega \) are automatically satisfied. As a consequence of (4.4), for \(k\ge 1\) the coefficients are connected by the relation:

\[ c_k=-\omega ^2k^2\, d_k. \qquad (4.7) \]

Moreover, the implication in (4.2) suggests that \(c_0=0\). Since \(\Psi \) is involved in the equations only through its derivatives, we can also set \(d_0=0\).
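The cosine expansions and the mode-by-mode relation between \(u=\Psi ^{\prime \prime }\) and \(\Psi \) can be sketched as follows. The helper names are hypothetical; the coefficients are computed with the midpoint rule, which is exact up to rounding for low-degree trigonometric polynomials on a full period \(2\pi /\omega \), and \(d_0\) is set to zero as in the text.

```python
import math


def cosine_coeffs(f, omega, K, M=512):
    """Coefficients c_0..c_K of f(phi) = sum_k c_k cos(omega*k*phi) on ]-pi/omega, pi/omega[.

    c_0 = (omega/(2 pi)) * integral of f,  c_k = (omega/pi) * integral of f*cos(omega k phi).
    Each cos(omega k phi) has zero derivative at phi = +-pi/omega, so the Neumann
    conditions are built into the basis.
    """
    L = math.pi / omega
    h = 2 * L / M
    nodes = [-L + (i + 0.5) * h for i in range(M)]  # midpoint rule
    coeffs = []
    for k in range(K + 1):
        integral = h * sum(f(x) * math.cos(omega * k * x) for x in nodes)
        coeffs.append(integral / (2 * L) if k == 0 else integral / L)
    return coeffs


def psi_coeffs_from_u(c, omega, tol=1e-9):
    """Invert u = Psi'' mode by mode: c_k = -(omega*k)**2 * d_k for k >= 1.

    The compatibility condition requires c_0 = 0; d_0 is set to zero because
    Psi only enters the equations through its derivatives.
    """
    if abs(c[0]) > tol:
        raise ValueError("compatibility condition c_0 = 0 is violated")
    return [0.0] + [-c[k] / (omega * k) ** 2 for k in range(1, len(c))]


omega = 4.0
c = cosine_coeffs(lambda x: math.cos(4 * x) - 0.5 * math.cos(8 * x), omega, K=3)
d = psi_coeffs_from_u(c, omega)
print([round(v, 6) for v in c])
print([round(v, 6) for v in d])
```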

From well-known trigonometric formulas, we get:

For any fixed integer \(n\ge 1\), by substituting (4.8) and (4.9) into Eq. (4.4), we find that, for the mode \(\cos (\omega n\phi )\), we must have:

Of course, this problem always admits the trivial solution \(u=0\), \(\Psi =0\). However, depending on the choice of \(\omega \), another solution is available, which seems to be unique and rather stable. Numerical tests show that, as long as \(\omega \le \sqrt{2}\) (note that \(u=\cos \sqrt{2}\phi \) is the first eigenfunction such that \(u^{\prime \prime }+2u=0\)), the pair of non-vanishing solutions has the expected shape. Nevertheless, by increasing \(\omega \) (recall that we would like to have \(\omega =4\)), the corresponding solutions display a certain number of oscillations, leading to a velocity field \(\textbf{v}\) that does not reflect the behavior we are trying to simulate. The analysis of the set of Eqs. (4.4) has affinities with the study of diffusive logistic models, where the existence of nonzero solutions depends on the location of a parameter relative to the distribution of the eigenvalues of the diffusive operator. The literature on the subject is rather extensive. Since we did not find explicit references to our specific case, we limit our citations to the general review paper [25].
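Substituting the expansions into the nonlinear terms requires projecting products of cosine (or sine) series onto the modes \(\cos (\omega n\phi )\). Assuming that the trigonometric formulas used for this are the standard product-to-sum identities \(\cos A\cos B=\frac{1}{2}[\cos (A-B)+\cos (A+B)]\) and \(\sin A\sin B=\frac{1}{2}[\cos (A-B)-\cos (A+B)]\), a minimal sketch of the projection (a convolution of coefficients, truncated at mode N) is given below; the function name is hypothetical.

```python
import math


def project_product(a, b, N, kind="cc"):
    """Coefficients p_0..p_N of a product of two series, expanded in cos(n*w*phi).

    kind="cc": (sum_j a_j cos(j w phi)) * (sum_k b_k cos(k w phi))
    kind="ss": (sum_j a_j sin(j w phi)) * (sum_k b_k sin(k w phi))
    Modes with index above N are discarded (truncation).
    """
    if kind not in ("cc", "ss"):
        raise ValueError("kind must be 'cc' or 'ss'")
    sign = 1.0 if kind == "cc" else -1.0
    p = [0.0] * (N + 1)
    for j, aj in enumerate(a):
        for k, bk in enumerate(b):
            w = 0.5 * aj * bk
            if abs(j - k) <= N:
                p[abs(j - k)] += w          # cos((j-k) w phi) contribution
            if j + k <= N:
                p[j + k] += sign * w        # +-cos((j+k) w phi) contribution
    return p


# Pointwise check at one angle (omega = 4; no truncation, since N >= 2 + 3).
omega, phi = 4.0, 0.3
a, b = [0.3, 1.0, -0.5], [0.0, 0.7, 0.2, 0.1]
p = project_product(a, b, N=5)
lhs = sum(aj * math.cos(j * omega * phi) for j, aj in enumerate(a)) * sum(
    bk * math.cos(k * omega * phi) for k, bk in enumerate(b))
rhs = sum(pn * math.cos(n * omega * phi) for n, pn in enumerate(p))
print(abs(lhs - rhs) < 1e-12)
```

The frequency \(\omega \) does not enter the coefficient arithmetic, so the same routine serves any aperture.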

Some analysis can also be carried out for the one-dimensional version of (4.3). In this case, we get the two equations:

According to (4.5), from (4.11) a relation is readily established between the Fourier coefficients for \(k\ge 0\):

\[ c_k=\left( 6-\omega ^2k^2\right) d_k. \qquad (4.12) \]

In particular, the coefficient \(c_0=6d_0\) does not need to be zero. Considering that:

we can obtain the counterpart of (4.10) for a fixed integer \(n\ge 1\), i.e.:

with \(\mu _1=3\) and \(\mu _2=1\). For \(n=0\), the first summation in (4.14) disappears. Thus, we must have:

By virtue of (4.12), for \(\omega =4\) we arrive at the estimate:

Suppose that, for \(t\rightarrow {\hat{t}}\) (where \({\hat{t}}\) may be finite or infinite), u converges to a limit in \(L^2(-\pi /4, \pi /4 )\). Let us also suppose that \(\sum _{j=1}^{\infty } c_j^2\) tends to a positive constant. Then (4.16) tells us that \(\lim _{t\rightarrow {\hat{t}}} c_0(t)\) does not exist (i.e., \(c_0\) diverges negatively), which contradicts the hypothesis of convergence in \(L^2(-\pi /4, \pi /4 )\). The remaining possibility is that \(\sum _{j=1}^{\infty } c_j^2\) tends to zero, which means that u converges to a constant function (i.e., u minus its average tends to zero). As a consequence, in the framework of functions with zero average, we expect u and \(\Psi \) to converge to zero, without producing any singular behavior.

This first attempt to build specific solutions (the first one stationary and singular at the point \(r=0\), the second one valid for \(\nu =0\)) has been a failure, since no interesting configurations seem to exist. Nevertheless, the construction is useful for the further, decisive improvements discussed in the following sections.

5 A simple 1D problem

The results of the previous sections suggest studying more carefully the system in the single variable \(\phi \), involving the two unknowns u and \(\Psi \):

where Neumann type boundary conditions are assumed at the endpoints, i.e.: \(u^\prime =0\) and \(\Psi ^\prime =0\) for \(\phi =\pm \pi /\omega \). In (5.1), \(\lambda \), \(\mu _1\) and \(\mu _2\) are real parameters. After integration of (5.1) between \(- \pi /\omega \) and \(\pi /\omega \), one gets:

where we used integration by parts and imposed the boundary conditions.

As a special case, (4.11) is recovered by setting \(\nu =0\), \(\lambda =6\), \(\mu _1=3\), \(\mu _2=1\). In the general case, relation (4.16) becomes:

Note that we are in the peculiar situation where the right-hand side of (5.3) does not contain the coefficient \(c_0\). If \(c_0\) does not depend on t, the above formula may allow for non-vanishing Fourier coefficients \(c_j\), \(j\ge 1\), if suitable compatibility conditions hold between the parameters \(\lambda \), \(\mu _1\) and \(\mu _2\). Namely, it is necessary that the generic quantity:

assumes both positive and negative values depending on j. For \(\lambda =6\), \(\mu _1=3\), \(\mu _2=1\), \(\omega =4\), we have that \(Q=18-64j^2\) is always negative, which confirms that the projection of the system (4.11) onto the space of zero average functions does not admit solutions different from zero. If the parameters are chosen differently, interesting new situations may emerge.
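Since the expression of Q in (5.4) is not shown above, the following check of the quoted numbers rests on an assumption: it takes \(Q=\mu _1\lambda -(\mu _1+\mu _2)\,j^2\omega ^2\), an inferred form chosen because it reproduces all the cases stated in this section and in Sect. 5 below (\(Q=18-64j^2\) for \(\lambda =6\), \(\mu _1=3\), \(\mu _2=1\), \(\omega =4\); \(Q=\alpha \lambda (\alpha )-2(\alpha -1)j^2\omega ^2\) for \(\mu _1=\alpha \), \(\mu _2=\alpha -2\); \(Q\approx -17+16j^2\) for \(\alpha =.5\)). The helper names are hypothetical.

```python
def Q(lam, mu1, mu2, j, omega=4.0):
    """ASSUMED form of the discriminating quantity (5.4), inferred from the
    worked values in the text: Q = mu1*lam - (mu1 + mu2) * j**2 * omega**2."""
    return mu1 * lam - (mu1 + mu2) * j**2 * omega**2


def sign_pattern(lam, mu1, mu2, modes, omega=4.0):
    """'+' or '-' for each mode j in `modes`, to see whether Q changes sign."""
    return "".join("+" if Q(lam, mu1, mu2, j, omega) > 0 else "-" for j in modes)


cases = {
    "(4.11): lam=6, mu1=3, mu2=1": (6.0, 3.0, 1.0),
    "Fig. 2: lam=-3, mu1=.5, mu2=-1.5": (-3.0, 0.5, -1.5),
    "alpha=.5: lam~-34, mu1=.5, mu2=-1.5": (-34.0, 0.5, -1.5),
}
for name, (lam, mu1, mu2) in cases.items():
    print(name, [Q(lam, mu1, mu2, j) for j in range(4)], sign_pattern(lam, mu1, mu2, range(4)))
```

Under this assumption, for \(j\ge 1\) the first case stays negative, in agreement with the text, while for \(\alpha =.5\) the sign switches between \(j=1\) and \(j=2\).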

A numerical test has been carried out by truncating the Fourier sums at a given N; the results are shown in Fig. 2. The diffusion parameter is \(\nu =.01\). The other parameters are: \(\lambda =-3\), \(\mu _1=.5\), \(\mu _2=-1.5\). This choice ensures that Q in (5.4) may attain both positive and negative values, depending on the frequency mode involved. In the computation we enforced the condition \(c_0(t)=0, \forall t\in [0,T]\), basically by not including the zero mode in the expansion of u and noting that it is not required in the evaluation of the right-hand side of (5.3). The explicit Euler scheme for \(0\le t\le T=1.48\) has been implemented with a sufficiently small time-step. The coefficients \(c_n\) and \(d_n\) are computed for \(1\le n\le N\), with \(N=50\). For \(\omega =4\), the initial guess is \(u_0(\phi )=\cos (\omega \phi )\). Note that the sign of u at time \(t=0\) has a nontrivial impact on the branch of solution we would like to follow. The coefficients of \(\Psi \) are updated at each iteration according to the relation \(\Psi ^{\prime \prime }+\lambda \Psi =u\). Very similar conclusions hold when \(\mu _1=1\), \(\mu _2=-4\) and \(\lambda \) is negative. This particular case will be discussed again in Sect. 8. The discrete solution is clearly trying to assume the shape of a very pronounced cusp at the center of the interval. For times t larger than \(T=1.486\), the simulation first produces oscillations and then overflow. Without a theoretical analysis, we are unable to decide whether there is a real blowup, though this seems quite likely.
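The time-marching loop just described can be sketched in terms of the coefficients \(c_n\), \(n=1,\dots ,N\) (the zero mode is excluded). Since system (5.1) is not reproduced here, the right-hand side is left as a user-supplied function; in the usage example it is a hypothetical stand-in (pure diffusion of each mode), chosen only because its exact solution is known. The update of \(\Psi \) applies \(\Psi ^{\prime \prime }+\lambda \Psi =u\) mode by mode, i.e. \(d_n=c_n/(\lambda -\omega ^2n^2)\).

```python
import math


def psi_from_u(c, lam, omega):
    """Solve Psi'' + lam*Psi = u mode by mode: d_n = c_n / (lam - (omega*n)**2).

    c[0] is the coefficient of cos(omega*phi), i.e. mode n = 1 (the zero mode is
    excluded, as in the computation described in the text).
    """
    d = []
    for n, cn in enumerate(c, start=1):
        den = lam - (omega * n) ** 2
        if den == 0:
            raise ZeroDivisionError(f"lambda is resonant with mode n = {n}")
        d.append(cn / den)
    return d


def explicit_euler(c, rhs, dt, T):
    """Forward Euler for the coefficient ODE system c' = rhs(c) on [0, T]."""
    c = list(c)
    for _ in range(round(T / dt)):
        c = [ci + dt * fi for ci, fi in zip(c, rhs(c))]
    return c


# Parameters quoted in the text (omega = 4, lambda = -3, nu = .01, N = 50,
# u_0 = cos(omega*phi)) with a HYPOTHETICAL right-hand side: pure diffusion.
omega, lam, nu, N = 4.0, -3.0, 0.01, 50
c0 = [1.0] + [0.0] * (N - 1)
d0 = psi_from_u(c0, lam, omega)          # d_1 = 1/(lam - 16) = -1/19


def diffusion(c):
    return [-nu * (omega * n) ** 2 * cn for n, cn in enumerate(c, start=1)]


cT = explicit_euler(c0, diffusion, dt=1e-4, T=0.5)
print(cT[0], math.exp(-nu * omega**2 * 0.5))  # Euler vs exact decay of mode 1
```

With explicit Euler the step must resolve the fastest retained mode: here \(\nu (\omega N)^2\,\Delta t=0.04\), well inside the stability bound of 2 for a decaying mode.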

From our rough analysis, we learned in this section that, for certain values of the parameters, the model problem (5.1) admits only the identically zero steady-state solution. For other suitable choices of the parameters, non-vanishing stable solutions emerge. They may effectively display a degeneracy of the regularity after a certain time.

Nonlinear parabolic equations whose solutions blow up in finite time are widely studied. A classical example is:

\[ \frac{\partial u}{\partial t}=\Delta u+f(u), \qquad (5.5) \]

with Dirichlet boundary conditions. Assume that f is convex and \(f(u)>0\) for \(u>0\). If, for some \(a>0\), the integral \(\int _a^\infty (1/f(u))\,du\) is finite, then the solution of (5.5) blows up when the initial datum is sufficiently large. These and similar questions are reviewed, for instance, in [15]. Our system may also have affinities with model equations arising from very diverse applications. A prominent example is the Cahn-Hilliard equation [7]. The literature on this subject is quite extensive, so we only mention the recent book [21]. In its basic formulation, the Cahn-Hilliard equation takes the form:

\[ \frac{\partial u}{\partial t}=\nu \Delta \big ( f(u)-\gamma \Delta u\big ), \qquad (5.6) \]

where \(\nu >0\) and \(\gamma >0\) are suitable parameters. It is often written as a system after introducing the function \(\mu = f(u) -\gamma \Delta u\). Typical boundary conditions are of Neumann type, i.e.: \(\partial u/\partial {\bar{n}}=0\) and \(\partial \mu /\partial {\bar{n}}=0\). Existence of nontrivial attractors is proven in several circumstances. A standard choice for the nonlinear term is \(f(u)=u^3-u\).
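For the spatially homogeneous part of the classical blowup example (5.5), i.e. the ODE \(u^\prime =f(u)\), the integral condition quoted above has a direct meaning: the solution starting from \(u_0>0\) blows up at \(T^*=\int _{u_0}^\infty du/f(u)\). This is only a heuristic for the PDE with Dirichlet conditions, where diffusion competes with the reaction term. The hypothetical helper below evaluates \(T^*\) numerically; it receives \(1/f\) directly to avoid overflow for fast-growing f.

```python
import math


def blowup_time(inv_f, u0, M=20000):
    """Approximate T* = integral from u0 to infinity of du / f(u).

    inv_f is the function 1/f. The substitution u = u0 + t/(1 - t) maps
    t in [0, 1) onto [u0, inf), with du = dt / (1 - t)**2; the midpoint rule
    on M cells never evaluates the endpoint t = 1.
    """
    h = 1.0 / M
    total = 0.0
    for i in range(M):
        t = (i + 0.5) * h
        total += inv_f(u0 + t / (1.0 - t)) / (1.0 - t) ** 2
    return h * total


print(blowup_time(lambda u: 1.0 / u**2, 2.0))    # f(u) = u^2: exact T* = 1/u0
print(blowup_time(lambda u: math.exp(-u), 1.0))  # f(u) = e^u: exact T* = exp(-u0)
```

For \(f(u)=u\) the integral diverges logarithmically, consistent with the fact that linear growth produces no finite-time blowup.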

In the one-dimensional counterpart of Eqs. (3.24) and (3.25), the quantity in (5.4) takes the value \(Q=\alpha \lambda (\alpha ) -2(\alpha -1)j^2\omega ^2\). For \(0<\alpha <1\), we get \(\lambda (\alpha ) <0\) and Q may actually change sign. As an example, we may set \(\alpha =.5\), so that \(\lambda (\alpha ) \approx -34\) and \(Q\approx -17+16 j^2\). Roughly speaking, by fixing \({\hat{r}}\) in the interval \(]0, r_M[\), we may encounter situations similar to those examined in this section, leading to a (presumed) degeneracy of the regularity of the solutions. This does not mean that this kind of trouble must actually occur in the framework of the real 3D problem, especially because our preliminary analysis was oversimplified. We will consolidate our knowledge in Sect. 9. In the next section, we try some numerical simulations on the global 3D problem. The aim is to check whether anomalous situations may effectively occur.

6 Full 3D discretization

In order to discretize the full system (3.9)–(3.10), we consider the series:

where the Fourier coefficients depend on r and t. In this way, we respect the Neumann boundary constraints prescribed in (3.11). Here, we decided to set \(c_{00}=d_{00}=0\). For \(n\ge 0\) and \(l \ge 0\), the mode \(\cos (\omega n \theta )\cos (\omega l \phi )\) is associated with the evolution of the corresponding coefficient \(c_{nl}\):

where \(c_{nl}\) and \(d_{nl}\) are related via (3.9) in the following way:

The above formulas, based on simple trigonometric identities, generalize those proposed in the previous sections. The two coefficients \(c_{00}\) and \(d_{00}\) are kept equal to zero, for all \(r\ge 0\), as time passes. Therefore, it is necessary to check whether a suitable integral of the nonlinear term satisfies a compatibility condition (see Sect. 9).

We compute approximate solutions where r belongs to the interval \([0, r_M]\) for some \(r_M>0\). We impose homogeneous Dirichlet boundary conditions on u and \(\Phi \) at \(r=0\) and \(r=r_M\). The final time is \(T=0.11\). The derivatives with respect to the variable r are approximated by central finite differences. The discretization in time is performed by the explicit Euler scheme with a rather small time-step, which allows us to easily update the coefficients \(c_{nl}\) at each iteration. The coefficients \(d_{nl}\) are obtained at each step by solving an implicit 1D boundary-value problem, recovered from a central finite-difference discretization of (6.3).
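Each implicit 1D boundary-value problem in r reduces, after central finite differencing, to a tridiagonal linear system. As a sketch only: the actual operator in (6.3) is not reproduced here, so the code assumes a model problem \(d^{\prime \prime }+s(r)\,d=g(r)\) on \(]0,r_M[\) with \(d(0)=d(r_M)=0\), where s and g are hypothetical inputs, and solves it with the Thomas algorithm. The check uses 73 intervals on \([0,10]\), the grid reported later in this section.

```python
import math


def solve_tridiagonal(lower, diag, upper, rhs):
    """Thomas algorithm for a tridiagonal system (lower[0] and upper[-1] unused).

    Stable without pivoting when the matrix is diagonally dominant, e.g. for
    the model problem below with s(r) <= 0.
    """
    n = len(diag)
    cp, dp = [0.0] * n, [0.0] * n
    cp[0], dp[0] = upper[0] / diag[0], rhs[0] / diag[0]
    for i in range(1, n):
        m = diag[i] - lower[i] * cp[i - 1]
        cp[i] = upper[i] / m if i < n - 1 else 0.0
        dp[i] = (rhs[i] - lower[i] * dp[i - 1]) / m
    x = [0.0] * n
    x[-1] = dp[-1]
    for i in range(n - 2, -1, -1):
        x[i] = dp[i] - cp[i] * x[i + 1]
    return x


def solve_model_bvp(s, g, r_M, K):
    """Central differences for d'' + s(r) d = g(r), d(0) = d(r_M) = 0,
    on K uniform intervals; returns the interior nodes and values."""
    h = r_M / K
    r = [i * h for i in range(1, K)]
    off = [1.0 / h**2] * (K - 1)
    diag = [-2.0 / h**2 + s(ri) for ri in r]
    return r, solve_tridiagonal(off, diag, off, [g(ri) for ri in r])


# Check against an exact solution: d = sin(pi r / r_M) solves d'' = -(pi/r_M)^2 d.
r_M, K = 10.0, 73
k = math.pi / r_M
r, d = solve_model_bvp(lambda rr: 0.0, lambda rr: -k**2 * math.sin(k * rr), r_M, K)
err = max(abs(di - math.sin(k * ri)) for ri, di in zip(r, d))
print(f"max nodal error: {err:.2e}")  # second order in h = r_M / K
```

The Thomas algorithm costs O(K) operations per solve, which keeps the per-step cost of the implicit update for each \(d_{nl}\) small.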

In the experiments that follow, we set \(\nu =.02\) and \(r_M=10\). Inspired by (3.14) and (3.19), at time \(t=0\) we impose:

which means that \(c_{00}=0\) and \(c_{10}=c_{01}=c_{11}\). We give in Fig. 3 the section for \(\phi =0\) of the initial velocity field \(\textbf{v}_0\) evaluated according to (3.3). We also show the third component of \(\textbf{A}\) as prescribed in (3.1). The level lines of \(A_3\) do not exactly envelop the streamlines, but they nevertheless give a reasonable idea of what is going on. The intensity of \(u_0\) in (6.4) has been calibrated to guarantee stability of the time-advancing scheme, also in relation to the magnitude of \(\nu \). The sign of the initial datum influences the behavior of the evolution. With the sign as in (6.4), the corresponding \(\textbf{v}_0\) has the rotatory aspect visible in Fig. 3. As in a driven-cavity problem, there is a tendency to form an internal layer towards the central axis of the domain (\(\theta =\phi =0\)). By switching the sign of \(\textbf{v}_0\), the evolution tends to bring the fluid towards the pyramid vertex (Fig. 10). We will mainly focus on the first situation.

We provide in Fig. 4 some snapshots of a section (corresponding to \(\phi =0\)) of the evolving ring. In truth, viewed from the top (i.e., lying on the square \(\Omega \) of the plane \((\theta ,\phi )\)), the shape is not exactly that of a classical rounded ring: the body is slightly elongated near the four corners.

Fig. 3. Vector representation of the initial field \(\textbf{v}_0\) (left), corresponding to \(u_0\) in (6.4), together with the level lines of the third component of \(\textbf{A}\) (right). These pictures refer to the section obtained for \(\phi =0\).

Fig. 4. Successive evolution of the ring sections, starting from the initial data of Fig. 3. The pictures refer to \(\phi =0\), and the snapshots are taken at times \(t=0.044\), \(t=0.077\), \(t=T=0.110\), respectively.

At time \(t=0.1\) (a bit earlier than the final time of computation), the section can be better examined in Fig. 5, where an enlargement is also provided. After that time, the evolution continues to be stable and the discrete solution remains bounded. The approximate solution has been obtained by truncating the sums in (6.2)–(6.3) at indices greater than \(N=11\). The interval \([0, r_M]=[0,10]\) has been divided into 73 parts. The \(L^2\) norm of the velocity field shows very little variation during the evolution, although a decay would normally be expected, due to the presence of the viscous term and the numerical diffusion introduced by the discretization. In Fig. 6 we can see the plot of the velocity component \(v_1\) in the square \([0,r_M]\times [-\pi /4, \pi /4 ]\). Qualitatively, the pictures do not change much when the number of degrees of freedom is increased, at least up to a critical time approximately equal to \(t=0.1\).

The sections develop so that the main vortex moves upwards, trying to create a layer near the upper boundary. More insight comes from examining Fig. 7, where the radial component \(v_1\) of the velocity field, as a function of the variable r, is shown for \(\theta =\phi =0\) (the other two components \(v_2\) and \(v_3\) are zero there). The behavior seems to follow a kind of 1D Burgers equation, where the graph shifts from left to right. Up to \(t=.09\) everything remains smooth, although the second derivatives tend to grow. Between \(t=.09\) and \(t=.10\) there is a change of regime.

According to Fig. 8, the vector field at the center, which is initially smooth, tends to generate a sort of jump in the flux rate. This change is transmitted laterally, although one may argue that this is either a numerical effect or a consequence of the forcing term \(\textbf{f}(\textbf{v})\). Beyond \(t=.10\), numerical oscillations pollute the outcome (the anomaly is already visible at the base of the last plot of Fig. 7). Going further in time, we can reach situations such as the one shown in Fig. 8, obtained with more accurate expansions (\(N=15\)). Some strange phenomena are then detectable independently of the number of degrees of freedom used.

These computations are not massive, but they are rather intensive. Thus, it is quite expensive to perform an accurate analysis of the real behavior. Moreover, confirming the presence of a jump of regularity in the first derivative of the flux may be very hard from the numerical viewpoint. At the critical time something happens: the solution reaches a kind of steady state and the computation degenerates. Certainly, we are not in the presence of a blowup to infinity or of a discontinuity of the field, but possibly of a lack of smoothness. We suspect that a reliable verification is only achievable with rather large values of N, with an abrupt growth of the cost of the numerical implementation. We refer the reader to Sect. 8 for further results based on a simplified 2D version of the original 3D problem.

As pointed out at the end of the previous section, the pictures presented so far do not reflect the actual physical behavior of an autonomous velocity field \(\textbf{v}=(v_1,v_2,v_3)\) simulating a vortex ring. There is in fact a forcing term \(\textbf{f}\), whose nature depends on the solution itself. According to (3.8) and (3.3), we have:

We show in Fig. 9 the plot of \(f_2\) restricted to the plane \(\phi =0\). The snapshot is taken at time \(t=.10\) (the same as in Fig. 5). Note that, on the section \(\theta =0\), \(f_2\) is identically zero.

The largest variations of \(\textbf{v}\) occur not far from the point (denoted by P) where \(\vert v_1\vert \) reaches its maximum (Fig. 7). We may assume that P is at a distance \(r^*>0\) from the origin. It should be noticed that both \(f_2\) and \(f_3\) are the product of two terms and that both \(v_2\) and \(v_3\) are identically zero for \(\theta =\phi =0\). In Fig. 9 there are regions where \(\textbf{f}\) undergoes sharp changes, but things do not seem to be so critical near P, where instead the worst variation of \(\textbf{v}\) is expected. We try to reach some conclusions by assuming that, at the blowup instant, the function \(\Psi \) behaves near P as \((r-r^*)^\alpha \) (up to additive and multiplicative constants), for an appropriate value of \(\alpha \). In this circumstance, by neglecting powers of \((r-r^*)\) with higher exponents, we obtain from (3.3) the estimates:

If, for example, we set \(\alpha =5/2\), the corresponding \(v_2\) and \(v_3\) belong to \(H^1(\Sigma )\cap C^0(\Sigma )\) but not to \(H^2(\Sigma )\), where \(\Sigma =]0,r_M[\times \Omega \). Note that the Jacobian of our system of coordinates behaves as \(r^2\) near the origin, but to deduce integrability we actually operate within a neighborhood of the point P, which has \(r^*>0\). On the other hand, \(f_2 \approx (r-r^*)^3 \) and \(f_3 \approx (r-r^*)^3\) are locally smooth functions. This leaves room to suppose that a regular forcing term may produce a non-regular solution, at least as far as the integrability of certain derivatives is concerned. As for the term \({\bar{\Delta }}{} \textbf{v}\), we argue that its smoothing effect at P could be momentarily diminished, because it is the sum of second-order partial derivatives that tend to cancel each other. The above arguments are, however, extremely heuristic.
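The power counting behind the claim for \(\alpha =5/2\) can be made explicit. The sketch below assumes (our reading, since the estimates themselves are not reproduced here) that \(v_2\) and \(v_3\) behave near P like \(\vert r-r^*\vert ^{\alpha -1}\). Because \(r^*>0\), the Jacobian \(r^2\) is bounded away from zero there, so only the one-dimensional behavior in r matters. For a non-integer exponent \(\beta \), the j-th derivative of \(\vert s\vert ^\beta \) behaves like \(\vert s\vert ^{\beta -j}\), which is square integrable near \(s=0\) exactly when \(2(\beta -j)>-1\). The helper name `in_local_Hk` is hypothetical.

```python
from fractions import Fraction


def in_local_Hk(beta: Fraction, k: int) -> bool:
    """Return True if |s|**beta lies in H^k near s = 0 (one space dimension).

    Valid for non-integer beta: the j-th derivative behaves like |s|**(beta - j),
    whose square is integrable at 0 iff 2 * (beta - j) > -1. The k-th derivative
    is the most singular one, so checking j = k is enough.
    """
    return 2 * (beta - k) > -1


alpha = Fraction(5, 2)  # exponent assumed for Psi near P
beta = alpha - 1        # assumed local exponent of v_2 and v_3

for k in (0, 1, 2):
    print(f"k={k}: |r - r*|^({beta}) in H^{k}? {in_local_Hk(beta, k)}")
```

Under this assumption, membership in \(H^1\) requires \(\alpha >3/2\) and failure of \(H^2\) requires \(\alpha \le 5/2\), so \(\alpha =5/2\) is the most regular non-integer choice that still escapes \(H^2\), while \(\beta =3/2>0\) keeps \(v_2\) and \(v_3\) continuous.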

As will be better discussed at the end of Sect. 8 for a modified version of the system (3.9–3.10), we may interpret the variable r as a parameter. As done in Sect. 4, for a fixed r we introduce some functions \(\lambda (r)\), \(\mu _1(r)\), \(\mu _2(r)\) and a discriminating quantity Q(r). Roughly speaking, depending on the behavior of Q, we expect regions where the set of equations in the variables \(\theta \) and \(\phi \) is dominated by dissipation, which forces the velocity field to vanish. For other values of r we are instead in situations like that of Fig. 2. This push-pull mechanism may explain why a full blowup at infinity is not realized, while the solution may still pass through a (possible) state of lack of regularity.

Searching the literature on the regularity of Navier–Stokes solutions, we find, for instance, the following papers: [3, 4, 6, 13, 23, 24]. Of course, much more material is available, as a consequence of intense research activity. Most of the results deal with the case \(\textbf{f}=0\). If a blowup to infinity occurs, the theory predicts with rather good reliability in which norms this happens and at what rate. Our case is a bit different. We have an uncommon forcing term that could produce irregularities, but that at the same time prevents an excessive growth of the velocity field. Therefore, it is not easy to find pertinent theoretical results, and we leave this kind of analysis to the experts. We guess that \(v_1, v_2, v_3\) may comfortably stay in the space \(H^1(\textbf{R}^3)\) during the time evolution. The considerations made above suggest a possible blowup in the norm of \(H^2(\textbf{R}^3)\), a space which is just a little more regular than \(C^0(\textbf{R}^3)\). Nevertheless, at the moment we have neither theoretical nor practical arguments to confirm this occurrence.

From our experiments it turns out that the role of the viscosity parameter \(\nu \) is not really crucial. It is true that, for relatively large values of \(\nu \), the solution smooths out very quickly. In those circumstances, it may just be a matter of increasing the intensity of the initial datum to reproduce the critical behavior again. On the other hand, it is also possible to choose \(\nu =0\) without affecting the stability of the numerical scheme, obtaining outputs very similar to those of Fig. 7. Future theoretical studies may establish that our approach is fruitless for analyzing the possible blowup of the solutions of the Navier–Stokes equations. However, the idea could still be applied successfully to the analysis of the inviscid Euler equations.

We spend a few words on the possibility of switching the sign of the initial datum (i.e., replacing \(u_0\) by \(-u_0\) in (6.4)). In Fig. 10 we see two moments of this evolution. We are quite confident that a sort of singularity is going to be generated at the origin. For instance, it is reasonable to suppose that \(v_1\) vanishes like r when approaching the vertex of the pyramid. In the whole space \(\textbf{R}^3\), however, \(r=\sqrt{x^2+y^2+z^2}\) is not a regular function at the origin. On the other hand, by examining the functions \(f_2\) and \(f_3\) we find a posteriori that they are affected by the same pathology. Thus, we are in the case where a bad forcing term \(\textbf{f}\) induces the creation of a bad field \(\textbf{v}\), and this is not an interesting discovery. The conclusion is that, within our framework, a deterioration of regularity at the origin (\(r^*=0\)) has little chance of occurring.

Field distribution at time \(t=0\) and time \(t=.11\) when the initial field \(\textbf{v}_0\) corresponds to \(-u_0\) in (6.4)
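The remark that r is not a regular function at the origin can be seen from one-sided difference quotients along the x-axis, where r reduces to \(\vert x\vert \). The slopes from the right and from the left tend to +1 and -1, so a field vanishing like r inherits a corner at the vertex, whereas \(r^2=x^2+y^2+z^2\) is a polynomial. This is a minimal illustration with hypothetical helper names.

```python
import math


def radius(x: float, y: float, z: float) -> float:
    """Distance from the origin, r = sqrt(x^2 + y^2 + z^2)."""
    return math.sqrt(x * x + y * y + z * z)


def radius_squared(x: float, y: float, z: float) -> float:
    """r^2 = x^2 + y^2 + z^2, a polynomial and hence smooth everywhere."""
    return x * x + y * y + z * z


h = 1e-6
for name, g in (("r", radius), ("r^2", radius_squared)):
    # One-sided difference quotients along the x-axis at the origin.
    right = (g(h, 0.0, 0.0) - g(0.0, 0.0, 0.0)) / h
    left = (g(0.0, 0.0, 0.0) - g(-h, 0.0, 0.0)) / h
    print(name, right, left)
# For r the two slopes are close to +1 and -1 (a corner); for r^2 both are O(h).
```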

As a final remark, we mention the possibility of replacing the pyramid \(\Sigma \) with a cone and using spherical coordinates \((r,\theta ,\phi )\), where \(\theta \) now denotes the azimuthal angle. In this fashion we require that the expression of the ring does not involve the variable \(\phi \), thus obtaining a 2D problem. After the usual computations, we get:

Unfortunately, if we approach the new set of equations with Fourier cosine expansions (in order to preserve Neumann boundary conditions), the formulas are not as neat as in (6.2), since there are spurious sine components that cannot be easily handled. Thus, the computational cost does not decrease significantly. Considering that we would not be solving exactly the original problem and that there are no numerical benefits, we decided not to proceed in this direction. Nevertheless, in Sect. 8, we examine a simplified version of (6.6)–(6.7). This surrogate problem will be more affordable from the numerical viewpoint, while retaining some of the main features.

7 Comparison with the 2D version

It is known that solutions of the 2D Navier–Stokes equations preserve their regularity indefinitely. The 2D version of the example examined so far corresponds to four flattened rings, built on triangular slices forming a partition of \(\textbf{R}^2\). In each single slice, we work in polar coordinates \((r, \theta )\), or more appropriately in cylindrical coordinates \((r, \theta , z)\), where no dependence on the variable z is assumed. In fact, the z-axis, orthogonal to the plane \(\textbf{R}^2\), is only introduced in order to apply the curl operator. We recall that, in this circumstance, the curl of a vector \(\textbf{A}=(A_1, A_2, A_3)\) is determined as follows:

For a scalar potential \(\Psi \), which is a function of t, r and \(\theta \), we define \(\textbf{A}=( 0, 0, {\partial \Psi }/{\partial \theta })\). Following the same steps as for the 3D version, we arrive at:

which are similar to (3.9)-(3.10).

Let us remark that the last system has little in common with (6.6) and (6.7). In the 3D version, defined on a cone, the flow comes from all directions and concentrates on the vertical axis. The section of the cone does not correspond to the slice of the 2D version, where the fluid only arrives from left or right. This is probably why the 3D version of the Navier–Stokes equations is more vulnerable to an overcrowding of the fluid in certain areas, giving rise to an exceptional increase of pressure.
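For reference, here is the standard curl in cylindrical coordinates when nothing depends on z, applied to \(\textbf{A}=( 0, 0, {\partial \Psi }/{\partial \theta })\). This is a direct computation of ours, not a reproduction of the formulas displayed in the paper. It shows that \(\textrm{curl}\,\textbf{A}\) is automatically divergence-free, and that its \(\theta \)-component, \(-\partial ^2\Psi /\partial r\partial \theta \), vanishes on the slice boundaries \(\theta =\pm \pi /\omega \) whenever \(\partial \Psi /\partial \theta =0\) there for all r, as under the Neumann conditions assumed for \(\Psi \).

```latex
% Curl in cylindrical coordinates (r, \theta, z) when nothing depends on z:
\[
\textrm{curl}\,\textbf{A} = \left( \frac{1}{r}\frac{\partial A_3}{\partial \theta},\;
  -\frac{\partial A_3}{\partial r},\;
  \frac{1}{r}\Big( \frac{\partial (r A_2)}{\partial r} - \frac{\partial A_1}{\partial \theta} \Big) \right)
\]
% With A = (0, 0, \partial\Psi/\partial\theta):
\[
\textrm{curl}\,\textbf{A} = \left( \frac{1}{r}\frac{\partial^2 \Psi}{\partial \theta^2},\;
  -\frac{\partial^2 \Psi}{\partial r\,\partial \theta},\; 0 \right)
\]
% Its planar divergence vanishes identically (mixed partials commute):
\[
\frac{1}{r}\frac{\partial}{\partial r}\Big( r \cdot \frac{1}{r}\frac{\partial^2 \Psi}{\partial \theta^2} \Big)
 + \frac{1}{r}\frac{\partial}{\partial \theta}\Big( -\frac{\partial^2 \Psi}{\partial r\,\partial \theta} \Big)
 = \frac{1}{r}\Big( \frac{\partial^3 \Psi}{\partial r\,\partial \theta^2}
 - \frac{\partial^3 \Psi}{\partial \theta^2\,\partial r} \Big) = 0
\]
```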

As before, Neumann type boundary conditions are assumed for both u and \(\Psi \), i.e.: \((\partial u /\partial \theta )(\pm \pi /\omega )= (\partial \Psi /\partial \theta )(\pm \pi /\omega )=0\), for all \(r>0\). The two functionals in (3.13) now become:

Using the lowest-order eigenmodes:

this time we discover that:

provided \(\chi (r) = J_{\sigma } (\gamma r)\), with \(\sigma =\omega \). For \(\omega =4\), the first nontrivial zero of the Bessel function \(J_4\) is 7.58.
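The value 7.58 can be checked with a few lines of standard-library Python. The sketch evaluates \(J_4\) through Bessel's integral representation, valid for integer order, and locates the sign change by bisection. It is a stand-in for a library Bessel routine, and the helper names are hypothetical.

```python
import math


def bessel_j(order: int, x: float, n: int = 2000) -> float:
    """J_order(x) for integer order via Bessel's integral
    J_n(x) = (1/pi) * integral_0^pi cos(n t - x sin t) dt,
    approximated with the composite trapezoidal rule. The integrand extends to
    an even, 2*pi-periodic function, so the rule converges very fast."""
    def integrand(t: float) -> float:
        return math.cos(order * t - x * math.sin(t))

    h = math.pi / n
    total = 0.5 * (integrand(0.0) + integrand(math.pi))
    total += sum(integrand(k * h) for k in range(1, n))
    return total * h / math.pi


def zero_in_bracket(order: int, lo: float, hi: float, tol: float = 1e-10) -> float:
    """Bisection for a zero of J_order inside [lo, hi] (a sign change is required)."""
    f_lo = bessel_j(order, lo)
    if f_lo * bessel_j(order, hi) > 0:
        raise ValueError("J has no sign change on [lo, hi]")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = bessel_j(order, mid)
        if f_lo * f_mid <= 0:
            hi = mid
        else:
            lo, f_lo = mid, f_mid
    return 0.5 * (lo + hi)


# J_4 is positive up to its first zero and changes sign once in [5, 8].
print(zero_in_bracket(4, 5.0, 8.0))
```

The result is approximately 7.5883, consistent with the value 7.58 quoted above.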

We ran some numerical experiments by setting \(\nu =.02\), \(r_M=8\) and \(T=.21\). At time \(t=0\) we impose \(u_0=\pm (r^4 /r_M) (r_M-r) \cos (\omega \theta )\). The Fourier expansions are truncated at \(N=20\). The plots of Fig. 11 show the evolution of \(v_1\) along the axes \(\theta =0\) and \(\theta =\pi /4\). Compared with Fig. 7, the transition looks smoother and the effects of dissipation are more prominent. However, it has to be remembered that the forcing term \(\textbf{f}\) (which implicitly depends on the solution itself) may alter our ability to judge what is really happening. As expected, everything looks pretty smooth.
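As a quick sanity check of the initial datum just described, the sketch below verifies that \(u_0\) satisfies the Neumann condition \(\partial u/\partial \theta =0\) on the slice boundaries \(\theta =\pm \pi /\omega \) (since \(\sin (\pm \pi )=0\) for \(\omega =4\)) and vanishes at \(r=0\) and \(r=r_M\). The function names are hypothetical; only the stated parameters \(r_M=8\) and \(\omega =4\) are used.

```python
import math

R_M = 8.0  # outer radius r_M of the 2D experiments
OMEGA = 4  # omega = 4, so each slice is ]-pi/4, pi/4[


def u0(r: float, theta: float, sign: int = 1) -> float:
    """Initial datum u_0 = sign * (r^4 / r_M) * (r_M - r) * cos(omega * theta)."""
    return sign * (r**4 / R_M) * (R_M - r) * math.cos(OMEGA * theta)


def du0_dtheta(r: float, theta: float, sign: int = 1) -> float:
    """Exact derivative of u_0 with respect to theta."""
    return -sign * (r**4 / R_M) * (R_M - r) * OMEGA * math.sin(OMEGA * theta)


radii = [0.5 * i for i in range(17)]  # 0, 0.5, ..., 8
edge = math.pi / OMEGA                # slice boundary theta = ±pi/omega
worst_neumann = max(abs(du0_dtheta(r, s * edge)) for r in radii for s in (1, -1))
print(worst_neumann)                  # round-off level only
print(u0(0.0, 0.3), u0(R_M, 0.3))     # vanishes at r = 0 and r = r_M
```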

8 A simplified model for the cone

At the end of Sect. 6, we introduced the equations (6.6–6.7). Defined on a three-dimensional cone, they just make use of the two variables r and \(\theta \). In order to develop a cheap numerical code for the calculation of their solutions, we introduce the following approximation:

which is valid for small \(\theta \). In this way we concentrate our attention on the central axis of the cone. At the same time, this makes it easy to implement Fourier cosine expansions.

First of all, the expression of the velocity field takes the form:

Next, the equations are modified as follows:

The numerical code is the same as the one used in the previous section. The results are, however, rather different. We studied the behavior in the time interval \([0,T]=[0,.4]\), with \(r_M=10\), \(\nu =.02\) and the initial condition \(u_0=(r^7/r_M^4)(r_M-r)\cos \omega \theta \). For the outcome, we refer to Figs. 12, 13, 14; in these experiments the series were truncated beyond \(N=18\).
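Both radial profiles used for the initial data, \(r^4(r_M-r)/r_M\) in Sect. 7 and \(r^7(r_M-r)/r_M^4\) here, are of the form \(r^p(r_M-r)\) up to a constant factor, which does not affect where they peak. Setting the r-derivative to zero gives the peak at \(r=p\,r_M/(p+1)\), i.e. 6.4 for the 2D run and 8.75 for the cone model, so the higher power pushes the initial datum toward the outer boundary. This is only arithmetic on the stated initial conditions, with hypothetical helper names; it says nothing about where \(\vert v_1\vert \) peaks during the evolution.

```python
def peak_location(p: int, r_m: float) -> float:
    """Maximizer of r^p * (r_m - r) on [0, r_m].

    d/dr [r^p (r_m - r)] = r^(p-1) * (p * r_m - (p + 1) * r) vanishes at
    r = p * r_m / (p + 1), the only interior critical point.
    """
    return p * r_m / (p + 1)


def grid_peak(p: int, r_m: float, n: int = 100_000) -> float:
    """Brute-force check: argmax of r^p (r_m - r) on a uniform grid."""
    grid = (r_m * i / n for i in range(n + 1))
    return max(grid, key=lambda r: r**p * (r_m - r))


# 2D experiments of Sect. 7: p = 4, r_M = 8; cone model: p = 7, r_M = 10.
for p, r_m in ((4, 8.0), (7, 10.0)):
    print(p, r_m, peak_location(p, r_m), grid_peak(p, r_m))
```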

It is interesting to observe that, at the points where \(\partial \Psi /\partial r=0\), the coefficients of the nonlinear term in (8.4) correspond to the case \(\mu _1=1\) and \(\mu _2=-4\) of the 1D model problem (5.1) introduced in Sect. 5. This means that we are in the condition where the quantity Q defined in (5.4) attains different signs depending on the index j. In these circumstances, we conjectured that the solution of (5.1) blows up in a finite time. Here, we do not have an explosion. However, the behavior looks quite unusual, especially in the picture on the right of Fig. 13, where a plateau is visible in the central part. Again, we are not in a position to decide whether a breakdown of regularity is effectively occurring, or whether the anomaly is just the consequence of the small diffusive term \(\nu \), which allows for the development of sharp layers without destroying smoothness. We add further comments in the following sections.

9 Some theoretical considerations

In the numerical simulations of Sect. 6, we imposed that the functions u and \(\Psi \) had zero average in \(\Omega \), corresponding to \(c_{00}=d_{00}=0\), for any r and any t. This property is compatible with (3.9) and (6.3). Moreover, it is inspired by the fact that the nonlinear term in (3.9) is independent of \(c_{00}\) and \(d_{00}\) (see also (6.2)). Let us study this aspect in more detail. To this end, we integrate equation (3.9) over the domain \(\Omega \) and perform some integrations by parts, taking into account the Neumann boundary constraints, valid for any r. Considering that \(\int _\Omega u \, d\theta d\phi =0\), for any r and t, we get:

The next step is to integrate the above expression with respect to \(r>0\). We denote by \(\Sigma \) the Cartesian product \(\Omega \times ]0, r_M[\), where \(r_M\) can be either finite or infinite. At \(r=0\) and \(r=r_M\) we impose vanishing boundary conditions, independently of \(\theta \) and \(\phi \). By integrating by parts when necessary, we must have:

The above equality comes from the balance of positive and negative quantities. It does not say much, except that it is compatible with the existence of nontrivial functions \(\Psi \) solving (3.9–3.10) and satisfying the constraint \(c_{00}=d_{00}=0\). If we instead multiply (9.1) by r before the subsequent integration, the counterpart of (9.2) becomes \(0=0\). If we finally multiply (9.1) by \(r^2\) and integrate, the new version of (9.2) is:

which also has an ambiguous sign.

The same conclusions can be reached by arguing with the expansions (6.2–6.3). We can substitute the generic coefficient \(c_{nl}\), given explicitly in (6.3), into (6.2). Then, by setting \(n=l=0\), the first sum in (6.2) disappears, the second one has \(i=j=0\) and \(k=m\), the third one has \(k=m=0\) and \(i=j\), and the fourth one has \(k=m\) and \(i=j\). We can analyze the terms of the summation after an integration with respect to the variable r. The conclusions are similar to those of Sect. 5, where, after introducing a suitable quantity Q, we distinguished between the case in which Q maintains the same sign (as a function of the indices of the summation) and the case in which it attains different signs. Here we are in the second situation.
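The equivalence between a zero average over \(\Omega \) and the vanishing of the constant coefficient (\(c_{00}\) here, \(c_0\) in the 2D case discussed below) rests on a standard fact: on an interval \(]a,b[\), every nonconstant Neumann cosine eigenfunction \(\cos (k\pi (\theta -a)/(b-a))\) has zero mean, so only the constant mode contributes to the average. The sketch assumes this Neumann basis on the 2D slice \(\Omega =]-\pi /4, \pi /4[\) and uses made-up coefficients; it does not reproduce the expansions (6.2)–(6.3).

```python
import math

A, B = -math.pi / 4, math.pi / 4  # 2D slice Omega = ]-pi/4, pi/4[


def neumann_mode(k: int, theta: float) -> float:
    """k-th Neumann cosine eigenfunction on ]A, B[ (assumed basis, hypothetical name)."""
    return math.cos(k * math.pi * (theta - A) / (B - A))


def mean_over_omega(f, n: int = 2000) -> float:
    """Average of f over ]A, B[ computed with the composite midpoint rule."""
    h = (B - A) / n
    return sum(f(A + (i + 0.5) * h) for i in range(n)) * h / (B - A)


coeffs = [0.7, -1.3, 0.25, 2.0, -0.4]  # made-up c_0, ..., c_4


def expansion(theta: float) -> float:
    """Truncated cosine expansion sum_k c_k * mode_k(theta)."""
    return sum(c * neumann_mode(k, theta) for k, c in enumerate(coeffs))


mode_means = [mean_over_omega(lambda t, k=k: neumann_mode(k, t)) for k in range(5)]
print(mode_means)                  # 1 for k = 0, round-off level otherwise
print(mean_over_omega(expansion))  # reproduces coeffs[0]
```

The same argument, applied to products of such modes in the two angular variables, singles out the constant coefficient of a double expansion.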

Things change if we approach the two-dimensional Navier–Stokes problem. Indeed, if we transfer the same kind of computations to the 2D system derived in Sect. 7, we first have:

where \(\Omega =]-\pi /4, \pi /4[\). A further integration with respect to r produces:

This situation is different from that of the three-dimensional case, since the right-hand side in (9.5) is negative and compatibility with \(c_0=0\) now only holds for \(\Psi =0\). The outcome does not change if we multiply (9.4) by r before integration, thus obtaining:

The considerations made in Sect. 5 were supported by some numerical tests and suggested, as a rule of thumb, that when Q has constant sign the time-dependent nonlinear model problem (projected onto the subspace of functions with zero average) has a unique attractor, consisting of the zero function. On the other hand, when Q attains different signs, there are stable singular solutions that are reached in a finite time. Can we deduce similar conclusions for the Navier–Stokes equations? Is the behavior of some indicator Q the discriminating factor between the two- and three-dimensional cases? We have no answers at the moment, but we hope that the results discussed here may serve as a starting point to advance this investigation. We also point out that the model problem introduced in Sect. 5 might be of interest in its own right, both for its mathematical elegance and for possible applications in other contexts.

10 Discussion

There are a few things still to be settled before concluding this paper. First of all, we need to say something about the assembly of the six pyramidal domains forming a partition of the whole space \(\textbf{R}^3\) (Fig. 1). The Neumann conditions imposed on \(\Psi \) (and consequently on \(\Phi \)) guarantee that \(\textbf{v}\) lies flat on each triangular boundary, for any r and t (see (3.3)). Due to the Neumann conditions imposed on u, an inspection of (3.7) shows that the same property holds for the nonlinear term \(\textbf{v}\times \textrm{curl}{} \textbf{v}\). Thus, the transfer of information between the domains only takes place through the diffusive term \(\nu {\bar{\Delta }} \textbf{v}\). After integration over \(\Omega \), the Laplacian \(\Delta u\) can be expressed in weak form, and the Neumann boundary conditions allow for a good match across the interfaces, once all the symmetries involved are taken into account. As a matter of fact, each normal derivative cancels out the corresponding normal derivative of the contiguous domain, since the two normal vectors are opposite. This property holds not only for the 12 triangles separating the domains, but also for the 8 straight lines constituting the boundary of the boundary, which are made of the so-called cross-points. A reasonable initial condition, such as the one given in (6.4), may ensure a \(C^1\) matching across the interfaces. Of course, global initial data can be chosen as smooth as we please. In the event that some loss of regularity occurs during the evolution, we expect it to happen at some points in the middle of the pyramids. If a deterioration of regularity shows up earlier at some other places (for instance at the origin or at the interfaces), it will nonetheless confirm the possibility of generating singularities in a finite time.
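The counts quoted above (six pyramids, 12 triangular interfaces, 8 lines of cross-points) are consistent with the partition of \(\textbf{R}^3\) into the six pyramids with apex at the origin that subtend the faces of a cube centered there. This is our reading of Fig. 1 and should be taken as an assumption: each pyramid collects the points whose largest \(\vert \)coordinate\(\vert \) lies along a given signed axis. The sketch below, with hypothetical helper names, reproduces the counts combinatorially.

```python
from itertools import combinations, product

# Each pyramid is labeled by (axis, sign): it collects the points whose
# largest |coordinate| is attained on that axis with that sign.
PYRAMIDS = [(axis, sign) for axis in range(3) for sign in (1, -1)]


def pyramid_of(point):
    """Return the (axis, sign) label of the pyramid containing a point
    (points on interfaces are assigned to the first maximal axis)."""
    axis = max(range(3), key=lambda i: abs(point[i]))
    return axis, 1 if point[axis] >= 0 else -1


# Two pyramids share a triangular face iff they sit on adjacent (not opposite)
# faces of the cube, i.e. iff their axes differ.
interfaces = [(p, q) for p, q in combinations(PYRAMIDS, 2) if p[0] != q[0]]

# The lines where the triangles meet are the rays through the cube vertices (±1, ±1, ±1).
rays = list(product((1, -1), repeat=3))

print(len(PYRAMIDS), len(interfaces), len(rays))  # 6 12 8
print(pyramid_of((0.2, -3.0, 1.0)))                # (1, -1): the "-y" pyramid
```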

We have not said much about the pressure p in this paper. It also depends strictly on \(\textbf{v}\). It is actually defined as the sum of all the potentials that can be written in the form of a gradient on the right-hand side of the Navier–Stokes momentum equation. Whatever the expression of p is, as long as the velocity field remains smooth, we expect the same to happen to the pressure. Otherwise, as soon as \(\textbf{v}\) starts showing a bad behavior, so will p.

For \(r=r_M\), the examples considered here are equivalent to enforcing homogeneous Dirichlet boundary conditions on the surface of a sphere centered at the origin. If we want our problem to be defined in the whole space \(\textbf{R}^3\) (i.e., \(r_M=+\infty \)), we may require either an appropriate monotone decay at infinity or an oscillating behavior. The Bessel function in (3.15) can be an option, since it oscillates while remaining bounded for all \(r\ge 0\), though it does not decay rapidly at infinity (\(\approx 1/\sqrt{r}\)). It should also be recalled that the sign of the initial datum influences the subsequent development in different ways (compare Figs. 3 and 10). Presumably, without the Dirichlet-type constraint at \(r_M\), the vortices of Fig. 4 would escape outward. They are, however, externally bounded by counter-rotating vortices. Thus, the adoption of an initial function with alternating signs looks appropriate. Unfortunately, our computational capabilities are not sufficient to handle these types of experiments.
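The decay rate quoted for the Bessel function can be observed numerically. For large r, \(J_\sigma (r)\) behaves like \(\sqrt{2/(\pi r)}\cos (r-\sigma \pi /2-\pi /4)\), so the envelope of \(\sqrt{r}\,\vert J_4(r)\vert \) should settle near \(\sqrt{2/\pi }\approx 0.798\). The order \(\sigma =4\) is borrowed from the 2D example of Sect. 7 purely for illustration (the order used in (3.15) is not restated here), and the integral-based helper is a self-contained stand-in for a library Bessel routine.

```python
import math


def bessel_j(order: int, x: float, n: int = 800) -> float:
    """J_order(x) for integer order via Bessel's integral and the trapezoidal
    rule on [0, pi] (accurate here for x up to a few hundred)."""
    h = math.pi / n
    vals = [math.cos(order * k * h - x * math.sin(k * h)) for k in range(n + 1)]
    return (sum(vals) - 0.5 * (vals[0] + vals[-1])) * h / math.pi


# Largest value of sqrt(r) * |J_4(r)| on a window of length pi (one period of
# |cos|) starting at r0, for increasing r0.
for r0 in (25.0, 50.0, 100.0, 200.0):
    window = [r0 + 0.01 * i for i in range(315)]
    envelope = max(math.sqrt(r) * abs(bessel_j(4, r)) for r in window)
    print(r0, round(envelope, 4))

print(round(math.sqrt(2 / math.pi), 4))  # limiting value of the envelope
```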

As a final remark, we note that the idea of the six collapsing rings described in Sect. 1 can be approached as it is, i.e., without resorting to the trick of simplifying the equations through a fictitious force \(\textbf{f}\). Alternatively, a purposely designed attractive radial force (i.e., \(f_1\not =0\)) may be added to speed up the collapsing process. This 3D fluid dynamics exercise can be tackled by a numerical code, with a great amount of computational effort. It is worth mentioning that many commercial codes for the time-dependent Navier–Stokes equations require artificial boundary conditions on the pressure. Such a constraint is unphysical and often leads to different outcomes depending on the conditions imposed (under the same initial and boundary conditions for the velocity field). While this may be acceptable for some engineering applications, it is discouraged in view of a more accurate mathematical analysis. It would nonetheless be interesting to try some special choices of \(\textbf{f}\); unforeseen surprises may come out.

References

Akhmetov, D.G.: Vortex Rings. Springer, New York (2009)

Beale, J.T., Kato, T., Majda, A.: Remarks on the breakdown of smooth solutions for the 3-D Euler equations. Comm. Math. Phys. 94, 61–66 (1984)

Beirão da Veiga, H.: A new regularity class for the Navier-Stokes equations in \({ R}^n\). Chin. Ann. Math. B 16, 407–412 (1995)

Berselli, L.C.: On a regularity criterion for the solutions to the 3D Navier-Stokes equations. Differ. Integral Equ. 15, 1129–1137 (2002)

Brenner, M.P., Hormoz, S., Pumir, A.: Potential singularity mechanism for the Euler equations. Phys. Rev. Fluids 1, 084503 (2016)

Caffarelli, L., Kohn, R., Nirenberg, L.: Partial regularity of suitable weak solutions of the Navier-Stokes equations. Comm. Pure Appl. Math. 35(6), 771–831 (1982)

Cahn, J.W., Hilliard, J.E.: Free energy of a nonuniform system, I, Interfacial free energy. J. Chem. Phys. 28(2), 258–267 (1958)

Cannone, M., Karch, G.: Smooth or singular solutions to the Navier-Stokes system? J. Diff. Eq. 197, 247 (2004)

Chan, C.H., Yoneda, T.: On possible isolated blow-up phenomena and regularity criterion of the 3D Navier-Stokes equation along the streamlines. MAA 19(3), 211–242 (2012)

Fefferman, C.: Existence and smoothness of the Navier-Stokes equation. In: The Millennium Prize Problems, pp. 57–67. Clay Math. Inst., Cambridge, MA (2006)

Foxall, E., Ibrahim, S., Yoneda, T.: Streamlines concentration and application to the incompressible Navier-Stokes equations. Tohoku Math. J. 65(2), 273–279 (2011)

Galaktionov, V.A.: On blow-up “twistors” for the Navier-Stokes equations in \({R}^3\): a view from reaction-diffusion theory. arXiv:0901.4286v1 (2009)

Giga, Y.: Solutions for semilinear parabolic equations in \(L^p\) and regularity of weak solutions of the Navier-Stokes system. J. Diff. Eq. 62, 186–212 (1986)

Grauer, R., Sideris, T.: Numerical computation of three dimensional incompressible ideal fluids with swirl. Phys. Rev. Lett. 67, 3511–3514 (1991)

Hu, B.: Blow-up theories for Semilinear Parabolic Equations. Springer, New York (2011)

Karch, G., Schonbek, M.E., Schonbek, T.P.: Singularities of certain finite energy solutions to the Navier-Stokes system. Discret. Cont. Dyn. A 40(1), 189–206 (2020)

McKeown, R., et al.: A cascade leading to the emergence of small structures in vortex ring collisions. Phys. Rev. Fluids 3, 124702 (2018)

Kerr, R.M.: Evidence for a singularity of the three-dimensional incompressible Euler equations. Phys. Fluids A 5, 1725 (1993)

Kimura, Y., Moffatt, H.K.: Scaling properties towards vortex reconnection under the Biot-Savart law. Fluid Dyn. Res. 50, 011409 (2018)

Lim, T.T., Nickels, T.B.: Instability and reconnection in the head-on collision of two vortex rings. Nature 357, 225–227 (1992)

Miranville, A.: The Cahn-Hilliard Equation: Recent Advances and Applications. CBMS-NSF Regional Conf. Ser. in Appl. Math., vol. 95. SIAM, Philadelphia (2019)

Moffatt, H.K., Kimura, Y.: Towards a finite-time singularity of the Navier-Stokes equations, Part 1. Derivation and analysis of dynamical system. J. Fluid Mech. 861, 930–967 (2019)

Serrin, J.: On the interior regularity of weak solutions of the Navier-Stokes equations. Arch. Rat. Mech. Anal. 9, 187–195 (1962)

Struwe, M.: On partial regularity results for the Navier-Stokes equations. Comm. Pure Appl. Math. 41(4), 437–458 (1988)

Taira, K.: Introduction to diffusive logistic equations in population dynamics. Korean J. Comput. Appl. Math. 9, 289 (2002)

Tao, T.: Finite time blowup for an averaged three-dimensional Navier-Stokes equation. J. Am. Math. Soc. 29(3), 601–674 (2016)

Wu, J.-Z., Ma, H.-Y., Zhou, M.-D.: Vorticity and Vortex Dynamics. Springer, New York (2006)


The author is affiliated to GNCS-INdAM and Research Associate at IMATI-CNR (Italy).


Cite this article

Funaro, D. How and why non smooth solutions of the 3D Navier–Stokes equations could possibly develop. Numer. Math. 152, 789–817 (2022). https://doi.org/10.1007/s00211-022-01333-9
