Abstract

In this article we consider the initial value problem for a scalar conservation law in one space dimension with a spatially discontinuous flux. There may be infinitely many flux discontinuities, and the set of discontinuities may have accumulation points. Thus the existence of traces cannot be assumed. Audusse and Perthame (Proc R Soc Edinb Sect A 135:253–265, 2005) proved a uniqueness result that does not require the existence of traces, using adapted entropies. We generalize the Godunov-type scheme of Adimurthi et al. (SIAM J Numer Anal 42(1):179–208, 2004) to this problem under the following assumptions on the flux function: (i) the flux is BV in the spatial variable and (ii) the critical point of the flux is BV as a function of the space variable. We prove that the Godunov approximations converge to an adapted entropy solution, thus providing an existence result and extending the convergence result of Adimurthi, Jaffré and Gowda.


1 Introduction

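In this article we prove existence of an adapted entropy solution in the sense of Audusse and Perthame [6], via a convergence proof for a Godunov type finite difference scheme, for the following Cauchy problem:

$$\begin{aligned} u_t + A(u,x)_x = 0, \quad (x,t)\in \Pi _T := \mathbb {R}\times (0,T), \end{aligned}$$(1)

$$\begin{aligned} u(x,0) = u_0(x), \quad x\in \mathbb {R}. \end{aligned}$$(2)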

The partial differential equation appearing above is a scalar one-dimensional conservation law whose flux A(u, x) has a spatial dependence that may have infinitely many spatial discontinuities. In contrast to all but a few previous papers on conservation laws with discontinuous flux that address the uniqueness question, we make no assumption about the existence of traces, and so the set of spatial flux discontinuities could have accumulation points.

Scalar conservation laws with discontinuous flux have a number of applications including vehicle traffic flow with rapid transitions in road conditions [11], sedimentation in clarifier-thickeners [8, 10], oil recovery simulation [20], two phase flow in porous media [4], and granular flow [16].

Even in the absence of spatial flux discontinuities, solutions of conservation laws develop discontinuities (shocks). Thus we seek weak solutions, which are bounded measurable functions u satisfying (1) in the sense of distributions. Closely related to the presence of shocks is the problem of nonuniqueness: weak solutions are generally not unique unless additional admissibility conditions, so-called entropy conditions, are imposed. For the classical case of a conservation law with a spatially independent flux

$$\begin{aligned} u_t + f(u)_x = 0, \end{aligned}$$(3)

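one requires that the Kružkov entropy inequalities hold in the sense of distributions:

$$\begin{aligned} \left| u-k\right| _t + \left( sgn(u-k)\left( f(u)-f(k)\right) \right) _x \le 0 \quad \text {for all } k\in \mathbb {R}, \end{aligned}$$(4)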

and then uniqueness follows from (4).

There are two main difficulties that are not present in the classical case (3). The first is existence: for BV initial data, a TV bound for the solution may fail in general, as shown by the counterexamples in [1, 12]. Interestingly, for non-uniformly convex fluxes a TV bound for the solution is possible near the interface (see Ghoshal [13]). Several methods have been used to deal with the lack of a spatial variation bound, the main ones being the so-called singular mapping, compensated compactness, and local variation bounds. In this paper we employ the singular mapping approach, applied to approximations generated by a Godunov type difference scheme. The singular mapping technique yields a TV bound for a transformed quantity, namely the image of the approximate solution under the singular mapping. Once this TV bound is available we can pass to the limit and obtain a solution satisfying the adapted entropy inequality. Showing that the limit of the numerical approximations satisfies the adapted entropy inequalities is not straightforward, due to the presence of infinitely many flux discontinuities.

The second problem is uniqueness. The usual Kružkov entropy inequalities do not apply to the discontinuous case. Moreover, there are many reasonable notions of entropy solution [3, 5], and the application under consideration must determine which definition of entropy solution to use.

There have been many papers on scalar conservation laws with spatially discontinuous flux over the past several decades. Most papers on this subject that have addressed the uniqueness question assume finitely many flux discontinuities. Often the case of a single flux discontinuity is treated, with the understanding that the results extend readily to any finite number of flux discontinuities. The admissibility condition usually reduces to a so-called interface condition (in addition to the Rankine–Hugoniot condition) that involves the traces of the solution on either side of the spatial flux discontinuity. The interface condition typically consists of one or more inequalities, and is often derived from some modified version of the classical Kružkov entropy inequality.

When there are only finitely many flux discontinuities, existence of the required traces is guaranteed, assuming that \(u \mapsto A(u,x)\) is genuinely nonlinear [17, 23]. However if there are infinitely many flux discontinuities, and the subset of \(\mathbb {R}\) where they occur has one or more accumulation points, these existence results for traces do not apply. Thus a definition of entropy solution which does not refer to traces is of great interest.

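A method based on so-called adapted entropy inequalities, first developed in [7] and then extended in [6], provides a notion of entropy solution that does not require the existence of traces. For the conservation law \(u_t + A(u,x)_x = 0\) with \(x \mapsto A(u,x)\) smooth, the classical Kružkov inequality (4) becomes

$$\begin{aligned} \partial _t\left| u-k\right| + \partial _x\left[ sgn(u-k)\left( A(u,x)-A(k,x)\right) \right] + sgn(u-k)\partial _x A(k,x) \le 0, \quad k\in \mathbb {R}. \end{aligned}$$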

Due to the term \(sgn(u-k)\partial _x A(k,x)\), this definition does not make sense without modification when one tries to extend it to the case of the discontinuous flux A(u, x) considered here.

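The adapted entropy approach consists of replacing the constants \(k \in \mathbb {R}\) by functions \(k_\alpha \) defined by the equations

$$\begin{aligned} A(k_\alpha (x),x) = \alpha , \quad x\in \mathbb {R}. \end{aligned}$$

With this approach the troublesome term \(sgn(u-k)\partial _x A(k,x)\) is not present, since \(A(k_\alpha (x),x)=\alpha \) does not depend on x, and the definition of adapted entropy solution is

$$\begin{aligned} \partial _t\left| u-k_\alpha (x)\right| + \partial _x\left[ sgn(u-k_\alpha (x))\left( A(u,x)-\alpha \right) \right] \le 0 \quad \text {for all } \alpha . \end{aligned}$$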

Baiti and Jenssen [7] used this approach for the closely related problem where \(u\mapsto A(u,x)\) is strictly increasing. They proved both existence and uniqueness, under the additional assumption that the flux has the form \(A(u,x) = {\tilde{A}}(u,v(x))\). Audusse and Perthame [6] proved uniqueness both for the unimodal case considered in this paper and for the case where \(u\mapsto A(u,x)\) is strictly increasing. The existence question was left open.

Recently there has been renewed interest in the existence question for problems where the Audusse–Perthame uniqueness theory applies. Piccoli and Tournus [19] proved existence for the problem where \(u\mapsto A(u,x)\) is strictly increasing, and without assuming the special functional form \(A(u,x) = {\tilde{A}}(u,v(x))\). This was accomplished under the simplifying assumption that \(u \mapsto A(u,x)\) is concave. Towers [22] extended the result of [19] to the case where \(u \mapsto A(u,x)\) is not required to be concave. Panov [18] proved existence of an adapted entropy solution, under assumptions that include our setup, by a measure-valued solution approach. The approach of [18] is quite general but more abstract than ours, and is not associated with a numerical algorithm.

The Godunov type scheme of this paper is a generalization of the scheme developed in [2] for the case where the flux has the form

$$\begin{aligned} A(u,x) = g(u)\left( 1-H(x)\right) + f(u)H(x), \end{aligned}$$(7)

where each of g, f is unimodal and \(H(\cdot )\) denotes the Heaviside function. This is a so-called two-flux problem, where there is a single spatial flux discontinuity. The authors of [2] proposed a very simple interface flux that extends the classical Godunov flux so that it properly handles a single flux discontinuity. The singular mapping technique is used to prove that the Godunov approximations converge to a weak solution of the conservation law. With an additional regularity assumption about the limit solution (the solution is assumed to be continuous except for finitely many Lipschitz curves in \(\mathbb {R}\times \mathbb {R}_+\)), they also prove uniqueness.

The scheme and results of the present paper improve and extend those of Adimurthi et al. [2]. By adopting the Audusse–Perthame definition of entropy solution [6], and then invoking the uniqueness result of [6], we are able to remove the regularity assumption employed in [2], and also the restriction to finitely many flux discontinuities. Moreover, the scheme of [2] is defined on a nonstandard spatial grid that is specific to the case of a single flux discontinuity, and would be inconvenient from a programming viewpoint for the case of multiple flux discontinuities. Our scheme uses a standard spatial grid, and in fact our algorithm does not require that flux discontinuities be specifically located, identified, or processed in any special way. Our approach is based on the observation that it is possible to simply apply the Godunov interface flux at every grid cell boundary. At cell boundaries where there is no flux discontinuity, the interface flux automatically reverts to the classical Godunov flux, as desired. This not only makes it possible to use a standard spatial grid, but also simplifies the analysis of the scheme.

The remainder of the paper is organized as follows. In Sect. 2 we specify the assumptions on the data of the problem, give the definition of adapted entropy solution, and state our main theorem, Theorem 2.5. In Sect. 3 we give the details of the Godunov numerical scheme, and prove convergence (along a subsequence) of the resulting approximations. In Sect. 4 we show that a (subsequential) limit solution guaranteed by our convergence theorem is an adapted entropy solution in the sense of Definition 2.1, completing the proof of the main theorem.

2 Main theorem

We assume that the flux function \(A:\mathbb {R}\times \mathbb {R}\rightarrow \mathbb {R}_+\) satisfies the following conditions:

- H-1: For every \(r>0\),

$$\begin{aligned} |A(u_1,x)-A(u_2,x)|\le C|u_1-u_2| \text{ for } u_1,u_2\in [-r,r], \end{aligned}$$where the constant \(C=C(r)\) is independent of x.

- H-2: There is a BV function \(a:\mathbb {R}\rightarrow \mathbb {R}\) and a continuous function \(R:\mathbb {R}\rightarrow \mathbb {R}^+\) such that

$$\begin{aligned} |A(u,x)-A(u,y)|\le R(u)|a(x)-a(y)|. \end{aligned}$$

- H-3: For each \(x\in \mathbb {R}\) the function \(u \mapsto A(u,x)\) is unimodal, meaning that there is \(u_{M}(x)\in \mathbb {R}\) such that \(A(u_M(x),x)=0\) and \(A(\cdot ,x)\) is decreasing on \((-\infty ,u_M(x)]\) and increasing on \([u_M(x),\infty )\). We further assume that there is a continuous function \(\gamma : [0,\infty ) \rightarrow [0,\infty )\), which is strictly increasing with \(\gamma (0)=0\), \(\gamma (+\infty ) = +\infty \), and such that

$$\begin{aligned} \begin{aligned}&A(u,x) \ge \gamma (u-u_M(x)) \text { for all } x\in \mathbb {R}\text { and } u \in [u_M(x),\infty ),\\&A(u,x) \ge \gamma (-(u-u_M(x))) \text { for all } x\in \mathbb {R}\text { and } u \in (-\infty ,u_M(x)]. \end{aligned} \end{aligned}$$(8)

- H-4: \(u_M \in BV(\mathbb {R})\).

Above we have used the notation \(\text {BV}(\mathbb {R})\) to denote the set of functions of bounded variation on \(\mathbb {R}\), i.e., those functions \(\rho :\mathbb {R}\rightarrow \mathbb {R}\) for which

$$\begin{aligned} {{\,\mathrm{TV}\,}}(\rho ) := \sup \sum _{k=1}^{K} \left| \rho (\xi _k)-\rho (\xi _{k-1})\right| < \infty , \end{aligned}$$

where the \(\sup \) extends over all \(K\ge 1\) and all partitions \(\{\xi _0< \xi _1< \ldots < \xi _K \}\) of \(\mathbb {R}\).
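The definition above admits functions with infinitely many jumps accumulating at a point, provided the jump sizes are summable, which is the situation this paper is designed to handle. As a hypothetical illustration (not taken from the paper), let \(u_M(x) = (-1)^k 2^{-k}\) on \((2^{-k-1},2^{-k}]\) for integers \(k\ge 0\), and \(u_M(x)=0\) otherwise. Its jumps accumulate at \(x=0\) and have total size \(1+\sum _{k\ge 0}\tfrac{3}{2}2^{-k}=4\). The Python sketch below evaluates the sum in the definition over finite partitions; each such sum is a lower bound for \({{\,\mathrm{TV}\,}}(u_M)\). The function names are our own.

```python
import math


def u_M(x):
    # Hypothetical critical point: (-1)^k 2^-k on (2^-(k+1), 2^-k] for k >= 0,
    # and 0 outside (0, 1]. The jumps accumulate at x = 0.
    if 0.0 < x <= 1.0:
        k = math.floor(-math.log2(x))
        return (-1) ** k * 2.0 ** (-k)
    return 0.0


def variation(rho, points):
    # Sum of |rho(xi_k) - rho(xi_{k-1})| over the partition formed by `points`.
    xs = sorted(points)
    return sum(abs(rho(b) - rho(a)) for a, b in zip(xs, xs[1:]))


def resolving_partition(K):
    # One point inside each piece k = 0, ..., K, plus the points -1, 0 and 2.
    return [-1.0, 0.0, 2.0] + [0.75 * 2.0 ** (-k) for k in range(K + 1)]


print(variation(u_M, resolving_partition(30)))  # slightly below TV(u_M) = 4
```

Refining the partition toward the accumulation point pushes the sum toward 4, while no partition, however fine, exceeds it.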

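By Assumption H-3, for each \(\alpha \ge 0\) there exist two functions \(k_\alpha ^+(x)\in [u_M(x),\infty )\) and \(k_\alpha ^-(x)\in (-\infty ,u_M(x)]\) uniquely determined by the equations

$$\begin{aligned} A(k_\alpha ^{+}(x),x) = A(k_\alpha ^{-}(x),x) = \alpha , \quad x\in \mathbb {R}. \end{aligned}$$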

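Related to the flux \(A(\cdot ,\cdot )\) is the so-called singular mapping:

$$\begin{aligned} \Psi (u,x) := \int _{u_M(x)}^{u} \left| \partial _\theta A(\theta ,x)\right| \,d\theta . \end{aligned}$$(10)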

It is clear that for each \(x\in \mathbb {R}\) the mapping \(u \mapsto \Psi (u,x)\) is strictly increasing. Therefore for each \(x\in \mathbb {R}\) the map \(u\mapsto \Psi (u,x)\) is invertible and we denote the inverse map by \(\alpha (u,x)\). Notice that \(\alpha (\cdot ,\cdot )\) and \(\Psi (\cdot ,\cdot )\) satisfy the following relation

Also, due to Assumption H-3, (10) is equivalent to \(\Psi (u,x)=sgn(u-u_M(x))A(u,x)\).
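The identity \(\Psi (u,x)=sgn(u-u_M(x))A(u,x)\) makes \(\Psi \) and its inverse easy to evaluate pointwise. It also shows that \(k_\alpha ^{\pm }(x)=\Psi ^{-1}(\pm \alpha ,x)\) for \(\alpha \ge 0\), where \(\Psi ^{-1}(\cdot ,x)\) is the inverse map denoted \(\alpha (\cdot ,x)\) above. The Python sketch below assumes a hypothetical flux \(A(u,x)=S(x)(u-u_M(x))^2\) with piecewise constant \(S\ge 1\) and \(u_M\), and computes the inverse by bisection; the names `u_M`, `S`, `A`, `Psi` and `Psi_inv` are illustrative only.

```python
import math


def u_M(x):
    # Hypothetical critical point with one jump at x = 0.
    return 0.5 if x >= 0.0 else -0.25


def S(x):
    # Hypothetical coefficient, S(x) >= 1.
    return 2.0 if x >= 0.0 else 1.0


def A(u, x):
    # Hypothetical unimodal flux with A(u_M(x), x) = 0.
    return S(x) * (u - u_M(x)) ** 2


def Psi(u, x):
    # Singular mapping in the form sgn(u - u_M(x)) * A(u, x).
    return math.copysign(A(u, x), u - u_M(x))


def Psi_inv(z, x, tol=1e-12):
    # Invert the strictly increasing map u -> Psi(u, x) by bisection.
    c = u_M(x)
    lo, hi = c - 1.0, c + 1.0
    while Psi(lo, x) > z:  # widen the bracket to the left
        lo = c - 2.0 * (c - lo)
    while Psi(hi, x) < z:  # widen the bracket to the right
        hi = c + 2.0 * (hi - c)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if Psi(mid, x) < z:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


alpha = 2.0
# Approximately k_alpha^+(1) = 1.5 and k_alpha^-(1) = -0.5 for this flux.
print(Psi_inv(alpha, 1.0), Psi_inv(-alpha, 1.0))
```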

Definition 2.1

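A function \(u \in L^{\infty }(\Pi _T) \cap C([0,T]:L^1_{{{\,\mathrm{loc}\,}}}(\mathbb {R}))\) is an adapted entropy solution of the Cauchy problem (1)–(2) if it satisfies the following adapted entropy inequality in the sense of distributions:

$$\begin{aligned} \partial _t\left| u-k_\alpha ^{\pm }(x)\right| + \partial _x\left[ sgn(u-k_\alpha ^{\pm }(x))\left( A(u,x)-\alpha \right) \right] \le 0 \end{aligned}$$(12)

for all \(\alpha \ge 0\), or equivalently,

$$\begin{aligned} \int _{\mathbb {R}_+}\int _{\mathbb {R}} \Big ( \left| u-k_\alpha ^{\pm }(x)\right| \partial _t\phi + sgn(u-k_\alpha ^{\pm }(x))\left( A(u,x)-\alpha \right) \partial _x\phi \Big )\,dx\,dt + \int _{\mathbb {R}} \left| u_0(x)-k_\alpha ^{\pm }(x)\right| \phi (x,0)\,dx \ge 0 \end{aligned}$$(13)

for all \(\alpha \ge 0\) and any \(0\le \phi \in C_c^{\infty }(\mathbb {R}\times [0,\infty ))\).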

For uniqueness and stability we will rely on the following result by Panov.

Theorem 2.2

(Uniqueness Theorem [18]) Let u, v be adapted entropy solutions in the sense of Definition 2.1, with corresponding initial data \(u_0\), \(v_0\), and assume that Assumptions (H-1)–(H-4) hold. Then for a.e. \(t\in [0,T]\) and any \(r>0\) we have

where \(L_1:=\sup \{{\left| \partial _u A(u,x)\right| };\,x\in \mathbb {R},\left| u\right| \le \max (\Vert u_0\Vert _{L^{\infty }},\Vert v_0\Vert _{L^{\infty }})\}\) and \(\alpha \) is as in (11).

Although Theorem 2.2 is not stated in [18], it essentially follows from the techniques used in [18, Theorem 2] and from Kružkov’s uniqueness proof [15] for scalar conservation laws. For the sake of completeness we give a sketch of the proof of Theorem 2.2 in the “Appendix”. The main reason for relying on Theorem 2.2 instead of the uniqueness result in [6] is to dispense with the following assumption on the flux [6, Hypothesis (H1); page 5]:

- A(u,x) is continuous at all points of \((\mathbb {R}\times \mathbb {R})\setminus \mathcal {N}\), where \(\mathcal {N}\) is a closed set of zero measure.

Audusse and Perthame [6] present the following two examples, to which their uniqueness theorem applies.

Example 2.3

In this example \(u_M(x) = 0\) for all \(x \in \mathbb {R}\). Assumptions (H-1)–(H-4) are satisfied if \(S \in BV(\mathbb {R})\), and \(S(x) \ge \epsilon \) for some \(\epsilon >0\).

Example 2.4

Assumptions (H-1)–(H-4) are satisfied for this example also if we assume that \(u_M \in BV(\mathbb {R}).\)

Our main theorem is

Theorem 2.5

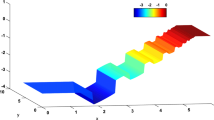

Assume that the flux function A satisfies (H-1)–(H-4), and that \(u_0\in L^{\infty }(\mathbb {R})\). Then as the mesh size \(\Delta \rightarrow 0\), the approximations \(u^{\Delta }\) generated by the Godunov scheme described in Sect. 3 converge in \(L^1_{{{\,\mathrm{loc}\,}}}(\Pi _T)\) and pointwise a.e. in \(\Pi _T\) to the unique adapted entropy solution \(u \in L^{\infty }(\Pi _T) \cap C([0,T]:L^1_{{{\,\mathrm{loc}\,}}}(\mathbb {R}))\) corresponding to the Cauchy problem (1)–(2) with initial data \(u_0\).

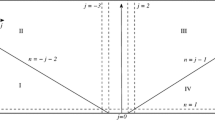

3 Godunov scheme and compactness

For \(\Delta x>0\) and \(\Delta t>0\) consider equidistant spatial grid points \(x_j:=j\Delta x\) for \(j\in \mathbb {Z}\) and temporal grid points \(t^n:=n\Delta t\) for integers \(0 \le n\le N\). Here N is the integer such that \(T \in [t^N,t^{N+1})\). Let \(\lambda :=\Delta t/\Delta x\). We fix the notation \(\chi _j(x)\) for the indicator function of \(I_j:=[x_j - \Delta x /2, x_j + \Delta x /2)\), and \(\chi ^n(t)\) for the indicator function of \(I^n:=[t^n,t^{n+1})\). Next we approximate the initial data \(u_0\in BV(\mathbb {R})\) by the piecewise constant function \(u^{\Delta }_0\) defined by

$$\begin{aligned} u_0^{\Delta }(x) := \sum _{j\in \mathbb {Z}} \chi _j(x)\, u_j^0, \quad u_j^0 := u_0(x_j). \end{aligned}$$

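Let \({\overline{m}}^0_j:=\max \{u_0(x);\,x\in I_j\}\) and \({\underline{m}}^0_j:=\min \{u_0(x);\,x\in I_j\}\). Then, for any \(r>0\),

$$\begin{aligned} \int _{-r}^{r}\left| u_0^{\Delta }(x)-u_0(x)\right| \,dx \le \sum _{j:\,I_j\cap [-r,r]\ne \emptyset } \left( {\overline{m}}^0_j-{\underline{m}}^0_j\right) \Delta x \le \Delta x\, {{\,\mathrm{TV}\,}}(u_0). \end{aligned}$$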

Therefore \(u_0^\Delta \rightarrow u_0\) in \(L^1_{loc}(\mathbb {R})\); the same argument is used again in Lemma 3.14. The approximations generated by the scheme are denoted by \(u_j^n\), where \(u_j^n \approx u(x_j,t^n)\). The grid function \(\{u_j^n\}\) is extended to a function defined on \(\Pi _T\) via

$$\begin{aligned} u^{\Delta }(x,t) := \sum _{n=0}^{N}\sum _{j\in \mathbb {Z}} \chi _j(x)\chi ^n(t)\, u_j^n. \end{aligned}$$

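We use \(\Delta _+\) and \(\Delta _-\) to denote the standard forward and backward difference operators in the spatial index, that is, \(\Delta _+v_j=v_{j+1}-v_j\) and \(\Delta _-v_j=v_j-v_{j-1}\). The Godunov type scheme that we employ is then

$$\begin{aligned} u_j^{n+1} = u_j^n - \lambda \Delta _- {\bar{A}}(u_j^n,u_{j+1}^n,x_j,x_{j+1}), \quad j\in \mathbb {Z},\ n\ge 0, \end{aligned}$$(17)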

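where the numerical flux \({\bar{A}}\) is

$$\begin{aligned} {\bar{A}}(u,v,x,y) := \max \left\{ A(\max (u,u_M(x)),x),\, A(\min (v,u_M(y)),y)\right\} . \end{aligned}$$(18)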

When \(A(\cdot ,x_j)=A(\cdot ,x_{j+1})\), the flux \({\bar{A}}\) reduces to the classical Godunov flux that is used for conservation laws where the flux does not have a spatial dependence. Otherwise \({\bar{A}}\) is a generalization of the Godunov flux proposed in [2] for the two-flux problem where the flux is given by (7). It is readily verified that \({\bar{A}}(u,u,x_j,x_j) = A(u,x_j)\) and that \({\bar{A}}(u,v,x_j,x_{j+1})\) is nondecreasing (nonincreasing) as a function of u (v).
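The properties just stated can be checked numerically. The Python sketch below assumes that (18) has the form \({\bar{A}}(u,v,x,y)=\max \{A(\max (u,u_M(x)),x),\,A(\min (v,u_M(y)),y)\}\), which we take to be the interface flux of [2] for fluxes with a single minimum, and uses a hypothetical flux \(A(u,x)=S(x)(u-u_M(x))^2\) with one jump at \(x=0\). The brute-force comparison with the classical Godunov flux (minimum of \(A\) over \([u,v]\) if \(u\le v\), maximum over \([v,u]\) otherwise) is for illustration only; all names are our own.

```python
def u_M(x):
    # Hypothetical critical point with one jump at x = 0.
    return 0.5 if x >= 0.0 else -0.25


def S(x):
    # Hypothetical coefficient, S(x) >= 1.
    return 2.0 if x >= 0.0 else 1.0


def A(u, x):
    # Hypothetical unimodal flux with minimum value 0 at u = u_M(x).
    return S(x) * (u - u_M(x)) ** 2


def A_bar(u, v, x, y):
    # Assumed form of (18): left state u in the cell at x, right state v in the cell at y.
    return max(A(max(u, u_M(x)), x), A(min(v, u_M(y)), y))


def godunov_bruteforce(u, v, x, n=2001):
    # Classical Godunov flux for the x-independent flux A(., x), by sampling:
    # min of A over [u, v] if u <= v, max of A over [v, u] otherwise.
    lo, hi = min(u, v), max(u, v)
    vals = [A(lo + (hi - lo) * i / (n - 1), x) for i in range(n)]
    return min(vals) if u <= v else max(vals)


print(A_bar(1.0, -1.0, -1.0, 1.0))  # flux across the jump at x = 0: 4.5
```

At a cell boundary where the flux does not jump, `A_bar` agrees with the sampled Godunov flux up to the sampling error, which is the reduction property stated above.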

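Consider \(\Psi (\cdot ,\cdot )\) as in (10). Let

$$\begin{aligned} z_j^n := \Psi (u_j^n,x_j), \quad z^{\Delta }(x,t) := \sum _{n=0}^{N}\sum _{j\in \mathbb {Z}} \chi _j(x)\chi ^n(t)\, z_j^n. \end{aligned}$$(19)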

We obtain compactness for \(\{u^{\Delta }\}\) via the singular mapping technique, which consists of first proving compactness for the sequence \(\{z^{\Delta }\}\), and then observing that convergence of the original sequence \(\{u^{\Delta } \}\) follows from the fact that \(u \mapsto \Psi (u,x)\) has a continuous inverse.

For our analysis we will assume that \(u_0-u_M\) has compact support and \(u_0 \in \text {BV}(\mathbb {R})\). We will show in Sect. 4 that the solution we obtain as a limit of numerical approximations satisfies the adapted entropy inequality (12). Using (14), the resulting existence theorem is then extended to the case of \(u_0 \in L^{\infty }(\mathbb {R})\) via approximations to \(u_0\) that are in \(\text {BV}\) and are equal to \(u_M\) outside of compact sets.

Let

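By Assumption H-1, and since \(\left| \left| u_0 \right| \right| _{\infty }<\infty \) (which follows from \(u_0 \in \text {BV}(\mathbb {R})\)), \({\bar{\alpha }} < \infty \). Define \(k_{{\bar{\alpha }}}^{\pm }(x)\) via the equations

$$\begin{aligned} A(k_{{\bar{\alpha }}}^{\pm }(x),x) = {\bar{\alpha }}, \quad k_{{\bar{\alpha }}}^{-}(x) \le u_M(x) \le k_{{\bar{\alpha }}}^{+}(x), \quad x\in \mathbb {R}. \end{aligned}$$(21)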

Lemma 3.1

The following bounds are satisfied:

Proof

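By definition, \(u_M(x) \le k^+_{{\bar{\alpha }}}(x)\). On the other hand, by (8) and (21), we have

$$\begin{aligned} \gamma \left( k^+_{{\bar{\alpha }}}(x)-u_M(x)\right) \le A(k^+_{{\bar{\alpha }}}(x),x) = {\bar{\alpha }}. \end{aligned}$$(23)

By Assumption H-3, \(\gamma ^{-1}\) is defined on \([0,\infty )\) and is increasing. Applying \(\gamma ^{-1}\) to both sides of (23) yields

$$\begin{aligned} k^+_{{\bar{\alpha }}}(x)-u_M(x) \le \gamma ^{-1}({\bar{\alpha }}). \end{aligned}$$(24)

Thus

$$\begin{aligned} u_M(x) \le k^+_{{\bar{\alpha }}}(x) \le u_M(x) + \gamma ^{-1}({\bar{\alpha }}), \quad x\in \mathbb {R}. \end{aligned}$$(25)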

The desired bound for \(k^+_{{\bar{\alpha }}}(x)\) then follows from (25), along with the fact that \(u_M \in L^{\infty }(\mathbb {R})\) (which follows from \(u_M \in \text {BV}(\mathbb {R})\)). The proof for \(k^-_{{\bar{\alpha }}}(x)\) is similar. \(\square \)
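For a flux whose lower bound \(\gamma \) in (8) is explicit, the bounds in this proof can be checked directly. The Python sketch below assumes the hypothetical flux \(A(u,x)=S(x)(u-u_M(x))^2\) with \(S\ge 1\), for which (8) holds with \(\gamma (s)=s^2\), and solves \(A(k,x)=\alpha \) on each monotone branch by bisection, as Assumption H-3 permits. It checks \(u_M(x)-\gamma ^{-1}(\alpha )\le k_\alpha ^-(x)\le u_M(x)\le k_\alpha ^+(x)\le u_M(x)+\gamma ^{-1}(\alpha )\); the names are illustrative.

```python
import math


def u_M(x):
    # Hypothetical critical point with one jump at x = 0.
    return 0.5 if x >= 0.0 else -0.25


def S(x):
    # Hypothetical coefficient with S >= 1, so (8) holds with gamma(s) = s**2.
    return 2.0 if x >= 0.0 else 1.0


def A(u, x):
    return S(x) * (u - u_M(x)) ** 2


def gamma_inv(a):
    # Inverse of gamma(s) = s**2 on [0, inf).
    return math.sqrt(a)


def k_alpha(alpha, x, branch, tol=1e-12):
    """Solve A(k, x) = alpha with k >= u_M(x) (branch=+1) or k <= u_M(x) (branch=-1)."""
    c = u_M(x)
    hi = 1.0
    while A(c + branch * hi, x) < alpha:  # terminates since gamma(+inf) = +inf
        hi *= 2.0
    lo = 0.0  # bisection on the distance d = |k - u_M(x)|
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if A(c + branch * mid, x) < alpha:
            lo = mid
        else:
            hi = mid
    return c + branch * 0.5 * (lo + hi)


alpha_bar = 2.0
for x in (-1.0, 1.0):
    kp, km = k_alpha(alpha_bar, x, +1), k_alpha(alpha_bar, x, -1)
    # k^- and k^+ lie between u_M -/+ gamma^{-1}(alpha_bar) and u_M.
    print(x, km, kp, u_M(x) - gamma_inv(alpha_bar), u_M(x) + gamma_inv(alpha_bar))
```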

Let

Note that by Assumption H-1, \(L< \infty \). Since R is continuous we have \({\bar{R}}<\infty \). Also, by (21) we have \(k^-_{{\bar{\alpha }}}(x) \le u_M(x) \le k^+_{{\bar{\alpha }}}(x)\) for all \(x \in \mathbb {R}\), implying that \(\left| \left| u_M \right| \right| _{\infty } \le \mathcal {M}\).

Lemma 3.2

The numerical flux \({\bar{A}}\) satisfies the following continuity estimates:

for \(u,{\hat{u}},v,{\hat{v}}\in [-\mathcal {M},\mathcal {M}]\) and \(x,{\hat{x}},y,{\hat{y}}\in \mathbb {R}\).

Proof

These inequalities follow from the definition of \({\bar{A}}\) along with

More specifically, from (18) and (28) we have

The second inequality in (27) can be proven in a similar manner. By using definition (18) and inequalities in (28) we have

The last inequality in (27) can be shown in a similar way. \(\square \)

Lemma 3.3

Let \(u\in [-\mathcal {M},\mathcal {M}]\) and \(x,y\in \mathbb {R}\). Then we have

Proof

We start with the observation that

When \(u_M(x)<u\le u_M(y)\) we have

Similarly, when \(u_M(y)<u\le u_M(x)\) we have

In the other cases we can estimate directly and get

By symmetry we have

\(\square \)

Lemma 3.4

The grid functions \(\{ k_{{\bar{\alpha }}}^{-}(x_j)\}_{j \in \mathbb {Z}}\) and \(\{ k_{{\bar{\alpha }}}^{+}(x_j)\}_{j \in \mathbb {Z}}\) are stationary solutions of the difference scheme.

Proof

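We will prove the lemma for \(\{ k_{{\bar{\alpha }}}^{+}(x_j)\}\). The proof for \(\{ k_{{\bar{\alpha }}}^{-}(x_j)\}\) is similar. It suffices to show that

$$\begin{aligned} {\bar{A}}(k_{{\bar{\alpha }}}^{+}(x_j),k_{{\bar{\alpha }}}^{+}(x_{j+1}),x_j,x_{j+1}) = {\bar{\alpha }} \quad \text {for all } j\in \mathbb {Z}. \end{aligned}$$

By definition

$$\begin{aligned} A(k_{{\bar{\alpha }}}^{+}(x_j),x_j) = {\bar{\alpha }}, \quad \max (k_{{\bar{\alpha }}}^{+}(x_j),u_M(x_j)) = k_{{\bar{\alpha }}}^{+}(x_j), \quad \min (k_{{\bar{\alpha }}}^{+}(x_{j+1}),u_M(x_{j+1})) = u_M(x_{j+1}). \end{aligned}$$

Thus, referring to (18),

$$\begin{aligned} {\bar{A}}(k_{{\bar{\alpha }}}^{+}(x_j),k_{{\bar{\alpha }}}^{+}(x_{j+1}),x_j,x_{j+1}) = \max \left\{ A(k_{{\bar{\alpha }}}^{+}(x_j),x_j),\, A(u_M(x_{j+1}),x_{j+1})\right\} = \max \{{\bar{\alpha }},0\} = {\bar{\alpha }}. \end{aligned}$$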

Recalling the formula for the scheme (17), it is clear from the above that \(\{ {k_{{\bar{\alpha }}}^{+}}(x_j)\}_{j \in \mathbb {Z}}\) is a stationary solution. \(\square \)
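Lemma 3.4 can be observed directly on a grid. The Python sketch below uses a hypothetical flux \(A(u,x)=S(x)(u-u_M(x))^2\) whose critical point \(u_M\) has infinitely many jumps accumulating at \(x=0\), together with the assumed form \({\bar{A}}(u,v,x,y)=\max \{A(\max (u,u_M(x)),x),\,A(\min (v,u_M(y)),y)\}\) of (18). As in the scheme, the flux is applied at every cell boundary without locating the discontinuities. For this flux \(k_\alpha ^{\pm }(x)=u_M(x)\pm \sqrt{\alpha /S(x)}\). The end cells are held fixed, a truncation of the infinite grid chosen only for the sketch; all names are hypothetical.

```python
import math


def u_M(x):
    # Hypothetical critical point: (-1)^k 2^-k on (2^-(k+1), 2^-k], k >= 0, else 0.
    if 0.0 < x <= 1.0:
        k = math.floor(-math.log2(x))
        return (-1) ** k * 2.0 ** (-k)
    return 0.0


def S(x):
    # Hypothetical coefficient with 1 <= S <= 2 and one jump at x = -0.5.
    return 2.0 if x < -0.5 else 1.0


def A(u, x):
    return S(x) * (u - u_M(x)) ** 2


def A_bar(u, v, x, y):
    # Assumed form of the interface flux (18), used at every cell boundary.
    return max(A(max(u, u_M(x)), x), A(min(v, u_M(y)), y))


def step(u, xs, lam):
    # One step of u_j^{n+1} = u_j^n - lam * (F_{j+1/2} - F_{j-1/2}); end cells fixed.
    F = [A_bar(u[j], u[j + 1], xs[j], xs[j + 1]) for j in range(len(u) - 1)]
    inner = [u[j] - lam * (F[j] - F[j - 1]) for j in range(1, len(u) - 1)]
    return [u[0]] + inner + [u[-1]]


def k_pm(alpha, x, sign):
    # For this flux, A(k, x) = alpha has the two roots u_M(x) +/- sqrt(alpha / S(x)).
    return u_M(x) + sign * math.sqrt(alpha / S(x))


def stationarity_defect(alpha, sign, lam=0.1, dx=0.01):
    # Largest change of the grid function k^{+/-}_alpha(x_j) under one scheme step.
    xs = [j * dx for j in range(-150, 151)]
    K = [k_pm(alpha, x, sign) for x in xs]
    return max(abs(p - q) for p, q in zip(K, step(K, xs, lam)))


print(stationarity_defect(1.3, +1), stationarity_defect(1.3, -1))  # rounding-level values
```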

For the convergence analysis that follows we assume that \(\Delta :=(\Delta x,\Delta t)\rightarrow 0\) with the ratio \(\lambda = \Delta t / \Delta x\) fixed and satisfying the CFL condition

$$\begin{aligned} \lambda L \le 1. \end{aligned}$$(30)

Lemma 3.5

Assume that \(\lambda \) is chosen so that the CFL condition (30) holds.

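The scheme is monotone, meaning that if \( \left| v_j^n\right| , \left| w_j^n\right| \le \mathcal {M}\) for \( j \in \mathbb {Z}\), then one step of (17) satisfies

$$\begin{aligned} v_j^n\le w_j^n \text { for all } j\in \mathbb {Z}\implies v_j^{n+1}\le w_j^{n+1} \text { for all } j\in \mathbb {Z}. \end{aligned}$$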

Proof

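We define \(H_j(u,v,w)\) as follows:

$$\begin{aligned} H_j(u,v,w) := v-\lambda \left( {\bar{A}}(v,w,x_j,x_{j+1})-{\bar{A}}(u,v,x_{j-1},x_j)\right) , \end{aligned}$$(31)

so that the scheme (17) reads \(u_j^{n+1}=H_j(u_{j-1}^n,u_j^n,u_{j+1}^n)\).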

We show that \(H_j\) is nondecreasing in each variable. Note that from definition (18) it is clear that \({\bar{A}}(\cdot ,\cdot ,x_j,x_{j+1})\) is nondecreasing in the first variable and nonincreasing in the second variable. Therefore, from (31) we have

Next we define

For \(v_1\le v_2\) we denote \(I_1={\bar{A}}(u,v_1,x_{j-1},x_{j})-{\bar{A}}(u,v_2,x_{j-1},x_{j})\) and \(I_2={\bar{A}}(v_1,w,x_{j},x_{j+1})-{\bar{A}}(v_2,w,x_{j},x_{j+1})\) and \(I=I_1-I_2\). From (18) we have the following:

From (32) and (33) it follows that \(I=-I_2\) if \(v_1\ge u_M(x_j)\) and \(I=I_1\) if \(v_2\le u_M(x_j)\). In both cases we have \(|I|\le L|v_1-v_2|\). When \(v_1\le u_M(x_j)\le v_2\) we have

Therefore we have

Hence, the proof is completed by invoking the CFL condition (30). \(\square \)
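A randomized check of Lemma 3.5, under the same kind of hypothetical flux \(A(u,x)=S(x)(u-u_M(x))^2\) (with \(u_M\) jumping infinitely often near \(x=0\)) and the assumed max/min form of (18). For this flux \(|\partial _u A(u,x)| = 2S(x)|u-u_M(x)|\le 4(\mathcal {M}+1)\) on \([-\mathcal {M},\mathcal {M}]\), since \(S\le 2\) and \(|u_M|\le 1\); the sketch therefore takes \(L=4(\mathcal {M}+1)\) and \(\lambda =0.9/L\). Reading the CFL condition (30) as \(\lambda L\le 1\) is our assumption, as are all names.

```python
import math
import random


def u_M(x):
    # Hypothetical critical point: (-1)^k 2^-k on (2^-(k+1), 2^-k], k >= 0, else 0.
    if 0.0 < x <= 1.0:
        k = math.floor(-math.log2(x))
        return (-1) ** k * 2.0 ** (-k)
    return 0.0


def S(x):
    # Hypothetical coefficient with 1 <= S <= 2.
    return 2.0 if x < -0.5 else 1.0


def A(u, x):
    return S(x) * (u - u_M(x)) ** 2


def A_bar(u, v, x, y):
    # Assumed form of the interface flux (18).
    return max(A(max(u, u_M(x)), x), A(min(v, u_M(y)), y))


def step(u, xs, lam):
    # One step of u_j^{n+1} = u_j^n - lam * (F_{j+1/2} - F_{j-1/2}); end cells fixed.
    F = [A_bar(u[j], u[j + 1], xs[j], xs[j + 1]) for j in range(len(u) - 1)]
    inner = [u[j] - lam * (F[j] - F[j - 1]) for j in range(1, len(u) - 1)]
    return [u[0]] + inner + [u[-1]]


def cfl_lambda(M, safety=0.9):
    # For M >= 1: |dA/du| <= 2 * 2 * (M + 1) =: L on [-M, M]; return lam = safety / L.
    return safety / (4.0 * (M + 1.0))


def worst_order_violation(M=1.5, trials=50, seed=0):
    # Max of v_j^{n+1} - w_j^{n+1} over random ordered data v <= w in [-M, M].
    # Monotonicity predicts a value <= 0, up to rounding.
    rng = random.Random(seed)
    xs = [j * 0.02 for j in range(-60, 61)]
    lam = cfl_lambda(M)
    worst = -math.inf
    for _ in range(trials):
        v = [rng.uniform(-M, M) for _ in xs]
        w = [min(M, vj + rng.uniform(0.0, 0.5)) for vj in v]
        v1, w1 = step(v, xs, lam), step(w, xs, lam)
        worst = max(worst, max(p - q for p, q in zip(v1, w1)))
    return worst


print(worst_order_violation())
```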

Lemma 3.6

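Assume that the CFL condition (30) holds. Then,

$$\begin{aligned} k_{{\bar{\alpha }}}^{-}(x_j) \le u_j^n \le k_{{\bar{\alpha }}}^{+}(x_j), \quad j\in \mathbb {Z},\ n\ge 0. \end{aligned}$$(35)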

Proof

From (20), we have

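Applying the two branches of the inverse function \(A^{-1}(\cdot ,x)\) to (36), and using the fact that the increasing branch preserves order, while the decreasing branch reverses order, we have

$$\begin{aligned} k_{{\bar{\alpha }}}^{-}(x) \le u_0(x) \le k_{{\bar{\alpha }}}^{+}(x). \end{aligned}$$(37)

By evaluation at \(x=x_j\), we also have

$$\begin{aligned} k_{{\bar{\alpha }}}^{-}(x_j) \le u_j^0 \le k_{{\bar{\alpha }}}^{+}(x_j), \quad j\in \mathbb {Z}. \end{aligned}$$(38)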

Thus \(\left| u_j^0\right| \le \mathcal {M}\) for \(j \in \mathbb {Z}\). It is clear from (26) that also \(\left| k_{{\bar{\alpha }}}^{\pm }(x_j)\right| \le \mathcal {M}\) for \(j \in \mathbb {Z}\). We apply a single step of the scheme to all three parts of (38). Due to the bounds \(\left| u_j^0\right| , \left| k_{{\bar{\alpha }}}^{\pm }(x_j)\right| \le \mathcal {M}\), the scheme acts in a monotone manner (Lemma 3.5), so that the ordering of (38) is preserved. In addition, each of \(\{ k_{{\bar{\alpha }}}^{-}(x_j)\}\) and \(\{ k_{{\bar{\alpha }}}^{+}(x_j)\}\) is a stationary solution of the difference scheme, by Lemma 3.4. Thus, after applying the difference scheme, the result is

$$\begin{aligned} k_{{\bar{\alpha }}}^{-}(x_j) \le u_j^1 \le k_{{\bar{\alpha }}}^{+}(x_j), \quad j\in \mathbb {Z}, \end{aligned}$$(39)

implying that (35) holds at time level \(n=1\). The proof is completed by continuing this way from one time step to the next. \(\square \)
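The invariant region of Lemma 3.6 can be monitored in a simulation. Under the same hypothetical flux \(A(u,x)=S(x)(u-u_M(x))^2\) and the assumed max/min form of (18), the Python sketch below starts from \(u_M\) plus a compactly supported bump, computes \({\bar{\alpha }}\) as the maximum of \(A(u_j^0,x_j)\) (our stand-in for definition (20)), and records the largest violation of \(k^-_{{\bar{\alpha }}}(x_j)\le u_j^n\le k^+_{{\bar{\alpha }}}(x_j)\) over 200 time steps. The time step uses \(\lambda L = 0.9\) with \(L=4(\mathcal {M}+1)\), which is our assumption about (30) for this flux; all names are hypothetical.

```python
import math


def u_M(x):
    # Hypothetical critical point: (-1)^k 2^-k on (2^-(k+1), 2^-k], k >= 0, else 0.
    if 0.0 < x <= 1.0:
        k = math.floor(-math.log2(x))
        return (-1) ** k * 2.0 ** (-k)
    return 0.0


def S(x):
    # Hypothetical coefficient with 1 <= S <= 2.
    return 2.0 if x < -0.5 else 1.0


def A(u, x):
    return S(x) * (u - u_M(x)) ** 2


def A_bar(u, v, x, y):
    # Assumed form of the interface flux (18).
    return max(A(max(u, u_M(x)), x), A(min(v, u_M(y)), y))


def step(u, xs, lam):
    # One step of u_j^{n+1} = u_j^n - lam * (F_{j+1/2} - F_{j-1/2}); end cells fixed.
    F = [A_bar(u[j], u[j + 1], xs[j], xs[j + 1]) for j in range(len(u) - 1)]
    inner = [u[j] - lam * (F[j] - F[j - 1]) for j in range(1, len(u) - 1)]
    return [u[0]] + inner + [u[-1]]


def max_violation(n_steps=200, dx=0.02):
    xs = [j * dx for j in range(-100, 101)]
    # Hypothetical datum: u_M plus a compactly supported bump.
    u = [u_M(x) + (0.8 if -0.3 < x < 0.4 else 0.0) for x in xs]
    alpha_bar = max(A(uj, x) for uj, x in zip(u, xs))
    k_lo = [u_M(x) - math.sqrt(alpha_bar / S(x)) for x in xs]
    k_hi = [u_M(x) + math.sqrt(alpha_bar / S(x)) for x in xs]
    M = max(abs(k) for k in k_lo + k_hi)
    lam = 0.9 / (4.0 * (M + 1.0))  # lam * L = 0.9 with L = 4 (M + 1)
    worst = 0.0
    for _ in range(n_steps):
        u = step(u, xs, lam)
        worst = max(worst, max(max(lo - uj, uj - hi) for uj, lo, hi in zip(u, k_lo, k_hi)))
    return worst


print(max_violation())  # expected to be at rounding level
```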

Lemma 3.7

The following bound holds for \(z_j^n\):

Proof

From definition (10) of \(\Psi \), (26) and (35) we have

\(\square \)

Lemma 3.8

The following time continuity estimate holds for \(u_j^n\):

Proof

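It is apparent from (18) that

$$\begin{aligned} {\bar{A}}(u_M(x_j),u_M(x_{j+1}),x_j,x_{j+1}) = 0, \quad j\in \mathbb {Z}. \end{aligned}$$(42)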

With (42) and the assumption that \(u_0-u_M\) has compact support, it is clear that \(u_j^n - u_M(x_j)\) vanishes for \(\left| j\right| \ge J(n)\), for some positive integer J(n), for each \(n \ge 0\). In particular, we have \(\sum \nolimits _{j \in \mathbb {Z}} \left| u_j^n - u_M(x_j)\right| < \infty \) for \(n \ge 0\). Moreover, the fact that \(u_j^n = u_M(x_j)\) for \(\left| j\right| \ge J(n)\), combined with (42), yields

As a consequence of (43) and (17) we have

Due to the monotonicity property (Lemma 3.5), and the conservation property (44) we can invoke a straightforward modification of the Crandall-Tartar lemma [14], yielding

The proof will be completed by estimating the last term.

Invoking Lemma 3.2, we obtain

Plugging (47) into (46), and then summing over \(j \in \mathbb {Z}\), the result is

\(\square \)
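The conservation property used in this proof can also be observed numerically: far from the support of \(u_0-u_M\) the interface flux vanishes, so \(\sum _j (u_j^n-u_M(x_j))\) is unchanged from one step to the next. The Python sketch below uses the same hypothetical flux \(A(u,x)=S(x)(u-u_M(x))^2\), with jumps of \(u_M\) accumulating at \(x=0\), and the assumed max/min form of (18). The end cells of the truncated grid are held fixed; they stay equal to \(u_M\) during the run because the bump never reaches them. All names are hypothetical.

```python
import math


def u_M(x):
    # Hypothetical critical point: (-1)^k 2^-k on (2^-(k+1), 2^-k], k >= 0, else 0.
    if 0.0 < x <= 1.0:
        k = math.floor(-math.log2(x))
        return (-1) ** k * 2.0 ** (-k)
    return 0.0


def S(x):
    # Hypothetical coefficient with 1 <= S <= 2.
    return 2.0 if x < -0.5 else 1.0


def A(u, x):
    return S(x) * (u - u_M(x)) ** 2


def A_bar(u, v, x, y):
    # Assumed form of the interface flux (18).
    return max(A(max(u, u_M(x)), x), A(min(v, u_M(y)), y))


def step(u, xs, lam):
    # One step of u_j^{n+1} = u_j^n - lam * (F_{j+1/2} - F_{j-1/2}); end cells fixed.
    F = [A_bar(u[j], u[j + 1], xs[j], xs[j + 1]) for j in range(len(u) - 1)]
    inner = [u[j] - lam * (F[j] - F[j - 1]) for j in range(1, len(u) - 1)]
    return [u[0]] + inner + [u[-1]]


def mass_drift(n_steps=50, dx=0.02):
    # Initial mass and largest change of sum_j (u_j^n - u_M(x_j)) over n_steps.
    xs = [j * dx for j in range(-150, 151)]
    u = [u_M(x) + (0.8 if -0.3 < x < 0.4 else 0.0) for x in xs]
    lam = 0.9 / (4.0 * (1.8 + 1.0))  # here |k^{+/-}_alpha_bar| <= 1 + 0.8 = 1.8

    def mass(v):
        return sum(vj - u_M(x) for vj, x in zip(v, xs))

    m0, drift = mass(u), 0.0
    for _ in range(n_steps):
        u = step(u, xs, lam)
        drift = max(drift, abs(mass(u) - m0))
    return m0, drift


print(mass_drift())  # drift at rounding level
```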

Lemma 3.9

The following time continuity estimate holds for \(z_j^n\):

Proof

The estimate (48) follows directly from time continuity for \(\{u_j^n \}\), and Lipschitz continuity of \(\Psi (\cdot ,x_j)\). Indeed,

Now (48) is immediate from (41) and (49). \(\square \)

We next turn to establishing a spatial variation bound for \(z_j^n\). Define

We use the notation \(b_+,b_-\) for the positive and negative parts of a real number b, defined by \(b_+=\max \{b,0\}\) and \(b_-=\min \{b,0\}\). The following lemma can also be deduced from Lemma 4.5 of [2] or Lemma 3.3 of [21].

Lemma 3.10

The following inequality holds:

Proof

Since the right-hand side of (51) is nonnegative, it suffices to consider the case \((\Psi (u_{j+1}^n,x_j) - \Psi (u_j^n,x_j))_+>0 \), that is, \(\Psi (u_{j+1}^n,x_j)> \Psi (u_j^n,x_j) \). Since \(u\mapsto \Psi (u,x_j)\) is increasing, this gives \(u_{j+1}^n>u_j^n\). Note that \(G(v,w):={\bar{A}}(v,w,x_j,x_j)\), \(v,w\in \mathbb {R}\), is the classical Godunov flux for \(A(\cdot ,x_j)\). Hence G is Lipschitz continuous and we have

Now we observe the following

Next we check the following two inequalities,

We first prove (56). If \(\sigma _-(u_j^n,x_j)=0\), then \(u_j^n\ge u_M(x_j)\), and consequently \(u_{j+1}^n>u_j^n\ge u_M(x_j)\). Since \(u\mapsto A(u,x_j)\) is increasing for \(u\ge u_{M}(x_j)\), the right-hand side of (56) vanishes. Now, if \(\sigma _-(u_j^n,x_j)=1\), then \(u_j^n<u_M(x_j)\). If \(u_{j-1}^n\ge u_M(x_j)\), we get \(-\int \nolimits _{u_{j-1}^n}^{u_{j}^n}\frac{\partial G}{\partial v}(v,u^n_j)\,dv\ge 0\). If \(u_{j-1}^n< u_M(x_j)\), then \(G(u_{j-1}^n,u_j^n)=G(u_j^n,u_j^n)\), and consequently \(-\int \nolimits _{u_{j-1}^n}^{u_{j}^n}\frac{\partial G}{\partial v}(v,u^n_j)\,dv =0\). Therefore, for \(\sigma _-(u^n_j,x_j)=1\) we have

To obtain the last equality we have used the fact that for \(u_j^n<u_M(x_j)\) and \(w\ge u_j^n\), \(G(u_j^n,w) = A(\min (w,u_M(x_j)),x_j)\). This completes the proof of (56). We now prove (55). If \(\sigma _+(u_{j+1}^n,x_j)=0\), then \(u_j^n<u_{j+1}^n\le u_M(x_j)\), and hence the right-hand side of (55) vanishes. Now consider the case \(\sigma _+(u^n_{j+1},x_j)=1\), that is, \(u_{j+1}^n>u_M(x_j)\). If \(u_{j+2}^n>u_M(x_j)\), we have \(G(u_{j+1}^n,u_{j+2}^n)=G(u_{j+1}^n,u_{j+1}^n)\), or equivalently, \(\int \nolimits _{u_{j+1}^n}^{u_{j+2}^n}\frac{\partial G}{\partial w}(u^n_{j+1},w)\,dw=0\). If \(u_{j+2}^n\le u_M(x_j)\), then \(u_{j+2}^n\le u_{j+1}^n\), and consequently \(\int \nolimits _{u_{j+1}^n}^{u_{j+2}^n}\frac{\partial G}{\partial w}(u^n_{j+1},w)\,dw\ge 0\). Hence, for \(\sigma _+(u^n_{j+1},x_j)=1\), we have

This guarantees (55). To obtain the last equality we have used the fact that for \(u_{j+1}^n>u_M(x_j)\) and \(v\le u_{j+1}^n\), \(G(v,u_{j+1}^n) = A(\max (v,u_M(x_j)),x_j)\). Now combining (55), (56) with (54) we conclude (51). \(\square \)

We remark that Lemma 3.10 remains true if \(u_{j+2}^n\) and \(u_{j-1}^n\) are replaced by any two real numbers \(w_1,w_2\).

Lemma 3.11

Let \({\bar{A}}^n_{j+1/2} = {\bar{A}}(u_j^n,u_{j+1}^n,x_j,x_{j+1})\). The following inequality holds:

where \(\sum \nolimits _{j\in \mathbb {Z}} \Omega _{j+1/2}^n \le C ({{\,\mathrm{TV}\,}}(a)+{{\,\mathrm{TV}\,}}(u_M))\) and C is independent of \(\Delta \).

Proof

By Lemma 3.3 we have

From (51) we have

We further modify (59) to get the following

Next we apply Lemma 3.2 to bound the last five terms of (60) by

Combining (60) and (61) we get (57) with

\(\square \)

Lemma 3.12

For each \(n\ge 0\), there is a positive integer J(n) such that

Proof

As part of the proof of Lemma 3.8 we showed that \(u_j^n = u_M(x_j)\) for sufficiently large \(\left| j\right| \). The proof is completed by combining this fact with (63). \(\square \)

Lemma 3.13

The following spatial variation bound holds for \(n \ge 0\):

where C is a \(\Delta \)-independent constant.

Proof

From Lemma 3.11 we find that

Invoking Lemma 3.8 and the fact that \(\sum \nolimits _{j \in \mathbb {Z}} \Omega _{j+1/2}^n \le C ({{\,\mathrm{TV}\,}}(a)+{{\,\mathrm{TV}\,}}(u_M))\), the result is

for some \(\Delta \)-independent constant \(C_2\).

As a result of Lemma 3.12,

We also have

implying that

From (66) and (69) it follows that

\(\square \)

Lemma 3.14

Define \(a^{\Delta }(x):=\sum _{j \in \mathbb {Z}}\chi _j(x)a(x_j)\) and \(u_M^{\Delta }(x):=\sum _{j \in \mathbb {Z}}\chi _j(x)u_M(x_j)\). As \(\Delta \rightarrow 0\), \(a^{\Delta } \rightarrow a\) and \(u_M^\Delta \rightarrow u_M\) in \(L^1_{{{\,\mathrm{loc}\,}}}(\mathbb {R})\).

Proof

We give the proof for \(a^\Delta \); the proof for \(u_M^\Delta \) is identical. Suppose

Then for any \(\sigma >0\), we have

\(\square \)
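Lemma 3.14, like the estimate for \(u_0^{\Delta }\) in Sect. 3, rests on the fact that on each cell the sampled value lies between the smallest and largest values of the function there, so the \(L^1\) error on a bounded interval is at most \(\Delta x\) times the total variation. The Python sketch below checks this for a hypothetical BV function with total variation 3 (a tent of height 1 plus a step of height 1/2 on (0.2, 0.7)), using the sampled approximation \(\sum _j \chi _j(x)a(x_j)\) from the lemma and a midpoint-rule quadrature; the names are our own.

```python
import math


def a(x):
    # Hypothetical BV function: a tent of height 1 plus a step of height 1/2
    # on (0.2, 0.7); its total variation is 2 + 1 = 3.
    tent = max(0.0, 1.0 - abs(x))
    bump = 0.5 if 0.2 < x < 0.7 else 0.0
    return tent + bump


def a_delta(x, dx):
    # Sampled approximation sum_j chi_j(x) a(x_j), with I_j = [x_j - dx/2, x_j + dx/2).
    j = math.floor(x / dx + 0.5)
    return a(j * dx)


def l1_error(dx, r=2.0, m=50000):
    # Midpoint-rule approximation of the L^1(-r, r) distance between a_delta and a.
    h = 2.0 * r / m
    return h * sum(abs(a_delta(-r + (i + 0.5) * h, dx) - a(-r + (i + 0.5) * h))
                   for i in range(m))


for dx in (0.1, 0.01):
    print(dx, l1_error(dx), dx * 3.0)  # error versus the bound dx * TV(a)
```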

Lemma 3.15

The approximations \(u^{\Delta }\) converge as \(\Delta \rightarrow 0\), modulo extraction of a subsequence, in \(L^1_{{{\,\mathrm{loc}\,}}}(\Pi _T)\) and pointwise a.e. in \(\Pi _T\) to a function \(u \in L^{\infty }(\Pi _T) \cap C([0,T]:L^1_{{{\,\mathrm{loc}\,}}}(\mathbb {R}))\).

Proof

From Lemmas 3.7, 3.9 and 3.13 we have for some subsequence, and some \(z \in L^1(\Pi _T) \cap L^{\infty }(\Pi _T)\), \(z^{\Delta } \rightarrow z\) in \(L^1(\Pi _T)\) and pointwise a.e. Define \(u(x,t) = \Psi ^{-1}(z(x,t),x)\). We have \(u_j^n = \Psi ^{-1}(z_j^n,x_j)\), or

By using triangle inequality and Lemma 3.3 we obtain the following

From Lemma 3.14, we have \(a(x^\Delta )=a^\Delta \rightarrow a\) and \(u_M(x^\Delta )=u^\Delta _M\rightarrow u_M\) in \(L^1_{loc}(\mathbb {R})\); therefore, up to a subsequence, \(a(x^\Delta )\rightarrow a(x)\) and \(u_M(x^\Delta )\rightarrow u_M(x)\) for a.e. \(x\in \mathbb {R}\). Hence, \(\Psi (u^{\Delta }(x,t),x)\rightarrow z(x,t)\) as \(\Delta \rightarrow 0\) for a.e. \((x,t)\in \Pi _T\). Fixing a point \((x,t) \in \Pi _T\) where \(\Psi (u^{\Delta }(x,t),x) \rightarrow z(x,t)\) and using the continuity of \(\zeta \mapsto \Psi ^{-1}(\zeta ,x)\) for each fixed \(x \in \mathbb {R}\), we get

Thus \(u^{\Delta } \rightarrow u\) pointwise a.e. in \(\Pi _T\). Since \(u^{\Delta }\) is bounded in \(\Pi _T\) independently of \(\Delta \), we also have \(u^{\Delta } \rightarrow u\) in \(L^1_{{{\,\mathrm{loc}\,}}}(\Pi _T)\), by the dominated convergence theorem. In fact, due to the time continuity estimate (41), we also have \(u \in C([0,T]:L^1_{{{\,\mathrm{loc}\,}}}(\mathbb {R}))\). \(\square \)

4 Entropy inequality and proof of Theorem 2.5

In this section we show that u satisfies the adapted entropy inequality (12), the remaining ingredient required for the proof of Theorem 2.5.

Lemma 4.1

We have the following discrete entropy inequalities:

where

Proof

The proof is a slightly generalized version of a now classical argument found in [9] or [14]. Denote the grid function \(\{u_j^n\}_{j \in \mathbb {Z}}\) by \(U^n\), and write the scheme defined by (17) as \(U^{n+1} = \Gamma (U^n)\), i.e., \(\Gamma (\cdot )\) is the operator that advances the solution from time level n to \(n+1\). Let \(K^{\pm }_{\alpha } = \{k_{\alpha }^{\pm }(x_j)\}_{j \in \mathbb {Z}}\). Since the scheme is monotone, we have

Using the fact that \(\Gamma (K^{\pm }_{\alpha }) = K^{\pm }_{\alpha }\), it follows from (76) that

The discrete entropy inequality (75) then follows from (77), using the definition of \(\Gamma (\cdot )\) in terms of (17) along with the identity \(a \vee b - a \wedge b = \left| a-b\right| \). \(\square \)
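Lemma 4.1 can be tested numerically in the standard Crandall–Majda form, which is our assumption about the content of (75): with \(k_j=k^{\pm }_\alpha (x_j)\) and \(\Phi _{j+1/2}={\bar{A}}(u_j\vee k_j,u_{j+1}\vee k_{j+1},x_j,x_{j+1})-{\bar{A}}(u_j\wedge k_j,u_{j+1}\wedge k_{j+1},x_j,x_{j+1})\), the argument above gives \(|u_j^{n+1}-k_j|-|u_j^n-k_j|+\lambda (\Phi _{j+1/2}-\Phi _{j-1/2})\le 0\). The Python sketch below evaluates the left side for random data in \([-\mathcal {M},\mathcal {M}]\), using the hypothetical flux \(A(u,x)=S(x)(u-u_M(x))^2\), the assumed max/min form of (18), and \(\alpha \) small enough that \(|k_\alpha ^{\pm }|\le \mathcal {M}\). All names are hypothetical.

```python
import math
import random


def u_M(x):
    # Hypothetical critical point: (-1)^k 2^-k on (2^-(k+1), 2^-k], k >= 0, else 0.
    if 0.0 < x <= 1.0:
        k = math.floor(-math.log2(x))
        return (-1) ** k * 2.0 ** (-k)
    return 0.0


def S(x):
    # Hypothetical coefficient with 1 <= S <= 2.
    return 2.0 if x < -0.5 else 1.0


def A(u, x):
    return S(x) * (u - u_M(x)) ** 2


def A_bar(u, v, x, y):
    # Assumed form of the interface flux (18).
    return max(A(max(u, u_M(x)), x), A(min(v, u_M(y)), y))


def step(u, xs, lam):
    # One step of u_j^{n+1} = u_j^n - lam * (F_{j+1/2} - F_{j-1/2}); end cells fixed.
    F = [A_bar(u[j], u[j + 1], xs[j], xs[j + 1]) for j in range(len(u) - 1)]
    inner = [u[j] - lam * (F[j] - F[j - 1]) for j in range(1, len(u) - 1)]
    return [u[0]] + inner + [u[-1]]


def max_entropy_defect(alpha, sign, M=1.5, dx=0.02, seed=1):
    # Needs |k_alpha^{+/-}| <= M: here |u_M| <= 1 and sqrt(alpha / S) <= 0.5 for alpha <= 0.25.
    rng = random.Random(seed)
    xs = [j * dx for j in range(-60, 61)]
    lam = 0.9 / (4.0 * (M + 1.0))  # lam * L = 0.9 with L = 4 (M + 1)
    u = [rng.uniform(-M, M) for _ in xs]
    k = [u_M(x) + sign * math.sqrt(alpha / S(x)) for x in xs]
    u1 = step(u, xs, lam)
    # Numerical entropy flux Phi_{j+1/2} in Crandall-Majda form.
    phi = [A_bar(max(u[j], k[j]), max(u[j + 1], k[j + 1]), xs[j], xs[j + 1])
           - A_bar(min(u[j], k[j]), min(u[j + 1], k[j + 1]), xs[j], xs[j + 1])
           for j in range(len(xs) - 1)]
    return max(abs(u1[j] - k[j]) - abs(u[j] - k[j]) + lam * (phi[j] - phi[j - 1])
               for j in range(1, len(xs) - 1))


print(max_entropy_defect(0.25, +1), max_entropy_defect(0.25, -1))  # both <= 0 up to rounding
```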

Lemma 4.2

Let

Then

Proof

We first show that \(\Psi (k^{\pm ,\Delta }_\alpha (\cdot ),\cdot )\rightarrow \Psi (k^{\pm }_\alpha (\cdot ),\cdot )\) as \(\Delta \rightarrow 0\). Observe that

This yields

By virtue of Assumption H-2 we obtain

By using (71) in (80), we have \(\Psi (k^{\pm ,\Delta }_{\alpha }(x),x)\rightarrow \Psi (k^{\pm }_\alpha (x),x)\) as \(\Delta \rightarrow 0\) for a.e. \(x\in \mathbb {R}\). By using continuity of \(\zeta \mapsto \Psi ^{-1}(\zeta ,x)\) for each fixed \(x\in \mathbb {R}\) we have \(k^{\pm ,\Delta }_{\alpha }(x)\rightarrow k^{\pm }_\alpha (x)\) as \(\Delta \rightarrow 0\) for a.e. \(x\in \mathbb {R}\). \(\square \)

Lemma 4.3

The (subsequential) limit u guaranteed by Lemma 3.15 satisfies the adapted entropy inequalities (13).

Proof

Fix \(\alpha \ge 0\). Define

and

We can rewrite \(v^{\Delta }\) and \(w^{\Delta }\) as follows

Invoking the convergence results for \(u^{\Delta }\) and for \(k^{\pm ,\Delta }_{\alpha }\), we have

pointwise a.e. and in \(L^1_{{{\,\mathrm{loc}\,}}}(\Pi _T)\). From Lemma 4.1 we have

where

Let \(0 \le \phi (x,t) \in C_0^1(\mathbb {R}\times [0,T))\) be a test function, and define \(\phi _j^n = \phi (x_j,t^n)\). As in the proof of the Lax–Wendroff theorem, we multiply (84) by \(\phi _j^n \Delta x\), and then sum by parts to arrive at

The first and third sums on the left-hand side of (85) converge to \(\int _{\mathbb {R}_+}\int _{\mathbb {R}}\frac{\partial \phi }{\partial t}|u(x,t)-k^{\pm }_{\alpha }(x)|\,dxdt\) and \(\int _{\mathbb {R}} \left| u_0(x) -k^{\pm }_{\alpha }(x)\right| \phi (x,0) \,dx\), respectively. The crucial part of the argument is to prove convergence of the second sum on the left-hand side of (85). It suffices to prove that

and that

We will prove (86). The proof of (87) is similar. We start with the following identity:

Taking absolute values, using \({\bar{A}}(v_j^n,v_{j}^n,x_j,x_{j}) = A(v_j^n,x_j)\), and (27), we have

Thus, with the abbreviation \(\rho _j^n := (\phi _{j+1}^{n} -\phi _j^n)/\Delta x\),

For \(S_1\), we can invoke the Kolmogorov compactness criterion [14], since \(v^{\Delta }\) converges in \(L^1_{{{\,\mathrm{loc}\,}}}(\Pi _T)\), and conclude that \(S_1 \rightarrow 0\). By Assumptions H-2 and H-4 (\(a\in \text {BV}(\mathbb {R})\) and \(u_M \in \text {BV}(\mathbb {R})\)), we also have \(S_2,S_3 \rightarrow 0\). As a result, in order to prove (86) it suffices to show that

This limit then follows from the estimate

along with the fact that \(a^{\Delta }\rightarrow a\) in \(L^1_{{{\,\mathrm{loc}\,}}}(\mathbb {R})\) (Lemma 3.14), and \(v^{\Delta } \rightarrow v\) in \(L^1_{{{\,\mathrm{loc}\,}}}(\Pi _T)\).

\(\square \)
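The step leading to (85) rests on two elementary discrete identities. In time, \(\sum_{n}\sum_j (w_j^{n+1}-w_j^n)\phi_j^n = -\sum_n\sum_j w_j^{n+1}(\phi_j^{n+1}-\phi_j^n) - \sum_j w_j^0\phi_j^0\) when \(\phi\) vanishes for large \(n\). In space, \(\sum_j (F_{j+1/2}-F_{j-1/2})\phi_j = -\Delta x\sum_j F_{j+1/2}\rho_j\) with \(\rho_j=(\phi_{j+1}-\phi_j)/\Delta x\) when \(\phi\) has compact support. Up to the factors \(\Delta x\) and \(\Delta t\), these are the manipulations that move all differences onto the test function. The sketch below verifies both identities with random stand-ins `w` (for \(|u_j^n-k^{\pm}_\alpha(x_j)|\)) and `F` (for a numerical entropy flux); all arrays are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
N, J = 40, 60
dx = 1.0 / J
w = rng.standard_normal((N + 1, J))   # w[n, j]: any grid function on time levels 0..N
F = rng.standard_normal((N, J))       # F[n, j]: a flux value at x_{j+1/2}, time level n
phi = np.zeros((N + 1, J))
phi[:N - 5, 5:J - 5] = rng.uniform(0.0, 1.0, (N - 5, J - 10))  # compactly supported test function
rho = (np.roll(phi, -1, axis=1) - phi) / dx                    # rho_j^n = (phi_{j+1}^n - phi_j^n) / dx

# Time part: differences of w against phi, then moved onto phi (phi^N = 0)
lhs_time = np.sum((w[1:] - w[:-1]) * phi[:-1])
rhs_time = -np.sum(w[1:] * (phi[1:] - phi[:-1])) - np.sum(w[0] * phi[0])

# Space part: flux differences against phi, then moved onto rho
lhs_space = np.sum((F - np.roll(F, 1, axis=1)) * phi[:-1])
rhs_space = -dx * np.sum(F * rho[:-1])

assert np.isclose(lhs_time, rhs_time)
assert np.isclose(lhs_space, rhs_space)
```

Because \(\phi\) vanishes near both ends of the index range, the periodic `np.roll` used here gives the same sums as summing over all of \(\mathbb{Z}\).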

We can now prove Theorem 2.5.

Proof

Taken together, Lemmas 3.15 and 4.3 establish that the approximations \(u^{\Delta }\) converge in \(L^1_{{{\,\mathrm{loc}\,}}}(\Pi _T)\) and pointwise a.e. in \(\Pi _T\), along a subsequence, to a function \(u \in L^{\infty }(\Pi _T) \cap C([0,T]:L^1_{{{\,\mathrm{loc}\,}}}(\mathbb {R}))\), and u is an adapted entropy solution in the sense of Definition 2.1. By Theorem 2.2, u is the unique solution to the Cauchy problem (1)–(2) with initial data \(u_0\). Moreover, as a consequence of the uniqueness result, the entire computed sequence \(u^{\Delta }\) converges to u, not just a subsequence. The final step of the proof is to extend the result to the case of \(u_0 \in L^{\infty }(\mathbb {R})\), as described in Sect. 3. \(\square \)

References

Adimurthi, Ghoshal, S.S., Veerappa Gowda, G.D.: Existence and nonexistence of TV bounds for scalar conservation laws with discontinuous flux. Commun. Pure Appl. Math. 64(1), 84–115 (2011)

Adimurthi, Jaffré, J., Veerappa Gowda, G.D.: Godunov-type methods for conservation laws with a flux function discontinuous in space. SIAM J. Numer. Anal. 42(1), 179–208 (2004)

Adimurthi, Mishra, S., Veerappa Gowda, G.D.: Optimal entropy solutions for conservation laws with discontinuous flux functions. J. Hyperbolic Differ. Equ. 2, 783–837 (2005)

Andreianov, B., Cancès, C.: Vanishing capillarity solutions of Buckley-Leverett equation with gravity in two-rocks’ medium. Comput. Geosci. 17, 551–572 (2013)

Andreianov, B., Karlsen, K.H., Risebro, N.H.: A theory of \(L^1\)-dissipative solvers for scalar conservation laws with discontinuous flux. Arch. Ration. Mech. Anal. 201, 27–86 (2011)

Audusse, E., Perthame, B.: Uniqueness for scalar conservation laws with discontinuous flux via adapted entropies. Proc. R. Soc. Edinb. Sect. A 135, 253–265 (2005)

Baiti, P., Jenssen, H.K.: Well-posedness for a class of \(2\times 2\) conservation laws with \(L^{\infty }\) data. J. Differ. Equ. 140, 161–185 (1997)

Bürger, R., Karlsen, K.H., Risebro, N.H., Towers, J.D.: Well-posedness in \(BV_t\) and convergence of a difference scheme for continuous sedimentation in ideal clarifier-thickener units. Numer. Math. 97, 25–65 (2004)

Crandall, M.G., Majda, A.: Monotone difference approximations for scalar conservation laws. Math. Comput. 34, 1–21 (1980)

Diehl, S.: A conservation law with point source and discontinuous flux function modelling continuous sedimentation. SIAM J. Appl. Math. 56, 388–419 (1996)

Garavello, M., Natalini, R., Piccoli, B., Terracina, A.: Conservation laws with discontinuous flux. Netw. Heterog. Media 2, 159–179 (2007)

Ghoshal, S.S.: Optimal results on TV bounds for scalar conservation laws with discontinuous flux. J. Differ. Equ. 3, 980–1014 (2015)

Ghoshal, S.S.: BV regularity near the interface for nonuniform convex discontinuous flux. Netw. Heterog. Media 11, 331–348 (2016)

Holden, H., Risebro, N.H.: Front Tracking for Hyperbolic Conservation Laws. Springer, New York (2002)

Kružkov, S.N.: First order quasilinear equations with several independent variables. Mat. Sb. (N.S.) 81(123), 228–255 (1970). (Russian)

May, L., Shearer, M., Davis, K.: Scalar conservation laws with nonconstant coefficients with application to particle size segregation in granular flow. J. Nonlinear Sci. 20, 689–707 (2010)

Panov, E.Y.: Existence of strong traces for quasi-solutions of multidimensional scalar conservation laws. J. Hyperbolic Differ. Equ. 4, 729–770 (2007)

Panov, E.Y.: On existence and uniqueness of entropy solutions to the Cauchy problem for a conservation law with discontinuous flux. J. Hyperbolic Differ. Equ. 6, 525–548 (2009)

Piccoli, B., Tournus, M.: A general BV existence result for conservation laws with spatial heterogeneities. SIAM J. Math. Anal. 50, 2901–2927 (2018)

Shen, W.: On the uniqueness of vanishing viscosity solutions for Riemann problems for polymer flooding. Nonlinear Differ. Equ. Appl. 24, 37 (2017)

Towers, J.D.: Convergence of a difference scheme for conservation laws with a discontinuous flux. SIAM J. Numer. Anal. 38, 681–698 (2000)

Towers, J.D.: An existence result for conservation laws having BV spatial flux heterogeneities—without concavity. J. Differ. Equ. 269, 5754–5764 (2020)

Vasseur, A.: Strong traces of multidimensional scalar conservation laws. Arch. Ration. Mech. Anal. 160, 181–193 (2001)

Acknowledgements

The first and second authors acknowledge the support of the Department of Atomic Energy, Government of India, under Project No. 12-R&D-TFR-5.01-0520. The first author also acknowledges the Inspire faculty-research Grant DST/INSPIRE/04/2016/000237. We thank two anonymous referees for their careful reading of the paper and for their helpful comments.

Appendix

Let \(g:\mathbb {R}\rightarrow \mathbb {R}\) be defined by \(g(x)=\left| x\right| \). Suppose u satisfies the entropy condition (12), and set \(v(x,t)=\Psi (u(x,t),x)\), where \(\Psi \) is as in (10). We denote the inverse of the map \(\zeta \mapsto \Psi (\zeta ,x)\) by \(\alpha (\cdot ,x)\). Then we have

for any \(k\in \mathbb {R}\) and \(0\le \phi \in C_0^{\infty }(\mathbb {R}\times \mathbb {R}_+)\).

Lemma 5.1

[18] Let \(v_1,v_2\in L^{\infty }(\mathbb {R}\times \mathbb {R}_+)\) be two functions satisfying (93). Then we have

Proof

For \(0\le \phi \in C_c^{\infty }(\mathbb {R}\times \mathbb {R}_+)\) and \(0\le \psi \in C_c^{\infty }(\mathbb {R}\times \mathbb {R}_+)\) we have

and

Fix a \(\Phi \in C_c^{\infty }(\mathbb {R}\times \mathbb {R}_+)\). Let \(\eta _\epsilon \) be Friedrichs mollifiers. Consider

Setting \(k=v_2(y,s)\) in (95) and \(l=v_1(x,t)\) in (96), and adding the resulting inequalities, we get

where

Let \(E_0,E_1,E_2\subset \mathbb {R}\) be three sets such that

where \(r=\max \{\Vert v_1\Vert _{L^{\infty }(\mathbb {R}\times \mathbb {R}_+)},\Vert v_2\Vert _{L^\infty (\mathbb {R}\times \mathbb {R}_+)}\}\). Since \(v_2\in L^{\infty }(\mathbb {R}\times \mathbb {R}_+)\), the sets \(E_0,E_1\) are measurable and \(meas(\mathbb {R}_+\setminus E_0)=meas(\mathbb {R}\setminus E_1)=0\). By our assumption, for each fixed \(x\in \mathbb {R}\), \(\Psi (\cdot ,x)\) is Lipschitz on \([-r,r]\). Since \(C([-r,r])\) is separable, the Pettis theorem gives the measurability of \(E_2\) and \(meas(\mathbb {R}\setminus E_2)=0\). Therefore we obtain

as \(\epsilon \rightarrow 0\) for \(x\in E_1\) and a.e. \(t,s\in \mathbb {R}_+\). We can also obtain

as \(\epsilon \rightarrow 0\) for \(x\in E_2\) and a.e. \(t,s\in \mathbb {R}_+\). Using (103), (104), and the Lebesgue dominated convergence theorem, we have

In a similar way we can show

Similarly we have

as \(\epsilon \rightarrow 0\) for \(x\in E_1\) and a.e. \(t,s\in \mathbb {R}_+\). Then, by the Lebesgue dominated convergence theorem, we have

We also have for a.e. \(x\in \mathbb {R}\) and \(t\in E_0\)

as \(\delta \rightarrow 0\). This yields

This completes the proof. \(\square \)
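The proof above passes to the limit \(\epsilon \rightarrow 0\) under Friedrichs mollifiers and relies on the fact that mollification converges in \(L^1_{\mathrm{loc}}\) even for discontinuous functions. As a generic illustration (not part of the paper), the sketch builds the standard bump \(\eta(z)=C\exp(-1/(1-z^2))\) on \(|z|<1\), rescales it to \(\eta_\epsilon(y)=\eta(y/\epsilon)/\epsilon\), and measures \(\Vert \eta_\epsilon * f - f\Vert_{L^1}\) for a hypothetical step function \(f\). The helper name `friedrichs_kernel` is ours.

```python
import numpy as np

def friedrichs_kernel(eps, h):
    # Samples of eta_eps(y) = eta(y / eps) / eps on a grid of spacing h, where
    # eta(z) = C exp(-1 / (1 - z^2)) for |z| < 1 and 0 otherwise
    m = int(np.ceil(eps / h))
    z = np.arange(-m, m + 1) * h / eps
    ker = np.zeros_like(z)
    inside = np.abs(z) < 1.0
    ker[inside] = np.exp(-1.0 / (1.0 - z[inside] ** 2))
    return ker / (ker.sum() * h)          # normalize so the discrete integral equals 1

h = 1e-4
x = np.arange(-1.0, 1.0, h)
f = np.where(x < 0.0, 1.0, -0.5)          # hypothetical L^infinity function with a jump at 0
interior = np.abs(x) < 0.5                # stay away from the zero-padded ends of the array

errs = []
for eps in (0.2, 0.05, 0.0125):
    f_eps = np.convolve(f, friedrichs_kernel(eps, h), mode="same") * h
    errs.append(np.sum(np.abs(f_eps - f)[interior]) * h)   # approximates the L^1(-0.5, 0.5) error
print(errs)
```

For a single jump the error is proportional to \(\epsilon\) times the jump size, so it drops by roughly a factor of four each time \(\epsilon\) is divided by four.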

Observe the following

Using Lemma 5.1, one can prove the following by an argument similar to that in [15].

Lemma 5.2

Let \(v_1,v_2\in C([0,T],L^{1}_{loc}(\mathbb {R}))\cap L^{\infty }(\mathbb {R}\times \mathbb {R}_+)\) be two functions satisfying (93). Then for a.e. \(t\in [0,T]\) and any \(r>0\) we have

where \(L_1:=\sup \{\partial _u A(u,x);\,x\in \mathbb {R},\left| u\right| \le \max (\Vert v_1(\cdot ,0)\Vert _{L^{\infty }},\Vert v_2(\cdot ,0)\Vert _{L^{\infty }})\}\).
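Lemma 5.2 combines an \(L^1\) contraction with finite speed of propagation. A discrete analogue holds for any conservative, monotone three-point scheme: the \(\ell^1\) distance between two numerical solutions never increases, and a perturbation spreads by at most one cell per time step. The sketch below assumes the hypothetical flux \(A(u,x)=a(x)u^2/2\) with an interface flux modeled on the Godunov-type flux of [2]; it is an illustration, not the scheme analyzed in the paper. With \(\alpha = 1\), the steady states \(\pm\sqrt{2/a(x_j)}\) bracket the data and confine the solution to \(|u_j|\le 2\), and \(\lambda = 0.2\) keeps \(\lambda \max a \max|u| \le 0.8\), so the step stays monotone.

```python
import numpy as np

def gamma(U, a, lam):
    # Hypothetical monotone Godunov-type step for A(u, x) = a(x) u^2 / 2 on a periodic grid;
    # F[j] = max{A(max(u_j, 0), x_j), A(min(u_{j+1}, 0), x_{j+1})} is the flux at x_{j+1/2}
    F = np.maximum(a * np.maximum(U, 0.0) ** 2 / 2,
                   np.roll(a, -1) * np.minimum(np.roll(U, -1), 0.0) ** 2 / 2)
    return U - lam * (F - np.roll(F, 1))

rng = np.random.default_rng(2)
J, lam, steps = 400, 0.2, 50
a = rng.choice([0.5, 1.0, 2.0], size=J)   # piecewise-constant coefficient with many jumps
U = rng.uniform(-1.0, 1.0, J)
V = U.copy()
V[190:210] = rng.uniform(-1.0, 1.0, 20)   # the two data differ only in cells 190..209

l1 = [np.sum(np.abs(U - V))]
for n in range(1, steps + 1):
    U, V = gamma(U, a, lam), gamma(V, a, lam)
    l1.append(np.sum(np.abs(U - V)))
    differ = np.nonzero(U != V)[0]
    # finite speed: the difference spreads by at most one cell per step in each direction
    assert differ.size == 0 or (differ.min() >= 190 - n and differ.max() <= 209 + n)

# l1 contraction: the discrete L^1 distance never increases
assert all(l1[k + 1] <= l1[k] + 1e-12 for k in range(steps))
# invariant region between the steady states -sqrt(2/a) and +sqrt(2/a)
assert np.all(np.abs(U) <= np.sqrt(2.0 / a) + 1e-12)
```

In the discrete setting the one-cell-per-step bound plays the role of the cone \(|x|\le r + L_1 t\): under the CFL condition, \(\Delta x/\Delta t\) dominates the maximal wave speed.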

About this article

Cite this article

Ghoshal, S.S., Jana, A. & Towers, J.D. Convergence of a Godunov scheme to an Audusse–Perthame adapted entropy solution for conservation laws with BV spatial flux. Numer. Math. 146, 629–659 (2020). https://doi.org/10.1007/s00211-020-01150-y

DOI: https://doi.org/10.1007/s00211-020-01150-y