Abstract

We provide both a general framework for discretizing de Rham sequences of differential forms of high regularity, and some examples of finite element spaces that fit in the framework. The general framework is an extension of the previously introduced notion of finite element systems, and the examples include conforming mixed finite elements for Stokes’ equation. In dimension 2 we detail four low order finite element complexes and one infinite family of high-order finite element complexes. In dimension 3 we define one low order complex, which may be branched into Whitney forms at a chosen index. Stokes pairs with continuous or discontinuous pressure are provided in arbitrary dimension. The finite element spaces all consist of composite polynomials. The framework guarantees several useful properties of the spaces, in particular the existence of commuting interpolators. It also shows that some of the examples are minimal spaces.


1 Introduction

This article is concerned with developing finite element complexes similar to those described in [4, 8, 13, 24, 27, 32], but with enhanced continuity properties. Finite element spaces should be compatible in a precise sense, which in general depends on the partial differential equation one wants to solve and reflects the functional framework adopted for the analysis. One way of phrasing compatibility is that the discrete spaces should form a subcomplex of a certain Hilbert complex, whose norm reflects the desired continuity, and that they should be equipped with bounded projections that commute with the differential operators. The fields we seek to discretize here can all be interpreted as differential forms, and the relevant operators are instances of the exterior derivative. What we seek can then be called good discrete de Rham sequences. The finite elements described in the works cited above are only partially continuous: for vector fields, continuity across interfaces of the mesh holds only for either the tangential or the normal components. In this paper, full continuity is achieved. This is particularly relevant for the Stokes equation.

In many cases the bounded projections alluded to above can be obtained by an averaging technique [12, 14] from the interpolators associated with degrees of freedom, which are defined on smooth differential forms. Since the averaging technique is designed to commute with the differential, we may concentrate on finding degrees of freedom that provide commuting interpolators. As it turns out, the existence of such degrees of freedom on a finite element space can be deduced from a few algebraic constraints, which have been clarified in the framework of finite element systems (FES) introduced in [9] and further developed in [10, 11, 13]. A precise notion of compatibility guarantees that the so-called harmonic degrees of freedom are unisolvent and provide a commuting interpolator.

Recall Ciarlet’s definition of a finite element (e.g. [16] Sect. 10), in terms of spaces equipped with degrees of freedom (DoF). The framework of FES gives DoFs a secondary role. Rather, compatibility is defined in terms of restrictions and differentials. On a compatible FES there will in general be many choices of DoFs for the same spaces. The harmonic DoFs are a natural choice among these possibilities. As we will see, DoFs seem most useful for describing low order elements, where there is less choice.

In this paper we provide both a generalization of the framework of FES that can handle higher order continuity of differential forms and some examples of new spaces that fit into the framework. The generalization essentially consists in allowing for other types of restriction operators than pullback of differential forms. These restriction operators reflect that higher continuity implies that more information about the fields should be available on interfaces in the mesh. The examples of FES we provide are all composite finite elements on a simplicial mesh, namely piecewise polynomials with respect to a simplicial refinement. For the spaces of scalar functions, considered as 0-forms, we use continuously differentiable composite elements, as introduced by Hsieh, Clough and Tocher and discussed further in [16, 19, 25, 35]. The rest of the sequences, pertaining to differential k-forms for \(k \ge 1\), appear to be new. These sequences end with conforming mixed finite elements for the Stokes equation: continuous vector fields with either continuous or discontinuous divergence.

Our results are quite closely related to those of [20], where finite element families for Stokes’ equation are defined in 2D, for both \(\mathrm {H}^1{-}\mathrm {L}^2\) conforming and \(\mathrm {H}^1_{{{\mathrm{div}}}}{-}\mathrm {H}^1\) conforming settings, as part of de Rham sequences with high regularity. In [29] these results are extended to 3D. The spaces attached to triangles or tetrahedra consist of polynomials, including polynomial bubbles; in particular they are smooth functions. Their degrees of freedom for vector fields include in particular all first order partial derivatives at vertices. Our local spaces of vector fields, on the other hand, consist of composite polynomials which are not necessarily of class \(\mathrm {C}^1\), and our degrees of freedom at vertices are just the vertex values and (for \(\mathrm {H}^1_{{{\mathrm{div}}}}\)) the vertex values of the divergence, which is a particular combination of first order derivatives. We point out that our spaces come equipped with commuting interpolators. From the point of view provided by FES, the lower order continuity/differentiability imposed at vertices (for instance) and the composite nature of our elements seem important in this respect, for the given continuity one wants to achieve. The commuting diagram that we obtain, as a consequence of compatibility, makes the proof of the inf-sup condition easier than with the macro-element techniques introduced for Stokes in [34]; at the very least, it provides an alternative type of proof.

We also mention a connection with [22, 23]. In two dimensions they construct a complex of spaces equipped with degrees of freedom that provide commuting interpolators, and such that the last two spaces form a Stokes pair. In dimension 3 they construct Stokes pairs equipped with degrees of freedom that make the interpolator commute. In both cases the local spaces contain rational functions, where we have used composite polynomials for similar purposes. In dimension two their lowest order complex resolves a \(\mathrm {C}^1\) element due to Zienkiewicz, whereas we resolve the Clough-Tocher element. See Remarks 5 and 12 for further considerations.

There is a vast literature on the construction of stable Stokes pairs. The most natural candidate seems to be the \(\mathrm {C}^{0}\mathrm {P}^{p} {-} \mathrm {P}^{p-1}\) pair, where the velocity is discretised by Lagrange elements of degree p and the pressure by discontinuous polynomials of degree \(p-1\). This is called the Scott–Vogelius element [33]; it is easy to implement and leads to strongly divergence-free discretisations: indeed \({{\mathrm{div}}}V_{h}\subseteq Q_{h}\), for velocity space \(V_{h}\) and pressure space \(Q_{h}\). However, the surjectivity and inf-sup conditions are subtle. The divergence operator \({{\mathrm{div}}}: \mathrm {C}^0\mathrm {P}^{p} \rightarrow \mathrm {P}^{p-1}\) is onto when there are no “singular vertices”. The definition of a singular vertex is clear-cut in 2D; in [33] it is shown that in 2D, when there is no singular vertex and \(p\ge 4\), the inf-sup condition holds (with respect to \(\mathrm {H}^1 {-} \mathrm {L}^2\) norms). In 3D, it remains open to define all singular vertices and edges and to find the minimal polynomial degree p; see [38].

Instead of trying to identify singular vertices and edges, one can also identify refinements of simplicial meshes on which the inf-sup condition holds:

– In 2D, on triangles with Clough-Tocher splits, stability of \(\mathrm {C}^{0}\mathrm {P}^{2} - \mathrm {P}^{1}\) and \(\mathrm {C}^{0}\mathrm {P}^{3} - \mathrm {P}^{2}\) approximations was shown in the thesis of Qin [31], see also [5]. In 2D, the stability of quadratic velocity and linear pressure on crisscross triangulations can be found in [5]. On two dimensional Powell–Sabin splits, the \(\mathrm {C}^0\mathrm {P}^{1} {-} \mathrm {P}^{0}\) pair is stable [39].

– The 3D case is more involved. When we subdivide a tetrahedron into four, by the Alfeld split that connects one internal point with the four vertices, the inf-sup condition was shown in [38]. The lowest degree in this case is \(\mathrm {C}^0\mathrm {P}^{4} - \mathrm {P}^{3}\). On Powell–Sabin splits, \(\mathrm {C}^0\mathrm {P}^{2} {-} \mathrm {P}^{1}\) is stable [40].

The main technique of proof in the above cases seems to be the macroelement technique of [34]. Here we rely instead on (often exact) sequences connected by cochain morphisms. In 2D we introduce sequences based on the Clough-Tocher \(\mathrm {C}^1\) element, so that naturally we are led to the \(\mathrm {C}^0\mathrm {P}^{2} - \mathrm {P}^{1}\) pair for Stokes, but not \(\mathrm {C}^0\mathrm {P}^{1} - \mathrm {P}^{0}\).

To be more specific on our contributions, we consider an n-dimensional domain S, say in the Euclidean space \( {\mathbb {R}}^n\). The space of alternating k-linear forms on \({\mathbb {R}}^n\) is denoted \(\mathrm {Alt}^k({\mathbb {R}}^n)\). For \(r \ge 0\) we denote by \(\mathrm {H}^r{\varLambda }^k(S)\) the space of k-forms on S with partial derivatives up to order r in \(\mathrm {L}^2(S)\otimes \mathrm {Alt}^k({\mathbb {R}}^n)\). We denote by \(\mathrm {H}^{r}_\mathrm{d}{\varLambda }^k(S)\) the following space:

$$\begin{aligned} \mathrm {H}^{r}_\mathrm{d}{\varLambda }^k(S) = \{ u \in \mathrm {H}^r{\varLambda }^k(S) \ : \ {\,}\mathrm {d}{\,}u \in \mathrm {H}^r{\varLambda }^{k+1}(S) \}. \end{aligned}$$

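We are interested in the complexes:

$$\begin{aligned} 0 \rightarrow \mathrm {H}^{r}_\mathrm{d}{\varLambda }^0(S) \xrightarrow {{\,}\mathrm {d}{\,}} \mathrm {H}^{r}_\mathrm{d}{\varLambda }^1(S) \xrightarrow {{\,}\mathrm {d}{\,}} \cdots \xrightarrow {{\,}\mathrm {d}{\,}} \mathrm {H}^{r}_\mathrm{d}{\varLambda }^n(S) \rightarrow 0. \end{aligned}$$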

We are also interested in letting r decrease in the complex, at some index, as follows:

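If we restrict attention to dimension \(n= 2\) and \(r =0 , 1\) this leaves us with three possibilities:

$$\begin{aligned} &\mathrm {H}^{0}_\mathrm{d}{\varLambda }^0(S) \rightarrow \mathrm {H}^{0}_\mathrm{d}{\varLambda }^1(S) \rightarrow \mathrm {H}^{0}{\varLambda }^2(S),\\ &\mathrm {H}^{1}_\mathrm{d}{\varLambda }^0(S) \rightarrow \mathrm {H}^{1}{\varLambda }^1(S) \rightarrow \mathrm {H}^{0}{\varLambda }^2(S),\\ &\mathrm {H}^{1}_\mathrm{d}{\varLambda }^0(S) \rightarrow \mathrm {H}^{1}_\mathrm{d}{\varLambda }^1(S) \rightarrow \mathrm {H}^{1}{\varLambda }^2(S). \end{aligned}$$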

We refer to these sequences as de Rham sequences with regularity \((1,0+,0)\), (2, 1, 0) and \((2,1+,1)\) respectively. The last two spaces in the last two sequences are of interest for conforming discretizations of the Stokes equation. It should be pointed out that some reformulations of the Stokes equation with auxiliary variables can be handled with the first type of sequence (e.g. [28]). There are also examples of non-conforming methods that have been successful, such as the Crouzeix–Raviart element [7]. As we see it, these methods have been developed because \(\mathrm {H}^1\)-conforming methods, such as those we introduce here, were not known.

We are interested in constructing finite element spaces which provide subcomplexes of the above three complexes. These subcomplexes should be equipped with commuting interpolation operators. For this purpose a framework of FES has been developed for the first type of complex, starting in [9]; it is summarized in [13]. In this paper we extend the framework so that it can encompass the other two types of complexes and, more generally, we believe, arbitrary \(r \ge 0\) as well as switches between different values of r as sketched above. For small r we provide examples that illustrate that high order polynomials can be included in the finite element spaces, to achieve arbitrarily high approximation order. In arbitrary dimension we also illustrate that it can be useful to consider different simplicial refinements at different indices of the differential complex. A key tool in our construction is the Poincaré operator, which has already been used to construct complexes of regularity \((1,0+,0)\) and their generalizations to arbitrary dimension [4, 24]. Many more examples than those provided here should fit in the proposed framework.

The paper can be seen as a step towards a general theory of discretization of highly continuous fields (sections of vector bundles), in terms of inverse systems of complexes of jets. From this point of view, the present paper provides examples of r-jets of order \(r=0\) and \(r= 1\). This already seems adequate for many of the PDEs we have in mind, since they are at most second order.

The paper is organized as follows. In Sect. 2 we relate the regularity of differential forms to their inter-element continuity, expressed with three different restriction operators. In Sect. 3 we recall methods for proving exactness of sequences under the exterior derivative, using the Poincaré operator, and we sketch how it intervenes in finite element constructions. In Sect. 4 we provide four examples of low order composite finite element sequences in space dimension 2. This motivates the framework of generalized finite element systems and gets the machinery started with respect to higher order polynomials. In Sect. 5 we provide the appropriate notions on generalized FES, leading up to the notion of harmonic interpolator. In Sect. 6 we provide, in dimension 2, examples of composite finite element de Rham sequences with enhanced continuity and arbitrarily high polynomial degree. In Sect. 7 we provide some tools for defining composite finite elements in arbitrary space dimension; in particular we define different simplicial refinements and study some continuous piecewise affine forms on them. In Sect. 8 we provide a composite finite element de Rham sequence with enhanced continuity and low order polynomials (of degree at most two). We also show how such sequences can be branched into Whitney forms at some index. We conclude with some topics for further research.

2 Restrictions and regularity of differential forms

Restriction operators adapted to different regularities. Consider a simplicial complex \({\mathcal {T}}\) on a domain S in a vector space \({\mathbb {V}}\) of dimension n. For differential forms which are piecewise smooth with respect to \({\mathcal {T}}\) we have:

– \(u \in \mathrm {H}^0_\mathrm{d} {\varLambda }^k(S)\) iff the pullbacks to faces are single-valued. If \(T\in {\mathcal {T}}\) is a simplex, pullback means here pullback in the sense of differential forms by the injection \(T \rightarrow S\). It remembers the action of u only on vectors which are tangent to T [see the paragraph leading to (22)].

In terms of vector proxies (in dimension \(n = 3\)), \(\mathrm {H}^0_\mathrm{d} {\varLambda }^1(S)\) corresponds to \(\mathrm {L}^2(S)\) vector fields with \({{\mathrm{curl}}}\) in \(\mathrm {L}^2(S)\), for which the pullback corresponds to taking the tangential component of the vector field. On the other hand, \(\mathrm {H}^0_\mathrm{d} {\varLambda }^{n-1}(S)\) corresponds to \(\mathrm {L}^2(S)\) vector fields with \({{\mathrm{div}}}\) in \(\mathrm {L}^2(S)\), for which the pullback to codimension 1 faces corresponds to taking the normal component of the vector field.

– \(u \in \mathrm {H}^1 {\varLambda }^k(S)\) iff the traces on faces are single-valued. Here trace means restriction in the usual sense, remembering the action of u on all tangent vectors in S (not only those tangent to T).

For vector proxies, this trace operator corresponds to keeping all the components of the vector fields on the faces.

– \(u \in \mathrm {H}^1_\mathrm{d} {\varLambda }^k(S)\) iff the traces of both u and \({\,}\mathrm {d}{\,}u\) are single-valued on faces. Here the word trace is used with the same meaning as above.
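To make the vector-proxy picture concrete in dimension 3, the following minimal Python sketch compares the pullback and the trace of two values of a field on either side of a flat face. It is an illustration of ours, assuming a flat face described by a unit normal n and representing 1-forms and 2-forms by their vector proxies; the helper names are hypothetical, and the double-trace (which also needs derivatives) is not modelled.

```python
# Vector proxies in R^3; a flat face is described by a unit normal n.
# Helper names are hypothetical, chosen for this illustration only.

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def pullback_1form(u, n):
    """Proxy of the pullback of a 1-form: tangential part u - (u.n) n."""
    c = dot(u, n)
    return tuple(ui - c * ni for ui, ni in zip(u, n))

def pullback_2form(u, n):
    """Proxy of the pullback of a 2-form to the face: normal component u.n."""
    return dot(u, n)

def trace(u, n):
    """Trace: all components of u are kept (n is not needed)."""
    return tuple(u)

n = (0.0, 0.0, 1.0)        # the face lies in the plane z = 0
u_minus = (1.0, 2.0, 3.0)  # value from the element below the face
u_plus = (1.0, 2.0, -5.0)  # value from the element above the face

print(pullback_1form(u_minus, n) == pullback_1form(u_plus, n))  # True: tangential parts agree
print(pullback_2form(u_minus, n) == pullback_2form(u_plus, n))  # False: normal parts differ
print(trace(u_minus, n) == trace(u_plus, n))                    # False
```

Read as a 1-form, this jump is compatible with \(\mathrm {H}^0_\mathrm{d} {\varLambda }^1\)-type (tangential) continuity, but not with \(\mathrm {H}^1\)-type continuity, which requires equal traces.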

It will be convenient to denote by \(\mathrm {C}^r{\varLambda }^k(S)\) the space of k-forms on S of class \(\mathrm {C}^r\) and by \(\mathrm {C}^r_\mathrm{d}{\varLambda }^k(S)\) the space of \(u \in \mathrm {C}^r{\varLambda }^k(S)\) such that \({\,}\mathrm {d}{\,}u \in \mathrm {C}^r{\varLambda }^{k+1}(S)\).

We interpret the above conditions ensuring various kinds of regularity by saying that we have defined three types of restriction operators. Explicitly, according to context, the restriction of a differential form \(u \in \mathrm {C}^r_\mathrm{d}{\varLambda }^k(S)\) to a face T of S will be:

– the pullback of u, denoted \({{\mathrm{\mathrm {pu}}}}_T u\), which is in \(\mathrm {C}^r_\mathrm{d}{\varLambda }^k(T)\).

– the trace of u, denoted \({{\mathrm{\mathrm {tr}}}}_T u\), which is in \(\mathrm {C}^r(T) \otimes \mathrm {Alt}^k({\mathbb {V}})\).

– the double-trace of u, written \(( {{\mathrm{\mathrm {tr}}}}_T u, {{\mathrm{\mathrm {tr}}}}_T {\,}\mathrm {d}{\,}u)\), which is in \(\mathrm {C}^r(T)\otimes \mathrm {Alt}^k({\mathbb {V}}) \oplus \mathrm {C}^r(T)\otimes \mathrm {Alt}^{k+1}({\mathbb {V}})\).

The framework of FES, introduced in [9] and developed further in [10,11,12,13] was designed to handle restrictions of the first type, whereas now we are interested in the other cases as well. More generally, we will consider a cellular complex \({\mathcal {T}}\) and restrictions from T to \(T'\) where \(T, T'\) are cells in \({\mathcal {T}}\) and \(T' \subseteq T\).

Admissibility condition. When we start with a k-form \(u \in \mathrm {C}^r_\mathrm{d}{\varLambda }^k(S)\), the trace of \((u, {\,}\mathrm {d}{\,}u)\) on a cell T, also called the double-trace of u, is in \(\mathrm {C}^r(T)\otimes \mathrm {Alt}^k({\mathbb {V}}) \oplus \mathrm {C}^r(T)\otimes \mathrm {Alt}^{k+1}({\mathbb {V}})\), but not all elements of this sum can occur. In other words, there are admissibility conditions, which we determine in this paragraph.

First we introduce some notations:

– When \(v \in \mathrm {C}^r(T)\otimes \mathrm {Alt}^k({\mathbb {V}})\) we denote by \({{\mathrm{\mathrm {pu}}}}_T v \in \mathrm {C}^r{\varLambda }^k(T)\) the induced k-form on T, which remembers the action of v only on vectors in \({\mathbb {V}}\) that are tangential to T.

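– When u is a k-form on S and X is a vector field on S, we denote by \(u \, {\mathsf {L}} \, X\) the contraction of u by X, which is the \((k-1)\)-form defined at \(x \in S\) by:

$$\begin{aligned} (u \, {\mathsf {L}} \, X)(x)(\xi _1, \ldots , \xi _{k-1}) = u(x)(X(x), \xi _1, \ldots , \xi _{k-1}). \end{aligned}$$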

Lemma 1

Let \({\mathbb {V}}\) be a finite dimensional vector space. Let \((e_i)\) be a basis of \({\mathbb {V}}\) and let \((f_i)\) be the dual basis. Then for \(u \in \mathrm {Alt}^k({\mathbb {V}})\), \(k \ge 1\), we have:

$$\begin{aligned} \sum _i f_i \wedge (u \, {\mathsf {L}} \, e_i) = k\, u. \end{aligned}$$

Proof

By induction on k. \(\square \)

We may consider that this identity is true also for \(k=0\), the left hand side being 0 by definition of contraction of 0-forms.
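Lemma 1 can be sanity-checked on constant forms, reading the identity as \(\sum _i f_i \wedge (u \, {\mathsf {L}} \, e_i) = k\, u\) with the contraction acting in the first slot. The Python sketch below is an illustration of ours with hypothetical helper names: a constant k-form on \({\mathbb {R}}^n\) is stored as a dictionary mapping increasing index tuples \((a_1, \ldots , a_k)\), standing for \(f_{a_1}\wedge \cdots \wedge f_{a_k}\), to integer coefficients.

```python
import random
from itertools import combinations

# Hypothetical representation: a constant k-form on R^n is a dict mapping an
# increasing index tuple (a_1, ..., a_k), meaning f_{a_1} ^ ... ^ f_{a_k},
# to its coefficient.

def clean(u):
    """Drop zero coefficients so that forms can be compared with ==."""
    return {key: c for key, c in u.items() if c != 0}

def contract(u, i):
    """Contraction of u by the basis vector e_i, inserted in the first slot."""
    out = {}
    for key, c in u.items():
        if i in key:
            pos = key.index(i)
            rest = key[:pos] + key[pos + 1:]
            out[rest] = out.get(rest, 0) + (-1) ** pos * c
    return out

def wedge_left(i, u):
    """Exterior product f_i ^ u."""
    out = {}
    for key, c in u.items():
        if i in key:
            continue  # f_i ^ f_i = 0
        q = sum(1 for a in key if a < i)  # transpositions needed to sort f_i into place
        new_key = key[:q] + (i,) + key[q:]
        out[new_key] = out.get(new_key, 0) + (-1) ** q * c
    return out

def lemma1_lhs(u, n):
    """Sum over i of f_i ^ (contraction of u by e_i)."""
    total = {}
    for i in range(n):
        for key, c in wedge_left(i, contract(u, i)).items():
            total[key] = total.get(key, 0) + c
    return clean(total)

rng = random.Random(0)
n = 4
for k in range(n + 1):
    u = {key: rng.randint(-5, 5) for key in combinations(range(n), k)}
    assert lemma1_lhs(u, n) == clean({key: k * c for key, c in u.items()})
print("Lemma 1 identity holds for n = 4, k = 0, ..., 4")
```

The sign bookkeeping explains the identity: contracting \(f_{a_1}\wedge \cdots \wedge f_{a_k}\) by \(e_{a_p}\) produces the sign \((-1)^{p-1}\), and wedging \(f_{a_p}\) back into place produces the same sign again, so each of the k indices contributes the original form once.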

Proposition 1

Fix \(r \ge 0\). Let \({\mathbb {V}}\) be a vector space and let T be a subspace. Let \(v_0\in \mathrm {C}^{r+1}(T)\otimes \mathrm {Alt}^k({\mathbb {V}})\) and \(v_1 \in \mathrm {C}^r(T)\otimes \mathrm {Alt}^{k+1}({\mathbb {V}})\). The following are equivalent:

– There exists \(u \in \mathrm {C}^{r+1} {\varLambda }^k({\mathbb {V}})\) such that \({{\mathrm{\mathrm {tr}}}}_T u = v_0\) and \({{\mathrm{\mathrm {tr}}}}_T {\,}\mathrm {d}{\,}u = v_1\).

– The induced forms \({{\mathrm{\mathrm {pu}}}}_T v_0 \in \mathrm {C}^{r+1}{\varLambda }^k(T)\) and \({{\mathrm{\mathrm {pu}}}}_T v_1 \in \mathrm {C}^r {\varLambda }^{k+1}(T)\) (obtained by remembering only the action on tangent vectors to T) are related by:

$$\begin{aligned} {\,}\mathrm {d}{\,}{{\mathrm{\mathrm {pu}}}}_T v_0 = {{\mathrm{\mathrm {pu}}}}_T v_1. \end{aligned}\tag{9}$$

Proof

(i) The first condition implies the second, because the exterior derivative commutes with pullback.

(ii) We prove that the second condition implies the first. We write \({\mathbb {V}}= T \oplus U\). We introduce a vector field X on \({\mathbb {V}}\), defined by, for any \(x \in T\) and any \(y \in U\):

$$\begin{aligned} X(x + y) = y. \end{aligned}$$

We choose a basis \((e_i)_{i \in I}\) of T and \((e_j)_{j \in J}\) of U. We impose \(I \cap J = \emptyset \), so they combine to a basis of \({\mathbb {V}}\) and we let \((f_i)_{i \in I \cup J}\) denote the corresponding dual basis of \({\mathbb {V}}\). We let \(\partial _i\) denote the directional derivative with respect to \(e_i\).

(iii) We first extend \(v_0\) to an element u of \(\mathrm {C}^{r+1} {\varLambda }^k({\mathbb {V}})\) by putting \(u(x +y) = v_0 (x)\) for \(x \in T\) and \(y \in U\). Subtracting this extension, we are left with the case \(v_0 =0\) and \({{\mathrm{\mathrm {pu}}}}_T v_1=0\). To avoid clutter we denote \(v=v_1\).

(iv) Suppose v is of the form: \(v = w\, w_T \wedge w_U\) with \(w_U = f_{j_1} \wedge \cdots \wedge f_{j_l}\) (with \(l\ge 1\) distinct indices in J), \(w_T = f_{i_1} \wedge \cdots \wedge f_{i_{k+1-l}}\) (with \(k+1-l\) distinct indices in I) and w a scalar function on T.

We trivially extend w to \({\mathbb {V}}\), which yields an extension of v to a \((k+1)\)-form on \({\mathbb {V}}\), which we still denote by v. We put \(u = v \, {\mathsf {L}} \, X\). We write:

The first term here, when restricted to T, is zero. For the second term we have:

We also remark that \(v \, {\mathsf {L}} \, X\) is zero on T. Dividing \(v \, {\mathsf {L}} \, X\) by l, we have a suitable extension of (0, v).

(v) Now, in general, the condition \({{\mathrm{\mathrm {pu}}}}_T v_1= 0\) guarantees that \(v_1\) is a linear combination of forms \(w\, w_T \wedge w_U\) of the above type, all for some \(l \ge 1\). \(\square \)
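Step (iv) can be written out by hand in its simplest instance. As an illustration of ours, take \({\mathbb {V}} = {\mathbb {R}}^2\), \(T = {\mathbb {R}}\times \{0\}\), \(U = \{0\}\times {\mathbb {R}}\), with \(e_1\) spanning T and \(e_2\) spanning U, \(k = 1\), \(l = 1\), and w a smooth function on T. In coordinates, the vector field \(X(x + y) = y\) reads \(X(x, y) = (0, y)\), and:

$$\begin{aligned} v&= w(x)\, f_1 \wedge f_2, \qquad {{\mathrm{\mathrm {pu}}}}_T v = 0,\\ u&= v \, {\mathsf {L}} \, X = w(x)\big (f_1(X)\, f_2 - f_2(X)\, f_1\big ) = -y\, w(x)\, f_1,\\ {\,}\mathrm {d}{\,}u&= -w(x)\, f_2 \wedge f_1 - y\, w'(x)\, f_1\wedge f_1 = w(x)\, f_1 \wedge f_2 = v. \end{aligned}$$

Since u vanishes on \(y = 0\), we get \({{\mathrm{\mathrm {tr}}}}_T u = 0\) and \({{\mathrm{\mathrm {tr}}}}_T {\,}\mathrm {d}{\,}u = v\), so u extends the pair (0, v); with \(l = 1\) no division is needed.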

This result motivates the following definition.

Definition 1

Let \(v_0 \in \mathrm {C}^0(T) \otimes \mathrm {Alt}^k({\mathbb {V}})\) and \(v_1 \in \mathrm {C}^0(T) \otimes \mathrm {Alt}^{k+1}({\mathbb {V}})\). We say that the pair \((v_0,v_1)\) is admissible if \({\,}\mathrm {d}{\,}{{\mathrm{\mathrm {pu}}}}_T v_0 = {{\mathrm{\mathrm {pu}}}}_T v_1\), where \({\,}\mathrm {d}{\,}{{\mathrm{\mathrm {pu}}}}_T v_0\) is defined a priori in the sense of distributions.

On the necessity of composite elements. Consider the line \(T= {\mathbb {R}}\times \{0\}\) sitting in \({\mathbb {V}}= {\mathbb {R}}^2\). The preceding paragraph shows that in order to extend data on T, consisting of a pair \((v_0, v_1) \in \mathrm {C}^{r+1}(T) \oplus \mathrm {C}^r(T) \otimes \mathrm {Alt}^1({\mathbb {V}})\), to a function in \(\mathrm {C}^{r+1}({\mathbb {V}})\), one must satisfy the compatibility condition \({\,}\mathrm {d}{\,}v_0 = {{\mathrm{\mathrm {pu}}}}_T v_1\). We now illustrate that if several lines meet at a vertex (which will be the case in simplicial complexes), additional compatibility conditions can appear at the vertex when we require the extension to be at least \(\mathrm {C}^2({\mathbb {V}})\).

Suppose we have two coordinates (x, y). We have data consisting of functions \(p_0\), \(p_1\) on the x-axis, which are \(\mathrm {C}^1({\mathbb {R}})\) and \(\mathrm {C}^0({\mathbb {R}})\) respectively, as well as functions \(q_0\), \(q_1\) on the y-axis that are \(\mathrm {C}^1({\mathbb {R}})\) and \(\mathrm {C}^0({\mathbb {R}})\) respectively.

We want to find a function u on \({\mathbb {R}}^2\) of class \(\mathrm {C}^1({\mathbb {R}}^2)\) such that \((u, \partial _y u)\) restricts to \((p_0, p_1)\) on the x-axis and \((u, \partial _x u)\) restricts to \((q_0, q_1)\) on the y-axis. There are compatibility conditions at the origin:

$$\begin{aligned} p_0(0) = q_0(0), \quad p_0'(0) = q_1(0), \quad q_0'(0) = p_1(0). \end{aligned}$$

These are sufficient for the existence of a \(\mathrm {C}^1({\mathbb {R}}^2)\) extension.

However, for extensions of class \(\mathrm {C}^2({\mathbb {R}}^2)\) of the same data, there is an additional constraint, expressing that \(\partial _x \partial _y u = \partial _y \partial _x u\) at the origin, namely:

$$\begin{aligned} p_1'(0) = q_1'(0). \end{aligned}$$

This remark applies in particular to polynomials. Compare with the fact that the Argyris element is \(\mathrm {C}^2\) at vertices, even though one only wants to obtain \(\mathrm {C}^1\) functions.

In this paper we are not interested in constructing functions that are globally \(\mathrm {C}^2\). We want \(\mathrm {C}^1\) functions, glued together from data on subsimplices that only involve derivatives up to order 1.

This explains why we prefer to construct spaces in terms of composite polynomials: we can then hope to satisfy first order constraints (that guarantee \(\mathrm {C}^1\) continuity), without adding second order constraints (corresponding for instance to symmetry of mixed derivatives as above). Another choice could have been to use rational functions that are \(\mathrm {C}^1\) on the simplices but not \(\mathrm {C}^2\).
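The data above can be chosen so that the first order conditions hold while the second order one fails. As an illustration of ours, take \(p_0 = 0\), \(p_1(x) = x\) on the x-axis and \(q_0 = q_1 = 0\) on the y-axis. Then \(p_0(0) = q_0(0)\), \(p_0'(0) = 0 = q_1(0)\) and \(q_0'(0) = 0 = p_1(0)\), but \(p_1'(0) = 1 \ne 0 = q_1'(0)\), so no \(\mathrm {C}^2\) extension exists. A \(\mathrm {C}^1\) extension is given by the rational function below; it is homogeneous of degree 2 and smooth away from the origin, so its first derivatives are homogeneous of degree 1 and extend continuously by 0 to the origin:

$$\begin{aligned} u(x,y)&= \frac{x^3 y}{x^2+y^2}, \qquad u(0,0) = 0,\\ \partial _y u(x,y)&= \frac{x^3(x^2-y^2)}{(x^2+y^2)^2}, \qquad \partial _y u(x,0) = x = p_1(x),\\ \partial _x u(x,y)&= \frac{x^2 y\,(x^2+3y^2)}{(x^2+y^2)^2}, \qquad \partial _x u(0,y) = 0 = q_1(y). \end{aligned}$$

Here u vanishes on both axes, so it matches \(p_0\) and \(q_0\), and its mixed derivatives at the origin, computed along the axes, are 1 and 0. It is thus a \(\mathrm {C}^1\) function that is not \(\mathrm {C}^2\), of the rational type mentioned above as an alternative to composite polynomials.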

A differential acting on admissible pairs. Let T be a flat cell in a vector space \({\mathbb {V}}\). Suppose that we have subspaces \(B^k(T)\) of \(\mathrm {C}^0(T) \otimes \mathrm {Alt}^{k}({\mathbb {V}})\) such that the exterior derivative on T maps \( {{\mathrm{\mathrm {pu}}}}_T B^k(T)\) into \({{\mathrm{\mathrm {pu}}}}_T B^{k+1}(T)\). Then we define the following spaces of admissible pairs:

$$\begin{aligned} A^k(T) = \{ (v_0, v_1) \in B^k(T) \times B^{k+1}(T) \ : \ {\,}\mathrm {d}{\,}{{\mathrm{\mathrm {pu}}}}_T v_0 = {{\mathrm{\mathrm {pu}}}}_T v_1 \}. \end{aligned}$$

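We define the following differential:

$$\begin{aligned} {\mathsf {d}}^k : A^k(T) \rightarrow A^{k+1}(T), \quad (v_0, v_1) \mapsto (v_1, 0). \end{aligned}$$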

It is well defined, because if \((v_0,v_1)\) is admissible then \({\,}\mathrm {d}{\,}{{\mathrm{\mathrm {pu}}}}_T(v_1) = {\,}\mathrm {d}{\,}^2 {{\mathrm{\mathrm {pu}}}}_T v_0= 0\), so \((v_1, 0)\) is admissible. Moreover we see that \({\mathsf {d}}^{k+1} \circ {\mathsf {d}}^k =0\).

Lemma 2

The sequence:

is exact if and only if the sequence:

is exact.

Proof

(i) Suppose the second sequence is exact.

Given an admissible \((v_1,0)\in A^{k+1}(T)\) we have \({\,}\mathrm {d}{\,}{{\mathrm{\mathrm {pu}}}}_T v_1 = 0\). Choose \(v_0' \in {{\mathrm{\mathrm {pu}}}}_T B^k(T)\) such that \({\,}\mathrm {d}{\,}v_0' = {{\mathrm{\mathrm {pu}}}}_T v_1\) and then \(v_0 \in B^k(T)\) such that \({{\mathrm{\mathrm {pu}}}}_T v_0 = v_0'\). Then \((v_0,v_1)\) is admissible and maps to \((v_1,0)\).

(ii) Suppose the first sequence is exact.

Suppose \(v_1' \in {{\mathrm{\mathrm {pu}}}}_T B^{k+1}(T)\) satisfies \({\,}\mathrm {d}{\,}v_1'=0\). Choose \(v_1 \in B^{k+1}(T)\) such that \({{\mathrm{\mathrm {pu}}}}_T v_1 = v_1'\). Then \((v_1,0) \in A^{k+1}(T)\) and \({\mathsf {d}}(v_1,0) = 0\). Writing \((v_1,0) = {\mathsf {d}}(v_0,v_1)\) we get \(v_0 \in B^{k}(T)\) such that \((v_0, v_1)\) is admissible. Then \(v_0' = {{\mathrm{\mathrm {pu}}}}_T v_0 \in {{\mathrm{\mathrm {pu}}}}_T B^{k}(T) \) satisfies \({\,}\mathrm {d}{\,}v_0' = v_1'\). \(\square \)

3 Poincaré and Koszul operators

Poincaré operators. We recall some properties of the so-called Poincaré and Koszul operators, used for constructing finite element differential forms in [4, 24] respectively. For the former, we refer to [26], especially chapter V, but we recall the main steps of interest to us.

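Recall that when S and \(S'\) are domains and \({\varPhi } : S \rightarrow S'\) is differentiable, the pullback of a k-form u on \(S'\) by \({\varPhi }\) is the k-form \({\varPhi }^\star u\) on S defined at \(x \in S\) by:

$$\begin{aligned} ({\varPhi }^\star u)(x)(\xi _1, \ldots , \xi _k) = u({\varPhi }(x))\big ({\mathrm D}{\varPhi }(x)\xi _1, \ldots , {\mathrm D}{\varPhi }(x)\xi _k\big ). \end{aligned}$$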

Suppose now that S is a domain. We consider a smooth map \(F:[0,1] \times S \rightarrow S\), and interpret it as a family of maps \(F_t = F(t,\cdot ): S \rightarrow S\), for \(t \in [0,1]\), defining a homotopy between \(F_0\) and \(F_1\). We write:

For most \(t \in [0,1]\), we suppose we have a vector field \(G_t\) on S such that, for \(x \in S\):

$$\begin{aligned} \partial _t F_t(x) = G_t(F_t(x)). \end{aligned}$$

This uniquely defines \(G_t\) on S when \(F_t : S \rightarrow S\) is a diffeomorphism, and expresses that any curve \(F_{\scriptscriptstyle \bullet }(x)\) flows with \(G_{\scriptscriptstyle \bullet }\).

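When u is a k-form we have:

$$\begin{aligned} \frac{\mathrm {d}}{\mathrm {d}t} F_t^\star u = F_t^\star ({\mathcal {L}}_{G_t} u) = {\,}\mathrm {d}{\,}F_t^\star (u \, {\mathsf {L}} \, G_t) + F_t^\star \big (({\,}\mathrm {d}{\,}u) \, {\mathsf {L}} \, G_t\big ), \end{aligned}$$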

using Cartan’s formula for the Lie derivative.

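The Poincaré operator associated with F (and G), acting on differential k-forms, is denoted \({{\mathrm{{\mathfrak {p}}}}}[F]\) or, when the choice of F is clear, \({{\mathrm{{\mathfrak {p}}}}}\). It can be written succinctly:

$$\begin{aligned} {\mathfrak {p}}\, u = \int _0^1 F_t^\star (u \, {\mathsf {L}} \, G_t)\, \mathrm {d}t. \end{aligned}$$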

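More explicitly, if u is a k-form:

$$\begin{aligned} ({\mathfrak {p}}\, u)(x)(\xi _1, \ldots , \xi _{k-1}) = \int _0^1 u(F_t(x))\big (\partial _t F_t(x), {\mathrm D}F_t(x)\xi _1, \ldots , {\mathrm D}F_t(x)\xi _{k-1}\big )\, \mathrm {d}t. \end{aligned}$$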

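With these considerations in mind, (23) can be expressed with the Poincaré operator as follows:

$$\begin{aligned} F_1^\star u - F_0^\star u = {\,}\mathrm {d}{\,}{\mathfrak {p}}\, u + {\mathfrak {p}}{\,}\mathrm {d}{\,}u. \end{aligned}$$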

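Suppose that \(F_1\) is the identity on S and that \(F_0\) is constant. Then the formula gives, for \({{\mathrm{{\mathfrak {p}}}}}= {{\mathrm{{\mathfrak {p}}}}}[F]\) acting on k-forms with \(k\ge 1\):

$$\begin{aligned} u = {\,}\mathrm {d}{\,}{\mathfrak {p}}\, u + {\mathfrak {p}}{\,}\mathrm {d}{\,}u, \end{aligned}\tag{30}$$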

whereas if u is a function, considered as a 0-form, and the value of \(F_0\) is W, we get:

$$\begin{aligned} u = u(W) + {\mathfrak {p}}{\,}\mathrm {d}{\,}u. \end{aligned}\tag{31}$$

If now S is a domain in an affine space which is star-shaped with respect to, say, W, we may choose F to be defined by:

$$\begin{aligned} F_t(x) = W + t(x - W). \end{aligned}$$

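Then we may substitute in the above formulas:

$$\begin{aligned} \partial _t F_t(x) = x - W, \qquad {\mathrm D}F_t(x) = t\, \mathrm {id}, \qquad G_t(y) = t^{-1}(y - W) \quad (t > 0). \end{aligned}$$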

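We denote the associated Poincaré operator as \({{\mathrm{{\mathfrak {p}}}}}_W\). It is defined explicitly on k-forms u by:

$$\begin{aligned} ({\mathfrak {p}}_W u)(x)(\xi _1, \ldots , \xi _{k-1}) = \int _0^1 t^{k-1}\, u(W + t(x - W))(x - W, \xi _1, \ldots , \xi _{k-1})\, \mathrm {d}t. \end{aligned}\tag{34}$$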

Koszul operators. In an affine space, given a choice of a point W, we may also define directly a vector field \(X_W\) by:

$$\begin{aligned} X_W(x) = x - W. \end{aligned}$$

The contraction of a differential form by \(X_W\) is called the Koszul operator associated with W and denoted:

$$\begin{aligned} \kappa _W u = u \, {\mathsf {L}} \, X_W. \end{aligned}$$

If the choice of W is clear from the context, we may sometimes omit it from the notation.

If u is a k-form which, with respect to some choice of origin W and basis, has components which are homogeneous polynomials of degree r, then from (34) we get:

$$\begin{aligned} {\mathfrak {p}}_W u = \frac{1}{k+r}\, u \, {\mathsf {L}} \, X_W, \end{aligned}$$

which is polynomial and whose components are homogeneous of degree \(r+1\).
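The relation follows by inserting the homogeneity \(u(W + t(x - W)) = t^r u(x)\) into the explicit formula for \({\mathfrak {p}}_W\), in which the \(k-1\) vectors \(\xi _i\) have been scaled by \({\mathrm D}F_t(x) = t\, \mathrm {id}\) and the first slot receives \(\partial _t F_t(x) = x - W = X_W(x)\). A short derivation, for \(k \ge 1\):

$$\begin{aligned} ({\mathfrak {p}}_W u)(x)(\xi _1, \ldots , \xi _{k-1})&= \int _0^1 t^{k-1}\, u(W + t(x - W))(x - W, \xi _1, \ldots , \xi _{k-1})\, \mathrm {d}t \\&= \Big (\int _0^1 t^{k-1+r}\, \mathrm {d}t\Big )\, u(x)(X_W(x), \xi _1, \ldots , \xi _{k-1}) \\&= \frac{1}{k+r}\, (u \, {\mathsf {L}} \, X_W)(x)(\xi _1, \ldots , \xi _{k-1}). \end{aligned}$$

Contracting with \(X_W(x) = x - W\) multiplies each coefficient by an affine function vanishing at W, which is why the result has components homogeneous of degree \(r+1\).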

We are mainly interested in identities (30, 31) and in the fact that the Poincaré operator maps polynomials to polynomials, increasing the degree by only one. Explicit computations are sometimes more convenient with the Koszul operator. For composite elements it will be important where we locate W, so as to respect the refinement used.

Remark 1

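From the above discussion of Poincaré operators, we can derive the identity, on k-forms which are homogeneous polynomials of degree r (with respect to the origin W):

$$\begin{aligned} {\,}\mathrm {d}{\,}(u \, {\mathsf {L}} \, X_W) + ({\,}\mathrm {d}{\,}u) \, {\mathsf {L}} \, X_W = (k + r)\, u. \end{aligned}$$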

It is obtained by two somewhat different techniques in section 3.2 of [4].
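Remark 1 and the identities (30, 31) can be checked mechanically on polynomial forms. The following Python sketch is ours, not the authors': it places W at the origin, stores polynomial k-forms on \({\mathbb {R}}^n\) as dictionaries, implements the exterior derivative and the Koszul operator (contraction with \(X_W\)), and defines the Poincaré operator term by term through \({\mathfrak {p}}_W u = (u \, {\mathsf {L}} \, X_W)/(k+r)\) on homogeneous components, rather than through the integral formula. All helper names are hypothetical.

```python
import random
from fractions import Fraction
from itertools import combinations

# A polynomial k-form on R^n (point W at the origin) is a dict {(basis, exps): coeff}
#   basis: increasing tuple (a_1, ..., a_k) meaning f_{a_1} ^ ... ^ f_{a_k}
#   exps:  tuple (e_1, ..., e_n) meaning the monomial x_1^e_1 ... x_n^e_n

def add(out, key, c):
    """Accumulate c into out[key], dropping entries that cancel to zero."""
    out[key] = out.get(key, 0) + c
    if out[key] == 0:
        del out[key]

def plus(a, b):
    out = dict(a)
    for key, c in b.items():
        add(out, key, c)
    return out

def d(u, n):
    """Exterior derivative: d(p f_A) = sum_j (dp/dx_j) f_j ^ f_A."""
    out = {}
    for (basis, exps), c in u.items():
        for j in range(n):
            if exps[j] == 0 or j in basis:
                continue
            q = sum(1 for a in basis if a < j)  # sign from sorting f_j into place
            new_exps = exps[:j] + (exps[j] - 1,) + exps[j + 1:]
            add(out, (basis[:q] + (j,) + basis[q:], new_exps), (-1) ** q * exps[j] * c)
    return out

def kappa(u):
    """Koszul operator: contraction with X_W(x) = x - W, here with W = 0."""
    out = {}
    for (basis, exps), c in u.items():
        for pos, a in enumerate(basis):
            new_exps = exps[:a] + (exps[a] + 1,) + exps[a + 1:]
            add(out, (basis[:pos] + basis[pos + 1:], new_exps), (-1) ** pos * c)
    return out

def euler(u):
    """Multiply each term by k + r (form degree plus polynomial degree)."""
    out = {}
    for (basis, exps), c in u.items():
        add(out, (basis, exps), (len(basis) + sum(exps)) * c)
    return out

def poincare(u):
    """p_W applied term by term as kappa / (k + r); zero on 0-forms."""
    out = {}
    for (basis, exps), c in u.items():
        k, r = len(basis), sum(exps)
        if k == 0:
            continue
        for key, val in kappa({(basis, exps): c}).items():
            add(out, key, val * Fraction(1, k + r))
    return out

def random_form(n, k, max_deg, rng):
    u = {}
    for basis in combinations(range(n), k):
        for _ in range(3):
            exps = tuple(rng.randint(0, max_deg) for _ in range(n))
            add(u, (basis, exps), Fraction(rng.randint(-4, 4)))
    return u

rng = random.Random(1)
n = 3
for k in range(n + 1):
    u = random_form(n, k, 3, rng)
    # Remark 1, term by term: d(kappa u) + kappa(d u) = (k + r) u
    assert plus(d(kappa(u), n), kappa(d(u, n))) == euler(u)
    if k >= 1:
        # identity (30): u = d p u + p d u
        assert plus(d(poincare(u), n), poincare(d(u, n))) == u
    else:
        # identity (31): u = u(W) + p d u, u(W) being the constant term
        const = {key: c for key, c in u.items() if sum(key[1]) == 0}
        assert plus(const, poincare(d(u, n))) == u
print("Koszul and Poincare identities verified")
```

Working with exact rational coefficients keeps the comparisons free of rounding, so each identity is checked exactly on the random forms.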

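Complexes constructed with Poincaré and Koszul operators. We suppose we have a complex \(U^{\scriptscriptstyle \bullet }\) (of perhaps infinite dimensional spaces):

$$\begin{aligned} \cdots \rightarrow U^{k-1} \xrightarrow {{\,}\mathrm {d}{\,}_{k-1}} U^k \xrightarrow {{\,}\mathrm {d}{\,}_k} U^{k+1} \rightarrow \cdots \end{aligned}$$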

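We also suppose that we have operators \({{\mathrm{{\mathfrak {p}}}}}_k : U^k \rightarrow U^{k-1}\) such that:

$$\begin{aligned} {\,}\mathrm {d}{\,}_{k-1}{\mathfrak {p}}_k + {\mathfrak {p}}_{k+1}{\,}\mathrm {d}{\,}_k = \lambda _k\, \mathrm {id}_{U^k}, \end{aligned}\tag{41}$$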

where \(\lambda _k\) is a non-zero scalar. It follows that the complex \(U^{\scriptscriptstyle \bullet }\) is exact.

We suppose furthermore that:

$$\begin{aligned} {\mathfrak {p}}_k\, {\mathfrak {p}}_{k+1} = 0. \end{aligned}\tag{42}$$

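In this situation we suppose that we have subspaces \(V^{\scriptscriptstyle \bullet }\) that form a complex:

$$\begin{aligned} V^k \subseteq U^k, \qquad {\,}\mathrm {d}{\,}_k V^k \subseteq V^{k+1}. \end{aligned}$$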

We then define:

$$\begin{aligned} W^k = V^k + {\mathfrak {p}}_{k+1} V^{k+1}. \end{aligned}$$

Proposition 2

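The spaces \(W^{\scriptscriptstyle \bullet }\) form an exact complex. We have:

$$\begin{aligned} W^k = {\,}\mathrm {d}{\,}_{k-1} W^{k-1} \oplus {\mathfrak {p}}_{k+1} W^{k+1}. \end{aligned}$$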

Proof

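(i) We notice that for \(u \in V^{k+1}\) we have:

$$\begin{aligned} {\,}\mathrm {d}{\,}_k {\mathfrak {p}}_{k+1} u = \lambda _{k+1} u - {\mathfrak {p}}_{k+2} {\,}\mathrm {d}{\,}_{k+1} u \in V^{k+1} + {\mathfrak {p}}_{k+2} V^{k+2} = W^{k+1}. \end{aligned}$$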

Since moreover \({\,}\mathrm {d}{\,}_k V^k \subseteq V^{k+1} \subseteq W^{k+1}\), it follows that \({\,}\mathrm {d}{\,}_{k}\) maps \(W^k\) to \(W^{k+1}\).

(ii) We also see that \({{\mathrm{{\mathfrak {p}}}}}_k\) maps \(W^k\) to \(W^{k-1}\), using (42). Therefore identity (41) also holds for the complex \(W^{\scriptscriptstyle \bullet }\). It follows that it is exact and that we have:

$$\begin{aligned} W^k = {\,}\mathrm {d}{\,}_{k-1} W^{k-1} + {\mathfrak {p}}_{k+1} W^{k+1}. \end{aligned}$$

Finally, if \(u \in {\,}\mathrm {d}{\,}_{k-1} W^{k-1} \cap {{\mathrm{{\mathfrak {p}}}}}_{k+1} W^{k+1}\), then \({\,}\mathrm {d}{\,}_k u = 0\) and \({{\mathrm{{\mathfrak {p}}}}}_k u = 0\) so that \(u = 0\), also from (41). \(\square \)

Remark 2

The spaces \(W^{\scriptscriptstyle \bullet }\) form a cochain complex with respect to \({{\mathrm{{\,}\mathrm {d}{\,}}}}_{\scriptscriptstyle \bullet }\), but they also form a chain complex with respect to \({{\mathrm{{\mathfrak {p}}}}}_{\scriptscriptstyle \bullet }\), and it is exact.

Examples. We can take \(V^k = \mathrm {P}^p {\varLambda }^k({\mathbb {R}}^n)\). Then we get the exact complex of spaces:

This generalizes the first family of Nédélec–Raviart–Thomas, and \(p= 0\) corresponds to Whitney forms.

We can also take \(V^k = \mathrm {P}^{p-k} {\varLambda }^k({\mathbb {R}}^n)\). Since this complex is stable under \({{\mathrm{{\mathfrak {p}}}}}\) (so that \(W^k = V^k\)), it is exact. This generalizes the second family of Nédélec–Brezzi–Douglas–Marini.
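A quick consistency check on the two families is to count dimensions: if the sequence \({\mathbb {R}} \rightarrow W^0 \rightarrow \cdots \rightarrow W^n \rightarrow 0\) is exact, the alternating sum of \(\dim W^k\) must equal 1. The Python sketch below is only a necessary-condition check, written by us. It assumes the standard dimension formulas of finite element exterior calculus, \(\dim \mathrm {P}^r{\varLambda }^k({\mathbb {R}}^n) = \binom{n+r}{n}\binom{n}{k}\) and \(\dim \mathrm {P}^-_r{\varLambda }^k({\mathbb {R}}^n) = \binom{r+n}{r+k}\binom{r+k-1}{k}\), together with the standard identification of \(\mathrm {P}^p {\varLambda }^k + {\mathfrak {p}}_W \mathrm {P}^p {\varLambda }^{k+1}\) with \(\mathrm {P}^-_{p+1}{\varLambda }^k\); none of these formulas is taken from this paper, and the function names are hypothetical.

```python
from math import comb

def dim_P(r, k, n):
    """dim P^r Lambda^k(R^n): k-forms with polynomial coefficients of degree <= r."""
    return comb(n + r, n) * comb(n, k) if r >= 0 else 0

def dim_first_family(p, k, n):
    """dim of P^p Lambda^k + p_W P^p Lambda^{k+1}, assumed equal to dim P^-_{p+1} Lambda^k."""
    r = p + 1
    return comb(r + n, r + k) * comb(r + k - 1, k)

def dim_second_family(p, k, n):
    """dim of P^{p-k} Lambda^k(R^n)."""
    return dim_P(p - k, k, n)

def euler_characteristic(dims):
    """Alternating sum of dimensions; equals 1 if R -> W^0 -> ... -> W^n -> 0 is exact."""
    return sum((-1) ** k * dk for k, dk in enumerate(dims))

for n in range(1, 5):
    for p in range(0, 5):
        first = [dim_first_family(p, k, n) for k in range(n + 1)]
        second = [dim_second_family(p, k, n) for k in range(n + 1)]
        assert euler_characteristic(first) == 1
        assert euler_characteristic(second) == 1

print([dim_first_family(0, k, 3) for k in range(4)])  # [4, 6, 4, 1]: Whitney forms on a tetrahedron
```

For \(p = 0\) the first family gives the dimensions of Whitney forms on a simplex (one degree of freedom per subsimplex), in line with the remark on Whitney forms above.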

We now consider the construction of composite elements on a simplicial complex. For instance, on a triangulation, the Clough-Tocher split consists in adding one point to each triangle and joining it with the three vertices, so that each triangle is divided into three smaller triangles.

More generally, one can consider a simplicial complex where each simplex is included in an n-dimensional simplex. We suppose that we add an inpoint to each n-dimensional simplex and join it to the vertices and, possibly, to inpoints of boundary simplices. More precisely, we suppose here that a simplicial refinement of the \((n-1)\)-skeleton is chosen. For each n-dimensional simplex T the inpoint W is coned with the refinement of the boundary of T. In this paragraph we denote such a refinement by \({\mathcal {S}}\).

It is then natural to define finite element spaces on T consisting of piecewise polynomials with respect to \({\mathcal {S}}\), using the Poincaré operator associated with W.

We can then take \(V^k(T) = \mathrm {C}^0_\mathrm{d}\mathrm {P}^{p-k} {\varLambda }^k({\mathcal {S}})\), consisting of k-forms which are piecewise polynomials of degree \(p-k\), continuous and with continuous exterior derivative. The Poincaré operator associated with the inpoint maps \(V^k(T)\) to \(V^{k-1}(T)\), so that we get an exact complex. This construction resembles that of the second family above. We carry out this construction in dimension \(n= 2\) in Sect. 6.

Another construction allows one to use a different simplicial refinement of T for each index k, and resembles that of the first family above. We denote these refinements of T by \({\mathcal {S}}_k\) and define:

These spaces form a complex which is not exact. We can then define the augmented spaces:

Notice that \(A^k(T)\) contains \(\mathrm {P}^p {\varLambda }^k(T)\). We carry out a construction of this type in arbitrary dimension n, with \(p = 1\), in Sect. 8.

We also show how one can branch such spaces into standard Whitney forms, by augmenting the complex:

See (171) for how this leads to a new space at index k.

4 Low order finite element complexes in 2D

We proceed to define four complexes based on the Clough-Tocher element.

A complex of regularity \((2,1+,1)\). Let T be a triangle with vertices \(V_0, V_1\) and \(V_2\). Choose a point W in the interior of T, and subdivide T into three triangles, by drawing edges from W to \(V_0\), \(V_1\) and \(V_2\). This equips T with a simplicial refinement, which we denote by \({\mathcal {R}}\).

The Clough-Tocher element involves a degree of freedom on the edges, which can be taken as the normal derivative at the midpoint. More generally, for each edge E, we consider a linear form on one-forms, which evaluates the one-form in a transverse direction \(\nu _E\), at an interior point \(W_E\) of the edge. We denote this degree of freedom on 1-forms as \(\mu _E\).

Fig. 1: Clough-Tocher complex with continuous pressure described in Proposition 3

For the following proposition we also refer to Fig. 1.

Proposition 3

We have an exact sequence:

The spaces are, more explicitly, the following:

- \(\mathrm {C}^1 \mathrm {P}^3 {\varLambda }^0({\mathcal {R}})\) consists of piecewise \(\mathrm {P}^3\) functions which are of class \(\mathrm {C}^1(T)\).
- \(\mathrm {C}^0_{\,}\mathrm {d}{\,}\mathrm {P}^2 {\varLambda }^1({\mathcal {R}})\) consists of piecewise \(\mathrm {P}^2\) one-forms which are \(\mathrm {C}^0(T)\) with exterior derivative in \(\mathrm {C}^0(T)\).
- \(\mathrm {C}^0\mathrm {P}^1 {\varLambda }^2({\mathcal {R}})\) consists of piecewise \(\mathrm {P}^1\) two-forms which are \(\mathrm {C}^0(T)\).

Moreover these spaces have the following properties:

- \(\mathrm {C}^1 \mathrm {P}^3 {\varLambda }^0({\mathcal {R}})\) has dimension 12. Any element u is determined by the following data:
  - vertices V: one DoF for u(V) and two DoFs for \({\,}\mathrm {d}{\,}u(V)\);
  - edges E: one DoF, say \(\mu _E ({\,}\mathrm {d}{\,}u)\).
- \(\mathrm {C}^0_{\,}\mathrm {d}{\,}\mathrm {P}^2 {\varLambda }^1({\mathcal {R}})\) has dimension 15. Any element u is determined by:
  - vertices V: two DoFs for u(V) and one DoF for \({\,}\mathrm {d}{\,}u (V)\);
  - edges E: two DoFs, transverse and tangential: \(\mu _E(u)\) and \(\int _E u\).
- \(\mathrm {C}^0\mathrm {P}^1 {\varLambda }^2({\mathcal {R}})\) has dimension 4. Any element u is determined by:
  - vertices V: one DoF for u(V);
  - interior T: one DoF, namely the integral \(\int _T u\).
- The above degrees of freedom provide commuting interpolators.
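The dimensions in Proposition 3 can be recovered by tallying the listed degrees of freedom over the 3 vertices, 3 edges and 1 face of T. The alternating sum \(1 - 12 + 15 - 4\) then vanishes, which is consistent with the exactness of the sequence augmented by the constants. The following Python lines are only a bookkeeping sketch.

```python
# DoFs per (vertex, edge, interior) for each space, read off from Proposition 3.
DOFS = {
    "C1 P3 Lambda0": (1 + 2, 1, 0),  # u(V), du(V) | mu_E(du) | none
    "C0d P2 Lambda1": (2 + 1, 2, 0),  # u(V), du(V) | mu_E(u), int_E u | none
    "C0 P1 Lambda2": (1, 0, 1),       # u(V) | none | int_T u
}
V, E, T = 3, 3, 1  # a single triangle has 3 vertices, 3 edges and 1 face
dims = [v * V + e * E + t * T for v, e, t in DOFS.values()]
print(dims)                             # [12, 15, 4]
print(1 - dims[0] + dims[1] - dims[2])  # 0
```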

Proof

(i) Exactness of the complex can be deduced from the Poincaré operator associated with the inpoint W, which maps each space into the preceding one.

Notice by the way that we get the identity:

(ii) Counting constraints on the space of piecewise polynomials of degree 3 on \({\mathcal {R}}\) shows that the dimension of the first space is at least \(30 - 18 = 12\). That the dimension is exactly 12 follows from proving unisolvence of the DoFs, which is done in particular in [15, 30].

It amounts to showing that if both u and its derivatives are 0 on \(\partial T\), then \(u= 0\). Such a u can be written \(\lambda _W^2 v\) with \(v \in \mathrm {C}^0\mathrm {P}^1({\mathcal {R}})\), where \(\lambda _W\) is the barycentric coordinate map of \({\mathcal {R}}\) associated with the inpoint W. We have that:

Since \(u\in \mathrm {C}^1(T)\) we get that the following form is continuous on T:

Since \({\,}\mathrm {d}{\,}\lambda _W\) is discontinuous at the vertices, the three vertex values of v are 0, so that v is proportional to \(\lambda _W\). Since \({\,}\mathrm {d}{\,}\lambda _W\) is discontinuous at W we deduce \(u = 0\).

(iii) The last space has dimension 4 and the given degrees of freedom are unisolvent.

(iv) Counting constraints on the spaces of piecewise polynomial one-forms shows that the dimension of the second space is at least \(36 - 21 = 15\). If \(u \in \mathrm {C}^0_{\,}\mathrm {d}{\,}\mathrm {P}^2 {\varLambda }^1({\mathcal {R}})\) has degrees of freedom 0 we write:

We notice that \({\,}\mathrm {d}{\,}u \in \mathrm {C}^0\mathrm {P}^1 {\varLambda }^2({\mathcal {R}})\) and has degrees of freedom 0 so \({\,}\mathrm {d}{\,}u = 0\). We also notice that \(v= {{\mathrm{{\mathfrak {p}}}}}_W u\) satisfies \({\,}\mathrm {d}{\,}v = u\). Its degrees of freedom are 0 except perhaps the vertex values v(V). They must be the same, because \(\int _E {\,}\mathrm {d}{\,}v = 0\) for each edge E. Hence v is constant, so \(u = {\,}\mathrm {d}{\,}v =0\).

This proves unisolvence and shows that the dimension count is exact (the dimension can also be deduced from (54)).

(v) It is straightforward to check that the interpolator associated with these DoFs commutes with the exterior derivative. \(\square \)

What remains, in order to prove that this is a good finite element, is to check that inter-element continuity behaves as expected: on each edge, the spaces of restrictions from the two adjacent triangles should be the same.

Remark 3

The space \(\mathrm {C}^0_{\,}\mathrm {d}{\,}\mathrm {P}^2 {\varLambda }^1({\mathcal {R}})\) is also described in [2], where it is analysed with Bernstein–Bézier techniques. Their definition incorporates the fact that an element of \(\mathrm {C}^0_{\,}\mathrm {d}{\,}\mathrm {P}^2 {\varLambda }^1({\mathcal {R}})\) is automatically \(\mathrm {C}^1\) at the inpoint W.

A minimal complex of regularity \((2,1+,1)\). It is also possible, in the previous example, to eliminate the edge degrees of freedom in \(\mathrm {C}^1 \mathrm {P}^3 {\varLambda }^0({\mathcal {R}})\) by requiring \({\,}\mathrm {d}{\,}u \, {\mathsf {L}} \, \nu _E\) to be affine on each edge E. Usually one requires the normal derivative on edges to be affine; this is called the reduced HCT element. The transverse edge degree of freedom in \(\mathrm {C}^0_{\,}\mathrm {d}{\,}\mathrm {P}^2 {\varLambda }^1({\mathcal {R}})\) is then also eliminated by requiring \(u \, {\mathsf {L}} \, \nu _E\) to be affine. See Fig. 2.

The natural degrees of freedom provide a commuting interpolator.

Remark 4

We see that we have as many degrees of freedom left for the space of 0-forms (namely three times the number of vertices) as for the space of 2-forms (namely the number of vertices plus number of triangles), up to the Euler–Poincaré characteristic of the surface. This can be interpreted as a balancing of the degrees of freedom describing the curl and the divergence of the vector fields.
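The balancing in Remark 4 can be checked on closed triangulated surfaces. The sketch below makes an assumption: reading the degrees of freedom off Proposition 3, after the edge DoFs are removed, we take the global dimensions of the minimal complex to be \(3V\), \(3V + E\) and \(V + F\), where V, E and F are the numbers of vertices, edges and triangles. Their alternating sum is then \(V - E + F = \chi \). On a closed surface, where \(3F = 2E\), the 0-form and 2-form counts differ by exactly \(2\chi \). The meshes and function names below are illustrative choices.

```python
from itertools import combinations

def counts(triangles):
    """(V, E, F) for a surface triangulation given as vertex triples."""
    verts = {v for t in triangles for v in t}
    edges = {frozenset(e) for t in triangles for e in combinations(t, 2)}
    return len(verts), len(edges), len(triangles)

def torus(n):
    """Periodic n x n grid with every square cut along a diagonal (n >= 3)."""
    tris = []
    for i in range(n):
        for j in range(n):
            a, b = (i, j), ((i + 1) % n, j)
            c, d = (i, (j + 1) % n), ((i + 1) % n, (j + 1) % n)
            tris += [(a, b, d), (a, d, c)]
    return tris

# The 8 faces of an octahedron: one vertex from each pair of opposite vertices.
OCTAHEDRON = [(x, y, z) for x in ("+x", "-x") for y in ("+y", "-y") for z in ("+z", "-z")]

def reduced_dims(V, E, F):
    """Assumed global dimensions of the minimal (2,1+,1) complex: 3 per vertex;
    3 per vertex plus 1 per edge; 1 per vertex plus 1 per triangle."""
    return 3 * V, 3 * V + E, V + F

for name, tris in [("octahedron", OCTAHEDRON), ("torus", torus(4))]:
    V, E, F = counts(tris)
    a0, a1, a2 = reduced_dims(V, E, F)
    chi = V - E + F
    print(name, (a0, a1, a2), a0 - a1 + a2 == chi, a0 - a2 == 2 * chi)
```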

This complex is minimal, among complexes with this regularity, in a sense which can be made precise in the framework of finite element systems. This is described below, in the last paragraph of Sect. 5.

A complex of regularity (2, 1, 0). We may also consider the sequence:

The spaces are, more explicitly, the following:

-

\(\mathrm {C}^1 \mathrm {P}^3 {\varLambda }^0({\mathcal {R}})\) consists of piecewise \(\mathrm {P}^3\) functions, which are of class \(\mathrm {C}^1(T)\).

-

\(\mathrm {C}^0 \mathrm {P}^2 {\varLambda }^1({\mathcal {R}})\) consists of piecewise \(\mathrm {P}^2\) one-forms which are \(\mathrm {C}^0(T)\).

-

\(\mathrm {P}^1 {\varLambda }^2({\mathcal {R}})\) consists of piecewise \(\mathrm {P}^1\) two-forms.

The second space has dimension 20 and the last one has dimension 9. Exactness follows from using the Poincaré operator at the inpoint W. A preliminary reasoning shows that \(\mathrm {C}^0 \mathrm {P}^2 {\varLambda }^1({\mathcal {R}})\) should have 2 degrees of freedom per vertex, 2 per edge and 8 interior ones (see Fig. 3). However, it is not clear what they should be if one wants the interpolator to commute with the exterior derivative (the harmonic degrees of freedom of the FES framework provide a possible choice). Apart from a choice of degrees of freedom adapted to Stokes, these spaces are well known (e.g. last paragraph of [5]).
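The dimensions 20 and 9 follow from the face counts of \({\mathcal {R}}\), which has 4 vertices, 6 edges and 3 triangles. A continuous piecewise \(\mathrm {P}^2\) scalar has one value per vertex and one per edge, and a one-form has two such components. The Python lines below redo this count. They also check that the proposed split of the degrees of freedom (2 per vertex of T, 2 per edge, 8 interior) adds up to 20, and that \(1 - 12 + 20 - 9 = 0\).

```python
# Subcell counts of the Clough-Tocher split R of one triangle.
R_VERTS, R_EDGES, R_TRIS = 4, 6, 3

c0p2_scalar = R_VERTS + R_EDGES  # continuous piecewise P^2: vertex and edge values
dim_A1 = 2 * c0p2_scalar         # two components for a 1-form in 2D
dim_A2 = 3 * R_TRIS              # discontinuous P^1: 3 coefficients per sub-triangle
dim_A0 = 12                      # C^1 P^3 on R (Proposition 3)

print(dim_A1, dim_A2, 1 - dim_A0 + dim_A1 - dim_A2)  # 20 9 0

# Proposed DoF split on T: 2 per vertex, 2 per edge, 8 interior.
print(2 * 3 + 2 * 3 + 8 == dim_A1)  # True
```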

A minimal complex of regularity (2, 1, 0). In the last example, the spaces are bigger than necessary. A smaller complex of the form:

may be defined as follows. The spaces are:

-

\(A^0(T)\) is reduced HCT, of dimension 9.

The DoFs are vertex values and vertex values of the exterior derivative.

-

\(A^1(T) = {\,}\mathrm {d}{\,}A^0(T) \oplus {{\mathrm{{\mathfrak {p}}}}}_W A^2(T) \approx {{\mathrm{curl}}}A^0(T) \oplus {\mathbb {R}}X_W \), of dimension 9.

The degrees of freedom are two at each vertex, for the value of the 1-form, and one at each edge, for its integral.

-

\(A^2(T) = \mathrm {P}^0 {\varLambda }^2(T)\) consists of constant 2-forms on T, of dimension 1.

The degree of freedom is the integral.

These degrees of freedom provide a commuting interpolator (see Fig. 4). This complex is minimal among complexes with this regularity, by the remarks that will be made in the last paragraph of Sect. 5.

Remark 5

In [23] a complex of regularity (2, 1, 0) equipped with commuting interpolators is also defined. Instead of resolving the Clough-Tocher element (full or reduced) like ours, their complex resolves a \(\mathrm {C}^1\) element due to Zienkiewicz that contains rational functions. The dimensions of their three spaces are (12, 12, 1), which is intermediate between those of our minimal complex, (9, 9, 1), and those of the previous complex, (12, 20, 9).
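All three dimension triples compared in Remark 5 are consistent with exact sequences \(0 \rightarrow {\mathbb {R}}\rightarrow A^0 \rightarrow A^1 \rightarrow A^2 \rightarrow 0\) on one triangle, since each gives \(1 - a_0 + a_1 - a_2 = 0\). The short Python check below also rebuilds (9, 9, 1) from the degrees of freedom of the minimal complex. It is only a bookkeeping illustration.

```python
# Dimensions (A^0, A^1, A^2) on a single triangle of the complexes of
# regularity (2, 1, 0) compared in Remark 5.
complexes = {
    "minimal complex": (9, 9, 1),
    "complex of [23]": (12, 12, 1),
    "C1P3 / C0P2 / P1 complex": (12, 20, 9),
}
for name, (a0, a1, a2) in complexes.items():
    print(name, 1 - a0 + a1 - a2)  # 0 in each case

# Minimal complex: 3 DoFs per vertex; 2 per vertex + 1 per edge; 1 integral.
print((3 * 3, 2 * 3 + 1 * 3, 1) == complexes["minimal complex"])  # True
```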

They also define high order versions of their complex.

5 Generalized finite element systems

Motivation for finite element systems Some general theorems make the study of the examples of the preceding section easier. Moreover, specifying the degrees of freedom a priori can be difficult when one wants to go to higher order polynomials.

If we are given spaces \(A^k(T)\) of k-forms on a cell T, we can actually forget about degrees of freedom and just consider the spaces \(A^k(T')\) obtained by restriction to the faces \(T'\) of T, with the appropriate definition of restriction, adapted to a particular regularity. Two properties turn out to be sufficient to obtain a good finite element:

-

\(\dim A^k(T) = \sum _{T'\unlhd T} \dim A^k_0(T')\). Here, as will be detailed below, \(T' \unlhd T\) signifies that \(T'\) is a subcell of T and \(A^k_0(T')\) denotes the subset of \(A^k(T')\) consisting of k-forms whose restrictions to boundary subcells of \(T'\) are 0.

-

The sequence \(A^{\scriptscriptstyle \bullet }(T')\) is exact on each subcell \(T'\) of T, except at index 0, where the cohomology group has dimension 1, essentially consisting of the constant functions.

When these properties are satisfied we will show that the sequences \(A^{\scriptscriptstyle \bullet }_0(T')\) are exact except at index \(\dim T'\), where the cohomology group has dimension 1. Then one can define a commuting interpolator by using the so-called harmonic degrees of freedom, described below.

In a cellular complex the spaces \(A^k(T')\) defined on faces should be well defined, in the sense that if they are obtained as the spaces of restrictions from a cell T (containing \(T'\)) to \(T'\), then they should be independent of T.

Definitions related to finite element systems Let \({\mathcal {T}}\) be a cellular complex. If \(T, T'\) are cells in \({\mathcal {T}}\) we write \(T' \unlhd T\) to signify that \(T'\) is a subcell of T (we consider that T is a subcell of T). Given two cells T and \(T'\) in \({\mathcal {T}}\), their relative orientation is denoted \({{\mathrm{\mathrm {o}}}}(T,T')\). It is 0 unless \(T'\) is a codimension one subcell of T, in which case it is \(\pm \,1\). The cellular cochain complex is denoted \({\mathcal {C}}^{\scriptscriptstyle \bullet }({\mathcal {T}})\). Its differential, also called the coboundary map, is denoted \(\delta : {\mathcal {C}}^k({\mathcal {T}}) \rightarrow {\mathcal {C}}^{k+1}({\mathcal {T}})\). Its matrix in the canonical basis is given by relative orientations.
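For a simplicial complex, one common convention (assumed here; other orientation choices work equally well) orients each simplex by increasing vertex labels and sets \({{\mathrm{\mathrm {o}}}}(T,T') = (-1)^i\) when \(T'\) is obtained from T by deleting its i-th vertex. The Python sketch below builds the coboundary matrices of a tetrahedron from these relative orientations and checks that \(\delta \circ \delta = 0\). The function names are our own.

```python
from itertools import combinations

def simplex_faces(n):
    """Faces of the n-simplex on vertices 0..n, grouped by dimension.
    Each face is a sorted vertex tuple, oriented by increasing vertex order."""
    return [list(combinations(range(n + 1), d + 1)) for d in range(n + 1)]

def relative_orientation(T, Tp):
    """o(T, T'): (-1)**i if T' is T with its i-th vertex removed, else 0."""
    if len(Tp) != len(T) - 1 or not set(Tp) <= set(T):
        return 0
    i = next(j for j, v in enumerate(T) if v not in Tp)
    return (-1) ** i

def coboundary(faces, k):
    """Matrix of delta: C^k -> C^{k+1}; rows are (k+1)-cells, columns k-cells."""
    return [[relative_orientation(T, Tp) for Tp in faces[k]] for T in faces[k + 1]]

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

faces = simplex_faces(3)  # a single tetrahedron and all its subcells
delta_squared_vanishes = [
    all(x == 0 for row in matmul(coboundary(faces, k + 1), coboundary(faces, k)) for x in row)
    for k in range(len(faces) - 2)
]
print(delta_squared_vanishes)  # [True, True]
```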

All complexes considered in this paper are cochain complexes in the sense that the differential increases the index.

Definition 2

A finite element system on \({\mathcal {T}}\) consists of the following data, which includes both spaces and operators:

-

We suppose that for each \(T \in {\mathcal {T}}\), and each \(k \in {\mathbb {Z}}\) we are given a vector space \(A^k(T)\). For \(k<0\) we suppose \(A^k(T) = 0\).

-

For every \(T \in {\mathcal {T}}\) and \(k \in {\mathbb {Z}}\), we have an operator \({\mathsf {d}}^k_T: A^k(T) \rightarrow A^{k+1}(T)\) called differential. Often we will denote it just as \({\mathsf {d}}\). We require \({\mathsf {d}}^{k+1}_T \circ {\mathsf {d}}^k_T = 0\). This makes \(A^{\scriptscriptstyle \bullet }(T)\) into a complex.

-

Given \(T, T'\) in \({\mathcal {T}}\) with \(T' \unlhd T\) we suppose we have restriction maps:

$$\begin{aligned} {\mathsf {r}}^k_{T'T} : A^k(T) \rightarrow A^k(T'), \end{aligned} \tag{61}$$

subject to:

-

\({\mathsf {r}}^{k+1}_{T'T} {\mathsf {d}}^k_T = {\mathsf {d}}^k_{T'} {\mathsf {r}}^k_{T'T}\).

-

\({\mathsf {r}}^k_{T''T} = {\mathsf {r}}^k_{T''T'} {\mathsf {r}}^k_{T'T}\) whenever \(T'' \unlhd T' \unlhd T\).

This makes the family \(A^{\scriptscriptstyle \bullet }(T)\), for \(T \in {\mathcal {T}}\), into an inverse system of complexes.

-

We suppose we have a map \({\mathsf {c}}_T: {\mathbb {R}}\rightarrow A^0(T)\). It mimics inclusion of constant scalar functions. We require:

-

For \(T \in {\mathcal {T}}\), \({\mathsf {d}}^0_T {\mathsf {c}}_T = 0\).

-

If \(T' \unlhd T\) are cells in \({\mathcal {T}}\), \({\mathsf {r}}_{T'T} {\mathsf {c}}_T = {\mathsf {c}}_{T'}\).

-

For T a k-dimensional cell in \({\mathcal {T}}\) we suppose we have an evaluation map \({\mathsf {e}}: A^k(T) \rightarrow {\mathbb {R}}\). It mimics integration of k-forms on a k-cell. We suppose that the following formula holds, for \(u \in A^{k-1}(T)\):

$$\begin{aligned} {\mathsf {e}}_T {\mathsf {d}}_T u = \sum _{T' \in \partial T} {{\mathrm{\mathrm {o}}}}(T, T') {\mathsf {e}}_{T'} {\mathsf {r}}_{T'T} u. \end{aligned} \tag{62}$$

It is an analogue of Stokes’ theorem on T.
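For the model case of smooth forms, where \({\mathsf {e}}\) is integration and \({\mathsf {r}}\) is the pullback, (62) on a triangle is Green's formula with the orientation signs \({{\mathrm{\mathrm {o}}}}(T,T') = (-1)^i\), where \(T'\) is the edge opposite the i-th vertex and edges are oriented by increasing vertex index. The Python sketch below checks this with exact rational arithmetic for one arbitrarily chosen polynomial 1-form \(u = P\,\mathrm {d}x + Q\,\mathrm {d}y\) on the reference triangle. The dictionary representation of polynomials and the function names are illustrative assumptions.

```python
from fractions import Fraction as F
from math import comb, factorial

# Polynomials in (x, y) are dicts {(a, b): coefficient of x^a y^b}.

def deriv(poly, var):
    """Partial derivative with respect to x (var = 0) or y (var = 1)."""
    out = {}
    for (a, b), c in poly.items():
        e = (a, b)[var]
        if e:
            key = (a - 1, b) if var == 0 else (a, b - 1)
            out[key] = out.get(key, 0) + c * e
    return out

def integrate_triangle(poly):
    """Exact integral over the triangle (0,0), (1,0), (0,1), using
    int x^a y^b = a! b! / (a + b + 2)!."""
    return sum(F(c * factorial(a) * factorial(b), factorial(a + b + 2))
               for (a, b), c in poly.items())

def integrate_edge(P, Q, A, B):
    """Exact integral of the 1-form P dx + Q dy along the segment A -> B."""
    dx, dy = B[0] - A[0], B[1] - A[1]
    total = F(0)
    for poly, weight in ((P, dx), (Q, dy)):
        for (a, b), c in poly.items():
            # x = A[0] + t dx, y = A[1] + t dy; integrate each t^(i+j) over [0, 1]
            for i in range(a + 1):
                for j in range(b + 1):
                    coef = (comb(a, i) * F(A[0]) ** (a - i) * F(dx) ** i
                            * comb(b, j) * F(A[1]) ** (b - j) * F(dy) ** j)
                    total += c * weight * coef / (i + j + 1)
    return total

verts = [(0, 0), (1, 0), (0, 1)]  # T = [V0, V1, V2], positively oriented
P = {(2, 1): 3, (0, 3): -1}       # u = P dx + Q dy, an arbitrary choice
Q = {(1, 2): 2, (4, 0): 5}

# Left-hand side of (62): e_T(du), with du = (dQ/dx - dP/dy) dx^dy.
curl = deriv(Q, 0)
for key, c in deriv(P, 1).items():
    curl[key] = curl.get(key, 0) - c
lhs = integrate_triangle(curl)

# Right-hand side: T' = T with vertex i removed, o(T, T') = (-1)^i.
faces = {0: (1, 2), 1: (0, 2), 2: (0, 1)}
rhs = sum((-1) ** i * integrate_edge(P, Q, verts[s], verts[t]) for i, (s, t) in faces.items())
print(lhs, rhs, lhs == rhs)  # 7/6 7/6 True
```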

If \({\mathcal {T}}'\) is a cellular subcomplex of \({\mathcal {T}}\), the spaces \(A^k(T)\) with \(T\in {\mathcal {T}}'\) constitute an inverse system. The inverse limits can be identified as:

In other words \({\underleftarrow{\lim }}_{T\in {\mathcal {T}}'} A^k(T)\) consists of families \((u_T)_{T \in {\mathcal {T}}'}\), such that for each cell \(T \in {\mathcal {T}}'\) (of all dimensions) \(u_T \in A^k(T)\), and the family is stable under restrictions to subcells. One can consider that such a family is given by a choice of \(u_T \in A^k(T)\) on top-dimensional cells \(T \in {\mathcal {T}}'\), together with their restrictions to subcells, provided that these are single-valued, i.e. the restrictions to a subcell are the same from all top-dimensional neighboring cells.

We notice that, if T is a cell and \({\mathcal {S}}(T)\) denotes the cellular complex consisting of all the subcells of T in \({\mathcal {T}}\), then the restriction maps provide an isomorphism:

For this reason it seems safe to use the notation:

This will be used in particular when \({\mathcal {T}}'\) is the boundary of a cell \(T\in {\mathcal {T}}\). In that case \(\partial T\) denotes the cellular complex consisting of the strict subcells of T, and \(A^k(\partial T)\) can be interpreted as consisting of families of elements \(u_{T'} \in A^k(T')\) for \(T' \in \partial T\) that are single-valued along interfaces inside the boundary.

Another way of formulating (62) is that for any cellular subcomplex \({\mathcal {T}}'\), the evaluation provides a cochain morphism:

We will later provide conditions under which it induces isomorphisms on cohomology groups, which would be an analogue of de Rham’s theorem.

We denote by \(A^k_0(T)\) the kernel of the induced map \({\mathsf {r}}^k: A^k(T) \rightarrow A^k(\partial T)\). We consider that the boundary of a point is empty, so that if T is a point \(A^k_0(T) = A^k(T)\).

Definition 3

We say that A admits extensions on \(T \in {\mathcal {T}}\), if the restriction map induces a surjection:

for each k. We say that A admits extensions on \({\mathcal {T}}\) if it admits extensions on each \(T\in {\mathcal {T}}\).

This notion corresponds to that of flabby sheaves (faisceaux flasques in French [21]), due to the following.

Proposition 4

The FES A admits extensions on \({\mathcal {T}}\) if and only if, for any cellular complexes \({\mathcal {T}}'', \mathcal T'\) such that \({\mathcal {T}}'' \subseteq {\mathcal {T}}' \subseteq {\mathcal {T}}\), the restriction \(A^{\scriptscriptstyle \bullet }({\mathcal {T}}') \rightarrow A^{\scriptscriptstyle \bullet }({\mathcal {T}}'')\) is onto.

In particular, if A admits extensions and \(T'\) is a subcell of T, then the restriction \(A^{\scriptscriptstyle \bullet }(T) \rightarrow A^{\scriptscriptstyle \bullet }(T')\) is onto. However, this is in general a strictly weaker condition than the extension property. To see this, consider for instance the finite element spaces \(A^0(T)\) consisting of \(\mathrm {P}^1\) functions on a quadrilateral S, on its edges E and on its vertices V. Then the restriction from \(A^0(S)\) to each \(A^0(E)\) is onto, as are the other restrictions from faces to subfaces, but the restriction from \(A^0(S)\) to \(A^0(\partial S)\) is not onto, since the latter has dimension 4 whereas the former has dimension only 3.
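The quadrilateral counterexample reduces to a rank computation. Take S to be the unit square, as a concrete instance (this choice is ours). An element of \(A^0(\partial S)\) is determined by its 4 vertex values, and restricting \(a + bx + cy\) to \(\partial S\) amounts to evaluating it at the 4 corners. The resulting \(4 \times 3\) matrix has rank 3, so the restriction cannot be onto. The Python sketch uses exact Gaussian elimination.

```python
from fractions import Fraction as F

def rank(rows):
    """Rank of a matrix (list of rows) by exact Gaussian elimination."""
    rows, r = [[F(x) for x in row] for row in rows], 0
    for c in range(len(rows[0]) if rows else 0):
        piv = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if piv is None:
            continue
        rows[r], rows[piv] = rows[piv], rows[r]
        for i in range(r + 1, len(rows)):
            f = rows[i][c] / rows[r][c]
            rows[i] = [x - f * y for x, y in zip(rows[i], rows[r])]
        r += 1
    return r

# Restriction of a + b x + c y to the boundary of the unit square, written in
# terms of the 4 vertex values that determine A^0(boundary of S).
square = [(0, 0), (1, 0), (1, 1), (0, 1)]
restriction = [[1, x, y] for x, y in square]  # 4 x 3 matrix
print(rank(restriction), len(square))  # 3 4: the restriction is not onto
```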

Definition 4

We say that \(A^{\scriptscriptstyle \bullet }\) is exact on T when the following sequences are exact:

We say that \(A^{\scriptscriptstyle \bullet }\) is locally exact on \({\mathcal {T}}\) when \(A^{\scriptscriptstyle \bullet }\) is exact on each \(T \in {\mathcal {T}}\).

Definition 5

We say that A is compatible when it admits extensions and is locally exact.

de Rham type theorems The following theorem extends Proposition 5.16 in [12]:

Theorem 1

Suppose that the element system A is compatible. Then the evaluation map \({\mathsf {e}}: A^{\scriptscriptstyle \bullet }({\mathcal {T}}) \rightarrow {\mathcal {C}}^{\scriptscriptstyle \bullet }({\mathcal {T}})\) induces isomorphisms on cohomology groups.

Proof

The proof of Proposition 5.16 in [12] works verbatim. \(\square \)

We also have the following extension of Proposition 5.17 in [12]:

Theorem 2

Suppose that A has extensions. Then A is compatible if and only if the following two conditions hold:

-

For each \(T\in {\mathcal {T}}\) the (“inclusion of constants”) map \({\mathsf {c}}: {\mathbb {R}}\rightarrow A^0(T)\) is injective.

-

For each \(T \in {\mathcal {T}}\) the sequence \(A^{\scriptscriptstyle \bullet }_0(T)\) has nontrivial cohomology only at index \(k = \dim T\), and there the induced map:

$$\begin{aligned} {\mathsf {e}}: \mathrm {H}^k A^{\scriptscriptstyle \bullet }_0(T) \rightarrow {\mathbb {R}}, \end{aligned} \tag{69}$$

is an isomorphism (it is well defined by (62)).

Proof

We proceed by induction on the dimension of the cells. Let \(m >0\) and suppose that the equivalence has been proved for cellular complexes consisting of cells of dimension \(n< m\).

Let \(T\in {\mathcal {T}}\) be a cell of dimension m. We suppose that A is compatible on the boundary of T. Since the boundary is \((m-1)\)-dimensional, we may apply the above de Rham theorem there.

We write the following diagram:

On the rows, the second map is inclusion and the third arrow restriction. Both rows are short exact sequences of complexes. The vertical map is the de Rham map. The diagram commutes.

We write the two long exact sequences corresponding to the two rows, and connect them by the map induced by the de Rham map.

Suppose that (68) is exact. Then the first and fourth vertical maps are isomorphisms. By the induction hypothesis the second and fifth are isomorphisms. By the five lemma, the third one is an isomorphism. This can be stated as announced.

Suppose that the two stated conditions hold. One applies the five lemma to the long exact sequence, and obtains that \(A^{\scriptscriptstyle \bullet }(T)\) is exact, except at index 0. The cohomology group of index 0 is one dimensional, and must consist of the constants, by injectivity of their inclusion. \(\square \)

Extensions, dimension counts and harmonic interpolation The following proposition almost exactly reproduces Proposition in [13].

Proposition 5

Suppose that \(A^k\) is an element system and that \(T \in {\mathcal {T}}\). Suppose that, for each cell \(U \in \partial T\), each element v of \(A^k_0(U)\) can be extended to an element u of \(A^k(T)\) in such a way that, \({\mathsf {r}}_{UT} u = v\) and for each cell \(U' \in \partial T\) with the same dimension as U, but different from U, we have \({\mathsf {r}}_{U'T} u = 0\). Then \(A^k\) admits extensions on T.

Proof

In the situation described in the proposition we denote by \({{\mathrm{\mathsf {ext}}}}_U v = u\) a chosen extension of v (from U to T).

Pick \(v \in A^k(\partial T)\). Define \(u_{-1}= 0 \in A^k(T)\).

Pick \(l \ge -1\) and suppose that we have a \(u_{l} \in A^k(T)\) such that v and \(u_l\) have the same restrictions on all l-dimensional cells in \(\partial T\). Put \(w_l = v - {\mathsf {r}}_{\partial T\, T} u_l \in A^k(\partial T)\). For each \((l+1)\)-dimensional cell U in \(\partial T\), remark that \({\mathsf {r}}_{U\partial T} w_l \in A^k_0(U)\), so we may extend it to the element \({{\mathrm{\mathsf {ext}}}}_U {\mathsf {r}}_{U\partial T} w_l \in A^k(T)\). Then put:

Then v and \(u_{l+1}\) have the same restrictions on all \((l+1)\)-dimensional cells in \(\partial T\).

We may repeat until \(l+1 = \dim T\) and then \(u_{l+1}\) is the required extension of v. \(\square \)
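The proof of Proposition 5 is constructive: start from \(u_{-1} = 0\) and correct cell by cell, in order of increasing dimension. The Python sketch below runs this procedure for a simple system chosen for illustration, which is not one of the composite spaces of this paper: \(\mathrm {P}^2\) Lagrange functions on a triangle, with pullback restrictions. Here the extension of a vertex value w is \(w\lambda _V\), and \(A^0_0(E)\) is spanned by the edge bubble \(\lambda _i\lambda _j\). Both extensions vanish on the other cells of the same dimension, as Proposition 5 requires. Boundary data are given by vertex and edge-midpoint values, and all names are hypothetical.

```python
from fractions import Fraction as F
from itertools import combinations

def evaluate(terms, lam):
    """Evaluate sum of c * prod(lam[i] for i in idx) at the barycentric point lam."""
    total = F(0)
    for c, idx in terms:
        prod = F(1)
        for i in idx:
            prod *= lam[i]
        total += c * prod
    return total

def vertex(i):
    return tuple(F(1) if k == i else F(0) for k in range(3))

def midpoint(i, j):
    return tuple(F(1, 2) if k in (i, j) else F(0) for k in range(3))

def extend_from_boundary(v_vertex, v_mid):
    """Extend continuous piecewise-P^2 boundary data to P^2(T), cell by cell.
    v_vertex[i]: value at vertex i; v_mid[(i, j)]: value at the midpoint of edge ij."""
    u = []                                  # u_{-1} = 0
    for i in range(3):                      # l = 0: vertices
        w = v_vertex[i] - evaluate(u, vertex(i))
        u.append((w, (i,)))                 # w * lambda_i vanishes at the other vertices
    for i, j in combinations(range(3), 2):  # l = 1: edges
        w = v_mid[(i, j)] - evaluate(u, midpoint(i, j))
        u.append((4 * w, (i, j)))           # lambda_i lambda_j is 1/4 at the midpoint, 0 on other edges
    return u

v_vertex = [F(1), F(2), F(-1)]
v_mid = {(0, 1): F(3), (0, 2): F(0), (1, 2): F(5, 2)}
u = extend_from_boundary(v_vertex, v_mid)
print(all(evaluate(u, vertex(i)) == v_vertex[i] for i in range(3)))  # True
print(all(evaluate(u, midpoint(i, j)) == v_mid[(i, j)] for i, j in v_mid))  # True
```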

Proposition 6

Let A be a FES on a cellular complex \({\mathcal {T}}\). Then:

-

We have:

$$\begin{aligned} \dim A^k ({\mathcal {T}}) \le \sum _{ T\in {\mathcal {T}}}\dim A^k_0(T). \end{aligned} \tag{73}$$

-

Equality holds in (73) if and only if \(A^k\) admits extensions on each \(T \in {\mathcal {T}}\).

Proof

The proof in [13] works verbatim. \(\square \)
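As an illustration of equality in (73), take the \(p = 3\) complex of Proposition 3. Reading its degrees of freedom as bases of the spaces \(A^k_0\) gives, per vertex, edge and triangle, (3, 1, 0) for \(k = 0\), (3, 2, 0) for \(k = 1\) and (1, 0, 1) for \(k = 2\) (this identification is our assumption). Summing over a mesh gives the global dimensions \(3V + E\), \(3V + 2E\) and \(V + F\). Consistently with Theorem 1, their alternating sum equals that of the cellular cochains, \(V - E + F\). The mesh sizes below are illustrative.

```python
# dim A^k_0 per cell dimension (vertex, edge, triangle) for the p = 3 complex
# of Proposition 3, read off from its degrees of freedom.
BUBBLES = {0: (3, 1, 0), 1: (3, 2, 0), 2: (1, 0, 1)}

def global_dims(V, E, F):
    """dim A^k over a mesh with V vertices, E edges and F triangles,
    assuming equality in (73), i.e. that A admits extensions."""
    return [BUBBLES[k][0] * V + BUBBLES[k][1] * E + BUBBLES[k][2] * F for k in range(3)]

meshes = {"single triangle": (3, 3, 1), "octahedron": (6, 12, 8), "4x4 torus": (16, 48, 32)}
for name, (V, E, F) in meshes.items():
    a = global_dims(V, E, F)
    # the alternating sum matches that of the cellular cochain complex
    print(name, a, a[0] - a[1] + a[2] == V - E + F)
```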

Suppose now that we are discretizing differential forms, say the sequence \(\mathrm {H}^1_\mathrm{d}{\varLambda }^{\scriptscriptstyle \bullet }(S)\) or, more precisely, \(\mathrm {C}^0_{\,}\mathrm {d}{\,}{\varLambda }^k(S)\). For each cell T, equip each space of double traces of \(\mathrm {C}^0_{\,}\mathrm {d}{\,}{\varLambda }^k(S)\) with a continuous scalar product \(\langle \cdot | \cdot \rangle \), typically a variant of the \(\mathrm {L}^2\) product on forms. For a given finite element system A (equipped with double traces for the restrictions), define spaces \({\mathcal {F}}^k(T)\) of degrees of freedom as follows. For \(k = \dim T\):

and for \(k \ne \dim T\):

This is the natural generalization, to the adopted setting, of so-called projection based interpolation, as defined in [17, 18]. We call these the harmonic degrees of freedom. For compatible finite element systems these degrees of freedom are unisolvent and yield a commuting interpolator \(\mathrm {C}^0_{\,}\mathrm {d}{\,}{\varLambda }^{\scriptscriptstyle \bullet }(S) \rightarrow A^{\scriptscriptstyle \bullet }({\mathcal {T}})\), which we call the harmonic interpolator. This topic is detailed in Sect. 2.4 of [13], see in particular Proposition 2.8 of that paper.

Minimality Consider a FES A on a cellular complex \({\mathcal {T}}\) where the top-dimensional cells are domains in a fixed vector space \({\mathbb {V}}\) of dimension n. Suppose furthermore that each vertex lies in an n-dimensional cell. Suppose that, when T is a top-dimensional cell, \(A^k(T)\) is a space of k-forms containing the constant ones. If A is compatible then, in particular, for each vertex \(V \in {\mathcal {T}}\) the restriction from \(A^k(T)\) to \(A^k(V)\) is onto. Depending on the nature of the restriction we deduce:

-

If restriction is the pullback, then \(A^k(V) = 0\), except for \(k= 0\), in which case it has dimension 1.

-

If restriction is the trace, then \(A^k(V) = \mathrm {Alt}^k({\mathbb {V}})\).

-

If restriction is the double trace then \(A^k(V) = \mathrm {Alt}^k({\mathbb {V}}) \oplus \mathrm {Alt}^{k+1}({\mathbb {V}})\).

Moreover, if A is compatible, then we must have, for any k-dimensional cell, \(\dim A^k_0(T) \ge 1\), by Theorem 2.

These considerations provide a lower bound on \(\dim A^k({\mathcal {T}})\) in view of Proposition 6. We will see examples where this lower bound is attained. These examples are then minimal FES.
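On a single n-simplex, the lower bound works as follows. Each vertex contributes \(\dim A^k(V)\), as determined by the type of restriction, and each k-dimensional face contributes at least 1 when \(k \ge 1\). The Python sketch below evaluates this bound in 2D. For regularity \((2,1+,1)\) we assume double traces for 0- and 1-forms and traces for 2-forms. For regularity (2, 1, 0) we assume double traces, traces and pullbacks. The results (9, 12, 4) and (9, 9, 1) coincide with the dimensions of the reduced complex of Sect. 4 (reduced HCT, and 15 minus the 3 transverse edge DoFs, and 4) and of the minimal complex \(A^{\scriptscriptstyle \bullet }(T)\).

```python
from math import comb

def vertex_dim(k, n, restriction):
    """dim A^k(V) forced at a vertex by the type of restriction."""
    if restriction == "pullback":
        return 1 if k == 0 else 0
    if restriction == "trace":
        return comb(n, k)
    if restriction == "double trace":
        return comb(n, k) + comb(n, k + 1)  # comb(n, n + 1) == 0
    raise ValueError(restriction)

def lower_bound_on_simplex(n, restrictions):
    """Lower bound on dim A^k(T) for an n-simplex T: vertex contributions plus
    at least one dimension on each k-dimensional face when k >= 1."""
    return [(n + 1) * vertex_dim(k, n, r) + (comb(n + 1, k + 1) if k >= 1 else 0)
            for k, r in enumerate(restrictions)]

print(lower_bound_on_simplex(2, ["double trace", "double trace", "trace"]))  # [9, 12, 4]
print(lower_bound_on_simplex(2, ["double trace", "trace", "pullback"]))      # [9, 9, 1]
```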

This paper defines four minimal FES: two in 2D and two in 3D. In each dimension we distinguish between continuous and discontinuous divergence.

The topic of minimal FES is studied in more detail in [11], in the case where the restriction is the pullback. General recipes for constructing small compatible FES within a big compatible FES are provided there.

6 High order composite elements in 2D

Definition of finite element spaces. On a triangle T, we define a complex of regularity \((2, 1+, 1)\) depending on a parameter \(p \ge 3\). The choice \(p=3\) was described previously, in Proposition 3, except for the characterization of spaces attached to faces.

We define the following spaces:

-

\(A^0(T) = \mathrm {C}^1\mathrm {P}^p {\varLambda }^0({\mathcal {R}})\).

It consists of the functions which are \({\mathcal {R}}\)-piecewise in \(\mathrm {P}^p\), and which are of class \(\mathrm {C}^1(T)\).

-

\(A^1(T) = \mathrm {C}^0_{\,}\mathrm {d}{\,}\mathrm {P}^{p-1}{\varLambda }^1({\mathcal {R}})\).

It consists of the 1-forms which are \({\mathcal {R}}\)-piecewise in \(\mathrm {P}^{p-1}\), and which are of class \(\mathrm {C}^0(T)\) with exterior derivative in \(\mathrm {C}^0(T)\).

-

\(A^2(T)= \mathrm {C}^0\mathrm {P}^{p-2}{\varLambda }^2({\mathcal {R}})\).

It consists of the 2-forms which are \({\mathcal {R}}\)-piecewise in \(\mathrm {P}^{p-2}\), and which are of class \(\mathrm {C}^0(T)\).

We analyse this complex as follows. First we notice:

Proposition 7

The following sequence is exact:

The dimensions are:

Proof

(i) Exactness follows from an application of the Poincaré operator associated with the inpoint W.

(ii) For \(A^0(T)\), the dimension is given in [19].

(iii) The space \(A^2(T)\) consists of continuous piecewise \(\mathrm {P}^r\) functions, with \(r = p-2\), on a mesh with 4 vertices, 6 edges and 3 triangles. Adding the dimensions of the bubble spaces we get:

(iv) The dimension of \(A^1(T)\) can then be deduced from the exactness of (76):

This completes the proof. \(\square \)
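The dimensions in Proposition 7 can also be computed independently by linear algebra. Each space is modelled as three independent polynomials on the sub-triangles of \({\mathcal {R}}\) (reference triangle, W at the barycenter, both our choices), together with the continuity of the relevant traces across the three interior edges: the value and gradient for \(A^0\), both components and \({\,}\mathrm {d}{\,}u\) for \(A^1\), and the value for \(A^2\). Continuity is imposed at \(p+2\) points per edge, which suffices for polynomials of degree at most p along a line. The dimension is the number of unknowns minus the exact rank. The Python sketch is illustrative and not optimized.

```python
from fractions import Fraction as F

# Clough-Tocher split R of the reference triangle, inpoint W = barycenter.
# Sub-triangle s is (W, V[s], V[s+1]); the interior edge W--V[i] separates
# sub-triangles i and i-1 (indices mod 3).
V = [(F(0), F(0)), (F(1), F(0)), (F(0), F(1))]
W = (F(1, 3), F(1, 3))

def monomials(deg):
    """Exponents (a, b) of x^a y^b with a + b <= deg."""
    return [(a, b) for a in range(deg + 1) for b in range(deg + 1 - a)]

def d_mono(e, pt, dx, dy):
    """Value at pt of the (dx, dy)-th partial derivative of x^a y^b."""
    a, b = e
    if dx > a or dy > b:
        return F(0)
    c = 1
    for k in range(dx):
        c *= a - k
    for k in range(dy):
        c *= b - k
    return c * pt[0] ** (a - dx) * pt[1] ** (b - dy)

def rank(rows):
    """Rank by exact Gaussian elimination over the rationals."""
    rows, r = [row[:] for row in rows], 0
    for c in range(len(rows[0]) if rows else 0):
        piv = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if piv is None:
            continue
        rows[r], rows[piv] = rows[piv], rows[r]
        for i in range(r + 1, len(rows)):
            f = rows[i][c] / rows[r][c]
            rows[i] = [x - f * y for x, y in zip(rows[i], rows[r])]
        r += 1
    return r

def dim_space(degs, traces, p):
    """Dimension of R-piecewise fields with polynomial components of degrees
    `degs` whose `traces` (lists of (component, dx, dy, coefficient)) are
    continuous across the interior edges, imposed at p + 2 points per edge."""
    blocks = [monomials(d) for d in degs]
    starts = [sum(len(b) for b in blocks[:c]) for c in range(len(blocks))]
    n_loc = sum(len(b) for b in blocks)
    rows = []
    for i in range(3):
        for j in range(p + 2):
            t = F(j, p + 1)
            pt = (W[0] + t * (V[i][0] - W[0]), W[1] + t * (V[i][1] - W[1]))
            for trace in traces:
                row = [F(0)] * (3 * n_loc)
                for sign, s in ((1, i), (-1, (i - 1) % 3)):
                    for comp, dx, dy, coef in trace:
                        for k, e in enumerate(blocks[comp]):
                            row[s * n_loc + starts[comp] + k] += sign * coef * d_mono(e, pt, dx, dy)
                rows.append(row)
    return 3 * n_loc - rank(rows)

def value(c):
    return [(c, 0, 0, 1)]

def complex_dims(p):
    a0 = dim_space([p], [value(0), [(0, 1, 0, 1)], [(0, 0, 1, 1)]], p)
    # 1-form a dx + b dy: a, b and du = (db/dx - da/dy) dx^dy continuous.
    a1 = dim_space([p - 1, p - 1], [value(0), value(1), [(1, 1, 0, 1), (0, 0, 1, -1)]], p)
    a2 = dim_space([p - 2], [value(0)], p)
    return a0, a1, a2

for p in (3, 4):
    a0, a1, a2 = complex_dims(p)
    print(p, (a0, a1, a2), 1 - a0 + a1 - a2)
```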

Remark 6

The dimensions are those one obtains by the perhaps naïve approach of counting constraints on piecewise-polynomial differential forms.

For instance, for \(A^0(T)\) one starts with the space of \({\mathcal {R}}\)-piecewise polynomials of degree p. It has dimension \((3/2)(p+2)(p+1)\). To be \(\mathrm {C}^1\) at W one imposes two equalities of first order jets, which amounts to 6 conditions. Then, on the edges joining W to the vertices, one expresses continuity, knowing we already have continuity at W as well as continuity of the directional derivative at W along the edge. This gives \(3(p-1)\) conditions. Finally one expresses continuity of a transverse derivative on the interior edges, knowing that we already have continuity of it at W. This also gives \(3(p-1)\) conditions.

This gives a lower bound on the dimension, since we are not certain at this point that the imposed conditions are linearly independent.
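The constraint count of Remark 6 can be tabulated directly. Starting from \(3\dim \mathrm {P}^p = (3/2)(p+2)(p+1)\), it subtracts the 6 jet conditions at W and twice \(3(p-1)\) edge conditions. The Python sketch below returns 12 for \(p = 3\), in agreement with Proposition 3. The name `naive_count` is ours.

```python
from math import comb

def naive_count(p):
    """Constraint count of Remark 6 for C^1 piecewise P^p on the Clough-Tocher split."""
    free = 3 * comb(p + 2, 2)   # three independent P^p polynomials
    at_W = 6                    # two equalities of first-order jets at W
    continuity = 3 * (p - 1)    # continuity along the three interior edges
    transverse = 3 * (p - 1)    # transverse derivative along the interior edges
    return free - at_W - continuity - transverse

print([naive_count(p) for p in range(3, 8)])  # [12, 21, 33, 48, 66]
```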

Having examined the spaces \(A^k(T)\), we now look at what happens on the faces of T:

Case 1 Vertices. We define, at a vertex V:

-

\(A^0(V) = {\mathbb {R}}\oplus \mathrm {Alt}^1({\mathbb {V}})\) interpreted as a value and a value of the differential. Its dimension is 3.

-

\(A^1(V) = \mathrm {Alt}^1({\mathbb {V}}) \oplus \mathrm {Alt}^2({\mathbb {V}})\) interpreted as a value and a value of its exterior derivative. Its dimension is 3.

-

\(A^2(V) = \mathrm {Alt}^2({\mathbb {V}})\). Its dimension is 1.

Notice, in view of Lemma 2, that we have a well defined complex:

where the second arrow is \(v_0\mapsto (v_0, 0)\), the third is \((v_0,v_1) \mapsto (v_1, 0)\) and the fourth is \((v_0, v_1)\mapsto v_1\). This complex is exact.
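Exactness of the vertex complex \(0 \rightarrow {\mathbb {R}}\rightarrow A^0(V) \rightarrow A^1(V) \rightarrow A^2(V) \rightarrow 0\) can be checked with small matrices. The coordinates below are our own choice, \((v_0, v_{1,x}, v_{1,y})\) on \(A^0(V)\) and \((w_{0,x}, w_{0,y}, w_1)\) on \(A^1(V)\). The Python sketch verifies that consecutive maps compose to zero and that, at every space, the dimension of the kernel of the outgoing map equals the rank of the incoming one.

```python
from fractions import Fraction as F

def rank(rows):
    """Rank by exact Gaussian elimination over the rationals."""
    rows, r = [[F(x) for x in row] for row in rows], 0
    for c in range(len(rows[0]) if rows else 0):
        piv = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if piv is None:
            continue
        rows[r], rows[piv] = rows[piv], rows[r]
        for i in range(r + 1, len(rows)):
            f = rows[i][c] / rows[r][c]
            rows[i] = [x - f * y for x, y in zip(rows[i], rows[r])]
        r += 1
    return r

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

incl = [[1], [0], [0]]                  # c -> (c, 0)
d0 = [[0, 1, 0], [0, 0, 1], [0, 0, 0]]  # (v0, v1) -> (v1, 0)
d1 = [[0, 0, 1]]                        # (w0, w1) -> w1
dims = [1, 3, 3, 1]                     # R, A^0(V), A^1(V), A^2(V)
maps = [incl, d0, d1]

def is_exact(dims, maps):
    """0 -> ... -> 0 is exact iff consecutive maps compose to zero and
    dim(space) - rank(outgoing) == rank(incoming) at every space."""
    composes_to_zero = all(
        all(x == 0 for row in matmul(maps[i + 1], maps[i]) for x in row)
        for i in range(len(maps) - 1))
    ranks = [0] + [rank(m) for m in maps] + [0]
    return composes_to_zero and all(
        dims[i] - ranks[i + 1] == ranks[i] for i in range(len(dims)))

print(is_exact(dims, maps))  # True
```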

Case 2 Edges. At an edge E we define:

-

\(A^0(E)\) is the subspace of \(\mathrm {P}^{p}(E) \oplus \mathrm {P}^{p-1}(E) \otimes \mathrm {Alt}^1({\mathbb {V}})\) consisting of admissible pairs \((v_0, v_1)\). Its dimension is \(p+1 + p= 2p+1\).

-

\(A^1(E) = \mathrm {P}^{p-1}(E)\otimes \mathrm {Alt}^1({\mathbb {V}}) \oplus \mathrm {P}^{p-2}(E) \otimes \mathrm {Alt}^2({\mathbb {V}})\). Its dimension is \(2p +p-1 = 3p -1\).

-

\(A^2(E) = \mathrm {P}^{p-2}(E)\otimes \mathrm {Alt}^2({\mathbb {V}})\). Its dimension is \(p-1\).

Again, in view of Lemma 2, we notice that we have a well defined complex:

and that it is exact.

We remark that A defines a finite element system on \({\mathcal {S}}(T)\), with restriction operators defined by taking double traces. The crucial missing point is the extension property (flabbiness).

The following result is immediate.

Proposition 8

For any edge E of T, A admits extensions from \(\partial E\) to E. Moreover:

And there is nontrivial cohomology only at index \(k=1\), where it has dimension 1.

Theorem 3

The finite element system A admits extensions from \(\partial T\) to T. Hence it is compatible.

Proof

We use Proposition 5. What is required is to prove some extension properties from vertices to edges and triangles, and from edges to triangles. These required properties are proved in the two next paragraphs. \(\square \)
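Once extensions are available (Proposition 8 and Theorem 3), equality in (73) determines the bubble dimensions \(\dim A^k_0(E)\) and \(\dim A^k_0(T)\) from the dimensions of the spaces. The Python sketch below takes \(\dim A^k(V)\) and \(\dim A^k(E)\) from Cases 1 and 2, \(\dim A^0(T)\) from the count of Remark 6, and \(\dim A^2(T)\) as that of continuous piecewise \(\mathrm {P}^{p-2}\) functions on \({\mathcal {R}}\). It deduces \(\dim A^1(T)\) from exactness. It then checks the cohomological statements: the edge complex with constants has Euler characteristic 0, \(A^{\scriptscriptstyle \bullet }_0(E)\) has alternating sum \(-1\) (cohomology only at \(k=1\)), and \(A^{\scriptscriptstyle \bullet }_0(T)\) has alternating sum 1 (cohomology only at \(k=2\), by Theorem 2).

```python
from math import comb

DIMS_V = (3, 3, 1)  # dim A^k(V), k = 0, 1, 2 (Case 1)

def dims_E(p):
    """dim A^k(E), k = 0, 1, 2 (Case 2)."""
    return (2 * p + 1, 3 * p - 1, p - 1)

def dims_T(p):
    """dim A^k(T): a0 from Remark 6, a2 = continuous P^{p-2} on R
    (4 vertices, 6 edges, 3 triangles), a1 from exactness of (76)."""
    r = p - 2
    a0 = 3 * comb(p + 2, 2) - 6 * p
    a2 = 4 + 6 * (r - 1) + 3 * comb(r - 1, 2)
    return (a0, a0 - 1 + a2, a2)

def bubbles(p):
    """dim A^k_0(E) and dim A^k_0(T), assuming equality in (73) on E and on T."""
    bE = [dims_E(p)[k] - 2 * DIMS_V[k] for k in range(3)]
    bT = [dims_T(p)[k] - 3 * DIMS_V[k] - 3 * bE[k] for k in range(3)]
    return bE, bT

def euler(dims):
    return sum((-1) ** k * d for k, d in enumerate(dims))

for p in range(3, 7):
    bE, bT = bubbles(p)
    print(p, bE, bT, 1 - euler(dims_E(p)), euler(bE), euler(bT))  # ... 0 -1 1
```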

We use the term jet informally. An r-jet corresponds to a Taylor expansion of order r in some vector bundle, which here will be a bundle of differential forms. However, for the highest order partial derivatives only a certain combination of them, corresponding to the exterior derivative, will be used. Moreover, a jet may be given even when the section of which it should be the expansion is not known a priori.

Extension of 1-jets from vertices In this section we consider elements in dimension 2 but our construction of extension from vertices is valid in any dimension. Let then \({\mathbb {V}}\) be a vector space of finite dimension and let V be a point in \({\mathbb {V}}\).

We are interested in complexes at V of the form:

Suppose we are given \((v_0, v_1) \in \mathrm {Alt}^k({\mathbb {V}}) \oplus \mathrm {Alt}^{k+1}({\mathbb {V}})\) at vertex V. Suppose T is a simplex, of arbitrary dimension, containing V. We want to find a k-form \(u_0\) on T whose double trace is \((v_0, v_1)\). In other words we want an admissible pair \((u_0, u_1)\) whose traces are \((v_0, v_1)\).

Let \(\lambda \) be the barycentric coordinate on T with respect to vertex V, and let X be the canonical vectorfield \(X: x \mapsto x - V\). Notice that for any \(w \in \mathrm {Alt}^{k+1}({\mathbb {V}})\) considered as a constant \((k+1)\)-form, we have \({\,}\mathrm {d}{\,}(w \, {\mathsf {L}} \, X) = (k+1) w\).
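The identity \({\,}\mathrm {d}{\,}(w \, {\mathsf {L}} \, X) = (k+1) w\) for a constant \((k+1)\)-form w can be checked mechanically. The Python sketch below represents forms on \({\mathbb {R}}^3\) as dictionaries from sorted index tuples to coefficients, uses the sign convention \((\mathrm {d}x^{i_0}\wedge \cdots \wedge \mathrm {d}x^{i_q}) \, {\mathsf {L}} \, X = \sum _j (-1)^j X^{i_j} \cdots \), and computes the exterior derivative by forward differences, which are exact because \(w \, {\mathsf {L}} \, X\) has affine coefficients. The dimension 3, the point V and the evaluation point are arbitrary choices made for the illustration.

```python
from fractions import Fraction as F
from itertools import combinations

N = 3                       # ambient dimension used for the check
V = (F(1, 2), F(-1), F(2))  # an arbitrary vertex position

def X(x):
    """Canonical vector field X(x) = x - V."""
    return [x[i] - V[i] for i in range(N)]

def contract(w, x):
    """(w contracted with X)(x) for a constant form w = {I: c}, I a sorted index tuple."""
    out, Xx = {}, X(x)
    for I, c in w.items():
        for j, i in enumerate(I):
            J = I[:j] + I[j + 1:]
            out[J] = out.get(J, 0) + (-1) ** j * c * Xx[i]
    return out

def d_affine(u, x):
    """Exterior derivative at x of a form-valued map u with affine coefficients;
    partial derivatives are forward differences, exact for affine functions."""
    base, out = u(x), {}
    for m in range(N):
        shifted = u(tuple(x[i] + (1 if i == m else 0) for i in range(N)))
        for J in set(base) | set(shifted):
            if m in J:
                continue
            deriv = shifted.get(J, 0) - base.get(J, 0)
            sign = (-1) ** sum(1 for j in J if j < m)  # move dx^m into sorted position
            K = tuple(sorted(J + (m,)))
            out[K] = out.get(K, 0) + sign * deriv
    return {K: c for K, c in out.items() if c != 0}

x0 = (F(3), F(1, 5), F(-2, 7))
checks = []
for q in range(1, N + 1):  # q = k + 1 is the degree of w
    for I in combinations(range(N), q):
        w = {I: 1}
        checks.append(d_affine(lambda x: contract(w, x), x0) == {I: q})
print(all(checks))  # True
```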

The admissible pair \((\lambda ^2 v_0, 2\lambda {\,}\mathrm {d}{\,}\lambda \wedge v_0)\) on T restricts to \((v_0, 2 {\,}\mathrm {d}{\,}\lambda \wedge v_0)\) at V. We therefore put \(w_1 = v_1 - 2{\,}\mathrm {d}{\,}\lambda \wedge v_0\), and we want to find an extension of \((0, w_1)\). We notice that the following pair on T is both admissible and restricts to \((0, w_1)\) at V:

All in all, we extend the data at V to T by the formula:

Notice that \(u_0\) is a differential k-form of polynomial degree 3, that \(u_1\) is a \((k+1)\)-form of degree 2, that the pair \((u_0,u_1)\) is admissible, and that its restriction to the other vertices of T is 0, in the sense of double traces. More strongly, the restriction to the face opposite to V in T is 0. This construction can be used to obtain the basis vectors of the global spaces attached to the vertices.

Remark 7

If T is a triangle, Proposition 3 guarantees that we have extensions from the vertices of T to T, as required in Proposition 5, simply by matching degrees of freedom.

Extension of polynomial 1-jets from edges to triangles Now suppose E is an edge of a triangle T, living in a vector space \({\mathbb {V}}\) of dimension 2. We wish to extend data on E to T, so as to be able to apply Proposition 5.

Fix p such that \( p \ge 3\). We consider the following spaces, for \(0 \le k \le \dim {\mathbb {V}}\).

The admissibility condition is non-trivial only for \(k=0\).

We label the vertices of E by 0 and 1, and the third vertex of T by 2. The barycentric coordinates on T are accordingly denoted \(\lambda _0, \lambda _1, \lambda _2\).

We suppose that an inpoint W of T has been chosen, and we divide T into three triangles by joining W to the vertices of T. The simplicial complex so obtained is denoted \({\mathcal {R}}\).

Lemma 3

There is a function \({\varPhi } \in \mathrm {C}^1\mathrm {P}^3({\mathcal {R}})\) such that:

Proof

Follows from Proposition 3 by matching degrees of freedom. \(\square \)

Lemma 4

There is a 1-form \({\varPsi } \in \mathrm {C}^0_{\mathrm {d}}\mathrm {P}^2{\varLambda }^1({\mathcal {R}})\) such that:

Proof

Follows from Proposition 3 by matching degrees of freedom. \(\square \)

Suppose we are given \((v_0,v_1) \in A^k_0(E)\) and that we wish to extend it to T. We may extend this data by 0 to all of \(\partial T\).

Case 3 (\(k=0\)). First we remark that \(v_0\) is of the form:

where \(w_0 \in \mathrm {P}^{p-4}(E)\). In this form \(v_0\) is trivially extendable to T, as a function \(u_0 \in \mathrm {P}^p(T)\). Subtracting \(({{\mathrm{\mathrm {tr}}}}_E u_0, {{\mathrm{\mathrm {tr}}}}_E {\,}\mathrm {d}{\,}u_0)\) from \((v_0,v_1)\) leaves us with data where \(v_0 = 0\). Assuming now that \(v_0 = 0\), admissibility shows that \(v_1\) is of the form:

where \(w_1 \in \mathrm {P}^{p-3}(E)\). Then we extend \((0, v_1)\) to T as the admissible pair:

In our setup only \(u_0\) is of interest on T, but we need the traces of both \(u_0\) and \({\,}\mathrm {d}{\,}u_0\) on \(\partial T\).

Notice also that the constructed extension satisfies \(u_0 \in \mathrm {C}^1\mathrm {P}^p{\varLambda }^0({\mathcal {R}})\).

Case 4 (\(k=1\)). We remark that the data are of the form:

with \(w_0 \in \mathrm {P}^{p-3}(E)\), \(w_1 \in \mathrm {P}^{p-3}(E)\) and \(w_2 \in \mathrm {P}^{p-4}(E)\). We extend the three components essentially separately, but in a precise order.

First, let \({\tilde{w}}_2 \in \mathrm {P}^{p-3}(E)\) denote an antiderivative of \(w_2\). Put:

Then:

whose trace is \(v_1\). This leaves us with the problem of extending data where \(w_2 = 0\).

Second, define:

Then \({\,}\mathrm {d}{\,}u_0 = 0\), so in particular \({{\mathrm{\mathrm {tr}}}}_{\partial T} u_0 = 0\). Moreover:

This leaves us with the problem of extending data where \(w_2 = 0\) and \(w_1 =0\).

Third, define:

Then:

and moreover:

This completes the extension procedure.

Case 5 (\(k=2\)). Then \(v_1=0\) and \(v_0\) is of the form:

for some \(w_0 \in \mathrm {P}^{p-4}(E)\). We extend \(v_0\) to T as:

7 Tools for composite finite elements

We develop some tools that will be used to define finite element sequences in dimension \(n \ge 3\).

Various refinements of simplices A simplex is a finite non-empty set. Its subsimplices are its non-empty subsets. The geometric realization of a simplex T, in a vector space containing its vertices, is its convex hull, denoted |T|. Geometric realizations are examples of cells. If T is a simplex with vertices \(V_0, \ldots , V_k\) we also write \(T = [V_0, \ldots , V_k]\).

If T is a cell in a cellular complex \({\mathcal {T}}\), we denote by \({\mathcal {S}}_{\mathcal {T}}(T)\) the set of subcells of T in \({\mathcal {T}}\), which is also a cellular complex. We denote by \({\mathcal {S}}^k_{\mathcal {T}}(T)\) the set of those subcells of T which have dimension k. When no confusion is possible we omit the subscript \({\mathcal {T}}\). In particular, if T is a simplex the associated simplicial complex is denoted \({\mathcal {S}}(T)\).

For each simplex T we choose an interior point \(W_T\), called the inpoint of T.

Definition 6

Given a simplex T we denote by \({\mathcal {R}}_m(T)\) the simplicial complex consisting of simplices of the form:

such that:

-

\(T' = [V_0, \ldots , V_l]\) is a subsimplex of T of dimension \(l \le m\),

-

\(T_0 , \ldots , T_{k-1} , T_k\) are subsimplices of T of dimension at least \(m+1\),

-

The simplices are nested as follows, with strict inclusions:

$$\begin{aligned} T' \lhd T_0 \lhd \ldots \lhd T_{k-1} \lhd T_k. \end{aligned}$$(113)

We call \({\mathcal {R}}_m(T)\) the m-refinement of T.

In particular, \({\mathcal {R}}_0(T)\) is the barycentric refinement of T, at least when the inpoints are chosen to be the isobarycenters. We see that \({\mathcal {R}}_m(T)\) only uses inpoints of subsimplices of T of dimension at least \(m+1\); subsimplices of T of dimension at most m are not refined. Another way of saying this is that \({\mathcal {S}}(T)\) and \({\mathcal {R}}_m(T)\) have the same m-skeleton (the m-skeleton of a cellular complex is the cellular complex consisting of those cells that have dimension at most m). For \(m \ge \dim T\) we have \({\mathcal {R}}_m(T) = {\mathcal {S}}(T)\).
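The displayed form of the simplices in Definition 6 is not reproduced here. The following Python sketch therefore assumes that they are the sets \(T' \cup \{W_{T_0}, \ldots , W_{T_k}\}\), that is, the vertices of \(T'\) together with the inpoints of the nested chain. It enumerates the top-dimensional simplices of \({\mathcal {R}}_m(T)\) combinatorially and encodes an inpoint \(W_F\) by the hypothetical label ("W", F). Counting maximal chains then suggests \((n+1)!/(m+1)!\) top simplices when \(m < n = \dim T\). For a triangle this gives 6 for \(m = 0\) and 3 for \(m = 1\) (the Clough–Tocher split). For a tetrahedron it gives 24, 12 (Worsey–Farin) and 4 (Alfeld) for \(m = 0, 1, 2\).

```python
from itertools import combinations
from math import factorial

def flags(face, m):
    """Yield maximal chains [T_0, ..., T_k] with T_0 < ... < T_k = face and dim T_0 = m + 1."""
    if len(face) == m + 2:  # dim face = m + 1
        yield [face]
        return
    for v in sorted(face):
        for chain in flags(face - {v}, m):
            yield chain + [face]

def top_simplices(T, m):
    """Top-dimensional simplices of R_m(T), ASSUMING they are T' together with the
    inpoints W_{T_0}, ..., W_{T_k} of a nested chain (Definition 6).
    An inpoint W_F is encoded by the hypothetical label ("W", F)."""
    T = frozenset(T)
    n = len(T) - 1
    if m >= n:
        return [T]  # R_m(T) = S(T): nothing is refined
    out = []
    for chain in flags(T, m):
        for Tp in combinations(sorted(chain[0]), m + 1):  # T' is an m-face of T_0
            out.append(frozenset(Tp) | {("W", F) for F in chain})
    return out

def expected_count(n, m):
    """Count suggested by enumerating flags: (n+1)!/(m+1)! for m < n, else 1."""
    return 1 if m >= n else factorial(n + 1) // factorial(m + 1)

for n in (2, 3):
    for m in range(n + 1):
        print(n, m, len(top_simplices(range(n + 1), m)), expected_count(n, m))
```

The enumeration is purely combinatorial. Whether a given choice of inpoints yields a Powell–Sabin or Worsey–Piper split depends on the alignment conditions discussed next, which this sketch does not check.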

When choosing the inpoints, one is often interested in special properties for adjacent simplices of a simplicial complex, as reviewed in [25]:

-

In dimension 2, \({\mathcal {R}}_1(T)\) is known as a Clough–Tocher split. One is also interested in splits where the inpoints of edges lie on the lines joining the inpoints of the adjacent triangles. Then \({\mathcal {R}}_0(T)\) is known as a Powell–Sabin split.

-

In dimension 3, one is interested in splits where the inpoints of faces lie on the lines joining the inpoints of the two adjacent tetrahedra. Then \({\mathcal {R}}_1(T)\) is known as a Worsey–Farin split, after [36]. If, in addition, the inpoint of each edge lies in a plane containing the inpoints of all the adjacent tetrahedra (i.e. those containing the edge), then \({\mathcal {R}}_0(T)\) is called a Worsey–Piper split, after [37].

-

In fact, [36] defines \({\mathcal {R}}_{1}(T)\) in arbitrary dimension n and refers to it as a generalized Clough–Tocher split. On n-dimensional simplices one chooses arbitrary inpoints. On \((n-1)\)-dimensional simplices the inpoint is the point where the simplex meets the line joining the inpoints of the two adjacent top-dimensional simplices.

-

Worsey–Piper splits may be difficult to construct. One possibility is to choose, as inpoints, the circumcenters of all subsimplices. A sufficient condition for this choice to yield points in the interior of the simplices is that the simplices be strictly acute. This is quite restrictive.

-

We also note that for \(m = \dim T -1\), \({\mathcal {R}}_m(T)\), which is obtained by adding the single inpoint \(W_T\) to T and coning it over the boundary simplices of T, is known as the Alfeld split of T, at least when \(\dim T = 3\), see [1].
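The circumcenter construction for Worsey–Piper splits can be explored with exact arithmetic. The Python sketch below is our own illustration, with hypothetical function names. It computes the barycentric coordinates of the circumcenter of a full-dimensional simplex in \({\mathbb {R}}^n\) from the linear system \(2 (V_i - V_0) \cdot c = |V_i|^2 - |V_0|^2\), \(i = 1, \ldots , n\). The circumcenter is an interior point exactly when all of these coordinates are positive. The sketch treats only the top-dimensional simplex, not its lower-dimensional faces.

```python
from fractions import Fraction

def solve(A, b):
    """Solve A x = b exactly (Gauss-Jordan elimination over the rationals)."""
    n = len(A)
    M = [[Fraction(v) for v in row] + [Fraction(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        # raises StopIteration if the simplex is degenerate
        piv = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[piv] = M[piv], M[col]
        for r in range(n):
            if r != col and M[r][col] != 0:
                f = M[r][col] / M[col][col]
                M[r] = [a - f * c for a, c in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]

def circumcenter_barycentric(vertices):
    """Barycentric coordinates of the circumcenter of an n-simplex with n + 1 vertices in R^n."""
    V0, rest = vertices[0], vertices[1:]
    # |c - V_i|^2 = |c - V_0|^2  <=>  2 (V_i - V_0) . c = |V_i|^2 - |V_0|^2
    A = [[2 * (vi - v0) for vi, v0 in zip(V, V0)] for V in rest]
    b = [sum(t * t for t in V) - sum(t * t for t in V0) for V in rest]
    c = solve(A, b)
    # write c - V_0 = sum_i mu_i (V_i - V_0); barycentric coordinates are (1 - sum mu, mu)
    E = [[V[j] - V0[j] for V in rest] for j in range(len(V0))]
    mu = solve(E, [cj - v0 for cj, v0 in zip(c, V0)])
    return [1 - sum(mu)] + mu

def circumcenter_is_interior(vertices):
    return all(t > 0 for t in circumcenter_barycentric(vertices))

print(circumcenter_is_interior([(0, 0), (4, 0), (1, 3)]))  # acute triangle
print(circumcenter_is_interior([(0, 0), (4, 0), (1, 1)]))  # obtuse triangle
print(circumcenter_barycentric([(1, 1, 1), (1, -1, -1), (-1, 1, -1), (-1, -1, 1)]))
```

For triangles, positivity of all coordinates is equivalent to the triangle being acute. A right triangle already places the circumcenter on the boundary, which shows how quickly the condition fails.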

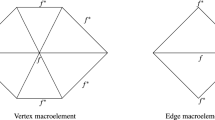

The different types of refinements of a tetrahedron are illustrated in Fig. 5. Not all subsimplices are shown, only those corresponding to one face of the tetrahedron.

We note the following:

Lemma 5

We have:

-

For any m, \({\mathcal {R}}_m(T)\) is a refinement of \({\mathcal {R}}_{m+1}(T)\).

-

If U is a subsimplex of T then:

$$\begin{aligned} {\mathcal {R}}_m(U) = \{T' \in {\mathcal {R}}_m(T) \ : \ |T'| \subseteq |U| \}. \end{aligned}$$(114)

Alignments in meshes Already on a triangular mesh in dimension 2, continuity requirements involving derivatives, imposed on piecewise polynomials, may produce complicated spaces. Their dimension will in general depend, for instance, on whether the edges meeting at a vertex are aligned. The following result pertains to one such situation.

Suppose S is a two-dimensional vector space with a basis \((e_1, e_2)\). The four rays generated by \(\pm e_1\) and \(\pm e_2\) divide S into four sectors, as follows. For each of the four choices of signs \(a,b \in \{+, -\}\) we consider the sector:

We consider differential forms that are piecewise polynomial with respect to this subdivision, with various continuity requirements across interfaces.

Proposition 9

We have an exact sequence on S:

where, more precisely:

-

\(\mathrm {C}^1 \mathrm {P}^2 {\varLambda }^0\), the space of continuously differentiable piecewise polynomials of degree 2, has dimension 8. The arrow arriving from \({\mathbb {R}}\) is the inclusion of constants. Any element u is uniquely determined by the values of the following data:

-

the 1-jet at 0, consisting of the function value u(0) and the differential \(\mathrm {D}u(0)\).

-

the directional second order derivatives at 0 in the four directions \(\pm e_1\) and \(\pm e_2\), which are well defined.

-

the value of the second order derivative \(\partial _1 \partial _2 u\), which, it turns out, must be the same in the four sectors.

-

\(\mathrm {C}^0 \mathrm {P}^1 {\varLambda }^1\) has dimension 10. The arrows arriving to and from this space are exterior derivatives.

-

\(\mathrm {P}^0 {\varLambda }^2\) has dimension 4. The arrow to \({\mathbb {R}}\) is the following map:

$$\begin{aligned} u \mapsto u(++) - u(-+) + u(--) - u(+-). \end{aligned}$$(117)

Here u(ab) stands for the value of the two-form u on \(T_{ab}\), or more precisely \(u[ae_1 + be_2](e_1, e_2)\).

Remark 8

It seems that, if we have just four sectors without alignment of the edges, then the sequence:

is exact and \(\mathrm {C}^1\mathrm {P}^2{\varLambda }^0\) has dimension only 7.

The situation is reminiscent of [33], which studies the last part of the complex for polynomials of higher order.
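The dimension counts 8 (Proposition 9) and 7 (Remark 8) can be reproduced with the classical smoothing-cofactor argument for \(C^1\) piecewise quadratics around a single vertex. This is our choice of method, not necessarily the argument the authors have in mind. Across a ray with direction d, a \(C^1\) piecewise quadratic may jump only by \(c\,\ell _d^2\), where \(\ell _d\) is a linear form vanishing on the ray. Going once around 0 imposes \(\sum _i c_i \ell _i^2 = 0\). With r rays, the dimension is therefore \(6 + r - \operatorname{rank}\{\ell _i^2\}\). The Python sketch below, with hypothetical function names, evaluates this count.

```python
from fractions import Fraction

def rank(rows):
    """Rank of a small matrix over the rationals (Gaussian elimination)."""
    M = [[Fraction(x) for x in row] for row in rows]
    r = 0
    for col in range(len(M[0]) if M else 0):
        piv = next((i for i in range(r, len(M)) if M[i][col] != 0), None)
        if piv is None:
            continue
        M[r], M[piv] = M[piv], M[r]
        for i in range(len(M)):
            if i != r and M[i][col] != 0:
                f = M[i][col] / M[r][col]
                M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
    return r

def dim_c1_quadratics_on_fan(rays):
    """Dimension of C^1 piecewise quadratics on the sectors around 0 cut out by `rays`
    (directions listed in cyclic order). l_d(x, y) = d[0]*y - d[1]*x vanishes on ray d."""
    # coefficients of l_d^2 in the monomial basis (x^2, x*y, y^2)
    squares = [(d[1] ** 2, -2 * d[0] * d[1], d[0] ** 2) for d in rays]
    # 6 free coefficients on the first sector, plus one cofactor c_i per ray,
    # minus the independent conditions in sum_i c_i l_i^2 = 0
    return 6 + len(rays) - rank(squares)

aligned = [(1, 0), (0, 1), (-1, 0), (0, -1)]  # the configuration of Proposition 9
generic = [(1, 0), (1, 2), (-1, 1), (0, -1)]  # four rays on four distinct lines
print(dim_c1_quadratics_on_fan(aligned), dim_c1_quadratics_on_fan(generic))
```

In the aligned case the squares \(\ell _i^2\) span only \(x^2\) and \(y^2\), which is why one more degree of freedom survives. For the sequence of Proposition 9, the alternating sum of dimensions, \(1 - 8 + 10 - 4 + 1\), equals 0, as exactness requires.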

Some spaces of piecewise polynomials on simplexes We first recall:

Proposition 10

Suppose \(T=[V_0, \ldots , V_n]\) is an oriented simplex of dimension n.

Suppose that u is a constant n-form on T. Then:

Suppose that u is affine on T and 0 at the vertices \(V_1, \dots , V_n\). Then:

Let S be a simplex of dimension n. We suppose that every face T of S is equipped with an orientation and a chosen inpoint \(W_T\).

We shall prove several results, of which the following is a first case:

Proposition 11

We have the following:

-

Suppose \(u \in \mathrm {C}^1 \mathrm {P}^2 {\varLambda }^0({\mathcal {R}}_{0} (S))\), that \({\,}\mathrm {d}{\,}u \) is 0 at the vertices of S, and that u has the same value at all vertices of S. Then u is constant on S.

-

Suppose \(u \in \mathrm {C}^0 \mathrm {P}^1 {\varLambda }^1({\mathcal {R}}_{0} (S))\) and that \({\,}\mathrm {d}{\,}u= 0\). If u is 0 at the vertices of S and the pullback of u to 1-dimensional faces of S has integral 0, then \(u = 0\).

Proof

We proceed by induction on \(\dim S\). For \(\dim S =0\) there is nothing to prove. Let \(n\ge 1\), suppose that the result has been proved for all simplices of dimension less than n, and let \(\dim S = n\).

(i) Choose \(u \in \mathrm {C}^1 \mathrm {P}^2 {\varLambda }^0({\mathcal {R}}_{0} (S))\) and suppose that \({\,}\mathrm {d}{\,}u = 0\) at the vertices of S and that u has the same value at all vertices of S. By the induction hypothesis, the pullback of u to any \((n-1)\)-face of S is constant. Since u takes the same value at all vertices, u is constant on \(\partial S\). Subtracting this constant, we may suppose that \({{\mathrm{\mathrm {tr}}}}_{\partial S} u = 0\). Let \(\lambda _S\) be the barycentric coordinate on S attached to the inpoint \(W_S\), so that \(\lambda _S \in \mathrm {C}^0 \mathrm {P}^1 {\varLambda }^0({\mathcal {R}}_{n-1}(S))\). We can write \(u = \lambda _S v\) for some \(v \in \mathrm {C}^0 \mathrm {P}^1 {\varLambda }^0({\mathcal {R}}_{0} (S))\). The condition \(u \in \mathrm {C}^1(S)\) then gives \(v \in \mathrm {C}^0 \mathrm {P}^1 {\varLambda }^0({\mathcal {R}}_{n-1} (S))\). Writing \({\,}\mathrm {d}{\,}u = \lambda _S {\,}\mathrm {d}{\,}v + v {\,}\mathrm {d}{\,}\lambda _S\), we deduce that v is zero at the vertices of S. Hence v is proportional to \(\lambda _S\): \(v = c\lambda _S\). We get \({\,}\mathrm {d}{\,}u = 2 c \lambda _S {\,}\mathrm {d}{\,}\lambda _S\). Since \({\,}\mathrm {d}{\,}\lambda _S\) is discontinuous at the inpoint of S, where \(\lambda _S = 1\), the continuity of \({\,}\mathrm {d}{\,}u\) forces \(c = 0\). Hence \(u = 0\), that is, the original u is constant.

(ii) Choose \(u \in \mathrm {C}^0 \mathrm {P}^1 {\varLambda }^1({\mathcal {R}}_{0} (S))\) such that \({\,}\mathrm {d}{\,}u = 0\) on S, u is 0 at the vertices of S, and the pullback of u to 1-dimensional faces of S has integral 0. Write \(u = {\,}\mathrm {d}{\,}v\) with \(v \in \mathrm {C}^1 \mathrm {P}^2 {\varLambda }^0({\mathcal {R}}_{0} (S))\). Then \({\,}\mathrm {d}{\,}v = u\) is zero at the vertices of S. Moreover, since the integral of u along each edge of S vanishes, Stokes' theorem in dimension one shows that v takes the same value at all vertices of S. By part (i), v is constant, so \(u = {\,}\mathrm {d}{\,}v = 0\). \(\square \)

The purpose of the next three propositions is to extend these results to k-forms for higher k. Eventually we want to show that if certain degrees of freedom are 0 then the k-form is 0.

Our first result is of the type that if certain degrees of freedom are 0 then the k-form is 0 at the center of the simplex.

Proposition 12