Abstract

There has been much recent research on preconditioning discretisations of the Helmholtz operator \(\Delta + k^2 \) (subject to suitable boundary conditions) using a discrete version of the so-called “shifted Laplacian” \(\Delta + (k^2+ \mathrm{i}\varepsilon )\) for some \(\varepsilon >0\). This is motivated by the fact that, as \(\varepsilon \) increases, the shifted problem becomes easier to solve iteratively. Despite many numerical investigations, there has been no rigorous analysis of how to choose the shift. In this paper, we focus on the question of how large \(\varepsilon \) can be so that the shifted problem provides a preconditioner that leads to \(k\)-independent convergence of GMRES, and our main result is a sufficient condition on \(\varepsilon \) for this property to hold. This result holds for finite element discretisations of both the interior impedance problem and the sound-soft scattering problem (with the radiation condition in the latter problem imposed as a far-field impedance boundary condition). Note that we do not address the important question of how large \(\varepsilon \) should be so that the preconditioner can easily be inverted by standard iterative methods.


1 Introduction

The Helmholtz equation is the simplest possible model of wave propagation. Although most applications are concerned with the propagation of waves in exterior domains, it is common to use as a model problem the Helmholtz equation posed in an interior domain with an impedance boundary condition, i.e.

\[
\Delta u + k^2 u = -f \quad \text{in } \Omega, \qquad \frac{\partial u}{\partial n} - \mathrm{i}k u = g \quad \text{on } \Gamma, \tag{1.1}
\]

where \(\Omega \) is a bounded Lipschitz domain in \(\mathbb {R}^d\) (\(d=2\) or \(3\)) with boundary \(\Gamma \), and \(f\) and \(g\) are prescribed functions. This paper is predominantly concerned with the interior impedance problem (1.1), but we also consider the exterior Dirichlet problem, with the radiation condition realised as an impedance boundary condition (i.e., a first-order absorbing boundary condition).

The Helmholtz equation is difficult to solve numerically for the following two reasons:

1. The solutions of the homogeneous Helmholtz equation oscillate on a scale of \(1/k\), and so to approximate them accurately one needs the total number of degrees of freedom, \(N\), to be proportional to \(k^d\) as \(k\) increases. Furthermore, the pollution effect means that in some cases (e.g. for low-order finite element methods) having \(N\sim k^d\) is still not enough to keep the relative error bounded independently of \(k\) as \(k\) increases. This growth of \(N\) with \(k\) leads to very large matrices, and hence to large (and sometimes intractable) computational costs.

2. The standard variational formulation of the Helmholtz equation is sign-indefinite (i.e., not coercive). This means that (1) it is hard to prove error estimates for the Galerkin method that are explicit in \(k\), and (2) it is hard to prove anything a priori about how iterative methods behave when solving the Galerkin linear system; indeed, one expects iterative methods to behave extremely badly if the indefinite system is not preconditioned.

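Quite a lot of recent research has focused on preconditioning (1.1) using the discretisation of the original Helmholtz problem with a complex shift:

\[
\Delta u + (k^2 + \mathrm{i}\varepsilon) u = -f \quad \text{in } \Omega, \qquad \frac{\partial u}{\partial n} - \mathrm{i}\eta u = g \quad \text{on } \Gamma. \tag{1.2}
\]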

The parameter \(\eta \) is usually chosen to be either \(k\) or \(\sqrt{k^2+ \mathrm{i}\varepsilon }\), and the analysis in this paper covers both these choices. It is well-known that, with \(k\) fixed, the solution of (1.2) tends to the solution of (1.1) as \(\varepsilon \rightarrow 0\); this is called the “principle of limited absorption”. When used as a preconditioner for (1.1), the problem (1.2) is usually called the “shifted Laplacian preconditioner” (even though the shift is added to the Helmholtz operator itself).

In some ways it is more natural to consider adding absorption to the problem (1.1) by letting \(k\mapsto k+ \mathrm{i}\delta \) for some \(\delta >0\) (with \(\eta \) then usually chosen as either \(k\) or \(k+ \mathrm{i}\delta \)). The results in this paper are equally applicable to this preconditioner; however, we consider absorption in the form of (1.2), since this form seems to be more prevalent in the literature.
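A one-line computation relates the two ways of adding absorption. It compares only the squared wavenumbers in the PDE and says nothing about the boundary parameter \(\eta \):

\[
(k+\mathrm{i}\delta)^2 = k^2 - \delta^2 + 2\mathrm{i}k\delta .
\]

So, apart from the real shift \(-\delta^2\), replacing \(k\) by \(k+\mathrm{i}\delta \) plays the role of the shift \(\varepsilon = 2k\delta \); in particular, \(\delta \sim 1\) corresponds to \(\varepsilon \sim k\).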

The question then arises, how should one choose the “absorption” (or “shift”) parameter \(\varepsilon \)? In this paper we investigate this question when (1.1) is solved using finite element methods (FEMs) of fixed order.

One of the advantages of the shifted Laplacian preconditioner is that it can be applied when the wavenumber \(k\) is variable (i.e., the medium being modelled is inhomogeneous) as was done, for example, in [15, 42], and [54] (with the last paper considering the higher-order case). In the present paper, however, all the theory is for constant \(k\) (although Example 5.5 contains an experiment where \(k\) is variable).

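Recall that the standard variational formulation of (1.2) (for any \(\varepsilon \ge 0\)) is, given \(f \in L^2(\Omega )\), \(g\in L^2(\Gamma )\), \(\eta >0\), and \(k>0\),

\[
\text{find } u \in H^1(\Omega) \text{ such that } a_\varepsilon(u,v) = F(v) \quad \text{for all } v \in H^1(\Omega), \tag{1.3}
\]

where

\[
a_\varepsilon(u,v) := \int_\Omega \nabla u \cdot \overline{\nabla v} - (k^2 + \mathrm{i}\varepsilon) \int_\Omega u\, \overline{v} - \mathrm{i}\eta \int_\Gamma u\, \overline{v} \tag{1.4}
\]

and

\[
F(v) := \int_\Omega f\, \overline{v} + \int_\Gamma g\, \overline{v}. \tag{1.5}
\]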

The original Helmholtz problem that we are interested in solving, (1.1), is therefore (1.3) when \(\varepsilon = 0\) and \(\eta = k\), and in this case we write \(a(u,v)\) instead of \(a_\varepsilon (u,v)\).

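If \(V_N\) is an \(N\)-dimensional subspace of \(H^1(\Omega )\) with basis \(\{\phi _i: i = 1, \ldots , N \}\) then the corresponding Galerkin approximation of (1.3) is:

\[
\text{find } u_N \in V_N \text{ such that } a_\varepsilon(u_N, v_N) = F(v_N) \quad \text{for all } v_N \in V_N. \tag{1.6}
\]

The Galerkin equations (1.6) are equivalent to the \(N\)-dimensional linear system

\[
\mathbf{A}_\varepsilon \mathbf{u} = \mathbf{f}, \qquad \mathbf{A}_\varepsilon := \mathbf{S} - (k^2 + \mathrm{i}\varepsilon)\mathbf{M} - \mathrm{i}\eta \mathbf{N}, \qquad \mathbf{f}_\ell := F(\phi_\ell), \tag{1.7}
\]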

where \(\mathbf{S}_{\ell ,m} = \int _{\Omega } \nabla \phi _\ell \cdot \nabla \phi _m \) is the stiffness matrix, \(\mathbf{M}_{\ell ,m} = \int _{\Omega } \phi _\ell \phi _m \) is the domain mass matrix, and \(\mathbf{N}_{\ell ,m} = \int _{\Gamma } \phi _\ell \phi _m\) is the boundary mass matrix. When \(\varepsilon = 0\) and \(\eta = k\), (1.7) is the discretisation of the original problem (1.1), in which case we write \(\mathbf{A}\) instead of \(\mathbf{A}_{\varepsilon }\) in (1.7). Note that \(\mathbf{A}_\varepsilon \) and \(\mathbf{A}\) are both symmetric but not Hermitian.
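To make the matrices in (1.7) concrete, the following NumPy sketch assembles \(\mathbf{S}\), \(\mathbf{M}\), \(\mathbf{N}\) and \(\mathbf{A}_\varepsilon \) for P1 hat functions on a uniform mesh of the 1-d interval \((0,1)\). The 1-d setting is an assumption made for illustration only (the paper works in 2-d and 3-d): in 1-d the boundary \(\Gamma \) consists of the two end points, so the boundary integral defining \(\mathbf{N}\) reduces to point evaluation. The function names are hypothetical.

```python
import numpy as np


def p1_matrices_1d(n):
    """Hypothetical helper: stiffness S, domain mass M and boundary mass N
    for P1 hat functions on a uniform mesh of (0, 1) with n elements."""
    h = 1.0 / n
    S = np.zeros((n + 1, n + 1))
    M = np.zeros((n + 1, n + 1))
    S_elem = np.array([[1.0, -1.0], [-1.0, 1.0]]) / h        # int phi_l' phi_m'
    M_elem = np.array([[2.0, 1.0], [1.0, 2.0]]) * h / 6.0    # int phi_l phi_m
    for e in range(n):
        idx = np.ix_([e, e + 1], [e, e + 1])
        S[idx] += S_elem
        M[idx] += M_elem
    # In 1-d, Gamma = {0, 1}: the boundary "integral" is point evaluation.
    N = np.zeros((n + 1, n + 1))
    N[0, 0] = N[n, n] = 1.0
    return S, M, N


def shifted_matrix(S, M, N, k, eps, eta):
    """A_eps = S - (k^2 + i eps) M - i eta N; eps = 0, eta = k gives A."""
    return S - (k**2 + 1j * eps) * M - 1j * eta * N


k = 10.0
S, M, N = p1_matrices_1d(int(k**2))                     # h = k^{-2}
A = shifted_matrix(S, M, N, k, 0.0, k)
A_eps = shifted_matrix(S, M, N, k, k, np.sqrt(k**2 + 1j * k))

print(np.allclose(A_eps, A_eps.T))           # checks symmetry
print(np.allclose(A_eps, A_eps.conj().T))    # checks Hermitian-ness

# With eps = 0 and real eta > 0: Im(x^H A x) = -eta x^H N x <= 0,
# because S, M, N are real symmetric.
rng = np.random.default_rng(0)
x = rng.standard_normal(A.shape[0]) + 1j * rng.standard_normal(A.shape[0])
print(np.vdot(x, A @ x).imag)
```

Because the basis functions are real, the complex shift and the impedance term only make the matrix complex symmetric, never Hermitian; the last line illustrates the half-plane property of the unshifted problem discussed in Sect. 1.1.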

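The “shifted Laplacian preconditioner” (applied in left-preconditioning mode) replaces the solution of \(\mathbf{A}\mathbf {u}= \mathbf {f}\) with the solution of:

\[
\mathbf{A}_\varepsilon^{-1}\mathbf{A}\mathbf{u} = \mathbf{A}_\varepsilon^{-1}\mathbf{f}. \tag{1.8}
\]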

GMRES works well applied to this problem if \(\Vert \mathbf{I}- \mathbf{A}_\varepsilon ^{-1}\mathbf{A}\Vert _2 \) is sufficiently small (and this can be quantified by the Elman estimate recalled in Theorem 1.8 and Corollary 1.9 below).

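In practice, \(\mathbf{A}_\varepsilon ^{-1}\) in (1.8) is replaced with an approximation that is easy to compute (e.g. a multigrid V-cycle). Then, letting \(\mathbf{B}_\varepsilon ^{-1}\) denote an approximation of \(\mathbf{A}_\varepsilon ^{-1}\), we replace (1.8) with

\[
\mathbf{B}_\varepsilon^{-1}\mathbf{A}\mathbf{u} = \mathbf{B}_\varepsilon^{-1}\mathbf{f}. \tag{1.9}
\]

Writing

\[
\mathbf{I} - \mathbf{B}_\varepsilon^{-1}\mathbf{A} = \bigl(\mathbf{I} - \mathbf{B}_\varepsilon^{-1}\mathbf{A}_\varepsilon\bigr) + \mathbf{B}_\varepsilon^{-1}\mathbf{A}_\varepsilon\bigl(\mathbf{I} - \mathbf{A}_\varepsilon^{-1}\mathbf{A}\bigr), \tag{1.10}
\]

we see that a sufficient condition for GMRES to converge in a \(k\)–independent number of steps is that both \( \Vert \mathbf{I}- \mathbf{A}_{\varepsilon }^{-1} \mathbf{A}\Vert _2\) and \(\Vert \mathbf{I}- \mathbf{B}_\varepsilon ^{-1}\mathbf{A}_{\varepsilon } \Vert _2 \) are sufficiently small. We write these two conditions as

(P1) \(\Vert \mathbf{I}- \mathbf{A}_{\varepsilon }^{-1} \mathbf{A}\Vert _2\) is sufficiently small,

(P2) \(\Vert \mathbf{I}- \mathbf{B}_\varepsilon ^{-1}\mathbf{A}_{\varepsilon } \Vert _2\) is sufficiently small.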

In other words, the task is to find \(\varepsilon \) and \(\mathbf{B}_\varepsilon \) so that both properties (P1) and (P2) are satisfied. At this stage, one might already guess that achieving both (P1) and (P2) imposes somewhat contradictory requirements on \(\varepsilon \). Indeed, on the one hand, (P1) requires \(\varepsilon \) to be sufficiently small (since the ideal preconditioner for \(\mathbf{A}\) is \(\mathbf{A}^{-1}\), which is \(\mathbf{A}_0^{-1}\)). On the other hand, the larger \(\varepsilon \) is, the less oscillatory the shifted problem is, and the cheaper it will be to construct a good approximation to \(\mathbf{A}_\varepsilon ^{-1}\) in (P2). These issues have been explored numerically in the literature (see the discussion in Sect. 1.1 below); however, there are no rigorous results about how to achieve either (P1) or (P2), and hence no theory about the best choice of \(\varepsilon \).
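The split into (P1) and (P2) rests on the identity \(\mathbf{I}-\mathbf{B}_\varepsilon^{-1}\mathbf{A} = (\mathbf{I}-\mathbf{B}_\varepsilon^{-1}\mathbf{A}_\varepsilon) + \mathbf{B}_\varepsilon^{-1}\mathbf{A}_\varepsilon(\mathbf{I}-\mathbf{A}_\varepsilon^{-1}\mathbf{A})\). Together with \(\Vert\mathbf{B}_\varepsilon^{-1}\mathbf{A}_\varepsilon\Vert_2 \le 1 + \Vert\mathbf{I}-\mathbf{B}_\varepsilon^{-1}\mathbf{A}_\varepsilon\Vert_2\), it gives \(\Vert\mathbf{I}-\mathbf{B}_\varepsilon^{-1}\mathbf{A}\Vert_2 \le \Vert\mathbf{I}-\mathbf{B}_\varepsilon^{-1}\mathbf{A}_\varepsilon\Vert_2 + (1+\Vert\mathbf{I}-\mathbf{B}_\varepsilon^{-1}\mathbf{A}_\varepsilon\Vert_2)\,\Vert\mathbf{I}-\mathbf{A}_\varepsilon^{-1}\mathbf{A}\Vert_2\). The NumPy sketch below checks the identity and the bound. The matrices are random stand-ins (a diagonally shifted random \(\mathbf{A}\) and small perturbations of it playing the roles of \(\mathbf{A}_\varepsilon \) and \(\mathbf{B}_\varepsilon \)), chosen for illustration only and not discretisations of (1.1).

```python
import numpy as np


def check_splitting(A, A_eps, B_eps):
    """Return (identity_holds, ||I - B^{-1} A||_2, upper bound) for
    I - B^{-1}A = (I - B^{-1}A_eps) + B^{-1}A_eps (I - A_eps^{-1}A)."""
    I = np.eye(A.shape[0])
    norm2 = lambda X: np.linalg.norm(X, 2)      # largest singular value
    B_inv = np.linalg.inv(B_eps)
    p1 = I - np.linalg.solve(A_eps, A)          # the matrix in (P1)
    p2 = I - B_inv @ A_eps                      # the matrix in (P2)
    lhs = I - B_inv @ A
    rhs = p2 + B_inv @ A_eps @ p1
    bound = norm2(p2) + (1.0 + norm2(p2)) * norm2(p1)
    return np.allclose(lhs, rhs), norm2(lhs), bound


rng = np.random.default_rng(0)
n = 30
A = 10.0 * np.eye(n) + rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
A_eps = A + 0.05 * rng.standard_normal((n, n))      # stand-in for A_eps
B_eps = A_eps + 0.05 * rng.standard_normal((n, n))  # stand-in for B_eps
ok, lhs_norm, bound = check_splitting(A, A_eps, B_eps)
print(ok, lhs_norm, bound)
```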

In this paper we perform the first step in this analysis by describing rigorously how large one can choose \(\varepsilon \) so that (P1) still holds. These results can then be used in conjunction with results concerning (P2) to answer the question of how to choose \(\varepsilon \) in (1.9). Indeed, the question of how one should choose \(\varepsilon \) for (P2) to hold when \(\mathbf{B}_\varepsilon ^{-1}\) is constructed using multigrid is considered in the recent preprint [7]. Furthermore, in a subsequent paper [21] we will describe, for a class of domain decomposition preconditioners, how \(\varepsilon \) should be chosen so that (P2) holds.

It could be argued that splitting the question of how to choose \(\varepsilon \) in (1.9) into (P1) and (P2) is somewhat artificial from a practical point of view. However, it is difficult to see how any rigorous numerical analysis of this question can proceed without this split.

We also mention here that although the discussion above was presented in the context of left preconditioning, it applies equally well to right preconditioning and the main results (Theorems 1.4 and 1.5) are for both approaches.

Before outlining the main results of this paper, we review the literature on the shifted Laplacian preconditioner, focusing on the choices of \(\varepsilon \) proposed, and whether these choices are aimed at achieving (P1) or (P2). (Although not all of this previous work concerns finite-element discretisations of the Helmholtz equation, in the discussion below we still use \(\mathbf{A}\) to denote the discretisation of the (unshifted) Helmholtz problem, and \(\mathbf{A}_\varepsilon ^{-1}\) to denote the preconditioner arising from the shifted Helmholtz problem.)

1.1 Previous work on the shifted Laplacian preconditioner

Preconditioning the Helmholtz operator with the inverse of the Laplacian was proposed in [2], and preconditioning with \((\Delta -k^2)^{-1}\) was proposed in [32].

Preconditioning the Helmholtz operator with \((\Delta + \mathrm{i}\varepsilon )^{-1}\) was considered in [16] and [17], and then preconditioning with \((\Delta + k^2+ \mathrm{i}\varepsilon )^{-1}\) was considered in [15] and [55]. For both preconditioners, the authors chose \(\varepsilon \sim k^2\), and constructed an approximation to the discrete counterpart of \((\Delta +\mathrm{i}\varepsilon )^{-1}\) or \((\Delta +k^2 +\mathrm{i}\varepsilon )^{-1}\) using multigrid. (Using the notation above, preconditioning with the second operator corresponds to choosing \(\varepsilon \sim k^2\) and constructing \(\mathbf {B}^{-1}_{\varepsilon }\) using a multigrid V-cycle.) Preconditioning with \((\Delta +k^2 +\mathrm{i}\varepsilon )^{-1}\) and \(\varepsilon \sim k^2\) was then further investigated in the context of multigrid in [8] and [49].

The choice \(\varepsilon \sim k^2\) was motivated by analysis of the 1-d Helmholtz equation in an interval with Dirichlet boundary conditions in [16, Sect. 5], [14, Sect. 5.1.2], [15, Sect. 3], with this analysis using the fact that in this situation the eigenvalues of the Laplacian are known explicitly. The investigations in [16, Sect. 5] and [14, Sect. 5.1.2] considered preconditioning the 1-d Helmholtz operator with \((\mathrm{d}^2/\mathrm{d}x^2 +k^2(a + \mathrm{i}b))^{-1}\), and found that, under the restriction that \(a\le 0\), \(|\lambda _{\text {max}}|/|\lambda _{\text {min}}|\) was minimised for the operator \((\mathrm{d}^2/\mathrm{d}x^2 +k^2(a + \mathrm{i}b))^{-1}(\mathrm{d}^2/\mathrm{d}x^2 +k^2)\) when \(a=0\) and \(b = \pm 1\). The eigenvalues of \((\mathrm{d}^2/\mathrm{d}x^2 +k^2(a + \mathrm{i}b))^{-1}(\mathrm{d}^2/\mathrm{d}x^2 +k^2)\) for this boundary value problem were plotted in [15, Sect. 3], and it was found that they were better clustered for \(a=1\) and several choices of \(b\sim 1\) than for \(a=0\) and \(b=1\). (This eigenvalue clustering can be seen as partially achieving (P1) at the continuous level).
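The 1-d eigenvalue computation described above is easy to reproduce. On \((0,1)\) with Dirichlet conditions, \(\mathrm{d}^2/\mathrm{d}x^2\) has eigenfunctions \(\sin(n\pi x)\) with eigenvalues \(-(n\pi)^2\), so the preconditioned operator has eigenvalues \((k^2-(n\pi)^2)/(k^2(a+\mathrm{i}b)-(n\pi)^2)\). The pure-Python sketch below evaluates these for finitely many modes and returns \(|\lambda_{\max}|/|\lambda_{\min}|\). The truncation `n_max` is an assumption (the continuous operator has infinitely many eigenvalues, which accumulate at 1), \(k^2\) is assumed not to be a Dirichlet eigenvalue, and this is not the code used in [14, 15, 16].

```python
import math


def preconditioned_eigenvalues(k, a, b, n_max=5000):
    """Eigenvalues of (d^2/dx^2 + k^2 (a + ib))^{-1} (d^2/dx^2 + k^2) on (0, 1)
    with Dirichlet conditions, for the first n_max modes sin(n pi x)."""
    shift = k * k * complex(a, b)
    lams = []
    for n in range(1, n_max + 1):
        mu = (n * math.pi) ** 2        # -d^2/dx^2 sin(n pi x) = mu sin(n pi x)
        lams.append((k * k - mu) / (shift - mu))
    return lams


def modulus_ratio(lams):
    """|lambda_max| / |lambda_min| over the computed eigenvalues."""
    moduli = [abs(z) for z in lams]
    return max(moduli) / min(moduli)


k = 50.0
for a, b in [(0.0, 1.0), (1.0, 0.5), (1.0, 1.0)]:
    print(a, b, modulus_ratio(preconditioned_eigenvalues(k, a, b)))
```

For \(a=0\), \(b=1\) one has \(|\lambda_n|^2 = (k^2-\mu_n)^2/(k^4+\mu_n^2) \le 1\) with \(\mu_n=(n\pi)^2\), so all these eigenvalues lie in the closed unit disc; the ratio is then governed by the modes with \(\mu_n\) close to \(k^2\).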

A more general eigenvalue-analysis was conducted in [55], with this investigation considering a general class of Helmholtz problems (including the interior impedance problem in 2- and 3-d). This investigation hinged on the fact that the field of values of many Helmholtz problems is contained within a closed half-plane (and thus the eigenvalues are also in this closed half-plane). One can see this for the interior impedance problem by noting from (1.4) that, since \(\eta \in \mathbb {R}\),

\[
\operatorname{Im} a_\varepsilon(v,v) = -\eta \Vert v \Vert^2_{L^2(\Gamma)} \le 0 \quad \text{for all } v \in H^1(\Omega) \text{ when } \varepsilon = 0.
\]

The investigation in [55] uses a bound on the number of GMRES iterations that (1) assumes that the eigenvalues are enclosed by a circle not containing the origin, and (2) involves the condition number of the matrix of eigenvectors (see, e.g., [45, Theorem 5], [44, Corollary 6.33]). Because of (1), [55] needs to assume that the wavenumber has a small imaginary part (to prevent the circle enclosing zero), and because of (2) [55] needs to assume that the matrix of eigenvectors is well-conditioned. Under this strong assumption about the matrix of eigenvectors, it was shown that when the operator \(\Delta + \widetilde{k}^2\), with \(\widetilde{k}=k + \mathrm{i}\alpha \), \(\alpha >0\), is preconditioned by \((\Delta +c + \mathrm{i}d)^{-1}\) with \(c\le 0\), the best choices for \(c\) and \(d\) (in terms of minimising the number of GMRES iterations) are \(c=0\) and \(d= |\widetilde{k}^2|\) [55, Sect. 4.1].

Another eigenvalue-analysis of the Helmholtz equation in 1-d with Dirichlet boundary conditions was conducted in [18]. Here, the eigenvalues of a finite-difference discretisation of this problem were calculated, and it was stated that \(\varepsilon <k\) is needed for the eigenvalues to be clustered around one [which partially achieves (P1)]. Furthermore, a Fourier analysis of multigrid in this paper showed that \(\varepsilon \sim k^2\) is needed for multigrid to converge for \(\mathbf{A}_\varepsilon \).

Other uses of the shifted Laplacian preconditioner include its use with \(\varepsilon \sim k^2\) in the context of domain decomposition methods in [30], and its use with \(\varepsilon \sim k\) in the sweeping preconditioner of Engquist and Ying in [13] (these authors consider preconditioning the Helmholtz equation with \(k\) replaced by \(k+\mathrm{i}\delta \) with \(\delta \sim 1\), and this corresponds to choosing \(\varepsilon \sim k\)). Finally we note that solving the problem with absorption by preconditioning with the inverse of the Laplacian (i.e., aiming to achieve (P2) with \(\varepsilon =0\)) has been investigated in [24, 25].

Two points to note from this literature review are the following.

(i) All the analysis of how to choose \(\varepsilon \) has focused on studying the eigenvalues of \(\mathbf{A}_\varepsilon ^{-1}\mathbf{A}\) (and then trying to either minimise \(|\lambda _{\text {max}}|/|\lambda _{\text {min}}|\) or cluster the eigenvalues around the point 1).

(ii) All these investigations, apart from that in [55], consider the Helmholtz equation posed in a 1-d interval with Dirichlet boundary conditions, under the assumption that \(k^2\) is not an eigenvalue.

Recall that linear systems involving Hermitian matrices can be solved using the conjugate gradient method, and bounds on the number of iterations can be obtained from information about the eigenvalues of the matrix. However, if the matrix is non-Hermitian, general-purpose iterative solvers such as GMRES or BiCGStab are required, and information about the spectrum is usually not enough to provide information about the number of iterations required. Even when \(\mathbf{A}\) is Hermitian (as is the case for Dirichlet boundary conditions, but not for impedance boundary conditions), \(\mathbf{A}_\varepsilon \) is not Hermitian, and therefore the investigations of the eigenvalues of \(\mathbf{A}_\varepsilon ^{-1}\mathbf{A}\) discussed above are not sufficient to provide bounds on the number of iterations (with this fact noted in [15]).

1.2 Statement of the main results

In this paper we prove several results that give sufficient conditions on \(\varepsilon \) for the shifted Laplacian to be a good preconditioner for the Helmholtz equation, in the sense that (P1) above is satisfied. We emphasise again that these results alone are not sufficient to decide how to choose the shift in the design of practical preconditioners for \(\mathbf{A}\), since they do not consider the cost of constructing approximations of \(\mathbf{A}_\varepsilon ^{-1}\), or equivalently the question of when the property (P2) holds.

The boundary value problems for the Helmholtz equation that we consider are

1. The interior impedance problem (1.1), and

2. The truncated sound-soft scattering problem.

By “the truncated sound-soft scattering problem” we mean the exterior Dirichlet problem (with zero Dirichlet boundary conditions on the obstacle) where the radiation condition is imposed via an impedance boundary condition on the boundary of a large domain containing the obstacle (i.e., a first-order absorbing boundary condition); see Problem 2.4 and Fig. 2.

We consider solving these boundary value problems with FEMs of fixed order. Although such methods suffer from the pollution effect, they are still widely used in applications. We prove results when

(a) The boundary of the domain is smooth and a quasi-uniform sequence of meshes is used, and

(b) The domain is non-smooth and locally refined meshes are used (under suitable assumptions).

For simplicity, we now state the main results of the paper for the interior impedance problem when (a) holds (Theorems 1.4 and 1.5 below). The analogous result for the interior impedance problem when (b) holds is Theorem 4.4, and the analogous result for the truncated sound-soft scattering problem when (a) holds is Theorem 4.5.

Notation 1.1

We use the notation \(a\lesssim b\) to mean that there exists a \(C>0\) (independent of all parameters of interest and in particular \(k, \varepsilon ,\) and \(h\)) such that \(a\le C\,b\). We say that \(a\sim b\) if \(a\lesssim b\) and \(a\gtrsim b\).

Throughout the paper we make the assumption that

\[
\varepsilon \lesssim k^2. \tag{1.11}
\]

It is possible to derive analogous results for larger \(\varepsilon \), but \(\varepsilon \sim k^2\) is the largest value of the shift/absorption usually considered in the literature and we do not expect interesting results for larger \(\varepsilon \).

Definition 1.2

(Star-shaped)

(i) The domain \(\Omega \) is star-shaped with respect to the point \(\mathbf {x}_0\in \Omega \) if the line segment \([\mathbf {x}_0,\mathbf {x}]\) is a subset of \(\Omega \) for all \(\mathbf {x}\in \Omega \).

(ii) The domain \(\Omega \) is star-shaped with respect to the ball \(B_a(\mathbf {x}_0)\) (with \(a>0\) and \(\mathbf {x}_0\in \Omega \)) if \(\Omega \) is star-shaped with respect to every point in \(B_a(\mathbf {x}_0)\).

Remark 1.3

(Remark on star-shapedness) If \(\Omega \) is Lipschitz (and so has a normal vector at almost every point on the boundary) then \(\Omega \) is star-shaped with respect to \(\mathbf {x}_0\) if and only if \((\mathbf {x}-\mathbf {x}_0)\cdot \mathbf {n}(\mathbf {x})\ge 0\) for all \(\mathbf {x}\in \partial \Omega \) for which \(\mathbf {n}(\mathbf {x})\) is defined. Furthermore, \(\Omega \) is star-shaped with respect to \(B_a(\mathbf {x}_0)\) if and only if \((\mathbf {x}-\mathbf {x}_0)\cdot \mathbf {n}(\mathbf {x})\ge a\) for all \(\mathbf {x}\in \partial \Omega \) for which \(\mathbf {n}(\mathbf {x})\) is defined (for proofs of these statements see [36, Lemma 5.4.1] or [27, Lemma 3.1]). Whenever we consider a star-shaped domain (in either sense) in this paper, we assume that \(\mathbf {x}_0=\mathbf{0}\).
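For a polygon (which is Lipschitz), the criterion in Remark 1.3 can be checked directly: on each straight edge the outward normal is constant, so \((\mathbf{x}-\mathbf{x}_0)\cdot\mathbf{n}\) is constant along the edge and equals the signed distance from \(\mathbf{x}_0\) to the line containing it. The pure-Python sketch below (a hypothetical helper, not from the paper; vertices are assumed to be listed counter-clockwise) returns the minimum of \((\mathbf{x}-\mathbf{x}_0)\cdot\mathbf{n}\) over the boundary. By Remark 1.3, a value \(\ge a>0\) means \(\Omega \) is star-shaped with respect to \(B_a(\mathbf{x}_0)\), and a negative value means \(\Omega \) is not star-shaped with respect to \(\mathbf{x}_0\).

```python
import math


def min_normal_distance(vertices, x0):
    """min over the boundary of (x - x0) . n(x) for a polygon.

    vertices: list of (x, y) pairs in counter-clockwise order (assumed input
    format).  On each straight edge the outward normal n is constant, so
    (x - x0) . n can be evaluated at the edge's first vertex."""
    m = len(vertices)
    result = math.inf
    for j in range(m):
        px, py = vertices[j]
        qx, qy = vertices[(j + 1) % m]
        dx, dy = qx - px, qy - py
        length = math.hypot(dx, dy)
        nx, ny = dy / length, -dx / length      # outward normal for CCW order
        result = min(result, (px - x0[0]) * nx + (py - x0[1]) * ny)
    return result


square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
l_shape = [(0, 0), (2, 0), (2, 1), (1, 1), (1, 2), (0, 2)]
print(min_normal_distance(square, (0.5, 0.5)))    # unit square, centred x0
print(min_normal_distance(l_shape, (0.5, 0.5)))   # L-shape, x0 in the lower-left square
print(min_normal_distance(l_shape, (1.5, 0.5)))   # L-shape, x0 in the right arm
```

For the L-shape, \(\mathbf{x}_0=(0.5,0.5)\) gives \(0.5\) (so the domain is star-shaped with respect to \(B_{0.5}(\mathbf{x}_0)\)), whereas \(\mathbf{x}_0=(1.5,0.5)\) gives a negative value because of the re-entrant edge from \((1,1)\) to \((1,2)\).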

Theorem 1.4

(Sufficient conditions for \(\mathbf{A}_\varepsilon ^{-1}\) to be a good preconditioner) Suppose that either \(\Omega \) is a \(C^{1,1}\) domain in 2- or 3-d that is star-shaped with respect to a ball or \(\Omega \) is a convex polygon and suppose that \(\mathbf{A}\) and \(\mathbf{A}_\varepsilon \) are obtained using \(H^1\)-conforming polynomial elements of fixed order on a quasi-uniform sequence of meshes. Assume that \(\varepsilon \lesssim k^2\) and either \(\eta = k\) or \(\eta = \sqrt{k^2 + \mathrm{i}\varepsilon }\). Then, given any \(k_0>0\) and \(C>0\), there exist \(C_1, C_2, C_3>0 \) (independent of \(h, k,\) and \(\varepsilon \) but depending on \(k_0\) and \(C\)) such that if \(h k^2 \ge C\) and

\[
hk\sqrt{|k^2 - \varepsilon|} \le C_1, \tag{1.12}
\]

then

\[
\Vert \mathbf{I} - \mathbf{A}_\varepsilon^{-1}\mathbf{A} \Vert_2 \le C_2\, \frac{\varepsilon}{k} \tag{1.13}
\]

and

\[
\Vert \mathbf{I} - \mathbf{A}\mathbf{A}_\varepsilon^{-1} \Vert_2 \le C_3\, \frac{\varepsilon}{k} \tag{1.14}
\]

for all \(k\ge k_0\).

Therefore, if \(\varepsilon /k\) is sufficiently small, \(\mathbf{A}_\varepsilon ^{-1}\) is a good preconditioner for \(\mathbf{A}\). (If absorption is added to the original problem by letting \(k\mapsto k+ \mathrm{i}\delta \), with corresponding Galerkin matrix \({\varvec{\mathcal{A}}}_\delta \), then the analogues of (1.13) and (1.14) are \(\Vert \mathbf {I}-{\varvec{\mathcal{A}}}_\delta ^{-1} \mathbf{A}\Vert _2 \le C_2 \delta \) and \(\Vert \mathbf {I}- \mathbf{A}{\varvec{\mathcal{A}}}_\delta ^{-1}\Vert _2 \le C_3 \delta \), and thus if \(\delta \) is sufficiently small, \({\varvec{\mathcal{A}}}_\delta ^{-1}\) is a good preconditioner for \(\mathbf{A}\).) Theorem 1.4 has the following consequence.

Theorem 1.5

(\(k\)-independent GMRES estimate) If the assumptions of Theorem 1.4 hold and \(\varepsilon /k\) is sufficiently small, then when GMRES is applied to either of the equations \(\mathbf{A}_\varepsilon ^{-1} \mathbf {A}\mathbf {u}= \mathbf{A}_\varepsilon ^{-1} \mathbf {f}\) or \(\mathbf {A}\mathbf{A}_\varepsilon ^{-1} \mathbf {v} = \mathbf {f}\), it converges in a \(k\)–independent number of iterations.

These two theorems are proved in §4, along with analogous results for non-quasi-uniform meshes.

Where do the requirements on \(h\) in Theorem 1.4 come from? The requirement (1.12) ensures that the Galerkin method is quasi-optimal, with constant independent of \(k\) and \(\varepsilon \), when it is applied to the variational problem (1.3), and the proof of Theorem 1.4 requires this quasi-optimality. (Recall that the best result so far about quasi-optimality of the \(h\)-FEM is that, under some geometric restrictions, quasi-optimality holds with constant independent of \(k\) when \(hk^2 \lesssim 1\) [35, Prop. 8.2.7]. The condition (1.12) is the analogue of \(hk^2\lesssim 1\) for the shifted problem; see Lemma 3.5 below for more details.) We discuss the condition (1.12) more in Remark 4.2, but note that if quasi-optimality could be proved under less restrictive conditions, then the bound (1.13) would hold under these conditions too.

When dealing with discretisations of the Helmholtz equation one expects to encounter a condition such as (1.12); however, one does not usually expect to encounter a condition such as \(hk^2 \ge C\) (although in practice this will always be satisfied). This second condition is only necessary when \(\eta = \sqrt{k^2+ \mathrm{i}\varepsilon }\) (and not when \(\eta =k\)), and arises from bounding \(\Vert \mathbf{A}_\varepsilon ^{-1}\mathbf{N}\Vert _2\) and \(\Vert \mathbf{N}\mathbf{A}_\varepsilon ^{-1}\Vert _2\) independently of \(k\), \(\varepsilon \), and \(h\); see Sect. 1.3 below and Lemma 4.1.

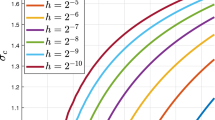

How sharp is the bound (1.13)? Numerical evidence suggests that (1.13) is sharp in the sense that the right-hand side cannot be replaced by \(\varepsilon /k^{\alpha }\) for \(\alpha >1\). Indeed, Fig. 1 plots the boundary of the numerical range of \(\mathbf{A}_\varepsilon ^{-1}\mathbf{A}\) for increasing \(k\) for each of the three choices \(\varepsilon =k\), \(\varepsilon =k^{3/2}\), and \(\varepsilon =k^2\). (Recall that the numerical range of a matrix \(\mathbf{C}\) is the set \(W(\mathbf{C}):=\{ (\mathbf{C}\, \mathbf {x}, \mathbf {x}) : \mathbf {x}\in \mathbb {C}^N, \Vert \mathbf {x}\Vert _2=1\}\).) In this example, \(\Omega \) is the unit square, \(\eta = k\), \(f=1\), \(g=0\), \(V_N\) is the standard hat-function basis for conforming \(P1\) finite elements on a uniform triangular mesh on \(\Omega \), and the mesh diameter \(h\) is chosen to decrease proportional to \(k^{-2}\). The numerical range is computed using an accelerated version of the algorithm of Cowen and Harel [9] (the algorithm is adapted to sparse matrices and the eigenvalues are estimated by an iterative method, which avoids forming the system matrix).

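The figures show that when \(\varepsilon = k\) the numerical range remains bounded away from the origin as \(k\) increases, whereas when \(\varepsilon =k^{3/2}\) or \(k^2\) the distance of the numerical range from the origin decreases as \(k\) increases. This is consistent with the result of Theorem 1.4 since, when \(\left\| \mathbf {x}\right\| _2= 1\),

\[
\bigl|(\mathbf{A}_\varepsilon^{-1}\mathbf{A}\mathbf{x}, \mathbf{x})\bigr| = \bigl|1 - ((\mathbf{I} - \mathbf{A}_\varepsilon^{-1}\mathbf{A})\mathbf{x}, \mathbf{x})\bigr| \ge 1 - \Vert \mathbf{I} - \mathbf{A}_\varepsilon^{-1}\mathbf{A} \Vert_2 \ge 1 - C_2\, \frac{\varepsilon}{k}
\]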

[where \(C_2\) is the constant in (1.13)]. This bound shows that when \(\varepsilon /k\) is small enough, the numerical range is bounded away from the origin, although we cannot quantify “small enough” here, since the value of \(C_2\) is unknown (although in principle one could work it out).

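Of course, these experiments do not rule out the possibility that a bound such as

\[
\Vert \mathbf{I} - \mathbf{A}_\varepsilon^{-1}\mathbf{A} \Vert_2 \le C_3\, \frac{\varepsilon}{k^{\alpha}} \tag{1.15}
\]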

holds for some \(\alpha >1\) and for some large \(C_3\). Nevertheless, in Sect. 6 we see that the condition “\(\varepsilon /k\) sufficiently small” also arises when one considers how well the solution of the boundary value problem with absorption (1.2) approximates the solution of the boundary value problem without absorption (1.1), independently of any discretisations, and thus we conjecture that (1.15) does not hold for any \(\alpha >1\).

A disadvantage of the bounds in Theorem 1.4 is that they seem to allow for the possibility that \(\Vert \mathbf {I}-\mathbf{A}_\varepsilon ^{-1}\mathbf{A}\Vert _2\) and \(\Vert \mathbf {I}-\mathbf{A}\mathbf{A}_\varepsilon ^{-1}\Vert _2\) might grow with increasing \(k\) if \(\varepsilon \gg k\). However, we also prove the following result, which rules out any growth.

Lemma 1.6

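(Alternative bound on \(\Vert \mathbf {I}- \mathbf{A}_\varepsilon ^{-1}\mathbf{A}\Vert _2\) and \(\Vert \mathbf {I}- \mathbf{A}\mathbf{A}_\varepsilon ^{-1}\Vert _2\)) Under the conditions of Theorem 1.4 there exists a \(C_4>0\) such that

\[
\Vert \mathbf{I} - \mathbf{A}_\varepsilon^{-1}\mathbf{A} \Vert_2 \le C_4 \quad \text{and} \quad \Vert \mathbf{I} - \mathbf{A}\mathbf{A}_\varepsilon^{-1} \Vert_2 \le C_4 \tag{1.16}
\]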

for all \(k\ge k_0\).

In Table 1 we report \(\mathrm {dist}(0,W(\mathbf{A}_\varepsilon ^{-1}\mathbf{A}))\) and also the number of GMRES iterations needed to reduce the initial residual by six orders of magnitude, starting with a zero initial guess, when GMRES is applied to \(\mathbf{A}_\varepsilon ^{-1} \mathbf{A}\mathbf {x}= \mathbf{A}_\varepsilon ^{-1} \mathbf{1}.\) The difference between the two sets of results is that results on the left are obtained with \(h= k^{-2}\) (in accordance with the conditions of Theorem 1.4), and the results on the right are obtained with the less restrictive condition that \(h= k^{-3/2}\); we see that the two sets of results are almost identical.

When \(\varepsilon =k\) the number of iterations stays constant as \(k\) increases (which is consistent with Theorem 1.5), but when \(\varepsilon =k^{3/2}\) or \(\varepsilon =k^2\) the number of iterations grows with \(k\). The results of more extensive experiments are given in Sect. 5, but they all show similar behaviour (i.e., the number of iterations remaining constant as \(k\) increases when \(\varepsilon =k\), but increasing as \(k\) increases for larger \(\varepsilon \)).
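To make the measurement protocol concrete (zero initial guess, residual reduced by a factor \(10^{-6}\), exact left preconditioning with \(\mathbf{A}_\varepsilon^{-1}\), right-hand side the vector of ones), here is a small NumPy sketch of the same measurement for a 1-d analogue of the impedance problem on \((0,1)\), using P1 elements with \(h\approx k^{-2}\). The 1-d setting, the choice \(\eta=k\), the dense solves and all helper names are assumptions for illustration; a 1-d problem need not reproduce the counts in Table 1, and this is not the code used for the paper's experiments.

```python
import numpy as np


def p1_matrices_1d(n):
    """Hypothetical helper: S, M, N for P1 elements on a uniform mesh of (0, 1)."""
    h = 1.0 / n
    S = np.zeros((n + 1, n + 1))
    M = np.zeros((n + 1, n + 1))
    S_elem = np.array([[1.0, -1.0], [-1.0, 1.0]]) / h
    M_elem = np.array([[2.0, 1.0], [1.0, 2.0]]) * h / 6.0
    for e in range(n):
        idx = np.ix_([e, e + 1], [e, e + 1])
        S[idx] += S_elem
        M[idx] += M_elem
    N = np.zeros((n + 1, n + 1))
    N[0, 0] = N[n, n] = 1.0              # Gamma = {0, 1} in 1-d
    return S, M, N


def gmres_iterations(C, b, tol=1e-6, maxit=300):
    """Unrestarted GMRES with zero initial guess (Arnoldi with modified
    Gram-Schmidt plus a small least-squares solve at each step).  Returns the
    number of iterations needed to reduce the residual norm by the factor tol."""
    n = b.size
    beta = np.linalg.norm(b)
    Q = np.zeros((n, maxit + 1), dtype=complex)
    H = np.zeros((maxit + 1, maxit), dtype=complex)
    Q[:, 0] = b / beta
    for j in range(maxit):
        w = C @ Q[:, j]
        for i in range(j + 1):
            H[i, j] = np.vdot(Q[:, i], w)
            w = w - H[i, j] * Q[:, i]
        H[j + 1, j] = np.linalg.norm(w)
        if abs(H[j + 1, j]) > 1e-14:     # otherwise: (lucky) breakdown
            Q[:, j + 1] = w / H[j + 1, j]
        Hj = H[: j + 2, : j + 1]
        rhs = np.zeros(j + 2, dtype=complex)
        rhs[0] = beta
        y = np.linalg.lstsq(Hj, rhs, rcond=None)[0]
        if np.linalg.norm(Hj @ y - rhs) <= tol * beta:   # GMRES residual norm
            return j + 1
    return maxit


def shifted_laplacian_gmres(k, eps, eta=None):
    """Iterations of GMRES for A_eps^{-1} A x = A_eps^{-1} 1, with h ~ k^{-2}."""
    eta = k if eta is None else eta
    n = int(np.ceil(k**2))
    S, M, N = p1_matrices_1d(n)
    A = S - k**2 * M - 1j * k * N                        # eps = 0, eta = k
    A_eps = S - (k**2 + 1j * eps) * M - 1j * eta * N
    C = np.linalg.solve(A_eps, A)                        # dense: small k only
    b = np.linalg.solve(A_eps, np.ones(n + 1))
    return gmres_iterations(C, b)


for k in (10.0, 20.0):
    print(k, [shifted_laplacian_gmres(k, eps) for eps in (k, k**1.5, k**2)])
```

Forming \(\mathbf{A}_\varepsilon^{-1}\mathbf{A}\) densely is only sensible for such tiny problems; a practical implementation would instead apply \(\mathbf{A}_\varepsilon^{-1}\) (or an approximation \(\mathbf{B}_\varepsilon^{-1}\)) inside each matrix-vector product.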

1.3 The idea behind the proofs of Theorems 1.4 and 1.5

The idea behind Theorem 1.4. Considering first the case of left preconditioning and noting that

\[
\mathbf{I} - \mathbf{A}_\varepsilon^{-1}\mathbf{A} = \mathbf{A}_\varepsilon^{-1}(\mathbf{A}_\varepsilon - \mathbf{A}) = -\mathrm{i}\varepsilon\, \mathbf{A}_\varepsilon^{-1}\mathbf{M} - \mathrm{i}(\eta - k)\, \mathbf{A}_\varepsilon^{-1}\mathbf{N}, \tag{1.17}
\]

where \(\mathbf{M}\) and \(\mathbf{N}\) are as in (1.7), we see that a bound on \(\Vert \mathbf {I}- \mathbf{A}_\varepsilon ^{-1}\mathbf{A}\Vert _2\) can be obtained from bounds on \(\Vert \mathbf{A}_\varepsilon ^{-1}\mathbf{M}\Vert _2\) and \(\Vert \mathbf{A}_\varepsilon ^{-1}\mathbf{N}\Vert _2\). We obtain bounds on \(\Vert \mathbf{A}_\varepsilon ^{-1}\mathbf{M}\Vert _2\) and \(\Vert \mathbf{A}_\varepsilon ^{-1}\mathbf{N}\Vert _2\) in Lemma 4.1 below using an argument that bounds these quantities when \(\mathbf{A}_\varepsilon \) is the Galerkin matrix of a general variational problem and one has

(i) a bound on the solution operator of the continuous problem, and

(ii) conditions under which the Galerkin method is quasi-optimal.

In our context, we need the bound (i) and the conditions (ii) (along with the corresponding constant of quasi-optimality) to be explicit in \(h\), \(k\), and \(\varepsilon \).
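The step from the identity for \(\mathbf{I}-\mathbf{A}_\varepsilon^{-1}\mathbf{A}\) to norm bounds can be written out explicitly. From the definitions of the matrices in (1.7), \(\mathbf{A}_\varepsilon - \mathbf{A} = -\mathrm{i}\varepsilon\mathbf{M} - \mathrm{i}(\eta-k)\mathbf{N}\) (with \(\mathbf{A}\) taken with \(\eta = k\)), so by the triangle inequality

\[
\Vert \mathbf{I}-\mathbf{A}_\varepsilon^{-1}\mathbf{A}\Vert_2 \le \varepsilon\, \Vert \mathbf{A}_\varepsilon^{-1}\mathbf{M}\Vert_2 + |\eta - k|\, \Vert \mathbf{A}_\varepsilon^{-1}\mathbf{N}\Vert_2 .
\]

The second term vanishes when \(\eta = k\). When \(\eta = \sqrt{k^2+\mathrm{i}\varepsilon}\) (principal branch), write \(\eta = p + \mathrm{i}q\); then \(p^2 - q^2 = k^2\) and \(p>0\), so \(p \ge k\) and

\[
|\eta - k| = \frac{|\eta^2 - k^2|}{|\eta + k|} = \frac{\varepsilon}{|\eta + k|} \le \frac{\varepsilon}{p + k} \le \frac{\varepsilon}{2k}.
\]

Thus in both cases the shift enters only through the factor \(\varepsilon\) in front of \(\Vert \mathbf{A}_\varepsilon^{-1}\mathbf{M}\Vert_2\) and a factor of at most \(\varepsilon/(2k)\) in front of \(\Vert \mathbf{A}_\varepsilon^{-1}\mathbf{N}\Vert_2\).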

Regarding (i): proving bounds on the solution of the Helmholtz equation posed in exterior domains is a classic problem, and in particular can be achieved using identities introduced by Morawetz in [39]. Bounds on the solution of the interior impedance problem (1.1) and the truncated sound-soft scattering problem were proved independently (although essentially using Morawetz’s identities) in [10, 35], and [26] (see [6, §5.3], [50, §1.2] for discussions of this work). In this paper we use Green’s identity to bound the solution of the shifted interior impedance problem (1.2) explicitly in \(k\) and \(\varepsilon \) when \(\varepsilon \gtrsim k\) and \(\Omega \) is a general Lipschitz domain, and we use Morawetz’s identities to bound the solution (again explicitly in \(k\) and \(\varepsilon \)) when \(\varepsilon \lesssim k\) and \(\Omega \) is a Lipschitz domain that is star-shaped with respect to a ball. (We also prove analogous results for the truncated sound-soft scattering problem.)

Regarding (ii): \(k\)-explicit quasi-optimality of the \(h\)-version of the FEM was proved by Melenk in [35] in the case \(\varepsilon = 0\). Indeed Melenk showed that quasi-optimality holds with a quasi-optimality constant independent of \(k\) under the condition that \(hk^2\lesssim 1\). This result was obtained using a duality argument that is often attributed to Schatz [47] along with the \(k\)-explicit bound on the solution discussed in (i). We apply this argument to the case when \(\varepsilon > 0\), with the only difference being that the variational formulation of (1.2) is coercive when \(\varepsilon >0\) with coercivity constant \(\sim \varepsilon /k^2\) (see Lemma 3.1). Therefore, instead of the mesh threshold \(hk^2\lesssim 1\) we obtain \(hk\sqrt{|k^2-\varepsilon |}\lesssim 1\), reflecting the fact that if \(\varepsilon =k^2\) then the uniform coercivity in this case implies that quasi-optimality holds with no mesh threshold.
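To see what the threshold \(hk\sqrt{|k^2-\varepsilon|}\lesssim 1\) means in practice, the pure-Python sketch below computes the largest admissible \(h\) for a few shifts. The constant `C1 = 1.0` is an arbitrary placeholder, since the theory only asserts that some constant works; only the scaling in \(k\) is meaningful, and the helper name is hypothetical.

```python
import math


def max_mesh_size(k, eps, C1=1.0):
    """Largest h with h * k * sqrt(|k^2 - eps|) <= C1.

    C1 is a placeholder: the theory guarantees that some constant works but
    does not give its value.  For eps = k^2 there is no mesh threshold."""
    gap = abs(k * k - eps)
    if gap == 0.0:
        return math.inf
    return C1 / (k * math.sqrt(gap))


for k in (10.0, 40.0, 160.0):
    print(k, [max_mesh_size(k, eps) for eps in (0.0, k, k**1.5, k**2)])
```

For \(\varepsilon = k\) or \(\varepsilon = k^{3/2}\) the admissible \(h\) still scales like \(k^{-2}\), exactly as for \(\varepsilon = 0\); only as \(\varepsilon\) approaches \(k^2\) does the threshold relax, and it disappears at \(\varepsilon = k^2\).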

The argument used to bound \(\Vert \mathbf{A}_\varepsilon ^{-1}\mathbf{M}\Vert _2\) and \(\Vert \mathbf{A}_\varepsilon ^{-1}\mathbf{N}\Vert _2\) in Lemma 4.1 below can also be used to bound \(\Vert \mathbf{A}_\varepsilon ^{-1}\Vert _2\) (when \(\mathbf{A}_\varepsilon \) is the Galerkin matrix of a general variational problem) if one has (i) and (ii) above. We have not been able to find this argument explicitly in the literature, although it is alluded to in [31, Last paragraph of §2.4]. Put another way, this argument states that if the sesquilinear form satisfies a continuous inf-sup condition and the Galerkin solutions exist, are unique, and are quasi-optimal, then one can obtain a discrete inf-sup condition. When phrased in this way, this result can be seen as a special case of [33, Theorem 3.9].

Remark 1.7

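The argument for the case of right preconditioning is very similar, in that a bound on \(\Vert \mathbf {I}- \mathbf{A}\mathbf{A}_\varepsilon ^{-1}\Vert _2\) can be obtained from bounds on \(\Vert \mathbf{M}\mathbf{A}_\varepsilon ^{-1}\Vert _2\) and \(\Vert \mathbf{N}\mathbf{A}_\varepsilon ^{-1}\Vert _2\). Then, because \(\mathbf{M}\) and \(\mathbf{N}\) are real symmetric matrices,

\[
\Vert \mathbf{M}\mathbf{A}_\varepsilon^{-1} \Vert_2 = \Vert (\mathbf{A}_\varepsilon^{*})^{-1}\mathbf{M} \Vert_2 \quad \text{and} \quad \Vert \mathbf{N}\mathbf{A}_\varepsilon^{-1} \Vert_2 = \Vert (\mathbf{A}_\varepsilon^{*})^{-1}\mathbf{N} \Vert_2 .
\]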

Since the matrix \(\mathbf{A}_\varepsilon ^{*}\) is simply the Galerkin matrix corresponding to the adjoint to problem (1.2), and we also have bounds on the solution operator for this problem (as outlined in Remark 2.5), the argument to obtain bounds on \(\Vert \mathbf{A}_\varepsilon ^{-1}\mathbf{M}\Vert _2\) and \(\Vert \mathbf{A}_\varepsilon ^{-1}\mathbf{N}\Vert _2\) in Lemma 4.1 below can be repeated for the adjoint problem, resulting in bounds on \(\Vert \mathbf{M}\mathbf{A}_\varepsilon ^{-1}\Vert _2\) and \(\Vert \mathbf{N}\mathbf{A}_\varepsilon ^{-1}\Vert _2\).
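The transposition step in Remark 1.7 uses only \(\Vert \mathbf{X}\Vert_2 = \Vert \mathbf{X}^*\Vert_2\) and the real symmetry of \(\mathbf{M}\) and \(\mathbf{N}\), which give \((\mathbf{M}\mathbf{A}_\varepsilon^{-1})^* = (\mathbf{A}_\varepsilon^{*})^{-1}\mathbf{M}\). Since \(\mathbf{A}_\varepsilon \) is complex symmetric, \(\mathbf{A}_\varepsilon^{*}\) is simply its entrywise complex conjugate. The NumPy sketch below checks both facts on a random complex-symmetric stand-in for \(\mathbf{A}_\varepsilon \) and a random real symmetric stand-in for \(\mathbf{M}\); the random matrices are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 20
Z = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
A_eps = Z + Z.T + 10.0 * np.eye(n)   # complex symmetric stand-in for A_eps
R = rng.standard_normal((n, n))
M = R @ R.T                          # real symmetric stand-in for M

lhs = np.linalg.norm(M @ np.linalg.inv(A_eps), 2)             # ||M A_eps^{-1}||_2
rhs = np.linalg.norm(np.linalg.solve(A_eps.conj().T, M), 2)  # ||(A_eps^*)^{-1} M||_2
print(np.isclose(lhs, rhs))

# For a complex symmetric matrix the adjoint is just the entrywise conjugate.
print(np.array_equal(A_eps.conj().T, A_eps.conj()))
```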

The idea behind Theorem 1.5. Theorem 1.5 follows from Theorem 1.4 by using the Elman estimate for GMRES.

Theorem 1.8

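If the matrix equation \(\mathbf{C}\mathbf {x}= \mathbf {y}\) is solved using GMRES then, for \(m\in \mathbb {N}\), the GMRES residual \(\mathbf {r}_m:= \mathbf{C}\mathbf {x}_m - \mathbf {y}\) satisfies

$$\begin{aligned} \frac{\Vert \mathbf {r}_m\Vert _2}{\Vert \mathbf {r}_0\Vert _2} \le \sin ^m (\beta ), \quad \text{where} \quad \cos (\beta ) := \frac{\mathrm{dist}\big (0, W(\mathbf{C})\big )}{\Vert \mathbf{C}\Vert _2} \end{aligned}$$(1.18)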

(recall that \(W(\mathbf{C}):= \{ (\mathbf{C}\mathbf {x}, \mathbf {x}) : \mathbf {x}\in \mathbb {C}^N, \Vert \mathbf {x}\Vert _2=1\}\) is the numerical range or field of values).

The bound (1.18) was originally proved in [12] (see also [11, Theorem 3.3]) and appears in the form above in [3, Equation 1.2]. A variant of this theory, where the Euclidean inner product \((\cdot , \cdot )\) and norm \(\Vert \cdot \Vert _2\) are replaced by a general inner product and norm, is used in [5].

Theorem 1.8 has the following corollary.

Corollary 1.9

If \(\Vert \mathbf{I}- \mathbf{C}\Vert _2 \le \sigma < 1\), then in (1.18)

$$\begin{aligned} \cos (\beta ) \ge \frac{1-\sigma }{1+\sigma }. \end{aligned}$$

Theorem 1.5 follows from Theorem 1.4 by applying Corollary 1.9 with \(\mathbf{C}= \mathbf{A}_\varepsilon ^{-1}\mathbf{A}\). Indeed, Theorem 1.4 shows that if \(\varepsilon /k\) is sufficiently small, then \(\Vert \mathbf{I}- \mathbf {C}\Vert _2\) can be bounded by a constant \(\sigma <1\), independently of \(k\) and \(\varepsilon \). Corollary 1.9 then bounds the convergence factor in (1.18) away from one, independently of \(k\), so GMRES converges and the number of iterations needed to reach a prescribed relative residual is independent of \(k\).
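A minimal numerical sketch of this mechanism, assuming the Elman estimate in the form \(\Vert \mathbf {r}_m\Vert _2/\Vert \mathbf {r}_0\Vert _2 \le \sin ^m\beta \) with \(\cos \beta = \mathrm{dist}(0,W(\mathbf{C}))/\Vert \mathbf{C}\Vert _2\). If \(\Vert \mathbf{I}-\mathbf{C}\Vert _2\le \sigma <1\) then \(|(\mathbf{C}\mathbf {x},\mathbf {x})|\ge 1-\sigma \) for unit \(\mathbf {x}\) and \(\Vert \mathbf{C}\Vert _2\le 1+\sigma \), so \(\cos \beta \ge (1-\sigma )/(1+\sigma )\) and hence \(\sin \beta \le 2\sqrt{\sigma }/(1+\sigma )\). The matrix \(\mathbf{C}=\mathbf{I}+\mathbf{E}\) below is a random, hypothetical stand-in for \(\mathbf{A}_\varepsilon ^{-1}\mathbf{A}\), and `gmres_relres` is a textbook unrestarted GMRES written only for this check (it is not a library routine).

```python
import numpy as np

def gmres_relres(C, y, m_max):
    """Relative GMRES residuals ||r_m||_2 / ||r_0||_2 for m = 0..m_max, with x_0 = 0.

    Arnoldi with modified Gram-Schmidt plus a small least-squares solve per
    step; written for clarity rather than efficiency, no restarts.
    """
    n = C.shape[0]
    beta = np.linalg.norm(y)
    Q = np.zeros((n, m_max + 1), dtype=complex)
    H = np.zeros((m_max + 1, m_max), dtype=complex)
    Q[:, 0] = y / beta
    res = [1.0]
    for j in range(m_max):
        w = C @ Q[:, j]
        for i in range(j + 1):
            H[i, j] = np.vdot(Q[:, i], w)
            w = w - H[i, j] * Q[:, i]
        H[j + 1, j] = np.linalg.norm(w)
        if H[j + 1, j] > 1e-14:                # skip normalisation on (lucky) breakdown
            Q[:, j + 1] = w / H[j + 1, j]
        rhs = np.zeros(j + 2, dtype=complex)
        rhs[0] = beta
        Hj = H[: j + 2, : j + 1]
        z = np.linalg.lstsq(Hj, rhs, rcond=None)[0]   # min ||beta e_1 - H_j z||
        res.append(np.linalg.norm(rhs - Hj @ z) / beta)
    return np.array(res)

rng = np.random.default_rng(0)
n, sigma, m_max = 60, 0.5, 10
E = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
E *= sigma / np.linalg.norm(E, 2)              # now ||I - C||_2 = ||E||_2 = sigma
C = np.eye(n) + E
y = rng.standard_normal(n).astype(complex)

res = gmres_relres(C, y, m_max)
sin_beta = 2 * np.sqrt(sigma) / (1 + sigma)    # from cos(beta) >= (1 - sigma)/(1 + sigma)
bound = sin_beta ** np.arange(m_max + 1)
print(np.all(res <= bound + 1e-12))
```

The Elman-type bound is typically pessimistic: here the residuals also satisfy \(\Vert \mathbf {r}_m\Vert _2\le \sigma ^m\Vert \mathbf {r}_0\Vert _2\), obtained by inserting the polynomial \((1-z)^m\) into the GMRES minimisation.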

1.4 Outline and preliminaries

In Sect. 2 we prove bounds that are explicit in \(k,\eta ,\) and \(\varepsilon \) on the solutions of the shifted interior impedance problem (1.2) and the shifted truncated sound-soft scattering problem. In Sect. 3 we prove results about the continuity and coercivity of \(a_\varepsilon (\cdot ,\cdot )\) and obtain sufficient conditions for the Galerkin method applied to \(a_\varepsilon (\cdot ,\cdot )\) to be quasi-optimal (with all the constants given explicitly in terms of \(k\), \(\eta \), and \(\varepsilon \)). In Sect. 4 we put the results of Sects. 2 and 3 together to prove Theorem 1.4 and its analogue for non-quasi-uniform meshes. In Sect. 5 we illustrate the theory with numerical experiments. Section 6 contains some concluding remarks about approximating the solution of (1.1) by the solution of (1.2), independently of any discretisation.

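Notation and recap of elementary results. Let \(\Omega \subset \mathbb {R}^d\), \(d=2\) or \(3\), be a bounded Lipschitz domain (where by “domain” we mean a connected open set) with boundary \(\Gamma \). We do not introduce any special notation for the trace operator, and thus the trace theorem is simply

$$\begin{aligned} \Vert v\Vert _{H^{1/2}(\Gamma )} \lesssim \Vert v\Vert _{H^1(\Omega )} \quad \text{for all } v\in H^1(\Omega ) \end{aligned}$$(1.19)

(see [34, Theorem 3.38, Page 102]), and the multiplicative trace inequality is

$$\begin{aligned} \Vert v\Vert ^2_{L^2(\Gamma )} \lesssim \Vert v\Vert _{L^2(\Omega )}\Vert v\Vert _{H^1(\Omega )} \quad \text{for all } v\in H^1(\Omega ) \end{aligned}$$(1.20)

(see [22, Theorem 1.5.1.10, last formula on Page 41]).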

Let \(\partial _n\) denote the normal-derivative trace on \(\Omega \) (with the convention that the normal vector points out of \(\Omega \)). Recall that if \(u\in H^2(\Omega )\) then \(\partial _n u:= \mathbf {n}\cdot \nabla u\), and, for \(u\in H^1(\Omega )\) with \(\Delta u \in L^2(\Omega )\), \(\partial _n u\) is defined so that Green’s first identity holds (see, e.g., [6, Equation (A.29)]). Denote the surface gradient on \(\Gamma \) by \(\nabla _{\Gamma }\); see, e.g., [6, Equation A.14] for the definition of this operator in terms of a parametrisation of the boundary.

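Finally, we repeatedly use the inequalities

$$\begin{aligned} 2ab \le \frac{a^2}{\delta } + \delta b^2 \end{aligned}$$(1.21)

and

$$\begin{aligned} (a+b)^2 \le 2\big (a^2+b^2\big ), \end{aligned}$$(1.22)

where \(a, b,\) and \(\delta \) are all \(>0\). (Recalling Notation 1.1, we see that (1.22) implies that \( a+b \sim \sqrt{a^2+ b^2}\).)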

2 Bounds on the solution operators to the problems with absorption

In this section we prove bounds that are explicit in \(k\), \(\eta \), and \(\varepsilon \) on the solutions of the shifted interior impedance problem and the shifted truncated sound-soft scattering problem. First, we define precisely what we mean by these problems.

Problem 2.1

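(Interior Impedance Problem with absorption) Let \(\Omega \subset \mathbb {R}^d\), with \(d=2\) or \(3\), be a bounded Lipschitz domain with outward-pointing unit normal vector \(\mathbf {n}\) and let \(\Gamma :=\partial \Omega \). Given \(f\in L^2(\Omega )\), \(g \in L^2(\Gamma )\), \(\eta \in \mathbb {C}{\setminus }\{ 0\}\) and \(\varepsilon \ge 0\), find \(u\in H^1(\Omega )\) such that

$$\begin{aligned} \Delta u + (k^2+\mathrm{i}\varepsilon ) u = -f \quad \text{in } \Omega , \end{aligned}$$(2.1a)

$$\begin{aligned} \partial _n u - \mathrm{i}\eta u = g \quad \text{on } \Gamma . \end{aligned}$$(2.1b)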

Remark 2.2

(Existence and uniqueness) One can prove using Green’s identity that the solution of Problem 2.1 (if it exists) is unique; see Sect. 2.1.2. One can prove via Fredholm theory [using the fact that \(H^1(\Omega )\) is compactly embedded in \(L^2(\Omega )\)] that uniqueness implies existence in exactly the same way as for the problem with \(\varepsilon =0\).

Remark 2.3

(The choice of \(\eta \)) If one thinks of the impedance boundary condition as being a first-order approximation to the Sommerfeld radiation condition, then for the unshifted problem \(\eta \) should be equal to \(k\), and for the shifted problem \(\eta \) should be equal to \(\sqrt{k^2+\mathrm{i}\varepsilon }\). With \(\eta _R\) and \(\eta _I\) denoting the real and imaginary parts of \(\eta \) respectively, we prove bounds under the assumption that \(\eta _R\sim k\) and \(0\le \eta _I\lesssim k\). These assumptions cover both the case that \(\eta =k\) and the case that \(\eta =\sqrt{k^2+\mathrm{i}\varepsilon }\) (recall that we assume that \(\varepsilon \lesssim k^2\)).
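The claim that \(\eta =\sqrt{k^2+\mathrm{i}\varepsilon }\) satisfies \(\eta _R\sim k\) and \(0\le \eta _I\lesssim k\) when \(\varepsilon \lesssim k^2\) can be checked directly: squaring \(\eta =\eta _R+\mathrm{i}\eta _I\) gives \(\eta _R^2-\eta _I^2=k^2\) and \(2\eta _R\eta _I=\varepsilon \), so \(\eta _R\ge k\) and \(\eta _I=\varepsilon /(2\eta _R)\le \varepsilon /(2k)\). The sketch below (NumPy principal square root; the helper name `shifted_eta` is ours) tabulates \(\eta _R/k\) and \(\eta _I/k\) for the shifts \(\varepsilon =k\) and \(\varepsilon =k^2\).

```python
import numpy as np

def shifted_eta(k, eps):
    """Principal square root of k^2 + i*eps (numpy returns the root with Re >= 0)."""
    return np.sqrt(complex(k**2, eps))

for k in (10.0, 40.0, 160.0):
    for eps in (k, k**2):                  # the two shifts discussed in the text
        eta = shifted_eta(k, eps)
        print(f"k={k:6.1f} eps={eps:9.1f}  eta_R/k={eta.real / k:.4f}  eta_I/k={eta.imag / k:.4f}")
```

For \(0\le \varepsilon \le k^2\) this gives \(k\le \eta _R\le \sqrt{(1+\sqrt{2})/2}\,k\approx 1.099\,k\) and \(0\le \eta _I\le k/2\).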

Problem 2.4

(Truncated sound-soft scattering problem with absorption) Let \(\Omega _D\) be a bounded Lipschitz open set in \(\mathbb {R}^d\) (\(d=2\) or \(3\)) such that the open complement \(\Omega _+:=\mathbb {R}^d{\setminus }\Omega _D\) is connected. Let \(\Omega _R\) be a bounded Lipschitz domain such that \(\Omega _D \subset \Omega _R \subset \mathbb {R}^d\) with \(d(\Omega _D, \partial \Omega _R)>0\) (where \(d(\cdot ,\cdot )\) is the distance function). Let \(\Gamma _R := \partial \Omega _R\), \(\Gamma _D :=\partial \Omega _D\), and \(\Omega := \Omega _R {\setminus } \overline{\Omega _D}\) (thus \(\partial \Omega = \Gamma _R \cup \Gamma _D\) and \(\Gamma _R \cap \Gamma _D = \emptyset \)). Given \(f \in L^2(\Omega )\), \(g \in L^2(\Gamma _R)\), \(\eta \in \mathbb {C}{\setminus }\{ 0\}\), and \(\varepsilon \ge 0\), find \(u \in H^1(\Omega )\) such that

$$\begin{aligned} \Delta u + (k^2+\mathrm{i}\varepsilon ) u = -f \ \ \text{in } \Omega , \qquad u = 0 \ \ \text{on } \Gamma _D, \qquad \partial _n u - \mathrm{i}\eta u = g \ \ \text{on } \Gamma _R. \end{aligned}$$(2.2)

If \(\varepsilon = 0, \eta = k\), \(\Omega _R\) is a large ball containing \(\Omega _D\), and \(f\) and \(g\) are chosen appropriately, then the solution of the truncated sound-soft scattering problem is a classical approximation to the solution of the sound-soft scattering problem (see, e.g., [6, Equation (2.16)]); Fig. 2 shows \(\Omega _R\) and \(\Omega _D\) in this case. We use the convention that on \(\Gamma _D\) the normal derivative \(\partial _n v\) equals \(\mathbf {n}_D\cdot \nabla v\) for \(v\) that are \(H^2\) in a neighbourhood of \(\Gamma _D\), and similarly \(\partial _n v= \mathbf {n}_R \cdot \nabla v\) on \(\Gamma _R\), where \(\mathbf {n}_D\) and \(\mathbf {n}_R\) are oriented as in Fig. 2. Note that Remarks 2.2 and 2.3 also apply to Problem 2.4.

Fig. 2 An example of the domains \(\Omega _D\) and \(\Omega _R\) in Problem 2.4

We go through the details of the bounds for Problem 2.1 in Sect. 2.1, and then outline in Sect. 2.2 the (small) modifications needed to the arguments to prove the analogous bounds for Problem 2.4.

2.1 Bounds on the interior impedance problem with absorption

Remark 2.5

(The adjoint problem) All the bounds on the solution of the interior impedance problem proved in this section are also valid when the signs of \(\varepsilon \) and \(\eta \) are changed; i.e., the bounds also hold for the solution of

(under the same conditions on \(\varepsilon \) and \(\eta \)).

Remark 2.6

(Regularity) Let \(u\) be the solution of Problem 2.1. Since \(f\in L^2(\Omega )\) we have that \(\Delta u \in L^2(\Omega )\), and since \(g \in L^2(\Gamma )\) we have that \(\partial _n u\in L^2(\Gamma )\). These two facts imply that \( u \in H^1(\Gamma )\) by a regularity result of Nečas for Lipschitz domains [41, §5.2.1], [34, Theorem 4.24(ii)].

We now state the two main results of this section.

Theorem 2.7

(Bound for \(\varepsilon > 0\) for general Lipschitz \(\Omega \)) Let \(u\) solve Problem 2.1, let \(\eta =\eta _R+ \mathrm{i}\eta _I\) and assume that \(\eta _I\ge 0\), \(\eta _R>0\). Then, given \(k_0>0\), there exists a \(C>0\), independent of \(\varepsilon \), \(k\), \(\eta _R\), and \(\eta _I\), such that

for all \(k\ge k_0\), \(\eta _R>0\), and \(\varepsilon >0\).

Assuming that \(\varepsilon \lesssim k^2\), we obtain the following corollary.

Corollary 2.8

If the conditions in Theorem 2.7 hold and, in addition, \(\varepsilon \lesssim k^2\), then

for all \(k\ge k_0\), \(\eta _R>0\), and \(\varepsilon >0\). In particular, if \(\eta _I\ge 0\), \(\eta _R\sim k\), and \(\varepsilon \sim k\) then

while if \(\eta _I\ge 0\), \(\eta _R\sim k\), and \(\varepsilon \sim k^2\) then

for all \(k\ge k_0\).

This corollary shows how the \(k\)-dependence of the bounds on the solution operator improves as \(\varepsilon \) is increased from \(k\) to \(k^2\).

As \(\varepsilon \rightarrow 0\), the right-hand side of (2.5) blows up. A bound that is valid uniformly in this limit can be obtained by imposing some geometric restrictions on \(\Omega \).

Theorem 2.9

(Bound for \(\varepsilon /k\) sufficiently small when \(\Omega \) is star-shaped with respect to a ball and Lipschitz) Let \(\Omega \) be a Lipschitz domain that is star-shaped with respect to a ball (see Definition 1.2), and let \(u\) be the solution of Problem 2.1 in \(\Omega \). If \(\eta _R\sim k\) and \(|\eta _I|\lesssim k\) then, given \(k_0>0\), there exist constants \(c>0\) and \(C>0\), independent of \(k\), \(\varepsilon \), and \(\eta \), such that, for all \(k \ge k_0\) with \({\varepsilon }/{k}\le c\),

Remark 2.10

(The case \(\varepsilon =0\)) The bound (2.7) for \(\varepsilon =0\) was proved for \(d=2\) in [35, Prop. 8.1.4] and for \(d=3\) in [10, Theorem 1] using essentially the same methods we use here (see Remark 2.16 for more details).

It is useful for what follows to combine the results of Theorems 2.7 and 2.9 to form the following corollary.

Corollary 2.11

(Bound for \(\varepsilon \lesssim k^2\)) If \(\Omega \) is star-shaped with respect to a ball, \(\varepsilon \lesssim k^2\), \(\eta _R\sim k\), and \(0\le \eta _I\lesssim k\), then, given \(k_0>0\),

for all \(k\ge k_0\).

In Sect. 3 we find sufficient conditions for the Galerkin method applied to Problem 2.1 to be quasi-optimal. To do this, we need a bound on the \(H^2\)-norm of the solution (in cases where the solution is in \(H^2(\Omega )\)), and this can be obtained by combining the following lemma with the bound (2.8).

Lemma 2.12

(A bound on the \(H^2(\Omega )\) norm) Let \(u\) be the solution of Problem 2.1, and assume further that \(g\in H^{1/2}(\Gamma )\). If \(\Omega \) is \(C^{1,1}\) (in 2- or 3-d) then \(u\in H^2(\Omega )\) and there exists a \(C\) (independent of \(k\) and \(\varepsilon \)) such that

for all \(k>0 \) and \(\varepsilon \ge 0\). Furthermore, if \(\Omega \) is a convex polygon and \(g\in H^{1/2}_{\mathrm{pw}}(\Gamma )\) (i.e., \(H^{1/2}\) on each side) then the bound (2.9) also holds, with \(\left\| g\right\| _{H^{1/2}(\Gamma )}\) replaced by \(\Vert g\Vert _{H^{1/2}_{\mathrm{pw}}(\Gamma )}\) (i.e., the sum of the \(H^{1/2}\)–norms of \(g\) on each side).

Proof of Lemma 2.12

First consider the case when \(\Omega \) is \(C^{1,1}\). By [22, Theorem 2.3.3.2, Page 106], if \(v\in H^1(\Omega )\) with \(\Delta v\in L^2(\Omega )\) and \(\partial _n v\in H^{1/2}(\Gamma )\) then

The bound (2.9) then follows from (2.10) by using (1) the fact that \(u\) satisfies the PDE (2.1a) and boundary conditions (2.1b), and (2) the trace theorem (1.19).

When \(\Omega \) is a convex polygon, the result (2.9) will follow if we can again establish that (2.10) holds (except with the condition that \(\partial _n v\in H^{1/2}(\Gamma )\) replaced by \(\partial _n v\in H^{1/2}_{\mathrm{pw}}(\Gamma )\)). (There is a slight subtlety in that we need to show that \(\left\| u\right\| _{H^{1/2}_{\mathrm{pw}}(\Gamma )}\lesssim \left\| u\right\| _{H^1(\Omega )}\), but this follows from the trace result for polygons in [22, Part (c) of Theorem 1.5.2.3, Page 43] using the fact that \(u\) is continuous at the corners of the polygon. This latter fact follows from the Sobolev embedding theorem [34, Theorem 3.26] and the fact that \(u \in H^1(\Gamma )\), which follows from the regularity result of Nečas [34, Theorem 4.24 (ii)] since \(u\in H^2(\Omega )\) implies \(\partial _n u\in L^2(\Gamma )\).)

The bound (2.10) can be established when \(\Omega \) is a convex polygon by combining two results in [22] and performing some additional work as follows. When \(\Omega \) is a convex polygon and \(v\) is such that \(v\in H^1(\Omega )\), \(\Delta v\in L^2(\Omega )\), and \(\partial _n v=0\) on \(\Gamma \), then

by [22, Theorem 4.3.1.4, Page 198]. When \(\partial _n v\ne 0\) but is in \(H^{1/2}_{\mathrm{pw}}(\Gamma )\) then \(v\in H^2(\Omega )\) by [22, Corollary 4.4.3.8, Page 233] (note that the sum in [22, Equation 4.4.3.8] is empty since \(\Omega \) is convex). Therefore, by linearity, to prove that the bound (2.10) holds when \(\Omega \) is a convex polygon we only need to show that for these domains there exists a lifting operator \(G: H^{1/2}_{\mathrm{pw}}(\Gamma ) \rightarrow H^2(\Omega )\) with \(\partial _n G(g)=g\) and

(in fact we show below that this is the case when \(\Omega \) is any polygon). Using a partition of unity, it suffices to construct such an operator when (1) \(\Omega \) is a half-space, and (2) \(\Omega \) is an infinite wedge.

For (1), given \(g\) define \(G(g)\) to be the solution of the Neumann problem for Laplace’s equation in \(\Omega \) (with Neumann data \(g\)). The explicit expression for the solution in terms of the Fourier transform shows that (2.12) is satisfied.

For (2), first consider the case when the wedge angle is \(\pi /2\) (i.e., a right-angle). By linearity we can take \(g\) to be zero on one side of the wedge. Introduce coordinates \((x_1,x_2)\) so that \(g\ne 0\) on the positive \(x_1\)–axis and \(g=0\) on the positive \(x_2\)–axis. Extend \(g\) to the negative \(x_1\)–axis by requiring that \(g\) is even about \(x_1=0\); one can then show that this even extension is a continuous map from \(H^{1/2}(\mathbb {R}^+)\) to \(H^{1/2}(\mathbb {R})\). Since the extended data are even in \(x_1\), so is the solution of the Neumann problem for Laplace’s equation in the half-space \(\{(x_1,x_2) : x_2>0\}\) with these data; hence its \(x_1\)-derivative vanishes on \(x_1=0\), i.e., it satisfies \(\partial _n u=0\) on the positive \(x_2\)–axis, and thus this function satisfies the requirements of the lifting. A lifting for a wedge of arbitrary angle can be obtained from a lifting for a right-angled wedge by expressing the function in polar coordinates and rescaling the angular variable. (Note that all our liftings up to this point have satisfied Laplace’s equation. Rescaling the angular variable means that the resulting function does not satisfy Laplace’s equation, but is still in \(H^2(\Omega )\).) \(\square \)

2.1.1 Green, Rellich, and Morawetz identities for the Helmholtz equation

For the proofs of Theorems 2.7 and 2.9 we need the following identities.

Lemma 2.13

(Green, Rellich, and Morawetz identities for the Helmholtz equation) Let \(v \in C^2(D)\) for some domain \(D\subset \mathbb {R}^d\), and let

for \(k\) and \(\alpha \in \mathbb {R}\). Then, on the domain \(D\),

Proof of Lemma 2.13

The identities (2.13) and (2.14) can be proved by expanding the divergences on the right-hand sides; for (2.13) this is straightforward, but for (2.14) this is more involved; see, e.g., [51, Lemma 2.1] for the details. The identity (2.15) is then (2.14) plus \(2\alpha \) times the real part of (2.13). \(\square \)

Remark 2.14

All three of the identities in Lemma 2.13 are formed by multiplying the Helmholtz operator \(\mathcal{L}v\) by a function of \(v\), say \(\overline{\mathcal{N}v}\), and then expressing this quantity as the divergence of something plus some non-divergence terms. The multiplier \(\mathcal{N}v =v\) is associated with the name of Green, and (2.13) is a special case of the pointwise form (as opposed to integrated form) of Green’s first identity. The multiplier \(\mathcal{N}v= \mathbf {x}\cdot \nabla v\) was introduced by Rellich in [43], and identities resulting from multipliers that are derivatives of \(v\) are thus often called Rellich identities. The idea of taking \(\mathcal{N}v\) to be a linear combination of \(v\) and a derivative of \(v\) (in general \(\mathbf {Z}\cdot \nabla v- \mathrm{i}k \beta v + \alpha v\) for \(\mathbf {Z}\) a real vector field and \(\beta \) and \(\alpha \) real scalar fields) was used extensively by Morawetz in the context of the Helmholtz and wave equations; see [38, 40], and [39]. The identity (2.15) is essentially contained in [39, §I.2] and [40]; see [52, Remark 2.7] for more details. For more discussion of Rellich and Morawetz identities, see [6, §5.3].

For the proofs of Theorems 2.7 and 2.9, we integrate the identities (2.13) and (2.15) over \(\Omega \).

Lemma 2.15

(Integrated forms of the Green and Morawetz identities) With \(\Omega \) as in Problem 2.1, define the space \(V\) by

(note that either of the conditions \(\partial _n v\in L^2(\Gamma )\) or \(v \in H^1(\Gamma )\) can be dropped from the definition of \(V\) by the results of Nečas [41, §5.1.2, 5.2.1], [34, Theorem 4.24]). Then, with \(\mathcal{L}v\) and \(\mathcal{M}v\) as in Lemma 2.13, if \(v\in V\) then

and

where the expression \(\nabla v\) in the integral on \(\Gamma \) is understood as \(\nabla _{\Gamma }v + \mathbf {n}\partial _n v\).

Proof of Lemma 2.15

Equations (2.17) and (2.18) hold as consequences of the divergence theorem applied to the identities (2.13) and (2.15). Indeed, the divergence theorem \(\int _\Omega \nabla \cdot \mathbf F = \int _{\Gamma } \mathbf F \cdot \mathbf {n}\) is valid when \(\Omega \) is Lipschitz and \(\mathbf F \in (C^1(\overline{\Omega }))^d\) [34, Theorem 3.34]. Therefore, (2.17) and (2.18) hold for \(v \in \mathcal{D}(\overline{\Omega }):= \{ U\vert _\Omega : U \in C^\infty _0(\mathbb {R}^d)\}\). By the density of \(\mathcal{D}(\overline{\Omega })\) in the space \(V\) [37, Appendix A], (2.17) and (2.18) hold for \(v \in V\). \(\square \)

2.1.2 Proof of Theorem 2.7

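Outline. The only ingredients for the proof are the integrated form of Green’s identity (2.17), the Cauchy-Schwarz inequality, and the inequality (1.21). By Remark 2.6, the solution \(u\) of Problem 2.1 is in the space \(V\); therefore, by Lemma 2.15, (2.17) holds with \(v\) replaced by \(u\). Using the impedance boundary condition (2.1b) and the fact that \(\mathcal{L}u = -f - \mathrm{i}\varepsilon u\) in \(\Omega \), we obtain

$$\begin{aligned} \left\| \nabla u\right\| _{L^2(\Omega )}^2 - (k^2+\mathrm{i}\varepsilon )\left\| u\right\| _{L^2(\Omega )}^2 - \mathrm{i}\eta \left\| u\right\| _{L^2(\Gamma )}^2 = \int _\Omega f\,\overline{u} + \int _\Gamma g\,\overline{u}. \end{aligned}$$(2.19)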

From here, the proof consists of the following three steps:

-

1.

Use the imaginary part of (2.19) to estimate \(\left\| u\right\| _{L^2(\Omega )}^2\) and \(\left\| u\right\| _{L^2(\Gamma )}^2\) by \(\left\| f\right\| _{L^2(\Omega )}^2\) and \(\left\| g\right\| _{L^2(\Gamma )}^2\).

-

2.

Use the real part of (2.19) to estimate \(\left\| \nabla u\right\| _{L^2(\Omega )}^2+k^2\left\| u\right\| _{L^2(\Omega )}^2\) by \(\left\| u\right\| _{L^2(\Omega )}^2\), \(\left\| u\right\| _{L^2(\Gamma )}^2\), \(\left\| f\right\| _{L^2(\Omega )}^2\), and \(\left\| g\right\| _{L^2(\Gamma )}^2\).

-

3.

Put the estimates of Steps 1 and 2 together to give the result (2.4).

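Step 1. Taking the imaginary part of (2.19) and using the Cauchy-Schwarz inequality, we obtain

$$\begin{aligned} \varepsilon \left\| u\right\| _{L^2(\Omega )}^2 + \eta _R\left\| u\right\| _{L^2(\Gamma )}^2 \le \left\| f\right\| _{L^2(\Omega )}\left\| u\right\| _{L^2(\Omega )} + \left\| g\right\| _{L^2(\Gamma )}\left\| u\right\| _{L^2(\Gamma )}. \end{aligned}$$(2.20)

(Note that this inequality establishes uniqueness of the interior impedance problem with absorption, since if \(f\) and \(g\) are both zero then the inequality implies that \(u\) is zero in \(\Omega \).) Using the inequality (1.21) on both terms on the right-hand side, we find that

$$\begin{aligned} \varepsilon \left\| u\right\| _{L^2(\Omega )}^2 + \eta _R\left\| u\right\| _{L^2(\Gamma )}^2 \le \frac{1}{2\delta _1}\left\| f\right\| _{L^2(\Omega )}^2 + \frac{\delta _1}{2}\left\| u\right\| _{L^2(\Omega )}^2 + \frac{1}{2\delta _2}\left\| g\right\| _{L^2(\Gamma )}^2 + \frac{\delta _2}{2}\left\| u\right\| _{L^2(\Gamma )}^2 . \end{aligned}$$(2.21)

Taking \(\delta _1 = \varepsilon \) and \(\delta _2= \eta _R\), we obtain

$$\begin{aligned} \varepsilon \left\| u\right\| _{L^2(\Omega )}^2 + \eta _R\left\| u\right\| _{L^2(\Gamma )}^2 \le \frac{1}{\varepsilon }\left\| f\right\| _{L^2(\Omega )}^2 + \frac{1}{\eta _R}\left\| g\right\| _{L^2(\Gamma )}^2 . \end{aligned}$$(2.22)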

Step 2. Taking the real part of (2.19) yields

and thus (since \(\eta _I\ge 0\))

Adding \(k^2 \left\| u\right\| _{L^2(\Omega )}^2\) to both sides and then using the inequality (1.21) on the terms involving \(f\) and \(g\), we obtain

Step 3. We choose \(\delta _1=k^2\) in (2.23) and then use (2.22) to estimate \(\left\| u\right\| _{L^2(\Omega )}^2\) and \(\left\| u\right\| _{L^2(\Gamma )}^2\) in terms of \(\left\| f\right\| _{L^2(\Omega )}^2\) and \(\left\| g\right\| _{L^2(\Gamma )}^2\) to get

We then choose \(\delta _2= \eta _R\) (to make \(1/\delta _2\) and \(\delta _2/\eta _R^2\) equal) and obtain the bound (2.4).
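Because the Galerkin solution \(u_N\) satisfies \(a_\varepsilon (u_N,u_N)=F(u_N)\), the argument of Step 1 applies verbatim to \(u_N\): reading (1.21) as \(2ab\le a^2/\delta +\delta b^2\) and taking \(\delta _1=\varepsilon \), \(\delta _2=\eta _R\) gives \(\varepsilon \Vert u\Vert ^2_{L^2(\Omega )}+\eta _R\Vert u\Vert ^2_{L^2(\Gamma )}\le \Vert f\Vert ^2_{L^2(\Omega )}/\varepsilon +\Vert g\Vert ^2_{L^2(\Gamma )}/\eta _R\). The sketch below checks this for a hypothetical P1 discretisation of Problem 2.1 on \(\Omega =(0,1)\), so that \(\Gamma =\{0,1\}\). It assumes the sign convention \(a_\varepsilon (u,v)=\int _\Omega \nabla u\cdot \nabla \overline{v}-(k^2+\mathrm{i}\varepsilon )\int _\Omega u\overline{v}-\mathrm{i}\eta \int _\Gamma u\overline{v}\) produced by (2.1); the mesh, \(k\), \(\varepsilon \), \(\eta \), and the data are arbitrary illustrative choices, and \(f\) is replaced by its nodal interpolant.

```python
import numpy as np

def fem_1d(n, k, eps, eta):
    """Hypothetical P1 Galerkin matrices for Problem 2.1 on Omega = (0, 1).

    A[i, j] = a_eps(phi_j, phi_i); Gamma = {0, 1}, so the boundary "mass"
    matrix B simply selects the two end nodes.
    """
    h = 1.0 / n
    d = np.full(n + 1, 2.0)
    d[[0, -1]] = 1.0
    off = np.ones(n)
    K = (np.diag(d) - np.diag(off, 1) - np.diag(off, -1)) / h          # stiffness
    M = (np.diag(2 * d) + np.diag(off, 1) + np.diag(off, -1)) * h / 6  # mass
    B = np.zeros((n + 1, n + 1))
    B[0, 0] = B[-1, -1] = 1.0
    A = K - (k**2 + 1j * eps) * M - 1j * eta * B
    return A, M, B

k, eps, eta, n = 30.0, 30.0, 30.0 + 0.0j, 400   # eps ~ k and eta = k (illustrative)
x = np.linspace(0.0, 1.0, n + 1)
A, M, B = fem_1d(n, k, eps, eta)
f = np.cos(3 * x) + 1j * x                      # nodal values of an arbitrary f
g = np.zeros(n + 1, dtype=complex)
g[0], g[-1] = 1.0, -2.0j                        # arbitrary impedance data at x = 0 and x = 1
u = np.linalg.solve(A, M @ f + B @ g)           # Galerkin solution u_N

# Step 1 estimate with delta_1 = eps and delta_2 = eta_R
lhs = eps * np.vdot(u, M @ u).real + eta.real * np.vdot(u, B @ u).real
rhs = np.vdot(f, M @ f).real / eps + np.vdot(g, B @ g).real / eta.real
print(lhs, rhs, lhs <= rhs)
```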

2.1.3 Proof of Theorem 2.9

Outline. The proof consists of the following two steps:

-

1.

Use the integrated Morawetz identity (2.18) to show that, given \(k_0>0\), there exist constants \(c>0\) and \(C>0\), independent of \(k\), \(\eta \), and \(\varepsilon \), such that if \(\varepsilon \le ck\) then

$$\begin{aligned} \left\| \nabla u\right\| _{L^2(\Omega )}^2 + k^2 \left\| u\right\| _{L^2(\Omega )}^2 \le C\left[ (k^2 +|\eta |^2)\left\| u\right\| _{L^2(\Gamma )}^2+ \left\| f\right\| _{L^2(\Omega )}^2 + \left\| g\right\| _{L^2(\Gamma )}^2\right] \nonumber \\ \end{aligned}$$(2.24)for all \(k\ge k_0\).

-

2.

Use the imaginary part of Green’s identity to remove the \((k^2+|\eta |^2) \left\| u\right\| _{L^2(\Gamma )}^2\) term from the right-hand side of (2.24).

We first prove the bound in Step 2 and then prove the bound in Step 1.

Step 2. In the proof of Theorem 2.7 we used the imaginary part of Green’s identity to obtain the bound (2.22). We could use (2.22) to bound the \((k^2+|\eta |^2) \left\| u\right\| _{L^2(\Gamma )}^2\) term in (2.24) by \(\left\| f\right\| _{L^2(\Omega )}^2\) and \(\left\| g\right\| _{L^2(\Gamma )}^2\); however, the right-hand side of (2.22) blows up as \(\varepsilon \rightarrow 0\), and we want to include the case \(\varepsilon =0\).

The bound (2.22) came from (2.21) with \(\delta _1=\varepsilon \) and \(\delta _2 = \eta _R\). If we instead keep \(\delta _1\) arbitrary we obtain

Dropping \(\varepsilon \left\| u\right\| _{L^2(\Omega )}^2\) from the left-hand side of (2.25) and then using the resulting inequality in (2.24) we obtain

for all \(k\ge k_0\). If \(\eta _R\sim k\) and \(|\eta _I|\lesssim k\) then

Therefore, if we let \(\delta _1= k\theta \) (for some \(\theta >0\)) then the right-hand side of (2.26) is \(\lesssim \left\| f\right\| _{L^2(\Omega )}^2 + \left\| g\right\| _{L^2(\Gamma )}^2\), which is the right-hand side of (2.7) [with the constant \(C\) in (2.7) different from the constant \(C\) in (2.26)]. The left-hand side of (2.26) is then

and so choosing \(\theta \) less than \(1/(Cb)\) gives the result (2.7).

Step 1. Remark 2.6 implies that \(u\) is in the space \(V\) defined by (2.16). Lemma 2.15 then implies that the integrated identity (2.18) holds with \(v\) replaced by \(u\). Recalling that \(\nabla u\) on \(\Gamma \) is understood as \(\nabla _{\Gamma }u + \mathbf {n}\partial _n u\), we find that the integral over \(\Gamma \) in (2.18) can be rewritten as

Therefore, using both (2.27) and the fact that \(\mathcal {L}u = -f - \mathrm{i}\varepsilon u\), we can rewrite (2.18) as

We now let

and note that \(\delta _+ \ge \delta _->0\) since \(\Omega \) is assumed to be star-shaped with respect to a ball (see Remark 1.3). Using both the definition of \(\mathcal{M}u\) and the Cauchy-Schwarz inequality on the right-hand side of (2.28), and writing the integrals as norms, we obtain that

(Note that the boundary condition (2.1b) gives us \(\partial _n u\) on \(\Gamma \) in terms of \(u\) and \(g\), but we choose not to use this yet.) Next we let \(2\alpha =d-1\) so that the coefficients of both \(\left\| \nabla u\right\| _{L^2(\Omega )}^2\) and \(\left\| u\right\| _{L^2(\Omega )}^2\) on the left-hand side become equal to one. We now use (1.21) on each of the terms on the right-hand side (with a different \(\delta \) each time) to obtain

To prove the bound (2.24) we need to ensure that (a) each bracket on the left-hand side is bounded below by a positive constant independent of \(k\), and (b) each bracket on the right-hand side does not grow with \(k\).

We choose \(\delta _7=1, \delta _6 = \delta _- /(2R)\) (so that the coefficient of \(\left\| \nabla _\Gamma u\right\| _{L^2(\Gamma )}^2\) on the left-hand side becomes \(\delta _-/2\), which is \(>0\)), \(\delta _4 = k^2/(d-1)\), and \(\delta _3 = 1/(2R)\). With these choices, and neglecting the term involving \(\left\| \nabla _\Gamma u\right\| _{L^2(\Gamma )}^2\) on the left-hand side, we obtain from (2.29) the bound

for some \(C'>0\) (independent of \(k\), \(\eta \) and \(\varepsilon \)). The right-hand side of (2.30) is bounded above by

for some \(C''>0\) (again independent of \(k\), \(\eta \) and \(\varepsilon \)), since the boundary condition (2.1b) and the inequality (1.21) imply that

Also, given any \(k_0>0\), there exists a \(C'''>0\) independent of \(k\) such that

Therefore, to establish (2.24) we only need to show that the coefficients of \(\Vert \nabla u\Vert ^2_{L^2(\Omega )}\) and \(k^2 \Vert u\Vert ^2_{L^2(\Omega )}\) on the left-hand side of (2.30) are bounded away from zero, independently of \(k\). If \(\varepsilon =0\) this is immediately true. If \(\varepsilon \ne 0\) we choose \(\delta _5 = 4 \varepsilon R\). The left-hand side of (2.30) then becomes

If \(\varepsilon /k\le 1/(4R)\) then this last expression is

and we are done.

Remark 2.16

The earlier proofs of the bound (2.7) when \(\varepsilon =0\) discussed in Remark 2.10 use essentially the same method that we use here, except that to get (2.24) they apply the Rellich (2.14) and Green (2.13) identities separately and then take the particular linear combination that corresponds to the Morawetz identity (2.15) with \(2\alpha =d-1\) (whereas we use the Morawetz identity with \(2\alpha =d-1\) directly). (In addition, these earlier proofs only consider the case when \(\Gamma \) is piecewise smooth, and not Lipschitz.)

In the next subsection we obtain the analogue of the bound of Theorem 2.9 for the truncated sound-soft scattering problem (see Theorem 2.18). For the case \(\varepsilon =0\), this bound was obtained in [26, Proposition 3.3] using essentially the same method as we do (but again using a combination of the Rellich and Green identities that is equivalent to using the Morawetz identity).

2.2 Bounds on the truncated sound-soft scattering problem

The following are analogues of Theorems 2.7 and 2.9 for Problem 2.4.

Theorem 2.17

(Bound for \(\varepsilon >0\) for Lipschitz \(\Omega _D\) and \(\Omega _R\)) Let \(u\) be the solution of Problem 2.4, and let \(\eta =\eta _R+ \mathrm{i}\eta _I\) with \(\eta _I\ge 0\), \(\eta _R>0\). Then, given \(k_0>0\), there exists a \(C>0\), independent of \(\varepsilon \), \(k\), \(\eta _R\) and \(\eta _I\), such that

for all \(k\ge k_0\), \(\eta _R>0\), and \(\varepsilon >0\).

Theorem 2.18

(Bound for \(\varepsilon /k\) sufficiently small when \(\Omega _R\) and \(\Omega _D\) are star-shaped) Let \(u\) be the solution to Problem 2.4 and assume that \(\Omega _R\) is star-shaped with respect to a ball centred at the origin and \(\Omega _D\) is star-shaped with respect to the origin, i.e.

where \(\mathbf {n}_D\) and \(\mathbf {n}_R\) are the unit normal vectors to \(\Omega _D\) and \(\Omega _R\) respectively (oriented as in Fig. 2). If \(\eta _R\sim k\) and \(|\eta _I|\lesssim k\), then, given \(k_0>0\), there exist constants \(c>0\) and \(C>0\), independent of \(k\), \(\eta \), and \(\varepsilon \), such that, if \({\varepsilon }/{k}\le c\), then

for all \(k\ge k_0\).

Proof of Theorem 2.17

This follows the proof of Theorem 2.7 exactly. Indeed, the starting point of the proof of Theorem 2.7 was (2.19) (the integrated form of Green’s identity with the PDE and boundary conditions imposed on \(u\)), and this holds for the truncated sound-soft scattering problem with \(\Gamma \) replaced by \(\Gamma _R\) (since the integral over \(\Gamma _D\) that arises when Green’s identity is applied in \(\Omega \) is zero as \(u=0\) on \(\Gamma _D\)). \(\square \)

Proof of Theorem 2.18

This follows the proof of Theorem 2.9 exactly. Indeed, Step 2 is the same since it depends on Green’s identity. For Step 1, we note that applying the integrated Morawetz identity (2.18) in \(\Omega \) yields (2.28) with \(\Gamma \) replaced by \(\Gamma _R\), and the additional term \(\int _{\Gamma _D} (\mathbf {x}\cdot \mathbf {n}_D) |\partial _n u|^2\) on the left-hand side. By (2.31), this additional term is non-negative, and the proof proceeds as before. \(\square \)

3 Variational formulations and quasi-optimality

In Sect. 3.1 we prove results about the continuity and coercivity of \(a_\varepsilon (\cdot ,\cdot )\), and then we use these in Sect. 3.2–Sect. 3.4 to obtain sufficient conditions for quasi-optimality of the Galerkin method applied to \(a_\varepsilon (\cdot ,\cdot )\). In Sect. 3.1–3.4 we consider the interior impedance problem, and then in Sect. 3.5 we outline the small modifications needed to extend the results to the truncated scattering problem.

3.1 Continuity and coercivity of \(a_\varepsilon (\cdot ,\cdot )\)

Recall from Sect. 1 the variational formulation of the shifted interior impedance problem (1.3) and its Galerkin approximation (1.6). Define a norm on \(H^1(\Omega )\) by

$$\begin{aligned} \Vert v\Vert _{1,k,\Omega }^2 := \Vert \nabla v\Vert ^2_{L^2(\Omega )} + k^2 \Vert v\Vert ^2_{L^2(\Omega )}; \end{aligned}$$(3.1)

in what follows we always have \(k\ge k_0\) for some \(k_0>0\) and thus \(\Vert \cdot \Vert _{1,k,\Omega }\) is indeed a norm and is equivalent to the usual \(H^1\)-norm.

Lemma 3.1

(Continuity and coercivity of \(a_\varepsilon (\cdot ,\cdot )\))

-

(i)

If \(|\eta | \lesssim k\) and \(\varepsilon \lesssim k^2\) then, given \(k_0>0\), there exists a \(C_c\) (independent of \(k\), \(\eta \), and \(\varepsilon \)) such that

$$\begin{aligned} \big |a_{\varepsilon }(u,v)\big | \le C_c \left\| u\right\| _{1,k,\Omega } \left\| v\right\| _{1,k,\Omega } \end{aligned}$$for all \(k\ge k_0\) and \(u,v\in H^1(\Omega )\).

-

(ii)

If \(\eta _R\) and \(\eta _I\) are both \(\ge 0\) and \(0<\varepsilon \lesssim k^2\), then there exists a constant \(\alpha >0\) (independent of \(k\), \(\eta \), and \(\varepsilon \)) such that

$$\begin{aligned} \big \vert a_{\varepsilon }(v,v) \big \vert \ge \alpha \, \frac{\varepsilon }{k^2}\, \left\| v\right\| ^2_{1,k, \Omega } \end{aligned}$$(3.2)for all \(k>0\) and \(v\in H^1(\Omega )\).

Proof

(i) This follows from the Cauchy-Schwarz inequality and the multiplicative trace inequality (1.20).

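(ii) Given \(k>0\) and \(\varepsilon >0\), define \(p>0\) and \(q>0\) by

$$\begin{aligned} p := \left( \frac{\sqrt{k^4+\varepsilon ^2}+k^2}{2}\right) ^{1/2}, \qquad q := \left( \frac{\sqrt{k^4+\varepsilon ^2}-k^2}{2}\right) ^{1/2}, \end{aligned}$$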

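so that \(k^2+\mathrm{i}\varepsilon = (p+\mathrm{i}q)^2\). The definition of \(p\) and the fact that \(\varepsilon \lesssim k^2\) mean that \(k\le p\lesssim k\), and the fact that \(2qp=\varepsilon \) then implies that \(q\sim \varepsilon /k\). Now

$$\begin{aligned} a_\varepsilon (v,v) = \left\| \nabla v\right\| _{L^2(\Omega )}^2 - (p+\mathrm{i}q)^2\left\| v\right\| _{L^2(\Omega )}^2 - \mathrm{i}\eta \left\| v\right\| _{L^2(\Gamma )}^2, \end{aligned}$$

and so

$$\begin{aligned} -(p-\mathrm{i}q)\, a_\varepsilon (v,v) = -(p-\mathrm{i}q)\left\| \nabla v\right\| _{L^2(\Omega )}^2 + (p^2+q^2)(p+\mathrm{i}q)\left\| v\right\| _{L^2(\Omega )}^2 + \mathrm{i}(p-\mathrm{i}q)\eta \left\| v\right\| _{L^2(\Gamma )}^2. \end{aligned}$$(3.3)

Therefore, taking the imaginary part of each side of (3.3), we have

$$\begin{aligned} \mathrm{Im}\big [-(p-\mathrm{i}q)\, a_\varepsilon (v,v)\big ] = q\left\| \nabla v\right\| _{L^2(\Omega )}^2 + q(p^2+q^2)\left\| v\right\| _{L^2(\Omega )}^2 + (p\eta _R + q\eta _I)\left\| v\right\| _{L^2(\Gamma )}^2 . \end{aligned}$$

Now, defining \(\Theta := {-(p-iq)}/{\vert p-iq\vert } = -(p-iq)/\sqrt{p^2 + q^2}\), and using the fact that \(\eta _R\) and \(\eta _I\) are both \(\ge 0\) and that \(p^2+q^2\ge k^2\), we have

$$\begin{aligned} \big |a_\varepsilon (v,v)\big | \ge \mathrm{Im}\big [\Theta \, a_\varepsilon (v,v)\big ] \ge \frac{q}{\sqrt{p^2+q^2}}\Big (\left\| \nabla v\right\| _{L^2(\Omega )}^2 + k^2\left\| v\right\| _{L^2(\Omega )}^2\Big ) = \frac{q}{\sqrt{p^2+q^2}}\left\| v\right\| _{1,k,\Omega }^2 . \end{aligned}$$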

The result (3.2) follows since \(p\sim k\), \(q\sim \varepsilon /k\), and \(\varepsilon \lesssim k^2\). \(\square \)
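The coercivity argument can be checked on a discrete example. Tracking the constants through the proof gives \(|a_\varepsilon (v,v)|\ge \frac{q}{\sqrt{p^2+q^2}}\Vert v\Vert ^2_{1,k,\Omega }\), where \((p+\mathrm{i}q)^2=k^2+\mathrm{i}\varepsilon \) and \(q/\sqrt{p^2+q^2}\sim \varepsilon /k^2\). For a Galerkin matrix \(\mathbf{A}\) with entries \(a_\varepsilon (\phi _j,\phi _i)\) one has \(a_\varepsilon (v,v)=\mathbf {v}^*\mathbf{A}\mathbf {v}\) and \(\Vert v\Vert ^2_{1,k,\Omega }=\mathbf {v}^*(\mathbf{K}+k^2\mathbf{M})\mathbf {v}\), so the bound should hold for every coefficient vector. The sketch below uses a hypothetical P1 discretisation on \(\Omega =(0,1)\) and assumes the sign convention \(a_\varepsilon (u,v)=\int _\Omega \nabla u\cdot \nabla \overline{v}-(k^2+\mathrm{i}\varepsilon )\int _\Omega u\overline{v}-\mathrm{i}\eta \int _\Gamma u\overline{v}\); the helper names, \(k\), \(\eta \), and the random test vectors are illustrative choices.

```python
import numpy as np

def p_q(k, eps):
    """Return p, q > 0 with (p + i q)^2 = k^2 + i*eps."""
    r = np.hypot(k**2, eps)                          # sqrt(k^4 + eps^2)
    return np.sqrt((r + k**2) / 2), np.sqrt((r - k**2) / 2)

def fem_1d(n, k, eps, eta):
    """Hypothetical P1 matrices on (0, 1): Galerkin matrix A, stiffness K, mass M."""
    h = 1.0 / n
    d = np.full(n + 1, 2.0)
    d[[0, -1]] = 1.0
    off = np.ones(n)
    K = (np.diag(d) - np.diag(off, 1) - np.diag(off, -1)) / h
    M = (np.diag(2 * d) + np.diag(off, 1) + np.diag(off, -1)) * h / 6
    B = np.zeros((n + 1, n + 1))
    B[0, 0] = B[-1, -1] = 1.0                        # Gamma = {0, 1}
    return K - (k**2 + 1j * eps) * M - 1j * eta * B, K, M

def worst_ratio(k, eps, eta, n=300, trials=100, seed=1):
    """Smallest |v^* A v| / ||v||_{1,k}^2 over random complex coefficient vectors."""
    A, K, M = fem_1d(n, k, eps, eta)
    G = K + k**2 * M                                 # v^* G v = ||v||_{1,k,Omega}^2
    rng = np.random.default_rng(seed)
    worst = np.inf
    for _ in range(trials):
        v = rng.standard_normal(n + 1) + 1j * rng.standard_normal(n + 1)
        worst = min(worst, abs(np.vdot(v, A @ v)) / np.vdot(v, G @ v).real)
    return worst

k = 25.0
eta = k + 0.3j * k                                   # eta_R >= 0 and eta_I >= 0
for eps in (k, k**1.5, k**2):
    p, q = p_q(k, eps)
    const = q / np.hypot(p, q)                       # explicit constant, ~ eps / k^2
    print(f"eps={eps:7.1f}  const={const:.3e}  worst ratio={worst_ratio(k, eps, eta):.3e}")
```

Random vectors are dominated by their gradients, so they test the inequality but not its sharpness; a resonant eigenvector is the natural stress test.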

Remark 3.2

This “trick” of multiplying the sesquilinear form by the complex conjugate of the wavenumber (in the proof above this was \(p-\mathrm{i}q\)) is well known in, for example, the time-domain boundary-integral-equation literature; see [23, Proposition 1].

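Note that (with \(\varepsilon \lesssim k^2\)) the bound (3.2) is sharp in its \(k\)- and \(\varepsilon \)-dependence. Indeed, if \(u_j\) is a Dirichlet eigenfunction of \(-\Delta \) on \(\Omega \) with eigenvalue \(\lambda _j\), then, since \(u_j=0\) on \(\Gamma \) and \(\left\| \nabla u_j\right\| _{L^2(\Omega )}^2=\lambda _j\left\| u_j\right\| _{L^2(\Omega )}^2\),

$$\begin{aligned} a_\varepsilon (u_j,u_j) = (\lambda _j - k^2 - \mathrm{i}\varepsilon )\left\| u_j\right\| _{L^2(\Omega )}^2 \quad \text{and}\quad \left\| u_j\right\| _{1,k,\Omega }^2 = (\lambda _j + k^2)\left\| u_j\right\| _{L^2(\Omega )}^2 . \end{aligned}$$

Therefore, if \(k = k_j := \sqrt{\lambda _j}\) then

$$\begin{aligned} \big |a_\varepsilon (u_j,u_j)\big | = \frac{\varepsilon }{2k_j^2}\left\| u_j\right\| _{1,k_j,\Omega }^2 . \end{aligned}$$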
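The sharpness example can be reproduced at the discrete level. If \(\mathbf {v}\) is an eigenvector of the interior-node (Dirichlet) pencil \(\mathbf{K}_0\mathbf {v}=\lambda \mathbf{M}_0\mathbf {v}\) and \(k^2=\lambda \), the boundary term vanishes and \(|a_\varepsilon (v,v)|/\Vert v\Vert ^2_{1,k,\Omega }=\varepsilon /(2k^2)\), which agrees to within a factor close to one with the explicit coercivity constant \(q/\sqrt{p^2+q^2}\), where \((p+\mathrm{i}q)^2=k^2+\mathrm{i}\varepsilon \). The P1 matrices on \((0,1)\), the value \(\varepsilon =50\), and the eigenvalue index are hypothetical illustrative choices; the generalised eigenproblem is reduced to a standard one via a Cholesky factor so that only NumPy is needed.

```python
import numpy as np

n, eps, j = 400, 50.0, 9          # mesh size 1/n, shift, eigenvalue index (all illustrative)
h, m = 1.0 / n, n - 1             # m interior nodes (Dirichlet condition on Gamma = {0, 1})
off = np.ones(m - 1)
K0 = (2 * np.eye(m) - np.diag(off, 1) - np.diag(off, -1)) / h       # P1 stiffness
M0 = (4 * np.eye(m) + np.diag(off, 1) + np.diag(off, -1)) * h / 6   # P1 mass

# Reduce K0 v = lam M0 v to a symmetric standard eigenproblem via M0 = L L^T.
L = np.linalg.cholesky(M0)
Linv = np.linalg.inv(L)
lam, W = np.linalg.eigh(Linv @ K0 @ Linv.T)
v = Linv.T @ W[:, j]              # discrete Dirichlet eigenvector, normalised so v^T M0 v = 1
k = np.sqrt(lam[j])               # resonant wavenumber: k^2 = lambda_j

stiff, mass = v @ K0 @ v, v @ M0 @ v
a_vv = stiff - (k**2 + 1j * eps) * mass   # impedance term vanishes since v = 0 on Gamma
ratio = abs(a_vv) / (stiff + k**2 * mass)

r = np.hypot(k**2, eps)                   # p, q with (p + i q)^2 = k^2 + i eps
p, q = np.sqrt((r + k**2) / 2), np.sqrt((r - k**2) / 2)
const = q / np.hypot(p, q)
print(ratio, eps / (2 * k**2), ratio / const)
```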

3.2 Abstract conditions for quasi-optimality

To state the main result of this section, we need to introduce the solution operator of the adjoint problem. Given \(f\in L^2(\Omega )\), define \(S_{k,\varepsilon }^* f\) as the solution of the variational problem

This is the variational formulation of the adjoint problem (2.3) with \(g=0\); i.e., if \(w= S_{k,\varepsilon }^* f\) satisfies (3.4) then \(w\) is a solution of the weak form of (2.3) with \(g=0\), and vice versa.

Lemma 3.3

(Quasi-optimality for \(a_\varepsilon (\cdot ,\cdot )\)) Assume that \(\varepsilon \lesssim k^2\) and \(|\eta |\lesssim k\). Let \(C_c\) and \(\alpha \) be the constants in Lemma 3.1. Let \(u_N\) denote the Galerkin solution defined by (1.6). Let

If

then

The analogue of this result for the Helmholtz equation (i.e. \(\varepsilon =0\)) first appeared in the form above as [46, Theorem 2.5], although the argument goes back to Schatz [47] and has been used by several authors since then (see, e.g., the discussion in [19, §4] and the references therein). The only difference in our use of this argument (compared to previous uses) is that, instead of using the fact that \(a_\varepsilon (\cdot ,\cdot )\) satisfies a Gårding inequality, we use the fact that when \(\varepsilon =k^2\) it is coercive with constant independent of \(k\). Note that \({\eta (V_N)}\) in (3.5) is not related to the \(\eta \) in the impedance boundary condition (2.1b); we use this notation to be consistent with the other uses of this argument in the literature.

Proof

We first prove the bound (3.7) under the assumption that \(u_N\) exists. Choosing \(v=v_N \in V_N\) in (1.3) and subtracting this from (1.6), we have Galerkin orthogonality:

Coercivity (3.2) and the triangle inequality imply that, for any \(v\in H^1(\Omega )\),

We now apply this last inequality with \(v=e_N:=u-u_N\) and use the fact that, by Galerkin orthogonality, \(a_\varepsilon (e_N,e_N)= a_\varepsilon (e_N, u-v_N)\) for any \(v_N\in V_N\). This yields

(where we have used the continuity of \(a_\varepsilon (\cdot ,\cdot )\) to obtain the second inequality). If we can show that

then we obtain the result (3.7).

Now, using the definition of \(S_{k,\varepsilon }^*\) (3.4), Galerkin orthogonality (3.8), continuity of \(a_\varepsilon (\cdot ,\cdot )\), and the definition of \({\eta (V_N)}\) (3.5), we have

for some \(v_N \in V_N\). Therefore

and the condition (3.6) is sufficient to ensure that (3.12) holds.

Up to now, we have assumed that \(u_N\) [the solution of the variational problem (1.6)] exists. The fact that \(u_N\) exists can be established using [33, Theorem 3.9], but here we follow the simpler approach found in, e.g., [4, Theorem 5.7.6]. Since (1.6) is a system of \(N\) equations in \(N\) unknowns, existence for all right-hand sides is equivalent to uniqueness. Therefore, we only need to show that if \(F=0\) and \(N\) is such that the condition (3.6) holds, then (1.6) has only the trivial solution \(u_N=0\). Seeking a contradiction, suppose that \(a_\varepsilon (u_N,v_N)=0\) for all \(v_N\in V_N\) for some \(u_N\ne 0\). Remark 2.2 implies that \(u=0\), and then (3.7) implies that \(u_N=0\) when \(N\) is such that (3.6) holds, contradicting \(u_N\ne 0\). Therefore, the solution to (1.6) exists and is unique when \(N\) satisfies (3.6). \(\square \)

Remark 3.4

(Using coercivity for \(\varepsilon = \gamma k^2\), for some \(\gamma >0\), instead of for \(\varepsilon =k^2\).) In the proof of Lemma 3.3 we used the coercivity of \(a_{k^2}(\cdot ,\cdot )\). Instead, we could have used the coercivity of \(a_{\gamma k^2}(\cdot ,\cdot )\), with \(\gamma \) any positive constant. If we had done this, then the mesh threshold for quasi-optimality would be

and the constant of quasi-optimality in (3.7) would be \(2C_c/(\gamma \alpha )\).

3.3 Quasi-optimality: smooth domains and convex polygons

In this subsection we consider the case when \(\Omega \) is either a \(C^{1,1}\) domain in 2-d or 3-d that is star-shaped with respect to a ball, or a convex polygon. We also assume that \(V_N\) has the property that, for all \(w\in H^2(\Omega )\),

this is true, for example, for continuous piecewise-polynomial elements on a simplicial mesh by properties of the quasi-interpolant given in [48, Theorem 4.1].

We now use Lemma 3.3 to prove the following result.

Lemma 3.5

(Quasi-optimality for \(a_\varepsilon (\cdot ,\cdot )\) for smooth domains and convex polygons) Suppose that the variational problem (1.3) is solved using the Galerkin method with \(V_N \subset H^1(\Omega )\). Assume that \(\varepsilon \lesssim k^2\), \(\eta _R\sim k\), and \(\eta _I\lesssim k\). Then, given \(k_0>0\), there exists \(C_1>0\) (with \(C_1\) independent of \(h,k,\) and \(\varepsilon \)) such that if \(k\ge k_0\) and

then (3.7) holds.
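In the proof of Lemma 4.1 below, Lemma 3.5 is invoked in the form \(hk\sqrt{\vert k^2-\varepsilon \vert }\le C_1\). The following Python sketch simply evaluates the largest mesh size admitted by that condition. The text does not give a value for \(C_1\), so the default \(C_1=1\) is a hypothetical placeholder, and `max_mesh_size` is an illustrative name.

```python
import math


def max_mesh_size(k: float, eps: float, C1: float = 1.0) -> float:
    """Largest h satisfying h * k * sqrt(|k^2 - eps|) <= C1.

    C1 stands for the unspecified constant of Lemma 3.5; the default 1.0 is a
    hypothetical placeholder. Returns math.inf when eps == k^2 (no restriction).
    """
    gap = math.sqrt(abs(k * k - eps))
    if gap == 0.0:
        return math.inf  # eps = k^2: a_eps is coercive, so no mesh threshold arises
    return C1 / (k * gap)


for k in (10.0, 20.0, 40.0):
    # eps = 0 (pure Helmholtz), eps = k^2 - k, and eps = k^2
    print(k, max_mesh_size(k, 0.0), max_mesh_size(k, k * k - k), max_mesh_size(k, k * k))
```

The three columns show the threshold \(h\sim k^{-2}\) for \(\varepsilon =0\), \(h\sim k^{-3/2}\) for \(\varepsilon =k^2-k\), and no restriction at all when \(\varepsilon =k^2\).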

Proof

Given \(f\in L^2(\Omega )\), let \(w:= S^*_{k,\varepsilon } f\). By Remark 2.5, given \(k_0>0\), \(\Vert w\Vert _{H^1(\Omega )}\lesssim \Vert f\Vert _{L^2(\Omega )}\) for all \(k\ge k_0\). Moreover, Lemma 2.12 then implies that \(\Vert w\Vert _{H^2(\Omega )}\lesssim k \Vert f\Vert _{L^2(\Omega )}\) for all \(k\ge k_0\). Combining these bounds with (3.15) yields

Therefore, from the definition of \(\eta (V_N)\),

and the result follows from Lemma 3.3. \(\square \)

Remark 3.6

For arbitrary curved \(C^{1,1}\) domains it is not always possible to fit the domain boundary exactly with polynomial elements, and some analysis of non-conforming error is then necessary; since this is very standard, we do not give it here.

3.4 Quasi-optimality: non-smooth domains

In obtaining Lemma 3.5 from Lemma 3.3 we used a bound on the \(H^2\)-norm of the solution of the adjoint problem to estimate \(\eta (V_N)\) and obtain a mesh threshold for quasi-optimality. We now consider domains in which the solution to the adjoint problem is not in \(H^2(\Omega )\). In this case we can still estimate \(\eta (V_N)\) (and thus obtain conditions for quasi-optimality) under assumptions on the solution and the mesh, which we now state.

Assumption 3.7

Let \(\Omega \) be a bounded Lipschitz polyhedron in \(\mathbb {R}^d\) (\(d=2,3\)).

1. Let \(w = S^*_{k,\varepsilon } f\) and let \(C_{\mathop {\mathrm{sol}}}(k,\varepsilon )\) be such that

$$\begin{aligned} \left\| w\right\| _{1,k,\Omega } \lesssim C_{\mathop {\mathrm{sol}}}(k,\varepsilon ) \left\| f\right\| _{L^2(\Omega )} \end{aligned}\tag{3.17}$$

for all \(f\in L^2(\Omega )\) and for all \(0\le \varepsilon \lesssim k^2\). Assume that there exists a weight function \(\Phi \in C(\overline{\Omega })\) such that, for any \(f\in L^2(\Omega )\),

$$\begin{aligned} \sup _{\vert \alpha \vert = 2} \left\| \Phi D^\alpha w\right\| _{L^2(\Omega )} \lesssim k\, C_{\mathop {\mathrm{sol}}}(k,\varepsilon )\left\| f\right\| _{L^2(\Omega )}. \end{aligned}\tag{3.18}$$

2. With \(\Phi \) as in Part 1, assume that if \(v \in H^1(\Omega )\) and \(\sup _{\vert \alpha \vert =2} \Vert \Phi D^\alpha v\Vert _{L^2(\Omega )} < \infty \), then there exists a shape-regular simplicial mesh sequence such that the corresponding finite element space \(V_N\) has dimension \(N\), has largest element diameter \((1/N)^{1/d}\), and satisfies

$$\begin{aligned}&\inf _{v_N \in V_N} \left\{ \left( \frac{1}{N}\right) ^{1/d} \left| v - v_N \right| _{H^1(\Omega )} + \left\| v - v_N\right\| _{L^2(\Omega )} \right\} \\&\quad \lesssim \left( \frac{1}{N}\right) ^{2/d} \sup _{\vert \alpha \vert = 2} \left\| \Phi D^\alpha v \right\| _{L^2(\Omega )}. \end{aligned}\tag{3.19}$$

Remark 3.8

Part 2 of Assumption 3.7 holds by results in [1], and Part 1 of Assumption 3.7 holds when \(\Omega \) is a polygon in \(\mathbb {R}^2\) and \(\varepsilon =0\) by [19, Theorem 3.2] (and we expect similar arguments to apply when \(0<\varepsilon \lesssim k^2\)). We now discuss both these sets of results when \(\Omega \) is a polygon. The result [19, Theorem 3.2] proves that there exists a weight function \(\Phi \in C(\overline{\Omega })\) such that the solution \(u = S^*_{k,0} f \) of (2.3) with \(f\in L^2(\Omega )\), \(g=0\), and \(\varepsilon =0\) has a decomposition \(u = u_{H^2} + u_{\mathcal{A}}\), where

The weight function \(\Phi \) can be taken to be one at convex corners, but at a non-convex corner \(\Phi (\mathbf {x}) \sim r^\beta \) as \(r\rightarrow 0\) for \(\mathbf {x}\) in a neighbourhood of a corner point \(\mathbf {x}_0\) with interior angle \(\omega >\pi \), where \(r:=|\mathbf {x}-\mathbf {x}_0|\) and \(\beta > 1- \pi /\omega \); see [19, Equation (24) and Lemma 3.11] (this decay of the weight function compensates for singularities in the second derivatives of \(u_{\mathcal{A}}\)). The subsequent verification of (3.19) can be obtained from several references, e.g. [1] and the references therein. Indeed, the existence of a suitably refined shape-regular mesh and corresponding \(v_N\in V_N\) satisfying

follows from [1, Theorems 3.2 and 3.3] and particularly the estimate [1, Equation (3.19)]. Note that [1] uses very different notation to ours; the weighted norm on the right-hand side of [1, Equation (3.19)] coincides with that on the right-hand side of our Eq. (3.19), and \(H_0\) in [1] equals \(\pi /\omega \) in our notation (the statement that \(H_0=\pi /(2\omega _0)\) on [1, Page 68] is a typo). The required complexity of the mesh follows from the discussion in [1, Remark 3.1] and the shape-regularity is [1, Condition (d) on Page 71]. The estimate on \(\Vert v-v_N\Vert _{L^2(\Omega )}\) in (3.19) is not proved explicitly in [1] but follows using similar arguments.
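The lower bound \(\beta >1-\pi /\omega \) on the weight exponent can be traced to an integrability count. Assume, as is standard for corner singularities, that the singular part behaves like \(r^{\pi /\omega }\) near a corner whose interior opening angle is \(\omega \) (so \(\omega >\pi \) at a non-convex corner). Its second derivatives then behave like \(r^{\pi /\omega -2}\), and in two dimensions \(\int _0^1 r^{2(\beta +\pi /\omega -2)}\,r\,\mathrm{d}r<\infty \) exactly when \(\beta >1-\pi /\omega \). The Python sketch below (hypothetical helper names) encodes this count; for the re-entrant corner of an L-shaped domain, \(\omega =3\pi /2\) gives \(\beta >1/3\).

```python
import math


def min_weight_exponent(omega: float) -> float:
    """Infimum of admissible beta at a corner with interior angle omega (radians).

    Convex corners (omega < pi) need no weight, so the bound is clipped at 0.
    """
    return max(0.0, 1.0 - math.pi / omega)


def weighted_hessian_in_L2(beta: float, omega: float) -> bool:
    """True iff r**beta * r**(pi/omega - 2) is square integrable near r = 0 in 2-d.

    int_0^1 r**(2*(beta + pi/omega - 2)) * r dr < inf  <=>  2*(beta + pi/omega - 2) + 1 > -1.
    ASSUMPTION: the singular part behaves like r**(pi/omega) near the corner.
    """
    return 2.0 * (beta + math.pi / omega - 2.0) + 1.0 > -1.0


omega_L = 1.5 * math.pi  # re-entrant corner of an L-shaped domain
print(min_weight_exponent(omega_L))
print(weighted_hessian_in_L2(0.4, omega_L), weighted_hessian_in_L2(0.2, omega_L))
```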

Remark 3.9

(How does \(C_{\mathop {\mathrm{sol}}}(k,\varepsilon )\) depend on \(k\) and \(\varepsilon \)?) By combining Theorems 2.7 and 2.9 (and using Remark 2.5) we see that if \(\Omega \) is Lipschitz and star-shaped with respect to a ball, \(0\le \varepsilon \lesssim k^2\), \(\eta _R\sim k\), and \(0<\eta _I\lesssim k\), then (3.17) holds with \(C_{\mathop {\mathrm{sol}}}(k,\varepsilon ) \sim 1\); in what follows we only consider this situation.

The following is the analogue of Lemma 3.5 for non-smooth domains.

Lemma 3.10

(Quasi-optimality for \(a_\varepsilon (\cdot ,\cdot )\) for non-smooth domains) Suppose that \(\Omega \) is such that Assumption 3.7 holds with \(C_{\mathop {\mathrm{sol}}}(k,\varepsilon )\sim 1\), and suppose that the variational problem (1.3) is solved using the Galerkin method in the space \(V_N\). If \(\varepsilon \lesssim k^2\), \(\eta _R\sim k\), and \(\eta _I\lesssim k\) then, given \(k_0>0\), there exists a \(C_1>0\) (independent of \(N,k,\) and \(\varepsilon \)) such that, if \(k\ge k_0\) and

then (3.7) holds.

Proof

By Lemma 3.3 we only need to estimate \(\eta (V_N)\) and ensure that (3.6) holds. With \(w= S^*_{k,\varepsilon } f\), Assumption 3.7 implies that there exists a \(v_N\in V_N\) such that

from which it follows that

Therefore,

and this implies that, given \(k_0>0\), there exists a \(C_1>0\) such that the condition (3.23) is sufficient to ensure that (3.6) holds. \(\square \)

3.5 The truncated sound-soft scattering problem with absorption

The variational formulation of Problem 2.4 is almost identical to that of Problem 2.1 except that the Hilbert space is now \(V = \{ v \in H^1(\Omega ) : v = 0 \text { on } \Gamma _D\}\), and the integrals over \(\Gamma \) in \(a_\varepsilon (\cdot ,\cdot )\) and \(F(\cdot )\) defined in (1.4) and (1.5) respectively are replaced by integrals over \(\Gamma _R\). Lemma 3.1 (continuity and coercivity of \(a_{\varepsilon }(\cdot ,\cdot )\)) holds as before. Lemma 3.5 holds if \(\Omega \) is \(C^{1,1}\) and satisfies the geometric assumptions in Theorem 2.18. Similarly, if Assumption 3.7 is satisfied with \(\Omega = \Omega _R{\setminus }\overline{\Omega _D}\) and \(\Omega _R\) and \(\Omega _D\) are as in Theorem 2.18, then Lemma 3.10 holds.

4 Proofs of Theorem 1.4 and its analogue for non-quasi-uniform meshes

In Sect. 4.1 we consider the interior impedance problem, and in Sect. 4.2 we consider the truncated sound-soft scattering problem.

4.1 Results about the interior impedance problem

4.1.1 Smooth domains and quasi-uniform meshes (i.e., Proof of Theorem 1.4)

As discussed in Sect. 1.3, we prove Theorem 1.4 by obtaining bounds on \(\Vert \mathbf{A}_\varepsilon ^{-1}\mathbf{M}\Vert _2\), \(\Vert \mathbf{A}_\varepsilon ^{-1}\mathbf{N}\Vert _2\), \(\Vert \mathbf{M}\mathbf{A}_\varepsilon ^{-1}\Vert _2\) and \(\Vert \mathbf{N}\mathbf{A}_\varepsilon ^{-1}\Vert _2\).

Lemma 4.1

Under the same conditions as in Theorem 1.4, given \(k_0>0\), there exist \(C_1, C_2, C_3>0\) (independent of \(h, k,\) and \(\varepsilon \) but depending on \(k_0\)) such that if \(h k\sqrt{|k^2-\varepsilon |} \le C_1\) then

Proof of Lemma 4.1

We prove below the estimates on \(\Vert \mathbf{A}_\varepsilon ^{-1}\mathbf{M}\Vert _2\) and \(\Vert \mathbf{A}_\varepsilon ^{-1}\mathbf{N}\Vert _2\); the analogous estimates for \(\Vert \mathbf{M}\mathbf{A}_\varepsilon ^{-1}\Vert _2\) and \(\Vert \mathbf{N}\mathbf{A}_\varepsilon ^{-1}\Vert _2\) are obtained as outlined in Remark 1.7 (and also using the fact that a result analogous to Lemma 3.5 holds for the adjoint problem).
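On a quasi-uniform mesh in \(d\) dimensions the standard mass-matrix scaling is the two-sided equivalence \(h^d\Vert \mathbf {v}\Vert _2^2\lesssim \mathbf {v}^*\mathbf{M}\mathbf {v}=\Vert v_N\Vert ^2_{L^2(\Omega )}\lesssim h^d\Vert \mathbf {v}\Vert _2^2\). A minimal one-dimensional illustration, assuming a uniform mesh of \([0,1]\) with spacing \(h\) and continuous piecewise-linear elements: each element contributes \((h/6)\begin{pmatrix}2&1\\1&2\end{pmatrix}\), whose eigenvalues \(h/6\) and \(h/2\), together with the fact that each node lies in one or two elements, give \(h/6\le \mathbf {v}^{\mathrm T}\mathbf{M}\mathbf {v}/\Vert \mathbf {v}\Vert _2^2\le h\). The Python sketch (hypothetical helper names) checks this with random real nodal vectors.

```python
import random


def p1_mass_quadratic_form(v: list[float], h: float) -> float:
    """v^T M v for the 1-d P1 mass matrix on a uniform mesh with spacing h.

    Element (a, b) contributes [a b] (h/6) [[2, 1], [1, 2]] [a b]^T = (h/3)(a^2 + a b + b^2).
    """
    return sum((h / 3.0) * (a * a + a * b + b * b) for a, b in zip(v[:-1], v[1:]))


def rayleigh_range(n_elems: int, samples: int = 500, seed: int = 1) -> tuple[float, float]:
    """Min and max of (v^T M v) / (h * ||v||_2^2) over random nodal vectors v."""
    rng = random.Random(seed)
    h = 1.0 / n_elems
    lo, hi = float("inf"), 0.0
    for _ in range(samples):
        v = [rng.gauss(0.0, 1.0) for _ in range(n_elems + 1)]  # all nodes (no Dirichlet nodes)
        ratio = p1_mass_quadratic_form(v, h) / (h * sum(x * x for x in v))
        lo, hi = min(lo, ratio), max(hi, ratio)
    return lo, hi


for n in (10, 100, 1000):
    print(n, rayleigh_range(n, samples=200))  # the observed range stays inside [1/6, 1]
```

The ratio does not drift as \(n\) grows, which is the \(h\)-scaling; in \(d\) dimensions the same element-by-element argument produces the factor \(h^d\).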

Given \(v_N \in V_N\), let \(\mathbf {v}\) denote the vector of the nodal values of \(v_N\). A standard scaling argument for the mass matrix \(\mathbf{M}\) yields

Therefore,

We first prove the bound (i) in (4.1) (i.e., the bound on \(\Vert \mathbf{A}_\varepsilon ^{-1}\mathbf{M}\Vert _2\)). Given \(\mathbf {f}\in \mathbb {C}^N\), we create a variational problem whose Galerkin discretisation leads to the equation \(\mathbf{A}_\varepsilon \widetilde{\mathbf {u}}= \mathbf{M}\,\mathbf {f}\). Indeed, let \(\widetilde{f} := \sum _j f_j \phi _j\) and note that \(\widetilde{f} \in L^2(\Omega )\). Define \(\widetilde{u}\) to be the solution of the variational problem

let \(\widetilde{u}_N\) be the solution of the finite element approximation of (4.4), i.e.,

and let \(\widetilde{\mathbf {u}}\) be the vector of nodal values of \(\widetilde{u}_N\). The definition of \(\widetilde{f}\) then implies that (4.5) is equivalent to \(\mathbf{A}_\varepsilon \widetilde{\mathbf {u}}= \mathbf{M}\,\mathbf {f}\), and so to obtain a bound on \(\Vert \mathbf{A}_\varepsilon ^{-1}\mathbf{M}\Vert _{2}\) we need to bound \(\Vert \widetilde{\mathbf {u}}\Vert _2\) in terms of \(\Vert \mathbf {f}\Vert _2\). Note that the hypotheses imply that the bound on the solution operator (2.8) holds (by Corollary 2.11), and also that if \(h k\sqrt{|k^2-\varepsilon |} \le C_1\) then quasi-optimality (3.7) holds (by Lemma 3.5). Starting with (4.3) we then have