Abstract

In their work Ikromov and Müller (Fourier Restriction for Hypersurfaces in Three Dimensions and Newton Polyhedra. Princeton University Press, Princeton, 2016) proved the full range \(L^p-L^2\) Fourier restriction estimates for a very general class of hypersurfaces in \({\mathbb {R}}^3\) which includes the class of real analytic hypersurfaces. In this article we partly extend their results to the mixed norm case where the coordinates are split in two directions, one tangential and the other normal to the surface at a fixed given point. In particular, we completely resolve the adapted case and partly resolve the non-adapted case: within the non-adapted case, we settle completely the situation where the linear height \(h_\text {lin}(\phi )\) is below two.

1 Introduction

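For a given smooth hypersurface S in \({\mathbb {R}}^n\), its surface measure \(\mathrm {d}\sigma \), and a smooth compactly supported function \(\rho \geqslant 0\), \(\rho \in C_0^\infty (S)\), the associated Fourier restriction problem asks for which \(p,q \in [1,\infty ]\) the estimate

\[ \Big ( \int _S |\widehat{f}|^q \, \rho \, \mathrm {d}\sigma \Big )^{1/q} \leqslant C \Vert f \Vert _{L^p({\mathbb {R}}^n)}, \qquad f \in {\mathcal {S}}({\mathbb {R}}^n), \tag{1.1} \]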

holds true. This problem was first considered by E.M. Stein in the late 1960s. Soon thereafter the problem was essentially solved for curves in two dimensions, see [4, 7, 34]. The higher-dimensional case in its most general form is still wide open. The three-dimensional case, as of yet, is far from being completely understood even when S is the sphere, and there has been a lot of deep work in the direction of understanding \(L^p-L^q\) estimates for surfaces with both vanishing and non-vanishing Gaussian curvature. A small sample of such works is [2, 3, 12, 21, 24, 30, 33].

The case when \(q = 2\) has proven to be more tractable since one can use the “\(R^* R\) technique”. This was exploited by Tomas and Stein (see [31]) to obtain the full range of \(L^p-L^2\) estimates when the hypersurface in question is the unit sphere, and later further developed by Greenleaf [11] where the full range of \(L^p-L^2\) estimates was obtained for surfaces with non-vanishing Gaussian curvature. In fact, Greenleaf proved that if one has a decay estimate on the Fourier transform of \(\rho \mathrm {d}\sigma \) (which can be interpreted as a uniform estimate for an oscillatory integral), i.e.,

\[ |\widehat{\rho \, \mathrm {d}\sigma }(\xi )| \lesssim (1+|\xi |)^{-1/h}, \]

then the associated restriction estimate holds true for \(p' \geqslant 2(h+1)\) and \(q = 2\). However, in general, this range is not optimal. Recently I.A. Ikromov and D. Müller in their series of works (see [14,15,16], and also their work with M. Kempe [13]) have developed techniques for proving the full range of \(L^p-L^2\) estimates for a very general class of surfaces in \({\mathbb {R}}^3\). Their work builds upon the work of V.I. Arnold and his school (in particular, the work by Varchenko [32]) which highlighted the importance of the Newton polyhedron within problems involving oscillatory integrals, and upon the work of Phong and Stein [25] and Phong et al. [26] in the real analytic case where the authors in addition to the Newton polyhedron used the Puiseux series expansions of roots to obtain results on oscillatory integral operators. For further and more detailed references we refer the reader to [16].

In [16] Ikromov and Müller proved the following theorem.

Theorem 1.1

Let S be a smooth hypersurface in \({\mathbb {R}}^3\) and \(\mathrm {d}\sigma \) its surface measure. After localisation and a change of coordinates assume that S is given as the graph of a smooth function \(\phi : \Omega \rightarrow {\mathbb {R}}\) of finite type with \(\phi (0) = 0\) and \(\nabla \phi (0) = 0\), where \(\Omega \subseteq {\mathbb {R}}^2\) is an open neighbourhood of 0. Furthermore, assume that \(\phi \) is linearly adapted in its original coordinates. Let \(\rho \geqslant 0\), \(\rho \in C_c^\infty (S)\), be a smooth compactly supported function. Then the estimate (1.1) holds true for all \(\rho \) with support contained in a sufficiently small neighbourhood of 0 when \(q=2\) and when either

(a) \(\phi \) is adapted in its original coordinates and \(p' \geqslant 2(h(\phi )+1)\), or

(b) \(\phi \) is not adapted in its original coordinates, satisfies the Condition (R), and \(p' \geqslant 2(h^{\text {res}}(\phi )+1)\).

Since linear transformations respect the Fourier transform, one can always assume linear adaptedness. The quantities \(h(\phi )\) and \(h^\text {res}(\phi )\) are respectively the height and the restriction height of the function \(\phi \) (the precise definitions can be found in Sects. 1.1 and 2.3 below respectively; also note that we use \(h^{\text {res}}(\phi )\) to denote the restriction height of the function \(\phi \) instead of \(h^{\text {r}}(\phi )\) as in [16]). Condition (R) is a factorisation condition which is true for real analytic functions, but not for general smooth functions, and it remains open whether this condition can be removed in the above theorem.

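In this paper we shall be interested in the mixed norm case with \(L^p({\mathbb {R}}^3)\) denoting from now on the space \(L^{p_3}_{x_3} (L^{p_2}_{x_2} (L^{p_1}_{x_1}))\) and \(q = 2\) in (1.1). We shall be interested in the particular case when \(p_1=p_2\), i.e., we only differentiate between the tangential and the normal direction to the surface S at the point \(0 \in S\). This means we take \(\Vert f \Vert _{L^p({\mathbb {R}}^3)}\) to mean

\[ \Vert f \Vert _{L^{p_3}_{x_3}(L^{p_1}_{(x_1,x_2)})} = \Big ( \int _{{\mathbb {R}}} \Big ( \int _{{\mathbb {R}}^2} |f(x_1,x_2,x_3)|^{p_1} \, \mathrm {d}x_1 \mathrm {d}x_2 \Big )^{p_3/p_1} \mathrm {d}x_3 \Big )^{1/p_3}. \]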

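Henceforth we shall denote by p the pair \((p_1, p_3)\). Our task is to determine for which \((p_1,p_3)\) the inequality

\[ \Big ( \int _S |\widehat{f}|^2 \, \rho \, \mathrm {d}\sigma \Big )^{1/2} \leqslant C \Vert f \Vert _{L^{p_3}_{x_3}(L^{p_1}_{(x_1,x_2)})}, \qquad f \in {\mathcal {S}}({\mathbb {R}}^3), \tag{1.2} \]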

holds true for \(\rho \geqslant 0\) supported in a sufficiently small neighbourhood of 0.

This question is of great interest in the theory of PDEs, as was noticed by Strichartz in [29]. Namely, one can obtain mixed norm Strichartz estimates for a wide collection of symbols \(\phi \) determining the surface S since the estimate (1.2) can be reinterpreted as an a priori estimate

for the Cauchy problem

where g has its Fourier transform supported in a small neighbourhood of the origin and \(\phi (D)\) is the operator with symbol \(\phi (\xi )\).

It turns out that we can use the same basic techniques and phase space decompositions as in [16] in proving the estimate (1.2) in the cases we consider (namely, the adapted case and the non-adapted case with \(h_\text {lin}(\phi ) < 2\)). The main additional ingredients we shall use are some basic ideas from [9] (see also [20]) for handling mixed norms. In our case additional complications appear which were absent in the corresponding cases in [16] and some of which resemble problems appearing in some of the final chapters of [16]. For example, after making a phase space decomposition of the kernel of the convolution operator obtained by the “\(R^* R\) technique”, a recurring theme will be that we will not be able to sum absolutely the operators associated to kernel decomposition pieces whose operators were absolutely summable in [16]. A further interesting feature of the mixed norm case is that estimates for the mixed norm endpoint for operators of certain kernel pieces become invariant under scalings considered in [16].

The structure of this article is as follows. In the following Sect. 1.1 we review some fundamental concepts such as the Newton polyhedron and adapted coordinates. In Sect. 1.2 we state the main results of this paper, namely Theorem 1.2 which states the necessary conditions, and Theorem 1.3 which gives us the mixed norm Fourier restriction estimates in the adapted case and the case \(h_{\text {lin}}(\phi ) < 2\). In Sect. 2 we derive the necessary conditions (by means of Knapp-type examples) for the exponents in (1.2). See Proposition 2.1. In Sect. 2.4 we also determine explicitly the Newton polyhedra of \(\phi \) in its original and adapted coordinates in the case when the linear height of \(\phi \) is strictly less than 2. Section 3 contains auxiliary results that we shall often refer to. In Sect. 3.2 we list results related to oscillatory integrals and also some results on oscillatory sums from [16] that are useful in conjunction with complex interpolation. In Sect. 3.3 we state results which we need for handling mixed norms. In Sect. 4, Proposition 4.2, we deal with the adapted case, i.e., we prove that if \(\phi \) is adapted in its original coordinates, then the estimate (1.2) holds for all p’s determined by the necessary conditions, except occasionally for a certain endpoint. In the same section (see Proposition 4.3) we also reduce the general non-adapted case to considering the part near the principal root jet of \(\phi \). In Sects. 5 and 6 we handle the case when the linear height of \(\phi \) is strictly less than 2 for a class of functions \(\phi \) which includes all analytic functions (see Theorem 5.1 for a precise formulation).

For reasons of consistency we use the same notational conventions as in [16]. We use the “variable constant” notation meaning that constants appearing in calculations and in the course of our arguments may have different values on different lines. Furthermore we use the symbols \(\sim , \lesssim , \gtrsim , \ll , \gg \) in order to avoid writing down constants. If we have two nonnegative quantities A and B, then by \(A \ll B\) we mean that there is a sufficiently small positive constant c such that \(A \leqslant cB\), by \(A \lesssim B\) we mean that there is a (possibly large) positive constant C such that \(A \leqslant CB\), and by \(A \sim B\) we mean that there are positive constants \(C_1 \leqslant C_2\) such that \(C_1 A \leqslant B \leqslant C_2 A\). One defines analogously \(A \gg B\) and \(A \gtrsim B\). Often the constants c and C shall depend on certain parameters p in which case we occasionally write \(A \ll _p B\), \(A \lesssim _p B\), etc., in order to emphasize this dependence.

A further notational convention adopted from [16] is the use of symbols \(\chi _0\) and \(\chi _1\) in denoting certain nonnegative smooth compactly supported functions on \({\mathbb {R}}\). Namely, we require \(\chi _0\) to be supported in a neighbourhood of the origin and identically 1 near the origin, and \(\chi _1\) to be supported away from the origin and identically 1 on some open neighbourhood of \(1 \in {\mathbb {R}}\). These cutoff functions \(\chi _0\) and \(\chi _1\) may vary from line to line, and sometimes, when several \(\chi _0\) and \(\chi _1\) appear within the same formula, they may even designate different functions.

1.1 Fundamental concepts and basic assumptions

Let the surface S be given as the graph \(S = S_\phi :=\{ (x_1,x_2,\phi (x_1,x_2)) : x = (x_1,x_2) \in \Omega \subset {\mathbb {R}}^2 \}\) of a smooth and real-valued function \(\phi \) defined on an open neighbourhood \(\Omega \) of the origin. We can assume without loss of generality that \(\phi (0) = 0\) and we take \(\Omega \) to be a sufficiently small neighbourhood of the origin in \({\mathbb {R}}^2\). In the mixed norm case we cannot use the rotational invariance of the Fourier transform in order to reduce to the case \(\nabla \phi (0) = 0\). Instead we use a different linear transformation (for details see Sect. 3.1), and so we may and shall assume \(\nabla \phi (0) = 0\).

Next, we require \(\phi \) to be a function of finite type at 0. This means that there exists a multi-index \(\alpha \in {\mathbb {N}}_0^2\) such that \(\partial ^\alpha \phi (0) \ne 0\). By continuity, \(\phi \) is of finite type on a neighbourhood of 0. We may therefore assume that \(\phi \) is of finite type at each point of \(\Omega \). We define the Taylor support of \(\phi \) as the set \({\mathcal {T}}(\phi ) :=\{ \alpha \in {\mathbb {N}}_0^2 : \partial ^\alpha \phi (0) \ne 0 \}\). The Newton polyhedron \({\mathcal {N}}(\phi )\) of \(\phi \) is the convex hull of the set \(\bigcup _\alpha \{(t_1,t_2) \in {\mathbb {R}}^2 : t_1 \geqslant \alpha _1, t_2 \geqslant \alpha _2 \}\), where the union is over all \(\alpha \) such that \(\partial ^\alpha \phi (0) \ne 0\) (and so \(|\alpha | \geqslant 2\)). See Fig. 1. Both edges and vertices are called faces of \({\mathcal {N}}(\phi )\). We define the Newton diagram \({\mathcal {N}}_d(\phi )\) of \(\phi \) to be the union of all compact faces of \({\mathcal {N}}(\phi )\).
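The definition of the Newton polyhedron can be made concrete with a small computation. The following is a minimal sketch, assuming the Taylor support is given as a finite list of exponent pairs (which suffices, since finitely many exponents determine the polyhedron); the function name `newton_vertices` and the sample support, taken from the illustrative polynomial \((x_2 - x_1^2)^2 + x_1^5\), are our own choices and do not appear in the text.

```python
def newton_vertices(support):
    """Vertices of the Newton polyhedron of a finite Taylor support.

    The polyhedron is the convex hull of the union of the quadrants
    alpha + [0, inf)^2, so its vertices form the lower-left convex
    chain of the support points, listed from left to right.
    """
    pts = sorted(set(support))
    # Keep only points that are strictly lower than every point to their left;
    # all other points lie inside a quadrant attached to an earlier point.
    staircase = []
    lowest = None
    for a1, a2 in pts:
        if lowest is None or a2 < lowest:
            staircase.append((a1, a2))
            lowest = a2
    # Lower convex hull of the staircase (monotone chain).
    hull = []
    for p in staircase:
        while len(hull) >= 2:
            (x1, y1), (x2, y2) = hull[-2], hull[-1]
            cross = (x2 - x1) * (p[1] - y1) - (y2 - y1) * (p[0] - x1)
            if cross <= 0:
                # hull[-1] lies on or above the segment from hull[-2] to p
                hull.pop()
            else:
                break
        hull.append(p)
    return hull


# Taylor support of (x2 - x1**2)**2 + x1**5 (illustrative example)
support = [(0, 2), (2, 1), (4, 0), (5, 0)]
print(newton_vertices(support))  # [(0, 2), (4, 0)]
```

In this example the Newton diagram is the single compact edge joining \((0,2)\) and \((4,0)\); the points \((2,1)\) and \((5,0)\) belong to the Taylor support but are not vertices.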

If we are given a face \(e_0\) of \({\mathcal {N}}(\phi )\), we can define its associated (formal) series

If \(e_0\) is a compact face, then \(\phi _{e_0}(x_1, x_2)\) is a mixed homogeneous polynomial. This means that there exists a weight \(\kappa ^{e_0} = (\kappa ^{e_0}_1,\kappa ^{e_0}_2) \in [0,\infty )^2\) such that for any \(r > 0\) we have \(\phi _{e_0}(r^{\kappa ^{e_0}_1} x_1, r^{\kappa ^{e_0}_2} x_2) = r \phi _{e_0}(x_1, x_2)\), and we call \(\phi _{e_0}\) a \(\kappa ^{e_0}\)-homogeneous polynomial. \(\kappa ^{e_0}\) is uniquely determined if and only if \(e_0\) is not a vertex. In fact, in the case when \(e_0\) is an edge, we define \(L_{\kappa ^{e_0}}\) to be the unique line containing \(e_0\):

Then the weight \(\kappa ^{e_0}\) is uniquely determined by the relation

When \(e_0\) is an unbounded face, \(\phi _{e_0}(x_1, x_2)\) is to be taken only as a formal power series. Note that then \(e_0\) is either a vertical or horizontal edge of \({\mathcal {N}}(\phi )\), and we can also find unique \(\kappa ^{e_0}_1\) and \(\kappa ^{e_0}_2\) (one of them being 0 in this case) such that (1.4) holds.

Of particular interest is the principal face \(\pi (\phi )\) defined as the face of minimal dimension of \({\mathcal {N}}(\phi )\) which intersects the bisectrix \(\{(t_1,t_2) \in {\mathbb {R}}^2 : t_1 = t_2\}\). Its associated series (or homogeneous polynomial) we call the principal part of \(\phi \) and denote by \(\phi _{\text {pr}} :=\phi _{\pi (\phi )}\). Let \(\kappa = (\kappa _1,\kappa _2)\) determine the line \(L_{\kappa }\) as in (1.5) containing the principal face if it is an edge, or when it is a vertex, let \(\kappa \) determine the edge of \({\mathcal {N}}(\phi )\) having the principal face as its left endpoint. Interchanging the \(x_1\) and \(x_2\) coordinates, if necessary, we may always assume that \(\kappa _2 \geqslant \kappa _1\). We shall denote the ratio \(\kappa _2/\kappa _1\) by m, and so \(m \geqslant 1\).

The Newton distance \(d(\phi )\) of \(\phi \) is defined to be the coordinate d of the point (d, d) which is the intersection of the bisectrix and the principal face of \({\mathcal {N}}(\phi )\). One can easily see that if \(\kappa = (\kappa _1,\kappa _2)\) determines the line containing the principal face (or any of the supporting lines to \({\mathcal {N}}(\phi )\) in case \(\pi (\phi ) = \{(d,d)\}\)), then we have \(d(\phi ) = 1/(\kappa _1+\kappa _2)\).
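As a hedged illustration of the Newton distance, the sketch below computes \(d(\phi )\) from a finite Taylor support. It relies on the observation that \((t,t)\) lies in the Newton polyhedron exactly when \(t \geqslant \min _\alpha (s\alpha _1 + (1-s)\alpha _2)\) for every \(s \in [0,1]\), so that \(d(\phi )\) is the maximum of this concave piecewise linear function of s. The function name `newton_distance` and the sample supports are our own assumptions.

```python
from fractions import Fraction


def newton_distance(support):
    """Newton distance d of a finite Taylor support, as an exact fraction."""
    pts = sorted(set(support))

    def g(s):
        # Value of the support function in the direction (s, 1 - s)
        return min(s * a1 + (1 - s) * a2 for a1, a2 in pts)

    # g is concave and piecewise linear on [0, 1]; its maximum is attained
    # at an endpoint or where two of the linear pieces cross.
    candidates = [Fraction(0), Fraction(1)]
    for a1, a2 in pts:
        for b1, b2 in pts:
            if a1 < b1 and a2 > b2:
                candidates.append(Fraction(a2 - b2, (b1 - a1) + (a2 - b2)))
    return max(g(s) for s in candidates)


# Support {(0,2),(4,0)}: the edge lies on t1/4 + t2/2 = 1, so kappa = (1/4, 1/2)
print(newton_distance([(0, 2), (4, 0)]))  # 4/3
```

The printed value agrees with the relation \(d(\phi ) = 1/(\kappa _1+\kappa _2)\) for \(\kappa = (1/4, 1/2)\).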

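The Newton height \(h(\phi )\) of \(\phi \) is defined as

\[ h(\phi ) :=\sup \{ d(\phi \circ \varphi ) : \varphi \text { is a smooth local coordinate change} \}. \]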

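By a smooth local coordinate change we mean a function \(\varphi \) which is smooth and invertible in a neighbourhood of the origin, and \(\varphi (0) = 0\). We also define the linear height as

\[ h_{\text {lin}}(\phi ) :=\sup \{ d(\phi \circ \varphi ) : \varphi \text { is an invertible linear coordinate change} \}. \]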

For a coordinate change \(\varphi \) we shall denote the new coordinates by \(y = \varphi (x)\). In this case we also denote \(d_y = d(\phi \circ \varphi )\). We say that \(\phi \) is adapted in the y coordinates if \(d_y = h(\phi )\). Analogously, we say that \(\phi \) is linearly adapted in coordinates y if \(d_y = h_{\text {lin}}(\phi )\). When \(\phi \) is adapted in its original coordinates x we say that \(\phi \) is adapted, and if \(\phi \) is not adapted in its original coordinates, then we say that \(\phi \) is non-adapted. We shall use analogous expressions for linear adaptedness. We obviously always have \(d_x = d(\phi ) \leqslant h_{\text {lin}}(\phi ) \leqslant h(\phi )\).

The existence of an adapted coordinate system for real analytic functions on \({\mathbb {R}}^2\) was first proven by Varchenko in [32]. He gave an explicit algorithm on how to construct an adapted coordinate system. His result was generalised in [14] where it was shown that an adapted coordinate system exists for general smooth functions. It turns out that in the smooth case one can also essentially use Varchenko’s algorithm. In this article when we refer to Varchenko’s algorithm we shall always mean the variant used in [14]. In this variant one constructs an adapted coordinate system in the form of a non-linear shear transformation

\[ y_1 :=x_1, \qquad y_2 :=x_2 - \psi (x_1). \]

The smooth real-valued function \(\psi \) can be taken in the real-analytic case to be the principal root jet of \(\phi \) as defined in [16]. We denote the function \(\phi \) in the new (adapted) coordinates by \(\phi ^a\). Then we have

\[ \phi ^a(y_1,y_2) = \phi (y_1, y_2 + \psi (y_1)). \]

We remark that when \(\phi \) is not adapted, then \(m = \kappa _2/\kappa _1\) is a positive integer and \(\psi (x_1) - b_1 x_1^m = {\mathcal {O}}(x_1^{m+1})\) for some nonzero real constant \(b_1\).
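To illustrate how a shear of this type can raise the Newton distance, here is a small sketch on a polynomial example of our own choosing, \(\phi (x_1,x_2) = (x_2 - x_1^2)^2 + x_1^5\), with the assumed shear \(\psi (x_1) = x_1^2\) (so \(m = 2\) and \(b_1 = 1\)). Polynomials are stored as dictionaries from exponent pairs to coefficients; the helper names `shear` and `newton_distance` are hypothetical and not taken from [14] or [16].

```python
from fractions import Fraction
from math import comb


def shear(poly, b1, m):
    """Return phi(y1, y2 + b1*y1**m) for phi given as {(a1, a2): coeff}."""
    out = {}
    for (a1, a2), c in poly.items():
        # Binomial expansion of (y2 + b1*y1**m)**a2
        for k in range(a2 + 1):
            key = (a1 + m * (a2 - k), k)
            out[key] = out.get(key, 0) + c * comb(a2, k) * b1 ** (a2 - k)
    # Drop monomials whose coefficients cancelled
    return {e: c for e, c in out.items() if c != 0}


def newton_distance(support):
    """Newton distance of a finite Taylor support (max of the support function)."""
    pts = sorted(set(support))

    def g(s):
        return min(s * a1 + (1 - s) * a2 for a1, a2 in pts)

    candidates = [Fraction(0), Fraction(1)]
    for a1, a2 in pts:
        for b1_, b2_ in pts:
            if a1 < b1_ and a2 > b2_:
                candidates.append(Fraction(a2 - b2_, (b1_ - a1) + (a2 - b2_)))
    return max(g(s) for s in candidates)


# phi = x2^2 - 2*x1^2*x2 + x1^4 + x1^5 = (x2 - x1^2)^2 + x1^5
phi = {(0, 2): 1, (2, 1): -2, (4, 0): 1, (5, 0): 1}
phi_a = shear(phi, b1=1, m=2)

print(phi_a)                   # {(0, 2): 1, (5, 0): 1}, i.e. y2^2 + y1^5
print(newton_distance(phi))    # 4/3
print(newton_distance(phi_a))  # 10/7
```

In this example the distance grows from \(4/3\) to \(10/7\) after the shear, consistent with \(d_x \leqslant h(\phi )\), and both values are below 2.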

We introduce next Varchenko’s exponent \(\nu (\phi ) \in \{0,1\}\). If \(h(\phi ) \geqslant 2\) and there exists an adapted coordinate system y such that in these coordinates the principal face of \(\phi ^a(y)\) is a vertex, we define \(\nu (\phi ) :=1\). In all other cases we take \(\nu (\phi ) :=0\). In particular \(\nu (\phi ) = 0\) whenever \(h(\phi ) < 2\). A concrete characterisation for determining when an adapted coordinate system having the principal face as a vertex exists can be found in [15, Lemma 1.5].

Let us discuss next linear adaptedness. We assume that \(h_{\text {lin}}(\phi ) < h(\phi )\), i.e., that we cannot achieve adapted coordinates with a linear coordinate change. In [16, Section 1.3] it was shown that in this case we can always find a linearly adapted coordinate system, and [16, Proposition 1.7] gives an explicit characterisation of when a coordinate system is linearly adapted. It was shown in particular that if the coordinate system x is not already linearly adapted, then one just needs to apply the first step of Varchenko’s algorithm in order to obtain it.

Since in our mixed norm case we consider only \(p_1=p_2\), we can freely use linear coordinate changes in “tangential” variables \((x_1,x_2)\) in the expression (1.2). Thus we may assume without loss of generality that either the original coordinate system x is already adapted, or that it is at least linearly adapted. In particular, we may assume \(d(\phi ) = h_{\text {lin}}(\phi )\).

The final important concept we introduce is the augmented Newton polyhedron \({\mathcal {N}}^{\text {res}}(\phi ^a)\) of a non-adapted \(\phi \) (note the slight change in notation compared to [16], where \({\mathcal {N}}^{\text {r}}(\phi ^a)\) is used instead). \({\mathcal {N}}^{\text {res}}(\phi ^a)\) is defined as the convex hull of the set

\[ L^+ \cup {\mathcal {N}}(\phi ^a), \]

where \(L^+\) is defined as follows. Let \(L_{\kappa }\) be the line containing the principal face \(\pi (\phi )\) of \({\mathcal {N}}(\phi )\) and let \(P = (t^P_1, t^P_2)\) be the point on \(L_{\kappa } \cap {\mathcal {N}}(\phi ^a)\) with the smallest \(t_2\) coordinate. Such a point always exists. Then \(L^+\) is the ray

\[ L^+ :=\{ (t_1,t_2) \in L_{\kappa } : t_2 \geqslant t^P_2 \}. \]

(See Fig. 1).

1.2 The main results

Let us briefly review all the conditions on the function \(\phi \) which we may assume without loss of generality when considering the mixed norm restriction problem:

- \(\phi (0)=0\) and \(\nabla \phi (0) = 0\),

- \(\phi \) is of finite type on \(\Omega \),

- the weight \(\kappa \) determined by the principal face of \({\mathcal {N}}(\phi )\) (or by the edge containing the principal face as its left endpoint) satisfies \(m = \kappa _2 / \kappa _1 \geqslant 1\), and

- the original coordinate system x is either adapted, or linearly adapted but not adapted. In both cases we have \(d(\phi ) = h_{\text {lin}}(\phi )\).

Recall that S denotes the surface given as the graph of \(\phi \) and \(\mathrm {d}\sigma \) its surface measure. We are considering the mixed norm Fourier restriction problem (1.2) when \(\rho \) is supported in a sufficiently small neighbourhood of the origin.

We begin by stating necessary conditions which will be obtained by means of Knapp-type examples. When \(\phi \) is not adapted we denote by

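the function defined in the following way. Consider all lines of the form

\[ L_{{\tilde{\kappa }}} :=\{ (t_1,t_2) \in {\mathbb {R}}^2 : {\tilde{\kappa }}_1 t_1 + {\tilde{\kappa }}_2 t_2 = 1 \}, \tag{1.6} \]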

where \({\tilde{\kappa }} \in [0,\infty )^2\) is a weight. For each \(0 \leqslant {\tilde{\kappa }}_1 \leqslant \kappa _1\) there is a unique \({\tilde{\kappa }}_2\) so that (1.6) determines a supporting line \(L_{{\tilde{\kappa }}}\) to \({\mathcal {N}}^{\text {res}}(\phi ^a)\). We then define \(K({\tilde{\kappa }}_1)\) to be \({\tilde{\kappa }}_2\) for \({\tilde{\kappa }}_1 \in [0,\kappa _1]\) (see Fig. 2). Note that then the weight (0, K(0)) determines the line containing the horizontal edge of the augmented Newton polyhedron, i.e., the rightmost edge of \({\mathcal {N}}^{\text {res}}(\phi ^a)\). The weight \((\kappa _1, K(\kappa _1)) = \kappa \) determines the line containing the edge associated to the principal face of \({\mathcal {N}}(\phi )\), which is the leftmost edge of \({\mathcal {N}}^{\text {res}}(\phi ^a)\).

Denote by \({\mathcal {L}}\) the Legendre transformation, which acts on real-valued convex functions K by:

Then we may state the necessary conditions in the following way:

Theorem 1.2

Let \(\phi \) be as above and let us assume that the estimate (1.2) holds true with \(\rho (0) \ne 0\). If \(\phi \) is adapted, then we have the necessary condition

If K is as above and \(\phi \) is linearly adapted, but not adapted, then we necessarily have

Recall that \(d(\phi ) = h(\phi )\) when \(\phi \) is adapted. The above theorem is a direct consequence of Proposition 2.1 in Sect. 2 below and the discussion in Sect. 2.2. The necessary conditions are depicted in Fig. 3.

The main result of this paper is:

Theorem 1.3

Let \(\phi \) be as above and \(\rho \) supported in a sufficiently small neighbourhood of 0. If either

(a) \(\phi \) is adapted in its original coordinates, or

(b) \(\phi \) is non-adapted, \(h_{\text {lin}}(\phi ) < 2\), and \(\phi \) is real analytic,

then the estimate (1.2) holds true for all \((1/p_1',1/p_3')\) as determined by Theorem 1.2, except for the point \((1/p_1',1/p_3') = (0,1/(2h(\phi )))\) where it is false if \(\rho (0) \ne 0\) and either \(h(\phi ) = 1\) or \(\nu (\phi ) = 1\).

In case (b) we shall actually prove the claim for a more general class of functions than is stated here.

Part (a) of the above theorem follows from Proposition 4.2, and part (b) follows from Theorem 5.1. Let us mention that in the case \(h_{\text {lin}}(\phi ) < 2\) it turns out that we always have \(\nu (\phi ) = 0\), which will be important for the boundary point \((1/p_1',1/p_3') = (0,1/(2h(\phi )))\).

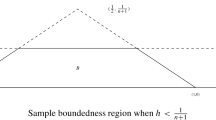

In this article we do not deal with the non-adapted case when \(h_\text {lin}(\phi ) \geqslant 2\) in its full generality. Let us briefly comment on how one can easily get some preliminary Fourier restriction estimates. Namely, the abstract result from [20] by Keel and Tao implies that we automatically have the Fourier restriction estimate for the region labeled by KT in Fig. 3 below. For details we refer to Proposition 4.1.

One can combine this result with the case \(p_1=p_3\) from Theorem 1.1 and get by interpolation the region labeled by IM in Fig. 3.

2 Necessary conditions

In this section our assumptions on \(\phi \) are as explained in Sect. 1.2. Our goal is to find a complete set of necessary conditions on \(p = (p_1,p_3) \in [1,\infty ]^2\) for (1.2) to hold true whenever \(\rho (0) \ne 0\). We shall reframe the conditions in several ways: an “explicit” form in Sect. 2.1, a form as in Theorem 1.2 using the Legendre transformation of K in Sect. 2.2, and a form when we fix the ratio \(p_1'/p_3'\) in Sect. 2.3. In Sect. 2.4 we discuss the normal forms of \(\phi \) when \(h_{\text {lin}}(\phi ) < 2\) and determine explicitly the necessary conditions in this case.

2.1 The explicit form

Let us first introduce some further notation. Recall that if \(\phi \) is linearly adapted but not adapted, then the adapted coordinate system is obtained through \((y_1, y_2) = (x_1, x_2 - \psi (x_1))\), where \(\psi \) is the principal root jet, and recall that the function \(\phi \) in the new coordinates y is \(\phi ^a(y_1,y_2) = \phi (y_1, y_2 + \psi (y_1))\), i.e., \(\phi ^a\) represents the function \(\phi \) in adapted coordinates. We denote the vertices of \({\mathcal {N}}(\phi ^a)\) by

where \(n \geqslant 0\) and we assume that the points are ordered from left to right, i.e., \(A_{l-1} < A_l\) for \(l = 1,2,\ldots ,n\). Next, we denote the compact edges of \({\mathcal {N}}(\phi ^a)\) by

and also the unbounded edges by

see Fig. 1. Let us denote by \(L_l, \, l = 0, \ldots , n+1\), the associated lines on which these edges lie. Each line \(L_l\) is given by the equation

where \((\kappa _1^l, \kappa _2^l) \in [0,\infty )^2\) is its associated weight. We also introduce the quantity \(a_l :={\kappa _2^l}/{\kappa _1^l}\), which is related to the slope of \(L_l\), namely, its slope is then equal to \(-1/a_l\). We obviously have \(a_0 = 0\) and \(a_{n+1} = \infty \).

Recall that we denote by \(0< m < \infty \) the leading exponent in the Taylor expansion of \(\psi \) and that we define \(L_{\kappa }\) to be the unique line \(\kappa _1 t_1 + \kappa _2 t_2 = 1\) satisfying \(\kappa _2 = m \kappa _1\) and which is a supporting line to the Newton polyhedron \({\mathcal {N}}(\phi ^a)\) (see Fig. 1). This line coincides with the line containing the principal face of \({\mathcal {N}}(\phi )\), as follows from Varchenko’s algorithm.

Next, let \(l_0\) be such that \(a_{l_0} > m \geqslant a_{l_0-1}\). Note that the point \((A_{l_0-1},B_{l_0-1})\) is the right endpoint of the intersection of \(L_{\kappa }\) and \({\mathcal {N}}(\phi ^a)\). Varchenko’s algorithm also shows that \(B_{l_0-1} \geqslant A_{l_0-1}\). We denote by \(l^a\) the index such that \(\kappa ^{l^a}\) is associated to the principal face of \({\mathcal {N}}(\phi ^a)\). If \(\pi (\phi ^a)\) is a vertex, we take \(l^a\) to be associated to the edge to the left of \(\pi (\phi ^a)\). Note \(l^a \geqslant l_0\).

Now we can express the augmented Newton polyhedron \({\mathcal {N}}^{\text {res}}(\phi ^a)\) as the convex hull of the set \({\mathcal {N}}(\phi ^a) \cup L_\kappa ^+\), where \(L_\kappa ^+\) denotes the ray \(\{ (t_1,t_2) \in L_{\kappa } : t_2 \geqslant B_{l_0-1}\}\).

Before stating the necessary conditions analogous to [16, Proposition 1.16], let us recall that in the case of the principal face being a vertex, we take \(\kappa \) to determine the line containing the edge of \({\mathcal {N}}(\phi )\) which has \(\pi (\phi )\) as its left endpoint. Furthermore recall that \(m = \kappa _2 / \kappa _1 \geqslant 1\) and that \(\phi \) is linearly adapted in its original coordinates.

Proposition 2.1

Let \(\phi \) be as above. Let \(\rho \geqslant 0\), \(\rho \in C_0^\infty (S)\), be a smooth compactly supported function with \(\rho (0) \ne 0\), and assume that the estimate (1.2) holds true. If \(\phi \) is non-adapted, let us consider the nonlinear shear transformation \((y_1, y_2) = (x_1, x_2 - \psi (x_1))\) and let \(\phi ^a(y) :=\phi (y_1,y_2+\psi (y_1))\) be the function \(\phi \) expressed in the adapted coordinates. Then it necessarily follows that for all weights \(({\tilde{\kappa }}_1,{\tilde{\kappa }}_2)\) such that \(L_{{\tilde{\kappa }}}\) is a supporting line to \({\mathcal {N}}^\text {res}(\phi ^a)\) we have

This is equivalent to

Furthermore, when \(\phi \) is either adapted or non-adapted we have the conditions

In particular when \(\phi \) is non-adapted the first condition in (2.3) then coincides with the one in the second line of (2.2). Moreover in this case the conditions in (2.2) for \(l > l^a\) are redundant, and if we fix \(p_3' = \infty \) (resp. \(p_1' = \infty \)) then all the conditions reduce to \(p_1' \geqslant 2\) (resp. \(p_3' \geqslant 2h(\phi )\)).

Proof

We give only a sketch of the proof since it follows the same lines as in [16]. Let us consider any supporting line \(L_{{\tilde{\kappa }}}\) to the augmented Newton polyhedron \({\mathcal {N}}^\text {res}(\phi ^a)\) for some weight \(({\tilde{\kappa }}_1,{\tilde{\kappa }}_2)\). In particular, by the definition of the augmented Newton polyhedron, this implies that \({\tilde{\kappa }}_2 \geqslant m {\tilde{\kappa }}_1\).

We first consider the case when \({\tilde{\kappa }}_1 > 0\), i.e., when the associated line \(L_{{\tilde{\kappa }}}\) is not horizontal. In this case for each sufficiently small \(\varepsilon > 0\) we define the region \(D_\varepsilon ^a :=\{ y \in {\mathbb {R}}^2 : |y_1| \leqslant \varepsilon ^{{\tilde{\kappa }}_1}, |y_2| \leqslant \varepsilon ^{{\tilde{\kappa }}_2} \}\), which in the original coordinate system has the form \(D_\varepsilon :=\{ x \in {\mathbb {R}}^2 : |x_1| \leqslant \varepsilon ^{{\tilde{\kappa }}_1}, |x_2-\psi (x_1)| \leqslant \varepsilon ^{{\tilde{\kappa }}_2} \}\). Using the \(\phi ^a_{{\tilde{\kappa }}}\) part of the Taylor approximation of \(\phi ^a\) one easily gets that for each \(y \in D_\varepsilon ^a\) we have \(|\phi ^a(y)| \leqslant C \varepsilon \). Returning to the x coordinates we obtain \(|\phi (x)| \leqslant C \varepsilon \) when \(x \in D_\varepsilon \). But for \(x \in D_\varepsilon \) one has

\[ |x_2| \leqslant |x_2 - \psi (x_1)| + |\psi (x_1)| \lesssim \varepsilon ^{{\tilde{\kappa }}_2} + \varepsilon ^{m {\tilde{\kappa }}_1} \lesssim \varepsilon ^{m {\tilde{\kappa }}_1}, \]

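since \(|\psi (x_1)| \lesssim |x_1|^{m}\) and \({\tilde{\kappa }}_2 \geqslant m {\tilde{\kappa }}_1\). Therefore the region \(D_\varepsilon \) is contained in the set where \(|x_1| \leqslant C_1 \varepsilon ^{{\tilde{\kappa }}_1}\) and \(|x_2| \leqslant C_2 \varepsilon ^{m {\tilde{\kappa }}_1}\). Thus, we choose a Schwartz function \(\varphi _\varepsilon \) which has its Fourier transform of the form

\[ \widehat{\varphi _\varepsilon }(x_1,x_2,x_3) = \chi _0\Big ( \frac{x_1}{C_1 \varepsilon ^{{\tilde{\kappa }}_1}} \Big ) \, \chi _0\Big ( \frac{x_2}{C_2 \varepsilon ^{m {\tilde{\kappa }}_1}} \Big ) \, \chi _0\Big ( \frac{x_3}{C \varepsilon } \Big ) \]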

for some smooth compactly supported function \(\chi _0\) which is identically 1 on the interval \([-1,1]\). Then in particular we have \(\widehat{\varphi _\varepsilon }(x_1,x_2,\phi (x_1,x_2)) \geqslant 1\) on \(D_\varepsilon \).

Now on the one hand, since \(\rho (0) \ne 0\), we have

and on the other

Plugging these into (1.2) and letting \(\varepsilon \rightarrow 0\) one obtains (2.1) for the non-horizontal edges.
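The scaling behind this step can be sketched as follows. This is a reconstruction under our own normalisation, assuming that \(\widehat{\varphi _\varepsilon }\) is a product of cutoffs adapted to the box \(|x_1| \leqslant C_1 \varepsilon ^{{\tilde{\kappa }}_1}\), \(|x_2| \leqslant C_2 \varepsilon ^{m {\tilde{\kappa }}_1}\), \(|x_3| \leqslant C \varepsilon \), so that \(\varphi _\varepsilon \) is an anisotropic dilate of a fixed Schwartz function \(\Phi \) (the symbol \(\Phi \) and the constant c below are introduced only for this illustration):

\[ \varphi _\varepsilon (x) = c \, \varepsilon ^{(1+m){\tilde{\kappa }}_1 + 1} \, \Phi \big ( C_1 \varepsilon ^{{\tilde{\kappa }}_1} x_1, \, C_2 \varepsilon ^{m {\tilde{\kappa }}_1} x_2, \, C \varepsilon x_3 \big ). \]

Changing variables in the mixed norm, first in \((x_1,x_2)\) and then in \(x_3\), gives

\[ \Vert \varphi _\varepsilon \Vert _{L^{p_3}_{x_3}(L^{p_1}_{(x_1,x_2)})} \sim \varepsilon ^{(1+m){\tilde{\kappa }}_1 (1 - 1/p_1) + (1 - 1/p_3)} = \varepsilon ^{(1+m){\tilde{\kappa }}_1/p_1' + 1/p_3'}. \]

On the other hand, since \(\widehat{\varphi _\varepsilon } \geqslant 1\) on the part of S lying over \(D_\varepsilon \), since \(\rho \gtrsim 1\) near the origin, and since the shear preserves area so that \(|D_\varepsilon | = |D_\varepsilon ^a| \sim \varepsilon ^{{\tilde{\kappa }}_1 + {\tilde{\kappa }}_2}\), one has

\[ \Big ( \int _S |\widehat{\varphi _\varepsilon }|^2 \, \rho \, \mathrm {d}\sigma \Big )^{1/2} \gtrsim |D_\varepsilon |^{1/2} \sim \varepsilon ^{({\tilde{\kappa }}_1 + {\tilde{\kappa }}_2)/2}. \]

Comparing the two powers of \(\varepsilon \) as \(\varepsilon \rightarrow 0\) forces

\[ \frac{(1+m){\tilde{\kappa }}_1}{p_1'} + \frac{1}{p_3'} \leqslant \frac{{\tilde{\kappa }}_1 + {\tilde{\kappa }}_2}{2}. \]

As a consistency check, setting \(1/p_1' = 0\) leaves \(1/p_3' \leqslant ({\tilde{\kappa }}_1 + {\tilde{\kappa }}_2)/2\), which matches the intercept with the axis \(1/p_1' = 0\) quoted at the end of this proof, and taking \({\tilde{\kappa }} = \kappa \) with \(p_3' = \infty \) leaves \(1/p_1' \leqslant 1/2\).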

In the horizontal case \({\tilde{\kappa }}_1 = 0\) one only slightly changes the argument. Namely, one defines for a sufficiently small \(\delta > 0\) the set \(D_\varepsilon ^a :=\{ y \in {\mathbb {R}}^2 : |y_1| \leqslant \varepsilon ^{\delta }, |y_2| \leqslant \varepsilon ^{{\tilde{\kappa }}_2} \}\). The associated set in the x coordinates \(D_\varepsilon \) is then contained in the box determined by \(|x_1| \leqslant \varepsilon ^\delta \) and \(|x_2| \leqslant \varepsilon ^{m \delta }\). Furthermore, using a Taylor series expansion, one can easily show that for \(x \in D_\varepsilon \) we have again \(|\phi (x)| \leqslant C \varepsilon \). Now one proceeds as in the non-horizontal case, the only difference is that after taking the limit \(\varepsilon \rightarrow 0\), one also needs to take the limit \(\delta \rightarrow 0\).

Let us now briefly explain why (2.1) and (2.2) are equivalent. We obviously have that (2.1) implies (2.2). For the reverse implication we note that the \({\tilde{\kappa }}\)’s considered in (2.2) are by definition precisely those for which the lines \(L_{{\tilde{\kappa }}}\) contain the edges of the augmented Newton diagram. This means that all the other supporting lines touch the augmented Newton diagram at only one point. Now one just uses the fact that the associated weight \({\tilde{\kappa }}\) of such a supporting line \(L_{{\tilde{\kappa }}}\) is obtained by a convex combination of weights associated to the edges which intersect at the point through which \(L_{{\tilde{\kappa }}}\) passes. Thus, all the conditions in (2.1) can be obtained as convex combinations of conditions in (2.2).

The proof of (2.3) is similar to the one for (2.1). One considers the set \(D_\varepsilon \) defined by \(\{ x \in {\mathbb {R}}^2 : |x_1| \leqslant \varepsilon ^{\kappa _1}, |x_2| \leqslant \varepsilon ^{\kappa _2} \}\) in the case when the principal face of \({\mathcal {N}}(\phi )\) is compact. If it is not compact, then one uses \(\{ x \in {\mathbb {R}}^2 : |x_1| \leqslant \varepsilon ^{\delta }, |x_2| \leqslant \varepsilon ^{\kappa _2} \}\). Using the Taylor approximation of \(\phi (x)\) one gets that for \(x \in D_\varepsilon \) we have \(|\phi (x)| \lesssim \varepsilon \). The first condition in (2.3) is then obtained by plugging

into the estimate (1.2) in the compact case. In the non-compact case we just change \(\varepsilon ^{\kappa _1}\) to \(\varepsilon ^\delta \).

In the adapted case, when \(d(\phi ) = h(\phi )\), we also get automatically the second condition from the first one. Finally, as was mentioned at the beginning of this section, if \(\phi \) is non-adapted and if we take l such that \(\kappa ^l\) is associated to the principal face of \({\mathcal {N}}(\phi ^a)\), then we have \(h(\phi ) = 1/(\kappa _1^l + \kappa _2^l)\). Therefore the associated condition to this l in (2.2) implies the second condition in (2.3).

Let us now prove the remaining claims. When \(p_1' = \infty \), then all the conditions indeed reduce to \(1/p_3' \leqslant 1/(2h(\phi ))\) since \(\kappa _1^l+\kappa _2^l\) is minimal precisely for the edge \(\gamma _{l^a}\) which intersects the bisectrix of \({\mathcal {N}}(\phi ^a)\). This is a direct consequence of the fact that the augmented Newton polyhedron is obtained by the intersection of upper half-planes which have \(L_{\kappa }\) and \(L_l\)’s with \(\kappa _2^l/\kappa _1^l > m\) (i.e., for \(l \geqslant l_0\)) as boundaries, and of the fact that the bisectrix intersects \(L_l\) at \((1/(\kappa _1^l+\kappa _2^l), 1/(\kappa _1^l+\kappa _2^l))\).

When \(p_3' = \infty \), then the condition \(1/p_1' \leqslant 1/2\) is the strongest one; this is a direct consequence of \(\kappa _2^l/\kappa _1^l > m = \kappa _2/\kappa _1\).

We finally prove that one does not need to consider all the conditions in the first row of (2.2), but only for \(l = l_0,\ldots ,l^a\) where \(l^a\) is such that \(\gamma _{l^a}\) is the principal face of \({\mathcal {N}}(\phi ^a)\). This follows from the following two facts. Namely, we first note that the line in the \((1/p_1',1/p_3')\)-plane given by

intersects the axis \(1/p_1' = 0\) at the point which has the \(1/p_3'\) coordinate equal to \((\kappa _1^l+\kappa _2^l)/2\), which is greater than \(1/(2h(\phi ))\) if \(l \ne l^a\), by the previous discussion in the case \(p_1' = \infty \). And secondly, as \(\kappa _1^l\) decreases when l increases, the slope of the line (2.4) in the \((1/p_1',1/p_3')\)-plane increases with l too. Therefore, in the \((1/p_1',1/p_3')\)-plane the lines given by (2.4) and corresponding to necessary conditions associated to any l with \(l > l^a\) are lying above the line associated to \(l^a\) in the area where \(1/p_1' \geqslant 0\). \(\square \)

The necessary conditions from Proposition 2.1 determine a polyhedron in the \((1/p_1',1/p_3')\)-plane which we denote by \({\mathcal {P}}\) (see Fig. 3). Let us define the lines

associated to the necessary conditions. Using arguments similar to those in the proof of Proposition 2.1, or the Legendre transformation from the following Sect. 2.2, one can show that the polyhedron \({\mathcal {P}}\) is of the form \({\mathcal {P}} = OPP_{l_0}P_{l_0+1}\ldots P_{l^a-1}P_{l^a}{\tilde{P}}\), i.e., the polyhedron with vertices \(O, P, P_{l_0}, P_{l_0+1}, \ldots , P_{l^a-1}, P_{l^a}, {\tilde{P}}\), where the point O is the origin and the other points are as follows. The point P is (1/2, 0) and the point \({\tilde{P}}\) is \((0,1/(2h(\phi )))\). The point \(P_{l_0}\) is the intersection of \({\tilde{L}}\) and \({\tilde{L}}_{l_0}\), and all the other points \(P_{l}\) are given as intersections of the lines \({\tilde{L}}_{l}\) and \({\tilde{L}}_{l-1}\) for \(l = l_0+1, \ldots , l^a\) (Footnote 1).

As in the \(p_1=p_3\) case considered in [16], we expect that the conditions from Proposition 2.1 are sharp. This will of course follow if we prove that the Fourier restriction estimate is true within the range they determine. In the adapted case, when \(d(\phi ) = h(\phi )\), the only condition we obtained was

This condition is sharp as will be shown in Sect. 4, though sometimes the endpoint estimate on the \(1/p_3'\) axis will not hold.

2.2 The form using the Legendre transformation

As already noted, the necessary conditions can be stated as

for all \(({\tilde{\kappa }}_1,{\tilde{\kappa }}_2)\) such that \(L_{{\tilde{\kappa }}}\) is a supporting line to the augmented Newton polyhedron of \(\phi ^a\). This can be rewritten as

As in Sect. 1.2 we denote by K the function associating to each \({\tilde{\kappa }}_1 \in [0,\kappa _1]\) the \({\tilde{\kappa }}_2\) such that \(L_{{\tilde{\kappa }}}\) is a supporting line to the augmented Newton polyhedron of \(\phi ^a\), i.e., we have \({\tilde{\kappa }} = ({\tilde{\kappa }}_1, K({\tilde{\kappa }}_1))\). The Legendre transformation of K is given by (1.7) and thus we have

We have depicted the graph of K in Fig. 2.

2.3 Conditions when the ratio is fixed

If we fix a ratio \(r = p_1'/p_3' \in [0,\infty ]\), then we are able to introduce a quantity slightly more general than the restriction height \(h^{\text {res}}(\phi )\) introduced in [16]. We shall not use this quantity in this article, but it may prove useful when considering the mixed norm Fourier restriction for functions \(\phi \) with \(h_{\text {lin}}(\phi ) \geqslant 2\). The cases \(r \in \{0,\infty \}\) are not interesting since we shall prove the associated results in Sect. 4 easily, so we assume that \(r \in (0,\infty )\) is fixed. In this case by plugging in \(p_1' = rp_3'\) the conditions (2.2) can be restated as

where again \({\tilde{\kappa }}\) is such that \(L_{{\tilde{\kappa }}}\) is a supporting line to the augmented Newton polyhedron \({\mathcal {N}}^\text {res}(\phi ^f)\). But now we notice that the number on the right hand side of the above inequality is actually the \(t_2\)-coordinate of the intersection of the line \(L_{{\tilde{\kappa }}}\) with the parametrised line \(t \mapsto ( t - \frac{1+m}{r},t )\), which we shall denote by \(\Delta ^{(m)}_r\). This motivates us to define

when \(\kappa _2^l/\kappa _1^l > m\) (i.e., for \(l \geqslant l_0\)). Then, if we define

the conditions (2.2) can be restated as the requirement that the inequalities

must necessarily hold for all \(r \in (0,\infty )\), along with the inequalities \(p_1' \geqslant 2\) and \(p_3' \geqslant 2h(\phi )\), representing the respective cases \(r = 0\) and \(r = \infty \).

By definition, the restriction height \(h^{\text {res}}(\phi )\) from [16] coincides with \(h^{\text {res}}_r(\phi )\) when \(r = 1\), and in the same way as in [16] we see from (2.6) that \(h^{\text {res}}_r(\phi )+1\) can be read off as the \(t_2\)-coordinate of the point where the line \(\Delta ^{(m)}_r\) intersects the augmented Newton diagram of \(\phi ^a\) (see Fig. 4).
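The geometric description above can be sketched numerically. The following is only an illustration under stated assumptions: the supporting line \(L_{{\tilde{\kappa }}}\) is taken to be \({\tilde{\kappa }}_1 t_1 + {\tilde{\kappa }}_2 t_2 = 1\), the function names `delta_line_hit` and `entry_height` are hypothetical, and the sample weights are the ones one would read off from an A type normal form (Sect. 2.4) with \(m = 2\), \(n = 5\). The height at which \(\Delta ^{(m)}_r\) enters an intersection of half-planes is the largest of the heights at which it meets the individual boundary lines.

```python
from fractions import Fraction

def delta_line_hit(kappa, m, r):
    """t_2-coordinate where t -> (t - (1+m)/r, t) meets k1*t1 + k2*t2 = 1."""
    k1, k2 = kappa
    # Substitute t1 = t - (1+m)/r and t2 = t, then solve the linear equation for t.
    return (1 + k1 * Fraction(1 + m) / r) / (k1 + k2)

def entry_height(weights, m, r):
    """Largest hit over all weights: the height at which the line enters
    the intersection of the half-planes k1*t1 + k2*t2 >= 1."""
    return max(delta_line_hit(k, m, r) for k in weights)

# Hypothetical sample data (assumption): A type normal form with m = 2, n = 5.
m, n = 2, 5
kappa = (Fraction(1, 2 * m), Fraction(1, 2))  # assumed weight of the principal face of N(phi)
kappa_l = (Fraction(1, n), Fraction(1, 2))    # assumed weight of the edge of N(phi^a)

height_r1 = entry_height([kappa, kappa_l], m, Fraction(1))
height_crit = entry_height([kappa, kappa_l], m, Fraction(m + 1, 2))
```

For this sample data the two boundary lines are met at the same height when \(r = (m+1)/2\), which is the ratio of the critical exponents found in Sect. 2.4 for A type singularities.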

2.4 Necessary conditions when \(h_\text {lin}(\phi ) < 2\)

In the case when \(\phi \) is non-adapted and the linear height of \(\phi \) is strictly less than 2 it turns out that there are only two necessary conditions from Proposition 2.1. Namely, in this case we shall show that \(l_0 = l^a\), and therefore the only conditions are

If we replace above the inequality signs with equality signs, we get two linear equations in \((1/p_1',1/p_3')\). Let \((1/{\mathfrak {p}}_1',1/{\mathfrak {p}}_3')\) be the solution of this system. We shall call \({\mathfrak {p}} = ({\mathfrak {p}}_1,{\mathfrak {p}}_3)\) the critical exponent. Then, by interpolation, it is sufficient to prove the Fourier restriction estimate (1.2) for the exponent \({\mathfrak {p}}\) and the endpoint exponents associated to the points lying on the axes, i.e., (1/2, 0) and \((0,1/(2h(\phi )))\).

In order to obtain what precisely the critical exponent \({\mathfrak {p}}\) is, we recall [16, Proposition 2.11] which gives us explicit normal forms of \(\phi \) in the case when \(h_\text {lin}(\phi ) < 2\). In the real analytic case these normal forms were derived in [27] by Siersma. [16, Proposition 2.11] states that there are two types of singularities, A and D.

In the case of A type singularity the form of the function \(\phi \) is

Here \(\psi \), b, and \(b_0\) are smooth functions such that \(\psi (x_1) = cx_1^m + {\mathcal {O}}(x_1^{m+1})\) (with \(c \ne 0\) and \(m \geqslant 2\)), \(b(0,0) \ne 0\), and \(b_0(x_1) = x_1^n \beta (x_1)\) (with either \(\beta (0) \ne 0\) and \(n \geqslant 2m+1\), or \(b_0\) is flat, i.e., “\(n = \infty \)”). The function \(\psi \) is the principal root jet of \(\phi \). If \(b_0\) is flat, this is \(A_\infty \) type singularity, and otherwise it is \(A_{n-1}\) type singularity. In adapted coordinates, the formula (2.8) turns into

where \(b^a(y_1,y_2) = b(y_1,y_2+\psi (y_1))\), i.e., the function b in \((y_1,y_2)\) coordinates. From the formulas (2.8) and (2.9) one can now determine the form of the Newton polyhedron of \(\phi \) and \(\phi ^a\) (see Fig. 5). Reading off the Newton polyhedra we have

and so the necessary conditions (2.2) can be written as

Now an easy calculation shows that \((1/{\mathfrak {p}}_1',1/{\mathfrak {p}}_3') = (1/(2m+2),1/4)\), i.e., we have determined the critical exponent.

In the case of D type singularity [16, Proposition 2.11] tells us that

i.e., the function b from (2.8) is now to be written as \(b(x_1,x_2) = x_1 b_1(x_1,x_2) + x_2^2 b_2(x_2)\). In this case we have the conditions \(b_1(0,0) \ne 0\) and \(b_2(x_2) = c_2 x_2^k + {\mathcal {O}}(x_2^{k+1})\). Again \(\psi (x_1) = cx_1^m + {\mathcal {O}}(x_1^{m+1})\) (\(c \ne 0\), \(m \geqslant 2\)) and \(b_0(x_1) = x_1^n \beta (x_1)\), but now either \(\beta (0) \ne 0\) and \(n \geqslant 2m+2\), or \(b_0\) is flat. If \(b_0\) is flat, this is \(D_\infty \) type singularity, and otherwise it is \(D_{n+1}\) type singularity. The function \(b_1^a\) is the function \(b_1\) in \((y_1,y_2)\) coordinates. Now one determines the form of the Newton polyhedra (see Fig. 6) and reads off that

Therefore, the necessary conditions can be written as

Again, a simple calculation shows that \((1/{\mathfrak {p}}_1',1/{\mathfrak {p}}_3') = (1/(4m+4),1/4)\).

Note that in the \(A_\infty \) and \(D_\infty \) cases the necessary conditions form a right-angled trapezium in the \((1/p_1',1/p_3')\)-plane (easily seen by taking \(n \rightarrow \infty \); one can also do a direct calculation). As the critical exponents in the cases \(A_{n-1}\) and \(D_{n+1}\) do not depend on n, one is easily convinced that the critical exponents of \(A_\infty \) and \(D_\infty \) cases are equal to the respective critical exponents of \(A_{n-1}\) and \(D_{n+1}\).
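The two critical exponents, and their independence of n, can be checked with exact rational arithmetic. The sketch below rests on assumptions that are not taken verbatim from the displayed formulas: the two boundary equations are assumed to be \(1/p_1' + d(\phi )/p_3' = 1/2\) and \((1+m)\kappa _1^l/p_1' + 1/p_3' = (\kappa _1^l+\kappa _2^l)/2\), and the values of \(d(\phi )\) and \(\kappa ^l\) are the ones one would read off from the normal forms above. All function names are hypothetical.

```python
from fractions import Fraction

def critical_exponent(d, m, kappa_l):
    """Solve, by Cramer's rule, the assumed boundary equations
         x + d*y = 1/2,
         (1+m)*k1*x + y = (k1 + k2)/2,
    for (x, y) = (1/p_1', 1/p_3')."""
    k1, k2 = kappa_l
    a11, a12, b1 = Fraction(1), d, Fraction(1, 2)
    a21, a22, b2 = (1 + m) * k1, Fraction(1), (k1 + k2) / 2
    det = a11 * a22 - a12 * a21
    return ((b1 * a22 - a12 * b2) / det, (a11 * b2 - a21 * b1) / det)

def a_type(m, n):
    # Assumed data: d = 2m/(m+1), kappa^l = (1/n, 1/2), valid for n >= 2m+1.
    return critical_exponent(Fraction(2 * m, m + 1), m,
                             (Fraction(1, n), Fraction(1, 2)))

def d_type(m, n):
    # Assumed data: d = (2m+1)/(m+1), kappa^l = (1/n, (n-1)/(2n)), valid for n >= 2m+2.
    return critical_exponent(Fraction(2 * m + 1, m + 1), m,
                             (Fraction(1, n), Fraction(n - 1, 2 * n)))

example_a = a_type(2, 5)
example_d = d_type(2, 6)
```

Under these assumptions `a_type(m, n)` returns \((1/(2m+2), 1/4)\) and `d_type(m, n)` returns \((1/(4m+4), 1/4)\) for every admissible n, in agreement with the values stated above.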

3 Auxiliary results

3.1 Reduction to the case \(\nabla \phi (0) = 0\)

In Sect. 1.1 we mentioned that one can always reduce the mixed norm Fourier restriction problem to the case when \(\nabla \phi (0) = 0\), despite rotational invariance not being at one’s disposal. Let us justify this. Consider the linear transformation \(L(x_1,x_2,x_3) :=(x_1,x_2,x_3 + \partial _1 \phi (0) x_1 + \partial _2 \phi (0) x_2)\) whose inverse and transpose are respectively \(L^{-1}(x_1,x_2,x_3) = (x_1,x_2,x_3 - \partial _1 \phi (0) x_1 - \partial _2 \phi (0) x_2)\) and \(L^t(x_1,x_2,x_3) = (x_1 + \partial _1 \phi (0) x_3, x_2 + \partial _2 \phi (0) x_3, x_3)\). Plugging in the function \(f \circ L^t\) into the expression of the mixed norm Fourier restriction estimate (1.2) we obtain

Now one just notices that \(\Vert f \circ L^t \Vert _{L^{p_3}_{x_3} (L^{p_1}_{(x_1,x_2)})} = \Vert f \Vert _{L^{p_3}_{x_3} (L^{p_1}_{(x_1,x_2)})}\), and that

since the determinant of L is 1. Thus the estimate (1.2) with the function \(\phi \) is equivalent (up to a slight change in amplitude due to the Jacobian factor \(\sqrt{1+|\nabla \phi (\xi )|^2}\)) to the same estimate with the function \(\phi \) replaced by the function \(\xi \mapsto \phi (\xi ) - \xi \cdot \nabla \phi (0)\), which has gradient 0 at the origin.
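The algebra behind this reduction can be checked on a concrete example. In the sketch below the phase `phi`, the sample point, and all function names are hypothetical choices made for illustration; integer arithmetic is used so that every identity is exact. Note that \(L^t\) leaves \(x_3\) unchanged and, for fixed \(x_3\), only translates \((x_1,x_2)\), which is the reason the mixed norm is preserved.

```python
def make_maps(g1, g2):
    """Return L, its inverse and its transpose for (g1, g2) = grad phi(0)."""
    L = lambda x: (x[0], x[1], x[2] + g1 * x[0] + g2 * x[1])
    L_inv = lambda x: (x[0], x[1], x[2] - g1 * x[0] - g2 * x[1])
    L_t = lambda x: (x[0] + g1 * x[2], x[1] + g2 * x[2], x[2])
    return L, L_inv, L_t

def dot(u, v):
    """Euclidean inner product of two tuples."""
    return sum(a * b for a, b in zip(u, v))

def phi(xi1, xi2):
    # Hypothetical phase with phi(0) = 0 and grad phi(0) = (3, -2).
    return 3 * xi1 - 2 * xi2 + xi1 ** 2 + xi1 * xi2 ** 3

g1, g2 = 3, -2
L, L_inv, L_t = make_maps(g1, g2)

xi = (5, 7)
graph_point = (xi[0], xi[1], phi(*xi))
# L^{-1} maps the graph of phi to the graph of phi minus its linear part at 0.
flattened = L_inv(graph_point)
```

Here `flattened[2]` equals \(\phi (\xi ) - \xi \cdot \nabla \phi (0)\) at the chosen point, i.e., the value of the modified phase with vanishing gradient at the origin.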

3.2 Auxiliary results related to oscillatory sums and integrals

We first mention that we shall often use the more or less standard van der Corput Lemma from [16] (see [16, Lemma 2.1]), originating from the work of van der Corput [5] (Footnote 2).

Another lemma (less general, but with a stronger implication than the one in [16, Section 2.2]) we need gives us an asymptotic of an oscillatory integral of Airy type. We shall also need some variants, but these we shall state and prove along the way when they are needed.

Lemma 3.1

For \(\lambda \geqslant 1\) and \(u \in {\mathbb {R}}\), \(|u| \lesssim 1\), let us consider the integral

where a, b are smooth and real-valued functions on an open neighbourhood of \(I \times K\) for I a compact neighbourhood of the origin in \({\mathbb {R}}\) and K a compact subset of \({\mathbb {R}}^m\). Let us assume that \(b(t,s) \ne 0\) on \(I \times K\) and that \(|t| \leqslant \varepsilon \) on the support of a. If \(\varepsilon > 0\) is chosen sufficiently small and \(\lambda \) sufficiently large, then the following holds true:

(a) If \(\lambda ^{2/3} |u| \lesssim 1\), then we can write

$$\begin{aligned} J(\lambda ,u,s) = \lambda ^{-1/3} g\left( \lambda ^{2/3}u, \lambda ^{-1/3}, s\right) , \end{aligned}$$

where \(g(v, \mu , s)\) is a smooth function of \((v,\mu ,s)\) on its natural domain.

(b) If \(\lambda ^{2/3} |u| \gg 1\), then we can write

$$\begin{aligned} J(\lambda ,u,s) =&\,\lambda ^{-1/2} |u|^{-1/4} \chi _0(u/\varepsilon ) \, \sum _{\tau \in \{+,-\}} a_\tau (|u|^{1/2},s; \lambda |u|^{3/2}) e^{i \lambda |u|^{3/2} q_\tau (|u|^{1/2},s)} \\&+ (\lambda |u|)^{-1} E(\lambda |u|^{3/2}, |u|^{1/2}, s), \end{aligned}$$

where \(a_{\pm }\) are smooth functions in \((|u|^{1/2},s)\) and classical symbols (Footnote 3) of order 0 in \(\lambda |u|^{3/2}\), and where \(q_\pm \) are smooth functions such that \(|q_\pm | \sim 1\). The function E is a smooth function satisfying

$$\begin{aligned} \left| \partial ^\alpha _\mu \partial ^\beta _v \partial ^\gamma _s E(\mu , v, s)\right| \leqslant C_{N,\alpha ,\beta ,\gamma } |\mu |^{-N}, \end{aligned}$$

for all \(N, \alpha , \beta , \gamma \in {\mathbb {N}}_0\).

The proof is the same as in [16], up to obvious modifications.

Next, we state results relating the Newton polyhedron and its associated quantities with asymptotics of oscillatory integrals.

Theorem 3.2

Let \(\phi : \Omega \rightarrow {\mathbb {R}}\) be a smooth function of finite type defined on an open set \(\Omega \subset {\mathbb {R}}^2\) containing the origin. If \(\Omega \) is a sufficiently small neighbourhood of the origin and \(\eta \in C_c^\infty (\Omega )\), then

for all \(\xi \in {\mathbb {R}}^3\).

This result was proven in [15] and can be interpreted as a uniform estimate with respect to a linear perturbation of the phase. The case when \(h(\phi ) < 2\) was considered earlier in [6]. In the case when \(\phi \) is real analytic and there is no perturbation (i.e., \(\xi _1=\xi _2=0\)) the above result goes back to Varchenko [32]. In the case of a real analytic function \(\phi \) one actually has a uniform estimate with respect to analytic perturbations (this was proved by Karpushkin [18]).

We also have the following result from [15] which gives us sharpness of Theorem 3.2 in the case when \(\xi _1=\xi _2=0\).

Theorem 3.3

Let \(\phi \) be as in Theorem 3.2 and let us define for \(\lambda > 0\) the function

for an \(\eta \in C_c^\infty (\Omega )\). If the principal face \(\pi (\phi ^a)\) of \({\mathcal {N}}(\phi ^a)\) is a compact face, and if \(\Omega \) is a sufficiently small neighbourhood of the origin, then

where \(c_{\pm }\) are nonzero constants depending on the phase \(\phi \) only.

An analogous result was proved earlier by Greenblatt in [10] for real analytic phase functions \(\phi \). When the principal face is not compact, Theorem 3.3 may fail in general (for an example of this see [17]).

Finally, we state three lemmas which we shall often use in conjunction with Stein’s complex interpolation theorem. The proofs of the first and the third lemma can be found in [16, Section 2.5], while we only give a brief note on the proof of the second lemma since it is a direct modification of the first one. The proofs of all of them are elementary, though the proof of the third one is quite technical.

Lemma 3.4

Let \(Q = \prod _{k=1}^n[-R_k,R_k]\) be a compact cuboid in \({\mathbb {R}}^n\) for some real numbers \(R_k > 0\), \(k = 1,\ldots ,n\), and let \(\alpha ,\beta ^1,\ldots ,\beta ^n\) be some fixed nonzero real numbers. For a \(C^1\) function H defined on an open neighbourhood of Q, nonzero real numbers \(a_1, \ldots , a_n\), and M a positive integer we define

for \(t \in {\mathbb {R}}\). Then there is a constant C which depends only on Q and the numbers \(\alpha \) and \(\beta ^k\)’s, but not on H, \(a_k\)’s, M, and t, such that

for all \(t \in {\mathbb {R}}\).

We shall often use this lemma in combination with the holomorphic function

when applying complex interpolation. This function has the property that \(| \gamma (1+it) F(t) | \leqslant C_\theta \) for a positive constant \(C_\theta < +\infty \), and \(\gamma (\theta ) = 1\).

The following lemma is a slight variation of what was written in [16, Remark 2.8].

Lemma 3.5

Let \(Q = \prod _{k=1}^n[-R_k,R_k]\) be a compact cuboid in \({\mathbb {R}}^n\) for some real numbers \(R_k > 0\), \(k = 1,\ldots ,n\), let \(\alpha ,\beta ^1,\ldots ,\beta ^n\) be some fixed nonzero real numbers, and let \(0< \epsilon < 1\). For a \(C^1\) function H on a neighbourhood of Q, nonzero real numbers \(a_1, \ldots , a_n\), and M a positive integer we define

for \(t \in {\mathbb {R}}\). Then there is a constant C which depends only on Q and the numbers \(\alpha \), \(\beta ^k\)’s, and \(\epsilon \), but not on H, \(a_k\)’s, M, and t, such that

for all \(t \in {\mathbb {R}}\). The constants \(C_k\) are given as

where the supremum goes over the set \(\prod _{j=1}^k[-R_j,R_j]\).

The only difference compared to the proof of [16, Lemma 2.7] is that one now writes

and notes that the fractions are bounded by their respective \(C_k\)’s.

In the above lemma we could have directly defined \(C_k\)’s as the Hölder quotients appearing in (3.2), but the formulas used in Lemma 3.5 turn out to be more practical. One can easily construct an example though where using the Hölder quotients is more appropriate. One example is when one has an oscillatory factor such as in \(H(y_1) = y_1^\epsilon \, e^{i y_1^{-1}}\), \(0< y_1 < 1\) (cf. the Riemann singularity as in [28, Chapter VIII, Subsection 1.4.2]). This function is \(\epsilon \)-Hölder continuous at 0 and satisfies the conclusion of Lemma 3.5 in the sense that \(|F(t)| \leqslant C/|2^{i\alpha t} - 1|\), but one can show without too much effort that the integral defining \(C_1\) in Lemma 3.5 is infinite.

The third lemma is a two parameter version of the first one.

Lemma 3.6

Let \(Q = \prod _{k=1}^n[-R_k,R_k]\) be a compact cuboid in \({\mathbb {R}}^n\) for some real numbers \(R_k > 0\), \(k = 1,\ldots ,n\), and let \(\alpha _1,\alpha _2 \in {\mathbb {Q}}^{\times }\), and \(\beta ^k_1, \beta ^k_2 \in {\mathbb {Q}}\), \(k = 1,\ldots ,n\), be fixed numbers such that \(\alpha _1 \beta _2^k - \alpha _2 \beta _1^k \ne 0\) for all k (i.e., the vector \((\alpha _1, \alpha _2)\) is linearly independent from \((\beta _1^k, \beta _2^k)\)). For a \(C^2\) function H defined on an open neighbourhood of Q, nonzero real numbers \(a_1, \ldots , a_n\), and \(M_1, M_2\) positive integers we define

for \(t \in {\mathbb {R}}\). Then there is a constant C which depends only on Q and the numbers \(\alpha _1, \alpha _2\), \(\beta _1^k\)’s, \(\beta _2^k\)’s, but not on H, \(a_k\)’s, \(M_1\), \(M_2\), and t, such that

for all \(t \in {\mathbb {R}}\). The function \(\rho \) is defined by \(\rho (t) :=\prod _{\nu = 1}^N {\tilde{\rho }}(\nu t) {\tilde{\rho }}(-\nu t)\), where

and N is a positive integer depending on the \(\beta _1^k\)’s and \(\beta _2^k\)’s.

For future reference we also note the following construction from [16, Remark 2.10] of a complex function \(\gamma \) on the strip \(\Sigma :=\{\zeta \in {\mathbb {C}}: 0 \leqslant {\text {Re}}\zeta \leqslant 1\}\) which shall be used in the context of complex interpolation together with the above two parameter lemma. If we are given \(0< \theta < 1\) and the exponents \(\alpha _1, \alpha _2\), and \(\beta _1^k\)’s, \(\beta _2^k\)’s as above, we define

where

The function \(\gamma \) has the following two key properties. It is an entire analytic function uniformly bounded on the strip \(\Sigma \), and for the function F as in Lemma 3.6 there is a positive constant \(C_\theta < +\infty \) such that for all \(t \in {\mathbb {R}}\) we have \(| \gamma (1+it) F(t) | \leqslant C_\theta \). It also has the property that \(\gamma (\theta ) = 1\).

3.3 Auxiliary results related to mixed \(L^p\)-norms

In this section R shall denote the Fourier restriction operator \(L^p({\mathbb {R}}^3) \rightarrow L^2(\mathrm {d}\mu )\) for a positive finite Radon measure \(\mu \), and all functions and measures will have \({\mathbb {R}}^3\) as their domain, unless stated otherwise. Recall that we assume \(p = (p_1,p_3)\).

We first recall what happens in the simple case when \(p=(2,1)\) and \(\mu \) has the form

where \(\phi \) is any measurable function on an open set \(\Omega \) and \(\eta \in C_c^\infty (\Omega )\) is a nonnegative function. In this case the form of the adjoint of R is

and it is called the extension operator. Using Plancherel for each fixed \(x_3\), we easily get boundedness of \(R^* : L^2(\mathrm {d}\mu ) \rightarrow L^\infty _{x_3}(L^2_{(x_1,x_2)})\). Note that the operator bound depends only on the \(L^\infty \) norm of \(\eta \). In particular we know that \(R : L^1_{x_3}(L^2_{(x_1,x_2)}) \rightarrow L^2(\mathrm {d}\mu )\) is bounded.

When considering the \(L^p-L^2\) Fourier restriction problem for other p’s, it is advantageous to reframe the problem using the so called “\(R^* R\)” method. The boundedness of the restriction operator \(R : L^p \rightarrow L^2(\mathrm {d}\mu )\) is equivalent to the boundedness of the operator \(T = R^* R\), which can be written as

in the pair of spaces \(L^p \rightarrow L^{p'}\), where \(p'\) denotes the Young conjugate exponents \((p_1',p_3')\). Note that the operator T is linear in \(\mu \) and it even makes sense for a complex \(\mu \) (unlike the restriction operator R). This enables us to decompose the measure \(\mu \) into a sum of complex measures, each having an associated operator of the same form as in (3.4).

The following few lemmas give us information on the boundedness of convolution operators such as in (3.4).

Lemma 3.7

Let us consider the convolution operator \(T : f \mapsto f * {\widehat{\mu }}\) for a tempered Radon measure \(\mu \) (i.e., a Radon measure which is a tempered distribution).

(i) If \({\widehat{\mu }}\) is a measurable function which satisfies

$$\begin{aligned} |{\widehat{\mu }}(x_1,x_2,x_3)| \lesssim A (1+|x_3|)^{-{\tilde{\sigma }}} \end{aligned}$$ (3.5)

for some \({\tilde{\sigma }} \in [0,1)\), then the operator norm of \(T : L^p \rightarrow L^{p'}\) for \((1/p_1',1/p_3') = (0,{\tilde{\sigma }}/2)\) is bounded (up to a multiplicative constant) by A.

(ii) If \(\mu \) is a bounded function such that \(\Vert \mu \Vert _{L^\infty } \lesssim B\), then the operator norm of \(T : L^2 \rightarrow L^{2}\) is bounded (up to a multiplicative constant) by B.

Proof

One can easily show by integrating (3.4) in \((x_1,x_2)\) variables that

and therefore we can now apply the (one-dimensional) Hardy-Littlewood-Sobolev inequality and obtain the claim in the first case. The second case when \(p_1=p_3=2\) is a well known classical result for multipliers. \(\square \)

For a more abstract approach to the above lemma see [9, 20]. There one also obtains an appropriate result for \({\tilde{\sigma }} = 1\) when \(1/p_1' > 0\), but we shall not need this.
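The exponent bookkeeping in part (i) of Lemma 3.7 can be made explicit. The sketch below uses the standard form of the Hardy-Littlewood-Sobolev inequality on \({\mathbb {R}}^n\), namely \(\Vert f * |x|^{-\gamma }\Vert _{L^q} \lesssim \Vert f\Vert _{L^p}\) for \(0< \gamma < n\), \(1< p< q < \infty \), and \(1/q = 1/p + \gamma /n - 1\); the function names are hypothetical. It only checks that, in dimension one with \(\gamma = {\tilde{\sigma }} \in (0,1)\), the dual pair \((p_3, p_3')\) with \(1/p_3' = {\tilde{\sigma }}/2\) satisfies this relation. The case \({\tilde{\sigma }} = 0\) is the trivial \(L^1 \rightarrow L^\infty \) bound and is not covered by the inequality.

```python
from fractions import Fraction

def hls_target_exponent(inv_p, gamma, n=1):
    """Return 1/q with 1/q = 1/p + gamma/n - 1 (Hardy-Littlewood-Sobolev),
    raising an error outside the range 0 < gamma < n, 1 < p < q < infinity."""
    inv_q = inv_p + Fraction(gamma) / n - 1
    if not (0 < gamma < n and 0 < inv_q < inv_p < 1):
        raise ValueError("exponents outside the HLS range")
    return inv_q

def dual_pair(sigma):
    """For kernel decay |x_3|^(-sigma), return (1/p_3, 1/p_3') with 1/p_3' = sigma/2."""
    inv_p_dual = Fraction(sigma) / 2
    inv_p = 1 - inv_p_dual
    # The HLS relation with q = p_3' must reproduce the same dual exponent.
    assert hls_target_exponent(inv_p, sigma) == inv_p_dual
    return inv_p, inv_p_dual

pair_half = dual_pair(Fraction(1, 2))
```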

A particularly useful application of the above lemma is the following.

Lemma 3.8

Let us consider \(T : f \mapsto f * {\widehat{\mu }}\) for a tempered Radon measure \(\mu \) which is now localised in the frequency space \({{\,\mathrm{supp}\,}}{\widehat{\mu }} \subset {\mathbb {R}}^2 \times [-\lambda _3,\lambda _3]\) for a \(\lambda _3 \gtrsim 1\). Let us assume that \(\mu \) and \({\widehat{\mu }}\) are measurable functions satisfying

Then T is a bounded operator for \((\frac{1}{p_1'},\frac{1}{p_3'}) = (0,\frac{{\tilde{\sigma }}}{2})\) for all \({\tilde{\sigma }} \in [0,1)\), with the associated operator norm being at most (up to a multiplicative constant) \(A \, \lambda _3^{{\tilde{\sigma }}}\). The operator norm of \(T : L^2 \rightarrow L^{2}\) is bounded (up to a multiplicative constant) by B.

Proof

We only need to obtain the decay estimate (3.5). We note that since \({\widehat{\mu }}\) has \(x_3\) support bounded by \(\lambda _3\), it follows

for all \({\tilde{\sigma }} \in [0,1)\). \(\square \)

At the end of this section we note the following simple result which tells us that the conclusion of Lemma 3.7 is in a sense quite sharp. We remark that the last conclusion in the lemma below is consistent with the condition \({\tilde{\sigma }}<1\) in (3.5).

Lemma 3.9

Consider the convolution operator \(T : f \mapsto f * {\widehat{\mu }}\) for a tempered Radon measure \(\mu \) whose Fourier transform \({\widehat{\mu }}\) is continuous. Let \(\varphi : [0,+\infty ) \rightarrow (0,+\infty )\) be an increasing and unbounded continuous function and assume that at least one of the limits

exists for some \({\tilde{\sigma }} \in (0,1)\), with the limiting value being a nonzero number. Then \(T : L^p \rightarrow L^{p'}\) is not a bounded operator for \((1/p_1',1/p_3') = (0,{\tilde{\sigma }}/2)\). The conclusion also holds in the case when \(\varphi \) is the constant function 1, \({\tilde{\sigma }} = 1\), and if we additionally assume that \({\widehat{\mu }}\) is an \(L^\infty ({\mathbb {R}}^3)\) function and that both of the above limits exist and are equal, with the limiting value being a nonzero number.

Proof

Let us begin the proof by assuming that the operator \(T : L^{\frac{2}{2-{\tilde{\sigma }}}}_{x_3} (L^1_{(x_1,x_2)}) \rightarrow L^{2/{\tilde{\sigma }}}_{x_3} (L^\infty _{(x_1,x_2)})\) is bounded. Since \({\widehat{\mu }}\) is continuous, without loss of generality we can assume that

for all x in the open set U of the form \(\{ x \in {\mathbb {R}}^3 : x_3 > K, |(x_1,x_2)| < \epsilon _U(x_3)\}\), where \(K > 0\) and \(\epsilon _U\) is a continuous and strictly positive function on \({\mathbb {R}}\).

Now consider the function

where \(\chi _0\) is smooth, identically 1 in the interval \([-1,1]\), and supported within the interval \([-2,2]\). Then

and if we assume \(\varepsilon \) to be sufficiently small and M sufficiently large, one obtains by a simple calculation that

for all \(x_3\) such that \(4M< x_3 < C(M, \varepsilon )\), where \(C(M, \varepsilon ) \rightarrow \infty \) when \(\varepsilon \rightarrow 0\) and M is fixed. If in addition we know, say, that \(x_3 \leqslant 5M < C(M, \varepsilon )\), then

and the lower bound on the norm is

But now by the boundedness assumption we obtain

i.e., \(\varphi (|M|) \lesssim 1\). This is impossible in general since we can take \(M \rightarrow \infty \).

In the case when the limits are equal, \({\tilde{\sigma }} = 1\), and \(\varphi \) is the constant function 1, we redefine U as the set \(\{ x \in {\mathbb {R}}^3 : |x_3| > K, |(x_1,x_2)| < \epsilon _U(x_3)\}\) after which we can take (3.7) to be true for \(x \in U\) too. If we use the same f as above, then for any \(x_3 \in [-M/2,M/2]\) we easily obtain from the definition of T that

for an M sufficiently large and \(\varepsilon \) sufficiently small. Thus the norm \(\Vert Tf \Vert _{L^{2}_{x_3} (L^\infty _{(x_1,x_2)})}\) is bounded below by \(M^{1/2} \ln M\), while \(\Vert f\Vert _{L^{2}_{x_3} (L^1_{(x_1,x_2)})}\) is of size \(M^{1/2}\). This is impossible if T is bounded. \(\square \)

In the case \({\tilde{\sigma }} = 1\) and when \(\varphi \) is identically equal to a nonzero constant the above proof does not work if the limits have the same absolute value but opposite signs. This is related to the fact that an operator given as a convolution against \(x \mapsto x/(1+x^2)\) is bounded \(L^2({\mathbb {R}}) \rightarrow L^2({\mathbb {R}})\) since the Fourier transform of \(x \mapsto x/(1+x^2)\) is up to a constant \(\xi \mapsto e^{-|\xi |} {{\,\mathrm{sgn}\,}}\xi \).

4 The adapted case and reduction to restriction estimates near the principal root jet

Here we mimic [16, Chapter 3] and the last section of [15], where the adapted case for \(p_1=p_3\) was considered. In this section we shall be concerned with measures of the form

where \(\phi (0) = 0\), \(\nabla \phi (0) = 0\), and \(\eta \) is a smooth nonnegative function with support contained in a sufficiently small neighbourhood of 0. We assume that \(\phi \) is of finite type on the support of \(\eta \). The associated Fourier restriction problem is

for any \(\eta \) with support contained in a sufficiently small neighbourhood of 0.

The following proposition will be useful in this section.

Proposition 4.1

Let \(\mu \), \(\phi \), and \(\eta \) be as above. Then the mixed norm Fourier restriction estimate (4.2) holds true for the point \((1/p_1',1/p_3') = (1/2,0)\). Furthermore we have the following two cases.

(i) If either \(h(\phi ) = 1\) or \(\nu (\phi ) = 1\), then the estimate (4.2) holds true for \(1/p_1' = 0\) and \(1/p_3' <1/(2h(\phi ))\). In this case the estimate for \((1/p_1',1/p_3') = (0,1/(2h(\phi )))\) does not hold if \(\eta (0) \ne 0\).

(ii) If \(h(\phi )>1\) and \(\nu (\phi )=0\), then the estimate (4.2) holds true for \((1/p_1',1/p_3') = (0,1/(2h(\phi )))\).

Proof

The claim for \((1/p_1',1/p_3') = (1/2,0)\) follows from considerations at the beginning of Sect. 3.3.

Let us now recall what happens in the non-degenerate case, i.e., when the determinant of the Hessian \(\det {\mathcal {H}}_\phi (0,0) \ne 0\). This is equivalent to \(h(\phi ) = 1\) and in this case \(\phi \) is adapted in any coordinate system. Here we have the bound (4.2) for all of the \((1/p_1',1/p_3')\) given in the necessary condition (2.5), except for the point (0, 1/2), for which it does not hold. This fact is actually true globally, i.e., the Strichartz estimates hold (see [9, 20] and references therein) in the same range, and one can easily convince oneself that the same proof as in say [20] goes through in our local case. For the negative results at the point (0, 1/2) in the case of Strichartz estimates see [19] and [23]. We can also get a negative result at the point (0, 1/2) directly in our case by applying Lemma 3.9 for the case \({\tilde{\sigma }} = 1\) and \(\varphi \) is identically equal to 1. The limits in Lemma 3.9 are obtained by a simple application of the two dimensional stationary phase method. Furthermore, since the Hessian does not change its sign when changing the phase \(\phi \mapsto -\phi \), the limits in both directions are equal.

The claims for the case when \(h(\phi ) > 1\) follow easily by applying Theorems 3.2 and 3.3 to Lemmas 3.7 and 3.9 respectively. In Lemma 3.9 we take \(\varphi \) to be the logarithmic function \(x \mapsto \log (2+x)\). \(\square \)

4.1 The adapted case

The following proposition tells us precisely when the Fourier restriction estimate holds in the adapted case.

Proposition 4.2

Let us assume that \(\mu \), \(\phi \), and \(\eta \) are as explained at the beginning of this section, and let us assume that \(\phi \) is adapted.

(i) If \(h(\phi )=1\) or \(\nu (\phi )=1\), then the full range Fourier restriction estimate given by the necessary condition (2.5) holds true, except for the point \((1/p_1',1/p_3') = (0,1/(2h(\phi )))\) where it is false if \(\eta (0) \ne 0\).

(ii) If \(h(\phi )>1\) and \(\nu (\phi )=0\), then the full range Fourier restriction estimate given by the necessary condition (2.5) holds true, including the point \((1/p_1',1/p_3') = (0,1/(2h(\phi )))\).

Proof

The case when \(h(\phi ) = 1\) is the classical known case and it was already discussed in the proof of Proposition 4.1. The case when \(h(\phi ) > 1\) and \(\nu (\phi ) = 0\) follows from Proposition 4.1 by interpolation.

Let us now consider the remaining case when \(h(\phi ) > 1\) and \(\nu (\phi ) = 1\). If we were to use Proposition 4.1 and interpolation as in the previous case, we would miss all the boundary points determined by the line of the necessary condition (2.5) except the point (1/2, 0) where we know that the estimate always holds. Recall that this is essentially because we have the logarithmic factor in the decay of the Fourier transform of \(\mu \). Instead, one can use the strategy from [15, Section 4] to avoid this problem. We only briefly sketch the argument. One decomposes

where \(\mu _k\) are supported within ellipsoid annuli centered at 0 and closing in to 0. This is done by considering the partition of unity

where \(\chi \) is an appropriate \(C_c^\infty ({\mathbb {R}}^2)\) function supported away from the origin and

where \(\kappa = (\kappa _1, \kappa _2)\) is the weight associated to the principal face of \({\mathcal {N}}(\phi )\). Next, one rescales the measures \(\mu _k\) and obtains measures \(\mu _{0,(k)}\) having the form (4.1). These new measures have uniformly bounded total variation and Fourier decay estimate with constants uniform in k:

Note that there is no logarithmic factor anymore. Now we can use Proposition 4.1 and interpolation to obtain the mixed norm Fourier restriction estimate within the range (2.5) for each \(\mu _{0,(k)}\). As in [15, Section 4], one now easily obtains the bound (see Footnote 4)

where \(\delta ^e_{r}(x_1,x_2,x_3) = (r^{\kappa _1}x_1,r^{\kappa _2}x_2,rx_3)\). The scaling in our mixed norm case is

and therefore

by the necessary condition \(1/p'_1 + 1/(|\kappa |p'_3) \leqslant 1/2\), and the equalities \(d(\phi ) |\kappa | = h(\phi ) |\kappa | = 1\). The rest of the proof is the same as in [15] if we assume \(p_1 > 1\), since then one can use the Littlewood-Paley theorem (see Footnote 5) and the Minkowski inequality (which we can apply since \(p_1=p_2\leqslant 2\) and \(p_3\leqslant 2\)) to sum the above inequality in k. This completes the proof of Proposition 4.2. \(\square \)
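
The mixed norm scaling used in the proof above comes from a change of variables, sketched here for a generic function f. We write the mixed norm with the tangential variables \((x_1,x_2)\) in \(L^{p_1}\) and the normal variable \(x_3\) in \(L^{p_3}\); the order in which the two norms are taken is an assumption made for illustration and does not affect the exponent. For \(r > 0\) and \(|\kappa | = \kappa _1 + \kappa _2\),

\[ \Vert f \circ \delta ^e_r \Vert _{L^{p_3}_{x_3}(L^{p_1}_{(x_1,x_2)})} = r^{-\frac{|\kappa |}{p_1} - \frac{1}{p_3}}\, \Vert f \Vert _{L^{p_3}_{x_3}(L^{p_1}_{(x_1,x_2)})}. \]

Indeed, the substitution \((y_1,y_2) = (r^{\kappa _1}x_1, r^{\kappa _2}x_2)\) contributes the Jacobian factor \(r^{-|\kappa |}\), which is raised to the power \(1/p_1\), and the substitution \(y_3 = r x_3\) contributes \(r^{-1/p_3}\) (with the usual convention \(1/\infty = 0\)).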

4.2 Reduction to the principal root jet

In this section we make some preliminary reductions for the case when \(\phi \) is not adapted. Recall that we may assume that \(\phi \) is linearly adapted and that we denote by \(\psi \) the principal root of \(\phi \). Then we can obtain the adapted coordinates y (after possibly interchanging the coordinates \(x_1\) and \(x_2\)) through \((y_1,y_2) = (x_1, x_2-\psi (x_1))\). Before stating the last proposition of this section (analogous to [16, Proposition 3.1]) let us recall some notation from [16]. We write

where \(b_1 \ne 0\) and \(m \geqslant 2\) by linear adaptedness (see [16, Proposition 1.7]). If F is an integrable function on the domain of \(\eta \), say \(\Omega \subseteq {\mathbb {R}}^2\), then we denote

If \(\chi _0\) denotes a \(C_c^\infty ({\mathbb {R}})\) function equal to 1 in a neighbourhood of the origin, we may define

where \(\varepsilon \) is an arbitrarily small parameter. The domain of \(\rho _1\) is a \(\kappa \)-homogeneous subset of \(\Omega \) which contains the principal root jet \(x_2 = \psi (x_1)\) of \(\phi \) when \(\Omega \) is contained in a sufficiently small neighbourhood of 0.

Proposition 4.3

Assume \(\phi \) is of finite type on \(\Omega \), non-adapted, and linearly adapted (i.e., \(d(\phi ) = h_{\text {lin}}(\phi )\)). Let \(\varepsilon > 0\) be sufficiently small and let \(\mu ^{1-\rho _1}\) have support contained in a sufficiently small neighbourhood of 0. Then the mixed norm Fourier restriction estimate (4.2) with respect to the measure \(\mu ^{1-\rho _1}\) holds true for all \((1/p_1',1/p_3')\) which satisfy

i.e., within the range determined by the necessary condition associated to the principal face of \({\mathcal {N}}(\phi )\), except maybe the boundary points of the form \((0,1/p_3')\). In particular, it also holds true within the narrower range determined by all of the necessary conditions, excluding maybe the boundary points of the form \((0,1/p_3')\).

We just briefly mention that the proof of Proposition 4.3 is straightforward once one uses the results from [16, Chapter 3]. Analogously to the previous section, one decomposes the measure \(\mu ^{1-\rho _1}\) by using the \(\kappa \) dilations associated to the principal face of \({\mathcal {N}}(\phi )\). The measures \(\nu _k\) obtained by rescaling are of the form (4.1), have uniformly bounded total variation, and have the Fourier transform decay (with constants uniform in k)

All of this was proven in [16, Chapter 3]. Therefore we have the Fourier restriction estimate for each \(\nu _k\) for the points \((1/p_1',1/p_3') = (1/2,0)\) and \((1/p_1',1/p_3') = (0,1/(2d(\phi )))\). Now one uses again interpolation, the Minkowski inequality, and the Littlewood-Paley theorem, to obtain the claim.

Note that the estimates for the boundary points of the form \((0,1/p_3')\) can be obtained directly for the original measure \(\mu \) through Proposition 4.1.

5 The case \(h_{\text {lin}}(\phi ) < 2\)

In the remainder of this article we shall be concerned with the proof of:

Theorem 5.1

Let \(\phi : {\mathbb {R}}^2 \rightarrow {\mathbb {R}}\) be a smooth function of finite type defined on a sufficiently small neighbourhood \(\Omega \) of the origin, satisfying \(\phi (0) = 0\) and \(\nabla \phi (0) = 0\). Let us assume that \(\phi \) is linearly adapted, but not adapted, and that \(h_{\text {lin}}(\phi ) < 2\). We additionally assume that the following holds: whenever the function \(b_0\) appearing in (2.8), (2.9), (2.10) is flat (i.e., when \(\phi \) has an \(A_\infty \) or \(D_\infty \) type singularity), it is necessarily identically equal to 0. Then, for all smooth \(\eta \geqslant 0\) with support in a sufficiently small neighbourhood of the origin, the Fourier restriction estimate (4.2) holds for all p given by the necessary conditions determined in Sect. 2.4.

The above condition on the function \(b_0\) is implied by the Condition (R) from [16] (see [16, Remark 2.12. (c)]).

We begin with some preliminaries. As one can see from the Newton diagrams in Sect. 2.4, the assumption \(h_{\text {lin}}(\phi ) < 2\) implies in our case that \(h(\phi ) \leqslant 2\). Additionally, we see that \(h(\phi ) = 2\) implies that we have either an \(A_\infty \) or a \(D_\infty \) type singularity. As mentioned in Sect. 1.1, the Varchenko exponent is 0, i.e., \(\nu (\phi ) = 0\), if \(h(\phi ) < 2\). When \(h(\phi ) = 2\) the equality \(\nu (\phi ) = 0\) also holds true in our case since the principal faces are non-compact. We conclude that if \(h_{\text {lin}}(\phi ) < 2\), then by Proposition 4.1 we have the mixed norm Fourier restriction estimate (4.2) for both of the points \((1/p_1',1/p_3') = (1/2,0)\) and \((1/p_1',1/p_3') = (0,1/(2h(\phi )))\). Therefore, according to Sect. 2.4, by interpolation it remains to prove the estimate (4.2) for the respective critical exponents given by

where \(m \geqslant 2\) is the principal exponent of \(\psi \) from Sect. 2.4.

Recall that according to Proposition 4.3 we may concentrate on the piece of the measure \(\mu \) located near the principal root jet:

where

for an arbitrarily small \(\varepsilon \) and \(\omega (0) x_1^m\) the first term in the Taylor expansion of

where \(\omega \) is a smooth function such that \(\omega (0) \ne 0\).

As we use the same decompositions of the measure \(\mu ^{\rho _1}\) as in [16], we shall only briefly outline the decomposition procedure.

5.1 Basic estimates

Before we outline the further decompositions and rescalings of \(\mu ^{\rho _1}\), we first describe here the general strategy for proving the Fourier restriction estimates for the pieces obtained through these decompositions. All of the pieces \(\nu \) of the measure \(\mu ^{\rho _1}\) will essentially be of the form

where \(\Phi \) is a phase function and \(a \geqslant 0\) an amplitude. The amplitude will usually be compactly supported with support away from the origin. Both \(\Phi \) and a will depend on various decomposition related parameters. We shall need to prove the Fourier restriction estimate with respect to these measures with estimates being uniform in a certain sense with respect to the appearing decomposition parameters.

At this point one uses the “\(R^* R\)” method applied to the measure \(\nu \). The resulting operator is \(T_\nu \), which acts by convolution against the Fourier transform of \(\nu \). Now one considers the spectral decomposition \((\nu ^\lambda )_\lambda \) of the measure \(\nu \) so that each function \(\nu ^\lambda \) is localised in the frequency space at \(\lambda = (\lambda _1, \lambda _2, \lambda _3)\), where \(\lambda _i \geqslant 1\) are dyadic numbers for \(i=1, 2, 3\). For such functions \(\nu ^\lambda \) we shall obtain bounds of the form (3.6). By Lemma 3.8 we then have the bounds on their associated convolution operators \(T^\lambda _\nu \):

for all \({\tilde{\sigma }} \in [0,1)\). A and B shall again depend on various decomposition related parameters. If we now define

then interpolating (5.3) (\(\theta \) being the interpolation coefficient) we get precisely the estimate for the critical exponent in (5.1) with the bound

Now it remains to sum over \(\lambda \).

When \(\theta < 1/4\), we shall be able to always sum absolutely. In the cases when \(\theta = 1/4\) and particularly \(\theta = 1/3\) (note that both appear only in A type singularity with \(m = 3\) and \(m = 2\) respectively) we shall need the complex interpolation method developed in [16].
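
The “\(R^* R\)” step used at the start of this subsection rests on a standard identity, sketched here under the convention \({\hat{f}}(\xi ) = \int e^{-i x\cdot \xi } f(x)\, \mathrm {d}x\); the convention and the notation X below are assumptions made for illustration. For a finite positive measure \(\nu \) and a Schwartz function f,

\[ \int |{\hat{f}}(\xi )|^2\, \mathrm {d}\nu (\xi ) = \iint f(x)\, \overline{f(y)}\, {\hat{\nu }}(x-y)\, \mathrm {d}x\, \mathrm {d}y. \]

If X denotes the mixed norm space with exponents \((p_1,p_1,p_3)\) and \(X'\) the one with the dual exponents, then Hölder's inequality for mixed norms gives

\[ \Vert {\hat{f}} \Vert _{L^2(\mathrm {d}\nu )}^2 \leqslant \Vert T_\nu f \Vert _{X'}\, \Vert f \Vert _{X} \leqslant \Vert T_\nu \Vert _{X \rightarrow X'}\, \Vert f \Vert _{X}^2, \]

where \(T_\nu \) is convolution against \({\hat{\nu }}\) (up to a reflection, depending on the sign convention). Thus a bound for \(T_\nu : X \rightarrow X'\) yields the restriction estimate with constant \(\Vert T_\nu \Vert _{X \rightarrow X'}^{1/2}\).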

5.2 First decompositions and rescalings of \(\mu ^{\rho _1}\)

As in Sect. 4, we use the \(\kappa \) dilations associated to the principal face of \({\mathcal {N}}(\phi )\), and subsequently a Littlewood-Paley argument. Then it remains to prove the Fourier restriction estimate for the renormalised measures \(\nu _k\) of the form

uniformly in k. As was shown in [16, Section 4.1], the function \(\phi (x,\delta )\) has the form

where \(\delta = (\delta _1,\delta _2,\delta _3) :=(2^{-\kappa _1 k}, 2^{-\kappa _2 k}, 2^{-(n\kappa _1-1)k})\), and

Above the functions b, \(b_1\), \(b_2\), \(\beta \), and the quantity n are as in Sect. 2.4. Recall that \(m = \kappa _2/\kappa _1 \geqslant 2\) and so \(\delta _2 = \delta _1^m\). The amplitude \(a(x,\delta ) \geqslant 0\) is a smooth function of \((x,\delta )\) supported at

Furthermore, due to the \(\rho _1\) cutoff function, which has a \(\kappa \)-homogeneous domain, we may assume \(|x_2 - x_1^m \omega (0)| \ll 1\).

Since we can take k arbitrarily large, the parameter \(\delta \) approaches 0. This implies that on the domain of integration of a we have that \({\tilde{b}}(x_1,x_2,\delta _1,\delta _2)\) converges as a function of \((x_1,x_2)\) to b(0, 0) (resp. \(b_1(0,0)x_1\)) in \(C^\infty \) when \(k \rightarrow \infty \) and \(\phi \) has A type singularity (resp. D type singularity). The amplitude \(a(x,\delta )\) converges in \(C_c^\infty \) to a(x, 0). We also recall that according to the assumption in Theorem 5.1, we may assume that \(\delta _3 = 0\) if “\(n = \infty \)”, i.e., if \(b_0\) is flat in the normal form of \(\phi \).
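
The relations between the components of \(\delta \) can be checked directly from their definitions; the following is only a short worked computation. Since \(\kappa _2 = m \kappa _1\),

\[ \delta _2 = 2^{-\kappa _2 k} = 2^{-m\kappa _1 k} = \big ( 2^{-\kappa _1 k} \big )^m = \delta _1^m, \qquad \delta _3 = 2^{-(n\kappa _1 - 1)k} = \delta _1^{\, n - 1/\kappa _1}. \]

In particular \(\delta _1, \delta _2 \rightarrow 0\) as \(k \rightarrow \infty \) because \(\kappa _1, \kappa _2 > 0\), while \(\delta _3 \rightarrow 0\) requires \(n\kappa _1 > 1\) for finite n, which the statement that \(\delta \) approaches 0 presupposes.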

The next step is to decompose the (compactly) supported amplitude a into finitely many parts, each localised near a point \(v=(v_1,v_2)\) for which we may assume that it satisfies \(v_2 = v_1^m \omega (0)\) (by compactness and since in (5.2) we can take \(\varepsilon \) arbitrarily small). The newly obtained measures we denote by \(\nu _\delta \) and their new amplitudes by the same symbol \(a(x,\delta ) \geqslant 0\):

where now the support of \(a(\cdot ,\delta )\) is contained in the set \(|x-v| \ll 1\).

Since we can use Littlewood-Paley decompositions in the mixed norm case (see [22, Theorem 2], and also [1, 8]), we can now decompose the measure \(\nu _\delta \) in the \(x_3\) direction in the same way as in [16, Section 4.1]. This is achieved by using the cutoff functions \(\chi _1(2^{2j}\phi (x,\delta ))\) in order to localise near the part where \(|\phi (x,\delta )| \sim 2^{-2j}\). Then it remains to prove the mixed norm estimate (4.2) for measures \(\nu _{\delta ,j}\) with bounds uniform in the parameters \(j \in {\mathbb {N}}\) and \(\delta = (\delta _1,\delta _2,\delta _3) \in {\mathbb {R}}^3\), \(\delta _i \geqslant 0\), \(i = 1,2,3\), where the measures \(\nu _{\delta ,j}\) are defined through

where j can be taken sufficiently large and \(\delta \) sufficiently small. The function \(2^{2j}\phi (x,\delta )\) can be written as

Following [16], we distinguish three cases: \(2^{2j} \delta _3 \ll 1\), \(2^{2j} \delta _3 \gg 1\), and the most involved case \(2^{2j} \delta _3 \sim 1\).

5.3 The case \(2^{2j} \delta _3 \gg 1\)

As was done in [16, Subsection 4.1.1], we change coordinates from \((x_1,x_2)\) to \((x_1, 2^{2j} \phi (x,\delta ))\) and subsequently perform a rescaling (which we adjust to our mixed norm case). Then one obtains that the mixed norm Fourier restriction for \(\nu _{\delta , j}\) is equivalent to the estimate

where \({\tilde{\nu }}_{\delta ,j}\) is the rescaled measure

The function \(a(x, \delta , j)\) has in \(\delta \) and j uniformly bounded \(C^l\) norms for an arbitrarily large \(l \geqslant 0\), and the phase function is given by

where \(x_1\sim 1\), \(x_2\sim 1\), and without loss of generality we may assume \({\tilde{b}}_1(x_1,x_2,0,0) \sim 1\) and \({\tilde{\beta }}(0) \sim 1\); for details see [16, Subsection 4.1.1]. There the phase function \(\phi (x, \delta , j)\) was obtained by solving the equation

in \(y_2\) after substituting \(x_1 = y_1\) and \(x_2 = 2^{2j} \phi (y,\delta )\).

By using the implicit function theorem one can show that when \(\delta \rightarrow 0\), then we have the following \(C^\infty \) convergence in the \((x_1,x_2)\) variables:

In both the A and D type singularity cases we see that \({\tilde{b}}_1\) does not depend on \(x_2\) in an essential way.

Now we proceed to perform a spectral decomposition of \({\tilde{\nu }}_{\delta , j}\), i.e., for \((\lambda _1, \lambda _2, \lambda _3)\) dyadic numbers with \(\lambda _i \geqslant 1\), \(i = 1,2,3\), we define the spectrally localised measures \(\nu _j^\lambda \) through