Abstract

In this article, we investigate systems of generalized Schrödinger operators and their fundamental matrices. More specifically, we establish the existence of such fundamental matrices and then prove sharp upper and lower exponential decay estimates for them. The Schrödinger operators that we consider have leading coefficients that are bounded and uniformly elliptic, while the zeroth-order terms are assumed to be nondegenerate and belong to a reverse Hölder class of matrices. In particular, our operators need not be self-adjoint. The exponential bounds are governed by the so-called upper and lower Agmon distances associated to the reverse Hölder matrix that serves as the potential function. Furthermore, we thoroughly discuss the relationship between this new reverse Hölder class of matrices, the more classical matrix \({\mathcal {A}_{p,\infty }}\) class, and the matrix \({\mathcal {A}_\infty }\) class introduced by Dall’Ara (J Funct Anal 268(12):3649–3679, 2015).

1 Introduction

In this article, we undertake the study of fundamental matrices associated to systems of generalized Schrödinger operators; we establish the existence of such fundamental matrices and we prove sharp upper and lower exponential decay estimates for them. Our work is strongly motivated by the papers [2] and [3] in which similar exponential decay estimates were established for fundamental solutions associated to Schrödinger operators of the form

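\[ -\Delta + \mu \]

and generalized magnetic Schrödinger operators of the form

\[ -\left( \nabla - i \textbf{a} \right) ^T A \left( \nabla - i \textbf{a} \right) + V, \]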

respectively. In [2], \(\mu \) is assumed to be a nonnegative Radon measure, whereas in [3], A is bounded and uniformly elliptic, while \(\textbf{a}\) and V satisfy a number of reverse Hölder conditions. Here we consider systems of generalized electric Schrödinger operators of the form

\[ \mathcal {L}_V = -D_\alpha \left( A^{\alpha \beta } D_\beta \right) + V, \tag{1} \]

where \(A^{\alpha \beta } = A^{\alpha \beta }(x)\), for each \(\alpha , \beta \in \left\{ 1, \dots , n\right\} \), is a \(d \times d\) matrix with bounded measurable coefficients defined on \(\mathbb {R}^n\) that satisfies boundedness and ellipticity conditions as described by (25) and (24), respectively. Moreover, the zeroth order potential function V is assumed to be a matrix \({\mathcal {B}_p}\) function. We say that V is in the matrix \({\mathcal {B}_p}\) class if and only if \(\left\langle V \vec {e}, \vec {e} \right\rangle := \vec {e}^T V \vec {e}\) is uniformly a scalar \({\text {B}_p}\) function for any \(\vec {e} \in \mathbb {R}^d\). As such, the operators that we consider in this article fall in between the generality of those that appear in [2] and [3], but are far more general in the sense that they are for elliptic systems of equations.

Many of the ideas in Shen’s prior work [4,5,6] have contributed to this article. In particular, we have built on some of the framework used to prove power decay estimates for fundamental solutions to Schrödinger operators \(-\Delta + V\), where V belongs to the scalar reverse Hölder class \({\text {B}_p}\), for \(p = \infty \) in [4] and \(p \ge \frac{n}{2}\) in [5], along with the exponential decay estimates for eigenfunctions of more general magnetic operators as in [6].

As in both [2] and [3], Fefferman–Phong inequalities (see [7], for example) serve as one of the main tools used to establish both the upper and lower exponential bounds that are presented in this article. However, since the Fefferman–Phong inequalities that we found in the literature only apply to scalar weights, we state and prove new matrix-weighted Fefferman–Phong inequalities (see Lemma 15 and Corollary 2) that are suited to our problem. To establish our new Fefferman–Phong inequalities, we build upon the notion of an auxiliary function associated to a scalar \({\text {B}_p}\) function that was introduced by Shen in [4]. More specifically, given a matrix function \(V \in {\mathcal {B}_p}\), we introduce a pair of auxiliary functions: the upper and lower auxiliary functions. (Sect. 3 contains precise definitions of these functions and examines their properties.) Roughly speaking, we can, in some settings, interpret these quantities as the auxiliary functions associated to the largest and smallest eigenvalues of V. The upper and lower auxiliary functions are used to produce two versions of the Fefferman–Phong inequalities. Using these auxiliary functions, we also define upper and lower Agmon distances (also defined in Sect. 3), which then appear in our lower and upper exponential bounds for the fundamental matrix, respectively. We remark that the original Agmon distance appeared in [8], where exponential upper bounds for N-body Schrödinger operators first appeared.

Given the elliptic operator \(\mathcal {L}_V\) as in (1) that satisfies a suitable set of conditions, there exists a fundamental matrix function associated to \(\mathcal {L}_V\), which we denote by \(\Gamma ^V\). The fundamental matrix generalizes the notion of a fundamental solution to the systems setting; see for example [9], where the authors generalized the results of [10] to the systems setting. To make precise the notion of the fundamental matrix for our systems setting, we rely upon the constructions presented in [11]. In particular, we define our bilinear form associated to (1), and introduce a well-tailored Hilbert space that is used to establish the existence of weak solutions to PDEs of the form \(\mathcal {L}_V\vec {u} = \vec {f}\). We then assume that our operator \(\mathcal {L}_V\) satisfies a natural collection of de Giorgi–Nash–Moser estimates. This allows us to confirm that the framework from [11] holds for our setting, thereby verifying the existence of the fundamental matrix \(\Gamma ^V\). Section 6 contains these details.

In Sect. 7, assuming very mild conditions on V, we verify that the class of systems of “weakly coupled” elliptic operators of the form

satisfy the de Giorgi–Nash–Moser estimates that are mentioned in the previous paragraph (see the remark at the end of Sect. 7 for details). Consequently, this implies that the fundamental matrices associated to weakly coupled elliptic systems exist and satisfy the required estimates. In fact, this additionally shows that Green’s functions associated to these elliptic systems exist and satisfy weaker interior estimates, though we will not need this fact. Further, we establish local Hölder continuity of bounded weak solutions \(\vec {u}\) to

under even weaker conditions on V. Specifically, V doesn’t have to be positive semidefinite a.e. or even symmetric, see Proposition 10 and Remark 10. Finally, although we will not pursue this line of thought in this paper, note that the combination of Proposition 10 and Remark 10 likely leads to new Schauder estimates for bounded weak solutions \(\vec {u}\) to (1). We remark that this section on elliptic theory for weakly coupled Schrödinger systems could be of independent interest beyond the theory of fundamental matrices. A “weakly coupled (linear) elliptic operator” typically refers to an operator of the form

where B is a \(d \times d\) matrix function and

where the matrices \(A_1, \ldots , A_d\) are uniformly elliptic. We refer the reader to [12,13,14] for boundedness and regularity results for weak solutions to \(- {{\,\textrm{div}\,}}\left( \mathcal {A} \nabla \vec {u} \right) + B \nabla \vec {u} + V\vec {u} = 0\) under very different conditions on the coefficients of matrices \(A_1, \ldots , A_d, B, \) and V.

Assuming the set-up outlined above, we now describe the decay results for the fundamental matrices. We show that there exists a small constant \(\varepsilon > 0\) so that for any \(\vec {e} \in \mathbb {S}^{d-1}\),

where \(\overline{d}\) and \(\underline{d}\) denote the upper and lower Agmon distances associated to the potential function \(V \in {\mathcal {B}_p}\) (as defined in Sect. 3). That is, we establish an exponential upper bound for the norm of the fundamental matrix in terms of the lower Agmon distance function, while the fundamental matrix is always exponentially bounded from below in terms of the upper Agmon distance function. The precise statements of these bounds are described by Theorems 11 and 12. For the upper bound, we assume that \(V \in {\mathcal {B}_p}\) along with a quantitative cancellability condition \({\mathcal{Q}\mathcal{C}}\) that will be made precise in Sect. 2.3. On the other hand, the lower bound requires the scalar condition \(\left| V\right| \in {\text {B}_p}\) and that the operator \(\mathcal {L}_V\) satisfies some additional properties—notably a scale-invariant Harnack-type condition. In fact, the term \(\overline{d}(x, y, V)\) in the lower bound of (3) can be replaced by \(d(x, y, \left| V\right| )\), see Remark 15.

Interestingly, (3) can be used to provide a beautiful connection between our upper and lower auxiliary functions and Landscape functions that are similar to those defined in [15]. Note that this connection was previously found in [16] for scalar elliptic operators with nonnegative potentials. We will briefly discuss these ideas at the end of Sect. 9, see Remark 17.

To further understand the structure of the bounds stated in (3), we consider a simple example. For some scalar functions \(0 < v_1 \le v_2 \in {\text {B}_p}\), define the matrix function

\[ V = \begin{bmatrix} v_1 & 0 \\ 0 & v_2 \end{bmatrix}. \]

A straightforward check shows that \(V \in {\mathcal {B}_p}\) and satisfies a nondegeneracy condition that will be introduced below. Moreover, the upper and lower Agmon distances satisfy \(\underline{d}\left( \cdot , \cdot , V \right) = d\left( \cdot , \cdot , v_1 \right) \) and \(\overline{d}\left( \cdot , \cdot , V \right) = d\left( \cdot , \cdot , v_2 \right) \), where \(d\left( x, y, v \right) \) denotes the standard Agmon distance from x to y that is associated to a scalar function \(v \in {\text {B}_p}\). We then set

\[ \mathcal {L}_V = -\Delta + V. \]

Since \(\vec {u}\) satisfies \(\mathcal {L}_V\vec {u} = \vec {f}\) if and only if \(u_i\) satisfies \(- \Delta u_i + v_i u_i = f_i\) for \(i = 1, 2\), then \(\mathcal {L}_V\) satisfies the set of elliptic assumptions required for our operator. Moreover, the fundamental matrix for \(\mathcal {L}_V\) has a diagonal form given by

\[ \Gamma ^V = \begin{bmatrix} \Gamma ^{v_1} & 0 \\ 0 & \Gamma ^{v_2} \end{bmatrix}, \]

where each \(\Gamma ^{v_i}\) is the fundamental solution for \(-\Delta + v_i\). The results of [2] and [3] show that for \(i = 1, 2\), there exists \(\varepsilon _i > 0\) so that

Restated, for \(i = 1, 2\), we have

where \(\left\{ \vec {e}_1, \vec {e}_2\right\} \) denotes the standard basis for \(\mathbb {R}^2\). Since \(v_1 \le v_2\) implies that \(\underline{d}\left( x, y, V \right) = d\left( x, y, v_1 \right) \le d\left( x, y, v_2 \right) = \overline{d}\left( x, y, V \right) \), then we see that there exists \(\varepsilon > 0\) so that for any \(\vec {e} \in {\mathbb {S}}^1\),

Compared to estimate (3) that holds for our general operators, this example shows that our results are sharp up to constants. In particular, the best exponential upper bound we can hope for will involve the lower Agmon distance function, while the best exponential lower bound will involve the upper Agmon distance function.
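
The combination of the two scalar estimates can be made explicit. The following sketch assumes, for illustration only, that the scalar bounds take the form \(c \, e^{-\varepsilon _i' d(x, y, v_i)} \left| x - y\right| ^{2-n} \le \Gamma ^{v_i}(x, y) \le C e^{-\varepsilon _i d(x, y, v_i)} \left| x - y\right| ^{2-n}\); the constants \(c, C, \varepsilon _i, \varepsilon _i'\) and the factor \(\left| x - y\right| ^{2-n}\) are assumptions of the sketch rather than quotations from [2] or [3]. For \(\vec {e} = (e_1, e_2) \in \mathbb {S}^1\), the diagonal form of \(\Gamma ^V\) gives a convex combination:

\[ \left\langle \Gamma ^V(x, y) \vec {e}, \vec {e} \right\rangle = e_1^2 \, \Gamma ^{v_1}(x, y) + e_2^2 \, \Gamma ^{v_2}(x, y), \qquad e_1^2 + e_2^2 = 1. \]

Since \(d(x, y, v_1) \le d(x, y, v_2)\), setting \(\varepsilon = \min \left\{ \varepsilon _1, \varepsilon _2\right\} \) and \(\varepsilon ' = \max \left\{ \varepsilon _1', \varepsilon _2'\right\} \) yields

\[ c \, \frac{e^{-\varepsilon ' \overline{d}(x, y, V)}}{\left| x - y\right| ^{n-2}} \le \left\langle \Gamma ^V(x, y) \vec {e}, \vec {e} \right\rangle \le C \, \frac{e^{-\varepsilon \underline{d}(x, y, V)}}{\left| x - y\right| ^{n-2}}. \]

Taking \(\vec {e} = \vec {e}_1\) or \(\vec {e} = \vec {e}_2\) recovers the scalar estimates for \(\Gamma ^{v_1}\) and \(\Gamma ^{v_2}\), which is why neither Agmon distance can be replaced by the other in general.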

As stated above, the Fefferman–Phong inequalities are crucial to proving the exponential upper and lower bounds of this article. The classical Poincaré inequality is one of the main tools used to prove the original Fefferman–Phong inequalities. Since we are working in a matrix setting, we use a new matrix-weighted Poincaré inequality. Interestingly, a fairly straightforward argument based on the scalar Poincaré inequality from [2] can be used to prove this matrix version of the Poincaré inequality, which is precisely what is needed to prove the main results described above.

Although the main theorems in this article may be interpreted as vector versions of the results in [2] and [3], many new ideas (that go well beyond the technicalities of working with systems) were required and developed to establish our results. We now describe these technical innovations.

First, the theory of matrix weights was not suitably developed for our needs. For example, we had to appropriately define the matrix reverse Hölder classes, \({\mathcal {B}_p}\). And while the scalar versions of \({\text {B}_p}\) and \({\text {A}_\infty }\) have a well-understood and very useful correspondence (namely, a scalar weight \(v \in \text {B}_p\) iff \(v^p \in \text {A}_\infty \)), this relationship was not known in the matrix setting. In order to arrive at a setting in which we could establish interesting results, we explored the connections between the matrix classes \({\mathcal {B}_p}\) that we develop, as well as \({\mathcal {A}_\infty }\) and \({\mathcal {A}_{p,\infty }}\) that were introduced in [1] and [17, 18], respectively. The matrix classes are introduced in Sect. 2, and many additional relationships (including a matrix version of the previously mentioned correspondence between A\({}_\infty \) and B\({}_p\)) are explored in Appendices A and B.

Given that we are working in a matrix setting, there was no reason to expect to work with a single auxiliary function. Instead, we anticipated that our auxiliary functions would either be matrix-valued, or that we would have multiple scalar-valued “directional” Agmon functions. We first tried to work with a matrix-valued auxiliary function based on the spectral decomposition of the matrix weight. However, since this set-up assumed that all eigenvalues belong to \({\text {B}_p}\), and it is unclear when that assumption holds, we decided that this approach was overly restrictive. As such, we decided to work with a pair of scalar-valued auxiliary functions that capture the upper and lower behaviors of the matrix weight. Once these functions were defined and understood, we could associate Agmon distances to them in the usual manner. These notions are introduced in Sect. 3.

Another virtue of this article is the verification of elliptic theory for a class of elliptic systems of the form (1). By following the ideas of Caffarelli from [19], we prove that under standard assumptions on the potential matrix V, the solutions to these systems are bounded and Hölder continuous. That is, instead of simply assuming that our operators are chosen to satisfy the de Giorgi–Nash–Moser estimates, we prove in Sect. 7 that these results hold for this class of examples. In particular, we can then fully justify the existence of their corresponding fundamental matrices. To the best of our knowledge, these ideas from [19] have not been used in the linear setting.

A final challenge that we overcame has to do with the fact that there are two distinct and unrelated Agmon distance functions associated to the matrix weight V. In particular, because these distance functions aren’t related, we had to modify the scalar approach to proving exponential upper and lower bounds for the fundamental matrix associated to the operator \(\mathcal {L}_V:= \mathcal {L}_0+ V\). The first bound that we prove for the fundamental matrix is an exponential upper bound in terms of the lower Agmon distance. In the scalar setting, this upper bound is then used to prove the exponential lower bound. But for us, the best exponential lower bound that we can expect is in terms of the upper Agmon distance. If we follow the scalar proof, we are led to a standstill since the upper and lower Agmon distances of V aren’t related. We overcame this issue by introducing \(\mathcal {L}_\Lambda := \mathcal {L}_0+ \left| V\right| I_d\), an elliptic operator whose upper and lower Agmon distances agree and are comparable to the upper Agmon distance associated to \(\mathcal {L}_V\). In particular, the upper bound for the fundamental matrix of \(\mathcal {L}_\Lambda \) depends on the upper Agmon distance of V. This observation, along with a clever trick, allows us to prove the required exponential lower bound. These ideas are described in Sect. 9, using results from the end of Sect. 8.

The motivating reasons for studying systems of elliptic equations are threefold, as we now describe.

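First, real-valued systems may be used to describe complex-valued equations and systems. To illuminate this point, we consider a simple example. Let \(\Omega \subseteq \mathbb {R}^n\) be an open set and consider the complex-valued Schrödinger operator given by

\[ L_x = -D_\alpha \left( c^{\alpha \beta } D_\beta \right) + x, \]

where \(c = \left( c^{\alpha \beta } \right) _{\alpha , \beta = 1}^n\) denotes the complex-valued coefficient matrix and x denotes the complex-valued potential function. That is, for each \(\alpha , \beta = 1, \ldots , n\),

\[ c^{\alpha \beta } = a^{\alpha \beta } + i \, b^{\alpha \beta }, \]

where both \(a^{\alpha \beta }\) and \(b^{\alpha \beta }\) are \(\mathbb {R}\)-valued functions defined on \(\Omega \subseteq \mathbb {R}^n\), while

\[ x = v + i \, w, \]

where both v and w are \(\mathbb {R}\)-valued functions defined on \(\Omega \). To translate our complex operator into the language of systems, we define

\[ A = \begin{bmatrix} a & -b \\ b & a \end{bmatrix}, \qquad V = \begin{bmatrix} v & -w \\ w & v \end{bmatrix}, \]

with \(a = \left( a^{\alpha \beta } \right) _{\alpha , \beta = 1}^n\) and \(b = \left( b^{\alpha \beta } \right) _{\alpha , \beta = 1}^n\). That is, each of the entries of A is an \(n \times n\) matrix function:

\[ A_{11} = A_{22} = a, \qquad A_{12} = -b, \qquad A_{21} = b, \]

while each of the entries of V is a scalar function:

\[ V_{11} = V_{22} = v, \qquad V_{12} = -w, \qquad V_{21} = w. \]

Then we define the systems operator

\[ \mathcal {L}_V = -D_\alpha \left( A^{\alpha \beta } D_\beta \right) + V, \tag{4} \]

where \(A^{\alpha \beta }_{ij}\) denotes the \((\alpha , \beta )\) entry of \(A_{ij}\).

If \(u = u_1 + i u_2\) is a \(\mathbb {C}\)-valued solution to \(L_x u = 0\), where both \(u_1\) and \(u_2\) are \(\mathbb {R}\)-valued, then \(\vec {u} = \begin{bmatrix} u_1 \\ u_2 \end{bmatrix}\) is an \(\mathbb {R}^2\)-valued vector solution to the elliptic system described by \(\mathcal {L}_V\vec {u} = \vec {0}\).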
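
This claim can be checked by separating real and imaginary parts. The following sketch assumes that \(L_x\) acts in divergence form, \(L_x u = -D_\alpha \left( c^{\alpha \beta } D_\beta u \right) + x u\), with summation over repeated indices; this form is an assumption made for illustration. Writing \(c^{\alpha \beta } = a^{\alpha \beta } + i b^{\alpha \beta }\) and \(x = v + i w\), we get

\[
\begin{aligned}
L_x u &= -D_\alpha \left( \left( a^{\alpha \beta } + i b^{\alpha \beta } \right) D_\beta \left( u_1 + i u_2 \right) \right) + \left( v + i w \right) \left( u_1 + i u_2 \right) \\
&= \left[ -D_\alpha \left( a^{\alpha \beta } D_\beta u_1 - b^{\alpha \beta } D_\beta u_2 \right) + v u_1 - w u_2 \right] \\
&\quad + i \left[ -D_\alpha \left( b^{\alpha \beta } D_\beta u_1 + a^{\alpha \beta } D_\beta u_2 \right) + w u_1 + v u_2 \right] .
\end{aligned}
\]

Both brackets are real-valued, so \(L_x u = 0\) holds if and only if each bracket vanishes. These two equations are the rows of a real system for \(\begin{bmatrix} u_1 \\ u_2 \end{bmatrix}\) with coefficient blocks \(\begin{bmatrix} a^{\alpha \beta } & -b^{\alpha \beta } \\ b^{\alpha \beta } & a^{\alpha \beta } \end{bmatrix}\) and potential \(\begin{bmatrix} v & -w \\ w & v \end{bmatrix}\).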

This construction also works with complex systems. Let \(C = A + i B\), where each \(A^{\alpha \beta }\) and \(B^{\alpha \beta }\) is \(\mathbb {R}^{d \times d}\)-valued, for \(\alpha , \beta \in \left\{ 1, \ldots , n\right\} \). If we take \(X = V + i W\), where V and W are \(\mathbb {R}^{d \times d}\)-valued, then the operator

describes a complex-valued system of d equations. Following the construction above, we get a real-valued system of 2d equations of the form described by (4), where now

In particular, if X is assumed to be a \(d \times d\) Hermitian matrix (meaning that \(X = X^*\)), then V is a \(2d \times 2d\) real, symmetric matrix. This shows that studying systems of equations with Hermitian potential functions (as is often done in mathematical physics) is equivalent to studying real-valued systems with symmetric potentials, as we do in this article. Moreover, X is positive (semi)definite iff V is positive (semi)definite. In conclusion, because there is much interest in complex-valued elliptic operators, we believe that it is very meaningful to study real-valued elliptic systems of equations.
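
The equivalence of positive (semi)definiteness can be verified directly. In the sketch below, \(\widetilde{V}\) is a label introduced here for the \(2d \times 2d\) real matrix, assumed to have the block form that arises from splitting into real and imaginary parts, and \(\vec {z} = \vec {u}_1 + i \vec {u}_2 \in \mathbb {C}^d\) with \(\vec {u}_1, \vec {u}_2 \in \mathbb {R}^d\). First,

\[ \widetilde{V} = \begin{bmatrix} V & -W \\ W & V \end{bmatrix}, \qquad X = X^* \iff \left( V^T = V \text { and } W^T = -W \right) \iff \widetilde{V}^T = \widetilde{V}. \]

Second, when \(X = X^*\), the antisymmetry of W and the symmetry of V make the imaginary part of \(\vec {z}^* X \vec {z}\) vanish, and

\[ \left\langle \widetilde{V} \begin{bmatrix} \vec {u}_1 \\ \vec {u}_2 \end{bmatrix}, \begin{bmatrix} \vec {u}_1 \\ \vec {u}_2 \end{bmatrix} \right\rangle = \left\langle V \vec {u}_1, \vec {u}_1 \right\rangle + \left\langle V \vec {u}_2, \vec {u}_2 \right\rangle - \left\langle W \vec {u}_2, \vec {u}_1 \right\rangle + \left\langle W \vec {u}_1, \vec {u}_2 \right\rangle = \vec {z}^* X \vec {z}. \]

Since \(\left( \vec {u}_1, \vec {u}_2 \right) \mapsto \vec {u}_1 + i \vec {u}_2\) is a bijection from \(\mathbb {R}^{2d}\) onto \(\mathbb {C}^d\), the two quadratic forms take the same values, so one is positive (semi)definite exactly when the other is.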

Our second motivation comes from physics and molecular dynamics. Schrödinger operators with complex Hermitian matrix potentials V naturally arise when one seeks to solve the Schrödinger eigenvalue problem for a molecule with Coulomb interactions between electrons and nuclei. More precisely, it is sometimes useful to convert the eigenvalue problem associated to the above (scalar) Schrödinger operator into a simpler eigenvalue problem associated to a Schrödinger operator with a matrix potential and Laplacian taken with respect to only the nuclear coordinates. See the classical references [20, p. 335–342] and [21, p. 148–153] for more details. Note that this potential is self-adjoint and is often assumed to have eigenvalues that are bounded below, or even approaching infinity as the nuclear variable approaches infinity. See for example [22,23,24,25,26], where various molecular dynamical approximation errors and asymptotics are computed utilizing the matrix Schrödinger eigenvalue problem stated above as their starting point.

With this in mind, we are hopeful that the results in this paper might find applications to the mathematical theory of molecular dynamics. Moreover, it would be interesting to know whether the results of Sects. 6 and 7 are true for “Schrödinger operators” with a matrix potential and nonzero first order terms. Note that such operators also appear naturally when one solves the same Schrödinger eigenvalue problem for a molecule with Coulomb interactions between electrons and nuclei, but only partially performs the “conversion” described in the previous paragraph. We again refer the reader to [20, p. 335–342] for additional details. It would also be interesting to determine whether defining a landscape function in terms of a Green’s function of a Schrödinger operator with a matrix potential would provide useful pointwise eigenfunction bounds.

Third, studying elliptic systems of PDEs with a symmetric nonnegative matrix potential provides a beautiful connection between the theory of matrix-weighted norm inequalities and the theory of elliptic PDEs. In particular, classical scalar reverse Hölder and Muckenhoupt A\({}_\infty \) assumptions on the scalar potential of elliptic equations are very often assumed (see [4,5,6] for example). On the other hand, while various matrix versions of these conditions have appeared in the literature (see for example [1, 17, 18, 27, 28]), the connection between elliptic systems of PDEs with a symmetric nonnegative matrix potential and the theory of matrix-weighted norm inequalities is a mostly unexplored area (with the exception of [1], which provides a Shubin–Maz’ya type sufficiency condition for the discreteness of the spectrum of a Schrödinger operator with complex Hermitian positive-semidefinite matrix potential V on \(\mathbb {R}^n\)). This project led to the systematic development of the theory of matrix reverse Hölder classes, \({\mathcal {B}_p}\), as well as an examination of the connections between \({\mathcal {B}_p}\), \({\mathcal {A}_\infty }\), and \({\mathcal {A}_{2,\infty }}\). By going beyond the ideas from [1, 17, 18], we carefully study \({\mathcal {A}_\infty }\) and prove that \({\mathcal {A}_\infty }= {\mathcal {A}_{2,\infty }}\).

Unless otherwise stated, we assume that our \(d \times d\) matrix weights (which play the role of the potential in our operators) are real-valued, symmetric, and positive semidefinite. As described above, real symmetric potentials are equivalent to complex Hermitian potentials through a “dimension doubling” process. In fact, because of this equivalence, our results can be compared with those in mathematical physics, where systems with complex, Hermitian matrix potentials are considered. To reiterate, we assume throughout the body of the article that V is real-valued and symmetric. However, in Appendix B, we follow the matrix weights community convention and assume that our matrix weights are complex-valued and Hermitian.

1.1 Organization of the article

The next three sections are devoted to matrix weight preliminaries with the goal of stating and proving our matrix version of the Fefferman–Phong inequality, Lemma 15. In Sect. 2, we present the different classes of matrix weights that we work with throughout this article, including the aforementioned matrix reverse Hölder condition \({\mathcal {B}_p}\) for \(p > 1\) and the (non-Muckenhoupt) quantitative cancellability condition \({\mathcal{Q}\mathcal{C}}\) that will be crucial to the proof of Lemma 15. These two classes will be discussed in relationship to the existing matrix weight literature in Appendix B. Section 3 introduces the auxiliary functions and their associated Agmon distance functions. The Fefferman–Phong inequalities are then stated and proved in Sect. 4. Section 4 also contains the Poincaré inequality that is used to prove one of our new Fefferman–Phong inequalities. The following three sections are concerned with elliptic theory. Section 5 introduces the elliptic systems of the form (1) discussed earlier. The fundamental matrices associated to these operators are discussed in Sect. 6. In Sect. 7, we show that the elliptic systems of the form (1) satisfy the assumptions from Sect. 6. The last two sections, Sects. 8 and 9, are respectively concerned with the upper and lower exponential bounds for our fundamental matrices. Further, we discuss the aforementioned connection between our upper and lower auxiliary functions and Landscape functions at the end of Sect. 9.

Finally, in our first two appendices, we state and prove a number of results related to the theory of matrix weights that are interesting in their own right, but are not needed for the proofs of our main results. In Appendix A, we explore the quantitative cancellability class \({\mathcal{Q}\mathcal{C}}\) in depth, providing examples and comparing it to our other matrix classes. In Appendix B, we systematically develop the theory of the various matrix classes that are introduced in Sect. 2. In particular, we provide a comprehensive discussion of the matrix \({\mathcal {B}_p}\) class and characterize this class of matrix weights in terms of the more classical matrix weight class \({\mathcal {A}_{p,\infty }}\) from [17, 18]. This discussion nicely complements a related matrix weight characterization from [28, Corollary 3.8]. We also discuss how the matrix \({\mathcal {A}_\infty }\) class introduced in [1] relates to the other matrix weight conditions discussed in this paper. In particular, we establish that \({\mathcal {A}_\infty }= {\mathcal {A}_{2,\infty }}\). Further, we provide a new characterization of the matrix \({\mathcal {A}_{2,\infty }}\) condition in terms of a new reverse Brunn–Minkowski type inequality. We hope that Appendix B will appeal to the reader who is interested in the theory of matrix-weighted norm inequalities in their own right. The last appendix contains the proofs of technical results that we skipped in the body.

We have attempted to make this article as self-contained as possible, particularly for the reader who is not an expert in elliptic theory or matrix weights. In Appendix B, we have not assumed any prior knowledge of matrix weights. As such, we hope that this section can serve as a reference for the \({\mathcal {A}_\infty }\) theory of matrix weights.

1.2 Notation

As is standard, we use C, c, etc. to denote constants that may change from line to line. We may use the notation C(n, p) to indicate that the constant depends on n and p, for example. The notation \(a \lesssim b\) means that there exists a constant \(c > 0\) so that \(a \le c b\). If \(c = c\left( d, p \right) \), for example, then we may write \(a \lesssim _{(d, p)} b\). We say that \(a \simeq b\) if both \(a \lesssim b\) and \(b \lesssim a\) with dependence denoted analogously.

Let \(\left\langle \cdot , \cdot \right\rangle _d: \mathbb {R}^d \times \mathbb {R}^d \rightarrow \mathbb {R}\) denote the standard Euclidean inner product on d-dimensional space. When the dimension of the underlying space is understood from the context, we may drop the subscript and simply write \(\left\langle \cdot , \cdot \right\rangle \). For a vector \(\vec {v} \in \mathbb {R}^d\), its scalar length is \(\left| \vec {v}\right| = \left\langle \vec {v}, \vec {v} \right\rangle ^{\frac{1}{2}}\). The sphere in d-dimensional space is \(\mathbb {S}^{d-1}= \left\{ \vec {v} \in \mathbb {R}^d: \left| \vec {v}\right| = 1\right\} \).

For a \(d \times d\) real-valued matrix A, we use the 2-norm, which is given by

\[ \left| A\right| = \sup \left\{ \left| A \vec {v}\right| : \vec {v} \in \mathbb {S}^{d-1}\right\} . \]

Alternatively, \(\left| A\right| \) is equal to its largest singular value, the square root of the largest eigenvalue of \(AA^T\). For symmetric positive semidefinite \(d \times d\) matrices A and B, we say that \(A \le B\) if \(\left\langle A \vec e, \vec e \right\rangle \le \left\langle B \vec e, \vec e \right\rangle \) for every \(\vec e \in \mathbb {R}^d\). Note that both \(\left| \vec {v}\right| \) and \(\left| A\right| \) are scalar quantities.

If A is symmetric and positive semidefinite, then \(\left| A\right| \) is equal to \(\lambda \), the largest eigenvalue of A. Let \(\vec {v} \in \mathbb {S}^{d-1}\) denote the eigenvector associated to \(\lambda \) and let \(\left\{ \vec {e}_i\right\} _{i=1}^d\) denote the standard basis of \(\mathbb {R}^d\). Observe that \(\vec {v} = \sum _{i=1}^d \left\langle \vec {v}, \vec {e}_i \right\rangle \vec {e}_i\) and for each j, \( \left\langle \vec {v}, \vec {e}_j \right\rangle ^2 \le \sum _{i=1}^d \left\langle \vec {v}, \vec {e}_i \right\rangle ^2 = 1\). Then, since \(A^{\frac{1}{2}}\) is well-defined, an application of Cauchy–Schwarz shows that

\[ \left| A\right| = \left\langle A \vec {v}, \vec {v} \right\rangle = \sum _{i, j = 1}^d \left\langle \vec {v}, \vec {e}_i \right\rangle \left\langle \vec {v}, \vec {e}_j \right\rangle \left\langle A^{\frac{1}{2}} \vec {e}_i, A^{\frac{1}{2}} \vec {e}_j \right\rangle \le \left( \sum _{i=1}^d \left\langle A \vec {e}_i, \vec {e}_i \right\rangle ^{\frac{1}{2}} \right) ^2 \le d \sum _{i=1}^d \left\langle A \vec {e}_i, \vec {e}_i \right\rangle . \tag{5} \]

Let \(\Omega \subseteq \mathbb {R}^n\) and \(p \in \left[ 1,\infty \right) \). For any d-vector \(\vec {v}\), we write \( \left\| \vec {v}\right\| _{L^p(\Omega )} = \left( \int _{\Omega } \left| \vec {v}(x)\right| ^p dx \right) ^{\frac{1}{p}}\). Similarly, for any \(d \times d\) matrix A, we use the notation \( \left\| A\right\| _{L^p(\Omega )} = \left( \int _{\Omega } \left| A(x)\right| ^p dx \right) ^{\frac{1}{p}}\). When \(p = \infty \), we write \( \left\| \vec {v}\right\| _{L^\infty (\Omega )} = {{\,\textrm{ess}\,}}\sup _{x \in \Omega } \left| \vec {v}(x)\right| \) and \( \left\| A\right\| _{L^\infty (\Omega )} = {{\,\textrm{ess}\,}}\sup _{x \in \Omega } \left| A(x)\right| \). We say that a vector \(\vec {v}\) belongs to \(L^p\left( \Omega \right) \) iff the scalar function \(\left| \vec {v}\right| \) belongs to \(L^p\left( \Omega \right) \). Similarly, for a matrix function A defined on \(\Omega \), \(A \in L^p\left( \Omega \right) \) iff \(\left| A\right| \in L^p\left( \Omega \right) \). In summary, we use the notation \(\left| \cdot \right| \) to denote norms of vectors and matrices, while we use the notations \(\left\| \cdot \right\| _p\), \(\left\| \cdot \right\| _{L^p}\), or \(\left\| \cdot \right\| _{L^p\left( \Omega \right) }\) to denote \(L^p\)-space norms.

We let \(C^\infty _c(\Omega )\) denote the set of all infinitely differentiable functions with compact support in \(\Omega \). If \(\vec {\varphi }: \Omega \rightarrow \mathbb {R}^d\) is a vector-valued function for which each component function \(\varphi _i \in C^\infty _c(\Omega )\), then \(\vec {\varphi } \in C^\infty _c(\Omega )\).

For \(x \in \mathbb {R}^n\) and \(r > 0\), we use the notation B(x, r) to denote a ball of radius \(r > 0\) centered at \(x \in \mathbb {R}^n\). We let Q(x, r) be the ball of radius r and center \(x \in \mathbb {R}^n\) in the \(\ell ^\infty \) norm on \(\mathbb {R}^n\) (i.e. the cube with side length 2r and center x). We write Q to denote a generic cube. We use the notation \(\ell \) to denote the sidelength of a cube. That is, \(\ell \left( Q\left( x, r \right) \right) = 2r\).

We will assume throughout that \(n \ge 3\) and \(d \ge 2\). In general, \(1< p < \infty \), but we may further specify the range of p as we go.

2 Matrix classes

Within this section, we define the classes of matrix functions that we work with, then we collect a number of observations about them.

2.1 Reverse Hölder matrices

Recall that for a nonnegative scalar-valued function v, we say that v belongs to the reverse Hölder class \({\text {B}_p}\) if \(v \in L^p_{{{\,\textrm{loc}\,}}}\left( \mathbb {R}^n \right) \) and there exists a constant \(C_v\) so that for every cube Q in \(\mathbb {R}^n\),

\[ \left( \frac{1}{\left| Q\right| } \int _Q \left| v(x)\right| ^p dx \right) ^{\frac{1}{p}} \le C_v \, \frac{1}{\left| Q\right| } \int _Q v(x) \, dx. \tag{6} \]

Let V be a \(d \times d\) matrix weight on \(\mathbb {R}^n\). That is, V is a \(d \times d\) real-valued, symmetric, positive semidefinite matrix. For such matrices, we define \({\mathcal {B}_p}\), the class of reverse Hölder matrices, via quadratic forms as follows.

Definition 1

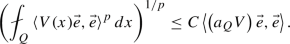

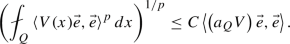

(Matrix \({\mathcal {B}_p}\)) For matrix weights, we say that V belongs to the class of reverse Hölder matrices, \(V \in {\mathcal {B}_p}\), if \(V \in L^p_{{{\,\textrm{loc}\,}}}\left( \mathbb {R}^n \right) \) and there exists a constant \(C_V\) so that for every cube Q in \(\mathbb {R}^n\) and every \(\vec e \in \mathbb {R}^d\) (or \(\mathbb {S}^{d-1}\)),

\[ \left( \frac{1}{\left| Q\right| } \int _Q \left\langle V(x) \vec {e}, \vec {e} \right\rangle ^p dx \right) ^{\frac{1}{p}} \le C_V \, \frac{1}{\left| Q\right| } \int _Q \left\langle V(x) \vec {e}, \vec {e} \right\rangle dx. \tag{7} \]

This constant is independent of Q and \(\vec {e}\), so that the inequality holds uniformly in Q and \(\vec {e}\). We call \(C_V\) the (uniform) \({\mathcal {B}_p}\) constant of V. Note that \(C_V\) depends on V as well as p; to indicate this, we may use the notation \(C_{V,p}\).
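
As an illustration of the definition, consider a diagonal matrix weight \(V = {{\,\textrm{diag}\,}}\left( v_1, \ldots , v_d \right) \) whose entries are nonnegative scalar \({\text {B}_p}\) functions with constants \(C_{v_1}, \ldots , C_{v_d}\). This example and the resulting constant are a sketch added for orientation, not a statement taken from the results below. For \(\vec {e} = \left( e_1, \ldots , e_d \right) \in \mathbb {R}^d\), we have \(\left\langle V \vec {e}, \vec {e} \right\rangle = \sum _{i=1}^d e_i^2 v_i\), and Minkowski's inequality followed by the scalar reverse Hölder inequality for each \(v_i\) gives

\[
\begin{aligned}
\left( \frac{1}{\left| Q\right| } \int _Q \Big ( \sum _{i=1}^d e_i^2 v_i \Big ) ^p \right) ^{\frac{1}{p}}
&\le \sum _{i=1}^d e_i^2 \left( \frac{1}{\left| Q\right| } \int _Q v_i^p \right) ^{\frac{1}{p}}
\le \sum _{i=1}^d e_i^2 \, C_{v_i} \frac{1}{\left| Q\right| } \int _Q v_i \\
&\le \Big ( \max _{1 \le i \le d} C_{v_i} \Big ) \frac{1}{\left| Q\right| } \int _Q \left\langle V \vec {e}, \vec {e} \right\rangle .
\end{aligned}
\]

Hence \(V \in {\mathcal {B}_p}\) with \(C_V \le \max _i C_{v_i}\), uniformly in Q and \(\vec {e}\).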

Now we collect some observations about such matrix functions. The first result regards the norm of a matrix \({\mathcal {B}_p}\) function.

Lemma 1

(Largest eigenvalue is \({\text {B}_p}\)) If \(V \in {\mathcal {B}_p}\), then \(\left| V\right| \in {\text {B}_p}\) with \(C_{\left| V\right| } \lesssim _{(d, p)} C_V\).

Proof

Let \(\vec {e}_1, \ldots , \vec {e}_d\) denote the standard basis for \(\mathbb {R}^d\). Using that \( \left| V\right| \le d \sum _{i=1}^d \left\langle V \vec {e}_i, \vec {e}_i \right\rangle \) (as explained in the notation section, see (5)) combined with the Hölder and Minkowski inequalities shows that

where we have used the reverse Hölder inequality (7) in the fourth step. \(\square \)
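For orientation, here is one possible chain of inequalities leading to Lemma 1. It is a sketch under the assumption that the reverse Hölder inequality (7) has the usual averaged form, and it is not necessarily the chain used in the article (in particular, it uses only Minkowski's inequality, (7), and the pointwise bound \(\left\langle V \vec {e}_i, \vec {e}_i \right\rangle \le \left| V\right| \)). It yields the explicit constant \(C_{\left| V\right| } \le d^2 C_V\):

$$\begin{aligned} \left( \frac{1}{\left| Q\right| } \int _Q \left| V\right| ^p \right) ^{\frac{1}{p}}&\le d \sum _{i=1}^d \left( \frac{1}{\left| Q\right| } \int _Q \left\langle V \vec {e}_i, \vec {e}_i \right\rangle ^p \right) ^{\frac{1}{p}} \\&\le d \, C_V \sum _{i=1}^d \frac{1}{\left| Q\right| } \int _Q \left\langle V \vec {e}_i, \vec {e}_i \right\rangle \le d^2 C_V \, \frac{1}{\left| Q\right| } \int _Q \left| V\right| . \end{aligned}$$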

Lemma 2

(Gehring’s Lemma) If \(V \in {\mathcal {B}_p}\), then there exists \(\varepsilon \left( p, C_V \right) > 0\) so that \(V \in \mathcal {B}_{p+\varepsilon }\). In particular, \(V \in \mathcal {B}_q\) for all \(q \in \left[ 1, p + \varepsilon \right] \). Moreover, if \(q \le s\), then \(C_{V, q} \le C_{V, s}\).

Proof

Since \(\left\langle V(x)\vec {e}, \vec {e} \right\rangle \) is a scalar \({\text {B}_p}\) weight (with \({\text {B}_p}\) constant uniform in \(\vec {e} \in \mathbb {R}^d\)), then it follows from the proof of Gehring’s Lemma (see for example the dyadic proof in [29]) that \(V \in {\mathcal {B}_p}\) implies that there exists \(\varepsilon > 0\) such that \(V \in \mathcal {B}_{p + \varepsilon }\). Let \(q \le p + \varepsilon \), \(\vec {e} \in \mathbb {R}^d\). Then by Hölder’s inequality,

showing that \(V \in \mathcal {B}_q\). If \(q \le s \le p + \varepsilon \), then the same argument holds with \(C_{V,s}\) in place of \(C_{V, p+\varepsilon }\). \(\square \)
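The Hölder step in the proof of Lemma 2 can be sketched as follows, assuming the averaged form of the reverse Hölder inequality. Write \(f = \left\langle V \vec {e}, \vec {e} \right\rangle \) (a shorthand introduced here) and let \(1 \le q \le s \le p + \varepsilon \). Since normalized \(L^q\) averages are nondecreasing in the exponent, one expects

$$\begin{aligned} \left( \frac{1}{\left| Q\right| } \int _Q f^q \right) ^{\frac{1}{q}} \le \left( \frac{1}{\left| Q\right| } \int _Q f^s \right) ^{\frac{1}{s}} \le C_{V, s} \, \frac{1}{\left| Q\right| } \int _Q f, \end{aligned}$$

which gives both \(V \in \mathcal {B}_q\) and the monotonicity \(C_{V, q} \le C_{V, s}\).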

Now we introduce an averaged version of V that will be extensively used in our arguments.

Definition 2

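(Averaged matrix) Let V be a function, \(x \in \mathbb {R}^n\), \(r > 0\). We define the averaged matrix as

$$\begin{aligned} \Psi \left( x, r; V \right) = \frac{1}{r^{n-2}} \int _{Q(x, r)} V(y) \, dy. \end{aligned} \tag{8}$$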

These averages have a controlled growth.

Lemma 3

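(Controlled growth, cf. Lemma 1.2 in [5]) If \(V \in {\mathcal {B}_p}\), then for any \(0< r< R < \infty \),

$$\begin{aligned} \Psi \left( x, r; V \right) \le C_V \left( \frac{r}{R} \right) ^{2 - \frac{n}{p}} \Psi \left( x, R; V \right) , \end{aligned}$$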

where \(C_V\) is the uniform \({\mathcal {B}_p}\) constant for V.

Proof

Let \(0< r < R\). Then for any \(\vec e \in \mathbb {R}^d\), applications of the Hölder inequality and the reverse Hölder inequality described by (7) show that

As \(\vec {e} \in \mathbb {R}^d\) was arbitrary, the resulting inequality holds in the sense of semidefinite matrices, which leads to the conclusion of the lemma. \(\square \)
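The Hölder and reverse Hölder steps behind Lemma 3 can be sketched as follows. This is a reconstruction under two assumptions: that the averaged matrix is \(\Psi \left( x, r; V \right) = r^{2-n} \int _{Q(x,r)} V\), and that (7) is the averaged reverse Hölder inequality. Write \(f = \left\langle V \vec {e}, \vec {e} \right\rangle \), \(Q_r = Q(x, r)\), and recall that \(\left| Q_r\right| = (2r)^n\). Then

$$\begin{aligned} \int _{Q_r} f&\le \left| Q_r\right| ^{1 - \frac{1}{p}} \left( \int _{Q_R} f^p \right) ^{\frac{1}{p}} \le C_V \left| Q_r\right| ^{1 - \frac{1}{p}} \left| Q_R\right| ^{\frac{1}{p} - 1} \int _{Q_R} f = C_V \left( \frac{r}{R} \right) ^{n - \frac{n}{p}} \int _{Q_R} f. \end{aligned}$$

Multiplying by \(r^{2-n}\) and using \(\int _{Q_R} f = R^{n-2} \left\langle \Psi \left( x, R; V \right) \vec {e}, \vec {e} \right\rangle \) gives

$$\begin{aligned} \left\langle \Psi \left( x, r; V \right) \vec {e}, \vec {e} \right\rangle \le C_V \left( \frac{r}{R} \right) ^{n - \frac{n}{p}} \left( \frac{r}{R} \right) ^{2 - n} \left\langle \Psi \left( x, R; V \right) \vec {e}, \vec {e} \right\rangle = C_V \left( \frac{r}{R} \right) ^{2 - \frac{n}{p}} \left\langle \Psi \left( x, R; V \right) \vec {e}, \vec {e} \right\rangle . \end{aligned}$$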

Furthermore, the \({\mathcal {B}_p}\) matrices serve as doubling measures.

Lemma 4

(Doubling) If \(V \in {\mathcal {B}_p}\), then V is a doubling measure. That is, there exists a doubling constant \(\gamma = \gamma \left( n, p, C_V \right) > 0\) so that for every \(x \in \mathbb {R}^n\) and every \(r > 0\),

$$\begin{aligned} \int _{Q(x, 2r)} V(y) \, dy \le \gamma \int _{Q(x, r)} V(y) \, dy. \end{aligned}$$

Proof

Since each \(\left\langle V \vec {e}, \vec {e} \right\rangle \) belongs to \({\text {B}_p}\), then by the scalar result, \(\left\langle V \vec {e}, \vec {e} \right\rangle \) defines a doubling measure. Moreover, since the \({\text {B}_p}\) constant is independent of \(\vec {e} \in \mathbb {S}^{d-1}\), then so too is the doubling constant associated to each measure defined by \(\left\langle V \vec {e}, \vec {e} \right\rangle \). It follows that V defines a doubling measure. \(\square \)

2.2 Nondegenerate matrices

Next, we define a very natural class of nondegenerate matrices.

Definition 3

(Nondegeneracy class) We say that V belongs to the nondegeneracy class, \(V \in \mathcal{N}\mathcal{D}\), if V is a matrix weight that satisfies the following very mild nondegeneracy condition: For any measurable set \(E \subseteq \mathbb {R}^n\) with \(\left| E\right| > 0\), we have (in the usual sense of semidefinite matrices) that

$$\begin{aligned} V(E) := \int _E V(y) \, dy > 0. \end{aligned} \tag{9}$$

First we give an example of a matrix function in \({\mathcal {B}_p}\) but not in \(\mathcal{N}\mathcal{D}\).

Example 1

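(\({\mathcal {B}_p}\setminus \mathcal{N}\mathcal{D}\) is nonempty) Take \(v: \mathbb {R}^n \rightarrow \mathbb {R}\) in \({\text {B}_p}\) and define

$$\begin{aligned} V = \begin{bmatrix} v &{} 0 &{} \cdots &{} 0 \\ 0 &{} 0 &{} \cdots &{} 0 \\ \vdots &{} \vdots &{} \ddots &{} \vdots \\ 0 &{} 0 &{} \cdots &{} 0 \end{bmatrix}. \end{aligned}$$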

It is clear that \(V \in {\mathcal {B}_p}\). However, since V and its averages all have zero eigenvalues, then \(V \notin \mathcal{N}\mathcal{D}\).

Now we produce a number of examples in both \({\mathcal {B}_p}\) and \(\mathcal{N}\mathcal{D}\).

Example 2

(\({\mathcal {B}_p}\cap \mathcal{N}\mathcal{D}\) polynomial matrices) Let V be a polynomial matrix.

(a) If V is symmetric, nontrivial along the diagonal, and positive semidefinite, then V satisfies (9). It follows from Corollary 5 in Appendix A that \(V \in {\mathcal {B}_p}\) as well.

(b) If for every \(\vec {e} \in \mathbb {R}^d\), there exists \(i \in \left\{ 1, \ldots , d\right\} \) so that \( \sum _{j = 1}^d V_{ij}e_j \ne 0\), then

$$\begin{aligned} \left\langle V^T V\vec {e}, \vec {e} \right\rangle&= \left\langle V\vec {e}, V\vec {e} \right\rangle = \sum _{k = 1}^d \left( \sum _{j = 1}^d V_{kj}e_j \right) ^2 \ge \left( \sum _{j = 1}^d V_{ij}e_j \right) ^2 > 0 \end{aligned}$$

so that \(V^T V\) satisfies (9). Since \(V^T V\) is symmetric and polynomial, then \(V^T V \in \mathcal{N}\mathcal{D}\cap {\mathcal {B}_p}\). A similar condition shows that \(V V^T \in \mathcal{N}\mathcal{D}\cap {\mathcal {B}_p}\) as well.

As we will see below, the nondegeneracy condition described by (9) facilitates the introduction of one of our key tools. However, there are also practical reasons to avoid working with matrices that aren’t nondegenerate. For example, consider a matrix-valued Schrödinger operator of the form \(- \Delta + V\), where V is as given in Example 1. The fundamental matrix of this operator is diagonal with only the first entry exhibiting decay, while all other diagonal entries contain the fundamental solution for \(\Delta \). In particular, since the norm of this fundamental matrix doesn’t exhibit exponential decay, we believe that the assumption of nondegeneracy is very natural for our setting.

2.3 Quantitative cancellability condition

As we’ll see below, the single assumption that \(V \in {\mathcal {B}_p}\) will not suffice for our needs, and we’ll impose additional conditions on V. To define the quantitative cancellability condition that we use, we need to introduce the lower auxiliary function associated to \(V \in {\mathcal {B}_p}\cap \mathcal{N}\mathcal{D}\).

If \(V \in \mathcal{N}\mathcal{D}\), then by (8) and (9), for each \(x \in \mathbb {R}^n\) and \(r > 0\), we have \(\Psi \left( x, r; V \right) > 0\). If \(V \in {\mathcal {B}_p}\) and \(p > \frac{n}{2}\), then the power \(2 - \frac{n}{p} > 0\) and it follows from Lemma 3 that

These observations allow us to make the following definition of \(\underline{m}\), the lower auxiliary function.

Definition 4

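(Lower auxiliary function) Let \(V \in {\mathcal {B}_p}\cap \mathcal{N}\mathcal{D}\) for some \(p > \frac{n}{2}\). We define the lower auxiliary function \( \underline{m}\left( \cdot , V \right) : \mathbb {R}^n\rightarrow (0, \infty )\) as follows:

$$\begin{aligned} \frac{1}{\underline{m}\left( x, V \right) } = \sup _{r > 0} \left\{ r : \min _{\vec {e} \in \mathbb {S}^{d-1}} \left\langle \Psi \left( x, r; V \right) \vec {e}, \vec {e} \right\rangle \le 1 \right\} . \end{aligned}$$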
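To see why the lower auxiliary function takes values in \((0, \infty )\), it may help to spell out how Lemma 3 and nondegeneracy control the averaged quadratic forms at small and large scales. The following is a sketch, assuming that Lemma 3 reads \(\Psi \left( x, r; V \right) \le C_V \left( r/R \right) ^{2 - n/p} \Psi \left( x, R; V \right) \) for \(0< r < R\). For a unit vector \(\vec {e}\), comparing with the fixed scale 1 gives

$$\begin{aligned} \left\langle \Psi \left( x, r; V \right) \vec {e}, \vec {e} \right\rangle&\le C_V \, r^{2 - \frac{n}{p}} \left\langle \Psi \left( x, 1; V \right) \vec {e}, \vec {e} \right\rangle \rightarrow 0 \quad \text {as } r \rightarrow 0^+, \\ \left\langle \Psi \left( x, R; V \right) \vec {e}, \vec {e} \right\rangle&\ge C_V^{-1} R^{2 - \frac{n}{p}} \left\langle \Psi \left( x, 1; V \right) \vec {e}, \vec {e} \right\rangle \rightarrow \infty \quad \text {as } R \rightarrow \infty , \end{aligned}$$

where the second limit uses \(p > \frac{n}{2}\) together with \(\left\langle \Psi \left( x, 1; V \right) \vec {e}, \vec {e} \right\rangle > 0\), which is where \(V \in \mathcal{N}\mathcal{D}\) enters.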

We investigate this function and others in much more detail within Sect. 3. For now, we use \(\underline{m}\) to define our next matrix class.

Definition 5

(Quantitative cancellability class) If \(V \in {\mathcal {B}_p}\cap \mathcal{N}\mathcal{D}\), then we say that V belongs to the Quantitative Cancellability class, \(V \in {\mathcal{Q}\mathcal{C}}\), if there exists \(N_V > 0\) so that for every \(x \in \mathbb {R}^n\) and every \(\vec {e} \in \mathbb {R}^d\),

where \(Q = Q\left( x, \frac{1}{\underline{m}(x, V)} \right) \).

For most of our applications, we consider \({\mathcal{Q}\mathcal{C}}\) as a subset of \({\mathcal {B}_p}\cap \mathcal{N}\mathcal{D}\) in order to make sense of \(\underline{m}(\cdot , V)\). However, if \(V \notin {\mathcal {B}_p}\cap \mathcal{N}\mathcal{D}\) or \(\underline{m}\left( \cdot , V \right) \) is not well-defined, we say that \(V \in {\mathcal{Q}\mathcal{C}}\) if (10) holds for every cube \(Q \subseteq \mathbb {R}^n\).

To show that this class of matrices is meaningful, we provide a non-example.

Example 3

(\({\mathcal{Q}\mathcal{C}}\) is a proper subset of \({\mathcal {B}_p}\cap \mathcal{N}\mathcal{D}\)) Define \(V: \mathbb {R}^n \rightarrow \mathbb {R}^{2 \times 2}\) by

By Example 2, \(V \in {\mathcal {B}_p}\cap \mathcal{N}\mathcal{D}\). However, as shown in Appendix A, \(V \notin {\mathcal{Q}\mathcal{C}}\).

In Sect. 8, we prove one of our main results: an upper exponential decay estimate for the fundamental matrix of the elliptic operator \(\mathcal {L}_V\), where \(V \in {\mathcal {B}_p}\cap \mathcal{N}\mathcal{D}\cap {\mathcal{Q}\mathcal{C}}\). A further discussion of these matrix classes and their relationships is available in Appendix A.

2.4 Stronger conditions

To finish our discussion of matrix weights, we introduce some closely related and more well-known classes of matrices. Note that these assumptions are stronger and more readily checkable.

Definition 6

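(\({\mathcal {A}_\infty }\) matrices) We say that V belongs to the A-infinity class of matrices, \(V \in {\mathcal {A}_\infty }\), if for any \(\varepsilon > 0\), there exists \(\delta > 0\) so that for every cube Q,

$$\begin{aligned} \left| \left\{ x \in Q : V(x) \ge \frac{\delta }{\left| Q\right| } \int _Q V(y) \, dy \right\} \right| \ge \left( 1 - \varepsilon \right) \left| Q\right| . \end{aligned} \tag{11}$$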

This class of matrix weights was first introduced in [1], where the author proved a Shubin–Maz’ya type sufficiency condition for the discreteness of the spectrum of a Schrödinger operator \(- \Delta + V\), where \(V \in {\mathcal {A}_\infty }\). Interestingly, and somewhat surprisingly, we show in Appendix B that the condition \(V \in {\mathcal {A}_\infty }\) is equivalent to \(V \in \mathcal {A}_{2, \infty }\). The class \(\mathcal {A}_{2, \infty }\) is the readily checkable class of matrix weights introduced in [17, 18], which we now define.

Definition 7

(\(\mathcal {A}_{2, \infty }\) matrices) We say \(V \in \mathcal {A}_{2, \infty }\), if there exists \(A_V > 0\) so that for every cube Q, we have

We briefly discuss the relationship between \({\mathcal {B}_p}\) and \({\mathcal {A}_\infty }\). First, we have the following application of Gehring’s lemma.

Lemma 5

(\({\mathcal {A}_\infty }\subseteq \mathcal {B}_q\)) If \(V \in {\mathcal {A}_\infty }\), then \(V \in \mathcal {B}_q\) for some \(q > 1\).

Proof

Since \(\left\langle V \vec {e}, \vec {e} \right\rangle \in {\text {A}_\infty }\) uniformly in \(\vec {e} \in \mathbb {S}^{d-1}\), then by [30, Lemma 7.2.2], there exists \(q > 1\) so that \(\left\langle V \vec {e}, \vec {e} \right\rangle \in {\text {B}_q}\) uniformly in \(\vec {e} \in \mathbb {S}^{d-1}\). In particular, \(V \in \mathcal {B}_q\), as required. \(\square \)

On the other hand, when \(V \in {\mathcal {B}_p}\), there is no reason to expect that \(V \in {\mathcal {A}_\infty }\). If \(V \in {\mathcal {B}_p}\), then for each \(\vec {e} \in \mathbb {R}^d\), \(\left\langle V(x) \vec {e}, \vec {e} \right\rangle \) is a scalar \({\text {B}_p}\) function, which means that \(\left\langle V(x) \vec {e}, \vec {e} \right\rangle \) is a scalar \({\text {A}_\infty }\) function. As the \({\text {B}_p}\) constants are uniform in \(\vec {e}\), then for any \(\varepsilon > 0\), there exists \(\delta > 0\) so that for every cube Q, if we define \(Q_{\vec {e}} = \left\{ x \in Q : \left\langle V(x) \vec {e}, \vec {e} \right\rangle \ge \frac{\delta }{\left| Q\right| } \int _Q \left\langle V \vec {e}, \vec {e} \right\rangle \right\} \), then \( \left| {Q_{\vec {e}}}\right| \ge (1-\varepsilon ) \left| Q\right| \). However, this doesn’t guarantee any quantitative lower bound on \( \left| {\bigcap _{\vec {e}} Q_{\vec {e}}}\right| \). In fact, Example 3 above gives a matrix function that belongs to \(\mathcal{N}\mathcal{D}\cap {\mathcal {B}_p}\) for every p, but has zero determinant everywhere. Therefore, for every x, there exists \(\vec {e}_x\) for which \(\left\langle V(x) \vec {e}_x, \vec {e}_x \right\rangle = 0\), while the nondegeneracy condition ensures that the average of V over every cube Q is positive definite. From this observation, we see that the \({\mathcal {A}_\infty }\) condition (11) is impossible to satisfy. Alternatively, as shown in Appendix A, \(V \notin {\mathcal{Q}\mathcal{C}}\) and therefore by Lemma 6 below, \(V \notin {\mathcal {A}_\infty }\).

Recall that \(\lambda _d = \left| V\right| \) denotes the largest eigenvalue of V. As we saw in Lemma 1, if \(V \in {\mathcal {B}_p}\), then \(\lambda _d\) belongs to \({\text {B}_p}\). Let \(\lambda _1\) denote the smallest eigenvalue of V. That is, \(\lambda _1 = \left| V^{-1}\right| ^{-1}\). Under a stronger set of assumptions, we can also make the interesting conclusion that \(\lambda _1\) is in \({\text {B}_p}\).

Proposition 1

(Smallest eigenvalue is scalar \({\text {B}_p}\)) If \(V \in {\mathcal {B}_p}\cap {\mathcal {A}_\infty }\), then \(\lambda _1 \in {\text {B}_p}\).

The proof of this result appears in the appendix of [31]. Although the assumption that \(V \in {\mathcal {B}_p}\cap {\mathcal {A}_\infty }\) implies that the smallest and largest eigenvalues of V belong to \({\text {B}_p}\), it is unclear what conditions would imply that the other eigenvalues belong to this reverse Hölder class.

The next result and its proof show that the \({\mathcal{Q}\mathcal{C}}\) condition can be thought of as a noncommutative, non-\({\mathcal {A}_\infty }\) condition that is very naturally implied by the noncommutativity that is built into the \({\mathcal {A}_\infty }\) definition.

Lemma 6

(\({\mathcal {A}_\infty }{\cap \mathcal{N}\mathcal{D}} \subseteq {\mathcal{Q}\mathcal{C}}\)) If \(V \in {\mathcal {A}_\infty }{\cap \mathcal{N}\mathcal{D}}\), then \(V \in {\mathcal{Q}\mathcal{C}}\).

In the following proof, we establish that (10) holds for all cubes \(Q \subseteq \mathbb {R}^n\), not just those at the special scale which are defined by \(Q = Q\left( x, \frac{1}{\underline{m}(x, V)} \right) \).

Proof

Since \(V \in {\mathcal {A}_\infty }\), we may choose \(\delta > 0\) so that (11) holds with \(\varepsilon = \frac{1}{2}\). That is, for any \(Q \subseteq \mathbb {R}^n\), if we define \(S = \left\{ x \in Q : V(x) \ge \frac{\delta }{\left| Q\right| } \int _Q V \right\} \), then \(\left| S\right| \ge \frac{1}{2} \left| Q\right| \). Observe that since V(Q) is invertible, then

showing that \(V \in {\mathcal{Q}\mathcal{C}}\). \(\square \)

Next we describe a collection of examples of matrix functions in \({\mathcal {B}_p}\cap {\mathcal {A}_{2,\infty }}\). Let \(A = \left( a_{ij} \right) _{i, j = 1}^d\) be a \(d \times d\) Hermitian, positive definite matrix and let \(\Gamma = \left( \gamma _{ij} \right) _{i, j = 1}^d\) be some constant matrix. We use A and \(\Gamma \) to define the \(d \times d\) matrix function \(V: \mathbb {R}^n \rightarrow \mathbb {R}^{d \times d}\) by

By [32, Theorem 3.1], a matrix of the form (12) is positive definite a.e. iff \(\gamma _{ij} = \gamma _{ji} = \frac{1}{2} \left( \gamma _{ii} + \gamma _{jj} \right) \) for \(i, j = 1, \ldots , d\). Moreover, in this setting, [32, Lemma 3.4] shows that \(V^{-1}: \mathbb {R}^n \rightarrow \mathbb {R}^{d \times d}\) is well-defined and given by

where \(A^{-1} = \left( a^{ij} \right) _{i, j = 1}^d\). Under the assumption of positive definiteness, these matrices provide a full class of examples of matrix weights in \({\mathcal {B}_p}\cap {\mathcal {A}_{2,\infty }}\).

Proposition 2

Let V be defined by (12), where \(A = \left( a_{ij} \right) _{i, j = 1}^d\) is a \(d \times d\) Hermitian, positive definite matrix and \(\gamma _{ij} = \frac{1}{2} \left( \gamma _i + \gamma _j \right) \) for some \(\vec {\gamma } \in \mathbb {R}^d\). If \(p \ge 1\) and \(\gamma _{i} > - \frac{n}{p}\) for each \(1 \le i \le d\), then \(V \in {\mathcal {B}_p}\cap {\mathcal {A}_{2,\infty }}\).

The proof of this result appears in Appendix C.

The classical Brunn–Minkowski inequality implies that the map \(A \mapsto (\det A)^{\frac{1}{d}}\), defined on the set of \(d \times d\) symmetric positive semidefinite matrices A, is concave. An application of Jensen’s inequality shows that

see [17, p. 48] for a proof. Accordingly, we make the following definition of an associated reverse class.

Definition 8

(\(\mathcal {R}_{\text {BM}}\)) We say that a matrix weight V belongs to the reverse Brunn–Minkowski class, \(V \in \mathcal {R}_{\text {BM}}\), if there exists a constant \(B_V > 0\) so that for any cube \(Q \subseteq \mathbb {R}^n\), it holds that

In Appendix C, we also provide the proof of the following “non-\({\mathcal {A}_\infty }\)” condition for \(V \in {\mathcal{Q}\mathcal{C}}\).

Proposition 3

If \(V \in \mathcal{N}\mathcal{D}\) and there exists a constant \(B_V > 0\) so that (15) holds for every cube \(Q \subseteq \mathbb {R}^n\), then \(V \in {\mathcal{Q}\mathcal{C}}\).

Even for a \(d \times d\) diagonal matrix weight V with a.e. positive entries \(\lambda _1(x), \ldots , \lambda _d(x)\), it is not clear when (15) holds. If each \(\lambda _j (x) \in {\text {A}_\infty }\) for \(1 \le j \le d\), then (15) holds. In particular, since every diagonal matrix weight V with positive a.e. entries belongs to \({\mathcal{Q}\mathcal{C}}\), then (15) doesn’t provide a necessary condition for \({\mathcal{Q}\mathcal{C}}\). It would be interesting to find an easily checkable sufficient condition for \(V \in {\mathcal{Q}\mathcal{C}}\) that is at least trivially true in the case of diagonal matrix weights.

For a much deeper discussion of the classes \({\mathcal {B}_p}, {\mathcal {A}_\infty }, {\mathcal {A}_{2,\infty }}\), and their relationships to each other, we refer the reader to Appendix B. We don’t discuss matrix \(\mathcal {A}_p\) weights in Appendix B since they play no role in this paper. However, [33] serves as an excellent reference for the theory of matrix \(\mathcal {A}_p\) weights and the boundedness of singular integrals on these spaces.

3 Auxiliary functions and Agmon distances

Now that we have introduced the class of matrices that we work with, we develop the theory of their associated auxiliary functions. In the scalar setting, these ideas appear in [4, 2, 5], and [3], for example. As we are working with matrices instead of scalar functions, there are many different ways to generalize these ideas.

We assume from now on that \(V \in {\mathcal {B}_p}\cap \mathcal{N}\mathcal{D}\) for some \(p \in \left[ \frac{n}{2}, \infty \right) \). By Lemma 2, there is no loss in assuming that \(p > \frac{n}{2}\). Since \(V \in \mathcal{N}\mathcal{D}\), then by (8) and (9), for each \(x \in \mathbb {R}^n\) and \(r > 0\), we have \(\Psi \left( x, r; V \right) > 0\). Since \(p > \frac{n}{2}\), then the power \(2 - \frac{n}{p} > 0\) and it follows from Lemma 3 that for any \(\vec {e} \in \mathbb {R}^d\),

This allows us to make the following definition.

If \(V \in {\mathcal {B}_p}\cap \mathcal{N}\mathcal{D}\) for some \(p > \frac{n}{2}\), then for \(x \in \mathbb {R}^n\) and \(\vec {e} \in \mathbb {S}^{d-1}\), the auxiliary function \(m\left( x, \vec {e}, V \right) \in (0, \infty )\) is defined by

Remark 1

If v is a scalar \({\text {B}_p}\) function, then we may eliminate the \(\vec {e}\)-dependence and define

We recall the following lemma from [5], for example, that applies to scalar functions.

Lemma 7

(cf. [5, Lemma 1.4]) Assume that \(v \in {\text {B}_p}\) for some \(p > \frac{n}{2}\). There exist constants \(C, c, k_0 > 0\), depending on n, p, and \(C_v\), so that for any \(x, y \in \mathbb {R}^n\),

(a) If \( \left| x - y\right| \lesssim \frac{1}{m\left( x, v \right) }\), then \( m\left( x, v \right) \simeq _{(n, p, C_v)} m\left( y, v \right) \),

(b) \( m\left( y, v \right) \le C \left[ 1 + \left| x - y\right| m\left( x, v \right) \right] ^{k_0} m\left( x, v \right) \),

(c) \( m\left( y, v \right) \ge \frac{c \, m\left( x, v \right) }{\left[ 1 + \left| x -y\right| m\left( x, v \right) \right] ^{{k_0}/ \left( k_0+1 \right) }}\).

As the properties described in this lemma will be very useful below, we seek auxiliary functions that also satisfy this set of results in the matrix setting. We define two auxiliary functions as follows.

Definition 9

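(Lower and upper auxiliary functions) Let \(V \in {\mathcal {B}_p}\cap \mathcal{N}\mathcal{D}\) for some \(p > \frac{n}{2}\). We define the lower auxiliary function as follows:

$$\begin{aligned} \frac{1}{\underline{m}\left( x, V \right) } = \sup _{r > 0} \left\{ r : \min _{\vec {e} \in \mathbb {S}^{d-1}} \left\langle \Psi \left( x, r; V \right) \vec {e}, \vec {e} \right\rangle \le 1 \right\} . \end{aligned} \tag{16}$$

The upper auxiliary function is given by

$$\begin{aligned} \frac{1}{\overline{m}\left( x, V \right) } = \sup _{r > 0} \left\{ r : \max _{\vec {e} \in \mathbb {S}^{d-1}} \left\langle \Psi \left( x, r; V \right) \vec {e}, \vec {e} \right\rangle \le 1 \right\} . \end{aligned} \tag{17}$$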

Remark 2

Since \(\left| \Psi \left( x, r; V \right) \right| \) satisfies Lemma 3 whenever \(V \in {\mathcal {B}_p}\), then for the upper auxiliary function, \(\overline{m}\left( x, V \right) \), we do not need to assume that \(V \in \mathcal{N}\mathcal{D}\).
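A worked example may help fix ideas. It is a sketch resting on two assumptions that should be checked against (8), (16), and (17): that \(\Psi \left( x, r; V \right) = r^{2-n} \int _{Q(x,r)} V\), and that \(1/\underline{m}\) and \(1/\overline{m}\) are the largest radii at which the smallest, respectively largest, eigenvalue of \(\Psi \left( x, r; V \right) \) is at most 1. Take \(V \equiv A\), a constant symmetric positive definite matrix with eigenvalues \(0 < \lambda _1 \le \cdots \le \lambda _d\) (a hypothetical choice, not an example from the article). Since \(\left| Q(x, r)\right| = (2r)^n\),

$$\begin{aligned} \Psi \left( x, r; V \right) = r^{2-n} (2r)^n A = 2^n r^2 A, \qquad \underline{m}\left( x, V \right) = 2^{\frac{n}{2}} \sqrt{\lambda _1}, \qquad \overline{m}\left( x, V \right) = 2^{\frac{n}{2}} \sqrt{\lambda _d}. \end{aligned}$$

In particular, \(\underline{m} \le \overline{m}\), and at the two critical scales the averaged matrix equals \(A/\lambda _1 \ge I\) and \(A/\lambda _d \le I\), respectively.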

For V fixed, we define

then observe that \(\overline{\Psi }(x) \le I \le \underline{\Psi }(x)\). In particular, for every \(\vec {e} \in \mathbb {S}^{d-1}\),

With this pair of functions in hand, we now seek to prove Lemma 7 for both \(\underline{m}\) and \(\overline{m}\). The following pair of observations for each auxiliary function will allow us to prove the desired results.

Lemma 8

(Lower observation) Let \(V \in {\mathcal {B}_p}\cap \mathcal{N}\mathcal{D}\) for some \(p > \frac{n}{2}\). If \( c \ge \left\langle \Psi \left( x,r; V \right) \vec {e}, \vec {e} \right\rangle \) for some \(\vec {e} \in \mathbb {S}^{d-1}\), then \( r \le \max \left\{ 1, (C_Vc)^\frac{p}{2p - n}\right\} \frac{1}{\underline{m}(x, V)} \).

Proof

If \(r \le \frac{1}{\underline{m}\left( x, V \right) }\), then we are done, so assume that \(\frac{1}{\underline{m}\left( x, V \right) } < r\). Then it follows from (18) and Lemma 3 that for any \(\vec {e} \in \mathbb {S}^{d-1}\),

The conclusion follows after algebraic simplifications. \(\square \)
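The algebraic simplification at the end of the proof of Lemma 8 can be sketched as follows. Write \(\rho = \frac{1}{\underline{m}\left( x, V \right) } < r\) (a shorthand introduced here) and assume that (18) provides \(\left\langle \Psi \left( x, \rho ; V \right) \vec {e}, \vec {e} \right\rangle \ge 1\) for unit vectors \(\vec {e}\), and that Lemma 3 reads \(\Psi \left( x, \rho ; V \right) \le C_V \left( \rho /r \right) ^{2 - n/p} \Psi \left( x, r; V \right) \). With \(\vec {e}\) the vector from the hypothesis,

$$\begin{aligned} 1 \le \left\langle \Psi \left( x, \rho ; V \right) \vec {e}, \vec {e} \right\rangle \le C_V \left( \frac{\rho }{r} \right) ^{\frac{2p - n}{p}} \left\langle \Psi \left( x, r; V \right) \vec {e}, \vec {e} \right\rangle \le C_V c \left( \frac{\rho }{r} \right) ^{\frac{2p - n}{p}}, \end{aligned}$$

so that \(r \le \left( C_V c \right) ^{\frac{p}{2p - n}} \rho \), which is the claimed bound in the case \(\rho < r\).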

As we observed in Lemma 1, if \(V \in {\mathcal {B}_p}\), then \(\left| V\right| \in {\text {B}_p}\). Thus, it is meaningful to discuss the quantity \(m\left( x, \left| V\right| \right) \). For \(\overline{m}\left( x, V \right) \), we rely on the following relationship regarding norms. Note that by Remark 2, we do not need to assume that \(V \in \mathcal{N}\mathcal{D}\) for this result.

Lemma 9

(Upper auxiliary function relates to norm) If \(V \in {\mathcal {B}_p}\) for some \(p > \frac{n}{2}\), then

Proof

For any \(r > 0\), choose \(\vec {e} \in \mathbb {S}^{d-1}\) so that

Since \(\left\langle V(y) \vec {e}, \vec {e} \right\rangle \le \left| V\right| \), then \(\left| \Psi (x, r;V)\right| \le \Psi (x, r; \left| V\right| )\). It follows that \(\frac{1}{\overline{m}\left( x, V \right) } \ge \frac{1}{m\left( x, \left| V\right| \right) }\) so that

Let \(\left\{ \vec {e}_i\right\} _{i=1}^d\) denote the standard basis of \(\mathbb {R}^d\). For any \(r > 0\), it follows from (5) that

Combining the fact that \(\Psi \left( x, \frac{1}{m\left( x, \left| V\right| \right) }; \left| V\right| \right) = 1\) with the previous observation, Lemma 3, and the definition of \(\overline{m}\) shows that

and the second part of the inequality follows. \(\square \)

Since \(\left| V\right| = \lambda _d\), the largest eigenvalue of V, then this result shows that \(\overline{m}\left( x, V \right) \simeq m\left( x, \lambda _d \right) \), indicating why we call \(\overline{m}\) the upper auxiliary function.
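The gap between the two auxiliary functions can also be explored numerically. The following Python sketch is purely illustrative and not taken from the article: it takes \(n = 3\), \(d = 2\), and the polynomial matrix \(V(y) = \textrm{diag}(1, \left| y\right| ^2)\), and it assumes that \(\Psi \left( x, r; V \right) = r^{2-n} \int _{Q(x,r)} V\) and that \(1/\underline{m}\) and \(1/\overline{m}\) are the largest radii at which the smallest, respectively largest, eigenvalue of \(\Psi \) is at most 1. The function names `psi_diag`, `critical_radius`, `lower_m`, and `upper_m` are hypothetical.

```python
# Illustrative sketch only: n = 3, d = 2, V(y) = diag(1, |y|^2).
# ASSUMPTIONS (not confirmed by the text above):
#   Psi(x, r; V) = r^(2 - n) * (integral of V over the cube Q(x, r)),
#   1/m_lower = sup{ r > 0 : smallest eigenvalue of Psi(x, r; V) <= 1 },
#   1/m_upper = sup{ r > 0 : largest  eigenvalue of Psi(x, r; V) <= 1 }.


def psi_diag(x, r):
    """Diagonal entries of Psi(x, r; V) for V(y) = diag(1, |y|^2) in R^3.

    Over the cube Q(x, r) of side 2r: the integral of 1 is 8 r^3 and the
    integral of |y|^2 is 8 r^3 |x|^2 + 8 r^5; both are then scaled by r^(-1).
    """
    x_sq = sum(c * c for c in x)
    return (8.0 * r**2, 8.0 * r**2 * x_sq + 8.0 * r**4)


def critical_radius(f, lo=1e-12, hi=1e6, iters=200):
    """Largest r in [lo, hi] with f(r) <= 1, for continuous increasing f."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if f(mid) <= 1.0:
            lo = mid
        else:
            hi = mid
    return lo


def lower_m(x):
    """Lower auxiliary function: uses the smallest eigenvalue of Psi."""
    return 1.0 / critical_radius(lambda r: min(psi_diag(x, r)))


def upper_m(x):
    """Upper auxiliary function: uses the largest eigenvalue of Psi."""
    return 1.0 / critical_radius(lambda r: max(psi_diag(x, r)))
```

At the origin the two eigenvalues of \(\Psi \) are \(8r^2\) and \(8r^4\), so under the stated assumptions `lower_m((0, 0, 0))` should approximate \(8^{1/4}\) and `upper_m((0, 0, 0))` should approximate \(8^{1/2}\). Bisection is used here only because each eigenvalue is increasing in r for this particular V.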

Now we use these lemmas to establish a number of important tools related to the functions \(\underline{m}\left( x, V \right) \) and \(\overline{m}\left( x, V \right) \). From now on we will assume that \(\left| \cdot \right| \) on \(\mathbb {R}^n\) refers to the \(\ell ^\infty \) norm.

Lemma 10

(Auxiliary function properties) If \(V \in {\mathcal {B}_p}\cap \mathcal{N}\mathcal{D}\) for some \(p > \frac{n}{2}\), then both \(\underline{m}(\cdot , V)\) and \(\overline{m}(\cdot , V)\) satisfy the conclusions of Lemma 7 where all constants depend on n, p, and \(C_V\). For \(\overline{m}(\cdot , V)\), the constants depend additionally on d and we may eliminate the assumption that \(V \in \mathcal{N}\mathcal{D}\).

Proof

First consider \(\overline{m}(\cdot , V)\). Lemma 9 combined with Lemma 1 implies that all of these properties follow immediately from Lemma 7.

Now consider \(\underline{m}(\cdot , V)\). Suppose \(\left| x - y\right| \le \frac{2^j -1}{\underline{m}\left( x, V \right) }\) for some \(j \in \mathbb {N}\). Then \(Q\left( y, \frac{1}{\underline{m}\left( x, V \right) } \right) \subseteq Q\left( x, \frac{2^j}{\underline{m}\left( x, V \right) } \right) \). Choose \(\vec {e} \in \mathbb {S}^{d-1}\) so that \(\left\langle \Psi \left( x, \frac{1}{\underline{m}\left( x, V \right) }; V \right) \vec {e}, \vec {e} \right\rangle = 1\). Then

where we have used Lemma 4 and \(\gamma \) denotes the doubling constant. It then follows from Lemma 8 that \( \frac{1}{\underline{m}\left( x, V \right) } \le \frac{\max \left\{ 1, \left( C_V \gamma ^j \right) ^{p/(2p-n)}\right\} }{\underline{m}\left( y, V \right) }\) or

Since \(\left| x - y\right| \le \frac{2^j -1}{\underline{m}\left( x, V \right) }\) and \(\frac{1}{\underline{m}\left( x, V \right) } \le \frac{\left( C_V \gamma ^j \right) ^{p/(2p-n)}}{\underline{m}\left( y, V \right) }\), then \(\left| x - y\right| \le \frac{\left( 2^j - 1 \right) \left( C_V \gamma ^j \right) ^{p/(2p-n)}}{\underline{m}\left( y, V \right) }\). Thus, \(Q\left( x, \frac{1}{\underline{m}\left( y, V \right) } \right) \subseteq Q\left( y, \frac{\left( 2^j - 1 \right) \left( C_V \gamma ^j \right) ^{p/(2p-n)}+1}{\underline{m}\left( y, V \right) } \right) \). Setting \( {\tilde{j}} = \lceil \ln \left[ \left( 2^j - 1 \right) \left( C_V \gamma ^j \right) ^{p/(2p-n)}+1\right] / \ln 2\rceil \), it can be shown, as above, that

where now \(\vec {e} \in \mathbb {S}^{d-1}\) is such that \(\left\langle \underline{\Psi }(y) \vec {e}, \vec {e} \right\rangle = 1\). Arguing as above, we see that \(\frac{1}{\underline{m}\left( y, V \right) } \le \frac{ \max \left\{ 1, \left( C_V \gamma ^{\tilde{j}} \right) ^{p/(2p-n)}\right\} }{\underline{m}\left( x, V \right) }\), or

When \(\left| x - y\right| \lesssim \frac{1}{\underline{m}\left( x, V \right) }\), we have that \(j \simeq 1\) and \(\tilde{j} \simeq 1\). Then statement (a) is a consequence of (20) and (21).

If \(\left| x - y\right| \le \frac{1}{\underline{m}\left( x,V \right) }\), then part (a) implies that \(\underline{m}\left( y, V \right) \lesssim \underline{m}\left( x, V \right) \) and the conclusion of (b) follows. Otherwise, choose \(j \in \mathbb {N}\) so that \(\frac{2^{j-1}-1}{\underline{m}\left( x, V \right) } \le \left| x - y\right| < \frac{2^j-1}{\underline{m}\left( x, V \right) }\). From (20), we see that

Setting \(C = \left( C_V \gamma \right) ^{\frac{p}{2p-n}}\) and \(k_0 = \frac{p \ln \gamma }{\left( 2p-n \right) \ln 2 }\) gives the conclusion of (b).
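The choice of C and \(k_0\) in part (b) can be unpacked as follows. This is a sketch, assuming that (20) reads \(\underline{m}\left( y, V \right) \le \left( C_V \gamma ^j \right) ^{\frac{p}{2p-n}} \underline{m}\left( x, V \right) \) and that \(C_V, \gamma \ge 1\). The choice of j gives \(2^{j-1} \le 1 + \left| x - y\right| \underline{m}\left( x, V \right) \), so that

$$\begin{aligned} \gamma ^j = \gamma \left( 2^{j-1} \right) ^{\frac{\ln \gamma }{\ln 2}} \le \gamma \left[ 1 + \left| x - y\right| \underline{m}\left( x, V \right) \right] ^{\frac{\ln \gamma }{\ln 2}}, \end{aligned}$$

and therefore

$$\begin{aligned} \left( C_V \gamma ^j \right) ^{\frac{p}{2p-n}} \le \left( C_V \gamma \right) ^{\frac{p}{2p-n}} \left[ 1 + \left| x - y\right| \underline{m}\left( x, V \right) \right] ^{\frac{p \ln \gamma }{\left( 2p-n \right) \ln 2}} = C \left[ 1 + \left| x - y\right| \underline{m}\left( x, V \right) \right] ^{k_0}. \end{aligned}$$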

If \(\left| x - y\right| \le \frac{1}{\underline{m}\left( x, V \right) }\) or \(\left| x - y\right| \le \frac{1}{\underline{m}\left( y, V \right) }\), then part (a) implies that \(\underline{m}\left( x, V \right) \lesssim \underline{m}\left( y, V \right) \), and the conclusion of (c) follows. Thus, we consider when \(\left| x - y\right| > \frac{1}{\underline{m}\left( x, V \right) }\) and \(\left| x - y\right| > \frac{1}{\underline{m}\left( y, V \right) }\). Repeating the arguments from the previous paragraph with x and y interchanged, we see that

Rearranging gives that

Taking \(c = 2^{-k_0/(k_0+1)}C^{-1/(k_0+1)}\) leads to the conclusion of (c). \(\square \)
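The rearrangement behind part (c) can be sketched as follows, assuming that the interchanged estimate reads \(\underline{m}\left( x, V \right) \le C \left[ 1 + \left| x - y\right| \underline{m}\left( y, V \right) \right] ^{k_0} \underline{m}\left( y, V \right) \). Since \(\left| x - y\right| \underline{m}\left( y, V \right) > 1\), we have \(1 + \left| x - y\right| \underline{m}\left( y, V \right) \le 2 \left| x - y\right| \underline{m}\left( y, V \right) \), and hence

$$\begin{aligned} \underline{m}\left( x, V \right)&\le 2^{k_0} C \left| x - y\right| ^{k_0} \underline{m}\left( y, V \right) ^{k_0 + 1}, \\ \underline{m}\left( y, V \right)&\ge \left( 2^{k_0} C \right) ^{-\frac{1}{k_0+1}} \frac{\underline{m}\left( x, V \right) }{\left[ \left| x - y\right| \underline{m}\left( x, V \right) \right] ^{\frac{k_0}{k_0+1}}} \ge \frac{c \, \underline{m}\left( x, V \right) }{\left[ 1 + \left| x - y\right| \underline{m}\left( x, V \right) \right] ^{\frac{k_0}{k_0+1}}}, \end{aligned}$$

with \(c = 2^{-k_0/(k_0+1)} C^{-1/(k_0+1)}\).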

Using these auxiliary functions, we now define the associated Agmon distance functions.

Definition 10

(Agmon distances) Let \(\underline{m}(\cdot , V)\) and \(\overline{m}(\cdot , V)\) be as in (16) and (17), respectively. We define the lower Agmon distance function as

and the upper Agmon distance function as

where in both cases, the infimum ranges over all absolutely continuous \(\gamma :[0,1] \rightarrow \mathbb {R}^n\) with \(\gamma (0) = x\) and \(\gamma (1) = y\).

We make the following observation.

Lemma 11

(Property of Agmon distances) If \(\left| x - y\right| \le \frac{C}{\underline{m}(x, V)}\), then \(\underline{d}\left( x, y, V \right) \lesssim _{(n, p, C_V)} C\). If \(\left| x - y\right| \le \frac{C}{\overline{m}(x, V)}\), then \(\overline{d}\left( x, y, V \right) \lesssim _{(d, n, p, C_V)} C\).

Proof

We only prove the first statement since the second one is analogous. Let \(x, y \in \mathbb {R}^n\) be as given. Define \(\gamma : \left[ 0,1\right] \rightarrow \mathbb {R}^n\) by \(\gamma \left( t \right) = x + t\left( y - x \right) \). By Lemma 10(a), \(\underline{m}\left( \gamma \left( t \right) , V \right) \lesssim _{(n, p, C_V)} \underline{m}\left( x, V \right) \) for all \(t \in \left[ 0, 1\right] \). It follows that

as required. \(\square \)
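The estimate in the proof of Lemma 11 can be sketched as follows, assuming that the lower Agmon distance is the infimum of \(\int _0^1 \underline{m}\left( \gamma (t), V \right) \left| \gamma '(t)\right| dt\) over admissible paths. For the straight-line path \(\gamma (t) = x + t(y - x)\), we have \(\left| \gamma '(t)\right| = \left| y - x\right| \), so

$$\begin{aligned} \underline{d}\left( x, y, V \right) \le \int _0^1 \underline{m}\left( \gamma (t), V \right) \left| y - x\right| dt \lesssim _{(n, p, C_V)} \underline{m}\left( x, V \right) \left| x - y\right| \le C. \end{aligned}$$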

In future sections, the lower Agmon distance function will be an important tool for us once it has been suitably regularized. We regularize this function \(\underline{d}(\cdot , \cdot , V)\) by following the procedure from [2]. Observe that by Lemma 10(c), \(\underline{m}\left( \cdot , V \right) \) is a slowly varying function; see [34, Definition 1.4.7], for example. As such, we have the following.

Lemma 12

(cf. the proof of Lemma 3.3 in [2]) There exists a sequence \(\left\{ x_j\right\} _{j=1}^\infty \subseteq \mathbb {R}^n\) so that with \( Q_j = Q\left( x_j, \tfrac{1}{\underline{m}\left( x_j, V \right) } \right) \), we have \( \mathbb {R}^n = \bigcup _{j=1}^\infty Q_j\) and \( \sum _{j=1}^\infty \chi _{Q_j} \lesssim _{(n, p, C_V)} 1\). Moreover, for each j, there exists \(\phi _j \in C^\infty _0\left( Q_j \right) \) so that \(0 \le \phi _j \le 1\), \( \sum _{j=1}^\infty \phi _j = 1\), and \( \left| \nabla \phi _j(x)\right| \lesssim _{(n, p, C_V)} \underline{m}\left( x, V \right) \).

Remark 3

Since \(\overline{m}\left( \cdot , V \right) \) is also a slowly varying function, the same result applies to \(\overline{m}(\cdot , V)\) with constants that depend additionally on d.

Using this lemma and [34, Theorem 1.4.10], we can follow the process from [2, p. 542] to establish the following pair of results.

Lemma 13

(Lemma 3.3 in [2]) For each \(y \in \mathbb {R}^n\), there exists a nonnegative function \(\varphi _V(\cdot , y) \in C^\infty (\mathbb {R}^n)\) such that for every \(x \in \mathbb {R}^n\),

Lemma 14

(Lemma 3.7 in [2]) For each \(y \in \mathbb {R}^n\), there exists a sequence of nonnegative, bounded functions \(\left\{ \varphi _{V, j}\left( \cdot , y \right) \right\} \subseteq C^\infty (\mathbb {R}^n)\) such that for every \(x \in \mathbb {R}^n\),

To conclude the section, we observe that under the stronger assumption that \(V \in {\mathcal {B}_p}\cap {\mathcal {A}_\infty }\), we can prove a result analogous to Lemma 9 for the smallest eigenvalue. By Proposition 1, \(\lambda _1 \in {\text {B}_p}\), so it is meaningful to discuss \(m\left( x, \lambda _1 \right) \). In subsequent sections, we will not assume that \(V \in {\mathcal {A}_\infty }\), so this result should be treated as an interesting observation. Its proof is provided in [31].

Proposition 4

(Lower auxiliary function relates to \(\lambda _1\)) If \(V \in {\mathcal {B}_p}\cap \mathcal{N}\mathcal{D}\cap {\mathcal {A}_\infty }\) for some \(p > \frac{n}{2}\), then

where the implicit constant depends on n, p, \(C_V\) and the \({\mathcal {A}_\infty }\) constants.

This result leads to the following observation.

Corollary 1

If \(V \in {\mathcal {B}_p}\cap \mathcal{N}\mathcal{D}\cap {\mathcal {A}_\infty }\) for some \(p > \frac{n}{2}\), then \(m\left( x, \lambda _1 \right) \) satisfies the conclusions of Lemma 7 where the constants have additional dependence on the \({\mathcal {A}_\infty }\) constants.

Remark 4

In fact, if we assume that \(V \in {\mathcal {B}_p}\cap \mathcal{N}\mathcal{D}\cap {\mathcal {A}_\infty }\), then we can show that Lemma 10 holds for \(\underline{m}\left( \cdot , V \right) \) in the same way that we show it holds for \(\overline{m}\left( \cdot , V \right) \). That is, we apply Lemma 7 to \(\lambda _1\), then use Proposition 4.

4 Fefferman–Phong inequalities

In this section, we present and prove our matrix versions of the Fefferman–Phong inequalities. The first result is a lower Fefferman–Phong inequality which holds with the lower auxiliary function from (16). This result will be applied in Sect. 8 where we establish upper bound estimates for the fundamental matrices. A corollary to this lower Fefferman–Phong inequality, which is used in Sect. 9 to prove lower bound estimates for the fundamental matrices, is then provided. In keeping with [2], we also present the upper bound with the upper auxiliary function from (17).

Before stating and proving the lower Fefferman–Phong inequality, we present the Poincaré inequality that will be used in its proof. Additional and more complex matrix-valued Poincaré inequalities and related Chanillo–Wheeden type conditions appear in a forthcoming manuscript.

Proposition 5

(Poincaré inequality) Let \(V \in \mathcal {B}_{\frac{n}{2}}\). For any open cube \(Q \subseteq \mathbb {R}^n\) and any \(\vec {u} \in C^1(Q)\), we have

We prove this result by following the arguments from the scalar version of the Poincaré inequality in Shen’s article, [2, Lemma 0.14].

Proof

Fix a cube Q and define the scalar weight \(v_Q (y) = \left| V(Q)^{-\frac{1}{2}} V(y) V(Q)^{-\frac{1}{2}}\right| \).

First we show that \(v_Q \in B_{\frac{n}{2}}\) with a comparable constant. For an arbitrary cube P, observe that by (5)

where we have used that \(V \in \mathcal {B}_{\frac{n}{2}}\) to reach the third line. This shows that \(v_Q \in B_{\frac{n}{2}}\).

Since \(v_Q \in B_{\frac{n}{2}}\), then it follows from [2, Lemma 0.14] that

Since

the conclusion follows. \(\square \)

Now we present the lower Fefferman–Phong inequality. This result will be applied in Sect. 8 to prove the exponential upper bound on the fundamental matrix. Note that we assume here that V belongs to the first three matrix classes that were introduced in Sect. 2.

Lemma 15

(Lower Auxiliary Function Fefferman–Phong Inequality) Assume that \(V \in {\mathcal {B}_p}\cap \mathcal{N}\mathcal{D}\cap {\mathcal{Q}\mathcal{C}}\) for some \(p > \frac{n}{2}\). Then for any \( \vec {u} \in C^1 _0(\mathbb {R}^n)\), it holds that

Proof

For some \(x_0 \in \mathbb {R}^n\), let \(r_0 = \frac{1}{\underline{m}(x_0, V)}\) and set \(Q = Q(x_0, r_0)\). Property \({\mathcal{Q}\mathcal{C}}\) in (10) shows that

where the last line follows from an application of Proposition 5. Now we multiply this inequality through by \(r_0^{-2} = \underline{m}\left( x_0, V \right) ^{2}\), then apply Lemma 10 to conclude that \(\underline{m}\left( x_0, V \right) \simeq _{(n, p, C_V)} \underline{m}\left( x, V \right) \) on Q. It follows that

Since \(r_0^{2-n}V(Q) = \Psi (x_0, r_0, V) \ge I\) implies that \(r_0^{n-2} \left| (V(Q))^{-1}\right| = \left| \Psi (x_0, r_0, V)^{-1} \right| \le 1\), then for any \(Q = Q\left( x_0, \frac{1}{\underline{m}\left( x_0, V \right) } \right) \), we have shown that

According to Lemma 12, there exists a sequence \(\left\{ x_j\right\} _{j=1}^\infty \subseteq \mathbb {R}^n\) such that if we define \( Q_j = Q\left( x_j, \frac{1}{\underline{m}\left( x_j, V \right) } \right) \), then \( \mathbb {R}^n = \bigcup _{j=1}^\infty Q_j\) and \( \sum _{j=1}^\infty \chi _{Q_j} \lesssim _{(n, p, C_V)} 1.\) Therefore, it follows from (23) that

as required. \(\square \)
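The covering step that closes this proof can be written out as a short chain. This is only a sketch under an assumption: we take the local estimate (23) on each cube \(Q_j\) to bound \(\int _{Q_j} \underline{m}\left( x, V \right) ^2 \left| \vec {u}\right| ^2\) by a multiple of \(\int _{Q_j} \left| D\vec {u}\right| ^2 + \left\langle V \vec {u}, \vec {u} \right\rangle \), which is our reading of the argument and not a quotation of the display.

```latex
\begin{align*}
\int_{\mathbb{R}^n} \underline{m}(x, V)^2 |\vec{u}|^2
&\le \sum_{j=1}^\infty \int_{Q_j} \underline{m}(x, V)^2 |\vec{u}|^2
  && \text{since } \mathbb{R}^n = \bigcup_{j} Q_j \\
&\lesssim \sum_{j=1}^\infty \int_{Q_j}
  \left( |D\vec{u}|^2 + \langle V \vec{u}, \vec{u} \rangle \right)
  && \text{assumed form of (23)} \\
&= \int_{\mathbb{R}^n} \Big( \sum_{j=1}^\infty \chi_{Q_j} \Big)
  \left( |D\vec{u}|^2 + \langle V \vec{u}, \vec{u} \rangle \right) \\
&\lesssim \int_{\mathbb{R}^n}
  \left( |D\vec{u}|^2 + \langle V \vec{u}, \vec{u} \rangle \right)
  && \text{bounded overlap of the } Q_j.
\end{align*}
```

The last two steps rely on the integrand being nonnegative, which holds because V is positive semidefinite a.e.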

Remark 5

If we assume that \(\vec {u} \equiv 1\) on Q, then the condition \({\mathcal{Q}\mathcal{C}}\) is necessary for (22) to be true on all such cubes. As such, the condition \({\mathcal{Q}\mathcal{C}}\) is a very natural assumption to impose. In fact, as we show in Appendix A, there are matrix weights \(V \in \left( {\mathcal {B}_p}\cap \mathcal{N}\mathcal{D} \right) \setminus {\mathcal{Q}\mathcal{C}}\) for which this Fefferman–Phong estimate fails to hold.

Finally, if we replace V by \(\left| V\right| I\), we are essentially reduced to the scalar setting and we only need that \(\left| V\right| \in {\text {B}_p}\). In particular, we do not need to assume that \(V \in {\mathcal{Q}\mathcal{C}}\). As shown in Sects. 8 and 9, this result will be applied to prove the exponential lower bound on the fundamental matrix.

Corollary 2

(Norm Fefferman–Phong Inequality) Assume that \(\left| V\right| \in {\text {B}_p}\) for some \(p > \frac{n}{2}\). Then for any \( \vec {u} \in C^1 _0(\mathbb {R}^n)\), it holds that

To conclude the section, although we will not use it, we state the straightforward upper bound, which is similar to [2, Theorem 1.13(b)]. The proof is very similar to that of [2, Theorem 1.13(b)], so we omit it. Notice that for this result, we only assume that \(V \in {\mathcal {B}_p}\cap \mathcal{N}\mathcal{D}\).

Proposition 6

(Upper Auxiliary Function Fefferman–Phong Inequality) Assume that \(V \in {\mathcal {B}_p}\) for some \(p > \frac{n}{2}\). For any \( \vec {u} \in C_0^1(\mathbb {R}^n)\), it holds that

5 The elliptic operator

In this section, we introduce the generalized Schrödinger operators. For this section and the subsequent two, we do not need to assume that our matrix weight V belongs to \({\mathcal {B}_p}\) and therefore work in a more general setting. In particular, to define the operator, the fundamental matrices, and discuss a class of systems operators that satisfy a set of elliptic theory results, we only require nondegeneracy and local p-integrability of the zeroth order potential terms. The stronger assumption that \(V \in {\mathcal {B}_p}\) is not required until we establish more refined bounds for the fundamental matrices, namely the exponential upper and lower bounds. As such, the next three sections are presented for V in this more general setting.

For the leading operator, let \(A^{\alpha \beta } = A^{\alpha \beta }(x)\), for each \(\alpha , \beta \in \left\{ 1, \dots , n\right\} \), be a \(d \times d\) matrix with bounded measurable coefficients defined on \(\mathbb {R}^n\). We assume that there exist constants \(0< \lambda , \Lambda < \infty \) so that \(A^{\alpha \beta }\) satisfies an ellipticity condition of the form

and a boundedness assumption of the form

For the zeroth order term, we assume that

In particular, since V is a matrix weight, then V is a \(d \times d\), a.e. positive semidefinite, symmetric, \(\mathbb {R}\)-valued matrix function.

Remark 6

Note that if \(V \in {\mathcal {B}_p}\cap \mathcal{N}\mathcal{D}\) for some \(p \in \left[ \frac{n}{2}, \infty \right] \), then since \(V \in {\mathcal {B}_p}\) implies that \(V \in L^p_{loc}\left( \mathbb {R}^n \right) \) for some \(p \ge \frac{n}{2}\), such a choice of V satisfies (26). This more specific assumption on the potential functions will appear in Sects. 8 and 9.

The equations that we study are formally given by

To make sense of what it means for some function \(\vec {u}\) to satisfy (27), we introduce new Hilbert spaces. But first we recall some familiar and related Hilbert spaces. For any open \(\Omega \subseteq \mathbb {R}^n\), \(W^{1,2}(\Omega )\) denotes the family of all weakly differentiable functions \(u \in L^{2}(\Omega )\) whose weak derivatives are functions in \(L^2(\Omega )\), equipped with the norm that is given by

The space \(W^{1,2}_0(\Omega )\) is defined to be the closure of \(C^\infty _c(\Omega )\) with respect to \(\left\| \cdot \right\| _{W^{1,2}(\Omega )}\). Recall that \(C^\infty _c(\Omega )\) denotes the set of all infinitely differentiable functions with compact support in \(\Omega \).

For any open \(\Omega \subseteq \mathbb {R}^n\), the space \(Y^{1,2}(\Omega )\) is the family of all weakly differentiable functions \(u \in L^{2^*}(\Omega )\) whose weak derivatives are functions in \(L^2(\Omega )\), where \(2^*=\frac{2n}{n-2}\). We equip \(Y^{1,2}(\Omega )\) with the norm

Define \(Y^{1,2}_0(\Omega )\) as the closure of \(C^\infty _c(\Omega )\) in \(Y^{1,2}(\Omega )\). When \(\Omega = \mathbb {R}^n\), \(Y^{1,2}\left( \mathbb {R}^n \right) = Y^{1,2}_0\left( \mathbb {R}^n \right) \) (see, e.g., Appendix A in [11]). By the Sobolev inequality,

It follows that \(W^{1,2}_0(\Omega ) \subseteq Y^{1,2}_0(\Omega )\) with set equality when \(\Omega \) has finite measure. The bilinear form on \(Y_0^{1,2}(\Omega )\) that is given by

defines an inner product on \(Y_0^{1,2}(\Omega )\). With this inner product, \(Y_0^{1,2}(\Omega )\) is a Hilbert space with norm

We refer the reader to [11, Appendix A] for further properties of \(Y^{1,2}(\Omega )\), and some relationships between \(Y^{1,2}(\Omega )\) and \(W^{1,2}(\Omega )\). These spaces can be generalized to vector-valued functions in the usual way.
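The inclusion \(W^{1,2}_0(\Omega ) \subseteq Y^{1,2}_0(\Omega )\) and the equality for sets of finite measure noted above can be checked with two standard inequalities. The sketch below assumes \(n \ge 3\), so that \(2^*\) is finite, and writes \(c_n\) for a dimensional constant, a name introduced here only for illustration.

```latex
% Sobolev inequality on Y_0^{1,2}(\Omega), with 2^* = 2n/(n-2):
\[
\| u \|_{L^{2^*}(\Omega)} \le c_n \, \| D u \|_{L^2(\Omega)}
\quad \text{for all } u \in Y^{1,2}_0(\Omega).
\]
% Hoelder's inequality with 1/2 = 1/2^* + 1/n, valid when |\Omega| < \infty:
\[
\| u \|_{L^2(\Omega)} \le |\Omega|^{\frac{1}{n}} \, \| u \|_{L^{2^*}(\Omega)}.
\]
```

The first inequality controls the \(L^{2^*}\) part of the \(Y^{1,2}\) norm by the \(W^{1,2}\) norm on \(C^\infty _c(\Omega )\), which gives the inclusion. The second controls the \(L^2\) part of the \(W^{1,2}\) norm by the \(Y^{1,2}\) norm when \(\Omega \) has finite measure, which gives the reverse inclusion.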

Towards the development of our new function spaces, we start with the associated inner products. For any V as in (26) and any \(\Omega \subseteq \mathbb {R}^n\) open and connected, let \(\left\langle \cdot , \cdot \right\rangle ^2_{W_V^{1,2}(\Omega )}: C_c^\infty (\Omega ) \times C_c^\infty (\Omega ) \rightarrow \mathbb {R}\) be given by

This inner product induces a norm, \(\left\| \cdot \right\| ^2_{W_V^{1,2}(\Omega )}: C_c^\infty (\Omega ) \rightarrow \mathbb {R}\) that is defined by

The nondegeneracy condition described by (9) ensures that this is indeed a norm and not just a semi-norm. In particular, if \(\left\| D \vec {u}\right\| _{L^2(\Omega )} = 0\), then \(\vec {u} = \vec {c}\) a.e., where \(\vec {c}\) is a constant vector. But then by (9), \(\left\| V^{1/2} \vec {u}\right\| ^2_{L^{2}(\Omega )} = \left\| V^{1/2} \vec {c}\right\| ^2_{L^{2}(\Omega )} = 0\) iff \(\vec {c} = \vec {0}\).
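The last equivalence can be made explicit as follows. This is a sketch under an assumption: we read the nondegeneracy condition (9) as saying that \(V(Q) = \int _Q V\) is positive definite for every cube Q, and we fix any cube \(Q \subseteq \Omega \).

```latex
\[
\left\| V^{1/2} \vec{c} \right\|^2_{L^2(\Omega)}
= \int_\Omega \left\langle V(x) \vec{c}, \vec{c} \right\rangle dx
\ge \int_Q \left\langle V(x) \vec{c}, \vec{c} \right\rangle dx
= \left\langle V(Q) \vec{c}, \vec{c} \right\rangle ,
\qquad V(Q) := \int_Q V(x) \, dx .
\]
```

Under that reading, the right-hand side is strictly positive whenever \(\vec {c} \ne \vec {0}\), so the weighted term can vanish only for \(\vec {c} = \vec {0}\).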

For any \(\Omega \subseteq \mathbb {R}^n\) open and connected, we use the notation \(L_V^2(\Omega )\) to denote the space of V-weighted square integrable functions. That is,

For any V as in (26) and any \(\Omega \subseteq \mathbb {R}^n\) open and connected, define each space \(W_{V,0}^{1,2}(\Omega )\) as the completion of \(C_c^\infty (\Omega )\) with respect to the norm \(\left\| \cdot \right\| _{W_V^{1,2}(\Omega )}\). That is,

The following proposition clarifies the meaning of our trace zero Sobolev space.

Proposition 7

Let V be as in (26) and let \(\Omega \subseteq \mathbb {R}^n\) be open and connected. For every sequence \(\{\vec {u}_k\}_{k=1}^\infty \subset C_c ^\infty (\Omega )\) that is Cauchy with respect to the \(W_{V}^{1,2}(\Omega )\)-norm, there exists a \(\vec {u} \in L_V ^2(\Omega ) \cap Y^{1,2}_0(\Omega )\) for which

Proof

Since \(\{\vec {u}_k\} \subset C_c ^\infty (\Omega )\) is Cauchy in the \(W_{V}^{1,2}(\Omega )\) norm, then \(\left\{ V^{1/2} \vec {u}_k\right\} \) and \(\left\{ D\vec {u}_k\right\} \) are Cauchy in \(L^2(\Omega )\), so there exists \(\vec {h} \in L^2(\Omega )\) and \(U \in L^2(\Omega )\) so that

By the Sobolev inequality applied to \(\vec {u}_k-\vec {u}_j\), we have

In particular, \(\left\{ \vec {u}_k\right\} \) is also Cauchy in \(L^{2^*}(\Omega )\) and then there exists \(\vec {u} \in L^{2^*}(\Omega )\) so that

For any \(\Omega ' \Subset \mathbb {R}^n\), observe that another application of Hölder’s inequality shows that

Since \(V \in L^{\frac{n}{2}}_{{{\,\textrm{loc}\,}}}(\mathbb {R}^n)\), then \(\left\| V\right\| _{L^{\frac{n}{2}}\left( \Omega ' \right) } < \infty \) and we conclude that \(V^{1/2} \vec {u}_k \rightarrow V^{1/2} \vec {u}\) in \(L^2\left( \Omega \cap \Omega ' \right) \) for any \(\Omega ' \Subset \mathbb {R}^n\). By comparing this statement with (28), we deduce that \(V^{1/2} \vec {u} = \vec {h}\) in \(L^2(\Omega )\) and therefore a.e., so that (28) holds with \(\vec {h} = V^{1/2} \vec {u}\). Moreover, \(\vec {u} \in L^2_V\left( \Omega \right) \).
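The Hölder step used in this argument can be written out as below. This is a sketch consistent with the surrounding text and not a quotation of the omitted display. It uses the conjugate exponents \(\frac{n}{2}\) and \(\frac{n}{n-2}\), and the operator norm \(\left| V\right| \).

```latex
\begin{align*}
\int_{\Omega \cap \Omega'} \left| V^{1/2} (\vec{u}_k - \vec{u}) \right|^2
&= \int_{\Omega \cap \Omega'}
   \left\langle V (\vec{u}_k - \vec{u}), \vec{u}_k - \vec{u} \right\rangle \\
&\le \int_{\Omega \cap \Omega'} |V| \, |\vec{u}_k - \vec{u}|^2 \\
&\le \| V \|_{L^{\frac{n}{2}}(\Omega')} \,
     \| \vec{u}_k - \vec{u} \|^2_{L^{2^*}(\Omega)} .
\end{align*}
```

The right-hand side tends to zero as \(k \rightarrow \infty \) because \(\vec {u}_k \rightarrow \vec {u}\) in \(L^{2^*}(\Omega )\), which is the local convergence used in the proof.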

Next we show that \(D\vec {u} = U\) weakly in \(\Omega \). Let \(\vec {\xi } \in C^\infty _c(\Omega )\). Then for \(j = 1, \ldots , n\), we get from (30) and (29) that

where \(U_j\) denotes the \(j^{\text {th}}\) column of U. That is, \(D\vec {u} = U \in L^2(\Omega )\). In particular, (29) holds with \(U = D\vec {u}\). Finally, in combination with (29) and (30), this shows that \(\vec {u} \in Y^{1,2}_0(\Omega )\). \(\square \)
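The identification of the weak derivative in this proof follows the usual pattern, sketched below for a fixed test function \(\vec {\xi } \in C^\infty _c(\Omega )\) and a fixed index j. The chain is our reconstruction of the standard argument and may differ in form from the omitted display.

```latex
\begin{align*}
\int_\Omega \vec{u} \cdot D_j \vec{\xi}
&= \lim_{k \to \infty} \int_\Omega \vec{u}_k \cdot D_j \vec{\xi}
  && \text{since } \vec{u}_k \to \vec{u} \text{ in } L^{2^*}(\Omega) \\
&= - \lim_{k \to \infty} \int_\Omega D_j \vec{u}_k \cdot \vec{\xi}
  && \text{integration by parts, } \vec{u}_k \in C_c^\infty(\Omega) \\
&= - \int_\Omega U_j \cdot \vec{\xi}
  && \text{since } D\vec{u}_k \to U \text{ in } L^2(\Omega).
\end{align*}
```

The first limit is justified because \(D_j \vec {\xi }\) is bounded with compact support and therefore lies in the dual space of \(L^{2^*}(\Omega )\).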

By Proposition 7, associated to each equivalence class of Cauchy sequences \([\{\vec {u}_k\}] \in W_{V, 0} ^{1, 2} (\Omega )\) is a function \(\vec {u} \in L_V ^2(\Omega ) \cap Y^{1,2}_0(\Omega )\) with

so that

In fact, this defines a norm on weakly-differentiable vector-valued functions \(\vec {u}\) for which \(\left\| \vec {u}\right\| _{W^{1,2}_V(\Omega )} < \infty \). It follows that the function \(\vec {u}\) is unique and this shows that \(W_{ V, 0} ^{1, 2} (\Omega )\) isometrically imbeds into the space \(L_V^2(\Omega ) \cap Y^{1,2}_0(\Omega )\) equipped with the norm \(\left\| \cdot \right\| _{W^{1,2}_V(\Omega )}\).

Going forward, we will slightly abuse notation and denote each element in \(W_{V, 0}^{1, 2} (\Omega )\) by its unique associated function \(\vec {u} \in L_V ^2(\Omega ) \cap Y^{1,2}_0(\Omega )\). To define the nonzero trace spaces that we need below to prove the existence of fundamental matrices, we use restriction. That is, define the space

and equip it with the \(W_{V}^{1,2}(\Omega )\)-norm.

Note that \(W_{V}^{1,2}(\mathbb {R}^n) = W_{V, 0}^{1,2}(\mathbb {R}^n)\). Moreover, when \(\Omega \ne \mathbb {R}^n\), \(W_{V}^{1,2}(\Omega )\) may not be complete so we simply treat it as an inner product space. We stress that in general, \(W_{V}^{1,2}(\Omega )\) should not be thought of as a kind of “Sobolev space,” but should instead be viewed as a convenient collection of functions used in the construction from [11]. Specifically, the construction of fundamental matrices from [11] uses the restrictions of elements from appropriate “trace zero Hilbert–Sobolev spaces” defined on \(\mathbb {R}^n\). For us, \(W_{V,0}^{1,2}(\mathbb {R}^n)\) plays the role of the trace zero Hilbert–Sobolev space. Also, as an immediate consequence of Proposition 7 we have the following.

Corollary 3

Let V be as in (26) and let \(\Omega \subseteq \mathbb {R}^n\) be open and connected. If \(\vec {u} \in W_V ^{1, 2} (\Omega )\), then \(\vec {u} \in L_V ^2(\Omega ) \cap Y^{1,2}(\Omega )\) and there exists \(\{\vec {u}_k \}_{k=1}^\infty \subset C_c ^\infty (\mathbb {R}^n)\) for which