Abstract

We provide a refined explicit estimate of the exponential decay rate of underdamped Langevin dynamics in the \(L^2\) distance, based on a framework developed in Albritton et al. (Variational methods for the kinetic Fokker–Planck equation, arXiv:1902.04037, 2019). To achieve this, we first prove a Poincaré-type inequality with a Gibbs measure in space and a Gaussian measure in momentum. Our estimate provides a more explicit and simpler expression of the decay rate; moreover, when the potential is convex with a Poincaré constant \(m \ll 1\), our estimate shows a decay rate of \(O(\sqrt{m})\) after optimizing the choice of the friction coefficient, which is much faster than the rate \(m\) of the overdamped Langevin dynamics.


1 Introduction

We consider the convergence rate for the following underdamped Langevin dynamics \(({x}_t, {v}_t)\in {\mathbb {R}}^d\times {\mathbb {R}}^d\):

Here U(x) is the potential energy, \(\gamma >0\) is the friction coefficient, and \({W}_t\) is a d-dimensional standard Brownian motion; the mass and temperature are set to 1 for simplicity. The law of the process (1), \(\rho (t,x,v)\), satisfies the kinetic Fokker–Planck equation

It is well-known (see for example [45, Proposition 6.1]) that under mild assumptions, (2) admits a unique stationary density function given by

where

When \(\gamma \rightarrow \infty \), the rescaled dynamics \(x^{(\gamma )}_t {:}{=} x_{\gamma t}\) converges to the Smoluchowski SDE, also known as the overdamped Langevin dynamics (see e.g., [45, Sec. 6.5]), which is given by

An equivalent formalism of (2) is the following backward Kolmogorov equation:

Here \({\mathcal {L}}_{\text {ham}}\) is the Hamiltonian transport operator and \({\mathcal {L}}_{\text {FD}}\) is the fluctuation-dissipation term

Indeed, (4) could be derived from (2) by considering \(\rho (t, x, v) = f(t, x, -v) \rho _{\infty }(x, v)\) [45]; since by \(L^2\)-duality, \(\left\Vert \rho - \rho _{\infty }\right\Vert _{L^2(\rho _{\infty }^{-1})} \equiv \left\Vert f - \int f\,\textrm{d}\rho _{\infty }\right\Vert _{L^2(\rho _{\infty })}\), the exponential convergence of the solution \(\rho (t,\cdot ,\cdot )\) of (2) to \(\rho _\infty \) is equivalent to the exponential decay of \(f(t, \cdot , \cdot )\) to zero, provided that \(\int f_0\,\textrm{d}\rho _{\infty }= 0\). Similarly, one could obtain the backward Kolmogorov equation for the overdamped Langevin dynamics, which is given by

If \(\mu \) satisfies a Poincaré inequality, one could show that the generator in the above equation (6) is self-adjoint and coercive with respect to \(L^2(\mu )\). As a consequence, if \(\int h_0\,\textrm{d}\mu = 0\), then h(t, x) decays to zero exponentially fast as \(t\rightarrow \infty \), see for example [6, Theorem 4.2.5].
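The exponential decay for the overdamped dynamics can be sketched by a one-line energy estimate. The computation below is only an illustration under two assumptions on normalization: that (6) reads \(\partial _t h = \Delta _x h - \nabla _x U\cdot \nabla _x h\), and that the Poincaré inequality for \(\mu \) is written as \(\int (h-\int h\,\textrm{d}\mu )^2\,\textrm{d}\mu \leqslant \frac{1}{m}\int |\nabla _x h|^2\,\textrm{d}\mu \). With \(\int h_0\,\textrm{d}\mu =0\) (a property preserved by the flow), integration by parts against \(\mu \) gives:

```latex
\begin{aligned}
\frac{\textrm{d}}{\textrm{d}t}\int h^2\,\textrm{d}\mu
  &= 2\int h\,\bigl(\Delta _x h-\nabla _x U\cdot \nabla _x h\bigr)\,\textrm{d}\mu
   = -2\int |\nabla _x h|^2\,\textrm{d}\mu
   \leqslant -2m\int h^2\,\textrm{d}\mu ,\\
\Vert h(t,\cdot )\Vert _{L^2(\mu )}
  &\leqslant e^{-mt}\,\Vert h_0\Vert _{L^2(\mu )}.
\end{aligned}
```

Under these assumptions the dissipation term controls the full \(L^2(\mu )\) norm directly, which is exactly the step that fails for the underdamped generator, where only velocity derivatives are dissipated.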

Unlike the generator of (6), the generator \({\mathcal {L}}\) in (4) for the underdamped Langevin dynamics is not uniformly elliptic. As a result, proving the exponential convergence of \(\rho (t, \cdot , \cdot )\) to the equilibrium \(\rho _{\infty }\) is more challenging. Thanks to extensive work over the years, the exponential convergence of the underdamped Langevin dynamics is now better understood in various norms (see Section 1.3 below for a review).

Our goal in this work is to provide an explicit estimate of the decay rate in \(L^2\) for the semigroup in (4), based on a framework proposed in [1] which implicitly uses Hörmander’s bracket conditions [32]. In particular, under some mild assumptions of U, we obtain explicit estimates for some universal constant \(C>1\) independent of \(U,\gamma ,d\) and some \(\nu > 0\) such that for any possible \(f=f(t,x,v)\) satisfying (4) and \(\int _{} f_0 \,\textrm{d}\rho _{\infty }= 0\), we have

In the rest of this section, we will first present our assumptions and main results. Next, we will briefly review existing approaches to study the exponential convergence of (4) (or equivalently (2)) in Section 1.3, and compare our estimate of the decay rate \(\nu \) with some previous works aiming at explicit estimates [9, 16, 40, 47]. We would like to comment here that convergence results are also obtained in earlier works [17, 26], although their rates are only explicit in \(\gamma \).

1.1 Notations

Throughout the paper we assume I to be the time interval (0, T), and we use \(\,\textrm{d}\lambda (t)=\frac{1}{T}\chi _{(0,T)}(t)\,\textrm{d}t\) to denote the rescaled Lebesgue measure on I so that \(\,\textrm{d}\lambda (t)\) denotes a probability measure. For any probability measure \(\rho \), we use \(L^2(\rho )\) (and similarly \(H^1(\rho ),H^2(\rho )\)) to denote the standard Sobolev spaces, and \(H^{-1}(\rho )\) to denote the dual space of \(H^1(\rho )\). For the Gaussian probability measure \(\kappa \) in velocity space, we also use \(L^2_\kappa \), \(H^1_\kappa , \, H^{-1}_\kappa \) to denote the corresponding spaces. Moreover, we use \(H_0^1(\lambda \otimes \mu )\) to denote the \(H^1(\lambda \otimes \mu )\) functions that vanish at both time boundaries \(t=0\) and \(t=T\). By abuse of notation, we denote the canonical pairing \(\langle \cdot , \cdot \rangle _{H^{-1}(\rho ),H^{1}(\rho )}\) between \(f\in H^1(\rho )\) and \(g\in H^{-1}(\rho )\) by

For \(f\in H^{-1}(\rho )\), we use the notation \((f)_{\rho } {:}{=} \langle f,1\rangle _{H^{-1}(\rho ),H^1(\rho )}\). For an arbitrary Banach space V and time interval I equipped with Lebesgue measure \(\,\textrm{d}\lambda (t)\), we denote by \(L^p(\lambda \otimes \mu ;V)\) the Banach space of functions f(t, x, v) with norm

Inspired by [1], we define the Banach space

We define a projection operator for \(\phi (t,x,v)\in L^2(\lambda \otimes \rho _{\infty })\) by

Equivalently, \(\Pi _v\) is used to obtain the marginal component of \(\phi \) in \(L^2(\lambda \otimes \mu )\). By a slight abuse of notation, for \(\phi (x, v) \in L^2(\rho _{\infty })\), we also use the same notation \(\Pi _v\) to represent the similar projection, i.e., \((\Pi _v \phi )(x) {:}{=} \int _{{\mathbb {R}}^d} \phi (x, v) \,\textrm{d}\kappa (v)\). The adjoints of \(\nabla _x\) and \(\nabla _v\) in the Hilbert space \(L^2(\rho _{\infty })\) are respectively given by \(\nabla _x^* F= - \nabla _x \cdot F + \nabla _x U \cdot F\) and \(\nabla _v^* F = -\nabla _v \cdot F + v \cdot F\) for any vector field \(F(x,v): {\mathbb {R}}^{2d} \rightarrow {\mathbb {R}}^d\). Thus we can rewrite operators \({\mathcal {L}}_{\text {ham}}\) and \({\mathcal {L}}_{\text {FD}}\) as

For time-augmented state space \(I\times {\mathbb {R}}^d\) equipped with measure \(\lambda \otimes \mu \), we use the convention \(\partial _{x_0}{:}{=}\partial _t\), the short-hand notation \(\bar{\nabla }{:}{=}(\partial _t,\nabla _x)^\top \), and the notation \({\mathscr {L}}{:}{=}-\partial _{tt}+\nabla _x^*\nabla _x\) to denote the “Laplace” operator on \(L^2(\lambda \otimes \mu )\). We use C to denote a universal constant independent of all parameters that may change from line to line.

1.2 Assumptions and Main Results

Assumption 1

(Poincaré inequality for \(\mu \)) Assume that the potential U(x) satisfies a Poincaré inequality in space

Assumption 2

The potential \(U\in C^2({\mathbb {R}}^d)\), and there exist constants \(M\geqslant 1\) and \(\delta \in (0,1)\) such that

Assumption 3

The embedding \(H^1(\mu )\hookrightarrow L^2(\mu )\) is compact.

Remark 1.1

(i) Assumption 1 guarantees that the elliptic equation \(\nabla _x^*\nabla _x u = h\) has a unique solution \(u\in H^2(\mu )\) for any \(h\in L^2(\mu )\) satisfying \((h)_\mu =0\) (see for example [19, Proposition 5]). Hence, together with Assumption 3, we derive from the Fredholm alternative that \(L^2(\mu )\) has an orthonormal basis \(\{1\}\cup \{w_\alpha \}_{\alpha >0}\) where \(w_\alpha \in H^2(\mu )\) are eigenfunctions of \(\nabla _x^*\nabla _x\) with eigenvalue \(\alpha ^2\) for a discrete set of \(\alpha >0\) (see [22, Chapter 6] for an argument with bounded domains):

$$\begin{aligned} \nabla ^*_x\nabla _x w_\alpha =\alpha ^2 w_\alpha . \end{aligned}$$

Further, by Assumption 1, any eigenvalue \(\alpha ^2\) of \(\nabla ^*_x \nabla _x\) satisfies \(\alpha \ge \sqrt{m}\); in fact, the smallest \(\alpha \) is precisely \(\sqrt{m}\), the square root of the Poincaré constant. The spectrum of \(\nabla _x^*\nabla _x\) is unbounded from above.

(ii) Assumption 3 is satisfied when

$$\begin{aligned} \lim _{|x|\rightarrow \infty } \dfrac{U(x)}{|x|^\beta }=\infty \end{aligned}$$

for some \(\beta >1\) (see [31] for a proof). We would like to comment here that we require Assumption 3 only for technical purposes, more precisely in the proof of Lemma 2.6, where we use the spectral decomposition of the elliptic operator \(\nabla _x^*\nabla _x\) to construct the test functions we desire. We believe that the assumption is not necessary for our main results to hold. We leave this for future research.

(iii) Similar versions of Assumption 2 are commonly used in the literature, see e.g., the books [45, 54] and the papers [18, 19], and are satisfied when U grows at most exponentially fast as \(|x|\rightarrow \infty \). Here we adopt the more natural dimension scaling in [10, Assumption 1] (in particular, we take \(c_1=c_3=M\) in their setting), since in the case of a separable potential \(U(x) = \sum _{i=1}^d u(x_i)\), this amounts to the more natural one-dimensional estimate \(|u''|^2 \leqslant M(1+|u'|^2)\).

Theorem 1

Under Assumptions 1, 2, and 3, there exist a constant \(\nu > 0\) and universal constants C, c independent of all parameters such that, for every f(t, x, v) satisfying the backward Kolmogorov equation (4) with initial condition \(f_0 \in L^2(\mu ;H^1_\kappa )\) and

we have, for every \(t\in (0,\infty )\),

Moreover, \(\nu \) can be made explicit as

with some constant \(R>0\) given by

(i) If U is convex, then

$$\begin{aligned} R=0. \end{aligned}$$

(ii) If the Hessian of U is bounded from below

$$\begin{aligned} \nabla _x^2 U(x) \geqslant -K\, \textrm{Id}, \qquad \forall \, x \in {\mathbb {R}}^d \end{aligned}\tag{14}$$

for some constant \(K \geqslant 0\), then

$$\begin{aligned} R=\sqrt{K}. \end{aligned}$$

Note that if \(K = 0\), we recover the estimate in case (i).

(iii) In the most general case without further assumptions,

$$\begin{aligned} R=M+M^\frac{3}{4}d^\frac{1}{4}. \end{aligned}$$

Remark 1.2

(i) If we fix \(m=O(1)\), then, as \(\gamma \rightarrow 0\) (resp. \(\gamma \rightarrow \infty \)), our estimate gives a decay rate of \(O(\gamma )\) (resp. \(O(\gamma ^{-1})\)). This is consistent with [17, 26, 47] and also with the isotropic Gaussian case \(U(x)=\frac{m}{2}|x|^2\) (see Appendix A).

(ii) In the convex case, if we optimize with respect to \(\gamma \) by choosing \(\gamma =\sqrt{m}\), then

$$\begin{aligned} \nu =\frac{\sqrt{m}}{4c}. \end{aligned}$$

As is shown in Appendix A, the scaling in m is optimal in the regime \(m\rightarrow 0\), as it is the rate even for the isotropic quadratic potential. We refer the readers to Appendix B for the corresponding results from the DMS method, with a slightly more explicit estimate compared to [47].

(iii) In the case where condition (14) is satisfied, e.g. for the double well potential \(U(x)=(|x|^2-1)^2\) with \(K=4\), our scaling in K is consistent with [36, Theorem 1] and [37, Sec. 5]. A similar assumption is also used in [44, Theorem 1] for functional inequalities.

(iv) It is well-known that for overdamped Langevin dynamics, the decay rate is simply m in \(L^2(\mu )\) for (6). By part (ii) of this remark, when \(m \ll 1\), the underdamped Langevin dynamics (1) could converge to its equilibrium \(\rho _{\infty }\) at a rate \(O(\sqrt{m})\) for convex potentials, which is much faster than the overdamped Langevin dynamics.

(v) Due to the relation (see e.g., [48])

$$\begin{aligned}&\frac{1}{\sqrt{2}}\left\Vert \rho - \rho _{\infty }\right\Vert _{\text {TV}} \leqslant \sqrt{\textrm{KL}\left( \rho \,\Vert \,\rho _{\infty }\right) } \leqslant \sqrt{\chi ^2(\rho , \rho _{\infty })} \\&\quad \equiv \left\Vert \rho - \rho _{\infty }\right\Vert _{L^2(\rho _{\infty }^{-1})} \equiv \left\Vert f - \int f\,\textrm{d}\rho _{\infty }\right\Vert _{L^2(\rho _{\infty })}, \end{aligned}$$

where \(f = \,\textrm{d}\rho / \,\textrm{d}\rho _{\infty }\), and the Talagrand inequality [44] \(W_2(\rho ,\rho _{\infty }) \leqslant \sqrt{\frac{2}{C_{LSI}}\textrm{KL}(\rho \Vert \rho _{\infty })}\) where \(C_{LSI}\) is the logarithmic Sobolev constant, Theorem 1 implies that \(\rho (t, \cdot , \cdot )\) converges to \(\rho _{\infty }\) with rate \(2\nu \) in both \(\chi ^2\)-divergence and relative entropy, and with rate \(\nu \) in total variation and (if \(\mu \) satisfies a log-Sobolev inequality) 2-Wasserstein distance. On the other hand, our result does not imply

$$\begin{aligned}d(\rho _t,\rho _{\infty }) \leqslant C\exp (-\nu t) d(\rho _0,\rho _{\infty }) \end{aligned}$$

where \(d(\rho ,\pi ) = TV(\rho ,\pi ), \, W_2(\rho ,\pi )\) or \(\textrm{KL}(\rho \Vert \pi )\). It is interesting to study whether one could establish the same convergence rate in Wasserstein distance (which is the same as asking whether one could establish a coupling argument for our result) or in relative entropy.
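The scalings in parts (i), (ii) and (iv) of Remark 1.2 can be checked numerically in the isotropic Gaussian case. The sketch below is an illustration under stated assumptions, not the computation of Appendix A: it assumes the dynamics (1) has the standard form \(\textrm{d}x = v\,\textrm{d}t\), \(\textrm{d}v = -\nabla U(x)\,\textrm{d}t - \gamma v\,\textrm{d}t + \sqrt{2\gamma }\,\textrm{d}W_t\), so that for \(U(x)=\frac{m}{2}|x|^2\) each coordinate pair has the linear drift matrix used below, and it uses the spectral abscissa of that matrix as a proxy for the asymptotic decay rate. The function names `gaussian_decay_rate` and `overdamped_rate` are hypothetical helpers introduced only for this example.

```python
import numpy as np


def gaussian_decay_rate(m: float, gamma: float) -> float:
    """Spectral abscissa of the linear drift for U(x) = m|x|^2 / 2.

    Assumption: each coordinate pair (x, v) evolves as
    dx = v dt, dv = -(m x + gamma v) dt + sqrt(2 gamma) dW,
    so the mean dynamics has drift matrix [[0, 1], [-m, -gamma]].
    """
    drift = np.array([[0.0, 1.0], [-m, -gamma]])
    # The slowest mode decays like exp(-rate * t), up to a polynomial
    # factor at critical damping (gamma = 2 sqrt(m)).
    return float(-np.max(np.linalg.eigvals(drift).real))


def overdamped_rate(m: float) -> float:
    """Decay rate m of the overdamped dynamics quoted in Remark 1.2 (iv)."""
    return m


if __name__ == "__main__":
    m = 1e-4
    # Small friction, critical damping, and large friction.
    for gamma in (1e-3, 2.0 * np.sqrt(m), 1.0):
        under = gaussian_decay_rate(m, gamma)
        print(f"gamma={gamma:.3g}: underdamped {under:.3g}, "
              f"overdamped {overdamped_rate(m):.3g}")
```

In this Gaussian model the proxy rate equals \(\gamma /2\) for \(\gamma \leqslant 2\sqrt{m}\) and behaves like \(m/\gamma \) for large \(\gamma \), so both the choice \(\gamma =\sqrt{m}\) of part (ii) and the critical value \(\gamma =2\sqrt{m}\) give a rate of order \(\sqrt{m}\), compared with m for the overdamped dynamics.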

Our decay estimate is based on the following Poincaré-type inequality in time-augmented space:

Theorem 2

Under Assumptions 1, 2, and 3, there exist a universal constant C independent of all parameters, and a constant \(R<\infty \) (the same constant as in Theorem 1) such that for every \(f\in H_{hyp}^1(\lambda \otimes \mu )\), we have

Let us give a brief introduction to the strategy of the proof, which is strongly motivated by the work of Albritton et al. [1]. A naive energy estimate and the Gaussian Poincaré inequality yield

While the above establishes the \(L^2\) energy decay, it does not directly yield an exponential decay rate. In particular, the energy dissipation is only present in the velocity variable. However, instead of looking at a single time slice, we should look at time intervals, since after time propagation, the dissipation in v together with the transport terms in x will lead to dissipation in x. Moreover, in the analysis, we are essentially treating the time variable t as another space variable alongside x. With the help of a Poincaré-type inequality in the time-augmented state space established in Theorem 2, we can prove exponential convergence still using the standard energy estimate, in line with the moral “hypocoercivity is simply coercivity with respect to the correct norm”, quoted from [1, Page 4].

To prove Theorem 2, as an educated reader might realize from [19], the elliptic regularity in the x variable plays an important role in the estimates; in Lemma 2.4 we give a mild generalization of it to the time-augmented space \(L^2(\lambda \otimes \mu )\). However, in the proof of Theorem 2 when applying integration by parts, we need test functions that vanish at both boundary layers \(t=0\) and \(t=T\), a condition that is not necessarily satisfied by the derivatives of the solution to the elliptic equation (22). This is why we resort to Lemma 2.6 (also an extension of Bogovskii’s operator [11] to \((I\times {\mathbb {R}}^d,\lambda \otimes \mu )\)) for the solution of the divergence equation (25), which is a cornerstone of this proof. In particular, even for convex U, the constants in (15) blow up as \(T\rightarrow 0\), which can be traced back to the estimate of \(\psi _{2,\alpha }'\) in (35), and this prevents us from working on single time slices.

1.3 A Literature Review and Comparison

The kinetic Fokker–Planck equation was first studied by Kolmogorov [34], and was the main motivation for Hörmander’s theory on hypoelliptic equations [32], which gave an almost complete classification of second-order hypoelliptic operators. The earliest results regarding its exponential convergence were established in [52] for potentials with bounded Hessian, and were later generalized in [41, 51, 55]. There is a substantial body of work in the literature on the exponential convergence of the underdamped Langevin dynamics. Below, we categorize these works based on the norms and approaches used to characterize the convergence.

(i) (Convergence in \(H^1(\rho _{\infty })\) norm). The exponential convergence of the kinetic Fokker–Planck equation in \(H^1(\rho _{\infty })\) was proved by Villani in [54, Theorem 35], which was inspired by early works of [27, 29]. See also [53] for a brief overview of main ideas. The earlier work of [43] proved similar results on the torus without forcing term. Since the \(L^2(\rho _{\infty })\) norm is controlled by the \(H^1(\rho _{\infty })\) norm, this result automatically implies the convergence of (4) in \(L^2(\rho _{\infty })\). However, the decay rate therein is quite implicit; see [54, Sec. 7.2]. This approach is extended in [9] to possibly singular potentials with convergence rates given in certain cases.

(ii) (Convergence in a modified \(L^2(\rho _{\infty })\) norm). A more direct approach for convergence in \(L^2(\rho _{\infty })\) was developed by Dolbeault, Mouhot and Schmeiser in [18, 19], see also earlier ideas in [28]. They identified a modified \(L^2(\rho _{\infty })\) norm, denoted by \(\textsf{E}\), such that \(\textsf{E}(\rho (t, x, v)) \rightarrow 0\) exponentially fast for \(\rho (t, \cdot , \cdot )\) evolving according to (2). This hypocoercivity method was revisited and adapted in [17, 26, 47] to deal with the backward Kolmogorov equation (4), i.e., to show that \(\textsf{E}(f(t, \cdot , \cdot ))\) decays to zero exponentially fast. In Appendix B.1, we will briefly revisit how to choose the Lyapunov function \(\textsf{E}\), based on [16, Sec. 2], because their setup is consistent with our \(L^2(\rho _{\infty })\) estimate in Section 1.2 above. We would like to remark that while [47] obtains a rate for which the scalings in d and \(\gamma \) are known, it is difficult to determine the optimal \(\gamma \) for their convergence rate estimates. As a remark, the DMS method [18, 19] has been extended or adapted to study the convergence of spherical velocity Langevin equation [25], non-equilibrium Langevin dynamics [33], Langevin dynamics with general kinetic energy [49], temperature-accelerated molecular dynamics [50], adaptive Langevin dynamics [38], dynamics with Boltzmann-type dissipation [2], dynamics with singular potentials [12], just to name a few. It might be interesting to study whether the variational framework [1] on which our work is based can be extended to these cases.

(iii) (Convergence in Wasserstein distance). Baudoin discussed a general framework extending the Bakry–Émery methodology [5] to hypoelliptic and hypocoercive operators, based on which the exponential convergence of the kinetic Fokker–Planck equation (quantified by a Wasserstein distance associated with a special metric) was proved under certain assumptions on the potential U(x) [7, Theorem 2.6]; see also [8]. A different approach is the coupling method for underdamped Langevin dynamics (1). In [16, Sec. 2], for strongly convex potential U, Dalalyan and Riou-Durand considered the mixing of the marginal distribution in the x coordinate, by a synchronous coupling argument; an estimate of the convergence rate was also explicitly provided, quantified by \(W_2\) distance [16, Theorem 1]. For more general potentials, Eberle, Guillin and Zimmer developed a hybrid coupling method, composed of synchronous and reflection couplings, to study the exponential convergence of probability distributions for the underdamped Langevin dynamics (1), quantified by a Kantorovich semi-metric [20]. Unfortunately, their rates are dimension dependent in general.

(iv) (Convergence in relative entropy). Villani [54] obtained exponential convergence of the kinetic Fokker–Planck equation in the case of potentials with bounded Hessian, which is extended in [8]. A more quantitative convergence rate is obtained in [40]. All of them essentially used Gamma calculus on a twisted metric so that derivatives in the x direction can be introduced. In [13], exponential convergence of entropy is established for potentials that may not have bounded Hessians but satisfy a stronger weighted log-Sobolev inequality.

There are other approaches to study the long time behavior of the underdamped Langevin dynamics, e.g., Lyapunov function [4, 41, 51, 55] and spectral analysis [21, 35]. There are also works that extend the aforementioned approaches to dynamics with singular potentials [9, 12, 14, 15, 30, 39]. We will not go into details here.

While our work is not the first one that studies the exponential convergence of underdamped Langevin dynamics, our estimates are more quantitative, and in certain cases, sharper than any existing result. In particular, for a large class of convex potentials, we establish an \(O(\sqrt{m})\) convergence rate after optimizing in \(\gamma \), which is independent of dimension and only assumes a mild upper bound (Assumption 2) on the derivatives of the potential. To the best of our knowledge, this optimal \(O(\sqrt{m})\) convergence rate is new in the literature.

Table 1 summarizes the previous results [9, 16, 40] under the assumption \(m \textrm{Id} \leqslant \nabla _x^2 U \leqslant L \textrm{Id}\) (which guarantees Assumptions 1–3) in the most interesting regime \(m\ll 1 \ll L\), with optimal choice of \(\gamma \). To elaborate on the comparison with the result of [40], after a rescaling, they proved exponential convergence of (4) with friction parameter (using their notations) \(\gamma \sqrt{\xi }\) and convergence rate \(O(\frac{\lambda }{\sqrt{\xi }})\), with constraints that require (see [40, Proof of Lemma 8])

Combined, these yield \(\xi \geqslant O(L)\) and \(\lambda \leqslant O(m)\), which means the convergence rate cannot exceed \(O(\frac{m}{\sqrt{L}})\). Moreover, they require \(\gamma \geqslant O(1)\), so their friction parameter \(\gamma \sqrt{\xi }\) must be at least \(O(\sqrt{L})\).

We also comment that in the case where \(\Vert \nabla _x^2 U\Vert \leqslant L\), but U is not necessarily convex, our convergence rate is \(\nu = O(\frac{m}{\sqrt{L}})\) after optimizing in \(\gamma \) by choosing \(\gamma \sim \sqrt{L}\), which matches the results of existing works [9, 40].
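The optimization in \(\gamma \) behind these rates can be sketched as follows. The computation assumes that the explicit rate of Theorem 1 has the form \(\nu = \frac{m\gamma }{c(\sqrt{m}+R+\gamma )^2}\); this form is an assumption made for illustration, chosen because it reproduces the \(O(\gamma )\) and \(O(\gamma ^{-1})\) limits of Remark 1.2 (i) and the value \(\nu =\frac{\sqrt{m}}{4c}\) of Remark 1.2 (ii). Writing \(a=\sqrt{m}+R\):

```latex
\begin{aligned}
\frac{\textrm{d}}{\textrm{d}\gamma }\,\frac{\gamma }{(a+\gamma )^2}
  &= \frac{a-\gamma }{(a+\gamma )^3}=0
  \quad \Longleftrightarrow \quad \gamma =a=\sqrt{m}+R,\\
\nu \big |_{\gamma =a}
  &= \frac{m\,a}{c\,(2a)^2}=\frac{m}{4c\,(\sqrt{m}+R)},\\
R=0:\quad \nu &= \frac{\sqrt{m}}{4c},
  \qquad \qquad
R=\sqrt{L},\ m\ll L:\quad \nu \approx \frac{m}{4c\sqrt{L}}.
\end{aligned}
```

Under this assumed form, the optimal friction is \(\gamma =\sqrt{m}\) in the convex case and \(\gamma \sim \sqrt{L}\) when the Hessian is bounded by L in norm (so that (14) holds with \(K=L\)), in agreement with the choices quoted above.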

2 Proofs

In this section, we present the statements and proofs of auxiliary lemmas, followed by the proofs of the two main theorems. Lemmas 2.1, 2.2 and 2.3 are the technical lemmas that prepare us for the elliptic regularity result in Lemma 2.4. The proof of the divergence Lemma, which builds up from elliptic regularity, is presented in Lemma 2.6. The proof of Theorem 2 is then possible with the test functions obtained from Lemma 2.6. Finally we present the proof of Theorem 1 which follows from Theorem 2 and energy estimate.

We start with the Poincaré inequality on the tensorized space \((I\times {\mathbb {R}}^d, \lambda \otimes \mu )\), which allows elliptic regularity to hold in the time-augmented state space. The proof is standard and is thus omitted.

Lemma 2.1

(Poincaré Inequality) For \(f\in H^1(\lambda \otimes \mu )\),

The next lemma is also a technical lemma, the goal of which is to show that under Assumption 2, \(|\nabla ^2 U|\) defines a bounded operator \(H^1(\lambda \otimes \mu )\rightarrow L^2(\lambda \otimes \mu )\), which allows us to improve the regularity \(u\in H^2(\lambda \otimes \mu )\) for u being the solution of (22) in the proof of Lemma 2.4.

Lemma 2.2

[54, Lemma A.24] For any \(\phi \in H^1(\lambda \otimes \mu )\), we have

where M is the constant in (11).

Proof

We thus finish the proof of (17) after rearranging and using \(\delta <1\). \(\quad \square \)

Next we present a technical lemma that prepares us for the (mixed space-time) \(H^2\) estimates of u, the solution of the elliptic equation (22). This is a generalization of a similar \(L^2\)–\(H^2\) regularity estimate in [19, Proposition 5], where only the spatial variable is considered, but our estimates are algebraically simpler thanks to Bochner’s formula. Let us remark that we adopt the same scaling of parameters as [10, Lemma 3.6], especially in the most general case (iii).

Lemma 2.3

For any \(u\in H^2(\lambda \otimes \mu )\) such that \(\bar{\nabla } u\in H_0^1(\lambda \otimes \mu )^{d+1}\),

Similarly,

Here C is a universal constant whose precise value can be traced in the proof under the different assumptions in Theorem 1, and R is defined in Theorem 1.

Proof

We only prove (18) since the proof of (19) follows from a similar argument. The starting point of the proof is Bochner’s formula

Integrating over \(\lambda \otimes \mu \) and noticing that the last term above has integral zero, we get

This already verifies the conclusion in cases (i) (setting \(K=0\)) and (ii) with \(C=1\).

Now we deal with the more general case, without assuming (14). Using (17) with \(\phi =\partial _{x_i} u,\ i=1,\cdots ,d\),

Rearranging the terms, we arrive at

Therefore by (21) and triangle inequality,

\(\square \)

One of the key lemmas of our proof is the following result on elliptic regularity on the space \((I\times {\mathbb {R}}^d, \lambda \otimes \mu )\) (the solution to such elliptic equation will play an important role in the proof of Lemma 2.6):

Lemma 2.4

Consider the following elliptic equation:

Assume \(h\in H^{-1}(\lambda \otimes \mu )\), and \((h)_{\lambda \otimes \mu }=0\). Define the function space

Then

(i) There exists a unique \(u\in V\) which is a weak solution to (22). More precisely, for any \(v\in H^1(\lambda \otimes \mu )\), we have

$$\begin{aligned} \int _{I\times {\mathbb {R}}^d} (\partial _t u\partial _t v +\nabla _x u \cdot \nabla _x v)\,\textrm{d}\lambda (t)\,\textrm{d}\mu (x) = \int _{I\times {\mathbb {R}}^d} hv \,\textrm{d}\lambda (t)\,\textrm{d}\mu (x). \end{aligned}$$

Moreover, when \(h\in L^2(\lambda \otimes \mu )\), we have the estimate

$$\begin{aligned} \Vert \partial _t u\Vert _{L^2(\lambda \otimes \mu )}^2 + \Vert \nabla _x u\Vert _{L^2(\lambda \otimes \mu )}^2 \leqslant \max \left\{ \frac{1}{m},\frac{T^2}{\pi ^2}\right\} \Vert h\Vert _{L^2(\lambda \otimes \mu )}^2. \end{aligned}\tag{23}$$

(ii) If \(h\in L^2(\lambda \otimes \mu )\), then the solution u to (22) satisfies \(u\in H^2(\lambda \otimes \mu )\).

Remark 2.5

One could in fact estimate \(\Vert u\Vert _{H^1(\lambda \otimes \mu )}\) using only \(\Vert h\Vert _{H^{-1}(\lambda \otimes \mu )}\), but with a slightly worsened constant \(\max \{\frac{1}{m},\frac{T^2}{\pi ^2},1\}\) on the rhs. Since in our applications we only use \(\Vert h\Vert _{L^2(\lambda \otimes \mu )}\), we opt for the current version of (23) for simplicity.

Proof

(i) V is a linear Hilbert space and has non-zero elements (any function constant in t, and \(H^1\) and mean zero in x is included in V). Moreover, V is a subspace of \(H^1(\lambda \otimes \mu )\), and for the rest of the paper we equip it with the \(H^1(\lambda \otimes \mu )\) norm. We also define the following inner-product:

One can easily verify \(B(\cdot ,\cdot )\) is an inner product on V. Notice that if \(B(u,u)=0\) then \(\partial _t u=\nabla _x u=0\), leaving u to be a constant, which has to be 0 since \((u)_{\lambda \otimes \mu }=0\). If u is a weak solution of (22), then for any \(v\in V\), \(B(u,v)=\int _{I\times {\mathbb {R}}^d} hv\,\textrm{d}\lambda (t)\,\textrm{d}\mu (x) \), and necessarily \((h)_{\lambda \otimes \mu }=0\) when we take \(v=1\).

Since \((u)_{\lambda \otimes \mu }=0\), by Poincaré inequality (Lemma 2.1) we can show B is coercive under \(H^1(\lambda \otimes \mu )\) norm in the sense of

We can also show B is bounded above since it is an inner product and \(B(u,u)\leqslant \Vert u\Vert _{H^1(\lambda \otimes \mu )}^2\). Define a linear functional on V: \(H(v){:}{=}\int _{I\times {\mathbb {R}}^d} hv \,\textrm{d}\lambda (t)\,\textrm{d}\mu (x)\). One can verify the boundedness of H:

Thus by Lax–Milgram’s Theorem, the equation (22) has a unique weak solution \(u\in V\). Moreover,

and the desired estimate follows.
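For the reader's convenience, the route from the weak formulation to (23) can be sketched in one chain of inequalities. The sketch assumes that the Poincaré inequality of Lemma 2.1 holds with constant \(C_P {:}{=} \max \{\frac{1}{m},\frac{T^2}{\pi ^2}\}\), i.e. \(\Vert u-(u)_{\lambda \otimes \mu }\Vert _{L^2(\lambda \otimes \mu )}^2\leqslant C_P\bigl (\Vert \partial _t u\Vert _{L^2(\lambda \otimes \mu )}^2+\Vert \nabla _x u\Vert _{L^2(\lambda \otimes \mu )}^2\bigr )\), and that \(B(u,u)\) equals the right-hand bracket; both are assumptions consistent with the weak formulation in part (i) and with the constant in (23). Since \((u)_{\lambda \otimes \mu }=0\) and \(h\in L^2(\lambda \otimes \mu )\):

```latex
\begin{aligned}
B(u,u)
  &= \int _{I\times {\mathbb {R}}^d} h\,u\,\textrm{d}\lambda (t)\,\textrm{d}\mu (x)
   \leqslant \Vert h\Vert _{L^2(\lambda \otimes \mu )}\,\Vert u\Vert _{L^2(\lambda \otimes \mu )}
   \leqslant \Vert h\Vert _{L^2(\lambda \otimes \mu )}\,\bigl (C_P\,B(u,u)\bigr )^{1/2},\\
B(u,u)
  &\leqslant C_P\,\Vert h\Vert _{L^2(\lambda \otimes \mu )}^2 .
\end{aligned}
```

The first inequality is Cauchy–Schwarz, the second is the assumed Poincaré inequality, and dividing by \(B(u,u)^{1/2}\) gives the second line.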

(ii) For each \(i=1,2,\cdots ,d\), consider the elliptic equation

The motivation for considering (24) is that, if we formally differentiate (22) with respect to \(x_i\), then \(\partial _{x_i} u \) satisfies precisely the equation (24) for \(w_i\). Hence, our plan is to use part (i) to establish \(w_i\in H^1(\lambda \otimes \mu )\), then argue that \(w_i-\partial _{x_i} u\) must be constant.

We first verify the rhs of (24) has total integral zero. Indeed

The next step is to show rhs is in \(H^{-1}(\lambda \otimes \mu )\). Pick a test function \(\phi \in H^1(\lambda \otimes \mu )\) with \(\Vert \phi \Vert _{H^1(\lambda \otimes \mu )}=1\), and, by Lemma 2.2,

where \(C(M,d)>0\) is a constant depending on M, d. Therefore, by (i) we know there exists a \(w_i\in V\) which is the weak solution of (24). Finally, comparing (22) and (24), we observe that \({\mathscr {L}}(w_i-\partial _{x_i}u)=0\) in the sense of distributions, which by (i) indicates that \(w_i-\partial _{x_i} u\) must be a constant. This constant must be \(-(\partial _{x_i} u)_{\lambda \otimes \mu }\), since by construction \(w_i\in V\) and \((w_i)_{\lambda \otimes \mu }=0\). This also means \(\partial _{x_i} u \in H^1(\lambda \otimes \mu )\) since \(w_i\in H^1(\lambda \otimes \mu )\). We end the proof of \(u\in H^2(\lambda \otimes \mu )\) by writing \(\partial _{tt} u =\nabla _x^*\nabla _x u-h \in L^2(\lambda \otimes \mu ) \). \(\quad \square \)

We finally need a lemma for the solution of a divergence equation with Dirichlet boundary conditions. The resolution of the divergence equation is an important tool in mathematical fluid dynamics (see the book [23, Section III.3]). However, in order to obtain a more natural estimate on the constants, instead of resorting to the aforementioned Bogovskii’s operator, we take advantage of the structure of the space \(L^2(\mu )\) by eigenspace decomposition, which is made possible thanks to Assumption 3. This will provide us with test functions which play a crucial role in the proof of Theorem 2.

Lemma 2.6

For any function \(f\in L^2(\lambda \otimes \mu )\) with \((f)_{\lambda \otimes \mu }=0\), there exist two functions \(\phi _0 \in H_0^1(\lambda \otimes \mu )\) and \(\Phi \in H^2(\lambda \otimes \mu )\) such that \(\nabla _x \Phi \in H_0^1(\lambda \otimes \mu )^d\) and

with estimates

and

Here C is a universal constant and R is the constant defined in Theorem 1.

Remark 2.7

We believe the correct scaling of the rhs should be \(O(\frac{1}{T})\) as \(T\rightarrow 0\). We are unable to obtain it because of the pessimistic estimates in the last two lines of (31), which change the scaling of the last two terms from O(1) to \(O(T^2)\); we do not pursue this further, since in the proof of Theorem 1 we only take \(T=\frac{1}{\sqrt{m}}\). As we mentioned earlier after Theorem 2, the scaling of \(O(\frac{1}{T})\) as \(T\rightarrow 0\) should come from (35).

Before we proceed to the proof, let us give a brief heuristic argument on why we need to introduce the space of harmonic functions (i.e. the space \({\mathbb {H}}\) that appears at the beginning of the proof) and consider the orthogonal projection onto it. Indeed, a direct way to look for a solution of (25) is to look for that of (22) and set \(\phi _0=\partial _t u, \Phi = u\). However, these test functions do not satisfy the appropriate boundary conditions. In particular, if the solution of (22) satisfies \(\nabla _x u(t=0,\cdot ) = \nabla _x u(t=T,\cdot )=0\), then necessarily f has to be perpendicular to the space of harmonic functions. Meanwhile, the harmonic part of f requires special treatment and brings technical difficulty to the proof. However, thanks to Assumption 3, one can decompose the harmonic part of f using separation of variables, which enables us to obtain the solution of the divergence equation by constructing it for each component and adding them up.

Proof

Let \({\mathbb {H}}\) be the subspace of \(L^2(\lambda \otimes \mu )\) that consists of “harmonic functions”, in other words, \(f\in {\mathbb {H}}\) if and only if \({\mathscr {L}}f=0\). We consider the decomposition \(f=f^{(1)}+f^{(2)}\) where \(f^{(1)}\in {\mathbb {H}}\) and \(f^{(2)}\perp {\mathbb {H}}\). Since \(1\in {\mathbb {H}}\) we know \((f^{(2)})_{\lambda \otimes \mu }=0\) and hence \((f^{(1)})_{\lambda \otimes \mu }=0\). Therefore by linearity it suffices to consider \(f^{(1)}\) and \(f^{(2)}\) separately. For \(f^{(2)}\), the equation

has a unique solution in \(V\cap H^2(\lambda \otimes \mu )\) by Lemma 2.4. Moreover, for any \(v\in {\mathbb {H}} \cap H^2(\lambda \otimes \mu )\), integration by parts yields

Therefore, since v is arbitrary, we have \(u(T)=u(0)=0\), which implies \(\nabla _x u\in H_0^1(\lambda \otimes \mu )^d\). Also, by the construction of the boundary conditions, \(\partial _t u\in H_0^1(\lambda \otimes \mu )\). Thus for the \(f^{(2)}\) part, it suffices to take correspondingly \(\phi _0^{(2)}=\partial _t u,~\Phi ^{(2)}= u\) with the estimates

and

We now consider the \(f^{(1)}\) part. Since \( \{1\}\cup \{w_\alpha \} \) forms an orthonormal basis in \(L^2(\mu )\) and \((f^{(1)})_{\lambda \otimes \mu }=0\), we have an orthogonal decomposition

Since \(f^{(1)}\) is harmonic,

and therefore \(f_0(t)\) is an affine function \(f_0(t)=c_0(t-\frac{T}{2})\) for some constant \(c_0\), as \(f_0(t)\) has integral zero. Moreover for \(\alpha >0\) there exist constants \(c_\pm ^\alpha \) such that

Therefore, by orthogonality in \(L^2(\lambda \otimes \mu )\), we can write for some constant \(C\in (1,\infty )\),

The construction of test functions for \(f_0(t)\) is straightforward: we simply take \(\Phi ^{(0)}=0\) and \(\phi _0^{(0)}(t,x)=-\frac{c_0}{2}(t^2-tT)\), so that \(-\partial _t \phi _0^{(0)} = c_0(t-\frac{T}{2}) = f_0(t)\). We then construct \(\phi _{0,\alpha },\Phi _\alpha \) for each component \(e^{-\alpha t} w_\alpha (x)\) of the sum; by the reflection \(t\mapsto T-t\), the functions \(-\phi _{0,\alpha }(T-t,\cdot ),\Phi _{\alpha }(T-t,\cdot )\) then apply to the component \(e^{-\alpha (T-t)}w_\alpha (x)\), so that the eventual test functions \(\phi _0,\Phi \) can be obtained by taking linear combinations. The goal is to find \(\phi _{0,\alpha },\Phi _\alpha \) such that

Since \(w_\alpha \in H^2(\lambda \otimes \mu )\), in order to eliminate the x part of the equation, we can take the natural ansatz by separation of variables \(\phi _{0,\alpha }=\psi _{1,\alpha }(t)w_\alpha (x)\) and \(\Phi _\alpha =\psi _{2,\alpha }(t) w_\alpha (x)\), and the two functions \(\psi _{1,\alpha }(t),\psi _{2,\alpha }(t)\) should satisfy \(\psi _{1,\alpha }(0)=\psi _{1,\alpha }(T)=\psi _{2,\alpha }(0)=\psi _{2,\alpha }(T)=0\) as well as the equation

Integrating (32) against t, we obtain the necessary and sufficient condition

Of course, there exist infinitely many possible solutions, since for any \(\psi _{2,\alpha }\) that vanishes at both time boundaries and satisfies (33), the choice \(\psi _{1,\alpha }(t) = \int _0^t (\alpha ^2\psi _{2,\alpha } (\tau ) -e^{-\alpha \tau })\,\textrm{d}\tau \) also vanishes at both time boundaries. Therefore, we only need to choose a particular one that satisfies the desired estimates. Let us introduce the shorthand notation \(\ell =e^{-\alpha T} \in (0,1)\). Our idea is to find \(\psi _{2,\alpha }\) of the form \(\psi _{2,\alpha }(t)= \frac{1}{\alpha ^2}g(e^{-\alpha t})\), which after the change of variable \(s{:}{=}e^{-\alpha t}\) turns the condition (33) into \(\int _{\ell }^1 \frac{g(s)}{s}\,\textrm{d}s = 1-\ell \), and the boundary conditions into \(g(1)=g(\ell )=0\). Hence, we may finish our construction by picking \(g(s)=sh(s)\) with

From the expression we can directly derive (using \(\alpha \geqslant \sqrt{m}\))

One can explicitly compute

Moreover since \(\psi _{1,\alpha }'(t) = \alpha ^2\psi _{2,\alpha }(t) - e^{-\alpha t}\) from (32),

Finally since

with

we can estimate

To sum up, our construction of test functions can be written as

Here we recall that u is the solution of (28).
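The one-dimensional construction of \(\psi _{1,\alpha },\psi _{2,\alpha }\) can be sanity-checked numerically. The sketch below is only an illustration under stated assumptions: the profile \(h(s)=6(s-\ell )(1-s)/(1-\ell )^2\) is a hypothetical choice (it vanishes at \(s=\ell \) and \(s=1\) and integrates to \(1-\ell \) over \([\ell ,1]\)) and need not coincide with the one used in the proof; the values of \(\alpha \) and T and all function names are arbitrary.

```python
import math

def simpson(func, a, b, n=2000):
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    step = (b - a) / n
    total = func(a) + func(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * func(a + i * step)
    return total * step / 3

alpha, T = 1.3, 2.0            # illustrative values, not taken from the paper
ell = math.exp(-alpha * T)     # shorthand ell = e^{-alpha T}

def h(s):
    # Hypothetical profile: h(ell) = h(1) = 0 and int_ell^1 h(s) ds = 1 - ell.
    return 6.0 * (s - ell) * (1.0 - s) / (1.0 - ell) ** 2

def psi2(t):
    # psi_2(t) = g(e^{-alpha t}) / alpha^2 with g(s) = s h(s)
    s = math.exp(-alpha * t)
    return s * h(s) / alpha ** 2

def psi1(t):
    # psi_1(t) = int_0^t (alpha^2 psi_2(tau) - e^{-alpha tau}) d tau
    return simpson(lambda tau: alpha ** 2 * psi2(tau) - math.exp(-alpha * tau), 0.0, t)

# Condition after the change of variable: int_ell^1 g(s)/s ds = int_ell^1 h(s) ds = 1 - ell.
lhs_33 = simpson(h, ell, 1.0)
print("integral condition:", lhs_33, "target:", 1.0 - ell)
print("boundary values:", psi2(0.0), psi2(T), psi1(0.0), psi1(T))
```

All four boundary values should vanish up to quadrature error, which is the Dirichlet-in-time requirement on the test functions.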

We now establish the estimates by direct calculations, which is possible since the variables are separated. Notice that for \(\alpha , \beta \),

hence cross terms in the expansion of \(\Vert \sum _\alpha (c_+^\alpha \psi _{2,\alpha }(t) + c_-^\alpha \psi _{2,\alpha }(T-t))\nabla _x w_\alpha (x)\Vert _{L^2(\lambda \otimes \mu )}^2\) vanish. Therefore, we can estimate

Here in the last line when we used (31), the worse factor \((1-e^{-\sqrt{m}T})^{-2}\) comes only from the last term on the line above. This establishes (26). Using similar arguments, we can estimate

as well as

We finally treat the terms from \(\nabla ^2_x \Phi \):

Adding together (39), (40) and (41), we arrive at

\(\quad \square \)

We are now ready to prove the main results of the paper. The proof is inspired by that of [1, Proof of Theorem 3]. In particular, to retrieve the \(L^2(\lambda \otimes \mu ;H^{-1}_\kappa )\) norm, we need to construct a test function in \(L^2(\lambda \otimes \mu ;H^1_\kappa )\), which is closely related to the test functions constructed in Lemma 2.6. The two proofs differ in the following ways: (1) we choose the test functions \(\xi _0=1\) and \(\xi _i = v_i\) explicitly; these are orthogonal to each other and have explicit moments up to fourth order (in particular, all first and third moments vanish); (2) instead of using \(\Vert \bar{\nabla } \Pi _v f\Vert _{H^{-1}(\lambda \otimes \mu )}\) as an intermediate step, we proceed as in (42) and control the \(L^2(\lambda \otimes \mu ;H^1_\kappa )\) norm of another explicitly constructed function, in order to minimize the use of the Cauchy–Schwarz inequality and to track the dimension dependence of the constants carefully.
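The moment identities for \(\xi _i = v_i\) used in the proof can be checked by a short computation. The following sketch assumes that the momentum measure is the standard Gaussian with independent coordinates, and compares the product of one-dimensional moments with the pairing formula \(\delta _{ij}\delta _{kl}+\delta _{ik}\delta _{jl}+\delta _{il}\delta _{jk}\) for fourth moments; the helper names and the dimension \(d=3\) are illustrative choices.

```python
from itertools import product

def gaussian_moment(n):
    """E[v^n] for a one-dimensional standard Gaussian: 0 for odd n, (n-1)!! for even n."""
    if n % 2 == 1:
        return 0
    result = 1
    for k in range(n - 1, 0, -2):
        result *= k
    return result

def mixed_moment(indices):
    """E[v_{i1} ... v_{ik}] for independent standard Gaussian coordinates."""
    counts = {}
    for i in indices:
        counts[i] = counts.get(i, 0) + 1
    value = 1
    for c in counts.values():
        value *= gaussian_moment(c)
    return value

def delta(i, j):
    """Kronecker symbol."""
    return 1 if i == j else 0

d = 3  # illustrative dimension
first_ok = all(mixed_moment((i,)) == 0 for i in range(d))
second_ok = all(mixed_moment((i, j)) == delta(i, j)
                for i, j in product(range(d), repeat=2))
third_ok = all(mixed_moment(idx) == 0 for idx in product(range(d), repeat=3))
fourth_ok = all(
    mixed_moment((i, j, k, l))
    == delta(i, j) * delta(k, l) + delta(i, k) * delta(j, l) + delta(i, l) * delta(j, k)
    for i, j, k, l in product(range(d), repeat=4)
)
print(first_ok, second_ok, third_ok, fourth_ok)
```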

Proof of Theorem 2

Without loss of generality, assume \((f)_{\lambda \otimes \rho _{\infty }}=0\), which implies \( (\Pi _v f)_{\lambda \otimes \mu } = 0\). Therefore, we can take \(\phi _0, \Phi \) as in Lemma 2.6 with \(\Pi _v f\) in place of f, so that \(-\partial _t \phi _0 + \nabla _x^* \nabla _x \Phi = \Pi _v f\). The trick in the following step is to introduce the v variable into the calculation. Notice, by Gaussianity, that

where \(\delta _{i,j}\) is the Kronecker symbol, which equals 1 if \(i=j\) and 0 otherwise. Thus,

For the first integral on the right hand side, we use integration by parts, where it is important that the test functions \((\phi _0,\nabla _x\Phi )\) have Dirichlet boundary conditions in time:

We further estimate the term \(\Vert \phi _0-v\cdot \nabla _x\Phi \Vert _{L^2(\lambda \otimes \mu ;H^1_\kappa )}\) by explicit integration, noting that \((\phi _0,\Phi )\) do not depend on v, so that the moments of v can be calculated directly:

For the second integral in (42), we again estimate by explicit expansion in v, which is possible since the moments of v up to fourth order are explicit:

Combining the above estimates, we arrive at

Finally

as claimed.\(\quad \square \)

With Theorem 2, we are now able to prove exponential relaxation to equilibrium claimed in Theorem 1, which essentially follows from a standard energy estimate.

Proof of Theorem 1

We first notice that the solution \(f\in H^1_{hyp}((0,T)\otimes \mu )\) for all \(T>0\). Indeed, as long as \(f_0\in L^2(\mu ;H^1_\kappa )\), we have \(f(t,\cdot ,\cdot ) \in L^2(\mu ;H^1_\kappa )\) for any \(t>0\) (see for example [54, Theorem 35]), and hence \(\partial _t f -{\mathcal {L}}_{\text {ham}}f = -\gamma \nabla _v^*\nabla _v f \in L^2(\lambda \otimes \mu ;H_\kappa ^{-1})\). We also have that (12) implies

for all \(t\in (0,T)\). This follows from

using the equation (4) and integration by parts.

For every \(0<s<t\), we have the typical energy estimate (hereafter we use \(L^2((s,t)\otimes \rho _{\infty })\) to denote \(L^2(\lambda _{(s,t)}\otimes \rho _{\infty })\)):

In particular,

Since by equation (4),

we have

Now fix T to be the length of the time interval. Denote \(b_1=C(\frac{1}{\sqrt{m}(1-e^{-\sqrt{m} T})}+T)\) and \(b_2=C(1+RT+ \frac{1}{(1-e^{-\sqrt{m}T})^2}+ \frac{R}{\sqrt{m}(1-e^{-\sqrt{m}T})^2})\). Then, by Theorem 2, (43) and (44), and the Gaussian Poincaré inequality

we have for time stamps \(t_k =kT\)

Now for any \(t>0\), we pick the integer k satisfying \(t_k\leqslant t < t_{k+1}\), so that \(\Vert f(t,\cdot )\Vert _{L^2(\rho _{\infty })} \leqslant \Vert f(t_k,\cdot )\Vert _{L^2(\rho _{\infty })}\). Applying the above inequality iteratively and using the monotonicity (44), we obtain

The prefactor

is bounded above by a constant. Using \(\log (1+x) \geqslant \frac{1}{C}x\) for \(x \in [0, \frac{1}{C}]\) with some universal constant C, and then picking \(T=\frac{1}{\sqrt{m}}\), this yields exponential decay with rate

which is precisely (13). \(\quad \square \)
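The iteration over the time stamps \(t_k=kT\) in the proof above can be illustrated in isolation. The sketch below assumes only a generic per-window contraction \(\Vert f(t_{k+1})\Vert \leqslant q\Vert f(t_k)\Vert \) with a hypothetical factor \(q\in (0,1)\), together with monotonicity in t; it does not use the constants \(b_1,b_2\) of the paper. It checks that the resulting step bound \(q^{\lfloor t/T\rfloor }\) is dominated by the exponential envelope \(q^{-1}e^{-\nu t}\) with \(\nu =-\log (q)/T\), and that \(\log (1+x)\geqslant x/2\) on \([0,\frac{1}{2}]\), i.e. the elementary inequality with the illustrative choice \(C=2\).

```python
import math

def window_bound(q, T, t):
    """Bound on ||f(t)|| / ||f_0|| from iterating the per-window contraction.

    Uses ||f(t_{k+1})|| <= q ||f(t_k)|| for t_k = k T and monotonicity in t.
    """
    k = math.floor(t / T)        # number of complete windows before time t
    return q ** k

def exponential_envelope(q, T, t):
    """Exponential form q^{-1} exp(-nu t) with rate nu = -log(q) / T."""
    nu = -math.log(q) / T
    return math.exp(-nu * t) / q

q, T = 0.8, 2.0                  # hypothetical contraction factor and window length
times = [0.25 * j for j in range(81)]
gaps = [exponential_envelope(q, T, t) - window_bound(q, T, t) for t in times]
envelope_ok = min(gaps) >= -1e-12

# Elementary inequality log(1 + x) >= x / C on [0, 1/C], here with C = 2.
log_ok = all(math.log1p(0.01 * j) >= 0.01 * j / 2 for j in range(51))
print(envelope_ok, log_ok)
```

The prefactor \(q^{-1}\) accounts for the incomplete last window, which is why a bounded constant appears in front of the exponential.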

Data Availability Statement

This manuscript has no associated data.

References

Albritton, D., Armstrong, S., Mourrat, J.-C., Novack, M.: Variational methods for the kinetic Fokker–Planck equation, arXiv preprint arXiv:1902.04037, 2019

Andrieu, C., Durmus, A., Nüsken, N., Roussel, J.: Hypocoercivity of piecewise deterministic Markov process-Monte Carlo. Ann. Appl. Probab. 31(5), 2478–2517, 2021

Armstrong, S.: Answer to “Elliptic regularity with Gibbs measure satisfying Bakry–Emery condition”, MathOverflow. https://mathoverflow.net/q/335599 (version: 2019-07-06)

Bakry, D., Cattiaux, P., Guillin, A.: Rate of convergence for ergodic continuous Markov processes: Lyapunov versus Poincaré. J. Funct. Anal. 254(3), 727–759, 2008

Bakry, D., Émery, M.: Diffusions hypercontractives, Séminaire de Probabilités XIX 1983/84, Springer, 177–206, 1985

Bakry, D., Gentil, I., Ledoux, M.: Analysis and Geometry of Markov Diffusion Operators, Springer, Cham, 2014

Baudoin, F.: Wasserstein contraction properties for hypoelliptic diffusions, arXiv:1602.04177 [math], 2016

Baudoin, F.: Bakry–Émery meet Villani. J. Funct. Anal. 273(7), 2275–2291, 2017

Baudoin, F., Gordina, M., Herzog, D.P.: Gamma calculus beyond Villani and explicit convergence estimates for Langevin dynamics with singular potentials. Arch. Rational Mech. Anal. 241(2), 765–804, 2021

Bernard, É., Fathi, M., Levitt, A., Stoltz, G.: Hypocoercivity with Schur complements. Annales Henri Lebesgue 5, 523–557, 2022

Bogovskii, M.E.: Solution of the First Boundary Value Problem for the Equation of Continuity of an Incompressible Medium, Doklady Akademii Nauk, vol. 248, Russian Academy of Sciences, 1037–1040, 1979

Camrud, E., Herzog, D.P., Stoltz, G., Gordina, M.: Weighted \(L^2\)-contractivity of Langevin dynamics with singular potentials. Nonlinearity 35(2), 998, 2021

Cattiaux, P., Guillin, A., Monmarché, P., Zhang, C.: Entropic multipliers method for Langevin diffusion and weighted log Sobolev inequalities. J. Funct. Anal. 277(11), 108288, 2019

Conrad, F., Grothaus, M.: Construction, ergodicity and rate of convergence of \({N}\)-particle Langevin dynamics with singular potentials. J. Evol. Equ. 10(3), 623–662, 2010

Cooke, B., Herzog, D.P., Mattingly, J.C., McKinley, S.A., Schmidler, S.C.: Geometric ergodicity of two-dimensional Hamiltonian systems with a Lennard–Jones-like repulsive potential. Commun. Math. Sci. 15(7), 1987–2025, 2017

Dalalyan, A.S., Riou-Durand, L.: On sampling from a log-concave density using kinetic Langevin diffusions. Bernoulli 26(3), 1956–1988, 2020

Dolbeault, J., Klar, A., Mouhot, C., Schmeiser, C.: Exponential rate of convergence to equilibrium for a model describing fiber lay-down processes. Appl. Math. Res. eXpress 2013(2), 165–175, 2013

Dolbeault, J., Mouhot, C., Schmeiser, C.: Hypocoercivity for kinetic equations with linear relaxation terms. Comptes Rendus Mathematique 347(9), 511–516, 2009

Dolbeault, J., Mouhot, C., Schmeiser, C.: Hypocoercivity for linear kinetic equations conserving mass. Trans. Am. Math. Soc. 367(6), 3807–3828, 2015

Eberle, A., Guillin, A., Zimmer, R.: Couplings and quantitative contraction rates for Langevin dynamics. Ann. Probab. 47(4), 1982–2010, 2019

Eckmann, J.-P., Hairer, M.: Spectral properties of hypoelliptic operators. Commun. Math. Phys. 235(2), 233–253, 2003

Evans, L.C.: Partial Differential Equations, vol. 19, American Mathematical Society, Philadelphia, 2010

Galdi, G.: An Introduction to the Mathematical Theory of the Navier–Stokes Equations: Steady-State Problems, Springer, Berlin, 2011

Gigli, N.: Answer to “Elliptic regularity with Gibbs measure satisfying Bakry–Emery condition”, MathOverflow. https://mathoverflow.net/q/335608 (version: 2019-07-06)

Grothaus, M., Stilgenbauer, P.: Hypocoercivity for Kolmogorov backward evolution equations and applications. J. Funct. Anal. 267(10), 3515–3556, 2014

Grothaus, M., Stilgenbauer, P.: Hilbert space hypocoercivity for the Langevin dynamics revisited. Methods Funct. Anal. Topol. 22(02), 152–168, 2016

Helffer, B., Nier, F.: Hypoelliptic Estimates and Spectral Theory for Fokker–Planck Operators and Witten Laplacians, vol. 1862, Springer, 2005

Hérau, F.: Hypocoercivity and exponential time decay for the linear inhomogeneous relaxation Boltzmann equation. Asympt. Anal. 46(3–4), 349–359, 2006

Hérau, F., Nier, F.: Isotropic hypoellipticity and trend to equilibrium for the Fokker–Planck equation with a high-degree potential. Arch. Ration. Mech. Anal. 171(2), 151–218, 2004

Herzog, D.P., Mattingly, J.C.: Ergodicity and Lyapunov functions for Langevin dynamics with singular potentials. Commun. Pure Appl. Math. 72(10), 2231–2255, 2019

Hooton, J.G.: Compact Sobolev imbeddings on finite measure spaces. J. Math. Anal. Appl. 83, 570–581, 1981

Hörmander, L.: Hypoelliptic second order differential equations. Acta Math. 119, 147–171, 1967

Iacobucci, A., Olla, S., Stoltz, G.: Convergence rates for nonequilibrium Langevin dynamics. Annales mathématiques du Québec 43(1), 73–98, 2019

Kolmogorov, A.: Zufällige Bewegungen (zur Theorie der Brownschen Bewegung). Ann. Math. 66, 116–117, 1934

Kozlov, S.M.: Effective diffusion in the Fokker–Planck equation. Math. Notes Acad. Sci. USSR 45(5), 360–368, 1989

Ledoux, M.: A simple analytic proof of an inequality by P. Buser. Proc. Am. Math. Soc. 121(3), 951–959, 1994

Ledoux, M.: Spectral gap, logarithmic Sobolev constant, and geometric bounds. Surv. Differ. Geom. 9(1), 219–240, 2004

Leimkuhler, B., Sachs, M., Stoltz, G.: Hypocoercivity properties of adaptive Langevin dynamics. SIAM J. Appl. Math. 80(3), 1197–1222, 2020

Lu, Y., Mattingly, J.C.: Geometric ergodicity of Langevin dynamics with Coulomb interactions. Nonlinearity 33(2), 675, 2019

Ma, Y.-A., Chatterji, N.S., Cheng, X., Flammarion, N., Bartlett, P.L., Jordan, M.I.: Is there an analog of Nesterov acceleration for gradient-based MCMC? Bernoulli 27(3), 1942–1992, 2021

Mattingly, J.C., Stuart, A.M., Higham, D.J.: Ergodicity for SDEs and approximations: locally Lipschitz vector fields and degenerate noise. Stoch. Process. Appl. 101(2), 185–232, 2002

Metafune, G., Pallara, D., Priola, E.: Spectrum of Ornstein–Uhlenbeck operators in \(L^p\) spaces with respect to invariant measures. J. Funct. Anal. 196(1), 40–60, 2002

Mouhot, C., Neumann, L.: Quantitative perturbative study of convergence to equilibrium for collisional kinetic models in the torus. Nonlinearity 19(4), 969, 2006

Otto, F., Villani, C.: Generalization of an inequality by Talagrand and links with the logarithmic Sobolev inequality. J. Funct. Anal. 173(2), 361–400, 2000

Pavliotis, G.A.: Stochastic Processes and Applications: Diffusion Processes, the Fokker–Planck and Langevin Equations, vol. 60, Springer, 2014

Risken, H.: Fokker–Planck Equation: Methods of Solution and Applications, Springer Series in Synergetics, 1989

Roussel, J., Stoltz, G.: Spectral methods for Langevin dynamics and associated error estimates. ESAIM Math. Model. Numer. Anal. 52(3), 1051–1083, 2018

Sason, I., Verdú, S.: \(f\)-divergence inequalities. IEEE Trans. Inf. Theory 62(11), 5973–6006, 2016

Stoltz, G., Trstanova, Z.: Langevin dynamics with general kinetic energies. Multiscale Model. Simul. 16(2), 777–806, 2018

Stoltz, G., Vanden-Eijnden, E.: Longtime convergence of the temperature-accelerated molecular dynamics method. Nonlinearity 31(8), 3748–3769, 2018

Talay, D.: Stochastic Hamiltonian systems: exponential convergence to the invariant measure, and discretization by the implicit Euler scheme. Markov Process. Relat. Fields 8(2), 163–198, 2002

Tropper, M.M.: Ergodic and quasideterministic properties of finite-dimensional stochastic systems. J. Stat. Phys. 17(6), 491–509, 1977

Villani, C.: Hypocoercive diffusion operators. Bollettino dell’Unione Matematica Italiana 10-B(2), 257–275, 2007

Villani, C.: Hypocoercivity. Mem. Am. Math. Soc. 202(950), 66, 2009

Wu, L.: Large and moderate deviations and exponential convergence for stochastic damping Hamiltonian systems. Stoch. Process. Appl. 91(2), 205–238, 2001

Acknowledgements

This research is supported in part by the National Science Foundation via Grants DMS-1454939 and CCF-1910571. We would like to thank Rong Ge, Yulong Lu, Jonathan Mattingly, Jean-Christophe Mourrat, and Gabriel Stoltz for helpful discussions, and thank Felix Otto for discussions and for providing an idea leading to the proof of Lemma 2.6. LW would also like to thank Scott Armstrong [3] and Nicola Gigli [24] for answering our question on MathOverflow, which led to our proof of Lemma 2.4 (ii).

Ethics declarations

Conflict of interest Statement

The authors have no conflict of interest.

Additional information

Communicated by C. Le Bris.

Appendices

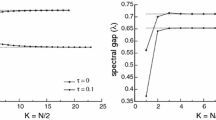

Appendix A. The Decay Rate for Isotropic Quadratic Potential

For an isotropic quadratic potential, an explicit expression for the spectral gap of \({\mathcal {L}}\) is available (and thus also for the decay rate in (7)). Note that while the result is stated for \(d = 1\), it trivially extends to arbitrary dimension for an isotropic quadratic potential, as different coordinates are independent. The spectrum is also explicitly known for \(V=0\) and \(x\in {\mathbb {T}}^d\) on a torus, see [35].

Theorem 3

([46, (10.83)], [42, Theorem 3.1]) When \(U(x) = \frac{m}{2}|x|^2\), \(d = 1\), the spectrum of the operator \(-{\mathcal {L}}\) is given by

Let \(\lambda _{\text {exact}}\) be the spectral gap for the real component of \(\{\lambda _{i,j}\}_{i,j\geqslant 0}\). Notice that the spectral gap is always achieved when \(i = 0\) and \(j = 1\), thus

Corollary A.1

For any dimension d, for isotropic potential \(U(x) = \frac{m}{2}|x|^2\), (7) holds with the decay rate \(\lambda _{\text {exact}}\).
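To illustrate how the spectral gap depends on the friction coefficient in the quadratic case, here is a minimal sketch. It assumes that the dynamics take the standard form \(\textrm{d}x = v\,\textrm{d}t\), \(\textrm{d}v = -mx\,\textrm{d}t - \gamma v\,\textrm{d}t + \sqrt{2\gamma }\,\textrm{d}W_t\), and that the gap equals the smallest real part among the eigenvalues of the negated drift matrix, as is known for Ornstein–Uhlenbeck generators; the value of m, the grid of \(\gamma \) and the function names are illustrative.

```python
import cmath
import math

def drift_eigenvalues(gamma, m):
    """Eigenvalues of the drift matrix [[0, 1], [-m, -gamma]] (roots of mu^2 + gamma mu + m)."""
    disc = cmath.sqrt(gamma ** 2 - 4.0 * m)
    return (-gamma + disc) / 2.0, (-gamma - disc) / 2.0

def spectral_gap(gamma, m):
    """Smallest real part of -mu over the drift eigenvalues mu."""
    return min((-mu).real for mu in drift_eigenvalues(gamma, m))

m = 0.01                                     # illustrative small convexity parameter
gammas = [0.01 * j for j in range(1, 201)]   # grid of friction coefficients
gaps = [spectral_gap(g, m) for g in gammas]
best_gamma = gammas[gaps.index(max(gaps))]
print("best gamma:", best_gamma, "expected 2 sqrt(m):", 2 * math.sqrt(m))
print("best gap:", max(gaps), "sqrt(m):", math.sqrt(m), "m:", m)
```

Under these assumptions the gap grows like \(\gamma /2\) for \(\gamma \leqslant 2\sqrt{m}\) and decreases afterwards, so the best value on the grid is close to \(\sqrt{m}\), attained near \(\gamma = 2\sqrt{m}\).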

Appendix B. The DMS Hypocoercive Estimation

In this section, we revisit the decay rate obtained by the DMS estimation [18, 19], as adapted and summarized for the underdamped Langevin equation in [47, Sec. 2]. In the first part of this section, we review the main result based on [47]; in addition, we provide a new estimate of the operator norm \( \left\Vert {\mathcal {A}}{\mathcal {L}}_{\text {ham}}(1-\Pi _v)\right\Vert _{L^2(\rho _{\infty }) \rightarrow L^2(\rho _{\infty })}\), which leads to a more explicit expression of the decay rate. In the second part, we present the asymptotic analysis of the decay rate with respect to m and \(\gamma \), under the assumption that \(\nabla _x^2 U \geqslant -2\, \textrm{Id}\).

B.1 Revisiting the DMS Hypocoercive Estimation in \(L^2(\rho _{\infty })\)

Let us first define an operator

and a Lyapunov function \(\textsf{E}\) for \(\phi (x, v)\) by

where \(\epsilon \in (-1, 1)\) is some quantity depending on \({\mathcal {L}}\), to be specified below. The functional \(\textsf{E}\) is equivalent to \(L^2(\rho _{\infty })\) norm in the following sense (see e.g., [47, Eq. (17)]),

Theorem 4

(See [47, Theorem 1]) Assume that the Poincaré inequality (10) holds and there exists \(\textsf{R}_{\text {ham}}< \infty \) such that

Suppose \(\epsilon \in (-1, 1)\) is chosen such that \(\lambda _{\text {DMS}} = \lambda _{\text {DMS}} (\gamma , m, \textsf{R}_{\text {ham}}, \epsilon ) > 0\), where

Then for any solution f(t, x, v) of (4) with \(\int f_0\ \,\textrm{d}\rho _{\infty }= 0\), we have

Notice that when \(\epsilon = 0\), the rate \(\lambda _{\text {DMS}} = 0\), which reduces to the conclusion that \(\left\Vert f(t, \cdot , \cdot )\right\Vert _{L^2(\rho _{\infty })}\) is non-increasing in time t. The existence of \(\textsf{R}_{\text {ham}}\) has been studied under fairly general assumptions on the potential U(x) in [19, Sec. 2]. In Proposition B.1 below, we provide a simpler estimate of \(\textsf{R}_{\text {ham}}\) under the sole assumption of a lower bound on the Hessian; see Appendix B.3 for its proof. The first part of the proof is the same as [19, Lemma 4]; the simplicity of our approach comes from the application of Bochner’s formula. It is interesting to observe that \(\textsf{R}_{\text {ham}}\) does not depend on m when U is an isotropic quadratic potential.

Proposition B.1

Assume that there exists \(K \in {\mathbb {R}}\) such that \(\nabla _x^2 U \geqslant -K\, \textrm{Id}\) for all \(x \in {\mathbb {R}}^d\). Then we can choose

such that (49) is satisfied.

For the isotropic case \(U(x) = \frac{m}{2}|x|^2\), we have

Thus the optimal choice of \(\textsf{R}_{\text {ham}}\) is \(\sqrt{2}\) and (51) is tight in this case.

As an immediate consequence, if it holds that \(\nabla _x^2 U \geqslant -2\, \textrm{Id}\), we can take \( \textsf{R}_{\text {ham}}= \sqrt{2}\), which is tight for the isotropic case.

B.2 Asymptotic Analysis of the Decay Rate

In this subsection, we shall assume that \(\nabla _x^2 U \geqslant -2\, \textrm{Id}\), so that we can choose \(\textsf{R}_{\text {ham}}= \sqrt{2}\) according to Proposition B.1. To remove the dependence on the parameter \(\epsilon \) and to find the optimal decay rate, let us introduce

provided that the supremum is not achieved at the boundary i.e., \(\epsilon = 1^{-}\) or \(\epsilon = (-1)^{+}\). Observe that

- When \(\epsilon = 0\), \(\lambda _{\text {DMS}}(\gamma , m, \sqrt{2}, 0) = 0\);

- When \(\epsilon = (-1)^{+}\), \(\lambda _{\text {DMS}}(\gamma , m, \sqrt{2}, (-1)^{+}) < 0\).

Therefore, the supremum can only be achieved at \(\epsilon = 1^{-}\), or at the critical points of the expression on the right-hand side of (52). In general, it is hard to obtain a simple explicit expression for \(\Lambda _{\text {DMS}}(\gamma , m)\). Therefore, we shall consider the following asymptotic regimes:

Proposition B.2

(i) For fixed \(m = O(1)\), we have

$$\begin{aligned} \Lambda _{\text {DMS}}(\gamma , m) = \left\{ \begin{aligned}&\left( \frac{-(1+m) \sqrt{3m^2+4m+1} + 3m^2+3m+1}{6m^2+8m+3}\right) \gamma +O(\gamma ^2),&\qquad&\text {when } \gamma \rightarrow 0;\\&\frac{4m^2}{(1+m)^2 }\gamma ^{-1} + O(\gamma ^{-2}),&\qquad&\text {when } \gamma \rightarrow \infty . \end{aligned} \right. \end{aligned} \tag{53}$$

(ii) In the coupled asymptotic regime \(\gamma = b\sqrt{m}\) (or equivalently \(m =\left( \gamma /b\right) ^2\)) for some \(b = O(1)\), we have

$$\begin{aligned} \Lambda _{\text {DMS}}(\gamma , m) = \left\{ \begin{aligned}&\frac{\gamma ^5}{2b^4} + O(\gamma ^6),&\qquad&\text {when } \gamma \rightarrow 0;\\&\frac{4}{\gamma } + O(\gamma ^{-2}),&\qquad&\text {when } \gamma \rightarrow \infty . \end{aligned}\right. \end{aligned} \tag{54}$$

The proof can be found in Appendix B.3. The scaling in the first case is already known in e.g., [17, 26, 47]; in the above proposition, we simply explicitly calculate the leading order term. The second case is relevant when we choose \(\gamma \) to optimize the convergence rate according to m and for the regime \(m\rightarrow 0\).
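As a heuristic consistency check between (53) and (54), one can substitute \(m=(\gamma /b)^2\) into the leading coefficients of (53). This is not a derivation, since the expansions in (53) are stated for fixed m; the sketch below only confirms that the leading terms agree in the limit. The algebraically equivalent form \(m^2/\bigl (3m^2+3m+1+(1+m)\sqrt{3m^2+4m+1}\bigr )\) of the small-\(\gamma \) coefficient is obtained by rationalizing the numerator; function names and the values of b and \(\gamma \) are illustrative.

```python
import math

def coeff_small_gamma(m):
    """Coefficient of gamma in (53) for gamma -> 0, as printed."""
    num = -(1 + m) * math.sqrt(3 * m**2 + 4 * m + 1) + 3 * m**2 + 3 * m + 1
    return num / (6 * m**2 + 8 * m + 3)

def coeff_small_gamma_rationalized(m):
    """Equivalent form after rationalizing the numerator; behaves like m^2 / 2 as m -> 0."""
    return m**2 / (3 * m**2 + 3 * m + 1 + (1 + m) * math.sqrt(3 * m**2 + 4 * m + 1))

def coeff_large_gamma(m):
    """Coefficient of 1/gamma in (53) for gamma -> infinity."""
    return 4 * m**2 / (1 + m) ** 2

b = 1.5                                   # illustrative O(1) constant

# Small-gamma regime: compare coeff(m) * gamma with gamma^5 / (2 b^4).
gamma_small = 0.05
m_small = (gamma_small / b) ** 2
ratio_small = (coeff_small_gamma_rationalized(m_small) * gamma_small) / (gamma_small**5 / (2 * b**4))

# Large-gamma regime: compare coeff(m) / gamma with 4 / gamma.
gamma_large = 100.0
m_large = (gamma_large / b) ** 2
ratio_large = (coeff_large_gamma(m_large) / gamma_large) / (4 / gamma_large)

print(coeff_small_gamma(1.0), coeff_small_gamma_rationalized(1.0))
print(ratio_small, ratio_large)
```

Both ratios should be close to 1, in line with the leading terms \(\frac{\gamma ^5}{2b^4}\) and \(\frac{4}{\gamma }\) of (54).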

B.3 Proofs of the Propositions in Appendix B

Proof of Proposition B.1

We first consider the case where the Hessian is bounded from below. It is equivalent to consider the operator norm of

Since \({\mathcal {A}}= \Pi _v {\mathcal {A}}\), this operator is supported on \(\text {Ran}(\Pi _v)\). It is therefore equivalent to find the smallest \(\textsf{R}_{\text {ham}}\) such that for any \(\phi (x,v)\) with \(\Pi _v \phi = \phi \) (i.e., \(\phi (x,v) \equiv \phi (x)\) is a function of x only), we have

Given such a function \(\phi \) with \(\Pi _v \phi = \phi \), define

It is easy to check that \(\Pi _v \varphi = \varphi \). By simplifying the above equation with (5) and (9),

Furthermore, by some straightforward calculation, we have

Thus

Then, by Bochner’s formula,

From (56), we have

By combining the last two equations,

which yields (51).

We now consider the isotropic case. Recall that the operator norm of \({\mathcal {A}}{\mathcal {L}}_{\text {ham}}(1-\Pi _v)\) is the smallest \(\textsf{R}_{\text {ham}}\) such that (55) holds. Let us consider the elliptic PDE (56). By the choice \(U(x) = \frac{m}{2}|x|^2\),

Then by rescaling the variable \(x = \frac{y}{\sqrt{m}}\) and rescaling the functions \(\bar{\phi }(y) {:}{=} {\phi }(x) = \phi \left( \frac{y}{\sqrt{m}}\right) \), \(\bar{\varphi }(y) {:}{=} {\varphi }(x) = {\varphi }\left( \frac{y}{\sqrt{m}}\right) \), we have

In addition, by rewriting (55), we need to find the smallest \(\textsf{R}_{\text {ham}}\) such that

Next, let us expand the last equation by probabilists’ Hermite polynomials \(H_k(z) {:}{=} (z - \frac{\textrm{d}}{\textrm{d}z})^k \cdot 1\) for integers \(k\geqslant 0\). Recall two important properties

Given \(\varvec{n} = (n_1, n_2, \cdots , n_d)\), define

By the above properties, it is easy to show that if \(\bar{\varphi } = H_{\varvec{n}}\), then \(\bar{\phi } = N_{\varvec{n}} H_{\varvec{n}}\), where \(N_{\varvec{n}} {:}{=} 1 + m \sum _{i} n_i\). Thus if \(\bar{\varphi }(y) = \sum _{\varvec{n}} a_{\varvec{n}}H_{\varvec{n}}\), then we have \(\bar{\phi } = \sum _{\varvec{n}} a_{\varvec{n}} N_{\varvec{n}} H_{\varvec{n}}\). With this expansion, (58) can be rewritten as

Then finding the operator norm of \({\mathcal {A}}{\mathcal {L}}_{\text {ham}}(1-\Pi _v)\) is equivalent to finding the smallest \(\textsf{R}_{\text {ham}}\) such that for any \(\varvec{n}\), one has

When \(n_1 \rightarrow \infty \) and \(n_2, n_3, \cdots , n_d = 0\), we know that \(\frac{\textsf{R}_{\text {ham}}^2}{2} \geqslant 1\). Also observe that

Therefore, \(\frac{\textsf{R}_{\text {ham}}^2}{2} = 1\) is sufficient.

In summary, \(\left\Vert {\mathcal {A}}{\mathcal {L}}_{\text {ham}}(1-\Pi _v)\right\Vert _{L^2(\rho _{\infty }) \rightarrow L^2(\rho _{\infty })} = \sqrt{2}\) and the optimal choice of \(\textsf{R}_{\text {ham}}\) is \(\sqrt{2}\). \(\quad \square \)
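The Hermite relation \(\bar{\phi } = N_{\varvec{n}} H_{\varvec{n}}\) used in the isotropic case can be verified symbolically in one dimension. The sketch below assumes that the rescaled elliptic equation takes the form \(\bar{\phi } = \bar{\varphi } + m\,(z\,\bar{\varphi }' - \bar{\varphi }'')\), an assumption that is consistent with the stated factor \(N_{n} = 1 + mn\); polynomials are stored as coefficient lists (lowest degree first), and the value \(m=\frac{1}{4}\) and the function names are illustrative.

```python
from fractions import Fraction

def hermite(k):
    """Coefficients (lowest degree first) of H_k = (z - d/dz)^k 1."""
    coeffs = [1]
    for _ in range(k):
        shifted = [0] + coeffs                                 # z * H
        deriv = [i * c for i, c in enumerate(coeffs)][1:]      # H'
        deriv += [0] * (len(shifted) - len(deriv))             # pad to equal length
        coeffs = [a - b for a, b in zip(shifted, deriv)]       # z H - H'
    return coeffs

def apply_operator(coeffs, m):
    """Apply p -> p + m (z p' - p'') to a polynomial given by its coefficients."""
    n = len(coeffs)
    out = []
    for i in range(n):
        # Coefficient of z^i in p'' is (i + 2)(i + 1) c_{i+2}; in z p' it is i c_i.
        second = (i + 2) * (i + 1) * coeffs[i + 2] if i + 2 < n else 0
        out.append(coeffs[i] + m * (i * coeffs[i] - second))
    return out

m = Fraction(1, 4)   # exact arithmetic avoids rounding in the comparison
checks = [
    apply_operator(hermite(k), m) == [(1 + m * k) * c for c in hermite(k)]
    for k in range(8)
]
print(hermite(3), all(checks))
```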

Proof of Proposition B.2

We used Maple software to help verify the asymptotic expansion.

Part (i): \(m = O(1)\).

- (when \(\gamma \rightarrow 0\)). Via asymptotic expansion, we have

$$\begin{aligned} \lambda _{\text {DMS}}(\gamma , m, \sqrt{2}, 1^{-}) = - \frac{1+\sqrt{6 m^2 + 8m + 3}}{4(1+m)} + O(\gamma ) < 0. \end{aligned}$$

Thus the supremum is not obtained at \(\epsilon = 1^{-}\). Then let us consider critical points within the domain \((-1, 1)\), whose asymptotic expansions are

$$\begin{aligned} \epsilon _{\pm } = \frac{(6m^2+5m+1 \pm \sqrt{3m^2+4m+1})(1+m)}{18m^3+30m^2+17m+3} \gamma + O(\gamma ^2) > 0. \end{aligned}$$

After comparison, the larger decay rate is obtained at \(\epsilon _{-}\) with the value in (53).

- (when \(\gamma \rightarrow \infty \)). Similarly, via asymptotic expansion, we have

$$\begin{aligned} \lambda _{\text {DMS}}(\gamma , m, \sqrt{2}, 1^{-}) = -\frac{\frac{\sqrt{5}}{2}-1}{4}\gamma + O(1) < 0. \end{aligned}$$

Thus we need to consider the critical points. It turns out that there is only one critical point within the domain \((-1, 1)\), which is \(\epsilon = \frac{8m}{1+m}\gamma ^{-1} + O(\gamma ^{-2})\) with the decay rate in (53).

Part (ii): \(\gamma = b\sqrt{m}\) with \(b=O(1)\).

- (when \(\gamma \rightarrow 0\)). Via asymptotic expansion, one could check that

$$\begin{aligned} \lambda _{\text {DMS}}(\gamma , m=(\gamma /b)^2, \sqrt{2}, 1^{-}) = -\frac{1+\sqrt{3}}{4} + O(\gamma ) < 0. \end{aligned}$$

Thus, we only need to consider the decay rate at critical points, which are given by

$$\begin{aligned} \epsilon _1 = \frac{\gamma ^3}{b^2} + O(\gamma ^4), \qquad \epsilon _2 = \frac{2}{3} \gamma + O(\gamma ^2), \end{aligned}$$

and the associated decay rates are

$$\begin{aligned} \lambda _{\text {DMS}}(\gamma , m=(\gamma /b)^2, \sqrt{2}, \epsilon _1)&= \frac{\gamma ^5}{2b^4} + O(\gamma ^{6})> 0;\\ \lambda _{\text {DMS}}(\gamma , m=(\gamma /b)^2, \sqrt{2}, \epsilon _2)&= -\frac{1}{3} \gamma + O(\gamma ^2) < 0. \end{aligned}$$

Therefore, the optimal decay rate is obtained at \(\epsilon _1\), which gives (54).

- (when \(\gamma \rightarrow \infty \)). Via asymptotic expansion, one could obtain

$$\begin{aligned} \lambda _{\text {DMS}}(\gamma , m=(\gamma /b)^2, \sqrt{2}, 1^{-}) = -\frac{\sqrt{5}-2}{8}\gamma + O(1) < 0. \end{aligned}$$

Thus the supremum in (52) cannot be obtained at \(\epsilon = 1^{-}\). Then, let us look at the critical points. It turns out that there is only one within the interval \((-1, 1)\), which is \(\epsilon _1 = \frac{8}{\gamma } + O(\gamma ^{-2})\). The optimal decay rate must be achieved at \(\epsilon _1\), with the expression given in (54). \(\quad \square \)

About this article

Cite this article

Cao, Y., Lu, J. & Wang, L. On Explicit \(L^2\)-Convergence Rate Estimate for Underdamped Langevin Dynamics. Arch Rational Mech Anal 247, 90 (2023). https://doi.org/10.1007/s00205-023-01922-4
