Abstract

Many-body perturbation theory (MBPT) is widely used in quantum physics, chemistry, and materials science. At the heart of MBPT is the Feynman diagrammatic expansion, which is, simply speaking, an elegant way of organizing the combinatorially growing number of terms of a certain Taylor expansion. In particular, the construction of the ‘bold Feynman diagrammatic expansion’ involves the partial resummation to infinite order of possibly divergent series of diagrams. This procedure demands investigation from both the combinatorial (perturbative) and the analytical (non-perturbative) viewpoints. In this paper, we approach the analytical investigation of the bold diagrammatic expansion in the simplified setting of Gibbs measures (known as the Euclidean lattice field theory in the physics literature). Using non-perturbative methods, we rigorously construct the Luttinger–Ward formalism for the first time, and we prove that the bold diagrammatic series can be obtained directly via an asymptotic expansion of the Luttinger–Ward functional, circumventing the partial resummation technique. Moreover we prove that the Dyson equation can be derived as the Euler–Lagrange equation associated with a variational problem involving the Luttinger–Ward functional. We also establish a number of key facts about the Luttinger–Ward functional, such as its transformation rule, its form in the setting of the impurity problem, and its continuous extension to the boundary of the domain of physical Green’s functions.

1 Introduction

The bold Feynman diagrammatic expansion of many-body perturbation theory (MBPT), along with the many practically used methods in quantum chemistry and condensed matter physics that derive from it, can be formally derived from the Luttinger–Ward (LW) formalism [19]. Since its original proposal in 1960, the LW formalism has found widespread applicability [5, 8, 13, 24]. However, the LW formalism and the LW functional are defined only formally, and this shortcoming poses serious questions both in theory and in practice. Indeed, the very existence of the LW functional in the setting of fermionic systems is under debate, with numerical evidence to the contrary appearing in the past few years [9, 11, 15, 28] in the physics community.

This paper expands on the work in [18], as well as an accompanying paper. In the accompanying paper, we provided a self-contained explanation of MBPT in the setting of the Gibbs model (alternatively known as the ‘Euclidean lattice field theory’ in the physics literature). In this setting one is interested in the evaluation of the moments of certain Gibbs measures. While the exact computation of such possibly high-dimensional integrals is intractable in general, important exceptions are the Gaussian integrals, that is, integrals for the moments of a Gaussian measure, which can be evaluated exactly. Perturbing about a reference system given by a Gaussian measure, one can evaluate quantities of interest by a series expansion of Feynman diagrams, which correspond to certain moments of Gaussian measures. For a specific form of quartic interaction that we refer to as the generalized Coulomb interaction, such a perturbation theory enjoys a correspondence with the Feynman diagrammatic expansion for the quantum many-body problem with a two-body interaction [1, 2, 22]. The generalized Coulomb interaction is also of interest in its own right and includes, for example, the (lattice) \(\varphi ^{4}\) interaction [2, 29], as a special case. The combinatorial study of its perturbation theory was the goal of the accompanying paper. Nonetheless, the techniques of the accompanying paper, and MBPT more broadly, are more generally applicable to various types of field theories and interactions.

The culmination of the developments of the accompanying paper is the bold diagrammatic expansion, which is obtained formally via a partial resummation technique which sums possibly divergent series of diagrams to infinite order. Indeed, the main technical contribution of the accompanying paper was to place the combinatorial side of this procedure on firm footing. One motivation for this paper is to interpret the bold diagrams analytically, which we accomplish by first constructing the LW formalism. In fact this construction is non-perturbative and valid for rather general forms of interaction. Below we focus on the contributions and organization of this paper only.

1.1 Contributions

The main contribution of this paper is to establish the LW formalism rigorously for the first time, in the context of Gibbs measures. In this setting, the role of the Green’s function is assumed by the two-point correlator.

The construction of the LW functional proceeds via concave duality, in a spirit similar to that of the Levy-Lieb construction in density functional theory [16, 17] at zero temperature and the Mermin functional [21] at finite temperature, as well as the density matrix functional theory developed in [3, 7, 27]. With careful interpretation, this duality gives rise to a one-to-one correspondence between non-interacting and interacting Green’s functions. The LW formalism yields a variational interpretation of the Dyson equation; to wit, the free energy can be expressed variationally as a minimum over all physical Green’s functions, and the self-consistent solution of the Dyson equation yields its unique global minimizer. We also prove a number of useful properties of the LW functional, such as the transformation rule, the projection rule, and the continuous extension of the LW functional to the boundary of its domain, which can be interpreted as the domain of physical Green’s functions. In particular, this last property suggests a novel interpretation of the LW functional as the non-divergent part of the concave dual of the free energy. These results allow us to interpret the appropriate analogs of quantum impurity problems in our simplified setting. In particular, we prove that the self-energy is always a sparse matrix for impurity problems, with nonzero entries appearing only in the block corresponding to the impurity sites. Such a result is at the foundation of numerical approaches such as the dynamical mean field theory (DMFT) [10, 14].

We prove that the bold diagrams for the generalized Coulomb interaction can be obtained as asymptotic series expansions of the LW and self-energy functionals, circumventing the formal strategy of performing resummation to infinite order. The proof of this fact proceeds by proving the existence of such series non-constructively and then employing the combinatorial results of the accompanying paper to ensure that the terms of these series are in fact given by the bold diagrams.

Although the bold diagrammatic expansion (evaluated in terms of the interacting Green’s function, which is always defined) appears to be applicable in cases where the non-interacting Green’s function is ill-defined, we demonstrate that caution should be exercised in practice in such cases. Using a one-dimensional example, we demonstrate that the approximate Dyson equation obtained via a truncated bold diagrammatic expansion may yield solutions with large error in the regime of vanishing interaction strength or fail to admit solutions at all.

1.2 Outline

In Section 2 we review preliminary material and definitions needed to understand the results of this paper.

Section 3 concerns the construction of the LW formalism, beginning with a discussion of the variational formulation of the free energy and the relevant concave duality (Section 3.1). This is followed by the introduction of the LW functional and the Dyson equation (Section 3.2). Then we introduce several key properties of the LW functional: the transformation rule (Section 3.3); the projection rule, accompanied by a discussion of impurity problems (Section 3.4); and the continuous extension property (Section 3.5). The proof of the continuous extension property, which is the most technically demanding part of the paper, is postponed to Section 5, which has its own outline.

Section 4 concerns the bold diagrammatic expansion. In Section 4.1 we prove the existence of asymptotic series for the LW functional and the self-energy, and in Section 4.2 we relate the coefficients of the former to the latter. Then for the rigorous development of the bold diagrammatic expansion, it only remains at this point to prove that the asymptotic series for the self-energy matches the bold diagrammatic expansion of the accompanying paper. This is the most involved task of Section 4. In Section 4.3, we review the results that we need from the accompanying paper in a ‘diagram-free’ way that should be understandable to the reader who has not read the accompanying paper, and in Section 4.4, we establish the claimed correspondence. Finally, in Section 4.5 we illustrate the aforementioned warning about the truncation of the bold diagrammatic series in cases where the non-interacting Green’s function is ill-defined.

Relevant background material on convex analysis and the weak convergence of measures is collected in “Appendices A and B”, respectively. The proofs of many lemmas are provided in “Appendix C”, as noted in the text.

2 Preliminaries

In this section we discuss some preliminary definitions and notations.

2.1 Notation and Quantities of Interest

Throughout we shall let \(\mathcal {S}^{N}\), \(\mathcal {S}^{N}_+\), and \(\mathcal {S}^{N}_{++}\) denote respectively the sets of symmetric, symmetric positive semidefinite, and symmetric positive definite \(N\times N\) real matrices. For simplicity we restrict our attention to real matrices, though analogous results can be obtained in the complex Hermitian case.

In this paper we will consider Gibbs measures defined by Hamiltonians \(h:\mathbb {R}^N \rightarrow \mathbb {R}\cup \{+\infty \}\) of the form

\[ h(x) = \frac{1}{2} x^{T} A x + U(x), \tag{2.1} \]

where \(A \in \mathcal {S}^N\). The first term represents the quadratic or ‘non-interacting’ part of the Hamiltonian, while the second term, U, represents the interaction. We define the partition function accordingly as

\[ Z = \int _{\mathbb {R}^N} e^{-\frac{1}{2} x^{T} A x - U(x)} \,\mathrm {d}x. \tag{2.2} \]

For fixed interaction U, we may think of the partition function as a function of A alone, that is, as \(Z:\mathcal {S}^N\rightarrow \mathbb {R}\) sending \(A\mapsto Z[A]\). In fact we adopt this perspective exclusively for the time being.

The free energy is then defined as a mapping \(\Omega :\mathcal {S}^{N} \rightarrow \mathbb {R}\cup \{-\infty \}\) via

\[ \Omega [A] = -\log Z[A]. \]

We denote the domain of \(\Omega \) by

\[ \mathrm {dom}\,\Omega = \left\{ A \in \mathcal {S}^{N} \,:\, \Omega [A] > -\infty \right\} , \]

and the interior of the domain by \(\mathrm {int}\,\mathrm {dom}\,\Omega \). As we will see, \(\Omega \) is concave in A, so this notion of domain is the usual one from convex analysis (see “Appendix A”); it is simply the set of A for which the integral in Eq. (2.2) converges.

For \(A \in \mathrm {int}\,\mathrm {dom}\,\Omega \), in fact the integrand in Eq. (2.2) must decay exponentially, hence we can define the two-point correlator (which we call the Green’s function by analogy with the quantum many-body literature) in terms of A via

\[ G_{ij} = \frac{1}{Z[A]} \int _{\mathbb {R}^N} x_i x_j \, e^{-\frac{1}{2} x^{T} A x - U(x)} \,\mathrm {d}x, \]

and the integral on the right-hand side is convergent. More compactly, we have a mapping \(G : \mathrm {int}\,\mathrm {dom}\,\Omega \rightarrow \mathcal {S}^N_{++}\) defined by

\[ G[A] = \frac{1}{Z[A]} \int _{\mathbb {R}^N} x x^{T} \, e^{-\frac{1}{2} x^{T} A x - U(x)} \,\mathrm {d}x. \]

It is important to note that \(G[A] \in \mathcal {S}^N_{++}\) for all \(A \in \mathrm {int}\,\mathrm {dom}\,\Omega \). As we shall see in Section 3, this constraint defines the domain of ‘physical’ Green’s functions, in a certain sense. In the discussion below, G is also called the interacting Green’s function.

In the case of the ‘non-interacting’ Gibbs measure, where \(U \equiv 0\), all quantities of interest can be computed exactly by straightforward multivariate integration. In particular, letting \(G^{0}[A] := G[A;0]\), we have for \(A\in \mathrm {dom}\,\Omega = \mathcal {S}^N_{++}\) that

\[ G^{0}[A] = A^{-1}. \]

The neatness of this relation motivates the factor of one half included in the quadratic part of the Hamiltonian. We refer to \(G^0 [A]\) as the non-interacting Green’s function associated to A, whenever \(A \in \mathcal {S}^N_{++}\). Note that for a general interaction U, \(\mathrm {int}\,\mathrm {dom}\,\Omega \) may contain elements not in \(\mathcal {S}^N_{++}\). For such A there is an associated (interacting) Green’s function but not a non-interacting Green’s function.
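As a numerical sanity check of these statements, the following minimal sketch evaluates the partition function and the two-point correlator by quadrature in the scalar case \(N=1\). The function name `gibbs_moments_1d`, the truncation half-width, and the grid size are illustrative assumptions and not part of the paper. The first call checks \(G^0[A] = A^{-1}\); the second uses the quartic interaction \(U(x) = x^4\) with a negative quadratic coefficient, a case in which an interacting Green’s function exists but a non-interacting one does not.

```python
import math

def gibbs_moments_1d(a, U, half_width=10.0, n=20001):
    """Return (Z, G) for the weight exp(-a*x^2/2 - U(x)) on the real line.

    Uses the composite trapezoidal rule on [-half_width, half_width].
    Assumption: the integrand is negligible outside this interval.
    """
    h = 2.0 * half_width / (n - 1)
    Z = 0.0
    M2 = 0.0
    for k in range(n):
        x = -half_width + k * h
        w = h if 0 < k < n - 1 else 0.5 * h  # trapezoidal end-point weights
        rho = math.exp(-0.5 * a * x * x - U(x))
        Z += w * rho
        M2 += w * x * x * rho
    return Z, M2 / Z

# Non-interacting case (U = 0, a = 2): expect Z = sqrt(2*pi/a) and G = 1/a.
Z0, G0 = gibbs_moments_1d(2.0, lambda x: 0.0)

# Quartic interaction with a = -1: a is outside S_{++}, yet Z and G are finite.
Z1, G1 = gibbs_moments_1d(-1.0, lambda x: x ** 4)
```

Here `G0` should agree with \(1/a = 0.5\) up to quadrature error, while `G1` is a finite positive number even though \(a < 0\).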

In general G can be viewed as the gradient of \(\Omega \), for a suitably defined notion of gradient for functions of symmetric matrices, which we now define:

Definition 2.1

For \(i,j=1,\ldots ,N\), let \(E^{(ij)} \in \mathcal {S}^N\) be defined by \(E^{(ij)}_{kl} = \delta _{ik}\delta _{jl} + \delta _{il}\delta _{jk}\). For a differentiable function \(f:\mathcal {S}^N\rightarrow \mathbb {R}\), define the gradient \(\nabla f :\mathcal {S}^N\rightarrow \mathcal {S}^N\) by

\[ \nabla _{ij} f (X) = \lim _{\delta \rightarrow 0} \frac{f\left( X + \delta E^{(ij)}\right) - f(X)}{\delta }. \]

If f is obtained by restriction from a function \(f: \mathbb {R}^{N\times N} \rightarrow \mathbb {R}\), then equivalently \(\nabla _{ij}f = \frac{\partial f}{\partial X_{ij}} + \frac{\partial f}{\partial X_{ji}}\).

Then on \(\mathrm {dom}\,\Omega \) the gradient map \(\nabla \Omega \) is given by

\[ \nabla _{ij} \Omega [A] = \frac{1}{Z[A]} \int _{\mathbb {R}^N} x_i x_j \, e^{-\frac{1}{2} x^{T} A x - U(x)} \,\mathrm {d}x, \]

that is, \(G = \nabla \Omega \), as claimed. The notion of gradient of Definition 2.1 is natural for our setting in that it yields this relation. However, it may seem a bit awkward when applied to specific computations. Indeed, consider a function \(X\mapsto f(X)\) on \(\mathcal {S}^N\) that is specified by a formula that can be applied to all \(N\times N\) matrices and in which the roles of \(X_{kl}\) and \(X_{lk}\) are the same for all l, k. For instance, such a formula is given by \(f(X) = \sum _{ij} X_{ij}^2\). Then the usual matrix derivative of f, considered as a function on \(N\times N\) matrices, is given by \(\frac{\partial f}{\partial X_{ij}}(X) = 2 X_{ij}\), whereas, viewing f as a function on \(\mathcal {S}^N\) and with notation as specified in Definition 2.1, we have \(\nabla _{ij} f (X) = 4 X_{ij}\). More generally in this situation we have \(\nabla _{ij} = 2 \frac{\partial }{\partial X_{ij}}\). Since formulas like this arise from the bold diagrammatic expansion (as discussed in the accompanying paper), it is convenient to establish the following definition.

Definition 2.2

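For a differentiable function \(f:\mathcal {S}^N\rightarrow \mathbb {R}\), define the matrix derivative \(\frac{\partial f}{\partial X} :\mathcal {S}^N\rightarrow \mathcal {S}^N\) by

\[ \frac{\partial f}{\partial X} = \frac{1}{2} \nabla f, \quad \text {that is,} \quad \frac{\partial f}{\partial X_{ij}} = \frac{1}{2} \nabla _{ij} f. \]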

Moreover, this notion of derivative will yield the relation

\[ \Sigma [G] = \frac{\partial \Phi }{\partial G}[G], \]

where \(\Sigma \) is the self-energy and \(\Phi \) is the LW functional, as was foreshadowed in the accompanying paper.
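The factor of two between the gradient of Definition 2.1 and the matrix derivative of Definition 2.2 can be checked by finite differences. The sketch below uses the example \(f(X) = \sum _{ij} X_{ij}^2\) from the text; the helper names `E` and `grad_entry`, the step size, and the test matrix are illustrative assumptions.

```python
def f(X):
    """f(X) = sum_{ij} X_ij^2, a formula symmetric in X_kl and X_lk."""
    return sum(v * v for row in X for v in row)

def E(i, j, N):
    """E^{(ij)}_{kl} = delta_ik delta_jl + delta_il delta_jk (Definition 2.1)."""
    M = [[0.0] * N for _ in range(N)]
    M[i][j] += 1.0
    M[j][i] += 1.0  # for i == j this gives the value 2 on the diagonal
    return M

def grad_entry(func, X, i, j, delta=1e-6):
    """Central-difference approximation of nabla_ij func(X) along E^{(ij)}."""
    N = len(X)
    D = E(i, j, N)
    Xp = [[X[k][l] + delta * D[k][l] for l in range(N)] for k in range(N)]
    Xm = [[X[k][l] - delta * D[k][l] for l in range(N)] for k in range(N)]
    return (func(Xp) - func(Xm)) / (2.0 * delta)

# A symmetric test matrix (arbitrary illustrative values).
X = [[1.0, 0.5, -0.2],
     [0.5, 2.0, 0.3],
     [-0.2, 0.3, 1.5]]

nabla = [[grad_entry(f, X, i, j) for j in range(3)] for i in range(3)]
matrix_derivative = [[0.5 * nabla[i][j] for j in range(3)] for i in range(3)]
```

Up to rounding, `nabla[i][j]` equals \(4 X_{ij}\) for both diagonal and off-diagonal entries, and `matrix_derivative[i][j]` recovers the familiar \(2 X_{ij}\).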

2.2 Interaction Growth Conditions

Note that \(\mathrm {dom}\,\Omega \) depends on the shape of U(x). For example, if \(U(x)=0\), then \(\mathrm {dom}\,\Omega =\mathcal {S}^{N}_{++}\). If \(U(x)=\sum _{i=1}^{N} x_{i}^4\), then \(\mathrm {dom}\,\Omega =\mathcal {S}^{N}\). Our most basic condition on U is the following:

Definition 2.3

(Weak growth condition) A measurable function \(U:{\mathbb {R}^{N}}\rightarrow \mathbb {R}\) satisfies the weak growth condition, if there exists a constant \(C_U\) such that \(U(x) + C_U( 1 + \Vert x\Vert ^2) \geqq 0\) for all \(x\in {\mathbb {R}^{N}}\), and \(\mathrm {dom}\,\Omega \) is an open set.

The weak growth condition of Definition 2.3 specifies that U cannot decay to \(-\infty \) faster than quadratically, which ensures in particular that \(\mathrm {dom}\,\Omega \) is non-empty. The assumption that \(\mathrm {dom}\,\Omega \) is an open set (that is, \(\mathrm {dom}\,\Omega = \mathrm {int}\,\mathrm {dom}\,\Omega \)) will be used later to ensure that for fixed U there is a one-to-one correspondence between A and G (hence also between non-interacting and interacting Green’s functions) over suitable domains.

Note that the condition of Definition 2.3 is weaker than the condition

For instance, if \(N=2\) and \(U(x)=x_{1}^4\), then the weak growth condition is satisfied with \(C_{U}=0\), but Eq. (2.6) is not satisfied for all \(A\in \mathcal {S}^N\). In fact, when U(x) only depends on a subset of components of \(x\in {\mathbb {R}^{N}}\), we call the Gibbs model an impurity model or impurity problem, in analogy with the impurity models of quantum many-body physics [20], and we call the subset of components on which U depends the fragment. The flexibility of the weak growth condition will allow us to rigorously establish the LW formalism for the impurity model. In the setting of the impurity model, the ‘projection rule’ of Proposition 3.13 then allows us to understand the LW formalism of the impurity model in terms of the lower-dimensional LW formalism of the fragment and to prove a special sparsity pattern of the self-energy.

One of our main results (Theorem 3.18) is that the LW functional, which is initially defined on the set \(\mathcal {S}^N_{++}\) of physical Green’s functions, can in fact be extended continuously to the boundary of \(\mathcal {S}^N_{++}\), a fact which will not be apparent from the definition of the LW functional. (In fact, this extension shall be specified by an explicit formula involving lower-dimensional LW functionals.) However, in order for this result to hold, we need to strengthen the weak growth condition to the following:

Definition 2.4

(Strong growth condition) A measurable function \(U:{\mathbb {R}^{N}}\rightarrow \mathbb {R}\) satisfies the strong growth condition if, for any \(\alpha \in \mathbb {R}\), there exists a constant \(b\in \mathbb {R}\) such that \(U(x) + b \geqq \alpha \Vert x\Vert ^2\) for all \(x\in {\mathbb {R}^{N}}\).

Note that the strong growth condition ensures that \(\mathrm {dom}\,\Omega = \mathcal {S}^{N}\) and is hence an open set. If U is a polynomial function of x and satisfies the strong growth condition, then Eq. (2.6) will also be satisfied.

In Section 5 we will discuss the precise statement and proof of the aforementioned continuous extension property. In addition, a counterexample will be provided in the case where the weak growth condition holds but the strong growth condition does not. In fact, the continuous extension property is also valid for impurity models (which do not satisfy the strong growth condition) via the projection rule (Proposition 3.13), provided that the interaction satisfies the strong growth condition when restricted to the fragment.

For the generalized Coulomb interaction considered in the accompanying paper, that is,

there is a natural condition on the matrix v that ensures that U satisfies the strong growth condition, namely that the matrix v is positive definite. We will simply assume that this holds whenever we refer to the generalized Coulomb interaction. To see that this assumption implies the strong growth condition, first note that \(v \succ 0\) guarantees in particular that U is a nonnegative polynomial, strictly positive away from \(x=0\). Since U is homogeneous quartic, it follows that \(U\geqq C^{-1}\vert x\vert ^{4}\) for some constant C sufficiently large, which evidently implies the strong growth condition. Another sufficient assumption is that the entries of v are nonnegative and moreover that the diagonal entries are strictly positive.

Our interest in diagrammatic expansions leads us to adopt a further condition on the interaction. To see why this is necessary, recall from the accompanying paper that the perturbation about a non-interacting theory (\(U \equiv 0\)) involves integrals such as

which is clearly undefined if, for example, \(U(x) = e^{x^4}\). In most applications of interest, U(x) is only of polynomial growth, but it is sufficient to assume growth that is at most exponential in the sense of Assumption 2.5, which is actually only needed in Section 4 for our consideration of the bold diagrammatic expansion.

Assumption 2.5

(At-most-exponential growth) In this section, we assume that there exist constants \(B,C > 0\) such that \(\vert U(x) \vert \leqq B e^{C \Vert x\Vert }\) for all \( x\in {\mathbb {R}^{N}}\).

Further technical reasons for this assumption will become clear in Section 4.

2.3 Measures and Entropy: Notation and Facts

Let \(\mathcal {M}\) be the space of probability measures on \({\mathbb {R}^{N}}\) (equipped with the Borel \(\sigma \)-algebra), let \(\mathcal {M}_2 \subset \mathcal {M}\) be the subset of probability measures with moments up to second order, and let \(\lambda \) denote the Lebesgue measure on \({\mathbb {R}^{N}}\). For notational convenience we define a mapping that takes the second-order moments of a probability measure:

Definition 2.6

Define \(\mathcal {G} :\mathcal {M}_2 \rightarrow \mathcal {S}_{+}^{N}\) by \(\mathcal {G}(\mu )=\int xx^T\,\,\mathrm {d}\mu \). Writing \(\mathcal {G}=(\mathcal {G}_{ij})\), we equivalently have \(\mathcal {G}_{ij}(\mu )=\int x_{i}x_{j}\,\,\mathrm {d}\mu \).

Therefore if \(\mu \) is defined via a density

\[ \,\mathrm {d}\mu (x) = \frac{1}{Z[A]} e^{-\frac{1}{2} x^{T} A x - U(x)} \,\mathrm {d}x, \]

then \(\mathcal {G}(\mu ) = G[A]\).

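We also denote by

\[ \mathrm {Cov}(\mu ) = \int \left( x - m_\mu \right) \left( x - m_\mu \right) ^{T} \,\mathrm {d}\mu (x), \qquad m_\mu := \int x \,\mathrm {d}\mu (x), \]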

the covariance matrix of \(\mu \).

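For \(\mu \in \mathcal {M}\), let H denote the (differential) entropy

\[ H(\mu ) = {\left\{ \begin{array}{ll} -\int \log \left( \frac{\,\mathrm {d}\mu }{\,\mathrm {d}\lambda }\right) \,\mathrm {d}\mu , &{} \mu \ll \lambda , \\ -\infty , &{} \text {otherwise}, \end{array}\right. } \tag{2.8} \]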

where \(\frac{\,\mathrm {d}\mu }{\,\mathrm {d}\lambda }\) denotes the Radon-Nikodym derivative (that is, the probability density function of \(\mu \) with respect to the Lebesgue measure \(\lambda \)) whenever \(\mu \ll \lambda \) (that is, whenever \(\mu \) is absolutely continuous with respect to the Lebesgue measure). We will often refer to the differential entropy as the entropy for convenience.

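For \(\mu ,\nu \in \mathcal {M}\), define the relative entropy \(H_\nu (\mu )\) via

\[ H_\nu (\mu ) = {\left\{ \begin{array}{ll} -\int \log \left( \frac{\,\mathrm {d}\mu }{\,\mathrm {d}\nu }\right) \,\mathrm {d}\mu , &{} \mu \ll \nu , \\ -\infty , &{} \text {otherwise}. \end{array}\right. } \tag{2.9} \]

Note carefully the sign convention. The integral in (2.9) is well-defined with values in \(\mathbb {R}\cup \{-\infty \}\) for all \(\mu ,\nu \in \mathcal {M}\).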

We now record some useful properties of the relative entropy.

Fact 2.7

For fixed \(\nu \in \mathcal {M}\), \(H_\nu \) is non-positive and strictly concave on \(\mathcal {M}\), and \(H_\nu (\mu ) = 0\) if and only if \(\mu = \nu \). Moreover \(H_\nu \) is upper semi-continuous with respect to the topology of weak convergence; that is, if the sequence \(\mu _k \in \mathcal {M}\) converges weakly to \(\mu \in \mathcal {M}\), then \(\limsup _{k\rightarrow \infty } H_{\nu }(\mu _k) \leqq H_{\nu }(\mu )\).

Proof

For proofs see [23].

By contrast to the relative entropy, the differential entropy suffers from two analytical nuisances.

First, in the definition of the entropy in (2.8), the entropy may actually fail to be defined for some measures (which simultaneously concentrate too much in some area and fail to decay fast enough at infinity, so the negative and positive parts of the integral are \(-\infty \) and \(+\infty \), respectively, and the Lebesgue integral is ill-defined). However, Lemma 2.8 states that when we restrict to \(\mathcal {M}_2\), the integral cannot have an infinite positive part and is well-defined.

Lemma 2.8

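For \(\mu \in \mathcal {M}_2\), if \(\mu \ll \lambda \), then the integral in (2.8) exists (in particular, the positive part of the integrand has finite integral) and moreover

\[ H(\mu ) \leqq \frac{1}{2} \log \left( (2\pi e)^{N} \det \mathrm {Cov}(\mu ) \right) \leqq \frac{1}{2} \log \left( (2\pi e)^{N} \det \mathcal {G}(\mu ) \right) , \]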

with possibly \(H(\mu ) = -\infty \). The first inequality is satisfied with equality if and only if \(\mu \) is a Gaussian measure with a positive definite covariance matrix. The second inequality is satisfied with equality if and only if \(\mu \) has mean zero.

Note that Lemma 2.8 also entails a useful bound on the entropy in terms of the second moments, as well as the classical fact that Gaussian measures are the measures of maximal entropy subject to second-order moment constraints.
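The maximal-entropy property of Gaussian measures can be illustrated in one dimension with closed-form entropies. The sketch below compares a uniform and a Laplace distribution against the Gaussian of the same variance; the function names and the chosen variance and mean are illustrative assumptions, and the entropy formulas used are the standard ones for these distributions.

```python
import math

def gaussian_entropy(variance):
    """Entropy of a one-dimensional Gaussian: (1/2) log(2 pi e variance)."""
    return 0.5 * math.log(2.0 * math.pi * math.e * variance)

def uniform_entropy(a):
    """Entropy of the uniform distribution on [-a, a] (variance a^2 / 3)."""
    return math.log(2.0 * a)

def laplace_entropy(b):
    """Entropy of the Laplace density exp(-|x|/b) / (2b) (variance 2 b^2)."""
    return 1.0 + math.log(2.0 * b)

variance = 1.5
a = math.sqrt(3.0 * variance)   # uniform with the prescribed variance
b = math.sqrt(variance / 2.0)   # Laplace with the prescribed variance

h_gauss = gaussian_entropy(variance)
h_unif = uniform_entropy(a)
h_lap = laplace_entropy(b)

# Shifting the mean leaves the covariance unchanged but enlarges the second
# moment, so a bound stated in terms of the second moment is looser.
mean = 0.7
bound_second_moment = gaussian_entropy(variance + mean ** 2)
```

Both non-Gaussian entropies fall strictly below `h_gauss`, and `bound_second_moment` exceeds `h_gauss` whenever the mean is nonzero.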

The second analytical nuisance of the differential entropy is that we do not have the same semi-continuity guarantee as we have for the relative entropy in Fact 2.7. However, control on second moments allows a semi-continuity result that will suffice for our purposes.

Lemma 2.9

Assume that \(\mu _j \in \mathcal {M}_2\) weakly converge to \(\mu \in \mathcal {M}\), and that there exists a constant C such that \(\mathcal {G}(\mu _j) \preceq C\cdot I_N\) for all j. Then \(\limsup _{j\rightarrow \infty } H(\mu _j) \leqq H(\mu )\).

Remark 2.10

In other words, the entropy is upper semi-continuous with respect to the topology of weak convergence on any subset of probability measures with uniformly bounded second moments. The subtle difference between the statements in Fact 2.7 and Lemma 2.9 is due to the fact that the Lebesgue measure \(\lambda \notin \mathcal {M}\).

The proofs of Lemmas 2.8 and 2.9 are given in “Appendix C”.

Finally we record the classical fact that subject to marginal constraints, the entropy is maximized by a product measure. In the statement and throughout the paper, ‘\(\#\)’ denotes the pushforward operation on measures.

Fact 2.11

Suppose \(p < N\) and let \(\pi _1:{\mathbb {R}^{N}}\rightarrow \mathbb {R}^{p}\) and \(\pi _2:{\mathbb {R}^{N}}\rightarrow \mathbb {R}^{N-p}\) be the projections onto the first p and last \(N-p\) components, respectively. Then for \(\mu \in \mathcal {M}_2\), \(H(\mu ) \leqq H(\pi _1 \# \mu ) + H(\pi _2 \# \mu )\).

Remark 2.12

Note that \(\pi _1 \# \mu \) and \(\pi _2 \# \mu \) are the marginal distributions of \(\mu \) with respect to the product structure \({\mathbb {R}^{N}}= \mathbb {R}^p \times \mathbb {R}^{N-p}\).

See “Appendix C” for a short proof.

3 Luttinger–Ward Formalism

This section is organized as follows. In Section 3.1, we provide a variational expression for the free energy via the classical Gibbs variational principle. For fixed U, this allows us to identify the Legendre dual of \(\Omega [A]\), denoted by \(\mathcal {F}[G]\), and to establish a bijection between A and the interacting Green’s function G. In Section 3.2, we define the Luttinger–Ward functional and show that the Dyson equation can be naturally derived by considering the first-order optimality condition associated to the minimization problem in the variational expression for the free energy. Then we prove that the LW functional satisfies a number of desirable properties. First, in Section 3.3 we prove the transformation rule, which relates a change of the coordinates of the interaction with an appropriate transformation of the Green’s function. The transformation rule leads to the projection rule in Section 3.4, which implies the sparsity pattern of the self-energy for the impurity problem. Up until this point we assume only that U satisfy the weak growth condition. Then in Section 3.5 we motivate and state our result that the LW functional is continuous up to the boundary of \(\mathcal {S}^N_{++}\), for which we need the assumption that U satisfies the strong growth condition. The proof (as well as a counterexample demonstrating that weak growth is not sufficient) is deferred to Section 5. Throughout we defer the proofs of some technical lemmas to “Appendix C”. Moreover we will invoke the language of convex analysis following Rockafellar [25] and Rockafellar and Wets [26]. See “Appendix A” for further background and details.

3.1 Variational Formulation of the Free Energy

The main result in this subsection is given by Theorem 3.1.

Theorem 3.1

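(Variational structure) For U satisfying the weak growth condition, the free energy can be expressed variationally via the constrained minimization problem

\[ \Omega [A] = \inf _{G \in \mathcal {S}^{N}_{+}} \left( \frac{1}{2} \mathrm {Tr}[AG] - \mathcal {F}[G] \right) , \tag{3.1} \]

where

\[ \mathcal {F}[G] := \sup _{\mu \in \mathcal {G}^{-1}(G)} \left[ H(\mu ) - \int U \,\mathrm {d}\mu \right] \tag{3.2} \]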

is the concave conjugate of \(\Omega [A]\) with respect to the inner product \(\langle A,G\rangle = \frac{1}{2}\mathrm {Tr}[AG]\). (Note that by convention \(\mathcal {F}[G] = -\infty \) whenever \(\mathcal {G}^{-1}(G)\) is empty, that is, whenever \(G\in \mathcal {S}^{N}\backslash \mathcal {S}_{+}^{N}\).) Moreover \(\Omega \) and \(\mathcal {F}\) are smooth and strictly concave on their respective domains \(\mathrm {dom}\,\Omega \) and \(\mathcal {S}^N_{++}\). The mapping \(G[A]:=\nabla \Omega [A]\) is a bijection \(\mathrm {dom}\,\Omega \rightarrow \mathcal {S}^{N}_{++}\), with inverse given by \(A[G]:=\nabla \mathcal {F}[G]\).

We first record some technical properties of \(\Omega \) in Lemma 3.2.

Lemma 3.2

\(\Omega \) is an upper semi-continuous, proper (hence closed) concave function. Moreover, \(\Omega \) is strictly concave and \(C^{\infty }\)-smooth on \(\mathrm {dom}\,\Omega \).

Remark 3.3

Recall that a function f on a metric space X is upper semi-continuous if for any sequence \(x_k \in X\) converging to x, we have \(\limsup _{k\rightarrow \infty } f(x_k) \leqq f(x)\).

We now turn to exploring the concave (or Legendre-Fenchel) duality associated to \(\Omega \). The following lemma, a version of the classical Gibbs variational principle [23] (alternatively known as the Donsker-Varadhan variational principle [12]), is the first step toward identifying the dual of \(\Omega \).

Lemma 3.4

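For any \(A\in \mathcal {S}^{N}\),

\[ \Omega [A] = \inf _{\mu \in \mathcal {M}_2} \left[ \int \left( \frac{1}{2}x^{T}Ax+U(x)\right) \,\mathrm {d}\mu (x) - H(\mu ) \right] . \tag{3.3} \]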

If \(A\in \mathrm {dom}\,\Omega \), the infimum is uniquely attained at \(\,\mathrm {d}\mu (x)=\frac{1}{Z[A]}e^{-\frac{1}{2}x^{T}Ax-U(x)}\,\,\mathrm {d}x\).

Remark 3.5

One might wonder whether the infimum in (3.3) can be taken over all of \(\mathcal {M}\). Note that if \(\mu \) does not have a second moment, it is possible to have both \(H(\mu ) = +\infty \) and \(\int \left( \frac{1}{2}x^{T}Ax+U(x)\right) \,\,\mathrm {d}\mu (x) = +\infty \), so the expression in brackets is of the indeterminate form \(\infty - \infty \). The restriction to \(\mu \in \mathcal {M}_2\) takes care of this problem because Lemma 2.8 guarantees that \(H(\mu ) < +\infty \), and by the weak growth condition, the other term in the infimum must be either finite or \(+\infty \). Moreover, \(\mathcal {M}_2\) is still large enough to contain the minimizer, and restricting our attention to measures with finite second-order moments will be convenient in later developments.
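The variational principle of Lemma 3.4 can be probed numerically in the scalar case with \(U(x) = x^4\): for a centered Gaussian trial measure of variance s, the bracketed expression is available in closed form (using \(\int x^4 \,\mathrm {d}\mu = 3s^2\) and \(H(\mu ) = \frac{1}{2}\log (2\pi e s)\)), and it should lie strictly above \(\Omega [A]\) because the minimizer is not Gaussian. The sketch below is a minimal check under these assumptions; the function names, the quadrature parameters, and the grid of trial variances are illustrative choices.

```python
import math

def free_energy_1d(a, half_width=10.0, n=20001):
    """Omega[a] = -log Z[a] for U(x) = x^4, via the trapezoidal rule."""
    h = 2.0 * half_width / (n - 1)
    Z = 0.0
    for k in range(n):
        x = -half_width + k * h
        w = h if 0 < k < n - 1 else 0.5 * h
        Z += w * math.exp(-0.5 * a * x * x - x ** 4)
    return -math.log(Z)

def gaussian_trial_value(a, s):
    """Energy minus entropy for the centered Gaussian with variance s."""
    energy = 0.5 * a * s + 3.0 * s * s          # E[a x^2 / 2] + E[x^4]
    entropy = 0.5 * math.log(2.0 * math.pi * math.e * s)
    return energy - entropy

a = 1.0
omega = free_energy_1d(a)
trial_values = [gaussian_trial_value(a, 0.05 * k) for k in range(1, 41)]
best_gaussian = min(trial_values)
gap = best_gaussian - omega  # strictly positive: Gaussians miss the minimizer
```

Every entry of `trial_values` is an upper bound on `omega`, and the positive `gap` reflects the restriction of the infimum to Gaussian trial measures.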

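From the previous lemma we can split up the infimum in (3.3) and obtain

\[ \Omega [A] = \inf _{G \in \mathcal {S}^{N}_{+}} \, \inf _{\mu \in \mathcal {G}^{-1}(G)} \left[ \frac{1}{2} \int x^{T}Ax \,\mathrm {d}\mu + \int U \,\mathrm {d}\mu - H(\mu ) \right] . \]

Since \(\int x^{T}Ax\,\,\mathrm {d}\mu =\mathrm {Tr}[\mathcal {G}(\mu )A]\), it follows that

\[ \Omega [A] = \inf _{G \in \mathcal {S}^{N}_{+}} \left( \frac{1}{2} \mathrm {Tr}[AG] - \sup _{\mu \in \mathcal {G}^{-1}(G)} \left[ H(\mu ) - \int U \,\mathrm {d}\mu \right] \right) . \]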

This proves Eq. (3.1) of Theorem 3.1 using the definition of \(\mathcal {F}[G]\) in Eq. (3.2).

Remark 3.6

From the perspective of large deviations theory, we comment that the construction of \(\mathcal {F}\) from the entropy may be recognized by analogy with the contraction principle [23]. Indeed, the expression \(\int U\,\,\mathrm {d}\mu - H(\mu )\) is equal (modulo a constant offset) to \(-H_{\nu _U}(\mu )\), where \(\nu _U\) is the measure with density proportional to \(e^{-U}\). If one considers i.i.d. sampling from the probability measure \(\nu _U\), by Sanov’s theorem \(-H_{\nu _U}\) is the corresponding large deviations rate function for the empirical measure. The rate function for the second-order moment matrix (that is, \(-\mathcal {F}\), modulo constant offset) is obtained via the contraction principle applied to the mapping \(\mu \mapsto \mathcal {G}(\mu )\). This is analogous to the procedure by which one obtains Cramér’s theorem from Sanov’s theorem via application of the contraction principle to a map that maps \(\mu \) to its mean [23].

Now we record some technical facts about \(\mathcal {F}\) in Lemma 3.7, which demonstrates in particular that \(\mathcal {F}\) diverges (at least) logarithmically at the boundary \(\partial \mathcal {S}_{+}^{N} = \mathcal {S}_{+}^{N} \backslash \mathcal {S}_{++}^{N}\).

Lemma 3.7

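\(\mathcal {F}\) is finite on \(\mathcal {S}^N_{++}\) and \(-\infty \) elsewhere. Moreover,

\[ \mathcal {F}[G] \leqq \frac{1}{2} \log \left( (2\pi e)^{N} \det G \right) + C_U \left( 1 + \mathrm {Tr}\, G \right) \]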

for all \(G \in \mathcal {S}^N_{++}\).

Define

\[ \Psi [\mu ] := H(\mu ) - \int U \,\mathrm {d}\mu , \]

so \(\mathcal {F}[G]=\sup _{\mu \in \mathcal {G}^{-1}(G)}\Psi [\mu ]\). By the concavity of the entropy, \(\Psi \) is concave on \(\mathcal {M}_2\). Thus, given G, we can in principle solve a concave maximization problem over \(\mu \in \mathcal {M}\) to find \(\mathcal {F}[G]\), with the linear constraint \(\mu \in \mathcal {G}^{-1}(G)\). Moreover, this variational representation of \(\mathcal {F}\) in terms of the concave function \(\Psi \) is enough to establish the concavity of \(\mathcal {F}\) by abstract considerations. This and other properties of \(\mathcal {F}\) are collected in the following.

Lemma 3.8

\(\mathcal {F}\) is an upper semi-continuous, proper (hence closed) concave function on \(\mathcal {S}^{N}\).

Now Eq. (3.1) states precisely that \(\Omega \) is the concave conjugate of \(\mathcal {F}\) with respect to the inner product \(\langle A,G\rangle = \frac{1}{2}\mathrm {Tr}[AG]\), and accordingly we write \(\Omega =\mathcal {F}^{*}\). Since \(\mathcal {F}\) is concave and closed, we have by Theorem A.14 that \(\mathcal {F}=\mathcal {F}^{**}=\Omega ^{*}\), that is, \(\mathcal {F}\) and \(\Omega \) are concave duals of one another. Thus we expect that \(\nabla \mathcal {F}\) and \(\nabla \Omega \) are inverses of one another, but to make sense of this claim we need to establish the differentiability of \(\mathcal {F}\). We collect this and other desirable properties of \(\mathcal {F}\) in the following:

Lemma 3.9

\(\mathcal {F}\) is \(C^\infty \)-smooth and strictly concave on \(\mathcal {S}^N_{++}\).

Then Theorem A.15 guarantees that \(\nabla \Omega \) is a bijection from \(\mathrm {dom}\,\Omega \rightarrow \mathcal {S}^N_{++}\) with its inverse given by \(\nabla \mathcal {F}\). This completes the proof of Theorem 3.1.

Finally, following Lemma 3.4, together with the splitting of (3.3) and the \(A\leftrightarrow G\) correspondence of Theorem 3.1, we observe that the supremum in (3.2) is attained uniquely at the measure \(\,\mathrm {d}\mu := \frac{1}{Z[A[G]]} e^{-\frac{1}{2}x^{T}A[G]x-U(x)} \,\mathrm {d}x\).

3.2 The Luttinger–Ward Functional and the Dyson Equation

According to Lemma 3.7, \(\mathcal {F}\) should blow up at least logarithmically as G approaches the boundary of \(\mathcal {S}_{++}^{N}\). Remarkably, we can explicitly separate the part that accounts for the blowup of \(\mathcal {F}\) at the boundary. In fact, subtracting away this part is how we define the Luttinger–Ward (LW) functional for the Gibbs model. We will see in this subsection that the definition of the Luttinger–Ward functional can also be motivated by the stipulation that its gradient (the self-energy) should satisfy the Dyson equation.

Consider for a moment the case in which \(U\equiv 0\), so

The random variable X achieving the maximum entropy subject to \(\mathbb {E}[X_{i}X_{j}]=G_{ij}\) follows a Gaussian distribution, that is, \(X\sim \mathcal {N}(0,G)\). It follows that

This motivates, for general U, the consideration of the Luttinger–Ward functional

For non-interacting systems, \(\Phi [G]\equiv 0\) by construction.
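
As a numerical sanity check of the non-interacting case (not part of the original argument), the following sketch verifies the duality between \(\Omega \) and \(\mathcal {F}\) when \(U\equiv 0\). It assumes the closed forms \(\mathcal {F}[G]=\frac{1}{2}\log \det G+\frac{N}{2}\log (2\pi e)\) (the entropy of \(\mathcal {N}(0,G)\)), \(\Omega [A]=-\log Z[A]\) with \(Z[A]=(2\pi )^{N/2}\det (A)^{-1/2}\), and \(\Phi [G]=2\mathcal {F}[G]-\log \det G-N\log (2\pi e)\); these are reconstructions consistent with the surrounding text rather than quotations of the displayed equations. All function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 4  # illustrative dimension

def random_spd(n):
    """Random symmetric positive definite matrix (illustrative test data)."""
    m = rng.standard_normal((n, n))
    return m @ m.T + n * np.eye(n)

def f0(G):
    """Entropy of N(0, G); assumed to equal F[G] when U = 0."""
    n = G.shape[0]
    return 0.5 * np.linalg.slogdet(G)[1] + 0.5 * n * np.log(2 * np.pi * np.e)

def omega0(A):
    """-log Z[A] for U = 0, using Z[A] = (2 pi)^{N/2} det(A)^{-1/2}."""
    n = A.shape[0]
    return 0.5 * np.linalg.slogdet(A)[1] - 0.5 * n * np.log(2 * np.pi)

def objective(A, G):
    """<A, G> - F[G] with the inner product <A, G> = Tr[AG] / 2."""
    return 0.5 * np.trace(A @ G) - f0(G)

def phi0(G):
    """Assumed LW functional at U = 0: 2 F[G] - log det G - N log(2 pi e)."""
    n = G.shape[0]
    return 2 * f0(G) - np.linalg.slogdet(G)[1] - n * np.log(2 * np.pi * np.e)

A = random_spd(N)
G_STAR = np.linalg.inv(A)                       # candidate minimizer G = A^{-1}
GAP_AT_MIN = objective(A, G_STAR) - omega0(A)   # should vanish up to rounding
GAPS_ELSEWHERE = [objective(A, random_spd(N)) - omega0(A) for _ in range(20)]
PHI_VALUES = [phi0(random_spd(N)) for _ in range(5)]
```

Under these assumptions the gap vanishes at \(G=A^{-1}\) and is positive at other positive definite matrices, while `phi0` is zero up to rounding, in line with \(\Phi [G]\equiv 0\).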

Now we turn to establishing the Dyson equation. Theorem 3.1 shows that for \(A\in \mathrm {dom}\,\Omega \), the minimizer \(G^{*}\) in (3.1) satisfies \(A=\nabla \mathcal {F}[G^{*}]=A[G^{*}]\), so the minimizer is \(G^{*}=G[A]\). Recall that

Taking gradients and plugging into \(A=\nabla \mathcal {F}[G^*]\) yields

Define the self-energy \(\Sigma \) as a functional of G by \(\Sigma [G]:= \frac{1}{2} \nabla \Phi [G] = \frac{\partial \Phi }{\partial G} [G]\). Then we have established that for \(G=G[A]\),

Moreover, by the strict concavity of \(\mathcal {F}\), \(G=G[A]\) is the unique G solving (3.5).

Eq. (3.5) is in fact the Dyson equation as in Section 3.8 of the accompanying paper. To see this, recall from Eq. (2.4) that the non-interacting Green’s function \(G^{0}\) is given by \(G^{0}=A^{-1}\), so we have

Left- and right-multiplying by \(G^{0}\) and G, respectively, and then rearranging, we obtain

However, Eq. (2.4) requires \(G^{0}\) to be well defined, that is, \(A\in \mathcal {S}_{++}^{N}\). On the other hand, the Dyson equation (3.5) derived from the LW functional does not rely on this assumption and makes sense for all \(A\in \mathrm {dom}\,\Omega \). Nonetheless, if for fixed A one seeks to approximately solve the Dyson equation for G by inserting an ansatz for the self-energy obtained from many-body perturbation theory, one must be wary in the case that \(A \notin \mathcal {S}^N_{++}\); see Section 4.5.
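
The point that the Dyson equation remains meaningful for indefinite A can be illustrated in one dimension. The sketch below is an assumption-laden toy, not the paper's construction: it takes \(U(x)=x^{4}/8\), evaluates \(\mathcal {F}[G]=\inf _{A}\left( \frac{1}{2}AG+\log Z[A]\right) \) by quadrature and bisection, forms \(\Phi [G]=2\mathcal {F}[G]-\log G-\log (2\pi e)\) (a reconstruction of the definition), and compares a finite-difference self-energy with the Dyson equation written as \(A=G^{-1}+\Sigma [G]\). The grid, bracket, and function names are hypothetical choices.

```python
import numpy as np

X = np.linspace(-12.0, 12.0, 24001)   # quadrature grid on a truncated line
DX = X[1] - X[0]
U = X**4 / 8.0                        # hypothetical 1D quartic interaction

def log_z_and_g(a):
    """Return (log Z[a], G[a]) for the weight exp(-a x^2 / 2 - U(x))."""
    e = -0.5 * a * X**2 - U
    m = e.max()                       # shift the exponent for stability
    w = np.exp(e - m)
    z = w.sum() * DX
    return m + np.log(z), (X**2 * w).sum() * DX / z

def a_of_g(g, lo=-20.0, hi=1.0e4):
    """Invert a -> G[a] by bisection; G[a] is strictly decreasing in a."""
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if log_z_and_g(mid)[1] > g:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def phi(g):
    """Assumed 1D LW functional: 2 F[g] - log g - log(2 pi e)."""
    a = a_of_g(g)
    f = 0.5 * a * g + log_z_and_g(a)[0]   # F[g] = inf_a (a g / 2 + log Z[a])
    return 2.0 * f - np.log(g) - np.log(2.0 * np.pi * np.e)

def sigma_fd(g, h=1.0e-4):
    """Self-energy d(phi)/dg by central differences."""
    return (phi(g + h) - phi(g - h)) / (2.0 * h)

A_NEG = -1.0                              # indefinite: 1/A is not a covariance
G_NEG = log_z_and_g(A_NEG)[1]             # the interacting G is still well defined
DYSON_RESIDUAL = A_NEG - 1.0 / G_NEG - sigma_fd(G_NEG)
```

Here `A_NEG` is negative, so no non-interacting Green's function exists, yet the residual of the Dyson equation is zero up to discretization error.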

3.3 Transformation Rule for the LW Functional

Though the dependence of the Luttinger–Ward functional on the interaction U was only implicit in the previous section, we now explicitly consider this dependence, including it in our notation as \(\Phi [G,U]\). The same convention will be followed for other functionals without comment. Proposition 3.10 relates a transformation of the interaction with a corresponding transformation of the Green’s function.

Proposition 3.10

(Transformation rule) Let \(G\in \mathcal {S}_{++}^{N}\), and let U be an interaction satisfying the weak growth condition. Let T denote an invertible matrix in \(\mathbb {R}^{N\times N}\), as well as the corresponding linear transformation \({\mathbb {R}^{N}}\rightarrow {\mathbb {R}^{N}}\). Then

Proof

For \(G\in \mathcal {S}^N_{++}\), note that the supremum in (3.2) can be restricted to the set of \(\mu \in \mathcal {G}^{-1}(G)\) that have densities with respect to the Lebesgue measure. (Indeed, for any \(\mu \in \mathcal {M}_2\) that does not have a density, \(H(\mu )-\int U\,\,\mathrm {d}\mu = -\infty \).) Then observe

Going forward we will denote \(C:=-N\log (2\pi e)\).

Then for T invertible, we have

Now observe by changing variables that

Therefore

as was to be shown.
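
A one-dimensional numerical check of the transformation rule can be sketched as follows. The sketch assumes the rule reads \(\Phi [TGT^{*},U]=\Phi [G,U\circ T]\), which is how it is applied later in the proof of the projection rule, and takes \(T=t\) a positive scalar with the hypothetical interaction \(U(x)=x^{4}/8\), so that \(U\circ T=t^{4}U\). The definition of \(\Phi \) used in the code is the same reconstruction, \(\Phi [G]=2\mathcal {F}[G]-\log G-\log (2\pi e)\), and all names are illustrative.

```python
import numpy as np

X = np.linspace(-12.0, 12.0, 24001)   # quadrature grid on a truncated line
DX = X[1] - X[0]

def log_z_and_g(a, u):
    """Return (log Z, E[x^2]) for the weight exp(-a x^2 / 2 - u(x))."""
    e = -0.5 * a * X**2 - u
    m = e.max()                       # shift the exponent for stability
    w = np.exp(e - m)
    z = w.sum() * DX
    return m + np.log(z), (X**2 * w).sum() * DX / z

def a_of_g(g, u, lo=-20.0, hi=1.0e4):
    """Invert a -> G[a] by bisection; G[a] is strictly decreasing in a."""
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if log_z_and_g(mid, u)[1] > g:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def phi(g, c):
    """Assumed 1D LW functional for the interaction c * x^4 / 8."""
    u = c * X**4 / 8.0
    a = a_of_g(g, u)
    f = 0.5 * a * g + log_z_and_g(a, u)[0]
    return 2.0 * f - np.log(g) - np.log(2.0 * np.pi * np.e)

T = 1.5                       # scalar transformation x -> T x
G = 0.7
LHS = phi(T * G * T, 1.0)     # Phi[T G T*, U]
RHS = phi(G, T**4)            # Phi[G, U o T], since U(T x) = T^4 U(x)
```

With \(t=\lambda ^{1/2}\) the same computation gives the scaling \(\Phi [\lambda G,U]=\Phi [G,\lambda ^{2}U]\) for homogeneous quartic U.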

Remark 3.11

Since T is real, the Hermitian conjugate \(T^{*}\) is the same as the matrix transpose; we use it simply to avoid the notation \(T^{T}\).

From the transformation rule we have the following corollary:

Corollary 3.12

Let \(G\in \mathcal {S}_{++}^{N}\), and consider an interaction U which is a homogeneous polynomial of degree 4 satisfying the weak growth condition. For \(\lambda >0\), we have

3.4 Impurity Problems and the Projection Rule

For the impurity problem, the interaction only depends on a subset of the variables \(x_1,\ldots ,x_N\), namely the fragment. In such a case, the Luttinger–Ward functional can be related to a lower-dimensional Luttinger–Ward functional corresponding to the fragment. This relation, called the projection rule, is given in Proposition 3.13 below. In the notation, we will now explicitly indicate the dimension d of the state space associated with the Luttinger–Ward functional via subscript as in \(\Phi _{d}[G,U]\), since we will be considering functionals for state spaces of different dimensions. We will follow the same convention for other functionals without comment.

Before we state the projection rule, we record some remarks on the domain of \(\Omega \) and growth conditions in the context of impurity problems. Suppose that the interaction U depends only on \(x_1, \ldots , x_p\), where \(p\leqq N\), so U can alternatively be considered as a function on \(\mathbb {R}^p\). Notice that even if U satisfies the strong growth condition as a function on \(\mathbb {R}^p\), it is of course not true that \(\mathrm {dom}\left( \Omega _N[\,\cdot \,,U] \right) = \mathcal {S}^N\). As mentioned above, this provides a natural reason to consider interactions that do not grow fast in all directions and motivates the generality of our previous considerations.

In fact, for

one can show by Fubini’s theorem, integrating out the last \(N-p\) variables in (2.2), that \(A\in \mathrm {dom}\left( \Omega _N[\,\cdot \,,U] \right) \) if and only if both

Moreover, one can show that for such A,

Therefore, if \(\mathrm {dom}\,\left( \Omega _p[\,\cdot \,,U(\,\cdot \,,0)] \right) \) is open, then so is \(\mathrm {dom}\left( \Omega _N[\,\cdot \,,U] \right) \). It follows that if U satisfies the weak growth condition as a function on \(\mathbb {R}^p\), then U also satisfies the weak growth condition as a function on \(\mathbb {R}^N\).

Proposition 3.13

(Projection rule) Let \(p\leqq N\). Suppose that U depends only on \(x_1,\ldots ,x_p\) and satisfies the weak growth condition. Hence we can think of U as a function on both \({\mathbb {R}^{N}}\) and \(\mathbb {R}^p\). Then for \(G\in \mathcal {S}^N_{++}\),

where \(G_{11}\) is the upper-left \(p\times p\) block of G.

Remark 3.14

If U can be made to depend only on \(p\leqq N\) variables by linearly changing variables, then we can use the projection rule in combination with the transformation rule (Proposition 3.10) to reveal the relationship with a lower-dimensional Luttinger–Ward functional, though we do not make this explicit here with a formula.

Corollary 3.15

Let \(p\leqq N\), and let P be the orthogonal projection onto the subspace \(\mathrm {span}\,\{e_1^{(N)},\ldots ,e_p^{(N)}\}\). Suppose that \(U(\,\cdot \,,0)\) satisfies the weak growth condition. Then for \(G \in \mathcal {S}^N_{++}\),

where \(G_{11}\) is the upper-left \(p\times p\) block of G.

Proof of Proposition 3.13

First we observe that we can assume that G is block-diagonal. To see this, let \(G\in \mathcal {S}^N_{++}\), and write

Then block Gaussian elimination reveals that

Define

so \(G = T\widetilde{G}T^*\). Then by the transformation rule, we have

where the last equality uses the fact that U depends only on the first p arguments, which are unchanged by the transformation T.
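
The block Gaussian elimination step can be checked numerically. The sketch below assumes the standard factorization, with \(\widetilde{G}\) block-diagonal with blocks \(G_{11}\) and the Schur complement \(G_{22}-G_{21}G_{11}^{-1}G_{12}\), and T unit lower block-triangular with off-diagonal block \(G_{21}G_{11}^{-1}\); the dimensions and variable names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(1)
N, p = 5, 2                                   # illustrative sizes

M = rng.standard_normal((N, N))
G = M @ M.T + N * np.eye(N)                   # a positive definite test matrix

G11, G12 = G[:p, :p], G[:p, p:]
G21, G22 = G[p:, :p], G[p:, p:]

SCHUR = G22 - G21 @ np.linalg.solve(G11, G12)  # Schur complement of G11 in G

T = np.eye(N)
T[p:, :p] = G21 @ np.linalg.inv(G11)          # unit lower block-triangular factor

G_TILDE = np.zeros((N, N))
G_TILDE[:p, :p] = G11                         # same upper-left block as G
G_TILDE[p:, p:] = SCHUR

x = rng.standard_normal(N)
RECONSTRUCTED = T @ G_TILDE @ T.T             # should reproduce G
FIRST_P_OF_TX = (T @ x)[:p]                   # T leaves the first p coordinates fixed
```

Because T fixes the first p coordinates, \(U\circ T=U\) whenever U depends only on \(x_1,\ldots ,x_p\), which is the fact used in the last equality.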

Since \(\widetilde{G}\) is block-diagonal with the same upper-left block as G, we have reduced to the block-diagonal case, as claimed, so now assume that \(G\in \mathcal {S}^N_{++}\) with

Recall the following expression for \(\mathcal {F}_N\):

Next define \(\pi _1:{\mathbb {R}^{N}}\rightarrow \mathbb {R}^{p}\) and \(\pi _2:{\mathbb {R}^{N}}\rightarrow \mathbb {R}^{N-p}\) to be the projections onto the first p and last \(N-p\) components, respectively. Then with ‘\(\#\)’ denoting the pushforward operation on measures, \(\pi _1 \# \mu \) and \(\pi _2 \# \mu \) are the marginals of \(\mu \) with respect to the product structure \({\mathbb {R}^{N}}= \mathbb {R}^p \times \mathbb {R}^{N-p}\). Now recall Fact 2.11, in particular the inequality \(H(\mu ) \leqq H(\pi _1 \# \mu ) + H(\pi _2 \# \mu )\). Also note that if \(\mu \in \mathcal {G}_N^{-1}(G)\), then \(\pi _1 \# \mu \in \mathcal {G}_p^{-1}(G_{11})\) and \(\pi _2 \# \mu \in \mathcal {G}_{N-p}^{-1}(G_{22})\). Finally observe that since U depends only on the first p arguments, \(\int U\,\,\mathrm {d}\mu = \int U\,d(\pi _1 \# \mu )\) for any \(\mu \). Therefore,

Since \(\det G = \det G_{11} \det G_{22}\), it follows that

For the reverse inequality, let \(\mu _1\) be arbitrary in \(\mathcal {G}_p^{-1}(G_{11})\), and consider \(\mu := \mu _1 \times \mu _2\), where \(\mu _2\) is given by the normal distribution with mean zero and covariance \(G_{22}\). Then

Since \(\mu _1\) is arbitrary in \(\mathcal {G}_p^{-1}(G_{11})\), it follows by taking the supremum over \(\mu _1\) that

which implies

Remark 3.16

The proof suggests that for U depending only on the first p arguments and G block-diagonal, the supremum in the definition of \(\mathcal {F}\) is attained by a product measure, which is perhaps not surprising. The proof also suggests, however, that for such U and general G, the supremum is attained by taking a product measure and then ‘correlating’ it via the transformation T.

For the impurity problem, Proposition 3.13 immediately implies that the self-energy has a particular sparsity pattern, and thus we have

Corollary 3.17

Let \(p\leqq N\) and suppose that U (satisfying the weak growth condition) depends only on \(x_1,\ldots ,x_p\). Then

For example, consider \(U(x) = \frac{1}{8} \sum _{ij} v_{ij} x_i^2 x_j^2\). Here the stipulation that U depend only on the first p arguments corresponds to the stipulation that \(v_{ij} = 0\) unless \(i,j \leqq p\). For such an interaction, in the bold diagrammatic expansion for \(\Phi \) and \(\Sigma \), any term in which \(G_{ij}\) appears will be zero unless \(i,j\leqq p\). This is a non-rigorous perturbative explanation of the fact that \(\Phi \) depends only on the upper-left block of G, which in turn explains the sparsity structure of \(\Sigma \), as well as the fact that \(\Sigma \) also depends only on the upper-left block of G. However, the developments of this section apply to interactions U of far greater generality and which may indeed be non-polynomial, hence not admitting of a bold diagrammatic expansion.

3.5 Continuous Extension of the LW Functional to the Boundary

The discussion in this subsection is only heuristic, and the proofs of the theorems stated here are deferred to Section 5.

In Section 3.1 we saw that the functional \(\mathcal {F}[G]\) diverges at the boundary \(\partial \mathcal {S}_{+}^{N} = \mathcal {S}_{+}^{N} \backslash \mathcal {S}_{++}^{N}\). On the other hand, the projection rule, together with the transformation rule, motivates the formula by which we can extend \(\Phi \) continuously up to the boundary \(\partial \mathcal {S}_{+}^{N}\).

Indeed, suppose that \(T^{(j)} \rightarrow P\), where \(T^{(j)}\) is invertible and P is the orthogonal projection onto the first p components, as in Corollary 3.15. Then for \(G\in \mathcal {S}^N_{++}\),

By naively taking limits of both sides, we expect that

where \(G_{11}\) is the upper-left \(p\times p\) block of G. Then by the projection rule we expect

where \(G_{11}\) is the upper-left \(p\times p\) block of G. After possibly changing coordinates via the transformation rule, this formula provides a general recipe for evaluating the LW functional on the boundary \(\partial \mathcal {S}^N_{+}\), which is the content of Theorem 3.18 below.

Unfortunately, there are nontrivial analytic difficulties that are hidden by this heuristic derivation. In fact there exists an interaction U satisfying the weak growth condition for which the continuous extension property fails. Since the discussion of this counterexample is somewhat involved, it is postponed to Section 5.5. However, the continuous extension property is true for U satisfying the strong growth condition of Definition 2.4.

Before stating the continuous extension property in Theorem 3.18, we provide a more careful discussion of the structure of the boundary \(\partial \mathcal {S}^N_{+}\). Consider a q-dimensional subspace K of \({\mathbb {R}^{N}}\), and let \(p=N-q\). Then the set

forms a ‘stratum’ of the boundary of \(\mathcal {S}_{+}^{N}\), which is itself isomorphic to the set of \(p\times p\) positive definite matrices. In turn, one can consider boundary strata (of smaller dimension) nested inside of \(S_{K}\).

We will show that the restriction of the Luttinger–Ward functional to such a stratum is precisely the Luttinger–Ward functional for a lower-dimensional system. To this end, fix a subspace K and choose any orthonormal basis \(v_{1},\ldots ,v_{p}\) for \(K^{\perp }\). (The choice of basis is not canonical but can be made for the purpose of writing down results explicitly.) Define \(V_{p}:=[v_{1},\ldots ,v_{p}]\). We use this notation to indicate both the matrix and the corresponding linear map.

Theorem 3.18

(Continuous extension, I) Suppose that U is continuous and satisfies the strong growth condition. With notation as in the preceding discussion, \(\Phi _{N}[\,\cdot \,,U]\) extends continuously to \(S_{K}\) via the rule

for \(G\in S_{K}\). Consequently, \(\Phi _{N}[\,\cdot \,,U]\) extends continuously to all of \(\mathcal {S}_{+}^{N}\).

Remark 3.19

We interpret the extension rule as setting \(\Phi _N [0,U] = \Phi _0[U] := -2 \cdot U(0)\). Moreover, it will become clear in the proof that even for continuous interactions U that do not satisfy the strong growth condition, the extension is still lower semi-continuous on \(\mathcal {S}^N_{+}\) and continuous on \(\mathcal {S}^N_{++}\cup \{0\}\).
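
The limiting value \(-2\cdot U(0)\) at \(G=0\) can be probed numerically in one dimension. The following sketch is only illustrative: it uses the hypothetical interaction \(U(x)=x^{4}/8+0.3\cos x\) (so that \(U(0)=0.3\)), the reconstructed definition \(\Phi [G]=2\mathcal {F}[G]-\log G-\log (2\pi e)\), and a quadrature grid and bisection bracket chosen for convenience.

```python
import numpy as np

X = np.linspace(-12.0, 12.0, 24001)   # quadrature grid on a truncated line
DX = X[1] - X[0]
U0 = 0.3                              # value of the hypothetical U at the origin
U = X**4 / 8.0 + U0 * np.cos(X)

def log_z_and_g(a):
    """Return (log Z[a], G[a]) for the weight exp(-a x^2 / 2 - U(x))."""
    e = -0.5 * a * X**2 - U
    m = e.max()                       # shift the exponent for stability
    w = np.exp(e - m)
    z = w.sum() * DX
    return m + np.log(z), (X**2 * w).sum() * DX / z

def a_of_g(g, lo=-20.0, hi=1.0e4):
    """Invert a -> G[a] by bisection; G[a] is strictly decreasing in a."""
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if log_z_and_g(mid)[1] > g:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def phi(g):
    """Assumed 1D LW functional: 2 F[g] - log g - log(2 pi e)."""
    a = a_of_g(g)
    f = 0.5 * a * g + log_z_and_g(a)[0]
    return 2.0 * f - np.log(g) - np.log(2.0 * np.pi * np.e)

G_VALUES = (1.0e-1, 1.0e-2, 1.0e-3)
GAPS = [abs(phi(g) + 2.0 * U0) for g in G_VALUES]   # distance to -2 U(0)
```

In this toy case the distance between \(\Phi [G]\) and \(-2\cdot U(0)\) shrinks as G decreases, consistent with the stated extension to \(G=0\).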

Changing coordinates via Proposition 3.10, we see that Theorem 3.18 is actually equivalent to the following:

Theorem 3.20

(Continuous extension, II) Suppose that U is continuous and satisfies the strong growth condition. For \(G\in \mathcal {S}_{++}^{p}\), \(\Phi [\,\cdot \,,U]\) extends continuously via the rule

Once again we comment that the proof is deferred to Section 5.

4 Bold Diagram Expansion for the Generalized Coulomb Interaction

Using the Luttinger–Ward formalism, in this section we prove that the bold diagrammatic expansions from the accompanying paper of the self-energy and the LW functional [for the generalized Coulomb interaction (2.7)] can indeed be interpreted as asymptotic series expansions in the interaction strength at fixed G. This provides a rigorous interpretation of the bold expansions that is not merely combinatorial. Recall that when each G in the bold diagrammatic expansion of the self-energy is further expanded using \(G^{0}\) and U, the resulting expansion should be formally the same as the bare diagrammatic expansion of the self-energy. The combinatorial argument in Section 4 of the accompanying paper guaranteeing this fact does not need to be repeated in this setting, and we will be able to directly use Theorem 4.12 from the accompanying paper. The remaining hurdles are analytical, not combinatorial.

We summarize the results of this section as follows:

Theorem 4.1

For any continuous interaction \(U:{\mathbb {R}^{N}}\rightarrow \mathbb {R}\) satisfying the weak growth condition and any \(G \in \mathcal {S}^N_{++}\), the LW functional and the self-energy have asymptotic series expansions as

Moreover, for U a homogeneous quartic polynomial, the coefficients of the asymptotic series satisfy

If U is moreover a generalized Coulomb interaction (2.7), we have (borrowing the language of the accompanying paper) that

that is, \(\Sigma ^{(k)}\) is given by the sum over bold skeleton diagrams of order k with bold propagator G and interaction \(v_{ij} \delta _{ik} \delta _{jl}\).

Remark 4.2

For a series as in Eq. (4.1) to be asymptotic means that the error of the M-th partial sum is \(O(\varepsilon ^{M+1})\) as \(\varepsilon \rightarrow 0\).

Since U is fixed, for simplicity in the ensuing discussion we will omit the dependence on U from the notation via the definitions \(\Phi _G(\varepsilon ) := \Phi [G,\varepsilon U]\), \(\Sigma _G(\varepsilon ) = \Sigma [G,\varepsilon U]\), and \(A_G(\varepsilon ) := A[G,\varepsilon U]\). We will also denote the series coefficients via \(\Phi ^{(k)}_G := \Phi ^{(k)}[G,U]\) and \(\Sigma ^{(k)}_G := \Sigma ^{(k)}[G,U]\). In this notation, our asymptotic series take the form

Notation 4.3

Note carefully that in this section the superscript (k) is merely a notation and does not indicate the k-th derivative. Such derivatives will be written out as \(\frac{\,\mathrm {d}^k}{\,\mathrm {d}\varepsilon ^k}\).

Now we outline the remainder of this section. In Section 4.1 we prove that the LW functional and the self-energy do indeed admit asymptotic series expansions. In Section 4.2 we prove the relation between the LW and self-energy expansions for quartic interactions, namely Eq. (4.2). Interestingly, this relation—which is well-known formally based on diagrammatic observations—was originally assumed to be true to obtain a formal derivation of the LW functional [19, 20]. Our proof here does not rely on any diagrammatic manipulation, only making use of the transformation rule and the quartic nature of the interaction U. Similar relations for homogeneous polynomial interactions of different order could easily be obtained. Next, in Section 4.3, we summarize and expand on the necessary results from the accompanying paper in diagram-free language; this both reduces the prerequisite knowledge needed for the remainder of the section and clarifies the arguments that follow. Finally, in Section 4.4 we prove that when U is a generalized Coulomb interaction, the series for the self-energy is in fact the bold diagrammatic expansion of Section 4 of the accompanying paper.

4.1 Existence of Asymptotic Series

In this section we assume that U is continuous and satisfies the weak growth condition. We first prove the following pair of lemmas.

Lemma 4.4

For any \(G\in \mathcal {S}^N_{++}\), \(A_G (\varepsilon ) \rightarrow G^{-1}\) as \(\varepsilon \rightarrow 0^+\).

Lemma 4.5

For \(G\in \mathcal {S}^N_{++}\), all derivatives of the functions \(\Phi _G:(0,\infty ) \rightarrow \mathbb {R}\) and \(\Sigma _G:(0,\infty ) \rightarrow \mathbb {R}^{N\times N}\) extend continuously to \([0,\infty )\).

We will convey the continuous extension of the derivatives of \(\Phi _G\) to the origin by the notation \(\Phi ^{(k)}_G := \Phi ^{(k)}_G (0)\), and similarly for the self-energy \(\Sigma _G^{(k)} := \Sigma _G^{(k)}(0)\). From the preceding it will follow that the series (4.4) are indeed asymptotic series in the following sense:

Proposition 4.6

For any nonnegative integer M, \(\Phi _G(\varepsilon ) - \sum _{k=1}^M \Phi ^{(k)}_G \varepsilon ^k = O(\varepsilon ^{M+1})\) and \(\Sigma _G(\varepsilon ) - \sum _{k=1}^M \Sigma ^{(k)}_G \varepsilon ^k = O(\varepsilon ^{M+1})\) as \(\varepsilon \rightarrow 0^+\).

Proof

Consider any function \(f:[0,\infty )\rightarrow \mathbb {R}\) with all derivatives extending continuously up to the boundary (and so defined at 0). Let \(\delta >0\), so for \(\varepsilon \in (\delta ,1]\) we know by the Lagrange error bound that

where C is a constant that depends only on a uniform bound on \(\left( \frac{\,\mathrm {d}}{\,\mathrm {d}\varepsilon }\right) ^{M+1} f\) over [0, 1] (the existence of which is guaranteed by the continuous extension property). Simply taking the limit of our inequality as \(\delta \rightarrow 0^+\), and again employing the continuous extension property, yields that \(\left| f(\varepsilon )-\sum _{k=0}^M f^{(k)}(0) \varepsilon ^k \right| \leqq C\varepsilon ^{M+1}\). This fact together with Lemma 4.5 proves the proposition.
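
The asymptotic behavior can be observed numerically in a one-dimensional toy case. The sketch below assumes \(U(x)=x^{4}/8\), computes \(A_G(\varepsilon )\) by quadrature and bisection, and obtains \(\Sigma _G(\varepsilon )=A_G(\varepsilon )-G^{-1}\) from the Dyson equation. The coefficients \(\Sigma ^{(1)}_G=-\frac{3}{2}G\) and \(\Sigma ^{(2)}_G=\frac{3}{2}G^{3}\) were worked out by hand for this toy case and should be treated as assumptions, as should all function names.

```python
import numpy as np

X = np.linspace(-12.0, 12.0, 24001)   # quadrature grid on a truncated line
U = X**4 / 8.0                        # hypothetical 1D quartic interaction

def second_moment(a, eps):
    """E[x^2] under the weight exp(-a x^2 / 2 - eps U(x)), for a >= 0."""
    w = np.exp(-0.5 * a * X**2 - eps * U)
    return (X**2 * w).sum() / w.sum()

def a_of_g(g, eps, lo=0.0, hi=1.0e4):
    """Solve G[a, eps U] = g for a by bisection (G is decreasing in a)."""
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if second_moment(mid, eps) > g:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def sigma(g, eps):
    """Self-energy from the Dyson equation A = 1/G + Sigma."""
    return a_of_g(g, eps) - 1.0 / g

G = 1.0
EPS_LIST = (0.02, 0.01, 0.005)
SIGMAS = [sigma(G, e) for e in EPS_LIST]

# Error of the first partial sum, scaled by eps^2 (should stay bounded).
RATIO1 = [(s + 1.5 * e * G) / e**2 for s, e in zip(SIGMAS, EPS_LIST)]
# Error of the second partial sum, scaled by eps^3 (should stay bounded).
RATIO2 = [(s + 1.5 * e * G - 1.5 * e**2 * G**3) / e**3
          for s, e in zip(SIGMAS, EPS_LIST)]
```

The scaled errors remain bounded as \(\varepsilon \) decreases, which is the asymptotic property in the sense of Remark 4.2; the values of `SIGMAS` also tend to zero, in line with Lemma 4.4.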

4.2 Relating the LW and Self-energy Expansions

The bold diagrams for the Luttinger–Ward functional are pinned down in terms of the bold diagrams for the self-energy via the following:

Proposition 4.7

If U is a homogeneous quartic polynomial, then for all k,

Proof

Observe by the transformation rule that, for any \(G\in \mathcal {S}^N_{++}\) and \(\varepsilon , t>0\),

Taking the gradient in G of both sides, we have

Since U is homogeneous quartic, in fact, we have

Then, using this relation, we compute

Now since t ranges from 0 to 1 in the integrand, we have that \(t^{2N+1}\varepsilon ^{N+1}\leqq \varepsilon ^{N+1}\), and therefore

This establishes the proposition.
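
For orientation, the computation in this proof can be sketched as follows. This is a reconstruction under stated assumptions, not a quotation of the displayed equations: it assumes the transformation rule in the form \(\Phi [TGT^{*},U]=\Phi [G,U\circ T]\), applied with \(T=t^{1/2}I\), uses the definition \(\Sigma =\partial \Phi /\partial G\), and writes M for the truncation order to avoid a clash with the dimension N.

```latex
\begin{align*}
\Phi[tG,\varepsilon U]
  &= \Phi\bigl[G,\varepsilon U(t^{1/2}\,\cdot\,)\bigr]
   = \Phi[G,t^{2}\varepsilon U]
  && \text{(homogeneity of degree 4)} \\
t\,\Sigma[tG,\varepsilon U]
  &= \Sigma[G,t^{2}\varepsilon U]
  && \text{(gradient in } G\text{)} \\
\Phi_G(\varepsilon)
  &= \int_0^1 \frac{\mathrm{d}}{\mathrm{d}t}\,\Phi[tG,\varepsilon U]\,\mathrm{d}t
   = \int_0^1 \mathrm{Tr}\bigl[G\,\Sigma[tG,\varepsilon U]\bigr]\,\mathrm{d}t
   = \int_0^1 \frac{1}{t}\,\mathrm{Tr}\bigl[G\,\Sigma_G(t^{2}\varepsilon)\bigr]\,\mathrm{d}t \\
  &= \sum_{k=1}^{M} \varepsilon^{k}\,\mathrm{Tr}\bigl[G\,\Sigma^{(k)}_G\bigr]
     \int_0^1 t^{2k-1}\,\mathrm{d}t + O(\varepsilon^{M+1})
   = \sum_{k=1}^{M} \frac{1}{2k}\,\mathrm{Tr}\bigl[G\,\Sigma^{(k)}_G\bigr]\,\varepsilon^{k}
     + O(\varepsilon^{M+1}).
\end{align*}
```

The third line uses that \(\Phi [tG,\varepsilon U]=\Phi [G,t^{2}\varepsilon U]\rightarrow 0\) as \(t\rightarrow 0^{+}\). Matching coefficients then suggests \(\Phi ^{(k)}_G=\frac{1}{2k}\mathrm {Tr}[G\,\Sigma ^{(k)}_G]\), which is consistent with the one-dimensional values \(\Sigma ^{(1)}_G=-\frac{3}{2}G\) and \(\Phi ^{(1)}_G=-\frac{3}{4}G^{2}\) for \(U(x)=x^{4}/8\).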

4.3 Diagram-free Discussion of Results from the Accompanying Paper

For U satisfying the weak growth condition and \(A \in \mathrm {dom}\,\Omega [\,\cdot \,,U]\), define

Here we use the lowercase \(\sigma \) to emphasize that the self-energy here is being considered as a functional of A (not G), together with the interaction.

Now we let U denote a fixed generalized Coulomb interaction (2.7). Further define

The following lemma concerns the bare diagrammatic expansion of the Green’s function and the self-energy, that is, the asymptotic series for \(G_A\) and \(\sigma _A\):

Lemma 4.8

For fixed \(A \in \mathcal {S}^N_{++}\), all derivatives \(\frac{\,\mathrm {d}^{n}G_A}{\,\mathrm {d}\varepsilon ^{n}} : (0,\infty )\rightarrow \mathcal {S}^N_{++}\) and \(\frac{\,\mathrm {d}^{n}\sigma _A}{\,\mathrm {d}\varepsilon ^{n}}: (0,\infty ) \rightarrow \mathcal {S}^N\) extend continuously to \([0,\infty )\). In fact, interpreted as functions of both A and \(\varepsilon \), \(\frac{\,\mathrm {d}^{n}G_A}{\,\mathrm {d}\varepsilon ^{n}}(\varepsilon )\) and \(\frac{\,\mathrm {d}^{n}\sigma _A}{\,\mathrm {d}\varepsilon ^{n}}(\varepsilon )\) extend continuously to \(\mathcal {S}^N_{++}\times [0,\infty )\). Moreover, we have asymptotic series expansions

where the coefficient functions \(g^{(k)}_A\) and \(\sigma ^{(k)}_A\) are polynomials in \(A^{-1}\). More precisely, \(g^{(k)}_A\) and \(\sigma ^{(k)}_A\) are homogeneous polynomials of degrees \(2k+1\) and \(2k-1\), respectively. (Note that the zeroth-order term \(\sigma _A^{(0)}\) is implicitly zero.)

Finally, let \(G_{A}^{(\le M)}(\varepsilon )\) and \(\sigma _{A}^{(\le M)}(\varepsilon )\) denote the M-th partial sums of the above asymptotic series for \(G_A(\varepsilon )\) and \(\sigma _A(\varepsilon )\), respectively. For every \(A \in \mathcal {S}^N_{++}\), there exists a neighborhood \(\mathcal {N}\) of A in \(\mathcal {S}^N_{++}\) on which the truncation errors can actually be bounded

for all \(\varepsilon \in [0,\tau ]\), with \(C, \tau \) independent of \(A \in \mathcal {N}\).

Proof

The asymptotic series expansions for \(G_A\) and \(\sigma _A\) are established in Theorems 3.15 and 3.17 of the accompanying paper. The continuous extension of the derivatives of \(G_A\) and \(\sigma _A\) to \([0,\infty )\) follows from differentiation under the integral and simple dominated convergence arguments.

The uniform error bound follows from a Lagrange error bound argument as in Proposition 4.6, together with the continuity of \(\frac{\,\mathrm {d}^{n}G_A}{\,\mathrm {d}\varepsilon ^{n}}(\varepsilon )\) and \(\frac{\,\mathrm {d}^{n}\sigma _A}{\,\mathrm {d}\varepsilon ^{n}}(\varepsilon )\) on \(\mathcal {S}^N_{++}\times [0,\infty )\).

Inspired by Eq. (4.3), let

In fact \(\mathbf {S}_G^{(k)}\) is polynomial in G, homogeneous of degree \(2k-1\). At this point we do not yet know that \(\mathbf {S}_G^{(k)}\) coincides with \(\Sigma _G^{(k)}\), and indeed this is what we want to show. For any G, also define the partial sum

Then the main result (Theorem 4.12) of the accompanying paper can be phrased as follows:

Theorem 4.9

For any fixed \(A\in \mathcal {S}^N_{++}\), the expressions

agree as polynomials in \(\varepsilon \) up to order M, and hence they agree as joint polynomials in \((A^{-1},\varepsilon )\) after neglecting all terms in which \(\varepsilon \) appears with degree at least \(M+1\).

4.4 Derivation of Self-energy Bold Diagrams

We have already shown that there exist asymptotic series for the LW functional and the self-energy. The remainder of Theorem 4.1 then consists of identifying that the self-energy coefficients \(\Sigma _G^{(k)}\) are indeed given by the bold diagrammatic expansion, that is, that \(\Sigma _G^{(k)} = \mathbf {S}_G^{(k)}\). Equivalently, we want to show that the partial sums \(\mathbf {S}_G^{(\leqq M)}(\varepsilon )\) and \(\Sigma _G^{(\leqq M)}(\varepsilon )\), which are polynomials of degree M in \(\varepsilon \), are equal. We will think of \(G \in \mathcal {S}^N_{++}\) as fixed throughout the following discussion, and we omit dependence on G from some of the notation below to avoid excess clutter. We will also think of M as a fixed positive integer and \(\varepsilon >0\) as variable (and sufficiently small).

Since our series expansion is only valid in the asymptotic sense, for any finite M we consider the truncation

Then we have \(\Sigma _G (\varepsilon ) - \Sigma ^{(\le M)}_G (\varepsilon ) = O(\varepsilon ^{M+1})\). For the purpose of this discussion, \(O(\varepsilon ^{M+1})\) will be thought of as negligibly small, and ‘\(\approx \)’ will be used to denote equality up to error \(O(\varepsilon ^{M+1})\). Meanwhile ‘\(\sim \)’ will be used to denote error that is \(O(\varepsilon ^{M+1-p})\) for all \(p\in (0,1)\), equivalently \(O(\varepsilon ^{M+\delta })\) for all \(\delta \in (0,1)\). We remark that the difference between the relations ‘\(\approx \)’ and ‘\(\sim \)’ is due to technical reasons to be detailed later, and may be neglected on first reading.

Note that it actually suffices to show that \(\Sigma _G^{(\leqq M)}(\varepsilon ) \sim \mathbf {S}_G^{(\leqq M)}(\varepsilon )\). Indeed, both sides are polynomials of degree M in \(\varepsilon \). Thus their difference is a polynomial of degree \(\leqq M\). If the degree-n part of the difference is nonzero for some \(n = 1,\ldots , M\), then the difference is not \(O(\varepsilon ^{n+\delta })\) for any \(\delta >0\). But if \(\Sigma _G^{(\leqq M)}(\varepsilon ) \sim \mathbf {S}_G^{(\leqq M)}(\varepsilon )\), then the difference is \(O(\varepsilon ^{n+\delta })\) for all \(n=1,\ldots ,M\), \(\delta \in (0,1)\). Thus in this case the difference is zero. With this reduction in mind, we now make a simple yet critical observation, namely that \(\Sigma ^{(\le M)}_G (\varepsilon )\) can be identified as the exact self-energy yielded by a modified interaction term. This will allow us to identify a quadratic form \(A^{(M)}(\varepsilon )\), for which dependence on G has been suppressed from the notation, which generates (up to negligible error) the Green’s function G under the interaction \(\varepsilon U\).

Lemma 4.10

With notation as in the preceding discussion, \(\Sigma ^{(\le M)}_G (\varepsilon )\) is the self-energy induced by the interaction \({U}^{(M)}_\varepsilon (x) := \varepsilon U(x) + \frac{1}{2} x^T \left[ \Sigma _G(\varepsilon ) - \Sigma ^{(\le M)}_G (\varepsilon ) \right] x \), that is,

and moreover

Thus we may identify

Proof

Recalling that \(A_G(\varepsilon ) = A[G,\varepsilon U]\) and \(\Sigma _G (\varepsilon ) = \Sigma [G,\varepsilon U]\), write

It follows that under the interaction \({U}^{(M)}_\varepsilon \), the quadratic form \(G^{-1} + \Sigma ^{(\le M)}_G (\varepsilon )\) corresponds to the (interacting) Green’s function G. This establishes the second statement of the lemma, that is, that

Moreover, by the Dyson equation we have that

which is the first statement of the lemma. The last statement then follows from the second, together with the definitions of \(G[\,\cdot \,,\,\cdot \,]\) and \(\sigma [\,\cdot \,,\,\cdot \,]\).

Remark 4.11

Note carefully that Lemma 4.10 is a non-perturbative fact and is valid for all \(\varepsilon >0\), though we shall apply it in a perturbative context.
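
Lemma 4.10 can be checked numerically in one dimension with \(M=1\). The sketch below is a toy illustration under assumptions: it takes \(U(x)=x^{4}/8\), uses the hand-computed first-order coefficient \(\Sigma ^{(1)}_G=-\frac{3}{2}G\), and obtains the exact self-energy from the Dyson equation \(A=G^{-1}+\Sigma \). The names and grid are hypothetical.

```python
import numpy as np

X = np.linspace(-12.0, 12.0, 24001)   # quadrature grid on a truncated line

def second_moment(a, u):
    """E[x^2] under the weight exp(-a x^2 / 2 - u(x))."""
    e = -0.5 * a * X**2 - u
    w = np.exp(e - e.max())           # shift the exponent for stability
    return (X**2 * w).sum() / w.sum()

def a_of_g(g, u, lo=0.0, hi=1.0e4):
    """Solve G[a, u] = g for a by bisection (G is decreasing in a)."""
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if second_moment(mid, u) > g:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

G = 1.0
EPS = 0.02
U_EPS = EPS * X**4 / 8.0                       # the interaction eps * U

A_EXACT = a_of_g(G, U_EPS)                     # A_G(eps)
SIGMA_EXACT = A_EXACT - 1.0 / G                # Sigma_G(eps) via Dyson
SIGMA_TRUNC = -1.5 * EPS * G                   # first-order truncation (assumed)
A_TRUNC = 1.0 / G + SIGMA_TRUNC                # A^{(1)}(eps)

# Modified interaction U^{(1)}_eps = eps U + x^2 (Sigma - Sigma_trunc) / 2.
U_MOD = U_EPS + 0.5 * (SIGMA_EXACT - SIGMA_TRUNC) * X**2

G_MOD = second_moment(A_TRUNC, U_MOD)          # equals G for any eps > 0
G_PLAIN = second_moment(A_TRUNC, U_EPS)        # differs from G at order eps^2
```

The first moment reproduces G exactly (up to solver tolerance), which is the non-perturbative content of the lemma; the second differs from G only at order \(\varepsilon ^{2}\), which is the kind of closeness asserted afterwards in Lemma 4.12.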

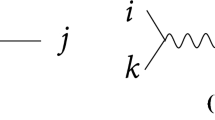

At this point we have defined the terms needed to present a schematic diagram (Fig. 1) of our proof that \(\Sigma _{G}^{(\le M)}(\varepsilon ) \sim \mathbf {S}^{(\le M)}_G (\varepsilon )\). Although the motivation for this schematic may not be fully clear at this point, the reader should refer back to it as needed for perspective.

Now recalling the definitions (4.5), we can write

Meanwhile, following Lemma 4.10 we have the identities

Note that pointwise, \(\varepsilon U\) and \(U_\varepsilon ^{(M)}\) differ negligibly, but the form of \(\varepsilon U\) is simpler and easier to work with going forward.

Based on Eqs. (4.6) and (4.7), one then hopes that \(G_{A^{(M)}(\varepsilon )}(\varepsilon )\) is close to G and \(\sigma _{A^{(M)}(\varepsilon )}(\varepsilon )\) is close to \(\Sigma _G^{(\leqq M)}(\varepsilon )\). This is the content of the next two lemmas.

Lemma 4.12

\(G_{A^{(M)}(\varepsilon )}(\varepsilon ) \sim G\).

Proof

See “Appendix C.11”.

Lemma 4.13

\(\sigma _{A^{(M)}(\varepsilon )}(\varepsilon ) \sim \Sigma ^{(\le M)}_{G}(\varepsilon )\).

Proof

Based on Eqs. (4.6) and (4.7), we want to show that \( \sigma [A^{(M)}(\varepsilon ), U_\varepsilon ^{(M)}] \sim \sigma [A^{(M)}(\varepsilon ), \varepsilon U]\). We have already shown that \( G = G[A^{(M)}(\varepsilon ), U_\varepsilon ^{(M)}] \sim G[A^{(M)}(\varepsilon ), \varepsilon U]\), from which it follows that

which is exactly what we want to show.

Then we can use \(\sigma _{A^{(M)}(\varepsilon )}(\varepsilon )\) as a stepping stone to relate \(\Sigma ^{(\leqq M)}_G (\varepsilon )\) with the bare diagrammatic expansion for the self-energy via the following:

Lemma 4.14

\(\sigma _{A^{(M)}(\varepsilon )}(\varepsilon ) \approx \sigma _{A^{(M)}(\varepsilon )}^{(\leqq M)}(\varepsilon )\).

Proof

Since \(A^{(M)}(\varepsilon ) = G^{-1} + O(\varepsilon )\), the result follows from Lemma 4.8 (in particular, the locally uniform bound on truncation error of the bare self-energy series).

We can prove a similar fact (which will be useful later on) regarding the bare series for the interacting Green’s function:

Lemma 4.15

\(G_{A^{(M)}(\varepsilon )} (\varepsilon ) \approx G_{A^{(M)}(\varepsilon )}^{(\leqq M)} (\varepsilon )\).

Proof

Since \(A^{(M)}(\varepsilon ) = G^{-1} + O(\varepsilon )\), the result follows from Lemma 4.8 (in particular, the locally uniform bound on truncation error of the bare series for the interacting Green’s function).

From Lemmas 4.12 and 4.15 we immediately obtain

Lemma 4.16

\(G_{A^{(M)}(\varepsilon )}^{(\leqq M)} (\varepsilon ) \sim G\).

Finally, we are ready to state and prove the last leg of the schematic diagram (Fig. 1).

Lemma 4.17

\(\mathbf {S}^{(\le M)}_G \sim \sigma _{A^{(M)}(\varepsilon )}^{(\leqq M)}(\varepsilon )\).

Proof

Consider \(\mathbf {S}_{G_{A}^{(\le M)}}^{(\le M)}\) as a polynomial in \((A^{-1},\varepsilon )\), and let \(P(A^{-1},\varepsilon )\) be the contribution of terms in which \(\varepsilon \) appears with degree at least \(M+1\). By Theorem 4.9 we have the equality

of polynomials in \((A^{-1},\varepsilon )\). Then substituting \(A\leftarrow A^{(M)}(\varepsilon )\), we obtain

Although the first term on the left-hand side of Eq. (4.8) looks quite intimidating, we can recognize it as \(\mathbf {S}_{ \mathbf {G}(\varepsilon ) }^{(\leqq M)}(\varepsilon )\), where

is the expression from Lemma 4.16. Since \(\mathbf {S}_{ [\,\cdot \,] }^{(\leqq M)}(\varepsilon ) = \sum _{k=1}^M \mathbf {S}_{ [\,\cdot \,] }^{(k)} \varepsilon ^k \), where each \(\mathbf {S}_{ [\,\cdot \,] }^{(k)}\) is a polynomial (homogeneous of positive degree) in the subscript slot, it follows that

Then from Eq. (4.8) we obtain

but since \([A^{(M)}(\varepsilon )]^{-1} = G + O(\varepsilon )\) and since P only includes terms of degree at least \(M+1\) in the second slot, it follows that \(P([A^{(M)}(\varepsilon )]^{-1},\varepsilon ) \approx 0\), and the desired result follows.

Taken together (as indicated in Fig. 1), Lemmas 4.13, 4.14, and 4.17 imply that \(\Sigma _{G}^{(\le M)}(\varepsilon ) \sim \mathbf {S}^{(\le M)}_G (\varepsilon )\) as desired, and the proof of Theorem 4.1 is complete.

4.5 Caveat Concerning Truncation of the Bold Diagrammatic Expansion

Although the LW and self-energy functionals are defined even for G such that the corresponding quadratic form \(A = A[G]\) is indefinite (and hence there is no physical bare non-interacting Green’s function), Green’s function methods (as discussed in Section 4.7 of the accompanying paper) based on truncation of the bold diagrammatic expansion can fail dramatically in the case of indefinite A. One can encounter divergent behavior as the interaction becomes small, or the Green’s function method may fail to admit a solution. Both failure modes can be demonstrated by simple one-dimensional examples. The relevance of these to the solution of the quantum many-body problem is at this point unclear.

Consider the one-dimensional example of

where \(a = -1\). The corresponding non-interacting Green’s function is \(G^{0}=-1<0\) and hence is not even a physical Green’s function.

Nonetheless with \(\lambda >0\) the true Green’s function is still well-defined via

We now compute G via the Hartree-Fock method (cf. Section 4.7 of the accompanying paper), that is, we approximate the self-energy as

Hence the self-consistent solution \(G^{(1)}\) of the Dyson equation solves

There is only one positive (physical) solution to this equation, namely

In the spirit of perturbation theory, one might hope that \(G^{(1)}\) is a good approximation to G at least when \(\lambda \rightarrow 0\). However we see just the opposite. This is perhaps not surprising because the exact Green’s function G itself blows up in this limit.

The failure of the method as \(\lambda \rightarrow 0\) can be understood more precisely as follows. Rewrite the Hamiltonian from (4.9) as

The corresponding Gibbs measure (which is unaffected by the additive constant) then concentrates about two peaks at \(x=\pm \sqrt{\frac{2}{\lambda }}\) as \(\lambda \rightarrow 0\). Hence we expect

We note that, in contrast with the statement of Lemma 4.8, the limit \(\lim _{\lambda \rightarrow 0+} G(\lambda )\) does not exist. According to Eq. (),

We find that as \(\lambda \rightarrow 0+\), G and its first order approximation \(G^{(1)}\) do not agree.
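The mismatch can be checked numerically. The sketch below is illustrative only and rests on two assumptions that are consistent with the surrounding text but are labeled as such: that the Hamiltonian in (4.9) is \(\frac{1}{2} a x^2 + \frac{1}{8}\lambda x^4\) with \(a=-1\) (which places the wells at \(x=\pm \sqrt{2/\lambda }\)), and that the first-order self-consistent equation reads \(\frac{3}{2}\lambda G^2 - G - 1 = 0\), the Gaussian closure for a quartic term. Completing the square, \(-\frac{1}{2}x^2 + \frac{1}{8}\lambda x^4 = \frac{\lambda }{8}\left( x^2 - \frac{2}{\lambda }\right) ^2 - \frac{1}{2\lambda }\), keeps the exponent non-positive in the quadrature. The function names are hypothetical.

```python
import math

def exact_green(lam, half_width=8.0, n=40001):
    """Second moment <x^2> under the weight exp(-h(x)).

    ASSUMPTION: h(x) = -x^2/2 + lam*x^4/8, used here up to an additive
    constant, which does not change the normalized moment.
    """
    x0 = math.sqrt(2.0 / lam)        # location of the two wells
    L = x0 + half_width              # integrate over [-L, L]
    dx = 2.0 * L / (n - 1)
    num = den = 0.0
    for k in range(n):
        x = -L + k * dx
        # completed-square form: exponent is <= 0, so no overflow
        w = math.exp(-(lam / 8.0) * (x * x - 2.0 / lam) ** 2)
        num += x * x * w
        den += w
    return num / den                 # the grid spacing cancels in the ratio

def hartree_fock_green(lam):
    """Positive root of (3/2)*lam*G^2 - G - 1 = 0.

    ASSUMPTION: this is the first-order self-consistent equation for a = -1.
    """
    return (1.0 + math.sqrt(1.0 + 6.0 * lam)) / (3.0 * lam)

if __name__ == "__main__":
    for lam in (0.2, 0.05, 0.01):
        g, g1 = exact_green(lam), hartree_fock_green(lam)
        print(f"lambda={lam}: lambda*G={lam * g:.4f}  lambda*G1={lam * g1:.4f}")
```

Under these assumptions \(\lambda G\) approaches 2 while \(\lambda G^{(1)}\) approaches \(\frac{2}{3}\) as \(\lambda \rightarrow 0+\), so both quantities diverge like \(1/\lambda \) but with different prefactors.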

If we include the second-order terms of the bold diagrammatic expansion

then the self-consistent solution \(G^{(2)}\) of the Dyson equation solves

This yields a quartic equation in the scalar \(G^{(2)}\), which in fact has no solution for physical \(G^{(2)}\), that is, \(G^{(2)} > 0\).

To see this, first ease the notation by substituting \(x \leftarrow G^{(2)}\), so we are interested in the solutions \(x>0\) of

However, \(y^4 - y^2 \geqq -\frac{1}{4}\) for all y, so the first term is at least \(-\frac{3}{8}\), which evidently implies that no solutions exist for \(x>0\).
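To spell out this last step, suppose (as an assumption consistent with the quoted bound of \(-\frac{3}{8}\), not as a quotation of the displayed equation) that the quartic has the form \(\frac{3}{2}\left( \lambda ^2 x^4 - \lambda x^2 \right) + x + 1 = 0\). This is what the scalar Dyson equation \(G^{-1} = a - \Sigma \) with \(a=-1\) would give if the second-order bold self-energy were \(-\frac{3}{2}\lambda G + \frac{3}{2}\lambda ^2 G^3\). Writing \(y = \sqrt{\lambda }\, x\), one then has

\[
\tfrac{3}{2}\left( y^4 - y^2 \right) = \tfrac{3}{2}\left[ \left( y^2 - \tfrac{1}{2} \right) ^2 - \tfrac{1}{4} \right] \geqq -\tfrac{3}{8},
\qquad \text{hence} \qquad
\tfrac{3}{2}\left( \lambda ^2 x^4 - \lambda x^2 \right) + x + 1 \geqq x + \tfrac{5}{8} > 0 \quad \text{for } x > 0.
\]

Under this assumed form the left-hand side is strictly positive on \(x>0\) for every \(\lambda > 0\), so the absence of a physical solution does not depend on the size of the interaction.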

5 Proof of the Continuous Extension of the LW Functional

In Section 3.5 we motivated the continuous extension of the LW functional to the boundary of \(\mathcal {S}^N_{++}\) and stated this result in two equivalent forms (Theorems 3.18 and 3.20). In this section we prove the continuous extension property (for interactions of strong growth). We also develop the counterexample promised earlier, an interaction of weak but not strong growth for which the continuous extension property fails.

The section is outlined as follows. In Section 5.1, we describe some preliminary reductions in the proof of the continuous extension property, after which the proof can be divided into two parts: lower-bounding the limit inferior of the LW functional as the argument approaches the boundary and upper-bounding the limit superior. In Section 5.2, we prove the lower bound, and in Section 5.3 we prove the upper bound. In Section 5.4 we provide an alternate view on the continuous extension property from the Legendre dual side, and in Section 5.5 we use this perspective to exhibit the aforementioned counterexample to the continuous extension property, which satisfies the weak growth condition but not the strong one.

5.1 Proof Setup

We are going to prove Theorem 3.20, which as we have remarked suffices to prove Theorem 3.18 by changing coordinates via Proposition 3.10.

Suppose \(G\in \mathcal {S}^N_{+}\) is of the form

where \(G_{p}\in \mathcal {S}_{++}^{p}\), and suppose that \(G^{(j)} \in \mathcal {S}^N_{++}\) with \(G^{(j)}\rightarrow G\) as \(j\rightarrow \infty \). For each j, diagonalize \(G^{(j)}=\sum _{i=1}^{N}\lambda _{i}^{(j)} v_{i}^{(j)} \left( v_{i}^{(j)} \right) ^{T}\), where the \(v_{i}^{(j)}\) are orthonormal and \(\lambda _{i}^{(j)}>0\) for \(i=1,\ldots ,N\).

We want to show that

It suffices to show that every subsequence has a convergent subsequence with its limit being \(\Phi _{p}[G_p,U(\,\cdot \,,0)]\). The \(G^{(j)}\) are convergent, hence bounded (in the \(\Vert \cdot \Vert _2\) norm), so the \(\lambda _i^{(j)}\) are bounded. Moreover, the \(v_i^{(j)}\) are all of unit length, hence bounded, so by passing to a subsequence if necessary we can assume that, for each i, there exist \(\lambda _i, v_i\) such that \(\lambda _i^{(j)} \rightarrow \lambda _i\) and \(v_i^{(j)} \rightarrow v_i\) as \(j\rightarrow \infty \). It follows that the \(v_i\) are orthonormal and that G can be diagonalized as \(G=\sum _{i=1}^{N}\lambda _{i}v_{i}v_{i}^{T}\). Since \(G_p\) is positive definite, we must have \(\lambda _{i}>0\) for \(i=1,\ldots ,p\), and moreover \(\lambda _{i}=0\) for \(i=p+1,\ldots ,N\). Evidently, the eigenvectors of G with strictly positive eigenvalues must be precisely the eigenvectors of \(G_p\), concatenated with \(N-p\) zero entries, that is, for \(i=1,\ldots ,p\), \(v_i\) must be of the form \((*,0)\). By orthogonality, for \(i=p+1,\ldots , N\), \(v_i\) must be of the form \((0,*)\).

For convenience we also establish the following notation:

Now the proof consists of proving two bounds: a lower bound

and an upper bound

These bounds will be proved in the next two sections, that is, Sections 5.2 and 5.3, respectively.

5.2 Lower Bound

We want to establish a lower bound on \(\Phi _{N}[G^{(j)},U]\) via our expression for \(\mathcal {F}_{N}\) as a supremum:

This strategy requires us to construct measures \(\mu ^{(j)} \in \mathcal {G}_{N}^{-1}(G^{(j)})\). Intuitively, what one hopes to do (though this strategy will require some modification) is the following: consider the measure \(\alpha \) on \(\mathbb {R}^p\) that attains the supremum in the analogous expression for \(\mathcal {F}_p [G_p,U(\,\cdot \,,0)]\), identify this measure with a measure on \(V_G \simeq \mathbb {R}^p\), rotate and scale appropriately to obtain a measure \(\alpha ^{(j)}\) supported on \(V_{G^{(j)}}\) with the correct second-order moments with respect to this subspace, and finally take the direct sum with an appropriate Gaussian measure \(\beta ^{(j)}\) on \(V_{G^{(j)}}^\perp \). Unfortunately, due to difficulties of analysis, it is not clear how to then prove the desired limit as \(j\rightarrow \infty \).

However, the analysis of this limit would be feasible if the \(\mu ^{(j)}\) had compact support (which they evidently do not). Then our approach is to carry out a construction that preserves the spirit of the ‘ideal’ construction just described but instead works with \(\mu ^{(j)}\) of (uniform) compact support.

For convenience we let \(\mathcal {M}_c \subset \mathcal {M}_2\) denote the subset of measures of compact support. The acceptability of working with measures of compact support can be motivated by the following lemma, which will be used below. (In the statement we temporarily suppress dependence on the interaction and the dimension from the notation.)

Lemma 5.1

For all \(G\in \mathcal {S}^N\),

Now we outline our actual construction of the \(\mu ^{(j)}\). Consider an arbitrary measure \(\alpha \in \mathcal {G}_{p}^{-1}(G_{p})\) with compact support on \(\mathbb {R}^{p}\simeq V_{G}\). (We abuse notation slightly by considering \(\alpha \) as a measure on both \(\mathbb {R}^{p}\) and \(V_{G}\).) The idea now is to construct a measure \(\mu ^{(j)} \in \mathcal {G}_{N}^{-1}(G^{(j)})\) by rotating \(\alpha \) and scaling appropriately to obtain a measure \(\alpha ^{(j)}\) supported on \(V_{G^{(j)}}\) and then taking the direct sum with a compactly supported measure \(\beta ^{(j)}\) on \(V_{G^{(j)}}^\perp \) (the details of which will be discussed later). In fact the supremum in (5.1) will be approximately attained by a measure of this form as \(j\rightarrow \infty \), that is, our lower bound will be tight as \(j\rightarrow \infty \).

Accordingly, for the construction of \(\alpha ^{(j)}\), let \(O^{(j)}\) be the orthogonal linear transformation sending \(v_i \mapsto v_i^{(j)}\), and let \(D^{(j)}\) be the linear transformation with matrix (in the \(v_i^{(j)}\) basis) given by

Then define \(T^{(j)} := D^{(j)} O^{(j)}\) and \(\alpha ^{(j)} := T^{(j)} \# \alpha \). Note that \(T^{(j)} \rightarrow I_N\) as \(j \rightarrow \infty \). Moreover, observe that \(\alpha ^{(j)}\) is a measure supported on \(V_{G^{(j)}}\) with second-order moment matrix given by \(\mathrm {diag}(\lambda _{1}^{(j)},\ldots ,\lambda _{p}^{(j)})\) with respect to the coordinates on \(V_{G^{(j)}}\) induced by the orthonormal basis \(v_{1}^{(j)},\ldots ,v_{p}^{(j)}\).
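The moment claim follows from the fact that a linear pushforward transforms second moments by congruence. The display below is a sketch under an explicit assumption: that \(D^{(j)}\) acts on \(v_i^{(j)}\) by the factor \(\sqrt{\lambda _i^{(j)}/\lambda _i}\) for \(i=1,\ldots ,p\). This form is chosen to be consistent with \(T^{(j)} \rightarrow I_N\) and with the stated moments; it is not quoted from the text. Since \(\alpha \in \mathcal {G}_{p}^{-1}(G_{p})\) has second-moment matrix \(\sum _{i=1}^{p} \lambda _i v_i v_i^T\) when viewed on \(V_G\),

\[
\int (T^{(j)}x)(T^{(j)}x)^T \,\mathrm{{d}}\alpha (x)
= D^{(j)} O^{(j)} \left( \sum _{i=1}^{p} \lambda _i v_i v_i^T \right) \left( O^{(j)} \right) ^T \left( D^{(j)} \right) ^T
= D^{(j)} \left( \sum _{i=1}^{p} \lambda _i v_i^{(j)} \left( v_i^{(j)} \right) ^T \right) \left( D^{(j)} \right) ^T
= \sum _{i=1}^{p} \lambda _i^{(j)} v_i^{(j)} \left( v_i^{(j)} \right) ^T .
\]

The rotation \(O^{(j)}\) moves the support onto \(V_{G^{(j)}}\), and the scaling \(D^{(j)}\) then corrects the eigenvalues from \(\lambda _i\) to \(\lambda _i^{(j)}\).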

Now we turn to the construction of \(\beta ^{(j)}\). Let \(R>1\) and let \(\gamma \) be a measure supported on \([-R,R]\) with \(\int x^2 \,\mathrm{{d}}\gamma = 1\). The parameter R will control the size of the support of \(\beta ^{(j)}\) and will be sent to \(+\infty \) at the very end of the proof of the lower bound (after the limit in j has been taken). Then define

and define a measure \(\beta ^{(j)}\) on \(\mathbb {R}^{N-p}\) by \(\beta ^{(j)} := \Lambda ^{(j)} \# (\gamma \times \cdots \times \gamma )\). Note that \(\Lambda ^{(j)}\rightarrow 0\) as \(j\rightarrow \infty \). Abusing notation slightly, we will also identify \(\beta ^{(j)}\) with a measure supported on \(V_{G^{(j)}}^{\perp }\simeq \mathbb {R}^{N-p}\) via the identification of the orthonormal basis \(v_{p+1}^{(j)},\ldots ,v_{N}^{(j)}\) for \(V_{G^{(j)}}^\perp \) with the standard basis of \(\mathbb {R}^{N-p}\).

Finally, define the product measure \(\mu ^{(j)}:=\alpha ^{(j)}\times \beta ^{(j)}\) with respect to the product structure \({\mathbb {R}^{N}}=V_{G^{(j)}}\times V_{G^{(j)}}^{\perp }\), and note that \(\mu ^{(j)}\in \mathcal {G}_N^{-1}(G^{(j)})\), so by (5.1),

where \(H(\alpha ^{(j)})\) and \(H(\beta ^{(j)})\) are the entropies of \(\alpha ^{(j)}\) and \(\beta ^{(j)}\) on the probability spaces \(V_{G^{(j)}}\) and \(V_{G^{(j)}}^{\perp }\), respectively.
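Two standard facts about differential entropy are used in this splitting: entropy is additive over product measures, and an invertible linear map T shifts it by \(\log |\det T|\). The display below is a sketch assuming \(\Lambda ^{(j)} = \mathrm {diag}\left( \sqrt{\lambda _{p+1}^{(j)}},\ldots ,\sqrt{\lambda _{N}^{(j)}}\right) \) and that \(\gamma \) has a density; the form of \(\Lambda ^{(j)}\) is an assumption, chosen so that \(\beta ^{(j)}\) has the second moments required for \(\mu ^{(j)}\in \mathcal {G}_N^{-1}(G^{(j)})\).

\[
H(\mu ^{(j)}) = H(\alpha ^{(j)}) + H(\beta ^{(j)}),
\qquad
H(\beta ^{(j)}) = (N-p)\, H(\gamma ) + \frac{1}{2} \sum _{i=p+1}^{N} \log \lambda _i^{(j)} .
\]

Since \(\lambda _i^{(j)} \rightarrow 0\) for \(i > p\), the last sum tends to \(-\infty \) as \(j \rightarrow \infty \), which is why the contribution of \(\beta ^{(j)}\) has to be tracked explicitly rather than bounded crudely.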

Notice that there is a compact set on which all of the measures \(\mu ^{(j)}\) are supported. It is then not difficult to see that \(\mu ^{(j)}\) converges weakly to the measure \(\alpha \times \delta _0\), where the product is with respect to the product structure \({\mathbb {R}^{N}}= V_G \times V_G^\perp \) and \(\delta _0\) is the Dirac delta measure localized at the origin. By the continuity of U and the uniform boundedness of the supports of \(\mu ^{(j)}\), this is enough to guarantee that

as \(j\rightarrow \infty \).

Next we write the Luttinger–Ward functional in terms of \(\mathcal {F}_N\):

Then combining the preceding observations yields

Now for any \(\varepsilon > 0\), we can choose R sufficiently large and \(\gamma \) supported on \([-R,R]\) such that \(H(\gamma ) \geqq \frac{1}{2} \log (2\pi e) - \varepsilon \). Indeed, note that \(\frac{1}{2} \log (2\pi e)\) is the entropy of the standard normal distribution, that is, the maximal entropy over measures of unit variance. By restricting the normal distribution to \([-R,R]\) for R sufficiently large, we can come arbitrarily close to saturating this bound. Therefore we have that

Since \(\alpha \) was arbitrary in \(\mathcal {G}_{p}^{-1}(G_{p})\cap \mathcal {M}_c\), this establishes the desired lower bound

where we have used Lemma 5.1, which allows us to look at the supremum over compactly supported measures.

Observe that the proof of the lower bound did not require the strong growth assumption, hence the semi-continuity claim of Remark 3.19.
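The entropy step used in the lower bound, namely that a compactly supported measure of unit variance can come arbitrarily close to the Gaussian entropy \(\frac{1}{2} \log (2\pi e)\), can be checked with a short numerical sketch. The construction below is one possible choice of \(\gamma \) and is an assumption of this illustration: the standard normal is restricted to \([-R,R]\) and then rescaled so that its variance is again 1, which enlarges the support radius slightly to \(R/\sqrt{v}\), where v is the variance after truncation. The function name is hypothetical.

```python
import math

GAUSS_ENTROPY = 0.5 * math.log(2.0 * math.pi * math.e)

def truncated_unit_variance_entropy(R):
    """Entropy of N(0,1) restricted to [-R, R] and rescaled to unit variance.

    Returns (entropy, support radius after rescaling).
    """
    Z = math.erf(R / math.sqrt(2.0))                     # mass of N(0,1) on [-R, R]
    phi_R = math.exp(-0.5 * R * R) / math.sqrt(2.0 * math.pi)
    v = 1.0 - 2.0 * R * phi_R / Z                        # variance after truncation (< 1)
    # entropy of the truncated density phi(x)/Z on [-R, R]
    h_trunc = math.log(Z) + 0.5 * math.log(2.0 * math.pi) + 0.5 * v
    # the map x -> x / sqrt(v) restores unit variance and adds -0.5*log(v)
    return h_trunc - 0.5 * math.log(v), R / math.sqrt(v)

if __name__ == "__main__":
    for R in (1.0, 2.0, 3.0, 4.0, 6.0):
        h, radius = truncated_unit_variance_entropy(R)
        print(f"R={R:.0f}  support radius={radius:.3f}  gap={GAUSS_ENTROPY - h:.3e}")
```

The printed gap is the \(\varepsilon \) of the argument for this particular \(\gamma \): it is positive, as the maximum-entropy property of the Gaussian requires, and it shrinks rapidly as R grows.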

5.3 Upper Bound

Next we turn to establishing an upper bound. The basic strategy is to select measures \(\mu ^{(j)}\) that (approximately) attain the supremum in (5.1) and take a limit as \(j\rightarrow \infty \).

Before proceeding, let \(\varepsilon >0\). Moreover, define \(\pi _1\) to be the orthogonal projection onto \(V_{G} \simeq \mathbb {R}^p\), and define \(\pi _2\) to be the orthogonal projection onto \(V_{G}^\perp \simeq \mathbb {R}^{N-p}\).

Now for every j, as suggested above choose \(\mu ^{(j)} \in \mathcal {G}_N^{-1}(G^{(j)})\) such that

Therefore