Abstract

We provide a mathematical analysis of the effective viscosity of suspensions of spherical particles in a Stokes flow, at low solid volume fraction \(\phi \). Our objective is to go beyond Einstein’s approximation \(\mu _{eff} = (1+\frac{5}{2}\phi ) \mu \). Assuming a lower bound on the minimal distance between the N particles, we are able to identify the \(O(\phi ^2)\) correction to the effective viscosity, which involves pairwise particle interactions. Applying the methodology developed over the last years for Coulomb gases, we are able to tackle the limit \(N \rightarrow +\infty \) of the \(O(\phi ^2)\)-correction, and provide an explicit formula for this limit when the particle centers can be described by either periodic or stationary ergodic point processes.

1 Setting of the Problem

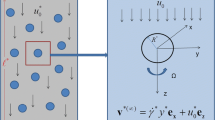

Our general concern is the computation of the effective viscosity generated by a suspension of N particles in a fluid flow. We consider spherical particles of small radius a, centered at \(x_{i,N}\), with \(N \geqq 1\) and \(1\leqq i\leqq N\). To lighten notations, we write \(x_i\) instead of \(x_{i,N}\), and \(B_i = B(x_i, a)\). We assume that the Reynolds number of the fluid flow is small, so that the fluid velocity is governed by the Stokes equation. Moreover, the particles are assumed to be force- and torque-free. If \(\mathcal {F} = \mathbb {R}^3 \setminus (\cup _i B_i)\) is the fluid domain, governing equations are

where \(\mu \) is the kinematic viscosity, while the constant vectors \(u_i\) and \(\omega _i\) are Lagrange multipliers associated to the constraints

Here, \(\sigma _\mu (u,p) := 2\mu D(u) - p I\) is the usual Cauchy stress tensor. The boundary condition at infinity will be specified later on.

We are interested in a situation where the number of particles is large, \(N \gg 1\). We want to understand the additional viscosity created by the particles. Ideally, our goal is to replace the viscosity coefficient \(\mu \) in (1.1) by an effective viscosity tensor \(\mu '\) that would encode the average effect induced by the particles. Note that such replacement can only make sense in the flow region \(\mathcal {O}\) in which the particles are distributed in a dense way. For instance, a finite number of isolated particles will not contribute to the effective viscosity, and should not be taken into account in \(\mathcal {O}\). The selection of the flow region is formalized through the following hypothesis on the empirical measure:

Note that we do not ask for regularity of the limit density f over \(\mathbb {R}^3\), but only in restriction to \(\mathcal {O}\). Hence, our assumption covers the important case \(f = \frac{1}{|\mathcal {O}|} 1_{\mathcal {O}}\).

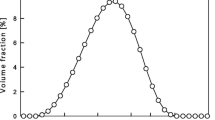

We investigate the classical regime of dilute suspensions, in which the solid volume fraction

is small, but independent of N. In addition to (H1), we make the separation hypothesis

\[\exists \, c > 0 \text{ independent of } N, \quad \forall i \ne j, \quad |x_i - x_j| \geqq c N^{-1/3}. \tag{H2}\]

Let us stress that (H2) is compatible with (H1) only if the \(L^\infty \) norm of f is small enough (roughly less than \(1/c^3\)), which in turn forces \(\mathcal {O}\) to be large enough.

Our hope is to replace a model of type (1.1) by a model of the form

with the usual continuity conditions on the velocity and the stress

A priori, \(\mu '\) could be inhomogeneous (and should be if the density f seen above is itself non-constant over \(\mathcal {O}\)). It could also be anisotropic, if the cloud of particles favours some direction. With this in mind, it is natural to look for \(\mu ' = \mu '(x)\) as a general 4-tensor, with \(\sigma ' = 2 \mu ' D(u)\) given in coordinates by \(\sigma _{ij} = \mu '_{ij kl} D(u)_{kl}\). By classical considerations of mechanics, \(\mu '\) should satisfy the relations

namely, \(\mu '\) should define a symmetric isomorphism over the space of \(3\times 3\) symmetric matrices.
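
To make the tensor notation concrete, here is a minimal sketch for the isotropic case. It uses the coordinate convention \(\sigma _{ij} = \mu '_{ijkl} D(u)_{kl}\) and assumes that the required relations are the usual minor and major index symmetries \(\mu '_{ijkl} = \mu '_{jikl} = \mu '_{ijlk} = \mu '_{klij}\); this reading, the isotropic tensor and the helper names are assumptions made for illustration only.

```python
import itertools

def isotropic_tensor(mu):
    """4-tensor mu * (d_ik d_jl + d_il d_jk); applied to a symmetric D it returns 2 * mu * D."""
    d = lambda a, b: 1.0 if a == b else 0.0
    return {(i, j, k, l): mu * (d(i, k) * d(j, l) + d(i, l) * d(j, k))
            for i, j, k, l in itertools.product(range(3), repeat=4)}

def contract(tensor, D):
    """sigma_ij = tensor_ijkl D_kl (summation over k and l)."""
    return [[sum(tensor[i, j, k, l] * D[k][l] for k in range(3) for l in range(3))
             for j in range(3)] for i in range(3)]

def has_symmetries(tensor):
    """Check tensor_ijkl = tensor_jikl = tensor_ijlk = tensor_klij (assumed form of the relations)."""
    return all(tensor[i, j, k, l] == tensor[j, i, k, l] == tensor[i, j, l, k] == tensor[k, l, i, j]
               for i, j, k, l in itertools.product(range(3), repeat=4))

mu = 1.5
D = [[1.0, 0.5, 0.0], [0.5, -2.0, 0.25], [0.0, 0.25, 1.0]]  # symmetric and trace-free
sigma = contract(isotropic_tensor(mu), D)
print(has_symmetries(isotropic_tensor(mu)), sigma)
```

In this special case the tensor acts on symmetric matrices as multiplication by \(2\mu \), so it is trivially a symmetric isomorphism; an anisotropic \(\mu '\) would be represented by a different table of coefficients with the same index symmetries.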

As we consider a situation in which \(\phi \) is small, we may expect \(\mu '\) to be a small perturbation of \(\mu \), and hopefully admit an expansion in powers of \(\phi \):

The main mathematical questions are:

- Can solutions \(u_N\) of (1.1)–(1.2) be approximated by solutions \(u_{eff} = 1_{\mathbb {R}^3\setminus \mathcal {O}} u + 1_{\mathcal {O}} u'\) of (1.4)–(1.5), for an appropriate choice of \(\mu '\) and an appropriate topology?

- If so, does \(\mu '\) admit an expansion of type (1.6), for some k?

- If so, what are the values of the viscosity coefficients \(\mu _i\), \(1 \leqq i \leqq k\)?

Let us stress that, in most articles about the effective viscosity of suspensions, it is implicitly assumed that the first two questions have a positive answer, at least for \(k=1\) or 2. In other words, the existence of an effective model is taken for granted, and the point is then to answer the third question, or at least to determine the mean values

of the viscosity coefficients. As we will see in Section 2, these mean values can be determined from the asymptotic behaviour of some integral quantities \(\mathcal {I}_N\) as \(N \rightarrow +\infty \). These integrals involve the solutions \(u_N\) of (1.1)–(1.2) with condition at infinity

\[\lim _{|x| \rightarrow +\infty } \big ( u(x) - Sx \big ) = 0, \tag{1.8}\]

where S is an arbitrary symmetric trace-free matrix.

The effective viscosity problem for dilute suspensions of spherical particles has a long history, mostly focused on the first order correction created by the suspension, that is \(k=1\) in (1.6). The pioneering work on this problem is due to Einstein [15], not to mention earlier contributions on the similar conductivity problem by Maxwell [29], Clausius [11] and Mossotti [32]. Einstein’s celebrated formula,

\[\mu _{eff} = \left( 1+\frac{5}{2}\phi \right) \mu , \tag{1.9}\]

was derived under the assumption that the particles are homogeneously and isotropically distributed, and neglecting the interactions between particles. In other words, the correction \(\mu _1 = \frac{5}{2} \mu \) is obtained by summing N times the contribution of one spherical particle to the effective stress. The calculation of Einstein will be seen in Section 2. It was later extended to the case of an inhomogeneous suspension by Almog and Brenner [1, p. 16], who found that

The mathematical justification of formula (1.9) came much later. As far as we know, the first step in this direction was due to Sanchez-Palencia [38] and Levy and Sanchez-Palencia [28], who recovered Einstein’s formula from homogenization techniques, when the suspension is periodically distributed in a bounded domain. Another justification, based on variational principles, is due to Haines and Mazzucato [19]. They also consider a periodic array of spherical particles in a bounded domain \(\Omega \), and define the viscosity coefficient of the suspension in terms of the energy dissipation rate:

where \(u_N\) is the solution of (1.1)–(1.2)–(1.8), replacing \(\mathbb {R}^3\) by \(\Omega \). Their main result is that

For preliminary results in the same spirit, see Keller-Rubenfeld [27]. Eventually, a recent work [21] by the second author and Di Wu shows the validity of Einstein’s formula under general assumptions of type (H1)–(H2). See also [33] for a similar recent result.
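
For orientation, the size of the first order correction is easy to evaluate. The sketch below implements Einstein’s approximation \(\mu _{eff} = (1+\frac{5}{2}\phi ) \mu \) and, as a purely hypothetical helper, adds a \(\phi ^2\) term with a user-supplied mean coefficient; the function names and the numerical values are illustrative and no value of the second order coefficient is implied.

```python
def einstein_viscosity(mu, phi):
    """First order (Einstein) approximation mu_eff = (1 + 5/2 * phi) * mu."""
    return (1.0 + 2.5 * phi) * mu

def second_order_viscosity(mu, phi, nu2):
    """Hypothetical helper: Einstein's value plus phi**2 * nu2, with nu2 supplied by the user."""
    return einstein_viscosity(mu, phi) + phi ** 2 * nu2

mu = 1.0e-3  # illustrative viscosity of the pure fluid
for phi in (0.01, 0.05, 0.10):
    relative_increase = einstein_viscosity(mu, phi) / mu - 1.0
    print(f"phi = {phi:.2f}: relative increase of viscosity = {relative_increase:.3f}")
```

The relative increase is \(\frac{5}{2}\phi \) whatever the value of \(\mu \), whereas any second order term is smaller by a further factor \(\phi \) in the dilute regime.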

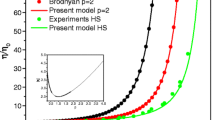

Our goal in the present paper is to go beyond this famous formula, and to study the second order correction to the effective viscosity, that is \(k=2\) in (1.6). Results on this problem have split so far into two settings: periodic distributions, and random distributions of spheres. Many different formulas have emerged in the literature, after analytical, numerical and experimental studies. In the periodic case, one can refer to the works [2, 34, 37, 42], or to the more recent work [2], dedicated to the case of spherical inclusions of another Stokes fluid with viscosity \(\tilde{\mu }\ne \mu \). Still, in the simple case of a primitive cubic lattice, the expressions for the second order correction differ. In the random case, the most renowned analysis is due to Batchelor and Green [5], who consider a homogeneous and stationary distribution of spheres, and express the correction \(\mu _2\) as an ensemble average that involves the N-point correlation function of the process. As pointed out by Batchelor and Green, the natural idea when investigating the effective viscosity up to \(O(\phi ^2)\) is to replace the N-point correlation function by the two-point correlation function, but this leads to a divergent integral. To overcome this difficulty, Batchelor and Green develop what they call a renormalization technique, which had been developed earlier by Batchelor to determine the sedimentation speed of a dilute suspension. After further analysis of the expression of the two-point correlation function of spheres in a Stokes flow [6], completed by numerical computations, they claim that under a pure strain, the particles induce a viscosity of the form

Although the result of Batchelor and Green is generally accepted by the fluid mechanics community, the lack of clarity about their renormalization technique has led to debate; see [1, 22, 35].

One main objective in the present paper is to give a rigorous and global mathematical framework for the computation of

leading to explicit formulas in periodic and stationary random settings. We will adopt the point of view of the studies mentioned before: we will assume the validity of an effective model of type (1.4)–(1.5)–(1.6) with \(k=2\), and will identify the averaged coefficient \(\nu _2\).

More precisely, our analysis is divided into two parts. The first part, conducted in Section 2, has as its main consequence the following:

Theorem 1.1

Let \((x_{i})_{1 \leqq i \leqq N}\) be a family of points supported in a fixed compact subset of \(\mathbb {R}^3\), and satisfying (H1)–(H2). For any trace-free symmetric matrix S and any \(\phi > 0\), let \(u_N\), resp. \(u_{eff}\), be the solution of (1.1)–(1.2)–(1.8) with the radius a of the balls defined through (1.3), resp. the solution of (1.4)–(1.5)–(1.8) where \(\mu '\) obeys (1.6) with \(k=2\), \(\mu _1\) being given in (1.10).

If \(u_N - u_{eff} = o(\phi ^2)\) in \(H^{-\infty }_{loc}(\mathbb {R}^3)\), meaning that for every bounded open set U, there exists \(s \in \mathbb {R}\) such that

then, necessarily, the coefficient \(\nu _2\) defined in (1.12) satisfies \(\, \nu _2 S : S = \mu \lim _{N \rightarrow +\infty } \mathcal {V}_N\), where

with the Calderón–Zygmund kernel

Roughly, this theorem states that if there is an effective model at order \(\phi ^2\), the mean quadratic correction \(\nu _2\) is given by the limit of \(\mathcal {V}_N\), defined in (1.13). Note that the integral at the right-hand side of (1.13) is well-defined: \(f \in L^2(\mathbb {R}^{3})\) and \(f \mapsto g_S \star f\) is a Calderón–Zygmund operator, therefore continuous on \(L^2(\mathbb {R}^3)\). We stress that our result is a conditional (“if”) theorem: the limit of (1.13) need not exist for every configuration of particles \(x_i = x_{i,N}\) satisfying (H1)–(H2). In particular, it is not clear that an effective model at order \(\phi ^2\) is available for all such configurations.

Still, the second part of our analysis shows that for points associated to stationary random processes (including periodic patterns or Poisson hard core processes), the limit of the functional does exist, and is given by an explicit formula. We shall leave for later investigation the problem of approximating \(u_N\) by \(u_{eff}\) when the limit of \(\mathcal {V}_N\) exists.

Our study of functional (1.13) is detailed in Sections 3 to 5. It borrows a lot from the mathematical analysis of Coulomb gases, as developed over the last years by Sylvia Serfaty and her coauthors [9, 36, 40]. Although our paper is self-contained, we find it useful to give a brief account of this analysis here. As explained in the lecture notes [41], one of its main goals is to understand what configurations of points minimize Coulomb energies of the form

where \(g(x) = \frac{1}{|x|}\) is a repulsive potential of Coulomb type, and V is typically a confining potential. It is well known, see [41, chapter 2], that under suitable assumptions on V, the sequence of functionals \(H_N\) (seen as functionals over probability measures, extended by \(+\infty \) outside the set of empirical measures) \(\Gamma \)-converges to the functional

Hence, the empirical measure \(\delta _N = \frac{1}{N} \sum _{i=1}^N \delta _{x_i}\) associated to the minimizer \((x_1,\dots ,x_N)\) of \(H_N\) converges weakly to the minimizer \(\lambda \) of H.

In the series of works [36, 40], see also [39] on the Ginzburg-Landau model, Serfaty and her coauthors investigate the next order term in the asymptotic expansion of \(\min _{x_1,\dots ,x_N} H_N\). A key point in these works is understanding the behaviour of (the minimum of)

as \(N \rightarrow +\infty \). This is done through the notion of renormalized energy. Roughly, the starting point behind this notion is the (abusive) formal identity

where \(h_N\) is the solution of \(\Delta h_N = 4 \pi (\delta _N - \lambda )\) in \(\mathbb {R}^3\). Of course, this identity does not make sense, as both sides are infinite. On the one hand, the left-hand side is not well-defined: the potential g is singular at the diagonal, so that the integral with respect to the product of the empirical measures diverges. On the other hand, the right-hand side is no better defined: as the empirical measure does not belong to \(H^{-1}(\mathbb {R}^3)\), \(h_N\) is not in \(\dot{H}^1(\mathbb {R}^3)\).

Still, as explained in [41, chapter 3], one can modify this identity, and show a formula of the form

where \(h_N^\eta \) is an approximation of \(h_N\) obtained by regularization of the Dirac masses at the right-hand side of the Laplace equation: \(\Delta h_N^\eta = 4 \pi (\delta ^\eta _N - \lambda )\) in \(\mathbb {R}^3\). Note the removal of the term \(N g(\eta )\) at the right-hand side of (1.17). This term, which goes to infinity as the parameter \(\eta \rightarrow 0\), corresponds to the self-interaction of the Dirac masses: it must be removed, consistently with the fact that the integral defining \(\mathcal {H}_N\) excludes the diagonal. This explains the term renormalized energy. See [41, chapter 3] for more details.
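
The role of the diverging self-interaction term can be seen on a toy discrete analogue. The sketch below is not formula (1.17): it assumes, for illustration, that each point charge is regularized by truncating the potential at height \(g(\eta )\), that is, by replacing g with \(\min (g, g(\eta ))\); the point configuration and the function names are hypothetical.

```python
import math

def coulomb(r):
    """Coulomb potential g(x) = 1/|x| as a function of r = |x| > 0."""
    return 1.0 / r

def truncated(r, eta):
    """Truncated potential min(g, g(eta)); equals g(eta) for r <= eta, including r = 0."""
    return 1.0 / max(r, eta)

def off_diagonal_energy(points):
    """Sum of g(x_i - x_j) over ordered pairs i != j (the diagonal is excluded)."""
    return sum(coulomb(math.dist(p, q))
               for i, p in enumerate(points) for j, q in enumerate(points) if i != j)

def regularized_energy(points, eta):
    """Sum of the truncated potential over all ordered pairs, diagonal included."""
    return sum(truncated(math.dist(p, q), eta) for p in points for q in points)

points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (1.0, 1.0, 3.0)]
N = len(points)
for eta in (0.5, 0.1, 0.01):
    total = regularized_energy(points, eta)
    # total blows up like N * g(eta); subtracting that term leaves the off-diagonal sum.
    print(eta, total, total - N * coulomb(eta), off_diagonal_energy(points))
```

As soon as \(\eta \) is smaller than the minimal distance between the points, the regularized sum minus \(N g(\eta )\) coincides with the off-diagonal sum, which is the discrete counterpart of removing the self-interaction.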

From there (leaving aside the delicate commutation of the limits in N and \(\eta \)), the asymptotics of \(\min _{x_1, \dots , x_N} \mathcal {H}_N\) can be deduced from those of \(\min _{x_1, \dots , x_N} \int _{\mathbb {R}^3} |{\nabla }h_N^\eta |^2\), for fixed \(\eta \). The next step is to show that such a minimum can be expressed as a spatial average of (minimal) microscopic energies, expressed in terms of solutions of the so-called jellium problems: see [41, chapter 4]. These problems, obtained through rescaling and blow-up of the equation on \(h_N^\eta \), are an analogue of cell problems in homogenization. More will be said in Section 4, and we refer to the lecture notes [41] for all necessary complements.

Thus, the main idea in the second part of our paper is to take advantage of the analogy between the functionals \(\mathcal {V}_N\) and \(\mathcal {H}_N\) to apply the strategy just described. Doing so, we face specific difficulties: our distribution of points is not minimizing an energy, the potential \(g_S\) is much more singular than g, the reformulation of the functional in terms of an energy is less obvious, etc. Still, we are able to reproduce the same kind of scheme. We introduce in Section 3 an analogue of the renormalized energy. The analogue of the jellium problem is discussed in Section 4. Finally, in Section 5, we are able to tackle the convergence of \(\mathcal {V}_N\), and give explicit formulas for the limit in two cases: the case of a (properly rescaled) \(L \mathbb {Z}^3\)-periodic pattern of M spherical particles with centers \(a_1\), ..., \(a_M\), and the case of a (properly rescaled) hardcore stationary random process with locally integrable two-point correlation function \(\rho _2(y,z) = \rho (y-z)\). In the first case, we show that

where \(G_S\) and \(G_{S,L}\) are the whole space and \(L\mathbb {Z}^3\)-periodic kernels defined respectively in (3.12) and (5.18); see Proposition 5.4. In the special case of a primitive cubic lattice, for which \(M=L=1\), we can push the calculation further, finding that

with \(\alpha \approx 9.48\) and \(\beta \approx -2.15\), cf. Proposition 5.5 for precise expressions. Our result is in agreement with [42]. In the random stationary case, if the process has mean intensity one, we show that

These formulas open the way to numerical computations of the viscosity coefficients of specific processes, and should in particular allow us to check the formulas found in the literature [5, 35].

Let us conclude this introduction by pointing out that our analysis falls into the general scope of deriving macroscopic properties of dilute suspensions. From this perspective, it can be related to mathematical studies on the drag or sedimentation speed of suspensions; see [13, 23, 24, 25, 30] among many others. See also the recent work [14] on the conductivity problem.

2 Expansion of the Effective Viscosity

The aim of this section is to understand the origin of the functional \(\mathcal {V}_N\) introduced in (1.13), and to prove Theorem 1.1. The outline is the following. We first consider the effective model (1.4)–(1.5)–(1.6). Given S a symmetric trace-free matrix, and a solution \(u_{eff}\) with condition at infinity (1.8), we exhibit an integral quantity \(\mathcal {I}_{eff} = \mathcal {I}_{eff}(S)\) that involves \(u_{eff}\) and allows us to recover (partially) the mean viscosity coefficient \(\nu _2\). In the next paragraph, we introduce the analogue \(\mathcal {I}_{N}\) of \(\mathcal {I}_{eff}\), that involves this time the solution \(u_N\) of (1.1)–(1.2) and (1.8). In brief, we show that if \(u_N\) is \(o(\phi ^2)\) close to \(u_{eff}\), then \(\mathcal {I}_{N}\) is \(o(\phi ^2)\) close to \(\mathcal {I}_{eff}\). Finally, we provide an expansion of \(\mathcal {I}_{N}\), allowing us to express \(\nu _2\) in terms of \(\mathcal {V}_N\). Theorem 1.1 follows.

2.1 Recovering the Viscosity Coefficients in the Effective Model

Let \(k \geqq 2\), and let \(\mu '\) satisfy (1.6), with viscosity coefficients \(\mu _i\) that may depend on x. Let S be symmetric and trace-free. We denote \(u_0(x) = Sx\). Let \(u_{eff} = 1_{\mathbb {R}^3\setminus \mathcal {O}} u + 1_{\mathcal {O}} u'\) be the weak solution in \(u_0+ \dot{H}^1(\mathbb {R}^3)\) of (1.4)–(1.5)–(1.8). By a standard energy estimate, one can show the expansion

where the system satisfied by \(u_{eff,i} = 1_{\mathbb {R}^3\setminus \mathcal {O}} u_i + 1_{\mathcal {O}} u'_i\) is derived by plugging the expansion in (1.4)–(1.5) and keeping terms with power \(\phi ^i\) only. Notably, we find that

together with the conditions \(u_1 = 0\) at infinity,

Similarly,

together with \(u_2 = 0\) at infinity,

Now, inspired by formula (4.11.16) in [4], we define

where n refers to the outward normal. We will show that

We first use (1.5) to write

Using the equations satisfied by \(u'_1\) and \(u'_2\), after integration by parts, we get

Plugging this last line in the expression for \(\mathcal {I}_{eff}\) yields (2.4).

We see through formula (2.4) that the expansion of \(\mathcal {I}_{eff}\) in powers of \(\phi \) gives access to \(\nu _1\), and, if \(\mu _1\) is known, it further gives access to \(\nu _2\). On the basis of the works [1, 33] and of the recent paper [21], which considers the same setting as ours, it is natural to assume that \(\mu _1\) is given by (1.10). This implies \(\nu _1 = \frac{5}{2} \mu \). With such expression of \(\mu _1\), and the form of f specified in (H1), we can check that \(u_S = (5 |\mathcal {O}|)^{-1} u_{eff,1}\) satisfies

It follows that

2.2 Recovering the Viscosity Coefficients in the Model with Particles

To determine the possible value of the mean viscosity coefficient \(\nu _2\), we must now relate the functional \(\mathcal {I}_{eff}\), based on the effective model, to a functional \(\mathcal {I}_N\) based on the real model with spherical rigid particles. From now on, we place ourselves under the assumptions of Theorem 1.1. Note that, thanks to hypothesis (H2), the spherical particles do not overlap for \(\phi \) small enough, so that a weak solution \(u_N \in u_0 + \dot{H}^1(\mathbb {R}^3)\) of (1.1)–(1.2)–(1.8) exists and is unique.
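
The non-overlap condition can be made quantitative under one assumption that we state explicitly: that the solid volume fraction (1.3) is the ratio of the total volume of the balls to \(|\mathcal {O}|\), that is \(\phi = N \frac{4}{3}\pi a^3 / |\mathcal {O}|\). With this reading, which is an assumption of the sketch, the requirement \(2a \leqq c N^{-1/3}\) becomes a bound on \(\phi \) that does not depend on N. The function names below are hypothetical.

```python
import math

def ball_radius(phi, n_particles, volume):
    """Radius a with n_particles * (4/3) * pi * a**3 = phi * volume (assumed form of (1.3))."""
    return (3.0 * phi * volume / (4.0 * math.pi * n_particles)) ** (1.0 / 3.0)

def max_phi_without_overlap(c, volume):
    """Largest phi for which 2a <= c * N**(-1/3); independent of N under the assumed scaling."""
    return math.pi * c ** 3 / (6.0 * volume)

def min_distance(points):
    """Smallest pairwise distance in a finite point configuration."""
    return min(math.dist(p, q) for i, p in enumerate(points) for q in points[i + 1:])

# Illustration: N = 1000 centers in a domain of volume 1, separation constant c = 1.
n_particles, volume, c = 1000, 1.0, 1.0
phi_max = max_phi_without_overlap(c, volume)
a = ball_radius(phi_max, n_particles, volume)
print(phi_max, 2.0 * a, c * n_particles ** (-1.0 / 3.0))
```

For a cubic lattice of unit-volume density this threshold is \(\pi /6\), the packing fraction of touching spheres on a simple cubic lattice, so any small \(\phi \) is admissible.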

By integration by parts, for any R such that \(\mathcal {O}\Subset B_R\), we have

By analogy with (2.3), and as all particles remain in a fixed compact \(K \supset \mathcal {O}\) independent of N, we set for any R such that \(K \subset B_R\):

which again does not depend on our choice of R by integration by parts. Now, if \(u_{eff}\) and \(u_N\) are \(o(\phi ^2)\)-close in the sense of Theorem 1.1, then

Indeed, \(u_N - u_{eff}\) is a solution of a homogeneous Stokes equation outside K. By elliptic regularity, we find that \(\limsup _{N \rightarrow +\infty } \Vert u_{eff} - u_N\Vert _{H^s(K')} = o(\phi ^2)\), for any compact \(K' \subset \mathbb {R}^3\setminus K\) and any positive s. Relation (2.9) follows.

We now turn to the most difficult part of this section, that is expanding \(\mathcal {I}_N\) in powers of \(\phi \). We aim to prove

Proposition 2.1

Let \((x_{i})_{1\leqq i \leqq N}\) satisfy (H1)–(H2). For S trace-free and symmetric and for \(\phi > 0\), let \(u_N\) be the solution of (1.1)–(1.2)–(1.8) with the ball radius a defined through (1.3). Let \(\mathcal {I}_N\) be as in (2.8), \(\mathcal {V}_N\) as in (1.13), and \(u_S\) the solution of (2.5). One has

As before, notation \(A_N = B_N + o(\phi ^2)\) means \(\limsup _N |A_N - B_N| = o(\phi ^2)\). Obviously, Theorem 1.1 follows directly from (2.6), (2.9) and from the proposition.

To start the proof, we set \(v_N := u_N - u_0\). Note that \(v_N \in \dot{H}^1(\mathcal F)\) still satisfies the Stokes equation outside the balls, with \(v_N = 0\) at infinity, and \(v_N = - Sx + u_i + \omega _i \times (x-x_i)\) inside \(B_i\). Moreover, taking into account the identities

and

one has for all i that

From the definition (2.8), we can re-express \(\mathcal {I}_N\) as

To obtain an expansion of \(\mathcal I_N\) in powers of \(\phi \), we will now approximate \((v_N,p_N)\) by some explicit field \((v_{app}, p_{app})\), inspired by the method of reflections. This approximation involves the elementary problem

The solution of (2.13) is explicit [18], and given by

with

The pressure is
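
The properties of the elementary solution that are used later can be checked numerically. The sketch below takes as an assumption the classical closed form for the perturbation flow around a rigid sphere of radius a in the pure strain \(Sx\), namely a dipole term \(-\frac{5}{2} a^3 (S:x\otimes x)\, x/|x|^5\) plus corrections of order \(a^5\); this form is assumed here for the fields of (2.14)–(2.15), and the function names are hypothetical. The pressure is not used.

```python
import math

def quad_form(S, x):
    """S : (x tensor x) = sum over i, j of S_ij x_i x_j."""
    return sum(S[i][j] * x[i] * x[j] for i in range(3) for j in range(3))

def mat_vec(S, x):
    """Matrix-vector product S x."""
    return [sum(S[i][j] * x[j] for j in range(3)) for i in range(3)]

def v_dipole(S, x, a):
    """Assumed leading far-field term: -(5/2) a^3 (S : x x) x / |x|^5."""
    r = math.sqrt(sum(c * c for c in x))
    q = quad_form(S, x)
    return [-2.5 * a ** 3 * q * c / r ** 5 for c in x]

def v_sphere(S, x, a):
    """Assumed classical solution outside the ball: dipole term plus two a^5 corrections."""
    r = math.sqrt(sum(c * c for c in x))
    q = quad_form(S, x)
    Sx = mat_vec(S, x)
    far = v_dipole(S, x, a)
    return [far[i] - a ** 5 * Sx[i] / r ** 5 + 2.5 * a ** 5 * q * x[i] / r ** 7
            for i in range(3)]

def divergence(field, x, h=1e-5):
    """Central finite-difference approximation of the divergence of a vector field at x."""
    total = 0.0
    for i in range(3):
        xp, xm = list(x), list(x)
        xp[i] += h
        xm[i] -= h
        total += (field(xp)[i] - field(xm)[i]) / (2.0 * h)
    return total

S = [[1.0, 0.5, 0.0], [0.5, -2.0, 0.25], [0.0, 0.25, 1.0]]  # symmetric and trace-free
a = 0.3
on_sphere = [0.6 * a, 0.0, 0.8 * a]          # a point with |x| = a
print(v_sphere(S, on_sphere, a), [-c for c in mat_vec(S, on_sphere)])
print(divergence(lambda y: v_sphere(S, y, a), [0.7, -0.4, 0.9]))
```

On the sphere \(|x| = a\) the two \(\frac{5}{2}\)-terms cancel and the field reduces to \(-Sx\), while away from the ball the difference with the dipole term decays like \(a^5 |x|^{-4}\), consistently with the bound \(O(a^5 |x|^{-5})\) on symmetric gradients used in the proof of (2.33).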

We now introduce

where

In short, the first sum at the right-hand side of (2.17) corresponds to a superposition of N elementary solutions, meaning that the interaction between the balls is neglected. This sum satisfies the Stokes equation outside the balls, but creates an error at each ball \(B_i\), whose leading term is \(S_i x\). This explains the correction by the second sum at the right-hand side of (2.17). One could of course reiterate the process: as the distance between particles is large compared to their radius, we expect the interactions to be smaller and smaller. This is the principle of the method of reflections that is investigated in [24]. From there, Proposition 2.1 will follow from two facts. Defining

we will show first that

and then

Identity (2.19) follows from a calculation that we now detail. We define

We have

To treat \(I_b\) and \(I_d\), we rely on the following property, which is checked easily through integration by parts: for any (v, p) solution of Stokes in \(B_i\), and any trace-free symmetric matrix S, \(\mathcal {I}_i(v,p) : S = 0\). As for all i and all \(j\ne i\), \(v^s[S](\cdot -x_j)\) or \(v^s[S_j](\cdot -x_j)\) is a solution of Stokes inside \(B_i\), we deduce

As regards \(I_a\), we use the following formula, which follows from a tedious calculation [18]: for any traceless matrix S,

It follows that

This term corresponds to the famous Einstein formula for the mean effective viscosity. It is consistent with the expression (1.10) for \(\mu _1\), which implies \(\nu _1 = \frac{5}{2} \mu \).

Eventually, as regards \(I_c\), we can use (2.22) again, replacing S by \(S_i\):

with \(g_S\) defined in (1.14). In view of (2.21)–(2.23)–(2.24), to conclude that (2.19) holds, it is enough to prove

Lemma 2.2

For any \(f \in L^2(\mathbb {R}^3)\),

with \(g_S\) defined in (1.14), and \(u_S \in \dot{H}^1(\mathbb {R}^3)\) the solution of (2.5).

Proof

Note that both sides of the identity are continuous over \(L^2\): the left-hand side is continuous as the Calderón–Zygmund operator \(f \mapsto g_S \star f\) is continuous over \(L^2\), while the right-hand side is continuous by classical elliptic estimates for the Stokes operator. By density, it is therefore enough to assume that \(f \in C^\infty _c(\mathbb {R}^3)\). We denote by \(U = (U_{ij}), Q = (Q_j)\) the fundamental solution of the Stokes operator. This means that for all j, the vector field \(U_j = (U_{ij})_{1\leqq i \leqq 3}\) and the scalar field \(Q_j\) satisfy the Stokes equation

It is well known (see [16, p. 239]) that

From there, one can deduce the following formula, cf. [16, p. 290, equation (IV.8.14)]:

Using the Einstein convention for summation, this implies in turn that

where we have used that S is trace-free to obtain the third equality. Hence,

Note that the permutations between the derivatives and the convolution product do not raise any difficulty, as \(f \in C_c^\infty (\mathbb {R}^3)\). Now, using \(S_{kl} = S_{lk}\), and denoting by \(\mathrm{St}^{-1}\) the convolution with the fundamental solution (inverse of the Stokes operator), we get

Eventually,

This concludes the proof of the lemma. \(\square \)

Remark 2.3

By polarization of the previous identity, at least for \(f, \tilde{f}\) smooth and decaying enough, one has

The last step in proving Proposition 2.1, hence Theorem 1.1, is to show the bound (2.20). If \(w := v_N - v_{app}\), \(q := p_N - p_{app}\),

Direct verifications show that \(v_{app}\), hence w, satisfies the same force- and torque-free conditions as \(v_N\). This means that for any family of constant vectors \(u_i\) and \(\omega _i\), \(1 \leqq i \leqq N\),

By a proper choice of \(u_i\) and \(\omega _i\), we find

for any family \((\tilde{u}_i, \tilde{\omega }_i)\), using this time that \(v_N\) is force- and torque-free. Let \(q \geqq 2\). By a proper choice of \((\tilde{u}_i, \tilde{\omega }_i)\), by Poincaré and Korn inequalities, one can ensure that for all i,

where

Note that the factor \(\frac{1}{a}\) at the right-hand side is consistent with scaling considerations. Moreover, by standard use of the Bogovskii operator, see [16], there exists a constant C (depending only on the constant c in (H2)) and a field \(W \in W^{1,q}(\mathcal {F})\), zero outside \(\cup _{i=1}^N B(x_i,2a)\), satisfying

We deduce, with \(p \leqq 2\) the conjugate exponent of q, that

By well-known variational properties of the Stokes solution, \(\Vert D(v_N)\Vert _{L^2}\) minimizes \(\Vert D(v)\Vert _{L^2}\) over the set of all v in \(\dot{H}^1(\mathbb {R}^3)\) satisfying a boundary condition of the form \(v\vert _{B_i} = - Sx + u_i + \omega _i \times (x-x_i)\) for all i. By the same considerations as before, based on the Bogovskii operator, we infer that

so that

Using this inequality with the first term in (2.31) and applying the Hölder inequality to the second term, we end up with

To deduce (2.20), it is now enough to prove that for all \(q > 1\), there exists a constant C independent of N or \(\phi \) such that

Indeed, taking \(q > 2\), meaning \(p < 2\), and combining this inequality with (2.32) yields (2.20), more precisely

In order to show the bound (2.33), we must write down the expression for \(w\vert _{B_i} = v_N\vert _{B_i} - v_{app}\vert _{B_i}\), where \(v_{app}\) was introduced in (2.17). A little calculation, using Taylor’s formula with an integral remainder, shows that

with \(w_i^r\) being a rigid vector field (that disappears when taking the symmetric gradient), with

and with the bilinear application

We recall that \(v^s[S]\) and v[S] were introduced in (2.14) and (2.15), while the matrices \(S_j\) are defined in (2.18). Note that the matrices \(D_i\) and \(S_i\) have the same kind of structure. More precisely, we can define for a collection \((A_1, \dots , A_N)\) of N symmetric matrices, an application

Then, \((S_1, \dots , S_N) = \mathcal {A}(S, \dots ,S)\) and \((D_1, \dots , D_N) = \mathcal {A}(S_1, \dots ,S_N) = \mathcal {A}^2(S, \dots ,S)\). Note that for any matrix A, the kernel D(v[A]), homogeneous of degree \(-3\), is of Calderón–Zygmund type. Using this property, we are able to prove in the appendix the following lemma, which is an adaptation of a result by the second author and Di Wu [21]:

Lemma 2.4

For all \(1< q < +\infty \), there exists a constant C, depending on q and on the constant c in (H2), such that, if \((A'_1, \dots , A'_N) = \mathcal {A}(A_1, \dots , A_N)\), then

We can now proceed to the proof of (2.33). Denoting \(w_i^1:= D_i (x-x_i)\), we find by the lemma that

Then, we notice that for any matrix A, \(|D(v^s[A] - v[A])(x)| = O(a^5 |x|^{-5})\). This implies that \(w^2_i := E_i (x - x_i)\) satisfies

By assumption (H2), the points \(y_i := N^{1/3} x_i\) satisfy, for all \(i \ne j\), that

In particular,

We then make use of the following easy generalization of Young’s convolution inequality: \(\forall q \geqq 1\),

Applied with \(a_{ij} = \frac{1}{(c + |y_i - y_j|)^5}\) and \(b_j = |S| + |S_j|\), together with Lemma 2.4, this yields

It remains to bound the symmetric gradient of \(w_i^3 := \mathbf {F}_i\vert _{x}(x-x_i,x-x_i)\). By the expression of \(v^s\), we get that, in \(B_i\)

Proceeding as above, we find

As \(D(w) = D(w_i^1) + D(w_i^2) + D(w_i^3)\), cf. (2.34), the previous estimates yield (2.33). This concludes the proof of Proposition 2.1, and therefore the proof of Theorem 1.1.
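
The discrete convolution inequality used in the proof above can be tested numerically. The sketch below assumes that it takes the following Schur-type form: \(\sum _i |\sum _j a_{ij} b_j|^q \leqq M^q \sum _j |b_j|^q\), where M is the larger of \(\sup _i \sum _j |a_{ij}|\) and \(\sup _j \sum _i |a_{ij}|\). This form is a standard statement that we assume here for illustration; the point configuration (a small cubic grid, so that the separation constant is \(c = 1\)) and the function names are hypothetical.

```python
import itertools
import math
import random

def kernel_matrix(points, c, power):
    """a_ij = 1 / (c + |y_i - y_j|)**power for all pairs (i, j)."""
    return [[1.0 / (c + math.dist(p, q)) ** power for q in points] for p in points]

def schur_bound_sides(a, b, q):
    """Return (lhs, rhs) of  sum_i |sum_j a_ij b_j|^q <= M^q * sum_j |b_j|^q,
    where M is the larger of the maximal absolute row sum and column sum of a."""
    n = len(b)
    lhs = sum(abs(sum(a[i][j] * b[j] for j in range(n))) ** q for i in range(n))
    row = max(sum(abs(v) for v in r) for r in a)
    col = max(sum(abs(a[i][j]) for i in range(n)) for j in range(n))
    rhs = max(row, col) ** q * sum(abs(v) ** q for v in b)
    return lhs, rhs

random.seed(0)
points = list(itertools.product(range(4), repeat=3))   # 64 points, pairwise distance >= 1
a = kernel_matrix(points, c=1.0, power=5)
b = [random.uniform(-1.0, 1.0) for _ in points]
for q in (1.0, 2.0, 4.0):
    lhs, rhs = schur_bound_sides(a, b, q)
    print(q, lhs, rhs)
```

The useful feature is that M is controlled by the separation assumption alone, uniformly in the number of points, because the kernel decays faster than \(|y|^{-3}\).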

3 The \(\phi ^2\) Correction \(\mathcal {V}_N\) as a Renormalized Energy

We start in this section the asymptotic analysis of the viscosity coefficient

As a preliminary step, we will show that there is no loss of generality in assuming

We introduce the set

By (H1)–(H2), it is easily seen that \(N_{ext} = o(N)\) as \(N \rightarrow +\infty \). We now show

Lemma 3.1

\(\mathcal {V}_N\) is uniformly bounded in N, and

goes to zero as \(N\rightarrow +\infty \).

Proof: For any open set U, we denote \(\fint _U = \frac{1}{|U|} \int _U\).

Let \(d := \frac{c}{4} N^{-1/3} \leqq \min _{i \ne j}\frac{|x_i-x_j|}{4}\) by (H2). We write

For the first term, with \(y_i := N^{1/3} x_i\) and with (H2) in mind, that is \(|y_i - y_j| \geqq c\) for \(i \ne j\), we get that

see (1.14). This yields, by a discrete convolution inequality,

where we have used that \(\sum _{j=1}^N \frac{1}{(c+|y_i - y_j|)^4}\) is uniformly bounded in N and in the index i thanks to the separation assumption. By similar arguments, \( | II | \leqq \mathcal {C}\). As regards the last term, we notice that

where \(F_N = \sum _{i=1}^N 1_{B(x_i,d)}\). The operator \(\mathcal {T} F(x) = \int g_S(x-y) F(y) \mathrm{d}y\) is a Calderón–Zygmund operator, and therefore continuous over \(L^2\). As \(F_N^2 = F_N\) (the balls are disjoint), we find that the \(L^2\) norm of \(F_N\) is \((N d^3)^{1/2}\) and

Similarly,

It follows that \(|III| \leqq \frac{C}{N d^3}\). With our choice of d, the first part of the lemma is proved.
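
Two elementary facts behind this first part of the proof can be checked on the model case where the rescaled points \(y_i\) form a subset of the integer lattice, an illustrative assumption with \(c = 1\). First, the sums \(\sum _j (c + |y_i - y_j|)^{-4}\) stay bounded as the configuration grows. Second, with \(d = \frac{c}{4} N^{-1/3}\), the quantity \(N d^3\) does not depend on N. The function names are hypothetical.

```python
import itertools
import math

def lattice_sum(half_width, c=1.0, power=4):
    """Sum of 1 / (c + |k|)**power over integer points k with |k_i| <= half_width."""
    rng = range(-half_width, half_width + 1)
    return sum(1.0 / (c + math.sqrt(i * i + j * j + k * k)) ** power
               for i, j, k in itertools.product(rng, repeat=3))

def n_times_d_cubed(n_particles, c):
    """N * d**3 with d = (c / 4) * N**(-1/3); equals (c / 4)**3 for every N."""
    d = 0.25 * c * n_particles ** (-1.0 / 3.0)
    return n_particles * d ** 3

sums = {n: lattice_sum(n) for n in (3, 6, 12)}
for n, s in sums.items():
    print(f"box half-width {n:2d}: sum = {s:.4f}")
print(n_times_d_cubed(1000, 2.0), n_times_d_cubed(10 ** 6, 2.0))
```

The partial sums increase with the size of the box but remain bounded, since the exponent 4 exceeds the space dimension 3.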

From there, to prove that \(\mathcal {V}_{N,ext}\) goes to zero, as \(N_{ext} = o(N)\), it is enough to show that

By symmetry, it is enough that

This can be shown by a similar decomposition as the previous one. Namely,

Proceeding as above, we find this time that

which concludes the proof. \(\square \)

Remark 3.2

By Lemma 3.1, there is no loss of generality in assuming (3.1) when studying the asymptotic behaviour of \(\mathcal {V}_N\). Therefore, from now on, we assume (3.1).

As explained in the introduction, the analysis of \(\mathcal {V}_N\) will rely on the mathematical methods introduced over the last years for Coulomb gases, the core problem being the analysis of a functional of the form (1.15). We shall first reexpress \(\mathcal {V}_N\) in a similar form. More precisely, we will show

Proposition 3.3

Denoting

we have \(\mathcal {V}_N = \mathcal {W}_N + \varepsilon (N)\) where \(\varepsilon (N) \rightarrow 0\) as \(N \rightarrow \infty .\)

Remark 3.4

In the definition of \(\mathcal {W}_N\), the integrals of the form

which appear when expanding the product, are understood as

where \(\mathrm{St}\) is the Stokes operator; see (2.30) and the proof below for an explanation.

Proof

Clearly,

so that, formally,

Note that it is not obvious that this formal decomposition makes sense, because all three quantities at the right-hand side involve integrals of \(g_S(x-y)\) against product measures of the form \(\mathrm{d} \delta _N(x) f(y) \mathrm{d}y\) (or the symmetric one), which may fail to converge because of the singularity of \(g_S\). To solve this issue, a rigorous path consists in approximating, at fixed N, each Dirac mass \(\delta _{x_i}\) by a (compactly supported) approximation of unity \(\rho _\eta (x-x_i)\), where \(\eta > 0\) is the approximation parameter and goes to zero. One can then set, for each \(\eta \), \(\delta _N^\eta (x) := \frac{1}{N} \sum _{i=1}^N \rho _\eta (x-x_i)\), leading to the rigorous decomposition

where \(\mathcal {V}_N^\eta \), \(\mathcal {W}_N^\eta \) are deduced from \(\mathcal {V}_N\), \(\mathcal {W}_N\) replacing the empirical measure by its regularization. It is easy to show that \(\lim _{\eta \rightarrow 0} \mathcal {V}_N^\eta = \mathcal {V}_N\). To conclude the proof, we shall establish the following: first,

the same limit holding for the symmetric term. In particular, (3.2) will show that \(\mathcal {W}_N = \lim _{\eta \rightarrow 0} \mathcal {W}_N^\eta \) exists, in the sense given in Remark 3.4. Then, we will prove

which, together with (3.2), will complete the proof of the proposition.

The limit (3.2) follows from identity (2.30). Indeed, for \(\eta > 0\), this formula yields

Now, we remark that due to our assumptions on f, by elliptic regularity, \(h = \mathrm{St}^{-1}(S {\nabla }f)(x)\) is \(C^1\) inside \(\mathcal {O}\). Moreover, by virtue of Remark 3.2, we can assume (3.1). Hence, as \(\eta \rightarrow 0\),

It remains to prove (3.3). In the special case where \(f \in C^r(\mathbb {R}^3)\) for some \(r \in (0,1)\) (implying that it vanishes at \({\partial }\mathcal {O}\)), classical results on Calderón–Zygmund operators yield that the function \(\int _{\mathbb {R}^3} g_S(x-y) f(x) \mathrm{d}x = \frac{8\pi }{3} S {\nabla }\cdot h(y)\) is a continuous (even Hölder) bounded function, so (H1) implies straightforwardly that

In the general case where f is discontinuous across \({\partial }\mathcal {O}\), the proof is a bit more involved. The difficulty lies in the fact that some points \(x_i\) get closer to the boundary as \(N \rightarrow +\infty \).

Let \({\varepsilon }> 0\). Under (H2), there exists \(c' > 0\) (depending on c only) such that for \(N^{-1/3} \leqq {\varepsilon }\),

Let \(\chi _{\varepsilon }: \mathbb {R}^3 \rightarrow [0,1]\) be a smooth function such that \(\chi _{\varepsilon }=1\) in a \(c' {\varepsilon }/4\) neighborhood of \({\partial }\mathcal {O}\), \(\chi _{\varepsilon }=0\) outside a \(c' {\varepsilon }/2\) neighborhood of \({\partial }\mathcal {O}\). We write

By formula (2.30), the second term reads as

with \(u_{\varepsilon }= \mathrm{St}^{-1} S {\nabla }((1- \chi _{\varepsilon }) f)\). The source term \((1- \chi _{\varepsilon }) f\) being \(C^1\) and compactly supported, \(S {\nabla }\cdot u_{\varepsilon }\) is Hölder and bounded, so that, as \(N \rightarrow +\infty \), the integral goes to zero by the weak convergence assumption (H1), for any fixed \({\varepsilon }> 0\). As regards the first term, we split it again into

where \(v_{\varepsilon }\) is this time the solution of the Stokes equation with source \(S {\nabla }(\chi _{\varepsilon }f)\). It is Hölder away from \({\partial }\mathcal {O}\), so that the last term at the right-hand side goes again to zero as \(N \rightarrow +\infty \), by assumption (H1).

It remains to handle the first term at the right-hand side. We shall show below that for a proper choice of \(\chi _{\varepsilon }\) one has

Taking advantage of this fact, we write

where we used property (3.4) to obtain the last inequality. With this bound and the convergence to zero of the other terms for fixed \({\varepsilon }\) and \(N \rightarrow +\infty \), the limit (3.3) follows.

We still have to show that \({\nabla }v_{\varepsilon }\) is uniformly bounded in \(L^\infty \) for a good choice of \(\chi _{\varepsilon }\). We borrow here from the analysis of vortex patches in the Euler equation, initiated by Chemin in 2-d [10] and extended by Gamblin and Saint-Raymond to 3-d [17]. First, as \(\mathcal {O}\) is smooth, one can find a family of five smooth divergence-free vector fields \(w_1, \dots , w_5\), tangent to \({\partial }\mathcal {O}\) and non-degenerate in the sense that

see [17, Proposition 3.2]. We take \(\chi _{\varepsilon }\) in the form \(\chi (t/{\varepsilon })\), for a coordinate t transverse to the boundary, meaning that \({\partial }_t\) is normal at \({\partial }\mathcal {O}\). With this choice and the assumptions on f, one checks easily that \(\chi _{\varepsilon }f\) is bounded uniformly in \({\varepsilon }\) in \(L^\infty (\mathbb {R}^3)\) and that for all i, \(w_i \cdot {\nabla }(\chi _{\varepsilon }f)\) is bounded uniformly in \({\varepsilon }\) in \(C^0(\mathbb {R}^3) \subset C^{r-1}(\mathbb {R}^3)\) for all \(r \in (0,1)\). Hence, the norm \(\Vert \chi _{\varepsilon }f\Vert _{r,W}\) introduced in [17, p. 395], where \(W = (w_1, \dots , w_5)\), is bounded uniformly in \({\varepsilon }\).

We then split the Stokes system

into the equations

and

Let us show that \({\partial }_i{\partial }_j \Delta ^{-1} (\chi _{\varepsilon }f)\) is bounded uniformly in \({\varepsilon }\) in \(L^\infty \). Let \(\chi \in C^\infty _c(\mathbb {R}^3)\), \(\chi \geqq 0\), \(\chi =1\) near zero. Let for all \(m \in \mathbb {R}\), \(\Lambda ^m(\xi ) := (\chi (\xi ) + |\xi |^2)^{m/2}\). It is easily seen through the Fourier transform that, for all \(s \in \mathbb {N}\),

Moreover, by the calculations in [17, p. 401], replacing \(\omega \) with \(\chi _{\varepsilon }f\), we get

Combining (3.6) and (3.7), we find that

is bounded uniformly in \({\varepsilon }\) in \(L^\infty \), and consequently that

Also, by continuity of Riesz transforms over \(L^p\), we have

Now, applying \(w_k \cdot {\nabla }\) to the equation satisfied by \(\Omega _{\varepsilon }\), we obtain for all \(1 \leqq k \leqq 5\),

where \(F_{i,j,{\varepsilon }}\), \(G_{i,{\varepsilon }}\) and \(H_{\varepsilon }\) are combinations of \(\Omega _{\varepsilon }\), \(\chi _{\varepsilon }f\) and \(w_k \cdot {\nabla }(\chi _{\varepsilon }f)\). In particular, they are bounded uniformly in \({\varepsilon }\) in \(L^\infty \cap L^p\), for any \(1< p < \infty \).

For the first term at the r.h.s., we write, with the same cut-off function \(\chi \) as before,

By continuity of \( (-\Delta )^{-1} {\partial }_{i} {\partial }_j\) over \(L^2\), the first term, with low frequencies, belongs to \(H^s\) for any s, with uniform bound in \({\varepsilon }\). By the continuity of \((1-\chi (D)) (-\Delta )^{-1} {\partial }_{i} {\partial }_j\) over Hölder spaces (Coifman-Meyer theorem), the second term, with high frequencies, is uniformly bounded in \({\varepsilon }\) in \(C^{r-1}(\mathbb {R}^3)\), for any \(0< r < 1\).

For the second and third terms in (3.8), we claim that

This can be easily seen by expressing these fields as \( \sum _{i} {\partial }_{i} \Phi \star G_{i,{\varepsilon }}\) and \(\Phi \star H_{\varepsilon }\) with \(\Phi \) the fundamental solution, and by using the uniform \(L^p\) bounds on \(G_{i,{\varepsilon }}\) and \(H_{\varepsilon }\). Eventually, we find that

We conclude by [17, Proposition 3.3] that \({\nabla }v_{\varepsilon }\) is bounded in \(L^\infty (\mathbb {R}^3)\) uniformly in \({\varepsilon }\). \(\square \)

3.1 Smoothing

By Proposition 3.3, we are left with understanding the asymptotic behaviour of

The following field will play a crucial role: for U, Q defined in (2.26), we set

From (2.27), we have \( g_S = \frac{8\pi }{3} \, (S {\nabla }) \cdot G_S\), and \(G_S\) solves, in the sense of distributions,

Moreover, from the explicit expression

and taking into account the fact that S is symmetric and trace-free, we get

Let us note that \(G_S\) is called a point stresslet in the literature, see [18]. It can be interpreted as the velocity field created in a fluid of viscosity 1 by a point particle whose resistance to a strain is given by \(-S\).

We now come back to the analysis of (3.9). Formal replacement of the function f in Lemma 2.2 by \(\delta _N - f\) yields the formula

where

satisfies

Formula (3.13) is similar to formula (1.16), and is just as abusive, since both sides are infinite. Still, by an appropriate regularization of the source term \(S{\nabla }\sum _i \delta _{x_i}\), we shall be able in the end to obtain a rigorous formula, convenient for the study of \(\mathcal {W}_N\). This regularization process is the purpose of the present paragraph.

For any \(\eta > 0\), we denote \(B_\eta = B(0,\eta )\), and define \(G_S^\eta \) by

Note that, by homogeneity,

The field \(G_S^\eta \) belongs to \(\dot{H}^1(\mathbb {R}^3)\), and solves

where \(S^\eta \) is the measure on the sphere defined by

with \(n=\frac{x}{|x|}\) the unit normal vector pointing outward \(B_\eta \), \(\left[ F\right] := F\vert _{{\partial }B_\eta ^+} - F\vert _{{\partial }B_\eta ^-}\) the jump at \({\partial }B_\eta \) (with \({\partial }B_\eta ^+\), resp. \({\partial }B_\eta ^-\), the outer, resp. inner boundary of the ball), and \(s^\eta \) the standard surface measure on \(\partial B_\eta \). We claim the following:

Lemma 3.5

For all \(\eta > 0\), \(\, S^\eta = \mathrm{div} \, \Psi ^\eta \,\) in \(\mathbb {R}^3\), where

Moreover, \(\Psi ^\eta \rightarrow S\delta \) in the sense of distributions as \(\eta \rightarrow 0\), so that \(S^\eta \rightarrow S{\nabla }\delta \).

Proof of the lemma.

From the explicit formula (3.12) for \(G_S\) and \(p_S\), we find

so that

Using that S is trace-free, one can check from definition (3.21) that \(\hbox {div}~\Psi ^\eta = 0\) in the complement of \({\partial }B_\eta \), while

where the last equality comes from (3.22). Together with (3.20), this implies the first claim of the lemma.

To compute the limit of \(\Psi ^\eta \) as \(\eta \rightarrow 0\), we write \(\Psi ^\eta = \Psi ^\eta _1 + \Psi ^\eta _2\), with

Let \(\varphi \in C^\infty _c(\mathbb {R}^3)\) be a test function. We can write \( \langle \Psi _1^\eta , \varphi \rangle = \langle \Psi _1^\eta , \varphi (0) \rangle + \langle \Psi _1^\eta , \varphi - \varphi (0) \rangle . \) The second term is \(O(\eta )\), while the first term can be computed using the elementary formula \(\int _{B_1} x_i x_j \mathrm{d}x = \frac{4\pi }{15} \delta _{ij}\). We find

For the second term, using the homogeneity (3.18), we find again that \(\lim _{\eta } \langle \Psi _2^\eta , \varphi \rangle = \langle \Psi _2^1 , \varphi (0) \rangle \). Note that the pressure \(p_S^1\) is defined up to a constant, so that we can always select the one with zero average. With this choice, we find

where the sixth equality comes from the elementary formula \(\int _{{\partial }B_1} n_i n_j n_k n_l \mathrm{d}s^1= \frac{4\pi }{15} (\delta _{ij} \delta _{kl} + \delta _{ik} \delta _{jl} + \delta _{il} \delta _{jk})\). The result follows. \(\quad \square \)
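The two elementary moment formulas used in this proof, \(\int _{B_1} x_i x_j \mathrm{d}x = \frac{4\pi }{15} \delta _{ij}\) and \(\int _{{\partial }B_1} n_i n_j n_k n_l \mathrm{d}s^1= \frac{4\pi }{15} (\delta _{ij} \delta _{kl} + \delta _{ik} \delta _{jl} + \delta _{il} \delta _{jk})\), can be checked with a small quadrature. The sketch below is a sanity check, not part of the argument. It assumes a product rule on the unit sphere (3-point Gauss-Legendre in \(\cos \theta \), 8-point trapezoid rule in the azimuth), which integrates polynomials of degree at most 4 in n exactly up to rounding. All function names are hypothetical.

```python
import math

# 3-point Gauss-Legendre rule on [-1, 1] (exact for polynomials of degree <= 5).
GAUSS_NODES = (-math.sqrt(3.0 / 5.0), 0.0, math.sqrt(3.0 / 5.0))
GAUSS_WEIGHTS = (5.0 / 9.0, 8.0 / 9.0, 5.0 / 9.0)
N_PHI = 8  # trapezoid rule in the azimuth, exact for trigonometric degree < 8

def sphere_points():
    """Return (normal, weight) pairs of a quadrature rule on the unit sphere."""
    pts = []
    for u, wu in zip(GAUSS_NODES, GAUSS_WEIGHTS):
        s = math.sqrt(1.0 - u * u)  # u = cos(theta), s = sin(theta)
        for m in range(N_PHI):
            phi = 2.0 * math.pi * m / N_PHI
            normal = (s * math.cos(phi), s * math.sin(phi), u)
            pts.append((normal, wu * 2.0 * math.pi / N_PHI))
    return pts

POINTS = sphere_points()

def sphere_moment(*idx):
    """Integrate the product n_{i1} ... n_{ik} over the unit sphere (k <= 4)."""
    return sum(w * math.prod(n[i] for i in idx) for n, w in POINTS)

def ball_second_moment(i, j):
    """Integral of x_i x_j over the unit ball; the radial part is int_0^1 r^4 dr = 1/5."""
    return sphere_moment(i, j) / 5.0

def delta(i, j):
    """Kronecker symbol."""
    return 1.0 if i == j else 0.0

def fourth_moment_formula(i, j, k, l):
    """Right-hand side of the fourth-moment identity quoted in the text."""
    return 4.0 * math.pi / 15.0 * (
        delta(i, j) * delta(k, l)
        + delta(i, k) * delta(j, l)
        + delta(i, l) * delta(j, k)
    )
```

Comparing `sphere_moment(i, j, k, l)` with `fourth_moment_formula(i, j, k, l)` over all 81 index choices, and `ball_second_moment(i, j)` with \(\frac{4\pi }{15} \delta _{ij}\), reproduces both identities to machine precision.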

For later purposes, we also prove here

Lemma 3.6

Proof

By (3.18), \(\int _{B_\eta } |{\nabla }G^\eta _S|^2 \mathrm{d}x = \frac{1}{\eta ^3} \int _{B_1} |{\nabla }G^1_S|^2 \mathrm{d}x\). The second term can be computed with (3.12):

\(\square \)

3.2 The Renormalized Energy

Thanks to the regularization of \(S{\nabla }\delta \) introduced in the previous paragraph, cf. Lemma 3.5, we shall be able to set a rigorous alternative to the abusive formula (3.13). Specifically, we shall state an identity involving \(\mathcal {W}_N\), defined in (3.9), and the energy of the function

This function solves

and is a regularization of \(h_N\), cf. (3.14)–(3.15).

The main result of this section is

Proposition 3.7

Proof

We assume that \(\eta \) is small enough so that \(2 \eta < \min _{i \ne j} |x_i - x_j|\). From the explicit expressions (3.14), (3.25), we find that \(h_N, h_N^\eta = O(|x|^{-2})\), \({\nabla }(h_N, h_N^\eta ) = O(|x|^{-3})\) and \(p_N, p_N^\eta = O(|x|^{-3})\) at infinity. As these quantities decay fast enough, we can perform an integration by parts to find

where we defined \(h_N^i := h_N - G_S(x-x_i)\).

As \(h_N^i\) is smooth over the support of \(S^\eta (\cdot - x_i)\), we can apply Lemma 3.5 to obtain

We can then apply Lemma 3.6 to obtain

As regards the fourth term, we notice that by our definition (3.16)–(3.17) of \(G_S^\eta \), and the fact that the balls \(B(x_i, \eta )\) are disjoint, the function \(h_N - h_N^\eta = \sum _{i} (G_S(x-x_i) - G_S^\eta (x-x_i))\) is zero over \(\cup _i {\partial }B(x_i, \eta )\), which is the support of \(\sum _i S^\eta (x-x_i)\). It follows that

where we integrated by parts, using that \(G_S - G_S^\eta \) is zero outside the balls. Let us notice that the second integral at the right-hand side converges despite the singularity of \(S{\nabla }\cdot G_S\), using the smoothness of f near \(x_i\) (by assumption (3.1) and Remark 3.2). Moreover, it goes to zero as \(\eta \rightarrow 0\). Using the homogeneity and smoothness properties of \(G_S^\eta \) inside \(B^\eta \), we also find that the first sum goes to zero with \(\eta \), resulting in

We end up with

It remains to rewrite properly the right-hand side. We first get

and, integrating by parts,

The last equality was deduced from the identity \(g_S = \frac{8\pi }{3} \, (S {\nabla }) \cdot G_S\), see the line after (3.10). The proposition follows. \(\square \)

We can refine the previous proposition as follows:

Proposition 3.8

Let \(c > 0\) be the constant in (H2). There exists \(C > 0\) such that: for all \(\alpha< \eta < \frac{c}{2} N^{-1/3}\),

Proof

One has from (3.25) that

It follows that

After integration by parts,

while

We get

We note that \(G_S^\eta - G_S^\alpha \) is zero outside \(B_\eta \), while \(S^\alpha + S^\eta \) is supported in \(B_\eta \). Moreover, thanks to (H2), for \(\alpha< \eta < \frac{c}{2} N^{-1/3}\), the balls \(B(x_i, \eta )\) are disjoint. We deduce: \(a=0\).

After integration by parts, taking into account that \(G_S^\eta - G_S^\alpha \) vanishes outside \(B_\eta \), we can write \(b = b_\eta - b_\alpha \) with

By assumption (3.1), for N large enough, for all \(1\leqq i \leqq N\) and all \(\eta \leqq \frac{c}{2} N^{-1/3}\), \(B(x_i, \eta )\) is included in \(\mathcal {O}\). Hence, f is \(C^{1}\) in \(B(x_i, \eta )\), and

This results in \(b_\eta \leqq C N^2 \eta \).

Similarly, decomposing \(B(x_i, \eta ) = B(x_i, \alpha ) \cup \Big ( B(x_i, \eta ) \setminus B(x_i, \alpha ) \Big )\), we find that

using again that f is Lipschitz over \(B(x_i, \eta )\). We end up with \(b_\alpha \leqq C N^2 \eta \), and finally \(b \leqq C N^2 \eta \).

For the last term c in (3.28), we first notice that as \(G_S^\eta - G_S^\alpha \) is zero outside \(B_\eta \):

where we used Lemma 3.6 in the last line. By the definition of \(S^\alpha \), the remaining term splits into

By integration by parts, applied in \(B_\eta \setminus B_\alpha \) for the first term and in \(B_\alpha \) for the second term, we get

From there, the conclusion follows easily. \(\square \)

If we let \(\alpha \rightarrow 0\) in Proposition 3.8, combining with Propositions 3.7 and 3.3, we find

Corollary 3.9

For all \( \eta < \frac{c}{2} N^{-1/3}\),

where \({\varepsilon }(N) \rightarrow 0\) as \(N \rightarrow +\infty \).

This corollary shows that to understand the limit of \(\mathcal {V}_N\), it is enough to study the limit of

for \(\eta _N := \eta N^{-1/3}\), \(\eta < \frac{c}{2}\) fixed. For periodic and more general stationary point processes, this will be possible through a homogenization approach. This homogenization approach involves an analogue of a cell equation, called jellium in the literature on Coulomb gases. We will motivate and introduce this system in the next section.

4 Blown-up System

Formula (3.27) suggests that we first understand the behaviour of \(\int _{\mathbb {R}^3} |{\nabla }h_N^\eta |^2\) at fixed \(\eta \), when \(N \rightarrow +\infty \). To analyze the system (3.26), a useful intuition can be taken from classical homogenization problems of the form

with F(x, y) periodic in variable y, and \(\overline{F}(x) := \int _{\mathbb {T}} F(x,y) \mathrm{d}y\). Roughly, \(\Omega \) would be like \(\mathcal {O}\), the small scale \({\varepsilon }\) like \(N^{-1/3}\), the term \( \frac{1}{{\varepsilon }^3} F(x,x/{\varepsilon })\) would correspond to the sum of (regularized) Dirac masses, while the term \( \frac{1}{{\varepsilon }^3} \overline{F}\) would be an analogue of Nf. The factor \(\frac{1}{{\varepsilon }^3}\) in front of F is put consistently with the fact that \(\sum _i \delta _{x_i}\) has mass N. The dependence on x of the source term in (4.1) mimics the possible macroscopic inhomogeneity of the point distribution \(\{x_i\}\).

In the much simpler model (4.1), standard arguments show that \(h_{\varepsilon }\) behaves like

where H(x, y) satisfies the cell problem

Let us stress that subtracting the term \(\frac{1}{{\varepsilon }^3} \overline{F}(x)\) in the source term of (4.1) is crucial for the asymptotics (4.2) to hold. It follows that

Note that the factor \({\varepsilon }^6\) in front of the left-hand side is consistent with the factor \(\frac{1}{N^2}\) at the right-hand side of (3.27). Note also that

Such average over larger and larger boxes may be still meaningful in more general settings, typically in stochastic homogenization.

Inspired by those remarks, and back to system (3.26), the hope is that some homogenization process may take place, at least locally near each \(x \in \mathcal {O}\). More precisely, we hope to recover \(\lim _{N} \mathcal {W}_N\) by summing over \(x \in \mathcal {O}\) some microscopic energy, locally averaged around x. This microscopic energy will be deduced from an analogue of the cell problem, called a jellium in the literature on the Ginzburg-Landau model and Coulomb gases.

4.1 Setting of the Problem

We will call point distribution a locally finite subset of \(\mathbb {R}^3\). Given a point distribution \(\Lambda \), we consider the following problem in \(\mathbb {R}^3\):

Given a solution \(H = H(y)\), \(P = P(y)\), we introduce, for any \(\eta > 0\),

which satisfies, by (3.11), (3.19), that

We remark that, the set \(\Lambda \) being locally finite, the sum at the right-hand side of (4.3) or (4.5) is well-defined as a distribution. Also, the sum at the right-hand side of (4.4) is well-defined pointwise, because \(G_S^\eta - G_S\) is supported in \(B_\eta \).

As discussed at the beginning of Section 4, we expect the limit of \(\int _{\mathbb {R}^3} |{\nabla }h_N^\eta |^2\) to be described in terms of quantities of the form

where \(K_R := (-\frac{R}{2},\frac{R}{2})^3\), for various \(\Lambda \) and solutions \(H^\eta \) of (4.5). Broadly, the energy concentrated locally around a point x should be understood from a blow-up of the original system (3.26), zooming at scale \(N^{-1/3}\) around x. Let \(x \in \mathcal {O}\) (the center of the blow-up), and \(\eta _N := \eta N^{-1/3}\), for a fixed \(\eta > 0\). If we introduce

we find that

System (4.5) corresponds to a formal asymptotics where one replaces \(\sum _{i=1}^N \delta _{z_{i,N}}\) by \(\sum _{i=1}^\infty \delta _{z_i}\), with \(\Lambda = \{z_i\}\) a point distribution. Note that, under (H2), we expect this point distribution to be well-separated, meaning that there is \(c >0\) such that: for all \(z' \ne z \in \Lambda \), \(|z'-z| \geqq c\). Still, we insist that this asymptotics is purely formal and requires much more to be made rigorous. Such rigorous asymptotics will be carried out in Section 5 for various classes of point configurations.

We now collect several general remarks on the blown-up system (4.3). We start by defining a renormalized energy. For any \(L > 0\), we denote \(K_L := (-\frac{L}{2}, \frac{L}{2})^3\).

Definition 4.1

Given a point distribution \(\Lambda \), we say that a solution H of (4.3) is admissible if for all \(\eta > 0\), the field \(H^\eta \) defined by (4.4) satisfies \({\nabla }H^\eta \in L^2_{loc}(\mathbb {R}^3)\).

Given an admissible solution H and \(\eta > 0\), we say that \(H^\eta \) is of finite renormalized energy if

exists in \(\mathbb {R}\). We say that H is of finite renormalized energy if \(H^\eta \) is for all \(\eta \), and

exists in \(\mathbb {R}\).

Remark 4.2

From formula (4.4), it is easily seen that H is admissible if and only if there exists one \(\eta > 0\) with \({\nabla }H^\eta \in L^2_{loc}(\mathbb {R}^3)\).

Proposition 4.3

If \(H_1\) and \(H_2\) are admissible solutions of (4.3) satisfying, for some \(\eta > 0\), that

then \({\nabla }H_1\) and \({\nabla }H_2\) differ by a constant matrix.

Proof

We set \(H := H_1 - H_2 = H_1^\eta - H_2^\eta \). It is a solution of the homogeneous Stokes equation with

By standard elliptic regularity, any solution v of the Stokes equation in the unit ball

satisfies, for some absolute constant C,

We apply this inequality to \(v(x) = H(x_0 + R x)\), \(x_0\) arbitrary. After rescaling, we find that

As \(R \rightarrow +\infty \), the right-hand side goes to zero, which concludes the proof. \(\square \)

Proposition 4.4

Let \(\Lambda \) be a well-separated point distribution, meaning there exists \(c > 0\) such that for all \(z' \ne z \in \Lambda \), \(|z'-z| \geqq c\). Let \(0< \alpha< \eta < \frac{\min (c,1)}{4}\). Let H be an admissible solution of (4.3) such that \(H^\eta \) is of finite renormalized energy. Then, \(H^\alpha \) is also of finite renormalized energy, and

In particular, H is of finite renormalized energy as soon as \(H^\eta \) is for some \(\eta \in (0, \frac{\min (c,1)}{4})\), and \( \mathcal {W}({\nabla }H) = \mathcal {W}^\eta ({\nabla }H)\) for all \(\eta < \frac{\min (c,1)}{4}\).

Proof

Let \(R > 0\). As \(\Lambda \) is well-separated,

From this and the fact that the limit \(\mathcal {W}^\eta ({\nabla }H)\) exists (in \(\mathbb {R}\)), it follows that

Let \(\Omega _R\) be an open set such that \(K_{R-1} \subset \Omega _R \subset K_R\) and such that

where \(c'\) depends on c only. This implies that \(G^{\eta }(\cdot + z)\), \(G^\alpha (\cdot + z)\) are smooth at \({\partial }\Omega _R\) for all \(z \in \Lambda \), and that \(H^{\eta }\), \(H^\alpha \) are smooth at \({\partial }\Omega _R\).

We now proceed as in the proof of Proposition 3.8. We write

After integration by parts, and manipulations similar to those used to show Proposition 3.8, we end up with

Let us emphasize that the contribution of the boundary terms at \({\partial }\Omega _R\) is zero: indeed, thanks to (4.10), \((G_S^\eta - G_S^\alpha )(\cdot + z)\) is zero at \({\partial }\Omega _R\) for any \(z \in \Lambda \). Similarly,

The integral at the right-hand side was computed above (see (3.29) and the lines after):

Back to (4.11), we find that

We deduce from this identity, (4.8) and (4.9) that

and replacing R by \(R+1\),

As \(\Omega _R \subset K_R \subset \Omega _{R+1}\), the result follows. \(\square \)

4.2 Resolution of the Blown-up System for Stationary Point Processes

As pointed out several times, we follow the strategy described in [41] for the treatment of minimizers and minima of Coulomb energies, but in our effective viscosity problem, the points \(x_{i,N}\) do not minimize the analogue \(\mathcal {V}_N\) of the Coulomb energy \(\mathcal {H}_N\). Actually, although we consider the steady Stokes equation, our point distribution may be time dependent. More precisely, in many settings, the dynamics of the suspension evolves on a timescale associated with viscous transport (scaling like \(a^2\), with a the radius of the particle), which is much smaller than the convective time scale (scaling like a). This allows us to neglect the time derivative in the Stokes equation: system (1.1)–(1.2) corresponds then to a snapshot of the flow at a given time t. Even when one is interested in the long time behaviour, the existence of an equilibrium measure for the system of particles is a very difficult problem. To bypass this issue, a usual point of view in the physics literature is to assume that the distribution of points is given by a stationary random process (whose refined description is an issue per se).

We will follow this point of view here, and introduce a class of random point processes for which we can solve (4.3). Let \(X = \mathbb {R}\) or \(X =\mathbb {T}_L := \mathbb {R}/(L\mathbb {Z})\) for some \(L > 0\). We denote by \(Point_X\) the set of point distributions in \(X^3\): an element of \(Point_X\) is a locally finite subset of \(X^3\), in particular a finite subset when \(X=\mathbb {T}_L\). We endow \(Point_X\) with the smallest \(\sigma \)-algebra \(\mathcal {P}_X\) which makes measurable all the mappings

Given a probability space \((\Omega ,\mathcal {A},P)\), a random point process \(\Lambda \) with values in \(X^3\) is a measurable map from \(\Omega \) to \(Point_X\), see [12]. By pushing forward the probability P with \(\Lambda \), we can always assume that the process is in canonical form, that is \(\Omega = Point_X\), \(\mathcal {A} = \mathcal {P}_X\), and \(\Lambda (\omega ) = \omega \).

We shall consider processes that, once in canonical form, are

(P1) stationary: the probability P on \(\Omega \) is invariant by the shifts

$$\begin{aligned} \tau _y : \Omega \rightarrow \Omega , \quad \omega \rightarrow y + \omega , \quad y \in X^3. \end{aligned}$$

(P2) ergodic: if \(A \in \mathcal {A}\) satisfies \(\tau _y(A) = A\) for all y, then \(P(A) = 0\) or \(P(A) = 1\).

(P3) uniformly well-separated: there exists \(c > 0\) such that almost surely, \(|z - z'| \geqq c\) for all \(z \ne z'\) in \(\omega \).

These properties are satisfied in two important contexts:

Example 4.5

(Periodic point distributions). Namely, for \(L > 0\), \(a_1, \dots , a_M\) in \(K_L\), we introduce the set \(\Lambda _0 := \{a_1, \dots , a_M\} + L \mathbb {Z}^3\). We can of course identify \(\Lambda _0\) with a point distribution in \(X^3\) with \(X = \mathbb {T}_L\). We then take \(\Omega = \mathbb {T}_L^3\), P the normalized Lebesgue measure on \(\mathbb {T}^3_L\), and set \(\Lambda (\omega ) := \Lambda _0 + \omega \). It is easily checked that this random process satisfies all assumptions. Moreover, a realization of this process is a translate of the initial periodic point distribution \(\Lambda _0\). By translation, the almost sure results that we will show below (well-posedness of the blown-up system, convergence of \(\mathcal {W}_N\)) will actually yield results for \(\Lambda _0\) itself.
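As a concrete illustration of this example, the following sketch enumerates the points of one realization \(\Lambda _0 + \omega \) inside a cube \(K_R\) and counts them. The base points, the period, the shift and the function name are hypothetical choices made for illustration only. When R is a multiple of L and no point sits on the boundary of the cube, the count is \(M (R/L)^3\), so the number of points per unit volume is \(M / L^3\).

```python
import itertools
import math

def periodic_points_in_cube(base_points, period, shift, box_side):
    """List the points of {a_1..a_M} + shift + period * Z^3 lying in (-R/2, R/2)^3."""
    half = box_side / 2.0
    # Enough lattice translates to cover the cube for base points in K_L
    # and shifts in [0, period)^3.
    reach = int(math.ceil(half / period)) + 2
    found = []
    for a in base_points:
        for n in itertools.product(range(-reach, reach + 1), repeat=3):
            p = tuple(a[d] + shift[d] + period * n[d] for d in range(3))
            if all(-half < x < half for x in p):
                found.append(p)
    return found

# Hypothetical data: M = 3 base points in K_L with L = 2, and one shift omega.
PERIOD = 2.0
BASE = [(-0.5, 0.25, 0.75), (0.5, -0.75, 0.0), (0.1, 0.2, -0.3)]
SHIFT = (0.3, 1.1, 0.7)

counts = {R: len(periodic_points_in_cube(BASE, PERIOD, SHIFT, R)) for R in (4.0, 8.0)}
densities = {R: n / R ** 3 for R, n in counts.items()}
for R in counts:
    print(f"R = {R}: {counts[R]} points, density {densities[R]:.4f}")
```

The density does not depend on the shift, which reflects the stationarity of the process under translations.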

Example 4.6

(Poisson hard core processes). These processes are obtained from Poisson point processes, by removing balls in order to guarantee the hypothesis (P3). For instance, given \(c > 0\), one can remove from the Poisson process all points z which are not alone in B(z, c). This leads to the so-called Matérn I hard-core process. To increase the density of points while keeping (P3), one can refine the removal process in the following way: for each point z of the Poisson process, one associates an “age” \(u_z\), with \((u_z)\) a family of i.i.d. variables, uniform over (0, 1). Then, one retains only the points z that are (strictly) the “oldest” in B(z, c). This leads to the so-called Matérn II hard-core process. Obviously, these two processes satisfy (P1) by stationarity of the Poisson process, and satisfy (P2) because they have only short range of correlations. For much more on hard core processes, we refer to [8].
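The two thinning rules described above can be sketched as follows. This is a minimal illustration under stated assumptions: the Poisson process is sampled in a bounded cube only, so boundary effects are ignored and the output is not a stationary process on \(\mathbb {R}^3\). "Oldest" is read as "largest age \(u_z\)". All function names and parameter values are hypothetical.

```python
import math
import random

def poisson_sample(rng, mean):
    """Draw a Poisson variable by counting unit-rate exponential arrivals before `mean`."""
    count, t = 0, rng.expovariate(1.0)
    while t < mean:
        count += 1
        t += rng.expovariate(1.0)
    return count

def poisson_points(rng, intensity, side):
    """Sample a Poisson point process of given intensity in the cube [0, side]^3."""
    n = poisson_sample(rng, intensity * side ** 3)
    return [tuple(rng.uniform(0.0, side) for _ in range(3)) for _ in range(n)]

def matern_thinning(points, ages, c):
    """Return the (Matern I, Matern II) thinnings with hard-core distance c."""
    matern1, matern2 = [], []
    for i, z in enumerate(points):
        neighbours = [j for j, w in enumerate(points)
                      if j != i and math.dist(z, w) < c]
        if not neighbours:
            matern1.append(z)  # z is alone in B(z, c)
        if all(ages[i] > ages[j] for j in neighbours):
            matern2.append(z)  # z is strictly the oldest point in B(z, c)
    return matern1, matern2

def min_distance(points):
    """Smallest pairwise distance, or infinity for fewer than two points."""
    return min((math.dist(p, q) for i, p in enumerate(points) for q in points[:i]),
               default=math.inf)

rng = random.Random(0)
pts = poisson_points(rng, intensity=2.0, side=5.0)
ages = [rng.random() for _ in pts]  # i.i.d. ages, uniform over (0, 1)
m1, m2 = matern_thinning(pts, ages, c=0.6)
print(len(pts), len(m1), len(m2), min_distance(m1), min_distance(m2))
```

Every point kept by the first rule is also kept by the second, which is why the Matérn II process is denser, and in both cases the retained points are at mutual distance at least c, as required by (P3).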

The point is now to solve almost surely the blown-up system (4.3) for point processes with properties (P1)–(P2)–(P3). We first state

Proposition 4.7

Let \(\Lambda = \Lambda (\omega )\) be a random point process with properties (P1)–(P2)–(P3). Let \(\eta > 0\). For almost every \(\omega \), there exists a solution \(\mathbf{H}^\eta (\omega , \cdot )\) of (4.5) in \(H^1_{loc}(X^3)\) such that

where \(D_\mathbf{H}^\eta \in L^2(\Omega )\) is the unique solution of the variational formulation (4.12) below.

Remark 4.8

In the case \(X = \mathbb {T}_L\), point distributions and solutions \(H^\eta \) over \(X^3\) can be identified with \(L\mathbb {Z}^3\)-periodic point distributions and \(L\mathbb {Z}^3\)-periodic solutions defined on \(\mathbb {R}^3\). This identification is implicit here and in all that follows.

Proof

We treat the case \(X=\mathbb {R}\); the case \(X=\mathbb {T}_L\) follows the same approach. We recall that the process is in canonical form: \(\Omega = Point_\mathbb {R}\), \(\mathcal {A} = \mathcal {P}_\mathbb {R}\), \(\Lambda (\omega ) = \omega \). The idea is to associate to (4.5) a probabilistic variational formulation. This approach is inspired by works of Kozlov [7, 26], see also [3]. Prior to the statement of this variational formulation, we introduce some vocabulary and functional spaces. First, for any \(\mathbb {R}^d\)-valued measurable \(\phi = \phi (\omega )\), we call a realization of \(\phi \) a map

For \(p \in [1,+\infty )\), \(\phi \in L^p(\Omega )\), as \(\tau _y\) is measure preserving, we have for all \(R > 0\) that \(\mathbb {E} \int _{K_R} |R_\omega [\phi ]|^p = R^3 \, \mathbb {E} |\phi |^p\). Hence, almost surely, \(R_\omega [\phi ]\) is in \(L^p_{loc}(\mathbb {R}^3)\). Also, for \(\phi \in L^\infty (\Omega )\), one finds that almost surely \(R_\omega [\phi ] \in L^\infty _{loc}(\mathbb {R}^3)\). It is a consequence of Fatou’s lemma: for all \(R > 0\),

We say that \(\phi \) is smooth if, almost surely, \(R_\omega [\phi ]\) is. For a smooth function \(\phi \), we can define its stochastic gradient \({\nabla }_\omega \phi \) by the formula

where here and below, \({\nabla }= {\nabla }_y\) refers to the usual gradient (in space). Note that \({\nabla }_\omega \phi (\tau _y \omega ) = {\nabla }R_\omega [\phi ](y)\). One can define similarly the stochastic divergence, curl, etc, and reiterate to define partial stochastic derivatives \({\partial }^\alpha _\omega \).

Starting from a function \(V \in L^p(\Omega )\), \(p \in [1,+\infty ]\) one can build smooth functions through convolution. Namely, for \(\rho \in C^\infty _c(\mathbb {R}^3)\), one can define

which is easily seen to be in \(L^p(\Omega )\), as

using that \(\tau _y\) is measure-preserving. Moreover, it is smooth: we leave it to the reader to check that

We are now ready to introduce the functional spaces we need. We set

We recall that \(S^\eta = \hbox {div}~\Psi ^\eta \), with \(\Psi ^\eta \) defined in (3.21). We introduce

Note that it is well-defined, as \(\Psi ^\eta \) is supported in \(B_\eta \) and \(\omega \) is a discrete subset. It is measurable: indeed, \(\Psi ^\eta \) is the pointwise limit of a sequence of simple functions of the form \(\sum _i \alpha _i 1_{A_i}\), where \(A_i\) are Borel subsets of \(\mathbb {R}^3\). As

is measurable by definition of the \(\sigma \)-algebra \(\mathcal {A}\), we find that \(\mathsf {\Pi }^\eta \) is. Moreover, as \(\Lambda \) is uniformly well-separated, one has \(|\mathsf {\Pi }^\eta (\omega )| \leqq C \Vert \Psi ^\eta \Vert _{L^\infty }\) for a constant C that does not depend on \(\omega \), so that \(\mathsf {\Pi }^\eta \) belongs to \(L^\infty (\Omega )\).

We now introduce the variational formulation: find \(D_\mathbf{H}^\eta \in \mathcal {V}_\sigma \) such that for all \(D_\phi \in \mathcal {V}_\sigma \),

As \(\mathcal {V}_\sigma \) is a closed subspace of \(L^2(\Omega )\), existence and uniqueness of a solution comes from the Riesz theorem.

It remains to build a solution of (4.5) almost surely, based on \(D_\mathbf{H}^\eta \). Let \(\phi _k = \phi _k(\omega )\) be a sequence in \(\mathcal {D}_\sigma \) such that \({\nabla }_\omega \phi _k\) converges to \(D_\mathbf{H}^\eta \) in \(L^2(\Omega )\). Let \(\rho \in C^\infty _c(\mathbb {R}^3)\). It is easily seen that \(\rho \star \phi _k\) also belongs to \(\mathcal {D}_\sigma \) and that \({\partial }^\alpha _\omega {\nabla }_\omega (\rho \star \phi _k) = {\partial }^\alpha _\omega (\rho \star {\nabla }_\omega \phi _k)\) converges to the smooth function \({\partial }^\alpha _\omega (\rho \star D_\mathbf{H}^\eta )\) in \(L^2(\Omega )\), for all \(\alpha \). In particular, as \({\nabla }_\omega \times {\nabla }_\omega (\rho \star \phi _k) = 0\), we find that \({\nabla }_\omega \times (\rho \star D_\mathbf{H}^\eta ) = 0\). Applying the realization operator \(R_\omega \), we deduce that

We recall that \(R_\omega [D_\mathbf{H}^\eta ]\) belongs almost surely to \(L^2_{loc}(\mathbb {R}^3)\), so that \({\nabla }\times R_\omega [D_\mathbf{H}^\eta ]\) is well-defined in \(H^{-1}_{loc}(\mathbb {R}^3)\). Taking \(\rho = \rho _n\) an approximation of the identity, and sending n to infinity, we end up with \({\nabla }\times R_\omega [D_\mathbf{H}^\eta ] = 0\) in \(\mathbb {R}^3\). As curl-free vector fields on \(\mathbb {R}^3\) are gradients, it follows that almost surely, there exists \(\mathbf{H}^\eta = \mathbf{H}^\eta (\omega , y)\) with

In the case \(X = \mathbb {T}_L\), one can show that the mean of \(R_\omega [D_\mathbf{H}^\eta ]\) is almost surely zero, so that the same result holds. In addition, because the matrices \({\nabla }_\omega \phi \), \(\phi \in \mathcal {D}_\sigma \), have zero trace, the same holds for \(D_\mathbf{H}^\eta \). Hence,

One still has to prove that the first equation of (4.5) is satisfied. To this end, we use (4.12) with the test function \(D_\phi = {\nabla }_\omega \phi \), where the smooth function \(\phi \) is of the form

Note that for smooth functions \(\varphi , \tilde{\varphi }\), a stochastic integration by parts formula holds:

Thanks to this formula, we may write

Similarly, we find

As this identity is valid for all smooth test fields \(\varphi \), we end up with

Proceeding as above, we find that, almost surely,

which can be written as

It follows that there exists \(\mathbf{P}^\eta = \mathbf{P}^\eta (\omega ,y)\) such that

which concludes the proof of the proposition. \(\square \)

Corollary 4.9

For random point processes with properties (P1)–(P2)–(P3), there exists almost surely a solution H of (4.3) with finite renormalized energy and such that for all \(\eta > 0\), the gradient field \({\nabla }H^\eta \), where \(H^\eta \) is given by (4.4), coincides with the gradient field \({\nabla }\mathbf{H}^\eta \) of Proposition 4.7. Moreover,

where \(m := \mathbb {E}|\Lambda \cap K_1|\) is the mean intensity of the point process, the expression on the right-hand side being in fact constant for \(\eta \) small enough.

Proof

By the definition of the mean intensity and by property (P2), which allows us to apply the ergodic theorem (cf. [12, Corollary 12.2.V]), we have, almost surely, that

Let \(\eta _0 < \frac{\min (c,1)}{4}\) be fixed, and let \(\mathbf{H}^{\eta _0}\) be given by the previous proposition. We set

It is clearly an admissible solution of (4.3). By Proposition 4.4, in order to show that H has almost surely finite renormalized energy, it is enough to show that for some \(\eta < \frac{\min (c,1)}{4}\), almost surely, the function \(H^\eta \) given by (4.4), namely,

has finite renormalized energy. This holds for \(\eta = \eta _0\), as \(H^{\eta _0} = \mathbf{H}^{\eta _0}\) and the ergodic theorem applies. We then notice that

We remark that \(G^\eta _S- G_S^{\eta _0} = 0\) outside \(B_{\max (\eta ,\eta _0)}\), so that the sum on the right-hand side has only a finite number of non-zero terms. In the same way as we proved that the function \(\mathsf {\Pi }^\eta \) belongs to \(L^\infty (\Omega )\), we get that \(\sum _{z \in \omega } {\nabla }(G^\eta _S- G_S^{\eta _0})(z)\) defines an element of \(L^\infty (\Omega )\). Hence, by the ergodic theorem, we have, almost surely, that

Combining this with (4.13) and Proposition 4.4, we obtain the formula for \(\mathcal {W}({\nabla }H)\).

The last step is to prove that for all \(\eta > 0\), \({\nabla }H^\eta = {\nabla }\mathbf{H}^\eta \) almost surely. As a consequence of the ergodic theorem, one has, almost surely, that

Reasoning as in the proof of Proposition 4.3, we find that their gradients differ by a constant:

Applying again the ergodic theorem, we get that almost surely \( \mathbb {E}D_{H}^\eta = \mathbb {E}D_\mathbf{H}^\eta + C(\omega )\). As \(D_\mathbf{H}^\eta \) belongs to \(\mathcal {V}_\sigma \), its expectation is easily seen to be zero. To conclude, it remains to prove that \(\mathbb {E}D_{H}^\eta = \mathbb {E}\sum _{z \in \omega } {\nabla }(G_S^\eta - G_S^{\eta _0})(z)\) is zero. Using stationarity, we write, for all \(R > 0\),

We remark that for all z outside a \(\max (\eta , \eta _0)\)-neighborhood of \({\partial }K_R\), \( \int _{K_R} {\nabla }(G_S^\eta - G_S^{\eta _0})(z+\cdot ) = \int _{{\partial }K_R} n \otimes (G_S^\eta - G_S^{\eta _0})(z+\cdot ) = 0\). It follows from the separation assumption and the \(L^\infty \) bound on \({\nabla }(G_S^\eta - G_S^{\eta _0})\) that

\(\square \)

5 Convergence of \(\mathcal {V}_N\)

This section concludes our analysis of the quadratic correction to the effective viscosity. From Theorem 1.1, we know that this quadratic correction should be given by the limit of \(\mathcal {V}_N\) as N goes to infinity, where \(\mathcal {V}_N\) was introduced in (1.13). We show here that the functional \(\mathcal {V}_N\) has indeed a limit, when the particles are given by the kind of stationary point processes seen in Section 4.

5.1 Proof of Convergence

Let \({\varepsilon }> 0\) be a small parameter, and let \(\Lambda = \Lambda (\omega )\) be a random point process with properties (P1)–(P2)–(P3): stationarity, ergodicity, and uniform separation. As seen in Examples 4.5 and 4.6, this setting covers the case of periodic patterns of points as well as classical hard core processes. We denote by \(N = N({\varepsilon })\) the cardinality of the set

where \(\check{\Lambda } := -\Lambda \) and where we label the elements arbitrarily. Note that N depends on \(\omega \), although it does not appear explicitly. From the fact that \(\Lambda \) is uniformly well-separated and from the ergodic theorem (cf. [12, Corollary 12.2.V]), we can deduce that, almost surely,

so that we shall write interchangeably \(\lim _{{\varepsilon }\rightarrow 0}\) or \(\lim _{N \rightarrow +\infty }\). Note that, strictly speaking, \(N = N({\varepsilon })\) does not necessarily take all integer values when \({\varepsilon }\rightarrow 0\), but this causes no difficulty.
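As a sanity check of this scaling in the simplest periodic case, the following sketch counts the points of the cubic lattice \({\varepsilon }\mathbb {Z}^3\) (mean intensity \(m = 1\)) that lie in the unit ball, and compares \(N {\varepsilon }^3\) with \(m |\mathcal {O}| = \frac{4\pi }{3}\). The choice of lattice, the choice \(\mathcal {O} = B(0,1)\), the restriction to \({\varepsilon }= 1/k\) with k an integer, and the function names are illustrative assumptions. The limit \(N {\varepsilon }^3 \rightarrow m |\mathcal {O}|\) is the form of (5.1) suggested by its later use in this section, not a quotation of it.

```python
import math


def count_lattice_points_in_ball(inv_eps: int) -> int:
    """Count points of eps * Z^3 in the closed unit ball, with eps = 1 / inv_eps.

    This equals the number of integer triples (i, j, k) with
    i^2 + j^2 + k^2 <= inv_eps^2, so the arithmetic stays exact.
    """
    r2 = inv_eps * inv_eps
    count = 0
    for i in range(-inv_eps, inv_eps + 1):
        for j in range(-inv_eps, inv_eps + 1):
            rem = r2 - i * i - j * j
            if rem < 0:
                continue
            # Integers k with k^2 <= rem are exactly -isqrt(rem), ..., isqrt(rem).
            count += 2 * math.isqrt(rem) + 1
    return count


def scaled_count(inv_eps: int) -> float:
    """Return N * eps^3, to be compared with m * |O| = 4 * pi / 3 for m = 1."""
    return count_lattice_points_in_ball(inv_eps) / inv_eps**3


if __name__ == "__main__":
    target = 4.0 * math.pi / 3.0
    for inv_eps in (5, 10, 20, 40):
        print(inv_eps, scaled_count(inv_eps), target)
```

The discrepancy between \(N {\varepsilon }^3\) and \(|\mathcal {O}|\) comes from lattice cells that meet \({\partial }\mathcal {O}\), which is the same boundary-layer effect that produces the lower-order terms in the counting arguments of this section.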

More generally, for all \(\varphi \) smooth and compactly supported in \(\mathbb {R}^3\), ergodicity implies

which shows that (H1) is satisfied with \(f = \frac{1}{|\mathcal {O}|} 1_{\mathcal {O}}\). The hypothesis (H2) is also trivially satisfied, as well as (3.1). Our main theorem is

Theorem 5.1

Almost surely,

with m the mean intensity of the process, and H the solution of (4.3) given in Corollary 4.9.

The rest of this subsection is dedicated to the proof of this theorem.

Let \(\eta \) satisfy \(\eta < \frac{\min (c,1)}{4}\) and \(\eta < \frac{c}{2} (m|\mathcal {O}|)^{-1/3}\). By (5.1), it follows that, almost surely, for \({\varepsilon }\) small enough, \({\varepsilon }\eta < \frac{c}{2} N^{-1/3}\). By Corollary 3.9,

We denote \(h_{\varepsilon }^\eta := h_N^{\eta {\varepsilon }}\), see (3.25)–(3.26). Let H be the solution of the blown-up system (4.3) provided by Corollary 4.9, \(H^\eta \) given in (4.4), and \(P^\eta \) as in (4.5). We define new fields \(\bar{h}^\eta _{\varepsilon }, \bar{p}^\eta _{\varepsilon }\) by the following conditions: \(\bar{h}^\eta _{\varepsilon }\in \dot{H}^1(\mathbb {R}^3)\),

We omit the dependence on \(\omega \) to lighten notation. We claim

Proposition 5.2

Proposition 5.3

Note that, by Proposition 4.4 and our choice of \(\eta \), \(\mathcal {W}^\eta ({\nabla }H) = \mathcal {W}({\nabla }H)\). Theorem 5.1 follows directly from this fact, (5.2), and the propositions.

Proof of Proposition 5.2.

We know from Corollary 4.9 that

From this and relation (5.1), we see that the proposition amounts to the statement

A simple application of the ergodic theorem shows that, almost surely,

It remains to show that

It will be deduced from the well-known fact that the Stokes solution \(\bar{h}^\eta _{\varepsilon }\) minimizes

among divergence-free fields \(\bar{h}\) in \(\mathrm{ext} \,\mathcal {O}\) satisfying the Dirichlet condition \(\bar{h}\vert _{{\partial }\mathcal {O}} = \bar{h}^\eta _{\varepsilon }\vert _{{\partial }\mathcal {O}}\).
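For completeness, here is a sketch of why this minimization property holds. It assumes that the functional in question is the Dirichlet energy \(\int _{\mathrm{ext} \,\mathcal {O}} |{\nabla }\bar{h}|^2\) (an assumption on its precise form and normalization), and that competitors decay enough at infinity to integrate by parts. Write \(\bar{h} = \bar{h}^\eta _{\varepsilon }+ w\), with \(\hbox {div}~w = 0\) in \(\mathrm{ext} \,\mathcal {O}\) and \(w\vert _{{\partial }\mathcal {O}} = 0\). Expanding the square,

\[
\int _{\mathrm{ext} \,\mathcal {O}} |{\nabla }\bar{h}|^2 = \int _{\mathrm{ext} \,\mathcal {O}} |{\nabla }\bar{h}^\eta _{\varepsilon }|^2 + \int _{\mathrm{ext} \,\mathcal {O}} |{\nabla }w|^2 + 2 \int _{\mathrm{ext} \,\mathcal {O}} {\nabla }\bar{h}^\eta _{\varepsilon }: {\nabla }w.
\]

As \((\bar{h}^\eta _{\varepsilon }, \bar{p}^\eta _{\varepsilon })\) solves a homogeneous Stokes equation in \(\mathrm{ext} \,\mathcal {O}\), normalized here as \(-\Delta \bar{h}^\eta _{\varepsilon }+ {\nabla }\bar{p}^\eta _{\varepsilon }= 0\), the cross term vanishes:

\[
\int _{\mathrm{ext} \,\mathcal {O}} {\nabla }\bar{h}^\eta _{\varepsilon }: {\nabla }w = - \int _{\mathrm{ext} \,\mathcal {O}} \Delta \bar{h}^\eta _{\varepsilon }\cdot w = - \int _{\mathrm{ext} \,\mathcal {O}} {\nabla }\bar{p}^\eta _{\varepsilon }\cdot w = \int _{\mathrm{ext} \,\mathcal {O}} \bar{p}^\eta _{\varepsilon }\, \hbox {div}~w = 0,
\]

where the boundary terms drop out because w vanishes on \({\partial }\mathcal {O}\). The energy of any competitor therefore exceeds that of \(\bar{h}^\eta _{\varepsilon }\) by \(\int _{\mathrm{ext} \,\mathcal {O}} |{\nabla }w|^2 \geqq 0\).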

First, we prove that the \(H^{1/2}(\partial \mathcal {O})\)-norm of \({\varepsilon }^3 \bar{h}^\eta _{\varepsilon }\) goes to zero. To this end, we introduce for all \(\delta > 0\) a function \(\chi _\delta \) with \(\chi _\delta = 1\) in a \(\frac{\delta }{2}\)-neighborhood of \({\partial }\mathcal {O}\), \(\chi _\delta = 0\) outside a \(\delta \)-neighborhood of \({\partial }\mathcal {O}\). We write

By the ergodic theorem and Corollary 4.9, \({\varepsilon }^3 {\nabla }\bar{h}^\eta _{\varepsilon }= {\nabla }_y H^\eta (\frac{\cdot }{{\varepsilon }})\) converges almost surely weakly in \(L^2(\mathcal {O})\) to \(\mathbb {E}D_{\mathbf {H}^{\eta }} = 0\). Let \(\varphi \in L^2(\mathcal {O})\). By standard results on the divergence operator, cf. [16], there exists \(v \in H^1_0(\mathcal {O})\) with \(\hbox {div}~v = \varphi - \fint _{\mathcal {O}} \varphi \) and \(\Vert v\Vert _{H^1(\mathcal {O})} \leqq C_\mathcal {O}\Vert \varphi \Vert _{L^2(\mathcal {O})}\). As by definition \(\bar{h}^\eta _{\varepsilon }\) has zero mean over \(\mathcal {O}\), it follows that

Hence, \({\varepsilon }^3 \bar{h}^\eta _{\varepsilon }\) converges weakly to zero in \(H^1(\mathcal {O})\) and therefore strongly in \(L^2(\mathcal {O})\). It follows that, for any given \(\delta \),

To conclude, it is enough to show that \(\limsup _{{\varepsilon }\rightarrow 0} \Vert {\varepsilon }^3 {\nabla }\bar{h}^\eta _{\varepsilon }\chi _\delta \Vert _{L^2(\mathcal {O})}\) goes to zero as \(\delta \rightarrow 0\). This comes from

Finally, \(\Vert {\varepsilon }^3 \bar{h}^\eta _{\varepsilon }\Vert _{H^{1/2}({\partial }\mathcal {O})} = o(1)\). To conclude that (5.3) holds, we notice that

By classical results on the right inverse of the divergence operator, see [16], one can find for R such that \(\mathcal {O}\Subset B(0,R)\) a solution \(\bar{h}\) of the equation

and such that

Extending \(\bar{h}\) by zero outside B(0, R), we find

This concludes the proof of the proposition. \(\quad \square \)

Proof of Proposition 5.3.

Let \(h := h_{\varepsilon }^\eta - \bar{h}_{\varepsilon }^\eta \). It satisfies an equation of the form

where the various source terms will now be defined. First,

Here, the value of the stress is taken from \(\mathrm{ext} \,\mathcal {O}\), n refers to the normal vector pointing outward from \(\mathcal {O}\) and \(s_{{\partial }}\) refers to the surface measure on \({\partial }\mathcal {O}\). We recall that \(\bar{h}^\eta _{\varepsilon }\in \dot{H}^1(\mathbb {R}^3)\) does not jump at the boundary, but its derivatives do, so that one must specify from which side the stress is considered. Then,

with the value of the stress taken from \(\mathcal {O}\), and n as before. Noticing that \( S {\nabla }f = - \frac{1}{|\mathcal {O}|} Sn \, s_{\partial }\), we finally set

where

Note that the term \(R_3\) is supported in pieces of spheres. From (3.20), we know that for all \(\eta > 0\),

This allows us to show that the integral of \(R_2+R_3\) is zero. Indeed,

so that

The point is now to prove that \({\varepsilon }^3 \Vert {\nabla }h\Vert _{L^2(\mathbb {R}^3)} \rightarrow 0\) as \({\varepsilon }\rightarrow 0\). From a simple energy estimate, and taking (5.7) into account, we find

As \((\bar{h}_{\varepsilon }^\eta , \bar{p}_{\varepsilon }^\eta )\) is a solution of a homogeneous Stokes equation in \(\mathrm{ext} \,\mathcal {O}\), we get, from an integration by parts, that

using the Cauchy–Schwarz inequality and the bound (5.5).

We now wish to show that

for some \(\nu ({\varepsilon })\) going to zero with \({\varepsilon }\). More precisely, we will prove that for any divergence-free \(\varphi \in \dot{H}^1(\mathbb {R}^3)\),

which implies (5.10), by the Poincaré inequality. We first notice that

where

Then, we use the relation \(S^\eta = \hbox {div}~\Psi ^\eta \), cf. Lemma 3.5, and integrate by parts to get

For a fixed \(\eta \), there is a constant C (depending on \(\eta \)) such that

For the last inequality, we have used that all \(x_i\)’s with \(i \in I_{\varepsilon }^\eta \) belong to an \({\varepsilon }\)-neighborhood of \({\partial }\mathcal {O}\), so that \(|I_{\varepsilon }^\eta | = O({\varepsilon }^{-2})\). Hence,

Let

We claim that \(\mathbb {E}\int _{K_1} F_3 = 0\). Indeed, by stationarity, for all \(R > 0\)

We have used crucially the fact that \(\Psi ^\eta \) is supported in \(B(0,\eta )\). The \(O(\frac{1}{R})\)-term is associated to the points \(z \in \Lambda \) which lie in a \(\delta \)-neighborhood of \({\partial }K_R\): see the end of the proof of Corollary 4.9 for similar reasoning. By sending R to infinity, we find that, almost surely,