Abstract

Let \(\Omega \subset {\mathbb {R}}^3\) be a domain and let \(f\in BV_{{\text {loc}}}(\Omega ,{\mathbb {R}}^3)\) be a homeomorphism such that its distributional adjugate is a finite Radon measure. We show that its inverse has locally bounded variation, \(f^{-1}\in BV_{{\text {loc}}}\). The condition that the distributional adjugate is a finite measure is not only sufficient but also necessary for the weak regularity of the inverse.

1 Introduction

Suppose that \(\Omega \subset {\mathbb {R}}^n\) is an open set and let \(f :\Omega \rightarrow f(\Omega )\subset {\mathbb {R}}^n\) be a homeomorphism. In this paper we address the issue of the weak regularity of \(f^{-1}\) under regularity assumptions on f.

The classical inverse function theorem states that the inverse of a \(C^1\)-smooth homeomorphism f is again a \(C^1\)-smooth homeomorphism, under the assumption that the Jacobian \(J_f\) is strictly positive. In this paper we address the question whether the inverse of a Sobolev or BV-homeomorphism is a BV function or even a Sobolev function. This problem is of particular importance as Sobolev and BV spaces are commonly used as initial spaces for existence problems in PDEs and the calculus of variations. For instance, elasticity is a typical field where both invertibility problems and Sobolev (or BV) regularity issues are relevant (see for example [2, 4, 29]).

The problem of the weak regularity of the inverse has attracted considerable attention in the past decade. It started with the result of [20, 23] where it was shown that for homeomorphisms in dimension \(n=2\) we have

This result has been generalized to \({\mathbb {R}}^n\) in [6] where it was shown that a homeomorphism \(f\in W^{1,n-1}\) has an inverse \(f^{-1}\in BV\).

It is natural to study the sharpness of the assumption \(f\in W^{1,n-1}\). It was shown in [22] that the assumption \(f\in W^{1,n-1-\varepsilon }\) (or any weaker Orlicz–Sobolev assumption) is not sufficient in general. Furthermore, by results of [9] we know that for \(f\in W^{1,n-1}\) we have not only \(f^{-1}\in BV\) but also the total variation of the inverse satisfies

where \({\text {adj}}Df\) denotes the adjugate matrix, that is the matrix of \((n-1)\times (n-1)\) subdeterminants. However, for \(n\ge 3\) it is possible to construct a \(W^{1,1}\) homeomorphism with \({\text {adj}}Df\in L^1\) such that \(f^{-1}\notin BV\) (see [22]), so the pointwise adjugate does not carry enough information about the regularity of the inverse. The main trouble in the example from [22] is that the pointwise adjugate does not capture some singular behavior on a set of measure zero.

On the other hand, the results of [6] are not perfect as they cannot be applied to even very simple mappings. Let c(x) denote the usual Cantor ternary function; then \(h(x)=x+c(x)\) is a BV homeomorphism and its inverse \(g=h^{-1}\) is even Lipschitz. It is easy to check that

$$\begin{aligned} f(x,y,z)=[h(x),y,z] \end{aligned}$$

is a BV homeomorphism and its inverse \(f^{-1}(x,y,z)=[g(x),y,z]\) is Lipschitz, but the results of [6] cannot be applied as f is not Sobolev. In this paper we obtain a new result in dimension \(n=3\) about the regularity of the inverse which generalizes the result of [6] and can be applied to the above mapping.
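The one-dimensional building block of this example can be explored numerically. The following Python sketch is an illustration only: the names `cantor`, `h` and `g`, the digit depth and the bisection inverse are assumptions made here and do not come from the paper. It approximates c from ternary digits and checks on a grid that \(h(b)-h(a)\ge b-a\) for \(a<b\), which is exactly what makes \(g=h^{-1}\) 1-Lipschitz.

```python
from fractions import Fraction


def cantor(x, depth=40):
    """Approximate the Cantor ternary function c on [0, 1] from ternary digits."""
    if x <= 0:
        return 0.0
    if x >= 1:
        return 1.0
    value, scale = 0.0, 0.5
    for _ in range(depth):
        x *= 3
        digit = int(x)                  # next ternary digit of x
        x -= digit
        if digit == 1:                  # closure of a removed middle third: c is constant
            return value + scale
        value += scale * (digit // 2)   # ternary digit 2 becomes binary digit 1
        scale *= 0.5
    return value


def h(x):
    """h(x) = x + c(x), an increasing continuous map of [0, 1] onto [0, 2]."""
    return float(x) + cantor(x)


def g(y, iterations=60):
    """Approximate inverse of h on [0, 2], computed by bisection."""
    lo, hi = 0.0, 1.0
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if h(mid) < y:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Exact rational grid points avoid floating point noise in the digit extraction.
xs = [Fraction(i, 300) for i in range(301)]
increments_ok = all(h(b) - h(a) >= float(b - a) - 1e-9 for a, b in zip(xs, xs[1:]))
```

Since c is nondecreasing, the increment of h dominates the increment of x. The sketch does not show that h fails to be Sobolev; that part comes from the singular part of the derivative of c.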

It is well-known that in models of Nonlinear Elasticity and in Geometric Function Theory the usual pointwise Jacobian does not carry enough information about the mapping and it is necessary to work with the distributional Jacobian; see for example [2, 5, 21, 24, 25, 27]. This distributional Jacobian captures the behavior on zero measure sets and can be used to model for example cavitations of the mapping; see for example [16, 17, 28, 29]. In the same spirit we introduce the notion of the distributional adjugate \(\mathcal {ADJ}\, Df\) (see Definition 1.4 below) and we show that the right assumption for the regularity of the inverse is that \(\mathcal {ADJ}\, Df\in {\mathcal {M}}(\Omega ,{\mathbb {R}}^{3\times 3})\), where \({\mathcal {M}}(\Omega )\) denotes finite Radon measures on \(\Omega \).

Furthermore, we need to add the technical assumption that the Lebesgue area (see (2.2) below) of the image of a.e. hyperplane parallel to the coordinate axes is finite. Let us recall that the Hausdorff measure is never smaller than the Lebesgue area (see Sect. 2.4):

so it is enough to assume the finiteness of the Hausdorff measure of the image. Our main result is the following:

Theorem 1.1

Let \(\Omega \subset {\mathbb {R}}^3\) be a domain and \(f\in BV_{{\text {loc}}}(\Omega ,{\mathbb {R}}^3)\) be a homeomorphism such that \(\mathcal {ADJ}\, Df\in {\mathcal {M}}(\Omega ,{\mathbb {R}}^{3\times 3})\) and assume further that for a.e. t we have

Then \(f^{-1}\in BV_{{\text {loc}}}(f(\Omega ),{\mathbb {R}}^3)\).

If we moreover know that the image of the measure \(f(\mathcal {ADJ}\, Df)\) is absolutely continuous with respect to Lebesgue measure, then \(f^{-1}\in W_{{\text {loc}}}^{1,1}(f(\Omega ),{\mathbb {R}}^3)\).

It would be very interesting to see if the assumption (1.2) can be removed. A similar extra assumption was made in [8, Theorem 14].

To show that our result generalizes the aforementioned result of [6] we notice first that for homeomorphisms in \(W^{1,n-1}\) the distributional adjugate \(\mathcal {ADJ}\, Df\) is equal to the pointwise adjugate \({\text {adj}}Df\) (see [21, Proposition 2.10]). The main part of the proof in [6] was to show that f maps \({\mathcal {H}}^{n-1}\) null sets on almost every hyperplane to \({\mathcal {H}}^{n-1}\) null sets. This implies (1.2) for \(W^{1,n-1}\)-homeomorphisms. This property of null sets on hyperplanes may fail in our setting, so our proof is more subtle and we have to use delicate tools of Geometric Measure Theory.

Moreover, our assumptions are not only sufficient but also necessary for the weak regularity of the inverse.

Theorem 1.2

Let \(\Omega \subset {\mathbb {R}}^3\) be a domain and \(f\in BV(\Omega ,{\mathbb {R}}^3)\) be a homeomorphism such that \(f^{-1}\in BV(f(\Omega ),{\mathbb {R}}^3)\). Then \(\mathcal {ADJ}\, Df\in {\mathcal {M}}(\Omega ,{\mathbb {R}}^{3\times 3})\) and for a.e. t we have

In an upcoming article [19] these results are further refined. There the total variation of the distributional adjugate is shown to equal the total variation of the derivative of the inverse mapping. Moreover, some simple ways of verifying the key assumption \(\mathcal {ADJ}\, Df\in {\mathcal {M}}(\Omega ,{\mathbb {R}}^{3\times 3})\) are presented there.

Now we give the formal definition of the distributional adjugate. Without loss of generality we can assume that \(\Omega =(0,1)^3\) as all statements are local.

Definition 1.3

Let \(f=(f_1,f_2,f_3) :(0,1)^3\rightarrow {\mathbb {R}}^3\) be a homeomorphism in BV. For \(t\in (0,1)\) we define

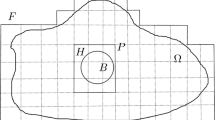

We can split these mappings into 9 mappings from \((0,1)^{2}\rightarrow {\mathbb {R}}^{2}\) using their coordinate functions. Given \(k,j\in \{1,2,3\}\) choose \(a,b\in \{1,2,3\}{\setminus }\{j\}\) with \(a<b\) and define

see Fig. 1. For example,

Fig. 1. The definition of the nine 2-dimensional restrictions \(f^t_{k,j}\) of a mapping \(f :(0,1)^3 \rightarrow {\mathbb {R}}^3\) in Definition 1.3

Now we recall the definition of distributional Jacobian and, using it, define the distributional adjugate.

Definition 1.4

Let f be as in Definition 1.3. For mappings \(f^t_{k,j}\) we consider the usual distributional Jacobian (see for example [21, Sect. 2.2]), that is the distribution

This distribution is well-defined for homeomorphisms in \(W^{1,1}\). It is also well-defined for homeomorphisms in BV for \(n=3\): we just consider the integral with respect to the corresponding measure \(d(\partial _l f^{t}_{k,j})_{2}(x)\) instead of \((\partial _l f^{t}_{k,j})_2(x)\; \mathrm{d}x\), and for example we define

Assume that these \(3\times 3\) distributions \({\mathcal {J}}_{f^{t}_{k,j}}\) are measures for a.e. \(t\in (0,1)\) and for measurable \(A\subset (0,1)^3\) we set

We say that \(\mathcal {ADJ}\, Df\in {\mathcal {M}}(\Omega ,{\mathbb {R}}^{3\times 3})\) if the distributions \({\mathcal {J}}_{f^{t}_{k,j}}\) are measures for a.e. \(t\in (0,1)\) and \((\mathcal {ADJ}\, Df)_{k,j}\in {\mathcal {M}}(\Omega )\) for every \(k,j\in \{1,2,3\}\).

A priori it seems that the definition of the distributional adjugate is dependent on the choice of coordinates. This turns out not to be the case and we discuss this further in Sect. 6.1.

2 Preliminaries

The total variation of a measure \(\mu \) is the measure \(|\mu |\) such that

For a domain \(\Omega \subset {\mathbb {R}}^n\) we denote by \(C^\infty _0(\Omega )\) those smooth functions \(\varphi \) whose support is compactly contained in \(\Omega \), that is \({\text {supp}}\varphi \subset \subset \Omega \).

2.1 Mollification

We will need to approximate continuous BV mappings with smooth maps. To this end we recall here the basic definitions of convolution and mollifiers for the reader’s convenience; for a more detailed treatise on the basics and connections to BV mappings we refer to [1, Sects. 2.1 and 3.1].

A family of mappings \((\rho _\varepsilon ) \in C^\infty ({\mathbb {R}}^n, {\mathbb {R}})\) is called a family of mollifiers if for all \(\varepsilon >0\) we have \(\rho _\varepsilon (x) = \varepsilon ^{-n} \rho (x / \varepsilon )\), where \(\rho \in C^\infty ({\mathbb {R}}^n, {\mathbb {R}})\) is a non-negative mapping satisfying \({\text {supp}}\rho \subset B({0},1)\), \(\rho (-x) = \rho (x)\) and \(\int _{{\mathbb {R}}^n} \rho = 1\). We will sometimes use a sequence of mollifiers \((\rho _j)\), in which case we tacitly assume that there is a family of mollifiers \(({{\tilde{\rho }}}_\varepsilon )\) from which we extract the sequence \((\rho _j)\) by setting \(\rho _j {:}{=}{{\tilde{\rho }}}_{\frac{1}{j} }\).

For \(\Omega \subset {\mathbb {R}}^n\) and any two functions \(f :\Omega \rightarrow {\mathbb {R}}^m\), \(g :\Omega \rightarrow {\mathbb {R}}\) we set their convolution to be

whenever the integral exists. Likewise, for an \({\mathbb {R}}^m\)-valued Radon measure \(\mu \) defined on \(\Omega \) and a function \(g :\Omega \rightarrow {\mathbb {R}}\) we define their convolution as

whenever the integral exists.

For a function \(f :\Omega \rightarrow {\mathbb {R}}^m\) or a Radon measure \(\mu \) defined on \(\Omega \) we define their (family of) mollifications to be the families \((f_\varepsilon ) {:}{=}(f *\rho _\varepsilon )\) and \((\mu _\varepsilon ){:}{=}(\mu *\rho _\varepsilon )\), respectively, where \((\rho _\varepsilon )\) is a family of mollifiers. Similarly we define the sequence of mollifications as \((f_j) {:}{=}(f *\rho _j)\) and \((\mu _j) {:}{=}(\mu *\rho _j)\). For our purposes the exact family of mollifiers does not matter, so we tacitly assume that some such family has been given whenever we use mollifications.
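As a concrete one-dimensional illustration of these definitions, the following Python sketch builds a family of mollifiers from the standard bump function and mollifies \(f(x)=|x|\). The choice of bump, the midpoint quadrature and all function names are assumptions made for this example; the text only requires some family with the stated properties. For a 1-Lipschitz f one has \(|f*\rho _\varepsilon - f|\le \varepsilon \) pointwise, which the sketch checks on a grid.

```python
import math


def bump(x):
    """Smooth, even, non-negative function supported in [-1, 1]."""
    return math.exp(-1.0 / (1.0 - x * x)) if abs(x) < 1.0 else 0.0


def integrate(func, a, b, n=2000):
    """Midpoint rule on [a, b] with n subintervals."""
    step = (b - a) / n
    return step * sum(func(a + (i + 0.5) * step) for i in range(n))


# Normalizing constant so that the mollifier has (numerical) integral one.
BUMP_MASS = integrate(bump, -1.0, 1.0)


def rho(x, eps):
    """rho_eps(x) = eps^{-1} rho(x / eps) in dimension n = 1."""
    return bump(x / eps) / (eps * BUMP_MASS)


def mollify(f, x, eps):
    """(f * rho_eps)(x), integrating over the support of rho_eps."""
    return integrate(lambda y: f(x - y) * rho(y, eps), -eps, eps)


grid = [i / 20 - 1 for i in range(41)]
scales = (0.2, 0.1, 0.05)
errors = [max(abs(mollify(abs, x, eps) - abs(x)) for x in grid) for eps in scales]
```

The list `errors` holds the largest deviation on the grid for each scale; it is attained at the kink \(x=0\) and shrinks proportionally to \(\varepsilon \), in line with the uniform convergence of mollifications of continuous functions.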

2.2 Topological degree

For \(\Omega \subset {\mathbb {R}}^n\) and a given smooth map \(f :\Omega \rightarrow {\mathbb {R}}^n\) we define the topological degree as

if \(J_{f}(x) \ne 0\) for each \(x \in f^{-1}\{y_{0}\}\). This definition can be extended to arbitrary continuous mappings and each point \(y_0\notin f(\partial \Omega )\), see for example [13, Sect. 1.2] or [21, Chapter 3.2]. For our purposes the following property of the topological degree is crucial; see [13, Definition 1.18]:

Lemma 2.1

Let \(\Omega \subset {\mathbb {R}}^n\) be a domain, \(f :\Omega \rightarrow {\mathbb {R}}^n\) a continuous function and U a domain with \({\overline{U}} \subset \Omega \). Then for any point \(y_0 \in {\mathbb {R}}^n{\setminus } f (\partial U)\) and any continuous mapping \(g :\Omega \rightarrow {\mathbb {R}}^n\) with

we have \(\deg (f, U, y_0) = \deg (g, U, y_0)\).

We will also need some classical results concerning the dependence of the degree on the domain. The following result is from [13, Theorem 2.7]:

Lemma 2.2

Let \(\Omega \subset {\mathbb {R}}^n\) be a domain, \(f :\Omega \rightarrow {\mathbb {R}}^n\) a continuous function and U a domain with \({\overline{U}} \subset \Omega \).

(1) (Domain decomposition property) For any domain \(D \subset U\) with a decomposition \(D = \cup _i D_i\) into open disjoint sets, and a point \( p \notin f (\partial D)\), we have

$$\begin{aligned} \deg (f,D,p) = \sum _i \deg (f,D_i,p). \end{aligned}$$

(2) (Excision property) For a compact set \(K \subset {{\overline{U}}}\) and a point \(p \notin f(K \cup \partial U)\) we have \(\deg (f,U,p) = \deg (f,U{\setminus } K,p)\).

The topological degree agrees with the Brouwer degree for continuous mappings, which in turn equals the winding number in the plane. The winding number is an integer expressing how many times the path \(\beta _f {:}{=}f (\partial D)\) circles the point p; indeed, the winding number equals the topological index of the mapping \(\frac{\beta _f - p}{|\beta _f - p|} :{\mathbb {S}}^1 \rightarrow {\mathbb {S}}^1\). We refer to [13, Sect. 2.5] for discussion of the winding number in the setting of holomorphic planar mappings.
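The agreement between the degree of a smooth map at a regular value (the sum of the signs of \(J_f\) over the preimages) and the winding number of the boundary curve can be checked numerically in simple cases. The Python sketch below is only an illustration under assumptions made here: the maps \(z\mapsto z^2\) and \((x,y)\mapsto (x,-y)\) on the unit disc, the point p, the sampling of the circle by 400 points, and all function names are chosen for the example.

```python
import math


def winding_number(path, p):
    """Winding number around p of the closed polygon through the points of path."""
    total = 0.0
    for (ax, ay), (bx, by) in zip(path, path[1:] + path[:1]):
        ax, ay, bx, by = ax - p[0], ay - p[1], bx - p[0], by - p[1]
        # signed angle at p between two consecutive vertices
        total += math.atan2(ax * by - ay * bx, ax * bx + ay * by)
    return round(total / (2 * math.pi))


def degree_from_preimages(preimages, jacobian):
    """Sum of sign(J_f) over the preimages of a regular value (J_f != 0 assumed)."""
    return sum(1 if jacobian(x, y) > 0 else -1 for x, y in preimages)


def square(x, y):
    """f(z) = z^2 written in real coordinates."""
    return (x * x - y * y, 2 * x * y)


def conjugate(x, y):
    """Orientation-reversing reflection."""
    return (x, -y)


circle = [(math.cos(2 * math.pi * k / 400), math.sin(2 * math.pi * k / 400))
          for k in range(400)]
p = (0.25, 0.0)

# z^2 has the two preimages (0.5, 0) and (-0.5, 0) of p, both with J_f = 1 > 0.
deg_square = degree_from_preimages([(0.5, 0.0), (-0.5, 0.0)],
                                   lambda x, y: 4 * (x * x + y * y))
wind_square = winding_number([square(x, y) for x, y in circle], p)
wind_conjugate = winding_number([conjugate(x, y) for x, y in circle], p)
```

Here `deg_square` and `wind_square` both equal 2, while the reflection gives winding number -1, matching the sign of its Jacobian at the single preimage of p.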

2.3 Hausdorff measure

For \(A\subset {\mathbb {R}}^n\) we use the classical definition of the Hausdorff measure (see for example [11])

where

The important ingredient of our proof is the Gustin boxing inequality [14] which states that for each compact set \(K\subset {\mathbb {R}}^n\) we have

2.4 On various areas

Besides homeomorphisms in three dimensions we work with continuous mappings \(g:[0,1]^2\rightarrow {\mathbb {R}}^3.\) A central object is the Hausdorff measure of the image \({\mathcal {H}}^2(g((0,1)^2)),\) but we need to use other finer notions of area. The results of this subsection can be found in the book of Cesari [3] and they also follow by some results of Federer, see for example [12, (13) on p. 93] and references given there.

First we define the Lebesgue area (see [3, 3.1]). Let L be an affine mapping, then for any triangle \(\Delta \) the area of \(L(\Delta )\) is defined in the natural way. For a piecewise linear mapping \(h:[0,1]^2\rightarrow {\mathbb {R}}^3\) we define the Lebesgue area \(A(h, [0,1]^2)\) to be the sum of the areas of the triangles of some triangulation where h is linear in each of these triangles. We define

where \(\mathcal {PH}(g)\) is the collection of all sequences of polyhedral surfaces converging uniformly to g.
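The elementary area of a single polyhedral surface, which is the quantity entering the definition above, is easy to compute. The following Python sketch evaluates it for the piecewise linear interpolant of a map g on a uniform triangulation of \([0,1]^2\). It is a hedged illustration: the triangulation, the two test surfaces and the function names are assumptions of this example, and the code computes the area of one particular approximating sequence, not the infimum over all sequences in \(\mathcal {PH}(g)\).

```python
import math


def triangle_area(p, q, r):
    """Area of the triangle with vertices p, q, r in R^3 (half the cross product norm)."""
    u = [q[i] - p[i] for i in range(3)]
    v = [r[i] - p[i] for i in range(3)]
    cross = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
    return 0.5 * math.sqrt(sum(c * c for c in cross))


def polyhedral_area(g, n):
    """Area of the piecewise linear interpolant of g on a uniform
    triangulation of [0, 1]^2 with 2 * n * n triangles."""
    total = 0.0
    for i in range(n):
        for j in range(n):
            a = g(i / n, j / n)
            b = g((i + 1) / n, j / n)
            c = g((i + 1) / n, (j + 1) / n)
            d = g(i / n, (j + 1) / n)
            # each grid cell is split along its diagonal into two triangles
            total += triangle_area(a, b, c) + triangle_area(a, c, d)
    return total


def plane(u, v):
    """Affine graph; its area over the unit square is sqrt(3)."""
    return (u, v, u + v)


def parabolic(u, v):
    """Parabolic cylinder z = u^2 over the unit square."""
    return (u, v, u * u)


# Classical area of the parabolic cylinder: arc length of z = u^2 on [0, 1].
exact_parabolic = math.sqrt(5) / 2 + math.asinh(2) / 4
approx_parabolic = polyhedral_area(parabolic, 64)
```

For these smooth graphs the polyhedral areas approach the classical surface area from below, because each chord is shorter than the arc it replaces.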

Next we define coordinate mappings \(g_{j}:[0,1]^2\rightarrow {\mathbb {R}}^2\) as

Finally we define (see [3, 9.1])

where S is any finite system of nonoverlapping simple open polygonal regions in \([0,1]^2\) and \(\deg (g_{j},y,A)\) denotes the topological degree of the mapping.

We need the following characterization of the Lebesgue area (see [3, 18.10 and 12.8.(ii)]) which holds for any continuous g

Let us note that these results are highly nontrivial. For example it is possible to construct continuous g such that \(A(g,[0,1]^2)\) is much smaller than \({\mathcal {H}}^2(g([0,1]^2))\) (which may be even infinite) but the result (2.4) is still true. Further for the validity we need only continuity of g and we do not need to assume that \(A(g, [0,1]^2)<\infty \). However, this is only known to hold for two dimensional surfaces in \({\mathbb {R}}^3\) and for higher dimensions the assumption about the finiteness of the Lebesgue area might be needed.

2.5 BV functions and the coarea formula

Let \(\Omega \subset {\mathbb {R}}^n\) be an open domain. A function \(h\in L^1(\Omega )\) is of bounded variation, \(h\in BV(\Omega ),\) if the distributional partial derivatives of h are measures with finite total variation in \(\Omega \), that is there are Radon (signed) measures \(\mu _1,\ldots ,\mu _n\) defined in \(\Omega \) so that for \(i=1,\ldots ,n\), \(|\mu _i|(\Omega )<\infty \) and

for all \(\varphi \in C^{\infty }_0(\Omega ).\) We say that \(f\in L^1(\Omega ,{\mathbb {R}}^n)\) belongs to \(BV(\Omega ,{\mathbb {R}}^n)\) if the coordinate functions of f belong to \(BV(\Omega )\).

Let \(\Omega \subset {\mathbb {R}}^n\) be an open set and \(E\subset \Omega \) be measurable. The perimeter of E in \(\Omega \) is defined as the total variation of \(\chi _E\) in \(\Omega \), that is

We will need the following coarea formula to characterize BV functions (see [1, Theorem 3.40]):

Theorem 2.3

Let \(\Omega \subset {\mathbb {R}}^n\) be open and \(u\in L^1(\Omega )\). Then we have

In particular, \(u\in BV(\Omega )\) if and only if the integral on the right-hand side is finite.
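In one dimension the coarea formula has a simple form that can be tested numerically: for a continuous function on an interval, the perimeter of the superlevel set \(\{u>t\}\) is the number of its boundary points, so the total variation equals the integral over t of the number of crossings of the level t. The Python sketch below checks this for samples of \(u(x)=\sin (2\pi x)\) on \((0,1)\). The sampling, the level grid and the function names are assumptions of this illustration, which covers only the one-dimensional analogue and not Theorem 2.3 itself.

```python
import math


def total_variation(samples):
    """Variation of the piecewise linear interpolant of the samples."""
    return sum(abs(b - a) for a, b in zip(samples, samples[1:]))


def crossings(samples, t):
    """Number of boundary points of {u > t} for the piecewise linear interpolant."""
    return sum((a > t) != (b > t) for a, b in zip(samples, samples[1:]))


def coarea_integral(samples, levels=2000):
    """Midpoint rule in t for the integral of the number of crossings."""
    lo, hi = min(samples), max(samples)
    step = (hi - lo) / levels
    return step * sum(crossings(samples, lo + (k + 0.5) * step)
                      for k in range(levels))


samples = [math.sin(2 * math.pi * i / 400) for i in range(401)]
variation_value = total_variation(samples)
coarea_value = coarea_integral(samples)
```

Both `variation_value` and `coarea_value` are approximately 4: the sine curve rises by 1, falls by 2 and rises by 1 again, and every level strictly between -1 and 1 is crossed exactly twice.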

It is well-known (see for example [1, Proposition 3.62]) that for the coordinate functions of a homeomorphism \(f :\Omega \rightarrow {\mathbb {R}}^n\) we have

Moreover, we have the following version of coarea formula for continuous BV functions by Federer [11, Theorem 4.5.9 (13) and (14) for \(k\equiv 1\)].

Theorem 2.4

Let \(\Omega \subset {\mathbb {R}}^n\) be open and \(u\in BV(\Omega )\) be continuous. Then we have

2.6 BVL condition

Let \(\Omega \subset {\mathbb {R}}^n\) be open and \(f\in L^1(\Omega )\). It is well-known that \(f\in BV(\Omega )\) if and only if it satisfies the BVL condition, that is it has bounded variation on \({\mathcal {L}}^{n-1}\) a.e. line parallel to the coordinate axes, and the variation along these lines is integrable (see for example [1, Remark 3.104]). As a corollary we obtain that a BV function of n variables is a BV function of \(n-1\) variables on \({\mathcal {L}}^1\) a.e. hyperplane parallel to the coordinate axes.

For example for \(n=2\) and \(f\in BV((0,1)^2)\) we have that the function

has bounded (one-dimensional) variation for a.e. \(x\in (0,1)\). Moreover,

where \(|D f_x|\) denotes the (one-dimensional) total variation of \(f_x\) and \(|D_2 f|\) denotes the total variation of the measure \(\frac{\partial {f}}{\partial y}\). A similar identity holds for \(f_y(x){:}{=}f(x,y)\) and \(D_1 f\).
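The slicing statement can be illustrated on two explicit functions on \((0,1)^2\), for which the integral over x of the one-dimensional variations of \(f_x\) can be compared with \(|D_2 f|((0,1)^2)\) computed by hand. In the Python sketch below, the step function \(\chi _{\{y>x\}}\) has \(|D_2 f|((0,1)^2)=1\) (its derivative is concentrated on the diagonal), and \(f(x,y)=xy\) has \(|D_2 f|((0,1)^2)=\int _0^1 x\,\mathrm{d}x=1/2\). The discretization and the names are assumptions made for this example.

```python
def variation(samples):
    """One-dimensional variation of a sampled function."""
    return sum(abs(b - a) for a, b in zip(samples, samples[1:]))


def sliced_variation(f, n=200):
    """Midpoint rule in x for the integral of the variation of y -> f(x, y)."""
    ys = [j / n for j in range(n + 1)]
    total = 0.0
    for i in range(n):
        x = (i + 0.5) / n
        total += variation([f(x, y) for y in ys]) / n
    return total


def step(x, y):
    """Characteristic function of the triangle {y > x}."""
    return 1.0 if y > x else 0.0


def product(x, y):
    return x * y


step_value = sliced_variation(step)        # every slice has a single unit jump
product_value = sliced_variation(product)  # the slice at x has variation x
```

The first function is BV but not Sobolev, and the slicing identity still returns the correct mass of the singular measure \(D_2 f\).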

2.7 Convergence of BV functions

In dimension two, the boundary of a ball B(x, r) is a curve and we will tacitly assume that it is always parametrized with the path

Thus when we speak of the length \(\ell (\partial B(x,r))\) of the boundary of a ball or its image \(f (\partial B(x,r))\) under a mapping f, we mean the length of the curve \(\beta \) or \(f \circ \beta \), respectively. Note that the length of a path \(\gamma :[0,1] \rightarrow {\mathbb {R}}^2\) equals

from which we immediately see that if \(f_j \rightarrow f\) uniformly, then

Similarly, we also assume line segments in the plane to be equipped with a path parametrization and to have similar length convergence properties.
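Under uniform convergence, length is only lower semicontinuous, and the inequality can be strict. A standard example, sketched below in Python, is the family of staircase paths from \((0,0)\) to \((1,1)\): they approach the diagonal uniformly, yet each has length 2, while the diagonal has length \(\sqrt{2}\). The function names and the choice of example are assumptions of this illustration.

```python
import math


def polygonal_length(points):
    """Length of the polygonal path through the given points."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


def staircase(j):
    """Vertices of a staircase path from (0, 0) to (1, 1) with j steps."""
    points = [(0.0, 0.0)]
    for k in range(j):
        points.append(((k + 1) / j, k / j))        # horizontal move
        points.append(((k + 1) / j, (k + 1) / j))  # vertical move
    return points


def distance_to_diagonal(point):
    """Euclidean distance from a point to the line y = x."""
    return abs(point[0] - point[1]) / math.sqrt(2)


step_counts = (1, 10, 100, 1000)
lengths = [polygonal_length(staircase(j)) for j in step_counts]
gaps = [max(distance_to_diagonal(p) for p in staircase(j)) for j in step_counts]
diagonal_length = polygonal_length([(0.0, 0.0), (1.0, 1.0)])
```

The values in `gaps` tend to zero while `lengths` stays at 2, so the limit of the lengths exceeds `diagonal_length`.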

By the results in the previous Sect. 2.6 we know that the restriction of a BV mapping \(f \in BV({\mathbb {R}}^2,{\mathbb {R}}^2)\) to \({\mathcal {L}}^1\) a.e. line segment in the plane is again a BV mapping; that is for all \(a<b\) and a.e. \(x \in {\mathbb {R}}\), the restriction \(f_x {:}{=}f|_{\{ x \} \times (a,b)}\) is a BV mapping and

where \(| Df_x |\) denotes the one-dimensional total variation of \(f_x\).

A similar result holds also for \({\mathcal {H}}^1\) a.e. radius of a sphere: given a point x, the restriction of f to \(\partial B(x,r)\) is BV for \({\mathcal {H}}^1\) a.e. radius \(r > 0\). This in particular implies that for such radii,

where \(f_r {:}{=}f|_{\partial B(x,r)}\) and \(|D(f_r)|\) denotes the one-dimensional total variation of \(f_r\). Furthermore, similarly as in (2.7), we have

Recall the weak* convergence of BV mappings.

Definition 2.5

We say that a sequence \((f_j)\) of BV mappings weakly* converges to f in BV, if \(f_j \rightarrow f\) in \(L^1\) and \(Df_j\) weakly* converge to Df, that is

for all \(\varphi \in C_0(\Omega )\).

The next result from [1, p. 125, Proposition 3.13] gives a characterization of weak* convergence in BV. Note in particular that the mollifications of continuous BV functions converge uniformly and hence locally in \(L^1\), so in this case the boundedness of the sequence in the BV norm gives weak* convergence of the derivatives.

Proposition 2.6

Let \(f_j\) be a sequence of BV mappings \(\Omega \rightarrow {\mathbb {R}}^2\). Then \(f_j\) weakly* converges to a BV mapping \(f :\Omega \rightarrow {\mathbb {R}}^2\) if and only if \(f_j \rightarrow f\) in \(L^1\) and \(\sup |Df_j|(\Omega )<\infty .\)

3 Properties of BV Mappings

In the proof of Theorem 1.1 we use some ideas of [9, proof of Theorem 1.7]. In particular we use the following observation based on the coarea formula (Theorem 2.3):

Theorem 3.1

Let \(\Omega \subset {\mathbb {R}}^n\) be a domain and \(f\in BV_{{\text {loc}}}(\Omega ,{\mathbb {R}}^n)\) be a homeomorphism. Then the following measure on \(\Omega \) is finite

if and only if \(f^{-1}\in BV_{{\text {loc}}}(f(\Omega ),{\mathbb {R}}^n)\). In addition, \(f(\mu )\) is absolutely continuous with respect to the Lebesgue measure if and only if \(f^{-1}\in W^{1,1}(f(\Omega ),{\mathbb {R}}^n).\)

Proof

Assume that \(\mu \) is a finite measure. By Theorem 2.3 and the perimeter inequality (2.6) we have

and thus \(f^{-1}\in BV_{{\text {loc}}}\).

If \(f^{-1}\in BV_{{\text {loc}}}\), then by Theorem 2.4 we know that the only inequality in the above computation (3.1) is actually equality and we get \(\mu \in {\mathcal {M}}(\Omega )\).

Let us now consider the final claim. We have to show that \(\left| Df^{-1} \right| (E)<\varepsilon \) if \(\left| E \right| <\delta .\) Given \(\varepsilon \) we choose \(\delta >0\) from the absolute continuity of the measure \(f(\mu )\). By approximation we may assume that E is open and \(|E|<\delta \). The definition of \(\mu ,\) the assumed absolute continuity of \(f(\mu )\) and (3.1) (with E instead of \(\Omega \)) imply

If we know that \(f^{-1}\in W^{1,1}\) then we have only equalities in (3.1) and we easily obtain that \(f(\mu )\) is absolutely continuous with respect to the Lebesgue measure. \(\square \)

We next show that for a mollification of a continuous BV mapping, the convergence is inherited by a.e. circle in a weak sense.

Proposition 3.2

Let \(f :\Omega \subset {\mathbb {R}}^2 \rightarrow {\mathbb {R}}^2\) be a continuous BV mapping and \((\rho _k)\) a sequence of mollifiers. Denote \(f^k {:}{=}f *\rho _k\), \(f^k_{c,s} {:}{=}f^k|_{\partial B(c,s)}\) and \(f_{c,s} {:}{=}f|_{\partial B(c,s)}\). Then for any point \(z \in {\mathbb {R}}^2\) we have

and

for \({\mathcal {H}}^1\) a.e. radius \(r > 0\) such that \(\overline{B(z,r)} \subset \Omega \).

Proof

Since the claim is local it suffices, after a smooth change of local coordinates, to show that for a continuous BV mapping \(f :(0,1)^2 \rightarrow {\mathbb {R}}^2\) we have

and

on \({\mathcal {H}}^1\) almost every line segment \(I_x {:}{=}\{ x \} \times (0,1)\), where \(f_x^k {:}{=}f^k|_{I_x}\) and \(f_x {:}{=}f|_{I_x}\).

We start by proving (3.2). By the results in Sect. 2.7, for \({\mathcal {H}}^1\) a.e. \(x \in (0,1)\) we have

so since \(f_x^k \rightarrow f_x\) uniformly, we see by the notions of Sect. 2.7 that

Thus to prove (3.2) it suffices to show that for a.e. \(x \in (0,1)\),

Suppose this is not true, whence there exists \(\delta > 0\) such that the set

has positive 1-measure. Fix a Lebesgue point \(x_0 \in (0,1)\) of J. By the Lebesgue density theorem we may assume \(x_0\) to be such that

Choose \(\eta >0\) such that

Fix \(r>0\) for which

As remarked in Sect. 2.6, \(D_2f\) is a finite Radon measure. Thus applying [1, Proposition 2.2.(b), p. 42] for the mollification of its total variation \(|D_2 f|\) and using the fact that the measure is Borel regular, we see that

for any Borel set U. By setting \(U {:}{=}(x_0-r,x_0+r) \times (0,1)\) we have by (i) that \(|D f| (\partial U) = 0\), and so also \(|D_2 f| (\partial U) \le |D f|(\partial U) = 0\). Thus

On the other hand by using Fatou’s lemma, the definition of J, (3.5) (iii), (ii), (iv) and (3.4),

This contradicts (3.6) and so (3.2) holds.

To prove (3.3) we note that for \({\mathcal {H}}^1\) a.e. line segment \(I_x\) the BV mappings \(f_x^k :I_x \rightarrow {\mathbb {R}}^2\) converge uniformly to the continuous BV mapping \(f_x :I_x \rightarrow {\mathbb {R}}^2\). Furthermore they form a bounded sequence with respect to the BV norm, and thus by Proposition 2.6 they converge weak* in BV. This implies (3.3) and the proof is complete. \(\quad \square \)

4 Degree Theorem for Continuous BV Planar Mappings

The aim of this section is to prove the following analogue of the change of variables formula for the distributional Jacobian in two dimensions. A similar statement was shown before in [5] for mappings that satisfy \(J_f>0\) a.e. and that are one-to-one and in [10] for open and discrete mappings. Here we generalize this result to mappings where the Jacobian can change sign, but we restrict our attention to planar mappings only.

Theorem 4.1

Let \(f :{\mathbb {R}}^2 \rightarrow {\mathbb {R}}^2\) be a continuous BV mapping such that the distributional Jacobian \({\mathcal {J}}f\) is a signed Radon measure. Then for every \( x \in {\mathbb {R}}^2\) we have

for a.e. \(r > 0\).

Before the proof of Theorem 4.1 we prove the following important corollary, which is one of the main tools in the proof of Theorem 1.1:

Proposition 4.2

Let \(f :{\mathbb {R}}^2 \rightarrow {\mathbb {R}}^2\) be a continuous BV mapping such that the distributional Jacobian \({\mathcal {J}}f\) is a signed Radon measure and such that \(V(f,{\mathbb {R}}^2)<\infty \). Then for every \( x \in {\mathbb {R}}^2\) we have

for a.e. \(r > 0\).

Proof

Let us note that the previous theorem holds not only for balls but also for a.e. cube Q(x, r). From the previous theorem we know that the set

has full \({\mathcal {L}}^{n+1}\) measure. It follows that for a.e. \(r>0\) we have that Q(x, r) is good for a.e. \(x\in \Omega \) with \(r<{\text {dist}}(x,\partial \Omega )\). Hence we can fix \(r_0>0\) such that all \(r_k=r_0 2^k\), \(k\in {\mathbb {Z}}\), are good for every \(x\in \Omega {\setminus } N_0\) with \(|N_0|=0\). Hence we can fix \(x_0\in {\mathbb {R}}^n\) and a dyadic grid

such that for all cubes from the grid inside \(\Omega \) we have

and \({\mathcal {L}}^2(f(\partial Q))=|{\mathcal {J}}_f|(\partial Q)=0\) for every \(Q\in G_0\), \(Q\subset \Omega \). It is enough to choose any

Let us fix a cube \(Q\subset \Omega \). Instead of proving (4.2) for a ball we prove it for Q which is equivalent. Analogously to (2.3) we define (see [3, 9.10])

where S is any finite system of nonoverlapping simple open polygonal regions in Q. By [3, 12.9 Theorem (iii)] we know that

Note that for the validity of this identity we need the additional assumption \(V(f,Q)<\infty \) as it is the assumption of [3, 12.9 Theorem (iii)] (it is stated in [3] as f is plane BV but in the notation of the book it means exactly \(V(f,Q)<\infty \)). By [3, 12.9 Theorem (i) and 12.6] we know that there is a sequence of figures \(F_n\) in Q that consists of disjoint cubes from our dyadic grid \(Q_{i,n}\), \(F_n=\bigcup _i Q_{i,n}\), so that

Now we can easily estimate with the help of (4.4) and definition of total variation

\(\square \)

The proof of Theorem 4.1 requires several auxiliary results. We begin with the following degree convergence lemma; compare to Lemma 2.1:

Lemma 4.3

Let \(f :\Omega \subset {\mathbb {R}}^2 \rightarrow {\mathbb {R}}^2\) be a continuous BV mapping and let \((f^k)\) be mollifications of f. Then for any point \(x \in {\mathbb {R}}^2\) and a.e. radius \(r > 0\) we have

Proof

Let \(x_0 \in {\mathbb {R}}^2\). By the BVL properties remarked in Sect. 2.6 we know that for almost every radius \(r>0\) the length of \(f(\partial B(x_0,r))\) is finite, that is \(|Df_{x_0,r}|(\partial B(x_0,r))<\infty \), and that the claim of Proposition 3.2 holds. Fix such an \(r_0\), and set \(B_0 {:}{=}B(x_0,r_0)\).

We first define

whence

and we have to show that \(F^k\rightarrow F\) in \(L^1.\) To show this we use compactness of BV. First we show that \(F^k\) is a bounded sequence in the BV norm.

It is easy to see that the variation measure of \(F^k\) is supported only on the curve \(f^k(\partial B_0)\). Furthermore, since \(f^k(\partial B_0)\) is rectifiable, \({\mathcal {H}}^1\)-a.e. point is on the boundary of at most two components of \({\mathbb {R}}^2 {\setminus } f^k(\partial B_0)\). In such a situation, if the value of \(F^k\) differs by N on these two components, the image \(f^k(\partial B_0)\) must cover this joint boundary at least N times. Thus the total variation of \(F^k\) is in fact bounded by

Since the radius \(r_0\) was chosen such that Proposition 3.2 holds, we have

and so \(\left| DF^k \right| \) is uniformly bounded. Furthermore the boundedness of the sequence \((F^k)\) in \(L^1\) follows from the Sobolev inequality [1, Theorem 3.47]. Thus, the compactness theorem in [1, Theorem 3.23] implies that there exists a subsequence \((F^{k(j)})\) which converges in \(L^1\) to a function G.

We will show that \(G=F,\) which implies that the original sequence \(F^k\) converges to F in \(L^1,\) as every converging subsequence must converge to F. Assume that \(F\ne G\) on a set A with positive Lebesgue measure. Since \(f(\partial B_0)\) has finite 1-Hausdorff measure, the Lebesgue density theorem gives a point \(z\in {\mathbb {R}}^2{\setminus } f(\partial B_0)\) which is a density point of A with \(G(z)\ne F(z).\) For some very small ball \(B_z\) centered at z we have

and \(B_z\) is compactly contained in some component of \({\mathbb {R}}^2{\setminus } f(\partial B_0).\) Now recall that \(f^k\) converge uniformly to f. When \(\Vert f^k-f \Vert <{\text{ dist }}(B_z,f(\partial B_0))\) we have by basic properties of the degree (see [13, Theorem 2.3.])

for every point \(y\in B_z.\) This is a contradiction with \(L^1\) convergence and the definition of \(B_z\). Thus the original claim follows. \(\quad \square \)

The proof of the previous lemma goes through also with absolute values of the degrees. We record this observation as the following corollary even though we will not be using it in this paper:

Corollary 4.4

Let \(f :\Omega \subset {\mathbb {R}}^2 \rightarrow {\mathbb {R}}^2\) be a continuous BV mapping and \((f^k)\) a mollification of f. Then for any point \(x \in {\mathbb {R}}^2\) and a.e. radius \(r > 0\) we have

Proposition 4.5 is essentially a BV-version of [18, Proposition 2.10]. For smooth mappings the identity (4.9) follows in a more general form with smooth test functions \(g \in C^\infty (\Omega ,{\mathbb {R}}^2)\) by combining the Gauss-Green theorem and the area formula in a ball B:

where \(\nu \) denotes the unit exterior normal to B and \({\text {cof}}D f(x)\) denotes the cofactor matrix, that is the matrix of \((n-1)\times (n-1)\) subdeterminants with correct signs. For more details for the general setting we refer to Müller, Spector and Tang; in [29, Proposition 2.1] they prove the claim for continuous \(f \in W^{1,p}\), \(p > n-1\) and \(g \in C^1\). We need the identity only in the case of \(g(x_1,x_2)=[x_1,0]\). In this case the integrand on left hand side of (4.7) reduces to

where \(\nu _t\) is the unit tangent vector of \(\partial B.\) Thus in the BV setting it is natural to replace the left hand side of (4.7) with

since by Sect. 2.6, f is a one-dimensional BV function on almost every sphere centered at any given point.
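For a smooth planar map the three quantities that appear in this section can be compared numerically: by Green's theorem the boundary integral \(\int _{\partial B} f_1\,\mathrm{d}f_2\) equals \(\int _B J_f\), and by the area formula the latter equals the integral of the degree. The Python sketch below does this for \(f(z)=z^2\) on the unit disc, where all three values are \(2\pi \). It is a hedged illustration of the smooth case only: the map, the quadrature rules, the grid sizes and the function names are assumptions of this example, and the degree is evaluated as a winding number on a coarse grid.

```python
import math


def f(x, y):
    """f(z) = z^2 written in real coordinates."""
    return (x * x - y * y, 2 * x * y)


def jacobian(x, y):
    """J_f = 4 |z|^2 for f(z) = z^2."""
    return 4.0 * (x * x + y * y)


def winding_number(path, p):
    """Winding number around p of the closed polygon through the points of path."""
    total = 0.0
    for (ax, ay), (bx, by) in zip(path, path[1:] + path[:1]):
        ax, ay, bx, by = ax - p[0], ay - p[1], bx - p[0], by - p[1]
        total += math.atan2(ax * by - ay * bx, ax * bx + ay * by)
    return round(total / (2 * math.pi))


def jacobian_integral(n=400):
    """Midpoint rule in polar coordinates for the integral of J_f over the unit disc."""
    total = 0.0
    for i in range(n):
        r = (i + 0.5) / n
        # J_f is radial here, so the angular integral contributes a factor 2 * pi
        total += 2 * math.pi * jacobian(r, 0.0) * r / n
    return total


def boundary_integral(path):
    """Integral of f_1 d f_2 along the closed polygon through the image points."""
    closed = path + path[:1]
    return sum(0.5 * (a[0] + b[0]) * (b[1] - a[1])
               for a, b in zip(closed, closed[1:]))


def degree_integral(path, n=60, half_width=1.5):
    """Midpoint rule on a square grid for the integral of y -> deg(f, B, y)."""
    cell = 2 * half_width / n
    total = 0
    for i in range(n):
        for j in range(n):
            y = (-half_width + (i + 0.5) * cell, -half_width + (j + 0.5) * cell)
            total += winding_number(path, y)
    return total * cell * cell


circle = [(math.cos(2 * math.pi * k / 400), math.sin(2 * math.pi * k / 400))
          for k in range(400)]
image = [f(x, y) for x, y in circle]
values = (jacobian_integral(), boundary_integral(image), degree_integral(image))
```

All three entries of `values` are close to \(2\pi \); the degree integral is the least accurate because the unit disc is resolved only on a grid of mesh size 0.05.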

Proposition 4.5

Let \(\Omega \subset {\mathbb {R}}^2\) be a domain and let \(f :\Omega \rightarrow {\mathbb {R}}^2\) be a continuous BV mapping. Then for every \(c\in {\mathbb {R}}^2\) and a.e. \(r>0\) such that \(B{:}{=}B(c,r)\subset \Omega \) we have

Proof

We prove the claim by approximating f with a sequence of mollifications \((f^k)\), showing that \([f^k_1,0] \cdot {\text {cof}}Df^k\) converges weakly* to \(f_1 \mathrm{d}(Df|_{\partial B})\) and combining this with Lemma 4.3.

Let us fix \(r>0\) such that \(B(c,r)\subset \subset \Omega \) and the conclusion of Lemma 4.3 and Proposition 3.2 hold for this radius. Now for every k with \(B(c,r+\frac{1}{k})\subset \subset \Omega \) we have \(f^k\in C^\infty (B,{\mathbb {R}}^2)\). Since f is continuous, \(f^k \rightarrow f\) uniformly. Clearly

We next note that by Proposition 3.2, \(Df^k|_{\partial B} \rightarrow Df|_{\partial B}\) with respect to the weak* convergence and \(\Vert f_1^k - f_1\Vert _\infty \rightarrow 0\) by the uniform convergence of \((f^k)\). Thus both terms of the right hand side converge to zero as \(k \rightarrow \infty \). It follows that

On the other hand, since the mappings \(f^k\) are smooth we have by for example [29, Proposition 2.1] that

Combining this with (4.10) and Lemma 4.3 gives the claim. \(\quad \square \)
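The convergence step in the proof above rests on a standard two-term splitting. The display below is only a sketch of that estimate, written under the assumption that the argument has the usual form; \(\zeta \) is a hypothetical name for a fixed continuous test function on \(\partial B\), and \(\mu _k\), \(\mu \) are shorthand introduced here for \(Df^k|_{\partial B}\) and \(Df|_{\partial B}\).

```latex
\[
\begin{aligned}
\Bigl| \int_{\partial B} \zeta f_1^k \,\mathrm{d}\mu_k
     - \int_{\partial B} \zeta f_1 \,\mathrm{d}\mu \Bigr|
&\le \Vert f_1^k - f_1\Vert_\infty \, \Vert \zeta\Vert_\infty \, |\mu_k|(\partial B) \\
&\quad + \Bigl| \int_{\partial B} \zeta f_1 \,\mathrm{d}(\mu_k - \mu) \Bigr| .
\end{aligned}
\]
```

The first term tends to zero because a weak* convergent sequence of measures has uniformly bounded total variation and \(f_1^k \rightarrow f_1\) uniformly; the second tends to zero because \(\zeta f_1\) is continuous on \(\partial B\).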

We are now ready to prove the main result of this section, Theorem 4.1. In its proof we use some ideas from [5, 27].

Proof of Theorem 4.1

We recall the definition of distributional Jacobian for any \(\varphi \in C^{\infty }_0(\Omega )\)

Let us pick a ball \(B{:}{=}B(y,r)\subset \Omega \) such that \(|Df|(\partial B)=0\). Furthermore, by the Lebesgue theorem we may assume that

where \(f_{y,s}{:}{=}f|_{\partial B(y,s)}\) and \(|Df_{y,s}|\) is the corresponding (one-dimensional) total variation. Let us fix \(\psi \in C^\infty ({\mathbb {R}},[0,1])\) such that \(\psi (s)\equiv 1\) for \(s<0\) and \(\psi (s)\equiv 0\) for \(s>1\). For \(0<\delta < r\) we set

As the distributional Jacobian is a Radon measure and \(|Df|(\partial B)=0\) we obtain

By (4.11) for \(\varphi =\Phi _{\delta }({ |x-y|})\) and Proposition 4.5 we have

We next show that the integral on the right hand side of (4.14) converges as \(\delta \rightarrow 0\). For all \(y \in \Omega \) and \(\delta > 0\) small enough we set

Note that with this notation,

and the right hand side is a type of average integral as \(\int _{r-\delta }^r \Phi '_\delta =1\).

We have a fixed mapping \(f|_{\overline{B(y,r)}}\) with \(|Df_{y,r}|(\partial B(y,r))<\infty \). We claim that given \(\varepsilon >0\) we can find \(\eta >0\) such that for every continuous mapping \(g|_{\overline{B(y,r)}}\) we have

Indeed, if this were not true, we would have a uniformly converging sequence for which the conclusion of (4.16) fails. Analogously to the proof of Lemma 4.3 we would then get a contradiction.

Moreover, similarly to the proof of Lemma 4.3, the Sobolev inequality gives, for these a.e. radii,

Given \(\varepsilon >0\) we choose \(\eta >0\) as in (4.16) and then we choose \(\delta >0\) so that for every \(s\in [r-\delta ,r]\) we have

where we have used (4.12). By Chebyshev’s inequality with (4.18) we obtain

By (4.14), (4.15), \(\int _{r-\delta }^r \Phi '_{\delta }=1\), \(|\Phi '_{\delta }|\le \frac{C}{\delta }\), (4.16) and (4.17) we obtain

Together with (4.13) this implies that

\(\square \)
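The Chebyshev step used in the proof above is the following elementary bound, stated here for a generic nonnegative integrable function \(h\) on \([r-\delta ,r]\) and a threshold \(\lambda >0\). Both names are placeholders introduced for illustration and are not the notation of the proof.

```latex
\[
{\mathcal L}^1\bigl(\{ s\in [r-\delta ,r] : h(s) > \lambda \}\bigr)
\le \frac{1}{\lambda } \int_{r-\delta }^{r} h(s)\,\mathrm{d}s .
\]
```

In particular, if the average of \(h\) over \([r-\delta ,r]\) is at most \(\lambda \theta \) for some \(\theta \in (0,1)\), then the exceptional set has measure at most \(\theta \delta \).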

5 Proof of Main Theorem 1.1

Given a point \(x\in {\mathbb {R}}^n\) and an \(s>0\) we denote by Q(x, s) the cube with center x and sidelength s whose sides are parallel to the coordinate planes. Given a \(t>0\) we also denote \(tQ(x,s){:}{=}Q(x,ts).\)
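For concreteness, the cube notation can be written out coordinatewise. The display below assumes open cubes (the text does not specify whether cubes are open or closed) and writes \(x=(x_1,\dots ,x_n)\).

```latex
\[
Q(x,s) = \prod_{i=1}^{n} \Bigl( x_i - \tfrac{s}{2},\; x_i + \tfrac{s}{2} \Bigr),
\qquad
tQ(x,s) = Q(x,ts) = \prod_{i=1}^{n} \Bigl( x_i - \tfrac{ts}{2},\; x_i + \tfrac{ts}{2} \Bigr).
\]
```

For example, \(Q((\frac{1}{2},\frac{1}{2}),1)=(0,1)^2\) and \(2Q((\frac{1}{2},\frac{1}{2}),1)=(-\frac{1}{2},\frac{3}{2})^2\).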

Proof of Theorem 1.1

Without loss of generality we may assume that \((-1,2)^3\subset \Omega \) and we prove only that \(f^{-1}\in BV\bigl (f((0,1)^3)\bigr )\) as the statement is local. We denote \(Q{:}{=}Q((\frac{1}{2},\frac{1}{2}), 1)= (0,1)^2.\) Slightly abusing the notation we write \(2Q{:}{=}Q((\frac{1}{2},\frac{1}{2}), 2)\). We claim that

and the statement of the theorem then follows from Theorem 3.1. Let \(\varepsilon >0\). We start with an estimate for \({\mathcal {H}}^2_{\varepsilon }(f(Q\times \{t\}))\) for some fixed \(t\in (0,1).\)

First let us fix \(t\in (0,1)\) such that (see (1.2))

and

and we note that this holds for a.e. \(t\in (0,1)\) by the Lebesgue density theorem. Let us define the measure on (0, 1) by

Let us denote by h the absolutely continuous part of \(\mu \) with respect to \({\mathcal {L}}^1\). Then it is easy to see that

Moreover, we can fix t so that

which holds for a.e. t by the Lebesgue density theorem and by the fact that the corresponding limit is zero a.e. for the singular part of \(\mu \).

Since f is uniformly continuous there exists for our fixed t a subdivision of \(Q\times \{t\}=\bigcup _i Q_i \) into squares \(Q_i=Q(c^i,r_i)\) such that \({\text {diam}}(f(2Q_i\times \{t\}))< \frac{\varepsilon }{2}\). Furthermore we fix \(r>0\) so that for every \(0<\delta <r\) we have with the help of (5.1)

For \(\eta >0\) we put our \(Q_i\times \{t\}\) into the box

In the following we divide \(\partial U_{i,\eta }\) into three parts parallel to coordinate axes:

where \(\partial _2 U_{i,\eta }\) denotes two rectangles perpendicular to \(x_2\) axis and \(\partial _1 U_{i,\eta }\) denotes two rectangles perpendicular to \(x_1\) axis. For each \(Q_i\) we choose a real number \(0\le \eta _{i}\le 1\) so that

which is possible as the smallest value is less than or equal to the average. Here and in the following we denote for simplicity by \(|{\mathcal {J}}_{f_{1,j}}|(\partial _1 U_{i,\eta })\) the sum of the two:

as \(Q_i=Q(c^i,r_ i)\).

It is obvious that \(f(Q_i\times \{t\})\subset f(U_{i,\eta _i})\). By the definition of the Lebesgue area (2.2) and its estimate (2.4) we obtain that we can approximate f on \(\partial U_{i,\eta _i}\) by piecewise linear \(f^i:\partial U_{i,\eta _i}\rightarrow {\mathbb {R}}^3\) such that

and so that \(f^i\) is so close to f that (see (5.4) (iv))

and \(f(Q_i\times \{t\})\) lies inside \(f^i(U_{i,\eta _i})\), that is

By (5.7) we have

Now we obtain for each t with the help of the Gustin boxing lemma (2.1) and (5.6) that

Recall that by definition (2.3),

so by Proposition 4.2 we obtain

Notice that even though Theorem 4.2 is stated only for disks, it also holds for rectangles and moreover, we may use it for polygons. This can be seen by covering the polygon by rectangles and arguing as in the end of the proof of Proposition 4.2.

We treat the terms in (5.9) separately. We sum the last inequality, use the fact that \((1+\eta _i)Q_i\) have bounded overlap (as \(1\le 1+\eta _i\le 2\)) and with the help of (5.4) (ii) and (iii) we obtain

For the remaining part of the right hand side of (5.8) we recall that \(Q_i=Q(c^i,r_i)\) and by (5.9), (5.5), linear change of variables and \(\delta <r\) we have

Summing over i, using bounded overlap of \(2 Q_i\), (5.2) and (5.4) (i) we obtain

Combining (5.8), (5.10) and (5.11), we have, with the help of (5.3),

By passing \(\varepsilon \rightarrow 0\) we obtain our conclusion with the help of Theorem 3.1. \(\quad \square \)

6 Reverse Implication

The main aim of this Section is to show Theorem 1.2. For its proof we again use some ideas from Müller [27] and De Lellis [7]. As a corollary we show that the notion of \(\mathcal {ADJ}\, Df\in {\mathcal {M}}\) does not depend on the chosen system of coordinates and that this notion is weakly closed.

For the proof of Theorem 1.2 we require the following result which shows that the topological degree is smaller than the number of preimages.

Lemma 6.1

Let \(F :{\mathbb {R}}^3 \rightarrow {\mathbb {R}}^3\) be a homeomorphism, \(f :{\mathbb {R}}^2 \rightarrow {\mathbb {R}}^3\) the restriction of F to the xy-hyperplane, \(p :{\mathbb {R}}^3 \rightarrow {\mathbb {R}}^2\) the projection \((x_1,x_2,x_3) \mapsto (x_1,x_2)\) and \(g = p \circ f\). Then for any \(B(z,r) \subset (0,1)^2\) and \(y \in {\mathbb {R}}^2 {\setminus } g (\partial B(z,r))\),

Proof

We may assume that N(g, B(z, r), y) is finite and \(\deg (g, B(z,r),y) > 0\). By [13, Theorem 2.9] we can write the degree as a sum of local indices

recall that local index is defined by

where V is any neighborhood of x such that \(g^{-1}\{y\}\cap {\bar{V}} =\{ x \}\).

To prove the claim it thus suffices to prove that \(|i(g,x,y)|\le 1\) for every \(x\in g ^{-1}\{ y \} \). Towards a contradiction suppose that this is not the case. Fix some \(x_0\in g ^{-1}\{ y \}\) such that \(i(g,x_0,y)\ge 2\); the case when the index is negative is dealt with identically. Let \(B(x_0,s)\) be a ball such that

Without loss of generality we may assume that \(x_0 = y = 0\), \(s=1\). Denote \(Z = \{ 0 \} \times \{ 0 \} \times {\mathbb {R}}\). Since the topological degree equals the winding number, the path \(\beta {:}{=}g (\partial B(0,1))\) winds around the point 0 at least twice in \({\mathbb {R}}^2 {\setminus } \{ 0 \}\), so especially the path \(\alpha {:}{=}f (\partial B(0,1) )\) winds twice around Z in \({\mathbb {R}}^3 {\setminus } Z\).

Now we note that \(\partial B(0,1) \times \{ 0 \} \subset {\mathbb {S}}^2\), where \({\mathbb {S}}^2\) denotes the two-dimensional unit sphere in \({\mathbb {R}}^3\). Since \(F:{\mathbb {R}}^3 \rightarrow {\mathbb {R}}^3\) is a homeomorphism and f is the restriction of F, \(F({\mathbb {S}}^2)\) is a topological sphere in \({\mathbb {R}}^3\). Furthermore, Z intersects \(f(B(0,1))\) only at a single point, and we fix \({\hat{Z}}\) to be the compact subinterval of Z which contains the intersection point and intersects \(F({\mathbb {S}}^2)\) only at the endpoints of the interval, which we may assume to be \((0,0,\pm 1)\). The unique pre-images of these points cannot be on the circle \(\partial B(0,1)\), so we may assume them to be \((0,0,\pm 1)\) as well. Thus

This gives rise to a contradiction, since F is a homeomorphism and so the degree of \(F|_{{\mathbb {S}}^2 {\setminus } Z}\) is \(\pm 1\). More specifically, the path \(\alpha :{\mathbb {S}}^1 \rightarrow F({\mathbb {S}}^2) {\setminus } Z\) winds around the Z-axis at least twice, that is, the homotopy class \([\alpha ]\) of \(\alpha \) in the group \(\pi _1({\mathbb {R}}^3 {\setminus } Z, \alpha (0)) \simeq {\mathbb {Z}}\) is non-zero and does not span the group \({\mathbb {Z}}\). Furthermore, the intersection \(Z \cap F(B^3(0,1))\) consists of countably many paths starting and ending at the boundary \(F({\mathbb {S}}^2)\), and so, since Z intersects \(f(B(0,1))\) only at a single point, all but one of these paths can be pulled to the boundary \(F({\mathbb {S}}^2)\) without intersecting \(\alpha \). Thus the homotopy class \([\alpha ]\) of \(\alpha \) in the group \(\pi _1(F({\mathbb {S}}^2) {\setminus } {\hat{Z}}, \alpha (0)) \simeq {\mathbb {Z}}\) is also non-zero and does not span the group \({\mathbb {Z}}\). But this is a contradiction since \(\alpha = F (\partial B(0,1) \times \{ 0 \})\), where the homotopy class \([ \partial B(0,1) \times \{ 0 \}]\) spans \(\pi _1(F({\mathbb {S}}^2) {\setminus } Z, (1,0,0)) \simeq {\mathbb {Z}}\) on the domain side and a homeomorphism F induces an isomorphism between homotopy groups by for example [15, p. 34]. \(\quad \square \)
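To see that the bound on the local index is a genuinely topological restriction and not a general property of continuous planar maps, it may help to compare with a standard example. The map \(w\) below is hypothetical and is not of the form \(p\circ f\) considered in Lemma 6.1; we identify \({\mathbb {R}}^2\) with \({\mathbb {C}}\) and let N count the preimages in the ball.

```latex
\[
w(z) = z^2 \ \text{ on } B(0,1)\subset {\mathbb C}: \qquad
w^{-1}\{0\} = \{0\}, \qquad
i(w,0,0) = \deg (w, B(0,1), 0) = 2 ,
\]
\[
\text{so that}\quad \deg (w,B(0,1),0) = 2 \;>\; 1 = N(w,B(0,1),0).
\]
```

Here the boundary circle is wrapped twice around the origin, which is exactly the double winding that the proof rules out for projections of restrictions of homeomorphisms of \({\mathbb {R}}^3\).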

Proof of Theorem 1.2

The distributional adjugate is a well-defined distribution as \(f\in BV\) is continuous. Without loss of generality we assume that f is defined on \((0,1)^3\) and we show that \(\mathcal {ADJ}\, Df\in {\mathcal {M}}((0,1)^3)\). We only show that \({\mathcal {J}}_{f^t_{1,1}}\) is a measure for a.e. \(t\in (0,1)\) and that

as the proof for the other eight components of \(\mathcal {ADJ}\, Df\) is similar.

By Theorem 3.1 we know that

and hence

Let us fix \(t\in (0,1)\) such that \({\mathcal {H}}^2\bigl ( f^t_{1}((0,1)^2) \bigr )<\infty \). Put \(g {:}{=}f^t_{1,1}\) and denote by \(g_1\) and \(g_2\) its coordinate functions. Let us fix \(\varphi \in C^{1}_0((0,1)^2)\). We recall the definition of distributional Jacobian

where the integration is with respect to the relevant components of the variation measure of g as earlier.

Let \(\psi \in C^{\infty }_C[0,1)\) be such that \(\psi \ge 0\), \(\psi ' \le 0\) and

For each \(\varepsilon >0\) we denote by \(\eta _\varepsilon \) the usual convolution kernel, that is

It is clear that \(\eta _\varepsilon *D\varphi =D\eta _\varepsilon *\varphi \) converges uniformly to \(D\varphi \) as \(\varepsilon \rightarrow 0+\) and hence

It is easy to see that \(D\eta _\varepsilon (x)={\psi _\varepsilon }'(|x|)\nu \), where \(\nu =\frac{x}{|x|}\) is the normal vector. By the Fubini theorem and change to polar coordinates we get

By the degree formula Proposition 4.5 we obtain

Let us fix \(0<\varepsilon <\frac{1}{2}{\text {dist}}({\text {supp}}\,\varphi ,\partial (0,1)^2)\). Then we have with the help of Lemma 6.1

Notice that for fixed \(y\in {\mathbb {R}}^2\) we have

With this we obtain from (6.2) that

By [26, Theorem 7.7] we see that

Combining this with (6.3), it follows that for every \(\varphi \in C^{1}_0((0,1)^2)\) we have

with C independent of \(\varphi .\) By the Hahn-Banach Theorem there is an extension to every \(\varphi \in C_0((0,1)^2)\) which satisfies the same bound. By the Riesz Representation Theorem there is a measure \(\mu _t\) such that

and thus \(\mathcal {ADJ}\, Df\in {\mathcal {M}}((0,1)^3)\). \(\quad \square \)
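The passage from the uniform bound to a measure in the proof above follows a standard pattern, sketched below. Here \(T\) is a hypothetical name for the linear functional \(\varphi \mapsto {\mathcal {J}}_g(\varphi )\) and C is the constant from the bound in the proof. The sketch uses the density of \(C^1_0\) in \(C_0\) with respect to the supremum norm, which gives the same extension as the Hahn-Banach argument and shows in addition that it is unique.

```latex
\[
|T(\varphi )| \le C \Vert \varphi \Vert _\infty \quad \text{for all } \varphi \in C^1_0((0,1)^2)
\]
\[
\Longrightarrow\quad
T \text{ extends uniquely to } \overline{T}\in \bigl(C_0((0,1)^2)\bigr)^* ,
\qquad \Vert \overline{T}\Vert \le C
\]
\[
\Longrightarrow\quad
\overline{T}(\varphi ) = \int _{(0,1)^2} \varphi \,\mathrm{d}\mu _t ,
\qquad |\mu _t|\bigl((0,1)^2\bigr) = \Vert \overline{T}\Vert \le C .
\]
```

The last line is the Riesz Representation Theorem for \(C_0\), under which the operator norm of the functional equals the total variation of the representing measure.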

6.1 Dependence on the system of coordinates

In principle, Definition 1.4 of \(\mathcal {ADJ}\, Df\in {\mathcal {M}}\) depends on our coordinate system. Below we show that this notion is independent of the system of coordinates.

Corollary 6.2

Let \(\Omega \subset {\mathbb {R}}^3\) be a domain and \(f\in BV(\Omega ,{\mathbb {R}}^3)\) be a homeomorphism satisfying (1.2) such that \(\mathcal {ADJ}\, Df\in {\mathcal {M}}(\Omega ,{\mathbb {R}}^{3\times 3})\). Then \(\mathcal {ADJ}\, Df\in {\mathcal {M}}(\Omega ,{\mathbb {R}}^{3\times 3})\) also for a different coordinate system.

Proof

By Theorem 1.1 we know that \(f^{-1}\in BV\). Hence \(f\in BV_{{\text {loc}}}\) and \(f^{-1}\in BV_{{\text {loc}}}\) and both of these do not depend on the choice of coordinate system. Thus by Theorem 1.2 we have \(\mathcal {ADJ}\, Df\in {\mathcal {M}}(\Omega ,{\mathbb {R}}^{3\times 3})\) for any coordinate system. \(\quad \square \)
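The coordinate independence of the BV class used in this proof can be checked directly for a rotation of the domain. The following sketch treats a scalar \(u\in BV({\mathbb {R}}^n)\) and an orthogonal matrix R; the names \(u\), R, \({\tilde{u}}\) and \({\tilde{\phi }}\) are introduced here for illustration only, and the whole space is used instead of a domain to keep the notation short.

```latex
\[
{\tilde u}(x) := u(Rx), \qquad
{\tilde \phi }(y) := R\,\phi (R^{T}y)
\quad \text{for } \phi \in C^1_c({\mathbb R}^n,{\mathbb R}^n),
\]
\[
\operatorname{div}{\tilde \phi }(y)
= \sum _{i,j,k} R_{ij}R_{ik}\,(\partial _k \phi _j)(R^{T}y)
= (\operatorname{div}\phi )(R^{T}y),
\qquad |{\tilde \phi }(y)| = |\phi (R^{T}y)| ,
\]
\[
\int {\tilde u}(x)\operatorname{div}\phi (x)\,\mathrm{d}x
= \int u(y)\,(\operatorname{div}\phi )(R^{T}y)\,\mathrm{d}y
= \int u(y)\operatorname{div}{\tilde \phi }(y)\,\mathrm{d}y .
\]
```

Since \(\phi \mapsto {\tilde{\phi }}\) is a bijection of the test fields with \(|\phi |\le 1\), taking the supremum on both sides gives \(|D{\tilde{u}}|({\mathbb {R}}^n)=|Du|({\mathbb {R}}^n)\), so the total variation is unchanged by the rotation.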

It is of course not true that the value of

is independent of coordinate system. In fact it might be more natural to define \(|\mathcal {ADJ}\, Df|\) as an average over all directions (and not only 3 coordinate directions). Then, one could ask for the validity of (compare with (1.1))

6.2 The notion is stable under weak convergence

For possible applications in the Calculus of Variations we need to know that the notion of distributional adjugate is stable under weak convergence.

Theorem 6.3

Let \(\Omega \subset {\mathbb {R}}^3\) be a bounded domain. Let \(f_j, f\) be BV homeomorphisms of \((0,1)^3\) onto \(\Omega \) and assume that \(f_j\rightarrow f\) uniformly and weak* in \(BV((0,1)^3,\Omega )\). Further suppose that each \(f_j\) satisfies (1.2) and let \(\mathcal {ADJ}\, Df_j\in {\mathcal {M}}((0,1)^3)\) with

Then \(\mathcal {ADJ}\, Df\in {\mathcal {M}}\).

Proof

By (6.5) and Theorem 1.1 we obtain that the sequence \((f^{-1}_j)\) is bounded in \(BV(\Omega ,{\mathbb {R}}^3)\) and hence it has a weakly* converging subsequence. Thus we can assume (passing to a subsequence) that \(f^{-1}_j\rightarrow h\) weakly* in BV and also strongly in \(L^1\) (see [1, Corollary 3.49]). We define the pointwise representative of h as

Now we need to show that \(h=f^{-1}\). Fix \(x_0\in (0,1)^3\) and \(0<r<{\text {dist}}(x_0,\partial (0,1)^3)\). We find \(\delta >0\) so that \(B(f(x_0),\delta )\) is compactly contained in \(f(B(x_0,r))\). Since \(f_j \rightarrow f\) uniformly we obtain that for j large enough we have

It follows that

where we use that \(f_j^{-1}\rightarrow h\) strongly in \(L^1\) and that we have a proper representative of h. As the above inequality holds for every \(r>0\) we obtain \(h(f(x_0))=x_0\).

From \(f\in BV\) and \(f^{-1}=h\in BV\) we obtain \(\mathcal {ADJ}\, Df\in {\mathcal {M}}((0,1)^3)\) by Theorem 1.2. \(\quad \square \)

References

Ambrosio, L., Fusco, N., Pallara, D.: Functions of Bounded Variation and Free Discontinuity Problems. Oxford Mathematical Monographs. The Clarendon Press, Oxford University Press, New York 2000

Ball, J.: Global invertibility of Sobolev functions and the interpenetration of matter. Proc. R. Soc. Edinb. Sect. A 88(3–4), 315–328, 1981

Cesari, L.: Surface Area. Annals of Mathematics Studies, No. 35, p. 595. Princeton University Press, Princeton, NJ 1956

Ciarlet, P.G., Nečas, J.: Injectivity and self-contact in nonlinear elasticity. Arch. Ration. Mech. Anal. 97(3), 171–188, 1987

Conti, S., De Lellis, C.: Some remarks on the theory of elasticity for compressible Neohookean materials. Ann. Sc. Norm. Super. Pisa Cl. Sci. (5) 2, 521–549, 2003

Csörnyei, M., Hencl, S., Malý, J.: Homeomorphisms in the Sobolev space \(W^{1, n-1}\). J. Reine Angew. Math. 644, 221–235, 2010

De Lellis, C.: Some fine properties of currents and applications to distributional Jacobians. Proc. R. Soc. Edinb. 132, 815–842, 2002

De Lellis, C.: Some remarks on the distributional Jacobian. Nonlinear Anal. 53, 1101–1114, 2003

D’Onofrio, L., Schiattarella, R.: On the total variations for the inverse of a BV-homeomorphism. Adv. Calc. Var. 6, 321–338, 2013

D’Onofrio, L., Hencl, S., Malý, J., Schiattarella, R.: Note on Lusin \((N)\) condition and the distributional determinant. J. Math. Anal. Appl. 439, 171–182, 2016

Federer, H.: Geometric Measure Theory. Die Grundlehren der mathematischen Wissenschaften, vol. 153, 2nd edn. Springer, New York 1996

Federer, H.: Hausdorff measure and Lebesgue area. Proc. Natl. Acad. Sci. USA 37, 90–94, 1951

Fonseca, I., Gangbo, W.: Degree Theory in Analysis and Applications. Clarendon Press, Oxford 1995

Gustin, W.: Boxing inequalities. J. Math. Mech. 9, 229–239, 1960

Hatcher, A.: Algebraic Topology, p. xii+544. Cambridge University Press, Cambridge 2002

Henao, D., Mora-Corral, C.: Invertibility and weak continuity of the determinant for the modelling of cavitation and fracture in nonlinear elasticity. Arch. Ration. Mech. Anal. 197, 619–655, 2010

Henao, D., Mora-Corral, C.: Fracture surfaces and the regularity of inverses for BV deformations. Arch. Ration. Mech. Anal. 201, 575–629, 2011

Henao, D., Mora-Corral, C.: Lusin’s condition and the distributional determinant for deformations with finite energy. Adv. Calc. Var. 5, 355–409, 2012

Hencl, S., Kauranen, A., Malý, J.: On distributional adjugate and the derivative of the inverse, to appear in Annales Academiae Scientiarum Fennicae Mathematica. Preprint arXiv:1904.04574

Hencl, S., Koskela, P.: Regularity of the inverse of a planar Sobolev homeomorphism. Arch. Ration. Mech. Anal. 180, 75–95, 2006

Hencl, S., Koskela, P.: Lectures on Mappings of Finite Distortion. Lecture Notes in Mathematics 2096, p. 176. Springer, Berlin 2014

Hencl, S.: Sharpness of the assumptions for the regularity of a homeomorphism. Mich. Math. J. 59, 667–678, 2010

Hencl, S., Koskela, P., Onninen, J.: Homeomorphisms of bounded variation. Arch. Ration. Mech. Anal. 186, 351–360, 2007

Iwaniec, T., Koskela, P., Onninen, J.: Mappings of finite distortion: monotonicity and continuity. Invent. Math. 144, 507–531, 2001

Iwaniec, T., Martin, G.: Geometric Function Theory and Nonlinear Analysis. Oxford Mathematical Monographs. Clarendon Press, Oxford 2001

Mattila, P.: Geometry of Sets and Measures in Euclidean Spaces. Cambridge Studies in Advanced Mathematics. Cambridge University Press, Cambridge 1999

Müller, S.: \(Det=det\). A remark on the distributional determinant. C. R. Acad. Sci. Paris Series I Math. 311(1), 13–17, 1990

Müller, S., Spector, S.J.: An existence theory for nonlinear elasticity that allows for cavitation. Arch. Ration. Mech. Anal. 131(1), 1–66, 1995

Müller, S., Spector, S.J., Tang, Q.: Invertibility and a topological property of Sobolev maps. SIAM J. Math. Anal. 27, 959–976, 1996

Acknowledgements

The authors would like to thank Jan Malý for drawing their attention to Theorem 2.4, for many valuable comments and for finding the gap in the original proof of Proposition 4.2. The authors would also like to thank Ulrich Menne for information about the literature on Lebesgue area and the anonymous referee for a careful reading of the manuscript.

Additional information

Communicated by S. Müller

SH and AK were supported in part by the ERC CZ grant LL1203 of the Czech Ministry of Education. SH was supported in part by the grant GAČR P201/18-07996S. AK acknowledges financial support from the Spanish Ministry of Economy and Competitiveness, through the “María de Maeztu” Programme for Units of Excellence in R&D (MDM-2014-0445). The last author was supported by a grant of the Finnish Academy of Science and Letters.

Cite this article

Hencl, S., Kauranen, A. & Luisto, R. Weak Regularity of the Inverse Under Minimal Assumptions. Arch Rational Mech Anal 238, 185–213 (2020). https://doi.org/10.1007/s00205-020-01540-4
