Abstract

Microgrids serve an essential role in the smart grid infrastructure, facilitating the seamless integration of distributed energy resources and supporting the increased adoption of renewable energy sources to satisfy the growing demand for sustainable energy solutions. This paper presents an application of integral reinforcement learning (IRL) algorithm-based adaptive optimal control strategy for automatic generation control of an is-landed micro-grid. This algorithm is a model-free actor-critic method that learns the critic parameters using the recursive least square method. The actor is straightforward and evaluates the action from the critic directly. The robustness of the proposed control technique is investigated under various uncertainties arising from parameter uncertainty, electric vehicle (EV) aggregator, and renewable energy sources. This study incorporates case studies and comparative analyses to demonstrate the control performance of the proposed control strategy. The effectiveness of the technique is evaluated by comparing it with deep Q-learning (DQN) control techniques and PI controllers. The proposed controller significantly improves performance metrics compared to the DQN and PI controllers. It reduces the peak frequency deviation by 6\(\%\) and 14\(\%\), respectively, compared to the DQN and PI controllers. When subjected to multiple-step load disturbances, the proposed controller reduces the mean square error by 28\(\%\) and 42\(\%\), respectively, while lowering both the integral absolute error and the integral time absolute error by 21\(\%\) and 35\(\%\) compared to the DQN and PI controllers. Additionally, when operating with renewable energy sources, the proposed controller decreases the standard deviation in the frequency deviation by 17\(\%\) compared to the DQN controller and 23\(\%\) compared to the PI controller.

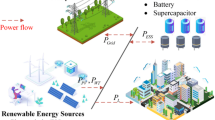

Graphical abstract

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Renewable energy is a long-term and potentially sustainable strategy for reducing green house gas emissions significantly. Due to environmental concerns, economic expansion, and the energy crisis problem, incorporating renewable energy sources into the power system is now getting accelerated. The transition from centralized to distributed generation (DG) has enhanced the desirability and suitability of micro-grids for integrating renewable energy sources [1]. Micro-grid is a small-scale integrated energy system with multifarious distribution configuration consisting of several interconnected distributed energy resources and loads situated within a specific local area and operating within a well-defined electrical boundary [2].

Microgrids may be viewed as a more developed form of distributed generation system with minimal influence from the stochastically distributed generations through efficient management of the storage systems and dispatched loads [3]. Generally, a microgrid operates in grid-connected mode or autonomous mode. However, due to their lower equivalent inertia compared to the main grid, the management and controls of autonomous microgrids are often more complex than those of grid-connected ones. Consequently, even moderate or minor disruptions in the power supply can cause issues in power quality and stability, specifically, a deterioration in the quality of the voltage and frequency [4]. Incorporating energy storage systems into microgrids can maintain the instantaneous power balance and enhance the microgrid’s dynamic performance, which is crucial in is-land operation mode. The integration of multiple energy storage devices, such as batteries and flywheel, has the potential to enhance the stability of microgrids. However, this integration also introduces increased complexity of control issues.

Frequency regulation is a significant challenge in autonomous microgrids due to the low inertia of the system and the large share of intermittent renewable sources [5]. Renewable energy sources and energy storage systems must co-ordinate in autonomous microgrids to limit the frequency deviations by minimizing the mismatch between generation and demand [6,7,8]. When there is an imbalance between generated power and demand power, different control strategies are needed to maintain the frequency stability of the system [9]. To stabilize the system, primary droop controllers are widely employed. Still, frequency deviations from the nominal value are observed in the steady state. Consequently, a secondary control strategy is employed to reach optimal frequency synchronization. Generally, the primary droop and secondary frequency control are called automatic generation control (AGC). In generally, it is also known as load frequency control (LFC). This paper mainly focuses on the automatic generation control of micro-grid supported by renewable energy and storage systems.

Demand response, grid integration of electric vehicles, smart homes, presence of noise on the sensor, etc. increase the unpredictability in the power system operation [10]. Huge integration of renewable energy sources increases the uncertainty of the system due to their intermittent in nature. During the parameter measurement of the microgrid, the value of internal parameters of the system may deviate in a small range from their nominal values. The presence of parameter uncertainties and load disruptions introduces unpredictability and uncertainty into the power system [11]. Due to the aforementioned reasons, the dynamics of the power system varies with time, and an accurate simulation model cannot always be found. Conventional controllers such as Proportional-Integral-Derivative (PID), Linear Matrix Inequality (LMI), Sliding Mode Control (SMC), and Model Predictive Control (MPC) are developed using model-based techniques to obtain the optimal control performance. These model-based control techniques guarantee optimal the performance when applied to a precise system model with no uncertainty. However, the interest of this paper is to design of controller for unknown system dynamics.

Reinforcement learning (RL) and adaptive dynamic programming (ADP) can solve the optimal control problem from data without having the knowledge of the system dynamics [12,13,14]. RL and ADP fill the gap between conventional optimal control and adaptive control techniques. The optimal solution is obtained by learning online the solution to the Hamilton–Jacobi–Bellman (HJB) equation [15]. The HJB equation reduces to an algebraic Recati equation (ARE) for linear systems. RL adopts the temporal difference (TD) approach. TD method can learn directly from the raw experience without having a model of the environment dynamics. TD method implements mainly two steps [15, 16]: (1) to solve the temporal difference equation (known as policy evaluation), (2) to find the optimal control strategy (known as policy improvement). These steps are analogous in solving Hamilton–Jacobi–Bellman (HJB) equation for the optimal control problem. Policy and value iteration methods determine the sequence in which the TD equations can be solved, and the corresponding optimal policies can be obtained.

In recent years, extensive research on RL-based AGCs has been conducted, including DQN-based AGC [17], DDQN based AGC [18], DDPG-based AGC [19, 20], SAC-based AGC [21], and others. However, these controllers often require multiple neural networks for designing the control strategy. Each algorithm presents its unique advantages; however, increased use of neural networks increases the complexity of the design. Considering the challenges associated with design complexity and popularity of PID controllers for AGC, authors of [22] propose RL-based adaptive PID for AGC. The above discussion reveals that adaptive control strategies to reduce design complexity have room for AGC. In [23, 24], the authors present an integral reinforcement learning (IRL) algorithm-based optimum control method for automatic generation control in power systems. Papers [23] present two different implementations of online IRL controllers, each employing separate neural networks for actor and critic networks, it lacks discussion on the neural network configurations, raising concerns about the complexity of the controller design. Conversely, the recursive least square-based IRL [25] approach offers a solution to the optimal control problem without relying on neural networks. The authors in [23] utilized the gradient method to update neural network parameters by computing the cost function gradient for each parameter. However, using the recursive least square method, the parameters of the IRL-based optimal controller can be evaluated directly, eliminating the need for a gradient descent approach [25]. This approach effectively eradicates suboptimal control policies arising from local optima.

The integration of renewable energy sources poses a significant challenge to traditional power system control methods, necessitating innovative approaches to ensure efficient and reliable operation. While the integral reinforcement learning (IRL) algorithm-based optimum control method proposed by [23] and [24] shows promise in addressing uncertainties and unknown dynamics in power systems, its efficacy in the context of renewable energy sources remains largely unexplored. Furthermore, IRL-based AGC needs to investigate the impact of uncertainty and unpredictability resulting from parameter uncertainty and external disturbances such as renewable integration and electric vehicle (EV) integration. Moreover, it is crucial to emphasize that the application of IRL controller for AGC of microgrids is still limited, and further research is needed in this area. This is because the IRL-based optimal controller offers notable advantages: (1) similar to value-based model-free RL algorithms, it does not rely on the discretization of action and state spaces, and (2) akin to deep RL algorithms, it avoids the necessity of training multiple neural networks. The proposed work can be viewed as an energy management problem. A comparison table of the proposed method with traditional methods is provided in Table 1.

The main contributions of the paper are as follows:

-

This paper investigates the automatic generation control of an isolated microgrid. The investigation focuses on the impact of an EV aggregator, parameter uncertainty, and the uncertainty associated with renewable energy sources on the frequency stability of the system.

-

This paper introduces an adaptive optimal control technique for secondary frequency control using the integral reinforcement learning algorithm. Like other model-free RL algorithms, it can learn the system without prior knowledge of the system dynamics. During pre-learning, the recursive least square (RLS) method is implemented to estimate the controller parameters.

-

For comparison purposes, the effectiveness of the IRL controller is compared with the performance of deep Q-learning (DQN) techniques and PI controller.

The rest parts of the paper are organized as follows. Section 2 describes the dynamic model of the system components. In Sect. 3, the mathematical formulation of the proposed control strategy is provided, followed by Sect. 4. Section 4 presents the simulation results. Finally, Sect. 5 concludes the paper.

2 Dynamic model of autonomous microgrid understudy

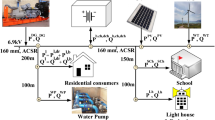

The proposed microgrid is comprised of various system components, including photovoltaic (PV) and wind power generation, a diesel engine system, an electric vehicle (EV) aggregator, and energy storage systems such as flywheel energy storage systems (FESS) and battery energy storage systems (BESS). This section presents the dynamic model of these components and the microgrid’s state-space representation. Figure 1 illustrates the schematic diagram of the system.

2.1 Diesel generator system

Diesel generators can provide reliable and stable power to remote areas. The dynamic model of the diesel generator can be expressed as follows [35]:

where \(\Delta X_{g}(t)\), \(\Delta P_{g}(t)\), \(\Delta f(t)\), \(\Delta I(t)\) and \(\Delta P_d\) denote deviation in governor position, deviation in generator power, deviation in frequency, incremental change in integral control and change in load disturbance, respectively.

2.2 Renewable energy sources

The renewable energies integrated to the micro-grid are PV and wind power. The first-order dynamic equations of PV and wind are given in Eqs. 2 and 3, respectively [40,41,42].

2.3 Storage systems

In the proposed micro-grid energy storage systems, battery energy storage system and flywheel energy storage systems (BESS and FESS) are used. The capacity of the BESS should reach a certain scale to significantly influence the system frequency [36]. However, the capital cost of a large BESS is relatively high. To get a large-scale BESS, aggregated multiple small-scale BESS is implemented in [37] for LFC. In this study, multiple small-scale BESS are aggregated for primary frequency control of the micro-grid. The first-order dynamic equations of ith BESS and FESS are given in Eqs. 4, and 5, respectively [38, 39]. \(\alpha _i\) represents the participation factor of each BESS and \(i=\)1, 2, 3.

2.4 EV Aggregrator

Assuming that all EVs participating in the EV aggregator have same time constant (\(T_e\)), the first-order dynamic model of the EV aggregator is expressed in Eq. 6 [43].

where \(K_e=\sum _{i=1}^N K_{{ei}} / N, i=1, \ldots , N\). \(K_{ei}\) is the gain of the ith EV. \(\alpha \) is the the participation factor of EV aggregator. N is the total number of EVs. The gain \((K_e)\) depends on the SOC of battery. An EV can take part in LFC with SOC controllable mode or SOC idle mode. In SOC idle mode, an EV can absorb or discharge power without considering its battery SOC. The gain (\(K_{ei}\)) of the ith EV is considered as \({\bar{K}}_{e}=1\) in SOC idle mode. When SOC is considered ,the gain of ith EV can be modified as \(K_{\textrm{ei}}={\bar{K}}_e-{\bar{K}}_e g_i(t)\), where

When the \({SOC}_i\) \(\ge \) \({SOC}_{\text{ high } ,i}\), the ith EV will only deliver power with \(K_{{ei}}={\bar{K}}_e\). When the \({SOC}_i\) \(\le \) \({SOC}_{\text{ low } ,i}\), the ith EV will only absorb power with \(K_{{ei}}=0\). For other cases, \(K_{ei}\) is calculated using Eq. 7.

Assume that among N EVs, \(N_1\) and \(N_{2}\) (\(=N-N_{1}\)) are participating in LFC with SOC idle mode and SOC controllable mode, respectively. Then the gain of the aggregated EVs can be written as

where \(\eta _2\) is \(N_2/N\), \(\eta =max(\eta _2)\), \(g_0(t)=\) \(\sum _{i=N_1+1}^N\) \(g_i(t)/N_2\) and \(g(t)=(\eta _2/\eta )g_0(t)\). N, \(N_1\) and \(N_2\) are varying with time. Figure 2a–c represents the number of plug-in EVs participating in LFC. The maximum and minimum numbers for the total number of available EVs (N) have been set at 650 and 900, respectively. The value of N, depicted in Fig. 2a, is determined randomly between the minimum and maximum values. Figure 2b presents the number of vehicles in idle mode. Similarly, Fig. 2c depicts the number of EVs in SOC controllable mode and it is set in such way that the \(\eta _2\) value should be less than given \(\eta \). Given \(\eta \) is 0.5, Fig. 2d shows \(\eta \) and \(\eta _2=N_2/N\). Based on these variables, \(g_0(t)\) and g(t) of Eq. 8 are shown in Fig. 3a and b. The time-dependent gain (\(K_e\)) is illustrated in Fig. 3c.

Remark

In [43], the total number of EVs of the aggregator is experiencing rapid fluctuations with time between the minimum and maximum values. However, maintaining such variation during all times may not be feasible in practice. Therefore, in this paper, the total number of EVs is adjusted slowly over time and fluctuates within the minimum and maximum values. A wide range of variations in total number of EVs, including slow and fast variations and constant fluctuations, are considered.

The complete state space model of the proposed micro-grid is

where \( x=[\Delta X_{g }, \Delta P_{t }, \Delta f, \Delta I, \Delta P_{PV }, \Delta P_{W }, \Delta P_{BESS1 },\) \(\Delta P_{BESS2}, \Delta P_{BESS3 }, \Delta P_{FESS }, \Delta P_{e} ]^T\). u is the output of the controller. \(\Delta P_{d}=[\Delta P_{Solar }, \Delta P_{Wind }]^T\). \(A_1\), \(A_2\), B, and \(\Gamma \) are as follows:

\(A_1\)=\(\begin{bmatrix} -\frac{1}{ T_{g} } & 0 & \frac{-1}{R{Tg}}& \frac{-1}{T_{g}}& 0& 0\\ \frac{1}{T_{t}} & \frac{-1}{T_{t}} & 0 & 0 & 0 & 0 \\ 0 & \frac{K_{P}}{T_{P}} & \frac{-1}{T_{p}} & 0 & \frac{K_{P}}{T_{P}} & \frac{K_{P}}{T_{P}} \\ 0 & 0 & 0 & K_I & 0 & 0 \\ 0 & 0 & 0 & 0 & -\frac{1}{T_{PV}} & 0 \\ 0 & 0 & 0 & 0 & 0 & -\frac{1}{T_{WT}}\\ 0& 0& \frac{\alpha _1}{T_{BESS1}}& 0& 0& 0\\ 0& 0& \frac{\alpha _2}{T_{BESS1}}& 0& 0& 0\\ 0& 0& \frac{\alpha _3}{T_{BESS1}}& 0& 0& 0\\ 0& 0& \frac{1}{T_{FESS}}& 0& 0& 0\\ 0& 0& 0& 0& 0& 0\\ \end{bmatrix}\)

\(A_2\)=\(\begin{bmatrix} 0& 0& 0& 0& 0\\ 0& 0& 0& 0& 0\\ \frac{K_{P}}{T_{P}}& \frac{K_{P}}{T_{P}}& \frac{K_{P}}{T_{P}}& \frac{K_{P}}{T_{P}}& \frac{K_{P}}{T_{P}}\\ 0& 0& 0& 0& 0\\ 0& 0& 0& 0& 0\\ 0& 0& 0& 0& 0\\ -\frac{1}{T_{BESS1}}& 0& 0& 0& 0\\ 0& -\frac{1}{T_{BESS2}}& 0& 0& 0\\ 0& 0& -\frac{1}{T_{BESS3}}& 0& 0\\ 0& 0& 0& -\frac{1}{T_{FESS}}& 0\\ 0& 0& 0& 0& -\frac{1}{T_{e}}\\ \end{bmatrix}\)

This paper aims to design a controller that can effectively determine the u value, as per Eq. 9, for the above system.

3 Adaptive controller design

3.1 IRL controller

In this section, an adaptive control strategy based on the IRL algorithm is presented. The IRL is an online learning technique of internal system dynamics [25]. In this article, the IRL algorithm is used for secondary frequency control. Consider the linear continuous-time system

with the Q-value function

where \(x \in R^n\) is the system state, \(u\in R^m\) is the control input, \(A \in {\mathbb {R}}^{n \times n}\) and \( B \in {\mathbb {R}}^{n \times m}\). J(x, u) is the quadratic cost function. It is assumed that (A, B) is controllable. The cost function J(x, u) is defined as \(J(x, u)=x^T {\textbf {Q}} x+u^T {\textbf {R}} u. ~{\textbf {Q}}={\textbf {Q}}^T \ge 0 \in {\mathbb {R}}^{n \times n}\) and \({\textbf {R}}={\textbf {R}}^T>0 \in {\mathbb {R}}^{m \times m}\) are time-invariant weight matrices. By the Belllman’s optimal principle [15], the Kvalue can be found as

where P is the unique positive definite solution of the algebric Reccati equation(ARE)

The optimal continuous time Q-value function can be presented in quadratic form [44] as shown in Eq. 14.

It can be noticed that the \(S^*\) is associated with \(P^*\) in ARE. By minimizing \(Q^*(x,u)\) with respect to u, the optimal solution \(u^*\) can be found as follows:

Problem Statement: Solving the value function (Eq. 14) yields the optimal policy (u); however, the system matrix B is involved in this case, and it is unknown. The final objective is to implement the online adaptive IRL algorithm to get the optimal policy \(u^*\) (as per Eq. 15) without involving matrix B.

Pseudo code of pre-learning of IRL algorithm:

-

1.

Initialize Time step (t), Step size (T), K(t), W(t), \( W(t - T )\) and x(t)

-

2.

Calculate \(u(t) = - K(t)x(t)\). Apply the current policy to the system and observe the next state \(x(t+T )\). Then calculate \(u(t + T ) = - K(t)x(t + T )\). Collect dataset (x(t), \(x(t + T )\), u(t), \(u(t + T )\) and compute \(\phi (z(t))\), \(\phi (z(t + T )\))

-

3.

Define the value function in parametric form as \(Q(x(t), u(t)) =W^T\phi (z(t))\), where W is weight matrix, \( \phi (z(t)) \) is the quadratic polynomial basis set, z(t) is the vector \([x(t)^T~u(t)^T]\). \(\phi (z(t))=z(t) \bigotimes z(t)\) and \(\bigotimes \) represents the Kronecker product [45]. The number of elements of W is \(n(n+1)/2\), where n represents the number of elements in z(t).

-

4.

Calculate the weight matrix as follows

$$\begin{aligned} W(t+T)^T=\Delta \phi ^{-1} \int _t^{t+T} J(x, u) d \tau \end{aligned}$$(16)where \(\Delta \phi =\phi (z(t))-\phi (z(t+T))\). The inverse of \(\Delta \phi \) cannot be found directly as it is a vector. In this study, the inverse is determined using the recursive least square (RLS) method [46]. So, \(\Delta \phi ^{-1}=\Delta \phi ^T\left( \Delta \phi \Delta \phi ^T\right) ^{-1}\).

-

5.

Unpack the vector \(W(t+T)\) into matrix S (shown in Eq. 14) and find \(K=\left( S_{22}\right) ^{-1}\left( S_{12}\right) ^T\).

-

6.

Check condition \(||W (t+T) - W (t )|| < \zeta \) (Tolerance). If not so, set \(W(t)\leftarrow W(t+T)\), \(t\leftarrow t+T\). Go to Step 2. Else Go to Step 7.

-

7.

Get the final K.

-

8.

STOP

3.2 Deep Q-learning Controller

The DQN [47] uses two networks, namely the current (Q) and target (\(Q^-\)) networks. These networks are the same in structure. \(\theta \) and \(\theta ^-\) are the parameter of the current network and target network, respectively. The current network selects the action (\({\arg \max }\hspace{0.1cm} Q(s,u;\theta )\)) associated with the highest Q value. The target network evaluates the Q value (\(Q(s_t^\prime , u_t^\prime \)) of the target state (\(s_t^\prime \)). In this paper, these networks are three-layer backpropagation neural networks, with an input layer of four dimensions and an output layer of seven.

DQN employs a replay buffer to collect the experiences at each time step of pre-learning. At each time step (t), a mini-batch of samples is drawn from the buffer and passed through the current neural network. The optimal action, obtained from greedy policy, is then executed in the environment, leading to the observation of next state (\(s^\prime \)) and the calculation of reward (\(R_{l_t}\)). These experiences are stored as tuples containing the current state, action taken, reward obtained, and resulting next state. For example, the tuple for the ith sample is (\(s_i,u_i,R_{l_i},s_{i}^\prime \)). Subsequently, the immediate next state is provided to the target network to determine the maximum \(Q^-\)-value. The pictorial view of pre-learning of DQN at time step (t) is shown in Fig. 4. After pre-learning the current network is used as controller.

The loss function of ith sample of the mini-batch is expressed in Eq. 17.

Here, \(R_l\) and \(\gamma \) are reward function and discount factor, respectively. The reward (R) is chosen using Eq. 10.

The parameters \(\theta \) are updated using Eq. 19, where N is the number of samples in a mini-batch.

where \(\nabla _{\theta _t} L\left( \theta _t\right) \) is the gradient of loss function with respect to parameter of current network \(\theta \) at time step t. After each c time step, the critic network’s parameters are modified to match the actor network’s. \(\theta ^-\) is updated using Eq. 20. \(\theta _{t+c}\) is the critic parameter

4 Simulation

This section demonstrates the performance of the proposed control technique for automatic generation control of the proposed micro-grid subject to unpredictability and uncertainty associated with load disturbances and an EV aggregator. Table 2 displays the parameters related to the micro-grid.

Pre-learning of proposed technique: Algorithm 1 depicts the pre-learning procedure of the IRL controller. It is assumed that the states (\(x=\left[ \Delta X_{g }, \Delta P_{t }, \Delta f, \Delta I(t) \right] ^T\)) are observable. Q and R, involved in cost function J, are chosen as \(0.001 I_{4\times 4}\) and 0.08, respectively. The initial state \(x_0\) is \([0.1,0,0,0]^T\). Step size (T) is chosen as 0.1 s. Four different initial K values are chosen to show the convergence of the controller. The values are given in Table 3. The corresponding convergence curves are shown in Fig. 7, 5, and 6. Figure 7 shows the 2- norm of difference of weight matrix (W) and the 2-norm of difference of K over consecutive time steps. The convergence of elements of K are illustrated in Fig. 5a–d. Figure 6a–o illustrates the convergence of elements of weight matrix (W). Step size (T) is chosen as 0.1 s. Based on algorithm 1, the kernel matrix S and optimal K values are found and expressed in Eqs. 21 and 22, respectively. Likewise, Fig. 8 depicts the convergence curve of the DQN in response to the sinusoidal load disturbance, as illustrated in Fig. 9.

4.1 Case 1: Performance comparison under step load disturbance

This case study aims to investigate the performance of the proposed controller under step load disturbance. Notably, this analysis does not consider the uncertainties corresponding to the PV and wind systems. To appraise the effectiveness and adaptive performance of the proposed control strategy, a step load disturbance of 0.01pu is applied to the system. Figure 10 depicts the outcomes of the simulation. The numerical values of the peak and peak time of the controllers are provided in Table 4. The peak value of the proposed method is reduced by 6\(\%\) and 14\(\%\) compared to DQN and PI controllers. From the simulation result, it is observed that the proposed control enhances the transient performance by reducing the peak of the frequency deviation. The proposed controller shows a faster response compared to the DQN and PI controller.

4.2 Case 2: Performance comparison under multiple step load change

This case study evaluates the performance of the IRL controller under step frequent load changes. The effect of PV and wind power is not considered. The system is applied to multi-step load perturbations, and the corresponding change in frequency is illustrated in Fig. 11. The numerical value of applied step load disturbances is given in Table 5.

The result demonstrates that the proposed controller helps the system to reach its steady state faster despite frequent disturbances. For performance evaluation, this case study considers three performance indices: mean squared error (MSE), integral absolute error (IAE), and integral time absolute error (ITAE) [48]. Tracking accuracy is evaluated using the MSE index. The IAE index evaluates the system’s overall overshoot. The ITAE index is used to assess the transient response time. The numerical results for these indices of frequency deviation are given in Table 6. The MSE for the proposed controller is decreased by 28% and 42% compared to the DQN and PI controller, respectively. Similarly, the IAE and ITAE for the proposed controller are approximately decreased by 21% and 35% percent compared to the DQN and PI controller, respectively. From these numerical performance values, it is observed that the proposed controller achieves better tracking performance with smaller tracking errors, smaller overshoots, and faster responses than other controllers.

4.3 Case 3: Performance comparison under renewable sources

This case study investigates the robustness of the proposed controller towards the PV and wind power uncertainty under random load disturbances. The system subjected to random load disturbances (\(\Delta P_d\) \( \in \) [0.04,0.08] pu), as depicted in Fig. 12a. The perturbations in wind and solar power applied to the system are illustrated in Fig. 12b and c. The dynamic performances of the system are shown in Fig. 13. For performance evaluation, this case study considers the normal distribution of the frequency deviation. Figure 14 illustrates this. The proposed controller reduces the standard deviation in the frequency curve by 17\(\%\) and 23\(\%\) compared to DQN and PI controller. It is observed that the proposed controller exhibits a lower standard deviation compared to others, indicating less deviation frequency from the mean value. Furthermore, compared to other controllers, the mean value of frequency deviation for the proposed controller is closer to zero, which signifies a performance improvement. Therefore, it is observed that the probability of getting the frequency deviation close to zero is highest for the proposed controller. From the above discussion, it is concluded that the proposed controller effectively minimizes frequency deviations against the uncertainties imposed by PV and wind power.

4.4 Case 4: Sensitivity analysis

Theoretical examinations indicate that in cases where the power system exhibits inherent stability, variations in frequency remain relatively minor and constrained across varying load-damping coefficients. Conversely, within an unstable power system, disturbances can lead to amplified frequency deviations over time [49]. To demonstrate the robustness and efficacy of the proposed IRL controller in a more challenging condition, some critical parameters of the power system are varied and corresponding deviation in frequency (\(\Delta f\)) is illustrated in Fig. 15. The change in parameters are shown in Table 7. At a time of 2 s in this case study, a step load disturbance of 0.02 per unit is applied. Figure 15 unveils the adaptability and robustness of the IRL controller to the variations in system parameters. Case 3 corresponds to no change in parameters. Despite changes to the system parameters, the desired outputs are bounded with small fluctuations.

Based on the analysis of case studies 1 and 2, it has been determined that the controller proposed is highly effective in reducing frequency deviations when faced with step load disturbances, even in the presence of EV uncertainties. Similarly, in case study 3, the proposed controller has demonstrated effectiveness in minimizing frequency deviations despite the uncertainties introduced by PV and wind power. The controller has also been shown to be robust against parameter changes, which further highlights its reliability and efficiency.

In the given study, the target network of DQN updates its parameters without requiring the gradient of the loss function; instead, it directly copies parameters from the current network. In contrast, the current network requires the gradient of the loss function to update its parameters. As shown in Fig. 4, the current networks employ gradient ascent to update 77 (weights of the current network) parameters at each time step. However, the proposed controller adopts the RLS method, requiring only 15 parameter updates. In this paper, the simulation results show that the proposed controller performs better than the DQN algorithm. However, DQN may achieve enhanced performance, particularly with deep neural networks featuring more hidden layers and a larger discretized action set. However, the complexity of the controller will increase, causing an increase in the number of parameter updates and learning time. Moreover, the proposed controller needs no discretization of action set.

5 Conclusion

An optimal control technique that uses integral reinforcement learning has been successfully implemented for automatic generation control of EV, renewable, and storage system integrated is-landed micro-grid. The proposal includes four case studies that analyze the robustness of the controller against uncertainties due to load disturbances, EV aggregator, renewable integration, and parameter uncertainty. The case studies show that the proposed IRL controller effectively minimizes the frequency deviation. The proposed controller markedly enhances performance metrics relative to the DQN and PI controllers. Specifically, it achieves a reduction in peak frequency deviation by 6\(\%\) and 14\(\%\) compared to the DQN and PI controllers, respectively. Under multiple-step load disturbances, the controller decreases the mean square error by 28\(\%\) and 42\(\%\), respectively, while reducing both the integral absolute error and the integral time absolute error by 21\(\%\) and 35\(\%\) in comparison to the DQN and PI controllers. Furthermore, in scenarios involving renewable energy sources, the proposed controller lowers the standard deviation in frequency deviation by 17\(\%\) compared to the DQN controller and by 23\(\%\) compared to the PI controller. The proposed method is a policy iteration-based algorithm. The main limitation of the proposed method is it needs permissible initial conditions, which have been determined based on human expertise in this study. This paper applies the method to a single-area microgrid, but future work could potentially expand to interconnected multi-microgrids.

Abbreviations

- J(x, u):

-

Quadratic cost function

- Q(x, u):

-

Q-value function

- A, B :

-

System matrices

- Q,R :

-

Time invariant weights matrices

- x :

-

System state

- u :

-

Controller output

- V(x):

-

Value function

- Q, \(Q^-\) :

-

Current, target network

- \(\theta ,~\theta ^-\) :

-

Parameters of current, target network

- \(L(\theta ), R_\textrm{l}\) :

-

Loss function, reward for DQN

- \(\gamma \), \(\alpha _\textrm{l}\) :

-

Discount factor, learning rate

- W :

-

Weight matrix

- z :

-

\([x ~~u]^\textrm{T}\)

- \( \phi (z) \) :

-

Quadratic polynomial basis set

- \(\bigotimes \) :

-

Kronecker product

- \(T_\textrm{g}\) :

-

Time constant of governor

- \(T_\textrm{t}\) :

-

Time constant of turbine

- \(T_{\textrm{BESS}_i}\) :

-

Time constant of ith BESS

- \(T_\textrm{P}\) :

-

Time constant of power system

- \(T_\textrm{PV}\) :

-

Time constant of PV

- \(T_\textrm{WT}\) :

-

Time constant of Wind

- \(T_\textrm{FESS}\) :

-

Time constant of FESS

- \(T_\textrm{e}\) :

-

Time constant of EV aggregator

- \(K_\textrm{P}\) :

-

Gain of power system

- \(\alpha \) :

-

Participation factor of EV aggregator

- \(\alpha _i\) :

-

Participation factor of ith BESS

- \(\zeta \) :

-

Tolerence

- \(K_\textrm{I}\) :

-

Integral gain

- T :

-

Time step

- \(K_{ei}\) :

-

Gain of the ith EV

- N :

-

Total number of EVs

- \(N_1\) :

-

No. of EVs in SOC idle mode

- \(N_2\) :

-

No. of EVs in SOC Controllable mode

- \(\eta _2\) :

-

\(N_2/N\)

- \(\eta \) :

-

Maximum of \(\eta _2\)

- \(\Delta X_\textrm{g}\) :

-

Deviation in governor position

- \(\Delta P_\textrm{g}\) :

-

Deviation in generator power

- \(\Delta f\) :

-

Deviation in frequency

- \(\Delta I\) :

-

Incremental change in integral control

- \(\Delta P_\textrm{d}\) :

-

Change in load disturbance

- R :

-

Speed regulation of the governor

- \(K_\textrm{e}\) :

-

Gain of EV aggregator

- SOC:

-

State of charge

- SOC\(_{\text{ low } (\textrm{high}),i}\) :

-

Low (High) battery SOC ith EV

- SOC\(_{\max (\min ), i}\) :

-

Max (Min) battery SOC ith EV

- c :

-

Delayed time-step in DQN pre-learning

- \(\nabla _{\theta _\textrm{t}} L\left( \theta _\textrm{t}\right) \) :

-

Gradient of loss function

- \(\Delta P_{\textrm{Solar}}\) :

-

Solar power change

- \(\Delta P_{\textrm{Wind}}\) :

-

Wind power change

- \(\Delta P_{\textrm{PV}}\) :

-

Output solar power change

- \(\Delta P_{\textrm{W}}\) :

-

Output wind power change

- \(\Delta P_{\textrm{BESS}}\) :

-

Output battery power change

- \(\Delta P_{\textrm{FESS}}\) :

-

Output flywheel power change

- \(\Delta P_\textrm{e}\) :

-

EV aggregator power change

- \(\Gamma \) :

-

Disturbance matrix

- HJB:

-

Hamilton–Jacobi–Bellman

- ARE:

-

Algebraic Recati equation

- TD:

-

Temporal difference

- DQN:

-

Deep Q-network

- DDQN:

-

Double deep Q-network

- DDPG:

-

Deep deterministic policy gradient

- SAC:

-

Soft actor-critic

- IRL:

-

Integral reinforcement learning

- EV:

-

Electric vehicle

- RLS:

-

Recursive least square

- PV:

-

Photovoltaic

- BESS:

-

Battery energy storage system

- FESS:

-

Flywheel energy storage system

- PI:

-

Proportional integral controller

- PID:

-

Proportional integral derivative

- MSE:

-

Mean squared error

- IAE:

-

Integral absolute error

- ITAE:

-

Integral time absolute error

- AGC:

-

Automatic generation control

- DG:

-

Distributed generation

- LFC:

-

Load frequency control

- RL:

-

Reinforcement learning

- ADP:

-

Adaptive dynamic programming

References

Yang F, Feng X, Li Z (2019) Advanced Microgrid Energy Management System for Future Sustainable and Resilient Power Grid. IEEE Trans Ind Appl 55(6):7251–7260. https://doi.org/10.1109/TIA.2019.2912133

Kerdphol T, Rahman FS, Watanabe M, Mitani Y, Turschner D, Beck H-P (2019) Enhanced virtual inertia control based on derivative technique to emulate simultaneous inertia and damping properties for microgrid frequency regulation. IEEE Access 7:14422–14433. https://doi.org/10.1109/ACCESS.2019.2892747

Tang X, Deng W, Qi Z (2014) Investigation of the dynamic stability of microgrid. IEEE Trans Power Syst 29(2):698–706. https://doi.org/10.1109/TPWRS.2013.2285585

Nikkhajoei H, Lasseter RH (2009) Distributed generation interface to the CERTS microgrid. IEEE Trans Power Delivery 24(3):1598–1608. https://doi.org/10.1109/TPWRD.2009.2021040

Farrokhabadi M et al (2020) Microgrid stability definitions, analysis, and examples. IEEE Trans Power Syst 35(1):13–29. https://doi.org/10.1109/TPWRS.2019.2925703

Khooban M-H, Dragicevic T, Blaabjerg F, Delimar M (2018) Shipboard microgrids: a novel approach to load frequency control. IEEE Trans Sustain Energy 9(2):843–852. https://doi.org/10.1109/TSTE.2017.2763605

Muduli R, Jena D, Moger T (2023) Application of expected Sarsa-learning for load frequency control of multi-area power system. In: 2023 5th International conference on energy, power and environment: towards flexible green energy technologies (ICEPE), Shillong, India, pp. 1–6 https://doi.org/10.1109/ICEPE57949.2023.10201593

Sadasiva Behera, Nalin B, Dev Choudhury (2022) Modelling and simulations of modified slime mould algorithm based on fuzzy PID to design an optimal battery management system in microgrid. Cleaner Energy Syst 3:100029. https://doi.org/10.1016/j.cles.2022.100029

Adibi M, van der Woude J (2022) Secondary frequency control of microgrids: an online reinforcement learning approach. IEEE Trans Autom Control 67(9):4824–4831. https://doi.org/10.1109/TAC.2022.3162550

Guo W, Liu F, Si J, He D, Harley R, Mei S (2016) Online supplementary ADP learning controller design and application to power system frequency control with large-scale wind energy integration. IEEE Trans Neural Netw Learn Syst 27(8):1748–1761. https://doi.org/10.1109/TNNLS.2015.2431734

Singh V, Moger T, Jena D (2022) Uncertainty handling techniques in power systems: a critical review. Electr Power Syst Res 203:107633

Li H, Liu D, Wang D (2014) Integral reinforcement learning for linear continuous-time zero-sum games with completely unknown dynamics. IEEE Trans Autom Sci Eng 11(3):706–714. https://doi.org/10.1109/TASE.2014.2300532

Liu D, Xue S, Zhao B, Luo B, Wei Q (2021) Adaptive dynamic programming for control: a survey and recent advances. IEEE Trans Syst, Man, Cybernet: Syst 51(1):142–160. https://doi.org/10.1109/TSMC.2020.3042876

Muduli R, Nair N, Kulkarni S, Singhal M, Jena D, Moger T (2023) Load frequency control of two-area power system using an actor- critic reinforcement learning method-based adaptive PID controller. In: 2023 IEEE 3rd international conference on sustainable energy and future electric transportation (SEFET), Bhubaneswar, India, pp. 1–6 https://doi.org/10.1109/SeFeT57834.2023.10245225

Zhu LM, Modares H, Peen GO, Lewis FL, Yue B (2015) Adaptive suboptimal output-feedback control for linear systems using integral reinforcement learning. IEEE Trans Control Syst Technol 23(1):264–273. https://doi.org/10.1109/TCST.2014.2322778

Abouheaf M, Gueaieb W, Sharaf A (2019) Load frequency regulation for multi-area power system using integral reinforcement learning. IET Generat, Transm Distribut 13(19):4311–4323

Xi L, Zhou L, Liu L, Duan D, Xu Y, Yang L, Wang S (2020) A deep reinforcement learning algorithm for the power order optimization allocation of agc in interconnected power grids. CSEE J Power Energy Syst 6(3):712–723

Xi L, Yu L, Xu Y, Wang S, Chen X (2020) A Novel Multi-Agent DDQN-AD Method-Based Distributed Strategy for Automatic Generation Control of Integrated Energy Systems. IEEE Transactions on Sustainable Energy 11(4):2417–2426. https://doi.org/10.1109/TSTE.2019.2958361

Li J, Yu T, Zhang X (2022) Coordinated load frequency control of multi-area integrated energy system using multi-agent deep reinforcement learning. Appl Energy 306:117900

Khooban MH, Gheisarnejad M (2021) A novel deep reinforcement learning controller based type-II fuzzy system: frequency regulation in microgrids. IEEE Trans Emerg Top Computat Intell 5(4):689–699. https://doi.org/10.1109/TETCI.2020.2964886

Zheng Y, Tao J, Sun H, Sun Q, Chen Z, Dehmer M, Zhou Q (2021) Load frequency active disturbance rejection control for multisource power system based on soft actor-critic. Energies 14(16):4804

Muduli R, Jena D, Moger T (2024) Application of reinforcement learning-based adaptive pid controller for automatic generation control of multi-area power system. IEEE Trans Automat Sci Eng. https://doi.org/10.1109/TASE.2024.3359219

Abouheaf Mohammed, Gueaieb Wail, Sharaf Adel (2019) Load frequency regulation for multi-area power system using integral reinforcement learning. IET Generat, Trans Distribut 13(19):4311–4323

Ou R, Xu Y, Li Z, Hu R, Huang J, Yu J (2021) Integral reinforcement learning-based adaptive optimal automatic generation control. In: 2021 4th International conference on energy, electrical and power engineering (CEEPE), pp. 643–649 https://doi.org/10.1109/CEEPE51765.2021.9475666

Vrabie D, Pastravanu O, Abu-Khalaf M, Lewis FL (2009) Adaptive optimal control for continuous-time linear systems based on policy iteration. Automatica 45(2):477–484

Qu Z, Xu C, Yang F, Ling F, Pirouzi S (2023) Market clearing price-based energy management of grid-connected renewable energy hubs including flexible sources according to thermal, hydrogen, and compressed air storage systems. J Energy Storage 69:107981

Zhang X, Yu X, Ye X, Pirouzi S (2023) Economic energy managementof networked flexi-renewable energy hubs according to uncertainty modeling by the unscented transformation method. Energy 278:128054

Khalafian F, Iliaee N, Diakina E, Parsa P, Alhaider MM, Masali MH, Zhu M (2024) Capabilities of compressed air energy storage in the economic design of renewable off-grid system to supply electricity and heat costumers and smart charging-based electric vehicles. J Energy Storage 78:109888

Liang H, Pirouzi S (2024) Energy management system based on economic Flexi-reliable operation for the smart distribution network including integrated energy system of hydrogen storage and renewable sources. Energy 293:130745

Pirouzi S (2023) Network-constrained unit commitment-based virtual power plant model in the day-ahead market according to energy management strategy. IET Generat, Trans Distribut 17(22):4958–4974

Norouzi M, Aghaei J, Niknam T, Pirouzi S, Lehtonen M (2022) Bi-level fuzzy stochastic-robust model for flexibility valorizing of renewable networked microgrids. Sustain Energy, Grids Netw 31:100684

Norouzi M, Aghaei J, Pirouzi S, Niknam T, Fotuhi-Firuzabad M (2022) Flexibility pricing of integrated unit of electric spring and EVs parking in microgrids. Energy 239:122080

Cavus M, Allahham A, Adhikari K, Giaouris D (2024) A hybrid method based on logic predictive controller for flexible hybrid microgrid with plug-and-play capabilities. Appl Energy 359:122752

Cavus M, Allahham A, Adhikari K, Zangiabadi M, Giaouris D (2023) Energy management of grid-connected microgrids using an optimal systems approach. IEEE Access 11:9907–9919

Wang C, Mi Y, Fu Y, Wang P (2018) Frequency control of an isolated micro-grid using double sliding mode controllers and disturbance observer. IEEE Trans Smart Grid 9(2):923–930. https://doi.org/10.1109/TSG.2016.2571439

Mahmoud TS, Ahmed BS, Hassan MY (2019) The role of intelligent generation control algorithms in optimizing battery energy storage systems size in microgrids: a case study from Western Australia. Energy Convers Manage 196:1335–1352

Chen S, Zhang T, Gooi HB, Masiello RD, Katzenstein W (2016) Penetration Rate and Effectiveness Studies of Aggregated BESS for Frequency Regulation. IEEE Transactions on Smart Grid 7(1):167–177. https://doi.org/10.1109/TSG.2015.2426017

Bevrani H, Habibi F, Babahajyani P, Watanabe M, Mitani Y (2012) Intelligent frequency control in an AC microgrid: online PSO-based fuzzy tuning approach. IEEE Trans Smart Grid 3(4):1935–1944. https://doi.org/10.1109/TSG.2012.2196806

Oshnoei A, Kheradmandi M, Muyeen SM (2020) Robust control scheme for distributed battery energy storage systems in load frequency control. IEEE Trans Power Syst 35(6):4781–4791. https://doi.org/10.1109/TPWRS.2020.2997950

Khooban M-H (2018) Secondary load frequency control of time-delay stand-alone microgrids with electric vehicles. IEEE Trans Industr Electron 65(9):7416–7422. https://doi.org/10.1109/TIE.2017.2784385

Javanmardi H, Dehghani M, Mohammadi M, Siamak S, Hesamzadeh MR (2022) BMI-based load frequency control in microgrids under false data injection attacks. IEEE Syst J 16(1):1021–1031. https://doi.org/10.1109/JSYST.2021.3054947

Rajesh KS, Dash SS (2019) Load frequency control of autonomous power system using adaptive fuzzy based PID controller optimized on improved sine cosine algorithm. J Ambient Intell Humaniz Comput 10:2361–2373

Pham TN, Nahavandi S, Hien LV, Trinh H, Wong KP (2017) Static output feedback frequency stabilization of time-delay power systems with coordinated electric vehicles state of charge control. IEEE Trans Power Syst 32(5):3862–3874. https://doi.org/10.1109/TPWRS.2016.2633540

Li H, Liu D, Wang D (2014) Integral reinforcement learning for linear continuous-time zero-sum games with completely unknown dynamics. IEEE Trans Autom Sci Eng 11(3):706–714. https://doi.org/10.1109/TASE.2014.2300532

Schacke K (2004) On the Kronecker product. Master’s thesis, University of Waterloo

Lewis JM, Lakshmivarahan S, Dhall S (2006) Dynamic data assimilation: a least squares approach, vol 13. Cambridge University Press, Cambridge

Mnih V, Kavukcuoglu K, Silver D, Graves A, Antonoglou I, Wierstra D, Riedmiller M (2013) Playing atari with deep reinforcement learning, arXiv preprint arXiv:1312.5602

Stojanovic V (2023) Fault-tolerant control of a hydraulic servo actuator via adaptive dynamic programming. Math Model Control 3(3):181–191. https://doi.org/10.3934/mmc.2023016

Huang H, Li F (2013) Sensitivity analysis of load-damping characteristic in power system frequency regulation. IEEE Trans Power Syst 28(2):1324–1335. https://doi.org/10.1109/TPWRS.2012.2209901

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Muduli, R., Jena, D. & Moger, T. Automatic generation control of is-landed micro-grid using integral reinforcement learning-based adaptive optimal control strategy. Electr Eng (2024). https://doi.org/10.1007/s00202-024-02648-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00202-024-02648-6