Abstract

This paper presents a hybrid agent-based stock-flow-consistent model featuring heterogeneous banks, purposely built to examine the effects of variations in banks’ expectations formation and forecasting behaviour and to conduct policy experiments with a focus on monetary and prudential policy. The model is initialised to a deterministic stationary state and a subset of its free parameters is calibrated empirically in order to reproduce characteristics of UK macro-time-series data. Experiments carried out on the baseline focus on the expectations formation and forecasting behaviour of banks, allowing banks to switch between forecasting strategies and to engage in least-squares learning. Overall, simple heuristics are remarkably robust in the model. In the baseline, which represents a relatively stable environment, the use of arguably more sophisticated expectations formation mechanisms makes little difference to simulation results. In a modified version of the baseline representing a less stable environment, alternative heuristics may in fact be destabilising. To conclude the paper, a range of policy experiments is conducted, showing that an appropriate mix of monetary and prudential policy can considerably attenuate the macroeconomic volatility produced by the model.

1 Introduction

This paper presents a hybrid agent-based stock-flow consistent (AB-SFC) macro-model with an agent-based banking sector. Its purpose is to investigate the effects of various assumptions concerning banks’ expectations formation and forecasting behaviour and, along the policy dimension, the impacts of monetary policy and prudential regulation. The hybrid model is constructed by fusing a macroeconomic stock-flow consistent model featuring households, firms, a government and a central bank with an agent-based banking sector which interacts with the aggregate portions of the model.

The model is initialised to a deterministic stationary state using UK data as a rough guide to give rise to realistic initial values, whereby the aggregate stock-flow consistent structure is utilised to reduce the degrees of freedom. A subset of the remaining free parameters is then calibrated empirically using the method of simulated moments, utilising a set of statistics calculated from UK macro time-series data, with the result that the model can reproduce these quite closely. Following validation exercises and a presentation of the dynamics produced by the baseline simulation thus obtained, I carry out two sets of experiments. First, I experiment with allowing banks to use a variety of forecasting heuristics in their expectations formation and decision-making, including heterogeneous expectations with heuristic switching and OLS learning. The result is that these changes produce little to no difference in the overall dynamics when implemented in the baseline model, which provides a relatively stable environment. More sophisticated heuristics do not appear able to significantly outperform simpler ones, giving rise to very similar simulation results. When implemented in a modified version of the baseline model representing a less stable environment, however, it turns out that varying the expectations formation mechanism of banks can have a strongly destabilising impact. In addition, it is shown that when banks use an alternative, arguably more sophisticated heuristic for setting their interest rates, this can produce inferior outcomes for them. These results are in line with existing research and the concept of ‘ecological rationality’, which emphasises that the fitness of behavioural rules is highly context-dependent. The second set of experiments concerns the implementation of various stabilisation policies. 
It is shown that an appropriate mix of monetary and prudential policy can considerably reduce the macroeconomic fluctuations present in the baseline simulation, a result which is strengthened when fiscal policy is added to the policy mix. A different policy mix is necessary, however, to contain the instability triggered by alternative expectations formation heuristics in the modified version of the model. Along the policy dimension, the paper hence makes a case for concerted action incorporating a range of different tools and highlights the possible dependence of policy effectiveness on expectations formation mechanisms used by agents.

The paper contributes to research on hybrid AB-SFC models in that the particular focus on bank heterogeneity is novel to the literature. Moreover, the literature examining expectations formation in macroeconomic agent-based models in detail is at present still underdeveloped. Finally, the paper contributes to the increasing empirical orientation of the AB-SFC literature through the application of an empirical calibration algorithm to the presented model.

The paper is structured as follows: Section 2 gives a brief motivation for this research and reviews some relevant literature. Section 3 outlines the structure of the model and the behavioural assumptions. Section 4 discusses the initialisation and calibration strategy and presents the baseline simulation. Section 5 contains the results of the experiments carried out on the baseline. Section 6 concludes the paper. Online Appendix A presents the traditional balance sheet and transactions-flow matrices summarising the stock-flow consistent structure of the model. A description of the initialisation and calibration procedure, as well as a full list of initial and parameter values, can be found in online Appendix B. Online Appendix C contains a sensitivity analysis of the monetary policy rule as well as of several parameters which are not included in the empirical calibration procedure.

2 Motivation and literature review

The purpose of this paper is to combine insights from various strands of the literature to advance research on agent-based stock-flow consistent (AB-SFC) models. Over the past 10 to 15 years there have been substantial advances in the use of agent-based models (ABMs) in macroeconomics, leading to the emergence of a number of frameworks which have been applied to a variety of topics in macroeconomic research. Among others, these include the family of ‘Complex Adaptive Trivial Systems’ (CATS) models (Delli Gatti et al. 2011; Assenza et al. 2015), the various incarnations of the Eurace model (Cincotti et al. 2010; Dawid et al. 2012), and the Keynes+Schumpeter (K+S) model (Dosi et al. 2010). The basic goal of all these frameworks is to provide an alternative way to ‘micro-found’ macroeconomic models, rooted in the complex adaptive systems paradigm and emphasising micro-micro and micro-macro interactions, adaptation and emergent properties. Agent-based approaches, including those applied to macroeconomics, have also attracted increasing interest among policy-makers (see e.g. Turrell 2016; Haldane and Turrell 2018, 2019). Dawid and Delli Gatti (2018) provide a comprehensive review of agent-based macroeconomics and compare the major frameworks in detail.

A strand of the literature that has by now become closely related is that of stock-flow consistent (SFC) models, which emerged from the post-Keynesian tradition in macroeconomic research (see Godley and Lavoie (2007), who develop the approach, as well as Caverzasi and Godin (2015) and Nikiforos and Zezza (2017) for surveys). Stock-flow consistent models are aggregative (i.e. not ‘micro-founded’), depicting dynamics at the sectoral level, and aim in particular at jointly modelling national accounts variables and flow-of-funds variables within a fully consistent accounting framework. This approach provides an important disciplining device and consistency check when building large-scale computational models and is essential for comprehensive depictions of real-financial interactions. While there now exists a range of large-scale pure SFC models, including one developed in a central bank (Burgess et al. 2016), there is also a growing literature which combines stock-flow consistent frameworks with agent-based modelling in various ways (Dawid et al. 2012; Michell 2014; Seppecher 2016). Among these, Caiani et al. (2016) stand out in their emphasis on the SFC structure of their model and its creative use in initialisation and calibration.

The present paper follows the trend of combining agent-based and SFC modelling techniques and in particular represents a contribution to the development of hybrid agent-based models in which certain parts or sectors of the economy are modelled in an aggregate/structural way or using representative agents whilst others (typically one sector) are disaggregated and modelled using AB methods. Examples of this include Assenza et al. (2007) and Assenza and Delli Gatti (2013) who apply this approach, using heterogeneous firms, to the Greenwald-Stiglitz financial accelerator model (Greenwald and Stiglitz 1993). Michell (2014) uses an agent-based firm sector within an otherwise aggregate SFC framework to model the ideas of Steindl (1952) regarding monopolisation and stagnation along with Minsky’s (1986) trichotomy of hedge, speculative and Ponzi finance. Pedrosa and Lang (2018) construct a more complex model than that of Michell (2014) to investigate similar issues. Botta et al. (2019) focus on heterogeneity among households to investigate inequality dynamics in a financialised economy. The advantage of such a hybrid approach is that important insights arising from agent heterogeneity can be gained from a hybrid model without the necessity of constructing a fully agent-based framework, instead focussing only on a subset of sectors. While, as indicated above, there exist several canonical macroeconomic agent-based modelling frameworks, this is not the case for pure SFC models and the class of hybrid models described in this paragraph. Rather, these models are typically purpose-built for a given research question.Footnote 1 The present paper follows this approach, presenting a model purpose-built to discuss banks’ expectations formation under bounded rationality and to conduct policy experiments with a particular focus on monetary policy and prudential regulation. 
The emphasis is hence on introducing heterogeneity only within the banking sector; an approach which to my knowledge is novel to the literature.Footnote 2 The hybrid approach allows me to pay particularly close attention to the banking sector which is modelled in great detail in order to investigate the effect of banks’ behaviour and particularly their expectations formation on macroeconomic dynamics. This stands in contrast to many existing non-mainstream macroeconomic models, particularly those with a post-Keynesian flavour, in which banks are frequently modelled as relatively passive entities.

The issues of bounded rationality, learning and expectations formation are relatively long-standing components of the macroeconomic literature. Bounded rationality is a broad concept, with contributions ranging from works such as that of Sargent (1993) which arguably involves only minimal departures from full rationality, via the heuristics and biases approach of (new) Behavioural Economics (Kahneman and Tversky 2000) to the ‘procedural/ecological’ rationality concepts of Simon (1982) and Gigerenzer (2008) which aim to replace the traditional concept of perfect rationality altogether.Footnote 3 Any departure from full rationality in the traditional sense raises several thorny issues, especially how economic agents are envisioned to form expectations in the absence of full rationality. Several ways of tackling this problem have been proposed. Evans and Honkapohja (2001) develop the so-called e-learning approach whereby one can derive conditions under which agents, through attempting to estimate model parameters, may be able to ‘learn’ the rational expectations equilibrium of a model even in the absence of full rationality and perfect information. Hommes (2013) is a book-length treatment of the idea, stemming from the seminal contribution of Brock and Hommes (1997), that agents may switch between a number of different forecasting strategies based on their relative performance, and possibly the cost of acquiring the necessary information. Arifovic (2000) discusses the use of evolutionary learning algorithms in various macroeconomic settings.
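To make the heuristic-switching idea concrete, the following minimal Python sketch implements the logit (discrete-choice) switching rule commonly associated with Brock and Hommes (1997). The share of agents using each forecasting strategy is proportional to the exponential of that strategy's recent performance, scaled by an ‘intensity of choice’ β. The fitness measure (minus the latest squared forecast error plus geometrically discounted past fitness) is one common specification. The names `switching_shares` and `update_fitness` are illustrative and are not taken from any particular implementation.

```python
import math


def update_fitness(past_fitness, forecast, realised, memory):
    """Fitness = minus the latest squared forecast error plus discounted past fitness."""
    return -(realised - forecast) ** 2 + memory * past_fitness


def switching_shares(fitness, beta):
    """Logit shares of agents using each forecasting strategy.

    beta = 0 gives equal shares; a large beta concentrates agents on the best rule.
    """
    best = max(fitness)  # subtracting the maximum avoids overflow; shares are unchanged
    weights = [math.exp(beta * (u - best)) for u in fitness]
    total = sum(weights)
    return [w / total for w in weights]


# Example: a naive and an adaptive forecaster after one period of realised inflation
realised = 0.02
fit_naive = update_fitness(0.0, forecast=0.01, realised=realised, memory=0.7)
fit_adaptive = update_fitness(0.0, forecast=0.018, realised=realised, memory=0.7)
print(switching_shares([fit_naive, fit_adaptive], beta=1e4))
```

With β = 0 agents ignore performance and split evenly across strategies. As β grows, the better-performing rule attracts a rising share of agents.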

While expectations formation, including under bounded rationality, is thus widely discussed in the literature, such considerations have had relatively little impact on AB and SFC models, in which simple adaptive or naive expectations are often assumed without much discussion. Thus, for instance, Dosi et al. (2017) appears so far to be the only paper explicitly applying structural heterogeneity and OLS learning of expectations in a macroeconomic ABM. Furthermore, while in most ABMs agents are by necessity boundedly rational and endowed with imperfect information, specific modelling choices with regard to how agents form expectations are seldom discussed in detail, meaning that there is much room to contribute to the existing literature. Generally, there appears so far to have been little systematic investigation of the consequences of variations in the behavioural assumptions commonly contained in AB-SFC models. In this context, it should however be noted that the modelling of learning, which is of course closely related to expectations formation and the adaptation of behavioural rules, has played a much more prominent role in the macro-ABM literature (see e.g. Dawid et al. 2012; Salle et al. 2012; Landini et al. 2014; Seppecher et al. 2019), with various forms of genetic algorithms (Dawid 1999) being a popular choice to depict the adaptation of agents to a changing economic environment. The present paper contributes to the literature along similar lines to Dosi et al. (2017), focussing on the consequences of varying assumptions about agents’ expectations formation. Despite not being based on any pre-existing framework, the model presented here incorporates a range of behavioural assumptions which are common in the AB and/or SFC literature, and hence the simulation results discussed below should be of some general interest to researchers in the area.

Recent years have seen major advances in the development of AB(-SFC) modelling as a viable alternative paradigm in macroeconomic analysis. Chief among these has been the work carried out on empirical estimation/calibration and validation of macro-ABMs, moving away from the rather informal validation protocols which had been standard in the earlier literature (Windrum et al. 2007). Grazzini and Richiardi (2015) discuss the use of simulated minimum distance estimators as developed e.g. by Gilli and Winker (2003), and Grazzini et al. (2017) suggest the application of Bayesian methods for the estimation of macroeconomic ABMs. Lamperti et al. (2018) show how machine learning surrogates can be used to empirically calibrate macro-ABMs in a computationally economical manner. Barde and van der Hoog (2017) present a detailed validation protocol for large-scale ABMs using the Markov Information Criterion and stochastic kriging to interpolate the response of the model to parameter changes. Guerini and Moneta (2017) suggest a validation method based on comparing structural vector autoregressive models estimated on both empirical and simulated data. These contributions represent a major step in increasing the credibility and empirical orientation of macro-ABMs, as one particular weakness of this approach has always been the large number of parameters contained in any reasonably detailed model. While a range of different empirical estimation/calibration methods for macroeconomic ABMs has hence recently become available, their application to relatively complex models, especially newly developed ones, is still not standard in the literature. By applying a simulated minimum distance approach, in particular the method of simulated moments, to the model developed here, this paper contributes to the increasing empirical orientation of the macro-ABM literature. 
In addition, the paper represents, to my knowledge, the first attempt to apply an empirical calibration algorithm to a hybrid AB-SFC model containing only one agent-based sector, meaning that it should also be of some interest to researchers working on pure SFC models, the empirical grounding of which is also somewhat underdeveloped.
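The method of simulated moments chooses parameters θ to minimise a weighted quadratic distance between statistics computed on empirical and on simulated data, \(J(\theta) = (m^{sim}(\theta) - m^{emp})^{\top} W (m^{sim}(\theta) - m^{emp})\). The Python sketch below illustrates the idea on a toy AR(1) process rather than on the model of this paper. Several choices are assumptions made purely for illustration: the moments (standard deviation and first-order autocorrelation), the identity weighting matrix, the fixed seeds (common random numbers across θ) and the grid search.

```python
import numpy as np


def moments(series):
    """Target statistics for one series: standard deviation and lag-1 autocorrelation."""
    x = np.asarray(series, dtype=float)
    x = x - x.mean()
    ac1 = np.dot(x[1:], x[:-1]) / np.dot(x, x)
    return np.array([x.std(), ac1])


def simulate_ar1(theta, seed, n=500):
    """Toy stand-in for a model simulation: x_t = theta * x_{t-1} + e_t."""
    rng = np.random.default_rng(seed)
    shocks = rng.standard_normal(n)
    x = np.zeros(n)
    for t in range(1, n):
        x[t] = theta * x[t - 1] + shocks[t]
    return x


def msm_objective(theta, emp_moments, weight, seeds):
    # average simulated moments over several seeds to reduce simulation noise
    sim = np.mean([moments(simulate_ar1(theta, s)) for s in seeds], axis=0)
    g = sim - emp_moments
    return float(g @ weight @ g)


emp = moments(simulate_ar1(0.5, seed=999, n=5000))  # pseudo-"empirical" data
grid = np.linspace(0.0, 0.9, 10)
seeds = range(5)
losses = [msm_objective(th, emp, np.eye(2), seeds) for th in grid]
best_theta = grid[int(np.argmin(losses))]
```

In applications the grid search is usually replaced by a numerical optimiser, and W by an estimate of the inverse covariance matrix of the empirical moments.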

The chosen focus for this paper, as already mentioned, lies on the expectations formation of the banking sector. On the policy front, the detailed modelling of the banking sector also provides an opportunity to contribute to recent debates surrounding the appropriate conduct of prudential regulation policy and its possible interactions with monetary policy (Galati and Moessner 2012; Barwell 2013; Claessens et al. 2013; Freixas et al. 2015). Prudential policy has begun to gain importance in the ABM literature, with several of the major frameworks being used to conduct policy experiments in financial regulation (e.g. van der Hoog 2015a, 2015b; Popoyan et al. 2017; Salle and Seppecher 2018; Krug 2018). By contrast, there have been relatively few treatments of this topic in pure aggregative SFC models (exceptions include Nikolaidi 2015; Detzer 2016 and Burgess et al. 2016). The model presented here is purposely constructed so as to incorporate a rich structure of prudential policy levers and potential feedback effects of monetary and prudential policy, which are detailed in the model description below.

3 Model outline

The current section provides an overview of the model and its behavioural assumptions, beginning with its general sectoral structure.

3.1 General structure

The macroeconomic sectoral structure of the model is summarised in Fig. 1. The more traditional balance sheet and transactions-flow matrices representing the aggregate structure of the model (i.e. excluding transactions occurring within the banking sector) are shown in Tables 11 and 12 in online Appendix A.

As can be seen, the model consists of 5 sectors, namely households, firms, the government, the central bank and the banks. The first four sectors are modelled as aggregates without explicit micro-foundations whilst the banks are disaggregated. In particular, the model contains an oligopolistic banking sector consisting of 12 individual banks which are structurally identical (i.e. they all hold the same types of assets and liabilities) but may differ w.r.t. their decision-making and the precise composition and size of their balance sheets. The following sub-sections provide a sector-by-sector overview of the behavioural assumptions. The basic tick-length in simulations of this model is one week (with one year being composed of 48 weeks), and it is assumed that while all endogenous variables are computed on a weekly basis, main decision variables adjust to their target or desired values at differing speeds in an adaptive fashion, as will be detailed below.

3.2 Households

Every week, households compute a plan for desired consumption according to the Haig-Simons consumption function

where α1 is the propensity to consume out of weekly disposable income, yde is expected real household disposable income for the week, α2 is the annual propensity to consume out of accumulated wealth and vh is real household wealth. The expectations formation mechanism for all expected values in the model is discussed in Section 3.7. This consumption rule is standard in the SFC literature, resembles many of the canonical ABM rules described by Dawid and Delli Gatti (2018), and is also used in the benchmark AB-SFC model of Caiani et al. (2016). Its motivation is that if disposable income is defined consistently with the Haig-Simons definition of income, the rule implicitly defines a target steady/stationary-state ratio of household wealth to disposable income towards which households adjust over time.Footnote 4 One departure from the usual assumption of constant consumption propensities (α1 and α2) is that here they depend on the (expected) real rate of return on households’ assets (government bonds, deposits and houses),Footnote 5 \(rr_{h}^{e}\), according to a logistic function, so that the target ratio of wealth to disposable income (and hence households’ saving) also becomes a function of this return rate. α1 is determined by

where \({\alpha _{1}^{L}}\) and \({\alpha _{1}^{U}}\) are the lower and upper bounds of the consumption propensity and the σ’s are parameters. The same functional form also determines α2. Household consumption demand hence becomes a decreasing function of interest rates in the model (in particular government bond and deposit rates). This introduces a feedback effect of monetary policy (as well as banks’ interest rate setting behaviour) on economic activity which is a basic building block of the New Keynesian framework (Gali 2015) but which is largely absent from the AB and SFC literatures. While the strong link between interest rates and consumption implied by the standard New Keynesian model may be viewed as unrealistic, it does appear reasonable to suppose that the return rate households can expect on their savings should have some effect on their consumption demand. Both desired consumption and the ‘desired’ consumption propensities are computed every period, but it is assumed, using an adaptive mechanism, that consumption adjusts more quickly towards the desired level than do the consumption propensities. The idea is the following: Equation 1 is interpreted as giving an aggregate level of desired consumption of all households represented by the modelled aggregate household sector. At the same time, I assume that households on average update their consumption every quarter, i.e. every 12 periods. Accordingly, I assume that every period (week), 1/12 of the gap between actual and desired consumption (which may of course itself change from period to period) is closed. The same mechanism is applied to the consumption propensities, but here I assume an updating frequency of 24 periods. This mechanism enables me to mimic asynchronous adaptive decision-making at differing frequencies even in the case of sectors modelled as aggregates, whilst sticking to the basic model-time unit of one week. 
Importantly, this imparts a degree of real stickiness to the model which is central in enabling the model to generate realistic macroeconomic dynamics. The adjustment mechanism is applied throughout the model to decision variables pertaining to the aggregate sectors, which adapt according to a process of the form

\[ x_{t} = x_{t-1} + \frac{1}{\text{horizon}}\left(x_{t}^{*} - x_{t-1}\right) \qquad (3) \]

where x is the decision variable in question, x* is its current desired or target value, and horizon is the adjustment horizon in weeks, which takes the value 4, 12, 24 or 48 depending on the variable (a longer horizon implies slower adjustment). Beyond the determination of consumption and the consumption propensities, the mechanism is also applied to the determination of demand on the housing market, households’ portfolio decisions, wage-setting, firms’ pricing and investment decisions, as well as their leverage adjustment and dividend payouts described below. Where appropriate, adaptive expectations formation as described in Section 3.7 is also specified so as to match the stickiness of the variable to be predicted.
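The following Python sketch puts the household consumption block together. It has three parts: a consumption propensity that falls logistically as the expected real return rises, a desired consumption level, and the partial-adjustment rule under which a fraction 1/horizon of the gap to the desired value is closed each week. The functional forms are assumptions rather than the paper's exact Eqs. 1 and 2. In particular, the logistic parameterisation (a slope `sigma_slope` and a midpoint `sigma_mid`) is assumed, and so is the conversion of the annual wealth propensity to a weekly rate by dividing by 48. All numerical values are hypothetical.

```python
import math

WEEKS_PER_YEAR = 48  # one model year = 48 weekly ticks


def logistic_propensity(rr_e, lower, upper, sigma_slope, sigma_mid):
    """Propensity falling smoothly from `upper` to `lower` as the expected real
    return rr_e rises (assumed parameterisation of the sigmas)."""
    return lower + (upper - lower) / (1.0 + math.exp(sigma_slope * (rr_e - sigma_mid)))


def desired_consumption(alpha1, alpha2, yd_e, v_h):
    # alpha2 is an annual propensity, scaled here to a weekly rate (assumption)
    return alpha1 * yd_e + alpha2 / WEEKS_PER_YEAR * v_h


def adjust(x_prev, x_target, horizon):
    """Close 1/horizon of the gap between the current and the desired value."""
    return x_prev + (x_target - x_prev) / horizon


c, a1 = 90.0, 0.8  # current consumption and propensity (hypothetical starting values)
for week in range(48):
    a1_star = logistic_propensity(0.02, lower=0.6, upper=0.95, sigma_slope=50.0, sigma_mid=0.01)
    a1 = adjust(a1, a1_star, horizon=24)  # propensities adjust over half a year
    c_star = desired_consumption(a1, alpha2=0.2, yd_e=100.0, v_h=500.0)
    c = adjust(c, c_star, horizon=12)  # consumption adjusts over a quarter
```

Because consumption uses a 12-week horizon and the propensities use a 24-week horizon, consumption tracks its moving target faster than the propensities that determine that target.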

Households are assumed to privately own the firms and banks in the model, i.e. there are no traded firm or bank shares. Consequently, in allocating their financial savings to different financial assets, households have a choice between bank deposits and government bonds. Households’ demand for government bonds is given by a Brainard-Tobin portfolio equation (Brainard and Tobin 1968; Kemp-Benedict and Godin 2017), which is standard in the SFC literature (though not in ABMs). In this case, deposits act as the buffer stock absorbing shocks and errors in expectations, hence the share of financial wealth held as deposits by households is a residual. It is assumed that households revise their portfolio decisions at a frequency of 12 periods (quarterly).
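Here is a minimal Python sketch of the portfolio block. It assumes a linear Brainard-Tobin share equation in which the desired bond share rises with the bond rate and falls with the deposit rate. The coefficients `lam0`, `lam1` and `lam2` are illustrative assumptions, as are the clipping of the share to [0, 1] and the quarterly (12-week) partial adjustment of bond holdings. What it shows is that deposits are not chosen directly: they absorb whatever part of financial wealth is not held in bonds.

```python
def desired_bond_share(r_b, r_d, lam0, lam1, lam2):
    """Linear Brainard-Tobin share: positive own-rate effect, negative cross-rate effect."""
    share = lam0 + lam1 * r_b - lam2 * r_d
    return min(max(share, 0.0), 1.0)  # keep the share between 0 and 1 (assumption)


def portfolio(fin_wealth, bonds_prev, r_b, r_d, lam, horizon=12):
    """Return (bond demand, deposits); deposits are the residual buffer stock."""
    target_bonds = desired_bond_share(r_b, r_d, *lam) * fin_wealth
    bond_demand = bonds_prev + (target_bonds - bonds_prev) / horizon  # quarterly revision
    deposits = fin_wealth - bond_demand
    return bond_demand, deposits


bond_demand, deposits = portfolio(1000.0, 350.0, r_b=0.03, r_d=0.01, lam=(0.3, 5.0, 5.0))
```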

The final two important behavioural assumptions regarding households are their demand for housing, and the dynamics of the wage rate. Households form a ‘notional’ demand for houses according to

where LTV is the maximum loan-to-value ratio (which is constant in the baseline but represents a possible prudential policy lever due to its effect on notional housing demand), \(V_{h}^{*}\) is the current target level of nominal household wealth derived from Eq. 1, and \(\overline {r_{M, r}^{e}}\) is the expected average real interest rate charged on mortgages, with \(\overline {r_{M, r}}^{n}\) being its ‘normal’ or conventional value, set equal to the value in the initial stationary state. There is hence no ‘direct’ speculative element in housing demand (in the sense that, for instance, (expected) house prices do not enter the function directly), although appreciation of the housing stock obviously has a positive impact on Vh. Notional housing demand is updated according to Eq. 3 with a horizon of one quarter. Based on notional housing demand, households formulate a demand for mortgages using the LTV ratio (it is assumed that households always borrow at the maximum permissible LTV). This demand for mortgages, which may or may not be fully satisfied depending on banks’ credit rationing behaviour, in turn gives rise to an ‘effective’ demand for houses equal to

where Ms is the total amount of mortgages supplied by banks in the current period. Banks’ behaviour (as well as prudential policy, which, as described below, has an impact on rationing behaviour) hence affects the housing market both through mortgage rates and through the extent to which mortgage demand is rationed. The supply of houses is determined by the assumption that in each period a constant fraction η of a constant total stock of houses in the model is up for sale. The price of houses is then determined by market clearing.
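The housing-market sequence can be sketched in Python as follows: notional demand, mortgage demand at the maximum LTV, possible rationing, effective demand and market clearing. The exact effective-demand equation does not appear in this text. The rule that rationed mortgages scale down purchases at an unchanged LTV (effective demand = granted mortgages / LTV) is therefore a hypothetical stand-in, and all numbers are illustrative. The market-clearing price divides nominal effective demand by the number of houses offered for sale, a fraction η of the fixed stock.

```python
def housing_market(notional_demand, ltv, mortgage_supply, eta, housing_stock):
    """Return (mortgage demand, mortgages granted, effective demand, house price)."""
    mortgage_demand = ltv * notional_demand  # households always borrow at the maximum LTV
    granted = min(mortgage_supply, mortgage_demand)
    # Hypothetical rule: if rationed, purchases shrink so that the LTV stays at its maximum
    effective_demand = granted / ltv if granted < mortgage_demand else notional_demand
    houses_for_sale = eta * housing_stock  # constant fraction of a constant stock
    price = effective_demand / houses_for_sale  # market clearing
    return mortgage_demand, granted, effective_demand, price


# Banks supply only 600 of the 720 in mortgages demanded
print(housing_market(notional_demand=800.0, ltv=0.9, mortgage_supply=600.0,
                     eta=0.01, housing_stock=1000.0))
```

Under this rule, a tighter LTV cap lowers house prices through two channels: lower notional demand, and a lower ratio of purchases to granted mortgages if rationing binds.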

Regarding wages, it is assumed that the (desired) nominal wage rate is determined by a Phillips-curve-type equation of the form

which is supposed to mimic the aggregate outcome of a wage-bargaining process. \({u^{e}_{h}}\) is the households’ expected rate of industrial capacity utilisation, \({\pi _{h}^{e}}\) is their (semi-annualised) expected rate of inflation whilst un is an exogenous ‘normal’ rate of capacity utilisation. The wage rate is anchored around Wn, its level in the stationary state, which appears reasonable since there is no long-run growth in the model and factor productivities are fixed. The actual wage rate adjusts to the desired one with a time-horizon of 24 periods which appears roughly consistent with available evidence regarding the duration of wage-spells.
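As an illustration only, the Python sketch below assumes a linear Phillips-curve form anchored at Wn. Under this form the desired wage rises with the gap between expected and normal capacity utilisation and with (semi-annualised) expected inflation. The coefficients `omega_u` and `omega_pi` are hypothetical, since the exact wage equation is not given in this text. The actual wage then closes 1/24 of the gap to the desired wage each week.

```python
def desired_wage(w_n, u_e, u_n, pi_e, omega_u, omega_pi):
    """Hypothetical linear wage curve anchored at the stationary-state wage w_n."""
    return w_n * (1.0 + omega_u * (u_e - u_n) + omega_pi * pi_e)


def update_wage(wage, target, horizon=24):
    """Close 1/horizon of the gap between the actual and the desired wage."""
    return wage + (target - wage) / horizon


wage = 1.0
for week in range(24):
    # a boom: expected utilisation 5 points above normal, 2% expected inflation
    target = desired_wage(1.0, u_e=0.85, u_n=0.80, pi_e=0.02, omega_u=0.5, omega_pi=1.0)
    wage = update_wage(wage, target)
```

At normal utilisation and zero expected inflation the desired wage equals Wn, which keeps the wage anchored in a model without long-run growth.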

3.3 Firms

Firms produce a homogeneous good, used both for consumption and investment, according to a Leontief production function whose coefficients are fixed throughout. The good is demanded by households, the government and firms themselves (for capital investment), and it is assumed that demand is in general satisfied instantaneously. However, the Leontief production function implies a maximum level of output that can be produced with the existing capital stock at full capacity utilisation (a utilisation rate of 1). Accordingly, it is assumed that if total demand exceeds capacity, consumption demand is rationed. Unless aggregate demand exceeds firms’ productive capacity \(\overline {y}\), output is hence demand-determined, i.e.

The production function together with actual production implies a demand for labour which is assumed to always be fully satisfied by households at the going wage rate. Firms set the price for their output according to a fixed mark-up over the sum of unit labour cost and ‘unit interest cost’, defined here as a one-year moving average of firms’ net interest payments over output.Footnote 6 The price level adjusts with a horizon of 24 periods.
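The output and pricing rules just described can be sketched in Python. Output is the minimum of aggregate demand and capacity, and consumption absorbs any excess demand. The target price is a fixed mark-up on unit labour cost plus unit interest cost, and the actual price closes 1/24 of the gap to it each week. Reading ‘unit interest cost’ as the 48-week moving average of the weekly ratio of net interest payments to output is one possible interpretation of the definition above. The class and parameter names are hypothetical.

```python
from collections import deque


def output_and_consumption(c_d, g_d, i_d, capacity):
    """Demand-determined output; consumption is rationed if demand exceeds capacity."""
    if c_d + g_d + i_d <= capacity:
        return c_d + g_d + i_d, c_d
    if g_d + i_d > capacity:
        raise ValueError("sketch assumes government and investment demand fit within capacity")
    return capacity, capacity - g_d - i_d


class MarkupPricer:
    def __init__(self, markup, price, window=48, horizon=24):
        self.markup = markup
        self.price = price
        self.horizon = horizon
        self.interest_ratios = deque(maxlen=window)  # last year of weekly interest/output

    def update(self, wage, labour_productivity, net_interest, output):
        self.interest_ratios.append(net_interest / output)
        unit_interest_cost = sum(self.interest_ratios) / len(self.interest_ratios)
        unit_labour_cost = wage / labour_productivity
        target = (1.0 + self.markup) * (unit_labour_cost + unit_interest_cost)
        self.price += (target - self.price) / self.horizon  # 24-week adjustment
        return self.price


y, c = output_and_consumption(c_d=70.0, g_d=20.0, i_d=15.0, capacity=100.0)  # c rationed to 65
pricer = MarkupPricer(markup=0.25, price=2.5)
p = pricer.update(wage=4.0, labour_productivity=2.0, net_interest=10.0, output=y)
```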

To determine the demand for investment goods, firms compute a desired growth rate of the capital stock according to

where \(\overline {r_{L, r}^{e}}\) is the expected average real interest rate charged on bank loans (with \(\overline {r_{L, r}}^{n}\) being once more a ‘normal’ or conventional level given by the value in the initial stationary state) and \({u^{e}_{f}}\) is firms’ expectation of the future rate of capacity utilisation. This formulation for the investment function is similar to the one adopted by Caiani et al. (2016). More generally, the formulation of investment demand as a function of capacity utilisation, implying that firms invest more in periods of high utilisation, targeting a ‘normal’ level of utilisation, is standard in the SFC literature and also quite common in ABMs (Dawid and Delli Gatti 2018). The incorporation of the interest rate on loans introduces an additional feedback effect of monetary policy (as well as the behaviour of banks) on aggregate demand. The desired growth rate of capital is assumed to adapt at a frequency of one quarter. Together with the depreciation of capital (which takes place at a fixed rate) the desired growth rate of capital implies a demand for investment goods. Despite the presence of a capital stock and investment, the model does not feature long-run growth but rather aims to depict business cycle fluctuations around a stationary state.
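Here is a Python sketch of the investment block. It assumes a linear form for the desired growth rate of capital, rising with the gap between expected and normal utilisation and falling with the gap between the expected and the ‘normal’ real loan rate. The coefficients `gamma_u` and `gamma_r` are hypothetical, and all rates are taken to be per week. Investment demand is then desired growth plus depreciation, multiplied by the existing capital stock. At normal utilisation and a normal loan rate, firms therefore only replace depreciated capital, consistent with the absence of long-run growth.

```python
def target_capital_growth(u_e, u_n, r_e, r_n, gamma_u, gamma_r):
    """Hypothetical linear rule: zero growth at normal utilisation and normal loan rate."""
    return gamma_u * (u_e - u_n) - gamma_r * (r_e - r_n)


def investment_demand(g_k, delta, capital):
    """Gross investment = (desired net growth rate + depreciation rate) * capital stock."""
    return (g_k + delta) * capital


g_k = 0.0
for week in range(12):
    g_star = target_capital_growth(u_e=0.82, u_n=0.80, r_e=0.001, r_n=0.001,
                                   gamma_u=0.01, gamma_r=0.5)
    g_k += (g_star - g_k) / 12  # desired growth adapts over one quarter
invest = investment_demand(g_k, delta=0.002, capital=1000.0)
```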

It is assumed that firms possess a fixed target for their leverage ratio (defined as loans over capital stock). Based on firms’ existing stocks of loans and capital, current loan repayments and depreciation as well as their current investment plans, one can derive a gap between current and target leverage and firms attempt to slowly close this gap by using appropriate combinations of loans and flows of current net revenue in financing their investment (indeed, if leverage is below target, firms may also take out new loans exceeding current investment in order to increase leverage). The actual combination of internal and external finance may differ from the planned one due to possible rationing of loan demand which is described below. If firms are unable to obtain the amount of loans for which they apply due to credit rationing, investment expenditure is curtailed accordingly. Deposits with the banking sector act as the buffer stock of the firm sector, just as they do for households, absorbing unexpected fluctuations in revenues and expenditures. Firms’ dividend payouts are determined by firms’ profit after deducting net revenues used to internally finance investment, adapting with a frequency of 24 periods.
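The leverage-targeting and rationing logic can be sketched in Python as below. Planned borrowing is split into two parts: the new loans needed to keep leverage at its current level, plus a fraction 1/horizon of the gap to target leverage. This split is one hypothetical way of ‘slowly closing’ the gap, and the 12-week default horizon is an assumption. Internal finance is whatever part of planned investment is not loan-financed; a negative value means loans exceed investment, raising leverage. Any rationed part of loan demand reduces investment one-for-one.

```python
def financing_plan(loans, capital, repayments, depreciation, investment,
                   target_leverage, horizon=12):
    """Return (loan demand, planned internal finance) under a hypothetical gap-closing rule."""
    capital_next = capital - depreciation + investment
    leverage = loans / capital  # leverage = loans over capital stock
    keep_leverage = leverage * capital_next - (loans - repayments)
    close_gap = (target_leverage - leverage) * capital_next / horizon
    loan_demand = max(keep_leverage + close_gap, 0.0)
    internal_finance = investment - loan_demand  # negative: surplus borrowing held as deposits
    return loan_demand, internal_finance


def realised_investment(planned_investment, loan_demand, loans_granted):
    """Credit rationing curtails investment by the unfunded amount."""
    shortfall = max(loan_demand - loans_granted, 0.0)
    return max(planned_investment - shortfall, 0.0)


# Leverage of 0.4 is below the 0.5 target, so firms borrow more than they invest
demand, internal = financing_plan(400.0, 1000.0, 10.0, 10.0, 12.0, target_leverage=0.5)
inv = realised_investment(12.0, demand, loans_granted=0.8 * demand)
```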

3.4 Government

The government collects taxes on a one-year moving average of household income (wages, interest and profits/dividends accruing to households) at a fixed rate τ. In addition it may levy a tax on firms’ retained earnings if it suffers persistent deficits. In the baseline model, the real value of government spending is assumed to be fixed. Deficits are covered as they occur by the issuance of government bonds of an amount corresponding to the deficit in the current period, while in the case of a surplus, repayments are made to households and the central bank proportionally to their respective holdings of bonds. Government bonds hence in principle have an infinite maturity (i.e. the government does not have to repay or roll over particular bonds at a specific time), but can be repaid when a surplus allows the government to do so. These assumptions are made so as to keep the government bond market relatively simple. A more elaborate maturity and issuance structure of bonds would greatly complicate the model without, in my view, adding much insight on the main objectives of the paper, which are to investigate the role of expectations formation among the heterogeneous banks and the impacts of monetary and prudential policy. For similar reasons, banks do not participate in the government bond market as the focus lies on modelling banks’ lending behaviour to the private sector, the associated competition and balance sheet dynamics, as well as the expectations banks have to form in this context. The balance sheet structure assumed for banks in the model is sufficient to allow for quite rich asset and liability management behaviour, as outlined in the description of bank behaviour in Section 3.6.
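The fiscal rules above can be sketched in Python as a small class. Taxes are levied at rate τ on a 48-week moving average of household income, and real spending is fixed. A deficit is financed by issuing bonds equal to the current-period deficit, and a surplus is used to repay bondholders in proportion to their holdings. For brevity the sketch treats interest payments on bonds as the only other outlay. It omits the contingent tax on firms’ retained earnings and central bank profit transfers. The names and the dictionary of holders are illustrative.

```python
from collections import deque


class Government:
    def __init__(self, tax_rate, real_spending, window=48):
        self.tax_rate = tax_rate
        self.real_spending = real_spending  # fixed in real terms in the baseline
        self.income_history = deque(maxlen=window)  # one year of weekly household income

    def step(self, household_income, price, interest_payments, bond_holdings):
        """Return (new bond issuance, repayments by holder) for the current week."""
        self.income_history.append(household_income)
        taxes = self.tax_rate * sum(self.income_history) / len(self.income_history)
        deficit = price * self.real_spending + interest_payments - taxes
        if deficit >= 0.0:
            return deficit, {holder: 0.0 for holder in bond_holdings}
        total = sum(bond_holdings.values())
        repayment = min(-deficit, total)  # cannot repay more than is outstanding
        return 0.0, {holder: repayment * b / total if total > 0 else 0.0
                     for holder, b in bond_holdings.items()}


gov = Government(tax_rate=0.2, real_spending=10.0)
issuance, repay = gov.step(100.0, price=1.0, interest_payments=2.0,
                           bond_holdings={"households": 300.0, "central_bank": 100.0})
```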

New government bonds are first offered to households, and the government adjusts the interest rate on bonds in an attempt to clear the market: it sets the rate so that the updated stock of government bonds (following any issuance in the current period) equals the previous period’s household demand for bonds (which, as outlined above, emanates from a portfolio equation).

3.5 Central bank

The central bank sets a nominal deposit rate according to a Taylor-type pure inflation-targeting rule:

where \(\pi^{e}\) is the central bank’s expected inflation rate and \(\pi^{t}\) is its target, set equal to 0. This rate is reset once a month (i.e. every 4 periods) and remains constant in between. The central bank’s lending rate is given by a constant mark-up over its deposit rate, giving rise to a corridor system. In addition, I suppose that the central bank has in mind a target interbank rate in the middle of this corridor and continuously carries out open-market operations in order to steer central bank reserves to a level consistent with this target. It does so by purchasing government bonds from, or selling them to, households, which transfers reserves to (or withdraws them from) the banking sector and in turn increases (or decreases) households’ deposits by the corresponding amount (for simplicity, I assume that households are always willing to enter into such transactions). Given the sequence of events within a period, the central bank is in fact always able to hit the required level of reserves exactly, meaning that in practice banks are never ‘in the Bank’ to acquire advances and, similarly, there is never an aggregate excess of reserves, since any excess is drained by the central bank.
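A generic Taylor-type rule of this kind, together with the monthly reset and the corridor described above, might be sketched as follows. The linear form, the neutral rate, the reaction coefficient `phi` and the additive corridor width are assumptions for illustration, not the calibrated specification of the model.

```python
def policy_rates(t, prev_deposit_rate, expected_inflation, neutral_rate=0.01,
                 phi=1.5, inflation_target=0.0, corridor_width=0.005,
                 reset_every=4):
    """Hypothetical pure inflation-targeting rule with an interest rate corridor.

    The deposit rate is reset only every `reset_every` periods (one month of
    4 weekly periods) and held constant otherwise. All parameter values and
    the linear functional form are illustrative assumptions.
    """
    if t % reset_every == 0:
        deposit_rate = neutral_rate + phi * (expected_inflation - inflation_target)
    else:
        deposit_rate = prev_deposit_rate
    lending_rate = deposit_rate + corridor_width              # constant mark-up
    target_interbank = 0.5 * (deposit_rate + lending_rate)    # corridor midpoint
    return deposit_rate, lending_rate, target_interbank


print(policy_rates(t=8, prev_deposit_rate=0.02, expected_inflation=0.01))
```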

If necessary, the central bank also acts as a lender of last resort to the government by purchasing residuals of newly issued bonds. All central bank profits are transferred to the government (and all losses are reimbursed by the government). In addition, the central bank is the prudential policy-maker in the model. At present, the model includes three prudential policy ratios, namely the capital adequacy ratio, the liquidity coverage ratio and a maximum loan-to-value ratio on mortgages, all applying to banks. In the baseline, the targets for all these regulatory ratios are assumed constant.

The capital adequacy ratio of a bank i is given by

\[ CAR_i = \frac{vbb_i}{\omega_M M_i + \omega_L L_i} \]

where M are mortgages, L are loans to firms and vbb is the bank’s capital buffer, equal to vb + eb, i.e. the bank’s net worth plus the fixed amount of bank equity held by households. The ω’s are risk weights: loans to firms, assumed to be the riskier asset, receive a weight of 1 in the calculation of the capital adequacy ratio, while mortgages receive a weight below 1. These risk weights are used below to determine the extent to which different sources of credit demand are rationed, and the default probabilities on loans and mortgages (described below) are set in line with them. The liquidity coverage ratio is in essence a minimum reserve requirement on deposits, defined in line with the Basel III framework (Basel Committee on Banking Supervision 2010, 2013).

3.6 Banks

The agent-based banks possess the richest behavioural structure of all the sectors in the model. Each bank must set three interest rates: the rate on deposits, on loans to firms, and on mortgages. Each period, a random sample of banks is drawn (such that on average each bank is drawn once every 4 periods), and only these banks may adjust their interest rates in that period (meaning that on average each bank can adjust its interest rates once a month). The deposit rate offered by a bank i is given by

\(\overline {r_{cb}}\) is a one-quarter moving average of the midpoint of the central bank’s interest rate corridor and εd1 is a parameter < 0. \(cl_i\) is an indicator for bank i’s clearing position (i.e. the difference between all transactions during a period that represent an inflow of reserves to bank i’s balance sheet and those that represent an outflow). This indicator is calculated as

whereby \(\overline {clear_{i}}\) is a one-quarter moving average of bank i’s clearing position. The intuition is that a bank which persistently finds itself with a negative clearing position (i.e. experiencing a constant drain of reserves) will raise its deposit rate in order to attract more deposits, and vice versa. Inflows of deposits are the cheapest source of funding for banks in the model; in particular, they are by construction cheaper than borrowing reserves on the interbank market or from the central bank. The hyperbolic tangent is chosen as the functional form so as to place upper and lower bounds on the value of \(cl_i\). The lending rates of bank i are given by

\[ r_M^i = \theta_M^i\left(\overline{r_{cb}} + default_M^i\right), \qquad r_L^i = \theta_L^i\left(\overline{r_{cb}} + default_L^i\right) \]

where the 𝜃’s are gross mark-ups (the mark-ups are > 1 and evolve endogenously as described below). default denotes the current default rate on bank i’s mortgages and loans respectively, which is added to \(\overline {r_{cb}}\) in an attempt by the bank to cover expected losses based on its current assessment of default rates. In addition to setting its interest rates, a bank can also ration credit directly. Each bank calculates the gap between its current risk-weighted assets and the maximum allowed given the target capital adequacy ratio and its expected capital buffer. If this gap is positive, meaning that risk-weighted assets are too high, the bank rations credit: in each period, it attempts to close \(\frac {1}{48}\) of the gap in risk-weighted assets by rationing both mortgage and loan demand according to their relative risk weights.Footnote 7 This way of modelling credit rationing is somewhat similar to the one used in the family of models building on Delli Gatti et al. (2011), such as Assenza et al. (2015, 2018). Since rationing can curtail both investment and housing demand (which in turn feeds back into household wealth and hence consumption), banks’ rationing behaviour, which is partly determined by their expectations about their own capital buffer, has an impact on aggregate demand.
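The lending-rate rule and the direct rationing step can be sketched as below. The rate formula follows the text (a gross mark-up applied to the central bank rate average plus the default rate); the way the required cut in risk-weighted assets is split between mortgages and loans is one assumed interpretation of "according to their relative risk weights", and the function names are hypothetical.

```python
def lending_rate(gross_markup, cb_rate_ma, default_rate):
    """Gross mark-up applied to the central-bank-rate average plus defaults."""
    return gross_markup * (cb_rate_ma + default_rate)


def rationing(mortgages, loans, w_m, w_l, expected_buffer, target_car,
              speed=1 / 48):
    """Return (mortgage demand rationed, loan demand rationed) this period.

    Risk-weighted assets above the maximum consistent with the target capital
    adequacy ratio are shed at rate `speed`. The cut is split so that nominal
    rationing is proportional to each asset's risk weight: an assumed
    reading of the text, not necessarily the model's exact rule.
    """
    rwa = w_m * mortgages + w_l * loans
    max_rwa = expected_buffer / target_car
    gap = rwa - max_rwa
    if gap <= 0:
        return 0.0, 0.0
    cut = speed * gap                      # risk-weighted assets to shed
    scale = cut / (w_m ** 2 + w_l ** 2)    # ensures w_m*x_m + w_l*x_l == cut
    return w_m * scale, w_l * scale


print(rationing(mortgages=100, loans=100, w_m=0.5, w_l=1.0,
                expected_buffer=10, target_car=0.1))
```

Under this split the riskier asset (firm loans) is rationed more heavily than mortgages, which matches the intent that rationing reflects relative risk.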

A central element in the modelling of the banking sector is that of the distribution of loan demand and deposits between the different banks. It is assumed that the aggregate amount of deposits of households and firms is distributed between the banks in each period according to

where D are deposits, \(\widehat {{r_{d}^{i}}}\) is bank i’s relative deposit rate and 𝜖d is an autocorrelated, normally distributed random shock centered on 1 with standard deviation σdis. The shares thus calculated are then normalised and multiplied by the total amount of deposits in order to determine the amount held by each individual bank. The share of mortgage demand received by each bank is calculated as follows:

Md is mortgage demand, \(\widehat {{r_{M}^{i}}}\) is the inverse of bank i’s relative rate on mortgages and \(\widehat {ratio{n_{M}^{i}}}\) is an indicator of the relative intensity of the rationing of mortgages by bank i. This is first calculated as

and then normalised so that \(\sum \widehat {ratio{n_{M}^{i}}}=1\). The equation implies that banks which rationed mortgage demand in the previous period will tend to lose market shares in the current period, with the logistic functional form bounding the value of \(\widehat {ratio{n_{M}^{i}}}\). Loan demand is distributed between the banks in the same way as mortgage demand. One potential problem with this formulation of deposit and loan distribution is that a bank which loses its entire market share has no way to re-enter the market. For this reason, a small lower bound is imposed on each bank’s market share, so that it can recapture market shares it has previously lost. In each period, a given fraction of the firm loans and mortgages held by each bank is repaid. Defaults evolve according to

and symmetrically for firm loans. ζM is a fixed parameter and \(\overline {lev_{h}}\) is a one-year moving average of the ratio of mortgages to the housing stock,Footnote 8 meaning that defaults tend to increase as households become more highly leveraged in the housing market. \(\epsilon _{M}^{def}\) is a random variable drawn from a logistic distribution which is not only autocorrelated but also cross-correlated across banks. Default shocks are therefore not completely idiosyncratic across banks but contain a ‘systemic’ element hitting all banks at the same time. To construct these shocks, I first generate a matrix of cross-correlated normal random variables and then transform them into draws from a logistic distribution whose location parameter is the relative interest rate of each bank and whose scale parameter, sdef, is calibrated empirically below. I assume that defaults on firm loans are on average more frequent than those on mortgages, and this is reflected in the risk weights of the two assets.
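The construction of the default shocks can be sketched as a copula-style transformation: correlated latent normals are mapped to uniforms with the normal CDF and then to logistic draws with the logistic quantile function \(loc + s\log\frac{u}{1-u}\). The one-factor correlation structure (standing in for a general correlation matrix), the AR(1) persistence and all parameter values are assumptions; the model's calibrated correlation structure and sdef are not reproduced here.

```python
import math
import random
from statistics import NormalDist


def default_shocks(prev_z, locations, scale, rho_common=0.5, rho_auto=0.8,
                   rng=random):
    """One period of autocorrelated, cross-correlated logistic default shocks.

    Hypothetical sketch: a single common factor gives every pair of banks
    latent correlation `rho_common`; latent normals follow a unit-variance
    AR(1) with persistence `rho_auto`.
    """
    to_uniform = NormalDist().cdf
    common = rng.gauss(0.0, 1.0)                 # systemic component
    new_z, shocks = [], []
    for z_prev, loc in zip(prev_z, locations):
        innov = (math.sqrt(rho_common) * common
                 + math.sqrt(1.0 - rho_common) * rng.gauss(0.0, 1.0))
        z = rho_auto * z_prev + math.sqrt(1.0 - rho_auto ** 2) * innov
        u = min(max(to_uniform(z), 1e-12), 1.0 - 1e-12)  # avoid log(0)
        # Inverse CDF of the logistic distribution with location loc, scale s
        shocks.append(loc + scale * math.log(u / (1.0 - u)))
        new_z.append(z)
    return new_z, shocks


rng = random.Random(42)
z = [0.0] * 3
for _ in range(5):
    z, eps = default_shocks(z, locations=[1.0, 0.98, 1.03], scale=0.05, rng=rng)
```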

Besides feeding into decisions on credit rationing (and being linked indirectly, through the effects of defaults, to both equity and interest rates), the capital adequacy ratio also feeds into banks’ dividend policy. In particular, banks form an expectation about their future capital buffer and compare it to a target value based on the target capital adequacy ratio. Every quarter, they calculate the mean of the deviations over the previous 12 periods and then, for the following 12 periods, pay a dividend equal to current profits plus \(\frac {1}{12}\) of this mean deviation (the deviation may be negative, but the total dividend must be non-negative). Since banks’ dividend payouts make up part of households’ disposable income, they (and the bank expectations that help determine them) feed back into aggregate demand through consumption expenditure.
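A minimal sketch of this dividend rule, assuming weekly periods indexed from 0 and a deviation measured as expected minus target capital buffer (so that a buffer above target raises payouts); the class name is hypothetical.

```python
class BankDividendPolicy:
    """Sketch of the quarterly dividend rule (one quarter = 12 weekly periods).

    Each period the deviation of the expected capital buffer from its target
    is recorded. Every 12 periods the mean deviation over the last 12 periods
    is fixed, and for the next 12 periods the bank pays current profits plus
    1/12 of that mean deviation, never less than zero.
    """

    def __init__(self, quarter=12):
        self.quarter = quarter
        self.deviations = []
        self.adjustment = 0.0  # mean deviation fixed at the last review

    def dividend(self, t, profit, expected_buffer, target_buffer):
        self.deviations.append(expected_buffer - target_buffer)
        if t > 0 and t % self.quarter == 0:
            recent = self.deviations[-self.quarter:]
            self.adjustment = sum(recent) / len(recent)
        return max(0.0, profit + self.adjustment / self.quarter)


policy = BankDividendPolicy()
payouts = [policy.dividend(t, profit=1.0, expected_buffer=12.0, target_buffer=0.0)
           for t in range(13)]
```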

A further element of the banking sector’s behaviour concerns the interbank market. Banks’ final demand for reserves is determined by their deposits and the target liquidity coverage ratio set by the regulator. To calculate its demand for or supply of funds on the interbank market, each bank computes a clearing position netting all its inflows and outflows of reserves over the present period. After adding this clearing position to its previous stock of reserves, a bank ends up with a ‘prior’ stock of reserves, which is compared to its target stock to determine whether it will demand or supply funds on the interbank market.

Demand and supply on the interbank market are aggregated and matched, and the side of the market in excess is rationed proportionately. For instance, if total demand on the interbank market is higher than total supply, each bank on the demand side receives funds equal to \(\frac{\text{individual demand}}{\text{total demand}}\cdot \text{total supply}\). The interbank market is hence modelled in an extremely simple fashion and in and of itself has only a very slight impact on simulation results, through its determination of the interbank rate (see below). By construction, there are no defaults and no possibility of the interbank market freezing up. It merely represents a straightforward way to redistribute reserves among banks in accordance with their reserve targets. As outlined above, however, inflows and outflows of reserves during the period (i.e. before interaction on the interbank market) do play an important role, in that they determine banks’ clearing positions and hence their behaviour in competing for deposits.
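The proportional matching rule can be sketched in a few lines of Python. The function name is hypothetical; any demand left unmet would, as the next paragraph describes, be covered by central bank advances.

```python
def clear_interbank(demands, supplies):
    """Match interbank demand and supply, rationing the side in excess proportionally.

    demands, supplies: dicts mapping bank id -> non-negative amount.
    Returns (borrowed, lent). The traded volume is the smaller of the two
    totals, and each bank on either side gets its pro-rata share of it.
    """
    total_d = sum(demands.values())
    total_s = sum(supplies.values())
    traded = min(total_d, total_s)
    borrowed = {b: d / total_d * traded if total_d else 0.0 for b, d in demands.items()}
    lent = {b: s / total_s * traded if total_s else 0.0 for b, s in supplies.items()}
    return borrowed, lent


borrowed, lent = clear_interbank({"A": 30.0, "B": 10.0}, {"C": 20.0})
print(borrowed, lent)  # {'A': 15.0, 'B': 5.0} {'C': 20.0}
```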

If banks are unable to obtain all the reserves they need on the interbank market, they request advances from the central bank. These advances are always granted on demand at the central bank lending rate, which is however higher than the interbank rate, since the latter by construction falls within the corridor and is given by

where Rgap is the aggregate gap between reserves prior to the central bank’s intervention and target reserves. This functional form ensures that the interbank rate is bounded within the interest rate corridor set by the central bank, which is reasonable as all banks have access to the central bank’s lending and deposit facilities.
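One way to obtain an interbank rate that is bounded within the corridor is a hyperbolic tangent of the reserve gap, sketched below. The functional form, the sign convention (gap = prior reserves minus target reserves) and the `sensitivity` parameter are assumptions for illustration rather than the model's equation.

```python
import math


def interbank_rate(cb_deposit_rate, cb_lending_rate, reserve_gap, sensitivity=1.0):
    """Hypothetical interbank rate bounded within the central bank corridor.

    reserve_gap = aggregate prior reserves - target reserves. A shortage
    (negative gap) pushes the rate towards the lending rate (ceiling), a
    surplus towards the deposit rate (floor); tanh keeps it inside the corridor.
    """
    mid = 0.5 * (cb_deposit_rate + cb_lending_rate)
    half_width = 0.5 * (cb_lending_rate - cb_deposit_rate)
    return mid - half_width * math.tanh(sensitivity * reserve_gap)
```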

Recall that when setting interest rates on loans and mortgages, banks add the default rate to a moving average of the central bank rate and then apply a mark-up to this sum. In the baseline model, each bank adaptively changes its mark-ups every time it is allowed to alter its interest rates. In particular, a given bank i applies the following rule:

- If revenues of bank i on the asset (mortgages or loans) were higher during the past month than in the month before that, and bank i’s rate is lower than the sector average, increase the mark-up.
- In the opposite case (revenues were lower and bank i’s rate is higher than the sector average), decrease the mark-up.
- Otherwise, leave the mark-up unchanged.

To revise the mark-up, banks draw a normally distributed random number centered on the parameter step (constrained to be non-negative) and increase or decrease the mark-up by this amount. Overall, this mechanism is intended to depict a heuristic search for the most profitable mark-up, and it involves banks implicitly making predictions about the relationship between (relative) interest rates and revenues. This heuristic for setting the price of loans is similar to the one used by Assenza et al. (2015) to model firms’ pricing decisions. When setting interest rates, banks’ information set includes the current-period default rates on their portfolios of mortgages and loans, and they use these current default rates as a prediction of future ones. Both of these mechanisms are altered as part of the experiments presented in Section 5.1.
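The mark-up search heuristic can be sketched as follows. The standard deviation `sd` of the draw and the floor keeping the gross mark-up at or above 1 are assumptions, and negative draws are simply set to zero to respect the non-negativity constraint.

```python
import random


def revise_markup(markup, revenue_last_month, revenue_month_before, own_rate,
                  sector_avg_rate, step=0.01, sd=0.005, rng=random):
    """Adaptive mark-up search heuristic (sketch).

    The size of a revision is drawn from N(step, sd) with negative draws set
    to zero; `sd` and the floor keeping the gross mark-up >= 1 are assumptions.
    """
    delta = max(0.0, rng.gauss(step, sd))
    revenues_up = revenue_last_month > revenue_month_before
    revenues_down = revenue_last_month < revenue_month_before
    if revenues_up and own_rate < sector_avg_rate:
        return markup + delta
    if revenues_down and own_rate > sector_avg_rate:
        return max(1.0, markup - delta)
    return markup  # mixed signals: leave the mark-up unchanged
```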

Finally, the model does not contain a bankruptcy mechanism for banks. This does not pose a problem, since no bank has so far gone bankrupt in any simulation.

3.7 Expectations

In the baseline all expectations are modelled following an adaptive mechanism:

To take into account that decision variables in the model adjust at different speeds, \(x_{-1}\) in the equation above may be a moving average of some length corresponding to the adjustment speed of the forecasted variable.
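A minimal sketch of the baseline adaptive rule, assuming the common error-correction form \(x^e = x^e_{-1} + \psi\,(x_{-1} - x^e_{-1})\) with a hypothetical coefficient \(\psi\). Where the text allows \(x_{-1}\) to be a moving average, the sketch uses the mean of the last `window` observations.

```python
from collections import deque


class AdaptiveForecaster:
    """Adaptive expectations: x_e <- x_e + psi * (x_obs - x_e).

    If `window` > 1, the observation is replaced by the moving average of the
    last `window` observations, slowing adjustment for slow-moving variables.
    `psi` and `window` are illustrative choices, not calibrated values.
    """

    def __init__(self, initial, psi=0.5, window=1):
        self.expectation = initial
        self.psi = psi
        self.history = deque(maxlen=window)

    def update(self, observation):
        self.history.append(observation)
        x_obs = sum(self.history) / len(self.history)
        self.expectation += self.psi * (x_obs - self.expectation)
        return self.expectation


buffer_forecast = AdaptiveForecaster(initial=10.0, psi=0.3, window=4)
for observed in [10.5, 11.0, 10.8]:
    buffer_forecast.update(observed)
```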

While the focus of the model lies on the expectations of banks, expectations also enter into the behaviour of other sectors for both theoretical and computational reasons. Households forecast their disposable income, their wealth, mortgage interest rates, the rate of inflation, and the composite rate of return on their assets. Firms forecast the average interest rate on loans, as well as their capacity utilisation. The central bank forms expectations about inflation as well as capacity utilisation. Banks must form expectations about their capital buffer, vbb. In addition, as outlined above, they engage in a type of forecasting or expectations formation when setting mark-ups and (possibly) in forming perceptions about default rates, which however is different from the adaptive expectations mechanism.

The first experiment reported in Section 5.1 consists of replacing the adaptive expectations mechanism in the banking sector with the model of heterogeneous expectations formation and heuristic switching proposed by Brock and Hommes (1997). Specifically, I use the version presented in Anufriev and Hommes (2012), in which agents can switch between four different rules for forming expectations about a variable, given by

The first rule is the same adaptive one used in the baseline, which is now augmented by two trend-following rules (one weak and one strong, i.e. 0 < ψtf1 < 1 and ψtf2 > 1) and an ‘anchoring and adjustment’ rule in which the anchor is the moving average of x. Agents switch between these four rules based on a fitness function computed from the errors between expected and realised values of the forecasted variables, as detailed in Anufriev and Hommes (2012). In addition, Section 5.1 discusses experiments in which banks use simple econometric techniques to conduct forecasts and form expectations.
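The switching mechanism can be sketched in Python as below. The rule coefficients and the parameters `beta` (intensity of choice), `eta` (memory) and `delta` (inertia) are illustrative values in the spirit of Anufriev and Hommes (2012) rather than the settings used in the experiments, and the timing is simplified so that each rule forecasts the next observation from the latest one.

```python
import math

# Forecasting rules: history x (len >= 2), the rule's own last forecast xe,
# and the anchor ma. Coefficients are illustrative assumptions.
RULES = {
    "ADA": lambda x, xe, ma: 0.65 * x[-1] + 0.35 * xe,              # adaptive
    "WTR": lambda x, xe, ma: x[-1] + 0.4 * (x[-1] - x[-2]),         # weak trend
    "STR": lambda x, xe, ma: x[-1] + 1.3 * (x[-1] - x[-2]),         # strong trend
    "LAA": lambda x, xe, ma: 0.5 * (ma + x[-1]) + (x[-1] - x[-2]),  # anchor & adjust
}


class HeuristicSwitching:
    """Sketch of Brock-Hommes style switching among the four rules above."""

    def __init__(self, x0, beta=0.4, eta=0.7, delta=0.9, window=48):
        self.beta, self.eta, self.delta, self.window = beta, eta, delta, window
        self.fitness = {h: 0.0 for h in RULES}
        self.share = {h: 1.0 / len(RULES) for h in RULES}
        self.forecast = {h: x0 for h in RULES}

    def update(self, history):
        """Score last forecasts on history[-1], update shares, return new forecast."""
        x_now = history[-1]
        # Fitness: negative squared error plus discounted past fitness
        for h in RULES:
            self.fitness[h] = -(x_now - self.forecast[h]) ** 2 + self.eta * self.fitness[h]
        # Logit (discrete-choice) weights; subtracting the max avoids overflow
        top = max(self.fitness.values())
        w = {h: math.exp(self.beta * (f - top)) for h, f in self.fitness.items()}
        total = sum(w.values())
        for h in RULES:
            # Inertia: only a fraction (1 - delta) re-optimises each period
            self.share[h] = self.delta * self.share[h] + (1 - self.delta) * w[h] / total
        recent = history[-self.window:]
        anchor = sum(recent) / len(recent)  # moving-average anchor for LAA
        for h, rule in RULES.items():
            self.forecast[h] = rule(history, self.forecast[h], anchor)
        return sum(self.share[h] * self.forecast[h] for h in RULES)


hs = HeuristicSwitching(x0=0.0)
series = list(range(30))  # a steady upward trend
for t in range(2, len(series) + 1):
    expectation = hs.update(series[:t])
```

On a steadily trending series like the one above, the strong trend-following rule earns the highest fitness and gains weight at the expense of the adaptive rule.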

4 Calibration & baseline simulation

Before simulation, the model is calibrated to a deterministic stationary state to pin down a range of initial and parameter values using the procedure described in the online Appendix, drawing on UK data where possible. A subset of the model’s remaining free parameters is then calibrated empirically using the method of simulated moments (Gilli and Winker 2003; Grazzini and Richiardi 2015) and UK macroeconomic time-series data. A detailed description of the procedure and a list of the empirically calibrated parameters can be found in the online Appendix. Table 1 compares the empirical statistics used to calibrate the model with their simulated counterparts generated following calibration. The model reproduces the standard deviations of the empirical time series very closely. It performs somewhat less well on the first-order autocorrelations, in particular those of consumption and investment, for which the simulated series are more autocorrelated than their empirical counterparts. This higher autocorrelation of consumption and investment, however, appears necessary for the model to reproduce the autocorrelation of GDP, given that the model contains no other components of GDP and that government spending is constant in the baseline.
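At the core of the method of simulated moments is an objective function measuring how far simulated moments lie from their empirical counterparts; a parameter search (not shown) then minimises it. The sketch below assumes the two moment types reported in Table 1, standard deviations and first-order autocorrelations, averages them over Monte Carlo runs, and uses a diagonal weighting matrix; it is an illustration, not the exact procedure of the online Appendix.

```python
import statistics


def moments(series):
    """Standard deviation and first-order autocorrelation of a series."""
    mean = statistics.fmean(series)
    dev = [x - mean for x in series]
    autocov = sum(a * b for a, b in zip(dev[:-1], dev[1:]))
    var_sum = sum(d * d for d in dev)
    return [statistics.pstdev(series), autocov / var_sum]


def msm_objective(simulated_runs, empirical, weights):
    """Weighted squared distance between empirical and average simulated moments.

    A diagonal weighting matrix is assumed for brevity.
    """
    per_run = [moments(run) for run in simulated_runs]
    mean_sim = [statistics.fmean(col) for col in zip(*per_run)]
    return sum(w * (s - e) ** 2 for w, s, e in zip(weights, mean_sim, empirical))
```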

A final remark regarding initialisation and parametrisation concerns the agent-based banks. Rather than starting with completely identical agents, as is sometimes done in the literature, I impose a heterogeneous initialisation by giving banks balance sheets of differing sizes, because I am primarily interested in the behaviour of heterogeneous banks rather than in the endogenous emergence of heterogeneity. The initial distribution is detailed in the online Appendix. Following calibration, I move on to the baseline simulation, which is conducted using 100 Monte Carlo repetitions. Stochastic elements in the simulation stem from the default process, the distribution of aggregate flows of loan and mortgage demand as well as deposits between banks, the resetting of bank interest rates at random intervals, and the random amount by which banks’ mark-ups change when they are revised. After discarding a transient of 480 periods, the baseline simulates the behaviour of the model for 1200 periods, corresponding to 25 years (one year is assumed to consist of 48 weeks).Footnote 9

Starting from the deterministic stationary state, the stochastic elements characterising the behaviour of the agent-based banks are sufficient to make the economy diverge from the stationary state and converge to a pattern of irregular cyclical movements. Figures 2, 3, 4, 5 and 6 give an idea of the dynamics of the model by showing aggregate time-series from one individual, representative run. Figure 6 reports the sectoral financial balances at a quarterly frequency since the weekly data is too noisy to allow for a legible graphical representation.

Investment demand, which reacts to both utilisation gaps and financing conditions (in the form of interest rates and credit rationing), is clearly the main driver of fluctuations in aggregate income. Consumption is more persistent, being driven by a combination of relatively slow-moving factors, including changes in the wage rate, fluctuations in the consumption propensities, and the impact of cycles in house prices on household wealth.

Figure 4 shows that even average lending rates are quite volatile, reacting to the cross-correlated default shocks and to revisions in the mark-ups. In addition, since the model produces fairly volatile inflation rates, the pure inflation-targeting monetary policy leads to strong fluctuations in the central bank rates, which in turn feed through into the rates offered by banks. Credit rationing is not particularly prevalent but increases strongly at the peaks of economic booms, contributing to the subsequent downturn.

Turning to the dynamics at the level of individual banks, it can be seen that banks are quite successful at remaining close to the fixed target for the capital adequacy ratio (Fig. 7). Fluctuations in the capital adequacy ratio are correlated across banks to some degree, but at times individual banks diverge from the common trend. Figure 8 shows that the size-distribution of banks in terms of the length of their balance sheets is relatively constant and in particular that there appears to be no tendency towards monopolisation of all loan markets by any single bank. Instead, the oligopolistic structure appears stable.

A look at the distribution of loans and mortgages, as in Fig. 9, reveals an interesting pattern: in general, banks never lose significant market shares in both the loan and mortgage markets at the same time; rather, a bank which loses shares in one market tends to gain shares in the other. This is a general property of the simulation results which can be found across the individual Monte Carlo runs of the model. In some simulations, one bank eventually gains a considerable share in one market (loans or mortgages), while its share in the other market declines strongly. This phenomenon is not built into the model but can be considered an emergent property of the system.

While the model dynamics are qualitatively quite similar across Monte Carlo repetitions, the cyclical movements almost disappear when taking the mean of the simulated time series, as the peaks and troughs of the cycles do not necessarily coincide across runs. To get an idea of the dynamics generated by the model, I hence focus on individual runs, as above, together with second moments of simulated data and various other summary statistics. In addition to calibrating the model so that the volatility and first-order autocorrelation of the main macro time series correspond to those of their empirical counterparts, I follow Assenza et al. (2015) in examining the cross-correlations and higher-order autocorrelations of these series. Figures 10 and 11 provide an overview of these, using quarterly and filtered simulated data. Figure 10 (where the solid lines represent the simulated quarterly data, the bars are Monte Carlo standard deviations and the dashed lines represent the empirical data) shows that the model does a reasonably good job of reproducing empirical autocorrelations, although the simulated time series of investment and the price level are somewhat less persistent than the empirical ones.

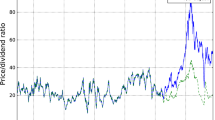

Figure 11 shows the cross-correlations of real output at time t with output, investment, consumption and the price level at time t − lag. Again the fit appears reasonably good, except for the price level, for which the model produces a much stronger cyclicality than is observed in the empirical data. Overall, the model therefore does not appear to reproduce price and inflation dynamics especially well (this is also underlined by Fig. 12, which shows that the model produces regular cyclical dynamics in the inflation rate that are not present to the same extent in the data).
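Cross-correlations of this kind, and the autocorrelations in Figure 10 as the special case where both series are the same, can be computed along the following lines. The sketch assumes the series have already been aggregated to quarterly frequency and filtered, and the helper names are hypothetical.

```python
import math


def _pearson(a, b):
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    va = sum((p - ma) ** 2 for p in a)
    vb = sum((q - mb) ** 2 for q in b)
    return cov / math.sqrt(va * vb)


def cross_correlation(y, x, lag):
    """Correlation between y_t and x_{t-lag}; negative lags pair y_t with x_{t+|lag|}.

    Assumes y and x have the same length.
    """
    n = len(y)
    if lag >= 0:
        return _pearson(y[lag:], x[:n - lag])
    return _pearson(y[:n + lag], x[-lag:])


def autocorrelation(x, lag):
    return cross_correlation(x, x, lag)
```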

Given the focus of the present paper on the agent-based banking sector, it appears appropriate to examine more closely the role of the banking sector, and particularly of bank heterogeneity, in producing the observed model dynamics. The banking sector is clearly an important driver of macroeconomic fluctuations in the model, with fluctuations in interest rates and credit availability affecting both investment and consumption expenditure. The interbank market, being modelled in a strongly simplified form, plays a mostly passive role of redistributing reserves among banks; by assumption, the model does not allow for defaults on, or a freeze-up of, the interbank market. The specific effect of including multiple banks with heterogeneous balance sheet compositions can be gauged rather simply through a counterfactual experiment, by running a version of the model with only a single bank.Footnote 10 Table 2 (where the numbers in parentheses represent the 95% confidence interval from a Wilcoxon signed-rank test) shows that in the absence of bank heterogeneity, macroeconomic volatility increases strongly.Footnote 11 Competition between banks, which redistributes loan demand towards banks that offer lower rates and are less likely to ration credit, hence appears to have a stabilising influence on the system, as it stabilises the flow of credit to the private sector to a certain degree. With only a single bank, default shocks are no longer partly idiosyncratic as in the baseline but become fully systemic, so that overall they have a greater influence on interest rates and credit rationing.

5 Simulation experiments

Having presented the baseline simulation, the current section reports a range of experiments which were carried out on the model. The first set focuses on the expectations formation and forecasting behaviour of banks while the second concerns the implementation of various policy tools.

5.1 Expectations & forecasting

Due to the importance of banks’ balance sheet management for the observed model dynamics, a straightforward experiment to carry out is to replace banks’ adaptive expectations formation process with the heterogeneous expectations and heuristic switching mechanism outlined in Section 3.7. While banks’ expectations in the baseline model are not homogeneous insofar as banks may hold different expectations at any given point in time, all banks make use of the same expectations formation rule, namely the one given by Eq. 16. In this experiment, by contrast, they may switch between different expectations formation mechanisms. As was explained in the model description, banks must make forecasts about the composition of their own balance sheet, in particular their future capital buffer, to use as an input in their decision-making about credit rationing and dividend payments. Interestingly, however, an implementation of the mechanism described in Anufriev and Hommes (2012) for banks in the present version of the model appears to have little effect on simulation results.Footnote 12 Banks do indeed switch between different heuristics and tend to prefer a mix between the strong and weak trend following rules with occasional use of the adaptive and the anchoring and adjustment rules. Notably, no individual dominant forecasting strategy appears to emerge.

Table 3 reports the means and standard deviations of banks’ errors in forecasting their own capital buffer in the baseline and under heterogeneous expectations with heuristic switching. Heuristic switching clearly improves on simple adaptive expectations in terms of the standard deviation of forecast errors; in the baseline model, however, simple adaptive expectations already turn out to be a fairly decent forecasting heuristic, and the alternative cannot improve on it enough to significantly alter the dynamics of the model.Footnote 13 The decrease in the standard deviation of forecast errors shows that under heuristic switching, banks tend to make smaller mistakes. An examination of the simulation data shows that this leads to a small increase in the average level of bank profits and a decrease in their volatility, as well as a lower incidence of credit rationing, as banks make smaller mistakes in forecasting their own capital buffer. However, none of these differences is large enough to be statistically significant: despite the large difference between the standard deviations shown in Table 3, banks’ forecast errors are already fairly small under simple adaptive expectations.

Table 4 consequently shows that the introduction of heterogeneous expectations and heuristic switching for banks makes no significant difference for the simulated moments of the macroeconomic time-series. As discussed further below, the mixture of forecasting heuristics selected by banks based on the fitness criterion turns out to be so similar to adaptive expectations in the baseline model that simulation results change only very little.

Instead of allowing banks to switch between the forecasting rules suggested by Anufriev and Hommes (2012), I can also let them make forecasts using econometric methods, as is sometimes done in conventional models (see Evans and Honkapohja 2001). Here, banks estimate an AR(1) model of their own capital buffer by OLS regression on all past observations of their capital buffer and base their forecast on the estimated parameters. Due to the use of OLS, this learning algorithm falls into the decreasing-gain category (Evans and Honkapohja 2001, Ch. 1), meaning that the period-to-period change in the estimated parameters tends to decrease as more data are accumulated.
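The OLS-learning variant can be sketched as follows: each period the bank re-estimates an AR(1) on its full history and forecasts one step ahead. Because each new observation carries a weight of roughly 1/t in the full-sample estimate, parameter updates shrink over time, which is the decreasing-gain property mentioned above. The function names and the constant-series fallback are assumptions for illustration.

```python
def fit_ar1(series):
    """OLS estimates (c, phi) of x_t = c + phi * x_{t-1} + e_t using all observations."""
    xs, ys = series[:-1], series[1:]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:  # no variation in the regressor: fall back to a constant forecast
        return my, 0.0
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    phi = sxy / sxx
    return my - phi * mx, phi


def forecast_ar1(series):
    """One-step-ahead forecast, re-estimating on the full sample each period."""
    c, phi = fit_ar1(series)
    return c + phi * series[-1]


# A noiseless AR(1) path x_{t+1} = 1 + 0.5 * x_t is recovered exactly
path = [0.0, 1.0, 1.5, 1.75, 1.875, 1.9375]
print(fit_ar1(path), forecast_ar1(path))
```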

The result of this exercise is summarised in Tables 5 and 6. As in the case of heuristic switching, the standard deviation of the forecast error decreases, though not to the same degree. In terms of macroeconomic outcomes this experiment is little different from the previous one; OLS-learning is able to improve upon simple adaptive expectations in terms of the standard deviation of forecast errors but for the same reasons given above, the improvement makes little difference to model outcomes.

The model contains two more points at which the behaviour of banks may be modified to allow for some more sophisticated prediction behaviour. Recall from the model description that in setting their lending rates, banks apply a mark-up over the sum of the central bank rate and the current default rate of loans/mortgages. Suppose that instead, banks attempt to estimate future default probabilities and also use estimation techniques in order to make a decision on mark-up revisions. I implement this notion in the following way: For default probabilities, I once more suppose that the banks use AR(1) models estimated using OLS on all past observations of the default rates on loans and mortgages to make one-period ahead forecasts. With regard to the mark-ups, recall that banks use two criteria for revision, namely whether their revenues from each asset have been growing and whether their interest rates are high relative to those of other banks. I now allow them to make a forecast of their future revenues using an econometric model to make a judgement as to whether their revenues are going to increase over the next month with the proposed mark-up revision. In particular, each bank which is allowed to re-set its interest rates in a given period estimates the model:

using all past observations, where iL are revenues on loans, rL is the interest rate on loans and \(\overline {r_{L}}\) is the average loan rate prevailing across the banking sector. Banks hence attempt to estimate the effect of their own lending rate on their revenues, controlling for the average rate charged in the economy. The equivalent model is also estimated for mortgages. Based on the estimated coefficients φ3 and φ4 of Eq. 21, the bank then estimates potential gains in revenue from increasing or decreasing its mark-up. It does so by randomly drawing a change in the mark-up from the same distribution as in the baseline and then, also taking into account its forecast of default probabilities, calculating the interest rates implied by increasing and by decreasing the mark-up on loans (or mortgages) by that amount. The bank then uses the estimated parameters φ3 and φ4 to calculate whether an increased, decreased or unchanged mark-up is expected to produce the highest revenue, and sets its mark-up accordingly.

In contrast to the previous experiments, this modification of the model does have a statistically significant impact on simulation outcomes, as shown in Table 7. In particular, allowing banks to use the new, arguably more sophisticated behavioural rules outlined above considerably reduces the volatility of investment at the macroeconomic level, because banks’ lending rates become less volatile under the alternative rules. The standard deviation of consumption increases somewhat, mainly owing to a slight increase in the volatility of dividend payments from firms and especially from banks, whose profits appear to become less stable under the alternative interest rate setting mechanism. At the microeconomic level, as shown in Table 8, banks’ average profits decrease when they use the alternative method of forecasting revenues and defaults in setting their interest rates, suggesting that the simple heuristics used in the baseline model are superior to the more sophisticated ones implemented in this experiment. This microeconomic result is in line with those obtained by Dosi et al. (2017), who find that in their model more sophisticated heuristics are generally less successful than simpler ones.

The results of the above experiments raise several issues worth discussing. It may appear curious that replacing banks’ adaptive expectations formation mechanism with heterogeneous expectations and heuristic switching or OLS learning, as in the first two experiments, should have next to no effect on simulation results. One might suspect, based on this result, that banks’ expectations in fact do not play an important role in the model. This conjecture can be tested by a simulation in which banks, rather than forming expectations about their capital buffer, simply assume that it will always equal its target. Banks then frequently make large forecast errors, which in turn increase volatility at the aggregate level, so expectations do matter. The robustness of the model to heterogeneous expectations with heuristic switching or OLS learning for banks is instead explained by the fact that in the present model, which is stationary and incorporates numerous rigidities imparting stickiness to endogenous variables, adaptive expectations, heuristic switching and OLS learning yield very similar expectations. Figure 13 compares banks’ average expectation of their capital buffer under the three expectations formation rules (using a snapshot of 250 periods from one individual simulation for better legibility) and shows that the differences between the predictions of the three heuristics are minimal. Given these negligible differences, banks’ behaviour is almost identical under all three heuristics, which explains why overall simulation results are also nearly identical. By contrast, the modifications made to the model in the third experiment, particularly the way banks forecast their revenues, represent a major change in the behavioural rules underlying the model, so it is unsurprising that simulation results are more strongly affected.
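The point that the three rules deliver almost the same forecasts for a sticky, stationary variable can be checked on a toy series. The Python below is an illustration under stated assumptions, not the model’s implementation: adaptive expectations with a hypothetical gain `lam`, an AR(1) fitted by OLS on all past data, and a two-heuristic logit (discrete-choice) switching rule of the kind common in the heuristic-switching literature, with a hypothetical intensity of choice `beta` and memory parameter `memory`. The smooth sine series merely stands in for a slowly moving variable such as the capital buffer.

```python
import math


def ar1_forecast(history):
    """One-step-ahead forecast from an AR(1) fitted by OLS on all of `history`."""
    x, y = history[:-1], history[1:]
    n = len(y)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0.0:
        return history[-1]  # degenerate sample: fall back to a naive forecast
    b = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    return my + b * (history[-1] - mx)


def compare_rules(series, lam=0.25, beta=1e4, memory=0.7, warmup=5):
    """Forecasts of series[t] formed with data up to t-1, for each t >= warmup.

    lam, beta and memory are hypothetical values chosen for illustration.
    """
    adaptive = series[0]
    fitness = {"adaptive": 0.0, "ols": 0.0}  # discounted minus squared errors
    weights = {"adaptive": 0.5, "ols": 0.5}
    out = {"adaptive": [], "ols": [], "switching": []}
    for t in range(1, len(series)):
        # Adaptive expectations: move a fraction lam towards the latest observation.
        adaptive += lam * (series[t - 1] - adaptive)
        if t < warmup:
            continue
        forecasts = {"adaptive": adaptive, "ols": ar1_forecast(series[:t])}
        # Logit weights on past fitness (shifted by the max to avoid overflow).
        top = max(fitness.values())
        expw = {k: math.exp(beta * (u - top)) for k, u in fitness.items()}
        total = sum(expw.values())
        weights = {k: v / total for k, v in expw.items()}
        out["switching"].append(sum(weights[k] * f for k, f in forecasts.items()))
        for k, f in forecasts.items():
            out[k].append(f)
            # Update fitness once series[t] has been observed.
            fitness[k] = memory * fitness[k] - (series[t] - f) ** 2
    return out, weights


# A smooth, slowly moving stationary series standing in for a sticky variable.
series = [1.0 + 0.01 * math.sin(t / 20) for t in range(300)]
out, final_weights = compare_rules(series)
gap = max(abs(a - o) for a, o in zip(out["adaptive"], out["ols"]))
print(f"largest adaptive-vs-OLS gap: {gap:.5f}; final weights: {final_weights}")
```

On such a series the adaptive and OLS forecasts differ only by the small lag that adaptive expectations accumulate, and the switching forecast is a convex combination of the two, so it cannot stray far from either.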

However, some minor modifications can make the model much more sensitive to the expectations formation mechanism used by banks. In particular, one can construct an environment that is somewhat more unstable than the baseline by reducing the adjustment horizons of aggregate consumption, housing demand and investment from 12 periods to 4, and by increasing the speed at which banks adjust their credit rationing and dividend payout behaviour so that they attempt to hit their target capital adequacy ratio from period to period rather than gradually over time. This makes the environment in which banks must form their expectations more unstable and less predictable, and it increases macroeconomic volatility under every expectations formation mechanism. Figure 14 plots the MC averages of GDP for the three types of expectations formation together with 95% confidence intervals. As can be seen, OLS learning still delivers outcomes similar to those under adaptive expectations, but heuristic switching puts the model on an explosive path. Across MC repetitions, heuristic switching appears to cause a collapse in output, driven by excessive credit rationing that results from large forecast errors during the transient phase. The recovery from this collapse invariably leads the economy onto an explosive trajectory fuelled by a feedback loop between a growing stock of government debt and the interest payments on it.
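Why shorter adjustment horizons make the environment less stable can be illustrated with a stylised partial-adjustment rule in which a variable closes a fraction \(1/h\) of the gap to its target each period. This reading of ‘adjustment horizon’ is an assumption made for illustration; the model’s own adjustment equations may differ. With \(h = 1\) the gap closes immediately, the analogue of banks targeting their capital adequacy ratio from period to period. The function name is hypothetical.

```python
def partial_adjustment(current, target, horizon, periods):
    """Close 1/horizon of the remaining gap to `target` in every period.

    This is a stylised reading of an 'adjustment horizon', assumed for illustration.
    """
    if horizon < 1:
        raise ValueError("horizon must be at least one period")
    path = [current]
    for _ in range(periods):
        current += (target - current) / horizon
        path.append(current)
    return path


for h in (12, 4, 1):
    path = partial_adjustment(0.0, 1.0, h, 4)
    print(f"horizon {h:>2}: share of gap closed after 4 periods = {path[-1]:.3f}")
```

In this stylised setting, cutting the horizon from 12 to 4 raises the share of a gap closed within four periods from about 0.29 to about 0.68, so the variables banks must forecast move more abruptly.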

Overall, the results of the experiments reported above build upon those obtained by Dosi et al. (2017), who also find that alternative expectations formation mechanisms may yield inferior results and can act as a source of instability. The present paper underlines the context-dependence of such results. The K+S model used by Dosi et al. (2017) represents a highly complex and fast-moving environment that also incorporates technological change and long-term growth. By contrast, the baseline model presented in this paper provides a stationary and relatively stable environment in which alternative expectations formation mechanisms perform fairly well, although, interestingly, they do not outperform the simpler one by a large margin. If the environment is made more unstable, or the modification to the behavioural rules is more fundamental, however, the results move closer to those obtained by Dosi et al. (2017). This general result is very much in line with the concept of ecological rationality advanced by Gigerenzer (2008), which emphasises that the ‘rationality’ or suitability of a particular behavioural rule always depends on the context in which it is applied.

5.2 Policy

To conclude my investigation of the model, I conduct several policy experiments, starting from the unmodified baseline model. First, I undertake a parameter sweep of a generalised Taylor rule reacting to both inflation and changes in capacity utilisation to analyse the effects of monetary policy. The detailed results of this experiment are reported in the online Appendix. They suggest that, within this model, adherence to the Taylor principle helps to stabilise prices, but an excessively strong response of monetary policy to inflation may be counterproductive, increasing macroeconomic fluctuations. Moreover, the generalised Taylor rule, which incorporates capacity utilisation as a proxy for output in addition to inflation, does not appear to improve substantially on the baseline simulation in terms of limiting macroeconomic volatility. Following the parameter sweep of the monetary policy rule, I experiment with several policy tools aimed at increasing macroeconomic stability relative to the baseline simulation, namely an activist fiscal policy, an alternative monetary policy rule, an endogenous maximum loan-to-value ratio on mortgages and an endogenous target capital adequacy ratio. Real government expenditure, which is constant in the baseline, is endogenised as

where \(\hat {c}\) is the annualised growth rate of private consumption over the preceding quarter. The government sets a target level of expenditure according to the equation above on a quarterly basis and then gradually adjusts its spending to the desired level over the next quarter. The monetary policy rule is amended with the specific aim of stabilising investment expenditure. The central bank continues to re-set its interest rate on a monthly basis, but does so according to

where \(\widehat {i_{d}}\) is the annualised growth rate of private investment demand over the previous month. The endogenous maximum loan-to-value ratio on mortgages is implemented as

where \(LTV^{*}\) is the fixed maximum loan-to-value ratio from the baseline, \(\overline {p_{h}}\) is the average price of housing over the past month and \(p_{h}^{*}\) is a target level for the house price, which for convenience is set equal to the house price prevailing in the initial stationary state. The central bank is assumed to reset a ‘target’ maximum LTV according to the equation above once every month and then to adjust the maximum LTV to this target over the following 4 periods. Finally, the endogenous target capital adequacy ratio for banks is given by

where \(CAR^{*}\) is the fixed target capital adequacy ratio from the baseline, \(\overline {lev_{f}}\) is the average leverage ratio of firms over the previous month and \(lev_{f}^{t}\) is the fixed target leverage ratio used by firms in the baseline, which the central bank is here assumed to adopt as its target as well. Like the endogenous maximum LTV ratio, the endogenous target capital adequacy ratio is re-set by the central bank once every month and then adjusted gradually to that level over the following 4 periods.
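The timing of the two macroprudential instruments (a target re-set once a month, followed by gradual adjustment over the next 4 periods) can be sketched as follows. The Python is hypothetical throughout: the linear rule forms for the LTV cap and the capital adequacy target, the baseline values (an LTV cap of 0.9, a CAR of 0.1, a firm leverage target of 0.5, a house price target normalised to 1), equal adjustment steps and a month of 4 model periods are placeholders chosen only for illustration, since the rule equations are not reproduced here. The reaction parameters 10 and 8 match the values quoted for the individual experiments, but how they enter the actual rules is an assumption. With `reset_every` and `adjust_periods` set to the length of a quarter, the same scheduler would describe the fiscal rule’s quarterly re-set.

```python
def ltv_target(house_price_avg, ltv_base=0.9, house_price_target=1.0, phi=10.0):
    """Hypothetical rule: tighten the LTV cap when house prices exceed their target."""
    return ltv_base * (1.0 - phi * (house_price_avg / house_price_target - 1.0))


def car_target(firm_leverage_avg, car_base=0.1, leverage_target=0.5, phi=8.0):
    """Hypothetical rule: raise the capital requirement when firm leverage exceeds its target."""
    return car_base * (1.0 + phi * (firm_leverage_avg / leverage_target - 1.0))


class GradualInstrument:
    """Re-set a target every `reset_every` periods and move towards it in
    equal steps over the following `adjust_periods` periods (assumed scheme)."""

    def __init__(self, value, reset_every=4, adjust_periods=4):
        self.value = value
        self.reset_every = reset_every  # assumed: 4 model periods per month
        self.adjust_periods = adjust_periods
        self.step = 0.0
        self.steps_left = 0

    def update(self, period, target_fn):
        # Apply any pending adjustment step first.
        if self.steps_left > 0:
            self.value += self.step
            self.steps_left -= 1
        # At the start of a new month, compute a fresh target and step size.
        if period % self.reset_every == 0:
            target = target_fn()
            self.step = (target - self.value) / self.adjust_periods
            self.steps_left = self.adjust_periods
        return self.value


# Hypothetical path: house prices 2% above target throughout.
max_ltv = GradualInstrument(0.9)
path = [max_ltv.update(t, lambda: ltv_target(1.02)) for t in range(9)]
print([round(v, 3) for v in path])
```

Neither rule is clamped here, so a real implementation would presumably also bound the LTV cap and the capital requirement to sensible ranges.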

Table 9 summarises the effects of the four policy tools outlined above, implemented individually, on macroeconomic volatility. Stars indicate standard deviations that become significantly lower (or higher) under the respective policy relative to the baseline, based on a Wilcoxon signed-rank test at the 5% significance level. As is common in the AB(-SFC) literature, fiscal policy proves highly effective at reducing output volatility. As the fiscal policy rule is tied to the growth of private consumption, the reduction in output volatility is achieved primarily through a reduction in the volatility of consumption. The endogenous capital adequacy ratio also appears very effective as a tool to stabilise GDP, its greatest impact being a reduction in the volatility of investment. The alternative monetary policy rule also significantly reduces the volatility of investment and GDP, but does not significantly affect consumption and has the drawback of considerably increasing fluctuations in the price level. Finally, the endogenous maximum LTV ratio decreases consumption volatility, but its impact is not strong enough to translate into a significant reduction of output volatility.
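A Wilcoxon signed-rank comparison of the kind behind the stars in Table 9 can be sketched in pure Python. The sketch assumes that per-run standard deviations are compared and that Monte Carlo runs are paired across the baseline and the policy scenario (for example, by reusing random seeds), which the signed-rank test requires. It uses the large-sample normal approximation, with average ranks for ties and without the tie correction to the variance; a statistics package, or an exact test for small samples, would normally be preferable. The function name and the sample values are hypothetical.

```python
from statistics import NormalDist


def wilcoxon_signed_rank(baseline, policy):
    """Two-sided Wilcoxon signed-rank test on paired samples.

    Uses the normal approximation, drops zero differences, assigns average
    ranks to ties and omits the tie correction to the variance.
    Returns (z, p); z < 0 means the policy values tend to be lower.
    """
    if len(baseline) != len(policy):
        raise ValueError("samples must be paired")
    diffs = [p_i - b_i for b_i, p_i in zip(baseline, policy) if p_i != b_i]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    order = sorted(range(n), key=lambda k: abs(diffs[k]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # average of 1-based ranks i+1..j+1
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    sd = (n * (n + 1) * (2 * n + 1) / 24) ** 0.5
    z = (w_plus - mean) / sd
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p


# Hypothetical per-run standard deviations of GDP, paired by random seed.
baseline_sd = [1.00, 1.05, 0.98, 1.10, 1.02, 0.97, 1.08, 1.01, 1.04, 0.99]
policy_sd = [0.90, 0.97, 0.95, 0.99, 0.96, 0.93, 1.00, 0.94, 0.98, 0.96]
z, p = wilcoxon_signed_rank(baseline_sd, policy_sd)
print(f"z = {z:.2f}, p = {p:.4f}, significantly lower: {z < 0 and p < 0.05}")
```

A negative `z` with `p < 0.05` corresponds to a significantly lower standard deviation under the policy, and a positive one to a significantly higher standard deviation.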

As a final experiment, I investigate the joint impacts of the policy tools considered above. For the joint implementation, I reduce the strength of each individual tool because, when implemented jointly, the policy tools may themselves become a source of additional volatility if they are calibrated to react too strongly. Accordingly, I reduce the parameter in the fiscal policy rule from 2.5 to 1, the one in the alternative monetary policy rule from 0.15 to 0.1, the one for the endogenous maximum LTV from 10 to 5 and the one for the endogenous target capital adequacy ratio from 8 to 4. Table 10 summarises the effects of jointly implementing the alternative monetary policy rule and the endogenous maximum LTV and target capital adequacy ratios, first without and then with the addition of an activist fiscal policy.

It can be seen that the alternative monetary policy rule, together with the two prudential policy tools, can produce a considerable reduction in the volatility of output and its components, a result that becomes even stronger when fiscal policy is added to the mix. However, both policy packages lead to a significant increase in the volatility of the price level. Depending on the policy tool(s) used, there hence appears to be a trade-off between output and price-level/inflation stabilisation in the model. Despite reducing overall macroeconomic volatility, both policy mixes somewhat increase short-term fluctuations in wage and interest costs, which translate into a more volatile price level. The overall conclusion of these experiments is that monetary, prudential and fiscal policies can, both individually and interacting in a mutually reinforcing manner, help to attenuate business cycles in real GDP and its components. However, such effects may come at the price of at least partly sacrificing other policy objectives, such as inflation control in the present analysis. In addition, both the calibration and the timing of policy interventions, especially the frequency at which policies are altered and the speed at which they adjust, play an important role in determining the success of a policy rule in this model. Intervention that is too strong or too weak, or too frequent or too infrequent, may well reduce the effectiveness of a particular policy or even turn it into a source of additional macroeconomic volatility. This suggests that prudential policies such as the ones tested here can be a valuable addition to policy-makers’ toolboxes and can interact favourably with monetary policy, but also that they must be very carefully calibrated and closely coordinated with other policy measures.

All policy experiments presented above were re-run under both heterogeneous expectations with heuristic switching and OLS learning for banks in the unmodified model. Just as in the baseline, the use of these two alternative heuristics does not have a significant impact on simulation outcomes, meaning that the policy interventions presented produce equivalent reductions in macroeconomic volatility under the alternative expectations formation regimes as well. A somewhat different picture emerges for the modified model, i.e. the one featuring a greater degree of volatility, which was discussed at the end of Section 5.1. There, it was shown that in the modified model, OLS learning leads to results very similar to those obtained under simple adaptive expectations. Re-running the policy experiments within the modified model under both OLS learning and adaptive expectations reveals that, in both cases, the policies reduce volatility to a similar degree as in the unmodified model. However, none of the policy measures discussed above appears able to contain the instability produced by the modified model under heterogeneous expectations, which was shown in Fig. 14. Preventing the model from settling onto an explosive path requires a different mix of policy interventions. For this experiment, the macro-prudential policy rules for the maximum LTV and target capital adequacy ratio presented above are combined with a generalised Taylor rule of the form

with \(\phi_{u} = 0.5\), meaning that monetary policy also reacts to fluctuations in capacity utilisation \(u\), and with a fairly strong activist fiscal policy of the form

where \(\overline {u}\) is a one-quarter average of industrial capacity utilisation. This policy mix does indeed prevent the model from settling onto the explosive path, as demonstrated by Fig. 15, which shows the MC averages of GDP with and without the application of the policy mix.
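The Taylor principle invoked in the parameter sweep, and the capacity-utilisation term with \(\phi_{u} = 0.5\) used in the final policy mix, can be made concrete with a stylised generalised Taylor rule, \(i = r^{n} + \pi^{*} + \phi_{\pi}(\pi - \pi^{*}) + \phi_{u}(u - u^{*})\). This functional form, the neutral real rate, the targets and the function names are illustrative assumptions rather than the paper’s specification. Under this form the ex-post real rate \(i - \pi = r^{n} + (\phi_{\pi} - 1)(\pi - \pi^{*}) + \phi_{u}(u - u^{*})\) rises with inflation only if \(\phi_{\pi} > 1\), which is what the sweep below prints.

```python
def taylor_rate(inflation, utilisation, phi_pi=1.5, phi_u=0.0,
                neutral_real=0.01, inflation_target=0.02, utilisation_target=0.8):
    """Hypothetical generalised Taylor rule; all rates are annual decimals."""
    return (neutral_real + inflation_target
            + phi_pi * (inflation - inflation_target)
            + phi_u * (utilisation - utilisation_target))


def real_rate_response(phi_pi, phi_u=0.0, step=0.01):
    """Change in the ex-post real rate (i - pi) per unit rise in inflation."""
    low = taylor_rate(0.02, 0.8, phi_pi, phi_u)
    high = taylor_rate(0.02 + step, 0.8, phi_pi, phi_u)
    return (high - low) / step - 1.0


# Stylised sweep over the inflation coefficient, with phi_u = 0.5 as in the final policy mix.
for phi_pi in (0.5, 1.0, 1.5, 2.5):
    response = real_rate_response(phi_pi, phi_u=0.5)
    print(f"phi_pi = {phi_pi}: real-rate response {response:+.2f}")
```

Coefficients below 1 let the real rate fall as inflation rises, which is the standard rationale for the Taylor principle. The sketch says nothing about the finding that very strong responses to inflation raise volatility in this model; that emerges only from full simulations.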