Abstract

For the special case of balanced one-way random effects ANOVA, it has been established that the generalized likelihood ratio test (LRT) and Wald’s test are largely equivalent in testing the variance component. We extend these results to explore the relationships between Wald’s F test, and the LRT for a much broader class of linear mixed models; the generalized split-plot models. In particular, we explore when the two tests are equivalent and prove that when they are not equivalent, Wald’s F test is more powerful, thus making the LRT test inadmissible. We show that inadmissibility arises in realistic situations with common number of degrees of freedom. Further, we derive the statistical distribution of the LRT under both the null and alternative hypotheses \(H_0\) and \(H_1\) where \(H_0\) is the hypothesis that the between variance component is zero. Providing an exact distribution of the test statistic for the LRT in these models will help in calculating a more accurate p-value than the traditionally used p-value derived from the large sample chi-square mixture approximations.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

For linear mixed models with one variance component beyond the usual error, Wald proposed in 1947, for the first time, an exact F test for the variance component being zero. In 2010, Lu and Zhang have established the equivalence between the generalized likelihood ratio test and the traditional F test for no group effects in the balanced one-way random effects model, see also Herbach (1959). We extend this result to a much broader family of linear mixed effects models with one random effect, compound symmetry (CS) covariance structure, and other constraints– namely the generalized split-plot (GSP) models introduced in Christensen (1987). GSPs assume CS covariance structure indicating heterogeneous variances and constant correlations among repeated measures. GSP models include standard split-plot models with nearly any experimental design for the whole plot treatments and include some ability to incorporate covariates into split-plot models. Further, the standard multivariate growth curve models (cf. some standard multivariate text like Johnson and Wichern) with compound symmetry covariance matrices are special cases of generalized split plot models as shown by Christensen (2019, Section 11.7.1). The balanced one-way random effects model is the simplest GSP model.

A general linear mixed model that specifies both fixed effects and random effects can be written as

where Y is a vector of observations, \({\tilde{X}}\) is a known model matrix for fixed effects, \(\beta \) is an unobservable parameter vector of fixed effects, \(X_1\) is a known model matrix for random effects, \(\gamma \) is an unobservable vector of random effects, and \(\epsilon \) is a vector of residual errors with \({\mathbb {E}}(\epsilon )=0\), \({\text {Cov}}(\epsilon )=R\), \({\mathbb {E}}(\gamma )=0\), \({\text {Cov}}(\gamma )=D\), \({\text {Cov}}(\epsilon ,\gamma )=0\), and therefore \({\text {Cov}}(Y)=X_1DX_1^{'}+R\).

1.1 Notation

For the rest of this paper, we use the notation C(A) and r(A) to denote the column space and rank of the matrix A respectively. In addition, the perpendicular projection operator (PPO) onto C(A) is denoted by \(M_A\) unless otherwise specified. In matrix notation, \(J_r^c\) and \(0_{r\times c}\) denote a matrix of ones and a matrix of zeros respectively each of size \(r\times c\). When \(c=1\), for simplicity we suppress c so that \(J_r\) and \(0_r\) denote a column vector of ones and a column vector of zeros respectively of length r. \(I_n\) is the identity matrix of size n while I, with no subscripts, has size that can be inferred from context. We use \(\text {diag}(V_1,\dots ,V_{N})\) to denote a block diagonal matrix with square matrices \(V_1, \dots , V_N\) on its diagonal and \(\text {Bdiag}(V)\) to denote a block diagonal matrix whose diagonal entries are all V. Vertical lines denote the determinant when enclosing a matrix and absolute value when enclosing a number. L(.), and \(\ell (.)\) denote the likelihood, and \(-2\)Log-likelihood functions respectively while \(\ell _*(.)\equiv -\ell (.)\).

1.2 A class of linear mixed models of interest

Our class of linear mixed models of interest is of the form

with \(\eta \sim N(0,\sigma _w^2 I_N)\), \(\epsilon \sim N(0,\sigma _s^2 I_n)\), \({\text {Cov}}(\epsilon ,\eta )=0_{n\times N}\), \(n=Nm\), \(\delta \) and \(\gamma \) are fixed effects, \(C(X_{*})\subset C(X_1)\), and \(C([X_{*},X_2])= C(X_{*}, (I-M_1)X_2)\) where \(M_1\) is the PPO onto \(C(X_1)\). If \(V=Cov(Y)\), then for this class of models it’s immediate that \(C(VX)\subset C(X)\) given that \(X_1\) is a matrix of indicator variables for the clusters with equal group sizes. GSPs are the class of such linear models for which \(V=\left( \sigma _w^2+\sigma _s^2\right) \left[ (1-\rho )I_n+\rho J_{nxn} \right] \) where \(\rho =\frac{\sigma _w^2}{\sigma _w^2+\sigma _s^2}\), \(\sigma _w^2\) is the variance between clusters or blocks, and \(\sigma _s^2\) is the variance within clusters or blocks.

1.2.1 Generalized split plot (GSP) models

We summarize the definition and analysis of GSP models from Christensen (1984, 1987 and 2011). Consider a two-stage cluster sampling model

with n observations, \(m_i\) subjects from each cluster, and includes fixed effects for each cluster. Let \(X=[X_1, X_2]\) where the columns of \(X_1\) are indicators for the clusters and \(X_2\) contains the other effects. Write \(\beta '=[\alpha ', \gamma ']\) so that \(\alpha \) is a vector of fixed cluster effects and \(\gamma \) is a vector of fixed non-cluster effects. Because it is a two-stage cluster sampling model, the error vector \(\xi \) has uncorrelated clusters and intraclass correlation structure and can be written with random effects as

where \(\eta \) contains random cluster effects and \(\epsilon \) is a random error. Assume that \(\eta \) and \(\epsilon \) are independent such that \(\eta \sim N(0,\sigma _w^2 I_N)\), \(\epsilon \sim N(0,\sigma _s^2 I_n)\) with \({\text {Cov}}(\epsilon ,\eta )=0_{n\times N}\) such that \(n=\sum _{i=1}^N m_i\) then we get the mixed model

with

The GSP models are obtained by imposing additional structure on the fixed cluster effects within the cluster sampling model (5). To model the whole plot (cluster) effects we put a constraint on \(C(X_1)\) by considering a reduced model

where \({\tilde{X}}=[X_{*}, X_2]\) and \(\beta _{*}'=[\delta ^{'}, \gamma ^{'}]\) and the covariance matrix remains as in (6). The \(\delta _i\)s will be the whole plots fixed effects and the \(\gamma _i\)s will be the subplots fixed effects. In this model, \(\eta \) is the whole plot error and \(\epsilon \) is the subplot error.

To develop a traditional split-plot analysis for GSP models, we need three assumptions:

- (a):

-

\(m_i=m\), i.e. all whole plots are of the same size,

- (b):

-

\(C(X_{*})\subset C(X_1)\), i.e. \(\delta \) contains whole plot effects,

- (c):

-

\(C({\tilde{X}})= C(X_{*}, (I-M_1)X_2)\) where \(M_1\) is the PPO onto \(C(X_1)\), i.e. subplot effects are orthogonal to whole plots (not just whole plot effects).

In particular, with these three conditions, the least-squares estimates (LSEs) for model (7) are best linear unbiased estimates (BLUEs) and standard split-plot F and t statistics have null hypothesis F and t distributions, cf., Christensen (2011, Chapter 11).

We consider a GSP model with n total observations, \(r(M_1)=N\) whole plots and m subplots in each whole plot. In model (7), with covariance structure (6) and assumption (a) we can rewrite (6) as

Also,

where

In a general mixed model (1) exact statistical inference cannot typically be performed noting that approximation for exact calculations could be made (Lavine et al. 2015). However, there are special cases such as Wald’s tests for variance components and split-plot models where exact inferences are available. The analysis of split-plot designs is complicated by having two different errors in the model. If \(\sigma _w^2=0\), the model (7) reduces to the standard linear model

In particular, if the whole plot model is a one-way ANOVA, i.e., the whole plot design is a completely randomized design (CRD), and the subplot effects involve only subplot main effects and whole plot by subplot interaction then the model reduces to two-way ANOVA with interaction. Further, if the whole plot one-way model is unbalanced this might be an interesting two-way ANOVA model wherein the number of observations on each pair of factors ij is \(k=1,\ldots ,mN_i\) instead of the usual \(k=1,\ldots ,N_{ij}\) which makes whole plot treatments and subplot treatments orthogonal (Christensen 2011, Section 7.4). To test the appropriateness of the standard linear model (11), the hypothesis of interest is whether or not the covariance component for whole plots lies on the boundary of the parameter space, i.e.,

There are three competing test statistics for the null hypothesis in (12), the likelihood ratio test (LRT) and the traditional, exact, F-test (Wald’s test) and the likelihood ratio test based on the residual likelihood (RLRT). These tests have been studied extensively over the past few decades (see Crainiceanu and Ruppert 2004; Greven et al. 2008; Wiencierz et al. 2011; Molenberghs and Verbeke 2007; Scheipl et al. 2008), however studies about their equivalnace in the context of split-plot designs are limited.

1.3 Objectives

In this work, we aim to show the inadmissibility of the LRT in testing the hypothesis that the between variance component in a linear mixed model with CS covariance structure and other constraints is zero (i.e. among GSP models). Firstly, we derive explicit expressions for the maximum likelihood estimates (MLEs) for the variance components of the GSP model. Further, we show that the LRT and F tests are equivalent for testing (12) in GSP models when the size of the test, \(\alpha \), is reasonably small and we derive the exact distribution for the LRT test statistics under both \(H_0\) and \(H_1\). In particular, we show that the two tests are equivalent when \(\alpha \le 1-p\) where p is the probability that the LRT statistic is zero and give a proof that the F test has a larger power when \(\alpha >1-p\).

1.4 Additional notations

Let M, \(M_1\), \({\tilde{M}}\) and \(M_{*}\) be the PPOs onto C(X), \(C(X_1)\), \(C({\tilde{X}})\) and \(C(X_{*})\), respectively, so that

and define

to be the PPO onto \(C(X_1)^\perp _{C(X)}\) which under our assumptions is also the PPO onto \(C(X_*)^\perp _{C({\tilde{X}})}\). From Christensen (2011, Chapter 11) the projection operators satisfy

For completeness, these properties are reproven in Appendix 1. The sum of squares for whole plot error and subplot error are, respectively,

such that

The F statistic for testing (12) is defined as

2 The equivalence between the LRT and F-test

2.1 Maximum likelihood estimators (MLEs)

The likelihood function for model (7) is:

where

We provide three lemmas:

Lemma 1

The inverse of \(aI_n+bP\), where P is a PPO and a and b are real numbers such that \(a\ne 0\) and \(a\ne -b\), is

Proof of Lemma 1

See Proposition 12.11.1 in Christensen (2011, p.322). \(\square \)

Lemma 2

The determinant of \(aI_n+bP\), where P is a PPO and a and b are real numbers, is

Proof of Lemma 2

Any nonzero vector in \(C(P)^\perp \) is an eigenvector for the eigenvalue a, so a has multiplicity \(n-r(P)\). Similarly, any nonzero vector in C(P) is an eigenvector for the eigenvalue \(a+b\), so \(a+b\) has multiplicity r(P). The determinant is the product of the eigenvalues and hence \(\left| aI_n+bP \right| =a^{n-r(P)} (a+b)^{r(P)}.\) Appendix 2 contains an illustration. \(\square \)

Lemma 3

For \(q_1, q_2>0\), maximizing the function

subject to the constraint \(x_2\ge x_1> 0\) gives a maximum at \((x_1, x_2)=(Q_1, Q_2)\) when \(x_2>x_1>0\) (i.e. when \((x_1, x_2)\) is in the interior of the constraint) or a maximum at \((x_1, x_2)=\left( \frac{q_1Q_1+q_2Q_2}{q_1+q_2}, \frac{q_1Q_1+q_2Q_2}{q_1+q_2}\right) \) when \(x_2=x_1\) (i.e. when \((x_1,x_2)\) is on the boundary of the constraint).

The proof is in Appendix 3.

Applying Lemmas 1 and 2 to V in (20) gives the following determinant and inverse covariance matrix:

and

Substituting (24) in (19) and taking \(-2\) times the natural logarithm leads to

Proposition 1

The Maximum Likelihood estimators for \(\beta _{*}\), \(\sigma _{w}^2\) and \(\sigma _{s}^2\) of model (7) are

such that the pair \({\hat{\sigma }}_w^2=0\) and \({\hat{\sigma }}_s^2=\frac{SSE(s)+SSE(w)}{n}\) occurs when \(\frac{SSE(w)}{N}\le \frac{SSE(s)}{n-N}\) and the other pair \({\hat{\sigma }}_w^2=\frac{1}{m}\left[ \frac{SSE(w)}{N}-\frac{SSE(s)}{n-N}\right] \) and \({\hat{\sigma }}_s^2=\frac{SSE(s)}{n-N}\) occurs otherwise.

Proof of Proposition 1

Differentiating (26) with respect to \(\beta _{*}\) and setting the partial derivative to zero, leads to

It is well known, for the fixed effects in mixed models, that the maximum likelihood estimates (MLEs) are also the best linear unbiased estimates (BLUEs) and Christensen (2011, Chapter 11) has shown, since \(C(V{\tilde{X}})\subset C({\tilde{X}})\), that the ordinary least squares estimates (OLSEs) are the BLUEs for \(\beta _{*}\) so they are also the maximum likelihood estimates. Therefore, \(\hat{\beta _{*}}=\left( {\tilde{X}}'V^{-1}{\tilde{X}} \right) ^{-1}{\tilde{X}}'V^{-}Y=\left( {\tilde{X}}'{\tilde{X}} \right) ^{-}{\tilde{X}}'Y\) and subsequently \({\tilde{X}}\hat{\beta _{*}}\) could be computed, using the OLSEs, as

Note that the least squares estimates do not depend on the variance parameters so to find \({\hat{\sigma }}_{w}^2\) and \({\hat{\sigma }}_{s}^2\), we need to maximize

Not re-expressing \({\varPsi }\) in terms of the sum of square errors would make it impossible to find closed form solutions that maximize \(\ell _*({\hat{\beta }}_*, \sigma _w^2,\sigma _s^2|Y)\). Hence, since \({\varPsi } = (Y-{\tilde{M}}Y)'V^{-1}(Y-{\tilde{M}}Y)\), we get

To apply Lemma 3 to \(\ell _*\), let \(q_1=N(m-1)=n-N\), \(q_2=N\), \(x_1=\sigma _s^2\), \(x_2=\sigma _s^2+m\sigma _w^2\), \(Q_1=\frac{SSE(s)}{q_1}\) and \(Q_2=\frac{SSE(w)}{q_2}\). A key point is \(x_2\ge x_1\) so our maximization has to be done subject to that constraint. Lemma 3 gives the maximizers

when \(\frac{SSE(w)}{N}>\frac{SSE(s)}{n-N}\) and

when \(\frac{SSE(w)}{N}\le \frac{SSE(s)}{n-N}\).

Now, suppose that the MLE of \(\sigma _w^2\) is \({\hat{\sigma }}^2_w=\frac{1}{m} \left( \frac{SSE(w)}{N}-\frac{SSE(s)}{n-N}\right) \) then \(\frac{SSE(s)}{n-N}\le \frac{SSE(w)}{N}\) so that

with the first inequality true because the term in the middle is a weighted average so has to be larger than the smaller of the two things being averaged, therefore the MLE of \(\sigma _s^2\) is the smaller of the terms \(\frac{SSE(s)}{n-N}\) and \(\frac{SSE(s)+SSE(w)}{n}\). That is, the larger term between 0 and \(\frac{1}{m} \left( \frac{SSE(w)}{N}-\frac{SSE(s)}{n-N}\right) \) forces the answer to be the smaller term between \(\frac{SSE(s)}{n-N}\) and \(\frac{SSE(s)+SSE(w)}{n}\) and vice versa. Hence (34) and (35) could be written via max and min as

and

\(\square \)

Note that the partial derivatives for (33) are

and

So, for varification purposes, pluging in the pair \({\hat{\sigma }}_w^2=0\) and \({\hat{\sigma }}_s^2=\frac{SSE(s)+SSE(w)}{n}\) into (38) and the other pair \({\hat{\sigma }}_w^2=\frac{1}{m}\left[ \frac{SSE(w)}{N}-\frac{SSE(s)}{n-N}\right] \) and \({\hat{\sigma }}_s^2=\frac{SSE(s)}{n-N}\) into (37) gives zero as desired.

3 Monotonic relationship between the LRT and F-test statistics

We show that the LRT statistic \({\varLambda }\) is a monotone function of the F-test statistic F for testing the null hypothesis in (12). When \({\varLambda }\) is not 0, the monotone relationship is strict, so whenever the size of the test \(\alpha \) is smaller than the probability \(1-p_m\) that \({\varLambda }\ne 0\), the tests are equivalent. We also examine the behavior of the tests when they are not equivalent (i.e. when \(\alpha >1-p_m\)). To establish this monotone relationship we need to distinguish between the sum of squared errors and model parameters under the reduced model in (11) versus the full model in (7). In particular, the sum of squares for errors under the reduced model is

Similarly, we use \(\sigma _{e}^2\) to denote the variance parameter corresponding to the reduced model while \(\sigma _{w}^2\) and \(\sigma _{s}^2\) denote the variance parameters of the full model. Since the reduced and full models are nested we present the equivalance between the LRT and F-test under the full model. Since there are two cases for the MLEs of \(\sigma _w^2\) and \(\sigma _s^2\), the relationship between the statistics \({\varLambda }\) and F will be decomposed into two cases as well.

Proposition 2

The LRT statistic \({\varLambda }\), for (12), is a monotone function of the F statistic. In particular, (case-I) when \({\hat{\sigma }}_{w}^2=0\) and \({\hat{\sigma }}_{s}^2=\frac{SSE(s)+SSE(w)}{n}\) we have

and (case-II) when \({\hat{\sigma }}_{w}^2=\frac{1}{m}\left[ \frac{SSE(w)}{N}-\frac{SSE(s)}{n-N} \right] \) and \({\hat{\sigma }}_{s}^2=\frac{SSE(s)}{n-N}\) we have

such that case-I occurs when \(F\le \kappa \) and case-II occurs when \(F>\kappa \) where

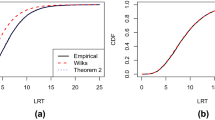

In Case-II, the relationship is strictly monotone so the tests are equivalent as long as the alpha level is smaller than \(P(F >\kappa )\). The plot of \({\varLambda }\) as a function of F is presented in Fig. 1.

Proof of proposition (2)

To examine the variation between plots, we test the whole plot error as in (12). Note that, under \(H_0\) we get the reduced model (11) where \(\epsilon \sim N(0,\sigma _{e}^2I)\) with

and

so that the MLE of \(\sigma _{e}^2\) is

In our case, (7) is the full model and (11) is the reduced one so that

where \(\ell _R ({\hat{\beta }}_*,{\hat{\sigma }}^2_e|Y)\equiv \sup \ell ({\hat{\beta }}_*,\sigma ^2_e|Y)\) and \(\ell _F ({\hat{\beta }}_*,{\hat{\sigma }}^2_w,{\hat{\sigma }}^2_s|Y)\equiv \sup \ell ({\hat{\beta }}_*,\sigma ^2_w,\sigma ^2_s|Y)\) such that the R and F subscripts refer to the reduced and full models respectively. Using (39), then plugging (45) into (44) gives

Further, if we let \({\hat{\sigma }}_{s}^2\) and \({\hat{\sigma }}_{w}^2\) be the MLEs for \(\sigma _{s}^2\) and \(\sigma _{w}^2\) and plug them into (33) we get

Case-I: If \({\hat{\sigma }}_{w}^2=0\) and \({\hat{\sigma }}_{s}^2=\frac{SSE(s)+SSE(w)}{n}\) then

Case-II: If \({\hat{\sigma }}_{w}^2=\frac{1}{m}\left[ \frac{SSE(w)}{N}-\frac{SSE(s)}{n-N}\right] \) and \({\hat{\sigma }}_{s}^2=\frac{SSE(s)}{n-N}\) then, by (18), we get

We note that this case holds only when \({\hat{\sigma }}_{w}^2>0\) so F must be larger than \(\kappa \) since

The function of \({\varLambda }\) for Case II given in (50) is strictly increasing in F since

\(\square \)

4 The distribution of the F-test and LRT statistics

The form of relation between the \({\varLambda }\) and F helps us better understand the distribution of \({\varLambda }\). In particular, this relation implies an important lemma (Lemma 4) on the distribution of \({\varLambda }\) for (7).

Proposition 3

The distribution of the \(F-ratio\) in (18) for the model in (7) is a constant multiple of a central F distribution,

Proof of Proposition (3)

It has been shown in Chapter 11.2 of Christensen (2011) that

and SSE(w) is independent of SSE(s). Thus, since the F-distribution arises from the ratio of two independent chi-squared random variables, each divided by its respective degrees of freedom, we have

\(\square \)

Now, if we let \(W\sim F\left( N-r(X_{*}), n-r(X)\right) \) then \({\hat{\sigma }}_w^2=0 \iff F \le \kappa \iff W\le \frac{\kappa \sigma _s^2}{\sigma _s^2+m\sigma _w^2}\) where the first if and only if is an exact relationship and the second one is only a distributional relationship.

Lemma 4

The distribution of the LRT statistic \({\varLambda }\) for the model in (7) is determined by the relationship in (57) where \(W\sim F\left( N-r(X_{*}), n-r(X)\right) \), \(p_m\), a, and \(\tau \) are as described.

such that

where

and

Proof of Lemma 4

From case-I of Proposition 2 we know that \({\varLambda }=0\) iff \(F\le \kappa \) so

The equality in the second line of (61) holds due to Proposition 3.

Now, from case-II of Proposition 2 we also know that, if \(F> \kappa \) (i.e. when \(W> \frac{\kappa \sigma _s^2}{\sigma _s^2+m\sigma _w^2}\)),

So, if we let \(W\sim F\left( N-r(X_{*}), n-r(X)\right) \) then substituting F from (53) into (62) gives

where \(a=\frac{N-r(X_{*})}{n-r(X)}\frac{\sigma _s^2+m\sigma _w^2}{\sigma _s^2}\) and \(\tau =N\log \left( \frac{(m-1)^{m-1}}{m^m}\frac{1}{a}\right) \). \(\square \)

We note that \(\lim _{W \rightarrow \frac{\kappa \sigma _s^2}{\sigma _s^2+m\sigma _w^2}}{\varLambda }=0\). This result can be verified by plugging in \(W=\frac{\kappa \sigma _s^2}{\sigma _s^2+m\sigma _w^2}\) in the third equality of (63). In practice, the probability mass at zero for the likelihood ratio test in (58) should be numerically estimated by using the MLEs as follows.

Providing an exact distribution of the test statistic for the LRT in these models will help in calculating a more accurate p-value than the traditionally used p-value from the large sample chi-square mixture approximations introduced by Self and Liang (1987). The distribution of \({\varLambda }\) under the null hypothesis is obtained by substituting zero for \(\sigma _w^2\) in Lemma 4. This implies that, under \(H_0\), the probability that \({\varLambda }\) is zero does not depend on the parameter \(\sigma _s^2\) and equals

GSP models offer remarkable advantages in the ability to perform exact calculations. For example, Crainiceanu and Ruppert (2004) derive the probability mass at zero for the likelihood ratio test in linear mixed models (LMM) with one variance component as

where \(\mu _{s,n}\) and \(\xi _{s,n}\) are the K eigenvalues of the \(K\times K\) matrices \(X_1^{'}P_{0}X_1\) and \(X_1^{'}X_1\) respectively, where \(w_i\sim N(0,1)\), \(P_{0}=I_n-{\tilde{X}}\left( {\tilde{X}}^{'}{\tilde{X}}\right) ^{-1}{\tilde{X}}^{'}\) and \({\tilde{p}}\) is the dimensionality of the vector \(\beta _{*}\) in view of (7). We note that \({\tilde{p}}\) is the rank of the design matrix \({\tilde{X}}\) instead of the dimensionality of the vector \(\beta _{*}\) when the generalized inverse is used. We present the equivalence between the two formulas in (65) and (66) in the discussion that follows.

Considering the model in (7), one can show that the eigenvalues \(\xi _{s,n}\), of \(X_1^{'}X_1\), are m of multiplicity N and the eigenvalues \(\mu _{s,n}\), of \(X_1^{'}P_{0}X_1\), are m of multiplicity \(N-r(X_{*})\) and zero of multiplicity \(r(X_{*})\). Further, from (15), it’s immediate that \(M_{*}\perp (M-M_1)\) and \(M_1\subset M\) so

Thus, if we let \(w_s\sim N(0,1)\) then according to (66), the probability mass at zero for the likelihood ratio test in testing (12) is

which is the same as (65).

The formula in (65) is an easier way of getting the probability mass at zero for the likelihood ratio test under \(H_0\) than (66). In fact, our results stand out relative to the derivation of Crainiceanu and Ruppert (2004) for being nice and compact and because it’s not possible in general to give such a short and straightforward expression for computing the probability mass at zero for the LRT statistic. Also, to the best of our knowledge, it is the first time that an explicit mathematical form, Lemma 4, has been presented for the LRT for any variance component in a linear mixed model under the full model. This allows us compute the power of the test through a formula instead of a Monte Carlo simulation.

5 Power comparison

This section includes four subsections. In the Sect. 1, we illustrate the steps for computing the critical value and power of the F-test and give a concrete example. In the Sect. 2, we illustrate the steps for computing the critical value and power of the LRT when \(\alpha \le 1-p_m\) (i.e. when there is no randomized test) and give a concrete example; we use the very same example that we use for the F-test to show the equivalence in power through a numerical example. These illustrative steps and example, of the Sect. 2, don’t use the relationship between the two test statistics \({\varLambda }\) and F. Thus, the Sect. 2 is concluded with a very short theorem on the equivalence between the two test for the case when \(\alpha \le 1-p_m\). The Sect. 4 gives a detailed proof, without numerical examples, that the F-test has a larger power than the LRT when \(\alpha > 1-p_m\). The Sect. 5 discusses the practicality of the second case when \(\alpha > 1-p_m\).

5.1 The power of the F-test

The F-test statistic, by Proposition 3, has a distribution that is a constant multiple of an F distribution as \(F\sim \frac{\sigma _s^2+m\sigma _w^2}{\sigma _s^2}F(N-r(X_{*}), n-r(X))\). Since the F-test statistic is denoted by F and the F-distribution is traditionally known by the symbol F, to eliminate ambiguities, we let \(W\sim F\left( N-r(X_{*}), n-r(X)\right) \). Then, at a given significance level \(\alpha \), the critical value C is computed under \(H_0\) as

G is the CDF for \(F_{(N-r(X_{*}), n-r(X))}\). For example, if we let \(N-r(X_*)=3\) and \(n-r(X)=9\) then, for \(\alpha =0.05\), C is found as \(C=G^{-1}(1-\alpha )=G^{-1}(0.95)=3.86255.\)

If \(m=4\), \(\sigma _s^2=3\) and \(\sigma _w^2=7\), then the power of a size \(\alpha \) F-test is

For example, if we let \(N-r(X_*)=3\), \(n-r(X)=9\), \(m=4\), \(\sigma _s^2=3\) and \(\sigma _w^2=7\) then, for \(\alpha =0.05\), the critical value is 3.86255 and the power is \({\varXi }_F=1-G\left( \frac{3}{31}\times 3.86255\right) =0.7741\).

5.2 The power of the LRT when \(\alpha \le 1-p_m\)

The LRT statistic, by Lemma 4, has a mixture distribution as

such that \(p_m\), \(\tau \) and a are defined in Lemma 4. Thus, at a given significance level \(\alpha \), the critical value \(C'\) is computed under \(H_0\) as

where \(G'\) is the CDF of the transformed random variable \(\tau +N\log \left( \frac{(1+aW)^m}{W} \right) \) for \(W\sim F\left( N-r(X_{*}), n-r(X)\right) \) when \(W>\kappa \) and \(\sigma _w^2=0\). For example, if we let \(N-r(X_*)=3\) and \(n-r(X)=9\) then, for \(\alpha =0.05\) under \(H_0\), \(\kappa =1\), \(p_m=0.56371\) and

so that \(C'\) is found, by numerical simulation or numerical integration after transformation, as \(C'=G'^{-1}(1-\frac{\alpha }{1-p_m})=G^{-1}(0.8853973)=4.848.\) See Castellacci (2012) for more details on computing the quantiles of mixture distributions.

If \(m=4\), \(\sigma _s^2=3\) and \(\sigma _w^2=7\) then the power of a size \(\alpha \) LRT is

where \(G^{''}\) is the CDF of the transformed random variable \(\tau +N\log \left( \frac{(1+aW)^m}{W} \right) \) for \(W\sim F\left( N-r(X_{*}), n-r(X)\right) \) when \(W> \frac{\kappa \sigma _s^2}{\sigma _s^2+m\sigma _w^2}\) and \(\sigma _w^2>0\). For example, if we let \(N-r(X_*)=3\), \(n-r(X)=9\), \(m=4\), \(\sigma _s^2=3\) and \(\sigma _w^2=7\) then, for \(\alpha =0.05\) under \(H_1\), \(\kappa =\frac{3}{4}\), \(p_m=0.04014\) and

so that \({\varXi }_{LRT}\) is found, by numerical simulation or numerical integration after transformation, as \({\varXi }_{LRT}=(1-p_m)\left[ 1-G^{''}(C')\right] =0.95986\left[ 1-G^{''}(4.848)\right] =0.7741.\)

Note that both test statistics \({\varLambda }\) and F are nonnegative and whenever \({\varLambda } \ne 0\) there is a strict monotonic relationship and thus when the LRT critical region does not include 0, the tests are the same. In fact, in the case when \(\alpha \le 1-p_m\), the critical region will consist of positive values where \({\varLambda }\) is a strictly increasing function of the F, thus we have

Proposition 4

Let \(\alpha \) be the size of the test. If \(\alpha \le 1-p_m\) where \(p_m=P({\varLambda }=0| \sigma _w^2=0)\) then the F-test and LRT are equivalent and hence have the same power.

Proof of Proposition (4)

Since \({\varLambda }\) can be written as

where g(.) is a strictly increasing function, then the critical region of the LRT when \(\alpha \le 1-p_m\) doesn’t involve 0 and hence the power can be calculated as

\(\square \)

Figure 2 illustrates the equivalence of the F-test and LRT whenever \(\alpha \le 1-p_m\) where \(p_m=P({\varLambda }=0| \sigma _w^2=0)\). Further, it clarifies why the two tests are equivalent as long as the critical value \(W_{\alpha }\) of the F-test is larger than \(W_{1-p_m}\); the minimal critical value at which the two tests are equivalent. In fact, under the null hypothesis of \(\sigma _w^2=0\) we have \(\lim _{W \rightarrow \kappa }{\varLambda }=0\).

5.3 Power comparison when \(\alpha > 1-p_m\)

In the case when \(\alpha >1-p_m\), the critical region of the LRT involves \({\varLambda }=0\) hence it involves randomization. We show mathematically, for this case, that the power of the F-test is larger than that of the LRT. Let \(k:=\frac{\sigma _s^2+m\sigma _w^2}{\sigma _s^2}\). Firstly, we rewrite the power of a size \(\alpha \) F-test in terms of \(p_m\) the probability that \({\varLambda }=0\) under \(H_1\) as follows.

Note that the second equality in (78) is due to the probabilistic identity \(P(E)+P(E^c)=1\). Secondly, we rewrite the randomized test for the LRT in terms of the F-test according to the monotonic relationship between their test statistics and the smallest critical value, \(W_{1-p_m}=\kappa \), where the F and LRT tests are equivalent as follows.

where \(\gamma \) is determined according to the size of the test as

Hence, the power of the LRT is

Proposition 5

Let \(p_m=P({\varLambda }=0|\sigma _w^2=0)\). For GSP models with a finite whole plots size m, if \(\alpha > 1-p_m\) then the power of the size \(\alpha \) F-test is larger than that of the LRT in testing \(\sigma _w^2=0\).

Proof of Proposition 5

It’s sufficient to show that

Since \(k=\frac{\sigma _s^2+m\sigma _w^2}{\sigma _s^2}>1\), this follows from Qeadan and Christensen (2014). \(\square \)

5.4 Is \(\alpha > 1-p_m\) practical?

The LRT and F-test are equivalent as long as the level of the test is smaller or equal to \(P(W>\kappa )\) where \(\kappa =\frac{n-r(X)}{(m-1)(N-r(X_{*}))}\) and \(W\sim F_{N-r(X_*), n-r(X)}\). That is, the two tests are equivalent for all \(\alpha \)’s satisfying the inequality \(\alpha \le P\left( W>\frac{n-r(X)}{(m-1)(N-r(X_{*}))}\right) \). Table 1 presents the maximal values of \(\alpha \) satisfying this inequality for different combinations of the degrees of freedom \(df_1=N-r(X_*)\) and \(df_2=n-r(X)\) when \(m=2\). Since the increase in m, for being in the denominator of \(\frac{n-r(X)}{(m-1)(N-r(X_{*}))}\), increases the maximal values of \(\alpha \) satisfying the inequality \(\alpha \le P\left( W>\frac{n-r(X)}{(m-1)(N-r(X_{*}))}\right) \), it’s sufficient to provide another Table (see Table 2) for the case when \(m=4\) to explain the pattern in which those maximal values of \(\alpha \) behave as a function of m. The highlighted cells of Table 1 in italic represent the combination of degrees of freedom for which the F-test has a larger power than the LRT at the \(5\%\) significance level when \(m=2\). The very same thing is true for Table 2 when \(m=4\). We observed from simulation, and below give a mathematical proof, that as m increases the power of the LRT approaches that of the F-test. Typically, the degrees of freedom for subplot error \(df_2\) are much larger than the degrees of freedom for whole plots error \(df_1\); for GSP models. So, the \(\alpha > 1-p_m\) case is very practical.

Proposition 6

For GSP models, if \(\alpha > 1-p_m\) then, for a size \(\alpha \) test, \({\varXi }_{LRT}\uparrow {\varXi }_{F}\) in testing \(\sigma _w^2=0\) as the whole plots size m approaches infinity.

Proof of Proposition 6

Recall that

and

From Proposition 5, we have established for a finite whole plot size m

If we let \(m\uparrow \infty \) then \(k\uparrow \infty \) so that

and thus the inequality becomes equality and as a result \({\varXi }_{LRT}\uparrow {\varXi }_{F}\). In fact for \(m=\infty \) we have \({\varXi }_{LRT}={\varXi }_{F}=1\) since \(\lim _{k \rightarrow +\infty }P\left( W\ge \frac{1}{k}W_{1-p_m}\right) =1\). \(\square \)

6 Conclusion

For a class of linear mixed models with CS covariance structure, we show the equivalence between the F-test and LRT when the level of the test, \(\alpha \), is less or equal to one minus the probability, p, that the LRT statistic is zero. However, when \(\alpha > 1 - p\), we show that the F-test has a larger power than the LRT and thus proving that the LRT test is inadmissible. Further, we derive the statistical distribution of the LRT under both the null and alternative hypotheses \(H_0\) and \(H_1\) where \(H_0\) is the hypothesis that the between component variance is zero. This work proves important property on the relationship between the F-test and LRT for a large class of Mixed effect models. Further, providing an exact distribution of the test statistic for the LRT in these models will help in calculating a more accurate p-value than the traditionally used p-value from the large sample chi-square mixture approximations introduced by Self and Liang (1987).

References

Castellacci G (2012) A formula for the quantiles of mixtures of distributions with disjoint supports. http://ssrn.com/abstract=2055022. Accessed 15 April 2013

Christensen R (1984) A note on ordinary least squares methods for two-stage sampling. J Am Stat Assoc 79:720–72

Christensen R (1987) The analysis of two-stage sampling data by ordinary least squares. J Am Stat Assoc 82:492–498

Christensen R (2011) Plane answers to complex questions: the theory of linear models, 4th edn. Springer, New York

Christensen R (2019) Advanced linear modeling: statistical learning and dependent data, 3rd edn. Springer, New York

Cloud MJ, Drachman BC (1998) Inequal Appl Eng, 1st edn. Springer, New York

Crainiceanu CM, Ruppert D (2004) Likelihood ratio tests in linear mixed models with one variance component. J R Stat Soc Ser B 66:165–85

Cuyt A, Petersen VB, Verdonk B, Waadeland H, Jones WB (2008) Handbook of continued fractions for special functions, pp 319–341

Dutka J (1981) The incomplete beta function–a historical profile. Arch History Exact Sci 24:11–29

Graybill FA (1961) An introduction to linear statistical models, vol I. McGraw Hill, New York

Greven S, Crainiceanu CM, Küchenhoff H, Peters A (2008) Restricted likelihood ratio testing for zero variance components in linear mixed models. J Comput Gr Stat 17(4):870–891

Harville DA (1997) Matrix algebra from a statistician’s perspective, 1st edn. Springer, New York

Herbach LH (1959) Properties of model II-type analysis of variance tests, a: optimum nature of the \(F\)-test for model II in the balanced case. Ann Math Stat 30(4):939–959

Johnson RA, Wichern DW (2002) Applied multivariate statistical analysis. Prentice hall, Upper Saddle River, NJ

Miller SS, Mocanu PT (1990) Univalence of Gaussian and confluent hypergeometric functions. Proc Am Math Soc 110(2):333–342

Lavine M, Bray A, Hodges J (2015) Approximately exact calculations for linear mixed models. Electron J Stat 9(2):2293–2323

Molenberghs G, Verbeke G (2007) Likelihood ratio, score, and wald tests in a constrained parameter space. Am Stat 61(1):22–27

O’Connor AN (2011) Probability distributions used in reliability engineering. RIAC, Maryland

Qeadan F, Christensen R (2014) New stochastic inequalities involving the F and Gamma Distribut. J Inequal Spec Funct 5(4):22–33

Scheipl F, Greven S, Küchenhoff H (2008) Size and power of tests for a zero random effect variance or polynomial regression in additive and linear mixed models. Comput Stat Data Anal 52(7):3283–3299

Self SG, Liang KY (1987) Asymptotic properties of maximum likelihood estimators and likelihood ratio tests under nonstandard conditions. J Am Stat Assoc 82(398):605–610

Wiencierz A, Greven S, Küchenhoff H (2011) Restricted likelihood ratio testing in linear mixed models with general error covariance structure. Electron J Stat 5:1718–1734

Yan L, Zhang G (2010) The equivalence between likelihood ratio test and f-test for testing variance component in a balanced one-way random effects model. J Stat Comput Simul 80:443–450

Acknowledgements

We acknowledge the reviewers greatly for their valuable time in reviewing the paper.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1

Supplementary material includes (i) Proof for the PPOs Properties in (15), (ii) Illustration for the proof of Lemma 2, and (iii) Proof of Lemma 3.

1.1 Proof for the PPOs Properties in (15)

Firstly, \({\tilde{M}}=M_{*}+M_2\): This result is an immediate consequence of conditions (b) and (c) of Sect. 1.3. In particular, since \(C({\tilde{X}})= C(X_{*}, (I-M_1)X_2)\) then by defintion of PPO

The equality in the third line of (83) is due to condition (b) which implies that \((I-M_1)X_{*}=X_{*}^{'}(I-M_1)=0\).

Secondly, \(M_{*}M_1=M_{*}\): This result is an immediate consequence of condition (b). In particular, since \(C(X_{*})\subset C(X_1)\) then \(X_{*}=X_1B\) for some matrix B and therefore, by definition of PPO,

Thus, using \(M_{*}\) from (84) and \(M_1\) from (13) gives

Thirdly, \(M_{1}M_2=0\): This results is trivially obtained by simply multiplying \(M_1\) from (13) and \(M_2\) from (14).

Fourthly, \(M=M_1+M_2\): Let \(X=[X_1, X_2]\) such that M is the PPO onto C(X). Since \(M_1M_2=0\), then \(C(M_1)\perp C(M_2)\) and hence \(M=M_1+M_2\) is a PPO onto \(C(M_1,M_2)\) by Theorem B.45 of Christensen (2011). But \(C(M_1,M_2)=C(X_1,(I-M_1)X_2)\) since \(C(M_1)=C(X_1)\) and \(C(M_2)=C((I-M_1)X_2)\). So, it remains to prove that \(C(X_1,X_2)=C(X_1,(I-M_1)X_2)\) to complete the proof. To do so, we use the fact that \(C(A_1) = C(A_2)\) iff there exist \(B_1\) and \(B_2\) such that \(A_1 = A_2 B_2\) and \(A_2=A_1 B_1\) as follows.

and

That is, \(C(X_1, (I-M_1)X_2)\subset C(X_1, X_2)\) and \(C(X_1, X_2)\subset C(X_1, (I-M_1)X_2)\) so that \(C(X_1,X_2)=C(X_1,(I-M_1)X_2)\) as desired. \(\square \)

Appendix 2

1.1 Illustration for the proof of Lemma 2

Let \(\lambda =-\,\frac{a}{b}\). Then

However, the determinant \(|P-\lambda I_n|\) in (88) is the characteristic polynomial of P which equals to (89) since 1 and 0 are the eigenvalues for P with multiplicity r(P) and \(n - r(P)\) respectively.

Hence, substituting (89) in (88) gives the desired result

\(\square \)

Appendix 3

1.1 Proof of Lemma 3

When \(x_2> x_1> 0\), we have a standard maximization problem for a function of two variables. Setting the partial derivatives to zero gives

and

Let \(g_{x_ix_j}=\frac{\partial }{\partial x_j}\left( \frac{\partial }{\partial x_i}g(x_i,x_j)\right) \) for \(i,j\in \{1,2\}\). Then, according to the second derivative test, we have

with \(D(Q_1, Q_2)=\frac{q_1q_2}{Q_1^2Q_2^2}>0\) and \(g_{x_1x_1}(Q_1, Q_2)=\frac{-q_1}{Q_1^2}<0\) so that \((x_1, x_2)=(Q_1, Q_2)\) is a maximum point. Thus, if \(Q_2>Q_1>0\) then the point \((Q_1,Q_2)\) is in the interior and maximizes the function within the interior; i.e. a local maximum.

When \(x_1=x_2:=x\), using direct substitution, the problem reduces to maximizing the function of one variable

over \(R^{+}\). So, setting the partial derivative of g(x) to zero gives

Now, using the second derivative test, we have

with

so that \((x_1, x_2)=\left( \frac{q_1Q_1+q_2Q_2}{q_1+q_2}, \frac{q_1Q_1+q_2Q_2}{q_1+q_2}\right) \) is a maximum point on the boundary of the domain.

Now, we show that if \(Q_2>Q_1>0\) then the maximum in the interior is a global maximum. Note that when the maximum is in the interior at \((Q_1,Q_2)\) it attains the value

Further, when the maximum is on the boundary at \(\left( \frac{q_1Q_1+q_2Q_2}{q_1+q_2}, \frac{q_1Q_1+q_2Q_2}{q_1+q_2}\right) \) it attains the value

Showing that \(g(Q_1,Q_2)>g\left( \frac{q_1Q_1+q_2Q_2}{q_1+q_2}\right) \) is the same as showing

which is true due to Jensen’s Inequality:

Let Q be a r.v. such that \(P(Q=Q_1)=\frac{q_1}{q_1+q_2}\) and \(P(Q=Q_2)=\frac{q_2}{q_1+q_2}\) then by Jensen’s Inequality we have

Now, we show that if \(Q_1>Q_2>0\) then the maximum in the boundary is a global maximum. Note that if \(Q_1> Q_2>0\), there are no critical points of the function within the interior. Further, we know that \(g(x_1,x_2)\) goes to \(-\infty \) in both \(x_1\) and \(x_2\) which forces the maximum on the boundary at \(\left( \frac{q_1Q_1+q_2Q_2}{q_1+q_2}, \frac{q_1Q_1+q_2Q_2}{q_1+q_2}\right) \) to be a global maximum. \(\square \)

Rights and permissions

About this article

Cite this article

Qeadan, F., Christensen, R. On the equivalence between the LRT and F-test for testing variance components in a class of linear mixed models. Metrika 84, 313–338 (2021). https://doi.org/10.1007/s00184-020-00777-z

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00184-020-00777-z