Abstract

Consider N independent stochastic processes \((X_i(t), t\in [0,T])\), \(i=1,\ldots , N\), defined by a stochastic differential equation with random effects where the drift term depends linearly on a random vector \(\Phi _i\) and the diffusion coefficient depends on another linear random effect \(\Psi _i\). For these effects, we consider a joint parametric distribution. We propose and study two approximate likelihoods for estimating the parameters of this joint distribution based on discrete observations of the processes on a fixed time interval. Consistent and \(\sqrt{N}\)-asymptotically Gaussian estimators are obtained when both the number of individuals and the number of observations per individual tend to infinity. The estimation methods are investigated on simulated data and show good performances.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Longitudinal data are widely collected in clinical trials, epidemiology, pharmacokinetic pharmacodynamics experiments and agriculture. The interest may focus on population effects among individuals and individual specific behaviour. In mixed effects models, random effects are incorporated to accomodate variability among subjects or inter-individual variability, while the same structural model rules the dynamics of each subject. In stochastic differential equations with mixed effects (SDEMEs), the structural model is a set of stochastic differential equations. The use of SDEMEs is comparatively recent. It has first been motivated by pharmacological applications (see Ditlevsen and De Gaetano 2005; Donnet and Samson 2008; Delattre and Lavielle 2013; Leander et al. 2015; Forman and Picchini 2016 and many others) but also neurobiological ones (as in Dion 2016 for example). The main issue in mixed-effects models is the estimation of the parameters in the distribution of the random effects. This is generally difficult in practice due to the intractable likelihood function. To overcome this problem, many methods based on approximate solutions associated with computationally intensive numerical methods have been proposed (Picchini et al. 2010; Picchini and Ditlevsen 2011; Delattre and Lavielle 2013). For general mixed models as well as for SDEMEs, these methods, due to their iterative settings, lead to large computation times for the estimation. Moreover, when the stuctural model is a stochastic differential equation, there is an additional problem which derives from the intractability of the likelihood associated with discrete observations of one path.

The SDEME framework allows to take account of two sources of randomness in the structural SDE model, random effects in the drift and random effects in the diffusion coefficient, and the temptation to incorporate in this SDE model a joint distribution for these two random effects is quite natural. This modeling has been proposed by several authors: Picchini et al. (2010), Picchini and Ditlevsen (2011), Berglund et al. (2001), Forman and Picchini (2016), Whitaker et al. (2017). But to our knowledge, it has never been studied theoretically. However, it is well known that, for discretely observed diffusion processes, estimation of parameters in the drift coefficient and diffusion coefficient have different properties (see e.g., Kessler et al. 2012). Our aim here is to investigate the statistical properties of such a situation according to n, the number of observations per individual (or path), and the total number of individuals N. The results of Nie and Yang (2005), Nie (2006, 2007) do not provide answers to these questions, and all the numerical methods compulsory to the likelihood intractability, are unable to tackle this problem. Understanding how estimation performs in these kinds of models is an important issue, necessary to clarify what can be expected from all the numerical methods widely used in this domain. Since the work of Ditlevsen and De Gaetano (2005), where the special case of a mixed-effects Brownian motion with drift is treated, the main contributions to our knowledge for a theoretical study of parametric inference in SDEMEs, are from Delattre et al. (2013, 2015, 2017) and Grosse Ruse et al. (2017).

In this paper, we consider discrete observations and simultaneous random effects in the drift and diffusion coefficients by means of a joint parametric distribution. The inclusion of random effects both in the drift and the diffusion coefficient raises new problems which were not addressed in our previous works. We focus on specific distributions for the random effects. They derive from a Bayesian choice of distributions and lead to explicit approximate likelihoods (this choice indeed corresponds to explicit posterior and marginal distributions for an n-sample of Gaussian distributions with a specific prior distribution on the parameters).

More precisely, we consider N real valued stochastic processes \((X_i(t), t \ge 0)\), \(i=1, \ldots ,N\), with dynamics ruled by the following random effects stochastic differential equation (SDE):

where \((W_1, \ldots , W_N)\) are N independent Wiener processes, \(((\Phi _i, \Psi _i), i=1, \ldots N)\) are N i.i.d. \({{\mathbb {R}}}^d\times (0,+\infty )\)-valued random variables, \(((\Phi _i, \Psi _i), i=1, \ldots ,N)\) and \((W_1, \ldots , W_N)\) are independent and x is a known real value. The functions \(\sigma (.):{{\mathbb {R}}}\rightarrow {{\mathbb {R}}}\) and \( b(.)=(b_1(.),\ldots , b_d(.))' :{{\mathbb {R}}}\rightarrow {{\mathbb {R}}}^d\) are known. The notation \(X'\) for a vector or a matrix X denotes the transpose of X. Each process \((X_i(t))\) represents an individual and the \(d+1\)-dimensional random vector \((\Phi _i, \Psi _i)\) represents the (multivariate) random effect of individual i. In Delattre et al. (2013), the N processes are assumed to be continuously observed throughout a fixed time interval [0, T], \(T>0\) and \(\Psi _i= \gamma ^{-1/2}\) is non random and known. When \(\Phi _i\) follows a Gaussian distribution \({{\mathcal {N}}}_d({\varvec{\mu }}, \gamma ^{-1}{\varvec{\Omega }})\), the exact likelihood associated with \((X_i(t), t\in [0,T], i=1, \ldots ,N)\) is explicitely computed and asymptotic properties of the exact maximum likelihood estimators are derived under the asymptotic framework \(N\rightarrow +\infty \). In Delattre et al. (2015), the case of \(b(.)=0\) and \(\Psi _i= \Gamma _i^{-1/2}\) with \(\Gamma _i\) following a Gamma distribution \(G(a, \lambda )\) is investigated; and Delattre et al. (2017) is concerned with estimation of mixed effects either in the drift or in the diffusion coefficient from discrete observations. From now on, each process \((X_i(t))\) is discretely observed on a fixed-length time interval [0, T] at n times \(t_j=jT/n\) and the random effects \((\Phi _i, \Psi _i)\) follow a joint parametric distribution. Our aim is to estimate the unknown parameters from the observations \((X_i(t_j), j=1, \ldots ,n; i=1, \ldots , N)\). We focus on distributions that give rise to explicit approximations of the likelihood functions so that the construction of estimators is easy and their asymptotic study feasible. To this end, we assume that \((\Phi _i,\Psi _i)\) has the following distribution:

Contrary to the common Gaussian assumption for random effects, the marginal distribution of \(\Phi _i\) is not Gaussian: \(\Phi _i -{\varvec{\mu }}= \Gamma _i^{-1/2} \eta _i\), with \(\eta _i \sim {{\mathcal {N}}}_d(0, {\varvec{\Omega }})\), is a Student distribution. We propose two distinct approximate likelihood functions which yield asymptotically equivalent estimators as both N and the number n of observations per individual tend to infinity. The first approximate likelihood (Method 1) is natural but proving its existence raises technical difficulties (Proposition 1, Lemma 1). The second one (Method 2) derives from Method 1 and has the advantage of splitting the estimation of parameters in the diffusion coefficient and parameters in the drift term. We obtain consistent and \(\sqrt{N}\)-asymptotically Gaussian estimators for all parameters under the condition \(N/n \rightarrow 0\). For the parameters \((\lambda ,a)\) of random effects in the diffusion coefficient, we obtain that the weaker constraint \(N/n^2\) is enough (Theorems 1, 2). We prove that the estimators obtained with these two approximate likelihoods are asymptotically equivalent (Theorem 3). We compare these results with the estimation for N i.i.d. direct observations of the random effects \((\Phi _i, \Psi _i)\).

The methods of the present paper and of the previous ones (Delattre et al. 2013, 2015, 2017) are now avalaible in the R package MsdeParEst (Delattre and Dion 2017) for mixed Ornstein–Uhlenbeck and CIR.

The structure of the paper is the following. Some working assumptions and two approximations of the model likelihood are introduced in Sect. 2. Two estimation methods are derived from these approximate likelihoods in Sect. 3, and their respective asymptotic properties are studied. Section 4 provides numerical simulation results for several examples of SDEMEs and illustrates the performances of the proposed methods in practice. Theoretical proofs are gathered in the “Appendix”. Some technical proofs are given in an electronic supplementary material. Auxiliary results are given in Sect. 7.

2 Approximate likelihoods

2.1 Framework and assumptions

Let \((X_i(t), t \ge 0)\), \(i=1, \ldots ,N\) be N real valued stochastic processes ruled by (1). The processes \((W_1, \ldots , W_N)\) and the r.v.’s \((\Phi _i,\Psi _i), i=1, \ldots ,N\) are defined on a common probability space \((\Omega , {{\mathcal {F}}}, {{\mathbb {P}}})\) and we set \(({{\mathcal {F}}}_t=\sigma ( \Phi _i, \Psi _i, W_i(s), s\le t, i=1, \ldots ,N), t\ge 0)\). We assume that:

-

(H1)

The real valued functions \(x \rightarrow b_j(x)\), \(j=1, \ldots , d\) and \(x \rightarrow \sigma (x)\) are \(C^2\) on \({{\mathbb {R}}}\) with first and second derivatives bounded by L. The function \(\sigma ^2(.)\) is lower bounded : \(\exists \; \sigma _0\ne 0, \forall x\in {{\mathbb {R}}}, \sigma ^2(x)\ge \sigma ^2_0.\)

-

(H2)

There exists a constant K such that, \(\forall x \in {{\mathbb {R}}}\), \(\Vert b(x)\Vert +|\sigma (x)|\le K\).

Assumption (H1) corresponds to the usual linear growth condition and regularity assumptions on b(.), \(\sigma (.)\) which ensure the existence and uniqueness of strong solutions of Eq. (1). However, we need here, for the accurate study of discretizations (see Sect. 7.2), that \(X_i(t)\) has finite moments. For stochastic differential equations, the property {\(E(X_i^{2p}(t)) <\infty \)} holds if the initial condition satisfies \(\{ E(X_i^{2p}(0))<\infty \}\). This does no longer hold here, even if {\( E(||\Phi _i||^{2p}+ \Psi _i^{2p})<\infty \)} (see Sect. 7.1). We have either to assume that \((\Phi _i,\Psi _i)\) belongs to a bounded set or (H2). To circumvent this last assumption, we could think of applying a localization device (see Jacod and Protter 2012, Chapter 4.4.1). However, while it is straightforward to apply this method for one path, the extension to N paths \(X_i(.)\) simultaneously, is complex, especially as \(X_i(t)\) has possibly no finite moments (see Sect. 7.1).

The processes \((X_i(t))\) are discretely observed with sampling interval \(\Delta _n\) on a fixed time interval [0, T] and for sake of clarity we assume a regular sampling on [0, T]:

Let \(\vartheta \) denote the unknown parameter and \(\Theta \) the parameter set with

The canonical space associated with one trajectory on [0, T] is defined by \((({{\mathbb {R}}}^d\times (0,+\infty )\times C_T), P_\vartheta )\) where \(C_T\) denotes the space of real valued continuous functions on [0, T], \(P_\vartheta \) denotes the distribution of \((\Phi _i,\Psi _i, (X_i(t), t\in [0,T]))\) and \(\vartheta \) the unknown parameter. For the N trajectories, the canonical space is \(\prod _{i=1}^N (({{\mathbb {R}}}^d\times (0,+\infty )\times C_T),{{\mathbb {P}}}_\vartheta = \otimes _{i=1}^N P_\vartheta )\). Below, the true value of the parameter is denoted \(\vartheta _0\).

We introduce the statistics and the assumptions

-

(H3)

The matrix \(V_i(T)\) is positive definite a.s., where

$$\begin{aligned} V_i(T)= \left( \int _0^T \frac{b_k(X_i(s))b_\ell (X_i(s))}{ \sigma ^2(X_i(s))}ds\right) _{1\le k,\ell \le d}. \end{aligned}$$(7) -

(H4)

The parameter set \(\Theta \) satisfies that, for constants \(\ell _0, \ell _1, \alpha _0, \alpha _1 ,m,c_0,c_1,\) \(0< \ell _0\le \lambda \le \ell _1,\; 0<\alpha _0 \le a\le \alpha _1,\; \Vert {\varvec{\mu }}\Vert \le m,\; c_0\le \lambda _{max}({\varvec{\Omega }})\le c_1,\) where \(\lambda _{max}({\varvec{\Omega }})\) denotes the maximal eigenvalue of \({\varvec{\Omega }}\).

Assumption (H3) ensures that all the components of \(\Phi _i\) can be estimated. If the functions \((b_k/\sigma ^2)\) are not linearly independent, the dimension of \(\Phi _i\) is not well defined and (H3) is not fulfilled. Note that, as n tends to infinity, the matrix \(V_{i,n}\) defined in (5) converges a.s. to \(V_i(T)\) so that, under (H3), for n large enough, \(V_{i,n}\) is positive definite. Assumption (H4) is classically used in a parametric setting. Under (H4), the matrix \({\varvec{\Omega }}\) may be non invertible which allows including non random effects in the drift term.

2.2 First approximation of the likelihood

Let us now compute our first approximate likelihood \({{\mathcal {L}}}_n(X_{i,n},\vartheta )\) of \(X_{i,n}\), with \(\vartheta \) defined in (4). The exact likelihood of the i-th vector of observations is obtained by computing first the conditional likelihood given \(\Phi _i=\varphi , \Psi _i=\psi \), and then integrating the result with respect to the joint distribution of \((\Phi _i, \Psi _i)\). The conditional likelihood given fixed values \((\varphi , \psi )\), i.e. the likelihood of \((X_i^{\varphi , \psi }(t_j), j=1, \ldots ,n)\) being not explicit, is approximated by using the Euler scheme likelihood of

Setting \(\psi = \gamma ^{-1/2}\), the exact likelihood of \((X_i^{\varphi , \psi }(t_j), j=1, \ldots ,n)\) is replaced by the likelihood of \((Y_{i,j},j=1, \ldots ,n)\):

with \(Y_{i,0}=x\) and \(\epsilon _{i,j}= \frac{W_i(t_j)-W_i(t_{j-1})}{\sqrt{\Delta }}\) i.i.d. \(\mathcal{N}(0,1)\). Therefore, this yields the approximate conditional likelihood:

where this formula ignores multiplicative functions which do not contain the unknown parameters.

The unconditional approximate likelihood is obtained integrating with respect to the joint distribution \(\nu _{\vartheta }(d\gamma ,d\varphi )\) of the random effects \((\Gamma _i=\Psi _i^{-2},\Phi _i)\).

For this, we first integrate \(L_n(X_i, \gamma ,\varphi )\) with respect to the Gaussian distribution \({{\mathcal {N}}}_d({\varvec{\mu }},\gamma ^{-1}{\varvec{\Omega }})\). Then, we integrate the result w.r.t. the distribution of \(\Gamma _i\). At this point, a difficulty arises. This second integration is only possible on the subset \(E_{i,n}(\vartheta )\) defined in (12).

Let \(I_d\) denote the identity matrix of \({{\mathbb {R}}}^d\) and set, for \(i=1, \ldots ,N\), under (H3)

Proposition 1

Assume that, for \(i=1, \ldots ,N\), \((\Phi _i, \Psi _i)\) has distribution (2). Under (H1) and (H3), an explicit approximate likelihood for the observation \((X_{i,n}, i=1, \ldots ,N)\) is on the set \(\displaystyle \mathbf E _{N,n}(\vartheta )\) (see 12),

Note that this approximate likelihood also ignores multiplicative functions which do not contain the unknown parameters. We must now deal with the set \(\displaystyle \mathbf E _{N,n}(\vartheta )\). For each i, elementary properties of quadratic variations yield that, as n tends to infinity, \(S_{i,n}/n\) tends to \(\Gamma _i^{-1}\) in probability. On the other hand, the random matrix \(V_{i,n}\) tends a.s. to the integral \(V_i(T)\) and the random vector \(U_{i,n}\) tends in probability to the stochastic integral

Therefore \( T_{i,n}( {\varvec{\mu }}, {\varvec{\Omega }})/n\) tends to 0 (see 11). This implies that, for all \(i=1, \ldots , N\) and for all \((\vartheta _0, \vartheta )\), \({{\mathbb {P}}}_{\vartheta _0}(E_{i,n}(\vartheta ))\rightarrow 1\) as n tends to infinity. However, we need the more precise result

Moreover, the set on which the approximate likelihood is considered should not depend on \(\vartheta \).

To this end, let us define, for \(\alpha >0\), using the notations of (H4),

Lemma 1

Assume (H1)–(H4). For all \(\vartheta \) satisfying (H4) and all i, we have \( F_{i,n} \subset E_{i,n}(\vartheta )\). If \(a_0>4\) and as \(N,n \rightarrow \infty \), \(N=N(n)\) is such that \(N/n^{2} \rightarrow 0\), then,

For this, we prove that, if \(a_0>4\),

where \(\lesssim \) means lower than or equal to up to a multiplicative known constant which does not depend on n. This explains the constraint \(N/n^2\rightarrow 0\).

For this proof, the condition \(c_0\le \lambda _{max}({\varvec{\Omega }})\) of (H4) is not required.

The condition \(a_0>4\) is a moment condition for \(\Psi _i\) required by the proof. It is equivalent to the fact that \({{\mathbb {E}}} \Psi _i^8={{\mathbb {E}}} \Gamma _i^{-4}= \lambda _0^4/(a_0-1)(a_0-2)(a_0-3)(a_0-4)<+\infty \). Note that \({{\mathbb {E}}} \Psi _i^2={{\mathbb {E}}} \Gamma _i^{-1}=\lambda _0/(a_0-1)\) so that the random effect takes moderate values which is reasonable.

As a consequence of Lemma 1, for all \(\vartheta \), \({{\mathbb {P}}}_{\vartheta _0}(\mathbf E _{N,n}(\vartheta ))\rightarrow 1\) and (13) is well defined on the set \(\mathbf F _{N,n}\) which is independent of \(\vartheta \) and has probability tending to 1. The proof of Lemma 1 is surprisingly technical and detailed in an electronic supplementary material.

2.3 Second approximation of the likelihood

Formulae (13)–(14) suggest another approximation of the likelihood which is simpler. We give the heuristics for this approximation. We can write:

The first term \({{\mathcal {L}}}_n^{(1)}(X_{i,n}, \vartheta )\) only depends on \((\lambda ,a)\) and is equal to the approximate likelihood function obtained in Delattre et al. (2015) for \(b\equiv 0\) and \(\Gamma _i\sim G(a,\lambda )\). For the second term, we have:

As for n tending to infinity, \(T_{i,n}( {\varvec{\mu }}, {\varvec{\Omega }})\) tends to a fixed limit and \(S_{i,n}/n\) tends to \(\Gamma _i^{-1}\), this yields:

Now, the above expression only depends on \(({\varvec{\mu }}, {\varvec{\Omega }})\). Morevover, as the term \(U_{i,n}'V_{i,n}^{-1}U_{i,n} \) in \(T_{i,n}( {\varvec{\mu }}, {\varvec{\Omega }})\) does not contain parameters, we can forget it and set:

and define the second approximation for the log-likelihood: \({{\varvec{V}}}_{N,n}( \vartheta )= \sum _{i=1}^N {{\varvec{V}}}_n(X_{i,n}, \vartheta ) \). Thus, estimators of \((\lambda ,a)\) and \(({\varvec{\mu }}, {\varvec{\Omega }})\) can be computed separately. It is noteworthy that this second approximation overcomes the difficulties encountered in Lemma 1.

3 Asymptotic properties of estimators

In this section, we study the asymptotic behaviour of the estimators based on the two approximate likelihood functions of the previous section. To serve as a baseline, the estimation when an i.i.d. sample \((\Phi _i,\Gamma _i), i=1, \ldots , N\) is observed is presented in Sect. 7.4.

3.1 Estimation based on the first approximation of the likelihood

We study the estimation of \(\vartheta =(\lambda ,a, {\varvec{\mu }}, {\varvec{\Omega }})\) using the approximate likelihood \({{\mathcal {L}}}_{N,n}( \vartheta )\) given in Proposition 1 on the set \(\mathbf {F}_{N,n}\) studied in Lemma 1 (see 13, 14, 16). Let

By (H4), using notations (16), we have \(\lambda + S_{i,n}/2 + T_{i,n}({\varvec{\mu }}, {\varvec{\Omega }})/2 \ge (S_{i,n} -M_{i,n})/2\). We refer to the supplementary material (proof of Lemma 1), where we give a proof of the nontrivial property that, if \(N/n^2\) tends to 0, with probability tending to 1, \(T_{i,n}({\varvec{\mu }}, {\varvec{\Omega }})\ge -M_{i,n}\) for all \(i=1, \ldots , N\).

More precisely, on the set \( F_{i,n}\), \(Z_{i,n}> \alpha / (\sqrt{n}+ 2(\alpha _1/\sqrt{n}))>0\) where \(\alpha _1\) is defined in (H4). Instead of considering \(\log {{{\mathcal {L}}}_n(X_i, \vartheta )}\) on \(F_{i,n}\), to define a contrast, we replace \(\log Z_{i,n} \) by \(1_{F_{i,n}}\log Z_{i,n}\) and set \(\mathbf U _{N,n}(\vartheta )= \sum _{i=1}^N \mathbf U _n(X_i, \vartheta ),\) with

Define the pseudo-score function and the associated estimators \({\tilde{\vartheta }}_{N,n}\):

To investigate their asymptotic behaviour, we need to prove that \(Z_{i,n}\) defined in (20) is close to \(\Gamma _i^{-1}\) and that \(1_{F_{i,n}}Z_{i,n}^{-1}\) is close to \(\Gamma _i\). For this, we introduce the random variable

which corresponds to \(S_{i,n}\) when \(b(.)=0, \sigma (.) =1\). Then, we split \(Z_{i,n}-\Gamma _i^{-1}\) into \(Z_{i,n} -\frac{S_{i,n}^{(1)}}{n}+ \frac{S_{i,n}^{(1)}}{n} -\Gamma _i^{-1}\) and study successively the two terms. The second term has explicit distribution as \(C_{i,n}^{(1)}\) has \(\chi ^2(n)\) distribution and is independent of \(\Gamma _i\). The first term is treated below. We proceed analogously for \(1_{F_{i,n}}Z_{i,n}^{-1}- \Gamma _i\) introducing \(n/ S_{i,n}^{(1)}\).

Lemma 2

Assume (H1)–(H4). For all \(p\ge 1\), we have

Let \(\psi (u)=\frac{\Gamma '(u)}{\Gamma (u)}\) and \(F^c\) denote the complementary set of F. We obtain,using (20), (25),

and let \(J(\vartheta )\) denote the covariance matrix of

The Fisher information matrix associated with the direct observation \((\Gamma _1, \ldots , \Gamma _N)\) (see Sect. 7.4) is

We now state:

Theorem 1

Assume (H1)–(H4), \(a>4\), and that n and \(N=N(n)\) tend to infinity. Then, for all \(\vartheta \),

-

If \(N/n^2\rightarrow 0\), \(N^{-1/2}\left( \frac{\partial \mathbf U _{N,n}}{\partial \lambda }(\vartheta ), \frac{\partial \mathbf U _{N,n}}{\partial a}(\vartheta )\right) '\) converges in distribution under \({{\mathbb {P}}}_{\vartheta }\) to the Gaussian law \({{\mathcal {N}}}_2(0, I_0(\lambda , a))\).

-

If \(N/n\rightarrow 0\), \(N^{-1/2}\nabla _{\vartheta } \mathbf U _{N,n}(\vartheta )\) converges in distribution under \({{\mathbb {P}}}_{\vartheta }\) to \({{\mathcal {N}}}_q(0, {{\mathcal {J}}}(\vartheta ))\) (\(q=2+d+d(d+1)/2\)) where

$$\begin{aligned} {{\mathcal {J}}}(\vartheta )= \left( \begin{array}{c|c} I_0(\lambda ,a) &{}\quad \mathbf 0 \\ \hline \mathbf 0 &{}\quad J(\vartheta ) \end{array}\right) \end{aligned}$$with \(J(\vartheta ),I_0( \lambda ,a)\) defined in (27), (28).

-

Define the opposite of the Hessian of \(\mathbf U _{N,n}(\vartheta )\) by \({{\mathcal {J}}}_{N,n}(\vartheta )=-\nabla ^2_{\vartheta }{} \mathbf U _{N,n}(\vartheta )\). Then, if \(a>6\), as n and \(N=N(n)\) tend to infinity, \(\frac{1}{N} {{\mathcal {J}}}_{N,n}(\vartheta )\) converges in \({{\mathbb {P}}}_{\vartheta }\)-probability to \({{\mathcal {J}}}(\vartheta )\).

Remark 1

The constraint \(a>6\) might seem too stringent. Noting that \({{\mathbb {E}}}(\Gamma _i^{-1})= \frac{\lambda }{a-1}\), it is indeed an assumption on the quadratic variations of \((X_i(t))\) and \(a>6\) corresponds to moderate quadratic variations.

Remark 2

In the case of univariate random effect \(\Phi _i \sim \mathcal{N}(\mu ,\omega ^2)\), \(J(\vartheta )\) writes,

For the proof, we use that \(A_{i,n}\) (resp. \( B_{i,n}\)) converges to \(A_i(T;{\varvec{\mu }},{\varvec{\Omega }})\) (resp. \(B_i(T;{\varvec{\Omega }})\)) as n tends to infinity and that \(Z_{i,n}^{-1}1_{F_{i,n}}\) is close to \(\Gamma _i\) for large n. Note that \(I_0(\lambda ,a)\) is invertible for all \((\lambda ,a) \in (0,+\infty )^2\) (see Sect. 7.4). We conclude:

Theorem 2

Assume (H1)–(H4), \(a_0>6\), that n and \(N=N(n)\) tend to infinity with \(N/n \rightarrow 0\) and that \(J(\vartheta _0)\) is invertible. Then, with probability tending to 1, a solution \({\tilde{\vartheta }}_{N,n}\) to (21) exists which is consistent and such that \({\sqrt{N}}({\tilde{\vartheta }}_{N,n} - \vartheta _0)\) converges in distribution to \({{\mathcal {N}}}_q(0, {{\mathcal {J}}}^{-1}(\vartheta _0))\) under \({{\mathbb {P}}}_{\vartheta _0}\) for all \(\vartheta _0\).

For the first two components, the constraint \(N/n^2 \rightarrow 0\) is enough.

The proof of Theorem 2 is deduced standardly from the previous theorem and omitted.

The estimators of \((\lambda , a)\) are asymptotically equivalent to the exact maximum likelihood estimators of the same parameters based on the observation of \((\Gamma _i, i=1, \ldots , N)\) under the constraint \(N/n^2 \rightarrow 0\). There is no loss of information for parameters coming from the random effects in the diffusion coefficient. For the parameters \(({\varvec{\mu }}, {\varvec{\Omega }})\), which come from the random effects in the drift, the constraint is \(N/n \rightarrow 0\) and there is a loss of information (see 49 in Sect. 7.4) w.r.t. the direct observation of \(((\Phi _i, \Gamma _i), i=1, \ldots ,N)\). For instance, consider a univariate random effect \(\Phi \sim \mathcal{N}(\mu ,\omega ^2)\). Then, if \(\omega ^2\) is known, one can see that

If for all i, \(\Gamma _i=\gamma \) is deterministic, there is no loss of information for the estimation of \((\mu , \omega ^2)\), with respect to the continuous observation of the processes \((X_i(t), t\in [0,T])\) (see Delattre et al. 2013).

3.2 Estimation based on the second approximation of the likelihood

Now, we consider the second approximation of the loglikelihood (19). We set

We do not need to truncate \(\xi _{i,n}\) as it is bounded from below. For the second term \(\mathbf V _n^{(2)}(X_{i,n}, {\varvec{\mu }},{\varvec{\Omega }})\), we need a truncation to deal with \(n/S_{i,n}\) and make a slight modification. Let, for k a given constant,

and \(\mathbf W _{N,n}({\varvec{\mu }}, {\varvec{\Omega }})= \sum _{i=1}^N \mathbf W _{n}(X_{i,n},{\varvec{\mu }}, {\varvec{\Omega }})\).

We define the estimators \({\vartheta }_{N,n}^*\) by

We have the following result.

Theorem 3

Assume (H1)–(H4), \(a_0>6\), that n and \(N=N(n)\) tend to infinity with \(N/n \rightarrow 0\) and that \(J(\vartheta _0)\) is invertible. Then, with probability tending to 1, a solution \(\vartheta ^*_{N,n}\) to (32) exists which is consistent and such that \({\sqrt{N}}( \vartheta ^*_{N,n} - \vartheta _0)\) converges in distribution to \({{\mathcal {N}}}_q(0, {{\mathcal {J}}}^{-1}(\vartheta _0))\) under \({{\mathbb {P}}}_{\vartheta _0}\) for all \(\vartheta _0\) (see (29), (28) and the statement of Theorem 2). For the first two components, the constraint \(N/n^2 \rightarrow 0\) is enough.

The estimators \(\vartheta ^*_{N,n}\) and \({\tilde{\vartheta }}_{N,n}\) are asymptotically equivalent.

This equivalence result is quite important: it could be expected that Method 1, being a natural approximation of the likelihood, would lead to better asymptotic results. This is not the case. Implementing Method 2 to compute the estimators is numerically easier because it splits the estimation of random effect parameters in the diffusion coefficient and those in the drift coefficient. On simulated data, both methods are comparable even for reasonably small sample sizes.

4 Simulation study

We illustrate and assess the properties of the parameter estimators. Model parameters are estimated by the two approximations of the likelihood. We found little difference between results of both methods, and so we only report results for Method 2.

Several models are simulated:

Model 1 Mixed effects Brownian motion:

Model 2 A model satisfying (H1)–(H2) with constant \(\sigma (\cdot )\):

Model 3 A model satisfying (H1)–(H2) with non constant \(\sigma (\cdot )\):

\(\Gamma _i \sim G(a,\lambda )\) , \(\Phi _i = (\Phi _{i,1}, \Phi _{i,2})'| \Gamma _i = \gamma \sim \mathcal {N}_2({\varvec{\mu }},\gamma ^{-1}{\varvec{\Omega }})\) with \({\varvec{\mu }}=(\mu _1,\mu _2)'\) and \({\varvec{\Omega }}=\mathrm {diag}(\omega _1^2,\omega _2^2)\)

Model 4 Mixed Ornstein–Uhlenbeck process:

\(\Gamma _i \sim G(a,\lambda )\) , \(\Phi _i = (\Phi _{i,1}, \Phi _{i,2})'| \Gamma _i = \gamma \sim \mathcal {N}_2({\varvec{\mu }},\gamma ^{-1}{\varvec{\Omega }})\) with \({\varvec{\mu }}=(\mu _1,\mu _2)'\) and \({\varvec{\Omega }}=\mathrm {diag}(\omega _1^2,\omega _2^2)\).

Note that Model 4 does not fulfill assumptions (H1)–(H2) but it is widely used in practice and the estimation results show that the estimation methods still perform well.

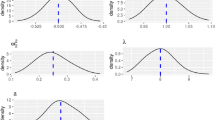

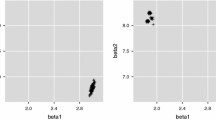

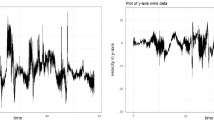

For each SDE model, 100 data sets are generated with N subjects on the same time interval [0, T], \(T=5\). Each data set is simulated as follows. First, the random effects are drawn, then, the diffusion sample paths are simulated with a very small discretization step-size \(\delta = 0.001\). Exact simulation is performed for Models 1 and 4 whereas a Euler discretization scheme is used for Models 2 and 3. The time interval between consecutive observations is taken equal to \(\Delta = 0.025\) or 0.005 with a resulting number of observations \(n= 200,1000\) and fixed time interval [0, 5]. The model parameters are then estimated by using the second approximation of the likelihood from each simulated dataset. The empirical mean and standard deviation of the estimates are computed from the 100 datasets (Tables 1, 2, 3 and 4). For Gamma distributions, the parameters \(m=a/\lambda \) and \(t = \psi (a) - \log (\lambda )\) have unbiased empirical estimators and are easier to estimate. This is why we present the estimates of m and t rather than the estimates of a and \(\lambda \) which are highly biased even for direct observations. The estimation procedure requires a truncation (see 31), we use \(k=0.1\).

We observe from Tables 1, 2, 3 and 4 that the estimation method has satisfactory performances overall. The parameters are estimated with very little bias whatever the model and the values of n and N. The bias becomes smaller as both N and n increase. The standard deviations of the estimates are small, they become smaller when N increases and they do not depend on n. These results are in accordance with the theory since the asymptotic distribution of the estimates is obtained when \(N/n \rightarrow 0\) or \(N/n^2 \rightarrow 0\) respectively, and the rate of convergence is \(\sqrt{N}\). Although for \(n=200\), we don’t have N / n very small in our simulation design, the results in this case are quite satisfactory which encourages the use of this estimation method not only for high but also moderate values of numbers of observations per path n. In all the examples below, the Gamma distribution parameters are \(a=8,\lambda =2\). The associated theoretical s.d. for m and t for direct observations are respectively 0.2, 0.05 for \(N=50\) and 0.14, 0.04 for \(N=100\) (see 7.4 and Table 5). This agrees with the results obtained in Examples 1–4 for \((a,\lambda )\) .

5 Concluding comments

In this paper, we have addressed the new problem of estimating parameters when having discrete observations and simultaneous random effects in the drift and in the diffusion coefficients by means of a joint parametric distribution. We have considered N paths and n observations per path. For linear random effects and a specific joint distribution for these random effects, we have proved that the model parameters in the drift and in the diffusion can be estimated consistently and with a rate \(\sqrt{N}\) under the condition \(N/n \rightarrow 0\). For the parameters in the diffusion coefficient, the constraint is weaker (\(N/n^2 \rightarrow 0\)). We have proposed two methods for the estimation leading to asymptotically equivalent properties. The second one is now implemented in a R package (MsdeParEst). Including random effects both in the drift and in the diffusion coefficient of SDE has been proposed in several data applications but to our knowledge, it had not been studied from a theoretical point of view.

Our results are obtained for a regular sampling but could be easily extended to non regular ones. They are derived in an asymptotic framework. An important issue would be to study non asymptotic properties. It would also be interesting to consider more general SDEMEs where fixed and random effects are no longer linear and different distributions for the random effects.

References

Berglund M, Sunnaker M, Adiels M, Jirstrand M, Wennberg B (2001) Investigations of a compartmental model for leucine kinetics using non-linear mixed effects models with ordinary and stochastic differential equations. Math Med Biol. https://doi.org/10.1093/imammb/dqr021

Delattre M, Dion C (2017) MsdeParEst: Parametric estimation in mixed-effects stochastic differential equations. https://CRAN.R-project.org/package=MsdeParEst. Accessed 16 Sept 2017

Delattre M, Genon-Catalot V, Larédo C (2017) Parametric inference for discrete observations of diffusion with mixed effects in the drift or in the diffusion coefficient. Stoch Process Appl. https://doi.org/10.1016/j.spa.2017.08.016

Delattre M, Genon-Catalot V, Samson A (2013) Maximum likelihood estimation for stochastic differential equations with random effects. Scand J Stat 40:322–343

Delattre M, Genon-Catalot V, Samson A (2015) Estimation of population parameters in stochastic differential equations with random effects in the diffusion coefficient. ESAIM Probab Stat 19:671–688

Delattre M, Lavielle M (2013) Coupling the SAEM algorithm and the extended Kalman filter for maximum likelihood estimation in mixed-effects diffusion models. Stat Interface 6(4):519–532

Dion C (2016) Nonparametric estimation in a mixed-effect Ornstein–Uhlenbeck model. Metrika 79:919–951

Ditlevsen S, De Gaetano A (2005) Mixed effects in stochastic differential equation models. REVSTAT Stat J 3:137–153

Donnet S, Samson A (2008) Parametric inference for mixed models defined by stochastic differential equations. ESAIM P&S 12:196–218

Forman J, Picchini U (2016) Stochastic differential equation mixed effects models for tumor growth and response to treatment. Preprint arXiv:1607.02633

Grosse Ruse M, Samson A, Ditlevsen S (2017) Multivariate inhomogeneous diffusion models with covariates and mixed effects. Preprint arXiv:1701.08284

Jacod J, Protter P (2012) Discretization of processes, vol 67. Stochastic modelling and applied probability. Springer, Berlin

Kessler M, Lindner A, Sørensen ME (2012) Statistical methods for stochastic differential equations, vol 124. Monographs on statistics and applied probability. Chapman & Hall, London

Leander J, Almquist J, Ahlstrom C, Gabrielsson J, Jirstrand M (2015) Mixed effects modeling using stochastic differential equations: Illustrated by pharmacokinetic data of nicotinic acid in obese zucker rats. AAPS J. https://doi.org/10.1208/s12248-015-9718-8

Nie L (2006) Strong consistency of the maximum likelihood estimator in generalized linear and nonlinear mixed-effects models. Metrika 63:123–143

Nie L (2007) Convergence rate of the MLE in generalized linear and nonlinear mixed-effects models: theory and applications. J Stat Plan Inference 137:1787–1804

Nie L, Yang M (2005) Strong consistency of the MLE in nonlinear mixed-effects models with large cluster size. Sankhya Indian J Stat 67:736–763

Picchini U, De Gaetano A, Ditlevsen S (2010) Stochastic differential mixed-effects models. Scand J Stat 37:67–90

Picchini U, Ditlevsen S (2011) Practicle estimation of high dimensional stochastic differential mixed-effects models. Comput Stat Data Anal 55:1426–1444

Whitaker GA, Golightly A, Boys RJ, Chris S (2017) Bayesian inference for diffusion driven mixed-effects models. Bayesian Anal 12:435–463

Author information

Authors and Affiliations

Corresponding author

Electronic supplementary material

Below is the link to the electronic supplementary material.

Appendices

Appendix

1.1 Proof of Proposition 1

For the likelihood of the i-th vector of observations, we first compute the conditional likelihood given \(\Phi _i=\varphi , \Psi _i=\psi \), and then integrate the result with respect to the joint distribution of \((\Phi _i, \Psi _i)\). We replace the exact likelihood given fixed values \((\varphi , \psi )\), i.e. the likelihood of \((X_i^{\varphi , \psi }(t_j), j=1, \ldots ,n)\) by the Euler scheme likelihood of (8). Setting \(\psi = \gamma ^{-1/2}\), it is given up to constants by:

The unconditional approximate likelihood is obtained integrating with respect to the joint distribution \(\nu _{\vartheta }(d\gamma ,d\varphi )\) of the random effects \((\Gamma _i=\Psi _i^{-2},\Phi _i)\). For this, we first integrate \(L_n(X_i, \gamma ,\varphi )\) with respect to the Gaussian distribution \({{\mathcal {N}}}_d({\varvec{\mu }},\gamma ^{-1}{\varvec{\Omega }})\). Then, we integrate the result w.r.t. the distribution of \(\Gamma _i\). This second integration is only possible on the subset \(E_{i,n}(\vartheta )\) defined in (12).

Assume first that \({\varvec{\Omega }}\) is invertible. Integrating (33) with respect to the distribution \({{\mathcal {N}}}({\varvec{\mu }}, \gamma ^{-1}{\varvec{\Omega }})\) yields the expression:

where

and

Computations using matrices equalities and (5), (6), (10) yield that \(T_{i,n}({{\varvec{\mu }}},{{\varvec{\Omega }}})\) is equal to the expression given in (11), i.e.:

Noting that \( \frac{det({{\varvec{\Sigma }}}_{i,n})}{ det({\varvec{\Omega }})}= (det(I_d+V_{i,n}{\varvec{\Omega }}))^{-1} \), we get

Then, we multiply \(\Lambda _n(X_{i,n},\gamma , {\varvec{\mu }}, {\varvec{\Omega }})\) by the Gamma density \((\lambda ^a /\Gamma (a)) \gamma ^{a-1}\exp {(-\lambda \gamma )}\), and on the set \(E_{i,n}(\vartheta )\) (see 12), we can integrate w.r.t. to \(\gamma \) on \((0,+\infty )\). This gives \({{\mathcal {L}}}_n(X_{i,n}, \vartheta )\).

At this point, we observe that the formula (14) and the set \(E_{i,n}(\vartheta )\) are still well defined for non invertible \({\varvec{\Omega }}\). Consequently, we can consider \({{\mathcal {L}}}_n(X_{i,n}, \vartheta )\) as an approximate likelihood for non invertible \({\varvec{\Omega }}\). \(\square \)

1.2 Proof of Theorem 1

For simplicity of notations, we consider the case \(d=1\) (univariate random effect in the drift, i.e. \(\mu = {\varvec{\mu }}\), \(\omega ^2={\varvec{\Omega }}\)). The case \(d>1\) does not present additional difficulties.

Proof of Lemma 2

We omit the index n but keep the index i for the i-th sample path. We have (see 20):

Therefore, using definition (22) and the notations introduced in (H4) (bounds on the parameter set),

Note that, using (11):

Thus, using (H4) and the fact that \(x+x^2\le 2(1+x^2)\) for all \(x\ge 0\),

By the Hölder inequality, we obtain using (6), (36) and (37):

Now, we apply Lemmas (7) and (5) of Sect. 7.2 and (48) of Sect. 7.3 and this yields inequality (23) of Lemma 2.

For the second inequality, we write:

On \(F_{i}\), \(Z_i\ge c/\sqrt{n}\), so:

Consequently, by the Hölder inequality,

Now, we take conditional expectation w.r.t. \(\Psi _i=\psi , \Phi _i=\varphi \) and apply the Cauchy–Schwarz inequality. We use that, for n large enough, (see (48) of Sect. 7.3):

And, we apply inequality (23) to get (24). \(\square \)

Now, we start proving Theorem 1. Omitting the index n in \(A_{i,n}, B_{i,n}\), we have [see (21), (25), (26)]

The remainder terms are:

The most difficult remainder terms are \(R_1, R_2,R_3,R_4\). They are treated in Lemma 3 below. For the term \(R'_2\), we use that \((\psi (a+(n/2)) - \log {(a+(n/2))})= O(n^{-1})\) (see 47) and that, for \(a>4\), \({{\mathbb {P}}}_{\theta }(F_{i}^c) \lesssim n^{-2}\) (see 17) to get that \(R'_2= O_P(\sqrt{N}/n)\).

Using Lemma 5 in Sect. 7.2, it is easy to check that \(R'_3\) and \(R'_4\) are \(O_P(\sqrt{N/n})\).

Therefore, there remains to find the limiting distribution of:

The first two components are exactly the score function corresponding to the exact observation of \((\Gamma _i, i=1, \ldots , N)\) (see Sect. 7.4). Hence, the first part of Theorem 1 is proved.

The whole vector is ruled by the standard central limit theorem. To compute the limiting distribution, we use results from Delattre et al. (2013) which deals with the case of \(\Gamma _i=\gamma \) fixed and \(\Phi _i \sim {{\mathcal {N}}}(\mu , \gamma ^{-1}\omega ^2)\). It is proved in this paper that

This result is stated for \(\gamma =1\) in Proposition 5, p. 328 of Delattre et al. (2013) with (unfortunately) different notations: \(A_i(T;\mu , \omega ^2)\) is denoted \(\gamma _i(\theta )\) and \(B_i(T, \omega ^2) \) is denoted \(I_i(\omega ^2)\) (formula (11) of this paper). It can be extended for any value of \(\gamma \) using formula (35) and the regularity properties of the statistical model. Hence, the third and fourth component are centered and the covariances between the first two components and the last two ones are null. Moreover, it is also proved (in the same Proposition 5) that

Hence, the covariance matrix of the last two components is equal to \(J(\vartheta )\) defined in (29).

The proof of the last item (second order derivatives) relies on the same tools with more cumbersome computations but no additional difficulty. It is detailed in the electronic supplementary material. Note that this part only requires that N, n both tend to infinity without further constraint. So the proof is complete. \(\square \)

Lemma 3

Recall (20). Then, for \(a>4\), (see (16) for the definition of \(F_{i,n}\)), \(R_1, R_2\) are \(O_P(\max \{1/\sqrt{n},\frac{\sqrt{N}}{n}\})\), \(R_3, R_4\) are \(O_P(\sqrt{\frac{N}{n}})\).

Proof

The proof goes in several steps. We have introduced

We know the exact distribution of \(S_{i}^{(1)}\): \(C_{i}^{(1)}\) is independent of \(\Gamma _i\) and has distribution \(\chi ^2(n)=G(n/2, 1/2)\). By exact computations, using Gamma distributions (see Sect. 7), we obtain:

Thus,

Let us detail the first computation. Note that \((\Gamma _i, i=1,\ldots ,N)\) and \((C_{i}^{(1)}, i=1,\ldots ,N)\) are independent. Thus,

The second computation is similar.

Then, we have to study

We write:

For \(a>4\), \({{\mathbb {P}}}_{\vartheta }(F_{i}^c) \lesssim n^{-2}\) (see 17). We have, applying the Cauchy–Schwarz inequality:

Next, apply Lemma 2,

As noted above, \(\Psi _i=\Gamma _i^{-1/2}\), \({{\mathbb {E}}}_{\vartheta }(\Psi _i^{-q})< +\infty \) for all \(q\ge 0\). We can write \(\Phi _i=\mu +\omega \Psi _i \varepsilon _i\) with \(\varepsilon _i\) a standard Gaussian variable independent of \(\Psi _i\). We have

Thus, for \(a>4\),

Joining (39) (left)–(40) (left) and (41), we obtain that, for \(a>4\), \(R_1=O_P(\max \{n^{-1/2}, \sqrt{N}/n\})\).

Analogously,

As above, we can prove

And:

On \(F_{i}\),

Therefore,

Now, we take conditional expectation w.r.t. \(\Phi _i=\varphi , \Psi _i=\psi \), and apply first the Cauchy–Schwarz inequality and then Lemma 2. This yields:

We have to check that the expectation above is finite. The worst term is \({{\mathbb {E}}}_{\vartheta }\Psi _i^6= {{\mathbb {E}}}_{\vartheta }\Gamma _i^{-3}\) which requires the constraint \(a>3\). Thus, for \(a>3\), we have:

Therefore, we have proved that \(R_1, R_2\) are \(O_P(\max \{\frac{1}{\sqrt{n}}, \frac{\sqrt{N}}{n}\})\).

For \(R_3, R_4\), we proceed analogously but we have to deal with the terms \(A_{i}, A_{i}^2\). We write again:

Using Lemma 6 and the Cauchy–Schwarz inequality, we obtain:

Applying now Lemma 2, we obtain:

This requires the constraint \({{\mathbb {E}}}_{\vartheta }\Psi _i^3 {{\mathbb {E}}}_{\vartheta } \Gamma _i^{-3/2}<+\infty \), i.e. \(a>3/2\). Note that \({{\mathbb {E}}}_{\vartheta }(\Psi _i^{-4p})\) is finite for any p. This is why these moments are omitted.

We proceed analogously for \(R_4\) and find that \(R_4= O_P(\sqrt{N/n})\) for \(a>2\). The different constraint on a derives from the presence of \(A_i^2\) in \(R_4\) which requires higher moments for \(\Gamma _i^{-1}\). \(\square \)

1.3 Proof of Theorem 3

We only give a sketch of the proof and assume \(d=1\) for simplicity. We compute \({{\mathcal {H}}}_{N,n}(\vartheta )\) (see 32) and set \(G_{i,n}=\{S_{i,n}\ge k\sqrt{n}\} \). We have:

We can prove that, under (H1)–(H2), if \(a_0>2\), \({{\mathbb {P}}}_{\vartheta _0} (G_{i,n}^c)\lesssim n^{-2}\) under analogous and simpler tools as in Lemma 1. The result of Lemma 2 holds with \(\xi _{i,n}\) instead of \(Z_{i,n}\) and without \(1_{F_{i,n}}\). This allows to prove that:

where \(r_1\) and \(r_2\) are \(O_P(\sqrt{N}/n)\).

The result of Lemma 2 holds with \(S_{i,n}/n\) instead of \(Z_{i,n}\) and \(G_{i,n}\) instead of \(F_{i,n}\) (and the proof is much simpler). This implies that:

and we can prove that \(r_3\) and \(r_4\) are \(O_P(N/n)\).

Auxiliary results

In Sect. 7.1, we explain why Assumption (H2) is required. Results on discretizations are recalled in Sect. 7.2. Sections 7.3 and 7.4 contain results on Gamma distributions and estimation from direct observations of the random effects.

1.1 Preliminary results for SDEs with random effects

SDEMEs have specific features that differ from usual SDEs. The discussion below justifies our setup and assumptions. Consider \((X(t), t \ge 0)\) a stochastic process ruled by:

where the Wiener process (W(t)) and the r.v.’s \((\Phi ,\Psi )\) are defined on a common probability space \((\Omega , {{\mathcal {F}}}, {{\mathbb {P}}})\) and independent. We set \(({{\mathcal {F}}}_t=\sigma ( \Phi , \Psi , W(s), s\le t), t\ge 0)\). To understand the properties of (X(t)), let us introduce the system of stochastic differential equations:

Existence and uniqueness of strong solutions is therefore ensured by the classical assumptions:

-

(A1)

The real valued functions \((x, \varphi )\in {{\mathbb {R}}}\times {{\mathbb {R}}}^{d} \rightarrow b(x, \varphi )\) and \((x, \psi )\in {{\mathbb {R}}}\times {{\mathbb {R}}}^{d'} \rightarrow \sigma (x, \psi )\) are \(C^1\).

-

(A2)

There exists a constant K such that, \(\forall x, \varphi , \psi \in {{\mathbb {R}}}\times {{\mathbb {R}}}^{d}\times {{\mathbb {R}}}^{d'}\), (\(\Vert .\Vert \) is the Euclidian norm):

$$\begin{aligned} |b(x, \varphi )| \le K(1+|x| +\Vert \varphi \Vert ), \quad |\sigma (x, \psi )| \le K(1+|x| +\Vert \psi \Vert ) \end{aligned}$$If the following additional assumption holds:

-

(A3)

The r.v.’s \(\Phi ,\Psi \) satisfy \({{\mathbb {E}}}(\Vert \Phi \Vert ^{2p} + \Vert \Psi \Vert ^{2p})<+\infty \)

then, under (A1)–(A2), for all T, \(\sup _{t\le T}{{\mathbb {E}}}X^{2p}(t)<+\infty \).

To deal with discrete observations, moment properties are required to control error terms. Here, we consider a simple model for the drift term, \(b(x,\varphi )= \varphi ' b(x)\), and for the diffusion coefficient, \(\sigma (x,\psi )= \psi \sigma (x)\), with \(\varphi \in {{\mathbb {R}}}^{d}\), \(\psi \in {{\mathbb {R}}}\). The common assumptions on b and \( \sigma \) should be that \(b, \sigma \) are \(C^1({{\mathbb {R}}})\) and have linear growth w.r.t. x. Then, for all fixed \((\varphi , \psi ) \in {{\mathbb {R}}}^d\times (0,+\infty )\), the stochastic differential equation

admits a unique strong solution process \((X^{\varphi ,\psi }(t), t\ge 0)\) adapted to the filtration \(({{\mathcal {F}}}_t)\). Moreover, the stochastic differential equation with random effects (1) admits a unique strong solution adapted to \(({{\mathcal {F}}}_t)\) such that the joint process \((\Phi ,\Psi , X(t), t \ge 0)\) is strong Markov and the conditional distribution of (X(t)) given \(\Phi =\varphi , \Psi =\psi \) is identical to the distribution of (46). We need more than this property. Indeed, moment properties for X(t) are required. Let us stress that, with \(b(x, \varphi )=\varphi 'b(x), \sigma (x,\psi )=\psi \sigma (x)\), (A2) does not hold even if \(b,\sigma \) have linear growth. In particular, (A3) does not ensure that X(t) has moments of order 2p. Let us illustrate this point on an example.

Example

Consider the mixed effect Ornstein–Uhlenbeck process \(dX(t)= \Phi X(t) dt + \Psi dW(t), X(0)=x\). Then, \(\displaystyle X(t)= x \exp {(\Phi t)} + \Psi \exp {(\Phi t)}\smallint _0^t \exp {(-\Phi s)}\; dW(s) \). The first order moment of X(t) is finite iff \({{\mathbb {E}}}\exp {(\Phi t)}<+\infty \) and \({{\mathbb {E}}}\left[ |\Psi |((\exp {(2\Phi t)}\right. \left. -1)/2\Phi )^{1/2}\right] <+\infty \) which is much stronger than the existence of moments for \(\Phi , \Psi \). When \((\Phi , \Psi )\) has distribution (2), \(\displaystyle {{\mathbb {E}}}\exp {(\Phi t)}= \exp {(\mu t)} {{\mathbb {E}}}(\exp {(\omega ^2 t^2/\Gamma )})=+\infty \).

In a more general setup, the existence of moments is standardly proved by using the Gronwall lemma. In this case, it would lead to an even stronger condition such as \( {{\mathbb {E}}}(\exp {(\Phi ^2 t)}+ \exp {(\Psi ^2 t)})<+\infty \).

Hence, stronger assumptions than usual are necessary. Indeed, (A2) holds for model (1) either if \(\varphi \) and \(\psi \) belong to a bounded set, or if b and \(\sigma \) are uniformly bounded.

We could think of using a localisation device to get bounded b and \(\sigma \). The problem here is to deal with N sample paths \((X_i(t)), i=1, \ldots ,N\) which have possibly no moments. The localisation device is here complex.

1.2 Approximation results for discretizations

The following lemmas are proved in Delattre et al. (2017). In the first two lemmas, we set \(X_1(t)=X(t), \Phi _1=\Phi , \Psi _1=\Psi \).

Lemma 4

Under (H1)–(H2), for \(s\le t\) and \(t-s \le 1\), \(p\ge 1\),

For \(t\rightarrow H(t,X.)\) a predictable process, let \(V(H;T)= \int _0^T H(s,X.)ds\) and \(U(H;T)=\int _0^T H(s,X.)dX(s)\). The following results can be standardly proved.

Lemma 5

Assume (H1)–(H2) and \(p\ge 1\). If H is bounded, \( {{\mathbb {E}}}_{\vartheta }(|U(H;T)|^{p}|\Phi =\varphi , \Psi =\psi ) \lesssim | \varphi |^{p}+\psi ^{p}.\)

Consider \(f:{{\mathbb {R}}} \rightarrow {{\mathbb {R}}}\) and set \(H(s,X.)=f(X(s)), H_n(s,X.)= \sum _{j=1}^n f(X((j-1)\Delta ))1_{((j-1)\Delta ,j\Delta ]}(s)\). If f is Lipschitz,

If f is \(C^2\) with \(f',f''\) bounded

Lemma 6

Recall notations (25)–(26). Under (H1)–(H2),

Let

Lemma 7

Then, for all \(p\ge 1\),

1.3 Properties of the Gamma distribution

The digamma function \(\psi (a)= \Gamma '(a)/\Gamma (a)\) admits the following integral representation: \(\psi (z)= -\gamma +\int _0^1 (1-t^{z-1})/(1-t) dt\) (where \(-\gamma =\psi (1)=\Gamma '(1)\)). For all positive a, we have \( \psi '(a)= -\int _0^1 \frac{\log {t}}{1-t}\;t^{a-1} dt\). Consequently, using an integration by parts, \( -a\psi '(a)=-1- \int _0^1 t^a g(t)dt\), where \(g(t)=(\log {t}/(1-t))' \). A simple study yields that \(t^ag(t)\) is integrable on (0, 1) and positive except at \(t=1\). Thus, \(1-a\psi '(a) \ne 0\). The following asymptotic expansion as a tends to infinity holds:

If X has distribution \(G(a, \lambda )\), then \(\lambda X\) has distribution G(a, 1). For all integer k, \({{\mathbb {E}}}(\lambda X)^k=\frac{\Gamma (a+k)}{\Gamma (a)}\). For \(a>k\), \({{\mathbb {E}}}(\lambda X)^{-k}=\frac{\Gamma (a-k)}{\Gamma (a)}\). Moreover, \({{\mathbb {E}}}\log {(\lambda X)}= \psi (a)\), Var \([\log {(\lambda X)}]= \psi '(a)\).

In particular, if \(X=\sum _{j=1}^n \varepsilon _i^2\) where the \(\varepsilon _i\)’s are i.i.d. \({{\mathcal {N}}}(0,1)\), then \(X\sim \chi ^2(n)=G(n/2,1/2)\). Therefore, \({{\mathbb {E}}}X^{-p}<+\infty \) for \(n>2p\) and as \(n\rightarrow +\infty \),

1.4 Direct observation of the random effects

Assume that a sample \((\Phi _i, \Gamma _i), i=1, \ldots ,N\), is observed and that \(d=1\) for simplicity. The Gamma distribution with parameters \((a, \lambda )\) (\(a>0, \lambda >0\)) \(G(a, \lambda )\), has density \( \gamma _{a,\lambda }(x)=(\lambda ^a/\Gamma (a)) x^{a-1} e^{-\lambda x} \mathbb {1}_{(0, +\infty )}(x), \) where \(\Gamma (a)\) is the Gamma function. We set \(\psi (a)= \Gamma '(a)/\Gamma (a)\). The log-likelihood \(\ell _N(\vartheta )\) of the N-sample \((\Phi _i, \Gamma _i), i=1, \ldots ,N\) has score function \({{\mathcal {S}}}_N(\vartheta )= \left( \frac{\partial }{\partial \lambda } \ell _N(\vartheta ) \;\; \frac{\partial }{\partial a} \ell _N(\vartheta )\;\;\frac{\partial }{\partial \mu } \ell _N(\vartheta ) \;\; \frac{\partial }{\partial \omega ^2} \ell _N(\vartheta ) \right) '\) given by

By standard properties, we have, under \({{\mathbb {P}}}_{\vartheta }\), \(N^{-1/2}{{\mathcal {S}}}_N(\vartheta ) \rightarrow _{{{\mathcal {D}}}}{{\mathcal {N}}}_4(0, {{\mathcal {J}}}_0(\vartheta )),\) where

Using properties of the di-gamma function (\(a\psi '(a)-1\ne 0\)), \(I_0(a,\lambda )\) is invertible for all \((a,\lambda )\in (0,+\infty )^2\). The maximum likelihood estimator based on the observation of \((\Phi _i,\Gamma _i, i=1, \ldots , N)\), denoted \(\vartheta _N=\vartheta _N(\Phi _i,\Gamma _i, i=1, \ldots , N)\), is consistent and satisfies \(\sqrt{N}(\vartheta _N-\vartheta )\rightarrow _{{{\mathcal {D}}}}{{\mathcal {N}}}_4(0, {{\mathcal {J}}}_0^{-1}(\vartheta ))\) under \({{\mathbb {P}}}_{\vartheta }\) as N tends to infinity.

In the simulations presented in Sect. 4, we took \(a=8\) and observed that estimations of a are biased with a large standard deviation. This can be seen on \(I_0^{-1}(\lambda ,a)\):

If a large, \(a/ (a\psi '(a)-1)=O(a^2)\).

However, natural parameters for Gamma distributions are \(m=a/\lambda , t= \psi (a)-\log {\lambda }\) with unbiased estimators \(\hat{m}= N^{-1}\sum _{i=1}^N \Gamma _i\), \(\hat{t}= N^{-1} \sum _{i=1}^N \log {\Gamma _i}\) such that the vector \(\sqrt{N}(\hat{m}-m, \hat{t} -t)\) is asymptotically Gaussian with limiting covariance matrix

The asymptotic variance of \(\hat{t}\) is \(\psi '(a)=O(a^{-1})\) and both parameters (m, t) are well estimated.

Rights and permissions

About this article

Cite this article

Delattre, M., Genon-Catalot, V. & Larédo, C. Approximate maximum likelihood estimation for stochastic differential equations with random effects in the drift and the diffusion. Metrika 81, 953–983 (2018). https://doi.org/10.1007/s00184-018-0666-z

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00184-018-0666-z