Abstract

The sparse precision matrix plays an essential role in the Gaussian graphical model since a zero off-diagonal element indicates conditional independence of the corresponding two variables given others. In the Gaussian graphical model, many methods have been proposed, and their theoretical properties are given as well. Among these, the sparse precision matrix estimation via scaled lasso (SPMESL) has an attractive feature in which the penalty level is automatically set to achieve the optimal convergence rate under the sparsity and invertibility conditions. Conversely, other methods need to be used in searching for the optimal tuning parameter. Despite such an advantage, the SPMESL has not been widely used due to its expensive computational cost. In this paper, we develop a GPU-parallel coordinate descent (CD) algorithm for the SPMESL and numerically show that the proposed algorithm is much faster than the least angle regression (LARS) tailored to the SPMESL. Several comprehensive numerical studies are conducted to investigate the scalability of the proposed algorithm and the estimation performance of the SPMESL. The results show that the SPMESL has the lowest false discovery rate for all cases and the best performance in the case where the level of the sparsity of the columns is high.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The covariance matrix and its inverse are of main interest in multivariate analysis to model dependencies between variables. Traditionally, these two parameters have been estimated by the sample covariance matrix and its inverse based on the maximum likelihood (ML) estimation. Although these ML estimators are often asymptotically unbiased and simple to calculate, there are some weaknesses when the number of variables is greater than that of samples. This circumstance is also known as high-dimensional low-sample size (HDLSS) data. For HDLSS data, it is known that the sample covariance matrix becomes inefficient (Yao et al. 2015). In addition, the inverse of the sample covariance matrix is undefined since the sample covariance matrix is singular for HDLSS data. For the covariance matrix, many methods have been proposed under some structural conditions such as bandable structure and sparse structure to improve the estimation efficiency (Bickel and Levina 2008a, b; Rothman et al. 2009; Wu and Pourahmadi 2009; Cai et al. 2010; Cai and Liu 2011; Cai and Zhou 2012).

To obtain an estimator of the precision matrix (i.e., inverse covariance matrix) for HDLSS data, various approaches have been developed by adopting the sparsity assumption that there are many zero elements in the matrix. The existing methods can be categorized into four approaches: covariance estimation-induced approach, ML-based approach, regression approach, and constrained \(\ell _1\)-minimization approach. The covariance estimation-induced approach considers an indirect estimation using the inversion of the well-conditioned shrinkage covariance matrix estimators and applies multiple testing procedures to identify nonzero elements of the precision matrix (GeneNet, Schäfer and Strimmer (2005)). The ML-based approach directly estimates the precision matrix by maximizing the penalized likelihood function (Yuan and Lin 2007; Friedman et al. 2008; Witten et al. 2011; Mazumder and Hastie 2012). The regression approach considers the linear regression model and uses the fact that the nonzero regression coefficients correspond to the nonzero off-diagonal elements of the precision matrix (Meinshausen and Bühlmann 2006; Peng et al. 2009; Yuan 2010; Sun and Zhang 2013; Ren et al. 2015; Khare et al. 2015; A et al. 2017). The constrained \(\ell _1\)-minimization (CLIME) approach (Cai et al. 2011) obtains the sparse precision matrix by solving the linear programming problem with the constraints on the proximity to the precision matrix where the objective function is the sum of absolute values of the design variables. The adaptive CLIME (Cai et al. 2016) improves the CLIME and attains the optimal rate of convergence.

Among the existing methods, sparse precision matrix estimation via the scaled Lasso (SPMESL) proposed by Sun and Zhang (2013) is a tuning-free procedure. Conversely, other existing methods require searching the optimal tuning parameter, GeneNet (Schäfer and Strimmer 2005) and the neighborhood selection (Meinshausen and Bühlmann 2006) require choosing the level of the false discovery rate; other penalized methods require choosing the optimal penalty level. In addition, SPMESL supports the consistency of the precision matrix estimation under the sparsity and invertibility conditions that are weaker than the irrepresentable condition (van de Geer and Bühlmann 2009; Sun and Zhang 2013). However, it has not been widely used for the sparse precision matrix estimation due to the inefficiency of the implemented algorithm of the SPMESL using the least angle regression (LARS) algorithm (Bradely Efron et al. 2004). Even though the LARS algorithm efficiently provides a whole solution path of the Lasso problem (Tibshirani 1996), its computational cost is expensive and the SPMESL needs to solve p independent Lasso problems as the subproblems of the SPMESL. Thus, the scalability of the SPMESL is still challenging.

In this paper, we found the possibility to improve the computational efficiency of the SPMESL with the empirical observation that the SPMESL does not need a whole solution path of the Lasso problem based on the tuning-free characteristic of the SPMESL (see details in Sect. 3). Motivated by this empirical observation, we propose a more efficient algorithm based on the coordinate descent (CD) algorithm and the warm start strategy for the scaled Lasso and the SPMESL as applied to the standard lasso problem in Wu and Lange (2008). Moreover, we develop the row-wise updating parallel CD algorithm using graphics processing units (GPUs) adequate for the SPMESL. To efficiently implement the proposed parallel CD algorithm, we consider the active response matrix that consists of columns of active response variables that corresponds to the error variance estimate not converged at the current iteration. We will show the efficiency of the proposed algorithms using comprehensive numerical studies. In this paper, we also provide the numerical comparisons of the estimation performance of the SPMESL with three different penalty levels, which are theoretically suggested in previous literature (Sun and Zhang 2012, 2013) but there is no comparison result of them in terms of the estimation performance.

The remainder of the paper is organized as follows. Sect. 2 introduces the SPMESL and its original algorithm. We explain the proposed CD algorithm and GPU-parallel CD algorithm in Sect. 3. Section 4 provides a comprehensive numerical study, including the comparisons of computation times and estimation performances with other existing methods. We provide a summary of the paper and discussion in Sect. 5.

2 Sparse precision matrix estimation via scaled lasso

In this section, we briefly introduce the scaled Lasso, the SPMESL, and their algorithms.

2.1 Scaled lasso

The scaled Lasso proposed by Sun and Zhang (2012) is a variant of a penalized regression model with the Lasso penalty. To be specific, let \(\mathbf{y}\in \mathbb {R}^n\), \(\mathbf{X}\in \mathbb {R}^{n\times p}\), \(\varvec{\beta } \in \mathbb {R}^p\), and \(\sigma >0\). Consider a linear regression model \(\mathbf{y}= \mathbf{X}\varvec{\beta } + \epsilon \), where \(\epsilon \sim N(0, \sigma ^2 \mathbf{I}_n)\) and \(\mathbf{I}_n\) is the n-dimensional identity matrix. The scaled Lasso considers the minimization of the following objective function \(L_{\lambda _0}(\varvec{\beta }, \sigma )\):

where \(\lambda _0\) is a given tuning parameter and \(\Vert \mathbf{x}\Vert _1 = \sum _{j=1}^p |x_j|\) for \(\mathbf{x}\in \mathbb {R}^p\). Thus, the scaled Lasso simultaneously obtains the estimate \(\hat{\varvec{\beta }}\) of the regression coefficient \(\varvec{\beta }\) and the estimate \(\hat{\sigma }\) of the error standard deviation \(\sigma \). It is proved that the objective function \(L_{\lambda _0}\) is jointly convex in \((\varvec{\beta },\sigma )\) and is strictly convex for \(\sigma \) in Sun and Zhang (2012). From the convexity of the objective function, the solution \((\hat{\varvec{\beta }}, \hat{\sigma })\) of the scaled Lasso problem can be obtained by the block CD algorithm as follows:

-

(Step 1) With a fixed \(\hat{\sigma }\), consider the minimization of \(\hat{\sigma }L_{\lambda _0}(\varvec{\beta }, \hat{\sigma })\):

$$\begin{aligned} \hat{\sigma }L_{\lambda _0}(\varvec{\beta }, \hat{\sigma }) = \frac{\Vert \mathbf{y}-\mathbf{X}\varvec{\beta }\Vert _2^2}{2n} + \hat{\sigma }\lambda _0 \Vert \varvec{\beta }\Vert _1 + \frac{\hat{\sigma }^2}{2}. \end{aligned}$$The minimizer \(\hat{\varvec{\beta }}\) of \(\hat{\sigma }L_{\lambda _0}(\varvec{\beta },\hat{\sigma })\) can be obtained by solving the following standard lasso problem with \(\lambda =\hat{\sigma }\lambda _0\):

$$\begin{aligned} \min _{\varvec{\beta }} \frac{\Vert \mathbf{y}-\mathbf{X}\varvec{\beta }\Vert _2^2}{2n} + \lambda \Vert \varvec{\beta }\Vert _1. \end{aligned}$$(2) -

(Step 2) With a fixed \(\hat{\varvec{\beta }}\), the minimizer \(\hat{\sigma }\) of \(L_{\lambda _0}(\hat{\varvec{\beta }},\sigma )\) is easily obtained by

$$\begin{aligned} \hat{\sigma }= \frac{\Vert \mathbf{y}- \mathbf{X}\hat{\varvec{\beta }}\Vert _2}{\sqrt{n}}. \end{aligned}$$ -

(Step 3) Repeat Steps 1 and 2 until convergence occurs.

In the original paper of the scaled Lasso, the standard lasso problem is solved by the LARS algorithm (Bradely Efron et al. 2004), which provides a whole solution path of the standard Lasso problem. During the block CD algorithm, the minimizer \(\hat{\varvec{\beta }}(\hat{\sigma }^{(r)})\) of \(\hat{\sigma }^{(r)} L_{\lambda _0}(\varvec{\beta },\hat{\sigma }^{(r)})\) in Step 1 at the r-th iteration is obtained from the solution path of the standard Lasso problem with \(\lambda =\hat{\sigma }^{(r)}\lambda _0\).

In addition to the joint estimation of \(\varvec{\beta }\) and \(\sigma \), the scaled Lasso has attractive features as described in Sun and Zhang (2012). First, the scaled Lasso guarantees the consistency of \(\hat{\varvec{\beta }}\) and \(\hat{\sigma }\) under two conditions: the penalty level condition \(\lambda _0 > A \sqrt{2n^{-1}\log p}\) for \(A > 1\) and the compatibility condition, which implies the oracle inequalities for the prediction and estimation (van de Geer and Bühlmann 2009). Second, the scaled Lasso estimates are scale-equivariant in \(\mathbf{y}\) in the sense that \(\hat{\varvec{\beta }}(\mathbf{X},\alpha \mathbf{y}) = \alpha \hat{\varvec{\beta }}(\mathbf{X},\mathbf{y})\) and \(\hat{\sigma }(\mathbf{X}, \alpha \mathbf{y}) = |\alpha | \hat{\sigma }(\mathbf{X},\mathbf{y})\). Finally, the authors suggest using the universal penalty level \(\lambda _{0}^{U} = \sqrt{2n^{-1}\log p}\) for \(\lambda _0\) based on their numerical and real-data examples. We can consider the scaled Lasso with the universal penalty level as a tuning-free procedure. Note that the universal penalty level does not satisfy the theoretical requirement \(\lambda _0 > A\sqrt{2n^{-1}\log p}\) for the consistency of \(\hat{\sigma }\). The authors of the scaled Lasso provide several conditions that can weaken the required condition for \(\lambda _0\), in order to justify using a penalty level smaller than \(A\sqrt{2n^{-1}\log p}\) for \(A>1\) (Sun and Zhang 2012).

2.2 Sparse precision matrix estimation via scaled Lasso

Let \(\varvec{\varSigma }=(\sigma _{jk})_{1\le j,k\le p}\) and \(\varvec{\varOmega }=\varvec{\varSigma }^{-1}=(\omega _{jk})_{1\le j,k\le p}\) be a covariance matrix and a corresponding precision matrix, respectively. Suppose that \(\mathbf{x}^{(i)} = (X_{i1},\ldots , X_{ip})\) for \(i=1,2,\ldots ,n\) are independently drawn from the multivariate normal distribution with mean \(\mathbf{0}\) and covariance matrix \(\varvec{\varSigma }\). Let \(\mathbf{X}\in \mathbb {R}^{n\times p}\) be a data matrix and \(\mathbf{x}_k = (X_{1k},\ldots ,X_{nk})^T\) be the kth column of \(\mathbf{X}\). Consider following linear regression models for \(k=1,\ldots ,p\):

where \(\epsilon _{ik}\)s are independent and identically distributed random variables drawn from the normal distribution with mean 0 and variance \(\sigma _k^2\). As applied in the regression approach for the sparse precision matrix estimation, the elements of the precision matrix can be represented into the regression coefficient and the error variance by using the following relationships:

As the scaled Lasso simultaneously estimates the regression coefficients \((\beta _{jk})\) and the error standard deviation \(\sigma _k\) as described in Sect. 2.1, we can use the scaled Lasso to estimate the precision matrix. Thus, the SPMESL considers solving the scaled Lasso problems column-wise and defines the SPMESL estimator that combines the estimates from the p scaled Lasso problems.

To be specific, let \(\mathbf{B} = (b_{jk})_{1\le j,k \le p}\) be a matrix of the regression coefficients such that \(b_{jk} = \beta _{jk}\) for \(j\ne k\) and \(b_{jj} = -1\) for \(j=1,\ldots ,p\). Denote \(\mathbf{b}^{(j)} =(b_{j1}, \ldots , b_{jp})\) and \(\mathbf{b}_{k}=(b_{1k},\ldots ,b_{pk})^T\) as the jth row and the kth column of a matrix \(\mathbf{B}\), respectively. We further let \(\mathbf{S} = (s_{jk})\) be the sample covariance matrix. Subsequently, the precision matrix can be represented with \(\mathbf{B}\) and \(\mathbf{D}=\mathrm{diag}(\sigma _1^{-2}, \ldots , \sigma _p^{-2})\) as follows:

To obtain the estimate of \(\varvec{\varOmega }\), the SPMESL solves the following p independent scaled Lasso problems first: for \(k=1,\ldots ,p\),

Note that the SPMESL in Sun and Zhang (2013) originally considers \(\lambda _0 \sum _{j\ne k} \sqrt{s_{jj}}|\beta _{jk}|\) instead of \(\lambda _0 \sum _{j\ne k} |\beta _{jk}|\) to penalize the coefficients on the same scale. In this paper, we assume that the columns of the data matrix \(\mathbf{X}\) are centered and scaled to \(\mathbf{X}_k^T \mathbf{X}_k = n\) for \(k=1,\ldots ,p\). Thus, \(s_{jj} = 1\) for \(j=1,\ldots ,p\). This assumption does not affect the estimation performance of the precision matrix as the scaled Lasso has the scale-equivariant property in the response variable as explained in the previous section. We can easily recover the estimate \(\hat{\varvec{\varOmega }}^o = (\hat{\omega }_{jk}^o)_{1\le j,k\le p}\) from the data in the original scale by the following Proposition 1:

Proposition 1

Let \(\mathbf{X}\in \mathbb {R}^{n \times p}\) and \(\tilde{\mathbf{X}} = \mathbf{X}\mathbf{C}\) be a data matrix and the scaled data matrix with \(\mathbf{C}=\mathrm{diag}(s_{11}^{-1/2},\ldots ,s_{pp}^{-1/2})\), where \(s_{jj} > 0\) for \(j=1,\ldots ,p\). Denote \(\hat{\varvec{\varOmega }}^o\) as the estimate of the precision matrix by applying the scaled Lasso in (5) with the penalty term \(\lambda _0 \sum _{j\ne k} \sqrt{s_{jj}}|\beta _{jk}|\) column by column with \(\mathbf{X}\). Similarly, denote \(\hat{\varvec{\varOmega }}^C = (\hat{\omega }_{jk}^C)_{1\le j,k \le p}\) as the estimate by the scaled Lasso in (5) with \(\tilde{\mathbf{X}}\). Then, \(\hat{\varvec{\varOmega }}^o = \mathbf{C}\hat{\varvec{\varOmega }}^C \mathbf{C}\).

Proof

By the definition of \(\tilde{\mathbf{X}}\), the kth column of \(\tilde{\mathbf{X}}\) is \(\tilde{\mathbf{X}}_{k} = \mathbf{X}_{k}/\sqrt{s_{kk}}\). Let \(\hat{\mathbf{B}}^C = (\hat{b}_{jk}^C)_{1\le j,k \le p}\) and \((\hat{\sigma }_{k,C})_{1\le k \le p}\) be the solutions of the p scaled Lasso problem in (5) with \(\tilde{\mathbf{X}}\). We further let \(\hat{\mathbf{B}}^o = (\hat{b}_{jk}^o)_{1\le j,k \le p}\) and \((\hat{\sigma }_{k,o})_{1\le k \le p}\) be the solutions of the following scaled Lasso problems: for \(k=1,\ldots ,p\),

By substituting \(\tilde{\mathbf{X}}\) with \(\mathbf{X}\mathbf{C}\) in (5) and the reparameterization with \(\tilde{\beta }_{jk} = \beta _{jk}/\sqrt{s_{jj}}\) for \(j\ne k\), the kth scaled Lasso problem becomes

From the forms of the problems (6) and (7), the relationship \(\hat{\mathbf{b}}^o_{k} = \sqrt{s_{kk}}\hat{\mathbf{b}}^C_{k}\) and \(\hat{\sigma }_{k,o} = \sqrt{s_{kk}}\hat{\sigma }_{k,C}\) hold by the scale equivariant property of the scaled Lasso. In addition, by the reparameterization, \(\hat{b}^o_{jk} = \sqrt{s_{kk}/s_{jj}} \hat{b}^C_{jk}\) for \(1\le j, k \le p\). Combining the above relationships, the (j, k)th element \(\hat{\omega }_{jk}^o\) of the precision matrix estimate \(\hat{\varvec{\varOmega }}^o\) is represented as

Hence, \(\hat{\varvec{\varOmega }}^o = \mathbf{C} \hat{\varvec{\varOmega }}^C \mathbf{C}\). \(\square \)

Note that the result \(\hat{\varvec{\varOmega }}^o = \mathbf{C} \hat{\varvec{\varOmega }}^C \mathbf{C}\) in Proposition 1 is consistent with the property of \((\mathrm{Var}(\mathbf{A} \mathbf{z}))^{-1} = \mathbf{A}^{-T} \mathrm{Var}(\mathbf{z})^{-1} \mathbf{A}^{-1}\) for a p-dimensional random vector \(\mathbf{z}\) and a nonsingular matrix \(\mathbf{A}\in \mathbb {R}^{p \times p}\).

After solving p independent scaled Lasso problems, the estimate \(\hat{\varvec{\varOmega }}_1 = - \hat{\mathbf{B}} \hat{\mathbf{D}} = (\hat{\omega }_{jk,1})_{1\le j,k \le p}\) of the precision matrix is obtained. However, the estimate \(\hat{\varvec{\varOmega }}_1\) is not symmetric in general. To find the symmetric estimate of the precision matrix using the current estimate \(\hat{\varvec{\varOmega }}_1\), the SPMESL considers solving the following linear programming problem as in Yuan (2010):

Remark that the authors of the SPMESL consider the above linear programming problem for the symmetrization step in Sun and Zhang (2013), but they applied the following symmetrization step in the implemented R package scalreg:

which is applied in the CLIME and the theoretical properties are developed on this symmetrization (Cai et al. 2011). In addition, for the high-dimensional data, the symmetrization applied in the CLIME is favorable as its computational cost is cheap and it is easily parallelizable. For these reasons, we apply the symmetrization step (9) in the proposed algorithm.

As stated in the previous section, the scaled Lasso guarantees the consistency of the regression coefficients and the error variance under the compatibility condition. Thus, the SPMESL also guarantees column-wise consistency of \(\hat{\varvec{\varOmega }}_1\) under the compatibility conditions, which is independently defined in each column of \(\hat{\varvec{\varOmega }}_1\), as the SPMESL applies the scaled Lasso column wise. To derive the overall consistency of \(\hat{\varvec{\varOmega }}\), the authors of the SPMESL considers the capped \(\ell _1\) sparsity and the invertibility conditions as follows.

-

(i)

Capped \(\ell _1\) sparsity condition: For a certain \(\epsilon _0\), \(\lambda _0^*\) not depending on j and an index set \(T_j \subset \{1,2,\ldots ,p\} \setminus \{j\}\), the capped \(\ell _1\) sparsity of the jth column with \(t_{j}>0\) is defined as

$$\begin{aligned} |T_j| + \sum _{k\ne j, k \notin S_j} \frac{|\omega _{kj}|\sqrt{\sigma _{kk}}}{(1-\epsilon _0) \sqrt{\omega _{jj}}\lambda _{0}^*} \le a_j. \end{aligned}$$ -

(ii)

Invertibility condition: Let \(\mathbf{W}=\mathrm{diag}(\sigma _{11},\ldots , \sigma _{pp})\) and \(\mathbf{R} = \mathbf{W}^{-1/2}\varvec{\varSigma } \mathbf{W}^{-1/2}\). Further, let \(T_j \subseteq Q_j \subseteq \{1,2,\ldots ,p\}\setminus \{j\}\). The invertibility condition is defined as

$$\begin{aligned} \inf _j \left\{ \frac{ \mathbf{u}^T \mathbf{R}_{-j,-j} \mathbf{u}}{\Vert \mathbf{u}_{Q_j}\Vert _2^2} ~:~ \mathbf{u}\in \mathbb {R}^p, \mathbf{u}_{Q_j} \ne 0, 1 \le j \le p\right\} \ge c_* \end{aligned}$$with a fixed constant \(c_*>0\). Note that the invertibility condition holds if the spectral norm of \(\mathbf{R}^{-1}= \mathbf{D}^{1/2} \varvec{\varOmega } \mathbf{D}^{1/2}\) is bounded (i.e., \(\Vert \mathbf{R}^{-1}\Vert _2 \le c_*^{-1}\)).

With some modifications on the capped \(\ell _1\) sparsity and the invertibility conditions, the authors of the SPMESL derive several conditions on \(\lambda _{0}^*\) that guarantees the estimation consistency of the precision matrix under the spectral norm. Among them, for practical usage, we consider two conditions on a penalty level \(\lambda _0 \ge \lambda _0^*\) as follows:

-

Union bound for p applications of the scaled Lasso (Theorem 2 in Sun and Zhang (2013)):

$$\begin{aligned} \lambda _0 = A \sqrt{4n^{-1} \log p} \text{ for } A > 1. \end{aligned}$$(10) -

Probabilistic error bound (Theorem 13 in Sun and Zhang (2013)):

$$\begin{aligned} \lambda _0 = A L_n (k/p) \text{ for } 1 < A \le \sqrt{2}, \end{aligned}$$(11)where k is a real solution of \(k=L_1^4(k/p) + 2 L_1^2(k/p)\), \(L_n(t) = n^{-1/2} \varPhi ^{-1}(1-t)\), and \(\varPhi ^{-1}(t)\) is the standard normal quantile function.

Note that a real solution of the equation \(k-L_1^4(k/p) + 2 L_1^2(k/p)=0\) can easily be found by applying the bisection method. For instance, we demonstrate two real solutions for \(p=100, 1000\) in Fig. 1 with the values of \(k-L_1^4(k/p) + 2 L_1^2(k/p)\). For \(p=1000\) and \(n=100\), \(\lambda _{ub} = \sqrt{4n^{-1} \log p} \approx 0.5257\) and \(\lambda _{pb}=\sqrt{2} L_n (k/p) \approx 0.2810\) with \(k=23.4748\) while \(\lambda _{univ} = \sqrt{2n^{-1} \log (p-1)} \approx 0.3717\), where \(\lambda _{ub}\) is the penalty level derived by the union bound, \(\lambda _{pb}\) is the penalty level derived by the probabilistic error bound, and \(\lambda _{univ}\) is the universal penalty level used in the scaled lasso. In the paper of Sun and Zhang (2013), the penalty level derived by the probabilistic error bound is suggested for the SPMESL. However, there are no comparison results for the three penalty levels \(\lambda _{univ}\), \(\lambda _{ub}\), and \(\lambda _{pb}\). We conduct a comparison of performances for identifying the nonzero elements of \(\varvec{\varOmega }\) by the three penalty levels above in Sect. 4 to provide a guideline for the penalty level \(\lambda _0\).

3 Efficient coordinate descent algorithm for SPMESL and its GPU-parallelization

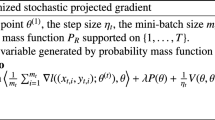

The original algorithm for the scaled Lasso and the SPMESL adopt the LARS algorithm, which provides the whole solution path of the Lasso regression problem, and its implemented R package scalreg is available in the Comprehensive R Archive Network (CRAN) repository. As mentioned in the Introduction, we empirically observed that the block CD algorithms for the scaled Lasso and the SPMESL do not need a whole solution path of the standard Lasso problem in their sub-problems, where the sub-problem denotes the minimization problem in Step 1 of the scaled Lasso problem. To describe our empirical observation, we consider an example with a linear regression model \(y_i = \mathbf{x}_i^T \varvec{\beta } + \epsilon _i\) and \(\epsilon _i \sim N(0,\sigma ^2)\) for \(i=1,2,\ldots ,250\), where the true parameter \(\varvec{\beta } = (\varvec{\beta }_2^T, \varvec{\beta }_{-1}^T, \varvec{\beta }_0^T)^T \in \mathbb {R}^{500}\), \(\varvec{\beta }_2 = (2,\ldots ,2)^T \in \mathbb {R}^{5}\), \(\varvec{\beta }_{-1} = (-1,\ldots ,-1)^T \in \mathbb {R}^{5}\), \(\varvec{\beta }_0=(0,\ldots ,0)^T \in \mathbb {R}^{490}\), and \(\sigma = 3, 5\). We set the initial value of \((\varvec{\beta }, \sigma )\) as \((\mathbf{0}_{500\times 1}, 1)\). As shown in Fig. 2, the numbers of iterations for the convergence of \(\hat{\sigma }\) are less than 10 when the true parameter \(\sigma = 3, 5\) and \(\lambda _0=\sqrt{2n^{-1}\log p}\). This implies that we do not need to obtain the whole solution paths of p lasso problems with respect to all \(\lambda \) values.

Thus, the calculation of the whole solution path by the LARS algorithm is inefficient for the scaled Lasso and the SPMESL. In addition, the scaled Lasso and the SPMESL iteratively solve the lasso problem with the penalty \(\lambda _r = \hat{\sigma }^{(r)} \lambda _0\) in their inner iteration, where \(\lambda _r\) denotes the penalty level at the rth iteration. As \(\hat{\sigma }^{(r)}\) converges to the minimizer of (1), the difference between \(\lambda _r\) and \(\lambda _{r-1}\) decreases as the iteration proceeds. This denotes that the Lasso estimate in the current iteration is not far from that in the next iteration. From this observation, the warm start strategy, which denotes that the solution in the previous iteration is used as an initial value of the next iteration, is favorable and can efficiently accelerate the algorithm for the Lasso regression problem in the inner iteration.

To fully utilize the warm start strategy, we consider the coordinate descent (CD) algorithm for the Lasso regression problem in the inner iteration. This is because it is known that the CD algorithm with the warm start strategy is efficient for the Lasso regression problem and has an advantage for memory consumption (Wu and Lange 2008). In addition, the SPMESL needs solving p independent scaled Lasso problems to obtain the estimate of the precision matrix. We develop the GPU-parallel CD algorithm for the SPMESL, which updates p coordinates simultaneously with GPUs. In the following subsections, we introduce the CD algorithm for the subproblem of the scaled Lasso and GPU-parallel CD algorithm for the SPMESL in detail.

3.1 CD algorithm for subproblem of the scaled Lasso

In this subsection, we focus on the following subproblem of the scaled Lasso with a given \(\lambda _0\):

where \(\mathbf{y}\in \mathbb {R}^n\) is a response vector, \(\mathbf{X}\in \mathbb {R}^{n \times p}\) is a design matrix, \(\lambda ^{(r-1)} = \hat{\sigma }^{(r-1)} \lambda _0\), and \(\hat{\sigma }^{(r-1)} = \Vert \mathbf{y}- \mathbf{X}\hat{\beta }^{(r-1)}\Vert _2/ \sqrt{n}\) is the iterative solution for \(\sigma \) at the \((r-1)\)th iteration. For the notational simplicity, we use \(\hat{\varvec{\beta }}\) to denote the rth iterative solution \(\hat{\varvec{\beta }}^{(r)}\), and \(\hat{\varvec{\beta }}^{[cur]} \) and \(\hat{\varvec{\beta }}^{[next]}\) denote the current and the next iterative solution in the CD algorithm, respectively. To apply the warm start strategy, we set the initial value \(\hat{\varvec{\beta }}^{[cur]}\) for \(\hat{\varvec{\beta }}\) to \(\hat{\varvec{\beta }}^{(r-1)}\). In this paper, we consider the cyclic CD algorithm with an ascending order (i.e., coordinate-wise update from the smallest index to the largest index). Subsequently, for \(j=1,\ldots , p\), the CD algorithm updates \(\hat{\beta }_j^{[next]}\) by following equations:

where \(\text{ Soft}_\lambda (a_j) = \text{ sign }(a_j)(|a_j|-\lambda )_+\) is the soft-thresholding operator and \((x)_+ = \max (x, 0)\). The CD algorithm repeats the cyclic updates until the convergence occurs, where the common convergence criterion is the \(L_\infty \)-norm of the difference between \(\hat{\varvec{\beta }}^{[cur]}\) and \(\hat{\varvec{\beta }}^{[next]}\) (i.e., \(\Vert \hat{\varvec{\beta }}^{[next]} - \hat{\varvec{\beta }}^{[cur]}\Vert _\infty \)). The whole CD algorithm with warm start strategy for the scaled Lasso is summarized in Algorithm 1.

3.2 CD algorithm for SPMESL

As described in Sect. 2.2, the SPMESL estimates the precision matrix by solving the p scaled Lasso problems, where each column of the observed data matrix is considered as the response variable and the other columns are considered as the exploratory variables. To be specific, let \((\mathbf{x}^{(i)})^T = (X_{i1}, X_{i2}, \ldots , X_{ip})^T \sim N(0, \varvec{\varOmega }^{-1})\) be the ith random sample and \(\varvec{\varOmega } = \varvec{\varSigma }^{-1}\) be the precision matrix. Furthermore, let \(\mathbf{x}_k = (X_{1k},\ldots , X_{nk})^T\) be the kth column vector of the observed data matrix \(\mathbf{X}= (\mathbf{x}_1, \ldots , \mathbf{x}_p) \in \mathbb {R}^{n\times p}\). The CD algorithm with the warm start strategy for the SPMESL independently applies the CD algorithm in Sect. 3.1 to the subproblem (5) for \(k=1,2,\ldots , p\). The main procedures in the CD algorithm for the SPMESL are summarized in the following two steps:

-

Updating \(\hat{\varvec{\beta }}_{-k}\) for \(1\le k \le p\): Applying the CD algorithm with the warm-start strategy for the following lasso subproblem: for \(k=1,2,\ldots ,p\),

$$\begin{aligned} \hat{\varvec{\beta }}_{-k} = \mathop {\mathrm{argmin}}\limits _{\varvec{\beta }_{-k}~:~\beta _{kk}=0} \frac{\Vert \mathbf{x}_k - \mathbf{X}~\varvec{\beta }_{-k} \Vert _2^2}{2n} + \hat{\sigma }_j \lambda _0 \Vert \varvec{\beta }_{-k}\Vert _1, \end{aligned}$$(13)where \(\varvec{\beta }_{-k} = (\beta _{1,k},\ldots ,\beta _{k-1,k},0,\beta _{k+1,k},\ldots ,\beta _{p,k})^T \in \mathbb {R}^{p}\).

-

Updating \(\hat{\sigma }_k\) for \(1\le k \le p\): For given \(\lambda _0\) and \(\hat{\varvec{\beta }}_{-k}s\), \(\hat{\sigma }_k\)s are obtained by the equation

$$\begin{aligned} \hat{\sigma }_k =\frac{\Vert \mathbf{x}_k - \mathbf{X}~\hat{\varvec{\beta }}_{-k} \Vert _2}{\sqrt{n}}, \end{aligned}$$(14)where \(\hat{\varvec{\beta }}_{-k} = (\hat{\beta }_{1,k},\ldots ,\hat{\beta }_{k-1,k},0,\hat{\beta }_{k+1,k},\ldots ,\hat{\beta }_{p,k})^T\) is the solution to the problem (13).

The CD algorithm for the SPMESL independently repeats the updating \(\hat{\varvec{\beta }}_{-k}\) and \(\hat{\sigma }_k\) until convergence occurs for \(k=1,2,\ldots , p\). After solving the p scaled Lasso problems, the final estimate \(\hat{\varvec{\varOmega }}\) of the precision matrix by the SPMESL is obtained by the symmetrization (9). The whole CD algorithm with warm start strategy for the SPMESL is summarized in Algorithm 2.

3.3 Parallel CD algorithm for SPMESL using GPU

As we described in Sect. 3.2, the CD algorithm for the SPMESL solves p independent scaled Lasso problems. From this independence structure, p elements in \(\mathbf{B}=(\beta _{jk})\) can be updated in parallel, where \((p-1)\) elements can simultaneously be updated in practice since \(\beta _{kk}=0\) is fixed. In addition, the computation of \(\hat{\sigma }\) is independent as well in the sense that the update equation for \(\hat{\sigma }_k\) only needs information of \(\hat{\varvec{\beta }}_{-k}\). To describe the proposed parallel CD algorithm, we consider the following joint minimization problem, which combines p scaled Lasso problems:

where \(\mathbf{B}=(\varvec{\beta }_{-1},\ldots , \varvec{\beta }_{-p})\) and \(\varvec{\beta }_{-k} = (\beta _{1,k},\ldots ,\beta _{k-1,k},0,\beta _{k+1,k},\ldots ,\beta _{p,k})^T\). As the updating equation (14) for \(\hat{\sigma }_k\) is simple and easily parallelizable, we focus on the update for \(\hat{\mathbf{B}}\) in this subsection. For the given iterative solution \(\hat{\varvec{\sigma }}^{(r)}=(\hat{\sigma }_1^{(r)}, \ldots , \hat{\sigma }_p^{(r)})\), the subproblem for updating \(\hat{\mathbf{B}}^{(r+1)}\) can be represented as follows:

where \(\Vert \mathbf{A}\Vert _F = \mathrm{tr}(\mathbf{A}^T \mathbf{A})=\big (\sum _{i,j} a_{ij}^2\big )^{1/2}\) is the Frobenius norm of a matrix \(\mathbf{A}\) and \(\lambda _j = \hat{\sigma }_j^{(k)} \lambda _0\). For the notational simplicity, hereafter, we denote \(f(\mathbf{B};\hat{\varvec{\sigma }}^{(r)}, \lambda _0)\) and \(\widehat{\mathbf{B}}^{(r+1)}\) as \(f(\mathbf{B})\) and \(\widehat{\mathbf{B}}\), respectively. As \(f(\mathbf{B})\) is the sum of the smooth function of \(\mathbf{B}\) (the square of the Frobenius norm) and non-smooth convex functions, \(f(\mathbf{B})\) satisfies the conditions of Theorem 4.1 in Tseng (2001). Thus, the iterative sequence \(\{\hat{\mathbf{B}}^{(r)}\}\) in the CD algorithm with cyclic rule converges to the stationary point of \(f(\mathbf{B})\), where \(\hat{\mathbf{B}}^{(r)}\) denotes the iterative solution of the CD algorithm at the rth iteration, and each iteration is counted when one cycle is finished (i.e., \(\beta _{12},\ldots ,\beta _{p-1,p}\) have been updated.).

To develop the parallel CD (PCD) algorithm, we consider a row-wise update for \(\hat{\mathbf{B}}\), which is one of the possible orderings in the cyclic rule. The main idea of the parallel CD algorithm is that the p Lasso subproblems are independent in the sense that \(\beta _{j,k}\) does not need information \((\beta _{j,l})\) for \(l\ne k\). To be specific, let \(\hat{\varvec{\beta }}^{(j), [cur]} =(\hat{\beta }_{j,1}^{[cur]},\ldots ,\hat{\beta }_{j,j-1}^{[cur]}, 0, \hat{\beta }_{j,j+1}^{[cur]}, \ldots , \hat{\beta }_{j,p}^{[cur]})\) and \(\hat{\varvec{\beta }}^{(j), [next]}\) be the jth row of the current and next iterative solutions of \(\hat{\mathbf{B}}\), respectively. The following Proposition 2 shows that the row of \(\hat{\mathbf{B}}\) can be updated in parallel.

Proposition 2

Let \(\mathbf{E} = (\mathbf{e}_1,\ldots ,\mathbf{e}_p)\) be a current residual matrix defined with \(\mathbf{e}_k = \mathbf{x}_k - \mathbf{X}\hat{\varvec{\beta }}_{-k}^{[cur]}\), and let \(\hat{\mathbf{B}}^{[cur]}\) and \(\widehat{\mathbf{B}}^{[next]}\) be the current and next iterative solution for the coefficient of the joint Lasso subproblem, respectively. Suppose we update the rows of \(\hat{\mathbf{B}}^{[next]}\) from the first row to the last row. Then, each row \(\hat{\varvec{\beta }}^{(j),[next]}\) of \(\hat{\mathbf{B}}^{[next]}\) for \(j=1,\ldots ,p\) can simultaneously be updated by following updating equations:

where \(\mathbf{S}_{\lambda _1,\ldots ,\lambda _p}(\mathbf{x}) = (\text{ Soft}_{\lambda _j}(x_j))_{1\le j \le p}\), \(\text{ Soft}_\lambda (x) = \mathrm{sign}(x)(|x|-\lambda )_+\), and \((x)_+ = \max (x,0)\).

Proof

As described in Sect. 3.1, the CD algorithm updates each element \(\hat{\beta }_{j,k}^{[next]}\) in \(\hat{\varvec{\beta }}^{(j),[next]}\) by

Consider updating the first row of \(\hat{\mathbf{B}}^{(next)}\) by the CD algorithm. Then, for \(k=2,\ldots ,p\), the above updating equations becomes

The update for \(\hat{\beta }_{1,k}^{[next]}\) only needs information on the current residual vector \(\mathbf{e}_k\) and \(\hat{\beta }_{1,k}^{[cur]}\). Hence, the updates of \(\hat{\beta }_{1,k}^{[next]}\) and \(\hat{\beta }_{1,l}^{[next]}\) for \(k\ne l\) are independent in the sense that \(\hat{\beta }_{1,k}^{[next]}\) does not depend on \(\hat{\beta }_{1,k}^{[next]}\), and vice versa. Combining these equations for \(j=2,\ldots ,p\), we can represent \((p-1)\) updating equations with the following vector form:

where \(\lambda _j = \hat{\sigma }_j \lambda _0\) and \(\mathbf{a}_1^T = (0, \mathbf{e}_2^T \mathbf{x}_1/n, \ldots , \mathbf{e}_p^T \mathbf{x}_1/n) + (0, \hat{\beta }_{1,2}^{[cur]},\ldots , \hat{\beta }_{1,p}^{[cur]}) =\mathbf{x}_1^T \mathbf{E}/n + \hat{\varvec{\beta }}^{(1),[cur]} - (\mathbf{x}_1^T \mathbf{e}_1/n) \mathbf{i}_1\), where \(\hat{\beta }_{1,1}^{[cur]}=0\) and \(\mathbf{i}_j\) is the jth row of p-dimensional identity matrix. After updating \(\hat{\varvec{\beta }}^{(1),[next]}\), the current residual matrix is also updated by \(\mathbf{E} \leftarrow \mathbf{E} + \mathbf{X}_1 (\hat{\varvec{\beta }}^{(1),[cur]}- \hat{\varvec{\beta }}^{(1),[next]})\). Then, the updated \(\mathbf{e}_k\) becomes \(\mathbf{x}_k - \mathbf{x}_1 \hat{\beta }_{1,k}^{[next]} - \sum _{l=2}^p \mathbf{x}_l \hat{\beta }_{l,k}^{[cur]}\). Using these equations, we can express a general form of updating equations as

where, for computational simplicity, we calculate \(\mathbf{a}_j\) by \(\mathbf{x}_j^T \mathbf{E}/n + \hat{\varvec{\beta }}^{(j),[cur]}\) and then set \(a_{jj} = 0\). Applying this sequence of updating equations from the first row (\(j=1\)) to the last row (\(j=p\)) of \(\widehat{\mathbf{B}}^{[next]}\) is equivalent to the cyclic CD algorithm for the joint Lasso subproblem. \(\square \)

In Proposition 2, the row-wise updating equations consist of basic linear algebra operations such as matrix-matrix multiplication and element-wise soft-thresholding, which are adequate for parallel computation using GPUs. To fully utilize the GPUs, we use the cuBLAS library for linear algebra operations and develop CUDA kernel functions for the element-wise soft-thresholding, parallel update for \(\hat{\varvec{\sigma }}\) , and symmetrization of \(\hat{\varvec{\varOmega }}\). We refer this CD algorithm to the parallel CD (PCD) algorithm in the sense of that p elements in each row of \(\widehat{\mathbf{B}}\) are simultaneously updated if p GPUs (i.e., p CUDA cores) are available. Note that \(\hat{\beta }_{jj}\) for \(j=1,\ldots ,p\) are fixed with 0 in the PCD algorithm, which is handled explicitly in the implementation. The whole PCD algorithm with warm start strategy for the SPMESL is summarized in Algorithm 3. In Algorithm 3, we use a convergence criterion \(\Vert \mathbf{B}^{(r+1)} - \mathbf{B}^{(r)}\Vert _\infty < \delta \) to check the convergence of \(\mathbf{B}^{(r)}\), which is different to the convergence criterion \(\Vert \varvec{\beta }_{-j}^{(r+1)} - \varvec{\beta }_{-j}^{(r)}\Vert _{\infty } < \delta \) in the CD algorithm. To ensure that the CD and the PCD algorithm provide the same solution, we show that the PCD algorithm obtains a solution that is sufficiently close to the solution of the CD algorithm if two algorithms use the same initial values in the following Theorem 1:

Theorem 1

For a given vector \((\hat{\sigma }_1, \ldots , \hat{\sigma }_p)\), a tuning parameter \(\lambda _0\), and a convergence tolerance \(\delta >0\), let \({\varvec{\beta }}_{-k}^{(r)}\) and \(\mathbf{b}_k^{(r)}\) be iterative solutions at the rth iteration by the CD algorithm with a convergence criterion \(\Vert \varvec{\beta }_{-k}^{(r+1)} - \varvec{\beta }_{-k}^{(r)}\Vert _{\infty } < \delta \) and the PCD algorithm with a convergence criterion \(\Vert \mathbf{B}^{(r+1)} - \mathbf{B}^{(r)}\Vert _\infty < \delta \), respectively. Let \(\hat{\varvec{\beta }}_{-j}\) and \(\hat{\mathbf{b}}_j\) be the solutions of the CD and the PCD that satisfy the given convergence criteria. Suppose that the CD and the PCD algorithms use the same initial point (\(\hat{\varvec{\beta }}_{-k}^{(0)}=\hat{\mathbf{b}}_k^{(0)}, 1\le j \le p)\). Then, \(\Vert \hat{\mathbf{b}}_k - \hat{\varvec{\beta }}_{-k}\Vert _\infty \) is also bounded by \(\delta \).

Proof

As the function \(g(\varvec{\beta }_{-k};\hat{\sigma }_k, \lambda _0)\) is convex with respect to \(\varvec{\beta }_{-k}\), it is easy to show that the coordinate-wise minimization of \(g(\varvec{\beta }_{-k};\hat{\sigma }_k, \lambda _0)\) satisfies the conditions (B1)–(B3) and (C1) in Tseng (2001). To see this, let \(\eta = (\eta _1,\ldots ,\eta _{p-1})^T=(\beta _{1k},\ldots ,\beta _{k-1,k},\beta _{k+1,k},\ldots ,\beta _{pk})^T\), \(\tau = (\tau _1,\ldots ,\tau _{p-1})^T\), and \(\tau _m = \lambda _{m}\) for \(1\le m \le k-1\) and \(\tau _m=\lambda _{m+1}\) for \(k\le m\le p-1\). We further let \(f_0(\eta ) = \frac{1}{2n}\Vert \mathbf{x}_j - \mathbf{X}_{-j} \eta \Vert _2^2\) and \(f_m(\eta _m) = \tau _m | \eta _{m}|\) for \(1\le m \le p-1\), where \(\mathbf{X}_{-j} = (\mathbf{x}_1,\ldots , \mathbf{x}_{j-1},\mathbf{x}_{j+1},\ldots , \mathbf{x}_p)\). Then, we can represent \(g(\varvec{\beta }_{-k};\hat{\sigma }_k, \lambda _0)\) as \(f(\eta )=f_0(\eta ) + \sum _{m=1}^{p-1} f_m(\eta _{m})\). With this representation, it is trivial that \(f_0\) is continuous on \(\mathrm{dom} f_0\) (B1) and \(f_0, f_1, \ldots , f_{p-1}\) are lower semi-continuous (B3). As \(f_0, f_1, \ldots , f_{p-1}\) are convex functions, the function \(\eta _m \mapsto f(\eta _1,\ldots , \eta _{p-1})\) for each \(m\in \{1,\ldots , p-1\}\) and \((\eta _l)_{l\ne m}\) is also convex and hemivariate (B2). The function \(f_0\) also satisfies that \(\mathrm{dom} f_0\) is open and \(f_0\) tends to \(\infty \) at every boundary point of \(\mathrm{dom} f_0\) because the domain of \(f_0(\eta )\) is \(\mathbb {R}^{p-1}\) and \(f_0(\eta )\) is the sum of squares of errors. Thus, by Theorem 5.1 in Tseng (2001), the cyclic CD algorithm guarantees that \(\varvec{\beta }_{-k}^{(r)}\) converges to a stationary point of \(g(\varvec{\beta }_{-k};\hat{\sigma }_k, \lambda _0)\). As the same updating order \((1\rightarrow 2 \rightarrow \cdots \rightarrow p)\) and equation are applied with the same initial values in the proposed CD and PCD algorithms, the sequence \(\{\mathbf{b}_k^{(r)}\}\) by the PCD is equivalent to the sequence \(\{ \varvec{\beta }_{-k}^{(r)}\}\). That is, \(\varvec{\beta }_{-k}^{(r)}=\mathbf{b}_k^{(r)}\) for \(r\ge 0\). Let \(K_{CD}\) and \(K_{PCD}\) be the iteration numbers that satisfies the convergence criteria of the CD and the PCD, respectively. As \(\Vert \varvec{\beta }_{-k}^{(r)} - \varvec{\beta }_{-k}^{(r-1)}\Vert _\infty =\Vert \mathbf{b}_k^{(r)} - \mathbf{b}_k^{(r-1)}\Vert _\infty \le \Vert \mathbf{B}^{(r)} - \mathbf{B}^{(r-1)}\Vert _\infty \), it is satisfied that \(K_{PCD} \ge K_{CD}\). As the p-dimensional Euclidean space with \(L_\infty \)-norm is Banach space, the convergent sequence \(\{ \varvec{\beta }_{-k}^{(r)}\}\) and \(\{ \mathbf{b}_{k}^{(r)}\}\) is a Cauchy sequence. Thus, from the definition of the Cauchy sequence, for a given \(\delta >0\), there exists K such that \(\Vert \varvec{\beta }_{-k}^{(u)} - \varvec{\beta }_{-k}^{(v)}\Vert _\infty < \delta \) for \(u,v \ge K\). Take \(K=K_{CD}\), \(u=K_{CD}\), and \(v=K_{PCD}\). Then, \(\varvec{\beta }_{-k}^{(K_{CD})} = \hat{\varvec{\beta }}_{-k}\) and \(\varvec{\beta }_{-k}^{(K_{PCD})} = \hat{\mathbf{b}}_k\). Hence, the \(L_\infty \)-norm of the difference of \(\hat{\varvec{\beta }}_{-k}\) and \(\hat{\mathbf{b}}_k\) is bounded by \(\delta \). \(\square \)

For convergence of \(\sigma _j\), we also use the same convergence criterion \(|\sigma _j^{(r)}- \sigma _j^{(r-1)}|<\delta \) as in the CD algorithm. To reduce the computational costs in the PCD algorithm, at each iteration, we remove some columns of \(\mathbf{B}\) in the problem if the corresponding \(\sigma _j\) satisfies the convergence criterion \(|\sigma _j^{(r)}- \sigma _j^{(r-1)}|<\delta \). This additional procedure needs the rearrangement of the coefficient matrix \(\mathbf{B}\). In the implementation, we use an index vector and a convergence flag vector to implement the additional rearrangement procedure efficiently. Thus, the PCD algorithm requires more computational costs than the CD algorithm for the SPMESL as the CD algorithm runs consequently for \(j=1,\ldots , p\) and does not need the rearrangement procedure. In the next section, however, we numerically show that the PCD algorithm becomes more efficient compared to the CD algorithm when either the number of variables or the sample size increases, although the PCD has more computational costs.

4 Numerical study

4.1 Data construction and simulation settings

In this section, we numerically investigate the computational efficiency of the proposed CD and PCD algorithms and the estimation performance of the SPMESL with comparisons to other existing methods. To proceed the comparison on various circumstances, we first consider four network structures for the precision matrix defined as follows:

-

AR(1): AR(1) network is also known as a chain graph. We define a precision matrix \(\varOmega \) for AR(1) as

$$\begin{aligned} \varOmega = (\omega _{ij})_{1\le i,j \le p} = \left\{ \begin{array}{ll} 1 &{} \text{ if } i = j \\ 0.48 &{} \text{ if } |i-j| = 1\\ 0 &{} \text{ otherwise } \end{array} \right. \end{aligned}$$ -

AR(4): In AR(4) network, each node is connected to neighborhood nodes whose distance is less than or equal to 4, where the distance of two nodes i and j is defined as \(d(i,j)=|i-j|\). The precision matrix \(\varOmega \) corresponding to AR(4) network is defined as

$$\begin{aligned} \varOmega = (\omega ^{ij})_{1\le i,j \le p} = \left\{ \begin{array}{ll} 0.6^{|i-j|} &{} \text{ if } |i-j| \le 4\\ 0 &{} \text{ otherwise } \end{array} \right. \end{aligned}$$ -

Scale-free: Degrees of nodes follow the power-law distribution having a form \(P(k) \propto k^{-\alpha }\), where P(k) is a fraction of nodes having k connections and \(\alpha \) is a preferential attachment parameter. We set \(\alpha = 2.3\), which is used in Peng et al. (2009), and we generate a scale-free network structure by using the Barabási and Albert (BA) model (Barabási and Albert 1999). With the generated network structure \(G=(V,E)\), we define a precision matrix corresponding to \(G=(V,E)\) by following the steps applied in (Peng et al. 2009): \( (i)~ \tilde{\varOmega } = (\tilde{\omega }_{ij})_{1\le i,j \le p} = \left\{ \begin{array}{ll} 1 &{} \text{ if } i = j \\ U &{} \text{ if } (i,j) \in E\\ 0 &{} \text{ otherwise } \end{array} \right. , \) \( U \sim \mathcal {U}([-1,-0.5]\cup [0.5,1])\). (ii) \(\varOmega =({\omega }_{ij})_{1\le i,j \le p} =\frac{\tilde{\omega }_{ij}}{1.5 \sum _{k\ne i} |\tilde{\omega }^{ik}|} \) (iii) \(\varOmega = (\varOmega + \varOmega ^T)\big /2\) (iv) \(\omega _{ii} = 1\) for \(i=1,2,\ldots ,p\)

-

Hub: Following (Peng et al. 2009), for \(p=100\), a hub network consists of 10 hub nodes whose degrees are around 15 and 90 non-hub nodes whose degrees lie between 1 and 3. Edges in the hub network are randomly selected with the above conditions. With the given network structure \(G=(V,E)\), we generate a precision matrix by the procedure described in the scale-free network generation.

To avoid nonzero elements having considerably small magnitudes (i.e., the absolute value of element), we generate (p/100) subnetworks, each of which consists of 100 nodes, and set nonzero elements having magnitudes less than 0.1 to 0.1 (i.e., \(\mathrm{sign}(\omega _{ij})/10\)) for the scale-free and hub networks. For example, we generate five subnetworks having 100 nodes when \(p=500\) for the scale-free and hub networks. We depict the generated four network structures in Fig. 3 for \(p=500\). Among four networks, AR(1) and AR(4) networks correspond the circumstances that the variables are measured in a specific order, and the hub and scale-free networks are frequently observed in real-world problems such as the gene regulatory networks and functional brain networks.

For comparison of the computational efficiency, we consider the number of variables \(p=1000\), and sample size \(n=250, 500\). To provide a benchmark of the computational efficiency, we also consider the well-known existing methods such as the CLIME (Cai et al. 2011), graphical lasso (GLASSO) (Friedman et al. 2008), and convex partial correlation estimation method (CONCORD) (Khare et al. 2015). As the computation times of the algorithms are affected by the level of sparsity on the estimate of the precision matrix, we set \(\lambda _0 = \sqrt{4n^{-1}\log p}\) for LARS-CPU (SPMESL-LARS) and CD-CPU (SPMESL-CD). Further, we search the tuning parameters for other methods to obtain the level of sparsity similar to the one obtained by the SPMESL. For example, for AR(1) and AR(4) networks with \(p=1000\), the averages of the estimated edges of all methods are around 1000, which is 0.2% of the total possible edges. With the chosen penalty levels, the computation time for each method is measured in CPU time (seconds) by using a workstation (Intel(R) Xeon(R) W-2175 CPU (base: 2.50 GHz, max-turbo: 4.30 GHz) and 128 GB RAM) with NVIDIA GeForce GTX 1080 Ti. We measure the average computation times and standard errors over 10 datasets.

For comparison of the estimation performance, we consider evaluating the estimation performance of the SPMESL with \(\lambda _{pb}=\sqrt{2} L_n (k/p)\), \(\lambda _{univ}=\sqrt{2 n^{-1} \log (p-1)}\), and \(\lambda _{ub}=\sqrt{4 n^{-1} \log p}\) as there are three different suggestions for \(\lambda _0\) without a comparison of the estimation performance, where k is a real solution of \(k=L_1^4(k/p) + 2 L_1^2(k/p)\), \(L_n(t) = n^{-1/2} \varPhi ^{-1}(1-t)\), and \(\varPhi ^{-1}(t)\) is the standard normal quantile function. Here, we denote the SPMESL with \(\lambda _{pb}\), \(\lambda _{univ}\), \(\lambda _{ub}\) as SPMESL-P, SPMESL-2, SPMESL-4, respectively. As described in the computational efficiency comparison, we apply the three existing methods (CLIME, GLASSO, CONCORD) to provide a benchmark of the estimation performance. Hereafter, we referred these three methods to the tuning-search methods. To measure the estimation performance, we consider five performance measures– sensitivity (SEN), specificity (SPE), false discovery rate (FDR), miss-classification error rate (MISR), and Matthew’s correlation coefficients (MCC)–for identifying the true edges and the Frobenius norm of \(\varvec{\varOmega }^0 - \hat{\varvec{\varOmega }}\) for estimation error, where \(\varvec{\varOmega }^0\) and \(\hat{\varvec{\varOmega }}\) denote the true precision matrix and the estimate of the precision matrix, respectively. The five performance measures for identification of the true edges are defined as follows:

where \(\mathrm{TP} = \sum _{j<k} I(\hat{\omega }_{jk}\ne 0, \omega ^0_{jk}\ne 0)\), \(\mathrm{FP} = \sum _{j<k} I(\hat{\omega }_{jk}\ne 0, \omega ^0_{jk}= 0)\), \(\mathrm{TN} = \sum _{j<k} I(\hat{\omega }_{jk}= 0, \omega ^0_{jk}= 0)\), and \(\mathrm{FN} = \sum _{j<k} I(\hat{\omega }_{jk}= 0, \omega ^0_{jk} \ne 0)\),

In the comparison of the estimation performance, we set the number of variables and sample size as 500 and 250, respectively. We generate samples \(\mathbf{X}^1, \mathbf{X}^2,\ldots , \mathbf{X}^n \sim N(\mathbf{0},\varvec{\varSigma })\), where \(\varvec{\varSigma } = \varvec{\varOmega }^{-1}\) and \(\varvec{\varOmega }\) is from a given network structure. Unlike the comparison of computational efficiency, we need to choose criteria for the selection of the optimal tuning parameter of the CLIME, GLASSO, and CONCORD. For a fair comparison, we adopt the Bayesian information criterion (BIC), which is widely used in model selection, and search the optimal tuning parameter over an equally spaced grid \((0.10, 0.12, \ldots , 0.88, 0.90)\). We generate 50 datasets, each of which we search the optimal tuning parameters for the CLIME, GLASSO, and CONCORD and apply \(\lambda _{pb}\), \(\lambda _{univ}\), \(\lambda _{ub}\) for the SPMESL.

In this numerical study, we implement R package cdscalreg for the CD algorithm with a warm start strategy for the scaled Lasso and SPMESL, which is available at https://sites.google.com/view/seunghwan-lee/software. For the other methods in this study, we use R packages fastclime for FASTCLIME, glasso for GLASSO, gconcord for CONCORD, scalreg for the original algorithm for SPMESL. Note that consider FASTCLIME (Pang et al. 2014) for the CLIME, which obtains the CLIME estimator efficiently and provides a solution path of the CLIME applying the parametric simplex method. It is also worth noting that the GPUs we used are more efficient for conducting operations with single-precision values than double-precision values, but the R programming language only supports the double-precision that makes the efficiency of the GPU-parallel computation decrease. Although this PCD implementation could decrease its computational efficiency, we develop an R function for the PCD algorithm to provide an efficient and convenient tool for R users. If readers want to utilize GPU-parallel computation maximally, PyCUDA (Klöckner et al. 2012) is one of the convenient and favorable ways to implement CUDA GPU-parallel computation.

4.2 Comparison results for computational efficiency

Table 1 reports the average computation times and standard errors over 10 datasets. From Table 1, we numerically verify that the proposed algorithm based on the CD with warm-start strategy is more efficient than the original algorithm based on the LARS, where the proposed algorithm (CD-CPU) is 110.2 and 466.3 times faster than LARS-CPU for the worst case (AR(1), \(n=250\)) and the best case (AR(4), \(n=500\)), respectively. For comparison of efficiency with other methods, overall, the CD-CPU is faster than FASTCLIME and slower than GLASSO and CONCORD. GLASSO is the most efficient algorithm in our numerical study. Its efficiency is from the sub-procedure that reduces the computational cost by the pre-identification procedure for nonzero block diagonals of the estimate that rearranges the order of variables for a given tuning parameter described in Witten et al. (2011). The CONCORD is faster than the CD-CPU in general because the CD algorithm for the CONCORD is applied to minimize its objective function directly. However, the CD algorithm in the CD-CPU is repeatedly applied to solve the lasso subproblems. Even though the GLASSO and CONCORD are faster than the CD-CPU, the GLASSO and CONCORD are tuning-search methods while the CD-CPU is not. Thus, the CD-CPU becomes the most efficient when the GLASSO and CONCORD need to evaluate more than five tuning parameters. For the efficiency of the PCD-GPU, we can see that the PCD-GPU is faster than CD-CPU for all cases except for the case of (Hub, \(n=250\)). In addition, Table 1 also shows that the efficiency of the PCD-GPU increases as the sample size increases. For example, all computation times of CD-CPU significantly increase when the sample size increases from 250 to 500. However, there is no significant difference on the computation times of PCD-GPU between the sample sizes 250 and 500. This might show that the GPU device has idle processing units when \(n=250\). Note that the estimator of the SPMESL can be obtained by solving p scaled Lasso problems independently on multi CPU cores instead of on GPUs. However, the cost per core of CPU is more expensive than that of GPU. Moreover, the average computation times of the parallel computation of the CD-CPU with 16 CPU cores with the R package doParallel (PCD-MPI in Table 1) are around 20 seconds, which are worse than CD-CPU. This inefficiency might be from the communication cost and the number of CPU cores not enough for large p.

To verify the efficiency of PCD-GPU compared to CD-CPU, we conduct additional numerical studies for CD-CPU and PCD-GPU with \(p=500, 1000, 2000, 5000\) and \(n=250, 500, 1000\). Table 2 reports the average computation times and standard errors measured in CPU time (seconds) for CD-CPU and PCD-GPU. As shown in Table 2, PCD-GPU becomes more efficient than CD-CPU when either the number of variables or the sample size increases. For example, CD-CPU is 1.05\(\sim \)1.94 times faster than PCD-GPU only for the Hub-network cases of \((p,n)=(500,250), (500,500), (1000,250)\); however, PCD-GPU outperforms CD-CPU for all the other cases and 4.71\(\sim \)11.65 times faster than CD-CPU when \(p=5000\). In Table 2, we also find an advantage of the GPU-parallel computation. Originally, the parallel computation in PCD-GPU applied to reduce the computational cost depends on the number of variables. However, the additional numerical studies support that the parallel computation in PCD-GPU also reduces the computation times when the number of samples increases for a fixed p. This advantage is from the efficiency of the GPU-parallel computation for the matrix-matrix and matrix-vector multiplications. Thus, the additional numerical studies show that PCD-GPU is favorable for the cases where either p or n is sufficiently large.

4.3 Comparison results for estimation performance

Tables 3 and 4 report the averages of the number of estimated edges (\(|\hat{E}|\)) and the six performance measures over 50 data sets for AR(1), AR(4), Scale-free, and Hub networks. From the results in Tables 3 and 4, we can find several interesting features. First, focusing on comparing SPMESL-P, SPMESL-2, and SPMESL-4, the SPMESL-P obtains the smallest estimation error in Frobenius norm, and the SPMESL-4 has the largest estimation error for all network structures. The estimation error of SPMESL-2 is located near the middle of an interval defined by the estimation errors of the SPMESL-P and SPMESL-4. However, for the performance in the identification of the true edges, the SPMESL-4 has the smallest SEN and FDR for all network structures, and the SPMESL-2 obtains the lowest MISR and the highest MCC for Scale-free and Hub networks while the SPMESL-P has the highest MISR and the lowest MCC for all network structures. For AR(1) and AR(4) network structures, the MISR and the MCC of the SPMESL-2 are worse than those of the SPMESL-4, but the differences of the SPMESL-2 and the SPMESL-4 are small. For example, the difference in the MCC of the SPMESL-2 and the SPMESL-4 are 0.0195 and 0.0095 in an original scale for AR(1) and AR(4), respectively.

Second, by comparing the SPMESL and the tuning-search methods (CLIME, GLASSO, CONCORD), the SPMESL-2 and SPMESL-4 obtain considerably small FDRs compared to the tuning-search methods. For example, the FDRs by the tuning-search methods are over 27% for all cases, but the FDRs by the SPMESL-2 and the SPMESL-4 are less than 6.2%. The SPMESL-P has the lowest MCC and the highest FDR for Scale-free and Hub networks and obtains the second-lowest MCC and the second-highest FDR for AR(4) networks, where only the GLASSO is worse than the SPMESL-P in terms of the MCC and FDR. For AR(1) network, the SPMESL with all penalty levels are better than the tuning-search methods for identifying the true edges, and the estimation errors of the SPMESL-P and SPMESL-2 are less than those of the tuning-search methods.

Finally, we compare the estimation performance of the tuning-search methods. For the estimation error in the Frobenius norm, the CONCORD outperforms CLIME and GLASSO for all cases, where the CONCORD obtains similar estimation errors to the SPMESL-P for AR(4), Scale-free, and Hub networks. However, for the identification of the true edges, the CLIME obtains slightly better performance than the CONCORD for all networks except the AR(1) network. For the AR(1) network, the CONCORD outperforms CLIME and GLASSO for estimation error and identification of the true edges. In our numerical study, the CONCORD is favorable among the three tuning-search methods we consider. Note that we adopt the BIC for three tuning-search methods for a fair comparison, but the comparison results might be changed if we apply other model selection criteria.

From the results of the estimation performance comparison, we recommend the SPMESL with the universal penalty level \(\lambda _{univ}\) if the target problem can accept the FDR level around 5%; the uniform-bound penalty level is only preferred when the problem only accepts the small FDR less than 1%. Note that we do not recommend using the probabilistic bound \(\lambda _{pb}\) as the SPMESL-P has the highest FDR among three penalty levels for the SPMESL, which are over 50% for Scale-free and Hub networks, although the SPMESL-P obtains the lowest estimation error in Frobenius norm.

5 Conclusion

In this paper, we proposed an efficient coordinate descent algorithm with the warm start strategy for sparse precision matrix estimation using the scaled lasso motivated by the empirical observation that the iterative solution for the diagonal elements of the precision matrix needs only a few iterations. In addition, we also develop the parallel coordinate descent algorithm (PCD) for the SPMESL by representing p Lasso subproblems as the unified minimization problem. In the PCD algorithm, we use a different convergence criterion \(\Vert \mathbf{B}^{(k+1)} - \mathbf{B}^{(k)}\Vert _\infty < \delta \) to check the convergence of the PCD algorithm for the unified minimization problem. We show that the difference in the iterative solutions of the CD and PCD caused by the difference of the convergence criteria is also bounded by the convergence tolerance \(\delta \).

Our numerical study shows that the proposed CD algorithm is much faster than the original algorithm of the SPMESL, which adopts the LARS algorithm to solve the Lasso subproblems. Moreover, the PCD algorithm with GPU-parallel computation becomes more efficient than the CD algorithm when either the number of variables or the sample size increases. For the optimal tuning parameter for the SPMESL, there are three suggestions without the comparison of the estimation performance. In the additional simulation, we numerically investigate the estimation performance of the SPMESL with the three penalty levels and the other three tuning-search methods. From the comparison results, the SPMESL with the uniform bound level and the universal penalty level outperform the three tuning-search methods. Specifically, the probabilistic bound level \(\lambda _{pb} = \sqrt{2} L_n (k/p)\) provides the estimate that has the smallest estimation error in terms of the Frobenius norm; the uniform bound level \(\lambda _{ub} = \sqrt{4 n^{-1} \log (p)}\) provides the estimate that has the smallest FDR less than 1%; and the universal penalty level \(\lambda _{univ} = \sqrt{2 n^{-1} \log (p-1)}\) obtains either the best or second-best in terms of MCC and FDR. Overall, we recommend the SPMESL with the universal penalty level and apply the CD algorithm if p is less than or equal to 1000 and the PCD algorithm when the target problem has more than 1000 variables and GPU-parallel computation is available.

References

Ali A, Khare K, Oh S-Y, Rajaratnam B (2017) Generalized pseudolikelihood methods for inverse covariance estimation. In: Proceedings of the 20th international conference on artificial intelligence and statistics vol 54, pp 280–288

Barabási A, Albert R (1999) Emergence of scaling in random networks. Science 286:509–512. https://doi.org/10.1126/science.286.5439.509

Bradely Efron B, Hastie T, Johnstone I, Tibshirani R (2004) Least angle regression. Ann Stat 32:407–499. https://doi.org/10.1214/009053604000000067

Bickel PJ, Levina E (2008) Regularized estimation of large covariance matrices. Ann Stat 36:199–227. https://doi.org/10.1214/009053607000000758

Bickel PJ, Levina E (2008) Covariance regularization by thresholding. Ann Stat 36:2577–2604. https://doi.org/10.1214/08-AOS600

Cai TT, Zhang CH, Zhou HH (2010) Optimal rates of convergence for covariance matrix estimation. Ann Stat 38:2118–2144. https://doi.org/10.1214/09-AOS752

Cai T, Liu W (2011) Adaptive thresholding for sparse covariance matrix estimation. J Am Stat Assoc 106:672–684. https://doi.org/10.1198/jasa.2011.tm10560

Cai TT, Liu W, Luo X (2011) A constrained \(\ell _1\) minimization approach to sparse precision matrix estimation. J Am Stat Assoc 106:594–607. https://doi.org/10.1198/jasa.2011.tm10155

Cai TT, Zhou HH (2012) Minimax estimation of large covariance matrices under \(\ell _1\) norm. Stat Sin 22:1319–1349. https://doi.org/10.5705/ss.2010.253

Cai TT, Liu W, Zhou HH (2016) Estimating sparse precision matrix: optimal rates of convergence and adaptive estimation. Ann Stat 44:455–488. https://doi.org/10.1214/13-AOS1171

Friedman J, Hastie T, Tibshirani R (2008) Sparse inverse covariance estimation with the graphical Lasso. Biostatistics 9:432–441. https://doi.org/10.1093/biostatistics/kxm045

Khare K, Oh S-Y, Rajaratnam B (2015) A convex pseudolikelihood framework for high dimensional partial correlation estimation with convergence guarantees. J R Stat Soc Series B 77:803–825

Klöckner A, Pinto N, Lee Y, Catanzaro B, Ivanov P, Fasih A (2012) PyCUDA and PyOpenCL: a scripting-based approach to GPU run-time code generation. Parallel Comput 38:157–174. https://doi.org/10.1016/j.parco.2011.09.001

Mazumder R, Hastie T (2012) The graphical lasso: new insights and alternatives. Electr J Stat 6:2125–2149. https://doi.org/10.1214/12-EJS740

Meinshausen N, Bühlmann P (2006) High dimensional graphs and variable selection with the Lasso. Ann Stat 34:1436–1462. https://doi.org/10.1214/009053606000000281

Pang H, Liu H, Vanderbei R (2014) The FASTCLIME package for linear programming and large-scale precision matrix estimation in R. J Mach Learn Res 15:489–493

Peng J, Wang P, Zhou N, Zhu J (2009) Partial correlation estimation by joint sparse regression models. J Am Stat Assoc 104:735–746. https://doi.org/10.1198/jasa.2009.0126

Ren Z, Sun T, Zhang C-H, Zhou HH (2015) Asymptotic normality and optimalities in estimation of large Gaussian graphical model. Ann Stat 43:991–1026. https://doi.org/10.1214/14-AOS1286

Rothman AJ, Levina E, Zhu J (2009) Generalized thresholding of large covariance matrices. J Am Stat Assoc 104:177–186. https://doi.org/10.1198/jasa.2009.0101

Schäfer J, Strimmer K (2005) A shrinkage approach to large-scale covariance matrix estimation and implications for functional genomics. Stat Appl Genet Mol Biol 4:32. https://doi.org/10.2202/1544-6115.1175

Sun T, Zhang CH (2012) Scaled sparse linear regression. Biometrika 99:879–898. https://doi.org/10.1093/biomet/ass043

Sun T, Zhang CH (2013) Sparse matrix inversion with scaled lasso. J Mach Learn Res 14:3385–3418

Tibshirani R (1996) Regression shrinkage and selection via the Lasso. J Roy Stat Soc B 58:267–288. https://doi.org/10.1111/j.2517-6161.1996.tb02080.x

Tseng P (2001) Convergence of a block coordinate descent method for nondifferentiable minimization. J Optim Theory Appl 109:475–494. https://doi.org/10.1023/A:1017501703105

van de Geer S, Bühlmann P (2009) On the conditions used to prove oracle results for the Lasso. Electr J Stat 3:1360–1392. https://doi.org/10.1214/09-EJS506

Witten D, Friedman J, Simon N (2011) New insights and faster computations for the graphical lasso. J Comput Graph Stat 20:892–900. https://doi.org/10.1198/jcgs.2011.11051a

Wu TT, Lange K (2008) Coordinate descent algorithms for lasso penalized regression. Ann Appl Stat 2:224–244. https://doi.org/10.1214/07-AOAS147

Wu WB, Pourahmadi M (2009) Banding sample autocovariance matrices of stationary processes. Stat Sin 19:1755–1768

Yao J, Zheng S, Bai Z (2015) Large sample covariance matrices and high-dimensional data analysis. Cambridge University Press, New York, NY. https://doi.org/10.1017/CBO9781107588080

Yuan M, Lin Y (2007) Model selection and estimation in the Gaussian graphical model. Biometrika 94:19–35. https://doi.org/10.1093/biomet/asm018

Yuan M (2010) Sparse inverse covariance matrix estimation via linear programming. J Mach Learn Res 11:2261–2286

Acknowledgements

Donghyeon Yu’s research was supported by the INHA UNIVERSITY Research Grant and the National Research Foundation of Korea (NRF-2020R1F1A1A01048127).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Lee, S., Kim, S.C. & Yu, D. An efficient GPU-parallel coordinate descent algorithm for sparse precision matrix estimation via scaled lasso. Comput Stat 38, 217–242 (2023). https://doi.org/10.1007/s00180-022-01224-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00180-022-01224-5