Abstract

Generalized linear models with categorical explanatory variables are considered and parameters of the model are estimated by an exact maximum likelihood method. The existence of a sequence of maximum likelihood estimators is discussed and considerations on possible link functions are proposed. We then focus on two particular positive distributions: the Pareto 1 distribution and the shifted log-normal distribution. Finally, the approach is illustrated on an actuarial dataset to model insurance losses.

1 Introduction

The assumption of identical distributions for random variables in an observation sample is relaxed for regression models by considering explanatory variables. Generalized linear models (GLMs) were introduced by Nelder and Wedderburn (1972) and popularized in McCullagh and Nelder (1989). GLMs rely on probability distribution functions of exponential type for the response variable which include most of the light and medium tailed distributions (such as normal, gamma or inverse Gaussian). Asymptotic properties of sequences of maximum likelihood estimators (MLE) for GLMs were studied by Fahrmeir and Kaufmann (1985).

The finite-sample properties of the MLE for specific GLMs have been discussed in depth in the statistical literature (see Fienberg 2007; Haberman 1974). In addition, there is a large literature on the finite-sample properties of the MLE for logistic regression models (see e.g. Albert and Anderson 1984; Silvapulle 1981).

Regression models for heavy-tailed distributions have been mainly studied from the point of view of extreme value analysis, see Beirlant et al. (2004) for a review. A regression model for the generalized Pareto distribution (GPD) where the scale parameter depends on covariates is described in Davison and Smith (1990) with a least squares estimation procedure and a model checking method. Beirlant et al. (1998) propose a Burr model by regressing the shape parameter with an exponential link on explanatory variables. In the aforementioned article, a simulation study with one explanatory variable is detailed as well as an application to fire insurance. Residual plots and asymptotic convergence towards the normal distribution are also discussed. Similarly, Ozkok et al. (2012) propose a regression model for the Burr distribution where the scale parameter depends on covariates.

An estimation of the extremal tail index (used in generalized extreme value (GEV) distributions and GPD) by considering a class of distribution functions with an exponential link on explanatory variables is also described in Beirlant and Goegebeur (2003). Using generalized residuals of the explanatory variables makes the estimation of the tail index possible. Still within the extreme value theory approach, Chavez-Demoulin et al. (2015) and Hambuckers et al. (2016) both propose a semi-parametric regression model for GEV and GPD where the explanatory variables are time or known factor levels. They assume that all parameters depend on covariates and also use exponentially distributed residuals.

Outside the extreme value theory framework, there is also a literature on covariate modelling. For instance, the so-called double GLMs, where the dispersion parameter also depends on covariates, have been studied by McCullagh and Nelder (1989, Chap. 10) or Smyth and Verbyla (1999). Furthermore, Rigby and Stasinopoulos (2005) propose a general regression framework where all parameters are modeled by explanatory variables and the distribution is not restricted to the exponential family. The only restriction that the authors impose is the twice differentiability of the density function w.r.t. parameters. However, there is no clear convergence result for the proposed estimators. Among the proposed distributions, Rigby and Stasinopoulos (2005) use the 1-parameter Pareto, log-logistic (a special case of the Pareto 3 distribution) and GEV distributions.

In this paper, we propose closed-form estimators for GLMs in the case of categorical variables. The expression is valid for any distribution belonging to the one-parameter exponential family and any link function. To our knowledge, only Lipovetsky (2015) provides an explicit solution in the special case of a logit regression with categorical predictors. He assumes the response variable follows a Bernoulli distribution with a canonical link function and a particular set-up of intercept.

The paper then applies these formulas not to classical distributions, but to distributions for which the log-transformed variable has a distribution in the exponential family. Therefore, the choice of probability distributions in this paper is led by two aspects: distributions with positive values and distributions for which the log-transformed variable belongs to the exponential family. The considered distributions have heavier tails than the exponential distribution. We choose to study two distributions: the Pareto 1 distribution and the shifted lognormal distribution with fixed threshold parameters. We could have considered the log-logistic and GEV distributions, which are also appropriate in many situations, but these distributions do not belong to the exponential family.

Applications of these distributions can be found in various disciplines such as finance, insurance, reliability theory, etc. Here, we are interested in an application to insurance loss modeling. Making insurance tariffs consists in appropriately selecting and transforming explanatory variables so that the prediction fairly estimates the mean of the response variable. When the relation between the transformed response variable and an explanatory variable is not affine, non-linearities are generally accommodated in three ways: binning the variable, adding polynomial terms or using piecewise linear functions in the predictor (see e.g. Goldburd et al. 2016). In this paper, we focus mainly on the first approach where continuous variables (typically the age of the policyholder) have been discretized so that explanatory variables are categorical.

More precisely, pricing non-life insurance relies on estimating the claim frequency and the claim severity distributions. The former is generally estimated by a regression model such as Poisson or zero-inflated models. However for modeling claim severity, we commonly split the claim dataset between attritional and atypical claims. A threshold \(\mu \) is chosen either from the extreme value theory or by expert judgments. A classical GLM such as gamma or inverse-Gaussian is fitted on attritional claim amounts below \(\mu \) (see e.g. Ohlsson and Johansson 2010). Atypical claim amounts above \(\mu \) are not necessarily modeled at all. An empirical rule of the insurance pricing is used to mutualize atypical claims over the portfolio, i.e. the aggregate sum of atypical claims is shared equally among all policies. We aim at providing a regression model for those claims above \(\mu \) in order to refine this empirical rule.

The threshold \(\mu \) can also be interpreted in another insurance context. Generally in non-life insurance, contracts are underwritten with a deductible. This has two consequences: the policyholder will retain the risk of claims below the deductible; and the insurer will only know and be interested in claims above the deductible. In the numerical section, we consider only the example of large claim modeling.

The paper is organized as follows. In Sect. 2, we present the GLMs. Section 3 provides exact formulas for the MLE in the case of categorical explanatory variables. Section 4 is dedicated to the Pareto 1 GLM, while Sect. 5 is dedicated to the shifted lognormal GLM. Finally, a simulation analysis is provided in Sect. 6 and an application to an actuarial dataset is carried out in Sect. 7, before Sect. 8 concludes.

2 Preliminaries on generalized linear models

In this section, we consider the estimation problem in GLMs. We consider deterministic exogenous variables \(\varvec{x}_1, \ldots , \varvec{x}_n\), with \(\varvec{x}_i = (x_i^{(1)},\ldots ,x_i^{(p)})\)\(\in \mathbb {R}^p\) for \(i=1,\ldots ,n\).

In the following, for the sake of clarity, bold notations are reserved for vectors of \(\mathbb {R}^p\) and bold notations with an underline are reserved for vectors of \(\mathbb {R}^n\). The index \(i\in I=\{1,\dots ,n\}\) is reserved for the observations, while the indexes j, k, l are used for the explanatory variables.

In this setting, the sample \(\underline{\varvec{Y}} = (Y_1,\ldots , Y_n)\) is composed of real-valued independent random variables; each one belongs to a family of probability measures of one-parameter exponential type with respective parameters \(\lambda _1, \ldots , \lambda _n\) valued in \(\varLambda \subset \mathbb {R}\).

Precisely, the likelihood L associated to the statistical experiment generated by \(Y_i\), \(i\in I\) verifies

and \(-\,\infty \) if \(y_i\notin \mathbb {Y}\), where \(a:\mathbb {R}\rightarrow \mathbb {R}\), \(b:\varLambda \rightarrow \mathbb {R}\) and \(c:\mathbb {X}\times \mathbb {R}\rightarrow \mathbb {R}\) are known real-valued measurable functions and \(\phi \) is the dispersion parameter (e.g. McCullagh and Nelder 1989, Section 2.2).
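In the notation just introduced, a likelihood of one-parameter exponential type is usually written as below. This is a sketch assuming the standard parametrization of McCullagh and Nelder (1989), with \(\mathbb {Y}\) the support of \(Y_i\):

```latex
\[
\log L(\lambda_i \,|\, y_i) = \frac{\lambda_i\, y_i - b(\lambda_i)}{a(\phi)} + c(y_i,\phi),
\qquad y_i \in \mathbb{Y}.
\]
```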

In Eq. (1), the parameters \(\lambda _1, \ldots , \lambda _n\) depend on a finite-dimensional parameter \(\varvec{\vartheta } \in \varTheta \subset \mathbb {R}^p\). Direct computations lead to
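Assuming b is twice differentiable on \(\varLambda \), the standard moment identities for this family, which are the expressions used later for the predicted mean and variance, are:

```latex
\[
\mathbf{E}_{\varvec{\vartheta}} Y_i = b'(\lambda_i),
\qquad
\text{Var}_{\varvec{\vartheta}}\, Y_i = a(\phi)\, b''(\lambda_i).
\]
```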

Using a twice continuously differentiable and bijective function g from \(b^\prime (\varLambda )\) to \(\mathbb {R}\), the GLM are defined by assuming the following relation between the expectation \(\mathbf {E}_{\varvec{\vartheta }} Y_i\) and the predictor

where \(\eta _i\) are the linear predictors and \(\langle .,.\rangle \) denotes the scalar product. In other words, the bijective function \(\ell =(b^\prime )^{-1}\circ g^{-1}\) is set; then we have
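Spelled out as a sketch, and assuming the mean relation \(\mathbf {E}_{\varvec{\vartheta }} Y_i = b'(\lambda _i)\) of the exponential family, the link determines the parameter through the following chain:

```latex
\[
g\big(\mathbf{E}_{\varvec{\vartheta}} Y_i\big) = \eta_i = \langle \varvec{x}_i, \varvec{\vartheta}\rangle
\;\Longrightarrow\;
b'(\lambda_i) = g^{-1}(\eta_i)
\;\Longrightarrow\;
\lambda_i = (b')^{-1}\big(g^{-1}(\eta_i)\big) = \ell(\eta_i).
\]
```

In particular, when \(\ell \) is the identity the parameter \(\lambda _i\) coincides with the linear predictor \(\eta _i\).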

We summarize with the following relations

where D is the space of linear predictors and X the set of possible values of \(\varvec{x}_i\) for \(i\in I\). Here \(\ell \) is chosen and, consequently, \(\varTheta \), \(\varLambda \) and D must be set.

The parameter \(\varvec{\vartheta } \in \varTheta \subset \mathbb {R}^p\) is to be estimated and g is called the link function in the regression framework. We speak of the canonical link function when \(\ell \) is the identity function.

Let us compute the log-likelihood of \(\underline{\varvec{y}}=(y_1,\ldots ,y_n)\):

with b, h and \(\ell \) being respectively defined in (1) and (3). Here, the vector of the parameters \(\varvec{\vartheta }\) is unknown. If the model is identifiable, it can be shown that the sequence of MLE \((\widehat{\varvec{\vartheta }}_n)_{n\ge 1}\) defined by \( \widehat{\varvec{\vartheta }}_n = \arg \max _{\varvec{\vartheta }\in \varTheta } L (\varvec{\vartheta }\,|\,\underline{\varvec{y}})\) asymptotically exists and is consistent (for example Fahrmeir and Kaufmann 1985, Theorem 2, 4).

The MLE \(\widehat{\varvec{\vartheta }}_n\), if it exists, is the solution of the nonlinear system

where \(S_j(\varvec{\vartheta })\) are the components of the score vector defined by
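As a sketch of where the score comes from, assume the exponential-type log-likelihood with \(\lambda _i = \ell (\eta _i)\) and \(\eta _i = \langle \varvec{x}_i, \varvec{\vartheta }\rangle \). Summing over the independent observations and differentiating with respect to \(\vartheta _j\) gives:

```latex
\[
\log L(\varvec{\vartheta}\,|\,\underline{\varvec{y}})
= \sum_{i=1}^n \left( \frac{y_i\,\ell(\eta_i) - b(\ell(\eta_i))}{a(\phi)} + c(y_i,\phi) \right),
\]
\[
S_j(\varvec{\vartheta})
= \frac{\partial \log L}{\partial \vartheta_j}(\varvec{\vartheta}\,|\,\underline{\varvec{y}})
= \frac{1}{a(\phi)} \sum_{i=1}^n x_i^{(j)}\, \ell'(\eta_i)\, \big( y_i - b'(\ell(\eta_i)) \big),
\qquad j = 1,\ldots,p.
\]
```

The factor \(1/a(\phi )\) is common to all components, so the roots of the score do not involve \(\phi \).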

It is worth mentioning that for a small data set (small n) or a large number of explanatory variables, the existence of the MLE is not guaranteed. Note that the MLE \(\widehat{\varvec{\vartheta }}_n\) does not depend on the value of the dispersion parameter \(\phi \). Indeed, the dispersion parameter is estimated in a second step using the sum of squared residuals or the log-likelihood (see e.g. McCullagh and Nelder 1989, Chap. 9).

In a general setting, the system (5) does not have a closed-form solution and GLMs are generally fitted using a Newton-type method, such as the iteratively re-weighted least squares (IWLS) algorithm, also referred to as the Fisher scoring algorithm (see e.g. McCullagh and Nelder 1989).

In the case of categorical explanatory variables described later on, the non-asymptotic existence of the MLE depends on the conditional distribution and the chosen link function (see Examples 4.1, 4.2 and 4.3 in Sect. 4.2).

3 A closed-form MLE for categorical explanatory variables

In any regression model, categorical or nominal explanatory variables have to be coded since their value is a name or a category. When the possible values are unordered, it is common to use a binary incidence matrix or dummy variables where each row has a single unity in the column of the class to which it belongs. In the case of ordered values, a contrast matrix has to be used (see e.g. Venables and Ripley 2002).

3.1 A single explanatory variable

Let us first consider the case of a single categorical explanatory variable. That is \(p=2\) and for all \(i\in I\), \(x_i^{(1)}=1\) is the intercept and \(x_i^{(2)}\) takes values in a set of d modalities \(\{v_{1},\dots ,v_{d}\}\) with \(d>2\). We define the incidence matrix \((x_i^{(2),j})_{i,j}\) where \(x_i^{(2),j}= \varvec{1}_{x_i^{(2)} = v_j}\) is the binary dummy of the jth category for \(i\in I\) and \(j\in J=\{1,\ldots ,d\}\). From this incidence matrix, we compute the number of occurrences \(m_j>0\) of the jth category and \(\overline{y}_n^{(j)}\), the mean value of \(\underline{\varvec{y}}\) taken over the jth category, by
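The two quantities just described are a column sum of the incidence matrix and a weighted average, i.e. \(m_j = \sum _{i} x_i^{(2),j}\) and \(\overline{y}_n^{(j)} = \frac{1}{m_j}\sum _{i} y_i x_i^{(2),j}\). A minimal sketch in plain Python follows; the function names and the toy data are hypothetical and serve only as an illustration.

```python
def incidence_matrix(categories, levels):
    """Return the n x d binary matrix with entry 1 when observation i has level j."""
    return [[1 if c == v else 0 for v in levels] for c in categories]


def group_counts_and_means(y, categories, levels):
    """Compute m_j (number of occurrences) and the mean of y within each level."""
    X = incidence_matrix(categories, levels)
    counts, means = [], []
    for j in range(len(levels)):
        m_j = sum(row[j] for row in X)
        if m_j == 0:
            raise ValueError(f"level {levels[j]!r} never occurs, so m_j > 0 fails")
        total = sum(y_i * row[j] for y_i, row in zip(y, X))
        counts.append(m_j)
        means.append(total / m_j)
    return X, counts, means


# Hypothetical toy data: three age bands and six responses.
levels = ["young", "middle", "senior"]
categories = ["young", "senior", "middle", "young", "senior", "senior"]
y = [4.0, 1.0, 3.0, 6.0, 2.0, 3.0]

X, counts, means = group_counts_and_means(y, categories, levels)
```

Each row of `X` contains exactly one 1, which is the row-sum property used in the next paragraph.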

By construction, this incidence matrix has rows that sum to 1. Therefore, if we combine the incidence matrix with a 1-column for the intercept, \((x_i^{(1)}, x_i^{(2),j})_{i,j}\), a redundancy appears. We must choose either to use no intercept, to drop one column for a particular modality of \(x_i^{(2)}\), or to use a zero-sum condition on the parameters. We investigate below these three options in a single framework.

Consider the following GLM for the explanatory variables \(x_i^{(1)},x_i^{(2),1}\), ..., \(x_i^{(2),d}\) assuming that

where \(\varvec{\vartheta } = (\vartheta _{(1)},\vartheta _{(2),1},\ldots ,\vartheta _{(2),d})\) is the unknown parameter vector. Since the model is not identifiable, we impose exactly one linear equation on \(\varvec{\vartheta }\)

with \(\varvec{R} = (r_0,r_1,\ldots ,r_d)\) any real vector of size \(d+1\). A theorem and two corollaries are given below and corresponding proofs are postponed to Appendix A.1.

Theorem 3.1

Suppose that for all \(i\in I\), \(Y_i\) takes values in \(b'(\varLambda )\). If the vector \(\varvec{R}\) is such that \(\sum _{j=1}^d{ r_{j} - r_{0}}\ne 0\), then there exists a unique, consistent and explicit MLE \(\widehat{\varvec{\vartheta }}_n = (\widehat{\varvec{\vartheta }}_{n,(1)},\widehat{\varvec{\vartheta }}_{n,(2),1},\ldots ,\widehat{\varvec{\vartheta }}_{n,(2),d})\) of \(\varvec{\vartheta }\) given by

Note that if \(\overline{Y}_n^{(j)}\) does not belong to \(b'(\varLambda )\), \(g(\overline{Y}_n^{(j)})\) and hence \(\widehat{\varvec{\vartheta }}_{n,(l),j}\) are not defined.
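The explicit form can be recovered by a short computation. As a sketch (not the full proof of Appendix A.1), assume the fit \(\vartheta _{(1)} + \vartheta _{(2),j} = g(\overline{Y}_n^{(j)})\) for each category j, which is what Corollary 3.1 expresses, and substitute it into the linear constraint \(\langle \varvec{R}, \varvec{\vartheta }\rangle = 0\):

```latex
\[
0 = r_0\,\vartheta_{(1)} + \sum_{j=1}^d r_j\,\vartheta_{(2),j}
  = \Big(r_0 - \sum_{j=1}^d r_j\Big)\vartheta_{(1)} + \sum_{j=1}^d r_j\, g\big(\overline{Y}_n^{(j)}\big),
\]
\[
\widehat{\vartheta}_{n,(1)} = \frac{\sum_{j=1}^d r_j\, g\big(\overline{Y}_n^{(j)}\big)}{\sum_{j=1}^d r_j - r_0},
\qquad
\widehat{\vartheta}_{n,(2),j} = g\big(\overline{Y}_n^{(j)}\big) - \widehat{\vartheta}_{n,(1)},
\quad j \in J.
\]
```

The denominator is nonzero exactly under the condition on \(\varvec{R}\) stated in the theorem.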

We give below the three most common examples of linear constraints; some details of the calculations are given in Appendix A.1.

Example 3.1

No-intercept model

The no-intercept model is obtained with \(\varvec{R}=(1,0,\ldots ,0)\) leading to \(\vartheta _{(1)} = 0\). Therefore, the unique, consistent and explicit MLE \(\widehat{\varvec{\vartheta }}_n\) of \(\varvec{\vartheta }\) is
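In the no-intercept case the linear predictor of category j is \(\vartheta _{(2),j}\) alone, so the estimator reduces to \(g(\overline{y}_n^{(j)})\). The sketch below checks this numerically against Newton iterations on the score. The Poisson response with log link is an illustrative assumption (it is not the distribution studied in this paper), and the function name and data are hypothetical.

```python
import math


def newton_no_intercept_poisson(sum_y, m, theta0=0.0, tol=1e-12, max_iter=100):
    """Newton iterations for one category of a no-intercept Poisson GLM (log link).

    With dummy coding and no intercept the Hessian is diagonal, so each
    category is solved separately. Score: sum_y - m*exp(theta);
    Fisher information: m*exp(theta).
    """
    theta = theta0
    for _ in range(max_iter):
        mu = math.exp(theta)
        step = (sum_y - m * mu) / (m * mu)
        theta += step
        if abs(step) < tol:
            break
    return theta


# Hypothetical per-category sufficient statistics: (sum of y, count m_j).
groups = {"young": (10.0, 2), "middle": (3.0, 1), "senior": (6.0, 3)}

# Closed form: g(ybar_j) with g = log.
closed_form = {k: math.log(s / m) for k, (s, m) in groups.items()}
# Iterative solution of the score equation, category by category.
iterative = {k: newton_no_intercept_poisson(s, m) for k, (s, m) in groups.items()}
```

Both dictionaries agree up to numerical tolerance, which illustrates that no iterative fit is needed in this setting.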

Example 3.2

Model without first modality

The model without first modality is obtained with \(\varvec{R}=(0,1,\ldots ,0)\) leading to \(\vartheta _{(2),1} = 0\). Therefore, the unique, consistent and explicit MLE \(\widehat{\varvec{\vartheta }}_n\) of \(\varvec{\vartheta }\) is

Example 3.3

Zero-sum condition

The zero-sum model is obtained with \(\varvec{R}=(0,1,\ldots ,1)\) leading to \(\sum _{j=1}^d\vartheta _{(2),j} = 0\). Therefore, the unique, consistent and explicit MLE \(\widehat{\varvec{\vartheta }}_n\) of \(\varvec{\vartheta }\) is
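The three constraints of Examples 3.1, 3.2 and 3.3 can be compared on the same data. The sketch below assumes that the estimator solves \(\vartheta _{(1)} + \vartheta _{(2),j} = g(\overline{y}_n^{(j)})\) together with \(\langle \varvec{R}, \varvec{\vartheta }\rangle = 0\); the function name and the numerical values are hypothetical.

```python
import math


def closed_form_mle(g_means, R):
    """Return (theta_1, [theta_2j]) from link-transformed group means and constraint R.

    g_means[j] = g(ybar_j); R = (r_0, r_1, ..., r_d) with sum(r_1..r_d) - r_0 != 0.
    """
    r0, r = R[0], R[1:]
    denom = sum(r) - r0
    if denom == 0:
        raise ValueError("this constraint does not identify the model")
    intercept = sum(rj * gj for rj, gj in zip(r, g_means)) / denom
    return intercept, [gj - intercept for gj in g_means]


# Hypothetical group means 5, 3, 2 with g = log.
g_means = [math.log(5.0), math.log(3.0), math.log(2.0)]

no_intercept = closed_form_mle(g_means, (1, 0, 0, 0))   # theta_1 = 0
drop_first = closed_form_mle(g_means, (0, 1, 0, 0))     # theta_{2,1} = 0
zero_sum = closed_form_mle(g_means, (0, 1, 1, 1))       # sum_j theta_{2,j} = 0
```

In all three cases the fitted linear predictor `intercept + theta_2j` equals `g_means[j]`; only the split between intercept and category effects changes.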

Remark 3.1

In Theorem 3.1, it is worth noting that the value of \(\widehat{\varvec{\vartheta }}_n\) does not depend on the distribution of the \(Y_i\). This fact was stated in Goldburd et al. (2016) but without any proof.

Remark 3.2

The three different parametrizations (Examples 3.1, 3.2 and 3.3) depend on the type of application and on the modeler's choice. In statistical software, there is a default choice: for instance in the statistical software R, the model without the first modality is the default parametrization (see functions lm(), glm() by R Core Team 2019). The first option without intercept may be justified when no group can be chosen as the reference group.

Remark 3.3

When g is the identity function, the first and third options (Examples 3.1 and 3.3) can be interpreted as a generalized analysis of variance (ANOVA) for \(Y_i\) with respect to groups defined by the explanatory variable \(\varvec{x}^{(2)}\). Even for non-Gaussian random variables, some applications may justify these options.

Remark 3.4

The case when there is no explanatory variable, i.e. \(Y_i\) are identically distributed, cannot be obtained with Eq. (8). But in that case, we get with similar arguments \(\widehat{\varvec{\vartheta }}_{n,(1)} = g(\overline{Y}_n)\), which is in line with Example 6.3.12 of Lehmann and Casella (1998) when g is the canonical link, see Appendix A.1.

Remark 3.5

Although the distribution of \({\overline{Y}}_n^{(j)}\) still belongs to the exponential family, the bias of \({\widehat{\vartheta }}_{n,(1)}\) and \({\widehat{\vartheta }}_{n,(2),j}\) cannot be determined for a general link function g. However, we can show the consistency of the estimator and derive an asymptotic confidence interval, see Appendix A.1. In the following, we will investigate the bias and the distribution of the MLE for some special cases of distributions and link functions.

Theorem 3.1 has two interesting corollaries which give some clues on the choice of the link function g. These corollaries temper the importance of the link function, since it does not affect the predicted moments in the case of a single explanatory variable.

Corollary 3.1

The value of the log-likelihood defined in (4) taken on the exact MLE \(\widehat{\varvec{\vartheta }}_n\) (if it exists) given by (8), under constraint (7), does not depend on the link function g. More precisely, we have \(\forall i\in I,\quad \ell (\hat{\eta }_i) = (b')^{-1}(\overline{y}_n^{(j)})\quad \text {for } j\in J \text { such that } x_i^{(2),j}=1\) and

with \({\tilde{b}}=\left( b'\right) ^{-1}\). Therefore, the criteria AIC and BIC are also independent of the link function g. The estimator of \(\phi \) is obtained by maximizing \(\log L(\widehat{\varvec{\vartheta }}_n\,|\,\underline{\varvec{y}})\) with respect to \(\phi \) given a, b, c functions.

Corollary 3.2

The predicted mean and predicted variance for the ith individual are estimated by \(\widehat{\mathbf {E}Y_i} =b'(\ell ({\widehat{\eta }}_i))\) and \(\widehat{\text{ Var }Y_i} = a({\widehat{\phi }})b''(\ell ({\widehat{\eta }}_i))\) respectively using (2). Both estimates do not depend on the link function g and the predicted mean does not depend on the function b. More precisely, when \(v_j\) is the modality of the ith individual (i.e. \(x_i^{(2),j}=1\)), the predicted mean and predicted variance are given by

Corollary 3.2 may be surprising because the predicted mean does not depend on the conditional distribution of \(Y_1,\ldots ,Y_n\). The predicted mean is just the mean of the response variable taken over the class j i.e. observations \(y_i\) such that the covariate \(x^{(2)}_i\) takes the modality \(v_j\).
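The step behind this statement can be written out as follows, as a sketch that uses \(\ell ({\widehat{\eta }}_i) = (b')^{-1}(\overline{y}_n^{(j)})\) from Corollary 3.1 and the notation \({\tilde{b}}=(b')^{-1}\):

```latex
\[
\widehat{\mathbf{E}Y_i} = b'\big(\tilde{b}(\overline{y}_n^{(j)})\big) = \overline{y}_n^{(j)},
\qquad
\widehat{\text{Var}\,Y_i} = a(\widehat{\phi})\, b''\big(\tilde{b}(\overline{y}_n^{(j)})\big).
\]
```

As an illustration outside the distributions studied here, a Poisson response has \(b=\exp \), \({\tilde{b}}=\log \) and \(a\equiv 1\), so the predicted variance is again \(\overline{y}_n^{(j)}\).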

The formula (8) of the MLE \(\widehat{\varvec{\vartheta }}_n\) can be reformulated as

where \(\varvec{Q}\) is the \(d\times (1+d)\) matrix defined by \(\varvec{Q}=\left( A_0, A_1\right) \), \(A_0\) is the ones vector of size d, \(A_1\) is the identity matrix of size d, and \(\varvec{g({\bar{Y}})}\) is the vector \((g({\bar{Y}}_n^{(1)}),\ldots ,g({\bar{Y}}_n^{(d)}))\). We have

With this formulation, the estimator of \(\varvec{\vartheta }\) is \( \widehat{\varvec{\vartheta }}_n = (\varvec{Q}'\varvec{Q} + \varvec{R}'\varvec{R})^{-1}\varvec{Q}'\varvec{g({\bar{Y}})}. \) This general form is particularly useful in the case of two categorical variables of the next subsection.
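The matrix form can be evaluated with a small linear solve. The sketch below builds \(\varvec{Q}=(A_0,A_1)\) for a single categorical variable and one constraint row \(\varvec{R}\), then solves \((\varvec{Q}'\varvec{Q} + \varvec{R}'\varvec{R})\,\varvec{\vartheta } = \varvec{Q}'\varvec{g({\bar{Y}})}\) in plain Python. The helper names and the numerical values are hypothetical.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting (A square)."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][c] * x[c] for c in range(i + 1, n))) / M[i][i]
    return x


def matrix_form_mle(g_means, R):
    """Evaluate (Q'Q + R'R)^{-1} Q' g for Q = (ones, identity) and one constraint row R."""
    d = len(g_means)
    p = d + 1
    Q = [[1.0] + [1.0 if j == k else 0.0 for k in range(d)] for j in range(d)]
    A = [[sum(Q[i][u] * Q[i][v] for i in range(d)) + R[u] * R[v] for v in range(p)]
         for u in range(p)]
    rhs = [sum(Q[i][u] * g_means[i] for i in range(d)) for u in range(p)]
    return solve(A, rhs)


g_means = [1.5, 0.5, -1.0]                                   # hypothetical g(ybar_j)
theta_zero_sum = matrix_form_mle(g_means, [0.0, 1.0, 1.0, 1.0])
theta_drop_first = matrix_form_mle(g_means, [0.0, 1.0, 0.0, 0.0])
```

With the zero-sum row the intercept is the average of the `g_means`; with the drop-first row it is `g_means[0]`, in line with Examples 3.2 and 3.3.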

3.2 Two explanatory variables

Now, we consider the case of two explanatory categorical variables. That is \(p=3\) and for all \(i=1,\dots ,n\), \(x_i^{(1)}=1\) is the intercept and \(x_i^{(2)}, x_i^{(3)}\) take values in \(\{v_{j1},\dots ,v_{jd_j}\}\), \(j=2,3\), with \(d_2,d_3\) modalities respectively. We define by \(x_i^{(2),k}\) and \(x_i^{(3),l}\), \(k\in K=\{1,\ldots ,d_2\}\) and \(l\in L=\{1,\ldots ,d_3\}\) the binary dummies of the kth and lth categories respectively, \(m^{(j)}_{k}>0\) the number of occurrences of the kth modality of the jth variable, \(j=2,3\), \(m_{k,l}\) the number of simultaneous occurrences of the kth and lth categories and \({\overline{y}}_n^{(k,l)}\) the mean value of \(\underline{\varvec{y}}\) taken over the kth and lth categories. That is

| Dummy | Frequency | Mean | Index |
|---|---|---|---|
| \(x_i^{(2),k} = 1_{x_i^{(2)} =\,v_{2k}}\) | \(m_k^{(2)} = \sum \limits _{i=1}^n x_i^{(2),k}\) | \({\overline{y}}_n^{(2),k} =\frac{1}{m_k^{(2)}} \sum \limits _{i=1}^n y_i x_i^{(2),k}\) | \(k\in K\) |
| \(x_i^{(3),l} = 1_{x_i^{(3)} =\,v_{3l}}\) | \(m_l^{(3)} = \sum \limits _{i=1}^n x_i^{(3),l}\) | \( {\overline{y}}_n^{(3),l} =\frac{1}{m_l^{(3)}} \sum \limits _{i=1}^n y_i x_i^{(3),l}\) | \(l\in L \) |
| \(x_i^{(k,l)} = x_i^{(2),k}x_i^{(3),l}\) | \(m_{k,l} = \sum \limits _{i=1}^n x_i^{(k,l)}\) | \( \overline{y}_n^{(k,l)}=\frac{1}{m_{k,l}}\sum \limits _{i=1}^{n} y_i x_i^{(k,l)}\) | \((k,l)\in K\times L\) |

where \({\overline{y}}_n^{(k,l)}\) is computed over \({ KL}^\star = (K\times L){\setminus } \{(k,l)\in K\times L;m_{k,l}=0\}\). Set \(d_{2,3}^\star = \#KL^\star \), for \(l\in L\), \(K_l^\star = \{{k\in K}; m_{k,l}>0\}\), \(d_{(3),l}^\star = \#K_l^\star \) and for \(k\in {K}\), \(L_k^{\star } = \{{l\in L}; m_{k,l}>0\}\), \(d_{(2),k}^\star = \#L_k^\star \).

Note that \((m_{k,l})_{kl}\) are absolute frequencies of the contingency table resulting from cross-classifying factors and can be computed very easily. Be careful that \(KL^\star \) is not equal to \(K \times L\) but \(\bigcup _{l\in L} K^\star _l = \bigcup _{k\in K} L^\star _k = KL^\star ,\) and \(d_{2,3}^\star = d_2d_3 - r\), where \(r=\#\{(k,l)\in K\times L; m_{k,l}=0\}\).
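The contingency counts, the cell means and the set \(KL^\star \) of observed cells can be obtained in one pass over the data. A minimal sketch in plain Python; the function name and the toy data are hypothetical.

```python
from collections import Counter


def cell_statistics(y, x2, x3):
    """Return counts m_{k,l} and means of y for each observed pair (k, l)."""
    counts = Counter(zip(x2, x3))
    sums = Counter()
    for y_i, cell in zip(y, zip(x2, x3)):
        sums[cell] += y_i
    means = {cell: sums[cell] / m for cell, m in counts.items()}
    return counts, means


# Hypothetical toy data with d_2 = 2 levels (a, b) and d_3 = 3 levels (u, v, w).
x2 = ["a", "a", "b", "b", "b"]
x3 = ["u", "v", "u", "u", "w"]
y = [2.0, 4.0, 1.0, 3.0, 5.0]

counts, means = cell_statistics(y, x2, x3)
observed_cells = set(counts)        # plays the role of KL*
d_star = len(observed_cells)        # d*_{2,3}
r = 2 * 3 - d_star                  # number of empty cells
```

Cells that never occur, such as `("a", "w")` here, are absent from `means`, which mirrors the restriction of \({\overline{y}}_n^{(k,l)}\) to \(KL^\star \).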

Let \(\varvec{Q}\) be the \(d_{2,3}^\star \times (1+d_2 + d_3 +d_{2,3}^\star )\) real matrix defined by \( \varvec{Q} = (A_0,A_1,A_2,A_{12}) \) with \(A_0=\varvec{1}_{d^\star _{2,3}}\) the \(d^\star _{2,3}\times 1\) ones matrix; \(A_1 =({{\,\mathrm{diag}\,}}(\varvec{1}_{d^\star _{(2),k}}))_{k\in K}\), the \(d^\star _{2,3}\times d_2\) block-diagonal matrix of ones vectors of size \(d^\star _{(2),k}\); \(A_2=(I^{\star ,k}_{d_{3}})_{k\in K}\), the \(d^\star _{2,3}\times d_3\) matrix where \(I^{\star ,k}_{d_{3}}\) is the identity matrix of size \(d_{3}\) without the rows l for which \(m_{k,l}=0\); \(A_{12}= I_{d^\star _{2,3}}\) the \(d^\star _{2,3}\times d^\star _{2,3}\) identity matrix.

Consider the following GLM for explanatory variables \(x_i^{(1)},x_i^{(2),k},x_i^{(3),l}\)

where \(\vartheta _{(1)},(\vartheta _{(2),k})_{k\in K},(\vartheta _{(3),l})_{l\in L},(\vartheta _{kl})_{(k,l)\in KL^\star }\) are the \(d_2 + d_3 + d_{2,3}^\star +1\) unknown parameters. Again at this stage, the model is not identifiable because of the redundancy among the vectors \((x_1^{(2),k},\ldots ,x_n^{(2),k})\), \(k\in K\), the vectors \((x_1^{(3),l},\ldots ,x_n^{(3),l})\), \(l\in L\) and the ones vector. As previously, we need to impose \(q\ge 1+d_2 + d_3\) linear constraints on the parameter vector \(\varvec{\vartheta }\)

where \(\varvec{R}\) is a \(q\times (1+d_2 + d_3 +d_{2,3}^\star )\) real matrix of linear contrasts, with \(rank(\varvec{R})=1+d_2 + d_3\) and \(\varvec{0}_q\) the zeros vector of size q. Again, the proofs of the following theorem and corollaries are postponed to Appendix A.2.
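Written out, a model with the parameters listed above (an intercept, two sets of main effects and one interaction term per observed cell) takes the following form. This is a sketch inferred from the parameter list and from the main-effects model of Remark 3.6, with the constraint stated in matrix form:

```latex
\[
g(\mathbf{E}Y_i) = \vartheta_{(1)}
 + \sum_{k\in K} x_i^{(2),k}\,\vartheta_{(2),k}
 + \sum_{l\in L} x_i^{(3),l}\,\vartheta_{(3),l}
 + \sum_{(k,l)\in KL^\star} x_i^{(k,l)}\,\vartheta_{kl},
\qquad
\varvec{R}\,\varvec{\vartheta} = \varvec{0}_q .
\]
```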

Theorem 3.2

Suppose that for all \(i\in \{1,\ldots ,n\}\), \(Y_i\) takes values in \(b'(\varLambda )\). Under constraint (11) and if \(\varvec{R}\) is such that \((\varvec{Q}', \varvec{R}')\) is of rank \(d^\star _{2,3}\), there exists a unique, consistent and explicit MLE \(\widehat{\varvec{\vartheta }}_n\) of \(\varvec{\vartheta }\) given by

where the vector \(\varvec{g({\bar{Y}})}\) is \(((g({\bar{Y}}_n^{(k,l)}))_{l\in L_k^\star })_{k\in K}\).

Example 3.4

No intercept and no single-variable dummy

The model with no intercept and no single-variable dummy is \(\vartheta _{(1)} = 0\) and \(\vartheta _{(2),k}=\vartheta _{(3),l}=0\) \(\forall k\in K\,\forall l\in L\). Therefore, the unique, consistent and explicit MLE \(\widehat{\varvec{\vartheta }}_n\) of \(\varvec{\vartheta }\) is

Example 3.5

Zero-sum conditions

The model with zero-sum conditions assumes

Therefore, the unique, consistent and explicit MLE \(\widehat{\varvec{\vartheta }}_n\) of \({\varvec{\vartheta }}\) is

Remark 3.6

The MLE of the model with only main effects for two explanatory variables defined as \(g(\mathbf {E}Y_i) = \vartheta _1 + \sum _{k=1}^{d_2}x_i^{(2),k}\vartheta _{(2),k} + \sum _{l=1}^{d_3}x_i^{(3),l}\vartheta _{(3),l}\) does not admit such an explicit formula whatever the matrix \(\varvec{R}\) of rank 2. In that case, the MLE does not solve a least squares problem under a linear constraint, see Appendix A.2. In the special case of logit regression, Lipovetsky (2015) also notices that least squares estimation does not coincide with the MLE.

For simplicity, we consider only the cases of one and two explanatory categorical variables. With a higher number of explanatory variables, we can perform a similar analysis to obtain an explicit solution of the MLE. As for one explanatory variable, Theorem 3.2 has two interesting corollaries on the value of the log-likelihood and the predicted moments.

Corollary 3.3

The value of the log-likelihood defined in (4) taken on the exact MLE \(\widehat{\varvec{\vartheta }}_n\) (if it exists) given by (12), under constraint (11), does not depend on the link function g. More precisely, we have \(\forall i\in I,\quad \ell (\hat{\eta }_i) = (b')^{-1}(\overline{y}_n^{(k,l)})\quad \text {for } k\in K\text { and } l\in L\text { such that } x_i^{(2),k} =1 \text { and } x_i^{(3), l} =1 \) and

with \({\tilde{b}}=(b')^{-1}\) and \({\tilde{I}}=\{i\in I, x_i^{(2),k}=x_i^{(3),l}=1\}\). The estimator of \(\phi \) is obtained by maximizing \(\log L( \widehat{\varvec{\vartheta }}_n\,|\,\underline{\varvec{y}})\) with respect to \(\phi \) given a, b, c functions.

Corollary 3.4

The predicted mean and predicted variance for the ith individual are estimated by \(\widehat{\mathbf {E}Y_i} =b'(\ell ({\widehat{\eta }}_i))\) and \(\widehat{\text{ Var }Y_i} = a({\widehat{\phi }})b''(\ell ({\widehat{\eta }}_i))\) respectively using (2). Both estimates do not depend on the link function g and the predicted mean does not depend on the function b. Let \(v_{2k}\) and \(v_{3l}\) be the modalities of the ith individual of the two explanatory variables, i.e. \(x_i^{(2),k}=1\) and \(x_i^{(3),l}=1\). The predicted mean and variance are given by

In the next two sections, we apply the previous theorems and corollaries to two particular distributions: the Pareto 1 and lognormal distributions. Our results apply not only to continuous distributions but also to discrete distributions. We nevertheless choose these two distributions in order to model insurance losses.

4 GLM for Pareto I distribution with categorical explanatory variables

4.1 Characterization

Consider the sample \(\underline{\varvec{Y}}=(Y_1,\ldots , Y_n)\) composed of independent Pareto Type 1 random variables. Precisely, we assume that the independent random variables \(Y_1\), ..., \(Y_n\) are Pareto with known threshold parameter \(\mu \) and respective shape parameters (depending on the unknown parameter \(\varvec{\vartheta }\)) \(\lambda _1(\varvec{\vartheta }), \ldots , \lambda _n(\varvec{\vartheta }) \in \varLambda =(0,\infty )\). The density f of the Pareto distribution with threshold and shape parameters \(\mu \) and \(\lambda _i(\varvec{\vartheta })\), \(i\in I\) is

and 0 if \(y<\mu \).
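For reference, the standard Pareto Type 1 density with threshold \(\mu \) and shape \(\lambda _i(\varvec{\vartheta })\), assumed here to be the parametrization intended, reads:

```latex
\[
f(y) = \frac{\lambda_i(\varvec{\vartheta})\,\mu^{\lambda_i(\varvec{\vartheta})}}{y^{\lambda_i(\varvec{\vartheta})+1}},
\qquad y \ge \mu .
\]
```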

We recall that for the Pareto Type 1 distribution

Unlike the known parameter \(\mu \), the parameter \(\varvec{\vartheta }\) is to be estimated. These closed-form formulas are particularly useful in an insurance context since the expectation and the variance are used in most premium computations. For instance, \(\mathbf {E}Y\) is the pure premium and for \(\gamma >0\), \(\mathbf {E}Y+\gamma \text{ Var }Y\) is the variance principle (see Bühlmann and Gisler 2006, Section 1.2.2).
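As an illustration of these premium formulas, the sketch below uses the standard Pareto Type 1 moments, \(\mathbf {E}Y = \lambda \mu /(\lambda -1)\) for \(\lambda >1\) and \(\text{ Var }Y = \lambda \mu ^2/((\lambda -1)^2(\lambda -2))\) for \(\lambda >2\). The function names and the numerical inputs are hypothetical.

```python
import math


def pareto1_mean(mu, lam):
    """Mean of a Pareto Type 1 variable; finite only when the shape lam exceeds 1."""
    if lam <= 1:
        return math.inf
    return lam * mu / (lam - 1)


def pareto1_variance(mu, lam):
    """Variance of a Pareto Type 1 variable; finite only when lam exceeds 2."""
    if lam <= 2:
        return math.inf
    return lam * mu ** 2 / ((lam - 1) ** 2 * (lam - 2))


def variance_principle_premium(mu, lam, gamma):
    """Premium E[Y] + gamma * Var[Y] for a loading gamma > 0."""
    return pareto1_mean(mu, lam) + gamma * pareto1_variance(mu, lam)


# Hypothetical threshold, shape and loading.
pure_premium = pareto1_mean(mu=1000.0, lam=3.0)
premium = variance_principle_premium(mu=1000.0, lam=3.0, gamma=0.001)
```

The variance principle is only usable when the shape exceeds 2, which is one practical reason to care about the range of the shape parameter implied by the link function.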

In the following, instead of \(\underline{\varvec{Y}}\) we consider the sample \(\underline{\varvec{Z}} = (T(Y_1),\ldots ,T(Y_n))\). With the re-parametrization \(z_i = T(y_i) = - \log \left( y_i/\mu \right) \), \(i\in I\), this distribution belongs to the exponential family as defined in (1), with

In particular, for the Pareto I distribution, there is no dispersion parameter. It is also worth mentioning that \(-Z_i\) is exponential with parameter \(\lambda _i(\varvec{\vartheta })\). Consequently, all moments of \(Z_i\) exist and are given by \(\mathbf {E}(Z_i)^m = (-1)^m m!/\lambda _i(\varvec{\vartheta })^m\), \(m\in \mathbb {N}^*\).
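The identification with the exponential family can be checked directly. As a sketch, assuming the standard Pareto Type 1 density \(\lambda \mu ^{\lambda }/y^{\lambda +1}\) on \(y\ge \mu \), the change of variable \(z=-\log (y/\mu )\) gives the following density and functions a, b, c:

```latex
\[
f_{Z_i}(z) = \lambda_i\, e^{\lambda_i z}, \quad z \le 0,
\qquad\text{so that}\qquad
\log f_{Z_i}(z) = \lambda_i z - b(\lambda_i),
\]
\[
a(\phi) = 1, \qquad b(\lambda) = -\log \lambda, \qquad c(z,\phi) = 0,
\qquad b'(\lambda) = -\frac{1}{\lambda}, \qquad b''(\lambda) = \frac{1}{\lambda^2}.
\]
```

The values \(b'(\lambda )=-1/\lambda \) and \(b''(\lambda )=1/\lambda ^2\) agree with the moments of \(Z_i\) for \(m=1,2\).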

Consider the regression model with a link function g, a response variable \(Y_i\) Pareto I distributed where \(Z_i = - \log \left( Y_i/\mu \right) \) and

where, for \(i\in I\), \(\varvec{x}_i = (1, x_i^{(2),1},\ldots ,x_i^{(2),d})^T\) are the covariate vectors and \(\varvec{\vartheta } = (\vartheta _{(1)},\vartheta _{(2),1}\ldots ,\vartheta _{(2),d})^T\) is the unknown parameter vector.

The choice of the link function g appearing in (15) is a crucial point. Let us start with the canonical link, that is, g is chosen so that \(\ell =(b')^{-1}\circ g^{-1}\) is the identity function. For our Pareto model, \(g(t) = -\frac{1}{t}\) since \(b'(\lambda ) = -\frac{1}{\lambda }\). From (4), the choice of the canonical link function imposes constraints on the linear predictor space \(D\subset \varLambda \subset (0,+\,\infty )\) in that case. Since D results from the scalar product of the parameters \(\varvec{\vartheta }\) with the explanatory variables \(\varvec{x}_i\), some negative values might be produced when the covariables take negative values. This makes the choice of the canonical link inappropriate.

In order to remedy this issue, we can choose a link function such that the values of the function \(\ell \) fall in \(\varLambda \subset (0,\infty )\). A natural choice is \(\ell (\eta )=\exp (\eta )\), which guarantees a positive parameter. The choice \(\ell (\eta )=\exp (\eta )+1\) guarantees a shape parameter greater than 1 and hence a finite expectation for the random variables \(Y_i\), \(i=1,\ldots ,n\). We summarize in Table 1 the tested \(\ell \) functions in our application in Sect. 7, and in Table 2, the spaces given a link function.
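The three choices of \(\ell \) discussed above can be written as pairs \((\ell , g)\) with \(g=\ell ^{-1}\circ (b')^{-1}\) and \((b')^{-1}(t)=-1/t\). In the sketch below the expressions for g are derived from this relation rather than copied from Table 1, and the label "shifted log-inv" is a hypothetical name.

```python
import math

# name: (ell, g) with g = ell^{-1} o (b')^{-1} and (b')^{-1}(t) = -1/t for t < 0.
LINKS = {
    "canonical": (lambda eta: eta, lambda t: -1.0 / t),
    "log-inv": (lambda eta: math.exp(eta), lambda t: math.log(-1.0 / t)),
    "shifted log-inv": (lambda eta: math.exp(eta) + 1.0,
                        lambda t: math.log(-1.0 / t - 1.0)),
}


def shape_parameter(name, eta):
    """Shape lambda = ell(eta) implied by a linear predictor eta."""
    ell, _ = LINKS[name]
    return ell(eta)


def round_trip(name, eta):
    """Apply g to the mean b'(ell(eta)) = -1/ell(eta); should return eta."""
    ell, g = LINKS[name]
    return g(-1.0 / ell(eta))


eta = -2.0  # a negative linear predictor, e.g. produced by a negative covariate
shapes = {name: shape_parameter(name, eta) for name in LINKS}
```

For this negative `eta` the canonical link gives an invalid negative shape, the log-inv link a positive shape below 1 (infinite mean), and the shifted version a shape above 1.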

4.2 Estimation for categorical exogenous variables

Consider the case of one categorical exogenous variable. We present the case of the re-parametrization without intercept, i.e. \( \langle \varvec{R}, \varvec{\vartheta }\rangle = 0\) with \(\varvec{R}=(1,0,\ldots ,0)\).

Let \(\widehat{\varvec{\vartheta }}_{n}\) be the MLE of \(\varvec{\vartheta }\) defined in (9), if it exists. Using the following equality of Corollary 3.1

the log likelihood evaluated on \(\widehat{\varvec{\vartheta }}_{n}\) for both the transformed sample \(\underline{\varvec{z}}\) and the original sample \(\underline{\varvec{y}}\) with one categorical exogenous variable is

The log-likelihood computation is detailed in Appendix B.

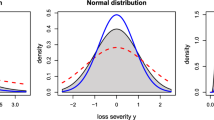

The second remark is that \(-Z_i\) are exponentially distributed \({\mathcal {E}}(\ell (\eta _i))\). Hence for \(j\in J\), the estimators \({\widehat{\vartheta }}_{n,(2),j}\) of \(\vartheta _{(2),j}\) are known transforms of a gamma random variable \(\mathcal Ga(m_j, m_j\ell (\vartheta _{(2),j}))\). Below we analyze the choice of the link functions considered in Table 1 in Examples 4.1, 4.2, 4.3 and plotted in Fig. 4a.

Example 4.1

canonical link

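In the special case of the canonical Pareto model, because \(z_i<0\) for all \(i\in I\), we have \({\overline{z}}_n^{(j)}\in b'(\varLambda ) = (-\,\infty ,0)\) for all \(j\in J\) (g and \(\varLambda \) are respectively defined in Tables 1 and 2). With no-intercept using Eq. (9), the MLE is

$$\begin{aligned} {\widehat{\vartheta }}_{n,(2),j} = g\left( {\overline{z}}_n^{(j)}\right) = -\frac{1}{{\overline{z}}_n^{(j)}},\quad j\in J. \end{aligned}$$

Hence, for \(j\in J\), \({\widehat{\vartheta }}_{n,(2),j}\) follows an Inverse Gamma distribution with shape parameter \(m_j\) and rate parameter \(m_j\vartheta _{(2),j}\) (see e.g. Johnson et al. 2000, Ch. 17). We can compute the moments of the Inverse Gamma distribution, for \(m_j>2\),

$$\begin{aligned} \mathbf {E}{\widehat{\vartheta }}_{n,(2),j} = \frac{m_j}{m_j-1}\vartheta _{(2),j},\quad \text{ Var }{\widehat{\vartheta }}_{n,(2),j} = \frac{m_j^2}{(m_j-1)^2(m_j-2)}\vartheta _{(2),j}^2. \end{aligned}$$

An unbiased estimator of \(\vartheta _{(2),j}\) is then \({\widehat{\vartheta }}^{\star }_{n,(2),j} = \frac{m_j-1}{m_j}{\widehat{\vartheta }}_{n,(2),j}\) which has a lower variance

$$\begin{aligned} \text{ Var }{\widehat{\vartheta }}^{\star }_{n,(2),j} = \frac{\vartheta _{(2),j}^2}{m_j-2}. \end{aligned}$$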

A similar bias is also obtained by Bühlmann and Gisler (2006) in a credibility context. Of course this unbiased estimator is also applicable for two exogenous variables with the first parametrization of Theorem 3.2. When some modalities (or pairs of modalities) are sparsely represented, it can be relevant to use this unbiased estimator.
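The closed-form estimator of Example 4.1 and its bias correction can be sketched in a few lines of Python (an illustration only). The function name `pareto_canonical_mle`, the modality labels and the toy data are hypothetical; the threshold `mu` is assumed known, and \(z_i=-\log (y_i/\mu )\) as in the Pareto parametrization used in this section.

```python
import math
from collections import defaultdict

def pareto_canonical_mle(y, groups, mu):
    """Per-modality closed-form MLE (canonical link, no intercept) and its
    bias-corrected version (m_j - 1) / m_j * MLE."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for yi, gi in zip(y, groups):
        sums[gi] += -math.log(yi / mu)   # z_i = -log(y_i / mu) < 0
        counts[gi] += 1
    out = {}
    for j, m in counts.items():
        z_bar = sums[j] / m
        mle = -1.0 / z_bar               # g(z_bar) with g(t) = -1/t
        out[j] = (mle, (m - 1) / m * mle)
    return out

# Toy data chosen so that the group means of z are -1 and -0.25.
mu = 150.0
y = [mu * math.exp(0.5), mu * math.exp(1.5),
     mu * math.exp(0.25), mu * math.exp(0.25)]
groups = ["A", "A", "B", "B"]
est = pareto_canonical_mle(y, groups, mu)
```

With only two observations per modality the correction factor \((m_j-1)/m_j\) halves the MLE, which illustrates why the correction matters for sparsely represented modalities.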

Example 4.2

log-inverse link

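In the special case of the log-inv Pareto model, we also have \({\overline{z}}_n^{(j)}\in b'(\varLambda ) = (-\,\infty ,0)\) for all \(j\in J\) (g and \(\varLambda \) are respectively defined in Tables 1 and 2). With no-intercept using Eq. (9), the MLE is

$$\begin{aligned} {\widehat{\vartheta }}_{n,(2),j} = g\left( {\overline{z}}_n^{(j)}\right) = -\log \left( -{\overline{z}}_n^{(j)}\right) ,\quad j\in J. \end{aligned}$$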

Here, for \(j\in J\), the distribution of \(-{\widehat{\vartheta }}_{n,(2),j}\) is the distribution of the log of the gamma distribution with shape \(m_j\) and rate \(m_j\exp (\vartheta _{(2),j})\). We can derive moments of this distribution which should not be confused with the log-gamma distribution studied e.g. in Hogg and Klugman (1984).

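Let \(L=\log (G)\) where G is gamma distributed with shape parameter \(a>0\) and rate parameter \(\lambda >0\). By elementary manipulations, the moment generating function of L is

$$\begin{aligned} M_L(t) = \mathbf {E}e^{tL} = \mathbf {E}G^t = \frac{\varGamma (a+t)}{\varGamma (a)\lambda ^t},\quad t>-a, \end{aligned}$$

where \(\varGamma \) denotes the usual gamma function. Therefore by differentiating and evaluating at 0, we deduce that the expectation and the variance of L are \( \mathbf {E}L =\psi (a)- \log \lambda \) and \(\text{ Var }L =\psi '(a), \) where the functions \(\psi \) and \(\psi '\) are the digamma and trigamma functions (see e.g. Olver et al. 2010). Getting back to our example, we deduce that

$$\begin{aligned} \mathbf {E}{\widehat{\vartheta }}_{n,(2),j} = \vartheta _{(2),j} + \log (m_j) - \psi (m_j),\quad \text{ Var }{\widehat{\vartheta }}_{n,(2),j} = \psi '(m_j). \end{aligned}$$

From Olver et al. (2010), we know that \(\log (m_j) - \psi (m_j)\) tends to 0 as \(m_j\) tends to infinity. Hence \({\widehat{\vartheta }}_{n,(2),j}\) is asymptotically unbiased, and an unbiased estimator of \(\vartheta _{(2),j}\) is

$$\begin{aligned} {\widehat{\vartheta }}^{\star }_{n,(2),j} = {\widehat{\vartheta }}_{n,(2),j} - \log (m_j) + \psi (m_j). \end{aligned}$$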
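The bias term \(\log (m_j)-\psi (m_j)\) of the log-inv estimator is easy to evaluate because \(m_j\) is an integer, so that \(\psi (m_j)=-\gamma +\sum _{k=1}^{m_j-1}1/k\) with \(\gamma \) the Euler constant. The following Python sketch (illustrative, with hypothetical function names and toy data) computes the MLE \(-\log (-{\overline{z}}_n^{(j)})\) for one modality and subtracts that bias.

```python
import math

EULER_GAMMA = 0.5772156649015329

def digamma_int(m):
    """psi(m) for a positive integer m: -gamma + sum_{k=1}^{m-1} 1/k."""
    return -EULER_GAMMA + sum(1.0 / k for k in range(1, m))

def log_inv_estimates(z):
    """z: transformed observations z_i = -log(y_i / mu) of one modality."""
    m = len(z)
    z_bar = sum(z) / m
    mle = -math.log(-z_bar)                 # g(t) = -log(-t)
    bias = math.log(m) - digamma_int(m)     # E[MLE] minus the true value
    return mle, mle - bias

mle, corrected = log_inv_estimates([-0.5, -1.5, -1.0, -1.0])
```

The bias is positive and behaves like \(1/(2m_j)\) for large \(m_j\), so the correction is only noticeable for small classes.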

Example 4.3

shifted log-inverse link

In the special case of the shifted log-inv Pareto model, \(\overline{z}_n^{(j)}\) is not necessarily in \(b'(\varLambda )=(-1 ,0)\) for all \(j\in J\) (g and \(\varLambda \) are respectively defined in Tables 1 and 2). If there is an index j such that \({\overline{z}}_n^{(j)} \le -1\), the MLE is not defined and we cannot use the shifted log-inv link with the same incidence matrix.

Nevertheless, for i and j such that \(x_i^{(2)}=v_j\), \({\overline{Z}}_n^{(j)} \rightarrow \mathbf {E}Z_i\) almost surely as \(m_j\rightarrow +\,\infty \), where \(\mathbf {E}Z_i =-1/(\exp (\vartheta _{(2),j})+1) > -1\). Hence for sufficiently large n, the conditions of Theorem 3.1 are satisfied. With no-intercept using Eq. (9), the MLE (provided it exists) is

$$\begin{aligned} {\widehat{\vartheta }}_{n,(2),j} = g\left( {\overline{z}}_n^{(j)}\right) = \log \left( -\frac{1}{{\overline{z}}_n^{(j)}}-1\right) ,\quad j\in J. \end{aligned}$$

The expectation of \({\widehat{\vartheta }}_{n,(2),j}\) has no simple closed form and should be computed numerically. However by the strong law of large numbers and the continuity of the link function, \({\widehat{\vartheta }}_{n,(2),j}= \log \left( -1/{\overline{Z}}_n^{(j)}-1\right) \) converges almost surely to \(\log ( (\exp (\vartheta _{(2),j})+1) -1) = \vartheta _{(2),j}.\)
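The existence condition of Example 4.3 translates directly into a guard in code. The Python sketch below is illustrative: the function name is hypothetical, and the estimator is written as \(\log (-1/{\overline{z}}_n^{(j)}-1)\), the form implied by \(\mathbf {E}Z_i=-1/(\exp (\vartheta _{(2),j})+1)\).

```python
import math

def shifted_log_inv_mle(z):
    """Return the MLE for one modality, or None when it does not exist.

    z holds the transformed observations z_i = -log(y_i / mu) < 0.
    """
    z_bar = sum(z) / len(z)
    if not (-1.0 < z_bar < 0.0):
        return None                      # z_bar outside b'(Lambda) = (-1, 0)
    return math.log(-1.0 / z_bar - 1.0)  # solves exp(theta) + 1 = -1 / z_bar

theta_ok = shifted_log_inv_mle([-0.2, -0.3])   # mean -0.25, inside (-1, 0)
theta_bad = shifted_log_inv_mle([-1.5, -0.7])  # mean -1.1, MLE undefined
```

Returning `None` rather than raising keeps the per-modality loop simple; a caller can then fall back to another link for the affected classes.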

Remark 4.1

In Theorem 3.1, the condition that \(Y_i\) takes values in \(b'(\varLambda )\) might seem too restrictive. In fact the condition \({\overline{y}}_n^{(j)} \in b'(\varLambda )\) for all \(j\in J\) is sufficient to define a vector \(\widehat{\varvec{\vartheta }}_n\) which maximises the likelihood. But \(\widehat{\varvec{\vartheta }}_n\) fails to be an MLE because the random variable \(g({\overline{Y}}_n^{(j)})\) may not be defined. Nevertheless, when \(m_j\) tends to infinity for any \(j\in J\), the random variables \(Y_i\) defined by (6) such that \(x_i^{(2),j}=1\) are i.i.d. (not only independent) and the strong law of large numbers implies that \({\overline{Y}}_n^{(j)}\) converges almost surely to \(\mathbf {E}Y_i = b'(\ell (\eta _i))\in b'(\varLambda )\). Hence, the probability \(P({\overline{Y}}_n^{(j)}\notin b'(\varLambda ))\) tends to zero, which guarantees the asymptotic existence of the MLE.
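For the shifted log-inv Pareto model of Example 4.3, the probability that the MLE is undefined can be computed exactly, which gives a concrete feel for the remark above. Since \(-\sum Z_i\) is gamma distributed with integer shape \(m_j\) and rate \(\exp (\vartheta _{(2),j})+1\), the event \({\overline{Z}}_n^{(j)}\le -1\) has the probability of a Poisson variable with mean \(m_j(\exp (\vartheta _{(2),j})+1)\) being at most \(m_j-1\). The Python sketch below is an illustration with a hypothetical function name and an arbitrary parameter value.

```python
import math

def prob_mle_undefined(m, theta):
    """P(mean of m transformed observations <= -1), shifted log-inv model.

    -Z_i ~ Exp(rate lam) with lam = exp(theta) + 1, so -sum(Z_i) ~ Gamma(m, lam)
    and P(-sum(Z_i) >= m) = P(Poisson(m * lam) <= m - 1).
    """
    lam = math.exp(theta) + 1.0
    rate = m * lam
    term = math.exp(-rate)       # Poisson probability of 0
    total = term
    for k in range(1, m):
        term *= rate / k         # Poisson probability of k
        total += term
    return total

probs = {m: prob_mle_undefined(m, theta=0.0) for m in (1, 5, 50)}
```

For this parameter value the probability drops quickly with the class size, in line with the asymptotic existence stated in the remark.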

4.3 Model diagnostic

In this paragraph, we propose residuals adapted to the Pareto case. First note that \(Y_i\) is Pareto I with shape \(\ell (\eta _i)\) and threshold \(\mu \) and, for the parametrization (14), \(-Z_i = \log (Y_i/\mu ) \sim {\mathcal {E}}(\ell (\eta _i))\). Let us define the residuals

$$\begin{aligned} R_i = -\ell (\eta _i)Z_i = \ell (\eta _i)\log (Y_i/\mu ),\quad i\in I. \end{aligned}$$

Hence \(R_{1},\ldots ,R_{n}\) are i.i.d. and have an exponential distribution \({\mathcal {E}}(1)\). The consistency of the MLE implies that the estimated residuals \({\widehat{R}}_{n,i} = -\ell (\widehat{\eta }_i)Z_i\), \(i\in I\), with \(\widehat{\eta }_i=\langle \varvec{x}_i,\widehat{\varvec{\vartheta }}_n\rangle \) are asymptotically i.i.d.

It is also possible to verify the assumption of the Pareto distribution for \(Y_i\) conditionally on \(\varvec{x}_i\) with a graphical diagnostic such as an exponential Quantile–Quantile plot on the residuals \({\widehat{R}}_{n,i}\).

In the case of a single explanatory variable, for \(i\in I\), the residuals \({\widehat{R}}_{n,i}\) do not depend on the function \(\ell \). Their explicit forms are

$$\begin{aligned} {\widehat{R}}_{n,i} = \frac{Z_i}{{\overline{Z}}_n^{(j)}}\quad \text {for } i \text { such that } x_i^{(2),j}=1. \end{aligned}$$

Furthermore, the sum of \({\widehat{R}}_{n,1},\ldots ,{\widehat{R}}_{n,n}\) has the surprising property of being deterministic: it is exactly equal to n.
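The deterministic sum of the estimated residuals can be checked numerically. In the Python sketch below (illustrative, with hypothetical names and toy data), each residual is the transformed observation divided by the mean of its own modality, which is what \(-\ell (\widehat{\eta }_i)Z_i\) reduces to for a single categorical variable since \(\ell (\widehat{\eta }_i)=(b')^{-1}({\overline{Z}}_n^{(j)})=-1/{\overline{Z}}_n^{(j)}\).

```python
from collections import defaultdict

def pareto_residuals(z, groups):
    """Estimated residuals z_i / z_bar_j, j being the modality of observation i."""
    sums, counts = defaultdict(float), defaultdict(int)
    for zi, gi in zip(z, groups):
        sums[gi] += zi
        counts[gi] += 1
    means = {j: sums[j] / counts[j] for j in sums}
    return [zi / means[gi] for zi, gi in zip(z, groups)]

z = [-0.5, -1.5, -0.2, -0.3, -0.1]       # z_i = -log(y_i / mu) < 0
groups = ["A", "A", "B", "B", "B"]
res = pareto_residuals(z, groups)
total = sum(res)                          # equals n up to rounding
```

Within each modality the residuals add up to \(m_j\), so the overall sum is \(\sum _j m_j=n\) whatever the data.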

5 GLM for shifted log-normal distribution with categorical explanatory variables

5.1 Characterization

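Secondly, consider the sample \(\underline{\varvec{Y}}=(Y_1,\ldots , Y_n)\) composed of independent shifted log-normal variables respectively with mean \(\lambda _1(\varvec{\vartheta }),\ldots ,\lambda _n(\varvec{\vartheta })\), dispersion \(\phi = \sigma ^2\) and a known threshold \(\mu \). The shifted log-normal is also known as the 3-parameter log-normal. Precisely, the density of \(Y_i\) is

$$\begin{aligned} f_{Y_i}(y) = \frac{1}{(y-\mu )\sqrt{2\pi \phi }}\exp \left( -\frac{(\log (y-\mu )-\lambda _i(\varvec{\vartheta }))^2}{2\phi }\right) \quad \text {for } y>\mu , \end{aligned}$$

and 0 for \(y\le \mu \). It is well known that the lognormal distribution has finite moments of all orders (see e.g. Johnson et al. 2000). In particular, the expectation and the variance are given by

$$\begin{aligned} \mathbf {E}Y_i = \mu + \exp \left( \lambda _i(\varvec{\vartheta })+\frac{\phi }{2}\right) ,\quad \text{ Var }Y_i = \left( e^{\phi }-1\right) \exp \left( 2\lambda _i(\varvec{\vartheta })+\phi \right) . \end{aligned}$$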

The transformed sample \(\underline{\varvec{Z}} = T(\underline{\varvec{Y}})=(T(Y_1),\ldots , T(Y_n))\) with \(T(y) = \log (y-\mu )\) belongs to the exponential family with

It is worth mentioning that the \(Z_i\) are normally distributed with mean \(\lambda _i(\varvec{\vartheta })\) and variance \(\phi \). As a consequence, all moments of \(Z_i\) exist, with \(\mathbf {E}(Z_i-\lambda _i)^{2m}=(2m)!\phi ^m/(2^mm!)\) for every integer \(m\ge 1\), while all odd central moments are 0. Consider the regression model with a link function g, a response variable \(Y_i\) lognormally distributed where \(Z_i = \log \left( Y_i-\mu \right) \) and

$$\begin{aligned} g(\mathbf {E}Z_i) = \eta _i = \langle \varvec{x}_i,\varvec{\vartheta }\rangle ,\quad i\in I, \end{aligned}$$

where, for \(i\in I\), \(\varvec{x}_i = (1, x_i^{(2),1},\ldots ,x_i^{(2),d})^T\) are the covariate vectors and \(\varvec{\vartheta } = (\vartheta _{(1)},\vartheta _{(2),1},\ldots ,\vartheta _{(2),d})^T\in \mathbb {R}^{d+1}\) is the unknown parameter vector.

The choice of the link function g for Eq. (19) is less restrictive than for the Pareto case. Any differentiable invertible function from \(\mathbb {R}\) to \(\mathbb {R}\) will work. Since \(b'(x)=x\), the canonical link function is obtained by choosing g such that \(\ell = {{\,\mathrm{id}\,}}\circ ~ g^{-1}=g^{-1}\) is the identity function. In other words, the canonical link function is the identity function.

Unlike the previous section, all moments of \(Y_i\) exist and no particular link is needed to guarantee their existence. In the numerical application, we will also consider another link function: a version of the logarithm defined on the whole real line.

5.2 Estimation for categorical exogenous variables

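Again we consider the case of categorical variables and the model without intercept, that is with a predictor \(\eta _i=x_i^{(2),1}\vartheta _{(2),1} + \dots + x_i^{(2),d} \vartheta _{(2),d}\). In the log-normal case, there is also a dispersion parameter to be estimated. The log-likelihood is given by

$$\begin{aligned} \log L(\varvec{\vartheta },\phi \,|\,\underline{\varvec{z}}) = -\frac{n}{2}\log (2\pi \phi ) - \frac{1}{2\phi }\sum _{i\in I}\left( z_i-\ell (\eta _i)\right) ^2. \end{aligned}$$

The components of the MLE of \(\varvec{\vartheta }\) are given by \({\widehat{\vartheta }}_{n,(2),j} = g({\bar{z}}_n^{(j)}), j\in J\), and the estimated log likelihood for the transformed sample \(\underline{\varvec{z}}\) is

$$\begin{aligned} \log L(\widehat{\varvec{\vartheta }}_n,\phi \,|\,\underline{\varvec{z}}) = -\frac{n}{2}\log (2\pi \phi ) - \frac{1}{2\phi }\sum _{j\in J}\sum _{i,\, x_i^{(2),j}=1}\left( z_i-{\bar{z}}_n^{(j)}\right) ^2. \end{aligned}$$

Maximizing the log-likelihood over \(\phi \) leads to the empirical variance

$$\begin{aligned} {\widehat{\phi }} = \frac{1}{n}\sum _{j\in J}\sum _{i,\, x_i^{(2),j}=1}\left( z_i-{\bar{z}}_n^{(j)}\right) ^2. \end{aligned}$$

Hence the estimator of \(\phi \) is the intra-class variance. This closed-form estimate \({\widehat{\phi }}\) differs from what standard statistical software computes, where the dispersion parameter is estimated by a quasi-likelihood approach.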
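The two closed-form quantities of this subsection, the class means of \(z_i=\log (y_i-\mu )\) and the intra-class variance, can be sketched as follows in Python (illustrative only; the function name and the toy data are hypothetical, and the threshold `mu` is assumed known). With the canonical link the class means are the MLE themselves; with another link g one would apply g to each mean.

```python
import math
from collections import defaultdict

def lognormal_closed_form_fit(y, groups, mu):
    """Class means of z_i = log(y_i - mu) and the intra-class variance."""
    by_group = defaultdict(list)
    for yi, gi in zip(y, groups):
        by_group[gi].append(math.log(yi - mu))
    means = {j: sum(zs) / len(zs) for j, zs in by_group.items()}
    n = len(y)
    # Sum of squared deviations from the class means, divided by n (not n - d).
    phi_hat = sum((zi - means[j]) ** 2
                  for j, zs in by_group.items() for zi in zs) / n
    return means, phi_hat

mu = 10.0
y = [mu + math.exp(1.0), mu + math.exp(3.0), mu + math.exp(0.0), mu + math.exp(2.0)]
groups = ["A", "A", "B", "B"]
means, phi_hat = lognormal_closed_form_fit(y, groups, mu)
```

The division by n rather than by the residual degrees of freedom is what distinguishes this maximum likelihood estimate from the dispersion estimate usually reported by GLM software.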

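Using the previous equations, the log-likelihood evaluated at \({\widehat{\phi }}\) and \(\widehat{\varvec{\vartheta }}_{n}\) for both the transformed sample \(\underline{\varvec{z}}\) and the original sample \(\underline{\varvec{y}}\) with one categorical exogenous variable (Corollary 3.1) is

$$\begin{aligned} \log L(\widehat{\varvec{\vartheta }}_n,{\widehat{\phi }}\,|\,\underline{\varvec{z}}) = -\frac{n}{2}\left( \log (2\pi {\widehat{\phi }})+1\right) ,\quad \log L(\widehat{\varvec{\vartheta }}_n,{\widehat{\phi }}\,|\,\underline{\varvec{y}}) = \log L(\widehat{\varvec{\vartheta }}_n,{\widehat{\phi }}\,|\,\underline{\varvec{z}}) - \sum _{i\in I}\log (y_i-\mu ). \end{aligned}$$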

Below we analyze the choice of the link functions defined in Table 3 in Examples 5.1, 5.2 and plotted in Fig. 4b and with parameter spaces given in Table 4.

Example 5.1

canonical link

With the canonical link function, there is no issue between the parameter space and the linear predictor space since \(D=\varLambda =\mathbb {R}\). With no-intercept using Eq. (9), the MLE is

$$\begin{aligned} {\widehat{\vartheta }}_{n,(2),j} = {\overline{z}}_n^{(j)},\quad j\in J. \end{aligned}$$

Hence, the distribution of \({\widehat{\vartheta }}_{n,(2),j}\) is simply a normal distribution with mean \(\vartheta _{(2),j}\) and variance \(\phi /m_j\). Therefore, the MLE is unbiased and converges almost surely to \(\vartheta _{(2),j}\).

Example 5.2

symmetrical log link

We consider a central symmetry of the logarithm function given in Table 3 leading to \(l_g(x)=e^{x}1_{x\ge 0}+(2-e^{-x})1_{x<0}\). With this symmetrical log link function, there is no issue between the parameter space and the linear predictor space since again \(D=\varLambda =\mathbb {R}\). With no-intercept using Eq. (9), the MLE is

The expectation of \({\widehat{\vartheta }}_{n,(2),j}\) has no simple closed form and should be computed numerically. However by the strong law of large numbers and the continuity of the link function, \({\widehat{\vartheta }}_{n,(2),j}= l_g({\overline{Z}}_n^{(j)})\) converges almost surely to \(l_g(\mathbf {E}{\overline{Z}}_n^{(j)}) =\vartheta _{(2),j}\).

5.3 Model diagnostic

In this paragraph, we give some details about residuals in the lognormal case. As already said, the transformed variables \(Z_i=\log (Y_i-\mu )\) are normally distributed with mean \(\ell (\eta _i)\) and variance \(\phi \). Let us define the residuals

$$\begin{aligned} R_i = \frac{Z_i-\ell (\eta _i)}{\sqrt{\phi }},\quad i\in I. \end{aligned}$$

Hence \(R_{1},\ldots ,R_{n}\) are i.i.d. and have a normal distribution \({\mathcal {N}}(0,1)\). The consistency of the MLE implies that the estimated residuals \({\widehat{R}}_{n,i} = \frac{Z_i - \ell ({\widehat{\eta }}_i)}{\sqrt{{\widehat{\phi }}}}\), \(i\in I\), with \(\widehat{\eta }_i=\langle \varvec{x}_i,\widehat{\varvec{\vartheta }}_n\rangle \) are asymptotically i.i.d. Furthermore, the sum of \({\widehat{R}}_{n,1},\ldots ,{\widehat{R}}_{n,n}\) is exactly equal to 0.
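As a quick numerical illustration of the zero-sum property, the Python sketch below standardizes each transformed observation by its class mean and by the intra-class variance. The function name and the toy data are hypothetical; the fitted mean \(\ell ({\widehat{\eta }}_i)\) is taken equal to the class mean of the \(z_i\), which holds for a single categorical variable whatever the link.

```python
import math
from collections import defaultdict

def lognormal_residuals(z, groups):
    """Standardized residuals (z_i - class mean) / sqrt(intra-class variance)."""
    by_group = defaultdict(list)
    for zi, gi in zip(z, groups):
        by_group[gi].append(zi)
    means = {j: sum(v) / len(v) for j, v in by_group.items()}
    phi_hat = sum((zi - means[gi]) ** 2 for zi, gi in zip(z, groups)) / len(z)
    return [(zi - means[gi]) / math.sqrt(phi_hat) for zi, gi in zip(z, groups)]

z = [1.0, 3.0, 0.0, 2.0, 4.0]            # z_i = log(y_i - mu)
groups = ["A", "A", "B", "B", "B"]
res = lognormal_residuals(z, groups)
res_sum = sum(res)
res_sum_sq = sum(r * r for r in res)
```

Besides summing to zero, the residuals have squares adding up to n, a direct consequence of dividing by the maximum likelihood estimate of the dispersion.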

It is also possible to verify the assumption of the lognormal distribution for \(Y_i\) conditionally on \(\varvec{x}_i\) with a graphical diagnostic such as a normal Quantile–Quantile plot on the residuals \({\widehat{R}}_{n,i}\).

6 Simulation study

This section is devoted to the simulation study: all computations are carried out with the R statistical software (R Core Team 2019). The first part of the simulation analysis consists in assessing the uncertainty of the MLE with the two different approaches: either the explicit formula given in Example 4.1 or the IWLS algorithm described in McCullagh and Nelder (1989).

The simulation process has the following steps. Firstly, given the number of parameters p, we simulate n random variables which are Pareto 1 distributed in the case of no intercept and a canonical link (see Example 4.1). Secondly, we check that the coefficients fitted by both methods have identical values. Thirdly, we compute the exact confidence interval using the result of Example 4.1 and the asymptotic MLE confidence interval resulting from the IWLS algorithm.

Figure 1 shows the estimated parameters when \(p=3\) for different values of n. That is, we plot \({\widehat{\vartheta }}_{n,(2),1}\), \({\widehat{\vartheta }}_{n,(2),2}\), \({\widehat{\vartheta }}_{n,(2),3}\) as solid lines for \(n=\) 100, 300, 500, 700, 900, 1000, 3000, 5000, 7000, 9000, 10,000 against the true values \(\varvec{\vartheta }=(2,3,4)\) (horizontal dot-dashed lines) and \(\mu =150\). The confidence intervals are plotted in dashed lines (theoretical) and dotted lines (asymptotic). We observe that for any sample size n, the explicit formula and IWLS produce the same value, but the confidence interval is much narrower in the explicit case.

The second part of the simulation analysis consists in assessing the gain in terms of computations between the two different approaches: either the explicit formula given in Example 4.1 or the IWLS algorithm described in McCullagh and Nelder (1989).

In Table 5, we provide a complexity analysis of the two procedures: the IWLS algorithm and the explicit solution. Again we simulate Pareto 1 distributed random variables for a specific number of parameters p and a known threshold \(\mu =150\), given a sample size n. Then we compute the number of floating-point operations given the size of the input dataset. Two different explanatory variables have been tested so that the number of parameters is different: \(p=5\) or 7. We observe that the explicit solution is far less computer intensive (4000 times faster) than the IWLS algorithm, which takes 5 or 6 iterations to reach the solution. Thus having an explicit solution can lead to substantial benefits for very large datasets.
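To see why an iterative fit needs several passes where the explicit formula needs one, consider a single modality of the canonical Pareto model. The Python sketch below is only a stand-in for the comparison above: it runs plain Fisher scoring (to which IWLS is equivalent for a GLM) on the shape parameter of one class, not the R `glm` routine used in the study. The function name, starting value and tolerance are assumptions, and the iteration only converges for a starting value in \((0,-2/{\overline{z}})\).

```python
def fisher_scoring_shape(z, start=1.0, tol=1e-12, max_iter=50):
    """Fisher scoring for the Pareto shape of one modality (canonical link).

    Score: sum(z_i) + m / lam; expected information: m / lam**2.
    Returns the estimate and the number of iterations used.
    """
    m = len(z)
    z_bar = sum(z) / m
    lam = start
    for it in range(1, max_iter + 1):
        new = lam * (2.0 + z_bar * lam)   # lam + score / information
        if abs(new - lam) < tol:
            return new, it
        lam = new
    return lam, max_iter

z = [-0.5, -0.3, -0.4]                    # z_i = -log(y_i / mu) < 0
lam_iter, n_iter = fisher_scoring_shape(z)
lam_explicit = -1.0 / (sum(z) / len(z))   # closed form of Example 4.1
```

Both routes give the same value; the iterative one simply repeats a pass over the data until the update becomes negligible.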

7 Application to large claim modeling

This section is devoted to the numerical illustration on a real dataset (again, computations are carried out with the R statistical software, R Core Team 2019). In our application, we focus on modeling non-life insurance losses (claim amount) of corporate business lines. Our data set comes from an anonymous private insurer: for privacy reasons, amounts have been randomly scaled, dates randomly rearranged and variable modalities renamed. The data set consists of 211,739 claims which occurred between 2000 and 2010. In addition to the claim amount level, various explanatory variables are available.

We provide in Table 10 in Appendix C a short descriptive analysis of the two most important variables (risk class and guarantee type with respectively 5 and 7 modalities). Given the very high values of skewness and kurtosis, the claim amount is particularly heavy tailed.

In the sequel, we consider only large claims which are in our context claims above \(\mu =340{,}000\) (in euros). The threshold value has been chosen by expert opinion of practitioners. We refer to e.g. Reiss and Thomas (2007) for advanced selection methods based on extreme value theory.

7.1 A single explanatory variable

Firstly, we consider both Pareto 1 GLM and Shifted log-normal GLM with only one explanatory variable: the guarantee type. We choose Guarantee 1 as the reference level implying that \(\vartheta _{(2),1}=0\). So, \(\vartheta _{(1)}\) representing the effect of the reference category and \((\vartheta _{(2),j})_j\) representing the differential effect of categories j relative to the reference category will be estimated through (15) and (19). Observations \(y_1,\ldots , y_n\) are observed claim amounts either from Pareto 1 (13) or shifted log-normal (18).

For these two models, we have many possible choices for the link function g. Naturally, we choose link functions appearing in Tables 1 and 3 respectively. In accordance with Corollary 3.1, the choice of g does not impact the values, respectively given in (16) and (21), of the log-likelihoods evaluated at the MLE of \(\varvec{\vartheta }\).

Furthermore, for the Pareto GLM, the choice of the shifted log-inverse link function seems attractive because it guarantees the existence of \(\mathbf {E}Y_i\). Nevertheless, alternative link functions (canonical or log-inv) allow the construction of an unbiased estimator (see Sect. 4). For the shifted log-normal model, the choice of the canonical link function is more attractive because it leads to an unbiased and simpler MLE (see Sect. 5).

Coefficients are estimated by explicit formulas given in Sects. 4 and 5. In Table 6, the estimated coefficients are given in the five considered situations. Positive values of \(\vartheta _{(2),j}\) in the Pareto GLM increase the shape parameter of the Pareto 1 distribution leading to a decrease in heavy-tailedness. Regarding the log-normal model, positive values of \(\vartheta _{(2),j}\) increase the scale parameter of the log-normal distribution leading to a shrink of the distribution.

Irrespective of the considered link function, the signs of the fitted coefficients are the same, except for the intercept (Table 6), given a distribution. This convinces us that different model assumptions (i.e. link) do not lead to opposite conclusions on the claim severity. Furthermore, from Table 10, we retrieve the fact that all guarantees except Guarantee 2 have heavier tails than the reference Guarantee 1.

Whatever the considered link function g, the residuals defined in Sect. 4.3 by \({\widehat{R}}_{n,i} = -\ell (\widehat{\eta }_i)Z_i\), \(i\in I\), do not depend on \(\ell \) and are given by Eq. (17). We show in Fig. 2 (left) the quantile/quantile plots of the residuals described in Sect. 4.3 against the standard exponential distribution. In Fig. 3, we observe that the assumption of Pareto 1 for \(Y_1,\ldots ,Y_n\) is better than the log-normal distribution at least for small quantiles. Moreover, comparing the value of the log-likelihood in Table 6, the Pareto 1 distribution is also the best choice. In the following, we focus only on the Pareto 1 distribution.

Quantile–quantile plots of the residuals defined in Sect. 4.3: (left) for one explanatory variable, (right) for two explanatory variables

For each coefficient, let us compute the p values of statistical tests with the null hypothesis \(\vartheta _{(1)}=0\) (null intercept), and for \(j\in J{\setminus }\{1\}\), \(\vartheta _{(2),j}=0\) (no differential effect of the jth guarantee). Table 7 reports the value of the coefficient, its standard error, the Student statistic and the associated p value. We observe that some modalities of the guarantee variable are statistically significant at the 5% level. Except for Guarantee 2 and Guarantee 6, the p values are relatively small, showing that the Pareto 1 distribution with explanatory variables is relevant in this context.

Quantile–quantile plots of the residuals defined in Sect. 4.3: (left) for Pareto 1, (right) for shifted log-normal distributions

7.2 Two explanatory variables

Secondly, we consider the Pareto GLM models and Shifted log-normal GLM models with the two explanatory variables (guarantee and risk class) without intercept or single-variable effects (cf. model (10) and Example 3.4), that is

with \(Z_i = -\log (Y_i/\mu )\) for the Pareto 1 modeling and \(Z_i = \log (Y_i-\mu )\) for the shifted log-normal modeling, and where for \((k,l)\in { KL}^\star \) the unknown parameters \(\vartheta _{kl}\) represent the effect of the pair of modalities k and l of the first and the second variable. In these examples, as described in Table 8, we have \(K=\{1,\ldots ,7\}\), \(L=\{1,\ldots ,5\}\) but \({ KL}^\star = \{1,\ldots ,7\}\times \{1,\ldots ,5\}{\setminus }\{(1,2),(6,5)\}\).

Consider the estimation procedures in (22). We compute the claim numbers by guarantee and risk class in Table 8. The claim number per class might be too small to ensure the existence of the MLE with the shifted log-inv link. We arbitrarily choose the simple case of the canonical link, for which an unbiased estimator is relevant in this context.

The coefficients of the model are estimated using the exact method described in Sect. 3 and then debiased as in Example 4.1. The fitted coefficients are not shown but are available upon request from the authors. Furthermore, we compute the p values of the statistical test \(\vartheta _{kl}=0\) in Table 9. We observe that most computed p values are small: either less than \(10^{-6}\) or less than 1%. Only 5 of the 33 p values are above the usual 5% level, corresponding to the guarantee/risk class pairs (1,5), (2,2), (2,3), (2,5) and (7,5) (claim number of 1, 2 or 3). In the two-variable setting, the Pareto 1 GLM is thus still relevant.

8 Conclusion

In this paper, we deal with regression models where the response variable belongs to the general formulation of the exponential family, the so-called GLM. We focus on the estimation of parameters of GLMs and derive explicit formulas for MLE in the case of categorical explanatory variables. In this case, the closed-form estimators do not require any use of numerical algorithms, in particular the well-known IWLS algorithm. This is logical, because in the special setting of categorical variables, a regression model is equivalent to fitting the same distribution on subgroups defined with respect to explanatory variables. Hence, we get back to usual explicit solutions for the exponential family in the i.i.d. case.

Although we work with one or two explanatory variables for the two derived theorems, the approach can be extended to d categorical variables as long as we consider interaction terms and a zero-sum condition. If we consider main effects only for d categorical variables, the MLE cannot be reformulated as a least-squares problem. Nevertheless, having an explicit formula is a clear advantage compared to the IWLS algorithm, particularly for large-scale datasets.

The explicit formulas are exemplified on two positive distributions particularly useful in an insurance context: the Pareto 1 distribution and the shifted log-normal distribution. In both cases, we present typical link functions and derive in most cases the distribution of the MLE. In relevant cases, we also give an unbiased estimator. In the general setting, the exact standard error computation is not available, yet the Delta Method can be used to obtain an asymptotic standard error. Finally, we illustrate the estimation process for both distributions on simulated datasets and an actuarial data set.

For future research, a natural extension is to propose regression models for distributions outside the exponential family. Typically, we could consider the Pareto 1 distribution with unknown threshold and shape parameters. We could also consider the generalized Pareto distribution based on the peaks-over-threshold approach. A natural extension could also be to jointly estimate the shape and the dispersion parameters of the distribution.

References

Albert A, Anderson JA (1984) On the existence of maximum likelihood estimates in logistic regression models. Biometrika 71(1):1–10

Beirlant J, Goegebeur Y (2003) Regression with response distributions of pareto-type. Comput Stat Data Anal 42(4):595–619

Beirlant J, Goegebeur Y, Verlaak R, Vynckier P (1998) Burr regression and portfolio segmentation. Insur Math Econ 23(3):231–250

Beirlant J, Goegebeur Y, Teugels J, Segers J (2004) Statistics of extremes: theory and applications. Wiley, Hoboken

Bühlmann H, Gisler A (2006) A course in credibility theory and its applications. Springer, Berlin

Chavez-Demoulin V, Embrechts P, Hofert M (2015) An extreme value approach for modeling operational risk losses depending on covariates. J Risk Insur 83(3):735–776

Davison A, Smith R (1990) Models for exceedances over high thresholds. J R Stat Soc Ser B 52(3):393–442

Fahrmeir L, Kaufmann H (1985) Consistency and asymptotic normality of the maximum likelihood estimator in generalized linear models. Ann Stat 13(1):342–368

Fienberg SE (2007) The analysis of cross-classified categorical data, 2nd edn. Springer, Berlin

Goldburd M, Khare A, Tevet D (2016) Generalized linear models for insurance rating. CAS monograph series number 5. Casualty Actuarial Society, Arlington

Haberman SJ (1974) Log-linear models for frequency tables with ordered classifications. Biometrics 30(4):589–600

Hambuckers J, Heuchenne C, Lopez O (2016) A semiparametric model for generalized pareto regression based on a dimension reduction assumption. HAL. https://hal.archives-ouvertes.fr/hal-01362314/

Hogg RV, Klugman SA (1984) Loss distributions. Wiley, Hoboken

Johnson N, Kotz S, Balakrishnan N (2000) Continuous univariate distributions, vol 1, 2nd edn. Wiley, Hoboken

Lehmann EL, Casella G (1998) Theory of point estimation, 2nd edn. Springer, Berlin

Lipovetsky S (2015) Analytical closed-form solution for binary logit regression by categorical predictors. J Appl Stat 42(1):37–49

McCullagh P, Nelder JA (1989) Generalized linear models, vol 37. CRC Press, Boca Raton

Nelder JA, Wedderburn RWM (1972) Generalized linear models. J R Stat Soc Ser A 135(3):370–384

Ohlsson E, Johansson B (2010) Non-life insurance pricing with generalized linear models. Springer, Berlin

Olver FWJ, Lozier DW, Boisvert RF, Clark CW (eds) (2010) NIST handbook of mathematical functions. Cambridge University Press, Cambridge

Ozkok E, Streftaris G, Waters HR, Wilkie AD (2012) Bayesian modelling of the time delay between diagnosis and settlement for critical illness insurance using a burr generalised-linear-type model. Insur Math Econ 50(2):266–279

R Core Team (2019) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna. https://www.R-project.org/

Reiss R, Thomas M (2007) Statistical analysis of extreme values, 3rd edn. Birkhauser, Basel

Rigby R, Stasinopoulos D (2005) Generalized additive models for location, scale and shape. Appl Stat 54(3):507–554

Smyth GK, Verbyla AP (1999) Adjusted likelihood methods for modelling dispersion in generalized linear models. Environmetrics 10(6):696–709

Silvapulle MJ (1981) On the existence of maximum likelihood estimators for the binomial response models. J R Stat Soc Ser B (Methodological) 43(3):310–313

Venables W, Ripley B (2002) Modern applied statistics with S. Springer, Berlin

Acknowledgements

This research benefited also from the support of the ‘Chair Risques Emergents ou atypiques en Assurance’, under the aegis of Fondation du Risque, a joint initiative by Le Mans University, Ecole Polytechnique and MMA company, member of Covea group. The authors thank Vanessa Desert for her active support during the writing of this paper. The authors are also very grateful for the useful suggestions of the two referees. This work is supported by the research project “PANORisk” and Région Pays de la Loire (France).

Appendices

Proofs of Sect. 3

1.1 Proof for the one-variable case

Proof of Theorem 3.1

We have to solve the system

The system \(S(\varvec{\vartheta })=0\) is

that is

The first equation in the previous system is redundant, and

Hence if \(Y_i\) takes values in \(\mathbb {Y}\subset b'(\varLambda )\) and \(\ell \) is injective, we have

The system (23) is

Let us compute the determinant of the matrix \(M_d = \left( \begin{array}{c} \varvec{Q} \\ \varvec{R}\end{array}\right) \). Consider \(\varvec{R} = (r_0,r_1,\ldots ,r_d)\). We have

The determinant can be computed recursively

Since \( \det (M_1) = -r_0+ r_1 \) and \( \det (M_2) = -r_2 -(-r_0 +r_1) = r_0 - r_1 -r_2, \) we get \(\det (M_d) = (-1)^d r_0+ (-1)^{d+1}(r_1+\dots + r_d) =(-1)^d( r_0 - r_1-\dots -r_d)\). This determinant is nonzero as long as \(r_0 \ne \sum _{j=1}^d r_j\).
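The determinant formula can be checked with exact rational arithmetic. The Python sketch below is illustrative and rests on one assumption not restated in this appendix: \(\varvec{Q}\) is taken to be the \(d\times (d+1)\) matrix made of a column of ones followed by the identity, which reproduces the values of \(\det (M_1)\) and \(\det (M_2)\) given above. All function names are hypothetical.

```python
from fractions import Fraction

def det(matrix):
    """Determinant by Laplace expansion along the first row (small matrices only)."""
    n = len(matrix)
    if n == 1:
        return matrix[0][0]
    total = Fraction(0)
    for col in range(n):
        minor = [row[:col] + row[col + 1:] for row in matrix[1:]]
        total += (-1) ** col * matrix[0][col] * det(minor)
    return total

def build_m(r):
    """Stack Q = [1_d | I_d] (assumed form) on the contrast row R = (r_0, ..., r_d)."""
    d = len(r) - 1
    q = [[Fraction(1)] + [Fraction(int(i == j)) for j in range(d)]
         for i in range(d)]
    return q + [[Fraction(x) for x in r]]

def det_formula(r):
    """Closed form (-1)^d (r_0 - r_1 - ... - r_d)."""
    d = len(r) - 1
    return (-1) ** d * (Fraction(r[0]) - sum(Fraction(x) for x in r[1:]))

contrasts = [(1, 0, 0, 0), (0, 1, 0, 0), (0, 1, 1, 1), (2, 5, -3, 7, 1), (3, 1, 2)]
checks = [(det(build_m(r)), det_formula(r)) for r in contrasts]
```

The last contrast satisfies \(r_0=r_1+r_2\), so both the computed determinant and the formula vanish and the stacked matrix is singular.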

Now we compute the inverse of matrix \(M_d\) by a direct inversion.

Let us check the inverse of \(M_d\)

So as long as \(r_0 \ne \sum _{j=1}^d r_j\)

Alternatively, the system (24) is equivalent to

and for \((\varvec{Q}\, \varvec{R})\) of full rank, the matrix \((\varvec{Q}'\varvec{Q} + \varvec{R}'\varvec{R})\) is invertible and \( {\varvec{\vartheta }} = (\varvec{Q}'\varvec{Q} + \varvec{R}'\varvec{R})^{-1}\varvec{Q}'\varvec{g({\bar{Y}})}. \)\(\square \)

Examples—Choice of the contrast vector \(\varvec{R}\)

1. Taking \(r_0=1, \varvec{r}=\varvec{0}\) leads to \( -r_0 + \varvec{r} \varvec{1}_d=-1 \Rightarrow \widehat{\varvec{\vartheta }}_n = \left( \begin{array}{c} 0 \\ \varvec{g({\bar{Y}})} \end{array}\right) . \)

2. Taking \(r_0=0, \varvec{r}=(1,\varvec{0})\) leads to

$$\begin{aligned} -r_0 + \varvec{r} \varvec{1}_d=1 \Rightarrow \widehat{\varvec{\vartheta }}_n = \left( \begin{array}{c} g({\bar{Y}}_n^{(1)})\\ 0\\ g({\bar{Y}}_n^{(2)}) - g({\bar{Y}}_n^{(1)})\\ \vdots \\ g({\bar{Y}}_n^{(d)}) - g({\bar{Y}}_n^{(1)}) \end{array}\right) . \end{aligned}$$

3. Taking \(r_0=0, \varvec{r}=\varvec{1}\) leads to

$$\begin{aligned} -r_0 + \varvec{r} \varvec{1}_d=d \Rightarrow \widehat{\varvec{\vartheta }}_n = \left( \begin{array}{c} \overline{\varvec{g({\bar{Y}})}}\\ g({\bar{Y}}_n^{(1)}) - \overline{\varvec{g({\bar{Y}})}}\\ \vdots \\ g({\bar{Y}}_n^{(d)}) - \overline{\varvec{g({\bar{Y}})}} \end{array}\right) , \text { with } \overline{\varvec{g({\bar{Y}})}} = \dfrac{1}{d}\displaystyle \sum _{j=1}^dg(\overline{Y}_n^{(j)}). \end{aligned}$$
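The three contrast choices listed above can be written as one small Python function (an illustrative sketch; the function name, the contrast labels and the toy values of \(g({\bar{Y}}_n^{(j)})\) are hypothetical). In each case the intercept plus the effect of modality j gives back \(g({\bar{Y}}_n^{(j)})\), and only the constraint differs.

```python
def solve_contrast(g_bar, contrast):
    """Return (theta_1, [theta_2_1, ..., theta_2_d]) for three usual contrasts.

    g_bar holds the values g(Y_bar_j), one per modality.
    """
    d = len(g_bar)
    if contrast == "no_intercept":        # R = (1, 0, ..., 0)
        return 0.0, list(g_bar)
    if contrast == "reference":           # R = (0, 1, 0, ..., 0)
        return g_bar[0], [g - g_bar[0] for g in g_bar]
    if contrast == "zero_sum":            # R = (0, 1, ..., 1)
        mean = sum(g_bar) / d
        return mean, [g - mean for g in g_bar]
    raise ValueError(contrast)

g_bar = [2.0, 3.5, 1.5]
solutions = {c: solve_contrast(g_bar, c)
             for c in ("no_intercept", "reference", "zero_sum")}
```

Since all three parametrizations reproduce the same fitted values, the fitted distribution of each class is unchanged by the choice of contrast.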

Proof of Remark 3.4

We have to solve the system

If \(\ell \) is injective, the system simplifies to

\(\square \)

Proof of Remark 3.5

Let \(Y_i\) be from the exponential family \(F_{exp}(a,b,c,\lambda ,\phi )\). It is well known that the moment generating function of \(Y_i\) is

Hence, the moment generating function of the average \({\overline{Y}}_m\) is

So we get back to a known result that \({\overline{Y}}_m\) belongs to the exponential family \(F_{exp}(x\mapsto a(x)/m,b,c,\lambda ,\phi )\) (e.g. McCullagh and Nelder 1989).

In our setting, random variables in the average \(\overline{Y}_n^{(j)}\) are i.i.d. with functions a, b, c and parameters \(\lambda =\ell (\vartheta _{(1)}+\vartheta _{(2),j})\) and \(\phi \). And \({\overline{Y}}_n^{(j)}\) also belongs to the exponential family with the same parameters but with the function \({\bar{a}}:x\mapsto a(x)/m_j\). In particular,

But the computation of \(\mathbf {E}g({\overline{Y}}_n^{(j)})\) remains difficult unless g is a linear function. By the strong law of large numbers, as \(m_j\rightarrow +\,\infty \), the estimator is consistent since

By the Central Limit Theorem (i.e. \({\overline{Y}}_n^{(j)}\) converges in distribution to a normal distribution) and using the Delta Method, we obtain the following

\(\square \)

Proof of Corollary 3.1

The log likelihood of \(\widehat{\varvec{\vartheta }}_n\) is defined by

In fact, we must verify that \(\ell ({\widehat{\eta }}_i)\) does not depend on the function g. If we consider \(\widehat{\varvec{\vartheta }}_n\) defined by (8), we have \(\varvec{Q}\widehat{\varvec{\vartheta }}_n = \varvec{g({\bar{y}})}\), since \(\widehat{\varvec{\vartheta }}_n\) is a solution of the system (23), i.e. \(\varvec{Q}(\varvec{Q}'\varvec{Q} + \varvec{R}'\varvec{R})^{-1}\varvec{Q}'=I\). Using \({\widehat{\eta }}_i= (\varvec{Q}\widehat{\varvec{\vartheta }}_n)_j\) for i such that \(x_i^{(2),j}=1\), we obtain

and

In the same way,

\(\square \)

1.2 Proof for the two-variable case

Proof of Theorem 3.2

The system \(S(\varvec{\vartheta })=0\) is

that is

The system have exactly \(1+d_2+d_3\) redundancies, and \(S(\varvec{\vartheta })=0\) reduces to

Hence the system has rank \({ KL}^\star \) and if \(Y_i\) takes values in \(\mathbb {Y}\subset b'(\varLambda )\), and \(\ell \) injective, we have

As in the proof of Theorem 3.1, we have to solve

that is, because \(\varvec{Q}'\varvec{Q}+\varvec{R}'\varvec{R}\) has full rank, as in the proof of Theorem 3.1,

In that case, the MLE solves a least-squares problem with response variable \(\varvec{g({\bar{Y}})}\) and explanatory variable \(\varvec{Q}\), under the linear constraint given by \(\varvec{R}\).

1. Under linear contrasts (\({\tilde{C}}_0\)), the model (10) is equivalent to model (6) with \(J=KL^\star \) modalities. Hence the solution is immediate.

2. Under linear contrasts (\({\tilde{C}}_\varSigma \)), the system

$$\begin{aligned} \vartheta _{(1)}+\vartheta _{(2),k} + \vartheta _{(3),l} + \vartheta _{kl} = g({\bar{Y}}_n^{(k,l)})\quad \forall (k,l)\in KL^\star \end{aligned}$$

implies that

$$\begin{aligned} \sum _{(k,l)\in KL^\star }m_{k,l}(\vartheta _{(1)}+\vartheta _{(2),k} + \vartheta _{(3),l} + \vartheta _{kl}) = \sum _{(k,l)\in KL^\star }m_{k,l} g({\bar{Y}}_n^{(k,l)}). \end{aligned}$$

Using

$$\begin{aligned} \sum _{(k,l)\in KL^\star }m_{k,l}&= n,\\ \sum _{(k,l)\in KL^\star }m_{k,l}\vartheta _{(2),k}&= \sum _{k\in K}\sum _{l\in L^\star _k}m_{k,l}\vartheta _{(2),k} = \sum _{k\in K}m^{(2)}_k\vartheta _{(2),k}= 0,\\ \sum _{(k,l)\in KL^\star }m_{k,l}\vartheta _{(3),l}&= \sum _{l\in L}\sum _{k\in K^\star _l}m_{k,l}\vartheta _{(3),l}= \sum _{l\in L}m^{(3)}_l\vartheta _{(3),l}= 0,\\ \sum _{(k,l)\in KL^\star }m_{k,l}\vartheta _{kl}&= 0, \end{aligned}$$

we get \(\vartheta _{(1)} = \dfrac{1}{n}\displaystyle \sum \nolimits _{(k,l)\in KL^\star }m_{k,l} g({\bar{Y}}_n^{(k,l)}).\) In the same way, taking summation over \(K^\star _l\) for \(l\in L\) and over \(L^\star _k\) for \(k\in K\), we find \(\vartheta _{(2),k}\) and \(\vartheta _{(3),l}\), and then \(\vartheta _{kl}\).
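The closed form obtained for \(\vartheta _{(1)}\) is a weighted mean of \(g\) applied to the cell averages, with weights \(m_{k,l}/n\). A minimal sketch, using made-up cell sizes and cell means (one cell is left empty on purpose, so that \(KL^\star \) is a strict subset of \(K\times L\)) and a hypothetical helper `intercept`:

```python
from math import log

# Hypothetical non-empty cells (k, l) -> (cell size m_{k,l}, cell mean of Y)
cells = {
    (0, 0): (12, 1.8),
    (0, 1): (8, 2.4),
    (1, 0): (20, 3.1),
    # cell (1, 1) has no observation and is therefore absent
}


def intercept(cells, g=log):
    """theta_(1) = (1/n) * sum over non-empty cells of m_{k,l} * g(cell mean)."""
    n = sum(m for m, _ in cells.values())
    return sum(m * g(ybar) for m, ybar in cells.values()) / n


theta1_log = intercept(cells)                   # assumed choice g = log
theta1_id = intercept(cells, g=lambda x: x)     # identity g: overall sample mean

print(round(theta1_log, 6), round(theta1_id, 6))
```

With the identity function for g, the intercept reduces to the overall sample mean, which gives a quick consistency check of the formula.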

With main effects only, the system \(S(\varvec{\vartheta })=0\) is

There are \(1+d_2+d_3\) equations for \(1+d_2+d_3\) parameters, but the explanatory variables are collinear. So the two additional constraints \(\varvec{R}\varvec{\vartheta }=0\) ensure that a solution exists for the remaining \(d_2+d_3-1\) parameters. Using \(\sum _k x_i^{(2),k}=1\), the second set of equations becomes \(\forall l\in L\)

Similarly, the third set of equations becomes \(\forall k\in K\)

Even with a canonical link \(\ell (x)=x\), so that \(\ell '(x)=1\), this system is not a least-squares problem when g is a nonlinear function. \(\square \)

Computation of the log-likelihoods appearing in Sects. 4 and 5

Consider the Pareto GLM described in (13) and (15). The b function is \(b(\lambda ) = -\log (\lambda )\). Using Corollary 3.1, we have \(\ell (\hat{\eta }_i) = (b')^{-1}(\overline{z}_n^{(j)})=-(\overline{z}_n^{(j)})^{-1}\) for j such that \(x_i^{(2),j}=1\) and

Let us compute the original log-likelihood of the Pareto 1 distribution:

Hence with \(z_i=-\log (y_i/\mu )\),

Now consider the shifted log-normal GLM described in (18) and (19). Here, the b function is \(b(\lambda )=\lambda ^2/2\). Hence, using Corollary 3.1, we have \(\ell (\hat{\eta }_i) = (b')^{-1}(\overline{z}_n^{(j)})=\overline{z}_n^{(j)}\) for j such that \(x_i^{(2),j}=1\) and Eq. (21) holds.

Let us compute the original log-likelihood of the shifted log-normal distribution:

with \(z_i=\log (y_i-\mu )\). Hence

Using \( {\widehat{\phi }} = \frac{1}{n}\sum _{j\in J}\sum _{i, x_i^{(2),j}=1}\left( z_i - {\bar{z}}_n^{(j)}\right) ^2 \) leads to the desired result.
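Both computations of this section can be sanity-checked numerically. The sketch below is not taken from the paper. It assumes the Pareto 1 density \(\lambda \mu ^\lambda /y^{\lambda +1}\) and the shifted log-normal density \(\exp (-(\log (y-\mu )-\lambda )^2/(2\phi ))/((y-\mu )\sqrt{2\pi \phi })\) for \(y>\mu \), and uses made-up losses with one categorical variable. The closed forms coded in `closed_pareto` and `closed_ln` are derived under these assumptions and may be written differently in Sects. 4 and 5.

```python
from math import log, pi


def group_means(z, groups):
    """Return the per-group means of z and the per-group counts."""
    sums, counts = {}, {}
    for zi, gi in zip(z, groups):
        sums[gi] = sums.get(gi, 0.0) + zi
        counts[gi] = counts.get(gi, 0) + 1
    return {g: sums[g] / counts[g] for g in sums}, counts


def pareto_loglik(y, lam, mu):
    """Log-likelihood under the assumed Pareto 1 density, one shape per observation."""
    return sum(log(l) + l * log(mu) - (l + 1.0) * log(yi) for yi, l in zip(y, lam))


def lognormal_loglik(y, lam, phi, mu):
    """Log-likelihood under the assumed shifted log-normal density."""
    return sum(-log(yi - mu) - 0.5 * log(2.0 * pi * phi)
               - (log(yi - mu) - l) ** 2 / (2.0 * phi) for yi, l in zip(y, lam))


y = [1.2, 1.5, 2.0, 3.5, 1.1, 4.0, 2.2]          # hypothetical losses, all > mu
groups = ["a", "a", "a", "b", "b", "b", "b"]     # hypothetical modalities
mu = 1.0                                         # threshold, assumed known
n = len(y)

# Pareto 1: z_i = -log(y_i / mu) and lambda_hat = -1 / zbar^(j)
z = [-log(yi / mu) for yi in y]
zbar, m = group_means(z, groups)
lam_hat = [-1.0 / zbar[g] for g in groups]
direct_pareto = pareto_loglik(y, lam_hat, mu)
closed_pareto = -n - sum(m[g] * log(-zbar[g]) for g in m) - sum(log(yi) for yi in y)

# Shifted log-normal: z_i = log(y_i - mu), lambda_hat = zbar^(j), phi_hat as in the text
z2 = [log(yi - mu) for yi in y]
zbar2, _ = group_means(z2, groups)
phi_hat = sum((zi - zbar2[g]) ** 2 for zi, g in zip(z2, groups)) / n
lam_hat2 = [zbar2[g] for g in groups]
direct_ln = lognormal_loglik(y, lam_hat2, phi_hat, mu)
closed_ln = -0.5 * n * (log(2.0 * pi * phi_hat) + 1.0) - sum(z2)

print(direct_pareto, closed_pareto)
print(direct_ln, closed_ln)
```

In each case the direct evaluation at the estimators agrees with the closed form, and perturbing the estimated shape parameters lowers the Pareto log-likelihood, as expected at a maximum.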

Link functions and descriptive statistics


Cite this article

Brouste, A., Dutang, C. & Rohmer, T. Closed-form maximum likelihood estimator for generalized linear models in the case of categorical explanatory variables: application to insurance loss modeling. Comput Stat 35, 689–724 (2020). https://doi.org/10.1007/s00180-019-00918-7
