Abstract

Separability is an attractive feature of covariance matrices or matrix variate data, which can improve and simplify many multivariate procedures. Due to its importance, testing separability has attracted much attention in the past. The procedures in the literature are of two types, likelihood ratio test (LRT) and Rao’s score test (RST). Both are based on the normality assumption or the large-sample asymptotic properties of the test statistics. In this paper, we develop a new approach that is very different from existing ones. We propose to reformulate the null hypothesis (the separability of a covariance matrix of interest) into many sub-hypotheses (the separability of the sub-matrices of the covariance matrix), which are testable using a permutation based procedure. We then combine the testing results of sub-hypotheses using the Bonferroni and two-stage additive procedures. Our permutation based procedures are inherently distribution free; thus it is robust to non-normality of the data. In addition, unlike the LRT, they are applicable to situations when the sample size is smaller than the number of unknown parameters in the covariance matrix. Our numerical study and data examples show the advantages of our procedures over the existing LRT and RST.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Covariance separability is an attractive property of covariance matrices, which can improve and simplify many multivariate procedures. A separable covariance matrix is defined by its representation as the Kronecker product of two covariance matrices. The most common multivariate procedures for a separable covariance matrix are those with the matrix normal distribution or transposable data (Dawid 1981; Gupta and Nagar 1999; Wang and West 2009). For example, Viroli (2010) and Glanz and Carvalho (2013) consider the mixture model and an EM procedure to estimate it. Yin and Li (2012) study the sparse Gaussian graphical model under the matrix normal assumption. Allen and Tibshirani (2012) and Tan and Witten (2014) study various inferential issues on transposable data, where transposability implies both rows and columns of the matrix data are correlated. Extensions to three-level multivariate data are also presented in the literature (e.g., Roy and Leiva 2008, 2011).

Due to its importance in inferential procedures, testing covariance separability has received considerable attention from previous researchers. Lu and Zimmerman (2005) and Mitchell et al. (2006) consider repeatedly measured multivariate data. They, independently, study the likelihood ratio (LR) statistic under the normality assumption, and propose an approximation to its quantiles under the null hypothesis. Fuentes (2006) studies the separability in spatio-temporal processes. She uses a spectral representation of the process, and reformulates the test problem to a simple two-way ANOVA problem. Li et al. (2007) work with stationary spatio-temporal random fields. Using the asymptotic normality of the estimated covariance functions, they build an unified framework for various testing problems. Recently, Filipiak et al. (2016, 2017) propose Rao’s score test (RST) under normality for the repeatedly measured multivariate data, and use the asymptotic chi-square distribution as its null distribution.

The normality is a common assumption in most of previous works on testing covariance separability, which is often not true in practice. In this paper, we propose permutation based testing procedures to resolve this difficulty. More specifically, we rewrite the null hypothesis on a separable covariance matrix \(\Sigma \) as an intersection of many individual sub-hypotheses. The sub-hypotheses are on separability of specially structured sub-matrices of \(\Sigma \), where the LR statistic is invariant to the permutation of (groups of) variables. Thus, the p value for each sub-hypothesis can be approximated numerically. The final decision is obtained by combining p values of individual sub-hypotheses using the Bonferroni and multi-stage additive procedures.

The remainder of this paper is organized as follows. In Sect. 2, we briefly introduce the LRT under normality assumption by Mitchell et al. (2006) and the RST by Filipiak et al. (2016, 2017). In Sect. 3, we propose permutation based procedures. We then apply our methods and also two existing procedures (the LRT and RST) to simulated data, and compare their sizes and powers in Sect. 4. We analyze two real data examples in Sect. 5. Concluding remarks and discussions are given in Sect. 6.

2 Existing procedures for testing separability

We consider a multivariate random variable \(\mathbf{X}_{p\times q}\),

and its vectorized variable

which has mean \(\mu =\big (\mu _{11},\mu _{12},\dots ,\mu _{1q},\mu _{21},\mu _{22},\ldots ,\mu _{p1},\mu _{p2},\ldots ,\mu _{pq}\big )^{\mathrm{T}}\) and covariance matrix \(\Sigma \) (a \(pq\times pq\) matrix). Suppose we have n repeatedly measured observations (independently and identically distributed copies) of \(\mathbf{X}\) and \(\mathbf{vX}\), which are \(\mathbf{X}_{1},\mathbf{X}_{2},\ldots ,\mathbf{X}_{n}\) and \(\mathbf{vX}_{1},\mathbf{vX}_{2},\ldots ,\mathbf{vX}_{n}\), respectively. The goal of this paper is to test the separability of \(\Sigma \), i.e., \(\Sigma =\mathrm{U}\otimes \mathrm{V}\) for two covariance matrices \(\mathrm{U}=\big (u_{ij},1\le i,j\le p\big )\) (a \(p\times p\) matrix) and \(\mathrm{V}=\big (v_{kl},1\le k,l\le q\big )\) (a \(q\times q\) matrix) using these observations.

A popular procedure to test the separability of \(\Sigma \) is the likelihood ratio (LR) test under normality. Suppose \(\mathbf{vX}\) is normal and has the covariance matrix \(\Sigma =\mathrm{U}\otimes \mathrm{V}\). The maximum likelihood estimators (MLEs) of \(\mathrm{U}\) and \(\mathrm{V}\) are the solution to the following equations (Dutilleul 1999),

where \(\mathbf{M}\) is the \(p\times q\) matrix formed from \(\mu \) and \(\widehat{\mathbf{M}}\) is the sample average of \(\mathbf{X}_{1},\ldots ,\mathbf{X}_{n}\). The LR statistic is then found to be

where \(\mathbf{S}\) is the sample covariance matrix

and \(\widehat{\mu }\) is the vectorized estimator of \(\widehat{\mathbf{M}}\).

For the null distribution of the LR statistic in (1), Mitchell et al. (2006) propose to match its first moment to that of a scaled chi-square distribution. They first show that the null distribution of the LR statistic only depends on p, q, and n, not the specific form of \(\mathrm{U}\) and \(\mathrm{V}\). Thus, all separable covariance models \(\Sigma =\mathrm{U}\otimes \mathrm{V}\) yield the same null distribution given p, q, n. For each combination of (p, q, n), they approximate the null distribution with a scaled chi-square distribution:

where \(\xi =pq(pq+1)/2-p(p+1)/2-q(q+1)/2+1\) is the number of degrees of freedom of the asymptotic null distribution; and k is determined by matching the first moment of \(\mathrm{lrt}\) to that of the \(k\cdot \chi ^{2}(\xi )\), given by

where \(\psi \) is the digamma function. They further approximate the critical value (denoted by \(\mathrm{lrt}_{\alpha }\)) with that of the approximate scaled chi-square distribution as

where \(\text {lrt}_{\alpha }\) is the \(100\times (1-\alpha )\)-th quantile of the null distribution of \(\mathrm{lrt}\) and \(\chi _{\alpha }^{2}(\xi )\) is that of the chi-square distribution \(\chi ^{2}(\xi )\).

The above approximation by Mitchell et al. (2006) relies on the multivariate normality assumption in evaluating the expectation of \(\mathrm{lrt}\). In particular, they evaluate the expectation of the generalized variance \(\log |\mathbf{S}|\) under the multivariate normality. As shown in our numerical study in Sect. 4, if the data are from a non-normal distribution (in our study, we use a multivariate t-distribution with degrees of freedom 5 and multivariate version of the chi-square distribution with degrees of freedom 3), the critical value in (3) is severely biased and accordingly the size of the LRT is much larger than the aimed level.

As an alternative to the LRT, very recently, Filipiak et al. (2016, 2017) consider the RST under normality, whose testing statistic is

where \(\mathbf{Z} = \big (\mathbf{vX}_{1}, \ldots , \mathbf{vX}_{n}\big )^\mathrm{T}\) is a matrix having n rows by stacking transposed \(\mathbf{vX}_h, h=1,\ldots , n\), and \(\mathrm{P}^{\perp } = \mathrm{I}_n - n^{-1} 1_n 1_n^\mathrm{T}\) is a projection matrix onto orthogonal complement of the column space of n dimensional vector \(1_n=(1,\ldots ,1)^\mathrm{T}\), and \(\mathrm{tr}( \mathrm{A})\) is the trace of the matrix \(\mathrm{A}\).

The RST statistic has an advantage in its applicability to a small sized data, where the required number of samples is smaller (\(n > \max (p,q)\)) than that of LRT (\(n > pq\)). Also, it is well-known that the RST statistic is asymptotically distributed as the chi-square distribution with degrees of freedom \(\xi \) (defined above) under the null hypothesis. However, as pointed out in Filipiak et al. (2016, 2017), the finite sample distribution of (4) is unknown under the null hypothesis and also is quite different from the asymptotic. The authors numerically approximate its critical values using Monte Carlo samples from the normal distribution. Nonetheless, like the LRT, the performance of the RST also depends on the normality assumption as shown later in Fig. 1 and tables in Sect. 4.

3 Permutation based procedures

All procedures to test the covariance separability in the literature, which include Mitchell et al. (2006) and Filipiak et al. (2016, 2017), strongly depend on the normality assumption or large sample asymptotic, which is often not true in practice. In this section, we propose permutation based procedures that are free of any distributional assumption on the data. To do so, we first rewrite the null hypothesis (the separability of \(\Sigma \)) into the intersection of many small individual hypotheses on (a specific form of) sub-matrices of \(\Sigma \) and test the individual hypotheses via permutation.

Suppose, as defined earlier, \(\mathbf{vX}\) is a pq dimensional random vector of

with covariance matrix \(\Sigma =\big (\sigma _{st},1\le s,t\le pq\big )\) (a \(pq\times pq\) matrix). The covariance matrix \(\Sigma \) is defined to be separable, if it can be written as the Kronecker product of two covariance matrices \(\mathrm{U}=\big (u_{ij},1\le i,j\le p\big )\) (a \(p\times p\) matrix) and \(\mathrm{V}=\big (v_{kl},1\le k,l\le q\big )\) (a \(q\times q\) matrix):

Let

which is the submatrix of \(\Sigma \) corresponding to the subvector

Similarly, we define \(\Sigma _{kl}^{\mathrm{c}}\) as

Then, the hypothesis “\(\Sigma \) is separable” is equivalent to “\(\Sigma _{ij}^{\mathrm{r}}\) and \(\Sigma _{kl}^{\mathrm{c}}\) are separable for all \(1\le i<j\le p\) and \(1\le k<l\le q\).” We let \(\mathcal {H}_{0,ij}^{\mathrm{r}}\) and \(\mathcal {H}_{0,kl}^{\mathrm{c}}\) be the separability hypotheses on \(\Sigma _{ij}^{\mathrm{r}}\) and \(\Sigma _{kl}^{\mathrm{c}}\), respectively, for each choice of (i, j) and (k, l). Below we propose a permutation based procedure to test the individual hypotheses \(\mathcal {H}_{0,ij}^{\mathrm{r}}\) and \(\mathcal {H}_{0,kl}^{\mathrm{c}}\).

We consider the test \(\mathcal {H}_{0,ij}^{\mathrm{r}}\) which is the hypothesis on the covariance matrix of the sub-vector

Here, we assume \(u_{ii}=u_{jj}\) (say they are equal to 1) and, before implementing the procedure below for the data, we standardize the data to take this assumption into account. Under this assumption, \(\mathbf{X}_{ij}^{\mathrm{r}}\) has the covariance matrix

In addition,

has the same covariance matrix with that of \(\mathbf{X}_{ij}^{\mathrm{r}}\). Thus, if the distribution of \(\mathbf{X}\) is specified only based on its mean and covariance matrix (for example, the elliptical distribution in Anderson 2003), the distributions of \(\mathbf{X}_{ij}^{\mathrm{r}}\) and \(\mathbf{X}_{ji}^{\mathrm{r}}\) are equal if they have common means. The detail about permutation of multivariate data can be found in Li et al. (2010, 2012) and Klingenberg et al. (2009).

The above allows us to construct a permutation based testing procedure for the sub-hypotheses \(\mathcal {H}_{0,ij}^{\mathrm{r}}\) and also for \(\mathcal {H}_{0,kl}^{\mathrm{c}}\). To be specific, let \(\mathbf{Y}^{h},h=1,2,\ldots ,n,\) be independent copies of \((\mathbf{X}_{ij}^{\mathrm{r}})^\mathrm{T}\) and let the likelihood ratio test (LRT) statistic for \(\mathcal {H}_{0,ij}^{\mathrm{r}}\) using \(\mathbf{Y}^{h}\)’s be \(\mathbf{T}\), that is,

where \(\widehat{\mathrm{U}}_{ij}\) and \(\widehat{\mathrm{V}}\) are the MLE of \(\mathrm{U}_{ij}=[1,u_{ij};u_{ji}, 1]\), \(\mathrm{V}\), and \(\mathbf{S}_{[i,j]}\) is the sample covariance matrix obtained from \(\mathbf{Y}^{h}\)’s. Note that we assume \(p=2\) for the time being, so that \(\mathbf{Y}^{h}=\big (\mathbf{Y}_{1}^{h},\mathbf{Y}_{2}^{h}\big )\), where \(\mathbf{Y}_{1}^{h}=\big (X_{i1}^{h},\ldots ,X_{iq}^{h}\big )\) and \(\mathbf{Y}_{2}^{h}=\big (X_{j1}^{h},\ldots ,X_{jq}^{h}\big )\). Suppose \(\pi =\big (\pi (h),h=1,2,\ldots ,n\big )\) is a vector of i.i.d. random numbers having values 0 or 1 with probability 1 / 2. The permutation of \(\big \{\mathbf{Y}^{h}=\big (\mathbf{Y}_{1}^{h},\mathbf{Y}_{2}^{h}\big ),h=1,2,\ldots ,n\big \}\) for \(\pi \) is defined as \(\big \{\mathbf{Y}^{h}(\pi )=\big (\mathbf{Y}_{1}^{h}(\pi ),\mathbf{Y}_{2}^{h}(\pi )\big ),h=1,2,\ldots ,n\big \}\), where

In the sequel, the permuted LR statistic corresponding to \(\pi \) is computed as

where \(\widehat{\mathrm{U}}_{ij}(\pi )\), \(\widehat{\mathrm{V}}(\pi )\), and \(\mathbf{S}_{[i,j]} ({ \pi })\) are the estimators with the \(1 \times 2q\) permuted samples \(\big \{\mathbf{Y}^{h}(\pi )=\big (\mathbf{Y}_{1}^{h}(\pi ),\mathbf{Y}_{2}^{h}(\pi )\big ),h=1,2,\ldots ,n\big \}\) defined above. We approximate the null distribution of \(\mathbf{T}\) with the empirical distribution function of the permuted statistics \(\big \{\mathbf{T}(\pi ),\pi \in \Pi \big \}\), where \(\Pi \) is the collection of all possible permutations, and then evaluate the p value for the hypothesis \(\mathcal {H}_{0,ij}^{\mathrm{r}}\). The above permutation algorithm is summarized as follows. For the column-wise permutation algorithm, we replace “rows” with “columns”; and “p” with “q”.

Finally, we do the same procedure for each individual hypothesis \(\mathcal {H}_{0,ij}^{\mathrm{r}}\) and \(\mathcal {H}_{0,kl}^{\mathrm{c}}\) and obtain their p values \(p_{ij}^{\mathrm{r}}\) and \(p_{kl}^{\mathrm{c}}\).

Our next step is to combine p values of individual sub-hypotheses to make a final decision on the separability of \(\Sigma \), given the overall significance level \(\alpha \). Here, we consider two combining procedures, both based on the Bonferroni correction. The first procedure, named as “m-perm”, considers only the sub-hypotheses along with the smaller dimension of the data matrix. If p is smaller than q, we consider the sub-hypotheses \(\mathcal {H}_{0,ij}^{\mathrm{r}}\), \(i,j=1,2,\ldots ,p\) with \(i<j\). The row-wise \(\left( {\begin{array}{c}p\\ 2\end{array}}\right) \) pairs of hypotheses result in p values \(\{p_{12}^{\mathrm{r}},p_{13}^{\mathrm{r}},\ldots ,p_{p-1,p}^{\mathrm{r}}\}\), and the Bonferroni correction compares all individual p values \(p_{ij}^{\mathrm{r}}\) with the adjusted significance level \(\alpha /\left( {\begin{array}{c}p\\ 2\end{array}}\right) \). Equivalently, the p value is set as \(\left( {\begin{array}{c}p\\ 2\end{array}}\right) \min _{i<j}p_{ij}^{\mathrm{r}}\). The second procedure, named as “two-s”, is the combination of the row and column-wise Bonferroni procedures with the idea of multi-stage additive testing (Sheng and Qiu 2007). Here, we first test the separability by testing the row-wise sub-hypotheses (here, \(p<q\) is assumed) using the Bonferroni procedure at level \(\gamma _{1}\). If the separability is not rejected at the first stage, we further test the column-wise sub-hypotheses using the Bonferroni procedure at level \(\gamma _{2}\). The p value of the two stage procedure becomes \(\left( {\begin{array}{c}p\\ 2\end{array}}\right) \min _{i<j}p_{ij}^{\mathrm{r}}\), if \(\left( {\begin{array}{c}p\\ 2\end{array}}\right) \min _{i<j}p_{ij}^{\mathrm{r}}\le \gamma _{1}\); \(\gamma _{1}+(1-\gamma _{1})\left( {\begin{array}{c}q\\ 2\end{array}}\right) \min _{k<l}p_{kl}^{\mathrm{c}}\), otherwise. In this paper, we set the significance levels \(\gamma _{1}\) and \(\gamma _{2}\) to be equal, i.e., \(\gamma =1-\sqrt{1-\alpha }\), following Sheng and Qiu (2007).

Despite the additional efforts on combining p values, the proposed procedures have at least two advantages over the existing LRT and RST. First, the LRT and RST strongly depend on the normality assumption, which is often not true in practice. In addition, even under the normality, the null distributions of the LR statistic and RST statistic are still not fully characterized, and several approximate formulas are proposed or Monte Carlo approximation is used in the literature. Unlike the existing procedures, our procedures are distribution free and can be used with minimal distributional assumptions. Second, our procedures are applicable to small sized data. For example, the required sample size for our procedures is \(n>2\min (p,q)\), while that of the LRT and RST is \(n>pq\) and \(n > \max (p,q)\), respectively.

4 A numerical study

We numerically investigate the sizes and powers of the proposed permutation based procedures and compare their performances to those of the LRT and the RST. Here, we use the linear model as in Mitchell et al. (2006); that is

where \(\mathbf{M}\) is the \(p\times q\) matrix corresponding to the mean vector \(\mu \); \(\mathbf{E}\) is the \(p\times q\) error matrix, whose (i, j)-th element is \(e_{ij}\), and let

We consider three distributions for \(\mathbf{vE}\) with mean 0 and covariance matrix \(\Sigma \); (i) the multivariate normal distribution, (ii) the multivariate t-distribution with degrees of freedom 5, and (iii) the “multivariate” chi-square distribution with degrees of freedom 3. Contrary to the first two, the last distribution is asymmetrical, which is generated by

where each component of \(\mathbf{vF}\) independently follows a univariate chi-square distribution with degrees of freedom 3, and a mean vector \(\mu _F\) of \(\mathbf{vF}\) is used to center the error at the mean zero. It is worthwhile to point out that these multivariate distributions can be characterized only by mean and covariance matrix when degrees of freedom, if relevant, are fixed, which ensures the applicability of our procedures for testing the covariance matrix. We also assume that \(\mathbf{M}\) is the zero matrix for simplicity. Following Mitchell et al. (2006), we set \(p=4\) (the row size), \(q=3,5,10\) (the column size), and \(n=20,25,50,75\) (the number of replicated samples). The covariance matrix is assumed to have the form of

where \(1\le i,j\le 4\), \(1\le t\le t+k\le q\), and \(\mathrm{I}(A)\) is an indicator function for the event A. For instance, the model (10) with \(p=4,q=3\) is written by

where each block matrix is defined by

where \(*\) indicates elementwise multiplication. In the study below, we set \(\sigma =1\), and \(\gamma =0.7\).

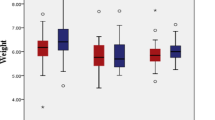

We first examine the magnitude of biases of Mitchell et al. (2006)’s approximation for the LRT and the RST (with the asymptotic chi-square distribution) to the critical value when the normality is violated. To do it, we generate samples from the multivariate t-distribution where the covariance is assumed to be separable (\((\rho _{1},\rho _{2},\rho _{3},\rho _{4})=(0.6,0.6,0.6,0.6)\)). We fix row and column sizes as \(p=4,q=3\) and compute 10, 000 LRT and RST statistics with different sample sizes \(n=20,25,50,75\) and degrees of freedom \(\mathrm{df}=5,10,30,\infty \) (\(\infty \) corresponds to the multivariate normal distribution). To understand the approximation error by Mitchell et al. (2006) and Filipiak et al. (2016, 2017), we consider the differences between the empirical 95-th percentile and the approximation by (3) for the LRT and that by the asymptotic chi-square distribution for the RST. Figure 1 shows that the approximate critical values by both methods are biased, and the magnitude of the biases increases as either the number of samples increases (decreases) when the degrees of freedom are small (large) or the non-normality grows (the degrees of freedom decreases).

Next, we compare the empirical sizes and powers of the permutation based procedures to the LRT based on (3) and RST. To evaluate the empirical sizes and powers, we consider three hypotheses: (i) null (“N”) hypothesis: \((\rho _{1},\rho _{2},\rho _{3},\rho _{4})=(0.6,0.6,0.6,0.6)\), (ii) the first alternative (“A1”) hypothesis: \((\rho _{1},\rho _{2},\rho _{3},\rho _{4})=(0.6,0.65,0.7,0.75)\), the second alternative (“A2”) hypothesis: \((\rho _{1},\rho _{2},\rho _{3},\rho _{4})=(0.9,0.7,0.7,0.45)\). We generate 500 data sets from the model (9) for each combination of p,q, and n. In each data set, we use 2000 permuted samples to calculate the p value. The significance level \(\alpha \) is set to 0.05. The empirical sizes and powers are reported in Table 1.

Table 1 first shows that the proposed permutation based procedures work better than both the LRT with the approximation in (3) and RST in controlling the size at the aimed level 0.05. The sizes of both the LRT with (3) and RST become larger than s0.05 when the underlying distribution has heavier tails (e.g. the t-distribution with smaller degrees of freedom). In addition, when the data are from an asymmetric distribution (e.g. the chi-square distribution), the same type of upward bias occurs in the size.

Second, the performance of the m-perm depends on the permutation direction (either row-wise or column-wise) as well as the true covariance \(\Sigma \). In both alternatives “A1” and “A2”, the covariance matrix of the column-wise selection (for example, \(\mathbf{X}_{12}^{\mathrm{c}}\)) is conjectured to be less separable than that of the row-wise selection (for example, \(\mathbf{X}_{12}^{\mathrm{r}}\)) and, thus, the choice of the column-wise permutation (the cases \(p>q\)) shows more power than the row-wise permutation. Table 2 reports the empirical powers (for the cases of \(p=4\) and \(q=3\)) of the Bonferroni procedure (at level \(\alpha \)) based on the row-wise permutation together with those based on the column-wise permutation. This shows the Bonferroni test for sub-hypotheses along with the row-wise direction (p-perm) now has lower power than the column-wise permutation (q-perm); the p-perm often has lower power than both the size-corrected LRT and RST.

Third, the two-s, our second permutation based procedure, considers both row-wise and column-wise permutations and has higher empirical power than the LRT in all but one case (normal distribution and with \(q=10\), \(r=75\) under A1). Compared to the RST, it tends to have higher power when \((p,q)=(4,3)\) and (4, 5), but lower power when \((p,q)=(4,10)\) (the case p and q are very unequal). It is interesting to see that it has higher empirical power than both the LRT and RST even for some cases with the data from the normal distribution. We conjecture this is because the sample size n is relatively small compared to the dimension of the data pq. Here, we remark that both the theoretical reference distributions of the LRT and RST are not available in practice.

Finally, we conclude the section with a short report on the computation, which was done with R ver. 3.4.2 and carried out on a PC with Intel Core i7 3.0 GHz processor. The average CPU time without parallel computing for the case \(p=4, q=5, n=50\) using 2000 permutation steps is 32.04 seconds with standard deviation 1.89 seconds based on 100 repetitions.

5 Data examples

5.1 Tooth size data

We now apply our method to testing the covariance separability of the tooth size data, which were obtained as a part of Korean National Occlusion Study, conducted from 1997 to 2005 (Wang et al. 2006; Lee et al. 2007). Here, we use the tooth sizes of 179 young Korean men who passed predefined selection criteria among 15, 836 respondents recorded in this dataset, to test separability in the analysis. The observation of each subject has a \(2\times 14\) matrix form, where the first row consists of sizes of 14 teeth in maxilla and the second row consists of those in mandible. We write the size matrix of the h-th subject as

where “\(\mathrm{X}\)” in the upper-script is short for maxilla and “\(\mathrm{N}\)” for mandible. In this section, we are interested in testing the covariance matrix of \(\mathbf{X}\) (its vectorized version), say \(\Sigma \), is separable as the Kronecker product of \(\mathrm{U}_{2\times 2}\) (the common covariance matrix between sizes of the upper and lower teeth at the same location) and \(\mathrm{V}_{14\times 14}\) (the covariance matrix among sizes of the teeth within maxillar (or mandible)). In short, we test the hypothesis \(\mathcal {H}_{0}:\Sigma =\mathrm{U}_{2\times 2}\otimes \mathrm{V}_{14\times 14}\).

Before testing the hypothesis on separability, we check the normality assumption which existing procedures rely on. We apply three popular testing procedures for the normality which are available from “MVN” R package by Korkmaz et al. (2014); they are Mardia’s (1970), Henze–Zirkler’s (1990), and Royston’s test (Shapiro and Wilk 1964; Royston 1983, 1992). All of the results clearly indicate non-normality of tooth sizes (p value = 0), and univariate Q–Q plots (Fig. 2) confirm it again, especially with “X4R” and “N2R”.

To test the separability, we use the centered data by subtracting the mean vector. The cross-covariance matrix between maxillary and mandible regions is estimated from the unstructured sample covariance matrix \(\mathbf{S}\) and the maximum likelihood estimator under separability \(\widehat{\mathrm{U}}\otimes \widehat{\mathrm{V}}\), respectively, as:

and

where M[a : b, c : d] denotes a submatrix of M from a-th row to b-th row and from c-th column to d-th column, producing a \((b-a+1)\times (d-c+1)\) matrix. From the above, we find a significant difference between \(\mathbf{S}[1:7,15:21]\) and \((\widehat{\mathrm{U}}\otimes \widehat{\mathrm{V}})[1:7,15:21]\). We approximate the p value using the procedure in Sect. 3, which is approximated to 0.025 by the two-s procedure. Here, the number of permuted data sets to approximate the p value is set as 10,000. On the other hand, the LRT statistic in (1) is evaluated as 921.91, and the critical value under normality approximated by Mitchell et al. (2006) is 368.26 at the significant level \(\alpha =0.01\). The RST statistic is evaluated as 741.45 with the asymptotic critical value \(\chi ^2_{.01}(299) = 358.81\) and empirical critical value 358.27 at \(\alpha =0.01\).

5.2 Corpus callosum thickness

Our second example is about two-year longitudinal MRI scans from the Alzheimer’s Disease Neuroimaging Initiative (ADNI). According to Lee et al. (2016), the corpus callosum (CC) thickness profile is calculated based on CC segmentation at equally spaced intervals. To be specific, the CC thicknesses of 135 subjects are measured at 99 points for each year. The separability hypothesis to be tested is \(\mathcal {H}_{0}:\Sigma =\mathrm{U}_{2\times 2}\otimes \mathrm{V}_{99\times 99}\), where we expect \(\mathrm{U}\) and \(\mathrm{V}\) to explain the covariance structure in CC thickness of the repeated measurements and the measurements within a subject, respectively.

The LRT based on the normality is not applicable to this dataset for two reasons. First, the multivariate normal tests provided by the MVN R-package reveal that the data do not satisfy the multivariate normality. This can also be observed from Fig. 3, in which each shown variable has a heavy right tail. Second, the sample size (\(n=135\)) is less than the number of measure points (\(pq=99\times 2=198\)), making the LR statistic undefined.

We apply the proposed permutation procedures to the data. More precisely, we apply the permutation test to the sub-hypotheses of all \(\left( {\begin{array}{c}99\\ 2\end{array}}\right) \) column-wise pairs. The permutation test for each sub-hypothesis is for the bivariate paired data with the size of \(135\times 4\); for example, if the column pair (k, l) is chosen, the bivariate paired data are \(\big \{\big (X_{hk}^1,X_{hl}^1,X_{hk}^2,X_{hl}^2 \big ),h=1,2,\ldots ,135\big \}\). We use 100, 000 random permutations to evaluate the p value of each sub-hypothesis, and the Bonferroni adjusted p value is given by \(\left( {\begin{array}{c}99\\ 2\end{array}}\right) \min _{k<l}p_{kl}^{\mathrm{c}}\), where \(p_{kl}^{\mathrm{c}}\) is the p value for the sub-hypothesis \(\mathcal {H}_{kl}^{\mathrm{c}}\) for the (k, l)-th column pair. The p value evaluated is less than 0.0001. The RST statistic is evaluated as 16, 346.75 with the asymptotic and empirical critical value as \(\chi ^2_{.01}(14749) = 15,151.49\) and 15, 095.09, respectively.

6 Discussion

In this paper, we propose permutation based procedures to test the separability of covariance matrices. The procedure divides the null hypothesis on a separable covariance matrix into many sub-hypotheses, which are testable via a permutation method. Compared to the existing LRT and RST under normality, the proposed procedures are distribution free and robust to non-normality of the data. In addition, it is applicable to small sized data, whose size is smaller than dimension of the covariance matrix under test. The numerical study and data examples show that the proposed permutation procedures are more powerful when the data are non-normal and the dimension is high.

Theory on permutation procedures has been well developed for linear permutation test statistics (Strasser and Weber 1999; Finos and Salmaso 2005; Pesarin and Salmaso 2010; Bertoluzzo et al. 2013) and its computational tool is publicly available (the R package “coin” by Hothorn et al. 2017). In our procedure, permutation is applied to testing the sub-hypotheses \(\mathcal {H}_{0,ij}^{\mathrm{r}}\) and \(\mathcal {H}_{0,kl}^{\mathrm{c}}\) for each choice of (i, j) and (k, l); the sub-hypotheses are on the separability of \(\Sigma _{ij}^{\mathrm{r}}\hbox {s}\) and \(\Sigma _{kl}^{\mathrm{c}}\hbox {s}\). Here, we use the LR statistic, one of a few known statistics for testing covariance separability. The LR statistic is non-linear and, thus the CRAN package and the asymptotic results of Strasser and Weber (1999) can not be directly applied to it. However, we conjecture that, for an appropriately chosen permutation test statistic, we may encapsulate our problem into the existing conditional inference framework and achieve the proven optimality.

Our procedures in this paper use the Bonferroni rule to combine p values from testing of many sub-hypotheses. In our problem, the p values of individual sub-hypotheses are conjectured to be strongly dependent to each other by its nature. For this reason, we adopt the Bonferroni rule to ensure the size of the combined test to be less than the aimed level despite of its conservativeness. In addition, the additional numerical study not reported here shows that the well-known Fisher’s omnibus and Lipták’s rules (under the assumption of independent p values) are severely biased in their sizes for non-normal data. The same reasoning would be applied to the direct aggregation of individual LR statistics. We could not specify the null distribution of the aggregated statistic due to the dependency among individual statistics. We thus have difficulty in proceeding with this.

We finally conclude the paper with the remark that the procedure of this paper can easily be applied to testing more complexly structured covariance matrix. Suppose we consider repeatedly measured spatial data (on the lattice system) or image data. The observation of a single subject has the form of a three-way array \(\mathbf{X}_{ijk}\), \(i=1,2,\ldots ,a\), \(j=1,2,\ldots ,b\), and \(k=1,2,\ldots ,c\) (with the dimension of \(a \times b \times c\)), and its separable covariance matrix has the form of \(\Sigma =\mathrm{A} \otimes \mathrm{B} \otimes \mathrm{C}\) (here, \(\mathrm{A}\), \(\mathrm{B}\), and \(\mathrm{C}\) are \(a \times a\), \(b \times b\), and \(c \times c\) covariance matrix, respectively). To test the hypothesis \(\Sigma =\mathrm{A} \otimes \mathrm{B} \otimes \mathrm{C}\), we read the data as a matrix form as \(\mathbf{Y}_{s,k} = \mathbf{X}_{[ij]k}\) with \(s=1,2,\ldots ,ab, k=1,2,\ldots c\), and test the sub-hypothesis \(\mathcal {H}_{0,\{[12],3\}}: \Sigma =\mathrm{U}_1 \otimes \mathrm{C} \Big (=\big ( \mathrm{A} \otimes \mathrm{B} \big ) \otimes \mathrm{C}\Big )\) at the level \(\alpha _1\). We repeat the same procedure for the other two sub-hypotheses \(\mathcal {H}_{0,\{1,[23]\}}: \Sigma = \mathrm{A} \otimes \mathrm{U}_2 \Big (= \mathrm{A} \otimes \big ( \mathrm{B} \otimes \mathrm{C} \big ) \Big )\) and \(\mathcal {H}_{0,\{2,[13]\}}: \Sigma =\mathrm{B} \otimes \mathrm{U}_3 \Big (= \mathrm{B} \otimes \big ( \mathrm{A} \otimes \mathrm{C} \big )\Big )\) at the level \(\alpha _2\) and \(\alpha _3\), respectively. Finally, we combine the results of three sub-hypotheses with the Bonferroni or multi-stage additive procedure discussed in Sect. 3.

References

Anderson TW (2003) An introduction to multivariate statistical analysis, 3rd edn. Wiley, New York

Allen GI, Tibshirani R (2012) Inference with transposable data: modelling the effects of row and column correlations. J R Stat Soc Ser B Stat Methodol 74(4):721–743

Bertoluzzo F, Pesarin F, Salmaso L (2013) On multi-sided permutation tests. Commun Stat Simul Comput 42(6):1380–1390

Dawid AP (1981) Some matrix-variate distribution theory: notational considerations and a Bayesian application. Biometrika 68(1):265–274

Dutilleul P (1999) The mle algorithm for the matrix normal distribution. J Stat Comput Simul 64(2):105–123

Filipiak K, Klein D, Roy A (2016) Score test for a separable covariance structure with the first component as compound symmetric correlation matrix. J Multivar Anal 150:105–124

Filipiak K, Klein D, Roy A (2017) A comparison of likelihood ratio tests and Rao’s score test for three separable covariance matrix structures. Biom J 59:192–215

Finos L, Salmaso L (2005) A new nonparametric approach for multiplicity control: optimal subset procedures. Comput Stat 20(4):643–654

Fuentes M (2006) Testing for separability of spatial-temporal covariance functions. J Stat Plan Inference 136(2):447–466

Glanz H, Carvalho L (2013) An expectation-maximization algorithm for the matrix normal distribution. arXiv preprint arXiv:1309.6609

Gupta AK, Nagar DK (1999) Matrix variate distribution. Chapman Hall/CRC, New York

Henze N, Zirkler B (1990) A class of invariant consistent tests for multivariate normality. Commun Stat Theory Methods 19(10):3595–3617

Hothorn T, Hornik K, van de Wiel MA, Zeileis A (2017) Package “coin”. Conditional inference procedures in a permutation test framework. ver. 1.2-2. 2017. https://cran.r-project.org/web/packages/coin/index.html. Accessed 02 July 2018

Klingenberg B, Solari A, Salmaso L, Pesarin F (2009) Testing marginal homogeneity against stochastic order in multivariate ordinal data. Biometrics 65(2):452–462

Korkmaz S, Goksuluk D, Zararsiz D (2014) MVN: an R package for assessing multivariate normality. R J 6(2):151–162

Lee SJ, Lee S, Lim J, Ahn SJ, Kim TW (2007) Cluster analysis of tooth size in subjects with normal occlusion. Am J Orthod Dentofac Orthop 132(6):796–800

Lee SH, Bachman AH, Yu D, Lim J, Ardekani BA (2016) Predicting progression from mild cognitive impairment to Alzheimers disease using longitudinal callosal atrophy. Alzheimers Dement Diagn Assess Dis Monit 2:68–74

Li B, Genton MG, Sherman M (2007) A nonparametric assessment of properties of space–time covariance functions. J Am Stat Assoc 102(478):736–744

Li E, Lim J, Lee S-J (2010) Likelihood ratio test for correlated multivariate samples. J Multivar Anal 101(3):541–554

Li E, Lim J, Kim K, Lee S-J (2012) Distribution-free tests of mean vectors and covariance matrices for multivariate paired data. Metrika 75(6):833–854

Lu N, Zimmerman DL (2005) The likelihood ratio test for a separable covariance matrix. Stat Probab Lett 73(4):449–457

Mardia KV (1970) Measures of multivariate skewness and kurtosis with applications. Biometrika 57(3):519–530

Mitchell MW, Genton MG, Gumpertz ML (2006) A likelihood ratio test for separability of covariances. J Multivar Anal 97(5):1025–1043

Pesarin F, Salmaso L (2010) Permutation tests for complex data. Wiley, New York

Roy A, Leiva R (2008) Likelihood ratio tests for triply multivariate data with structured correlation on spatial repeated measurements. Stat Probab Lett 78(13):1971–1980

Roy A, Leiva R (2011) Estimating and testing a structured covariance matrix for three-level multivariate data. Commun Stat Theory Methods 40(11):1945–1963

Royston P (1983) Some techniques for assessing multivariate normality based on the Shapiro–Wilk W. Appl Stat 32(2):121–133

Royston P (1992) Approximating the Shapiro–Wilk W test for non-normality. Stat Comput 2(3):117–119

Shapiro SS, Wilk MB (1964) An analysis of variance test for normality (complete samples). Biometrika 52:591–611

Sheng J, Qiu P (2007) p-Value calculation for multi-stage additive tests. J Stat Comput Simul 77(12):1057–1064

Strasser H, Weber C (1999) On the asymptotic theory of permutation statistics. Math Methods Stat 8(2):220–250

Tan KM, Witten D (2014) Sparse biclustering of transposable data. J Comput Gr Stat 23(4):985–1008

Viroli C (2010) Finite mixtures of matrix normal distributions for classifying three-way data. Stat Comput 21(4):511–522

Wang X, Stokes L, Lim J, Chen M (2006) Concomitant of multivariate order statistics with application to judgment post-stratification. J Am Stat Assoc 101:1693–1704

Wang H, West M (2009) Bayesian analysis of matrix normal graphical models. Biometrika 96(4):821–834

Yin J, Li H (2012) Model selection and estimation in the matrix normal graphical model. J Multivar Anal 107:119–140

Acknowledgements

We are grateful to the Associate Editor and two anonymous reviewers for their many helpful comments. The R-package “NPCovSepTest” of the proposed procedure is online available from https://sites.google.com/view/seongohpark/software. J. Lim’s research is supported by National Research Foundation of Korea (Nos. NRF-2017R1A2B2012264; MIST-2011-0030810).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Park, S., Lim, J., Wang, X. et al. Permutation based testing on covariance separability. Comput Stat 34, 865–883 (2019). https://doi.org/10.1007/s00180-018-0839-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00180-018-0839-2