Abstract

This paper focuses on the estimation of the coefficient functions, which is of primary interest, in generalized varying-coefficient models with non-exponential family error. The local weighted quasi-likelihood method which results from local polynomial regression techniques is presented. The nonparametric estimator based on iterative weighted quasi-likelihood method is obtained to estimate coefficient functions. The asymptotic efficiency of the proposed estimator is given. Furthermore, some simulations are carried out to evaluate the finite sample performance of the proposed method, which show that it possesses some advantages to the previous methods. Finally, a real data example is used to illustrate the proposed methodology.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Generalized linear models (GLMs) were introduced by Nelder and Wedderburn (1972) as a unifying concept of the linear model. They are based on two fundamental assumptions: the conditional distributions belong to an exponential family and a known transform of the underlying regression function is linear. An important extension proposed by Wedderburn (1974) is the quasi-likelihood function, which relaxes the above model assumptions. It only requires assumptions on the first two moments, rather than the entire distribution of the data. The quasi-likelihood approach is useful because in many situations the exact distribution of the observations is unknown. In addition, a quasi-likelihood function has statistical properties similar to those of a log-likelihood function.

The generalized varying-coefficient models are such GLMs that the coefficients of the explanatory variables are assumed to vary with another factor. Let \(Y\) be the response variable, which is to be predicted by the associated covariates \((\mathbf X , \mathbf U )\), where \(\mathbf X =(X_1, X_2, \ldots , X_p)^T\) and \(\mathbf U =(U_1, U_2, \ldots , U_d)^T\) are possibly vector-valued predictors of lengths \(p\) and \(d\), respectively. The conditional density of \(Y\) given \(\mathbf X =\mathbf x \) and \(\mathbf U =\mathbf u \) belongs to an unknown distribution family, but the first two moments are given by

and

for a given function \(V(\cdot )\) and an unknown parameter \(\sigma ^2\). A generalized varying-coefficient model is of the form

or

for some given reversible link function \(g(\cdot )\), where \(\mathbf x =(x_1, x_2, \ldots , x_p)^T\), \({\mathbf {b}}(\cdot )=\left[ b_1(\cdot ), b_2(\cdot ), \ldots , b_p(\cdot )\right] ^T\) is a \(p\)-dimensional vector, consisting of unspecified smooth coefficient functions and \(\mu (\mathbf x , \mathbf u )\) is the mean regression function of the response variable \(Y\). Obviously, if all the coefficient functions \(\left\{ b_j(\cdot )\right\} _{j=1}^p\) are constants, the generalized varying-coefficient model in model (1) will slip into a classical generalized linear regression model. On the other hand, if part of them are viewed as constants, the model (1) can be regarded as a generalized partially linear varying-coefficient model.

The generalized varying-coefficient model is a simple but useful extension of the classical linear models. It has been extensively studied by many researchers. For example, Cai et al. (2000a) proposed a one-step procedure to estimate the coefficient functions and studied the asymptotic normality of the estimators. Additionally, the standard error formulas of the estimated coefficients were derived and were empirically tested. Furthermore, a goodness of fit test was proposed to detect coefficient functions. Kuruwita et al. (2011) proposed a new two-step estimation method for generalized varying coefficient models, with normal distribution of error term, where the link function was specified up to some smoothness conditions. Furthermore, they established the consistency and asymptotic normality of the estimated varying coefficient functions. Lian (2012) considered the problem of variable selection for high-dimensional generalized varying-coefficient models and proposed a polynomial-spline procedure that simultaneously eliminated irrelevant predictors and estimated the nonzero coefficients. Generalized varying-coefficient models are particularly appealing in longitudinal studies where they allow one to explore the extent to which covariates affect responses changing over time; see, for instance, the work of Hoover et al. (1998), Huang et al. (2004), Fan et al. (2007), Chiou et al. (2012) and the references therein. Besides, the ability to model dynamical systems led to applications in the areas including functional data modelling, see Ramsay (2006). Cai et al. (2000b) and Huang and Shen (2004) extended the generalized varying-coefficient models to time series analysis. Cai et al. (2008) developed them in survival analysis.

For the generalized varying-coefficient models mentioned above, the errors are assumed to be independently and identically distributed and follow an exponential family of distribution (Cai et al. 2000a; Lian 2012), or an finite distribution with mean zero and small variance (Kuruwita et al. 2011; Hoover et al. 1998; Huang et al. 2004). However, in practice, the distribution of the error term is always unknown, i.e., the exponential family distribution may be not appropriate. For example, for the simulated model in Sect. 4.1, we fitted it by one-step estimate introduced in Cai et al. (2000a, (2000b), in Fig. 1. The performance of the one-step estimator is not satisfying. Thus, a suitable estimation method needs to be developed for solving this problem.There has been little work on generalized varying-coefficient model with non-exponential family error in the literature. In this paper, by applying local weighted quasi-likelihood method and the estimation method of generalized linear model, we propose a new nonparametric estimation method for the generalized varying-coefficient model with non-exponential family error. Since the proposed estimation method needs some iterative computations, henceforth, we call it nonparametric estimation based on iterative weighted quasi-likelihood (NIWQL). The resulting estimator, NIWQL estimator, will be shown to be asymptotic efficiency.

Simulated results for model (18) with sample size 200. a, b and c are the estimates of \(b_0(U)\), \(b_1(U)\) and \(b_2(U)\), respectively, using one-step estimation method with bandwidth \(\text {h} = 0.1326\). Solid curves and dashed curves are the true functions and the one-step estimates

The article is organized as follows. In Sect. 2, details for the estimation process of generalized varying-coefficient models are introduced. Results on asymptotic efficiency of the estimators are presented in Sect. 3. The simulation results are reported in Sect. 4, followed by the real data application in Sect. 5 and concluding remarks in Sect. 6. Finally, technical proofs are collected in the Appendix.

2 The estimation process

We consider the generalized varying-coefficient model in which the variance function is given by a mean response function and an unknown parameter \(\sigma ^2\) as well. In this section, we propose a nonparametric estimation based on the iterative weighted quasi-likelihood to estimate the coefficient functions of generalized varying-coefficient model with the structure of variance function is specificated. For simplicity, we only consider the case in which \(\mathbf u \) is one-dimensional, i.e., \(d=1\). Extension to multivariate \(\mathbf u \) involves no fundamentally new ideas. However, implementations with \(\mathbf u \) having more than two dimensions may have some difficulties due to the “curse of dimensionality”.

2.1 Local weighted quasi-likelihood

In this part, we shall discuss how to implement the local weighted quasi-likelihood methods for the generalized varying-coefficient model.

Let \(\left\{ (U_i, \mathbf X _i, Y_i)\right\} _{i=1}^n\) be a random sample, then we have \(U=(U_1, \ldots , U_n)^T,\, \mathbf X =(\mathbf X _1, \mathbf X _2, \ldots , \mathbf X _n)^T,\, Y=(Y_1, \ldots , Y_n)^T\). Denote \(L(\mu ,Y)\) as a log conditional density function of \(Y\) given \(\mathbf X =\mathbf x \) and \(U=u\), then we have

Furthermore, the quasi-likelihood function of \((Y_1, Y_2, \ldots , Y_n)\) given \(\mathbf X =\mathbf x \) and \(U=u\) can be briefly described as follows,

Under the model (1), the primary interest is to estimate the coefficient functions \(\left\{ b_j(\cdot )\right\} _{j=1}^p\). We use a local polynomial modelling scheme, which has several nice properties, such as high statistical efficiency, design adaptation (Fan 1993) and good boundary behavior (Fan and Gijbels 1996; Ruppert and Wand 1994). Assume that each \(b_j(\cdot )\) has a continuous \(m+1\) derivative. For each given point \(u_0\), we approximate \(b_j(\cdot )\) locally by a polynomial function

for \(u\) in a neighborhood of \(u_0\). Furthermore, based on a random sample \(\left\{ (U_i, \mathbf X _i, Y_i)\right\} _{i=1}^n\), we have

Therefore, the following weighted quasi-likelihood estimation of the generalized varying-coefficient model is to obtain the solution vector

via maximizing the weighted quasi-likelihood function

where \(K_h(\cdot )=K(\cdot /h)/h,\, K(\cdot )\) is a kernel function and \(h>0\) is a bandwidth. Let the solution vector of the above optimization problem be

then the estimations of the coefficient functions \(\left\{ b_j(\cdot )\right\} _{j=1}^n\) at the point \(u=u_0\) can be expressed as

The algorithm above uses local polynomial weighted fits based on kernel weights with a fixed global bandwidth. One may replace these by more sophisticated smoothers, such as locally varying bandwidths, nearest neighbor weights, and so on. Other non-kernel smoothers, such as splines, also may be used.

2.2 The NIWQL estimator

For simplicity of notation, denote \(\varsigma _1^{(0)}(u_0)= \varsigma _1(u_0),\, \varsigma _1^{(1)}(u_0)=\varsigma '_1(u_0)\), and so on. Then, we can rewrite the expression (6) as

The quasi-score function \(\Phi _r^{k}\) for the weighted quasi-likelihood is

where \(k=0, 1, \ldots , m\). Hence, the expression (9) is the solution of the following equation

where \(g(\mu _i)=\sum \nolimits _{j=1}^p{\left[ \varsigma _j^{(0)}(u_0) +\varsigma _j^{(1)}(u_0)(U_i-u_0) + \cdots +\varsigma _j^{(m)}(u_0)(U_i-u_0)^m\right] } {X_{ij}},\, r=1, 2, \ldots , p\) and \(k=0, 1, \ldots , m\). In order to obtain the solution of (10), we extend the estimation algorithm of the generalized linear model to the generalized varying-coefficient model.

Let \(z_i=g(\mu _i)+(Y_i-\mu _i)g'(\mu _i)\), then we have \(E(z_i)=g(\mu _i)\) and \(\text {Var}(z_i)=\text {Var}(Y_i)\left[ g'(\mu _i)\right] ^2= \phi V(\mu _i)\left[ g'(\mu _i)\right] ^2=a_i\phi \), where \(a_i=V(\mu _i)\left[ g'(\mu _i)\right] ^2\). Then we have the following equation

Furthermore,

Denote the design matrix in the expression (11) by:

where \(\mathbf X =(X_{ij})_{1\le i \le n, 1\le j \le p}\) and \(U^k=\text {diag}\left[ (U_1-u_0)^k, (U_2-u_0)^k, \ldots , (U_n-u_0)^k\right] (k=1, 2, \cdots , m)\). Let \(Z=(z_1, z_2, \ldots , z_n)^T\). Furthermore, let \(W\) be the \(n\times n\) diagonal matrix of weights:

Therefore, the Eq. (11) can be easily written as

If the matrix \(\Gamma ^T(u_0)W\Gamma (u_0)\) is nonsingular, the solution vector of the expression (12), i.e., the NIWQL estimator, can be given by

Therefore, the estimator \(\hat{\beta }_{\text {NIWQL}}(u_0)\) of the coefficient functions \(\left\{ {b_j(u_0)}\right\} _{j=1}^p\) at the point \(u=u_0\) can be obtained, denoted by,

where \(E_1=(\mathbf I _p , \mathbf 0 _p , \ldots , \mathbf 0 _p)\) is a \(p\times mp\) matrix, \(\mathbf I _p\) and \(\mathbf 0 _p\) are the identity and null matrices of order \(p\), respectively.

2.3 The implementation of NIWQL method

Note that the vector \(Z\) in the NIWQL estimator (13) is unknown, so some iterative computations are needed if we want to obtain the estimation of (13). Combining the local weighted quasi-likelihood with the iterative least square estimation of generalized linear models, we proposed a nonparametric estimation method based on local weighted quasi-likelihood, whose procedure can be constructed as follows:

-

Step 1. Give a set of starting values of \((\mu _1, \mu _2, \ldots , \mu _n),\, \left( \mu _1^{0}, \mu _2^{0}, \ldots , \mu _n^{0}\right) \).

-

Step 2. Calculate the initial values of \(g(\mu _i)\) according to the given starting values in Step 1, and denote \(\eta _i^{(0)}=g(\mu _i^{(0)}), i= 1, 2, \ldots , n\).

-

Step 3. Compute the starting values of \(z_i (i= 1, 2, \ldots , n)\) in term of the relationship \(z_i=g(\mu _1)+(y_i-\mu _i)g^{'}(\mu _i)\), namely,

$$\begin{aligned} z_i^{(0)}=\eta _i^{(0)}+(y_i-\mu _i^{(0)})g'(\mu _i^{(0)}), \quad i=1, 2, \ldots , n. \end{aligned}$$Furthermore, we can obtain \(\left\{ a_i^{(0)}\right\} _{i=1}^n\). Therefore, \(Z^{(0)}=(z_1^{(0)}, z_2^{(0)}, \ldots , z_n^{(0)})^T\) and \(W^{(0)}=\,\text {diag}\left( \frac{K_h(U_1-u_0)}{a_1^{(0)}}, \ldots , \frac{K_h(U_n-u_0)}{a_n^{(0)}}\right) \) can be calculated, respectively.

-

Step 4. Compute the first estimation of \(\xi (u_0)\), that is,

$$\begin{aligned} \hat{\xi }^{(1)}(u_0)=\left( \Gamma ^T(u_0)W^{(0)}\Gamma (u_0)\right) ^{-1}\Gamma ^T(u_0)W^{(0)}Z^{(0)}. \end{aligned}$$Furthermore, \(\eta ^{(1)}=(\eta ^{(1)}_1, \eta ^{(1)}_2, \ldots , \eta ^{(1)}_n)^T\) can be obtained, where \(\eta ^{(1)}_i=\,\sum \nolimits _{j=1}^p{[\varsigma _j^{(1,0)}(u_0)+\varsigma _j^{(1,1)}(u_0)(U_i-u_0) + \cdots +\varsigma _j^{(1,m)}(u_0)(U_i-u_0)^m]}{X_{ij}}, (i=1, 2, \ldots , n)\) and \(\varsigma _j^{(1,k)} (1\le k \le m)\) is the \(k\)th component of the vector \(\hat{\xi }^{(1)}(u_0)\).

-

Step 5. Note that \(\eta =g(\mu )\), then we can obtain \(\mu _i^{(1)}=g^{-1}(\eta ^{(1)}_i), i=1, 2, \ldots , n\). Furthermore, \(\hat{\xi }^{(2)}(u_0)\) can be calculated via repeating Steps 2, 3 and 4.

-

Step 6. Repeat the above steps, the iterative estimation of \(\xi (u_0)\) can be obtained

$$\begin{aligned} \hat{\xi }^{(t+1)}(u_0)=\left( \Gamma ^T(u_0)W^{(t)}\Gamma (u_0)\right) ^{-1} \Gamma ^T(u_0)W^{(t)}Z^{(t)} \end{aligned}$$until the convergence holds. Furthermore, the NIWQL estimator \(\hat{\xi }_{\text {NIWQL}}(u_0)\) can be obtained, that is,

$$\begin{aligned} \hat{\xi }_{\text {NIWQL}}(u_0)=\left( \Gamma ^T(u_0)W^{(t)}\Gamma (u_0)\right) ^{-1} \Gamma ^T(u_0)W^{(t)}Z^{(t)}. \end{aligned}$$

We now briefly discuss the choice of the initial estimates. An intuitive and explicit method is based on the substitution of the response observations (\(y_1, y_2, \ldots , y_n\)), namely, in Step 1 of the estimation procedure, let \(\mu _i^{(0)}=y_i (i=1, 2, \ldots , n)\). In the simulation implementation, we will verify this set of initial values is effective.

Another problem associated with the NIWQL estimator is the singularities of the the matrix \(\Gamma ^T(u_0)W\Gamma (u_0)\). In the theoretical part discussed, we will assume that it is nonsingular. However, it may be singular in practical applications. In local modelling, the matrix \(\Gamma ^T(u_0)W\Gamma (u_0)\) can easily be ill conditioned in certain local neighborhood since there can only be a few data points in this neighborhood. This cannot simply be rescued by increasing the size of the bandwidth or using the nearest neighborhood type of bandwidths. We handle this problem of singularity via a ridge regression, namely, replace \(\Gamma ^T(u_0)W\Gamma (u_0)\) by \(\Gamma ^T(u_0)W\Gamma (u_0)+\lambda I\), where \(I\) is an identity matrix with the same order as matrix \(\Gamma ^T(u_0)W\Gamma (u_0)\), and \(\lambda \) is a ridge parameter which needs to be chosen.

3 Asymptotic theory

In this section, we give some assumption conditions, and then derive the asymptotic distribution of the NIWQL estimator. We demonstrate that the performance of the nonparametric estimation based on iterative weighted quasi-likelihood method is good.

Some regularity conditions should be first imposed for the theorem. Let \(q_\ell (s, y)=(\partial ^{\ell }/\partial s^{\ell })Q\left\{ g^{-1}(s, t)\right\} \). Note that \(q_k(\cdot , \cdot )\) is linear in \(y\) for fixed \(s\) and such that

where \(\rho (u, \mathbf x )=[g'(\mu )]^2 V(\mu )\).

Define \(\theta _j=\int {u^jK(u)du}\) and \(\nu _j=\int {u^jK^2(u)du}\), \(j=0, 1, \ldots , m+1\). Let \(\Theta =(\theta _{i+j-2})_{1\le i,j \le m+1}\), \(\Theta ^{*} =(\nu _{i+j-2})_{1\le i,j \le m+1}\) and \(\text {H}=\text {diag}(1, h, \ldots , h^m)\otimes \mathbf I _p\), where \(\otimes \) denotes the Kronecker product. Also, other notations need to be defined, that is, \(\Pi (u_0)=E\left[ \rho (U, \mathbf X )\mathbf X \mathbf X ^T|U=u\right] \) and \(\Lambda (u_0)= \frac{Var(Y|U=u,\mathbf X =\mathbf x )}{\rho (u, \mathbf x )}\). To state the main results of this paper, we make the following conditions, namely, for a given point \(u\):

-

\(\mathbf {C}1 \). \(K(\cdot )\) has a bounded support.

-

\(\mathbf C2 \). Suppose that \(\text {Var}(Y|\mathbf X =\mathbf x , U=u)\ne 0,\, g'(\mu )\ne 0\), and \(\rho (\mathbf x , u)\ne 0\).

-

\(\mathbf C3 \). \(E(Y^4|\mathbf X =\mathbf x , U=u)<\infty \) in the neighborhood of \(u=u_0\).

-

\(\mathbf C4 \). The functions \(f_U(u_0)\), \(\Pi (u)\), \(b_j^{(m+1)}(\cdot ),\, \text {Var}(Y|\mathbf X =\cdot , U=\cdot ),\, V'(\cdot )\), and \(g'''(\cdot )\) are continuous.

-

\(\mathbf C5 \). The function \(q_2(s, y)<0\) for \(s\in \mathfrak {R}\) and \(y\) in the range of the response variable.

-

\(\mathbf C6 \). \(h\rightarrow 0\) and \(nh\rightarrow \infty \).

Then, we can obtain the following theorem.

Theorem 1

Under the conditions \(\mathbf C1 -\mathbf C6 \), then we have

where \({\mathbf {b}}^{(m+1)}(u_0)=\left[ b_1^{(m+1)}(u_0), \ldots , {b}_p^{(m+1)}(u_0)\right] ^T\), \(\varvec{\theta }=(\theta _{m+1}, \ldots , \theta _{2m+1})\) is a \(m+1\)-vector, \(\Delta =f_U(u_0)\Theta \otimes \Pi (u_0)\) and \(\mathbf B =f_U(u_0)\Theta ^{*}\otimes \Lambda (u_0)\). Furthermore, when \(m=1\) and the kernel function \(K(\cdot )\) is symmetric, we obtain that

where \(\Upsilon (u_0)=f_U^{-1}(u_0)\left( \begin{array}{cc} \nu _1 &{} 0 \\ 0 &{} \nu _2/\theta _2^2 \\ \end{array} \right) \otimes \frac{\Lambda (u_0)}{\Pi ^2(u_0)}\).

The bias and variance expressions in Theorem 1 can be deduced from the general theorem in Carroll et al. (1998). The main difference is that here we establish the results in terms of asymptotic normality, whereas those authors established them for the general case by using conditional expectations.

The proof of the Theorem 1 is given in the Appendix.

4 Simulations

Section 3 has shown that the NIWQL estimator for the generalized varying-coefficient model possesses the asymptotic normality under some mild assumptions. In this section, some simulation studies based on the nonparametric iterative estimation discussed in Sect. 2 will be carried out. We illustrate the performance of the proposed NIWQL estimator and compare it with the local quasi-likelihood(LQL) estimator proposed in Fan and Chen (1999) and the one-step LQL estimator proposed in Cai et al. (2000a). Furthermore, the proposed method seems to be more robust, even though the error term is contaminated by a Gaussian distribution or a \(t\) distribution with a suitable degree of freedom.

4.1 Comparisons with LQL estimator and one-step LQL estimator

Consider the relationship between \(Y\) and \(\mathbf X =(1, X_1, X_2)^T\) given by

where \(U\) came from the uniform random distribution \(U(0,1)\) and the covariates \(X_1\) and \(X_2\) were the standard normal random variables with correlation coefficient \(3^{-1}\), independent of \(U\). The variance of error term \(\epsilon \) was defined as \(\text {Var}(\epsilon |\mathbf X =\mathbf x , U=u) =\sigma ^2V(\mu )\) for a given function \(V(\cdot )\) with the form of \(V(\mu )=\frac{\alpha _1}{\alpha _0}\mu ^2\). Then

where \(e\) was a random variable with zero mean and finite variance \(\delta ^2\), independent of both \(X\) and \(U\).

In the model (18), the coefficients were set as \({{\alpha }_{0}}=3,\, {{\alpha }_{1}}=2,\, {{b }_{0}}\left( U \right) =\exp \left( 2U-1 \right) \), \({{b }_{1}}\left( U \right) =8U\left( 1-U \right) \), and \({{b }_{2}}\left( U \right) ={{\sin }^{2}}\left( 2\pi U \right) \), respectively.

For the above example, we conducted N = 500 replications with the sample size n=200. Furthermore, the local linear fits for the coefficient functions were carried out, that is, \(m=1\) in formula (4), and the Epanechnikov kernel function \(K\left( s \right) =\frac{3}{4}{{\left( 1-{{s}^{2}} \right) }_{+}}\) with the cross-validation procedure was adopted.

In practical implementation, the matrix \(\Gamma ^T(u_0)W\Gamma (u_0)\) in (14) can be ill conditioned. A commonly used technique to deal with this problem is the ridge regression technique (see for example Seifert and Gasser 1996). Then an issue arises about how large a ridge parameter should be used. Following the same heuristic as in Fan and Chen (1999), we suggest using the ridge parameters

Replacing \(\Gamma ^T(u_0)W\Gamma (u_0)\) by \(\Gamma ^T(u_0)W\Gamma (u_0)+\lambda I\) in (14) will not alter asymptotic behaviour and will avoid near singularity of the matrix. Furthermore, for each coefficient function, the 500 estimated values at each grid point \(G_i=i/n\) were obtained by the proposed method described in Sect. 2, and the averaged value of them was taken as the final estimated value of the coefficient function at the point \(G_i\).

Figure 2a–c display the true functions and the estimated functions. The corresponding residual plots were presented in Fig. 3a–c. As expected, the performance of the NIWQL estimator is better than that of the LQL estimator, and significantly better than that of the one-step LQL estimator, because the additional estimation steps are involved in the other two methods (referred to Fan and Chen 1999; Cai et al. 2000a), which maybe Reduce the estimation accuracy. This reveals that the NIWQL method outperforms the other two competitors.

Simulation results under the sample size 200. a, b and c are the estimates of \(b_{0}\left( U \right) ,\, b_{1}\left( U \right) \) and \(b_{2} \left( U \right) \), respectively, with bandwidth h \(=\) 0.1326 chosen by the cross-validation. Solid curve is the true function; dashed curve, dotted curve and dash-dotted curve are the estimated curves calculated by the NIWQL, LQL and one-step methods, respectively

Residual plots with the sample size 200. a, b and c are the residual plots of \(b_0(U)\), \(b_1(U)\) and \(b_2(U)\), respectively, with bandwidth \(\hbox {h} = 0.1326\) chosen by the cross-validation. Solid curve, dotted curve and dash-dotted curve are the residual curves calculated by the NIWQL, LQL and one-step methods, respectively

For three estimators \(\hat{\varvec{b}}_{\text {NIWQL}},\, \hat{\varvec{b}}_{\text {LQL}}\) and \(\hat{\varvec{b}}_{\text {one}}\), we assess their performances via the average square errors(ASE), which is similar to the expression (15) in Fan and Chen (1999),

where \(\left\{ {{U}_{k}},k=1,2,\cdots ,n \right\} \) are the grid points. Similarly, the performance of joint estimator \(\hat{\mathbf{b}}(\cdot )\) is evaluated by

Tables 1 and 2 display the simulation results of the ASE in 500 replications, where the values in Table 1 were calculated by (19), and the ones in Table 2 by (20). As expected, the results of NIWQL method are smaller than those of LQL and one-step LQL methods, which demonstrates that NIWQL method is better than the other two methods.

Another issue of interest is how sensitive the NIWQL estimator is to the choice of bandwidth. A mean integrated squared error (MISE) index is defined as

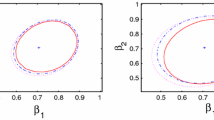

where \(\text {SE}_i(U_j)\) is the squared error of the \(i\)th-simulated sample. Figure 4 depicts the MISE of the estimator \(\hat{\xi }_{\text {NIWQL}}\) of the coefficient function as a function of bandwidth \(h\). We can observe that the MISE curve first decreases and gradually increases, and reaches its minimizer around the optimal bandwidth. This means that NIWQL estimator is sensitive to choice of \(h\).

In order to assess the variability of the estimated coefficient functions, we developed the bootstrap pointwise confidence band, compared with the method derived by the estimated standard errors proposed in Cai et al. (2000a). The curves of the estimated coefficient functions of the model (18) are displayed in Fig. 5, together with the pointwise confidence bands derived by the two methods mentioned above. The dotted curves are the estimated function plus or minus 1.96 of the estimated standard errors and the dashed curves are 95 % bootstrap confidence band based on 1000 replications. It can easily be seen that, around the origin, the pointwise confidence band resulted from the estimated standard errors exist a larger range than that from the bootstrap method. Specially, the proposed bootstrap pointwise confidence band method possesses the obvious superiority when coefficient function admits different degrees of smoothness, for instance, the sinusoidal function has a higher degree of smoothness than that of exponential function and quadratic function. Therefore, the confidence band implemented by the bootstrap approach is superior to that derived by the estimated standard errors.

The estimates of coefficient functions (solid line) in model (18), along with their pointwise confidence bands; a: \(b_0(U)\), b: \(b_1(U)\), and c: \(b_2(U)\). Dotted curves are the confidence bands based on the estimated standard errors, and dashed curves are 95 % bootstrap confidence interval based on 1000 bootstrap replications

4.2 Simulations of robustness

In this part, we implemented the simulations to illustrate that the proposed NIWQL estimator is robust even if the variance function of the error term is misspecification. For convenience, the model (18) was continued to be used here. We wrote an algorithm program using the nonparametric iterative weighted quasi-likelihood method under the following circumstances:

-

(a)

We firstly generated 100(200,500) pseudo-random numbers of \(\left( {{X}_{1}},{{X}_{2}} \right) \) and \(U\) from their distributions discussed above, respectively. Secondly, \(\epsilon _i\)’s \([i=1, 2, \ldots , 100(200,500)]\) were taken to be independent and identically distributed, normal random variables with mean zero and variance \(\delta ^2=0.5\). Thirdly, we obtain the variance of dependent variable \(Y\) according to a given variance function and the random errors generated from the second step. Fourthly, according to the third step, generate the simulation values of the dependent variable \(Y\). The simulation results are listed in Table 3.

-

(b)

Generate 100(200,500) random errors with some contaminated random errors by adding another random error term in the second step described in (a), and the remaining steps were the same as those of case (a). We took Gaussian random errors \(\epsilon \sim N(0,0.5)\) with contaminated random errors \(\epsilon \sim N(0,16)\) with probability 0.1, 0.2, 0.3, respectively. The simulation results are presented in Table 4.

-

(c)

In the second step in (a), generate 200(500) random errors with some contaminated random errors by adding another random error term, and the other steps were the same as those of case (a). We took Gaussian random errors \(\epsilon \sim N(0, 0.5)\) and contaminated \(t\) distribution random errors \(\epsilon \sim t(\omega , \delta , \nu )\), where \(\omega =0,\, \delta =4\) and \(\nu =5\). The probability density function of a \(t\) distributed variable Y with location and dispersion parameters is

$$\begin{aligned} p(y, \omega , \delta ; \nu )=\frac{\nu ^{\nu /2}\Gamma \left( (\nu +1)/2\right) }{\delta \sqrt{\pi }\Gamma (\nu /2)} \left\{ \nu +\left( \frac{y-\omega }{\delta }\right) \right\} ^{-(\nu +1)/2}, \end{aligned}$$where \(t, \omega \in \mathbf {R},\, \delta >0\) and \(\Gamma (\cdot )\) is the gamma function. The mean and variance of \(Y\) are \(\omega \,(\nu >1)\) and \(\nu \delta ^2/(\nu -2) (\nu >2)\), respectively. The \(t\) distribution reduces to the normal and Cauchy distributions when \(\nu =\infty \) and 1, respectively, referred to Lin et al. (2009). In another case, we took Gaussian random errors \(\epsilon \sim N(0, 0.5)\) and contaminated \(t\) distribution random errors \(\epsilon \sim t(0, 4, 20)\). Gaussian random errors \(\epsilon \sim N(0, 0.5)\) and contaminated \(t\) distribution random errors \(\epsilon \sim t(0, 4, 40)\) were considered in the final case. The simulation results are shown in Table 5.

In the Monte Carlo simulations, the same kernel function and replications were used. Table 3 summarizes the results of case (a). It shows that the values of mean and SD of ASE decrease as the sample size increases, which indicates that the large sample properties is valid. The simulated results with different degrees of Gaussian contamination are reported in Table 4. From Table 4, we can observe that NIWQL estimator has a better performance than the competitors. Furthermore, for the case of (c), we used the normal error \(\epsilon \sim N(0, 0.5)\) with contaminated \(t(0, 4, 5),\, t(0, 4, 20)\) and \(t(0, 4, 50)\) distributions with probability 0.1 and 0.2, respectively. From Table 5, it can easily be seen that the performance of the NIWQL estimator outperforms its competitors. More specially, by the NIWQL method, all contaminations are of small influence on the simulated results, but has an evident effect on that for the one-step LQL method. All facts show that the proposed NIWQL estimator is more robust than the LQL and one-step LQL estimators. However, the same conclusion can not be drawn if one uses Cauchy distribution as contaminated random errors instead of normal and other \(t\) distributions even at a large sample size.

5 Real data analysis

In this section, we used an environmental data set to illustrate the proposed method. The data set, collected in Hong Kong from January 1, 1994 to November 30, 1995, was to examine the association between the levels of pollutants and the number of daily hospital admissions for circulation problems, and studied the extent to which the association varies over time. The data set consists of 700 observations, which had been analyzed by Fan and Chen (1999). The covariates were taken as the levels pollutants sulphur dioxide (\(\text {SDO}\)), \(X_2\) (in \(\upmu {\text{ g/m }}^3\)) and nitrogen dioxide (\(\text {NDO}\)) \(X_3\) (in \(\upmu {\text{ g/m }}^3\)). It was reasonable to model the number of hospital admissions (\(\text {NHA}\)) as a Poisson process. Based on the observations \(\left\{ \text {SDO}_i, \text {NDO}_i, \text {NHA}_i, i=1, \ldots , 700 \right\} \), we suggested the following Poisson regression model with the mean \(\mu (u, \mathbf x )\) given by

The NIWQL procedure with the Epanechnikov kernel \(K\left( s \right) = \frac{3}{4}{{\left( 1-{{s}^{2}} \right) }_{+}}\) was used to fit the model (21) and the smoothing parameter \(h\) was chosen by the cross-validation method, which suggested that \(h=0.0843\). The scatter and the fitted curve of the response NHA are shown in Fig. 6a. Figure 6b–d display the estimated curves of the coefficient functions together with 95 % bootstrap confidence bands based on 1000 replications. The three confidence bands of the coefficient functions exclude zero in most of the support, which indicates that the three covariates have a significant impact on the response. The corresponding residual standard deviation is 0.0140. For comparison, we fitted the LQL and one-step LQL estimators using the three independent covariates. The resulting residual standard deviations are 0.0143 and 0.0178, respectively, which implies that the proposed method can produce a satisfactory fit of the response.

6 Concluding remarks

In this paper, we focus on the estimation problem of the generalized varying-coefficient model. By applying both the weighted quasi-likelihood and nonparametric smoothing techniques, we extend the nonparametric estimation based on iterative weighted quasi-likelihood method to estimate the coefficient functions of the generalized varying-coefficient model with non-exponential family distribution. The asymptotic efficiency of the generalized varying-coefficient estimates, obtained by the NIWQL method is established under some assumptions. Furthermore, two simulation experiments are implemented to assess the performance of the proposed estimator, the results of which demonstrate that it possesses good estimation accuracy and robust estimation effect. Finally, a practical data analysis is performed to evaluate the finite sample behaviors of the proposed estimator.

References

Cai J, Fan J, Jiang J, Zhou H (2008) Partially linear hazard regression with varying coefficients for multivariate survival data. J R Stat Soc 70(1):141–158

Cai Z, Fan J, Li R (2000a) Efficient estimation and inferences for varying-coefficient models. J Am Stat Assoc 95(451):888–902

Cai Z, Fan J, Yao Q (2000b) Functional-coefficient regression models for nonlinear time series. J Am Stat Assoc 95(451):941–956

Carroll RJ, Ruppert D, Welsh AH (1998) Local estimating equations. J Am Stat Assoc 93:214–227

Chiou JM, Ma Y, Tsai CL (2012) Functional random effect time-varying coefficient model for longitudinal data. Statistic 1(1):75–89

Fan J (1993) Local linear regression smoothers and their minimax. Ann Stat 21:196–216

Fan J, Chen J (1999) One-step local quasi-likelihood estimation. J R Stat Soc 61(4):927–943

Fan J, Gijbels I (1996) Local polynomial modelling and its applications: monographs on statistics and applied probability. CRC Press, Boca Raton

Fan J, Huang T, Li R (2007) Analysis of longitudinal data with semiparametric estimation of covariance function. J Am Stat Assoc 102(478):632–641

Hoover DR, Rice JA, Wu CO, Yang LP (1998) Nonparametric smoothing estimates of time-varying coefficient models with longitudinal data. Biometrika 85(4):809–822

Huang JZ, Shen H (2004) Functional coefficient regression models for non-linear time series: a polynomial spline approach. Scand J Stat 31(4):515–534

Huang JZ, Wu CO, Zhou L (2004) Polynomial spline estimation and inference for varying coefficient models with longitudinal data. Statistica Sinica 14(3):763–788

Kuruwita C, Kulasekera K, Gallagher C (2011) Generalized varying coefficient models with unknown link function. Biometrika 98(3):701–710

Lian H (2012) Variable selection for high-dimensional generalized varying-coefficient models. Statistica Sinica 22(3):1563–1588

Lin JG, Zhu LX, Xie FC (2009) Heteroscedasticity diagnostics for t linear regression models. Metrika 70(1):59–77

Nelder JA, Wedderburn RWM (1972) Generalized linear models. J Royal Stat Soc Ser A 135:370–384

Ramsay JO (2006) Functional data analysis. Wiley Online Library, New Jersey

Ruppert D, Wand MP (1994) Multivariate weighted least squares regression. Ann Stat 22:1346–1370

Seifert B, Gasser T (1996) Finite-sample variance of local polynomials: analysis and solutions. J Am Stat Assoc 91(433):267–275

Wedderburn RW (1974) Quasi-likelihood functions, generalized linear models, and the GaussNewton method. Biometrika 61(3):439–447

Acknowledgments

The authors are grateful to the Editor and two anonymous referees for their constructive comments which have greatly improved this paper. The research work is supported by the National Natural Science Foundation of China under Grant No. 11171065, 11401094, the Natural Science Foundation of Jiangsu Province of China under Grant No. BK20140617, BK20141326 and the Research Fund for the Doctoral Program of Higher Education of China under Grant No. 20120092110021

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

1.1 Proof of the Theorem 1

The expression (17) can be obtained easily according to the expression (16) under some assumptions. For brevity, we outline only the proof of the expression (16). For each given point \(u\), the conditions \(\mathbf C1 \)–\(\mathbf C6 \) proposed in the Sect. 3 are needed. For simplicity, we recall that the vector \(\hat{\xi }(u_0)\) maximizes (9). Here, we consider the normalized estimator

where \(\psi =(nh)^{-1/2}\). Let \(\bar{\eta }(u_0, u, \mathbf x )=\sum \nolimits _{j=1}^p{\left[ b_j(u_0)+ \cdots +b_j^{(m)}(u_0)(u-u_0)\right] x_{j}}\) and \(z_i=\left\{ \mathbf X _i^T, \frac{U_i-u_0}{h}\mathbf X _i^T, \ldots , \frac{(U_i-u_0)^m}{h^m}\mathbf X _i^T \right\} \). It can easily be seen that \(\hat{\xi }^{*}(u_0)\) maximizes

where \(\mu _i=g^{-1}\left( \bar{\eta }(u_0,u,\mathbf x )+\psi \xi ^{*T}(u_0)Z_i\right) \). Equivalently, \(\hat{\xi }^{*}(u_0)\) maximizes

where \(\bar{\mu }_i=\bar{\eta }(u_0, u, \mathbf x ))\). Condition \(\mathbf C5 \) implies that the function \(\ln (\cdot )\) is concave in \(\hat{\xi }^{*}(u_0)\). We have the following expression via a Taylor’s expansion:

where \(\bar{\eta }(u_0)=\bar{\eta }(u_0, u, \mathbf x ),\, \eta _i\) is between \(\bar{\eta }(u_0)\) and \(\bar{\eta }(u_0)+\psi \xi ^{*T}(u_0)\Gamma _i(u_0)\), and

and

Note the fact that \((\Delta _n)_{ij}=(E \Delta _n)_{ij}+ O_p\left\{ \left[ \text {Var}(\Delta _n)_{ij}\right] ^{\frac{1}{2}}\right\} \), and take a Taylor’s expansion of \(\eta (u, \mathbf x )\) with respect to \(u\) around \(|u-u_0|<h\),

and

Therefore, the expect of \(\Delta _n\)

where \(\Pi (u_0)=E(\rho (u, \mathbf x )\mathbf X \mathbf X ^T|U=u)\). Besides, the element of the variance term can be calculated that \(\text {Var}(\Delta _n)_{ij}=E[(\Delta _{nij}-E\Delta _{nij})(\Delta _{nij} -E\Delta _{nij})^T]=O(\psi ^2)\). Therefore,

Next, we will compute the expected value of the absolute of \(\Omega _n\),

since \(q_3\) is linear in \(Y\) with \(E(Y_1|(\mathbf X _1,U_1))<\infty \), we have \(E\left[ |q_3\{\eta _1(u_0), Y_1\}\mathbf X _1^3|\right] <\infty \), therefore, \(E(\Omega _n)=O(\psi )\). Combining the above equations leads to

By the quadratic approximation lemma (Fan and Gijbels 1996), we have

If \(\Xi _n\) is a stochastically bounded sequence of random vectors. The asymptotic normality of \(\xi ^{*}(u_0)\) follows from that of \(\Xi _n\). So, we need to establish the asymptotic normality of \(\Xi _n\). To establish its asymptotic normality, the mean and covariance need to be computed. The mean

where \(U_h=\left( 1, \frac{U-u_0}{h}, \ldots , \left( \frac{U-u_0}{h}\right) ^m\right) ^T,\, \varvec{\theta }=(\theta _{m+1}, \theta _{m+2}, \ldots , \theta _{2m+1})^T\), and \({\mathbf {b}}^{(m+1)}(u_0)=\left[ b^{(m+1)}_1(u_0), \ldots , b^{(m+1)}_p(u_0)\right] ^T\). An application of \(E(\Xi _n)\) calculated above and the definition of \(q_1\), one obtains that

where \(\Lambda (u_0)=\frac{\text {Var}(Y|U=u,\mathbf X =\mathbf x )}{\rho (u, \mathbf x )}\). In order to prove that

we now employ Cramér-Wold device to derive the asymptotic normality of \(\text {Var}(\Xi _n)\): for any unit vector \(\mathbf {e}\),

Combining (23), (24), (25) and (26), one has

Therefore, the Theorem (16) holds true. Besides, it is easy to verify the Lyapounov’s condition for that sequence, that is formula (27) can easily be proved. If \(m=1\) and \(K(\cdot )\) is symmetric, then \(\theta _1=0\), so that (17) holds true. The proof is completed.

Rights and permissions

About this article

Cite this article

Zhao, YY., Lin, JG. & Huang, XF. Nonparametric estimation in generalized varying-coefficient models based on iterative weighted quasi-likelihood method. Comput Stat 31, 247–268 (2016). https://doi.org/10.1007/s00180-015-0579-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00180-015-0579-5

Keywords

- Generalized varying-coefficient models

- Nonparametric estimation

- Local polynomial regression

- Weighted quasi-likelihood